repo

stringclasses 1

value | number

int64 1

25.3k

| state

stringclasses 2

values | title

stringlengths 1

487

| body

stringlengths 0

234k

⌀ | created_at

stringlengths 19

19

| closed_at

stringlengths 19

19

| comments

stringlengths 0

293k

|

|---|---|---|---|---|---|---|---|

transformers | 9,711 | closed | Add support for RemBERT | # 🌟 New model addition

## Model description

Hi,

I just found this really interesting upcoming ICLR 2021 paper: "Rethinking Embedding Coupling in Pre-trained Language Models":

> We re-evaluate the standard practice of sharing weights between input and output embeddings in state-of-the-art pre-trained language models. We show that decoupled embeddings provide increased modeling flexibility, allowing us to significantly improve the efficiency of parameter allocation in the input embedding of multilingual models. By reallocating the input embedding parameters in the Transformer layers, we achieve dramatically better performance on standard natural language understanding tasks with the same number of parameters during fine-tuning. We also show that allocating additional capacity to the output embedding provides benefits to the model that persist through the fine-tuning stage even though the output embedding is discarded after pre-training. Our analysis shows that larger output embeddings prevent the model's last layers from overspecializing to the pre-training task and encourage Transformer representations to be more general and more transferable to other tasks and languages. Harnessing these findings, we are able to train models that achieve strong performance on the XTREME benchmark without increasing the number of parameters at the fine-tuning stage.

Paper can be found [here](https://openreview.net/forum?id=xpFFI_NtgpW).

Thus, the authors propose a new *Rebalanced mBERT (**RemBERT**) model* that outperforms XLM-R. An integration into Transformers would be awesome!

I would really like to help with the integration into Transformers, as soon as the model is out!

## Open source status

* [ ] the model implementation is available: authors plan to release model implementation

* [ ] the model weights are available: authors plan to release model checkpoint

* [ ] who are the authors: @hwchung27, @Iwontbecreative, Henry Tsai, Melvin Johnson and @sebastianruder

| 01-20-2021 23:16:44 | 01-20-2021 23:16:44 | Decided it would be easier for us to take care of this since we plan to directly release the model checkpoint in huggingface.

Started working on it over the week-end, will share PR once it is more polished. <|||||>This is great news @Iwontbecreative! Let us know if you need help. |

transformers | 9,710 | closed | Let Trainer provide the device to perform training | # 🚀 Feature request

Training_args object [chooses](https://github.com/huggingface/transformers/blob/7acfa95afb8194f8f9c1f4d2c6028224dbed35a2/src/transformers/training_args.py#L477) the training device by itself(cuda:0 by default). I request a possibility for a user to be able to choose it :)

## Motivation

Imagine a situation when we have a cluster with several gpus and cuda:0 memory is full(I have it right now :)) So user cannot use Trainer object for training.

| 01-20-2021 21:49:21 | 01-20-2021 21:49:21 | |

transformers | 9,709 | closed | DeepSpeed: Exits with CUDA runtime error on A100 (requires recompiling DeepSpeed for NVIDIA 8.0 Arch) | ## Environment info

- `transformers` version: 4.3.0 (unofficial, off current main branch)

- Platform: Linux

- Python version: 3.7

- PyTorch version (GPU?): 1.7.1

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: Yes

## Information

Model I am using (Bert, XLNet ...): T-5

The problem arises when using:

* [ ] the official example scripts: examples/seq2seq/finetune_trainer.py

## Issue

In the hopes this saves others some time since it took a while for me to fix: When running the new DeepSpeed mode in Transformers 4.3.0 on an A100 GPU, it will exit with a runtime error:

RuntimeError: CUDA error: no kernel image is available for execution on the device

For me, this was due to installing DeepSpeed from pip rather than source. The A100 architecture appears not to be (as of this writing) installed in the default. If you install from source as described in this post ( https://www.deepspeed.ai/tutorials/advanced-install/ ), the error goes away. The post suggests selecting the architecture using the TORCH_CUDA_ARCH_LIST environment variable, but I found just using the install.sh script (which I am assuming auto-detects the architecture of your GPU) worked more successfully.

| 01-20-2021 21:04:14 | 01-20-2021 21:04:14 | Pinging @stas00<|||||>Heh, actually I wrote this section: https://www.deepspeed.ai/tutorials/advanced-install/#building-for-the-correct-architectures and the autodetector, since I originally had the same issue.

This problem is also partially in pytorch - which is now fixed too in pytorch-nightly.

`TORCH_CUDA_ARCH_LIST` is there if you say want to use the binary build on another machine or want to optimize it for whatever reason. e.g. I build it with:

```

TORCH_CUDA_ARCH_LIST="6.1;8.6" DS_BUILD_OPS=1 pip install --no-cache -v --disable-pip-version-check -e .

```

because I have 1070 and 3090 cards.

I'm glad you found a way to solve it.

Now, this is a purely DeepSpeed issue and has nothing to do with transformers, other than perhaps a documentation issue.

I'm all ears at how perhaps `transformers` can improve the doc on our side to help the users find a solution quickly.

1. Probably should recommend to install from source

2. but then when we bail on missing `deepspeed` we say do `pip install deepspeed` - do you think we should change that to:

> `pip install deepspeed` or if it doesn't work install from source?

The thing is `pip install deepspeed` is installing from source, but I think it perhaps isn't using the same build script? So should we say:

> `pip install deepspeed` or if it doesn't work install from https://github.com/microsoft/deepspeed?

or may be easier to just say:

> install from https://github.com/microsoft/deepspeed?

What happens if you install with:

```

DS_BUILD_OPS=1 pip install deepspeed

```

Perhaps your issue is JIT/PTX which happens if you don't do the above - i.e. the binary build gets postpone till run time. `DS_BUILD_OPS=1` forces the binary build.

In any case let's discuss this over at DeepSpeed Issues - @PeterAJansen, would you please open an issue there because only you can report/reproduce the specific error - should they fix the pip build. and tag me?

BTW, fairscale has its own issues with `pip install fairscale` - I also have to build from the the repo, because I am forced to use pytorch-nightly due to rtx-30* and it won't build at all via `pip` directly.

so whatever we decide we should do the same for `fairscale`.

Thank you!<|||||>This issue has been automatically marked as stale and been closed because it has not had recent activity. Thank you for your contributions.

If you think this still needs to be addressed please comment on this thread. |

transformers | 9,708 | closed | fix typo | # What does this PR do?

fix typo

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors which may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

albert, bert, XLM: @LysandreJik

GPT2: @LysandreJik, @patrickvonplaten

tokenizers: @mfuntowicz

Trainer: @sgugger

Benchmarks: @patrickvonplaten

Model Cards: @julien-c

examples/distillation: @VictorSanh

nlp datasets: [different repo](https://github.com/huggingface/nlp)

rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Text Generation: @patrickvonplaten, @TevenLeScao

Blenderbot, Bart, Marian, Pegasus: @patrickvonplaten

T5: @patrickvonplaten

Rag: @patrickvonplaten, @lhoestq

EncoderDecoder: @patrickvonplaten

Longformer, Reformer: @patrickvonplaten

TransfoXL, XLNet: @TevenLeScao, @patrickvonplaten

examples/seq2seq: @patil-suraj

examples/bert-loses-patience: @JetRunner

tensorflow: @jplu

examples/token-classification: @stefan-it

documentation: @sgugger

FSMT: @stas00

-->

| 01-20-2021 19:46:42 | 01-20-2021 19:46:42 | |

transformers | 9,707 | closed | Allow text generation for ProphetNetForCausalLM | # What does this PR do?

The configuration for ProphetNetForCausalLM is overwritten at initialization to ensure that it is used as a decoder (and not as an encoder_decoder) for text generation.

The initialization of the parent class for ProphetNetForCausalLM is done before this overwrite, causing the `model.config.is_encoder_decoder` to remain possibly True. This leads to an error if the generate method of the model is later called as the non-existing method `get_encoder` is called.

Fixes https://github.com/huggingface/transformers/issues/9702

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [x] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [x] Did you write any new necessary tests?

## Who can review?

@patrickvonplaten

| 01-20-2021 18:56:34 | 01-20-2021 18:56:34 | Thanks a lot for fixing it @guillaume-be |

transformers | 9,706 | closed | [PR/Issue templates] normalize, group, sort + add myself for deepspeed | This PR:

* case-normalizes, groups and sorts the tagging entries

* removes one duplicate

* adds myself for deepspeed

* adds/removes/moves others based on their suggestions through this PR

@LysandreJik, @sgugger, @patrickvonplaten

| 01-20-2021 17:08:51 | 01-20-2021 17:08:51 | Once the PR template is complete and everybody is happy I will sync with the Issue template. So please only review the former if you're just joining in.<|||||>should we add bullets? As in:

```

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, longformer, reformer, t5, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @jplu

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0

- trainer: @sgugger

Documentation: @sgugger

HF projects:

- nlp datasets: [different repo](https://github.com/huggingface/nlp)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

```<|||||>I like bullets.<|||||>someone to tag for ONNX issues? @mfuntowicz? |

transformers | 9,705 | closed | [deepspeed] fix the backward for deepspeed | This PR fixes a bug in my deepspeed integration - `backward` needs to be called on the deepspeed object.

@sgugger

Fixes: https://github.com/huggingface/transformers/issues/9694

| 01-20-2021 16:55:41 | 01-20-2021 16:55:41 | Thanks for fixing! |

transformers | 9,704 | closed | ValueError("The training dataset must have an asserted cardinality") when running run_tf_ner.py | ## Environment info

- `transformers` version: 4.2.0

- Platform: linux

- Python version: python3.6

- PyTorch version (GPU?): 1.7.1 gpu

- Tensorflow version (GPU?):2.4.0

- Using GPU in script?: yes

- Using distributed or parallel set-up in script?:no

### Who can help

@stefan-it

## Information

Model I am using (Bert, XLNet ...): bert-base-multilingual-cased

The problem arises when using:

- [yes ] the official example scripts: (give details below)

* [ ] my own modified scripts: (give details below)

The tasks I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name) ner GermEval 2014

* [ ] my own task or dataset: (give details below)

## To reproduce

Steps to reproduce the behavior:

1. Created a new conda environment using conda env -n xftf2 python=3.6

2. pip insrall transformer==4.2.0 tensorflow==2.4 torch==1.7.1

3. Prepare the data set(train.txt, test.txt, dev.txt) according to the README under the folder token-classification, run run_tf_ner.py

setting from_pt=True, with the following parameters:

```

--data_dir ./data \

--labels ./data/labels.txt \

--model_name_or_path bert-base-multilingual-cased \

--output_dir ./output \

--max_seq_length 128 \

--num_train_epochs 4\

--per_device_train_batch_size 32 \

--save_steps 500 \

--seed 100 \

--do_train \

--do_eval \

--do_predict

```

Here is the stack trace:

```

01/21/2021 00:12:18 - INFO - utils_ner - *** Example ***

01/21/2021 00:12:18 - INFO - utils_ner - guid: dev-5

01/21/2021 00:12:18 - INFO - utils_ner - tokens: [CLS] Dara ##us entwickelte sich im Rok ##oko die Sitt ##e des gemeinsamen Wein ##ens im Theater , das die Stand ##es ##grenze ##n innerhalb des Publikum ##s über ##brücken sollte . [SEP]

01/21/2021 00:12:18 - INFO - utils_ner - input_ids: 101 95621 10251 28069 10372 10211 51588 20954 10128 105987 10112 10139 58090 90462 12457 10211 16223 117 10242 10128 15883 10171 58433 10115 21103 10139 63332 10107 10848 99765 17799 119 102 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

01/21/2021 00:12:18 - INFO - utils_ner - input_mask: 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

01/21/2021 00:12:18 - INFO - utils_ner - segment_ids: 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0 0

01/21/2021 00:12:18 - INFO - utils_ner - label_ids: -1 24 -1 24 24 24 6 -1 24 24 -1 24 24 24 -1 24 24 24 24 24 24 -1 -1 -1 24 24 24 -1 24 -1 24 24 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1 -1

Traceback (most recent call last):

File "run_tf_ner.py", line 299, in <module>

main()

File "run_tf_ner.py", line 231, in main

trainer.train()

File "/.conda/envs/xftf2/lib/python3.6/site-packages/transformers/trainer_tf.py", line 457, in train

train_ds = self.get_train_tfdataset()

File "/.conda/envs/xftf2/lib/python3.6/site-packages/transformers/trainer_tf.py", line 141, in get_train_tfdataset

raise ValueError("The training dataset must have an asserted cardinality")

ValueError: The training dataset must have an asserted cardinality

```

## Expected behavior

In such a case, is there any tips to deal with it?I really appreciate any help you can provide.

| 01-20-2021 16:54:35 | 01-20-2021 16:54:35 | Maybe @jplu has an idea!<|||||>Hello!

This error is always raised by the TFTrainer when your dataset has not a cardinality attached.

Can you give me the version of the `run_tf_ner.py` you are using please?<|||||>The run_tf_ner.py I used was downloaded from this https://github.com/huggingface/transformers/tree/master/examples/token-classification, transformer version is 4.2.0, tensorflow == 2.4.0

@jplu <|||||>Are you sure this is the exact version or not from another commit? Because I see a cardinality assigned in the current script. Even thought the script is not working since 4.2.0 but for a diffeerent reason.<|||||>This issue has been automatically marked as stale and been closed because it has not had recent activity. Thank you for your contributions.

If you think this still needs to be addressed please comment on this thread. |

transformers | 9,703 | closed | Fix WAND_DISABLED test | # What does this PR do?

As reported in #9699, the test for the WAND_DISABLED environment variable is not working right now. This PR fixes that.

Fixes #9699

| 01-20-2021 16:49:29 | 01-20-2021 16:49:29 | |

transformers | 9,702 | closed | ProphetNetForCausalLM text generation fails | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: latest master (4.3.0.dev0)

- Platform: win64

- Python version: 3.7

- PyTorch version (GPU?): 1.7

- Tensorflow version (GPU?): N/A

- Using GPU in script?: yes

- Using distributed or parallel set-up in script?: no

### Who can help

@patrickvonplaten

## Information

Model I am using: ProphetNet

The `ProphetNetForCausalLM` defined at https://github.com/huggingface/transformers/blob/88583d4958ae4cb08a4cc85fc0eb3aa02e6b68af/src/transformers/models/prophetnet/modeling_prophetnet.py#L1884 overwrites the `is_encoder_decoder` flag to a value of False to ensure the mode is used as a decoder only, regardless of what is given in the configuration file.

However, the initialization of the parent class is done before this overwrite, causing the `model.config.is_encoder_decoder` to remain possibly `True`. This leads to an error if the `generate` method of the model is later called as the non-existign method `get_encoder` is called:

```python

AttributeError: 'ProphetNetForCausalLM' object has no attribute 'get_encoder'

```

The script below allows reproducing:

```python

from transformers import ProphetNetTokenizer, ProphetNetForCausalLM

tokenizer = ProphetNetTokenizer.from_pretrained('microsoft/prophetnet-large-uncased')

model = ProphetNetForCausalLM.from_pretrained('patrickvonplaten/prophetnet-decoder-clm-large-uncased').cuda()

model = model.eval()

input_sentences = ["It was a very nice and sunny"]

inputs = tokenizer(input_sentences, return_tensors='pt')

# Generate text

summary_ids = model.generate(inputs['input_ids'].cuda(),

num_beams=4,

temperature=1.0,

top_k=50,

top_p=1.0,

repetition_penalty=1.0,

min_length=10,

max_length=32,

no_repeat_ngram_size=3,

do_sample=False,

early_stopping=True)

model_output = tokenizer.batch_decode(summary_ids, skip_special_tokens=True)

```

## Step to fix it

The call to `super().__init__(config)` in the initialization method should be moved from modeling_prophetnet.py#L1886 to modeling_prophetnet.py#L1890 (after the configuration object was modified). If you agree I could submit a small PR with the same, I tested locally and the model does not crash.

As a side note, After the fix, the generation quality remains very poor, is there a pretrained snapshot for ProphetNet that can actually be used for causal generation? | 01-20-2021 15:49:00 | 01-20-2021 15:49:00 | You're totally right @gui11aume! Thanks for posting this issue - it would be great if you could open a PR to fix it. The checkpoint I uploaded won't work well because I just took the decoder part of the encoder-decoder model and removed all cross-attention layer. The model would have to be fine-tuned to work correctly. The main motivation to add `ProphetNetForCausalLM` however was to enable things like `Longformer2ProphetNet` as described here: https://github.com/huggingface/transformers/pull/9033 |

transformers | 9,701 | closed | how to run pegasus finetune on multiple gpus | ## Environment Information

- transformers version: 4.2.0dev0

- Platform: Linux-3.10.0-1062.18.1.el7.x86_64-x86_64-with-centos-7.7.1908-Core

- Python version: 3.7.3

- PyTorch version (GPU?): 1.7.1 (False)

- Tensorflow version (GPU?): 2.4.0 (False)

- Using GPU in script?:

- Using distributed or parallel set-up in script?:

## Who might help

@sgugger

@patrickvonplaten

@patil-suraj

## Information

The fine-tune process is taking really long time, so I want to do it parallel on multiple gpus.

The problem arises when using:

I do not have found the instructions for training on multiple gpus for the arguments, are there configurations for something like nodes, etc. or should I implement it in my own script?

## To reproduce

```

python finetune.py \

--gpus 0 \

--learning_rate=1e-4 \

--do_train \

--do_predict \

--n_val 1000 \

--val_check_interval 0.25 \

--max_source_length 512 --max_target_length 56 \

--freeze_embeds --label_smoothing 0.1 --adafactor --task summarization_xsum \

--model_name_or_path google/pegasus-xsum \

--output_dir=xsum_results \

--data_dir xsum \

--tokenizer_name google/pegasus-large \

"$@"

```

and which of the belowings are correct? I saw both in other posts:

--model_name_or_path google/pegasus-xsum

--tokenizer_name google/pegasus-large \

or

--model_name_or_path google/pegasus-large

--tokenizer_name google/pegasus-xum \

I think it should be the second one but I am not sure.

## Expected behavior

1. Enable the finetune of pegasus model on multiple gpus.

2. Inject the correct arguments. | 01-20-2021 13:46:46 | 01-20-2021 13:46:46 | Please use the [forums](https://discuss.huggingface.co/) to ask questions like this. Also note that there is no `finetune` script in the example folder anymore, so you should probably be using `finetune_trainer` or `run_seq2seq`. |

transformers | 9,700 | closed | NAN return from F.softmax function in pytorch implementation of BART self-attention | Pytorch 1.7.1 with GPU

transformers 3.0.2

Filling all masked positions with "-inf" may cause a NAN issue for softmax function returns. | 01-20-2021 13:28:09 | 01-20-2021 13:28:09 | It may cause similar issues in other models and other versions of the same model as well.<|||||>HI @KaiQiangSong

We haven't yet observed `NaN`s with BART specifically, could you post a code snippet where the model returns `NaN` so we could take a look ?<|||||>> HI @KaiQiangSong

>

> We haven't yet observed `NaN`s with BART specifically, could you post a code snippet where the model returns `NaN` so we could take a look ?

Sorry that, I couldn't publish my code now due to it is unpublished research.

I've fixed the issue myself with changing the mask_fill of float("-inf") to -1e5 (for supporting AMP as well).

Just post this issue here to let you know there might be a potential issue.<|||||>I have the same exact problem.

Ill try with the -1e5 trick and see if it helps me too.

Thanks a lot!<|||||>> I have the same exact problem.

>

> Ill try with the -1e5 trick and see if it helps me too.

>

> Thanks a lot!

glad that my solution helps.<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 9,699 | closed | WANDB_DISABLED env variable not working as expected | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.2.1

- Platform: Linux-5.4.34-1-pve-x86_64-with-glibc2.10

- Python version: 3.8.5

- PyTorch version (GPU?): 1.7.0 (False)

- Tensorflow version (GPU?): not installed (NA)

- Using GPU in script?: No

- Using distributed or parallel set-up in script?: No

### Who can help

@sgugger

<!-- Your issue will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

albert, bert, GPT2, XLM: @LysandreJik

tokenizers: @mfuntowicz

Trainer: @sgugger

Speed and Memory Benchmarks: @patrickvonplaten

Model Cards: @julien-c

TextGeneration: @TevenLeScao

examples/distillation: @VictorSanh

nlp datasets: [different repo](https://github.com/huggingface/nlp)

rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Text Generation: @patrickvonplaten @TevenLeScao

Blenderbot: @patrickvonplaten

Bart: @patrickvonplaten

Marian: @patrickvonplaten

Pegasus: @patrickvonplaten

mBART: @patrickvonplaten

T5: @patrickvonplaten

Longformer/Reformer: @patrickvonplaten

TransfoXL/XLNet: @TevenLeScao

RAG: @patrickvonplaten, @lhoestq

FSMT: @stas00

examples/seq2seq: @patil-suraj

examples/bert-loses-patience: @JetRunner

ray/raytune: @richardliaw @amogkam

tensorflow: @jplu

examples/token-classification: @stefan-it

documentation: @sgugger

-->

## Information

Model I am using (Bert, XLNet ...): Bert

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [x] my own modified scripts: (give details below)

I'm using modified scripts, but the error is related to a specific function in the `integrations.py` module, as explained below.

The tasks I am working on is:

* [x] an official GLUE/SQUaD task: SQUaD

* [ ] my own task or dataset: (give details below)

## To reproduce

Steps to reproduce the behavior:

1. Make sure that `wandb` is installed on your system and set the environment variable `WANDB_DISABLED` to "true", which should entirely disable `wandb` logging

2. Create an instance of the `Trainer` class

3. Observe that the Trainer always reports the error "WandbCallback requires wandb to be installed. Run `pip install wandb`."

<!-- If you have code snippets, error messages, stack traces please provide them here as well.

Important! Use code tags to correctly format your code. See https://help.github.com/en/github/writing-on-github/creating-and-highlighting-code-blocks#syntax-highlighting

Do not use screenshots, as they are hard to read and (more importantly) don't allow others to copy-and-paste your code.-->

## Expected behavior

I would have expected to disable `wandb`, but instead setting the `WANDB_DISABLED` environment variable completely prevents the user from using `wandb`.

After a bit of digging in the source code, I discovered that the `Trainer` uses the `WandbCallback` class (in `integrations.py`) to handle `wandb` logging. In that class, the `__init__` method has the following lines:

```python

has_wandb = is_wandb_available()

assert has_wandb, "WandbCallback requires wandb to be installed. Run `pip install wandb`."

```

In particular, by checking the `is_wandb_available()` function, we can see that it performs the following check:

```python

if os.getenv("WANDB_DISABLED"):

return False

```

That if statement does not seem to be correct, since environment variables are stored as strings and the truth value of a string depends on whether it is empty or not. So, for example, by not setting the `WANDB_DISABLED` variable at all, then `wandb` would be enabled, but setting it to any value would entirely disable `wandb`. | 01-20-2021 13:21:21 | 01-20-2021 13:21:21 | You're right, thanks for reporting! The PR mentioned above should fix that. |

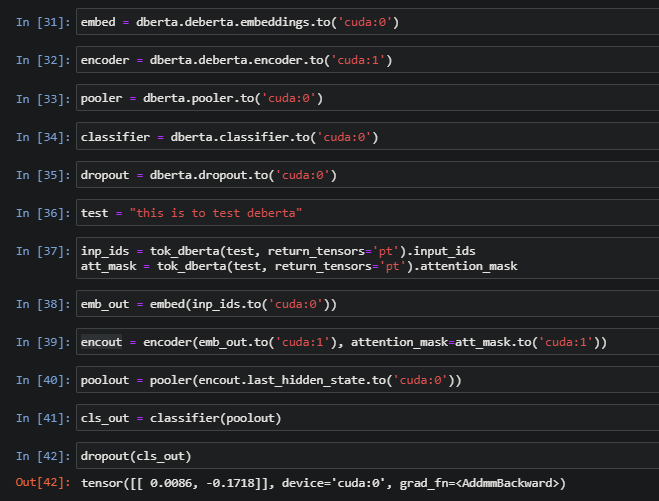

transformers | 9,698 | closed | Model Parallelism for DeBERTa |

Hi,

Is there any way to apply [Model Parallelism](https://pytorch.org/tutorials/intermediate/model_parallel_tutorial.html#apply-model-parallel-to-existing-modules) for DeBERTa ?

I want to run 'microsoft/deberta-large' on 2 GPU's (32 GB each) using [PyTorch's Model Parallelism](https://pytorch.org/tutorials/intermediate/model_parallel_tutorial.html#apply-model-parallel-to-existing-modules) . | 01-20-2021 12:41:35 | 01-20-2021 12:41:35 | Hello! It's currently not implemented for DeBERTa, unfortunately. Following the document you linked, it should be pretty easy to do it in a script!<|||||>Hi @LysandreJik ,

Will DeBERTa (or any of RoBERTa, ALBERT) work if I separate these layers as two or three parts and connect them sequentially?

Because this is what is happening in [previous link](https://pytorch.org/tutorials/intermediate/model_parallel_tutorial.html#apply-model-parallel-to-existing-modules) that I shared<|||||>You would need to cast the intermediate hidden states to the correct devices as well. You can see that in the example you shared, see how the intermediate hidden states were cast to cuda 1:

```py

def forward(self, x):

x = self.seq2(self.seq1(x).to('cuda:1'))

return self.fc(x.view(x.size(0), -1))

```<|||||>Hi @LysandreJik ,

For DeBERTa, I'm able to split entire model into 'embedding', 'encoder', 'pooler', 'classifier' and 'dropout' layers as shown in below pic.

With this approach, I trained on IMDB classification task by assigning 'encoder' to second GPU and others to first 'GPU'. At the end of the training, second GPU consumed lot of memory when compared to first GPU and this resulted in 20-80 split of the entire model.

So, I tried splitting encoder layers also as shown below but getting this error - **"TypeError: forward() takes 1 positional argument but 2 were given"**

```

embed = dberta.deberta.embeddings.to('cuda:0')

f6e = dberta.deberta.encoder.layer[:6].to('cuda:0')

l6e = dberta.deberta.encoder.layer[6:].to('cuda:1')

pooler = dberta.pooler.to('cuda:0')

classifier = dberta.classifier.to('cuda:0')

dropout = dberta.dropout.to('cuda:0')

test = "this is to test deberta"

inp_ids = tok_dberta(test, return_tensors='pt').input_ids

att_mask = tok_dberta(test, return_tensors='pt').attention_mask

emb_out = embed(inp_ids.to('cuda:0'))

first_6_enc_lay_out = f6e(emb_out)

---------------------------------------------------------------------------

TypeError Traceback (most recent call last)

<ipython-input-15-379d948e5ba5> in <module>

----> 1 first_6_enc_lay_out = f6e(emb_out)

/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py in _call_impl(self, *input, **kwargs)

725 result = self._slow_forward(*input, **kwargs)

726 else:

--> 727 result = self.forward(*input, **kwargs)

728 for hook in itertools.chain(

729 _global_forward_hooks.values(),

TypeError: forward() takes 1 positional argument but 2 were given

```

Plz suggest how to proceed further..<|||||>Hi @LysandreJik ,

Plz update on the above issue that I'm facing<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 9,697 | closed | Fix TF template | # What does this PR do?

Fix a template issue for TF. | 01-20-2021 11:30:57 | 01-20-2021 11:30:57 | Thanks for fixing! |

transformers | 9,696 | closed | Add notebook | # What does this PR do?

Add a notebook to the list of community notebooks, illustrating how you can fine-tune `LayoutLMForSequenceClassification` for classifying scanned documents, just as invoices or resumes.

## Who can review?

@sgugger

| 01-20-2021 11:12:52 | 01-20-2021 11:12:52 | |

transformers | 9,695 | closed | The model learns nothing after 3 epochs of training | I have trained a multilingual Bert model on 3 different input data configurations ( imbalanced, partial balanced, and full balanced) for the sentiment classification task. Everything works fine so far, except the zero-shot model being trainined on the full balanced dataset (training data: label balanced data; val/test data: label balanced data). however, the result is very weird:

<img width="638" alt="Screen Shot 2021-01-20 at 11 45 47 AM" src="https://user-images.githubusercontent.com/41744366/105165475-afb1b800-5b16-11eb-9d8f-d775fa9a07ee.png">

As you can see, the model has not learned anything, and it classifies everything into neutral in the testing phase.

Could anyone helps please?

| 01-20-2021 10:58:44 | 01-20-2021 10:58:44 | Hello, thanks for opening an issue! We try to keep the github issues for bugs/feature requests.

Could you ask your question on the [forum](https://discusss.huggingface.co) instead? You'll get more answers over there.

Thanks! |

transformers | 9,694 | closed | ModuleAttributeError: 'GPT2LMHeadModel' object has no attribute 'backward' | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.2.1

- Platform: Linux-4.19.0-12-cloud-amd64-x86_64-with-debian-10.6

- Python version: 3.7.8

- PyTorch version (GPU?): 1.6.0a0+9907a3e (True)

- Tensorflow version (GPU?): not installed (NA)

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: No(?)

### Who can help

albert, bert, GPT2, XLM: @LysandreJik

Trainer: @sgugger

## To reproduce

Steps to reproduce the behavior:

1. Set up a TrainingArguments for a GPT2LMHeadModel with the following deepspeed config:

```

{

"fp16": {

"enabled": true,

"loss_scale": 0,

"loss_scale_window": 1000,

"hysteresis": 2,

"min_loss_scale": 1

},

"zero_optimization": {

"stage": 2,

"allgather_partitions": true,

"allgather_bucket_size": 2e8,

"reduce_scatter": true,

"reduce_bucket_size": 2e8,

"overlap_comm": true,

"contiguous_gradients": true,

"cpu_offload": false

},

"optimizer": {

"type": "Adam",

"params": {

"adam_w_mode": true,

"lr": 3e-5,

"betas": [ 0.9, 0.999 ],

"eps": 1e-8,

"weight_decay": 3e-7

}

},

"scheduler": {

"type": "WarmupLR",

"params": {

"warmup_min_lr": 0,

"warmup_max_lr": 3e-5,

"warmup_num_steps": 500

}

}

}

```

2. Attempt to call `trainer.train()`.

## Expected behavior

Training should begin as expected.

## Believed bug location

It would appear that [line 1286 in trainer.py](https://github.com/huggingface/transformers/blob/76f36e183a825b8e5576256f4e057869b2e2df29/src/transformers/trainer.py#L1286) actually calls the `backward` method on the *model*, not the loss object. I will try rebuilding after fixing that line and seeing if it helps.

| 01-20-2021 10:10:59 | 01-20-2021 10:10:59 | > It would appear that line 1286 in trainer.py actually calls the backward method on the model, not the loss object. I will try rebuilding after fixing that line and seeing if it helps.

This is incorrect. It appears that the ``model_wrapped.module`` in the aforementioned trainer.py actually resolves to GPT2LMHeadModel. Another big shot in the dark, but maybe ``model_wrapped`` is never actually wrapping because I'm only using one GPU? It's very late where I live, I'll take another shot at this in the morning.<|||||>You then need to launch your script with the `deepspeed` launcher. Could you tell us which command you ran?

Also cc @stas00 since he added deepspeed to Trainer.<|||||>Yes, please tag me on any deepspeed issues.

Thank you for this report.

I think it's a bug, it should be:

```

self.deepspeed.backward(loss)

```

I will test and send a fix.

<|||||>The merged PR closed this report, but should you still have an issue please don't hesitate to re-open it.

<|||||>Hello,

Thanks for this great deepspeed feature. I am also running into the same error both for

DistilBertForSequenceClassification' object has no attribute 'backward'

and for

BertForSequenceClassification object has no attribute 'backward'

here is the full error:

> ---------------------------------------------------------------------------

AttributeError Traceback (most recent call last)

<ipython-input-6-beaae64139c1> in <module>

23 )

24

---> 25 trainer.train()

~/anaconda3/lib/python3.7/site-packages/transformers/trainer.py in train(self, model_path, trial)

886 tr_loss += self.training_step(model, inputs)

887 else:

--> 888 tr_loss += self.training_step(model, inputs)

889 self._total_flos += self.floating_point_ops(inputs)

890

~/anaconda3/lib/python3.7/site-packages/transformers/trainer.py in training_step(self, model, inputs)

1263 elif self.deepspeed:

1264 # calling on DS engine (model_wrapped == DDP(Deepspeed(PretrainedModule)))

-> 1265 self.model_wrapped.module.backward(loss)

1266 else:

1267 loss.backward()

~/anaconda3/lib/python3.7/site-packages/torch/nn/modules/module.py in __getattr__(self, name)

574 return modules[name]

575 raise AttributeError("'{}' object has no attribute '{}'".format(

--> 576 type(self).__name__, name))

577

578 def __setattr__(self, name, value):

AttributeError: 'DistilBertForSequenceClassification' object has no attribute 'backward'

Any idea?

Thanks<|||||>@victorstorchan, can you please ensure you use an up-to-date master?<|||||>Thanks for your answer. I just pip installed transformers 1h ago. It should be up-to-date right?<|||||>no, it won't. pip installs the released version. you need the unreleased master build, which there are several ways to go about, one of them is just:

```

pip install git+https://github.com/huggingface/transformers

```

<|||||>My bad! Thanks @stas00 <|||||>You did nothing wrong, @victorstorchan.

I will propose an update to the installation page so that the distinction is loud and clear. |

transformers | 9,693 | closed | ModuleAttributeError: 'GPT2LMHeadModel' object has no attribute 'backward' | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version:

- Platform:

- Python version: 3.7

- PyTorch version (GPU?):

- Tensorflow version (GPU?): None

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: No?

### Who can help

<!-- Your issue will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

albert, bert, GPT2, XLM: @LysandreJik

Trainer: @sgugger

## To reproduce

Steps to reproduce the behavior:

1. Set up a TrainingArguments for a GPT2LMHeadModel with the following deepspeed config:

`{

"fp16": {

"enabled": true,

"loss_scale": 0,

"loss_scale_window": 1000,

"hysteresis": 2,

"min_loss_scale": 1

},

"zero_optimization": {

"stage": 2,

"allgather_partitions": true,

"allgather_bucket_size": 2e8,

"reduce_scatter": true,

"reduce_bucket_size": 2e8,

"overlap_comm": true,

"contiguous_gradients": true,

"cpu_offload": false

},

"optimizer": {

"type": "Adam",

"params": {

"adam_w_mode": true,

"lr": 3e-5,

"betas": [ 0.9, 0.999 ],

"eps": 1e-8,

"weight_decay": 3e-7

}

},

"scheduler": {

"type": "WarmupLR",

"params": {

"warmup_min_lr": 0,

"warmup_max_lr": 3e-5,

"warmup_num_steps": 500

}

}

}`

2. Attempt to train.

## Expected behavior

Training should begin as expected.

## Believed bug location

It would appear that [line 1286 in trainer.py](https://github.com/huggingface/transformers/blob/76f36e183a825b8e5576256f4e057869b2e2df29/src/transformers/trainer.py#L1286) actually calls the `backward` method on the *model*, not the loss object. I will try rebuilding after fixing that line and seeing if it helps.

| 01-20-2021 10:07:31 | 01-20-2021 10:07:31 | https://github.com/huggingface/transformers/issues/9694 |

transformers | 9,692 | closed | input one model's output to another one | Hello,

I want to create a model which generates text and the generated text is input to other model. So basically two models are trained together. How can i achieve this using hugging face?

Thanks | 01-20-2021 10:07:12 | 01-20-2021 10:07:12 | Hello, thanks for opening an issue! We try to keep the github issues for bugs/feature requests.

Could you ask your question on the [forum](https://discusss.huggingface.co) instead? You'll get more answers there!

Thanks! |

transformers | 9,691 | closed | Add DeBERTa head models | This PR adds 3 head models on top of the DeBERTa base model: `DebertaForMaskedLM`, `DebertaForTokenClassification`, `DebertaForQuestionAnswering`. These are mostly copied from `modeling_bert.py` with bert->deberta.

## Who can review?

@LysandreJik

Also tagging original DeBERTa author: @BigBird01

Fixes #9689 | 01-20-2021 08:57:48 | 01-20-2021 08:57:48 | Thanks for the review @LysandreJik, the test did fail because of the pooler. Is fixed now! |

transformers | 9,690 | closed | Is there a C++ interface? |

Is there a C++ interface? transformers | 01-20-2021 08:27:10 | 01-20-2021 08:27:10 | No, only Python.<|||||>> No, only Python.

thx.

It means that using torch cannot call bert with c++, right? |

transformers | 9,689 | closed | MLM training for DeBERTa not supported: configuration class is missing | When I ran the example script run_mlm.py to fine tune the pretrained deberta model on a customized dataset, I got the following error. The same command worked for roberta-base.

The command:

python run_mlm.py --model_name_or_path 'microsoft/deberta-base' --train_file slogans/train.txt --validation_file slogans/test.txt --do_train --do_eval --per_device_train_batch_size 64 --per_device_eval_batch_size 64 --learning_rate 1e-3 --num_train_epochs 10 --output_dir /home/jovyan/share2/xiaolin/models/mlm/temp --save_steps 5000 --logging_steps 100

The terminal error:

Traceback (most recent call last):

File "run_mlm.py", line 409, in <module>

main()

File "run_mlm.py", line 264, in main

cache_dir=model_args.cache_dir,

File "/home/jovyan/.local/lib/python3.7/site-packages/transformers/models/auto/modeling_auto.py", line 1093, in from_pretrained

config.__class__, cls.__name__, ", ".join(c.__name__ for c in MODEL_FOR_MASKED_LM_MAPPING.keys())

ValueError: Unrecognized configuration class <class 'transformers.models.deberta.configuration_deberta.DebertaConfig'> for this kind of AutoModel: AutoModelForMaskedLM.

Model type should be one of LayoutLMConfig, DistilBertConfig, AlbertConfig, BartConfig, CamembertConfig, XLMRobertaConfig, LongformerConfig, RobertaConfig, SqueezeBertConfig, BertConfig, MobileBertConfig, FlaubertConfig, XLMConfig, ElectraConfig, ReformerConfig, FunnelConfig, MPNetConfig, TapasConfig. | 01-20-2021 05:27:39 | 01-20-2021 05:27:39 | Looking at the [docs](https://huggingface.co/transformers/model_doc/deberta.html), it seems like there's currently no `DeBERTaForMaskedLM` defined. I will make a PR that adds this. |

transformers | 9,688 | closed | [Open in Colab] links not working in examples/README.md | For the following tasks below, the  button contains github links instead of colab links.

- question-answering

- text-classification

- token-classification

@sgugger

| 01-20-2021 04:12:45 | 01-20-2021 04:12:45 | Hi @wilcoln

Yes, the links point to Github, feel free to open a PR to replace the GitHub links with colab :). Thanks! |

transformers | 9,687 | closed | Can't load previously built tokenizers | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.2.1

- Platform: Linux-3.10.0-957.5.1.el7.x86_64-x86_64-with-glibc2.10

- Python version: 3.8.5

- PyTorch version (GPU?): 1.7.1 (False)

- Tensorflow version (GPU?): not installed (NA)

- Using GPU in script?: not for this part that triggers this error

- Using distributed or parallel set-up in script?: n

### Who can help

Probably @mfuntowicz or @patrickvonplaten

## Information

N/A -- none of the fields here applied

## To reproduce

Context: I work on cluster where most nodes don't have internet access. Therefore I pre-build tokenizers, models, etc., in cli on nodes with internet access and then make sure that I can access the local caches on other nodes. That last part -- accessing the tokenizer I've built -- is failing for BlenderBot 400M distilled tokenizer. It's also failing for blenderbot small 90M which I also built today, potentially for others too, but it doesn't seem to be failing for roberta-base, which I had built before (and is a tokenizer small rather than base).

1. `AutoTokenizer.from_pretrained('facebook/blenderbot-400M-distill')` from a node with internet access

2. the same as above, from a node without internet access

3. You should see this error getting triggered : [https://github.com/huggingface/transformers/blob/14d677ca4a62facf70b28f2922b12e6cd3692a03/src/transformers/file_utils.py#L1234](url)

Here's the specific Traceback:

`Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/usr/lusers/margsli/miniconda3/envs/latest/lib/python3.8/site-packages/transformers/models/auto/tokenization_auto.py", line 388, in from_pretrained

return tokenizer_class_py.from_pretrained(pretrained_model_name_or_path, *inputs, **kwargs)

File "/usr/lusers/margsli/miniconda3/envs/latest/lib/python3.8/site-packages/transformers/tokenization_utils_base.py", line 1738, in from_pretrained

resolved_vocab_files[file_id] = cached_path(

File "/usr/lusers/margsli/miniconda3/envs/latest/lib/python3.8/site-packages/transformers/file_utils.py", line 1048, in cached_path

output_path = get_from_cache(

File "/usr/lusers/margsli/miniconda3/envs/latest/lib/python3.8/site-packages/transformers/file_utils.py", line 1234, in get_from_cache

raise ValueError(

ValueError: Connection error, and we cannot find the requested files in the cached path. Please try again or make sure your Internet connection is on.`

Dug around a little and found that cached_path() gets called 7 filenames/urls when I'm offline and only 6 when I'm online (I printed cache_path every time cached_path() gets called) -- the last one is not seen when offline, and that's the one that triggers the error. Printed the same things for other tokenizers I had previously built and didn't see this. Not sure if that's helpful, but it was as far as I got during my debugging.

## Expected behavior

no error

| 01-20-2021 00:57:12 | 01-20-2021 00:57:12 | Hello! To make sure I understand your issue, you're doing the following:

```py

AutoTokenizer.from_pretrained('facebook/blenderbot-400M-distill')

```

on a node which has internet access, and then you're doing the same once you have no internet access. You want the library to rely on the cache that it had previously downloaded, is that right?

Could you make sure you are up to date with the `master` branch, and try the following once you have no internet access:

```py

AutoTokenizer.from_pretrained('facebook/blenderbot-400M-distill', local_files_only=True)

```

Thank you.<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 9,686 | closed | BertGenerationDecoder .generate() issue during inference with PyTorch Lightning | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.2.1

- Platform: Ubuntu 20.04.1 LTS

- Python version: 3.8.5

- PyTorch version: 1.7.1

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: Tried both distributed and parallel

### Who can help

TextGeneration: @TevenLeScao

Text Generation: @patrickvonplaten

examples/seq2seq: @patil-suraj

<!-- Your issue will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

-->

## Information

I am using BertGenerationEncoder and BertGenerationDecoder. I am using `transformers` in combination with PyTorch lightning.

At inference, `.generate()` outputs the same thing for each input.

I am unsure of why this is occurring, my only hunch is that PyTorch lighting is somehow blocking the outputs of the encoder to reach the decoder for cross-attention? As the outputs seem as though the decoder is just given the `[BOS]` token only for each input during inference.

The task that I am demonstrating this issue on is:

* WMT'14 English to German.

I have had this problem occur on different tasks as well. Using WMT'14 English to German to demonstrate.

## To reproduce

I have tried to simplify this down, but unfortunately, the example is still long. Sorry about that. Please let me know if something does not work.

If torchnlp is not installed: `pip install pytorch-nlp`

If pytorch_lightning is not installed: `pip install pytorch-lightning `

```

from torchnlp.datasets.wmt import wmt_dataset

import torch

import torch.nn as nn

from pytorch_lightning.core.datamodule import LightningDataModule

from pytorch_lightning.metrics.functional.nlp import bleu_score

import pytorch_lightning as pl

from transformers import (

BertGenerationConfig,

BertGenerationEncoder,

BertGenerationDecoder,

)

from transformers import AutoTokenizer

import os

import numpy as np

import multiprocessing

class Dataset(LightningDataModule):

def __init__(

self,

mbatch_size,

dataset_path,

encoder_tokenizer,

decoder_tokenizer,

max_len=None,

**kwargs,

):

super().__init__()

self.mbatch_size = mbatch_size

self.dataset_path = dataset_path

self.encoder_tokenizer = encoder_tokenizer

self.decoder_tokenizer = decoder_tokenizer

self.max_len = max_len

## Number of workers for DataLoader

self.n_workers = multiprocessing.cpu_count()

def setup(self, stage=None):

## Assign train & validation sets

if stage == "fit" or stage is None:

train_iterator, val_iterator = wmt_dataset(

directory=self.dataset_path,

train=True,

dev=True,

)

self.train_set = Set(

train_iterator,

self.encoder_tokenizer,

self.decoder_tokenizer,

self.max_len,

)

self.val_set = Set(

val_iterator,

self.encoder_tokenizer,

self.decoder_tokenizer,

self.max_len,

)

## Assign test set

if stage == "test" or stage is None:

test_iterator = wmt_dataset(directory=self.dataset_path, test=True)

self.test_set = Set(

test_iterator,

self.encoder_tokenizer,

self.decoder_tokenizer,

self.max_len,

)

def train_dataloader(self):

return DataLoader(

self.train_set,

batch_size=self.mbatch_size,

num_workers=self.n_workers,

shuffle=True,

)

def val_dataloader(self):

return DataLoader(

self.val_set,

batch_size=self.mbatch_size,

num_workers=self.n_workers,

)

def test_dataloader(self):

return DataLoader(

self.test_set,

batch_size=self.mbatch_size,

num_workers=self.n_workers,

)

class Set(torch.utils.data.Dataset):

def __init__(

self,

iterator,

encoder_tokenizer,

decoder_tokenizer,

max_len,

):

self.iterator = iterator

self.encoder_tokenizer = encoder_tokenizer

self.decoder_tokenizer = decoder_tokenizer

self.n_examples = len(self.iterator)

self.max_len = max_len

def __getitem__(self, index):

example = self.iterator[index]

english_encoded = self.encoder_tokenizer(

example["en"],

return_tensors="pt",

padding="max_length",

truncation=True,

max_length=self.max_len,

)

german_encoded = self.decoder_tokenizer(

example["de"],

return_tensors="pt",

padding="max_length",

truncation=True,

max_length=self.max_len,

)

return {

"input_ids": english_encoded["input_ids"][0],

"token_type_ids": english_encoded["token_type_ids"][0],

"attention_mask": english_encoded["attention_mask"][0],

"decoder_input_ids": german_encoded["input_ids"][0],

"decoder_token_type_ids": german_encoded["token_type_ids"][0],

"decoder_attention_mask": german_encoded["attention_mask"][0],

}

def __len__(self):

return self.n_examples

class BERT2BERT(nn.Module):

def __init__(self, **kwargs):

super(BERT2BERT, self).__init__()

assert "ckpt_base" in kwargs, "ckpt_base must be passed."

self.ckpt_base = kwargs["ckpt_base"]

## Tokenizer

assert (

"encoder_tokenizer" in kwargs

), "A tokenizer for the encoder must be passed."

assert (

"decoder_tokenizer" in kwargs

), "A tokenizer for the decoder must be passed."

self.encoder_tokenizer = kwargs["encoder_tokenizer"]

self.decoder_tokenizer = kwargs["decoder_tokenizer"]

## Encoder

assert "encoder_init" in kwargs, "Set encoder_init in config file."

self.encoder_init = kwargs["encoder_init"]

ckpt_dir = os.path.join(self.ckpt_base, self.encoder_init)

self.encoder = BertGenerationEncoder.from_pretrained(ckpt_dir)

## Decoder

assert "decoder_init" in kwargs, "Set decoder_init in config file."

self.decoder_init = kwargs["decoder_init"]

ckpt_dir = os.path.join(self.ckpt_base, self.decoder_init)

config = BertGenerationConfig.from_pretrained(ckpt_dir)

config.is_decoder = True

config.add_cross_attention = True

config.bos_token_id = self.decoder_tokenizer.cls_token_id

config.eos_token_id = self.decoder_tokenizer.sep_token_id

config.pad_token_id = self.decoder_tokenizer.pad_token_id

config.max_length = kwargs["max_length"] if "max_length" in kwargs else 20

config.min_length = kwargs["min_length"] if "min_length" in kwargs else 10

config.no_repeat_ngram_size = (

kwargs["no_repeat_ngram_size"] if "no_repeat_ngram_size" in kwargs else 0

)

config.early_stopping = (

kwargs["early_stopping"] if "early_stopping" in kwargs else False

)

config.length_penalty = (

kwargs["length_penalty"] if "length_penalty" in kwargs else 1.0

)

config.num_beams = kwargs["num_beams"] if "num_beams" in kwargs else 1

self.decoder = BertGenerationDecoder.from_pretrained(

ckpt_dir,

config=config,

)

def forward(self, x):

## Get last hidden state of the encoder

encoder_hidden_state = self.encoder(

input_ids=x["input_ids"],

attention_mask=x["attention_mask"],

).last_hidden_state

## Teacher forcing: labels are given as input

outp = self.decoder(

input_ids=x["decoder_input_ids"],

attention_mask=x["decoder_attention_mask"],

encoder_hidden_states=encoder_hidden_state,

)

return outp["logits"]

def generate(self, input_ids, attention_mask):

## Get last hidden state of the encoder

encoder_hidden_state = self.encoder(

input_ids=input_ids,

attention_mask=attention_mask,

).last_hidden_state

print("\n Output of encoder:")

print(encoder_hidden_state)

bos_ids = (

torch.ones(

(encoder_hidden_state.size()[0], 1),

dtype=torch.long,

device=self.decoder.device,

)

* self.decoder.config.bos_token_id

)

## Autoregresively generate predictions

return self.decoder.generate(

input_ids=bos_ids,

encoder_hidden_states=encoder_hidden_state,

)

class Seq2Seq(pl.LightningModule):

def __init__(

self,

encoder_init,

decoder_init,

encoder_tokenizer,

decoder_tokenizer,

permute_outp=False,

ckpt_base="",

ver="tmp",

print_model=True,

**kwargs,

):

super(Seq2Seq, self).__init__()

self.save_hyperparameters()

self.permute_outp = permute_outp

self.ckpt_base = ckpt_base

self.ver = ver

self.encoder_tokenizer = encoder_tokenizer

self.decoder_tokenizer = decoder_tokenizer

self.seq2seq = BERT2BERT(

encoder_init=encoder_init,

decoder_init=decoder_init,

encoder_tokenizer=encoder_tokenizer,

decoder_tokenizer=decoder_tokenizer,

ckpt_base=ckpt_base,

**kwargs,

)

## Loss function

self.loss = torch.nn.CrossEntropyLoss()

def forward(self, x):

## Iterate through the networks

return self.seq2seq(x)

def training_step(self, batch, batch_idx):

## Target

y = batch["decoder_input_ids"]

## Inference

y_hat = self(batch)

## Permute output

if self.permute_outp:

y_hat = y_hat.permute(*self.permute_outp)

## Loss

train_loss = self.loss(y_hat, y)

## Compute and log metrics

logs = {"train_loss": train_loss}

self.log_dict(logs, on_step=False, on_epoch=True)

######### TEMPORARY!!!

if batch_idx % 100 == 0:

pred = self.seq2seq.generate(

batch["input_ids"],

batch["attention_mask"],

)

pred_str = self.decoder_tokenizer.batch_decode(pred, skip_special_tokens=True)

ref_str = self.decoder_tokenizer.batch_decode(y, skip_special_tokens=True)

print("\nTraining reference labels:")

print(ref_str)

print("\n Training predictions:")

print(pred_str)

print("\n\n")

## Return training loss

return train_loss

def validation_step(self, batch, batch_idx):

print("\n\n\n Validation input_ids:")

print(batch["input_ids"])

## Generate outputs autoregresively

pred = self.seq2seq.generate(

batch["input_ids"],

batch["attention_mask"],

)

pred_str = self.decoder_tokenizer.batch_decode(pred, skip_special_tokens=True)

ref_str = self.decoder_tokenizer.batch_decode(batch["decoder_input_ids"], skip_special_tokens=True)

print("Validation reference labels:")

print(ref_str)

print("Validation predictions:")

print(pred_str)

print("\n\n")

pred_str = [i.split() for i in pred_str]

ref_str = [i.split() for i in ref_str]

self.log_dict({"val_bleu": bleu_score(pred_str, ref_str)})

def test_step(self, batch, batch_idx):

## Generate outputs autoregresively

pred = self.seq2seq.generate(

batch["input_ids"],

batch["attention_mask"],

)

pred_str = self.decoder_tokenizer.batch_decode(pred, skip_special_tokens=True)

ref_str = self.decoder_tokenizer.batch_decode(batch["decoder_input_ids"], skip_special_tokens=True)

pred_str = [i.split() for i in pred_str]

ref_str = [i.split() for i in ref_str]

self.log_dict({"test_bleu": bleu_score(pred_str, ref_str)})

def configure_optimizers(self):

self.optimisers = [torch.optim.Adam(self.parameters(), lr=4e-5)]

return self.optimisers

if __name__ == "__main__":

ckpt_base = ""

encoder_init = "bert-base-uncased"

decoder_init = "dbmdz/bert-base-german-uncased"

dataset_path = ""

encoder_tokenizer = AutoTokenizer.from_pretrained(

os.path.join(ckpt_base, encoder_init),

)

decoder_tokenizer = AutoTokenizer.from_pretrained(

os.path.join(ckpt_base, decoder_init),

)

dataset = Dataset(

mbatch_size=4,

dataset_path=dataset_path,

encoder_tokenizer=encoder_tokenizer,

decoder_tokenizer=decoder_tokenizer,

max_len=512,

)

trainer = pl.Trainer(

max_epochs=2,

num_sanity_val_steps=0,

fast_dev_run=True,

accelerator="ddp" if torch.cuda.device_count() > 1 else None,

gpus=torch.cuda.device_count() if torch.cuda.is_available() else None,

precision=16 if torch.cuda.is_available() else 32,

log_gpu_memory=log_gpu_memory if torch.cuda.is_available() else False,

plugins=plugins if torch.cuda.device_count() > 1 else None,

)

seq2seq = Seq2Seq(

encoder_init=encoder_init,

decoder_init=decoder_init,

encoder_tokenizer=encoder_tokenizer,

decoder_tokenizer=decoder_tokenizer,

ckpt_base=ckpt_base,

permute_outp=[0, 2, 1],

)

trainer.fit(seq2seq, datamodule=dataset)

# trainer.test(seq2seq, datamodule=dataset)

```

## Outputs of script demonstrating the issue

#### During training:

Output of encoder (to demonstrate that there is a difference per input):

```

tensor([[[-0.1545, 0.0785, 0.4573, ..., -0.3254, 0.5409, 0.4258],

[ 0.2935, -0.1310, 0.4843, ..., -0.4160, 0.8018, 0.2589],

[ 0.0649, -0.5836, 1.9177, ..., -0.3412, 0.2852, 0.8098],

...,

[ 0.1109, 0.1653, 0.5843, ..., -0.3402, 0.1081, 0.2566],

[ 0.3011, 0.0258, 0.4950, ..., -0.2070, 0.1684, -0.0199],

[-0.1004, -0.0299, 0.4860, ..., -0.2958, -0.1653, 0.0719]],

[[-0.3105, 0.0351, -0.5714, ..., -0.1062, 0.3461, 0.8927],

[ 0.0727, 0.2580, -0.6962, ..., 0.3195, 0.9559, 0.6534],

[-0.6213, 0.9008, 0.2194, ..., 0.1259, 0.1122, 0.7071],

...,

[ 0.2667, -0.1453, -0.2017, ..., 0.5667, -0.0772, -0.2298],

[ 0.4050, 0.0916, 0.2218, ..., 0.0295, -0.2065, 0.1230],

[-0.1895, 0.0259, -0.1619, ..., -0.1657, -0.0760, -0.6030]],

[[-0.1366, 0.2778, 0.1203, ..., -0.4764, 0.4009, 0.2918],

[ 0.2401, -0.2308, 1.1218, ..., -0.2140, 0.7054, 0.6656],

[-0.7005, -0.9183, 1.6280, ..., 0.2339, -0.1870, 0.0630],

...,

[-0.0212, -0.2678, 0.0711, ..., 0.2884, 0.3741, -0.2103],

[-0.0058, -0.2364, 0.2587, ..., 0.0689, 0.2010, -0.0315],

[ 0.1869, -0.0784, 0.2257, ..., -0.1498, 0.0935, -0.0234]],

[[ 0.1023, 0.0532, 0.2052, ..., -0.5335, 0.0676, 0.2436],

[-0.2254, 1.0484, -0.1338, ..., -0.9030, -0.1407, -0.2173],

[-0.8384, 0.3990, 0.6661, ..., -0.4869, 0.7780, -0.5461],

...,

[ 0.4410, 0.1868, 0.6844, ..., -0.2972, -0.1069, -0.1848],

[-0.0021, -0.0537, 0.2477, ..., 0.1877, -0.0479, -0.3762],

[ 0.1981, 0.0980, 0.3827, ..., 0.1449, 0.0403, -0.2863]]],

grad_fn=<NativeLayerNormBackward>)

```

Training reference labels:

```

[

'pau @ @ schal @ @ preis 80 € / person auf basis von 2 person @ @ nen.',

'ich finde es be @ @ denk @ @ lich, dass der bericht, den wir im ausschuss angenommen haben, so unterschiedlich ausgelegt wird.',

'die globalisierung hat eine betrachtliche veranderung der bedeutung ge @ @ ok @ @ ultur @ @ eller regionen in der welt mit sich gebracht.',

'falls sie eigentumer einer immobili @ @ e in andor @ @ ra sind, kontaktieren sie uns, um ihr apartment oder hotel hier auf @ @ zun @ @ ehem @ @ en.',

]

```

Training predictions after `.generate()` and `.batch_decode()` (garbage, but different per input):

```

[

'##exe int int int int fid fid fid fid fid fid fid fid fid fid fid fid lanz urn',

'##schleschleually vno stadien stadien stadienherzherzherzherzherzherzherzherzherzherzherzherz', '##betrtghattkerlabend verpackungahmahm te te teila einfl einfl einflierende add adduff',

'##reisreisviert fairrug ganze ganze ganze veh wz wz wz ihr x ihrverdverdverdverd',

]

```

#### During validation:

Input IDs to encoder:

```

tensor([[ 101, 1037, 3072, ..., 0, 0, 0],

[ 101, 3072, 1030, ..., 0, 0, 0],

[ 101, 2174, 1010, ..., 0, 0, 0],

[ 101, 5262, 1010, ..., 0, 0, 0]])

```

Output of encoder (to demonstrate that there is a difference per input):

```

tensor([[[-0.2494, -0.2050, -0.2032, ..., -1.0734, 0.1397, 0.4336],

[-0.2473, 0.0091, -0.2359, ..., -0.6884, 0.2158, -0.0761],

[-0.5098, -0.1364, 0.7411, ..., -1.0496, -0.0250, -0.2929],

...,

[-0.1039, -0.2547, 0.2264, ..., -0.2483, -0.2153, 0.0748],

[ 0.2561, -0.3465, 0.5167, ..., -0.2460, -0.1611, 0.0155],

[-0.0767, -0.3239, 0.4679, ..., -0.2552, -0.1551, -0.1501]],

[[-0.3001, 0.0428, -0.3463, ..., -0.6265, 0.3733, 0.3856],

[-0.1463, -0.0212, 0.1447, ..., -0.7843, -0.0542, 0.2394],

[ 0.7481, -0.3762, 0.6301, ..., 0.2269, 0.0267, -0.4466],

...,

[ 0.3723, -0.2708, 0.2251, ..., -0.0096, -0.0072, -0.2217],

[ 0.4360, -0.1101, 0.3447, ..., 0.0117, -0.0956, -0.1236],

[ 0.3221, -0.1846, 0.3263, ..., -0.0600, -0.0025, -0.1883]],

[[-0.1365, 0.1746, 0.1038, ..., -0.2151, 0.7875, 0.8574],

[ 0.1072, 0.2133, -0.8644, ..., 0.0739, 1.0464, 0.3385],

[ 0.7204, 0.2680, 0.0991, ..., -0.2964, -0.8238, -0.0604],

...,

[ 0.2686, -0.0701, 0.8973, ..., -0.0366, -0.2160, 0.0276],

[ 0.2265, -0.2171, 0.4239, ..., 0.0833, -0.0573, 0.0297],

[ 0.0690, -0.2430, 0.4186, ..., 0.0897, -0.0287, 0.0762]],

[[ 0.0408, 0.2332, -0.0992, ..., -0.2242, 0.6512, 0.4630],

[ 0.3257, 0.1358, -0.3344, ..., 0.0866, 1.0004, -0.0733],

[ 0.6827, 0.3013, 0.0672, ..., -0.2793, -0.8870, -0.0024],

...,

[ 0.4291, -0.5344, 0.0134, ..., 0.0439, 0.0617, -0.4433],

[ 0.4847, -0.2888, 0.2942, ..., 0.0153, 0.0121, -0.1231],

[ 0.4725, -0.3132, 0.3458, ..., -0.0207, 0.0517, -0.4281]]])

```

Validation reference labels:

```

[

'eine repub @ @ li @ @ kanische strategie, um der wieder @ @ wahl von obama entgegen @ @ zu @ @ treten',

'die fuhrungs @ @ krafte der republi @ @ kaner rechtfertigen ihre politik mit der notwendigkeit, den wahl @ @ betrug zu bekampfen.',

'allerdings halt das brenn @ @ an center letz @ @ teres fur einen my @ @ thos, indem es bekraftigt, dass der wahl @ @ betrug in den usa sel @ @ tener ist als die anzahl der vom bli @ @ tz @ @ schlag geto @ @ teten menschen.',

'die rechtsan @ @ walte der republi @ @ kaner haben in 10 jahren in den usa ubrigens nur 300 falle von wahl @ @ betrug ver @ @ zeichnet.',

]

```

Validation predictions after `.generate()` and `.batch_decode()` (garbage, but the same per input):

```

[

'##schleschleschleschleschleschleschleschleschleschleschleschleschleschleschleschleschleschleschle',

'##schleschleschleschleschleschleschleschleschleschleschleschleschleschleschleschleschleschleschle',

'##schleschleschleschleschleschleschleschleschleschleschleschleschleschleschleschleschleschleschle',

'##schleschleschleschleschleschleschleschleschleschleschleschleschleschleschleschleschleschleschle',

]

```

## Expected behavior

I would expect the model to generate a different output per input, as during training time.

## Thank you for your help!

Hopefully, it is something simple that I am missing. | 01-20-2021 00:10:09 | 01-20-2021 00:10:09 | Hi @anicolson ,

We would love to help, but sadly when you post such a long script it will be very hard and time-consuming for us to take a look at. We're happy to assist if you could provide a short, precise, and complete code snippet that is based on Transformers Seq2SeqTrainer only. Here's our guide on [how to request support](https://discuss.huggingface.co/t/how-to-request-support/3128).

Also from what I can see, seems like you are initializing bert encoder and bert decoder separately, you could directly instantiate it using the `EncoderDecoder` model class to get a seq2seq model. Here are two colab notebooks that show how to train `EncoderDecoder` models using `Seq2SeqTrainer`. The notebooks show how to fine-tune for summarization task, but could be easily adapted for translation as well.

[Leverage BERT for Encoder-Decoder Summarization on CNN/Dailymail](https://colab.research.google.com/github/patrickvonplaten/notebooks/blob/master/BERT2BERT_for_CNN_Dailymail.ipynb)

[Leverage RoBERTa for Encoder-Decoder Summarization on BBC XSum](https://colab.research.google.com/github/patrickvonplaten/notebooks/blob/master/RoBERTaShared_for_BBC_XSum.ipynb)<|||||>Thanks for your reply,

I am attempting to create a shorter version that is not so time-consuming.

Certainly, the `EncoderDecoder` is an attractive option if one is using natural language, but I would like to highlight that using `BertGenerateDecoder` allows the user to provide any sequence for cross-attention, even those derived from encoders that operate on modalities other than natural language, which I think is powerful.<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.<|||||>> Thanks for your reply,

>

> I am attempting to create a shorter version that is not so time-consuming.

>

> Certainly, the `EncoderDecoder` is an attractive option if one is using natural language, but I would like to highlight that using `BertGenerateDecoder` allows the user to provide any sequence for cross-attention, even those derived from encoders that operate on modalities other than natural language, which I think is powerful.

Hi, have you tackled the problem? I encounter the exactly same problem. Any cues? |

transformers | 9,685 | closed | Fix Trainer and Args to mention AdamW, not Adam. | This PR fixed the issue with Docs and labels in Trainer and TrainingArguments Class for AdamW, current version mentions adam in several places.

Fixes #9628

The Trainer class in `trainer.py` uses AdamW as the default optimizer. The TrainingArguments class mentions it as Adam in the documentation, which was confusing.

I have also changed variable names to `adamw_beta1`, `adamw_beta2`, `adamw_epsilon` in `trainer.py`.

- [x] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

@LysandreJik | 01-19-2021 23:13:38 | 01-19-2021 23:13:38 | Thanks for opening the PR. As it stands this would break every existing script leveraging the parameters defined, so renaming the parameters is probably not the way to go.

@sgugger, your insight on this would be very welcome.

<|||||>There is no reason to change all the names of the parameters indeed, and it would be a too-heavy breaking change. `AdamW` is not a different optimizer from `Adam`, it's just `Adam` with a different way (some might say the right way) of doing weight decay. I don't think we need to do more than a mention at the beginning of the docstring saying that all mentions of `Adam` are actually about `AdamW`, with a link to the paper.<|||||>Hi @LysandreJik @sgugger. Thanks for your comments, I'll be changing the variables back.

I apologize if this is too silly a question, but how can I run and see how the docs look on a browser after the changes?<|||||>You should check [this page](https://github.com/huggingface/transformers/tree/master/docs#generating-the-documentation) for all the information on generating/writing the documentation :-)<|||||>I have updated it and also added that by default, the weight decay is applied to all layers except bias and LayerNorm weights while training.<|||||>@sgugger My code passed only 3 out of 12 checks, I was unable to run CirlceCI properly. Can you point out the reasons why this happened?<|||||>We are trying to get support from them to understand why, but the checks on your PR were all cancelled. I couldn't retrigger them from our interface either.<|||||>Hi @sgugger

I believe a possible reason could be that I followed `transformers` on CircleCI. Maybe it performs checks on my fork of transformers and expects to find some "resources" which aren't there.

I'm not sure how CircleCI works, so this is just a wild guess. |

transformers | 9,684 | closed | Fix model templates and use less than 119 chars | # What does this PR do?

This PR fixes the model templates that were broken by #9596 (copies not inline with the original anymore). In passing since I'm a dictator, I've rewritten the warning to take less than 119 chars.

Will merge as soon as CI is green. | 01-19-2021 21:35:04 | 01-19-2021 21:35:04 | |

transformers | 9,683 | closed | Fix Funnel Transformer conversion script | # What does this PR do?

The conversion script was using the wrong kind of model, so wasn't working. I've also added the option to convert the base models.

Fixes #9644

| 01-19-2021 21:02:34 | 01-19-2021 21:02:34 | Do not miss ```transformers/cammand/convert.py``` for ```transformer-cli``` user.

Need ```base_model``` arg for ```convert_tf_checkpoint_to_pytorch(self._tf_checkpoint, self._config, self._pytorch_dump_output)```. |

transformers | 9,682 | closed | Add a community page to the docs | # What does this PR do?

This PR adds a new "community" page in the documentation that aims to gather information about all resources developed by the community. I copied all the community notebooks there, and we have an open PR that will also populate it. | 01-19-2021 20:39:33 | 01-19-2021 20:39:33 | |

transformers | 9,681 | closed | Restrain tokenizer.model_max_length default | # What does this PR do?

Apply the same fix to `run_mlm` (when line_by_line is not selected) as we did previously in `run_clm`. Since the tokenizer model_max_length can be excessively large, we should restrain it when no `max_seq_length` is passed.

Fixes #9665 | 01-19-2021 20:20:35 | 01-19-2021 20:20:35 | |

transformers | 9,680 | closed | Generating sentence embeddings from pretrained transformers model | Hi, I have a pretrained BERT based model hosted on huggingface.

https://huggingface.co/microsoft/SportsBERT