repo | number | state | title | body | created_at | closed_at | comments |

|---|---|---|---|---|---|---|---|

transformers | 9,110 | closed | Not able to train RoBERTa language model from scratch | I tried training RoBERTa language model from scratch using

<code> !python ./run_mlm.py

--model_name_or_path roberta-base

--train_file './data_lm.txt'

--do_train

--line_by_line

--num_train_epochs 3

--output_dir ./roberta

</code>

Due to the limits on the colab storage, I deleted all the checkpoints generated during the training process. So finally my model directory has the following files -

1. config.json

2. merges.txt

3. pytorch_model.bin

4. special_tokens_map.json

5. tokenizer.config

6. vocab.json

But after loading my trained model using RobertaTokenizer and RobertaForSequenceClassification and fine-tuning it for my classification task, I get almost the same accuracy as when loading and fine-tuning the readily available 'roberta-base'.

Also, when I try loading it as

`model = RobertaModel.from_pretrained('./roberta')`

I get the warning-

<code>

Some weights of RobertaModel were not initialized from the model checkpoint at ./roberta/ and are newly initialized: ['roberta.pooler.dense.weight', 'roberta.pooler.dense.bias']

You should probably TRAIN this model on a down-stream task to be able to use it for predictions and inference.

</code>

So, my question is: is there something wrong with how I trained the language model on my dataset, or with how I load it? Or were the checkpoints that I deleted important?

| 12-14-2020 21:04:15 | 12-14-2020 21:04:15 | The `run_mlm` script trains a `RobertaForMaskedLM` model, which does not have a pooler layer. That's why you get this warning when using this pretrained model to initialize a `RobertaModel`.<|||||>Thanks @sgugger! Now I get where the problem was.

Moreover, I found some good tutorials on it. Here are the links for those who need it.

https://zablo.net/blog/post/training-roberta-from-scratch-the-missing-guide-polish-language-model/

https://colab.research.google.com/github/huggingface/blog/blob/master/notebooks/01_how_to_train.ipynb |
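The warning quoted in this thread can be read as a key-set difference between what the checkpoint stores and what the target class expects. The following is a minimal sketch of that idea in plain Python. The parameter names are abbreviated stand-ins chosen for illustration, not the full RoBERTa state dict, and `diff_keys` is a hypothetical helper, not a `transformers` function.

```python
def diff_keys(checkpoint_keys, model_keys):
    """Return (missing, unexpected) parameter names, each sorted.

    missing:    expected by the model but absent from the checkpoint
                (these would be newly initialized).
    unexpected: present in the checkpoint but unused by the model.
    """
    checkpoint_keys, model_keys = set(checkpoint_keys), set(model_keys)
    missing = sorted(model_keys - checkpoint_keys)
    unexpected = sorted(checkpoint_keys - model_keys)
    return missing, unexpected


# Illustrative, abbreviated key lists (assumptions, not the real state dict).
mlm_checkpoint = [
    "roberta.embeddings.word_embeddings.weight",
    "roberta.encoder.layer.0.attention.self.query.weight",
    "lm_head.dense.weight",  # masked-LM head saved by MLM training
]
base_model = [
    "roberta.embeddings.word_embeddings.weight",
    "roberta.encoder.layer.0.attention.self.query.weight",
    "roberta.pooler.dense.weight",  # pooler is expected only by the base model
    "roberta.pooler.dense.bias",
]

missing, unexpected = diff_keys(mlm_checkpoint, base_model)
print("newly initialized:", missing)
print("ignored from checkpoint:", unexpected)
```

Under this reading, the encoder weights are loaded as trained and only the pooler starts from random values, which matches the explanation given in the reply.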

transformers | 9,109 | closed | Cannot disable logging from trainer module | @sgugger @stas00

- `transformers` version: 3.2.0

- Platform:

- Python version: 3.7.6

- PyTorch version (GPU?): 1.6.0, Tesla V100

- Tensorflow version (GPU?):

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: Parallel

I am using the Hugging Face Trainer class for NER fine-tuning. Whenever I turn evaluation on with the `--do_eval` argument, my console gets overwhelmed with a printed dictionary that appears to be coming from evaluation that is happening inside of the Trainer.

The dictionary has the keys:

` 'eval_loss', 'eval_accuracy_score', 'eval_precision', 'eval_recall', 'eval_f1', 'eval_class_report', 'eval_predictions' `

It's especially hard to read the console with NER predictions, because `eval_predictions` is a list with each token receiving an IOB tag.

I tried suppressing logging from transformers with this solution https://github.com/huggingface/transformers/issues/3050. I also tried disabling all logging below CRITICAL level. The problem persisted, and I noticed that the console output of the evaluation dictionary appeared to be coming from a print statement.

I tried suppressing all print statements from the Trainer's `train(...)` method, using this solution https://stackoverflow.com/questions/977840/redirecting-fortran-called-via-f2py-output-in-python/978264#978264. That worked, but now I have no logging of training at all :(.

| 12-14-2020 19:55:26 | 12-14-2020 19:55:26 | Could you elaborate on the script you're using? `run_ner.py` does not report `eval_class_report` or `eval_predictions`, and there are no print statements in it, nor in the `Trainer.train` method.<|||||>Yes, it's a custom script. The script creates an `AutoModelForTokenClassification`, passes it to the `Trainer` and calls the `Trainer.train` method.

We are using WandB to plot a confusion matrix, so we define our own `compute_metrics` function which we also pass to the `Trainer` (sorry, I should have stated this earlier). `compute_metrics` does return a dictionary with the keys `'predictions', 'class_report' and 'target'`. It looks like the one that gets output from `Trainer` has the prefix `'eval_'` in front of each key produced by `compute_metrics`.

I can't find anywhere in our script or our custom dependencies where we print or log this dictionary, and when I suppress console output from `Trainer` then the problem stops.

<|||||>Is it just a matter of changing the log level? `run_ner.py` sets it to `INFO` for the main process (it does it twice: once for the root logger and once for the transformers logger):

https://github.com/huggingface/transformers/blob/251eb70c979d74d3823e999236ff3621b07510a1/examples/token-classification/run_ner.py#L158-L168

<|||||>Maybe your `Trainer` ends up with a `PrinterCallback` that prints all the logs. You can remove this with

```

from transformers.trainer_callback import PrinterCallback

trainer.remove_callback(PrinterCallback)

```<|||||>Fixed! I had to upgrade to 4.0. I ended up having to upgrade in order to import the `PrinterCallback`, but I believe the upgrade itself fixed the problem.<|||||>Even better if the upgrade fixes the problem! There were indeed print statements in older versions.<|||||>Same issue here. How do I stop all prints coming from `trainer.predict()`? |
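For custom `compute_metrics` functions that return large objects, one option is to keep bulky entries out of whatever ends up on the console. Below is a minimal sketch in plain Python, assuming the metrics arrive as a dict shaped like the one described in this thread. `split_metrics` and the `BULKY_KEYS` set are hypothetical and not part of `transformers`.

```python
# Key names taken from the report in this thread; treat them as assumptions.
BULKY_KEYS = {"eval_predictions", "eval_class_report"}


def split_metrics(metrics, bulky_keys=BULKY_KEYS):
    """Split a metrics dict into (printable entries, bulky entries)."""
    printable = {k: v for k, v in metrics.items() if k not in bulky_keys}
    bulky = {k: v for k, v in metrics.items() if k in bulky_keys}
    return printable, bulky


# Made-up example values for illustration only.
metrics = {
    "eval_loss": 0.12,
    "eval_f1": 0.91,
    "eval_predictions": ["B-PER", "I-PER", "O"] * 1000,  # one IOB tag per token
}
printable, bulky = split_metrics(metrics)
print(printable)  # short enough to read on the console
```

The bulky part can then be sent to a plotting or experiment-tracking tool separately instead of being printed.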

transformers | 9,108 | closed | Time for second encoding is much higher than first time | Hi,

using a BERT model on a single GPU to encode several times in a row, like

```

bert_model = TFBertModel.from_pretrained('bert-base-cased',output_hidden_states=True)

input = tokenizer(data , max_length=MAX_SEQ_lEN,padding="max_length",truncation=True, return_tensors="tf")

outputs1 = bert_model(input)

###time1 : 0.1 seconds

outputs2 = bert_model(input)

### time2: 1.7 seconds

```

gives a disproportionately high time for the second encoding. If the first encoding takes just 0.1 seconds, I would expect every subsequent encoding to also take about 0.1 seconds. I ran this multiple times and the pattern is consistent: the encodings after the first one take significantly longer.

Can someone explain this behaviour? I assume it is due to the GPU.

```

Env: win 10, python: 3.6, tensorflow 2.3, transformers 3.3.1

GPU: Nvidia mx 150

```

| 12-14-2020 18:36:55 | 12-14-2020 18:36:55 | Could you show the entire code, including how you instantiate the model, as well as your environment information, as mentioned in the template? Thank you.<|||||>that's ok?<|||||>With the following code:

```py

from transformers import TFBertModel, BertTokenizer

from time import time

import tensorflow as tf

print("GPU Available:", tf.test.is_gpu_available())

tokenizer = BertTokenizer.from_pretrained("bert-base-cased")

bert_model = TFBertModel.from_pretrained('bert-base-cased',output_hidden_states=True)

input = tokenizer("Hey is this slow?" * 100 , max_length=512,padding="max_length",truncation=True, return_tensors="tf")

for i in range(100):

    start = time()

    outputs = bert_model(input)

    print(time() - start)

```

Running on CPU doesn't increase the time for me:

```

GPU Available: False

0.5066382884979248

0.5038580894470215

0.5125613212585449

0.5018391609191895

0.4927494525909424

0.5066125392913818

0.49803781509399414

0.5140326023101807

0.501518726348877

0.49771928787231445

0.5038976669311523

```

[Running on GPU, no problem either:](https://colab.research.google.com/drive/1tfynzpOiQJKkEi0vkpKaDXTwhB5-k6Td?usp=sharing)

```

GPU Available: True

0.09349918365478516

0.09653115272521973

0.09893131256103516

0.10591268539428711

0.09297466278076172

0.09105610847473145

0.10088920593261719

0.0935661792755127

0.09639692306518555

0.10130929946899414

0.0947415828704834

0.09380221366882324

```<|||||>Thanks. If I run exactly your code, I observe an increasing time! Very strange, but I assume this has something to do with the GPU memory not being released?

```

0.07779383659362793

0.20029330253601074

0.2085282802581787

0.22140789031982422

0.23041844367980957

0.22839117050170898

0.23337340354919434

0.22336935997009277

0.22971582412719727

0.22768259048461914

0.22839140892028809

0.22934865951538086

0.23038363456726074

0.22646212577819824

0.23062443733215332

0.22713351249694824

0.24032235145568848

0.24936795234680176

0.24984216690063477

0.2523007392883301

0.2481672763824463

0.2532966136932373

0.24833273887634277

0.2513241767883301

0.2522923946380615

0.2536492347717285

0.25013017654418945

0.25212621688842773

0.24585843086242676

0.25535058975219727

0.2563152313232422

0.2423419952392578

0.6144394874572754

0.647824764251709

0.6494302749633789

0.6406776905059814

0.6507377624511719

0.6411724090576172

0.6513652801513672

0.6484384536743164

0.6489207744598389

0.6405856609344482

0.6493120193481445

0.6484384536743164

0.6372919082641602

0.6494011878967285

0.6433298587799072

0.65077805519104

0.6475985050201416

0.6383304595947266

0.6525297164916992

0.6413178443908691

0.6475212574005127

0.6485188007354736

0.64430832862854

0.6478779315948486

0.6457436084747314

0.7288320064544678

0.6573460102081299

0.6572368144989014

0.5861053466796875

0.6324939727783203

0.722456693649292

0.6353938579559326

0.6324222087860107

0.6373186111450195

0.6216456890106201

0.6627655029296875

0.7275354862213135

0.6035926342010498

0.6590445041656494

0.5936176776885986

0.6416335105895996

0.6400752067565918

1.1317992210388184

1.2438006401062012

1.2430295944213867

1.2435650825500488

1.2585129737854004

1.2704930305480957

1.2204067707061768

1.2424969673156738

1.2366819381713867

1.2533769607543945

1.2510595321655273

1.2426464557647705

1.2566087245941162

1.2392685413360596

```

If you don't mind, my issue regarding the usage for the input of the tokenizer is still open. :)

https://github.com/huggingface/transformers/issues/7674<|||||>Indeed, I'll try and check the issue ASAP. Thanks for the reminder!<|||||>@LysandreJik . Thank you! But, do you have any idea for my issue? It seems it is a gpu issue?<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |
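When comparing timings like those in this thread, it can help to separate warm-up calls from measured calls and to summarize with a median rather than reading single samples. The following is a minimal sketch using only the standard library. `benchmark` is a hypothetical helper, and the lambda is a stand-in for a call such as `bert_model(input)`. On a GPU, a framework may return before the device has finished its work, so per-call wall-clock numbers should be treated as approximate.

```python
import statistics
import time


def benchmark(fn, warmup=3, repeats=10):
    """Call fn() `warmup` times untimed, then time `repeats` calls.

    Returns the list of per-call durations in seconds.
    """
    for _ in range(warmup):
        fn()
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        durations.append(time.perf_counter() - start)
    return durations


# Stand-in workload; replace with e.g. `lambda: bert_model(input)`.
durations = benchmark(lambda: sum(range(10_000)))
print(f"median {statistics.median(durations):.6f}s, max {max(durations):.6f}s")
```

If the median keeps growing across repeated runs, as in the log posted above, that would suggest something other than a one-off initialization cost.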

transformers | 9,107 | closed | Fix T5 model parallel test | The model was defined in the wrong model tester. | 12-14-2020 18:32:01 | 12-14-2020 18:32:01 | |

transformers | 9,106 | closed | Cannot load community model on local machine | ## Environment info


- `transformers` version: 3.4.0

- Platform: Darwin-19.6.0-x86_64-i386-64bit

- Python version: 3.7.6

- PyTorch version (GPU?): 1.7.0 (False)

- Tensorflow version (GPU?): not installed (NA)

- Using GPU in script?: No

- Using distributed or parallel set-up in script?: No

### Who can help

Probably @LysandreJik

## Information

Model I am using: https://huggingface.co/huggingtweets/xinqisu

The problem arises when using:

* [x] the official example scripts: (give details below)

* [ ] my own modified scripts: (give details below)

## To reproduce

Steps to reproduce the behavior: (this is the instruction on the model page)

```

from transformers import pipeline

generator = pipeline('text-generation', model='huggingtweets/xinqisu')

generator("My dream is", num_return_sequences=5)

```

It gives me

```

OSError: Can't load config for 'huggingtweets/xinqisu'. Make sure that:

- 'huggingtweets/xinqisu' is a correct model identifier listed on 'https://huggingface.co/models'

- or 'huggingtweets/xinqisu' is the correct path to a directory containing a config.json file

```

## Expected behavior

The generator should work with the snippet above. I have trained other `huggingtweets` models and they still work with the same code, for example, the following still works, it downloaded the model successfully.

```

from transformers import pipeline

generator = pipeline('text-generation', model='huggingtweets/billgates')

generator("My dream is", num_return_sequences=5)

```

| 12-14-2020 15:37:08 | 12-14-2020 15:37:08 | Hello! I believe this is because this model uses the new weights system, which was introduced in v3.5.1. Please upgrade your transformers version to at least v3.5.1; we recommend the latest (v4.0.1):

```

pip install -U transformers==4.0.1

```<|||||>@LysandreJik Thanks for the quick reply! It works now 👍 |
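A quick guard against this kind of mismatch is to compare the installed version with the minimum mentioned in the reply before loading a model. Below is a minimal sketch in plain Python. `parse_version` and `meets_minimum` are hypothetical helpers written for illustration, and the default minimum of `3.5.1` is simply the version quoted in this thread.

```python
def parse_version(text):
    """Turn '3.5.1' into (3, 5, 1); stops at the first non-numeric piece."""
    parts = []
    for piece in text.split("."):
        if not piece.isdigit():
            break
        parts.append(int(piece))
    return tuple(parts)


def meets_minimum(installed, minimum="3.5.1"):
    """True if the installed version is at least the minimum."""
    return parse_version(installed) >= parse_version(minimum)


print(meets_minimum("3.4.0"))  # version from the report above
print(meets_minimum("4.0.1"))  # version suggested in the reply
```

In practice the installed version string can be read from `transformers.__version__`. This sketch ignores pre-release suffixes, so a dedicated version-parsing library is a better fit for anything beyond a quick check.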

transformers | 9,105 | closed | Added TF OpenAi GPT1 Sequence Classification | This PR implements Sequence classification for TF OpenAi GPT1 model.

TFOpenAIGPTForSequenceClassification uses the last token in order to do the classification, as other causal models (e.g. Transformer XL, GPT-2) do.

Fixes #7623

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [x] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [x] Did you write any new necessary tests?

## Who can review?

@LysandreJik @jplu

| 12-14-2020 14:47:08 | 12-14-2020 14:47:08 | Not sure why the test cases are failing for 'run_tests_tf', @LysandreJik.

Let me know if any action is required from my side.<|||||>Can you rebase on master? A fix has been recently merged.<|||||>The tests have already been fixed on `master`, merging! Thanks a lot @spatil6 |

transformers | 9,104 | closed | Cannot load custom tokenizer for Trainer | ## Environment info

- `transformers` version: 4.0.0

- Platform: Linux-5.9.13-zen1-1-zen-x86_64-with-glibc2.2.5

- Python version: 3.8.6

- PyTorch version (GPU?): 1.7.0 (True)

- Tensorflow version (GPU?): not installed (NA)

- Using GPU in script?: No

- Using distributed or parallel set-up in script?: No

### Who can help

@mfuntowicz

## Information

Model I am using: My own

The problem arises when using:

* [X] the official example scripts: (give details below)

The tasks I am working on is:

* [X] my own task or dataset: (give details below)

I want to fine-tune a model on my own dataset. For now it doesn't matter whether I fine-tune BERT, DistilBERT or another model; I just want good embeddings for text similarity (cosine distance).

## To reproduce

Steps to reproduce the behavior:

1. Read the [How to train tutorial](https://colab.research.google.com/github/huggingface/blog/blob/master/notebooks/01_how_to_train.ipynb)

2. Train your own tokenizer (working perfectly) and got `model-vocab.json` and `model-merges.txt`

3. Load and encode with :

```

tokenizer = ByteLevelBPETokenizer("./models/custom/my_model-vocab.json", "./models/custom/my_model-merges.txt")

```

This works nicely!

4. Try to do the same with a DistilBertTokenizerFast to use with the `Trainer` class

```

tokenizer = DistilBertTokenizerFast.from_pretrained('./models/custom', max_len=512)

```

5. Get the error `check that './models/custom' is the correct path to a directory containing relevant tokenizer files`

Note : I also tried to add a `config.json` file next to the merge and vocab which seemed missing but it doesn't change anything.

I also tried a RobertaTokenizerFast (and the 'not fast' version) but same problem

## Expected behavior

Train a custom tokenizer and be able to load it with a ModelTokenizer for the Trainer.

(The BPE tokenizer, which works, does not have the `mask_token` attribute needed to work with the dataset loader.)

| 12-14-2020 14:20:31 | 12-14-2020 14:20:31 | After playing with it more and changing files, names, etc., I managed to make it work with RoBERTa. I guess it was a stupid naming error. Sorry for taking 5 minutes of your time reading this.

I realize it couldn't work with DistilBERT (BERT) as the tokenizers are different.

In the end, the model is training.

Maybe it will help someone else one day.

Have a good day. <|||||>Glad you could resolve your issue! |

transformers | 9,103 | closed | Seq2Seq training calculate_rouge with precision and recall | ## Environment info


- `transformers` version: master

- Platform: Google Colab

- Python version: 3.6.9

- PyTorch version (GPU?): pytorch-lightning==1.0.4

- Tensorflow version (GPU?):

- Using GPU in script?:

- Using distributed or parallel set-up in script?:

### Who can help

Trainer: @sgugger

examples/seq2seq: @patil-suraj

## Information

Model I am using (Bert, XLNet ...): bart-base

The tasks I am working on is:

summarization on XSUM

## To reproduce

Change `calculate_rouge` function in `utils.py` with `return_precision_and_recall=True`.

Fine-tune any seq2seq model with the official script `finetune.py`:

```

!python3 $finetune_script \

--model_name_or_path facebook/bart-base \

--tokenizer_name facebook/bart-base \

--data_dir $data_dir \

--learning_rate 3e-5 --label_smoothing 0.1 --num_train_epochs 2 \

--sortish_sampler --freeze_embeds --adafactor \

--task summarization \

--do_train \

--max_source_length 1024 \

--max_target_length 60 \

--val_max_target_length 60 \

--test_max_target_length 100 \

--n_train 8 --n_val 2 \

--train_batch_size 2 --eval_batch_size 2 \

--eval_beams 2 \

--val_check_interval 0.5 \

--log_every_n_steps 1 \

--logger_name wandb \

--output_dir $output_dir \

--overwrite_output_dir \

--gpus 1

```

Throws the error

```

Validation sanity check: 100%|██████████| 1/1 [00:01<00:00, 1.67s/it]Traceback (most recent call last):

File "/content/drive/My Drive/MAGMA: Summarization/seq2seq/finetune.py", line 443, in <module>

main(args)

File "/content/drive/My Drive/MAGMA: Summarization/seq2seq/finetune.py", line 418, in main

logger=logger,

File "/content/drive/My Drive/MAGMA: Summarization/seq2seq/lightning_base.py", line 389, in generic_train

trainer.fit(model)

File "/usr/local/lib/python3.6/dist-packages/pytorch_lightning/trainer/trainer.py", line 440, in fit

results = self.accelerator_backend.train()

File "/usr/local/lib/python3.6/dist-packages/pytorch_lightning/accelerators/gpu_accelerator.py", line 54, in train

results = self.train_or_test()

File "/usr/local/lib/python3.6/dist-packages/pytorch_lightning/accelerators/accelerator.py", line 68, in train_or_test

results = self.trainer.train()

File "/usr/local/lib/python3.6/dist-packages/pytorch_lightning/trainer/trainer.py", line 462, in train

self.run_sanity_check(self.get_model())

File "/usr/local/lib/python3.6/dist-packages/pytorch_lightning/trainer/trainer.py", line 650, in run_sanity_check

_, eval_results = self.run_evaluation(test_mode=False, max_batches=self.num_sanity_val_batches)

File "/usr/local/lib/python3.6/dist-packages/pytorch_lightning/trainer/trainer.py", line 597, in run_evaluation

num_dataloaders=len(dataloaders)

File "/usr/local/lib/python3.6/dist-packages/pytorch_lightning/trainer/evaluation_loop.py", line 196, in evaluation_epoch_end

deprecated_results = self.__run_eval_epoch_end(num_dataloaders, using_eval_result)

File "/usr/local/lib/python3.6/dist-packages/pytorch_lightning/trainer/evaluation_loop.py", line 247, in __run_eval_epoch_end

eval_results = model.validation_epoch_end(eval_results)

File "/content/drive/My Drive/MAGMA: Summarization/seq2seq/finetune.py", line 190, in validation_epoch_end

k: np.array([x[k] for x in outputs]).mean() for k in self.metric_names + ["gen_time", "gen_len"]

File "/content/drive/My Drive/MAGMA: Summarization/seq2seq/finetune.py", line 190, in <dictcomp>

k: np.array([x[k] for x in outputs]).mean() for k in self.metric_names + ["gen_time", "gen_len"]

File "/usr/local/lib/python3.6/dist-packages/numpy/core/_methods.py", line 163, in _mean

ret = ret / rcount

TypeError: unsupported operand type(s) for /: 'dict' and 'int'

```

From my understanding self.metric_names should be a list. | 12-14-2020 13:57:17 | 12-14-2020 13:57:17 | Hi there. Please note that this script is not maintained anymore and is provided as is. We only maintain the `finetune_trainer.py` script now.<|||||>Ok, I will switch to that one. Thank you |
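The traceback is consistent with each metric value becoming a nested dict once precision and recall are requested, which a plain `.mean()` over the collected values cannot average. One way around that is to flatten the nested metrics into scalar entries before averaging. Below is a minimal sketch in plain Python. `flatten_metrics` and `average_outputs` are hypothetical helpers, and the metric names and numbers are made up for illustration.

```python
from statistics import mean


def flatten_metrics(metrics, sep="_"):
    """Flatten {'rouge1': {'precision': p}} into {'rouge1_precision': p}.

    Scalar values are passed through unchanged.
    """
    flat = {}
    for name, value in metrics.items():
        if isinstance(value, dict):
            for sub_name, sub_value in value.items():
                flat[f"{name}{sep}{sub_name}"] = sub_value
        else:
            flat[name] = value
    return flat


def average_outputs(outputs):
    """Average each flattened metric across a list of per-batch dicts."""
    flat_outputs = [flatten_metrics(o) for o in outputs]
    return {k: mean(o[k] for o in flat_outputs) for k in flat_outputs[0]}


# Hypothetical per-batch outputs with made-up numbers.
outputs = [
    {"rouge1": {"precision": 0.5, "recall": 0.25}, "gen_len": 20},
    {"rouge1": {"precision": 0.75, "recall": 0.25}, "gen_len": 30},
]
averaged = average_outputs(outputs)
print(averaged)
```

With this shape, the list of metric names to average would contain the flattened keys rather than the top-level ROUGE names.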

transformers | 9,102 | closed | Unexpected logits shape on prediction with TFRobertaForSequenceClassification | ## Environment info


- `transformers` version: 4.0.0

- Platform: Linux-4.9.0-11-amd64-x86_64-with-debian-9.11

- Python version: 3.7.9

- PyTorch version (GPU?): 1.6.0a0+bf2bbd9 (False)

- Tensorflow version (GPU?): 2.3.1 (False)

- Using GPU in script?: No

- Using distributed or parallel set-up in script?: Distributed

I am using `TFRobertaForSequenceClassification` to create a classifier. According to the documentation, the logits output should have a shape of (batch_size, num_labels), which makes sense. However, I get (batch_size, seq_length, num_labels).

Code to reproduce:

```

from transformers import TFRobertaForSequenceClassification, RobertaConfig

import numpy as np

seq_len = 512

classifier = TFRobertaForSequenceClassification(RobertaConfig())

#create random inputs for demo

input_ids = np.random.randint(0,10000, size=(seq_len,))

attention_mask = np.random.randint(0,2, size=(seq_len,))

token_type_ids = np.random.randint(0,2, size=(seq_len,))

#make a prediction with batch_size of 1

output = classifier.predict([input_ids, attention_mask, token_type_ids])

print(output.logits.shape)  # -> prints out (512, 2)

```

## Expected behavior

Logits in the shape of (batch_size,num_labels) or (1,2)

| 12-14-2020 13:39:42 | 12-14-2020 13:39:42 | Hello! The main issue here is that your arrays are of shape `(seq_length)`, whereas they should be of shape `(batch_size, seq_length)`, even if the batch size is 1.

Updating your code to reflect that:

```py

from transformers import TFRobertaForSequenceClassification, RobertaConfig

import numpy as np

bs = 1

seq_len = 510

classifier = TFRobertaForSequenceClassification(RobertaConfig())

#create random inputs for demo

input_ids = np.random.randint(0,10000, size=(bs, seq_len,))

attention_mask = np.random.randint(0,2, size=(bs, seq_len,))

token_type_ids = np.random.randint(0,2, size=(bs, seq_len,))

#make a prediction with batch_size of 1

output = classifier.predict([input_ids, attention_mask, token_type_ids])

print(output.logits.shape) # -> outputs (1, 2)

```

However, there seems to be an error as the model cannot handle a sequence length of 512 when used this way. @jplu running the above code with a sequence length of 512 results in the following error:

```

tensorflow.python.framework.errors_impl.InvalidArgumentError: indices[0,510] = 512 is not in [0, 512)

[[node tf_roberta_for_sequence_classification/roberta/embeddings/position_embeddings/embedding_lookup (defined at /home/lysandre/Workspaces/Python/transformers/src/transformers/models/roberta/modeling_tf_roberta.py:199) ]] [Op:__inference_predict_function_8030]

Errors may have originated from an input operation.

Input Source operations connected to node tf_roberta_for_sequence_classification/roberta/embeddings/position_embeddings/embedding_lookup:

tf_roberta_for_sequence_classification/roberta/embeddings/add (defined at /home/lysandre/Workspaces/Python/transformers/src/transformers/models/roberta/modeling_tf_roberta.py:122)

Function call stack:

predict_function

```

Using a smaller sequence length doesn't raise the error. Do you mind weighing in on the issue?<|||||>Yep, you are limited to 510 tokens + 2 extra tokens (beginning + end)<|||||>After talking about it a bit offline with @jplu we realize there might be an issue with the `predict` method when passing in the values as a list. Could you try passing them as a dictionary instead?

Doing this instead:

```py

output = classifier.predict({"input_ids": input_ids, "attention_mask": attention_mask, "token_type_ids": token_type_ids})

```<|||||>Hei! Thank you for the feedback. I passed the parameters as a dict with everything else unchanged but still get the output as (seq_len, num_labels) unfortunately. <|||||>Can you try this:

```

from transformers import TFRobertaForSequenceClassification, RobertaConfig

import numpy as np

bs = 1

seq_len = 510

classifier = TFRobertaForSequenceClassification(RobertaConfig())

input_ids = np.random.randint(0,10000, size=(bs, seq_len,))

attention_mask = np.random.randint(0,2, size=(bs, seq_len,))

token_type_ids = np.zeros(shape=(bs, seq_len,))

classifier.predict({"input_ids": input_ids, "attention_mask": attention_mask, "token_type_ids": token_type_ids})

```<|||||>Yes, this works with seq_len = 510. It might help stating this behaviour in the docs or perhaps raise an error or show a warning when one tries to input an unbatched sample. Also a bit confusing that seq_len needs to be 510 and not 512 to account for the extra tokens (and the error received when one tries with 512 is a bit murky). Anyway, thanks for the help. I'll go ahead and close this. |
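The limit of 510 and the message `indices[0,510] = 512 is not in [0, 512)` are consistent with RoBERTa-style position ids that start counting after the padding index. The sketch below reproduces that arithmetic in plain Python. It assumes a padding index of 1 and 512 position embeddings, which is what a default `RobertaConfig()` is taken to use here. Both values, and the helper names, are assumptions for illustration rather than a copy of the library code.

```python
def roberta_position_ids(input_ids, padding_idx=1):
    """Position ids as assumed here: padding keeps padding_idx,
    other tokens count up from padding_idx + 1."""
    position_ids = []
    running = 0
    for token_id in input_ids:
        if token_id == padding_idx:
            position_ids.append(padding_idx)
        else:
            running += 1
            position_ids.append(running + padding_idx)
    return position_ids


def max_sequence_length(max_position_embeddings=512, padding_idx=1):
    """Longest all-non-padding sequence whose position ids stay in range."""
    return max_position_embeddings - padding_idx - 1


ids_512 = roberta_position_ids([5] * 512)
print(ids_512[510])           # 512, the out-of-range index from the error above
print(max_sequence_length())  # 510 under the assumed defaults
```

Pretrained RoBERTa checkpoints are usually configured with more position embeddings than this default, so the practical limit depends on the config that is actually loaded.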

transformers | 9,101 | closed | Fix a broken link in documentation | # What does this PR do?

Fixes a broken link to the BERTology example in documentation

Fixes #9100

## Before submitting

- [x] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

## Who can review?

documentation: @sgugger

| 12-14-2020 13:00:25 | 12-14-2020 13:00:25 | |

transformers | 9,100 | closed | Link to BERTology example is broken | Link to BERTology example is broken in Documentation (https://huggingface.co/transformers/bertology.html)

| 12-14-2020 12:57:36 | 12-14-2020 12:57:36 | |

transformers | 9,099 | closed | bug with _load_optimizer_and_scheduler in trainer.py | ## Environment info

- `transformers` version: 3.5.1

- Platform: GPU

- Python version: 3.7

- PyTorch version (GPU?): 1.4.0

- Using GPU in script?: No

- Using distributed or parallel set-up in script?: No

### Who can help

Trainer: @sgugger

Text Generation: @patrickvonplaten

## Information

I am using `finetune_trainer.py`. What I observe is that in `trainer.py`, when `_load_optimizer_and_scheduler` is called and the `model_path` folder exists, the learning rate set by the user is ignored: training continues with a saved learning rate instead of the one the user set. Could you have a look, please? Thanks.

| 12-14-2020 11:00:20 | 12-14-2020 11:00:20 | Hi @rabeehk ,

In `_load_optimizer_and_scheduler` if `model_path` exists and `optimizer` and `scheduler` `state_dict` is found then that means you are loading from a saved checkpoint and continue training from there, so the `lr` is read from the `scheduler` and used instead of the set LR. This is expected behaviour.<|||||>Hi Suraj,

thanks for the reply. I have a couple of questions on this. 1) I see this is ignoring the training epochs when loading from the saved checkpoints, so it does not train for the epochs set. How could I resolve it? Also, if I want to change the lr, could I load from the checkpoint but change the lr? Could you give me some information on how loading from a trained optimizer could help?

To explain better: I train a model for X epochs, then I want to fine-tune it on other datasets for an extra Y epochs with a different learning rate. For this I pass the updated model to the trainer, but then should I pass the model_path so it loads from the saved checkpoint of the optimizer? And why is this ignoring the set number of epochs?

thanks


<|||||>If you want to fine-tune the saved checkpoint on another dataset then you could save it in a different path or remove the saved `optimizer` and `scheduler` files.

Also @sgugger might have a better answer here.<|||||>Hi Suraj,

I am having a similar problem here.

When the trainer continues from a checkpoint, i.e. `trainer.train(check_point_path)`, I notice a peak in the learning curve. I suspect that is related to what Rabeeh has mentioned.

Please have a look at the learning curve I got after I had to resume the training twice.

Any ideas?<|||||>> this I pass the updated model to trainer, but then should I pass the model_path so it loads from the saved checkpoint of optimizer? and why this is ignoring the set number of epochs?

Passing a `model_path` to the train method is done when you want to resume an interrupted training, which is why it does not do all epochs (it resumes the training from where you were). If you want to do a new training, you should not use that argument and manually pass the optimizer/scheduler you want to use at init.

@abdullah-alnahas I have no idea what your plot is since you haven't told us how you generated it. <|||||>Thanks for your response @sgugger , and sorry for not making myself clear.

I am training an Electra model from scratch using the [`Trainer` API](https://huggingface.co/transformers/main_classes/trainer.html). I have interrupted the trainer twice, then resumed training by `trainer.train(latest_checkpoint_path)`.

After that, I have generated the learning curve plot from `{latest_checkpoint_path}/trainer_state.json`'s `log_history` using `step` as the x axis, and `loss` as the y axis.

My question: Is it normal that the learning curve peaks after resuming the training from a checkpoint after an interruption?<|||||>The loss is reinitialized to 0 (it's not saved with the checkpoints) so it could come from this. There were also some recent changes in how the loss is logged so having your transformers version would help. The CI tests the final values of the weights of a (small) model are the same with a full training or resumed training, so I think this is just some weird reporting of the loss.<|||||>thanks Suraj and everyone, makes sense not to initialize the optimizers.<|||||>Hi @sgugger,

I encountered the same issue on Transformers 4.3.0. I think the problem is not the loss being reinitialized as 0, but that the model is not being loaded from model_path. Only `TrainerState` is loaded but not the model weights. I looked through the code before concluding this, but as a sanity check, the current code will run even if `pytorch_model.bin` is not in the checkpoint directory, confirming that it's not being loaded at all. It's odd that the CI tests are passing.

Anyway, I modified `trainer.py:train()` under the code block:

```
# Check if continuing training from a checkpoint
if model_path and os.path.isfile(os.path.join(model_path, "trainer_state.json")):
    ...
    self._globalstep_last_logged = self.state.global_step
    if isinstance(self.model, PreTrainedModel):
        model = model.from_pretrained(model_path)
        if not self.is_model_parallel:
            model = model.to(self.args.device)
    else:
        state_dict = torch.load(os.path.join(model_path, WEIGHTS_NAME))
        model.load_state_dict(state_dict)
```

`self._globalstep_last_logged = self.state.global_step` ensures the first logging of the loss is correct. `self._globalstep_last_logged` should not be 0 (that line is removed in the later part of the code)

The training is properly resumed after this.

<|||||>`Trainer` does not handle the reloading of the model indeed, which can be confusing. So I'll add that functionality this afternoon!<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 9,098 | closed | [RAG, Bart] Align RAG, Bart cache with T5 and other models of transformers | # What does this PR do?


In Transformers, the cache should always have the same structure. This becomes especially important for composite models like `RAG` and `EncoderDecoder` that expect all models to have the same cache.

Bart and T5 had different caches with Bart being most different from the standard cache of the library.

This PR aligns the `past_key_values` cache of Bart/Rag with all other models in the library. In general, the philosophy should be:

- the `past_key_value` should have exactly one level for each layer, whether the model is decoder-only (e.g. GPT2) or seq2seq (e.g. BART). This was not correctly refactored in BART (it should have been implemented 1-to-1 as in T5). No breaking changes here though.
- the `past_key_value` tuple for each layer should always be a tuple of tensors, **not** a tuple of tuples
- for decoder-only models (GPT2), the tuple for each layer contains 2 tensors: key and value states
- for seq2seq (BART/T5), the tuple for each layer contains 4 tensors: key and value states of uni-directional self-attention, saved key and value states for cross-attention
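
The layout described in the list above can be sketched with plain Python tuples. This is only an illustration: strings stand in for tensors, and `check_cache` is a hypothetical helper, not a function of the library.

```python
def check_cache(past_key_values, is_seq2seq):
    """Validate the per-layer cache layout; raise ValueError on a mismatch.

    Each layer must be a flat tuple of 2 entries (decoder-only) or
    4 entries (seq2seq), never a tuple of tuples.
    """
    expected = 4 if is_seq2seq else 2
    for layer_idx, layer_past in enumerate(past_key_values):
        if len(layer_past) != expected:
            raise ValueError(
                f"layer {layer_idx}: expected {expected} entries, got {len(layer_past)}"
            )
        if any(isinstance(state, tuple) for state in layer_past):
            raise ValueError(f"layer {layer_idx}: nested tuples are not allowed")
    return True


# Strings are placeholders for tensors; two layers each.
decoder_only = tuple(("key", "value") for _ in range(2))
seq2seq = tuple(
    ("self_key", "self_value", "cross_key", "cross_value") for _ in range(2)
)
# A nested layout (self-attention and cross-attention grouped separately).
nested = ((("self_key", "self_value"), ("cross_key", "cross_value")),)
```

With these placeholders, `decoder_only` and `seq2seq` pass the check, while `nested` is rejected.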

This doesn't break any backward compatibility and should fix some RAG problems (@ratthachat). All RAG, Bart slow tests are passing and changes correspond just to the tuple structure.

PR is blocking me for TFBart refactor -> will merge already.

cc @LysandreJik, @sgugger, @patil-suraj for info.

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors which may be interested in your PR.


| 12-14-2020 10:36:53 | 12-14-2020 10:36:53 | |

transformers | 9,097 | closed | Is the LayoutLM working now? | I am getting endless errors when trying to use `LayoutLMForTokenClassification` from transformers for an NER task. Am I doing something wrong, or is this functionality still a work in progress?

Really appreciate if anyone can give some information. | 12-14-2020 09:25:13 | 12-14-2020 09:25:13 | Hi @shaonanqinghuaizongshishi

Could you please post the code snippet, stack trace and your env info so that we can take a look ?<|||||>I am working on:

ubuntu 16.04

torch 1.5.0

transformers 3.4.0

```
device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')
tokenizer = LayoutLMTokenizer.from_pretrained(model_path)
model = LayoutLMForTokenClassification.from_pretrained(model_path, num_labels=config.num_labels).to(device)
outputs = model(b_input_ids, bbox=b_boxes, token_type_ids=None,
                attention_mask=b_input_mask, labels=b_labels)
```

Then I run CUDA_LAUNCH_BLOCKING=1 python layoutLM.py, and got the following error:

```
Traceback (most recent call last):
  File "layoutLM.py", line 275, in <module>
    train(train_dataloader, validation_dataloader)
  File "layoutLM.py", line 162, in train
    attention_mask=b_input_mask, labels=b_labels)
  File "/Classification/lib/python3.6/site-packages/torch/nn/modules/module.py", line 550, in __call__
    result = self.forward(*input, **kwargs)
  File "/Classification/lib/python3.6/site-packages/transformers/modeling_layoutlm.py", line 864, in forward
    return_dict=return_dict,
  File "/Classification/lib/python3.6/site-packages/torch/nn/modules/module.py", line 550, in __call__
    result = self.forward(*input, **kwargs)
  File "/Classification/lib/python3.6/site-packages/transformers/modeling_layoutlm.py", line 701, in forward
    inputs_embeds=inputs_embeds,
  File "/Classification/lib/python3.6/site-packages/torch/nn/modules/module.py", line 550, in __call__
    result = self.forward(*input, **kwargs)
  File "/Classification/lib/python3.6/site-packages/transformers/modeling_layoutlm.py", line 118, in forward
    + token_type_embeddings
RuntimeError: CUDA error: an illegal memory access was encountered
```

<|||||>Hi there!

I have been investigating the model by making [integration tests](https://github.com/NielsRogge/transformers/blob/e5431da34ab2d03d6114303f18fd70192c880913/tests/test_modeling_layoutlm.py#L318), and turns out it outputs the same tensors as the original repository on the same input data, so there are no issues (tested this both for the base model - `LayoutLMModel` as well as the models with heads on top - `LayoutLMForTokenClassification` and `LayoutLMForSequenceClassification`).

However, the model is poorly documented in my opinion, I needed to first look at the original repository to understand everything. I made a demo notebook that showcases how to fine-tune HuggingFace's `LayoutLMForTokenClassification` on the FUNSD dataset (a sequence labeling task): https://github.com/NielsRogge/Transformers-Tutorials/blob/master/LayoutLM/Fine_tuning_LayoutLMForTokenClassification_on_FUNSD.ipynb

Let me know if this helps you!

<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 9,096 | closed | Fix variable name in TrainingArguments docstring | # What does this PR do?

Corrects a var name in the docstring for `TrainingArguments` (there is no `ignore_skip_data`)

## Before submitting

- [x] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

## Who can review?

@sgugger | 12-14-2020 07:51:55 | 12-14-2020 07:51:55 | |

transformers | 9,095 | closed | [TorchScript] Received several warning during Summarization model conversion | ## Environment info

Using Transformers 4.0.1 and PyTorch 1.6.0.

```python
from transformers import AutoTokenizer, AutoModelForSeq2SeqLM
import torch

tokenizer = AutoTokenizer.from_pretrained("sshleifer/distilbart-cnn-6-6")
model = AutoModelForSeq2SeqLM.from_pretrained("sshleifer/distilbart-cnn-6-6")
# model = BartModel.from_pretrained("sshleifer/bart-tiny-random")
input_ids = decoder_input_ids = torch.tensor([19 * [1] + [model.config.eos_token_id]])
traced_model = torch.jit.trace(model, (input_ids, decoder_input_ids), strict=False)
traced_model.save("distilbart.pt")
```

I have to disable strict checking for the trace to succeed. Without `strict=False`, I get this error message:

```
RuntimeError: Encountering a dict at the output of the tracer might cause the trace to be incorrect, this is only valid if the container structure does not change based on the module's inputs. Consider using a constant container instead (e.g. for `list`, use a `tuple` instead. for `dict`, use a `NamedTuple` instead). If you absolutely need this and know the side effects, pass strict=False to trace() to allow this behavior.
```

Here are the warning messages:

```
/Users/qingla/PycharmProjects/pytorch/venv/lib/python3.7/site-packages/transformers/models/bart/modeling_bart.py:232: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!
  if not padding_mask.any():
/Users/qingla/PycharmProjects/pytorch/venv/lib/python3.7/site-packages/transformers/models/bart/modeling_bart.py:175: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!
  if decoder_padding_mask is not None and decoder_padding_mask.shape[1] > 1:
/Users/qingla/PycharmProjects/pytorch/venv/lib/python3.7/site-packages/transformers/models/bart/modeling_bart.py:716: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!
  assert key_padding_mask is None or key_padding_mask.shape == (bsz, src_len)
/Users/qingla/PycharmProjects/pytorch/venv/lib/python3.7/site-packages/transformers/models/bart/modeling_bart.py:718: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!
  assert attn_weights.size() == (bsz * self.num_heads, tgt_len, src_len)
/Users/qingla/PycharmProjects/pytorch/venv/lib/python3.7/site-packages/transformers/models/bart/modeling_bart.py:736: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!
  assert attn_output.size() == (bsz * self.num_heads, tgt_len, self.head_dim)
/Users/qingla/PycharmProjects/pytorch/venv/lib/python3.7/site-packages/transformers/models/bart/modeling_bart.py:287: TracerWarning: Converting a tensor to a Python boolean might cause the trace to be incorrect. We can't record the data flow of Python values, so this value will be treated as a constant in the future. This means that the trace might not generalize to other inputs!
  if torch.isinf(x).any() or torch.isnan(x).any():
```

If these warnings are accurate, then the model I traced is tightly coupled to the dummy input I provided, which would produce inaccurate inference results... Any thoughts on how to improve it? @sshleifer Thanks! | 12-14-2020 07:14:20 | 12-14-2020 07:14:20 | Have you tried removing the `strict=False`, and instead specify `return_dict=False` when you initialize the model with `from_pretrained`? Can you let me know if this fixes your issue?<|||||>> Have you tried removing the `strict=False`, and instead specify `return_dict=False` when you initialize the model with `from_pretrained`? Can you let me know if this fixes your issue?

Thanks. It seems the error message is gone. However, I still receive the warning messages. Is there any way I can modify the script to make it work without warnings?<|||||>Usually these do not impact the result, as they are python values that do not change over time. Have you seen an error in prediction?<|||||>@LysandreJik Sounds good. Haven't seen anything wrong yet. :) |

transformers | 9,094 | closed | head mask issue transformers==3.5.1 | ## Environment info

- `transformers` version: 3.5.1

- Platform: windows & linux

- Python version: python 3.7

- PyTorch version (GPU?): 1.7.0

- Tensorflow version (GPU?): 2.3.1

- Using GPU in script?: Yes both CPU and GPU

- Using distributed or parallel set-up in script?: No

### Who can help

albert, bert, GPT2, XLM: @LysandreJik

## Information

Hi

I am using tiny ALBERT Chinese as an encoder, and I've also tried to use the ALBERT transformer in my code.

I had to change the source code a little to avoid a head mask issue.

See `transformers/modeling_albert.py`,

line 387: layer_output = albert_layer(hidden_states, attention_mask, **head_mask[layer_index]**, output_attentions)

However, a few lines above, the default `head_mask` is `None`, so **TypeError: 'NoneType' object is not subscriptable** is raised.

It's not a deep bug and can easily be avoided by passing a `torch.ones` head mask. I just want to bring it up in case it helps others who encounter the same problem.
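
To illustrate the failure and one possible guard, here is a small sketch in plain Python. The helper `expand_head_mask` is hypothetical and is not the library's code; it only shows how a `None` mask can be expanded to one entry per layer so that indexing by `layer_index` is safe.

```python
def expand_head_mask(head_mask, num_hidden_layers):
    """Return a list with one mask entry per layer.

    A missing mask becomes a list of None values, so that
    head_mask[layer_index] never indexes into None itself.
    """
    if head_mask is None:
        return [None] * num_hidden_layers
    if len(head_mask) != num_hidden_layers:
        raise ValueError("head_mask must have one entry per layer")
    return list(head_mask)


# Indexing None directly reproduces the reported TypeError.
try:
    None[0]
    failed = False
except TypeError:
    failed = True

per_layer = expand_head_mask(None, num_hidden_layers=4)
```

Each layer then receives `per_layer[layer_index]`, which is `None` when no mask was supplied.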

| 12-14-2020 06:56:53 | 12-14-2020 06:56:53 | This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 9,093 | closed | Not able to load T5 tokenizer | Transformers==4.0.0

torch == 1.7.0+cu101

tensorflow == 2.3.0

Platform = Colab notebook

@julien-c @patrickvonplaten

Not able to load T5 tokenizer using

`tokenizer = T5Tokenizer.from_pretrained('t5-base')`

Getting error -

I am able to download the pre-trained model though. | 12-14-2020 06:55:44 | 12-14-2020 06:55:44 | Hey @adithyaan-creator,

as the error message says you need to install the sentence piece library :-)

If you run:

```

pip install sentencepiece==0.1.91

```

before, it should work.<|||||>Thanks @patrickvonplaten . <|||||>Hi @patrickvonplaten

I installed sentencepiece, but it still doesnt seem to be working for me. Please see the snapshot below. Please help.

<|||||>@DesiKeki try sentencepiece version 0.1.94.<|||||>Thanks @adithyaan-creator , it worked!<|||||>Hello @patrickvonplaten

I have gone through the issue and the suggestions given above. However, I am facing the same issue and for some reason, none of the above solutions are proving fruitful.

The issue I am facing is exactly the same as the one stated above:

`from transformers import T5Tokenizer,T5ForConditionalGeneration,Adafactor`

`!pip install sentencepiece==0.1.91`

`tokenizer = T5Tokenizer.from_pretrained("t5-base")`

`print(tokenizer)`

The output of the above code is: None.

I tried using other versions of sentencepiece as well (as the one suggested above 0.1.94 and others as well). But it is still not working.

<|||||>Did you restart your kernel after installing `sentencepiece`? See conversation in https://github.com/huggingface/transformers/issues/10797<|||||>> Did you restart your kernel after installing `sentencepiece`? See conversation in #10797

it works for me, thank you<|||||>> Did you restart your kernel after installing `sentencepiece`? See conversation in #10797

It works for me. Thanks a lot. |

transformers | 9,092 | closed | Patch *ForCausalLM model with TF resize_token_embeddings | cc @jplu | 12-14-2020 05:30:42 | 12-14-2020 05:30:42 | |

transformers | 9,091 | closed | Chinese | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version:

- Platform:

- Python version:

- PyTorch version (GPU?):

- Tensorflow version (GPU?):

- Using GPU in script?:

- Using distributed or parallel set-up in script?:

### Who can help

<!-- Your issue will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

albert, bert, GPT2, XLM: @LysandreJik

tokenizers: @mfuntowicz

Trainer: @sgugger

Speed and Memory Benchmarks: @patrickvonplaten

Model Cards: @julien-c

TextGeneration: @TevenLeScao

examples/distillation: @VictorSanh

nlp datasets: [different repo](https://github.com/huggingface/nlp)

rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Text Generation: @patrickvonplaten @TevenLeScao

Blenderbot: @patrickvonplaten

Bart: @patrickvonplaten

Marian: @patrickvonplaten

Pegasus: @patrickvonplaten

mBART: @patrickvonplaten

T5: @patrickvonplaten

Longformer/Reformer: @patrickvonplaten

TransfoXL/XLNet: @TevenLeScao

RAG: @patrickvonplaten, @lhoestq

FSMT: @stas00

examples/seq2seq: @patil-suraj

examples/bert-loses-patience: @JetRunner

tensorflow: @jplu

examples/token-classification: @stefan-it

documentation: @sgugger

-->

## Information

Model I am using (Bert, XLNet ...):

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [ ] my own modified scripts: (give details below)

The tasks I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [ ] my own task or dataset: (give details below)

## To reproduce

Steps to reproduce the behavior:

1.

2.

3.

<!-- If you have code snippets, error messages, stack traces please provide them here as well.

Important! Use code tags to correctly format your code. See https://help.github.com/en/github/writing-on-github/creating-and-highlighting-code-blocks#syntax-highlighting

Do not use screenshots, as they are hard to read and (more importantly) don't allow others to copy-and-paste your code.-->

## Expected behavior

<!-- A clear and concise description of what you would expect to happen. -->

| 12-14-2020 02:46:27 | 12-14-2020 02:46:27 | |

transformers | 9,090 | closed | run_clm example gives `CUDA out of memory. Tried to allocate` error | ## Environment info

Google Colab with GPU runtime.

- Python version: 3.6.9

## Information

I'm trying to run the GPT2 training example from `https://github.com/huggingface/transformers/blob/master/examples/language-modeling/run_clm.py`.

The problem arises when using:

- Run CLM language modeling example.

## To reproduce

Steps to reproduce the behavior:

1. Open Google Colab with GPU on

2. Run

```
!git clone https://github.com/huggingface/transformers
%cd transformers
!pip install .
%cd examples
%cd language-modeling
!pip install -r requirements.txt
```

```
!python run_clm.py \
    --model_name_or_path gpt2 \
    --dataset_name wikitext \
    --dataset_config_name wikitext-2-raw-v1 \
    --do_train \
    --do_eval \
    --output_dir test
```

Log:

```
[INFO|trainer.py:668] 2020-12-14 02:09:02,049 >> ***** Running training *****
[INFO|trainer.py:669] 2020-12-14 02:09:02,049 >> Num examples = 2318
[INFO|trainer.py:670] 2020-12-14 02:09:02,049 >> Num Epochs = 3
[INFO|trainer.py:671] 2020-12-14 02:09:02,049 >> Instantaneous batch size per device = 8
[INFO|trainer.py:672] 2020-12-14 02:09:02,049 >> Total train batch size (w. parallel, distributed & accumulation) = 8
[INFO|trainer.py:673] 2020-12-14 02:09:02,049 >> Gradient Accumulation steps = 1
[INFO|trainer.py:674] 2020-12-14 02:09:02,049 >> Total optimization steps = 870
  0% 0/870 [00:00<?, ?it/s]Traceback (most recent call last):
  File "run_clm.py", line 357, in <module>
    main()
  File "run_clm.py", line 327, in main
    trainer.train(model_path=model_path)
  File "/usr/local/lib/python3.6/dist-packages/transformers/trainer.py", line 767, in train
    tr_loss += self.training_step(model, inputs)
  File "/usr/local/lib/python3.6/dist-packages/transformers/trainer.py", line 1096, in training_step
    loss = self.compute_loss(model, inputs)
  File "/usr/local/lib/python3.6/dist-packages/transformers/trainer.py", line 1120, in compute_loss
    outputs = model(**inputs)
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 727, in _call_impl
    result = self.forward(*input, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/transformers/models/gpt2/modeling_gpt2.py", line 895, in forward
    return_dict=return_dict,
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 727, in _call_impl
    result = self.forward(*input, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/transformers/models/gpt2/modeling_gpt2.py", line 740, in forward
    output_attentions=output_attentions,
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 727, in _call_impl
    result = self.forward(*input, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/transformers/models/gpt2/modeling_gpt2.py", line 295, in forward
    output_attentions=output_attentions,
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 727, in _call_impl
    result = self.forward(*input, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/transformers/models/gpt2/modeling_gpt2.py", line 239, in forward
    attn_outputs = self._attn(query, key, value, attention_mask, head_mask, output_attentions)
  File "/usr/local/lib/python3.6/dist-packages/transformers/models/gpt2/modeling_gpt2.py", line 166, in _attn
    w = torch.matmul(q, k)
RuntimeError: CUDA out of memory. Tried to allocate 384.00 MiB (GPU 0; 15.90 GiB total capacity; 14.75 GiB already allocated; 185.88 MiB free; 14.81 GiB reserved in total by PyTorch)
  0% 0/870 [00:00<?, ?it/s]
```

## Expected behavior

Outputs the model in the output_dir with no memory error.

| 12-14-2020 02:17:31 | 12-14-2020 02:17:31 | You should try to reduce the batch size. This will reduce the memory usage.<|||||>Yup. What @LysandreJik said is correct. Use the following:

`--per_device_train_batch_size x \`

`--per_device_eval_batch_size x \`

Replace x with your preferred batch size, I would recommend the highest power of 2 your GPU memory allows.<|||||>It worked! With the Colab's GPU memory size of 12.72GB, the batch size worked at:

`--per_device_train_batch_size 2 \`

`--per_device_eval_batch_size 16 \`
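
As a side note, lowering the batch size raises the number of optimization steps. The sketch below reproduces the arithmetic behind the `Total optimization steps = 870` line in the log above (2318 examples, batch size 8, 3 epochs). The formula is an assumption about how the count is derived (last partial batch kept, single device), and `total_optimization_steps` is a hypothetical helper.

```python
import math


def total_optimization_steps(num_examples, per_device_batch_size, num_epochs,
                             grad_accum=1, num_devices=1):
    """Estimate the number of optimizer updates for a full training run."""
    # The last, partially filled batch is kept, hence the ceiling.
    batches_per_epoch = math.ceil(
        num_examples / (per_device_batch_size * num_devices)
    )
    updates_per_epoch = max(batches_per_epoch // grad_accum, 1)
    return updates_per_epoch * num_epochs


steps_bs8 = total_optimization_steps(2318, 8, 3)  # matches the 870 in the log
steps_bs2 = total_optimization_steps(2318, 2, 3)  # batch size used in the fix
```

Under these assumptions, going from a batch size of 8 to 2 takes the run from 870 to 3477 steps.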

Thanks for the quick response guys. |

transformers | 9,089 | closed | Fix a bug in eval_batch_retrieval of eval_rag.py | # What does this PR do?


Following the instructions in [RAG example](https://github.com/huggingface/transformers/tree/master/examples/research_projects/rag#retrieval-evaluation), I was trying to evaluate retrieval against DPR evaluation data.

`pipenv run python eval_rag.py --model_name_or_path facebook/rag-sequence-nq --model_type rag_sequence --evaluation_set output/biencoder-nq-dev.questions --gold_data_path output/biencoder-nq-dev.pages --predictions_path output/retrieval_preds.tsv --eval_mode retrieval --k 1`

With the above command, I faced the following error and confirmed that `question_enc_outputs` is a tuple whose length is 1.

```

...

loading weights file https://huggingface.co/facebook/rag-sequence-nq/resolve/main/pytorch_model.bin from cache at /home/ubuntu/.cache/huggingface/transformers/9456ce4ba210322153f704e0f26c6228bd6c0caad60fe1b3bdca001558adbeca.ee816b8e716f9741a2ac602bb9c6f4d84eff545b0b00a6c5353241bea6dec221

All model checkpoint weights were used when initializing RagSequenceForGeneration.

All the weights of RagSequenceForGeneration were initialized from the model checkpoint at facebook/rag-sequence-nq.

If your task is similar to the task the model of the checkpoint was trained on, you can already use RagSequenceForGeneration for predictions without further training.

initializing retrieval

Loading index from https://storage.googleapis.com/huggingface-nlp/datasets/wiki_dpr/

loading file https://storage.googleapis.com/huggingface-nlp/datasets/wiki_dpr/hf_bert_base.hnswSQ8_correct_phi_128.c_index.index.dpr from cache at /home/ubuntu/.cache/huggingface/transformers/a481b3aaed56325cb8901610e03e76f93b47f4284a1392d85e2ba5ce5d40d174.a382b038f1ea97c4fbad3098cd4a881a7cd4c5f73902c093e0c560511655cc0b

loading file https://storage.googleapis.com/huggingface-nlp/datasets/wiki_dpr/hf_bert_base.hnswSQ8_correct_phi_128.c_index.index_meta.dpr from cache at /home/ubuntu/.cache/huggingface/transformers/bb9560964463bc761c682818cbdb4e1662e91d25a9407afb102970f00445678c.f8cbe3240b82ffaad54506b5c13c63d26ff873d5cfabbc30eef9ad668264bab4

7it [00:00, 212.77it/s]

Traceback (most recent call last):

File "eval_rag.py", line 315, in <module>

main(args)

File "eval_rag.py", line 301, in main

answers = evaluate_batch_fn(args, model, questions)

File "eval_rag.py", line 99, in evaluate_batch_retrieval

question_enc_pool_output = question_enc_outputs.pooler_output

AttributeError: 'tuple' object has no attribute 'pooler_output'

```

With this simple change (`question_enc_outputs.pooler_output` -> `question_enc_outputs[0]`), I was able to run the evaluation code and got

`INFO:__main__:Precision@1: 70.74`
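
The difference between the two access styles can be sketched without the library. This is an illustration only: `FakeOutput` is a stand-in for an output object with a `pooler_output` attribute, `pooled_output` is a hypothetical helper, and the sketch assumes that the pooled vector is the first element when a plain tuple is returned (as in the length-1 tuple reported above).

```python
from collections import namedtuple

# Stand-in for an output object exposing a named field.
FakeOutput = namedtuple("FakeOutput", ["pooler_output"])


def pooled_output(encoder_outputs):
    """Return the pooled vector from either an output object or a plain tuple."""
    pooled = getattr(encoder_outputs, "pooler_output", None)
    if pooled is not None:
        return pooled
    # Plain tuples have no named fields, so fall back to positional access.
    return encoder_outputs[0]


from_object = pooled_output(FakeOutput(pooler_output="pooled"))
from_tuple = pooled_output(("pooled",))
```

Positional indexing works for both kinds of return value, which is why the one-line change in this PR is enough.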

## Environments

- Ubuntu 18.04 LTS

- Python 3.7.7

- transformers 4.0.1

- torch: 1.7.1

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors which may be interested in your PR.


@ola13 (confirmed by `git blame`) @patrickvonplaten @lhoestq | 12-14-2020 01:49:45 | 12-14-2020 01:49:45 | @lhoestq - feel free to merge if you're ok with the PR |

transformers | 9,088 | closed | run_clm.py Early stopping with ^C | - `transformers` version: 4.0.1

- Platform: Colab

- Python version: 3.6.9

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: No

### Who can help

@sgugger

## Information

Model I am using: GPT2

The problem arises when using:

`run_clm.py`

## To reproduce

```
!python ./transformers/examples/language-modeling/run_clm.py \
    --model_name_or_path ./GPT2_PRETRAINED_LOCAL \
    --dataset_name bookcorpusopen \
    --dataset_config_name plain_text \
    --per_device_train_batch_size 2 \
    --per_device_eval_batch_size 2 \
    --block_size 128 \
    --gradient_accumulation_steps 1 \
    --overwrite_output_dir \
    --do_train \
    --do_eval \
    --num_train_epochs 20 \
    --save_steps 50000 \
    --save_total_limit 1 \
    --output_dir ./GPT2-trained-save
```

Output:

```
2020-12-13 20:02:51.391764: I tensorflow/stream_executor/platform/default/dso_loader.cc:48] Successfully opened dynamic library libcudart.so.10.1
12/13/2020 20:02:53 - WARNING - __main__ - Process rank: -1, device: cpu, n_gpu: 0distributed training: False, 16-bits training: False
12/13/2020 20:02:53 - INFO - __main__ - Training/evaluation parameters TrainingArguments(output_dir='./RPT-trained-save', overwrite_output_dir=True, do_train=True, do_eval=True, do_predict=False, evaluation_strategy=<EvaluationStrategy.NO: 'no'>, prediction_loss_only=False, per_device_train_batch_size=2, per_device_eval_batch_size=2, per_gpu_train_batch_size=None, per_gpu_eval_batch_size=None, gradient_accumulation_steps=1, eval_accumulation_steps=None, learning_rate=5e-05, weight_decay=0.0, adam_beta1=0.9, adam_beta2=0.999, adam_epsilon=1e-08, max_grad_norm=1.0, num_train_epochs=20.0, max_steps=-1, warmup_steps=0, logging_dir='runs/Dec13_20-02-53_7d34d2e22bee', logging_first_step=False, logging_steps=500, save_steps=50000, save_total_limit=1, no_cuda=False, seed=42, fp16=False, fp16_opt_level='O1', local_rank=-1, tpu_num_cores=None, tpu_metrics_debug=False, debug=False, dataloader_drop_last=False, eval_steps=500, dataloader_num_workers=0, past_index=-1, run_name='./RPT-trained-save', disable_tqdm=False, remove_unused_columns=True, label_names=None, load_best_model_at_end=False, metric_for_best_model=None, greater_is_better=None)
Downloading: 4.00kB [00:00, 2.73MB/s]
Downloading: 2.10kB [00:00, 1.47MB/s]
Downloading and preparing dataset book_corpus_open/plain_text (download: 2.24 GiB, generated: 6.19 GiB, post-processed: Unknown size, total: 8.43 GiB) to /root/.cache/huggingface/datasets/book_corpus_open/plain_text/1.0.0/5cc3e4620a202388e77500f913b37532be8b036287436f3365e066671a1bd97e...
Downloading: 100% 2.40G/2.40G [02:41<00:00, 14.9MB/s]
9990 examples [01:04, 149.50 examples/s]^C
```

The ^C automatically appears and the script stops.

## Expected behavior

The training process takes place as normal.

| 12-13-2020 20:19:17 | 12-13-2020 20:19:17 | ^C means you have hit Ctrl + C on your machine, which stops the running command. You should re-run the command without hitting Ctrl + C.<|||||>Yup, I am aware that ^C is a halt command. I am running this on Colab and I have tried to run it 5-7 times now, not hitting Ctrl+C once. For some reason it appears by itself and halts the execution. <|||||>There might be something in Colab that aborts bash commands after some time then, or it happens when the session disconnects. But there is absolutely nothing in the script that triggers a cancel like this, so there is nothing we can do to fix this.

Note that the scripts are not meant to be run on Colab, we have [notebook versions](https://github.com/huggingface/notebooks/tree/master/examples) of them for that.<|||||>I think I have figured out the issue. This is happening because the dataset is large and when the full thing is loaded, colab crashes.<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 9,087 | closed | BertForSequenceClassification finetune training loss and accuracy have some problem | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: v4.0.0

- Platform: colab pro

- Python version: 3.6.9

- PyTorch version (GPU?): 1.7.0+cu101

- Tensorflow version (GPU?): 2.3.0

- Using GPU in script?: no

- Using distributed or parallel set-up in script?: no

@sgugger

@JetRunner

## Information

I follow the paper https://arxiv.org/pdf/2003.02245.pdf to do data augmentation and test its performance.

Model I am using: BERT (BertTokenizer, BertForMaskedLM, BertForSequenceClassification)

The problem arises when using:

When I use Trainer to fine-tune on either the training set alone or the concatenation of the training set and the augmentation set, the training log shows `No log` or 0.683592, and the accuracy is always 0.8.

The tasks I am working on is:

An official GLUE task: sst2, loaded with the Hugging Face datasets package

The details:

For the Trainer settings I follow examples/text_classification.ipynb to build the compute_metrics function and the tokenize mapping function, but the training loss and accuracy look wrong.

My tokenized dataset format:

compute_metrics function, slightly modified from examples/text_classification.ipynb

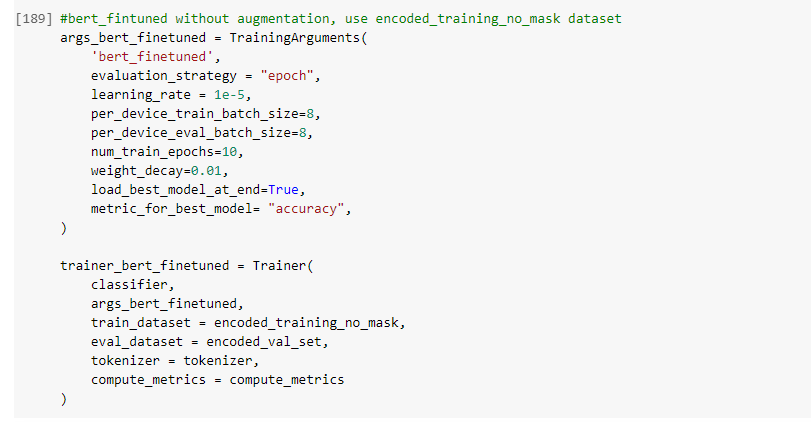
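For readers without access to the screenshot, a metric function in the spirit of that notebook could look like the sketch below. It is only an illustration, not the reporter's actual code: it assumes the Trainer hands `compute_metrics` a pair of `(logits, labels)` arrays and that plain accuracy is the metric of interest.

```python
import numpy as np


def compute_metrics(eval_pred):
    """Return accuracy for a sequence-classification head.

    Assumes eval_pred unpacks into (logits, labels), with logits of shape
    (num_examples, num_classes) and labels of shape (num_examples,).
    """
    logits, labels = eval_pred
    # Pick the highest-scoring class for each example.
    predictions = np.argmax(np.asarray(logits), axis=-1)
    labels = np.asarray(labels)
    return {"accuracy": float((predictions == labels).mean())}
```

An accuracy that never moves between runs is worth comparing against the share of the majority class in the evaluation set, since a model that always predicts one class would produce exactly that number.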

bert_finetuned_setting

fine_tuned result

| 12-13-2020 18:39:28 | 12-13-2020 18:39:28 | Hello, thanks for opening an issue! We try to keep the github issues for bugs/feature requests, rather than help with training.

Could you ask your question on the [forum](https://discuss.huggingface.co) instead?

Thanks! |

transformers | 9,086 | closed | Getting a 404 error when loading TFXLMRobertaModel from 'xlm-roberta-large' | Getting a 404 when trying to load the model.

I manually checked the https://huggingface.co repository for xlm-roberta-large and was only able to find the PyTorch models. Why aren't the TF models available for this? If they are not, why is that not explicitly mentioned in the documentation? | 12-13-2020 17:42:20 | 12-13-2020 17:42:20 | Could you try with the flag `from_pt=True` when using `from_pretrained`? <|||||>That worked, thanks |
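The suggestion in that reply can be sketched as follows. The helper name `load_tf_weights_from_pytorch` is hypothetical and exists only to keep the example small; the `from_pt=True` flag of `from_pretrained` is the one mentioned in the reply, and converting this way is assumed to need both TensorFlow and PyTorch installed.

```python
def load_tf_weights_from_pytorch(model_cls, checkpoint):
    """Load a TF model class from a checkpoint that only ships PyTorch weights.

    model_cls is expected to be a TF* class from transformers, for example
    TFXLMRobertaModel; from_pt=True asks from_pretrained to convert the
    PyTorch weights instead of looking for a TensorFlow weights file.
    """
    return model_cls.from_pretrained(checkpoint, from_pt=True)


# Example usage (downloads the checkpoint, so it is left commented out):
# from transformers import TFXLMRobertaModel
# model = load_tf_weights_from_pytorch(TFXLMRobertaModel, "xlm-roberta-large")
```

Calling `model.save_pretrained(...)` afterwards should write TensorFlow weights locally, so later loads from that directory would not need the flag.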

transformers | 9,085 | closed | Adding to docs how to train CTRL Model with control codes. | # 🚀 Feature request

At the moment there is no explanation in the docs how to train a CTRL model with user defined `control codes`.

## Motivation

At the moment there is no explanation in the docs how to train a CTRL model with user defined `control codes`. I think it should be added because control codes are an important part of CTRL model.

## Your contribution

I am currently struggling to come up with ideas on how to do that using the transformers interface, but I'd love to open a PR once I understand how to do it. | 12-13-2020 15:20:18 | 12-13-2020 15:20:18 | This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.<|||||>Did anyone figure out how to do this? |

transformers | 9,084 | closed | Problem with Token Classification models | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.0.1

- Platform: Win10

- Python version:3.7

- PyTorch version (GPU?):

- Tensorflow version (GPU?): 2.3 CPU

- Using GPU in script?: no

- Using distributed or parallel set-up in script?: no

### Who can help

examples/token-classification: @stefan-it

## Information

I followed this tutorial https://huggingface.co/transformers/custom_datasets.html#token-classification-with-w-nut-emerging-entities for token classification but results were really bad. So I changed the dataset to conll2003 and simplified the data a little (remove sentences without entities, keep only sentences with a certain length) as I saw that the model should perform well on this data. Unfortunately the results are still bad for example after epoch two with the bert model set trainable=True:

Conf mat: (rows are prediction, columns are the labels)

[[ 1 3 21 10 5 10 1 16 172]

[ 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 1 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0]

[ 0 0 0 0 0 0 0 0 0]

[ 2 7 7 13 7 6 0 9 162]]

Classification report

(' precision recall f1-score support\n'

'\n'

' 0 0.33 0.00 0.01 239\n'

' 1 0.00 0.00 0.00 0\n'

' 2 0.00 0.00 0.00 0\n'

' 3 0.00 0.00 0.00 1\n'

' 4 0.00 0.00 0.00 0\n'

' 5 0.00 0.00 0.00 0\n'

' 6 0.00 0.00 0.00 0\n'

' 7 0.00 0.00 0.00 0\n'

' 8 0.49 0.76 0.59 213\n'

'\n'

' accuracy 0.36 453\n'

' macro avg 0.09 0.08 0.07 453\n'

'weighted avg 0.40 0.36 0.28 453\n')

I tried a lot of things and checked pre-processing and post-processing multiple times and can't find a bug in there.

The model is close to the one in the tutorial (the tutorial uses a DistilBert model, but as it performed in the same manner I switched to its bigger brother), yet it seems like it's not learning at all. It should perform well on conll data, and in other tutorials this model has shown good results (for example: https://www.depends-on-the-definition.com/named-entity-recognition-with-bert/)

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [x] my own modified scripts: (give details below)

The tasks I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [x] my own task or dataset: (give details below)

## To reproduce

Here's the code I use (preprocessing is not shown in full):

```
import numpy as np
import tensorflow as tf
from pprint import pprint
from sklearn.metrics import classification_report, confusion_matrix
from transformers import BertTokenizerFast, TFBertForTokenClassification

# train_texts / val_texts, train_labels / val_labels and unique_tags come from
# the preprocessing that is not shown here.
tokenizer = BertTokenizerFast.from_pretrained('bert-base-cased')

train_encodings = tokenizer(train_texts, is_split_into_words=True, return_offsets_mapping=True, padding=True, truncation=True)
val_encodings = tokenizer(val_texts, is_split_into_words=True, return_offsets_mapping=True, padding=True, truncation=True)

train_dataset = tf.data.Dataset.from_tensor_slices((dict(train_encodings), train_labels))
val_dataset = tf.data.Dataset.from_tensor_slices((dict(val_encodings), val_labels))

model = TFBertForTokenClassification.from_pretrained('bert-base-cased', num_labels=len(unique_tags))  # unique tags are inferred from training data
model.layers[0].trainable = True

optimizer = tf.keras.optimizers.Adam(learning_rate=5e-5)
model.compile(optimizer=optimizer, loss=model.compute_loss)  # or tf.keras.losses.SparseCategoricalCrossentropy(from_logits=True)

for epoch in range(8):
    model.fit(train_dataset.shuffle(64).batch(16), batch_size=16, verbose=1, epochs=10)
    predictions = model.predict(val_dataset)

    # some post-processing. predictions is a list with all logits. for calculating the metrics I only consider
    # the tags that are not -100 (which are supposed to be ignored).
    good_indexes = [i for i, l in enumerate(val_labels) if l != -100]
    list_preds = []
    for logi in predictions['logits']:
        list_preds.append(np.argmax(logi))
    pred_post = [list_preds[j] for j in good_indexes]

    # label_post (the labels filtered the same way) is defined in code that is not shown here.
    print(confusion_matrix(pred_post, label_post))
    report = classification_report(pred_post, label_post)
    pprint(report)
```
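The post-processing comments above describe keeping only the positions whose label is not -100. A minimal NumPy sketch of that filtering step follows. It assumes the logits have shape `(num_sequences, seq_len, num_labels)` and the labels have shape `(num_sequences, seq_len)`; the function name `flatten_token_predictions` is hypothetical. Note that `np.argmax` without an `axis` argument returns an index into the flattened array, so the sketch takes the argmax over the last axis explicitly. Whether this accounts for the scores reported above is not established here.

```python
import numpy as np


def flatten_token_predictions(logits, labels, ignore_index=-100):
    """Return (predicted_ids, true_ids) for all positions that should be scored.

    logits: array-like of shape (num_sequences, seq_len, num_labels)
    labels: array-like of shape (num_sequences, seq_len), with ignore_index
            marking padding and sub-word positions that must not be scored.
    """
    logits = np.asarray(logits)
    labels = np.asarray(labels)
    # One predicted label id per token: argmax over the label dimension.
    pred_ids = logits.argmax(axis=-1)
    # Boolean mask of the token positions that carry a real label.
    keep = labels != ignore_index
    return pred_ids[keep], labels[keep]
```

The two returned arrays are flat and aligned, so they could be passed to `confusion_matrix` and `classification_report` directly. scikit-learn expects the true labels as the first argument and the predictions as the second.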

## Expected behavior