repo (stringclasses, 1 value) | number (int64, 1 to 25.3k) | state (stringclasses, 2 values) | title (stringlengths, 1 to 487) | body (stringlengths, 0 to 234k, nullable) | created_at (stringlengths, 19) | closed_at (stringlengths, 19) | comments (stringlengths, 0 to 293k) |

|---|---|---|---|---|---|---|---|

transformers | 8,806 | closed | Create README.md | # What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes # (issue)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors which may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

albert, bert, XLM: @LysandreJik

GPT2: @LysandreJik, @patrickvonplaten

tokenizers: @mfuntowicz

Trainer: @sgugger

Benchmarks: @patrickvonplaten

Model Cards: @julien-c

examples/distillation: @VictorSanh

nlp datasets: [different repo](https://github.com/huggingface/nlp)

rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Text Generation: @patrickvonplaten, @TevenLeScao

Blenderbot, Bart, Marian, Pegasus: @patrickvonplaten

T5: @patrickvonplaten

Rag: @patrickvonplaten, @lhoestq

EncoderDecoder: @patrickvonplaten

Longformer, Reformer: @patrickvonplaten

TransfoXL, XLNet: @TevenLeScao, @patrickvonplaten

examples/seq2seq: @patil-suraj

examples/bert-loses-patience: @JetRunner

tensorflow: @jplu

examples/token-classification: @stefan-it

documentation: @sgugger

FSTM: @stas00

-->

| 11-26-2020 19:17:24 | 11-26-2020 19:17:24 | |

transformers | 8,805 | closed | Revert "[s2s] finetune.py: specifying generation min_length" | Reverts huggingface/transformers#8478 | 11-26-2020 19:05:51 | 11-26-2020 19:05:51 | |

transformers | 8,804 | closed | MPNet: Masked and Permuted Pre-training for Language Understanding | # Model addition

[MPNet](https://arxiv.org/abs/2004.09297)

## Model description

MPNet introduces a novel self-supervised objective named masked and permuted language modeling for language understanding. It inherits the advantages of both masked language modeling (MLM) and permuted language modeling (PLM) to address the limitations of MLM/PLM, and further reduces the inconsistency between the pre-training and fine-tuning paradigms.

# What does this PR do?


Fixes # (issue)

## Before submitting

- [x] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [x] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [x] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors which may be interested in your PR.


| 11-26-2020 17:17:32 | 11-26-2020 17:17:32 | Hey @StillKeepTry - could you maybe link the paper corresponding to your model and add a small PR description? :-) That would be very helpful<|||||>Thanks for the new PR @StillKeepTry - could you add a `test_modeling_mpnet.py` file - it would be important to test the model :-)

Also it would be amazing if you could give some context of MPNet - is there a paper, blog post, analysis, results that go along with the model? And are there pretrained weights?

Thanks a lot! <|||||>> Thanks for the new PR @StillKeepTry - could you add a `test_modeling_mpnet.py` file - it would be important to test the model :-)

>

>

>

> Also it would be amazing if you could give some context of MPNet - is there a paper, blog post, analysis, results that go along with the model? And are there pretrained weights?

>

>

>

> Thanks a lot!

https://arxiv.org/abs/2004.09297<|||||>> > Thanks for the new PR @StillKeepTry - could you add a `test_modeling_mpnet.py` file - it would be important to test the model :-)

> > Also it would be amazing if you could give some context of MPNet - is there a paper, blog post, analysis, results that go along with the model? And are there pretrained weights?

> > Thanks a lot!

>

> https://arxiv.org/abs/2004.09297

ok<|||||>Oh and another thing to do after the merge will be to add your new model to the main README and the documentation so that people can use it! The template should give you a file for the `.rst` (or you can use `docs/model_doc/bert.rst` as an example).<|||||>I have updated `test_modeling_mpnet.py` now.<|||||>Hi, every reviewer. Thank you for your valuable reviews. I have fixed previous comments (like doc, format, and so on) and updated the `tokenization_mpnet.py` and `tokenization_mpnet_fast.py` by removing the inheritance. Besides, I also upload test files (`test_modeling_mpnet.py`, `test_modeling_tf_mpnet.py`) for testing, and model weights into the model hub. <|||||>Fantastic, thanks for working on it! Will review today.<|||||>@patrickvonplaten Hi, are there any new comments?<|||||>Hello!

Still some comments:

1. Update the inputs handling in the TF file, we have merged an update for the booleans last Friday. You can see an example in the TF BERT file if you need one.

2. rebase and fix the conflicting files.

3. Fix the check_code_quality test.<|||||>I think something went wrong with the merge here :-/ Could you try to open a new PR that does not include all previous commits or fix this one? <|||||>> I think something went wrong with the merge here :-/ Could you try to open a new PR that does not include all previous commits or fix this one?

OK, it seems something went wrong when I updated to the latest version. :(<|||||>@patrickvonplaten @JetRunner @sgugger @LysandreJik @jplu The new PR is moved to [https://github.com/huggingface/transformers/pull/8971](https://github.com/huggingface/transformers/pull/8971) |

transformers | 8,803 | closed | Get locally cached models programmatically | # 🚀 Feature request

A small utility function to allow users to get a list of model binaries that are cached locally. Each list entry would be a tuple in the form `(model_url, etag, size_in_MB)`.

## Motivation

I have quite a few environments on my local machine containing the package and have downloaded a number of models. Over time these begin to stack up in terms of storage usage so I thought it would be useful at the very least to be able to retrieve a list of the models that are stored locally as well as some info regarding their size. I had also thought about building on this further and providing a function to remove a model from the local cache programmatically. However, for now I think getting a list is a good start.

## Your contribution

I have a PR ready to go if you think this would be a suitable feature to add. I've added it inside `file_utils.py` as this seemed like the most appropriate place. The function only adds files to the list that end with `.bin`, so right now only model binaries are included.

An example usage of the function is below:

```python

from transformers import file_utils

models = file_utils.get_cached_models()

for model in models:
    print(model)

# Output:
# ('https://s3.amazonaws.com/models.huggingface.co/bert/gpt2-pytorch_model.bin', '"2d19e321961949b7f761cdffefff32c0-66"', 548.118077)
# ('https://cdn.huggingface.co/distilbert-base-uncased-finetuned-sst-2-english-pytorch_model.bin', '"1d085de7c065928ccec2efa407bd9f1e-16"', 267.844284)
# ('https://cdn.huggingface.co/twmkn9/bert-base-uncased-squad2/pytorch_model.bin', '"e5f04c87871ae3a98e6eea90f1dec146"', 437.985356)

```

<!-- Is there any way that you could help, e.g. by submitting a PR?

Make sure to read the CONTRIBUTING.MD readme:

https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md -->

| 11-26-2020 16:30:27 | 11-26-2020 16:30:27 | I think that would be a cool addition! What do you think @julien-c?<|||||>Yes, I almost wrote something like that a while ago, so go for it 👍

To remove old weights you don't use anymore @cdpierse, we could also document a unix command to `find` files sorted by last access time and `rm` them

(I think @sshleifer or @patrickvonplaten had a bash alias for this at some point?)<|||||>@julien-c I have the PR for handling cached models pushed but I've been trying to think of some way to add a function that allows model deletion. We could use the model names and etags returned by `get_cached_models()` to select specific model `.bin` files to delete but the problem is that it will still leave behind stray config and tokenizer files which probably isn't great. The filenames for tokenizers, configs, and models don't seem to be related so I'm not sure if they can be deleted that way. The two approaches seem to be to delete by last-accessed date or just delete model `.bin` files. <|||||>This issue has been automatically marked as stale and been closed because it has not had recent activity. Thank you for your contributions.

If you think this still needs to be addressed please comment on this thread. |
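For readers who want to try the idea outside the library, here is a rough sketch of how such a listing could work. It assumes a cache layout in which every cached file has a sidecar `<name>.json` holding `{"url": ..., "etag": ...}`; that layout, the function name `list_cached_binaries`, and the `cache_dir` argument are assumptions made for illustration, not the code from the PR.

```python
import json
from pathlib import Path
from typing import List, Tuple


def list_cached_binaries(cache_dir: str) -> List[Tuple[str, str, float]]:
    """Return (url, etag, size_in_MB) for every cached file whose URL ends in .bin.

    Assumption: each cached file `<name>` sits next to a `<name>.json`
    metadata file containing the keys "url" and "etag".
    """
    entries = []
    for meta_path in sorted(Path(cache_dir).glob("*.json")):
        with open(meta_path, encoding="utf-8") as handle:
            meta = json.load(handle)
        url = meta.get("url", "")
        if not url.endswith(".bin"):
            continue  # skip configs, vocab files, and anything else
        blob_path = meta_path.with_suffix("")  # drop the trailing ".json"
        if not blob_path.exists():
            continue  # metadata without a matching payload
        size_mb = blob_path.stat().st_size / 1e6
        entries.append((url, meta.get("etag", ""), size_mb))
    return entries
```

Filtering on the URL suffix mirrors the `.bin`-only behaviour described in the issue, which is also why stray config and tokenizer files would not show up in the result.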

transformers | 8,802 | closed | Use GPT to assign sentence probability/perplexity given previous sentence? | Hi!

Is it possible to use GPT to assign a sentence probability given the previous sentences?

I have seen this code here, which can be used to assign a perplexity score to a sentence:

https://github.com/huggingface/transformers/issues/473

But is there a way to compute this score given a certain context (up to 1024 tokens)?

| 11-26-2020 15:29:50 | 11-26-2020 15:29:50 | Hello, thanks for opening an issue! We try to keep the github issues for bugs/feature requests.

Could you ask your question on the [forum](https://discuss.huggingface.co) instead?

Thanks! |
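Independently of where the question gets answered, the arithmetic behind it is small enough to sketch. The snippet assumes you already have per-token log-probabilities for the concatenated context + sentence from some causal language model (how you obtain them is not shown); the function name and arguments are hypothetical. Only the sentence tokens are summed, so the score is conditioned on the context.

```python
import math
from typing import Sequence, Tuple


def conditional_sentence_score(
    token_logprobs: Sequence[float], n_context: int
) -> Tuple[float, float]:
    """Score the sentence tokens that follow `n_context` context tokens.

    Assumption: token_logprobs[i] is log P(token_i | token_0 .. token_{i-1})
    for the concatenated context + sentence.
    """
    sentence_logprobs = token_logprobs[n_context:]
    if not sentence_logprobs:
        raise ValueError("no sentence tokens to score")
    log_prob = sum(sentence_logprobs)  # log P(sentence | context)
    perplexity = math.exp(-log_prob / len(sentence_logprobs))
    return log_prob, perplexity
```

The context tokens still have to pass through the model so that the sentence tokens see them; they are just left out of the sum.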

transformers | 8,801 | closed | Multiprocessing behavior change 3.1.0 -> 3.2.0 | ## Environment info

```

- `transformers` version: 3.2.0

- Platform: Linux-4.15.0-88-generic-x86_64-with-Ubuntu-18.04-bionic

- Python version: 3.6.9

- PyTorch version (GPU?): 1.7.0 (True)

- Tensorflow version (GPU?): 2.3.0 (False)

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: Yes (Python multiprocessing)

```

## Information

I am writing a custom script that uses Python's multiprocessing. The goal of it is to have multiple child processes that run inference (using `torch.nn.Module`) **on separate GPUs**.

See below a minimal example of the issue. Please note that the script contains pure `torch` code; however, it seems like importing `transformers` (**and not even using it afterwards**) changes some internal states.

```python

import multiprocessing as mp
import os

import torch
import transformers  # <-- Just imported, never used


def diagnostics(name):
    """Print diagnostics."""
    print(name)
    print(f"CUDA initialized: {torch.cuda.is_initialized()}")
    print(f"Is bad fork: {torch._C._cuda_isInBadFork()}")
    print(80 * "*")


def fun(gpu):
    current_process = mp.current_process()
    diagnostics(current_process.pid)
    os.environ["CUDA_VISIBLE_DEVICES"] = str(gpu)
    model = torch.nn.Linear(200, 300)
    model = model.to("cuda")  # Trouble maker

    while True:
        model(torch.ones(32, 200, device="cuda"))


if __name__ == "__main__":
    n_processes = 2
    gpus = [0, 1]
    start_method = "fork"  # fork, forkserver, spawn
    diagnostics("Parent")

    mp.set_start_method(start_method)
    processes = []

    for i in range(n_processes):
        p = mp.Process(name=str(i), target=fun, kwargs={"gpu": gpus[i]})
        p.start()
        processes.append(p)

    for p in processes:
        p.join()

```

The above script works as expected in `3.1.0` or when we do not import transformers at all. Each subprocess does inference on a separate GPU. See below the standard output.

```

Parent

CUDA initialized: False

Is bad fork: False

********************************************************************************

21091

CUDA initialized: False

Is bad fork: False

********************************************************************************

21092

CUDA initialized: False

Is bad fork: False

********************************************************************************

```

However, for `3.2.0` and higher there is the following error.

```

Parent

CUDA initialized: False

Is bad fork: False

********************************************************************************

21236

CUDA initialized: False

Is bad fork: True

********************************************************************************

Process 0:

Traceback (most recent call last):

File "/usr/lib/python3.6/multiprocessing/process.py", line 258, in _bootstrap

self.run()

File "/usr/lib/python3.6/multiprocessing/process.py", line 93, in run

self._target(*self._args, **self._kwargs)

File "git_example.py", line 21, in fun

model = model.to("cuda") # Trouble maker

File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 612, in to

return self._apply(convert)

File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 381, in _apply

param_applied = fn(param)

File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 610, in convert

return t.to(device, dtype if t.is_floating_point() else None, non_blocking)

File "/usr/local/lib/python3.6/dist-packages/torch/cuda/__init__.py", line 164, in _lazy_init

"Cannot re-initialize CUDA in forked subprocess. " + msg)

RuntimeError: Cannot re-initialize CUDA in forked subprocess. To use CUDA with multiprocessing, you must use the 'spawn' start method

21237

CUDA initialized: False

Is bad fork: True

********************************************************************************

Process 1:

Traceback (most recent call last):

File "/usr/lib/python3.6/multiprocessing/process.py", line 258, in _bootstrap

self.run()

File "/usr/lib/python3.6/multiprocessing/process.py", line 93, in run

self._target(*self._args, **self._kwargs)

File "git_example.py", line 21, in fun

model = model.to("cuda") # Trouble maker

File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 612, in to

return self._apply(convert)

File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 381, in _apply

param_applied = fn(param)

File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 610, in convert

return t.to(device, dtype if t.is_floating_point() else None, non_blocking)

File "/usr/local/lib/python3.6/dist-packages/torch/cuda/__init__.py", line 164, in _lazy_init

"Cannot re-initialize CUDA in forked subprocess. " + msg)

RuntimeError: Cannot re-initialize CUDA in forked subprocess. To use CUDA with multiprocessing, you must use the 'spawn' start method

```

Changing the `start_method` to `"forkserver"` or `"spawn"` prevents the exception from being raised. However, only a single GPU for all child processes is used.

| 11-26-2020 15:20:28 | 11-26-2020 15:20:28 | Sorry to insist. Could you at least share your thoughts on this? @LysandreJik @patrickvonplaten <|||||>Is the only dependency you change `transformers`? The PyTorch version remained the same (v.1.7.0) for both `transformers` versions? If so, I'll take a deeper look this week.<|||||>@LysandreJik In both experiments `torch==v1.7.0`. However, downgrading to `torch==v.1.6.0` (in both experiments) leads to exactly the same problem. I did `pip install transformers==v3.1.0` and `pip install transformers==v3.2.0` back and forth so that could be the only way other dependencies got updated.

Thank you!<|||||>Okay, thanks for checking. I'll have a look this week.<|||||>Hello @LysandreJik , is there any update on this issue?

Thanks in advance!<|||||>This issue has been stale for 1 month.<|||||>I think I managed to solve the problem.

Instead of using the environment variable `os.environ["CUDA_VISIBLE_DEVICES"] = str(gpu)` to specify the GPUs one needs to provide it via `torch.device(f"cuda:{gpu}")`. |
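A possible shape for that workaround, combined with the `spawn` start method mentioned in the report, is sketched below. The helper names `device_name`, `worker`, and `launch` are made up for this illustration, and the worker needs `torch` with CUDA available when it actually runs; treat it as a sketch under those assumptions rather than a verified fix.

```python
import multiprocessing as mp


def device_name(gpu: int) -> str:
    """Build the explicit device string for one worker, e.g. "cuda:1"."""
    return f"cuda:{gpu}"


def worker(gpu: int, n_steps: int = 10) -> None:
    # torch is imported inside the child so the parent never touches CUDA.
    import torch

    # Select the GPU explicitly instead of relying on CUDA_VISIBLE_DEVICES.
    device = torch.device(device_name(gpu))
    model = torch.nn.Linear(200, 300).to(device)
    with torch.no_grad():
        for _ in range(n_steps):
            model(torch.ones(32, 200, device=device))


def launch(gpus, start_method: str = "spawn") -> None:
    """Start one worker process per GPU index and wait for all of them."""
    ctx = mp.get_context(start_method)
    processes = [ctx.Process(target=worker, args=(gpu,)) for gpu in gpus]
    for p in processes:
        p.start()
    for p in processes:
        p.join()
```

To try it, call `launch([0, 1])` from under an `if __name__ == "__main__":` guard, which `spawn` requires.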

transformers | 8,800 | closed | Problem with using custom tokenizers with run_mlm.py | Hi! I have an issue with running the `run_mlm.py` script with a tokenizer I myself trained. If I use pretrained tokenizers everything works.

Versions:

```

python: 3.8.3

transformers: 3.5.1

tokenizers: 0.9.4

torch: 1.7.0

```

This is how I train my tokenizer:

```

from tokenizers import BertWordPieceTokenizer

tokenizer = BertWordPieceTokenizer(lowercase=False, strip_accents=False, clean_text=True)
tokenizer.train(
    files=['/mounts/data/proj/antmarakis/wikipedia/wikipedia_en_1M.txt'],
    vocab_size=350,
    special_tokens=["[PAD]", "[UNK]", "[CLS]", "[SEP]", "[MASK]"],
)
tokenizer.save_model('wikipedia_en')

```

The above results in a vocab.txt file.

And this is how I try to train my model (using the `run_mlm.py` script):

```

python run_mlm.py \
    --model_type bert \
    --config_name bert_custom.json \
    --train_file wikipedia_en_1M.txt \
    --tokenizer_name wikipedia_en \
    --output_dir lm_temp \
    --do_train \
    --num_train_epochs 1 \
    --overwrite_output_dir

```

If I use a pretrained model/tokenizer, this script works (that is, I replace `config_name` and `tokenizer_name` with `model_name_or_path roberta-base` or something). But using the above code, I get the following error message:

```

Traceback (most recent call last):

File "run_mlm.py", line 392, in <module>

main()

File "run_mlm.py", line 334, in main

tokenized_datasets = tokenized_datasets.map(

File "/mounts/Users/cisintern/antmarakis/.local/lib/python3.8/site-packages/datasets/dataset_dict.py", line 283, in map

{

File "/mounts/Users/cisintern/antmarakis/.local/lib/python3.8/site-packages/datasets/dataset_dict.py", line 284, in <dictcomp>

k: dataset.map(

File "/mounts/Users/cisintern/antmarakis/.local/lib/python3.8/site-packages/datasets/arrow_dataset.py", line 1240, in map

return self._map_single(

File "/mounts/Users/cisintern/antmarakis/.local/lib/python3.8/site-packages/datasets/arrow_dataset.py", line 156, in wrapper

out: Union["Dataset", "DatasetDict"] = func(self, *args, **kwargs)

File "/mounts/Users/cisintern/antmarakis/.local/lib/python3.8/site-packages/datasets/fingerprint.py", line 163, in wrapper

out = func(self, *args, **kwargs)

File "/mounts/Users/cisintern/antmarakis/.local/lib/python3.8/site-packages/datasets/arrow_dataset.py", line 1525, in _map_single

writer.write_batch(batch)

File "/mounts/Users/cisintern/antmarakis/.local/lib/python3.8/site-packages/datasets/arrow_writer.py", line 278, in write_batch

pa_table = pa.Table.from_pydict(typed_sequence_examples)

File "pyarrow/table.pxi", line 1531, in pyarrow.lib.Table.from_pydict

File "pyarrow/array.pxi", line 295, in pyarrow.lib.asarray

File "pyarrow/array.pxi", line 195, in pyarrow.lib.array

File "pyarrow/array.pxi", line 107, in pyarrow.lib._handle_arrow_array_protocol

File "/mounts/Users/cisintern/antmarakis/.local/lib/python3.8/site-packages/datasets/arrow_writer.py", line 100, in __arrow_array__

if trying_type and out[0].as_py() != self.data[0]:

File "pyarrow/array.pxi", line 949, in pyarrow.lib.Array.__getitem__

File "pyarrow/array.pxi", line 362, in pyarrow.lib._normalize_index

IndexError: index out of bounds

```

This approach used to work for previous versions, but after I upgraded to the latest releases this doesn't seem to work anymore and I do not know where it broke. Any help would be appreciated! | 11-26-2020 13:56:00 | 11-26-2020 13:56:00 | I have simplified the code to show that it is definitely the pretrained tokenizer that breaks the execution:

```

python run_mlm.py \
    --model_name_or_path bert-base-cased \
    --train_file data.txt \
    --tokenizer_name custom_tokenizer \
    --output_dir output \
    --do_train \
    --num_train_epochs 1 \
    --overwrite_output_dir

```<|||||>This seems like an issue that concerns the new mlm script, `tokenizers` and `datasets` so I'll ping the holy trinity that may have an idea where the error comes from: @sgugger @n1t0 @lhoestq <|||||>Looks like the tokenizer returns an empty batch of elements, which causes an `IndexError` ?<|||||>is this issue resolved? ran into the same error.<|||||>From recent experience, I think this might happen if no `model_max_length` is set for the tokenizer.

In the directory where your tokenizer files live, do you mind adding another file called `tokenizer_config.json`, with the following information: `{"model_max_length": 512}`?

Thank you.<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |
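The `tokenizer_config.json` suggestion above can also be scripted. A minimal sketch, assuming the tokenizer directory is the one passed to `--tokenizer_name` (the helper name `write_tokenizer_config` is hypothetical, and whether this resolves the `IndexError` was not confirmed in the thread):

```python
import json
from pathlib import Path


def write_tokenizer_config(tokenizer_dir: str, model_max_length: int = 512) -> Path:
    """Write tokenizer_config.json next to vocab.txt and return its path."""
    config_path = Path(tokenizer_dir) / "tokenizer_config.json"
    config_path.write_text(
        json.dumps({"model_max_length": model_max_length}), encoding="utf-8"
    )
    return config_path
```

For the setup in this issue the call would be `write_tokenizer_config("wikipedia_en")`.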

transformers | 8,799 | closed | Warning about too long input for fast tokenizers too | # What does this PR do?

If truncation is not set in tokenizers but the tokenized input is too long for the model (`model_max_length`), we used to trigger a warning that the input would probably fail (which it most likely will).

This PR re-enables the warning for fast tokenizers too and uses common code for the trigger so that the behavior is consistent across slow and fast tokenizers.


Fixes # (issue)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [x] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors which may be interested in your PR.

@LysandreJik

@thomwolf

| 11-26-2020 11:35:12 | 11-26-2020 11:35:12 | Failing tests seem to come from some other code (seq2seq)<|||||>@thomwolf could you review this PR as you're the mastermind behind this code?<|||||>@LysandreJik May I merge (failing tests and quality is linked to unrelated `finetune.py` code, I tried to rebase but it does not seem to be enough) |

transformers | 8,798 | closed | Fix setup.py on Windows | # What does this PR do?

This PR fixes the target `deps_table_update` on Windows by forcing the newline to be LF. | 11-26-2020 10:55:49 | 11-26-2020 10:55:49 | |
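For context, forcing LF line endings when writing a text file in Python usually comes down to the `newline` argument of `open`. The helper below is only an illustration of that technique; the name `write_with_lf` is hypothetical and this is not the code from the PR.

```python
def write_with_lf(path: str, content: str) -> None:
    """Write text so that line endings stay "\n" on every platform."""
    # newline="\n" turns off the translation of "\n" to os.linesep,
    # which would otherwise produce "\r\n" on Windows.
    with open(path, "w", encoding="utf-8", newline="\n") as handle:
        handle.write(content)
```

Without that argument, a generated file written on Windows differs byte-for-byte from the same file written on Linux or macOS.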

transformers | 8,797 | closed | Minor docs typo fixes | # What does this PR do?

Just a few typo fixes in the docs.

## Before submitting

- [x] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

## Who can review?

@sgugger | 11-26-2020 10:28:00 | 11-26-2020 10:28:00 | Looks like you have a styling problem, could you run the command `make style` after doing a dev install with

```

pip install -e .[dev]

```

in the repo?<|||||>Oops! Done. |

transformers | 8,796 | closed | QARiB Arabic and dialects models | # What does this PR do?


Fixes # (issue)

## Before submitting

- [x] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors which may be interested in your PR.


| 11-26-2020 08:49:20 | 11-26-2020 08:49:20 | Thanks @ahmed451!

For context, please also read https://discuss.huggingface.co/t/announcement-all-model-cards-will-be-migrated-to-hf-co-model-repos/2755 |

transformers | 8,795 | closed | Use model.from_pretrained for DataParallel also | When training on multiple GPUs, the code wraps a model with torch.nn.DataParallel. However, if the model has custom from_pretrained logic, it does not get applied during load_best_model_at_end.

This commit uses the underlying model during load_best_model_at_end, and re-wraps the loaded model with DataParallel.

If you choose to reject this change, could you please move this logic to a function, e.g. def load_best_model_checkpoint(best_model_checkpoint) or something similar, so that it can be overridden?
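
A minimal sketch of the overridable hook suggested above. Everything here is illustrative: `load_best_model_checkpoint` is the name proposed in this description, while `ToyModel` and `ToyParallel` are hypothetical stand-ins for a model with custom `from_pretrained` logic and for `torch.nn.DataParallel` (which exposes the wrapped model as `.module`). It is not the Trainer's actual implementation.

```python
class ToyModel:
    """Hypothetical stand-in for a model with custom from_pretrained logic."""

    def __init__(self, source):
        self.source = source

    @classmethod
    def from_pretrained(cls, path):
        # Custom loading logic would live here.
        return cls(source=path)


class ToyParallel:
    """Hypothetical stand-in for torch.nn.DataParallel: wraps a model as .module."""

    def __init__(self, module):
        self.module = module


def load_best_model_checkpoint(model, best_model_checkpoint, wrapper_cls=ToyParallel):
    """Reload the best checkpoint through the underlying model's own class."""
    is_wrapped = isinstance(model, wrapper_cls)
    inner = model.module if is_wrapped else model
    # Use the underlying class so that its custom from_pretrained is applied.
    reloaded = type(inner).from_pretrained(best_model_checkpoint)
    # Re-wrap only if the original model was wrapped.
    return wrapper_cls(reloaded) if is_wrapped else reloaded
```

Because the logic sits in one function, a subclass could override it without touching the rest of the training loop.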

# What does this PR do?

Fixes # (issue)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors which may be interested in your PR.

| 11-26-2020 08:36:39 | 11-26-2020 08:36:39 | Oh, looks like there is a last code-style issue to fix. Could you run `make style` on your branch? Then we can merge this.<|||||>I don't have `make` installed 😄 , what is the style issue? Wonder what style issue can go wrong in such a simple patch. The only thing we added is `self.` in those 2 lines<|||||>`check_code_quality` complains about `finetune.py`, but it's not modified by this patch<|||||>Weird indeed. Will merge and fix if the issue persists. |

transformers | 8,794 | closed | Can I get logits for each sequence I acquired from model.generate()? | Hi, I’m currently stuck on getting logits from model.generate. I’m wondering whether it is possible to get the logits of each sequence returned by model.generate (like the logits for each token returned by model.logits). | 11-26-2020 07:33:02 | 11-26-2020 07:33:02 | Sadly not at the moment... -> we are currently thinking about how to improve the `generate()` outputs though! <|||||>This issue has been automatically marked as stale and been closed because it has not had recent activity. Thank you for your contributions.

If you think this still needs to be addressed please comment on this thread. |

transformers | 8,793 | closed | Losing pooling layer parameters after fine-tuning. | According to the [code](https://github.com/huggingface/transformers/blob/master/src/transformers/models/bert/modeling_bert.py#L1005): when fine-tuning BERT with the LM objective, the pooling layer is not initialized.

So we lose the original (pre-trained by Google) parameters if we save the fine-tuned model and reload it.

Usually we use this model for a downstream task (e.g. text classification), so this may lead to worse results.

`add_pooling_layer` should always be `True`, even if the pooling parameters are not updated during fine-tuning.

@thomwolf @LysandreJik | 11-26-2020 06:40:20 | 11-26-2020 06:40:20 | The pooling layer is not used during fine-tuning with MLM, so gradients are not backpropagated through that layer and its parameters are not updated.<|||||>@LysandreJik The pooling parameters are not needed for MLM fine-tuning. But usually we use MLM to fine-tune BERT on our own corpus, then use the saved model weights (which are missing the pooling parameters) in a downstream task.

It's unreasonable to randomly initialize the pooling parameters; we should reload Google's original pooling parameters (even though they were not updated during MLM fine-tuning).<|||||>I see, thank you for explaining! In that case, would using the `BertForPreTraining` model fit your needs? You would only need to pass the masked LM labels, not the NSP labels, but you would still have all the layers that were used for the pre-training.

This is something we had not taken into account when implementing the `add_pooling_layer` argument cc @patrickvonplaten @sgugger <|||||>Hi @LysandreJik,

I also tried to further pre-train BERT with new, domain specific text data using the recommended run_mlm_wwm.py file, since I read a paper which outlines the benefits of this approach. I also got the warning that the Pooling Layers are not initialized from the model checkpoint. I have a few follow up questions to that:

- Does that mean that the final hidden vector of the [CLS] token is randomly initialized? That would be an issue for me since I need it in my downstream application.

- If the former point is true: Why is not at least the hidden vector of the source model copied?

- I think to get a proper hidden vector for [CLS], NSP would be needed. If I understand your answers in issue #6330 correctly, you don't support the NSP objective due to the results of the RoBERTa paper. Does that mean there is no code for pre-training BERT in the whole huggingface library which yields meaningful final [CLS] hidden vectors?

- Is there an alternative to [CLS] for downstream tasks that use sentence/document embeddings rather than token embeddings?

I would really appreciate any kind of help. Thanks a lot!

<|||||>The [CLS] token is not randomly initialized. It's a token in the BERT vocabulary.

The pooling layer we are talking about is defined [here](https://github.com/huggingface/transformers/blob/master/src/transformers/models/bert/modeling_bert.py#L609).

<|||||>Oh okay, I see. Only the weight matrix and the bias vector of that feed-forward operation on the [CLS] vector are randomly initialized, not the [CLS] vector itself. I misunderstood a comment in another forum. Thanks for the clarification @wlhgtc!<|||||>This issue has been automatically marked as stale and been closed because it has not had recent activity. Thank you for your contributions.

If you think this still needs to be addressed please comment on this thread. |

transformers | 8,792 | closed | [finetune_trainer] --evaluate_during_training is no more | In `examples/seq2seq/builtin_trainer/` all scripts reference `--evaluate_during_training ` but it doesn't exist in pt trainer, but does exist in tf trainer:

```

grep -Ir evaluate_during

builtin_trainer/finetune.sh: --do_train --do_eval --do_predict --evaluate_during_training \

builtin_trainer/train_distil_marian_enro.sh: --do_train --do_eval --do_predict --evaluate_during_training\

builtin_trainer/finetune_tpu.sh: --do_train --do_eval --evaluate_during_training \

builtin_trainer/train_distilbart_cnn.sh: --do_train --do_eval --do_predict --evaluate_during_training \

builtin_trainer/train_distil_marian_enro_tpu.sh: --do_train --do_eval --evaluate_during_training \

builtin_trainer/train_mbart_cc25_enro.sh: --do_train --do_eval --do_predict --evaluate_during_training \

```

```

Traceback (most recent call last):

File "finetune_trainer.py", line 310, in <module>

main()

File "finetune_trainer.py", line 118, in main

model_args, data_args, training_args = parser.parse_args_into_dataclasses()

File "/home/stas/anaconda3/envs/main-38/lib/python3.8/site-packages/transformers/hf_argparser.py", line 144, in parse_args_into_dataclasses

raise ValueError(f"Some specified arguments are not used by the HfArgumentParser: {remaining_args}")

ValueError: Some specified arguments are not used by the HfArgumentParser: ['--evaluate_during_training']

```

Is this meant to be replaced by: `--evaluation_strategy` - this is the closest I found in `training_args.py`

If so which one? `steps` or `epoch`?

Also the help output is borked:

```

$ python finetune_trainer.py -h

...

[--evaluation_strategy {EvaluationStrategy.NO,EvaluationStrategy.STEPS,EvaluationStrategy.EPOCH}]

```

This is probably not what's intended; something like this would be clearer:

```

[--evaluation_strategy {no,steps,epoch}]

```

But perhaps it's a bigger issue - I see `trainer.args.evaluate_during_training`:

```

src/transformers/integrations.py: ) and (not trainer.args.do_eval or not trainer.args.evaluate_during_training):

```

and also `--evaluate_during_training` in many other files under `examples/`.

Thank you.

@sgugger, @patrickvonplaten

| 11-26-2020 06:37:54 | 11-26-2020 06:37:54 | Found the source of breakage: https://github.com/huggingface/transformers/pull/8604 - I guess that PR needs more work |

transformers | 8,791 | closed | [FlaxBert] Fix non-broadcastable attention mask for batched forward-passes | # What does this PR do?

Fixes #8790

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

@mfuntowicz

@avital

@LysandreJik

| 11-26-2020 06:10:31 | 11-26-2020 06:10:31 | @mfuntowicz @avital

I just fixed the bug that I was hitting. There might be other places that need this fix as well.<|||||>Wuuhu - first Flax PR :-). This looks great to me @KristianHolsheimer - think you touched all necessary files as well!

@mfuntowicz - maybe you can take a look as well<|||||>**EDIT** I re-enabled GPU memory preallocation but set the mem fraction < 1/parallelism. That seemed to fix the tests. The problem with this is that future tests might fail if a model doesn't fit in 1/8th of the GPU memory.

---

The flax tests time out. When I ran the tests locally with `pytest -n auto`, I did notice OOM issues due to preallocation of GPU memory by XLA. I addressed this in commit 6bb1f5e600cd35c712f4f980699df7735b4f59eb.

Other than that, it's hard to debug the tests when there's no output.

Would it be an option to run these tests single-threaded instead?<|||||>I had the changes in another branch I'm working on, happy to merge this one and will rebase mine 👍.

Thanks for looking at it @KristianHolsheimer |

transformers | 8,790 | closed | [FlaxBert] Non-broadcastable attention mask in batched forward-pass | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version:

- Platform: Linux

- Python version: 3.8

- JAX version:

- jax==0.2.6

- jaxlib==0.1.57+cuda110

- flax==0.2.2

- PyTorch version (GPU?): n/a

- Tensorflow version (GPU?): n/a

- Using GPU in script?: **yes** (cuda 11.0)

- Using distributed or parallel set-up in script?: **no**

### Who can help

@mfuntowicz

@avital

@LysandreJik

## Information

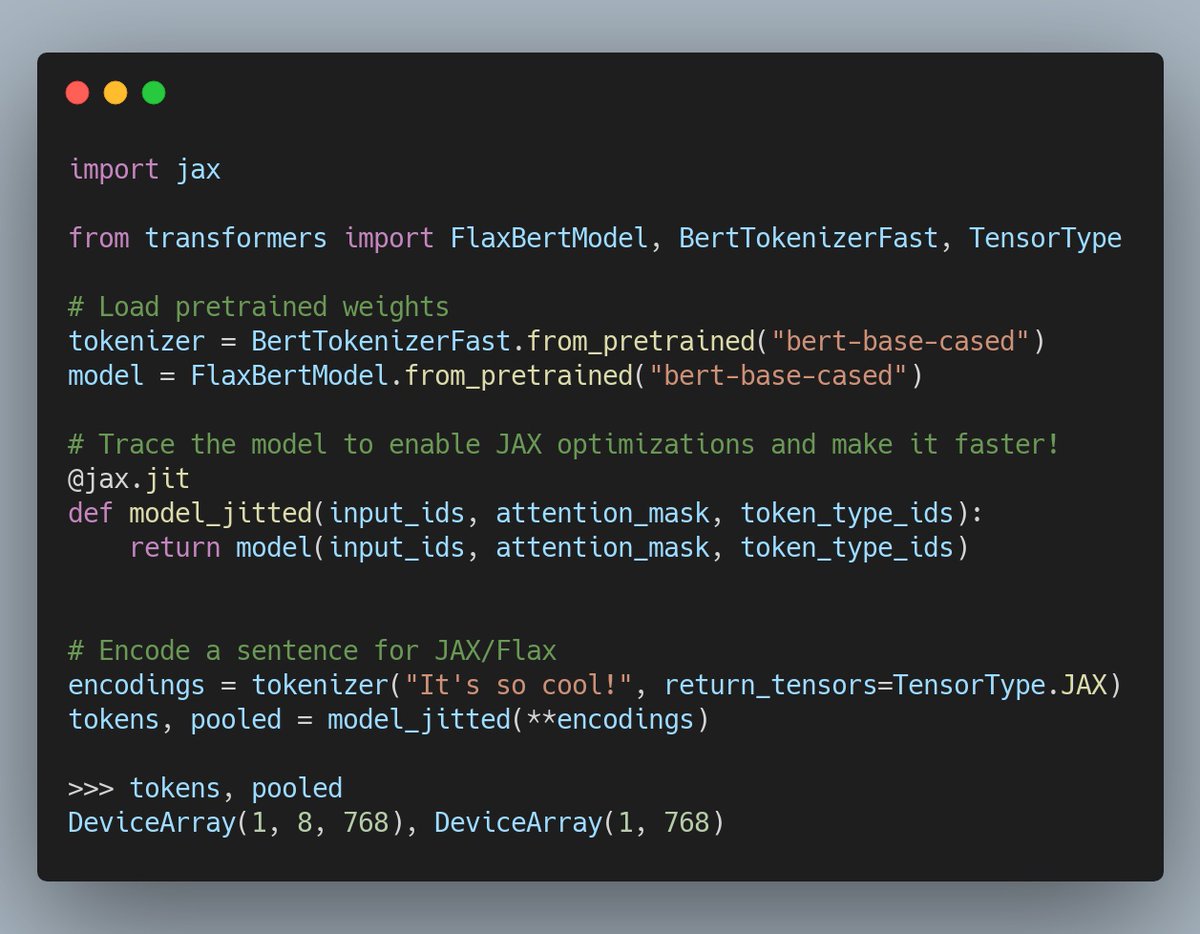

I ran the script from the recent Twitter [post](https://twitter.com/huggingface/status/1331255460033400834).

The only thing I changed was that I fed in multiple sentences:

```python
from transformers import FlaxBertModel, BertTokenizerFast, TensorType

tokenizer = BertTokenizerFast.from_pretrained('bert-base-cased')
model = FlaxBertModel.from_pretrained('bert-base-cased')
# apply_fn = jax.jit(model.model.apply)

sentences = ["this is an example sentence", "this is another", "and a third one"]
encodings = tokenizer(sentences, return_tensors=TensorType.JAX, padding=True, truncation=True)
tokens, pooled = model(**encodings)
```

> ValueError: Incompatible shapes for broadcasting: ((3, 12, 7, 7), (1, 1, 3, 7))

See full stack trace: https://pastebin.com/sPUSjGVi
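
To illustrate why these two shapes cannot be combined, here is a small pure-Python sketch of the NumPy-style broadcasting rule (dimensions are aligned from the right and must be equal or 1). Reading `(3, 12, 7, 7)` as (batch, heads, query, key) is an assumption based on the three padded inputs and the 12 attention heads of BERT-base. The reshaped mask `(3, 1, 1, 7)` is one layout that would broadcast, not necessarily the fix adopted in the library.

```python
from itertools import zip_longest


def broadcast_shape(a, b):
    """Return the broadcast result shape of a and b, or raise ValueError."""
    result = []
    # Align shapes from the trailing dimension; missing dims count as 1.
    for x, y in zip_longest(reversed(a), reversed(b), fillvalue=1):
        if x != y and x != 1 and y != 1:
            raise ValueError(f"Incompatible shapes for broadcasting: {(a, b)}")
        result.append(max(x, y))
    return tuple(reversed(result))


scores = (3, 12, 7, 7)    # assumed (batch, heads, query_len, key_len)
bad_mask = (1, 1, 3, 7)   # the batch size of 3 ended up on the query axis
good_mask = (3, 1, 1, 7)  # batch first, then broadcast over heads and queries

try:
    broadcast_shape(scores, bad_mask)
except ValueError as err:
    print(err)

print(broadcast_shape(scores, good_mask))
```

With a single sentence the batch size is 1, so the misplaced axis still broadcasts, which would explain why the problem only shows up for batched inputs.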

| 11-26-2020 06:09:09 | 11-26-2020 06:09:09 | |

transformers | 8,789 | closed | KeyError: 'eval_loss' when fine-tuning gpt-2 with run_clm.py | ## Environment info

- `transformers` version: 4.0.0-rc-1

- Platform: Linux-4.19.0-12-amd64-x86_64-with-glibc2.10

- Python version: 3.8.5

- PyTorch version (GPU?): 1.6.0 (True)

- Tensorflow version (GPU?): 2.2.0 (True)

- Using GPU in script?: yes

- Using distributed or parallel set-up in script?: default option

### Who can help

albert, bert, GPT2, XLM: @LysandreJik

Trainer: @sgugger

## Information

Model I am using (Bert, XLNet ...): GPT2

The problem arises when using:

* [x] the official example scripts: (give details below)

The bug occurs when running the run_clm.py file from transformers/examples/language-modeling/: the evaluation step (--do_eval) crashes with a Python `KeyError: 'eval_loss'`.

## To reproduce

Steps to reproduce the behavior:

1. Use run_clm.py file from transformers/examples/language-modeling/

2. Try to fine-tune gpt-2 model, with your own train file and your own validation file

3. Add the `--do_eval` option to the run_clm.py command; an error occurs when the evaluation step is reached:

```

File "run_clm.py", line 353, in <module>

main()

File "run_clm.py", line 333, in main

perplexity = math.exp(eval_output["eval_loss"])

KeyError: 'eval_loss'

```

When I print the content of eval_output, it contains just one key: "epoch".
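
A defensive sketch of the perplexity step, assuming `eval_output` is a plain dict as the traceback suggests. The helper name `perplexity_from_eval` is hypothetical; it only makes the missing key explicit and does not address why no loss was reported.

```python
import math


def perplexity_from_eval(eval_output):
    """Return exp(eval_loss), or None when evaluation reported no loss."""
    loss = eval_output.get("eval_loss")
    if loss is None:
        return None
    return math.exp(loss)


print(perplexity_from_eval({"epoch": 1.0}))  # only an "epoch" key, as in this report
print(perplexity_from_eval({"eval_loss": 0.0, "epoch": 1.0}))
```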

This is how I run run_clm.py:

```
python run_clm.py \
    --model_name_or_path gpt2 \
    --train_file train.txt \
    --validation_file dev.txt \
    --do_train \
    --do_eval \
    --per_device_train_batch_size 2 \
    --per_device_eval_batch_size 2 \
    --output_dir results/test-clm
```

## Expected behavior

The evaluation step should run without problems. | 11-26-2020 04:15:56 | 11-26-2020 04:15:56 | This is weird, as the script is tested for evaluation. What does your `dev.txt` file look like?<|||||>dev.txt contains text in English, one sentence per line.

The PC I use has 2 graphics cards, so run_clm.py uses both cards for training. Perhaps the bug occurs only when 2 or more graphics cards are used for training?<|||||>The script is tested on 2 GPUs as well as one. Are you sure this file contains enough text to have at least one batch during evaluation? This is the only thing I can think of for not having an eval_loss returned.<|||||>The dev.txt file contains 46 lines; the train file contains 268263 lines.

the specifications of the PC I use :

- Intel Xeon E5-2650 v4 (Broadwell, 2.20GHz)

- 128 Gb ram

- 2 x Nvidia GeForce GTX 1080 Ti
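
One way a short validation file can yield no batches, as suggested earlier in this thread: if the tokenized text is concatenated and cut into fixed-size blocks with the remainder dropped, a file with fewer tokens than one block produces zero evaluation examples. The sketch below assumes that behaviour and a block size of 1024 tokens; the function name and the token counts are illustrative, not taken from this report.

```python
def group_into_blocks(token_ids, block_size):
    """Split token_ids into full blocks of block_size, dropping the remainder."""
    usable = (len(token_ids) // block_size) * block_size
    return [token_ids[i:i + block_size] for i in range(0, usable, block_size)]


block_size = 1024                 # assumed block size
short_dev = list(range(46 * 15))  # e.g. 46 lines of ~15 tokens each (made-up figure)
long_dev = list(range(5000))      # a longer file, also made up

print(len(group_into_blocks(short_dev, block_size)))
print(len(group_into_blocks(long_dev, block_size)))
```

If this is the cause, a longer validation file or a smaller block size should give at least one block to evaluate on.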

<|||||>Like I said, the dev file is maybe too short to provide at least one batch and return a loss. You should try with a longer dev file.<|||||>This issue has been automatically marked as stale and been closed because it has not had recent activity. Thank you for your contributions.

If you think this still needs to be addressed please comment on this thread. |

transformers | 8,788 | closed | Add QCRI Arabic and Dialectal BERT (QARiB) models | # What does this PR do?

Fixes # (issue)

## Before submitting

- [x] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [x] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors which may be interested in your PR.

| 11-25-2020 20:51:34 | 11-25-2020 20:51:34 | Thanks for sharing; your filenames are wrong, they should be nested inside folders named from your model id<|||||>> Thanks for sharing; your filenames are wrong, they should be nested inside folders named from your model id

Thanks Julien, I have updated the branch accordingly.<|||||>closing in favor of #8796 |

transformers | 8,787 | closed | QA pipeline fails during convert_squad_examples_to_features | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.0.0-rc-1

- Platform: Linux-3.10.0-1062.9.1.el7.x86_64-x86_64-with-redhat-7.8-Maipo

- Python version: 3.7.9

- PyTorch version (GPU?): 1.7.0 (True)

- Tensorflow version (GPU?): 2.3.1 (True)

- Using GPU in script?: yes

- Using distributed or parallel set-up in script?: yes

### Who can help

@LysandreJik @mfuntowicz

I don't know who else can help, but in short, I am looking for someone who can help me with QA tasks.

<!-- Your issue will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

albert, bert, GPT2, XLM: @LysandreJik

tokenizers: @mfuntowicz

Trainer: @sgugger

Speed and Memory Benchmarks: @patrickvonplaten

Model Cards: @julien-c

TextGeneration: @TevenLeScao

examples/distillation: @VictorSanh

nlp datasets: [different repo](https://github.com/huggingface/nlp)

rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Text Generation: @patrickvonplaten @TevenLeScao

Blenderbot: @patrickvonplaten

Bart: @patrickvonplaten

Marian: @patrickvonplaten

Pegasus: @patrickvonplaten

mBART: @patrickvonplaten

T5: @patrickvonplaten

Longformer/Reformer: @patrickvonplaten

TransfoXL/XLNet: @TevenLeScao

RAG: @patrickvonplaten, @lhoestq

FSMT: @stas00

examples/seq2seq: @patil-suraj

examples/bert-loses-patience: @JetRunner

tensorflow: @jplu

examples/token-classification: @stefan-it

documentation: @sgugger

-->

## Information

Model I am using (Bert, XLNet ...): Bert

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [x] my own modified scripts: run_squad.py (modifying to run using jupyter notebook, using "HfArgumentParser")

The tasks I am working on is:

* [x] an official GLUE/SQUaD task: SQUaD

* [ ] my own task or dataset: (give details below)

## To reproduce

Steps to reproduce the behavior:

1. modified all argparse to HfArgumentParser

2. created "ModelArguments" dataclass function for HfArgumentParser (Ref: https://github.com/patil-suraj/Notebooks/blob/master/longformer_qa_training.ipynb)

3. Made the small changes this required throughout the script.

The test fails with error `TypeError: TextInputSequence must be str`

Complete failure result:

```

RemoteTraceback Traceback (most recent call last)

RemoteTraceback:

"""

Traceback (most recent call last):

File "/data/user/tr27p/.conda/envs/DeepBioComp/lib/python3.7/multiprocessing/pool.py", line 121, in worker

result = (True, func(*args, **kwds))

File "/data/user/tr27p/.conda/envs/DeepBioComp/lib/python3.7/multiprocessing/pool.py", line 44, in mapstar

return list(map(*args))

File "/data/user/tr27p/.conda/envs/DeepBioComp/lib/python3.7/site-packages/transformers/data/processors/squad.py", line 175, in squad_convert_example_to_features

return_token_type_ids=True,

File "/data/user/tr27p/.conda/envs/DeepBioComp/lib/python3.7/site-packages/transformers/tokenization_utils_base.py", line 2439, in encode_plus

**kwargs,

File "/data/user/tr27p/.conda/envs/DeepBioComp/lib/python3.7/site-packages/transformers/tokenization_utils_fast.py", line 463, in _encode_plus

**kwargs,

File "/data/user/tr27p/.conda/envs/DeepBioComp/lib/python3.7/site-packages/transformers/tokenization_utils_fast.py", line 378, in _batch_encode_plus

is_pretokenized=is_split_into_words,

TypeError: TextInputSequence must be str

"""

The above exception was the direct cause of the following exception:

TypeError Traceback (most recent call last)

<ipython-input-19-263240bbee7e> in <module>

----> 1 main()

<ipython-input-18-61d7f0eab618> in main()

111 # Training

112 if train_args.do_train:

--> 113 train_dataset = load_and_cache_examples((model_args, train_args), tokenizer, evaluate=False, output_examples=False)

114 global_step, tr_loss = train(args, train_dataset, model, tokenizer)

115 logger.info(" global_step = %s, average loss = %s", global_step, tr_loss)

<ipython-input-8-79eb3ed364c2> in load_and_cache_examples(args, tokenizer, evaluate, output_examples)

54 max_query_length=model_args.max_query_length,

55 is_training=not evaluate,

---> 56 return_dataset="pt",

57 # threads=model_args.threads,

58 )

/data/user/tr27p/.conda/envs/DeepBioComp/lib/python3.7/site-packages/transformers/data/processors/squad.py in squad_convert_examples_to_features(examples, tokenizer, max_seq_length, doc_stride, max_query_length, is_training, padding_strategy, return_dataset, threads, tqdm_enabled)

366 total=len(examples),

367 desc="convert squad examples to features",

--> 368 disable=not tqdm_enabled,

369 )

370 )

/data/user/tr27p/.conda/envs/DeepBioComp/lib/python3.7/site-packages/tqdm/std.py in __iter__(self)

1131

1132 try:

-> 1133 for obj in iterable:

1134 yield obj

1135 # Update and possibly print the progressbar.

/data/user/tr27p/.conda/envs/DeepBioComp/lib/python3.7/multiprocessing/pool.py in <genexpr>(.0)

323 result._set_length

324 ))

--> 325 return (item for chunk in result for item in chunk)

326

327 def imap_unordered(self, func, iterable, chunksize=1):

/data/user/tr27p/.conda/envs/DeepBioComp/lib/python3.7/multiprocessing/pool.py in next(self, timeout)

746 if success:

747 return value

--> 748 raise value

749

750 __next__ = next # XXX

TypeError: TextInputSequence must be str

```

## Expected behavior

#### for more details check here:

link: https://github.com/uabinf/nlp-group-project-fall-2020-deepbiocomp/blob/cancer_ask/scripts/qa_script/qa_squad_v1.ipynb

<!-- A clear and concise description of what you would expect to happen. -->

| 11-25-2020 17:28:10 | 11-25-2020 17:28:10 | After updating the run_squad.py script with a newer version of transformers, it works now!

Thank you!<|||||>@TrupeshKumarPatel Seems that this is not working still. What was the actual solution to this?<|||||>Hi @aleSuglia,

here is the updated link: https://github.com/uabinf/nlp-group-project-fall-2020-deepbiocomp/blob/main/scripts/qa_script/qa_squad_v1.ipynb. See if this helps. If not, please elaborate on the error or problem that you are facing. <|||||>I have exactly the same error that you reported: `TypeError: TextInputSequence must be str`

By debugging, I can see that the variable `truncated_query` has a list of integers (which should be the current question's token ids). However, when you pass that to the [encode_plus](https://github.com/huggingface/transformers/blob/df2af6d8b8765b1ac2cda12d2ece09bf7240fba8/src/transformers/data/processors/squad.py#L181) method, you get the error. I guess it's because `encode_plus` expects strings and not integers. Do you have any suggestion?<|||||>If you googled this error and you are reading this post, please do the following. When you create your tokenizer make sure that you set the flag `use_fast` to `False` like this:

```python

AutoTokenizer.from_pretrained(tokenizer_name, use_fast=False)

```

This fixes the error. However, I wonder why there is no backward compatibility...<|||||>I had a similar issue. What @aleSuglia suggested does work, but the underlying issue persists: the fast version of the tokenizer should be compatible with the previous methods. In my case, I narrowed the problem down to `InputExample`, where `text_b` can be `None`, https://github.com/huggingface/transformers/blob/447808c85f0e6d6b0aeeb07214942bf1e578f9d2/src/transformers/data/processors/utils.py#L47-L48

but the tokenizer apparently doesn't accept `None` as an input. So, I found a workaround by changing

```

InputExample(guid=some_id, text_a=some_text, label=some_label)

-> InputExample(guid=some_id, text_a=some_text, text_b='', label=some_label)

```
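
The workaround above can be wrapped in a small helper so that it is applied to every example before tokenization. This is only a sketch: `Example` is a hypothetical stand-in that mirrors the `guid`, `text_a`, `text_b` and `label` fields used above, and `with_empty_text_b` is an illustrative name rather than a library function.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Example:
    """Hypothetical stand-in mirroring the guid/text_a/text_b/label fields."""

    guid: str
    text_a: str
    text_b: Optional[str] = None
    label: Optional[str] = None


def with_empty_text_b(example):
    """Return a copy whose text_b is '' instead of None; other examples pass through."""
    if example.text_b is None:
        return replace(example, text_b="")
    return example


examples = [Example("1", "first text"), Example("2", "question", "context")]
cleaned = [with_empty_text_b(e) for e in examples]
print([e.text_b for e in cleaned])
```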

I'm not sure this completely solves the issue though.<|||||>Potentially related issues: https://github.com/huggingface/transformers/issues/6545 https://github.com/huggingface/transformers/issues/7735 https://github.com/huggingface/transformers/issues/7011 |

transformers | 8,786 | closed | What would be the license of the model files available in Hugging face repository? | Dear Team,

Could you clarify the license for the different models pushed to the Hugging Face repo, such as legal_bert, contracts_bert, etc.? Do the model files follow the same license as the Hugging Face library, i.e. Apache 2.0?

Regards

Gaurav | 11-25-2020 16:52:40 | 11-25-2020 16:52:40 | Maybe @julien-c can answer!<|||||>You can check on the model hub:

eg. apache-2.0 models: https://huggingface.co/models?filter=license:apache-2.0

mit models: https://huggingface.co/models?filter=license:mit

etc.<|||||>Thanks @julien-c for the update. |

transformers | 8,785 | closed | Update README.md | Disable Hosted Inference API while output inconsistency is not solved.

"How to use" section.

# What does this PR do?

Fixes # (issue)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors which may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

albert, bert, XLM: @LysandreJik

GPT2: @LysandreJik, @patrickvonplaten

tokenizers: @mfuntowicz

Trainer: @sgugger

Benchmarks: @patrickvonplaten

Model Cards: @julien-c

examples/distillation: @VictorSanh

nlp datasets: [different repo](https://github.com/huggingface/nlp)

rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Text Generation: @patrickvonplaten, @TevenLeScao

Blenderbot, Bart, Marian, Pegasus: @patrickvonplaten

T5: @patrickvonplaten

Rag: @patrickvonplaten, @lhoestq

EncoderDecoder: @patrickvonplaten

Longformer, Reformer: @patrickvonplaten

TransfoXL, XLNet: @TevenLeScao, @patrickvonplaten

examples/seq2seq: @patil-suraj

examples/bert-loses-patience: @JetRunner

tensorflow: @jplu

examples/token-classification: @stefan-it

documentation: @sgugger

FSMT: @stas00

-->

| 11-25-2020 16:51:51 | 11-25-2020 16:51:51 | Decided to delete "inference: false" |

transformers | 8,784 | closed | Different outputs from code and Hosted Inference API | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 3.5.1

- Platform: Windows-10-10.0.19041-SP0

- Python version: 3.8.5

- PyTorch version (GPU?): 1.6.0 (True)

- Tensorflow version (GPU?): not installed (NA)

- Using GPU in script?: <fill in>

- Using distributed or parallel set-up in script?: <fill in>

## To reproduce

Steps to reproduce the behavior:

1. Run the following code:

```python

from transformers import BertForTokenClassification, DistilBertTokenizerFast, pipeline

model = BertForTokenClassification.from_pretrained('monilouise/ner_pt_br')

tokenizer = DistilBertTokenizerFast.from_pretrained('neuralmind/bert-base-portuguese-cased', model_max_length=512, do_lower_case=False)

nlp = pipeline('ner', model=model, tokenizer=tokenizer)

result = nlp("O Tribunal de Contas da União é localizado em Brasília e foi fundado por Rui Barbosa. Fiscaliza contratos, por exemplo com empresas como a Veigamed e a Buyerbr.")

print(result)

```

It outputs:

[{'word': 'Tribunal', 'score': 0.9858521819114685, 'entity': 'B-PUB', 'index': 2}, {'word': 'de', 'score': 0.9954801201820374, 'entity': 'I-PUB', 'index': 3}, {'word': 'Contas', 'score': 0.9929609298706055, 'entity': 'I-PUB', 'index': 4}, {'word': 'da', 'score': 0.9949454665184021, 'entity': 'I-PUB', 'index': 5}, {'word': 'União', 'score': 0.9913719296455383, 'entity': 'L-PUB', 'index': 6}, {'word': 'Brasília', 'score': 0.9405767321586609, 'entity': 'B-LOC', 'index': 10}, {'word': 'Rui', 'score': 0.979736328125, 'entity': 'B-PESSOA', 'index': 15}, {'word': 'Barbosa', 'score': 0.988306999206543, 'entity': 'L-PESSOA', 'index': 16}, {'word': 'Veiga', 'score': 0.9748793244361877, 'entity': 'B-ORG', 'index': 29}, {'word': '##med', 'score': 0.9309309124946594, 'entity': 'L-ORG', 'index': 30}, {'word': 'Bu', 'score': 0.9679405689239502, 'entity': 'B-ORG', 'index': 33}, {'word': '##yer', 'score': 0.6654638051986694, 'entity': 'L-ORG', 'index': 34}, {'word': '##br', 'score': 0.9575732350349426, 'entity': 'L-ORG', 'index': 35}]

This includes all entity types (PUB, PESSOA, ORG and LOC).

2. In the Hosted Inference API, the same sentence returns the following result, which omits the PUB entity type and is otherwise incorrect and incomplete:

```json

[

{

"entity_group": "LOC",

"score": 0.8127626776695251,

"word": "bras"

},

{

"entity_group": "PESSOA",

"score": 0.7101765692234039,

"word": "rui barbosa"

},

{

"entity_group": "ORG",

"score": 0.7679458856582642,

"word": "ve"

},

{

"entity_group": "ORG",

"score": 0.45047426223754883,

"word": "##igamed"

},

{

"entity_group": "ORG",

"score": 0.8467527627944946,

"word": "bu"

},

{

"entity_group": "ORG",

"score": 0.6024420410394669,

"word": "##yerbr"

}

]

```

## Expected behavior

The same model file should give the same results in both places. How can the results differ? Am I missing something?

| 11-25-2020 16:29:44 | 11-25-2020 16:29:44 | Hi @moniquebm .

The hosted inference is running `nlp = pipeline('ner', model=model, tokenizer=tokenizer, grouped_entities=True)` by default.

There is currently no way to override it.

Does that explain the difference? <|||||>Hi @Narsil

>Does that explain the difference?

I'm afraid it doesn't. I've just tested `nlp = pipeline('ner', model=model, tokenizer=tokenizer, grouped_entities=True)` and the following (correct) result is generated programmatically:

[{'entity_group': 'PUB', 'score': 0.9921221256256103, 'word': 'Tribunal de Contas da União'}, {'entity_group': 'LOC', 'score': 0.9405767321586609, 'word': 'Brasília'}, {'entity_group': 'PESSOA', 'score': 0.9840216636657715, 'word': 'Rui Barbosa'}, {'entity_group': 'ORG', 'score': 0.9529051184654236, 'word': 'Veigamed'}, {'entity_group': 'ORG', 'score': 0.8636592030525208, 'word': 'Buyerbr'}]
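For reference, the grouped result above can be reproduced from the token-level output of the first snippet with plain Python. This is only a minimal sketch, not the library's implementation. It assumes that grouping merges tokens with consecutive `index` values and the same entity type (ignoring the `B-`/`I-`/`L-` prefix), averages their scores, and glues `##` word pieces back onto the previous piece. `group_entities` and `join_wordpieces` are hypothetical helper names.

```python
from statistics import mean

# (word, score, entity, index) tuples copied from the ungrouped pipeline output.
TOKENS = [
    ("Tribunal", 0.9858521819114685, "B-PUB", 2),
    ("de", 0.9954801201820374, "I-PUB", 3),
    ("Contas", 0.9929609298706055, "I-PUB", 4),
    ("da", 0.9949454665184021, "I-PUB", 5),
    ("União", 0.9913719296455383, "L-PUB", 6),
    ("Brasília", 0.9405767321586609, "B-LOC", 10),
    ("Rui", 0.979736328125, "B-PESSOA", 15),
    ("Barbosa", 0.988306999206543, "L-PESSOA", 16),
    ("Veiga", 0.9748793244361877, "B-ORG", 29),
    ("##med", 0.9309309124946594, "L-ORG", 30),
    ("Bu", 0.9679405689239502, "B-ORG", 33),
    ("##yer", 0.6654638051986694, "L-ORG", 34),
    ("##br", 0.9575732350349426, "L-ORG", 35),
]


def join_wordpieces(words):
    """Join word pieces; a '##' piece attaches to the previous one without a space."""
    text = ""
    for word in words:
        if word.startswith("##"):
            text += word[2:]
        elif text:
            text += " " + word
        else:
            text = word
    return text


def group_entities(tokens):
    """Merge adjacent tokens of the same entity type into one span (assumed rule)."""
    groups = []
    for word, score, entity, index in tokens:
        # Drop the B-/I-/L- position prefix to get the bare entity type.
        entity_type = entity.split("-", 1)[-1]
        last = groups[-1] if groups else None
        if last and last["type"] == entity_type and index == last["end"] + 1:
            last["words"].append(word)
            last["scores"].append(score)
            last["end"] = index
        else:
            groups.append(
                {"type": entity_type, "end": index, "words": [word], "scores": [score]}
            )
    return [
        {
            "entity_group": g["type"],
            "score": mean(g["scores"]),
            "word": join_wordpieces(g["words"]),
        }
        for g in groups
    ]


for group in group_entities(TOKENS):
    print(group)
```

Under these assumptions the five spans and their averaged scores line up with the grouped output above, which suggests that the grouping step itself is not where the local and hosted results diverge.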

But in fact it seems the API does not group entities:

```json

[

{

"entity_group": "LOC",

"score": 0.8127626776695251,

"word": "bras"

},

{

"entity_group": "PESSOA",

"score": 0.7101765692234039,

"word": "rui barbosa"

},

{

"entity_group": "ORG",

"score": 0.7679458856582642,

"word": "ve"

},

{

"entity_group": "ORG",

"score": 0.45047426223754883,

"word": "##igamed"

},

{

"entity_group": "ORG",

"score": 0.8467527627944946,

"word": "bu"

},

{

"entity_group": "ORG",

"score": 0.6024420410394669,

"word": "##yerbr"

}

]

```

The tokens are also different. One possible explanation is that the Hosted Inference API may be using an English tokenizer, whereas my model and code use the Portuguese tokenizer from this model: https://huggingface.co/neuralmind/bert-base-portuguese-cased

Does it make sense?<|||||>You need to update the tokenizer of your model on the Hub (`monilouise/ner_pt_br`) to reflect this. The hosted inference has no way to know it should use a tokenizer other than the one you provide.

If you are simply using the one from `neuralmind/bert-base-portuguese-cased`, you can probably just download theirs and re-upload it as your own, following this doc: https://huggingface.co/transformers/model_sharing.html<|||||>May I suggest moving the discussion here:

https://discuss.huggingface.co/c/intermediate/6

as it's not really a transformers problem but a Hub one.

I am closing the issue here. Feel free to comment here to show the new location of the discussion or ping me directly on discuss.
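The tokenizer fix suggested in this thread could look roughly like the sketch below. It assumes that the Portuguese tokenizer from `neuralmind/bert-base-portuguese-cased` is the right one for `monilouise/ner_pt_br`, and it reuses the `do_lower_case=False` and `model_max_length=512` arguments from the reproduction snippet. `export_tokenizer` and the local directory `./ner_pt_br` are hypothetical names. The saved files would still have to be uploaded to the model repository as described in the model sharing doc linked above.

```python
import os


def export_tokenizer(source_model: str, output_dir: str):
    """Download a tokenizer and save its files into a local model directory."""
    # Imported lazily so the helper can be defined without transformers installed.
    from transformers import AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(
        source_model,
        do_lower_case=False,   # same setting as in the reproduction snippet
        model_max_length=512,  # same setting as in the reproduction snippet
    )
    os.makedirs(output_dir, exist_ok=True)
    # Writes the vocabulary and tokenizer configuration files into output_dir
    # and returns the paths of the files that were saved.
    return tokenizer.save_pretrained(output_dir)


# Example call (needs network access; "./ner_pt_br" is a hypothetical local clone):
# export_tokenizer("neuralmind/bert-base-portuguese-cased", "./ner_pt_br")
```

Keeping the download and save steps in one small function makes it easy to rerun if the tokenizer settings need to change before uploading.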

|

transformers | 8,783 | closed | MPNet: Masked and Permuted Pre-training for Natural Language Understanding | # What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one has reviewed your PR after a week, don't hesitate to post a new comment @-mentioning the same people. Sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes # (issue)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a GitHub issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

albert, bert, XLM: @LysandreJik

GPT2: @LysandreJik, @patrickvonplaten

tokenizers: @mfuntowicz

Trainer: @sgugger

Benchmarks: @patrickvonplaten

Model Cards: @julien-c

examples/distillation: @VictorSanh

nlp datasets: [different repo](https://github.com/huggingface/nlp)

rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Text Generation: @patrickvonplaten, @TevenLeScao

Blenderbot, Bart, Marian, Pegasus: @patrickvonplaten

T5: @patrickvonplaten

Rag: @patrickvonplaten, @lhoestq

EncoderDecoder: @patrickvonplaten

Longformer, Reformer: @patrickvonplaten

TransfoXL, XLNet: @TevenLeScao, @patrickvonplaten

examples/seq2seq: @patil-suraj

examples/bert-loses-patience: @JetRunner

tensorflow: @jplu

examples/token-classification: @stefan-it

documentation: @sgugger

FSMT: @stas00

-->

| 11-25-2020 16:28:09 | 11-25-2020 16:28:09 | |

transformers | 8,782 | closed | Unexpected output from bart-large | I am looking at this thread about generation: https://stackoverflow.com/questions/64904840/why-we-need-a-decoder-start-token-id-during-generation-in-huggingface-bart

I re-ran the code from that thread.

With the ```facebook/bart-base``` model:

```python

from transformers import BartForConditionalGeneration, BartTokenizer

import torch

model = BartForConditionalGeneration.from_pretrained('facebook/bart-base')

tokenizer = BartTokenizer.from_pretrained('facebook/bart-base')

input_ids = torch.LongTensor([[0, 894, 213, 7, 334, 479, 2]])

res = model.generate(input_ids, num_beams=1, max_length=100)

print(res)

preds = [tokenizer.decode(g, skip_special_tokens=True, clean_up_tokenization_spaces=True).strip() for g in res]

print(preds)

```

I get this output: