repo | number | state | title | body | created_at | closed_at | comments |

|---|---|---|---|---|---|---|---|

transformers | 3,992 | closed | RuntimeError: Creating MTGP constants failed. at /opt/conda/conda-bld/pytorch_1549287501208/work/aten/src/THC/THCTensorRandom.cu:35 | # 🐛 Bug

## Information

Model I am using (Bert, XLNet ...): BertForSequenceClassification

Language I am using the model on (English, Chinese ...): English

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [x] my own modified scripts: (give details below)

The tasks I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [x] my own task or dataset: (give details below)

## To reproduce

Steps to reproduce the behavior:

I am performing multi-class (50 classes) classification on the Project Gutenberg dataset. The maximum text length is 1620, so I set the max length to 2048. I'm also padding the texts with 0s.

```

import torch
from tensorflow.keras.preprocessing.sequence import pad_sequences
from torch.utils.data import DataLoader, RandomSampler, SequentialSampler, TensorDataset

# `tokenizer`, `train_df`, `test_df` and the encoded/padded/mask arrays
# (train_encodings, train_attention_masks, ...) are defined elsewhere in the script.

MAX_LEN = 2048

def get_encodings(texts):
    token_ids = []
    for text in texts:
        token_id = tokenizer.encode(text, add_special_tokens=True, max_length=MAX_LEN)
        token_ids.append(token_id)
    return token_ids

def pad_encodings(encodings):
    return pad_sequences(encodings, maxlen=MAX_LEN, dtype="long",
                         value=0, truncating="post", padding="post")

def get_attention_masks(padded_encodings):
    attention_masks = []
    for encoding in padded_encodings:
        # 1 for real tokens, 0 for the 0-valued padding
        attention_mask = [int(token_id > 0) for token_id in encoding]
        attention_masks.append(attention_mask)
    return attention_masks

X_train = torch.tensor(train_encodings)
y_train = torch.tensor(train_df.author_id.values)
train_masks = torch.tensor(train_attention_masks)

X_test = torch.tensor(test_encodings)
y_test = torch.tensor(test_df.author_id.values)
test_masks = torch.tensor(test_attention_masks)

batch_size = 32

# Create the DataLoader for our training set.
train_data = TensorDataset(X_train, train_masks, y_train)
train_sampler = RandomSampler(train_data)
train_dataloader = DataLoader(train_data, sampler=train_sampler, batch_size=batch_size)

# Create the DataLoader for our validation set.
validation_data = TensorDataset(X_test, test_masks, y_test)
validation_sampler = SequentialSampler(validation_data)
validation_dataloader = DataLoader(validation_data, sampler=validation_sampler, batch_size=batch_size)

```

My model is set up like so:

```

model = BertForSequenceClassification.from_pretrained(
    "bert-base-uncased",
    num_labels=50,
    output_attentions=False,
    output_hidden_states=False,
)

```

During training, however, the line indicated below throws the error:

```

for step, batch in enumerate(train_dataloader):
    b_texts = batch[0].to(device)
    b_attention_masks = batch[1].to(device)
    b_authors = batch[2].to(device)  # <---- ERROR

```


## Expected behavior


## Environment info


- `transformers` version: 2.8.0

- Platform: Ubuntu 16.04

- Python version: 1.0.0

- PyTorch version (GPU?):

- Tensorflow version (GPU?): 2.1.0

- Using GPU in script?: Yes. P4000

- Using distributed or parallel set-up in script?: No

| 04-26-2020 20:12:45 | 04-26-2020 20:12:45 | |
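The padding and masking steps described in this report can be restated in plain Python to make the convention explicit. This is a minimal sketch, not the reporter's code: it assumes pad id 0 (as in the issue) and mirrors what `pad_sequences(..., value=0, truncating="post", padding="post")` and `get_attention_masks` compute. The `check_inputs` helper is hypothetical; it verifies that every label lies in `[0, num_labels)` and that no row is longer than the model's position limit (`max_position_embeddings` is 512 in the `bert-base-uncased` config). Running checks like these on the CPU, before `.to(device)`, can turn an out-of-range index into a readable Python error instead of a CUDA error that surfaces asynchronously at a later line.

```python
from typing import List, Sequence

PAD_ID = 0  # assumption: 0 is the padding id, as in the issue ([PAD] in BERT's vocab)


def pad_post(ids: Sequence[int], max_len: int, pad_id: int = PAD_ID) -> List[int]:
    """Truncate at the end, then pad at the end (truncating="post", padding="post")."""
    kept = list(ids[:max_len])
    return kept + [pad_id] * (max_len - len(kept))


def attention_mask(padded: Sequence[int], pad_id: int = PAD_ID) -> List[int]:
    """1 for real tokens, 0 for padding; same as int(token_id > 0) for non-negative ids when pad_id is 0."""
    return [int(token_id != pad_id) for token_id in padded]


def check_inputs(padded_batch: Sequence[Sequence[int]], labels: Sequence[int],
                 num_labels: int, max_positions: int) -> None:
    """Hypothetical CPU-side sanity check to run before tensors are moved to the GPU."""
    for row in padded_batch:
        if len(row) > max_positions:
            raise ValueError(f"sequence length {len(row)} exceeds {max_positions} positions")
    for label in labels:
        if not 0 <= label < num_labels:
            raise ValueError(f"label {label} is outside [0, {num_labels})")


padded = [pad_post([101, 7592, 102], max_len=6)]
print(padded)                               # [[101, 7592, 102, 0, 0, 0]]
print([attention_mask(p) for p in padded])  # [[1, 1, 1, 0, 0, 0]]
check_inputs(padded, labels=[3], num_labels=50, max_positions=512)  # passes silently
```

With the settings from this report, `check_inputs(..., max_positions=512)` would reject rows padded to `MAX_LEN = 2048`.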

transformers | 3,991 | closed | Fix the typos | Fix the typos of "[0.0, 1.0[" --> "[0.0, 1.0]". | 04-26-2020 19:20:37 | 04-26-2020 19:20:37 | That's not a typo :)

https://en.wikipedia.org/wiki/Interval_(mathematics)<|||||>Ohhh! I wasn't aware of this notation before (only [X, Y) was in my knowledge base). Thanks for the clarification. |
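In the reversed-bracket notation, `[0.0, 1.0[` denotes the half-open interval usually written `[0.0, 1.0)`: 0.0 is included and 1.0 is excluded. As a small illustration (the helper name is hypothetical), Python's `random.random()` is documented to return floats in exactly this range:

```python
import random


def in_half_open_unit_interval(x: float) -> bool:
    """True if x is in [0.0, 1.0[ (equivalently [0.0, 1.0)): left end included, right end excluded."""
    return 0.0 <= x < 1.0


print(in_half_open_unit_interval(0.0))  # True: the left endpoint is included
print(in_half_open_unit_interval(1.0))  # False: the right endpoint is excluded
print(all(in_half_open_unit_interval(random.random()) for _ in range(1000)))  # True
```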

transformers | 3,990 | closed | Language generation not possible with roberta? | Hi,

I have a small question.

I fine-tuned a RoBERTa model and was then about to generate text with it, but I found out that this is not possible with my model, only with a smaller set of models, most of which are quite large.

Why is that? Is there another way to generate language with RoBERTa?

Thanks in advance!

PS: the scripts I used were: `run_language_modeling.py` and `run_generation.py` | 04-26-2020 18:53:24 | 04-26-2020 18:53:24 | `Roberta` has not really been trained on generating text. Small models that work well for generation are `distilgpt2` and `gpt2` for example. |

transformers | 3,989 | closed | Model cards for KoELECTRA | Hi:)

I've recently uploaded `KoELECTRA`, pretrained ELECTRA model for Korean:)

Thanks for supporting this great library and S3 storage:)

| 04-26-2020 17:15:25 | 04-26-2020 17:15:25 | That's great! Model pages:

https://huggingface.co/monologg/koelectra-base-generator

https://huggingface.co/monologg/koelectra-base-discriminator

cc'ing @LysandreJik and @clarkkev for information |

transformers | 3,988 | closed | [Trainer] Add more schedules to trainer | Adds all available schedules to the trainer and also adds beta1 and beta2 for Adam to the args.

@julien-c | 04-26-2020 16:38:21 | 04-26-2020 16:38:21 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/3988?src=pr&el=h1) Report

> Merging [#3988](https://codecov.io/gh/huggingface/transformers/pull/3988?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/cb3c2212c79d7ff0a4a4e84c3db48371ecc1c15d&el=desc) will **decrease** coverage by `0.05%`.

> The diff coverage is `33.33%`.


```diff

@@ Coverage Diff @@

## master #3988 +/- ##

==========================================

- Coverage 78.45% 78.40% -0.06%

==========================================

Files 111 111

Lines 18521 18533 +12

==========================================

- Hits 14531 14530 -1

- Misses 3990 4003 +13

```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/3988?src=pr&el=tree) | Coverage Δ | |

|---|---|---|

| [src/transformers/trainer.py](https://codecov.io/gh/huggingface/transformers/pull/3988/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90cmFpbmVyLnB5) | `42.42% <9.09%> (-1.18%)` | :arrow_down: |

| [src/transformers/training\_args.py](https://codecov.io/gh/huggingface/transformers/pull/3988/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90cmFpbmluZ19hcmdzLnB5) | `88.40% <100.00%> (+0.71%)` | :arrow_up: |

| [src/transformers/configuration\_auto.py](https://codecov.io/gh/huggingface/transformers/pull/3988/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9jb25maWd1cmF0aW9uX2F1dG8ucHk=) | `91.89% <0.00%> (-8.11%)` | :arrow_down: |

| [src/transformers/configuration\_xlnet.py](https://codecov.io/gh/huggingface/transformers/pull/3988/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9jb25maWd1cmF0aW9uX3hsbmV0LnB5) | `93.87% <0.00%> (-2.05%)` | :arrow_down: |

| [src/transformers/modeling\_tf\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3988/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl91dGlscy5weQ==) | `92.59% <0.00%> (-0.17%)` | :arrow_down: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/3988?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/3988?src=pr&el=footer). Last update [cb3c221...851b8f3](https://codecov.io/gh/huggingface/transformers/pull/3988?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>I'd rather let the user pass an optimizer and a scheduler in the Trainer's `__init__` and only keep the default one (which was the one used in all the scripts) in the implementation. Otherwise there's really a combinatorial explosion of possibilities.

What do you think?<|||||>Yeah it's true that this could lead to a "combinatorial explosion" but I don't really mind that because:

1. If we set good default values (like the beta ones for Adam), and have a good description I don't feel like the user is affected by more and more parameters

2. I think if a user does want to change very special params (like the beta ones), then it's very convenient to be able to do it directly

What I already started in this PR, and what can become messy, is that there are now parameters that are only relevant if other parameters are set in a certain way: `num_cycles` is only relevant if there is a cosine scheduler. And it's true that the trainer class could become messier this way as well.

In the end, I guess the use case of the trainer class matters more to me. If the idea is to have a very easy and fast way to train a model, then I would not mind introducing a bunch of parameters with good default values and descriptions. If instead it should be a bit more "low-level" and the `run_language_modeling` script should wrap this class into a faster interface, then it might be better to keep it clean. <|||||>Closing this due to cafa6a9e29f3e99c67a1028f8ca779d439bc0689 |
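For readers comparing the schedules discussed in this PR, here is a minimal pure-Python sketch of two learning-rate multipliers with linear warmup. It is modeled on the shapes of `get_linear_schedule_with_warmup` and `get_cosine_schedule_with_warmup` from `transformers.optimization`, but it is an illustration, not the library code. It also shows why `num_cycles` only matters for the cosine schedule: the linear schedule has no such parameter. The multiplier is meant to scale the base learning rate at each step (for example through `torch.optim.lr_scheduler.LambdaLR`).

```python
import math


def linear_with_warmup(step: int, warmup_steps: int, total_steps: int) -> float:
    """Multiplier rises linearly from 0 to 1 during warmup, then decays linearly to 0."""
    if step < warmup_steps:
        return step / max(1, warmup_steps)
    return max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


def cosine_with_warmup(step: int, warmup_steps: int, total_steps: int,
                       num_cycles: float = 0.5) -> float:
    """Linear warmup, then cosine decay; num_cycles=0.5 is a half cosine from 1 down to 0."""
    if step < warmup_steps:
        return step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return max(0.0, 0.5 * (1.0 + math.cos(math.pi * num_cycles * 2.0 * progress)))


base_lr = 5e-5
for step in (0, 50, 100, 550, 1000):
    print(step,
          base_lr * linear_with_warmup(step, warmup_steps=100, total_steps=1000),
          base_lr * cosine_with_warmup(step, warmup_steps=100, total_steps=1000))
```

Both schedules reach the full base learning rate at the end of warmup and 0 at `total_steps`; they differ only in the shape of the decay in between.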

transformers | 3,987 | closed | fix output_dir / tokenizer_name confusion | Fixing #3950

It seems that `output_dir` has been used in place of `tokenizer_name` in `run_xnli.py`. I have corrected this typo.

I am not that familiar with the code style in this repo; in a lot of instances I used:

`args.tokenizer_name if args.tokenizer_name else args.model_name_or_path`

Instead, I believe I could have set `args.tokenizer_name = args.tokenizer_name if args.tokenizer_name else args.model_name_or_path` somewhere at the start of the script, so that the code could be cleaner below. | 04-26-2020 13:27:51 | 04-26-2020 13:27:51 | No, this isn't correct. This was consistent with other scripts (before they were ported to the new Trainer in #3800) where we always saved the model and its tokenizer before re-loading it for eval

So I think that code is currently right. (but again, should be re-written to Trainer pretty soon) |
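The PR description suggests resolving the tokenizer fallback once, right after argument parsing, instead of repeating `args.tokenizer_name if args.tokenizer_name else args.model_name_or_path` at every call site. A minimal sketch of that pattern with the standard-library `argparse` follows; only the two flags named in the PR are modeled, the rest of `run_xnli.py` is omitted, and the model name in the usage line is just an example.

```python
import argparse
from typing import List, Optional


def parse_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    parser = argparse.ArgumentParser()
    parser.add_argument("--model_name_or_path", required=True)
    parser.add_argument("--tokenizer_name", default="",
                        help="Defaults to --model_name_or_path when empty")
    args = parser.parse_args(argv)
    # Resolve the fallback once so later code can use args.tokenizer_name directly.
    args.tokenizer_name = args.tokenizer_name or args.model_name_or_path
    return args


args = parse_args(["--model_name_or_path", "bert-base-multilingual-cased"])
print(args.tokenizer_name)  # bert-base-multilingual-cased
```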

transformers | 3,986 | closed | Run Multiple Choice failure with ImportError: cannot import name 'AutoModelForMultipleChoice' | # 🐛 Bug

## Information

Running the example returns the error below. The version from April 17 doesn't have this problem.

```bash
python ./examples/run_multiple_choice.py \
  --task_name swag \
  --model_name_or_path roberta-base \
  --do_train \
  --do_eval \
  --data_dir $SWAG_DIR \
  --learning_rate 5e-5 \
  --num_train_epochs 3 \
  --max_seq_length 80 \
  --output_dir models_bert/swag_base \
  --per_gpu_eval_batch_size=16 \
  --per_gpu_train_batch_size=16 \
  --gradient_accumulation_steps 2 \
  --overwrite_output
```

```
Traceback (most recent call last):
  File "./examples/run_multiple_choice.py", line 26, in <module>
    from transformers import (
ImportError: cannot import name 'AutoModelForMultipleChoice'
```

Model I am using (Bert, XLNet ...):

Language I am using the model on (English, Chinese ...):English

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [ ] my own modified scripts: (give details below)

The tasks I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [ ] my own task or dataset: (give details below)

## To reproduce

Steps to reproduce the behavior:

Follow the exact steps in README.md

1. Download transformers with `git clone`

2. `git clone https://github.com/rowanz/swagaf.git`

3. `export SWAG_DIR=/path/to/swag_data_dir`

```bash
python ./examples/run_multiple_choice.py \
  --task_name swag \
  --model_name_or_path roberta-base \
  --do_train \
  --do_eval \
  --data_dir $SWAG_DIR \
  --learning_rate 5e-5 \
  --num_train_epochs 3 \
  --max_seq_length 80 \
  --output_dir models_bert/swag_base \
  --per_gpu_eval_batch_size=16 \
  --per_gpu_train_batch_size=16 \
  --gradient_accumulation_steps 2 \
  --overwrite_output
```


## Expected behavior

```
***** Eval results *****
eval_acc = 0.8338998300509847
eval_loss = 0.44457291918821606
```


## Environment info


- `transformers` version:

- Platform: ubuntu 18.04

- Python version: 3.6.8

- PyTorch version (GPU?): 1.4.0 GPU

- Tensorflow version (GPU?):

- Using GPU in script?: No

- Using distributed or parallel set-up in script?: No.

| 04-26-2020 13:23:05 | 04-26-2020 13:23:05 | You need to install transformers from source as specified in the README |
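In this thread the fix was to install `transformers` from source, which suggests the installed release predated `AutoModelForMultipleChoice`. A small, hypothetical helper built only on the standard-library `importlib` can check whether an installed module exports a given name before a script is run:

```python
import importlib


def module_exports(module_name: str, symbol: str) -> bool:
    """Return True if `module_name` can be imported and defines `symbol`."""
    try:
        module = importlib.import_module(module_name)
    except ImportError:  # also covers ModuleNotFoundError
        return False
    return hasattr(module, symbol)


print(module_exports("json", "dumps"))  # True
# Usage against this issue (requires transformers to be installed):
# module_exports("transformers", "AutoModelForMultipleChoice")
```

A `False` result for `("transformers", "AutoModelForMultipleChoice")` would point to an installed `transformers` that is older than the example script.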

transformers | 3,985 | closed | Using the T5 model with huggingface's mask-fill pipeline | Does anyone know if it is possible to use the T5 model with Hugging Face's mask-fill pipeline? Below is how you can do it with the default model, but I can't figure out how to do it with the T5 model specifically.

```

from transformers import pipeline

nlp_fill = pipeline('fill-mask')

nlp_fill('Hugging Face is a French company based in ' + nlp_fill.tokenizer.mask_token)

```

Trying this, for example, raises the error "TypeError: must be str, not NoneType" because `nlp_fill.tokenizer.mask_token` is `None`:

```

nlp_fill = pipeline('fill-mask',model="t5-base", tokenizer="t5-base")

nlp_fill('Hugging Face is a French company based in ' + nlp_fill.tokenizer.mask_token)

```

Stack overflow [question](https://stackoverflow.com/questions/61408753/using-the-t5-model-with-huggingfaces-mask-fill-pipeline) | 04-26-2020 12:18:26 | 04-26-2020 12:18:26 | Correct me if I'm wrong @patrickvonplaten, but I don't think T5 is trained on masked language modeling (and does not have a mask token) so will not work with this pipeline.<|||||>Yeah, `T5` is not trained on the conventional "Bert-like" masked language modeling objective. It does a special encoder-decoder masked language modeling (see docs [here](https://huggingface.co/transformers/model_doc/t5.html#training)), but this is not really supported in combination with the `mask-fill` pipeline at the moment.<|||||>Hi @patrickvonplaten, is there any plan to support `T5` with the `mask-fill` pipeline in the near future?<|||||>`T5` is an encoder-decoder model so I don't really see it as a fitting model for the `mask-fill` task. <|||||>Could we use the following workaround?

* `<extra_id_0>` could be considered as a mask token

* Candidate sequences for the mask token could be generated with code like the following:

```python

import torch
from transformers import T5Tokenizer, T5Config, T5ForConditionalGeneration

T5_PATH = 't5-base'  # "t5-small", "t5-base", "t5-large", "t5-3b", "t5-11b"

DEVICE = torch.device('cuda' if torch.cuda.is_available() else 'cpu')  # My environment uses CPU

t5_tokenizer = T5Tokenizer.from_pretrained(T5_PATH)
t5_config = T5Config.from_pretrained(T5_PATH)
t5_mlm = T5ForConditionalGeneration.from_pretrained(T5_PATH, config=t5_config).to(DEVICE)

# Input text
text = 'India is a <extra_id_0> of the world. </s>'

encoded = t5_tokenizer.encode_plus(text, add_special_tokens=True, return_tensors='pt')
input_ids = encoded['input_ids'].to(DEVICE)

# Generating 20 sequences with maximum length set to 5
outputs = t5_mlm.generate(input_ids=input_ids,
                          num_beams=200, num_return_sequences=20,
                          max_length=5)

_0_index = text.index('<extra_id_0>')
_result_prefix = text[:_0_index]
_result_suffix = text[_0_index + len('<extra_id_0>'):]  # text after the sentinel

def _filter(output, end_token='<extra_id_1>'):
    # The first token is the decoder start token <pad> (id 0) and the second token is <extra_id_0> (id 32099)
    _txt = t5_tokenizer.decode(output[2:], skip_special_tokens=False, clean_up_tokenization_spaces=False)
    if end_token in _txt:
        # Keep only the span generated before the next sentinel
        _end_token_index = _txt.index(end_token)
        return _result_prefix + _txt[:_end_token_index] + _result_suffix
    else:
        return _result_prefix + _txt + _result_suffix

results = list(map(_filter, outputs))
results

```

Output:

```

['India is a cornerstone of the world. </s>',

'India is a part of the world. </s>',

'India is a huge part of the world. </s>',

'India is a big part of the world. </s>',

'India is a beautiful part of the world. </s>',

'India is a very important part of the world. </s>',

'India is a part of the world. </s>',

'India is a unique part of the world. </s>',

'India is a part of the world. </s>',

'India is a part of the world. </s>',

'India is a beautiful country in of the world. </s>',

'India is a part of the of the world. </s>',

'India is a small part of the world. </s>',

'India is a part of the world. </s>',

'India is a part of the world. </s>',

'India is a country in the of the world. </s>',

'India is a large part of the world. </s>',

'India is a part of the world. </s>',

'India is a significant part of the world. </s>',

'India is a part of the world. </s>']

```<|||||>@girishponkiya Thanks for your example! Unfortunately, I can't reproduce your results. I get

```

['India is a _0> of the world. </s>',

'India is a ⁇ extra of the world. </s>',

'India is a India is of the world. </s>',

'India is a ⁇ extra_ of the world. </s>',

'India is a a of the world. </s>',

'India is a [extra_ of the world. </s>',

'India is a India is an of the world. </s>',

'India is a of the world of the world. </s>',

'India is a India. of the world. </s>',

'India is a is a of the world. </s>',

'India is a India ⁇ of the world. </s>',

'India is a Inde is of the world. </s>',

'India is a ] of the of the world. </s>',

'India is a . of the world. </s>',

'India is a _0 of the world. </s>',

'India is a is ⁇ of the world. </s>',

'India is a india is of the world. </s>',

'India is a India is the of the world. </s>',

'India is a -0> of the world. </s>',

'India is a ⁇ _ of the world. </s>']

```

Tried on CPU, GPU, 't5-base' and 't5-3b' — same thing.<|||||>Could you please mention the version of torch, transformers and tokenizers?

I used the following:

* torch: 1.5.0+cu101

* transformers: 2.8.0

* tokenizers: 0.7.0

`tokenizers` in the latest version of `transformers` has a bug. Looking at your output, I believe you are using a buggy version of tokenizers. <|||||>@girishponkiya I'm using

```

transformers 2.9.0

tokenizers 0.7.0

torch 1.4.0

```

Tried tokenizers-0.5.2 with transformers-2.8.0; now it works, thank you!<|||||>Thanks to @takahiro971. He pointed out this bug in #4021. <|||||>@girishponkiya thanks a lot for your code above. Your example works, but if I run it with the text

`text = "<extra_id_0> came to power after defeating Stalin"`

I get the following error:

```

/usr/local/lib/python3.6/dist-packages/transformers/modeling_utils.py in _generate_beam_search(self, input_ids, cur_len, max_length, min_length, do_sample, early_stopping, temperature, top_k, top_p, repetition_penalty, no_repeat_ngram_size, bad_words_ids, bos_token_id, pad_token_id, eos_token_id, decoder_start_token_id, batch_size, num_return_sequences, length_penalty, num_beams, vocab_size, encoder_outputs, attention_mask)

1354 # test that beam scores match previously calculated scores if not eos and batch_idx not done

1355 if eos_token_id is not None and all(

-> 1356 (token_id % vocab_size).item() is not eos_token_id for token_id in next_tokens[batch_idx]

1357 ):

1358 assert torch.all(

UnboundLocalError: local variable 'next_tokens' referenced before assignment

```

Any ideas of the cause?

<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

<|||||>Can I use multiple masks in the same sentence?<|||||>> Can I use multiple masks in the same sentence?

In this case, you need to set `max_length` higher or not set it at all.

This might produce more outputs than you need, though.

<|||||>
```python
outputs = t5_mlm.generate(input_ids=input_ids,
                          num_beams=200, num_return_sequences=20,
                          max_length=5)
```

is very slow. How can I improve performance?<|||||>@jban-x3 I think you should try smaller values for `num_beams`.<|||||>> Could we use the following workaround?
>
> [...]

Nice tool!

May I ask a question?

What if I have several masked places to predict?

```python

t5('India is a <extra_id_0> of the <extra_id_1>. </s>',max_length=5)

```

I got:

[{'generated_text': 'part world'}]

It seems only the first masked place is predicted.<|||||>> > Could we use the following workaround?
> >
> > [...]

>

> Nice tool! may I ask a question? what if I have several masked places to predict?

>

> ```python

> t5('India is a <extra_id_0> of the <extra_id_1>. </s>',max_length=5)

> ```

>

> I got: [{'generated_text': 'part world'}]

>

> It seems only the first masked place is predicted.

Seems like `<extra_id_0>` is filled with "part", and `<extra_id_1>` is filled with "world"?<|||||>Hi @beyondguo,

> may I ask a question?

> what if I have several masked places to predict?

> ```python

> t5('India is a <extra_id_0> of the <extra_id_1>. </s>',max_length=5)

> ```

You need to modify the `_filter` function.

The (original) function in [my code](https://github.com/huggingface/transformers/issues/3985#issuecomment-622981083) extracts the word sequence between `<extra_id_0>` and `<extra_id_1>` from the output, and the word sequence is being used to replace the `<extra_id_0>` token in the input. In addition to it, you have to extract the word sequence between `<extra_id_1>` and `<extra_id_2>` to replace the `<extra_id_1>` token from the input. Now, as the system needs to generate more tokens, one needs to set a bigger value for the `max_length` argument of `t5_mlm.generate`.<|||||>How is it possible to use the workaround with target words like in the pipeline?

E.g. `targets=["target1", "target2"]`, and get the probabilities specifically for those targets? |
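The generalization of `_filter` described in this thread (take the text between `<extra_id_0>` and `<extra_id_1>` for the first mask, between `<extra_id_1>` and `<extra_id_2>` for the second, and so on) can be sketched as a pure string function. This is a minimal sketch under stated assumptions: it works on an already-decoded output string such as `t5_tokenizer.decode(output, skip_special_tokens=False)` would return, it drops `<pad>` and `</s>` from that string, and the name `fill_sentinels` is hypothetical. The decoded string in the usage line is illustrative, not real model output.

```python
import re

SENTINEL = re.compile(r"<extra_id_(\d+)>")


def fill_sentinels(masked_text: str, decoded_output: str) -> str:
    """Replace each <extra_id_N> in `masked_text` with the span that follows
    <extra_id_N> in `decoded_output` (up to the next sentinel or the end)."""
    # Drop decoder-start/padding and end-of-sequence markers from the output.
    cleaned = decoded_output.replace("<pad>", "").replace("</s>", "")
    # re.split with a capturing group yields [before, id0, span0, id1, span1, ...].
    parts = SENTINEL.split(cleaned)
    spans = {int(parts[i]): parts[i + 1].strip() for i in range(1, len(parts) - 1, 2)}

    def replace(match: re.Match) -> str:
        # Leave the sentinel in place if the model produced no span for it.
        return spans.get(int(match.group(1)), match.group(0))

    return SENTINEL.sub(replace, masked_text)


print(fill_sentinels("India is a <extra_id_0> of the <extra_id_1>.",
                     "<pad> <extra_id_0> part<extra_id_1> world<extra_id_2></s>"))
# India is a part of the world.
```

With beam search, this would be applied once per returned sequence, e.g. `[fill_sentinels(text, t5_tokenizer.decode(o, skip_special_tokens=False)) for o in outputs]`, with `max_length` raised so the model has room to emit a span for every sentinel.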

transformers | 3,984 | closed | why the accuracy is very low when we test on sentences one by one of the words input? | # ❓ Questions & Help

Hi, friends:

We have a problem. We trained a good language model with GPT-2 using a sequence length of 30. When we batch-test during training with a sequence length of 30, the accuracy reaches 90%. But when we test like this:

[1]->[2], [1,2]->[3], [1,2,3]->[4], where the left side is the input and we want the model to predict the right side, the accuracy is very low: only 6%.

| 04-26-2020 10:21:15 | 04-26-2020 10:21:15 | During training, we computed accuracy like this:

input: [0,1,2,3,4,5,...,29]

label: [1,2,3,4,5,6,...,30]

so we count the correct predictions across the 30 positions.<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|
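The two evaluation protocols in this question can be compared with a toy predictor. The sketch below is illustrative only: `toy_predictor` is a hypothetical stand-in for a causal LM's argmax prediction. In training-style evaluation, position `i` of `input = tokens[:-1]` is scored against `label = tokens[1:]`; in the incremental protocol, the prefix `tokens[:i+1]` is scored against `tokens[i+1]`. For a causally masked model such as GPT-2, the prediction at position `i` only sees the first `i+1` tokens, so both protocols score the same (prefix, target) pairs. All else being equal (same preprocessing, same position ids, no padding differences), the two accuracies should therefore agree, and a large gap may suggest the inputs differ between the two setups.

```python
from typing import Callable, List, Sequence

Predictor = Callable[[Sequence[int]], int]  # prefix -> predicted next token id


def batch_style_accuracy(tokens: List[int], predict_next: Predictor) -> float:
    """Score every position of one sequence: input tokens[:-1], labels tokens[1:]."""
    inputs, labels = tokens[:-1], tokens[1:]
    # A causal model's prediction at position i may only depend on inputs[:i+1].
    hits = sum(predict_next(inputs[: i + 1]) == labels[i] for i in range(len(labels)))
    return hits / len(labels)


def incremental_accuracy(tokens: List[int], predict_next: Predictor) -> float:
    """Score growing prefixes one by one: [t0]->t1, [t0, t1]->t2, ..."""
    pairs = [(tokens[: i + 1], tokens[i + 1]) for i in range(len(tokens) - 1)]
    hits = sum(predict_next(prefix) == target for prefix, target in pairs)
    return hits / len(pairs)


def toy_predictor(prefix: Sequence[int]) -> int:
    """Hypothetical stand-in for a model: always guesses 'last token + 1'."""
    return prefix[-1] + 1


seq = [0, 1, 2, 3, 7, 8, 9]
# Both protocols evaluate the same pairs, so they print the same value.
print(batch_style_accuracy(seq, toy_predictor), incremental_accuracy(seq, toy_predictor))
```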

transformers | 3,983 | closed | MLM Loss not decreasing when pretraining Bert from scratch | I want to pretrain a BERT-base model from scratch on the English Wikipedia dataset, since I haven't found a copy of BookCorpus. The code I used was adapted from pytorch-pretrained-bert and NVIDIA Megatron-LM. I can fine-tune BERT on SQuAD and get SOTA results. But for pretraining, the MLM loss stays around 7.3. I have no idea why this happens. Can someone suggest some possible solutions? Many thanks! | 04-26-2020 10:08:34 | 04-26-2020 10:08:34 | |
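For reference when debugging MLM pretraining, here is a minimal sketch of the masking scheme described in the original BERT paper. About 15% of positions are selected; of those, 80% are replaced with `[MASK]`, 10% with a random token, and 10% are kept unchanged, and the loss is computed only on selected positions. Unselected positions get the label -100, which is the default `ignore_index` of PyTorch's `CrossEntropyLoss`. This is an illustration, not the reporter's code or any library's implementation. The token ids in the usage example are assumptions (`[CLS]`=101, `[SEP]`=102, `[MASK]`=103 and a vocabulary of 30522 match `bert-base-uncased`; the other ids are hypothetical).

```python
import random
from typing import Iterable, List, Optional, Tuple

IGNORE_INDEX = -100  # default ignore_index of torch.nn.CrossEntropyLoss


def mask_tokens(ids: List[int], mask_id: int, vocab_size: int,
                special_ids: Iterable[int] = (), mlm_prob: float = 0.15,
                rng: Optional[random.Random] = None) -> Tuple[List[int], List[int]]:
    """Return (masked_inputs, labels) following the 80/10/10 BERT recipe."""
    if rng is None:
        rng = random.Random()
    special = set(special_ids)
    inputs, labels = list(ids), [IGNORE_INDEX] * len(ids)
    for i, tok in enumerate(ids):
        if tok in special or rng.random() >= mlm_prob:
            continue  # not selected: this position contributes no loss
        labels[i] = tok  # the model must recover the original token here
        r = rng.random()
        if r < 0.8:
            inputs[i] = mask_id  # 80%: replace with [MASK]
        elif r < 0.9:
            inputs[i] = rng.randrange(vocab_size)  # 10%: random token
        # remaining 10%: keep the original token unchanged
    return inputs, labels


ids = [101, 7592, 2088, 2003, 1037, 3231, 102]  # hypothetical token ids
inputs, labels = mask_tokens(ids, mask_id=103, vocab_size=30522,
                             special_ids={101, 102}, rng=random.Random(0))
print(inputs)
print(labels)
```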

transformers | 3,982 | closed | Can't install transformers from sources using poetry | # 🐛 Bug

When installing transformers from sources using poetry

```bash

poetry add git+https://github.com/huggingface/transformers.git

```

The following exception is thrown:

```bash

[InvalidRequirement]

Invalid requirement, parse error at "'extra =='"

```

This is a problem on the poetry side; this issue is just here to cross-link it.

Ref: https://github.com/python-poetry/poetry/issues/2326

| 04-26-2020 10:01:50 | 04-26-2020 10:01:50 | In the meantime as a workaround, you can do the following:

1) Fork huggingface/transformers

2) On your fork remove this line https://github.com/huggingface/transformers/blob/97a375484c618496691982f62518130f294bb9a8/setup.py#L79

3) Install your fork with poetry<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 3,981 | closed | Improve split on token | This patch makes the code shorter and also fixes a bug that is already suppressed [1].

Old code:

```
>>> split_on_token(tok='[MASK]', text='')
['[MASK]']
```

This is a bug, because `split_on_token` shouldn't add the token when the input doesn't contain it.

[1] 21451ec (handle string with only whitespaces as empty, 2019-12-06)

| 04-26-2020 09:46:25 | 04-26-2020 09:46:25 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/3981?src=pr&el=h1) Report

> Merging [#3981](https://codecov.io/gh/huggingface/transformers/pull/3981?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/4e817ff41885063e08bb3bcd63e5adfd835b9911&el=desc) will **decrease** coverage by `0.02%`.

> The diff coverage is `100.00%`.


```diff
@@            Coverage Diff             @@
##           master    #3981      +/-   ##
==========================================
- Coverage   78.44%   78.42%   -0.03%
==========================================
  Files         111      111
  Lines       18518    18513       -5
==========================================
- Hits        14527    14518       -9
- Misses       3991     3995       +4
```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/3981?src=pr&el=tree) | Coverage Δ | |
|---|---|---|
| [src/transformers/tokenization\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3981/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fdXRpbHMucHk=) | `89.78% <100.00%> (+0.05%)` | :arrow_up: |
| [src/transformers/modeling\_tf\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3981/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl91dGlscy5weQ==) | `91.94% <0.00%> (-0.83%)` | :arrow_down: |
| [src/transformers/file\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3981/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9maWxlX3V0aWxzLnB5) | `68.49% <0.00%> (-0.37%)` | :arrow_down: |
| [src/transformers/modeling\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3981/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ191dGlscy5weQ==) | `91.06% <0.00%> (+0.12%)` | :arrow_up: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/3981?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/3981?src=pr&el=footer). Last update [4e817ff...410c538](https://codecov.io/gh/huggingface/transformers/pull/3981?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 3,980 | closed | When I run `import transformers`, it reports an error, but I had no problem with pytorch-transformers before. Is it a TensorRT problem? | # ❓ Questions & Help

When I run `import transformers`, I get this:

```
2020-04-26 17:02:59.210145: W tensorflow/compiler/tf2tensorrt/utils/py_utils.cc:30] Cannot dlopen some TensorRT libraries. If you would like to use Nvidia GPU with TensorRT, please make sure the missing libraries mentioned above are installed properly.
*** Error in `/conda-torch/bin/python': double free or corruption (!prev): 0x00007faa0eac23c0 ***
```

Is there anything that can fix it? | 04-26-2020 09:13:55 | 04-26-2020 09:13:55 | |

transformers | 3,979 | closed | Add modelcard for Hate-speech-CNERG/dehatebert-mono-arabic model | 04-26-2020 03:11:09 | 04-26-2020 03:11:09 | ||

transformers | 3,978 | closed | Fix t5 doc typos | Read through the docs for T5 at `docs/source/model_doc/t5.rst` and found some typos. Hope this helps! | 04-26-2020 03:02:57 | 04-26-2020 03:02:57 | Great, thank you @enzoampil |

transformers | 3,977 | closed | xlnet large | If you train in batch 8 at max length = 512 with the xlnet-base-cased, you will learn well with changes in values such as Loss, Accuracy, Precision, Recall, and F1-score.

However, if you train with batch 2 at max length = 512 with a xlnet-large-cased, even if you run several times, there is almost no change, and precision is always 1.00000, and other values are only fixed.

Is it because the batch size is too small? Help.

[epoch1]

Tr Loss | Vld Acc | Vld Loss | Vld Prec | Vld Reca | Vld F1

0.39480 | 0.87500 | 0.39765 | 1.00000 | 0.87500 | 0.93333

[epoch2]

Tr Loss | Vld Acc | Vld Loss | Vld Prec | Vld Reca | Vld F1

0.39772 | 0.87500 | 0.38215 | 1.00000 | 0.87500 | 0.93333 | 04-26-2020 02:52:36 | 04-26-2020 02:52:36 | Maybe the task is learning rate and batch size sensitive. You can try to decrease the learning rate when changing the batch size from 8 to 2. <|||||>> Maybe the task is learning rate and batch size sensitive. You can try to decrease the learning rate when changing the batch size from 8 to 2.

Using xlnet-large-cased with batch size 2 and learning rate 1e-5 gives the same symptom. Is there another way?<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 3,976 | closed | Fixed Style Inconsistency | This fixes a style inconsistency in BertForSequenceClassification's constructor where 'config' is referenced using both the parameter 'config' and 'self.config' on the same line.

This line is the only instance in modeling_bert.py where the config parameter is referenced using self.config in a constructor. | 04-26-2020 02:05:45 | 04-26-2020 02:05:45 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/3976?src=pr&el=h1) Report

> Merging [#3976](https://codecov.io/gh/huggingface/transformers/pull/3976?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/4e817ff41885063e08bb3bcd63e5adfd835b9911&el=desc) will **decrease** coverage by `0.01%`.

> The diff coverage is `100.00%`.


```diff
@@            Coverage Diff             @@
##           master    #3976      +/-   ##
==========================================
- Coverage   78.44%   78.43%   -0.02%
==========================================
  Files         111      111
  Lines       18518    18518
==========================================
- Hits        14527    14525       -2
- Misses       3991     3993       +2
```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/3976?src=pr&el=tree) | Coverage Δ | |
|---|---|---|
| [src/transformers/modeling\_bert.py](https://codecov.io/gh/huggingface/transformers/pull/3976/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ19iZXJ0LnB5) | `88.40% <100.00%> (ø)` | |
| [src/transformers/file\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3976/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9maWxlX3V0aWxzLnB5) | `68.49% <0.00%> (-0.37%)` | :arrow_down: |
| [src/transformers/modeling\_tf\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3976/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl91dGlscy5weQ==) | `92.59% <0.00%> (-0.17%)` | :arrow_down: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/3976?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/3976?src=pr&el=footer). Last update [4e817ff...6e5ef43](https://codecov.io/gh/huggingface/transformers/pull/3976?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>Thanks @jtaylor351 ! |

transformers | 3,975 | closed | Added support for pathlib.Path objects instead of string paths in from_pretrained() (resolves #3962) | Resolves issue/feature request #3962

This only covers string paths in `from_pretrained` (so `vocab_file` paths passed to tokenizers' `__init__` aren't covered). This may or may not be a desired feature, since it's fairly easy for the client to convert a path-like to a string. With the changes, you would be able to use `from_pretrained` with path-likes:

```python
from pathlib import Path
from transformers import AutoModel, AutoTokenizer

my_path = Path("path/to/model_dir/")
AutoTokenizer.from_pretrained(my_path)
AutoModel.from_pretrained(my_path)
``` | 04-25-2020 22:42:25 | 04-25-2020 22:42:25 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/3975?src=pr&el=h1) Report

> Merging [#3975](https://codecov.io/gh/huggingface/transformers/pull/3975?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/4e817ff41885063e08bb3bcd63e5adfd835b9911&el=desc) will **increase** coverage by `0.00%`.

> The diff coverage is `85.00%`.


```diff
@@           Coverage Diff           @@
##           master    #3975   +/-   ##
=======================================
  Coverage   78.44%   78.45%
=======================================
  Files         111      111
  Lines       18518    18534   +16
=======================================
+ Hits        14527    14541   +14
- Misses       3991     3993    +2
```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/3975?src=pr&el=tree) | Coverage Δ | |
|---|---|---|
| [src/transformers/tokenization\_transfo\_xl.py](https://codecov.io/gh/huggingface/transformers/pull/3975/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fdHJhbnNmb194bC5weQ==) | `40.57% <50.00%> (-0.10%)` | :arrow_down: |
| [src/transformers/file\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3975/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9maWxlX3V0aWxzLnB5) | `68.68% <75.00%> (-0.19%)` | :arrow_down: |
| [src/transformers/configuration\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3975/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9jb25maWd1cmF0aW9uX3V0aWxzLnB5) | `96.57% <100.00%> (+0.02%)` | :arrow_up: |
| [src/transformers/modelcard.py](https://codecov.io/gh/huggingface/transformers/pull/3975/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGNhcmQucHk=) | `87.80% <100.00%> (+0.15%)` | :arrow_up: |
| [src/transformers/modeling\_tf\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3975/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl91dGlscy5weQ==) | `93.10% <100.00%> (+0.34%)` | :arrow_up: |
| [src/transformers/modeling\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3975/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ191dGlscy5weQ==) | `90.95% <100.00%> (+0.01%)` | :arrow_up: |
| [src/transformers/tokenization\_auto.py](https://codecov.io/gh/huggingface/transformers/pull/3975/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fYXV0by5weQ==) | `97.50% <100.00%> (+0.13%)` | :arrow_up: |
| [src/transformers/tokenization\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3975/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy90b2tlbml6YXRpb25fdXRpbHMucHk=) | `89.73% <100.00%> (+0.01%)` | :arrow_up: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/3975?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/3975?src=pr&el=footer). Last update [4e817ff...033d5f8](https://codecov.io/gh/huggingface/transformers/pull/3975?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>On second thought, I probably wouldn't merge this. I think it's better to just let the user deal with this on their end.<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 3,974 | closed | Weights from pretrained model not used in GPT2LMHeadModel | # 🐛 Bug

## Information

Model I am using (Bert, XLNet ...): gpt2

Language I am using the model on (English, Chinese ...): English

The problem arises when using:

* [x] the official example scripts: (give details below)

* [ ] my own modified scripts: (give details below)

## To reproduce

Steps to reproduce the behavior:

1. Train gpt2 using `run_language_modeling.py`.

2. Generate text with the trained model using `run_generation.py`.

```
backend_1 | 04/25/2020 18:37:27 - INFO - transformers.modeling_utils - Weights from pretrained model not used in GPT2LMHeadModel: ['transformer.h.0.attn.masked_bias', 'transformer.h.1.attn.masked_bias', 'transformer.h.2.attn.masked_bias', 'transformer.h.3.attn.masked_bias', 'transformer.h.4.attn.masked_bias', 'transformer.h.5.attn.masked_bias', 'transformer.h.6.attn.masked_bias', 'transformer.h.7.attn.masked_bias', 'transformer.h.8.attn.masked_bias', 'transformer.h.9.attn.masked_bias', 'transformer.h.10.attn.masked_bias', 'transformer.h.11.attn.masked_bias']
```

| 04-25-2020 18:44:57 | 04-25-2020 18:44:57 | @patrickvonplaten - could you help elaborate on this?<|||||>Yeah, we need to clean up this logging. As far as I know, the weights are correctly loaded into the model (we have integration tests for pretrained GPT2 models, so the weights have to be loaded correctly). We just need to clean up the logging logic there.<|||||>Actually, this logging info is fine. Those masked biases are values stored with the `register_buffer()` method and don't need to be loaded. |

transformers | 3,973 | closed | Pytorch 1.5.0 | PyTorch 1.5.0 doesn't allow specifying a standard deviation of 0 in normal distributions. We were testing that our models initialized with a normal distribution with a mean and a standard deviation of 0 had all their parameters initialized to 0.

This allows us to verify that all weights in the model are indeed initialized according to the specified weights initializations.

We now specify a tiny value (1e-10) and round to the 9th decimal so that all these values are set to 0.
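The rounding trick can be illustrated without PyTorch. The sketch below is a stdlib stand-in, not the test suite's code: Python's `random.gauss` replaces a PyTorch normal initializer, and the helper names are hypothetical. Weights drawn with a standard deviation of 1e-10 are not exactly zero, but their mean rounds to 0 at 9 decimals, which is the kind of check a zero-initialization test can still make.

```python
import random
import statistics
from typing import List


def normal_init(n: int, mean: float = 0.0, std: float = 1e-10, seed: int = 0) -> List[float]:
    """Stand-in for a normal initializer: n draws from N(mean, std**2)."""
    rng = random.Random(seed)
    return [rng.gauss(mean, std) for _ in range(n)]


def looks_zero_initialized(weights: List[float], decimals: int = 9) -> bool:
    """True if the mean of the weights rounds to 0 at the given number of decimals."""
    return round(statistics.mean(weights), decimals) == 0.0


weights = normal_init(1000)
print(all(w == 0.0 for w in weights))   # raw draws are tiny but not exactly zero
print(looks_zero_initialized(weights))  # after rounding, the mean reads as zero
```

A non-zero mean (for example `mean=1.0`) survives the rounding, so the check still distinguishes a genuinely different initialization.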

closes #3947

closes #3872 | 04-25-2020 17:33:14 | 04-25-2020 17:33:14 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/3973?src=pr&el=h1) Report

> Merging [#3973](https://codecov.io/gh/huggingface/transformers/pull/3973?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/4e817ff41885063e08bb3bcd63e5adfd835b9911&el=desc) will **decrease** coverage by `0.01%`.

> The diff coverage is `n/a`.


```diff
@@            Coverage Diff             @@
##           master    #3973      +/-   ##
==========================================
- Coverage   78.44%   78.43%   -0.02%
==========================================
  Files         111      111
  Lines       18518    18518
==========================================
- Hits        14527    14525       -2
- Misses       3991     3993       +2
```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/3973?src=pr&el=tree) | Coverage Δ | |
|---|---|---|
| [src/transformers/file\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3973/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9maWxlX3V0aWxzLnB5) | `68.49% <0.00%> (-0.37%)` | :arrow_down: |
| [src/transformers/modeling\_tf\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3973/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl91dGlscy5weQ==) | `92.59% <0.00%> (-0.17%)` | :arrow_down: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/3973?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/3973?src=pr&el=footer). Last update [4e817ff...4bee514](https://codecov.io/gh/huggingface/transformers/pull/3973?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>~~I've seen one error with 1.5 and the new trainer interface (using ner) - I'll raise an issue for that :)~~ |

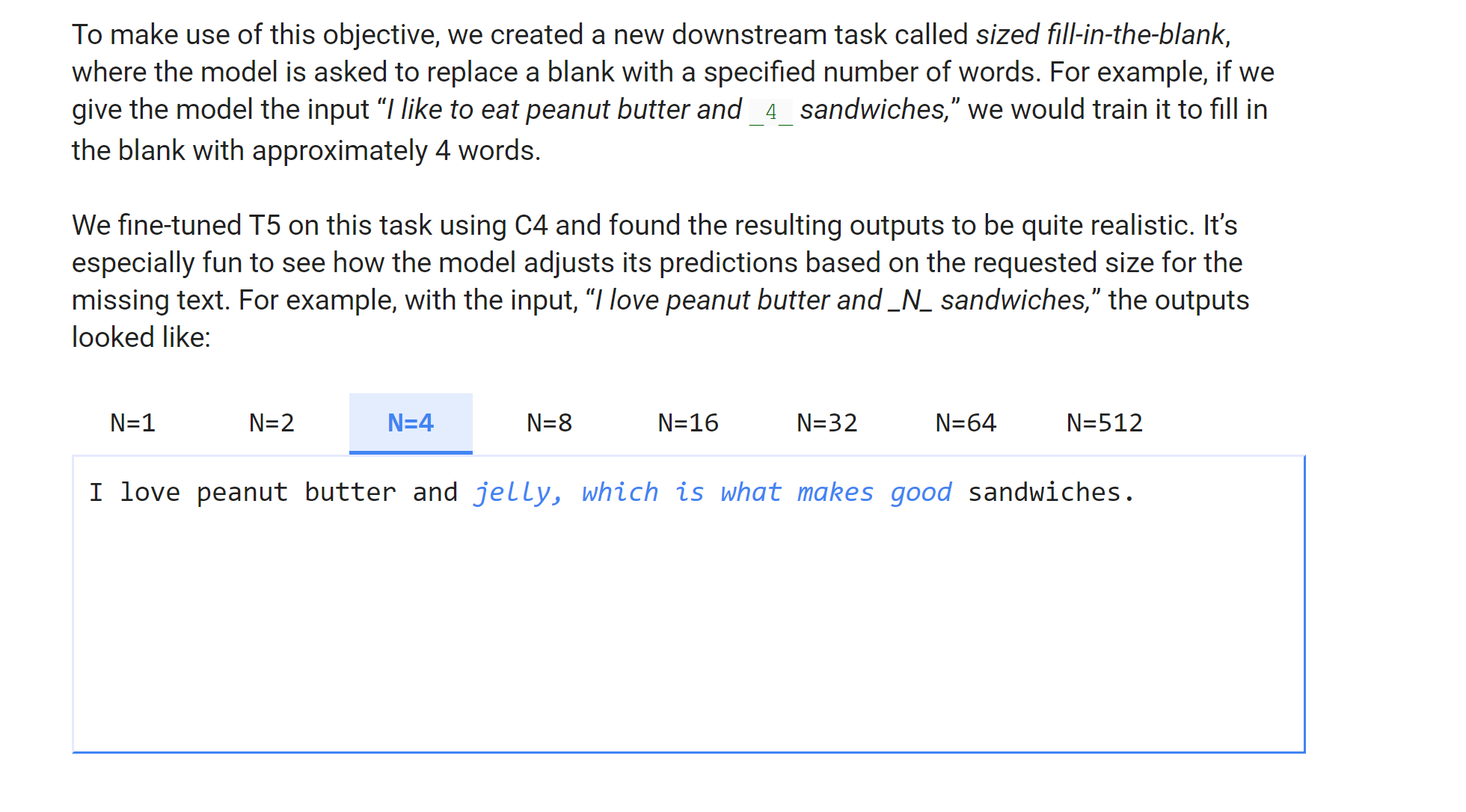

transformers | 3,972 | closed | Sized Fill-in-the-blank or Multi Mask filling with T5 | In the Google T5 paper they mentioned :

For example, with the input, “I love peanut butter and _4_ sandwiches,” the outputs looked like:

I love peanut butter and jelly, which is what makes good sandwiches.

How do I achieve Multi Mask filling with T5 in hugging face transformers?

Code samples please :)
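For context on the output side: T5's pretraining format replaces each blank with a sentinel token (`<extra_id_0>`, `<extra_id_1>`, ...), and the model generates each sentinel followed by the text it predicts for that blank. The sketch below is pure Python and does not call the model. It assumes the generation was decoded with `skip_special_tokens=False` so the sentinels survive. It splits that output into per-blank spans and substitutes them back into the input. The helper names and the example string are hypothetical, and nothing here enforces a span length like the paper's `_4_`.

```python
import re
from typing import List

# T5 marks blanks with sentinel tokens <extra_id_0>, <extra_id_1>, ...
SENTINEL = re.compile(r"<extra_id_(\d+)>")


def split_sentinel_output(decoded: str) -> List[str]:
    """Return the generated span for each sentinel in a decoded T5 output, in order."""
    # Drop <pad>/</s> markers that survive decoding with skip_special_tokens=False.
    cleaned = decoded.replace("<pad>", "").replace("</s>", "")
    # With a capturing group, re.split yields [prefix, id0, span0, id1, span1, ...].
    parts = SENTINEL.split(cleaned)
    return [parts[i + 1].strip() for i in range(1, len(parts), 2)]


def fill_blanks(template: str, spans: List[str]) -> str:
    """Replace <extra_id_k> in the input with spans[k]; unknown sentinels are left as-is."""
    def repl(match):
        k = int(match.group(1))
        return spans[k] if k < len(spans) else match.group(0)
    return SENTINEL.sub(repl, template)


# Made-up decoded string, not real model output.
spans = split_sentinel_output("<pad> <extra_id_0> peanut butter <extra_id_1> jelly <extra_id_2></s>")
print(spans)
print(fill_blanks("I love <extra_id_0> and <extra_id_1> sandwiches.", spans))
```

The trailing sentinel marks the end of the last span, so its (empty) entry is simply never used when filling a template that has one fewer blank.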

| 04-25-2020 17:30:56 | 04-25-2020 17:30:56 | Did you discover any way of doing this?<|||||>@edmar Nothing solid yet! I will update if I do.<|||||>Also looking for a solution

https://github.com/google-research/text-to-text-transfer-transformer/issues/133<|||||>@franz101 @edmar

The closest thing I could come up with (using RoBERTa rather than T5):

```python
import torch
from transformers import RobertaTokenizer, RobertaForMaskedLM

tokenizer = RobertaTokenizer.from_pretrained('roberta-base')
model = RobertaForMaskedLM.from_pretrained('roberta-base')
model.eval()

sentence = "Tom has fully <mask> <mask> <mask> illness."
token_ids = tokenizer.encode(sentence, return_tensors='pt')
print(tokenizer.tokenize(sentence))

# Positions of the <mask> tokens in the encoded sequence
masked_position = (token_ids.squeeze() == tokenizer.mask_token_id).nonzero()
masked_pos = [mask.item() for mask in masked_position]
print(masked_pos)

with torch.no_grad():
    output = model(token_ids)

# output[0] holds the vocabulary logits; after squeeze: (seq_len, vocab_size)
logits = output[0].squeeze()

print("\n\n")
print("sentence : ", sentence)
print("\n")

list_of_list = []
for mask_index in masked_pos:
    # Top-5 tokens for this mask; each mask is predicted independently of the others
    idx = torch.topk(logits[mask_index], k=5, dim=0)[1]
    words = [tokenizer.decode(i.item()).strip() for i in idx]
    list_of_list.append(words)
    print(words)

# Greedy guess: take the top candidate at every mask position
best_guess = " ".join(candidates[0] for candidates in list_of_list)
print("\nBest guess for fill in the blank :::", best_guess)
```

The output is:

```
['Tom', 'Ġhas', 'Ġfully', '<mask>', '<mask>', '<mask>', 'Ġillness', '.']
[4, 5, 6]

sentence : Tom has fully <mask> <mask> <mask> illness.

['recovered', 'returned', 'recover', 'healed', 'cleared']
['from', 'his', 'with', 'to', 'the']
['his', 'the', 'her', 'mental', 'this']

Best guess for fill in the blank ::: recovered from his
```<|||||>@ramsrigouthamg @edmar @franz101 Any update on how to do that?<|||||>@Diego999 The above-provided answer is the best I have.

Google hasn't released the pretrained multi-mask fill model.<|||||>@ramsrigouthamg Thanks! This is also similar to the BART architecture, where they mask a span of text. A similar thread is available here: https://github.com/huggingface/transformers/issues/4984 <|||||>@Diego999 There are a few things you could explore. There is SpanBERT (https://huggingface.co/SpanBERT/spanbert-base-cased), but I haven't explored how to use it.

Then there is Google's PEGASUS, which is trained with whole sentences masked out: https://ai.googleblog.com/2020/06/pegasus-state-of-art-model-for.html<|||||>This example might be useful:

https://github.com/huggingface/transformers/issues/3985<|||||>@ramsrigouthamg Any luck with it yet on T5?

<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

<|||||>I think the `__4__` is a special token, and they use the same text-to-text framework (seq2seq instead of masked LM) to train this task.

And that's why the screenshot above says:

> train it to fill in the blank with **approximately** 4 words<|||||>Are there any updates or simple example code for doing this? Thanks!<|||||>```python
import torch
from transformers import T5Tokenizer, T5Config, T5ForConditionalGeneration

T5_PATH = 't5-base'  # "t5-small", "t5-base", "t5-large", "t5-3b", "t5-11b"
DEVICE = torch.device('cuda' if torch.cuda.is_available() else 'cpu')  # My environment uses CPU

t5_tokenizer = T5Tokenizer.from_pretrained(T5_PATH)
t5_config = T5Config.from_pretrained(T5_PATH)
t5_mlm = T5ForConditionalGeneration.from_pretrained(T5_PATH, config=t5_config).to(DEVICE)

# Input text
text = 'India is a <extra_id_0> of the world. </s>'
encoded = t5_tokenizer.encode_plus(text, add_special_tokens=True, return_tensors='pt')
input_ids = encoded['input_ids'].to(DEVICE)

# Generating 20 sequences with maximum length set to 5
outputs = t5_mlm.generate(input_ids=input_ids,
                          num_beams=200, num_return_sequences=20,
                          max_length=5)

_0_index = text.index('<extra_id_0>')
_result_prefix = text[:_0_index]
_result_suffix = text[_0_index + len('<extra_id_0>'):]  # skip past the sentinel itself

def _filter(output, end_token='<extra_id_1>'):
    # The first token is <pad> (index 0) and the second token is <extra_id_0> (index 32099)
    _txt = t5_tokenizer.decode(output[2:], skip_special_tokens=False, clean_up_tokenization_spaces=False)
    if end_token in _txt:
        _end_token_index = _txt.index(end_token)
        return _result_prefix + _txt[:_end_token_index] + _result_suffix
    else:
        return _result_prefix + _txt + _result_suffix

results = list(map(_filter, outputs))
results
```<|||||>Does the above work? |

transformers | 3,971 | closed | Problem with downloading the XLNetForSequenceClassification pretrained xlnet-large-cased | I've run this before and it was fine. However, this time I keep encountering this runtime error. In fact, when I tried to initialize `model = XLNetModel.from_pretrained('xlnet-large-cased')`, it also gave me the 'negative dimension' error.

```
model = XLNetForSequenceClassification.from_pretrained("xlnet-large-cased", num_labels = 2)
model.to(device)
```

```
---------------------------------------------------------------------------
RuntimeError                              Traceback (most recent call last)
<ipython-input-37-d6f698a3714b> in <module>()
----> 1 model = XLNetForSequenceClassification.from_pretrained("xlnet-large-cased", num_labels = 2)
      2 model.to(device)

3 frames
/usr/local/lib/python3.6/dist-packages/torch/nn/modules/sparse.py in __init__(self, num_embeddings, embedding_dim, padding_idx, max_norm, norm_type, scale_grad_by_freq, sparse, _weight)
     95         self.scale_grad_by_freq = scale_grad_by_freq
     96         if _weight is None:
---> 97             self.weight = Parameter(torch.Tensor(num_embeddings, embedding_dim))
     98             self.reset_parameters()
     99         else:

RuntimeError: Trying to create tensor with negative dimension -1: [-1, 1024]
``` | 04-25-2020 17:08:40 | 04-25-2020 17:08:40 | Hey mate, I've encountered the same issue. How did you solve it?<|||||>I encountered this issue too. How can I solve it?<|||||>The problem occurs when I import XLNet from `pytorch_transformers`. Instead, you should import it from the module called `transformers`. |

transformers | 3,970 | closed | Fix GLUE TPU script | Temporary fix until we refactor this script to work with the trainer. | 04-25-2020 17:01:10 | 04-25-2020 17:01:10 | Pinging @jysohn23 to see if it fixed the issue.<|||||>Closing in favour of integrating TPU support for the trainer. |

transformers | 3,969 | closed | override weights name | Optional override of the weights name, relevant for cases where torch.save() is patched like in https://github.com/allegroai/trains | 04-25-2020 16:35:55 | 04-25-2020 16:35:55 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/3969?src=pr&el=h1) Report

> Merging [#3969](https://codecov.io/gh/huggingface/transformers/pull/3969?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/4e817ff41885063e08bb3bcd63e5adfd835b9911&el=desc) will **increase** coverage by `0.01%`.

> The diff coverage is `82.60%`.


```diff
@@            Coverage Diff             @@
##           master    #3969      +/-   ##
==========================================
+ Coverage   78.44%   78.45%   +0.01%
==========================================
  Files         111      111
  Lines       18518    18527       +9
==========================================
+ Hits        14527    14536       +9
  Misses       3991     3991
```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/3969?src=pr&el=tree) | Coverage Δ | |
|---|---|---|
| [src/transformers/modeling\_tf\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3969/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ190Zl91dGlscy5weQ==) | `92.81% <80.00%> (+0.04%)` | :arrow_up: |
| [src/transformers/modeling\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3969/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ191dGlscy5weQ==) | `91.11% <84.61%> (+0.17%)` | :arrow_up: |
| [src/transformers/file\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3969/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9maWxlX3V0aWxzLnB5) | `68.49% <0.00%> (-0.37%)` | :arrow_down: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/3969?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/3969?src=pr&el=footer). Last update [4e817ff...56024ae](https://codecov.io/gh/huggingface/transformers/pull/3969?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>This issue has been automatically marked as stale because it has not had recent activity. It will be closed if no further activity occurs. Thank you for your contributions.

|

transformers | 3,968 | closed | Remove boto3 dependency | Downloading a model from `s3://` urls was not documented anywhere and I suspect it doesn't work with our `from_pretrained` methods anyways.

Removing boto3 also kills its transitive dependencies (some of which are slow to support new versions of Python), contributing to a leaner library:

```
boto3-1.12.46
botocore-1.15.46
docutils-0.15.2
jmespath-0.9.5
python-dateutil-2.8.1
s3transfer-0.3.3
six-1.14.0
urllib3-1.25.9
``` | 04-25-2020 14:50:59 | 04-25-2020 14:50:59 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/3968?src=pr&el=h1) Report

> Merging [#3968](https://codecov.io/gh/huggingface/transformers/pull/3968?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/4e817ff41885063e08bb3bcd63e5adfd835b9911&el=desc) will **increase** coverage by `0.07%`.

> The diff coverage is `100.00%`.


```diff
@@            Coverage Diff             @@
##           master    #3968      +/-   ##
==========================================
+ Coverage   78.44%   78.51%   +0.07%
==========================================
  Files         111      111
  Lines       18518    18486      -32
==========================================
- Hits        14527    14515      -12
+ Misses       3991     3971      -20
```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/3968?src=pr&el=tree) | Coverage Δ | |
|---|---|---|
| [src/transformers/file\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3968/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9maWxlX3V0aWxzLnB5) | `72.61% <100.00%> (+3.74%)` | :arrow_up: |
| [src/transformers/modeling\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3968/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ191dGlscy5weQ==) | `91.06% <0.00%> (+0.12%)` | :arrow_up: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/3968?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/3968?src=pr&el=footer). Last update [4e817ff...b440a19](https://codecov.io/gh/huggingface/transformers/pull/3968?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

|

transformers | 3,967 | closed | Proposal: saner num_labels in configs. | See https://github.com/guillaume-be/rust-bert/pull/21 for more context | 04-25-2020 13:55:19 | 04-25-2020 13:55:19 | I like this better as well. Probably won't be backwards compatible with users that have their config with `_num_labels` though.<|||||>Like it better as well! Agree with @LysandreJik that some configs might need to be fixed manually<|||||>Well, `_num_labels` (if present) should always be consistent with the length of `id2label`, no?<|||||>You're right, it should!<|||||>All right, merging this. @patrickvonplaten if you want to re-run your cleaning script at some point feel free to do it :) (let me know before) |

transformers | 3,966 | closed | Add CALBERT (Catalan ALBERT) base-uncased model card | Hi there!

I just uploaded a new ALBERT model pretrained on 4.3 GB of Catalan text. Thank you for these fantastic libraries and the platform that make this a joy! | 04-25-2020 09:19:10 | 04-25-2020 09:19:10 | Looks good! Model page for [CALBERT](https://huggingface.co/codegram/calbert-base-uncased)<|||||>One additional tweak: you should add a

```
---
language: catalan
---
```

metadata block at the top of the model page. Thanks! |

transformers | 3,965 | closed | Remove hard-coded pad token id in distilbert and albert | As the config adds `pad_token_id` attribute, `padding_idx` set the value of `config.pad_token_id` in BertEmbedding. ( PR #3793 )

But it seems that not only the config of `Bert`, but also that of `DistilBert` and `Albert` has `pad_token_id`. ([Distilbert config](https://s3.amazonaws.com/models.huggingface.co/bert/distilbert-base-uncased-config.json), [Albert config](https://s3.amazonaws.com/models.huggingface.co/bert/albert-base-v1-config.json))

But in Embedding class of Distilbert and Albert, it seems that `padding_idx` is still hard-coded. So I've fixed those parts. | 04-25-2020 07:03:25 | 04-25-2020 07:03:25 | # [Codecov](https://codecov.io/gh/huggingface/transformers/pull/3965?src=pr&el=h1) Report

> Merging [#3965](https://codecov.io/gh/huggingface/transformers/pull/3965?src=pr&el=desc) into [master](https://codecov.io/gh/huggingface/transformers/commit/73d6a2f9019960c327f19689c1d9a6c0fba31d86&el=desc) will **decrease** coverage by `0.01%`.

> The diff coverage is `100.00%`.


```diff
@@            Coverage Diff             @@
##           master    #3965      +/-   ##
==========================================
- Coverage   78.45%   78.44%   -0.02%
==========================================
  Files         111      111
  Lines       18518    18518
==========================================
- Hits        14528    14526       -2
- Misses       3990     3992       +2
```

| [Impacted Files](https://codecov.io/gh/huggingface/transformers/pull/3965?src=pr&el=tree) | Coverage Δ | |
|---|---|---|
| [src/transformers/modeling\_albert.py](https://codecov.io/gh/huggingface/transformers/pull/3965/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ19hbGJlcnQucHk=) | `75.31% <100.00%> (ø)` | |
| [src/transformers/modeling\_distilbert.py](https://codecov.io/gh/huggingface/transformers/pull/3965/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ19kaXN0aWxiZXJ0LnB5) | `98.15% <100.00%> (ø)` | |
| [src/transformers/file\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3965/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9maWxlX3V0aWxzLnB5) | `68.49% <0.00%> (-0.37%)` | :arrow_down: |
| [src/transformers/modeling\_utils.py](https://codecov.io/gh/huggingface/transformers/pull/3965/diff?src=pr&el=tree#diff-c3JjL3RyYW5zZm9ybWVycy9tb2RlbGluZ191dGlscy5weQ==) | `90.94% <0.00%> (-0.13%)` | :arrow_down: |

------

[Continue to review full report at Codecov](https://codecov.io/gh/huggingface/transformers/pull/3965?src=pr&el=continue).

> **Legend** - [Click here to learn more](https://docs.codecov.io/docs/codecov-delta)

> `Δ = absolute <relative> (impact)`, `ø = not affected`, `? = missing data`

> Powered by [Codecov](https://codecov.io/gh/huggingface/transformers/pull/3965?src=pr&el=footer). Last update [73d6a2f...9d92a30](https://codecov.io/gh/huggingface/transformers/pull/3965?src=pr&el=lastupdated). Read the [comment docs](https://docs.codecov.io/docs/pull-request-comments).

<|||||>LGTM<|||||>@LysandreJik @VictorSanh

Hi :) Could you please review this PR? The hard-coded value causes problems for Korean BERT (which uses `pad_token_id=1` and `unk_token_id=0`).

I hope this PR makes it into the next version of the transformers library :)<|||||>lgtm!<|||||>@julien-c

Can you merge this PR? Thank you so much:) |

transformers | 3,964 | closed | Error on dtype in modeling_bertabs.py file | # 🐛 Bug

```
Traceback (most recent call last):
  File "run_summarizationn.py", line 324, in <module>
    main()
  File "run_summarizationn.py", line 309, in main
    evaluate(args)
  File "run_summarizationn.py", line 84, in evaluate
    batch_data = predictor.translate_batch(batch)
  File "/kaggle/working/transformers/examples/summarization/bertabs/modeling_bertabs.py", line 797, in translate_batch
    return self._fast_translate_batch(batch, self.max_length, min_length=self.min_length)
  File "/kaggle/working/transformers/examples/summarization/bertabs/modeling_bertabs.py", line 844, in _fast_translate_batch
    dec_out, dec_states = self.model.decoder(decoder_input, src_features, dec_states, step=step)
  File "/opt/conda/lib/python3.6/site-packages/torch/nn/modules/module.py", line 547, in __call__
    result = self.forward(*input, **kwargs)
  File "/kaggle/working/transformers/examples/summarization/bertabs/modeling_bertabs.py", line 231, in forward
    step=step,
  File "/opt/conda/lib/python3.6/site-packages/torch/nn/modules/module.py", line 547, in __call__
    result = self.forward(*input, **kwargs)
  File "/kaggle/working/transformers/examples/summarization/bertabs/modeling_bertabs.py", line 328, in forward
    dec_mask = torch.gt(tgt_pad_mask + self.mask[:, : tgt_pad_mask.size(1), : tgt_pad_mask.size(1)], 0)
RuntimeError: expected device cpu and dtype Byte but got device cpu and dtype Bool
```
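The failing line combines a padding mask with the cached upper-triangular "subsequent" mask (`self.mask`) by adding them and testing `> 0`. That is an element-wise logical OR. Comparison ops such as `eq` return `torch.bool` tensors in recent PyTorch releases. If the padding mask is bool while `self.mask` is a uint8 buffer (an assumption about how the two masks are built here), then a PyTorch version that does not promote mixed Byte/Bool arithmetic fails with exactly this error. Casting both masks to one dtype, or combining two bool masks with `|`, should avoid it. The plain-Python sketch below shows only what the combined mask should contain. All names are illustrative, and `True` means "masked".

```python
from typing import List

Mask = List[List[bool]]


def subsequent_mask(size: int) -> Mask:
    """True above the diagonal: query i must not attend to keys j > i."""
    return [[j > i for j in range(size)] for i in range(size)]


def padding_mask(tgt_ids: List[int], pad_id: int) -> Mask:
    """True where the key position is padding, repeated for every query row."""
    row = [token_id == pad_id for token_id in tgt_ids]
    return [list(row) for _ in tgt_ids]


def decoder_mask(tgt_ids: List[int], pad_id: int) -> Mask:
    """Element-wise OR of both masks, i.e. what gt(pad + subsequent, 0) computes."""
    pad = padding_mask(tgt_ids, pad_id)
    sub = subsequent_mask(len(tgt_ids))
    return [[p or s for p, s in zip(p_row, s_row)] for p_row, s_row in zip(pad, sub)]


mask = decoder_mask([5, 8, 0], pad_id=0)
for row in mask:
    print([int(v) for v in row])
# [0, 1, 1]
# [0, 0, 1]
# [0, 0, 1]
```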

## Information

Model I am using (Bert, XLNet ...): bertabs

Language I am using the model on (English, Chinese ...): English

The problem arises when using:

* [x] the official example scripts: (give details below)

* [ ] my own modified scripts: (give details below)

The tasks I am working on are:

* [ ] an official GLUE/SQuAD task: (give the name)

* [x] my own task or dataset: (give details below)

| 04-25-2020 06:31:34 | 04-25-2020 06:31:34 | |

transformers | 3,963 | closed | run_language_modeling, RuntimeError: expected scalar type Half but found Float | # 🐛 Bug

## Information

CUDA: 10.1

Python 3.6.8 (default, Oct 7 2019, 12:59:55)

Installed transformers from source.

Trying to train **gpt2-medium**

Command Line:

```

export TRAIN_FILE=dataset/wiki.train.raw
export TEST_FILE=dataset/wiki.test.raw

python3 examples/run_language_modeling.py --fp16 \
    --per_gpu_eval_batch_size 1 \
    --output_dir=output \
    --model_type=gpt2-medium \
    --model_name_or_path=gpt2-medium \
    --do_train \
    --train_data_file=$TRAIN_FILE \
    --do_eval \
    --eval_data_file=$TEST_FILE \
    --overwrite_output_dir

```

```

Traceback (most recent call last):
  File "examples/run_language_modeling.py", line 284, in <module>
    main()
  File "examples/run_language_modeling.py", line 254, in main
    trainer.train(model_path=model_path)
  File "/usr/local/lib/python3.6/dist-packages/transformers/trainer.py", line 314, in train
    tr_loss += self._training_step(model, inputs, optimizer)
  File "/usr/local/lib/python3.6/dist-packages/transformers/trainer.py", line 388, in _training_step
    outputs = model(**inputs)
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 541, in __call__
    result = self.forward(*input, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/parallel/data_parallel.py", line 152, in forward
    outputs = self.parallel_apply(replicas, inputs, kwargs)
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/parallel/data_parallel.py", line 162, in parallel_apply
    return parallel_apply(replicas, inputs, kwargs, self.device_ids[:len(replicas)])
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/parallel/parallel_apply.py", line 85, in parallel_apply
    output.reraise()
  File "/usr/local/lib/python3.6/dist-packages/torch/_utils.py", line 385, in reraise
    raise self.exc_type(msg)
RuntimeError: Caught RuntimeError in replica 0 on device 0.
Original Traceback (most recent call last):
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/parallel/parallel_apply.py", line 60, in _worker
    output = module(*input, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 541, in __call__
    result = self.forward(*input, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/transformers/modeling_gpt2.py", line 616, in forward
    use_cache=use_cache,
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 541, in __call__
    result = self.forward(*input, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/transformers/modeling_gpt2.py", line 500, in forward
    use_cache=use_cache,
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 541, in __call__
    result = self.forward(*input, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/transformers/modeling_gpt2.py", line 237, in forward
    use_cache=use_cache,
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 541, in __call__
    result = self.forward(*input, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/transformers/modeling_gpt2.py", line 196, in forward
    attn_outputs = self._attn(query, key, value, attention_mask, head_mask)
  File "/usr/local/lib/python3.6/dist-packages/transformers/modeling_gpt2.py", line 149, in _attn
    w = torch.where(mask, w, self.masked_bias)
RuntimeError: expected scalar type Half but found Float

```
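Line 149 fills the causal-mask positions of the attention scores `w` with `self.masked_bias` before the softmax. The error says `torch.where` received a Half tensor and a Float tensor. This suggests, as an inference from the message that was not verified here, that under `--fp16` the scores are half precision while the `masked_bias` buffer stayed float32. One plausible workaround is to cast the fill value to the scores' dtype, e.g. `torch.where(mask, w, self.masked_bias.to(w.dtype))`. The plain-Python sketch below only shows what the masking step computes. The fill value of -1e4 is an assumption about GPT-2's buffer, and `causal_softmax` is a hypothetical helper.

```python
import math
from typing import List

MASKED_BIAS = -1e4  # assumed fill value, playing the role of GPT-2's masked_bias buffer


def causal_softmax(scores: List[List[float]], masked_bias: float = MASKED_BIAS) -> List[List[float]]:
    """Row-wise softmax after replacing scores for future keys (j > i) with masked_bias."""
    out = []
    for i, row in enumerate(scores):
        # Equivalent of torch.where(mask, w, masked_bias): keep allowed scores, fill the rest.
        filled = [s if j <= i else masked_bias for j, s in enumerate(row)]
        m = max(filled)  # subtract the row max for numerical stability
        exps = [math.exp(s - m) for s in filled]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


probs = causal_softmax([[0.5, 2.0, 1.0],
                        [1.0, 1.0, 3.0],
                        [0.0, 0.0, 0.0]])
for row in probs:
    print([round(p, 3) for p in row])
# [1.0, 0.0, 0.0]
# [0.5, 0.5, 0.0]
# [0.333, 0.333, 0.333]
```

Any sufficiently negative finite fill works here, as long as it is also representable in the dtype the scores are computed in.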

| 04-25-2020 06:06:35 | 04-25-2020 06:06:35 | What's your PyTorch version? Can you post the output of `transformers-cli env`

This does not seem specific to `examples/run_language_modeling.py` so I'm gonna ping @patrickvonplaten on this<|||||>@julien-c thanks for the prompt response.

- `transformers` version: 2.8.0

- Platform: Linux-4.14.138+-x86_64-with-Ubuntu-18.04-bionic

- Python version: 3.6.8

- PyTorch version (GPU?): 1.3.0 (True)

- Tensorflow version (GPU?): not installed (NA)

- Using GPU in script?: yes

- Using distributed or parallel set-up in script?: no<|||||>Hi @ankit-chadha,

I will take a look next week :-). Maybe @sshleifer, do you know something off the top of your head? This issue is also related: https://github.com/huggingface/transformers/issues/3676.<|||||>I have tried to replicate this in torch 1.4: `mask` and `masked_bias` are float16, and there is no error.

@ankit-chadha, would it be possible to upgrade torch to 1.4 and see if the problem persists?
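Whatever dtype the fill value ends up in, it must be representable there. IEEE 754 half precision tops out at 65504. A stdlib-only check using `struct`'s half-precision `'e'` format shows that a fill of -1e4 survives a round trip through fp16, while a larger constant such as -1e9 would not fit. The helper names are illustrative.

```python
import struct


def fits_in_fp16(value: float) -> bool:
    """True if value can be packed as an IEEE 754 half-precision float."""
    try:
        struct.pack("<e", value)
    except OverflowError:
        return False
    return True


def to_fp16(value: float) -> float:
    """Round-trip a Python float through half precision."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


print(fits_in_fp16(-1e4), to_fp16(-1e4))  # True -10000.0
print(fits_in_fp16(65504.0))              # True: largest finite fp16 value
print(fits_in_fp16(-1e9))                 # False: too large for half precision
```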

<|||||>@sshleifer @patrickvonplaten

- transformers version: 2.8.0

- Platform: Linux-4.14.138+-x86_64-with-Ubuntu-18.04-bionic

- Python version: 3.6.8

- PyTorch version (GPU?): 1.4.0 (True)

- Tensorflow version (GPU?): 2.0.0 (False)

```
Traceback (most recent call last):
  File "examples/run_language_modeling.py", line 284, in <module>
    main()
  File "examples/run_language_modeling.py", line 254, in main
    trainer.train(model_path=model_path)
  File "/usr/local/lib/python3.6/dist-packages/transformers/trainer.py", line 314, in train
    tr_loss += self._training_step(model, inputs, optimizer)
  File "/usr/local/lib/python3.6/dist-packages/transformers/trainer.py", line 388, in _training_step
    outputs = model(**inputs)
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 532, in __call__
    result = self.forward(*input, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/parallel/data_parallel.py", line 152, in forward
    outputs = self.parallel_apply(replicas, inputs, kwargs)
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/parallel/data_parallel.py", line 162, in parallel_apply
    return parallel_apply(replicas, inputs, kwargs, self.device_ids[:len(replicas)])
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/parallel/parallel_apply.py", line 85, in parallel_apply
    output.reraise()
  File "/usr/local/lib/python3.6/dist-packages/torch/_utils.py", line 394, in reraise
    raise self.exc_type(msg)
RuntimeError: Caught RuntimeError in replica 0 on device 0.
Original Traceback (most recent call last):
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/parallel/parallel_apply.py", line 60, in _worker
    output = module(*input, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 532, in __call__
    result = self.forward(*input, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/transformers/modeling_gpt2.py", line 616, in forward
    use_cache=use_cache,
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 532, in __call__
    result = self.forward(*input, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/transformers/modeling_gpt2.py", line 500, in forward
    use_cache=use_cache,
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 532, in __call__
    result = self.forward(*input, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/transformers/modeling_gpt2.py", line 237, in forward
    use_cache=use_cache,
  File "/usr/local/lib/python3.6/dist-packages/torch/nn/modules/module.py", line 532, in __call__
    result = self.forward(*input, **kwargs)
  File "/usr/local/lib/python3.6/dist-packages/transformers/modeling_gpt2.py", line 196, in forward
    attn_outputs = self._attn(query, key, value, attention_mask, head_mask)
  File "/usr/local/lib/python3.6/dist-packages/transformers/modeling_gpt2.py", line 149, in _attn
    w = torch.where(mask, w, self.masked_bias)
RuntimeError: expected scalar type Half but found Float
```

<|||||>Could you provide a small subset of your data so that I can reproduce?<|||||>@sshleifer - I am using the wiki.train.raw default dataset. |

transformers | 3,962 | closed | `BertTokenizer.from_pretrained()` not working with native Python `pathlib` module | Consider this code that downloads models and tokenizers to disk and then uses `BertTokenizer.from_pretrained` to load the tokenizer from disk.

**ISSUE:** `BertTokenizer.from_pretrained()` does not seem to be compatible with Python's native [pathlib](https://docs.python.org/3/library/pathlib.html) module.

```

# -*- coding: utf-8 -*-
"""
Created on: 25-04-2020
Author: MacwanJ

ISSUE:
BertTokenizer.from_pretrained() is not compatible with pathlib constructs
"""

from pathlib import Path
import os

from transformers import BertModel, BertTokenizer

# enables proper path resolution when this script is run in a terminal
# or in an interactive Python shell/notebook
try:
    file_location = Path(__file__).parent.resolve()
except NameError:
    file_location = Path.cwd().resolve()

PROJ_DIR = file_location.parent

#####################################################################
# DOWNLOAD MODELS & TOKENIZERS
#####################################################################

model_name = 'bert-base-uncased'

if not os.path.exists(PROJ_DIR/'models'/model_name):
    print(model_name,
          'folder does not exist. Creating folder now',
          'and proceeding to download and save model')
    os.makedirs(PROJ_DIR/'models'/model_name)
else:
    print(model_name,
          'folder already exists. Proceeding to download and save model')

tokenizer = BertTokenizer.from_pretrained(model_name)
model = BertModel.from_pretrained(model_name)
print('Download complete. Proceeding to save to disk')
model.save_pretrained(PROJ_DIR/'models'/model_name)