repo

stringclasses 1

value | number

int64 1

25.3k

| state

stringclasses 2

values | title

stringlengths 1

487

| body

stringlengths 0

234k

⌀ | created_at

stringlengths 19

19

| closed_at

stringlengths 19

19

| comments

stringlengths 0

293k

|

|---|---|---|---|---|---|---|---|

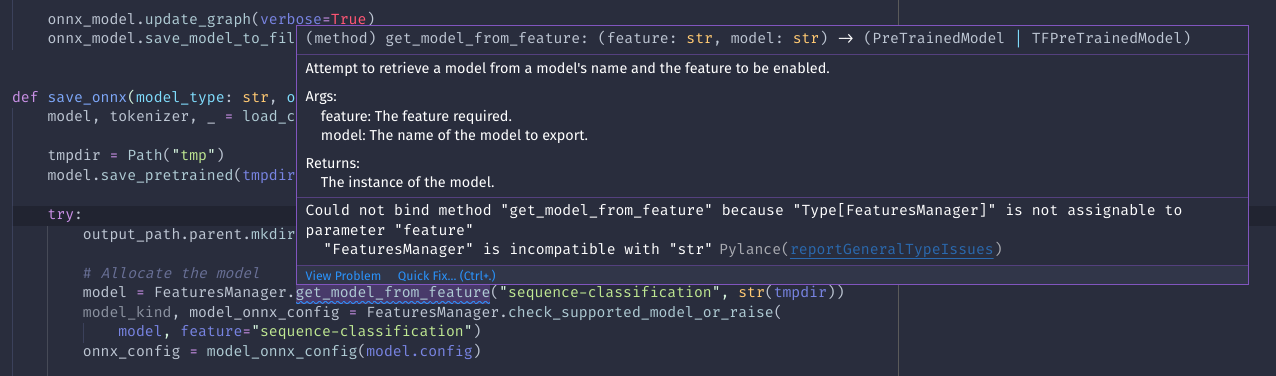

transformers | 16,347 | closed | `FeaturesManager.get_model_from_feature` should be a staticmethod | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.17.0

- Platform: Linux (Pop!_OS)

- Python version: 3.9.6

- PyTorch version (GPU?): 1.9.0+cu111

- Tensorflow version (GPU?): /

- Using GPU in script?: No

- Using distributed or parallel set-up in script?: No

### Who can help

@michaelbenayoun who committed #14358

## Information

Type error for Pylance in VsCode:

## Fix

https://github.com/huggingface/transformers/blob/77321481247787c97568c3b9f64b19e22351bab8/src/transformers/onnx/features.py#L336-L345

I believe the above function should have the `@staticmethod` decorator.

| 03-23-2022 00:14:27 | 03-23-2022 00:14:27 | |

transformers | 16,346 | closed | Fix code repetition in serialization guide | # What does this PR do?

## Before submitting

- [x] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

@sgugger @stevhliu | 03-22-2022 20:34:48 | 03-22-2022 20:34:48 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 16,345 | closed | [FlaxBart] make sure no grads are computed an bias | # What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Makes sure that no gradients are computed on FlaxBart bias. Note that in PyTorch we don't compute a gradient because the bias logits are saved as a PyTorch buffer: https://discuss.pytorch.org/t/what-is-the-difference-between-register-buffer-and-register-parameter-of-nn-module/32723

Also see results of: https://discuss.huggingface.co/t/gradients-verification-between-jax-flax-models-and-pytorch/15970

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

| 03-22-2022 20:21:39 | 03-22-2022 20:21:39 | The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_16345). All of your documentation changes will be reflected on that endpoint.<|||||>@patil-suraj @sanchit-gandhi - this surely makes a difference when training - I'm not sure how much of a difference though. Will be interesting to try out though.<|||||>The `stop_gradient` fix looks good - the results you cited verify nicely that the gradients are only frozen for the `final_logits_bias` parameters, and not for any other additional parameters upstream in the computation graph.

One small comment in regards to the testing script `check_gradients_pt_flax.py` that you used to generate the gradient comparison results. Currently, we are comparing the PyTorch gradients relative to the Flax ones (https://huggingface.co/patrickvonplaten/codesnippets/blob/main/check_gradients_pt_flax.py#L86):

```python

diff_rel = np.abs(ak_norm - bk_norm) / np.abs(ak_norm)

```

Since we are taking the PyTorch gradients as our ground truth values, we should compare the Flax gradients relative to the PyTorch ones:

```python

diff_rel = np.abs(ak_norm - bk_norm) / np.abs(bk_norm)

``` |

transformers | 16,344 | closed | [Bug template] Shift responsibilities for long-range | # What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

@ydshieh knows Longformer and BigBird probably better than me now

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

| 03-22-2022 19:02:05 | 03-22-2022 19:02:05 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 16,343 | closed | Checkpoint sharding | # What does this PR do?

This PR introduces the ability to create and load sharded checkpoints. It introduces a new argument in `save_pretrained` that controls the maximum size of a checkpoint before being auto-sharded into smaller parts (which defaults to 10GB after internal discussion, which should be good with the Hub and environment with low RAM like Colab).

When the model total size is less than this maximum size, it's saved exactly like before. When the model size is bigger, while traversing the state dict, each time a new weight tips the size above that threshold, a new shard is created. Therefore each shard is usually of size less than the max size, but if an individual weight has a size bigger than this threshold, it will spawn a shard containing only itself that will be of a bigger size.

On the `from_pretrained` side, a bit of refactoring was necessary to make the API deal with several state dict files. The main part is isolating the code that loads a state dict into a model in a separate function, so I can call it for each shard. I'm leaving comments on the PR to facilitate the review and I will follow up with another PR that refactors `from_pretrained` even more for cleaning but with no change of actual code.

cc @julien-c @thomwolf @stas00 @Narsil who interacted in the RFC.

Linked issue: #13548 | 03-22-2022 19:01:10 | 03-22-2022 19:01:10 | _The documentation is not available anymore as the PR was closed or merged._<|||||>@sgugger is it possibile to apply sharding to a current pretrained model that is bigger than 10gb (let's say t0pp like), using

load_pretrained-> save_pretrained

To save a sharded version, and the again

load_pretrained

That should handle therefore load the sharded checkpoint?

Thanks<|||||>Yes, that's exactly what you should do!

We'll also create a new branch of the t0pp checkpoint with a sharded checkpoint (we can't do it on the main branch or it would break compatibility with older versions of Transformers).<|||||>Hahaha amazing! Where is it? <|||||>@sgugger we could possibly upload the sharded checkpoint **in addition to** the current checkpoint in the same repo branch no?

I thought that's what we wanted to do to preserve backward compat while still upgrading those big models

<|||||>If you put them in the same branch, `from_pretrained` will only download the full checkpoint as it's the first in order of priority.<|||||>I see. should we consider changing this behavior? we've seen that git-branches are not super practical for those large models (cc @osanseviero )<|||||>(yep, i know this is going to require to call something like /api/models/xxx at the start of `from_pretrained`... :) )<|||||>or maybe we can say that we'll just push sharded models from now on (for example the bigscience models will be sharded-only) I think that's actually fine<|||||>or we can default to the shared model for the new version of `transformers`?<|||||>yep that was what i was suggesting but implementation wise is a bit more complex (and affects all model repos not just the sharded ones) |

transformers | 16,342 | closed | Adopt framework-specific blocks for content | This PR adopts the framework-specific blocks from [#130](https://github.com/huggingface/doc-builder/pull/130) for PyTorch/TensorFlow code samples and content. | 03-22-2022 17:52:49 | 03-22-2022 17:52:49 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 16,341 | closed | Modeling Outputs | # What does this PR do?

This PR tries to add new model outputs to cover avoid implementing custom outputs for models that do not have attentions. Three new outputs are added:

- `BaseModelOutputWithNoAttention`

- `BaseModelOutputWithPoolingAndNoAttention`

- `ImageClassifierOutputWithNoAttention`

The docstring of the `hidden_states` and `hidden_states` shape is changed to `(batch_size, num_channels, height, width)`. The thinking behind it is models that do not output attentions are usually conv models. However, this is not quite general.

Another solution is to change the docstring of `BaseModelOutput***` to state that `attentions` will be returned only if the model has an attention mechanism.

A custom `ModelOutput` will still be needed if the returned tensors' shapes are not the ones in the docstring. One solution may be making the outputs "model aware". For example, I can define a `BaseModelOutput2D` that returns 2d tensors (`batch_size, num_channels, height, width`). Doing so will unfortunately result in a lot of new model outputs.

Let me know your feedbacks | 03-22-2022 17:13:57 | 03-22-2022 17:13:57 | _The documentation is not available anymore as the PR was closed or merged._<|||||>Updated the docstring of the outputs and replaced multiple models' outputs with the new ones<|||||>@NielsRogge Not sure what the point of your last comments. We know the modeling outputs won't work for every model and we have a strategy for that (subclass and overwrite the docstring). Did you have a suggestion on how to make the docstring more general?<|||||>Maybe we can add a statement that says that the `hidden_states` optionally include the initial embeddings, if the model has an embedding layer.<|||||>+1 on @NielsRogge idea<|||||>Looks good to me, note that we have some more vision models:

* Swin Transformer

* DeiT

* BEiT

* ViTMAE<|||||>Added correct outputs for

- DeiT

- BEiT

The following models need a custom output

- ViTMAE

- Swin<|||||>Changed the docstring for every `hidden_states` field in every `*ModelOutput*` to specify the embedding hidden state may be optional (depending on the model's architecture)<|||||>Update the docstrings and create a custom model output for SegFormer `SegFormerImageClassifierOutput` |

transformers | 16,340 | closed | VAN: Code sample tests | # What does this PR do?

This PR fixes the wrong name in `_CHECKPOINT_FOR_DOC` for VAN

| 03-22-2022 16:54:22 | 03-22-2022 16:54:22 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 16,339 | closed | The `convert_tokens_to_string` method fails when the added tokens include a space | ## Environment info

```

- `transformers` version: 4.17.0

- Platform: Linux-5.4.144+-x86_64-with-Ubuntu-18.04-bionic

- Python version: 3.7.12

- PyTorch version (GPU?): 1.10.0+cu111 (False)

- Tensorflow version (GPU?): 2.8.0 (False)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

```

## Information

The bug that was identified [for the decode method regarding added tokens with space ](https://github.com/huggingface/transformers/issues/1133)also occurs with the `convert_tokens_to_string` method.

## To reproduce

```python

from transformers import AutoTokenizer, AddedToken

def print_tokenizer_result(text, tokenizer):

text = tokenizer.convert_tokens_to_string(tokenizer.tokenize(text))

print(f"convert_tokens_to_string method: {text}")

model_name = "patrickvonplaten/norwegian-roberta-base"

tokenizer_init = AutoTokenizer.from_pretrained(model_name)

tokenizer_init.save_pretrained("local_tokenizer")

model_name = "local_tokenizer"

tokenizer_s = AutoTokenizer.from_pretrained(model_name, use_fast=False)

tokenizer_f = AutoTokenizer.from_pretrained(model_name, use_fast=True)

new_token = "token with space"

tokenizer_s.add_tokens(AddedToken(new_token, lstrip=True))

tokenizer_f.add_tokens(AddedToken(new_token, lstrip=True))

text = "Example with token with space"

print("Output for the fast:")

print_tokenizer_result(text, tokenizer_f)

print("\nOutput for the slow:")

print_tokenizer_result(text, tokenizer_s)

```

## Expected behavior

No error or the fact that `convert_tokens_to_string` is a private method.

| 03-22-2022 16:00:10 | 03-22-2022 16:00:10 | One way to solve this problem is to move the logic implemented in the `_decode` method into the `convert_tokens_to_string` method.

https://github.com/huggingface/transformers/blob/9d88be57785dccfb1ce104a1226552cd216b726e/src/transformers/tokenization_utils.py#L933-L946

The only issue will be that we'll need to add new argument (`skip_special_tokens`, `clean_up_tokenization_spaces`, `spaces_between_special_tokens`) to the `convert_tokens_to_string` method of the slow tokenizers.

Another option is to make the method `convert_tokens_to_string` private.

<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |

transformers | 16,338 | closed | Add type annotations for Rembert/Splinter and copies | Splinter and Rembert (Torch) type annotations.

Dependencies on Bert (Torch).

Raw files w/o running make fixup or make fix-copies

#16059

@Rocketknight1 | 03-22-2022 15:59:40 | 03-22-2022 15:59:40 | By the way, GitHub is having some issues right now and tests may not be running. Feel free to continue with the PR, but we'll need to wait for them to come back before we can merge!<|||||>_The documentation is not available anymore as the PR was closed or merged._<|||||>Tests are back, going to try fixing copies etc. now, one sec!<|||||>Is this a private file? This was the main error I was receiving after run fix-copies (from the build strack trace)

```

self = <module 'transformers.models.template_bi' from '/home/runner/work/transformers/transformers/src/transformers/models/template_bi/__init__.py'>

module_name = 'modeling_template_bi'

def _get_module(self, module_name: str):

try:

return importlib.import_module("." + module_name, self.__name__)

except Exception as e:

raise RuntimeError(

f"Failed to import {self.__name__}.{module_name} because of the following error (look up to see its traceback):\n{e}"

> ) from e

E RuntimeError: Failed to import transformers.models.template_bi.modeling_template_bi because of the following error (look up to see its traceback):

E name 'Optional' is not defined```<|||||>Ah, I see the problem now! This error is in our template files, and yes, it can be hard to spot, because it only appears when one of our tests tries to generate a new class from the template. Give me a second and I'll see if I can fix it!<|||||>I see. Definitely scratched my head at these for a while - Thanks so much for your help! |

transformers | 16,337 | closed | [TBD] discrepancy regarding the tokenize method behavior - should the token correspond to the token in the vocabulary or to the initial text | ## Environment info

```

- `transformers` version: 4.17.0

- Platform: Linux-5.4.144+-x86_64-with-Ubuntu-18.04-bionic

- Python version: 3.7.12

- PyTorch version (GPU?): 1.10.0+cu111 (False)

- Tensorflow version (GPU?): 2.8.0 (False)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

```

## Information

When adding a token to a tokenizer with a slow backend or to a tokenizer with a fast backend, if you use the `AddedToken` class with the `lstrip=True` argument, the output of the tokenize method is not the same.

This difference should be put into perspective by the fact that the encoding (the sequence of ids) is identical: the model will see the correct input.

## To reproduce

```python

from transformers import AutoTokenizer, AddedToken

def print_tokenizer_result(text, tokenizer):

tokens = tokenizer.tokenize(text)

print(f"tokenize method: {tokens}")

model_name = "patrickvonplaten/norwegian-roberta-base"

tokenizer_init = AutoTokenizer.from_pretrained(model_name)

tokenizer_init.save_pretrained("local_tokenizer")

model_name = "local_tokenizer"

tokenizer_s = AutoTokenizer.from_pretrained(model_name, use_fast=False)

tokenizer_f = AutoTokenizer.from_pretrained(model_name, use_fast=True)

new_token = "added_token_lstrip_false"

tokenizer_s.add_tokens(AddedToken(new_token, lstrip=True))

tokenizer_f.add_tokens(AddedToken(new_token, lstrip=True))

text = "Example with added_token_lstrip_false"

print("Output for the fast:")

print_tokenizer_result(text, tokenizer_f)

print("\nOutput for the slow:")

print_tokenizer_result(text, tokenizer_s)

```

Output:

```

Output for the fast:

tokenize method: ['Ex', 'amp', 'le', 'Ġwith', ' added_token_lstrip_false'] # Note the space at the beginning of ' added_token_lstrip_false'

Output for the slow:

tokenize method: ['Ex', 'amp', 'le', 'Ġwith', 'added_token_lstrip_false']

``` | 03-22-2022 15:46:01 | 03-22-2022 15:46:01 | This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |

transformers | 16,336 | closed | A slow tokenizer cannot add a token with the argument `lstrip=False` | ## Environment info

```

- `transformers` version: 4.17.0

- Platform: Linux-5.4.144+-x86_64-with-Ubuntu-18.04-bionic

- Python version: 3.7.12

- PyTorch version (GPU?): 1.10.0+cu111 (False)

- Tensorflow version (GPU?): 2.8.0 (False)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

```

## Information

When adding a token to a tokenizer with a slow backend or to a tokenizer with a fast backend, if you use the `AddedToken` class with the `lstrip=False` argument, the final tokenization will not be the same.

My impression is that the slow tokenizer always strips and that the fast tokenizer takes this argument into account (to be confirmed by the conclusion of [this issue](https://github.com/huggingface/tokenizers/issues/959) in the tokenizers library).

## To reproduce

```python

from transformers import AutoTokenizer, AddedToken

def print_tokenizer_result(text, tokenizer):

ids = tokenizer.encode(text, add_special_tokens=False)

tokens = tokenizer.convert_ids_to_tokens(ids)

print(f"tokens: {tokens}")

model_name = "patrickvonplaten/norwegian-roberta-base"

tokenizer_init = AutoTokenizer.from_pretrained(model_name)

tokenizer_init.save_pretrained("local_tokenizer")

model_name = "local_tokenizer"

tokenizer_s = AutoTokenizer.from_pretrained(model_name, use_fast=False)

tokenizer_f = AutoTokenizer.from_pretrained(model_name, use_fast=True)

new_token = "added_token_lstrip_false"

tokenizer_s.add_tokens(AddedToken(new_token, lstrip=False))

tokenizer_f.add_tokens(AddedToken(new_token, lstrip=False))

text = "Example with added_token_lstrip_false"

print("Output for the fast:")

print_tokenizer_result(text, tokenizer_f)

print("\nOutput for the slow:")

print_tokenizer_result(text, tokenizer_s)

```

Output:

```

Output for the fast:

tokens: ['Ex', 'amp', 'le', 'Ġwith', 'Ġ', 'added_token_lstrip_false']

Output for the slow:

tokens: ['Ex', 'amp', 'le', 'Ġwith', 'added_token_lstrip_false']

```

## Expected behavior

Output:

```

Output for the fast:

tokens: ['Ex', 'amp', 'le', 'Ġwith', 'Ġ', 'added_token_lstrip_false']

Output for the slow:

tokens: ['Ex', 'amp', 'le', 'Ġwith', 'Ġ', 'added_token_lstrip_false']

```

| 03-22-2022 15:26:49 | 03-22-2022 15:26:49 | The logic for `lstrip` **is** implemented for slow tokenizers actually:

https://github.com/huggingface/transformers/blob/main/src/transformers/tokenization_utils.py#L519

The main thing I think is that in that function,the added token is NOT in the `all_special_added_tokens_extended`. If it was, it would work.

Now the function `add_tokens` starts by removing all information about the `AddedToken` which if it didn't maybe we could keep parity here. https://github.com/huggingface/transformers/blob/main/src/transformers/tokenization_utils.py#L411

So my conclusion is that the core issue is that the slow tokenizer discards all the info you are sending it, it probably shouldn't.

That being said, the simplest backward compatible fix, might not be obvious.<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |

transformers | 16,335 | closed | [WIP] test doctest | # What does this PR do?

test doctest | 03-22-2022 14:27:07 | 03-22-2022 14:27:07 | |

transformers | 16,334 | closed | T5Tokenizer Fast and Slow give different results with AddedTokens | When adding a new token to T5TokenizerFast and/or T5Tokenizer, we get different results for the tokenizers which is unexpected.

E.g. running the following code:

```python

from transformers import AutoTokenizer, AddedToken

tok = AutoTokenizer.from_pretrained("t5-small", use_fast=False)

tok_fast = AutoTokenizer.from_pretrained("t5-small", use_fast=True)

tok.add_tokens("$$$")

tok_fast.add_tokens(AddedToken("$$$", lstrip=False))

prompt = "Hello what is going on $$$ no ? We should"

print("Slow")

print(tok.decode(tok(prompt).input_ids))

print("Fast")

print(tok_fast.decode(tok_fast(prompt).input_ids))

```

yields different results for each tokenizer

```

Slow

Hello what is going on $$$ no? We should</s>

Fast

Hello what is going on$$$ no? We should</s>

```

## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.18.0.dev0

- Platform: Linux-5.15.15-76051515-generic-x86_64-with-glibc2.34

- Python version: 3.9.7

- Huggingface_hub version: 0.4.0.dev0

- PyTorch version (GPU?): 1.10.2+cu102 (True)

- Tensorflow version (GPU?): 2.8.0 (False)

- Flax version (CPU?/GPU?/TPU?): 0.4.0 (cpu)

- Jax version: 0.3.1

- JaxLib version: 0.3.0

| 03-22-2022 13:49:00 | 03-22-2022 13:49:00 | cc @Narsil @SaulLu <|||||>Hi, The behavior can be explained by the fact that the encode, splits on whitespace and ignores them,

then the decoder uses `Metaspace` (which is for the `spm` behavior) which does not prefix things with spaces even on the added token. The spaces are supposed to already be contained within the tokens themselves.

We could have parity on this at least for sure !

But I am not sure who is right in that case, both decoded values look OK to me. The proposed AddedToken contains no information about the spaces so it's ok to no place one back by default (it would break things when added tokens are specifically intended for stuff not containing spaces).

In that particular instance, because we're coming from a sentence with a space, ofc it makes more sense to put one back to recover the original string. But `decode[999, 998]` with `999="$("` and `998=")$"` It's unclear to me if a user wants `"$( )$"` or `"$()$"` when decoded. (Just trying to take an plausible example where the answer is unclear.)<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored.<|||||>should this be reopened if it's not resolved yet?<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |

transformers | 16,333 | closed | Update docs/README.md | # What does this PR do?

Add information about how to perform doc testing **for a specific python module**. Potentially making the process less scaring (a lot of file changes) for the users.

Also make it more clear/transparent that `file_utils.py` should be (almost) always included.

| 03-22-2022 13:37:26 | 03-22-2022 13:37:26 | _The documentation is not available anymore as the PR was closed or merged._<|||||>Do you mean: there are some python module files or doc files, for which if we want to run doctest, it will require changes (preparation) in files other than

- the target files themselves

- `file_utils.py`

<|||||>> Ah sorry, I misread the command! This works indeed but could we maybe wait for the refactor of `file_utils` to merge this (and fix the paths)? I'll probably forget to adapt this otherwise.

Oh, yes for sure!<|||||>Will merge this PR once Sylvain's PR about file_utils is merged. |

transformers | 16,332 | closed | TF - Fix interchangeable past/past_key_values and revert output variable name in GPT2 | # Context

From the discussion in https://github.com/huggingface/transformers/pull/16311 (PR that applies `@unpack_inputs` to TF `gpt2`): In the generate refactor, TF `gpt2` got an updated `prepare_inputs_for_generation()`, where its output `past` got renamed into `past_key_values` (i.e. as in FLAX/PT). Patrick suggested reverting it since this prepared input could be used externally.

# What did I find while working on this PR?

Reverting as suggested above makes TF `gpt2` fail tests related to `encoder_decoder`, which got an updated `prepare_inputs_for_generation()` in the same PR that expects a `past_key_values` (and not a `past`).

Meanwhile, I've also noticed a related bug in the new `@unpack_inputs` decorator, where it was not preserving a previous behavior -- when the model received a `past_key_values` but expected a `past` input (and vice-versa), it automatically swapped the keyword. This feature was the key enabler behind `encoder_decoder`+`gpt2`, as `encoder_decoder` was throwing out `past_key_values` prepared inputs that were caught by `gpt2`'s `past` argument.

# So, what's in this PR?

This PR fixes the two issues above, which are needed for proper behavior in all combinations of inputs to TF `gpt2`, after the introduction of the decorator:

1. corrects the bug in the `@unpack_inputs` decorator and adds tests to ensure we don't regress on some key properties of our TF input handling. After this PR, `gpt2` preserves its ability to receive `past` (and `past_key_values`, if through `encoder_decoder`-like), with and without the decorator.

2. It also reverts `past_key_values` into `past` whenever the change was introduced in https://github.com/huggingface/transformers/pull/15944, and makes the necessary changes in `encoder_decoder`-like models. | 03-22-2022 12:50:17 | 03-22-2022 12:50:17 | ~(Wait, there is an error)~

~Should be good now~

Nope<|||||>@patrickvonplaten hold your review, this change is not conflicting with the `encoder_decoder` models. I believe I know why, digging deeper.<|||||>_The documentation is not available anymore as the PR was closed or merged._<|||||>@patrickvonplaten now it is properly fixed -- please check the updated description at the top :)

Meanwhile, the scope increased a bit, so I'm tagging a 2nd reviewer (@Rocketknight1 )<|||||>I think Sylvain also tries to avoid `modeling_tf_utils.py` as much as possible too these days, lol. Let me take a look! <|||||>> I'd really like to get rid of these old non-standard arguments next time we can make a breaking change, though!

@Rocketknight1 me too 🙈 that function is a mess |

transformers | 16,331 | closed | [GLPN] Improve docs | # What does this PR do?

This PR adds a link to a notebook. | 03-22-2022 12:21:10 | 03-22-2022 12:21:10 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 16,330 | closed | Deal with the error when task is regression | # What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes # (issue)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

| 03-22-2022 11:45:44 | 03-22-2022 11:45:44 | _The documentation is not available anymore as the PR was closed or merged._<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored.<|||||>Sorry for not seeing this earlier! cc @sgugger <|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |

transformers | 16,329 | closed | Spanish translation of the file multilingual.mdx | # What does this PR do?

Adds the Spanish version of [multilingual.mdx](https://github.com/huggingface/transformers/blob/master/docs/source/en/multilingual.mdx) to [transformers/docs/source_es](https://github.com/huggingface/transformers/tree/main/docs/source/es)

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes https://github.com/huggingface/transformers/issues/15947 (issue)

## Before submitting

- [x] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

## Who can review?

@omarespejel @osanseviero

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

| 03-22-2022 11:34:02 | 03-22-2022 11:34:02 | _The documentation is not available anymore as the PR was closed or merged._<|||||>any other thing left to do? @omarespejel <|||||>@SimplyJuanjo thank you! 🤗

@sgugger LGTM 👍 |

transformers | 16,328 | closed | [T5] Add t5 download script | # What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

There have been a lot of issues on converting any of the official T5 checkpoints to HF. All original T5 checkpoints are stored on google cloud bucked and it's not always obvious how to download them. I think it makes sense to provide a bash script to the T5 folder that can help with this.

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

| 03-22-2022 11:30:10 | 03-22-2022 11:30:10 | cc @peregilk @stefan-it <|||||>_The documentation is not available anymore as the PR was closed or merged._ |

transformers | 16,327 | closed | Arg to begin `Trainer` evaluation on eval data after n steps/epochs | # 🚀 Feature request

It would be great to be able to have the option to delay evaluation on the eval set until after a set number of steps/epochs.

## Motivation

Evaluation metrics can take a while to calculate and are arguably often not as meaningful in the early stages of training (like during warmup). This change would free up time that would be better spent training. Increasing `eval_steps` works until you end up waiting a while at the business end of training so this would not be an ideal compromise.

## Your contribution

First maybe have a training argument such as `evaluation_delay`.

Then:

(Adapted from [here](https://github.com/huggingface/transformers/blob/v4.17.0/src/transformers/trainer.py#L1578).)

```python

if self.args.evaluation_delay is not None:

if self.args.save_strategy == IntervalStrategy.STEPS:

is_delayed = self.state.global_step <= self.args.evaluation_delay

else:

is_delayed = epoch <= self.args.evaluation_delay

if self.control.should_save and not is_delayed:

self._save_checkpoint(model, trial, metrics=metrics)

self.control = self.callback_handler.on_save(self.args, self.state, self.control)

```

Maybe get some input from @sgugger?

Thanks! | 03-22-2022 10:27:15 | 03-22-2022 10:27:15 | That sounds like a very interesting new feature, and I agree with the proposed implementation. Would you like to work on a PR for this?<|||||>Sure thing! @sgugger <|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |

transformers | 16,326 | closed | Updates the default branch from master to main | Updates the default branch name for the doc-builder. | 03-22-2022 10:11:55 | 03-22-2022 10:11:55 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 16,325 | open | Implement HybridNets: End-to-End Perception Network | # 🌟 New model addition

## Model description

Quote from the Github repository:

```markdown

HybridNets is an end2end perception network for multi-tasks.

Our work focused on traffic object detection, drivable area segmentation and lane detection.

HybridNets can run real-time on embedded systems, and obtains SOTA Object Detection,

Lane Detection on BDD100K Dataset.

```

## Open source status

* [x] the model implementation is available: yes, they have pushed everything [here](https://github.com/datvuthanh/HybridNets) on Github

* [x] the model weights are available: yes, there are available directly [here](https://github.com/datvuthanh/HybridNets/releases/download/v1.0/hybridnets.pth) on Github.

* [x] who are the authors: Dat Vu and Bao Ngo and Hung Phan (@xoiga123 @datvuthanh)

Btw, I would love to help to implement this kind of models to the Hub if possible :hugs: .

But I don't know if it fits to `Transformers` library or not and if it involves a lot of work to implement it, but I want to try to help! | 03-22-2022 08:52:31 | 03-22-2022 08:52:31 | cc @NielsRogge |

transformers | 16,324 | closed | Add type hints for Pegasus model (PyTorch) | Adding type hints for forward methods in user-facing class for Pegasus model (PyTorch) as mentioned in #16059

@Rocketknight1 | 03-22-2022 08:38:52 | 03-22-2022 08:38:52 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 16,323 | closed | Funnel type hints | # What does this PR do?

Added type hints for Funnel Transformer and TF Funnel Transformer as described in https://github.com/huggingface/transformers/issues/16059

@Rocketknight1 | 03-22-2022 06:42:45 | 03-22-2022 06:42:45 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 16,322 | closed | RuntimeError: CUDA out of memory. Tried to allocate 20.00 MiB with 8 Ampere GPU's | I am fine tuning masked language model from XLM Roberta large on google machine specs.

I made couple of experiments and was strange to see few results. `I think something is not functioning properly. `

I am using pre-trained Hugging face model.

`I launch it as train.py file which I copy inside docker image and use vertex-ai ( GCP) to launch it using Containerspec`

`machineSpec = MachineSpec(machine_type="a2-highgpu-8g",accelerator_count=8,accelerator_type="NVIDIA_TESLA_A100")`

```

container = ContainerSpec(image_uri="us-docker.pkg.dev/*****",

command=["/bin/bash", "-c", "gsutil cp gs://***/tfr_code2.tar.gz . && tar xvzf tfr_code2.tar.gz && cd pythonPackage/trainer/ && python train.py"])

```

I am using

https://huggingface.co/xlm-roberta-large

I am not using fairseq or anything.

```

tokenizer = tr.XLMRobertaTokenizer.from_pretrained("xlm-roberta-large",local_files_only=True)

model = tr.XLMRobertaForMaskedLM.from_pretrained("xlm-roberta-large", return_dict=True,local_files_only=True)

model.gradient_checkpointing_enable() #included as new line

```

Here is `Nvidia-SMI`

```

b'Tue Mar 22 05:06:40 2022 \n+-----------------------------------------------------------------------------+\n| NVIDIA-SMI 450.119.04 Driver Version: 450.119.04 CUDA Version: 11.0

|\n|-------------------------------+----------------------+----------------------+\n| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |\n| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M.

|\n| | | MIG M. |\n|===============================+======================+======================|\n| 0 A100-SXM4-40GB On | 00000000:00:04.0 Off | 0 |\n| N/A 33C P0 54W / 400W | 0MiB / 40537MiB | 0% Default |\n| | | Disabled

|\n+-------------------------------+----------------------+----------------------+\n| 1 A100-SXM4-40GB On | 00000000:00:05.0 Off | 0 |\n| N/A 31C P0 53W / 400W | 0MiB / 40537MiB | 0% Default |\n| | | Disabled |

\n+-------------------------------+----------------------+----------------------+\n| 2 A100-SXM4-40GB On | 00000000:00:06.0 Off | 0 |\n| N/A 31C P0 54W / 400W | 0MiB / 40537MiB | 0% Default |\n| | | Disabled |

\n+-------------------------------+----------------------+----------------------+\n| 3 A100-SXM4-40GB On | 00000000:00:07.0 Off | 0 |\n| N/A 34C P0 54W / 400W | 0MiB / 40537MiB | 0% Default |\n| | | Disabled |

\n+-------------------------------+----------------------+----------------------+\n| 4 A100-SXM4-40GB On | 00000000:80:00.0 Off | 0 |\n| N/A 32C P0 57W / 400W | 0MiB / 40537MiB | 0% Default |\n| | | Disabled |

\n+-------------------------------+----------------------+----------------------+\n| 5 A100-SXM4-40GB On | 00000000:80:01.0 Off | 0 |\n| N/A 34C P0 54W / 400W | 0MiB / 40537MiB | 0% Default |\n| | | Disabled |

\n+-------------------------------+----------------------+----------------------+\n| 6 A100-SXM4-40GB On | 00000000:80:02.0 Off | 0 |\n| N/A 32C P0 54W / 400W | 0MiB / 40537MiB | 0% Default |\n| | | Disabled |

\n+-------------------------------+----------------------+----------------------+\n| 7 A100-SXM4-40GB On | 00000000:80:03.0 Off | 0 |\n| N/A 34C P0 61W / 400W | 0MiB / 40537MiB | 0% Default |\n| | | Disabled |

\n+-------------------------------+----------------------+----------------------+\n \n+-----------------------------------------------------------------------------+\n| Processes: |\n| GPU GI CI PID Type Process name GPU Memory |\n| ID ID Usage |\n|=============================================================================|\n| No running processes found |\n+-----------------------------------------------------------------------------+\n'

2022-03-22T05:10:07.712355Z

```

**It has lot of free memories but still I get this error.**

`RuntimeError: CUDA out of memory. Tried to allocate 20.00 MiB (GPU 0; 39.59 GiB total capacity; 33.48 GiB already allocated; 3.19 MiB free; 34.03 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF`

```

['Traceback (most recent call last):\n', ' File "train.py", line 144, in <module>\n trainer.train()\n', ' File "/opt/conda/lib/python3.7/site-packages/transformers/trainer.py", line 1400, in train\n tr_loss_step = self.training_step(model, inputs)\n', ' File "/opt/conda/lib/python3.7/site-packages/transformers/trainer.py", line 1994, in training_step\n self.scaler.scale(loss).backward()\n', ' File "/opt/conda/lib/python3.7/site-packages/torch/_tensor.py", line 363, in backward\n torch.autograd.backward(self, gradient, retain_graph, create_graph, inputs=inputs)\n', ' File "/opt/conda/lib/python3.7/site-packages/torch/autograd/__init__.py", line 175, in backward\n allow_unreachable=True, accumulate_grad=True) # Calls into the C++ engine to run the backward pass\n', ' File "/opt/conda/lib/python3.7/site-packages/torch/autograd/function.py", line 253, in apply\n return user_fn(self, *args)\n', ' File "/opt/conda/lib/python3.7/site-packages/torch/utils/checkpoint.py", line 146, in backward\n torch.autograd.backward(outputs_with_grad, args_with_grad)\n', ' File "/opt/conda/lib/python3.7/site-packages/torch/autograd/__init__.py", line 175, in backward\n allow_unreachable=True, accumulate_grad=True) # Calls into the C++ engine to run the backward pass\n', ' File "/opt/conda/lib/python3.7/site-packages/torch/autograd/function.py", line 253, in apply\n return user_fn(self, *args)\n', ' File "/opt/conda/lib/python3.7/site-packages/torch/nn/parallel/_functions.py", line 34, in backward\n return (None,) + ReduceAddCoalesced.apply(ctx.input_device, ctx.num_inputs, *grad_outputs)\n', ' File "/opt/conda/lib/python3.7/site-packages/torch/nn/parallel/_functions.py", line 45, in forward\n return comm.reduce_add_coalesced(grads_, destination)\n', ' File "/opt/conda/lib/python3.7/site-packages/torch/nn/parallel/comm.py", line 143, in reduce_add_coalesced\n flat_result = reduce_add(flat_tensors, destination)\n', ' File "/opt/conda/lib/python3.7/site-packages/torch/nn/parallel/comm.py", line 95, in reduce_add\n result = torch.empty_like(inputs[root_index])\n', 'RuntimeError: CUDA out of memory. Tried to allocate 20.00 MiB (GPU 0; 39.59 GiB total capacity; 33.48 GiB already allocated; 3.19 MiB free; 34.03 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF\n'] | 2022-03-22T05:11:26.409945Z

-- | --

```

**Training Code**

```

training_args = tr.TrainingArguments(

output_dir='****'

,logging_dir='****' # directory for storing logs

,save_strategy="epoch"

,run_name="****"

,learning_rate=2e-5

,logging_steps=1000

,overwrite_output_dir=True

,num_train_epochs=10

,per_device_train_batch_size=8

,prediction_loss_only=True

,gradient_accumulation_steps=4

# ,gradient_checkpointing=True

,bf16=True #57100

,optim="adafactor"

)

trainer = tr.Trainer(

model=model,

args=training_args,

data_collator=data_collator,

train_dataset=train_data

)

```

Also `gradient_checkpointing` never works. Strange.

**Also, is it using all 8 GPU's?**

### Versions

Versions

torch==1.11.0+cu113

torchvision==0.12.0+cu113

torchaudio==0.11.0+cu113

transformers==4.17.0

**Train.py**

```

import torch

import numpy as np

import pandas as pd

from transformers import BertTokenizer, BertForSequenceClassification

import transformers as tr

from sentence_transformers import SentenceTransformer

from transformers import XLMRobertaTokenizer, XLMRobertaForMaskedLM

from transformers import AdamW

from transformers import AutoTokenizer

from transformers import BertTokenizerFast as BertTokenizer, BertModel, AdamW, get_linear_schedule_with_warmup,BertForMaskedLM

from transformers import DataCollatorForLanguageModeling

from scipy.special import softmax

import scipy

import random

import pickle

import os

import time

import subprocess as sp

# torch.cuda.empty_cache()

print(sp.check_output('nvidia-smi'))

print("current device",torch.cuda.current_device())

print("device count",torch.cuda.device_count())

# os.environ["CUDA_DEVICE_ORDER"]="PCI_BUS_ID"

# os.environ["CUDA_VISIBLE_DEVICES"]="0,1,2,3,4,5,6,7"

import sys

from traceback import format_exception

def my_except_hook(exctype, value, traceback):

print(format_exception(exctype, value, traceback))

sys.__excepthook__(exctype, value, traceback)

sys.excepthook = my_except_hook

start=time.time()

print("package imported completed")

os.environ['TRANSFORMERS_OFFLINE']='1'

os.environ['HF_MLFLOW_LOG_ARTIFACTS']='TRUE'

# os.environ['PYTORCH_CUDA_ALLOC_CONF']='max_split_size_mb'

print("env setup completed")

print( "transformer",tr.__version__)

print("torch",torch.__version__)

device = torch.device('cuda') if torch.cuda.is_available() else torch.device('cpu')

print("Using", device)

torch.backends.cudnn.deterministic = True

tr.trainer_utils.set_seed(0)

print("here")

tokenizer = tr.XLMRobertaTokenizer.from_pretrained("xlm-roberta-large",local_files_only=True)

model = tr.XLMRobertaForMaskedLM.from_pretrained("xlm-roberta-large", return_dict=True,local_files_only=True)

model.gradient_checkpointing_enable() #included as new line

print("included gradient checkpoint")

model.to(device)

print("Model loaded successfully")

df=pd.read_csv("gs://******/data.csv")

print("read csv")

# ,engine='openpyxl',sheet_name="master_data"

train_df=df.text.tolist()

print(len(train_df))

train_df=list(set(train_df))

train_df = [x for x in train_df if str(x) != 'nan']

print("Length of training data is \n ",len(train_df))

print("DATA LOADED successfully")

train_encodings = tokenizer(train_df, truncation=True, padding=True, max_length=512, return_tensors="pt")

print("encoding done")

data_collator = DataCollatorForLanguageModeling(tokenizer=tokenizer, mlm=True, mlm_probability=0.15)

print("data collector done")

class SEDataset(torch.utils.data.Dataset):

def __init__(self, encodings):

self.encodings = encodings

def __getitem__(self, idx):

item = {key: torch.tensor(val[idx]) for key, val in self.encodings.items()}

return item

def __len__(self):

return len(self.encodings["attention_mask"])

train_data = SEDataset(train_encodings)

print("train data created")

training_args = tr.TrainingArguments(

output_dir='gs://****/results_mlm_exp1'

,logging_dir='gs://****logs_mlm_exp1' # directory for storing logs

,save_strategy="epoch"

# ,run_name="MLM_Exp1"

,learning_rate=2e-5

,logging_steps=500

,overwrite_output_dir=True

,num_train_epochs=20

,per_device_train_batch_size=4

,prediction_loss_only=True

,gradient_accumulation_steps=2

# ,sharded_ddp='zero_dp_3'

# ,gradient_checkpointing=True

,bf16=True #Ampere GPU

# ,fp16=True

# ,optim="adafactor"

# ,dataloader_num_workers=20

# ,logging_strategy='no'

# per_device_train_batch_size

# per_gpu_train_batch_size

# disable_tqdm=True

)

print("training sample is 400001")

print("Included ,gradient_accumulation_steps=4 ,bf16=True and per_device_train_batch_size=4 " )

print(start)

trainer = tr.Trainer(

model=model,

args=training_args,

data_collator=data_collator,

train_dataset=train_data

)

print("training to start without bf16")

trainer.train()

print("model training finished")

trainer.save_model("gs://*****/model_mlm_exp1")

print("training finished")

end=time.time()

print("total time taken in hours is", (end-start)/3600)

``` | 03-22-2022 05:35:00 | 03-22-2022 05:35:00 | @sgugger <|||||>Please follow the issue template. You're not showing the script you are running or how you are launching it, so there is nothing anyone can do to help.<|||||>> Please follow the issue template. You're not showing the script you are running or how you are launching it, so there is nothing anyone can do to help.

Edited and Showing you the script. <|||||>You're launching the script with just `python`. As highlighted in the [examples README](https://github.com/huggingface/transformers/tree/master/examples/pytorch#distributed-training-and-mixed-precision) for distributed training, you need to use `python -m torch.distributed.launch` to use the 8 GPUs.<|||||>> You're launching the script with just `python`. As highlighted in the [examples README](https://github.com/huggingface/transformers/tree/master/examples/pytorch#distributed-training-and-mixed-precision) for distributed training, you need to use `python -m torch.distributed.launch` to use the 8 GPUs.

Ok.

You mean to say I should use this:

`command=["/bin/bash", "-c", "gsutil cp gs://***/tfr_code2.tar.gz . && tar xvzf tfr_code2.tar.gz && cd pythonPackage/trainer/ && python -m torch.distributed.launch train.py"])`

<|||||>> > You're launching the script with just `python`. As highlighted in the [examples README](https://github.com/huggingface/transformers/tree/master/examples/pytorch#distributed-training-and-mixed-precision) for distributed training, you need to use `python -m torch.distributed.launch` to use the 8 GPUs.

>

> Ok.

>

> You mean to say I should use this:

>

> `command=["/bin/bash", "-c", "gsutil cp gs://***/tfr_code2.tar.gz . && tar xvzf tfr_code2.tar.gz && cd pythonPackage/trainer/ && python -m torch.distributed.launch train.py"])`

I get this error:

```

RuntimeError: CUDA out of memory. Tried to allocate 7.63 GiB (GPU 0; 39.59 GiB total capacity; 23.22 GiB already allocated; 3.52 GiB free; 33.88 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF | 2022-03-22T16:24:41.763613Z

-- | --

```

Why its showing `GPU 0` only<|||||>I am using this parameters or am I missing anything.

@sgugger

```

training_args = tr.TrainingArguments(

output_dir='**'

,logging_dir='**' # directory for storing logs

,save_strategy="epoch"

,learning_rate=2e-5

,logging_steps=2500

,overwrite_output_dir=True

,num_train_epochs=20

,per_device_train_batch_size=4

,prediction_loss_only=True

,bf16=True #Ampere GPU

)

And Launching using this:

python -m torch.distributed.launch --nproc_per_node 8 train.py --bf16

```

**ERROR**

```

Traceback (most recent call last):\n', ' File "train.py", line 159, in <module>\n trainer.train()\n',

' File "/opt/conda/lib/python3.7/site-packages/transformers/trainer.py", line 1400, in train\n tr_loss_step = self.training_step(model, inputs)\n',

' File "/opt/conda/lib/python3.7/site-packages/transformers/trainer.py", line 1984, in training_step\n loss = self.compute_loss(model, inputs)\n',

' File "/opt/conda/lib/python3.7/site-packages/transformers/trainer.py", line 2016, in compute_loss\n outputs = model(**inputs)\n',

' File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl\n return forward_call(*input, **kwargs)\n',

' File "/opt/conda/lib/python3.7/site-packages/torch/nn/parallel/distributed.py", line 963, in forward\n output = self.module(*inputs[0], **kwargs[0])\n',

' File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl\n return forward_call(*input, **kwargs)\n',

' File "/opt/conda/lib/python3.7/site-packages/transformers/models/roberta/modeling_roberta.py", line 1114, in forward\n masked_lm_loss = loss_fct(prediction_scores.view(-1, self.config.vocab_size), labels.view(-1))\n', ' File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/module.py", line 1110, in _call_impl\n return forward_call(*input, **kwargs)\n',

' File "/opt/conda/lib/python3.7/site-packages/torch/nn/modules/loss.py", line 1165, in forward\n label_smoothing=self.label_smoothing)\n',

' File "/opt/conda/lib/python3.7/site-packages/torch/nn/functional.py", line 2996, in cross_entropy\n return torch._C._nn.cross_entropy_loss(input, target, weight, _Reduction.get_enum(reduction), ignore_index, label_smoothing)\n',

'RuntimeError: CUDA out of memory. Tried to allocate 978.00 MiB (GPU 0; 39.59 GiB total capacity; 6.40 GiB already allocated; 690.19 MiB free; 6.45 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

If your script expects `--local_rank` argument to be set, please change it to read from `os.environ['LOCAL_RANK']`

```<|||||>Any help?<|||||>@sgugger If I use distributed training, then would `trainer.save_model("gs://*****/model_mlm_exp1")` will work or do I need to pass any extra parameter so that only 1 model is saved from multiple GPU's?<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored.<|||||>Assalamu alekum, I also came across such a mistake

RuntimeError: CUDA out of memory. Tried to allocate 20.00 MiB (GPU 0; 7.80 GiB total capacity; 6.41 GiB already allocated; 1.69 MiB free; 6.82 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF |

transformers | 16,321 | closed | First token misses the first character in its `offsets_mapping` WHEN `add_prefix_space=True` is used | ## Environment info

- `transformers` version: 3.5.1

- Python version: 3.9.7

- PyTorch version (GPU?): 1.6.0 (GPU)

### Who can help

@SaulLu

## Information

Model I am using (Bert, XLNet ...): roberta-large

## To reproduce

Steps to reproduce the behavior:

```

tokenizer = AutoTokenizer.from_pretrained('roberta-large', use_fast=True)

tokenizer("This is a sentence", return_offsets_mapping=True)['offset_mapping']

[(0, 0), **(0, 4)**, (5, 7), (8, 9), (10, 18), (0, 0)]}

tokenizer = AutoTokenizer.from_pretrained('roberta-large', use_fast=True, add_prefix_space=True)

tokenizer("This is a sentence", return_offsets_mapping=True)['offset_mapping']

[(0, 0), **(1, 4)**, (5, 7), (8, 9), (10, 18), (0, 0)]}

```

## Expected behavior

Should be [(0, 0), **(0, 4)**, (5, 7), (8, 9), (10, 18), (0, 0)]} | 03-22-2022 05:31:02 | 03-22-2022 05:31:02 | Hi @ciaochiaociao ,

I've just tested you're snippet of code with the latest version of transformers (`transformers==4.17.0`) and the result correspond to what you're expecting:

```python

tokenizer = AutoTokenizer.from_pretrained('roberta-large', use_fast=True)

print(tokenizer("This is a sentence", return_offsets_mapping=True)['offset_mapping'])

# [(0, 0), **(0, 4)**, (5, 7), (8, 9), (10, 18), (0, 0)]}

tokenizer = AutoTokenizer.from_pretrained('roberta-large', use_fast=True, add_prefix_space=True)

print(tokenizer("This is a sentence", return_offsets_mapping=True)['offset_mapping'])

# [(0, 0), **(0, 4)**, (5, 7), (8, 9), (10, 18), (0, 0)]}

```

I've also tried to test with the version 3.5.1 of `transformers` but I think got this error when I tried to import AutoTokenizer -that you use in your snippet of code - from transformers:

```

---------------------------------------------------------------------------

ImportError Traceback (most recent call last)

[<ipython-input-3-939527a2a513>](https://localhost:8080/#) in <module>()

----> 1 from transformers import AutoTokenizer

2 frames

[/usr/local/lib/python3.7/dist-packages/transformers/trainer_pt_utils.py](https://localhost:8080/#) in <module>()

38 SAVE_STATE_WARNING = ""

39 else:

---> 40 from torch.optim.lr_scheduler import SAVE_STATE_WARNING

41

42 logger = logging.get_logger(__name__)

ImportError: cannot import name 'SAVE_STATE_WARNING' from 'torch.optim.lr_scheduler' (/usr/local/lib/python3.7/dist-packages/torch/optim/lr_scheduler.py)

---------------------------------------------------------------------------

NOTE: If your import is failing due to a missing package, you can

manually install dependencies using either !pip or !apt.

To view examples of installing some common dependencies, click the

"Open Examples" button below.

---------------------------------------------------------------------------

```

Let me know if upgrading to the last version of transformers work for you :smile:

<|||||>Cool. Thank you for your reply. 4.17.0 did work. <|||||>By the way, FYI

`4.16.2` also works, while `4.14.1` does not, which shows the same results of `3.5.1`.<|||||>Yes, I think 2 PRs helped to solve this issue:

1. One in the transformers lib: https://github.com/huggingface/transformers/pull/14752. That was integrated into transformers v4.15.0

2. One in the tokenizers lib: https://github.com/huggingface/tokenizers/pull/844. That was integrated into tokenizers v0.11.0

With previous version, we might indeed have issues with offset mappings. :slightly_smiling_face:

I'm closing this issue since this seems to be solved :smile: |

transformers | 16,320 | closed | Can we support the trace of ViT and Swin-Transformer based on torch.fx? | # 🚀 Feature request

Can we support the trace of ViT and Swin-Transformer based on torch.fx()? If not, what's the difficulty for that?

## Motivation

ViT and Swin-Transformation is widely used in CV scenarios. Hope we could support the trace of torch.fx, so we can do the quantization based on the work.

## Your contribution

I'm not sure right now. Currently I wanda whether we could support the ViT and Swin-Transformer. If not, the reason.

| 03-22-2022 01:52:27 | 03-22-2022 01:52:27 | @michaelbenayoun May I know the current situation or your schedule first? Thanks.<|||||>Hi,

The plan is to work on a nicer solution for tracing the model as soon as possible.

I will take a look to add those models in the mean time as they might not be hard to add in the current setting.<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |

transformers | 16,319 | closed | ibert seems to be quite slow in quant_mode = True | Hi, for iBert -

I found it is MUCH slower in quant_mode = True. here's a notebook with a slightly modified version of the HF code to allow dynamically switching quant_mode. You can see the timing difference.

https://colab.research.google.com/drive/1DkYFGc18oPvAn5nyGEL1aIFHmD_aNlXW

@patrickvonplaten

https://github.com/kssteven418/I-BERT/issues/21

| 03-22-2022 01:19:25 | 03-22-2022 01:19:25 | Hey @ontocord,

I don't have much experience with iBERT. @kssteven418 any ideas here? Is such a slowdown expected? <|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |

transformers | 16,318 | closed | Fix the issue #16317 in judging module names starting with base_model_prefix | This fixed #16317:

```

Keys are still reported as "missing" during model loading even though

specified in `_keys_to_ignore_on_load_missing`

```

as described in detail in #16317.

The cause of the issue is:

* The code that judges if a module name has the `base_model_prefix`

would match it with *any* beginning part of the name. This caused

the first part of the module name being stripped off unexpectedly

sometimes, hence not able to match the prefix in

`_keys_to_ignore_on_load_missing`.

Fix:

* Match `base_model_prefix` as a whole beginning word followed by

`.` in module names.

Also added test into `test_modeling_common`.

# What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes # (issue)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [x] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [x] Did you write any new necessary tests?

## Who can review?

@patrickvonplaten @sgugger

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten