repo

stringclasses 1

value | number

int64 1

25.3k

| state

stringclasses 2

values | title

stringlengths 1

487

| body

stringlengths 0

234k

⌀ | created_at

stringlengths 19

19

| closed_at

stringlengths 19

19

| comments

stringlengths 0

293k

|

|---|---|---|---|---|---|---|---|

transformers | 15,847 | closed | [UniSpeechSat] Revert previous incorrect change of slow tests | # What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes `tests/unispeech_sat/test_modeling_unispeech_sat.py::UniSpeechSatModelIntegrationTest::test_inference_encoder_large`

The previous PR: #15818 was incorrect and due to a Datasets library version mismatch. See: https://github.com/huggingface/transformers/pull/15818/files#r815712576

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

| 02-28-2022 09:26:20 | 02-28-2022 09:26:20 | |

transformers | 15,846 | closed | [TF-PT-Tests] Fix PyTorch - TF tests for different GPU devices | # What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes `tests/t5/test_modeling_t5.py::T5EncoderOnlyModelTest::test_pt_tf_model_equivalence`

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

| 02-28-2022 09:09:23 | 02-28-2022 09:09:23 | The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_15846). All of your documentation changes will be reflected on that endpoint. |

transformers | 15,845 | closed | Decision transformer gym | # What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Added the Decision Transformer Model to the transformers library (https://github.com/kzl/decision-transformer)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [x] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

@LysandreJik | 02-28-2022 09:05:57 | 02-28-2022 09:05:57 | I have:

- Removed the (temporary) changes that were made on the gpt2 model

- Added zeroed position encodings to the call to gpt2 forward, so they are ignored. (as these are implemented in the DT model)

- Fixed issues related to black line length (my IDE was set to the default I think)

- Removed datasets submodule

- Added and fixed many tests for the ModelTesterMixin. I skip:

- test_generate_without_input_ids

- test_pruning

- test_resize_embeddings

- test_head_masking

- test_attention_outputs

- test_hidden_states_output

- test_inputs_embeds

- test_model_common_attributes

- Updated some of the doc strings etc.

Still Todo:

- Example file / notebook. Ideally this would show a model checkpoint being loaded and then demonstrate the performance in an RL environment. Is this what you are thinking? I have checkpoints saved from the author's implementation, but I will need to modify them so the state_dict matches this implementation (it will be missing the gpt2 position encoding weights, for example)

- Integration test. Similar issue as the above.

Please let me know if you have any comments, questions or ideas related to the commits I have just added.

<|||||>Really cool to see the first RL model in Transformers here! Agree with @LysandreJik in that we can just set the position ids to a 0 valued tensor so that the model doesn't need to be adapted.

For me, it would be important to understand how the model would be used in practice so that we can better understand how to best implement the model in Transformers. @edbeeching, do you think it could be possible to add an integration test that verifies that a **minimal** use case of the model works as expected? E.g. maybe we could show how the user would use this implementation to retrieve an action state `a_t` from `s_{t-1}`, `r_{t-1}` and `a_{t-1}` and then how `a_t` would be further treated for the next time step? So a code-snippet showing a two-step inference with some comments/code explaining where the reward and state come from as well.

Very exciting!<|||||>Thanks for the comments, the model is now loaded from a pretrained checkpoint. I have set the seed and have added a comparison between the output and the expected output. Let me know of any other comments or additions.<|||||>Sorry some files got committed unintentially, I have removed them.

Regarding the model checkpoint files, the authors are from Berkley, but I trained the models myself with their codebase (they did not share their checkpoints). So I don't know who the rightful "authors" of the checkpoints are, I am not bothered either way. Let me know what you think.<|||||>> checkpoint

I see! Yeah since you've trained them, then I think it's totally fine to just publish them under your name on the Hub! So I think the PR is good to go for me :-)<|||||>Think we still got some `decision-transformer-gym-halfcheetah-expert` folders though ;-) <|||||>There are five conflicts to resolve, could you take care of this before we do a final review @edbeeching ?<|||||>Ok I went through the conflicts.

One final question, it appears that the test files have been reorganized since I first forked the transformers repo. They now appear to be nested each in their own subdirectory. Should add a directory for my test to reflect this change in organization?<|||||>_The documentation is not available anymore as the PR was closed or merged._ |

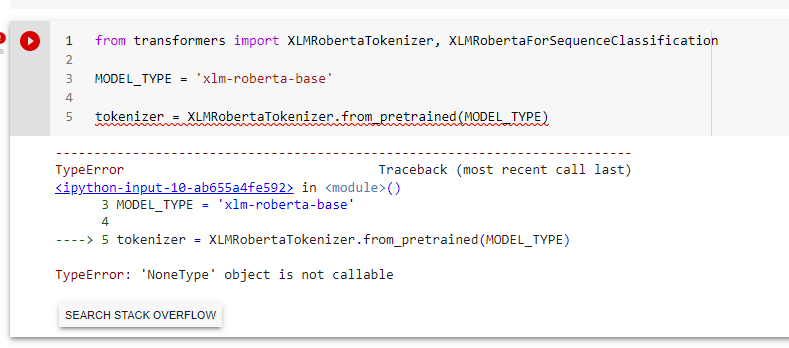

transformers | 15,844 | closed | Error:"TypeError: 'NoneType' object is not callable" with model specific Tokenizers | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.17.0.dev0 but also current latest master

- Platform: Colab

- Python version:

- PyTorch version (GPU?):

- Tensorflow version (GPU?):

- Using GPU in script?:

- Using distributed or parallel set-up in script?:

Models:

- XGLM (although I don't think this is model specific)

## To reproduce

Steps to reproduce the behavior:

1. Try to create a tokenizer from class-specific tokenizers (eg XGLMTokenizer), it fails.

```

from transformers import XGLMTokenizer

tokenizer = XGLMTokenizer.from_pretrained("facebook/xglm-564M")

```

## Expected behavior

Should work, but it fails with this exception:

```

Error:"TypeError: 'NoneType' object is not callable" with Tokenizers

```

However, creating it with `AutoTokenizer` just works, this is fine, but there're a lot of examples for specific models which do not use AutoTokenizer (I found out this by pasting an example from XGLM) | 02-28-2022 08:09:33 | 02-28-2022 08:09:33 | cc @SaulLu for tokenizers<|||||>Oops #15827 existed already, this seems to be widespread to more than XGLMTokenizer. XLMRobertaTokenizer doesn't seem to work either. AutoTokenizer seems to work but because it's a separate class altogether (`PreTrainedTokenizerFast`)<|||||>Hi @afcruzs ! `XGLMTokenizer` depends on `sentencepiece`, if it's not installed then a None object is imported.

`pip install sentencepiece` should resolve this.

> AutoTokenizer seems to work but because it's a separate class altogether (PreTrainedTokenizerFast)

yes, by default `AutoTokenizer` returns fast tokenizer.<|||||>Oh yes, this works indeed. Thanks! It'd be great to have a meaningful error message though, is not at all evident.<|||||>If I understood correctly, you tried with the version of transformers on master. After running:

```python

from transformers import XGLMTokenizer

tokenizer = XGLMTokenizer.from_pretrained("facebook/xglm-564M")

```

normally you should see this error message after:

```python

---------------------------------------------------------------------------

ImportError Traceback (most recent call last)

[<ipython-input-3-34ff8e92d7d6>](https://localhost:8080/#) in <module>()

----> 1 tokenizer = XGLMTokenizer.from_pretrained("facebook/xglm-564M")

1 frames

[/usr/local/lib/python3.7/dist-packages/transformers/file_utils.py](https://localhost:8080/#) in requires_backends(obj, backends)

846 failed = [msg.format(name) for available, msg in checks if not available()]

847 if failed:

--> 848 raise ImportError("".join(failed))

849

850

ImportError:

XGLMTokenizer requires the SentencePiece library but it was not found in your environment. Checkout the instructions on the

installation page of its repo: https://github.com/google/sentencepiece#installation and follow the ones

that match your environment.

---------------------------------------------------------------------------

NOTE: If your import is failing due to a missing package, you can

manually install dependencies using either !pip or !apt.

To view examples of installing some common dependencies, click the

"Open Examples" button below.

---------------------------------------------------------------------------

```

Can you share with us a little more about what you did so we can understand why you didn't receive this error? :slightly_smiling_face: <|||||>@SaulLu I've tried to repro it again and i couldn't, it seems to work just like you said 😐. I'll take a closer look and share a colab if I find something, otherwise will close this. Thanks!<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored.<|||||>Closing this issue as it seems to be solved :smile: <|||||>> Oh yes, this works indeed. Thanks! It'd be great to have a meaningful error message though, is not at all evident.

Mine does not work still with distilbert tokenizer<|||||>@aiendeveloper , that would be super helpful if you can share with us your environment info (that you can get with the `transformers-cli env` command). Thank you!<|||||>

I am getting this error for xlm-roberta-base model. Any help will be very helpful.<|||||>@tanuj2212, My hunch is that you are missing the `sentencepiece` dependency. Could you check if you don't have it in your virtual environment or install it with for example `pip install sentencepiece`?

What version of `transformers` are you using? I would have expected you to get the following message the first time you tried to use `XLMRobertaTokenizer` :

```bash

XLMRobertaTokenizer requires the SentencePiece library but it was not found in your environment. Checkout the instructions on the

installation page of its repo: https://github.com/google/sentencepiece#installation and follow the ones

that match your environment.

```<|||||>I am having the same issue with T5Tokenizer - I have installed sentencepiece and I still get the NoneType error. I am working on a Google Colab file and here is my environment info from the `transformers-cli env` command:

- `transformers` version: 4.24.0

- Platform: Linux-5.10.133+-x86_64-with-glibc2.27

- Python version: 3.8.15

- Huggingface_hub version: 0.11.1

- PyTorch version (GPU?): 1.12.1+cu113 (False)

- Tensorflow version (GPU?): 2.9.2 (False)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: no

- Using distributed or parallel set-up in script?: no

Also, `pip install sentencepiece` gave the following output: `Successfully installed sentencepiece-0.1.97`<|||||>What I found it works perfectly with the CPU, this problem arises with GPU. <|||||>I ran my code in colab and on the first try it also gave the same error. But then I restarted the runtime, then it worked.<|||||>@tianshuailu same thing, restarting the kernel worked. Pretty buggy I would say.<|||||>I am also getting the same error message even though I have sentencepiece installed<|||||>Friendly ping to @ArthurZucker <|||||>Hey @91jpark19 could you share the settings in which this is happening or a notebook?

I can't reproduce any of the errors. See this notebook:

https://colab.research.google.com/drive/1RFdXqNwA-kdyx0S_ALRrGzZMR8dk48Ie?usp=sharing

|

transformers | 15,843 | closed | Fixing the timestamps with chunking. | # What does this PR do?

This PR fixes #15840

- Have to change the tokenization word state machine. With chunking we can have 2 chunks

being concatenated, both containing a separate space. Hopefully this code is readable enough.

- Refactored things to keep more alignment on CTC vs CTC_WITH_LM.

- Removed a bunch of code (no more `apply_stride`).

- Added `align_to` mecanism to make sure `stride` is aligned on token space so

that we don't have rounding errors that make an extra stride creep, and mess up the

timestamps.

- I tested 10s chunking on a 36mn long file and the last timings held ot the second at least, so it seems to be good. ~There is still potential room for accumulation of error. Since ctc use convolution to downsample for sample space to logits space, It is regular the `logits_shape * inputs_to_logits_ratio` != `input_shape`. Even when everything is aligned. It seems the real formula is `(logits_shape + 1) * inputs_to_logits_ratio` != `input_shape`. That means the ratio might be slightly off and the resulting timestamps could be shifted.~

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes # (issue)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

--> | 02-28-2022 08:09:21 | 02-28-2022 08:09:21 | The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_15843). All of your documentation changes will be reflected on that endpoint.<|||||>```

RUN_SLOW=1 RUN_PIPELINE_TESTS=1 pytest -sv tests/pipelines/test_pipelines_automatic_speech_recognition.py

Results (614.95s):

22 passed

8 skipped

```<|||||>Merging since slow tests are good. |

transformers | 15,842 | closed | Output embedding from each self-attention head from each encoder layer | Hi there!

I wanted the embeddings from each self-attention head of each encoder layer for one of my projects, is this possible with the hugging face library?

If not, can I just slice the original embeddings from each layer (suppose 768/12 = 128 size slice) to get the attention head output?

Thank You | 02-28-2022 07:42:55 | 02-28-2022 07:42:55 | Hi @Sreyan88 ! The best place to ask this question is [the forum](https://discuss.huggingface.co/). We use issues for bug reports and feature requests. Thank you! |

transformers | 15,841 | closed | Make Flax pt-flax equivalence test more aggressive | # What does this PR do?

Make Flax pt-flax equivalence test more aggressive. (Similar to #15839 for PT/TF).

It uses `output_hidden_states=True` and `output_attentions=True` to test all output tensors (in a recursive way).

Also, it lowers the tolerance from `4e-2` to `1e-5`. (From the experience I gained in PT/TF test, if an error > `1e-5`, I always found a bug to fix).

(A bit) surprisingly, but very good news: unlike PT/TF, there is no PT/Flax inconsistency found by this more aggressive test! (@patil-suraj must have done a great job on flax models :-) )

Flax: @patil-suraj @patrickvonplaten

| 02-27-2022 12:05:32 | 02-27-2022 12:05:32 | The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_15841). All of your documentation changes will be reflected on that endpoint.<|||||>Also very surprised that the tests are all passing. @ydshieh - can you double check that the tests are actually passing for most models. Some edge-case models that could be tested locally:

- BigBird

- Pegagus

- GPT2<|||||>@patil-suraj @patrickvonplaten

There are a few things to fix in this PR (same for the PT/TF) - some tests are just ignored by my mistakes.

I will let you know once I fix them.<|||||>**[Updated Info.]**

- The (more aggressive) PT/TF test is merged to `master`

- I fixed some bugs for this new PT/Flax test

- There are 10 failures

- 6 have `very large` difference between PT/Flax ( > `1.8`) --> I will check if I can fix them easily

- 4 have `large` difference (0.01 ~ 0.05) --> Need to verify if these are expected

<|||||>> * 6 have `very large` difference between PT/Flax ( > `1.8`) --> I will check if I can fix them easily

> * 4 have `large` difference (0.01 ~ 0.05) --> Need to verify if these are expected

~~Good news! Once #16167 and #16168 are merged, this more aggressive PT/Flax test will pass with `1e-5` on `CPU`. I will test with `GPU` later.~~

Sorry, but please ignore the above claim. The tests ran flax models on CPU because the GPU version of Jax/Flax were not installed.

----

Running on `GPU` with `1e-5` also passes! (run 3 times per model class)<|||||>_The documentation is not available anymore as the PR was closed or merged._<|||||>Update:

After rebasing on a more recent commit on master (`5a6b3ccd28a320bcde85190b0853ade385bd4158`), this test with `1e-5` work fine!

I ran this new test on GPU (inside docker container that we use for CI GPU testing + with `jax==0.3.0`). The only errors I got is

- Flax/Jax `failed to determine best cudnn convolution algorithm for ...`

- Need to find out the cause eventually.

Think this PR is ready! @patil-suraj @patrickvonplaten

(After installing `jax[cuda11_cudnn805]` instead of `jax[cuda11_cudnn82]`, the errors listed below no longer appear)

----

## Error logs

```

FAILED tests/beit/test_modeling_flax_beit.py::FlaxBeitModelTest::test_equivalence_flax_to_pt - RuntimeError: UNKNOWN: Failed to determine best cudnn convolution algorithm for:

FAILED tests/clip/test_modeling_flax_clip.py::FlaxCLIPVisionModelTest::test_equivalence_flax_to_pt - RuntimeError: UNKNOWN: Failed to determine best cudnn convolution algorithm for:

FAILED tests/clip/test_modeling_flax_clip.py::FlaxCLIPModelTest::test_equivalence_flax_to_pt - RuntimeError: UNKNOWN: Failed to determine best cudnn convolution algorithm for:

FAILED tests/vit/test_modeling_flax_vit.py::FlaxViTModelTest::test_equivalence_flax_to_pt - RuntimeError: UNKNOWN: Failed to determine best cudnn convolution algorithm for:

FAILED tests/wav2vec2/test_modeling_flax_wav2vec2.py::FlaxWav2Vec2ModelTest::test_equivalence_flax_to_pt - RuntimeError: UNKNOWN: Failed to determine best cudnn convolution algorithm for:

```<|||||>It's all green! I will approve my own PR too: LGTM! |

transformers | 15,840 | closed | Timestamps in AutomaticSpeechRecognitionPipeline not aligned in sample space | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.17.0.dev0

- Platform: Linux-5.10.98-1-MANJARO-x86_64-with-glibc2.33

- Python version: 3.9.8

- PyTorch version (GPU?): 1.10.2+cpu (False)

- Tensorflow version (GPU?): 2.8.0 (False)

- Flax version (CPU?/GPU?/TPU?): 0.4.0 (cpu)

- Jax version: 0.3.1

- JaxLib version: 0.3.0

- Using GPU in script?: No

- Using distributed or parallel set-up in script?: No

### Who can help

@Narsil

<!-- Your issue will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- ALBERT, BERT, XLM, DeBERTa, DeBERTa-v2, ELECTRA, MobileBert, SqueezeBert: @LysandreJik

- T5, BART, Marian, Pegasus, EncoderDecoder: @patrickvonplaten

- Blenderbot, MBART: @patil-suraj

- Longformer, Reformer, TransfoXL, XLNet, FNet, BigBird: @patrickvonplaten

- FSMT: @stas00

- Funnel: @sgugger

- GPT-2, GPT: @patrickvonplaten, @LysandreJik

- RAG, DPR: @patrickvonplaten, @lhoestq

- TensorFlow: @Rocketknight1

- JAX/Flax: @patil-suraj

- TAPAS, LayoutLM, LayoutLMv2, LUKE, ViT, BEiT, DEiT, DETR, CANINE: @NielsRogge

- GPT-Neo, GPT-J, CLIP: @patil-suraj

- Wav2Vec2, HuBERT, SpeechEncoderDecoder, UniSpeech, UniSpeechSAT, SEW, SEW-D, Speech2Text: @patrickvonplaten, @anton-l

If the model isn't in the list, ping @LysandreJik who will redirect you to the correct contributor.

Library:

- Benchmarks: @patrickvonplaten

- Deepspeed: @stas00

- Ray/raytune: @richardliaw, @amogkam

- Text generation: @patrickvonplaten @narsil

- Tokenizers: @SaulLu

- Trainer: @sgugger

- Pipelines: @Narsil

- Speech: @patrickvonplaten, @anton-l

- Vision: @NielsRogge, @sgugger

Documentation: @sgugger

Model hub:

- for issues with a model, report at https://discuss.huggingface.co/ and tag the model's creator.

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

For research projetcs, please ping the contributor directly. For example, on the following projects:

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

## Issue

Generating timestamps in the `AutomaticSpeechRecognitionPipeline` does not match the timestamps generated from `Wav2Vec2CTCTokenizer.decode()`. The timestamps from the pipeline are exceeding the duration of the audio signal because of the strides.

## Expected behavior

Generating timestamps using the pipeline gives the following prediction IDs and offsets:

```python

pred_ids=array([ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 12, 14, 14, 0, 0,

0, 22, 11, 0, 7, 4, 15, 16, 0, 0, 4, 17, 5, 7, 7, 4, 4,

14, 14, 18, 18, 15, 4, 4, 0, 7, 5, 0, 0, 13, 0, 9, 0, 0,

0, 8, 0, 11, 11, 0, 0, 0, 27, 4, 4, 0, 23, 0, 16, 0, 5,

7, 7, 0, 25, 25, 0, 0, 22, 7, 7, 11, 0, 0, 0, 10, 10, 0,

0, 8, 0, 5, 5, 0, 0, 19, 19, 0, 4, 4, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 5, 0, 6, 4, 4, 0, 25, 0,

14, 14, 16, 0, 0, 0, 0, 10, 9, 9, 0, 0, 0, 0, 25, 0, 0,

0, 0, 0, 26, 26, 16, 12, 12, 0, 0, 0, 19, 0, 5, 0, 8, 8,

4, 4, 27, 0, 16, 0, 0, 4, 4, 0, 3, 3, 0, 0, 0, 0, 0,

0, 0, 0, 4, 4, 17, 11, 11, 13, 0, 13, 11, 14, 16, 16, 0, 6,

5, 6, 6, 4, 0, 5, 5, 16, 16, 0, 0, 7, 14, 0, 0, 4, 4,

12, 5, 0, 0, 0, 26, 0, 13, 14, 0, 0, 0, 0, 23, 0, 0, 21,

11, 11, 11, 0, 5, 5, 5, 7, 7, 0, 8, 0, 4, 4, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 12, 21, 11, 11, 11, 0, 4, 4, 0, 0, 12, 11, 11, 0, 0, 0,

0, 0, 7, 7, 5, 5, 0, 0, 0, 0, 23, 0, 0, 8, 0, 0, 4,

4, 0, 0, 0, 0, 12, 5, 0, 0, 4, 4, 10, 0, 0, 24, 14, 14,

0, 7, 7, 0, 8, 8, 10, 10, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 26, 0, 5, 0, 0, 0, 20, 0, 5, 0,

0, 5, 5, 0, 0, 19, 16, 16, 0, 6, 6, 19, 0, 5, 6, 6, 4,

4, 0, 14, 18, 18, 15, 4, 4, 0, 0, 25, 25, 0, 5, 4, 4, 19,

16, 8, 8, 0, 8, 8, 4, 4, 0, 23, 23, 0, 14, 17, 17, 0, 0,

17, 0, 5, 6, 6, 4, 4])

decoded['word_offsets']=[{'word': 'dofir', 'start_offset': 12, 'end_offset': 22}, {'word': 'hu', 'start_offset': 23, 'end_offset': 25}, {'word': 'mer', 'start_offset': 28, 'end_offset': 32

}, {'word': 'och', 'start_offset': 34, 'end_offset': 39}, {'word': 'relativ', 'start_offset': 42, 'end_offset': 60}, {'word': 'kuerzfristeg', 'start_offset': 63, 'end_offset': 94}, {'word'

: 'en', 'start_offset': 128, 'end_offset': 131}, {'word': 'zousazbudget', 'start_offset': 134, 'end_offset': 170}, {'word': 'vu', 'start_offset': 172, 'end_offset': 175}, {'word': '<unk>',

'start_offset': 180, 'end_offset': 182}, {'word': 'milliounen', 'start_offset': 192, 'end_offset': 207}, {'word': 'euro', 'start_offset': 209, 'end_offset': 217}, {'word': 'deblokéiert',

'start_offset': 221, 'end_offset': 249}, {'word': 'déi', 'start_offset': 273, 'end_offset': 278}, {'word': 'direkt', 'start_offset': 283, 'end_offset': 303}, {'word': 'de', 'start_offset': 311, 'end_offset': 313},

{'word': 'sportsbeweegungen', 'start_offset': 317, 'end_offset': 492}, {'word': 'och', 'start_offset': 495, 'end_offset': 499}, {'word': 'ze', 'start_offset': 503, 'end_offset': 507}, {'word': 'gutt', 'start_offset': 509, 'end_offset': 516},

{'word': 'kommen', 'start_offset': 519, 'end_offset': 532}]

```

However, the following is computed using `Wav2Vec2CTCTokenizer.decode()` and these offsets are expected:

```python

pred_ids=tensor([ 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 12, 14, 14, 0, 0, 0,

22, 11, 0, 7, 4, 15, 16, 0, 0, 4, 17, 5, 7, 7, 4, 0, 14, 14,

18, 18, 15, 4, 4, 0, 7, 5, 0, 0, 13, 0, 9, 0, 0, 0, 8, 0,

11, 11, 0, 0, 0, 27, 4, 4, 0, 23, 0, 16, 0, 5, 7, 7, 0, 25,

25, 0, 0, 22, 7, 7, 11, 0, 0, 0, 10, 10, 0, 0, 8, 0, 5, 5,

0, 0, 19, 19, 0, 4, 4, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 5, 0, 6, 4, 4, 0, 25, 0, 14, 14, 16, 0, 0, 0, 0, 10,

9, 9, 0, 0, 0, 0, 25, 0, 0, 0, 0, 0, 26, 26, 16, 12, 12, 0,

0, 0, 19, 0, 5, 0, 8, 8, 4, 4, 27, 0, 16, 0, 0, 4, 4, 0,

3, 3, 0, 0, 0, 0, 0, 0, 0, 0, 4, 4, 17, 11, 11, 13, 0, 13,

11, 14, 16, 16, 0, 6, 5, 6, 6, 4, 0, 5, 5, 16, 16, 0, 0, 7,

14, 0, 0, 4, 4, 12, 5, 0, 0, 0, 26, 0, 13, 14, 0, 0, 0, 0,

23, 0, 0, 21, 11, 11, 11, 0, 5, 5, 5, 7, 7, 0, 8, 0, 4, 4,

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,

0, 0, 0, 12, 21, 11, 11, 11, 0, 4, 4, 0, 0, 12, 11, 11, 0, 0,

0, 0, 0, 7, 7, 5, 5, 0, 0, 0, 0, 23, 0, 0, 8, 0, 0, 4,

4, 0, 0, 0, 0, 12, 5, 0, 0, 4, 4, 10, 0, 0, 24, 14, 14, 0,

7, 7, 0, 8, 8, 10, 10, 0, 0, 0, 26, 5, 5, 0, 0, 0, 20, 5,

0, 0, 0, 5, 0, 0, 19, 0, 16, 16, 6, 6, 19, 19, 5, 5, 6, 6,

4, 0, 14, 0, 18, 15, 15, 4, 4, 0, 0, 25, 0, 16, 0, 4, 4, 19,

16, 8, 0, 0, 8, 0, 4, 0, 0, 23, 0, 14, 0, 17, 0, 0, 0, 17,

5, 5, 6, 6, 4, 4])

word_offsets=[{'word': 'dofir', 'start_offset': 12, 'end_offset': 22}, {'word': 'hu', 'start_offset': 23, 'end_offset': 25}, {'word': 'mer', 'start_offset': 28, 'end_offset': 32}, {'word': 'och', 'start_offset': 34, 'end_offset': 39}, {'word': 'relativ', 'start_offset': 42, 'end_offset': 60}, {'word': 'kuerzfristeg', 'start_offset': 63, 'end_offset': 94}, {'word': 'en', 'start_offset': 128, 'end_offset': 131}, {'word': 'zousazbudget', 'start_offset': 134, 'end_offset': 170}, {'word': 'vu', 'start_offset': 172, 'end_offset': 175}, {'word': '<unk>', 'start_offset': 180, 'end_offset': 182}, {'word': 'milliounen', 'start_offset': 192, 'end_offset': 207}, {'word': 'euro', 'start_offset': 209, 'end_offset': 217}, {'word': 'deblokéiert', 'start_offset': 221, 'end_offset': 249}, {'word': 'déi', 'start_offset': 273, 'end_offset': 278}, {'word': 'direkt', 'start_offset': 283, 'end_offset': 303}, {'word': 'de', 'start_offset': 311, 'end_offset': 313}, {'word': 'sportsbeweegungen', 'start_offset': 317, 'end_offset': 360}, {'word': 'och', 'start_offset': 362, 'end_offset': 367}, {'word': 'zu', 'start_offset': 371, 'end_offset': 374}, {'word': 'gutt', 'start_offset': 377, 'end_offset': 383}, {'word': 'kommen', 'start_offset': 387, 'end_offset': 400}]

```

## Potential Fix

A fix could be removing the strides instead of filling them with `first_letter` and `last_letter` in `apply_stride()`:

```python

def apply_stride(tokens, stride):

input_n, left, right = rescale_stride(tokens, stride)[0]

left_token = left

right_token = input_n - right

return tokens[:, left_token:right_token]

```

<!-- A clear and concise description of what you would expect to happen. -->

| 02-26-2022 21:50:50 | 02-26-2022 21:50:50 | Hi @lemswasabi ,

Thanks for the report. We unfortunately cannot drop the stride as when there's batching involved, the tensors cannot be of different shapes. We can however keep track of the stride and fix the timestamps.

I'll submit a patch tomorrow probably.<|||||>Hi @Narsil,

Thanks for having a look at it.<|||||>@lemswasabi , in the end I aligned most of the code closer to what `ctc_with_lm` did (dropping the stride in the postprocess method as I said, we can't in _forward).

There could still be some slight issue with timings, so the PR is not necessarily finished<|||||>@Narsil, I understand that dropping the stride has to be done in the postprocess method. I tested your patch and it works as expected now.<|||||>Great ! Please let us know if there's any further issue, ctc_with_lm coming right up :D |

transformers | 15,839 | closed | Make TF pt-tf equivalence test more aggressive | # What does this PR do?

Make TF pt-tf equivalence test more aggressive.

After a series of fixes done so far, I think it is a good time to include this more aggressive testing in `master` branch.

(Otherwise, the new models added might have undetected issues. For example, the recent `TFConvNextModel` would have `hidden_states` not transposed to match Pytorch version issue - I tested it on my local branch and informed the author to fix it).

There are still 3 categories of PT/TF inconsistency to address, but they are less urgent in my opinion. See below.

Currently, the test makes a few exception to not test these 3 cases (in order to get green test) - I add `TODO` comments in the code.

TF: @Rocketknight1 @gante

Test: @LysandreJik @sgugger

## TODO in separate PRs

- failing due to the difference of large negative values (used for attn mask) between PT/TF:

- https://circleci.com/api/v1.1/project/github/huggingface/transformers/379126/output/112/0?file=true&allocation-id=621b51ac72134246517cac0c-0-build%2F6E4C1534

- albert, convbert, speech_to_text, t5, tapas

- failing due to cache format difference between PT/TF:

- https://app.circleci.com/pipelines/github/huggingface/transformers/35203/workflows/d0c0fbb4-661b-4a61-96e7-2fd4a3249f44/jobs/379098/parallel-runs/0/steps/0-112

- gpt2, t5, speech_to_text

- failing due to outputting loss or not between PT/TF:

- https://app.circleci.com/pipelines/github/huggingface/transformers/35206/workflows/d2533f38-6877-4db5-9eb6-da92e6421e11/jobs/379144/parallel-runs/0/steps/0-112

- flaubert, funnel, transfo_xl, xlm | 02-26-2022 17:05:21 | 02-26-2022 17:05:21 | The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_15839). All of your documentation changes will be reflected on that endpoint.<|||||>> Thank you for your time spent making this better! Two questions:

>

> * Does it run on GPU?

For now, I haven't tested it with GPU. I can run it on the office's GPU machine this week (a good chance to learn how to connect to those machine!)

> * How long does it take to run? Our test suite is already taking a significant time to run, so aiming for common tests that run as fast as possible is important.

Let me measure the timing of this test with the current master and with this PR. Will report it.

>

>

> There's quite a bit of model-specific logic which I'm not particularly enthusiastic about (pre-training models + convnext), but I understand why it's rigorous to do it like that here.

(Yeah, once we fix all the inconsistency, we can remove all these exceptional conditions.)<|||||>Good news! Testing on single GPU with the small tolerance `1e-5` still works! All models pass `test_pt_tf_model_equivalence`.

(I forgot to install CUDA driver - and I'm glad I double-checked and fixed this silly mistake :-) )

I will address a few style review suggestions. After that, I think it's ready to merge ..?<|||||>It's weird that it took more time when you expected it to take less, no? Can you try running the test suite with `--durations=0` to see all the tests and the time it took for them to run? <|||||>After a thorough verification and a few fixes, this PR is ready (again) from my side.

I would love @sgugger (and @LysandreJik when he is back) to check it again, and @gante & @Rocketknight1 if they want (no particular TF-related change compared to the last commit).

The following summary might save some review (again) time

- **Main fixes** (since the last review):

- In the last commit, the following line forgot to use `to_tuple()`, and the method `check_output` (previous version) only deal with `tuple` or `tensor`. So half test cases passed just because no check was performed at all. (my bad ...).

https://github.com/huggingface/transformers/blob/6f2025054dc49a42dc3de82cbea22f2a5913f122/tests/test_modeling_tf_common.py#L447

This is fixed now, and also `check_output` now raises error if a result is not tested (if its type is not in `[tuple, list, tensor]`).

- In the last commit, I accidentally deleted the following test case (`check the results after save/load checkpoints`, which exists on the current `master`).

https://github.com/huggingface/transformers/blob/6f2025054dc49a42dc3de82cbea22f2a5913f122/tests/test_modeling_tf_common.py#L576

This is added back now.

- Make the test also run on GPU (put models/inputs on the correct device)

- **Style changes**

- Since I have to add back the accidentally deleted test cases , I added `check_pt_tf_models` to avoid duplicated large code block.

- `tfo` -> `tf_outputs` and `pto` -> `pt_outputs`

- no need to use `np.copy` in [here](https://github.com/huggingface/transformers/blob/e9555ea9c75c3fb2741e27a1be7c2240ce8395f2/tests/test_modeling_tf_common.py#L406), which was discussed in [a comment](https://github.com/huggingface/transformers/pull/15256#discussion_r791942224).

I run this new version of test, and with the small tolerance `1e-5`, it pass both on CPU and GPU :tada:!

In order to be super sure, I also ran it on CPU/GPU for 100 times (very aggressive :fire: :fire: )! All models passed for this test, except for:

- `TFSpeech2TextModel`: this is addressed in #15952

- (`TFHubertModel`): There is 1 among 300 runs on CPU where I got a diff > 1e-5. I ran it 10000 times again, and still got only 1 such occurrence. (Guess it's OK to set `1e-5` in this case.)

Regarding the running time, I will measure it and post the results in the next comment.<|||||>In terms of running time:

- Circle CI

- current : [56.11s](https://circleci.com/api/v1.1/project/github/huggingface/transformers/384755/output/112/0?file=true&allocation-id=62238c1d4f148c1110a1efa8-0-build%2F1610CB69)

- this PR : [61.21s](https://circleci.com/api/v1.1/project/github/huggingface/transformers/384731/output/112/0?file=true&allocation-id=622385347ccc3f3894ec7697-0-build%2F351FF724)

- GCP VM (with `-n 1` + CPU)

- current : 178.75s

- this PR : 200.81s

These suggest an increase by roughly 10%.<|||||>I think I made a mistake that ~~TF 2.8 doesn't work with CUDA 11.4 (installed on my GCP VM machine)~~ K80 GPU doesn't work well with Ubuntu 20.04 (regarding the drivers and CUDA), and it fallbacks to CPU instead. I will fix this and re-run the tests.<|||||>After using Tesla T4 GPU (same as for the CI GPU testings), I confirmed that this aggressive PT/TF equivalence test passes with `1e-5` when running on GPU!

I ran this test **1000** times (on GPU) for each TF models.

Think it's ready if @LysandreJik is happy with the [slightly(?) increased running time](https://github.com/huggingface/transformers/pull/15839#issuecomment-1059791405).

(I saved all the differences. I can share the values if you would like to see them!) |

transformers | 15,838 | closed | Issue running run_glue.py at test time | Hi,

I'm using [this script](https://github.com/huggingface/transformers/blob/master/examples/pytorch/text-classification/run_glue.py) to train a model on my own dataset.

(transformers 4.15.0, datasets 1.17.0, python3.7)

I'm doing single sentence classification, and my CSV files have 2 cols: label, sentence1

I can run the script with no issue when --do_train --do_eval --do_predict are true.

However, later, when I use the same test set used when training, but this time with only "--do_predict" on one of the checkpoints of the models I previously trained, I get the following error:

> File "/lib/python3.7/site-packages/datasets/arrow_writer.py", line 473, in <listcomp>

pa_table = pa.Table.from_arrays([pa_table[name] for name in self._schema.names], schema=self._schema)

File "pyarrow/table.pxi", line 1339, in pyarrow.lib.Table.__getitem__

File "pyarrow/table.pxi", line 1900, in pyarrow.lib.Table.column

File "pyarrow/table.pxi", line 1875, in pyarrow.lib.Table._ensure_integer_index

KeyError: 'Field "sentence2" does not exist in table schema'

I would appreciate any tips on how to fix this, thanks!

| 02-25-2022 18:59:41 | 02-25-2022 18:59:41 | This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |

transformers | 15,837 | closed | Problems with convert_blenderbot_original_pytorch_checkpoint_to_pytorch.py | I am trying to use `the convert_blenderbot_original_pytorch_checkpoint_to_pytorch.py` script to convert a finetuned ParlAI Blenderbot-1B-distill model. I call it this way:

```python convert.py --src_path model --hf_config_json config.json```

Where the `config.json` file is downloaded from the HuggingFace repository. Unfortunately, I get the following error:

```

raceback (most recent call last):

File "C:\Users\micha\Documents\Chatbot\AI\persona3\convert.py", line 114, in <module>

convert_parlai_checkpoint(args.src_path, args.save_dir, args.hf_config_json)

File "C:\Users\micha\AppData\Local\Programs\Python\Python39\lib\site-packages\torch\autograd\grad_mode.py", line 27, in decorate_context

return func(*args, **kwargs)

File "C:\Users\micha\Documents\Chatbot\AI\persona3\convert.py", line 99, in convert_parlai_checkpoint

rename_layernorm_keys(sd)

File "C:\Users\micha\Documents\Chatbot\AI\persona3\convert.py", line 68, in rename_layernorm_keys

v = sd.pop(k)

KeyError: 'model.encoder.layernorm_embedding.weight'

```

And after commenting lines 98 and 99 of the script, it gives a further error:

```

raceback (most recent call last):

File "C:\Users\micha\Documents\Chatbot\AI\persona3\convert.py", line 114, in <module>

convert_parlai_checkpoint(args.src_path, args.save_dir, args.hf_config_json)

File "C:\Users\micha\AppData\Local\Programs\Python\Python39\lib\site-packages\torch\autograd\grad_mode.py", line 27, in decorate_context

return func(*args, **kwargs)

File "C:\Users\micha\Documents\Chatbot\AI\persona3\convert.py", line 100, in convert_parlai_checkpoint

m.model.load_state_dict(mapping, strict=True)

File "C:\Users\micha\AppData\Local\Programs\Python\Python39\lib\site-packages\torch\nn\modules\module.py", line 1497, in load_state_dict

raise RuntimeError('Error(s) in loading state_dict for {}:\n\t{}'.format(

RuntimeError: Error(s) in loading state_dict for BartModel:

size mismatch for encoder.embed_positions.weight: copying a param with shape torch.Size([128, 2560]) from checkpoint, the shape in current model is torch.Size([130, 2560]).

size mismatch for decoder.embed_positions.weight: copying a param with shape torch.Size([128, 2560]) from checkpoint, the shape in current model is torch.Size([130, 2560]).

```

I tried changing `BartConfig` to `BlenderbotConfig` and `BartForConditionalGeneration` to `BlenderbotForConditionalGeneration` but then it gives still another error:

```

Traceback (most recent call last):

File "C:\Users\micha\Documents\Chatbot\AI\persona3\convert.py", line 114, in <module>

convert_parlai_checkpoint(args.src_path, args.save_dir, args.hf_config_json)

File "C:\Users\micha\AppData\Local\Programs\Python\Python39\lib\site-packages\torch\autograd\grad_mode.py", line 27, in decorate_context

return func(*args, **kwargs)

File "C:\Users\micha\Documents\Chatbot\AI\persona3\convert.py", line 100, in convert_parlai_checkpoint

m.model.load_state_dict(mapping, strict=True)

File "C:\Users\micha\AppData\Local\Programs\Python\Python39\lib\site-packages\torch\nn\modules\module.py", line 1497, in load_state_dict

raise RuntimeError('Error(s) in loading state_dict for {}:\n\t{}'.format(

RuntimeError: Error(s) in loading state_dict for BlenderbotModel:

Missing key(s) in state_dict: "encoder.layer_norm.weight", "encoder.layer_norm.bias", "decoder.layer_norm.weight", "decoder.layer_norm.bias".

```

I changed `strict=True` to `strict=False` and commented out lines 98 & 99 and the script was finally executed, but I am not sure if this is the correct way. I would appreciate help in making the script work as intended.

| 02-25-2022 18:00:47 | 02-25-2022 18:00:47 | Hi @MichalPleban ! The blenderbot conversion script has not been updated in a long time. It should actually blenderbot classes instead of bart. Feel free to open a PR to fix this if you are interested. Happy to help! <|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |

transformers | 15,836 | closed | Inference for multilingual models | This PR updates the multilingual model docs to include M2M100 and MBart whose inference usage is different (basically forcing the `bos` token as the target language id). Let me know if I'm missing any other multilingual models that have a different method for inference! :)

Other notes:

- Moved this doc to the How-to guide section because it seems like it is more of an intermediate-level topic.

- Changed a section heading because the ampersand wasn't being properly displayed in the right navbar:

| 02-25-2022 17:52:34 | 02-25-2022 17:52:34 | The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_15836). All of your documentation changes will be reflected on that endpoint. |

transformers | 15,835 | closed | [FlaxT5 Example] Fix flax t5 example pretraining | # What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes #12755 . 0 can be a pad_token that occurs in the text

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

| 02-25-2022 17:46:46 | 02-25-2022 17:46:46 | The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_15835). All of your documentation changes will be reflected on that endpoint.<|||||>Gently pinging @stefan-it and @yhavinga here<|||||>Ok merging now - would love to get some feedback if this fixes the problem :-)<|||||>I just tested the fix and the reshape error is gone! Thanks @patrickvonplaten ! |

transformers | 15,834 | closed | Embedding index getting out of range while running onlplab/alephbert-base model | ## Environment info

- Platform: Google colab

## Information

Model I am using: 'onlplab/alephbert-base'

The problem arises when using:

[* ] the official example scripts:

I tried to implement the code written in your official course (https://huggingface.co/course/chapter7/7?fw=pt)

When the only change I want to make is to replace the model with an 'onlplab/alephbert-base'.

I mean I want to do a finetuning question answering to the 'onlplab/alephbert-base'.

The tasks I am working on is:

[ *] an official task: SQUaD

## To reproduce

Steps to reproduce the behavior:

1.Run the script:

!pip install datasets transformers[sentencepiece]

!pip install accelerate

!apt install git-lfs

from huggingface_hub import notebook_login

from datasets import load_dataset

from transformers import AutoTokenizer,BertTokenizerFast

from transformers import AutoModelForQuestionAnswering, BertForQuestionAnswering

import collections

import numpy as np

from datasets import load_metric

from tqdm.auto import tqdm

from transformers import TrainingArguments

from transformers import Trainer

from transformers import pipeline

import torch

raw_datasets = load_dataset("squad")

raw_datasets["train"].filter(lambda x: len(x["answers"]["text"]) != 1)

# model_checkpoint = "bert-base-cased"

model_checkpoint = "onlplab/alephbert-base"

# tokenizer = AutoTokenizer.from_pretrained(model_checkpoint)

tokenizer = BertTokenizerFast.from_pretrained(model_checkpoint)

context = raw_datasets["train"][0]["context"]

question = raw_datasets["train"][0]["question"]

inputs = tokenizer(question, context)

tokenizer.decode(inputs["input_ids"])

max_length = 384

stride = 128

def preprocess_training_examples(examples):

questions = [q.strip() for q in examples["question"]]

inputs = tokenizer(

questions,

examples["context"],

max_length=max_length,

truncation="only_second",

stride=stride,

return_overflowing_tokens=True,

return_offsets_mapping=True,

padding="max_length",

)

offset_mapping = inputs.pop("offset_mapping")

sample_map = inputs.pop("overflow_to_sample_mapping")

answers = examples["answers"]

start_positions = []

end_positions = []

for i, offset in enumerate(offset_mapping):

sample_idx = sample_map[i]

answer = answers[sample_idx]

start_char = answer["answer_start"][0]

end_char = answer["answer_start"][0] + len(answer["text"][0])

sequence_ids = inputs.sequence_ids(i)

# Find the start and end of the context

idx = 0

while sequence_ids[idx] != 1:

idx += 1

context_start = idx

while sequence_ids[idx] == 1:

idx += 1

context_end = idx - 1

# If the answer is not fully inside the context, label is (0, 0)

if offset[context_start][0] > end_char or offset[context_end][1] < start_char:

start_positions.append(0)

end_positions.append(0)

else:

# Otherwise it's the start and end token positions

idx = context_start

while idx <= context_end and offset[idx][0] <= start_char:

idx += 1

start_positions.append(idx - 1)

idx = context_end

while idx >= context_start and offset[idx][1] >= end_char:

idx -= 1

end_positions.append(idx + 1)

inputs["start_positions"] = start_positions

inputs["end_positions"] = end_positions

return inputs

train_dataset = raw_datasets["train"].map(

preprocess_training_examples,

batched=True,

remove_columns=raw_datasets["train"].column_names,

)

len(raw_datasets["train"]), len(train_dataset)

def preprocess_validation_examples(examples):

questions = [q.strip() for q in examples["question"]]

inputs = tokenizer(

questions,

examples["context"],

max_length=max_length,

truncation="only_second",

stride=stride,

return_overflowing_tokens=True,

return_offsets_mapping=True,

padding="max_length",

)

sample_map = inputs.pop("overflow_to_sample_mapping")

example_ids = []

for i in range(len(inputs["input_ids"])):

sample_idx = sample_map[i]

example_ids.append(examples["id"][sample_idx])

sequence_ids = inputs.sequence_ids(i)

offset = inputs["offset_mapping"][i]

inputs["offset_mapping"][i] = [

o if sequence_ids[k] == 1 else None for k, o in enumerate(offset)

]

inputs["example_id"] = example_ids

return inputs

validation_dataset = raw_datasets["validation"].map(

preprocess_validation_examples,

batched=True,

remove_columns=raw_datasets["validation"].column_names,

)

len(raw_datasets["validation"]), len(validation_dataset)

metric = load_metric("squad")

n_best = 20

max_answer_length = 30

def compute_metrics(start_logits, end_logits, features, examples):

example_to_features = collections.defaultdict(list)

for idx, feature in enumerate(features):

example_to_features[feature["example_id"]].append(idx)

predicted_answers = []

for example in tqdm(examples):

example_id = example["id"]

context = example["context"]

answers = []

# Loop through all features associated with that example

for feature_index in example_to_features[example_id]:

start_logit = start_logits[feature_index]

end_logit = end_logits[feature_index]

offsets = features[feature_index]["offset_mapping"]

start_indexes = np.argsort(start_logit)[-1 : -n_best - 1 : -1].tolist()

end_indexes = np.argsort(end_logit)[-1 : -n_best - 1 : -1].tolist()

for start_index in start_indexes:

for end_index in end_indexes:

# Skip answers that are not fully in the context

if offsets[start_index] is None or offsets[end_index] is None:

continue

# Skip answers with a length that is either < 0 or > max_answer_length

if (

end_index < start_index

or end_index - start_index + 1 > max_answer_length

):

continue

answer = {

"text": context[offsets[start_index][0] : offsets[end_index][1]],

"logit_score": start_logit[start_index] + end_logit[end_index],

}

answers.append(answer)

# Select the answer with the best score

if len(answers) > 0:

best_answer = max(answers, key=lambda x: x["logit_score"])

predicted_answers.append(

{"id": example_id, "prediction_text": best_answer["text"]}

)

else:

predicted_answers.append({"id": example_id, "prediction_text": ""})

theoretical_answers = [{"id": ex["id"], "answers": ex["answers"]} for ex in examples]

return metric.compute(predictions=predicted_answers, references=theoretical_answers)

# model = AutoModelForQuestionAnswering.from_pretrained(model_checkpoint)

model = BertForQuestionAnswering.from_pretrained(model_checkpoint)

args = TrainingArguments(

"alephbert-finetuned-squad",

evaluation_strategy="no",

save_strategy="epoch",

learning_rate=2e-5,

num_train_epochs=3,

weight_decay=0.01,

fp16=False,

push_to_hub=False, #TODO enable it if we eant push to hub

)

trainer = Trainer(

model=model,

args=args,

train_dataset=train_dataset,

eval_dataset=validation_dataset,

tokenizer=tokenizer,

)

trainer.train() #TODO enable it if we want to train

predictions, _ = trainer.predict(validation_dataset)

start_logits, end_logits = predictions

compute_metrics(start_logits, end_logits, validation_dataset, raw_datasets["validation"])

# Save the model

output_dir = "aleph_bert-finetuned-squad"

trainer.save_model(output_dir)

# Replace this with your own checkpoint

#model_checkpoint = "huggingface-course/bert-finetuned-squad"

model_checkpoint=output_dir

question_answerer = pipeline("question-answering", model=model_checkpoint)

context = """

🤗 Transformers is backed by the three most popular deep learning libraries — Jax, PyTorch and TensorFlow — with a seamless integration

between them. It's straightforward to train your models with one before loading them for inference with the other.

"""

question = "Which deep learning libraries back 🤗 Transformers?"

question_answerer(question=question, context=context)

2. The problem arises on trainer.train()

3. If i change the model back to Bert the code runs well.

4. I dont know what is the problem

Thanks

## Expected behavior

from: torch.embedding(weight, input, padding_idx, scale_grad_by_freq, sparse)

IndexError: index out of range in self

--> 211 trainer.train() #TODO enable it if we want to train

212

213 predictions, _ = trainer.predict(validation_dataset)

11 frames

[/usr/local/lib/python3.7/dist-packages/torch/nn/functional.py](https://localhost:8080/#) in embedding(input, weight, padding_idx, max_norm, norm_type, scale_grad_by_freq, sparse)

2042 # remove once script supports set_grad_enabled

2043 _no_grad_embedding_renorm_(weight, input, max_norm, norm_type)

-> 2044 return torch.embedding(weight, input, padding_idx, scale_grad_by_freq, sparse)

2045

2046

IndexError: index out of range in self

[Question_answering_(PyTorch).zip](https://github.com/huggingface/transformers/files/8142807/Question_answering_.PyTorch.zip)

| 02-25-2022 16:40:48 | 02-25-2022 16:40:48 | This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |

transformers | 15,833 | closed | [examples/summarization and translation] fix readme | # What does this PR do?

Remove the `TASK_NAME` which is never used in the command.

cc @michaelbenayoun | 02-25-2022 14:51:34 | 02-25-2022 14:51:34 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 15,832 | closed | Adding Decision Transformer Model (WIP) | # What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Added the Decision Transformer Model to the transformers library (https://github.com/kzl/decision-transformer)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [x] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

@LysandreJik

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

| 02-25-2022 14:43:27 | 02-25-2022 14:43:27 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 15,831 | closed | support new marian models | # What does this PR do?

This PR updates the Marian model:

1. To allow not sharing embeddings between encoder and decoder.

2. Allow tying only decoder embeddings with `lm_head`.

3. Separate two `vocabs` in `tokenizer` for `src` and `tgt` language

To support this, the PR introduces the following new methods:

- `get_decoder_input_embeddings` and `set_decoder_input_embeddings`

To get and set the decoder embeddings when the embeddings are not shared. These methods will raise an error if the embeddings are shared.

- `resize_decoder_token_embeddings`

To only resize the decoder embeddings. Will raise an error if the embeddings are shared.

This PR also adds two new config attributes to `MarianConfig`:

- `share_encoder_decoder_embeddings`: to indicate if emb should be shared or not

- `decoder_vocab_size`: to specify the vocab size for decoder when emb are not shared.

And the following methods from `PreTrainedModel` class are overridden to support these changes:

- `tie_weights`

- `_resize_token_embeddings`

Fixes #15109 | 02-25-2022 13:27:44 | 02-25-2022 13:27:44 | The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_15831). All of your documentation changes will be reflected on that endpoint. |

transformers | 15,830 | closed | Re-enable doctests for task_summary | # What does this PR do?

This PR re-enables doctests for the task_summary. It should be merged after #15828 | 02-25-2022 12:28:23 | 02-25-2022 12:28:23 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 15,829 | closed | BART model parameters should not contain biases for Q, K and V | ## Environment info

- `transformers` version: 4.16.2

- Platform: Linux-5.11.0-1018-gcp-x86_64-with-glibc2.29

- Python version: 3.8.10

- PyTorch version (GPU?): 1.10.2+cu102 (False)

- Tensorflow version (GPU?): 2.7.0 (False)

- Flax version (CPU?/GPU?/TPU?): 0.4.0 (cpu)

- Jax version: 0.3.0

- JaxLib version: 0.3.0

- Using GPU in script?: False

- Using distributed or parallel set-up in script?: False

### Who can help

@patrickvonplaten

## Information

Model I am using: BART

## To reproduce

Steps to reproduce the behavior:

```python

>>> from transformers import FlaxBartForConditionalGeneration

>>> import jax.numpy as np

>>> model = FlaxBartForConditionalGeneration.from_pretrained('facebook/bart-base', dtype=np.bfloat16)

>>> model.params['model']['decoder']['layers']['0']['encoder_attn']['q_proj']['bias']

DeviceArray([-6.86645508e-02, 3.71704102e-02, 3.40820312e-01,

-3.87451172e-01, 1.04980469e-01, -9.60693359e-02,

-2.45361328e-01, -1.50985718e-02, 2.50244141e-01,

-2.20581055e-01, 9.33074951e-03, 1.28295898e-01,

...

>>> model.params['model']['decoder']['layers']['0']['encoder_attn']['k_proj']['bias']

DeviceArray([-4.09317017e-03, 3.31687927e-03, 5.46646118e-03,

-9.59777832e-03, 4.63104248e-03, -4.14276123e-03,

-6.32858276e-03, -3.68309021e-03, 2.80380249e-03,

-2.85339355e-03, 1.19304657e-03, 1.88636780e-03,

...

>>> model.params['model']['decoder']['layers']['0']['encoder_attn']['v_proj']['bias']

DeviceArray([-6.41479492e-02, -5.52749634e-03, -4.86145020e-02,

5.64575195e-03, -6.95800781e-02, -2.50053406e-03,

6.31332397e-03, -1.13952637e-01, 8.81195068e-03,

4.99877930e-02, 1.15814209e-02, 8.41617584e-04,

...

```

## Expected behavior

The parameters should not contain biases for Q, K and V. However, it turns out that there exists non-zero biases for Q, K and V.

Reason:

> BART (https://arxiv.org/pdf/1910.13461.pdf): BART uses the standard sequence-to-sequence Transformer architecture from (Vaswani et al., 2017), except, following GPT, that we modify ReLU activation functions to GeLUs (Hendrycks & Gimpel, 2016) and initialise parameters from N (0, 0.02).

>

> Transformer (https://arxiv.org/pdf/1706.03762.pdf): In practice, we compute the attention function on a set of queries simultaneously, packed together into a matrix Q. The keys and values are also packed together into matrices K and V.

This proves that Q, K and V are **matrices** instead of **linear layers**. Therefore, they should not contain biases. | 02-25-2022 11:42:41 | 02-25-2022 11:42:41 | Hey @ayaka14732 !

Note that the original BART checkpoints use `bias` in Q, K, V layers.

> This proves that Q, K and V are matrices instead of linear layers. Therefore, they should not contain biases.

The weights of linear layers are also just matrices or for that matter, every layer weights in transformers are just matrices.<|||||>Hey @patil-suraj !

This should be very clear in the Transformer paper. There is a formula for scaled dot-product attention:

And for multi-head attention:

You can see the difference clearly if you compare them with the formula for feed-forward network: