| repo | number | state | title | body | created_at | closed_at | comments |
|---|---|---|---|---|---|---|---|

transformers | 15,446 | closed | [generate] fix synced_gpus default | The default for `synced_gpus` should be `False` - it's already correctly documented as such.

@sgugger | 01-31-2022 19:15:47 | 01-31-2022 19:15:47 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 15,445 | closed | skip large generations pipeline test for XGLM | # What does this PR do?

Skip large generations pipeline test for XGLM since it uses sinusoidal positional embeddings which are resized on the fly. | 01-31-2022 19:09:33 | 01-31-2022 19:09:33 | _The documentation is not available anymore as the PR was closed or merged._<|||||>Can we just add some TODO to fix better later in the comment in that case?<|||||>Merging to get the CI green. |

transformers | 15,444 | closed | [M2M100, XGLM] fix positional emb resize | # What does this PR do?

The sinusoidal position embeddings in M2M100 and XGLM are not resized correctly because `max_pos` is computed incorrectly. This PR takes the `past_key_values_length` into account when computing `max_pos`. | 01-31-2022 18:56:55 | 01-31-2022 18:56:55 | _The documentation is not available anymore as the PR was closed or merged._<|||||>Looks good to me - a bit surprising to me that no one saw this earlier |
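To make the fix concrete, here is a minimal NumPy sketch of a fairseq-style sinusoidal table that grows on demand. It assumes, without checking against the M2M100/XGLM source, that non-padding positions start at `padding_idx + 1`, that the table carries an extra `offset` of 2 rows, and that the sin and cos halves are concatenated; `SinusoidalPositions` and `sinusoidal_table` are hypothetical names. The line that matters is `max_pos`: once cached tokens are counted, the new token's position index can exceed the current table, so `past_key_values_length` has to be part of the size check.

```python
import math

import numpy as np


def sinusoidal_table(num_positions, dim):
    """Build a (num_positions, dim) table: sin features first, then cos (dim even, >= 4)."""
    half = dim // 2
    scale = math.log(10000) / (half - 1)
    freqs = np.exp(np.arange(half) * -scale)
    angles = np.arange(num_positions)[:, None] * freqs[None, :]
    return np.concatenate([np.sin(angles), np.cos(angles)], axis=1)


class SinusoidalPositions:
    """Hypothetical position-embedding module that resizes its table on the fly."""

    def __init__(self, num_positions, dim, padding_idx, offset=2):
        self.dim = dim
        self.padding_idx = padding_idx
        self.offset = offset
        self.weights = sinusoidal_table(num_positions + offset, dim)

    def __call__(self, seq_len, past_key_values_length=0):
        # New tokens continue numbering after the cached ones, so the largest
        # index needed depends on past_key_values_length as well as seq_len.
        max_pos = self.padding_idx + 1 + seq_len + past_key_values_length
        if max_pos > self.weights.shape[0]:
            self.weights = sinusoidal_table(max_pos + self.offset, self.dim)
        positions = np.arange(seq_len) + past_key_values_length + self.padding_idx + 1
        return self.weights[positions]
```

For example, `SinusoidalPositions(4, 8, padding_idx=1)(seq_len=1, past_key_values_length=10)` needs row 12; computing `max_pos` from `seq_len` alone would give 3, skip the resize, and index past the 6-row table.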

transformers | 15,443 | closed | Fix FlaxDataCollatorForT5MLM for texts with "<pad>" | If the input text contains `"<pad>"` (true for C4/en dataset) T5 tokenizer assigns it index 0.

Collator code (line 371) assumes that only tokens with `id > 0` are the text tokens and the rest are sentinel ids (`-1`), which turns out not to be true for this particular case.

This causes the line to throw an error similar to

```
input_ids = input_ids_full[input_ids_full > 0].reshape((batch_size, -1))
*** ValueError: cannot reshape array of size 1040383 into shape (8192,newaxis)
```

This modification should also allow padding to be used in T5 pre-training.

@patil-suraj (note edited by @stas00 to tag the correct maintainer for flax) | 01-31-2022 18:53:50 | 01-31-2022 18:53:50 | The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_15443). All of your documentation changes will be reflected on that endpoint.<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.<|||||>@patil-suraj, could you please review this PR? It's very short.<|||||>Oh, we have a similar PR here from @patrickvonplaten

https://github.com/huggingface/transformers/pull/15835<|||||>Great! |
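The failure can be reproduced in a few lines of NumPy. The sketch below is a simplified, hypothetical version of the collator's filtering step, assuming (as the description says) that positions to drop are marked `-1` after sentinel ids are merged into `input_ids`. Filtering with `> 0` also drops a real token with id 0, so rows end up with different lengths and the `reshape` fails; filtering with `>= 0` removes only the `-1` markers. Whether the linked PR uses exactly this condition is not confirmed here.

```python
import numpy as np


def filter_input_ids(input_ids, sentinel_ids, keep_pad=True):
    """Merge sentinel ids into input_ids and drop positions marked -1.

    Hypothetical simplification: sentinel_ids is 0 where the original token is
    kept, -1 where it is swallowed by a span, and a positive id where a span starts.
    """
    batch_size = input_ids.shape[0]
    merged = np.where(sentinel_ids != 0, sentinel_ids, input_ids)
    # `> 0` would also drop a genuine token with id 0 (e.g. "<pad>" in T5),
    # leaving rows of unequal length; `>= 0` only drops the -1 markers.
    mask = merged >= 0 if keep_pad else merged > 0
    return merged[mask].reshape((batch_size, -1))
```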

transformers | 15,442 | closed | Misfiring tf warnings | One last misfiring TF warning switched to `tf.print`. | 01-31-2022 18:38:57 | 01-31-2022 18:38:57 | _The documentation is not available anymore as the PR was closed or merged._<|||||>I think this is the last misfiring warning (this one is usually triggered in Seq2Seq models). I'm a little worried that there's some use-case where this will actually cause console spam instead, but I think it's safe.<|||||>Aaa, wait! In some examples this is actually much worse. I believe the warning isn't even correct/necessary anymore, though - I'm doing some testing to see if it even makes sense with modern TF.<|||||>After testing, I don't think this warning even makes sense anymore - I had no problems changing the values of these arguments at call-time instead of model initialization. I presume this dates from a time when TensorFlow had much more trouble with function retracing. I removed the warning entirely. |

transformers | 15,441 | closed | [XGLMTokenizer] correct positional emb size | # What does this PR do?

Correct the `PRETRAINED_POSITIONAL_EMBEDDINGS_SIZES`, 1024 => 2048 | 01-31-2022 18:14:39 | 01-31-2022 18:14:39 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 15,440 | closed | tokenizer truncation_side is not set up with from_pretrained call | ## Environment info

- `transformers` version: 4.16.1

### Who can help

Library:

- Tokenizers: @SaulLu

- Pipelines: @Narsil

## Information

Model I am using (Bert, XLNet ...): **GPT2Tokenizer**

The problem arises when using:

* [ ] the official example scripts: (give details below)

* [x] my own modified scripts: (give details below)

The task I am working on is:

* [ ] an official GLUE/SQuAD task: (give the name)

* [x] my own task or dataset: (give details below)

## To reproduce

```

tokenizer = GPT2Tokenizer.from_pretrained("gpt2", truncation_side="left")

print(tokenizer.truncation_side)

```

```

right

```

## Expected behavior

```

left

```

## Possible solution

I believe the problem is in the missing part at `tokenization_utils_base.py` (just like the one for the padding side at https://github.com/huggingface/transformers/blob/master/src/transformers/tokenization_utils_base.py#L1457-L1462)

```
# Truncation side is right by default and overridden in subclasses. If specified in the kwargs, it is changed.
self.truncation_side = kwargs.pop("truncation_side", self.truncation_side)
assert self.truncation_side in [
    "right",
    "left",
], f"Truncation side should be selected between 'right' and 'left', current value: {self.truncation_side}"
```

Feel free to add this solution without tests if it is good enough; I don't have a transformers dev environment ready for a PR right now.

| 01-31-2022 18:12:03 | 01-31-2022 18:12:03 | Thanks for the detailed issue @LSinev ! Indeed, this seems to be a desirable change!

@Narsil, it looks like we doubled the work, we should choose one of the 2 PRs. :relaxed: <|||||>Haha no worries @SaulLu .

Whichever you prefer as a PR, I don't have a real preference for the test choice. (Yours has much more coverage, mine is much simpler)<|||||>Thank you! Personally, I think it would be good to test the behavior on as many tokenizer classes as possible, so we'll start with mine?

I will of course put both of you as co-authors.<|||||>Go right ahead, I'll close my PR. |
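A toy, hypothetical class (not the real `PreTrainedTokenizerBase`) illustrating the pattern proposed above: a class-level default that a `from_pretrained`-style keyword argument can override, plus what `truncation_side` changes when a sequence is cut. It raises `ValueError` instead of using `assert`, so the check still runs under `python -O`.

```python
class ToyTokenizer:
    """Hypothetical stand-in showing the kwargs.pop pattern proposed in the issue."""

    padding_side = "right"
    truncation_side = "right"

    def __init__(self, **kwargs):
        # Instance values fall back to the class-level defaults when not given.
        self.padding_side = kwargs.pop("padding_side", self.padding_side)
        self.truncation_side = kwargs.pop("truncation_side", self.truncation_side)
        if self.truncation_side not in ("right", "left"):
            raise ValueError(
                f"Truncation side should be 'right' or 'left', got {self.truncation_side!r}"
            )

    def truncate(self, ids, max_length):
        """Keep at most max_length ids, dropping tokens from the configured side."""
        if len(ids) <= max_length:
            return list(ids)
        if self.truncation_side == "left":
            return list(ids[len(ids) - max_length:])
        return list(ids[:max_length])
```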

transformers | 15,439 | closed | Change REALM checkpoint to new ones | # What does this PR do?

This moves all REALM checkpoints to the Google organization as they have been moved here. | 01-31-2022 17:28:48 | 01-31-2022 17:28:48 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 15,438 | closed | Harder check for IndexErrors in QA scripts | # What does this PR do?

This falls back by not adding the example when we get an `IndexError` for some obscure reason when trying to get the offsets of a given feature.

Fixes #15401 | 01-31-2022 17:16:37 | 01-31-2022 17:16:37 | _The documentation is not available anymore as the PR was closed or merged._ |
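A minimal sketch of the kind of guard described, using a hypothetical helper name and plain tuples for the offset mapping: if a predicted start or end index cannot be mapped back to character offsets, the candidate is skipped instead of crashing post-processing. This is an illustration, not the code from the PR.

```python
def answer_from_offsets(context, offset_mapping, start_index, end_index):
    """Return the answer span from context, or None if it cannot be recovered."""
    try:
        start_offsets = offset_mapping[start_index]
        end_offsets = offset_mapping[end_index]
    except IndexError:
        # Index outside the feature: skip this candidate instead of failing.
        return None
    if start_offsets is None or end_offsets is None or end_index < start_index:
        return None
    return context[start_offsets[0]:end_offsets[1]]
```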

transformers | 15,437 | closed | Error when group_by_length is used with an IterableDataset | # What does this PR do?

Since `group_by_length` is not supported for `IterableDataset`s, we should raise an error at the trainer init when we detect that arg is set and the training set is an `IterableDataset`.

Fixes #15418 | 01-31-2022 16:57:23 | 01-31-2022 16:57:23 | _The documentation is not available anymore as the PR was closed or merged._ |
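Length grouping needs every sample's length up front, which an iterable-style dataset cannot provide. Below is a minimal sketch of an init-time check. The PR's check targets `torch.utils.data.IterableDataset`; this framework-free version uses `collections.abc.Sized` as a stand-in, and `check_group_by_length` is a hypothetical name.

```python
from collections.abc import Sized


def check_group_by_length(train_dataset, group_by_length):
    """Fail early if length grouping is requested for a dataset without a length."""
    if group_by_length and not isinstance(train_dataset, Sized):
        raise ValueError(
            "group_by_length=True requires a dataset with a known length; "
            "it cannot be used with an iterable-style dataset."
        )
```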

transformers | 15,436 | closed | use mean instead of elementwise_mean in XLMPredLayer | # What does this PR do?

Super tiny change:

`XLMPredLayer` currently uses

```
loss = nn.functional.cross_entropy(
    scores.view(-1, self.n_words), y.view(-1), reduction="elementwise_mean"
)
```

I believe `elementwise_mean` is deprecated:

https://github.com/pytorch/pytorch/pull/13419

https://pytorch.org/docs/stable/generated/torch.nn.functional.cross_entropy.html

| 01-31-2022 16:53:46 | 01-31-2022 16:53:46 | _The documentation is not available anymore as the PR was closed or merged._ |
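The linked PyTorch PR renamed the `"elementwise_mean"` reduction to `"mean"`; both average the per-element losses. For reference, here is a plain-Python sketch of what `reduction="mean"` computes for integer class targets. It handles no `ignore_index` and no class weights, so it is a simplification of `nn.functional.cross_entropy`, not a replacement.

```python
import math


def cross_entropy_mean(logits, targets):
    """Average negative log-softmax of the target class over all rows."""
    losses = []
    for row, target in zip(logits, targets):
        # Subtract the row max before exponentiating for numerical stability.
        m = max(row)
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in row))
        losses.append(log_sum_exp - row[target])
    return sum(losses) / len(losses)
```

With uniform logits over four classes, every target has probability 1/4, so the loss is `log(4)`.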

transformers | 15,435 | closed | Fix spurious warning in TF TokenClassification models | This small fix switches `warnings.warn` for `tf.print` in the loss function for TF `TokenClassification` models. When TF compiles a model which has a conditional that depends on values inside a Tensor, it traces both branches. The result of this is that when one of the branches contains a `warnings.warn` statement, that warning is fired during tracing, before the model has seen any data.

Using `tf.print` instead of `warnings.warn` has a few downsides - in particular, it will possibly fire more than once. Still, this is better than firing the warning every time the model is used even if the data is correct, which is what happens now. | 01-31-2022 16:19:52 | 01-31-2022 16:19:52 | _The documentation is not available anymore as the PR was closed or merged._<|||||>I think if we use `FutureWarning` they are only printed once, no? Could you check in this instance @Rocketknight1 ?

<|||||>@sgugger Either `FutureWarning` or `warnings.warn` are correctly only printed once - the problem is that they are **always** printed once, even if there are no -1s in the labels! This is because TF compiles the branch of the graph where the labels tensor contains `-1` and fires the warning during tracing. Only a TF graph op (like `tf.print()`) is correctly fired if and only if the tensor contains `-1`.<|||||>Ah thanks for explaining! I had completely misunderstood :-)<|||||>Don't worry, even the TF engineers don't understand TF |

transformers | 15,434 | closed | [Swin] Add missing header | # What does this PR do?

Add missing header to Swin docs. | 01-31-2022 16:12:27 | 01-31-2022 16:12:27 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 15,433 | closed | Add doc for add-new-model-like command | # What does this PR do?

This PR adds documentation for the new command `add-new-model-like`. | 01-31-2022 15:48:07 | 01-31-2022 15:48:07 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 15,432 | closed | add t5 ner finetuning | # What does this PR do?

This PR adds a Colab notebook for fine-tuning Text-2-Text T5 for Named Entity Recognition

@patil-suraj @patrickvonplaten | 01-31-2022 15:19:57 | 01-31-2022 15:19:57 | _The documentation is not available anymore as the PR was closed or merged._<|||||>@patil-suraj , thanks

I have reverted the commit |

transformers | 15,431 | closed | Can't push a model to hub | ## Environment info

- `transformers` version: 4.16.1

- Platform: Linux-5.4.0-96-generic-x86_64-with-glibc2.29

- Python version: 3.8.10

- PyTorch version (GPU?): 1.10.0+cu113 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: <fill in>

- Using distributed or parallel set-up in script?: <fill in>

### Who can help

@patrickvonplaten, @anton-l

## Information

Model I am using: facebook/wav2vec2-xls-r-300m

The problem arises when using:

* [ ] my own modified scripts: (give details below)

The task I am working on is:

* [ ] an official GLUE/SQuAD task: speech-recognition-community-v2

## To reproduce

Steps to reproduce the behavior:

1. I use a modified Jupyter notebook from this blog post: https://huggingface.co/blog/fine-tune-wav2vec2-english

2. First, training was run with `TrainingArguments(push_to_hub=True)`. It worked; you can see the results here: https://huggingface.co/AigizK/wav2vec2-large-xls-r-300m-bashkir-cv7_opt/commits/main up to the commit "Training in progress, step 94900"

3. Then my notebook crashed.

4. I ran all cells up to `trainer.train()`.

5. Without thinking, I pushed the current state to the repository (commits 293563110d31ba9610e4ad7bffd8b377beaf4cf5 and 3f6e999d7d2fdb4640fd1523bfca744681fcc2fc).

6. Then I resumed training from the last checkpoint.

7. When I tried to push the result to the Hub, I got an error.

8.

9. I could only resolve this issue with these commands:

- repo = Repository(local_dir="xls-r-300m-ba_v2", clone_from="AigizK/wav2vec2-large-xls-r-300m-bashkir-cv7_opt")

- copy last model.bin to this folder

- repo.push_to_hub(commit_message="50 epochs")

When I asked @patrickvonplaten on Discord how to resolve this, he wrote:

`Could you open an issue on Transformers for this? This looks like a library mismatch between transformers and huggingface_hub`

https://discord.com/channels/879548962464493619/930507803339137024/937665851878952990

| 01-31-2022 14:54:50 | 01-31-2022 14:54:50 | Gently pinging @sgugger here. Any idea what the problem could be? I cannot really reproduce this error.<|||||>Are you sure that in your second run you still had `push_to_hub=True` in your `TrainingArguments`? Because the error about `repo` not being defined can only come from there, I think.<|||||>@sgugger From step 3 on, I only used `push_to_hub=False`.<|||||>So that's why you get an error. We will make it clearer, but you can't use `Trainer.push_to_hub` if `push_to_hub=False`.<|||||>Thank you. I thought that `push_to_hub` only had an effect during training. |

transformers | 15,430 | closed | Update README.md | fix typo

# What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

Fixes # (issue)

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

@sgugger @LysandreJik | 01-31-2022 14:46:29 | 01-31-2022 14:46:29 | _The documentation is not available anymore as the PR was closed or merged._<|||||>Thanks for fixing! |

transformers | 15,429 | closed | [RobertaTokenizer] remove inheritance on GPT2Tokenizer | # What does this PR do?

Continue making tokenizers independent. This PR refactors the `RobertaTokenizer` to remove dependency on `GPT2Tokenizer` | 01-31-2022 14:42:17 | 01-31-2022 14:42:17 | _The documentation is not available anymore as the PR was closed or merged._<|||||>The failures are unrelated, merging. |

transformers | 15,428 | closed | [Robust Speech Challenge] Add missing LR parameter | Some participants of the Robust Speech event complain about learning rate issues when training the model with the run_speech_recognition_ctc_bnb.py script. This problem is due to a missing LR parameter on the 8-bit optimizer initialization. This PR fixes this.

@patrickvonplaten | 01-31-2022 14:10:12 | 01-31-2022 14:10:12 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 15,427 | closed | [XGLM] fix gradient checkpointing | # What does this PR do?

Adds the missing import statement for `torch.utils.checkpoint`

Fixes #15422 | 01-31-2022 14:00:15 | 01-31-2022 14:00:15 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 15,426 | closed | Higher memory allocation for GPT-2 forward pass when not using past_key_values. | I have following problem:

I have a context text as input and then GPT-2 generates a text based on the input.

Currently I do this token for token the following way:

```
model_outputs = model(input_ids=last_token, past_key_values=past)
logits = model_outputs['logits']
past = model_outputs['past_key_values']
# do stuff with the logits to determine the next token...
```

This works and is pretty fast, but the problem is the high GPU memory consumption from always keeping the past_key_values.

AMP is enabled too!

To avoid this, I had a trade-off in mind: only cache the past_key_values of the context text, and pass the text generated so far as input_ids instead of just the last token:

```
model_outputs = model(input_ids=generated_tokens, past_key_values=past, use_cache=False)
logits = model_outputs['logits']
# do stuff with the logits to determine the next token...
```

But now the memory allocation per generated token is even higher, even though I didn't change anything else in the code.

Can anyone help me with this, or explain what exactly happens here?

## Environment info

- `transformers` version: 4.15.0

- Platform: Windows-10-10.0.19041-SP0 (also tested on Ubuntu)

- Python version: Python 3.7.3

- PyTorch version (GPU?): 1.10.1+cu102 (True)

- Tensorflow version (GPU?): not installed

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: No

### Who can help

@patrickvonplaten, @narsil

| 01-31-2022 13:27:12 | 01-31-2022 13:27:12 | Hi @LFruth ,

I think `past_key_values` should never incur more memory usage than running without them (it's really a cache of matrices that have to be calculated anyway during inference).

What could be at play here, is that you are using custom code, keeping references to old past_key_values, which means an ever growing amount of memory. Can you provide your full script ?

Do you mind trying to use `model.generate(....)` and see if it also has ever growing memory usage (more than expected) ? And if you want to play with the logits, you can add a custom `LogitsProcessor` in order to use some rule which isn't already integrated into `transformers`.

I can help by providing an example of how to do so if that's the case.<|||||>Hi @Narsil,

Thank you for the quick answer.

What you say about past_key_values makes sense to me!

I don't keep any references to past_key_values anywhere afaik, but I copy my code here later!

I already have the same thing implemented with `model.generate`, which is interesting: it is a little slower but has almost no extra memory allocation. I tried playing with past_key_values and use_cache there too, but didn't notice any difference in speed and/or memory allocation.<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored.<|||||>I encountered this same issue, and in case it helps anyone, my mistake was forgetting to use `torch.no_grad()`. |
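A rough count helps explain the observation in the report. The sketch below counts query-key attention-score entries per generation step for one head in one layer (ignoring heads, layers, hidden size and autograd state). It assumes the context of length `context_len` is always cached and compares (a) feeding only the newest token with a growing cache against (b) re-feeding every token generated so far with `use_cache=False`. In (b) the score matrix at step `t` is `t` times larger than in (a), which is consistent with higher per-token memory. Separately, as the last comment notes, running the loop outside `torch.no_grad()` lets autograd retain activations for a backward pass, which adds memory regardless of caching.

```python
def attention_score_entries(context_len, new_tokens, cache_generated):
    """Per-step count of query-key score entries (one head, one layer).

    cache_generated=True:  feed only the newest token, keys come from the cache.
    cache_generated=False: re-feed every generated token at each step.
    """
    per_step = []
    for step in range(1, new_tokens + 1):
        key_len = context_len + step  # cached context plus generated tokens
        query_len = 1 if cache_generated else step
        per_step.append(query_len * key_len)
    return per_step
```

For a 4-token context and 3 generated tokens, `attention_score_entries(4, 3, True)` gives `[5, 6, 7]`, while `attention_score_entries(4, 3, False)` gives `[5, 12, 21]`.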

transformers | 15,425 | closed | Partial Torch Memory Efficient Attention | # What does this PR do?

This begins the implementation of a central `attention()` function in modeling_utils.py that calls out to https://github.com/AminRezaei0x443/memory-efficient-attention if configuration parameters are set, to allocate configurably down to O(n) memory rather than O(n^2) memory at the expense of parallel execution.

I'm afraid the new memory-efficient-attention still needs to be added as a dependency and some development cruft removed from the source.

I believe it is important to reuse existing projects, so that people's work can be more effective and valued, but I also believe memory-efficient-attention code is MIT licensed if copying it is preferred.

The GPTJ and Perceiver models are altered to call out to the new attention function.

Working on this has been very hard for me, so I am contributing what I have now. If others have better work on this, feel free to accept them before mine.

- [ ] I have commented out the rest of the PR form here, to return to as I find capacity.

<!--

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

-->

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

| 01-31-2022 13:25:37 | 01-31-2022 13:25:37 | The docs for this PR live [here](https://moon-ci-docs.huggingface.co/docs/transformers/pr_15425). All of your documentation changes will be reflected on that endpoint.<|||||>Hey @xloem, thanks a lot for your hard work on this! It is cool to support the attention mechanism as visible in https://github.com/AminRezaei0x443/memory-efficient-attention. However, the `transformers` library does not really work with central components to be shared among many models, so we do not design layers in the `modeling_utils.py` file.

This comes from the following two pieces of the "Why should I/shouldn't I use Transformers" from the README:

> 4. Easily customize a model or an example to your needs:

> - We provide examples for each architecture to reproduce the results published by its original authors.

> - **Model internals are exposed as consistently as possible.**

> - **Model files can be used independently of the library for quick experiments.**

and

> This library is not a modular toolbox of building blocks for neural nets. The code in the model files is not refactored with additional abstractions on purpose so that researchers can quickly iterate on each of the models without diving into additional abstractions/files.

You'll see that we have other implementations of efficient attention mechanisms spread across the codebase, and each of them is linked to a single model. Recent examples are YOSO and Nyströmformer, which were added in the v4.16 release last week.

cc @sgugger @patrickvonplaten for knowledge<|||||>@xloem, would you be interested in adding this new attention mechanism simply as a new model - linked to its official paper: https://arxiv.org/abs/2112.05682v2 (maybe called something like `O1Transformer` ?) <|||||>Thanks so much for your replies and advice.

I hadn't noticed the readme line. Do you know of any similar libraries to transformers, that do indeed collect building blocks? The transformers library is far smaller than the torch and flax libraries that models already depend on, but I imagine you know the experience of research work better than I do to make that call.

I've been finding a little more energy to work on this. The owner of the dependency repo found there's still an outstanding issue that masks and biases are built O(n^2), so more work is needed.<|||||>Apologies for submitting this PR in such a poor state.

Unlike YOSO and Nystromformer, this implementation is exact. Its output is theoretically identical to that of standard softmax attention.

Although the research could be implemented as a standalone model[edit:typo], I'm really hoping to actually help users on low-memory systems use the code to fine tune current pretrained models. @patrickvonplaten how does that hope settle with you?

After rereading the concerns, my plan is to move the code into the model files to provide for the statements in the readme. @LysandreJik, does this sound reasonable?

I also try to stick to just one sourcefile when developing and learning. My usual approach is to open a python interpreter and do `print(inspect.getsource(modulefunc))` to quickly see abstraction implementations, which is indeed quite unideal. Abstraction can _really_ empower a domain: research and development accelerates when abstraction is made a norm.<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |
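For readers unfamiliar with the technique, here is a minimal NumPy sketch of the key-chunking idea behind memory-efficient attention (arXiv 2112.05682): keys and values are processed in chunks while a running maximum, numerator and denominator are rescaled, so only an `n_queries x key_chunk_size` block of scores exists at a time, yet the result matches ordinary softmax attention up to floating-point error. It is not the code from the `memory-efficient-attention` package or from this PR; masks, biases and query chunking are omitted.

```python
import numpy as np


def naive_attention(q, k, v):
    """Reference softmax attention that materializes the full score matrix."""
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores -= scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights @ v


def chunked_attention(q, k, v, key_chunk_size=4):
    """Exact softmax attention computed over key/value chunks."""
    scale = 1.0 / np.sqrt(q.shape[-1])
    n_q = q.shape[0]
    running_max = np.full((n_q, 1), -np.inf)
    numerator = np.zeros((n_q, v.shape[-1]))
    denominator = np.zeros((n_q, 1))
    for start in range(0, k.shape[0], key_chunk_size):
        k_chunk = k[start:start + key_chunk_size]
        v_chunk = v[start:start + key_chunk_size]
        scores = (q @ k_chunk.T) * scale  # (n_q, chunk) block only
        new_max = np.maximum(running_max, scores.max(axis=-1, keepdims=True))
        # Rescale what was accumulated so far to the new running maximum.
        correction = np.exp(running_max - new_max)
        exp_scores = np.exp(scores - new_max)
        numerator = numerator * correction + exp_scores @ v_chunk
        denominator = denominator * correction + exp_scores.sum(axis=-1, keepdims=True)
        running_max = new_max
    return numerator / denominator
```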

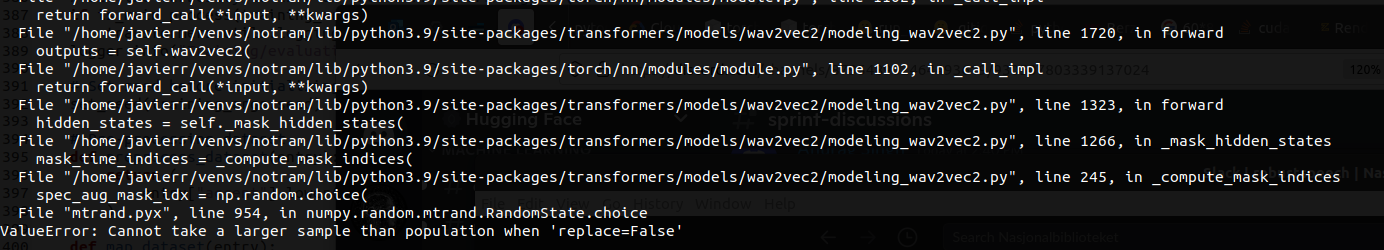

transformers | 15,424 | closed | error when running official run_speech_recognition_ctc.py (ValueError: 'a' cannot be empty unless no samples are taken) | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.17.0.dev0

- Platform: Ubuntu 20.04.3 LTS

- Python version: 3.8.10

- PyTorch version (GPU?): 1.10.2+cu113

- Tensorflow version (GPU?): not installed

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: No

### Who can help

@patrickvonplaten @anton-l

Models:

- Wav2Vec2

Library:

- Speech

## Information

Model I am using: facebook/wav2vec2-xls-r-300m

The problem arises when using:

* [ ] the official example script: https://github.com/huggingface/transformers/blob/master/examples/pytorch/speech-recognition/run_speech_recognition_ctc.py

The task I am working on is:

* [ ] dataset: mozilla-foundation/common_voice_8_0

## To reproduce

Steps to reproduce the behavior:

I have been running the official script `run_speech_recognition_ctc.py` as provided in

[https://github.com/huggingface/transformers/tree/master/examples/pytorch/speech-recognition](https://github.com/huggingface/transformers/tree/master/examples/pytorch/speech-recognition)

1. Run the following command:

```
python run_speech_recognition_ctc.py \
    --dataset_name="common_voice" \
    --model_name_or_path="facebook/wav2vec2-large-xlsr-53" \
    --dataset_config_name="ta" \
    --output_dir="./wav2vec2-common_voice-tamil" \
    --overwrite_output_dir \
    --num_train_epochs="15" \
    --per_device_train_batch_size="16" \
    --gradient_accumulation_steps="2" \
    --learning_rate="3e-4" \
    --warmup_steps="500" \
    --evaluation_strategy="steps" \
    --text_column_name="sentence" \
    --length_column_name="input_length" \
    --save_steps="400" \
    --eval_steps="100" \
    --layerdrop="0.0" \
    --save_total_limit="3" \
    --freeze_feature_encoder \
    --gradient_checkpointing \
    --chars_to_ignore , ? . ! - \; \: \" “ % ‘ ” � \
    --fp16 \
    --group_by_length \
    --push_to_hub \
    --do_train --do_eval
```

## Error Message

```
train_result = trainer.train(resume_from_checkpoint=checkpoint)
  File "/home/stephen/.local/lib/python3.8/site-packages/transformers/trainer.py", line 1373, in train
    tr_loss_step = self.training_step(model, inputs)
  File "/home/stephen/.local/lib/python3.8/site-packages/transformers/trainer.py", line 1948, in training_step
    loss = self.compute_loss(model, inputs)
  File "/home/stephen/.local/lib/python3.8/site-packages/transformers/trainer.py", line 1980, in compute_loss
    outputs = model(**inputs)
  File "/home/stephen/.local/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1102, in _call_impl
    return forward_call(*input, **kwargs)
  File "/home/stephen/.local/lib/python3.8/site-packages/transformers/models/wav2vec2/modeling_wav2vec2.py", line 1742, in forward
    outputs = self.wav2vec2(
  File "/home/stephen/.local/lib/python3.8/site-packages/torch/nn/modules/module.py", line 1102, in _call_impl
    return forward_call(*input, **kwargs)
  File "/home/stephen/.local/lib/python3.8/site-packages/transformers/models/wav2vec2/modeling_wav2vec2.py", line 1342, in forward
    hidden_states = self._mask_hidden_states(
  File "/home/stephen/.local/lib/python3.8/site-packages/transformers/models/wav2vec2/modeling_wav2vec2.py", line 1284, in _mask_hidden_states
    mask_time_indices = _compute_mask_indices(
  File "/home/stephen/.local/lib/python3.8/site-packages/transformers/models/wav2vec2/modeling_wav2vec2.py", line 263, in _compute_mask_indices
    spec_aug_mask_idx = np.random.choice(
  File "mtrand.pyx", line 909, in numpy.random.mtrand.RandomState.choice
ValueError: 'a' cannot be empty unless no samples are taken
```

## Expected behavior

The same script runs without any errors when **mask_time_prob** is set to 0 instead of the script's default value of 0.05. | 01-31-2022 13:09:21 | 01-31-2022 13:09:21 | Hey @StephennFernandes,

Thanks a mille for opening the issue - this PR seems to be related: https://github.com/huggingface/transformers/pull/15423<|||||>Hey @StephennFernandes,

Can you check whether the error goes away with the newest `transformers` version?<|||||>Hey @patrickvonplaten, I installed transformers from source (`pip install git+https://github.com/huggingface/transformers`) and the issue still persists.<|||||>Hmm, that's a bit odd. Rerunning your training command now<|||||>Hey @StephennFernandes,

I ran the command on master and trained for 3 epochs without error. You can see the run here: https://huggingface.co/patrickvonplaten/wav2vec2-common_voice-tamil<|||||>Could you verify that you are using current master of `transformers`?<|||||>Hey Patrick, I uninstalled and reinstalled transformers and it looks like everything is working fine.

One strange thing I noticed: for the first couple of epochs the WER dropped to 0.63, but then it rose again and stayed at 1.0 for the rest of training, so the final model ended with a WER of 1.0.

This isn't the first time I've run into this with recent versions of transformers.

The only version of transformers that trains fine and converges for me is 4.8.0. Could there be an issue with my CUDA drivers? Which CUDA driver and PyTorch versions does Hugging Face recommend?

I have an Nvidia A6000 GPU <|||||>Uff I really don't know sadly :-/ Could you maybe open a new issue since this one does not seem to be related anymore or a forum discussion: https://discuss.huggingface.co/? :-)<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 15,423 | closed | Update modeling_wav2vec2.py | @patrickvonplaten

With very short audio files (less than 0.1 seconds), `num_masked_span` can become too large for the sequence length. The issue is described in issue 15366 and was discussed with @patrickvonplaten. This can affect custom datasets that contain utterances with short words like "OK", "Yes", etc.
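To illustrate the failure mode, here is a minimal sketch of span masking with a clamp on the number of spans. `compute_num_masked_spans` and `sample_span_starts` are hypothetical helpers written for this note, not the code in `modeling_wav2vec2.py`: when an input has fewer frames than `mask_length`, the list of valid start indices is empty, and asking `choice` for a non-zero number of samples from it raises the `'a' cannot be empty` error shown in the traceback above.

```python
import numpy as np


def compute_num_masked_spans(input_length, mask_prob, mask_length, min_masks, rng):
    """Hypothetical helper: how many spans of `mask_length` frames to mask."""
    # Probabilistic rounding so the expected masked fraction is roughly mask_prob.
    epsilon = rng.random()
    num_masked_span = int(mask_prob * input_length / mask_length + epsilon)
    num_masked_span = max(num_masked_span, min_masks)
    # Clamp: never request more spans than fit into the sequence. For very short
    # inputs (input_length < mask_length) this drives the count down to 0.
    if num_masked_span * mask_length > input_length:
        num_masked_span = input_length // mask_length
    return num_masked_span


def sample_span_starts(input_length, num_masked_span, mask_length, rng):
    """Pick distinct start indices so every span fits inside the sequence."""
    if num_masked_span == 0:
        return np.empty(0, dtype=np.int64)
    # After the clamp above there are at least `num_masked_span` valid starts.
    candidates = np.arange(input_length - (mask_length - 1))
    return rng.choice(candidates, num_masked_span, replace=False)
```

With 5 frames, `mask_length=10` and `min_masks=2`, the unclamped count would be 2 spans over an empty candidate range; the clamp turns it into 0 and sampling returns an empty array instead of raising.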

| 01-31-2022 12:51:12 | 01-31-2022 12:51:12 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 15,422 | closed | XGLM Bug: break: module 'torch.utils' has no attribute 'checkpoint' | ## Environment info

<!-- You can run the command `transformers-cli env` and copy-and-paste its output below.

Don't forget to fill out the missing fields in that output! -->

- `transformers` version: 4.17.0.dev0

- Platform: Linux-4.9.253-tegra-aarch64-with-glibc2.29

- Python version: 3.8.10

- PyTorch version (GPU?): 1.10.2 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

- Using GPU in script?: Yes

- Using distributed or parallel set-up in script?: No

### Who can help

@patil-suraj

## Information

Model I am using (Bert, XLNet ...): XGLMForCausalLM

The problem arises when using:

* [X] the official example scripts: (give details below)

* [ ] my own modified scripts: (give details below)

The tasks I am working on is:

* [ ] an official GLUE/SQUaD task: (give the name)

* [X] my own task or dataset: (give details below)

## To reproduce

Steps to reproduce the behavior:

1. I am trying to start training on a dataset using a converted model.

2. As soon as I start training using the script `run_clm.py` (found in the examples folder) I get the following error back:

```

break: module 'torch.utils' has no attribute 'checkpoint'

```

Cause might be related to this: https://discuss.pytorch.org/t/attributeerror-module-torch-utils-has-no-attribute-checkpoint/101543/6

When I add the following line to `modeling_xglm.py`, the error disappears and training starts:

```

from torch.utils.checkpoint import checkpoint

```
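For reference, a minimal sketch of gradient checkpointing with an explicit submodule import. `checkpointed_forward` is a hypothetical helper, not code from `modeling_xglm.py`; passing `use_reentrant=False` assumes PyTorch 1.11 or newer (the environment above reports 1.10.2, where that argument does not exist and should be dropped).

```python
import torch
import torch.utils.checkpoint  # explicit submodule import; `import torch` alone may not expose it


def checkpointed_forward(layer: torch.nn.Module, hidden_states: torch.Tensor) -> torch.Tensor:
    # Recompute `layer`'s activations during backward instead of storing them.
    # use_reentrant=False assumes PyTorch >= 1.11.
    return torch.utils.checkpoint.checkpoint(layer, hidden_states, use_reentrant=False)


layer = torch.nn.Linear(4, 4)
x = torch.randn(2, 4, requires_grad=True)
out = checkpointed_forward(layer, x)
out.sum().backward()  # gradients flow through the recomputed forward pass
```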

<!-- If you have code snippets, error messages, stack traces please provide them here as well.

Important! Use code tags to correctly format your code. See https://help.github.com/en/github/writing-on-github/creating-and-highlighting-code-blocks#syntax-highlighting

Do not use screenshots, as they are hard to read and (more importantly) don't allow others to copy-and-paste your code.-->

## Expected behavior

Training would start without any issues. | 01-31-2022 12:30:24 | 01-31-2022 12:30:24 | Hi,

Could you please share a code snippet or colab so I could reproduce this issue? Thanks <|||||>I can't share my dataset, but I used this one as the basis for the trainer:

https://github.com/huggingface/transformers/blob/master/examples/pytorch/language-modeling/run_clm.py

EDIT: Just train any XGLM-compatible model with a default dataset (wikitext for example)<|||||>Thank you for reporting this. Fixed in #15427 |

transformers | 15,421 | closed | [Trainer] suppress warning for length-related columns | # What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

<!-- Remove if not applicable -->

During the robust speech event multiple participants reported that they were confused by the (harmless) warning:

```

"The following columns in the training set don't have a corresponding argument in `Wav2Vec2ForCTC.forward` and have been ignored: input_length."

```

This warning shows up because we pass a dataset column `"input_length"` to the Trainer: when the input lengths are precomputed in the dataset, `group_by_length` is significantly faster because the Trainer does not have to compute them itself.
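For context, a simplified sketch of length-grouped sampling (loosely modeled on the idea behind the Trainer's length-grouped sampler, not its actual code; `length_grouped_indices` and its parameters are hypothetical). Once lengths are precomputed, grouping only needs a list of integers and never touches the audio itself:

```python
import random
from typing import List


def length_grouped_indices(lengths: List[int], batch_size: int, mega_batch_mult: int = 50, seed: int = 0) -> List[int]:
    """Simplified sketch: shuffle, split into mega-batches, sort each by length (longest first)."""
    rng = random.Random(seed)
    indices = list(range(len(lengths)))
    rng.shuffle(indices)
    megabatch_size = mega_batch_mult * batch_size
    megabatches = [indices[i : i + megabatch_size] for i in range(0, len(indices), megabatch_size)]
    # Sorting inside each mega-batch keeps similarly sized examples in the same batch,
    # which reduces padding; it only needs `lengths`, not the examples themselves.
    return [idx for mb in megabatches for idx in sorted(mb, key=lambda j: lengths[j], reverse=True)]
```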

Fixes #https://github.com/huggingface/transformers/issues/15366#issuecomment-1024166475

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [ ] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [ ] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

| 01-31-2022 12:03:03 | 01-31-2022 12:03:03 | _The documentation is not available anymore as the PR was closed or merged._<|||||>I disagree with this. The column is in the dataset (to speed up the preprocessing) but it is then ignored by the Trainer, which warrants something. The logging level is not set at warning, but at info, so the user knows there is nothing wrong with it.

I'm fine with rephrasing the message if you think it helps, but we shouldn't silently discard a column of the training dataset.

In most of our examples, we don't clean up the datasets to include only the columns the model wants, and we never had any user complain about this info.<|||||>Fair point! Made the message a tiny bit more explicit<|||||>Failing tests are due to REALM. |

transformers | 15,420 | closed | Bug in T5 Tokenizer - Adding space after special tokens | ## Environment info

- `transformers` version: 4.16.1

- Platform: Linux-4.15.0-65-generic-x86_64-with-debian-buster-sid

- Python version: 3.7.11

- PyTorch version (GPU?): 1.10.2+cu102 (True)

- Tensorflow version (GPU?): not installed (NA)

- Flax version (CPU?/GPU?/TPU?): not installed (NA)

- Jax version: not installed

- JaxLib version: not installed

### Who can help

@SaulLu @patrickvonplaten @PhilipMay

## Information

When decoding with the T5 tokenizer it inserts redundant spaces after special tokens; this leads to incorrect decoding. Here is an example:

```python
>>> tokenizer.convert_ids_to_tokens(tokenizer.encode("``America''"))
['▁', '<unk>', 'America', "'", "'", '</s>']
>>> tokenizer.convert_ids_to_tokens(tokenizer.encode(tokenizer.decode(tokenizer.encode("``America''"))))
['<unk>', '▁America', "'", "'", '</s>']
```

This happens in line 291 of file `tokenization_t5.py`:

```python

out_string += self.sp_model.decode_pieces(current_sub_tokens) + token + " "

```

What is the purpose of the last space (" ")?
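To see where the extra space comes from, here is a simplified, self-contained sketch of the decoding loop quoted above. `toy_decode_pieces` is a hypothetical stand-in for SentencePiece's `decode_pieces` (it only maps "▁" to a space and strips the leading space), and the `add_space_after_special` flag is also hypothetical:

```python
from typing import List, Set


def toy_decode_pieces(pieces: List[str]) -> str:
    # Toy stand-in for SentencePiece decoding: "▁" marks a word boundary.
    return "".join(pieces).replace("▁", " ").lstrip(" ")


def convert_tokens_to_string(tokens: List[str], special_tokens: Set[str], add_space_after_special: bool = True) -> str:
    current_sub_tokens: List[str] = []
    out_string = ""
    for token in tokens:
        # Special tokens are emitted verbatim instead of going through the SentencePiece model.
        if token in special_tokens:
            out_string += toy_decode_pieces(current_sub_tokens) + token
            if add_space_after_special:
                out_string += " "  # the space questioned above
            current_sub_tokens = []
        else:
            current_sub_tokens.append(token)
    out_string += toy_decode_pieces(current_sub_tokens)
    return out_string.strip()
```

With the trailing space, `['▁', '<unk>', 'America', "'", "'", '</s>']` decodes to `<unk> America''</s>`, and re-encoding that string yields `'▁America'` instead of `'America'`, which matches the round trip shown above.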

## Expected behavior

IMO the decoding in this example should be exactly the same as the original text.

Thank you! :)

| 01-31-2022 12:00:12 | 01-31-2022 12:00:12 | That's a good question! @SaulLu @LysandreJik @n1t0 - do you have an idea by any chance?<|||||>This seems to be about special tokens:

```python

# make sure that special tokens are not decoded using sentencepiece model

if token in self.all_special_tokens:

out_string += self.sp_model.decode_pieces(current_sub_tokens) + token + " "

current_sub_tokens = []

```

https://github.com/huggingface/transformers/blob/2ca6268394c1464eaa813ccb398c3812904e2283/src/transformers/models/t5/tokenization_t5.py#L289-L292<|||||>@PhilipMay will removing the additional `" "` lead to any negative effect?

If not, can we please remove the additional `" "`?

Sometimes we need to decode encoded inputs, and we would prefer the outcome to be as similar as possible to the original text.<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/main/CONTRIBUTING.md) are likely to be ignored. |

transformers | 15,419 | closed | Add option to resize like torchvision's Resize | # What does this PR do?

A lot of research papers (like ConvNeXT, PoolFormer, etc.) use [`torchvision.transforms.Resize`](https://pytorch.org/vision/stable/transforms.html#torchvision.transforms.Resize) when preparing images for the model. This class accepts an integer size, in which case only the smaller edge of the image is matched to that size and the aspect ratio is preserved.

The `resize` method defined in `image_utils.py` currently resizes to (size, size) in case size is an integer. This PR adds the possibility to resize images similar to torchvision's resize by adding an argument `torch_resize` (which is set to `False` by default). One can then also provide an optional `max_size` argument.
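The smaller-edge rule can be sketched as a pure size computation, modeled on torchvision's documented `Resize` behavior (integer `size` plus optional `max_size`). `smaller_edge_output_size` is a hypothetical helper, not the function added in this PR; its `(width, height)` result is in the order Pillow's `Image.resize` expects, so `image.resize(smaller_edge_output_size(*image.size, 224))` would apply it.

```python
from typing import Optional, Tuple


def smaller_edge_output_size(width: int, height: int, size: int, max_size: Optional[int] = None) -> Tuple[int, int]:
    """Return (new_width, new_height) so the smaller edge equals `size`, keeping the aspect ratio."""
    short, long = (width, height) if width <= height else (height, width)
    new_short, new_long = size, int(size * long / short)
    if max_size is not None:
        if max_size <= size:
            raise ValueError("max_size must be strictly greater than size")
        # If the longer edge would exceed max_size, shrink both edges proportionally.
        if new_long > max_size:
            new_short, new_long = int(max_size * new_short / new_long), max_size
    return (new_short, new_long) if width <= height else (new_long, new_short)
```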

This is replicated using Pillow. | 01-31-2022 10:28:57 | 01-31-2022 10:28:57 | _The documentation is not available anymore as the PR was closed or merged._<|||||>Applied all suggestions from your review, I've renamed `torch_resize` (which defaults to `False`) to `default_to_square` (which defaults to `True`).

I guess we cannot default it to a rectangle, to be backwards compatible? Or can we, if we change the existing ones that expect a square to `default_to_square=True`)? Based on [this](https://github.com/huggingface/transformers/search?q=self.resize), it seems like we currently have 10 models using the resize method that assume providing an integer will resize to a square.<|||||>> I guess we cannot default it to a rectangle, to be backwards compatible? Or can we, if we change the existing ones that expect a square to default_to_square=True)? Based on this, it seems like we currently have 10 models using the resize method that assume providing an integer will resize to a square.

We can switch the other way, but only if we change the default for all the subclasses that expected the resize to a square, so not sure it's worth the hassle. |

transformers | 15,418 | closed | Notify user if group_by_length won't be used | @sgugger @stas00 (as suggested in [15196](https://github.com/huggingface/transformers/issues/15196#issuecomment-1024143208))

It is just a minor issue but it could prevent some head-scratching:

Setting `group_by_length` to `True` during training has, as far as I can see, no effect if the provided dataset is a `torch.utils.data.IterableDataset`, as can be seen in this line:

https://github.com/huggingface/transformers/blob/c4d1fd77fa52d72b66207c6002d3ec13cc36dca8/src/transformers/trainer.py#L646

Entering this branch will run the training without bucketing / grouping.

Imo it would be best to raise an exception or, at the very least, print a warning message for the user. Grouping only happens in [`_get_train_sampler()`](https://github.com/huggingface/transformers/blob/c4d1fd77fa52d72b66207c6002d3ec13cc36dca8/src/transformers/trainer.py#L566:L628) which [is never reached](https://github.com/huggingface/transformers/blob/c4d1fd77fa52d72b66207c6002d3ec13cc36dca8/src/transformers/trainer.py#L646:L664) if said condition is true.
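A minimal sketch of the proposed warning, assuming the check happens before the sampler is built. `effective_group_by_length` is a hypothetical helper, not the Trainer's code:

```python
import logging

from torch.utils.data import Dataset, IterableDataset

logger = logging.getLogger(__name__)


def effective_group_by_length(train_dataset: Dataset, group_by_length: bool) -> bool:
    """Hypothetical helper: return whether length grouping will actually be applied."""
    if group_by_length and isinstance(train_dataset, IterableDataset):
        # No sampler is built for iterable datasets, so grouping is silently skipped.
        logger.warning(
            "`group_by_length=True` has no effect with an IterableDataset: "
            "no length-grouped sampler is used, so batches are not grouped by length."
        )
        return False
    return group_by_length
```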

| 01-31-2022 08:33:37 | 01-31-2022 08:33:37 | Yes, that's a great idea! Will add something for that today. |

transformers | 15,417 | closed | Support for larger XGLM models | According to the official XGLM repo, https://github.com/pytorch/fairseq/tree/main/examples/xglm, there are five versions of XGLM out there. The architecture of the larger XGLM versions is same as the base XGLM, ; why doesn't HF provide support for larger XGLM versions? It seems that currently HF provides support for the smallest one (546M parameters) only. | 01-31-2022 08:27:34 | 01-31-2022 08:27:34 | Hi,

There are already 4 variants available: https://huggingface.co/models?other=xglm.

Only the 4.5B variant seems to be missing as of now, but pretty sure @patil-suraj is going to add that one as well.<|||||>As Niels pointed out, all the xglm checkpoints are available on the hub except the 4.5B. I'm having some issues with that checkpoint so will upload that once it's resolved.<|||||>Just pushed the 4.5B checkpoint https://huggingface.co/facebook/xglm-4.5B

All XGLM checkpoints are now available on the hub. Closing this issue.<|||||>Hi @patil-suraj and @NielsRogge! Thanks for your quick reply! I went through the code of the XGLM model. It seems that for the model and tokenizer we can only use the smallest checkpoint, because I noticed a snippet like this:

```python
XGLM_PRETRAINED_MODEL_ARCHIVE_LIST = [
    "facebook/xglm-564M",
    # See all XGLM models at https://huggingface.co/models?filter=xglm
]
```

Would you like to provide any advice to make use of the larger models like 1.7B, 2.5B and 4.5B? For example, just add the model names in the list? Thanks in advance!<|||||>Hi @WadeYin9712

That list is only used for internal purposes. You can use all the xglm checkpoints available on the hub https://huggingface.co/models?other=xglm.

To use it, just pass the repo id to `from_pretrained`. For ex

```python3

from transformers import XGLMForCausalLM

model = XGLMForCausalLM.from_pretrained("facebook/xglm-1.7B")

``` |

transformers | 15,416 | closed | Constrained Beam Search [without disjunctive decoding] | # ~~Disjunctive~~ Positive Constraint Decoding

@patrickvonplaten @Narsil

Fixes #14081,

But without the "disjunctive" component. I think it's a good idea to first integrate this `Constraint` framework before adding more advanced techniques like Disjunctive Decoding, which can arguably be added with ease afterwards.

Currently this PR can force the generation of tokens and/or ordered sequences of tokens during beam search with `constrained_beam_search` in `GenerationMixin.generate`. It finds the most probable beam outputs that satisfy a list of `Constraint` objects, which yields more sensible results than blindly forcing the tokens into the output sequence.

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests),

Pull Request section?

- [x] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link

to it if that's the case. #14081

- [x] Did you make sure to update the documentation with your changes? Here are the

[documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and

[here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [x] Did you write any new necessary tests?

## Who can review?

Anyone in the community is free to review the PR once the tests have passed. Feel free to tag

members/contributors who may be interested in your PR.

Tagging @patrickvonplaten because my main back-and-forth was with you on this PR.

## Example Use

Here is an example of how one could use this functionality:

```python
from transformers import GPT2Tokenizer, GPT2LMHeadModel
from transformers.generation_beam_constraints import PhrasalConstraint

device = "cuda"

model = GPT2LMHeadModel.from_pretrained("gpt2").to(device)
tokenizer = GPT2Tokenizer.from_pretrained("gpt2")

force_text = " big monsters"
force_text_2 = " crazy"

force_tokens = tokenizer.encode(force_text, return_tensors="pt").to(device)[0]
force_tokens_2 = tokenizer.encode(force_text_2, return_tensors="pt").to(device)[0]

constraints = [
    PhrasalConstraint(force_tokens),
    PhrasalConstraint(force_tokens_2),
]

input_text = ["The baby is crying because"]

model_inputs = tokenizer(input_text, return_tensors="pt")
for key, value in model_inputs.items():
    model_inputs[key] = value.to(device)

k = model.generate(
    **model_inputs,
    constraints=constraints,
    num_beams=7,
    num_return_sequences=7,
    no_repeat_ngram_size=2,
)

for out in k:
    print(tokenizer.decode(out))
```

For some example outputs:

```

The baby is crying because she's been told crazy big monsters are going to come and kill her.

The baby is crying because she's been told crazy big monsters are coming for her.

The baby is crying because she's been told crazy big monsters are going to come after her.

```

## The Constraint Framework

Users can define their own constraints by inheriting from the abstract `Constraint` class. The framework is designed to work reliably because the `Constraint` interface is strictly defined: an implementation that passes the interface's `self.test()` method necessarily works as intended, and an incorrect implementation raises an error. Most users will probably be fine with the built-in `TokenConstraint` and `PhrasalConstraint` classes.
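To make the idea of a stateful constraint concrete, here is a hypothetical, simplified phrasal constraint written for this note. The method names (`advance`, `update`, `remaining`) are illustrative assumptions, not a copy of the `Constraint` interface in this PR: the constraint tracks how much of a token sequence has been generated in order and reports whether each new token stepped forward, completed the phrase, or reset progress.

```python
from typing import List, Optional, Tuple


class ToyPhrasalConstraint:
    """Hypothetical, simplified constraint: generate `token_ids` contiguously, in order."""

    def __init__(self, token_ids: List[int]):
        if not token_ids or any(t < 0 for t in token_ids):
            raise ValueError("token_ids must be a non-empty list of non-negative ints")
        self.token_ids = list(token_ids)
        self.fulfilled_idx = -1  # index of the last matched token; -1 = no progress

    @property
    def completed(self) -> bool:
        return self.fulfilled_idx == len(self.token_ids) - 1

    def advance(self) -> Optional[int]:
        """Token that would make progress next, or None once completed."""
        return None if self.completed else self.token_ids[self.fulfilled_idx + 1]

    def remaining(self) -> int:
        return len(self.token_ids) - (self.fulfilled_idx + 1)

    def update(self, token_id: int) -> Tuple[bool, bool, bool]:
        """Feed one generated token and return (stepped, completed, reset)."""
        if self.completed:
            return False, True, False
        if token_id == self.token_ids[self.fulfilled_idx + 1]:
            self.fulfilled_idx += 1
            return True, self.completed, False
        # A wrong token mid-phrase throws away the partial progress ...
        reset = self.fulfilled_idx >= 0
        self.fulfilled_idx = -1
        # ... but it may itself start a new attempt at the phrase.
        if token_id == self.token_ids[0]:
            self.fulfilled_idx = 0
            return True, self.completed, reset
        return False, False, reset
```

A beam scorer can call `advance()` to find tokens worth adding to candidate beams and `update()` after each step to track which beams are closer to satisfying the constraint.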

## Testing

My approach to testing this new functionality was that it passes every test that was related to the existing `beam_search` function, with the **additional testing that requires that the generated output indeed fulfills the constraint.**

One such example:

```python
# tests/test_generation_utils.py
def test_constrained_beam_search_generate(self):
    for model_class in self.all_generative_model_classes:
        ...
        # check `generate()` and `constrained_beam_search()` are equal for `num_return_sequences`
        # Sample constraints
        force_tokens = torch.randint(min_id, max_id, (1, 2)).type(torch.LongTensor)[0]
        constraints = [
            PhrasalConstraint(force_tokens),
        ]

        num_return_sequences = 2
        max_length = 10

        beam_kwargs, beam_scorer = self._get_constrained_beam_scorer_and_kwargs(
            input_ids.shape[0], max_length, constraints, num_return_sequences=num_return_sequences
        )
        output_generate, output_beam_search = self._constrained_beam_search_generate(
            model=model,
            input_ids=input_ids,
            attention_mask=attention_mask,
            max_length=max_length,
            constrained_beam_scorer=beam_scorer,
            constraints=constraints,
            beam_kwargs=beam_kwargs,
            logits_processor=logits_processor,
            logits_process_kwargs=logits_process_kwargs,
        )
        self.assertListEqual(output_generate.tolist(), output_beam_search.tolist())

        for generation_output in output_generate:
            self._check_sequence_inside_sequence(force_tokens, generation_output)
        ...
```
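The last assertion relies on a helper that checks whether the forced phrase occurs in the output. A minimal sketch of such a check (hypothetical, not the PR's `_check_sequence_inside_sequence`; convert tensors with `.tolist()` first):

```python
from typing import Sequence


def contains_subsequence(needle: Sequence[int], haystack: Sequence[int]) -> bool:
    """Return True if `needle` appears as a contiguous run inside `haystack`."""
    needle, haystack = list(needle), list(haystack)
    n = len(needle)
    return any(haystack[i : i + n] == needle for i in range(len(haystack) - n + 1))
```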

| 01-31-2022 07:36:12 | 01-31-2022 07:36:12 | _The documentation is not available anymore as the PR was closed or merged._<|||||>Very cool taking a look now<|||||>Hey @cwkeam,

This already looks like a super clean and impactful addition to the `transformers` library! Great job. I really like how you implement this new generation method in an extendable way without affecting existing generate methods. I'm sure we can merge it soon.

At the moment, it seems like your example above doesn't work. When I try to run:

```python
from transformers import GPT2Tokenizer, GPT2LMHeadModel
from transformers.generation_beam_constraints import PhrasalConstraint

device = "cuda"

model = GPT2LMHeadModel.from_pretrained("gpt2").to(device)
tokenizer = GPT2Tokenizer.from_pretrained("gpt2")

force_text = " big monsters"
force_text_2 = " crazy"

force_tokens = tokenizer.encode(force_text, return_tensors="pt").to(device)[0]
force_tokens_2 = tokenizer.encode(force_text_2, return_tensors="pt").to(device)[0]

constraints = [
    PhrasalConstraint(force_tokens),
    PhrasalConstraint(force_tokens_2),
]

input_text = ["The baby is crying because"]

model_inputs = tokenizer(input_text, return_tensors="pt")
for key, value in model_inputs.items():
    model_inputs[key] = value.to(device)

k = model.generate(
    **model_inputs,
    constraints=constraints,
    num_beams=7,
    num_return_sequences=7,
    no_repeat_ngram_size=2,
)

for out in k:
    print(tokenizer.decode(out))
```

on my computer, I'm getting the following error:

```

ValueError: `token_ids` has to be list of positive integers or a `torch.LongTensor` but is tensor([7165], device='cuda:0')For single token constraints, refer to `TokenConstraint`.

```

I think the example is really nice and exactly what we are looking for. So maybe you could also add it as a `@slow` test under `tests/test_generation_utils.py` once it works :-)<|||||>Let me know if you need further help!<|||||>Added 1. clear examples (**and tests for them**), 2. more specific input parameter tests, and 3. **bug fixes in other beam search code**.

## 1. Examples (text completion & translation)

The latter example really emphasizes the usefulness of this feature.

Fixed up the first example as requested previously, showing that it can:

- [x] handle multiple constraints

- [x] handle constraints that have more than one word (a sequence of tokens)

```python
def test_constrained_beam_search(self):
    model = GPT2LMHeadModel.from_pretrained("gpt2").to(torch_device)
    tokenizer = GPT2Tokenizer.from_pretrained("gpt2")

    force_tokens = tokenizer.encode(" scared", return_tensors="pt").to(torch_device)[0]
    force_tokens_2 = tokenizer.encode(" big weapons", return_tensors="pt").to(torch_device)[0]

    constraints = [
        PhrasalConstraint(force_tokens),
        PhrasalConstraint(force_tokens_2),
    ]

    starting_text = ["The soldiers were not prepared and"]

    input_ids = tokenizer(starting_text, return_tensors="pt").input_ids.to(torch_device)

    outputs = model.generate(
        input_ids,
        constraints=constraints,
        num_beams=10,
        num_return_sequences=1,
        no_repeat_ngram_size=1,
        max_length=30,
        remove_invalid_values=True,
    )

    generated_text = tokenizer.batch_decode(outputs, skip_special_tokens=True)

    self.assertListEqual(
        generated_text,
        [
            "The soldiers were not prepared and didn't know how big the big weapons would be, so they scared them off. They had no idea what to do",
        ],
    )
```

and here's a translation example:

```python
def test_constrained_beam_search_example_integration(self):
    tokenizer = AutoTokenizer.from_pretrained("t5-base")
    model = AutoModelForSeq2SeqLM.from_pretrained("t5-base")

    encoder_input_str = "translate English to German: How old are you?"
    encoder_input_ids = tokenizer(encoder_input_str, return_tensors="pt").input_ids

    # let's run beam search using 5 beams
    num_beams = 5

    # define decoder start token ids
    input_ids = torch.ones((num_beams, 1), device=model.device, dtype=torch.long)
    input_ids = input_ids * model.config.decoder_start_token_id

    # add encoder_outputs to model keyword arguments
    model_kwargs = {
        "encoder_outputs": model.get_encoder()(
            encoder_input_ids.repeat_interleave(num_beams, dim=0), return_dict=True
        )
    }

    constraint_str = "sind"
    constraint_token_ids = tokenizer.encode(constraint_str)[:-1]  # remove eos token
    constraints = [PhrasalConstraint(token_ids=constraint_token_ids)]

    # instantiate beam scorer
    beam_scorer = ConstrainedBeamSearchScorer(
        batch_size=1, num_beams=num_beams, device=model.device, constraints=constraints
    )

    # instantiate logits processors
    logits_processor = LogitsProcessorList(
        [
            MinLengthLogitsProcessor(5, eos_token_id=model.config.eos_token_id),
        ]
    )

    outputs = model.constrained_beam_search(
        input_ids, beam_scorer, constraints=constraints, logits_processor=logits_processor, **model_kwargs
    )
    outputs = tokenizer.batch_decode(outputs, skip_special_tokens=True)

    self.assertListEqual(outputs, ["Wie alter sind Sie?"])
```

This is a really useful example, because the following is the test for **ordinary beam search**

```python
def test_beam_search_example_integration(self):
    # ... the exact same code as above minus constraints
    outputs = model.beam_search(input_ids, beam_scorer, logits_processor=logits_processor, **model_kwargs)
    outputs = tokenizer.batch_decode(outputs, skip_special_tokens=True)

    self.assertListEqual(outputs, ["Wie alt bist du?"])
```

I've never studied German before, but upon looking it up, it seemed like there were two equally realistic ways to translate "How old are you" in German. While the ordinary beam search output may be fine for most uses, maybe the user specifically wants the other way of translating it. In this case, they can do as I have and force the generation to include the token that changes the direction of the translation as desired.

## 2. Input Parameters tests

Added @patrickvonplaten's recommendation, as well as handling of `num_beam_groups`:

```python
if num_beams <= 1:
    raise ValueError("`num_beams` needs to be greater than 1 for constrained generation.")
if do_sample:
    raise ValueError("`do_sample` needs to be false for constrained generation.")
if num_beam_groups is not None and num_beam_groups > 1:
    raise ValueError("`num_beam_groups` not supported yet for constrained generation.")
```

I'm not sure whether the last part is a good idea and whether I should just make the implementation work for `num_beam_groups>1` but the fact is that it can't handle that case yet (will throw an error).

## 3. Bug Fixes in **beam_search** with `max_length`

As I was writing the test code for the few examples above, I realized that the example in the [huggingface documentation](https://huggingface.co/docs/transformers/v4.16.2/en/main_classes/model#transformers.generation_utils.GenerationMixin.beam_search) for **ordinary beam search** was actually failing, because of the **recent change with `max_length`**.

The above testing code `test_beam_search_example_integration(self)` is taken directly from the huggingface documentation for `GenerationMixin.beam_search` and it fails, because `max_length` can be set to None unless otherwise specified, and it throws an error saying that you can't compare a `Tensor` to a `NoneType`.

[From `BeamSearchScorer.finalize()`](https://github.com/huggingface/transformers/blob/master/src/transformers/generation_beam_search.py#L333),

```python
sent_max_len = min(sent_lengths.max().item() + 1, max_length)  # ERROR 1
decoded: torch.LongTensor = input_ids.new(batch_size * self.num_beam_hyps_to_keep, sent_max_len)

if sent_lengths.min().item() != sent_lengths.max().item():
    assert pad_token_id is not None, "`pad_token_id` has to be defined"
    decoded.fill_(pad_token_id)

for i, hypo in enumerate(best):
    decoded[i, : sent_lengths[i]] = hypo
    if sent_lengths[i] < max_length:  # ERROR 2
        decoded[i, sent_lengths[i]] = eos_token_id
```

So I changed that to:

```python
sent_lengths_max = sent_lengths.max().item() + 1
sent_max_len = min(sent_lengths_max, max_length) if max_length is not None else sent_lengths_max
decoded: torch.LongTensor = input_ids.new(batch_size * self.num_beam_hyps_to_keep, sent_max_len)

# shorter batches are padded if needed
if sent_lengths.min().item() != sent_lengths.max().item():
    assert pad_token_id is not None, "`pad_token_id` has to be defined"
    decoded.fill_(pad_token_id)

# fill with hypotheses and eos_token_id if the latter fits in
for i, hypo in enumerate(best):
    decoded[i, : sent_lengths[i]] = hypo
    if sent_lengths[i] < sent_max_len:
        decoded[i, sent_lengths[i]] = eos_token_id
```
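The corrected padding logic can be exercised without a model. The sketch below is a hypothetical pure-Python mirror of the fixed `finalize()` step (lists instead of tensors, and it always pre-fills with `pad_token_id` for simplicity); `pad_hypotheses` is not a library function:

```python
from typing import List, Optional


def pad_hypotheses(
    hypotheses: List[List[int]], max_length: Optional[int], pad_token_id: int, eos_token_id: int
) -> List[List[int]]:
    sent_lengths = [len(h) for h in hypotheses]
    sent_lengths_max = max(sent_lengths) + 1  # +1 leaves room for a trailing eos token
    # With max_length=None, min(x, None) would raise a TypeError; fall back to the longest hypothesis + 1.
    sent_max_len = min(sent_lengths_max, max_length) if max_length is not None else sent_lengths_max
    decoded = [[pad_token_id] * sent_max_len for _ in hypotheses]
    for row, hypo in zip(decoded, hypotheses):
        if len(hypo) > sent_max_len:
            raise ValueError("hypothesis longer than max_length")
        row[: len(hypo)] = hypo
        # Compare against sent_max_len (never None), not max_length.
        if len(hypo) < sent_max_len:
            row[len(hypo)] = eos_token_id
    return decoded
```

With `max_length=None` the hypotheses `[[1, 2, 3], [4]]` become `[[1, 2, 3, 9], [4, 9, 0, 0]]` (eos `9`, pad `0`); with `max_length=3` the longest hypothesis has no room left for eos.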

<|||||>Hey @patrickvonplaten

I also wanted to ask your opinions on making this feature integrate more easily to the `model.generate()` function. The same way how `bad_word_ids` is converted into a `LogitsProcessor` in the internal logic, maybe there's a way to add a similarly simple API like `force_token_ids` that internally converts it into `Constraint` objects in the backend.

I'm thinking of something like:

```python
starting_text = ["The soldiers were not prepared and"]
force_tokens = ["scared", "big weapons"]

input_ids = tokenizer(starting_text, return_tensors="pt").input_ids.to(torch_device)
force_token_ids = tokenizer(force_tokens).input_ids

outputs = model.generate(
    input_ids,
    force_token_ids=force_token_ids,  # [[28349], [23981, 3721]] something that looks like this (made the numbers up)
    # ...
)
```

But the problem arises when we start talking about **Disjunctive Positive Constraint Decoding** which was the main subject of the original #14081 issue. Although the disjunctive constraint generation implementation isn't even made yet, I think the best approach is something like this:

```python
from transformers.generation_beam_constraints import DisjunctiveConstraint

variations_1 = ["rain", "raining", "rained"]
variations_2 = ["smile", "smiling", "smiled"]

force_rain = tokenizer.encode(variations_1, return_tensors="pt")
force_smile = tokenizer.encode(variations_2, return_tensors="pt")

constraints = [
    DisjunctiveConstraint(force_rain),
    DisjunctiveConstraint(force_smile),
]

outputs = model.generate(
    input_ids,
    constraints=constraints,
    # ...
)
```

Here, a `DisjunctiveConstraint` is fulfilled as soon as any one of the options in its list is generated.

However, this feature would be quite awkward to express through the `force_token_ids` API.

I think one way to approach this is to realize that a `PhrasalConstraint` is basically a special case of a `DisjunctiveConstraint`:

```python
variations_1 = ["rain"]
force_rain = tokenizer(variations_1, add_special_tokens=False).input_ids

constraints = [
    DisjunctiveConstraint(force_rain),
]
```

The above has the same effect as just doing `PhrasalConstraint(tokenizer.encode("rain"))`, since the disjunctive constraint would be choosing one option from a list with a single item.
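To make the "single option = phrasal constraint" point concrete, here is a minimal pure-Python sketch. `SimpleDisjunctiveConstraint` is a hypothetical, simplified class, not the transformers `DisjunctiveConstraint`; it assumes a match must be a contiguous run of generated tokens and simply resets on a mismatch.

```python
from typing import List, Set


class SimpleDisjunctiveConstraint:
    """Hypothetical, simplified disjunctive constraint (not the transformers class).

    Fulfilled once any one of `options` has been generated as a contiguous
    run of tokens. A mismatch resets the partial match.
    """

    def __init__(self, options: List[List[int]]) -> None:
        if not options or any(len(opt) == 0 for opt in options):
            raise ValueError("options must be non-empty token-id sequences")
        self.options = [list(opt) for opt in options]
        self.current: List[int] = []  # partial match in progress
        self.completed = False

    def _is_prefix(self, seq: List[int]) -> bool:
        # True if seq is a prefix of at least one option
        return any(opt[: len(seq)] == seq for opt in self.options)

    def advance(self) -> Set[int]:
        """Token ids that would extend the current partial match."""
        if self.completed:
            return set()
        n = len(self.current)
        return {opt[n] for opt in self.options if len(opt) > n and opt[:n] == self.current}

    def update(self, token_id: int) -> bool:
        """Feed one generated token; return True if a partial match is in progress."""
        if self.completed:
            return False
        candidate = self.current + [token_id]
        if self._is_prefix(candidate):
            self.current = candidate
        else:
            # reset, but the token may itself start a new match
            self.current = [token_id] if self._is_prefix([token_id]) else []
        if self.current in self.options:
            self.completed = True
        return bool(self.current)
```

With a single option, e.g. `SimpleDisjunctiveConstraint([[7, 8]])`, the class behaves exactly like a phrasal constraint for the phrase `[7, 8]`.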

So maybe the API above should be changed to:

```python
starting_text = ["The soldiers were not prepared and"]
force_tokens = [
    ["scared", "another"],
    ["big weapons"],
]

input_ids = tokenizer(starting_text, return_tensors="pt").input_ids.to(torch_device)
# tokenize each group separately so the nesting is preserved
force_token_ids = [tokenizer(group, add_special_tokens=False).input_ids for group in force_tokens]
# force_token_ids would then look like (made-up numbers):
# [
#     [[28349], [456849]],  # DisjunctiveConstraint, choose one of the two
#     [[23981, 3721]],      # ordinary PhrasalConstraint for a sequence, yet still a Disjunctive object
# ]

outputs = model.generate(
    input_ids,
    force_token_ids=force_token_ids,
    # ...other generation arguments
)
```

Then the input type for `force_token_ids` would be `List[List[List[int]]]`, which sounds quite messy.
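One hedged way to keep the user-facing type simpler: accept, per entry, either a flat phrase (`List[int]`) or a group of alternatives (`List[List[int]]`), and normalize internally so every entry becomes a disjunctive group. `normalize_force_token_ids` is a hypothetical helper sketched here for illustration, not part of transformers.

```python
from typing import List, Union

TokenSeq = List[int]


def normalize_force_token_ids(
    force_token_ids: List[Union[TokenSeq, List[TokenSeq]]],
) -> List[List[TokenSeq]]:
    """Hypothetical helper: turn every entry into a list of alternative phrases."""
    normalized: List[List[TokenSeq]] = []
    for entry in force_token_ids:
        if not entry:
            raise ValueError("empty constraint entry")
        if all(isinstance(t, int) for t in entry):
            # a plain phrase: treat it as a disjunction with a single option
            normalized.append([list(entry)])
        elif all(
            isinstance(opt, list) and opt and all(isinstance(t, int) for t in opt)
            for opt in entry
        ):
            # already a group of alternatives
            normalized.append([list(opt) for opt in entry])
        else:
            raise TypeError(f"cannot interpret constraint entry: {entry!r}")
    return normalized
```

With this, `[[23981, 3721], [[28349], [456849]]]` normalizes to `[[[23981, 3721]], [[28349], [456849]]]`, so users only write the extra nesting level when they actually want alternatives.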

Let me know your thoughts on this!<|||||>Hey @patrickvonplaten,

That's a good point. As you said, I think the `DisjunctiveConstraint` feature should also come as a separate PR for maintenance purposes. I'll open a new PR soon.

The checks seem to be all passing now! Will you do a final review?

Thanks!

<|||||>Incredible job at implementing this @cwkeam - it's very clean and enables some really cool use cases.

If you'd like, we could now add the disjunctive decoding in a follow-up PR, make it a bit easier for the user to enable via some keywords for `generate()`, and then post about this method - I'm sure the community will be very excited about this addition. If you'd like to promote the integration already at this point, we could also do it right away and delay the disjunctive decoding PR a bit - as you wish :-)<|||||>All slow tests run correctly and the fast tests are not slowed down by this PR. One final change @cwkeam and then I think we are good to go<|||||>@patrickvonplaten @sgugger Thanks for the review! I've adjusted all the code accordingly.

As for promoting these changes, I think I'll wait until the `DisjunctiveConstraint` and the simple integration into the `generate()` function are complete. I'll open a new PR about it soon. Once those changes are done, what would be a good way to promote this feature? Would it be possible to write a post on the Hugging Face blog as well as on TowardsDataScience about it?

Thanks so much for everyone's help!<|||||>> @patrickvonplaten @sgugger Thanks for the review! I've adjusted all the code accordingly.

>

> As for promoting these changes, I think I'll save it for the `DisjunctiveConstraint` and simple integration to the `generate()` function is complete. I'll open a new PR about it soon. In terms of after those other changes are done, what would be a nice way to promote this change? Could it be possible to write a blog post in huggingface blogs as well as posting on TowardsDataScience about it?

>

> Thanks so much for everyone's help!

A blog post would be a great idea indeed! I think this is a really nice feature to write a blog post about. Happy to help!<|||||>@patrickvonplaten

I've made all the requested adjustments on the comments.

Only one test is failing right now:

```
tests/test_modeling_speech_encoder_decoder.py::Wav2Vec2Speech2Text2::test_encoder_decoder_model_generate
```

It actually passes on my machine. I'm not sure why it fails after a commit that only changed comments. This has happened before and was resolved simply by fetching from master. How does one generally deal with these problems?

EDIT: after pushing again with some docstring adjustments (which presumably just triggered a re-run), it's passing now.<|||||>Incredible work here @cwkeam - this was one of the cleanest and highest-quality PRs that I've seen in a long time! Merging - let's try to tackle disjunctive decoding next :rocket: |

transformers | 15,415 | closed | Performance of T5 | I'm testing the performance of T5 and want to reproduce the WMT English-to-German newstest2014 result in Table 14. However, my results were always 1 or 2 points lower. Are there any scripts that reproduce this result with PyTorch? | 01-31-2022 02:38:56 | 01-31-2022 02:38:56 | I have found that<|||||>> I have found that

Hi, could you please share how you managed to reproduce the results?<|||||>It's impossible, because the model hasn't been fine-tuned on translation. The details are at https://github.com/huggingface/transformers/tree/v2.9.0/examples/translation/t5<|||||>@zzasdf Thanks a lot! |

transformers | 15,414 | closed | [Hotfix] Fix Swin model outputs | # What does this PR do?

This PR:

- fixes some critical outputs of `SwinModel` so that they match those of `ViTModel`, `BeitModel`, and `DeitModel`. More specifically, `last_hidden_state` previously returned a pooled output, which shouldn't be the case. Instead, it should be a tensor of shape (batch_size, seq_len, hidden_size), and the pooler output a tensor of shape (batch_size, hidden_size).

- adds `problem_type` support to `SwinForImageClassification`

- fixes the integration test
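The intended shape contract between `last_hidden_state` and the pooler output can be illustrated in plain Python. This sketch assumes, as in Swin, that pooling is an average over the token (sequence) axis; `mean_pool` is a hypothetical helper for illustration only, not the model code.

```python
from typing import List

Matrix = List[List[float]]


def mean_pool(last_hidden_state: List[Matrix]) -> Matrix:
    """Average over the sequence axis: (batch, seq_len, hidden) -> (batch, hidden)."""
    pooled = []
    for sequence in last_hidden_state:
        seq_len = len(sequence)
        hidden = len(sequence[0])
        # one averaged value per hidden dimension
        pooled.append([sum(token[h] for token in sequence) / seq_len for h in range(hidden)])
    return pooled
```

So a batch of shape (1, 3, 2) such as `[[[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]]` pools to shape (1, 2): `[[3.0, 4.0]]`.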

To do:

- [x] more of a nit, but the pooler in Swin is called `self.pool` rather than `self.pooler` (as in models like BERT, ViT). We could rename it to `self.pooler` for consistency. | 01-30-2022 21:05:55 | 01-30-2022 21:05:55 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 15,413 | closed | add scores to Wav2Vec2WithLMOutput | # What does this PR do?

<!--

Congratulations! You've made it this far! You're not quite done yet though.

Once merged, your PR is going to appear in the release notes with the title you set, so make sure it's a great title that fully reflects the extent of your awesome contribution.

Then, please replace this with a description of the change and which issue is fixed (if applicable). Please also include relevant motivation and context. List any dependencies (if any) that are required for this change.

Once you're done, someone will review your PR shortly (see the section "Who can review?" below to tag some potential reviewers). They may suggest changes to make the code even better. If no one reviewed your PR after a week has passed, don't hesitate to post a new comment @-mentioning the same persons---sometimes notifications get lost.

-->

- Adds `logit_score` and `lm_score` produced by `BeamSearchDecoderCTC` to `Wav2Vec2DecoderWithLMOutput`. Useful for results analysis and pseudo-labeling for self-training.

- Removes padding from batched logits (as returned by `Trainer.predict`) at `batch_decode`. This prevents producing nonsensical tokens at the end of decoded texts.
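A minimal sketch of the padding-removal idea, assuming the batch was padded with -100 (the padding index `Trainer` uses when concatenating predictions of different lengths) and that real frames never consist entirely of that value. `strip_padded_frames` is a hypothetical helper, not the code in this PR.

```python
from typing import List

PAD_VALUE = -100.0  # assumption: the value used to pad predictions across batches


def strip_padded_frames(logits: List[List[float]], pad_value: float = PAD_VALUE) -> List[List[float]]:
    """Drop frames (time steps) whose every logit equals the pad value."""
    return [frame for frame in logits if not all(v == pad_value for v in frame)]
```

Applied per utterance before CTC decoding, this keeps the decoder from interpreting padded frames as real audio, which is what would otherwise produce spurious tokens at the end of the text.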

## Before submitting

- [ ] This PR fixes a typo or improves the docs (you can dismiss the other checks if that's the case).

- [x] Did you read the [contributor guideline](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md#start-contributing-pull-requests), Pull Request section?

- [ ] Was this discussed/approved via a Github issue or the [forum](https://discuss.huggingface.co/)? Please add a link to it if that's the case.

- [ ] Did you make sure to update the documentation with your changes? Here are the [documentation guidelines](https://github.com/huggingface/transformers/tree/master/docs), and [here are tips on formatting docstrings](https://github.com/huggingface/transformers/tree/master/docs#writing-source-documentation).

- [x] Did you write any new necessary tests?

## Who can review?

The PR is related to Robust ASR week.

@patrickvonplaten @anton-l

<!-- Your PR will be replied to more quickly if you can figure out the right person to tag with @

If you know how to use git blame, that is the easiest way, otherwise, here is a rough guide of **who to tag**.

Please tag fewer than 3 people.

Models:

- albert, bert, xlm: @LysandreJik

- blenderbot, bart, marian, pegasus, encoderdecoder, t5: @patrickvonplaten, @patil-suraj

- longformer, reformer, transfoxl, xlnet: @patrickvonplaten

- fsmt: @stas00

- funnel: @sgugger

- gpt2: @patrickvonplaten, @LysandreJik

- rag: @patrickvonplaten, @lhoestq

- tensorflow: @LysandreJik

Library:

- benchmarks: @patrickvonplaten

- deepspeed: @stas00

- ray/raytune: @richardliaw, @amogkam

- text generation: @patrickvonplaten

- tokenizers: @n1t0, @LysandreJik

- trainer: @sgugger

- pipelines: @LysandreJik

Documentation: @sgugger

HF projects:

- datasets: [different repo](https://github.com/huggingface/datasets)

- rust tokenizers: [different repo](https://github.com/huggingface/tokenizers)

Examples:

- maintained examples (not research project or legacy): @sgugger, @patil-suraj

- research_projects/bert-loses-patience: @JetRunner

- research_projects/distillation: @VictorSanh

-->

| 01-30-2022 18:58:45 | 01-30-2022 18:58:45 | _The documentation is not available anymore as the PR was closed or merged._ |

transformers | 15,412 | closed | How to use multi-node multi-gpu cluster for large batch beam-search decoding | I have a GPT2 model implemented from scratch as a torch.nn.Module (so that I can make custom tweaks to it), and I have a 4-node, 32-GPU cluster that I'd like to use to generate beam-search continuations for millions of prefixes. Is there a way to use Hugging Face as a wrapper to do this in a multi-node, multi-GPU fashion? I know it can be done by manually splitting the file into small chunks and running beam-search generation on each one individually, but that is messy. | 01-30-2022 18:14:22 | 01-30-2022 18:14:22 | Hello, thanks for opening an issue! We try to keep the GitHub issues for bugs/feature requests.

Could you ask your question on the [forum](https://discuss.huggingface.co) instead?

Thanks!<|||||>This issue has been automatically marked as stale because it has not had recent activity. If you think this still needs to be addressed please comment on this thread.

Please note that issues that do not follow the [contributing guidelines](https://github.com/huggingface/transformers/blob/master/CONTRIBUTING.md) are likely to be ignored. |

transformers | 15,411 | closed | [JAX/FLAX]: Language modeling example throws RESOURCE_EXHAUSTED error for large datasets | Hi,

I haven't found any similar issue that is related to my `RESOURCE_EXHAUSTED` error, so I'm creating a new issue here.

The problem is: I can use a dataset that is <= 50GB with a certain batch size for BERT or T5 pre-training, and it works fine.