markdown

stringlengths 0

1.02M

| code

stringlengths 0

832k

| output

stringlengths 0

1.02M

| license

stringlengths 3

36

| path

stringlengths 6

265

| repo_name

stringlengths 6

127

|

|---|---|---|---|---|---|

Registering your model with Azure ML | model_dir = "emotion_ferplus" # replace this with the location of your model files

# leave as is if it's in the same folder as this notebook

from azureml.core.model import Model

model = Model.register(model_path = model_dir + "/" + "model.onnx",

model_name = "onnx_emotion",

tags = {"onnx": "demo"},

description = "FER+ emotion recognition CNN from ONNX Model Zoo",

workspace = ws) | _____no_output_____ | MIT | onnx/onnx-inference-emotion-recognition.ipynb | sxusx/MachineLearningNotebooks |

Optional: Displaying your registered modelsThis step is not required, so feel free to skip it. | models = ws.models()

for m in models:

print("Name:", m.name,"\tVersion:", m.version, "\tDescription:", m.description, m.tags) | _____no_output_____ | MIT | onnx/onnx-inference-emotion-recognition.ipynb | sxusx/MachineLearningNotebooks |

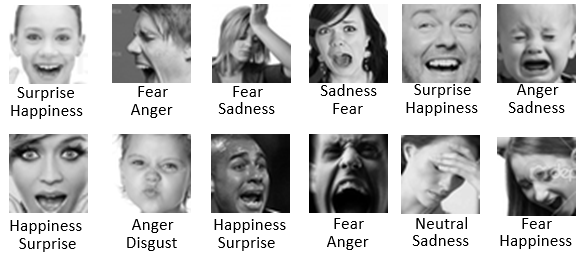

ONNX FER+ Model MethodologyThe image classification model we are using is pre-trained using Microsoft's deep learning cognitive toolkit, [CNTK](https://github.com/Microsoft/CNTK), from the [ONNX model zoo](http://github.com/onnx/models). The model zoo has many other models that can be deployed on cloud providers like AzureML without any additional training. To ensure that our cloud deployed model works, we use testing data from the famous FER+ data set, provided as part of the [trained Emotion Recognition model](https://github.com/onnx/models/tree/master/emotion_ferplus) in the ONNX model zoo.The original Facial Emotion Recognition (FER) Dataset was released in 2013, but some of the labels are not entirely appropriate for the expression. In the FER+ Dataset, each photo was evaluated by at least 10 croud sourced reviewers, creating a better basis for ground truth. You can see the difference of label quality in the sample model input below. The FER labels are the first word below each image, and the FER+ labels are the second word below each image.***Input: Photos of cropped faces from FER+ Dataset******Task: Classify each facial image into its appropriate emotions in the emotion table***``` emotion_table = {'neutral':0, 'happiness':1, 'surprise':2, 'sadness':3, 'anger':4, 'disgust':5, 'fear':6, 'contempt':7} ```***Output: Emotion prediction for input image***Remember, once the application is deployed in Azure ML, you can use your own images as input for the model to classify. | # for images and plots in this notebook

import matplotlib.pyplot as plt

from IPython.display import Image

# display images inline

%matplotlib inline | _____no_output_____ | MIT | onnx/onnx-inference-emotion-recognition.ipynb | sxusx/MachineLearningNotebooks |

Model DescriptionThe FER+ model from the ONNX Model Zoo is summarized by the graphic below. You can see the entire workflow of our pre-trained model in the following image from Barsoum et. al's paper ["Training Deep Networks for Facial Expression Recognitionwith Crowd-Sourced Label Distribution"](https://arxiv.org/pdf/1608.01041.pdf), with our (64,64) input images and our output probabilities for each of the labels.  Deploy our model on Azure ML We are now going to deploy our ONNX Model on AML with inference in ONNX Runtime. We begin by writing a score.py file, which will help us run the model in our Azure ML virtual machine (VM), and then specify our environment by writing a yml file.You will also notice that we import the onnxruntime library to do runtime inference on our ONNX models (passing in input and evaluating out model's predicted output). More information on the API and commands can be found in the [ONNX Runtime documentation](https://aka.ms/onnxruntime). Write Score FileA score file is what tells our Azure cloud service what to do. After initializing our model using azureml.core.model, we start an ONNX Runtime GPU inference session to evaluate the data passed in on our function calls. | %%writefile score.py

import json

import numpy as np

import onnxruntime

import sys

import os

from azureml.core.model import Model

import time

def init():

global session

model = Model.get_model_path(model_name = 'onnx_emotion')

session = onnxruntime.InferenceSession(model, None)

def run(input_data):

'''Purpose: evaluate test input in Azure Cloud using onnxruntime.

We will call the run function later from our Jupyter Notebook

so our azure service can evaluate our model input in the cloud. '''

try:

# load in our data, convert to readable format

start = time.time()

data = np.array(json.loads(input_data)['data']).astype('float32')

r = session.run(["Plus214_Output_0"], {"Input3": data})[0]

result = emotion_map(postprocess(r[0]))

end = time.time()

result_dict = {"result": np.array(result).tolist(),

"time": np.array(end - start).tolist()}

except Exception as e:

result_dict = {"error": str(e)}

return json.dumps(result_dict)

def emotion_map(classes, N=1):

"""Take the most probable labels (output of postprocess) and returns the top N emotional labels that fit the picture."""

emotion_table = {'neutral':0, 'happiness':1, 'surprise':2, 'sadness':3, 'anger':4, 'disgust':5, 'fear':6, 'contempt':7}

emotion_keys = list(emotion_table.keys())

emotions = []

for i in range(N):

emotions.append(emotion_keys[classes[i]])

return emotions

def softmax(x):

"""Compute softmax values (probabilities from 0 to 1) for each possible label."""

x = x.reshape(-1)

e_x = np.exp(x - np.max(x))

return e_x / e_x.sum(axis=0)

def postprocess(scores):

"""This function takes the scores generated by the network and returns the class IDs in decreasing

order of probability."""

prob = softmax(scores)

prob = np.squeeze(prob)

classes = np.argsort(prob)[::-1]

return classes | _____no_output_____ | MIT | onnx/onnx-inference-emotion-recognition.ipynb | sxusx/MachineLearningNotebooks |

Write Environment File | from azureml.core.conda_dependencies import CondaDependencies

myenv = CondaDependencies()

myenv.add_pip_package("numpy")

myenv.add_pip_package("azureml-core")

myenv.add_pip_package("onnxruntime-gpu")

with open("myenv.yml","w") as f:

f.write(myenv.serialize_to_string()) | _____no_output_____ | MIT | onnx/onnx-inference-emotion-recognition.ipynb | sxusx/MachineLearningNotebooks |

Create the Container ImageThis step will likely take a few minutes. | from azureml.core.image import ContainerImage

# enable_gpu = True to install CUDA 9.1 and cuDNN 7.0

image_config = ContainerImage.image_configuration(execution_script = "score.py",

runtime = "python",

conda_file = "myenv.yml",

description = "test",

tags = {"demo": "onnx"},

enable_gpu = True

)

image = ContainerImage.create(name = "onnxtest",

# this is the model object

models = [model],

image_config = image_config,

workspace = ws)

image.wait_for_creation(show_output = True) | _____no_output_____ | MIT | onnx/onnx-inference-emotion-recognition.ipynb | sxusx/MachineLearningNotebooks |

DebuggingIn case you need to debug your code, the next line of code accesses the log file. | print(image.image_build_log_uri) | _____no_output_____ | MIT | onnx/onnx-inference-emotion-recognition.ipynb | sxusx/MachineLearningNotebooks |

We're all set! Let's get our model chugging. Deploy the container image | from azureml.core.webservice import AciWebservice

aciconfig = AciWebservice.deploy_configuration(cpu_cores = 1,

memory_gb = 1,

tags = {'demo': 'onnx'},

description = 'ONNX for facial emotion recognition model') | _____no_output_____ | MIT | onnx/onnx-inference-emotion-recognition.ipynb | sxusx/MachineLearningNotebooks |

The following cell will likely take a few minutes to run as well. | from azureml.core.webservice import Webservice

aci_service_name = 'onnx-emotion-demo'

print("Service", aci_service_name)

aci_service = Webservice.deploy_from_image(deployment_config = aciconfig,

image = image,

name = aci_service_name,

workspace = ws)

aci_service.wait_for_deployment(True)

print(aci_service.state)

if aci_service.state != 'Healthy':

# run this command for debugging.

print(aci_service.get_logs())

# If your deployment fails, make sure to delete your aci_service before trying again!

# aci_service.delete() | _____no_output_____ | MIT | onnx/onnx-inference-emotion-recognition.ipynb | sxusx/MachineLearningNotebooks |

Success!If you've made it this far, you've deployed a working VM with a facial emotion recognition model running in the cloud using Azure ML. Congratulations!Let's see how well our model deals with our test images. Testing and Evaluation Useful Helper FunctionsWe preprocess and postprocess our data (see score.py file) using the helper functions specified in the [ONNX FER+ Model page in the Model Zoo repository](https://github.com/onnx/models/tree/master/emotion_ferplus). | def preprocess(img):

"""Convert image to the write format to be passed into the model"""

input_shape = (1, 64, 64)

img = np.reshape(img, input_shape)

img = np.expand_dims(img, axis=0)

return img

# to manipulate our arrays

import numpy as np

# read in test data protobuf files included with the model

import onnx

from onnx import numpy_helper

# to use parsers to read in our model/data

import json

import os

test_inputs = []

test_outputs = []

# read in 3 testing images from .pb files

test_data_size = 3

for i in np.arange(test_data_size):

input_test_data = os.path.join(model_dir, 'test_data_set_{0}'.format(i), 'input_0.pb')

output_test_data = os.path.join(model_dir, 'test_data_set_{0}'.format(i), 'output_0.pb')

# convert protobuf tensors to np arrays using the TensorProto reader from ONNX

tensor = onnx.TensorProto()

with open(input_test_data, 'rb') as f:

tensor.ParseFromString(f.read())

input_data = preprocess(numpy_helper.to_array(tensor))

test_inputs.append(input_data)

with open(output_test_data, 'rb') as f:

tensor.ParseFromString(f.read())

output_data = numpy_helper.to_array(tensor)

test_outputs.append(output_data) | _____no_output_____ | MIT | onnx/onnx-inference-emotion-recognition.ipynb | sxusx/MachineLearningNotebooks |

Show some sample imagesWe use `matplotlib` to plot 3 images from the dataset with their labels over them. | plt.figure(figsize = (20, 20))

for test_image in np.arange(3):

test_inputs[test_image].reshape(1, 64, 64)

plt.subplot(1, 8, test_image+1)

plt.axhline('')

plt.axvline('')

plt.text(x = 10, y = -10, s = test_outputs[test_image][0], fontsize = 18)

plt.imshow(test_inputs[test_image].reshape(64, 64))

plt.show() | _____no_output_____ | MIT | onnx/onnx-inference-emotion-recognition.ipynb | sxusx/MachineLearningNotebooks |

Run evaluation / prediction | plt.figure(figsize = (16, 6), frameon=False)

plt.subplot(1, 8, 1)

plt.text(x = 0, y = -30, s = "True Label: ", fontsize = 13, color = 'black')

plt.text(x = 0, y = -20, s = "Result: ", fontsize = 13, color = 'black')

plt.text(x = 0, y = -10, s = "Inference Time: ", fontsize = 13, color = 'black')

plt.text(x = 3, y = 14, s = "Model Input", fontsize = 12, color = 'black')

plt.text(x = 6, y = 18, s = "(64 x 64)", fontsize = 12, color = 'black')

plt.imshow(np.ones((28,28)), cmap=plt.cm.Greys)

for i in np.arange(test_data_size):

input_data = json.dumps({'data': test_inputs[i].tolist()})

# predict using the deployed model

r = json.loads(aci_service.run(input_data))

if len(r) == 1:

print(r['error'])

break

result = r['result']

time_ms = np.round(r['time'] * 1000, 2)

ground_truth = int(np.argmax(test_outputs[i]))

# compare actual value vs. the predicted values:

plt.subplot(1, 8, i+2)

plt.axhline('')

plt.axvline('')

# use different color for misclassified sample

font_color = 'red' if ground_truth != result else 'black'

clr_map = plt.cm.gray if ground_truth != result else plt.cm.Greys

# ground truth labels are in blue

plt.text(x = 10, y = -30, s = ground_truth, fontsize = 18, color = 'blue')

# predictions are in black if correct, red if incorrect

plt.text(x = 10, y = -20, s = result, fontsize = 18, color = font_color)

plt.text(x = 5, y = -10, s = str(time_ms) + ' ms', fontsize = 14, color = font_color)

plt.imshow(test_inputs[i].reshape(64, 64), cmap = clr_map)

plt.show() | _____no_output_____ | MIT | onnx/onnx-inference-emotion-recognition.ipynb | sxusx/MachineLearningNotebooks |

Try classifying your own images! | # Replace the following string with your own path/test image

# Make sure the dimensions are 28 * 28 pixels

# Any PNG or JPG image file should work

# Make sure to include the entire path with // instead of /

# e.g. your_test_image = "C://Users//vinitra.swamy//Pictures//emotion_test_images//img_1.jpg"

your_test_image = "<path to file>"

import matplotlib.image as mpimg

if your_test_image != "<path to file>":

img = mpimg.imread(your_test_image)

plt.subplot(1,3,1)

plt.imshow(img, cmap = plt.cm.Greys)

img = img.reshape(1, 1, 64, 64)

else:

img = None

if img is None:

print("Add the path for your image data.")

else:

input_data = json.dumps({'data': img.tolist()})

try:

r = json.loads(aci_service.run(input_data))

result = r['result']

time_ms = np.round(r['time'] * 1000, 2)

except Exception as e:

print(json.loads(r)['error'])

plt.figure(figsize = (16, 6))

plt.subplot(1, 15,1)

plt.axhline('')

plt.axvline('')

plt.text(x = -100, y = -20, s = "Model prediction: ", fontsize = 14)

plt.text(x = -100, y = -10, s = "Inference time: ", fontsize = 14)

plt.text(x = 0, y = -20, s = str(result), fontsize = 14)

plt.text(x = 0, y = -10, s = str(time_ms) + " ms", fontsize = 14)

plt.text(x = -100, y = 14, s = "Input image: ", fontsize = 14)

plt.imshow(img.reshape(28, 28), cmap = plt.cm.Greys)

# remember to delete your service after you are done using it!

# aci_service.delete() | _____no_output_____ | MIT | onnx/onnx-inference-emotion-recognition.ipynb | sxusx/MachineLearningNotebooks |

2d. Distributed training and monitoring In this notebook, we refactor to call ```train_and_evaluate``` instead of hand-coding our ML pipeline. This allows us to carry out evaluation as part of our training loop instead of as a separate step. It also adds in failure-handling that is necessary for distributed training capabilities.We also use TensorBoard to monitor the training. | from google.cloud import bigquery

import tensorflow as tf

import numpy as np

import shutil

print(tf.__version__) | _____no_output_____ | Apache-2.0 | courses/machine_learning/deepdive/03_tensorflow/labs/d_traineval.ipynb | jonesevan007/training-data-analyst |

Input Read data created in Lab1a, but this time make it more general, so that we are reading in batches. Instead of using Pandas, we will use add a filename queue to the TensorFlow graph. | CSV_COLUMNS = ['fare_amount', 'pickuplon','pickuplat','dropofflon','dropofflat','passengers', 'key']

LABEL_COLUMN = 'fare_amount'

DEFAULTS = [[0.0], [-74.0], [40.0], [-74.0], [40.7], [1.0], ['nokey']]

def read_dataset(filename, mode, batch_size = 512):

def decode_csv(value_column):

columns = tf.decode_csv(value_column, record_defaults = DEFAULTS)

features = dict(zip(CSV_COLUMNS, columns))

label = features.pop(LABEL_COLUMN)

return features, label

# Create list of file names that match "glob" pattern (i.e. data_file_*.csv)

filenames_dataset = tf.data.Dataset.list_files(filename)

# Read lines from text files

textlines_dataset = filenames_dataset.flat_map(tf.data.TextLineDataset)

# Parse text lines as comma-separated values (CSV)

dataset = textlines_dataset.map(decode_csv)

# Note:

# use tf.data.Dataset.flat_map to apply one to many transformations (here: filename -> text lines)

# use tf.data.Dataset.map to apply one to one transformations (here: text line -> feature list)

if mode == tf.estimator.ModeKeys.TRAIN:

num_epochs = None # indefinitely

dataset = dataset.shuffle(buffer_size = 10 * batch_size)

else:

num_epochs = 1 # end-of-input after this

dataset = dataset.repeat(num_epochs).batch(batch_size)

return dataset | _____no_output_____ | Apache-2.0 | courses/machine_learning/deepdive/03_tensorflow/labs/d_traineval.ipynb | jonesevan007/training-data-analyst |

Create features out of input data For now, pass these through. (same as previous lab) | INPUT_COLUMNS = [

tf.feature_column.numeric_column('pickuplon'),

tf.feature_column.numeric_column('pickuplat'),

tf.feature_column.numeric_column('dropofflat'),

tf.feature_column.numeric_column('dropofflon'),

tf.feature_column.numeric_column('passengers'),

]

def add_more_features(feats):

# Nothing to add (yet!)

return feats

feature_cols = add_more_features(INPUT_COLUMNS) | _____no_output_____ | Apache-2.0 | courses/machine_learning/deepdive/03_tensorflow/labs/d_traineval.ipynb | jonesevan007/training-data-analyst |

Serving input function Defines the expected shape of the JSON feed that the modelwill receive once deployed behind a REST API in production. | ## TODO: Create serving input function

def serving_input_fn():

#ADD CODE HERE

return tf.estimator.export.ServingInputReceiver(features, json_feature_placeholders) | _____no_output_____ | Apache-2.0 | courses/machine_learning/deepdive/03_tensorflow/labs/d_traineval.ipynb | jonesevan007/training-data-analyst |

tf.estimator.train_and_evaluate | ## TODO: Create train and evaluate function using tf.estimator

def train_and_evaluate(output_dir, num_train_steps):

#ADD CODE HERE

tf.estimator.train_and_evaluate(estimator, train_spec, eval_spec) | _____no_output_____ | Apache-2.0 | courses/machine_learning/deepdive/03_tensorflow/labs/d_traineval.ipynb | jonesevan007/training-data-analyst |

Monitoring with TensorBoard Start the TensorBoard by opening up a new Launcher (File > New Launcher) and selecting TensorBoard. | OUTDIR = './taxi_trained' | _____no_output_____ | Apache-2.0 | courses/machine_learning/deepdive/03_tensorflow/labs/d_traineval.ipynb | jonesevan007/training-data-analyst |

Run training | # Run training

shutil.rmtree(OUTDIR, ignore_errors = True) # start fresh each time

tf.summary.FileWriterCache.clear() # ensure filewriter cache is clear for TensorBoard events file

train_and_evaluate(OUTDIR, num_train_steps = 2000) | _____no_output_____ | Apache-2.0 | courses/machine_learning/deepdive/03_tensorflow/labs/d_traineval.ipynb | jonesevan007/training-data-analyst |

DescriptionResnet from scratch tutorial from medium post: https://towardsdatascience.com/building-a-resnet-in-keras-e8f1322a49baNet structure: - Input with shape (32, 32, 3) - 1 Conv2D layer, with 64 filters - 2, 5, 5, 2 residual blocks with 64, 128, 256, and 512 filters - AveragePooling2D layer with pool size = 4 - Flatten layer - Dense layer with 10 output nodes Import libraries | from tensorflow import Tensor

from tensorflow.keras.layers import Input, Conv2D, ReLU, BatchNormalization,\

Add, AveragePooling2D, Flatten, Dense

from tensorflow.keras.models import Model

from tensorflow.keras.datasets import cifar10

from tensorflow.keras.callbacks import ModelCheckpoint, TensorBoard

import datetime

import os | _____no_output_____ | MIT | tutorial_resnet.ipynb | ElHouas/cnn-deep-learning |

Function definitions | def relu_bn(inputs: Tensor) -> Tensor: # Specifying return type

relu = ReLU()(inputs)

bn = BatchNormalization()(relu)

return bn

def residual_block(x: Tensor, downsample: bool, filters: int, kernel_size: int = 3) -> Tensor:

y = Conv2D(kernel_size=kernel_size,

strides= (1 if not downsample else 2),

filters=filters,

padding="same")(x)

y = relu_bn(y)

y = Conv2D(kernel_size=kernel_size,

strides=1,

filters=filters,

padding="same")(y)

if downsample:

x = Conv2D(kernel_size=1,

strides=2,

filters=filters,

padding="same")(x)

out = Add()([x, y])

out = relu_bn(out)

return out

def create_res_net():

inputs = Input(shape=(32, 32, 3))

num_filters = 64

t = BatchNormalization()(inputs)

t = Conv2D(kernel_size=3,

strides=1,

filters=num_filters,

padding="same")(t)

t = relu_bn(t)

num_blocks_list = [2, 5, 5, 2]

for i in range(len(num_blocks_list)):

num_blocks = num_blocks_list[i]

for j in range(num_blocks):

t = residual_block(t, downsample=(j==0 and i!=0), filters=num_filters)

num_filters *= 2

t = AveragePooling2D(4)(t)

t = Flatten()(t)

outputs = Dense(10, activation='softmax')(t)

model = Model(inputs, outputs)

model.compile(

optimizer='adam',

loss='sparse_categorical_crossentropy',

metrics=['accuracy']

)

return model | _____no_output_____ | MIT | tutorial_resnet.ipynb | ElHouas/cnn-deep-learning |

Main function | (x_train, y_train), (x_test, y_test) = cifar10.load_data()

model = create_res_net()

model.summary

timestr= datetime.datetime.now().strftime("%Y%m%d-%H%M%S")

name = 'cifar-10_res_net_30-'+timestr # or 'cifar-10_plain_net_30-'+timestr

checkpoint_path = "checkpoints/"+name+"/cp-{epoch:04d}.ckpt"

checkpoint_dir = os.path.dirname(checkpoint_path)

os.system('mkdir {}'.format(checkpoint_dir))

# save model after each epoch

cp_callback = ModelCheckpoint(

filepath=checkpoint_path,

verbose=1

)

tensorboard_callback = TensorBoard(

log_dir='tensorboard_logs/'+name,

histogram_freq=1

)

model.fit(

x=x_train,

y=y_train,

epochs=20,

verbose=1,

validation_data=(x_test, y_test),

batch_size=128,

callbacks=[cp_callback, tensorboard_callback]

) | Epoch 1/20

1/391 [..............................] - ETA: 0s - loss: 2.3458 - accuracy: 0.0938WARNING:tensorflow:From /usr/local/lib/python3.6/dist-packages/tensorflow/python/ops/summary_ops_v2.py:1277: stop (from tensorflow.python.eager.profiler) is deprecated and will be removed after 2020-07-01.

Instructions for updating:

use `tf.profiler.experimental.stop` instead.

30/391 [=>............................] - ETA: 2:22:53 - loss: 2.1849 - accuracy: 0.2419 | MIT | tutorial_resnet.ipynb | ElHouas/cnn-deep-learning |

Quiz 1 : Sifat ListJawab Pertanyaan di bawah ini :Jenis data apa saja yang bisa ada di dalam List? list, ada numerik, string, boolean, dan sebagainya Quiz 2 : Akses ListLengkapi kode untuk menghasilkan suatu output yang di harapkan | a = ['1', '13b', 'aa1', 1.32, 22.1, 2.34]

# slicing list

print(a[1:5]) | ['13b', 'aa1', 1.32, 22.1]

| MIT | Learn/Week 1 Basic Python/Week_1_Day_2.ipynb | mazharrasyad/Data-Science-SanberCode |

Expected Output :[ '13b', 'aa1', 1.32, 22.1 ] Quiz 3 : Nested ListLengkapi kode untuk menghasilkan suatu output yang di harapkan | a = [1.32, 22.1, 2.34]

b = ['1', '13b', 'aa1']

c = [3, 40, 100]

# combine list

d = [a, c, b]

print(d) | [[1.32, 22.1, 2.34], [3, 40, 100], ['1', '13b', 'aa1']]

| MIT | Learn/Week 1 Basic Python/Week_1_Day_2.ipynb | mazharrasyad/Data-Science-SanberCode |

Expected Output :[ [1.32, 22.1, 2.34], [3, 40, 100], ['1', '13b', 'aa1'] ] Quiz 4 : Akses Nested ListLengkapi kode untuk menghasilkan suatu output yang di harapkan | a = [

[5, 9, 8],

[0, 0, 6]

]

# subsetting list

print(a[1][1:3]) | [0, 6]

| MIT | Learn/Week 1 Basic Python/Week_1_Day_2.ipynb | mazharrasyad/Data-Science-SanberCode |

Expected Output :[0, 6] Quiz 5 : Built in Function ListLengkapi kode untuk menghasilkan suatu output yang di harapkan | p = [0, 5, 2, 10, 4, 9]

# ordered list

print(sorted(p, reverse=False))

# get max value of list

print(max(p)) | [0, 2, 4, 5, 9, 10]

10

| MIT | Learn/Week 1 Basic Python/Week_1_Day_2.ipynb | mazharrasyad/Data-Science-SanberCode |

Expected Output :[0, 2, 4, 5, 9, 10]10 Quiz 6 : List OperationLengkapi kode untuk menghasilkan suatu output yang di harapkan | a = [1, 3, 5]

b = [5, 1, 3]

# combine list

c = b + a

print(c) | [5, 1, 3, 1, 3, 5]

| MIT | Learn/Week 1 Basic Python/Week_1_Day_2.ipynb | mazharrasyad/Data-Science-SanberCode |

Expected Output :[5, 1, 3, 1, 3, 5] Quiz 7 : List ManipulationLengkapi kode untuk menghasilkan suatu output yang di harapkan | a = [

[5, 9, 8],

[0, 0, 6]

]

# change list value

a[0][2] = 10

# change list value

a[1][0] = 11

print(a) | [[5, 9, 10], [11, 0, 6]]

| MIT | Learn/Week 1 Basic Python/Week_1_Day_2.ipynb | mazharrasyad/Data-Science-SanberCode |

Expected Output :[ [5, 9, 10], [11, 0, 6] ] Quiz 8 : Delete Element ListLengkapi kode untuk menghasilkan suatu output yang di harapkan | areas = ["hallway", 11.25, "kitchen", 18.0,

"chill zone", 20.0, "bedroom", 10.75,

"bathroom", 10.50, "poolhouse", 24.5,

"garage", 15.45]

# Hilangkan elemen yang bernilai "bathroom" dan 10.50 dalam satu statement code

del(areas[8], areas[8])

print(areas) | ['hallway', 11.25, 'kitchen', 18.0, 'chill zone', 20.0, 'bedroom', 10.75, 'poolhouse', 24.5, 'garage', 15.45]

| MIT | Learn/Week 1 Basic Python/Week_1_Day_2.ipynb | mazharrasyad/Data-Science-SanberCode |

Expected Output :['hallway', 11.25, 'kitchen', 18.0, 'chill zone', 20.0, 'bedroom', 10.75, 'poolhouse', 24.5, 'garage', 15.45] | _____no_output_____ | MIT | Learn/Week 1 Basic Python/Week_1_Day_2.ipynb | mazharrasyad/Data-Science-SanberCode |

|

Welcome to the walkthrough for the updated Python SDK for libsimba.py-platformTo use the Python SDK for SEP, there are two steps the user/developer takes. These two steps assume that you have already created a contract, and an app that uses that contract, on SEP.1. Instantiate a SimbaHintedContract object. Instantiating this object will automatically invoke that object's write_contract method. This method will generate a .py file that contains a class representation of our smart contract. Our smart contract's methods will be represented as class methods in this class. 2. Instantiate an instance of your contract class. If your smart contract is named "CarContract", then you would instantiate, for example, cc = CarContract(). You would then call your smart contract's methods as methods of your cc instance. For example, if your smart contract has a method "arrived_from_warehouse", then you can invoke cc.arrived_from_warehouse(), with relevant parameters, of course. First, we import SimbaHintedContract, define our app name, contract name, base api url, contract clas name (which take sthe name of our contract if not specified), and the path of our output file.This output file will be the name of the file that we write our class-based contract object to. In this example, we have already created a contract and app in SEP, both of which have the name "TestSimbaHinted". | from libsimba.simba_hinted_contract import SimbaHintedContract

app_name = "TestSimbaHinted"

contract_name = "TestSimbaHinted"

base_api_url = 'https://api.sep.dev.simbachain.com/'

output_file = "test_simba_hinted.py"

contract_class_name = "TestSimbaHinted" | _____no_output_____ | MIT | notebooks/examples.ipynb | SIMBAChain/libsimba.py-platform |

Next, we instantiate our SimbaHintedContract object: | sch = SimbaHintedContract(

app_name,

contract_name,

contract_class_name=contract_class_name,

base_api_url=base_api_url,

output_file=output_file) | _____no_output_____ | MIT | notebooks/examples.ipynb | SIMBAChain/libsimba.py-platform |

Instantiating that object will write our class-based smart contract to "test_simba_hinted.py", since that is the name we specified for our output fileIn this output, solidity structs from our smart contract are represented as subclasses (Addr, Person, AddressPerson). Also note that our contract methods are now represented as class methods (eg nowt, an_arr, etc.). One important detail to notice here is that if a method call does not accept files, then we are given the option to pass a query_method parameter. If query_method == True, then previous invocations of that method call will be queried. If query_method == False, then the method itself will actually be invoked. Here is our generated file: | from libsimba.simba import Simba

from typing import List, Tuple, Dict, Any, Optional

from libsimba.class_converter import ClassToDictConverter, convert_classes

from libsimba.file_handler import open_files, close_files

class TestSimbaHinted:

def __init__(self):

self.app_name = "TestSimbaHinted"

self.base_api_url = "https://api.sep.dev.simbachain.com/"

self.contract_name = "TestSimbaHinted"

self.simba = Simba(self.base_api_url)

self.simba_contract = self.simba.get_contract(self.app_name, self.contract_name)

class Addr(ClassToDictConverter):

def __init__(self, street: str = '', number: int = 0, town: str = ''):

self.street=street

self.number=number

self.town=town

class Person(ClassToDictConverter):

def __init__(self, name: str = '', age: int = 0, addr: "TestSimbaHinted.Addr" = None):

self.name=name

self.age=age

self.addr=addr

class AddressPerson(ClassToDictConverter):

def __init__(self, name: str = '', age: int = 0, addrs: List["TestSimbaHinted.Addr"] = []):

self.name=name

self.age=age

self.addrs=addrs

def get_bundle_file(self, bundle_hash, file_name, opts: Optional[dict] = None):

return self.simba.get_bundle_file(self.app_name, self.contract_name, bundle_hash, file_name, opts)

def get_transactions(self, opts: Optional[dict] = None):

return self.simba_contract.get_transactions(opts)

def validate_bundle_hash(self, bundle_hash: str, opts: Optional[dict] = None):

return self.simba_contract.validate_bundle_hash(bundle_hash, opts)

def get_transaction_statuses(self, txn_hashes: List[str] = None, opts: Optional[dict] = None):

return self.simba_contract.get_transaction_statuses(txn_hashes, opts)

def nowt(self, async_method: Optional[bool] = False, opts: Optional[dict] = None, query_method: Optional[bool] = False):

"""

If query_method == True, then invocations of nowt will be queried. Otherwise nowt will be invoked with inputs.

"""

inputs= {

}

convert_classes(inputs)

if query_method:

return self.simba_contract.query_method("nowt", opts)

else:

return self.simba_contract.submit_method("nowt", inputs, opts, async_method)

def an_arr(self, first: List[int], async_method: Optional[bool] = False, opts: Optional[dict] = None, query_method: Optional[bool] = False):

"""

If query_method == True, then invocations of an_arr will be queried. Otherwise an_arr will be invoked with inputs.

"""

inputs= {

'first': first,

}

convert_classes(inputs)

if query_method:

return self.simba_contract.query_method("an_arr", opts)

else:

return self.simba_contract.submit_method("an_arr", inputs, opts, async_method)

def two_arrs(self, first: List[int], second: List[int], async_method: Optional[bool] = False, opts: Optional[dict] = None, query_method: Optional[bool] = False):

"""

If query_method == True, then invocations of two_arrs will be queried. Otherwise two_arrs will be invoked with inputs.

"""

inputs= {

'first': first,

'second': second,

}

convert_classes(inputs)

if query_method:

return self.simba_contract.query_method("two_arrs", opts)

else:

return self.simba_contract.submit_method("two_arrs", inputs, opts, async_method)

def address_arr(self, first: List[str], async_method: Optional[bool] = False, opts: Optional[dict] = None, query_method: Optional[bool] = False):

"""

If query_method == True, then invocations of address_arr will be queried. Otherwise address_arr will be invoked with inputs.

"""

inputs= {

'first': first,

}

convert_classes(inputs)

if query_method:

return self.simba_contract.query_method("address_arr", opts)

else:

return self.simba_contract.submit_method("address_arr", inputs, opts, async_method)

def nested_arr_0(self, first: List[List[int]], async_method: Optional[bool] = False, opts: Optional[dict] = None, query_method: Optional[bool] = False):

"""

If query_method == True, then invocations of nested_arr_0 will be queried. Otherwise nested_arr_0 will be invoked with inputs.

"""

inputs= {

'first': first,

}

convert_classes(inputs)

if query_method:

return self.simba_contract.query_method("nested_arr_0", opts)

else:

return self.simba_contract.submit_method("nested_arr_0", inputs, opts, async_method)

def nested_arr_1(self, first: List[List[int]], async_method: Optional[bool] = False, opts: Optional[dict] = None, query_method: Optional[bool] = False):

"""

If query_method == True, then invocations of nested_arr_1 will be queried. Otherwise nested_arr_1 will be invoked with inputs.

"""

inputs= {

'first': first,

}

convert_classes(inputs)

if query_method:

return self.simba_contract.query_method("nested_arr_1", opts)

else:

return self.simba_contract.submit_method("nested_arr_1", inputs, opts, async_method)

def nested_arr_2(self, first: List[List[int]], async_method: Optional[bool] = False, opts: Optional[dict] = None, query_method: Optional[bool] = False):

"""

If query_method == True, then invocations of nested_arr_2 will be queried. Otherwise nested_arr_2 will be invoked with inputs.

"""

inputs= {

'first': first,

}

convert_classes(inputs)

if query_method:

return self.simba_contract.query_method("nested_arr_2", opts)

else:

return self.simba_contract.submit_method("nested_arr_2", inputs, opts, async_method)

def nested_arr_3(self, first: List[List[int]], async_method: Optional[bool] = False, opts: Optional[dict] = None, query_method: Optional[bool] = False):

"""

If query_method == True, then invocations of nested_arr_3 will be queried. Otherwise nested_arr_3 will be invoked with inputs.

"""

inputs= {

'first': first,

}

convert_classes(inputs)

if query_method:

return self.simba_contract.query_method("nested_arr_3", opts)

else:

return self.simba_contract.submit_method("nested_arr_3", inputs, opts, async_method)

def nested_arr_4(self, first: List[List[int]], files: List[Tuple], async_method: Optional[bool] = False, opts: Optional[dict] = None):

"""

If async_method == True, then nested_arr_4 will be invoked as async, otherwise nested_arr_4 will be invoked as non async

files parameter should be list with tuple elements of form (file_name, file_path) or (file_name, readable_file_like_object).

see libsimba.file_handler for further details on what open_files expects as arguments

"""

inputs= {

'first': first,

}

convert_classes(inputs)

files = open_files(files)

if async_method:

response = self.simba_contract.submit_contract_method_with_files_async("nested_arr_4", inputs, files, opts)

close_files(files)

return response

else:

response = self.simba_contract.submit_contract_method_with_files("nested_arr_4", inputs, files, opts)

close_files(files)

return response

def structTest_1(self, people: List["TestSimbaHinted.Person"], test_bool: bool, async_method: Optional[bool] = False, opts: Optional[dict] = None, query_method: Optional[bool] = False):

"""

If query_method == True, then invocations of structTest_1 will be queried. Otherwise structTest_1 will be invoked with inputs.

"""

inputs= {

'people': people,

'test_bool': test_bool,

}

convert_classes(inputs)

if query_method:

return self.simba_contract.query_method("structTest_1", opts)

else:

return self.simba_contract.submit_method("structTest_1", inputs, opts, async_method)

def structTest_2(self, person: "TestSimbaHinted.Person", test_bool: bool, async_method: Optional[bool] = False, opts: Optional[dict] = None, query_method: Optional[bool] = False):

"""

If query_method == True, then invocations of structTest_2 will be queried. Otherwise structTest_2 will be invoked with inputs.

"""

inputs= {

'person': person,

'test_bool': test_bool,

}

convert_classes(inputs)

if query_method:

return self.simba_contract.query_method("structTest_2", opts)

else:

return self.simba_contract.submit_method("structTest_2", inputs, opts, async_method)

def structTest_3(self, person: "TestSimbaHinted.AddressPerson", files: List[Tuple], async_method: Optional[bool] = False, opts: Optional[dict] = None):

"""

If async_method == True, then structTest_3 will be invoked as async, otherwise structTest_3 will be invoked as non async

files parameter should be list with tuple elements of form (file_name, file_path) or (file_name, readable_file_like_object).

see libsimba.file_handler for further details on what open_files expects as arguments

"""

inputs= {

'person': person,

}

convert_classes(inputs)

files = open_files(files)

if async_method:

response = self.simba_contract.submit_contract_method_with_files_async("structTest_3", inputs, files, opts)

close_files(files)

return response

else:

response = self.simba_contract.submit_contract_method_with_files("structTest_3", inputs, files, opts)

close_files(files)

return response

def structTest_4(self, persons: List["TestSimbaHinted.AddressPerson"], files: List[Tuple], async_method: Optional[bool] = False, opts: Optional[dict] = None):

"""

If async_method == True, then structTest_4 will be invoked as async, otherwise structTest_4 will be invoked as non async

files parameter should be list with tuple elements of form (file_name, file_path) or (file_name, readable_file_like_object).

see libsimba.file_handler for further details on what open_files expects as arguments

"""

inputs= {

'persons': persons,

}

convert_classes(inputs)

files = open_files(files)

if async_method:

response = self.simba_contract.submit_contract_method_with_files_async("structTest_4", inputs, files, opts)

close_files(files)

return response

else:

response = self.simba_contract.submit_contract_method_with_files("structTest_4", inputs, files, opts)

close_files(files)

return response

def structTest_5(self, person: "TestSimbaHinted.Person", files: List[Tuple], async_method: Optional[bool] = False, opts: Optional[dict] = None):

"""

If async_method == True, then structTest_5 will be invoked as async, otherwise structTest_5 will be invoked as non async

files parameter should be list with tuple elements of form (file_name, file_path) or (file_name, readable_file_like_object).

see libsimba.file_handler for further details on what open_files expects as arguments

"""

inputs= {

'person': person,

}

convert_classes(inputs)

files = open_files(files)

if async_method:

response = self.simba_contract.submit_contract_method_with_files_async("structTest_5", inputs, files, opts)

close_files(files)

return response

else:

response = self.simba_contract.submit_contract_method_with_files("structTest_5", inputs, files, opts)

close_files(files)

return response | _____no_output_____ | MIT | notebooks/examples.ipynb | SIMBAChain/libsimba.py-platform |

Here we will instantiate an instance of our contract class. You could do that from a separate file, by importing your contract class, but since we're using a notebook here, we'll just instantiate an object in the same file: | tsh = TestSimbaHinted() | _____no_output_____ | MIT | notebooks/examples.ipynb | SIMBAChain/libsimba.py-platform |

Now we will call one of our smart contract's methods by invoking a method of our contract class object. We will call our method two_arrs, which simply takes two arrays as parameters. | arr1 = [2, 4, 20, 10, 3, 3]

arr2 = [1,3,5]

r = tsh.two_arrs(arr1, arr2) | _____no_output_____ | MIT | notebooks/examples.ipynb | SIMBAChain/libsimba.py-platform |

We can inspect the response from this submission to check it has succeeded: | assert (200 <= r.status_code <= 299)

print(r.json()) | _____no_output_____ | MIT | notebooks/examples.ipynb | SIMBAChain/libsimba.py-platform |

Now let's invoke structTest_5, which is a method that accepts files, and also takes a nested struct as a parameter. First we need to assign our file path and file name, as well as specify the read_mode for our file. if read_mode is not specified here, then it defaults to 'r' (see file_handler.py for documentation on this). | file_name = 'test_file'

file_path = '../tests/data/file1.txt'

files = [(file_name, file_path, 'r')] | _____no_output_____ | MIT | notebooks/examples.ipynb | SIMBAChain/libsimba.py-platform |

Now we will need to instantiate Person and Addr objects. Person takes an Addr object as one of its initialization parameters. | name = "Charlie"

age = 99

street = "rogers street"

number = 123

town = "new york"

addr = TestSimbaHinted.Addr(street, number, town)

p = TestSimbaHinted.Person(name, age, addr)

| _____no_output_____ | MIT | notebooks/examples.ipynb | SIMBAChain/libsimba.py-platform |

Now we will invoke structTest_5 with parameters p, and files. |

r = tsh.structTest_5(p, files)

if 200 <= r.status_code <= 299:

print(r.json()) | _____no_output_____ | MIT | notebooks/examples.ipynb | SIMBAChain/libsimba.py-platform |

**Movie Recommendation Model** | import numpy as np

import pandas as pd

movies = pd.read_csv("/Users/sarang/Documents/Movie-Reccomendation/content/tmdb_5000_movies.csv")

credits = pd.read_csv("/Users/sarang/Documents/Movie-Reccomendation/content/tmdb_5000_credits.csv")

movies = movies.merge(credits,on='title')

movies = movies[['movie_id','title','overview','genres','keywords','cast','crew']]

movies.head(2)

# movies.shape

credits.head()

credits.shape

#From a dict, it takes the value of the key named 'name' and adds it to a list

import ast

def convert(text):

L = []

for i in ast.literal_eval(text):

L.append(i['name'])

return L

movies.dropna(inplace=True)

movies['genres'] = movies['genres'].apply(convert)

movies.head()

movies['keywords'] = movies['keywords'].apply(convert)

movies.head(2)

movies['cast'] = movies['cast'].apply(convert)

# Only 3 member cast

movies['cast'] = movies['cast'].apply(lambda x: x[0:3])

movies.head(2)

def get_Director(text):

L=[]

for i in ast.literal_eval(text):

if i['job'] == 'Director':

L.append(i['name'])

return L

movies['crew'] = movies['crew'].apply(get_Director)

movies.head(2)

#removes the space in the string in a list

def remove_space(text):

L=[]

for i in text:

L.append(i.replace(" ", ""))

return L

movies['genres'] = movies['genres'].apply(remove_space)

movies['keywords'] = movies['keywords'].apply(remove_space)

movies['cast'] = movies['cast'].apply(remove_space)

movies['crew'] = movies['crew'].apply(remove_space)

movies.head(2)

#makes a list of words of the string overview

movies['overview'] = movies['overview'].apply(lambda x: x.split())

#makes a new col 'tag' concats all the parameters we created

movies['tag'] = movies['cast']+movies['crew']+movies['genres']+movies['overview']+movies['keywords']

#Creates new dataframe 'new'(softcopy of movies), where every col is dropped except id,title,tag

new = movies.drop(columns=['genres', 'overview', 'keywords', 'cast', 'crew'])

new.head(2)

new['tag'] = new['tag'].apply(lambda x: " ".join(x))

new.head(2) | _____no_output_____ | MIT | Movie-Recommendation.ipynb | Git-Sarang/Movie-Recommender |

Machine Learning Section | from sklearn.feature_extraction.text import CountVectorizer

cv = CountVectorizer(max_features=5000,stop_words='english')

vector = cv.fit_transform(new['tag']).toarray()

vector.shape

from sklearn.metrics.pairwise import cosine_similarity

similarity = cosine_similarity(vector)

type(similarity)

new[new['title']=='The Lego Movie'].index[0]

def recommend(movie):

index = new[new['title'] == movie].index[0]

distances = sorted(list(enumerate(similarity[index])),reverse=True,key = lambda x: x[1])

for i in distances[1:6]:

print(new.iloc[i[0]].title)

# Sample titles to try the recommend function

new['title'].sample(5)

recommend('Fury')

import pickle

pickle.dump(new.to_dict(), open('movie_dict.pkl', 'wb'))

pickle.dump(similarity, open('similarity.pkl', 'wb')) | _____no_output_____ | MIT | Movie-Recommendation.ipynb | Git-Sarang/Movie-Recommender |

Hypothesis test power from scratch with Python Calculating power of hypothesis tests.The code is from the [Data Science from Scratch](https://www.oreilly.com/library/view/data-science-from/9781492041122/) book. Libraries and helper functions | from typing import Tuple

import math as m

def calc_normal_cdf(x: float, mu: float = 0, sigma: float = 1) -> float:

return (1 + m.erf((x - mu) / m.sqrt(2) / sigma)) / 2

normal_probability_below = calc_normal_cdf

def normal_probability_between(lo: float, hi: float, mu: float = 0, sigma: float = 1) -> float:

return normal_probability_below(hi, mu, sigma) - normal_probability_below(lo, mu, sigma)

def calc_normal_cdf(x: float, mu: float = 0, sigma: float = 1) -> float:

return (1 + m.erf((x - mu) / m.sqrt(2) / sigma)) / 2

def calc_inverse_normal_cdf(p: float, mu:float = 0, sigma: float = 1, tolerance: float = 1E-5, show_steps=False) -> float:

if p == 0: return -np.inf

if p == 1: return np.inf

# In case it is not a standard normal distribution, calculate the standard normal first and then rescale

if mu != 0 or sigma != 1:

return mu + sigma * calc_inverse_normal_cdf(p, tolerance=tolerance)

low_z = -10

hi_z = 10

if show_steps: print(f"{'':<19}".join(['low_z', 'mid_z', 'hi_z']), "\n")

while hi_z - low_z > tolerance:

mid_z = (low_z + hi_z) / 2

mid_p = calc_normal_cdf(mid_z)

if mid_p < p:

low_z = mid_z

else:

hi_z = mid_z

if show_steps: print("\t".join(map(to_string, [low_z, mid_z, hi_z])))

return mid_z

def normal_upper_bound(probabilty: float, mu: float = 0, sigma: float = 1) -> float:

return calc_inverse_normal_cdf(probabilty, mu, sigma)

def normal_lower_bound(probabilty: float, mu: float = 0, sigma: float = 1) -> float:

return calc_inverse_normal_cdf(1 - probabilty, mu, sigma)

def normal_two_sided_bounds(probability: float, mu: float = 0, sigma: float = 1) -> float:

if probability == 0: return 0, 0

tail_probability = (1 - probability) / 2

lower_bound = normal_upper_bound(tail_probability, mu, sigma)

upper_bound = normal_lower_bound(tail_probability, mu, sigma)

return lower_bound, upper_bound

def normal_approximation_to_binomial(n: int, p: float) -> Tuple[float, float]:

mu = p * n

sigma = m.sqrt(p * (1 - p) * n)

return mu, sigma | _____no_output_____ | Apache-2.0 | _notebooks/2020-10-12-hypothesis-test-power.ipynb | nocibambi/ds_blog |

Type 1 Error and Tolerance Let's make our null hypothesis ($H_0$) that the probability of head is 0.5 | mu_0, sigma_0 = normal_approximation_to_binomial(1000, 0.5)

mu_0, sigma_0 | _____no_output_____ | Apache-2.0 | _notebooks/2020-10-12-hypothesis-test-power.ipynb | nocibambi/ds_blog |

We define our tolerance at 5%. That is, we accept our model to produce 'type 1' errors (false positive) in 5% of the time. With the coin flipping example, we expect to receive 5% of the results to fall outsied of our defined interval. | lo, hi = normal_two_sided_bounds(0.95, mu_0, sigma_0)

lo, hi | _____no_output_____ | Apache-2.0 | _notebooks/2020-10-12-hypothesis-test-power.ipynb | nocibambi/ds_blog |

Type 2 Error and Power At type 2 error we consider false negatives, that is, those cases where we fail to reject our null hypothesis even though we should. Let's assume that contra $H_0$ the actual probability is 0.55. | mu_1, sigma_1 = normal_approximation_to_binomial(1000, 0.55)

mu_1, sigma_1 | _____no_output_____ | Apache-2.0 | _notebooks/2020-10-12-hypothesis-test-power.ipynb | nocibambi/ds_blog |

In this case we get our Type 2 probability as the overlapping of the real distribution and the 95% probability region of $H_0$. In this particular case, in 11% of the cases we will wrongly fail to reject our null hypothesis. | type_2_probability = normal_probability_between(lo, hi, mu_1, sigma_1)

type_2_probability | _____no_output_____ | Apache-2.0 | _notebooks/2020-10-12-hypothesis-test-power.ipynb | nocibambi/ds_blog |

The power of the test is then the probability of rightly rejecting the $H_0$ | power = 1 - type_2_probability

power | _____no_output_____ | Apache-2.0 | _notebooks/2020-10-12-hypothesis-test-power.ipynb | nocibambi/ds_blog |

Now, let's redefine our null hypothesis so that we expect the probability of head to be less than or equal to 0.5.In this case we have a one-sided test. | hi = normal_upper_bound(0.95, mu_0, sigma_0)

hi | _____no_output_____ | Apache-2.0 | _notebooks/2020-10-12-hypothesis-test-power.ipynb | nocibambi/ds_blog |

Because this is a less strict hypothesis than our previus one, it has a smaller T2 probability and a greater power. | type_2_probability = normal_probability_below(hi, mu_1, sigma_1)

type_2_probability

power = 1 - type_2_probability

power | _____no_output_____ | Apache-2.0 | _notebooks/2020-10-12-hypothesis-test-power.ipynb | nocibambi/ds_blog |

Carry signals by Gustavo SoaresIn this notebook you will apply a few things you learned in our Python lecture [FinanceHub's Python lectures](https://github.com/Finance-Hub/FinanceHubMaterials/tree/master/Python%20Lectures):* You will use and manipulate different kinds of variables in Python such as text variables, booleans, date variables, floats, dictionaries, lists, list comprehensions, etc.;* We will also use `Pandas.DataFrame` objects and methods which are very useful in manipulating financial time series;* You will use if statements and loops, and;* You will use [FinanceHub's Bloomberg tools](https://github.com/Finance-Hub/FinanceHub/tree/master/bloomberg) for fetching data from a Bloomberg terminal. If you are using this notebook within BQNT, you may want to use BQL for getting the data. Basic imports | import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

from bloomberg import BBG

bbg = BBG() # because BBG is a class, we need to create an instance of the BBG class wihtin this notebook, here deonted by bbg | _____no_output_____ | MIT | Quantitative Finance Lectures/carry.ipynb | antoniosalomao/FinanceHubMaterials |

CarryThe concept of carry arised in currency markets but it can be applied to any asset. Any security or derivative expected return can be decomposed into its “carry” – an ex-ante and model-free characteristic – and its expected price appreciation. So, "carry" can be understood as the expected return of a security or derivative if there is no change in underlying prices. That is, if "nothing happens", i.e., prices do not move and only time passes, that security or derivative will earn its "carry".The concept of carry has been shown to be a good predictor or returns cross-sectionally (going long securities with high carry while at the same time going short securities with low carry) and in time series (going long a particular security when carry is positive or historically high and short when carry is negative or historically low). So, the concept of "carry" provides a unifying framework for return predictability and gives orgin to carry strategies across a host of different asset classes, including global equities, global bonds, commodities, US Treasuries, credit, and options. Carry strategies are commonly exposed to global recession, liquidity, and volatility risks, though none fully explain carry’s premium.[Koijen, Moskowitz, Pedersen, and Vrugt (2016)](https://ssrn.com/abstract=2298565) is a great reference for discussing cross-sectional carry strategies in several different markets. There are typically market-neutral carry strategies where we are always long some currencies, rates, commodities and indices and short some others. [Baz, Granger, Harvey, Le Roux, and Rattray (2015)](https://ssrn.com/abstract=2695101) discusses in detail the differences between cross-sectional strategies and time series strategies for three different factors, including carry. It is really important to understand the difference. [Baltas (2017)](https://doi.org/10.1016/B978-1-78548-201-4.50013-1) also looks at carry stratgies, both cross-sectional and time series, across different futures markets (commodities, equity indices and government bonds). He also discusses the benefits of constructing a multi-asset carry strategies.Here in this notebook, we just follow [Koijen, Moskowitz, Pedersen, and Vrugt (2016)](https://ssrn.com/abstract=2298565) and show how, using Bloomberg data, calculate carry metrics for different asset classes. Carry in FXLet's start with the best known case, which is carry in currencies. For that, let's take the USDBRL and see how we can compute carry for that currency. Deposit ratesThe “classic” definition of currency carry is the local deposit interest rate in the correspondingcountry. This definition captures an investment in a currency by literally putting cash into a country’s money market, which earns the interest rate if the exchange rate (the “price of the currency”) does not change. Typically, we would use theBRL 3M deposit rate (BCDRC BDSR Curncy on Bloomberg) which is the interest rate that a bank will charge for lending or pay for borrowing in BRL. For shorter investment horizons, we could pick shorter tenors such as 1M. However, in terms of predictability of future returns there should be little difference in using 1M, 3M or 6M deposit rates in your carry signal. As a matter of fact, now that many currencies have very small short term rates, many quantitative strategies have been using 2Y rates in their carry signals, even when the signal are meant to be predictive over relatively short investment horizons of 1M or 3M. So, in the case of 3M rates, we can define the annualized USDBRL carry as:$$Carry_{USDBRL}^{3M} = \frac{1+r_{USD}}{1+r_{BRL}}-1$$where $r_{USD}$ is the 3M USD deposit rate and $r_{BRL}$ is the 3M BRL interest rate.Note that if we were calculating carry for BRLUSD, it would be a different number. This is important because some curruncies, like the BRL, are quoted as USDBRL and some currencies, like the EUR, are quoted as EURUSD. So, when trading FX, make sure your carry signal is calculated according to whethere you are trading XXXYYY or YYYXXX! ForwardsRecall that by a no-arbitrage argument a currency 3M forward contract should be prices by:$$F_{USDBRL}^{3M} = S_{USDBRL} \times \Big(\frac{1+r_{BRL}}{1+r_{USD}}\Big)^{3/12}$$where $S_{USDBRL}$ is the spot USDBRL exchange rate. So, an alternative way of defining the 3M annualized carry in USDBRL is:$$Carry_{USDBRL}^{3M} = \Big(\frac{S_{USDBRL}}{F_{USDBRL}^{3M}}\Big)^{12/3}-1$$ Volatility adjusted carryThe forwards based definition of $Carry_{USDBRL}^{3M}$ above suggests that the expected return of buying a certain notional, $N$, of 3M forward contract at price $F_{USDBRL}$ expects to earn$$N \times \Big(\big(1+Carry_{USDBRL}^{3M}\big)^{3/12}-1\Big)$$in profits if deposit rates $r_{USD}$ and $r_{BRL}$ and the spot exchange rate $S_{USDBRL}$ remains constant over the 3M period investment horizon. This is the right intuition for the concept of carry in FX.However, it is important to note that this trade, buying a 3M forward contract at price $F_{USDBRL}$ and unwinding it at maturity, does not really require the full notional, $N$ in capital. Let's call the actual required capital $X < N$, the "margin amount". The margin $X$ is the actual cash amount needed to operate unfunded derivatives contracts like FX forwards.An investor typically allocates a fraction of $N$, sometimes as litle as 2% or 3% of $N$, as margin. Let's say $X = k \times N$, then the carry return over the allocated cash capital $X$ is given by:$$k^{-1} \times \Big(\big(1+Carry_{USDBRL}^{3M}\big)^{3/12}-1\Big)$$Hence, by using a scaling factor $k$ one can modify the definition of carry to be consistent with the returns per unit of allocated capital. So, an asset with carry equal to 5% but that requires 2.5% margin has the same carry per allocated capital of an asset with carry equal to 10% but that requires 5% margin.For time-series strategies, this scaling factor $k$ tends to be not very relevant because it is typically constant over time. However, for cross-sectional strategies, $k$ can vary from asset to asset making the comparison of their carry measures inapropriate as different assets may require different amounts of cash as margin. Asset from the same asset class will typically have very similar margin requirements unless the asset volatilities vary significantly in the cross section. Since, margin requirements, very roughly, vary with the underlying asset volatiltiy. It is common in quantitative cross-sectional carry strategies to adjust carry signals by the volatility of the underlying asset as in:$$\sigma_{USDBRL}^{-1} \times Carry_{USDBRL}^{3M}$$Let's see how these definitions can be computed in Python using Bloomberg data and [FinanceHub's Bloomberg tools](https://github.com/Finance-Hub/FinanceHub/tree/master/bloomberg): | ref_date = '2019-12-04'

tickers = [

'BCDRC BDSR Curncy', # BRL 3M deposit rate

'USDRC BDSR Curncy', # USD 3M deposit rate

'USDBRL Curncy', # USDBRL spot exchange rate

'BCN+3M BGN Curncy', # USDBRL 3M forward contract

'USDBRLV3M BGN Curncy', # USDBRL 3M ATM implied volatility

]

bbg_data = bbg.fetch_series(securities=tickers,

fields='PX_LAST', # This is the Bloomberg field that contains the spot exchange rates data

startdate=ref_date,

enddate=ref_date)

bbg_data | _____no_output_____ | MIT | Quantitative Finance Lectures/carry.ipynb | antoniosalomao/FinanceHubMaterials |

Given the data above, let's calculate carry in both ways: | dr_carry = (1+bbg_data.iloc[-1,1]/100)/(1+bbg_data.iloc[-1,0]/100)-1

print('Deposit rate 3M ann. carry for USDBRL on %s is: %s'% (ref_date,dr_carry))

fwd_carry = ((1+bbg_data.iloc[-1,2])/(1+bbg_data.iloc[-1,3]))**(12/3)-1

print('Forward contract 3M ann. carry for USDBRL on %s is: %s'% (ref_date,fwd_carry))

vol_adj_carry = fwd_carry/(bbg_data.iloc[-1,-1]/100)

print('Vol-adjusted forward contract 3M ann. carry for USDBRL on %s is: %s'% (ref_date,vol_adj_carry)) | Deposit rate 3M ann. carry for USDBRL on 2019-12-04 is: -0.016833325297719526

Forward contract 3M ann. carry for USDBRL on 2019-12-04 is: -0.013180395716221094

Vol-adjusted forward contract 3M ann. carry for USDBRL on 2019-12-04 is: -0.11723201739945827

| MIT | Quantitative Finance Lectures/carry.ipynb | antoniosalomao/FinanceHubMaterials |

Carry in commodity futuresCalculating carry for commodity futures, or for any futures contract follows pretty much the same lines as calculating carry for forward FX contract. Again, by a no-arbitrage argument any futures contract on an underlying $i$, maturing in $T$ years, should be priced by:$$F_{i}^{T} = S_{i} \times \big(1+Carry_{i}^{T}\big)^{T}$$where $S_{i}$ is the spot price of the underlying. So, we can use the equation above as the definition of carry in futures. The problem is that in many commodity markets, there is not exactly a spot market like in FX. Hence, the standard practice is to use the front month future, i.e., the futures closest to maturity as the "spot" market and calculate carry as:$$Carry_{i}^{T-T_{0}} = \Big(\frac{F_{i}^{T_{0}}}{F_{i}^{T}}\Big)^{1/(T-T_{0})}-1$$For many commodities, prices move seasonally. For example, let's look at natural gas futures prices: | tickers = ['NG' + str(i) + ' Comdty' for i in range(1,13)]

bbg_data = bbg.fetch_series(securities=tickers,

fields='PX_LAST',

startdate=ref_date,

enddate=ref_date)

bbg_data.columns = [int(x.replace('NG','').replace(' Comdty','')) for x in bbg_data.columns]

bbg_data.sort_index(axis=1)

plt.figure(figsize=(15,10))

bbg_data.iloc[-1].sort_index().plot(title='Natural gas futures curve')

plt.ylabel('$/MBTU')

plt.xlabel('Months ahead')

plt.show() | _____no_output_____ | MIT | Quantitative Finance Lectures/carry.ipynb | antoniosalomao/FinanceHubMaterials |

In order to avoid the definition of carry moving up and down seasonally, carry strategies in commodities often look at $T$ and $T_{0}$ exactly one year apart. However, this not always true. Some people may argue that you want carry to move up and down seasonally because the underlying spot markets do so. Carry in rates Carry in zero coupon bondsWhen we have data on a zero-coupon rates curve, we can also apply the same carry definition as in FX forward contracts or commodity futures. Let's suppose we want to calculate carry on zero coupon bon with maturity $\tau$ years ahead. Since we have a zero-coupon rates curve, we know the yield of this zero coupon bond, $y_{t}^{\tau}$, on date $t$. Asuming without loss of generality that the bond pays 1 as principal, the spot price of this bond is then given by $P_{t}^{\tau} = (1+y_{t}^{\tau})^{-\tau}$.So, what is the expected return of this bond over a time period $h$ if there is no change in underlying prices, i.e., the zero-coupon rates curve remains the same? Well, after $h$ time periods have passed, this bond will now have maturity equal to $\tau-h$ years and therefore, if the zero-coupon rates curve remains the same, it will have price: $P_{t+h}^{\tau-h} = P_{t}^{\tau-h} = (1+y_{t}^{\tau-h})^{-\tau+h}$. Hence, the annualized total return of buying a zero coupon bond of maturity $\tau$ years and holding it for $h$ years is:$$\Big(\frac{P_{t+h}^{\tau-h}}{P_{t}^{\tau}}\Big)^{1/h}-1= \frac{(1+y_{t}^{\tau})^{\tau/h}}{(1+y_{t}^{\tau-h})^{(\tau-h)/h}}-1.$$The chapter *A Framework for Analyzing Yield-curve trades* in [Fabozzi's book](https://www.amazon.com/Handbook-Fixed-Income-Securities-Eighth/dp/0071768467) will show that the expression on the right hand side of the equation above is in fact the $h$ years rate $\tau-h$ years forward, i.e., the so-called $\tau-h:h$ years forward rate, denoted here by $f_{t}^{\tau-h:h}$. The $\tau-h:h$ years forward rate is the non-arbitrage $h$ years rate implied by the current zero-coupon curve that is suppose to prevalent during the time period in between $\tau-h$ and $\tau$. Hence, the annualized total return of buying a zero coupon bond of maturity $\tau$ years and holding it for $h$ years is $f_{t}^{\tau-h:h}$.However, note that buying a zero coupon bond requires some actual cash. This cash which is required duing $h$ years needs to be remunerated at the $y_{t}^{h}$ rate. So, the actual carry of a zero-coupon bond, or any no-coupon paying derivative, with maturity $\tau$ is:$$Carry_{\tau}^{h} = \frac{1+f_{t}^{\tau-h:h}}{1+y_{t}^{h}}-1.$$ Carry in coupon paying bondsWhen we do not have data on a zero-coupon rates curve, calculating carry is a bit different. We still know the bond, $y_{t}^{\tau}$, on date $t$ and its price $P_{t}^{\tau}$. After $h$ time periods have passed, this bond will now have maturity equal to $\tau-h$ years and therefore, if the yield curve remains the same, it will have price: $P_{t+h}^{\tau-h} = P_{t}^{\tau-h}$ and yield equal to $y_{t}^{\tau-h}$.Using the arguemnts in the chapter *A Framework for Analyzing Yield-curve trades* in [Fabozzi's book](https://www.amazon.com/Handbook-Fixed-Income-Securities-Eighth/dp/0071768467) one can show that the annualized total return of buying a bond of maturity $\tau$ years and holding it for $h$ years can be approximated by:$$\Big(\frac{P_{t+h}^{\tau-h}}{P_{t}^{\tau}}\Big)^{1/h}-1 \approx y_{t}^{\tau} - \frac{D_{t}^{\tau-h}}{h} \times (y_{t}^{\tau-h}-y_{t}^{\tau})$$where $D_{t}^{\tau-h}$ is the modified duration of a bond with maturity $\tau-h$.Again, buying a bond requires some actual cash which needs to be remunerated at the $y_{t}^{h}$ rate. So, the actual carry of a a bond, or any similar derivative, with maturity $\tau$ can be approximated by:$$Carry_{\tau}^{h} \approx \underbrace{(y_{t}^{\tau} - y_{t}^{h})}_\text{slope} - \underbrace{\frac{D_{t}^{\tau-h}}{h} \times (y_{t}^{\tau-h}-y_{t}^{\tau})}_\text{roll down}$$As dicussed in [Koijen, Moskowitz, Pedersen, and Vrugt (2016)](https://ssrn.com/abstract=2298565) the equation above shows that the bond carry consists of two effects: (i) the bond’s yield spread to the risk-free rate, which is also called the **slope** of the term structure; plus (ii) the **roll down** which captures the price increase due to the fact that the bond rolls down the yield curve. The idea of the second component is that if the entire yield curve stays constanto, the bond "rolls down" theyield curve and it will now have yield $y_{t}^{\tau-h}$, resulting in a price appreciation that can be measured by the change in yields times the modified duration.In many case, the magnitude of the roll down component is small relative to the slope component. So, from time to time you will see carry strategies in rates defining carry simply as the slope component, i.e., $Carry_{\tau}^{h} \approx y_{t}^{\tau} - y_{t}^{h}$. This approximation is sometimes called **cash carry** while the previous one is sometimes called **total carry**. Carry in interest rate swapsWe want to calculate the carry of holding a vanilla (fixed vs floating) interest rate swap in a particular currency. Here, we can actually use the same logic we used when calculating carry in FX using forward contracts. Let's denote the spot swap rate by $y^{\tau}$. If there are $h:\tau+h$ forward starting swaps available, they will trade at rate $y^{h:\tau+h}$. The carry for holding the $\tau$ years swap over $h$ periods should be equal to the expected return from holding a $h:\tau+h$ forward starting swaps to its maturity at time ${\tau}$.Again using the arguements from the chapter *A Framework for Analyzing Yield-curve trades* in [Fabozzi's book](https://www.amazon.com/Handbook-Fixed-Income-Securities-Eighth/dp/0071768467) one can approximate the return of a $h:\tau+h$ forward starting swaps by:$$Carry_{\tau}^{h} \approx D_{\tau} \times \Delta y \approx \frac{D_{\tau}}{h} \times (y^{h:\tau+h}-y_{t}^{\tau})$$ Carry across G10 IRSLet's see how all this looks in practice. Let's calcualte the 6M carry in 10Y rates across G10: | # get spot 10Y rates tickers

spot_IRS = pd.Series({

'USD':'USSW10 Curncy',

'EUR':'EUSA10 Curncy',

'JPY':'JYSW10 Curncy',

'GBP':'BPSW10 Curncy',

'AUD':'ADSW10Q Curncy',

'CAD':'CDSW10 Curncy',

'SEK':'SKSW10 Curncy',

'CHF':'SFSW10 Curncy',

'NOK':'NKSW10 Curncy',

'NZD':'NDSWAP10 Curncy',

})

swap_rates = bbg.fetch_series(securities=list(spot_IRS.values),

fields='PX_LAST',

startdate=ref_date,

enddate=ref_date)

swap_rates.columns = [spot_IRS.index[list(spot_IRS.values).index(x)] for x in list(swap_rates.columns)]

# 6M forward 10Y rates tickers

fwd_6M_IRS = pd.Series({

'USD':'USFS0F10 Curncy',

'EUR':'EUSAF10 Curncy',

'JPY':'JYFS0F10 Curncy',

'GBP':'BPSW0F10 Curncy',

'AUD':'S0302FS 6M10Y BLC Curncy',

'CAD':'CDFS0F10 Curncy',

'SEK':'SKFS0F10 Curncy',

'CHF':'SFFS0F10 Curncy',

'NOK':'S0313FS 6M10Y BLC Curncy',

'NZD':'NDFS0F10 Curncy',

})

swap_6M_fwd_rates = bbg.fetch_series(securities=list(fwd_6M_IRS.values),

fields='PX_LAST',

startdate=ref_date,

enddate=ref_date)

swap_6M_fwd_rates.columns = [fwd_6M_IRS.index[list(fwd_6M_IRS.values).index(x)] for x in list(swap_6M_fwd_rates.columns)]

durations = bbg.fetch_contract_parameter(securities=list(spot_IRS.values), field='DUR_ADJ_BID')

durations.index = [spot_IRS.index[list(spot_IRS.values).index(x)] for x in list(durations.index)]

durations = durations.iloc[:,0]

# calculate carry

carry = durations*(swap_6M_fwd_rates - swap_rates).iloc[0]/100

plt.figure(figsize=(15,10))

carry.sort_values().plot(kind='bar', color='b',

title='%s: 6M carry in 10Y rates' % ref_date)

plt.show() | _____no_output_____ | MIT | Quantitative Finance Lectures/carry.ipynb | antoniosalomao/FinanceHubMaterials |

Carry in equity indicesFor equities, we can also use the same arguemnt we used for FX forward contracts. The no-arbitrage price of a futures contract, $F_{t}$ depends on the current equity value $S_{t}$, the expected future dividend payment $D_{t+1}$ computed under the risk-neutral measure and the risk-free interest rate in the country of the equity index. So, $F_{t}=S_{t}(1+r_{f})-E^{Q}[D_{t+1}]$ and therefore:$$Carry_{eq} = \frac{S_{t}}{F_{t}} -1 = \Big(\underbrace{ \frac{E^{Q}[D_{t+1}]}{S_{t}}}_\text{div yield}-r_{f}\Big) \frac{S_{t}}{F_{t}}$$In practice, historical dividend yields can be quite different from $E^{Q}[D_{t+1}]/S_{t}$ so, calculating carry over $h$ periods in equity indices is simply:$$Carry_{eq}^{h} = \Big(\frac{S_{t}}{F_{t}}\Big)^{1/h} -1$$Let's use S&P futures to illustrate: | front_month = bbg.fetch_contract_parameter(securities='SP1 Comdty', field='FUT_CUR_GEN_TICKER')

expiry = bbg.fetch_contract_parameter(securities=front_month.iloc[0,0] + ' Index', field='FUT_NOTICE_FIRST')

h = (pd.to_datetime(expiry.iloc[0,0]) - pd.to_datetime('today')).days/365.25

bbg_data = bbg.fetch_series(securities=['SP1 Comdty'] + ['SPX Index'],

fields='PX_LAST',

startdate=pd.to_datetime('today'),

enddate=pd.to_datetime('today'))

carry = (bbg_data['SPX Index']/bbg_data['SP1 Comdty'])**(1/h)-1

print('On %s, the carry on the front month S&P future is: %s' % (carry.index[0].strftime('%d-%b-%y'),carry.iloc[0])) | On 28-Jan-20, the carry on the front month S&P future is: 0.0007568960130031055

| MIT | Quantitative Finance Lectures/carry.ipynb | antoniosalomao/FinanceHubMaterials |

Carry in bond futuresCalculating carry for bond futures is similar to calculating carry for any futures contract and it follows pretty much the same lines as calculating carry for forward FX contract. The tricky part about bond futures is that the underlying $i$ keeps changing. At any one point in time, the bond underlying a bond futures is called the *cheapest-to-deliver* (CTD) because it is the cheapest bond meeting the futures contract criteria that can be physically delivered to settle a futures contract at maturity. Associated to the CTD bond there is a conversion factor $k$ that makes the price of the bond (quoted in relation to 100 par bond) comparable with the bond futures price quoted on the screen. So, if you buy a bond via a bond future at price $F_{i}^{T}$ and the CTD bond has price $P_{i}$ at $T$, then you will profit: $F_{i}^{T} \times k - P_{i}$. Hence, for 100 par bond, the annualized total returns on this strategy is given by:$$Carry_{i}^{T} = \Big(1+\frac{F_{i}^{T} \times k - P_{i}}{100}\Big)^{1/T}-1$$ | front_month_ticker = bbg.fetch_contract_parameter(securities='TY1 Comdty', field='FUT_CUR_GEN_TICKER')

front_month_ticker = front_month_ticker.iloc[0,0] + ' Comdty'

expiry = bbg.fetch_contract_parameter(securities=front_month_ticker, field='LAST_TRADEABLE_DT')

h = (pd.to_datetime(expiry.iloc[0,0])-pd.to_datetime('today')).days/365.25

ctd_cusip = bbg.fetch_contract_parameter(securities=front_month_ticker, field='FUT_CTD_CUSIP')

ctd_cusip = ctd_cusip.iloc[0,0] + ' Govt'

conv_factor = bbg.fetch_contract_parameter(securities=front_month_ticker, field='FUT_CNVS_FACTOR')

conv_factor = conv_factor.iloc[0,0]

bbg_data = bbg.fetch_series(securities=[front_month_ticker,ctd_cusip],

fields='PX_LAST',

startdate=pd.to_datetime('today'),

enddate=pd.to_datetime('today'))

fut_price = bbg_data.iloc[0,0]*conv_factor

bond_price = bbg_data.iloc[0,1]

carry = (1+(fut_price-bond_price)/100)**(1/h)-1

print('On %s, the carry on the front month 10Y UST future is: %s' % (pd.to_datetime('today').strftime('%d-%b-%y'),carry)) | On 28-Jan-20, the carry on the front month 10Y UST future is: -0.001715206652355361

| MIT | Quantitative Finance Lectures/carry.ipynb | antoniosalomao/FinanceHubMaterials |

import matplotlib.pyplot as plt

import matplotlib as mpl

import numpy as np

from ipywidgets import interact, interactive, fixed, interact_manual

import ipywidgets as widgets

x = np.arange(1, 15)

x

y = x * 5

y

plt.plot(x, y)

plt.axis([0, 16, -20, 100])

plt.show()

def f(m):

y = x * m

plt.plot(x, y)

plt.axis([0, 16, -20, 100])

f(5)

interact(f, m=1)

interact(f, m=(1, 7))

def f(m, b):

y = x * m + b

plt.plot(x, y)

plt.axis([0, 16, -20, 100])

interact(f, m=(1, 7), b=(-10, 10))

def f(m, b, xmin, xmax, ymin, ymax):

y = x * m + b

plt.plot(x, y)

plt.axis([xmin, xmax, ymin, ymax])

interact(f, m=(1, 7), b=(-10, 10),

xmin=(-10, 10), xmax=(10, 20), ymin=(-30, 20), ymax=(10, 200))

def f(a, b):

return a + b

interact(f, a=(1, 100), b=(1, 100))

interact(f, a=(1, 10, 0.5), b=(1, 10, 0.5))

interact(f, a=range(1, 10, 2), b=range(1, 10, 3))

data = {

'a': np.arange(1, 15),

'b': np.arange(1, 15) ** 2,

'c': np.arange(1, 15) + 2.5,

'd': np.arange(1, 15) ** .5,

}

def f2(x, y):

plt.scatter(data[x], data[y])

cols = ['a', 'b', 'c', 'd']

interact(f2, x=cols, y=cols)

def f3(val: bool):

if val:

return "hola"

else:

return "chao"

interact(f3, val=False)

#@title Example form fields

#@markdown Forms support many types of fields.

no_type_checking = '' #@param

string_type = '' #@param {type: "string"}

slider_value = 131 #@param {type: "slider", min: 100, max: 200}

number = 102 #@param {type: "number"}

date = '2010-11-05' #@param {type: "date"}

pick_me = "monday" #@param ['monday', 'tuesday', 'wednesday', 'thursday']

select_or_input = "apples" #@param ["apples", "bananas", "oranges"] {allow-input: true}

#@markdown ---

from IPython.display import display, Javascript

from google.colab.output import eval_js

from base64 import b64decode

def take_photo(filename='photo.jpg', quality=0.8):

js = Javascript('''

async function takePhoto(quality) {

const div = document.createElement('div');

const capture = document.createElement('button');

capture.textContent = 'Capture';

div.appendChild(capture);

const video = document.createElement('video');

video.style.display = 'block';

const stream = await navigator.mediaDevices.getUserMedia({video: true});

document.body.appendChild(div);

div.appendChild(video);

video.srcObject = stream;

await video.play();

// Resize the output to fit the video element.

google.colab.output.setIframeHeight(document.documentElement.scrollHeight, true);

// Wait for Capture to be clicked.

await new Promise((resolve) => capture.onclick = resolve);

const canvas = document.createElement('canvas');

canvas.width = video.videoWidth;

canvas.height = video.videoHeight;

canvas.getContext('2d').drawImage(video, 0, 0);

stream.getVideoTracks()[0].stop();

div.remove();

return canvas.toDataURL('image/jpeg', quality);

}

''')

display(js)

data = eval_js('takePhoto({})'.format(quality))

binary = b64decode(data.split(',')[1])

with open(filename, 'wb') as f:

f.write(binary)

return filename

from IPython.display import Image

try:

filename = take_photo()

print('Saved to {}'.format(filename))

# Show the image which was just taken.

display(Image(filename))

except Exception as err:

# Errors will be thrown if the user does not have a webcam or if they do not

# grant the page permission to access it.

print(str(err))

import matplotlib.image as mpimg

img = mpimg.imread("photo.jpg", format="jpeg")

plt.imshow(img)

| _____no_output_____ | CC0-1.0 | notebooks/ea_Interactive_controls.ipynb | lmcanavals/analytics_visualization |

|