sha (stringlengths 40–40) | text (stringlengths 1–13.4M) | id (stringlengths 2–117) | tags (listlengths 1–7.91k) | created_at (stringlengths 25–25) | metadata (stringlengths 2–875k) | last_modified (stringlengths 25–25) | arxiv (listlengths 0–25) | languages (listlengths 0–7.91k) | tags_str (stringlengths 17–159k) | text_str (stringlengths 1–447k) | text_lists (listlengths 0–352) | processed_texts (listlengths 1–353) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|

af71effd6410078b0b8b2859aabe497b189a8260 | # Dataset Card for "Wikipedia_5gram_more_orders"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | lshowway/Wikipedia_5gram_more_orders | [

"region:us"

]

| 2023-01-31T11:14:39+00:00 | {"dataset_info": {"features": [{"name": "text", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 3729298637, "num_examples": 1894957}], "download_size": 2399612708, "dataset_size": 3729298637}} | 2023-02-01T22:19:20+00:00 | []

| []

| TAGS

#region-us

| # Dataset Card for "Wikipedia_5gram_more_orders"

More Information needed | [

"# Dataset Card for \"Wikipedia_5gram_more_orders\"\n\nMore Information needed"

]

| [

"TAGS\n#region-us \n",

"# Dataset Card for \"Wikipedia_5gram_more_orders\"\n\nMore Information needed"

]

|

cd91fb6d2ee07c29c24accbf04d5454c89e8b2e8 | # Dataset Card for "boostcamp-docvqa-v3-test"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | Ssunbell/boostcamp-docvqa-v3-test | [

"region:us"

]

| 2023-01-31T11:18:55+00:00 | {"dataset_info": {"features": [{"name": "questionId", "dtype": "int64"}, {"name": "question", "dtype": "string"}, {"name": "image", "sequence": {"sequence": {"sequence": {"sequence": "uint8"}}}}, {"name": "docId", "dtype": "int64"}, {"name": "ucsf_document_id", "dtype": "string"}, {"name": "ucsf_document_page_no", "dtype": "string"}, {"name": "data_split", "dtype": "string"}, {"name": "words", "sequence": "string"}, {"name": "boxes", "sequence": {"sequence": "int64"}}], "splits": [{"name": "test", "num_bytes": 843104716, "num_examples": 5188}], "download_size": 297133332, "dataset_size": 843104716}} | 2023-01-31T11:20:11+00:00 | []

| []

| TAGS

#region-us

| # Dataset Card for "boostcamp-docvqa-v3-test"

More Information needed | [

"# Dataset Card for \"boostcamp-docvqa-v3-test\"\n\nMore Information needed"

]

| [

"TAGS\n#region-us \n",

"# Dataset Card for \"boostcamp-docvqa-v3-test\"\n\nMore Information needed"

]

|

019f5d6c88be9c55b605076c88da75aa3d39de7f |

# MIRACL (ru) embedded with cohere.ai `multilingual-22-12` encoder

We encoded the [MIRACL dataset](https://huggingface.co/miracl) using the [cohere.ai](https://txt.cohere.ai/multilingual/) `multilingual-22-12` embedding model.

The query embeddings can be found in [Cohere/miracl-ru-queries-22-12](https://huggingface.co/datasets/Cohere/miracl-ru-queries-22-12) and the corpus embeddings can be found in [Cohere/miracl-ru-corpus-22-12](https://huggingface.co/datasets/Cohere/miracl-ru-corpus-22-12).

For the original datasets, see [miracl/miracl](https://huggingface.co/datasets/miracl/miracl) and [miracl/miracl-corpus](https://huggingface.co/datasets/miracl/miracl-corpus).

Dataset info:

> MIRACL 🌍🙌🌏 (Multilingual Information Retrieval Across a Continuum of Languages) is a multilingual retrieval dataset that focuses on search across 18 different languages, which collectively encompass over three billion native speakers around the world.

>

> The corpus for each language is prepared from a Wikipedia dump, where we keep only the plain text and discard images, tables, etc. Each article is segmented into multiple passages using WikiExtractor based on natural discourse units (e.g., `\n\n` in the wiki markup). Each of these passages comprises a "document" or unit of retrieval. We preserve the Wikipedia article title of each passage.

## Embeddings

We compute for `title+" "+text` the embeddings using our `multilingual-22-12` embedding model, a state-of-the-art model that works for semantic search in 100 languages. If you want to learn more about this model, have a look at [cohere.ai multilingual embedding model](https://txt.cohere.ai/multilingual/).

## Loading the dataset

In [miracl-ru-corpus-22-12](https://huggingface.co/datasets/Cohere/miracl-ru-corpus-22-12) we provide the corpus embeddings. Note, depending on the selected split, the respective files can be quite large.

You can either load the dataset like this:

```python

from datasets import load_dataset

docs = load_dataset(f"Cohere/miracl-ru-corpus-22-12", split="train")

```

Or you can also stream it without downloading it before:

```python

from datasets import load_dataset

docs = load_dataset(f"Cohere/miracl-ru-corpus-22-12", split="train", streaming=True)

for doc in docs:
    docid = doc['docid']
    title = doc['title']
    text = doc['text']
    emb = doc['emb']

```

## Search

Have a look at [miracl-ru-queries-22-12](https://huggingface.co/datasets/Cohere/miracl-ru-queries-22-12) where we provide the query embeddings for the MIRACL dataset.

To search the documents, you must use **dot-product** similarity.

Compare the query embedding with the document embeddings either through a vector database (recommended) or by computing the dot product directly.

A full search example:

```python

# Attention! For large datasets, this requires a lot of memory to store

# all document embeddings and to compute the dot product scores.

# Only use this for smaller datasets. For large datasets, use a vector DB

from datasets import load_dataset

import torch

#Load documents + embeddings

docs = load_dataset(f"Cohere/miracl-ru-corpus-22-12", split="train")

doc_embeddings = torch.tensor(docs['emb'])

# Load queries

queries = load_dataset(f"Cohere/miracl-ru-queries-22-12", split="dev")

# Select the first query as example

qid = 0

query = queries[qid]

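# Use only the selected query; unsqueeze to shape (1, dim) because torch.mm needs 2-D inputs
query_embedding = torch.tensor(query['emb']).unsqueeze(0)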

# Compute dot score between query embedding and document embeddings

dot_scores = torch.mm(query_embedding, doc_embeddings.transpose(0, 1))

top_k = torch.topk(dot_scores, k=3)

# Print results

print("Query:", query['query'])

for doc_id in top_k.indices[0].tolist():
    print(docs[doc_id]['title'])
    print(docs[doc_id]['text'])

```

You can get embeddings for new queries using our API:

```python

#Run: pip install cohere

import cohere

co = cohere.Client("YOUR_API_KEY")  # Replace the placeholder with your Cohere API key

texts = ['my search query']

response = co.embed(texts=texts, model='multilingual-22-12')

query_embedding = response.embeddings[0] # Get the embedding for the first text

```

## Performance

In the following table we compare the cohere multilingual-22-12 model with Elasticsearch version 8.6.0 lexical search (title and passage indexed as independent fields). Note that Elasticsearch doesn't support all languages that are part of the MIRACL dataset.

We compute nDCG@10 (a ranking-based metric), as well as hit@3: whether at least one relevant document appears in the top-3 results. We find that hit@3 is easier to interpret, as it gives the share of queries for which a relevant document is found among the top-3 results.

Note: MIRACL only annotated a small fraction of passages (10 per query) for relevancy. Especially for larger Wikipedias (like English), we often found many more relevant passages. This is known as annotation holes. Real nDCG@10 and hit@3 performance is likely higher than depicted.
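The two metrics described above can be sketched as follows. This is a minimal illustration assuming binary relevance labels, not the evaluation script used for the tables; the function names `hit_at_k` and `ndcg_at_k` and the toy document ids are hypothetical. The tables presumably report these per-query values averaged over all queries and scaled to percentages.

```python
import math

def hit_at_k(ranked_ids, relevant_ids, k=3):
    """Return 1.0 if any of the top-k ranked ids is relevant, else 0.0."""
    return 1.0 if any(doc_id in relevant_ids for doc_id in ranked_ids[:k]) else 0.0

def ndcg_at_k(ranked_ids, relevant_ids, k=10):
    """Binary-relevance nDCG@k: DCG of the ranking divided by the ideal DCG."""
    # A relevant document at 0-based position `rank` contributes 1 / log2(rank + 2)
    dcg = sum(
        1.0 / math.log2(rank + 2)
        for rank, doc_id in enumerate(ranked_ids[:k])
        if doc_id in relevant_ids
    )
    # The ideal ranking places all relevant documents first
    ideal_hits = min(len(relevant_ids), k)
    idcg = sum(1.0 / math.log2(rank + 2) for rank in range(ideal_hits))
    return dcg / idcg if idcg > 0 else 0.0

# Hypothetical ranking for a single query
ranked = ["d7", "d2", "d9", "d4"]
relevant = {"d2", "d4"}
print(hit_at_k(ranked, relevant, k=3))
print(ndcg_at_k(ranked, relevant, k=10))
```

Because of the annotation holes mentioned above, a passage that is relevant but unlabeled counts as a miss in both functions.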

| Model | cohere multilingual-22-12 nDCG@10 | cohere multilingual-22-12 hit@3 | ES 8.6.0 nDCG@10 | ES 8.6.0 hit@3 |

|---|---|---|---|---|

| miracl-ar | 64.2 | 75.2 | 46.8 | 56.2 |

| miracl-bn | 61.5 | 75.7 | 49.2 | 60.1 |

| miracl-de | 44.4 | 60.7 | 19.6 | 29.8 |

| miracl-en | 44.6 | 62.2 | 30.2 | 43.2 |

| miracl-es | 47.0 | 74.1 | 27.0 | 47.2 |

| miracl-fi | 63.7 | 76.2 | 51.4 | 61.6 |

| miracl-fr | 46.8 | 57.1 | 17.0 | 21.6 |

| miracl-hi | 50.7 | 62.9 | 41.0 | 48.9 |

| miracl-id | 44.8 | 63.8 | 39.2 | 54.7 |

| miracl-ru | 49.2 | 66.9 | 25.4 | 36.7 |

| **Avg** | 51.7 | 67.5 | 34.7 | 46.0 |

Further languages (not supported by Elasticsearch):

| Model | cohere multilingual-22-12 nDCG@10 | cohere multilingual-22-12 hit@3 |

|---|---|---|

| miracl-fa | 44.8 | 53.6 |

| miracl-ja | 49.0 | 61.0 |

| miracl-ko | 50.9 | 64.8 |

| miracl-sw | 61.4 | 74.5 |

| miracl-te | 67.8 | 72.3 |

| miracl-th | 60.2 | 71.9 |

| miracl-yo | 56.4 | 62.2 |

| miracl-zh | 43.8 | 56.5 |

| **Avg** | 54.3 | 64.6 |

| Cohere/miracl-ru-corpus-22-12 | [

"task_categories:text-retrieval",

"task_ids:document-retrieval",

"annotations_creators:expert-generated",

"multilinguality:multilingual",

"language:ru",

"license:apache-2.0",

"region:us"

]

| 2023-01-31T11:24:36+00:00 | {"annotations_creators": ["expert-generated"], "language": ["ru"], "license": ["apache-2.0"], "multilinguality": ["multilingual"], "size_categories": [], "source_datasets": [], "task_categories": ["text-retrieval"], "task_ids": ["document-retrieval"], "tags": []} | 2023-02-06T11:56:20+00:00 | []

| [

"ru"

]

| TAGS

#task_categories-text-retrieval #task_ids-document-retrieval #annotations_creators-expert-generated #multilinguality-multilingual #language-Russian #license-apache-2.0 #region-us

| MIRACL (ru) embedded with URL 'multilingual-22-12' encoder

==========================================================

We encoded the MIRACL dataset using the URL 'multilingual-22-12' embedding model.

The query embeddings can be found in Cohere/miracl-ru-queries-22-12 and the corpus embeddings can be found in Cohere/miracl-ru-corpus-22-12.

For the original datasets, see miracl/miracl and miracl/miracl-corpus.

Dataset info:

>

> MIRACL (Multilingual Information Retrieval Across a Continuum of Languages) is a multilingual retrieval dataset that focuses on search across 18 different languages, which collectively encompass over three billion native speakers around the world.

>

>

> The corpus for each language is prepared from a Wikipedia dump, where we keep only the plain text and discard images, tables, etc. Each article is segmented into multiple passages using WikiExtractor based on natural discourse units (e.g., '\n\n' in the wiki markup). Each of these passages comprises a "document" or unit of retrieval. We preserve the Wikipedia article title of each passage.

>

>

>

Embeddings

----------

We compute for 'title+" "+text' the embeddings using our 'multilingual-22-12' embedding model, a state-of-the-art model that works for semantic search in 100 languages. If you want to learn more about this model, have a look at URL multilingual embedding model.

Loading the dataset

-------------------

In miracl-ru-corpus-22-12 we provide the corpus embeddings. Note, depending on the selected split, the respective files can be quite large.

You can either load the dataset like this:

Or you can also stream it without downloading it before:

Search

------

Have a look at miracl-ru-queries-22-12 where we provide the query embeddings for the MIRACL dataset.

To search the documents, you must use dot-product similarity.

Compare the query embedding with the document embeddings either through a vector database (recommended) or by computing the dot product directly.

A full search example:

You can get embeddings for new queries using our API:

Performance

-----------

In the following table we compare the cohere multilingual-22-12 model with Elasticsearch version 8.6.0 lexical search (title and passage indexed as independent fields). Note that Elasticsearch doesn't support all languages that are part of the MIRACL dataset.

We compute nDCG@10 (a ranking-based metric), as well as hit@3: whether at least one relevant document appears in the top-3 results. We find that hit@3 is easier to interpret, as it gives the share of queries for which a relevant document is found among the top-3 results.

Note: MIRACL only annotated a small fraction of passages (10 per query) for relevancy. Especially for larger Wikipedias (like English), we often found many more relevant passages. This is known as annotation holes. Real nDCG@10 and hit@3 performance is likely higher than depicted.

Further languages (not supported by Elasticsearch):

Model: miracl-fa, cohere multilingual-22-12 nDCG@10: 44.8, cohere multilingual-22-12 hit@3: 53.6

Model: miracl-ja, cohere multilingual-22-12 nDCG@10: 49.0, cohere multilingual-22-12 hit@3: 61.0

Model: miracl-ko, cohere multilingual-22-12 nDCG@10: 50.9, cohere multilingual-22-12 hit@3: 64.8

Model: miracl-sw, cohere multilingual-22-12 nDCG@10: 61.4, cohere multilingual-22-12 hit@3: 74.5

Model: miracl-te, cohere multilingual-22-12 nDCG@10: 67.8, cohere multilingual-22-12 hit@3: 72.3

Model: miracl-th, cohere multilingual-22-12 nDCG@10: 60.2, cohere multilingual-22-12 hit@3: 71.9

Model: miracl-yo, cohere multilingual-22-12 nDCG@10: 56.4, cohere multilingual-22-12 hit@3: 62.2

Model: miracl-zh, cohere multilingual-22-12 nDCG@10: 43.8, cohere multilingual-22-12 hit@3: 56.5

Model: Avg, cohere multilingual-22-12 nDCG@10: 54.3, cohere multilingual-22-12 hit@3: 64.6

| []

| [

"TAGS\n#task_categories-text-retrieval #task_ids-document-retrieval #annotations_creators-expert-generated #multilinguality-multilingual #language-Russian #license-apache-2.0 #region-us \n"

]

|

5496cca11b191bf58ad8bad91da85af5a35a8734 |

# Miyuki Character LoRA

# Use Cases

The LoRA is compatible with a wide range of models. However, it is most effective when used with Kenshi or AbyssOrangeMix2.

The LoRA itself was trained with the token: ```miyuki```.

I would suggest using the token with AbyssOrangeMix2, but not with Kenshi, since I got better results that way.

The models mentioned above:

1. AbyssOrangeMix2 from [WarriorMama777](https://huggingface.co/WarriorMama777/OrangeMixs)

2. Kenshi Model from [Luna](https://huggingface.co/SweetLuna/Kenshi)

## Strength

I would personally use these strengths with the associated models:

- 0.6-0.75 for AbyssOrangeMix2

- 0.4-0.65 for Kenshi

# Showcase

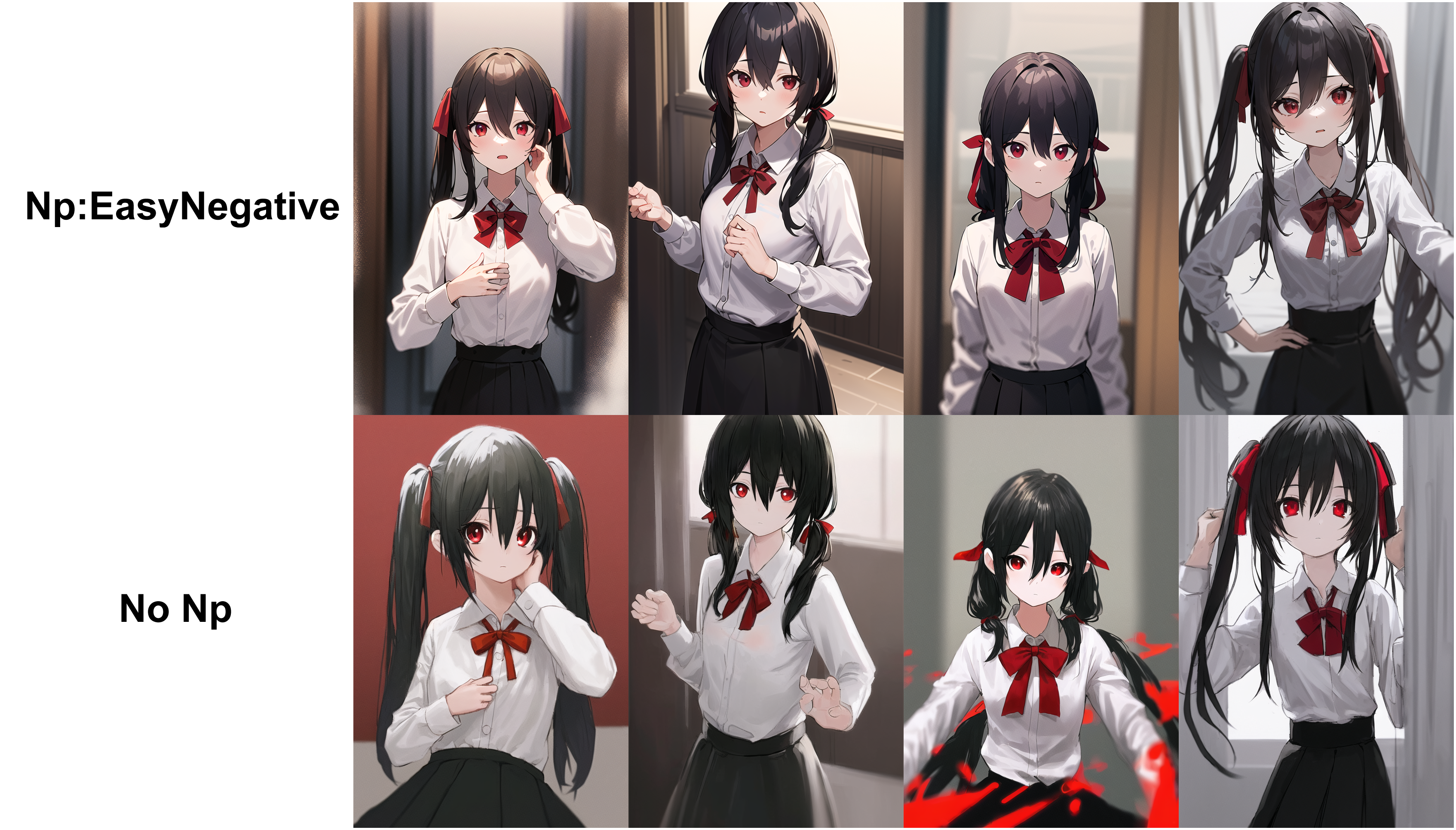

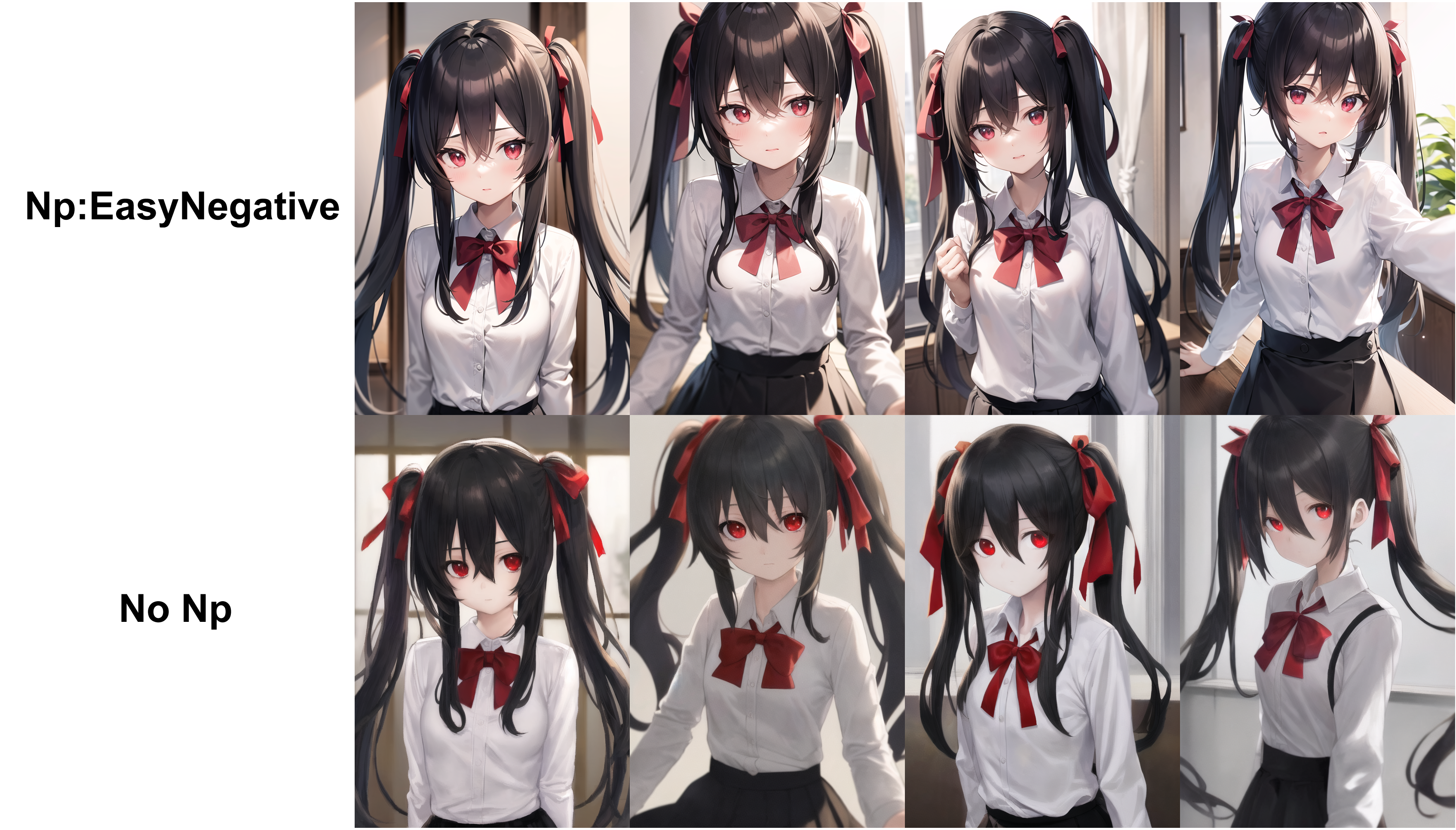

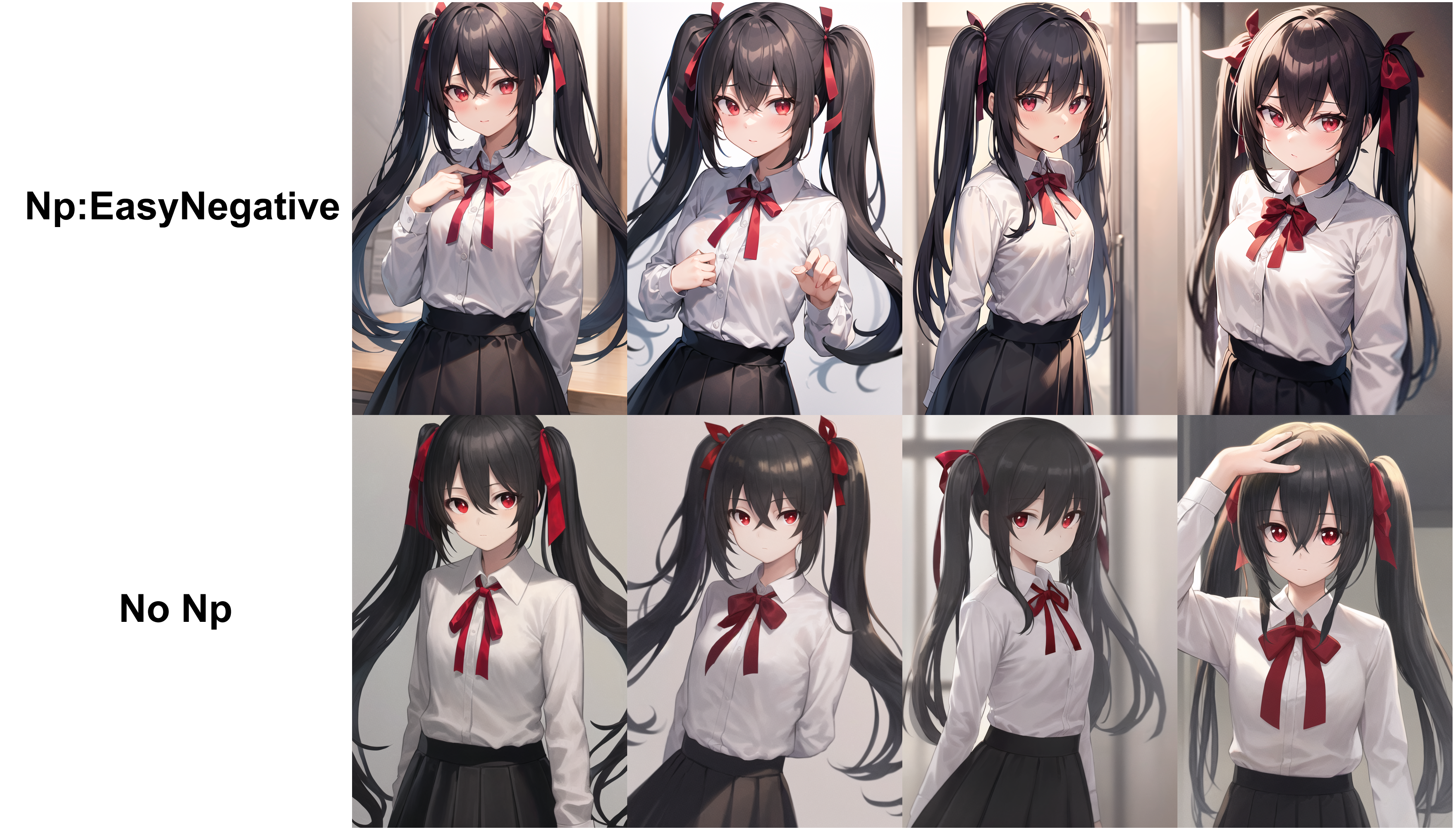

**Example 1**

<img alt="Showcase" src="https://huggingface.co/datasets/Nerfgun3/miyuki-shiba_LoRA/resolve/main/preview/preview%20(2).png"/>

```

miyuki,

1girl, (masterpiece:1.2), (best quality:1.2), (sharp detail:1.2), (highres:1.2), (in a graden of flowers), sitting, waving

Steps: 32, Sampler: Euler a, CFG scale: 7

```

**Example 2**

<img alt="Showcase" src="https://huggingface.co/datasets/Nerfgun3/miyuki-shiba_LoRA/resolve/main/preview/preview%20(3).png"/>

```

miyuki, 1girl, (masterpiece:1.2), (best quality:1.2), (sharp detail:1.2), (highres:1.2), (in a graden of flowers), sitting, waving

Steps: 32, Sampler: Euler a, CFG scale: 7

```

**Example 3**

<img alt="Showcase" src="https://huggingface.co/datasets/Nerfgun3/miyuki-shiba_LoRA/resolve/main/preview/preview%20(4).png"/>

```

miyuki, 1girl, (masterpiece:1.2), (best quality:1.2), (sharp detail:1.2), (highres:1.2), (in a graden of flowers), sitting, hands behind her back

Steps: 20, Sampler: DPM++ SDE Karras, CFG scale: 7

```

# License

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce or share illegal or harmful outputs or content

2. The authors claim no rights on the outputs you generate; you are free to use them and are accountable for their use, which must not go against the provisions set in the license

3. You may re-distribute the weights and use the embedding commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)

[Please read the full license here](https://huggingface.co/spaces/CompVis/stable-diffusion-license) | Nerfgun3/miyuki-shiba_LoRA | [

"language:en",

"license:creativeml-openrail-m",

"stable-diffusion",

"text-to-image",

"image-to-image",

"region:us"

]

| 2023-01-31T12:08:33+00:00 | {"language": ["en"], "license": "creativeml-openrail-m", "thumbnail": "https://huggingface.co/datasets/Nerfgun3/miyuki-shiba_LoRA/resolve/main/preview/preview%20(1).png", "tags": ["stable-diffusion", "text-to-image", "image-to-image"], "inference": false} | 2023-01-31T12:22:58+00:00 | []

| [

"en"

]

| TAGS

#language-English #license-creativeml-openrail-m #stable-diffusion #text-to-image #image-to-image #region-us

|

# Miyuki Character LoRA

# Use Cases

The LoRA is compatible with a wide range of models. However, it is most effective when used with Kenshi or AbyssOrangeMix2.

The LoRA itself was trained with the token: miyuki.

I would suggest using the token with AbyssOrangeMix2, but not with Kenshi, since I got better results that way.

The models mentioned above:

1. AbyssOrangeMix2 from WarriorMama777

2. Kenshi Model from Luna

## Strength

I would personally use these strengths with the associated models:

- 0.6-0.75 for AbyssOrangeMix2

- 0.4-0.65 for Kenshi

# Showcase

Example 1

<img alt="Showcase" src="URL

Example 2

<img alt="Showcase" src="URL

Example 3

<img alt="Showcase" src="URL

# License

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce or share illegal or harmful outputs or content

2. The authors claim no rights on the outputs you generate; you are free to use them and are accountable for their use, which must not go against the provisions set in the license

3. You may re-distribute the weights and use the embedding commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)

Please read the full license here | [

"# Miyuki Character LoRA",

"# Use Cases\n\nThe LoRA is in itself very compatible with the most diverse model. However, it is most effective when used with Kenshi or AbyssOrangeMix2.\n\nThe LoRA itself was trained with the token: .\nI would suggest using the token with AbyssOrangeMix2, but not with Kenshi, since I got better results that way.\n\nThe models mentioned right now\n1. AbyssOrangeMix2 from WarriorMama777\n2. Kenshi Model from Luna",

"## Strength\n\nI would personally use these strength with the assosiated model:\n- 0.6-0.75 for AbyssOrangeMix2\n- 0.4-0.65 for Kenshi",

"# Showcase\n\nExample 1\n\n<img alt=\"Showcase\" src=\"URL\n\n\n\nExample 2\n<img alt=\"Showcase\" src=\"URL\n\n\n\nExample 3\n<img alt=\"Showcase\" src=\"URL",

"# License\n\nThis model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.\nThe CreativeML OpenRAIL License specifies: \n\n1. You can't use the model to deliberately produce nor share illegal or harmful outputs or content \n2. The authors claims no rights on the outputs you generate, you are free to use them and are accountable for their use which must not go against the provisions set in the license\n3. You may re-distribute the weights and use the embedding commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)\nPlease read the full license here"

]

| [

"TAGS\n#language-English #license-creativeml-openrail-m #stable-diffusion #text-to-image #image-to-image #region-us \n",

"# Miyuki Character LoRA",

"# Use Cases\n\nThe LoRA is in itself very compatible with the most diverse model. However, it is most effective when used with Kenshi or AbyssOrangeMix2.\n\nThe LoRA itself was trained with the token: .\nI would suggest using the token with AbyssOrangeMix2, but not with Kenshi, since I got better results that way.\n\nThe models mentioned right now\n1. AbyssOrangeMix2 from WarriorMama777\n2. Kenshi Model from Luna",

"## Strength\n\nI would personally use these strength with the assosiated model:\n- 0.6-0.75 for AbyssOrangeMix2\n- 0.4-0.65 for Kenshi",

"# Showcase\n\nExample 1\n\n<img alt=\"Showcase\" src=\"URL\n\n\n\nExample 2\n<img alt=\"Showcase\" src=\"URL\n\n\n\nExample 3\n<img alt=\"Showcase\" src=\"URL",

"# License\n\nThis model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.\nThe CreativeML OpenRAIL License specifies: \n\n1. You can't use the model to deliberately produce nor share illegal or harmful outputs or content \n2. The authors claims no rights on the outputs you generate, you are free to use them and are accountable for their use which must not go against the provisions set in the license\n3. You may re-distribute the weights and use the embedding commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)\nPlease read the full license here"

]

|

5240f784fb2f0b59d51358464c25d60dd0959015 |

# MIRACL (ru) embedded with cohere.ai `multilingual-22-12` encoder

We encoded the [MIRACL dataset](https://huggingface.co/miracl) using the [cohere.ai](https://txt.cohere.ai/multilingual/) `multilingual-22-12` embedding model.

The query embeddings can be found in [Cohere/miracl-ru-queries-22-12](https://huggingface.co/datasets/Cohere/miracl-ru-queries-22-12) and the corpus embeddings can be found in [Cohere/miracl-ru-corpus-22-12](https://huggingface.co/datasets/Cohere/miracl-ru-corpus-22-12).

For the original datasets, see [miracl/miracl](https://huggingface.co/datasets/miracl/miracl) and [miracl/miracl-corpus](https://huggingface.co/datasets/miracl/miracl-corpus).

Dataset info:

> MIRACL 🌍🙌🌏 (Multilingual Information Retrieval Across a Continuum of Languages) is a multilingual retrieval dataset that focuses on search across 18 different languages, which collectively encompass over three billion native speakers around the world.

>

> The corpus for each language is prepared from a Wikipedia dump, where we keep only the plain text and discard images, tables, etc. Each article is segmented into multiple passages using WikiExtractor based on natural discourse units (e.g., `\n\n` in the wiki markup). Each of these passages comprises a "document" or unit of retrieval. We preserve the Wikipedia article title of each passage.

## Embeddings

We compute for `title+" "+text` the embeddings using our `multilingual-22-12` embedding model, a state-of-the-art model that works for semantic search in 100 languages. If you want to learn more about this model, have a look at [cohere.ai multilingual embedding model](https://txt.cohere.ai/multilingual/).
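As a rough illustration of the input format described above, the following sketch builds the `title+" "+text` strings and groups them into batches before they would be sent to an embedding model. The helper name `build_embedding_inputs`, the batch size, and the sample documents are assumptions for illustration only; the actual preprocessing pipeline is not documented here.

```python
def build_embedding_inputs(docs, batch_size=2):
    """Concatenate title and text with a single space, then split into batches."""
    texts = [doc["title"] + " " + doc["text"] for doc in docs]
    return [texts[i:i + batch_size] for i in range(0, len(texts), batch_size)]

# Hypothetical passages using the same field names as the corpus
sample_docs = [
    {"title": "Moscow", "text": "Capital of Russia."},
    {"title": "Volga", "text": "Longest river in Europe."},
    {"title": "Baikal", "text": "Deepest lake in the world."},
]
batches = build_embedding_inputs(sample_docs, batch_size=2)
print(batches)
```

Each batch could then be passed as the `texts` argument of an embedding call.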

## Loading the dataset

In [miracl-ru-corpus-22-12](https://huggingface.co/datasets/Cohere/miracl-ru-corpus-22-12) we provide the corpus embeddings. Note, depending on the selected split, the respective files can be quite large.

You can either load the dataset like this:

```python

from datasets import load_dataset

docs = load_dataset(f"Cohere/miracl-ru-corpus-22-12", split="train")

```

Or you can also stream it without downloading it before:

```python

from datasets import load_dataset

docs = load_dataset(f"Cohere/miracl-ru-corpus-22-12", split="train", streaming=True)

for doc in docs:
    docid = doc['docid']
    title = doc['title']
    text = doc['text']
    emb = doc['emb']

```

## Search

Have a look at [miracl-ru-queries-22-12](https://huggingface.co/datasets/Cohere/miracl-ru-queries-22-12) where we provide the query embeddings for the MIRACL dataset.

To search the documents, you must use **dot-product** similarity.

Compare the query embedding with the document embeddings either through a vector database (recommended) or by computing the dot product directly.

A full search example:

```python

# Attention! For large datasets, this requires a lot of memory to store

# all document embeddings and to compute the dot product scores.

# Only use this for smaller datasets. For large datasets, use a vector DB

from datasets import load_dataset

import torch

#Load documents + embeddings

docs = load_dataset(f"Cohere/miracl-ru-corpus-22-12", split="train")

doc_embeddings = torch.tensor(docs['emb'])

# Load queries

queries = load_dataset(f"Cohere/miracl-ru-queries-22-12", split="dev")

# Select the first query as example

qid = 0

query = queries[qid]

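# Use only the selected query; unsqueeze to shape (1, dim) because torch.mm needs 2-D inputs
query_embedding = torch.tensor(query['emb']).unsqueeze(0)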

# Compute dot score between query embedding and document embeddings

dot_scores = torch.mm(query_embedding, doc_embeddings.transpose(0, 1))

top_k = torch.topk(dot_scores, k=3)

# Print results

print("Query:", query['query'])

for doc_id in top_k.indices[0].tolist():
    print(docs[doc_id]['title'])
    print(docs[doc_id]['text'])

```
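When the corpus does not fit in memory and no vector database is at hand, one possible alternative is to score the documents chunk by chunk and keep only a running top-k. The sketch below is an assumption-laden illustration, not part of the original example: it uses NumPy instead of torch, the function name `chunked_top_k` is hypothetical, and the toy chunks stand in for batches that would be collected from the streaming dataset.

```python
import numpy as np

def chunked_top_k(query_emb, doc_chunks, k=3):
    """Keep a running top-k of dot-product scores over chunks of embeddings.

    doc_chunks yields (ids, embeddings) pairs, with embeddings shaped (n, dim).
    """
    query_vec = np.asarray(query_emb, dtype=np.float32)
    best_scores = np.empty(0, dtype=np.float32)
    best_ids = []
    for ids, embs in doc_chunks:
        # Dot product between every document in the chunk and the query
        scores = np.asarray(embs, dtype=np.float32) @ query_vec
        # Merge with the current best candidates and keep the k highest
        all_scores = np.concatenate([best_scores, scores])
        all_ids = best_ids + list(ids)
        keep = np.argsort(-all_scores)[:k]
        best_scores = all_scores[keep]
        best_ids = [all_ids[i] for i in keep]
    return list(zip(best_ids, best_scores.tolist()))

# Toy data standing in for streamed batches of (docid, emb)
toy_query = [1.0, 0.0]
toy_chunks = [
    (["a", "b"], [[0.9, 0.1], [0.1, 0.9]]),
    (["c", "d"], [[2.0, 0.0], [0.5, 0.5]]),
]
results = chunked_top_k(toy_query, toy_chunks, k=2)
print(results)
```

Only one chunk of embeddings and the k best candidates are held in memory at a time, at the cost of a full pass over the corpus for each query.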

You can get embeddings for new queries using our API:

```python

#Run: pip install cohere

import cohere

co = cohere.Client("YOUR_API_KEY")  # Replace the placeholder with your Cohere API key

texts = ['my search query']

response = co.embed(texts=texts, model='multilingual-22-12')

query_embedding = response.embeddings[0] # Get the embedding for the first text

```

## Performance

In the following table we compare the cohere multilingual-22-12 model with Elasticsearch version 8.6.0 lexical search (title and passage indexed as independent fields). Note that Elasticsearch doesn't support all languages that are part of the MIRACL dataset.

We compute nDCG@10 (a ranking-based metric), as well as hit@3: whether at least one relevant document appears in the top-3 results. We find that hit@3 is easier to interpret, as it gives the share of queries for which a relevant document is found among the top-3 results.

Note: MIRACL only annotated a small fraction of passages (10 per query) for relevancy. Especially for larger Wikipedias (like English), we often found many more relevant passages. This is known as annotation holes. Real nDCG@10 and hit@3 performance is likely higher than depicted.

| Model | cohere multilingual-22-12 nDCG@10 | cohere multilingual-22-12 hit@3 | ES 8.6.0 nDCG@10 | ES 8.6.0 hit@3 |

|---|---|---|---|---|

| miracl-ar | 64.2 | 75.2 | 46.8 | 56.2 |

| miracl-bn | 61.5 | 75.7 | 49.2 | 60.1 |

| miracl-de | 44.4 | 60.7 | 19.6 | 29.8 |

| miracl-en | 44.6 | 62.2 | 30.2 | 43.2 |

| miracl-es | 47.0 | 74.1 | 27.0 | 47.2 |

| miracl-fi | 63.7 | 76.2 | 51.4 | 61.6 |

| miracl-fr | 46.8 | 57.1 | 17.0 | 21.6 |

| miracl-hi | 50.7 | 62.9 | 41.0 | 48.9 |

| miracl-id | 44.8 | 63.8 | 39.2 | 54.7 |

| miracl-ru | 49.2 | 66.9 | 25.4 | 36.7 |

| **Avg** | 51.7 | 67.5 | 34.7 | 46.0 |

Further languages (not supported by Elasticsearch):

| Model | cohere multilingual-22-12 nDCG@10 | cohere multilingual-22-12 hit@3 |

|---|---|---|

| miracl-fa | 44.8 | 53.6 |

| miracl-ja | 49.0 | 61.0 |

| miracl-ko | 50.9 | 64.8 |

| miracl-sw | 61.4 | 74.5 |

| miracl-te | 67.8 | 72.3 |

| miracl-th | 60.2 | 71.9 |

| miracl-yo | 56.4 | 62.2 |

| miracl-zh | 43.8 | 56.5 |

| **Avg** | 54.3 | 64.6 |

| Cohere/miracl-ru-queries-22-12 | [

"task_categories:text-retrieval",

"task_ids:document-retrieval",

"annotations_creators:expert-generated",

"multilinguality:multilingual",

"language:ru",

"license:apache-2.0",

"region:us"

]

| 2023-01-31T12:18:51+00:00 | {"annotations_creators": ["expert-generated"], "language": ["ru"], "license": ["apache-2.0"], "multilinguality": ["multilingual"], "size_categories": [], "source_datasets": [], "task_categories": ["text-retrieval"], "task_ids": ["document-retrieval"], "tags": []} | 2023-02-06T11:56:00+00:00 | []

| [

"ru"

]

| TAGS

#task_categories-text-retrieval #task_ids-document-retrieval #annotations_creators-expert-generated #multilinguality-multilingual #language-Russian #license-apache-2.0 #region-us

| MIRACL (ru) embedded with URL 'multilingual-22-12' encoder

==========================================================

We encoded the MIRACL dataset using the URL 'multilingual-22-12' embedding model.

The query embeddings can be found in Cohere/miracl-ru-queries-22-12 and the corpus embeddings can be found in Cohere/miracl-ru-corpus-22-12.

For the original datasets, see miracl/miracl and miracl/miracl-corpus.

Dataset info:

>

> MIRACL (Multilingual Information Retrieval Across a Continuum of Languages) is a multilingual retrieval dataset that focuses on search across 18 different languages, which collectively encompass over three billion native speakers around the world.

>

>

> The corpus for each language is prepared from a Wikipedia dump, where we keep only the plain text and discard images, tables, etc. Each article is segmented into multiple passages using WikiExtractor based on natural discourse units (e.g., '\n\n' in the wiki markup). Each of these passages comprises a "document" or unit of retrieval. We preserve the Wikipedia article title of each passage.

>

>

>

Embeddings

----------

We compute for 'title+" "+text' the embeddings using our 'multilingual-22-12' embedding model, a state-of-the-art model that works for semantic search in 100 languages. If you want to learn more about this model, have a look at URL multilingual embedding model.

Loading the dataset

-------------------

In miracl-ru-corpus-22-12 we provide the corpus embeddings. Note, depending on the selected split, the respective files can be quite large.

You can either load the dataset like this:

Or you can also stream it without downloading it before:

Search

------

Have a look at miracl-ru-queries-22-12 where we provide the query embeddings for the MIRACL dataset.

To search the documents, you must use dot-product similarity.

Compare the query embedding with the document embeddings either through a vector database (recommended) or by computing the dot product directly.

A full search example:

You can get embeddings for new queries using our API:

Performance

-----------

In the following table we compare the cohere multilingual-22-12 model with Elasticsearch version 8.6.0 lexical search (title and passage indexed as independent fields). Note that Elasticsearch doesn't support all languages that are part of the MIRACL dataset.

We compute nDCG@10 (a ranking-based metric), as well as hit@3: whether at least one relevant document appears in the top-3 results. We find that hit@3 is easier to interpret, as it gives the share of queries for which a relevant document is found among the top-3 results.

Note: MIRACL only annotated a small fraction of passages (10 per query) for relevancy. Especially for larger Wikipedias (like English), we often found many more relevant passages. This is known as annotation holes. Real nDCG@10 and hit@3 performance is likely higher than depicted.

Further languages (not supported by Elasticsearch):

Model: miracl-fa, cohere multilingual-22-12 nDCG@10: 44.8, cohere multilingual-22-12 hit@3: 53.6

Model: miracl-ja, cohere multilingual-22-12 nDCG@10: 49.0, cohere multilingual-22-12 hit@3: 61.0

Model: miracl-ko, cohere multilingual-22-12 nDCG@10: 50.9, cohere multilingual-22-12 hit@3: 64.8

Model: miracl-sw, cohere multilingual-22-12 nDCG@10: 61.4, cohere multilingual-22-12 hit@3: 74.5

Model: miracl-te, cohere multilingual-22-12 nDCG@10: 67.8, cohere multilingual-22-12 hit@3: 72.3

Model: miracl-th, cohere multilingual-22-12 nDCG@10: 60.2, cohere multilingual-22-12 hit@3: 71.9

Model: miracl-yo, cohere multilingual-22-12 nDCG@10: 56.4, cohere multilingual-22-12 hit@3: 62.2

Model: miracl-zh, cohere multilingual-22-12 nDCG@10: 43.8, cohere multilingual-22-12 hit@3: 56.5

Model: Avg, cohere multilingual-22-12 nDCG@10: 54.3, cohere multilingual-22-12 hit@3: 64.6

| []

| [

"TAGS\n#task_categories-text-retrieval #task_ids-document-retrieval #annotations_creators-expert-generated #multilinguality-multilingual #language-Russian #license-apache-2.0 #region-us \n"

]

|

5f4c79d0710fffe58328dfa2795a64b927cca5de | # Dataset Card for Dataset Name

## Dataset Description

- **Homepage:**

- **Repository:**

- **Paper:**

- **Leaderboard:**

- **Point of Contact:**

### Dataset Summary

This dataset card aims to be a base template for new datasets. It has been generated using [this raw template](https://github.com/huggingface/huggingface_hub/blob/main/src/huggingface_hub/templates/datasetcard_template.md?plain=1).

### Supported Tasks and Leaderboards

[More Information Needed]

### Languages

[More Information Needed]

## Dataset Structure

### Data Instances

[More Information Needed]

### Data Fields

[More Information Needed]

### Data Splits

[More Information Needed]

## Dataset Creation

### Curation Rationale

[More Information Needed]

### Source Data

#### Initial Data Collection and Normalization

[More Information Needed]

#### Who are the source language producers?

[More Information Needed]

### Annotations

#### Annotation process

[More Information Needed]

#### Who are the annotators?

[More Information Needed]

### Personal and Sensitive Information

[More Information Needed]

## Considerations for Using the Data

### Social Impact of Dataset

[More Information Needed]

### Discussion of Biases

[More Information Needed]

### Other Known Limitations

[More Information Needed]

## Additional Information

### Dataset Curators

[More Information Needed]

### Licensing Information

[More Information Needed]

### Citation Information

[More Information Needed]

### Contributions

[More Information Needed] | flow3rdown/MarKG | [

"language:en",

"license:mit",

"region:us"

]

| 2023-01-31T13:07:23+00:00 | {"language": ["en"], "license": "mit"} | 2023-01-31T13:18:49+00:00 | []

| [

"en"

]

| TAGS

#language-English #license-mit #region-us

| # Dataset Card for Dataset Name

## Dataset Description

- Homepage:

- Repository:

- Paper:

- Leaderboard:

- Point of Contact:

### Dataset Summary

This dataset card aims to be a base template for new datasets. It has been generated using this raw template.

### Supported Tasks and Leaderboards

### Languages

## Dataset Structure

### Data Instances

### Data Fields

### Data Splits

## Dataset Creation

### Curation Rationale

### Source Data

#### Initial Data Collection and Normalization

#### Who are the source language producers?

### Annotations

#### Annotation process

#### Who are the annotators?

### Personal and Sensitive Information

## Considerations for Using the Data

### Social Impact of Dataset

### Discussion of Biases

### Other Known Limitations

## Additional Information

### Dataset Curators

### Licensing Information

### Contributions

| [

"# Dataset Card for Dataset Name",

"## Dataset Description\n\n- Homepage: \n- Repository: \n- Paper: \n- Leaderboard: \n- Point of Contact:",

"### Dataset Summary\n\nThis dataset card aims to be a base template for new datasets. It has been generated using this raw template.",

"### Supported Tasks and Leaderboards",

"### Languages",

"## Dataset Structure",

"### Data Instances",

"### Data Fields",

"### Data Splits",

"## Dataset Creation",

"### Curation Rationale",

"### Source Data",

"#### Initial Data Collection and Normalization",

"#### Who are the source language producers?",

"### Annotations",

"#### Annotation process",

"#### Who are the annotators?",

"### Personal and Sensitive Information",

"## Considerations for Using the Data",

"### Social Impact of Dataset",

"### Discussion of Biases",

"### Other Known Limitations",

"## Additional Information",

"### Dataset Curators",

"### Licensing Information",

"### Contributions"

]

| [

"TAGS\n#language-English #license-mit #region-us \n",

"# Dataset Card for Dataset Name",

"## Dataset Description\n\n- Homepage: \n- Repository: \n- Paper: \n- Leaderboard: \n- Point of Contact:",

"### Dataset Summary\n\nThis dataset card aims to be a base template for new datasets. It has been generated using this raw template.",

"### Supported Tasks and Leaderboards",

"### Languages",

"## Dataset Structure",

"### Data Instances",

"### Data Fields",

"### Data Splits",

"## Dataset Creation",

"### Curation Rationale",

"### Source Data",

"#### Initial Data Collection and Normalization",

"#### Who are the source language producers?",

"### Annotations",

"#### Annotation process",

"#### Who are the annotators?",

"### Personal and Sensitive Information",

"## Considerations for Using the Data",

"### Social Impact of Dataset",

"### Discussion of Biases",

"### Other Known Limitations",

"## Additional Information",

"### Dataset Curators",

"### Licensing Information",

"### Contributions"

]

|

7efe436235598edf9b6103abaa757659d2a5c1cb |

# MIRACL (zh) embedded with cohere.ai `multilingual-22-12` encoder

We encoded the [MIRACL dataset](https://huggingface.co/miracl) using the [cohere.ai](https://txt.cohere.ai/multilingual/) `multilingual-22-12` embedding model.

The query embeddings can be found in [Cohere/miracl-zh-queries-22-12](https://huggingface.co/datasets/Cohere/miracl-zh-queries-22-12) and the corpus embeddings can be found in [Cohere/miracl-zh-corpus-22-12](https://huggingface.co/datasets/Cohere/miracl-zh-corpus-22-12).

For the original datasets, see [miracl/miracl](https://huggingface.co/datasets/miracl/miracl) and [miracl/miracl-corpus](https://huggingface.co/datasets/miracl/miracl-corpus).

Dataset info:

> MIRACL 🌍🙌🌏 (Multilingual Information Retrieval Across a Continuum of Languages) is a multilingual retrieval dataset that focuses on search across 18 different languages, which collectively encompass over three billion native speakers around the world.

>

> The corpus for each language is prepared from a Wikipedia dump, where we keep only the plain text and discard images, tables, etc. Each article is segmented into multiple passages using WikiExtractor based on natural discourse units (e.g., `\n\n` in the wiki markup). Each of these passages comprises a "document" or unit of retrieval. We preserve the Wikipedia article title of each passage.

## Embeddings

We compute the embeddings for `title+" "+text` using our `multilingual-22-12` embedding model, a state-of-the-art model that works for semantic search in 100 languages. If you want to learn more about this model, have a look at [cohere.ai multilingual embedding model](https://txt.cohere.ai/multilingual/).

## Loading the dataset

In [miracl-zh-corpus-22-12](https://huggingface.co/datasets/Cohere/miracl-zh-corpus-22-12) we provide the corpus embeddings. Note, depending on the selected split, the respective files can be quite large.

You can either load the dataset like this:

```python

from datasets import load_dataset

docs = load_dataset(f"Cohere/miracl-zh-corpus-22-12", split="train")

```

Or you can stream it without downloading it first:

```python
from datasets import load_dataset

docs = load_dataset("Cohere/miracl-zh-corpus-22-12", split="train", streaming=True)

for doc in docs:
    docid = doc['docid']
    title = doc['title']
    text = doc['text']
    emb = doc['emb']
```

## Search

Have a look at [miracl-zh-queries-22-12](https://huggingface.co/datasets/Cohere/miracl-zh-queries-22-12) where we provide the query embeddings for the MIRACL dataset.

To search in the documents, you must use **dot-product**.

Then compare the query embeddings with the document embeddings, either via a vector database (recommended) or by directly computing the dot product.

A full search example:

```python
# Attention! For large datasets, this requires a lot of memory to store
# all document embeddings and to compute the dot product scores.
# Only use this for smaller datasets. For large datasets, use a vector DB

from datasets import load_dataset
import torch

# Load documents + embeddings
docs = load_dataset("Cohere/miracl-zh-corpus-22-12", split="train")
doc_embeddings = torch.tensor(docs['emb'])

# Load queries
queries = load_dataset("Cohere/miracl-zh-queries-22-12", split="dev")

# Select the first query as example
qid = 0
query = queries[qid]
# Shape (1, dim): embed only the selected query, not the whole query set
query_embedding = torch.tensor(query['emb']).unsqueeze(0)

# Compute dot score between query embedding and document embeddings
dot_scores = torch.mm(query_embedding, doc_embeddings.transpose(0, 1))
top_k = torch.topk(dot_scores, k=3)

# Print results
print("Query:", query['query'])
for doc_id in top_k.indices[0].tolist():
    print(docs[doc_id]['title'])
    print(docs[doc_id]['text'])
```

You can get embeddings for new queries using our API:

```python
# Run: pip install cohere
import cohere

api_key = "YOUR_API_KEY"  # Replace with your Cohere API key
co = cohere.Client(api_key)
texts = ['my search query']
response = co.embed(texts=texts, model='multilingual-22-12')
query_embedding = response.embeddings[0]  # Get the embedding for the first text
```

## Performance

In the following table we compare the cohere multilingual-22-12 model with Elasticsearch version 8.6.0 lexical search (title and passage indexed as independent fields). Note that Elasticsearch doesn't support all languages that are part of the MIRACL dataset.

We compute nDCG@10 (a ranking-based metric) as well as hit@3, which checks whether at least one relevant document appears in the top-3 results. We find hit@3 easier to interpret, as it reports the percentage of queries for which a relevant document is found among the top-3 results.

Note: MIRACL only annotated a small fraction of passages (10 per query) for relevancy. Especially for larger Wikipedias (like English), we often found many more relevant passages. This is known as annotation holes. Real nDCG@10 and hit@3 performance is likely higher than depicted.
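To make the two metrics concrete, here is a minimal sketch of how hit@3 and nDCG@10 could be computed for a single query, assuming binary relevance labels. The function names and the toy document ids are illustrative assumptions, not part of the MIRACL tooling or of the evaluation code used for the numbers reported here.

```python
import math

def hit_at_k(ranked_ids, relevant_ids, k=3):
    """Return 1.0 if any of the top-k ranked ids is relevant, else 0.0."""
    return 1.0 if any(doc_id in relevant_ids for doc_id in ranked_ids[:k]) else 0.0

def ndcg_at_k(ranked_ids, relevant_ids, k=10):
    """Binary-relevance nDCG@k: DCG of the ranking divided by the ideal DCG."""
    dcg = sum(
        1.0 / math.log2(rank + 2)
        for rank, doc_id in enumerate(ranked_ids[:k])
        if doc_id in relevant_ids
    )
    ideal_hits = min(len(relevant_ids), k)
    idcg = sum(1.0 / math.log2(rank + 2) for rank in range(ideal_hits))
    return dcg / idcg if idcg > 0 else 0.0

# Hypothetical ranking for one query and its annotated relevant passages
ranked = ["d7", "d2", "d9", "d4"]
relevant = {"d2", "d4"}
print(hit_at_k(ranked, relevant, k=3))
print(round(ndcg_at_k(ranked, relevant, k=10), 3))
```

Averaging these per-query values over all queries and multiplying by 100 would give scores on the same scale as the tables. A relevant passage that was never annotated counts as a miss in both functions, which is how annotation holes push the measured scores down.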

| Model | cohere multilingual-22-12 nDCG@10 | cohere multilingual-22-12 hit@3 | ES 8.6.0 nDCG@10 | ES 8.6.0 hit@3 |
|---|---|---|---|---|
| miracl-ar | 64.2 | 75.2 | 46.8 | 56.2 |
| miracl-bn | 61.5 | 75.7 | 49.2 | 60.1 |
| miracl-de | 44.4 | 60.7 | 19.6 | 29.8 |
| miracl-en | 44.6 | 62.2 | 30.2 | 43.2 |
| miracl-es | 47.0 | 74.1 | 27.0 | 47.2 |
| miracl-fi | 63.7 | 76.2 | 51.4 | 61.6 |
| miracl-fr | 46.8 | 57.1 | 17.0 | 21.6 |
| miracl-hi | 50.7 | 62.9 | 41.0 | 48.9 |
| miracl-id | 44.8 | 63.8 | 39.2 | 54.7 |
| miracl-ru | 49.2 | 66.9 | 25.4 | 36.7 |
| **Avg** | 51.7 | 67.5 | 34.7 | 46.0 |

Further languages (not supported by Elasticsearch):

| Model | cohere multilingual-22-12 nDCG@10 | cohere multilingual-22-12 hit@3 |
|---|---|---|
| miracl-fa | 44.8 | 53.6 |
| miracl-ja | 49.0 | 61.0 |
| miracl-ko | 50.9 | 64.8 |
| miracl-sw | 61.4 | 74.5 |
| miracl-te | 67.8 | 72.3 |
| miracl-th | 60.2 | 71.9 |
| miracl-yo | 56.4 | 62.2 |
| miracl-zh | 43.8 | 56.5 |
| **Avg** | 54.3 | 64.6 |

| Cohere/miracl-zh-corpus-22-12 | [

"task_categories:text-retrieval",

"task_ids:document-retrieval",

"annotations_creators:expert-generated",

"multilinguality:multilingual",

"language:zh",

"license:apache-2.0",

"region:us"

]

| 2023-01-31T13:13:33+00:00 | {"annotations_creators": ["expert-generated"], "language": ["zh"], "license": ["apache-2.0"], "multilinguality": ["multilingual"], "size_categories": [], "source_datasets": [], "task_categories": ["text-retrieval"], "task_ids": ["document-retrieval"], "tags": []} | 2023-02-06T11:55:44+00:00 | []

| [

"zh"

]

| TAGS

#task_categories-text-retrieval #task_ids-document-retrieval #annotations_creators-expert-generated #multilinguality-multilingual #language-Chinese #license-apache-2.0 #region-us

MIRACL (zh) embedded with cohere.ai 'multilingual-22-12' encoder

================================================================

We encoded the MIRACL dataset using the cohere.ai 'multilingual-22-12' embedding model.

The query embeddings can be found in Cohere/miracl-zh-queries-22-12 and the corpus embeddings can be found in Cohere/miracl-zh-corpus-22-12.

For the original datasets, see miracl/miracl and miracl/miracl-corpus.

Dataset info:

> MIRACL (Multilingual Information Retrieval Across a Continuum of Languages) is a multilingual retrieval dataset that focuses on search across 18 different languages, which collectively encompass over three billion native speakers around the world.
>
> The corpus for each language is prepared from a Wikipedia dump, where we keep only the plain text and discard images, tables, etc. Each article is segmented into multiple passages using WikiExtractor based on natural discourse units (e.g., '\n\n' in the wiki markup). Each of these passages comprises a "document" or unit of retrieval. We preserve the Wikipedia article title of each passage.

Embeddings

----------

We compute the embeddings for 'title+" "+text' using our 'multilingual-22-12' embedding model, a state-of-the-art model that works for semantic search in 100 languages. If you want to learn more about this model, have a look at the cohere.ai multilingual embedding model.

Loading the dataset

-------------------

In miracl-zh-corpus-22-12 we provide the corpus embeddings. Note, depending on the selected split, the respective files can be quite large.

You can either load the dataset like this:

Or you can stream it without downloading it first:

Search

------

Have a look at miracl-zh-queries-22-12 where we provide the query embeddings for the MIRACL dataset.

To search in the documents, you must use dot-product.

Then compare the query embeddings with the document embeddings, either via a vector database (recommended) or by directly computing the dot product.

A full search example:

You can get embeddings for new queries using our API:

Performance

-----------

In the following table we compare the cohere multilingual-22-12 model with Elasticsearch version 8.6.0 lexical search (title and passage indexed as independent fields). Note that Elasticsearch doesn't support all languages that are part of the MIRACL dataset.

We compute nDCG@10 (a ranking-based metric) as well as hit@3, which checks whether at least one relevant document appears in the top-3 results. We find hit@3 easier to interpret, as it reports the percentage of queries for which a relevant document is found among the top-3 results.

Note: MIRACL only annotated a small fraction of passages (10 per query) for relevancy. Especially for larger Wikipedias (like English), we often found many more relevant passages. This is known as annotation holes. Real nDCG@10 and hit@3 performance is likely higher than depicted.

Further languages (not supported by Elasticsearch):

Model: miracl-fa, cohere multilingual-22-12 nDCG@10: 44.8, cohere multilingual-22-12 hit@3: 53.6

Model: miracl-ja, cohere multilingual-22-12 nDCG@10: 49.0, cohere multilingual-22-12 hit@3: 61.0

Model: miracl-ko, cohere multilingual-22-12 nDCG@10: 50.9, cohere multilingual-22-12 hit@3: 64.8

Model: miracl-sw, cohere multilingual-22-12 nDCG@10: 61.4, cohere multilingual-22-12 hit@3: 74.5

Model: miracl-te, cohere multilingual-22-12 nDCG@10: 67.8, cohere multilingual-22-12 hit@3: 72.3

Model: miracl-th, cohere multilingual-22-12 nDCG@10: 60.2, cohere multilingual-22-12 hit@3: 71.9

Model: miracl-yo, cohere multilingual-22-12 nDCG@10: 56.4, cohere multilingual-22-12 hit@3: 62.2

Model: miracl-zh, cohere multilingual-22-12 nDCG@10: 43.8, cohere multilingual-22-12 hit@3: 56.5

Model: Avg, cohere multilingual-22-12 nDCG@10: 54.3, cohere multilingual-22-12 hit@3: 64.6

| []

| [

"TAGS\n#task_categories-text-retrieval #task_ids-document-retrieval #annotations_creators-expert-generated #multilinguality-multilingual #language-Chinese #license-apache-2.0 #region-us \n"

]

|

da70cd209896584e91cccac9c86092ec9c25c2c1 | # Dataset Card for "wikipedia.reorder.SVO"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | lshowway/wikipedia.reorder.SVO | [

"region:us"

]

| 2023-01-31T13:29:50+00:00 | {"dataset_info": {"features": [{"name": "text", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 4083836556, "num_examples": 1986076}], "download_size": 1989232973, "dataset_size": 4083836556}} | 2023-01-31T16:41:06+00:00 | []

| []

| TAGS

#region-us

| # Dataset Card for "wikipedia.reorder.SVO"

More Information needed | [

"# Dataset Card for \"wikipedia.reorder.SVO\"\n\nMore Information needed"

]

| [

"TAGS\n#region-us \n",

"# Dataset Card for \"wikipedia.reorder.SVO\"\n\nMore Information needed"

]

|

6d9607deb61364812da24e285f35d6463f8910fa | # Dataset Card for "wikipedia.reorder.VOS"

[More Information needed](https://github.com/huggingface/datasets/blob/main/CONTRIBUTING.md#how-to-contribute-to-the-dataset-cards) | lshowway/wikipedia.reorder.VOS | [

"region:us"

]

| 2023-01-31T13:37:46+00:00 | {"dataset_info": {"features": [{"name": "text", "dtype": "string"}], "splits": [{"name": "train", "num_bytes": 4083836556, "num_examples": 1986076}], "download_size": 2018381284, "dataset_size": 4083836556}} | 2023-01-31T17:40:42+00:00 | []

| []

| TAGS

#region-us

| # Dataset Card for "wikipedia.reorder.VOS"

More Information needed | [

"# Dataset Card for \"wikipedia.reorder.VOS\"\n\nMore Information needed"

]

| [

"TAGS\n#region-us \n",

"# Dataset Card for \"wikipedia.reorder.VOS\"\n\nMore Information needed"

]

|

125f282756795fe4c1a4ba1a80cbf4434c48835b |

# MIRACL (zh) embedded with cohere.ai `multilingual-22-12` encoder

We encoded the [MIRACL dataset](https://huggingface.co/miracl) using the [cohere.ai](https://txt.cohere.ai/multilingual/) `multilingual-22-12` embedding model.

The query embeddings can be found in [Cohere/miracl-zh-queries-22-12](https://huggingface.co/datasets/Cohere/miracl-zh-queries-22-12) and the corpus embeddings can be found in [Cohere/miracl-zh-corpus-22-12](https://huggingface.co/datasets/Cohere/miracl-zh-corpus-22-12).

For the original datasets, see [miracl/miracl](https://huggingface.co/datasets/miracl/miracl) and [miracl/miracl-corpus](https://huggingface.co/datasets/miracl/miracl-corpus).

Dataset info:

> MIRACL 🌍🙌🌏 (Multilingual Information Retrieval Across a Continuum of Languages) is a multilingual retrieval dataset that focuses on search across 18 different languages, which collectively encompass over three billion native speakers around the world.

>

> The corpus for each language is prepared from a Wikipedia dump, where we keep only the plain text and discard images, tables, etc. Each article is segmented into multiple passages using WikiExtractor based on natural discourse units (e.g., `\n\n` in the wiki markup). Each of these passages comprises a "document" or unit of retrieval. We preserve the Wikipedia article title of each passage.

## Embeddings

We compute the embeddings for `title+" "+text` using our `multilingual-22-12` embedding model, a state-of-the-art model that works for semantic search in 100 languages. If you want to learn more about this model, have a look at [cohere.ai multilingual embedding model](https://txt.cohere.ai/multilingual/).

## Loading the dataset

In [miracl-zh-corpus-22-12](https://huggingface.co/datasets/Cohere/miracl-zh-corpus-22-12) we provide the corpus embeddings. Note, depending on the selected split, the respective files can be quite large.

You can either load the dataset like this:

```python

from datasets import load_dataset

docs = load_dataset(f"Cohere/miracl-zh-corpus-22-12", split="train")

```

Or you can stream it without downloading it first:

```python
from datasets import load_dataset

docs = load_dataset("Cohere/miracl-zh-corpus-22-12", split="train", streaming=True)

for doc in docs:
    docid = doc['docid']
    title = doc['title']
    text = doc['text']
    emb = doc['emb']
```

## Search

Have a look at [miracl-zh-queries-22-12](https://huggingface.co/datasets/Cohere/miracl-zh-queries-22-12) where we provide the query embeddings for the MIRACL dataset.

To search in the documents, you must use **dot-product**.

Then compare the query embeddings with the document embeddings, either via a vector database (recommended) or by directly computing the dot product.

A full search example:

```python
# Attention! For large datasets, this requires a lot of memory to store
# all document embeddings and to compute the dot product scores.
# Only use this for smaller datasets. For large datasets, use a vector DB

from datasets import load_dataset
import torch

# Load documents + embeddings
docs = load_dataset("Cohere/miracl-zh-corpus-22-12", split="train")
doc_embeddings = torch.tensor(docs['emb'])

# Load queries
queries = load_dataset("Cohere/miracl-zh-queries-22-12", split="dev")

# Select the first query as example
qid = 0
query = queries[qid]
# Shape (1, dim): embed only the selected query, not the whole query set
query_embedding = torch.tensor(query['emb']).unsqueeze(0)

# Compute dot score between query embedding and document embeddings
dot_scores = torch.mm(query_embedding, doc_embeddings.transpose(0, 1))
top_k = torch.topk(dot_scores, k=3)

# Print results
print("Query:", query['query'])
for doc_id in top_k.indices[0].tolist():
    print(docs[doc_id]['title'])
    print(docs[doc_id]['text'])
```
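If the corpus does not fit in memory and no vector database is at hand, one possible alternative is to scan the documents once and keep only the best few scores. The sketch below is an assumption-laden illustration in plain Python: `top_k_streaming` and the toy documents are hypothetical, and only the `docid` and `emb` field names come from the dataset. In practice the `docs` argument could be the streaming dataset shown earlier.

```python
import heapq

def dot(a, b):
    """Dot product of two equal-length sequences of floats."""
    return sum(x * y for x, y in zip(a, b))

def top_k_streaming(query_emb, docs, k=3):
    """Keep only the k best-scoring documents while iterating once over docs."""
    heap = []  # min-heap of (score, position, docid); the weakest hit is heap[0]
    for position, doc in enumerate(docs):
        item = (dot(query_emb, doc["emb"]), position, doc["docid"])
        if len(heap) < k:
            heapq.heappush(heap, item)
        elif item > heap[0]:
            heapq.heapreplace(heap, item)
    # Highest score first
    return [(docid, score) for score, _, docid in sorted(heap, reverse=True)]

# Toy stand-in for the corpus; real embeddings are much longer vectors
toy_docs = [
    {"docid": "a", "emb": [1.0, 0.0]},
    {"docid": "b", "emb": [0.5, 0.5]},
    {"docid": "c", "emb": [0.0, 1.0]},
    {"docid": "d", "emb": [2.0, 1.0]},
]
print(top_k_streaming([1.0, 0.0], toy_docs, k=2))
```

Memory use stays proportional to `k` rather than to the corpus size, at the cost of a full pass over the data for every query, so this only makes sense for occasional lookups.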

You can get embeddings for new queries using our API:

```python
# Run: pip install cohere
import cohere

api_key = "YOUR_API_KEY"  # Replace with your Cohere API key
co = cohere.Client(api_key)
texts = ['my search query']
response = co.embed(texts=texts, model='multilingual-22-12')
query_embedding = response.embeddings[0]  # Get the embedding for the first text
```

## Performance

In the following table we compare the cohere multilingual-22-12 model with Elasticsearch version 8.6.0 lexical search (title and passage indexed as independent fields). Note that Elasticsearch doesn't support all languages that are part of the MIRACL dataset.

We compute nDCG@10 (a ranking-based metric) as well as hit@3, which checks whether at least one relevant document appears in the top-3 results. We find hit@3 easier to interpret, as it reports the percentage of queries for which a relevant document is found among the top-3 results.

Note: MIRACL only annotated a small fraction of passages (10 per query) for relevancy. Especially for larger Wikipedias (like English), we often found many more relevant passages. This is known as annotation holes. Real nDCG@10 and hit@3 performance is likely higher than depicted.

| Model | cohere multilingual-22-12 nDCG@10 | cohere multilingual-22-12 hit@3 | ES 8.6.0 nDCG@10 | ES 8.6.0 hit@3 |
|---|---|---|---|---|
| miracl-ar | 64.2 | 75.2 | 46.8 | 56.2 |
| miracl-bn | 61.5 | 75.7 | 49.2 | 60.1 |
| miracl-de | 44.4 | 60.7 | 19.6 | 29.8 |
| miracl-en | 44.6 | 62.2 | 30.2 | 43.2 |
| miracl-es | 47.0 | 74.1 | 27.0 | 47.2 |
| miracl-fi | 63.7 | 76.2 | 51.4 | 61.6 |
| miracl-fr | 46.8 | 57.1 | 17.0 | 21.6 |
| miracl-hi | 50.7 | 62.9 | 41.0 | 48.9 |
| miracl-id | 44.8 | 63.8 | 39.2 | 54.7 |
| miracl-ru | 49.2 | 66.9 | 25.4 | 36.7 |
| **Avg** | 51.7 | 67.5 | 34.7 | 46.0 |

Further languages (not supported by Elasticsearch):

| Model | cohere multilingual-22-12 nDCG@10 | cohere multilingual-22-12 hit@3 |
|---|---|---|
| miracl-fa | 44.8 | 53.6 |
| miracl-ja | 49.0 | 61.0 |
| miracl-ko | 50.9 | 64.8 |
| miracl-sw | 61.4 | 74.5 |
| miracl-te | 67.8 | 72.3 |
| miracl-th | 60.2 | 71.9 |
| miracl-yo | 56.4 | 62.2 |
| miracl-zh | 43.8 | 56.5 |
| **Avg** | 54.3 | 64.6 |

| Cohere/miracl-zh-queries-22-12 | [

"task_categories:text-retrieval",

"task_ids:document-retrieval",

"annotations_creators:expert-generated",

"multilinguality:multilingual",

"language:zh",

"license:apache-2.0",

"region:us"

]

| 2023-01-31T13:38:51+00:00 | {"annotations_creators": ["expert-generated"], "language": ["zh"], "license": ["apache-2.0"], "multilinguality": ["multilingual"], "size_categories": [], "source_datasets": [], "task_categories": ["text-retrieval"], "task_ids": ["document-retrieval"], "tags": []} | 2023-02-06T11:55:33+00:00 | []

| [

"zh"

]

| TAGS

#task_categories-text-retrieval #task_ids-document-retrieval #annotations_creators-expert-generated #multilinguality-multilingual #language-Chinese #license-apache-2.0 #region-us

MIRACL (zh) embedded with cohere.ai 'multilingual-22-12' encoder

================================================================

We encoded the MIRACL dataset using the cohere.ai 'multilingual-22-12' embedding model.

The query embeddings can be found in Cohere/miracl-zh-queries-22-12 and the corpus embeddings can be found in Cohere/miracl-zh-corpus-22-12.

For the original datasets, see miracl/miracl and miracl/miracl-corpus.

Dataset info:

> MIRACL (Multilingual Information Retrieval Across a Continuum of Languages) is a multilingual retrieval dataset that focuses on search across 18 different languages, which collectively encompass over three billion native speakers around the world.
>
> The corpus for each language is prepared from a Wikipedia dump, where we keep only the plain text and discard images, tables, etc. Each article is segmented into multiple passages using WikiExtractor based on natural discourse units (e.g., '\n\n' in the wiki markup). Each of these passages comprises a "document" or unit of retrieval. We preserve the Wikipedia article title of each passage.

Embeddings

----------

We compute the embeddings for 'title+" "+text' using our 'multilingual-22-12' embedding model, a state-of-the-art model that works for semantic search in 100 languages. If you want to learn more about this model, have a look at the cohere.ai multilingual embedding model.

Loading the dataset

-------------------

In miracl-zh-corpus-22-12 we provide the corpus embeddings. Note, depending on the selected split, the respective files can be quite large.

You can either load the dataset like this:

Or you can stream it without downloading it first:

Search

------

Have a look at miracl-zh-queries-22-12 where we provide the query embeddings for the MIRACL dataset.

To search in the documents, you must use dot-product.

Then compare the query embeddings with the document embeddings, either via a vector database (recommended) or by directly computing the dot product.

A full search example:

You can get embeddings for new queries using our API:

Performance

-----------

In the following table we compare the cohere multilingual-22-12 model with Elasticsearch version 8.6.0 lexical search (title and passage indexed as independent fields). Note that Elasticsearch doesn't support all languages that are part of the MIRACL dataset.

We compute nDCG@10 (a ranking-based metric) as well as hit@3, which checks whether at least one relevant document appears in the top-3 results. We find hit@3 easier to interpret, as it reports the percentage of queries for which a relevant document is found among the top-3 results.

Note: MIRACL only annotated a small fraction of passages (10 per query) for relevancy. Especially for larger Wikipedias (like English), we often found many more relevant passages. This is known as annotation holes. Real nDCG@10 and hit@3 performance is likely higher than depicted.

Further languages (not supported by Elasticsearch):

Model: miracl-fa, cohere multilingual-22-12 nDCG@10: 44.8, cohere multilingual-22-12 hit@3: 53.6

Model: miracl-ja, cohere multilingual-22-12 nDCG@10: 49.0, cohere multilingual-22-12 hit@3: 61.0

Model: miracl-ko, cohere multilingual-22-12 nDCG@10: 50.9, cohere multilingual-22-12 hit@3: 64.8

Model: miracl-sw, cohere multilingual-22-12 nDCG@10: 61.4, cohere multilingual-22-12 hit@3: 74.5

Model: miracl-te, cohere multilingual-22-12 nDCG@10: 67.8, cohere multilingual-22-12 hit@3: 72.3

Model: miracl-th, cohere multilingual-22-12 nDCG@10: 60.2, cohere multilingual-22-12 hit@3: 71.9

Model: miracl-yo, cohere multilingual-22-12 nDCG@10: 56.4, cohere multilingual-22-12 hit@3: 62.2

Model: miracl-zh, cohere multilingual-22-12 nDCG@10: 43.8, cohere multilingual-22-12 hit@3: 56.5

Model: Avg, cohere multilingual-22-12 nDCG@10: 54.3, cohere multilingual-22-12 hit@3: 64.6

| []

| [

"TAGS\n#task_categories-text-retrieval #task_ids-document-retrieval #annotations_creators-expert-generated #multilinguality-multilingual #language-Chinese #license-apache-2.0 #region-us \n"

]

|

3c25218126e5a3ea1a8f1ee6d1646d42b5d40646 | # Dataset Card for "lex_fridman_podcast"

### Dataset Summary

This dataset contains transcripts from the [Lex Fridman podcast](https://www.youtube.com/playlist?list=PLrAXtmErZgOdP_8GztsuKi9nrraNbKKp4) (Episodes 1 to 325).

The transcripts were generated using [OpenAI Whisper](https://github.com/openai/whisper) (large model) and made publicly available at: https://karpathy.ai/lexicap/index.html.

### Languages

- English

## Dataset Structure

The dataset contains around 803K entries, consisting of audio transcripts generated from episodes 1 to 325 of the [Lex Fridman podcast](https://www.youtube.com/playlist?list=PLrAXtmErZgOdP_8GztsuKi9nrraNbKKp4). In addition to the transcript text, the dataset includes other metadata such as episode id and title, guest name, and start and end timestamps for each transcript.

### Data Fields

The dataset schema is as follows:

- **id:** Episode id.
- **guest:** Name of the guest interviewed.
- **title:** Title of the episode.
- **text:** Text of the transcription.
- **start:** Timestamp (`HH:mm:ss.mmm`) indicating the beginning of the transcription.
- **end:** Timestamp (`HH:mm:ss.mmm`) indicating the end of the transcription.
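Since `start` and `end` are stored as `HH:mm:ss.mmm` strings, computing segment durations requires converting them to numbers first. The following is a minimal sketch of one way to do that; the helper name `timestamp_to_seconds` and the sample values are made up for illustration and are not taken from the dataset.

```python
def timestamp_to_seconds(ts: str) -> float:
    """Convert an 'HH:mm:ss.mmm' timestamp into seconds."""
    hours, minutes, seconds = ts.split(":")
    return int(hours) * 3600 + int(minutes) * 60 + float(seconds)

# Hypothetical transcript segment using the field names from the schema above
segment = {"start": "00:01:02.500", "end": "00:01:05.250"}
duration = timestamp_to_seconds(segment["end"]) - timestamp_to_seconds(segment["start"])
print(round(duration, 3))
```

The same helper could be mapped over the whole dataset to filter out very short segments or to align transcripts with the audio.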

### Source Data

Source data provided by Andrej Karpathy at: https://karpathy.ai/lexicap/index.html

### Contributions

Thanks to [nmac](https://huggingface.co/nmac) for adding this dataset. | nmac/lex_fridman_podcast | [

"task_categories:automatic-speech-recognition",

"task_categories:sentence-similarity",

"size_categories:100K<n<1M",

"language:en",

"podcast",

"whisper",

"region:us"

]

| 2023-01-31T13:40:48+00:00 | {"language": ["en"], "size_categories": ["100K<n<1M"], "task_categories": ["automatic-speech-recognition", "sentence-similarity"], "tags": ["podcast", "whisper"]} | 2023-01-31T16:24:07+00:00 | []

| [

"en"

]

| TAGS

#task_categories-automatic-speech-recognition #task_categories-sentence-similarity #size_categories-100K<n<1M #language-English #podcast #whisper #region-us

| # Dataset Card for "lex_fridman_podcast"

### Dataset Summary

This dataset contains transcripts from the Lex Fridman podcast (Episodes 1 to 325).

The transcripts were generated using OpenAI Whisper (large model) and made publicly available at: URL

### Languages

- English

## Dataset Structure

The dataset contains around 803K entries, consisting of audio transcripts generated from episodes 1 to 325 of the Lex Fridman podcast. In addition to the transcript text, the dataset includes other metadata such as episode id and title, guest name, and start and end timestamps for each transcript.

### Data Fields

The dataset schema is as follows:

- id: Episode id.

- guest: Name of the guest interviewed.

- title: Title of the episode.

- text: Text of the transcription.

- start: Timestamp ('HH:mm:ss.mmm') indicating the beginning of the transcription.

- end: Timestamp ('HH:mm:ss.mmm') indicating the end of the transcription.

### Source Data

Source data provided by Andrej Karpathy at: URL

### Contributions

Thanks to nmac for adding this dataset. | [

"# Dataset Card for \"lex_fridman_podcast\"",

"### Dataset Summary\n\nThis dataset contains transcripts from the Lex Fridman podcast (Episodes 1 to 325).\nThe transcripts were generated using OpenAI Whisper (large model) and made publicly available at: URL",

"### Languages\n\n- English",

"## Dataset Structure\n\nThe dataset contains around 803K entries, consisting of audio transcripts generated from episodes 1 to 325 of the Lex Fridman podcast. In addition to the transcript text, the dataset includes other metadata such as episode id and title, guest name, and start and end timestamps for each transcript.",

"### Data Fields\n\nThe dataset schema is as follows:\n- id: Episode id.\n- guest: Name of the guest interviewed.\n- title: Title of the episode.\n- text: Text of the transcription.\n- start: Timestamp ('HH:mm:ss.mmm') indicating the beginning of the transcription.\n- end: Timestamp ('HH:mm:ss.mmm') indicating the end of the transcription.",

"### Source Data\n\nSource data provided by Andrej Karpathy at: URL",

"### Contributions\n\nThanks to nmac for adding this dataset."

]

| [

"TAGS\n#task_categories-automatic-speech-recognition #task_categories-sentence-similarity #size_categories-100K<n<1M #language-English #podcast #whisper #region-us \n",

"# Dataset Card for \"lex_fridman_podcast\"",

"### Dataset Summary\n\nThis dataset contains transcripts from the Lex Fridman podcast (Episodes 1 to 325).\nThe transcripts were generated using OpenAI Whisper (large model) and made publicly available at: URL",

"### Languages\n\n- English",

"## Dataset Structure\n\nThe dataset contains around 803K entries, consisting of audio transcripts generated from episodes 1 to 325 of the Lex Fridman podcast. In addition to the transcript text, the dataset includes other metadata such as episode id and title, guest name, and start and end timestamps for each transcript.",

"### Data Fields\n\nThe dataset schema is as follows:\n- id: Episode id.\n- guest: Name of the guest interviewed.\n- title: Title of the episode.\n- text: Text of the transcription.\n- start: Timestamp ('HH:mm:URL') indicating the beginning of the transcription.\n- end: Timestamp ('HH:mm:URL') indicating the end of the transcription.",

"### Source Data\n\nSource data provided by Andrej Karpathy at: URL",

"### Contributions\n\nThanks to nmac for adding this dataset."

]

|

5f326be836f64a96e42641983de3e5feafbc835c | # Dataset Card for "cqadupstack"

## Table of Contents

- [Table of Contents](#table-of-contents)

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Additional Information](#additional-information)

- [Licensing Information](#licensing-information)

## Dataset Description

- **Homepage:** [http://nlp.cis.unimelb.edu.au/resources/cqadupstack/](http://nlp.cis.unimelb.edu.au/resources/cqadupstack/)

### Dataset Summary

This is a preprocessed version of CQADupStack, prepared so that it can be consumed easily via Hugging Face. The original dataset can be found [here](http://nlp.cis.unimelb.edu.au/resources/cqadupstack/).

CQADupStack is a benchmark dataset for community question-answering (cQA) research. It contains threads from twelve StackExchange subforums, annotated with duplicate-question information, and comes with predefined training, development, and test splits for both retrieval and classification experiments.

## Dataset Structure

### Data Instances

An example of 'train' looks as follows.

```json

{

"question": "Very often, when some unknown company is calling me, in couple of seconds I see its name and logo on standard ...",

"answer": "You didn't explicitely mention it, but from the context I assume you're using a device with Android 4.4 (Kitkat). With that ...",

"title": "Why Dialer shows contact name and image, when contact is not in my address book?",

"forum_tag": "android"

}

```

### Data Fields

The data fields are the same among all splits.

- `question`: a `string` feature.

- `answer`: a `string` feature.

- `title`: a `string` feature.

- `forum_tag`: a categorical `string` feature.
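
The schema above can be checked and used with a few lines of plain Python. The following is a minimal sketch, not part of the dataset tooling: it validates that a record carries the four string fields and groups valid records by `forum_tag`. The helper names and the second sample record are hypothetical; the first record is abbreviated from the example in Data Instances.

```python
from collections import defaultdict

# Field names taken from the Data Fields list above.
EXPECTED_FIELDS = ("question", "answer", "title", "forum_tag")


def is_valid_record(record: dict) -> bool:
    """Return True if every expected field is present and is a string."""
    return all(isinstance(record.get(name), str) for name in EXPECTED_FIELDS)


def group_by_forum(records: list) -> dict:
    """Group valid records by their forum_tag; invalid records are skipped."""
    groups = defaultdict(list)
    for record in records:
        if is_valid_record(record):
            groups[record["forum_tag"]].append(record)
    return dict(groups)


sample_records = [
    {
        "question": "Very often, when some unknown company is calling me, ...",
        "answer": "You didn't explicitely mention it, but from the context ...",
        "title": "Why Dialer shows contact name and image, when contact is not in my address book?",
        "forum_tag": "android",
    },
    # Hypothetical malformed record (missing "answer"), used to show filtering.
    {"question": "Example question", "title": "Example title", "forum_tag": "android"},
]

groups = group_by_forum(sample_records)
print({tag: len(items) for tag, items in groups.items()})
```

In practice the records would presumably come from the `datasets` library rather than a hand-written list; the in-memory list here only keeps the sketch self-contained.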

## Additional Information

### Licensing Information

This dataset is distributed under the Apache 2.0 licence.

| LLukas22/cqadupstack | [

"task_categories:sentence-similarity",

"task_categories:feature-extraction",

"size_categories:100K<n<1M",

"language:en",

"license:apache-2.0",

"region:us"

]

| 2023-01-31T14:18:36+00:00 | {"language": ["en"], "license": "apache-2.0", "size_categories": ["100K<n<1M"], "task_categories": ["sentence-similarity", "feature-extraction"]} | 2023-04-30T18:24:35+00:00 | []

| [

"en"

]

| TAGS

#task_categories-sentence-similarity #task_categories-feature-extraction #size_categories-100K<n<1M #language-English #license-apache-2.0 #region-us

| # Dataset Card for "cqadupstack"

## Table of Contents

- Table of Contents

- Dataset Description

- Dataset Summary

- Dataset Structure

- Data Instances

- Data Fields

- Additional Information

- Licensing Information

## Dataset Description

- Homepage: URL

### Dataset Summary

This is a preprocessed version of CQADupStack, prepared so that it can be consumed easily via Hugging Face. The original dataset can be found here.

CQADupStack is a benchmark dataset for community question-answering (cQA) research. It contains threads from twelve StackExchange subforums, annotated with duplicate-question information, and comes with predefined training, development, and test splits for both retrieval and classification experiments.

## Dataset Structure

### Data Instances

An example of 'train' looks as follows.

### Data Fields

The data fields are the same among all splits.

- 'question': a 'string' feature.

- 'answer': a 'string' feature.

- 'title': a 'string' feature.

- 'forum_tag': a categorical 'string' feature.

## Additional Information

### Licensing Information

This dataset is distributed under the Apache 2.0 licence.

| [

"# Dataset Card for \"cqadupstack\"",

"## Table of Contents\n- Table of Contents\n- Dataset Description\n - Dataset Summary\n- Dataset Structure\n - Data Instances\n - Data Fields\n- Additional Information\n - Licensing Information",

"## Dataset Description\n\n- Homepage: URL",

"### Dataset Summary\nThis is a preprocessed version of CQADupStack, prepared so that it can be consumed easily via Hugging Face. The original dataset can be found here.\n\n\nCQADupStack is a benchmark dataset for community question-answering (cQA) research. It contains threads from twelve StackExchange subforums, annotated with duplicate-question information, and comes with predefined training, development, and test splits for both retrieval and classification experiments.",

"## Dataset Structure",

"### Data Instances\n\nAn example of 'train' looks as follows.",

"### Data Fields\n\nThe data fields are the same among all splits.\n\n- 'question': a 'string' feature.\n- 'answer': a 'string' feature.\n- 'title': a 'string' feature.\n- 'forum_tag': a categorical 'string' feature.",

"## Additional Information",

"### Licensing Information\n\nThis dataset is distributed under the Apache 2.0 licence."

]

| [

"TAGS\n#task_categories-sentence-similarity #task_categories-feature-extraction #size_categories-100K<n<1M #language-English #license-apache-2.0 #region-us \n",

"# Dataset Card for \"cqadupstack\"",

"## Table of Contents\n- Table of Contents\n- Dataset Description\n - Dataset Summary\n- Dataset Structure\n - Data Instances\n - Data Fields\n- Additional Information\n - Licensing Information",

"## Dataset Description\n\n- Homepage: URL",

"### Dataset Summary\nThis is a preprocessed version of CQADupStack, prepared so that it can be consumed easily via Hugging Face. The original dataset can be found here.\n\n\nCQADupStack is a benchmark dataset for community question-answering (cQA) research. It contains threads from twelve StackExchange subforums, annotated with duplicate-question information, and comes with predefined training, development, and test splits for both retrieval and classification experiments.",

"## Dataset Structure",

"### Data Instances\n\nAn example of 'train' looks as follows.",

"### Data Fields\n\nThe data fields are the same among all splits.\n\n- 'question': a 'string' feature.\n- 'answer': a 'string' feature.\n- 'title': a 'string' feature.\n- 'forum_tag': a categorical 'string' feature.",

"## Additional Information",

"### Licensing Information\n\nThis dataset is distributed under the Apache 2.0 licence."

]

|

7c4aa0946d69f52ef275002e06b3f820e6482a8d |

# Dataset Card for "fiqa"

## Table of Contents

- [Table of Contents](#table-of-contents)

- [Dataset Description](#dataset-description)

- [Dataset Summary](#dataset-summary)

- [Dataset Structure](#dataset-structure)

- [Data Instances](#data-instances)

- [Data Fields](#data-fields)

- [Additional Information](#additional-information)

- [Licensing Information](#licensing-information)

## Dataset Description

- **Homepage:** [https://sites.google.com/view/fiqa/?pli=1](https://sites.google.com/view/fiqa/?pli=1)

### Dataset Summary

This is a preprocessed version of FiQA, prepared so that it can be consumed easily via Hugging Face. The original dataset can be found [here](https://sites.google.com/view/fiqa/?pli=1).

The growing maturity of Natural Language Processing (NLP) techniques and resources is drastically changing the landscape of many application domains that depend on the analysis of unstructured data at scale. The financial domain, with its dependency on the interpretation of multiple unstructured and structured data sources and its demand for fast and comprehensive decision making, is already emerging as a primary ground for experimentation with NLP, Web Mining, and Information Retrieval (IR) techniques. This challenge focuses on advancing the state of the art in aspect-based sentiment analysis and opinion-based Question Answering for the financial domain.

## Dataset Structure

### Data Instances

An example of 'train' looks as follows.

```json

{

"question": "How does a 2 year treasury note work?",

"answer": "Notes and Bonds sell at par (1.0). When rates go up, their value goes down. When rates go down, their value goes up. ..."

}

```

### Data Fields

The data fields are the same among all splits.

- `question`: a `string` feature.

- `answer`: a `string` feature.
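
Since each record is a question paired with an answer, one plausible use is to turn records into (query, passage) pairs for sentence-similarity or feature-extraction work. The snippet below is a minimal sketch under that assumption; the helper name `to_pairs` and the second sample record are hypothetical, and the first record is taken from the example in Data Instances.

```python
def to_pairs(records: list) -> list:
    """Build (question, answer) tuples, dropping records with an empty side."""
    pairs = []
    for record in records:
        question = record.get("question", "").strip()
        answer = record.get("answer", "").strip()
        if question and answer:
            pairs.append((question, answer))
    return pairs


sample_records = [
    {
        "question": "How does a 2 year treasury note work?",
        "answer": "Notes and Bonds sell at par (1.0). When rates go up, their value goes down. ...",
    },
    # Hypothetical record with an empty answer, used to show filtering.
    {"question": "Example question without an answer", "answer": "   "},
]

pairs = to_pairs(sample_records)
print(len(pairs))
```

Filtering out empty sides is a design choice made for this sketch; whether the actual data contains such records is not stated in the card.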

## Additional Information

### Licensing Information

This dataset is distributed under the [CC BY-NC](https://creativecommons.org/licenses/by-nc/3.0/) licence providing free access for non-commercial and academic usage. | LLukas22/fiqa | [

"task_categories:feature-extraction",

"task_categories:sentence-similarity",

"size_categories:10K<n<100K",

"language:en",

"license:cc-by-3.0",

"region:us"

]