pipeline_tag

stringclasses 48

values | library_name

stringclasses 198

values | text

stringlengths 1

900k

| metadata

stringlengths 2

438k

| id

stringlengths 5

122

| last_modified

null | tags

sequencelengths 1

1.84k

| sha

null | created_at

stringlengths 25

25

| arxiv

sequencelengths 0

201

| languages

sequencelengths 0

1.83k

| tags_str

stringlengths 17

9.34k

| text_str

stringlengths 0

389k

| text_lists

sequencelengths 0

722

| processed_texts

sequencelengths 1

723

| tokens_length

sequencelengths 1

723

| input_texts

sequencelengths 1

1

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

reinforcement-learning | sample-factory |

A(n) **APPO** model trained on the **doom_health_gathering_supreme** environment.

This model was trained using Sample-Factory 2.0: https://github.com/alex-petrenko/sample-factory.

Documentation for how to use Sample-Factory can be found at https://www.samplefactory.dev/

## Downloading the model

After installing Sample-Factory, download the model with:

```

python -m sample_factory.huggingface.load_from_hub -r aw-infoprojekt/rl_course_vizdoom_health_gathering_supreme

```

## Using the model

To run the model after download, use the `enjoy` script corresponding to this environment:

```

python -m .usr.local.lib.python3.10.dist-packages.colab_kernel_launcher --algo=APPO --env=doom_health_gathering_supreme --train_dir=./train_dir --experiment=rl_course_vizdoom_health_gathering_supreme

```

You can also upload models to the Hugging Face Hub using the same script with the `--push_to_hub` flag.

See https://www.samplefactory.dev/10-huggingface/huggingface/ for more details

## Training with this model

To continue training with this model, use the `train` script corresponding to this environment:

```

python -m .usr.local.lib.python3.10.dist-packages.colab_kernel_launcher --algo=APPO --env=doom_health_gathering_supreme --train_dir=./train_dir --experiment=rl_course_vizdoom_health_gathering_supreme --restart_behavior=resume --train_for_env_steps=10000000000

```

Note, you may have to adjust `--train_for_env_steps` to a suitably high number as the experiment will resume at the number of steps it concluded at.

| {"library_name": "sample-factory", "tags": ["deep-reinforcement-learning", "reinforcement-learning", "sample-factory"], "model-index": [{"name": "APPO", "results": [{"task": {"type": "reinforcement-learning", "name": "reinforcement-learning"}, "dataset": {"name": "doom_health_gathering_supreme", "type": "doom_health_gathering_supreme"}, "metrics": [{"type": "mean_reward", "value": "10.86 +/- 3.72", "name": "mean_reward", "verified": false}]}]}]} | aw-infoprojekt/rl_course_vizdoom_health_gathering_supreme | null | [

"sample-factory",

"tensorboard",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | null | 2024-04-30T06:22:42+00:00 | [] | [] | TAGS

#sample-factory #tensorboard #deep-reinforcement-learning #reinforcement-learning #model-index #region-us

|

A(n) APPO model trained on the doom_health_gathering_supreme environment.

This model was trained using Sample-Factory 2.0: URL

Documentation for how to use Sample-Factory can be found at URL

## Downloading the model

After installing Sample-Factory, download the model with:

## Using the model

To run the model after download, use the 'enjoy' script corresponding to this environment:

You can also upload models to the Hugging Face Hub using the same script with the '--push_to_hub' flag.

See URL for more details

## Training with this model

To continue training with this model, use the 'train' script corresponding to this environment:

Note, you may have to adjust '--train_for_env_steps' to a suitably high number as the experiment will resume at the number of steps it concluded at.

| [

"## Downloading the model\n\nAfter installing Sample-Factory, download the model with:",

"## Using the model\n\nTo run the model after download, use the 'enjoy' script corresponding to this environment:\n\n\n\nYou can also upload models to the Hugging Face Hub using the same script with the '--push_to_hub' flag.\nSee URL for more details",

"## Training with this model\n\nTo continue training with this model, use the 'train' script corresponding to this environment:\n\n\nNote, you may have to adjust '--train_for_env_steps' to a suitably high number as the experiment will resume at the number of steps it concluded at."

] | [

"TAGS\n#sample-factory #tensorboard #deep-reinforcement-learning #reinforcement-learning #model-index #region-us \n",

"## Downloading the model\n\nAfter installing Sample-Factory, download the model with:",

"## Using the model\n\nTo run the model after download, use the 'enjoy' script corresponding to this environment:\n\n\n\nYou can also upload models to the Hugging Face Hub using the same script with the '--push_to_hub' flag.\nSee URL for more details",

"## Training with this model\n\nTo continue training with this model, use the 'train' script corresponding to this environment:\n\n\nNote, you may have to adjust '--train_for_env_steps' to a suitably high number as the experiment will resume at the number of steps it concluded at."

] | [

26,

17,

57,

63

] | [

"TAGS\n#sample-factory #tensorboard #deep-reinforcement-learning #reinforcement-learning #model-index #region-us \n## Downloading the model\n\nAfter installing Sample-Factory, download the model with:## Using the model\n\nTo run the model after download, use the 'enjoy' script corresponding to this environment:\n\n\n\nYou can also upload models to the Hugging Face Hub using the same script with the '--push_to_hub' flag.\nSee URL for more details## Training with this model\n\nTo continue training with this model, use the 'train' script corresponding to this environment:\n\n\nNote, you may have to adjust '--train_for_env_steps' to a suitably high number as the experiment will resume at the number of steps it concluded at."

] |

text-generation | transformers |

# mlx-community/llm-jp-13b-instruct-full-dolly-ichikara_004_001_single-oasst-oasst2-v2.0

This model was converted to MLX format from [`llm-jp/llm-jp-13b-instruct-full-dolly-ichikara_004_001_single-oasst-oasst2-v2.0`]() using mlx-lm version **0.12.0**.

Refer to the [original model card](https://huggingface.co/llm-jp/llm-jp-13b-instruct-full-dolly-ichikara_004_001_single-oasst-oasst2-v2.0) for more details on the model.

## Use with mlx

```bash

pip install mlx-lm

```

```python

from mlx_lm import load, generate

model, tokenizer = load("mlx-community/llm-jp-13b-instruct-full-dolly-ichikara_004_001_single-oasst-oasst2-v2.0")

response = generate(model, tokenizer, prompt="hello", verbose=True)

```

| {"language": ["en", "ja"], "license": "apache-2.0", "library_name": "transformers", "tags": ["mlx"], "datasets": ["databricks/databricks-dolly-15k", "llm-jp/databricks-dolly-15k-ja", "llm-jp/oasst1-21k-en", "llm-jp/oasst1-21k-ja", "llm-jp/oasst2-33k-en", "llm-jp/oasst2-33k-ja"], "programming_language": ["C", "C++", "C#", "Go", "Java", "JavaScript", "Lua", "PHP", "Python", "Ruby", "Rust", "Scala", "TypeScript"], "pipeline_tag": "text-generation", "inference": false} | mlx-community/llm-jp-13b-instruct-full-dolly-ichikara_004_001_single-oasst-oasst2-v2.0 | null | [

"transformers",

"safetensors",

"llama",

"text-generation",

"mlx",

"conversational",

"en",

"ja",

"dataset:databricks/databricks-dolly-15k",

"dataset:llm-jp/databricks-dolly-15k-ja",

"dataset:llm-jp/oasst1-21k-en",

"dataset:llm-jp/oasst1-21k-ja",

"dataset:llm-jp/oasst2-33k-en",

"dataset:llm-jp/oasst2-33k-ja",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"region:us"

] | null | 2024-04-30T06:24:57+00:00 | [] | [

"en",

"ja"

] | TAGS

#transformers #safetensors #llama #text-generation #mlx #conversational #en #ja #dataset-databricks/databricks-dolly-15k #dataset-llm-jp/databricks-dolly-15k-ja #dataset-llm-jp/oasst1-21k-en #dataset-llm-jp/oasst1-21k-ja #dataset-llm-jp/oasst2-33k-en #dataset-llm-jp/oasst2-33k-ja #license-apache-2.0 #autotrain_compatible #text-generation-inference #region-us

|

# mlx-community/llm-jp-13b-instruct-full-dolly-ichikara_004_001_single-oasst-oasst2-v2.0

This model was converted to MLX format from ['llm-jp/llm-jp-13b-instruct-full-dolly-ichikara_004_001_single-oasst-oasst2-v2.0']() using mlx-lm version 0.12.0.

Refer to the original model card for more details on the model.

## Use with mlx

| [

"# mlx-community/llm-jp-13b-instruct-full-dolly-ichikara_004_001_single-oasst-oasst2-v2.0\nThis model was converted to MLX format from ['llm-jp/llm-jp-13b-instruct-full-dolly-ichikara_004_001_single-oasst-oasst2-v2.0']() using mlx-lm version 0.12.0.\nRefer to the original model card for more details on the model.",

"## Use with mlx"

] | [

"TAGS\n#transformers #safetensors #llama #text-generation #mlx #conversational #en #ja #dataset-databricks/databricks-dolly-15k #dataset-llm-jp/databricks-dolly-15k-ja #dataset-llm-jp/oasst1-21k-en #dataset-llm-jp/oasst1-21k-ja #dataset-llm-jp/oasst2-33k-en #dataset-llm-jp/oasst2-33k-ja #license-apache-2.0 #autotrain_compatible #text-generation-inference #region-us \n",

"# mlx-community/llm-jp-13b-instruct-full-dolly-ichikara_004_001_single-oasst-oasst2-v2.0\nThis model was converted to MLX format from ['llm-jp/llm-jp-13b-instruct-full-dolly-ichikara_004_001_single-oasst-oasst2-v2.0']() using mlx-lm version 0.12.0.\nRefer to the original model card for more details on the model.",

"## Use with mlx"

] | [

154,

130,

6

] | [

"TAGS\n#transformers #safetensors #llama #text-generation #mlx #conversational #en #ja #dataset-databricks/databricks-dolly-15k #dataset-llm-jp/databricks-dolly-15k-ja #dataset-llm-jp/oasst1-21k-en #dataset-llm-jp/oasst1-21k-ja #dataset-llm-jp/oasst2-33k-en #dataset-llm-jp/oasst2-33k-ja #license-apache-2.0 #autotrain_compatible #text-generation-inference #region-us \n# mlx-community/llm-jp-13b-instruct-full-dolly-ichikara_004_001_single-oasst-oasst2-v2.0\nThis model was converted to MLX format from ['llm-jp/llm-jp-13b-instruct-full-dolly-ichikara_004_001_single-oasst-oasst2-v2.0']() using mlx-lm version 0.12.0.\nRefer to the original model card for more details on the model.## Use with mlx"

] |

text-to-image | diffusers |

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🧨 diffusers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

| {"library_name": "diffusers"} | Niggendar/mightMixes15Ponyxl_pxlBlastwrx | null | [

"diffusers",

"safetensors",

"arxiv:1910.09700",

"endpoints_compatible",

"diffusers:StableDiffusionXLPipeline",

"region:us"

] | null | 2024-04-30T06:25:17+00:00 | [

"1910.09700"

] | [] | TAGS

#diffusers #safetensors #arxiv-1910.09700 #endpoints_compatible #diffusers-StableDiffusionXLPipeline #region-us

|

# Model Card for Model ID

## Model Details

### Model Description

This is the model card of a diffusers model that has been pushed on the Hub. This model card has been automatically generated.

- Developed by:

- Funded by [optional]:

- Shared by [optional]:

- Model type:

- Language(s) (NLP):

- License:

- Finetuned from model [optional]:

### Model Sources [optional]

- Repository:

- Paper [optional]:

- Demo [optional]:

## Uses

### Direct Use

### Downstream Use [optional]

### Out-of-Scope Use

## Bias, Risks, and Limitations

### Recommendations

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

## Training Details

### Training Data

### Training Procedure

#### Preprocessing [optional]

#### Training Hyperparameters

- Training regime:

#### Speeds, Sizes, Times [optional]

## Evaluation

### Testing Data, Factors & Metrics

#### Testing Data

#### Factors

#### Metrics

### Results

#### Summary

## Model Examination [optional]

## Environmental Impact

Carbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).

- Hardware Type:

- Hours used:

- Cloud Provider:

- Compute Region:

- Carbon Emitted:

## Technical Specifications [optional]

### Model Architecture and Objective

### Compute Infrastructure

#### Hardware

#### Software

[optional]

BibTeX:

APA:

## Glossary [optional]

## More Information [optional]

## Model Card Authors [optional]

## Model Card Contact

| [

"# Model Card for Model ID",

"## Model Details",

"### Model Description\n\n\n\nThis is the model card of a diffusers model that has been pushed on the Hub. This model card has been automatically generated.\n\n- Developed by: \n- Funded by [optional]: \n- Shared by [optional]: \n- Model type: \n- Language(s) (NLP): \n- License: \n- Finetuned from model [optional]:",

"### Model Sources [optional]\n\n\n\n- Repository: \n- Paper [optional]: \n- Demo [optional]:",

"## Uses",

"### Direct Use",

"### Downstream Use [optional]",

"### Out-of-Scope Use",

"## Bias, Risks, and Limitations",

"### Recommendations\n\n\n\nUsers (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.",

"## How to Get Started with the Model\n\nUse the code below to get started with the model.",

"## Training Details",

"### Training Data",

"### Training Procedure",

"#### Preprocessing [optional]",

"#### Training Hyperparameters\n\n- Training regime:",

"#### Speeds, Sizes, Times [optional]",

"## Evaluation",

"### Testing Data, Factors & Metrics",

"#### Testing Data",

"#### Factors",

"#### Metrics",

"### Results",

"#### Summary",

"## Model Examination [optional]",

"## Environmental Impact\n\n\n\nCarbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).\n\n- Hardware Type: \n- Hours used: \n- Cloud Provider: \n- Compute Region: \n- Carbon Emitted:",

"## Technical Specifications [optional]",

"### Model Architecture and Objective",

"### Compute Infrastructure",

"#### Hardware",

"#### Software\n\n\n\n[optional]\n\n\n\nBibTeX:\n\n\n\nAPA:",

"## Glossary [optional]",

"## More Information [optional]",

"## Model Card Authors [optional]",

"## Model Card Contact"

] | [

"TAGS\n#diffusers #safetensors #arxiv-1910.09700 #endpoints_compatible #diffusers-StableDiffusionXLPipeline #region-us \n",

"# Model Card for Model ID",

"## Model Details",

"### Model Description\n\n\n\nThis is the model card of a diffusers model that has been pushed on the Hub. This model card has been automatically generated.\n\n- Developed by: \n- Funded by [optional]: \n- Shared by [optional]: \n- Model type: \n- Language(s) (NLP): \n- License: \n- Finetuned from model [optional]:",

"### Model Sources [optional]\n\n\n\n- Repository: \n- Paper [optional]: \n- Demo [optional]:",

"## Uses",

"### Direct Use",

"### Downstream Use [optional]",

"### Out-of-Scope Use",

"## Bias, Risks, and Limitations",

"### Recommendations\n\n\n\nUsers (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.",

"## How to Get Started with the Model\n\nUse the code below to get started with the model.",

"## Training Details",

"### Training Data",

"### Training Procedure",

"#### Preprocessing [optional]",

"#### Training Hyperparameters\n\n- Training regime:",

"#### Speeds, Sizes, Times [optional]",

"## Evaluation",

"### Testing Data, Factors & Metrics",

"#### Testing Data",

"#### Factors",

"#### Metrics",

"### Results",

"#### Summary",

"## Model Examination [optional]",

"## Environmental Impact\n\n\n\nCarbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).\n\n- Hardware Type: \n- Hours used: \n- Cloud Provider: \n- Compute Region: \n- Carbon Emitted:",

"## Technical Specifications [optional]",

"### Model Architecture and Objective",

"### Compute Infrastructure",

"#### Hardware",

"#### Software\n\n\n\n[optional]\n\n\n\nBibTeX:\n\n\n\nAPA:",

"## Glossary [optional]",

"## More Information [optional]",

"## Model Card Authors [optional]",

"## Model Card Contact"

] | [

39,

6,

4,

76,

23,

3,

5,

8,

9,

8,

34,

20,

4,

5,

5,

11,

13,

12,

3,

10,

6,

5,

6,

4,

5,

7,

49,

7,

7,

5,

5,

15,

7,

7,

8,

5

] | [

"TAGS\n#diffusers #safetensors #arxiv-1910.09700 #endpoints_compatible #diffusers-StableDiffusionXLPipeline #region-us \n# Model Card for Model ID## Model Details### Model Description\n\n\n\nThis is the model card of a diffusers model that has been pushed on the Hub. This model card has been automatically generated.\n\n- Developed by: \n- Funded by [optional]: \n- Shared by [optional]: \n- Model type: \n- Language(s) (NLP): \n- License: \n- Finetuned from model [optional]:### Model Sources [optional]\n\n\n\n- Repository: \n- Paper [optional]: \n- Demo [optional]:## Uses### Direct Use### Downstream Use [optional]### Out-of-Scope Use## Bias, Risks, and Limitations### Recommendations\n\n\n\nUsers (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.## How to Get Started with the Model\n\nUse the code below to get started with the model.## Training Details### Training Data### Training Procedure#### Preprocessing [optional]#### Training Hyperparameters\n\n- Training regime:#### Speeds, Sizes, Times [optional]## Evaluation### Testing Data, Factors & Metrics#### Testing Data#### Factors#### Metrics### Results#### Summary## Model Examination [optional]## Environmental Impact\n\n\n\nCarbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).\n\n- Hardware Type: \n- Hours used: \n- Cloud Provider: \n- Compute Region: \n- Carbon Emitted:## Technical Specifications [optional]### Model Architecture and Objective### Compute Infrastructure#### Hardware#### Software\n\n\n\n[optional]\n\n\n\nBibTeX:\n\n\n\nAPA:## Glossary [optional]## More Information [optional]## Model Card Authors [optional]## Model Card Contact"

] |

sentence-similarity | sentence-transformers |

# {MODEL_NAME}

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 384 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('{MODEL_NAME}')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

#Mean Pooling - Take attention mask into account for correct averaging

def mean_pooling(model_output, attention_mask):

token_embeddings = model_output[0] #First element of model_output contains all token embeddings

input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()

return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('{MODEL_NAME}')

model = AutoModel.from_pretrained('{MODEL_NAME}')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():

model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 113 with parameters:

```

{'batch_size': 64, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss`

Parameters of the fit()-Method:

```

{

"epochs": 1,

"evaluation_steps": 1000,

"evaluator": "sentence_transformers.evaluation.EmbeddingSimilarityEvaluator.EmbeddingSimilarityEvaluator",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": null,

"warmup_steps": 0,

"weight_decay": 0.01

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: BertModel

(1): Pooling({'word_embedding_dimension': 384, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False, 'pooling_mode_weightedmean_tokens': False, 'pooling_mode_lasttoken': False, 'include_prompt': True})

)

```

## Citing & Authors

<!--- Describe where people can find more information --> | {"library_name": "sentence-transformers", "tags": ["sentence-transformers", "feature-extraction", "sentence-similarity", "transformers"], "pipeline_tag": "sentence-similarity"} | Mihaiii/test16 | null | [

"sentence-transformers",

"safetensors",

"bert",

"feature-extraction",

"sentence-similarity",

"transformers",

"endpoints_compatible",

"region:us"

] | null | 2024-04-30T06:25:40+00:00 | [] | [] | TAGS

#sentence-transformers #safetensors #bert #feature-extraction #sentence-similarity #transformers #endpoints_compatible #region-us

|

# {MODEL_NAME}

This is a sentence-transformers model: It maps sentences & paragraphs to a 384 dimensional dense vector space and can be used for tasks like clustering or semantic search.

## Usage (Sentence-Transformers)

Using this model becomes easy when you have sentence-transformers installed:

Then you can use the model like this:

## Usage (HuggingFace Transformers)

Without sentence-transformers, you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

## Evaluation Results

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: URL

## Training

The model was trained with the parameters:

DataLoader:

'URL.dataloader.DataLoader' of length 113 with parameters:

Loss:

'sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss'

Parameters of the fit()-Method:

## Full Model Architecture

## Citing & Authors

| [

"# {MODEL_NAME}\n\nThis is a sentence-transformers model: It maps sentences & paragraphs to a 384 dimensional dense vector space and can be used for tasks like clustering or semantic search.",

"## Usage (Sentence-Transformers)\n\nUsing this model becomes easy when you have sentence-transformers installed:\n\n\n\nThen you can use the model like this:",

"## Usage (HuggingFace Transformers)\nWithout sentence-transformers, you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.",

"## Evaluation Results\n\n\n\nFor an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: URL",

"## Training\nThe model was trained with the parameters:\n\nDataLoader:\n\n'URL.dataloader.DataLoader' of length 113 with parameters:\n\n\nLoss:\n\n'sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss' \n\nParameters of the fit()-Method:",

"## Full Model Architecture",

"## Citing & Authors"

] | [

"TAGS\n#sentence-transformers #safetensors #bert #feature-extraction #sentence-similarity #transformers #endpoints_compatible #region-us \n",

"# {MODEL_NAME}\n\nThis is a sentence-transformers model: It maps sentences & paragraphs to a 384 dimensional dense vector space and can be used for tasks like clustering or semantic search.",

"## Usage (Sentence-Transformers)\n\nUsing this model becomes easy when you have sentence-transformers installed:\n\n\n\nThen you can use the model like this:",

"## Usage (HuggingFace Transformers)\nWithout sentence-transformers, you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.",

"## Evaluation Results\n\n\n\nFor an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: URL",

"## Training\nThe model was trained with the parameters:\n\nDataLoader:\n\n'URL.dataloader.DataLoader' of length 113 with parameters:\n\n\nLoss:\n\n'sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss' \n\nParameters of the fit()-Method:",

"## Full Model Architecture",

"## Citing & Authors"

] | [

30,

41,

30,

58,

26,

69,

5,

5

] | [

"TAGS\n#sentence-transformers #safetensors #bert #feature-extraction #sentence-similarity #transformers #endpoints_compatible #region-us \n# {MODEL_NAME}\n\nThis is a sentence-transformers model: It maps sentences & paragraphs to a 384 dimensional dense vector space and can be used for tasks like clustering or semantic search.## Usage (Sentence-Transformers)\n\nUsing this model becomes easy when you have sentence-transformers installed:\n\n\n\nThen you can use the model like this:## Usage (HuggingFace Transformers)\nWithout sentence-transformers, you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.## Evaluation Results\n\n\n\nFor an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: URL## Training\nThe model was trained with the parameters:\n\nDataLoader:\n\n'URL.dataloader.DataLoader' of length 113 with parameters:\n\n\nLoss:\n\n'sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss' \n\nParameters of the fit()-Method:## Full Model Architecture## Citing & Authors"

] |

reinforcement-learning | stable-baselines3 |

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

| {"library_name": "stable-baselines3", "tags": ["LunarLander-v2", "deep-reinforcement-learning", "reinforcement-learning", "stable-baselines3"], "model-index": [{"name": "PPO", "results": [{"task": {"type": "reinforcement-learning", "name": "reinforcement-learning"}, "dataset": {"name": "LunarLander-v2", "type": "LunarLander-v2"}, "metrics": [{"type": "mean_reward", "value": "220.28 +/- 85.29", "name": "mean_reward", "verified": false}]}]}]} | Chhabi/PPO-LunarLander-v2 | null | [

"stable-baselines3",

"LunarLander-v2",

"deep-reinforcement-learning",

"reinforcement-learning",

"model-index",

"region:us"

] | null | 2024-04-30T06:26:59+00:00 | [] | [] | TAGS

#stable-baselines3 #LunarLander-v2 #deep-reinforcement-learning #reinforcement-learning #model-index #region-us

|

# PPO Agent playing LunarLander-v2

This is a trained model of a PPO agent playing LunarLander-v2

using the stable-baselines3 library.

## Usage (with Stable-baselines3)

TODO: Add your code

| [

"# PPO Agent playing LunarLander-v2\nThis is a trained model of a PPO agent playing LunarLander-v2\nusing the stable-baselines3 library.",

"## Usage (with Stable-baselines3)\nTODO: Add your code"

] | [

"TAGS\n#stable-baselines3 #LunarLander-v2 #deep-reinforcement-learning #reinforcement-learning #model-index #region-us \n",

"# PPO Agent playing LunarLander-v2\nThis is a trained model of a PPO agent playing LunarLander-v2\nusing the stable-baselines3 library.",

"## Usage (with Stable-baselines3)\nTODO: Add your code"

] | [

31,

35,

17

] | [

"TAGS\n#stable-baselines3 #LunarLander-v2 #deep-reinforcement-learning #reinforcement-learning #model-index #region-us \n# PPO Agent playing LunarLander-v2\nThis is a trained model of a PPO agent playing LunarLander-v2\nusing the stable-baselines3 library.## Usage (with Stable-baselines3)\nTODO: Add your code"

] |

text-classification | transformers |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# robust_llm_pythia-31m_mz-133_WordLength_n-its-10-seed-3

This model is a fine-tuned version of [EleutherAI/pythia-31m](https://huggingface.co/EleutherAI/pythia-31m) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 64

- seed: 3

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

### Framework versions

- Transformers 4.39.3

- Pytorch 2.2.1

- Datasets 2.18.0

- Tokenizers 0.15.2

| {"tags": ["generated_from_trainer"], "base_model": "EleutherAI/pythia-31m", "model-index": [{"name": "robust_llm_pythia-31m_mz-133_WordLength_n-its-10-seed-3", "results": []}]} | AlignmentResearch/robust_llm_pythia-31m_mz-133_WordLength_n-its-10-seed-3 | null | [

"transformers",

"tensorboard",

"safetensors",

"gpt_neox",

"text-classification",

"generated_from_trainer",

"base_model:EleutherAI/pythia-31m",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null | 2024-04-30T06:29:09+00:00 | [] | [] | TAGS

#transformers #tensorboard #safetensors #gpt_neox #text-classification #generated_from_trainer #base_model-EleutherAI/pythia-31m #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us

|

# robust_llm_pythia-31m_mz-133_WordLength_n-its-10-seed-3

This model is a fine-tuned version of EleutherAI/pythia-31m on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 64

- seed: 3

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

### Framework versions

- Transformers 4.39.3

- Pytorch 2.2.1

- Datasets 2.18.0

- Tokenizers 0.15.2

| [

"# robust_llm_pythia-31m_mz-133_WordLength_n-its-10-seed-3\n\nThis model is a fine-tuned version of EleutherAI/pythia-31m on an unknown dataset.",

"## Model description\n\nMore information needed",

"## Intended uses & limitations\n\nMore information needed",

"## Training and evaluation data\n\nMore information needed",

"## Training procedure",

"### Training hyperparameters\n\nThe following hyperparameters were used during training:\n- learning_rate: 1e-05\n- train_batch_size: 8\n- eval_batch_size: 64\n- seed: 3\n- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n- lr_scheduler_type: linear\n- num_epochs: 1",

"### Training results",

"### Framework versions\n\n- Transformers 4.39.3\n- Pytorch 2.2.1\n- Datasets 2.18.0\n- Tokenizers 0.15.2"

] | [

"TAGS\n#transformers #tensorboard #safetensors #gpt_neox #text-classification #generated_from_trainer #base_model-EleutherAI/pythia-31m #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us \n",

"# robust_llm_pythia-31m_mz-133_WordLength_n-its-10-seed-3\n\nThis model is a fine-tuned version of EleutherAI/pythia-31m on an unknown dataset.",

"## Model description\n\nMore information needed",

"## Intended uses & limitations\n\nMore information needed",

"## Training and evaluation data\n\nMore information needed",

"## Training procedure",

"### Training hyperparameters\n\nThe following hyperparameters were used during training:\n- learning_rate: 1e-05\n- train_batch_size: 8\n- eval_batch_size: 64\n- seed: 3\n- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n- lr_scheduler_type: linear\n- num_epochs: 1",

"### Training results",

"### Framework versions\n\n- Transformers 4.39.3\n- Pytorch 2.2.1\n- Datasets 2.18.0\n- Tokenizers 0.15.2"

] | [

62,

58,

7,

9,

9,

4,

93,

5,

40

] | [

"TAGS\n#transformers #tensorboard #safetensors #gpt_neox #text-classification #generated_from_trainer #base_model-EleutherAI/pythia-31m #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us \n# robust_llm_pythia-31m_mz-133_WordLength_n-its-10-seed-3\n\nThis model is a fine-tuned version of EleutherAI/pythia-31m on an unknown dataset.## Model description\n\nMore information needed## Intended uses & limitations\n\nMore information needed## Training and evaluation data\n\nMore information needed## Training procedure### Training hyperparameters\n\nThe following hyperparameters were used during training:\n- learning_rate: 1e-05\n- train_batch_size: 8\n- eval_batch_size: 64\n- seed: 3\n- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n- lr_scheduler_type: linear\n- num_epochs: 1### Training results### Framework versions\n\n- Transformers 4.39.3\n- Pytorch 2.2.1\n- Datasets 2.18.0\n- Tokenizers 0.15.2"

] |

null | peft |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# taide_llama3_8b_lora_completion_only

This model is a fine-tuned version of [taide/Llama3-TAIDE-LX-8B-Chat-Alpha1](https://huggingface.co/taide/Llama3-TAIDE-LX-8B-Chat-Alpha1) on the DandinPower/ZH-Reading-Comprehension-Llama-Instruct dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0968

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- distributed_type: multi-GPU

- num_devices: 2

- gradient_accumulation_steps: 8

- total_train_batch_size: 16

- total_eval_batch_size: 2

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 700

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:------:|:----:|:---------------:|

| 0.1474 | 0.3690 | 250 | 0.1201 |

| 0.1072 | 0.7380 | 500 | 0.1581 |

| 0.098 | 1.1070 | 750 | 0.1148 |

| 0.0963 | 1.4760 | 1000 | 0.1044 |

| 0.0502 | 1.8450 | 1250 | 0.1064 |

| 0.05 | 2.2140 | 1500 | 0.1017 |

| 0.0239 | 2.5830 | 1750 | 0.1015 |

| 0.0443 | 2.9520 | 2000 | 0.0968 |

### Framework versions

- PEFT 0.10.0

- Transformers 4.40.0

- Pytorch 2.2.2+cu121

- Datasets 2.19.0

- Tokenizers 0.19.1 | {"language": ["zh"], "license": "other", "library_name": "peft", "tags": ["trl", "sft", "nycu-112-2-deeplearning-hw2", "generated_from_trainer"], "datasets": ["DandinPower/ZH-Reading-Comprehension-Llama-Instruct"], "base_model": "taide/Llama3-TAIDE-LX-8B-Chat-Alpha1", "model-index": [{"name": "taide_llama3_8b_lora_completion_only", "results": []}]} | DandinPower/taide_llama3_8b_lora_completion_only | null | [

"peft",

"safetensors",

"trl",

"sft",

"nycu-112-2-deeplearning-hw2",

"generated_from_trainer",

"zh",

"dataset:DandinPower/ZH-Reading-Comprehension-Llama-Instruct",

"base_model:taide/Llama3-TAIDE-LX-8B-Chat-Alpha1",

"license:other",

"region:us"

] | null | 2024-04-30T06:29:55+00:00 | [] | [

"zh"

] | TAGS

#peft #safetensors #trl #sft #nycu-112-2-deeplearning-hw2 #generated_from_trainer #zh #dataset-DandinPower/ZH-Reading-Comprehension-Llama-Instruct #base_model-taide/Llama3-TAIDE-LX-8B-Chat-Alpha1 #license-other #region-us

| taide\_llama3\_8b\_lora\_completion\_only

=========================================

This model is a fine-tuned version of taide/Llama3-TAIDE-LX-8B-Chat-Alpha1 on the DandinPower/ZH-Reading-Comprehension-Llama-Instruct dataset.

It achieves the following results on the evaluation set:

* Loss: 0.0968

Model description

-----------------

More information needed

Intended uses & limitations

---------------------------

More information needed

Training and evaluation data

----------------------------

More information needed

Training procedure

------------------

### Training hyperparameters

The following hyperparameters were used during training:

* learning\_rate: 0.0001

* train\_batch\_size: 1

* eval\_batch\_size: 1

* seed: 42

* distributed\_type: multi-GPU

* num\_devices: 2

* gradient\_accumulation\_steps: 8

* total\_train\_batch\_size: 16

* total\_eval\_batch\_size: 2

* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

* lr\_scheduler\_type: linear

* lr\_scheduler\_warmup\_steps: 700

* num\_epochs: 3.0

### Training results

### Framework versions

* PEFT 0.10.0

* Transformers 4.40.0

* Pytorch 2.2.2+cu121

* Datasets 2.19.0

* Tokenizers 0.19.1

| [

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 0.0001\n* train\\_batch\\_size: 1\n* eval\\_batch\\_size: 1\n* seed: 42\n* distributed\\_type: multi-GPU\n* num\\_devices: 2\n* gradient\\_accumulation\\_steps: 8\n* total\\_train\\_batch\\_size: 16\n* total\\_eval\\_batch\\_size: 2\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* lr\\_scheduler\\_warmup\\_steps: 700\n* num\\_epochs: 3.0",

"### Training results",

"### Framework versions\n\n\n* PEFT 0.10.0\n* Transformers 4.40.0\n* Pytorch 2.2.2+cu121\n* Datasets 2.19.0\n* Tokenizers 0.19.1"

] | [

"TAGS\n#peft #safetensors #trl #sft #nycu-112-2-deeplearning-hw2 #generated_from_trainer #zh #dataset-DandinPower/ZH-Reading-Comprehension-Llama-Instruct #base_model-taide/Llama3-TAIDE-LX-8B-Chat-Alpha1 #license-other #region-us \n",

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 0.0001\n* train\\_batch\\_size: 1\n* eval\\_batch\\_size: 1\n* seed: 42\n* distributed\\_type: multi-GPU\n* num\\_devices: 2\n* gradient\\_accumulation\\_steps: 8\n* total\\_train\\_batch\\_size: 16\n* total\\_eval\\_batch\\_size: 2\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* lr\\_scheduler\\_warmup\\_steps: 700\n* num\\_epochs: 3.0",

"### Training results",

"### Framework versions\n\n\n* PEFT 0.10.0\n* Transformers 4.40.0\n* Pytorch 2.2.2+cu121\n* Datasets 2.19.0\n* Tokenizers 0.19.1"

] | [

92,

174,

5,

52

] | [

"TAGS\n#peft #safetensors #trl #sft #nycu-112-2-deeplearning-hw2 #generated_from_trainer #zh #dataset-DandinPower/ZH-Reading-Comprehension-Llama-Instruct #base_model-taide/Llama3-TAIDE-LX-8B-Chat-Alpha1 #license-other #region-us \n### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 0.0001\n* train\\_batch\\_size: 1\n* eval\\_batch\\_size: 1\n* seed: 42\n* distributed\\_type: multi-GPU\n* num\\_devices: 2\n* gradient\\_accumulation\\_steps: 8\n* total\\_train\\_batch\\_size: 16\n* total\\_eval\\_batch\\_size: 2\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* lr\\_scheduler\\_warmup\\_steps: 700\n* num\\_epochs: 3.0### Training results### Framework versions\n\n\n* PEFT 0.10.0\n* Transformers 4.40.0\n* Pytorch 2.2.2+cu121\n* Datasets 2.19.0\n* Tokenizers 0.19.1"

] |

text-generation | transformers | # starcoder2-15b-instruct-v0.1-GGUF

- Original model: [starcoder2-15b-instruct-v0.1](https://huggingface.co/bigcode/starcoder2-15b-instruct-v0.1)

<!-- description start -->

## Description

This repo contains GGUF format model files for [starcoder2-15b-instruct-v0.1](https://huggingface.co/bigcode/starcoder2-15b-instruct-v0.1).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). This is the source project for GGUF, providing both a Command Line Interface (CLI) and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), Known as the most widely used web UI, this project boasts numerous features and powerful extensions, and supports GPU acceleration.

* [Ollama](https://github.com/jmorganca/ollama) Ollama is a lightweight and extensible framework designed for building and running language models locally. It features a simple API for creating, managing, and executing models, along with a library of pre-built models for use in various applications

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), A comprehensive web UI offering GPU acceleration across all platforms and architectures, particularly renowned for storytelling.

* [GPT4All](https://gpt4all.io), This is a free and open source GUI that runs locally, supporting Windows, Linux, and macOS with full GPU acceleration.

* [LM Studio](https://lmstudio.ai/) An intuitive and powerful local GUI for Windows and macOS (Silicon), featuring GPU acceleration.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui). A notable web UI with a variety of unique features, including a comprehensive model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), An attractive, user-friendly character-based chat GUI for Windows and macOS (both Silicon and Intel), also offering GPU acceleration.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), A Python library equipped with GPU acceleration, LangChain support, and an OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), A Rust-based ML framework focusing on performance, including GPU support, and designed for ease of use.

* [ctransformers](https://github.com/marella/ctransformers), A Python library featuring GPU acceleration, LangChain support, and an OpenAI-compatible AI server.

* [localGPT](https://github.com/PromtEngineer/localGPT) An open-source initiative enabling private conversations with documents.

<!-- README_GGUF.md-about-gguf end -->

<!-- compatibility_gguf start -->

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weight. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw)

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This end up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K resulting in 5.5 bpw

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw.

</details>

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-how-to-download start -->

## How to download GGUF files

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single folder.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

* LM Studio

* LoLLMS Web UI

* Faraday.dev

### In `text-generation-webui`

Under Download Model, you can enter the model repo: LiteLLMs/starcoder2-15b-instruct-v0.1-GGUF and below it, a specific filename to download, such as: Q4_0/Q4_0-00001-of-00009.gguf.

Then click Download.

### On the command line, including multiple files at once

I recommend using the `huggingface-hub` Python library:

```shell

pip3 install huggingface-hub

```

Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download LiteLLMs/starcoder2-15b-instruct-v0.1-GGUF Q4_0/Q4_0-00001-of-00009.gguf --local-dir . --local-dir-use-symlinks False

```

<details>

<summary>More advanced huggingface-cli download usage (click to read)</summary>

You can also download multiple files at once with a pattern:

```shell

huggingface-cli download LiteLLMs/starcoder2-15b-instruct-v0.1-GGUF --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install huggingface_hub[hf_transfer]

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download LiteLLMs/starcoder2-15b-instruct-v0.1-GGUF Q4_0/Q4_0-00001-of-00009.gguf --local-dir . --local-dir-use-symlinks False

```

Windows Command Line users: You can set the environment variable by running `set HF_HUB_ENABLE_HF_TRANSFER=1` before the download command.

</details>

<!-- README_GGUF.md-how-to-download end -->

<!-- README_GGUF.md-how-to-run start -->

## Example `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 35 -m Q4_0/Q4_0-00001-of-00009.gguf --color -c 8192 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "<PROMPT>"

```

Change `-ngl 32` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 8192` to the desired sequence length. For extended sequence models - eg 8K, 16K, 32K - the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically. Note that longer sequence lengths require much more resources, so you may need to reduce this value.

If you want to have a chat-style conversation, replace the `-p <PROMPT>` argument with `-i -ins`

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md)

## How to run in `text-generation-webui`

Further instructions can be found in the text-generation-webui documentation, here: [text-generation-webui/docs/04 ‐ Model Tab.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/04%20%E2%80%90%20Model%20Tab.md#llamacpp).

## How to run from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries. Note that at the time of writing (Nov 27th 2023), ctransformers has not been updated for some time and is not compatible with some recent models. Therefore I recommend you use llama-cpp-python.

### How to load this model in Python code, using llama-cpp-python

For full documentation, please see: [llama-cpp-python docs](https://abetlen.github.io/llama-cpp-python/).

#### First install the package

Run one of the following commands, according to your system:

```shell

# Base ctransformers with no GPU acceleration

pip install llama-cpp-python

# With NVidia CUDA acceleration

CMAKE_ARGS="-DLLAMA_CUBLAS=on" pip install llama-cpp-python

# Or with OpenBLAS acceleration

CMAKE_ARGS="-DLLAMA_BLAS=ON -DLLAMA_BLAS_VENDOR=OpenBLAS" pip install llama-cpp-python

# Or with CLBLast acceleration

CMAKE_ARGS="-DLLAMA_CLBLAST=on" pip install llama-cpp-python

# Or with AMD ROCm GPU acceleration (Linux only)

CMAKE_ARGS="-DLLAMA_HIPBLAS=on" pip install llama-cpp-python

# Or with Metal GPU acceleration for macOS systems only

CMAKE_ARGS="-DLLAMA_METAL=on" pip install llama-cpp-python

# In windows, to set the variables CMAKE_ARGS in PowerShell, follow this format; eg for NVidia CUDA:

$env:CMAKE_ARGS = "-DLLAMA_OPENBLAS=on"

pip install llama-cpp-python

```

#### Simple llama-cpp-python example code

```python

from llama_cpp import Llama

# Set gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.

llm = Llama(

model_path="./Q4_0/Q4_0-00001-of-00009.gguf", # Download the model file first

n_ctx=32768, # The max sequence length to use - note that longer sequence lengths require much more resources

n_threads=8, # The number of CPU threads to use, tailor to your system and the resulting performance

n_gpu_layers=35 # The number of layers to offload to GPU, if you have GPU acceleration available

)

# Simple inference example

output = llm(

"<PROMPT>", # Prompt

max_tokens=512, # Generate up to 512 tokens

stop=["</s>"], # Example stop token - not necessarily correct for this specific model! Please check before using.

echo=True # Whether to echo the prompt

)

# Chat Completion API

llm = Llama(model_path="./Q4_0/Q4_0-00001-of-00009.gguf", chat_format="llama-2") # Set chat_format according to the model you are using

llm.create_chat_completion(

messages = [

{"role": "system", "content": "You are a story writing assistant."},

{

"role": "user",

"content": "Write a story about llamas."

}

]

)

```

## How to use with LangChain

Here are guides on using llama-cpp-python and ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

<!-- README_GGUF.md-how-to-run end -->

<!-- footer end -->

<!-- original-model-card start -->

# Original model card: starcoder2-15b-instruct-v0.1

# StarCoder2-Instruct: Fully Transparent and Permissive Self-Alignment for Code Generation

## Model Summary

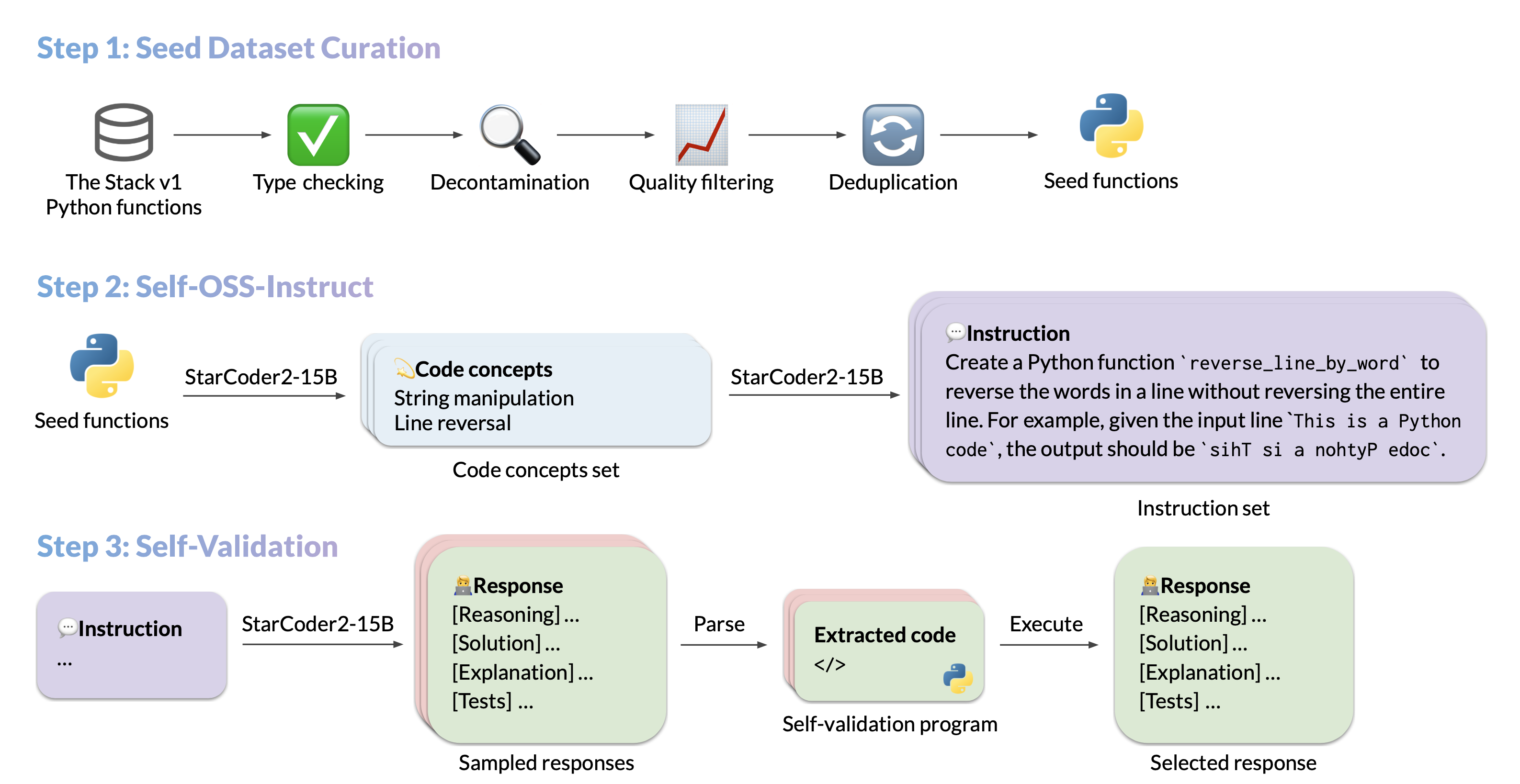

We introduce StarCoder2-15B-Instruct-v0.1, the very first entirely self-aligned code Large Language Model (LLM) trained with a fully permissive and transparent pipeline. Our open-source pipeline uses StarCoder2-15B to generate thousands of instruction-response pairs, which are then used to fine-tune StarCoder-15B itself without any human annotations or distilled data from huge and proprietary LLMs.

- **Model:** [bigcode/starcoder2-15b-instruct-v0.1](https://huggingface.co/bigcode/starcoder2-instruct-15b-v0.1)

- **Code:** [bigcode-project/starcoder2-self-align](https://github.com/bigcode-project/starcoder2-self-align)

- **Dataset:** [bigcode/self-oss-instruct-sc2-exec-filter-50k](https://huggingface.co/datasets/bigcode/self-oss-instruct-sc2-exec-filter-50k/)

- **Authors:**

[Yuxiang Wei](https://yuxiang.cs.illinois.edu),

[Federico Cassano](https://federico.codes/),

[Jiawei Liu](https://jw-liu.xyz),

[Yifeng Ding](https://yifeng-ding.com),

[Naman Jain](https://naman-ntc.github.io),

[Harm de Vries](https://www.harmdevries.com),

[Leandro von Werra](https://twitter.com/lvwerra),

[Arjun Guha](https://www.khoury.northeastern.edu/home/arjunguha/main/home/),

[Lingming Zhang](https://lingming.cs.illinois.edu).

## Use

### Intended use

The model is designed to respond to **coding-related instructions in a single turn**. Instructions in other styles may result in less accurate responses.

Here is an example to get started with the model using the [transformers](https://huggingface.co/docs/transformers/index) library:

```python

import transformers

import torch

pipeline = transformers.pipeline(

model="bigcode/starcoder2-15b-instruct-v0.1",

task="text-generation",

torch_dtype=torch.bfloat16,

device_map="auto",

)

def respond(instruction: str, response_prefix: str) -> str:

messages = [{"role": "user", "content": instruction}]

prompt = pipeline.tokenizer.apply_chat_template(messages, tokenize=False)

prompt += response_prefix

teminators = [

pipeline.tokenizer.eos_token_id,

pipeline.tokenizer.convert_tokens_to_ids("###"),

]

result = pipeline(

prompt,

max_length=256,

num_return_sequences=1,

do_sample=False,

eos_token_id=teminators,

pad_token_id=pipeline.tokenizer.eos_token_id,

truncation=True,

)

response = response_prefix + result[0]["generated_text"][len(prompt) :].split("###")[0].rstrip()

return response

instruction = "Write a quicksort function in Python with type hints and a 'less_than' parameter for custom sorting criteria."

response_prefix = ""

print(respond(instruction, response_prefix))

```

Here is the expected output:

``````

Here's how you can implement a quicksort function in Python with type hints and a 'less_than' parameter for custom sorting criteria:

```python

from typing import TypeVar, Callable

T = TypeVar('T')

def quicksort(items: list[T], less_than: Callable[[T, T], bool] = lambda x, y: x < y) -> list[T]:

if len(items) <= 1:

return items

pivot = items[0]

less = [x for x in items[1:] if less_than(x, pivot)]

greater = [x for x in items[1:] if not less_than(x, pivot)]

return quicksort(less, less_than) + [pivot] + quicksort(greater, less_than)

```

``````

### Bias, Risks, and Limitations

StarCoder2-15B-Instruct-v0.1 is primarily finetuned for Python code generation tasks that can be verified through execution, which may lead to certain biases and limitations. For example, the model might not adhere strictly to instructions that dictate the output format. In these situations, it's beneficial to provide a **response prefix** or a **one-shot example** to steer the model’s output. Additionally, the model may have limitations with other programming languages and out-of-domain coding tasks.

The model also inherits the bias, risks, and limitations from its base StarCoder2-15B model. For more information, please refer to the [StarCoder2-15B model card](https://huggingface.co/bigcode/starcoder2-15b).

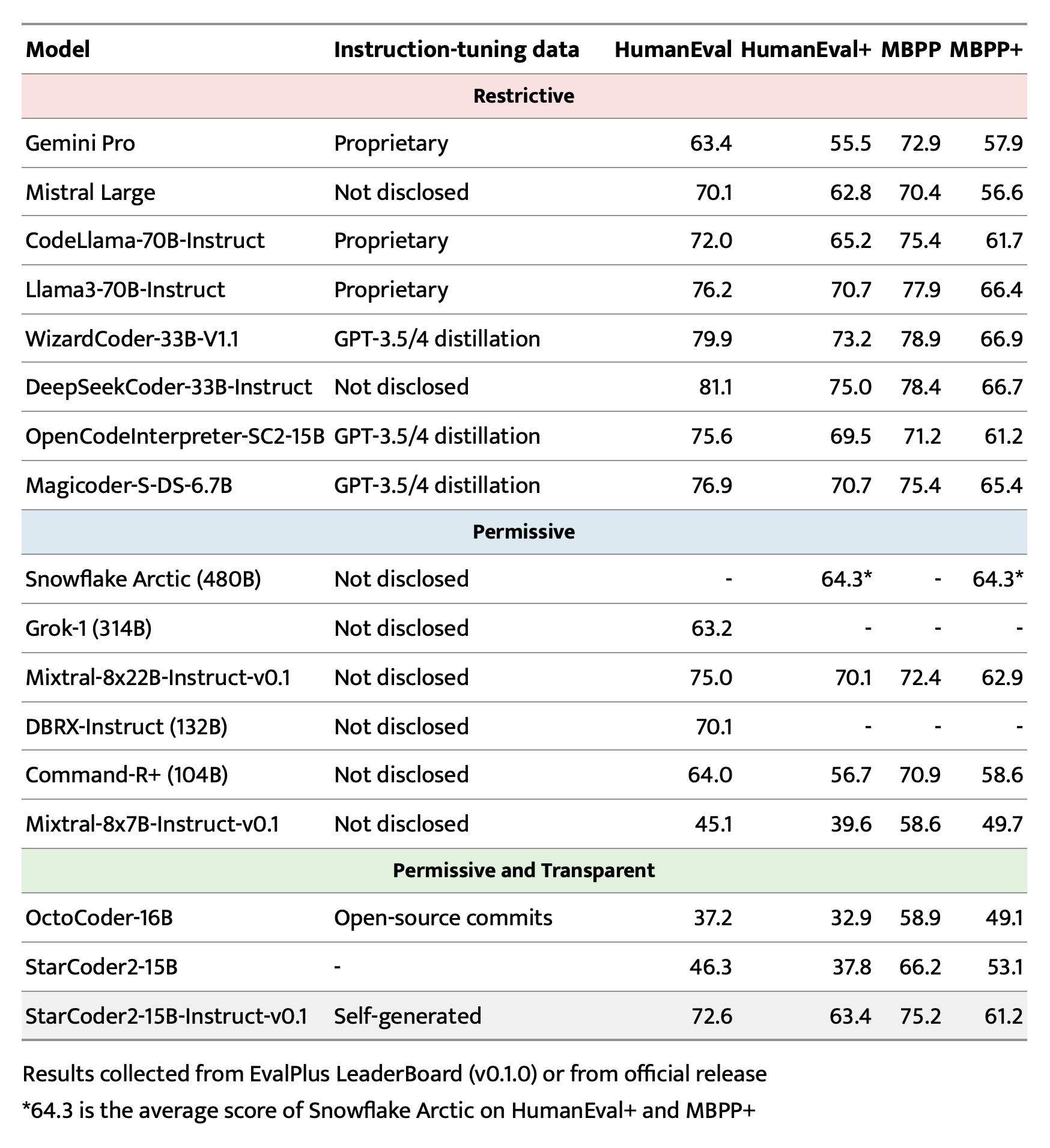

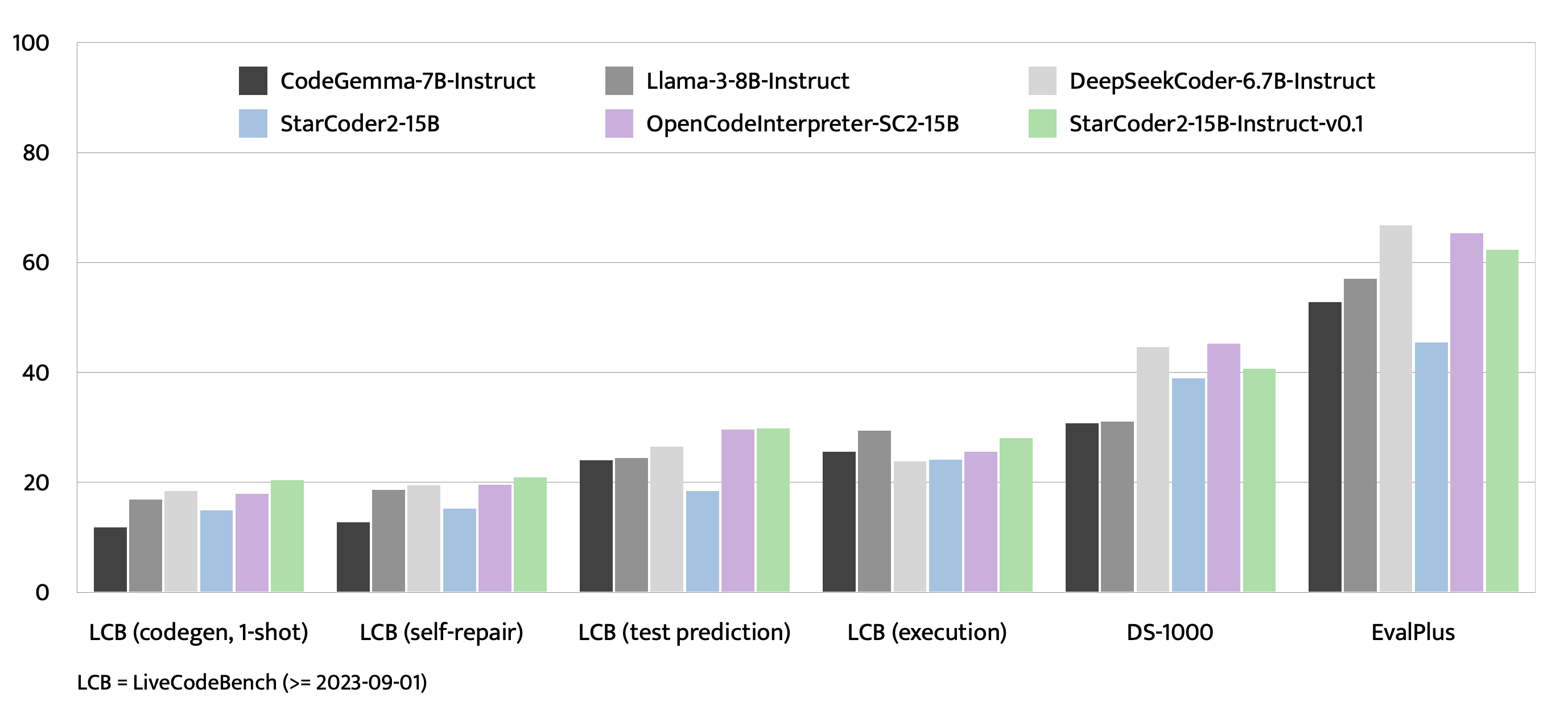

## Evaluation on EvalPlus, LiveCodeBench, and DS-1000

## Training Details

### Hyperparameters

- **Optimizer:** Adafactor

- **Learning rate:** 1e-5

- **Epoch:** 4

- **Batch size:** 64

- **Warmup ratio:** 0.05

- **Scheduler:** Linear

- **Sequence length:** 1280

- **Dropout**: Not applied

### Hardware

1 x NVIDIA A100 80GB

## Resources

- **Model:** [bigcode/starCoder2-15b-instruct-v0.1](https://huggingface.co/bigcode/starcoder2-instruct-15b-v0.1)

- **Code:** [bigcode-project/starcoder2-self-align](https://github.com/bigcode-project/starcoder2-self-align)

- **Dataset:** [bigcode/self-oss-instruct-sc2-exec-filter-50k](https://huggingface.co/datasets/bigcode/self-oss-instruct-sc2-exec-filter-50k/)

<!-- original-model-card end -->

| {"license": "bigcode-openrail-m", "library_name": "transformers", "tags": ["code", "GGUF"], "datasets": ["bigcode/self-oss-instruct-sc2-exec-filter-50k"], "base_model": "bigcode/starcoder2-15b", "pipeline_tag": "text-generation", "quantized_by": "andrijdavid", "model-index": [{"name": "starcoder2-15b-instruct-v0.1", "results": [{"task": {"type": "text-generation"}, "dataset": {"name": "LiveCodeBench (code generation)", "type": "livecodebench-codegeneration"}, "metrics": [{"type": "pass@1", "value": 20.4, "verified": false}]}, {"task": {"type": "text-generation"}, "dataset": {"name": "LiveCodeBench (self repair)", "type": "livecodebench-selfrepair"}, "metrics": [{"type": "pass@1", "value": 20.9, "verified": false}]}, {"task": {"type": "text-generation"}, "dataset": {"name": "LiveCodeBench (test output prediction)", "type": "livecodebench-testoutputprediction"}, "metrics": [{"type": "pass@1", "value": 29.8, "verified": false}]}, {"task": {"type": "text-generation"}, "dataset": {"name": "LiveCodeBench (code execution)", "type": "livecodebench-codeexecution"}, "metrics": [{"type": "pass@1", "value": 28.1, "verified": false}]}, {"task": {"type": "text-generation"}, "dataset": {"name": "HumanEval", "type": "humaneval"}, "metrics": [{"type": "pass@1", "value": 72.6, "verified": false}]}, {"task": {"type": "text-generation"}, "dataset": {"name": "HumanEval+", "type": "humanevalplus"}, "metrics": [{"type": "pass@1", "value": 63.4, "verified": false}]}, {"task": {"type": "text-generation"}, "dataset": {"name": "MBPP", "type": "mbpp"}, "metrics": [{"type": "pass@1", "value": 75.2, "verified": false}]}, {"task": {"type": "text-generation"}, "dataset": {"name": "MBPP+", "type": "mbppplus"}, "metrics": [{"type": "pass@1", "value": 61.2, "verified": false}]}, {"task": {"type": "text-generation"}, "dataset": {"name": "DS-1000", "type": "ds-1000"}, "metrics": [{"type": "pass@1", "value": 40.6, "verified": false}]}]}]} | LiteLLMs/starcoder2-15b-instruct-v0.1-GGUF | null | [

"transformers",

"gguf",

"code",

"GGUF",

"text-generation",

"dataset:bigcode/self-oss-instruct-sc2-exec-filter-50k",

"base_model:bigcode/starcoder2-15b",

"license:bigcode-openrail-m",

"model-index",

"endpoints_compatible",

"region:us"

] | null | 2024-04-30T06:31:08+00:00 | [] | [] | TAGS

#transformers #gguf #code #GGUF #text-generation #dataset-bigcode/self-oss-instruct-sc2-exec-filter-50k #base_model-bigcode/starcoder2-15b #license-bigcode-openrail-m #model-index #endpoints_compatible #region-us

| # starcoder2-15b-instruct-v0.1-GGUF

- Original model: starcoder2-15b-instruct-v0.1

## Description

This repo contains GGUF format model files for starcoder2-15b-instruct-v0.1.

### About GGUF

GGUF is a new format introduced by the URL team on August 21st 2023. It is a replacement for GGML, which is no longer supported by URL.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* URL. This is the source project for GGUF, providing both a Command Line Interface (CLI) and a server option.

* text-generation-webui, Known as the most widely used web UI, this project boasts numerous features and powerful extensions, and supports GPU acceleration.

* Ollama Ollama is a lightweight and extensible framework designed for building and running language models locally. It features a simple API for creating, managing, and executing models, along with a library of pre-built models for use in various applications

* KoboldCpp, A comprehensive web UI offering GPU acceleration across all platforms and architectures, particularly renowned for storytelling.

* GPT4All, This is a free and open source GUI that runs locally, supporting Windows, Linux, and macOS with full GPU acceleration.

* LM Studio An intuitive and powerful local GUI for Windows and macOS (Silicon), featuring GPU acceleration.

* LoLLMS Web UI. A notable web UI with a variety of unique features, including a comprehensive model library for easy model selection.

* URL, An attractive, user-friendly character-based chat GUI for Windows and macOS (both Silicon and Intel), also offering GPU acceleration.

* llama-cpp-python, A Python library equipped with GPU acceleration, LangChain support, and an OpenAI-compatible API server.

* candle, A Rust-based ML framework focusing on performance, including GPU support, and designed for ease of use.

* ctransformers, A Python library featuring GPU acceleration, LangChain support, and an OpenAI-compatible AI server.

* localGPT An open-source initiative enabling private conversations with documents.

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weight. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw)

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This end up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K resulting in 5.5 bpw

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw.

</details>

## How to download GGUF files

Note for manual downloaders: You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single folder.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

* LM Studio

* LoLLMS Web UI

* URL

### In 'text-generation-webui'

Under Download Model, you can enter the model repo: LiteLLMs/starcoder2-15b-instruct-v0.1-GGUF and below it, a specific filename to download, such as: Q4_0/Q4_0-URL.

Then click Download.

### On the command line, including multiple files at once

I recommend using the 'huggingface-hub' Python library:

Then you can download any individual model file to the current directory, at high speed, with a command like this:

<details>

<summary>More advanced huggingface-cli download usage (click to read)</summary>

You can also download multiple files at once with a pattern:

For more documentation on downloading with 'huggingface-cli', please see: HF -> Hub Python Library -> Download files -> Download from the CLI.

To accelerate downloads on fast connections (1Gbit/s or higher), install 'hf_transfer':

And set environment variable 'HF_HUB_ENABLE_HF_TRANSFER' to '1':

Windows Command Line users: You can set the environment variable by running 'set HF_HUB_ENABLE_HF_TRANSFER=1' before the download command.

</details>

## Example 'URL' command

Make sure you are using 'URL' from commit d0cee0d or later.

Change '-ngl 32' to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change '-c 8192' to the desired sequence length. For extended sequence models - eg 8K, 16K, 32K - the necessary RoPE scaling parameters are read from the GGUF file and set by URL automatically. Note that longer sequence lengths require much more resources, so you may need to reduce this value.

If you want to have a chat-style conversation, replace the '-p <PROMPT>' argument with '-i -ins'

For other parameters and how to use them, please refer to the URL documentation

## How to run in 'text-generation-webui'

Further instructions can be found in the text-generation-webui documentation, here: text-generation-webui/docs/04 ‐ Model URL.

## How to run from Python code

You can use GGUF models from Python using the llama-cpp-python or ctransformers libraries. Note that at the time of writing (Nov 27th 2023), ctransformers has not been updated for some time and is not compatible with some recent models. Therefore I recommend you use llama-cpp-python.

### How to load this model in Python code, using llama-cpp-python

For full documentation, please see: llama-cpp-python docs.

#### First install the package

Run one of the following commands, according to your system:

#### Simple llama-cpp-python example code

## How to use with LangChain

Here are guides on using llama-cpp-python and ctransformers with LangChain:

* LangChain + llama-cpp-python

* LangChain + ctransformers

# Original model card: starcoder2-15b-instruct-v0.1

# StarCoder2-Instruct: Fully Transparent and Permissive Self-Alignment for Code Generation

!Banner

## Model Summary

We introduce StarCoder2-15B-Instruct-v0.1, the very first entirely self-aligned code Large Language Model (LLM) trained with a fully permissive and transparent pipeline. Our open-source pipeline uses StarCoder2-15B to generate thousands of instruction-response pairs, which are then used to fine-tune StarCoder-15B itself without any human annotations or distilled data from huge and proprietary LLMs.

- Model: bigcode/starcoder2-15b-instruct-v0.1

- Code: bigcode-project/starcoder2-self-align

- Dataset: bigcode/self-oss-instruct-sc2-exec-filter-50k

- Authors:

Yuxiang Wei,

Federico Cassano,

Jiawei Liu,

Yifeng Ding,

Naman Jain,

Harm de Vries,

Leandro von Werra,

Arjun Guha,

Lingming Zhang.

!self-alignment pipeline

## Use

### Intended use

The model is designed to respond to coding-related instructions in a single turn. Instructions in other styles may result in less accurate responses.

Here is an example to get started with the model using the transformers library:

Here is the expected output:

Here's how you can implement a quicksort function in Python with type hints and a 'less_than' parameter for custom sorting criteria:

### Bias, Risks, and Limitations

StarCoder2-15B-Instruct-v0.1 is primarily finetuned for Python code generation tasks that can be verified through execution, which may lead to certain biases and limitations. For example, the model might not adhere strictly to instructions that dictate the output format. In these situations, it's beneficial to provide a response prefix or a one-shot example to steer the model’s output. Additionally, the model may have limitations with other programming languages and out-of-domain coding tasks.

The model also inherits the bias, risks, and limitations from its base StarCoder2-15B model. For more information, please refer to the StarCoder2-15B model card.

## Evaluation on EvalPlus, LiveCodeBench, and DS-1000

!EvalPlus

!LiveCodeBench and DS-1000

## Training Details

### Hyperparameters

- Optimizer: Adafactor

- Learning rate: 1e-5

- Epoch: 4

- Batch size: 64

- Warmup ratio: 0.05

- Scheduler: Linear

- Sequence length: 1280

- Dropout: Not applied

### Hardware

1 x NVIDIA A100 80GB

## Resources

- Model: bigcode/starCoder2-15b-instruct-v0.1

- Code: bigcode-project/starcoder2-self-align

- Dataset: bigcode/self-oss-instruct-sc2-exec-filter-50k

| [

"# starcoder2-15b-instruct-v0.1-GGUF\n- Original model: starcoder2-15b-instruct-v0.1",

"## Description\n\nThis repo contains GGUF format model files for starcoder2-15b-instruct-v0.1.",