pipeline_tag

stringclasses 48

values | library_name

stringclasses 198

values | text

stringlengths 1

900k

| metadata

stringlengths 2

438k

| id

stringlengths 5

122

| last_modified

null | tags

listlengths 1

1.84k

| sha

null | created_at

stringlengths 25

25

| arxiv

listlengths 0

201

| languages

listlengths 0

1.83k

| tags_str

stringlengths 17

9.34k

| text_str

stringlengths 0

389k

| text_lists

listlengths 0

722

| processed_texts

listlengths 1

723

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

text-generation

|

transformers

|

<p align="center">

<img src="https://cdn-uploads.huggingface.co/production/uploads/641b435ba5f876fe30c5ae0a/Ds-Nf-6VvLdpUx_l0Yiu_.png" alt="" style="width: 95%; max-height: 750px;">

</p>

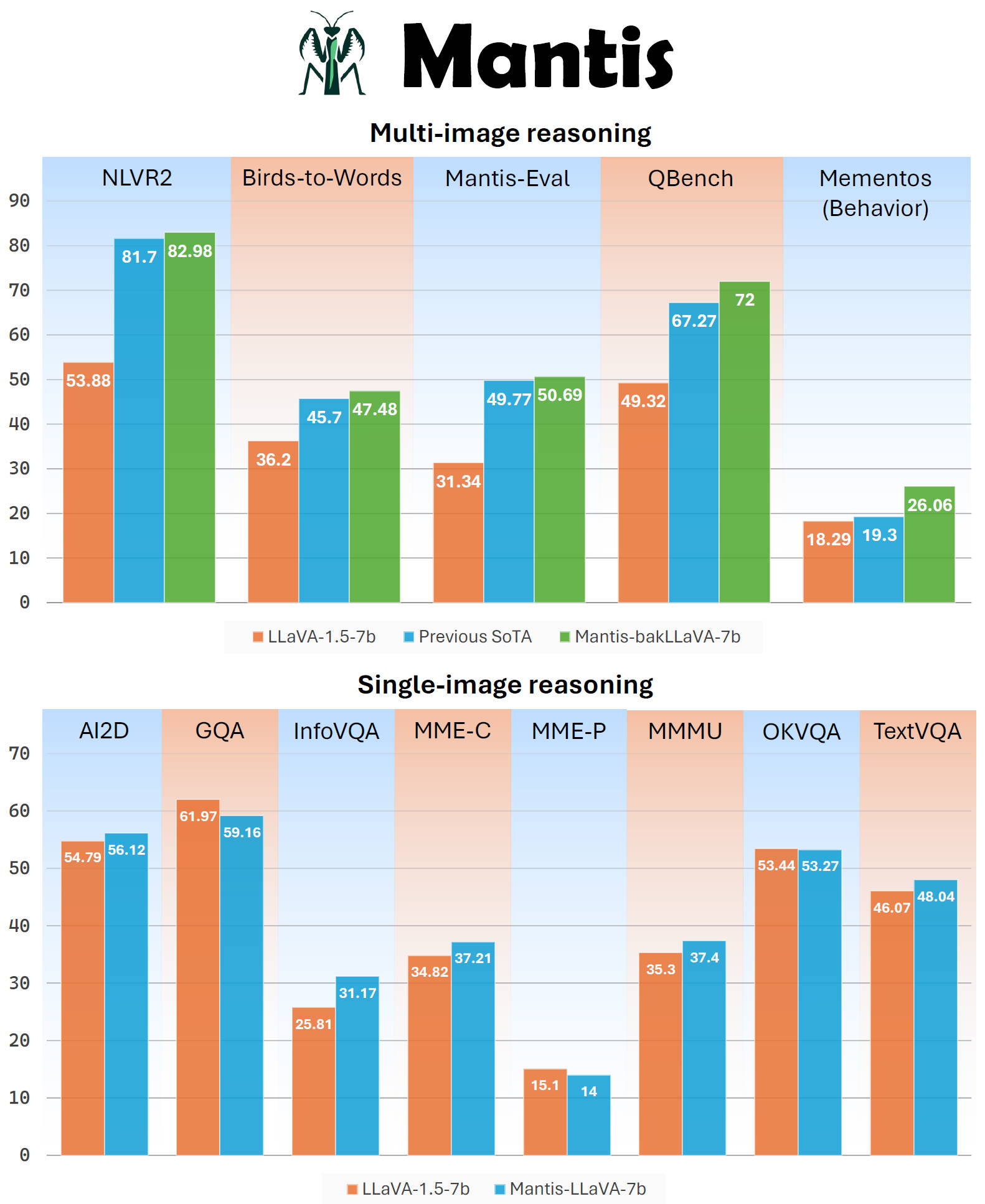

## Metrics.

<p align="center">

<img src="https://cdn-uploads.huggingface.co/production/uploads/641b435ba5f876fe30c5ae0a/clMqtJvaKZQ3y4sCdxHNC.png" alt="" style="width: 95%; max-height: 750px;">

</p>

<p align="center">

<img src="https://cdn-uploads.huggingface.co/production/uploads/641b435ba5f876fe30c5ae0a/jd63fRtz2fCs9AxYKTsaP.png" alt="" style="width: 95%; max-height: 750px;">

</p>

```

interrupted execution no TrainOutput

```

## Take dataset.

```

hiyouga/glaive-function-calling-v2-sharegpt

```

## Dataset format gemma fine tune.

```

NickyNicky/function-calling_chatml_gemma_v1

```

## colab examples.

```

https://colab.research.google.com/drive/1an2D2C3VNs32UV9kWlXEPJjio0uJN6nW?usp=sharing

```

|

{"language": ["en"], "license": "apache-2.0", "library_name": "transformers", "datasets": ["hiyouga/glaive-function-calling-v2-sharegpt", "NickyNicky/function-calling_chatml_gemma_v1"], "model": ["google/gemma-1.1-2b-it"], "widget": [{"text": "<bos><start_of_turn>system\nYou are a helpful AI assistant.<end_of_turn>\n<start_of_turn>user\n{question}<end_of_turn>\n<start_of_turn>model"}]}

|

NickyNicky/gemma-1.1-2b-it_oasst_format_chatML_unsloth_V1_function_calling_V2

| null |

[

"transformers",

"safetensors",

"gemma",

"text-generation",

"conversational",

"en",

"dataset:hiyouga/glaive-function-calling-v2-sharegpt",

"dataset:NickyNicky/function-calling_chatml_gemma_v1",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null |

2024-04-13T18:28:49+00:00

|

[] |

[

"en"

] |

TAGS

#transformers #safetensors #gemma #text-generation #conversational #en #dataset-hiyouga/glaive-function-calling-v2-sharegpt #dataset-NickyNicky/function-calling_chatml_gemma_v1 #license-apache-2.0 #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us

|

<p align="center">

<img src="URL alt="" style="width: 95%; max-height: 750px;">

</p>

## Metrics.

<p align="center">

<img src="URL alt="" style="width: 95%; max-height: 750px;">

</p>

<p align="center">

<img src="URL alt="" style="width: 95%; max-height: 750px;">

</p>

## Take dataset.

## Dataset format gemma fine tune.

## colab examples.

|

[

"## Metrics.\n\n<p align=\"center\">\n <img src=\"URL alt=\"\" style=\"width: 95%; max-height: 750px;\">\n</p>\n\n<p align=\"center\">\n <img src=\"URL alt=\"\" style=\"width: 95%; max-height: 750px;\">\n</p>",

"## Take dataset.",

"## Dataset format gemma fine tune.",

"## colab examples."

] |

[

"TAGS\n#transformers #safetensors #gemma #text-generation #conversational #en #dataset-hiyouga/glaive-function-calling-v2-sharegpt #dataset-NickyNicky/function-calling_chatml_gemma_v1 #license-apache-2.0 #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us \n",

"## Metrics.\n\n<p align=\"center\">\n <img src=\"URL alt=\"\" style=\"width: 95%; max-height: 750px;\">\n</p>\n\n<p align=\"center\">\n <img src=\"URL alt=\"\" style=\"width: 95%; max-height: 750px;\">\n</p>",

"## Take dataset.",

"## Dataset format gemma fine tune.",

"## colab examples."

] |

image-classification

|

transformers

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Psoriasis-Project-M-swinv2-base-patch4-window12-192-22k

This model is a fine-tuned version of [microsoft/swinv2-base-patch4-window12-192-22k](https://huggingface.co/microsoft/swinv2-base-patch4-window12-192-22k) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2385

- Accuracy: 0.9167

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| No log | 0.92 | 6 | 0.3476 | 0.9167 |

| 0.0528 | 2.0 | 13 | 0.2577 | 0.9167 |

| 0.0528 | 2.92 | 19 | 0.3270 | 0.9167 |

| 0.0535 | 4.0 | 26 | 0.3330 | 0.8542 |

| 0.0176 | 4.92 | 32 | 0.2745 | 0.8958 |

| 0.0176 | 6.0 | 39 | 0.3743 | 0.8958 |

| 0.0337 | 6.92 | 45 | 0.3473 | 0.8958 |

| 0.0066 | 8.0 | 52 | 0.2628 | 0.9167 |

| 0.0066 | 8.92 | 58 | 0.2392 | 0.9167 |

| 0.0049 | 9.23 | 60 | 0.2385 | 0.9167 |

### Framework versions

- Transformers 4.39.3

- Pytorch 2.1.2

- Datasets 2.18.0

- Tokenizers 0.15.2

|

{"license": "apache-2.0", "tags": ["generated_from_trainer"], "metrics": ["accuracy"], "base_model": "microsoft/swinv2-base-patch4-window12-192-22k", "model-index": [{"name": "Psoriasis-Project-M-swinv2-base-patch4-window12-192-22k", "results": []}]}

|

ahmedesmail16/Psoriasis-Project-M-swinv2-base-patch4-window12-192-22k

| null |

[

"transformers",

"tensorboard",

"safetensors",

"swinv2",

"image-classification",

"generated_from_trainer",

"base_model:microsoft/swinv2-base-patch4-window12-192-22k",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | null |

2024-04-13T18:31:04+00:00

|

[] |

[] |

TAGS

#transformers #tensorboard #safetensors #swinv2 #image-classification #generated_from_trainer #base_model-microsoft/swinv2-base-patch4-window12-192-22k #license-apache-2.0 #autotrain_compatible #endpoints_compatible #region-us

|

Psoriasis-Project-M-swinv2-base-patch4-window12-192-22k

=======================================================

This model is a fine-tuned version of microsoft/swinv2-base-patch4-window12-192-22k on an unknown dataset.

It achieves the following results on the evaluation set:

* Loss: 0.2385

* Accuracy: 0.9167

Model description

-----------------

More information needed

Intended uses & limitations

---------------------------

More information needed

Training and evaluation data

----------------------------

More information needed

Training procedure

------------------

### Training hyperparameters

The following hyperparameters were used during training:

* learning\_rate: 5e-05

* train\_batch\_size: 16

* eval\_batch\_size: 16

* seed: 42

* gradient\_accumulation\_steps: 4

* total\_train\_batch\_size: 64

* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

* lr\_scheduler\_type: linear

* lr\_scheduler\_warmup\_ratio: 0.1

* num\_epochs: 10

### Training results

### Framework versions

* Transformers 4.39.3

* Pytorch 2.1.2

* Datasets 2.18.0

* Tokenizers 0.15.2

|

[

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 5e-05\n* train\\_batch\\_size: 16\n* eval\\_batch\\_size: 16\n* seed: 42\n* gradient\\_accumulation\\_steps: 4\n* total\\_train\\_batch\\_size: 64\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* lr\\_scheduler\\_warmup\\_ratio: 0.1\n* num\\_epochs: 10",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.39.3\n* Pytorch 2.1.2\n* Datasets 2.18.0\n* Tokenizers 0.15.2"

] |

[

"TAGS\n#transformers #tensorboard #safetensors #swinv2 #image-classification #generated_from_trainer #base_model-microsoft/swinv2-base-patch4-window12-192-22k #license-apache-2.0 #autotrain_compatible #endpoints_compatible #region-us \n",

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 5e-05\n* train\\_batch\\_size: 16\n* eval\\_batch\\_size: 16\n* seed: 42\n* gradient\\_accumulation\\_steps: 4\n* total\\_train\\_batch\\_size: 64\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* lr\\_scheduler\\_warmup\\_ratio: 0.1\n* num\\_epochs: 10",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.39.3\n* Pytorch 2.1.2\n* Datasets 2.18.0\n* Tokenizers 0.15.2"

] |

text2text-generation

|

transformers

|

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

|

{"library_name": "transformers", "tags": ["trl", "sft"]}

|

Rutts07/t5-ai-human-gen

| null |

[

"transformers",

"safetensors",

"t5",

"text2text-generation",

"trl",

"sft",

"arxiv:1910.09700",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null |

2024-04-13T18:31:35+00:00

|

[

"1910.09700"

] |

[] |

TAGS

#transformers #safetensors #t5 #text2text-generation #trl #sft #arxiv-1910.09700 #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us

|

# Model Card for Model ID

## Model Details

### Model Description

This is the model card of a transformers model that has been pushed on the Hub. This model card has been automatically generated.

- Developed by:

- Funded by [optional]:

- Shared by [optional]:

- Model type:

- Language(s) (NLP):

- License:

- Finetuned from model [optional]:

### Model Sources [optional]

- Repository:

- Paper [optional]:

- Demo [optional]:

## Uses

### Direct Use

### Downstream Use [optional]

### Out-of-Scope Use

## Bias, Risks, and Limitations

### Recommendations

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

## Training Details

### Training Data

### Training Procedure

#### Preprocessing [optional]

#### Training Hyperparameters

- Training regime:

#### Speeds, Sizes, Times [optional]

## Evaluation

### Testing Data, Factors & Metrics

#### Testing Data

#### Factors

#### Metrics

### Results

#### Summary

## Model Examination [optional]

## Environmental Impact

Carbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).

- Hardware Type:

- Hours used:

- Cloud Provider:

- Compute Region:

- Carbon Emitted:

## Technical Specifications [optional]

### Model Architecture and Objective

### Compute Infrastructure

#### Hardware

#### Software

[optional]

BibTeX:

APA:

## Glossary [optional]

## More Information [optional]

## Model Card Authors [optional]

## Model Card Contact

|

[

"# Model Card for Model ID",

"## Model Details",

"### Model Description\n\n\n\nThis is the model card of a transformers model that has been pushed on the Hub. This model card has been automatically generated.\n\n- Developed by: \n- Funded by [optional]: \n- Shared by [optional]: \n- Model type: \n- Language(s) (NLP): \n- License: \n- Finetuned from model [optional]:",

"### Model Sources [optional]\n\n\n\n- Repository: \n- Paper [optional]: \n- Demo [optional]:",

"## Uses",

"### Direct Use",

"### Downstream Use [optional]",

"### Out-of-Scope Use",

"## Bias, Risks, and Limitations",

"### Recommendations\n\n\n\nUsers (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.",

"## How to Get Started with the Model\n\nUse the code below to get started with the model.",

"## Training Details",

"### Training Data",

"### Training Procedure",

"#### Preprocessing [optional]",

"#### Training Hyperparameters\n\n- Training regime:",

"#### Speeds, Sizes, Times [optional]",

"## Evaluation",

"### Testing Data, Factors & Metrics",

"#### Testing Data",

"#### Factors",

"#### Metrics",

"### Results",

"#### Summary",

"## Model Examination [optional]",

"## Environmental Impact\n\n\n\nCarbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).\n\n- Hardware Type: \n- Hours used: \n- Cloud Provider: \n- Compute Region: \n- Carbon Emitted:",

"## Technical Specifications [optional]",

"### Model Architecture and Objective",

"### Compute Infrastructure",

"#### Hardware",

"#### Software\n\n\n\n[optional]\n\n\n\nBibTeX:\n\n\n\nAPA:",

"## Glossary [optional]",

"## More Information [optional]",

"## Model Card Authors [optional]",

"## Model Card Contact"

] |

[

"TAGS\n#transformers #safetensors #t5 #text2text-generation #trl #sft #arxiv-1910.09700 #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us \n",

"# Model Card for Model ID",

"## Model Details",

"### Model Description\n\n\n\nThis is the model card of a transformers model that has been pushed on the Hub. This model card has been automatically generated.\n\n- Developed by: \n- Funded by [optional]: \n- Shared by [optional]: \n- Model type: \n- Language(s) (NLP): \n- License: \n- Finetuned from model [optional]:",

"### Model Sources [optional]\n\n\n\n- Repository: \n- Paper [optional]: \n- Demo [optional]:",

"## Uses",

"### Direct Use",

"### Downstream Use [optional]",

"### Out-of-Scope Use",

"## Bias, Risks, and Limitations",

"### Recommendations\n\n\n\nUsers (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.",

"## How to Get Started with the Model\n\nUse the code below to get started with the model.",

"## Training Details",

"### Training Data",

"### Training Procedure",

"#### Preprocessing [optional]",

"#### Training Hyperparameters\n\n- Training regime:",

"#### Speeds, Sizes, Times [optional]",

"## Evaluation",

"### Testing Data, Factors & Metrics",

"#### Testing Data",

"#### Factors",

"#### Metrics",

"### Results",

"#### Summary",

"## Model Examination [optional]",

"## Environmental Impact\n\n\n\nCarbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).\n\n- Hardware Type: \n- Hours used: \n- Cloud Provider: \n- Compute Region: \n- Carbon Emitted:",

"## Technical Specifications [optional]",

"### Model Architecture and Objective",

"### Compute Infrastructure",

"#### Hardware",

"#### Software\n\n\n\n[optional]\n\n\n\nBibTeX:\n\n\n\nAPA:",

"## Glossary [optional]",

"## More Information [optional]",

"## Model Card Authors [optional]",

"## Model Card Contact"

] |

text-to-image

| null |

# LoRA model of Bianca Eleanor/エレオノール・ビアンカ (Maou Gakuin no Futekigousha)

## What Is This?

This is the LoRA model of waifu Bianca Eleanor/エレオノール・ビアンカ (Maou Gakuin no Futekigousha).

## How Is It Trained?

* This model is trained with [kohya-ss/sd-scripts](https://github.com/kohya-ss/sd-scripts), and the test images are generated with [a1111's webui](AUTOMATIC1111/stable-diffusion-webui) and [API sdk](https://github.com/mix1009/sdwebuiapi).

* The [auto-training framework](https://github.com/deepghs/cyberharem) is maintained by [DeepGHS Team](https://huggingface.co/deepghs).

The architecture of base model is is `SD1.5`.

* Dataset used for training is the `stage3-p480-1200` in [CyberHarem/bianca_eleanor_maougakuinnofutekigousha](https://huggingface.co/datasets/CyberHarem/bianca_eleanor_maougakuinnofutekigousha), which contains 261 images.

* The images in the dataset is auto-cropped from anime videos, more images for other waifus in the same anime can be found in [BangumiBase/maougakuinnofutekigousha](https://huggingface.co/datasets/BangumiBase/maougakuinnofutekigousha)

* **Trigger word is `bianca_eleanor_maougakuinnofutekigousha`.**

* **Trigger word of anime style is is `anime_style`.**

* Pruned core tags for this waifu are `long hair, black hair, braid, purple eyes, breasts, hair between eyes, purple hair, large breasts`. You can add them to the prompt when some features of waifu (e.g. hair color) are not stable.

* For more details in training, you can take a look at [training configuration file](https://huggingface.co/CyberHarem/bianca_eleanor_maougakuinnofutekigousha/resolve/main/train.toml).

* For more details in LoRA, you can download it, and read the metadata with a1111's webui.

## How to Use It?

After downloading the safetensors files for the specified step, you need to use them like common LoRA.

* Recommended LoRA weight is 0.5-0.85.

* Recommended trigger word weight is 0.7-1.1.

For example, if you want to use the model from step 2040, you need to download [`2040/bianca_eleanor_maougakuinnofutekigousha.safetensors`](https://huggingface.co/CyberHarem/bianca_eleanor_maougakuinnofutekigousha/resolve/main/2040/bianca_eleanor_maougakuinnofutekigousha.safetensors) as LoRA. By using this model, you can generate images for the desired characters.

## Which Step Should I Use?

We selected 5 good steps for you to choose. The best one is step 2040.

780 images (744.76 MiB) were generated for auto-testing.

Here are the preview of the recommended steps:

| Step | Epoch | CCIP | AI Corrupt | Bikini Plus | Score | Download | pattern_0 | pattern_1 | pattern_2 | pattern_3 | portrait_0 | portrait_1 | portrait_2 | full_body_0 | full_body_1 | profile_0 | profile_1 | free_0 | free_1 | shorts | maid_0 | maid_1 | miko | yukata | suit | china | bikini_0 | bikini_1 | bikini_2 | sit | squat | kneel | jump | crossed_arms | angry | smile | cry | grin | n_lie_0 | n_lie_1 | n_stand_0 | n_stand_1 | n_stand_2 | n_sex_0 | n_sex_1 |

|-------:|--------:|:----------|:-------------|:--------------|:----------|:----------------------------------------------------------------------------------------------------------------------------------------------------|:------------------------------------------|:------------------------------------------|:------------------------------------------|:------------------------------------------|:--------------------------------------------|:--------------------------------------------|:--------------------------------------------|:----------------------------------------------|:----------------------------------------------|:------------------------------------------|:------------------------------------------|:------------------------------------|:------------------------------------|:------------------------------------|:------------------------------------|:------------------------------------|:--------------------------------|:------------------------------------|:--------------------------------|:----------------------------------|:----------------------------------------|:----------------------------------------|:----------------------------------------|:------------------------------|:----------------------------------|:----------------------------------|:--------------------------------|:------------------------------------------------|:----------------------------------|:----------------------------------|:------------------------------|:--------------------------------|:--------------------------------------|:--------------------------------------|:------------------------------------------|:------------------------------------------|:------------------------------------------|:--------------------------------------|:--------------------------------------|

| 2040 | 51 | 0.866 | 0.989 | 0.832 | **0.772** | [Download](https://huggingface.co/CyberHarem/bianca_eleanor_maougakuinnofutekigousha/resolve/main/2040/bianca_eleanor_maougakuinnofutekigousha.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

| 2160 | 54 | 0.863 | 0.992 | 0.831 | 0.766 | [Download](https://huggingface.co/CyberHarem/bianca_eleanor_maougakuinnofutekigousha/resolve/main/2160/bianca_eleanor_maougakuinnofutekigousha.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

| 1560 | 39 | 0.817 | **0.992** | 0.830 | 0.703 | [Download](https://huggingface.co/CyberHarem/bianca_eleanor_maougakuinnofutekigousha/resolve/main/1560/bianca_eleanor_maougakuinnofutekigousha.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

| 2280 | 57 | **0.866** | 0.986 | 0.810 | 0.684 | [Download](https://huggingface.co/CyberHarem/bianca_eleanor_maougakuinnofutekigousha/resolve/main/2280/bianca_eleanor_maougakuinnofutekigousha.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

| 1320 | 33 | 0.784 | 0.991 | **0.837** | 0.674 | [Download](https://huggingface.co/CyberHarem/bianca_eleanor_maougakuinnofutekigousha/resolve/main/1320/bianca_eleanor_maougakuinnofutekigousha.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

## Anything Else?

Because the automation of LoRA training always annoys some people. So for the following groups, it is not recommended to use this model and we express regret:

1. Individuals who cannot tolerate any deviations from the original character design, even in the slightest detail.

2. Individuals who are facing the application scenarios with high demands for accuracy in recreating character outfits.

3. Individuals who cannot accept the potential randomness in AI-generated images based on the Stable Diffusion algorithm.

4. Individuals who are not comfortable with the fully automated process of training character models using LoRA, or those who believe that training character models must be done purely through manual operations to avoid disrespecting the characters.

5. Individuals who finds the generated image content offensive to their values.

## All Steps

We uploaded the files in all steps. you can check the images, metrics and download them in the following links:

* [Steps From 1320 to 2400](all/0.md)

* [Steps From 120 to 1200](all/1.md)

|

{"license": "mit", "tags": ["art", "not-for-all-audiences"], "datasets": ["CyberHarem/bianca_eleanor_maougakuinnofutekigousha", "BangumiBase/maougakuinnofutekigousha"], "pipeline_tag": "text-to-image"}

|

CyberHarem/bianca_eleanor_maougakuinnofutekigousha

| null |

[

"art",

"not-for-all-audiences",

"text-to-image",

"dataset:CyberHarem/bianca_eleanor_maougakuinnofutekigousha",

"dataset:BangumiBase/maougakuinnofutekigousha",

"license:mit",

"region:us"

] | null |

2024-04-13T18:31:45+00:00

|

[] |

[] |

TAGS

#art #not-for-all-audiences #text-to-image #dataset-CyberHarem/bianca_eleanor_maougakuinnofutekigousha #dataset-BangumiBase/maougakuinnofutekigousha #license-mit #region-us

|

LoRA model of Bianca Eleanor/エレオノール・ビアンカ (Maou Gakuin no Futekigousha)

======================================================================

What Is This?

-------------

This is the LoRA model of waifu Bianca Eleanor/エレオノール・ビアンカ (Maou Gakuin no Futekigousha).

How Is It Trained?

------------------

* This model is trained with kohya-ss/sd-scripts, and the test images are generated with a1111's webui and API sdk.

* The auto-training framework is maintained by DeepGHS Team.

The architecture of base model is is 'SD1.5'.

* Dataset used for training is the 'stage3-p480-1200' in CyberHarem/bianca\_eleanor\_maougakuinnofutekigousha, which contains 261 images.

* The images in the dataset is auto-cropped from anime videos, more images for other waifus in the same anime can be found in BangumiBase/maougakuinnofutekigousha

* Trigger word is 'bianca\_eleanor\_maougakuinnofutekigousha'.

* Trigger word of anime style is is 'anime\_style'.

* Pruned core tags for this waifu are 'long hair, black hair, braid, purple eyes, breasts, hair between eyes, purple hair, large breasts'. You can add them to the prompt when some features of waifu (e.g. hair color) are not stable.

* For more details in training, you can take a look at training configuration file.

* For more details in LoRA, you can download it, and read the metadata with a1111's webui.

How to Use It?

--------------

After downloading the safetensors files for the specified step, you need to use them like common LoRA.

* Recommended LoRA weight is 0.5-0.85.

* Recommended trigger word weight is 0.7-1.1.

For example, if you want to use the model from step 2040, you need to download '2040/bianca\_eleanor\_maougakuinnofutekigousha.safetensors' as LoRA. By using this model, you can generate images for the desired characters.

Which Step Should I Use?

------------------------

We selected 5 good steps for you to choose. The best one is step 2040.

780 images (744.76 MiB) were generated for auto-testing.

!Metrics Plot

Here are the preview of the recommended steps:

Anything Else?

--------------

Because the automation of LoRA training always annoys some people. So for the following groups, it is not recommended to use this model and we express regret:

1. Individuals who cannot tolerate any deviations from the original character design, even in the slightest detail.

2. Individuals who are facing the application scenarios with high demands for accuracy in recreating character outfits.

3. Individuals who cannot accept the potential randomness in AI-generated images based on the Stable Diffusion algorithm.

4. Individuals who are not comfortable with the fully automated process of training character models using LoRA, or those who believe that training character models must be done purely through manual operations to avoid disrespecting the characters.

5. Individuals who finds the generated image content offensive to their values.

All Steps

---------

We uploaded the files in all steps. you can check the images, metrics and download them in the following links:

* Steps From 1320 to 2400

* Steps From 120 to 1200

|

[] |

[

"TAGS\n#art #not-for-all-audiences #text-to-image #dataset-CyberHarem/bianca_eleanor_maougakuinnofutekigousha #dataset-BangumiBase/maougakuinnofutekigousha #license-mit #region-us \n"

] |

null | null |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# wav2vecvlora_ctc_zero_infinity

This model is a fine-tuned version of [facebook/wav2vec2-base-960h](https://huggingface.co/facebook/wav2vec2-base-960h) on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 7

### Training results

### Framework versions

- Transformers 4.38.2

- Pytorch 2.2.1+cu121

- Datasets 2.18.0

- Tokenizers 0.15.2

|

{"license": "apache-2.0", "tags": ["generated_from_trainer"], "base_model": "facebook/wav2vec2-base-960h", "model-index": [{"name": "wav2vecvlora_ctc_zero_infinity", "results": []}]}

|

charris/wav2vecvlora_ctc_zero_infinity

| null |

[

"tensorboard",

"safetensors",

"generated_from_trainer",

"base_model:facebook/wav2vec2-base-960h",

"license:apache-2.0",

"region:us"

] | null |

2024-04-13T18:32:48+00:00

|

[] |

[] |

TAGS

#tensorboard #safetensors #generated_from_trainer #base_model-facebook/wav2vec2-base-960h #license-apache-2.0 #region-us

|

# wav2vecvlora_ctc_zero_infinity

This model is a fine-tuned version of facebook/wav2vec2-base-960h on an unknown dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- num_epochs: 7

### Training results

### Framework versions

- Transformers 4.38.2

- Pytorch 2.2.1+cu121

- Datasets 2.18.0

- Tokenizers 0.15.2

|

[

"# wav2vecvlora_ctc_zero_infinity\n\nThis model is a fine-tuned version of facebook/wav2vec2-base-960h on an unknown dataset.",

"## Model description\n\nMore information needed",

"## Intended uses & limitations\n\nMore information needed",

"## Training and evaluation data\n\nMore information needed",

"## Training procedure",

"### Training hyperparameters\n\nThe following hyperparameters were used during training:\n- learning_rate: 0.0001\n- train_batch_size: 4\n- eval_batch_size: 8\n- seed: 42\n- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n- lr_scheduler_type: linear\n- lr_scheduler_warmup_steps: 500\n- num_epochs: 7",

"### Training results",

"### Framework versions\n\n- Transformers 4.38.2\n- Pytorch 2.2.1+cu121\n- Datasets 2.18.0\n- Tokenizers 0.15.2"

] |

[

"TAGS\n#tensorboard #safetensors #generated_from_trainer #base_model-facebook/wav2vec2-base-960h #license-apache-2.0 #region-us \n",

"# wav2vecvlora_ctc_zero_infinity\n\nThis model is a fine-tuned version of facebook/wav2vec2-base-960h on an unknown dataset.",

"## Model description\n\nMore information needed",

"## Intended uses & limitations\n\nMore information needed",

"## Training and evaluation data\n\nMore information needed",

"## Training procedure",

"### Training hyperparameters\n\nThe following hyperparameters were used during training:\n- learning_rate: 0.0001\n- train_batch_size: 4\n- eval_batch_size: 8\n- seed: 42\n- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n- lr_scheduler_type: linear\n- lr_scheduler_warmup_steps: 500\n- num_epochs: 7",

"### Training results",

"### Framework versions\n\n- Transformers 4.38.2\n- Pytorch 2.2.1+cu121\n- Datasets 2.18.0\n- Tokenizers 0.15.2"

] |

token-classification

|

transformers

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-finetuned-ner

This model is a fine-tuned version of [](https://huggingface.co/) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1575

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 0.3277 | 1.0 | 679 | 0.1754 |

| 0.1793 | 2.0 | 1358 | 0.1697 |

| 0.1118 | 3.0 | 2037 | 0.1575 |

### Framework versions

- Transformers 4.38.2

- Pytorch 2.2.1+cu121

- Datasets 2.18.0

- Tokenizers 0.15.2

|

{"tags": ["generated_from_trainer"], "model-index": [{"name": "bert-finetuned-ner", "results": []}]}

|

Kkkelsey/bert-finetuned-ner

| null |

[

"transformers",

"tensorboard",

"safetensors",

"gpt2",

"token-classification",

"generated_from_trainer",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null |

2024-04-13T18:33:03+00:00

|

[] |

[] |

TAGS

#transformers #tensorboard #safetensors #gpt2 #token-classification #generated_from_trainer #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us

|

bert-finetuned-ner

==================

This model is a fine-tuned version of [](URL on an unknown dataset.

It achieves the following results on the evaluation set:

* Loss: 0.1575

Model description

-----------------

More information needed

Intended uses & limitations

---------------------------

More information needed

Training and evaluation data

----------------------------

More information needed

Training procedure

------------------

### Training hyperparameters

The following hyperparameters were used during training:

* learning\_rate: 2e-05

* train\_batch\_size: 8

* eval\_batch\_size: 8

* seed: 42

* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

* lr\_scheduler\_type: linear

* num\_epochs: 3

### Training results

### Framework versions

* Transformers 4.38.2

* Pytorch 2.2.1+cu121

* Datasets 2.18.0

* Tokenizers 0.15.2

|

[

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 2e-05\n* train\\_batch\\_size: 8\n* eval\\_batch\\_size: 8\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 3",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.38.2\n* Pytorch 2.2.1+cu121\n* Datasets 2.18.0\n* Tokenizers 0.15.2"

] |

[

"TAGS\n#transformers #tensorboard #safetensors #gpt2 #token-classification #generated_from_trainer #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us \n",

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 2e-05\n* train\\_batch\\_size: 8\n* eval\\_batch\\_size: 8\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 3",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.38.2\n* Pytorch 2.2.1+cu121\n* Datasets 2.18.0\n* Tokenizers 0.15.2"

] |

text2text-generation

|

transformers

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# new_output

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0280

- Rouge1: 0.2245

- Rouge2: 0.1862

- Rougel: 0.2241

- Rougelsum: 0.2241

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 10

- eval_batch_size: 10

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:------:|:------:|:---------:|

| 0.0628 | 1.0 | 17768 | 0.0338 | 0.2237 | 0.1848 | 0.2232 | 0.2232 |

| 0.0494 | 2.0 | 35536 | 0.0280 | 0.2245 | 0.1862 | 0.2241 | 0.2241 |

### Framework versions

- Transformers 4.39.3

- Pytorch 2.2.2+cu121

- Datasets 2.18.0

- Tokenizers 0.15.2

|

{"license": "apache-2.0", "tags": ["generated_from_trainer"], "metrics": ["rouge"], "base_model": "t5-small", "model-index": [{"name": "new_output", "results": []}]}

|

aprab/new_output

| null |

[

"transformers",

"safetensors",

"t5",

"text2text-generation",

"generated_from_trainer",

"base_model:t5-small",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null |

2024-04-13T18:34:19+00:00

|

[] |

[] |

TAGS

#transformers #safetensors #t5 #text2text-generation #generated_from_trainer #base_model-t5-small #license-apache-2.0 #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us

|

new\_output

===========

This model is a fine-tuned version of t5-small on an unknown dataset.

It achieves the following results on the evaluation set:

* Loss: 0.0280

* Rouge1: 0.2245

* Rouge2: 0.1862

* Rougel: 0.2241

* Rougelsum: 0.2241

Model description

-----------------

More information needed

Intended uses & limitations

---------------------------

More information needed

Training and evaluation data

----------------------------

More information needed

Training procedure

------------------

### Training hyperparameters

The following hyperparameters were used during training:

* learning\_rate: 2e-05

* train\_batch\_size: 10

* eval\_batch\_size: 10

* seed: 42

* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

* lr\_scheduler\_type: linear

* num\_epochs: 2

* mixed\_precision\_training: Native AMP

### Training results

### Framework versions

* Transformers 4.39.3

* Pytorch 2.2.2+cu121

* Datasets 2.18.0

* Tokenizers 0.15.2

|

[

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 2e-05\n* train\\_batch\\_size: 10\n* eval\\_batch\\_size: 10\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 2\n* mixed\\_precision\\_training: Native AMP",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.39.3\n* Pytorch 2.2.2+cu121\n* Datasets 2.18.0\n* Tokenizers 0.15.2"

] |

[

"TAGS\n#transformers #safetensors #t5 #text2text-generation #generated_from_trainer #base_model-t5-small #license-apache-2.0 #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us \n",

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 2e-05\n* train\\_batch\\_size: 10\n* eval\\_batch\\_size: 10\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 2\n* mixed\\_precision\\_training: Native AMP",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.39.3\n* Pytorch 2.2.2+cu121\n* Datasets 2.18.0\n* Tokenizers 0.15.2"

] |

null |

peft

|

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# organc-deit-base-finetuned

This model is a fine-tuned version of [facebook/deit-base-patch16-224](https://huggingface.co/facebook/deit-base-patch16-224) on the medmnist-v2 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2795

- Accuracy: 0.9240

- Precision: 0.9199

- Recall: 0.9123

- F1: 0.9154

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.005

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Precision | Recall | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:---------:|:------:|:------:|

| 0.7947 | 1.0 | 203 | 0.3123 | 0.8976 | 0.9090 | 0.8450 | 0.8632 |

| 0.6703 | 2.0 | 406 | 0.1400 | 0.9607 | 0.9590 | 0.9543 | 0.9535 |

| 0.5941 | 3.0 | 609 | 0.1182 | 0.9699 | 0.9647 | 0.9681 | 0.9649 |

| 0.5837 | 4.0 | 813 | 0.1016 | 0.9678 | 0.9558 | 0.9586 | 0.9551 |

| 0.5193 | 5.0 | 1016 | 0.0800 | 0.9791 | 0.9701 | 0.9684 | 0.9675 |

| 0.5513 | 6.0 | 1219 | 0.0579 | 0.9862 | 0.9831 | 0.9855 | 0.9840 |

| 0.4343 | 7.0 | 1422 | 0.0775 | 0.9833 | 0.9858 | 0.9818 | 0.9835 |

| 0.3942 | 8.0 | 1626 | 0.0782 | 0.9833 | 0.9813 | 0.9827 | 0.9817 |

| 0.2971 | 9.0 | 1829 | 0.0839 | 0.9862 | 0.9884 | 0.9866 | 0.9873 |

| 0.3242 | 9.99 | 2030 | 0.0745 | 0.9870 | 0.9877 | 0.9863 | 0.9868 |

### Framework versions

- PEFT 0.10.0

- Transformers 4.38.2

- Pytorch 2.2.1+cu121

- Datasets 2.18.0

- Tokenizers 0.15.2

|

{"license": "apache-2.0", "library_name": "peft", "tags": ["generated_from_trainer"], "datasets": ["medmnist-v2"], "metrics": ["accuracy", "precision", "recall", "f1"], "base_model": "facebook/deit-base-patch16-224", "model-index": [{"name": "organc-deit-base-finetuned", "results": []}]}

|

selmamalak/organc-deit-base-finetuned

| null |

[

"peft",

"safetensors",

"generated_from_trainer",

"dataset:medmnist-v2",

"base_model:facebook/deit-base-patch16-224",

"license:apache-2.0",

"region:us"

] | null |

2024-04-13T18:36:52+00:00

|

[] |

[] |

TAGS

#peft #safetensors #generated_from_trainer #dataset-medmnist-v2 #base_model-facebook/deit-base-patch16-224 #license-apache-2.0 #region-us

|

organc-deit-base-finetuned

==========================

This model is a fine-tuned version of facebook/deit-base-patch16-224 on the medmnist-v2 dataset.

It achieves the following results on the evaluation set:

* Loss: 0.2795

* Accuracy: 0.9240

* Precision: 0.9199

* Recall: 0.9123

* F1: 0.9154

Model description

-----------------

More information needed

Intended uses & limitations

---------------------------

More information needed

Training and evaluation data

----------------------------

More information needed

Training procedure

------------------

### Training hyperparameters

The following hyperparameters were used during training:

* learning\_rate: 0.005

* train\_batch\_size: 16

* eval\_batch\_size: 16

* seed: 42

* gradient\_accumulation\_steps: 4

* total\_train\_batch\_size: 64

* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

* lr\_scheduler\_type: linear

* num\_epochs: 10

* mixed\_precision\_training: Native AMP

### Training results

### Framework versions

* PEFT 0.10.0

* Transformers 4.38.2

* Pytorch 2.2.1+cu121

* Datasets 2.18.0

* Tokenizers 0.15.2

|

[

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 0.005\n* train\\_batch\\_size: 16\n* eval\\_batch\\_size: 16\n* seed: 42\n* gradient\\_accumulation\\_steps: 4\n* total\\_train\\_batch\\_size: 64\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 10\n* mixed\\_precision\\_training: Native AMP",

"### Training results",

"### Framework versions\n\n\n* PEFT 0.10.0\n* Transformers 4.38.2\n* Pytorch 2.2.1+cu121\n* Datasets 2.18.0\n* Tokenizers 0.15.2"

] |

[

"TAGS\n#peft #safetensors #generated_from_trainer #dataset-medmnist-v2 #base_model-facebook/deit-base-patch16-224 #license-apache-2.0 #region-us \n",

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 0.005\n* train\\_batch\\_size: 16\n* eval\\_batch\\_size: 16\n* seed: 42\n* gradient\\_accumulation\\_steps: 4\n* total\\_train\\_batch\\_size: 64\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 10\n* mixed\\_precision\\_training: Native AMP",

"### Training results",

"### Framework versions\n\n\n* PEFT 0.10.0\n* Transformers 4.38.2\n* Pytorch 2.2.1+cu121\n* Datasets 2.18.0\n* Tokenizers 0.15.2"

] |

summarization

|

peft

|

# Model Card

There are facebook/bard-large-cnn LoRA finetuned model for dialogue summarization.

This LoRA weights trained on [dialogue sum augmented dataset](https://huggingface.co/datasets/doublecringe123/dialoguesum-npc-dialoguesum-stemmed-augmented)

## Model Details

```

cfg.lora_params = {

'target_modules':['out_proj', 'v_proj', 'q_proj', 'cf1', 'cf2'],

'r':8,

'lora_alpha': 16,

}

lora_conf = LoraConfig(

**cfg.lora_params,

lora_dropout = 0.05,

bias = 'none',

task_type = TaskType.CAUSAL_LM,

init_lora_weights = 'gaussian',

)

```

### Model Description

This is the model card of a 🤗 transformers model that has been pushed on the Hub by [doublecringe](https://huggingface.co/doublecringe123)

- **Developed by:** [doublecringe](https://huggingface.co/doublecringe123)

- **Model type:** LoRA (PEFT)

- **Language(s) (NLP):** English

- **Finetuned from model [optional]:** facebook\bart-large-cnn

## Uses

There are where this LoRA model can be usefull:

- Summarize Dialogues

- Summarize the News and etc.

### Direct Use

Model was developed for use it for summarize dialogues to chatbots to make them dont forget the meaning from first messages

## How to Get Started with the Model

There model inference way:

```

from peft import PeftConfig, PeftModel

from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

import torch

from torch.nn import DataParallel

class SumModel():

def __init__(cfg, model_preset, **generation_parameters)->None:

# requires transformers and peft installed libs

cfg.model_preset = model_preset

cfg.generation_params = generation_parameters

config = PeftConfig.from_pretrained(cfg.model_preset)

model = AutoModelForSeq2SeqLM.from_pretrained(config.base_model_name_or_path)

cfg.tokenizer = AutoTokenizer.from_pretrained(config.base_model_name_or_path)

cfg.lora_model = PeftModel.from_pretrained(model, cfg.model_preset)

cfg.lora_model.print_trainable_parameters()

cfg.device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

cfg.lora_model.model = DataParallel(cfg.lora_model.model)

def __call__(self, text, **generation_params):

tokens = self.tokenizer(text, return_tensors = 'pt', truncation=True, padding=True).to(self.device)

if len(generation_params):

gen = self.lora_model.generate(**tokens, **generation_params)

else:

gen = self.lora_model.generate(**tokens, **self.generation_params)

return self.tokenizer.batch_decode(gen)

model = SumModel(model_preset = 'doublecringe123/bardt-large-cnn-dialoguesum-booksum-lora',

max_length = 96,

min_length = 26,

do_sample = True,

temperature = 0.9,

num_beams = 8,

repetition_penalty= 2.)

```

#### Training Hyperparameters

- fp16=True,

- learning rate = 2e-5,

- weights decay = .01,

- batch size = 8

#### Speeds, Sizes, Times [optional]

Model trained 18 hours - 12 epochs on kaggle notebook GPU A100 enviroment.

[First 6 epochs](https://www.kaggle.com/code/yannchikk/first-experience-in-peft-nlp-sum-model-lora?scriptVersionId=171845611)

[Last epochs until 12](https://www.kaggle.com/code/yannchikk/first-experience-in-peft-nlp-sum-model-lora?scriptVersionId=171944212)

### Results

There is revisions comparition on test dataset: [notebook](https://www.kaggle.com/code/yannchikk/first-experience-in-peft-nlp-sum-model-lora?scriptVersionId=172145140)

### Model Architecture and Objective

LoRA

#### Hardware

GPU A100

#### Software

Python, Transformes, PEFT

|

{"language": ["en"], "library_name": "peft", "datasets": ["doublecringe123/dialoguesum-npc-dialoguesum-stemmed-augmented"], "metrics": ["rouge"], "pipeline_tag": "summarization"}

|

doublecringe123/bardt-large-cnn-dialoguesum-booksum-lora

| null |

[

"peft",

"safetensors",

"summarization",

"en",

"dataset:doublecringe123/dialoguesum-npc-dialoguesum-stemmed-augmented",

"region:us"

] | null |

2024-04-13T18:37:00+00:00

|

[] |

[

"en"

] |

TAGS

#peft #safetensors #summarization #en #dataset-doublecringe123/dialoguesum-npc-dialoguesum-stemmed-augmented #region-us

|

# Model Card

There are facebook/bard-large-cnn LoRA finetuned model for dialogue summarization.

This LoRA weights trained on dialogue sum augmented dataset

## Model Details

### Model Description

This is the model card of a transformers model that has been pushed on the Hub by doublecringe

- Developed by: doublecringe

- Model type: LoRA (PEFT)

- Language(s) (NLP): English

- Finetuned from model [optional]: facebook\bart-large-cnn

## Uses

There are where this LoRA model can be usefull:

- Summarize Dialogues

- Summarize the News and etc.

### Direct Use

Model was developed for use it for summarize dialogues to chatbots to make them dont forget the meaning from first messages

## How to Get Started with the Model

There model inference way:

#### Training Hyperparameters

- fp16=True,

- learning rate = 2e-5,

- weights decay = .01,

- batch size = 8

#### Speeds, Sizes, Times [optional]

Model trained 18 hours - 12 epochs on kaggle notebook GPU A100 enviroment.

First 6 epochs

Last epochs until 12

### Results

There is revisions comparition on test dataset: notebook

### Model Architecture and Objective

LoRA

#### Hardware

GPU A100

#### Software

Python, Transformes, PEFT

|

[

"# Model Card\n\nThere are facebook/bard-large-cnn LoRA finetuned model for dialogue summarization. \nThis LoRA weights trained on dialogue sum augmented dataset",

"## Model Details",

"### Model Description\n\nThis is the model card of a transformers model that has been pushed on the Hub by doublecringe\n\n- Developed by: doublecringe\n- Model type: LoRA (PEFT)\n- Language(s) (NLP): English\n- Finetuned from model [optional]: facebook\\bart-large-cnn",

"## Uses\n\nThere are where this LoRA model can be usefull: \n- Summarize Dialogues\n- Summarize the News and etc.",

"### Direct Use\n\nModel was developed for use it for summarize dialogues to chatbots to make them dont forget the meaning from first messages",

"## How to Get Started with the Model\n\nThere model inference way:",

"#### Training Hyperparameters\n\n- fp16=True, \n- learning rate = 2e-5, \n- weights decay = .01,\n- batch size = 8",

"#### Speeds, Sizes, Times [optional]\n\nModel trained 18 hours - 12 epochs on kaggle notebook GPU A100 enviroment. \nFirst 6 epochs\nLast epochs until 12",

"### Results\n\nThere is revisions comparition on test dataset: notebook",

"### Model Architecture and Objective\n\nLoRA",

"#### Hardware\n\nGPU A100",

"#### Software\n\nPython, Transformes, PEFT"

] |

[

"TAGS\n#peft #safetensors #summarization #en #dataset-doublecringe123/dialoguesum-npc-dialoguesum-stemmed-augmented #region-us \n",

"# Model Card\n\nThere are facebook/bard-large-cnn LoRA finetuned model for dialogue summarization. \nThis LoRA weights trained on dialogue sum augmented dataset",

"## Model Details",

"### Model Description\n\nThis is the model card of a transformers model that has been pushed on the Hub by doublecringe\n\n- Developed by: doublecringe\n- Model type: LoRA (PEFT)\n- Language(s) (NLP): English\n- Finetuned from model [optional]: facebook\\bart-large-cnn",

"## Uses\n\nThere are where this LoRA model can be usefull: \n- Summarize Dialogues\n- Summarize the News and etc.",

"### Direct Use\n\nModel was developed for use it for summarize dialogues to chatbots to make them dont forget the meaning from first messages",

"## How to Get Started with the Model\n\nThere model inference way:",

"#### Training Hyperparameters\n\n- fp16=True, \n- learning rate = 2e-5, \n- weights decay = .01,\n- batch size = 8",

"#### Speeds, Sizes, Times [optional]\n\nModel trained 18 hours - 12 epochs on kaggle notebook GPU A100 enviroment. \nFirst 6 epochs\nLast epochs until 12",

"### Results\n\nThere is revisions comparition on test dataset: notebook",

"### Model Architecture and Objective\n\nLoRA",

"#### Hardware\n\nGPU A100",

"#### Software\n\nPython, Transformes, PEFT"

] |

text-to-image

| null |

# LoRA model of Rudewell Emilia/エミリア・ルードウェル (Maou Gakuin no Futekigousha)

## What Is This?

This is the LoRA model of waifu Rudewell Emilia/エミリア・ルードウェル (Maou Gakuin no Futekigousha).

## How Is It Trained?

* This model is trained with [kohya-ss/sd-scripts](https://github.com/kohya-ss/sd-scripts), and the test images are generated with [a1111's webui](AUTOMATIC1111/stable-diffusion-webui) and [API sdk](https://github.com/mix1009/sdwebuiapi).

* The [auto-training framework](https://github.com/deepghs/cyberharem) is maintained by [DeepGHS Team](https://huggingface.co/deepghs).

The architecture of base model is is `SD1.5`.

* Dataset used for training is the `stage3-p480-1200` in [CyberHarem/rudewell_emilia_maougakuinnofutekigousha](https://huggingface.co/datasets/CyberHarem/rudewell_emilia_maougakuinnofutekigousha), which contains 81 images.

* The images in the dataset is auto-cropped from anime videos, more images for other waifus in the same anime can be found in [BangumiBase/maougakuinnofutekigousha](https://huggingface.co/datasets/BangumiBase/maougakuinnofutekigousha)

* **Trigger word is `rudewell_emilia_maougakuinnofutekigousha`.**

* **Trigger word of anime style is is `anime_style`.**

* Pruned core tags for this waifu are `long hair, purple hair, purple eyes, hair between eyes, ponytail, pink eyes, asymmetrical hair`. You can add them to the prompt when some features of waifu (e.g. hair color) are not stable.

* For more details in training, you can take a look at [training configuration file](https://huggingface.co/CyberHarem/rudewell_emilia_maougakuinnofutekigousha/resolve/main/train.toml).

* For more details in LoRA, you can download it, and read the metadata with a1111's webui.

## How to Use It?

After downloading the safetensors files for the specified step, you need to use them like common LoRA.

* Recommended LoRA weight is 0.5-0.85.

* Recommended trigger word weight is 0.7-1.1.

For example, if you want to use the model from step 1152, you need to download [`1152/rudewell_emilia_maougakuinnofutekigousha.safetensors`](https://huggingface.co/CyberHarem/rudewell_emilia_maougakuinnofutekigousha/resolve/main/1152/rudewell_emilia_maougakuinnofutekigousha.safetensors) as LoRA. By using this model, you can generate images for the desired characters.

## Which Step Should I Use?

We selected 5 good steps for you to choose. The best one is step 1152.

972 images (917.47 MiB) were generated for auto-testing.

Here are the preview of the recommended steps:

| Step | Epoch | CCIP | AI Corrupt | Bikini Plus | Score | Download | pattern_0 | portrait_0 | portrait_1 | portrait_2 | full_body_0 | full_body_1 | profile_0 | profile_1 | free_0 | free_1 | shorts | maid_0 | maid_1 | miko | yukata | suit | china | bikini_0 | bikini_1 | bikini_2 | sit | squat | kneel | jump | crossed_arms | angry | smile | cry | grin | n_lie_0 | n_lie_1 | n_stand_0 | n_stand_1 | n_stand_2 | n_sex_0 | n_sex_1 |

|-------:|--------:|:----------|:-------------|:--------------|:----------|:------------------------------------------------------------------------------------------------------------------------------------------------------|:------------------------------------------|:--------------------------------------------|:--------------------------------------------|:--------------------------------------------|:----------------------------------------------|:----------------------------------------------|:------------------------------------------|:------------------------------------------|:------------------------------------|:------------------------------------|:------------------------------------|:------------------------------------|:------------------------------------|:--------------------------------|:------------------------------------|:--------------------------------|:----------------------------------|:----------------------------------------|:----------------------------------------|:----------------------------------------|:------------------------------|:----------------------------------|:----------------------------------|:--------------------------------|:------------------------------------------------|:----------------------------------|:----------------------------------|:------------------------------|:--------------------------------|:--------------------------------------|:--------------------------------------|:------------------------------------------|:------------------------------------------|:------------------------------------------|:--------------------------------------|:--------------------------------------|

| 1152 | 72 | **0.924** | 0.991 | **0.844** | **0.707** | [Download](https://huggingface.co/CyberHarem/rudewell_emilia_maougakuinnofutekigousha/resolve/main/1152/rudewell_emilia_maougakuinnofutekigousha.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

| 1200 | 75 | 0.922 | **0.994** | 0.844 | 0.705 | [Download](https://huggingface.co/CyberHarem/rudewell_emilia_maougakuinnofutekigousha/resolve/main/1200/rudewell_emilia_maougakuinnofutekigousha.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

| 1008 | 63 | 0.917 | 0.991 | 0.844 | 0.701 | [Download](https://huggingface.co/CyberHarem/rudewell_emilia_maougakuinnofutekigousha/resolve/main/1008/rudewell_emilia_maougakuinnofutekigousha.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

| 768 | 48 | 0.904 | 0.984 | 0.842 | 0.686 | [Download](https://huggingface.co/CyberHarem/rudewell_emilia_maougakuinnofutekigousha/resolve/main/768/rudewell_emilia_maougakuinnofutekigousha.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

| 912 | 57 | 0.905 | 0.987 | 0.841 | 0.685 | [Download](https://huggingface.co/CyberHarem/rudewell_emilia_maougakuinnofutekigousha/resolve/main/912/rudewell_emilia_maougakuinnofutekigousha.zip) |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |  |

## Anything Else?

Because the automation of LoRA training always annoys some people. So for the following groups, it is not recommended to use this model and we express regret:

1. Individuals who cannot tolerate any deviations from the original character design, even in the slightest detail.

2. Individuals who are facing the application scenarios with high demands for accuracy in recreating character outfits.

3. Individuals who cannot accept the potential randomness in AI-generated images based on the Stable Diffusion algorithm.

4. Individuals who are not comfortable with the fully automated process of training character models using LoRA, or those who believe that training character models must be done purely through manual operations to avoid disrespecting the characters.

5. Individuals who finds the generated image content offensive to their values.

## All Steps

We uploaded the files in all steps. you can check the images, metrics and download them in the following links:

* [Steps From 864 to 1280](all/0.md)

* [Steps From 384 to 816](all/1.md)

* [Steps From 48 to 336](all/2.md)

|

{"license": "mit", "tags": ["art", "not-for-all-audiences"], "datasets": ["CyberHarem/rudewell_emilia_maougakuinnofutekigousha", "BangumiBase/maougakuinnofutekigousha"], "pipeline_tag": "text-to-image"}

|

CyberHarem/rudewell_emilia_maougakuinnofutekigousha

| null |

[

"art",

"not-for-all-audiences",

"text-to-image",

"dataset:CyberHarem/rudewell_emilia_maougakuinnofutekigousha",

"dataset:BangumiBase/maougakuinnofutekigousha",

"license:mit",

"region:us"

] | null |

2024-04-13T18:37:03+00:00

|

[] |

[] |

TAGS

#art #not-for-all-audiences #text-to-image #dataset-CyberHarem/rudewell_emilia_maougakuinnofutekigousha #dataset-BangumiBase/maougakuinnofutekigousha #license-mit #region-us

|

LoRA model of Rudewell Emilia/エミリア・ルードウェル (Maou Gakuin no Futekigousha)

=======================================================================

What Is This?

-------------

This is the LoRA model of waifu Rudewell Emilia/エミリア・ルードウェル (Maou Gakuin no Futekigousha).

How Is It Trained?

------------------

* This model is trained with kohya-ss/sd-scripts, and the test images are generated with a1111's webui and API sdk.

* The auto-training framework is maintained by DeepGHS Team.

The architecture of base model is is 'SD1.5'.

* Dataset used for training is the 'stage3-p480-1200' in CyberHarem/rudewell\_emilia\_maougakuinnofutekigousha, which contains 81 images.

* The images in the dataset is auto-cropped from anime videos, more images for other waifus in the same anime can be found in BangumiBase/maougakuinnofutekigousha

* Trigger word is 'rudewell\_emilia\_maougakuinnofutekigousha'.

* Trigger word of anime style is is 'anime\_style'.

* Pruned core tags for this waifu are 'long hair, purple hair, purple eyes, hair between eyes, ponytail, pink eyes, asymmetrical hair'. You can add them to the prompt when some features of waifu (e.g. hair color) are not stable.

* For more details in training, you can take a look at training configuration file.

* For more details in LoRA, you can download it, and read the metadata with a1111's webui.

How to Use It?

--------------

After downloading the safetensors files for the specified step, you need to use them like common LoRA.

* Recommended LoRA weight is 0.5-0.85.

* Recommended trigger word weight is 0.7-1.1.

For example, if you want to use the model from step 1152, you need to download '1152/rudewell\_emilia\_maougakuinnofutekigousha.safetensors' as LoRA. By using this model, you can generate images for the desired characters.

Which Step Should I Use?

------------------------

We selected 5 good steps for you to choose. The best one is step 1152.

972 images (917.47 MiB) were generated for auto-testing.

!Metrics Plot

Here are the preview of the recommended steps:

Anything Else?

--------------

Because the automation of LoRA training always annoys some people. So for the following groups, it is not recommended to use this model and we express regret:

1. Individuals who cannot tolerate any deviations from the original character design, even in the slightest detail.

2. Individuals who are facing the application scenarios with high demands for accuracy in recreating character outfits.

3. Individuals who cannot accept the potential randomness in AI-generated images based on the Stable Diffusion algorithm.

4. Individuals who are not comfortable with the fully automated process of training character models using LoRA, or those who believe that training character models must be done purely through manual operations to avoid disrespecting the characters.

5. Individuals who finds the generated image content offensive to their values.

All Steps

---------

We uploaded the files in all steps. you can check the images, metrics and download them in the following links:

* Steps From 864 to 1280

* Steps From 384 to 816

* Steps From 48 to 336

|

[] |

[

"TAGS\n#art #not-for-all-audiences #text-to-image #dataset-CyberHarem/rudewell_emilia_maougakuinnofutekigousha #dataset-BangumiBase/maougakuinnofutekigousha #license-mit #region-us \n"

] |

text-generation

|

transformers

|

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->