pipeline_tag

stringclasses 48

values | library_name

stringclasses 198

values | text

stringlengths 1

900k

| metadata

stringlengths 2

438k

| id

stringlengths 5

122

| last_modified

null | tags

sequencelengths 1

1.84k

| sha

null | created_at

stringlengths 25

25

| arxiv

sequencelengths 0

201

| languages

sequencelengths 0

1.83k

| tags_str

stringlengths 17

9.34k

| text_str

stringlengths 0

389k

| text_lists

sequencelengths 0

722

| processed_texts

sequencelengths 1

723

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

text-generation | transformers |

# Nous-Hermes-2-Vision - Mistral 7B

*In the tapestry of Greek mythology, Hermes reigns as the eloquent Messenger of the Gods, a deity who deftly bridges the realms through the art of communication. It is in homage to this divine mediator that I name this advanced LLM "Hermes," a system crafted to navigate the complex intricacies of human discourse with celestial finesse.*

## Model description

Nous-Hermes-2-Vision stands as a pioneering Vision-Language Model, leveraging advancements from the renowned **OpenHermes-2.5-Mistral-7B** by teknium. This model incorporates two pivotal enhancements, setting it apart as a cutting-edge solution:

- **SigLIP-400M Integration**: Diverging from traditional approaches that rely on substantial 3B vision encoders, Nous-Hermes-2-Vision harnesses the formidable SigLIP-400M. This strategic choice not only streamlines the model's architecture, making it more lightweight, but also capitalizes on SigLIP's remarkable capabilities. The result? A remarkable boost in performance that defies conventional expectations.

- **Custom Dataset Enriched with Function Calling**: Our model's training data includes a unique feature – function calling. This distinctive addition transforms Nous-Hermes-2-Vision into a **Vision-Language Action Model**. Developers now have a versatile tool at their disposal, primed for crafting a myriad of ingenious automations.

This project is led by [qnguyen3](https://twitter.com/stablequan) and [teknium](https://twitter.com/Teknium1).

## Training

### Dataset

- 220K from **LVIS-INSTRUCT4V**

- 60K from **ShareGPT4V**

- 150K Private **Function Calling Data**

- 50K conversations from teknium's **OpenHermes-2.5**

## Usage

### Prompt Format

- Like other LLaVA's variants, this model uses Vicuna-V1 as its prompt template. Please refer to `conv_llava_v1` in [this file](https://github.com/qnguyen3/hermes-llava/blob/main/llava/conversation.py)

- For Gradio UI, please visit this [GitHub Repo](https://github.com/qnguyen3/hermes-llava)

### Function Calling

- For functiong calling, the message should start with a `<fn_call>` tag. Here is an example:

```json

<fn_call>{

"type": "object",

"properties": {

"bus_colors": {

"type": "array",

"description": "The colors of the bus in the image.",

"items": {

"type": "string",

"enum": ["red", "blue", "green", "white"]

}

},

"bus_features": {

"type": "string",

"description": "The features seen on the back of the bus."

},

"bus_location": {

"type": "string",

"description": "The location of the bus (driving or pulled off to the side).",

"enum": ["driving", "pulled off to the side"]

}

}

}

```

Output:

```json

{

"bus_colors": ["red", "white"],

"bus_features": "An advertisement",

"bus_location": "driving"

}

```

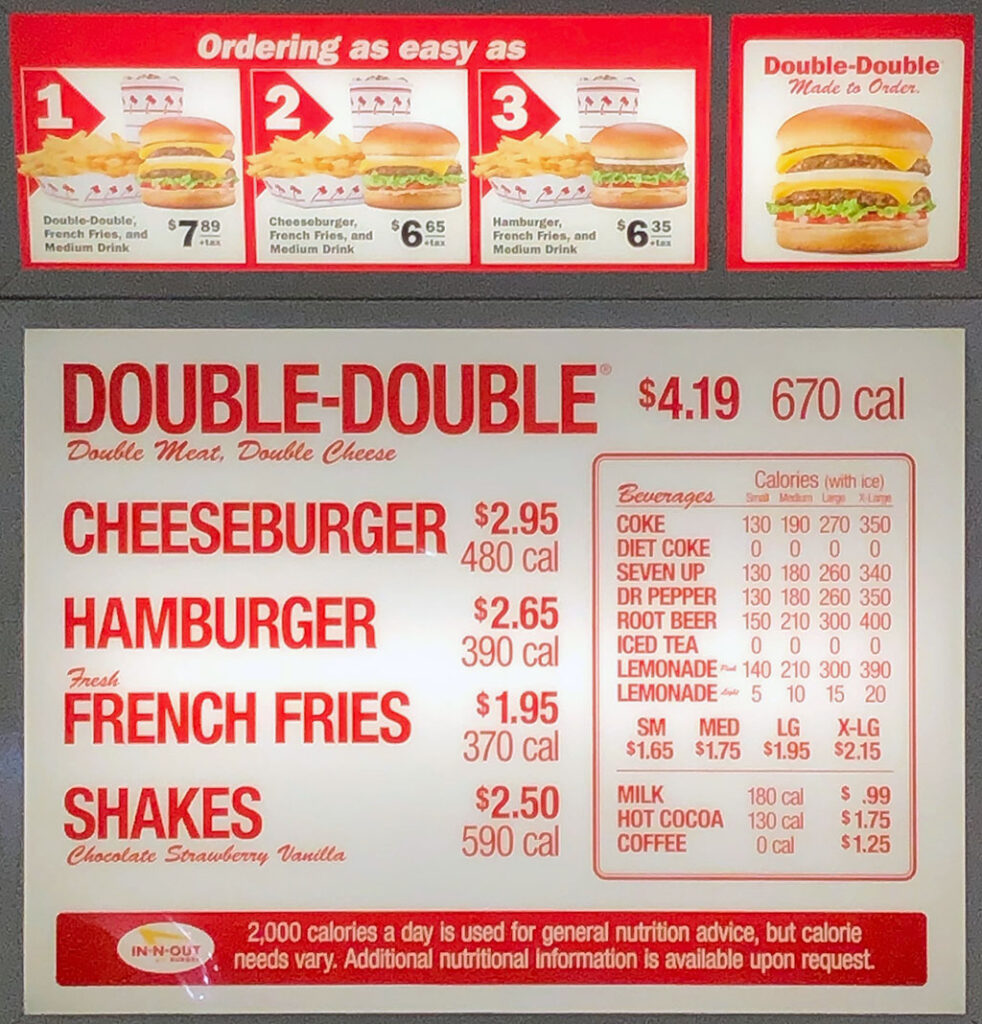

## Example

### Chat

### Function Calling

Input image:

Input message:

```json

<fn_call>{

"type": "object",

"properties": {

"food_list": {

"type": "array",

"description": "List of all the food",

"items": {

"type": "string",

}

},

}

}

```

Output:

```json

{

"food_list": [

"Double Burger",

"Cheeseburger",

"French Fries",

"Shakes",

"Coffee"

]

}

```

| {"language": ["en"], "license": "apache-2.0", "tags": ["mistral", "instruct", "finetune", "chatml", "gpt4", "synthetic data", "distillation", "multimodal", "llava"], "base_model": "mistralai/Mistral-7B-v0.1", "model-index": [{"name": "Nous-Hermes-2-Vision", "results": []}]} | jeiku/noushermesvisionalphaconfigedit | null | [

"transformers",

"pytorch",

"mistral",

"text-generation",

"instruct",

"finetune",

"chatml",

"gpt4",

"synthetic data",

"distillation",

"multimodal",

"llava",

"conversational",

"en",

"base_model:mistralai/Mistral-7B-v0.1",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null | 2024-04-16T22:55:09+00:00 | [] | [

"en"

] | TAGS

#transformers #pytorch #mistral #text-generation #instruct #finetune #chatml #gpt4 #synthetic data #distillation #multimodal #llava #conversational #en #base_model-mistralai/Mistral-7B-v0.1 #license-apache-2.0 #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us

|

# Nous-Hermes-2-Vision - Mistral 7B

!image/png

*In the tapestry of Greek mythology, Hermes reigns as the eloquent Messenger of the Gods, a deity who deftly bridges the realms through the art of communication. It is in homage to this divine mediator that I name this advanced LLM "Hermes," a system crafted to navigate the complex intricacies of human discourse with celestial finesse.*

## Model description

Nous-Hermes-2-Vision stands as a pioneering Vision-Language Model, leveraging advancements from the renowned OpenHermes-2.5-Mistral-7B by teknium. This model incorporates two pivotal enhancements, setting it apart as a cutting-edge solution:

- SigLIP-400M Integration: Diverging from traditional approaches that rely on substantial 3B vision encoders, Nous-Hermes-2-Vision harnesses the formidable SigLIP-400M. This strategic choice not only streamlines the model's architecture, making it more lightweight, but also capitalizes on SigLIP's remarkable capabilities. The result? A remarkable boost in performance that defies conventional expectations.

- Custom Dataset Enriched with Function Calling: Our model's training data includes a unique feature – function calling. This distinctive addition transforms Nous-Hermes-2-Vision into a Vision-Language Action Model. Developers now have a versatile tool at their disposal, primed for crafting a myriad of ingenious automations.

This project is led by qnguyen3 and teknium.

## Training

### Dataset

- 220K from LVIS-INSTRUCT4V

- 60K from ShareGPT4V

- 150K Private Function Calling Data

- 50K conversations from teknium's OpenHermes-2.5

## Usage

### Prompt Format

- Like other LLaVA's variants, this model uses Vicuna-V1 as its prompt template. Please refer to 'conv_llava_v1' in this file

- For Gradio UI, please visit this GitHub Repo

### Function Calling

- For functiong calling, the message should start with a '<fn_call>' tag. Here is an example:

Output:

## Example

### Chat

!image/png

### Function Calling

Input image:

!image/png

Input message:

Output:

| [

"# Nous-Hermes-2-Vision - Mistral 7B\n\n\n!image/png\n\n*In the tapestry of Greek mythology, Hermes reigns as the eloquent Messenger of the Gods, a deity who deftly bridges the realms through the art of communication. It is in homage to this divine mediator that I name this advanced LLM \"Hermes,\" a system crafted to navigate the complex intricacies of human discourse with celestial finesse.*",

"## Model description\n\nNous-Hermes-2-Vision stands as a pioneering Vision-Language Model, leveraging advancements from the renowned OpenHermes-2.5-Mistral-7B by teknium. This model incorporates two pivotal enhancements, setting it apart as a cutting-edge solution:\n\n- SigLIP-400M Integration: Diverging from traditional approaches that rely on substantial 3B vision encoders, Nous-Hermes-2-Vision harnesses the formidable SigLIP-400M. This strategic choice not only streamlines the model's architecture, making it more lightweight, but also capitalizes on SigLIP's remarkable capabilities. The result? A remarkable boost in performance that defies conventional expectations.\n\n- Custom Dataset Enriched with Function Calling: Our model's training data includes a unique feature – function calling. This distinctive addition transforms Nous-Hermes-2-Vision into a Vision-Language Action Model. Developers now have a versatile tool at their disposal, primed for crafting a myriad of ingenious automations.\n\nThis project is led by qnguyen3 and teknium.",

"## Training",

"### Dataset\n- 220K from LVIS-INSTRUCT4V\n- 60K from ShareGPT4V\n- 150K Private Function Calling Data\n- 50K conversations from teknium's OpenHermes-2.5",

"## Usage",

"### Prompt Format\n- Like other LLaVA's variants, this model uses Vicuna-V1 as its prompt template. Please refer to 'conv_llava_v1' in this file\n- For Gradio UI, please visit this GitHub Repo",

"### Function Calling\n- For functiong calling, the message should start with a '<fn_call>' tag. Here is an example:\n\n\n\nOutput:",

"## Example",

"### Chat\n!image/png",

"### Function Calling\nInput image:\n\n!image/png\n\nInput message:\n\n\nOutput:"

] | [

"TAGS\n#transformers #pytorch #mistral #text-generation #instruct #finetune #chatml #gpt4 #synthetic data #distillation #multimodal #llava #conversational #en #base_model-mistralai/Mistral-7B-v0.1 #license-apache-2.0 #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us \n",

"# Nous-Hermes-2-Vision - Mistral 7B\n\n\n!image/png\n\n*In the tapestry of Greek mythology, Hermes reigns as the eloquent Messenger of the Gods, a deity who deftly bridges the realms through the art of communication. It is in homage to this divine mediator that I name this advanced LLM \"Hermes,\" a system crafted to navigate the complex intricacies of human discourse with celestial finesse.*",

"## Model description\n\nNous-Hermes-2-Vision stands as a pioneering Vision-Language Model, leveraging advancements from the renowned OpenHermes-2.5-Mistral-7B by teknium. This model incorporates two pivotal enhancements, setting it apart as a cutting-edge solution:\n\n- SigLIP-400M Integration: Diverging from traditional approaches that rely on substantial 3B vision encoders, Nous-Hermes-2-Vision harnesses the formidable SigLIP-400M. This strategic choice not only streamlines the model's architecture, making it more lightweight, but also capitalizes on SigLIP's remarkable capabilities. The result? A remarkable boost in performance that defies conventional expectations.\n\n- Custom Dataset Enriched with Function Calling: Our model's training data includes a unique feature – function calling. This distinctive addition transforms Nous-Hermes-2-Vision into a Vision-Language Action Model. Developers now have a versatile tool at their disposal, primed for crafting a myriad of ingenious automations.\n\nThis project is led by qnguyen3 and teknium.",

"## Training",

"### Dataset\n- 220K from LVIS-INSTRUCT4V\n- 60K from ShareGPT4V\n- 150K Private Function Calling Data\n- 50K conversations from teknium's OpenHermes-2.5",

"## Usage",

"### Prompt Format\n- Like other LLaVA's variants, this model uses Vicuna-V1 as its prompt template. Please refer to 'conv_llava_v1' in this file\n- For Gradio UI, please visit this GitHub Repo",

"### Function Calling\n- For functiong calling, the message should start with a '<fn_call>' tag. Here is an example:\n\n\n\nOutput:",

"## Example",

"### Chat\n!image/png",

"### Function Calling\nInput image:\n\n!image/png\n\nInput message:\n\n\nOutput:"

] |

image-classification | pytorch |

# TransNeXt

Official Model release

for ["TransNeXt: Robust Foveal Visual Perception for Vision Transformers"](https://arxiv.org/pdf/2311.17132.pdf) [CVPR 2024]

.

## Model Details

- **Code:** https://github.com/DaiShiResearch/TransNeXt

- **Paper:** [TransNeXt: Robust Foveal Visual Perception for Vision Transformers](https://arxiv.org/abs/2311.17132)

- **Author:** [Dai Shi](https://github.com/DaiShiResearch)

- **Email:** [email protected]

## Methods

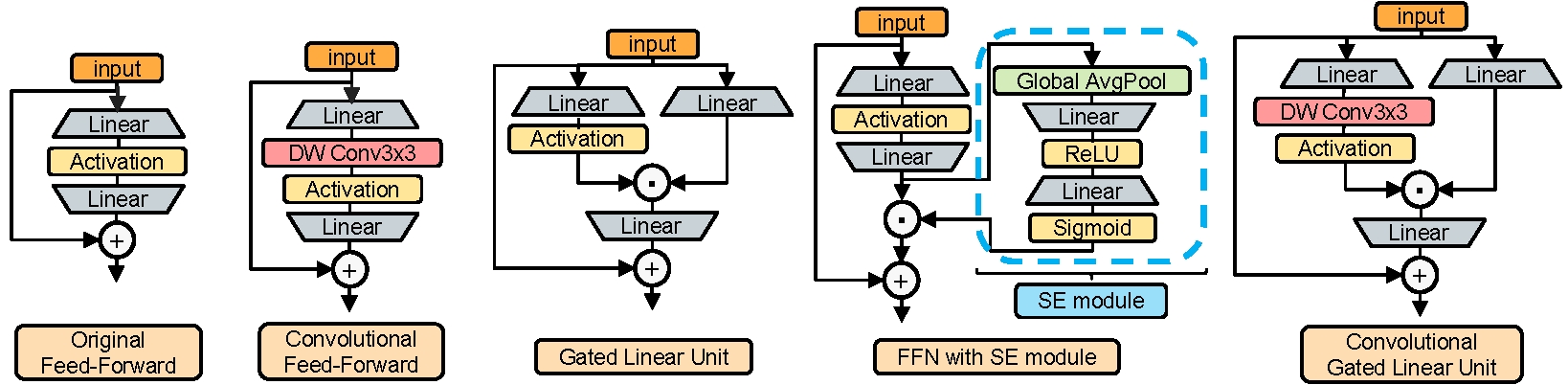

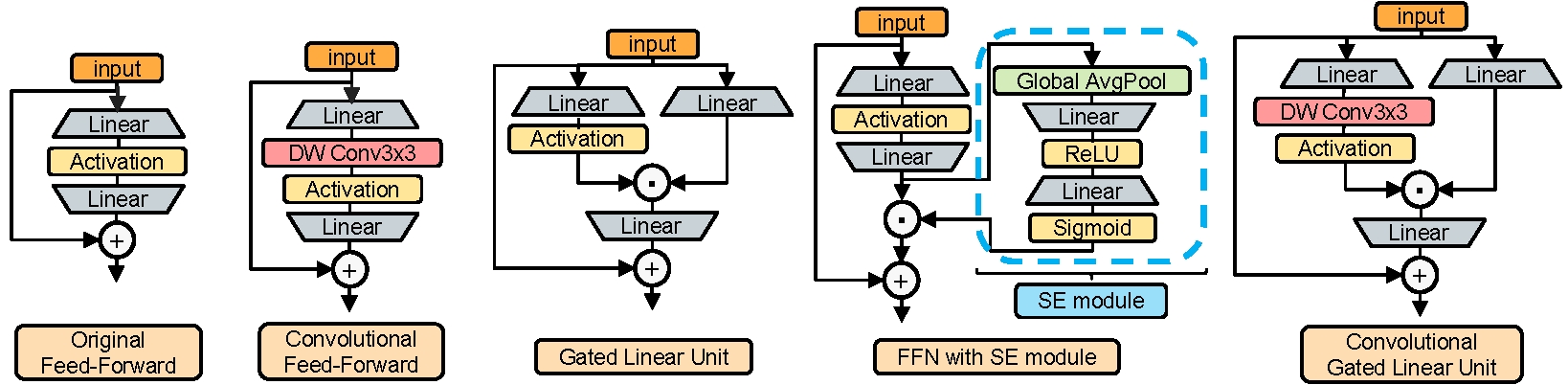

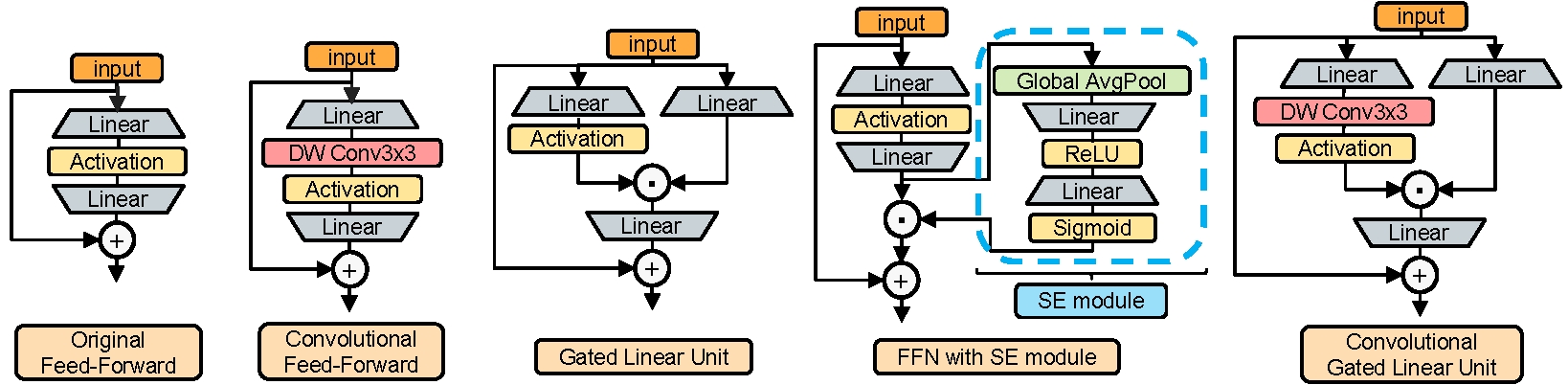

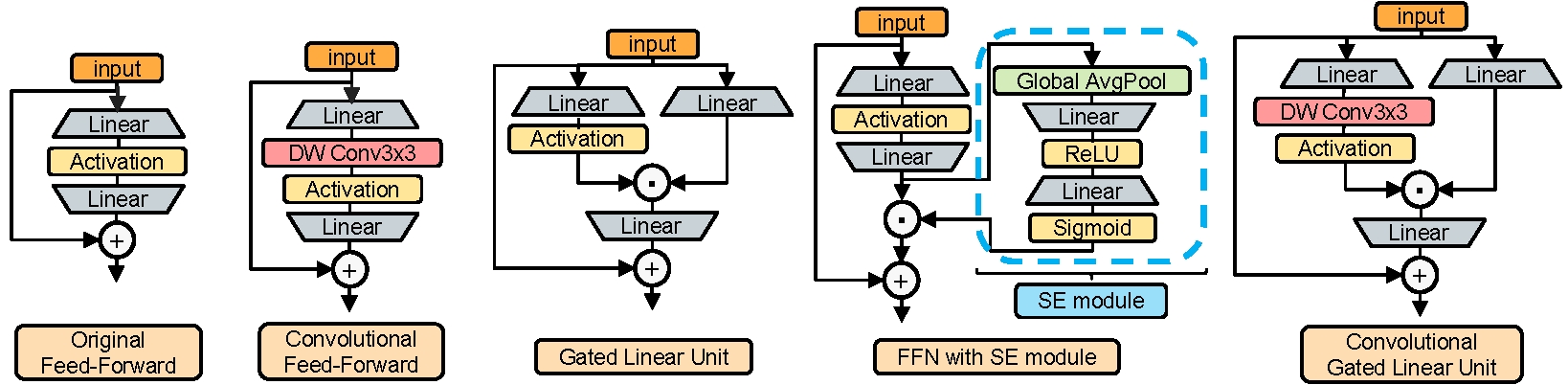

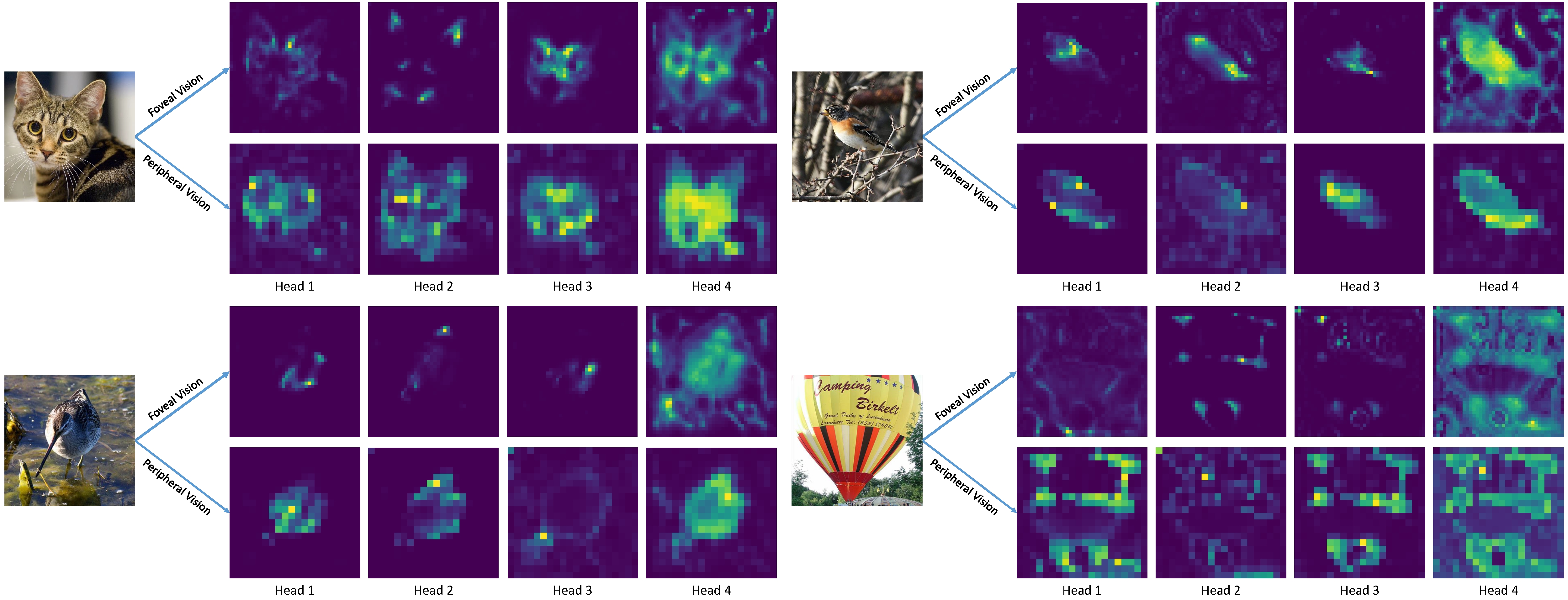

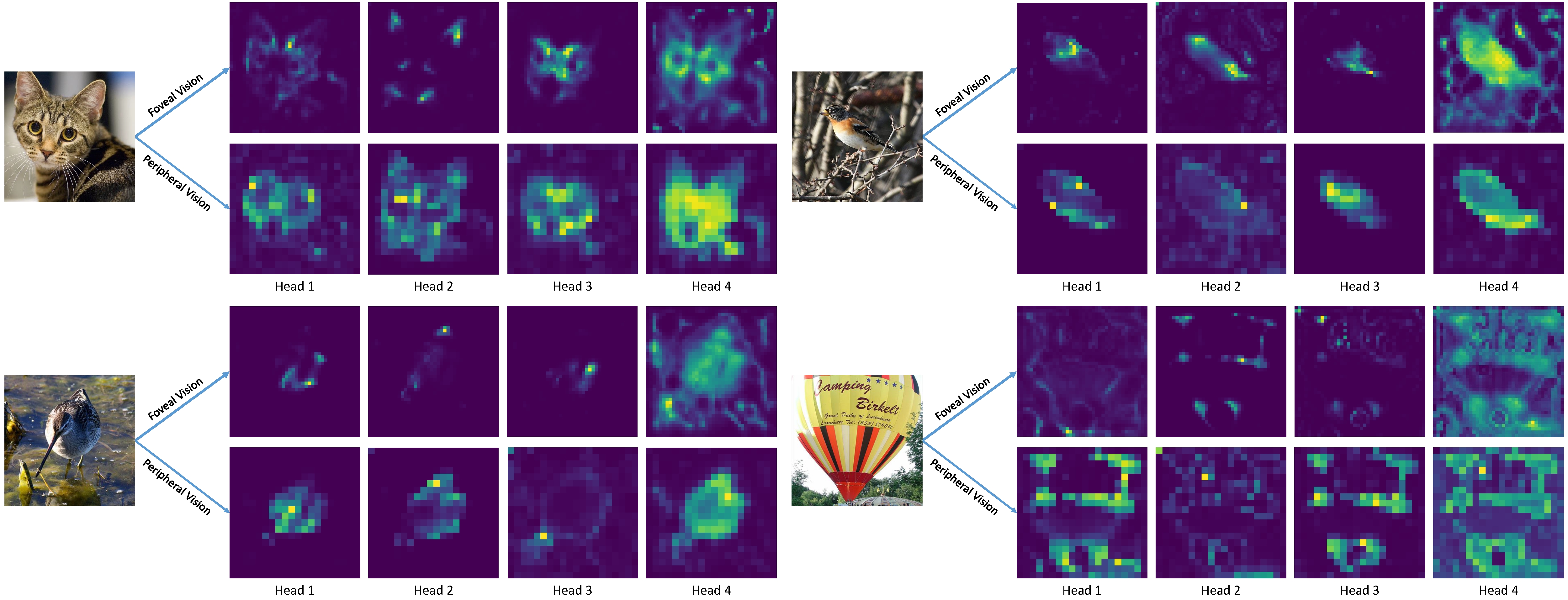

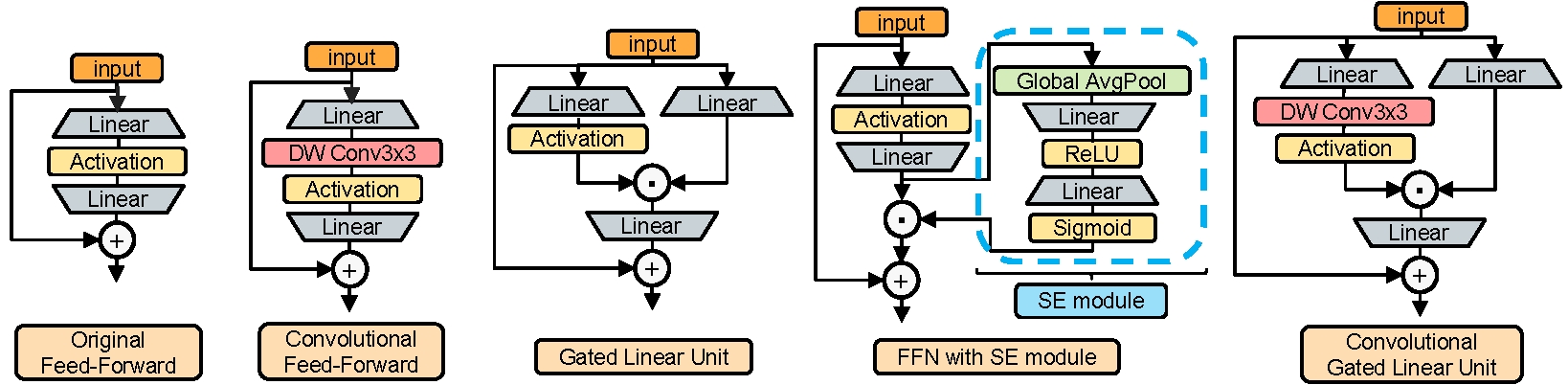

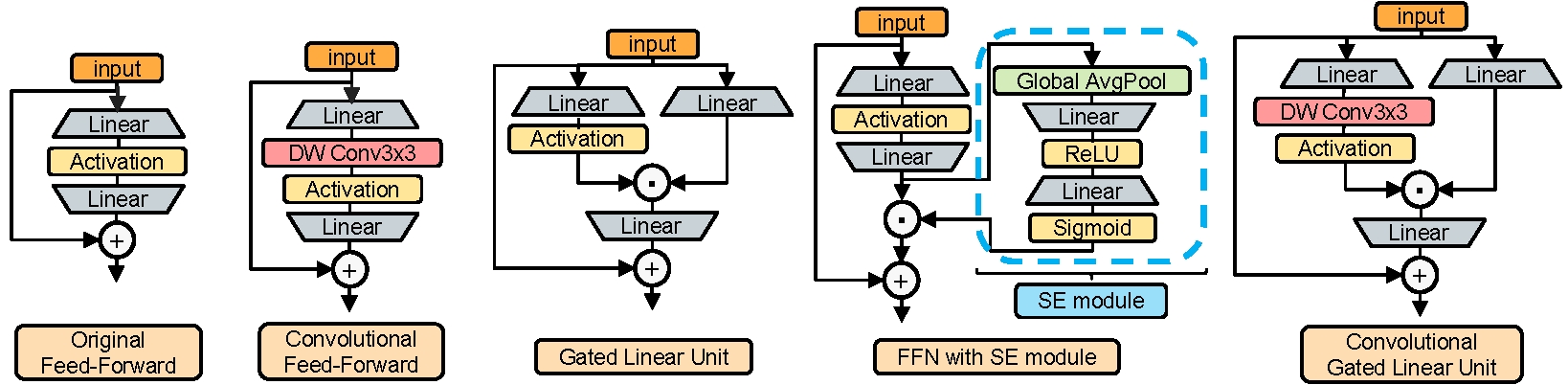

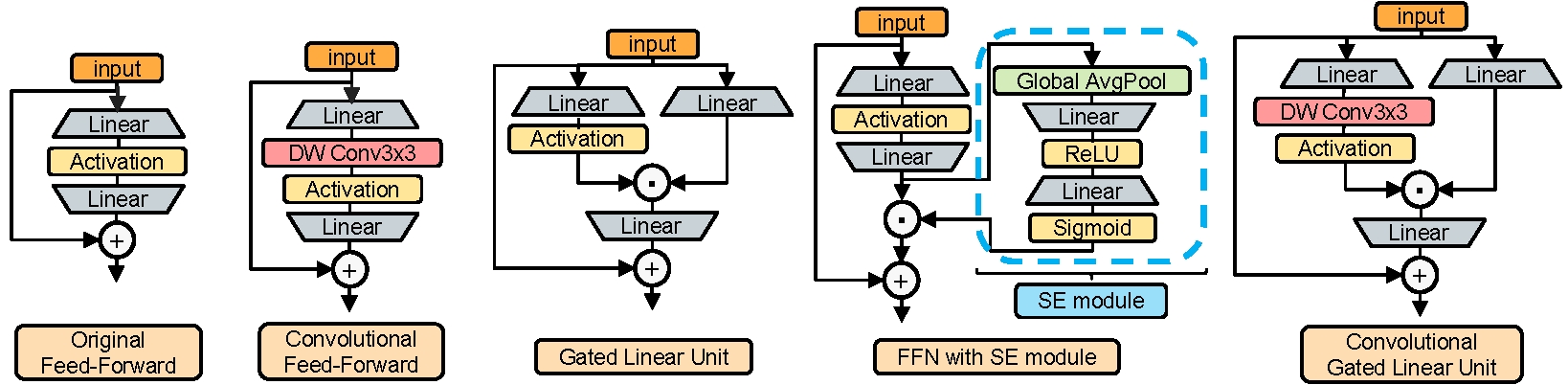

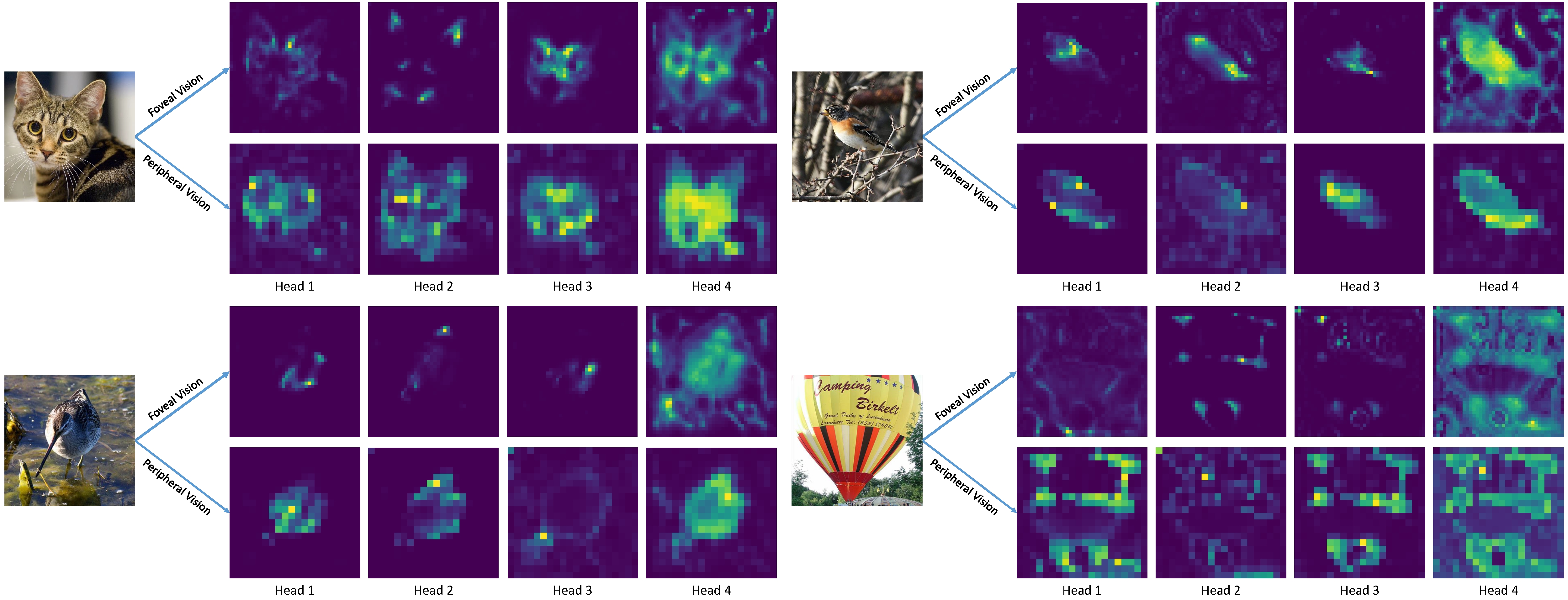

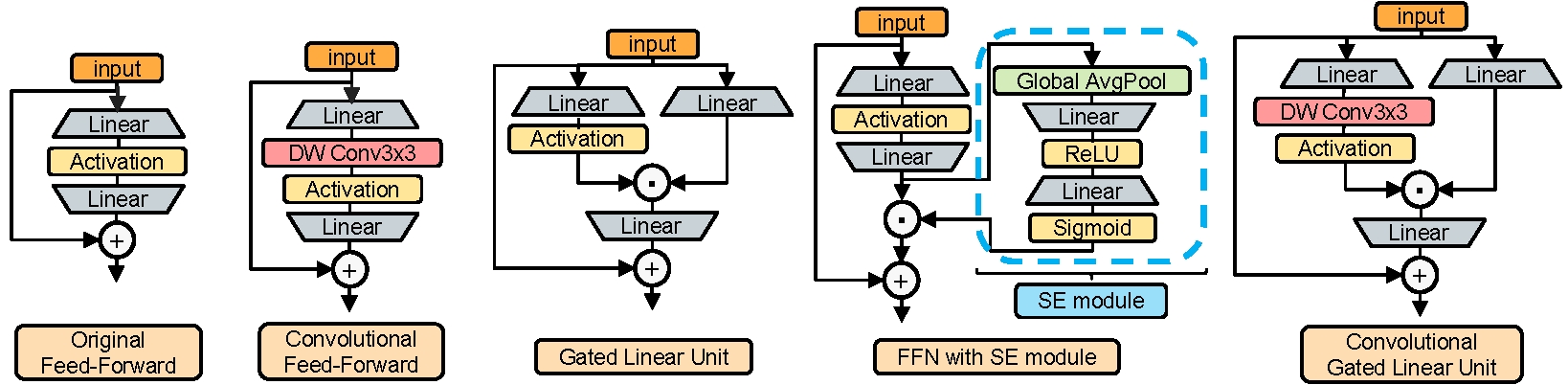

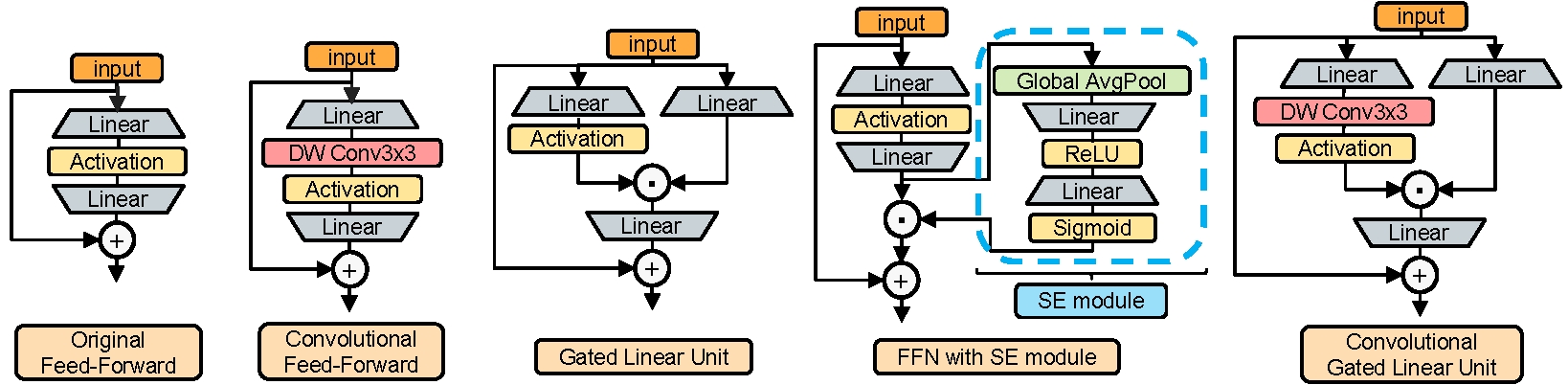

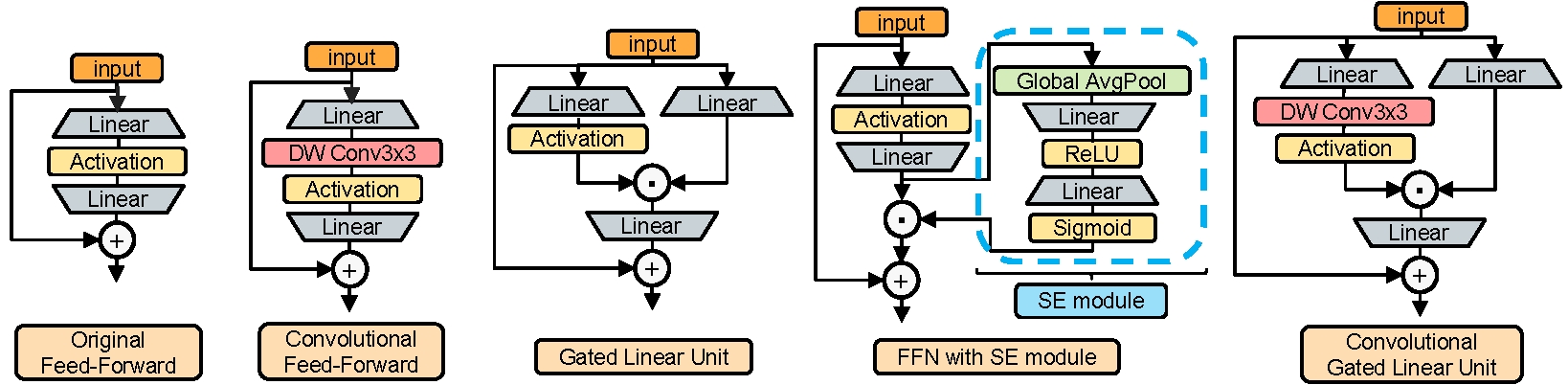

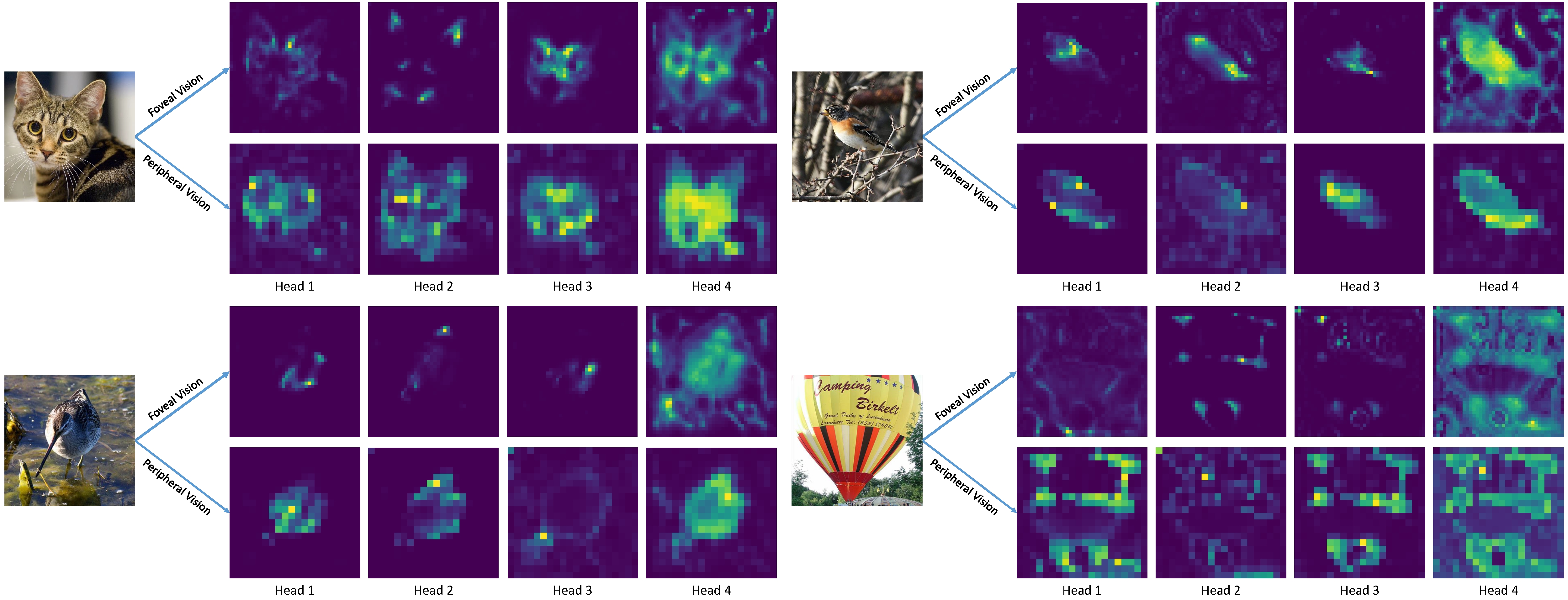

#### Pixel-focused attention (Left) & aggregated attention (Right):

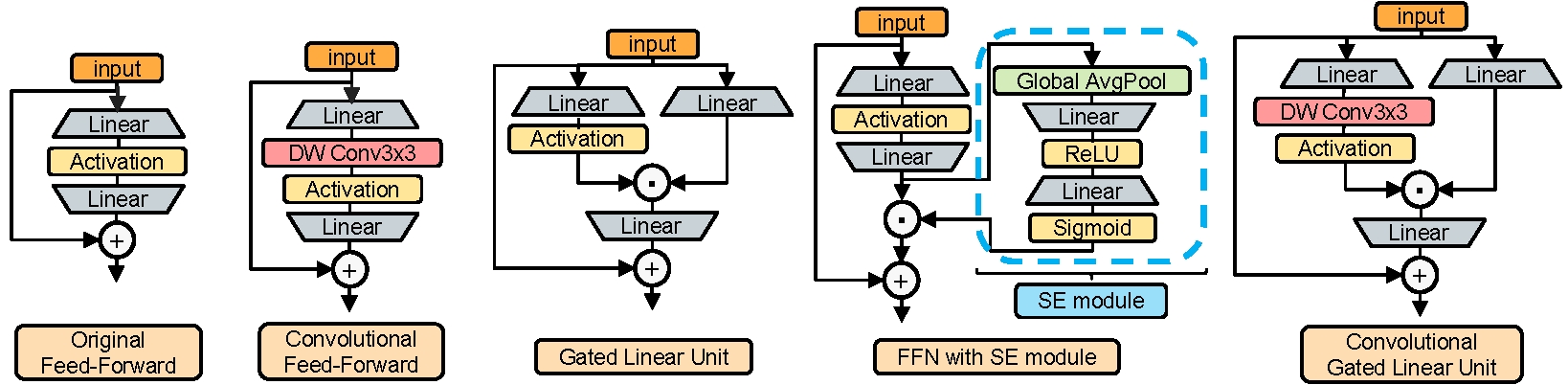

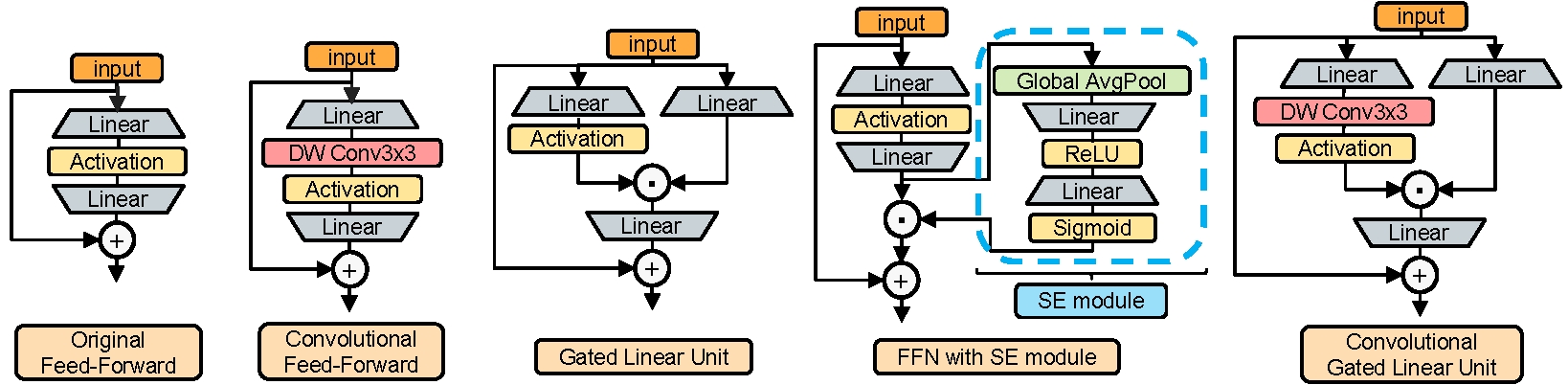

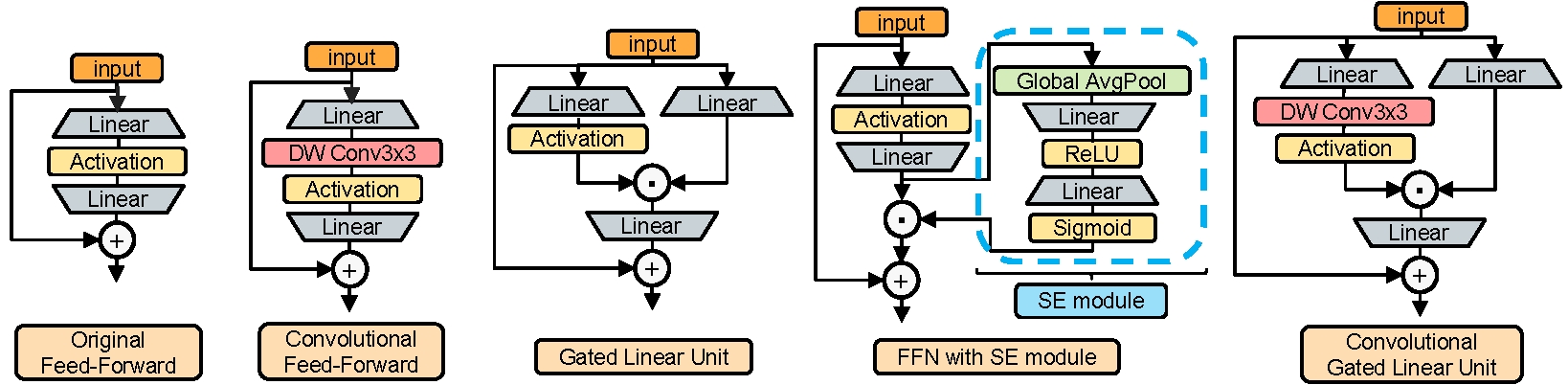

#### Convolutional GLU (First on the right):

## Results

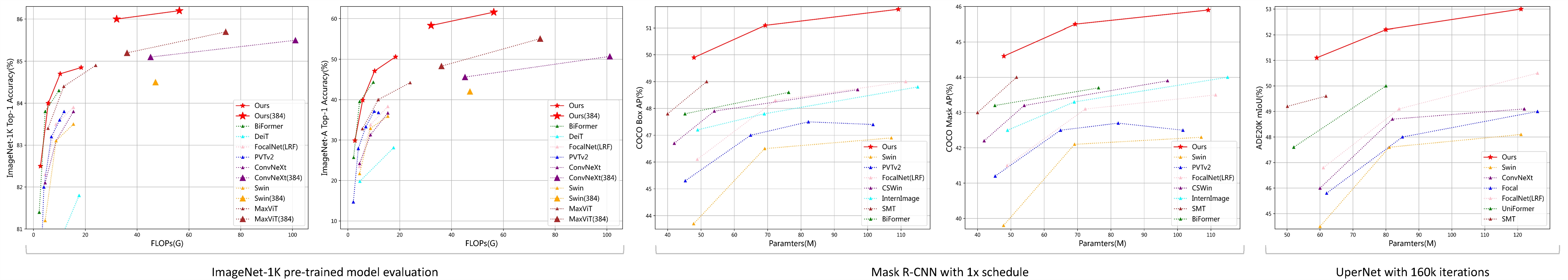

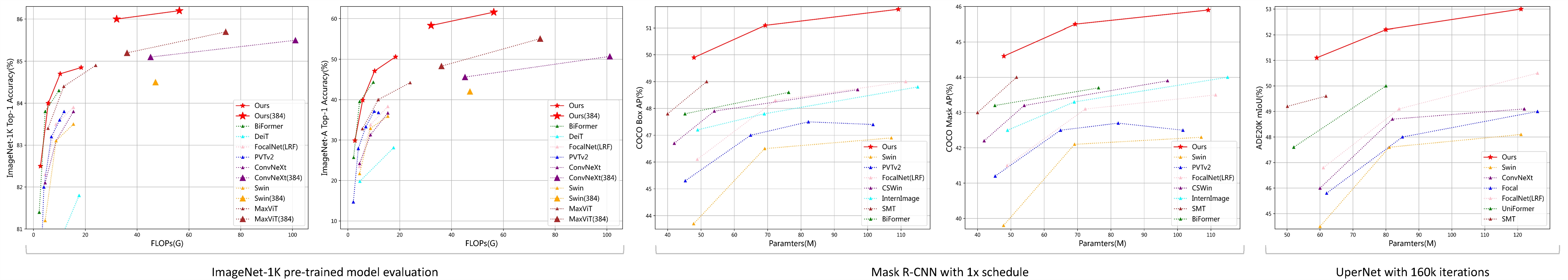

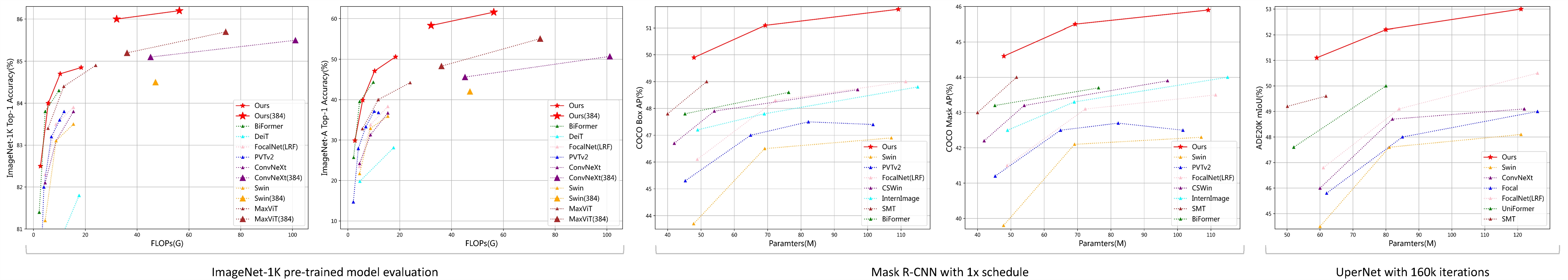

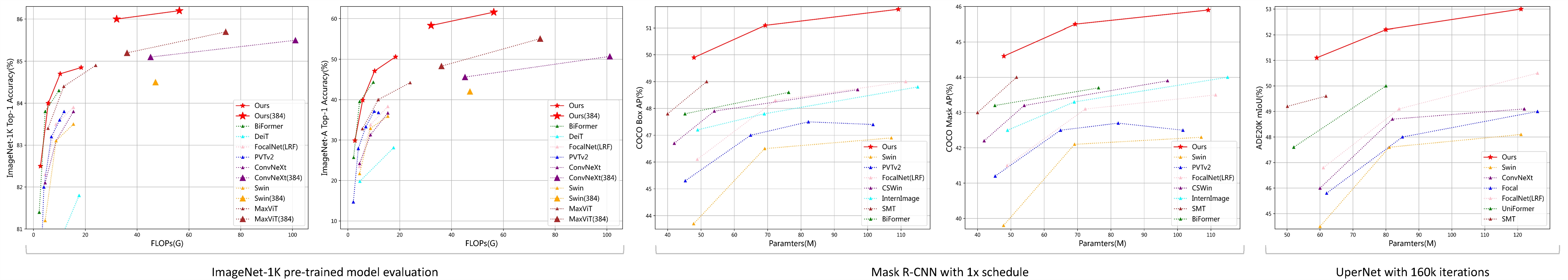

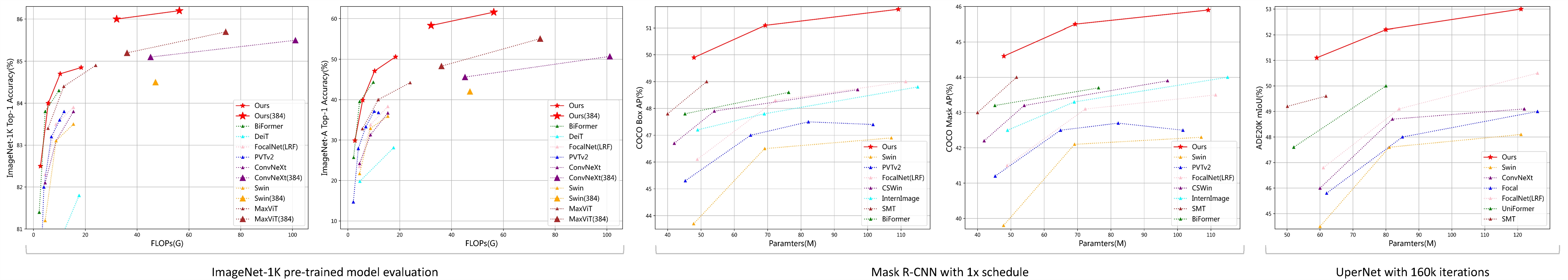

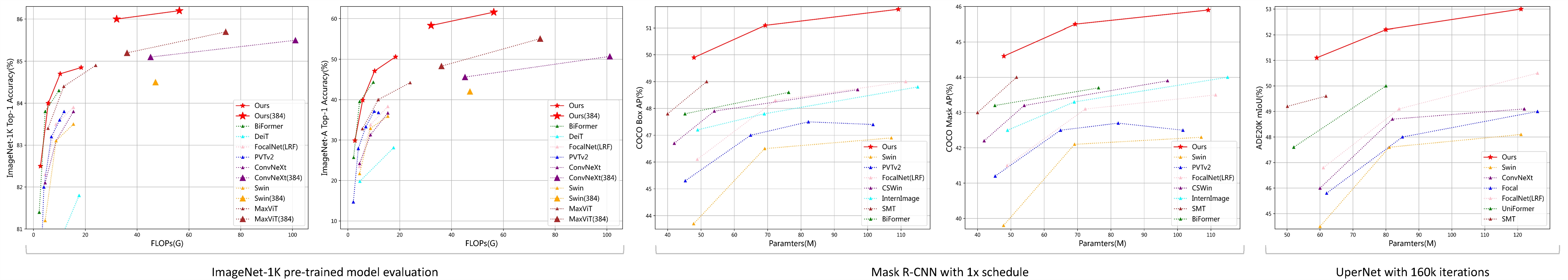

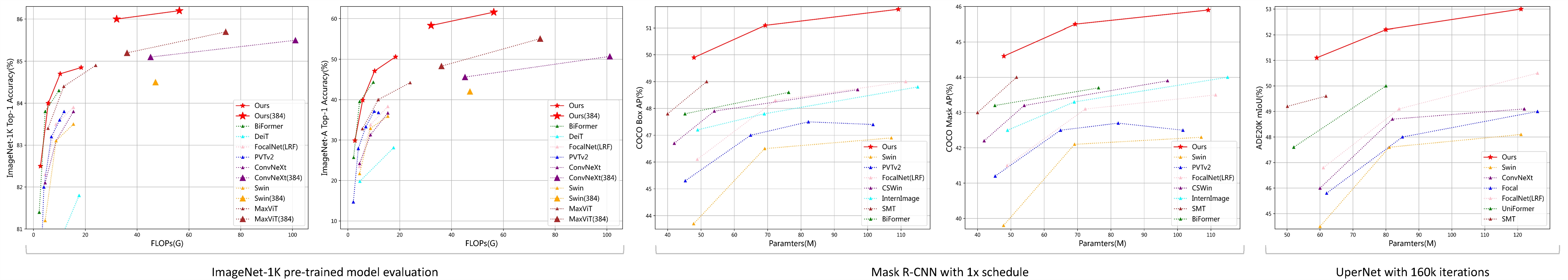

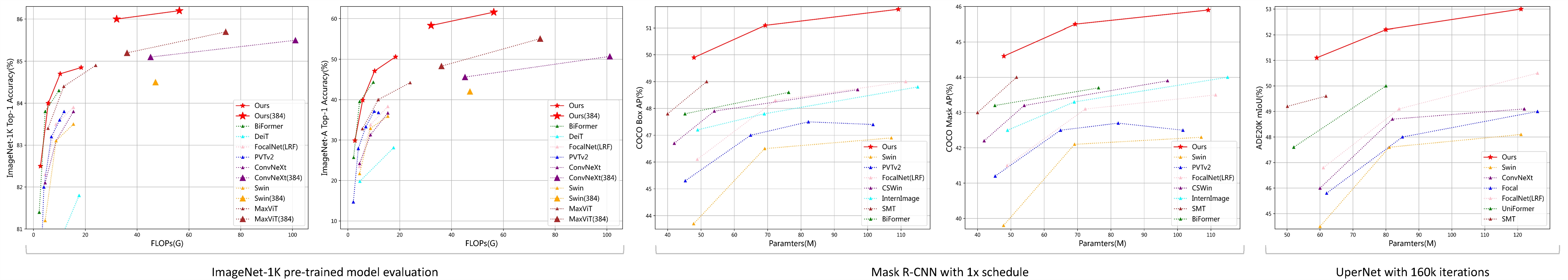

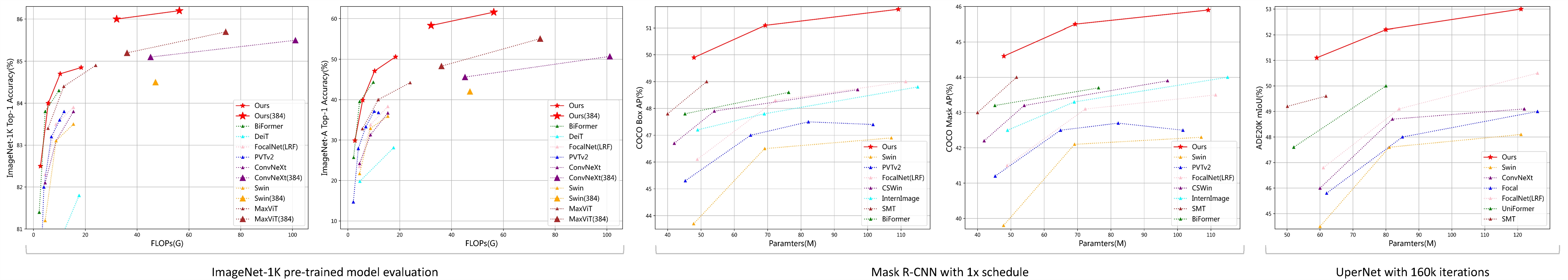

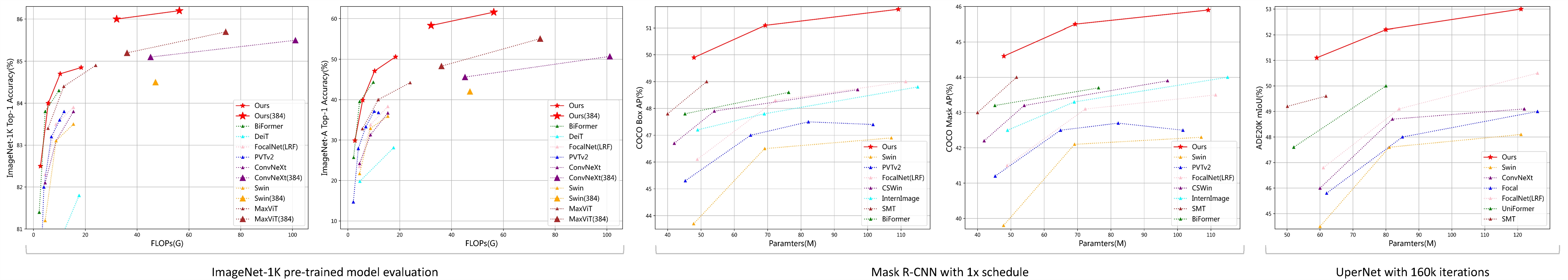

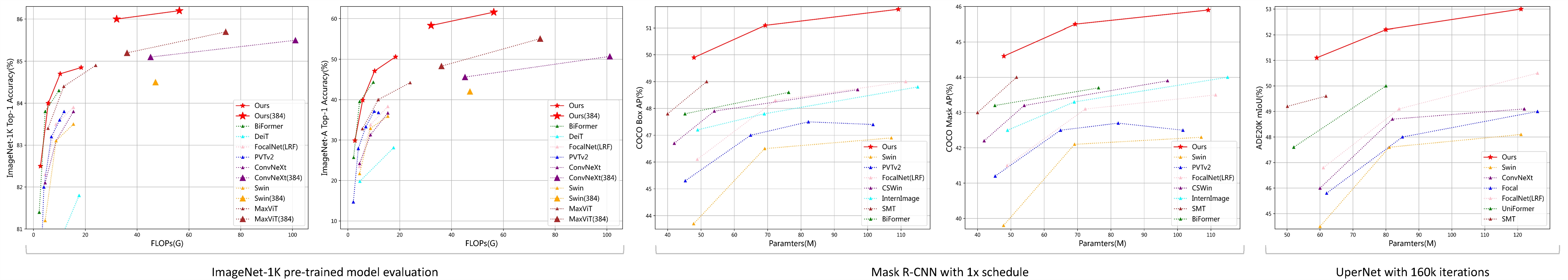

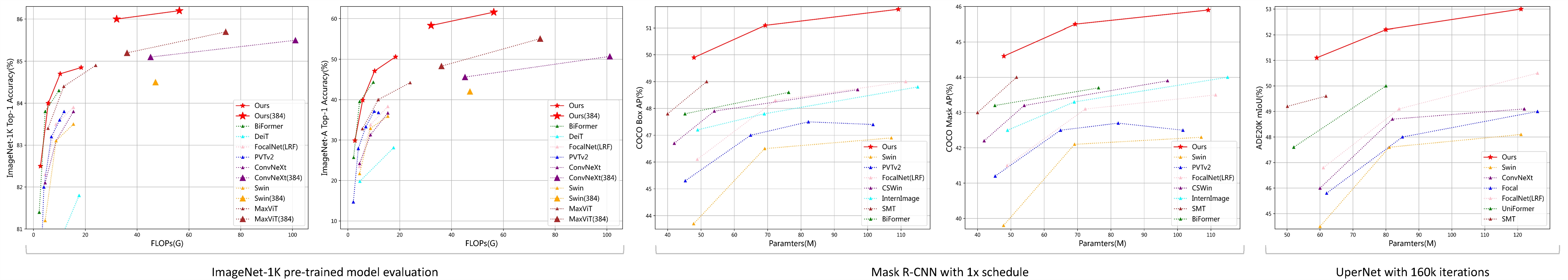

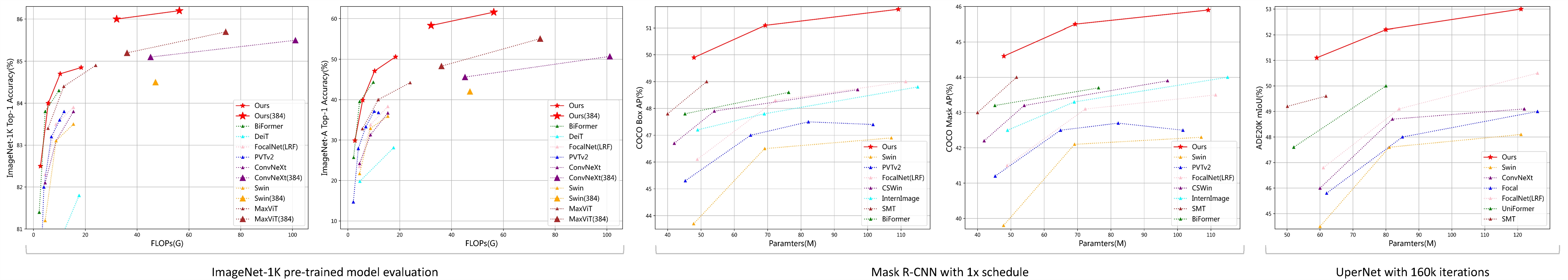

#### Image Classification, Detection and Segmentation:

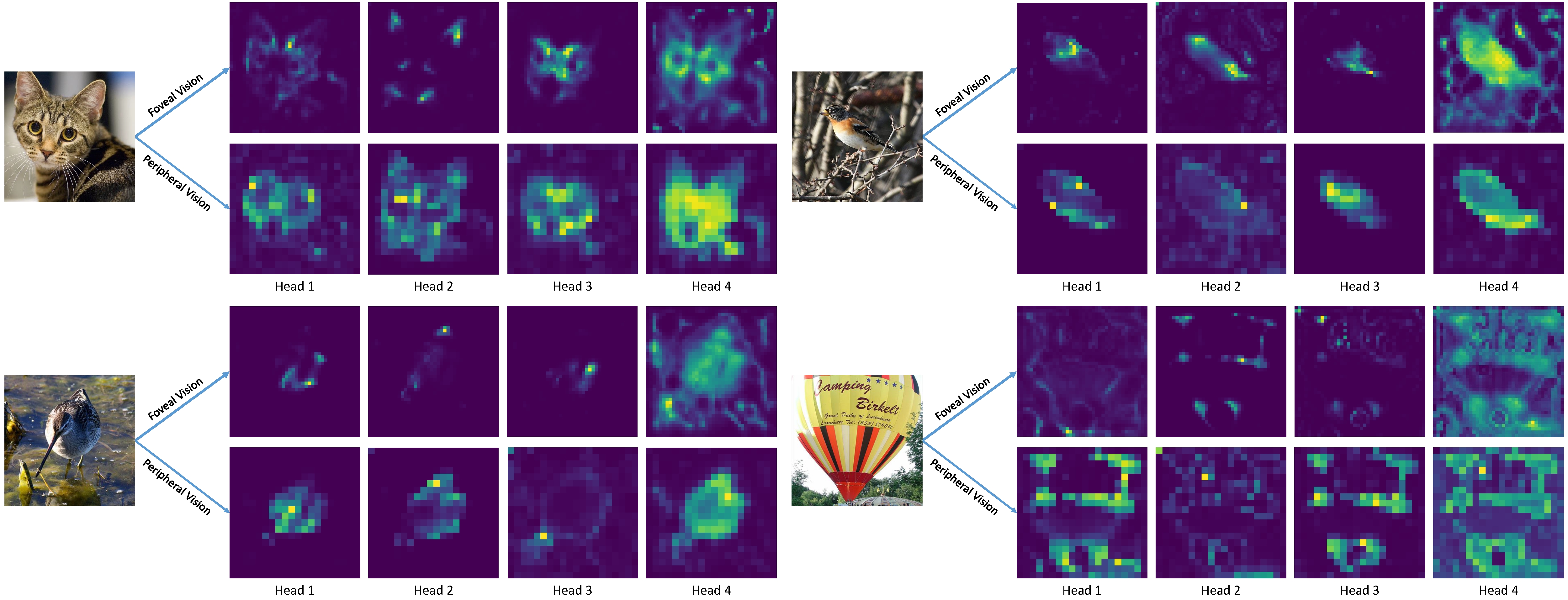

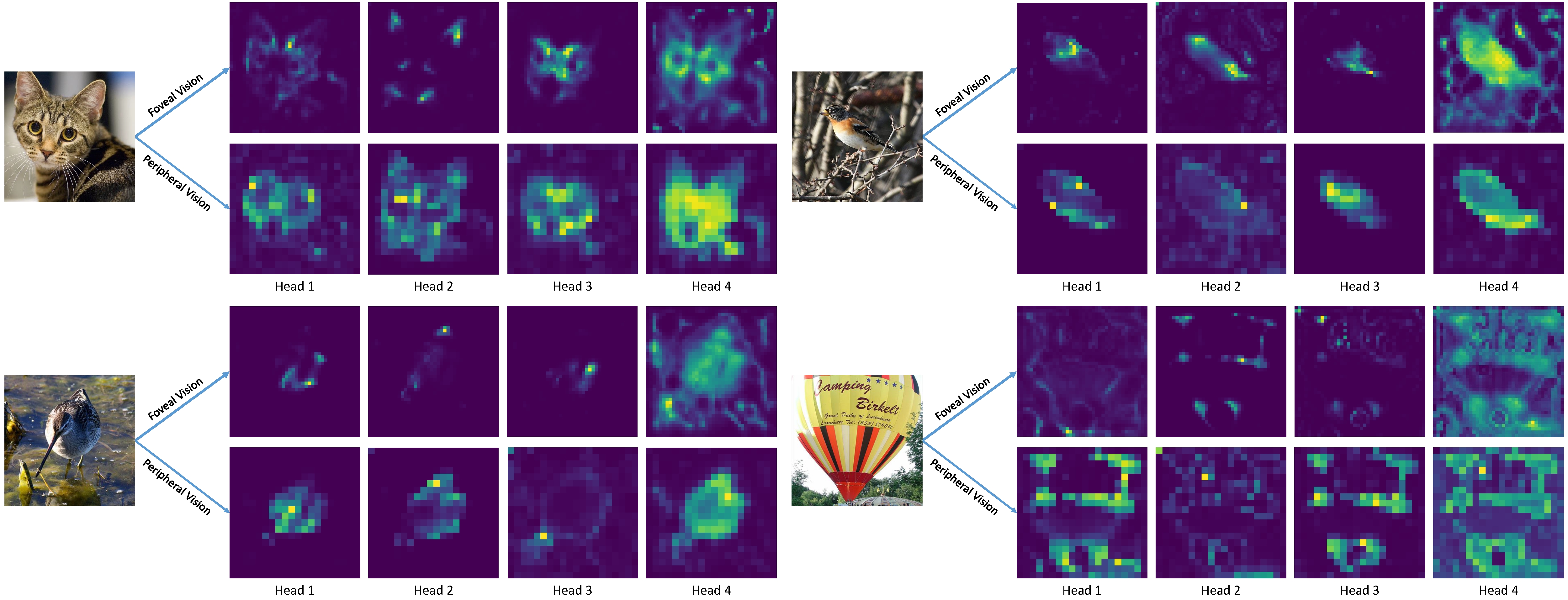

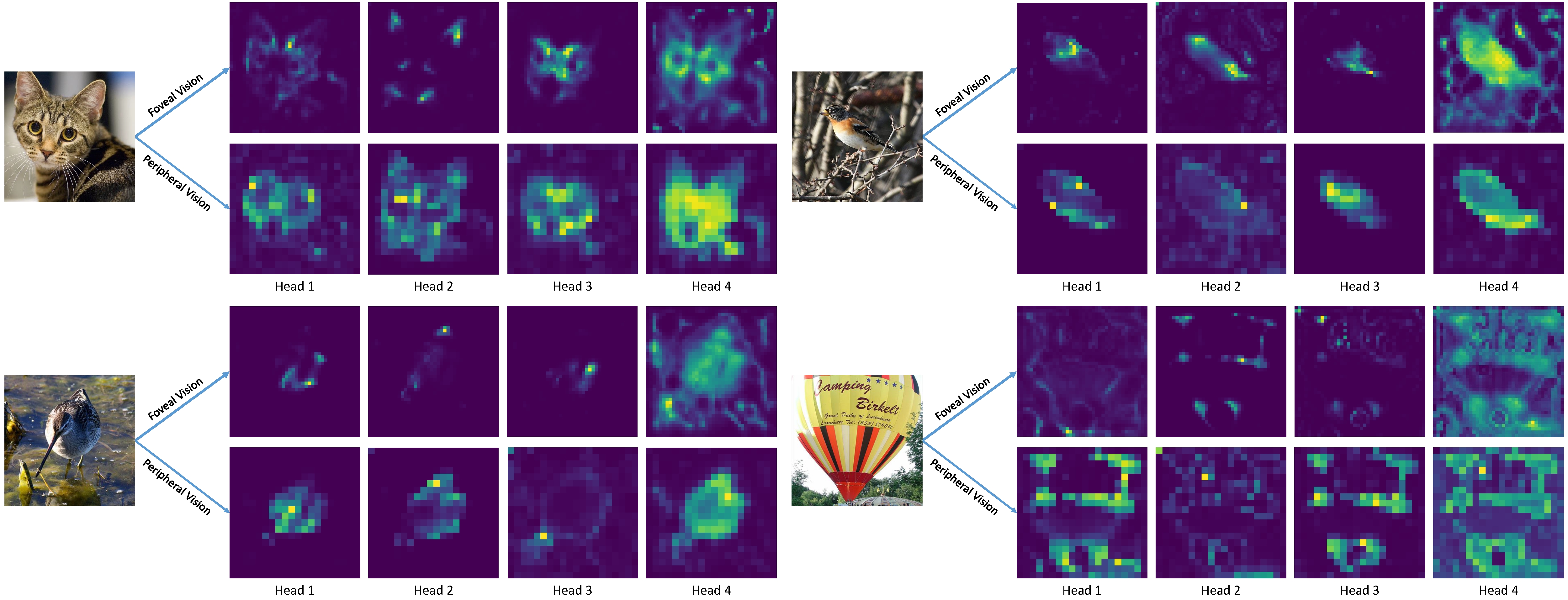

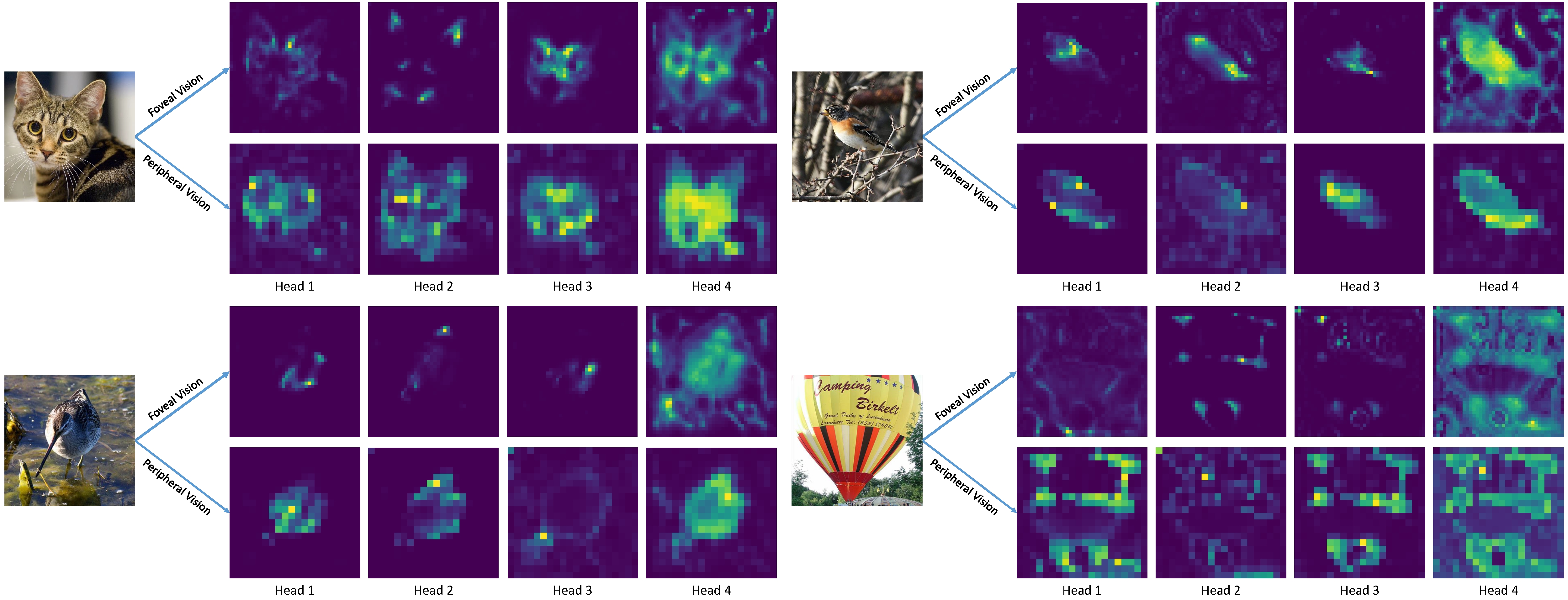

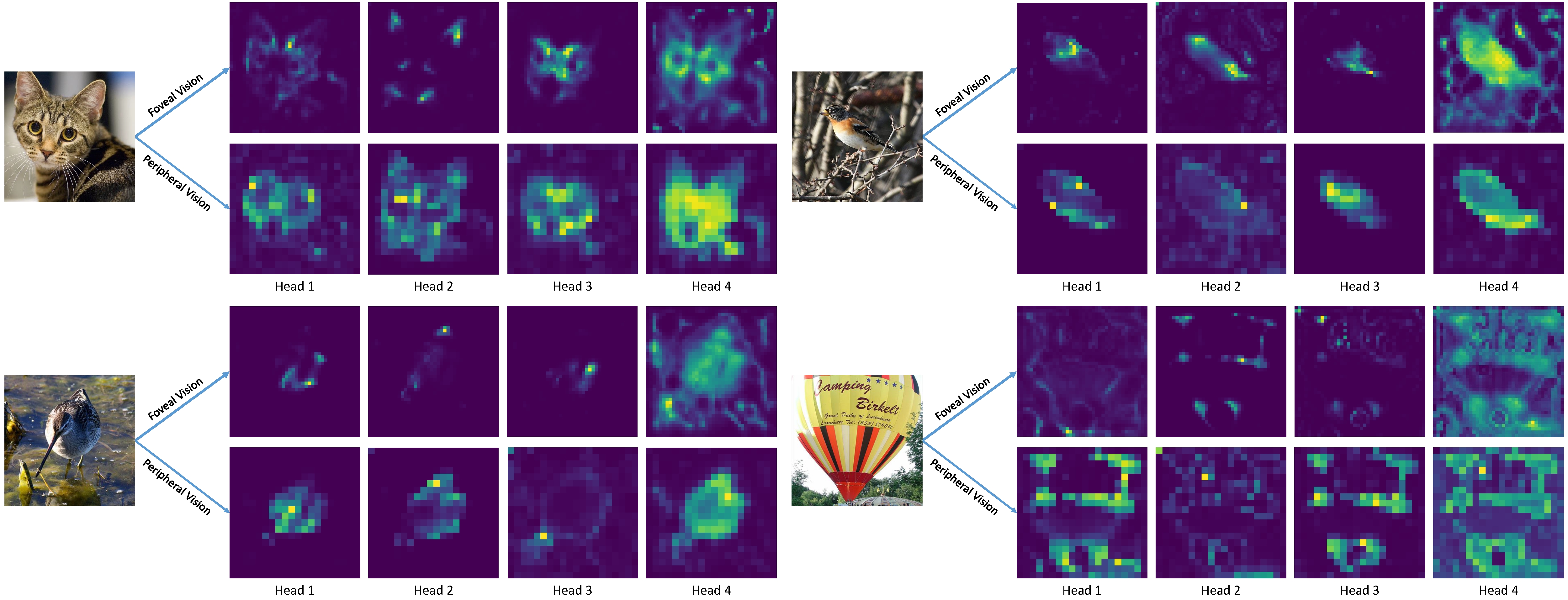

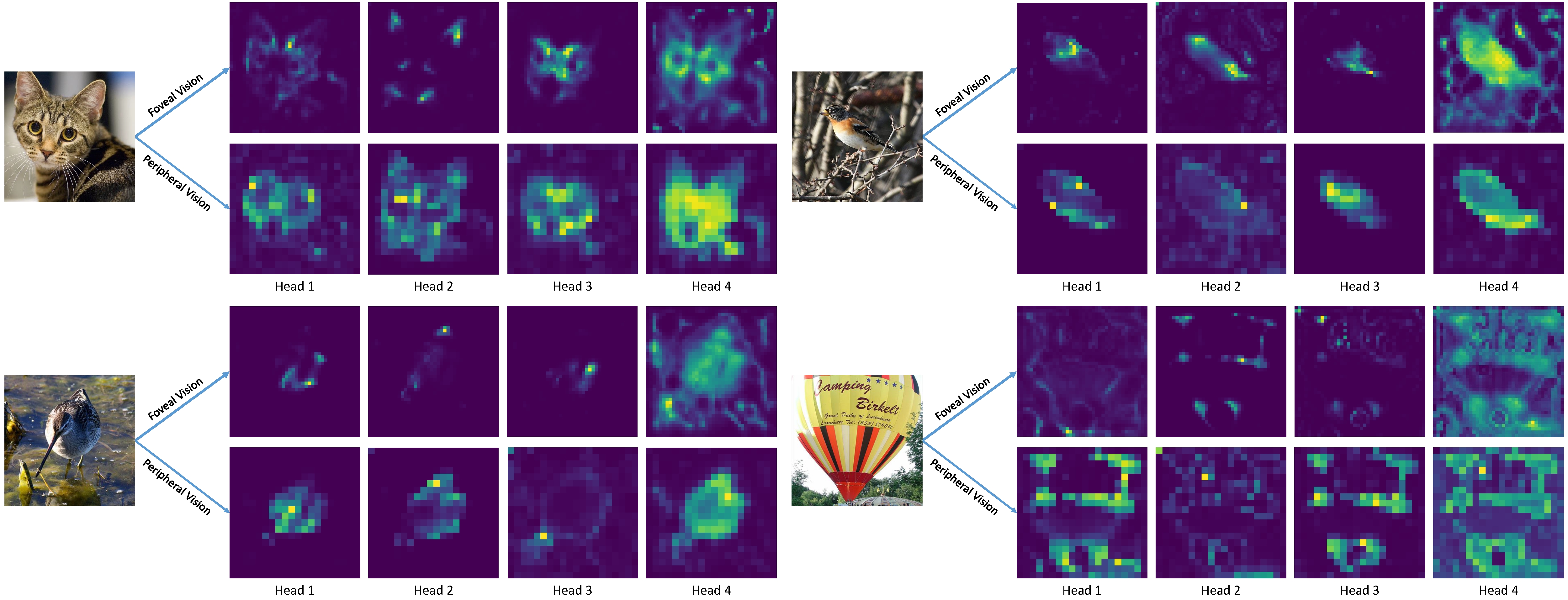

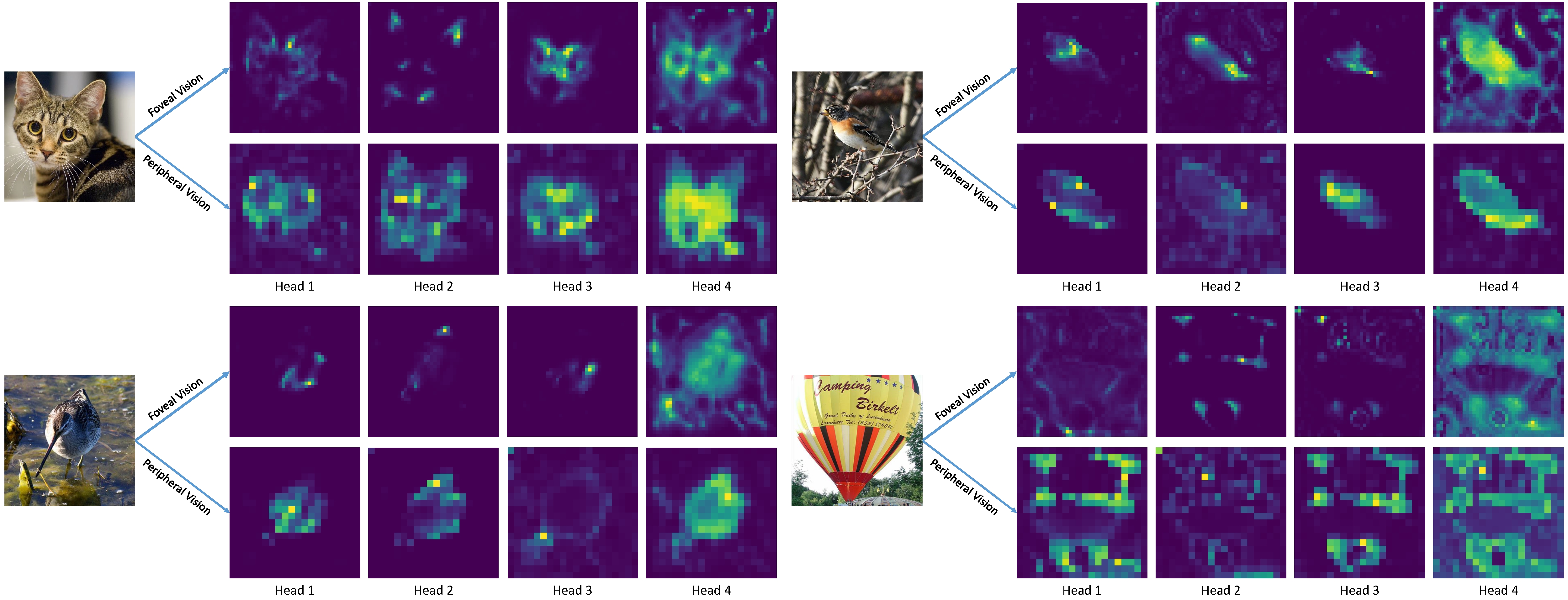

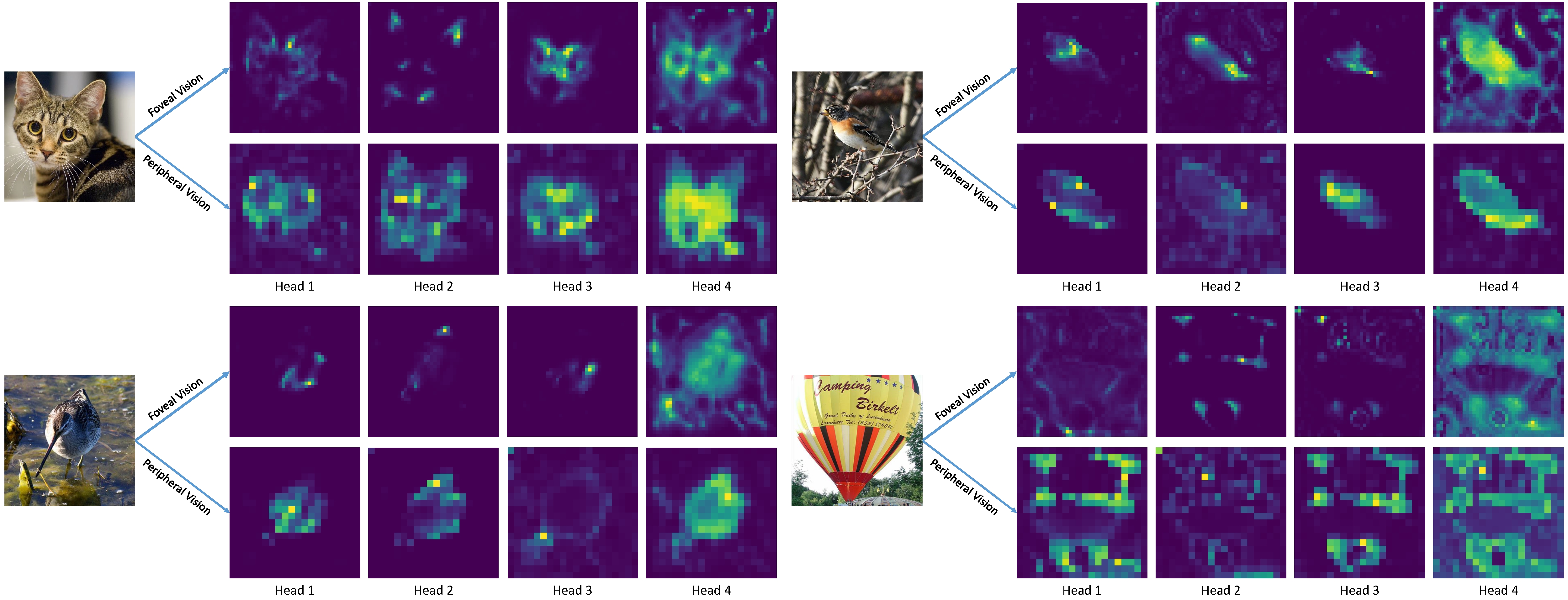

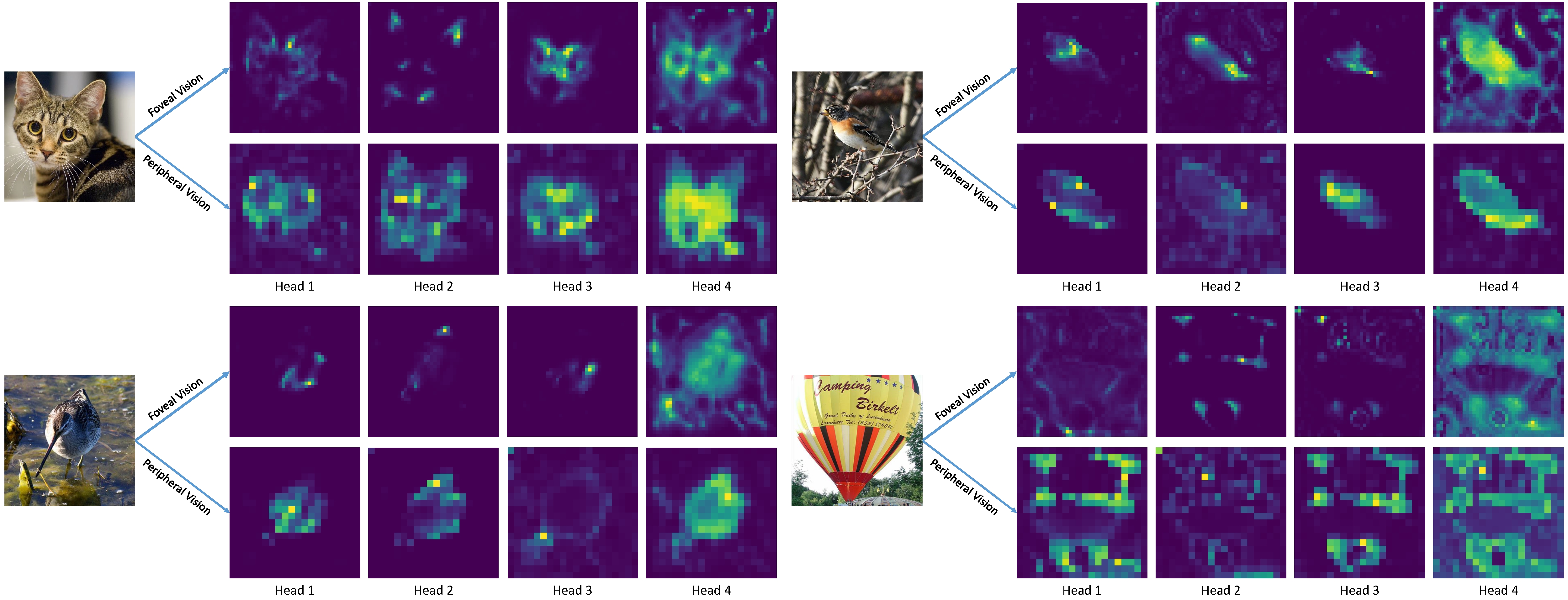

#### Attention Visualization:

## Model Zoo

### Image Classification

***Classification code & weights & configs & training logs are >>>[here](https://github.com/DaiShiResearch/TransNeXt/tree/main/classification/ )<<<.***

**ImageNet-1K 224x224 pre-trained models:**

| Model | #Params | #FLOPs |IN-1K | IN-A | IN-C↓ |IN-R|Sketch|IN-V2|Download |Config| Log |

|:---:|:---:|:---:|:---:| :---:|:---:|:---:|:---:| :---:|:---:|:---:|:---:|

| TransNeXt-Micro|12.8M|2.7G| 82.5 | 29.9 | 50.8|45.8|33.0|72.6|[model](https://huggingface.co/DaiShiResearch/transnext-micro-224-1k/resolve/main/transnext_micro_224_1k.pth?download=true) |[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/classification/configs/transnext_micro.py)|[log](https://huggingface.co/DaiShiResearch/transnext-micro-224-1k/raw/main/transnext_micro_224_1k.txt) |

| TransNeXt-Tiny |28.2M|5.7G| 84.0| 39.9| 46.5|49.6|37.6|73.8|[model](https://huggingface.co/DaiShiResearch/transnext-tiny-224-1k/resolve/main/transnext_tiny_224_1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/classification/configs/transnext_tiny.py)|[log](https://huggingface.co/DaiShiResearch/transnext-tiny-224-1k/raw/main/transnext_tiny_224_1k.txt)|

| TransNeXt-Small |49.7M|10.3G| 84.7| 47.1| 43.9|52.5| 39.7|74.8 |[model](https://huggingface.co/DaiShiResearch/transnext-small-224-1k/resolve/main/transnext_small_224_1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/classification/configs/transnext_small.py)|[log](https://huggingface.co/DaiShiResearch/transnext-small-224-1k/raw/main/transnext_small_224_1k.txt)|

| TransNeXt-Base |89.7M|18.4G| 84.8| 50.6|43.5|53.9|41.4|75.1| [model](https://huggingface.co/DaiShiResearch/transnext-base-224-1k/resolve/main/transnext_base_224_1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/classification/configs/transnext_base.py)|[log](https://huggingface.co/DaiShiResearch/transnext-base-224-1k/raw/main/transnext_base_224_1k.txt)|

**ImageNet-1K 384x384 fine-tuned models:**

| Model | #Params | #FLOPs |IN-1K | IN-A |IN-R|Sketch|IN-V2| Download |Config|

|:---:|:---:|:---:|:---:| :---:|:---:|:---:| :---:|:---:|:---:|

| TransNeXt-Small |49.7M|32.1G| 86.0| 58.3|56.4|43.2|76.8| [model](https://huggingface.co/DaiShiResearch/transnext-small-384-1k-ft-1k/resolve/main/transnext_small_384_1k_ft_1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/classification/configs/finetune/transnext_small_384_ft.py)|

| TransNeXt-Base |89.7M|56.3G| 86.2| 61.6|57.7|44.7|77.0| [model](https://huggingface.co/DaiShiResearch/transnext-base-384-1k-ft-1k/resolve/main/transnext_base_384_1k_ft_1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/classification/configs/finetune/transnext_base_384_ft.py)|

**ImageNet-1K 256x256 pre-trained model fully utilizing aggregated attention at all stages:**

*(See Table.9 in Appendix D.6 for details)*

| Model |Token mixer| #Params | #FLOPs |IN-1K |Download |Config| Log |

|:---:|:---:|:---:|:---:| :---:|:---:|:---:|:---:|

|TransNeXt-Micro|**A-A-A-A**|13.1M|3.3G| 82.6 |[model](https://huggingface.co/DaiShiResearch/transnext-micro-AAAA-256-1k/resolve/main/transnext_micro_AAAA_256_1k.pth?download=true) |[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/classification/configs/transnext_micro_AAAA_256.py)|[log](https://huggingface.co/DaiShiResearch/transnext-micro-AAAA-256-1k/blob/main/transnext_micro_AAAA_256_1k.txt) |

### Object Detection

***Object detection code & weights & configs & training logs are >>>[here](https://github.com/DaiShiResearch/TransNeXt/tree/main/detection/ )<<<.***

**COCO object detection and instance segmentation results using the Mask R-CNN method:**

| Backbone | Pretrained Model| Lr Schd| box mAP | mask mAP | #Params | Download |Config| Log |

|:---:|:---:|:---:|:---:| :---:|:---:|:---:|:---:|:---:|

| TransNeXt-Tiny | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-tiny-224-1k/resolve/main/transnext_tiny_224_1k.pth?download=true) |1x|49.9|44.6|47.9M|[model](https://huggingface.co/DaiShiResearch/maskrcnn-transnext-tiny-coco/resolve/main/mask_rcnn_transnext_tiny_fpn_1x_coco_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/detection/maskrcnn/configs/mask_rcnn_transnext_tiny_fpn_1x_coco.py)|[log](https://huggingface.co/DaiShiResearch/maskrcnn-transnext-tiny-coco/raw/main/mask_rcnn_transnext_tiny_fpn_1x_coco_in1k.log.json)|

| TransNeXt-Small | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-small-224-1k/resolve/main/transnext_small_224_1k.pth?download=true) |1x|51.1|45.5|69.3M|[model](https://huggingface.co/DaiShiResearch/maskrcnn-transnext-small-coco/resolve/main/mask_rcnn_transnext_small_fpn_1x_coco_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/detection/maskrcnn/configs/mask_rcnn_transnext_small_fpn_1x_coco.py)|[log](https://huggingface.co/DaiShiResearch/maskrcnn-transnext-small-coco/raw/main/mask_rcnn_transnext_small_fpn_1x_coco_in1k.log.json)|

| TransNeXt-Base | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-base-224-1k/resolve/main/transnext_base_224_1k.pth?download=true) |1x|51.7|45.9|109.2M|[model](https://huggingface.co/DaiShiResearch/maskrcnn-transnext-base-coco/resolve/main/mask_rcnn_transnext_base_fpn_1x_coco_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/detection/maskrcnn/configs/mask_rcnn_transnext_base_fpn_1x_coco.py)|[log](https://huggingface.co/DaiShiResearch/maskrcnn-transnext-base-coco/raw/main/mask_rcnn_transnext_base_fpn_1x_coco_in1k.log.json)|

**COCO object detection results using the DINO method:**

| Backbone | Pretrained Model| scales | epochs | box mAP | #Params | Download |Config| Log |

|:---:|:---:|:---:|:---:| :---:|:---:|:---:|:---:|:---:|

| TransNeXt-Tiny | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-tiny-224-1k/resolve/main/transnext_tiny_224_1k.pth?download=true)|4scale | 12|55.1|47.8M|[model](https://huggingface.co/DaiShiResearch/dino-4scale-transnext-tiny-coco/resolve/main/dino_4scale_transnext_tiny_12e_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/detection/dino/configs/dino-4scale_transnext_tiny-12e_coco.py)|[log](https://huggingface.co/DaiShiResearch/dino-4scale-transnext-tiny-coco/raw/main/dino_4scale_transnext_tiny_12e_in1k.json)|

| TransNeXt-Tiny | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-tiny-224-1k/resolve/main/transnext_tiny_224_1k.pth?download=true)|5scale | 12|55.7|48.1M|[model](https://huggingface.co/DaiShiResearch/dino-5scale-transnext-tiny-coco/resolve/main/dino_5scale_transnext_tiny_12e_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/detection/dino/configs/dino-5scale_transnext_tiny-12e_coco.py)|[log](https://huggingface.co/DaiShiResearch/dino-5scale-transnext-tiny-coco/raw/main/dino_5scale_transnext_tiny_12e_in1k.json)|

| TransNeXt-Small | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-small-224-1k/resolve/main/transnext_small_224_1k.pth?download=true)|5scale | 12|56.6|69.6M|[model](https://huggingface.co/DaiShiResearch/dino-5scale-transnext-small-coco/resolve/main/dino_5scale_transnext_small_12e_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/detection/dino/configs/dino-5scale_transnext_small-12e_coco.py)|[log](https://huggingface.co/DaiShiResearch/dino-5scale-transnext-small-coco/raw/main/dino_5scale_transnext_small_12e_in1k.json)|

| TransNeXt-Base | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-base-224-1k/resolve/main/transnext_base_224_1k.pth?download=true)|5scale | 12|57.1|110M|[model](https://huggingface.co/DaiShiResearch/dino-5scale-transnext-base-coco/resolve/main/dino_5scale_transnext_base_12e_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/detection/dino/configs/dino-5scale_transnext_base-12e_coco.py)|[log](https://huggingface.co/DaiShiResearch/dino-5scale-transnext-base-coco/raw/main/dino_5scale_transnext_base_12e_in1k.json)|

### Semantic Segmentation

***Semantic segmentation code & weights & configs & training logs are >>>[here](https://github.com/DaiShiResearch/TransNeXt/tree/main/segmentation/ )<<<.***

**ADE20K semantic segmentation results using the UPerNet method:**

| Backbone | Pretrained Model| Crop Size |Lr Schd| mIoU|mIoU (ms+flip)| #Params | Download |Config| Log |

|:---:|:---:|:---:|:---:|:---:|:---:|:---:|:---:|:---:|:---:|

| TransNeXt-Tiny | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-tiny-224-1k/resolve/main/transnext_tiny_224_1k.pth?download=true)|512x512|160K|51.1|51.5/51.7|59M|[model](https://huggingface.co/DaiShiResearch/upernet-transnext-tiny-ade/resolve/main/upernet_transnext_tiny_512x512_160k_ade20k_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/segmentation/upernet/configs/upernet_transnext_tiny_512x512_160k_ade20k_ss.py)|[log](https://huggingface.co/DaiShiResearch/upernet-transnext-tiny-ade/blob/main/upernet_transnext_tiny_512x512_160k_ade20k_ss.log.json)|

| TransNeXt-Small | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-small-224-1k/resolve/main/transnext_small_224_1k.pth?download=true)|512x512|160K|52.2|52.5/51.8|80M|[model](https://huggingface.co/DaiShiResearch/upernet-transnext-small-ade/resolve/main/upernet_transnext_small_512x512_160k_ade20k_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/segmentation/upernet/configs/upernet_transnext_small_512x512_160k_ade20k_ss.py)|[log](https://huggingface.co/DaiShiResearch/upernet-transnext-small-ade/blob/main/upernet_transnext_small_512x512_160k_ade20k_ss.log.json)|

| TransNeXt-Base | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-base-224-1k/resolve/main/transnext_base_224_1k.pth?download=true)|512x512|160K|53.0|53.5/53.7|121M|[model](https://huggingface.co/DaiShiResearch/upernet-transnext-base-ade/resolve/main/upernet_transnext_base_512x512_160k_ade20k_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/segmentation/upernet/configs/upernet_transnext_base_512x512_160k_ade20k_ss.py)|[log](https://huggingface.co/DaiShiResearch/upernet-transnext-base-ade/blob/main/upernet_transnext_base_512x512_160k_ade20k_ss.log.json)|

* In the context of multi-scale evaluation, TransNeXt reports test results under two distinct scenarios: **interpolation** and **extrapolation** of relative position bias.

**ADE20K semantic segmentation results using the Mask2Former method:**

| Backbone | Pretrained Model| Crop Size |Lr Schd| mIoU| #Params | Download |Config| Log |

|:---:|:---:|:---:|:---:|:---:|:---:|:---:|:---:|:---:|

| TransNeXt-Tiny | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-tiny-224-1k/resolve/main/transnext_tiny_224_1k.pth?download=true)|512x512|160K|53.4|47.5M|[model](https://huggingface.co/DaiShiResearch/mask2former-transnext-tiny-ade/resolve/main/mask2former_transnext_tiny_512x512_160k_ade20k_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/segmentation/mask2former/configs/mask2former_transnext_tiny_160k_ade20k-512x512.py)|[log](https://huggingface.co/DaiShiResearch/mask2former-transnext-tiny-ade/raw/main/mask2former_transnext_tiny_512x512_160k_ade20k_in1k.json)|

| TransNeXt-Small | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-small-224-1k/resolve/main/transnext_small_224_1k.pth?download=true)|512x512|160K|54.1|69.0M|[model](https://huggingface.co/DaiShiResearch/mask2former-transnext-small-ade/resolve/main/mask2former_transnext_small_512x512_160k_ade20k_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/segmentation/mask2former/configs/mask2former_transnext_small_160k_ade20k-512x512.py)|[log](https://huggingface.co/DaiShiResearch/mask2former-transnext-small-ade/raw/main/mask2former_transnext_small_512x512_160k_ade20k_in1k.json)|

| TransNeXt-Base | [ImageNet-1K](https://huggingface.co/DaiShiResearch/transnext-base-224-1k/resolve/main/transnext_base_224_1k.pth?download=true)|512x512|160K|54.7|109M|[model](https://huggingface.co/DaiShiResearch/mask2former-transnext-base-ade/resolve/main/mask2former_transnext_base_512x512_160k_ade20k_in1k.pth?download=true)|[config](https://github.com/DaiShiResearch/TransNeXt/tree/main/segmentation/mask2former/configs/mask2former_transnext_base_160k_ade20k-512x512.py)|[log](https://huggingface.co/DaiShiResearch/mask2former-transnext-base-ade/raw/main/mask2former_transnext_base_512x512_160k_ade20k_in1k.json)|

## Citation

If you find our work helpful, please consider citing the following bibtex. We would greatly appreciate a star for this

project.

@misc{shi2023transnext,

author = {Dai Shi},

title = {TransNeXt: Robust Foveal Visual Perception for Vision Transformers},

year = {2023},

eprint = {arXiv:2311.17132},

archivePrefix={arXiv},

primaryClass={cs.CV}

} | {"language": ["en"], "license": "apache-2.0", "library_name": "pytorch", "tags": ["vision"], "datasets": ["imagenet-1k"], "metrics": ["accuracy"], "pipeline_tag": "image-classification"} | DaiShiResearch/transnext-small-224-1k | null | [

"pytorch",

"vision",

"image-classification",

"en",

"dataset:imagenet-1k",

"arxiv:2311.17132",

"license:apache-2.0",

"region:us"

] | null | 2024-04-16T22:57:10+00:00 | [

"2311.17132"

] | [

"en"

] | TAGS

#pytorch #vision #image-classification #en #dataset-imagenet-1k #arxiv-2311.17132 #license-apache-2.0 #region-us

| TransNeXt

=========

Official Model release

for "TransNeXt: Robust Foveal Visual Perception for Vision Transformers" [CVPR 2024]

.

Model Details

-------------

* Code: URL

* Paper: TransNeXt: Robust Foveal Visual Perception for Vision Transformers

* Author: Dai Shi

* Email: daishiresearch@URL

Methods

-------

#### Pixel-focused attention (Left) & aggregated attention (Right):

!pixel-focused\_attention

#### Convolutional GLU (First on the right):

!Convolutional GLU

Results

-------

#### Image Classification, Detection and Segmentation:

!experiment\_figure

#### Attention Visualization:

!foveal\_peripheral\_vision

Model Zoo

---------

### Image Classification

*Classification code & weights & configs & training logs are >>>here<<<.*

ImageNet-1K 224x224 pre-trained models:

ImageNet-1K 384x384 fine-tuned models:

ImageNet-1K 256x256 pre-trained model fully utilizing aggregated attention at all stages:

*(See Table.9 in Appendix D.6 for details)*

### Object Detection

*Object detection code & weights & configs & training logs are >>>here<<<.*

COCO object detection and instance segmentation results using the Mask R-CNN method:

COCO object detection results using the DINO method:

### Semantic Segmentation

*Semantic segmentation code & weights & configs & training logs are >>>here<<<.*

ADE20K semantic segmentation results using the UPerNet method:

* In the context of multi-scale evaluation, TransNeXt reports test results under two distinct scenarios: interpolation and extrapolation of relative position bias.

ADE20K semantic segmentation results using the Mask2Former method:

If you find our work helpful, please consider citing the following bibtex. We would greatly appreciate a star for this

project.

```

@misc{shi2023transnext,

author = {Dai Shi},

title = {TransNeXt: Robust Foveal Visual Perception for Vision Transformers},

year = {2023},

eprint = {arXiv:2311.17132},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

```

| [

"#### Pixel-focused attention (Left) & aggregated attention (Right):\n\n\n!pixel-focused\\_attention",

"#### Convolutional GLU (First on the right):\n\n\n!Convolutional GLU\n\n\nResults\n-------",

"#### Image Classification, Detection and Segmentation:\n\n\n!experiment\\_figure",

"#### Attention Visualization:\n\n\n!foveal\\_peripheral\\_vision\n\n\nModel Zoo\n---------",

"### Image Classification\n\n\n*Classification code & weights & configs & training logs are >>>here<<<.*\n\n\nImageNet-1K 224x224 pre-trained models:\n\n\n\nImageNet-1K 384x384 fine-tuned models:\n\n\n\nImageNet-1K 256x256 pre-trained model fully utilizing aggregated attention at all stages:\n\n\n*(See Table.9 in Appendix D.6 for details)*",

"### Object Detection\n\n\n*Object detection code & weights & configs & training logs are >>>here<<<.*\n\n\nCOCO object detection and instance segmentation results using the Mask R-CNN method:\n\n\n\nCOCO object detection results using the DINO method:",

"### Semantic Segmentation\n\n\n*Semantic segmentation code & weights & configs & training logs are >>>here<<<.*\n\n\nADE20K semantic segmentation results using the UPerNet method:\n\n\n\n* In the context of multi-scale evaluation, TransNeXt reports test results under two distinct scenarios: interpolation and extrapolation of relative position bias.\n\n\nADE20K semantic segmentation results using the Mask2Former method:\n\n\n\nIf you find our work helpful, please consider citing the following bibtex. We would greatly appreciate a star for this\nproject.\n\n\n\n```\n@misc{shi2023transnext,\n author = {Dai Shi},\n title = {TransNeXt: Robust Foveal Visual Perception for Vision Transformers},\n year = {2023},\n eprint = {arXiv:2311.17132},\n archivePrefix={arXiv},\n primaryClass={cs.CV}\n}\n\n```"

] | [

"TAGS\n#pytorch #vision #image-classification #en #dataset-imagenet-1k #arxiv-2311.17132 #license-apache-2.0 #region-us \n",

"#### Pixel-focused attention (Left) & aggregated attention (Right):\n\n\n!pixel-focused\\_attention",

"#### Convolutional GLU (First on the right):\n\n\n!Convolutional GLU\n\n\nResults\n-------",

"#### Image Classification, Detection and Segmentation:\n\n\n!experiment\\_figure",

"#### Attention Visualization:\n\n\n!foveal\\_peripheral\\_vision\n\n\nModel Zoo\n---------",

"### Image Classification\n\n\n*Classification code & weights & configs & training logs are >>>here<<<.*\n\n\nImageNet-1K 224x224 pre-trained models:\n\n\n\nImageNet-1K 384x384 fine-tuned models:\n\n\n\nImageNet-1K 256x256 pre-trained model fully utilizing aggregated attention at all stages:\n\n\n*(See Table.9 in Appendix D.6 for details)*",

"### Object Detection\n\n\n*Object detection code & weights & configs & training logs are >>>here<<<.*\n\n\nCOCO object detection and instance segmentation results using the Mask R-CNN method:\n\n\n\nCOCO object detection results using the DINO method:",

"### Semantic Segmentation\n\n\n*Semantic segmentation code & weights & configs & training logs are >>>here<<<.*\n\n\nADE20K semantic segmentation results using the UPerNet method:\n\n\n\n* In the context of multi-scale evaluation, TransNeXt reports test results under two distinct scenarios: interpolation and extrapolation of relative position bias.\n\n\nADE20K semantic segmentation results using the Mask2Former method:\n\n\n\nIf you find our work helpful, please consider citing the following bibtex. We would greatly appreciate a star for this\nproject.\n\n\n\n```\n@misc{shi2023transnext,\n author = {Dai Shi},\n title = {TransNeXt: Robust Foveal Visual Perception for Vision Transformers},\n year = {2023},\n eprint = {arXiv:2311.17132},\n archivePrefix={arXiv},\n primaryClass={cs.CV}\n}\n\n```"

] |

null | peft |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# GUE_EMP_H3K4me2-seqsight_32768_512_43M-L32_all

This model is a fine-tuned version of [mahdibaghbanzadeh/seqsight_32768_512_43M](https://huggingface.co/mahdibaghbanzadeh/seqsight_32768_512_43M) on the [mahdibaghbanzadeh/GUE_EMP_H3K4me2](https://huggingface.co/datasets/mahdibaghbanzadeh/GUE_EMP_H3K4me2) dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7291

- F1 Score: 0.5835

- Accuracy: 0.5963

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 2048

- eval_batch_size: 2048

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 10000

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 Score | Accuracy |

|:-------------:|:------:|:-----:|:---------------:|:--------:|:--------:|

| 0.6613 | 16.67 | 200 | 0.6624 | 0.5765 | 0.6119 |

| 0.5901 | 33.33 | 400 | 0.7221 | 0.5913 | 0.6025 |

| 0.5271 | 50.0 | 600 | 0.7747 | 0.5806 | 0.5777 |

| 0.476 | 66.67 | 800 | 0.8449 | 0.5662 | 0.5696 |

| 0.439 | 83.33 | 1000 | 0.8451 | 0.5652 | 0.5718 |

| 0.4151 | 100.0 | 1200 | 0.9032 | 0.5618 | 0.5676 |

| 0.3953 | 116.67 | 1400 | 0.9255 | 0.5612 | 0.5634 |

| 0.3766 | 133.33 | 1600 | 0.9668 | 0.5572 | 0.5539 |

| 0.3612 | 150.0 | 1800 | 0.9742 | 0.5636 | 0.5676 |

| 0.3482 | 166.67 | 2000 | 1.0234 | 0.5556 | 0.5533 |

| 0.3367 | 183.33 | 2200 | 1.0741 | 0.5487 | 0.5455 |

| 0.3268 | 200.0 | 2400 | 1.1159 | 0.5534 | 0.5513 |

| 0.3176 | 216.67 | 2600 | 1.0693 | 0.5619 | 0.5617 |

| 0.3087 | 233.33 | 2800 | 1.0744 | 0.5581 | 0.5575 |

| 0.3037 | 250.0 | 3000 | 1.0694 | 0.5660 | 0.5660 |

| 0.2951 | 266.67 | 3200 | 1.1822 | 0.5566 | 0.5543 |

| 0.2897 | 283.33 | 3400 | 1.1189 | 0.5559 | 0.5536 |

| 0.2835 | 300.0 | 3600 | 1.0699 | 0.5608 | 0.5588 |

| 0.278 | 316.67 | 3800 | 1.1427 | 0.5602 | 0.5582 |

| 0.2706 | 333.33 | 4000 | 1.1472 | 0.5597 | 0.5595 |

| 0.2672 | 350.0 | 4200 | 1.1485 | 0.5525 | 0.5510 |

| 0.2623 | 366.67 | 4400 | 1.1635 | 0.5575 | 0.5546 |

| 0.2564 | 383.33 | 4600 | 1.1665 | 0.5631 | 0.5617 |

| 0.2523 | 400.0 | 4800 | 1.2188 | 0.5661 | 0.5663 |

| 0.2488 | 416.67 | 5000 | 1.1831 | 0.5655 | 0.5650 |

| 0.2444 | 433.33 | 5200 | 1.2457 | 0.5598 | 0.5575 |

| 0.2406 | 450.0 | 5400 | 1.2023 | 0.5615 | 0.5611 |

| 0.2363 | 466.67 | 5600 | 1.1984 | 0.5607 | 0.5575 |

| 0.2337 | 483.33 | 5800 | 1.2028 | 0.5620 | 0.5617 |

| 0.2293 | 500.0 | 6000 | 1.2133 | 0.5609 | 0.5595 |

| 0.2254 | 516.67 | 6200 | 1.2177 | 0.5613 | 0.5595 |

| 0.223 | 533.33 | 6400 | 1.2381 | 0.5616 | 0.5595 |

| 0.219 | 550.0 | 6600 | 1.2617 | 0.5591 | 0.5559 |

| 0.2177 | 566.67 | 6800 | 1.2483 | 0.5600 | 0.5582 |

| 0.2149 | 583.33 | 7000 | 1.2313 | 0.5620 | 0.5614 |

| 0.2137 | 600.0 | 7200 | 1.2816 | 0.5588 | 0.5562 |

| 0.2086 | 616.67 | 7400 | 1.2936 | 0.5595 | 0.5572 |

| 0.2074 | 633.33 | 7600 | 1.2590 | 0.5623 | 0.5614 |

| 0.2042 | 650.0 | 7800 | 1.2862 | 0.5628 | 0.5601 |

| 0.2031 | 666.67 | 8000 | 1.2691 | 0.5600 | 0.5608 |

| 0.2007 | 683.33 | 8200 | 1.3315 | 0.5617 | 0.5601 |

| 0.2008 | 700.0 | 8400 | 1.3048 | 0.5613 | 0.5614 |

| 0.1972 | 716.67 | 8600 | 1.2883 | 0.5624 | 0.5604 |

| 0.1959 | 733.33 | 8800 | 1.2794 | 0.5628 | 0.5617 |

| 0.196 | 750.0 | 9000 | 1.2947 | 0.5636 | 0.5627 |

| 0.1944 | 766.67 | 9200 | 1.2998 | 0.5664 | 0.5647 |

| 0.1932 | 783.33 | 9400 | 1.2988 | 0.5647 | 0.5627 |

| 0.1917 | 800.0 | 9600 | 1.2993 | 0.5640 | 0.5624 |

| 0.1912 | 816.67 | 9800 | 1.3223 | 0.5623 | 0.5608 |

| 0.1909 | 833.33 | 10000 | 1.3096 | 0.5621 | 0.5604 |

### Framework versions

- PEFT 0.9.0

- Transformers 4.38.2

- Pytorch 2.2.0+cu121

- Datasets 2.17.1

- Tokenizers 0.15.2 | {"library_name": "peft", "tags": ["generated_from_trainer"], "metrics": ["accuracy"], "base_model": "mahdibaghbanzadeh/seqsight_32768_512_43M", "model-index": [{"name": "GUE_EMP_H3K4me2-seqsight_32768_512_43M-L32_all", "results": []}]} | mahdibaghbanzadeh/GUE_EMP_H3K4me2-seqsight_32768_512_43M-L32_all | null | [

"peft",

"safetensors",

"generated_from_trainer",

"base_model:mahdibaghbanzadeh/seqsight_32768_512_43M",

"region:us"

] | null | 2024-04-16T22:57:32+00:00 | [] | [] | TAGS

#peft #safetensors #generated_from_trainer #base_model-mahdibaghbanzadeh/seqsight_32768_512_43M #region-us

| GUE\_EMP\_H3K4me2-seqsight\_32768\_512\_43M-L32\_all

====================================================

This model is a fine-tuned version of mahdibaghbanzadeh/seqsight\_32768\_512\_43M on the mahdibaghbanzadeh/GUE\_EMP\_H3K4me2 dataset.

It achieves the following results on the evaluation set:

* Loss: 0.7291

* F1 Score: 0.5835

* Accuracy: 0.5963

Model description

-----------------

More information needed

Intended uses & limitations

---------------------------

More information needed

Training and evaluation data

----------------------------

More information needed

Training procedure

------------------

### Training hyperparameters

The following hyperparameters were used during training:

* learning\_rate: 0.0005

* train\_batch\_size: 2048

* eval\_batch\_size: 2048

* seed: 42

* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

* lr\_scheduler\_type: linear

* training\_steps: 10000

### Training results

### Framework versions

* PEFT 0.9.0

* Transformers 4.38.2

* Pytorch 2.2.0+cu121

* Datasets 2.17.1

* Tokenizers 0.15.2

| [

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 0.0005\n* train\\_batch\\_size: 2048\n* eval\\_batch\\_size: 2048\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* training\\_steps: 10000",

"### Training results",

"### Framework versions\n\n\n* PEFT 0.9.0\n* Transformers 4.38.2\n* Pytorch 2.2.0+cu121\n* Datasets 2.17.1\n* Tokenizers 0.15.2"

] | [

"TAGS\n#peft #safetensors #generated_from_trainer #base_model-mahdibaghbanzadeh/seqsight_32768_512_43M #region-us \n",

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 0.0005\n* train\\_batch\\_size: 2048\n* eval\\_batch\\_size: 2048\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* training\\_steps: 10000",

"### Training results",

"### Framework versions\n\n\n* PEFT 0.9.0\n* Transformers 4.38.2\n* Pytorch 2.2.0+cu121\n* Datasets 2.17.1\n* Tokenizers 0.15.2"

] |

text-generation | transformers | # merge

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details

### Merge Method

This model was merged using the SLERP merge method.

### Models Merged

The following models were included in the merge:

* [Citaman/command-r-18-layer](https://huggingface.co/Citaman/command-r-18-layer)

### Configuration

The following YAML configuration was used to produce this model:

```yaml

slices:

- sources:

- model: Citaman/command-r-18-layer

layer_range: [0, 17]

- model: Citaman/command-r-18-layer

layer_range: [1, 18]

merge_method: slerp

base_model: Citaman/command-r-18-layer

parameters:

t:

- filter: self_attn

value: [0, 0.5, 0.3, 0.7, 1]

- filter: mlp

value: [1, 0.5, 0.7, 0.3, 0]

- value: 0.5

dtype: bfloat16

```

| {"library_name": "transformers", "tags": ["mergekit", "merge"], "base_model": ["Citaman/command-r-18-layer"]} | Citaman/command-r-17-layer | null | [

"transformers",

"safetensors",

"cohere",

"text-generation",

"mergekit",

"merge",

"conversational",

"base_model:Citaman/command-r-18-layer",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | null | 2024-04-16T22:58:22+00:00 | [] | [] | TAGS

#transformers #safetensors #cohere #text-generation #mergekit #merge #conversational #base_model-Citaman/command-r-18-layer #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us

| # merge

This is a merge of pre-trained language models created using mergekit.

## Merge Details

### Merge Method

This model was merged using the SLERP merge method.

### Models Merged

The following models were included in the merge:

* Citaman/command-r-18-layer

### Configuration

The following YAML configuration was used to produce this model:

| [

"# merge\n\nThis is a merge of pre-trained language models created using mergekit.",

"## Merge Details",

"### Merge Method\n\nThis model was merged using the SLERP merge method.",

"### Models Merged\n\nThe following models were included in the merge:\n* Citaman/command-r-18-layer",

"### Configuration\n\nThe following YAML configuration was used to produce this model:"

] | [

"TAGS\n#transformers #safetensors #cohere #text-generation #mergekit #merge #conversational #base_model-Citaman/command-r-18-layer #autotrain_compatible #endpoints_compatible #text-generation-inference #region-us \n",

"# merge\n\nThis is a merge of pre-trained language models created using mergekit.",

"## Merge Details",

"### Merge Method\n\nThis model was merged using the SLERP merge method.",

"### Models Merged\n\nThe following models were included in the merge:\n* Citaman/command-r-18-layer",

"### Configuration\n\nThe following YAML configuration was used to produce this model:"

] |

null | transformers |

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed]

| {"library_name": "transformers", "tags": []} | arzans9/hasil | null | [

"transformers",

"safetensors",

"vision-encoder-decoder",

"arxiv:1910.09700",

"endpoints_compatible",

"region:us"

] | null | 2024-04-16T23:00:30+00:00 | [

"1910.09700"

] | [] | TAGS

#transformers #safetensors #vision-encoder-decoder #arxiv-1910.09700 #endpoints_compatible #region-us

|

# Model Card for Model ID

## Model Details

### Model Description

This is the model card of a transformers model that has been pushed on the Hub. This model card has been automatically generated.

- Developed by:

- Funded by [optional]:

- Shared by [optional]:

- Model type:

- Language(s) (NLP):

- License:

- Finetuned from model [optional]:

### Model Sources [optional]

- Repository:

- Paper [optional]:

- Demo [optional]:

## Uses

### Direct Use

### Downstream Use [optional]

### Out-of-Scope Use

## Bias, Risks, and Limitations

### Recommendations

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

## Training Details

### Training Data

### Training Procedure

#### Preprocessing [optional]

#### Training Hyperparameters

- Training regime:

#### Speeds, Sizes, Times [optional]

## Evaluation

### Testing Data, Factors & Metrics

#### Testing Data

#### Factors

#### Metrics

### Results

#### Summary

## Model Examination [optional]

## Environmental Impact

Carbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).

- Hardware Type:

- Hours used:

- Cloud Provider:

- Compute Region:

- Carbon Emitted:

## Technical Specifications [optional]

### Model Architecture and Objective

### Compute Infrastructure

#### Hardware

#### Software

[optional]

BibTeX:

APA:

## Glossary [optional]

## More Information [optional]

## Model Card Authors [optional]

## Model Card Contact

| [

"# Model Card for Model ID",

"## Model Details",

"### Model Description\n\n\n\nThis is the model card of a transformers model that has been pushed on the Hub. This model card has been automatically generated.\n\n- Developed by: \n- Funded by [optional]: \n- Shared by [optional]: \n- Model type: \n- Language(s) (NLP): \n- License: \n- Finetuned from model [optional]:",

"### Model Sources [optional]\n\n\n\n- Repository: \n- Paper [optional]: \n- Demo [optional]:",

"## Uses",

"### Direct Use",

"### Downstream Use [optional]",

"### Out-of-Scope Use",

"## Bias, Risks, and Limitations",

"### Recommendations\n\n\n\nUsers (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.",

"## How to Get Started with the Model\n\nUse the code below to get started with the model.",

"## Training Details",

"### Training Data",

"### Training Procedure",

"#### Preprocessing [optional]",

"#### Training Hyperparameters\n\n- Training regime:",

"#### Speeds, Sizes, Times [optional]",

"## Evaluation",

"### Testing Data, Factors & Metrics",

"#### Testing Data",

"#### Factors",

"#### Metrics",

"### Results",

"#### Summary",

"## Model Examination [optional]",

"## Environmental Impact\n\n\n\nCarbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).\n\n- Hardware Type: \n- Hours used: \n- Cloud Provider: \n- Compute Region: \n- Carbon Emitted:",

"## Technical Specifications [optional]",

"### Model Architecture and Objective",

"### Compute Infrastructure",

"#### Hardware",

"#### Software\n\n\n\n[optional]\n\n\n\nBibTeX:\n\n\n\nAPA:",

"## Glossary [optional]",

"## More Information [optional]",

"## Model Card Authors [optional]",

"## Model Card Contact"

] | [

"TAGS\n#transformers #safetensors #vision-encoder-decoder #arxiv-1910.09700 #endpoints_compatible #region-us \n",

"# Model Card for Model ID",

"## Model Details",

"### Model Description\n\n\n\nThis is the model card of a transformers model that has been pushed on the Hub. This model card has been automatically generated.\n\n- Developed by: \n- Funded by [optional]: \n- Shared by [optional]: \n- Model type: \n- Language(s) (NLP): \n- License: \n- Finetuned from model [optional]:",

"### Model Sources [optional]\n\n\n\n- Repository: \n- Paper [optional]: \n- Demo [optional]:",

"## Uses",

"### Direct Use",

"### Downstream Use [optional]",

"### Out-of-Scope Use",

"## Bias, Risks, and Limitations",

"### Recommendations\n\n\n\nUsers (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.",

"## How to Get Started with the Model\n\nUse the code below to get started with the model.",

"## Training Details",

"### Training Data",

"### Training Procedure",

"#### Preprocessing [optional]",

"#### Training Hyperparameters\n\n- Training regime:",

"#### Speeds, Sizes, Times [optional]",

"## Evaluation",

"### Testing Data, Factors & Metrics",

"#### Testing Data",

"#### Factors",

"#### Metrics",

"### Results",

"#### Summary",

"## Model Examination [optional]",

"## Environmental Impact\n\n\n\nCarbon emissions can be estimated using the Machine Learning Impact calculator presented in Lacoste et al. (2019).\n\n- Hardware Type: \n- Hours used: \n- Cloud Provider: \n- Compute Region: \n- Carbon Emitted:",

"## Technical Specifications [optional]",

"### Model Architecture and Objective",

"### Compute Infrastructure",

"#### Hardware",

"#### Software\n\n\n\n[optional]\n\n\n\nBibTeX:\n\n\n\nAPA:",

"## Glossary [optional]",

"## More Information [optional]",

"## Model Card Authors [optional]",

"## Model Card Contact"

] |

null | peft |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# GUE_EMP_H3K9ac-seqsight_32768_512_43M-L32_all

This model is a fine-tuned version of [mahdibaghbanzadeh/seqsight_32768_512_43M](https://huggingface.co/mahdibaghbanzadeh/seqsight_32768_512_43M) on the [mahdibaghbanzadeh/GUE_EMP_H3K9ac](https://huggingface.co/datasets/mahdibaghbanzadeh/GUE_EMP_H3K9ac) dataset.

It achieves the following results on the evaluation set:

- Loss: 1.1794

- F1 Score: 0.6081

- Accuracy: 0.6071

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 2048

- eval_batch_size: 2048

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 10000

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 Score | Accuracy |

|:-------------:|:------:|:-----:|:---------------:|:--------:|:--------:|

| 0.6617 | 18.18 | 200 | 0.6671 | 0.6050 | 0.6114 |

| 0.5709 | 36.36 | 400 | 0.7096 | 0.6114 | 0.6114 |

| 0.4961 | 54.55 | 600 | 0.7751 | 0.6051 | 0.6060 |

| 0.4402 | 72.73 | 800 | 0.8118 | 0.6101 | 0.6099 |

| 0.3998 | 90.91 | 1000 | 0.8499 | 0.6129 | 0.6121 |

| 0.3713 | 109.09 | 1200 | 0.8935 | 0.6026 | 0.6020 |

| 0.3506 | 127.27 | 1400 | 0.9348 | 0.6107 | 0.6099 |

| 0.331 | 145.45 | 1600 | 0.9848 | 0.5935 | 0.5955 |

| 0.3168 | 163.64 | 1800 | 0.9878 | 0.6086 | 0.6081 |

| 0.302 | 181.82 | 2000 | 1.0570 | 0.6051 | 0.6045 |

| 0.2915 | 200.0 | 2200 | 1.0663 | 0.6050 | 0.6042 |

| 0.2818 | 218.18 | 2400 | 1.0933 | 0.6049 | 0.6056 |

| 0.2729 | 236.36 | 2600 | 1.1083 | 0.5952 | 0.5945 |

| 0.2656 | 254.55 | 2800 | 1.1346 | 0.6008 | 0.6006 |

| 0.2597 | 272.73 | 3000 | 1.1517 | 0.6004 | 0.5999 |

| 0.2557 | 290.91 | 3200 | 1.1102 | 0.5992 | 0.5991 |

| 0.2476 | 309.09 | 3400 | 1.1596 | 0.5959 | 0.5966 |

| 0.2419 | 327.27 | 3600 | 1.1836 | 0.5995 | 0.5995 |

| 0.2356 | 345.45 | 3800 | 1.1120 | 0.6057 | 0.6049 |

| 0.2312 | 363.64 | 4000 | 1.1530 | 0.6061 | 0.6053 |

| 0.2266 | 381.82 | 4200 | 1.1690 | 0.6045 | 0.6042 |

| 0.2224 | 400.0 | 4400 | 1.1636 | 0.6018 | 0.6013 |

| 0.2175 | 418.18 | 4600 | 1.1667 | 0.5932 | 0.5930 |

| 0.2148 | 436.36 | 4800 | 1.1544 | 0.6032 | 0.6027 |

| 0.2092 | 454.55 | 5000 | 1.1731 | 0.6027 | 0.6020 |

| 0.2055 | 472.73 | 5200 | 1.1572 | 0.6043 | 0.6035 |

| 0.2024 | 490.91 | 5400 | 1.1931 | 0.6010 | 0.6002 |

| 0.1983 | 509.09 | 5600 | 1.1850 | 0.6038 | 0.6031 |

| 0.1947 | 527.27 | 5800 | 1.2111 | 0.6035 | 0.6031 |

| 0.1917 | 545.45 | 6000 | 1.2057 | 0.6133 | 0.6125 |

| 0.1871 | 563.64 | 6200 | 1.2591 | 0.6060 | 0.6056 |

| 0.1846 | 581.82 | 6400 | 1.2351 | 0.6071 | 0.6063 |

| 0.1823 | 600.0 | 6600 | 1.2167 | 0.6088 | 0.6081 |

| 0.1797 | 618.18 | 6800 | 1.2809 | 0.6086 | 0.6081 |

| 0.1765 | 636.36 | 7000 | 1.2408 | 0.6115 | 0.6107 |

| 0.1743 | 654.55 | 7200 | 1.2897 | 0.6074 | 0.6071 |

| 0.1725 | 672.73 | 7400 | 1.2601 | 0.6079 | 0.6071 |

| 0.169 | 690.91 | 7600 | 1.2472 | 0.6107 | 0.6099 |

| 0.1686 | 709.09 | 7800 | 1.2704 | 0.6065 | 0.6060 |

| 0.1658 | 727.27 | 8000 | 1.2722 | 0.6107 | 0.6103 |

| 0.1647 | 745.45 | 8200 | 1.2621 | 0.6082 | 0.6074 |

| 0.1637 | 763.64 | 8400 | 1.2940 | 0.6045 | 0.6038 |

| 0.1616 | 781.82 | 8600 | 1.3066 | 0.6100 | 0.6092 |

| 0.1611 | 800.0 | 8800 | 1.2912 | 0.6104 | 0.6096 |

| 0.1591 | 818.18 | 9000 | 1.2844 | 0.6114 | 0.6107 |

| 0.1582 | 836.36 | 9200 | 1.3036 | 0.6133 | 0.6125 |

| 0.1579 | 854.55 | 9400 | 1.3022 | 0.6103 | 0.6096 |

| 0.1562 | 872.73 | 9600 | 1.3085 | 0.6122 | 0.6114 |

| 0.1569 | 890.91 | 9800 | 1.2826 | 0.6089 | 0.6081 |

| 0.1556 | 909.09 | 10000 | 1.3016 | 0.6107 | 0.6099 |

### Framework versions

- PEFT 0.9.0

- Transformers 4.38.2

- Pytorch 2.2.0+cu121

- Datasets 2.17.1

- Tokenizers 0.15.2 | {"library_name": "peft", "tags": ["generated_from_trainer"], "metrics": ["accuracy"], "base_model": "mahdibaghbanzadeh/seqsight_32768_512_43M", "model-index": [{"name": "GUE_EMP_H3K9ac-seqsight_32768_512_43M-L32_all", "results": []}]} | mahdibaghbanzadeh/GUE_EMP_H3K9ac-seqsight_32768_512_43M-L32_all | null | [

"peft",

"safetensors",

"generated_from_trainer",

"base_model:mahdibaghbanzadeh/seqsight_32768_512_43M",

"region:us"

] | null | 2024-04-16T23:01:07+00:00 | [] | [] | TAGS

#peft #safetensors #generated_from_trainer #base_model-mahdibaghbanzadeh/seqsight_32768_512_43M #region-us

| GUE\_EMP\_H3K9ac-seqsight\_32768\_512\_43M-L32\_all

===================================================

This model is a fine-tuned version of mahdibaghbanzadeh/seqsight\_32768\_512\_43M on the mahdibaghbanzadeh/GUE\_EMP\_H3K9ac dataset.

It achieves the following results on the evaluation set:

* Loss: 1.1794

* F1 Score: 0.6081

* Accuracy: 0.6071

Model description

-----------------

More information needed

Intended uses & limitations

---------------------------

More information needed

Training and evaluation data

----------------------------

More information needed

Training procedure

------------------

### Training hyperparameters

The following hyperparameters were used during training:

* learning\_rate: 0.0005

* train\_batch\_size: 2048

* eval\_batch\_size: 2048

* seed: 42

* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

* lr\_scheduler\_type: linear

* training\_steps: 10000

### Training results

### Framework versions

* PEFT 0.9.0

* Transformers 4.38.2

* Pytorch 2.2.0+cu121

* Datasets 2.17.1

* Tokenizers 0.15.2

| [

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 0.0005\n* train\\_batch\\_size: 2048\n* eval\\_batch\\_size: 2048\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* training\\_steps: 10000",

"### Training results",

"### Framework versions\n\n\n* PEFT 0.9.0\n* Transformers 4.38.2\n* Pytorch 2.2.0+cu121\n* Datasets 2.17.1\n* Tokenizers 0.15.2"

] | [

"TAGS\n#peft #safetensors #generated_from_trainer #base_model-mahdibaghbanzadeh/seqsight_32768_512_43M #region-us \n",

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 0.0005\n* train\\_batch\\_size: 2048\n* eval\\_batch\\_size: 2048\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* training\\_steps: 10000",

"### Training results",

"### Framework versions\n\n\n* PEFT 0.9.0\n* Transformers 4.38.2\n* Pytorch 2.2.0+cu121\n* Datasets 2.17.1\n* Tokenizers 0.15.2"

] |

token-classification | transformers |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ruRoberta-distilled-med-kd_ner

This model is a fine-tuned version of [DimasikKurd/ruRoberta-distilled-med-kd](https://huggingface.co/DimasikKurd/ruRoberta-distilled-med-kd) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.7519

- Precision: 0.5167

- Recall: 0.5141

- F1: 0.5154

- Accuracy: 0.8935

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 4

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 100

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 1.0 | 50 | 0.8729 | 0.0 | 0.0 | 0.0 | 0.7613 |

| No log | 2.0 | 100 | 0.6001 | 0.0 | 0.0 | 0.0 | 0.7640 |

| No log | 3.0 | 150 | 0.4551 | 0.0260 | 0.0347 | 0.0297 | 0.8276 |

| No log | 4.0 | 200 | 0.3959 | 0.0833 | 0.1156 | 0.0969 | 0.8423 |

| No log | 5.0 | 250 | 0.3948 | 0.1213 | 0.2235 | 0.1573 | 0.8416 |

| No log | 6.0 | 300 | 0.3469 | 0.1554 | 0.1869 | 0.1697 | 0.8674 |

| No log | 7.0 | 350 | 0.3555 | 0.2174 | 0.2408 | 0.2285 | 0.8713 |

| No log | 8.0 | 400 | 0.2919 | 0.2318 | 0.3430 | 0.2766 | 0.8875 |

| No log | 9.0 | 450 | 0.3070 | 0.2609 | 0.3333 | 0.2927 | 0.8876 |

| 0.4413 | 10.0 | 500 | 0.2882 | 0.3046 | 0.4624 | 0.3673 | 0.8924 |

| 0.4413 | 11.0 | 550 | 0.2943 | 0.3292 | 0.4586 | 0.3833 | 0.8956 |

| 0.4413 | 12.0 | 600 | 0.3656 | 0.3769 | 0.4663 | 0.4169 | 0.8993 |

| 0.4413 | 13.0 | 650 | 0.3350 | 0.3280 | 0.5549 | 0.4123 | 0.8870 |

| 0.4413 | 14.0 | 700 | 0.3448 | 0.3614 | 0.5299 | 0.4297 | 0.8948 |

| 0.4413 | 15.0 | 750 | 0.3847 | 0.3270 | 0.5645 | 0.4141 | 0.8909 |

| 0.4413 | 16.0 | 800 | 0.4304 | 0.3365 | 0.6166 | 0.4354 | 0.8846 |

| 0.4413 | 17.0 | 850 | 0.4168 | 0.3905 | 0.6185 | 0.4787 | 0.8960 |

| 0.4413 | 18.0 | 900 | 0.4903 | 0.4258 | 0.5145 | 0.4660 | 0.9031 |

| 0.4413 | 19.0 | 950 | 0.4139 | 0.4255 | 0.5780 | 0.4902 | 0.8984 |

| 0.089 | 20.0 | 1000 | 0.4383 | 0.4034 | 0.5511 | 0.4658 | 0.9022 |

| 0.089 | 21.0 | 1050 | 0.5534 | 0.4744 | 0.5356 | 0.5032 | 0.9055 |

| 0.089 | 22.0 | 1100 | 0.4808 | 0.4585 | 0.5530 | 0.5013 | 0.9040 |

| 0.089 | 23.0 | 1150 | 0.4970 | 0.4547 | 0.5992 | 0.5170 | 0.9014 |

| 0.089 | 24.0 | 1200 | 0.5934 | 0.4421 | 0.5588 | 0.4936 | 0.9044 |

| 0.089 | 25.0 | 1250 | 0.4604 | 0.4150 | 0.6069 | 0.4930 | 0.9032 |

| 0.089 | 26.0 | 1300 | 0.5973 | 0.4741 | 0.5645 | 0.5154 | 0.9045 |

| 0.089 | 27.0 | 1350 | 0.5220 | 0.4617 | 0.5915 | 0.5186 | 0.9002 |

| 0.089 | 28.0 | 1400 | 0.5929 | 0.4816 | 0.6050 | 0.5363 | 0.9021 |

| 0.089 | 29.0 | 1450 | 0.6293 | 0.4828 | 0.5414 | 0.5104 | 0.9083 |

| 0.0249 | 30.0 | 1500 | 0.5865 | 0.4812 | 0.5665 | 0.5204 | 0.9069 |

| 0.0249 | 31.0 | 1550 | 0.6042 | 0.4429 | 0.6204 | 0.5169 | 0.8979 |

| 0.0249 | 32.0 | 1600 | 0.5790 | 0.4513 | 0.6166 | 0.5212 | 0.9010 |

| 0.0249 | 33.0 | 1650 | 0.5528 | 0.4105 | 0.6012 | 0.4879 | 0.8958 |

| 0.0249 | 34.0 | 1700 | 0.6139 | 0.4694 | 0.5607 | 0.5110 | 0.9059 |

| 0.0249 | 35.0 | 1750 | 0.5735 | 0.4316 | 0.6262 | 0.5110 | 0.8950 |

### Framework versions

- Transformers 4.38.2

- Pytorch 2.1.2

- Datasets 2.1.0

- Tokenizers 0.15.2

| {"tags": ["generated_from_trainer"], "metrics": ["precision", "recall", "f1", "accuracy"], "base_model": "DimasikKurd/ruRoberta-distilled-med-kd", "model-index": [{"name": "ruRoberta-distilled-med-kd_ner", "results": []}]} | DimasikKurd/ruRoberta-distilled-med-kd_ner | null | [

"transformers",

"tensorboard",

"safetensors",

"roberta",

"token-classification",

"generated_from_trainer",

"base_model:DimasikKurd/ruRoberta-distilled-med-kd",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | null | 2024-04-16T23:02:22+00:00 | [] | [] | TAGS

#transformers #tensorboard #safetensors #roberta #token-classification #generated_from_trainer #base_model-DimasikKurd/ruRoberta-distilled-med-kd #autotrain_compatible #endpoints_compatible #region-us

| ruRoberta-distilled-med-kd\_ner

===============================

This model is a fine-tuned version of DimasikKurd/ruRoberta-distilled-med-kd on the None dataset.

It achieves the following results on the evaluation set:

* Loss: 0.7519

* Precision: 0.5167

* Recall: 0.5141

* F1: 0.5154

* Accuracy: 0.8935

Model description

-----------------

More information needed

Intended uses & limitations

---------------------------

More information needed

Training and evaluation data

----------------------------

More information needed

Training procedure

------------------

### Training hyperparameters

The following hyperparameters were used during training:

* learning\_rate: 5e-05

* train\_batch\_size: 4

* eval\_batch\_size: 8

* seed: 42

* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

* lr\_scheduler\_type: linear

* num\_epochs: 100

### Training results

### Framework versions

* Transformers 4.38.2

* Pytorch 2.1.2

* Datasets 2.1.0

* Tokenizers 0.15.2

| [

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 5e-05\n* train\\_batch\\_size: 4\n* eval\\_batch\\_size: 8\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 100",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.38.2\n* Pytorch 2.1.2\n* Datasets 2.1.0\n* Tokenizers 0.15.2"

] | [

"TAGS\n#transformers #tensorboard #safetensors #roberta #token-classification #generated_from_trainer #base_model-DimasikKurd/ruRoberta-distilled-med-kd #autotrain_compatible #endpoints_compatible #region-us \n",

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 5e-05\n* train\\_batch\\_size: 4\n* eval\\_batch\\_size: 8\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* num\\_epochs: 100",

"### Training results",

"### Framework versions\n\n\n* Transformers 4.38.2\n* Pytorch 2.1.2\n* Datasets 2.1.0\n* Tokenizers 0.15.2"

] |

null | peft |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# GUE_EMP_H3K4me3-seqsight_32768_512_43M-L32_all

This model is a fine-tuned version of [mahdibaghbanzadeh/seqsight_32768_512_43M](https://huggingface.co/mahdibaghbanzadeh/seqsight_32768_512_43M) on the [mahdibaghbanzadeh/GUE_EMP_H3K4me3](https://huggingface.co/datasets/mahdibaghbanzadeh/GUE_EMP_H3K4me3) dataset.

It achieves the following results on the evaluation set:

- Loss: 0.6802

- F1 Score: 0.5609

- Accuracy: 0.5628

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 2048

- eval_batch_size: 2048

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 10000

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 Score | Accuracy |

|:-------------:|:------:|:-----:|:---------------:|:--------:|:--------:|

| 0.6879 | 13.33 | 200 | 0.6832 | 0.5583 | 0.5595 |

| 0.6382 | 26.67 | 400 | 0.7334 | 0.5464 | 0.5579 |

| 0.5843 | 40.0 | 600 | 0.7553 | 0.5456 | 0.5484 |

| 0.5389 | 53.33 | 800 | 0.8090 | 0.5488 | 0.5568 |

| 0.5076 | 66.67 | 1000 | 0.8400 | 0.5412 | 0.5416 |

| 0.4841 | 80.0 | 1200 | 0.8626 | 0.5466 | 0.5470 |

| 0.4655 | 93.33 | 1400 | 0.8843 | 0.5324 | 0.5332 |

| 0.4488 | 106.67 | 1600 | 0.8990 | 0.5358 | 0.5353 |

| 0.4356 | 120.0 | 1800 | 0.9441 | 0.5408 | 0.5427 |

| 0.4236 | 133.33 | 2000 | 0.9798 | 0.5303 | 0.5318 |

| 0.4135 | 146.67 | 2200 | 0.9903 | 0.5470 | 0.5467 |

| 0.402 | 160.0 | 2400 | 0.9648 | 0.5433 | 0.5435 |

| 0.3939 | 173.33 | 2600 | 0.9957 | 0.5432 | 0.5432 |

| 0.3867 | 186.67 | 2800 | 1.0448 | 0.5471 | 0.5467 |

| 0.3789 | 200.0 | 3000 | 1.0005 | 0.5441 | 0.5451 |

| 0.3702 | 213.33 | 3200 | 1.0095 | 0.5426 | 0.5427 |

| 0.366 | 226.67 | 3400 | 1.0214 | 0.5417 | 0.5413 |

| 0.3585 | 240.0 | 3600 | 1.0384 | 0.5420 | 0.5429 |

| 0.3549 | 253.33 | 3800 | 1.0652 | 0.5407 | 0.5410 |

| 0.3478 | 266.67 | 4000 | 1.0531 | 0.5424 | 0.5429 |

| 0.3418 | 280.0 | 4200 | 1.0429 | 0.5362 | 0.5359 |

| 0.3366 | 293.33 | 4400 | 1.0908 | 0.5393 | 0.5402 |

| 0.3329 | 306.67 | 4600 | 1.0780 | 0.5350 | 0.5345 |

| 0.325 | 320.0 | 4800 | 1.0910 | 0.5298 | 0.5299 |

| 0.3214 | 333.33 | 5000 | 1.0893 | 0.5426 | 0.5424 |

| 0.3186 | 346.67 | 5200 | 1.0718 | 0.5390 | 0.5386 |

| 0.3144 | 360.0 | 5400 | 1.1087 | 0.5337 | 0.5334 |

| 0.3118 | 373.33 | 5600 | 1.0730 | 0.5344 | 0.5359 |

| 0.3062 | 386.67 | 5800 | 1.1140 | 0.5406 | 0.5408 |

| 0.302 | 400.0 | 6000 | 1.1265 | 0.5339 | 0.5334 |

| 0.2987 | 413.33 | 6200 | 1.1369 | 0.5336 | 0.5332 |

| 0.2954 | 426.67 | 6400 | 1.1322 | 0.5361 | 0.5359 |

| 0.2945 | 440.0 | 6600 | 1.1478 | 0.5365 | 0.5361 |

| 0.2874 | 453.33 | 6800 | 1.1603 | 0.5403 | 0.5408 |

| 0.2851 | 466.67 | 7000 | 1.1172 | 0.5325 | 0.5321 |

| 0.2841 | 480.0 | 7200 | 1.1720 | 0.5355 | 0.5351 |

| 0.2815 | 493.33 | 7400 | 1.1704 | 0.5418 | 0.5432 |

| 0.2784 | 506.67 | 7600 | 1.1646 | 0.5365 | 0.5367 |

| 0.2754 | 520.0 | 7800 | 1.1859 | 0.5291 | 0.5291 |

| 0.2748 | 533.33 | 8000 | 1.1461 | 0.5347 | 0.5342 |

| 0.2702 | 546.67 | 8200 | 1.2038 | 0.5361 | 0.5359 |

| 0.2699 | 560.0 | 8400 | 1.1909 | 0.5330 | 0.5326 |

| 0.2689 | 573.33 | 8600 | 1.1961 | 0.5324 | 0.5321 |

| 0.2668 | 586.67 | 8800 | 1.1852 | 0.5373 | 0.5372 |

| 0.2647 | 600.0 | 9000 | 1.2011 | 0.5309 | 0.5304 |

| 0.2649 | 613.33 | 9200 | 1.1837 | 0.5325 | 0.5323 |

| 0.2627 | 626.67 | 9400 | 1.2160 | 0.5309 | 0.5304 |

| 0.262 | 640.0 | 9600 | 1.2265 | 0.5322 | 0.5318 |

| 0.26 | 653.33 | 9800 | 1.2140 | 0.5301 | 0.5296 |

| 0.2613 | 666.67 | 10000 | 1.2069 | 0.5332 | 0.5329 |

### Framework versions

- PEFT 0.9.0

- Transformers 4.38.2

- Pytorch 2.2.0+cu121

- Datasets 2.17.1

- Tokenizers 0.15.2 | {"library_name": "peft", "tags": ["generated_from_trainer"], "metrics": ["accuracy"], "base_model": "mahdibaghbanzadeh/seqsight_32768_512_43M", "model-index": [{"name": "GUE_EMP_H3K4me3-seqsight_32768_512_43M-L32_all", "results": []}]} | mahdibaghbanzadeh/GUE_EMP_H3K4me3-seqsight_32768_512_43M-L32_all | null | [

"peft",

"safetensors",

"generated_from_trainer",

"base_model:mahdibaghbanzadeh/seqsight_32768_512_43M",

"region:us"

] | null | 2024-04-16T23:02:56+00:00 | [] | [] | TAGS

#peft #safetensors #generated_from_trainer #base_model-mahdibaghbanzadeh/seqsight_32768_512_43M #region-us

| GUE\_EMP\_H3K4me3-seqsight\_32768\_512\_43M-L32\_all

====================================================

This model is a fine-tuned version of mahdibaghbanzadeh/seqsight\_32768\_512\_43M on the mahdibaghbanzadeh/GUE\_EMP\_H3K4me3 dataset.

It achieves the following results on the evaluation set:

* Loss: 0.6802

* F1 Score: 0.5609

* Accuracy: 0.5628

Model description

-----------------

More information needed

Intended uses & limitations

---------------------------

More information needed

Training and evaluation data

----------------------------

More information needed

Training procedure

------------------

### Training hyperparameters

The following hyperparameters were used during training:

* learning\_rate: 0.0005

* train\_batch\_size: 2048

* eval\_batch\_size: 2048

* seed: 42

* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

* lr\_scheduler\_type: linear

* training\_steps: 10000

### Training results

### Framework versions

* PEFT 0.9.0

* Transformers 4.38.2

* Pytorch 2.2.0+cu121

* Datasets 2.17.1

* Tokenizers 0.15.2

| [

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 0.0005\n* train\\_batch\\_size: 2048\n* eval\\_batch\\_size: 2048\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* training\\_steps: 10000",

"### Training results",

"### Framework versions\n\n\n* PEFT 0.9.0\n* Transformers 4.38.2\n* Pytorch 2.2.0+cu121\n* Datasets 2.17.1\n* Tokenizers 0.15.2"

] | [

"TAGS\n#peft #safetensors #generated_from_trainer #base_model-mahdibaghbanzadeh/seqsight_32768_512_43M #region-us \n",

"### Training hyperparameters\n\n\nThe following hyperparameters were used during training:\n\n\n* learning\\_rate: 0.0005\n* train\\_batch\\_size: 2048\n* eval\\_batch\\_size: 2048\n* seed: 42\n* optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08\n* lr\\_scheduler\\_type: linear\n* training\\_steps: 10000",

"### Training results",

"### Framework versions\n\n\n* PEFT 0.9.0\n* Transformers 4.38.2\n* Pytorch 2.2.0+cu121\n* Datasets 2.17.1\n* Tokenizers 0.15.2"

] |

null | peft |

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# GUE_EMP_H4-seqsight_32768_512_43M-L32_all

This model is a fine-tuned version of [mahdibaghbanzadeh/seqsight_32768_512_43M](https://huggingface.co/mahdibaghbanzadeh/seqsight_32768_512_43M) on the [mahdibaghbanzadeh/GUE_EMP_H4](https://huggingface.co/datasets/mahdibaghbanzadeh/GUE_EMP_H4) dataset.

It achieves the following results on the evaluation set:

- Loss: 1.4422

- F1 Score: 0.7429

- Accuracy: 0.7426

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 2048

- eval_batch_size: 2048

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 10000

### Training results