| repo (string, lengths 8-116) | tasks (string, lengths 8-117) | titles (string, lengths 17-302) | dependencies (string, lengths 5-372k) | readme (string, lengths 5-4.26k) | __index_level_0__ (int64, 0-4.36k) |
|---|---|---|---|---|---|

Srijha09/Making-Images-Artsy-Neural-Style-Transfer | ['style transfer'] | ['A Neural Algorithm of Artistic Style'] | utils.py style_transfer.py app.py run.py home upload serve_image style_transfer run_model is_file_allowed image_loader StyleLoss Normalization gram_matrix imshow get_style_model_and_losses ContentLoss add_time run_style_transfer get_input_optimizer is_file_allowed print save filename add_time print run_model image_loader ToPILImage Compose clone imshow eval figure device is_available to run_style_transfer replace unsqueeze convert open str time squeeze clone close unloader title savefig t size mm view deepcopy children isinstance Sequential StyleLoss MaxPool2d add_module Conv2d len ReLU ContentLoss BatchNorm2d to range append detach LBFGS print clamp_ get_style_model_and_losses step get_input_optimizer | # Making-Images-Artsy-Neural-Style-Transfer The idea of editing images and making them more creative by merging them with other images rests on the principle of Neural Style Transfer. Neural style transfer is an optimization technique that takes three images, a content image, a style reference image (such as an artwork by a famous painter), and the input image you want to style, and blends them so that the input image is transformed to look like the content image, but “painted” in the style of the style image. The principle is simple: we define two distances, one for the content (DC) and one for the style (DS). DC measures how different the content is between two images, while DS measures how different the style is between two images. Then we take a third image, the input, and transform it to minimize both its content distance from the content image and its style distance from the style image. Now we can import the necessary packages and begin the neural transfer.
I drew on the PyTorch Neural Style Transfer tutorial (https://pytorch.org/tutorials/advanced/neural_style_tutorial.html) and on the idea from the paper https://arxiv.org/abs/1508.06576. I have also incorporated a Flask application deployed on Heroku Cloud.    |  | 1,000 |

Stanford-ILIAD/batch-active-preference-based-learning | ['active learning'] | ['Batch Active Preference-Based Learning of Reward Functions'] | input_sampler.py simulator.py dynamics.py demos.py lane.py utils_driving.py run.py feature.py world.py kmedoids.py utils.py trajectory.py algos.py visualize.py car.py run_optimizer.py simulation_utils.py sampling.py models.py boundary_medoids successive_elimination select_top_candidates generate_psi random func_psi func greedy medoids nonbatch Car batch nonbatch CarDynamics Dynamics feature speed control bounded_control Feature kMedoids Lane StraightLane LunarLander MountainCar Tosser Driver Swimmer Sampler perform_best get_feedback run_algo func create_env GymSimulation MujocoSimulation DrivingSimulation Simulation Trajectory extract vector randomize grad jacobian Maximizer shape hessian matrix NestedMaximizer scalar extract vector grad jacobian shape hessian matrix Visualizer irl_ground world2 world3 Object zero world_features world6 world1 world_test playground world0 world5 world4 World dot exp T sum list reshape range feed get_features zeros num_of_features array feed_size generate_psi fmin_l_bfgs_b feed_size load name argsort func_psi zeros num_of_features feed_size select_top_candidates select_top_candidates kMedoids pairwise_distances simplices select_top_candidates kMedoids pairwise_distances unique ConvexHull select_top_candidates reshape pairwise_distances where delete array uniform str norm format print get_feedback reshape exit run_algo num_of_features sample mean uniform append create_env Sampler range norm format print get_feedback reshape run_algo num_of_features sample mean uniform append create_env Sampler range list sort argmin shuffle where set add shape copy mean array_equal zip array range len watch feed get_features lower array print exit print exit set_ctrl array get_features watch set_ctrl inf ctrl_size lower fmin_l_bfgs_b range normal set_value isinstance concatenate shape isinstance concatenate 
CarDynamics StraightLane UserControlledCar Car append World CarDynamics StraightLane simple_reward World UserControlledCar f goal cars zip append SimpleOptimizerCar enumerate open CarDynamics StraightLane simple_reward UserControlledCar append SimpleOptimizerCar World CarDynamics StraightLane simple_reward bounds UserControlledCar NestedOptimizerCar append bounded_control World CarDynamics StraightLane simple_reward bounds UserControlledCar traj_h NestedOptimizerCar append bounded_control World CarDynamics StraightLane simple_reward bounds UserControlledCar traj_h NestedOptimizerCar append bounded_control World CarDynamics StraightLane simple_reward bounds UserControlledCar traj_h NestedOptimizerCar append bounded_control World CarDynamics StraightLane simple_reward bounds UserControlledCar traj_h NestedOptimizerCar append bounded_control World CarDynamics StraightLane simple_reward bounds UserControlledCar NestedOptimizerCar append bounded_control World CarDynamics asarray StraightLane simple_reward bounds NestedOptimizerCar append bounded_control SimpleOptimizerCar World CarDynamics StraightLane UserControlledCar Car append World | This code learns reward functions from human preferences in various tasks by actively generating batches of scenarios and querying a human expert. Companion code to [CoRL 2018 paper](https://arxiv.org/abs/1810.04303): E Bıyık, D Sadigh. **"[Batch Active Preference-Based Learning of Reward Functions](https://arxiv.org/abs/1810.04303)"**. *Conference on Robot Learning (CoRL)*, Zurich, Switzerland, Oct. 2018. ## Dependencies You need to have the following libraries with [Python3](http://www.python.org/downloads): - [MuJoCo 1.50](http://www.mujoco.org/index.html) - [NumPy](https://www.numpy.org/) - [OpenAI Gym](https://gym.openai.com) - [pyglet](https://bitbucket.org/pyglet/pyglet/wiki/Home) - PYMC | 1,001 |

StanfordAI4HI/ICLR2019_evaluating_discrete_temporal_structure | ['time series'] | ['Learning Procedural Abstractions and Evaluating Discrete Latent Temporal Structure'] | data_loaders.py metrics.py plot.py config.py viz.py evaluate.py utils.py load_bees_dataset load_mocap6_dataset evaluate_all_methods analyze_single_method analyze_best_runs_across_methods_for_metric_pair viz_best_runs_across_methods read_single_run generate_eval_dict restrict_eval_dict restrict_frame_to_metrics analyze_best_runs_across_method_pairs_by_metrics get_methods_sorted_by_best_runs_on_metric evaluate_a_prediction analyze_best_runs_across_methods_for_metric select_best_run_per_method_by_metric evaluate_single_method analyze_all_methods evaluate_single_run repeated_structure_score munkres_score segment_homogeneity_score dcg_score label_agnostic_segmentation_score segment_completeness_score temporal_structure_score_new segment_structure_score_new compute_HC_given_SG ndcg_score compute_HSC_given_SG transition_structure_score bar_plot_combined_2 setup_sns factor_plot_single_method factorplot_methods_grouped_by_metrics scatterplot_methods_varying_beta bar_plot_single_method factor_plot_combined bar_plot_combined barplot_methods_grouped_by_metrics heaviest_common_substring entropy heaviest_common_subsequence_with_alignment relabel_clustering_with_munkres_correspondences relabel_clustering get_segment_dict heaviest_common_subsequence viz_temporal_clusterings_with_segment_spacing viz_temporal_clusterings_by_segments viz_temporal_clusterings clear_labels _add_subplot append flatten loadmat transform concatenate glob len natsorted LabelEncoder scale append range fit repeated_structure_score transition_structure_score munkres_score normalized_mutual_info_score label_agnostic_segmentation_score adjusted_rand_score temporal_structure_score_new segment_structure_score_new homogeneity_completeness_v_measure generate_eval_dict load exists read_single_run evaluate_a_prediction write glob append 
evaluate_single_run evaluate_single_method DataFrame analyze_single_method print natsorted append DataFrame viz_temporal_clusterings_with_segment_spacing concatenate set viz_temporal_clusterings_by_segments relabel_clustering_with_munkres_correspondences viz_temporal_clusterings vstack append makedirs barplot_methods_grouped_by_metrics makedirs restrict_frame_to_metrics get_methods_sorted_by_best_runs_on_metric viz_best_runs_across_methods restrict_frame_to_metrics get_methods_sorted_by_best_runs_on_metric join makedirs factorplot_methods_grouped_by_metrics restrict_frame_to_metrics enumerate evaluate_all_methods analyze_best_runs_across_methods_for_metric_pair viz_best_runs_across_methods to_latex print makedirs map restrict_frame_to_metrics scatterplot_methods_varying_beta analyze_best_runs_across_method_pairs_by_metrics pivot_table analyze_best_runs_across_methods_for_metric select_best_run_per_method_by_metric round drop compute sum Munkres concatenate contingency_matrix float make_cost_matrix list heaviest_common_substring values heaviest_common_subsequence_with_alignment unique zip append sum heaviest_common_subsequence enumerate len entropy relabel_clustering get_segment_dict float len float get_segment_dict entropy len compute_HSC_given_SG relabel_clustering entropy compute_HSC_given_SG relabel_clustering entropy compute_HC_given_SG entropy compute_HC_given_SG compute_HSC_given_SG relabel_clustering entropy repeated_structure_score segment_structure_score_new log2 take arange len dcg_score set_style set_context set list plot yticks xlabel min close ylabel flatten ylim savefig Categorical figure append ndcg_score xticks max range len setup_sns list set_ylabels set_title suptitle set_xticklabels factorplot set_xlabel close flat savefig legend zip range set_ylim list remove arange set_xticklabels xlabel close ylabel ylim set_xticks title figure savefig barplot range list remove arange set_xticklabels xlabel close ylabel ylim set_xticks savefig figure barplot 
setup_sns list set_ylabels set_title set_xticklabels print factorplot set_yticks set_yticklabels set_xlabel close subplots_adjust flat figure savefig zip range set_ylim list remove arange set_xticklabels print xlabel close ylabel ylim set_xticks savefig figure barplot close tight_layout title ylim savefig figure barplot setup_sns list set_ylabels set_title set_xticklabels factorplot set_yticklabels set_yticks set_xlabel close subplots_adjust flat figure savefig zip range set_ylim float64 sum astype OrderedDict unique zip append enumerate append compute max Munkres contingency_matrix LabelEncoder make_cost_matrix fit_transform zeros abs array range len zeros array range len zeros array range len set_yticks set_xticks set_visible set_xticklabels imshow clear_labels add_subplot set_title len close tight_layout GridSpec savefig figure _add_subplot enumerate list sorted close extend GridSpec tight_layout get_segment_dict savefig figure _add_subplot array enumerate len list sorted close extend GridSpec tight_layout get_segment_dict savefig figure _add_subplot array enumerate len | # Evaluating Discrete Latent Temporal Structure This repository provides code to run the experiments from **Learning Procedural Abstractions and Evaluating Discrete Latent Temporal Structure**, Karan Goel and Emma Brunskill, _ICLR 2019_. Specifically, this repository reproduces the experiments with the new evaluation criteria proposed in the paper. The results are reproduced from logged runs of the methods being compared, since running them from scratch can be very slow. #### Usage | 1,002 |

StanfordVL/arxivbot | ['few shot learning', 'program induction'] | ['Neural Task Programming: Learning to Generalize Across Hierarchical Tasks'] | forever.py bot.py format_arxiv summarize parse_direct_mention parse_arxiv parse_bot_commands handle_command summarizer get_stop_words document PlaintextParser list results print findall append summarize join title split parse_direct_mention search parse_arxiv api_call | # ArxivBot This is a Slack bot that posts arXiv summaries in a channel. It is based on this tutorial: https://www.fullstackpython.com/blog/build-first-slack-bot-python.html ## Quick start - Follow these [instructions](https://www.fullstackpython.com/blog/build-first-slack-bot-python.html) to create a Slack bot (you are free to choose a name) and obtain the Slack bot token. - Export the environment variable on the computer where you want to deploy the bot ``` export SLACK_BOT_TOKEN=<your-slack-bot-token> ``` | 1,003 |

Star-Clouds/CenterFace | ['face detection'] | ['CenterFace: Joint Face Detection and Alignment Using Face as Point'] | prj-python/centerface.py prj-tensorrt/centerface.py prj-tensorrt/demo.py prj-python/demo.py CenterFace test_image_tensorrt camera test_widerface test_image CenterFace test_image_tensorrt VideoCapture read imshow rectangle CenterFace centerface release range circle waitKey imshow rectangle CenterFace centerface imread range circle waitKey imshow rectangle CenterFace centerface imread range circle join format print len write close CenterFace centerface open imread loadmat enumerate makedirs print len | ## CenterFace ### Introduction CenterFace (model size 7.3 MB) is a practical anchor-free face detection and alignment method for edge devices. ### Recent Update - `2019.09.13` CenterFace is released. ### Environment - OpenCV 4.1.0 - NumPy - Python 3.6+ | 1,004 |

Statwolf/LocalLinearForest | ['causal inference'] | ['Local Linear Forests'] | modules/local_linear_forest.py modules/test_local_linear_forest.py LocalLinearForestRegressor TestLiuk TestLocalLinearForest | # LocalLinearForest Python implementation of Local Linear Forest (https://arxiv.org/pdf/1807.11408.pdf) | 1,005 |

StephenBo-China/DIEN | ['click through rate prediction'] | ['Deep Interest Evolution Network for Click-Through Rate Prediction'] | alibaba_data_reader.py activations.py layers.py main.py model.py loss.py Dice get_embedding_count_dict get_embedding_features_list get_sequence_data get_normal_data get_data convert_tensor get_embedding_dim_dict get_embedding_count get_user_behavior_features get_batch_data AUGRU GRU_GATES AuxLayer main DIEN dict get_embedding_count dict fillna read_csv list len map append zeros array values split sample get_sequence_data get_normal_data DIEN get_embedding_count_dict print get_embedding_features_list get_data get_embedding_dim_dict get_user_behavior_features get_batch_data | # DIEN_Final This project reproduces DIEN using TensorFlow 2.0. Paper: https://arxiv.org/pdf/1809.03672.pdf The model code is tested on the Alibaba dataset; dataset link: https://tianchi.aliyun.com/dataset/dataDetail?dataId=56 # How to call DIEN: ## 0. Overview: DIEN's input features consist of three main parts: the user's historical behavior sequence, the target item features, and the user profile features. The user's historical behavior sequence must include both a click sequence and a non-click sequence. Please process the input features following steps 1~2 below. ## 1. Initialization: | 1,006 |

StephenBo-China/DIEN-DIN | ['click through rate prediction'] | ['Deep Interest Network for Click-Through Rate Prediction', 'Deep Interest Evolution Network for Click-Through Rate Prediction'] | alibaba_data_reader.py activations.py layers.py utils.py main.py model.py loss.py dice Dice get_embedding_count_dict get_test_data get_embedding_features_list get_sequence_data get_normal_data get_data convert_tensor get_embedding_dim_dict get_batch_data get_embedding_count get_user_behavior_features get_length AUGRU GRU_GATES attention AuxLayer main train_one_step get_train_data DIEN DIN make_test_loss_dir make_train_loss_dir get_file_name get_input_dim add_loss concat_features mkdir dict get_embedding_count dict fillna read_csv list len map append zeros array values split list len map append values split sample get_sequence_data get_length get_normal_data get_sequence_data head get_length get_normal_data trainable_variables clip_by_global_norm gradient loss_metric apply_gradients zip int DIEN train_one_step get_embedding_count_dict get_train_data get_embedding_features_list len Adam get_data get_embedding_dim_dict Sum get_user_behavior_features create_file_writer range AUC get_batch_data localtime strftime join close write open join close write open join list write close append open append makedirs | # DIEN-DIN This project reproduces Alibaba's interest-based ranking models DIEN and DIN using TensorFlow 2.0. DIN paper: https://arxiv.org/pdf/1706.06978.pdf DIEN paper: https://arxiv.org/pdf/1809.03672.pdf The model code is tested on the Alibaba dataset; dataset link: https://tianchi.aliyun.com/dataset/dataDetail?dataId=56 # Usage: ## 0. Overview: DIEN's input features consist of three main parts: the user's historical behavior sequence, the target item features, and the user profile features. The user's historical behavior sequence must include both a click sequence and a non-click sequence. Please process the input features following steps 1~2 below. | 1,007 |

StephenBo-China/recommendation_system_sort_model | ['click through rate prediction'] | ['Deep Interest Network for Click-Through Rate Prediction', 'Deep Interest Evolution Network for Click-Through Rate Prediction'] | layers/Dice.py layers/AUGRU.py model/DIEN.py layers/attention.py layers/AuxLayer.py model/DIN.py utils.py data_reader/alibaba_data_reader.py make_test_loss_dir make_train_loss_dir get_file_name get_input_dim add_loss concat_features mkdir get_embedding_count_dict get_test_data get_embedding_features_list get_sequence_data get_normal_data get_data convert_tensor get_embedding_dim_dict get_batch_data get_embedding_count get_user_behavior_features get_length attention AUGRU GRU_GATES attention AuxLayer dice Dice DIEN DIN localtime strftime join close write open join close write open join list write close append open append makedirs dict get_embedding_count dict fillna read_csv list len map append zeros array values split list len map append values split sample get_sequence_data get_length get_normal_data get_sequence_data head get_length get_normal_data | # This project uses TensorFlow 2.0 to reproduce the mainstream ranking models used in today's recommender systems ## DIN: Paper: https://arxiv.org/pdf/1706.06978.pdf The model code is tested on the Alibaba dataset; dataset link: https://tianchi.aliyun.com/dataset/dataDetail?dataId=56 ### How to call DIN: #### 0. Overview: DIN's input features consist of three main parts: the user's historical behavior sequence, the target item features, and the user profile features. The user's historical behavior sequence must include both a click sequence and a non-click sequence. Please process the input features following steps 1~2 below. #### 1. Initialization: | 1,008 |

StevenGrove/TreeFilter-Torch | ['semantic segmentation'] | ['Learnable Tree Filter for Structure-preserving Feature Transform'] | furnace/tools/benchmark/__init__.py furnace/legacy/sync_bn/comm.py furnace/engine/engine.py furnace/seg_opr/sgd.py model/cityscapes/cityscapes.fcn_32d.R101_v1c.extra/train.py furnace/base_model/xception.py model/voc/voc.fcn_32d.R101_v1c.extra/network.py model/voc/voc.fcn_32d.R101_v1c.extra.tree_filter.further_finetune/network.py model/voc/voc.fcn_32d.R50_v1c/config.py furnace/tools/benchmark/statistics.py model/cityscapes/cityscapes.fcn_32d.R101_v1c.extra/network.py furnace/datasets/ade/__init__.py furnace/datasets/__init__.py model/voc/voc.fcn_32d.R101_v1c.extra.tree_filter.further_finetune/train.py furnace/kernels/lib_tree_filter/functions/mst.py model/voc/voc.fcn_32d.R101_v1c.extra.tree_filter/network.py model/cityscapes/cityscapes.fcn_32d.R101_v1c.extra.tree_filter/train.py furnace/seg_opr/__init__.py furnace/legacy/sync_bn/__init__.py model/cityscapes/cityscapes.fcn_32d.R101_v1c.extra/eval.py furnace/kernels/lib_tree_filter/functions/bfs.py model/voc/voc.fcn_32d.R101_v1c.extra.tree_filter.further_finetune/eval.py furnace/legacy/sync_bn/functions.py furnace/base_model/resnet.py furnace/tools/gluon2pytorch.py furnace/utils/init_func.py furnace/legacy/sync_bn/parallel.py furnace/datasets/BaseDataset.py furnace/datasets/pcontext/pcontext.py furnace/utils/img_utils.py model/voc/voc.fcn_32d.R101_v1c.extra.tree_filter/dataloader.py furnace/legacy/sync_bn/src/gpu/setup.py furnace/engine/version.py model/voc/voc.fcn_32d.R50_v1c/dataloader.py model/cityscapes/cityscapes.fcn_32d.R101_v1c.extra.tree_filter/network.py model/voc/voc.fcn_32d.R101_v1c.extra.tree_filter.further_finetune/config.py furnace/legacy/parallel/parallel_apply.py model/voc/voc.fcn_32d.R101_v1c.extra.tree_filter/eval.py model/cityscapes/cityscapes.fcn_32d.R101_v1c.extra/config.py furnace/tools/benchmark/model_hook.py model/voc/voc.fcn_32d.R101_v1c.extra/config.py 
furnace/utils/pyt_utils.py furnace/legacy/sync_bn/src/cpu/setup.py furnace/tools/benchmark/compute_memory.py furnace/seg_opr/metric.py furnace/legacy/sync_bn/src/__init__.py furnace/datasets/camvid/camvid.py model/voc/voc.fcn_32d.R50_v1c/eval.py furnace/datasets/voc/voc.py furnace/kernels/lib_tree_filter/setup.py furnace/utils/visualize.py furnace/datasets/ade/ade.py furnace/tools/__init__.py model/voc/voc.fcn_32d.R50_v1c/train.py furnace/tools/benchmark/compute_speed.py furnace/tools/benchmark/compute_flops.py furnace/datasets/voc/__init__.py furnace/seg_opr/seg_oprs.py model/voc/voc.fcn_32d.R101_v1c.extra.tree_filter.further_finetune/dataloader.py furnace/datasets/cityscapes/__init__.py furnace/datasets/pcontext/__init__.py furnace/tools/benchmark/stat_tree.py model/voc/voc.fcn_32d.R101_v1c.extra/eval.py furnace/base_model/__init__.py furnace/datasets/camvid/__init__.py model/voc/voc.fcn_32d.R101_v1c.extra/train.py model/voc/voc.fcn_32d.R50_v1c/network.py furnace/engine/lr_policy.py model/voc/voc.fcn_32d.R50_v1c.tree_filter/train.py furnace/tools/benchmark/reporter.py model/cityscapes/cityscapes.fcn_32d.R101_v1c.extra.tree_filter/dataloader.py model/voc/voc.fcn_32d.R50_v1c.tree_filter/dataloader.py model/voc/voc.fcn_32d.R50_v1c.tree_filter/eval.py furnace/kernels/lib_tree_filter/modules/tree_filter.py model/cityscapes/cityscapes.fcn_32d.R101_v1c.extra.tree_filter/config.py furnace/engine/logger.py furnace/seg_opr/loss_opr.py furnace/legacy/sync_bn/parallel_apply.py model/cityscapes/cityscapes.fcn_32d.R101_v1c.extra/dataloader.py furnace/kernels/lib_tree_filter/functions/refine.py model/voc/voc.fcn_32d.R50_v1c.tree_filter/config.py model/voc/voc.fcn_32d.R50_v1c.tree_filter/network.py model/voc/voc.fcn_32d.R101_v1c.extra.tree_filter/config.py furnace/datasets/cityscapes/cityscapes.py furnace/engine/evaluator.py model/voc/voc.fcn_32d.R101_v1c.extra.tree_filter/train.py furnace/legacy/sync_bn/syncbn.py 
model/cityscapes/cityscapes.fcn_32d.R101_v1c.extra.tree_filter/eval.py furnace/legacy/eval_methods.py model/voc/voc.fcn_32d.R101_v1c.extra/dataloader.py furnace/tools/benchmark/compute_madd.py ResBlock ResNet resnet50 Bottleneck resnet152 conv3x3 resnet34 resnet18 BasicBlock resnet101 xception39 Block Xception SeparableConvBnRelu BaseDataset ADE CamVid Cityscapes PascalContext VOC State Engine Evaluator get_logger LogFormatter MultiStageLR BaseLR LinearIncreaseLR PolyLR _BFS _MST _Refine MinimumSpanningTree TreeFilter2D val_func_process whole_eval scale_process pre_img sliding_eval get_a_var parallel_apply SyncMaster FutureResult SlavePipe _batchnormtrain batchnormtrain _sum_square sum_square CallbackContext allreduce AllReduce DataParallelModel execute_replication_callbacks Reduce patch_replication_callback get_a_var parallel_apply BatchNorm3d SharedTensor _SyncBatchNorm BatchNorm1d BatchNorm2d SigmoidFocalLoss ProbOhemCrossEntropy2d hist_info pixelAccuracy meanIoU accuracy intersectionAndUnion compute_score mean_pixel_accuracy ChannelAttention RefineResidual AttentionRefinement FeatureFusion ConvBnRelu BNRefine SeparableConvBnRelu SELayer GlobalAvgPool2d StandardSGD compute_Pool2d_flops compute_Upsample_flops compute_flops compute_BatchNorm2d_flops compute_ReLU_flops compute_Linear_flops compute_Conv2d_flops compute_Conv2d_madd compute_BatchNorm2d_madd compute_ConvTranspose2d_madd compute_AvgPool2d_madd compute_Softmax_madd compute_ReLU_madd compute_Linear_madd compute_madd compute_MaxPool2d_madd compute_Bilinear_madd compute_BatchNorm2d_memory compute_Conv2d_memory compute_Linear_memory compute_memory compute_Pool2d_memory num_params compute_ReLU_memory compute_PReLU_memory compute_speed ModelHook round_value report_format convert_leaf_modules_to_stat_tree get_parent_node ModelStat stat StatNode StatTree random_crop_pad_to_shape random_scale_with_length random_crop resize_ensure_shortest_edge random_gaussian_blur pad_image_to_shape center_crop random_scale 
random_rotation get_2dshape pad_image_size_to_multiples_of normalize generate_random_crop_pos random_mirror __init_weight group_weight init_weight load_model _dbg_interactive parse_devices ensure_dir all_reduce_tensor extant_file link_file reduce_tensor show_img show_prediction set_img_color print_iou get_ade_colors get_colors open_tensorboard add_path get_train_loader TrainPre SegEvaluator PredictHead Network open_tensorboard add_path get_train_loader TrainPre SegEvaluator PredictHead Network open_tensorboard add_path get_train_loader TrainPre SegEvaluator PredictHead Network open_tensorboard add_path get_train_loader TrainPre SegEvaluator PredictHead Network open_tensorboard add_path get_train_loader TrainPre SegEvaluator PredictHead Network open_tensorboard add_path get_train_loader TrainPre SegEvaluator PredictHead Network open_tensorboard add_path get_train_loader TrainPre SegEvaluator PredictHead Network ResNet load_model ResNet load_model ResNet load_model ResNet load_model ResNet load_model Xception load_model setFormatter getLogger addHandler formatter StreamHandler ensure_dir setLevel INFO FileHandler val_func_process permute resize pre_img zeros argmax shape argmax zeros resize int pad_image_to_shape min val_func_process pre_img shape permute resize ceil BORDER_CONSTANT numpy cuda range cuda ascontiguousarray concatenate pad_image_to_shape transpose normalize BORDER_CONSTANT Tensor map isinstance items get join list isinstance _worker get_context map start is_grad_enabled Queue append range len list hasattr __data_parallel_replicate__ modules enumerate len replicate sum nanmean sum diag nanmean sum histogram copy spacing sum sum float sum format isinstance print Conv2d Upsample BatchNorm2d __name__ Linear kernel_size groups shape affine prod kernel_size groups kernel_size groups kernel_size isinstance kernel_size isinstance format Softmax isinstance AvgPool2d print MaxPool2d Conv2d Bilinear BatchNorm2d ConvTranspose2d __name__ Linear PReLU format 
isinstance print Conv2d BatchNorm2d __name__ Linear numel num_params numel num_params numel size numel num_params numel numel time model randn synchronize set_device eval info cuda range sum list format fillna str parameter_quantity ConvFlops name duration Series inference_memory apply MAdd append DataFrame Flops join find_child_index split range len join get_parent_node items add_child tolist len StatNode range split ModelStat show_report int map pad_image_to_shape BORDER_CONSTANT get_2dshape randint get_2dshape zeros uint32 get_2dshape copyMakeBorder map float resize resize int choice resize choice flip getRotationMatrix2D warpAffine random GaussianBlur choice randint Number isinstance astype float32 named_modules isinstance conv_init bias weight constant_ __init_weight isinstance isinstance bias dict modules append weight Linear reduce clone div_ all_reduce clone div_ load items time format join isinstance set OrderedDict warning load_state_dict info keys int list format join endswith device_count info append range split remove format system makedirs embed range len uint8 array set_img_color uint8 set_img_color zeros array column_stack append range insert tolist join print size nanmean append range insert TrainPre batch_size DistributedSampler target_size image_mean distributed niters_per_epoch DataLoader image_std world_size dataset | # TreeFilter-Torch By [Lin Song](https://linsong.me), [Yanwei Li](https://yanwei-li.com), [Zeming Li](http://www.zemingli.com), [Gang Yu](https://www.skicyyu.org), [Hongbin Sun](http://gr.xjtu.edu.cn/web/hsun/chinese), [Jian Sun](http://www.jiansun.org), Nanning Zheng. This project provides a cuda implementation for "[Learnable Tree Filter for Structure-preserving Feature Transform](https://arxiv.org/pdf/1909.12513.pdf)" (NeurIPS2019) on PyTorch. Multiple semantic segmentation experiments are reproduced to verify the effectiveness of tree filtering module on PASCAL VOC2012 and Cityscapes. 
Because the experiments in the paper were conducted with an internal framework, this project reimplements them in PyTorch and reports detailed comparisons below. In addition, many thanks to [TorchSeg](https://github.com/ycszen/TorchSeg).  ## Prerequisites - PyTorch 1.2 - `sudo pip3 install torch torchvision` - Easydict - `sudo pip3 install easydict` | 1,009 |

StevenHickson/CreateNormals | ['surface normals estimation', 'semantic segmentation'] | ['Floors are Flat: Leveraging Semantics for Real-Time Surface Normal Prediction'] | python/calc_normals.py NormalCalculation | # CreateNormals [The paper can be found here](https://arxiv.org/abs/1906.06792). If you use this code for a paper, please cite the following: ``` @inproceedings{Hickson_2019_ICCV_Workshops, author = {Hickson, Steven and Raveendran, Karthik and Fathi, Alireza and Murphy, Kevin and Essa, Irfan}, title = {Floors are Flat: Leveraging Semantics for Real-Time Surface Normal Prediction}, booktitle = {The IEEE International Conference on Computer Vision (ICCV) Workshops}, month = {Oct}, year = {2019} | 1,010 |

StevenLiuWen/sRNN_TSC_Anomaly_Detection | ['anomaly detection'] | ['A Revisit of Sparse Coding Based Anomaly Detection in Stacked RNN Framework'] | libs/feature_loader_multi_patch.py libs/sista_rnn_anomaly_detection.py libs/sista_rnn_anomaly_detection_coherence.py libs/sista_rnn.py libs/common.py tools/__init__.py run_anomaly_detection.py libs/FLAGS.py tools/ground_truth.py tools/init_path.py evaluate.py libs/base.py run_anomaly_detection_coherence.py RecordResult compute_eer evaluate load_loss_gt compute_auc parser_args plot_roc test_func main main parse_args base checkdir checkrank BatchAdvancer HDF5Reader SequenceGeneratorVideo advance_batch readHDF5 FeatureLoader sista_rnn dim_reduction matmul sista_rnn_anomaly_detection_AE sista_rnn_anomaly_detection_TSC sista_rnn_anomaly_detection dim_reduction sista_rnn_anomaly_detection sista_rnn_anomaly_detection_AE matmul GroundTruthLoader add_path add_argument ArgumentParser gt_loader GroundTruthLoader len max show str ylabel title ylim gca append range plot concatenate load_loss_gt compute_auc annotate auc xlabel min roc_curve add_patch Rectangle array len RecordResult concatenate glob print load_loss_gt roc_curve shape any auc array range len AVENUE gt_loader format GroundTruthLoader print name random append plot_roc range len format eval_func print dataset auc join format RandomState asarray sista_rnn_anomaly_detection_TSC print train float32 placeholder test checkdir uniform ConfigProto Session sista_rnn_anomaly_detection_AE add_argument ArgumentParser makedirs list map sequence_generator array len as_list reshape AVENUE PED1 PED2 EXIT ENTRANCE SHANGHAITECH MOVINGMNIST append | # A revisit of sparse coding based anomaly detection in stacked rnn framework This repo is the official open-source implementation of [A revisit of sparse coding based anomaly detection in stacked rnn framework, ICCV 2017]. It is implemented in TensorFlow. Please follow the instructions to run the code. ## 1. 
Installation (Anaconda with Python 3.6 is recommended) * Install the third-party Python dependencies (listed in requirements.txt) ``` numpy==1.15.4 matplotlib==2.2.2 scikit_image==0.13.1 six==1.11.0 | 1,011 |

StonyBrookNLP/PerSenT | ['sentiment analysis'] | ["Author's Sentiment Prediction"] | pre_post_processing_steps/6_get_final_sentiment_compute_agreement_create_reannotation.py pre_post_processing_steps/8_masked_data_prepare_datasets.py pre_post_processing_steps/1_preprocess_EMNLP.py pre_post_processing_steps/2_core.py pre_post_processing_steps/10_data_stats.py pre_post_processing_steps/[7]_combine_4_votes.py pre_post_processing_steps/4_prepare_mturk_input.py pre_post_processing_steps/5_save_links.py pre_post_processing_steps/7_seperate_train_test.py pre_post_processing_steps/11_masked_lm_prepare_datasets.py pre_post_processing_steps/data_distribution.py pre_post_processing_steps/9_combine_pre_new_sets.py pre_post_processing_steps/3_entity_recognition.py word_distribution plot_class_distribution paragraph_distribution count_entities entity_frequency prepare_date map_title_doc del_unsused_doc_title word_distribution plot_class_distribution separate_train_test paragraph_distribution entity_frequency data_dist enclose_mask_entity data_dist tolist print pie axis title savefig figure plot Counter savefig figure most_common DataFrame xlabel print tolist len ylabel axis set hist savefig figure append sum split xlabel print tolist axis ylabel set hist savefig figure split append sum len time word_tokenize list replace print len tag append DataFrame range count list print len range set append DataFrame read_csv values open print len to_csv append keys read_csv drop remove print tolist head choice append DataFrame drop_duplicates read_csv len list start_char sorted replace print text Series nlp coref_clusters append end_char len append notnull split len | ## What is PerSenT? ### Person SenTiment, a challenge dataset for author's sentiment prediction in news domain. You can find our paper [Author's sentiment prediction](https://arxiv.org/abs/2011.06128) Mohaddeseh Bastan, Mahnaz Koupaee, Youngseo Son, Richard Sicoli, Niranjan Balasubramanian. 
COLING2020 We introduce PerSenT, a crowd-sourced dataset that captures the sentiment of an author towards the main entity in a news article. This dataset contains annotations for 5.3k documents and 38k paragraphs covering 3.2k unique entities. ### Example In the following example we see a 4-paragraph document about an entity (Donald Trump). Each paragraph is labeled separately, and the author's sentiment for the whole document is given in the last row. <a href="https://github.com/MHDBST/PerSenT/blob/main/example2.png?raw=true"><img src="https://github.com/MHDBST/PerSenT/blob/main/example2.png?raw=true" alt="Image of PerSenT stats"/></a> ### Dataset Statistics To split the dataset, we separated the entities into 4 mutually exclusive sets. Due to the nature of news collections, some entities tend to dominate the collection. In our collection, there were four entities which were the main entity in nearly 800 articles. To prevent these entities from dominating the train or test splits, we moved them to a separate test collection. We split the remainder into training, dev, and test sets at random. Thus our collection includes two test sets: a standard one consisting of articles drawn at random (Test Standard), and one that contains multiple articles about a small number of popular entities (Test Frequent). | 1,012 |

StonyBrookNLP/SLDS-Stories | ['text generation'] | ['Generating Narrative Text in a Switching Dynamical System'] | scripts/viterbi.py S2S/EncDec.py EncDec.py scripts/sentiment_tag_rocstories.py scripts/gen_ref_for_decoding.py S2S/s2sa.py S2S/generate.py S2S/utils.py generate.py utils.py Sampler.py SLDS.py S2S/train.py data_utils.py LM.py train_alternate.py scripts/run_rouge.py scripts/convert_to_turk.py gibbs_interpolate.py scripts/calculate_transition.py generate_lm.py train_LM.py masked_cross_entropy.py ExtendableField load_vocab create_vocab RocStoryBatches RocStoryDataset S2SSentenceDataset transform RocStoryClozeDataset sentiment_label_vocab RocStoryDatasetSentiment gather_last sequence_mask Decoder fix_enc_hidden Encoder EncDecBase kl_divergence generate generate check_save_model_path generate LM compute_loss_unsupervised compute_loss_supervised compute_loss_unsupervised_LM _sequence_mask masked_cross_entropy print_iter_stats GibbsSampler SLDS check_save_model_path tally_parameters compute_loss_unsupervised compute_loss_supervised train print_iter_stats train print_iter_stats normal_sample top_k_logits normal_sample_deterministic gumbel_softmax_sample gumbel_sample get_context_vector gather_last sequence_mask Decoder fix_enc_hidden Encoder EncDecBase Attention generate S2SWithA train get_data_loader check_path_no_create variable build_transition get_stories write_for_turk rouge_log get_sents do_rouge write_for_rouge make_html_safe rouge_eval test_sentiment_tag get_sentiment sentiment_tag decode load Vocab Counter zero_ view numel cat Variable zeros cuda itos set_use_cuda combine_story compute_loss_unsupervised convert_to_target nll RocStoryClozeDataset cuda exp load_model squeeze exit RocStoryBatches vocab format eval cloze valid_data keys flush enumerate load print min load_vocab RocStoryDataset transform Tensor len model compute_loss_unsupervised_LM interpolate initial_sents reconstruct num_samples dirname abspath makedirs GibbsSampler train_data 
aggregate_gibbs_sample sentiment_label_vocab Variable Tensor cuda range print_iter_stats Variable mean Tensor cuda range print_iter_stats Variable mean Tensor range cuda CrossEntropyLoss print_iter_stats print max Variable size expand cuda expand_as long is_cuda view log_softmax size _sequence_mask float sum cuda print sum load_vectors save_model combine_story batch_size model compute_loss_unsupervised zero_grad convert_to_target compute_loss_supervised GloVe rnn_hidden_size save cuda clip open sentiment load_model squeeze Adam epochs RocStoryBatches use_pretrained vocab SLDS RocStoryDatasetSentiment format load_opt generative_params variational_params emb_size clip_grad_norm sentiment_label_vocab keys combine_sentiment_labels enumerate load time backward print min load_vocab RocStoryDataset parameters Tensor step hidden_size len compute_loss_unsupervised_LM LM exp randn Variable size cuda exp cuda size gumbel_sample topk expand_as mean BatchIter target S2SSentenceDataset tolist masked_cross_entropy text out_vocab BatchIter S2SSentenceDataset train_data batch_size get_data_loader clip_grad_norm_ target dataset expt_name S2SWithA ceil start_epoch float masked_cross_entropy int enc_hid_size text out_vocab dec_hid_size cuda makedirs print zeros sum zip get_stories format print append range len WARNING Rouge155 convert_and_evaluate setLevel print replace append join index split print rouge_log format print write_for_rouge rouge_eval rmtree mkdir exists polarity_scores SentimentIntensityAnalyzer format print SentimentIntensityAnalyzer sum flush len format print zeros argmax max range len | Code for Generating Narrative Text in a Switching Dynamical System Structure: 1. SLDS.py has the code for the proposed model. 2. train\_alternate.py and generate.py are used to train the model and for inference. 3. Lm.py has the code for the baseline Language model. 4. train\_LM.py and generate\_lm.py is used to train the model and for inference. 5. 
S2S folder has the code for the baseline sequence-to-sequence model with attention. 6. scripts folder has various scripts used for the project. 7. Sampler.py has the main code for Gibbs sampling and gibbs\_interpolate.py is used to perform interpolations. | 1,013 |

Stream-AD/MIDAS | ['anomaly detection'] | ['Real-Time Anomaly Detection in Edge Streams', 'MIDAS: Microcluster-Based Detector of Anomalies in Edge Streams'] | util/DeleteTempFile.py util/EvaluateScore.py util/ReproduceROC.py util/PreprocessData.py darpa_original codes concat astype to_csv read_csv | # MIDAS <p> <a href="https://aaai.org/Conferences/AAAI-20/"> <img src="http://img.shields.io/badge/AAAI-2020-red.svg"> </a> <a href="https://arxiv.org/pdf/2009.08452.pdf"><img src="http://img.shields.io/badge/Paper-PDF-brightgreen.svg"></a> <a href="https://www.comp.nus.edu.sg/~sbhatia/assets/pdf/MIDAS_slides.pdf"> <img src="http://img.shields.io/badge/Slides-PDF-ff9e18.svg"> </a> <a href="https://youtu.be/Bd4PyLCHrto"> | 1,014 |

SuLab/crowdflower_relation_verification | ['relation extraction'] | ['Exposing ambiguities in a relation-extraction gold standard with crowdsourcing'] | src/true_relation_type.py src/file_util.py src/get_pubmed_abstract.py src/broad_reltype_performance.py src/unicode_to_ascii.py src/aggregate_votes.py src/filter_data.py src/check_settings.py aggregate_votes determine_broad_reltype_results has_proper_settings make_dir read_file filter_data query_ncbi get_abstract_information get_pubmed_article_xml_tree parse_article_xml_tree split_abstract get_euadr_relation_type convert_unicode_to_ascii append groupby sum len groupby insert sort map aggregate_votes append sum makedirs join format map query read_csv urlopen format query_ncbi text iter get format isinstance text convert_unicode_to_ascii iter append convert_unicode_to_ascii get_pubmed_article_xml_tree parse_article_xml_tree isinstance dict groupby filter_data normalize replace | ### Data and Code for *Exposing ambiguities in a relation-extraction gold standard with crowdsourcing* Last updated 2015-04-14 Tong Shu Li This repository contains the code and data used to generate *Exposing ambiguities in a relation-extraction gold standard with crowdsourcing*. Any questions can be sent to `[email protected]` ### Contents - **crowdflower/**: this directory contains all of the instructions and markup for CrowdFlower job 710587, which was used to gather the data analyzed in the paper. - **data/**: this directory contains the CrowdFlower output data as well as other data. - **src/**: this directory contains all of the source code referenced by the iPython notebooks. - **create_work_units.ipynb**: Code for randomly selecting some drug-disease relationships to show to the crowd. - **demographic_analysis.ipynb**: An analysis of the countries of origin of the task participants. | 1,015 |

SudhanshuMishra8826/Style-Transfer-On-Images | ['style transfer'] | ['A Neural Algorithm of Artistic Style'] | Algo2/styleTransfer.py Algo2/styleTransferOnVideos.py Algo1/styleTransferAlgo1.py Evaluator gram_matrix eval_loss_and_grads content_loss total_variation_loss style_loss dot transpose batch_flatten permute_dimensions gram_matrix square reshape astype f_outputs | # Style Transfer on Images The problem tackled in this report is transferring the style of one image to the content of another image, thus creating a third image whose style is similar to the first image and whose content is similar to the second. To achieve this, I implemented approaches from two different research papers. ## Style transfer on Images using Leon A. Gatys's paper “A Neural Algorithm of Artistic Style” In this approach we used a VGG network with a function that combines the style and content losses, and then by optimizing this loss in one iteration we will reduce the style and content loss | 1,016 |

SunnerLi/Cross-you-in-style | ['style transfer'] | ['Crossing You in Style: Cross-modal Style Transfer from Music to Visual Arts'] | Models/Classifier.py DataLoader/DatasetUtils.py Models/layer.py Models/loss.py Utils.py Models/model.py Models/Unet.py DataLoader/GetLoader.py evaluate.py DataLoader/WikiartDataset.py Models/generate.py batch_paint.py mkdir train get_gram presentParameters dumpWav dumpPaint sampleGt dumpMal dumpMusicLatent sample dumpYear gram_matrix gridTranspose Log mkdir normalize_batch label2year getArgs getTransform getLoaderEvaluate WikiartDatasetEvaluate UnetInDown Classifier basicConv UnetDown UnetUp UnetOutUp Generator Self_Attn Discriminator SpectralNorm2d LayerNorm l2normalize calc_gradient_penalty StyleLoss PerceptualLoss SVN UnetInDown basicConv UnetDown UnetUp Unet UnetOutUp format system model resample cuda squeeze dumpPaint append to format SVN getLoaderEvaluate eval getTransform mkdir item save_output_path enumerate load join print load_model_path Log system tqdm numpy len presentParameters add_argument ArgumentParser vars parse_args sorted format Log keys makedirs size stack append range enumerate len year_interval year_base join uint8 format imwrite transpose astype join format save join format join format save join format squeeze Log dumpPaint dumpMal dumpYear mkdir save_output_path numpy enumerate bmm size transpose view view div_ vgg cuda normalize_batch print format Compose paint_resize_min_edge DataLoader WikiartDatasetEvaluate Variable size rand expand mean netD cuda | # Crossing You in Style: Cross-modal Style Transfer from Music to Visual Arts

[[Project page]](https://sunnerli.github.io/Cross-you-in-style/) [[Arxiv paper]](https://arxiv.org/abs/2009.08083)

[[Dataset]](https://drive.google.com/drive/folders/1XgrXx1qKd8etj9-75ma_8z1tlO8Y49tE)

| 1,017 |

SurajDonthi/Clean-ST-ReID-Multi-Target-Multi-Camera-Tracking | ['person retrieval', 'person re identification'] | ['Beyond Part Models: Person Retrieval with Refined Part Pooling (and a Strong Convolutional Baseline)'] | mtmct_reid/eval.py mtmct_reid/engine.py mtmct_reid/data.py mtmct_reid/re_ranking.py mtmct_reid/train.py mtmct_reid/utils.py mtmct_reid/model.py mtmct_reid/rough.py mtmct_reid/metrics.py ReIDDataModule ReIDDataset ST_ReID generate_features main st_distribution smooth_st_distribution joint_scores gaussian_kernel mAP gaussian_func AP_CMC ClassifierBlock weights_init_classifier weights_init_kaiming PCB k_reciprocal_neigh re_ranking train plot_distributions standardize l2_norm_standardize save_args fliplr get_ids l2_norm_standardize cpu tensor Tensor cat load re_ranking print joint_scores Compose generate_features DataLoader eval ReIDDataset load_state_dict mAP model_path re_rank PCB st_distribution_path int sum unique zip zeros abs range len pow sqrt pi zeros arange range gaussian_func st_distribution dot gaussian_kernel sum range int16 exp matmul standardize type zip Tensor tensor abs cat setdiff1d argsort flatten intersect1d argwhere zero_ in1d append range len zero_ zip float AP_CMC len data normal_ kaiming_normal_ constant_ data normal_ constant_ zeros_like around max list exp transpose append sum range astype mean unique minimum int print float32 argpartition k_reciprocal_neigh zeros len num_classes experiment fit ST_ReID test Path save_args ModelCheckpoint save_dir TestTubeLogger from_argparse_args makedirs int stem append range enumerate split index_select long norm view size div expand_as append T sum subplots plot enumerate | # Multi-Camera Person Re-Identification

This repository is inspired by the paper [Spatial-Temporal Reidentification (ST-ReID)](https://arxiv.org/abs/1812.03282v1)[1], the state of the art for person re-identification tasks. It offers a flexible, easy-to-understand, clean implementation of the model architecture, training and evaluation.

This repository has been trained & tested on the [DukeMTMC-reID](https://megapixels.cc/duke_mtmc/) and [Market-1501](https://www.kaggle.com/pengcw1/market-1501) datasets. The model can be easily trained on any new dataset with a few tweaks to parse the files!

>You can do a quick run on Google Colab: [demo.ipynb](https://colab.research.google.com/github/SurajDonthi/Multi-Camera-Person-Re-Identification/blob/master/demo.ipynb)

Below are the metrics on the various datasets.

| 1,018 |

SurajDonthi/Multi-Camera-Person-Re-Identification | ['person retrieval', 'person re identification'] | ['Beyond Part Models: Person Retrieval with Refined Part Pooling (and a Strong Convolutional Baseline)', 'Spatial-Temporal Person Re-identification'] | mtmct_reid/eval.py mtmct_reid/engine.py mtmct_reid/data.py mtmct_reid/re_ranking.py mtmct_reid/train.py mtmct_reid/utils.py mtmct_reid/model.py mtmct_reid/rough.py mtmct_reid/metrics.py ReIDDataModule ReIDDataset ST_ReID generate_features main st_distribution smooth_st_distribution joint_scores gaussian_kernel mAP gaussian_func AP_CMC ClassifierBlock weights_init_classifier weights_init_kaiming PCB k_reciprocal_neigh re_ranking train plot_distributions standardize l2_norm_standardize save_args fliplr get_ids l2_norm_standardize cpu tensor Tensor cat load re_ranking print joint_scores Compose generate_features DataLoader eval ReIDDataset load_state_dict mAP model_path re_rank PCB st_distribution_path int sum unique zip zeros abs range len pow sqrt pi zeros arange range gaussian_func st_distribution dot gaussian_kernel sum range int16 exp matmul standardize type zip Tensor tensor abs cat setdiff1d argsort flatten intersect1d argwhere zero_ in1d append range len zero_ zip float AP_CMC len data normal_ kaiming_normal_ constant_ data normal_ constant_ zeros_like around max list exp transpose append sum range astype mean unique minimum int print float32 argpartition k_reciprocal_neigh zeros len num_classes experiment fit ST_ReID test Path save_args ModelCheckpoint save_dir TestTubeLogger from_argparse_args makedirs int stem append range enumerate split index_select long norm view size div expand_as append T sum subplots plot enumerate | # Multi-Camera Person Re-Identification

This repository is inspired by the paper [Spatial-Temporal Reidentification (ST-ReID)](https://arxiv.org/abs/1812.03282v1)[1], the state of the art for person re-identification tasks. It offers a flexible, easy-to-understand, clean implementation of the model architecture, training and evaluation.

This repository has been trained & tested on the [DukeMTMC-reID](https://megapixels.cc/duke_mtmc/) and [Market-1501](https://www.kaggle.com/pengcw1/market-1501) datasets. The model can be easily trained on any new dataset with a few tweaks to parse the files!

>You can do a quick run on Google Colab: [demo.ipynb](https://colab.research.google.com/github/SurajDonthi/Multi-Camera-Person-Re-Identification/blob/master/demo.ipynb)

Below are the metrics on the various datasets.

| 1,019 |

SurajDonthi/Multi-Target-Multi-Camera-Tracking-ST-ReID | ['person retrieval', 'person re identification'] | ['Beyond Part Models: Person Retrieval with Refined Part Pooling (and a Strong Convolutional Baseline)'] | mtmct_reid/eval.py mtmct_reid/engine.py mtmct_reid/data.py mtmct_reid/re_ranking.py mtmct_reid/train.py mtmct_reid/utils.py mtmct_reid/model.py mtmct_reid/rough.py mtmct_reid/metrics.py ReIDDataModule ReIDDataset ST_ReID generate_features main st_distribution smooth_st_distribution joint_scores gaussian_kernel mAP gaussian_func AP_CMC ClassifierBlock weights_init_classifier weights_init_kaiming PCB k_reciprocal_neigh re_ranking train plot_distributions standardize l2_norm_standardize save_args fliplr get_ids l2_norm_standardize cpu tensor Tensor cat load re_ranking print joint_scores Compose generate_features DataLoader eval ReIDDataset load_state_dict mAP model_path re_rank PCB st_distribution_path int sum unique zip zeros abs range len pow sqrt pi zeros arange range gaussian_func st_distribution dot gaussian_kernel sum range int16 exp matmul standardize type zip Tensor tensor abs cat setdiff1d argsort flatten intersect1d argwhere zero_ in1d append range len zero_ zip float AP_CMC len data normal_ kaiming_normal_ constant_ data normal_ constant_ zeros_like around max list exp transpose append sum range astype mean unique minimum int print float32 argpartition k_reciprocal_neigh zeros len num_classes experiment fit ST_ReID test Path save_args ModelCheckpoint save_dir TestTubeLogger from_argparse_args makedirs int stem append range enumerate split index_select long norm view size div expand_as append T sum subplots plot enumerate | # Multi-Camera Person Re-Identification

This repository is inspired by the paper [Spatial-Temporal Reidentification (ST-ReID)](https://arxiv.org/abs/1812.03282v1)[1], the state of the art for person re-identification tasks. It offers a flexible, easy-to-understand, clean implementation of the model architecture, training and evaluation.

This repository has been trained & tested on the [DukeMTMC-reID](https://megapixels.cc/duke_mtmc/) and [Market-1501](https://www.kaggle.com/pengcw1/market-1501) datasets. The model can be easily trained on any new dataset with a few tweaks to parse the files!

>You can do a quick run on Google Colab: [demo.ipynb](https://colab.research.google.com/github/SurajDonthi/Multi-Camera-Person-Re-Identification/blob/master/demo.ipynb)

Below are the metrics on the various datasets.

| 1,020 |

SurajDonthi/Multi-Target-Multi-Camera-Tracking-ST-ReID-Clean-Code | ['person retrieval', 'person re identification'] | ['Beyond Part Models: Person Retrieval with Refined Part Pooling (and a Strong Convolutional Baseline)'] | mtmct_reid/eval.py mtmct_reid/engine.py mtmct_reid/data.py mtmct_reid/re_ranking.py mtmct_reid/train.py mtmct_reid/utils.py mtmct_reid/model.py mtmct_reid/rough.py mtmct_reid/metrics.py ReIDDataModule ReIDDataset ST_ReID generate_features main st_distribution smooth_st_distribution joint_scores gaussian_kernel mAP gaussian_func AP_CMC ClassifierBlock weights_init_classifier weights_init_kaiming PCB k_reciprocal_neigh re_ranking train plot_distributions standardize l2_norm_standardize save_args fliplr get_ids l2_norm_standardize cpu tensor Tensor cat load re_ranking print joint_scores Compose generate_features DataLoader eval ReIDDataset load_state_dict mAP model_path re_rank PCB st_distribution_path int sum unique zip zeros abs range len pow sqrt pi zeros arange range gaussian_func st_distribution dot gaussian_kernel sum range int16 exp matmul standardize type zip Tensor tensor abs cat setdiff1d argsort flatten intersect1d argwhere zero_ in1d append range len zero_ zip float AP_CMC len data normal_ kaiming_normal_ constant_ data normal_ constant_ zeros_like around max list exp transpose append sum range astype mean unique minimum int print float32 argpartition k_reciprocal_neigh zeros len num_classes experiment fit ST_ReID test Path save_args ModelCheckpoint save_dir TestTubeLogger from_argparse_args makedirs int stem append range enumerate split index_select long norm view size div expand_as append T sum subplots plot enumerate | # Multi-Camera Person Re-Identification

This repository is inspired by the paper [Spatial-Temporal Reidentification (ST-ReID)](https://arxiv.org/abs/1812.03282v1)[1], the state of the art for person re-identification tasks. It offers a flexible, easy-to-understand, clean implementation of the model architecture, training and evaluation.

This repository has been trained & tested on the [DukeMTMC-reID](https://megapixels.cc/duke_mtmc/) and [Market-1501](https://www.kaggle.com/pengcw1/market-1501) datasets. The model can be easily trained on any new dataset with a few tweaks to parse the files!

>You can do a quick run on Google Colab: [demo.ipynb](https://colab.research.google.com/github/SurajDonthi/Multi-Camera-Person-Re-Identification/blob/master/demo.ipynb)

Below are the metrics on the various datasets.

| 1,021 |

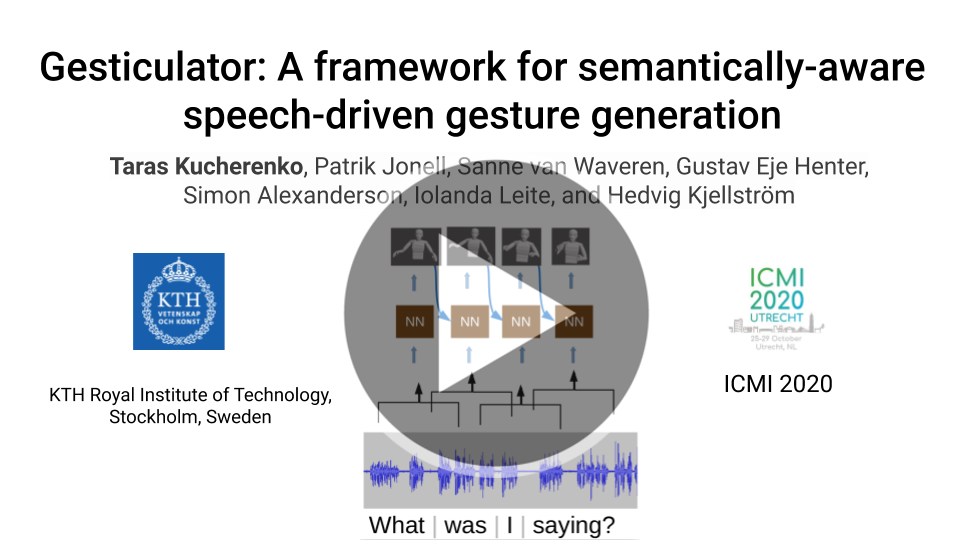

Svito-zar/gesticulator | ['gesture generation'] | ['Gesticulator: A framework for semantically-aware speech-driven gesture generation'] | gesticulator/visualization/pymo/preprocessing_old.py gesticulator/data_processing/text_features/compare_bert_versions.py gesticulator/hyper_param_search/scheduled_search.py gesticulator/visualization/motion_visualizer/model_animator.py gesticulator/train.py gesticulator/visualization/motion_visualizer/convert2bvh.py gesticulator/visualization/pymo/preprocessing.py gesticulator/visualization/pymo/slask.py gesticulator/data_processing/pca_gestures.py gesticulator/interface/gesture_predictor.py gesticulator/data_processing/SGdataset.py gesticulator/data_processing/text_features/parse_json_transcript.py gesticulator/visualization/motion_visualizer/bvh2npy.py gesticulator/hyper_param_search/schedulers.py gesticulator/visualization/setup.py gesticulator/visualization/motion_visualizer/bvh_helper.py gesticulator/visualization/pymo/rotation_tools_bkp.py gesticulator/evaluate.py gesticulator/data_processing/text_features/syllable_count.py gesticulator/visualization/pymo/rotation_tools.py gesticulator/data_processing/process_dataset.py gesticulator/visualization/pymo/features.py gesticulator/obj_evaluation/calc_errors.py gesticulator/visualization/motion_visualizer/visualize.py gesticulator/visualization/pymo/parsers.py install_script.py gesticulator/visualization/motion_visualizer/generate_videos.py gesticulator/hyper_param_search/random_search.py gesticulator/visualization/pymo/viz_tools.py gesticulator/data_processing/data_params.py gesticulator/data_processing/tools.py gesticulator/hyper_param_search/ray_trainable.py gesticulator/obj_evaluation/calc_histogram.py gesticulator/visualization/motion_visualizer/read_bvh.py gesticulator/obj_evaluation/calc_jerk.py gesticulator/data_processing/split_dataset.py gesticulator/model/model.py gesticulator/data_processing/test_audio.py gesticulator/visualization/pymo/writers.py demo/demo.py 
gesticulator/data_processing/bvh2features.py gesticulator/model/final_model.py gesticulator/data_processing/features2bvh.py dataset/rename_data_files.py gesticulator/config/model_config.py gesticulator/obj_evaluation/plot_hist.py setup.py gesticulator/visualization/pymo/Pivots.py gesticulator/model/prediction_saving.py gesticulator/visualization/pymo/data.py gesticulator/visualization/pymo/Quaternions.py main check_feature_type truncate_audio parse_args main create_save_dirs add_test_script_arguments create_logger main add_training_script_arguments ModelSavingCallback construct_model_config_parser extract_joint_angles feat2bvh create_embedding _save_data_as_sequences create_dataset _save_dataset _encode_vectors SpeechGestureDataset ValidationDataset check_dataset_directories create_dataset_splits copy_files _create_data_directories _format_datasets _files_to_pandas_dataframe create_bvh derivative calculate_mfcc average extract_prosodic_features compute_prosody calculate_spectrogram shorten encode_json_transcript_with_bert check_json_transcript encode_json_transcript_with_bert_DEPRECATED json_time_to_deciseconds merge_subword_encodings get_bert_embedding extract_extra_features extract_word_attributes count_syllables syl_count TrainableTrainer MyEarlyStopping main MyAsyncHyperBandScheduler MyAsyncHyperBandScheduler MyFIFOScheduler _Bracket ASHAv2 GesturePredictor My_Model weights_init_he weights_init_zeros GesticulatorModel weights_init_he weights_init_zeros PredictionSavingMixin read_joint_names remove_velocity MAE APE main main compute_velocity save_result compute_acceleration main save_result compute_acceleration compute_jerks read_csv read_joint_names convert_bvh2npy load parse_bvh_node BVHChannel loads BVHNode write_bvh generate_videos create_arg_parser visualize create_video AnimateSkeletons ModelSkeletons append obtain_coords read_bvh_to_array convert_bvh2npy visualize Joint MocapData plot_foot_up_down create_foot_contact_signal get_foot_contact_idxs BVHParser 
BVHScanner Pivots RootCentricPositionNormalizer Mirror JointSelector Flattener ConstantsRemover ReverseTime Numpyfier EulerReorder MocapParameterizer DownSampler TemplateTransform ListMinMaxScaler Slicer RootTransformer ListStandardScaler RootCentricPositionNormalizer Mirror JointSelector Flattener ConstantsRemover ReverseTime Numpyfier EulerReorder MocapParameterizer DownSampler TemplateTransform ListMinMaxScaler Slicer RootTransformer ListStandardScaler Quaternions euler_reorder Rotation euler2expmap2 expmap2euler euler_reorder2 rad2deg deg2rad unroll_2 euler2expmap unroll offsets offsets_inv unroll_1 Rotation expmap2euler euler2expmap rad2deg deg2rad EulerReorder Offsetter nb_play_mocap_fromurl draw_stickfigure draw_stickfigure3d viz_cnn_filter sketch_move print_skel nb_play_mocap save_fig BVHWriter check_feature_type int remove model_file detach print text video_out predict_gestures audio call GesturePredictor split load_from_checkpoint visualize load print exit load write_wav replace add_argument ArgumentParser create_save_dirs eval generate_evaluation_videos join generated_gestures_dir makedirs add_argument join GesticulatorModel create_logger save_checkpoint save_dir from_argparse_args fit rpartition add_argument add_argparse_args ArgumentParser add join list BVHParser parse dump savez print append Pipeline fit_transform load BVHWriter print shape inverse_transform load encode_json_transcript_with_bert isinstance concatenate print future_context min exit seq_len calculate_mfcc past_context shape extract_prosodic_features calculate_spectrogram shorten array len join _save_data_as_sequences proc_data_dir _save_dataset read_csv makedirs join save trange _encode_vectors len join concatenate print save trange _encode_vectors len print from_pretrained exit join copy zfill isfile join copy_files to_csv _create_data_directories _format_datasets range print join abspath makedirs print _files_to_pandas_dataframe range join format print zfill abspath append getsize 
join print exit abspath split convolve copy min len int len load melspectrogram abs log mfcc read transpose arange derivative from_file min transpose average stack compute_prosody len get_total_duration eps asarray arange log nan_to_num to_pitch to_intensity Sound clip list format print exit get_bert_embedding extract_extra_features extend any extract_word_attributes append array len join merge_subword_encodings unsqueeze encode numpy convert_ids_to_tokens strip mean iter startswith append print float float rstrip json_time_to_deciseconds print exit extend extract_extra_features any extract_word_attributes append array len findall lower enumerate len dict lower items Namespace Trainer ModelCheckpoint setattr fill_ in_features normal_ sqrt out_features __name__ zeros_ data __name__ arange mean empty range mean_absolute_error zeros norm range ArgumentParser sorted basename std shape predicted parse_args append format glob upper mean zip original load remove_velocity add_argument min out makedirs norm reshape zeros range diff zeros norm range diff print join format makedirs arange warn show str save_result ylabel title legend width sum range plot concatenate size tight_layout stack xlabel measure histogram len norm mean zeros range diff mean reshape read_bvh_to_array save concatenate name filter append BVHNode Bvh parse_bvh_node load inverse_transform BVHWriter range write_bvh convert_bvh2npy create_video load join remove print isfile listdir visualize add_argument ArgumentParser AnimateSkeletons getSkeletons animate ModelSkeletons initLines save reshape children append load_frame range apply_transformation load obtain_coords array len indexes get_foot_contact_idxs append range values len index get_foot_contact_idxs plot values norm reshape pi copy tile append range diff norm reshape pi copy tile range einsum diff from_euler rad2deg deg2rad euler print deg2rad rad2deg mat2euler lower euler2mat qinverse quat2euler euler2quat deg2rad rad2deg lower qmult quat2euler 
euler2quat deg2rad rad2deg lower qmult from_euler deg2rad angle_axis lower euler2axangle deg2rad axangle2euler lower norm array savefig tight_layout plot add_subplot scatter figure annotate keys values plot text add_subplot scatter figure keys values plot add_subplot figure keys range values T plot axis imshow scatter figure subplot2grid keys range enumerate pop print append len BVHWriter list columns remove to_csv join list columns remove replace str to_csv realpath dirname | [](https://youtu.be/VQ8he6jjW08) This repository contains PyTorch based implementation of the ICMI 2020 Best Paper Award recipient paper [Gesticulator: A framework for semantically-aware speech-driven gesture generation](https://svito-zar.github.io/gesticulator/). ## 0. Set up ### Requirements - python3.6+ - ffmpeg (for visualization) ### Installation **NOTE**: during installation, there will be several error messages (one for bert-embedding and one for mxnet) about conflicting packages - please ignore them, they don't affect the functionality of the repository. - Clone the repository: ``` | 1,022 |

Svobikl/cz_corpus | ['word embeddings'] | ['New word analogy corpus for exploring embeddings of Czech words'] | Evaluator.py | # cz_corpus

The word embedding methods have been proven to be very useful in many tasks of NLP (Natural Language Processing). Much has been investigated about word embeddings of English words and phrases, but only little attention has been dedicated to other languages. Our goal in this paper is to explore the behavior of state-of-the-art word embedding methods on Czech, a language characterized by very rich morphology. We introduce a new corpus for the word analogy task that inspects syntactic, morphosyntactic and semantic properties

| 1,023 |

SwordHolderSH/neural-style-pytorch | ['style transfer'] | ['A Neural Algorithm of Artistic Style'] | utils.py neural_style.py vgg.py closure save_image_epoch normalize gram_matrix normalize_batch load_image Vgg16 save_image_epoch format backward abs print zero_grad clamp_ relu4_3 zip vgg sum mse_loss resize ANTIALIAS open bmm size transpose view view div_ join str squeeze clone unloader save cuda | # neural-style-pytorch A simple PyTorch implementation of "A Neural Algorithm of Artistic Style" ## Introduction I tried some other neural style transfer code for PyTorch, but the outputs may turn into noise in some epochs, such as epochs 45, 170 and 230 in Figure 1. I don't know why. Therefore, I simply implemented the method of "A Neural Algorithm of Artistic Style" (http://arxiv.org/abs/1508.06576). <div align=center> <img src="https://github.com/SwordHolderSH/neural-style-pytorch/blob/master/demos/output.png" width="900" /> </div> <p align="center">**Figure 1**</p> ## Results <p align="left"> The output is shown in Table 1. </p> <table> <tr> | 1,024 |

Swybino/PotatoNet | ['face detection'] | ['PyramidBox: A Context-assisted Single Shot Face Detector'] | data_handler.py MemTrack/memnet/access.py MemTrack/build_tfrecords/process_xml.py faceAlignment/face_alignment/__init__.py faceAlignment/examples/detect_landmarks_in_image_3D.py PyramidBox/nets/ssd.py faceAlignment/test/test_utils.py faceAlignment/test/facealignment_test.py faceAlignment/face_alignment/detection/sfd/sfd_detector.py utils.py PyramidBox/tf_extended/bboxes.py side_scripts/align_faces.py test.py MemTrack/memnet/memnet.py faceAlignment/face_alignment/api.py faceAlignment/face_alignment/detection/dlib/__init__.py MemTrack/config.py config.py PyramidBox/widerface_eval.py MemTrack/model.py PyramidBox/tf_utils.py MemTrack/memnet/rnn.py faceAlignment/face_alignment/detection/folder/folder_detector.py PyramidBox/makedir.py MemTrack/memnet/utils.py faceAlignment/face_alignment/utils.py faceAlignment/face_alignment/detection/sfd/detect.py MemTrack/tracking/demo.py PyramidBox/tf_extended/tensors.py PyramidBox/nets/np_methods.py faceAlignment/face_alignment/detection/sfd/bbox.py faceAlignment/face_alignment/detection/folder/__init__.py MemTrack/build_tfrecords/build_data_vid.py face_analyzer.py PyramidBox/demo.py faceAlignment/setup.py faceAlignment/face_alignment/models.py MemTrack/tracking/tracker.py faceAlignment/examples/detect_landmarks_in_image.py PyramidBox/nets/custom_layers.py tests/_test_iou.py eval/evaluation.py MemTrack/input.py PyramidBox/preprocessing/vgg_preprocessing.py faceAlignment/face_alignment/detection/core.py visualization.py PyramidBox/utility/visualization.py PyramidBox/preprocessing/inception_preprocessing.py init.py MemTrack/build_tfrecords/collect_vid_info.py PyramidBox/preprocessing/ssd_vgg_preprocessing.py face_alignment.py PyramidBox/AnchorSampling.py PyramidBox/tf_extended/metrics.py PyramidBox/train_model.py eval/label.py faceAlignment/face_alignment/detection/sfd/net_s3fd.py MemTrack/estimator.py PyramidBox/tf_extended/math.py 
PyramidBox/datasets/dataset_utils.py faceAlignment/face_alignment/detection/sfd/__init__.py PyramidBox/tf_extended/__init__.py faceAlignment/face_alignment/detection/dlib/dlib_detector.py MemTrack/build_tfrecords/generate_vidb.py PyramidBox/check_data_io.py PyramidBox/datasets/pascalvoc_to_tfrecords.py MemTrack/experiment.py PyramidBox/nets/ssd_common.py PyramidBox/preparedata.py PyramidBox/datasets/pascalvoc_datasets.py PyramidBox/preprocessing/tf_image.py PyramidBox/preprocessing/preprocessing_factory.py MemTrack/feature.py faceAlignment/test/FA_test.py faceAlignment/face_alignment/detection/__init__.py faceAlignment/test/smoke_test.py side_scripts/result_video.py MemTrack/memnet/addressing.py children_tracker.py detect_faces MainTracker get_data DataHandler save_data FaceAligner face_orientation FaceAnalizer load_init load_seq_video process_image run_tracker display_result rotate_landmarks get_angular_dist get_roi is_between bb_intersection_over_union get_bbox_dist is_point_in_bbox rotate_roi crop_roi is_bbox_in_bbox_list get_angle grad bbox_in_roi bbox_img_coord is_point_in_bbox_list get_bbox_dict_ang_pos get_list_disorder load_seq_video landmarks_img_coord reformat_bbox_coord bb_contained rotate_bbox VisualizerOpencv VisualizerPlt Evaluator Labeler find_version read plot plot FaceAlignment LandmarksType NetworkSize ResNetDepth Bottleneck conv3x3 FAN HourGlass ConvBlock appdata_dir shuffle_lr get_preds_fromhm _gaussian flip draw_gaussian transform crop FaceDetector DlibDetector FolderDetector nms decode bboxlog encode bboxloginv flip_detect detect pts_to_bb s3fd L2Norm SFDDetector Tester Tester Estimator experiment extract_feature get_key_feature conv2d bn_relu_conv2d conv2d_bn_relu _parse_example_proto _batch_input _generate_labels_overlap_py _distort_color _bbox_overlaps generate_labels_dist generate_input_fn _translate_and_strech _process_images generate_labels_overlap get_loss build_model ModeKeys model_fn get_train_op build_initial_state get_saver 
get_dist_error get_summary focal_loss get_predictions batch_conv get_cnn_feature _float_feature_list _int64_feature partition_vid _bytes_feature_list save_to_tfrecords Vid convert_to_example process_videos_area build_tfrecords EncodeJpeg _bytes_feature _float_feature process_videos process collect_video_info generate_vidb Vid BoundingBox process_xml get_int get_item find_num_bb MemoryAccess _reset_and_write attention_read _weighted_softmax update_usage cosine_similarity _vector_norms calc_allocation_weight attention MemNet rnn weights_summay run_tracker load_seq_config display_result calc_x_size get_new_state Model Tracker calc_z_size process_image PrepareData get_init_fn configure_optimizer update_model_scope reshape_list get_variables_to_train print_configuration add_variables_summaries configure_learning_rate TrainModel process_image download_and_uncompress_tarball image_to_tfexample write_label_file int64_feature bytes_feature float_feature read_label_file has_labels get_dataset_info _convert_to_example _add_to_tfrecord _get_output_filename run _get_dataset_filename _process_image abs_smooth_2 pad2d channel_to_last l2_normalization abs_smooth bboxes_nms ssd_bboxes_decode ssd_bboxes_select bboxes_nms_fast bboxes_jaccard bboxes_clip ssd_bboxes_select_layer bboxes_intersection bboxes_resize bboxes_sort PyramidBoxModel tf_ssd_bboxes_select_all_classes tf_ssd_bboxes_select_layer_all_classes tf_ssd_bboxes_encode tf_ssd_bboxes_select_layer tf_ssd_bboxes_encode_layer tf_ssd_bboxes_decode tf_ssd_bboxes_select tf_ssd_bboxes_decode_layer distorted_bounding_box_crop preprocess_for_train preprocess_for_eval preprocess_image distort_color apply_with_random_selector get_preprocessing tf_image_unwhitened distorted_bounding_box_crop np_image_unwhitened tf_summary_image preprocess_for_train preprocess_for_eval preprocess_image tf_image_whitened distort_color apply_with_random_selector _assert _Check3DImage bboxes_crop_or_pad fix_image_flip_shape random_flip_left_right 
_ImageDimensions _is_tensor resize_image resize_image_bboxes_with_crop_or_pad _aspect_preserving_resize preprocess_for_train _crop _central_crop _smallest_size_at_least _mean_image_subtraction preprocess_for_eval preprocess_image _random_crop bboxes_nms bboxes_matching bboxes_filter_overlap bboxes_filter_labels bboxes_nms_batch bboxes_jaccard bboxes_matching_batch bboxes_sort_all_classes bboxes_clip bboxes_intersection bboxes_filter_center bboxes_resize bboxes_sort safe_divide cummax _create_local streaming_tp_fp_arrays average_precision_voc12 precision_recall_values _safe_div _precision_recall average_precision_voc07 precision_recall _broadcast_weights streaming_precision_recall_arrays get_shape pad_axis bboxes_draw_on_img colors_subselect plt_bboxes draw_rectangle draw_bbox draw_lines to_video fromarray int uint8 bboxes_nms ssd_bboxes_select bboxes_sort bboxes_clip resize ssd_anchors_all_layers bboxes_resize run norm degrees mean cross arcsin array fromarray int uint8 bboxes_nms ssd_bboxes_select bboxes_sort bboxes_clip resize ssd_anchors_all_layers bboxes_resize run VideoCapture read join imwrite sorted print mkdir img_dir sequence_path listdir release makedirs join data_dir readlines append open ConfigProto FONT_HERSHEY_DUPLEX imwrite replace tuple putText save_img astype imshow rectangle split merge video_path split max get_roi int roi_min_size min max get_roi rotate_bound items list get_angle abs get_angle enumerate index len is_point_in_bbox read search M show add_subplot axis imshow figure plot3D view_init set_xlim scatter exp empty range sum _gaussian ones inverse eye transform zeros array resize view FloatTensor size add_ apply_ mul_ transform zeros float max range from_numpy ndimension join remove isdir close executable lstrip getenv prefix getattr startswith abspath dirname expanduser mkdir append log cat decode list view reshape transpose size zeros shape softmax zip append Tensor to array range len zeros detect flip shape min max train 
generate_input_fn Estimator conv2d max_pooling2d conv2d_bn_relu concat split conv2d batch_normalization batch_normalization relu as_list use_fc_key reshape average_pooling2d key_dim bn_relu_conv2d enqueue image sorted string_input_producer num_preprocess_threads cast append num_readers range min_queue_examples batch_join add_queue_runner glob QueueRunner _process_images _parse_example_proto join read dequeue int32 RandomShuffleQueue to_int32 patch_size crop_to_bounding_box z_exemplar_size top_k linspace random_uniform set_shape gather is_limit_search to_float max_strech_x append max_translate_x range random_shuffle max_search_range max_strech_z max_translate_z stack is_augment decode_jpeg minimum int convert_image_dtype _translate_and_strech convert_to_tensor minimum concat squeeze expand_dims reverse random_uniform crop_and_resize tile float round random_normal random_hue random_saturation random_brightness clip_by_value random_contrast parse_single_sequence_example count_nonzero dist tile zeros array range as_list reshape tolist set_shape py_func count_nonzero arange concatenate print reshape transpose hstack _bbox_overlaps pause stride imshow meshgrid zeros expand_dims append minimum prod maximum as_list reshape as_list reshape squeeze map_fn batch_normalization batch_conv rnn initial_state as_list sigmoid_cross_entropy_with_logits reduce_sum generate_labels_dist focal_loss scalar array use_focal_loss generate_labels_overlap as_list reshape stack tile mean_squared_error expand_dims argmax scalar trainable_variables learning_rate get_or_create_global_step l2_regularizer apply_regularization gradients clip_by_global_norm get_collection lr_decay weight_decay decay_circles AdamOptimizer UPDATE_OPS exponential_decay clip_gradients scalar get_loss get_train_op get_saver get_dist_error get_summary get_predictions get_cnn_feature get_cnn_feature get_saver batch_conv rnn get_cnn_feature int sorted print unique append sum array range len load join Thread basename append 
partition_vid TFRecordWriter print makedirs Coordinator tfrecords_path start open enumerate flush len height ymin print save_to_tfrecords tolist ymax dir objs xmax xmin acquire width append process release get_img_buffer enumerate print save_to_tfrecords tolist objs dir acquire append process release get_img_buffer enumerate tuple patch_size z_exemplar_size paste floor resize open new prod hstack astype sqrt tile crop join fix_aspect maximum context_amount z_scale img_path array mode len write convert_to_example SerializeToString zip enumerate FeatureLists SequenceExample Features join sorted write close open listdir len pop int join sorted dump max_trackid print trackid Vid process_xml open img_path listdir append split iter BoundingBox join parse get_int get_item getroot append find_num_bb range as_list reshape expand_dims reduce_sum softmax strengths_op expand_dims _vector_norms matmul dense as_list tanh conv2d expand_dims usage_decay stop_gradient squeeze top_k one_hot as_list dense tanh format reshape reduce_max conv2d softmax histogram expand_dims argmax as_list summary_display_step format weights_summay tuple write_weight reshape concat read_weight usage append memory range len as_list format histogram range join sorted otb_data_dir readlines append listdir open join save_path is_save fix_aspect context_amount sqrt z_scale repeat prod x_instance_size z_exemplar_size AccessState num_scale LSTMStateTuple append array append list isinstance join print_config makedirs int num_epochs_per_decay batch_size MomentumOptimizer AdagradOptimizer GradientDescentOptimizer AdamOptimizer RMSPropOptimizer AdadeltaOptimizer FtrlOptimizer name get_model_variables histogram append scalar checkpoint_path latest_checkpoint get_model_variables checkpoint_exclude_scopes IsDirectory startswith info append train_dir extend get_collection TRAINABLE_VARIABLES join urlretrieve print extractall stat join join index split TFExampleDecoder TFRecordReader join read format parse int encode 
findall print text getroot append find Example _convert_to_example write SerializeToString _process_image seed join sorted int print min shuffle mkdir ceil float listdir _get_dataset_filename range len minimum abs abs square where shape exp reshape zeros_like ssd_bboxes_decode reshape where shape argmax amax concatenate ssd_bboxes_select_layer append range len argsort minimum transpose maximum copy copy minimum transpose maximum minimum transpose maximum ones size logical_and where bboxes_jaccard shape logical_or range append maximum minimum ones while_loop stack zeros log stack exp get_shape dtype reshape reduce_max greater stack cast argmax random_uniform constant constant cast int32 uint8 astype copy tf_image_unwhitened expand_dims image draw_bounding_boxes _is_tensor as_list shape unstack is_fully_defined any with_rank get_shape set_shape pack greater_equal slice to_int32 logical_and with_dependencies Assert shape rank equal greater_equal reshape logical_and with_dependencies extend Assert shape rank random_uniform append range equal len append _crop range split convert_to_tensor to_float to_int32 greater cond convert_to_tensor resize_bilinear squeeze shape set_shape _smallest_size_at_least expand_dims to_float _aspect_preserving_resize random_flip_left_right set_shape random_uniform to_float set_shape _aspect_preserving_resize isinstance isinstance list get_shape list keys isinstance list keys as_list shape unstack is_fully_defined len append range isinstance len line rectangle FONT_HERSHEY_DUPLEX putText rectangle str FONT_HERSHEY_DUPLEX putText shape rectangle range int suptitle add_patch dict imshow figure Rectangle range join write VideoWriter imread listdir VideoWriter_fourcc release | # PotatoNet using PyramidBox for face Detection @article{DBLP:journals/corr/abs-1803-07737, author = {Xu Tang and Daniel K. 
Du and Zeqiang He and Jingtuo Liu}, title = {PyramidBox: {A} Context-assisted Single Shot Face Detector}, journal = {CoRR}, volume = {abs/1803.07737}, | 1,025 |