repo (string, length 8 to 116) | tasks (string, length 8 to 117) | titles (string, length 17 to 302) | dependencies (string, length 5 to 372k) | readme (string, length 5 to 4.26k) | __index_level_0__ (int64, 0 to 4.36k) |
|---|---|---|---|---|---|

cyh4/FCTSFN | ['semantic segmentation'] | ['A Fully Convolutional Two-Stream Fusion Network for Interactive Image Segmentation'] | solve_32s.py surgery.py solve_fctsfn.py solve_8s.py util.py run_example.py voc_click_pattern_layers.py voc_click_pattern_batch_input_data_aug_layers.py score.py solve_16s.py compute_hist do_seg_tests fast_hist do_seg_tests_fg seg_tests seg_tests_fg getClickMap fromarray join uint8 channels astype mkdir save zeros forward print do_seg_tests iter share_with net format print channels close compute_hist sum diag print do_seg_tests_fg iter share_with net format print channels close compute_hist sum diag minimum arange ones square sqrt meshgrid array range | # FCTSFN This repository includes the [caffe][1] model and code for the following paper on interactive image segmentation: Y. Hu, A. Soltoggio, R. Lock and S. Carter. A Fully Convolutional Two-Stream Fusion Network for Interactive Image Segmentation. Neural Networks, vol.109, pp.31-42, 2019. The code was tested with Python 2.7 and Ubuntu 16.04. ## Example run Run `run_example.py` on an example to use the model file and pre-trained weights. ## Model training See `./data/example_data/voc` for an example of how to organize training data (note that this is not the full data; see the paper above for more details on the data used in the paper). Run `solve_32s.py`, `solve_16s.py`, `solve_8s.py` to train the TSLFN subnet in FCTSFN from stride 32 to stride 8. Run `solve_fctsfn.py` to train the MSRN subnet in FCTSFN. | 1,800 |

cyk1337/UrbanDict | ['word embeddings'] | ['How to Evaluate Word Representations of Informal Domain?'] | demo/__init__.py UrbanDictScraper/UD_spider/items.py UD_Extractor/Bootstrapping/Tuple.py trainEmbedding/mittens/Glob_settings.py trainEmbedding/mittens/eval/eval_all_vecs.py trainEmbedding/fastText/fastText-0.1.0/eval.py SeqLabeling/crf_trial_backup/trainPyCRF.py HashtagPrediction/settings.py trainEmbedding/GloVe-1.2/eval/python/python2_evaluate.py HashtagPrediction/data_loader.py UrbanDictScraper/UD_spider/middlewares.py SeqLabeling/load_data.py UrbanDictScraper/UD_spider/settings.py preprocess/Preprocess_sub.py UrbanDictScraper/UD_spider/_crawl_utils.py HashtagPrediction/find_best_val_acc.py SeqLabeling/crf_trial_backup/load_data_NLTK.py UD_Extractor/Bootstrapping/__init__.py calcSim/calcCorrelation.py preprocess/gen_hashtag_data.py UD_Extractor/eval.py trainEmbedding/mittens/prep_scripts/old_vocab_to_new.py UD_Extractor/_config.py preprocess/__init__.py SeqLabeling/__init__.py SeqLabeling/crf_trial_backup/trainCRF_NLTK.py UD_Extractor/test.py SeqLabeling/SL_config.py demo/forms.py UrbanDictScraper/run.py trainEmbedding/mittens/prep_scripts/twokenize.py preprocess/twokenize.py SeqLabeling/HandLabel-rm_index_duplicate/get_list.py UD_Extractor/baseline.py trainEmbedding/mittens/prep_scripts/concatenate_corpus.py trainEmbedding/mittens/prep_scripts/twitter_process.py preprocess/data_split.py UD_Extractor/plot_wordcloud.py UD_Extractor/Bootstrapping/Definition.py calcSim/sample2evalGold.py SeqLabeling/CRF.py trainEmbedding/mittens/gen_wiki.py UrbanDictScraper/UD_spider/spiders/UD.py SeqLabeling/crf_trial_backup/label_processing_NLTK.py preprocess/TwitterProcess.py SeqLabeling/sample2eval.py demo/config.py UD_Extractor/Bootstrapping/Seed.py UrbanDictScraper/UD_spider/spiders/UD_API.py HashtagPrediction/plot_fit.py HashtagPrediction/train_cnn.py UrbanDictScraper/UD_spider/pipelines.py SeqLabeling/label_processing.py SeqLabeling/_utils.py UrbanDictScraper/__init__.py 
UD_Extractor/ie_utils.py UD_Extractor/Bootstrapping/Pattern.py demo/run.py UD_Extractor/Bootstrap.py demo/_view_func.py UD_Extractor/BootstrapIE.py trainEmbedding/GloVe-1.2/eval/python/evaluate.py calcSim/calcMAP.py trainEmbedding/mittens/prep_scripts/wikipedia_process.py UrbanDictScraper/UD_spider/spiders/__init__.py trainEmbedding/w2v/W2V.py filter_variant_tuple evaluate_pair load_embedding evaluate_all_pairs gen_goldTuple fetch_gold_from_db sample4handcheck QueryForm demo_page extract_variant_spelling search_UrbanDict find_all_entries load_test load_pretrained_model load_tweets plot_all_history save_history visialize_model plot_fit save_fig write2file take_top_N count_tweets allcaps hashtag tokenize traverse_docs tweet_process save_en_tweets main tokenizeRawTweetText simpleTokenize addAllnonempty splitToken squeezeWhitespace splitEdgePunct regex_or tokenize normalizeTextForTagger SelfTrainCRF conn_db load_unlabel_data load_data gen_label eval_single_exp gen_sample100 main count_estimated_label file_len timeit days_hours_mins_secs conn_db load_unlabel_data load_data gen_label word2features split_train_test_set get_labels mk_prediction get_tokens eval_Test main trainPyCRF extract_features word2features split_train_test_set get_labels mk_prediction get_tokens eval_Test main trainPyCRF extract_features compat_splitting similarity main evaluate_vectors main evaluate_vectors processfile verblog traversedocs concatenate_main log tokenizeRawTweetText simpleTokenize addAllnonempty splitToken squeezeWhitespace splitEdgePunct regex_or tokenize normalizeTextForTagger train_cbow train_sg train_sg_ns train_cbow_ns Basic Baseline main Bootstrap main BootstrapIE update_variant_db load_iter update_label_db sample2Estimate_prec get_defns_from_defids count_num update_sample_dir read_valid_file eval_recall save_iter _count_and_write_db conn_db normalized_levenshtein detokenize days_hours_mins_secs iterative_levenshtein load_pkl dump_pkl fetch_data mask basic_plot 
iterative_levenshtein Definition Pattern Seed Tuple UdSpiderItem UdSpiderSpiderMiddleware UdSpiderDownloaderMiddleware SyncMySQLPipeline CsvExporterPipeline AsyncMySQLPipeline changeTime days_hours_mins_secs _time_log _err_log _filter_word_log UdSpider UdApiSpider dict list print keys print close lower open append split T norm print index dot join format print evaluate_pair len system close load_embedding filter_variant_tuple array open system close open iterrows format read_sql create_engine write to_csv connect close lower split open SubmitField StringField SelectField QueryForm data search_UrbanDict validate_on_submit get urljoin text print select dict BeautifulSoup get_text zip append load SelfTrainCRF format predict_single print lower nlp startswith append extract_features dict extract_variant_spelling find_all_entries count read_csv read_csv dict subplot list plot save_fig xlabel grid ylabel title figure legend range len join format print savefig mkdir join format print plot_model mkdir join from_dict format print to_csv history mkdir grid show subplot list ylabel title legend read_csv range format plot mkdir listdir enumerate join xlabel print figure save_fig len print dict items list len join format group isupper split group re_sub format lower join listdir tweet_process traverse_docs sub addAllnonempty len splitEdgePunct append range finditer split append strip search replace unescape tokenize normalizeTextForTagger connect create_engine int len split load join iterrows format print extend gen_label lower nlp append read_csv load str iterrows print tolist lower nlp append read_csv enumerate join format print system mkdir listdir join sorted print system listdir communicate Popen join count_estimated_label listdir eval_single_exp startswith tolist apply apply append extend train_test_split load_data set_params print Trainer zip append train print classification_report Tagger open join format print Tagger tag load_unlabel_data zip extract_features enumerate 
open split_train_test_set join trainPyCRF eval_Test shape_ tag_ lemma_ text pos_ is_stop is_alpha dep_ range print print norm items list T add_argument shape ArgumentParser parse_args sum evaluate_vectors zeros len int T arange print min dot flatten ceil zeros float sum array range len print log join endswith close verblog open verblog processfile walk rstrip rootdir add_argument verbose ArgumentParser traversedocs parse_args save_word2vec_format Word2Vec save save_word2vec_format Word2Vec save save_word2vec_format Word2Vec save save_word2vec_format Word2Vec save init_bootstrap Bootstrap seeds BootstrapIE print read_valid_file len print join system join conn_db read_sql join listdir get_defns_from_defids insert print to_csv set mkdir defid_list sample DataFrame append execute conn_db print len execute str conn_db nunique update_variant_db iterrows join update_label_db print tuple insert tolist dirname read_csv _count_and_write_db join sorted listdir join sorted listdir system join mkdir join mkdir strip min range len read_sql connect create_engine show join axis imshow savefig figure generate show join recolor axis set to_file imshow title savefig figure to_array generate array open Field join mkdir join mkdir join mkdir divmod urljoin ascii_uppercase append urljoin ascii_uppercase append | # Discovering spelling variants on Urban Dictionary Source code of the paper [How to Evaluate Word Representations of Informal Domain?](https://arxiv.org/abs/1911.04669) ## Scraping data from [Urban Dictionary](https://www.urbandictionary.com/) :bamboo: * Scraping data from webpage: ```diff + scrapy crawl UD ``` * Scrapying data via API: ```diff + scrapy crawl UD_API | 1,801 |

cyn228/Yelp-Sentiment | ['classification'] | ['Predicting the Sentiment Polarity and Rating of Yelp Reviews'] | classify.py yelp_utils.py classify print_usage valid_args loadData numLines int time print loadData SGDClassifier LogisticRegression Pipeline numLines float MultinomialNB predict fit print append loads open | # Sentiment Predictor For Yelp Review ## About Given a Yelp review, this project builds two types of classifiers to assign to the review 1) a positive or negative sentiment, and 2) a rating in the interval [1, 5]. The classifier is trained with either Naive Bayes, SVM, or Logistic Regression as the model, and the [Yelp dataset](http://www.yelp.com/dataset_challenge/). The corresponding paper *Predicting the Sentiment Polarity and Rating of Yelp Reviews* may be found on arXiv [here](http://arxiv.org/abs/1512.06303). ## Motivation From Section 1.2 of *Predicting the Sentiment Polarity and Rating of Yelp Reviews*: "It is useful for Yelp to associate review text with a star rating (or at least a positive or negative assignment) accurately in order to judge how helpful and reliable certain reviews are. Perhaps users could give a good review but a bad rating, or vice versa. Also Yelp might be interested in automating the rating process, so that all users would have to do is write the review, and Yelp could give a suggested rating." ## Example The user can specify which type of classification to perform (positive/negative or 5-star), which technique to use (Naive Bayes, SVM, or Logistic Regression), and how much data to use. Assuming you have the Yelp review dataset in the same directory as `classify.py`, the following will build a Logistic Regression classifier for 5-star classification using 80% of the data: ``` classify.py svm False 80 | 1,802 |

cynicaldevil/neural-style-transfer | ['style transfer'] | ['Arbitrary Style Transfer in Real-time with Adaptive Instance Normalization'] | train.py | Arbitrary neural style transfer implementation in PyTorch. Based on https://arxiv.org/abs/1703.06868 | 1,803 |

cysmith/neural-style-tf | ['style transfer'] | ['A Neural Algorithm of Artistic Style', 'Artistic style transfer for videos', 'Preserving Color in Neural Artistic Style Transfer'] | neural_style.py get_noise_image style_layer_loss pool_layer write_image_output get_style_images get_prev_frame maybe_make_directory get_content_weights get_bias write_video_output read_image get_optimizer write_image sum_masked_style_losses sum_shortterm_temporal_losses warp_image parse_args get_weights sum_longterm_temporal_losses conv_layer normalize convert_to_original_colors render_single_image postprocess relu_layer stylize build_model preprocess sum_style_losses check_image get_longterm_weights render_video main temporal_loss read_weights_file minimize_with_lbfgs get_content_frame read_flow_file content_layer_loss get_init_image get_prev_warped_frame gram_matrix sum_content_losses get_content_image minimize_with_adam get_mask_image mask_style_layer normalize video_output_dir style_layer_weights add_argument style_imgs_weights maybe_make_directory img_output_dir ArgumentParser content_layer_weights video relu_layer print Variable pool_layer loadmat shape verbose zeros conv_layer model_weights get_shape format print conv2d verbose get_shape format relu print verbose get_shape format print max_pool verbose avg_pool constant reshape size constant pow get_shape value reduce_sum get_shape value reduce_sum gram_matrix pow reshape transpose matmul convert_to_tensor get_shape value multiply stack get_mask_image append expand_dims range convert_to_tensor style_layers zip style_layer_weights style_imgs_weights assign mask_style_layer style_mask_imgs run convert_to_tensor style_layers style_layer_weights style_imgs_weights assign zip run convert_to_tensor content_layers assign zip content_layer_weights run size float32 reduce_sum cast float l2_loss maximum get_content_weights prev_frame_indices range get_prev_warped_frame assign prev_frame_indices get_longterm_weights range run 
get_prev_warped_frame assign get_content_weights temporal_loss run astype float32 IMREAD_COLOR preprocess check_image imread postprocess imwrite copy astype copy list readlines len map float32 dstack zeros array range split sum makedirs minimize print assign verbose global_variables_initializer run format minimize print assign verbose eval global_variables_initializer run AdamOptimizer learning_rate ScipyOptimizerInterface join format write_image video_output_dir zfill style_layers style_imgs_weights maybe_make_directory open str write_image img_name init_img_type style_weight content_layers format max_iterations close zip max_size tv_weight optimizer join style_imgs content_img write style_mask_imgs content_weight img_output_dir get_prev_frame get_prev_warped_frame get_noise_image noise_ratio join format video_input_dir zfill read_image join content_img_dir float astype float32 IMREAD_COLOR shape preprocess check_image resize max_size imread join style_imgs astype float32 IMREAD_COLOR shape preprocess check_image resize append imread style_imgs_dir seed astype float32 join content_img_dir astype float32 IMREAD_GRAYSCALE check_image resize imread amax join format video_output_dir zfill IMREAD_COLOR check_image imread str join format read_flow_file video_input_dir astype float32 get_prev_frame preprocess str join format video_input_dir read_weights_file remap shape zeros float range postprocess COLOR_LUV2BGR COLOR_YCR_CB2BGR COLOR_BGR2YUV astype float32 COLOR_BGR2LAB COLOR_BGR2YCR_CB merge preprocess COLOR_BGR2LUV COLOR_LAB2BGR COLOR_YUV2BGR cvtColor split get_content_image content_img get_style_images end_frame range start_frame parse_args render_single_image render_video video | # neural-style-tf This is a TensorFlow implementation of several techniques described in the papers: * [Image Style Transfer Using Convolutional Neural Networks](http://www.cv-foundation.org/openaccess/content_cvpr_2016/papers/Gatys_Image_Style_Transfer_CVPR_2016_paper.pdf) by Leon A. 
Gatys, Alexander S. Ecker, Matthias Bethge * [Artistic style transfer for videos](https://arxiv.org/abs/1604.08610) by Manuel Ruder, Alexey Dosovitskiy, Thomas Brox * [Preserving Color in Neural Artistic Style Transfer](https://arxiv.org/abs/1606.05897) by Leon A. Gatys, Matthias Bethge, Aaron Hertzmann, Eli Shechtman Additionally, techniques are presented for semantic segmentation and multiple style transfer. The Neural Style algorithm synthesizes a [pastiche](https://en.wikipedia.org/wiki/Pastiche) by separating and combining the content of one image with the style of another image using convolutional neural networks (CNN). Below is an example of transferring the artistic style of [The Starry Night](https://en.wikipedia.org/wiki/The_Starry_Night) onto a photograph of an African lion: | 1,804 |

czarmanu/tiramisu_keras | ['remote sensing image classification', 'change detection for remote sensing images', 'semantic segmentation'] | ['LAKE ICE MONITORING WITH WEBCAMS'] | train.py helper.py result.py test.py data_loader.py dataGenerator.py modelTiramisu.py saveHDF5.py sparse DataGenerator recover_set_list get_num_aug get_mean get_crop main DataLoader DataSet classTocolor computeIoU normalized colorToclass one_hot_reverse one_hot_it Tiramisu main SaveHDF5 main prediction main train int floor value where unique true_divide zeros sum range len print unique append array range len hdf5_dir save_hdf5 in_dir DataLoader generate hdf5_file parse_args zeros float32 equalizeHist zeros range zeros argmax range zeros argmax range true_divide zeros range zeros range SaveHDF5 image_dir label_dir writeHDF5 hdf5_name imwrite num_crop_per_im floor save one_hot_reverse open create sorted ones len predict_on_batch set_list c colorToclass expand_dims sum range value DataGenerator model_from_json close mean load_weights get_crop int read print r File write crop_list zeros array amax makedirs result_dir prediction model_name save_weights save open str create list balancing TensorBoard aug len ylabel strftime class_weight title savefig legend generate Nadam format plot DataGenerator close fit_generator mean keys compile print xlabel EarlyStopping File write crop_list figure ModelCheckpoint array makedirs train | # Lake Ice Monitoring with Webcams This repository is the implementation (keras) of: Xiao M., Rothermel M., Tom M., Galliani S., Baltsavias E., Schindler K.: [Lake Ice Monitoring with Webcams](https://www.isprs-ann-photogramm-remote-sens-spatial-inf-sci.net/IV-2/311/2018/isprs-annals-IV-2-311-2018.pdf), ISPRS Annals of the Photogrammetry, Remote Sensing and Spatial Information Sciences, IV-2, pages 311-317, 2018  This work is part of the [Lake Ice Project (Phase 
1)](https://prs.igp.ethz.ch/research/completed_projects/integrated-monitoring-of-ice-in-selected-swiss-lakes.html). Here is the link to [Phase 2](https://prs.igp.ethz.ch/research/current_projects/integrated-lake-ice-monitoring-and-generation-of-sustainable--re.html) of the same project. The implementation was extended and modified starting from the one in [0bserver07/One-Hundred-Layers-Tiramisu ](https://github.com/0bserver07/One-Hundred-Layers-Tiramisu). ##### Pre-requisites: - Numpy - Keras | 1,805 |

czbiohub/noise2self | ['denoising'] | ['Noise2Self: Blind Denoising by Self-Supervision'] | models/modules.py mask.py util.py models/dncnn.py models/singleconv.py models/unet.py models/babyunet.py gaussianprocess/gp.py models/models.py pixel_grid_mask interpolate_mask Masker test_mse_rescale plot_tensors plot_images show_data smooth gpuinfo getbestgpu clamp_tensor ssim test_psnr tensor_to_numpy show test_percentile_normalizer psnr gaussian scale_tensor expand normalize get_args create_window test_bernoulli_noise plot_grid getfreegpumem mse show_tensor normalize_mi_ma PercentileNormalizer random_noise _ssim gaussian_process_posterior rbe_kernel convolve2d_vectorized grid_distances GPDataset sample_rbe_gp make_test_gp_dataset BabyUnet DnCNN get_model ConvBlock pad_circular SingleConvolution Unet zeros range conv2d device to sum array transpose imshow numpy max clip numpy subplots set_title set_ticks imshow range len cat show_tensor subplots concatenate reshape set_ticks imshow range imshow set_ticks plot_grid len concatenate bernoulli ones clamp shape device to poisson ones random_noise manual_seed ones randn unsqueeze randn batchwise_mean Tensor contiguous unsqueeze pow conv2d create_window size type_as get_device cuda is_cuda conv2d device to sum array percentile dtype astype clip evaluate astype uint16 PercentileNormalizer communicate Popen split print getfreegpumem index append max range parse_args add_argument ArgumentParser minimum arange concatenate multivariate_normal reshape zeros grid_distances inv solve dot repeat len seed makedirs MSE GPDataset cat | # Noise2Self: Blind Denoising by Self-Supervision This repo demonstrates a framework for blind denoising high-dimensional measurements, as described in the [paper](https://arxiv.org/abs/1901.11365). It can be used to calibrate classical image denoisers and train deep neural nets; the same principle works on matrices of single-cell gene expression. 
<img src="https://github.com/czbiohub/noise2self/blob/master/figs/hanzi_movie.gif" width="512" height="256" title="Hanzi Noise2Self"> *The result of training a U-Net to denoise a stack of noisy Chinese characters. Note that the only input is the noisy data; no ground truth is necessary.* ## Images The notebook [Intro to Calibration](notebooks/Intro%20to%20Calibration.ipynb) shows how to calibrate any traditional image denoising model, such as median filtering, wavelet thresholding, or non-local means. We use the excellent [scikit-image](www.scikit-image.org) implementations of these methods, and have submitted a PR to incorporate self-supervised calibration directly into the package. (Comments welcome on the [PR](https://github.com/scikit-image/scikit-image/pull/3824)!) The notebook [Intro to Neural Nets](notebooks/Intro%20to%20Neural%20Nets.ipynb) shows how to train a denoising neural net using a self-supervised loss, on the simple example of MNIST digits. The notebook runs in less than a minute, on CPU, on a MacBook Pro. We implement this in [pytorch](www.pytorch.org). | 1,806 |

czhang99/SynonymNet | ['entity disambiguation'] | ['Entity Synonym Discovery via Multipiece Bilateral Context Matching'] | src/train_siamese.py src/train_triplet.py src/model.py src/utils.py __init__.py SiameseModel TripletModel inference valid inference valid createVocabulary siamese_loss loadVocabulary evaluateTopN __splitTagType margin_loss sentenceToIds padSentence DataProcessor str concatenate roc_curve close average_precision_score get_batch_siamese info append auc DataFrame DataProcessor run concatenate close groups evaluateTopN get_batch_siamese append read_csv DataProcessor run get_batch_triple get_batch_triple greater float32 pow cast less str list keys mean nan_to_num info append array len get isinstance index append split split | # Entity Synonym Discovery via Multipiece Bilateral Context Matching This project provides source code and data for SynonymNet, a model that detects entity synonyms via multipiece bilateral context matching. Details about SynonymNet can be accessed [here](https://arxiv.org/abs/1901.00056), and the implementation is based on the Tensorflow library. ## Quick Links - [Installation](#installation) - [Usage](#usage) - [Data](#data) - [Results](#results) - [Acknowledgements](#acknowledgements) ## Installation | 1,807 |

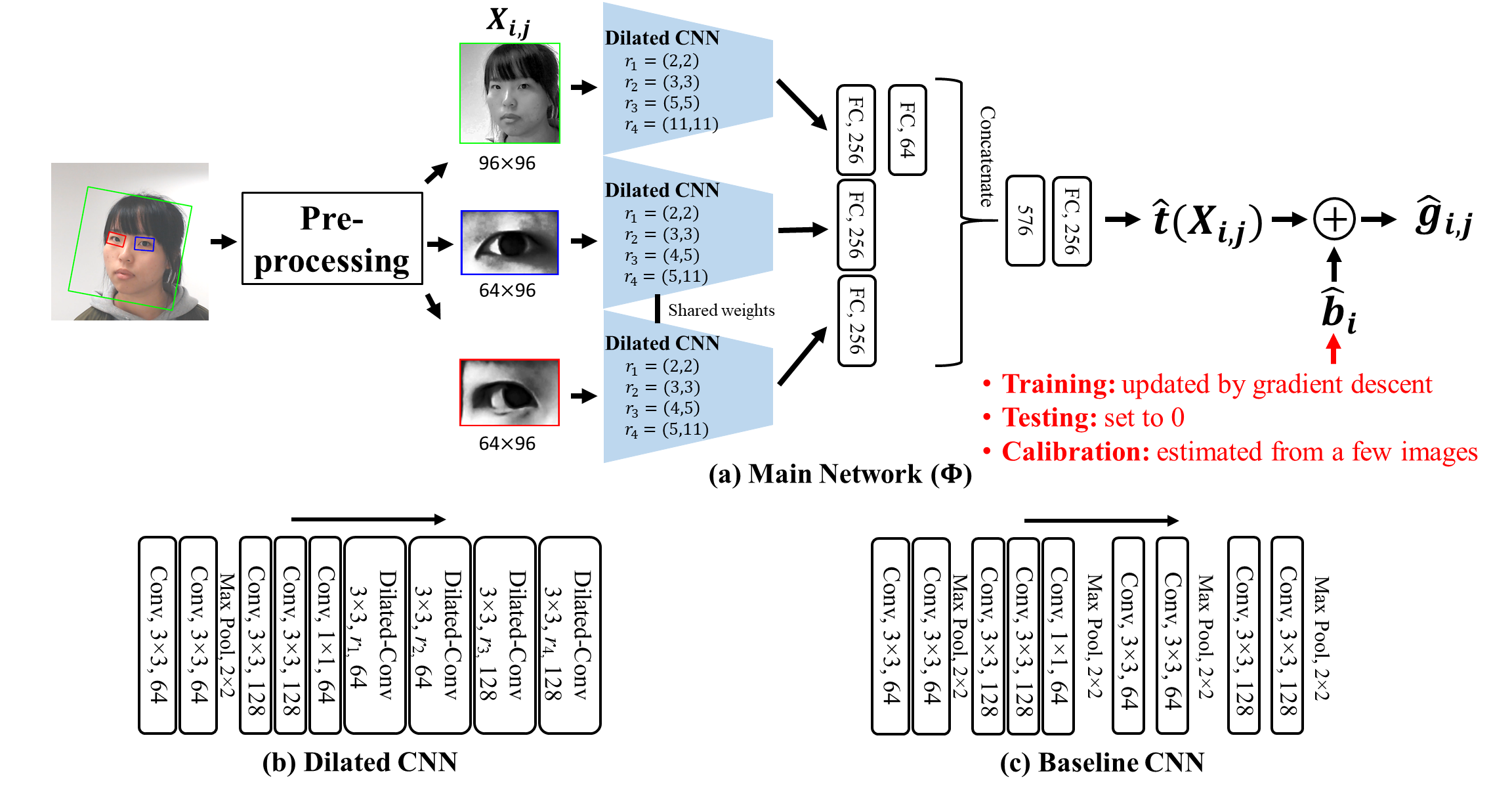

czk32611/GEDDnet | ['gaze estimation'] | ['Towards High Performance Low Complexity Calibration in Appearance Based Gaze Estimation'] | code/PreProcess.py code/GEDDnet.py code/infer.py code/PreProcess_eyecenter.py code/tf_utils.py code/train.py GEDDnet_infer GEDDnet _2d2vec _angle2error sigmoid main shape_to_np preprocess_face_image _vec22d flip_images randomRotate preprocess_eye_image pre_process_face_images pre_process_eye_images WarpNCrop WarpNDraw point_to_matrix grayNhist max_pool_2x2 dilated2d conv2d dense_to_one_hot bias_variable weight_variable _2d2vec _vec22d _angle2error sigmoid creatIter main load max_pool_2x2 relu conv2d l2_loss load resize_images max_pool_2x2 relu concat conv2d stack minimum arccos maximum pi sum zeros range VideoCapture float64 camera_mat Saver global_variables placeholder resizeWindow append WINDOW_NORMAL get_frontal_face_detector GEDDnet_infer int_ set shape_predictor namedWindow mat reshape inv float32 moveWindow zeros loadmat array stack warpAffine _vec22d randint getRotationMatrix2D dot vstack zeros array range resize_images pad_to_bounding_box constant concat crop_to_bounding_box rotate stack uniform cast round map_fn resize_images pad_to_bounding_box constant crop_to_bounding_box stack uniform cast round map_fn shape uniform array arange uint8 COLOR_RGB2GRAY equalizeHist astype stack cvtColor int arctan2 astype degrees getRotationMatrix2D sqrt warpAffine norm point_to_matrix int_ reshape mat hstack transpose astype grayNhist dot cross mean shape vstack resize abs warpPerspective line tuple transpose matmul array truncated_normal random_normal arange int_ astype zeros ravel array from_tensor_slices make_initializable_iterator shuffle get_next batch _2d2vec arange TRAINABLE_VARIABLES where abs num_subject open str GEDDnet data_dir get_collection reduce_sum pre_process_face_images range format creatIter join system write AdamOptimizer reduce_mean UPDATE_OPS bool pre_process_eye_images | # GEDDnet: A Network for Gaze Estimation with 
Dilation and Decomposition ## Dilated Convolution We use dilated convolutions to capture high-level features at high resolution from eye images. We replace some regular convolutional layers and max-pooling layers of a VGG16 network by dilated-convolutional layers with different dilation rates. ## Gaze Decomposition We propose gaze decomposition for appearance-based gaze estimation, which decomposes the gaze estimate into the sum of a subject-independent term estimated from the input image by a deep convolutional network and a subject-dependent bias term. During training, both the weights of the deep network and the bias terms are estimated. During testing, if no calibration data is available, we can set the bias term to zero. Otherwise, the bias term can be estimated from images of the subject gazing at different gaze targets. The proposed gaze decomposition method enables low-complexity calibration, i.e., using calibration data collected when subjects view only one or a few gaze targets and the number of images per gaze target is small. ## Setup ### 1. Prerequisites Tensorflow == 1.15 | 1,808 |

czyssrs/Logic2Text | ['text generation'] | ['Logic2Text: High-Fidelity Natural Language Generation from Logical Forms'] | gpt_base/encoder.py gpt_base/preprocess.py execution/execute.py gpt_base/Main.py gpt_base/model.py execution/APIs.py gpt_base/utils.py gpt_base/DataLoader.py gpt_base/SeqUnit.py ExeError fuzzy_match_filter nth_maxmin fuzzy_compare_filter obj_compare agg is_ascii hop_op Node execute_all DataLoader Preprocessor bytes_to_unicode get_pairs get_encoder Encoder main train evaluate mlp default_hparams norm past_shape model block merge_states gelu attention_mask gpt_emb_init_tune positions_for conv1d softmax expand_tile attn shape_list split_states test_split_for_rouge linear_table_in make_html_safe make_dirs preprocess text2id check_res clean_space SeqUnit read_word2vec create_init_embedding read_word2vec_zip progress_bar load_vocab make_html_safe bleu_score write_word get_current_git_version get_rouge write_log check_res format_time get_res reset_index replace extract int reset_index replace all to_datetime astype map findall float DataFrame datetime float extract all replace astype mean sum fillna extract int list all replace to_datetime astype map DataFrame values defaultdict print tqdm Node eval append DataFrame enumerate append list range ord add set str time join epoch evaluate train_set model print flag_values_dict DataLoader reset write_log report save use_table range makedirs decode strip DataLoader setLevel max get_res open tolist bleu_score generate append use_table dev_set test_set close convert_and_evaluate generate_beam join WARNING Rouge155 min write index tqdm array makedirs join ConfigProto gpt_model_name gpt_model_path as_list shape exp reduce_max shape_list shape_list range convert_to_tensor ndims append strip split replace isalpha strip enumerate join makedirs close open join mkdir time test_split_for_rouge print text2id float join mkdir realpath join dirname list print strip len map infolist array open ZipFile split read_word2vec 
print endswith read_word2vec_zip load_vocab sqrt uniform load_word2vec_format range len int time join format_time write append range flush len int join write open Repo hexsha print append join index split load deepcopy readlines strip zip append open | # Logic2Text ## Data In the dataset folder, we have the full dataset (all_data.json), and the train test split (train.json, valid.json, test.json). Each example is in a dictionary of the following format: ``` "topic": table caption, "wiki": link to the wikipedia page, "action": logic type, "sent": description, "annotation": raw annotation data from the mturk, | 1,809 |

d-maurya/hypred_tensorEVD | ['link prediction'] | ['Hyperedge Prediction using Tensor Eigenvalue Decomposition'] | baselines/HyperedgePrediction_TD/dataset_utils/movielens_network.py baselines/HyperedgePrediction_TD/dataset_utils/twitter_network.py baselines/HyperedgePrediction_TD/dataset_utils/amazon_network.py realdata_utils.py baselines/HyperedgePrediction_TD/__main__.py baselines/HyperedgePrediction_TD/hyperedge_prediction.py baselines/HyperedgePrediction_TD/dataset_utils/dblp_network.py baselines/HyperedgePrediction_TD/dataset_utils/cora_citeseer_network.py baselines/HyperedgePrediction_TD/dataset_utils/mat_utils.py baselines/HyperedgePrediction_TD/model_evaluation_1.py gen_candset.py baselines/HyperedgePrediction_TD/network.py baselines/HyperedgePrediction_TD/hypergraph_utils.py baselines/HyperedgePrediction_TD/dataset_utils/aminer_network.py baselines/HyperedgePrediction_TD/__init__.py hy_utils.py realdata_main.py baselines/HyperedgePrediction_TD/measures.py all_models.py common_neigh hypred_ndp_cand hypred_prop_teneig Katz main_model get_candset_muni sample_neqcliques_m sample_mns get_candset sample_neqcliques sample_neqcliques_m_degree rm_hy_main f1_score_self hy_reduction get_incidence_matrix compute_hm_f1_score get_incidence_matrix_w am_gm_score compute_auc flatten get_incidence_from_hy get_nodeid compute_f1_main hedges_to_pg intersection harmonic_mean rm_hy plot_hyedgefreq get_largest_cc get_hy_specific_card get_hyperedges_from_incidence get_amazon_network get_realdata predict_hyperedge get_pref_node compute_NHAS get_hyedges_from_indices get_incidence_matrix validate_hyedges get_nodeid get_adj_matrix compute_avg_f1_score compute_precision compute_auc compute_f1_score compute_model_f1_score get_hyedges_degree_dist get_missing_hyedges_indices get_hra_scores get_network main model_performance get_amazon_network prune_hyedges get_aminer_cocitation get_file_paths get_aminer_coreference get_aminer_network get_cocitation_network get_coreference_network 
get_cora_citeseer_network get_dblp_network store_as_mat get_largest_cc get_movielens_network get_twitter_network reciprocal asarray minimize squeeze transpose rand dot flatten spdiags diagonal sum x dot transpose dot transpose inv identity get_hyedges_degree_dist get_hra_scores Katz hypred_ndp_cand hypred_prop_teneig common_neigh list subgraph sort tuple nodes choice set add sample range sum list asarray subgraph sort squeeze tolist tuple nodes apply set get_incidence_from_hy choice add sample DataFrame range int list tolist nodes apply set edges DataFrame union range combinations list tolist map merge apply sample_mns DataFrame fillna sample_neqcliques_m_degree len groupby list hy_reduction tolist get_candset_muni apply DataFrame keys groupby list sample_neqcliques_m apply DataFrame keys len roc_curve auc abs power sum prod len lil_matrix enumerate array get_nodeid lil_matrix get_nodeid lil_matrix enumerate int list asarray DataFrame squeeze min tolist map merge apply get_incidence_from_hy mean sample sum values len groupby list tolist apply DataFrame keys rm_hy len intersection compute_f1_score len groupby list compute_hm_f1_score tolist apply harmonic_mean DataFrame keys transpose from_scipy_sparse_matrix dot diagonal spdiags combinations add_edge Graph print append enumerate has_edge add_edge remove list Graph print nodes index add_nodes_from max range len update list get_largest_cc get_incidence_matrix_w tolist set apply DataFrame len DataFrame apply list append loadmat range len tocsr apply bar title savefig figure transform DataFrame asarray arange squeeze choice sum len append range get_pref_node compute_NHAS choice add set reciprocal asarray subtract squeeze transpose dot spdiags diagonal sum asarray tocsr squeeze set append sum range len tolil append range len set compute_f1_score len list shuffle append float range asarray print squeeze dot sum diags get_adj_matrix int list asarray squeeze Counter append sum keys validate_hyedges get_hyedges_degree_dist 
get_network predict_hyperedge seed str list compute_avg_f1_score append sum range get_hra_scores set choice get_hyedges_from_indices print sort get_missing_hyedges_indices std len getcwd loadmat print compute_model_f1_score seed add_argument model_performance use_candidateset ArgumentParser K parse_args dataset network append readlines open list readlines add set get_file_paths append open update list readlines set add get_file_paths append open list remove set list prune_hyedges get_aminer_cocitation get_aminer_coreference keys list readlines add set open append split list readlines add set open append split get_cocitation_network get_coreference_network load list sort len set append range open connected_component_subgraphs update list get_movielens_network get_largest_cc get_incidence_matrix get_cora_citeseer_network getcwd get_dblp_network sort set get_twitter_network savemat get_aminer_network get_amazon_network list readlines len prune_hyedges add set split append next keys open int list sort readlines len open append keys range split | # hypred_tensorEVD This repo contains the code for hyperedge prediction using tensor eigenvalue decomposition. We represent the hypergraph as a tensor and use the Fiedler vector of the Laplacian tensor to predict new hyperedges using the hyperedge score proposed in our work. We compare the results on 5 datasets across three baselines: common neighbour, Katz, and <a href="https://arxiv.org/pdf/2006.11070.pdf">HPRA</a> (a resource allocation based algorithm). The code of the HPRA algorithm is taken directly from <a href="https://github.com/darwk/HyperedgePrediction ">this link</a>. For more information about the proposed algorithm, please refer to <a href="https://arxiv.org/pdf/2102.04986.pdf">Hyperedge Prediction using Tensor Eigenvalue Decomposition</a>. 
# Running the code - Run the file 'realdata_main.py' <br> - Please change the dataset using the variable 'realdt_argsinp' <br> - Please change the folder name using variable 'new_folder' for each run <br> The results will be saved in the folder results/<new_folder>/. It will save multiple files | 1,810 |

d909b/perfect_match | ['counterfactual inference'] | ['Perfect Match: A Simple Method for Learning Representations For Counterfactual Inference With Neural Networks'] | perfect_match/models/baselines/gradientboosted.py perfect_match/data_access/ihdp/data_access.py perfect_match/models/baselines/cfr/util.py perfect_match/data_access/patient_generator.py perfect_match/models/pehe_loss.py perfect_match/models/baselines/bart.py perfect_match/apps/main.py perfect_match/models/benchmarks/twins_benchmark.py perfect_match/models/model_eval.py perfect_match/models/model_factory.py perfect_match/apps/parameters.py perfect_match/data_access/propensity_batch.py perfect_match/models/baselines/baseline.py perfect_match/models/benchmarks/news_benchmark.py perfect_match/data_access/mahalanobis_batch.py perfect_match/models/baselines/causal_forest.py perfect_match/models/distributions.py perfect_match/models/benchmarks/ihdp_benchmark.py perfect_match/models/benchmarks/jobs_benchmark.py perfect_match/models/cf_early_stopping.py perfect_match/models/benchmarks/tcga_benchmark.py perfect_match/models/model_builder.py perfect_match/data_access/generator.py perfect_match/models/baselines/neural_network.py perfect_match/models/baselines/knn.py perfect_match/models/baselines/psm.py perfect_match/apps/util.py perfect_match/models/baselines/cfr/cfr_net.py perfect_match/data_access/tcga/data_access.py perfect_match/data_access/jobs/data_access.py perfect_match/models/baselines/ganite_package/ganite_builder.py perfect_match/data_access/twins/data_access.py perfect_match/models/baselines/ganite.py perfect_match/models/baselines/tf_neural_network.py perfect_match/models/baselines/gaussian_process.py perfect_match/apps/run_all_experiments.py perfect_match/models/per_sample_dropout.py perfect_match/apps/evaluate.py perfect_match/models/baselines/random_forest.py perfect_match/models/baselines/ordinary_least_squares.py perfect_match/data_access/batch_augmentation.py setup.py 
perfect_match/models/baselines/psm_pbm.py perfect_match/models/baselines/ganite_package/ganite_model.py perfect_match/data_access/news/data_access.py EvaluationApplication MainApplication clip_percentage parse_parameters ReadableDir dataset_is_binary_and_has_counterfactuals get_dataset_params model_is_pbm_variant run random_cycle_generator time_function report_duration error resample_with_replacement_generator log get_num_available_gpus BatchAugmentation wrap_generator_with_constant_y make_keras_generator get_last_id_set MahalanobisBatch get_last_row_id report_distribution make_generator PropensityBatch DataAccess DataAccess DataAccess DataAccess convert_array adapt_array DataAccess CounterfactualEarlyStopping safe_sqrt pdist2sq wasserstein calculate_distances calculate_distance ModelBuilder ModelEvaluation ModelFactory ModelFactoryCheckpoint pehe_loss cf_nn pdist2 pehe_nn PerSampleDropout BayesianAdditiveRegressionTrees PickleableMixin Baseline CausalForest GANITE GaussianProcess GradientBoostedTrees KNearestNeighbours NeuralNetwork OrdinaryLeastSquares1 OrdinaryLeastSquares2 PSM PSM_PBM RandomForest NeuralNetwork CFRNet build_mlp safe_sqrt pdist2sq pdist2 save_config get_nonlinearity_by_name wasserstein pop_dist log lindisc mmd2_lin load_data simplex_project validation_split mmd2_rbf load_sparse GANITEBuilder GANITEModel IHDPBenchmark JobsBenchmark NewsBenchmark TCGABenchmark TwinsBenchmark set_defaults add_argument ArgumentParser tolist len format dataset_is_binary_and_has_counterfactuals print model_is_pbm_variant zip get_dataset_params range permutation RandomState randint range len list_local_devices log print log log zeros float sum range len int get_split_indices permutation StratifiedShuffleSplit rint get_labels filter split floor report_distribution make_propensity_lists get_labelled_patients next len BytesIO seek save BytesIO seek transpose reduce_sum square matmul to_float safe_sqrt exp pdist2sq dropout ones concat reduce_max transpose matmul reduce_sum 
shape reduce_mean stop_gradient gather range to_float zeros concat size cond gather range equal size cond equal transpose reduce_sum square matmul gather argmin pdist2 cf_nn square sqrt reduce_mean gather gather concat range int dropout Variable nonlinearity matmul append zeros range random_normal int list permutation range join close write open load loadtxt load_sparse open int todense loadtxt coo_matrix open safe_sqrt square reduce_sum sign reduce_mean gather gather reduce_mean square reduce_sum to_float exp square reduce_sum gather to_float gather pdist2 to_float safe_sqrt exp pdist2sq dropout ones concat reduce_max transpose matmul reduce_sum shape reduce_mean stop_gradient gather range cumsum list maximum range | ## Perfect Match: A Simple Method for Learning Representations For Counterfactual Inference With Neural Networks  Perfect Match (PM) is a method for learning to estimate individual treatment effect (ITE) using neural networks. PM is easy to implement, compatible with any architecture, does not add computational complexity or hyperparameters, and extends to any number of treatments. This repository contains the source code used to evaluate PM and most of the existing state-of-the-art methods at the time of publication of [our manuscript](https://arxiv.org/abs/1810.00656). PM and the presented experiments are described in detail in our paper. Since we performed one of the most comprehensive evaluations to date with four different datasets with varying characteristics, this repository may serve as a benchmark suite for developing your own methods for estimating causal effects using machine learning methods. In particular, the source code is designed to be easily extensible with (1) new methods and (2) new benchmark datasets. 
Author(s): Patrick Schwab, ETH Zurich <[email protected]>, Lorenz Linhardt, ETH Zurich <[email protected]> and Walter Karlen, ETH Zurich <[email protected]> License: MIT, see LICENSE.txt #### Citation If you reference or use our methodology, code or results in your work, please consider citing: @article{schwab2018perfect, title={{Perfect Match: A Simple Method for Learning Representations For Counterfactual Inference With Neural Networks}}, | 1,811 |

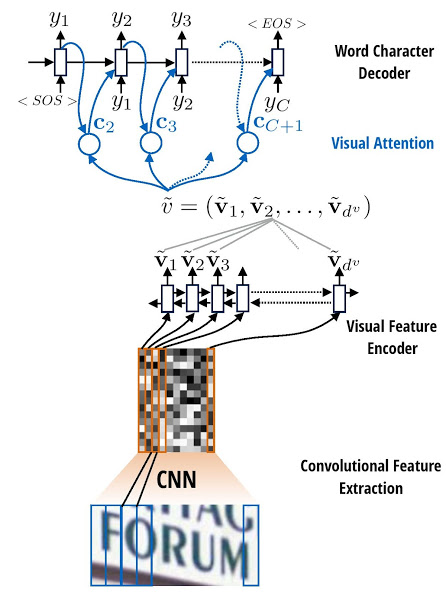

da03/Attention-OCR | ['optical character recognition'] | ['Image-to-Markup Generation with Coarse-to-Fine Attention'] | src/__init__.py src/data_util/bucketdata.py tmp.py src/data_util/__init__.py src/model/seq2seq_model.py src/exp_config.py src/model/model.py src/launcher.py src/data_util/data_gen.py src/model/cnn.py src/model/seq2seq.py src/model/__init__.py ExpConfig main process_args BucketData test_gen DataGen CNN ConvReluBN max_2x2pool batch_norm ConvRelu dropout max_2x1pool tf_create_attention_map var_random Model model_with_buckets tied_rnn_seq2seq embedding_rnn_decoder sequence_loss_by_example one2many_rnn_seq2seq _extract_argmax_and_embed sequence_loss attention_decoder embedding_attention_seq2seq embedding_attention_decoder embedding_tied_rnn_seq2seq rnn_decoder basic_rnn_seq2seq embedding_rnn_seq2seq Seq2SeqModel parse_args set_defaults add_argument ArgumentParser setFormatter basicConfig addHandler StreamHandler Formatter process_args setLevel INFO print EvalGen gen str get_variable as_list get_shape format print convert_to_tensor assert_is_compatible_with convert_to_tensor assert_is_compatible_with output_size convert_to_tensor assert_is_compatible_with output_size | # Attention-OCR Authors: [Qi Guo](http://qiguo.ml) and [Yuntian Deng](https://github.com/da03) Visual Attention based OCR. The model first runs a sliding CNN on the image (images are resized to height 32 while preserving aspect ratio). Then an LSTM is stacked on top of the CNN. Finally, an attention model is used as a decoder for producing the final outputs. ![example image 0](http://cs.cmu.edu/~yuntiand/OCR-2.jpg) # Prerequisites Most of our code is written in Tensorflow, but we also use Keras for the convolution part of our model. In addition, we use the Python package distance to calculate edit distance for evaluation. (This is not mandatory: if distance is not installed, we fall back to exact match.) 
### Tensorflow: [Installation Instructions](https://www.tensorflow.org/get_started/os_setup#download-and-setup) (tested on 0.12.1) ### Distance (Optional): ``` wget http://www.cs.cmu.edu/~yuntiand/Distance-0.1.3.tar.gz | 1,812 |

dadung/MCVL | ['visual localization', 'visual place recognition'] | ['Visual Localization Under Appearance Change: Filtering Approaches'] | libs/vlfeat-0.9.21/docsrc/doxytag.py libs/vlfeat-0.9.21/docsrc/mdoc.py libs/vlfeat-0.9.21/docsrc/wikidoc.py libs/vlfeat-0.9.21/docsrc/webdoc.py libs/vlfeat-0.9.21/docsrc/formatter.py Doxytag Terminal Lexer B PL L lex Formatter DL BL E extract towiki depth_first breadCrumb MFile Node runcmd xscan wikidoc usage bullet indent inner_content PL group match DL BL len pid Popen waitpid children group lstrip match startswith append open join addMFile addChildNode print sort MFile Node match listdir __next__ prev runcmd join wikidoc print print insert print readlines close len writelines append range exists open | About ============ MATLAB code of our NCAA 2020 paper: "Visual Localization Under Appearance Change: Filtering Approaches" - NCAA 2020. [Anh-Dzung Doan](https://sites.google.com/view/dzungdoan/home), [Yasir Latif](http://ylatif.github.io/), [Tat-Jun Chin](https://cs.adelaide.edu.au/~tjchin/doku.php), [Yu Liu](https://sites.google.com/site/yuliuunilau/home), [Shin-Fang Ch’ng](https://sites.google.com/view/shinfang-chng/), [Thanh-Toan Do](https://sites.google.com/view/thanhtoando/home), and [Ian Reid](https://cs.adelaide.edu.au/~ianr/). [[pdf]](https://arxiv.org/abs/1811.08063) If you use/adapt our code, please kindly cite our paper. <p align="center"> <b>Comparison results on Oxford RobotCar dataset [3] between PoseNet [1], MapNet [2], and our method</b><br> </p>  [1] Alex Kendall, Matthew Grimes, and Roberto Cipolla, "PoseNet: A Convolutional Network for Real-Time 6-DOF Camera Relocalization", in CVPR 2015. | 1,813 |

dadung/Visual-Localization-Filtering | ['visual localization', 'visual place recognition'] | ['Visual Localization Under Appearance Change: Filtering Approaches'] | libs/vlfeat-0.9.21/docsrc/doxytag.py libs/vlfeat-0.9.21/docsrc/mdoc.py libs/vlfeat-0.9.21/docsrc/wikidoc.py libs/vlfeat-0.9.21/docsrc/webdoc.py libs/vlfeat-0.9.21/docsrc/formatter.py Doxytag Terminal Lexer B PL L lex Formatter DL BL E extract towiki depth_first breadCrumb MFile Node runcmd xscan wikidoc usage bullet indent inner_content PL group match DL BL len pid Popen waitpid children group lstrip match startswith append open join addMFile addChildNode print sort MFile Node match listdir __next__ prev runcmd join wikidoc print print insert print readlines close len writelines append range exists open | About ============ MATLAB code of our NCAA 2020 paper: "Visual Localization Under Appearance Change: Filtering Approaches" - NCAA 2020. [Anh-Dzung Doan](https://sites.google.com/view/dzungdoan/home), [Yasir Latif](http://ylatif.github.io/), [Tat-Jun Chin](https://cs.adelaide.edu.au/~tjchin/doku.php), [Yu Liu](https://sites.google.com/site/yuliuunilau/home), [Shin-Fang Ch’ng](https://sites.google.com/view/shinfang-chng/), [Thanh-Toan Do](https://sites.google.com/view/thanhtoando/home), and [Ian Reid](https://cs.adelaide.edu.au/~ianr/). [[pdf]](https://arxiv.org/abs/1811.08063) If you use/adapt our code, please kindly cite our paper. <p align="center"> <b>Comparison results on Oxford RobotCar dataset [3] between PoseNet [1], MapNet [2], and our method</b><br> </p>  [1] Alex Kendall, Matthew Grimes, and Roberto Cipolla, "PoseNet: A Convolutional Network for Real-Time 6-DOF Camera Relocalization", in CVPR 2015. | 1,814 |

daemonmaker/neural_artistic_style | ['style transfer'] | ['A Neural Algorithm of Artistic Style'] | gatys/Styler.py gatys/Server.py singlenet/model.py utils.py gatys/__init__.py singlenet/script01.py load_img clip_0_1 tensor_to_image gram_matrix imshow vgg_layers index run_styler StyleContentModel style_content_loss train_step make_style_transfer_model res_block load_img clip_0_1 gram_matrix vgg_layers run_styler array float32 convert_image_dtype read_file cast int32 resize max decode_image title squeeze shape cast float32 einsum VGG19 Model add_n assign apply_gradients gradient clip_0_1 layers train_step print name StyleContentModel Variable Adam VGG19 range len Input range res_block make_style_transfer_model | # neural_artistic_style A playground for experimenting with different methods of neural artistic style transfer. Currently, only the Gatys method (i.e. the original methodology, https://arxiv.org/abs/1508.06576) is supported. There are two implementations. The first is the notebook from the TensorFlow tutorial called Neural Style Transfer (https://www.tensorflow.org/tutorials/generative/style_transfer), which is found in `style_transfer.ipynb`. The other is in the notebook `gatys.ipynb` and is merely a refactoring of this code such that the utility functions are in `utils.py` and the logic specific to the method is found in the `gatys` python module in this repository. ## Docker image The simplest way to get started with this repository is to use docker. Execute the following command to build the docker image: ```bash ./scripts/build_image ``` The docker image is named `neural_artistic_style` and it supports two modes: one that exposes Jupyter notebooks and another that exposes a Flask server. These modes can be activated by executing `./scripts/run_jupyter` and `./scripts/run_flask` respectively. These scripts need to be executed from within the root of this repository because they mount this repository at `/tf` within the container. 
*Note:* The resulting containers are not retained once they are shut down. | 1,815 |

daintlab/confidence-aware-learning | ['active learning', 'out of distribution detection'] | ['Confidence-Aware Learning for Deep Neural Networks'] | train.py data.py metrics.py model/resnet.py test.py model/vgg.py crl_utils.py model/densenet_BC.py utils.py main.py History negative_entropy Custom_Dataset one_hot_encoding get_loader main calc_nll aurc_eaurc calc_fpr_aupr calc_metrics get_metric_values calc_ece coverage_risk calc_nll_brier calc_aurc_eaurc main train accuracy AverageMeter Logger DenseNet3 TransitionBlock BottleneckBlock DenseBlock BasicBlock resnet110 resnet20 PreActBasicBlock ResNet_Cifar Bottleneck conv3x3 PreActBottleneck BasicBlock PreAct_ResNet_Cifar make_layers vgg19 VGG vgg16 log_softmax sum softmax data one_hot_encoding print Compose SVHN labels targets DataLoader CIFAR10 Custom_Dataset CIFAR100 get list print map set array data batch_size SGD MultiStepLR Logger save dataset cuda calc_metrics epochs range state_dict save_path join History makedirs write data_path parameters get_loader train step gpu len calc_fpr_aupr get_metric_values calc_ece calc_nll_brier calc_aurc_eaurc aurc_eaurc list sorted array zip coverage_risk max format print roc_curve argmin average_precision_score array abs max format zip print gt mean eq linspace item tensor le max zeros calc_nll logsoftmax format print LogSoftmax mean item tensor sum range zeros_like len append range len print format log eval load format file_name load_state_dict model get_target_margin zero_grad roll max topk rank_weight max_correctness_update correctness_update update format size softmax item enumerate time backward print AverageMeter clone criterion_cls accuracy criterion_ranking write step negative_entropy len topk size t eq mul_ expand_as append sum max PreAct_ResNet_Cifar PreAct_ResNet_Cifar Conv2d | # Confidence-Aware Learning for Deep Neural Networks This repository provides the code for training with *Correctness Ranking Loss* presented in the paper "[Confidence-Aware Learning for Deep 
Neural Networks](https://arxiv.org/abs/2007.01458)" accepted to ICML2020. ## Getting Started ### Requirements ``` * ubuntu 18.0.4, cuda10 * python 3.6.8 * pytorch >= 1.2.0 * torchvision >= 0.4.0 ``` | 1,816 |

dair-iitd/PoolingAnalysis | ['text classification'] | ['Why and when should you pool? Analyzing Pooling in Recurrent Architectures'] | train.py my_models/utils.py my_models/models.py my_dataloader.py test.py my_utils.py params.py my_models/custom_lstm.py grad_cam.py free_stored_grads gradients_compute compute_norm write_gradients process_gradients gates_compute compute_ratios load_pickle get_wiki_test_data get_data dump_pickle get_ood_test_data get_all_logs categorical_accuracy set_all_seeds_to get_model_path epoch_time parse_args add_config wiki_appended_text evaluate_NWI evaluate text_to_vector count_parameters get_str vector_to_text evaluate_wiki_attack myprint get_wiki_vec evaluate count_parameters iter_func train myprint round_down CustomLSTM RNN total_extra_params to_cuda MyRNN swish_module gelu swish gelu_module tensorize parse_args list linspace interp range len str tolist write f_ht b_ht process_gradients append concatenate_lists join tolist write close open mean average load task list isinstance close extend BucketIterator append Dataset values open load task list isinstance close extend BucketIterator append Dataset values open load list isinstance close extend append values task splits dump print debug close shuffle load_pickle task int splits print LabelField Field float build_vocab eq argmax int seed manual_seed_all manual_seed str nesterov batch_size use_bert wiki data_size lr amsgrad open add_argument ArgumentParser load items list __dict__ isinstance config_file extend open print range print eval zeros len range split randint int choice long get_str t get_wiki_vec item to range cat print eval zeros eval batch_size str write log flush log compute_ratios gradients zero_grad squeeze exit write_gradients gradients_compute copy label flush criterion backward evaluate text iter_func write accuracy step free_stored_grads out_dim fc_dim final_dim hidden_dim use_base FloatTensor index append range len is_available | # Analyzing Pooling in Recurrent 
Architectures Repository for the paper [Analyzing Pooling in Recurrent Architectures](https://arxiv.org/abs/2005.00159) by [Pratyush Maini](https://pratyush911.github.io), [Kolluru Sai Keshav](https://saikeshav.github.io/), [Danish Pruthi](https://www.cs.cmu.edu/~ddanish/) and [Mausam](http://www.cse.iitd.ac.in/~mausam/) ## Dependencies The dependencies required to run the code can be installed using the provided `conda` environment file: ``` conda env create --file environment.yaml ``` ## Running gradients experiments ### Evaluate the Initial gradient distribution ``` | 1,817 |

dakot/probal | ['active learning'] | ['Toward Optimal Probabilistic Active Learning Using a Bayesian Approach'] | src/utils/mathematical_functions.py src/query_strategies/probabilistic_active_learning.py src/query_strategies/active_learnig_with_cost_embedding.py src/base/query_strategy.py src/query_strategies/expected_error_reduction.py src/query_strategies/random_sampling.py src/utils/evaluation_functions.py src/utils/data_functions.py src/utils/mixture.py src/classifier/parzen_window_classifier.py src/query_strategies/expected_probabilistic_active_learning.py src/query_strategies/uncertainty_sampling.py src/query_strategies/query_by_committee.py src/query_strategies/optimal_sampling.py src/evaluation/experimental_setup_csv.py src/query_strategies/mdsp.py src/base/data_set.py DataSet QueryStrategy PWC main run ALCE cost_sensitive_uncertainty EER expected_error_reduction expected_error_reduction_pwc xpal_gain XPAL _smacof_single_p MDSP smacof_p compute_errors_pwc compute_errors OS PAL pal_gain QBC calc_avg_KL_divergence RS US uncertainty_scores load_data compute_statistics misclf_rate eval_perfs euler_beta gen_l_vec_list multinomial_coefficient kernels np_ix Mixture PWC XPAL PAL process_time make_query QBC update_entries floor abspath DataFrame log str ALCE cdist len dirname append US train_test_split sum fit_transform eval_perfs range format DataSet size EER sqrt mean unique startswith power float int deepcopy RS join print OS to_csv kernels load_data eye split transform fit parse_args add_argument ArgumentParser run int zeros kneighbors array range predict fit deepcopy len predict_proba vstack append zeros sum max range enumerate fit max np_ix zeros sum array range enumerate len T format arange print argmin check_array eye zeros sum array enumerate T check_random_state euclidean_distances ones reshape print inv rand copy ravel dot IsotonicRegression sum check_symmetric range fit_transform list check_random_state hasattr check_array argmin copy warn zip 
_smacof_single_p randint max range deepcopy len misclf_rate zeros range fit misclf_rate unique zeros argmax range len asarray ones reshape argmin euler_beta multinomial_coefficient vstack swapaxes eye tile zeros sum len check_random_state join list check_X_y compress LabelEncoder dirname abspath fit_transform array read_csv values fit items list predict append perf_func mean array std len pop str append arange range array | # Toward Optimal Probabilistic Active Learning Using a Bayesian Approach ## Project Structure - data: contains .csv-files of data sets that are not available at [OpenML](https://www.openml.org/home) - data_set_ids.csv: contains the list of data sets with their IDs at [OpenML](https://www.openml.org/home) - images: path where the visualizations of the results and utility plots will be saved - src: Python package consisting of several sub-packages - base: implementation of the DataSet and QueryStrategy classes - classifier: implementation of the Parzen Window Classifier (PWC) - evaluation: scripts for experimental setup and SLURM - notebooks: jupyter notebooks for the investigation of the different query strategies | 1,818 |

dalab/web2text | ['information retrieval'] | ['Web2Text: Deep Structured Boilerplate Removal'] | src/main/python/forward.py src/main/python/viterbi.py other_frameworks/bte/bte.py src/main/python/config.py src/main/python/main.py src/main/python/shuffle_queue.py src/main/python/data.py src/main/python/data/convert_scala_csv.py html2text bte html_entities find_paragraphs tokenise preclean usage token_value Config assert_raises _get_variable edge unary conv loss get_batch train_edge classify evaluate_edge test_structured main train_unary evaluate_unary ShuffleQueue softmax viterbi cli join bte find_paragraphs tokenise preclean append sub html_entities sub append token_value range len startswith append lower search fn Config convert_to_tensor len Config convert_to_tensor len REGULARIZATION_LOSSES get_collection sparse_softmax_cross_entropy_with_logits reduce_mean add_n scalar l2_regularizer _get_variable dropout truncated_normal_initializer argv train_edge print exit test_structured classify train_unary float prediction_fn enumerate prediction_fn enumerate ShuffleQueue minimize reshape reuse_variables get_collection float32 unary placeholder int64 Saver global_variables_initializer loss ShuffleQueue minimize reshape reuse_variables edge float32 placeholder get_collection int64 Saver global_variables_initializer loss reuse_variables edge unary float32 placeholder get_collection Saver global_variables_initializer genfromtxt constant astype unary float32 edge get_collection Saver global_variables_initializer zeros range takeOne random_integers exp max log softmax zeros argmax range genfromtxt docs list echo save array | # Web2Text Source code for [Web2Text: Deep Structured Boilerplate Removal](https://arxiv.org/abs/1801.02607), full paper at ECIR '18 ## Introduction This repository contains * Scala code to parse an (X)HTML document into a DOM tree, convert it to a CDOM tree, interpret tree leaves as a sequence of text blocks and extract features for each of these blocks. 
* Python code to train and evaluate unary and pairwise CNNs on top of these features. Inference on the hidden Markov model based on the CNN output potentials can be executed using the provided implementation of the Viterbi algorithm. * The [CleanEval](https://cleaneval.sigwac.org.uk) dataset under `src/main/resources/cleaneval/`: - `orig`: raw pages - `clean`: reference clean pages - `aligned`: clean content aligned with the corresponding raw page on a per-character basis using the alignment algorithm described in our paper | 1,819 |

danielegrattarola/ccm-aae | ['link prediction'] | ['Adversarial Autoencoders with Constant-Curvature Latent Manifolds'] | src/mnist.py src/geometry.py get_ccm_distribution hyperbolic_clip hyperbolic_uniform is_hyperbolic _ccm_uniform clip hyperbolic_distance get_distance spherical_uniform _ccm_normal belongs log_map ccm_normal ccm_uniform is_spherical spherical_normal exp_map euclidean_distance spherical_clip spherical_distance CCMMembership hyperbolic_inner hyperbolic_normal sample_points mean_pred normal spherical_clip sqrt uniform sum sign append _ccm_uniform normal exp_map normal zeros abs ndarray isinstance _ccm_normal zip append len norm copy arange delete copy sqrt sum dot T eye hyperbolic_inner zeros abs ndarray isinstance zeros abs ndarray isinstance reshape hstack shuffle unique append | This is the official implementation of the paper "Adversarial Autoencoders with Constant-Curvature Latent Manifolds" by D. Grattarola, C. Alippi, and L. Livi. (2018, [https://arxiv.org/abs/1812.04314](https://arxiv.org/abs/1812.04314)). This code showcases the general structure of the methodology used for the experiments in the paper, and makes it possible to reproduce the results on MNIST (the other two applications are conceptually similar, but the code was much messier). Please cite the paper if you use any of this code for your research: ``` @article{grattarola2019adversarial, title={Adversarial autoencoders with constant-curvature latent manifolds}, author={Grattarola, Daniele and Livi, Lorenzo and Alippi, Cesare}, journal={Applied Soft Computing}, volume={81}, pages={105511}, | 1,820 |

danielenricocahall/Keras-Weighted-Hausdorff-Distance-Loss | ['object localization'] | ['Locating Objects Without Bounding Boxes'] | test/test_loss.py hausdorff/hausdorff.py cdist weighted_hausdorff_distance test_hausdorff_loss_diff test_cdist test_hausdorff_loss_match reshape maximum square reduce_sum matmul sqrt convert_to_tensor sqrt cartesian convert_to_tensor cdist expand_dims astype expand_dims astype | # Weighted Hausdorff Distance Loss # In this repository, you'll find an implementation of the weighted Hausdorff Distance Loss, described here (https://arxiv.org/abs/1806.07564). A majority of the work was just porting their PyTorch implementation (https://github.com/HaipengXiong/weighted-hausdorff-loss). I figured some researchers/practitioners that are doing object detection/localization may find this useful! # Setup `pipenv install .` should configure a python environment and install all necessary dependencies in the environment. # Testing Some tests verifying basic components of the loss function have been incorporated. Run `python -m pytest` in the repo to execute them. ## TODO ## Add an example script. | 1,821 |

danielenricocahall/One-Class-NeuralNetwork | ['anomaly detection'] | ['Anomaly Detection using One-Class Neural Networks'] | ocnn.py test/test_basic.py driver.py loss.py main quantile_loss OneClassNeuralNetwork test_loss_function test_build_model add_subplot show use set_xlabel ylabel title scatter legend predict epoch plot set_zlabel train_model pop T ListedColormap xlabel File OneClassNeuralNetwork set_ylabel figure len convert_to_tensor OneClassNeuralNetwork build_model | # One-Class-NeuralNetwork Simplified Keras implementation of a one-class neural network for nonlinear anomaly detection. The implementation is based on the approach described here: https://arxiv.org/pdf/1802.06360.pdf. I've included several datasets from ODDS (http://odds.cs.stonybrook.edu/) and the Wine Dataset from UCI (https://archive.ics.uci.edu/ml/datasets/wine) to play with. # Setup `pipenv install .` should configure a python environment and install all necessary dependencies in the environment. # Running Running `python kdd_cup.py` or `python wine.py` within your new python environment (either through CLI or IDE) should kick off training on the corresponding dataset and generate some output plots. # Testing Two unit tests are defined in `test/test_basic.py`: building the model, and the quantile loss test based on the example in the paper: ![Alt text](images/quantile_loss_test_example.png "Title") | 1,822 |

danielgordon10/thor-iqa-cvpr-2018 | ['visual question answering'] | ['IQA: Visual Question Answering in Interactive Environments'] | qa_agents/qa_agent.py generate_questions/generate_existence_questions.py supervised/train_navigation_agent.py depth_estimation_network/models/network.py question_embedding/parse_question.py layouts/make_layout_files.py thor_tests/run_all_tests.py question_embedding/train_question_embedding.py thor_tests/test_image_overlays.py generate_questions/questions.py thor_tests/test_random_initialize.py utils/question_util.py generate_questions/generate_contains_questions.py train.py darknet_object_detection/detector.py thor_tests/speed_test.py networks/question_embedding_network.py supervised/sequence_generator.py graph/batchGraphGRU.py reinforcement_learning/a3c_testing_thread.py networks/free_space_network.py constants.py networks/rl_network.py utils/bb_util.py reinforcement_learning/a3c_test.py graph/graph_obj.py reinforcement_learning/a3c_training_thread.py thor_tests/__init__.py qa_agents/graph_agent.py utils/tf_util.py generate_questions/episode.py generate_questions/generate_counting_questions.py generate_questions.py test_thor.py networks/end_to_end_baseline_network.py tasks.py depth_estimation_network/models/fcrn.py qa_agents/end_to_end_baseline_agent.py utils/action_util.py networks/qa_planner_network.py depth_estimation_network/depth_estimator.py supervised/semantic_map_pretrain.py run_thor_tests.py eval.py human_controlled_test.py utils/game_util.py game_state.py question_to_text.py utils/drawing.py reinforcement_learning/rmsprop_applier.py utils/py_util.py reinforcement_learning/a3c_train.py generate_questions/combine_hdf5.py __init__.py depth_estimation_network/models/__init__.py GameState QuestionGameState PersonGameState main startx pci_records generate_xorg_conf ObjectDetector visualize_detections setup_detectors get_detector DepthEstimator get_depth_estimator ResNet50UpProj layer interleave get_incoming_shape Network combine 
Episode main main main ListQuestion ExistenceQuestion Question CountQuestion BatchGraphGRUCell Graph EndToEndBaselineNetwork FreeSpaceNetwork QAPlannerNetwork QuestionEmbeddingNetwork DeepQNetwork RLNetwork A3CNetwork EndToEndBaselineGraphAgent GraphAgent RLGraphAgent QAAgent tokenize_sentence get_sequences vocabulary_size shuffle_data run main A3CTestingThread run A3CTrainingThread RMSPropApplier run SequenceGenerator run main run_tests test_movement_speed test_random_initialize_speed assert_image test_depth_and_ids_images run_tests test_random_initialize run_tests test_random_initialize_with_remove_prob assert_failed_action test_random_initialize_randomize_open assert_successful_action test_random_initialize_with_repeats ActionUtil make_square xywh_to_xyxy xyxy_to_xywh scale_bbox clip_bbox subplot visualize_detections draw_detection_box drawRect get_question_str pretty_action distance choose_action_q unique_rows get_objects_of_type check_object_size get_rotation_matrix get_object_bounds choose_action set_open_close_object depth_to_world_coordinates imresize get_object_point get_action_str object_size get_pose reset get_object object_center_position create_env depth_imresize encode decode get_time_str get_question_str cond_scope restore l2_regularizer split_axis kernel_to_image variable_summaries remove_axis_get_shape empty_scope conv_variable_summaries save split_axis_get_shape restore_from_dir remove_axis Session update str sorted int get_question_str glob print File write len set split OBJECTS bool max flush open append decode split append join format int list mkstemp call split astype copy draw_detection_box int32 enumerate append ObjectDetector range acquire release Session Tensor isinstance dtype list str remove print cumsum insert File extend shape rename create_dataset append keys exists enumerate Process join create_dump TEST_SCENE_NUMBERS start sleep ceil round range append TRAIN_SCENE_NUMBERS MAX_SENTENCE_LENGTH len split append int tokenize_sentence 
items list permutation arange copy save Session str sorted list restore BATCH_SIZE GPU_ID get_time_str append range get glob TEST_SET FileWriter mean LOG_FILE flush join items time train_op graph print extend QuestionEmbeddingNetwork create_network finalize shuffle_data add_summary global_variables_initializer create_train_ops OBJECT_DETECTION USED_QUESTION_TYPES setup_detectors Lock list get_time_str Thread TEST_SET close shuffle LOG_FILE zip extend finalize PARALLEL_SIZE makedirs OBJECT_DETECTION TRAINABLE_VARIABLES conv_variable_summaries Saver restore_from_dir USED_QUESTION_TYPES setup_detectors MAX_TIME_STEP Lock merge_all get_collection placeholder USE_NAVIGATION_AGENT RMSPropApplier Thread close PREDICT_DEPTH start load_weights mkdir RUN_TEST merge sync A3CTestingThread File makedirs float32 CHECKPOINT_DIR A3CTrainingThread reset create_dataset PARALLEL_SIZE len randint where flatten argmax max add_run_metadata subplot STEPS_AHEAD ones tolist set_trace waitKey imshow sum concatenate ascontiguousarray choice copy SCREEN_HEIGHT flipud zeros enumerate RunOptions int DRAWING SCREEN_WIDTH sort reshape maximum min RunMetadata release add acquire sleep GRU_SIZE SequenceGenerator set remove NUM_CLASSES NUM_UNROLLS split create_env run_tests time print reset random_initialize range time print reset random_initialize step range create_env test_movement_speed test_random_initialize_speed compare_images_for_scene print test_depth_and_ids_images step step dict reset assert_failed_action random_initialize step assert_successful_action dict reset assert_failed_action random_initialize step assert_successful_action dict reset random_initialize step assert_successful_action dict reset random_initialize step assert_successful_action test_random_initialize test_random_initialize_with_remove_prob test_random_initialize_randomize_open test_random_initialize_with_repeats clip isinstance astype float32 shape zeros clip_bbox isinstance astype float32 shape zeros clip_bbox Number 
isinstance astype copy array tile full clip_bbox isinstance astype maximum float32 zeros floor resize max fromarray pad ceil COLORMAP_JET range truetype astype full int uint8 Draw applyColorMap putText text float32 array int squeeze round array int list tuple putText getTextSize map rectangle hexdigest print start Controller dict step abs pose deepcopy dtype view unique copy exp EVAL astype float32 resize astype float32 resize cos matmul pi sin matrix T AGENT_STEP_SIZE SCREEN_WIDTH arange reshape inv SCREEN_HEIGHT stack FOCAL_LENGTH get_rotation_matrix maximum clip astype int32 clip array int32 astype SCREEN_WIDTH SCREEN_HEIGHT array clip int T argmin square sqrt sum array localtime seed randint as_list int ndims tuple squeeze transpose reshape reduce_max sqrt pad ceil reduce_min range variable_summaries minimum join sorted NewCheckpointReader list as_list int print set shape assign Saver append keys get_variable_to_shape_map run get_checkpoint_state model_checkpoint_path restore print print join makedirs pop insert | # THOR-IQA-CVPR-2018  This repository contains the code for training and evaluating the various models presented in the paper [IQA: Visual Question Answering in Interactive Environments](https://arxiv.org/pdf/1712.03316.pdf). It also provides an interface for reading the questions and generating new questions if desired. ## If all you want is IQUAD-V1: IQUAD, the Interactive Question Answering Dataset, is included in this repository. If you do not want the rest of the code, then you may find what is included in the [questions](questions) folder sufficient as long as you also have a working version of THOR. You should be able to set up THOR using the simple pip install command, but you may want to install the exact version specified in the [requirements.txt](reqirements.txt). [questions](questions) contains three sub-folders: | 1,823 |

danielhers/synsem | ['semantic parsing'] | ['Content Differences in Syntactic and Semantic Representation', 'Content Differences in Syntactic and Semantic Representations'] | lost_participants.py compare_yields.py nested_conj.py extract_raw_counts.py secondary_verbs.py eval_conj.py extract_eval.py calc_len.py lost_scenes.py eval_depcl.py AverageLengthCalculator Evaluator ConjunctEvaluator DependentClauseEvaluator eval_ref Data Report eval_model_features strip combine main eval_corpus split eval_ref eval_model_features main get_columns eval_corpus Scene LostParticipantEvaluator LostSceneEvaluator NestedConjunctEvaluator SecondaryVerbEvaluator eval_ref groupby sorted list attrgetter print map set groupby sorted attrgetter eval_model_features print astype map dict zip list replace print tolist combine list getattr combine_first replace eval_corpus groupby sorted attrgetter ref name filter get_columns print sorted set join product Series astype map get_columns | # Content Differences in Syntactic and Semantic Representations This is code for the experiments and analysis in the [paper](https://www.aclweb.org/anthology/N19-1047): ``` @inproceedings{hershcovich2019content, title = "Content Differences in Syntactic and Semantic Representation", author = "Hershcovich, Daniel and Abend, Omri and Rappoport, Ari", booktitle = "Proc. of NAACL-HLT", url = "https://www.aclweb.org/anthology/N19-1047", | 1,824 |

danielhers/tupa | ['semantic parsing'] | ['A Transition-Based Directed Acyclic Graph Parser for UCCA', 'Multitask Parsing Across Semantic Representations'] | tupa/parse.py tupa/classifiers/nn/constants.py tupa/features/dense_features.py tupa/states/state.py tests/test_model.py tupa/states/edge.py tupa/features/empty_features.py tests/test_parser.py tupa/traceutil.py tests/test_oracle.py tupa/config.py docs/conf.py tupa/model_util.py tupa/oracle.py tupa/scripts/load_save.py tupa/classifiers/nn/util.py tupa/classifiers/nn/neural_network.py tupa/model.py tupa/features/sparse_features.py tupa/__main__.py tupa/scripts/viz.py server/parse_server.py tupa/states/node.py tupa/classifiers/linear/sparse_perceptron.py tupa/scripts/dump_vocab.py tupa/scripts/export.py tupa/classifiers/nn/sub_model.py tupa/scripts/tune.py tupa/features/feature_params.py tupa/scripts/visualize_learning_curve.py tupa/scripts/conll18_ud_eval.py tests/conftest.py tupa/classifiers/noop.py tupa/features/feature_extractor.py tupa/scripts/strip_multitask.py tupa/action.py tupa/scripts/enum_to_json.py tests/test_config.py tests/test_features.py setup.py tupa/classifiers/classifier.py tupa/__version__.py tupa/labels.py tupa/classifiers/nn/birnn.py tupa/classifiers/nn/mlp.py install Mock parse download parser_demo get_parser visualize config pytest_addoption empty_features_config passage_files basename write_oracle_actions Settings default_setting weight_decay pytest_generate_tests write_features create_config load_passage remove_existing test_passage assert_all_params_equal test_boolean_params config test_params test_hyperparams _test_features test_features_conllu test_feature_templates FeatureExtractorCreator test_features feature_extractors extract_features test_model parse test_oracle gen_actions test_train_empty test_ensemble test_extra_classifiers test_parser test_copy_shared test_empty_features test_iterations Action Actions Config FallbackNamespace Iterations Hyperparams add_param_arguments 
HyperparamsInitializer Labels ParameterDefinition Model ClassifierProperty DropoutDict DefaultOrderedDict remove_backup load_enum KeyBasedDefaultDict AutoIncrementDict jsonify Strings load_dict UnknownDict save_dict IdentityVocab remove_existing save_json Lexeme Vocab load_json is_terminal_edge is_implicit_node is_remote_edge Oracle print_scores generate_and_len to_lower_case read_passages single_to_iter Parser PassageParser filter_passages_for_bert get_eval_type from_text_format get_output_converter AbstractParser train_test ParserException ParseMode main BatchParser percents_str main_generator average_f1 tracefunc set_tracefunc set_traceback_listener print_traceback dict_value Classifier NoOp FeatureWeights FeatureWeightsCreator SparsePerceptron HighwayRNN HierarchicalBiRNN BiRNN EmptyRNN CategoricalParameter MultilayerPerceptron NeuralNetwork AxisModel SubModel randomize_orthonormal DenseFeatureExtractor EmptyFeatureExtractor dep_distance static_vars height FeatureTemplateElement FeatureTemplate gap_type get_punctuation head_terminal gap_lengths calc prop_getter FeatureExtractor head_terminal_height has_gaps gap_length_sum FeatureParameters NumericFeatureParameters SparseFeatureExtractor UDError _decode evaluate load_conllu load_conllu_file TestAlignment main _encode main main decode main save_model load_model main main delete_if_exists strip_multitask sample main get_values_based_on_format Params main load_scores visualize main smooth visualize Edge Node State InvalidActionError print Parser decode print to_standard next from_text decode BytesIO print FigureCanvasAgg fromstring from_standard draw close get_data print_png figure print addoption getoption parametrize Config update update_hyperparams update create_config read_files_and_dirs items sorted assert_allclose glob remove Config update update_hyperparams update items list update items list update_hyperparams items list params append need_label label_node values set_format list feature_extractor_creator 
get_label transition load_passage extract_features finished annotate State items join min init_param Actions Oracle _test_features _test_features feature_extractor_creator join set_format str argmax init_features update init_model finished_step State extract_features set_format finished_item update join load parse sorted items dict Model finalize remove_existing save range assert_all_params_equal all_params load_passage update dict set_format str finished label_node min need_label get_label transition Actions State Oracle values model tuple Parser weight_decay all_params list map append update parse passage_files init zip assert_allclose print dict average_f1 remove_existing train assert_all_params_equal update list passage_files print map dict Parser remove_existing train update list passage_files map update_hyperparams dict Parser remove_existing train update list parse passage_files map dict remove_existing train enumerate update list parse passage_files map dict Parser remove_existing train update list passage_files isinstance map dict remove_existing Iterations append train update dict remove_existing Action update add_argument_group get_group_arg_names add_mutually_exclusive_group add add_boolean ArgParser print copy2 glob remove setrecursionlimit time remove_existing print print time try_load print remove_existing print print_scores parse print Scores Parser testscores get lower all from_text from_pretrained join get str bert_model print ID tokenize len join list passages print train_test Scores shuffle read_passages append folds range args print main_generator list set_traceback_listener print setprofile dump_traceback signal randn shape set_value svd child get_terminals any has_gaps list map sorted prop_getter rstrip UDWord list _decode len map add UDSpan append UDRepresentation range readline process_word words set startswith int join extend split align_words words open system_files format evaluate system_total add_argument load_conllu_file gold_file 
precision correct gold_total ArgumentParser f1 parse_args recall enumerate counts model_name isinstance data lang get_vocab suffix load_model models params save_json filename ArgParser values load Model print savez_compressed save_model save difference delete_if_exists join basename keep out_dir strip_multitask makedirs get int seed map run max print list plot xlabel ylabel title clf savefig legend range len load_scores splitext visualize reshape subplots smooth values all_params set_major_locator ndarray colorbar pcolormesh OrderedDict delaxes append MaxNLocator tight_layout enumerate items isinstance tqdm sub sca | Transition-based UCCA Parser ============================ TUPA is a transition-based parser for [Universal Conceptual Cognitive Annotation (UCCA)][1]. ### Requirements * Python 3.6+ ### Install Install the latest release: pip install tupa[bert] Alternatively, install the latest code from GitHub (may be unstable): pip install git+git://github.com/danielhers/tupa.git#egg=tupa | 1,825 |