| repo (string, 8 to 116 chars) | tasks (string, 8 to 117 chars) | titles (string, 17 to 302 chars) | dependencies (string, 5 to 372k chars) | readme (string, 5 to 4.26k chars) | __index_level_0__ (int64, 0 to 4.36k) |
|---|---|---|---|---|---|

nbfigueroa/ICSC-HMM

|

['time series']

|

['Transform-Invariant Non-Parametric Clustering of Covariance Matrices and its Application to Unsupervised Joint Segmentation and Action Discovery']

|

plotting/mocap/buildHTMLForSkelViz.py ExtractInfoFromWeb CreateHTML GetBasename read strip index dict len splitext join read int arange replace print glob write close take open ExtractInfoFromWeb range split

|

# ICSC-HMM **[TODO: UPDATE README.. paper and code have drastically changed!]** ICSC-HMM : IBP Coupled SPCM-CRP Hidden Markov Model for Transform-Invariant Time-Series Segmentation Website: https://github.com/nbfigueroa/ICSC-HMM Author: Nadia Figueroa (nadia.figueroafernandez AT epfl.ch) **NOTE:** If you are solely interested in the transform-invariant metric and clustering algorithm for Covariance matrices introduced in [1], go to [https://github.com/nbfigueroa/SPCM-CRP.git](https://github.com/nbfigueroa/SPCM-CRP.git) This is a toolbox for inference of the ICSC-HMM (IBP Coupled SPCM-CRP Hidden Markov Model) [1]. The ICSC-HMM is a segmentation and action recognition algorithm that addresses three challenges in HMM-based segmentation and action recognition: **(1) Unknown cardinality:** the typical model-selection problem, in which the number of hidden states is unknown. This can be solved by giving the HMM a Bayesian Non-Parametric (BNP) treatment, i.e. by placing an infinite prior on the transition distributions, typically the Hierarchical Dirichlet Process (HDP). **(2) Fixed dynamics:** in BNP analysis of ***multiple*** time series with the HDP prior, all time series are tied together by the same set of transition and emission parameters. The Indian Buffet Process (IBP) prior alleviates this problem by relaxing the assumption that all time series follow the same transition parameters, allowing each time series to use only a subset of the states. **(3) Transform-invariance:** for ***any*** type of HMM, the emission models are always assumed to be unique, so there is no way to handle transformations within or across time series.

| 3,100 |
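Challenge (2) above hinges on the IBP letting each time series activate only a subset of shared states. A minimal sketch of the standard one-parameter IBP generative process, assuming concentration `alpha` and a seeded RNG, is shown below; `sample_ibp` and `sample_poisson` are hypothetical helpers for illustration and are not part of the ICSC-HMM toolbox.

```python
import math
import random


def sample_poisson(lam, rng):
    """Sample a Poisson(lam) variate with Knuth's multiplication method."""
    threshold = math.exp(-lam)
    k, p = 0, 1.0
    while True:
        p *= rng.random()
        if p <= threshold:
            return k
        k += 1


def sample_ibp(num_series, alpha, seed=0):
    """Draw a binary matrix Z from a one-parameter Indian Buffet Process.

    Z[i][k] == 1 means time series i may use shared state k
    (hypothetical helper, for illustration only).
    """
    rng = random.Random(seed)
    counts = []  # counts[k]: number of earlier series that activated state k
    rows = []
    for i in range(1, num_series + 1):
        row = []
        # Reuse each existing state k with probability counts[k] / i.
        for k in range(len(counts)):
            use = rng.random() < counts[k] / i
            row.append(1 if use else 0)
            if use:
                counts[k] += 1
        # Activate Poisson(alpha / i) brand-new states for this series.
        new_states = sample_poisson(alpha / i, rng)
        row.extend([1] * new_states)
        counts.extend([1] * new_states)
        rows.append(row)
    width = len(counts)
    # Earlier rows never saw states created later, so pad them with zeros.
    return [row + [0] * (width - len(row)) for row in rows]


if __name__ == "__main__":
    for row in sample_ibp(num_series=4, alpha=2.0):
        print(row)
```

Each row is one time series, so different series end up with different active subsets of a shared state library, which is the relaxation of the shared-dynamics assumption described in (2).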

ncoop57/tango

|

['optical character recognition']

|

['It Takes Two to Tango: Combining Visual and Textual Information for Detecting Duplicate Video-Based Bug Reports']

|

two_to_tango/utils.py two_to_tango/cli.py two_to_tango/__init__.py setup.py two_to_tango/combo.py two_to_tango/approach.py two_to_tango/eval.py two_to_tango/features.py two_to_tango/prep.py two_to_tango/_nbdev.py two_to_tango/model.py compute_sims gen_lcs_similarity gen_tfidfs gen_bovw_similarity fuzzy_LCS gen_extracted_features flatten_dict sort_rankings fix_sims approach _download tango _print_performance _output_performance _generate_vis_results _get_single_model_performance _generate_txt_results reproduce download write_results convert_results_format write_rankings tango_combined run_settings execute_retrieval_run get_info_to_ranking_results hit_rate_at_k recall_at_k evaluate get_eval_results evaluate_ranking calc_tf_idf mean_average_precision precision_at_k average_precision cosine_similarity mean_reciprocal_rank rank_stats r_precision new_get_bovw imagenet_normalize_transform Extractor get_df get_bovw calc_tf_idf extract_features gen_vcodebook CNNExtractor get_transforms SimCLRExtractor gen_codebooks SIFTExtractor NTXEntCriterion get_train_transforms imagenet_normalize_transform SimCLRModel get_val_transforms sift_frame_sim SimCLRDataset simclr_frame_sim get_rand_imgs Video vid_from_frames VideoDataset get_rico_imgs read_video_data generate_setting2 get_all_texts get_non_duplicate_corpus read_json_line_by_line read_json load_settings deskew write_json_line_by_line read_csv_to_dic_list canny remove_noise preprocess_img find_file write_csv_from_json_list match_template extract_text dilate opening group_dict thresholding get_grayscale thresholding_med extract_frames erode process_frame custom_doc_links items list isinstance time labels tqdm calc_tf_idf new_get_bovw defaultdict deepcopy list time defaultdict norm items gen_tfidfs dot reversed list range sim_func deepcopy list time defaultdict items tqdm fuzzy_LCS len list reversed items sorted list tuple OrderedDict flatten_dict deepcopy list defaultdict items tqdm sort_rankings fix_sims items new_get_bovw time 
norm sorted list tuple labels gen_tfidfs calc_tf_idf dot tqdm OrderedDict gen_extracted_features flatten_dict extract_features get BytesIO extractall mkdir Path info content ZipFile _download load items time list evaluate gen_lcs_similarity SIFT_create tqdm eval gen_extracted_features gen_bovw_similarity mkdir info open approach SimCLRExtractor SIFTExtractor convert_results_format check_output read_video_data generate_setting2 mkdir get_all_texts info load append open print mean read_csv values _print_performance seed _download tango_combined _output_performance _generate_vis_results label_from_paths Path info _generate_txt_results load Video PrettyPrinter compute_sims eval pprint Path label_from_paths SimCLRExtractor open items sorted list OrderedDict split update evaluate_ranking print execute_retrieval_run append split to_csv join mkdir extend write_json_line_by_line join extend mkdir load join list write_results write_rankings print extend load_settings find_file run_settings open keys split update join format split arange evaluate_ranking read_json_line_by_line read_json load_settings write_json_line_by_line str list sorted OrderedDict get_info_to_ranking_results append mkdir zip items time join print to_csv group_dict dict split zip append sum array log len append asarray items list asarray hit_rate_at_k print extend mean_average_precision average_precision append rank_stats print int asarray recall_at_k precision_at_k average_precision append range len load extract time asarray dump KMeans fit extend tqdm append open list dump glob open zip sample gen_vcodebook extract asarray extend predict get VideoCapture str read extract list concatenate progress_bar CAP_PROP_FRAME_COUNT array range predict extract int append range len array extend predict norm calc_tf_idf dot expand_dims predict ColorJitter CrossEntropyLoss CosineSimilarity VideoCapture str read CAP_PROP_POS_MSEC set randrange append str parent output input run glob sorted len append extend list extend 
copy write_json_line_by_line pprint mkdir append keys range get_non_duplicate_corpus len join str sorted replace print stem write_json_line_by_line find_file extract_frames Path mkdir append join fnmatch walk to_csv sort list groupby find_file ones uint8 ones uint8 ones uint8 getRotationMatrix2D warpAffine where column_stack opening get_grayscale thresholding_med system imread extract_text preprocess_img

|

# Tango > Summary description here. This file will become your README and also the index of your documentation.

| 3,101 |

ndalsanto/PDE-DNN

|

['network embedding']

|

['Data driven approximation of parametrized PDEs by Reduced Basis and Neural Networks']

|

fem_data_generation.py pde_dnn_model.py navier_stokes/navier_stokes_pde_activation.py navier_stokes/main_pde_dnn_model.py navier_stokes/build_ns_rb_manager.py generate_fem_training_data generate_fem_coordinates PrintDot plot_history custom_loss pde_dnn_model build_ns_rb_manager navier_stokes_pde_activation seed print sort randint floor unique ceil zeros float range zeros range get_snapshot_function yscale show epoch plot xlabel rc close ylabel tight_layout ylim savefig figure legend array update_fom_specifics set_affine_decomposition_handler set_Q import_rb_affine_matrices get_rb_functions_dict get_num_mdeim_basis clear_fom_specifics compute_theta_min_max perform_pod substitute_parameter_generator set_affine_a build_snapshots M_snapshots_matrix import_snapshots_matrix set_deim import_snapshots_parameters AffineDecompositionHandler load_mdeim_offline get_number_of_basis get_basis_list save_rb_affine_decomposition M_snapshots_coefficients load_deim_offline Mdeim import_basis_matrix save_matrix __doc__ Tensor_parameter_generator set_mdeim perform_mdeim import_rb_affine_vectors get_num_basis loadtxt save_vector print save_offline_structures build_rb_affine_decompositions import_test_parameters set_affine_f RbManager set_save_offline import_test_snapshots_matrix zeros get_deim_basis_list Deim perform_deim zeros

|

# PDE-DNN This repository contains the numerical examples from the paper "Data driven approximation of parametrized PDEs by Reduced Basis and Neural Networks", by N. Dal Santo, S. Deparis and L. Pegolotti. If you use the code, please cite the following reference [arXiv:1904.01514](https://arxiv.org/abs/1904.01514). In this work we propose a novel way to integrate data and PDE simulations by combining DNNs and RB solvers for the prediction of the solution of a parametrized PDE. The proposed architecture features an MLP followed by an RB solver, which acts as a nonlinear activation function. The output of the MLP is interpreted as a prediction of parameter-dependent quantities: physical parameters, theta functions of the approximated affine decomposition, and approximated RB solutions. Compared to standard DNNs, we obtain as a byproduct the solution in the full physical space and, for affine dependencies, the value of the parameter. Compared to the RB method, we obtain accurate solutions with a smaller number of affine components by solving a linear problem instead of a nonlinear one. # Running the test To train and use the networks, you need a working installation of [TensorFlow](https://www.tensorflow.org/). The RB structures for the offline phase of the RB method were generated with [PyORB](https://github.com/ndalsanto/pyorb), so you need a working installation of it to run the example. PyORB itself relies on a finite element (FE) library, which can be connected through the [pyorb-matlab-api](https://github.com/ndalsanto/pyorb-matlab-api). In the example provided, [feamat](https://github.com/lucapegolotti/feamat) was used as the FE backend.

| 3,102 |
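The "RB solver as activation function" idea can be made concrete with a small sketch. It assumes an affinely parametrized reduced system A(θ) u_N = f(θ), with A(θ) = Σ_q θ_q A_q and f(θ) = Σ_q θ^f_q f_q, and a basis matrix V that lifts u_N to the full space. The matrices, sizes and function names here are illustrative assumptions and are not taken from PDE-DNN or PyORB. In the described architecture, the MLP would supply the θ coefficients, and this layer would turn them into a solution.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def affine_matrix(thetas: Vector, mats: List[Matrix]) -> Matrix:
    """Assemble A(theta) = sum_q theta_q * A_q."""
    n = len(mats[0])
    return [[sum(t * m[i][j] for t, m in zip(thetas, mats)) for j in range(n)]
            for i in range(n)]


def affine_vector(thetas: Vector, vecs: List[Vector]) -> Vector:
    """Assemble f(theta) = sum_q theta_q * f_q."""
    return [sum(t * v[i] for t, v in zip(thetas, vecs)) for i in range(len(vecs[0]))]


def solve(a: Matrix, b: Vector) -> Vector:
    """Gaussian elimination with partial pivoting (small dense RB systems only)."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x


def rb_activation(theta_a, theta_f, a_q, f_q, basis):
    """Map predicted theta coefficients to a full-order solution V @ u_N."""
    u_rb = solve(affine_matrix(theta_a, a_q), affine_vector(theta_f, f_q))
    # basis[i][k]: i-th full-order degree of freedom of the k-th RB basis function
    return [sum(basis[i][k] * u_rb[k] for k in range(len(u_rb)))
            for i in range(len(basis))]


if __name__ == "__main__":
    a_q = [[[2.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]]]
    f_q = [[3.0, 4.0]]
    basis = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
    print(rb_activation([1.0, 1.0], [1.0], a_q, f_q, basis))
```

Only an N×N reduced system is solved per evaluation, and the affine assembly is linear in θ. That keeps such a layer cheap enough to sit after an MLP.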

ndrplz/ConvLSTM_pytorch

|

['weather forecasting', 'video prediction']

|

['Convolutional LSTM Network: A Machine Learning Approach for Precipitation Nowcasting']

|

convlstm.py ConvLSTMCell ConvLSTM

|

# ConvLSTM_pytorch **[This](https://github.com/ndrplz/ConvLSTM_pytorch/blob/master/convlstm.py)** file **contains the implementation of Convolutional LSTM in PyTorch** made by [me](https://github.com/ndrplz) and [DavideA](https://github.com/DavideA). We started from [this](https://github.com/rogertrullo/pytorch_convlstm/blob/master/conv_lstm.py) implementation, heavily refactored it and added features to match our needs. Please note that in this repository we implement the following dynamics:  which is a bit different from the one in the original [paper](https://arxiv.org/pdf/1506.04214.pdf). ### How to Use The `ConvLSTM` module derives from `nn.Module`, so it can be used like any other PyTorch module. The ConvLSTM class supports an arbitrary number of layers. For each layer, the hidden dimension (that is, the number of channels) and the kernel size can be specified. If there are several layers but only a single value is provided, that value is replicated for all layers. For example, in the following snippet each of the three layers has a different hidden dimension but the same kernel size. Example usage:

| 3,103 |
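The replication rule described above (a single hidden dimension or kernel size is copied to every layer, while a list gives one value per layer) can be sketched in plain Python. `expand_per_layer` is a hypothetical helper for illustration and is not claimed to match the code in convlstm.py.

```python
from typing import Any, List


def expand_per_layer(value: Any, num_layers: int) -> List[Any]:
    """Return one setting per layer (hypothetical helper).

    A list is treated as explicit per-layer values and must have num_layers
    entries. Anything else (an int, or a tuple such as a (3, 3) kernel size)
    is treated as a single value and replicated for every layer.
    """
    if isinstance(value, list):
        if len(value) != num_layers:
            raise ValueError(f"expected {num_layers} values, got {len(value)}")
        return list(value)
    return [value] * num_layers


if __name__ == "__main__":
    num_layers = 3
    hidden_dims = expand_per_layer([64, 64, 128], num_layers)  # different per layer
    kernel_sizes = expand_per_layer((3, 3), num_layers)        # same for all layers
    for layer, (h, k) in enumerate(zip(hidden_dims, kernel_sizes)):
        print(f"layer {layer}: hidden_dim={h}, kernel_size={k}")
```

Treating tuples as single values lets one `(3, 3)` kernel size be shared by all layers. The trade-off is that per-layer kernel sizes must then be passed as a list of tuples.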

neerakara/TTA_prostate

|

['medical image segmentation', 'denoising', 'domain generalization', 'semantic segmentation']

|

['Test-Time Adaptable Neural Networks for Robust Medical Image Segmentation']

|

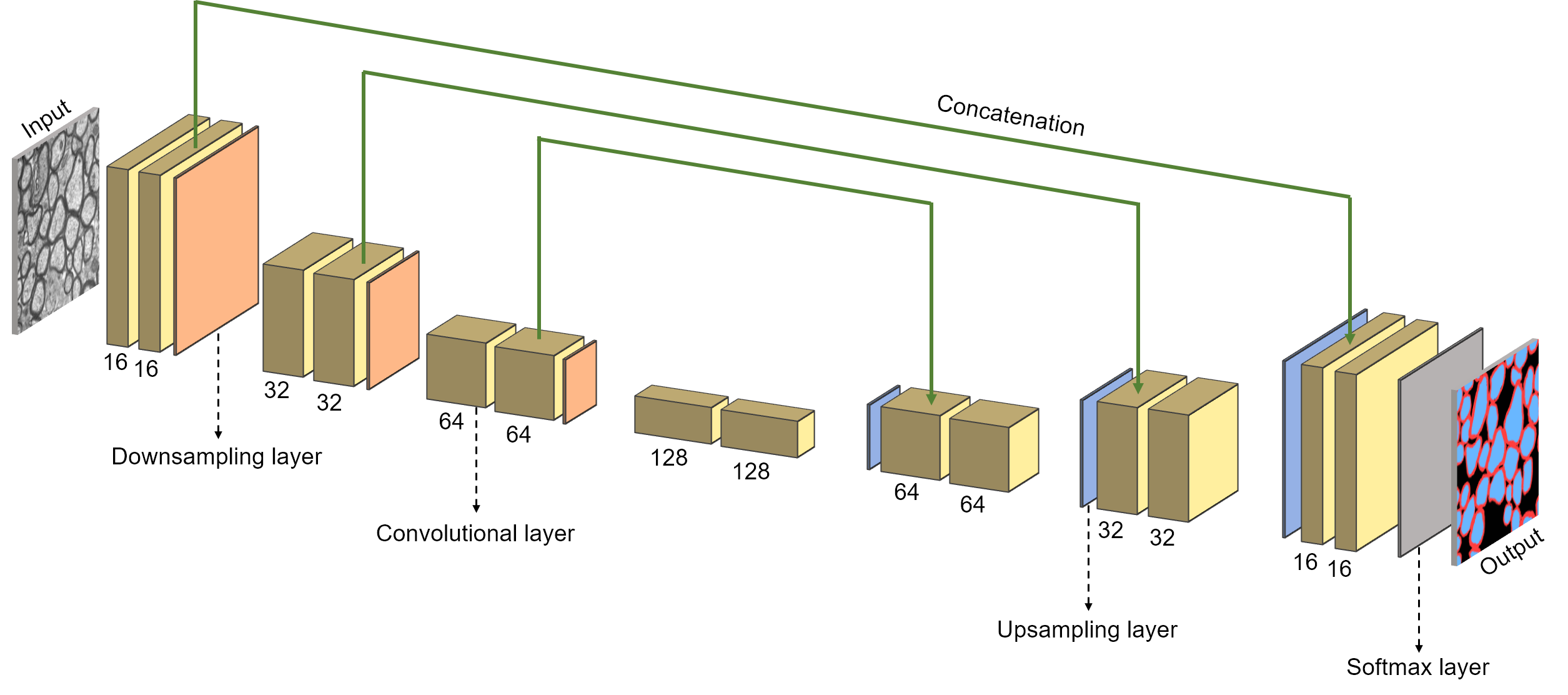

data/data_nci.py data/data_pirad_erc.py tfwrapper/layers.py config/system.py models/i2i/subject_88800049/i2i.py utils_vis.py data/data_promise.py experiments/i2i.py update_i2i.py update_i2i_all.py models/i2i/subject_88800036/i2i.py evaluate.py tfwrapper/losses.py model_zoo.py utils.py model.py main rescale_and_crop predict_segmentation prepare_tensor_for_summary evaluate_losses evaluation_dae predict_i2l evaluation_i2l training_step normalize predict_dae loss dae3D unet2D_i2l net2D_i2i iterate_minibatches_images_and_labels iterate_minibatches_images rescale_image_and_label run_test_time_training main elastic_transform_image_and_label crop_or_pad_slice_to_size_1hot elastic_transform_label_pair_3d elastic_transform_label_3d normalise_image crop_or_pad_slice_to_size crop_or_pad_volume_to_size_along_z makefolder make_onehot get_latest_model_checkpoint_path crop_or_pad_volume_to_size_along_x_1hot group_segmentation_classes_15 save_nii elastic_transform_label load_nii compute_surface_distance_per_label crop_or_pad_volume_to_size_along_x compute_surface_distance group_segmentation_classes save_sample_prediction_results save_sample_results add_1_pixel_each_class save_single_image save_samples_downsampled load_without_size_preprocessing count_slices get_patient_folders prepare_data test_train_val_split load_and_maybe_process_data _release_tmp_memory _write_range_to_hdf5 crop_or_pad_slice_to_size load_without_size_preprocessing count_slices prepare_data load_data _release_tmp_memory _write_range_to_hdf5 load_without_size_preprocessing prepare_data _release_tmp_memory test_train_val_split load_and_maybe_process_data count_slices_and_patient_ids_list convert_to_nii_and_correct_bias_field _write_range_to_hdf5 bilinear_upsample2D conv3D_layer deconv2D_layer_bn crop_and_concat_layer conv3D_layer_bn conv2D_layer_bn conv2D_layer max_pool_layer2d bilinear_upsample3D max_pool_layer3d deconv3D_layer bilinear_upsample3D_ pad_to_size dice_loss compute_dice 
pixel_wise_cross_entropy_loss_using_probs dice_loss_within_mask pixel_wise_cross_entropy_loss compute_dice_3d_without_batch_axis crop_or_pad_slice_to_size squeeze rescale swapaxes append rot90 array range arange test_dataset flatten load_and_maybe_process_data round open str basicConfig rescale_and_crop array append normalize sum range close mean info f1_score save_sample_prediction_results load_without_size_preprocessing predict_segmentation write compute_surface_distance orig_data_root_pirad_erc load_data zeros orig_data_root_nci std argmax model_handle_i2l softmax argmax softmax model_handle_l2l model_handle_normalizer one_hot pixel_wise_cross_entropy_loss_using_probs dice_loss dice_loss_within_mask softmax pixel_wise_cross_entropy_loss minimize group get_collection UPDATE_OPS optimizer_handle one_hot loss compute_dice prepare_tensor_for_summary concat image softmax evaluate_losses argmax int prepare_tensor_for_summary one_hot dice_loss concat image softmax argmax constant slice reshape squeeze stack cast get_cmap gather expand_dims colors reset_default_graph collect expand_dims arange range copy expand_dims arange range copy int uint8 collect __file__ rescale_image_and_label astype run_test_time_training copy project_code_root MakeDirs save_samples_downsampled uint8 astype float32 make_onehot rescale nlabels argmax makedirs int join glob append max load Nifti1Image to_filename percentile float32 divide copy mean std shape zeros shape zeros zeros zeros zeros group_segmentation_classes_15 arange print unique zeros array RandomState arange reshape rand shape meshgrid gaussian_filter RandomState arange reshape rand shape meshgrid gaussian_filter RandomState arange reshape rand copy meshgrid range gaussian_filter RandomState arange reshape rand copy meshgrid range gaussian_filter zeros shape concatenate distance_transform_edt astype ndim float32 atleast_1d generate_binary_structure bool compute_surface_distance_per_label percentile mean append range range copy clim 
axis close colorbar add_1_pixel_each_class imshow savefig figure subplot close colorbar imshow add_1_pixel_each_class savefig figure range subplot arange clim close copy colorbar imshow title savefig figure range len subplot zeros_like clim close copy colorbar imshow title savefig figure rot90 range len int join endswith test_train_val_split listdir walk int join test_train_val_split startswith append listdir rescale normalise_image crop_or_pad_slice_to_size str list count_slices squeeze call shape append walk range save_nii close swapaxes zip info float join SliceThickness read get_patient_folders print File read_file create_dataset zeros _release_tmp_memory pixel_array _write_range_to_hdf5 asarray info clear collect join prepare_data makefolder info join get_patient_folders normalise_image append walk join prepare_data makefolder info load_nii range rot90 load_nii unique listdir load_nii listdir call match imread walk save_nii int test_train_val_split match append walk count_slices_and_patient_ids_list GetSpacing ReadImage swapaxes as_list slice subtract append range len as_list subtract pad mod max_pooling2d conv2d conv2d batch_normalization activation batch_normalization conv2d_transpose activation resize_bilinear batch_normalization conv3d activation max_pooling3d conv3d_transpose conv3d reduce_mean softmax_cross_entropy_with_logits_v2 expand_dims reduce_sum copy

|

# TTA_prostate Code for the paper: https://arxiv.org/abs/2004.04668

| 3,104 |

neerakara/test-time-adaptable-neural-networks-for-domain-generalization

|

['medical image segmentation', 'denoising', 'domain generalization', 'semantic segmentation']

|

['Test-Time Adaptable Neural Networks for Robust Medical Image Segmentation']

|

evaluate_with_post_processing.py train_i2l_mapper.py utils_vis.py tfwrapper/losses.py train_l2l_mapper.py utils_masks.py experiments/l2i.py update_i2i_mapper_using_gt_for_multiple_subjects.py evaluate.py utils.py update_i2i_mapper.py update_i2i_mapper_for_multiple_subjects.py train_l2i_mapper.py make_hcp_atlas.py update_i2i_mapper_using_gt.py update_i2l_mapper_for_multiple_subjects.py data/data_hcp_3d.py data/data_abide.py tfwrapper/layers.py generate_visual_results.py model.py config/system.py generate_visual_results_all.py experiments/i2l.py data/data_hcp.py update_i2l_mapper.py experiments/l2l.py experiments/i2i.py model_zoo.py main rescale_and_crop predict_segmentation main rescale_and_crop predict_segmentation main rescale_and_crop predict_segmentation main rescale_and_crop predict_segmentation predict_l2i prepare_tensor_for_summary evaluate_losses likelihood_loss predict_i2l predict_l2l evaluation_i2l evaluation_l2i evaluation_l2l training_step normalize loss unet2D_l2i net2D_i2i unet3D_n5_l2l_no_skip_connections unet3D_n5_l2l_with_skip_connections_except_first_layer unet3D_n5_l2l_with_skip_connections unet2D_i2l unet3D_n4_l2l_no_skip_connections unet3D_n4_l2l_with_skip_connections_except_first_layer unet2D_l2l do_eval iterate_minibatches run_training do_data_augmentation main do_eval iterate_minibatches run_training do_data_augmentation main do_eval iterate_minibatches run_training do_data_augmentation main modify_image_and_label iterate_minibatches_images run_training main iterate_minibatches_images_and_downsampled_labels modify_image_and_label iterate_minibatches_images run_training main iterate_minibatches_images_and_downsampled_labels modify_image_and_label iterate_minibatches_images run_training main iterate_minibatches_images_and_downsampled_labels crop_or_pad_slice_to_size crop_or_pad_volume_to_size_along_z compute_surface_distance_per_label elastic_transform_image_and_label group_segmentation_classes_15 elastic_transform_label makefolder make_onehot 
crop_or_pad_volume_to_size_along_x elastic_transform_label_pair_3d load_nii group_segmentation_classes compute_surface_distance elastic_transform_label_3d normalise_image get_latest_model_checkpoint_path crop_or_pad_volume_to_size_along_x_1hot save_nii make_noise_masks_2d make_roi_mask make_noise_masks_3d save_sample_prediction_results save_sample_results add_1_pixel_each_class plot_graph save_single_image save_samples_downsampled load_without_size_preprocessing count_slices center_image_and_label prepare_data get_image_and_label_paths copy_site_files_abide_stanford load_and_maybe_process_data correct_bias_field _release_tmp_memory copy_site_files_abide_caltech _write_range_to_hdf5 load_without_size_preprocessing count_slices prepare_data get_image_and_label_paths load_and_maybe_process_data _release_tmp_memory _write_range_to_hdf5 prepare_data get_image_and_label_paths load_and_maybe_process_data _release_tmp_memory _write_range_to_hdf5 bilinear_upsample2D conv3D_layer deconv2D_layer_bn crop_and_concat_layer conv3D_layer_bn conv2D_layer_bn conv2D_layer max_pool_layer2d bilinear_upsample3D max_pool_layer3d deconv3D_layer bilinear_upsample3D_ pad_to_size dice_loss compute_dice pixel_wise_cross_entropy_loss_using_probs dice_loss_within_mask pixel_wise_cross_entropy_loss compute_dice_3d_without_batch_axis crop_or_pad_slice_to_size squeeze rescale swapaxes append rot90 array range arange test_dataset orig_data_root_ixi expname_normalizer flatten load_and_maybe_process_data round open str basicConfig image_depth_ixi rescale_and_crop array image_depth_hcp normalize orig_data_root_abide sum append range orig_data_root_hcp close mean info f1_score log_root save_sample_prediction_results join expname_i2l image_depth_stanford load_without_size_preprocessing predict_segmentation write compute_surface_distance image_depth_caltech std post_process dae_post_process_runs crop_or_pad_volume_to_size_along_z save_single_image argmax model_handle_i2l softmax model_handle_l2i one_hot 
argmax softmax model_handle_l2l model_handle_normalizer one_hot pixel_wise_cross_entropy_loss_using_probs dice_loss dice_loss_within_mask softmax pixel_wise_cross_entropy_loss ssim reduce_mean square reduce_sum minimize group get_collection UPDATE_OPS optimizer_handle one_hot loss compute_dice prepare_tensor_for_summary concat image softmax evaluate_losses argmax prepare_tensor_for_summary concat image softmax evaluate_losses argmax prepare_tensor_for_summary concat square reduce_mean image ssim argmax constant slice reshape squeeze stack cast get_cmap gather expand_dims colors int str orig_data_root_ixi orig_data_root_hcp experiment_name_i2l shape load_and_maybe_process_data info get_latest_model_checkpoint_path orig_data_root_abide iterate_minibatches info run permutation sort do_data_augmentation expand_dims range crop_or_pad_slice_to_size normal elastic_transform_image_and_label shift copy rotate rescale uniform round range __file__ run_training copy continue_run MakeDirs experiment_name make_roi_mask experiment_name_l2l make_onehot nlabels make_noise_masks_3d elastic_transform_label_3d downsampling_factor_x collect make_onehot rescale nlabels reset_default_graph crop_or_pad_volume_to_size_along_x_1hot expand_dims arange range copy expand_dims arange range copy modify_image_and_label preproc_folder_hcp save_samples_downsampled load int collect uint8 astype float32 make_onehot rescale nlabels argmax makedirs int join glob append max load Nifti1Image to_filename percentile float32 divide copy mean std shape zeros zeros zeros zeros group_segmentation_classes_15 arange print unique zeros array RandomState arange reshape rand shape meshgrid gaussian_filter RandomState arange reshape rand shape meshgrid gaussian_filter RandomState arange reshape rand copy meshgrid range gaussian_filter RandomState arange reshape rand copy meshgrid range gaussian_filter zeros shape concatenate distance_transform_edt astype ndim float32 atleast_1d generate_binary_structure bool 
compute_surface_distance_per_label percentile mean append range ones_like zeros_like where array range ones zeros range randint ones zeros range randint range copy clim axis close colorbar add_1_pixel_each_class imshow savefig figure subplot close colorbar imshow add_1_pixel_each_class savefig figure range subplot arange zeros_like clim close copy colorbar imshow title savefig figure rot90 range len subplot arange clim close copy colorbar imshow title savefig figure range len close savefig figure plot sorted glob len copyfile range makedirs sorted glob len copyfile range makedirs str sorted glob print call range len get_image_and_label_paths load_nii range minimum min maximum where max rescale normalise_image crop_or_pad_volume_to_size_along_z str sorted list crop_or_pad_slice_to_size count_slices center_image_and_label squeeze append range glob close copy get_image_and_label_paths load_nii swapaxes info float File group_segmentation_classes create_dataset _release_tmp_memory _write_range_to_hdf5 len asarray info clear collect crop_or_pad_volume_to_size_along_z sorted center_image_and_label glob copy get_image_and_label_paths load_nii group_segmentation_classes swapaxes normalise_image join prepare_data makefolder info argmax make_onehot_ array as_list slice subtract append range len as_list subtract pad mod max_pooling2d conv2d conv2d batch_normalization activation batch_normalization conv2d_transpose activation resize_bilinear batch_normalization conv3d activation max_pooling3d conv3d_transpose conv3d reduce_mean softmax_cross_entropy_with_logits_v2 expand_dims reduce_sum copy

|

# domain_generalization_image_segmentation Code for the paper "Test-time adaptable neural networks for robust medical image segmentation": https://arxiv.org/abs/2004.04668 The method consists of three steps: 1. Train a segmentation network on the source domain: train_i2l_mapper.py 2. Train a denoising autoencoder on the source domain labels: train_l2l_mapper.py 3. Adapt the normalization module of the segmentation network for each test image: update_i2i_mapper.py

| 3,105 |
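Step 3 above (adapting only the normalization module for each test image) can be illustrated with a deliberately tiny toy. It makes several assumptions, and none of the names come from the repository:

- a 1-D four-pixel "image";
- an affine normalizer with parameters `a` and `b`;
- a fixed sigmoid threshold standing in for the trained segmentation network;
- hard thresholding in place of the trained denoising autoencoder;
- a squared-error loss in place of the paper's loss.

The toy shows the mechanism only: the segmenter stays frozen, and the normalizer is updated so that the prediction agrees with its "denoised" version.

```python
import math

SHARPNESS = 10.0  # steepness of the frozen toy segmenter (assumption)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def segment(z):
    """Frozen toy 'segmentation network': foreground probability per pixel."""
    return [sigmoid(SHARPNESS * (v - 0.5)) for v in z]


def denoise(p):
    """Stand-in for the label denoiser: snap each probability to a 0/1 label."""
    return [1.0 if v >= 0.5 else 0.0 for v in p]


def normalize(x, a, b):
    """Toy normalization module: a per-image affine intensity map."""
    return [a * v + b for v in x]


def adaptation_loss(x, a, b):
    p = segment(normalize(x, a, b))
    t = denoise(p)
    return sum((pi - ti) ** 2 for pi, ti in zip(p, t))


def adapt_normalizer(x, a=1.0, b=0.0, lr=0.01, steps=1000):
    """Gradient descent on (a, b) only; segmenter and denoiser stay fixed."""
    for _ in range(steps):
        p = segment(normalize(x, a, b))
        t = denoise(p)  # treated as a constant target (no gradient through it)
        grad_a = grad_b = 0.0
        for v, pi, ti in zip(x, p, t):
            # Chain rule for (p - t)^2 with p = sigmoid(SHARPNESS * (z - 0.5)), z = a*v + b
            dloss_dz = 2.0 * (pi - ti) * SHARPNESS * pi * (1.0 - pi)
            grad_a += dloss_dz * v
            grad_b += dloss_dz
        a -= lr * grad_a
        b -= lr * grad_b
    return a, b


if __name__ == "__main__":
    # Low-contrast "test-domain" image: intensities squeezed around 0.5.
    image = [0.3, 0.4, 0.6, 0.7]
    a, b = adapt_normalizer(image)
    print("loss before:", adaptation_loss(image, 1.0, 0.0))
    print("loss after: ", adaptation_loss(image, a, b))
```

Keeping the segmenter frozen and adapting only the small normalizer is the design choice the three-step recipe emphasizes. The toy reflects that by updating just two parameters per image.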

neeyoo/Neuralnetwork_project_art_transfer

|

['style transfer']

|

['A Neural Algorithm of Artistic Style']

|

nst_utils.py generate_noise_image reshape_and_normalize_image save_image CONFIG load_vgg_model reshape _conv2d_relu Variable zeros _avgpool loadmat astype reshape MEANS shape MEANS imwrite astype

|

# Neural Network Project: Art Style Transfer This project implements Neural Style Transfer. Using a neural network, the algorithm extracts the style of an art masterpiece, for example "The Starry Night" by Vincent van Gogh:  and then merges that style with your own photo. Here is the original photo:  And here is the photo after art style transfer:

| 3,106 |
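The README describes extracting the style of one image and merging it into another. In "A Neural Algorithm of Artistic Style", style is represented by Gram matrices of CNN feature maps. Each layer contributes E_l = Σ_ij (G_ij - A_ij)^2 / (4 N_l^2 M_l^2), where N_l is the number of filters and M_l is the number of spatial positions. The plain-Python sketch below assumes the feature maps have already been extracted (e.g. from VGG) and flattened to channels × positions. It is not code from nst_utils.py.

```python
from typing import List

Features = List[List[float]]  # features[c][k]: channel c at spatial position k


def gram_matrix(features: Features) -> List[List[float]]:
    """G[i][j] = sum_k F[i][k] * F[j][k]: correlations between channels."""
    return [[sum(fi * fj for fi, fj in zip(row_i, row_j)) for row_j in features]
            for row_i in features]


def layer_style_cost(style: Features, generated: Features) -> float:
    """E_l = sum_ij (G_ij - A_ij)^2 / (4 * N_l^2 * M_l^2)."""
    n_l = len(style)        # number of channels (filters)
    m_l = len(style[0])     # number of spatial positions (height * width)
    g_style = gram_matrix(style)
    g_gen = gram_matrix(generated)
    squared = sum((g_style[i][j] - g_gen[i][j]) ** 2
                  for i in range(n_l) for j in range(n_l))
    return squared / (4.0 * n_l ** 2 * m_l ** 2)


if __name__ == "__main__":
    print(gram_matrix([[1.0, 2.0], [3.0, 4.0]]))  # [[5.0, 11.0], [11.0, 25.0]]
```

Because the Gram matrix sums over spatial positions, it discards where features occur and keeps only which features co-occur. This is why it captures texture-like style rather than image layout.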

nefujiangping/EncAttAgg

|

['relation extraction']

|

['Improving Document-level Relation Extraction via Contextualizing Mention Representations and Weighting Mention Pairs']

|

models/model_utils.py misc/constant.py models/modules.py models/lock_dropout.py data/gen_data.py misc/param.py models/embedding.py misc/util.py models/models.py misc/metrics.py data_loader/data_loader.py main.py trainer/training.py models/long_seq.py data_loader/data_utils.py set_wandb main init collate_fn_bert_enc to_tensor BertEncDataSet get_marker_to_token REDataset IntegrationAttender MutualAttender PoolingStyle compute_f1 __get__dev_true diff_dist_performance compute_ign_f1 __get__test_true num2_p_r_f p_r_f coref_vs_non_coref_performance Accuracy __p_r_f__ Config boolean_string DocumentEncoder LockedDropout process_long_input AttenderAggregator BiLSTMEncoder Embedding MentionExtractor RelationExtraction get_device_of masked_index_fill weighted_sum max_value_of_dtype flatten_and_batch_shift_indices tiny_value_of_dtype get_range_vector ConfigurationError min_value_of_dtype batched_index_select info_value_of_dtype masked_softmax TwoLayerLinear MutualAttendEncoderLayer _get_activation_fn MultiLayerMMA _get_clones EncoderLayer EAATrainer Trainer load FullLoader init open Config param_file EAATrainer evaluate logging train add_argument test print_params ArgumentParser parse_args RelationExtraction save max open list ones convert_tokens_to_ids add append range get dump set build_inputs_with_special_tokens zip tokenize enumerate load int join print min zeros len pad stack to_tensor append max load list format dict open keys len auto auto auto auto split enumerate set load set open enumerate split load dump list len add set num2_p_r_f open enumerate __get__dev_true __get__test_true items list isinstance add set num2_p_r_f append sum range enumerate items list isinstance add set num2_p_r_f append sum range enumerate asarray append float argmax enumerate asarray append float argmax enumerate lower model size tolist extend stack pad unsqueeze zip append to cat enumerate list insert size expand unsqueeze expand_as dim range dtype tiny_value_of_dtype min_value_of_dtype 
unsqueeze masked_fill softmax sum get_device_of view size get_range_vector unsqueeze range len list view size flatten_and_batch_shift_indices index_select view reshape size flatten_and_batch_shift_indices unsqueeze scatter bool is_floating_point

|

EncAttAgg --------- # ====== 2020.12.30 Update: the code has been restructured into the [main](https://github.com/nefujiangping/EncAttAgg/tree/main) branch; the master branch is deprecated. ====== This is the source code for the ICKG 2020 paper "[Improving Document-level Relation Extraction via Contextualizing Mention Representations and Weighting Mention Pairs](https://conferences.computer.org/ickg/pdfs/ICKG2020-66r9RP2mQIZywMjHhQVtDI/815600a305/815600a305.pdf)" We propose an effective **Enc**oder-**Att**ender-**Agg**regator (EncAttAgg) model for document-level RE. This model introduces two attenders to tackle two problems: 1) We introduce a mutual attender layer to efficiently obtain the entity-pair-specific mention representations. 2) We introduce an integration attender to weight the mention pairs of a target entity pair.  ## Requirements + python 3.7.4 + scikit-learn

| 3,107 |
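The README only names the integration attender that "weights mention pairs of a target entity pair". As a hedged illustration of that general idea, the sketch below shows generic attention-weighted pooling over mention-pair vectors. It assumes a learned query vector and dot-product scores. This is not the paper's exact formulation, and the function names are hypothetical.

```python
import math
from typing import List, Tuple


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax (subtract the max before exponentiating)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def aggregate_mention_pairs(pair_reprs: List[List[float]],
                            query: List[float]) -> Tuple[List[float], List[float]]:
    """Weight each mention-pair vector by softmax(query . pair) and sum them."""
    scores = [sum(q * x for q, x in zip(query, pair)) for pair in pair_reprs]
    weights = softmax(scores)
    dim = len(pair_reprs[0])
    pooled = [sum(w * pair[d] for w, pair in zip(weights, pair_reprs))
              for d in range(dim)]
    return pooled, weights


if __name__ == "__main__":
    pairs = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]  # toy mention-pair representations
    pooled, weights = aggregate_mention_pairs(pairs, query=[2.0, 0.0])
    print("weights:", weights)
    print("entity-pair representation:", pooled)
```

Compared with mean or max pooling, learned weights let informative mention pairs dominate the entity-pair representation while uninformative ones are down-weighted.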

nehap25/rlwithgp

|

['gaussian processes']

|

['Manifold Gaussian Processes for Regression']

|

syntheticChrissAlmgren.py robotics_fancy_kernel_experiment.py experiment.py ddpg_agent.py fancy_kernel.py robotics_exp.py gen_data.py fancy_kernel_experiment.py simple_world/primitives.py simple_world/constants.py simple_world/run.py torch_rbf.py main.py untitled.py simple_world/utils.py OUNoise Agent GP d Q get_reward_v2 covering_number argmax_action GP get_reward_v1 FancyKernel train d Q get_reward_v2 covering_number argmax_action GP get_reward_v1 calc_action_list conf_json get_conf gen_objects sample_attributes empty_steps setup data_to_json calc_action_list_v2 gen_stack init_world json_to_data region_json pose_json calc_reward simulate_push data_json robot_json object_json step covering_number d Q get_reward_v2 covering_number argmax_action GP get_reward_v1 d Q get_reward_v2 covering_number argmax_action GP get_reward_v1 MarketEnvironment RBF matern32 multiquadric linear inverse_quadratic gaussian poisson_two matern52 quadratic basis_func_dict spline poisson_one inverse_multiquadric test_cfree_push pose_gen move get_push_conf_fn test_feasible get_push_from_conf get_pose_kin test_touches new_move get_push_to_conf push get_push_conf execute_plan load_world get_problem main solve_problem Pose u_vec close_gripper Conf get_full_aabb get_conf sample_aabb close_enough Region create_object change_constraint collision get_turn_traj create_stack rrt create_constraint touches set_pose assert_poses_close rejection_sample_region get_obstacles get_yaw Robot eul_to_quat set_conf dense_path set_friction step_simulation contains get_straight_traj rejection_sample_aabb Obj sample_region get_dims read_file rrt_to_traj get_pose quat_to_eul pi range len float d min tuple float tolist Q backward step float forward range tuple pid Conf getBasePositionAndOrientation data_json Pose setGravity gen_objects createMultiBody conf createVisualShape connect init_world set_pose gen_stack getDataPath rejection_sample_region get_yaw pos setAdditionalSearchPath eul_to_quat subtract 
step_simulation getAABB divide extend array GEOM_BOX pos int Conf cos pi sin append range append Conf range step_simulation time simulate_push calc_reward get_conf calc_action_list_v2 step_simulation empty_steps list pid getAABB append range sample_attributes create_object list create_stack append range array sample_attributes Conf getAABB Robot loadURDF uniform rejection_sample_aabb new_move pow exp pow pow ones_like pow pow ones_like pow ones_like log ones_like exp ones_like exp ones_like exp pow ones_like exp pos eul_to_quat u_vec subtract get_yaw pos eul_to_quat u_vec subtract get_yaw T set_conf constrain close_gripper rrt get_conf step_simulation assert_poses_close rrt_to_traj range len time constrain step_simulation close_gripper move sample_aabb array aabb get_pose set_pose get_straight_traj set_conf collision set_pose get_full_aabb solve_problem input eval step_simulation print tuple extend dict load_world read_file append range len Pose setGravity Conf get_conf createMultiBody createVisualShape Region create_object create_stack set_pose loadURDF getDataPath append Robot setAdditionalSearchPath step_simulation getAABB extend get_pose array GEOM_BOX seed get_state DIRECT execute_plan enable disconnect GUI solve_incremental connect step_simulation load_world get_problem set_state disable print_stats print_solution Profile subtract abs norm tuple subtract add append max range len append list getAABB pid pos ori get_turn_traj u_vec subtract Conf extend append list u_vec subtract Conf append dot cross u_vec realpath join dirname sample_aabb any sample_region any array SearchSpace RRTStarBidirectional rrt_star_bidirectional pop ori pos get_turn_traj extend sample_aabb divide changeConstraint pid resetJointState Obj divide set_friction createMultiBody createCollisionShape createVisualShape GEOM_BOX list Pose pose set_pose append range create_object get_full_aabb getNumJoints pid getAABB vstack append amin array range amax tuple pid Pose 
getBasePositionAndOrientation resetBasePositionAndOrientation pid ori pos changeDynamics pid resetBasePositionAndOrientation pid ori pos stepSimulation range sleep

|

# rlwithgp This is our repository for implementing Algorithm 1 from http://proceedings.mlr.press/v32/grande14.pdf for Efficient Reinforcement Learning with Gaussian Processes, along with all of our other experiments. Our paper can be found at https://github.com/nehap25/rlwithgp/blob/master/6_435_Final_Project.pdf. **experiment.py** --> Implements Algorithm 1 and tests it on Experiment 1 from the paper; also contains our Gaussian Process class. **fancy_kernel.py** --> Implements the kernel from https://arxiv.org/abs/1402.5876 **fancy_kernel_experiment.py** --> Runs Experiment 1 from the paper with fancy_kernel.py **torch_rbf.py** --> Implements a radial basis kernel in PyTorch for fancy_kernel.py **main.py** --> Runs Algorithm 1 for modeling optimal execution of Portfolio Transactions using the Chriss/Almgren model from https://www.math.nyu.edu/faculty/chriss/optliq_f.pdf **ddpg_agent.py** --> Implements the code for the agent's Q-function, the Gaussian Process, and noise sampling using an Ornstein-Uhlenbeck process **syntheticChrissAlmgren.py** --> Creates a simple simulated trading environment, obtained from https://github.com/udacity/deep-reinforcement-learning/tree/master/finance **robotics_exp.py** --> Implements the robotics experiment described in the paper
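`ddpg_agent.py` is described as sampling exploration noise from an Ornstein-Uhlenbeck process. The sketch below shows a minimal discretized OU process with a unit time step and Gaussian increments. The class layout and the defaults `theta=0.15` and `sigma=0.2` are assumptions for illustration and are not taken from this repository.

```python
import random
from typing import List, Optional


class OUNoise:
    """Discretized Ornstein-Uhlenbeck process (dt = 1), applied per dimension:
    x <- x + theta * (mu - x) + sigma * N(0, 1)."""

    def __init__(self, size: int, mu: float = 0.0, theta: float = 0.15,
                 sigma: float = 0.2, seed: Optional[int] = None) -> None:
        self.size = size
        self.mu = mu
        self.theta = theta    # strength of the pull back toward mu (assumed default)
        self.sigma = sigma    # scale of the Gaussian increments (assumed default)
        self.rng = random.Random(seed)
        self.reset()

    def reset(self) -> None:
        # Restart at the long-run mean, e.g. at the beginning of each episode.
        self.state: List[float] = [self.mu] * self.size

    def sample(self) -> List[float]:
        # Mean-reverting drift plus Gaussian noise, so consecutive samples are correlated.
        self.state = [
            x + self.theta * (self.mu - x) + self.sigma * self.rng.gauss(0.0, 1.0)
            for x in self.state
        ]
        return list(self.state)
```

DDPG-style agents typically add such noise to the deterministic action, for example `action = [a + n for a, n in zip(action, noise.sample())]`, and call `reset()` at the start of each episode. Unlike independent white noise, the mean reversion yields temporally correlated exploration.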

| 3,108 |

neheller/labels18

|

['liver segmentation', 'semantic segmentation']

|

['Imperfect Segmentation Labels: How Much Do They Matter?']

|

initializers/convtransposeinterp.py roc_curve.py figures.py Models/Mylayers.py Models/UNet.py Models/SegNet.py experiment_scripts/generate_experiment_scripts.py preprocessing/tools.py callbacks/topnsaver.py viz/create_viz_set.py AUC.py Models/FCN32.py Models/metrics.py model_runner.py callbacks/vizpreds.py losses/sampledbce.py test_perturb.py data_analysis/fancy_scatter.py DataGenerator.py data_analysis/get_performance.py DataGenerator perturb_row random_perturb smooth_function Vector2 choppy_perturb perturb_nat get_segnet get_unet get_callbacks get_run_dir get_time get_fcn32 get_performance dice load_bundles TopNSaver VizPreds get_marker_and_color get_performance get_runs_by_hand Experiment get_assets get_runs LoadBalancer get_access_info ConvTransposeInterp sampled_bce create_fcn32 recall precision dice_sorensen MaxPoolingWithArgmax2D MaxUnpooling2D create_segnet create_unet perturb_row random_perturb perturb smooth_function Vector2 choppy_perturb preprocess natural_perturb perturb_nat load_bundles append normal linspace pi smooth_function fillPoly findContours Vector2 f pi RETR_LIST shape boundingRect unitV zeros CHAIN_APPROX_NONE enumerate len arange normal perturb_row reshape sum range shape logical_and less random join parent existing print new exit map Path mkdir str TopNSaver print TensorBoard VizPreds lower run_dir CSVLogger visualize sum logical_and greater logical_not load int str glob zeros sum logical_and name glob greater cast float32 astype Input range logical_and round cast sum equal logical_and round cast sum equal logical_and round cast sum equal print Input int concatenate append Input range perturb_nat range uint8 random_perturb astype choppy_perturb shape natural_perturb zeros

|

# LABELS 2018 Code A study of how erroneous training data affects the performance of deep learning systems for semantic segmentation ## Usage ``` usage: model_runner.py [-h] [-n NEW] [-e EXISTING] [-b BATCH_SIZE] [-d DATASET] [-p PERTURBATION] [-g GPU_INDEX] [-m MODEL] [-t [TESTING]] [-v [VISUALIZE]] Run a training or testing round for our Labels 2018 Submission optional arguments:

| 3,109 |

neka-nat/gazebo_domain_randomization

|

['object localization']

|

['Domain Randomization for Transferring Deep Neural Networks from Simulation to the Real World']

|

gazebo_domain_randomizer/src/gazebo_domain_randomizer/utils.py gazebo_domain_randomizer/setup.py get_model_properties _get_model_properties_once status_message wait_for_service ServiceProxy logwarn get_model_prop GetModelPropertiesRequest success range _get_model_properties_once sleep

|

# Gazebo Domain Randomization https://arxiv.org/abs/1703.06907 **Double pendulum demo** **Shadow hand demo** ## Run ``` roslaunch gazebo_domain_randomizer demo.launch

| 3,110 |

nekitmm/FunnelAct_Pytorch

|

['scene generation', 'semantic segmentation']

|

['Funnel Activation for Visual Recognition']

|

main.py frelu.py resnet_frelu.py FReLU validate AverageMeter accuracy save_checkpoint ProgressMeter train conv1x1 resnext50_32x4d wide_resnet50_2 ResNet resnet50 resnext101_32x8d Bottleneck resnet152 wide_resnet101_2 conv3x3 _resnet resnet34 resnet18 BasicBlock Bottleneck_FReLU resnet101 len eval AverageMeter ProgressMeter model zero_grad cuda display update param_groups size item is_available enumerate time criterion backward print AverageMeter accuracy ProgressMeter step len copyfile save ResNet load_state_dict load_state_dict_from_url

|

# FunnelAct Pytorch Pytorch implementation of Funnel Activation (FReLU): https://arxiv.org/pdf/2007.11824.pdf Validation results are listed below: | Model | Activation | Err@1 | Err@5 | | :---------------------- | :--------: | :------: | :------: | | ResNet50 | FReLU | **22.40** | **6.164** | Note that from the file resnet_frelu.py you can call ResNet18, ResNet34, ResNet50, ResNet101 and ResNet152, but the weights in this repo are only available for ResNet50, and I never tried to train the other models, so no guarantees there! The code in this repo is based on pytorch imagenet example:
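The following is for illustration only and is not code from this repository or from the PyTorch ImageNet example. FReLU replaces ReLU's fixed zero threshold with a spatial condition, FReLU(x) = max(x, T(x)). In the paper, T is a 3x3 depthwise convolution followed by batch normalization. This pure-Python sketch makes three simplifications:

- it handles a single channel,
- it omits batch normalization,
- it takes a caller-supplied 3x3 kernel.

The function names are hypothetical.

```python
from typing import List

Grid = List[List[float]]


def spatial_condition(x: Grid, kernel: Grid) -> Grid:
    """Funnel condition T(x): 3x3 cross-correlation, zero padding, stride 1 (BN omitted)."""
    h, w = len(x), len(x[0])
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for di in range(-1, 2):
                for dj in range(-1, 2):
                    ii, jj = i + di, j + dj
                    if 0 <= ii < h and 0 <= jj < w:  # pixels outside the image count as zero
                        acc += kernel[di + 1][dj + 1] * x[ii][jj]
            out[i][j] = acc
    return out


def frelu(x: Grid, kernel: Grid) -> Grid:
    """FReLU(x) = max(x, T(x)), taken elementwise."""
    t = spatial_condition(x, kernel)
    return [[max(a, b) for a, b in zip(row_x, row_t)] for row_x, row_t in zip(x, t)]
```

An all-zero kernel gives T(x) = 0, so the function reduces to ReLU. A learned kernel lets a negative pixel be lifted by its neighborhood, which is the spatial context the funnel condition is meant to add.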

| 3,111 |

nemanja-rakicevic/informed_search

|

['active learning']

|

['Active learning via informed search in movement parameter space for efficient robot task learning and transfer']

|

informed_search/analysis/evaluate_test_target.py informed_search/analysis/plot_evaluations.py informed_search/analysis/evaluate_test_full.py informed_search/tasks/experiment_manage.py informed_search/models/kernels.py informed_search/tasks/environments.py informed_search/utils/plotting.py informed_search/analysis/transfer_test.py informed_search/main_training.py setup.py informed_search/envs/__init__.py informed_search/models/modelling.py informed_search/envs/mujoco/striker_oneshot.py informed_search/utils/misc.py CleanCommand main_run _load_args _start_logging load_metadata main_test load_metadata main_test plot_performance load_metadata _start_logging main_test load_trial_data Striker2LinkEnv Striker5LinkNLEnv Striker5LinkEnv Striker2LinkNLEnv BaseStriker se_kernel mat_kernel rq_kernel UIDFSearch InformedSearch EntropySearch BOSearch BaseModel RandomSearch RobotExperiment SimulationExperiment ExperimentManager scaled_sqdist elementwise_sqdist plot_model_separate plot_model plot_evals MidpointNormalize config_file add_argument ArgumentParser vars parse_args join basicConfig format getcwd strftime upper info makedirs n_success format ExperimentManager n_fail evaluate_test_cases info range _load_args _start_logging update format ExperimentManager std load_metadata print dict mean evaluate_test_cases sum array len evaluate_single_test split subplots grid MultipleLocator set_major_formatter max clip show list set_major_locator set_xlabel FormatStrFormatter savefig legend append range format setdiff1d MaxNLocator plot glob set_xlim cla zip keys enumerate join set_size_inches min set_ylabel split fill_between get_legend_handles_labels array set_ylim len n_success execute_trial zip load_trial_data n_fail uncertainty info append enumerate update_model _start_logging cdist cdist cdist min max arange set_clim set_yticklabels round max MidpointNormalize show subplot set_title set_xlabel colorbar imshow savefig format set_xticklabels cla info join suptitle set_yticks makedirs 
set_ylabel set_xticks figure len set_clim set_yticklabels add_subplot mu_alpha set_visible uncertainty linspace tick_params round values coord_failed show list set_title tick_right uidf set_xlabel colorbar imshow scatter savefig ticklabel_format meshgrid format sidf set_xticklabels plot set_zticks set_xlim cla set_label_position set_zlim set_zlabel info join set_size_inches suptitle reshape set_yticks makedirs coord_explored set_ylabel set_xticks figure pidf mu_L plot_surface set_ylim len set_clim set_yticklabels add_subplot mu_alpha set_visible linspace tick_params round values list tick_right uidf set_xlabel len colorbar imshow scatter savefig ticklabel_format meshgrid format sidf set_xticklabels plot set_zticks set_xlim set_label_position set_zlim set_zlabel join set_size_inches reshape set_yticks coord_explored set_ylabel set_xticks figure pidf mu_L plot_surface set_ylim makedirs

|

# Informed Search Informed Search of the movement parameter space, which gathers the most informative samples for fitting the forward model. <p align="center"> <a href="https://link.springer.com/article/10.1007%2Fs10514-019-09842-7">Full paper</a> | <a href="https://sites.google.com/view/informedsearch">Project website</a> </p> ## Motivation and Method Description The main goal is to build an invertible forward model (which maps movement parameters to trial outputs) by fitting a Gaussian Process Regression model

| 3,112 |

neo85824/epsnet

|

['scene parsing', 'panoptic segmentation', 'instance segmentation', 'semantic segmentation']

|

['EPSNet: Efficient Panoptic Segmentation Network with Cross-layer Attention Fusion']

|

scripts/cluster_bbox_sizes.py layers/box_utils.py layers/__init__.py scripts/plot_loss_pan.py utils/timer.py train.py data/__init__.py layers/interpolate.py layers/output_utils.py scripts/make_grid.py layers/functions/detection.py scripts/parse_eval.py panoptic_eval.py data/config.py utils/__init__.py layers/functions/__init__.py utils/augmentations.py scripts/bbox_recall.py epsnet.py scripts/save_bboxes.py layers/modules/multibox_loss.py data/coco.py web/server.py scripts/unpack_statedict.py utils/functions.py scripts/optimize_bboxes.py scripts/convert_darknet.py scripts/compute_masks.py layers/modules/__init__.py scripts/augment_bbox.py scripts/plot_loss.py backbone.py DarkNetBlock DarkNetBackbone darknetconvlayer ResNetBackboneGN VGGBackbone Bottleneck ResNetBackbone construct_backbone make_net FusionModule FPN EPSNet AddCoords Concat CAModule PredictionModule get_transformed_cat calc_map postprocess_ins_sem merge_segmentation evalvideo postprocess_stuff_lincomb CustomDataParallel print_maps prep_unified_display evalimages prep_panoptic_display parse_args savevideo prep_coco_cats postprocess_stuff get_coco_cat evalimage prep_unified_result str2bool evaluate prep_panoptic_result badhash set_lr replace compute_validation_loss ScatterWrapper prepare_data setup_eval compute_validation_map train str2bool COCOPanoptic_inst_sem get_label_map COCOPanoptic COCOAnnotationTransform COCODetection Config set_cfg set_dataset detection_collate index2d decode intersect atss_match log_sum_exp jaccard center_size match sanitize_coordinates is_in_box point_form encode center_distance crop change InterpolateModule postprocess undo_image_transformation display_lincomb instance_logit panoptic_logit semantic_logit Detect CrossEntropyLoss2d MultiBoxLoss FocalLoss2d random_sample_crop intersect prep_box augment_boxes jaccard_numpy make_priors jaccard to_relative intersect process to_relative sigmoid mask_iou logit paint_mask add_randomize test_uniqueness randomize update_centery 
update_scale update_centerx add render export update_angle update_spacing optimize intersect compute_recall pretty_str jaccard compute_hits print_out to_relative make_priors step grabMAP plot_train smoother plot_val plot_train smoother plot_val SwapChannels ToTensor RandomCrop ToAbsoluteCoords RandomBrightness PhotometricDistort enable_if RandomSaturation Resize BaseTransform RandomSampleCrop ToPercentCoords RandomFlip Pad intersect SSDAugmentation Lambda Compose FastBaseTransform ConvertColor BackboneTransform Expand jaccard_numpy RandomHue ConvertFromInts RandomMirror RandomContrast do_nothing PrepareMasks ToCV2Image RandomLightingNoise RandomScale init_console ProgressBar SavePath MovingAverage enable total_time enable_all start reset disable disable_all stop print_stats env Handler selected_layers type max add_layer sum seed output_web_json add_argument ArgumentParser set_defaults size semantic_logit items addWeighted uint8 IdGenerator undo_image_transformation astype copy shape zeros cuda shape cuda undo_image_transformation imwrite prep_unified_display unsqueeze reset prep_panoptic_display float net use_panoptic_head str join basename print glob evalimage mkdir VideoCapture eval_network transform_frame MovingAverage ThreadPool put cleanup_and_exit CAP_PROP_FPS get_next_frame video_multiframe cuda get_avg list apply_async exit add append get isdigit reversed Queue int time print extract_frame VideoCapture CAP_PROP_FRAME_HEIGHT MovingAverage VideoWriter CAP_PROP_FPS CAP_PROP_FRAME_COUNT get_avg round VideoWriter_fourcc release add set_val range get FastBaseTransform total_time ProgressBar CAP_PROP_FRAME_WIDTH print reset MovingAverage flip_test image unsqueeze save postprocess_ins_sem stuff_num_classes evalvideo cuda pull_image_name video merge_segmentation get_avg list str values display transpose len exit evalimages add from_numpy set_val prep_panoptic_display append multiscale_test savevideo range cat imsave pull_item format total_time ProgressBar shuffle 
evalimage prep_unified_result flip net use_panoptic_head enumerate fast_nms print sort mask_proto_debug Variable prep_panoptic_result clone reset disable split zeros makedirs class_names values print print_maps get_ap append sum range enumerate len print make_sep make_row len setattr getattr batch_size save_folder MovingAverage lr_warmup_until ScatterWrapper zero_grad make_mask SGD tuple DataLoader save_weights compute_validation_map MultiBoxLoss cuda max max_iter set_lr lr_warmup_init name COCOPanoptic get_interrupt add delayed_settings ceil sum range format replace save_path init_weights load_weights resume mkdir lr item start_iter gamma net time get_latest remove criterion backward print keep_latest EPSNet prepare_data setup_eval parameters reset iteration disable_all step len param_groups Variable cuda parse_args eval replace eval append LongTensor FloatTensor clamp size min expand max intersect expand_as size expand transpose mm view topk encode std use_yolo_regressors zeros_like reshape size jaccard mean long is_in_box append center_distance max range cat enumerate use_yolo_regressors fill_ size jaccard index_fill_ encode max range enumerate center_size log cat cat point_form max max min long clamp sanitize_coordinates size expand size expand_as faster_rcnn_scale sanitize_coordinates interpolate save min_size view mask_proto_mask_activation squeeze matmul range size gt_ max_size float long crop mask_size display_lincomb contiguous mask_proto_debug t center_size preserve_aspect_ratio zeros numpy zeros astype cuda instance_logit num_classes semantic_logit size cuda permute things_to_stuff_map min_size faster_rcnn_scale subtract_means astype float32 preserve_aspect_ratio resize max_size normalize numpy array clip show exp size astype matmul t argsort imshow zeros float numpy range append prep_box concatenate random_sample_crop print uniform randint array minimum clip maximum intersect minimum maximum copy choice uniform float array range jaccard_numpy list range 
product zip t view matmul shape tile reshape copy exp reshape draw_idle cos square pi imshow set_data tile sin clip render render render render render exp pi set_val uniform render test_uniqueness str set_text draw_idle stack append len randomize add clear str set_text print draw_idle stack save len sum print reshape astype int32 abs range max jaccard float make_priors x_func minimize size min compute_hits cat ndarray isinstance print pretty_str MovingAverage append get_avg range len show basename smoother plot xlabel ylabel title legend append show basename plot xlabel ylabel title legend basesname init add remove clear append perf_counter stop print start pop format print total_time find max len

|

# EPSNet: Efficient Panoptic Segmentation Network with Cross-layer Attention Fusion This project hosts the code for implementing the EPSNet for panoptic segmentation. - [EPSNet: Efficient Panoptic Segmentation Network with Cross-layer Attention Fusion](https://arxiv.org/abs/2003.10142) Some examples from our EPSNet model (19 fps on a 2080Ti and 38.9 PQ on COCO Panoptic test-dev):    ## Models Here are our EPSNet models trained on COCO Panoptic dataset along with their FPS on a 2080Ti and PQ on `val`: | Image Size | Backbone | FPS | PQ | Weights |

| 3,113 |

networkinference/ESL

|

['time series']

|

['Inferring network connectivity from event timing patterns']

|

simulation.py reconstruction.py reconstruction subplots euclidean_distances kurtosis vstack max skew use set_title ones set_xlabel tolist argmin legend append range asarray fabs plot close tight_layout copy mean pinv auc time T norm print loadtxt reshape roc_curve argsort dot set_ylabel zeros std len

|

# ESL -- Event Space Linearization The Event Space Linearization (ESL) is the framework proposed in the article [Inferring network connectivity from event timing patterns, PRL](https://journals.aps.org/prl/abstract/10.1103/PhysRevLett.121.054101). Relying only on spike timing data, ESL reveals the interaction topology of networks of spiking neurons without assuming specific neuron models to be known in advance. In this repository, you will find simple example codes for simulating and reconstructing networks of spiking neurons in Python. [Nest Simulator](http://www.nest-simulator.org/) is required for simulations. Optimized codes for reconstruction may also be found in [Connectivity_from_event_timing_patterns](https://gitlab.com/di.ma/Connectivity_from_event_timing_patterns/) ## Citation ``` @article{PhysRevLett.121.054101, title = {Inferring Network Connectivity from Event Timing Patterns}, author = {Casadiego, Jose and Maoutsa, Dimitra and Timme, Marc},

| 3,114 |

neu-spiral/AcceleratedExperimentalDesign

|

['experimental design']

|

['Accelerated Experimental Design for Pairwise Comparisons']

|

PlotCode/Test_Plot.py Code/Lazypack.py Code/AUCTool.py Code/FourSubmodular.py PlotCode/Time_All.py Code/mathpackage.py Code/Greedypack.py Code/Main.py Code/TimeTool.py AUCReturn AppendY EntGreedy Random MutGreedy Greedy CovGreedy FishGreedy FactorizationGreedy NaiveGreedy Greedy ScalarGreedy ScalarLazyMemo LazyGreedy DeMem FactorizationLazyMemo FactorizationLazy NaiveLazy ScalarLazy Hp shermanMorissonUpdate lamfunction EMupdateVariational logis TimeTransfer SizePlot AllData_Bar shape concatenate concatenate copy LogisticRegression dot append array AppendY roc_auc_score fit sample dot exp dot entropy exp ones inv copy outer dot sqrt range len reverse append array range len str subplots arange plot tick_right suptitle set_yscale set_xlabel set_ylabel savefig legend fill_between set_tick_params array set_ylim len subplots arange tick_right set_xticklabels set_xlim bar set_ylabel set_xticks savefig legend append set_tick_params range set_ylim

|

Experimental Design Acceleration ============================== This code improves the running time of the greedy algorithm for maximizing the D-optimal design objective for comparisons. We also provide three algorithms: Mutual Information (Mut), Fisher Information (Fisher), and Entropy (Ent). When using this code, cite the paper >["Accelerated Experimental Design for Pairwise Comparisons"](https://arxiv.org/abs/1901.06080). >Yuan Guo, Jennifer Dy, Deniz Erdogmus, Jayashree Kalpathy-Cramer, Susan Ostmo, J. Peter Campbell, Michael F. Chiang, Stratis Ioannidis. >SIAM International Conference on Data Mining (SDM), Calgary, Alberta, 2019. Usage ====================== An example execution is as follows:
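The block below is not the repository's code or its usage example. It is a minimal sketch of plain greedy D-optimal selection of comparisons, built on three assumptions:

- **Objective:** the standard D-optimal form log det(lam*I + sum of x x^T).
- **Comparison vectors:** each comparison (i, j) contributes the feature difference x = f_i - f_j.
- **Features:** the feature vectors are hypothetical.

Two standard identities make each step cheap:

- **Marginal gain:** by the matrix determinant lemma, a candidate's gain is log(1 + x^T A^{-1} x).
- **Inverse update:** A^{-1} is updated with the Sherman-Morrison formula after each pick.

Names in the dependency list such as `LazyGreedy` and `FactorizationLazy` suggest lazy and factorization-based accelerations; those are not shown. All function names here are illustrative.

```python
import math
from itertools import combinations
from typing import Dict, List, Sequence, Tuple

Matrix = List[List[float]]
Pair = Tuple[int, int]


def mat_vec(m: Matrix, v: Sequence[float]) -> List[float]:
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def dot(u: Sequence[float], v: Sequence[float]) -> float:
    return sum(a * b for a, b in zip(u, v))


def greedy_d_optimal(features: List[List[float]], k: int,
                     lam: float = 1.0) -> Tuple[List[Pair], float]:
    """Greedily pick up to k comparisons maximizing log det(lam*I + sum x x^T)."""
    d = len(features[0])
    # Start from A = lam * I, so A^{-1} = I / lam.
    a_inv: Matrix = [[1.0 / lam if r == c else 0.0 for c in range(d)] for r in range(d)]
    # Candidate comparison vectors x_ij = f_i - f_j for every unordered pair (i, j).
    candidates: Dict[Pair, List[float]] = {
        (i, j): [a - b for a, b in zip(features[i], features[j])]
        for i, j in combinations(range(len(features)), 2)
    }
    chosen: List[Pair] = []
    total_gain = 0.0  # log det(A_final) - log det(lam * I)
    for _ in range(min(k, len(candidates))):
        # gain(x) = log(1 + x^T A^{-1} x), so maximizing the quadratic form suffices.
        best = max(candidates, key=lambda p: dot(candidates[p], mat_vec(a_inv, candidates[p])))
        x = candidates.pop(best)
        u = mat_vec(a_inv, x)  # A^{-1} x; A^{-1} stays symmetric
        denom = 1.0 + dot(x, u)
        total_gain += math.log(denom)
        # Sherman-Morrison: (A + x x^T)^{-1} = A^{-1} - u u^T / (1 + x^T u)
        a_inv = [[a_inv[r][c] - u[r] * u[c] / denom for c in range(d)] for r in range(d)]
        chosen.append(best)
    return chosen, total_gain
```

Each greedy step costs O(d^2) per candidate instead of recomputing a determinant. For three items with features (0, 0), (1, 0) and (0, 1), the first pick is the pair (1, 2), whose difference vector is longest.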

| 3,115 |

neulab/REALSumm

|

['text summarization', 'text generation']

|

['Re-evaluating Evaluation in Text Summarization']

|

process_data/realign_all.py scoring/gehrmann_rouge_opennmt/rouge_baselines/g_rouge.py scoring/get_scores.py process_data/process_bart.py process_data/write_to_presumm_format.py scoring/all_metrics/sentence_mover/wmd/evaluator.py scoring/peyrard_s3/S3/JS_eval.py process_data/process_tac.py process_data/realign.py scoring/peyrard_genetic/example.py scoring/utils.py scoring/gehrmann_rouge_opennmt/rouge_baselines/pyrouge/pyrouge/utils/string_utils.py scoring/all_metrics/get_rouge_pyrouge.py scoring/gehrmann_rouge_opennmt/rouge_baselines/util.py scoring/gehrmann_rouge_opennmt/rouge_baselines/Rouge155.py scoring/gehrmann_rouge_opennmt/rouge_baselines/pyrouge/pyrouge/tests/Rouge155_test.py scoring/peyrard_s3/S3/S3.py process_data/process_fast_abs_rl.py process_data/subsample.py process_data/process_unilm.py scoring/peyrard_genetic/SwarmOptimizer.py scoring/peyrard_genetic/JS.py scoring/peyrard_genetic/greedy.py scoring/gehrmann_rouge_opennmt/rouge_baselines/pyrouge/pyrouge/utils/argparsers.py scoring/all_metrics/sentence_mover/corr_examples.py process_data/file2dir.py scoring/all_metrics/moverscore.py scoring/gehrmann_rouge_opennmt/rouge_baselines/pyrouge/pyrouge/__init__.py scoring/gehrmann_rouge_opennmt/rouge_baselines/pyrouge/pyrouge/tests/__main__.py scoring/peyrard_genetic/GeneticOptimizer.py scoring/peyrard_genetic/run.py scoring/gehrmann_rouge_opennmt/rouge_baselines/pyrouge/pyrouge/utils/file_utils.py process_data/process_reddit.py scoring/gehrmann_rouge_opennmt/rouge_baselines/pyrouge/pyrouge/utils/sentence_splitter.py scoring/all_metrics/sentence_mover/wmd/__init__.py analysis/utils.py process_data/utils.py process_data/process_t5.py scoring/peyrard_s3/S3/ROUGE.py scoring/peyrard_s3/S3/word_embeddings.py scoring/gehrmann_rouge_opennmt/rouge_baselines/baseline.py scoring/scorer.py scoring/gehrmann_rouge_opennmt/rouge_baselines/pyrouge/pyrouge/Rouge155.py process_data/preprocess.py scoring/all_metrics/sentence_mover/smd.py scoring/score_dict_update.py 
process_data/get_alignment.py process_data/filter_by_rouge.py scoring/peyrard_genetic/nlp_utils.py scoring/peyrard_s3/S3/utils.py scoring/peyrard_genetic/post_process_genetic_out.py process_data/process_neusumm.py scoring/gehrmann_rouge_opennmt/rouge_baselines/pyrouge/setup.py scoring/gehrmann_rouge_opennmt/rouge_baselines/pyrouge/pyrouge/test.py scoring/gehrmann_rouge_opennmt/rouge_baselines/pyrouge/pyrouge/utils/log.py get_doc_y_val get_topk filter_summaries get_metrics_list print_ktau_matrix init_logger print_score_ranges get_pickle get_correlation get_system_level_scores file2dir file_split process_files_in_dir process_files_q_to_t get_dataset_from_jsonl process_ptrgen remove_dups process_arxiv process_pubmed match find_matching process_files_in_dir process_files_q_to_t get_dataset_from_jsonl process_ptrgen remove_dups process_arxiv process_pubmed remove_empty tokenize read_summaries read_source_docs write_aligned_data listdir_fullpath get_all_summaries process_t5 convert_to_txt_files load_tac_json_files get_clean_text get_num_sys_outs tokenize write_to_disk read_yang_presum find_matching read_processed_file read_msft_unilm write_to_disk get_chunks read_bottom_up match read_abisee read_fast_abs listdir_fullpath get_content subsample tokenize_file get_sents_from_tags get_word_tokenizer get_chunks sent_tokenize sent_list_to_tagged_str apply_function_to_all_items_in_dir read_file get_sent_tokenizer listdir_fullpath get_pickle retain_first_n_sent sent_tokenize_by_tags write_pickle write_to_presumm_format get_num_lines_ref main get_model_paths get_scores_dict_parallel Scorer invert_metric merge_score_dicts remove_metrics apply_cutoff remove_dup_sys_summs sd_to_new_format remove_duplicates add_normed_scores sd_to_new_format_file word_tokenize tokenize_file tokenize_strings get_sents_from_tags get_word_tokenizer get_chunks sent_tokenize sent_list_to_tagged_str apply_function_to_all_items_in_dir get_metrics_list read_file init_logger get_sent_tokenizer listdir_fullpath 
get_pickle retain_first_n_sent sent_tokenize_by_tags write_pickle get_rouge get_idf_dict collate_idf padding word_mover_score get_bert_embedding bert_encode batched_cdist_l2 truncate process _safe_divide read_normal_file get_overlap_examples process_files read_rouge_wmd_file get_examples get_embeddings tokenize_texts get_weights get_sent_embedding smd Evaluator TailVocabularyOptimizer WMD split_sentences first_sentence first_two_sentences baseline_main verbatim sent_tag_p_verbatim first_three_sentences sent_tag_verbatim adhoc_old0 adhoc_base pre_sent_tag_verbatim register_to_registry second_sentence no_sent_tag full _len_lcs _get_ngrams rouge rouge_l_summary_level rouge_n _recon_lcs _split_into_words _lcs rouge_l_sentence_level _union_lcs _f_p_r_lcs _get_word_ngrams Rouge155 evaluate_rouge n_grams has_repeat Rouge155 main PyrougeTest str_from_file list_files xml_equal DirectoryProcessor verify_dir get_global_console_logger get_console_logger PunktSentenceSplitter remove_newlines cleanup remove_extraneous_whitespace JS_Swarm JS_Gen save_scored_population GeneticOptimizer get_len greedy_optimizer compute_average_freq compute_tf get_all_content_words_lemmatized get_content_words_in_sentence js_divergence compute_word_freq get_all_content_words compute_tf_doc get_all_content_words_stemmed kl_divergence sentence_tokenizer stem_word get_ngrams to_unicode normalize_word get_len remove_duplicates read_out_file write_to_files get_sents generate SwarmOptimizer compute_average_freq compute_tf is_ngram_content KL_Divergence compute_word_freq JS_eval get_all_content_words pre_process_summary JS_Divergence rouge_n_we _ngram_counts rouge_n _find_closest _safe_f1 _ngrams _get_embedding _has_embedding get_all_content_words pre_process_summary _counter_overlap _ngram_count _soft_overlap _safe_divide S3 extract_feature stem_word get_ngrams normalize_word get_len get_words _convert_to_numpy load_embeddings setFormatter getLogger addHandler StreamHandler Formatter setLevel INFO 
FileHandler append get_metrics_list print tabulate list set print len mean append zeros enumerate percentile kendalltau list isnan filter_summaries spearmanr pearsonr values items list ddict min mean append max values sorted kendalltau join list append pearsonr values join basename print file2dir mkdir normpath listdir exists read_file print join split print join listdir process_ptrgen join listdir mkdir join listdir items list format print lower sub randint len print sent_list_to_tagged_str append enumerate split listdir read_summaries join listdir_fullpath join split listdir_fullpath join append train_test_split out_dec out_src print strip write close out_ref sent_tokenize sent_list_to_tagged_str loads get_sent_tokenizer infile enumerate open update load tac_files info open isnumeric list max values sent_list_to_tagged_str join dump get_clean_text info get_num_sys_outs write close mkdir out_dir range enumerate open sep print items list range len join format print read_folder exists split format print split range len print len format split split length append items sorted list n_jobs get_chunks len create_pipe add_pipe English create_pipe add_pipe English create_tokenizer English tokenizer_fn append strip find join print join listdir function create_pipe add_pipe English findall print sent_tokenizer get_sent_tokenizer zip get_word_tokenizer word_tokenizer append append listdir pjoin get_model_paths pjoin Scorer update list get_model_paths get_num_lines_ref glob print pprint pjoin info keys range get_scores_dict_parallel get_pickle sd_to_new_format write_pickle print list keys set update deepcopy print set keys pop list print startswith keys len sorted print dict append keys print list keys set print keys items print min get_metrics_list max list print set append keys print create_tokenizer join English print strip create_tokenizer nlp append create_pipe add_pipe enumerate tokenizer_fn join list items isinstance Rouge get_scores truncate convert_tokens_to_ids 
tokenize update defaultdict partial Counter len LongTensor ones item zeros tensor enumerate len eval padding to collate_idf convert_tokens_to_ids tokenize cat len sum sqrt_ _safe_divide sum get_bert_embedding emd_with_flow unsqueeze div_ append zeros numpy array range cat len strip len append float range split append float strip list read_normal_file print read_rouge_wmd_file values len percentile str print min split append max range len percentile str print split append range len append strip range average embed_batch append get_sent_embedding get_vector sum max range len mean list array pop list Counter array append sum keys range len get_embeddings exp ElmoEmbedder print tokenize_texts WMD append get_weights range len findall split_sentences split_sentences split_sentences split_sentences append strip split split_sentences strip split_sentences append join split_sentences append join split_sentences index findall list strip split_sentences join time format list sorted run_rouge rouge print reduce stemming mean evaluate_rouge get_each_score check_repeats run_google_rouge keys tuple add set range len _split_into_words _lcs dict max range tuple _lcs intersection _get_word_ngrams len _len_lcs _split_into_words len _recon_lcs set _split_into_words union len _split_into_words len mean list map zip str join print Rouge155 output_to_dict map rmtree convert_and_evaluate zip randint exists enumerate makedirs len set join walk extend format setFormatter getLogger addHandler StreamHandler Formatter setLevel remove_extraneous_whitespace sub compile sub compile append compute_tf GeneticOptimizer extend append compute_tf extend SwarmOptimizer append get_len index list map extend list map extend list map tokenize extend tokenize list extend set dict append get_all_content_words_stemmed get dict compute_word_freq get_all_content_words_stemmed len get keys set items list compute_average_freq compute_tf kl_divergence print str hasattr isinstance lower tokenize int sorted 
get_sents_from_tags tuple add set enumerate format print write zip enumerate findall join compute_tf format get_sents evolve save_scored_population info GeneticOptimizer get_all_content_words isnan items list compute_average_freq isnan KL_Divergence pre_process_summary get_all_content_words deque append iteritems _safe_divide pre_process_summary _ngram_counts _ngram_count append append iteritems _get_embedding sorted iteritems _find_closest pre_process_summary _ngram_counts _ngram_count len rouge_n_we JS_eval rouge_n append sorted extract_feature array

|

# REALSumm: Re-evaluating EvALuation in Summarization #### Paper: [Re-evaluating Evaluation in Text Summarization](https://www.aclweb.org/anthology/2020.emnlp-main.751/) #### Authors: [Manik Bhandari](https://manikbhandari.github.io/), [Pranav Gour](https://scholar.google.com/citations?user=OKM72KwAAAAJ&hl=en), [Atabak Ashfaq](https://www.linkedin.com/in/atabakashfaq), [Pengfei Liu](http://pfliu.com/), [Graham Neubig](http://www.phontron.com/) ## Outline * ### [Leaderboard](https://github.com/neulab/Leaderboard-1) * ### [Motivation](https://github.com/neulab/REALSumm#Motivation-1) * ### [Released Data](https://github.com/neulab/REALSumm#Released-Data-1) * ### [Meta-evaluation Tool](https://github.com/neulab/REALSumm#Meta-evaluation-Tool-1) * ### [Bib](https://github.com/neulab/REALSumm#Bib-1) ## Leaderboard

| 3,116 |

neulab/incremental_tree_edit

|

['imitation learning']

|

['Learning Structural Edits via Incremental Tree Transformations']

|

common/utils.py edit_model/data_model.py asdl/asdl.py asdl/transition_system.py edit_components/change_graph.py edit_model/edit_encoder/edit_encoder.py asdl/lang/csharp/demo.py edit_components/utils/decode.py __init__.py edit_model/embedder.py edit_model/utils.py trees/utils.py edit_model/edit_encoder/hybrid_change_encoder.py asdl/hypothesis.py edit_model/edit_encoder/sequential_change_encoder.py edit_model/edit_encoder/bag_of_edits_change_encoder.py common/savable.py edit_model/encdec/encoder.py edit_model/edit_encoder/tree_diff_encoder.py asdl/utils.py edit_components/utils/sub_token.py asdl/__init__.py asdl/asdl_ast.py trees/__init__.py asdl/lang/csharp/demo_edits.py edit_model/pointer_net.py edit_components/dataset.py edit_components/utils/relevance.py edit_model/encdec/__init__.py edit_components/utils/utils.py edit_model/edit_encoder/__init__.py edit_model/nn_utils.py edit_model/encdec/edit_decoder.py edit_model/editor.py asdl/lang/csharp/csharp_grammar.py edit_model/encdec/decoder.py trees/hypothesis.py edit_model/edit_encoder/graph_change_encoder.py exp_githubedits.py asdl/lang/csharp/csharp_transition.py edit_components/change_entry.py trees/substitution_system.py edit_model/encdec/transition_decoder.py edit_model/gnn.py edit_components/vocab.py edit_model/encdec/sequential_encoder.py edit_model/encdec/graph_encoder.py trees/edits.py edit_components/diff_utils.py asdl/lang/csharp/csharp_hypothesis.py edit_components/utils/wikidata.py edit_model/encdec/sequential_decoder.py datasets/utils.py edit_components/utils/unary_closure.py datasets/githubedits/common/config.py common/registerable.py edit_components/evaluate.py _collect_correction_iteration_example_in_batch decode_updated_code imitation_learning eval_csharp_fixer collect_edit_vecs _collect_iteration_example_in_batch _load_dataset _extract_record _train_fn test_ppl _eval_decode_in_batch train _eval_decode _collect_iteration_example _eval_ppl Field ASDLPrimitiveType ASDLCompositeType ASDLGrammar 
ASDLType ASDLConstructor ASDLProduction AbstractSyntaxTree RealizedField SyntaxToken DummyReduce AbstractSyntaxNode Hypothesis GenTokenDecodingAction ApplySubTreeAction ApplyRuleAction DecodingAction GenTokenAction ReduceDecodingAction TransitionSystem ApplyRuleDecodingAction ApplySubTreeDecodingAction ReduceAction Action remove_comment CSharpASDLGrammar CSharpHypothesis CSharpTransitionSystem _encode Registrable Savable init_arg_parser cached_property update_args ExampleProcessor get_example_processor_cls isfloat isint Arguments ChangeExample ChangeGraph DataSet _encode load_one_change_entry_csharp default_collate_override TokenLevelDiffer evaluate_nll Vocab VocabEntry dump_rerank_file decode_change_vec ndcg dcg get_nn get_rank_score dump_aggregated_query_results_from_query_results_for_annotation gather_all_query_results_from_annotations generate_reranked_list load_query_results generate_top_k_query_results SubTokenHelper extract_unary_closure get_unary_closure_syntax_sub_tree get_entry_str run_from_ipython BatchedCodeChunk Graph2IterEditEditor SequentialAutoEncoder _prepare_edit_encoding NeuralEditor WordPredictionMultiTask ChangedWordPredictionMultiTask Graph2TreeEditor TreeBasedAutoEncoderWithGraphEncoder Seq2SeqEditor SyntaxTreeEmbedder EmbeddingTable CodeTokenEmbedder ConvolutionalCharacterEmbedder Embedder AdjacencyList GatedGraphNeuralNetwork main input_transpose batch_iter dot_prod_attention log_softmax log_sum_exp to_input_variable word2id pad_lists length_array_to_mask_tensor get_sort_map id2word anonymize_unk_tokens PointerNet get_method_args_dict cached_property BagOfEditsChangeEncoder EditEncoder GraphChangeEncoder HybridChangeEncoder SequentialChangeEncoder TreeDiffEncoder Decoder IterativeDecoder SyntaxTreeEncoder SequentialDecoderWithTreeEncoder SequentialDecoder ContextEncoder SequentialEncoder TransitionDecoder Delete Edit Stop Add AddSubtree Hypothesis SubstitutionSystem copy_tree_field get_field_repr get_productions_str get_sibling_ids 
find_by_id get_field_node_queue calculate_tree_prod_f1 stack_subtrees update hasattr load compute_change_edges time examples dump replace isinstance tgt_actions print enable populate_aligned_token_index_and_mask average load_from_jsonl disable exists enumerate open edit_mappings batch_iter copy_and_reindex_w_dummy_reduce model clip_grad_norm_ zero_grad save cuda list _generate_target_tree_edits Adam load_state_dict tgt_edits append state_dict tgt_actions param_groups item _eval_decode_in_batch _eval_ppl load join time updated_code_ast isinstance backward print SubstitutionSystem parameters transition_system substitution_system prev_code_ast train step print time evaluate_nll isinstance print average eval sum len print average eval sum len compute_change_edges to_string join decode_with_gold_sample root_node get_productions_str ChangeExample populate_aligned_token_index_and_mask add append calculate_tree_prod_f1 float compute_change_edges to_string join tgt_actions root_node get_productions_str decode_with_gold_sample_in_batch ChangeExample populate_aligned_token_index_and_mask add append calculate_tree_prod_f1 float enumerate to_string compute_change_edges decode_with_extend_correction_in_batch tgt_actions root_node get_productions_str ChangeExample add populate_aligned_token_index_and_mask append calculate_tree_prod_f1 range len join to_string list print from_file makedirs write close build Adam parameters _load_dataset _train_fn device to open to_string _train_fn save device max open list defaultdict Adam build add load_state_dict append to range state_dict examples tgt_actions DataSet enable close eval float load join deepcopy time print from_file write extend parameters _load_dataset disable makedirs load setrecursionlimit print _load_dataset _eval_ppl args load int dump basename setrecursionlimit print _load_dataset _eval_decode_in_batch _eval_decode _eval_ppl open seed int load setrecursionlimit print eval _load_dataset args load dump replace print eval 
_load_dataset open numpy args join sub compile dict add_argument ArgumentParser _actions setattr dest default float int float get_ast_from_json_obj compute_change_edges reindex_w_dummy_reduce populate_gen_and_copy_index_and_mask ChangeExample populate_aligned_token_index_and_mask loads get_decoding_edits_fast _encode get_decoding_actions namedtuple compile list exp training keys dict eval train array load print eval load_from_jsonl generate_reranked_list startswith open args load dump examples isinstance print encode_code_changes code_change_encoder eval load_from_jsonl startswith open args exp log2 float range len int readline print strip close append open update print glob dict isfile load_query_results exp log2 float range len sorted dcg examples sort append dist_func range len join get_example_by_id replace print get_nn makedirs update items list join get_example_by_id replace dict gather_all_query_results_from_annotations keys makedirs join items dump replace print dict open makedirs CSharpTransitionSystem load_from_jsonl read from_roslyn_xml is_composite append add_value AbstractSyntaxNode fields production ndarray isinstance unsqueeze device to AdjacencyList GatedGraphNeuralNetwork compute_node_representations print log_softmax squeeze masked_fill_ softmax bool squeeze masked_fill_ bool max log masked_fill_ bool ones len tensor max enumerate append max len tensor word2id input_transpose pad_lists append max range len list sorted len range enumerate int sorted arange shuffle ceil float range enumerate len dict list __to_new_token_seq getfullargspec dict compile enumerate parent_node as_value_list find_by_id parent_field fields as_value_list parent_node find_by_id append parent_field fields pop copy_and_reindex_w_dummy_reduce root_node parent_node as_value_list find_by_id append parent_field fields copy_and_reindex_wo_dummy_reduce isinstance as_value_list extend fields len get str list items isinstance as_value_list dict fields items list sum append 
parent_node id

|

# Learning Structural Edits via Incremental Tree Transformations Code for ["Learning Structural Edits via Incremental Tree Transformations" (ICLR'21)](https://openreview.net/pdf?id=v9hAX77--cZ). If you use our code and data, please cite our paper: ``` @inproceedings{yao2021learning, title={Learning Structural Edits via Incremental Tree Transformations}, author={Ziyu Yao and Frank F. Xu and Pengcheng Yin and Huan Sun and Graham Neubig}, booktitle={International Conference on Learning Representations}, year={2021}, url={https://openreview.net/forum?id=v9hAX77--cZ}

| 3,117 |

neulab/langrank

|

['cross lingual transfer']

|

['Choosing Transfer Languages for Cross-Lingual Learning']

|

langrank_predict.py tests/test_train_file.py index_ted_datasets.py index_el_datasets.py index_pos_datasets.py index_parsing_datasets.py langrank.py get_vocab read_data get_vocab read_data get_vocab read_data read_data map_task_to_models map_task_to_data uriel_distance_vec check_task_model_data check_task_model prepare_train_file distance_vec lgbm_rel_exp rank prepare_new_dataset get_candidates read_vocab_file train check_task test_train append int split append split map_task_to_models join check_task join check_task_model map_task_to_data append int split join map_task_to_data resource_filename item append isinstance print set float len inventory_distance print geographic_distance syntactic_distance genetic_distance phonological_distance featural_distance set float intersection len ndarray isinstance join format uriel_distance_vec print len write close distance_vec prepare_new_dataset mkdir zip open array lgbm_rel_exp enumerate join save_model load_svmlight_file LGBMRanker fit Booster list check_task_model distance_vec append sum range predict map_task_to_models format resource_filename enumerate join uriel_distance_vec print min argsort get_candidates array len prepare_train_file format train

|

# LangRank by [NeuLab](http://www.cs.cmu.edu/~neulab/) @ [CMU LTI](https://lti.cs.cmu.edu) <img align="right" width="400px" src="figures/overview.png"> LangRank is a program for **Choosing Transfer Languages for Cross-lingual Transfer Learning**, described by our [paper](https://arxiv.org/abs/1905.12688) on the topic at ACL 2019. Cross-lingual transfer, where a high-resource *transfer* language is used to improve the accuracy of a low-resource *task* language, is now an invaluable tool for improving performance of natural language processing (NLP) on low-resource languages. However, given a particular task language, it is not clear *which* language to transfer from, and the standard strategy is to select languages based on *ad hoc* criteria, usually the intuition of the experimenter. Since a large number of features contribute to the success of cross-lingual transfer (including phylogenetic similarity, typological properties, lexical overlap, or size of available data), even the most enlightened experimenter rarely considers all these factors for the particular task at hand. LangRank is a program to solve this task of automatically selecting optimal transfer languages, treating it as a ranking problem and building models that consider the aforementioned features to perform this prediction. For example, let's say you have a *machine translation* (MT) task, and you want to know which languages/datasets you should use to build a system for the low-resource language *Azerbaijani*. In this case, you would prepare an example of the type of data you want to translate (in word and sub-word format, details below), and the language code "aze", then run a command like the following (where `-n 3` requests the top 3 languages):

| 3,118 |

neuro-ml/inverse_weighting

|

['medical image segmentation', 'semantic segmentation']

|

['Universal Loss Reweighting to Balance Lesion Size Inequality in 3D Medical Image Segmentation']

|