| repo (string, lengths 8-116) | tasks (string, lengths 8-117) | titles (string, lengths 17-302) | dependencies (string, lengths 5-372k) | readme (string, lengths 5-4.26k) | __index_level_0__ (int64, 0-4.36k) |

|---|---|---|---|---|---|

KevinHuang841006/AI_Security_training

|

['adversarial attack']

|

['Towards Evaluating the Robustness of Neural Networks']

|

rescaling_defense/l2_attack.py ZOO/models.py FGSM/model_CW.py CandWL2/models.py rescaling_defense/check_test.py FGSM/model.py rescaling_defense/test_attack.py Downscaling_Attack/down_attack.py rescaling_defense/setup_inception2.py FGSM/run.py CandWL2/train_model.py ZOO/zoo_newton.py CandWL2/C_W.py ZOO/train_model.py CandWL2/CarliniWagner.py model.py cnn_model conv_2d ZERO CarliniWagnerL2 Softmax MLP Linear Model ReLU Conv2D make_basic_cnn Flatten Layer batch_indices model_loss model_train cnn_model conv_2d conv_2d batch_indices model_train cnn_model model_loss fgm test show generate_data CarliniL2 readimg NodeLookup ImageNet create_graph InceptionModel main maybe_download_and_extract run_inference_on_image show generate_data Softmax MLP Linear Model ReLU Conv2D make_basic_cnn Flatten Layer batch_indices model_loss model_train plot_image Run_Stocastic_Gradient check_range gradient_hessian Sequential model Activation add MLP softmax_cross_entropy_with_logits reduce_mean int update join list RandomState minimize print batch_indices len shuffle AdamOptimizer Saver save global_variables_initializer range model_loss run print to_float reduce_max reduce_sum stop_gradient equal to_float softmax_cross_entropy_with_logits gradients reduce_max reduce_sum sign reduce_mean clip_by_value stop_gradient equal flatten join print range append array range sample create_graph read fatal join urlretrieve st_size print extractall stat model_dir makedirs maybe_download_and_extract run_inference_on_image imread array imresize square randint sqrt zeros sum max log run print reshape show reshape imshow argmax print plot_image min range sqrt gradient_hessian zeros sum max clip run

|

# AI_Security_training ## Setup run python ./C_W.py env : Anaconda, python-3.5, numpy, tensorflow ## Attack : Carlini and Wagner L2 Proposed by Carlini and Wagner. It is an iterative, white-box attack. paper reference : https://arxiv.org/abs/1608.04644 ## Model model data structure : Based on CleverHans. Defined in models.py, which provides numerous hierarchical tensor models. ### 3 layer CNN

| 600 |

KexianHust/Structure-Guided-Ranking-Loss

|

['depth estimation', 'monocular depth estimation']

|

['Structure-Guided Ranking Loss for Single Image Depth Prediction']

|

demo.py models/syncbn/modules/functional/syncbn.py models/syncbn/test.py models/syncbn/modules/nn/__init__.py models/networks.py models/syncbn/modules/functional/_syncbn/_ext/syncbn/__init__.py models/syncbn/modules/functional/__init__.py models/syncbn/modules/functional/_syncbn/build.py models/syncbn/modules/nn/syncbn.py models/resnet.py models/DepthNet.py demo DepthNet Decoder FTB AO FFM ResNet resnet50 Bottleneck resnet152 conv3x3 resnet34 resnet18 BasicBlock resnet101 init_weight _count_samples _check_contiguous BatchNorm2dSyncFunc _import_symbols BatchNorm2d unsqueeze resize fromarray data_dir imsave format asarray img_transform size Compose astype listdir net join uint8 ANTIALIAS print Variable result_dir convert BILINEAR numpy update ResNet load_state_dict state_dict update ResNet load_state_dict state_dict update ResNet load_state_dict state_dict update ResNet load_state_dict state_dict update ResNet load_state_dict state_dict fill_ isinstance out_channels Conv2d normal_ sqrt modules zero_ Linear size enumerate dir _wrap_function getattr append callable

|

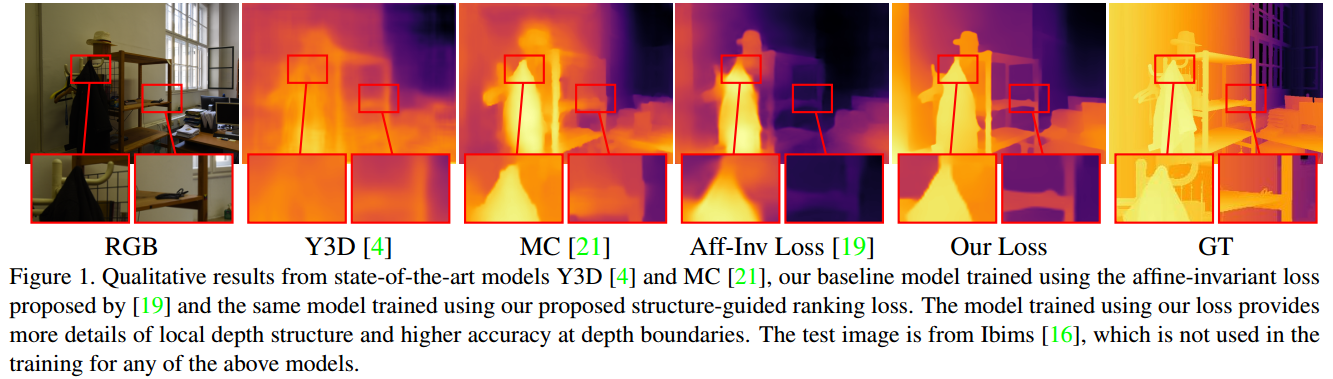

# Structure-Guided Ranking Loss for Single Image Depth Prediction This repository contains a pytorch implementation of our CVPR2020 paper "Structure-Guided Ranking Loss for Single Image Depth Prediction". [Project Page](https://KexianHust.github.io/Structure-Guided-Ranking-Loss/)  ## Changelog * [Jun. 2020] Initial release ## To do - [ ] Mix data training ## Prerequisites * Pytorch >= 0.4.1

| 601 |

Keziwen/Unsupervised-via-TIS

|

['mri reconstruction']

|

['An Unsupervised Deep Learning Method for Multi-coil Cine MRI']

|

train_v2.py evaluate_v2.py model.py dataset.py get_train_data_UIH get_fine_tuning_data get_train_data get_test_data get_data_sos main evaluate getCoilCombineImage_DCCNN apply_conv getMultiCoilImage getADMM_2D apply_conv_3D dc conv_op DC_CNN_2D dc_DCCNN real2complex getCoilCombineImage generate_data get_data_sos shape transpose shape transpose shape transpose transpose concatenate abs transpose squeeze fft2 shape tile sum join makedirs join format fftshift evaluate get_test_data print size sum value value value cast imag real stack range zeros_like complex conv_op range dc_DCCNN append stack range getADMM_2D complex apply_conv getADMM_2D relu concat dc shape stack append abs range complex apply_conv relu concat shape stack ifft2d DC_CNN_2D real2complex abs range append multiply ifft2d fft2d real2complex multiply ifft2d fft2d permutation range len

|

# Unsupervised-via-TIS Code for our work: "An unsupervised deep learning method for multi-coil cine MRI". If you use this code, please refer to: Ke et al 2020 Phys. Med. Biol. https://doi.org/10.1088/1361-6560/abaffa

| 602 |

Kipok/understanding-momentum

|

['stochastic optimization']

|

['Understanding the Role of Momentum in Stochastic Gradient Methods']

|

utils/qhm.py resnet_on_cifar/utils.py resnet_on_cifar/shb.py utils/verify_conjecture.py lr_on_mnist/utils.py lr_on_mnist/main.py resnet_on_cifar/main.py lr_on_mnist/qhm.py resnet_on_cifar/models.py lr_on_mnist/param_conv.py eval_train eval_test Net loss_fn run_exp train from_accsgd from_synthesized_nesterov from_nadam from_robust_momentum from_pid from_two_state_optimizer QHM get_git_diff get_git_hash Logger eval_test AverageMeter loss_fn run_exp train resnet44_cifar resnet110_cifar resnet1202_cifar resnet1001_cifar PreActBasicBlock ResNet_Cifar Bottleneck resnet164_cifar preact_resnet110_cifar resnet20_cifar conv3x3 preact_resnet1001_cifar resnet32_cifar PreActBottleneck BasicBlock resnet56_cifar PreAct_ResNet_Cifar preact_resnet164_cifar SHB get_git_diff get_git_hash Logger regime alpha_solver qhm_rate qhm qhm_rate_split beta_solver nu_solver alpha_beta_solver verify_conjecture exp view nll_loss scatter_ wd weight zeros sum mse_loss step enumerate add_scalar format add_scalar print eval item dataset len format add_scalar print eval dataset len drop_freq MultiStepLR DataLoader output_dir device StepLR eval_test data_dir drop_steps epochs to range eval_train SummaryWriter format bs is_available drop_rate MNIST join print QHM parameters train step add_scalar sqrt sqrt named_parameters model zero_grad max update val format size mean avg time norm backward print AverageMeter min named_parameters loss_fn SHB save load_state_dict CIFAR10 checkpoint load isfile ResNet_Cifar ResNet_Cifar ResNet_Cifar ResNet_Cifar ResNet_Cifar ResNet_Cifar ResNet_Cifar ResNet_Cifar PreAct_ResNet_Cifar PreAct_ResNet_Cifar PreAct_ResNet_Cifar g copy zeros empty range sqrt abs max sqrt alpha_solver qhm_rate linspace empty enumerate alpha_solver qhm_rate linspace empty enumerate alpha_solver qhm_rate linspace empty enumerate join qhm_rate format kappa_grid_size kappa_rb nu_grid_size print dump_results kappa_lb mkdir linspace save alpha_beta_solver empty range enumerate

|

# Understanding the Role of Momentum in Stochastic Gradient Methods To reproduce experiments performed in the paper, see code and comments in `experiments.ipynb`. To run logistic regression on MNIST or ResNet-18 on CIFAR, you can use scripts in `lr_on_mnist` and `resnet_on_cifar` correspondingly. Run `python main.py --help` for list of available hyperparameters in both scripts. To be able to run the code, you need to install the following Python packages: numpy, matplotlib, pytorch (v0.4), tensorboardx

| 603 |

KirillShmilovich/ActiveLearningCG

|

['active learning']

|

['Discovery of Self-Assembling $π$-Conjugated Peptides by Active Learning-Directed Coarse-Grained Molecular Simulation']

|

ActiveLearning/step_2-aquisition/Acquisitions.py ActiveLearning/step_2-aquisition/GPR_v2.py ActiveLearning/step_1-GPR/GPR_v2.py VAE/IO.py VAE/martini22_ff.py VAE/encoding.py VAE/MAP.py VAE/SS.py VAE/FUNC.py GaussianProcessRegressor EIacquisition PIacquisition UCBacquisition Exploitacquisition GaussianProcessRegressor main Processing norm2 pat norm cos_angle distance2 spl hash nsplit formatString pdbOut pdbAtom pdbBoxRead Residue pdbFrameIterator breaks streamTag contacts Chain getChargeType pdbBoxString check_merge groAtom pdbChains add_dummy groFrameIterator groBoxRead residues residueDistance2 isPdbAtom mapIndex CoarseGrained map aver martini22 tt ssClassification typesub call_dssp sqrt log pi sqrt sum startswith sqrt append info append pdbAtom pdbBoxRead startswith strip int groBoxRead next print iteritems input endswith isdigit open info append range len append norm range join zip sort distance2 extend set dict add reverse warning info append range len update spl nsplit sum zip replace zip dict join translate communicate debug pdbOut wait write system id readlines Popen

|

# Discovery of Self-Assembling pi-Conjugated Peptides by Active Learning-Directed Coarse-Grained Molecular Simulation Code and notebooks to accompany "Discovery of Self-Assembling pi-Conjugated Peptides by Active Learning-Directed Coarse-Grained Molecular Simulation" (DOI: https://doi.org/10.1021/acs.jpcb.0c00708) ## Contents ### VAE The [VAE](VAE) directory details the procedure for generating the VAE used for producing the latent space embeddings. [VAE notebook](VAE/VAE.ipynb)  ### Active Learning The [ActiveLearning](ActiveLearning) directory details the steps involved in a single active learning iteration. [GPR model selection notebook](ActiveLearning/step_1-GPR/Model_Selection_GPR.ipynb)

| 604 |

Kishwar/tensorflow

|

['style transfer']

|

['Artistic style transfer for videos and spherical images']

|

rnn-mnist/model.py vgg19-style-transfer/model.py vgg19-style-transfer-video/vgg19Model.py emotion/emo_test.py vgg19-style-transfer/utils.py deep-cnn-rnn-asr/char_map.py vgg19-style-transfer-video/utils.py vgg19-style-transfer/optimize.py vgg19-style-transfer/style-transfer-main.py emotion/model.py vgg19-style-transfer/constants.py vgg19-style-transfer-video/style-transfer-Noise-train.py vgg19-style-transfer-video/optimize.py vgg19-style-transfer-video/style-transfer-Noise-test.py vgg19-style-transfer-video/noiseModel.py rnn-mnist/rnn_mnist_train.py mnist/mnist_test.py vgg19-style-transfer-video/constants.py rnn-mnist/constants.py tiny_yolo_tf_keras/yolo_utils.py mnist/mnist_train.py tiny_yolo_tf_keras/coco2pascal.py tiny_yolo_tf_keras/preprocessing.py emotion/utils.py mnist/model.py emotion/constants.py mnist/constants.py emotion/emo_train.py rnn-voice-control-car/keras/Generate_Voice_Data.py rnn-voice-control-car/keras/Test-Voice-Control-Car-Model.py main main model init_biases init_weights layer_weights layer_biases train loss create_onehot_label read_data get_next_batch run init_weights layer_weights model RNN run model wav2mfcc run create_imageset write_categories get_instances keyjoin create_annotations rename instance_to_xml root BatchGenerator parse_annotation _sigmoid generate_colors _softmax read_anchors draw_boxes BoundBox bbox_iou decode_netout read_classes preprocess_image scale_boxes vgg19 optimize build_parser generate_noise_image getresizeImage compute_style_cost compute_tv_cost normalize_image compute_layer_gen_cost list_files Denormalize tensor_size gram_matrix compute_content_cost load_mat_file save_image compute_layer_style_cost resizeImage total_cost init_weights layer_weights Layer_Norm noiseModel optimize generate build_parser build_parser generate_noise_image getresizeImage readimage compute_style_cost compute_tv_cost normalize_image compute_layer_gen_cost list_files Denormalize tensor_size gram_matrix compute_content_cost load_mat_file 
get_video_image save_image compute_layer_style_cost resizeImage total_cost vgg19 model Variable float32 placeholder layer_biases layer_weights init_weights init_biases dropout relu reshape matmul max_pool conv2d add_to_collection bias_add l2_loss softmax_cross_entropy_with_logits get_collection reduce_mean add_n scalar zeros join int permutation print reshape apply dropna read_csv softmax_cross_entropy_with_logits model print reshape minimize placeholder shape layer_weights reduce_mean argmax read_data_sets reshape BasicLSTMCell transpose static_rnn split RNN equal float32 cast sleep encode send LSTM Dense Sequential add load print shape pad mfcc ElementMaker ElementMaker text tuple expand loads savemat text loads expand stripext write_text format stripext expand listdir iteritems groupby format print write expand get_instances rename instance_to_xml root imread append enumerate int list parse sorted listdir text iter float round seed list shuffle map reshape stack tuple reversed BICUBIC what resize expand_dims array open get_score int str ymin putText ymax FONT_HERSHEY_SIMPLEX xmin shape xmax rectangle ymin min ymax xmax xmin max list exp _softmax _sigmoid reversed argsort BoundBox append range len exp min max constant relu max_pool _weights conv2d load_mat_file bias_add vgg19 reset_default_graph save_image run str compute_style_cost normalize_image placeholder shape imsave range getresizeImage compute_tv_cost astype compute_content_cost zeros InteractiveSession generate_noise_image time minimize print float32 tensor_size AdamOptimizer global_variables_initializer array total_cost add_argument ArgumentParser walk extend gram_matrix reshape eval size as_list reshape transpose matmul size compute_layer_gen_cost reduce add append compute_layer_style_cost l2_loss l2_loss tensor_size l2_loss str print shape uniform imsave astype imsave as_list tanh conv2d_transpose relu conv2d layer_weights Layer_Norm stack moments len VideoCapture destroyAllWindows Graph ConfigProto 
release divide multiply divide float32 cast to_float get_shape multiply subtract divide float32 reduce_prod cast to_float multiply subtract divide to_float subtract multiply divide add add subtract add uint8 astype avg_pool

|

# tensorflow (Python 2.7 / 3.5) This repo contains my TensorFlow code ## MNIST - mnist training - python mnist_train.py Required training and test/validation data are downloaded automatically by the code. - mnist test - python mnist_test.py Image input as arg (not yet implemented; change the input image in the code for now) ## EMOTION - emotion training - python emo_train.py Required training and test/validation data can be downloaded from

| 605 |

KnollFrank/automl_zero

|

['automl']

|

['AutoML-Zero: Evolving Machine Learning Algorithms From Scratch']

|

generate_datasets.py task_pb2.py get_dataset create_projected_binary_dataset main load_projected_binary_dataset train_valid_test_split seed int list append randn extend ScalarLabelDataset get_dataset dot add transform StandardScaler range train_valid_test_split fit valid_labels train_labels zeros features range test_labels len as_numpy reshape load_fn astype float select_classes train_test_split projected_dim as_numpy min_data_seed dataset_name sorted list data_dir SerializeToString create_projected_binary_dataset num_test_examples range format num_valid_examples load max_data_seed num_train_examples print makedirs len

|

# AutoML-Zero Open source code for the paper: "[**AutoML-Zero: Evolving Machine Learning Algorithms From Scratch**](https://arxiv.org/abs/2003.03384)" | [Introduction](#what-is-automl-zero) | [Quick Demo](#5-minute-demo-discovering-linear-regression-from-scratch)| [Reproducing Search Baselines](#reproducing-search-baselines) | [Citation](#citation) | |-|-|-|-| ## What is AutoML-Zero? AutoML-Zero aims to automatically discover computer programs that can solve machine learning tasks, starting from empty or random programs and using only basic math operations. The goal is to simultaneously search for all aspects of an ML algorithm—including the model structure and the learning strategy—while employing *minimal human bias*.  Despite AutoML-Zero's challenging search space, *evolutionary search* shows promising results by discovering linear regression with gradient descent, 2-layer neural networks with backpropagation, and even algorithms that surpass hand designed baselines of comparable complexity. The figure above shows an example sequence of discoveries from one of our experiments, evolving algorithms to solve binary classification tasks. Notably, the evolved algorithms can be *interpreted*. Below is an analysis of the best evolved algorithm: the search process "invented" techniques like bilinear interactions, weight averaging, normalized gradient, and data augmentation (by adding noise to the inputs).  More examples, analysis, and details can be found in the [paper](https://arxiv.org/abs/2003.03384).

| 606 |

Koldh/LearnableGroupTransform-TimeSeries

|

['time series']

|

['Learnable Group Transform For Time-Series']

|

LGT-ToyExample.py LGT-Haptics.py LGT-BirdDetection.py

|

# LearnableGroupTransform-TimeSeries

| 607 |

Krisztina-Sinkovics/IDcard-visibility-classifier

|

['face detection']

|

['MIDV-500: A Dataset for Identity Documents Analysis and Recognition on Mobile Devices in Video Stream']

|

code/main.py code/lib/model.py code/lib/predict.py code/lib/train.py code/lib/load_images.py code/lib/helpers/__init__.py code/lib/helpers/helpers.py load_images load_single_image get_model predict train_model to_one_channel contrast_stretching oversample_minority_classes join print len append array load_single_image to_one_channel imread contrast_stretching stack print compile Sequential output Adam add Dense ResNet50 Model Flatten Dropout load_model print reshape argmax load_single_image strip to_categorical LabelEncoder load_images classes_ flow save list apply fit_generator ImageDataGenerator join oversample_minority_classes print summary transform get_model read_csv fit percentile rescale_intensity int str value_counts print shape floor append float range len

|

*** # Classifying visibility of ID cards in photos Implementation of multiclass image classifier for identifying visibility of ID cards on corrupted images utilizing CNN architecture with a pretrained backbone network and Keras/Tensorflow. Input image example:  Output labels example:  The project includes: - a [notebook](notebook/House_of_ID_Cards.html) with data exploration, model training and evaluation of the performance;

| 608 |

KurochkinAlexey/IMV_LSTM

|

['time series']

|

['Exploring Interpretable LSTM Neural Networks over Multi-Variable Data']

|

networks.py IMVFullLSTM IMVTensorLSTM

|

# IMV-LSTM Pytorch implementation of "Exploring Interpretable LSTM Neural Networks over Multi-Variable Data" https://arxiv.org/pdf/1905.12034.pdf # Content 1) Nasdaq dataset experiment 2) SML2010 dataset experiment 3) PM2.5 dataset experiment

| 609 |

KylianvG/Embetter

|

['word embeddings']

|

['Man is to Computer Programmer as Woman is to Homemaker? Debiasing Word Embeddings']

|

embetter/data.py embetter/download.py embetter/benchmarks.py experiments.py embetter/debias.py compare_bias.py embetter/embeddings_config.py embetter/we.py compare_occupational_bias get_datapoints_embedding project_profession_words plot_comparison_embeddings soft run_benchmark hard print_details show_bias main Benchmark load_professions load_gender_seed load_equalize_pairs load_data load_definitional_pairs soft_debias hard_debias download get_confirm_token save_response_content doPCA viz WordEmbedding to_utf8 drop show format subplots xlabel min set_xlim ylabel title scatter savefig max set_ylim sorted range len words load_definitional_pairs profession_stereotypes load_professions list get_datapoints_embedding project_profession_words intersection plot_comparison_embeddings center soft print run_benchmark words hard print_details WordEmbedding show_bias load_data embeddings center print ljust embeddings rjust center best_analogies_dist_thresh print viz profession_stereotypes print deepcopy hard_debias deepcopy soft_debias print WordEmbedding do_soft Benchmark format evaluate print pprint_compare join join join join load_professions load_gender_seed load_definitional_pairs load_equalize_pairs norm words v set sqrt normalize enumerate drop randn zero_grad MultiStepLR numpy svd list str mm Adam range detach words set item norm backward print t step diag get join save_response_content print get_confirm_token dirname abspath Session items startswith isinstance center join print ljust rjust PCA append v array fit

|

# Embetter: FactAI Word Embedding Debiasing Are you using pre-trained word embeddings like word2vec, GloVe or fastText? `Embetter` allows you to easily remove gender bias. No longer are nurses only female or males the only cowards. This repository was made during the FACT-AI course at the University of Amsterdam, during which papers from the FACT field are reproduced and possibly extended. ## Getting Started Here we will outline how to access the code. ### Prerequisites To run the code, create an Anaconda environment using: ``` conda env create -f environment.yml

| 610 |

Kyubyong/g2p

|

['speech synthesis']

|

['Learning pronunciation from a foreign language in speech synthesis networks', 'Learning pronunciation from a foreign language in speech synthesis networks']

|

g2p_en/expand.py setup.py g2p_en/g2p.py g2p_en/__init__.py normalize_numbers _expand_dollars _expand_ordinal _expand_decimal_point _expand_number _remove_commas G2p construct_homograph_dictionary group split int group sub join dict splitlines startswith split

|

# g2pE: A Simple Python Module for English Grapheme To Phoneme Conversion * [v.2.0] We removed TensorFlow from the dependencies. After all, it changes its APIs quite often, and we don't expect you to have a GPU. Instead, NumPy is used for inference. This module is designed to convert English graphemes (spelling) to phonemes (pronunciation). It is considered essential in several tasks such as speech synthesis. Unlike many languages like Spanish or German where pronunciation of a word can be inferred from its spelling, English words are often far from people's expectations. Therefore, it will be the best idea to consult a dictionary if we want to know the pronunciation of some word. However, there are at least two tentative issues in this approach.

| 611 |

L0SG/seqgan-music

|

['text generation']

|

['SeqGAN: Sequence Generative Adversarial Nets with Policy Gradient']

|

discriminator.py music_seqgan_conditional.py postprocessing.py music_seqgan.py discriminator_w.py music_lsseqgan.py music_wseqgan.py dataloader.py utils.py discriminator_ls.py generator_w.py rollout.py music_lsseqgan_conditional.py generator.py make_ref.py utils_pickle_reference.py rollout_w.py preprocessing.py generator_ls.py overfit_noise.py rollout_ls.py Dis_fakedataloader Gen_Data_loader Dis_dataloader Dis_realdataloader linear highway Discriminator linear highway Discriminator linear highway Discriminator Generator Generator Generator load_data preprocessing generate_samples load_checkpoint save_checkpoint main pre_train_epoch calculate_train_loss_epoch calculate_bleu generate_samples pre_train_epoch_condtional generate_samples_conditional_v2 load_checkpoint generate_samples_conditonal save_checkpoint main pre_train_epoch calculate_train_loss_epoch calculate_bleu generate_samples load_checkpoint save_checkpoint main pre_train_epoch calculate_train_loss_epoch calculate_bleu generate_samples pre_train_epoch_condtional generate_samples_conditional_v2 load_checkpoint generate_samples_conditonal save_checkpoint main pre_train_epoch calculate_train_loss_epoch calculate_bleu generate_samples load_checkpoint save_checkpoint main pre_train_epoch calculate_train_loss_epoch calculate_bleu load_data preprocessing ROLLOUT ROLLOUT ROLLOUT Dis_fakedataloader Gen_Data_loader Dis_dataloader Dis_realdataloader linear highway Discriminator Generator load_data preprocessing generate_samples load_checkpoint save_checkpoint main pre_train_epoch calculate_train_loss_epoch calculate_bleu pre_train_epoch_condtional generate_samples_conditional_v2 generate_samples_conditonal pre_train_epoch_condtional generate_samples_conditional_v2 generate_samples_conditonal load_data preprocessing ROLLOUT as_list parse int range extend generate pretrain_step num_batch append next_batch reset_pointer range calculate_nll_loss_step num_batch append next_batch reset_pointer range SmoothingFunction 
tolist extend map mean num_batch array Pool next_batch reset_pointer range predict trainable_variables Dis_realdataloader num_batch save_checkpoint Gen_Data_loader Saver create_batches Dis_fakedataloader Session calculate_bleu open seed str run ROLLOUT Generator Discriminator generate pre_train_epoch range load_train_data close get_reward update_params ConfigProto flush generate_samples train_op print load_checkpoint g_updates write global_variables_initializer next_batch reset_pointer print str restore format join str format print save int range extend predict int extend next_batch reset_pointer range predict constant pretrain_step num_batch append next_batch reset_pointer range generate_samples_conditional_v2 pre_train_epoch_condtional Dis_dataloader argmax

|

**This repo is a work in progress, without code cleanup or refactoring.** ## Introduction This is a TensorFlow implementation of the paper [Polyphonic Music Generation with Sequence Generative Adversarial Networks](https://arxiv.org/abs/1710.11418). Hard-forked from the [official SeqGAN code](https://github.com/LantaoYu/SeqGAN). ## Requirements Python 2.7 Tensorflow 1.4 or newer (tested on 1.9) pip packages: music21 4.1.0, pyyaml, nltk, pathos ## How to use `python music_seqgan.py` for full training run.

| 612 |

L706077/OCR-CRNN

|

['optical character recognition', 'scene text recognition']

|

['An End-to-End Trainable Neural Network for Image-based Sequence Recognition and Its Application to Scene Text Recognition']

|

tool/create_dataset.py createDataset writeCache checkImageIsValid imdecode fromstring IMREAD_GRAYSCALE join str print len xrange writeCache open

|

Convolutional Recurrent Neural Network ====================================== This software implements the Convolutional Recurrent Neural Network (CRNN), a combination of CNN, RNN and CTC loss for image-based sequence recognition tasks, such as scene text recognition and OCR. For details, please refer to our paper http://arxiv.org/abs/1507.05717. **UPDATE Mar 14, 2017** A Docker file has been added to the project. Thanks to [@varun-suresh](https://github.com/varun-suresh). **UPDATE May 1, 2017** A PyTorch [port](https://github.com/meijieru/crnn.pytorch) has been made by [@meijieru](https://github.com/meijieru). **UPDATE Jun 19, 2017** For an end-to-end text detector+recognizer, check out the [CTPN+CRNN implementation](https://github.com/AKSHAYUBHAT/DeepVideoAnalytics/tree/master/notebooks/OCR) by [@AKSHAYUBHAT](https://github.com/AKSHAYUBHAT). Build ----- The software has only been tested on Ubuntu 14.04 (x64). CUDA-enabled GPUs are required. To build the project, first install the latest versions of [Torch7](http://torch.ch), [fblualib](https://github.com/facebook/fblualib) and LMDB. Please follow their installation instructions respectively. On Ubuntu, lmdb can be installed by ``apt-get install liblmdb-dev``. To build the project, go to ``src/`` and execute ``sh build_cpp.sh`` to build the C++ code. If successful, a file named ``libcrnn.so`` should be produced in the ``src/`` directory.

| 613 |

LALIC-UFSCar/sense-vectors-analogies-pt

|

['word embeddings']

|

['Generating Sense Embeddings for Syntactic and Semantic Analogy for Portuguese']

|

sense2vec/evaluation/intrinsic/keyedvectorss2v.py mssg/evaluation/intrinsic/analogies.py sense2vec/evaluation/intrinsic/analogies.py corpora/preprocessing.py sense2vec/preprocessing/postagging.py clean_text Word2VecKeyedVectors Doc2VecKeyedVectors WordEmbeddingsKeyedVectors FastTextKeyedVectors Vocab BaseKeyedVectors main represent_sentence_nlpnet represent_doc represent_word_nlpnet lower sub str replace tag strip POSTagger append sents represent_sentence_nlpnet load str format iglob print chdir Path resolve

|

# Sense Embeddings for Syntactic and Semantic Analogy for Portuguese Implementation of Sense Embeddings for Syntactic and Semantic Analogy for Portuguese --- ## About the paper This repository contains the results obtained in a paper to be presented in <a href="http://comissoes.sbc.org.br/ce-pln/stil2019/">STIL 2019</a>. ``` Rodrigues, J. S. and Caseli, H. M. (2019). Generating Sense Embeddings for Syntactic and Semantic Analogy for Portuguese. STIL - Symposium in Information and Human Language Technology. Salvador, Bahia. ``` <a href="https://drive.google.com/open?id=1_VL-UTNxg-dBPFUVgMqvifCQb_JDbTUk">Trained embeddings models</a>

| 614 |

LIDS-UNICAMP/DISF

|

['superpixels', 'semantic segmentation']

|

['Superpixel Segmentation using Dynamic and Iterative Spanning Forest']

|

DISF_demo.py python3/setup.py

|

LIDS-UNICAMP/DISF

| 615 |

LIDS-UNICAMP/grabber

|

['semantic segmentation']

|

['Grabber: A tool to improve convergence in interactive image segmentation']

|

grabber/grabber.py grabber/__init__.py grabber/_tests/test_dock_widget.py grabber/_dock_widget.py setup.py grabber/anchors.py grabber/grabberwidget.py Anchors Grabber Path GrabberWidget napari_experimental_provide_dock_widget test_something_with_viewer _dock_widgets add_plugin_dock_widget make_napari_viewer register len

|

# Grabber: A Tool to Improve Convergence in Interactive Image Segmentation A tool for contour-based segmentation correction (2D only). This repository provides demo code for the paper: > **Grabber: A Tool to Improve Convergence in Interactive Image Segmentation** > [Jordão Bragantini](https://jookuma.github.io/), Bruno Moura, [Alexandre X. Falcão](http://lids.ic.unicamp.br/), [Fábio A. M. Cappabianco](https://scholar.google.com/citations?user=qmH9VEEAAAAJ&hl=en&oi=ao)

| 616 |

LIVIAETS/extended_logbarrier

|

['stochastic optimization', 'weakly supervised segmentation', 'semantic segmentation']

|

['Constrained Deep Networks: Lagrangian Optimization via Log-Barrier Extensions']

|

report.py preprocess/gen_toy.py log_compare.py metrics.py dataloader.py layers.py plot.py utils.py hist.py gen_weak.py networks.py remap_values.py test.py augment.py moustache.py main.py scheduler.py losses.py bounds.py preprocess/slice_promise.py main process_name get_args save BoxBounds TagsPredictions ConstantBounds PredictionBounds PreciseTags PreciseUpper TagBounds PreciseBounds SliceDataset get_loaders PatientSampler get_args centroid_strat box_strat erosion_strat main weaken_img random_strat get_args run conv_block convBatch residualConv conv_block_3 maxpool conv_block_Asym conv_block_1 conv_block_3_3 upSampleConv conv interpolate downSampleConv conv_decod_block relu log_barrier ext_log_barrier_quadra quadratic ext_log_barrier_quadra_2 ext_log_barrier LogBarrierLoss CrossEntropy NaivePenalty do_epoch get_args setup run main get_args runInference get_args run Dummy ENet BottleNeckNormal_Asym Bottleneck UNet BasicBlock BottleNeckDownSamplingDilatedConv Conv_residual_conv BottleNeckDownSampling ResidualUNet weights_init BottleneckC DenseNet fcn8s ResNeXt BottleNeckNormal Transition BottleNeckDownSamplingDilatedConvLast resnext101 BottleNeckUpSampling get_args run main remap main display_metric get_args AddWeightLoss StealWeight DummyScheduler MultiplyT TestDistMap TestCentroid TestDice TestNumpyHaussdorf map_ save_images mmap_ simplex compose_acc depth compose uniq flatten_ union_sum probs2one_hot uncurry soft_centroid eq simplex2colors intersection iIoU augment_arr numpy_haussdorf get_center union class2one_hot one_hot uc_ one_hot2dist batch_soft_size sset haussdorf fast_np_class2one_hot augment meta_dice str2bool probs2class id_ inter_sum soft_size ndarray get_args noise gen_img main get_args save_slices get_p_id main norm_arr print mmap_ partial mkdir range save with_suffix zip print parse_args add_argument ArgumentParser data_loader bounds_class PatientSampler group_train list grp_regex training_folders __import__ getattr append partial Compose eval 
gen_dataset zip enumerate losses print folders fromarray uint8 ellipse Draw print astype verbose fromarray dtype uint8 print astype generate_binary_structure ellipse Draw where fromarray min max argwhere show base_folder save_subfolder imshow verbose Path mkdir save strategy map_ list asarray pprint verbose zip map_ grid linspace show columns set_title set_xlabel shape savefig nbins legend gca set_xlim tight_layout mean zip set_ylabel hist figure BatchNorm2d Sequential Conv2d PReLU BatchNorm2d Sequential Conv2d PReLU BatchNorm2d Sequential Conv2d PReLU BatchNorm2d Sequential Conv2d conv_block BatchNorm2d Sequential Conv2d activ insert append BatchNorm2d layer BatchNorm2d Sequential ConvTranspose2d MaxPool2d load use_sgd losses print weights __import__ Adam SGD apply parameters eval getattr append to loss_class network enumerate zero_grad reduce union_sum list set_postfix range detach update slice close mean eval zip tqdm_ enumerate join backward print inter_sum train step len batch_size compute_miou Path save dataset DataFrame exists list setup do_epoch csv compute_haussdorf in_memory copytree range get_loaders param_groups debug eval type items scheduler print float32 to_csv rmtree numpy list n_class range items list slice grp_regex print SliceDataset Compose len DataLoader to enumerate PatientSampler tqdm_ pred_folders get_args runInference set_xticklabels boxplot enumerate set_yticks set_xticks set_ylim xavier_normal_ normal_ data fill_ ResNeXt arange smooth stem ylabel ylim title curves_styles plot hline l_line rc min labels spline eval Path axises folders display_metric print mean argmax std enumerate len map_ float32 shape stack type einsum ones_like type float32 shape zeros numpy_haussdorf numpy range union_sum inter_sum shape argmax shape type unsqueeze int32 shape zeros put_along_axis shape class2one_hot probs2class zeros_like astype distance any bool range str with_suffix shape mkdir zip numpy imsave map_ size flip mirror fliplr map_ list flip dot shape 
wh range n asarray ellipse Draw with_suffix noise save clip seed max min astype float32 stem match str time sum GetSpacing map_ asarray augment shape ReadImage mkdir zip imread norm_arr range len uc_

|

LIVIAETS/extended_logbarrier

| 617 |

LahiruJayasinghe/DeepDOA

|

['denoising']

|

['RF-Based Direction Finding of UAVs Using DNN']

|

DenoisingAE.py get_csv_data.py main.py DNN_Ground_data_8sectors.py autoencoder corrupt kl_divergence train_DOA multilayer_perceptron corrupt get_predicted_angle HandleData autoencoder multilayer_perceptron getDAE corrupt cast float32 random_uniform add random_uniform kl_divergence transpose placeholder matmul shape reverse append astype square sqrt corrupt enumerate int tanh print Variable float32 reduce_mean zeros autoencoder Saver save xticks Session yticks run subplot show HandleData total_data legend range plot mean minimize print get_synthatic_data subplots_adjust global_variables_initializer next_batch array len relu corrupt matmul add import_meta_graph autoencoder restore latest_checkpoint print mean reset_default_graph Saver append global_variables_initializer array Session run

|

# DeepDOA An implementation of a Sparse Denoising Autoencoder (SDAE)-based Deep Neural Network (DNN) for direction finding (DF) of small unmanned aerial vehicles (UAVs). It is motivated by the practical challenges associated with classical DF algorithms such as MUSIC and ESPRIT. The proposed DF scheme is practical and low-complex in the sense that a phase synchronization mechanism, an antenna calibration mechanism, and the analytical model of the antenna radiation pattern are not essential. Also, the proposed DF method can be implemented using a single-channel RF receiver. For more details, please see our [Arxiv paper](https://arxiv.org/pdf/1712.01154.pdf). ### Whole Architecture: <img src="images/Whole_Architecture.PNG" width="500"> ### Architecture training phase: <img src="images/Training.PNG" width="500"> ### Dependencies - Tensorflow (recommended below 1.5)

| 618 |

LahiruJayasinghe/RUL-Net

|

['data augmentation']

|

['Temporal Convolutional Memory Networks for Remaining Useful Life Estimation of Industrial Machinery']

|

data_processing.py utils_laj.py model.py kink_RUL get_PHM08Data combine_FD001_and_FD003 compute_rul_of_one_file compute_rul_of_one_id get_CMAPSSData data_augmentation analyse_Data CNNLSTM get_predicted_expected_RUL get_RNNCell dense_layer model_summary conv_layer BatchNorm scoring_func trjectory_generator batch_generator plot_data append range len tolist max int compute_rul_of_one_id extend set append concat drop save values list read_table append fit_transform range replace compute_rul_of_one_file mean eval print to_csv MinMaxScaler std read_csv len read_table concatenate print compute_rul_of_one_file mean savetxt unique save std values drop combine_FD001_and_FD003 concat drop save max values show str list from_dict read_table title append range reset_index replace plot compute_rul_of_one_file shuffle copy mean unique print to_csv index figure randint std len show str get_PHM08Data plot print title figure get_CMAPSSData list read_table concat max range len max_pooling1d Saver get_PHM08Data placeholder reduce_sum get_CMAPSSData conv_layer get_RNNCell dense_layer square model_summary sqrt batch_generator as_list dynamic_rnn zero_state minimize print reshape float32 reduce_mean MultiRNNCell BasicRNNCell GLSTMCell LSTMCell LSTMBlockFusedCell DropoutWrapper append LayerNormBasicLSTMCell GRUCell zeros range randint int concatenate print ones reshape index copy unique append zeros range len show plot dirname makedirs exp argmax round

|

# RUL-Net Deep learning approach for estimation of the Remaining Useful Life (RUL) of an engine. This repo is dedicated to new architectures for estimating RUL using the CMAPSS dataset and the PHM08 prognostic challenge dataset. The datasets are included in this repo or can be downloaded from: https://ti.arc.nasa.gov/tech/dash/groups/pcoe/prognostic-data-repository/#turbofan For more details, please see our [Arxiv paper](https://arxiv.org/pdf/1810.05644.pdf). ## System Model  ## Dependencies tensorflow 1.8 numpy 1.14.4

| 619 |

Lambda-3/Indra

|

['semantic textual similarity']

|

['Indra: A Word Embedding and Semantic Relatedness Server']

|

indra-core/src/test/resources/annoy/retrieve.py indra-core/src/test/resources/annoy/makeindex.py do load AnnoyIndex

|

# What is Indra? Indra is an efficient library and service to deliver word-embeddings and semantic relatedness to real-world applications in the domains of machine learning and natural language processing. It offers 60+ pre-built models in 15 languages and several model algorithms and corpora. Indra is powered by [spotify-annoy](https://github.com/spotify/annoy) delivering an efficient [approximate nearest neighbors](http://en.wikipedia.org/wiki/Nearest_neighbor_search#Approximate_nearest_neighbor) function. # Features * Efficient approximate nearest neighbors (powered by [spotify-annoy](https://github.com/spotify/annoy)); * 60+ pre-built models in 15 languages; * Permissive license for commercial use (MIT License);

| 620 |

Lambda-3/pyindra

|

['semantic textual similarity']

|

['Indra: A Word Embedding and Semantic Relatedness Server']

|

setup.py pyindra/__init__.py IndraException Indra NeighborsType

|

# The Python Indra Client ##### The official Python client for the Indra word embedding and semantic relatedness server Indra is an efficient library and service to deliver word-embeddings and semantic relatedness to real-world applications in the domains of machine learning and natural language processing. It offers 60+ pre-built models in 14 languages and several model algorithms and corpora. Indra is powered by [spotify-annoy](https://github.com/spotify/annoy) delivering an efficient [approximate nearest neighbors](http://en.wikipedia.org/wiki/Nearest_neighbor_search#Approximate_nearest_neighbor) function. The Indra server is available under the MIT License in [this repository](https://github.com/Lambda-3/Indra).

| 621 |

LantaoYu/SeqGAN

|

['text generation']

|

['SeqGAN: Sequence Generative Adversarial Nets with Policy Gradient']

|

rollout.py discriminator.py sequence_gan.py dataloader.py generator.py target_lstm.py Gen_Data_loader Dis_dataloader linear highway Discriminator Generator ROLLOUT TARGET_LSTM as_list

|

# SeqGAN ## Requirements: * **Tensorflow r1.0.1** * Python 2.7 * CUDA 7.5+ (For GPU) ## Introduction Apply Generative Adversarial Nets to generating sequences of discrete tokens.  The illustration of SeqGAN. Left: D is trained over the real data and the generated data by G. Right: G is trained by policy gradient where the final reward signal is provided by D and is passed back to the intermediate action value via Monte Carlo search. The research paper [SeqGAN: Sequence Generative Adversarial Nets with Policy Gradient](http://arxiv.org/abs/1609.05473) has been accepted at the Thirty-First AAAI Conference on Artificial Intelligence (AAAI-17).
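The Monte Carlo search step described above can be sketched as follows. This is a minimal illustration, not the repository's `ROLLOUT` class: `rollout_reward`, `toy_sample_next`, and `toy_discriminator` are hypothetical names, and the toy generator and discriminator are stand-ins chosen only so the example runs without TensorFlow.

```python
import random


def rollout_reward(prefix, seq_len, sample_next, discriminator, n_rollouts=16, rng=None):
    """Estimate the action value of a partial sequence by Monte Carlo search.

    The prefix is completed n_rollouts times with the generator's sampling
    policy, each completed sequence is scored by the discriminator, and the
    scores are averaged. A full-length sequence is scored directly.
    """
    rng = rng or random.Random(0)
    if len(prefix) >= seq_len:
        return discriminator(list(prefix))
    total = 0.0
    for _ in range(n_rollouts):
        seq = list(prefix)
        while len(seq) < seq_len:
            seq.append(sample_next(seq, rng))
        total += discriminator(seq)
    return total / n_rollouts


def toy_sample_next(seq, rng):
    # Hypothetical generator policy: uniform over a two-token vocabulary.
    return rng.randrange(2)


def toy_discriminator(seq):
    # Hypothetical discriminator: "realness" is the fraction of 1-tokens.
    return sum(seq) / len(seq)


if __name__ == "__main__":
    # Intermediate reward for the first two tokens of a length-4 sequence.
    print(rollout_reward([1, 1], 4, toy_sample_next, toy_discriminator))
```

In a policy-gradient update, the value returned for each prefix would weight the log-probability of the token chosen at that step.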

| 622 |

LaoYang1994/SOGNet

|

['panoptic segmentation', 'instance segmentation', 'semantic segmentation']

|

['SOGNet: Scene Overlap Graph Network for Panoptic Segmentation']

|

sognet/rpn/setup.py sognet/config/parse_args.py sognet/dataset/cityscapes.py sognet/operators/functions/mod_deform_conv.py sognet/operators/modules/mask_roi.py sognet/bbox/sample_rois.py sognet/models/fcn.py sognet/lib/utils/metric.py sognet/operators/functions/proposal_target.py sognet/bbox/bbox_regression.py sognet/lib/utils/timer.py sognet/operators/functions/proposal_mask_target.py sognet/lib/nn/optimizer.py sognet/operators/modules/view.py sognet/dataset/ade20k.py sognet/lib/utils/logging.py sognet/dataset/json_dataset_city.py init_coco.py sognet/operators/modules/pyramid_proposal.py sognet/operators/modules/fpn_roi_pooling.py sognet/operators/modules/deform_conv.py sognet/operators/modules/proposal_mask_target.py sognet/lib/utils/data_parallel.py sognet/operators/functions/pyramid_proposal.py sognet/models/relation.py sognet/dataset/__init__.py sognet/operators/build_deform_conv.py sognet/nms/py_cpu_nms.py sognet/operators/modules/mask_removal.py sognet/operators/modules/mask_matching.py sognet/dataset/imdb.py sognet/models/__init__.py sognet/operators/build_mod_deform_conv.py tools/train_net.py sognet/lib/utils/callback.py sognet/operators/functions/roialign.py sognet/bbox/setup.py sognet/rpn/assign_anchor.py tools/test_net.py sognet/dataset/base_dataset.py sognet/operators/modules/fpn_roi_align.py sognet/operators/modules/unary_logits.py sognet/bbox/bbox_transform.py sognet/lib/utils/colormap.py sognet/models/sognet.py sognet/operators/functions/deform_conv.py sognet/operators/modules/proposal_target.py sognet/mask/mask_transform.py sognet/rpn/generate_anchors.py sognet/models/rpn.py sognet/operators/modules/roialign.py sognet/config/config.py sognet/dataset/json_dataset.py sognet/nms/nms.py sognet/models/fpn.py sognet/operators/build_roialign.py sognet/operators/modules/mod_deform_conv.py sognet/models/resnet.py sognet/nms/setup.py sognet/dataset/coco.py sognet/models/rcnn.py add_bbox_regression_targets compute_bbox_mask_targets_and_label 
expand_bbox_regression_targets compute_bbox_regression_targets compute_mask_and_label expand_boxes iou_transform clip_xyxy_to_image nonlinear_transform aspect_ratio flip_boxes filter_boxes unique_boxes nonlinear_pred xywh_to_xyxy soft_nms clip_boxes bbox_transform clip_tiled_boxes xyxy_to_xywh bbox_overlaps_py bbox_transform_inv clip_boxes_to_image iou_pred bbox_overlaps _compute_targets sample_rois _expand_bbox_targets compute_mask_and_label compute_assign_targets customize_compiler_for_nvcc custom_build_ext update_config parse_args ade20k PQStat BaseDataset PQStatCat Cityscapes coco imdb add_bbox_regression_targets extend_with_flipped_entries _compute_targets _merge_proposal_boxes_into_roidb _add_class_assignments _sort_proposals filter_for_training _filter_crowd_proposals JsonDataset add_proposals add_bbox_regression_targets extend_with_flipped_entries _compute_targets _merge_proposal_boxes_into_roidb _add_class_assignments _sort_proposals filter_for_training _filter_crowd_proposals JsonDataset add_proposals clip_grad Adam SGD Speedometer colormap DataParallel _check_balance create_logger IoUMetric AvgMetric AccWithIgnoreMetric EvalMetric timeit Timer add_mask_rcnn_blobs mask_overlap mask_to_bbox _expand_to_class_specific_mask_targets polys_to_mask flip_segms intersect_box_mask get_gt_masks polys_to_boxes mask_aggregation polys_to_mask_wrt_box FCNSubNet FCNHead CrossEntropyLoss2d FPN MaskRCNNLoss RCNNLoss RCNN MaskBranch RelationHead RelationLoss resnet_rcnn DCNBottleneck Bottleneck res_block ResNetBackbone get_params conv1 RPN RPNLoss resnet_101_sognet SOGNet resnet_50_sognet py_nms cpu_nms_wrapper py_nms_wrapper py_soft_nms_wrapper gpu_nms_wrapper py_cpu_nms find_in_path customize_compiler_for_nvcc custom_build_ext locate_cuda _create_module_dir _create_module_dir _create_module_dir DeformConvFunction ModDeformConvFunction ProposalMaskTargetFunction ProposalTargetFunction PyramidProposalFunction RoIAlignFunction DeformConv DeformConvWithOffset FPNRoIAlign 
FPNRoIPool MaskMatching PanopticGTGenerate MaskRemoval MaskROI ModDeformConvWithOffsetMask ModDeformConv ProposalMaskTarget ProposalTarget PyramidProposal RoIAlign MaskTerm SegTerm View add_rpn_blobs _get_rpn_blobs assign_anchor assign_pyramid_anchor generate_anchors _scale_enum get_field_of_anchors _whctrs unmap compute_targets _ratio_enum _generate_anchors _mkanchors find_in_path customize_compiler_for_nvcc custom_build_ext locate_cuda im_post im_detect sognet_test lr_poly adjust_learning_rate sognet_train lr_factor get_step_index print bbox_transform astype zeros float argmax bbox_overlaps min where shape unique resize zeros max range print astype float argmax bbox_overlaps compute_mask_and_label bbox_stds bbox_means print bbox_normalization_precomputed mean sqrt compute_bbox_regression_targets tile zeros array range len zeros bbox_weights class_agnostic shape min zeros float max range minimum maximum minimum maximum minimum maximum minimum maximum dot array unique transpose log exp astype shape zeros float zeros float astype shape ndarray maximum isinstance ndarray isinstance copy copy float32 uint8 ascontiguousarray minimum dtype exp astype shape zeros log transpose log zeros shape logical_and rcnn_feat_stride sqrt zeros enumerate minimum int fg_fraction add_mask_rcnn_blobs has_mask_head ones size _compute_targets hstack float32 choice dict batch_rois _expand_bbox_targets append round array bbox_reg_weights bbox_transform_inv zeros int shape append _compile compiler_so debug_mode add_argument parse_known_args cfg ArgumentParser update_config _merge_proposal_boxes_into_roidb _add_class_assignments _filter_crowd_proposals append range len items flip_segms extend copy append format info len _compute_targets astype zeros argmax bbox_overlaps dtype toarray csr_matrix astype bbox_overlaps zeros argmax max append enumerate iou toarray csr_matrix xyxy_to_xywh len max argmax toarray argsort list filter clamp_ reshape astype float32 warn_imbalance join basicConfig 
format setFormatter getLogger addHandler strftime StreamHandler Formatter setLevel INFO makedirs zeros astype range resize sum min max zeros min max min astype where array zeros max range len append _flip_rle frPyObjects sum array decode decode maximum append frPyObjects sum array min zeros max range len mask_size _expand_to_class_specific_mask_targets ones reshape hstack astype float32 copy polys_to_boxes array zeros argmax bbox_overlaps range polys_to_mask_wrt_box mask_size int range named_modules join named_parameters append maximum minimum append maximum minimum pathsep pjoin exists split find_in_path items pjoin pathsep dirname sep join reduce close accumulate split rpartition makedirs arange _unmap rpn_bbox_weights rpn_fg_fraction argmax rpn_batch_size rpn_clobber_positives generate_anchors transpose array meshgrid sum bbox_transform astype choice fill float empty int reshape zeros bbox_overlaps _unmap rpn_bbox_weights rpn_fg_fraction argmax rpn_batch_size max rpn_clobber_positives generate_anchors list transpose array append sum range concatenate bbox_transform anchors_cython astype choice sqrt fill float empty int print reshape zeros bbox_overlaps len items anchor_scales get_field_of_anchors has_fpn concatenate anchor_ratios rpn_feat_stride _get_rpn_blobs field_of_anchors zeros round array append enumerate num_cell_anchors arange rpn_fg_fraction argmax rpn_batch_size transpose rpn_straddle_thresh append sum unmap choice fill empty int compute_targets dict zeros bbox_overlaps field_size len vstack _ratio_enum array str generate_anchors int arange meshgrid reshape transpose FieldOfAnchors rcnn_feat_stride max_size ceil float ravel fill empty hstack sqrt _whctrs round _mkanchors _whctrs _mkanchors has_mask_head has_fcn_head append has_panoptic_head range len mask_size expand_boxes max decode hstack astype maximum min int32 resize append zeros array range model_prefix DataLoader Timer evaluate_ssegs im_post resize cuda open str vis_mask get_unified_pan_result 
num_classes evaluate_boxes exit pprint tic eval_only load_state_dict has_fcn_head has_rcnn append to next vis_all_mask range format has_mask_head astype eval pformat info __iter__ zip enumerate load join items toc weight_path makedirs extend evaluate_panoptic test_iteration parameters int32 evaluate_masks has_panoptic_head output_path split enumerate backbone_freeze_at callback allreduce_async backbone_fix_bn model_prefix zero_grad SGD DistributedOptimizer use_syncbn pretrained DataLoader adjust_learning_rate save div_ cuda has_rpn use_horovod str DistributedSampler fcn_with_roi_loss relation_loss_weight has_relation load_state_dict has_fcn_head has_rcnn broadcast_parameters to next append eval_data state_dict update get freeze_backbone format has_mask_head bbox_loss_weight begin_iteration get_params_lr mean eval resume pformat info __iter__ item zip keys panoptic_loss_weight enumerate load join items train_model backward extend set_epoch reset fcn_loss_weight train step has_panoptic_head add_scalar

|

# Attention!!! We have made the [detectron2-based code](https://github.com/LaoYang1994/SOGNet-Dev) public, but there are still some bugs in it. We will fix them as soon as possible. # SOGNet This repository is for [SOGNet: Scene Overlap Graph Network for Panoptic Segmentation](https://arxiv.org/abs/1911.07527), which has been accepted by AAAI 2020 and won the Innovation Award in the COCO 2019 challenge, by [Yibo Yang](https://zero-lab-pku.github.io/personwise/yangyibo/), [Hongyang Li](https://zero-lab-pku.github.io/personwise/lihongyang/), [Xia Li](https://zero-lab-pku.github.io/personwise/lixia/), Qijie Zhao, [Jianlong Wu](https://zero-lab-pku.github.io/personwise/wujianlong/), [Zhouchen Lin](https://zero-lab-pku.github.io/personwise/linzhouchen/) This repo is modified from [UPSNet](https://github.com/uber-research/UPSNet). We have been transferring the code to the [detectron2](https://github.com/facebookresearch/detectron2) framework; this is not finished yet. ## Introduction The panoptic segmentation task requires a unified result from semantic and instance segmentation outputs that may contain overlaps. However, current studies widely ignore modeling overlaps. In this study, we aim to model overlap relations among instances and resolve them for panoptic segmentation. Inspired by scene graph representation, we formulate the overlapping problem as a simplified case, named scene overlap graph. We leverage each object's category, geometry and appearance features to perform relational embedding, and output a relation matrix that encodes overlap relations. In order to overcome the lack of supervision, we introduce a differentiable module to resolve the overlap between any pair of instances. The mask logits after removing overlaps are fed into per-pixel instance id classification, which leverages the panoptic supervision to assist in the modeling of overlap relations. 
Besides, we generate an approximate ground truth of overlap relations as the weak supervision, to quantify the accuracy of overlap relations predicted by our method. Experiments on COCO and Cityscapes demonstrate that our method is able to accurately predict overlap relations, and outperform the state-of-the-art performance for panoptic segmentation. Our method also won the Innovation Award in COCO 2019 challenge.  ## Usage

| 623 |

Larryliu912/Vincent-2.0

|

['style transfer']

|

['A Neural Algorithm of Artistic Style']

|

Vincent/histsimilar.py Vincent/vincent.py Vincent/vincent_2.0.py Vincent/vgg.py Vincent/stylize.py make_regalur_image hist_similar calc_similar calc_similar_by_path stylize _tensor_size load_net _pool_layer net_preloaded _conv_layer unprocess preprocess generate_noise_image style_loss_func content_loss_func imread load_vgg_model save_image main imread imsave load_net Graph shape range len mean loadmat _pool_layer relu reshape transpose _conv_layer enumerate conv2d constant Variable random_normal float astype dstack astype imsave _conv2d_relu Variable zeros _avgpool loadmat sum stylize imresize imsave shape imread sum range len uint8 astype save

|

# Vincent-2.0 A neural network that renders a picture in the style of Vincent van Gogh's oil paintings. It implements the algorithm and network from the paper A Neural Algorithm of Artistic Style (https://arxiv.org/pdf/1508.06576v2.pdf) in TensorFlow and Python. The paper proposes a method to obtain a representation of the style of one picture and to combine that style with the content of another picture. For Vincent, the main idea is: 1. Use the pre-trained classification network VGG 19 to extract features from different layers for each picture (imagenet-vgg-verydeep-19.mat is needed to run this program; because the file is too large to include, please download it from http://www.vlfeat.org/matconvnet/models/beta16/imagenet-vgg-verydeep-19.mat and put it in the Vincent folder). 2. The style is captured in the lower layers, and the content is captured in the higher layers. 3. Use gradient descent on the loss function to refine the image. 4. After the iterations, the result keeps part of the content of one picture and applies the style of the other picture to it.
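The content and style terms behind steps 2 and 3 can be sketched with NumPy as below. This is an illustrative sketch rather than the code in `Vincent/stylize.py`: the function names, the Gram-matrix normalization, and the `alpha`/`beta` weights are assumptions, and random arrays stand in for VGG 19 feature maps.

```python
import numpy as np


def gram_matrix(features):
    """Channel-by-channel correlations of one feature map of shape (H, W, C)."""
    h, w, c = features.shape
    flat = features.reshape(h * w, c)
    # Normalizing by the number of entries is an assumption of this sketch.
    return flat.T @ flat / (h * w * c)


def content_loss(generated, content):
    """Squared error between feature maps taken from a higher layer."""
    return 0.5 * np.sum((generated - content) ** 2)


def style_loss(generated, style):
    """Squared error between Gram matrices taken from a lower layer."""
    return np.sum((gram_matrix(generated) - gram_matrix(style)) ** 2)


def total_loss(gen_hi, content_hi, gen_lo, style_lo, alpha=1.0, beta=100.0):
    # alpha and beta trade content fidelity against style; values are illustrative.
    return alpha * content_loss(gen_hi, content_hi) + beta * style_loss(gen_lo, style_lo)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    gen_hi, content_hi = rng.normal(size=(4, 4, 8)), rng.normal(size=(4, 4, 8))
    gen_lo, style_lo = rng.normal(size=(8, 8, 4)), rng.normal(size=(8, 8, 4))
    print(total_loss(gen_hi, content_hi, gen_lo, style_lo))
```

Gradient descent then updates the pixels of the generated image to lower this total loss, which is what step 3 refers to.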

| 624 |

LaurantChao/VIP

|

['gaussian processes', 'stochastic optimization']

|

['Variational Implicit Processes']

|

import_libs.py validation.py test.py Main.py VIP_fun_speedup_recon_alpha_classic_validate.py

|

# Variational Implicit Processes This is example code for the paper titled Variational Implicit Processes (ICML 2019). This code implements VIP-BNN on a UCI dataset. To use this code, simply run Main.py. Minor differences from the paper might occur due to randomness. Dependencies: - sklearn - 0.19.0 - pandas - 0.22.0 - tensorflow - 1.4.0

| 625 |

LaurenceA/bayesfunc

|

['gaussian processes', 'data augmentation']

|

['Global inducing point variational posteriors for Bayesian neural networks and deep Gaussian processes']

|

bayesfunc/kernels_minimal.py bayesfunc/bconv2d.py bayesfunc/det.py bayesfunc/outputs.py bayesfunc/gp.py bayesfunc/dkp.py docs/conf.py bayesfunc/inducing.py bayesfunc/abstract_bnn.py examples/cifar10.py bayesfunc/transforms.py bayesfunc/wishart_dist.py bayesfunc/priors.py bayesfunc/prob_prog.py bayesfunc/general.py bayesfunc/wishart_layer.py bayesfunc/singular_cholesky.py bayesfunc/factorised_local_reparam.py examples/models/resnet8.py bayesfunc/__init__.py bayesfunc/random.py bayesfunc/factorised.py setup.py bayesfunc/conv_mm.py bayesfunc/lop.py uci/__init__.py AbstractConv2d AbstractLinear bconv2d conv_1x1 extract_patches_conv conv_mm batch_extract_patches_conv extract_patches_unfold DetLinear DetLinearWeights DetConv2d DetParam DetConv2dWeights InverseWishart SingularIWLayer IWLayer bartlett FactorisedLinear FactorisedConv2dWeights FactorisedParam FactorisedConv2d FactorisedLinearWeights FactorisedLRLinearWeights FactorisedLRConv2d FactorisedLRParam FactorisedLRLinear FactorisedParam FactorisedLRConv2dWeights AbstractLRLinear InducingAdd InducingWrapper Reduce KG DetBatchNorm2d Conv2d_2_FC WrapMod Bias MaxPool2d MultFeatures BiasFeature Sum unbatch AbstractBatchNorm2d clear_sample InducingRemove Cat2d get_sample_dict AdaptiveAvgPool2d MultKernel propagate batch AvgPool2d set_sample_dict WrapMod2d logpq _Conv2d_2_FC NormalLearnedScale BatchNorm2d Print KernelLIGP GIGP KernelGIGP GIConv2dWeights LILinearWeights rsample_logpq_weights_fc GILinear GILinearWeights GIConv2d GILinearFullPrec LILinear LIConv2d rsample_logpq_weights LIConv2dWeights Kernel ReluKernelFeatures FeaturesToKernel KernelFeatures CombinedKernel ReluKernelGram SqExpKernel SqExpKernelGram IdentityKernel KernelGram Inv TriangularMatrix PositiveDefiniteMatrix Product FullMatrix mvnormal_log_prob Matrix kron_prod Scale LowerTriangularMatrix trace_quad UpperTriangularMatrix Identity KFac mvnormal_log_prob_unnorm tests CategoricalOutput NormalOutput Output CutOutput DetScalePrior ScalePrior 
SpatialIWPrior IWPrior InsanePrior KFacIWPrior NealPrior FactorisedPrior VI_Normal VI_InverseWishart VI_Scale VI_Scalar VI_Gamma RandomLinear RandomParam RandomLinearWeights RandomConv2d RandomConv2dWeights singular_cholesky Blur DoG ConvTransform Identity InverseWishart Wishart bartlett IWLinear train test block net UCI Dataset view reshape transpose shape conv2d shape sum view reshape transpose expand shape conv2d pad permute view reshape transpose expand conv2d pad numel view extract_patches_conv unfold shape pad contiguous PositiveDefiniteMatrix Gamma arange randn LowerTriangularMatrix shape sqrt eye N tril InducingAdd InducingRemove hasattr modules hasattr modules named_modules _sample hasattr items detach clear_sample f set_sample_dict get_sample_dict contiguous GIGP DefaultKernel DefaultKernel GIGP InducingAdd InducingRemove randn squeeze transpose unsqueeze cholesky device cholesky_solve mvnormal_log_prob_unnorm sum full prior u exp transpose prec_L log_prec_lr log_prec_scaled randn cholesky transpose eye allclose ones device argmax backward train_samples squeeze zero_grad L logsumexp expand mean log floor Categorical step propagate len squeeze test_samples logsumexp expand mean argmax log Conv2d_2_FC AvgPool2d linear block Sequential conv2d ReLU AdaptiveAvgPool2d

|

# bayesfunc ## Installation Run: ```python python setup.py develop ``` which copies symlinks to your package directory. ## Tutorial Look at examples/simple.ipynb in the repo. ## Documentation

| 626 |

Lavender105/DFF

|

['object proposal generation', 'edge detection', 'semantic segmentation']

|

['CASENet: Deep Category-Aware Semantic Edge Detection', 'Dynamic Feature Fusion for Semantic Edge Detection']

|

pytorch-encoding/scripts/prepare_cityscapes.py pytorch-encoding/experiments/recognition/model/encnet.py pytorch-encoding/scripts/prepare_pcontext.py pytorch-encoding/encoding/nn/encoding.py pytorch-encoding/encoding/nn/customize.py pytorch-encoding/encoding/utils/log.py pytorch-encoding/encoding/nn/syncbn.py pytorch-encoding/experiments/recognition/model/mynn.py exps/visualize/__init__.py pytorch-encoding/encoding/__init__.py exps/datasets/base_sbd.py exps/models/base.py pytorch-encoding/encoding/datasets/ade20k.py pytorch-encoding/tests/unit_test/test_function.py pytorch-encoding/encoding/lib/cpu/setup.py pytorch-encoding/encoding/utils/pallete.py pytorch-encoding/encoding/models/psp.py pytorch-encoding/encoding/parallel.py pytorch-encoding/encoding/models/__init__.py pytorch-encoding/encoding/datasets/pcontext.py pytorch-encoding/encoding/models/model_zoo.py pytorch-encoding/encoding/lib/__init__.py pytorch-encoding/encoding/models/gcnet.py pytorch-encoding/experiments/recognition/dataset/minc.py pytorch-encoding/experiments/segmentation/train.py pytorch-encoding/encoding/nn/comm.py pytorch-encoding/scripts/prepare_coco.py pytorch-encoding/experiments/recognition/model/resnet.py exps/losses/customize.py exps/models/dff.py exps/visualize/visualize.py exps/train.py pytorch-encoding/encoding/models/model_store.py pytorch-encoding/encoding/models/encnet.py pytorch-encoding/encoding/utils/visualize.py pytorch-encoding/scripts/prepare_ade20k.py exps/datasets/sbd.py pytorch-encoding/experiments/recognition/main.py pytorch-encoding/encoding/datasets/base.py pytorch-encoding/tests/unit_test/test_module.py pytorch-encoding/encoding/utils/__init__.py pytorch-encoding/encoding/datasets/cityscapes.py pytorch-encoding/tests/unit_test/test_utils.py pytorch-encoding/experiments/recognition/dataset/cifar10.py pytorch-encoding/encoding/dilated/__init__.py pytorch-encoding/encoding/datasets/pascal_voc.py pytorch-encoding/encoding/utils/files_orig.py 
pytorch-encoding/encoding/utils/presets.py pytorch-encoding/encoding/dilated/resnet.py pytorch-encoding/experiments/segmentation/option.py pytorch-encoding/tests/lint.py pytorch-encoding/encoding/version.py pytorch-encoding/encoding/datasets/pascal_aug.py pytorch-encoding/setup.py pytorch-encoding/docs/source/conf.py pytorch-encoding/encoding/functions/encoding.py exps/datasets/__init__.py pytorch-encoding/encoding/utils/train_helper.py pytorch-encoding/encoding/datasets/__init__.py pytorch-encoding/encoding/models/casenet.py pytorch-encoding/encoding/utils/files.py pytorch-encoding/experiments/recognition/option.py pytorch-encoding/experiments/segmentation/demo.py pytorch-encoding/encoding/datasets/coco.py exps/option.py pytorch-encoding/scripts/prepare_pascal.py pytorch-encoding/encoding/models/base.py pytorch-encoding/experiments/recognition/model/deepten.py pytorch-encoding/encoding/lib/gpu/setup.py pytorch-encoding/scripts/prepare_minc.py pytorch-encoding/experiments/segmentation/test_models.py exps/models/__init__.py pytorch-encoding/encoding/utils/lr_scheduler.py pytorch-encoding/encoding/models/danet.py pytorch-encoding/encoding/nn/__init__.py pytorch-encoding/encoding/functions/customize.py exps/models/casenet.py exps/losses/__init__.py pytorch-encoding/encoding/functions/syncbn.py pytorch-encoding/encoding/nn/attention.py pytorch-encoding/encoding/functions/__init__.py pytorch-encoding/experiments/recognition/model/download_models.py exps/datasets/base_cityscapes.py pytorch-encoding/encoding/models/plain.py exps/datasets/cityscapes.py exps/test.py pytorch-encoding/encoding/models/fcn.py pytorch-encoding/experiments/recognition/model/encnetdrop.py pytorch-encoding/experiments/segmentation/test.py pytorch-encoding/encoding/utils/metrics.py Options eval_model test BaseDataset test_batchify_fn BaseDataset test_batchify_fn _get_cityscapes_pairs CityscapesEdgeDetection _get_sbd_pairs SBDEdgeDetection get_edge_dataset WeightedCrossEntropyWithLogits 
EdgeDetectionReweightedLosses_CPU EdgeDetectionReweightedLosses module_inference MultiEvalModule BaseNet flip_image resize_image pad_image crop_image CaseNet get_casenet get_dff DFF LocationAdaptiveLearner get_edge_model visualize_prediction apply_mask create_version_file develop install patched_make_field CallbackContext allreduce AllReduce DataParallelModel _criterion_parallel_apply execute_replication_callbacks Reduce patch_replication_callback DataParallelCriterion ADE20KSegmentation _get_ade20k_pairs BaseDataset test_batchify_fn _get_cityscapes_pairs CityscapesEdgeDetection COCOSegmentation VOCAugSegmentation VOCSegmentation ContextSegmentation get_segmentation_dataset get_edge_dataset ResNet resnet50 Bottleneck resnet152 conv3x3 resnet34 resnet18 BasicBlock resnet101 NonMaxSuppression scaled_l2 aggregate _aggregate pairwise_cosine _scaled_l2 _batchnormtrain batchnormtrain _sum_square sum_square module_inference MultiEvalModule BaseNet flip_image resize_image pad_image crop_image CaseNet get_casenet DANet get_danet DANetHead EncHead EncNet get_encnet_resnet50_ade EncModule get_encnet_resnet101_pcontext get_encnet get_encnet_resnet50_pcontext get_fcn_resnet50_pcontext FCNHead get_fcn_resnet50_ade FCN get_fcn get_gcnet Customized_Unit GCNet GCNetHead GCN get_model_file short_hash purge pretrained_model_list get_model PlainNet get_plain PlainNetHead PSPHead PSP get_psp_resnet50_ade get_psp get_edge_model CAM_Module PAM_Module SyncMaster FutureResult SlavePipe softmax_crossentropy WeightedCrossEntropyWithLogits SegmentationMultiLosses Mean EdgeDetectionReweightedChannelwiseLosses EdgeDetectionReweightedLosses_CPU WeightedChannelwiseCrossEntropyWithLogits EdgeDetectionReweightedChannelwiseLosses_CPU SegmentationLosses Sum Normalize View PyramidPooling GramMatrix EdgeDetectionReweightedLosses Encoding EncodingDrop UpsampleConv2d Inspiration BatchNorm3d SharedTensor _SyncBatchNorm BatchNorm1d BatchNorm2d download save_checkpoint mkdir check_sha1 download 
save_checkpoint mkdir check_sha1 create_logger LR_Scheduler SegmentationMetric batch_intersection_union batch_pix_accuracy pixel_accuracy intersection_and_union get_mask_pallete _get_voc_pallete load_image get_selabel_vector EMA visualize_prediction apply_mask class_specific_color main Options Dataloader Lighting find_classes MINCDataloder Dataloader make_dataset Net Net Net test EncDropLayer Bottleneck EncLayerV3 conv3x3 Basicblock EncBasicBlock EncBottleneck EncLayerV2 EncLayer Net Options test Trainer parse_args download_ade download_city parse_args parse_args install_coco_api download_coco parse_args download_minc parse_args download_voc download_aug install_pcontext_api parse_args download_ade filepath_enumerate LintHelper get_header_guard_dmlc main process test_aggregate_v2 test_non_max_suppression test_sum_square test_syncbn_func test_encoding_dist_inference test_encoding_dist test_aggregate test_scaled_l2 _assert_tensor_close _assert_tensor_close testSyncBN test_encoding test_all_reduce test_segmentation_metrics Options eval_model test BaseDataset test_batchify_fn _get_cityscapes_pairs CityscapesEdgeDetection _get_sbd_pairs SBDEdgeDetection get_edge_dataset WeightedCrossEntropyWithLogits EdgeDetectionReweightedLosses_CPU EdgeDetectionReweightedLosses module_inference MultiEvalModule BaseNet flip_image resize_image pad_image crop_image CaseNet get_casenet get_dff DFF LocationAdaptiveLearner get_edge_model visualize_prediction apply_mask create_version_file develop install patched_make_field CallbackContext allreduce AllReduce DataParallelModel _criterion_parallel_apply execute_replication_callbacks Reduce patch_replication_callback DataParallelCriterion ADE20KSegmentation _get_ade20k_pairs BaseDataset test_batchify_fn _get_cityscapes_pairs CityscapesEdgeDetection COCOSegmentation VOCAugSegmentation VOCSegmentation ContextSegmentation get_segmentation_dataset get_edge_dataset ResNet resnet50 Bottleneck resnet152 conv3x3 resnet34 resnet18 BasicBlock resnet101 
NonMaxSuppression scaled_l2 aggregate _aggregate pairwise_cosine _scaled_l2 _batchnormtrain batchnormtrain _sum_square sum_square module_inference MultiEvalModule BaseNet flip_image resize_image pad_image crop_image CaseNet get_casenet DANet get_danet DANetHead EncHead EncNet get_encnet_resnet50_ade EncModule get_encnet_resnet101_pcontext get_encnet get_encnet_resnet50_pcontext get_fcn_resnet50_pcontext FCNHead get_fcn_resnet50_ade FCN get_fcn get_gcnet Customized_Unit GCNet GCNetHead GCN get_model_file short_hash purge pretrained_model_list get_model PlainNet get_plain PlainNetHead PSPHead PSP get_psp_resnet50_ade get_psp get_edge_model CAM_Module PAM_Module SyncMaster FutureResult SlavePipe softmax_crossentropy WeightedCrossEntropyWithLogits SegmentationMultiLosses Mean EdgeDetectionReweightedChannelwiseLosses EdgeDetectionReweightedLosses_CPU WeightedChannelwiseCrossEntropyWithLogits EdgeDetectionReweightedChannelwiseLosses_CPU SegmentationLosses Sum Normalize View PyramidPooling GramMatrix EdgeDetectionReweightedLosses Encoding EncodingDrop UpsampleConv2d Inspiration BatchNorm3d SharedTensor _SyncBatchNorm BatchNorm1d BatchNorm2d download save_checkpoint mkdir check_sha1 create_logger LR_Scheduler SegmentationMetric batch_intersection_union batch_pix_accuracy pixel_accuracy intersection_and_union get_mask_pallete _get_voc_pallete load_image get_selabel_vector EMA visualize_prediction apply_mask class_specific_color main Options Dataloader Lighting find_classes MINCDataloder make_dataset Net Net test EncDropLayer Bottleneck EncLayerV3 conv3x3 Basicblock EncBasicBlock EncBottleneck EncLayerV2 EncLayer Net Options test Trainer parse_args download_ade download_city install_coco_api download_coco download_minc download_voc download_aug install_pcontext_api download_ade filepath_enumerate LintHelper get_header_guard_dmlc main process test_aggregate_v2 test_non_max_suppression test_sum_square test_syncbn_func test_encoding_dist_inference test_encoding_dist 
test_aggregate test_scaled_l2 _assert_tensor_close testSyncBN test_encoding test_all_reduce test_segmentation_metrics load get_edge_dataset num_class get_edge_model model print Compose tqdm eval DataLoader resume load_state_dict append dataset cuda range enumerate makedirs resume_dir test isinstance zip join get_path_pairs join get_path_pairs flip_image evaluate size resize_ pad array range load CaseNet get_model_file load_state_dict NUM_CLASS load DFF get_model_file load_state_dict NUM_CLASS range where imsave transpose astype shape apply_mask zeros bool array range print join handle_item field_body label field_name list_type join isinstance _worker len start is_grad_enabled append range Lock list hasattr __data_parallel_replicate__ modules enumerate len replicate join get_path_pairs replace load_url ResNet load_state_dict load_url ResNet load_state_dict load ResNet load_state_dict get_model_file load ResNet load_state_dict get_model_file load ResNet load_state_dict is_cuda normalize load get_model_file DANet load_state_dict NUM_CLASS load EncNet get_model_file load_state_dict NUM_CLASS load get_model_file load_state_dict NUM_CLASS FCN load get_model_file GCNet load_state_dict NUM_CLASS get join remove format print check_sha1 expanduser download exists makedirs join remove endswith expanduser listdir lower load get_model_file PlainNet load_state_dict NUM_CLASS load PSP get_model_file load_state_dict NUM_CLASS save makedirs get join isdir print dirname abspath expanduser makedirs sha1 makedirs copyfile join setFormatter format FileHandler getLogger addHandler strftime StreamHandler localtime Formatter setLevel INFO makedirs sum max astype dtype max histogram astype sum asarray asarray histogram fromarray astype putpalette range int ANTIALIAS convert resize input_transform histc size zeros float range hsv_to_rgb ones class_specific_color uint32 model SGD DataParallel clf dataset ion cuda seed show getloader ylabel Dataloader savefig load_state_dict CrossEntropyLoss 
range parse format plot test Net eval import_module resume lr start_epoch manual_seed lr_step load lr_scheduler LR_Scheduler print xlabel parameters isfile train epochs len sort parameters net get_mask_pallete get_segmentation_model save get_segmentation_dataset format model_zoo zip join SegmentationMetric get_model add_argument ArgumentParser join download mkdir print join mkdir download mkdir rmtree system join download mkdir join download mkdir join download mkdir basename move rmtree system join normpath isfile append walk FileInfo sub startswith append RepositoryName project_name find process_python str process_cpp rsplit exclude_path ArgumentParser project exit stderr normpath parse_args process filetype walk filepath_enumerate set print_summary getwriter join getreader add_argument path StreamReaderWriter format aggregate Variable print gradcheck uniform_ scaled_l2 format Variable print gradcheck uniform_ format aggregate_v2 Variable print requires_grad_ gradcheck uniform_ _assert_tensor_close py_aggregate_v2 cuda detach format backward print encoding_dist requires_grad_ gradcheck mahalanobis_dist zero_ uniform_ _assert_tensor_close sum cuda detach encoding_dist_inference format backward Variable print grad requires_grad_ gradcheck mahalanobis_dist uniform_ _assert_tensor_close sum cuda detach format Variable print gradcheck uniform_ sum_square format batchnormtrain Variable print gradcheck uniform_ _test_nms format Variable print gradcheck uniform_ cuda data allreduce format print device_count gradcheck _assert_tensor_close range print _check_batchnorm_result patch_replication_callback double cuda range spacing batch_intersection_union print batch_pix_accuracy random mean unsqueeze randint matrix argmax long pixel_accuracy intersection_and_union

|

# Dynamic Feature Fusion for Semantic Edge Detection (DFF)

Yuan Hu, Yunpeng Chen, Xiang Li and Jiashi Feng

### Video Demo

We have released a demo video of DFF on [Youtube](https://youtu.be/wSCKTepMfhY) and [Bilibili](https://www.bilibili.com/video/av54650328/).

## Introduction

The repository contains the entire pipeline (including data preprocessing, training, testing, visualization, evaluation and demo generation, etc.) for DFF using PyTorch 1.0.

We propose a novel dynamic feature fusion strategy for semantic edge detection. This is achieved by a weight learner that infers proper fusion weights over multi-level features for each location of the feature map, conditioned on the specific input. We show that our model with the novel dynamic feature fusion is superior to fixed weight fusion and also to the naïve location-invariant weight fusion methods, and we achieve new state-of-the-art results on the Cityscapes and SBD benchmarks. For more details, please refer to the [IJCAI2019](https://www.ijcai.org/proceedings/2019/0110.pdf) paper.

We also reproduce CASENet in this repository, and actually achieve higher accuracy than the original [paper](https://arxiv.org/abs/1705.09759).
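
The difference between fixed weight fusion and location-adaptive fusion can be illustrated with a toy example. This is only a sketch in plain Python and not the code in this repository: the function names `fuse_fixed` and `fuse_location_adaptive` are hypothetical, and the fusion weights are supplied by hand here, whereas in DFF they are inferred from the input by the weight learner.

```python
# Toy illustration (hypothetical names, not the repository's API).
# A "feature map" is a nested list of shape H x W; `features` holds one map per level.

def fuse_fixed(features, weights):
    """Fixed weight fusion: one scalar weight per level, shared by every location."""
    h, w = len(features[0]), len(features[0][0])
    return [
        [sum(wk * f[i][j] for wk, f in zip(weights, features)) for j in range(w)]
        for i in range(h)
    ]

def fuse_location_adaptive(features, weight_maps):
    """Location-adaptive fusion: one weight per level *and* per location."""
    h, w = len(features[0]), len(features[0][0])
    return [
        [sum(wm[i][j] * f[i][j] for wm, f in zip(weight_maps, features)) for j in range(w)]
        for i in range(h)
    ]

# Two feature levels on a 1 x 2 map: each level responds at a different location.
low_level = [[1.0, 0.0]]
high_level = [[0.0, 1.0]]

fixed = fuse_fixed([low_level, high_level], weights=[0.5, 0.5])

# Hand-picked weight maps (an assumption for illustration): trust the low level
# at the first location and the high level at the second.
adaptive = fuse_location_adaptive(
    [low_level, high_level],
    weight_maps=[[[1.0, 0.0]], [[0.0, 1.0]]],
)

print(fixed)     # [[0.5, 0.5]]
print(adaptive)  # [[1.0, 1.0]]
```

With shared weights both responses are halved, while per-location weights can keep the stronger response at each position.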

| 627 |

LeMinhThong/blackbox-attack

|

['adversarial attack']

|

['Towards Evaluating the Robustness of Neural Networks']

|

boundary_attack.py blackbox_attack.py models.py batch_attack.py zoo_attack.py attack_untargeted fine_grained_binary_search initial_fine_grained_binary_search initial_fine_grained_binary_search_targeted attack_mnist fine_grained_binary_search_local attack_imgnet fine_grained_binary_search_targeted attack_targeted fine_grained_binary_search_local_targeted attack_single attack_cifar attack_untargeted fine_grained_binary_search attack_mnist fine_grained_binary_search_local attack_cifar10 fine_grained_binary_search_targeted attack_targeted fine_grained_binary_search_local_targeted attack_imagenet attack_untargeted fine_grained_binary_search_local fine_grained_binary_search boundary_attack_mnist save_model ToSpaceBGR train_cifar10 load_mnist_data ToRange255 load_model ImagenetTestDataset SimpleMNIST train_mnist IMAGENET show_image CIFAR10 test_cifar10 imagenettest load_imagenet_data load_cifar10_data MNIST train_simple_mnist test_mnist zoo_attack attack coordinate_ADAM sub_ randn cuda FloatTensor squeeze expand range predict initial_fine_grained_binary_search_targeted size fine_grained_binary_search_local_targeted manual_seed float type enumerate time norm print min clone max mul view FloatTensor predict_batch size resize_ clone min expand type cuda range view print predict_batch min expand sub_ randn cuda initial_fine_grained_binary_search FloatTensor squeeze expand range predict size manual_seed float type enumerate time norm print predict_batch fine_grained_binary_search_local min clone max mul view FloatTensor predict_batch size resize_ clone min expand type cuda range view print predict_batch min expand attack_untargeted print attack_targeted show_image numpy predict MNIST seed list format pop load_model print choice DataParallel eval is_available randint cuda range load_mnist_data len seed pop list format load_model print len choice DataParallel eval CIFAR10 is_available randint cuda range load_cifar10_data seed pop list format print IMAGENET choice randint 
imagenettest range len set fine_grained_binary_search_targeted sample zeros len enumerate fine_grained_binary_search set sample zeros len format load_model print DataParallel eval CIFAR10 is_available cuda load_cifar10_data len format print IMAGENET load_imagenet_data len enumerate MNIST attack_untargeted format load_model print predict DataParallel eval show_image is_available numpy cuda load_mnist_data enumerate flatten join print range MNIST DataLoader DataLoader CIFAR10 ImageFolder Compose Normalize DataLoader DataLoader Compose Normalize ImagenetTestDataset criterion model Variable backward print zero_grad Adam range parameters step CrossEntropyLoss enumerate criterion model Variable backward print zero_grad SGD range parameters is_available train step CrossEntropyLoss enumerate data model Variable print eval is_available max criterion model Variable backward print zero_grad SGD range parameters is_available train step CrossEntropyLoss enumerate data model Variable print eval is_available max save state_dict load load_state_dict sqrt power range reshape permutation scatter_ cuda view FloatTensor ones coordinate_ADAM from_numpy sum range size zero_ net norm Variable clamp print zeros numpy MNIST module load_model print predict_batch DataParallel eval DataLoader attack CIFAR10 is_available cuda enumerate load_mnist_data load_cifar10_data

|

# blackbox-attack

### About

Implementations of the blackbox attack algorithms in PyTorch

### Model description

There are two CNN models for the MNIST dataset: a simple model and the C&W model.

Simple Model for MNIST: stride = 1, padding = 0

Layer 1: Conv2d 5x5x16, BatchNorm(16), ReLU, Max Pooling 2x2

Layer 2: Conv2d 5x5x32, BatchNorm(32), ReLU, Max Pooling 2x2

Layer 3: FC 10
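
The size of the input to the final FC layer follows from the layer description above. The sketch below works through the arithmetic in plain Python, assuming the standard 28x28 MNIST input and a 2x2 max pooling with stride 2 and no padding (the pooling stride is not stated above, so it is an assumption). The helper names are hypothetical and are not taken from `models.py`.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Spatial output size of a convolution along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1

def pool_out(size, kernel=2, stride=2):
    """Spatial output size of max pooling along one dimension (stride 2 assumed)."""
    return (size - kernel) // stride + 1

def simple_mnist_shapes(input_size=28):
    """Return (channels, height, width) after each conv + pool stage."""
    size = input_size
    shapes = []
    for channels in (16, 32):              # Layer 1 and Layer 2 output channels
        size = conv_out(size, kernel=5)    # 5x5 conv, stride 1, padding 0
        size = pool_out(size)              # 2x2 max pooling
        shapes.append((channels, size, size))
    return shapes

shapes = simple_mnist_shapes()
channels, height, width = shapes[-1]
fc_in = channels * height * width          # flattened features fed to FC 10

print(shapes)  # [(16, 12, 12), (32, 4, 4)]
print(fc_in)   # 512
```

Under these assumptions the FC layer maps 512 features to the 10 MNIST classes.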

| 628 |

Leandropassosjr/o2pf

|

['breast cancer detection']

|