modelId

stringlengths 4

81

| tags

list | pipeline_tag

stringclasses 17

values | config

dict | downloads

int64 0

59.7M

| first_commit

timestamp[ns, tz=UTC] | card

stringlengths 51

438k

|

|---|---|---|---|---|---|---|

AnonymousSub/SR_specter | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

]

| feature-extraction | {

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 5 | null | ---

license: mit

tags:

- nowcasting

- forecasting

- timeseries

- remote-sensing

---

# Nowcasting CNN

## Model description

3d conv model, that takes in different data streams

architecture is roughly

1. satellite image time series goes into many 3d convolution layers.

2. nwp time series goes into many 3d convolution layers.

3. Final convolutional layer goes to full connected layer. This is joined by

other data inputs like

- pv yield

- time variables

Then there ~4 fully connected layers which end up forecasting the

pv yield / gsp into the future

## Intended uses & limitations

Forecasting short term PV power for different regions and nationally in the UK

## How to use

[More information needed]

## Limitations and bias

[More information needed]

## Training data

Training data is EUMETSAT RSS imagery over the UK, on-the-ground PV data, and NWP predictions.

## Training procedure

[More information needed]

## Evaluation results

[More information needed]

|

AnonymousSub/T5_pubmedqa_question_generation | [

"pytorch",

"t5",

"text2text-generation",

"transformers",

"autotrain_compatible"

]

| text2text-generation | {

"architectures": [

"T5ForConditionalGeneration"

],

"model_type": "t5",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": true,

"length_penalty": 2,

"max_length": 200,

"min_length": 30,

"no_repeat_ngram_size": 3,

"num_beams": 4,

"prefix": "summarize: "

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": true,

"max_length": 300,

"num_beams": 4,

"prefix": "translate English to German: "

},

"translation_en_to_fr": {

"early_stopping": true,

"max_length": 300,

"num_beams": 4,

"prefix": "translate English to French: "

},

"translation_en_to_ro": {

"early_stopping": true,

"max_length": 300,

"num_beams": 4,

"prefix": "translate English to Romanian: "

}

}

} | 6 | 2022-09-30T14:29:25Z | ---

language:

- is

- en

- multilingual

tags:

- translation

inference:

parameters:

src_lang: is_IS

tgt_lang: en_XX

decoder_start_token_id: 2

max_length: 512

widget:

- text: Einu sinni átti ég hest. Hann var svartur og hvítur.

---

# mBART based translation model

This model was trained to translate multiple sentences at once, compared to one sentence at a time.

It will occasionally combine sentences or add an extra sentence.

This is the same model as are provided on CLARIN: https://repository.clarin.is/repository/xmlui/handle/20.500.12537/278

|

AnonymousSub/bert-base-uncased_wikiqa | [

"pytorch",

"bert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 30 | null | ---

language:

- en

tags:

- esb

datasets:

- esb/datasets

- librispeech_asr

---

To reproduce this run, execute:

```python

#!/usr/bin/env bash

python run_flax_speech_recognition_seq2seq.py \

--dataset_name="esb/datasets" \

--model_name_or_path="esb/wav2vec2-aed-pretrained" \

--dataset_config_name="librispeech" \

--output_dir="./" \

--wandb_name="wav2vec2-aed-librispeech" \

--wandb_project="wav2vec2-aed" \

--per_device_train_batch_size="8" \

--per_device_eval_batch_size="2" \

--learning_rate="1e-4" \

--warmup_steps="500" \

--logging_steps="25" \

--max_steps="50001" \

--eval_steps="10000" \

--save_steps="10000" \

--generation_max_length="40" \

--generation_num_beams="1" \

--final_generation_max_length="300" \

--final_generation_num_beams="12" \

--generation_length_penalty="1.6" \

--hidden_dropout="0.2" \

--activation_dropout="0.2" \

--feat_proj_dropout="0.2" \

--overwrite_output_dir \

--gradient_checkpointing \

--freeze_feature_encoder \

--predict_with_generate \

--do_eval \

--do_train \

--do_predict \

--push_to_hub \

--use_auth_token

```

|

AnonymousSub/bert_hier_diff_equal_wts_epochs_1_shard_1 | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

]

| feature-extraction | {

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 4 | null | ---

language:

- en

tags:

- esb

datasets:

- esb/datasets

- mozilla-foundation/common_voice_9_0

---

To reproduce this run, execute:

```python

#!/usr/bin/env bash

python run_flax_speech_recognition_seq2seq.py \

--dataset_name="esb/datasets" \

--model_name_or_path="esb/wav2vec2-aed-pretrained" \

--dataset_config_name="common_voice" \

--output_dir="./" \

--wandb_name="wav2vec2-aed-common-voice" \

--wandb_project="wav2vec2-aed" \

--per_device_train_batch_size="8" \

--per_device_eval_batch_size="2" \

--learning_rate="1e-4" \

--warmup_steps="500" \

--logging_steps="25" \

--max_steps="50001" \

--eval_steps="10000" \

--save_steps="10000" \

--generation_max_length="40" \

--generation_num_beams="1" \

--final_generation_max_length="200" \

--generation_num_beams="14" \

--generation_length_penalty="1.2" \

--max_eval_duration_in_seconds="20" \

--overwrite_output_dir \

--gradient_checkpointing \

--freeze_feature_encoder \

--predict_with_generate \

--do_eval \

--do_train \

--do_predict \

--push_to_hub \

--use_auth_token

```

|

AnonymousSub/bert_hier_diff_equal_wts_epochs_1_shard_10 | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

]

| feature-extraction | {

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 1 | null | ---

language:

- en

tags:

- esb

datasets:

- esb/datasets

- LIUM/tedlium

---

To reproduce this run, execute:

```python

#!/usr/bin/env bash

python run_flax_speech_recognition_seq2seq.py \

--dataset_name="esb/datasets" \

--model_name_or_path="esb/wav2vec2-aed-tedlium" \

--dataset_config_name="tedlium" \

--output_dir="./" \

--wandb_name="wav2vec2-aed-tedlium" \

--wandb_project="wav2vec2-aed" \

--per_device_train_batch_size="8" \

--per_device_eval_batch_size="2" \

--learning_rate="1e-4" \

--warmup_steps="500" \

--logging_steps="25" \

--max_steps="50001" \

--eval_steps="10000" \

--save_steps="10000" \

--generation_max_length="40" \

--generation_num_beams="1" \

--final_generation_max_length="250" \

--final_generation_num_beams="12" \

--generation_length_penalty="1.5" \

--hidden_dropout="0.2" \

--activation_dropout="0.2" \

--feat_proj_dropout="0.2" \

--overwrite_output_dir \

--gradient_checkpointing \

--freeze_feature_encoder \

--predict_with_generate \

--do_eval \

--do_train \

--do_predict \

--push_to_hub \

--use_auth_token

```

|

AnonymousSub/bert_mean_diff_epochs_1_shard_1 | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

]

| feature-extraction | {

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 6 | 2022-09-30T14:39:11Z | ---

language:

- en

tags:

- esb

datasets:

- esb/datasets

- facebook/voxpopuli

---

To reproduce this run, execute:

```python

#!/usr/bin/env bash

python run_flax_speech_recognition_seq2seq.py \

--dataset_name="esb/datasets" \

--model_name_or_path="esb/wav2vec2-aed-pretrained" \

--dataset_config_name="voxpopuli" \

--output_dir="./" \

--wandb_name="wav2vec2-aed-voxpopuli" \

--wandb_project="wav2vec2-aed" \

--per_device_train_batch_size="8" \

--per_device_eval_batch_size="1" \

--learning_rate="1e-4" \

--warmup_steps="500" \

--logging_steps="25" \

--max_steps="10001" \

--eval_steps="10000" \

--save_steps="10000" \

--generation_max_length="40" \

--generation_num_beams="1" \

--final_generation_max_length="225" \

--final_generation_num_beams="5" \

--generation_length_penalty="0.8" \

--hidden_dropout="0.2" \

--activation_dropout="0.2" \

--feat_proj_dropout="0.2" \

--overwrite_output_dir \

--gradient_checkpointing \

--freeze_feature_encoder \

--predict_with_generate \

--do_eval \

--do_train \

--do_predict \

--push_to_hub \

--use_auth_token

```

|

AnonymousSub/bert_mean_diff_epochs_1_shard_10 | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

]

| feature-extraction | {

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 4 | null | ---

language:

- en

tags:

- esb

datasets:

- esb/datasets

- speechcolab/gigaspeech

---

To reproduce this run, execute:

```python

#!/usr/bin/env bash

python run_flax_speech_recognition_seq2seq.py \

--dataset_name="esb/datasets" \

--model_name_or_path="esb/wav2vec2-aed-pretrained" \

--dataset_config_name="gigaspeech" \

--output_dir="./" \

--wandb_name="wav2vec2-aed-gigaspeech" \

--wandb_project="wav2vec2-aed" \

--per_device_train_batch_size="8" \

--per_device_eval_batch_size="2" \

--learning_rate="1e-4" \

--warmup_steps="500" \

--logging_steps="25" \

--max_steps="50001" \

--eval_steps="10000" \

--save_steps="10000" \

--generation_max_length="40" \

--generation_num_beams="1" \

--final_generation_max_length="200" \

--final_generation_num_beams="14" \

--generation_length_penalty="1.2" \

--overwrite_output_dir \

--gradient_checkpointing \

--freeze_feature_encoder \

--predict_with_generate \

--do_eval \

--do_train \

--do_predict \

--push_to_hub \

--use_auth_token

```

|

AnonymousSub/bert_snips | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

]

| feature-extraction | {

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 5 | null | ---

language:

- en

tags:

- esb

datasets:

- esb/datasets

- kensho/spgispeech

---

To reproduce this run, execute:

```python

#!/usr/bin/env bash

python run_flax_speech_recognition_seq2seq.py \

--dataset_name="esb/datasets" \

--model_name_or_path="esb/wav2vec2-aed-pretrained" \

--dataset_config_name="spgispeech" \

--output_dir="./" \

--wandb_name="wav2vec2-aed-spgispeech" \

--wandb_project="wav2vec2-aed" \

--per_device_train_batch_size="8" \

--per_device_eval_batch_size="2" \

--learning_rate="1e-4" \

--warmup_steps="500" \

--logging_steps="25" \

--max_steps="50001" \

--eval_steps="10000" \

--save_steps="10000" \

--generation_max_length="40" \

--generation_num_beams="1" \

--final_generation_max_length="225" \

--final_generation_num_beams="14" \

--generation_length_penalty="1.6" \

--overwrite_output_dir \

--gradient_checkpointing \

--freeze_feature_encoder \

--predict_with_generate \

--do_eval \

--do_train \

--do_predict \

--push_to_hub \

--use_auth_token

```

|

AnonymousSub/bert_triplet_epochs_1_shard_1 | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

]

| feature-extraction | {

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 2 | null | ---

language:

- en

tags:

- esb

datasets:

- esb/datasets

- revdotcom/earnings22

---

To reproduce this run, execute:

```python

#!/usr/bin/env bash

python run_flax_speech_recognition_seq2seq.py \

--dataset_name="esb/datasets" \

--model_name_or_path="esb/wav2vec2-aed-pretrained" \

--dataset_config_name="earnings22" \

--output_dir="./" \

--wandb_name="wav2vec2-aed-earnings22" \

--wandb_project="wav2vec2-aed" \

--per_device_train_batch_size="8" \

--per_device_eval_batch_size="4" \

--logging_steps="25" \

--max_steps="50000" \

--eval_steps="10000" \

--save_steps="10000" \

--generation_max_length="40" \

--generation_num_beams="1" \

--generation_length_penalty="1.2" \

--final_generation_max_length="200" \

--final_generation_num_beams="5" \

--learning_rate="1e-4" \

--warmup_steps="500" \

--hidden_dropout="0.2" \

--activation_dropout="0.2" \

--feat_proj_dropout="0.2" \

--overwrite_output_dir \

--gradient_checkpointing \

--freeze_feature_encoder \

--predict_with_generate \

--do_eval \

--do_train \

--do_predict \

--push_to_hub \

--use_auth_token

```

|

AnonymousSub/bert_triplet_epochs_1_shard_10 | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

]

| feature-extraction | {

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 1 | null | ---

language:

- en

tags:

- esb

datasets:

- esb/datasets

- edinburghcstr/ami

---

To reproduce this run, execute:

```python

#!/usr/bin/env bash

python run_flax_speech_recognition_seq2seq.py \

--dataset_name="esb/datasets" \

--model_name_or_path="esb/wav2vec2-aed-pretrained" \

--dataset_config_name="ami" \

--output_dir="./" \

--wandb_name="wav2vec2-aed-ami" \

--wandb_project="wav2vec2-aed" \

--per_device_train_batch_size="8" \

--per_device_eval_batch_size="4" \

--learning_rate="1e-4" \

--warmup_steps="500" \

--logging_steps="25" \

--max_steps="50001" \

--eval_steps="10000" \

--save_steps="10000" \

--generation_max_length="40" \

--generation_num_beams="1" \

--final_generation_max_length="225" \

--final_generation_num_beams="5" \

--generation_length_penalty="1.4" \

--hidden_dropout="0.2" \

--activation_dropout="0.2" \

--feat_proj_dropout="0.2" \

--overwrite_output_dir \

--gradient_checkpointing \

--freeze_feature_encoder \

--predict_with_generate \

--do_eval \

--do_train \

--do_predict \

--push_to_hub \

--use_auth_token

```

|

AnonymousSub/cline-papers-biomed-0.618 | [

"pytorch",

"roberta",

"transformers"

]

| null | {

"architectures": [

"LecbertForPreTraining"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 2 | null | ---

license: mit

---

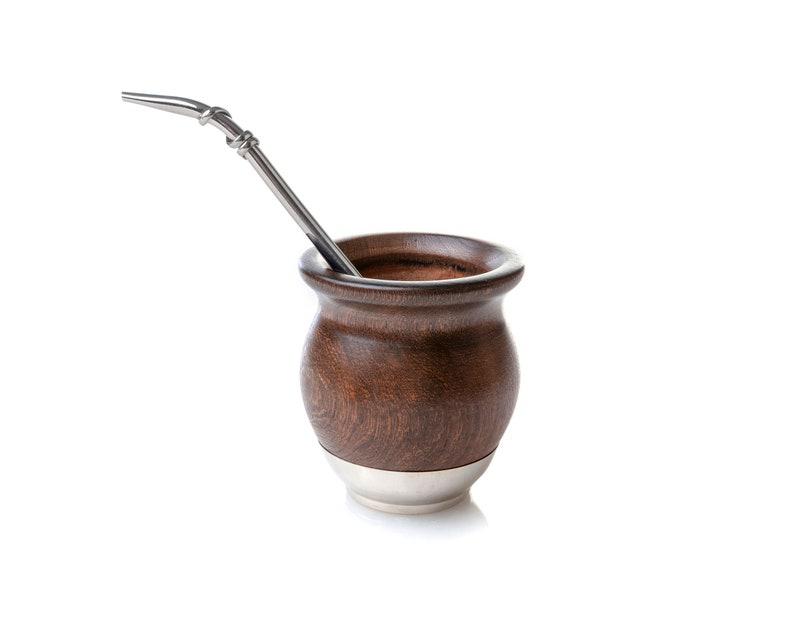

### mate on Stable Diffusion via Dreambooth

#### model by machinelearnear

This your the Stable Diffusion model fine-tuned the mate concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **a photo of sks mate**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

AnonymousSub/cline-s10-SR | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

language:

- en

tags:

- esb

datasets:

- esb/datasets

- ldc/switchboard

---

To reproduce this run, execute:

```python

#!/usr/bin/env bash

python run_flax_speech_recognition_seq2seq.py \

--dataset_name="esb/datasets" \

--model_name_or_path="esb/wav2vec2-aed-pretrained" \

--dataset_config_name="switchboard" \

--output_dir="./" \

--wandb_name="wav2vec2-aed-switchboard" \

--wandb_project="wav2vec2-aed" \

--per_device_train_batch_size="8" \

--per_device_eval_batch_size="2" \

--learning_rate="1e-4" \

--warmup_steps="500" \

--logging_steps="25" \

--max_steps="50001" \

--eval_steps="10000" \

--save_steps="10000" \

--generation_max_length="40" \

--generation_num_beams="1" \

--final_generation_max_length="260" \

--final_generation_num_beams="5" \

--generation_length_penalty="0.8" \

--overwrite_output_dir \

--gradient_checkpointing \

--freeze_feature_encoder \

--predict_with_generate \

--do_eval \

--do_train \

--do_predict \

--push_to_hub \

--use_auth_token

```

|

AnonymousSub/cline_emanuals | [

"pytorch",

"roberta",

"transformers"

]

| null | {

"architectures": [

"LecbertForPreTraining"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3 | null | ---

language:

- en

tags:

- esb

datasets:

- esb/datasets

- ldc/chime-4

---

To reproduce this run, execute:

```python

#!/usr/bin/env bash

python run_flax_speech_recognition_seq2seq.py \

--dataset_name="esb/datasets" \

--model_name_or_path="esb/wav2vec2-aed-pretrained" \

--dataset_config_name="chime4" \

--output_dir="./" \

--wandb_name="wav2vec2-aed-chime4" \

--wandb_project="wav2vec2-aed" \

--per_device_train_batch_size="8" \

--per_device_eval_batch_size="4" \

--logging_steps="25" \

--max_steps="50001" \

--eval_steps="10000" \

--save_steps="10000" \

--generation_max_length="40" \

--generation_num_beams="1" \

--final_generation_max_length="250" \

--final_generation_num_beams="5" \

--generation_length_penalty="0.6" \

--learning_rate="1e-4" \

--warmup_steps="500" \

--hidden_dropout="0.2" \

--activation_dropout="0.2" \

--feat_proj_dropout="0.2" \

--overwrite_output_dir \

--gradient_checkpointing \

--freeze_feature_encoder \

--predict_with_generate \

--do_eval \

--do_train \

--do_predict \

--push_to_hub \

--use_auth_token

```

|

AnonymousSub/cline_wikiqa | [

"pytorch",

"roberta",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"RobertaForSequenceClassification"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 27 | null | ---

license: mit

tags:

- generated_from_trainer

metrics:

- f1

model-index:

- name: stbl_clinical_bert_ft_rs7

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# stbl_clinical_bert_ft_rs7

This model is a fine-tuned version of [emilyalsentzer/Bio_ClinicalBERT](https://huggingface.co/emilyalsentzer/Bio_ClinicalBERT) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0848

- F1: 0.9208

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 12

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.2755 | 1.0 | 101 | 0.0986 | 0.8484 |

| 0.0655 | 2.0 | 202 | 0.0780 | 0.8873 |

| 0.0299 | 3.0 | 303 | 0.0622 | 0.9047 |

| 0.0145 | 4.0 | 404 | 0.0675 | 0.9110 |

| 0.0097 | 5.0 | 505 | 0.0706 | 0.9141 |

| 0.0057 | 6.0 | 606 | 0.0753 | 0.9174 |

| 0.0032 | 7.0 | 707 | 0.0755 | 0.9182 |

| 0.0024 | 8.0 | 808 | 0.0835 | 0.9219 |

| 0.0014 | 9.0 | 909 | 0.0838 | 0.9197 |

| 0.0013 | 10.0 | 1010 | 0.0838 | 0.9204 |

| 0.0009 | 11.0 | 1111 | 0.0850 | 0.9183 |

| 0.0009 | 12.0 | 1212 | 0.0848 | 0.9208 |

### Framework versions

- Transformers 4.22.2

- Pytorch 1.12.1+cu113

- Datasets 2.5.1

- Tokenizers 0.12.1

|

AnonymousSub/rule_based_bert_mean_diff_epochs_1_shard_10 | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

]

| feature-extraction | {

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 4 | null | ---

license: mit

---

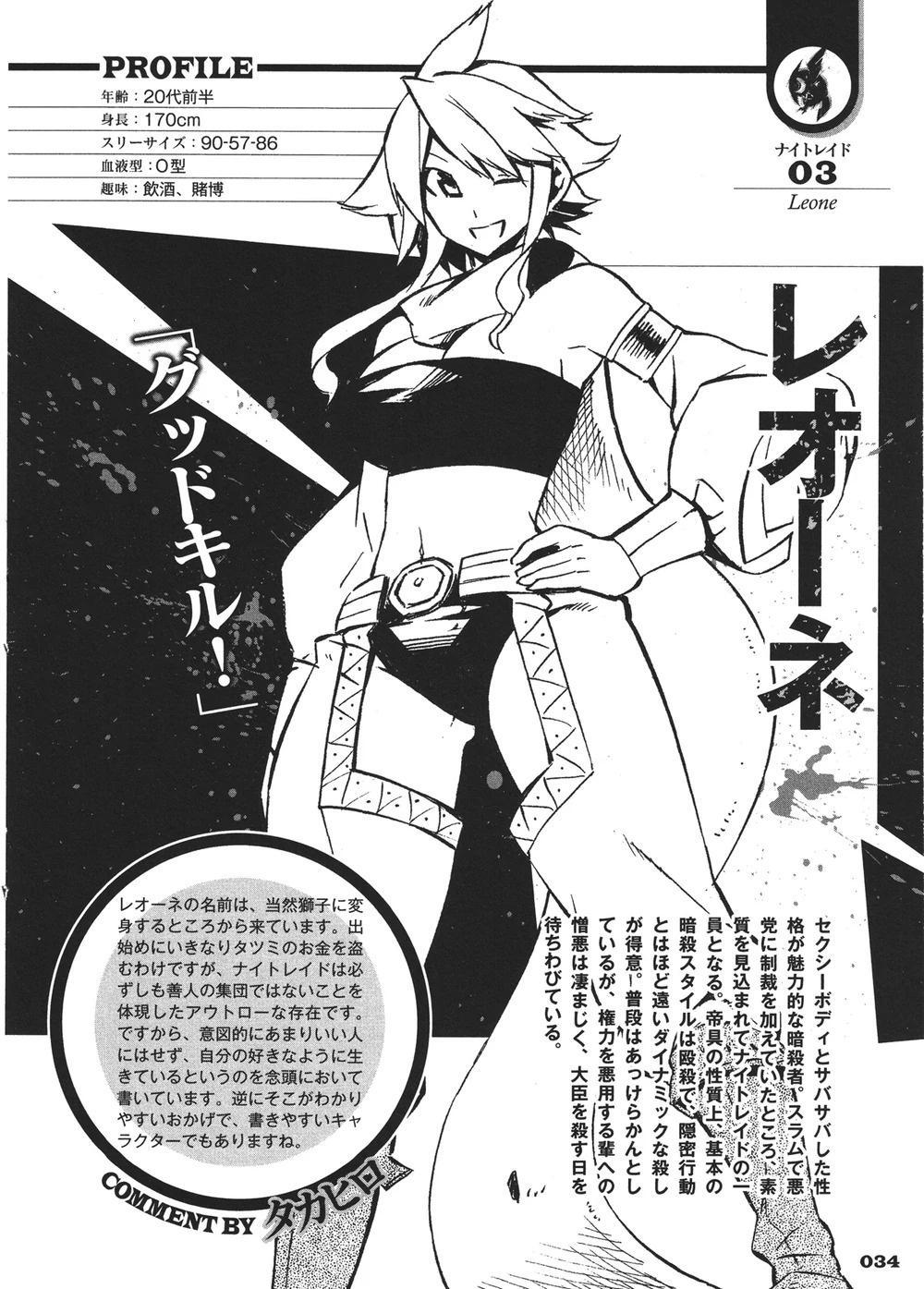

# Warning: Heavy Overfitting #

# Description

Trainer: Hank

Demiurge from Overlord

# Dataset

>Training: 6 images

>Regularization: 20 images

# Info

>Model Used: Waifu Diffusion 1.2

>Steps: 4000

>Keyword: Demiurge (Use this in the prompt)

>Class Phrase: anime_man_slick_black_hair_with_glasses

|

AnonymousSub/rule_based_roberta_twostage_quadruplet_epochs_1_shard_10 | [

"pytorch",

"roberta",

"feature-extraction",

"transformers"

]

| feature-extraction | {

"architectures": [

"RobertaModel"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3 | null | ---

language:

- en

- ja

tags:

- nllb

license: cc-by-nc-4.0

---

# NLLB 1.3B fine-tuned on Japanese to English Light Novel translation

This model was fine-tuned on light and web novel for Japanese to English translation.

It can translate sentences and paragraphs up to 512 tokens.

## Usage

```python

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("thefrigidliquidation/nllb-jaen-1.3B-lightnovels")

model = AutoModelForSeq2SeqLM.from_pretrained("thefrigidliquidation/nllb-jaen-1.3B-lightnovels")

generated_tokens = model.generate(

**inputs,

forced_bos_token_id=tokenizer.lang_code_to_id[tokenizer.tgt_lang],

max_new_tokens=1024,

no_repeat_ngram_size=6,

).cpu()

translated_text = tokenizer.batch_decode(generated_tokens, skip_special_tokens=True)[0]

```

Generating with diverse beam search seems to work best. Add the following to `model.generate`:

```python

num_beams=8,

num_beam_groups=4,

do_sample=False,

```

## Glossary

You can provide up to 10 custom translations for nouns and character names at runtime. To do so, surround the Japanese term with term tokens. Prefix the word with one of `<t0>, <t1>, ..., <t9>` and suffix the word with `</t>`. The term will be translated as the prefix term token which can then be string replaced.

For example, in `マイン、ルッツが迎えに来たよ` if you wish to have `マイン` translated as `Myne` you would replace `マイン` with `<t0>マイン</t>`. The model will translate `<t0>マイン</t>、ルッツが迎えに来たよ` as `<t0>, Lutz is here to pick you up.` Then simply do a string replacement on the output, replacing `<t0>` with `Myne`.

## Honorifics

You can force the model to generate or ignore honorifics.

```python

# default, the model decides whether to use honorifics

tokenizer.tgt_lang = "jpn_Jpan"

# no honorifics, the model is discouraged from using honorifics

tokenizer.tgt_lang = "zsm_Latn"

# honorifics, the model is encouraged to use honorifics

tokenizer.tgt_lang = "zul_Latn"

```

|

AnonymousSub/unsup-consert-base_squad2.0 | [

"pytorch",

"bert",

"question-answering",

"transformers",

"autotrain_compatible"

]

| question-answering | {

"architectures": [

"BertForQuestionAnswering"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 2 | null | ---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1** .

## Usage

```python

model = load_from_hub(repo_id="FIT17/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

AnonymousSub/unsup-consert-emanuals | [

"pytorch",

"bert",

"feature-extraction",

"transformers"

]

| feature-extraction | {

"architectures": [

"BertModel"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 2 | null | ---

tags:

- Taxi-v3

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-Taxi-v3

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: Taxi-v3

type: Taxi-v3

metrics:

- type: mean_reward

value: 7.50 +/- 2.76

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **Taxi-v3**

This is a trained model of a **Q-Learning** agent playing **Taxi-v3** .

## Usage

```python

model = load_from_hub(repo_id="FIT17/q-Taxi-v3", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

AnonymousSubmission/pretrained-model-1 | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 275.78 +/- 16.76

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

Anonymreign/savagebeta | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: mit

---

### ChairTest on Stable Diffusion via Dreambooth

#### model by dadosdq

This your the Stable Diffusion model fine-tuned the ChairTest concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **ChA1r**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

Anthos23/my-awesome-model | [

"pytorch",

"tf",

"roberta",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"RobertaForSequenceClassification"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 30 | null | ---

language: en

license: apache-2.0

library_name: diffusers

tags: []

datasets: microsoft/fluentui-emoji

metrics: []

---

<!-- This model card has been generated automatically according to the information the training script had access to. You

should probably proofread and complete it, then remove this comment. -->

# emoji-diffusion

## Model description

This diffusion model is trained with the [🤗 Diffusers](https://github.com/huggingface/diffusers) library

on the `microsoft/fluentui-emoji` dataset.

## Intended uses & limitations

#### How to use

```python

# TODO: add an example code snippet for running this diffusion pipeline

```

#### Limitations and bias

[TODO: provide examples of latent issues and potential remediations]

## Training data

[TODO: describe the data used to train the model]

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 16

- gradient_accumulation_steps: 1

- optimizer: AdamW with betas=(None, None), weight_decay=None and epsilon=None

- lr_scheduler: None

- lr_warmup_steps: 500

- ema_inv_gamma: None

- ema_inv_gamma: None

- ema_inv_gamma: None

- mixed_precision: False

### Training results

📈 [TensorBoard logs](https://huggingface.co/rycont/emoji-diffusion/tensorboard?#scalars)

|

ArashEsk95/bert-base-uncased-finetuned-cola | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-10-01T13:23:05Z | ---

license: mit

---

### Joseph Russel Ammen on Stable Diffusion via Dreambooth

#### model by wallowbitz

This your the Stable Diffusion model fine-tuned the Joseph Russel Ammen concept taught to Stable Diffusion with Dreambooth.

It can be used by modifying the `instance_prompt`: **Joseph Russel Ammen**

You can also train your own concepts and upload them to the library by using [this notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_training.ipynb).

And you can run your new concept via `diffusers`: [Colab Notebook for Inference](https://colab.research.google.com/github/huggingface/notebooks/blob/main/diffusers/sd_dreambooth_inference.ipynb), [Spaces with the Public Concepts loaded](https://huggingface.co/spaces/sd-dreambooth-library/stable-diffusion-dreambooth-concepts)

Here are the images used for training this concept:

|

ArashEsk95/bert-base-uncased-finetuned-sst2 | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-10-01T13:24:06Z | ---

language: en

thumbnail: http://www.huggingtweets.com/elonmusk-nftfreaks-nftgirl/1664630772232/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1572573363255525377/Xz3fufYY_400x400.jpg')">

</div>

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1524408283674591232/ZcdTVEPl_400x400.jpg')">

</div>

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1384551299681714177/fHRGvDJR_400x400.jpg')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI CYBORG 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Elon Musk & NFT Freaks 🗝🏰🦸🏿♂️ & NFTGirl 🖼</div>

<div style="text-align: center; font-size: 14px;">@elonmusk-nftfreaks-nftgirl</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Elon Musk & NFT Freaks 🗝🏰🦸🏿♂️ & NFTGirl 🖼.

| Data | Elon Musk | NFT Freaks 🗝🏰🦸🏿♂️ | NFTGirl 🖼 |

| --- | --- | --- | --- |

| Tweets downloaded | 3200 | 3247 | 2210 |

| Retweets | 121 | 1753 | 298 |

| Short tweets | 984 | 306 | 395 |

| Tweets kept | 2095 | 1188 | 1517 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/3aevkd35/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @elonmusk-nftfreaks-nftgirl's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/al5jjb8v) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/al5jjb8v/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/elonmusk-nftfreaks-nftgirl')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[](https://github.com/borisdayma/huggingtweets)

|

ArenaGrenade/char-cnn | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-10-01T14:28:12Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets: din0s/msmarco-nlgen

model-index:

- name: t5-base-msmarco-nlgen-cb

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-base-msmarco-nlgen-cb

This model is a fine-tuned version of [t5-base](https://huggingface.co/t5-base) on the [MS MARCO Natural Language Generation](https://huggingface.co/datasets/din0s/msmarco-nlgen) dataset.

It achieves the following results on the evaluation set:

- Loss: 2.0571

- Rougelsum: 24.7427

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0001

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rougelsum |

|:-------------:|:-----:|:----:|:---------------:|:---------:|

| 2.1393 | 0.26 | 2500 | 2.1099 | 24.5028 |

| 2.1006 | 0.52 | 5000 | 2.0739 | 24.6017 |

| 2.0694 | 0.78 | 7500 | 2.0571 | 24.7427 |

### Framework versions

- Transformers 4.23.0.dev0

- Pytorch 1.12.1+cu102

- Datasets 2.4.0

- Tokenizers 0.12.1

|

AriakimTaiyo/kumiko | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-10-01T15:02:22Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- emotion

metrics:

- accuracy

- f1

model-index:

- name: distilbert-base-uncased-finetuned-emotion

results:

- task:

name: Text Classification

type: text-classification

dataset:

name: emotion

type: emotion

args: default

metrics:

- name: Accuracy

type: accuracy

value: 0.9415

- name: F1

type: f1

value: 0.9414702638466222

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1764

- Accuracy: 0.9415

- F1: 0.9415

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.436 | 1.0 | 2000 | 0.2178 | 0.93 | 0.9305 |

| 0.1615 | 2.0 | 4000 | 0.1764 | 0.9415 | 0.9415 |

### Framework versions

- Transformers 4.13.0

- Pytorch 1.12.1+cu113

- Datasets 1.16.1

- Tokenizers 0.10.3

|

Arina/Erine | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:

- name: q-FrozenLake-v1-4x4-noSlippery

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: FrozenLake-v1-4x4-no_slippery

type: FrozenLake-v1-4x4-no_slippery

metrics:

- type: mean_reward

value: 1.00 +/- 0.00

name: mean_reward

verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1** .

## Usage

```python

model = load_from_hub(repo_id="rwheel/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

evaluate_agent(env, model["max_steps"], model["n_eval_episodes"], model["qtable"], model["eval_seed"])

```

|

ArjunKadya/HuggingFace | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-10-01T15:12:12Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets: din0s/asqa

model-index:

- name: t5-base-asqa-ob

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# t5-base-asqa-ob

This model is a fine-tuned version of [t5-base](https://huggingface.co/t5-base) on the [ASQA](https://huggingface.co/datasets/din0s/asqa) dataset.

It achieves the following results on the evaluation set:

- Loss: 1.7356

- Rougelsum: 12.0879

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rougelsum |

|:-------------:|:-----:|:----:|:---------------:|:---------:|

| No log | 1.0 | 355 | 1.8545 | 11.6549 |

| 2.4887 | 2.0 | 710 | 1.8050 | 11.7533 |

| 1.9581 | 3.0 | 1065 | 1.7843 | 11.8327 |

| 1.9581 | 4.0 | 1420 | 1.7722 | 11.9442 |

| 1.9252 | 5.0 | 1775 | 1.7648 | 11.9331 |

| 1.8853 | 6.0 | 2130 | 1.7567 | 11.9788 |

| 1.8853 | 7.0 | 2485 | 1.7519 | 12.0300 |

| 1.8512 | 8.0 | 2840 | 1.7483 | 12.0225 |

| 1.8328 | 9.0 | 3195 | 1.7451 | 12.0402 |

| 1.8115 | 10.0 | 3550 | 1.7436 | 12.0444 |

| 1.8115 | 11.0 | 3905 | 1.7419 | 12.0850 |

| 1.7878 | 12.0 | 4260 | 1.7408 | 12.1047 |

| 1.774 | 13.0 | 4615 | 1.7394 | 12.0839 |

| 1.774 | 14.0 | 4970 | 1.7390 | 12.0910 |

| 1.7787 | 15.0 | 5325 | 1.7381 | 12.0880 |

| 1.7632 | 16.0 | 5680 | 1.7380 | 12.1088 |

| 1.7623 | 17.0 | 6035 | 1.7370 | 12.1046 |

| 1.7623 | 18.0 | 6390 | 1.7368 | 12.0997 |

| 1.7508 | 19.0 | 6745 | 1.7359 | 12.0902 |

| 1.7597 | 20.0 | 7100 | 1.7356 | 12.0879 |

### Framework versions

- Transformers 4.23.0.dev0

- Pytorch 1.12.1+cu102

- Datasets 2.4.0

- Tokenizers 0.12.1

|

asaakyan/mbart-poetic-all | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-10-01T15:25:30Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- conll2003

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: bert-finetuned-ner

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: conll2003

type: conll2003

config: conll2003

split: train

args: conll2003

metrics:

- name: Precision

type: precision

value: 0.9363425925925926

- name: Recall

type: recall

value: 0.9530461124200605

- name: F1

type: f1

value: 0.9446205170975813

- name: Accuracy

type: accuracy

value: 0.986769294166127

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-finetuned-ner

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0774

- Precision: 0.9363

- Recall: 0.9530

- F1: 0.9446

- Accuracy: 0.9868

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| 0.0273 | 1.0 | 1756 | 0.0787 | 0.9286 | 0.9411 | 0.9348 | 0.9845 |

| 0.0141 | 2.0 | 3512 | 0.0772 | 0.9299 | 0.9504 | 0.9400 | 0.9863 |

| 0.0054 | 3.0 | 5268 | 0.0774 | 0.9363 | 0.9530 | 0.9446 | 0.9868 |

### Framework versions

- Transformers 4.22.2

- Pytorch 1.12.1+cu113

- Datasets 2.5.1

- Tokenizers 0.12.1

|

Arnold/common_voiceha | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2022-10-01T15:48:32Z | ---

language:

- en

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- image-to-image

datasets:

- rrustom/architecture2022clean

pipeline: image-to-image

--- |

Arnold/wav2vec2-hausa2-demo-colab | [

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"dataset:common_voice",

"transformers",

"generated_from_trainer",

"license:apache-2.0"

]

| automatic-speech-recognition | {

"architectures": [

"Wav2Vec2ForCTC"

],

"model_type": "wav2vec2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 9 | 2022-10-01T15:54:14Z | ---

license: mit

---

# Description

Trainer: Chris

Aqua from Konosuba

# Dataset

>Training: 16 images

>Regularization: 3249 images - waifu-research-department reg images

# Info

>Model Used: Waifu Diffusion 1.3 epoch 5

>Steps: 3000

>Keyword: Aqua (Use this in the prompt)

>Class Phrase: Useless_Goddess |

Arnold/wav2vec2-large-xlsr-hausa2-demo-colab | [

"pytorch",

"tensorboard",

"wav2vec2",

"automatic-speech-recognition",

"dataset:common_voice",

"transformers",

"generated_from_trainer",

"license:apache-2.0"

]

| automatic-speech-recognition | {

"architectures": [

"Wav2Vec2ForCTC"

],

"model_type": "wav2vec2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 5 | null | ---

license: mit

tags:

- generated_from_trainer

metrics:

- accuracy

- precision

- recall

- f1

model-index:

- name: roberta-large-finetuned-ours-DS

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-large-finetuned-ours-DS

This model is a fine-tuned version of [roberta-large](https://huggingface.co/roberta-large) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 2.3369

- Accuracy: 0.75

- Precision: 0.7054

- Recall: 0.6949

- F1: 0.6974

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 43

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 20

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | Precision | Recall | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:---------:|:------:|:------:|

| 1.0561 | 0.99 | 99 | 0.8773 | 0.615 | 0.4054 | 0.5584 | 0.4591 |

| 0.762 | 1.98 | 198 | 0.6514 | 0.715 | 0.6735 | 0.6672 | 0.6588 |

| 0.5661 | 2.97 | 297 | 0.6806 | 0.71 | 0.6764 | 0.6608 | 0.6435 |

| 0.3699 | 3.96 | 396 | 0.8358 | 0.71 | 0.6611 | 0.6691 | 0.6570 |

| 0.2184 | 4.95 | 495 | 1.1627 | 0.7 | 0.6597 | 0.6337 | 0.6414 |

| 0.1743 | 5.94 | 594 | 1.0544 | 0.725 | 0.6831 | 0.6949 | 0.6831 |

| 0.098 | 6.93 | 693 | 1.4757 | 0.73 | 0.6885 | 0.6902 | 0.6892 |

| 0.0813 | 7.92 | 792 | 1.8146 | 0.73 | 0.6840 | 0.6772 | 0.6800 |

| 0.0435 | 8.91 | 891 | 1.6697 | 0.755 | 0.7141 | 0.7127 | 0.7132 |

| 0.0209 | 9.9 | 990 | 1.8931 | 0.755 | 0.7102 | 0.7070 | 0.7082 |

| 0.0201 | 10.89 | 1089 | 2.1934 | 0.74 | 0.6971 | 0.6866 | 0.6907 |

| 0.0095 | 11.88 | 1188 | 2.1389 | 0.75 | 0.7014 | 0.6915 | 0.6932 |

| 0.0141 | 12.87 | 1287 | 2.1902 | 0.74 | 0.6942 | 0.6943 | 0.6936 |

| 0.0112 | 13.86 | 1386 | 2.5021 | 0.73 | 0.6889 | 0.6669 | 0.6741 |

| 0.0054 | 14.85 | 1485 | 2.3840 | 0.73 | 0.6819 | 0.6715 | 0.6746 |

| 0.0088 | 15.84 | 1584 | 2.3224 | 0.74 | 0.6909 | 0.6825 | 0.6787 |

| 0.003 | 16.83 | 1683 | 2.2641 | 0.75 | 0.7054 | 0.6949 | 0.6974 |

| 0.0017 | 17.82 | 1782 | 2.3361 | 0.75 | 0.7077 | 0.6968 | 0.7012 |

| 0.0014 | 18.81 | 1881 | 2.3041 | 0.755 | 0.7131 | 0.7009 | 0.7051 |

| 0.0083 | 19.8 | 1980 | 2.3369 | 0.75 | 0.7054 | 0.6949 | 0.6974 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.10.1+cu111

- Datasets 2.3.2

- Tokenizers 0.12.1

|

ArtemisZealot/DialoGTP-small-Qkarin | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

]

| conversational | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,