modelId

stringlengths 4

81

| tags

list | pipeline_tag

stringclasses 17

values | config

dict | downloads

int64 0

59.7M

| first_commit

timestamp[ns, tz=UTC] | card

stringlengths 51

438k

|

|---|---|---|---|---|---|---|

Callidior/bert2bert-base-arxiv-titlegen | [

"pytorch",

"safetensors",

"encoder-decoder",

"text2text-generation",

"en",

"dataset:arxiv_dataset",

"transformers",

"summarization",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| summarization | {

"architectures": [

"EncoderDecoderModel"

],

"model_type": "encoder-decoder",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 145 | null | ---

language: "en" # Example: en

license: "cc-by-4.0" # Example: apache-2.0 or any license from https://hf.co/docs/hub/repositories-licenses

library_name: "transformers" # Optional. Example: keras or any library from https://github.com/huggingface/hub-docs/blob/main/js/src/lib/interfaces/Libraries.ts

---

# Model description

This is the T5-3B model for System 3 DREAM-FLUTE (all 4 dimensions), as described in our paper Just-DREAM-about-it: Figurative Language Understanding with DREAM-FLUTE, FigLang workshop @ EMNLP 2022 (Arxiv link: https://arxiv.org/abs/2210.16407)

Systems 3: DREAM-FLUTE - Providing DREAM’s different dimensions as input context

We adapt DREAM’s scene elaborations (Gu et al., 2022) for the figurative language understanding NLI task by using the DREAM model to generate elaborations for the premise and hypothesis separately. This allows us to investigate if similarities or differences in the scene elaborations for the premise and hypothesis will provide useful signals for entailment/contradiction label prediction and improving explanation quality. The input-output format is:

```

Input <Premise> <Premise-elaboration-from-DREAM> <Hypothesis> <Hypothesis-elaboration-from-DREAM>

Output <Label> <Explanation>

```

where the scene elaboration dimensions from DREAM are: consequence, emotion, motivation, and social norm. We also consider a system incorporating all these dimensions as additional context.

In this model, DREAM-FLUTE (all 4 dimensions), we use elaborations along all DREAM dimensions. For more details on DREAM, please refer to DREAM: Improving Situational QA by First Elaborating the Situation, NAACL 2022 (Arxiv link: https://arxiv.org/abs/2112.08656, ACL Anthology link: https://aclanthology.org/2022.naacl-main.82/).

# How to use this model?

We provide a quick example of how you can try out DREAM-FLUTE (all 4 dimensions) in our paper with just a few lines of code:

```

>>> from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

>>> model = AutoModelForSeq2SeqLM.from_pretrained("allenai/System3_DREAM_FLUTE_all_dimensions_FigLang2022")

>>> tokenizer = AutoTokenizer.from_pretrained("t5-3b")

>>> input_string = "Premise: I was really looking forward to camping but now it is going to rain so I won't go. [Premise - social norm] It's okay to be disappointed when plans change. [Premise - emotion] I (myself)'s emotion is disappointed. [Premise - motivation] I (myself)'s motivation is to stay home. [Premise - likely consequence] I will miss out on a great experience and be bored and sad. Hypothesis: I am absolutely elated at the prospects of getting drenched in the rain and then sleep in a wet tent just to have the experience of camping. [Hypothesis - social norm] It's good to want to have new experiences. [Hypothesis - emotion] I (myself)'s emotion is excited. [Hypothesis - motivation] I (myself)'s motivation is to have fun. [Hypothesis - likely consequence] I am so excited that I forget to bring a raincoat and my tent gets soaked. Is there a contradiction or entailment between the premise and hypothesis?"

>>> input_ids = tokenizer.encode(input_string, return_tensors="pt")

>>> output = model.generate(input_ids, max_length=200)

>>> tokenizer.batch_decode(output, skip_special_tokens=True)

['Answer : Contradiction. Explanation : Camping in the rain is often associated with the prospect of getting wet and cold, so someone who is elated about it is not being rational.']

```

# More details about DREAM-FLUTE ...

For more details about DREAM-FLUTE, please refer to our:

* 📄Paper: https://arxiv.org/abs/2210.16407

* 💻GitHub Repo: https://github.com/allenai/dream/

This model is part of our DREAM-series of works. This is a line of research where we make use of scene elaboration for building a "mental model" of situation given in text. Check out our GitHub Repo for more!

# More details about this model ...

## Training and evaluation data

We use the FLUTE dataset for the FigLang2022SharedTask (https://huggingface.co/datasets/ColumbiaNLP/FLUTE) for training this model. ∼7500 samples are provided as the training set. We used a 80-20 split to create our own training (6027 samples) and validation (1507 samples) partitions on which we build our models. For details on how we make use of the training data provided in the FigLang2022 shared task, please refer to https://github.com/allenai/dream/blob/main/FigLang2022SharedTask/Process_Data_Train_Dev_split.ipynb.

## Model details

This model is a fine-tuned version of [t5-3b](https://huggingface.co/t5-3b).

It achieves the following results on the evaluation set:

- Loss: 0.7499

- Rouge1: 58.5551

- Rouge2: 38.5673

- Rougel: 52.3701

- Rougelsum: 52.335

- Gen Len: 40.7452

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- distributed_type: multi-GPU

- num_devices: 2

- total_train_batch_size: 2

- total_eval_batch_size: 2

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:-------:|:-------:|:---------:|:-------:|

| 0.992 | 0.33 | 1000 | 0.8911 | 39.9287 | 27.5817 | 38.2127 | 38.2042 | 19.0 |

| 0.9022 | 0.66 | 2000 | 0.8409 | 40.8873 | 28.7963 | 39.16 | 39.1615 | 19.0 |

| 0.8744 | 1.0 | 3000 | 0.7813 | 41.2617 | 29.5498 | 39.5857 | 39.5695 | 19.0 |

| 0.5636 | 1.33 | 4000 | 0.7961 | 41.1429 | 30.2299 | 39.6592 | 39.6648 | 19.0 |

| 0.5585 | 1.66 | 5000 | 0.7763 | 41.2581 | 30.0851 | 39.6859 | 39.68 | 19.0 |

| 0.5363 | 1.99 | 6000 | 0.7499 | 41.8302 | 30.964 | 40.3059 | 40.2964 | 19.0 |

| 0.3347 | 2.32 | 7000 | 0.8540 | 41.4633 | 30.6209 | 39.9933 | 39.9948 | 18.9954 |

| 0.341 | 2.65 | 8000 | 0.8599 | 41.6576 | 31.0316 | 40.1466 | 40.1526 | 18.9907 |

| 0.3531 | 2.99 | 9000 | 0.8368 | 42.05 | 31.6387 | 40.6239 | 40.6254 | 18.9907 |

### Framework versions

- Transformers 4.22.0.dev0

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

CallumRai/HansardGPT2 | [

"pytorch",

"jax",

"gpt2",

"text-generation",

"transformers"

]

| text-generation | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": true,

"max_length": 50

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 14 | null | ---

language: "en" # Example: en

license: "cc-by-4.0" # Example: apache-2.0 or any license from https://hf.co/docs/hub/repositories-licenses

library_name: "transformers" # Optional. Example: keras or any library from https://github.com/huggingface/hub-docs/blob/main/js/src/lib/interfaces/Libraries.ts

---

# Model description

This is the T5-3B model for the "explain" component of System 4's "Classify then explain" pipeline, as described in our paper Just-DREAM-about-it: Figurative Language Understanding with DREAM-FLUTE, FigLang workshop @ EMNLP 2022 (Arxiv link: https://arxiv.org/abs/2210.16407)

System 4: Two-step System - Classify then explain

In contrast to Systems 1 to 3 where the entailment/contradiction label and associated explanation are predicted jointly, System 4 uses a two-step “classify then explain” pipeline. This current model is for the "explain" component of the pipeline. The input-output format is:

```

Input <Premise> <Hypothesis> <Label>

Output <Explanation>

```

# How to use this model?

We provide a quick example of how you can try out the "explain" component of System 4 in our paper with just a few lines of code:

```

>>> from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

>>> model = AutoModelForSeq2SeqLM.from_pretrained("allenai/System4_explain_FigLang2022")

>>> tokenizer = AutoTokenizer.from_pretrained("t5-3b")

>>> input_string = "Premise: It is wrong to lie to children. Hypothesis: Telling lies to the young is like clippin the wings of a butterfly. Is there a contradiction or entailment between the premise and hypothesis? Answer : Entailment. Explanation : "

>>> input_ids = tokenizer.encode(input_string, return_tensors="pt")

>>> output = model.generate(input_ids, max_length=200)

>>> tokenizer.batch_decode(output, skip_special_tokens=True)

['Clipping the wings of a butterfly means that the butterfly will never be able to fly, so lying to children is like doing the same.']

```

# More details about DREAM-FLUTE ...

For more details about DREAM-FLUTE, please refer to our:

* 📄Paper: https://arxiv.org/abs/2210.16407

* 💻GitHub Repo: https://github.com/allenai/dream/

This model is part of our DREAM-series of works. This is a line of research where we make use of scene elaboration for building a "mental model" of situation given in text. Check out our GitHub Repo for more!

# More details about this model ...

## Training and evaluation data

We use the FLUTE dataset for the FigLang2022SharedTask (https://huggingface.co/datasets/ColumbiaNLP/FLUTE) for training this model. ∼7500 samples are provided as the training set. We used a 80-20 split to create our own training (6027 samples) and validation (1507 samples) partitions on which we build our models. For details on how we make use of the training data provided in the FigLang2022 shared task, please refer to https://github.com/allenai/dream/blob/main/FigLang2022SharedTask/Process_Data_Train_Dev_split.ipynb.

## Model details

This model is a fine-tuned version of [t5-3b](https://huggingface.co/t5-3b).

It achieves the following results on the evaluation set:

- Loss: 1.0331

- Rouge1: 53.8485

- Rouge2: 32.8855

- Rougel: 46.6534

- Rougelsum: 46.6435

- Gen Len: 29.7724

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- distributed_type: multi-GPU

- num_devices: 2

- total_train_batch_size: 2

- total_eval_batch_size: 2

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:-------:|:-------:|:---------:|:-------:|

| 1.3633 | 0.33 | 1000 | 1.2468 | 44.8469 | 24.3002 | 37.9797 | 37.9943 | 18.8341 |

| 1.2531 | 0.66 | 2000 | 1.1445 | 45.7234 | 25.6755 | 39.5817 | 39.5653 | 18.8786 |

| 1.2148 | 1.0 | 3000 | 1.0806 | 47.4244 | 27.6605 | 41.0803 | 41.0628 | 18.7339 |

| 0.7554 | 1.33 | 4000 | 1.1006 | 47.5505 | 28.2781 | 41.385 | 41.3774 | 18.6556 |

| 0.7761 | 1.66 | 5000 | 1.0671 | 48.583 | 29.6223 | 42.5451 | 42.5247 | 18.6821 |

| 0.7777 | 1.99 | 6000 | 1.0331 | 48.8329 | 30.5086 | 43.0964 | 43.0586 | 18.6881 |

| 0.4378 | 2.32 | 7000 | 1.1978 | 48.6239 | 30.2101 | 42.8863 | 42.8851 | 18.7259 |

| 0.4715 | 2.66 | 8000 | 1.1545 | 49.1311 | 31.0582 | 43.523 | 43.5043 | 18.7598 |

| 0.462 | 2.99 | 9000 | 1.1471 | 49.4022 | 31.7946 | 44.0345 | 44.0128 | 18.7200 |

### Framework versions

- Transformers 4.22.0.dev0

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

CalvinHuang/mt5-small-finetuned-amazon-en-es | [

"pytorch",

"tensorboard",

"mt5",

"text2text-generation",

"transformers",

"summarization",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible"

]

| summarization | {

"architectures": [

"MT5ForConditionalGeneration"

],

"model_type": "mt5",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 16 | null | ---

license: mit

tags:

- generated_from_trainer

datasets:

- conll2003

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: hmBERT-CoNLL-cp2

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: conll2003

type: conll2003

args: conll2003

metrics:

- name: Precision

type: precision

value: 0.8931730929727926

- name: Recall

type: recall

value: 0.9005385392123864

- name: F1

type: f1

value: 0.8968406938741306

- name: Accuracy

type: accuracy

value: 0.983217164440637

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# hmBERT-CoNLL-cp2

This model is a fine-tuned version of [dbmdz/bert-base-historic-multilingual-cased](https://huggingface.co/dbmdz/bert-base-historic-multilingual-cased) on the conll2003 dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0666

- Precision: 0.8932

- Recall: 0.9005

- F1: 0.8968

- Accuracy: 0.9832

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 0.06 | 25 | 0.4116 | 0.3632 | 0.3718 | 0.3674 | 0.9005 |

| No log | 0.11 | 50 | 0.2247 | 0.6384 | 0.6902 | 0.6633 | 0.9459 |

| No log | 0.17 | 75 | 0.1624 | 0.7303 | 0.7627 | 0.7461 | 0.9580 |

| No log | 0.23 | 100 | 0.1541 | 0.7338 | 0.7688 | 0.7509 | 0.9588 |

| No log | 0.28 | 125 | 0.1349 | 0.7610 | 0.8095 | 0.7845 | 0.9643 |

| No log | 0.34 | 150 | 0.1230 | 0.7982 | 0.8253 | 0.8115 | 0.9694 |

| No log | 0.4 | 175 | 0.0997 | 0.8069 | 0.8406 | 0.8234 | 0.9727 |

| No log | 0.46 | 200 | 0.1044 | 0.8211 | 0.8410 | 0.8309 | 0.9732 |

| No log | 0.51 | 225 | 0.0871 | 0.8413 | 0.8603 | 0.8507 | 0.9760 |

| No log | 0.57 | 250 | 0.1066 | 0.8288 | 0.8465 | 0.8376 | 0.9733 |

| No log | 0.63 | 275 | 0.0872 | 0.8580 | 0.8667 | 0.8624 | 0.9766 |

| No log | 0.68 | 300 | 0.0834 | 0.8522 | 0.8706 | 0.8613 | 0.9773 |

| No log | 0.74 | 325 | 0.0832 | 0.8545 | 0.8834 | 0.8687 | 0.9783 |

| No log | 0.8 | 350 | 0.0776 | 0.8542 | 0.8834 | 0.8685 | 0.9787 |

| No log | 0.85 | 375 | 0.0760 | 0.8629 | 0.8896 | 0.8760 | 0.9801 |

| No log | 0.91 | 400 | 0.0673 | 0.8775 | 0.9004 | 0.8888 | 0.9824 |

| No log | 0.97 | 425 | 0.0681 | 0.8827 | 0.8938 | 0.8882 | 0.9817 |

| No log | 1.03 | 450 | 0.0659 | 0.8844 | 0.8950 | 0.8897 | 0.9824 |

| No log | 1.08 | 475 | 0.0690 | 0.8833 | 0.9015 | 0.8923 | 0.9832 |

| 0.1399 | 1.14 | 500 | 0.0666 | 0.8932 | 0.9005 | 0.8968 | 0.9832 |

| 0.1399 | 1.2 | 525 | 0.0667 | 0.8891 | 0.8997 | 0.8944 | 0.9825 |

| 0.1399 | 1.25 | 550 | 0.0699 | 0.8751 | 0.8953 | 0.8851 | 0.9820 |

| 0.1399 | 1.31 | 575 | 0.0617 | 0.8947 | 0.9068 | 0.9007 | 0.9840 |

| 0.1399 | 1.37 | 600 | 0.0633 | 0.9 | 0.9058 | 0.9029 | 0.9841 |

| 0.1399 | 1.42 | 625 | 0.0639 | 0.8966 | 0.9116 | 0.9040 | 0.9843 |

| 0.1399 | 1.48 | 650 | 0.0624 | 0.8972 | 0.9110 | 0.9041 | 0.9845 |

| 0.1399 | 1.54 | 675 | 0.0619 | 0.8980 | 0.9081 | 0.9030 | 0.9842 |

| 0.1399 | 1.59 | 700 | 0.0615 | 0.9002 | 0.9090 | 0.9045 | 0.9843 |

| 0.1399 | 1.65 | 725 | 0.0601 | 0.9037 | 0.9128 | 0.9082 | 0.9850 |

| 0.1399 | 1.71 | 750 | 0.0585 | 0.9031 | 0.9142 | 0.9086 | 0.9849 |

| 0.1399 | 1.77 | 775 | 0.0582 | 0.9035 | 0.9143 | 0.9089 | 0.9851 |

| 0.1399 | 1.82 | 800 | 0.0580 | 0.9044 | 0.9157 | 0.9100 | 0.9853 |

| 0.1399 | 1.88 | 825 | 0.0583 | 0.9034 | 0.9160 | 0.9097 | 0.9851 |

| 0.1399 | 1.94 | 850 | 0.0578 | 0.9058 | 0.9170 | 0.9114 | 0.9854 |

| 0.1399 | 1.99 | 875 | 0.0576 | 0.9060 | 0.9165 | 0.9112 | 0.9852 |

### Framework versions

- Transformers 4.20.1

- Pytorch 1.12.0

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Cat/Kitty | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

tags:

- generated_from_trainer

metrics:

- bleu

model-index:

- name: mBART_translator_json_sentence_split

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mBART_translator_json_sentence_split

This model is a fine-tuned version of [facebook/mbart-large-cc25](https://huggingface.co/facebook/mbart-large-cc25) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0769

- Bleu: 87.2405

- Gen Len: 27.425

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Bleu | Gen Len |

|:-------------:|:-----:|:-----:|:---------------:|:-------:|:-------:|

| 2.0011 | 1.0 | 2978 | 0.5458 | 63.8087 | 32.3819 |

| 1.1978 | 2.0 | 5956 | 0.1854 | 76.5291 | 27.6781 |

| 0.9276 | 3.0 | 8934 | 0.1123 | 84.7194 | 27.5773 |

| 0.776 | 4.0 | 11912 | 0.0845 | 87.505 | 27.2845 |

| 0.6889 | 5.0 | 14890 | 0.0769 | 87.2405 | 27.425 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

dccuchile/albert-base-spanish-finetuned-mldoc | [

"pytorch",

"albert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"AlbertForSequenceClassification"

],

"model_type": "albert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 34 | null | ---

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: mT5_multilingual_XLSum-finetuned-xlsum-coba

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mT5_multilingual_XLSum-finetuned-xlsum-coba

This model is a fine-tuned version of [csebuetnlp/mT5_multilingual_XLSum](https://huggingface.co/csebuetnlp/mT5_multilingual_XLSum) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 1.2369

- Rouge1: 0.3744

- Rouge2: 0.1718

- Rougel: 0.3092

- Rougelsum: 0.3106

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.00056

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:------:|:------:|:---------:|

| 1.6963 | 1.0 | 7648 | 1.2369 | 0.3744 | 0.1718 | 0.3092 | 0.3106 |

| 1.6975 | 2.0 | 15296 | 1.2369 | 0.3744 | 0.1718 | 0.3092 | 0.3106 |

| 1.6969 | 3.0 | 22944 | 1.2369 | 0.3744 | 0.1718 | 0.3092 | 0.3106 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

dccuchile/albert-base-spanish-finetuned-pawsx | [

"pytorch",

"albert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"AlbertForSequenceClassification"

],

"model_type": "albert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 25 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- beans

metrics:

- accuracy

model-index:

- name: platzi-vit-model-yeder-lvicente

results:

- task:

name: Image Classification

type: image-classification

dataset:

name: beans

type: beans

config: default

split: train

args: default

metrics:

- name: Accuracy

type: accuracy

value: 1.0

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# platzi-vit-model-yeder-lvicente

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on the beans dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0077

- Accuracy: 1.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.0084 | 3.85 | 500 | 0.0077 | 1.0 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

dccuchile/albert-large-spanish-finetuned-mldoc | [

"pytorch",

"albert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"AlbertForSequenceClassification"

],

"model_type": "albert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 27 | null | storage for eva models. it has intermediate low-performing models |

dccuchile/albert-xlarge-spanish-finetuned-ner | [

"pytorch",

"albert",

"token-classification",

"transformers",

"autotrain_compatible"

]

| token-classification | {

"architectures": [

"AlbertForTokenClassification"

],

"model_type": "albert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 5 | null |

---

language: en

tags:

- diffusers

license: mit

--- |

dccuchile/albert-xlarge-spanish-finetuned-pawsx | [

"pytorch",

"albert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"AlbertForSequenceClassification"

],

"model_type": "albert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 24 | null | ---

license: apache-2.0

library_name: keras

language: en

tags:

- vision

- maxim

- image-to-image

datasets:

- realblur_j

---

# MAXIM pre-trained on RealBlur-J for image deblurring

MAXIM model pre-trained for image deblurring. It was introduced in the paper [MAXIM: Multi-Axis MLP for Image Processing](https://arxiv.org/abs/2201.02973) by Zhengzhong Tu, Hossein Talebi, Han Zhang, Feng Yang, Peyman Milanfar, Alan Bovik, Yinxiao Li and first released in [this repository](https://github.com/google-research/maxim).

Disclaimer: The team releasing MAXIM did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

MAXIM introduces a shared MLP-based backbone for different image processing tasks such as image deblurring, deraining, denoising, dehazing, low-light image enhancement, and retouching. The following figure depicts the main components of MAXIM:

## Training procedure and results

The authors didn't release the training code. For more details on how the model was trained, refer to the [original paper](https://arxiv.org/abs/2201.02973).

As per the [table](https://github.com/google-research/maxim#results-and-pre-trained-models), the model achieves a PSNR of 32.84 and an SSIM of 0.935.

## Intended uses & limitations

You can use the raw model for image deblurring tasks.

The model is [officially released in JAX](https://github.com/google-research/maxim). It was ported to TensorFlow in [this repository](https://github.com/sayakpaul/maxim-tf).

### How to use

Here is how to use this model:

```python

from huggingface_hub import from_pretrained_keras

from PIL import Image

import tensorflow as tf

import numpy as np

import requests

url = "https://github.com/sayakpaul/maxim-tf/raw/main/images/Deblurring/input/1fromGOPR0950.png"

image = Image.open(requests.get(url, stream=True).raw)

image = np.array(image)

image = tf.convert_to_tensor(image)

image = tf.image.resize(image, (256, 256))

model = from_pretrained_keras("google/maxim-s3-deblurring-realblur-j")

predictions = model.predict(tf.expand_dims(image, 0))

```

For a more elaborate prediction pipeline, refer to [this Colab Notebook](https://colab.research.google.com/github/sayakpaul/maxim-tf/blob/main/notebooks/inference-dynamic-resize.ipynb).

### Citation

```bibtex

@article{tu2022maxim,

title={MAXIM: Multi-Axis MLP for Image Processing},

author={Tu, Zhengzhong and Talebi, Hossein and Zhang, Han and Yang, Feng and Milanfar, Peyman and Bovik, Alan and Li, Yinxiao},

journal={CVPR},

year={2022},

}

```

|

dccuchile/albert-xlarge-spanish-finetuned-qa-mlqa | [

"pytorch",

"albert",

"question-answering",

"transformers",

"autotrain_compatible"

]

| question-answering | {

"architectures": [

"AlbertForQuestionAnswering"

],

"model_type": "albert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 7 | null | ---

license: apache-2.0

library_name: keras

language: en

tags:

- vision

- maxim

- image-to-image

datasets:

- rain13k

---

# MAXIM pre-trained on Rain13k for image deraining

MAXIM model pre-trained for image deraining. It was introduced in the paper [MAXIM: Multi-Axis MLP for Image Processing](https://arxiv.org/abs/2201.02973) by Zhengzhong Tu, Hossein Talebi, Han Zhang, Feng Yang, Peyman Milanfar, Alan Bovik, Yinxiao Li and first released in [this repository](https://github.com/google-research/maxim).

Disclaimer: The team releasing MAXIM did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

MAXIM introduces a shared MLP-based backbone for different image processing tasks such as image deblurring, deraining, denoising, dehazing, low-light image enhancement, and retouching. The following figure depicts the main components of MAXIM:

## Training procedure and results

The authors didn't release the training code. For more details on how the model was trained, refer to the [original paper](https://arxiv.org/abs/2201.02973).

As per the [table](https://github.com/google-research/maxim#results-and-pre-trained-models), the model achieves a PSNR of 33.24 and an SSIM of 0.933.

## Intended uses & limitations

You can use the raw model for image deraining tasks.

The model is [officially released in JAX](https://github.com/google-research/maxim). It was ported to TensorFlow in [this repository](https://github.com/sayakpaul/maxim-tf).

### How to use

Here is how to use this model:

```python

from huggingface_hub import from_pretrained_keras

from PIL import Image

import tensorflow as tf

import numpy as np

import requests

url = "https://github.com/sayakpaul/maxim-tf/raw/main/images/Deraining/input/55.png"

image = Image.open(requests.get(url, stream=True).raw)

image = np.array(image)

image = tf.convert_to_tensor(image)

image = tf.image.resize(image, (256, 256))

model = from_pretrained_keras("google/maxim-s2-deraining-rain13k")

predictions = model.predict(tf.expand_dims(image, 0))

```

For a more elaborate prediction pipeline, refer to [this Colab Notebook](https://colab.research.google.com/github/sayakpaul/maxim-tf/blob/main/notebooks/inference-dynamic-resize.ipynb).

### Citation

```bibtex

@article{tu2022maxim,

title={MAXIM: Multi-Axis MLP for Image Processing},

author={Tu, Zhengzhong and Talebi, Hossein and Zhang, Han and Yang, Feng and Milanfar, Peyman and Bovik, Alan and Li, Yinxiao},

journal={CVPR},

year={2022},

}

```

|

dccuchile/albert-xxlarge-spanish-finetuned-mldoc | [

"pytorch",

"albert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"AlbertForSequenceClassification"

],

"model_type": "albert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 26 | 2022-10-19T06:12:24Z | ---

license: apache-2.0

library_name: keras

language: en

tags:

- vision

- maxim

- image-to-image

datasets:

- raindrop

---

# MAXIM pre-trained on Raindrop for image deraining

MAXIM model pre-trained for image deraining. It was introduced in the paper [MAXIM: Multi-Axis MLP for Image Processing](https://arxiv.org/abs/2201.02973) by Zhengzhong Tu, Hossein Talebi, Han Zhang, Feng Yang, Peyman Milanfar, Alan Bovik, Yinxiao Li and first released in [this repository](https://github.com/google-research/maxim).

Disclaimer: The team releasing MAXIM did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

MAXIM introduces a shared MLP-based backbone for different image processing tasks such as image deblurring, deraining, denoising, dehazing, low-light image enhancement, and retouching. The following figure depicts the main components of MAXIM:

## Training procedure and results

The authors didn't release the training code. For more details on how the model was trained, refer to the [original paper](https://arxiv.org/abs/2201.02973).

As per the [table](https://github.com/google-research/maxim#results-and-pre-trained-models), the model achieves a PSNR of 31.87 and an SSIM of 0.935.

## Intended uses & limitations

You can use the raw model for image deraining tasks.

The model is [officially released in JAX](https://github.com/google-research/maxim). It was ported to TensorFlow in [this repository](https://github.com/sayakpaul/maxim-tf).

### How to use

Here is how to use this model:

```python

from huggingface_hub import from_pretrained_keras

from PIL import Image

import tensorflow as tf

import numpy as np

import requests

url = "https://github.com/sayakpaul/maxim-tf/raw/main/images/Deraining/input/55.png"

image = Image.open(requests.get(url, stream=True).raw)

image = np.array(image)

image = tf.convert_to_tensor(image)

image = tf.image.resize(image, (256, 256))

model = from_pretrained_keras("google/maxim-s2-deraining-raindrop")

predictions = model.predict(tf.expand_dims(image, 0))

```

For a more elaborate prediction pipeline, refer to [this Colab Notebook](https://colab.research.google.com/github/sayakpaul/maxim-tf/blob/main/notebooks/inference-dynamic-resize.ipynb).

### Citation

```bibtex

@article{tu2022maxim,

title={MAXIM: Multi-Axis MLP for Image Processing},

author={Tu, Zhengzhong and Talebi, Hossein and Zhang, Han and Yang, Feng and Milanfar, Peyman and Bovik, Alan and Li, Yinxiao},

journal={CVPR},

year={2022},

}

```

|

dccuchile/albert-xxlarge-spanish-finetuned-pawsx | [

"pytorch",

"albert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"AlbertForSequenceClassification"

],

"model_type": "albert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 26 | null | ---

license: apache-2.0

library_name: keras

language: en

tags:

- vision

- maxim

- image-to-image

datasets:

- sots-indoor

---

# MAXIM pre-trained on RESIDE-Indoor for image dehazing

MAXIM model pre-trained for image dehazing. It was introduced in the paper [MAXIM: Multi-Axis MLP for Image Processing](https://arxiv.org/abs/2201.02973) by Zhengzhong Tu, Hossein Talebi, Han Zhang, Feng Yang, Peyman Milanfar, Alan Bovik, Yinxiao Li and first released in [this repository](https://github.com/google-research/maxim).

Disclaimer: The team releasing MAXIM did not write a model card for this model so this model card has been written by the Hugging Face team.

## Model description

MAXIM introduces a shared MLP-based backbone for different image processing tasks such as image deblurring, deraining, denoising, dehazing, low-light image enhancement, and retouching. The following figure depicts the main components of MAXIM:

## Training procedure and results

The authors didn't release the training code. For more details on how the model was trained, refer to the [original paper](https://arxiv.org/abs/2201.02973).

As per the [table](https://github.com/google-research/maxim#results-and-pre-trained-models), the model achieves a PSNR of 38.11 and an SSIM of 0.991.

## Intended uses & limitations

You can use the raw model for image dehazing tasks.

The model is [officially released in JAX](https://github.com/google-research/maxim). It was ported to TensorFlow in [this repository](https://github.com/sayakpaul/maxim-tf).

### How to use

Here is how to use this model:

```python

from huggingface_hub import from_pretrained_keras

from PIL import Image

import tensorflow as tf

import numpy as np

import requests

url = "https://github.com/sayakpaul/maxim-tf/raw/main/images/Dehazing/input/1440_10.png"

image = Image.open(requests.get(url, stream=True).raw)

image = np.array(image)

image = tf.convert_to_tensor(image)

image = tf.image.resize(image, (256, 256))

model = from_pretrained_keras("google/maxim-s2-dehazing-sots-indoor")

predictions = model.predict(tf.expand_dims(image, 0))

```

For a more elaborate prediction pipeline, refer to [this Colab Notebook](https://colab.research.google.com/github/sayakpaul/maxim-tf/blob/main/notebooks/inference-dynamic-resize.ipynb).

### Citation

```bibtex

@article{tu2022maxim,

title={MAXIM: Multi-Axis MLP for Image Processing},

author={Tu, Zhengzhong and Talebi, Hossein and Zhang, Han and Yang, Feng and Milanfar, Peyman and Bovik, Alan and Li, Yinxiao},

journal={CVPR},

year={2022},

}

```

|

dccuchile/albert-xxlarge-spanish-finetuned-qa-mlqa | [

"pytorch",

"albert",

"question-answering",

"transformers",

"autotrain_compatible"

]

| question-answering | {

"architectures": [

"AlbertForQuestionAnswering"

],

"model_type": "albert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 7 | null | ---

license: mit

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: roberta-base-twitter_eval_sentiment

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-base-twitter_eval_sentiment

This model is a fine-tuned version of [roberta-base](https://huggingface.co/roberta-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.8020

- Accuracy: 0.6635

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 1.0144 | 1.0 | 1875 | 0.9109 | 0.6025 |

| 0.8331 | 2.0 | 3750 | 0.8187 | 0.6555 |

| 0.7549 | 3.0 | 5625 | 0.8020 | 0.6635 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

dccuchile/albert-xxlarge-spanish | [

"pytorch",

"tf",

"albert",

"pretraining",

"es",

"dataset:large_spanish_corpus",

"transformers",

"spanish",

"OpenCENIA"

]

| null | {

"architectures": [

"AlbertForPreTraining"

],

"model_type": "albert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 42 | null | Model card for RoSummary-large

---

language:

- ro

---

# RoSummary

This is a version of the RoGPT2 model trained on the [AlephNews](https://huggingface.co/datasets/readerbench/AlephNews) dataset for the summarization task. There are 3 trained versions, they are available on the HuggingFace Hub:

* [base](https://huggingface.co/readerbench/RoSummary-base)

* [medium](https://huggingface.co/readerbench/RoSummary-medium)

* [large](https://huggingface.co/readerbench/RoSummary-large)

## Evaluation on [AlephNews](https://huggingface.co/datasets/readerbench/AlephNews)

| Model | Decode Method | | BERTScore | | | ROUGE | |

|:------:|:--------------:|:---------:|:---------:|:--------:|:--------:|:--------:|:--------:|

| | | Precision | Recall | F1-Score | ROUGE-1 | ROUGE-2 | ROUGE-L |

| | Greedy | 0.7335 | 0.7399 | 0.7358 | 0.3360 | 0.1862 | 0.3333 |

| Base | Beam Search | 0.7354 | 0.7468 | 0.7404 | 0.3480 | 0.1991 | 0.3416 |

| | Top-p Sampling | 0.7296 | 0.7299 | 0.7292 | 0.3058 | 0.1452 | 0.2951 |

| | Greedy | 0.7378 | 0.7401 | 0.7380 | 0.3422 | 0.1922 | 0.3394 |

| Medium | Beam Search | 0.7390 | **0.7493**|**0.7434**|**0.3546**|**0.2061**|**0.3467**|

| | Top-p Sampling | 0.7315 | 0.7285 | 0.7294 | 0.3042 | 0.1400 | 0.2921 |

| | Greedy | 0.7376 | 0.7424 | 0.7391 | 0.3414 | 0.1895 | 0.3355 |

| Large | Beam Search | **0.7394**| 0.7470 | 0.7424 | 0.3492 | 0.1995 | 0.3384 |

| | Top-p Sampling | 0.7311 | 0.7301 | 0.7299 | 0.3051 | 0.1418 | 0.2931 |

## Acknowledgments

---

Research supported with [Cloud TPUs](https://cloud.google.com/tpu/) from Google's [TensorFlow Research Cloud (TFRC)](https://www.tensorflow.org/tfrc)

|

dccuchile/bert-base-spanish-wwm-cased-finetuned-pos | [

"pytorch",

"bert",

"token-classification",

"transformers",

"autotrain_compatible"

]

| token-classification | {

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 1 | null | ---

license: cc-by-nc-sa-4.0

tags:

- generated_from_trainer

datasets:

- sroie

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: Sinergy-Question-Answering

results:

- task:

name: Token Classification

type: token-classification

dataset:

name: sroie

type: sroie

config: discharge

split: train

args: discharge

metrics:

- name: Precision

type: precision

value: 0.7948717948717948

- name: Recall

type: recall

value: 0.7948717948717948

- name: F1

type: f1

value: 0.7948717948717948

- name: Accuracy

type: accuracy

value: 0.9261159569009748

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Sinergy-Question-Answering

This model is a fine-tuned version of [microsoft/layoutlmv3-base](https://huggingface.co/microsoft/layoutlmv3-base) on the sroie dataset.

It achieves the following results on the evaluation set:

- Loss: 0.5867

- Precision: 0.7949

- Recall: 0.7949

- F1: 0.7949

- Accuracy: 0.9261

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 2

- eval_batch_size: 2

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- training_steps: 5000

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:------:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 4.55 | 100 | 0.3686 | 0.5748 | 0.7179 | 0.6384 | 0.8881 |

| No log | 9.09 | 200 | 0.3057 | 0.6799 | 0.7546 | 0.7153 | 0.9189 |

| No log | 13.64 | 300 | 0.3287 | 0.7491 | 0.7875 | 0.7679 | 0.9354 |

| No log | 18.18 | 400 | 0.3452 | 0.7414 | 0.7875 | 0.7638 | 0.9307 |

| 0.2603 | 22.73 | 500 | 0.3365 | 0.7313 | 0.7875 | 0.7584 | 0.9415 |

| 0.2603 | 27.27 | 600 | 0.5244 | 0.7745 | 0.7802 | 0.7774 | 0.9097 |

| 0.2603 | 31.82 | 700 | 0.4429 | 0.7737 | 0.7766 | 0.7751 | 0.9338 |

| 0.2603 | 36.36 | 800 | 0.4776 | 0.7657 | 0.8022 | 0.7835 | 0.9266 |

| 0.2603 | 40.91 | 900 | 0.5305 | 0.7855 | 0.7912 | 0.7883 | 0.9236 |

| 0.051 | 45.45 | 1000 | 0.5867 | 0.7949 | 0.7949 | 0.7949 | 0.9261 |

| 0.051 | 50.0 | 1100 | 0.5569 | 0.7774 | 0.7802 | 0.7788 | 0.9323 |

| 0.051 | 54.55 | 1200 | 0.6154 | 0.7509 | 0.7509 | 0.7509 | 0.9200 |

| 0.051 | 59.09 | 1300 | 0.5406 | 0.7305 | 0.7546 | 0.7423 | 0.9297 |

| 0.051 | 63.64 | 1400 | 0.6069 | 0.7544 | 0.7875 | 0.7706 | 0.9287 |

| 0.0127 | 68.18 | 1500 | 0.6142 | 0.7603 | 0.7436 | 0.7519 | 0.9210 |

| 0.0127 | 72.73 | 1600 | 0.5822 | 0.7399 | 0.7399 | 0.7399 | 0.9297 |

| 0.0127 | 77.27 | 1700 | 0.5584 | 0.75 | 0.7582 | 0.7541 | 0.9297 |

| 0.0127 | 81.82 | 1800 | 0.5962 | 0.7509 | 0.7729 | 0.7617 | 0.9241 |

| 0.0127 | 86.36 | 1900 | 0.6891 | 0.7580 | 0.7802 | 0.7690 | 0.9236 |

| 0.0013 | 90.91 | 2000 | 0.6205 | 0.75 | 0.7582 | 0.7541 | 0.9266 |

| 0.0013 | 95.45 | 2100 | 0.6235 | 0.7745 | 0.7802 | 0.7774 | 0.9292 |

| 0.0013 | 100.0 | 2200 | 0.6329 | 0.7656 | 0.7656 | 0.7656 | 0.9292 |

| 0.0013 | 104.55 | 2300 | 0.6482 | 0.7739 | 0.7399 | 0.7566 | 0.9241 |

| 0.0013 | 109.09 | 2400 | 0.6440 | 0.7675 | 0.7619 | 0.7647 | 0.9292 |

| 0.0008 | 113.64 | 2500 | 0.6388 | 0.7630 | 0.7546 | 0.7587 | 0.9343 |

| 0.0008 | 118.18 | 2600 | 0.7076 | 0.7774 | 0.7546 | 0.7658 | 0.9225 |

| 0.0008 | 122.73 | 2700 | 0.6698 | 0.7721 | 0.7692 | 0.7706 | 0.9297 |

| 0.0008 | 127.27 | 2800 | 0.6898 | 0.76 | 0.7656 | 0.7628 | 0.9220 |

| 0.0008 | 131.82 | 2900 | 0.6800 | 0.7482 | 0.7619 | 0.7550 | 0.9282 |

| 0.0006 | 136.36 | 3000 | 0.6911 | 0.7393 | 0.7582 | 0.7486 | 0.9215 |

| 0.0006 | 140.91 | 3100 | 0.6818 | 0.7446 | 0.7582 | 0.7514 | 0.9220 |

| 0.0006 | 145.45 | 3200 | 0.7043 | 0.7473 | 0.7692 | 0.7581 | 0.9210 |

| 0.0006 | 150.0 | 3300 | 0.6935 | 0.7482 | 0.7729 | 0.7604 | 0.9246 |

| 0.0006 | 154.55 | 3400 | 0.7163 | 0.7482 | 0.7729 | 0.7604 | 0.9230 |

| 0.0001 | 159.09 | 3500 | 0.7329 | 0.7590 | 0.7729 | 0.7659 | 0.9205 |

| 0.0001 | 163.64 | 3600 | 0.7570 | 0.7737 | 0.7766 | 0.7751 | 0.9215 |

| 0.0001 | 168.18 | 3700 | 0.7552 | 0.7664 | 0.7692 | 0.7678 | 0.9225 |

| 0.0001 | 172.73 | 3800 | 0.7226 | 0.7831 | 0.7802 | 0.7817 | 0.9246 |

| 0.0001 | 177.27 | 3900 | 0.6868 | 0.7844 | 0.7729 | 0.7786 | 0.9297 |

| 0.0003 | 181.82 | 4000 | 0.6916 | 0.7757 | 0.7729 | 0.7743 | 0.9256 |

| 0.0003 | 186.36 | 4100 | 0.6862 | 0.7749 | 0.7692 | 0.7721 | 0.9292 |

| 0.0003 | 190.91 | 4200 | 0.7067 | 0.7749 | 0.7692 | 0.7721 | 0.9225 |

| 0.0003 | 195.45 | 4300 | 0.7059 | 0.7628 | 0.7656 | 0.7642 | 0.9210 |

| 0.0003 | 200.0 | 4400 | 0.7300 | 0.7609 | 0.7692 | 0.7650 | 0.9210 |

| 0.0002 | 204.55 | 4500 | 0.7299 | 0.7572 | 0.7656 | 0.7614 | 0.9215 |

| 0.0002 | 209.09 | 4600 | 0.7168 | 0.7527 | 0.7692 | 0.7609 | 0.9210 |

| 0.0002 | 213.64 | 4700 | 0.7177 | 0.7545 | 0.7656 | 0.76 | 0.9210 |

| 0.0002 | 218.18 | 4800 | 0.7182 | 0.7545 | 0.7656 | 0.76 | 0.9210 |

| 0.0002 | 222.73 | 4900 | 0.7190 | 0.7628 | 0.7656 | 0.7642 | 0.9205 |

| 0.0001 | 227.27 | 5000 | 0.7168 | 0.7572 | 0.7656 | 0.7614 | 0.9215 |

### Framework versions

- Transformers 4.24.0.dev0

- Pytorch 1.12.1+cu113

- Datasets 2.2.2

- Tokenizers 0.13.1

|

dccuchile/bert-base-spanish-wwm-cased-finetuned-xnli | [

"pytorch",

"bert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 28 | null | ---

license: apache-2.0

---

# T5 Sami - Norwegian - Sami

Placeholder for future model. Description is coming soon.

|

dccuchile/bert-base-spanish-wwm-uncased-finetuned-ner | [

"pytorch",

"bert",

"token-classification",

"transformers",

"autotrain_compatible"

]

| token-classification | {

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 5 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- opus_books

model-index:

- name: byt5-small-finetuned-1epoch-batch16-opus_books-en-to-it

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# byt5-small-finetuned-1epoch-batch16-opus_books-en-to-it

This model is a fine-tuned version of [google/byt5-small](https://huggingface.co/google/byt5-small) on the opus_books dataset.

It achieves the following results on the evaluation set:

- Loss: 0.9848

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 1

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.3771 | 1.0 | 1819 | 0.9848 |

### Framework versions

- Transformers 4.23.1

- Pytorch 1.12.1+cu113

- Datasets 2.6.1

- Tokenizers 0.13.1

|

dccuchile/bert-base-spanish-wwm-uncased-finetuned-pos | [

"pytorch",

"bert",

"token-classification",

"transformers",

"autotrain_compatible"

]

| token-classification | {

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 5 | null | ---

language: en

tags:

- EBK-BERT

license: apache-2.0

datasets:

- Araevent(November)

- Araevent(July)

---

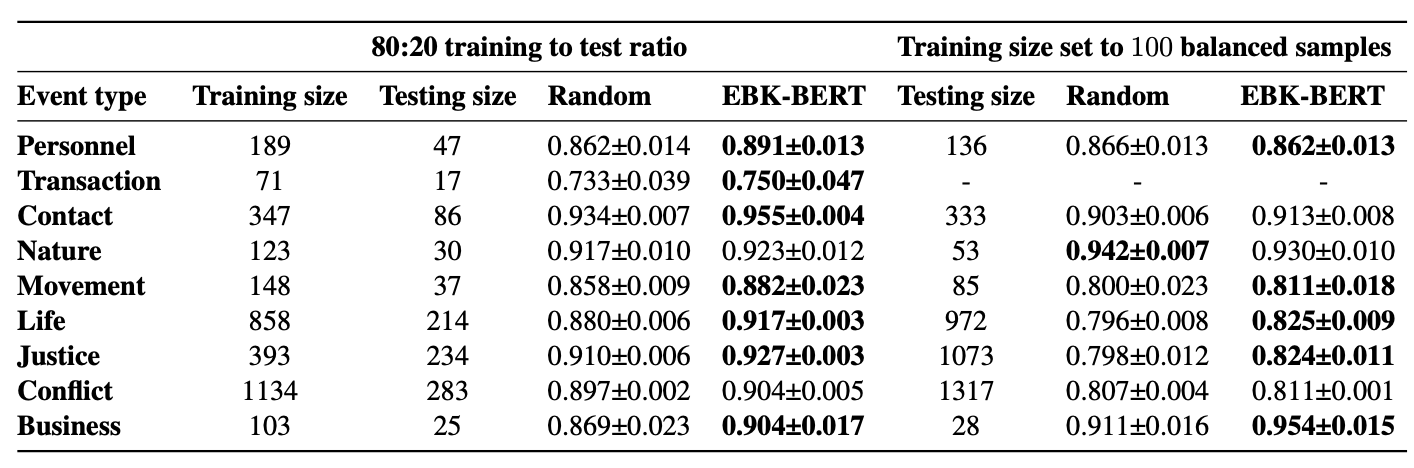

# BK-BERT

Event Knowledge-Based BERT (EBK-BERT) leverages knowledge extracted from events-related sentences to mask words that

are significant to the events detection task. This approach aims to produce a language model that enhances the

performance of the down-stream event detection task, which is later trained during the fine-tuning process.

## Model description

The BERT-base configuration is adopted which has 12 encoder blocks, 768 hidden dimensions, 12 attention heads,

512 maximum sequence length, and a total of 110M parameters.

## Pre-training Data

The pre-training data consists of news articles from the 1.5 billion words corpus by (El-Khair, 2016).

Due to computation limitations, we only use articles from Alittihad, Riyadh, Almasrya- lyoum, and Alqabas,

which amount to 10GB of text and about 8M sentences after splitting the articles to approximately

100 word sentences to accommodate the 128 max_sentence length used when training the model.

The average number of tokens per sentence is 105.

### Pretraining

As previous studies have shown, contextual representation models that are pre-trained using top Personnel

Transaction Contact Nature Movement Life Justice Conflict business the MLM training task benefit from masking

the most significant words, using whole word masking.

To select the most significant words we use odds-ratio. Only words with greater than 2 odds-ratio are considered

in the masking, which means the words included are at least twice as likely to appear in one event type than the other.

Google Cloud GPU is used for pre-training the model. The selected hyperparameters are: learning rate=1e − 4,

batch size =16, maxi- mum sequence length = 128 and average se- quence length = 104. In total, we pre-trained

our models for 500, 000 steps, completing 1 epoch. Pre-training a single model took approximately 2.25 days.

## Fine-tuning data

Tweets are collected from well-known Arabic news accounts, which are: Al-Arabiya, Sabq,

CNN Arabic, and BBC Arabic. These accounts belong to television channels and online

newspapers, where they use Twitter to broadcast news related to real-world events.

The first collection process tracks tweets from the news accounts for 20 days period,

between November 2, 2021, and November 22, 2021 and we call this dataset AraEvent(November).

## Evaluation results

When fine-tuned on down-stream event detection task, this model achieves the following results:

## Gradio Demo

will be released soon |

dccuchile/distilbert-base-spanish-uncased-finetuned-mldoc | [

"pytorch",

"distilbert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"DistilBertForSequenceClassification"

],

"model_type": "distilbert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 27 | null | Access to model sd-concepts-library/wedding-HandPainted is restricted and you are not in the authorized list. Visit https://huggingface.co/sd-concepts-library/wedding-HandPainted to ask for access. |

dccuchile/distilbert-base-spanish-uncased-finetuned-pos | [

"pytorch",

"distilbert",

"token-classification",

"transformers",

"autotrain_compatible"

]

| token-classification | {

"architectures": [

"DistilBertForTokenClassification"

],

"model_type": "distilbert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 3 | null | ---

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: bert-base-greek-uncased-v1-finetuned-ner

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# bert-base-greek-uncased-v1-finetuned-ner

This model is a fine-tuned version of [nlpaueb/bert-base-greek-uncased-v1](https://huggingface.co/nlpaueb/bert-base-greek-uncased-v1) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.1052

- Precision: 0.8440

- Recall: 0.8566

- F1: 0.8503

- Accuracy: 0.9768

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 0.64 | 250 | 0.0913 | 0.7814 | 0.8208 | 0.8073 | 0.9728 |

| 0.1144 | 1.29 | 500 | 0.0823 | 0.7940 | 0.8448 | 0.8342 | 0.9755 |

| 0.1144 | 1.93 | 750 | 0.0812 | 0.8057 | 0.8212 | 0.8328 | 0.9751 |

| 0.0570 | 2.58 | 1000 | 0.0855 | 0.8244 | 0.8514 | 0.8292 | 0.9744 |

| 0.0570 | 3.22 | 1250 | 0.0926 | 0.8329 | 0.8441 | 0.8397 | 0.9760 |

| 0.0393 | 3.87 | 1500 | 0.0869 | 0.8256 | 0.8633 | 0.8440 | 0.9774 |

| 0.0393 | 4.51 | 1750 | 0.1049 | 0.8290 | 0.8636 | 0.8459 | 0.9766 |

| 0.026 | 5.15 | 2000 | 0.1093 | 0.8440 | 0.8566 | 0.8503 | 0.9768 |

| 0.026 | 5.8 | 2250 | 0.1172 | 0.8301 | 0.8514 | 0.8406 | 0.9760 |

| 0.0189 | 6.44 | 2500 | 0.1273 | 0.8238 | 0.8688 | 0.8457 | 0.9766 |

| 0.0189 | 7.09 | 2750 | 0.1246 | 0.8350 | 0.8539 | 0.8443 | 0.9764 |

| 0.0148 | 7.73 | 3000 | 0.1262 | 0.8333 | 0.8608 | 0.8468 | 0.9764 |

| 0.0148 | 8.38 | 3250 | 0.1347 | 0.8319 | 0.8591 | 0.8453 | 0.9762 |

| 0.0010 | 9.02 | 3500 | 0.1325 | 0.8376 | 0.8504 | 0.8439 | 0.9766 |

| 0.0010 | 9.66 | 3750 | 0.1362 | 0.8371 | 0.8563 | 0.8466 | 0.9765 |

### Framework versions

- Transformers 4.22.0

- Pytorch 1.12.1+cu113

- Datasets 2.4.0

- Tokenizers 0.12.1

|

Certified-Zoomer/DialoGPT-small-rick | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

tags:

- generated_from_keras_callback

model-index:

- name: Long_Bartpho_word_base

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# Long_Bartpho_word_base

This model was trained from scratch on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.23.1

- TensorFlow 2.9.2

- Tokenizers 0.13.1

|

Chae/botman | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

]

| conversational | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 5 | null | ---

tags:

- generated_from_keras_callback

model-index:

- name: Long_Bartpho_syllable_base

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# Long_Bartpho_syllable_base

This model was trained from scratch on an unknown dataset.

It achieves the following results on the evaluation set:

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: None

- training_precision: float32

### Training results

### Framework versions

- Transformers 4.23.1

- TensorFlow 2.9.2

- Tokenizers 0.13.1

|

Chaewon/mmnt_decoder_en | [

"pytorch",

"gpt2",

"text-generation",

"transformers"

]

| text-generation | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null