modelId (string, lengths 4 to 81) | tags (list) | pipeline_tag (string, 17 classes) | config (dict) | downloads (int64, 0 to 59.7M) | first_commit (timestamp[ns, tz=UTC]) | card (string, lengths 51 to 438k) |

|---|---|---|---|---|---|---|

CLTL/gm-ner-xlmrbase | [

"pytorch",

"tf",

"xlm-roberta",

"token-classification",

"nl",

"transformers",

"dighum",

"license:apache-2.0",

"autotrain_compatible"

]

| token-classification | {

"architectures": [

"XLMRobertaForTokenClassification"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 2 | null | ---

license: creativeml-openrail-m

tags:

- text-to-image

- stable-diffusion

---

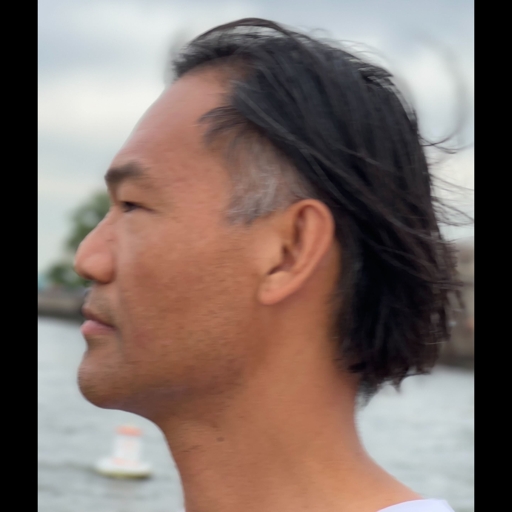

### Hussein_Deliberate_1000steps Dreambooth model trained by HusseinHE with [TheLastBen's fast-DreamBooth](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast-DreamBooth.ipynb) notebook

Test the concept via A1111 Colab [fast-Colab-A1111](https://colab.research.google.com/github/TheLastBen/fast-stable-diffusion/blob/main/fast_stable_diffusion_AUTOMATIC1111.ipynb)

Sample pictures of this concept:

|

CLTL/icf-levels-adm | [

"pytorch",

"roberta",

"text-classification",

"nl",

"transformers",

"license:mit"

]

| text-classification | {

"architectures": [

"RobertaForSequenceClassification"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 33 | null | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: PPO

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: LunarLander-v2

type: LunarLander-v2

metrics:

- type: mean_reward

value: 273.05 +/- 17.96

name: mean_reward

verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

CLTL/icf-levels-etn | [

"pytorch",

"roberta",

"text-classification",

"nl",

"transformers",

"license:mit"

]

| text-classification | {

"architectures": [

"RobertaForSequenceClassification"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 31 | null | A tiny version of Zipformer-Transducer (https://github.com/k2-fsa/icefall/tree/master/egs/librispeech/ASR/pruned_transducer_stateless7).

Number of model parameters: 20697573

Decoding results at epoch-30-avg-9:

* greedy_search: 2.67 & 6.4

* modified_beam_search: 2.6 & 6.26

* fast_beam_search: 2.64 & 6.3

|

CLTL/icf-levels-fac | [

"pytorch",

"roberta",

"text-classification",

"nl",

"transformers",

"license:mit"

]

| text-classification | {

"architectures": [

"RobertaForSequenceClassification"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 32 | 2023-01-28T01:48:37Z | ---

license: cc-by-sa-4.0

---

Abstract Expression Machine 30 (AEM30):

Stable Diffusion 2 from Stability AI, fine-tuned for 30 steps on the [maximalmargin/mitchell](https://huggingface.co/datasets/maximalmargin/mitchell) dataset. |

CLTL/icf-levels-ins | [

"pytorch",

"roberta",

"text-classification",

"nl",

"transformers",

"license:mit"

]

| text-classification | {

"architectures": [

"RobertaForSequenceClassification"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 32 | 2023-01-28T01:48:47Z | ---

license: cc-by-sa-4.0

---

Abstract Expression Machine 100 (AEM100):

Stable Diffusion 2 from Stability AI, fine-tuned for 100 steps on the [maximalmargin/mitchell](https://huggingface.co/datasets/maximalmargin/mitchell) dataset. |

CSResearcher/TestModel | [

"license:mit"

]

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2023-01-28T03:16:19Z | ---

language: en

thumbnail: http://www.huggingtweets.com/aneternalenigma/1674876241213/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1610259562988294147/Ck8uDVHJ_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">🔴LIVE on TWITCH!🔴AnEternalEnigma</div>

<div style="text-align: center; font-size: 14px;">@aneternalenigma</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from 🔴LIVE on TWITCH!🔴AnEternalEnigma.

| Data | 🔴LIVE on TWITCH!🔴AnEternalEnigma |

| --- | --- |

| Tweets downloaded | 3235 |

| Retweets | 773 |

| Short tweets | 320 |

| Tweets kept | 2142 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/mw1vu54f/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.
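The counts in the table above are consistent with a simple filter: the tweets kept equal the tweets downloaded minus retweets and short tweets. The sketch below only checks that arithmetic. It assumes this subtraction is how the kept count arises, which the card does not state, and `tweets_kept` is a hypothetical helper, not part of huggingtweets.

```python
# Counts copied from the training-data table above.
downloaded = 3235
retweets = 773
short_tweets = 320


def tweets_kept(downloaded: int, retweets: int, short_tweets: int) -> int:
    """Assumed filter: drop retweets and short tweets, keep everything else."""
    return downloaded - retweets - short_tweets


kept = tweets_kept(downloaded, retweets, short_tweets)
print(kept)  # 2142, matching the "Tweets kept" row
```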

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @aneternalenigma's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/cu1opgto) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/cu1opgto/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/aneternalenigma')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the [project repository](https://github.com/borisdayma/huggingtweets).

|

CSZay/bart | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

language: en

thumbnail: https://github.com/borisdayma/huggingtweets/blob/master/img/logo.png?raw=true

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1597709018142855170/e0xfVtT4_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">silly little time</div>

<div style="text-align: center; font-size: 14px;">@muzhroommama</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from silly little time.

| Data | silly little time |

| --- | --- |

| Tweets downloaded | 236 |

| Retweets | 87 |

| Short tweets | 32 |

| Tweets kept | 117 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/xaynl4xc/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @muzhroommama's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/x523rtvl) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/x523rtvl/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/muzhroommama')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the [project repository](https://github.com/borisdayma/huggingtweets).

|

CZWin32768/xlm-align | [

"pytorch",

"xlm-roberta",

"fill-mask",

"arxiv:2106.06381",

"transformers",

"autotrain_compatible"

]

| fill-mask | {

"architectures": [

"XLMRobertaForMaskedLM"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 6 | null | Access to model shaoncsecu/en_Disease_A_Z_SpaCy is restricted and you are not in the authorized list. Visit https://huggingface.co/shaoncsecu/en_Disease_A_Z_SpaCy to ask for access. |

Callidior/bert2bert-base-arxiv-titlegen | [

"pytorch",

"safetensors",

"encoder-decoder",

"text2text-generation",

"en",

"dataset:arxiv_dataset",

"transformers",

"summarization",

"license:apache-2.0",

"autotrain_compatible",

"has_space"

]

| summarization | {

"architectures": [

"EncoderDecoderModel"

],

"model_type": "encoder-decoder",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 145 | null | ---

tags:

- conversational

---

# 42meow DialoGPT Model |

CallumRai/HansardGPT2 | [

"pytorch",

"jax",

"gpt2",

"text-generation",

"transformers"

]

| text-generation | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": true,

"max_length": 50

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 14 | null |

---

tags:

- ultralyticsplus

- yolov8

- ultralytics

- yolo

- vision

- image-classification

- pytorch

- awesome-yolov8-models

library_name: ultralytics

library_version: 8.0.23

inference: false

datasets:

- keremberke/chest-xray-classification

model-index:

- name: keremberke/yolov8m-chest-xray-classification

results:

- task:

type: image-classification

dataset:

type: keremberke/chest-xray-classification

name: chest-xray-classification

split: validation

metrics:

- type: accuracy

value: 0.95533 # min: 0.0 - max: 1.0

name: top1 accuracy

- type: accuracy

value: 1 # min: 0.0 - max: 1.0

name: top5 accuracy

---

<div align="center">

<img width="640" alt="keremberke/yolov8m-chest-xray-classification" src="https://huggingface.co/keremberke/yolov8m-chest-xray-classification/resolve/main/thumbnail.jpg">

</div>

### Supported Labels

```

['NORMAL', 'PNEUMONIA']

```

### How to use

- Install [ultralyticsplus](https://github.com/fcakyon/ultralyticsplus):

```bash

pip install ultralyticsplus==0.0.24 ultralytics==8.0.23

```

- Load model and perform prediction:

```python

from ultralyticsplus import YOLO, postprocess_classify_output

# load model

model = YOLO('keremberke/yolov8m-chest-xray-classification')

# set model parameters

model.overrides['conf'] = 0.25 # model confidence threshold

# set image

image = 'https://github.com/ultralytics/yolov5/raw/master/data/images/zidane.jpg'

# perform inference

results = model.predict(image)

# observe results

print(results[0].probs) # class probabilities, one value per supported label

processed_result = postprocess_classify_output(model, result=results[0])

print(processed_result) # dict mapping each label to its probability

```
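If `processed_result` is a dictionary mapping each label to a probability, as the example output suggests, the predicted class is the key with the largest value. The following is a minimal sketch under that assumption. `top_label` is a hypothetical helper, not part of ultralyticsplus, and the scores are made up for illustration.

```python
from typing import Dict, Tuple


def top_label(scores: Dict[str, float]) -> Tuple[str, float]:
    """Return the (label, probability) pair with the highest probability."""
    if not scores:
        raise ValueError("scores is empty")
    label = max(scores, key=scores.get)
    return label, scores[label]


# Made-up scores for the two supported labels; not real model output.
example = {"NORMAL": 0.2, "PNEUMONIA": 0.8}
print(top_label(example))
```

In practice `example` would be replaced by the `processed_result` value from the snippet above.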

**More models available at: [awesome-yolov8-models](https://yolov8.xyz)** |

Cameron/BERT-Jigsaw | [

"pytorch",

"jax",

"bert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 35 | null |

---

tags:

- ultralyticsplus

- yolov8

- ultralytics

- yolo

- vision

- image-classification

- pytorch

- awesome-yolov8-models

library_name: ultralytics

library_version: 8.0.21

inference: false

datasets:

- keremberke/pokemon-classification

model-index:

- name: keremberke/yolov8n-pokemon-classification

results:

- task:

type: image-classification

dataset:

type: keremberke/pokemon-classification

name: pokemon-classification

split: validation

metrics:

- type: accuracy

value: 0.02322 # min: 0.0 - max: 1.0

name: top1 accuracy

- type: accuracy

value: 0.09016 # min: 0.0 - max: 1.0

name: top5 accuracy

---

<div align="center">

<img width="640" alt="keremberke/yolov8n-pokemon-classification" src="https://huggingface.co/keremberke/yolov8n-pokemon-classification/resolve/main/thumbnail.jpg">

</div>

### Supported Labels

```

['Abra', 'Aerodactyl', 'Alakazam', 'Alolan Sandslash', 'Arbok', 'Arcanine', 'Articuno', 'Beedrill', 'Bellsprout', 'Blastoise', 'Bulbasaur', 'Butterfree', 'Caterpie', 'Chansey', 'Charizard', 'Charmander', 'Charmeleon', 'Clefable', 'Clefairy', 'Cloyster', 'Cubone', 'Dewgong', 'Diglett', 'Ditto', 'Dodrio', 'Doduo', 'Dragonair', 'Dragonite', 'Dratini', 'Drowzee', 'Dugtrio', 'Eevee', 'Ekans', 'Electabuzz', 'Electrode', 'Exeggcute', 'Exeggutor', 'Farfetchd', 'Fearow', 'Flareon', 'Gastly', 'Gengar', 'Geodude', 'Gloom', 'Golbat', 'Goldeen', 'Golduck', 'Golem', 'Graveler', 'Grimer', 'Growlithe', 'Gyarados', 'Haunter', 'Hitmonchan', 'Hitmonlee', 'Horsea', 'Hypno', 'Ivysaur', 'Jigglypuff', 'Jolteon', 'Jynx', 'Kabuto', 'Kabutops', 'Kadabra', 'Kakuna', 'Kangaskhan', 'Kingler', 'Koffing', 'Krabby', 'Lapras', 'Lickitung', 'Machamp', 'Machoke', 'Machop', 'Magikarp', 'Magmar', 'Magnemite', 'Magneton', 'Mankey', 'Marowak', 'Meowth', 'Metapod', 'Mew', 'Mewtwo', 'Moltres', 'MrMime', 'Muk', 'Nidoking', 'Nidoqueen', 'Nidorina', 'Nidorino', 'Ninetales', 'Oddish', 'Omanyte', 'Omastar', 'Onix', 'Paras', 'Parasect', 'Persian', 'Pidgeot', 'Pidgeotto', 'Pidgey', 'Pikachu', 'Pinsir', 'Poliwag', 'Poliwhirl', 'Poliwrath', 'Wigglytuff', 'Zapdos', 'Zubat']

```

### How to use

- Install [ultralyticsplus](https://github.com/fcakyon/ultralyticsplus):

```bash

pip install ultralyticsplus==0.0.23 ultralytics==8.0.21

```

- Load model and perform prediction:

```python

from ultralyticsplus import YOLO, postprocess_classify_output

# load model

model = YOLO('keremberke/yolov8n-pokemon-classification')

# set model parameters

model.overrides['conf'] = 0.25 # model confidence threshold

# set image

image = 'https://github.com/ultralytics/yolov5/raw/master/data/images/zidane.jpg'

# perform inference

results = model.predict(image)

# observe results

print(results[0].probs) # class probabilities, one value per supported label

processed_result = postprocess_classify_output(model, result=results[0])

print(processed_result) # dict mapping each label to its probability

```

**More models available at: [awesome-yolov8-models](https://yolov8.xyz)** |

Cameron/BERT-SBIC-offensive | [

"pytorch",

"jax",

"bert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 31 | null | ---

language: en

thumbnail: http://www.huggingtweets.com/rhilever/1674878721821/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1611525483770044416/fYPREQ1N_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Rhilever</div>

<div style="text-align: center; font-size: 14px;">@rhilever</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Rhilever.

| Data | Rhilever |

| --- | --- |

| Tweets downloaded | 2728 |

| Retweets | 326 |

| Short tweets | 402 |

| Tweets kept | 2000 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/imxxkyr1/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @rhilever's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/bvkpy3yi) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/bvkpy3yi/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/rhilever')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the [project repository](https://github.com/borisdayma/huggingtweets).

|

Cameron/BERT-SBIC-targetcategory | [

"pytorch",

"jax",

"bert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 30 | null |

---

license: creativeml-openrail-m

base_model: CompVis/stable-diffusion-v1-4

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

inference: true

---

# KerasCV Stable Diffusion in Diffusers 🧨🤗

The pipeline contained in this repository was created using [this Space](https://huggingface.co/spaces/sayakpaul/convert-kerascv-sd-diffusers). The purpose is to convert the KerasCV Stable Diffusion weights in a way that is compatible with [Diffusers](https://github.com/huggingface/diffusers). This allows users to fine-tune using KerasCV and use the fine-tuned weights in Diffusers taking advantage of its nifty features (like [schedulers](https://huggingface.co/docs/diffusers/main/en/using-diffusers/schedulers), [fast attention](https://huggingface.co/docs/diffusers/optimization/fp16), etc.).

The following KerasCV weight paths were used: ['https://huggingface.co/sayakpaul/dreambooth-keras-dogs-unet/resolve/main/lr_1e-6_steps_1000.h5'] |

Cameron/BERT-eec-emotion | [

"pytorch",

"jax",

"bert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 36 | 2023-01-28T04:19:19Z | ---

language: en

thumbnail: http://www.huggingtweets.com/maxylobes/1674880251172/predictions.png

tags:

- huggingtweets

widget:

- text: "My dream is"

---

<div class="inline-flex flex-col" style="line-height: 1.5;">

<div class="flex">

<div

style="display:inherit; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('https://pbs.twimg.com/profile_images/1618233941110173696/x6aWoIH3_400x400.jpg')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

<div

style="display:none; margin-left: 4px; margin-right: 4px; width: 92px; height:92px; border-radius: 50%; background-size: cover; background-image: url('')">

</div>

</div>

<div style="text-align: center; margin-top: 3px; font-size: 16px; font-weight: 800">🤖 AI BOT 🤖</div>

<div style="text-align: center; font-size: 16px; font-weight: 800">Maxy</div>

<div style="text-align: center; font-size: 14px;">@maxylobes</div>

</div>

I was made with [huggingtweets](https://github.com/borisdayma/huggingtweets).

Create your own bot based on your favorite user with [the demo](https://colab.research.google.com/github/borisdayma/huggingtweets/blob/master/huggingtweets-demo.ipynb)!

## How does it work?

The model uses the following pipeline.

To understand how the model was developed, check the [W&B report](https://wandb.ai/wandb/huggingtweets/reports/HuggingTweets-Train-a-Model-to-Generate-Tweets--VmlldzoxMTY5MjI).

## Training data

The model was trained on tweets from Maxy.

| Data | Maxy |

| --- | --- |

| Tweets downloaded | 3245 |

| Retweets | 236 |

| Short tweets | 142 |

| Tweets kept | 2867 |

[Explore the data](https://wandb.ai/wandb/huggingtweets/runs/xfg543v2/artifacts), which is tracked with [W&B artifacts](https://docs.wandb.com/artifacts) at every step of the pipeline.
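
The counts in the table are consistent with a simple filter: the tweets kept equal the tweets downloaded minus retweets and short tweets. The check below is a minimal sketch, assuming the two filtered groups do not overlap (the card does not state this explicitly).

```python
# Figures copied from the training-data table.
tweets_downloaded = 3245
retweets = 236
short_tweets = 142

# Assumption: retweets and short tweets are disjoint groups, and both are dropped.
tweets_kept = tweets_downloaded - retweets - short_tweets
print(tweets_kept)  # 2867, matching the "Tweets kept" row
```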

## Training procedure

The model is based on a pre-trained [GPT-2](https://huggingface.co/gpt2) which is fine-tuned on @maxylobes's tweets.

Hyperparameters and metrics are recorded in the [W&B training run](https://wandb.ai/wandb/huggingtweets/runs/ge6894ny) for full transparency and reproducibility.

At the end of training, [the final model](https://wandb.ai/wandb/huggingtweets/runs/ge6894ny/artifacts) is logged and versioned.

## How to use

You can use this model directly with a pipeline for text generation:

```python

from transformers import pipeline

generator = pipeline('text-generation',

model='huggingtweets/maxylobes')

generator("My dream is", num_return_sequences=5)

```

## Limitations and bias

The model suffers from [the same limitations and bias as GPT-2](https://huggingface.co/gpt2#limitations-and-bias).

In addition, the data present in the user's tweets further affects the text generated by the model.

## About

*Built by Boris Dayma*

[Follow @borisdayma on Twitter](https://twitter.com/intent/follow?screen_name=borisdayma)

For more details, visit the project repository.

[huggingtweets on GitHub](https://github.com/borisdayma/huggingtweets)

|

Cameron/BERT-jigsaw-severetoxic | [

"pytorch",

"jax",

"bert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 30 | 2023-01-28T04:23:35Z | ---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imagefolder

metrics:

- accuracy

model-index:
- name: swin-tiny-patch4-window7-224-finetuned-eurosat
  results:
  - task:
      name: Image Classification
      type: image-classification
    dataset:
      name: imagefolder
      type: imagefolder
      config: default
      split: train
      args: default
    metrics:
    - name: Accuracy
      type: accuracy
      value: 0.9822222222222222

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# swin-tiny-patch4-window7-224-finetuned-eurosat

This model is a fine-tuned version of [microsoft/swin-tiny-patch4-window7-224](https://huggingface.co/microsoft/swin-tiny-patch4-window7-224) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0583

- Accuracy: 0.9822

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 4

- total_train_batch_size: 128

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 3
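
Several of these values are related: the total train batch size is the per-device batch size times the gradient accumulation steps, and the warmup ratio fixes how many steps the learning rate ramps up before decaying. The sketch below works through that arithmetic. It assumes a single device, takes the total of 570 optimizer steps from the card's training-results table, and uses a hypothetical helper name (`linear_schedule_lr`); the rounding of the warmup length is also an assumption, not the Trainer's exact rule.

```python
# Values copied from the hyperparameter list and the training-results table.
train_batch_size = 32
gradient_accumulation_steps = 4
learning_rate = 5e-05
warmup_ratio = 0.1
total_steps = 570  # final step reported in the training-results table

# Assumption: a single device, so there is no extra data-parallel multiplier.
effective_batch_size = train_batch_size * gradient_accumulation_steps

# Assumption: warmup length is the ratio times the total number of optimizer steps.
warmup_steps = round(total_steps * warmup_ratio)


def linear_schedule_lr(step: int) -> float:
    """Linear warmup to the peak rate, then linear decay to zero."""
    if step < warmup_steps:
        return learning_rate * step / warmup_steps
    remaining = max(0, total_steps - step)
    return learning_rate * remaining / (total_steps - warmup_steps)


print(effective_batch_size, warmup_steps, linear_schedule_lr(warmup_steps))
```

Under these assumptions the effective batch size comes out at 128, which agrees with the reported `total_train_batch_size`.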

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.2655 | 1.0 | 190 | 0.1039 | 0.9707 |

| 0.1519 | 2.0 | 380 | 0.0866 | 0.9715 |

| 0.1402 | 3.0 | 570 | 0.0583 | 0.9822 |

### Framework versions

- Transformers 4.26.0

- Pytorch 1.13.1+cu117

- Datasets 2.9.0

- Tokenizers 0.13.2

|

Cameron/BERT-mdgender-convai-binary | [

"pytorch",

"jax",

"bert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 33 | 2023-01-28T04:36:59Z | ---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:
- name: FrozenLake-v1-4x4-noSlippery
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: FrozenLake-v1-4x4-no_slippery
      type: FrozenLake-v1-4x4-no_slippery
    metrics:
    - type: mean_reward
      value: 1.00 +/- 0.00
      name: mean_reward
      verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

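```python
import gym

# load_from_hub is not defined in this card; it is assumed to be available
# in the calling environment (for example, as a notebook helper).
model = load_from_hub(repo_id="shivr/FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)
env = gym.make(model["env_id"])
```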
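
The usage snippet treats the loaded model as a dictionary with an `env_id` entry, and the file name suggests a pickle. The sketch below shows how such a file could be read locally and used to pick a greedy action. The helper names and the `qtable` key are assumptions made for illustration, and a toy table stands in for the real download.

```python
import os
import pickle
import tempfile


def load_pickled_model(path):
    """Load a pickled model dictionary from a local file."""
    with open(path, "rb") as handle:
        return pickle.load(handle)


def greedy_action(qtable, state):
    """Return the index of the highest-valued action for a state."""
    row = qtable[state]
    return max(range(len(row)), key=lambda action: row[action])


# Toy stand-in for the downloaded file; the "qtable" key is an assumption.
toy_model = {
    "env_id": "FrozenLake-v1",
    "qtable": [[0.0, 0.5, 0.1, 0.0], [0.2, 0.0, 0.9, 0.0]],
}
with tempfile.TemporaryDirectory() as tmp_dir:
    model_path = os.path.join(tmp_dir, "q-learning.pkl")
    with open(model_path, "wb") as handle:
        pickle.dump(toy_model, handle)
    model = load_pickled_model(model_path)

print(model["env_id"], greedy_action(model["qtable"], 0))
```

Unpickling can execute arbitrary code, so a real file should only be loaded from a source you trust.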

|

Cameron/BERT-mdgender-wizard | [

"pytorch",

"jax",

"bert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 30 | 2023-01-28T04:48:41Z |

---

tags:

- ultralyticsplus

- yolov8

- ultralytics

- yolo

- vision

- image-classification

- pytorch

- awesome-yolov8-models

library_name: ultralytics

library_version: 8.0.21

inference: false

datasets:

- keremberke/pokemon-classification

model-index:
- name: keremberke/yolov8s-pokemon-classification
  results:
  - task:
      type: image-classification
    dataset:
      type: keremberke/pokemon-classification
      name: pokemon-classification
      split: validation
    metrics:
    - type: accuracy
      value: 0.02459 # min: 0.0 - max: 1.0
      name: top1 accuracy
    - type: accuracy
      value: 0.0806 # min: 0.0 - max: 1.0
      name: top5 accuracy

---

<div align="center">

<img width="640" alt="keremberke/yolov8s-pokemon-classification" src="https://huggingface.co/keremberke/yolov8s-pokemon-classification/resolve/main/thumbnail.jpg">

</div>

### Supported Labels

```

['Abra', 'Aerodactyl', 'Alakazam', 'Alolan Sandslash', 'Arbok', 'Arcanine', 'Articuno', 'Beedrill', 'Bellsprout', 'Blastoise', 'Bulbasaur', 'Butterfree', 'Caterpie', 'Chansey', 'Charizard', 'Charmander', 'Charmeleon', 'Clefable', 'Clefairy', 'Cloyster', 'Cubone', 'Dewgong', 'Diglett', 'Ditto', 'Dodrio', 'Doduo', 'Dragonair', 'Dragonite', 'Dratini', 'Drowzee', 'Dugtrio', 'Eevee', 'Ekans', 'Electabuzz', 'Electrode', 'Exeggcute', 'Exeggutor', 'Farfetchd', 'Fearow', 'Flareon', 'Gastly', 'Gengar', 'Geodude', 'Gloom', 'Golbat', 'Goldeen', 'Golduck', 'Golem', 'Graveler', 'Grimer', 'Growlithe', 'Gyarados', 'Haunter', 'Hitmonchan', 'Hitmonlee', 'Horsea', 'Hypno', 'Ivysaur', 'Jigglypuff', 'Jolteon', 'Jynx', 'Kabuto', 'Kabutops', 'Kadabra', 'Kakuna', 'Kangaskhan', 'Kingler', 'Koffing', 'Krabby', 'Lapras', 'Lickitung', 'Machamp', 'Machoke', 'Machop', 'Magikarp', 'Magmar', 'Magnemite', 'Magneton', 'Mankey', 'Marowak', 'Meowth', 'Metapod', 'Mew', 'Mewtwo', 'Moltres', 'MrMime', 'Muk', 'Nidoking', 'Nidoqueen', 'Nidorina', 'Nidorino', 'Ninetales', 'Oddish', 'Omanyte', 'Omastar', 'Onix', 'Paras', 'Parasect', 'Persian', 'Pidgeot', 'Pidgeotto', 'Pidgey', 'Pikachu', 'Pinsir', 'Poliwag', 'Poliwhirl', 'Poliwrath', 'Wigglytuff', 'Zapdos', 'Zubat']

```

### How to use

- Install [ultralyticsplus](https://github.com/fcakyon/ultralyticsplus):

```bash

pip install ultralyticsplus==0.0.23 ultralytics==8.0.21

```

- Load model and perform prediction:

```python

from ultralyticsplus import YOLO, postprocess_classify_output

# load model

model = YOLO('keremberke/yolov8s-pokemon-classification')

# set model parameters

model.overrides['conf'] = 0.25 # model confidence threshold

# set image

image = 'https://github.com/ultralytics/yolov5/raw/master/data/images/zidane.jpg'

# perform inference

results = model.predict(image)

# observe results

print(results[0].probs) # [0.1, 0.2, 0.3, 0.4]

processed_result = postprocess_classify_output(model, result=results[0])

print(processed_result) # {"cat": 0.4, "dog": 0.6}

```
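
The card's metadata reports top-1 and top-5 accuracy, which depend on ranking the class scores. The sketch below ranks a label-to-score dictionary, assuming the processed result has the shape suggested by the comment in the snippet above; `top_k_labels` is a hypothetical helper, not part of ultralyticsplus.

```python
def top_k_labels(scores, k=5):
    """Return the k (label, score) pairs with the highest scores."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return ranked[:k]


# Toy scores in the same shape as the example comment in the card.
example_scores = {"cat": 0.4, "dog": 0.6}
print(top_k_labels(example_scores, k=1))
```

A prediction counts as a top-5 hit when the true label appears anywhere in the list returned for `k=5`.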

**More models available at: [awesome-yolov8-models](https://yolov8.xyz)** |

Cameron/BERT-rtgender-opgender-annotations | [

"pytorch",

"jax",

"bert",

"text-classification",

"transformers"

]

| text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 33 | null | ---

library_name: stable-baselines3

tags:

- LunarLander-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:
- name: PPO
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: LunarLander-v2
      type: LunarLander-v2
    metrics:
    - type: mean_reward
      value: 249.56 +/- 19.30
      name: mean_reward
      verified: false

---

# **PPO** Agent playing **LunarLander-v2**

This is a trained model of a **PPO** agent playing **LunarLander-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```
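
The metadata reports `mean_reward` as `249.56 +/- 19.30`. The sketch below shows how a figure in that format could be produced, assuming it is the mean and standard deviation of per-episode rewards over an evaluation run. The rewards are made up for illustration, and the choice of population standard deviation is an assumption.

```python
import statistics


def summarize_rewards(episode_rewards):
    """Return (mean, standard deviation) of per-episode rewards."""
    mean_reward = statistics.fmean(episode_rewards)
    # Population standard deviation; the card does not say which variant is used.
    std_reward = statistics.pstdev(episode_rewards)
    return mean_reward, std_reward


# Made-up rewards for illustration only; not the data behind the card.
rewards = [230.0, 250.0, 270.0]
mean_reward, std_reward = summarize_rewards(rewards)
print(f"{mean_reward:.2f} +/- {std_reward:.2f}")
```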

|

Camzure/MaamiBot | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2023-01-28T05:02:37Z |

---

tags:

- ultralyticsplus

- yolov8

- ultralytics

- yolo

- vision

- image-classification

- pytorch

- awesome-yolov8-models

library_name: ultralytics

library_version: 8.0.23

inference: false

datasets:

- keremberke/pokemon-classification

model-index:
- name: keremberke/yolov8m-pokemon-classification
  results:
  - task:
      type: image-classification
    dataset:
      type: keremberke/pokemon-classification
      name: pokemon-classification
      split: validation
    metrics:
    - type: accuracy
      value: 0.03279 # min: 0.0 - max: 1.0
      name: top1 accuracy
    - type: accuracy
      value: 0.09699 # min: 0.0 - max: 1.0
      name: top5 accuracy

---

<div align="center">

<img width="640" alt="keremberke/yolov8m-pokemon-classification" src="https://huggingface.co/keremberke/yolov8m-pokemon-classification/resolve/main/thumbnail.jpg">

</div>

### Supported Labels

```

['Abra', 'Aerodactyl', 'Alakazam', 'Alolan Sandslash', 'Arbok', 'Arcanine', 'Articuno', 'Beedrill', 'Bellsprout', 'Blastoise', 'Bulbasaur', 'Butterfree', 'Caterpie', 'Chansey', 'Charizard', 'Charmander', 'Charmeleon', 'Clefable', 'Clefairy', 'Cloyster', 'Cubone', 'Dewgong', 'Diglett', 'Ditto', 'Dodrio', 'Doduo', 'Dragonair', 'Dragonite', 'Dratini', 'Drowzee', 'Dugtrio', 'Eevee', 'Ekans', 'Electabuzz', 'Electrode', 'Exeggcute', 'Exeggutor', 'Farfetchd', 'Fearow', 'Flareon', 'Gastly', 'Gengar', 'Geodude', 'Gloom', 'Golbat', 'Goldeen', 'Golduck', 'Golem', 'Graveler', 'Grimer', 'Growlithe', 'Gyarados', 'Haunter', 'Hitmonchan', 'Hitmonlee', 'Horsea', 'Hypno', 'Ivysaur', 'Jigglypuff', 'Jolteon', 'Jynx', 'Kabuto', 'Kabutops', 'Kadabra', 'Kakuna', 'Kangaskhan', 'Kingler', 'Koffing', 'Krabby', 'Lapras', 'Lickitung', 'Machamp', 'Machoke', 'Machop', 'Magikarp', 'Magmar', 'Magnemite', 'Magneton', 'Mankey', 'Marowak', 'Meowth', 'Metapod', 'Mew', 'Mewtwo', 'Moltres', 'MrMime', 'Muk', 'Nidoking', 'Nidoqueen', 'Nidorina', 'Nidorino', 'Ninetales', 'Oddish', 'Omanyte', 'Omastar', 'Onix', 'Paras', 'Parasect', 'Persian', 'Pidgeot', 'Pidgeotto', 'Pidgey', 'Pikachu', 'Pinsir', 'Poliwag', 'Poliwhirl', 'Poliwrath', 'Wigglytuff', 'Zapdos', 'Zubat']

```

### How to use

- Install [ultralyticsplus](https://github.com/fcakyon/ultralyticsplus):

```bash

pip install ultralyticsplus==0.0.24 ultralytics==8.0.23

```

- Load model and perform prediction:

```python

from ultralyticsplus import YOLO, postprocess_classify_output

# load model

model = YOLO('keremberke/yolov8m-pokemon-classification')

# set model parameters

model.overrides['conf'] = 0.25 # model confidence threshold

# set image

image = 'https://github.com/ultralytics/yolov5/raw/master/data/images/zidane.jpg'

# perform inference

results = model.predict(image)

# observe results

print(results[0].probs) # [0.1, 0.2, 0.3, 0.4]

processed_result = postprocess_classify_output(model, result=results[0])

print(processed_result) # {"cat": 0.4, "dog": 0.6}

```

**More models available at: [awesome-yolov8-models](https://yolov8.xyz)** |

Canadiancaleb/DialoGPT-small-jesse | [

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

]

| conversational | {

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 9 | null | ---

license: apache-2.0

---

Novel (fiction) writing model: https://github.com/BlinkDL/AI-Writer/releases |

Canadiancaleb/jessebot | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | 2023-01-28T05:25:57Z | ---

license: other

tags:

- generated_from_keras_callback

model-index:
- name: dousey/scene_segmentation
  results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# dousey/scene_segmentation

This model is a fine-tuned version of [nvidia/mit-b0](https://huggingface.co/nvidia/mit-b0) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: nan

- Validation Loss: nan

- Validation Mean Iou: 0.0217

- Validation Mean Accuracy: 0.5

- Validation Overall Accuracy: 0.2545

- Validation Accuracy Background: 1.0

- Validation Accuracy Bleuet: 0.0

- Validation Accuracy Comptonie: nan

- Validation Accuracy Kalmia: nan

- Validation Iou Background: 0.0433

- Validation Iou Bleuet: 0.0

- Validation Iou Comptonie: nan

- Validation Iou Kalmia: nan

- Epoch: 1
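
The mean figures above appear to be averages over only those classes whose per-class value is defined: two of the four classes report `nan`, and the remaining two average to the stated means. The sketch below checks this with a NaN-aware mean. It is an assumption about how the means were computed, not a statement about the metric implementation used in training.

```python
import math


def nan_mean(values):
    """Average the values that are not NaN; return NaN if none remain."""
    valid = [value for value in values if not math.isnan(value)]
    return sum(valid) / len(valid) if valid else math.nan


# Per-class figures from the list above: Background, Bleuet, Comptonie, Kalmia.
per_class_iou = [0.0433, 0.0, math.nan, math.nan]
per_class_accuracy = [1.0, 0.0, math.nan, math.nan]

print(nan_mean(per_class_iou), nan_mean(per_class_accuracy))
```

With the rounded inputs the IoU average lands close to the reported 0.0217, and the accuracy average is exactly 0.5.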

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 6e-05, 'decay_steps': 76500, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32
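
The optimizer entry describes a polynomial learning-rate decay with `power` 1.0, which is a straight line from the initial rate down to the end rate over `decay_steps`. The sketch below re-implements that formula in plain Python from the listed configuration; `polynomial_decay_lr` is a hypothetical name and the function is an illustration, not the Keras class itself.

```python
def polynomial_decay_lr(step, initial_lr=6e-05, decay_steps=76500, end_lr=0.0, power=1.0):
    """Learning rate after `step` optimizer steps, without cycling."""
    # With cycle=False the step is clamped, so the rate stays at end_lr afterwards.
    clamped_step = min(step, decay_steps)
    fraction_left = 1.0 - clamped_step / decay_steps
    return (initial_lr - end_lr) * fraction_left ** power + end_lr


for step in (0, 38250, 76500, 100000):
    print(step, polynomial_decay_lr(step))
```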

### Training results

| Train Loss | Validation Loss | Validation Mean Iou | Validation Mean Accuracy | Validation Overall Accuracy | Validation Accuracy Background | Validation Accuracy Bleuet | Validation Accuracy Comptonie | Validation Accuracy Kalmia | Validation Iou Background | Validation Iou Bleuet | Validation Iou Comptonie | Validation Iou Kalmia | Epoch |

|:----------:|:---------------:|:-------------------:|:------------------------:|:---------------------------:|:------------------------------:|:--------------------------:|:-----------------------------:|:--------------------------:|:-------------------------:|:---------------------:|:------------------------:|:---------------------:|:-----:|

| nan | nan | 0.0217 | 0.5 | 0.2545 | 1.0 | 0.0 | nan | nan | 0.0433 | 0.0 | nan | nan | 0 |

| nan | nan | 0.0217 | 0.5 | 0.2545 | 1.0 | 0.0 | nan | nan | 0.0433 | 0.0 | nan | nan | 1 |

### Framework versions

- Transformers 4.26.0

- TensorFlow 2.9.2

- Datasets 2.9.0

- Tokenizers 0.13.2

|

Capreolus/birch-bert-large-mb | [

"pytorch",

"tf",

"jax",

"bert",

"next-sentence-prediction",

"transformers"

]

| null | {

"architectures": [

"BertForNextSentencePrediction"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 1 | null | ---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:
- name: piyusharma/bert-base-uncased-finetuned-lex
  results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# piyusharma/bert-base-uncased-finetuned-lex

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.2112

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'name': 'AdamWeightDecay', 'learning_rate': 2e-05, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-07, 'amsgrad': False, 'weight_decay_rate': 0.01}

- training_precision: float32

### Training results

| Train Loss | Epoch |

|:----------:|:-----:|

| 0.2112 | 0 |

### Framework versions

- Transformers 4.26.0

- TensorFlow 2.9.2

- Datasets 2.9.0

- Tokenizers 0.13.2

|

Capreolus/birch-bert-large-msmarco_mb | [

"pytorch",

"tf",

"jax",

"bert",

"next-sentence-prediction",

"transformers"

]

| null | {

"architectures": [

"BertForNextSentencePrediction"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 1 | null | ---

library_name: stable-baselines3

tags:

- PandaReachDense-v2

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:
- name: A2C
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: PandaReachDense-v2
      type: PandaReachDense-v2
    metrics:
    - type: mean_reward
      value: -1.00 +/- 0.25
      name: mean_reward
      verified: false

---

# **A2C** Agent playing **PandaReachDense-v2**

This is a trained model of an **A2C** agent playing **PandaReachDense-v2** using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3).

## Usage (with Stable-baselines3)

TODO: Add your code

```python

from stable_baselines3 import ...

from huggingface_sb3 import load_from_hub

...

```

|

Captain-1337/CrudeBERT | [

"pytorch",

"bert",

"text-classification",

"arxiv:1908.10063",

"transformers"

]

| text-classification | {

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 28 | null | ---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- precision

- recall

- f1

- accuracy

model-index:
- name: model1_absa_cont
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# model1_absa_cont

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 1.2382

- Precision: 0.2445

- Recall: 0.3046

- F1: 0.2712

- Accuracy: 0.5420
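
The F1 figure is the harmonic mean of precision and recall. The sketch below recomputes it from the rounded values listed above. Because the inputs are already rounded to four decimals, the result can differ from the reported F1 in the last digit.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; zero when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Rounded figures from the evaluation list above.
print(round(f1_score(0.2445, 0.3046), 4))
```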

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

### Training results

| Training Loss | Epoch | Step | Validation Loss | Precision | Recall | F1 | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:---------:|:------:|:------:|:--------:|

| No log | 1.0 | 7 | 1.2382 | 0.2445 | 0.3046 | 0.2712 | 0.5420 |

| No log | 2.0 | 14 | 1.2382 | 0.2445 | 0.3046 | 0.2712 | 0.5420 |

| No log | 3.0 | 21 | 1.2382 | 0.2445 | 0.3046 | 0.2712 | 0.5420 |

| No log | 4.0 | 28 | 1.2382 | 0.2445 | 0.3046 | 0.2712 | 0.5420 |

### Framework versions

- Transformers 4.26.0

- Pytorch 1.13.1+cu116

- Datasets 2.9.0

- Tokenizers 0.13.2

|

Carlork314/Carlos | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

datasets:

- Ramos-Ramos/nllb-eng-tgl-12k

---

# Ramos-Ramos/xlm-roberta-base-en-tl

This is a [sentence-transformers](https://www.SBERT.net) model: it maps sentences and paragraphs to a 768-dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('Ramos-Ramos/xlm-roberta-base-en-tl')

embeddings = model.encode(sentences)

print(embeddings)

```
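
For semantic search, embeddings like these are usually compared with cosine similarity. The sketch below computes it in plain Python on toy vectors. It stands in for whatever similarity routine you would use on the real 768-dimensional output, and `cosine_similarity` here is a hypothetical helper, not a sentence-transformers function.

```python
import math


def cosine_similarity(vector_a, vector_b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(vector_a, vector_b))
    norm_a = math.sqrt(sum(a * a for a in vector_a))
    norm_b = math.sqrt(sum(b * b for b in vector_b))
    return dot / (norm_a * norm_b)


# Toy 3-dimensional vectors standing in for the 768-dimensional embeddings.
print(cosine_similarity([1.0, 0.0, 1.0], [1.0, 1.0, 0.0]))
```

Values near 1 indicate vectors pointing in nearly the same direction, values near 0 indicate unrelated directions.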

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: first, pass your input through the transformer model, then apply the right pooling operation on top of the contextualized word embeddings.

```python
from transformers import AutoTokenizer, AutoModel
import torch


# Mean Pooling - Take attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)


# Sentences we want sentence embeddings for
sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub
tokenizer = AutoTokenizer.from_pretrained('Ramos-Ramos/xlm-roberta-base-en-tl')
model = AutoModel.from_pretrained('Ramos-Ramos/xlm-roberta-base-en-tl')

# Tokenize sentences
encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings
with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.
sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")
print(sentence_embeddings)
```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=Ramos-Ramos/xlm-roberta-base-en-tl)

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 308 with parameters:

```

{'batch_size': 64, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.MSELoss.MSELoss`

Parameters of the fit()-Method:

```

{
    "epochs": 5,
    "evaluation_steps": 0,
    "evaluator": "sentence_transformers.evaluation.SequentialEvaluator.SequentialEvaluator",
    "max_grad_norm": 1,
    "optimizer_class": "<class 'torch.optim.adamw.AdamW'>",
    "optimizer_params": {
        "eps": 1e-06,
        "lr": 2e-05
    },
    "scheduler": "WarmupLinear",
    "steps_per_epoch": null,
    "warmup_steps": 200,
    "weight_decay": 0.01
}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: XLMRobertaModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information --> |

Carlork314/Xd | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null |

---

tags:

- ultralyticsplus

- yolov8

- ultralytics

- yolo

- vision

- image-segmentation

- pytorch

- awesome-yolov8-models

library_name: ultralytics

library_version: 8.0.21

inference: false

datasets:

- keremberke/pcb-defect-segmentation

model-index:
- name: keremberke/yolov8n-pcb-defect-segmentation
  results:
  - task:
      type: image-segmentation
    dataset:
      type: keremberke/pcb-defect-segmentation
      name: pcb-defect-segmentation
      split: validation
    metrics:
    - type: precision # since mAP@0.5 is not available on hf.co/metrics
      value: 0.51186 # min: 0.0 - max: 1.0
      name: mAP@0.5(box)
    - type: precision # since mAP@0.5 is not available on hf.co/metrics
      value: 0.51667 # min: 0.0 - max: 1.0
      name: mAP@0.5(mask)

---

<div align="center">

<img width="640" alt="keremberke/yolov8n-pcb-defect-segmentation" src="https://huggingface.co/keremberke/yolov8n-pcb-defect-segmentation/resolve/main/thumbnail.jpg">

</div>

### Supported Labels

```

['Dry_joint', 'Incorrect_installation', 'PCB_damage', 'Short_circuit']

```

### How to use

- Install [ultralyticsplus](https://github.com/fcakyon/ultralyticsplus):

```bash

pip install ultralyticsplus==0.0.23 ultralytics==8.0.21

```

- Load model and perform prediction:

```python

from ultralyticsplus import YOLO, render_result

# load model

model = YOLO('keremberke/yolov8n-pcb-defect-segmentation')

# set model parameters

model.overrides['conf'] = 0.25 # NMS confidence threshold

model.overrides['iou'] = 0.45 # NMS IoU threshold

model.overrides['agnostic_nms'] = False # NMS class-agnostic

model.overrides['max_det'] = 1000 # maximum number of detections per image

# set image

image = 'https://github.com/ultralytics/yolov5/raw/master/data/images/zidane.jpg'

# perform inference

results = model.predict(image)

# observe results

print(results[0].boxes)

print(results[0].masks)

render = render_result(model=model, image=image, result=results[0])

render.show()

```
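
The `conf`, `iou` and `agnostic_nms` overrides above control how overlapping detections are filtered. The sketch below illustrates the general idea with a simplified greedy non-maximum suppression in plain Python. It is not the Ultralytics implementation: the helper names `box_iou` and `filter_detections`, the dictionary layout of a detection, and the exact comparison operators at the thresholds are all assumptions made for illustration.

```python
def box_iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def filter_detections(detections, conf=0.25, iou=0.45, agnostic_nms=False):
    """Drop low-confidence boxes, then greedily suppress overlapping ones."""
    candidates = sorted(
        (d for d in detections if d["score"] >= conf),
        key=lambda d: d["score"],
        reverse=True,
    )
    kept = []
    for det in candidates:
        suppressed = False
        for other in kept:
            # With class-aware NMS, only boxes of the same class compete.
            same_group = agnostic_nms or other["label"] == det["label"]
            if same_group and box_iou(det["box"], other["box"]) > iou:
                suppressed = True
                break
        if not suppressed:
            kept.append(det)
    return kept


# Hypothetical detections using labels from the list above.
detections = [
    {"box": (0, 0, 10, 10), "score": 0.9, "label": "Short_circuit"},
    {"box": (1, 1, 11, 11), "score": 0.8, "label": "Short_circuit"},
    {"box": (1, 1, 11, 11), "score": 0.7, "label": "Dry_joint"},
    {"box": (50, 50, 60, 60), "score": 0.1, "label": "PCB_damage"},
]
kept = filter_detections(detections)
print([(d["label"], d["score"]) for d in kept])
```

With the class-aware setting, the overlapping `Dry_joint` box survives because it belongs to a different class. Passing `agnostic_nms=True` would suppress it as well.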

**More models available at: [awesome-yolov8-models](https://yolov8.xyz)** |

Carolhuehuehuehue/Sla | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

} | 0 | null | ---

license: mit

tags:

- pytorch

- diffusers

- unconditional-image-generation

- diffusion-models-class

---

# Model Card for Unit 1 of the [Diffusion Models Class 🧨](https://github.com/huggingface/diffusion-models-class)

This model is a diffusion model for unconditional image generation of cute 🦋.

## Usage

```python

from diffusers import DDPMPipeline

pipeline = DDPMPipeline.from_pretrained('garg-aayush/sd-class-butterflies-32')

image = pipeline().images[0]

image

```

|

Cat/Kitty | []

| null | {

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,