modelId

stringlengths 4

81

| tags

list | pipeline_tag

stringclasses 17

values | config

dict | downloads

int64 0

59.7M

| first_commit

timestamp[ns, tz=UTC] | card

stringlengths 51

438k

|

|---|---|---|---|---|---|---|

CoderBoy432/DialoGPT-small-harrypotter

|

[

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

] |

conversational

|

{

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 11 | null |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- wer

model-index:

- name: whisper-small-chunke-nfl-finance

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# whisper-small-chunke-nfl-finance

This model is a fine-tuned version of [openai/whisper-small.en](https://huggingface.co/openai/whisper-small.en) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0732

- Wer: 10.2102

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 4000

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:-----:|:----:|:---------------:|:-------:|

| 0.0004 | 10.42 | 1000 | 0.0638 | 8.2761 |

| 0.0001 | 20.83 | 2000 | 0.0691 | 8.9629 |

| 0.0001 | 31.25 | 3000 | 0.0721 | 9.6356 |

| 0.0001 | 41.67 | 4000 | 0.0732 | 10.2102 |

### Framework versions

- Transformers 4.28.0

- Pytorch 2.0.1+cu117

- Datasets 2.12.0

- Tokenizers 0.13.3

|

CoffeeAddict93/gpt2-modest-proposal

|

[

"pytorch",

"gpt2",

"text-generation",

"transformers"

] |

text-generation

|

{

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": true,

"max_length": 50

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 12 | null |

---

license: apache-2.0

datasets:

- asset

- wi_locness

- GEM/wiki_auto_asset_turk

- discofuse

- zaemyung/IteraTeR_plus

- jfleg

language:

- en

metrics:

- sari

- bleu

- accuracy

---

# Model Card for CoEdIT-xl-composite

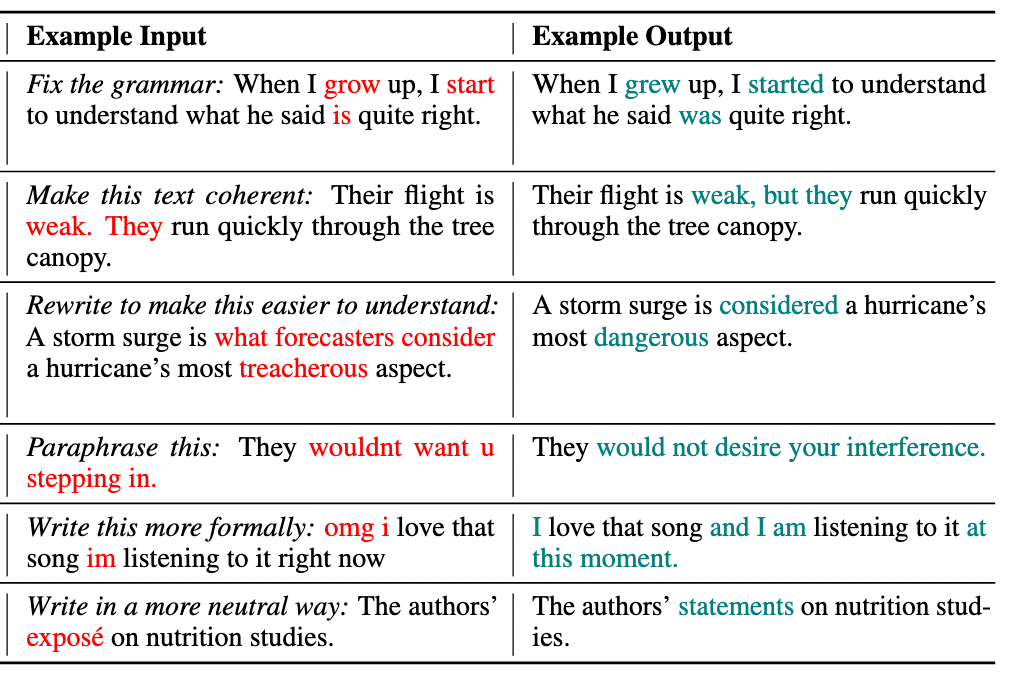

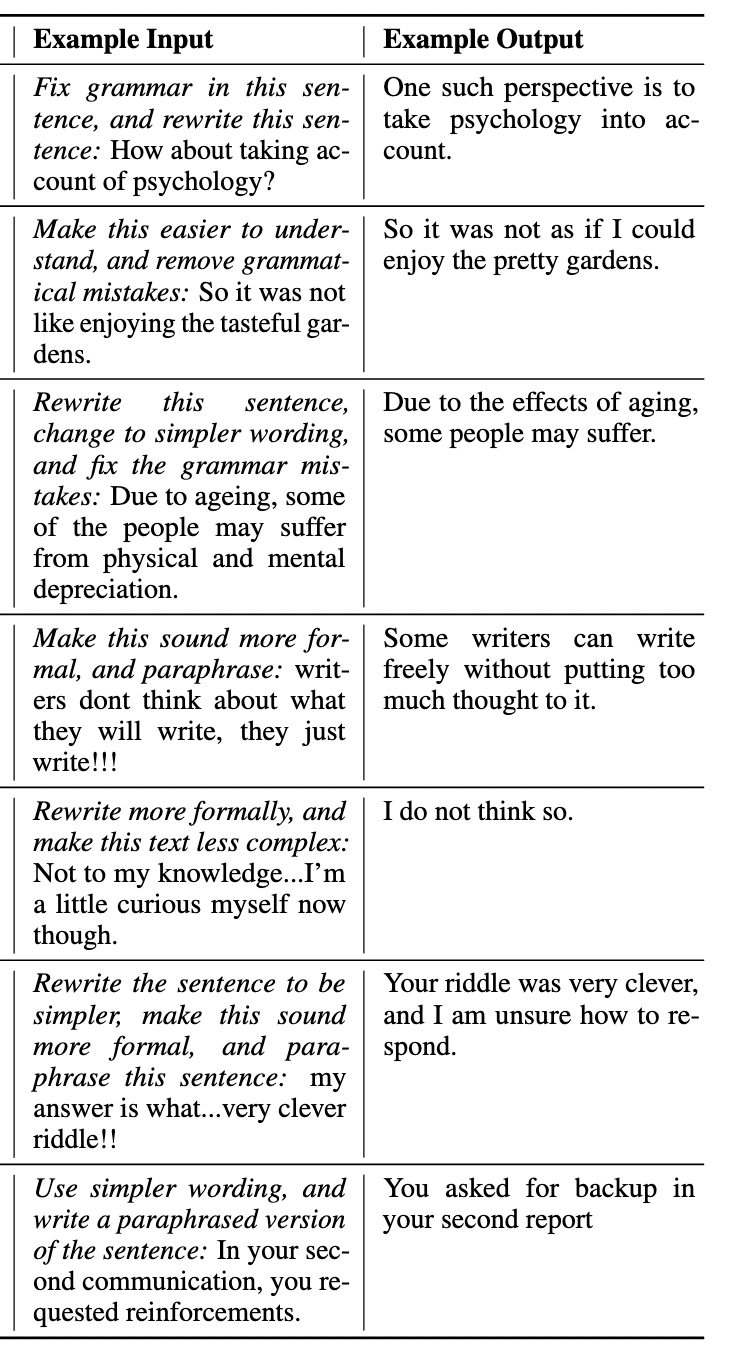

This model was obtained by fine-tuning the corresponding `google/flan-t5-xl` model on the CoEdIT-Composite dataset. Details of the dataset can be found in our paper and repository.

**Paper:** CoEdIT: Text Editing by Task-Specific Instruction Tuning

**Authors:** Vipul Raheja, Dhruv Kumar, Ryan Koo, Dongyeop Kang

## Model Details

### Model Description

- **Language(s) (NLP)**: English

- **Finetuned from model:** google/flan-t5-xl

### Model Sources

- **Repository:** https://github.com/vipulraheja/coedit

- **Paper:** https://arxiv.org/abs/2305.09857

## How to use

We make available the models presented in our paper.

<table>

<tr>

<th>Model</th>

<th>Number of parameters</th>

</tr>

<tr>

<td>CoEdIT-large</td>

<td>770M</td>

</tr>

<tr>

<td>CoEdIT-xl</td>

<td>3B</td>

</tr>

<tr>

<td>CoEdIT-xxl</td>

<td>11B</td>

</tr>

</table>

## Uses

## Text Revision Task

Given an edit instruction and an original text, our model can generate the edited version of the text.<br>

This model can also perform edits on composite instructions, as shown below:

## Usage

```python

from transformers import AutoTokenizer, T5ForConditionalGeneration

tokenizer = AutoTokenizer.from_pretrained("grammarly/coedit-xl-composite")

model = T5ForConditionalGeneration.from_pretrained("grammarly/coedit-xl-composite")

input_text = 'Fix grammatical errors in this sentence and make it simpler: When I grow up, I start to understand what he said is quite right.'

input_ids = tokenizer(input_text, return_tensors="pt").input_ids

outputs = model.generate(input_ids, max_length=256)

edited_text = tokenizer.decode(outputs[0], skip_special_tokens=True)

```

#### Software

https://github.com/vipulraheja/coedit

## Citation

**BibTeX:**

```

@article{raheja2023coedit,

title={CoEdIT: Text Editing by Task-Specific Instruction Tuning},

author={Vipul Raheja and Dhruv Kumar and Ryan Koo and Dongyeop Kang},

year={2023},

eprint={2305.09857},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

**APA:**

Raheja, V., Kumar, D., Koo, R., & Kang, D. (2023). CoEdIT: Text Editing by Task-Specific Instruction Tuning. ArXiv. /abs/2305.09857

|

ComCom/gpt2-large

|

[

"pytorch",

"gpt2",

"feature-extraction",

"transformers"

] |

feature-extraction

|

{

"architectures": [

"GPT2Model"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": true,

"max_length": 50

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 1 | null |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: flan-t5-large-extraction-all-cnndm_4000-ep5-nonstop

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# flan-t5-large-extraction-all-cnndm_4000-ep5-nonstop

This model is a fine-tuned version of [google/flan-t5-large](https://huggingface.co/google/flan-t5-large) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 1.7363

- Hint Hit Num: 1.936

- Hint Precision: 0.3338

- Num: 5.818

- Gen Len: 18.99

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 40

- eval_batch_size: 80

- seed: 1799

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | Hint Hit Num | Hint Precision | Num | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:------------:|:--------------:|:-----:|:-------:|

| 2.1988 | 1.0 | 100 | 1.8020 | 1.852 | 0.3214 | 5.78 | 19.0 |

| 1.9385 | 2.0 | 200 | 1.7482 | 1.974 | 0.3426 | 5.796 | 18.986 |

| 1.8744 | 3.0 | 300 | 1.7407 | 1.976 | 0.3399 | 5.86 | 18.99 |

| 1.8422 | 4.0 | 400 | 1.7398 | 1.958 | 0.3382 | 5.816 | 18.99 |

| 1.8238 | 5.0 | 500 | 1.7363 | 1.936 | 0.3338 | 5.818 | 18.99 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.10.0+cu111

- Datasets 2.5.1

- Tokenizers 0.12.1

|

ComCom-Dev/gpt2-bible-test

|

[] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | null |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- imdb

model-index:

- name: distilbert-base-uncased-finetuned-imdb

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-imdb

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the imdb dataset.

It achieves the following results on the evaluation set:

- Loss: 2.4411

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 2.7193 | 1.0 | 313 | 2.4903 |

| 2.5804 | 2.0 | 626 | 2.4569 |

| 2.5498 | 3.0 | 939 | 2.4574 |

### Framework versions

- Transformers 4.28.1

- Pytorch 2.0.0+cu118

- Datasets 2.12.0

- Tokenizers 0.13.3

|

Connor/DialoGPT-small-rick

|

[

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

] |

conversational

|

{

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 7 | null |

---

license: mit

tags:

- generated_from_trainer

model-index:

- name: gpt2-wikitext2

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# gpt2-wikitext2

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 6.3144

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3.0

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 6.7737 | 1.0 | 1125 | 6.6432 |

| 6.4176 | 2.0 | 2250 | 6.3929 |

| 6.2554 | 3.0 | 3375 | 6.3144 |

### Framework versions

- Transformers 4.28.1

- Pytorch 1.11.0+cu102

- Datasets 2.12.0

- Tokenizers 0.12.1

|

Contrastive-Tension/BERT-Distil-CT-STSb

|

[

"pytorch",

"tf",

"distilbert",

"feature-extraction",

"transformers"

] |

feature-extraction

|

{

"architectures": [

"DistilBertModel"

],

"model_type": "distilbert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 1 | null |

---

language:

- zh

- en

library_name: transformers

pipeline_tag: text2text-generation

datasets:

- Yaxin/SemEval2016Task5NLTK

metrics:

- yuyijiong/quad_match_score

---

情感分析任务 T5模型\

[yuyijiong/T5-large-sentiment-analysis-Chinese](https://huggingface.co/yuyijiong/T5-large-sentiment-analysis-Chinese)的改进版,增加更多任务,使用chatgpt生成部分数据\

在多个中英文情感分析数据集上微调得到 \

输出格式为

```

'对象1 | 观点1 | 方面1 | 情感极性1 & 对象2 | 观点2 | 方面2 | 情感极性2 ......'

```

可以使用yuyijiong/quad_match_score评估指标进行评估

```python

import evaluate

module = evaluate.load("yuyijiong/quad_match_score")

predictions=["food | good | food#taste | pos"]

references=["food | good | food#taste | pos & service | bad | service#general | neg"]

result=module.compute(predictions=predictions, references=references)

print(result)

```

支持以下情感分析任务

```

["四元组(对象 | 观点 | 方面 | 极性)",

'二元组(对象 | 观点)',

'三元组(对象 | 观点 | 方面)',

'三元组(对象 | 观点 | 极性)',

'三元组(对象 | 方面 | 极性)',

'二元组(方面 | 极性)',

'二元组(观点 | 极性)',

'单元素(极性)']

```

可以增加额外条件来控制答案的生成,例如:

答案风格控制,希望抽取的观点为整句话or缩减为几个词:\

(观点尽量短)\

(观点可以较长)\

(对较长观点进行概括) 注意此条件可能使答案中出现与原文不同的词

可以对指定的方面做情感分析:

(方面选项:商品/物流/商家/平台)

情感对象target可能为null,表示文本中未明确给出

可以允许模型自动猜测为null的对象:

(补全null)

support the following sentiment analysis tasks

```

["quadruples (target | opinion | aspect | polarity)",

"quadruples (target | opinion | aspect | polarity)",

'pairs (target | opinion)',

'triples (target | opinion | aspect)',

'triples (target | opinion | polarity)',

'triples (target | aspect | polarity)',

'pairs (aspect | polarity)',

'pairs (target | polarity)',

'pairs (opinion | polarity)',

'single (polarity)']

```

使用方法:

Usage

```python

import torch

from transformers import T5Tokenizer, AutoModelForSeq2SeqLM

tokenizer = T5Tokenizer.from_pretrained("yuyijiong/T5-large-sentiment-analysis-Chinese-MultiTask")

model = AutoModelForSeq2SeqLM.from_pretrained("yuyijiong/T5-large-sentiment-analysis-Chinese-MultiTask", device_map="auto")

generation_config=GenerationConfig.from_pretrained("yuyijiong/T5-large-sentiment-analysis-Chinese-MultiTask")

text = '情感四元组(对象 | 观点 | 方面 | 极性)抽取任务(观点可以较长): [个头大、口感不错,就是个别坏了的或者有烂掉口子刻意用泥土封着,这样做不好。]'

input_ids = tokenizer(text,return_tensors="pt", padding=True)['input_ids'].cuda(0)

with torch.no_grad():

output = model.generate(input_ids=input_ids,generation_config=generation_config)

output_str = tokenizer.batch_decode(output, skip_special_tokens=True)

print(output_str)

```

用法示例

```

Q:情感四元组(对象 | 观点 | 方面 | 极性)抽取任务(观点可以较长): [个头大、口感不错,就是个别坏了的或者有烂掉口子刻意用泥土封着,这样做不好。]

A:个头 | 大 | 商品#大小 | 积极 & 口感 | 不错 | 商品#口感 | 积极 & null | 个别坏了的或者有烂掉口子刻意用泥土封着,这样做不好 | 商品#新鲜程度 | 消极

Q:情感四元组(对象 | 观点 | 方面 | 极性)抽取任务(观点可以较长,补全null): [个头大、口感不错,就是个别坏了的或者有烂掉口子刻意用泥土封着,这样做不好。]

A:个头 | 大 | 商品#大小 | 积极 & 口感 | 不错 | 商品#口感 | 积极 & null (个别) | 有烂掉口子刻意用泥土封着,这样做不好 | 商品#新鲜程度 | 消极

Q:情感四元组(对象 | 观点 | 方面 | 极性)抽取任务(观点尽量短): [个头大、口感不错,就是个别坏了的或者有烂掉口子刻意用泥土封着,这样做不好。]

A:个头 | 大 | 商品#大小 | 积极 & 口感 | 不错 | 商品#口感 | 积极

Q:情感四元组(对象 | 观点 | 方面 | 极性)抽取任务(对较长观点进行概括): [个头大、口感不错,就是个别坏了的或者有烂掉口子刻意用泥土封着,这样做不好。]

A:个头 | 大 | 商品#大小 | 积极 & 口感 | 不错 | 商品#口感 | 积极 & null | 坏了口子封泥 | 商品#新鲜程度 | 消极

Q:情感三元组(对象 | 观点 | 极性)抽取任务(观点可以较长,补全null): [个头大、口感不错,就是个别坏了的或者有烂掉口子刻意用泥土封着,这样做不好。]

A:个头 | 大 | 积极 & 口感 | 不错 | 积极 & null (花生) | 个别坏了的或者有烂掉口子刻意用泥土封着,这样做不好 | 消极

Q:判断以下评论的情感极性: [个头大、口感不错,就是个别坏了的或者有烂掉口子刻意用泥土封着,这样做不好。]

A:中性

Q:情感二元组(方面 | 极性)抽取任务(方面选项: 价格#性价比/价格#折扣/价格#水平/食品#外观/食物#分量/食物#味道/食物#推荐): [个头大、口感不错,就是个别坏了的或者有烂掉口子刻意用泥土封着,这样做不好。]

A:食物#分量 | 积极 & 食物#味道 | 中性

Q:sentiment quadruples (target | opinion | aspect | polarity) extraction task : [The hot dogs are good , yes , but the reason to get over here is the fantastic pork croquette sandwich , perfect on its supermarket squishy bun .]

A:hot dogs | good | food#quality | pos & pork croquette sandwich | fantastic | food#quality | pos & bun | perfect | food#quality | pos

```

|

Coolhand/Abuela

|

[

"en",

"image_restoration",

"superresolution",

"license:mit"

] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | null |

---

license: apache-2.0

tags:

- generated_from_keras_callback

model-index:

- name: CatherineGeng/vit-base-patch16-224-in21k-euroSat

results: []

---

<!-- This model card has been generated automatically according to the information Keras had access to. You should

probably proofread and complete it, then remove this comment. -->

# CatherineGeng/vit-base-patch16-224-in21k-euroSat

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on an unknown dataset.

It achieves the following results on the evaluation set:

- Train Loss: 0.4854

- Train Accuracy: 0.9415

- Train Top-3-accuracy: 0.9856

- Validation Loss: 0.1574

- Validation Accuracy: 0.9817

- Validation Top-3-accuracy: 0.9988

- Epoch: 0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- optimizer: {'inner_optimizer': {'class_name': 'AdamWeightDecay', 'config': {'name': 'AdamWeightDecay', 'learning_rate': {'class_name': 'PolynomialDecay', 'config': {'initial_learning_rate': 3e-05, 'decay_steps': 3590, 'end_learning_rate': 0.0, 'power': 1.0, 'cycle': False, 'name': None}}, 'decay': 0.0, 'beta_1': 0.9, 'beta_2': 0.999, 'epsilon': 1e-08, 'amsgrad': False, 'weight_decay_rate': 0.01}}, 'dynamic': True, 'initial_scale': 32768.0, 'dynamic_growth_steps': 2000}

- training_precision: mixed_float16

### Training results

| Train Loss | Train Accuracy | Train Top-3-accuracy | Validation Loss | Validation Accuracy | Validation Top-3-accuracy | Epoch |

|:----------:|:--------------:|:--------------------:|:---------------:|:-------------------:|:-------------------------:|:-----:|

| 0.4854 | 0.9415 | 0.9856 | 0.1574 | 0.9817 | 0.9988 | 0 |

### Framework versions

- Transformers 4.29.1

- TensorFlow 2.4.0

- Datasets 2.12.0

- Tokenizers 0.13.3

|

CopymySkill/DialoGPT-medium-atakan

|

[

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

] |

conversational

|

{

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 7 | null |

---

license: mit

tags:

- generated_from_trainer

datasets:

- imagefolder

model-index:

- name: git-base-pokemon

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# git-base-pokemon

This model is a fine-tuned version of [microsoft/git-base](https://huggingface.co/microsoft/git-base) on the imagefolder dataset.

It achieves the following results on the evaluation set:

- Loss: 6.6921

- Wer Score: 19.4724

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 50

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer Score |

|:-------------:|:-----:|:----:|:---------------:|:---------:|

| 3.9687 | 50.0 | 50 | 6.6921 | 19.4724 |

### Framework versions

- Transformers 4.29.1

- Pytorch 2.0.0+cu118

- Datasets 2.12.0

- Tokenizers 0.13.3

|

Corvus/DialoGPT-medium-CaptainPrice

|

[

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

] |

conversational

|

{

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 7 | null |

---

license: creativeml-openrail-m

tags:

- text-to-image

- stable-diffusion

---

# Model Card for EGRX

## Model Description

- **Developed by:** BADMONK

- **Model type:** Dreambooth Model + Extracted LoRA

- **Language(s) (NLP):** EN

- **License:** Creativeml-Openrail-M

- **Parent Model:** ChilloutMix

# How to Get Started with the Model

Use the code below to get started with the model.

### EGRX ###

|

Coyotl/DialoGPT-test-last-arthurmorgan

|

[

"conversational"

] |

conversational

|

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | null |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

- precision

- recall

model-index:

- name: Baseline_10Kphish_benignFall_20_20_20

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Baseline_10Kphish_benignFall_20_20_20

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0830

- Accuracy: 0.9916

- F1: 0.9039

- Precision: 0.9971

- Recall: 0.8266

- Roc Auc Score: 0.9132

- Tpr At Fpr 0.01: 0.8118

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 | Precision | Recall | Roc Auc Score | Tpr At Fpr 0.01 |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|:------:|:---------:|:------:|:-------------:|:---------------:|

| 0.0118 | 1.0 | 6563 | 0.0538 | 0.9889 | 0.8681 | 0.9948 | 0.77 | 0.8849 | 0.7234 |

| 0.0053 | 2.0 | 13126 | 0.0538 | 0.9915 | 0.9021 | 0.9945 | 0.8254 | 0.9126 | 0.7654 |

| 0.0018 | 3.0 | 19689 | 0.0639 | 0.9916 | 0.9040 | 0.9945 | 0.8286 | 0.9142 | 0.7782 |

| 0.0009 | 4.0 | 26252 | 0.0843 | 0.9905 | 0.8894 | 0.9978 | 0.8022 | 0.9011 | 0.8086 |

| 0.0 | 5.0 | 32815 | 0.0830 | 0.9916 | 0.9039 | 0.9971 | 0.8266 | 0.9132 | 0.8118 |

### Framework versions

- Transformers 4.29.1

- Pytorch 1.9.0+cu111

- Datasets 2.10.1

- Tokenizers 0.13.2

|

Coyotl/DialoGPT-test3-arthurmorgan

|

[

"conversational"

] |

conversational

|

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | null |

---

datasets:

- glue

model-index:

- name: e5-large-unsupervised-mnli

results: []

pipeline_tag: zero-shot-classification

language:

- en

license: mit

---

# e5-large-unsupervised-mnli

This model is a fine-tuned version of [intfloat/e5-large-unsupervised](https://huggingface.co/intfloat/e5-large-unsupervised) on the glue dataset.

## Model description

[Text Embeddings by Weakly-Supervised Contrastive Pre-training](https://arxiv.org/pdf/2212.03533.pdf).

Liang Wang, Nan Yang, Xiaolong Huang, Binxing Jiao, Linjun Yang, Daxin Jiang, Rangan Majumder, Furu Wei, arXiv 2022

## How to use the model

The model can be loaded with the `zero-shot-classification` pipeline like so:

```python

from transformers import pipeline

classifier = pipeline("zero-shot-classification",

model="mjwong/e5-large-unsupervised-mnli")

```

You can then use this pipeline to classify sequences into any of the class names you specify.

```python

sequence_to_classify = "one day I will see the world"

candidate_labels = ['travel', 'cooking', 'dancing']

classifier(sequence_to_classify, candidate_labels)

#{'sequence': 'one day I will see the world',

# 'labels': ['travel', 'dancing', 'cooking'],

# 'scores': [0.9692057967185974, 0.019781911745667458, 0.011012292467057705]}

```

If more than one candidate label can be correct, pass `multi_class=True` to calculate each class independently:

```python

candidate_labels = ['travel', 'cooking', 'dancing', 'exploration']

classifier(sequence_to_classify, candidate_labels, multi_class=True)

#{'sequence': 'one day I will see the world',

# 'labels': ['travel', 'exploration', 'dancing', 'cooking'],

# 'scores': [0.9841939806938171,

# 0.9155724048614502,

# 0.2912618815898895,

# 0.024328863248229027]}

```

### Eval results

The model was evaluated using the dev sets for MultiNLI and test sets for ANLI. The metric used is accuracy.

|Datasets|mnli_dev_m|mnli_dev_mm|anli_test_r1|anli_test_r2|anli_test_r3|

| :---: | :---: | :---: | :---: | :---: | :---: |

|[e5-base-mnli](https://huggingface.co/mjwong/e5-base-mnli)|0.840|0.839|0.231|0.285|0.309|

|[e5-large-mnli](https://huggingface.co/mjwong/e5-large-mnli)|0.868|0.869|0.301|0.296|0.294|

|[e5-large-unsupervised-mnli](https://huggingface.co/mjwong/e5-large-unsupervised-mnli)|0.865|0.867|0.314|0.285|0.303|

|[e5-large-mnli-anli](https://huggingface.co/mjwong/e5-large-mnli-anli)|0.843|0.848|0.646|0.484|0.458|

|[e5-large-unsupervised-mnli-anli](https://huggingface.co/mjwong/e5-large-unsupervised-mnli-anli)|0.836|0.842|0.634|0.481|0.478|

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 2

### Framework versions

- Transformers 4.28.1

- Pytorch 1.12.1+cu116

- Datasets 2.11.0

- Tokenizers 0.12.1

|

Crisblair/Wkwk

|

[] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | null |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: flan-t5-large-fce-e8-b16

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# flan-t5-large-fce-e8-b16

This model is a fine-tuned version of [google/flan-t5-large](https://huggingface.co/google/flan-t5-large) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3123

- Rouge1: 87.0781

- Rouge2: 79.8175

- Rougel: 86.6213

- Rougelsum: 86.6385

- Gen Len: 14.8832

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.001

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adafactor

- lr_scheduler_type: linear

- num_epochs: 8

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:-----:|:---------------:|:-------:|:-------:|:-------:|:---------:|:-------:|

| 0.3761 | 0.23 | 400 | 0.3325 | 86.7053 | 79.1321 | 86.1593 | 86.1958 | 14.8864 |

| 0.3516 | 0.45 | 800 | 0.3201 | 86.8076 | 79.1282 | 86.2981 | 86.3209 | 14.8781 |

| 0.3401 | 0.68 | 1200 | 0.3187 | 86.479 | 78.6505 | 85.9172 | 85.9585 | 14.8800 |

| 0.3283 | 0.9 | 1600 | 0.3123 | 87.0781 | 79.8175 | 86.6213 | 86.6385 | 14.8832 |

| 0.2395 | 1.13 | 2000 | 0.3278 | 86.7979 | 79.3766 | 86.3314 | 86.3581 | 14.9046 |

| 0.1817 | 1.35 | 2400 | 0.3170 | 86.8343 | 79.4019 | 86.3148 | 86.3232 | 14.8964 |

| 0.1962 | 1.58 | 2800 | 0.3138 | 86.8702 | 79.425 | 86.3412 | 86.36 | 14.9069 |

| 0.1971 | 1.81 | 3200 | 0.3191 | 86.8355 | 79.3178 | 86.2974 | 86.322 | 14.8809 |

| 0.1816 | 2.03 | 3600 | 0.3490 | 87.0986 | 79.7312 | 86.6108 | 86.6227 | 14.9142 |

| 0.0975 | 2.26 | 4000 | 0.3534 | 86.7684 | 79.3649 | 86.2755 | 86.2885 | 14.9069 |

| 0.1033 | 2.48 | 4400 | 0.3536 | 86.8978 | 79.714 | 86.4135 | 86.435 | 14.9302 |

| 0.1086 | 2.71 | 4800 | 0.3553 | 86.6286 | 79.3293 | 86.1381 | 86.1686 | 14.9078 |

| 0.1141 | 2.93 | 5200 | 0.3530 | 86.8452 | 79.4178 | 86.2927 | 86.3239 | 14.9010 |

| 0.076 | 3.16 | 5600 | 0.4088 | 86.992 | 79.8179 | 86.5124 | 86.5186 | 14.9096 |

| 0.0595 | 3.39 | 6000 | 0.4052 | 86.8874 | 79.6302 | 86.3643 | 86.3784 | 14.9101 |

| 0.0606 | 3.61 | 6400 | 0.4051 | 86.9236 | 79.5305 | 86.3715 | 86.3959 | 14.9101 |

| 0.0653 | 3.84 | 6800 | 0.3860 | 86.8353 | 79.541 | 86.3249 | 86.3292 | 14.9165 |

| 0.0553 | 4.06 | 7200 | 0.4229 | 86.7788 | 79.5444 | 86.3393 | 86.3468 | 14.8868 |

| 0.0339 | 4.29 | 7600 | 0.4478 | 86.6863 | 79.5215 | 86.216 | 86.2363 | 14.9133 |

| 0.0375 | 4.51 | 8000 | 0.4359 | 86.8412 | 79.668 | 86.3237 | 86.3349 | 14.9229 |

| 0.0376 | 4.74 | 8400 | 0.4459 | 86.8836 | 79.682 | 86.3993 | 86.4062 | 14.9069 |

| 0.0372 | 4.97 | 8800 | 0.4324 | 86.6833 | 79.5114 | 86.1856 | 86.2031 | 14.9197 |

| 0.023 | 5.19 | 9200 | 0.4930 | 86.9595 | 79.8244 | 86.4103 | 86.4373 | 14.9279 |

| 0.0211 | 5.42 | 9600 | 0.4927 | 87.0212 | 79.8707 | 86.5054 | 86.5117 | 14.9320 |

| 0.0215 | 5.64 | 10000 | 0.4915 | 86.9495 | 79.8479 | 86.458 | 86.4632 | 14.9115 |

| 0.0205 | 5.87 | 10400 | 0.4919 | 86.8966 | 79.7666 | 86.424 | 86.4482 | 14.9069 |

| 0.0169 | 6.09 | 10800 | 0.5415 | 87.1119 | 80.0504 | 86.6205 | 86.6255 | 14.9083 |

| 0.0116 | 6.32 | 11200 | 0.5767 | 87.1828 | 80.2547 | 86.6809 | 86.6742 | 14.9215 |

| 0.0113 | 6.55 | 11600 | 0.5799 | 87.2494 | 80.2853 | 86.7412 | 86.761 | 14.9147 |

| 0.0103 | 6.77 | 12000 | 0.6036 | 87.1081 | 80.1873 | 86.6086 | 86.6176 | 14.9251 |

| 0.0106 | 7.0 | 12400 | 0.5821 | 87.1489 | 80.1987 | 86.654 | 86.6694 | 14.9242 |

| 0.0064 | 7.22 | 12800 | 0.6325 | 87.2026 | 80.2043 | 86.6988 | 86.704 | 14.9197 |

| 0.0056 | 7.45 | 13200 | 0.6878 | 87.184 | 80.1382 | 86.6798 | 86.7049 | 14.9188 |

| 0.0061 | 7.67 | 13600 | 0.6888 | 87.2465 | 80.1602 | 86.7407 | 86.7459 | 14.9201 |

| 0.0057 | 7.9 | 14000 | 0.6922 | 87.2584 | 80.2614 | 86.7806 | 86.7948 | 14.9201 |

### Framework versions

- Transformers 4.28.1

- Pytorch 1.11.0a0+b6df043

- Datasets 2.12.0

- Tokenizers 0.13.3

|

CrypticT1tan/DialoGPT-medium-harrypotter

|

[] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | null |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: flan-t5-base-fce-e8-b16

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# flan-t5-base-fce-e8-b16

This model is a fine-tuned version of [google/flan-t5-base](https://huggingface.co/google/flan-t5-base) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3114

- Rouge1: 86.9035

- Rouge2: 79.2645

- Rougel: 86.4197

- Rougelsum: 86.4231

- Gen Len: 14.8850

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.001

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adafactor

- lr_scheduler_type: linear

- num_epochs: 8

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:-----:|:---------------:|:-------:|:-------:|:-------:|:---------:|:-------:|

| 0.4128 | 0.23 | 400 | 0.3457 | 86.8983 | 79.1632 | 86.3755 | 86.3944 | 14.8435 |

| 0.3783 | 0.45 | 800 | 0.3469 | 86.8995 | 78.8428 | 86.3368 | 86.3283 | 14.8955 |

| 0.3627 | 0.68 | 1200 | 0.3114 | 86.9035 | 79.2645 | 86.4197 | 86.4231 | 14.8850 |

| 0.3484 | 0.9 | 1600 | 0.3239 | 87.2292 | 79.8056 | 86.7218 | 86.7237 | 14.8759 |

| 0.2696 | 1.13 | 2000 | 0.3419 | 87.15 | 79.6016 | 86.6082 | 86.6241 | 14.8959 |

| 0.22 | 1.35 | 2400 | 0.3270 | 87.0232 | 79.4806 | 86.5137 | 86.5173 | 14.8868 |

| 0.2327 | 1.58 | 2800 | 0.3185 | 87.1028 | 79.6758 | 86.5985 | 86.6221 | 14.9005 |

| 0.2354 | 1.81 | 3200 | 0.3125 | 87.143 | 79.786 | 86.6545 | 86.6788 | 14.9010 |

| 0.2177 | 2.03 | 3600 | 0.3292 | 87.0858 | 79.5707 | 86.5451 | 86.5456 | 14.9133 |

| 0.1347 | 2.26 | 4000 | 0.3342 | 87.1768 | 79.9161 | 86.6402 | 86.6666 | 14.9142 |

| 0.1411 | 2.48 | 4400 | 0.3456 | 87.1049 | 79.9438 | 86.6152 | 86.6265 | 14.9110 |

| 0.1487 | 2.71 | 4800 | 0.3393 | 86.5182 | 78.468 | 86.0005 | 86.0283 | 14.8813 |

| 0.1498 | 2.93 | 5200 | 0.3347 | 87.2024 | 79.7098 | 86.6782 | 86.6904 | 14.8859 |

| 0.1055 | 3.16 | 5600 | 0.4027 | 87.1281 | 79.799 | 86.5714 | 86.5965 | 14.9105 |

| 0.0862 | 3.39 | 6000 | 0.4046 | 87.2721 | 79.8755 | 86.6838 | 86.6956 | 14.9073 |

| 0.0894 | 3.61 | 6400 | 0.3776 | 87.1508 | 79.865 | 86.6178 | 86.6424 | 14.8946 |

| 0.0942 | 3.84 | 6800 | 0.3781 | 87.2854 | 80.0876 | 86.7694 | 86.7867 | 14.8927 |

| 0.0816 | 4.06 | 7200 | 0.4300 | 87.3854 | 80.1162 | 86.8398 | 86.8446 | 14.8978 |

| 0.0582 | 4.29 | 7600 | 0.4201 | 87.2594 | 80.1824 | 86.7653 | 86.7807 | 14.9019 |

| 0.0588 | 4.51 | 8000 | 0.4129 | 87.3373 | 80.1802 | 86.8332 | 86.8414 | 14.9014 |

| 0.0571 | 4.74 | 8400 | 0.4437 | 87.2985 | 80.0215 | 86.8171 | 86.8238 | 14.8946 |

| 0.0587 | 4.97 | 8800 | 0.4019 | 87.2321 | 80.0933 | 86.6888 | 86.6931 | 14.9105 |

| 0.0381 | 5.19 | 9200 | 0.4822 | 87.2798 | 80.1822 | 86.7799 | 86.7886 | 14.9014 |

| 0.0378 | 5.42 | 9600 | 0.4831 | 87.409 | 80.3418 | 86.8845 | 86.8844 | 14.8927 |

| 0.0368 | 5.64 | 10000 | 0.4809 | 87.2276 | 79.9415 | 86.6776 | 86.6833 | 14.9105 |

| 0.0359 | 5.87 | 10400 | 0.4964 | 87.2916 | 80.1468 | 86.7693 | 86.7704 | 14.9028 |

| 0.0311 | 6.09 | 10800 | 0.5266 | 87.3443 | 80.1762 | 86.7852 | 86.7825 | 14.8991 |

| 0.0225 | 6.32 | 11200 | 0.5550 | 87.3142 | 80.2689 | 86.7856 | 86.7884 | 14.9037 |

| 0.0239 | 6.55 | 11600 | 0.5308 | 87.4003 | 80.2637 | 86.8373 | 86.8356 | 14.9023 |

| 0.0236 | 6.77 | 12000 | 0.5490 | 87.3865 | 80.3184 | 86.8563 | 86.8626 | 14.9037 |

| 0.0223 | 7.0 | 12400 | 0.5454 | 87.3842 | 80.2875 | 86.8109 | 86.8293 | 14.9055 |

| 0.0164 | 7.22 | 12800 | 0.5818 | 87.4641 | 80.3669 | 86.8908 | 86.9062 | 14.8964 |

| 0.0155 | 7.45 | 13200 | 0.5927 | 87.4191 | 80.3356 | 86.8541 | 86.8718 | 14.9014 |

| 0.0152 | 7.67 | 13600 | 0.5990 | 87.4257 | 80.2974 | 86.8481 | 86.8589 | 14.9005 |

| 0.0144 | 7.9 | 14000 | 0.6084 | 87.4754 | 80.3558 | 86.9086 | 86.9184 | 14.9014 |

### Framework versions

- Transformers 4.28.1

- Pytorch 1.11.0a0+b6df043

- Datasets 2.12.0

- Tokenizers 0.13.3

|

Crystal/distilbert-base-uncased-finetuned-squad

|

[] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | null |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: mt5-large-fce-e8-b16

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mt5-large-fce-e8-b16

This model is a fine-tuned version of [google/mt5-large](https://huggingface.co/google/mt5-large) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3526

- Rouge1: 84.5329

- Rouge2: 76.3656

- Rougel: 83.9027

- Rougelsum: 83.9238

- Gen Len: 15.4614

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.001

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adafactor

- lr_scheduler_type: linear

- num_epochs: 8

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:-----:|:---------------:|:-------:|:-------:|:-------:|:---------:|:-------:|

| 1.2105 | 0.23 | 400 | 0.4344 | 84.6268 | 76.3447 | 84.0402 | 84.0182 | 15.4564 |

| 0.4664 | 0.45 | 800 | 0.4256 | 84.3821 | 75.6104 | 83.8113 | 83.8303 | 15.4404 |

| 0.434 | 0.68 | 1200 | 0.3839 | 84.0212 | 75.7319 | 83.4232 | 83.431 | 15.4952 |

| 0.406 | 0.9 | 1600 | 0.3713 | 84.7743 | 76.7805 | 84.2379 | 84.2352 | 15.4514 |

| 0.3193 | 1.13 | 2000 | 0.3665 | 84.634 | 76.5132 | 84.0604 | 84.0755 | 15.4774 |

| 0.2693 | 1.35 | 2400 | 0.3718 | 84.6587 | 76.7057 | 84.099 | 84.1045 | 15.4619 |

| 0.2815 | 1.58 | 2800 | 0.3617 | 84.5181 | 76.6792 | 83.9922 | 83.9976 | 15.4820 |

| 0.2776 | 1.81 | 3200 | 0.3526 | 84.5329 | 76.3656 | 83.9027 | 83.9238 | 15.4614 |

| 0.2551 | 2.03 | 3600 | 0.3720 | 84.504 | 76.6676 | 83.9957 | 84.0108 | 15.4801 |

| 0.1617 | 2.26 | 4000 | 0.3648 | 84.4385 | 76.3684 | 83.8585 | 83.8657 | 15.4897 |

| 0.1711 | 2.48 | 4400 | 0.3671 | 84.5241 | 76.6518 | 83.9862 | 83.9987 | 15.4902 |

| 0.1771 | 2.71 | 4800 | 0.3607 | 84.6437 | 76.6682 | 84.103 | 84.1174 | 15.4683 |

| 0.1803 | 2.93 | 5200 | 0.3582 | 84.479 | 76.6205 | 83.9509 | 83.9504 | 15.4715 |

| 0.1199 | 3.16 | 5600 | 0.3971 | 84.6367 | 76.7872 | 84.0191 | 84.0534 | 15.4715 |

| 0.1005 | 3.39 | 6000 | 0.4085 | 84.5153 | 76.6564 | 83.9365 | 83.9506 | 15.4820 |

| 0.1033 | 3.61 | 6400 | 0.4007 | 84.3191 | 76.399 | 83.8183 | 83.8142 | 15.4728 |

| 0.1067 | 3.84 | 6800 | 0.4014 | 84.5289 | 76.5335 | 83.9706 | 83.9967 | 15.4674 |

| 0.09 | 4.06 | 7200 | 0.4328 | 84.3978 | 76.6231 | 83.8654 | 83.8728 | 15.4783 |

| 0.0574 | 4.29 | 7600 | 0.4305 | 84.4476 | 76.7198 | 83.8943 | 83.9 | 15.4820 |

| 0.0579 | 4.51 | 8000 | 0.4510 | 84.5536 | 76.7635 | 83.977 | 83.9745 | 15.4719 |

| 0.061 | 4.74 | 8400 | 0.4447 | 84.5632 | 76.9892 | 84.0419 | 84.0501 | 15.4815 |

| 0.0608 | 4.97 | 8800 | 0.4353 | 84.6004 | 76.8883 | 84.0518 | 84.0596 | 15.4788 |

| 0.0362 | 5.19 | 9200 | 0.4853 | 84.7169 | 77.1321 | 84.1485 | 84.1486 | 15.4760 |

| 0.0333 | 5.42 | 9600 | 0.5053 | 84.851 | 77.4661 | 84.307 | 84.3106 | 15.4829 |

| 0.0325 | 5.64 | 10000 | 0.5066 | 84.7412 | 77.3031 | 84.2107 | 84.2006 | 15.4948 |

| 0.0335 | 5.87 | 10400 | 0.4947 | 84.7596 | 77.2636 | 84.2156 | 84.224 | 15.4906 |

| 0.0269 | 6.09 | 10800 | 0.5306 | 84.7484 | 77.2693 | 84.1824 | 84.1962 | 15.4811 |

| 0.0184 | 6.32 | 11200 | 0.5535 | 84.8066 | 77.3749 | 84.2765 | 84.2989 | 15.4756 |

| 0.0177 | 6.55 | 11600 | 0.5555 | 84.7335 | 77.2108 | 84.1917 | 84.2084 | 15.4865 |

| 0.0168 | 6.77 | 12000 | 0.5538 | 84.7053 | 77.2902 | 84.184 | 84.1929 | 15.4792 |

| 0.0165 | 7.0 | 12400 | 0.5614 | 84.7332 | 77.3098 | 84.2055 | 84.2055 | 15.4879 |

| 0.0092 | 7.22 | 12800 | 0.6222 | 84.7668 | 77.3059 | 84.2235 | 84.2397 | 15.4724 |

| 0.0086 | 7.45 | 13200 | 0.6485 | 84.8211 | 77.4247 | 84.2857 | 84.2996 | 15.4751 |

| 0.0098 | 7.67 | 13600 | 0.6417 | 84.7854 | 77.4226 | 84.2457 | 84.2652 | 15.4865 |

| 0.0088 | 7.9 | 14000 | 0.6445 | 84.7809 | 77.4171 | 84.2396 | 84.2591 | 15.4852 |

### Framework versions

- Transformers 4.28.1

- Pytorch 1.11.0a0+b6df043

- Datasets 2.12.0

- Tokenizers 0.13.3

|

CyberMuffin/DialoGPT-small-ChandlerBot

|

[

"pytorch",

"gpt2",

"text-generation",

"transformers",

"conversational"

] |

conversational

|

{

"architectures": [

"GPT2LMHeadModel"

],

"model_type": "gpt2",

"task_specific_params": {

"conversational": {

"max_length": 1000

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 9 | null |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:

- name: flan-t5-large-extraction-all-cnndm_2000-ep6-nonstop

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# flan-t5-large-extraction-all-cnndm_2000-ep6-nonstop

This model is a fine-tuned version of [google/flan-t5-large](https://huggingface.co/google/flan-t5-large) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 1.7750

- Hint Hit Num: 1.962

- Hint Precision: 0.3394

- Num: 5.812

- Gen Len: 18.986

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 40

- eval_batch_size: 80

- seed: 1799

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 6

### Training results

| Training Loss | Epoch | Step | Validation Loss | Hint Hit Num | Hint Precision | Num | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:------------:|:--------------:|:-----:|:-------:|

| 2.1808 | 2.0 | 100 | 1.8110 | 1.822 | 0.3218 | 5.738 | 19.0 |

| 1.9109 | 4.0 | 200 | 1.7813 | 1.916 | 0.3357 | 5.76 | 18.986 |

| 1.8525 | 6.0 | 300 | 1.7750 | 1.962 | 0.3394 | 5.812 | 18.986 |

### Framework versions

- Transformers 4.18.0

- Pytorch 1.10.0+cu111

- Datasets 2.5.1

- Tokenizers 0.12.1

|

D3xter1922/electra-base-discriminator-finetuned-cola

|

[

"pytorch",

"tensorboard",

"electra",

"text-classification",

"dataset:glue",

"transformers",

"generated_from_trainer",

"license:apache-2.0",

"model-index"

] |

text-classification

|

{

"architectures": [

"ElectraForSequenceClassification"

],

"model_type": "electra",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 68 | null |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

- f1

- precision

- recall

model-index:

- name: Baseline_100Kphish_benignFall_20_20_20

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Baseline_100Kphish_benignFall_20_20_20

This model is a fine-tuned version of [bert-base-uncased](https://huggingface.co/bert-base-uncased) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0206

- Accuracy: 0.9973

- F1: 0.9713

- Precision: 0.9998

- Recall: 0.9444

- Roc Auc Score: 0.9722

- Tpr At Fpr 0.01: 0.962

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 32

- eval_batch_size: 32

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5.0

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 | Precision | Recall | Roc Auc Score | Tpr At Fpr 0.01 |

|:-------------:|:-----:|:------:|:---------------:|:--------:|:------:|:---------:|:------:|:-------------:|:---------------:|

| 0.0021 | 1.0 | 65625 | 0.0198 | 0.9974 | 0.9721 | 0.9966 | 0.9488 | 0.9743 | 0.9436 |

| 0.0013 | 2.0 | 131250 | 0.0251 | 0.9969 | 0.9664 | 0.9996 | 0.9354 | 0.9677 | 0.9416 |

| 0.0025 | 3.0 | 196875 | 0.0284 | 0.9966 | 0.9625 | 0.9996 | 0.928 | 0.9640 | 0.953 |

| 0.0 | 4.0 | 262500 | 0.0187 | 0.9974 | 0.9717 | 0.9994 | 0.9456 | 0.9728 | 0.965 |

| 0.0011 | 5.0 | 328125 | 0.0206 | 0.9973 | 0.9713 | 0.9998 | 0.9444 | 0.9722 | 0.962 |

### Framework versions

- Transformers 4.29.1

- Pytorch 1.9.0+cu111

- Datasets 2.10.1

- Tokenizers 0.13.2

|

DTAI-KULeuven/robbertje-1-gb-merged

|

[

"pytorch",

"roberta",

"fill-mask",

"nl",

"dataset:oscar",

"dataset:oscar (NL)",

"dataset:dbrd",

"dataset:lassy-ud",

"dataset:europarl-mono",

"dataset:conll2002",

"arxiv:2101.05716",

"transformers",

"Dutch",

"Flemish",

"RoBERTa",

"RobBERT",

"RobBERTje",

"license:mit",

"autotrain_compatible"

] |

fill-mask

|

{

"architectures": [

"RobertaForMaskedLM"

],

"model_type": "roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 1 | null |

---

license: apache-2.0

tags:

- setfit

- sentence-transformers

- text-classification

pipeline_tag: text-classification

---

# tollefj/setfit-nocola-20-iter-25-epochs-allsamples

This is a [SetFit model](https://github.com/huggingface/setfit) that can be used for text classification. The model has been trained using an efficient few-shot learning technique that involves:

1. Fine-tuning a [Sentence Transformer](https://www.sbert.net) with contrastive learning.

2. Training a classification head with features from the fine-tuned Sentence Transformer.

## Usage

To use this model for inference, first install the SetFit library:

```bash

python -m pip install setfit

```

You can then run inference as follows:

```python

from setfit import SetFitModel

# Download from Hub and run inference

model = SetFitModel.from_pretrained("tollefj/setfit-nocola-20-iter-25-epochs-allsamples")

# Run inference

preds = model(["i loved the spiderman movie!", "pineapple on pizza is the worst 🤮"])

```

## BibTeX entry and citation info

```bibtex

@article{https://doi.org/10.48550/arxiv.2209.11055,

doi = {10.48550/ARXIV.2209.11055},

url = {https://arxiv.org/abs/2209.11055},

author = {Tunstall, Lewis and Reimers, Nils and Jo, Unso Eun Seo and Bates, Luke and Korat, Daniel and Wasserblat, Moshe and Pereg, Oren},

keywords = {Computation and Language (cs.CL), FOS: Computer and information sciences, FOS: Computer and information sciences},

title = {Efficient Few-Shot Learning Without Prompts},

publisher = {arXiv},

year = {2022},

copyright = {Creative Commons Attribution 4.0 International}

}

```

|

alexandrainst/da-emotion-classification-base

|

[

"pytorch",

"tf",

"bert",

"text-classification",

"da",

"transformers",

"license:cc-by-sa-4.0"

] |

text-classification

|

{

"architectures": [

"BertForSequenceClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 837 | null |

---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

---

# {MODEL_NAME}

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 128 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('{MODEL_NAME}')

embeddings = model.encode(sentences)

print(embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 2819 with parameters:

```

{'batch_size': 16, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.MultipleNegativesRankingLoss.MultipleNegativesRankingLoss` with parameters:

```

{'scale': 20.0, 'similarity_fct': 'cos_sim'}

```

Parameters of the fit()-Method:

```

{

"epochs": 1,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": null,

"warmup_steps": 10000,

"weight_decay": 0.01

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: AlbertModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

(2): Dense({'in_features': 768, 'out_features': 128, 'bias': True, 'activation_function': 'torch.nn.modules.activation.Tanh'})

)

```

## Citing & Authors

<!--- Describe where people can find more information -->

|

alexandrainst/da-ner-base

|

[

"pytorch",

"tf",

"bert",

"token-classification",

"da",

"dataset:dane",

"transformers",

"license:cc-by-sa-4.0",

"autotrain_compatible"

] |

token-classification

|

{

"architectures": [

"BertForTokenClassification"

],

"model_type": "bert",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 78 | null |

---

license: other

---

# 聲明 Disclaimer

本資料夾中的模型不是我所製作,版權歸原作者所有(各模型版權詳見 http://www.civitai.com 所示)。我上傳至本資料夾僅爲方便在綫抽取資源,并非盈利。

The models in this folder are not made by me, and the copyright belongs to the original author (see http://www.civitai.com for details on the copyright of each model). I uploaded to this folder only for the convenience of extracting resources online, not for profit.

# 模型列表 List of Models

本資料夾中所有模型詳見下表。

All the models in this folder are detailed in the table below.

| 模型名稱 Model Name | Civitai 頁面鏈接 Civitai Page Link | Civitai 下載鏈接 Civitai Download Link |

|----------------------|--------------------|--------------------|

|fantasticmix_v50.safetensors |https://civitai.com/models/22402?modelVersionId=68821 |https://civitai.com/api/download/models/68821 |

|fantasticmix_v40.safetensors |https://civitai.com/models/22402?modelVersionId=59385 |https://civitai.com/api/download/models/59385 |

|fantasticmix_v30.safetensors |https://civitai.com/models/22402?modelVersionId=39880 |https://civitai.com/api/download/models/39880 |

|fantasticmix_v30Baked.safetensors |https://civitai.com/models/22402?modelVersionId=39900 |https://civitai.com/api/download/models/39900 |

|fantasticmix_v20.safetensors |https://civitai.com/models/22402?modelVersionId=30145 |https://civitai.com/api/download/models/30145 |

|fantasticmix_v20Baked.safetensors |https://civitai.com/models/22402?modelVersionId=30589 |https://civitai.com/api/download/models/30589 |

|fantasticmix_v10.safetensors |https://civitai.com/models/22402?modelVersionId=26744 |https://civitai.com/api/download/models/26744 |

|

DaWang/demo

|

[] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | null |

---

license: mit

---

# InstructPix2Pix: Learning to Follow Image Editing Instructions

GitHub: https://github.com/timothybrooks/instruct-pix2pix

<img src='https://instruct-pix2pix.timothybrooks.com/teaser.jpg'/>

## Example

To use `InstructPix2Pix`, install `diffusers` using `main` for now. The pipeline will be available in the next release

```bash

pip install diffusers accelerate safetensors transformers

```

```python

import PIL

import requests

import torch

from diffusers import StableDiffusionInstructPix2PixPipeline, EulerAncestralDiscreteScheduler

model_id = "timbrooks/instruct-pix2pix"

pipe = StableDiffusionInstructPix2PixPipeline.from_pretrained(model_id, torch_dtype=torch.float16, safety_checker=None)

pipe.to("cuda")

pipe.scheduler = EulerAncestralDiscreteScheduler.from_config(pipe.scheduler.config)

url = "https://raw.githubusercontent.com/timothybrooks/instruct-pix2pix/main/imgs/example.jpg"

def download_image(url):

image = PIL.Image.open(requests.get(url, stream=True).raw)

image = PIL.ImageOps.exif_transpose(image)

image = image.convert("RGB")

return image

image = download_image(URL)

prompt = "turn him into cyborg"

images = pipe(prompt, image=image, num_inference_steps=10, image_guidance_scale=1).images

images[0]

```

|

Darkecho789/email-gen

|

[] | null |

{

"architectures": null,

"model_type": null,

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 0 | null |

---

tags:

- autotrain

- vision

- image-classification

datasets:

- ceogpt/autotrain-data-test-cgpt

widget:

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/tiger.jpg

example_title: Tiger

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/teapot.jpg

example_title: Teapot

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/palace.jpg

example_title: Palace

co2_eq_emissions:

emissions: 0.20224231551586896

---

# Model Trained Using AutoTrain

- Problem type: Binary Classification

- Model ID: 57734132888

- CO2 Emissions (in grams): 0.2022

## Validation Metrics

- Loss: 0.430

- Accuracy: 0.882

- Precision: 0.000

- Recall: 0.000

- AUC: 0.233

- F1: 0.000

|

Davlan/xlm-roberta-base-finetuned-xhosa

|

[

"pytorch",

"xlm-roberta",

"fill-mask",

"transformers",

"license:apache-2.0",

"autotrain_compatible"

] |

fill-mask

|

{

"architectures": [

"XLMRobertaForMaskedLM"

],

"model_type": "xlm-roberta",

"task_specific_params": {

"conversational": {

"max_length": null

},

"summarization": {

"early_stopping": null,

"length_penalty": null,

"max_length": null,

"min_length": null,

"no_repeat_ngram_size": null,

"num_beams": null,

"prefix": null

},

"text-generation": {

"do_sample": null,

"max_length": null

},

"translation_en_to_de": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_fr": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

},

"translation_en_to_ro": {

"early_stopping": null,

"max_length": null,

"num_beams": null,

"prefix": null

}

}

}

| 12 | null |

---

library_name: stable-baselines3

tags:

- SpaceInvadersNoFrameskip-v4

- deep-reinforcement-learning

- reinforcement-learning

- stable-baselines3

model-index:

- name: DQN

results:

- task:

type: reinforcement-learning

name: reinforcement-learning

dataset:

name: SpaceInvadersNoFrameskip-v4

type: SpaceInvadersNoFrameskip-v4

metrics:

- type: mean_reward

value: 434.00 +/- 154.03

name: mean_reward

verified: false

---

# **DQN** Agent playing **SpaceInvadersNoFrameskip-v4**

This is a trained model of a **DQN** agent playing **SpaceInvadersNoFrameskip-v4**

using the [stable-baselines3 library](https://github.com/DLR-RM/stable-baselines3)

and the [RL Zoo](https://github.com/DLR-RM/rl-baselines3-zoo).

The RL Zoo is a training framework for Stable Baselines3

reinforcement learning agents,

with hyperparameter optimization and pre-trained agents included.

## Usage (with SB3 RL Zoo)

RL Zoo: https://github.com/DLR-RM/rl-baselines3-zoo<br/>

SB3: https://github.com/DLR-RM/stable-baselines3<br/>

SB3 Contrib: https://github.com/Stable-Baselines-Team/stable-baselines3-contrib

Install the RL Zoo (with SB3 and SB3-Contrib):

```bash

pip install rl_zoo3

```

```

# Download model and save it into the logs/ folder

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga Bhanu9Prakash -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

If you installed the RL Zoo3 via pip (`pip install rl_zoo3`), from anywhere you can do:

```

python -m rl_zoo3.load_from_hub --algo dqn --env SpaceInvadersNoFrameskip-v4 -orga Bhanu9Prakash -f logs/

python -m rl_zoo3.enjoy --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

```

## Training (with the RL Zoo)

```

python -m rl_zoo3.train --algo dqn --env SpaceInvadersNoFrameskip-v4 -f logs/

# Upload the model and generate video (when possible)