| modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |
|---|---|---|---|---|---|---|---|---|---|

Sympan/Reinforce-Cart | Sympan | 2023-07-11T13:53:32Z | 0 | 0 | null | ["CartPole-v1", "reinforce", "reinforcement-learning", "custom-implementation", "deep-rl-class", "model-index", "region:us"] | reinforcement-learning | 2023-07-11T13:53:23Z |

---

tags:

- CartPole-v1

- reinforce

- reinforcement-learning

- custom-implementation

- deep-rl-class

model-index:
- name: Reinforce-Cart
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: CartPole-v1
      type: CartPole-v1
    metrics:
    - type: mean_reward
      value: 485.30 +/- 44.10
      name: mean_reward
      verified: false

---

# **Reinforce** Agent playing **CartPole-v1**

This is a trained model of a **Reinforce** agent playing **CartPole-v1**.

To learn how to use this model and train your own, see Unit 4 of the Deep Reinforcement Learning Course: https://huggingface.co/deep-rl-course/unit4/introduction
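As a rough illustration of what a Reinforce agent optimizes, the sketch below computes the discounted return for every step of one episode; the policy-gradient update weights each action's log-probability by this quantity. The function name and the `gamma` value are hypothetical and are not taken from this model's training code.

```python
def discounted_returns(rewards, gamma=0.99):
    """Return G_t = r_t + gamma * G_{t+1} for every step of one episode."""
    returns = []
    running = 0.0
    # Walk the episode backwards so each return reuses the one after it.
    for reward in reversed(rewards):
        running = reward + gamma * running
        returns.append(running)
    returns.reverse()
    return returns


# CartPole-v1 yields a reward of 1.0 for every step the pole stays up.
episode_rewards = [1.0, 1.0, 1.0]
# gamma=0.5 is a hypothetical value chosen only to keep the numbers simple.
print(discounted_returns(episode_rewards, gamma=0.5))
```

Earlier steps receive larger returns because more reward still lies ahead of them.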

|

Xorbits/chatglm2-6B-GGML | Xorbits | 2023-07-11T13:50:37Z | 0 | 23 | null | ["arxiv:1911.02150", "license:apache-2.0", "region:us"] | null | 2023-07-05T08:03:24Z |

---

license: apache-2.0

---

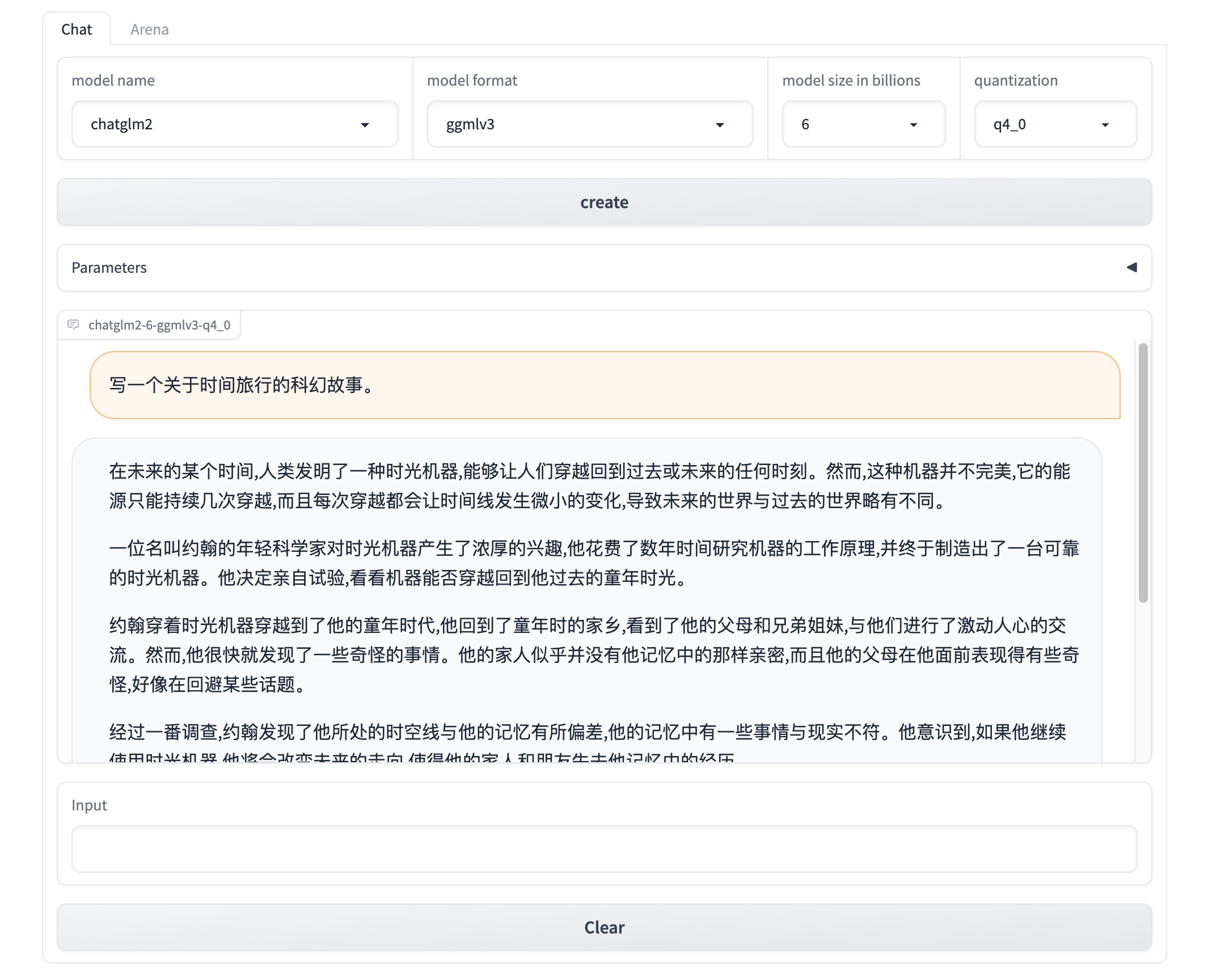

# THUDM's chatglm2 6B GGML

These files are GGML format model files for [THUDM's chatglm2 6B](https://huggingface.co/THUDM/chatglm2-6b).

GGML files are for CPU + GPU inference using [chatglm.cpp](https://github.com/li-plus/chatglm.cpp) and [Xorbits Inference](https://github.com/xorbitsai/inference).

# Prompt template

**NOTE**: prompt template is not available yet since the system prompt is hard coded in chatglm.cpp for now.

# Provided files

| Name | Quant method | Bits | Size |

|------|--------------|------|------|

| chatglm2-ggml-q4_0.bin | q4_0 | 4 | 3.5 GB |

| chatglm2-ggml-q4_1.bin | q4_1 | 4 | 3.9 GB |

| chatglm2-ggml-q5_0.bin | q5_0 | 5 | 4.3 GB |

| chatglm2-ggml-q5_1.bin | q5_1 | 5 | 4.7 GB |

| chatglm2-ggml-q8_0.bin | q8_0 | 8 | 6.6 GB |
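The file sizes above can be roughly reproduced from the storage cost of each quantization method. The sketch below is an estimate under two stated assumptions that do not come from this card: the per-block byte layouts are those used by ggml around mid-2023 (32 weights per block), and the model has roughly 6.24 billion parameters. It also ignores tensors that are kept unquantized.

```python
# Assumed bytes per 32-weight block (ggml layouts, mid-2023; not stated in this card).
BLOCK_BYTES = {
    "q4_0": 2 + 16,          # fp16 scale + 32 x 4-bit values
    "q4_1": 2 + 2 + 16,      # fp16 scale + fp16 minimum + 32 x 4-bit values
    "q5_0": 2 + 4 + 16,      # fp16 scale + 32 high bits + 32 x 4-bit values
    "q5_1": 2 + 2 + 4 + 16,  # as q5_0, plus an fp16 minimum
    "q8_0": 2 + 32,          # fp16 scale + 32 x 8-bit values
}
BLOCK_SIZE = 32
N_PARAMS = 6.24e9  # assumed parameter count for ChatGLM2-6B


def estimated_size_gb(quant):
    """Estimate the file size in GB (10^9 bytes) for a quantization method."""
    bits_per_weight = BLOCK_BYTES[quant] * 8 / BLOCK_SIZE
    return N_PARAMS * bits_per_weight / 8 / 1e9


for name in BLOCK_BYTES:
    print(f"{name}: ~{estimated_size_gb(name):.1f} GB")
```

The block overhead is why the nominal 4-bit files are larger than a plain 4 bits per weight would suggest.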

# How to run in Xorbits Inference

## Install

Xinference can be installed via pip from PyPI. It is highly recommended to create a new virtual environment to avoid conflicts.

```bash

$ pip install "xinference[all]"

$ pip install chatglm-cpp

```

## Start Xorbits Inference

To start a local instance of Xinference, run the following command:

```bash

$ xinference

```

Once Xinference is running, an endpoint will be accessible for model management via CLI or Xinference client. The default endpoint is `http://localhost:9997`. You can also view a web UI using the Xinference endpoint to chat with all the builtin models. You can even chat with two cutting-edge AI models side-by-side to compare their performance!

# Slack

For further support, and discussions on these models and AI in general, join our [slack channel](https://join.slack.com/t/xorbitsio/shared_invite/zt-1o3z9ucdh-RbfhbPVpx7prOVdM1CAuxg)!

# Original model card: THUDM's chatglm2 6B

ChatGLM**2**-6B is the second-generation version of the open-source bilingual (Chinese-English) chat model [ChatGLM-6B](https://github.com/THUDM/ChatGLM-6B). It retains the smooth conversation flow and low deployment threshold of the first-generation model, while introducing the following new features:

1. **Stronger Performance**: Based on the development experience of the first-generation ChatGLM model, we have fully upgraded the base model of ChatGLM2-6B. ChatGLM2-6B uses the hybrid objective function of [GLM](https://github.com/THUDM/GLM), and has undergone pre-training with 1.4T bilingual tokens and human preference alignment training. The [evaluation results](README.md#evaluation-results) show that, compared to the first-generation model, ChatGLM2-6B has achieved substantial improvements in performance on datasets like MMLU (+23%), CEval (+33%), GSM8K (+571%), BBH (+60%), showing strong competitiveness among models of the same size.

2. **Longer Context**: Based on [FlashAttention](https://github.com/HazyResearch/flash-attention) technique, we have extended the context length of the base model from 2K in ChatGLM-6B to 32K, and trained with a context length of 8K during the dialogue alignment, allowing for more rounds of dialogue. However, the current version of ChatGLM2-6B has limited understanding of single-round ultra-long documents, which we will focus on optimizing in future iterations.

3. **More Efficient Inference**: Using the [Multi-Query Attention](http://arxiv.org/abs/1911.02150) technique, ChatGLM2-6B achieves faster inference and lower GPU memory usage: under the official implementation, inference speed has increased by 42% compared to the first generation; under INT4 quantization, the dialogue length supported by 6 GB of GPU memory has increased from 1K to 8K.

For more instructions, including how to run CLI and web demos, and model quantization, please refer to our [Github Repo](https://github.com/THUDM/ChatGLM2-6B).

|

h2o-llmstudio/falcon-7b-fix | h2o-llmstudio | 2023-07-11T13:46:34Z | 17 | 1 | transformers | ["transformers", "pytorch", "RefinedWebModel", "text-generation", "custom_code", "en", "dataset:tiiuae/falcon-refinedweb", "arxiv:2205.14135", "arxiv:1911.02150", "arxiv:2101.00027", "arxiv:2005.14165", "arxiv:2104.09864", "arxiv:2306.01116", "license:apache-2.0", "autotrain_compatible", "text-generation-inference", "region:us"] | text-generation | 2023-07-06T09:48:00Z |

---

datasets:

- tiiuae/falcon-refinedweb

language:

- en

inference: false

license: apache-2.0

---

⚠️ **This is an unofficial fork of the original [Falcon-7B](https://huggingface.co/tiiuae/falcon-7b) model.**

The following changes have been made:

- Fixed the generation configuration settings.

- The model now correctly applies the specified `attention_mask` when calling `scaled_dot_product_attention`, which also makes it possible to pass custom attention masks and to work with left-padded input. However, this disables the additional memory and flash optimizations.

# 🚀 Falcon-7B

**Falcon-7B is a 7B parameters causal decoder-only model built by [TII](https://www.tii.ae) and trained on 1,500B tokens of [RefinedWeb](https://huggingface.co/datasets/tiiuae/falcon-refinedweb) enhanced with curated corpora. It is made available under the Apache 2.0 license.**

*Paper coming soon* 😊.

🤗 To get started with Falcon (inference, finetuning, quantization, etc.), we recommend reading [this great blogpost from HF](https://huggingface.co/blog/falcon)!

## Why use Falcon-7B?

* **It outperforms comparable open-source models** (e.g., [MPT-7B](https://huggingface.co/mosaicml/mpt-7b), [StableLM](https://github.com/Stability-AI/StableLM), [RedPajama](https://huggingface.co/togethercomputer/RedPajama-INCITE-Base-7B-v0.1) etc.), thanks to being trained on 1,500B tokens of [RefinedWeb](https://huggingface.co/datasets/tiiuae/falcon-refinedweb) enhanced with curated corpora. See the [OpenLLM Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard).

* **It features an architecture optimized for inference**, with FlashAttention ([Dao et al., 2022](https://arxiv.org/abs/2205.14135)) and multiquery ([Shazeer et al., 2019](https://arxiv.org/abs/1911.02150)).

* **It is made available under a permissive Apache 2.0 license allowing for commercial use**, without any royalties or restrictions.

⚠️ **This is a raw, pretrained model, which should be further finetuned for most usecases.** If you are looking for a version better suited to taking generic instructions in a chat format, we recommend taking a look at [Falcon-7B-Instruct](https://huggingface.co/tiiuae/falcon-7b-instruct).

🔥 **Looking for an even more powerful model?** [Falcon-40B](https://huggingface.co/tiiuae/falcon-40b) is Falcon-7B's big brother!

```python

from transformers import AutoTokenizer, AutoModelForCausalLM
import transformers
import torch

model = "tiiuae/falcon-7b"

# Load the tokenizer and build a text-generation pipeline in bfloat16
tokenizer = AutoTokenizer.from_pretrained(model)
pipeline = transformers.pipeline(
    "text-generation",
    model=model,
    tokenizer=tokenizer,
    torch_dtype=torch.bfloat16,
    trust_remote_code=True,
    device_map="auto",
)

# Sample one continuation with top-k sampling (max_length counts the prompt tokens too)
sequences = pipeline(
    "Girafatron is obsessed with giraffes, the most glorious animal on the face of this Earth. Girafatron believes all other animals are irrelevant when compared to the glorious majesty of the giraffe.\nDaniel: Hello, Girafatron!\nGirafatron:",
    max_length=200,
    do_sample=True,
    top_k=10,
    num_return_sequences=1,
    eos_token_id=tokenizer.eos_token_id,
)

for seq in sequences:
    print(f"Result: {seq['generated_text']}")

```

💥 **Falcon LLMs require PyTorch 2.0 for use with `transformers`!**

For fast inference with Falcon, check out [Text Generation Inference](https://github.com/huggingface/text-generation-inference)! Read more in this [blogpost](https://huggingface.co/blog/falcon).

You will need **at least 16GB of memory** to swiftly run inference with Falcon-7B.

# Model Card for Falcon-7B

## Model Details

### Model Description

- **Developed by:** [https://www.tii.ae](https://www.tii.ae);

- **Model type:** Causal decoder-only;

- **Language(s) (NLP):** English and French;

- **License:** Apache 2.0.

### Model Source

- **Paper:** *coming soon*.

## Uses

### Direct Use

Research on large language models; as a foundation for further specialization and finetuning for specific usecases (e.g., summarization, text generation, chatbot, etc.)

### Out-of-Scope Use

Production use without adequate assessment of risks and mitigation; any use cases which may be considered irresponsible or harmful.

## Bias, Risks, and Limitations

Falcon-7B is trained on English and French data only, and will not generalize appropriately to other languages. Furthermore, as it is trained on large-scale corpora representative of the web, it will carry the stereotypes and biases commonly encountered online.

### Recommendations

We recommend that users of Falcon-7B consider finetuning it for the specific set of tasks of interest, and that guardrails and appropriate precautions be taken for any production use.

## How to Get Started with the Model

```python

from transformers import AutoTokenizer, AutoModelForCausalLM
import transformers
import torch

model = "tiiuae/falcon-7b"

# Load the tokenizer and build a text-generation pipeline in bfloat16
tokenizer = AutoTokenizer.from_pretrained(model)
pipeline = transformers.pipeline(
    "text-generation",
    model=model,
    tokenizer=tokenizer,
    torch_dtype=torch.bfloat16,
    trust_remote_code=True,
    device_map="auto",
)

# Sample one continuation with top-k sampling (max_length counts the prompt tokens too)
sequences = pipeline(
    "Girafatron is obsessed with giraffes, the most glorious animal on the face of this Earth. Girafatron believes all other animals are irrelevant when compared to the glorious majesty of the giraffe.\nDaniel: Hello, Girafatron!\nGirafatron:",
    max_length=200,
    do_sample=True,
    top_k=10,
    num_return_sequences=1,
    eos_token_id=tokenizer.eos_token_id,
)

for seq in sequences:
    print(f"Result: {seq['generated_text']}")

```

## Training Details

### Training Data

Falcon-7B was trained on 1,500B tokens of [RefinedWeb](https://huggingface.co/datasets/tiiuae/falcon-refinedweb), a high-quality filtered and deduplicated web dataset which we enhanced with curated corpora. Significant components from our curated corpora were inspired by The Pile ([Gao et al., 2020](https://arxiv.org/abs/2101.00027)).

| **Data source** | **Fraction** | **Tokens** | **Sources** |

|--------------------|--------------|------------|-----------------------------------|

| [RefinedWeb-English](https://huggingface.co/datasets/tiiuae/falcon-refinedweb) | 79% | 1,185B | massive web crawl |

| Books | 7% | 110B | |

| Conversations | 6% | 85B | Reddit, StackOverflow, HackerNews |

| Code | 3% | 45B | |

| RefinedWeb-French | 3% | 45B | massive web crawl |

| Technical | 2% | 30B | arXiv, PubMed, USPTO, etc. |

The data was tokenized with the Falcon-[7B](https://huggingface.co/tiiuae/falcon-7b)/[40B](https://huggingface.co/tiiuae/falcon-40b) tokenizer.
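As a quick consistency check on the mixture table, the sketch below recomputes each source's share from the token counts listed above. The dictionary simply transcribes the table; the variable names are illustrative.

```python
# Token counts in billions, transcribed from the data-mixture table.
token_counts_b = {
    "RefinedWeb-English": 1185,
    "Books": 110,
    "Conversations": 85,
    "Code": 45,
    "RefinedWeb-French": 45,
    "Technical": 30,
}

total_b = sum(token_counts_b.values())
print(f"Total: {total_b}B tokens")

# Share of each source, to one decimal place.
for source, tokens in token_counts_b.items():
    print(f"{source}: {tokens / total_b:.1%}")
```

The counts sum to the stated 1,500B tokens, and the shares computed this way differ slightly from the table's whole-number fractions for some rows (Books comes out at about 7.3%), which suggests the published percentages are rounded.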

### Training Procedure

Falcon-7B was trained on 384 A100 40GB GPUs, using a 2D parallelism strategy (PP=2, DP=192) combined with ZeRO.

#### Training Hyperparameters

| **Hyperparameter** | **Value** | **Comment** |

|--------------------|------------|-------------------------------------------|

| Precision | `bfloat16` | |

| Optimizer | AdamW | |

| Learning rate | 6e-4 | 4B tokens warm-up, cosine decay to 1.2e-5 |

| Weight decay | 1e-1 | |

| Z-loss | 1e-4 | |

| Batch size | 2304 | 30B tokens ramp-up |
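The learning-rate row can be read as a warm-up followed by a cosine decay. The sketch below is one plausible reading, not the authors' training code: it assumes a linear warm-up over the first 4B tokens and a cosine decay that reaches 1.2e-5 at the end of the 1,500B-token run. The function and constant names are hypothetical.

```python
import math

PEAK_LR = 6e-4          # from the table
MIN_LR = 1.2e-5         # from the table
WARMUP_TOKENS = 4e9     # from the table
TOTAL_TOKENS = 1500e9   # assumption: decay ends with the 1,500B-token run


def learning_rate(tokens_seen):
    """Learning rate after a given number of training tokens."""
    if tokens_seen < WARMUP_TOKENS:
        # Assumed linear warm-up from 0 to the peak value.
        return PEAK_LR * tokens_seen / WARMUP_TOKENS
    progress = min(1.0, (tokens_seen - WARMUP_TOKENS) / (TOTAL_TOKENS - WARMUP_TOKENS))
    cosine = 0.5 * (1.0 + math.cos(math.pi * progress))
    return MIN_LR + (PEAK_LR - MIN_LR) * cosine


for tokens in (0, 2e9, 4e9, 750e9, 1500e9):
    print(f"{tokens / 1e9:>6.0f}B tokens -> lr = {learning_rate(tokens):.2e}")
```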

#### Speeds, Sizes, Times

Training happened in early March 2023 and took about two weeks.

## Evaluation

*Paper coming soon*.

See the [OpenLLM Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard) for early results.

## Technical Specifications

### Model Architecture and Objective

Falcon-7B is a causal decoder-only model trained on a causal language modeling task (i.e., predict the next token).

The architecture is broadly adapted from the GPT-3 paper ([Brown et al., 2020](https://arxiv.org/abs/2005.14165)), with the following differences:

* **Positional embeddings:** rotary ([Su et al., 2021](https://arxiv.org/abs/2104.09864));

* **Attention:** multiquery ([Shazeer et al., 2019](https://arxiv.org/abs/1911.02150)) and FlashAttention ([Dao et al., 2022](https://arxiv.org/abs/2205.14135));

* **Decoder-block:** parallel attention/MLP with a single layer norm.

| **Hyperparameter** | **Value** | **Comment** |

|--------------------|-----------|----------------------------------------|

| Layers | 32 | |

| `d_model` | 4544 | Increased to compensate for multiquery |

| `head_dim` | 64 | Reduced to optimise for FlashAttention |

| Vocabulary | 65024 | |

| Sequence length | 2048 | |
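The `d_model` and `head_dim` rows together determine the number of query heads, and they show why multiquery attention shrinks the key/value cache. The sketch below assumes that multiquery keeps a single shared key/value head (as in Shazeer et al., 2019) and that the cache stores one key and one value vector per layer for each token; the variable names are illustrative.

```python
n_layers = 32
d_model = 4544
head_dim = 64

# Number of query heads implied by the table.
n_heads, remainder = divmod(d_model, head_dim)
print(f"query heads: {n_heads} (remainder {remainder})")

# Cached key/value elements per token across all layers.
kv_multihead = n_layers * 2 * n_heads * head_dim  # one K and one V per head
kv_multiquery = n_layers * 2 * 1 * head_dim       # one shared K and V head (assumed)

print(f"multi-head cache per token: {kv_multihead} elements")
print(f"multiquery cache per token: {kv_multiquery} elements")
print(f"reduction factor: {kv_multihead // kv_multiquery}x")
```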

### Compute Infrastructure

#### Hardware

Falcon-7B was trained on AWS SageMaker, on 384 A100 40GB GPUs in P4d instances.

#### Software

Falcon-7B was trained using a custom distributed training codebase, Gigatron. It uses a 3D parallelism approach combined with ZeRO and high-performance Triton kernels (FlashAttention, etc.).

## Citation

*Paper coming soon* 😊. In the meantime, you can use the following information to cite:

```

@article{falcon40b,

title={{Falcon-40B}: an open large language model with state-of-the-art performance},

author={Almazrouei, Ebtesam and Alobeidli, Hamza and Alshamsi, Abdulaziz and Cappelli, Alessandro and Cojocaru, Ruxandra and Debbah, Merouane and Goffinet, Etienne and Hesslow, Daniel and Launay, Julien and Malartic, Quentin and Noune, Badreddine and Pannier, Baptiste and Penedo, Guilherme},

year={2023}

}

```

To learn more about the pretraining dataset, see the 📓 [RefinedWeb paper](https://arxiv.org/abs/2306.01116).

```

@article{refinedweb,

title={The {R}efined{W}eb dataset for {F}alcon {LLM}: outperforming curated corpora with web data, and web data only},

author={Guilherme Penedo and Quentin Malartic and Daniel Hesslow and Ruxandra Cojocaru and Alessandro Cappelli and Hamza Alobeidli and Baptiste Pannier and Ebtesam Almazrouei and Julien Launay},

journal={arXiv preprint arXiv:2306.01116},

eprint={2306.01116},

eprinttype = {arXiv},

url={https://arxiv.org/abs/2306.01116},

year={2023}

}

```

## License

Falcon-7B is made available under the Apache 2.0 license.

## Contact

[email protected]

|

shaunster/xlm-roberta-base-finetuned-panx-en-ext_head_loss | shaunster | 2023-07-11T13:38:10Z | 103 | 0 | transformers | ["transformers", "pytorch", "xlm-roberta", "token-classification", "generated_from_trainer", "dataset:xtreme", "license:mit", "model-index", "autotrain_compatible", "endpoints_compatible", "region:us"] | token-classification | 2023-07-05T04:20:15Z |

---

license: mit

tags:

- generated_from_trainer

datasets:

- xtreme

metrics:

- f1

model-index:
- name: xlm-roberta-base-finetuned-panx-en-ext_head_loss
  results:
  - task:
      name: Token Classification
      type: token-classification
    dataset:
      name: xtreme
      type: xtreme
      config: PAN-X.en
      split: validation
      args: PAN-X.en
    metrics:
    - name: F1
      type: f1
      value: 0.8075228972942741

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# xlm-roberta-base-finetuned-panx-en-ext_head_loss

This model is a fine-tuned version of [xlm-roberta-base](https://huggingface.co/xlm-roberta-base) on the xtreme dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2985

- F1: 0.8075

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 24

- eval_batch_size: 24

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 |

|:-------------:|:-----:|:----:|:---------------:|:------:|

| 0.9161 | 1.0 | 417 | 0.5816 | 0.3588 |

| 0.5572 | 2.0 | 834 | 0.5326 | 0.4619 |

| 0.3821 | 3.0 | 1251 | 0.3929 | 0.5508 |

| 0.2552 | 4.0 | 1668 | 0.3149 | 0.8015 |

| 0.1755 | 5.0 | 2085 | 0.2985 | 0.8075 |

### Framework versions

- Transformers 4.28.0

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

sjdata/speecht5_finetuned_voxpopuli_nl | sjdata | 2023-07-11T13:37:35Z | 75 | 0 | transformers | ["transformers", "pytorch", "tensorboard", "speecht5", "text-to-audio", "generated_from_trainer", "dataset:voxpopuli", "license:mit", "endpoints_compatible", "region:us"] | text-to-audio | 2023-07-11T11:50:21Z |

---

license: mit

tags:

- generated_from_trainer

datasets:

- voxpopuli

model-index:
- name: speecht5_finetuned_voxpopuli_nl
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# speecht5_finetuned_voxpopuli_nl

This model is a fine-tuned version of [microsoft/speecht5_tts](https://huggingface.co/microsoft/speecht5_tts) on the voxpopuli dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4626

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 4

- eval_batch_size: 2

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 32

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 4000
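The relationship between the batch-size entries above can be checked with a little arithmetic. The sketch below assumes training ran on a single device (the card does not say), in which case the effective batch size is the per-device batch size multiplied by the number of gradient-accumulation steps.

```python
train_batch_size = 4             # per-device batch size, from the list above
gradient_accumulation_steps = 8  # from the list above
training_steps = 4000            # optimizer steps, from the list above
num_devices = 1                  # assumption: single-device training

# Examples contributing to each optimizer step.
total_train_batch_size = train_batch_size * gradient_accumulation_steps * num_devices

# Examples processed over the whole run (with repetition across epochs).
examples_processed = total_train_batch_size * training_steps

print(f"effective batch size: {total_train_batch_size}")
print(f"examples processed:   {examples_processed}")
```

Under that assumption the result matches the reported `total_train_batch_size` of 32.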

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 0.5217 | 4.3 | 1000 | 0.4827 |

| 0.4955 | 8.61 | 2000 | 0.4678 |

| 0.4936 | 12.91 | 3000 | 0.4666 |

| 0.4936 | 17.21 | 4000 | 0.4626 |

### Framework versions

- Transformers 4.31.0.dev0

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

BlueAvenir/model_growth_restructuring_V_0_1 | BlueAvenir | 2023-07-11T13:20:12Z | 2 | 0 | sentence-transformers | ["sentence-transformers", "pytorch", "xlm-roberta", "feature-extraction", "sentence-similarity", "transformers", "autotrain_compatible", "text-embeddings-inference", "endpoints_compatible", "region:us"] | sentence-similarity | 2023-07-11T13:19:50Z |

---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# BlueAvenir/model_growth_restructuring_V_0_1

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('BlueAvenir/model_growth_restructuring_V_0_1')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python
from transformers import AutoTokenizer, AutoModel
import torch


# Mean pooling: take the attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)


# Sentences we want sentence embeddings for
sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub
tokenizer = AutoTokenizer.from_pretrained('BlueAvenir/model_growth_restructuring_V_0_1')
model = AutoModel.from_pretrained('BlueAvenir/model_growth_restructuring_V_0_1')

# Tokenize sentences
encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings
with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.
sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")
print(sentence_embeddings)
```
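To make the pooling step concrete without any dependencies, the sketch below applies the same idea to a single sentence using plain Python lists: token vectors at padded positions (mask 0) are excluded from the average. The function name and toy values are illustrative only.

```python
def mean_pool_single(token_embeddings, attention_mask):
    """Average the token vectors whose attention-mask entry is 1.

    token_embeddings: list of per-token vectors (lists of floats)
    attention_mask:   list of 0/1 flags, one per token
    """
    dim = len(token_embeddings[0])
    sums = [0.0] * dim
    for vector, keep in zip(token_embeddings, attention_mask):
        if keep:
            for i, value in enumerate(vector):
                sums[i] += value
    # Guard against division by zero, like the clamp in the tensor version.
    count = max(sum(attention_mask), 1e-9)
    return [s / count for s in sums]


# Two real tokens followed by one padding token with arbitrary values.
tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]
mask = [1, 1, 0]
print(mean_pool_single(tokens, mask))
```

The padding vector does not affect the result, which is the point of weighting by the attention mask.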

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 258 with parameters:

```

{'batch_size': 16, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.CosineSimilarityLoss.CosineSimilarityLoss`
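For intuition, the sketch below shows the idea behind this loss in plain Python: the cosine similarity of each embedding pair is compared with a target similarity score. It assumes the comparison uses mean squared error, which is our understanding of the library's default; the helper names and toy vectors are hypothetical.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def cosine_similarity_loss(pairs, labels):
    """Mean squared error between predicted cosine similarity and target label."""
    errors = [
        (cosine_similarity(u, v) - label) ** 2
        for (u, v), label in zip(pairs, labels)
    ]
    return sum(errors) / len(errors)


# Toy embeddings: an identical pair and an orthogonal pair.
pairs = [([1.0, 0.0], [1.0, 0.0]), ([1.0, 0.0], [0.0, 1.0])]
labels = [1.0, 0.5]  # hypothetical gold similarity scores
print(cosine_similarity_loss(pairs, labels))
```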

Parameters of the fit()-Method:

```

{
    "epochs": 1,
    "evaluation_steps": 0,
    "evaluator": "NoneType",
    "max_grad_norm": 1,
    "optimizer_class": "<class 'torch.optim.adamw.AdamW'>",
    "optimizer_params": {
        "lr": 2e-05
    },
    "scheduler": "WarmupLinear",
    "steps_per_epoch": 258,
    "warmup_steps": 26,
    "weight_decay": 0.01
}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: XLMRobertaModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information -->

|

NasimB/gpt2-cocnat-mod-datasets3-rarity-all | NasimB | 2023-07-11T13:13:07Z | 5 | 0 | transformers | ["transformers", "pytorch", "gpt2", "text-generation", "generated_from_trainer", "dataset:generator", "license:mit", "autotrain_compatible", "text-generation-inference", "endpoints_compatible", "region:us"] | text-generation | 2023-07-11T11:20:45Z |

---

license: mit

tags:

- generated_from_trainer

datasets:

- generator

model-index:
- name: gpt2-cocnat-mod-datasets3-rarity-all
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# gpt2-cocnat-mod-datasets3-rarity-all

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on the generator dataset.

It achieves the following results on the evaluation set:

- Loss: 4.3779
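For a language model, an evaluation loss is often easier to interpret as perplexity. The sketch below performs the conversion, assuming the reported loss is the mean per-token cross-entropy in nats (the usual convention for causal language modeling, though the card does not state it).

```python
import math

eval_loss = 4.3779  # evaluation loss reported above

# Perplexity is the exponential of the mean per-token cross-entropy (in nats).
perplexity = math.exp(eval_loss)
print(f"perplexity ~ {perplexity:.1f}")
```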

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 1000

- num_epochs: 6

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 6.7201 | 0.3 | 500 | 5.6554 |

| 5.3777 | 0.6 | 1000 | 5.2100 |

| 5.0257 | 0.91 | 1500 | 4.9662 |

| 4.7428 | 1.21 | 2000 | 4.8246 |

| 4.5916 | 1.51 | 2500 | 4.6972 |

| 4.4886 | 1.81 | 3000 | 4.5927 |

| 4.3213 | 2.12 | 3500 | 4.5355 |

| 4.173 | 2.42 | 4000 | 4.4840 |

| 4.1402 | 2.72 | 4500 | 4.4195 |

| 4.0833 | 3.02 | 5000 | 4.3844 |

| 3.8496 | 3.33 | 5500 | 4.3743 |

| 3.8398 | 3.63 | 6000 | 4.3421 |

| 3.8193 | 3.93 | 6500 | 4.3113 |

| 3.6103 | 4.23 | 7000 | 4.3294 |

| 3.5592 | 4.53 | 7500 | 4.3199 |

| 3.5442 | 4.84 | 8000 | 4.3041 |

| 3.4575 | 5.14 | 8500 | 4.3158 |

| 3.3572 | 5.44 | 9000 | 4.3191 |

| 3.3595 | 5.74 | 9500 | 4.3171 |

### Framework versions

- Transformers 4.26.1

- Pytorch 1.11.0+cu113

- Datasets 2.13.0

- Tokenizers 0.13.3

|

Mithun12/my_first_asr_model | Mithun12 | 2023-07-11T13:12:29Z | 77 | 0 | transformers | ["transformers", "pytorch", "tensorboard", "wav2vec2", "automatic-speech-recognition", "generated_from_trainer", "license:apache-2.0", "endpoints_compatible", "region:us"] | automatic-speech-recognition | 2023-07-11T11:15:57Z |

---

license: apache-2.0

tags:

- generated_from_trainer

model-index:
- name: my_first_asr_model
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# my_first_asr_model

This model is a fine-tuned version of [facebook/wav2vec2-base](https://huggingface.co/facebook/wav2vec2-base) on an unspecified dataset.

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-05

- train_batch_size: 1

- eval_batch_size: 1

- seed: 42

- gradient_accumulation_steps: 2

- total_train_batch_size: 2

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 2000

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

Bhanu9Prakash/ast-finetuned-audioset-10-10-0.4593-finetuned-gtzan | Bhanu9Prakash | 2023-07-11T13:05:14Z | 222 | 0 | transformers | ["transformers", "pytorch", "tensorboard", "audio-spectrogram-transformer", "audio-classification", "generated_from_trainer", "dataset:marsyas/gtzan", "base_model:MIT/ast-finetuned-audioset-10-10-0.4593", "base_model:finetune:MIT/ast-finetuned-audioset-10-10-0.4593", "license:bsd-3-clause", "model-index", "endpoints_compatible", "region:us"] | audio-classification | 2023-07-11T12:44:34Z |

---

license: bsd-3-clause

base_model: MIT/ast-finetuned-audioset-10-10-0.4593

tags:

- generated_from_trainer

datasets:

- marsyas/gtzan

metrics:

- accuracy

model-index:
- name: ast-finetuned-audioset-10-10-0.4593-finetuned-gtzan
  results:
  - task:
      name: Audio Classification
      type: audio-classification
    dataset:
      name: GTZAN
      type: marsyas/gtzan
      config: all
      split: train
      args: all
    metrics:
    - name: Accuracy
      type: accuracy
      value: 0.92

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# ast-finetuned-audioset-10-10-0.4593-finetuned-gtzan

This model is a fine-tuned version of [MIT/ast-finetuned-audioset-10-10-0.4593](https://huggingface.co/MIT/ast-finetuned-audioset-10-10-0.4593) on the GTZAN dataset.

It achieves the following results on the evaluation set:

- Loss: 0.3966

- Accuracy: 0.92

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-05

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 10
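The scheduler settings above describe a linear warm-up followed by a linear decay. The sketch below illustrates that shape in plain Python, assuming 1,130 total optimizer steps (the final step in the training-results table) and 113 warm-up steps (10% of that total); the function name is hypothetical and this is not the Trainer's own code.

```python
def linear_schedule_lr(step, base_lr=5e-5, total_steps=1130, warmup_steps=113):
    """Learning rate at a given optimizer step: linear warm-up, then linear decay to 0."""
    if step < warmup_steps:
        return base_lr * step / max(1, warmup_steps)
    remaining = max(0, total_steps - step)
    return base_lr * remaining / max(1, total_steps - warmup_steps)


for step in (0, 56, 113, 565, 1130):
    print(f"step {step:>4}: lr = {linear_schedule_lr(step):.2e}")
```

The rate peaks at the configured `learning_rate` when warm-up ends and reaches zero at the final step.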

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 1.0687 | 1.0 | 113 | 0.6197 | 0.84 |

| 0.299 | 2.0 | 226 | 0.5065 | 0.86 |

| 0.2634 | 3.0 | 339 | 0.5042 | 0.88 |

| 0.0473 | 4.0 | 452 | 0.5413 | 0.88 |

| 0.0033 | 5.0 | 565 | 0.3706 | 0.91 |

| 0.0003 | 6.0 | 678 | 0.4485 | 0.9 |

| 0.2538 | 7.0 | 791 | 0.4006 | 0.9 |

| 0.0002 | 8.0 | 904 | 0.3985 | 0.9 |

| 0.003 | 9.0 | 1017 | 0.3952 | 0.91 |

| 0.0001 | 10.0 | 1130 | 0.3966 | 0.92 |

### Framework versions

- Transformers 4.31.0.dev0

- Pytorch 1.12.1+cu116

- Datasets 2.4.0

- Tokenizers 0.12.1

|

kmariunas/uncased-bert-triplet-40 | kmariunas | 2023-07-11T13:02:44Z | 101 | 1 | sentence-transformers | ["sentence-transformers", "pytorch", "tf", "bert", "feature-extraction", "sentence-similarity", "transformers", "autotrain_compatible", "endpoints_compatible", "region:us"] | sentence-similarity | 2023-07-11T13:01:06Z |

---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# kmariunas/uncased-bert-triplet-40

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('kmariunas/uncased-bert-triplet-40')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

#Mean Pooling - Take attention mask into account for correct averaging

def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('{MODEL_NAME}')

model = AutoModel.from_pretrained('{MODEL_NAME}')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```
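To make the pooling step concrete, here is a minimal sketch of masked mean pooling in plain Python (no PyTorch). The function name `masked_mean` and the toy numbers are illustrative assumptions and are not part of the card's own code.

```python
def masked_mean(token_embeddings, attention_mask):
    """Average token vectors, ignoring positions where the mask is 0 (padding)."""
    dim = len(token_embeddings[0])
    summed = [0.0] * dim
    for vector, keep in zip(token_embeddings, attention_mask):
        if keep:
            for i, value in enumerate(vector):
                summed[i] += value
    # Same guard as torch.clamp(..., min=1e-9): avoid division by zero
    count = max(sum(attention_mask), 1e-9)
    return [s / count for s in summed]


# Toy example: three token positions, the last one is padding
tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]
mask = [1, 1, 0]
pooled = masked_mean(tokens, mask)
```

Because the padded position is masked out, its large values do not affect the sentence vector; only the first two tokens are averaged.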

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 108 with parameters:

```

{'batch_size': 64, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.BatchHardTripletLoss.BatchHardTripletLoss`
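As a rough illustration of the batch-hard idea behind this loss, the sketch below picks, for each anchor in a batch, the farthest same-label example and the closest different-label example. It is a simplified plain-Python version under stated assumptions: Euclidean distance, a hypothetical `margin` of 1.0, and anchors without a valid triplet are skipped. The library implementation may differ in these details.

```python
import math


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def batch_hard_triplet_loss(embeddings, labels, margin=1.0):
    """Mean over anchors of max(0, hardest_positive - hardest_negative + margin)."""
    losses = []
    for i, (anchor, label) in enumerate(zip(embeddings, labels)):
        # Distances to other examples with the same label (positives)
        pos = [euclidean(anchor, e)
               for j, (e, l) in enumerate(zip(embeddings, labels))
               if l == label and j != i]
        # Distances to examples with a different label (negatives)
        neg = [euclidean(anchor, e)
               for e, l in zip(embeddings, labels) if l != label]
        if not pos or not neg:
            continue  # simplification: this anchor has no valid triplet
        losses.append(max(0.0, max(pos) - min(neg) + margin))
    return sum(losses) / len(losses) if losses else 0.0


# Toy batch: the two classes are interleaved, so every anchor is penalized
mixed = batch_hard_triplet_loss(
    [[0.0, 0.0], [3.0, 0.0], [1.0, 0.0], [4.0, 0.0]], [0, 0, 1, 1])
# Toy batch: the two classes are well separated, so the loss vanishes
separated = batch_hard_triplet_loss(
    [[0.0, 0.0], [1.0, 0.0], [5.0, 0.0], [6.0, 0.0]], [0, 0, 1, 1])
```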

Parameters of the fit()-Method:

```

{

"epochs": 40,

"evaluation_steps": 0,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": null,

"warmup_steps": 429.20000000000005,

"weight_decay": 0.01

}

```
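The `WarmupLinear` scheduler listed above can be sketched as a learning-rate multiplier that rises linearly during warmup and then decays linearly to zero. This is a minimal sketch: the function names are hypothetical, and the total step count is assumed to be the DataLoader length times the number of epochs (108 x 40).

```python
def warmup_linear_factor(step, warmup_steps, total_steps):
    """Multiplier applied to the base learning rate at a given optimizer step."""
    if step < warmup_steps:
        return step / max(1.0, warmup_steps)
    return max(0.0, (total_steps - step) / max(1.0, total_steps - warmup_steps))


base_lr = 2e-05
warmup_steps = 429.2
total_steps = 108 * 40  # assumption: DataLoader length x epochs


def learning_rate(step):
    return base_lr * warmup_linear_factor(step, warmup_steps, total_steps)
```

Under these assumptions the learning rate starts at 0, approaches `2e-05` at the end of warmup, and returns to 0 at the final step.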

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 512, 'do_lower_case': False}) with Transformer model: BertModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information -->

|

ashnrk/textual_inversion_residential

|

ashnrk

| 2023-07-11T12:59:49Z | 18 | 0 |

diffusers

|

[

"diffusers",

"tensorboard",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"textual_inversion",

"base_model:stabilityai/stable-diffusion-2-1",

"base_model:adapter:stabilityai/stable-diffusion-2-1",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2023-07-11T11:57:37Z |

---

license: creativeml-openrail-m

base_model: stabilityai/stable-diffusion-2-1

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

- textual_inversion

inference: true

---

# Textual inversion text2image fine-tuning - ashnrk/textual_inversion_residential

These are textual inversion adaptation weights for stabilityai/stable-diffusion-2-1. You can find some example images below.

|

antonioalvarado/text_analyzer_albert-base-v2

|

antonioalvarado

| 2023-07-11T12:53:05Z | 103 | 1 |

transformers

|

[

"transformers",

"pytorch",

"albert",

"text-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2023-07-11T12:23:30Z |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: text_analyzer_albert-base-v2
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# text_analyzer_albert-base-v2

This model is a fine-tuned version of [albert-base-v2](https://huggingface.co/albert-base-v2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0093

- Accuracy: 0.9988

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:--------:|

| 0.4321 | 1.0 | 3457 | 0.3820 | 0.9341 |

| 0.1756 | 2.0 | 6914 | 0.1220 | 0.9815 |

| 0.029 | 3.0 | 10371 | 0.0464 | 0.9919 |

| 0.0001 | 4.0 | 13828 | 0.0093 | 0.9988 |
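The step counts in the table give a rough idea of the training-set size. The sketch below is only an estimate: it assumes a single device and no gradient accumulation (neither is stated in the card), and the helper name is hypothetical.

```python
def approx_train_examples(steps_per_epoch, per_device_batch_size,
                          num_devices=1, grad_accum_steps=1):
    """Upper-bound estimate: the last batch of an epoch may be smaller."""
    return steps_per_epoch * per_device_batch_size * num_devices * grad_accum_steps


# 3457 optimizer steps per epoch with train_batch_size 1
estimate = approx_train_examples(steps_per_epoch=3457, per_device_batch_size=1)
```

With a batch size of 1 there is no partial final batch, so under these assumptions the estimate is about 3457 training examples.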

### Framework versions

- Transformers 4.29.1

- Pytorch 1.12.0+cu102

- Datasets 2.13.1

- Tokenizers 0.13.3

|

sumitrsch/multiconer2_muril_large_bn

|

sumitrsch

| 2023-07-11T12:41:30Z | 109 | 2 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"token-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2023-02-02T13:05:45Z |

To run prediction on test data, use this Colab notebook: https://colab.research.google.com/drive/1K-ED0yvMsdciNo52rluauQBEAg-DBomC?usp=sharing

In the notebook, set `best_model_path = "sumitrsch/multiconer2_muril_large_bn"`.

If you use this code, please cite the paper "silp_nlp at SemEval-2023 Task 2: Cross-lingual Knowledge Transfer for Mono-lingual Learning":

https://aclanthology.org/2023.semeval-1.164

|

sumitrsch/multiconer2_muril_large_hi

|

sumitrsch

| 2023-07-11T12:40:43Z | 120 | 2 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"token-classification",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

token-classification

| 2023-02-02T13:04:38Z |

To run prediction on test data, use this Colab notebook: https://colab.research.google.com/drive/1K-ED0yvMsdciNo52rluauQBEAg-DBomC?usp=sharing

If you use this code, please cite the paper "silp_nlp at SemEval-2023 Task 2: Cross-lingual Knowledge Transfer for Mono-lingual Learning":

https://aclanthology.org/2023.semeval-1.164

|

duwuonline/mymodel-generation

|

duwuonline

| 2023-07-11T12:38:20Z | 105 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"t5",

"text2text-generation",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text2text-generation

| 2023-07-11T12:20:34Z |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- rouge

model-index:

- name: mymodel-generation
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# mymodel-generation

This model is a fine-tuned version of [t5-small](https://huggingface.co/t5-small) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4959

- Rouge1: 15.814

- Rouge2: 6.0889

- Rougel: 13.524

- Rougelsum: 13.6797

- Gen Len: 19.0

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 10

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rouge1 | Rouge2 | Rougel | Rougelsum | Gen Len |

|:-------------:|:-----:|:----:|:---------------:|:-------:|:------:|:-------:|:---------:|:-------:|

| No log | 1.0 | 100 | 0.6815 | 14.8968 | 4.9117 | 12.5655 | 12.7826 | 19.0 |

| No log | 2.0 | 200 | 0.6100 | 14.9404 | 4.9974 | 12.8103 | 13.0953 | 19.0 |

| No log | 3.0 | 300 | 0.5827 | 14.991 | 5.2082 | 12.9564 | 13.1979 | 19.0 |

| No log | 4.0 | 400 | 0.5568 | 14.9205 | 5.1634 | 12.6664 | 12.8388 | 19.0 |

| 0.8938 | 5.0 | 500 | 0.5352 | 15.2597 | 5.6541 | 13.0388 | 13.1956 | 19.0 |

| 0.8938 | 6.0 | 600 | 0.5212 | 15.4645 | 5.7723 | 13.2198 | 13.3698 | 19.0 |

| 0.8938 | 7.0 | 700 | 0.5098 | 15.4663 | 5.8769 | 13.2799 | 13.403 | 19.0 |

| 0.8938 | 8.0 | 800 | 0.5015 | 16.0013 | 6.2874 | 13.7037 | 13.8538 | 19.0 |

| 0.8938 | 9.0 | 900 | 0.4957 | 15.8722 | 6.1918 | 13.6299 | 13.7783 | 19.0 |

| 0.6764 | 10.0 | 1000 | 0.4959 | 15.814 | 6.0889 | 13.524 | 13.6797 | 19.0 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

digiplay/NewMarsMix_R

|

digiplay

| 2023-07-11T12:31:12Z | 0 | 0 | null |

[

"license:other",

"region:us"

] | null | 2023-07-11T01:51:42Z |

---

license: other

---

https://civitai.com/models/19321?modelVersionId=25545

|

srirammadduri-ts/roberta-base-squad2-finetuned-roberta

|

srirammadduri-ts

| 2023-07-11T12:26:34Z | 106 | 0 |

transformers

|

[

"transformers",

"pytorch",

"roberta",

"question-answering",

"generated_from_trainer",

"license:cc-by-4.0",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2023-07-11T12:06:31Z |

---

license: cc-by-4.0

tags:

- generated_from_trainer

model-index:

- name: roberta-base-squad2-finetuned-roberta
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# roberta-base-squad2-finetuned-roberta

This model is a fine-tuned version of [deepset/roberta-base-squad2](https://huggingface.co/deepset/roberta-base-squad2) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0003

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 3

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| No log | 1.0 | 2 | 0.0008 |

| No log | 2.0 | 4 | 0.0004 |

| No log | 3.0 | 6 | 0.0003 |

### Framework versions

- Transformers 4.28.1

- Pytorch 2.0.0

- Datasets 2.13.1

- Tokenizers 0.13.3

|

F-Haru/test

|

F-Haru

| 2023-07-11T12:10:12Z | 2 | 0 |

sentence-transformers

|

[

"sentence-transformers",

"xlm-roberta",

"feature-extraction",

"sentence-similarity",

"transformers",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2023-07-11T12:03:50Z |

---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

# {MODEL_NAME}

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('{MODEL_NAME}')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

#Mean Pooling - Take attention mask into account for correct averaging

def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

tokenizer = AutoTokenizer.from_pretrained('{MODEL_NAME}')

model = AutoModel.from_pretrained('{MODEL_NAME}')

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 1686 with parameters:

```

{'batch_size': 64, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.MSELoss.MSELoss`

Parameters of the fit()-Method:

```

{

"epochs": 10,

"evaluation_steps": 1000,

"evaluator": "NoneType",

"max_grad_norm": 1,

"optimizer_class": "<class 'torch.optim.adamw.AdamW'>",

"optimizer_params": {

"eps": 1e-06,

"lr": 2e-05

},

"scheduler": "WarmupLinear",

"steps_per_epoch": null,

"warmup_steps": 10000,

"weight_decay": 0.01

}

```

## Full Model Architecture

```

SentenceTransformer(

(0): Transformer({'max_seq_length': 128, 'do_lower_case': False}) with Transformer model: XLMRobertaModel

(1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})

)

```

## Citing & Authors

<!--- Describe where people can find more information -->

|

NasimB/gpt2-concat-all-mod-datasets1-rarity-all-c13k-c2p6k-rev

|

NasimB

| 2023-07-11T12:08:38Z | 5 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"generated_from_trainer",

"dataset:generator",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-07-11T10:23:20Z |

---

license: mit

tags:

- generated_from_trainer

datasets:

- generator

model-index:

- name: gpt2-concat-all-mod-datasets1-rarity-all-c13k-c2p6k-rev
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# gpt2-concat-all-mod-datasets1-rarity-all-c13k-c2p6k-rev

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on the generator dataset.

It achieves the following results on the evaluation set:

- Loss: 4.8844
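For a language model, an evaluation loss is often easier to interpret as perplexity. The sketch below assumes the reported loss is the mean per-token cross-entropy in nats, which is the usual convention for causal language modeling but is not stated in the card.

```python
import math


def perplexity(cross_entropy_loss):
    """Perplexity is the exponential of the mean per-token cross-entropy."""
    return math.exp(cross_entropy_loss)


eval_loss = 4.8844
ppl = perplexity(eval_loss)
```

Under that assumption, a loss of 4.8844 corresponds to a perplexity of roughly 132.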

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 1000

- num_epochs: 6

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 6.7952 | 0.32 | 500 | 5.7564 |

| 5.4839 | 0.63 | 1000 | 5.3858 |

| 5.1442 | 0.95 | 1500 | 5.2129 |

| 4.8614 | 1.27 | 2000 | 5.0996 |

| 4.7476 | 1.59 | 2500 | 5.0222 |

| 4.6481 | 1.9 | 3000 | 4.9507 |

| 4.4504 | 2.22 | 3500 | 4.9514 |

| 4.3796 | 2.54 | 4000 | 4.8926 |

| 4.3388 | 2.85 | 4500 | 4.8553 |

| 4.1754 | 3.17 | 5000 | 4.8697 |

| 4.0695 | 3.49 | 5500 | 4.8398 |

| 4.0541 | 3.8 | 6000 | 4.8012 |

| 3.9275 | 4.12 | 6500 | 4.8334 |

| 3.7741 | 4.44 | 7000 | 4.8258 |

| 3.7686 | 4.76 | 7500 | 4.8182 |

| 3.7113 | 5.07 | 8000 | 4.8313 |

| 3.5725 | 5.39 | 8500 | 4.8351 |

| 3.5762 | 5.71 | 9000 | 4.8371 |

### Framework versions

- Transformers 4.26.1

- Pytorch 1.11.0+cu113

- Datasets 2.13.0

- Tokenizers 0.13.3

|

komo-dono/risataneda

|

komo-dono

| 2023-07-11T12:03:18Z | 0 | 0 | null |

[

"region:us"

] | null | 2023-07-11T12:01:51Z |

---

license: openrail

language:

- ja

tags:

- music

---

risa taneda 600 epoch

|

kfkas/LawBot-v1_koalpaca_legalQA_easylaw_train

|

kfkas

| 2023-07-11T12:00:10Z | 0 | 0 |

peft

|

[

"peft",

"region:us"

] | null | 2023-07-11T12:00:06Z |

---

library_name: peft

---

## Training procedure

The following `bitsandbytes` quantization config was used during training:

- load_in_8bit: False

- load_in_4bit: True

- llm_int8_threshold: 6.0

- llm_int8_skip_modules: None

- llm_int8_enable_fp32_cpu_offload: False

- llm_int8_has_fp16_weight: False

- bnb_4bit_quant_type: nf4

- bnb_4bit_use_double_quant: True

- bnb_4bit_compute_dtype: bfloat16
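The settings above can be collected into keyword arguments, for example to recreate the same quantization setup when loading the base model. This is a sketch: the variable name `bnb_kwargs` is hypothetical, and passing the compute dtype as the string `"bfloat16"` is an assumption about how the config is constructed.

```python
# Quantization settings copied from the list above
bnb_kwargs = {
    "load_in_8bit": False,
    "load_in_4bit": True,
    "llm_int8_threshold": 6.0,
    "llm_int8_skip_modules": None,
    "llm_int8_enable_fp32_cpu_offload": False,
    "llm_int8_has_fp16_weight": False,
    "bnb_4bit_quant_type": "nf4",
    "bnb_4bit_use_double_quant": True,
    "bnb_4bit_compute_dtype": "bfloat16",
}

# Assumed usage (requires transformers, torch and bitsandbytes to be installed):
# from transformers import BitsAndBytesConfig
# quant_config = BitsAndBytesConfig(**bnb_kwargs)
```

In short, the adapter was trained on a 4-bit NF4 base model with double quantization and bfloat16 compute, and the 8-bit options were left at their defaults.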

### Framework versions

- PEFT 0.4.0.dev0

|

sofia-todeschini/PubMedBERT-LitCovid-v1.2

|

sofia-todeschini

| 2023-07-11T11:50:04Z | 107 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"bert",

"text-classification",

"generated_from_trainer",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2023-07-11T08:51:46Z |

---

license: mit

tags:

- generated_from_trainer

metrics:

- f1

- accuracy

model-index:

- name: PubMedBERT-LitCovid-v1.2
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# PubMedBERT-LitCovid-v1.2

This model is a fine-tuned version of [microsoft/BiomedNLP-PubMedBERT-base-uncased-abstract](https://huggingface.co/microsoft/BiomedNLP-PubMedBERT-base-uncased-abstract) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0998

- F1: 0.9200

- Roc Auc: 0.9529

- Accuracy: 0.7868

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 16

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 5

### Training results

| Training Loss | Epoch | Step | Validation Loss | F1 | Roc Auc | Accuracy |

|:-------------:|:-----:|:-----:|:---------------:|:------:|:-------:|:--------:|

| 0.1017 | 1.0 | 2211 | 0.0897 | 0.9155 | 0.9492 | 0.7722 |

| 0.0742 | 2.0 | 4422 | 0.0868 | 0.9177 | 0.9508 | 0.7778 |

| 0.0559 | 3.0 | 6633 | 0.0903 | 0.9191 | 0.9521 | 0.7827 |

| 0.0396 | 4.0 | 8844 | 0.0955 | 0.9184 | 0.9512 | 0.7814 |

| 0.0282 | 5.0 | 11055 | 0.0998 | 0.9200 | 0.9529 | 0.7868 |

### Framework versions

- Transformers 4.28.0

- Pytorch 2.0.0

- Datasets 2.1.0

- Tokenizers 0.13.3

|

Penbloom/Penbloom_semirealmix

|

Penbloom

| 2023-07-11T11:34:24Z | 0 | 0 | null |

[

"musclar",

"korean",

"license:openrail",

"region:us"

] | null | 2023-04-08T14:59:05Z |

---

license: openrail

tags:

- musclar

- korean

---

## Model Detail & Merge Recipes

Penbloom_semirealmix aims to create muscular girls with nice skin texture and detailed clothes. This is a ``merge`` model.

## Source model

[Civitai:Beenyou|Stable Diffusion Checkpoint](https://civitai.com/models/27688/beenyou)

[⚠NSFW][Civitai:饭特稀|Stable Diffusion Checkpoint](https://civitai.com/models/18427/v08)

### Penbloom_semirealmix_v1.0

|

vvasanth/falcon7b-finetune-test-220623_1

|

vvasanth

| 2023-07-11T11:31:41Z | 0 | 0 | null |

[

"text-generation",

"license:apache-2.0",

"region:us"

] |

text-generation

| 2023-07-04T11:51:13Z |

---

license: apache-2.0

pipeline_tag: text-generation

---

|

jasonyim2/distilbert-base-uncased-finetuned-emotion

|

jasonyim2

| 2023-07-11T11:22:55Z | 104 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"distilbert",

"text-classification",

"generated_from_trainer",

"dataset:emotion",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2022-03-26T06:45:02Z |

---

license: apache-2.0

tags:

- generated_from_trainer

datasets:

- emotion

metrics:

- accuracy

- f1

model-index:
- name: distilbert-base-uncased-finetuned-emotion
  results:
  - task:
      name: Text Classification
      type: text-classification
    dataset:
      name: emotion
      type: emotion
      config: split
      split: validation
      args: split
    metrics:
    - name: Accuracy
      type: accuracy
      value: 0.9215
    - name: F1
      type: f1
      value: 0.9215386837894378

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# distilbert-base-uncased-finetuned-emotion

This model is a fine-tuned version of [distilbert-base-uncased](https://huggingface.co/distilbert-base-uncased) on the emotion dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2227

- Accuracy: 0.9215

- F1: 0.9215

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy | F1 |

|:-------------:|:-----:|:----:|:---------------:|:--------:|:------:|

| 0.8265 | 1.0 | 250 | 0.3204 | 0.9 | 0.8963 |

| 0.2534 | 2.0 | 500 | 0.2227 | 0.9215 | 0.9215 |

### Framework versions

- Transformers 4.30.2

- Pytorch 2.0.1+cu118

- Datasets 2.13.1

- Tokenizers 0.13.3

|

antonioalvarado/text_analyzer_base_bert

|

antonioalvarado

| 2023-07-11T11:21:46Z | 103 | 0 |

transformers

|

[

"transformers",

"pytorch",

"bert",

"text-classification",

"generated_from_trainer",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

text-classification

| 2023-07-11T10:55:01Z |

---

license: apache-2.0

tags:

- generated_from_trainer

metrics:

- accuracy

model-index:

- name: text_analyzer_base_bert
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# text_analyzer_base_bert

This model is a fine-tuned version of [bert-base-cased](https://huggingface.co/bert-base-cased) on an unknown dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0472

- Accuracy: 0.9861

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 1

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |

|:-------------:|:-----:|:----:|:---------------:|:--------:|

| 0.3672 | 1.0 | 1728 | 0.1788 | 0.9469 |

| 0.1509 | 2.0 | 3456 | 0.1311 | 0.9769 |

| 0.0071 | 3.0 | 5184 | 0.0494 | 0.9861 |

| 0.0076 | 4.0 | 6912 | 0.0472 | 0.9861 |

### Framework versions

- Transformers 4.29.1

- Pytorch 1.12.0+cu102

- Datasets 2.13.1

- Tokenizers 0.13.3

|

jwu323/origin-llama-7b

|

jwu323

| 2023-07-11T11:06:24Z | 8 | 0 |

transformers

|

[

"transformers",

"pytorch",

"llama",

"text-generation",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-07-11T09:17:15Z |

This contains the original weights for the LLaMA-7b model.

This model is under a non-commercial license (see the LICENSE file).

You should only use this repository if you have been granted access to the model by filling out [this form](https://docs.google.com/forms/d/e/1FAIpQLSfqNECQnMkycAp2jP4Z9TFX0cGR4uf7b_fBxjY_OjhJILlKGA/viewform) but either lost your copy of the weights or had trouble converting them to the Transformers format.

[According to this comment](https://github.com/huggingface/transformers/issues/21681#issuecomment-1436552397), a model created in PyTorch is float32 by default, regardless of the dtype of the checkpoint you saved. If you load a float16 checkpoint into a model you create (which is in float32 by default), the dtype that is kept at the end is the dtype of the model, not the dtype of the checkpoint.

|

NasimB/gpt2-concat-all-mod-datasets1-rarity-all-iorder-end-c2p6k

|

NasimB

| 2023-07-11T10:57:16Z | 6 | 0 |

transformers

|

[

"transformers",

"pytorch",

"gpt2",

"text-generation",

"generated_from_trainer",

"dataset:generator",

"license:mit",

"autotrain_compatible",

"text-generation-inference",

"endpoints_compatible",

"region:us"

] |

text-generation

| 2023-07-11T09:09:23Z |

---

license: mit

tags:

- generated_from_trainer

datasets:

- generator

model-index:

- name: gpt2-concat-all-mod-datasets1-rarity-all-iorder-end-c2p6k
  results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# gpt2-concat-all-mod-datasets1-rarity-all-iorder-end-c2p6k

This model is a fine-tuned version of [gpt2](https://huggingface.co/gpt2) on the generator dataset.

It achieves the following results on the evaluation set:

- Loss: 4.3830

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0005

- train_batch_size: 64

- eval_batch_size: 64

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 1000

- num_epochs: 6

- mixed_precision_training: Native AMP
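The cosine schedule with 1000 warmup steps listed above can be sketched as a multiplier on the base learning rate. This is a minimal sketch under assumptions: the function name is hypothetical, a single half-cosine cycle is assumed, and `total_steps = 9500` is only an illustrative value close to the last step logged in the results table.

```python
import math


def cosine_with_warmup_factor(step, warmup_steps, total_steps):
    """Linear warmup followed by a half-cosine decay to zero."""
    if step < warmup_steps:
        return step / max(1, warmup_steps)
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


base_lr = 0.0005
warmup_steps = 1000
total_steps = 9500  # assumption for illustration only

halfway_through_warmup = base_lr * cosine_with_warmup_factor(500, warmup_steps, total_steps)
peak = base_lr * cosine_with_warmup_factor(1000, warmup_steps, total_steps)
```

Under these assumptions the learning rate reaches its peak of 0.0005 at step 1000 and then decays smoothly toward zero by the final step.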

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 6.784 | 0.32 | 500 | 5.6510 |

| 5.4458 | 0.63 | 1000 | 5.2234 |

| 5.0999 | 0.95 | 1500 | 4.9802 |

| 4.8039 | 1.26 | 2000 | 4.8338 |

| 4.6712 | 1.58 | 2500 | 4.7110 |

| 4.5643 | 1.89 | 3000 | 4.5978 |

| 4.3499 | 2.21 | 3500 | 4.5524 |

| 4.2538 | 2.52 | 4000 | 4.4816 |

| 4.2202 | 2.84 | 4500 | 4.4235 |

| 4.0629 | 3.15 | 5000 | 4.4144 |

| 3.9341 | 3.47 | 5500 | 4.3803 |

| 3.9186 | 3.78 | 6000 | 4.3457 |

| 3.8084 | 4.1 | 6500 | 4.3452 |

| 3.6343 | 4.41 | 7000 | 4.3378 |

| 3.6317 | 4.73 | 7500 | 4.3197 |

| 3.5947 | 5.04 | 8000 | 4.3232 |

| 3.4369 | 5.36 | 8500 | 4.3272 |

| 3.434 | 5.67 | 9000 | 4.3271 |

| 3.4327 | 5.99 | 9500 | 4.3265 |

### Framework versions

- Transformers 4.26.1

- Pytorch 1.11.0+cu113

- Datasets 2.13.0

- Tokenizers 0.13.3

|

digiplay/RealEpicMajicRevolution_v1

|

digiplay

| 2023-07-11T10:42:18Z | 393 | 1 |

diffusers

|

[

"diffusers",

"safetensors",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2023-07-11T09:48:27Z |

---

license: other

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

inference: true

---

Model info:

https://civitai.com/models/107185/real-epic-majic-revolution

Original Author's DEMO images :

|

mort1k/q-FrozenLake-v1-4x4-noSlippery

|

mort1k

| 2023-07-11T10:35:42Z | 0 | 0 | null |

[

"FrozenLake-v1-4x4-no_slippery",

"q-learning",

"reinforcement-learning",

"custom-implementation",

"model-index",

"region:us"

] |

reinforcement-learning

| 2023-07-11T10:35:41Z |

---

tags:

- FrozenLake-v1-4x4-no_slippery

- q-learning

- reinforcement-learning

- custom-implementation

model-index:
- name: q-FrozenLake-v1-4x4-noSlippery
  results:
  - task:
      type: reinforcement-learning
      name: reinforcement-learning
    dataset:
      name: FrozenLake-v1-4x4-no_slippery
      type: FrozenLake-v1-4x4-no_slippery
    metrics:
    - type: mean_reward
      value: 1.00 +/- 0.00
      name: mean_reward
      verified: false

---

# **Q-Learning** Agent playing **FrozenLake-v1**

This is a trained model of a **Q-Learning** agent playing **FrozenLake-v1**.

## Usage

```python

model = load_from_hub(repo_id="mort1k/q-FrozenLake-v1-4x4-noSlippery", filename="q-learning.pkl")

# Don't forget to check if you need to add additional attributes (is_slippery=False etc)

env = gym.make(model["env_id"])

```
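Once the model is loaded, acting with a Q-learning agent amounts to picking the highest-valued action for the current state. The sketch below uses a toy table; it assumes the loaded dictionary exposes the learned table under a key such as `"qtable"`, which is not confirmed by this card, and `greedy_action` is a hypothetical helper.

```python
def greedy_action(qtable, state):
    """Return the index of the action with the highest Q-value in this state."""
    row = qtable[state]
    return max(range(len(row)), key=lambda action: row[action])


# Toy Q-table with 2 states and 4 actions (illustrative values only)
toy_qtable = [
    [0.0, 0.5, 0.9, 0.1],
    [0.2, 0.8, 0.1, 0.0],
]

action_in_state_0 = greedy_action(toy_qtable, 0)
action_in_state_1 = greedy_action(toy_qtable, 1)
```

In an evaluation loop, the chosen action would be passed to `env.step(...)` and the returned state used for the next lookup.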

|

F-Haru/paraphrase-mpnet-base-v2_09-04-MarginMSELoss-finetuning-7-5

|

F-Haru

| 2023-07-11T10:29:25Z | 1 | 0 |

sentence-transformers

|

[

"sentence-transformers",

"pytorch",

"xlm-roberta",

"feature-extraction",

"sentence-similarity",

"transformers",

"autotrain_compatible",

"text-embeddings-inference",

"endpoints_compatible",

"region:us"

] |

sentence-similarity

| 2023-07-11T09:35:14Z |

---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- transformers

---

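This model was first fine-tuned using only negative ja-en and en-ja pairs whose cosine similarity was 0.9 or higher, or 0.4 or lower, and was then trained by knowledge distillation with paraphrase-mpnet-base-v2 as the teacher model.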

# {MODEL_NAME}

This is a [sentence-transformers](https://www.SBERT.net) model: It maps sentences & paragraphs to a 768 dimensional dense vector space and can be used for tasks like clustering or semantic search.

<!--- Describe your model here -->

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('{MODEL_NAME}')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: First, you pass your input through the transformer model, then you have to apply the right pooling-operation on-top of the contextualized word embeddings.

```python

from transformers import AutoTokenizer, AutoModel

import torch

# Mean pooling: take the attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)

# Sentences we want sentence embeddings for

sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub

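tokenizer = AutoTokenizer.from_pretrained('F-Haru/paraphrase-mpnet-base-v2_09-04-MarginMSELoss-finetuning-7-5')

model = AutoModel.from_pretrained('F-Haru/paraphrase-mpnet-base-v2_09-04-MarginMSELoss-finetuning-7-5')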

# Tokenize sentences

encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings

with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling. In this case, mean pooling.

sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

print("Sentence embeddings:")

print(sentence_embeddings)

```

## Evaluation Results

<!--- Describe how your model was evaluated -->

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name={MODEL_NAME})

## Training

The model was trained with the parameters:

**DataLoader**:

`torch.utils.data.dataloader.DataLoader` of length 1686 with parameters:

```

{'batch_size': 64, 'sampler': 'torch.utils.data.sampler.RandomSampler', 'batch_sampler': 'torch.utils.data.sampler.BatchSampler'}

```

**Loss**:

`sentence_transformers.losses.MSELoss.MSELoss`
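
The card's description mentions knowledge distillation from a teacher model, and an MSE loss fits that setup: the student is trained so that its sentence embeddings match the teacher's. The sketch below shows the quantity being minimized in plain Python. The function name and the tiny 2-dimensional embeddings are illustrative assumptions, not the library's implementation.

```python
def mse_distillation_loss(student_embeddings, teacher_embeddings):
    """Mean squared error over all components of all embeddings in a batch."""
    total, count = 0.0, 0
    for s_vec, t_vec in zip(student_embeddings, teacher_embeddings):
        if len(s_vec) != len(t_vec):
            raise ValueError("student and teacher embeddings must have the same dimension")
        for s, t in zip(s_vec, t_vec):
            total += (s - t) ** 2
            count += 1
    return total / count


# Toy batch of two sentences with 2-dimensional embeddings.
student = [[0.0, 1.0], [2.0, 2.0]]
teacher = [[0.0, 0.0], [2.0, 4.0]]

# Squared differences are 0, 1, 0 and 4, averaged over 4 components.
loss = mse_distillation_loss(student, teacher)
print(loss)
```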

Parameters of the fit()-Method:

```

{
    "epochs": 3,
    "evaluation_steps": 1000,
    "evaluator": "NoneType",
    "max_grad_norm": 1,
    "optimizer_class": "<class 'torch.optim.adamw.AdamW'>",
    "optimizer_params": {
        "eps": 1e-06,
        "lr": 2e-05
    },
    "scheduler": "WarmupLinear",
    "steps_per_epoch": null,
    "warmup_steps": 10000,
    "weight_decay": 0.01
}

```
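
These settings interact in a way that is easy to miss: with a DataLoader of length 1686 and 3 epochs, training runs for fewer steps than the 10000 warmup steps. The sketch below works through the arithmetic, assuming `WarmupLinear` means linear warmup followed by linear decay and that the total step count is the DataLoader length times the number of epochs (since `steps_per_epoch` is null). The function `warmup_linear_factor` is an illustrative reimplementation, not the library's code.

```python
def warmup_linear_factor(step, warmup_steps, total_steps):
    """Learning-rate multiplier: linear ramp-up, then linear decay to zero."""
    if step < warmup_steps:
        return step / max(1, warmup_steps)
    return max(0.0, (total_steps - step) / max(1, total_steps - warmup_steps))


# Values taken from the card.
steps_per_epoch = 1686  # DataLoader length
epochs = 3
warmup_steps = 10000
base_lr = 2e-05

total_steps = steps_per_epoch * epochs

# Training ends while the schedule is still in its warmup phase,
# so the multiplier at the final step is total_steps / warmup_steps.
final_factor = warmup_linear_factor(total_steps, warmup_steps, total_steps)
final_lr = base_lr * final_factor
print(total_steps, final_factor, final_lr)
```

Under these assumptions the learning rate would rise for the whole run and end at roughly half of the configured `lr`, never entering the decay phase.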

## Full Model Architecture

```

SentenceTransformer(
  (0): Transformer({'max_seq_length': 514, 'do_lower_case': False}) with Transformer model: XLMRobertaModel
  (1): Pooling({'word_embedding_dimension': 768, 'pooling_mode_cls_token': False, 'pooling_mode_mean_tokens': True, 'pooling_mode_max_tokens': False, 'pooling_mode_mean_sqrt_len_tokens': False})
)

```

## Citing & Authors

<!--- Describe where people can find more information -->

|

miki-kawa/huggingdatavit-base-beans

|

miki-kawa

| 2023-07-11T10:22:59Z | 193 | 0 |

transformers

|

[

"transformers",

"pytorch",

"tensorboard",

"vit",

"image-classification",

"generated_from_trainer",

"dataset:beans",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

image-classification

| 2023-07-11T09:55:51Z |

---
license: apache-2.0
tags:
- image-classification
- generated_from_trainer
datasets:
- beans
metrics:
- accuracy
model-index:
- name: huggingdatavit-base-beans
  results:
  - task:
      name: Image Classification
      type: image-classification
    dataset:
      name: beans
      type: beans
      config: default
      split: validation
      args: default
    metrics:
    - name: Accuracy
      type: accuracy
      value: 0.9924812030075187
---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# huggingdatavit-base-beans

This model is a fine-tuned version of [google/vit-base-patch16-224-in21k](https://huggingface.co/google/vit-base-patch16-224-in21k) on the beans dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0356

- Accuracy: 0.9925

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 0.0002

- train_batch_size: 16

- eval_batch_size: 8

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 4

### Training results

| Training Loss | Epoch | Step | Validation Loss | Accuracy |
|:-------------:|:-----:|:----:|:---------------:|:--------:|
| 0.1059        | 1.54  | 100  | 0.0356          | 0.9925   |
| 0.0256        | 3.08  | 200  | 0.0663          | 0.9774   |
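
The logged epochs and accuracies can be cross-checked with a little arithmetic. The sketch below infers the number of optimizer steps per epoch from the (step, epoch) pairs and derives the range of training-set sizes that would fit a batch size of 16, assuming a single device, no gradient accumulation, and a kept final partial batch. The validation-set size of 133 is an assumption that the card does not state.

```python
train_batch_size = 16
logged = [(100, 1.54), (200, 3.08)]  # (step, epoch) pairs from the table


def consistent(steps_per_epoch):
    """True if every logged epoch equals step / steps_per_epoch to 2 decimals."""
    return all(round(step / steps_per_epoch, 2) == epoch for step, epoch in logged)


# Smallest steps-per-epoch value that reproduces the logged epochs.
steps_per_epoch = next(n for n in range(1, 1000) if consistent(n))

# Training-set sizes that give exactly that many batches of 16.
min_train = (steps_per_epoch - 1) * train_batch_size + 1
max_train = steps_per_epoch * train_batch_size

# Assumption: the validation split holds 133 images (not stated in the card).
val_size = 133
correct_best = round(0.9924812030075187 * val_size)  # accuracy at step 100
correct_last = round(0.9774 * val_size)               # accuracy at step 200

print(steps_per_epoch, (min_train, max_train), correct_best, correct_last)
```

Under the assumed validation size, the gap between the two checkpoints would come down to two images, which puts the drop from 0.9925 to 0.9774 in perspective.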

### Framework versions

- Transformers 4.28.0

- Pytorch 2.0.1+cu117

- Datasets 2.13.0

- Tokenizers 0.11.0

|

Krish23/Tujgc

|

Krish23

| 2023-07-11T10:22:51Z | 0 | 0 | null |

[