modelId

stringlengths 5

122

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC] | downloads

int64 0

738M

| likes

int64 0

11k

| library_name

stringclasses 245

values | tags

sequencelengths 1

4.05k

| pipeline_tag

stringclasses 48

values | createdAt

timestamp[us, tz=UTC] | card

stringlengths 1

901k

|

|---|---|---|---|---|---|---|---|---|---|

mradermacher/miqu-evil-dpo-GGUF | mradermacher | 2024-05-05T15:05:38Z | 775 | 0 | transformers | [

"transformers",

"gguf",

"not-for-all-audiences",

"en",

"base_model:maywell/miqu-evil-dpo",

"license:other",

"endpoints_compatible",

"region:us"

] | null | 2024-04-26T12:57:37Z | ---

base_model: maywell/miqu-evil-dpo

language:

- en

library_name: transformers

license: other

license_link: LICENSE

license_name: miqu-license

quantized_by: mradermacher

tags:

- not-for-all-audiences

---

## About

<!-- ### quantize_version: 1 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: -->

<!-- ### vocab_type: -->

static quants of https://huggingface.co/maywell/miqu-evil-dpo

<!-- provided-files -->

weighted/imatrix quants are available at https://huggingface.co/mradermacher/miqu-evil-dpo-i1-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.
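
As a rough sketch of the concatenation step, the snippet below joins split files byte for byte, in part order. It assumes the `.partXofY` files are plain byte-wise splits of the original GGUF file (as that naming suggests); `find_parts` and `join_parts` are hypothetical helper names, not part of any tool mentioned here.

```python
import shutil
from pathlib import Path


def find_parts(directory, base_name):
    """Return base_name.part1ofN ... base_name.partNofN sorted by part number."""
    parts = list(Path(directory).glob(base_name + ".part*of*"))
    # "model.gguf.part1of2" -> "1of2" -> 1
    return sorted(parts, key=lambda p: int(p.name.rsplit(".part", 1)[1].split("of")[0]))


def join_parts(part_paths, output_path):
    """Concatenate the parts, in the given order, into a single output file."""
    with open(output_path, "wb") as out:
        for part in part_paths:
            with open(part, "rb") as src:
                # Stream in chunks so multi-gigabyte parts never sit fully in memory.
                shutil.copyfileobj(src, out, length=16 * 1024 * 1024)
    return Path(output_path)
```

For example, `join_parts(find_parts(".", "miqu-evil-dpo.Q6_K.gguf"), "miqu-evil-dpo.Q6_K.gguf")` would rebuild the Q6_K file from its two downloaded parts, assuming both sit in the current directory.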

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/miqu-evil-dpo-GGUF/resolve/main/miqu-evil-dpo.Q2_K.gguf) | Q2_K | 25.6 | |

| [GGUF](https://huggingface.co/mradermacher/miqu-evil-dpo-GGUF/resolve/main/miqu-evil-dpo.IQ3_XS.gguf) | IQ3_XS | 28.4 | |

| [GGUF](https://huggingface.co/mradermacher/miqu-evil-dpo-GGUF/resolve/main/miqu-evil-dpo.IQ3_S.gguf) | IQ3_S | 30.0 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/miqu-evil-dpo-GGUF/resolve/main/miqu-evil-dpo.Q3_K_S.gguf) | Q3_K_S | 30.0 | |

| [GGUF](https://huggingface.co/mradermacher/miqu-evil-dpo-GGUF/resolve/main/miqu-evil-dpo.IQ3_M.gguf) | IQ3_M | 31.0 | |

| [GGUF](https://huggingface.co/mradermacher/miqu-evil-dpo-GGUF/resolve/main/miqu-evil-dpo.Q3_K_M.gguf) | Q3_K_M | 33.4 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/miqu-evil-dpo-GGUF/resolve/main/miqu-evil-dpo.Q3_K_L.gguf) | Q3_K_L | 36.2 | |

| [GGUF](https://huggingface.co/mradermacher/miqu-evil-dpo-GGUF/resolve/main/miqu-evil-dpo.IQ4_XS.gguf) | IQ4_XS | 37.3 | |

| [GGUF](https://huggingface.co/mradermacher/miqu-evil-dpo-GGUF/resolve/main/miqu-evil-dpo.Q4_K_S.gguf) | Q4_K_S | 39.3 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/miqu-evil-dpo-GGUF/resolve/main/miqu-evil-dpo.Q4_K_M.gguf) | Q4_K_M | 41.5 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/miqu-evil-dpo-GGUF/resolve/main/miqu-evil-dpo.Q5_K_S.gguf) | Q5_K_S | 47.6 | |

| [GGUF](https://huggingface.co/mradermacher/miqu-evil-dpo-GGUF/resolve/main/miqu-evil-dpo.Q5_K_M.gguf) | Q5_K_M | 48.9 | |

| [PART 1](https://huggingface.co/mradermacher/miqu-evil-dpo-GGUF/resolve/main/miqu-evil-dpo.Q6_K.gguf.part1of2) [PART 2](https://huggingface.co/mradermacher/miqu-evil-dpo-GGUF/resolve/main/miqu-evil-dpo.Q6_K.gguf.part2of2) | Q6_K | 56.7 | very good quality |

| [PART 1](https://huggingface.co/mradermacher/miqu-evil-dpo-GGUF/resolve/main/miqu-evil-dpo.Q8_0.gguf.part1of2) [PART 2](https://huggingface.co/mradermacher/miqu-evil-dpo-GGUF/resolve/main/miqu-evil-dpo.Q8_0.gguf.part2of2) | Q8_0 | 73.4 | fast, best quality |
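
Since the table is sorted by size, one simple way to choose a file is to take the largest quant that fits a size budget. The sketch below copies the sizes from the table; `largest_fitting` and `download_url` are hypothetical helpers. The budget covers file size only, so any runtime overhead (context, buffers) has to be allowed for separately, and the URL pattern applies only to the single-file quants, not the split Q6_K and Q8_0 files.

```python
# (type, size in GB) pairs copied from the table above.
QUANTS = [
    ("Q2_K", 25.6), ("IQ3_XS", 28.4), ("IQ3_S", 30.0), ("Q3_K_S", 30.0),
    ("IQ3_M", 31.0), ("Q3_K_M", 33.4), ("Q3_K_L", 36.2), ("IQ4_XS", 37.3),
    ("Q4_K_S", 39.3), ("Q4_K_M", 41.5), ("Q5_K_S", 47.6), ("Q5_K_M", 48.9),
    ("Q6_K", 56.7), ("Q8_0", 73.4),
]

REPO = "mradermacher/miqu-evil-dpo-GGUF"


def largest_fitting(budget_gb, quants=QUANTS):
    """Return the (type, size) pair with the largest size <= budget_gb, or None."""
    fitting = [q for q in quants if q[1] <= budget_gb]
    return max(fitting, key=lambda q: q[1]) if fitting else None


def download_url(quant_type, repo=REPO, stem="miqu-evil-dpo"):
    """Build the resolve URL for a single-file quant, following the table's link pattern."""
    return f"https://huggingface.co/{repo}/resolve/main/{stem}.{quant_type}.gguf"
```

Size is only a proxy for quality here: as noted above, an IQ quant can be preferable to a non-IQ quant of similar size.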

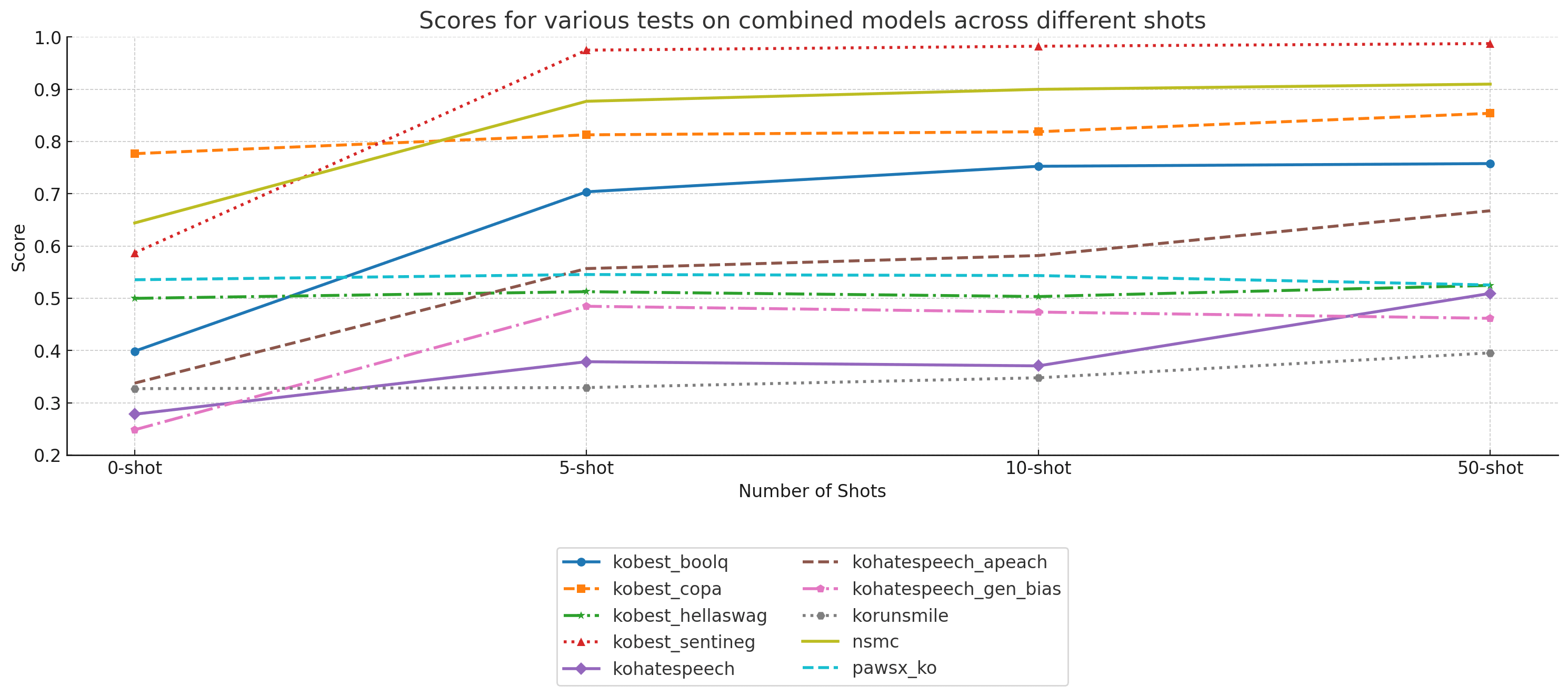

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

CHE-72/Yi-1.5-6B-Chat-Q5_K_S-GGUF | CHE-72 | 2024-06-22T07:21:11Z | 775 | 0 | null | [

"gguf",

"llama-cpp",

"gguf-my-repo",

"base_model:01-ai/Yi-1.5-6B-Chat",

"license:apache-2.0",

"region:us"

] | null | 2024-06-22T07:20:53Z | ---

base_model: 01-ai/Yi-1.5-6B-Chat

license: apache-2.0

tags:

- llama-cpp

- gguf-my-repo

---

# CHE-72/Yi-1.5-6B-Chat-Q5_K_S-GGUF

This model was converted to GGUF format from [`01-ai/Yi-1.5-6B-Chat`](https://huggingface.co/01-ai/Yi-1.5-6B-Chat) using llama.cpp via ggml.ai's [GGUF-my-repo](https://huggingface.co/spaces/ggml-org/gguf-my-repo) space.

Refer to the [original model card](https://huggingface.co/01-ai/Yi-1.5-6B-Chat) for more details on the model.

## Use with llama.cpp

Install llama.cpp through brew (works on Mac and Linux)

```bash

brew install llama.cpp

```

Invoke the llama.cpp server or the CLI.

### CLI:

```bash

llama-cli --hf-repo CHE-72/Yi-1.5-6B-Chat-Q5_K_S-GGUF --hf-file yi-1.5-6b-chat-q5_k_s.gguf -p "The meaning to life and the universe is"

```

### Server:

```bash

llama-server --hf-repo CHE-72/Yi-1.5-6B-Chat-Q5_K_S-GGUF --hf-file yi-1.5-6b-chat-q5_k_s.gguf -c 2048

```
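
Once the server is running, it can be queried over HTTP. The following is a minimal sketch, assuming the server listens on its default address (`http://localhost:8080`) and exposes a `/completion` endpoint that accepts `prompt` and `n_predict` and returns the text in a `content` field; these details are assumptions that should be checked against the installed llama.cpp version. The function names are hypothetical.

```python
import json
import urllib.request


def build_completion_request(prompt, n_predict=64, base_url="http://localhost:8080"):
    """Build (but do not send) a POST request for the assumed /completion endpoint."""
    payload = json.dumps({"prompt": prompt, "n_predict": n_predict}).encode("utf-8")
    return urllib.request.Request(
        base_url + "/completion",
        data=payload,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(prompt, **kwargs):
    """Send the request and return the generated text (requires a running server)."""
    with urllib.request.urlopen(build_completion_request(prompt, **kwargs)) as resp:
        return json.loads(resp.read())["content"]
```

Keeping request construction separate from sending makes the payload easy to inspect without a running server.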

Note: You can also use this checkpoint directly by following the [usage steps](https://github.com/ggerganov/llama.cpp?tab=readme-ov-file#usage) listed in the llama.cpp repo.

Step 1: Clone llama.cpp from GitHub.

```

git clone https://github.com/ggerganov/llama.cpp

```

Step 2: Move into the llama.cpp folder and build it with the `LLAMA_CURL=1` flag, along with any hardware-specific flags (for example, `LLAMA_CUDA=1` for Nvidia GPUs on Linux).

```

cd llama.cpp && LLAMA_CURL=1 make

```

Step 3: Run inference through the main binary.

```

./llama-cli --hf-repo CHE-72/Yi-1.5-6B-Chat-Q5_K_S-GGUF --hf-file yi-1.5-6b-chat-q5_k_s.gguf -p "The meaning to life and the universe is"

```

or

```

./llama-server --hf-repo CHE-72/Yi-1.5-6B-Chat-Q5_K_S-GGUF --hf-file yi-1.5-6b-chat-q5_k_s.gguf -c 2048

```

|

Tech-Meld/llm-compiler-7b-Q4_K_M-GGUF | Tech-Meld | 2024-06-28T09:58:28Z | 775 | 1 | null | [

"gguf",

"llama-cpp",

"base_model:facebook/llm-compiler-7b",

"license:other",

"region:us"

] | null | 2024-06-28T09:56:08Z | ---

base_model: facebook/llm-compiler-7b

license: other

tags:

- llama-cpp

extra_gated_prompt: >-

**Meta Large Language Model Compiler (LLM Compiler) LICENSE AGREEMENT**

Version Release Date: 27th June 2024

“**Agreement**” means the terms and conditions for use, reproduction,

distribution and modification of the LLM Compiler Materials set forth herein.

“**Documentation**” means the specifications, manuals and documentation

accompanying the LLM Compiler distributed by Meta at:

* [https://huggingface.co/facebook/llm-compiler-7b](https://huggingface.co/facebook/llm-compiler-7b)

* [https://huggingface.co/facebook/llm-compiler-7b-ftd](https://huggingface.co/facebook/llm-compiler-7b-ftd)

* [https://huggingface.co/facebook/llm-compiler-13b](https://huggingface.co/facebook/llm-compiler-13b)

* [https://huggingface.co/facebook/llm-compiler-13b-ftd](https://huggingface.co/facebook/llm-compiler-13b-ftd)

“**Licensee**” or “**you**” means you, or your employer or any other person or

entity (if you are entering into this Agreement on such person or entity’s

behalf), of the age required under applicable laws, rules or regulations to

provide legal consent and that has legal authority to bind your employer or

such other person or entity if you are entering in this Agreement on their

behalf.

“**Meta Large Language Model Compiler” and “LLM Compiler**” mean the

foundational large language models and software and algorithms, including

machine-learning model code, trained model weights, inference-enabling code,

training-enabling code, fine-tuning enabling code and other elements of the

foregoing distributed by Meta at:

* [https://huggingface.co/facebook/llm-compiler-7b](https://huggingface.co/facebook/llm-compiler-7b)

* [https://huggingface.co/facebook/llm-compiler-7b-ftd](https://huggingface.co/facebook/llm-compiler-7b-ftd)

* [https://huggingface.co/facebook/llm-compiler-13b](https://huggingface.co/facebook/llm-compiler-13b)

* [https://huggingface.co/facebook/llm-compiler-13b-ftd](https://huggingface.co/facebook/llm-compiler-13b-ftd)

“**LLM Compiler Materials**” means, collectively, Meta’s proprietary LLM

Compiler and Documentation (and any portion thereof) made available under this

Agreement.

“**Meta**” or “**we**” means Meta Platforms Ireland Limited (if you are

located in or, if you are an entity, your principal place of business is in

the EEA or Switzerland) and Meta Platforms, Inc. (if you are located outside

of the EEA or Switzerland).

By clicking “I Accept” below or by using or distributing any portion or

element of the LLM Compiler Materials, you agree to be bound by this

Agreement.

1. **License Rights and Redistribution**. \

a. <span style="text-decoration:underline;">Grant of Rights</span>. You are granted a non-exclusive, worldwide, non-transferable and royalty-free limited license under Meta’s intellectual property or other rights owned by Meta embodied in the LLM Compiler Materials to use, reproduce, distribute, copy, create derivative works of, and make modifications to the LLM Compiler Materials.

b. <span style="text-decoration:underline;">Redistribution and Use</span>.

i. If you distribute or make available the LLM Compiler Materials (or any derivative works thereof), or a product or service that uses any of them, including another AI model, you shall (A) provide a copy of this Agreement with any such LLM Compiler Materials; and (B) prominently display “Built with LLM Compiler” on a related website, user interface, blogpost, about page, or product documentation. If you use the LLM Compiler Materials to create, train, fine tune, or otherwise improve an AI model, which is distributed or made available, you shall also include “LLM Compiler” at the beginning of any such AI model name.

ii. If you receive LLM Compiler Materials, or any derivative works thereof, from a Licensee as part of an integrated end user product, then Section 2 of this Agreement will not apply to you.

iii. You must retain in all copies of the LLM Compiler Materials that you distribute the following attribution notice within a “Notice” text file distributed as a part of such copies: “LLM Compiler is licensed under the LLM Compiler License, Copyright © Meta Platforms, Inc. All Rights Reserved.”

iv. Your use of the LLM Compiler Materials must comply with applicable laws and regulations (including trade compliance laws and regulations) and adhere to the Acceptable Use Policy for Llama Materials (available at https://llama.meta.com/llama3/use-policy), which is hereby incorporated by reference into this Agreement.

v. You will not use the LLM Compiler Materials or any output or results of the LLM Compiler Materials to improve any other large language model.

2. **Additional Commercial Terms**. If, on the LLM Compiler release date, the

monthly active users of the products or services made available by or for

Licensee, or Licensee’s affiliates, is greater than 700 million monthly active

users in the preceding calendar month, you must request a license from Meta,

which Meta may grant to you in its sole discretion, and you are not authorized

to exercise any of the rights under this Agreement unless or until Meta

otherwise expressly grants you such rights.

3. **Disclaimer of Warranty**. UNLESS REQUIRED BY APPLICABLE LAW, THE LLM

COMPILER MATERIALS AND ANY OUTPUT AND RESULTS THEREFROM ARE PROVIDED ON AN “AS

IS” BASIS, WITHOUT WARRANTIES OF ANY KIND, AND META DISCLAIMS ALL WARRANTIES

OF ANY KIND, BOTH EXPRESS AND IMPLIED, INCLUDING, WITHOUT LIMITATION, ANY

WARRANTIES OF TITLE, NON-INFRINGEMENT, MERCHANTABILITY, OR FITNESS FOR A

PARTICULAR PURPOSE. YOU ARE SOLELY RESPONSIBLE FOR DETERMINING THE

APPROPRIATENESS OF USING OR REDISTRIBUTING THE LLM COMPILER MATERIALS AND

ASSUME ANY RISKS ASSOCIATED WITH YOUR USE OF THE LLM COMPILER MATERIALS AND

ANY OUTPUT AND RESULTS.

4. **Limitation of Liability**. IN NO EVENT WILL META OR ITS AFFILIATES BE

LIABLE UNDER ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, TORT, NEGLIGENCE,

PRODUCTS LIABILITY, OR OTHERWISE, ARISING OUT OF THIS AGREEMENT, FOR ANY LOST

PROFITS OR ANY INDIRECT, SPECIAL, CONSEQUENTIAL, INCIDENTAL, EXEMPLARY OR

PUNITIVE DAMAGES, EVEN IF META OR ITS AFFILIATES HAVE BEEN ADVISED OF THE

POSSIBILITY OF ANY OF THE FOREGOING.

5. **Intellectual Property**.

a. No trademark licenses are granted under this Agreement, and in connection with the LLM Compiler Materials, neither Meta nor Licensee may use any name or mark owned by or associated with the other or any of its affiliates, except as required for reasonable and customary use in describing and redistributing the LLM Compiler Materials or as set forth in this Section 5(a). Meta hereby grants you a license to use LLM Compiler (the “Mark”) solely as required to comply with the last sentence of Section 1.b.i. You will comply with Meta’s brand guidelines (currently accessible at [https://about.meta.com/brand/resources/meta/company-brand/](https://about.meta.com/brand/resources/meta/company-brand/)). All goodwill arising out of your use of the Mark will inure to the benefit of Meta.

b. Subject to Meta’s ownership of LLM Compiler Materials and derivatives made by or for Meta, with respect to any derivative works and modifications of the LLM Compiler Materials that are made by you, as between you and Meta, you are and will be the owner of such derivative works and modifications.

c. If you institute litigation or other proceedings against Meta or any entity (including a cross-claim or counterclaim in a lawsuit) alleging that the LLM Compiler Materials or LLM Compiler outputs or results, or any portion of any of the foregoing, constitutes infringement of intellectual property or other rights owned or licensable by you, then any licenses granted to you under this Agreement shall terminate as of the date such litigation or claim is filed or instituted. You will indemnify and hold harmless Meta from and against any claim by any third party arising out of or related to your use or distribution of the LLM Compiler Materials.

6. **Term and Termination**. The term of this Agreement will commence upon

your acceptance of this Agreement or access to the LLM Compiler Materials and

will continue in full force and effect until terminated in accordance with the

terms and conditions herein. Meta may terminate this Agreement if you are in

breach of any term or condition of this Agreement. Upon termination of this

Agreement, you shall delete and cease use of the LLM Compiler Materials.

Sections 3, 4 and 7 shall survive the termination of this Agreement.

7. **Governing Law and Jurisdiction**. This Agreement will be governed and

construed under the laws of the State of California without regard to choice

of law principles, and the UN Convention on Contracts for the International

Sale of Goods does not apply to this Agreement. The courts of California shall

have exclusive jurisdiction of any dispute arising out of this Agreement.

extra_gated_fields:

First Name: text

Last Name: text

Date of birth: date_picker

Country: country

Affiliation: text

I accept the terms and conditions: checkbox

geo: ip_location

By clicking Submit below I accept the terms of the license and acknowledge that the information I provide will be collected stored processed and shared in accordance with the Meta Privacy Policy: checkbox

extra_gated_description: >-

The information you provide will be collected, stored, processed and shared in

accordance with the [Meta Privacy

Policy](https://www.facebook.com/privacy/policy/).

extra_gated_button_content: I Accept Meta LLM Compiler License and AUP

---

# Tech-Meld/llm-compiler-7b-Q4_K_M-GGUF

This model was converted to GGUF format from [`facebook/llm-compiler-7b`](https://huggingface.co/facebook/llm-compiler-7b) using llama.cpp via ggml.ai's [GGUF-my-repo](https://huggingface.co/spaces/ggml-org/gguf-my-repo) space.

Refer to the [original model card](https://huggingface.co/facebook/llm-compiler-7b) for more details on the model.

## Use with llama.cpp

Install llama.cpp through brew (works on Mac and Linux)

```bash

brew install llama.cpp

```

Invoke the llama.cpp server or the CLI.

### CLI:

```bash

llama-cli --hf-repo Tech-Meld/llm-compiler-7b-Q4_K_M-GGUF --hf-file llm-compiler-7b-q4_k_m.gguf -p "The meaning to life and the universe is"

```

### Server:

```bash

llama-server --hf-repo Tech-Meld/llm-compiler-7b-Q4_K_M-GGUF --hf-file llm-compiler-7b-q4_k_m.gguf -c 2048

```

Note: You can also use this checkpoint directly by following the [usage steps](https://github.com/ggerganov/llama.cpp?tab=readme-ov-file#usage) listed in the llama.cpp repo.

Step 1: Clone llama.cpp from GitHub.

```

git clone https://github.com/ggerganov/llama.cpp

```

Step 2: Move into the llama.cpp folder and build it with the `LLAMA_CURL=1` flag, along with any hardware-specific flags (for example, `LLAMA_CUDA=1` for Nvidia GPUs on Linux).

```

cd llama.cpp && LLAMA_CURL=1 make

```

Step 3: Run inference through the main binary.

```

./llama-cli --hf-repo Tech-Meld/llm-compiler-7b-Q4_K_M-GGUF --hf-file llm-compiler-7b-q4_k_m.gguf -p "The meaning to life and the universe is"

```

or

```

./llama-server --hf-repo Tech-Meld/llm-compiler-7b-Q4_K_M-GGUF --hf-file llm-compiler-7b-q4_k_m.gguf -c 2048

``` |

timm/dpn68.mx_in1k | timm | 2023-04-21T21:56:34Z | 774 | 0 | timm | [

"timm",

"pytorch",

"safetensors",

"image-classification",

"dataset:imagenet-1k",

"arxiv:1707.01629",

"license:apache-2.0",

"region:us"

] | image-classification | 2023-04-21T21:56:14Z | ---

tags:

- image-classification

- timm

library_name: timm

license: apache-2.0

datasets:

- imagenet-1k

---

# Model card for dpn68.mx_in1k

A DPN (Dual-Path Net) image classification model. Trained on ImageNet-1k in MXNet by paper authors and ported to PyTorch by Ross Wightman.

## Model Details

- **Model Type:** Image classification / feature backbone

- **Model Stats:**

- Params (M): 12.6

- GMACs: 2.4

- Activations (M): 10.5

- Image size: 224 x 224

- **Papers:**

- Dual Path Networks: https://arxiv.org/abs/1707.01629

- **Dataset:** ImageNet-1k

- **Original:** https://github.com/cypw/DPNs

## Model Usage

### Image Classification

```python

from urllib.request import urlopen

from PIL import Image

import timm
import torch  # needed for torch.topk below

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model('dpn68.mx_in1k', pretrained=True)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

top5_probabilities, top5_class_indices = torch.topk(output.softmax(dim=1) * 100, k=5)

```

### Feature Map Extraction

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'dpn68.mx_in1k',

pretrained=True,

features_only=True,

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

for o in output:
    # print shape of each feature map in output
    # e.g.:
    #  torch.Size([1, 10, 112, 112])
    #  torch.Size([1, 144, 56, 56])
    #  torch.Size([1, 320, 28, 28])
    #  torch.Size([1, 704, 14, 14])
    #  torch.Size([1, 832, 7, 7])
    print(o.shape)

```

### Image Embeddings

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'dpn68.mx_in1k',

pretrained=True,

num_classes=0, # remove classifier nn.Linear

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # output is (batch_size, num_features) shaped tensor

# or equivalently (without needing to set num_classes=0)

output = model.forward_features(transforms(img).unsqueeze(0))

# output is unpooled, a (1, 832, 7, 7) shaped tensor

output = model.forward_head(output, pre_logits=True)

# output is a (1, num_features) shaped tensor

```

## Citation

```bibtex

@article{Chen2017,

title={Dual Path Networks},

author={Yunpeng Chen and Jianan Li and Huaxin Xiao and Xiaojie Jin and Shuicheng Yan and Jiashi Feng},

journal={arXiv preprint arXiv:1707.01629},

year={2017}

}

```

|

openai/shap-e-img2img | openai | 2023-07-20T16:02:43Z | 774 | 38 | diffusers | [

"diffusers",

"image-to-image",

"text-to-3d",

"shap-e",

"arxiv:2305.02463",

"license:mit",

"diffusers:ShapEImg2ImgPipeline",

"region:us"

] | image-to-image | 2023-07-04T13:25:57Z | ---

license: mit

tags:

- image-to-image

- text-to-3d

- diffusers

- shap-e

---

# Shap-E

Shap-E introduces a diffusion process that can generate a 3D image from a text prompt. It was introduced in [Shap-E: Generating Conditional 3D Implicit Functions](https://arxiv.org/abs/2305.02463) by Heewoo Jun and Alex Nichol from OpenAI.

Original repository of Shap-E can be found here: https://github.com/openai/shap-e.

_The authors of Shap-E didn't author this model card. They provide a separate model card [here](https://github.com/openai/shap-e/blob/main/model-card.md)._

## Introduction

The abstract of the Shap-E paper:

*We present Shap-E, a conditional generative model for 3D assets. Unlike recent work on 3D generative models which produce a single output representation, Shap-E directly generates the parameters of implicit functions that can be rendered as both textured meshes and neural radiance fields. We train Shap-E in two stages: first, we train an encoder that deterministically maps 3D assets into the parameters of an implicit function; second, we train a conditional diffusion model on outputs of the encoder. When trained on a large dataset of paired 3D and text data, our resulting models are capable of generating complex and diverse 3D assets in a matter of seconds. When compared to Point-E, an explicit generative model over point clouds, Shap-E converges faster and reaches comparable or better sample quality despite modeling a higher-dimensional, multi-representation output space. We release model weights, inference code, and samples at [this https URL](https://github.com/openai/shap-e).*

## Released checkpoints

The authors released the following checkpoints:

* [openai/shap-e](https://hf.co/openai/shap-e): produces a 3D image from a text input prompt

* [openai/shap-e-img2img](https://hf.co/openai/shap-e-img2img): samples a 3D image from a synthetic 2D image

## Usage examples in 🧨 diffusers

First make sure you have installed all the dependencies:

```bash

pip install transformers accelerate -q

pip install diffusers

```

Once the dependencies are installed, use the code below:

```python

import torch

from diffusers import ShapEImg2ImgPipeline

from diffusers.utils import export_to_gif, load_image

ckpt_id = "openai/shap-e-img2img"

pipe = ShapEImg2ImgPipeline.from_pretrained(ckpt_id).to("cuda")

img_url = "https://hf.co/datasets/diffusers/docs-images/resolve/main/shap-e/corgi.png"

image = load_image(img_url)

generator = torch.Generator(device="cuda").manual_seed(0)

batch_size = 4

guidance_scale = 3.0

images = pipe(

image,

num_images_per_prompt=batch_size,

generator=generator,

guidance_scale=guidance_scale,

num_inference_steps=64,

size=256,

output_type="pil"

).images

gif_path = export_to_gif(images, "corgi_sampled_3d.gif")

```

## Results

<table>

<tbody>

<tr>

<td align="center">

<img src="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/shap-e/corgi.png" alt="Reference corgi image in 2D">

</td>

<td align="center">

<img src="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/shap-e/corgi_sampled_3d.gif" alt="Sampled image in 3D (one)">

</td>

<td align="center">

<img src="https://huggingface.co/datasets/diffusers/docs-images/resolve/main/shap-e/corgi_sampled_3d_two.gif" alt="Sampled image in 3D (two)">

</td>

</tr>

<tr>

<td align="center">Reference corgi image in 2D</td>

<td align="center">Sampled image in 3D (one)</td>

<td align="center">Sampled image in 3D (two)</td>

</tr>

</tbody>

</table>

## Training details

Refer to the [original paper](https://arxiv.org/abs/2305.02463).

## Known limitations and potential biases

Refer to the [original model card](https://github.com/openai/shap-e/blob/main/model-card.md).

## Citation

```bibtex

@misc{jun2023shape,

title={Shap-E: Generating Conditional 3D Implicit Functions},

author={Heewoo Jun and Alex Nichol},

year={2023},

eprint={2305.02463},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

``` |

TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GGUF | TheBloke | 2023-11-14T01:59:42Z | 774 | 9 | transformers | [

"transformers",

"gguf",

"mistral",

"llama-2",

"code",

"text-generation",

"en",

"dataset:jondurbin/airoboros-2.2.1",

"dataset:Open-Orca/OpenOrca",

"dataset:garage-bAInd/Open-Platypus",

"dataset:ehartford/samantha-data",

"arxiv:2310.06825",

"base_model:uukuguy/speechless-mistral-dolphin-orca-platypus-samantha-7b",

"license:llama2",

"model-index",

"text-generation-inference",

"region:us"

] | text-generation | 2023-11-14T00:30:32Z | ---

base_model: uukuguy/speechless-mistral-dolphin-orca-platypus-samantha-7b

datasets:

- jondurbin/airoboros-2.2.1

- Open-Orca/OpenOrca

- garage-bAInd/Open-Platypus

- ehartford/samantha-data

inference: false

language:

- en

library_name: transformers

license: llama2

model-index:

- name: SpeechlessCoder

results:

- dataset:

name: HumanEval

type: openai_humaneval

metrics:

- name: pass@1

type: pass@1

value: 34.146

verified: false

task:

type: text-generation

model_creator: Jiangwen Su

model_name: Speechless Mistral Dolphin Orca Platypus Samantha 7B

model_type: mistral

pipeline_tag: text-generation

prompt_template: '<|im_start|>system

{system_message}<|im_end|>

<|im_start|>user

{prompt}<|im_end|>

<|im_start|>assistant

'

quantized_by: TheBloke

tags:

- llama-2

- code

---

<!-- markdownlint-disable MD041 -->

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# Speechless Mistral Dolphin Orca Platypus Samantha 7B - GGUF

- Model creator: [Jiangwen Su](https://huggingface.co/uukuguy)

- Original model: [Speechless Mistral Dolphin Orca Platypus Samantha 7B](https://huggingface.co/uukuguy/speechless-mistral-dolphin-orca-platypus-samantha-7b)

<!-- description start -->

## Description

This repo contains GGUF format model files for [Jiangwen Su's Speechless Mistral Dolphin Orca Platypus Samantha 7B](https://huggingface.co/uukuguy/speechless-mistral-dolphin-orca-platypus-samantha-7b).

These files were quantised using hardware kindly provided by [Massed Compute](https://massedcompute.com/).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for story telling.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

<!-- README_GGUF.md-about-gguf end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GGUF)

* [Jiangwen Su's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/uukuguy/speechless-mistral-dolphin-orca-platypus-samantha-7b)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: ChatML

```

<|im_start|>system

{system_message}<|im_end|>

<|im_start|>user

{prompt}<|im_end|>

<|im_start|>assistant

```

<!-- prompt-template end -->
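
To show how the template above is filled in, here is a small sketch that substitutes a system message and a user prompt into the ChatML layout. `format_chatml` is a hypothetical helper, and the default system message is only a placeholder assumption, not something this model card prescribes.

```python
CHATML_TEMPLATE = (
    "<|im_start|>system\n"
    "{system_message}<|im_end|>\n"
    "<|im_start|>user\n"
    "{prompt}<|im_end|>\n"
    "<|im_start|>assistant\n"
)


def format_chatml(prompt, system_message="You are a helpful assistant."):
    """Fill the ChatML template; the model's reply is generated after the final line."""
    return CHATML_TEMPLATE.format(system_message=system_message, prompt=prompt)
```

The returned string ends right after the `<|im_start|>assistant` line, so the model continues from there with its answer.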

<!-- compatibility_gguf start -->

## Compatibility

These quantised GGUFv2 files are compatible with llama.cpp from August 27th onwards, as of commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221)

They are also compatible with many third party UIs and libraries - please see the list at the top of this README.

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw)

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This ends up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K resulting in 5.5 bpw

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw

Refer to the Provided Files table below to see what files use which methods, and how.

</details>
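The bits-per-weight figures quoted for the k-quant types can be reproduced with a little arithmetic. The sketch below rests on two assumptions that the text does not state: that "type-1" means each block stores a scale and a min while "type-0" stores a scale only, and that each super-block additionally stores one 16-bit float per scale/min. Under those assumptions the Q3_K, Q4_K, Q5_K and Q6_K numbers come out exactly as quoted. Q2_K is left out because the same accounting gives 2.625 rather than the quoted 2.5625, so its overhead is presumably counted differently.

```python
FP16_BITS = 16  # assumed width of each per-super-block scale/min (not stated in the text)


def bits_per_weight(quant_bits, blocks, weights_per_block, scale_bits, has_min):
    """Effective bits per weight for one super-block, including metadata."""
    n_weights = blocks * weights_per_block
    # Each block carries a quantized scale, plus a quantized min for "type-1".
    per_block_meta = scale_bits * (2 if has_min else 1)
    # Assumption: one fp16 value per super-block for the scale, plus one for the min.
    super_block_meta = FP16_BITS * (2 if has_min else 1)
    total_bits = n_weights * quant_bits + blocks * per_block_meta + super_block_meta
    return total_bits / n_weights


# Layouts taken from the descriptions in the list above.
LAYOUTS = {
    "Q3_K": dict(quant_bits=3, blocks=16, weights_per_block=16, scale_bits=6, has_min=False),
    "Q4_K": dict(quant_bits=4, blocks=8, weights_per_block=32, scale_bits=6, has_min=True),
    "Q5_K": dict(quant_bits=5, blocks=8, weights_per_block=32, scale_bits=6, has_min=True),
    "Q6_K": dict(quant_bits=6, blocks=16, weights_per_block=16, scale_bits=8, has_min=False),
}

for name, layout in LAYOUTS.items():
    print(name, bits_per_weight(**layout))
```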

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-provided-files start -->

## Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |

| ---- | ---- | ---- | ---- | ---- | ----- |

| [speechless-mistral-dolphin-orca-platypus-samantha-7b.Q2_K.gguf](https://huggingface.co/TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GGUF/blob/main/speechless-mistral-dolphin-orca-platypus-samantha-7b.Q2_K.gguf) | Q2_K | 2 | 3.08 GB| 5.58 GB | smallest, significant quality loss - not recommended for most purposes |

| [speechless-mistral-dolphin-orca-platypus-samantha-7b.Q3_K_S.gguf](https://huggingface.co/TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GGUF/blob/main/speechless-mistral-dolphin-orca-platypus-samantha-7b.Q3_K_S.gguf) | Q3_K_S | 3 | 3.16 GB| 5.66 GB | very small, high quality loss |

| [speechless-mistral-dolphin-orca-platypus-samantha-7b.Q3_K_M.gguf](https://huggingface.co/TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GGUF/blob/main/speechless-mistral-dolphin-orca-platypus-samantha-7b.Q3_K_M.gguf) | Q3_K_M | 3 | 3.52 GB| 6.02 GB | very small, high quality loss |

| [speechless-mistral-dolphin-orca-platypus-samantha-7b.Q3_K_L.gguf](https://huggingface.co/TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GGUF/blob/main/speechless-mistral-dolphin-orca-platypus-samantha-7b.Q3_K_L.gguf) | Q3_K_L | 3 | 3.82 GB| 6.32 GB | small, substantial quality loss |

| [speechless-mistral-dolphin-orca-platypus-samantha-7b.Q4_0.gguf](https://huggingface.co/TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GGUF/blob/main/speechless-mistral-dolphin-orca-platypus-samantha-7b.Q4_0.gguf) | Q4_0 | 4 | 4.11 GB| 6.61 GB | legacy; small, very high quality loss - prefer using Q3_K_M |

| [speechless-mistral-dolphin-orca-platypus-samantha-7b.Q4_K_S.gguf](https://huggingface.co/TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GGUF/blob/main/speechless-mistral-dolphin-orca-platypus-samantha-7b.Q4_K_S.gguf) | Q4_K_S | 4 | 4.14 GB| 6.64 GB | small, greater quality loss |

| [speechless-mistral-dolphin-orca-platypus-samantha-7b.Q4_K_M.gguf](https://huggingface.co/TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GGUF/blob/main/speechless-mistral-dolphin-orca-platypus-samantha-7b.Q4_K_M.gguf) | Q4_K_M | 4 | 4.37 GB| 6.87 GB | medium, balanced quality - recommended |

| [speechless-mistral-dolphin-orca-platypus-samantha-7b.Q5_0.gguf](https://huggingface.co/TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GGUF/blob/main/speechless-mistral-dolphin-orca-platypus-samantha-7b.Q5_0.gguf) | Q5_0 | 5 | 5.00 GB| 7.50 GB | legacy; medium, balanced quality - prefer using Q4_K_M |

| [speechless-mistral-dolphin-orca-platypus-samantha-7b.Q5_K_S.gguf](https://huggingface.co/TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GGUF/blob/main/speechless-mistral-dolphin-orca-platypus-samantha-7b.Q5_K_S.gguf) | Q5_K_S | 5 | 5.00 GB| 7.50 GB | large, low quality loss - recommended |

| [speechless-mistral-dolphin-orca-platypus-samantha-7b.Q5_K_M.gguf](https://huggingface.co/TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GGUF/blob/main/speechless-mistral-dolphin-orca-platypus-samantha-7b.Q5_K_M.gguf) | Q5_K_M | 5 | 5.13 GB| 7.63 GB | large, very low quality loss - recommended |

| [speechless-mistral-dolphin-orca-platypus-samantha-7b.Q6_K.gguf](https://huggingface.co/TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GGUF/blob/main/speechless-mistral-dolphin-orca-platypus-samantha-7b.Q6_K.gguf) | Q6_K | 6 | 5.94 GB| 8.44 GB | very large, extremely low quality loss |

| [speechless-mistral-dolphin-orca-platypus-samantha-7b.Q8_0.gguf](https://huggingface.co/TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GGUF/blob/main/speechless-mistral-dolphin-orca-platypus-samantha-7b.Q8_0.gguf) | Q8_0 | 8 | 7.70 GB| 10.20 GB | very large, extremely low quality loss - not recommended |

**Note**: the above RAM figures assume no GPU offloading. If layers are offloaded to the GPU, this will reduce RAM usage and use VRAM instead.
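As a rough aid for choosing a file, the table can be turned into a small lookup. This is only a sketch: the helper name `largest_quant_that_fits` is hypothetical, the figures are copied from the table above, and it ignores GPU offloading entirely. In this table every "Max RAM required" value happens to be the file size plus 2.5 GB; that is an observation about these rows, not a documented rule.

```python
# (quant method, file size in GB, max RAM required in GB), copied from the table above
QUANTS = [
    ("Q2_K", 3.08, 5.58), ("Q3_K_S", 3.16, 5.66), ("Q3_K_M", 3.52, 6.02),
    ("Q3_K_L", 3.82, 6.32), ("Q4_0", 4.11, 6.61), ("Q4_K_S", 4.14, 6.64),
    ("Q4_K_M", 4.37, 6.87), ("Q5_0", 5.00, 7.50), ("Q5_K_S", 5.00, 7.50),
    ("Q5_K_M", 5.13, 7.63), ("Q6_K", 5.94, 8.44), ("Q8_0", 7.70, 10.20),
]


def largest_quant_that_fits(available_ram_gb):
    """Return the quant with the highest RAM requirement that still fits, or None."""
    best = None
    for name, _size_gb, ram_gb in QUANTS:
        # ">=" lets a later row win a tie, so Q5_K_S is preferred over legacy Q5_0.
        if ram_gb <= available_ram_gb and (best is None or ram_gb >= best[1]):
            best = (name, ram_gb)
    return best[0] if best else None


print(largest_quant_that_fits(8.0))
```

Picking by RAM alone is a simplification; the "Use case" column still matters, for example when a smaller recommended quant is preferable to a larger legacy one.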

<!-- README_GGUF.md-provided-files end -->

<!-- README_GGUF.md-how-to-download start -->

## How to download GGUF files

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

* LM Studio

* LoLLMS Web UI

* Faraday.dev

### In `text-generation-webui`

Under Download Model, you can enter the model repo: TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GGUF and below it, a specific filename to download, such as: speechless-mistral-dolphin-orca-platypus-samantha-7b.Q4_K_M.gguf.

Then click Download.

### On the command line, including multiple files at once

I recommend using the `huggingface-hub` Python library:

```shell

pip3 install huggingface-hub

```

Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GGUF speechless-mistral-dolphin-orca-platypus-samantha-7b.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

<details>

<summary>More advanced huggingface-cli download usage</summary>

You can also download multiple files at once with a pattern:

```shell

huggingface-cli download TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GGUF --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GGUF speechless-mistral-dolphin-orca-platypus-samantha-7b.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

Windows Command Line users: You can set the environment variable by running `set HF_HUB_ENABLE_HF_TRANSFER=1` before the download command.

</details>
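The same single-file download can be done from Python with the `huggingface_hub` library's `hf_hub_download` function. The sketch below is one way to wrap it; the helper names `gguf_filename` and `download_quant` are hypothetical, and the filename pattern is inferred from the file list in the Provided files table.

```python
REPO_ID = "TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GGUF"
BASENAME = "speechless-mistral-dolphin-orca-platypus-samantha-7b"


def gguf_filename(quant: str) -> str:
    """Build the file name for a quant method such as 'Q4_K_M'."""
    return f"{BASENAME}.{quant}.gguf"


def download_quant(quant: str = "Q4_K_M", local_dir: str = ".") -> str:
    """Download one GGUF file into local_dir and return its local path."""
    # Imported lazily so the helpers above work without huggingface_hub installed.
    from huggingface_hub import hf_hub_download

    return hf_hub_download(
        repo_id=REPO_ID,
        filename=gguf_filename(quant),
        local_dir=local_dir,
    )


# Usage (requires network access and `pip3 install huggingface-hub`):
# path = download_quant("Q4_K_M")
```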

<!-- README_GGUF.md-how-to-download end -->

<!-- README_GGUF.md-how-to-run start -->

## Example `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 32 -m speechless-mistral-dolphin-orca-platypus-samantha-7b.Q4_K_M.gguf --color -c 2048 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "<|im_start|>system\n{system_message}<|im_end|>\n<|im_start|>user\n{prompt}<|im_end|>\n<|im_start|>assistant"

```

Change `-ngl 32` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 2048` to the desired sequence length. For extended sequence models - eg 8K, 16K, 32K - the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically.

If you want to have a chat-style conversation, replace the `-p <PROMPT>` argument with `-i -ins`

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md)

## How to run in `text-generation-webui`

Further instructions can be found in the text-generation-webui documentation, here: [text-generation-webui/docs/04 ‐ Model Tab.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/04%20%E2%80%90%20Model%20Tab.md#llamacpp).

## How to run from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries.

### How to load this model in Python code, using ctransformers

#### First install the package

Run one of the following commands, according to your system:

```shell

# Base ctransformers with no GPU acceleration

pip install ctransformers

# Or with CUDA GPU acceleration

pip install ctransformers[cuda]

# Or with AMD ROCm GPU acceleration (Linux only)

CT_HIPBLAS=1 pip install ctransformers --no-binary ctransformers

# Or with Metal GPU acceleration for macOS systems only

CT_METAL=1 pip install ctransformers --no-binary ctransformers

```

#### Simple ctransformers example code

```python

from ctransformers import AutoModelForCausalLM

# Set gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.

llm = AutoModelForCausalLM.from_pretrained("TheBloke/speechless-mistral-dolphin-orca-platypus-samantha-7B-GGUF", model_file="speechless-mistral-dolphin-orca-platypus-samantha-7b.Q4_K_M.gguf", model_type="mistral", gpu_layers=50)

print(llm("AI is going to"))

```
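The section mentions llama-cpp-python but only shows ctransformers, so here is a comparable sketch for llama-cpp-python. It assumes the Q4_K_M file has already been downloaded to the current directory; the helper names are hypothetical, and the sampling values simply mirror the `llama.cpp` command shown earlier rather than being recommended settings.

```python
MODEL_PATH = "./speechless-mistral-dolphin-orca-platypus-samantha-7b.Q4_K_M.gguf"


def chatml_prompt(system_message: str, prompt: str) -> str:
    """Format a single-turn ChatML prompt (trailing newline is an assumption)."""
    return (
        f"<|im_start|>system\n{system_message}<|im_end|>\n"
        f"<|im_start|>user\n{prompt}<|im_end|>\n"
        "<|im_start|>assistant\n"
    )


def generate(prompt: str, system_message: str = "You are a helpful assistant.",
             n_gpu_layers: int = 32) -> str:
    """Run one completion with llama-cpp-python and return the generated text."""
    # Imported lazily so this file can be read and tested without llama-cpp-python.
    from llama_cpp import Llama

    # Set n_gpu_layers to 0 if no GPU acceleration is available.
    llm = Llama(model_path=MODEL_PATH, n_ctx=2048, n_gpu_layers=n_gpu_layers)
    output = llm(
        chatml_prompt(system_message, prompt),
        max_tokens=256,
        temperature=0.7,
        repeat_penalty=1.1,
        stop=["<|im_end|>"],  # stop at the end of the assistant turn
    )
    return output["choices"][0]["text"]


# Usage (requires `pip install llama-cpp-python` and the model file on disk):
# print(generate("AI is going to"))
```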

## How to use with LangChain

Here are guides on using llama-cpp-python and ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

<!-- README_GGUF.md-how-to-run end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](https://gpus.llm-utils.org)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donaters will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.

**Patreon special mentions**: Brandon Frisco, LangChain4j, Spiking Neurons AB, transmissions 11, Joseph William Delisle, Nitin Borwankar, Willem Michiel, Michael Dempsey, vamX, Jeffrey Morgan, zynix, jjj, Omer Bin Jawed, Sean Connelly, jinyuan sun, Jeromy Smith, Shadi, Pawan Osman, Chadd, Elijah Stavena, Illia Dulskyi, Sebastain Graf, Stephen Murray, terasurfer, Edmond Seymore, Celu Ramasamy, Mandus, Alex, biorpg, Ajan Kanaga, Clay Pascal, Raven Klaugh, 阿明, K, ya boyyy, usrbinkat, Alicia Loh, John Villwock, ReadyPlayerEmma, Chris Smitley, Cap'n Zoog, fincy, GodLy, S_X, sidney chen, Cory Kujawski, OG, Mano Prime, AzureBlack, Pieter, Kalila, Spencer Kim, Tom X Nguyen, Stanislav Ovsiannikov, Michael Levine, Andrey, Trailburnt, Vadim, Enrico Ros, Talal Aujan, Brandon Phillips, Jack West, Eugene Pentland, Michael Davis, Will Dee, webtim, Jonathan Leane, Alps Aficionado, Rooh Singh, Tiffany J. Kim, theTransient, Luke @flexchar, Elle, Caitlyn Gatomon, Ari Malik, subjectnull, Johann-Peter Hartmann, Trenton Dambrowitz, Imad Khwaja, Asp the Wyvern, Emad Mostaque, Rainer Wilmers, Alexandros Triantafyllidis, Nicholas, Pedro Madruga, SuperWojo, Harry Royden McLaughlin, James Bentley, Olakabola, David Ziegler, Ai Maven, Jeff Scroggin, Nikolai Manek, Deo Leter, Matthew Berman, Fen Risland, Ken Nordquist, Manuel Alberto Morcote, Luke Pendergrass, TL, Fred von Graf, Randy H, Dan Guido, NimbleBox.ai, Vitor Caleffi, Gabriel Tamborski, knownsqashed, Lone Striker, Erik Bjäreholt, John Detwiler, Leonard Tan, Iucharbius

Thank you to all my generous patrons and donaters!

And thank you again to a16z for their generous grant.

<!-- footer end -->

<!-- original-model-card start -->

# Original model card: Jiangwen Su's Speechless Mistral Dolphin Orca Platypus Samantha 7B

<p><h1> speechless-mistral-dolphin-orca-platypus-samantha-7b </h1></p>

This model is a merge of ehartford/dolphin-2.1-mistral-7b, Open-Orca/Mistral-7B-OpenOrca, bhenrym14/mistral-7b-platypus-fp16 and ehartford/samantha-1.2-mistral-7b.

I apologise for such a long and peculiar name. It started as a lazy habit while building models, a way to easily tell the various model and dataset combinations apart. I didn't expect the [previous model](https://huggingface.co/uukuguy/speechless-llama2-hermes-orca-platypus-wizardlm-13b) ([TheBloke GPTQ version](https://huggingface.co/TheBloke/Speechless-Llama2-Hermes-Orca-Platypus-WizardLM-13B-GPTQ)) to be so popular. This time, at the request of several people, I am releasing a model based on Mistral, and it has inherited the super-long naming style. You are welcome to try the model; please refrain from harsh criticism if you don't like it.

Code: https://github.com/uukuguy/speechless

## HumanEval

| Metric | Value |

| --- | --- |

| humaneval-python | 34.146|

[Big Code Models Leaderboard](https://huggingface.co/spaces/bigcode/bigcode-models-leaderboard)

CodeLlama-34B-Python: 53.29

CodeLlama-34B-Instruct: 50.79

CodeLlama-13B-Instruct: 50.6

CodeLlama-34B: 45.11

CodeLlama-13B-Python: 42.89

CodeLlama-13B: 35.07

Mistral-7B-v0.1: 30.488

## LM-Evaluation-Harness

[Open LLM Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)

| Metric | Value |

| --- | --- |

| ARC | 64.33 |

| HellaSwag | 84.4|

| MMLU | 63.72 |

| TruthfulQA | 52.52|

| Winogrande | 78.37 |

| GSM8K | 21.38 |

| DROP | 8.66 |

| Average | 53.34 |
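As a quick sanity check, the Average row can be recomputed from the seven scores above. This is only a sketch, assuming the average is an unweighted mean rounded to two decimals; the variable names are illustrative.

```python
# Scores copied from the LM-Evaluation-Harness table above.
SCORES = {
    "ARC": 64.33,
    "HellaSwag": 84.4,
    "MMLU": 63.72,
    "TruthfulQA": 52.52,
    "Winogrande": 78.37,
    "GSM8K": 21.38,
    "DROP": 8.66,
}

# Unweighted mean over all seven benchmarks, rounded like the table.
average = round(sum(SCORES.values()) / len(SCORES), 2)
print(average)
```

The result matches the reported 53.34, which suggests that DROP is included in the average.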

# Model Card for Mistral-7B-v0.1

The Mistral-7B-v0.1 Large Language Model (LLM) is a pretrained generative text model with 7 billion parameters.

Mistral-7B-v0.1 outperforms Llama 2 13B on all benchmarks we tested.

For full details of this model please read our [paper](https://arxiv.org/abs/2310.06825) and [release blog post](https://mistral.ai/news/announcing-mistral-7b/).

## Model Architecture

Mistral-7B-v0.1 is a transformer model, with the following architecture choices:

- Grouped-Query Attention

- Sliding-Window Attention

- Byte-fallback BPE tokenizer

## Troubleshooting

- If you see the following error:

```

KeyError: 'mistral'

```

- Or:

```

NotImplementedError: Cannot copy out of meta tensor; no data!

```

Ensure you are utilizing a stable version of Transformers, 4.34.0 or newer.
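One way to check the installed version before loading the model is sketched below. The helper names are hypothetical, and the parser is deliberately simple: it compares only the first three numeric components and ignores suffixes such as `.dev0` or `rc1`.

```python
import re


def version_tuple(version: str) -> tuple:
    """Turn '4.35.2' or '4.35.0.dev0' into a comparable (major, minor, patch) tuple."""
    parts = []
    for piece in version.split(".")[:3]:
        match = re.match(r"\d+", piece)  # keep leading digits only, e.g. '0rc1' -> 0
        parts.append(int(match.group()) if match else 0)
    while len(parts) < 3:  # pad short versions such as '4.34'
        parts.append(0)
    return tuple(parts)


def is_new_enough(installed: str, minimum: str = "4.34.0") -> bool:
    """Return True if the installed version is at least the minimum."""
    return version_tuple(installed) >= version_tuple(minimum)


def check_installed_transformers() -> bool:
    """Check the locally installed Transformers version (lazy import)."""
    import transformers

    return is_new_enough(transformers.__version__)
```

If the check returns `False`, upgrading with `pip install -U transformers` is the usual fix.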

## Notice

Mistral 7B is a pretrained base model and therefore does not have any moderation mechanisms.

## The Mistral AI Team

Albert Jiang, Alexandre Sablayrolles, Arthur Mensch, Chris Bamford, Devendra Singh Chaplot, Diego de las Casas, Florian Bressand, Gianna Lengyel, Guillaume Lample, Lélio Renard Lavaud, Lucile Saulnier, Marie-Anne Lachaux, Pierre Stock, Teven Le Scao, Thibaut Lavril, Thomas Wang, Timothée Lacroix, William El Sayed.

<!-- original-model-card end -->

|

Josephgflowers/Tinyllama-1.3B-Cinder-Reason-Test | Josephgflowers | 2024-03-09T13:54:23Z | 774 | 0 | transformers | [

"transformers",

"safetensors",

"gguf",

"llama",

"text-generation",

"license:mit",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-02-04T14:51:23Z | ---

license: mit

widget:

- text: '<|system|>

You are a helpful assistant</s>

<|user|>

What is your name? Tell me about yourself.</s>

<|assistant|>

'

model-index:

- name: Tinyllama-1.3B-Cinder-Reason-Test

results:

- task:

type: text-generation

name: Text Generation

dataset:

name: AI2 Reasoning Challenge (25-Shot)

type: ai2_arc

config: ARC-Challenge

split: test

args:

num_few_shot: 25

metrics:

- type: acc_norm

value: 32.51

name: normalized accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=Josephgflowers/Tinyllama-1.3B-Cinder-Reason-Test

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: HellaSwag (10-Shot)

type: hellaswag

split: validation

args:

num_few_shot: 10

metrics:

- type: acc_norm

value: 55.85

name: normalized accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=Josephgflowers/Tinyllama-1.3B-Cinder-Reason-Test

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: MMLU (5-Shot)

type: cais/mmlu

config: all

split: test

args:

num_few_shot: 5

metrics:

- type: acc

value: 26.61

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=Josephgflowers/Tinyllama-1.3B-Cinder-Reason-Test

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: TruthfulQA (0-shot)

type: truthful_qa

config: multiple_choice

split: validation

args:

num_few_shot: 0

metrics:

- type: mc2

value: 35.59

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=Josephgflowers/Tinyllama-1.3B-Cinder-Reason-Test

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: Winogrande (5-shot)

type: winogrande

config: winogrande_xl

split: validation

args:

num_few_shot: 5

metrics:

- type: acc

value: 62.12

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=Josephgflowers/Tinyllama-1.3B-Cinder-Reason-Test

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: GSM8k (5-shot)

type: gsm8k

config: main

split: test

args:

num_few_shot: 5

metrics:

- type: acc

value: 2.35

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=Josephgflowers/Tinyllama-1.3B-Cinder-Reason-Test

name: Open LLM Leaderboard

---

A 1.3B test model made by merging two Cinder models (layers 1-22 and layers 18-22), trained on math and step-by-step reasoning.

## Model Overview

Cinder is an AI chatbot tailored for engaging users in scientific and educational conversations, offering companionship, and sparking imaginative exploration. It is built on the TinyLlama 1.1B parameter model and trained on a unique combination of datasets. This version is being tested on the Reason-with-cinder dataset.
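The widget example in this card's metadata suggests a prompt layout with `<|system|>`, `<|user|>` and `<|assistant|>` tags, each turn closed by `</s>`. A minimal sketch of a prompt builder follows; the function name is hypothetical, and the exact placement of newlines is an assumption, since the metadata does not make the whitespace unambiguous.

```python
def build_cinder_prompt(user_message: str,
                        system_message: str = "You are a helpful assistant") -> str:
    """Format a single-turn prompt in the tag layout used by the widget example."""
    return (
        f"<|system|>\n{system_message}</s>\n"
        f"<|user|>\n{user_message}</s>\n"
        # Assumption: the model continues on a new line after the assistant tag.
        "<|assistant|>\n"
    )


print(build_cinder_prompt("What is your name? Tell me about yourself."))
```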

# [Open LLM Leaderboard Evaluation Results](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)

Detailed results can be found [here](https://huggingface.co/datasets/open-llm-leaderboard/details_Josephgflowers__Tinyllama-1.3B-Cinder-Reason-Test)

| Metric |Value|

|---------------------------------|----:|

|Avg. |35.84|

|AI2 Reasoning Challenge (25-Shot)|32.51|

|HellaSwag (10-Shot) |55.85|

|MMLU (5-Shot) |26.61|

|TruthfulQA (0-shot) |35.59|

|Winogrande (5-shot) |62.12|

|GSM8k (5-shot) | 2.35|

|

makhataei/Whisper-Small-Ctejarat | makhataei | 2024-03-25T08:01:02Z | 774 | 1 | transformers | [

"transformers",

"tensorboard",

"safetensors",

"whisper",

"automatic-speech-recognition",

"fa-asr",

"generated_from_trainer",

"fa",

"base_model:makhataei/Whisper-Small-Ctejarat",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2024-03-10T07:05:08Z | ---

language:

- fa

license: apache-2.0

base_model: makhataei/Whisper-Small-Ctejarat

tags:

- fa-asr

- generated_from_trainer

metrics:

- wer

model-index:

- name: Whisper Small Persian

results: []

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Whisper Small Persian

This model is a fine-tuned version of [makhataei/Whisper-Small-Ctejarat](https://huggingface.co/makhataei/Whisper-Small-Ctejarat) on the Ctejarat dataset.

It achieves the following results on the evaluation set:

- Loss: 0.0031

- Wer: 13.3353

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 1e-07

- train_batch_size: 8

- eval_batch_size: 8

- seed: 42

- gradient_accumulation_steps: 8

- total_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_steps: 500

- training_steps: 5000
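The relationship between these values can be worked through in a few lines. This is a sketch under stated assumptions: a single training device (the card does not say), and every optimizer step consuming a full effective batch. The step and epoch figures are read from the training results table in this card, where step 2100 corresponds to epoch 200.0.

```python
train_batch_size = 8
gradient_accumulation_steps = 8
num_devices = 1  # assumption: the card does not state the device count

# Effective batch size per optimizer step.
total_train_batch_size = train_batch_size * gradient_accumulation_steps * num_devices

# From the results table: step 2100 is reached at epoch 200.0.
steps_per_epoch = 2100 / 200.0

# Implied number of training examples seen per epoch (approximate).
examples_per_epoch = steps_per_epoch * total_train_batch_size

print(total_train_batch_size, steps_per_epoch, examples_per_epoch)
```

Under these assumptions the product matches the reported `total_train_batch_size` of 64, and the training set would be on the order of a few hundred examples, which is consistent with the very high epoch counts in the results table.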

### Training results

| Training Loss | Epoch | Step | Validation Loss | Wer |

|:-------------:|:------:|:----:|:---------------:|:-------:|

| 0.0032 | 9.52 | 100 | 0.0031 | 13.1328 |

| 0.0027 | 19.05 | 200 | 0.0031 | 13.0171 |

| 0.002 | 28.57 | 300 | 0.0031 | 13.0171 |

| 0.0015 | 38.1 | 400 | 0.0031 | 13.0749 |

| 0.0005 | 47.62 | 500 | 0.0031 | 13.3642 |

| 0.0003 | 57.14 | 600 | 0.0031 | 13.3353 |

| 0.0002 | 66.67 | 700 | 0.0031 | 13.3353 |

| 0.0002 | 76.19 | 800 | 0.0031 | 13.3353 |

| 0.0001 | 85.71 | 900 | 0.0031 | 13.3063 |

| 0.0001 | 95.24 | 1000 | 0.0031 | 13.3063 |

| 0.0001 | 104.76 | 1100 | 0.0031 | 13.3063 |

| 0.0001 | 114.29 | 1200 | 0.0031 | 13.3931 |

| 0.0001 | 123.81 | 1300 | 0.0031 | 13.3931 |

| 0.0001 | 133.33 | 1400 | 0.0031 | 13.3642 |

| 0.0001 | 142.86 | 1500 | 0.0031 | 13.3931 |

| 0.0001 | 152.38 | 1600 | 0.0031 | 13.3931 |

| 0.0001 | 161.9 | 1700 | 0.0031 | 13.3642 |

| 0.0 | 171.43 | 1800 | 0.0031 | 13.3642 |

| 0.0001 | 180.95 | 1900 | 0.0031 | 13.3642 |

| 0.0 | 190.48 | 2000 | 0.0031 | 13.3642 |

| 0.0 | 200.0 | 2100 | 0.0031 | 13.3642 |

| 0.0 | 209.52 | 2200 | 0.0031 | 13.3642 |

| 0.0 | 219.05 | 2300 | 0.0031 | 13.3642 |

| 0.0 | 228.57 | 2400 | 0.0031 | 13.3642 |

| 0.0 | 238.1 | 2500 | 0.0031 | 13.3353 |

| 0.0 | 247.62 | 2600 | 0.0031 | 13.3353 |

| 0.0 | 257.14 | 2700 | 0.0031 | 13.3353 |

| 0.0 | 266.67 | 2800 | 0.0031 | 13.3353 |

| 0.0 | 276.19 | 2900 | 0.0031 | 13.3353 |

| 0.0 | 285.71 | 3000 | 0.0031 | 13.3353 |

| 0.0 | 295.24 | 3100 | 0.0031 | 13.3353 |

| 0.0 | 304.76 | 3200 | 0.0031 | 13.3353 |

| 0.0 | 314.29 | 3300 | 0.0031 | 13.3642 |

| 0.0 | 323.81 | 3400 | 0.0031 | 13.3642 |

| 0.0 | 333.33 | 3500 | 0.0031 | 13.3642 |

| 0.0 | 342.86 | 3600 | 0.0031 | 13.3642 |

| 0.0 | 352.38 | 3700 | 0.0031 | 13.3353 |

| 0.0 | 361.9 | 3800 | 0.0031 | 13.3353 |

| 0.0 | 371.43 | 3900 | 0.0031 | 13.3353 |

| 0.0 | 380.95 | 4000 | 0.0031 | 13.3353 |

| 0.0 | 390.48 | 4100 | 0.0031 | 13.3353 |

| 0.0 | 400.0 | 4200 | 0.0031 | 13.3353 |

| 0.0 | 409.52 | 4300 | 0.0031 | 13.3353 |

| 0.0 | 419.05 | 4400 | 0.0031 | 13.3353 |

| 0.0 | 428.57 | 4500 | 0.0031 | 13.3353 |

| 0.0 | 438.1 | 4600 | 0.0031 | 13.3353 |

| 0.0 | 447.62 | 4700 | 0.0031 | 13.3353 |

| 0.0 | 457.14 | 4800 | 0.0031 | 13.3353 |

| 0.0 | 466.67 | 4900 | 0.0031 | 13.3353 |

| 0.0 | 476.19 | 5000 | 0.0031 | 13.3353 |
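Since validation loss is flat throughout, WER is the only column that separates checkpoints. The sketch below picks the lowest-WER step from a handful of rows copied from the table (the first five and the last); every omitted row has a higher WER than the first four, so the result holds for the full table. The variable names are illustrative.

```python
# (step, validation loss, WER) copied from the table above; intermediate rows omitted.
ROWS = [
    (100, 0.0031, 13.1328),
    (200, 0.0031, 13.0171),
    (300, 0.0031, 13.0171),
    (400, 0.0031, 13.0749),
    (500, 0.0031, 13.3642),
    (5000, 0.0031, 13.3353),
]

# Lowest WER wins; ties are broken in favour of the earlier step.
best_step, _best_loss, best_wer = min(ROWS, key=lambda row: (row[2], row[0]))
final_wer = ROWS[-1][2]

print(best_step, best_wer, round(final_wer - best_wer, 4))
```

The lowest WER appears early, at step 200, and the final checkpoint is slightly worse, so an earlier checkpoint may be the better choice if one is available.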

### Framework versions

- Transformers 4.35.2

- Pytorch 2.0.1+cu117

- Datasets 2.15.0

- Tokenizers 0.15.0

|

rubbrband/juggernaut_reborn | rubbrband | 2024-04-23T07:07:17Z | 774 | 0 | diffusers | [

"diffusers",

"safetensors",

"arxiv:1910.09700",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2024-04-23T07:06:53Z | ---

library_name: diffusers

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🧨 diffusers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

nbeerbower/llama-3-stinky-8B | nbeerbower | 2024-05-04T01:12:59Z | 774 | 2 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"mergekit",

"merge",

"conversational",

"arxiv:2403.19522",

"base_model:cgato/L3-TheSpice-8b-v0.8.3",

"base_model:Undi95/Llama3-Unholy-8B-OAS",

"base_model:Endevor/InfinityRP-v2-8B",

"base_model:nbeerbower/llama-3-bophades-v3-8B",

"base_model:NousResearch/Hermes-2-Pro-Llama-3-8B",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-05-03T22:03:10Z | ---

base_model:

- cgato/L3-TheSpice-8b-v0.8.3

- Undi95/Llama3-Unholy-8B-OAS

- Endevor/InfinityRP-v2-8B

- nbeerbower/llama-3-bophades-v3-8B

- NousResearch/Hermes-2-Pro-Llama-3-8B

library_name: transformers

tags:

- mergekit

- merge

license: other

license_name: llama3

---

# llama-3-stinky-8B

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details

### Merge Method

This model was merged using the [Model Stock](https://arxiv.org/abs/2403.19522) merge method using [nbeerbower/llama-3-bophades-v3-8B](https://huggingface.co/nbeerbower/llama-3-bophades-v3-8B) as a base.

### Models Merged

The following models were included in the merge:

* [cgato/L3-TheSpice-8b-v0.8.3](https://huggingface.co/cgato/L3-TheSpice-8b-v0.8.3)

* [Undi95/Llama3-Unholy-8B-OAS](https://huggingface.co/Undi95/Llama3-Unholy-8B-OAS)

* [Endevor/InfinityRP-v2-8B](https://huggingface.co/Endevor/InfinityRP-v2-8B)

* [NousResearch/Hermes-2-Pro-Llama-3-8B](https://huggingface.co/NousResearch/Hermes-2-Pro-Llama-3-8B)

### Configuration

The following YAML configuration was used to produce this model:

```yaml
models:
  - model: cgato/L3-TheSpice-8b-v0.8.3
  - model: Endevor/InfinityRP-v2-8B
  - model: Undi95/Llama3-Unholy-8B-OAS
  - model: NousResearch/Hermes-2-Pro-Llama-3-8B
merge_method: model_stock
base_model: nbeerbower/llama-3-bophades-v3-8B
dtype: bfloat16
```

|

John6666/pony-diffusion-v6-xl-sdxl-spo | John6666 | 2024-06-22T10:49:23Z | 774 | 1 | diffusers | [

"diffusers",

"safetensors",

"text-to-image",

"stable-diffusion",

"stable-diffusion-xl",

"anime",

"pony",

"SPO",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionXLPipeline",

"region:us"

] | text-to-image | 2024-06-22T06:45:42Z | ---

license: other

license_name: faipl-1.0-sd

license_link: https://freedevproject.org/faipl-1.0-sd/

tags:

- text-to-image

- stable-diffusion

- stable-diffusion-xl

- anime

- pony

- SPO

---

The original model is [here](https://civitai.com/models/257749/pony-diffusion-v6-xl).

|

mohammad2928git/medical_v5_gguf | mohammad2928git | 2024-06-29T06:27:27Z | 774 | 0 | transformers | [

"transformers",

"gguf",

"llama",

"text-generation-inference",

"unsloth",

"en",

"base_model:ruslanmv/Medical-Llama3-8B",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2024-06-29T06:23:10Z | ---

base_model: ruslanmv/Medical-Llama3-8B

language:

- en

license: apache-2.0

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- gguf

---

# Uploaded model

- **Developed by:** mohammad2928git

- **License:** apache-2.0

- **Finetuned from model :** ruslanmv/Medical-Llama3-8B

This Llama model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

clancystudios/DialoGPT-medium-Morty | clancystudios | 2022-02-07T12:38:25Z | 773 | 0 | transformers | [

"transformers",

"pytorch",

"gpt2",

"text-generation",

"conversational",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2022-03-02T23:29:05Z | ---

tags:

- conversational

--- |

TheBloke/Yarn-Llama-2-13B-64K-GGUF | TheBloke | 2023-09-27T12:46:59Z | 773 | 18 | transformers | [

"transformers",

"gguf",

"llama",

"dataset:pg19",

"arxiv:2309.00071",

"base_model:NousResearch/Yarn-Llama-2-13b-64k",

"license:llama2",

"text-generation-inference",

"region:us"

] | null | 2023-09-01T08:38:06Z | ---

license: llama2

library_name: transformers

datasets:

- pg19

metrics:

- perplexity

model_name: Yarn Llama 2 13B 64K

base_model: NousResearch/Yarn-Llama-2-13b-64k

inference: false

model_creator: NousResearch

model_type: llama

prompt_template: '{prompt}

'

quantized_by: TheBloke

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# Yarn Llama 2 13B 64K - GGUF

- Model creator: [NousResearch](https://huggingface.co/NousResearch)

- Original model: [Yarn Llama 2 13B 64K](https://huggingface.co/NousResearch/Yarn-Llama-2-13b-64k)

<!-- description start -->

## Description

This repo contains GGUF format model files for [NousResearch's Yarn Llama 2 13B 64K](https://huggingface.co/NousResearch/Yarn-Llama-2-13b-64k).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp. GGUF offers numerous advantages over GGML, such as better tokenisation and support for special tokens. It also supports metadata, and is designed to be extensible.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for story telling.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

<!-- README_GGUF.md-about-gguf end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/Yarn-Llama-2-13B-64K-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/Yarn-Llama-2-13B-64K-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/Yarn-Llama-2-13B-64K-GGUF)

* [NousResearch's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/NousResearch/Yarn-Llama-2-13b-64k)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: None

```

{prompt}

```

<!-- prompt-template end -->

<!-- compatibility_gguf start -->

## Compatibility

These quantised GGUFv2 files are compatible with llama.cpp from August 27th onwards, as of commit [d0cee0d36d5be95a0d9088b674dbb27354107221](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221)

They are also compatible with many third party UIs and libraries - please see the list at the top of this README.

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw).

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This ends up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K resulting in 5.5 bpw

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw

Refer to the Provided Files table below to see what files use which methods, and how.

</details>
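The bits-per-weight figures quoted for the quantisation methods can be checked with a little arithmetic. The sketch below adds up the quantised weights, the per-block scales (and mins, for "type-1" methods), and a number of 16-bit super-block fields. Those 16-bit fields are not described in this README. They are an assumption chosen so that the totals match the quoted numbers, and `bits_per_weight` is a hypothetical helper rather than anything from llama.cpp.

```python
def bits_per_weight(quant_bits, blocks, weights_per_block,
                    scale_bits, has_mins, fp16_fields):
    """Effective bits per weight for one super-block (illustrative only)."""
    weights = blocks * weights_per_block
    # "type-1" methods store both a scale and a min per block.
    per_block_meta = scale_bits * (2 if has_mins else 1)
    total_bits = (
        weights * quant_bits        # the quantised weights themselves
        + blocks * per_block_meta   # per-block scales (and mins)
        + 16 * fp16_fields          # assumed 16-bit super-block fields
    )
    return total_bits / weights


# Parameters taken from the list above; fp16_fields is the assumption.
print(bits_per_weight(3, 16, 16, 6, False, 1))  # Q3_K
print(bits_per_weight(4, 8, 32, 6, True, 2))    # Q4_K
print(bits_per_weight(5, 8, 32, 6, True, 2))    # Q5_K
print(bits_per_weight(6, 16, 16, 8, False, 1))  # Q6_K
print(bits_per_weight(2, 16, 16, 4, True, 1))   # Q2_K
```

Under this accounting, the quoted Q2_K figure of 2.5625 bpw is reproduced only with a single 16-bit field. With two such fields (one for scales, one for mins) the same formula gives 2.625 bpw.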

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-provided-files start -->

## Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |

| ---- | ---- | ---- | ---- | ---- | ----- |

| [yarn-llama-2-13b-64k.Q2_K.gguf](https://huggingface.co/TheBloke/Yarn-Llama-2-13B-64K-GGUF/blob/main/yarn-llama-2-13b-64k.Q2_K.gguf) | Q2_K | 2 | 5.43 GB| 7.93 GB | smallest, significant quality loss - not recommended for most purposes |

| [yarn-llama-2-13b-64k.Q3_K_S.gguf](https://huggingface.co/TheBloke/Yarn-Llama-2-13B-64K-GGUF/blob/main/yarn-llama-2-13b-64k.Q3_K_S.gguf) | Q3_K_S | 3 | 5.66 GB| 8.16 GB | very small, high quality loss |