| modelId (string, length 5 to 122) | author (string, length 2 to 42) | last_modified (timestamp[us, tz=UTC]) | downloads (int64, 0 to 738M) | likes (int64, 0 to 11k) | library_name (string, 245 classes) | tags (list, length 1 to 4.05k) | pipeline_tag (string, 48 classes) | createdAt (timestamp[us, tz=UTC]) | card (string, length 1 to 901k) |

|---|---|---|---|---|---|---|---|---|---|

6DammK9/bpmodel-sd14-merge | 6DammK9 | 2023-11-08T14:01:14Z | 690 | 1 | diffusers | [

"diffusers",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"safetensors",

"en",

"license:creativeml-openrail-m",

"region:us"

] | text-to-image | 2023-09-10T15:12:49Z | ---

language:

- en

license: creativeml-openrail-m

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- safetensors

#inference: true

#thumbnail: https://s2.loli.net/2023/05/31/bl27yWANrT3asoG.png

#widget:

#- text: >-

# 1girl with blonde two side up disheveled hair red eyes in black serafuku red

# ribbon, upper body, simple background, grey background, collarbone

# example_title: example 1girl

#datasets:

#- Crosstyan/BPDataset

library_name: diffusers

---

# BPModel (and other models)'s UNET merged into SD 1.4 #

```

parameters

(ushanka:0.98), [[braid]], [[astolfo]], [[[[moscow, russia]]]], [[[[[[snow]]]]]]

Negative prompt: (bad:0), (comic:0), (cropped:0), (error:0), (extra:0), (low:0), (lowres:0), (speech:0), (worst:0)

Steps: 48, Sampler: Euler, CFG scale: 11, Seed: 1370168022, Size: 768x768, Model hash: 4a15b47ed1, Model: bp_nman_e29-sd_14, VAE hash: 551eac7037, VAE: vae-ft-mse-840000-ema-pruned.ckpt, Clip skip: 2, Dynamic thresholding enabled: True, Mimic scale: 1, Separate Feature Channels: False, Scaling Startpoint: MEAN, Variability Measure: AD, Interpolate Phi: 0.3, Threshold percentile: 100, Version: v1.6.0

```

## Self explained. ##

- [BPModel](https://huggingface.co/Crosstyan/BPModel/tree/main) is a model that intentionally preserves only its UNET, *dropping all the other components, especially the Text Encoder and VAE*. [A PR has been made](https://github.com/AUTOMATIC1111/stable-diffusion-webui/pull/7523), but it was never entertained. The workaround (switch to SD 1.4 and then to BPModel) worked fine, [until I had some other use for it.](https://github.com/6DammK9/nai-anime-pure-negative-prompt/blob/main/ch05/README.MD)

- I need to align all the models I want to merge before I start merging them, because *preserving the original Text Encoder should maximize the variance of the generation.*

## Why SD 1.4 ##

- "*It is by design.*" - Not the author; the source of the quote was lost long ago

- To verify, [head to my earlier artwork and try to replicate it.](https://www.pixiv.net/en/artworks/104582308) *I made a fancier version of him because dynamic CFG is OP.*

## Models to be merged ##

- See [Files.](https://huggingface.co/6DammK9/bpmodel-sd14-merge/tree/main)

- [Full list on GitHub](https://github.com/6DammK9/nai-anime-pure-negative-prompt/blob/main/ch05/README.MD#merging-models-from-different-background)

- *Since I cannot rename the files, please use Ctrl+F to search for the desired file.*

|Index|Model|File name|

|---|---|---|

|01|[VBP](https://github.com/6DammK9/nai-anime-pure-negative-prompt/blob/main/ch02/f59359c175.md)|[_01a-VBP23-1024-ep49-sd-v1-4](https://huggingface.co/6DammK9/bpmodel-sd14-merge/blob/main/_01a-VBP23-1024-ep49-sd-v1-4.safetensors)|

|02|[CBP](https://github.com/6DammK9/nai-anime-pure-negative-prompt/blob/main/ch02/ae2b38ac14.md)|[_02a-cbp2-e60-sd-v1-4](https://huggingface.co/6DammK9/bpmodel-sd14-merge/blob/main/_02a-cbp2-e60-sd-v1-4.safetensors)|

|03|[mzpikas_tmnd_enhanced](https://huggingface.co/ashen-sensored/mzpikas_tmnd_enhanced)|[_03a-mzpikas_tmnd_enhanced-sd-v1-4](https://huggingface.co/6DammK9/bpmodel-sd14-merge/blob/main/_03a-mzpikas_tmnd_enhanced-sd-v1-4.safetensors)|

|04|[DreamShaperV8](https://civitai.com/models/4384/dreamshaper)|[_04a-dreamshaper_8-sd-v1-4](https://huggingface.co/6DammK9/bpmodel-sd14-merge/blob/main/_04a-dreamshaper_8-sd-v1-4.safetensors)|

|05|[CoffeeWithLiquor](https://huggingface.co/StereoBartender/CoffeeWithLiquor)|[_05a-CoffeeWithLiquor-sd-v1-4](https://huggingface.co/6DammK9/bpmodel-sd14-merge/blob/main/_05a-CoffeeWithLiquor-sd-v1-4.safetensors)|

|06|[BreakDomain](https://civitai.com/models/117192/breakdomainowners-model-i-have-no-rights-if-he-want-it-will-be-taken-down)|[_06a-breakdomain-A0440-sd-v1-4](https://huggingface.co/6DammK9/bpmodel-sd14-merge/blob/main/_06a-breakdomain-A0440-sd-v1-4.safetensors)|

|07|[AIWMix](https://civitai.com/models/74165?modelVersionId=78888)|[_07a-aiwmix-sd-v1-4](https://huggingface.co/6DammK9/bpmodel-sd14-merge/blob/main/_07a-aiwmix-sd-v1-4.safetensors)|

|08|[Ether Blu Mix](https://civitai.com/models/17427/ether-blu-mix)|[_08a-etherBluMix5-sd-v1-4](https://huggingface.co/6DammK9/bpmodel-sd14-merge/blob/main/_08a-etherBluMix5-sd-v1-4.safetensors)|

|09|[MajicMix](https://civitai.com/models/43331?modelVersionId=94640)|[_09a-majicmixRealistic_v6-sd-v1-4](https://huggingface.co/6DammK9/bpmodel-sd14-merge/blob/main/_09a-majicmixRealistic_v6-sd-v1-4.safetensors)|

|10|[Silicon29](https://huggingface.co/Xynon/SD-Silicon)|[_10a-Silicon29-sd](https://huggingface.co/6DammK9/bpmodel-sd14-merge/blob/main/_10a-Silicon29-sd.safetensors)|

|11|[BPModel](https://huggingface.co/Crosstyan/BPModel)|[_11a-bp_nman_e29-sd-v1-4](https://huggingface.co/6DammK9/bpmodel-sd14-merge/blob/main/_11a-bp_nman_e29-sd-v1-4.safetensors)|

|12|[CGA9](https://t.me/StableDiffusion_CN/1170018)|[_12a-CGA9-sd](https://huggingface.co/6DammK9/bpmodel-sd14-merge/blob/main/_12a-CGA9-sd.safetensors)|

|13|[LimeREmix_anniversary](https://civitai.com/models/153081?modelVersionId=171399)|[_13a-limeremixAnniversary-sd](https://huggingface.co/6DammK9/bpmodel-sd14-merge/blob/main/_13a-limeremixAnniversary-sd.safetensors)|

|14|[CyberRealistic Classic](https://civitai.com/models/71185/cyberrealistic-classic)|[_14a-cyberrealistic_classicV2-sd](https://huggingface.co/6DammK9/bpmodel-sd14-merge/blob/main/_14a-cyberrealistic_classicV2-sd.safetensors)|

|15|[ORCHIDHEART](https://huggingface.co/reroti/ORCHIDHEART)|[_15a-ORCHID-HEART-sd](https://huggingface.co/6DammK9/bpmodel-sd14-merge/blob/main/_15a-ORCHID-HEART-sd.safetensors)|

|16|[BB95 Furry Mix](https://civitai.com/models/17649/bb95-furry-mix)|[_16a-bb95FurryMix-sd](https://huggingface.co/6DammK9/bpmodel-sd14-merge/blob/main/_16a-bb95FurryMix-sd.safetensors)|

|17|[Indigo Furry mix](https://civitai.com/models/34469?modelVersionId=167882)|[_17a-indigoFurryMix_v75-sd](https://huggingface.co/6DammK9/bpmodel-sd14-merge/blob/main/_17a-indigoFurryMix_v75-sd.safetensors)|

|18|[AOAOKO [PVC Style Model]](https://civitai.com/models/15509/aoaoko-pvc-style-model)|[_18a-aoaokoPVC-sd](https://huggingface.co/6DammK9/bpmodel-sd14-merge/blob/main/_18a-aoaokoPVC-sd.safetensors)|

|19|[GuoFeng3](https://civitai.com/models/10415/3-guofeng3)|[_19a-GuoFeng3.4-sd](https://huggingface.co/6DammK9/bpmodel-sd14-merge/blob/main/_19a-GuoFeng3.4-sd.safetensors)|

|20|[YiffyMix](https://civitai.com/models/3671?modelVersionId=189192)|[_20a-yiffymix_v34-sd](https://huggingface.co/6DammK9/bpmodel-sd14-merge/blob/main/_20a-yiffymix_v34-sd.safetensors)|

## Models that may be merged ##

- [ALunarDream](https://discord.com/channels/930499730843250783/1087111248447017172/1087111248447017172): *Coming soon*

- [AIDv2.10](https://civitai.com/models/16828/aidv210-anime-illust-diffusion): *Coming soon*

## Experimental Merges ##

- *Swapping TE back with original model*. See [AstolfoMix.](https://huggingface.co/6DammK9/AstolfoMix/blob/main/README.md)

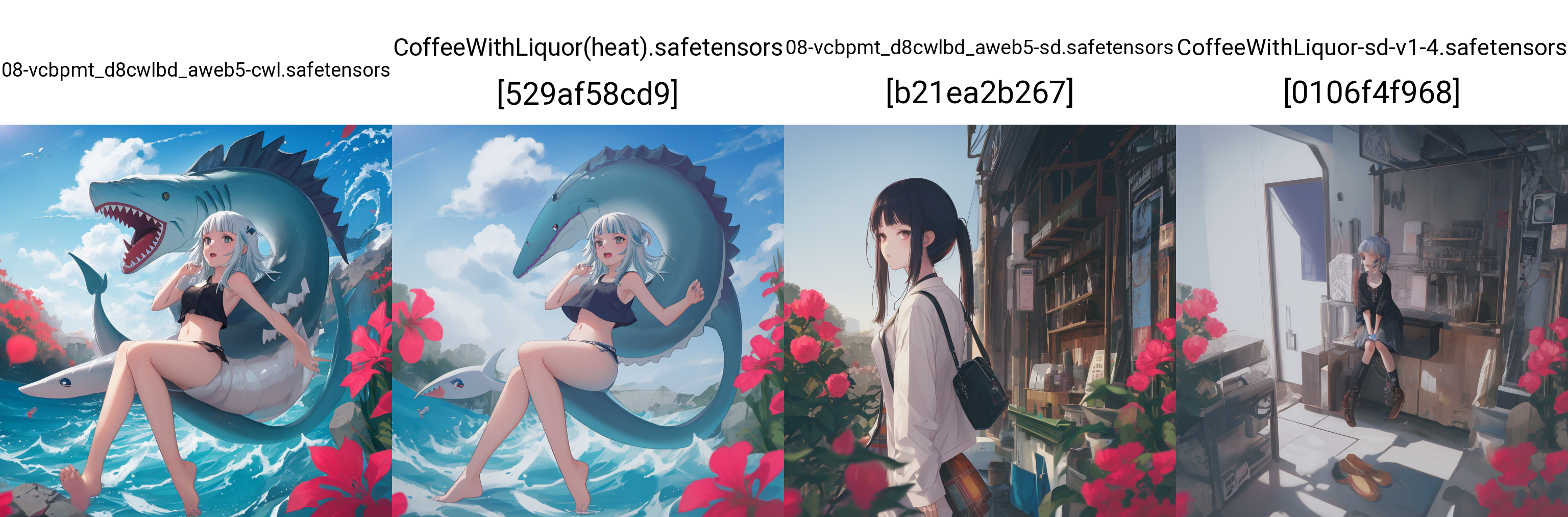

- 08 with CoffeeWithLiquor: `08-vcbpmt_d8cwlbd_aweb5-cwl`

```

parameters

(aesthetic:0), (quality:0), (solo:0), (1girl:0), (gawr_gura:0.98)

Negative prompt: (worst:0), (low:0), (bad:0), (exceptional:0), (masterpiece:0), (comic:0), (extra:0), (lowres:0)

Steps: 48, Sampler: Euler, CFG scale: 4.5, Seed: 978318572, Size: 768x768, Model hash: d94d7363a0, Model: 08-vcbpmt_d8cwlbd_aweb5-cwl, VAE hash: 551eac7037, VAE: vae-ft-mse-840000-ema-pruned.ckpt, Clip skip: 2, Version: v1.6.0

```

## License ##

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage. The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce or share illegal or harmful outputs or content.

2. The authors claim no rights on the outputs you generate; you are free to use them and are accountable for their use, which must not go against the provisions set in the license.

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M with all your users (please read the license entirely and carefully).

Please read the full license [here](https://huggingface.co/spaces/CompVis/stable-diffusion-license/blob/main/license.txt) |

facebook/dpt-dinov2-small-kitti | facebook | 2024-03-29T08:03:38Z | 690 | 3 | transformers | [

"transformers",

"pytorch",

"safetensors",

"dpt",

"depth-estimation",

"vision",

"dinov2",

"arxiv:2304.07193",

"arxiv:2103.13413",

"license:apache-2.0",

"region:us"

] | depth-estimation | 2023-10-31T17:48:29Z | ---

license: apache-2.0

tags:

- vision

- dinov2

- depth-estimation

inference: false

---

# Model Card: DPT model with DINOv2 backbone

## Model Details

DPT (Dense Prediction Transformer) model with DINOv2 backbone as proposed in [DINOv2: Learning Robust Visual Features without Supervision](https://arxiv.org/abs/2304.07193) by Oquab et al.

<img src="https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/dpt_architecture.jpg"

alt="drawing" width="600"/>

<small> DPT architecture. Taken from the <a href="https://arxiv.org/abs/2103.13413" target="_blank">original paper</a>. </small>

### Resources

- [DINOv2 Paper](https://arxiv.org/abs/2304.07193)

- [DPT Paper](https://arxiv.org/abs/2103.13413)

### Use with Transformers

```python

from transformers import AutoImageProcessor, DPTForDepthEstimation
import torch
import numpy as np
from PIL import Image
import requests

url = "http://images.cocodataset.org/val2017/000000039769.jpg"
image = Image.open(requests.get(url, stream=True).raw)

image_processor = AutoImageProcessor.from_pretrained("facebook/dpt-dinov2-small-kitti")
model = DPTForDepthEstimation.from_pretrained("facebook/dpt-dinov2-small-kitti")

# prepare image for the model
inputs = image_processor(images=image, return_tensors="pt")

# run inference without tracking gradients
with torch.no_grad():
    outputs = model(**inputs)
    predicted_depth = outputs.predicted_depth

# interpolate to original size
prediction = torch.nn.functional.interpolate(
    predicted_depth.unsqueeze(1),
    size=image.size[::-1],
    mode="bicubic",
    align_corners=False,
)

# visualize the prediction
output = prediction.squeeze().cpu().numpy()
formatted = (output * 255 / np.max(output)).astype("uint8")
depth = Image.fromarray(formatted)

```
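The `size=image.size[::-1]` argument in the snippet above is easy to misread: PIL reports an image size as `(width, height)`, while `torch.nn.functional.interpolate` expects the spatial size as `(height, width)`. A minimal sketch of that reversal using plain tuples; the helper name `pil_size_to_hw` and the 640x480 example size are illustrative assumptions, not part of either library:

```python
from typing import Tuple


def pil_size_to_hw(pil_size: Tuple[int, int]) -> Tuple[int, int]:
    """Convert a PIL-style (width, height) pair to (height, width)."""
    width, height = pil_size
    return (height, width)


# Hypothetical 640x480 image, written the way PIL would report its size.
target_hw = pil_size_to_hw((640, 480))
print(target_hw)
```

Slicing with `[::-1]` does the same thing in one step; the named helper only makes the intent explicit.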

## Model Use

### Intended Use

The model is intended to showcase that using the DPT framework with DINOv2 as backbone yields a powerful depth estimator.

### BibTeX entry and citation info

```bibtex

@misc{oquab2023dinov2,

title={DINOv2: Learning Robust Visual Features without Supervision},

author={Maxime Oquab and Timothée Darcet and Théo Moutakanni and Huy Vo and Marc Szafraniec and Vasil Khalidov and Pierre Fernandez and Daniel Haziza and Francisco Massa and Alaaeldin El-Nouby and Mahmoud Assran and Nicolas Ballas and Wojciech Galuba and Russell Howes and Po-Yao Huang and Shang-Wen Li and Ishan Misra and Michael Rabbat and Vasu Sharma and Gabriel Synnaeve and Hu Xu and Hervé Jegou and Julien Mairal and Patrick Labatut and Armand Joulin and Piotr Bojanowski},

year={2023},

eprint={2304.07193},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

``` |

TheBloke/openchat-3.5-0106-AWQ | TheBloke | 2024-01-10T11:07:19Z | 690 | 5 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"openchat",

"C-RLFT",

"conversational",

"arxiv:2309.11235",

"arxiv:2303.08774",

"base_model:openchat/openchat-3.5-0106",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"4-bit",

"awq",

"region:us"

] | text-generation | 2024-01-10T10:49:16Z | ---

base_model: openchat/openchat-3.5-0106

inference: false

library_name: transformers

license: apache-2.0

model_creator: OpenChat

model_name: Openchat 3.5 0106

model_type: mistral

pipeline_tag: text-generation

prompt_template: 'GPT4 Correct User: {prompt}<|end_of_turn|>GPT4 Correct Assistant:

'

quantized_by: TheBloke

tags:

- openchat

- mistral

- C-RLFT

---

<!-- markdownlint-disable MD041 -->

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# Openchat 3.5 0106 - AWQ

- Model creator: [OpenChat](https://huggingface.co/openchat)

- Original model: [Openchat 3.5 0106](https://huggingface.co/openchat/openchat-3.5-0106)

<!-- description start -->

## Description

This repo contains AWQ model files for [OpenChat's Openchat 3.5 0106](https://huggingface.co/openchat/openchat-3.5-0106).

These files were quantised using hardware kindly provided by [Massed Compute](https://massedcompute.com/).

### About AWQ

AWQ is an efficient, accurate and blazing-fast low-bit weight quantization method, currently supporting 4-bit quantization. Compared to the most commonly used GPTQ settings, it offers faster Transformers-based inference with equivalent or better quality.

AWQ models are currently supported on Linux and Windows, with NVidia GPUs only. macOS users: please use GGUF models instead.

It is supported by:

- [Text Generation Webui](https://github.com/oobabooga/text-generation-webui) - using Loader: AutoAWQ

- [vLLM](https://github.com/vllm-project/vllm) - version 0.2.2 or later for support for all model types.

- [Hugging Face Text Generation Inference (TGI)](https://github.com/huggingface/text-generation-inference)

- [Transformers](https://huggingface.co/docs/transformers) version 4.35.0 and later, from any code or client that supports Transformers

- [AutoAWQ](https://github.com/casper-hansen/AutoAWQ) - for use from Python code

<!-- description end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/openchat-3.5-0106-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/openchat-3.5-0106-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/openchat-3.5-0106-GGUF)

* [OpenChat's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/openchat/openchat-3.5-0106)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: OpenChat-Correct

```

GPT4 Correct User: {prompt}<|end_of_turn|>GPT4 Correct Assistant:

```
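Because the template is an ordinary string with a `{prompt}` placeholder, it can be filled in with `str.format`. A minimal sketch; the names `PROMPT_TEMPLATE` and `fill_template` are illustrative and do not come from any library:

```python
# Template copied from the section above; {prompt} is a str.format placeholder.
PROMPT_TEMPLATE = "GPT4 Correct User: {prompt}<|end_of_turn|>GPT4 Correct Assistant:"


def fill_template(user_prompt: str) -> str:
    """Return the full model input for a single user prompt (hypothetical helper)."""
    return PROMPT_TEMPLATE.format(prompt=user_prompt)


filled = [fill_template(p) for p in ["Tell me about AI", "What is 291 - 150?"]]
for text in filled:
    print(text)
```

An f-string would interpolate `prompt` at the moment the template is defined, whereas a plain string defers substitution until `format` is called, which is what a batch of prompts needs.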

<!-- prompt-template end -->

<!-- README_AWQ.md-provided-files start -->

## Provided files, and AWQ parameters

I currently release 128g GEMM models only. The addition of group_size 32 models, and GEMV kernel models, is being actively considered.

Models are released as sharded safetensors files.

| Branch | Bits | GS | AWQ Dataset | Seq Len | Size |

| ------ | ---- | -- | ----------- | ------- | ---- |

| [main](https://huggingface.co/TheBloke/openchat-3.5-0106-AWQ/tree/main) | 4 | 128 | [VMware Open Instruct](https://huggingface.co/datasets/VMware/open-instruct/viewer/) | 4096 | 4.15 GB |

<!-- README_AWQ.md-provided-files end -->

<!-- README_AWQ.md-text-generation-webui start -->

## How to easily download and use this model in [text-generation-webui](https://github.com/oobabooga/text-generation-webui)

Please make sure you're using the latest version of [text-generation-webui](https://github.com/oobabooga/text-generation-webui).

It is strongly recommended to use the text-generation-webui one-click-installers unless you're sure you know how to make a manual install.

1. Click the **Model tab**.

2. Under **Download custom model or LoRA**, enter `TheBloke/openchat-3.5-0106-AWQ`.

3. Click **Download**.

4. The model will start downloading. Once it's finished it will say "Done".

5. In the top left, click the refresh icon next to **Model**.

6. In the **Model** dropdown, choose the model you just downloaded: `openchat-3.5-0106-AWQ`

7. Select **Loader: AutoAWQ**.

8. Click **Load**; the model will load and then be ready for use.

9. If you want any custom settings, set them and then click **Save settings for this model** followed by **Reload the Model** in the top right.

10. Once you're ready, click the **Text Generation** tab and enter a prompt to get started!

<!-- README_AWQ.md-text-generation-webui end -->

<!-- README_AWQ.md-use-from-vllm start -->

## Multi-user inference server: vLLM

Documentation on installing and using vLLM [can be found here](https://vllm.readthedocs.io/en/latest/).

- Please ensure you are using vLLM version 0.2 or later.

- When using vLLM as a server, pass the `--quantization awq` parameter.

For example:

```shell

python3 -m vllm.entrypoints.api_server --model TheBloke/openchat-3.5-0106-AWQ --quantization awq --dtype auto

```

- When using vLLM from Python code, again set `quantization="awq"`.

For example:

```python

from vllm import LLM, SamplingParams

prompts = [
    "Tell me about AI",
    "Write a story about llamas",
    "What is 291 - 150?",
    "How much wood would a woodchuck chuck if a woodchuck could chuck wood?",
]

# Plain string (not an f-string) so that {prompt} is filled in by .format() below
prompt_template = '''GPT4 Correct User: {prompt}<|end_of_turn|>GPT4 Correct Assistant:
'''

prompts = [prompt_template.format(prompt=prompt) for prompt in prompts]

sampling_params = SamplingParams(temperature=0.8, top_p=0.95)

llm = LLM(model="TheBloke/openchat-3.5-0106-AWQ", quantization="awq", dtype="auto")

outputs = llm.generate(prompts, sampling_params)

# Print the outputs.
for output in outputs:
    prompt = output.prompt
    generated_text = output.outputs[0].text
    print(f"Prompt: {prompt!r}, Generated text: {generated_text!r}")

```

<!-- README_AWQ.md-use-from-vllm end -->

<!-- README_AWQ.md-use-from-tgi start -->

## Multi-user inference server: Hugging Face Text Generation Inference (TGI)

Use TGI version 1.1.0 or later. The official Docker container is: `ghcr.io/huggingface/text-generation-inference:1.1.0`

Example Docker parameters:

```shell

--model-id TheBloke/openchat-3.5-0106-AWQ --port 3000 --quantize awq --max-input-length 3696 --max-total-tokens 4096 --max-batch-prefill-tokens 4096

```
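The three limits in the parameters above interact. The sketch below assumes TGI's documented meaning of these flags, where `--max-total-tokens` bounds prompt tokens plus generated tokens and `--max-input-length` bounds the prompt alone. The helper name `max_new_tokens_budget` is hypothetical:

```python
# Values copied from the example Docker parameters above.
MAX_INPUT_LENGTH = 3696
MAX_TOTAL_TOKENS = 4096


def max_new_tokens_budget(prompt_tokens: int) -> int:
    """Return how many tokens may still be generated for a prompt of the given length."""
    if prompt_tokens > MAX_INPUT_LENGTH:
        raise ValueError(
            f"prompt has {prompt_tokens} tokens, limit is {MAX_INPUT_LENGTH}"
        )
    return MAX_TOTAL_TOKENS - prompt_tokens


# A prompt that uses the full input allowance leaves room for 400 new tokens.
print(max_new_tokens_budget(3696))
```

With these settings, a request whose `max_new_tokens` exceeds the remaining budget would be expected to be rejected by the server rather than silently truncated.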

Example Python code for interfacing with TGI (requires [huggingface-hub](https://github.com/huggingface/huggingface_hub) 0.17.0 or later):

```shell

pip3 install huggingface-hub

```

```python

from huggingface_hub import InferenceClient

endpoint_url = "https://your-endpoint-url-here"

prompt = "Tell me about AI"
prompt_template = f'''GPT4 Correct User: {prompt}<|end_of_turn|>GPT4 Correct Assistant:
'''

client = InferenceClient(endpoint_url)

# Send the templated prompt, not the bare user prompt
response = client.text_generation(
    prompt_template,
    max_new_tokens=128,
    do_sample=True,
    temperature=0.7,
    top_p=0.95,
    top_k=40,
    repetition_penalty=1.1,
)

print("Model output:", response)

```

<!-- README_AWQ.md-use-from-tgi end -->

<!-- README_AWQ.md-use-from-python start -->

## Inference from Python code using Transformers

### Install the necessary packages

- Requires: [Transformers](https://huggingface.co/docs/transformers) 4.35.0 or later.

- Requires: [AutoAWQ](https://github.com/casper-hansen/AutoAWQ) 0.1.6 or later.

```shell

pip3 install --upgrade "autoawq>=0.1.6" "transformers>=4.35.0"

```

Note that if you are using PyTorch 2.0.1, the above AutoAWQ command will automatically upgrade you to PyTorch 2.1.0.

If you are using CUDA 11.8 and wish to continue using PyTorch 2.0.1, instead run this command:

```shell

pip3 install https://github.com/casper-hansen/AutoAWQ/releases/download/v0.1.6/autoawq-0.1.6+cu118-cp310-cp310-linux_x86_64.whl

```

If you have problems installing [AutoAWQ](https://github.com/casper-hansen/AutoAWQ) using the pre-built wheels, install it from source instead:

```shell

pip3 uninstall -y autoawq

git clone https://github.com/casper-hansen/AutoAWQ

cd AutoAWQ

pip3 install .

```

### Transformers example code (requires Transformers 4.35.0 and later)

```python

from transformers import AutoModelForCausalLM, AutoTokenizer, TextStreamer

model_name_or_path = "TheBloke/openchat-3.5-0106-AWQ"

tokenizer = AutoTokenizer.from_pretrained(model_name_or_path)
model = AutoModelForCausalLM.from_pretrained(
    model_name_or_path,
    low_cpu_mem_usage=True,
    device_map="cuda:0"
)

# Using the text streamer to stream output one token at a time
streamer = TextStreamer(tokenizer, skip_prompt=True, skip_special_tokens=True)

prompt = "Tell me about AI"
prompt_template=f'''GPT4 Correct User: {prompt}<|end_of_turn|>GPT4 Correct Assistant:
'''

# Convert prompt to tokens
tokens = tokenizer(
    prompt_template,
    return_tensors='pt'
).input_ids.cuda()

generation_params = {
    "do_sample": True,
    "temperature": 0.7,
    "top_p": 0.95,
    "top_k": 40,
    "max_new_tokens": 512,
    "repetition_penalty": 1.1
}

# Generate streamed output, visible one token at a time
generation_output = model.generate(
    tokens,
    streamer=streamer,
    **generation_params
)

# Generation without a streamer, which will include the prompt in the output
generation_output = model.generate(
    tokens,
    **generation_params
)

# Get the tokens from the output, decode them, print them
token_output = generation_output[0]
text_output = tokenizer.decode(token_output)
print("model.generate output: ", text_output)

# Inference is also possible via Transformers' pipeline
from transformers import pipeline

pipe = pipeline(
    "text-generation",
    model=model,
    tokenizer=tokenizer,
    **generation_params
)

pipe_output = pipe(prompt_template)[0]['generated_text']
print("pipeline output: ", pipe_output)

```

<!-- README_AWQ.md-use-from-python end -->

<!-- README_AWQ.md-compatibility start -->

## Compatibility

The files provided are tested to work with:

- [text-generation-webui](https://github.com/oobabooga/text-generation-webui) using `Loader: AutoAWQ`.

- [vLLM](https://github.com/vllm-project/vllm) version 0.2.0 and later.

- [Hugging Face Text Generation Inference (TGI)](https://github.com/huggingface/text-generation-inference) version 1.1.0 and later.

- [Transformers](https://huggingface.co/docs/transformers) version 4.35.0 and later.

- [AutoAWQ](https://github.com/casper-hansen/AutoAWQ) version 0.1.1 and later.

<!-- README_AWQ.md-compatibility end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](llm-utils)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donors will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.

**Patreon special mentions**: Michael Levine, 阿明, Trailburnt, Nikolai Manek, John Detwiler, Randy H, Will Dee, Sebastain Graf, NimbleBox.ai, Eugene Pentland, Emad Mostaque, Ai Maven, Jim Angel, Jeff Scroggin, Michael Davis, Manuel Alberto Morcote, Stephen Murray, Robert, Justin Joy, Luke @flexchar, Brandon Frisco, Elijah Stavena, S_X, Dan Guido, Undi ., Komninos Chatzipapas, Shadi, theTransient, Lone Striker, Raven Klaugh, jjj, Cap'n Zoog, Michel-Marie MAUDET (LINAGORA), Matthew Berman, David, Fen Risland, Omer Bin Jawed, Luke Pendergrass, Kalila, OG, Erik Bjäreholt, Rooh Singh, Joseph William Delisle, Dan Lewis, TL, John Villwock, AzureBlack, Brad, Pedro Madruga, Caitlyn Gatomon, K, jinyuan sun, Mano Prime, Alex, Jeffrey Morgan, Alicia Loh, Illia Dulskyi, Chadd, transmissions 11, fincy, Rainer Wilmers, ReadyPlayerEmma, knownsqashed, Mandus, biorpg, Deo Leter, Brandon Phillips, SuperWojo, Sean Connelly, Iucharbius, Jack West, Harry Royden McLaughlin, Nicholas, terasurfer, Vitor Caleffi, Duane Dunston, Johann-Peter Hartmann, David Ziegler, Olakabola, Ken Nordquist, Trenton Dambrowitz, Tom X Nguyen, Vadim, Ajan Kanaga, Leonard Tan, Clay Pascal, Alexandros Triantafyllidis, JM33133, Xule, vamX, ya boyyy, subjectnull, Talal Aujan, Alps Aficionado, wassieverse, Ari Malik, James Bentley, Woland, Spencer Kim, Michael Dempsey, Fred von Graf, Elle, zynix, William Richards, Stanislav Ovsiannikov, Edmond Seymore, Jonathan Leane, Martin Kemka, usrbinkat, Enrico Ros

Thank you to all my generous patrons and donors!

And thank you again to a16z for their generous grant.

<!-- footer end -->

# Original model card: OpenChat's Openchat 3.5 0106

<div align="center">

<img src="https://raw.githubusercontent.com/imoneoi/openchat/master/assets/logo_new.png" style="width: 65%">

<h1>Advancing Open-source Language Models with Mixed-Quality Data</h1>

</div>

<p align="center" style="margin-top: 0px;">

<a href="https://openchat.team">

<img src="https://github.com/alpayariyak/openchat/blob/master/assets/logo_nobg.png?raw=true" alt="OpenChat Logo" style="width:20px; vertical-align: middle; display: inline-block; margin-right: 5px; margin-left: 10px; margin-top: 0px; margin-bottom: 0px;"/>

<span class="link-text" style=" margin-right: 5px;">Online Demo</span>

</a> |

<a href="https://github.com/imoneoi/openchat">

<img src="https://github.githubassets.com/assets/GitHub-Mark-ea2971cee799.png" alt="GitHub Logo" style="width:20px; vertical-align: middle; display: inline-block; margin-right: 5px; margin-left: 5px; margin-top: 0px; margin-bottom: 0px;"/>

<span class="link-text" style=" margin-right: 5px;">GitHub</span>

</a> |

<a href="https://arxiv.org/pdf/2309.11235.pdf">

<img src="https://github.com/alpayariyak/openchat/blob/master/assets/arxiv-logomark-small-square-border.png?raw=true" alt="ArXiv Logo" style="width:20px; vertical-align: middle; display: inline-block; margin-right: 5px; margin-left: 5px; margin-top: 0px; margin-bottom: 0px;"/>

<span class="link-text" style="margin-right: 5px;">Paper</span>

</a> |

<a href="https://discord.gg/pQjnXvNKHY">

<img src="https://cloud.githubusercontent.com/assets/6291467/26705903/96c2d66e-477c-11e7-9f4e-f3c0efe96c9a.png" alt="Discord Logo" style="width:20px; vertical-align: middle; display: inline-block; margin-right: 5px; margin-left: 5px; margin-top: 0px; margin-bottom: 0px;"/>

<span class="link-text">Discord</span>

</a>

</p>

<p align="center" style="margin-top: 0px;">

<span class="link-text" style=" margin-right: 0px; font-size: 0.8em">Sponsored by RunPod</span>

<img src="https://styles.redditmedia.com/t5_6075m3/styles/profileIcon_71syco7c5lt81.png?width=256&height=256&frame=1&auto=webp&crop=256:256,smart&s=24bd3c71dc11edc5d4f88d0cbc1da72ed7ae1969" alt="RunPod Logo" style="width:30px; vertical-align: middle; display: inline-block; margin-right: 5px; margin-left: 5px; margin-top: 0px; margin-bottom: 0px;"/>

</p>

<div style="background-color: white; padding: 0.7em; border-radius: 0.5em; color: black; display: flex; flex-direction: column; justify-content: center; text-align: center; ont-size: 0.5em; border: 0.8em solid #864AF9;">

<a href="https://huggingface.co/openchat/openchat-3.5-0106" style="text-decoration: none; color: black;">

<span style="font-size: 1.7em; font-family: 'Helvetica'; letter-spacing: 0.1em; font-weight: bold; color: black;">OPENCHAT</span><span style="font-size: 1.8em; font-family: 'Helvetica'; color: #3c72db; ">3.5</span>

<span style="font-size: 1.0em; font-family: 'Helvetica'; color: white; background-color: #864AF9; vertical-align: top; border-radius: 6em; padding: 0.066em 0.4em; letter-spacing: 0.1em; font-weight: bold;">0106</span>

<span style="font-size: 0.85em; font-family: 'Helvetica'; color: black;">

<br> 🏆 The Overall Best Performing Open Source 7B Model 🏆

<br> 🤖 Outperforms <span style="font-weight: bold;">ChatGPT</span> (March) and <span style="font-weight: bold;">Grok-1</span> 🤖

<br> 🚀<span style="font-size: 1em; font-family: 'Helvetica'; color: black; font-weight: bold;">15</span>-point improvement in Coding over <span style="font-size: 0.9em;

font-family: 'Helvetica'; color: black; font-weight: bold;">OpenChat-3.5🚀</span>

<br><br><span style="font-size: 1em; font-family: 'Helvetica'; color: #3c72db; font-weight: bold;">New Features</span>

<br> 💡 2 Modes: Coding + Generalist, Mathematical Reasoning 💡

<br> 🧑‍⚖️ Experimental support for Evaluator and Feedback capabilities 🧑‍⚖️

</span>

</a>

</div>

<div style="display: flex; justify-content: center; align-items: center">

<img src="https://raw.githubusercontent.com/imoneoi/openchat/master/assets/openchat-bench-0106.png" style="width: 100%; border-radius: 1em">

</div>

<div>

<h3> Table of Contents</h3>

</div>

1. [Usage](#usage)

2. [Benchmarks](#benchmarks)

3. [Limitations](#limitations)

4. [License](#license)

5. [Citation](#citation)

6. [Acknowledgements](#acknowledgements)

<div align="center">

<h2> Usage </h2>

</div>

To use this model, we highly recommend installing the OpenChat package by following the [installation guide](https://github.com/imoneoi/openchat#installation) in our repository and using the OpenChat OpenAI-compatible API server by running the serving command from the table below. The server is optimized for high-throughput deployment using [vLLM](https://github.com/vllm-project/vllm) and can run on a consumer GPU with 24GB RAM. To enable tensor parallelism, append `--tensor-parallel-size N` to the serving command.

Once started, the server listens at `localhost:18888` for requests and is compatible with the [OpenAI ChatCompletion API specifications](https://platform.openai.com/docs/api-reference/chat). Please refer to the example request below for reference. Additionally, you can use the [OpenChat Web UI](https://github.com/imoneoi/openchat#web-ui) for a user-friendly experience.

If you want to deploy the server as an online service, you can use `--api-keys sk-KEY1 sk-KEY2 ...` to specify allowed API keys and `--disable-log-requests --disable-log-stats --log-file openchat.log` for logging only to a file. For security purposes, we recommend using an [HTTPS gateway](https://fastapi.tiangolo.com/es/deployment/concepts/#security-https) in front of the server.

| Model | Size | Context | Weights | Serving |

|-------------------|------|---------|------------------------------------------------------------------|------------------------------------------------------------------------------------------------------------------|

| OpenChat-3.5-0106 | 7B | 8192 | [Huggingface](https://huggingface.co/openchat/openchat-3.5-0106) | `python -m ochat.serving.openai_api_server --model openchat/openchat-3.5-0106 --engine-use-ray --worker-use-ray` |

<details>

<summary>Example request (click to expand)</summary>

💡 **Default Mode (GPT4 Correct)**: Best for coding, chat and general tasks

```bash

curl http://localhost:18888/v1/chat/completions \

-H "Content-Type: application/json" \

-d '{

"model": "openchat_3.5",

"messages": [{"role": "user", "content": "You are a large language model named OpenChat. Write a poem to describe yourself"}]

}'

```

🧮 **Mathematical Reasoning Mode**: Tailored for solving math problems

```bash

curl http://localhost:18888/v1/chat/completions \

-H "Content-Type: application/json" \

-d '{

"model": "openchat_3.5",

"condition": "Math Correct",

"messages": [{"role": "user", "content": "10.3 − 7988.8133 = "}]

}'

```

</details>
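The same requests can be issued from Python. The following is a minimal sketch using only the standard library; the helper names `build_chat_request` and `send_chat_request` are illustrative and not part of the OpenChat package, and it assumes the server is already running at `localhost:18888` as described above.

```python
import json
import urllib.request

# Assumed default address of the OpenChat API server (see above)
API_URL = "http://localhost:18888/v1/chat/completions"


def build_chat_request(content, condition=None, model="openchat_3.5"):
    """Build the same JSON payload as the curl examples."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": content}],
    }
    if condition is not None:
        # For example "Math Correct" for the mathematical reasoning mode
        payload["condition"] = condition
    return payload


def send_chat_request(payload, url=API_URL):
    """POST the payload to the server and return the decoded JSON reply."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))
```

With a server running, `send_chat_request(build_chat_request("10.3 − 7988.8133 = ", condition="Math Correct"))` should return a reply in the ChatCompletion format, where the generated text is expected under `choices[0]["message"]["content"]`.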

### Conversation templates

💡 **Default Mode (GPT4 Correct)**: Best for coding, chat and general tasks

```

GPT4 Correct User: Hello<|end_of_turn|>GPT4 Correct Assistant: Hi<|end_of_turn|>GPT4 Correct User: How are you today?<|end_of_turn|>GPT4 Correct Assistant:

```

🧮 **Mathematical Reasoning Mode**: Tailored for solving math problems

```

Math Correct User: 10.3 − 7988.8133=<|end_of_turn|>Math Correct Assistant:

```

⚠️ **Notice:** Remember to set `<|end_of_turn|>` as the end-of-generation token.
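If you build prompts by hand, the two templates above can be produced with a small helper. This is a rough sketch, not code from the OpenChat repository: the function name `format_conversation` is hypothetical, and it only handles `user` and `assistant` roles and does not add a beginning-of-sequence token.

```python
END_OF_TURN = "<|end_of_turn|>"


def format_conversation(messages, prefix="GPT4 Correct"):
    """Render a list of {"role", "content"} dicts into the template string.

    `prefix` is "GPT4 Correct" for the default mode or "Math Correct"
    for the mathematical reasoning mode.
    """
    parts = []
    for message in messages:
        role = "User" if message["role"] == "user" else "Assistant"
        parts.append(f"{prefix} {role}: {message['content']}{END_OF_TURN}")
    # Leave the final assistant turn open so the model continues from here
    parts.append(f"{prefix} Assistant:")
    return "".join(parts)
```

Passing `prefix="Math Correct"` yields the mathematical reasoning template.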

The default (GPT4 Correct) template is also available as the integrated `tokenizer.chat_template`,

which can be used instead of manually specifying the template:

```python

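from transformers import AutoTokenizer

# Load the tokenizer shipped with this model; it carries the chat template
tokenizer = AutoTokenizer.from_pretrained("openchat/openchat-3.5-0106")

messages = [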

{"role": "user", "content": "Hello"},

{"role": "assistant", "content": "Hi"},

{"role": "user", "content": "How are you today?"}

]

tokens = tokenizer.apply_chat_template(messages, add_generation_prompt=True)

assert tokens == [1, 420, 6316, 28781, 3198, 3123, 1247, 28747, 22557, 32000, 420, 6316, 28781, 3198, 3123, 21631, 28747, 15359, 32000, 420, 6316, 28781, 3198, 3123, 1247, 28747, 1602, 460, 368, 3154, 28804, 32000, 420, 6316, 28781, 3198, 3123, 21631, 28747]

```

<div align="center">

<h2> (Experimental) Evaluator / Feedback Capabilities </h2>

</div>

We've included evaluator capabilities in this release to advance open-source models as evaluators. You can use `Default Mode (GPT4 Correct)` with the following prompt (same as [Prometheus](https://huggingface.co/datasets/kaist-ai/Feedback-Collection)) to evaluate a response.

```

###Task Description:

An instruction (might include an Input inside it), a response to evaluate, a reference answer that gets a score of 5, and a score rubric representing a evaluation criteria are given.

1. Write a detailed feedback that assess the quality of the response strictly based on the given score rubric, not evaluating in general.

2. After writing a feedback, write a score that is an integer between 1 and 5. You should refer to the score rubric.

3. The output format should look as follows: "Feedback: (write a feedback for criteria) [RESULT] (an integer number between 1 and 5)"

4. Please do not generate any other opening, closing, and explanations.

###The instruction to evaluate:

{orig_instruction}

###Response to evaluate:

{orig_response}

###Reference Answer (Score 5):

{orig_reference_answer}

###Score Rubrics:

[{orig_criteria}]

Score 1: {orig_score1_description}

Score 2: {orig_score2_description}

Score 3: {orig_score3_description}

Score 4: {orig_score4_description}

Score 5: {orig_score5_description}

###Feedback:

```
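Because the prompt asks for output of the form `Feedback: ... [RESULT] n`, the score can be separated from the feedback text afterwards. The snippet below is one possible way to do that; `parse_feedback` is a hypothetical helper, and it assumes the model actually follows the requested output format.

```python
import re


def parse_feedback(output):
    """Split evaluator output into (feedback_text, score).

    Returns a score of None when no "[RESULT] n" marker with n in 1..5
    is found.
    """
    match = re.search(r"\[RESULT\]\s*([1-5])\b", output)
    if match is None:
        return output.strip(), None
    feedback = output[: match.start()].strip()
    # The prompt already ends with "###Feedback:", so the prefix is optional
    if feedback.startswith("Feedback:"):
        feedback = feedback[len("Feedback:"):].strip()
    return feedback, int(match.group(1))
```

Outputs that do not contain a valid score are returned unchanged with `None`, so they can be retried or discarded.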

<div align="center">

<h2> Benchmarks </h2>

</div>

| Model | # Params | Average | MT-Bench | HumanEval | BBH MC | AGIEval | TruthfulQA | MMLU | GSM8K | BBH CoT |

|-----------------------|----------|----------|----------|-----------|----------|----------|------------|----------|----------|----------|

| **OpenChat-3.5-0106** | **7B** | **64.5** | 7.8 | **71.3** | **51.5** | **49.1** | 61.0 | 65.8 | **77.4** | 62.2 |

| OpenChat-3.5-1210 | **7B** | 63.8 | 7.76 | 68.9 | 49.5 | 48.0 | **61.8** | 65.3 | 77.3 | 61.8 |

| OpenChat-3.5 | **7B** | 61.6 | 7.81 | 55.5 | 47.6 | 47.4 | 59.1 | 64.3 | 77.3 | 63.5 |

| ChatGPT (March)* | ???B | 61.5 | **7.94** | 48.1 | 47.6 | 47.1 | 57.7 | **67.3** | 74.9 | **70.1** |

| | | | | | | | | | | |

| OpenHermes 2.5 | 7B | 59.3 | 7.54 | 48.2 | 49.4 | 46.5 | 57.5 | 63.8 | 73.5 | 59.9 |

| OpenOrca Mistral | 7B | 52.7 | 6.86 | 38.4 | 49.4 | 42.9 | 45.9 | 59.3 | 59.1 | 58.1 |

| Zephyr-β^ | 7B | 34.6 | 7.34 | 22.0 | 40.6 | 39.0 | 40.8 | 39.8 | 5.1 | 16.0 |

| Mistral | 7B | - | 6.84 | 30.5 | 39.0 | 38.0 | - | 60.1 | 52.2 | - |

<details>

<summary>Evaluation Details (click to expand)</summary>

*: ChatGPT (March) results are from [GPT-4 Technical Report](https://arxiv.org/abs/2303.08774), [Chain-of-Thought Hub](https://github.com/FranxYao/chain-of-thought-hub), and our evaluation. Please note that ChatGPT is not a fixed baseline and evolves rapidly over time.

^: Zephyr-β often fails to follow few-shot CoT instructions, likely because it was aligned with only chat data but not trained on few-shot data.

**: Mistral and Open-source SOTA results are taken from reported results in instruction-tuned model papers and official repositories.

All models are evaluated in chat mode (e.g. with the respective conversation template applied). All zero-shot benchmarks follow the same setting as in the AGIEval paper and Orca paper. CoT tasks use the same configuration as Chain-of-Thought Hub, HumanEval is evaluated with EvalPlus, and MT-bench is run using FastChat. To reproduce our results, follow the instructions in [our repository](https://github.com/imoneoi/openchat/#benchmarks).

</details>

<div>

<h3>HumanEval+</h3>

</div>

| Model | Size | HumanEval+ pass@1 |

|-----------------------------|--------|-------------------|

| **OpenChat-3.5-0106** | **7B** | **65.9** |

| ChatGPT (December 12, 2023) | ???B | 64.6 |

| WizardCoder-Python-34B-V1.0 | 34B | 64.6 |

| OpenChat 3.5 1210 | 7B | 63.4 |

| OpenHermes 2.5 | 7B | 41.5 |

<div>

<h3>OpenChat-3.5 vs. Grok</h3>

</div>

🔥 OpenChat-3.5-0106 (7B) now outperforms Grok-0 (33B) on **all 4 benchmarks** and Grok-1 (???B) on average and **3/4 benchmarks**.

| | License | # Param | Average | MMLU | HumanEval | MATH | GSM8k |

|-----------------------|-------------|---------|----------|--------|-----------|----------|----------|

| **OpenChat-3.5-0106** | Apache-2.0 | **7B** | **61.0** | 65.8 | **71.3** | **29.3** | **77.4** |

| OpenChat-3.5-1210 | Apache-2.0 | **7B** | 60.1 | 65.3 | 68.9 | 28.9 | 77.3 |

| OpenChat-3.5 | Apache-2.0 | **7B** | 56.4 | 64.3 | 55.5 | 28.6 | 77.3 |

| Grok-0 | Proprietary | 33B | 44.5 | 65.7 | 39.7 | 15.7 | 56.8 |

| Grok-1 | Proprietary | ???B | 55.8 | **73** | 63.2 | 23.9 | 62.9 |

*: Grok results are reported by [X.AI](https://x.ai/).
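The comparison above can be checked directly from the table. The short sketch below copies the four benchmark scores and counts on how many of them OpenChat-3.5-0106 is ahead; the averages appear to be plain means of the four columns, which is an inference from the numbers rather than something stated in the table.

```python
# Scores copied from the table above
scores = {
    "OpenChat-3.5-0106": {"MMLU": 65.8, "HumanEval": 71.3, "MATH": 29.3, "GSM8k": 77.4},
    "Grok-0": {"MMLU": 65.7, "HumanEval": 39.7, "MATH": 15.7, "GSM8k": 56.8},
    "Grok-1": {"MMLU": 73.0, "HumanEval": 63.2, "MATH": 23.9, "GSM8k": 62.9},
}


def average(model):
    """Unweighted mean over the four benchmarks."""
    values = scores[model].values()
    return sum(values) / len(values)


def wins(model, baseline):
    """Number of benchmarks on which `model` scores higher than `baseline`."""
    return sum(scores[model][name] > scores[baseline][name] for name in scores[model])


for baseline in ("Grok-0", "Grok-1"):
    print(baseline, wins("OpenChat-3.5-0106", baseline), "of 4 benchmarks")
```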

<div align="center">

<h2> Limitations </h2>

</div>

**Foundation Model Limitations**

Despite its advanced capabilities, OpenChat is still bound by the limitations inherent in its foundation models. These limitations may impact the model's performance in areas such as:

- Complex reasoning

- Mathematical and arithmetic tasks

- Programming and coding challenges

**Hallucination of Non-existent Information**

OpenChat may sometimes generate information that does not exist or is not accurate, also known as "hallucination". Users should be aware of this possibility and verify any critical information obtained from the model.

**Safety**

OpenChat may sometimes generate harmful content, hate speech, or biased responses, or answer unsafe questions. It's crucial to apply additional AI safety measures in use cases that require safe and moderated responses.

<div align="center">

<h2> License </h2>

</div>

Our OpenChat 3.5 code and models are distributed under the Apache License 2.0.

<div align="center">

<h2> Citation </h2>

</div>

```

@article{wang2023openchat,

title={OpenChat: Advancing Open-source Language Models with Mixed-Quality Data},

author={Wang, Guan and Cheng, Sijie and Zhan, Xianyuan and Li, Xiangang and Song, Sen and Liu, Yang},

journal={arXiv preprint arXiv:2309.11235},

year={2023}

}

```

<div align="center">

<h2> 💌 Main Contributor </h2>

</div>

* Wang Guan [[email protected]], Cheng Sijie [[email protected]], Alpay Ariyak [[email protected]]

* We look forward to hearing from you and collaborating on this exciting project!

|

mradermacher/GOAT-70B-Storytelling-i1-GGUF | mradermacher | 2024-05-06T06:22:15Z | 690 | 3 | transformers | [

"transformers",

"gguf",

"facebook",

"meta",

"pytorch",

"llama",

"llama-2",

"Storywriter",

"en",

"base_model:GOAT-AI/GOAT-70B-Storytelling",

"license:llama2",

"endpoints_compatible",

"region:us"

] | null | 2024-02-23T03:25:30Z | ---

base_model: GOAT-AI/GOAT-70B-Storytelling

language:

- en

library_name: transformers

license: llama2

model_type: llama

quantized_by: mradermacher

tags:

- facebook

- meta

- pytorch

- llama

- llama-2

- Storywriter

---

## About

weighted/imatrix quants of https://huggingface.co/GOAT-AI/GOAT-70B-Storytelling

<!-- provided-files -->

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.
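For the multi-part files, concatenation can also be done from Python. This is a minimal sketch that assumes the parts are plain byte-wise splits that only need to be joined in order; `concatenate_parts` is an illustrative name, not a function from any library.

```python
import shutil
from pathlib import Path


def concatenate_parts(part_paths, output_path):
    """Join split files, in the order given, into a single output file."""
    with open(output_path, "wb") as output:
        for part in part_paths:
            with open(part, "rb") as source:
                # Stream in chunks so large parts are never fully loaded in memory
                shutil.copyfileobj(source, output)
    return Path(output_path)
```

For example, the two Q6_K parts listed in the table below would be passed as `["GOAT-70B-Storytelling.i1-Q6_K.gguf.part1of2", "GOAT-70B-Storytelling.i1-Q6_K.gguf.part2of2"]`, with `GOAT-70B-Storytelling.i1-Q6_K.gguf` as the output path.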

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-IQ1_S.gguf) | i1-IQ1_S | 15.0 | for the desperate |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-IQ1_M.gguf) | i1-IQ1_M | 16.0 | mostly desperate |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-IQ2_XXS.gguf) | i1-IQ2_XXS | 18.7 | |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-IQ2_XS.gguf) | i1-IQ2_XS | 20.8 | |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-IQ2_S.gguf) | i1-IQ2_S | 21.8 | |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-IQ2_M.gguf) | i1-IQ2_M | 23.7 | |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-Q2_K.gguf) | i1-Q2_K | 25.9 | IQ3_XXS probably better |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-IQ3_XXS.gguf) | i1-IQ3_XXS | 27.4 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-IQ3_XS.gguf) | i1-IQ3_XS | 28.6 | |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-Q3_K_XS.gguf) | i1-Q3_K_XS | 28.7 | |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-IQ3_S.gguf) | i1-IQ3_S | 30.3 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-Q3_K_S.gguf) | i1-Q3_K_S | 30.3 | IQ3_XS probably better |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-IQ3_M.gguf) | i1-IQ3_M | 31.4 | |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-Q3_K_M.gguf) | i1-Q3_K_M | 33.7 | IQ3_S probably better |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-Q3_K_L.gguf) | i1-Q3_K_L | 36.6 | IQ3_M probably better |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-IQ4_XS.gguf) | i1-IQ4_XS | 37.2 | |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-IQ4_NL.gguf) | i1-IQ4_NL | 39.4 | prefer IQ4_XS |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-Q4_0.gguf) | i1-Q4_0 | 39.4 | fast, low quality |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-Q4_K_S.gguf) | i1-Q4_K_S | 39.7 | optimal size/speed/quality |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-Q4_K_M.gguf) | i1-Q4_K_M | 41.8 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-Q5_K_S.gguf) | i1-Q5_K_S | 47.9 | |

| [GGUF](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-Q5_K_M.gguf) | i1-Q5_K_M | 49.2 | |

| [PART 1](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-Q6_K.gguf.part1of2) [PART 2](https://huggingface.co/mradermacher/GOAT-70B-Storytelling-i1-GGUF/resolve/main/GOAT-70B-Storytelling.i1-Q6_K.gguf.part2of2) | i1-Q6_K | 57.0 | practically like static Q6_K |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

Felladrin/gguf-TinyMistral-248M-Chat-v2 | Felladrin | 2024-06-15T10:19:12Z | 690 | 0 | null | [

"gguf",

"base_model:Felladrin/TinyMistral-248M-Chat-v2",

"license:apache-2.0",

"region:us"

] | null | 2024-04-02T07:49:25Z | ---

license: apache-2.0

base_model: Felladrin/TinyMistral-248M-Chat-v2

---

GGUF version of [Felladrin/TinyMistral-248M-Chat-v2](https://huggingface.co/Felladrin/TinyMistral-248M-Chat-v2).

## Try it with [llama.cpp](https://github.com/ggerganov/llama.cpp)

```bash

brew install ggerganov/ggerganov/llama.cpp

```

```bash

llama-cli \

--hf-repo Felladrin/gguf-TinyMistral-248M-Chat-v2 \

--model TinyMistral-248M-Chat-v2.Q8_0.gguf \

--random-prompt \

--temp 1.3 \

--dynatemp-range 1.2 \

--top-k 0 \

--top-p 1 \

--min-p 0.1 \

--typical 0.85 \

--mirostat 2 \

--mirostat-ent 3.5 \

--repeat-penalty 1.1 \

--repeat-last-n -1 \

-n 256

```

|

QuantFactory/Matter-0.2-7B-GGUF | QuantFactory | 2024-04-02T17:29:21Z | 690 | 0 | transformers | [

"transformers",

"gguf",

"mistral",

"conversational",

"text-generation-inference",

"text-generation",

"en",

"dataset:0-hero/Matter-0.2",

"base_model:0-hero/Matter-0.2-7B",

"license:apache-2.0",

"region:us"

] | text-generation | 2024-04-02T14:57:56Z | ---

license: apache-2.0

datasets:

- 0-hero/Matter-0.2

language:

- en

inference: false

library_name: transformers

pipeline_tag: text-generation

base_model: 0-hero/Matter-0.2-7B

tags:

- mistral

- conversational

- text-generation-inference

---

## Matter 7B - 0.2 - GGUF (Mistral 7B 0.2 Finetune)

## This is the GGUF version of the model, made for the llama.cpp inference engine.

# Model Description

Matter 7B 0.2 is a full finetune on the **Matter 0.2 dataset** (not yet released), which is curated from over 35 datasets, analyzing >6B tokens.

### Training

Prompt format: This model uses ChatML prompt format.

```

<|im_start|>system

You are a helpful AI assistant.<|im_end|>

<|im_start|>user

{prompt}<|im_end|>

<|im_start|>assistant

```
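The template above can be assembled programmatically. The helper below is a small sketch; `build_chatml_prompt` is a hypothetical name, and the trailing newline after the final `assistant` tag is an assumption about how generation is usually started.

```python
def build_chatml_prompt(user_prompt, system_prompt="You are a helpful AI assistant."):
    """Return a single-turn ChatML prompt ready for completion."""
    return (
        f"<|im_start|>system\n{system_prompt}<|im_end|>\n"
        f"<|im_start|>user\n{user_prompt}<|im_end|>\n"
        # The assistant turn is left open for the model to fill in
        "<|im_start|>assistant\n"
    )
```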

### Function Calling

The model also supports function calling, using the following additional tokens.

Model function call tokens

- `<tool_call>` - Function call start token

- `</tool_call>` - Function call end token

Function call response tokens

- `<tool_response>` - Function response start token

- `</tool_response>` - Function response end token
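On the application side, these tokens can be handled with simple string processing. The sketch below uses hypothetical helper names and returns the raw text between the tags without parsing it, since the exact payload formatting produced by the model is not guaranteed to be strict JSON.

```python
import re

# Non-greedy match so several calls in one reply are captured separately
TOOL_CALL_PATTERN = re.compile(r"<tool_call>(.*?)</tool_call>", re.DOTALL)


def extract_tool_calls(text):
    """Return the raw payload of every <tool_call>...</tool_call> span."""
    return [payload.strip() for payload in TOOL_CALL_PATTERN.findall(text)]


def wrap_tool_response(payload):
    """Wrap a function result so it can be sent back to the model."""
    return f"<tool_response>{payload}</tool_response>"
```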

Example

```

<|im_start|>system

You are a helpful assistant with access to the following functions. Use them if required -

{ "name": "get_news_headlines",

"description": "Get the latest news headlines",

"parameters":

{ "type": "object",

"properties":

{ "country":

{ "type": "string",

"description": "The country for which to fetch news"

}

},

"required": [ "country" ]

}

}

<|im_end|>

<|im_start|>user

Can you tell me the latest news headlines for the United States?<|im_end|>

<|im_start|>assistant

<tool_call>{"name": "get_news_headlines", "arguments": '{"country": "United States"}'}</tool_call><|im_end|>

<|im_start|>user

<tool_response>{

"headlines":

[

"Biden announces new vaccine mandates",

"Hurricane Ida devastates Louisiana",

"Apple unveils new iPhone",

"NASA's Perseverance rover collects first Mars rock sample"

]

}</tool_response>

<|im_end|>

<|im_start|>assistant

Here are the latest news headlines for the United States:

1. Biden announces new vaccine mandates

2. Hurricane Ida devastates Louisiana

3. Apple unveils new iPhone

4. NASA's Perseverance rover collects first Mars rock sample

<|im_end|>

``` |

DrNicefellow/Mistral-1-from-Mixtral-8x7B-v0.1 | DrNicefellow | 2024-04-11T12:08:18Z | 690 | 1 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-04-11T04:31:51Z | ---

license: apache-2.0

---

# Mixtral-8x7B-v0.1: Model 1

## Model Description

This model is the 1st extracted standalone model from the [mistralai/Mixtral-8x7B-v0.1](https://huggingface.co/mistralai/Mixtral-8x7B-v0.1), using the [Mixtral Model Expert Extractor tool](https://github.com/MeNicefellow/Mixtral-Model-Expert-Extractor) I made. It is constructed by selecting the first expert from each Mixture of Experts (MoE) layer. The extraction of this model is experimental. It is expected to be worse than Mistral-7B.

## Model Architecture

The architecture of this model includes:

- Multi-head attention layers derived from the base Mixtral model.

- The first expert from each MoE layer, intended to provide a balanced approach to language understanding and generation tasks.

- Additional layers and components as required to ensure the model's functionality outside the MoE framework.
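To illustrate the idea of keeping one expert per MoE layer, the sketch below remaps parameter names in a checkpoint dictionary. It is not the extractor tool's actual code: the key patterns and the `w1`/`w2`/`w3` to `gate_proj`/`down_proj`/`up_proj` mapping are assumptions based on the naming used by the Hugging Face `transformers` Mixtral and Mistral implementations, and `select_expert` is a hypothetical name.

```python
import re

# Assumed Mixtral key layout: model.layers.<i>.block_sparse_moe.experts.<j>.w<k>.weight
EXPERT_KEY = re.compile(
    r"^(model\.layers\.\d+)\.block_sparse_moe\.experts\.(\d+)\.(w[123])\.weight$"
)
# Assumed correspondence between expert weights and dense Mistral MLP weights
PROJECTION_NAMES = {"w1": "gate_proj", "w2": "down_proj", "w3": "up_proj"}


def select_expert(state_dict, expert_index):
    """Keep one expert per layer and rename it as a dense MLP."""
    selected = {}
    for key, tensor in state_dict.items():
        match = EXPERT_KEY.match(key)
        if match:
            layer, index, weight = match.groups()
            if int(index) == expert_index:
                selected[f"{layer}.mlp.{PROJECTION_NAMES[weight]}.weight"] = tensor
        elif ".block_sparse_moe.gate." not in key:
            # Attention, norms, and embeddings are copied; the router is dropped
            selected[key] = tensor
    return selected
```

The function works on any mapping from parameter names to values, so it can be tried on a dictionary of placeholder strings before touching real weights.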

### Example

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "DrNicefellow/Mistral-1-from-Mixtral-8x7B-v0.1"

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(model_name)

text = "Today is a pleasant"

input_ids = tokenizer.encode(text, return_tensors='pt')

output = model.generate(input_ids)

print(tokenizer.decode(output[0], skip_special_tokens=True))

```


## Discord Server

Join our Discord server [here](https://discord.gg/xhcBDEM3).

## License

This model is open-sourced under the Apache 2.0 License. See the LICENSE file for more details.

|

DrNicefellow/Mistral-7-from-Mixtral-8x7B-v0.1 | DrNicefellow | 2024-04-12T16:24:40Z | 690 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-04-11T12:07:45Z | ---

license: apache-2.0

---

# Mixtral-8x7B-v0.1: Model 7

## Model Description

This model is the 7th extracted standalone model from the [mistralai/Mixtral-8x7B-v0.1](https://huggingface.co/mistralai/Mixtral-8x7B-v0.1), using the [Mixtral Model Expert Extractor tool](https://github.com/MeNicefellow/Mixtral-Model-Expert-Extractor) I made. It is constructed by selecting the seventh expert from each Mixture of Experts (MoE) layer. The extraction of this model is experimental. It is expected to be worse than Mistral-7B.

## Model Architecture

The architecture of this model includes:

- Multi-head attention layers derived from the base Mixtral model.

- The seventh expert from each MoE layer, intended to provide a balanced approach to language understanding and generation tasks.

- Additional layers and components as required to ensure the model's functionality outside the MoE framework.

### Example

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "DrNicefellow/Mistral-7-from-Mixtral-8x7B-v0.1"

tokenizer = AutoTokenizer.from_pretrained(model_name)

model = AutoModelForCausalLM.from_pretrained(model_name)

text = "Today is a pleasant"

input_ids = tokenizer.encode(text, return_tensors='pt')

output = model.generate(input_ids)

print(tokenizer.decode(output[0], skip_special_tokens=True))

```


## Discord Server

Join our Discord server [here](https://discord.gg/xhcBDEM3).

## License

This model is open-sourced under the Apache 2.0 License. See the LICENSE file for more details.

|

UnfilteredAI/Promt-generator | UnfilteredAI | 2024-04-15T09:16:41Z | 690 | 8 | transformers | [

"transformers",

"pytorch",

"safetensors",

"bloom",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-04-15T09:14:35Z | Entry not found |

AlanRobotics/nanit | AlanRobotics | 2024-04-19T05:55:43Z | 690 | 0 | transformers | [

"transformers",

"safetensors",

"phi",

"text-generation",

"custom_code",

"license:other",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-04-18T19:51:31Z | ---

license: other

library_name: transformers

model-index:

- name: nanit

results:

- task:

type: text-generation

name: Text Generation

dataset:

name: AI2 Reasoning Challenge (25-Shot)

type: ai2_arc

config: ARC-Challenge

split: test

args:

num_few_shot: 25

metrics:

- type: acc_norm

value: 58.96

name: normalized accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=AlanRobotics/nanit

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: HellaSwag (10-Shot)

type: hellaswag

split: validation

args:

num_few_shot: 10

metrics:

- type: acc_norm

value: 74.68

name: normalized accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=AlanRobotics/nanit

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: MMLU (5-Shot)

type: cais/mmlu

config: all

split: test

args:

num_few_shot: 5

metrics:

- type: acc

value: 57.67

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=AlanRobotics/nanit

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: TruthfulQA (0-shot)

type: truthful_qa

config: multiple_choice

split: validation

args:

num_few_shot: 0

metrics:

- type: mc2

value: 47.89

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=AlanRobotics/nanit

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: Winogrande (5-shot)

type: winogrande

config: winogrande_xl

split: validation

args:

num_few_shot: 5

metrics:

- type: acc

value: 76.16

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=AlanRobotics/nanit

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: GSM8k (5-shot)

type: gsm8k

config: main

split: test

args:

num_few_shot: 5

metrics:

- type: acc

value: 55.04

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=AlanRobotics/nanit

name: Open LLM Leaderboard

---

Hello, my name is nanit. I am an AI assistant designed to help you with various tasks and provide information. I can assist with answering questions, providing recommendations, and performing calculations. I am constantly learning and improving to better serve you. If you have any specific questions or tasks you would like assistance with, please let me know.

# [Open LLM Leaderboard Evaluation Results](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)

Detailed results can be found [here](https://huggingface.co/datasets/open-llm-leaderboard/details_AlanRobotics__nanit)

| Metric |Value|

|---------------------------------|----:|

|Avg. |61.73|

|AI2 Reasoning Challenge (25-Shot)|58.96|

|HellaSwag (10-Shot) |74.68|

|MMLU (5-Shot) |57.67|

|TruthfulQA (0-shot) |47.89|

|Winogrande (5-shot) |76.16|

|GSM8k (5-shot) |55.04|
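The reported average is consistent with an unweighted mean of the six benchmark scores, which can be verified in a few lines:

```python
# Scores copied from the table above
scores = {
    "AI2 Reasoning Challenge (25-Shot)": 58.96,
    "HellaSwag (10-Shot)": 74.68,
    "MMLU (5-Shot)": 57.67,
    "TruthfulQA (0-shot)": 47.89,
    "Winogrande (5-shot)": 76.16,
    "GSM8k (5-shot)": 55.04,
}

average = sum(scores.values()) / len(scores)
print(round(average, 2))  # 61.73
```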

|

PrunaAI/codegemma-2b-GGUF-smashed | PrunaAI | 2024-04-18T22:17:13Z | 690 | 1 | null | [

"gguf",

"pruna-ai",

"region:us"

] | null | 2024-04-18T21:58:30Z | ---

thumbnail: "https://assets-global.website-files.com/646b351987a8d8ce158d1940/64ec9e96b4334c0e1ac41504_Logo%20with%20white%20text.svg"

metrics:

- memory_disk

- memory_inference

- inference_latency

- inference_throughput

- inference_CO2_emissions

- inference_energy_consumption

tags:

- pruna-ai

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<a href="https://www.pruna.ai/" target="_blank" rel="noopener noreferrer">

<img src="https://i.imgur.com/eDAlcgk.png" alt="PrunaAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</a>

</div>

<!-- header end -->

[Twitter](https://twitter.com/PrunaAI)

[GitHub](https://github.com/PrunaAI)

[LinkedIn](https://www.linkedin.com/company/93832878/admin/feed/posts/?feedType=following)

[Discord](https://discord.gg/CP4VSgck)

# Simply make AI models cheaper, smaller, faster, and greener!

- Give a thumbs up if you like this model!

- Contact us and tell us which model to compress next [here](https://www.pruna.ai/contact).

- Request access to easily compress your *own* AI models [here](https://z0halsaff74.typeform.com/pruna-access?typeform-source=www.pruna.ai).

- Read the documentation to learn more [here](https://pruna-ai-pruna.readthedocs-hosted.com/en/latest/).

- Join the Pruna AI community on Discord [here](https://discord.gg/CP4VSgck) to share feedback/suggestions or get help.

**Frequently Asked Questions**

- ***How does the compression work?*** The model is compressed with GGUF.

- ***How does the model quality change?*** The quality of the model output might vary compared to the base model.

- ***What is the model format?*** We use GGUF format.

- ***What calibration data has been used?*** If needed by the compression method, we used WikiText as the calibration data.

- ***How to compress my own models?*** You can request premium access to more compression methods and tech support for your specific use-cases [here](https://z0halsaff74.typeform.com/pruna-access?typeform-source=www.pruna.ai).

# Downloading and running the models

You can download the individual files from the Files & versions section. Here is a list of the different versions we provide. For more info, check out [this chart](https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9) and [this guide](https://www.reddit.com/r/LocalLLaMA/comments/1ba55rj/overview_of_gguf_quantization_methods/):

| Quant type | Description |

|------------|--------------------------------------------------------------------------------------------|

| Q5_K_M | High quality, recommended. |

| Q5_K_S | High quality, recommended. |

| Q4_K_M | Good quality, uses about 4.83 bits per weight, recommended. |

| Q4_K_S | Slightly lower quality with more space savings, recommended. |

| IQ4_NL | Decent quality, slightly smaller than Q4_K_S with similar performance, recommended. |

| IQ4_XS | Decent quality, smaller than Q4_K_S with similar performance, recommended. |

| Q3_K_L | Lower quality but usable, good for low RAM availability. |

| Q3_K_M | Even lower quality. |

| IQ3_M | Medium-low quality, new method with decent performance comparable to Q3_K_M. |

| IQ3_S | Lower quality, new method with decent performance, recommended over Q3_K_S quant, same size with better performance. |

| Q3_K_S | Low quality, not recommended. |

| IQ3_XS | Lower quality, new method with decent performance, slightly better than Q3_K_S. |

| Q2_K | Very low quality but surprisingly usable. |

## How to download GGUF files?

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

* LM Studio

* LoLLMS Web UI

* Faraday.dev

- **Option A** - Downloading in `text-generation-webui`:

- **Step 1**: Under Download Model, you can enter the model repo: PrunaAI/codegemma-2b-GGUF-smashed and below it, a specific filename to download, such as: codegemma-2b.IQ3_M.gguf.

- **Step 2**: Then click Download.

- **Option B** - Downloading on the command line (including multiple files at once):

- **Step 1**: We recommend using the `huggingface-hub` Python library:

```shell

pip3 install huggingface-hub

```

- **Step 2**: Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download PrunaAI/codegemma-2b-GGUF-smashed codegemma-2b.IQ3_M.gguf --local-dir . --local-dir-use-symlinks False

```

<details>

<summary>More advanced huggingface-cli download usage (click to read)</summary>

Alternatively, you can also download multiple files at once with a pattern:

```shell

huggingface-cli download PrunaAI/codegemma-2b-GGUF-smashed --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download PrunaAI/codegemma-2b-GGUF-smashed codegemma-2b.IQ3_M.gguf --local-dir . --local-dir-use-symlinks False

```

Windows Command Line users: You can set the environment variable by running `set HF_HUB_ENABLE_HF_TRANSFER=1` before the download command.

</details>
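To preview which files a pattern such as `*Q4_K*gguf` would select, the glob semantics can be imitated with the standard library. This is only an illustration of the pattern matching, not the CLI's own implementation; `select_files` is a hypothetical helper, and the file names in the usage note are inferred from the naming of `codegemma-2b.IQ3_M.gguf`.

```python
from fnmatch import fnmatch


def select_files(filenames, pattern):
    """Return the file names that match a shell-style glob pattern."""
    return [name for name in filenames if fnmatch(name, pattern)]
```

For instance, given `["codegemma-2b.Q4_K_M.gguf", "codegemma-2b.Q4_K_S.gguf", "codegemma-2b.IQ3_M.gguf"]`, the pattern `*Q4_K*gguf` keeps only the two Q4_K files.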

<!-- README_GGUF.md-how-to-download end -->

<!-- README_GGUF.md-how-to-run start -->

## How to run model in GGUF format?

- **Option A** - Introductory example with `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 35 -m codegemma-2b.IQ3_M.gguf --color -c 32768 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "<s>[INST] {prompt} [/INST]"

```

Change `-ngl 35` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 32768` to the desired sequence length. For extended sequence models - eg 8K, 16K, 32K - the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically. Note that longer sequence lengths require much more resources, so you may need to reduce this value.

For a chat-style conversation, replace the `-p <PROMPT>` argument with `-i -ins`.

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md)
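If you launch `llama.cpp` from a script, the flag choices described above can be collected in one place. The sketch below only assembles the argument list and does not run anything. The helper name `build_llama_cpp_args` and its parameters are hypothetical; the flags themselves are the ones used in the command above.

```python
import shlex


def build_llama_cpp_args(model_path, prompt, n_gpu_layers=None, ctx_size=32768):
    """Assemble a llama.cpp command line (hypothetical helper, not part of llama.cpp)."""
    args = ["./main"]
    # Only pass -ngl when GPU offloading is wanted; leave it out on CPU-only systems.
    if n_gpu_layers:
        args += ["-ngl", str(n_gpu_layers)]
    args += [
        "-m", model_path,
        "--color",
        "-c", str(ctx_size),  # sequence length; lower it if memory is tight
        "--temp", "0.7",
        "--repeat_penalty", "1.1",
        "-n", "-1",
        "-p", prompt,
    ]
    return args


cpu_args = build_llama_cpp_args("codegemma-2b.IQ3_M.gguf", "<s>[INST] Hello [/INST]", ctx_size=4096)
gpu_args = build_llama_cpp_args("codegemma-2b.IQ3_M.gguf", "<s>[INST] Hello [/INST]", n_gpu_layers=35)

# Print a copy-pasteable shell command.
print(shlex.join(gpu_args))
```

The resulting list can be passed to `subprocess.run` once the binary and the model file are in place.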

- **Option B** - Running in `text-generation-webui`

Further instructions can be found in the text-generation-webui documentation, here: [text-generation-webui/docs/04 ‐ Model Tab.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/04%20-%20Model%20Tab.md#llamacpp).

- **Option C** - Running from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries. Note that at the time of writing (Nov 27th 2023), ctransformers has not been updated for some time and is not compatible with some recent models. Therefore I recommend you use llama-cpp-python.

### How to load this model in Python code, using llama-cpp-python

For full documentation, please see: [llama-cpp-python docs](https://abetlen.github.io/llama-cpp-python/).

#### First install the package

Run one of the following commands, according to your system:

```shell

# Base llama-cpp-python with no GPU acceleration

pip install llama-cpp-python

# With NVidia CUDA acceleration

CMAKE_ARGS="-DLLAMA_CUBLAS=on" pip install llama-cpp-python

# Or with OpenBLAS acceleration

CMAKE_ARGS="-DLLAMA_BLAS=ON -DLLAMA_BLAS_VENDOR=OpenBLAS" pip install llama-cpp-python

# Or with CLBLast acceleration

CMAKE_ARGS="-DLLAMA_CLBLAST=on" pip install llama-cpp-python

# Or with AMD ROCm GPU acceleration (Linux only)

CMAKE_ARGS="-DLLAMA_HIPBLAS=on" pip install llama-cpp-python

# Or with Metal GPU acceleration for macOS systems only

CMAKE_ARGS="-DLLAMA_METAL=on" pip install llama-cpp-python

# On Windows, set the CMAKE_ARGS variable in PowerShell using this format; e.g. for NVidia CUDA:

$env:CMAKE_ARGS = "-DLLAMA_CUBLAS=on"

pip install llama-cpp-python

```

#### Simple llama-cpp-python example code

```python

from llama_cpp import Llama

# Set n_gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.
llm = Llama(
    model_path="./codegemma-2b.IQ3_M.gguf",  # Download the model file first
    n_ctx=32768,  # The max sequence length to use - note that longer sequence lengths require much more resources
    n_threads=8,  # The number of CPU threads to use, tailor to your system and the resulting performance
    n_gpu_layers=35  # The number of layers to offload to GPU, if you have GPU acceleration available
)

# Simple inference example
output = llm(
    "<s>[INST] {prompt} [/INST]",  # Prompt
    max_tokens=512,  # Generate up to 512 tokens
    stop=["</s>"],  # Example stop token - not necessarily correct for this specific model! Please check before using.
    echo=True  # Whether to echo the prompt
)

# Chat Completion API
llm = Llama(model_path="./codegemma-2b.IQ3_M.gguf", chat_format="llama-2")  # Set chat_format according to the model you are using
llm.create_chat_completion(
    messages=[
        {"role": "system", "content": "You are a story writing assistant."},
        {
            "role": "user",
            "content": "Write a story about llamas."
        }
    ]
)

```
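Both calls in the example above return OpenAI-style dictionaries rather than plain strings. The sketch below shows one way to pull the generated text out of them. The helper names are hypothetical, and the two dictionaries are hand-written stand-ins for real responses, so no model is needed to run it.

```python
# Hypothetical helpers; the response layout is assumed to follow the
# OpenAI-style dictionaries that llama-cpp-python returns.

def completion_text(response: dict) -> str:
    """Return the generated text from a plain completion response."""
    return response["choices"][0]["text"]


def chat_text(response: dict) -> str:
    """Return the assistant message from a chat completion response."""
    return response["choices"][0]["message"]["content"]


# Hand-written stand-ins for real responses (illustrative values only).
fake_completion = {"choices": [{"text": "Hello from the model", "finish_reason": "stop"}]}
fake_chat = {"choices": [{"message": {"role": "assistant", "content": "Once upon a time..."}}]}

print(completion_text(fake_completion))
print(chat_text(fake_chat))
```

With a loaded model, the same helpers would be applied to `output` and to the return value of `llm.create_chat_completion(...)`.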

- **Option D** - Running with LangChain

Here are guides on using llama-cpp-python and ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

## Configurations

The configuration info is in `smash_config.json`.

## Credits & License

The license of the smashed model follows the license of the original model. Please check the license of the original model, which provided the base for this model, before using it. The license of `pruna-engine` is available [here](https://pypi.org/project/pruna-engine/) on PyPI.

## Want to compress other models?

- Contact us and tell us which model to compress next [here](https://www.pruna.ai/contact).

- Request access to easily compress your own AI models [here](https://z0halsaff74.typeform.com/pruna-access?typeform-source=www.pruna.ai).

|

PotatoB/Mistral-offspring-1-3 | PotatoB | 2024-04-21T16:04:45Z | 690 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"merge",

"mergekit",

"lazymergekit",

"akjindal53244/Arithmo-Mistral-7B",

"meta-math/MetaMath-Mistral-7B",

"base_model:akjindal53244/Arithmo-Mistral-7B",

"base_model:meta-math/MetaMath-Mistral-7B",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-04-21T13:13:04Z | ---

tags:

- merge

- mergekit

- lazymergekit

- akjindal53244/Arithmo-Mistral-7B

- meta-math/MetaMath-Mistral-7B

base_model:

- akjindal53244/Arithmo-Mistral-7B

- meta-math/MetaMath-Mistral-7B

license: apache-2.0

---

# Mistral-offspring-1-3

Mistral-offspring-1-3 is a merge of the following models using [LazyMergekit](https://colab.research.google.com/drive/1obulZ1ROXHjYLn6PPZJwRR6GzgQogxxb?usp=sharing):

* [akjindal53244/Arithmo-Mistral-7B](https://huggingface.co/akjindal53244/Arithmo-Mistral-7B)

* [meta-math/MetaMath-Mistral-7B](https://huggingface.co/meta-math/MetaMath-Mistral-7B)

## 🧩 Configuration

```yaml

models:
  - model: mistralai/Mistral-7B-v0.1
    # no parameters necessary for base model
  - model: akjindal53244/Arithmo-Mistral-7B
    parameters:
      density: 0.5
      weight: 0.5
  - model: meta-math/MetaMath-Mistral-7B
    parameters:
      density: 0.5
      weight: 0.5
merge_method: ties
base_model: mistralai/Mistral-7B-v0.1
parameters:
  normalize: true
dtype: float16

```
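To give a feel for what `density`, `weight` and `normalize` do in the configuration above, here is a toy TIES-style merge on flat lists of numbers. It is a simplified illustration written for this card, not mergekit's implementation. The function names and the sample values are made up for the example.

```python
def trim(delta, density):
    """Keep the top `density` fraction of entries by magnitude and zero the rest."""
    k = int(round(density * len(delta)))
    order = sorted(range(len(delta)), key=lambda i: abs(delta[i]), reverse=True)
    keep = set(order[:k])
    return [d if i in keep else 0.0 for i, d in enumerate(delta)]


def ties_merge(base, models, density=0.5, weights=None, normalize=True):
    """Toy TIES merge: trim task vectors, elect a sign per entry, merge agreeing entries."""
    weights = weights or [1.0] * len(models)
    # Task vectors are the differences between each fine-tuned model and the base.
    deltas = [trim([m - b for m, b in zip(model, base)], density) for model in models]
    merged = []
    for i, b in enumerate(base):
        contribs = [w * d[i] for w, d in zip(weights, deltas)]
        sign = 1 if sum(contribs) >= 0 else -1
        # Only contributions that agree with the elected sign are kept.
        agree = [(w, c) for w, c in zip(weights, contribs) if c * sign > 0]
        value = sum(c for _, c in agree)
        if normalize and agree:
            value /= sum(w for w, _ in agree)
        merged.append(b + value)
    return merged


# Made-up 4-parameter "models" for illustration.
base = [0.0, 0.0, 0.0, 0.0]
model_a = [1.0, 0.1, -2.0, 0.0]
model_b = [-0.5, 0.2, -1.0, 3.0]

result = ties_merge(base, [model_a, model_b], density=0.5, weights=[0.5, 0.5])
print(result)
```

In this toy run the small entries (0.1, 0.2, -0.5) are trimmed away first, so they never take part in the sign election.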

## 💻 Usage

```python

# Install the dependencies first (in a notebook: !pip install -qU transformers accelerate)

from transformers import AutoTokenizer

import transformers

import torch

model = "PotatoB/Mistral-offspring-1-3"

messages = [{"role": "user", "content": "What is a large language model?"}]

tokenizer = AutoTokenizer.from_pretrained(model)

prompt = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

pipeline = transformers.pipeline(
    "text-generation",
    model=model,
    torch_dtype=torch.float16,
    device_map="auto",
)

outputs = pipeline(prompt, max_new_tokens=256, do_sample=True, temperature=0.7, top_k=50, top_p=0.95)

print(outputs[0]["generated_text"])

``` |

Yuma42/KangalKhan-Alpha-Sapphiroid-7B-Fixed | Yuma42 | 2024-04-25T18:17:59Z | 690 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"merge",

"mergekit",

"lazymergekit",

"kaist-ai/mistral-orpo-capybara-7k",

"argilla/CapybaraHermes-2.5-Mistral-7B",

"conversational",

"en",

"base_model:kaist-ai/mistral-orpo-capybara-7k",

"base_model:argilla/CapybaraHermes-2.5-Mistral-7B",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-04-25T18:10:06Z | ---

tags:

- merge

- mergekit

- lazymergekit

- kaist-ai/mistral-orpo-capybara-7k

- argilla/CapybaraHermes-2.5-Mistral-7B

base_model:

- kaist-ai/mistral-orpo-capybara-7k

- argilla/CapybaraHermes-2.5-Mistral-7B

license: apache-2.0

language:

- en

---

# KangalKhan-Alpha-Sapphiroid-7B-Fixed

KangalKhan-Alpha-Sapphiroid-7B-Fixed is a merge of the following models using [LazyMergekit](https://colab.research.google.com/drive/1obulZ1ROXHjYLn6PPZJwRR6GzgQogxxb?usp=sharing):

* [kaist-ai/mistral-orpo-capybara-7k](https://huggingface.co/kaist-ai/mistral-orpo-capybara-7k)

* [argilla/CapybaraHermes-2.5-Mistral-7B](https://huggingface.co/argilla/CapybaraHermes-2.5-Mistral-7B)

## 🧩 Configuration

```yaml

slices:
  - sources:
      - model: kaist-ai/mistral-orpo-capybara-7k
        layer_range: [0, 32]
      - model: argilla/CapybaraHermes-2.5-Mistral-7B
        layer_range: [0, 32]
merge_method: slerp
base_model: kaist-ai/mistral-orpo-capybara-7k
parameters:
  t:
    - filter: self_attn
      value: [0, 0.5, 0.3, 0.7, 1]
    - filter: mlp
      value: [1, 0.5, 0.7, 0.3, 0]
    - value: 0.5
dtype: bfloat16

```
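The `slerp` method and the `t` lists in the configuration above can be illustrated with a small sketch. It shows spherical linear interpolation between two vectors, plus one plausible way a list such as `[0, 0.5, 0.3, 0.7, 1]` could be spread across 32 layers by linear interpolation. Both functions are simplified illustrations with hypothetical names, and the layer mapping in particular is an assumption, not mergekit's code.

```python
import math


def slerp(t, v0, v1, eps=1e-8):
    """Spherical linear interpolation between two equal-length vectors."""
    norm0 = math.sqrt(sum(x * x for x in v0))
    norm1 = math.sqrt(sum(x * x for x in v1))
    lerp = [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    if norm0 < eps or norm1 < eps:
        return lerp  # direction undefined, fall back to linear interpolation
    cos_theta = sum(a * b for a, b in zip(v0, v1)) / (norm0 * norm1)
    cos_theta = max(-1.0, min(1.0, cos_theta))
    if abs(cos_theta) > 1 - eps:
        return lerp  # vectors are (anti)parallel, slerp is numerically unstable
    theta = math.acos(cos_theta)
    w0 = math.sin((1 - t) * theta) / math.sin(theta)
    w1 = math.sin(t * theta) / math.sin(theta)
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]


def layer_t(gradient, layer, num_layers):
    """Linearly interpolate a gradient list to get t for one layer (assumed mapping)."""
    if len(gradient) == 1 or num_layers == 1:
        return float(gradient[0])
    pos = layer / (num_layers - 1) * (len(gradient) - 1)
    lo = int(pos)
    hi = min(lo + 1, len(gradient) - 1)
    frac = pos - lo
    return gradient[lo] * (1 - frac) + gradient[hi] * frac


self_attn_gradient = [0, 0.5, 0.3, 0.7, 1]
midpoint = slerp(0.5, [1.0, 0.0], [0.0, 1.0])
print(midpoint)
print([round(layer_t(self_attn_gradient, layer, 32), 3) for layer in (0, 8, 16, 31)])
```

Under this reading, `t = 0` keeps the first vector and `t = 1` gives the second, so the `self_attn` and `mlp` lists in the configuration move in opposite directions across the layer stack.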

## 💻 Usage

```python

# Install the dependencies first (in a notebook: !pip install -qU transformers accelerate)

from transformers import AutoTokenizer

import transformers

import torch

model = "Yuma42/KangalKhan-Alpha-Sapphiroid-7B-Fixed"

messages = [{"role": "user", "content": "What is a large language model?"}]

tokenizer = AutoTokenizer.from_pretrained(model)

prompt = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

pipeline = transformers.pipeline(
    "text-generation",
    model=model,
    torch_dtype=torch.float16,
    device_map="auto",
)