modelId (string, 5 to 122 chars) | author (string, 2 to 42 chars) | last_modified (timestamp[us, tz=UTC]) | downloads (int64, 0 to 738M) | likes (int64, 0 to 11k) | library_name (string, 245 distinct values) | tags (list, 1 to 4.05k items) | pipeline_tag (string, 48 distinct values) | createdAt (timestamp[us, tz=UTC]) | card (string, 1 to 901k chars) |

|---|---|---|---|---|---|---|---|---|---|

nbeerbower/maidphin | nbeerbower | 2024-02-04T20:24:16Z | 451 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"mergekit",

"merge",

"not-for-all-audiences",

"nsfw",

"base_model:SanjiWatsuki/Silicon-Maid-7B",

"base_model:nbeerbower/bruphin-zeta",

"license:cc-by-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2024-02-04T20:21:14Z | ---

license: cc-by-4.0

base_model:

- SanjiWatsuki/Silicon-Maid-7B

- nbeerbower/bruphin-zeta

tags:

- mergekit

- merge

- not-for-all-audiences

- nsfw

---

# maidphin

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details

### Merge Method

This model was merged using the SLERP merge method.

### Models Merged

The following models were included in the merge:

* [SanjiWatsuki/Silicon-Maid-7B](https://huggingface.co/SanjiWatsuki/Silicon-Maid-7B)

* [nbeerbower/bruphin-zeta](https://huggingface.co/nbeerbower/bruphin-zeta)

### Configuration

The following YAML configuration was used to produce this model:

```yaml
models:
  - model: nbeerbower/bruphin-zeta
    layer_range: [0, 32]
  - model: SanjiWatsuki/Silicon-Maid-7B
    layer_range: [0, 32]
merge_method: slerp
base_model: nbeerbower/bruphin-zeta
parameters:
  t:
    - filter: self_attn
      value: [0, 0.5, 0.3, 0.7, 1]
    - filter: mlp
      value: [1, 0.5, 0.7, 0.3, 0]
    - value: 0.5
dtype: bfloat16
```
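SLERP interpolates each pair of weight tensors along the arc between them rather than along a straight line. The `t` lists above act as gradients spread over layer depth: with t=0 the base model's weights are kept and with t=1 the other model's are taken. The sketch below is a minimal, pure-Python illustration of both ideas. It assumes flat weight vectors and assumes the anchor values are spread over the 32 layers by piecewise-linear interpolation. It is not mergekit's implementation, and `slerp` and `layer_t` are hypothetical names.

```python
import math
from typing import List


def slerp(t: float, v0: List[float], v1: List[float], eps: float = 1e-8) -> List[float]:
    """Spherical linear interpolation between two flat weight vectors.

    The angle is measured between the normalized vectors, but the
    interpolation weights are applied to the original (unnormalized) vectors.
    """
    norm0 = math.sqrt(sum(x * x for x in v0))
    norm1 = math.sqrt(sum(x * x for x in v1))
    cos_theta = sum(a * b for a, b in zip(v0, v1)) / max(norm0 * norm1, eps)
    cos_theta = max(-1.0, min(1.0, cos_theta))  # guard acos against rounding error
    theta = math.acos(cos_theta)
    if math.sin(theta) < eps:
        # Nearly (anti)parallel vectors: fall back to plain linear interpolation.
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    s0 = math.sin((1 - t) * theta) / math.sin(theta)
    s1 = math.sin(t * theta) / math.sin(theta)
    return [s0 * a + s1 * b for a, b in zip(v0, v1)]


def layer_t(anchors: List[float], layer_idx: int, num_layers: int) -> float:
    """Spread anchor values evenly over layer depth with linear interpolation (assumed scheme)."""
    if num_layers == 1 or len(anchors) == 1:
        return anchors[0]
    pos = layer_idx / (num_layers - 1) * (len(anchors) - 1)
    lo = min(int(pos), len(anchors) - 2)
    frac = pos - lo
    return anchors[lo] * (1 - frac) + anchors[lo + 1] * frac


# self_attn gradient from the config above, sampled at a few of the 32 layers
self_attn = [0, 0.5, 0.3, 0.7, 1]
print([round(layer_t(self_attn, i, 32), 3) for i in (0, 8, 16, 31)])
print(slerp(0.5, [1.0, 0.0], [0.0, 1.0]))
```

With this reading, the first layers' attention comes almost entirely from the base model and the last layers' attention from Silicon-Maid-7B, while the MLP gradient runs the other way.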

|

predibase/dbpedia | predibase | 2024-02-21T19:14:00Z | 451 | 8 | peft | [

"peft",

"safetensors",

"text-generation",

"base_model:mistralai/Mistral-7B-v0.1",

"region:us"

]

| text-generation | 2024-02-19T23:16:23Z | ---

library_name: peft

base_model: mistralai/Mistral-7B-v0.1

pipeline_tag: text-generation

---

Description: Topic extraction from a news article and title\

Original dataset: https://huggingface.co/datasets/fancyzhx/dbpedia_14 \

---\

Try querying this adapter for free in Lora Land at https://predibase.com/lora-land! \

The adapter_category is Topic Identification and the name is News Topic Identification (dbpedia)\

---\

Sample input: You are given the title and the body of an article below. Please determine the type of the article.\n### Title: Great White Whale\n\n### Body: Great White Whale is the debut album by the Canadian rock band Secret and Whisper. The album was in the works for about a year and was released on February 12 2008. A music video was shot in Pittsburgh for the album's first single XOXOXO. The album reached number 17 on iTunes's top 100 albums in its first week on sale.\n\n### Article Type: \

---\

Sample output: 11\

---\
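The sample above uses a fixed template and returns a bare number. The sketch below shows one way to build that prompt and decode the answer. This card does not document the meaning of the number. The sketch assumes it is the 0-based label index of the original dbpedia_14 dataset, which fits the sample: 11 (Album) is returned for a debut album. `build_prompt` and `parse_label` are hypothetical helpers.

```python
from typing import Optional

# Class names of dbpedia_14 in label-index order (assumed mapping for the adapter's output).
DBPEDIA_14_LABELS = [
    "Company", "EducationalInstitution", "Artist", "Athlete", "OfficeHolder",
    "MeanOfTransportation", "Building", "NaturalPlace", "Village", "Animal",
    "Plant", "Album", "Film", "WrittenWork",
]


def build_prompt(title: str, body: str) -> str:
    """Reproduce the sample input template from this card (hypothetical helper)."""
    return (
        "You are given the title and the body of an article below. "
        "Please determine the type of the article.\n"
        f"### Title: {title}\n\n"
        f"### Body: {body}\n\n"
        "### Article Type: "
    )


def parse_label(generated: str) -> Optional[str]:
    """Map the model's numeric answer to a class name; None if it is not a valid index."""
    tokens = generated.strip().split()
    if not tokens or not tokens[0].isdigit():
        return None
    idx = int(tokens[0])
    return DBPEDIA_14_LABELS[idx] if idx < len(DBPEDIA_14_LABELS) else None


print(build_prompt("Great White Whale", "Great White Whale is the debut album ..."))
print(parse_label("11"))
```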

Try using this adapter yourself!

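```python
from transformers import AutoModelForCausalLM, AutoTokenizer

model_id = "mistralai/Mistral-7B-v0.1"
peft_model_id = "predibase/dbpedia"

# Load the base model and its tokenizer, then attach the LoRA adapter
# (load_adapter requires the `peft` package to be installed).
model = AutoModelForCausalLM.from_pretrained(model_id)
tokenizer = AutoTokenizer.from_pretrained(model_id)
model.load_adapter(peft_model_id)
``` |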

dreamgen/opus-v1.2-70b | dreamgen | 2024-03-30T17:04:51Z | 451 | 2 | transformers | [

"transformers",

"pytorch",

"safetensors",

"llama",

"text-generation",

"unsloth",

"axolotl",

"en",

"license:cc-by-nc-nd-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2024-03-06T13:31:54Z | ---

language:

- en

pipeline_tag: text-generation

tags:

- unsloth

- axolotl

license: cc-by-nc-nd-4.0

---

# DreamGen Opus V1

<div style="display: flex; flex-direction: row; align-items: center;">

<img src="/dreamgen/opus-v1.2-70b/resolve/main/images/logo-1024.png" alt="model logo" style="

border-radius: 12px;

margin-right: 12px;

margin-top: 0px;

margin-bottom: 0px;

max-width: 100px;

height: auto;

"/>

Models for **(steerable) story-writing and role-playing**.

<br/>[All Opus V1 models, including quants](https://huggingface.co/collections/dreamgen/opus-v1-65d092a6f8ab7fc669111b31).

</div>

## Resources

- [**Opus V1 prompting guide**](https://dreamgen.com/docs/models/opus/v1) with many (interactive) examples and prompts that you can copy.

- [**Google Colab**](https://colab.research.google.com/drive/1J178fH6IdQOXNi-Njgdacf5QgAxsdT20?usp=sharing) for interactive role-play using `opus-v1.2-7b`.

- [Python code](example/prompt/format.py) to format the prompt correctly.

- Join the community on [**Discord**](https://dreamgen.com/discord) to get early access to new models.

<img src="/dreamgen/opus-v1.2-70b/resolve/main/images/story_writing.webp" alt="story writing on dreamgen.com" style="

padding: 12px;

border-radius: 12px;

border: 2px solid #f9a8d4;

background: rgb(9, 9, 11);

"/>

## Prompting

<details>

<summary>The models use an extended version of ChatML.</summary>

```

<|im_start|>system

(Story description in the right format here)

(Typically consists of plot description, style description and characters)<|im_end|>

<|im_start|>user

(Your instruction on how the story should continue)<|im_end|>

<|im_start|>text names= Alice

(Continuation of the story from the Alice character)<|im_end|>

<|im_start|>text

(Continuation of the story from no character in particular (pure narration))<|im_end|>

<|im_start|>user

(Your instruction on how the story should continue)<|im_end|>

<|im_start|>text names= Bob

(Continuation of the story from the Bob character)<|im_end|>

```

Opus V1 extends ChatML by adding the `text` role and by adding / modifying role names.

Pay attention to the following:

- `text` messages can (but do not have to) have `names`; names indicate the "active" character during role-play.
- There can be multiple consecutive messages with the `text` role, especially if names are involved.
- There can be multiple names attached to a message.
- The format for names is `names= {{name[0]}}; {{name[1]}}`. Note the spaces after `names=` and after the `;`: this spacing leads to the most natural tokenization of the names.

</details>
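The format above can be sketched in Python as follows. This is not the repository's `example/prompt/format.py`, whose exact behaviour (for example, whitespace handling) may differ. `Message`, `role_header` and `format_opus_prompt` are hypothetical names. Ending the prompt with an open `text` turn, so that the model continues the story as the chosen character, is an assumption based on the example above.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Message:
    role: str  # "system", "user" or "text"
    content: str
    names: List[str] = field(default_factory=list)


def role_header(role: str, names: List[str]) -> str:
    # Names use the `names= A; B` spacing described above.
    return f"{role} names= {'; '.join(names)}" if names else role


def format_opus_prompt(messages: List[Message], next_names: Optional[List[str]] = None) -> str:
    """Render messages in the extended ChatML format and open a `text` turn for the model."""
    turns = [
        f"<|im_start|>{role_header(m.role, m.names)}\n{m.content}<|im_end|>"
        for m in messages
    ]
    # Leave the final `text` turn open so that generation continues the story.
    turns.append(f"<|im_start|>{role_header('text', next_names or [])}\n")
    return "\n".join(turns)


prompt = format_opus_prompt(
    [
        Message("system", "A short fantasy story about Alice and Bob."),
        Message("user", "Alice finds a map."),
    ],
    next_names=["Alice"],
)
print(prompt)
```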

While the main goal for the models is great story-writing and role-playing performance, the models are also capable of several writing-related tasks as well as general assistance.

Here's how you can prompt the model for the following tasks:

### Steerable Story-writing and Role-playing:

- [Story-writing prompt guide](https://dreamgen.com/docs/models/opus/v1#task-story-writing)

- [Role-playing prompt guide](https://dreamgen.com/docs/models/opus/v1#task-role-playing)

- Input:
  - System prompt: you provide a story / role-play description, which consists of:
    - Plot description
    - Style description
    - Characters and their descriptions
  - Conversation turns:
    - Text / message turn: represents part of the story or role-play
    - Instruction: tells the model what should happen next
- Output: Continuation of the story / role-play.

### Story plot summarization

- [Prompt guide](https://dreamgen.com/docs/models/opus/v1#task-plot-description)

- Input: A story, or a few chapters of a story.

- Output: A description of the story or chapters.

#### Examples:

<details>

<summary>Summary of <a href="https://www.libraryofshortstories.com/onlinereader/the-last-incantation">short fiction story</a> in 50 words</summary>

<blockquote>

Malygris, a powerful magician, summons his long-dead lover Nylissa from the grave using necromancy. However, upon seeing her, he realizes that she is not the same person he once knew, leading him to question the nature of love, memory, and the power of magic.

</blockquote>

</details>

<details>

<summary>Summary of <a href="https://www.libraryofshortstories.com/onlinereader/the-last-incantation">short fiction story</a> in 200 words</summary>

<blockquote>

The story is a dark fantasy set in the city of Susran, where an ancient and powerful magician named Malygris dwells in a tower atop a conical hill. Malygris, who has mastered all forms of sorcery and necromancy, is plagued by a deep sense of ennui and melancholy. He seeks solace in the memory of Nylissa, a girl he once loved in his youth.

One day, Malygris decides to summon Nylissa's spirit from the grave using his necromantic powers. He performs the necessary rituals and incantations, and Nylissa appears before him as a ghostly apparition. However, as Malygris gazes upon her, he becomes increasingly disillusioned. He notices subtle changes in her appearance and behavior that do not match his memories of her. He begins to question whether this is truly the same Nylissa he once knew, or if it is just another illusion conjured by his magic.

As Malygris grapples with this uncertainty, he becomes increasingly despondent and disillusioned with life itself. He realizes that even his magic cannot bring back the past or make him truly happy. In the end, he dismisses Nylissa's spirit and returns to his lonely tower, resigned to his fate as an old and weary sorcerer.

</blockquote>

</details>

<details>

<summary>Summary of <a href="https://edition.cnn.com/2024/02/29/health/pets-diseases-viruses-bacteria-wellness/index.html">CNN News article</a> in 150 words</summary>

<blockquote>

The article discusses the recent human deaths from diseases transmitted by animals, specifically Alaskapox and bubonic plague. Dr. Leana Wen, an emergency physician and former Baltimore health commissioner, explains the various ways household pets can transmit diseases to humans. These include fecal-oral transmission, scratches and bites, and vector-borne diseases like ticks, fleas, and mosquitoes. Wen emphasizes the importance of vaccinating pets, maintaining cleanliness, and taking commonsense precautions to reduce disease transmission. She also advises that individuals who are immunocompromised, babies, and pregnant women should take additional care when interacting with animals.

</blockquote>

</details>

<details>

<summary>Summary of <a href="https://edition.cnn.com/2024/02/29/health/pets-diseases-viruses-bacteria-wellness/index.html">CNN News article</a> in 50 words</summary>

<blockquote>

A doctor discusses the risks of diseases transmitted from animals to humans, focusing on household pets like cats and dogs. They emphasize the importance of vaccination, hygiene, and regular veterinary care to prevent these illnesses.

</blockquote>

</details>

### Story character description

- [Prompt guide](https://dreamgen.com/docs/models/opus/v1#task-char-description)

- Input: A story, or a few chapters of a story, and a set of characters.

- Output: A description of the characters.

#### Examples:

<details>

<summary>Description of the Malygris character from <a href="https://www.libraryofshortstories.com/onlinereader/the-last-incantation">this story</a> in 50 words</summary>

<blockquote>

Malygris is an ancient and powerful magician, characterized by his long white beard, cold green eyes, and a violet robe. He is a master of necromancy and possesses a vast knowledge of forbidden magic. Despite his immense power, he is plagued by a deep sense of melancholy and ennui.

</blockquote>

</details>

<details>

<summary>Description of the prince character from <a href="https://www.libraryofshortstories.com/onlinereader/the-pearl-of-love">this story</a> in 50 words</summary>

<blockquote>

The prince is a devoted and determined individual, driven by his love for his late queen. He is described as a graceful youth in the beginning, but as he grows older, his dedication to the Pearl of Love transforms him into a grave and intent man. His sense of color and design evolves over time, reflecting his growing maturity and artistic vision.

</blockquote>

</details>

### Story style description

- [Prompt guide](https://dreamgen.com/docs/models/opus/v1#task-style-description)

- Input: A story, or a few chapters of a story.

- Output: A description of the style of the story.

<details>

<summary>Style description of <a href="https://www.libraryofshortstories.com/onlinereader/the-last-incantation">this story</a></summary>

<blockquote>

The story is written in a descriptive and evocative style, with a focus on the atmosphere and setting. The author uses vivid and detailed language to create a sense of place and time, and employs a variety of literary devices such as similes, metaphors, and allusions to enhance the mood and tone of the narrative. The sentence structure is varied, with a mix of short and long sentences that create a rhythmic flow to the prose.

</blockquote>

</details>

<details>

<summary>Style description of <a href="https://www.libraryofshortstories.com/onlinereader/the-pearl-of-love">this story</a></summary>

<blockquote>

The writing style is descriptive and evocative, with a focus on the beauty and grandeur of the Pearl of Love. The author uses vivid imagery and sensory details to create a rich and immersive atmosphere. The tone is reverential and contemplative, reflecting the prince's deep love for his queen and his dedication to creating a lasting monument to her memory.

</blockquote>

</details>

### Story description to chapters

- [Prompt guide](https://dreamgen.com/docs/models/opus/v1#task-story-description-to-chapter-descriptions)

- Input: A brief plot description and the desired number of chapters.

- Output: A description for each chapter.

### And more...

## Sampling params

For story-writing and role-play, I recommend "Min P" based sampling with `min_p` in the range `[0.01, 0.1]` and with `temperature` in the range `[0.5, 1.5]`, depending on your preferences. A good starting point would be `min_p=0.1; temperature=0.8`.

You may also benefit from setting presence, frequency and repetition penalties, especially at lower temperatures.
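The idea behind min-p sampling can be sketched as follows. After temperature scaling, every token whose probability is below `min_p` times the top token's probability is discarded, and the remaining tokens are renormalized before sampling. The code below is a minimal, pure-Python illustration with hypothetical function names, not the code of any particular backend. Backends may also differ on whether temperature is applied before or after the min-p filter.

```python
import math
import random
from typing import Dict, List, Optional


def min_p_filter(logits: List[float], min_p: float = 0.1, temperature: float = 0.8) -> Dict[int, float]:
    """Return {token_id: probability} for tokens that survive the min-p cut, renormalized."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)
    exps = [math.exp(x - peak) for x in scaled]  # subtract the max for numerical stability
    total = sum(exps)
    probs = [e / total for e in exps]
    threshold = min_p * max(probs)  # the cut scales with the top token's probability
    kept = {i: p for i, p in enumerate(probs) if p >= threshold}
    norm = sum(kept.values())
    return {i: p / norm for i, p in kept.items()}


def min_p_sample(logits: List[float], min_p: float = 0.1, temperature: float = 0.8,
                 rng: Optional[random.Random] = None) -> int:
    """Draw one token id from the min-p-filtered distribution."""
    rng = rng or random.Random()
    dist = min_p_filter(logits, min_p, temperature)
    ids = list(dist)
    return rng.choices(ids, weights=[dist[i] for i in ids], k=1)[0]


# Starting point suggested above: min_p=0.1, temperature=0.8
print(min_p_filter([4.0, 3.5, 1.0, -2.0], min_p=0.1, temperature=0.8))
print(min_p_sample([4.0, 3.5, 1.0, -2.0], rng=random.Random(0)))
```

Because the cut is relative to the top token, a confident distribution keeps few candidates and a flat distribution keeps many. This is why min-p tolerates higher temperatures better than a fixed top-k.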

## Dataset

The fine-tuning dataset consisted of ~100M tokens of steerable story-writing, role-playing, writing-assistant and general-assistant examples. Each example was up to 31000 tokens long.

All story-writing and role-playing examples were based on human-written text.

## Running the model

The model should be compatible with any software that supports the base model, but be careful with prompting and tokenization.

I recommend using these model versions:

- 7B: [no quant (opus-v1.2-7b)](https://huggingface.co/dreamgen/opus-v1.2-7b)

- 34B: [no quant (opus-v1-34b)](https://huggingface.co/dreamgen/opus-v1-34b) or [awq (opus-v1-34b-awq)](https://huggingface.co/dreamgen/opus-v1-34b-awq)

- 70B: [no quant (opus-v1.2-70b)](https://huggingface.co/dreamgen/opus-v1.2-70b) or [awq (opus-v1.2-70b-awq)](https://huggingface.co/dreamgen/opus-v1.2-70b-awq)

### Running on DreamGen.com (free)

You can run the models on [dreamgen.com](https://dreamgen.com) for free: you can use the built-in UI for story-writing & role-playing, or use [the API](https://dreamgen.com/docs/api).

### Running Locally

- **Make sure your prompt is as close as possible to the Opus V1 format**
  - Regardless of which backend you use, it's important that you format your prompt well and that the tokenization works correctly.
  - [Read the prompt guide](https://dreamgen.com/docs/models/opus/v1)
  - [Read the prompt formatting code](example/prompt/format.py)
  - Make sure `<|im_start|>` and `<|im_end|>` are tokenized correctly

- **vLLM**
  - [**Google Colab**](https://colab.research.google.com/drive/1J178fH6IdQOXNi-Njgdacf5QgAxsdT20?usp=sharing): a simple interactive Google Colab for role-play with the 7B model; it should fit on the T4 GPU.
  - [Code](example/prompt/interactive.py): a simple script for interactive chat with one hard-coded scenario.

- **SillyTavern**
  - [Official SillyTavern documentation for DreamGen](https://docs.sillytavern.app/usage/api-connections/dreamgen/): applies to both the API and local models
  - SillyTavern (staging) comes with a built-in DreamGen preset for RP
  - Other presets can be found [here](https://huggingface.co/dreamgen/opus-v1.2-70b/tree/main/configs/silly_tavern), v2 kindly provided by @MarinaraSpaghetti
  - Make sure to unselect `Skip special tokens`, otherwise it won't work
  - This is just an attempt at approximating the Opus V1 prompt; it won't be perfect
  - Character cards specifically rewritten for the built-in DreamGen preset:
    - [Seraphina](configs/silly_tavern/cards/Seraphina.png) (based on the default Seraphina card)
    - [Lara Lightland](configs/silly_tavern/cards/LaraLightland.png) (based on the card by Deffcolony)

- **LM Studio**
  - [Config](configs/lmstudio/preset.json)
  - Same as ChatML, except that the "assistant" role is changed to "text".
  - **There's a bug** in LM Studio if you delete a message or click "Continue", [see here for details](https://discord.com/channels/1110598183144399058/1212665261128417280/1212665261128417280).

- **HuggingFace**
  - [Chat template](tokenizer_config.json#L51)
  - Same as ChatML, except that the "assistant" role is changed to "text".

## Known Issues

- **34B repetition**:
  - The 34B sometimes gets stuck repeating the same word, or synonyms. This seems to be a common problem across various Yi 34B fine-tunes.
- **GGUF**:
  - The tokenization might be broken. Some users reported that `<|im_start|>` and `<|im_end|>` are tokenized as multiple tokens. llama.cpp may also not tokenize correctly (the Yi tokenizer is subtly different from the Llama 2 tokenizer).

## License

- This model is intended for personal use only; other use is not permitted. |

TeeZee/DarkForest-20B-v2.0-GGUF-iMat | TeeZee | 2024-04-01T11:03:43Z | 451 | 6 | null | [

"gguf",

"merge",

"not-for-all-audiences",

"license:other",

"region:us"

]

| null | 2024-03-19T09:02:40Z | ---

license: other

license_name: microsoft-research-license

tags:

- merge

- not-for-all-audiences

---

# DarkForest 20B v2.0 - GGUF IMat quants

[IMatrix file](https://huggingface.co/TeeZee/DarkForest-20B-v2.0-GGUF-iMat/resolve/main/DarkForest-20B-v2.0.fp16_512.imatrix?download=true) prepared with context 4096 and 5521 chunks of wiki.test.raw.

Original model info: [DarkForest-20B-v2.0](https://huggingface.co/TeeZee/DarkForest-20B-v2.0)

Final perplexity estimates (5521 chunks; lower is better):

- [q8_0](https://huggingface.co/TeeZee/DarkForest-20B-v2.0-GGUF-iMat/resolve/main/DarkForest-20B-v2.0_Q8_0_imatrix.gguf?download=true): PPL = 8.5016 +/- 0.02134
- [q6_k](https://huggingface.co/TeeZee/DarkForest-20B-v2.0-GGUF-iMat/resolve/main/DarkForest-20B-v2.0.q6_K_imatrix.gguf?download=true): PPL = 8.5046 +/- 0.02136
- [q5_0](https://huggingface.co/TeeZee/DarkForest-20B-v2.0-GGUF-iMat/resolve/main/DarkForest-20B-v2.0.q5_0_imatrix.gguf?download=true): PPL = 8.4903 +/- 0.02132
- [q4_K_S](https://huggingface.co/TeeZee/DarkForest-20B-v2.0-GGUF-iMat/resolve/main/DarkForest-20B-v2.0.q4_K_S_imatrix.gguf?download=true): PPL = 8.5880 +/- 0.02162
- [q4_K_M](https://huggingface.co/TeeZee/DarkForest-20B-v2.0-GGUF-iMat/resolve/main/DarkForest-20B-v2.0.q4_K_M_imatrix.gguf?download=true): PPL = 8.5906 +/- 0.02163
- [q4_0](https://huggingface.co/TeeZee/DarkForest-20B-v2.0-GGUF-iMat/resolve/main/DarkForest-20B-v2.0.q4_0_imatrix.gguf?download=true): PPL = 8.5610 +/- 0.02151
- [q3_K_M](https://huggingface.co/TeeZee/DarkForest-20B-v2.0-GGUF-iMat/resolve/main/DarkForest-20B-v2.0.q3_K_M_imatrix.gguf?download=true): PPL = 8.7283 +/- 0.02196
- [q2_K](https://huggingface.co/TeeZee/DarkForest-20B-v2.0-GGUF-iMat/resolve/main/DarkForest-20B-v2.0.q2_K_imatrix.gguf?download=true): PPL = 9.2445 +/- 0.02351
- [IQ2_XS](https://huggingface.co/TeeZee/DarkForest-20B-v2.0-GGUF-iMat/resolve/main/DarkForest-20B-v2.0.IQ2_XS_imatrix.gguf?download=true): PPL = 9.8329 +/- 0.02452
- [IQ2_XXS](https://huggingface.co/TeeZee/DarkForest-20B-v2.0-GGUF-iMat/resolve/main/DarkForest-20B-v2.0.IQ2_XXS_imatrix.gguf?download=true): PPL = 10.5170 +/- 0.02651
- [IQ1_S](https://huggingface.co/TeeZee/DarkForest-20B-v2.0-GGUF-iMat/resolve/main/DarkForest-20B-v2.0.IQ1_S_imatrix.gguf?download=true): PPL = 13.9487 +/- 0.03502 (UNUSABLE: the model is too small to be coherent at 1 bit)
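The relative perplexity increase of each quant over q8_0 can be computed directly from the final estimates listed above. A small Python sketch (the dictionary simply copies those figures):

```python
# Final perplexity estimates listed above (lower is better).
ppl = {
    "q8_0": 8.5016, "q6_k": 8.5046, "q5_0": 8.4903, "q4_K_S": 8.5880,
    "q4_K_M": 8.5906, "q4_0": 8.5610, "q3_K_M": 8.7283, "q2_K": 9.2445,
    "IQ2_XS": 9.8329, "IQ2_XXS": 10.5170, "IQ1_S": 13.9487,
}

baseline = ppl["q8_0"]
# Relative increase over q8_0; a negative value means slightly lower perplexity.
relative = {name: (value - baseline) / baseline for name, value in ppl.items()}

for name, rel in sorted(relative.items(), key=lambda kv: kv[1]):
    print(f"{name:8s} {rel:+.2%}")
```

The gaps between q8_0, q6_k and q5_0 are smaller than the reported +/- uncertainty, so their ordering should not be over-interpreted.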

All comments are greatly appreciated. Download, test, and if you appreciate my work, consider buying me my fuel:

<a href="https://www.buymeacoffee.com/TeeZee" target="_blank"><img src="https://cdn.buymeacoffee.com/buttons/v2/default-yellow.png" alt="Buy Me A Coffee" style="height: 60px !important;width: 217px !important;" ></a>

|

stablediffusionapi/lazymixv4-inpaint | stablediffusionapi | 2024-03-20T08:09:22Z | 451 | 0 | diffusers | [

"diffusers",

"modelslab.com",

"stable-diffusion-api",

"text-to-image",

"ultra-realistic",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

]

| text-to-image | 2024-03-20T08:07:26Z | ---

license: creativeml-openrail-m

tags:

- modelslab.com

- stable-diffusion-api

- text-to-image

- ultra-realistic

pinned: true

---

# API Inference

## Get API Key

Get an API key from [ModelsLab API](http://modelslab.com); no payment needed.

Replace the key in the code below and set **model_id** to "lazymixv4-inpaint".

Coding in PHP/Node/Java etc.? Have a look at the docs for more code examples: [View docs](https://modelslab.com/docs)

Try model for free: [Generate Images](https://modelslab.com/models/lazymixv4-inpaint)

Model link: [View model](https://modelslab.com/models/lazymixv4-inpaint)

View all models: [View Models](https://modelslab.com/models)

```python
import json

import requests

url = "https://modelslab.com/api/v6/images/text2img"

payload = json.dumps({
    "key": "your_api_key",  # replace with your ModelsLab API key
    "model_id": "lazymixv4-inpaint",
    "prompt": "ultra realistic close up portrait ((beautiful pale cyberpunk female with heavy black eyeliner)), blue eyes, shaved side haircut, hyper detail, cinematic lighting, magic neon, dark red city, Canon EOS R3, nikon, f/1.4, ISO 200, 1/160s, 8K, RAW, unedited, symmetrical balance, in-frame, 8K",
    "negative_prompt": "painting, extra fingers, mutated hands, poorly drawn hands, poorly drawn face, deformed, ugly, blurry, bad anatomy, bad proportions, extra limbs, cloned face, skinny, glitchy, double torso, extra arms, extra hands, mangled fingers, missing lips, ugly face, distorted face, extra legs, anime",
    "width": "512",
    "height": "512",
    "samples": "1",
    "num_inference_steps": "30",
    "safety_checker": "no",
    "enhance_prompt": "yes",
    "seed": None,
    "guidance_scale": 7.5,
    "multi_lingual": "no",
    "panorama": "no",
    "self_attention": "no",
    "upscale": "no",
    "embeddings": "embeddings_model_id",
    "lora": "lora_model_id",
    "webhook": None,
    "track_id": None
})

headers = {
    'Content-Type': 'application/json'
}

# Send the generation request and print the raw JSON response
response = requests.request("POST", url, headers=headers, data=payload)
print(response.text)
```

> Use this coupon code to get 25% off **DMGG0RBN** |

ruslanmv/Medical-Llama3-8B-GGUF | ruslanmv | 2024-04-24T11:55:08Z | 451 | 4 | transformers | [

"transformers",

"gguf",

"text-generation-inference",

"ruslanmv",

"llama",

"trl",

"en",

"dataset:ruslanmv/ai-medical-chatbot",

"base_model:meta-llama/Meta-Llama-3-8B",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

]

| null | 2024-04-23T14:51:07Z | ---

language: en

license: apache-2.0

tags:

- text-generation-inference

- transformers

- ruslanmv

- llama

- trl

base_model: meta-llama/Meta-Llama-3-8B

datasets:

- ruslanmv/ai-medical-chatbot

---

# Medical-Llama3-8B-GGUF


This is a fine-tuned version of the Llama3 8B model, specifically designed to answer medical questions.

The model was trained on the AI Medical Chatbot dataset, which can be found at [ruslanmv/ai-medical-chatbot](https://huggingface.co/datasets/ruslanmv/ai-medical-chatbot). The fine-tuned weights are distributed in GGUF, the llama.cpp file format for quantized models, for efficient quantized inference (the example below uses the 5-bit Q5_K_M file).

**Model:** [ruslanmv/Medical-Llama3-8B-GGUF](https://huggingface.co/ruslanmv/Medical-Llama3-8B-GGUF)

- **Developed by:** ruslanmv

- **License:** apache-2.0

- **Finetuned from model:** meta-llama/Meta-Llama-3-8B

## Installation

**Prerequisites:**

- A system with CUDA support is highly recommended for optimal performance.

- Python 3.10 or later

1. **Install the required Python libraries** (in a Jupyter/Colab notebook, prefix each command with `!`):

```bash
# GPU build of llama-cpp-python (newer releases use -DGGML_CUDA=on instead of -DLLAMA_CUBLAS=on)
CMAKE_ARGS="-DLLAMA_CUBLAS=on" FORCE_CMAKE=1 pip install llama-cpp-python --force-reinstall --upgrade --no-cache-dir --verbose
```

```bash
pip install huggingface-hub hf-transfer
```

2. **Download the quantized model:**

```bash
# HF_HUB_ENABLE_HF_TRANSFER=1 enables the faster hf-transfer download backend
HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download \
  ruslanmv/Medical-Llama3-8B-GGUF \
  medical-llama3-8b.Q5_K_M.gguf \
  --local-dir . \
  --local-dir-use-symlinks False
```

## Example of use

Here's an example of how to use the quantized Medical-Llama3-8B-GGUF model (the Q5_K_M file downloaded above) to generate an answer to a medical question:

```python
from llama_cpp import Llama

# Path of the GGUF file downloaded in step 2
MODEL_PATH = "./medical-llama3-8b.Q5_K_M.gguf"

B_INST, E_INST = "<s>[INST]", "[/INST]"
B_SYS, E_SYS = "<<SYS>>\n", "\n<</SYS>>\n\n"

DEFAULT_SYSTEM_PROMPT = """\
You are an AI Medical Chatbot Assistant, I'm equipped with a wealth of medical knowledge derived from extensive datasets. I aim to provide comprehensive and informative responses to your inquiries. However, please note that while I strive for accuracy, my responses should not replace professional medical advice and short answers.
If a question does not make any sense, or is not factually coherent, explain why instead of answering something not correct. If you don't know the answer to a question, please don't share false information."""

SYSTEM_PROMPT = B_SYS + DEFAULT_SYSTEM_PROMPT + E_SYS


def create_prompt(user_query):
    # Wrap the system prompt and the user's question in the [INST] template
    instruction = f"User asks: {user_query}\n"
    prompt = B_INST + SYSTEM_PROMPT + instruction + E_INST
    return prompt.strip()


user_query = "I'm a 35-year-old male experiencing symptoms like fatigue, increased sensitivity to cold, and dry, itchy skin. Could these be indicative of hypothyroidism?"
prompt = create_prompt(user_query)
print(prompt)

# n_gpu_layers=-1 offloads all layers to the GPU
llm = Llama(model_path=MODEL_PATH, n_gpu_layers=-1)
result = llm(
    prompt=prompt,
    max_tokens=100,
    echo=False
)
print(result['choices'][0]['text'])
```

Example output:

```text

Hi, thank you for your query.

Hypothyroidism is characterized by fatigue, sensitivity to cold, weight gain, depression, hair loss and mental dullness. I would suggest that you get a complete blood count with thyroid profile including TSH (thyroid stimulating hormone), free thyroxine level, and anti-thyroglobulin antibodies. These tests will help in establishing the diagnosis of hypothyroidism.

If there is no family history of autoimmune disorders, then it might be due

```

## License

This model is licensed under the Apache License 2.0. You can find the full license in the LICENSE file. |

duyntnet/Mistral-7B-Instruct-v0.2-imatrix-GGUF | duyntnet | 2024-04-26T06:46:06Z | 451 | 0 | transformers | [

"transformers",

"gguf",

"imatrix",

"mistralai",

"Mistral-7B-Instruct-v0.2",

"text-generation",

"en",

"license:other",

"region:us"

]

| text-generation | 2024-04-26T03:54:16Z | ---

license: other

inference: false

language:

- en

pipeline_tag: text-generation

tags:

- gguf

- imatrix

- mistralai

- Mistral-7B-Instruct-v0.2

- transformers

---

Quantizations of https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.2

# From original readme

## Instruction format

In order to leverage instruction fine-tuning, your prompt should be surrounded by `[INST]` and `[/INST]` tokens. The very first instruction should begin with a beginning-of-sentence (BOS) token id; subsequent instructions should not. The assistant generation will be terminated by the end-of-sentence (EOS) token id.

E.g.

```

text = "<s>[INST] What is your favourite condiment? [/INST]"

"Well, I'm quite partial to a good squeeze of fresh lemon juice. It adds just the right amount of zesty flavour to whatever I'm cooking up in the kitchen!</s> "

"[INST] Do you have mayonnaise recipes? [/INST]"

```

This format is available as a [chat template](https://huggingface.co/docs/transformers/main/chat_templating) via the `apply_chat_template()` method:

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

device = "cuda" # the device to load the model onto

model = AutoModelForCausalLM.from_pretrained("mistralai/Mistral-7B-Instruct-v0.2")

tokenizer = AutoTokenizer.from_pretrained("mistralai/Mistral-7B-Instruct-v0.2")

messages = [

{"role": "user", "content": "What is your favourite condiment?"},

{"role": "assistant", "content": "Well, I'm quite partial to a good squeeze of fresh lemon juice. It adds just the right amount of zesty flavour to whatever I'm cooking up in the kitchen!"},

{"role": "user", "content": "Do you have mayonnaise recipes?"}

]

encodeds = tokenizer.apply_chat_template(messages, return_tensors="pt")

model_inputs = encodeds.to(device)

model.to(device)

generated_ids = model.generate(model_inputs, max_new_tokens=1000, do_sample=True)

decoded = tokenizer.batch_decode(generated_ids)

print(decoded[0])

```
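Some backends take a raw prompt string rather than a chat template, for example when running these GGUF files directly. In that case the same format can be assembled by hand. Below is a minimal sketch with a hypothetical helper, `build_mistral_prompt`, that reproduces the string example above. Here `<s>` and `</s>` are written as literal text. Whether they are converted into the special BOS/EOS tokens depends on the tokenizer or backend, and some backends (llama.cpp, for instance) may add their own BOS token, so check for a doubled BOS.

```python
from typing import Dict, List


def build_mistral_prompt(messages: List[Dict[str, str]]) -> str:
    """Render alternating user/assistant turns in the [INST] format shown above (hypothetical helper)."""
    prompt = "<s>"  # BOS only once, before the very first instruction
    for message in messages:
        if message["role"] == "user":
            prompt += f"[INST] {message['content']} [/INST]"
        elif message["role"] == "assistant":
            prompt += f"{message['content']}</s> "  # EOS closes each completed answer
        else:
            raise ValueError(f"unsupported role: {message['role']}")
    return prompt


messages = [
    {"role": "user", "content": "What is your favourite condiment?"},
    {"role": "assistant", "content": "Well, I'm quite partial to a good squeeze of fresh lemon juice."},
    {"role": "user", "content": "Do you have mayonnaise recipes?"},
]
print(build_mistral_prompt(messages))
```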

## Troubleshooting

- If you see the following error:

```
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/transformers/models/auto/auto_factory.py", line 482, in from_pretrained
    config, kwargs = AutoConfig.from_pretrained(
  File "/transformers/models/auto/configuration_auto.py", line 1022, in from_pretrained
    config_class = CONFIG_MAPPING[config_dict["model_type"]]
  File "/transformers/models/auto/configuration_auto.py", line 723, in __getitem__
    raise KeyError(key)
KeyError: 'mistral'
```

Installing transformers from source should solve the issue: `pip install git+https://github.com/huggingface/transformers`

This should not be required after transformers-v4.33.4. |

tanganke/clip-vit-base-patch32_eurosat | tanganke | 2024-04-28T11:30:39Z | 451 | 0 | transformers | [

"transformers",

"safetensors",

"clip_vision_model",

"feature-extraction",

"dataset:tanganke/eurosat",

"base_model:openai/clip-vit-base-patch32",

"endpoints_compatible",

"region:us"

]

| feature-extraction | 2024-04-28T11:26:19Z | ---

base_model:

- openai/clip-vit-base-patch32

datasets:

- tanganke/eurosat

metrics:

- accuracy

---

# Model Card

## Model Details

- Architecture: ViT-Base with patch size 32

- Training Data: EuroSAT dataset

## Training Details

Trained with the Adam optimizer at a constant learning rate of 1e-5 for 4000 steps (batch_size=32).

Only the vision encoder is fine-tuned.

## Evaluation Results

Accuracy on the EuroSAT dataset:

- pre-trained: 0.455623596906662
- fine-tuned: 0.9906941652297974

## Usage

Load the vision model:

```python

from transformers import CLIPVisionModel

vision_model = CLIPVisionModel.from_pretrained('tanganke/clip-vit-base-patch32_eurosat')

```

Replace the vision encoder of CLIP with the fine-tuned one:

```python

from transformers import CLIPModel

clip_model = CLIPModel.from_pretrained("openai/clip-vit-base-patch32")

clip_model.vision_model.load_state_dict(vision_model.vision_model.state_dict())

```

|

Local-Novel-LLM-project/Vecteus-v1-gguf | Local-Novel-LLM-project | 2024-05-03T00:37:22Z | 451 | 5 | transformers | [

"transformers",

"gguf",

"finetuned",

"text-generation",

"en",

"ja",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

]

| text-generation | 2024-05-01T06:39:28Z | ---

license: apache-2.0

language:

- en

- ja

tags:

- finetuned

library_name: transformers

pipeline_tag: text-generation

---

<img src="./veteus_logo.svg" width="100%" height="20%" alt="">

- GGUF version of Vecteus-v1

# Our Models for GGUF

- [Vecteus](https://huggingface.co/Local-Novel-LLM-project/Vecteus-v1-gguf)

- [Ninja-v1](https://huggingface.co/Local-Novel-LLM-project/Ninja-v1-GGUF)

- [Ninja-v1-NSFW](https://huggingface.co/Local-Novel-LLM-project/Ninja-v1-NSFW-GGUF)

|

ukung/komodo-7b-base-GGUF | ukung | 2024-05-19T16:47:36Z | 451 | 0 | null | [

"gguf",

"finetuned",

"text-generation",

"base_model:Yellow-AI-NLP/komodo-7b-base",

"license:apache-2.0",

"region:us"

]

| text-generation | 2024-05-19T14:13:14Z | ---

base_model: Yellow-AI-NLP/komodo-7b-base

inference: false

license: apache-2.0

model_type: llama

pipeline_tag: text-generation

prompt_template: '<s>[INST] {prompt} [/INST]

'

quantized_by: ukung

tags:

- finetuned

---

<!-- markdownlint-disable MD041 -->

<!-- description start -->

## Description

These files were quantised using hardware kindly provided by [Kaggle](https://kaggle.com/).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU acceleration across all platforms and GPU architectures. Especially good for storytelling.

* [GPT4All](https://gpt4all.io/index.html), a free and open-source, locally running GUI, supporting Windows, Linux and macOS with full GPU acceleration.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration. Linux available, in beta as of 27/11/2023.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU acceleration, LangChain support, and an OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU acceleration, LangChain support, and an OpenAI-compatible AI server. Note: at the time of writing (November 27th 2023), ctransformers has not been updated in a long time and does not support many recent models.

<!-- README_GGUF.md-about-gguf end -->

<!-- repositories-available start -->

## Repositories available

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/ukung/komodo-7b-base-GGUF)

* [Original unquantised fp16 model in PyTorch format, for GPU inference and for further conversions](https://huggingface.co/Yellow-AI-NLP/komodo-7b-base)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: Mistral

```

<s>[INST] {prompt} [/INST]

```

<!-- prompt-template end -->

<!-- compatibility_gguf start -->

## Compatibility

These quantised GGUFv2 files are compatible with llama.cpp from August 27th 2023 onwards, as of commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221).

They are also compatible with many third-party UIs and libraries; please see the list in the "About GGUF" section above.

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* q2_k: Uses Q4_K for the attention.wv and feed_forward.w2 tensors, Q2_K for the other tensors.

* q3_k_l: Uses Q5_K for the attention.wv, attention.wo, and feed_forward.w2 tensors, else Q3_K

* q3_k_m: Uses Q4_K for the attention.wv, attention.wo, and feed_forward.w2 tensors, else Q3_K

* q3_k_s: Uses Q3_K for all tensors

* q4_0: Original quant method, 4-bit.

* q4_1: Higher accuracy than q4_0 but not as high as q5_0. However, it has quicker inference than q5 models.

* q4_k_m: Uses Q6_K for half of the attention.wv and feed_forward.w2 tensors, else Q4_K

* q4_k_s: Uses Q4_K for all tensors

* q5_0: Higher accuracy, higher resource usage and slower inference.

* q5_1: Even higher accuracy, resource usage and slower inference.

* q5_k_m: Uses Q6_K for half of the attention.wv and feed_forward.w2 tensors, else Q5_K

* q5_k_s: Uses Q5_K for all tensors

* q6_k: Uses Q6_K for all tensors

* q8_0: Almost indistinguishable from float16. High resource use and slow. Not recommended for most users.

Refer to the Provided Files table below to see what files use which methods, and how.

</details>

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-provided-files start -->

## Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |
| ---- | ---- | ---- | ---- | ---- | ----- |
| [komodo-7b-base-q2_k.gguf](https://huggingface.co/ukung/komodo-7b-base-GGUF/tree/main/komodo-7b-base-q2_k.gguf) | Q2_K | 2 | 2.55 GB | Unknown | smallest, significant quality loss - not recommended for most purposes |
| [komodo-7b-base-q3_k_l.gguf](https://huggingface.co/ukung/komodo-7b-base-GGUF/tree/main/komodo-7b-base-q3_k_l.gguf) | Q3_K_L | 3 | 3.61 GB | Unknown | very small, high quality loss |
| [komodo-7b-base-q3_k_m.gguf](https://huggingface.co/ukung/komodo-7b-base-GGUF/tree/main/komodo-7b-base-q3_k_m.gguf) | Q3_K_M | 3 | 3.31 GB | Unknown | very small, high quality loss |
| [komodo-7b-base-q3_k_s.gguf](https://huggingface.co/ukung/komodo-7b-base-GGUF/tree/main/komodo-7b-base-q3_k_s.gguf) | Q3_K_S | 3 | 2.96 GB | Unknown | very small, high quality loss |
| [komodo-7b-base-q4_0.gguf](https://huggingface.co/ukung/komodo-7b-base-GGUF/tree/main/komodo-7b-base-q4_0.gguf) | Q4_0 | 4 | 3.84 GB | Unknown | smaller, moderate quality loss |
| [komodo-7b-base-q4_1.gguf](https://huggingface.co/ukung/komodo-7b-base-GGUF/tree/main/komodo-7b-base-q4_1.gguf) | Q4_1 | 4 | 4.26 GB | Unknown | smaller, moderate quality loss |
| [komodo-7b-base-q4_k_m.gguf](https://huggingface.co/ukung/komodo-7b-base-GGUF/tree/main/komodo-7b-base-q4_k_m.gguf) | Q4_K_M | 4 | 4.1 GB | Unknown | smaller, moderate quality loss |
| [komodo-7b-base-q4_k_s.gguf](https://huggingface.co/ukung/komodo-7b-base-GGUF/tree/main/komodo-7b-base-q4_k_s.gguf) | Q4_K_S | 4 | 3.87 GB | Unknown | smaller, moderate quality loss |
| [komodo-7b-base-q5_0.gguf](https://huggingface.co/ukung/komodo-7b-base-GGUF/tree/main/komodo-7b-base-q5_0.gguf) | Q5_0 | 5 | 4.67 GB | Unknown | medium, balanced quality |
| [komodo-7b-base-q5_1.gguf](https://huggingface.co/ukung/komodo-7b-base-GGUF/tree/main/komodo-7b-base-q5_1.gguf) | Q5_1 | 5 | 5.08 GB | Unknown | medium, balanced quality |
| [komodo-7b-base-q5_k_m.gguf](https://huggingface.co/ukung/komodo-7b-base-GGUF/tree/main/komodo-7b-base-q5_k_m.gguf) | Q5_K_M | 5 | 4.8 GB | Unknown | medium, balanced quality |
| [komodo-7b-base-q5_k_s.gguf](https://huggingface.co/ukung/komodo-7b-base-GGUF/tree/main/komodo-7b-base-q5_k_s.gguf) | Q5_K_S | 5 | 4.67 GB | Unknown | medium, balanced quality |
| [komodo-7b-base-q6_k.gguf](https://huggingface.co/ukung/komodo-7b-base-GGUF/tree/main/komodo-7b-base-q6_k.gguf) | Q6_K | 6 | 5.55 GB | Unknown | larger, higher quality |
| [komodo-7b-base-q8_0.gguf](https://huggingface.co/ukung/komodo-7b-base-GGUF/tree/main/komodo-7b-base-q8_0.gguf) | Q8_0 | 8 | 7.19 GB | Unknown | largest, best quality |

**Note**: RAM requirements have not been measured for these files. If layers are offloaded to the GPU, RAM usage goes down and VRAM is used instead.
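Since the table does not list RAM requirements, one rough way to choose a file is to take the largest quant whose file size still leaves some headroom under your memory budget. The sketch below uses the file sizes from the table; the helper name `pick_quant` and the default 1.5 GB headroom for context and runtime overhead are assumptions for illustration, not measured figures.

```python
# File sizes (GB) copied from the Provided files table.
QUANT_SIZES_GB = {
    "q2_k": 2.55, "q3_k_s": 2.96, "q3_k_m": 3.31, "q3_k_l": 3.61,
    "q4_0": 3.84, "q4_k_s": 3.87, "q4_k_m": 4.1, "q4_1": 4.26,
    "q5_0": 4.67, "q5_k_s": 4.67, "q5_k_m": 4.8, "q5_1": 5.08,
    "q6_k": 5.55, "q8_0": 7.19,
}

def pick_quant(budget_gb, headroom_gb=1.5):
    """Return the largest quant whose size plus an assumed headroom fits the budget, or None."""
    fitting = [(size, name) for name, size in QUANT_SIZES_GB.items()
               if size + headroom_gb <= budget_gb]
    if not fitting:
        return None
    return max(fitting)[1]

print(pick_quant(6.0))  # q4_1
```

Offloading layers to the GPU shifts part of this budget to VRAM, so treat the result as a starting point rather than a guarantee.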

<!-- README_GGUF.md-provided-files end -->

<!-- README_GGUF.md-how-to-download start -->

## How to download GGUF files

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

* LM Studio

* LoLLMS Web UI

* Faraday.dev

### In `text-generation-webui`

Under Download Model, you can enter the model repo: ukung/komodo-7b-base-GGUF and below it, a specific filename to download, such as: komodo-7b-base-q4_0.gguf.

Then click Download.

### On the command line, including multiple files at once

I recommend using the `huggingface-hub` Python library:

```shell

pip3 install huggingface-hub

```

Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download ukung/komodo-7b-base-GGUF komodo-7b-base-q4_0.gguf --local-dir . --local-dir-use-symlinks False

```

<details>

<summary>More advanced huggingface-cli download usage (click to read)</summary>

You can also download multiple files at once with a pattern:

```shell

huggingface-cli download ukung/komodo-7b-base-GGUF --local-dir . --local-dir-use-symlinks False --include='*q4_k*gguf'

```

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download ukung/komodo-7b-base-GGUF komodo-7b-base-q4_0.gguf --local-dir . --local-dir-use-symlinks False

```

Windows Command Line users: You can set the environment variable by running `set HF_HUB_ENABLE_HF_TRANSFER=1` before the download command.

</details>
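The same download can also be scripted from Python. This is a sketch assuming `huggingface_hub` is installed; it uses that library's `hf_hub_download` function. The helper names `resolve_url` and `download` are hypothetical, and the `resolve/main` URL builder is included so the file location can be checked without downloading anything.

```python
import os

REPO_ID = "ukung/komodo-7b-base-GGUF"
FILENAME = "komodo-7b-base-q4_0.gguf"

def resolve_url(repo_id, filename, revision="main"):
    """Direct download URL for a file in a Hugging Face model repo."""
    return f"https://huggingface.co/{repo_id}/resolve/{revision}/{filename}"

def download(repo_id=REPO_ID, filename=FILENAME, local_dir=".", fast=False):
    """Download a single GGUF file and return its local path (needs `pip3 install huggingface-hub`)."""
    if fast:
        # Only takes effect if hf_transfer is installed and huggingface_hub
        # has not already been imported in this process.
        os.environ["HF_HUB_ENABLE_HF_TRANSFER"] = "1"
    from huggingface_hub import hf_hub_download
    return hf_hub_download(repo_id=repo_id, filename=filename, local_dir=local_dir)
```

Calling `download()` fetches only the one file rather than the whole repo; pass `fast=True` only after `pip3 install hf_transfer`.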

<!-- README_GGUF.md-how-to-download end -->

<!-- README_GGUF.md-how-to-run start -->

## Example `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 35 -m komodo-7b-base-q4_0.gguf --color -c 32768 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "<s>[INST] {prompt} [/INST]"

```

Change `-ngl 35` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 32768` to the desired sequence length. For extended sequence models - eg 8K, 16K, 32K - the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically. Note that longer sequence lengths require much more resources, so you may need to reduce this value.
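To see why a large `-c` value is expensive, here is a back-of-the-envelope estimate of the key/value cache, which grows linearly with context length. It assumes Llama-2-7B-like dimensions (32 layers, hidden size 4096, no grouped-query attention) and a 16-bit cache; the actual Komodo-7B configuration and the cache type llama.cpp uses may differ, and the function name is hypothetical.

```python
def kv_cache_bytes(n_ctx, n_layers=32, hidden_size=4096, bytes_per_elem=2):
    """Approximate KV-cache size: one key and one value vector per layer per token."""
    return 2 * n_layers * n_ctx * hidden_size * bytes_per_elem

GIB = 1024 ** 3
for ctx in (4096, 32768):
    print(f"-c {ctx}: ~{kv_cache_bytes(ctx) / GIB:.0f} GiB")
# -c 4096: ~2 GiB
# -c 32768: ~16 GiB
```

Under these assumptions the cache alone at `-c 32768` is larger than any of the model files above, which is why reducing `-c` is often the first thing to try when memory runs out.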

If you want to have a chat-style conversation, replace the `-p <PROMPT>` argument with `-i -ins`

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md)

## How to run in `text-generation-webui`

Further instructions can be found in the text-generation-webui documentation, here: [text-generation-webui/docs/04 ‐ Model Tab.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/04%20%E2%80%90%20Model%20Tab.md#llamacpp).

## How to run from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries. Note that at the time of writing (Nov 27th 2023), ctransformers has not been updated for some time and is not compatible with some recent models. Therefore I recommend you use llama-cpp-python.

### How to load this model in Python code, using llama-cpp-python

For full documentation, please see: [llama-cpp-python docs](https://abetlen.github.io/llama-cpp-python/).

#### First install the package

Run one of the following commands, according to your system:

```shell

# Base llama-cpp-python with no GPU acceleration

pip install llama-cpp-python

# With NVidia CUDA acceleration

CMAKE_ARGS="-DLLAMA_CUBLAS=on" pip install llama-cpp-python

# Or with OpenBLAS acceleration

CMAKE_ARGS="-DLLAMA_BLAS=ON -DLLAMA_BLAS_VENDOR=OpenBLAS" pip install llama-cpp-python

# Or with CLBLast acceleration

CMAKE_ARGS="-DLLAMA_CLBLAST=on" pip install llama-cpp-python

# Or with AMD ROCm GPU acceleration (Linux only)

CMAKE_ARGS="-DLLAMA_HIPBLAS=on" pip install llama-cpp-python

# Or with Metal GPU acceleration for macOS systems only

CMAKE_ARGS="-DLLAMA_METAL=on" pip install llama-cpp-python

# On Windows, set CMAKE_ARGS in PowerShell using this format, e.g. for NVidia CUDA:

$env:CMAKE_ARGS = "-DLLAMA_CUBLAS=on"

pip install llama-cpp-python

```

#### Simple llama-cpp-python example code

```python

from llama_cpp import Llama

# Set n_gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.

llm = Llama(

model_path="./komodo-7b-base-q4_0.gguf", # Download the model file first

n_ctx=32768, # The max sequence length to use - note that longer sequence lengths require much more resources

n_threads=8, # The number of CPU threads to use, tailor to your system and the resulting performance

n_gpu_layers=35 # The number of layers to offload to GPU, if you have GPU acceleration available

)

# Simple inference example

output = llm(

"<s>[INST] {prompt} [/INST]", # Prompt

max_tokens=512, # Generate up to 512 tokens

stop=["</s>"], # Example stop token - not necessarily correct for this specific model! Please check before using.

echo=True # Whether to echo the prompt

)

# Chat Completion API

llm = Llama(model_path="./komodo-7b-base-q4_0.gguf", chat_format="llama-2") # Set chat_format according to the model you are using

llm.create_chat_completion(

messages = [

{"role": "system", "content": "You are a story writing assistant."},

{

"role": "user",

"content": "Write a story about llamas."

}

]

)

```

## How to use with LangChain

Here are guides on using llama-cpp-python and ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

<!-- README_GGUF.md-how-to-run end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](https://gpus.llm-utils.org)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

<!-- footer end -->

<!-- original-model-card start -->

## Instruction format

In order to leverage instruction fine-tuning, your prompt should be surrounded by `[INST]` and `[/INST]` tokens. The very first instruction should begin with a beginning-of-sentence (BOS) token; subsequent instructions should not. Each assistant generation ends with the end-of-sentence (EOS) token.

E.g.

```

text = "<s>[INST] What is your favourite condiment? [/INST]"

"Well, I'm quite partial to a good squeeze of fresh lemon juice. It adds just the right amount of zesty flavour to whatever I'm cooking up in the kitchen!</s> "

"[INST] Do you have mayonnaise recipes? [/INST]"

```
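The rule above can be expressed as a small helper that assembles a multi-turn prompt string. It is a sketch that reproduces the concatenation in the example (BOS once, `</s> ` after each finished reply); the function name `build_inst_prompt` is hypothetical, and whether a space should follow `[/INST]` before a reply depends on the tokenizer, so check it against the model you use.

```python
def build_inst_prompt(turns):
    """Build an [INST]-style prompt.

    `turns` is a list of (user_message, assistant_reply) pairs; the reply of the
    final pair may be None, leaving the prompt open for the model to complete.
    """
    prompt = "<s>"  # BOS only once, before the first instruction
    for user, reply in turns:
        prompt += f"[INST] {user} [/INST]"
        if reply is not None:
            prompt += f"{reply}</s> "  # each finished reply ends with EOS
    return prompt

text = build_inst_prompt([
    ("What is your favourite condiment?",
     "Well, I'm quite partial to a good squeeze of fresh lemon juice."),
    ("Do you have mayonnaise recipes?", None),
])
print(text)
```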

The following example loads the Q4_0 file with ctransformers and streams a completion for an Indonesian prompt ("explain in detail what self-attention is"):

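```python
# Install first with: pip install ctransformers
from ctransformers import AutoModelForCausalLM

# Downloads (if needed) and loads the Q4_0 file; gpu_layers sets how many layers are offloaded to the GPU
llm1 = AutoModelForCausalLM.from_pretrained("ukung/komodo-7b-base-GGUF", model_file="komodo-7b-base-q4_0.gguf", model_type="llama", gpu_layers=50)

# Indonesian: "explain in detail what self-attention is"
prompt = """jelaskan dengan detail apa itu self-attention?"""

# Stream tokens as they are generated, stopping at any of the listed strings
for text in llm1(prompt, stream=True, max_new_tokens=2048, stop=["</s>", "<s>", "<|im_start|>", "<|im_end|>", "|im_end|>", "|im_end|", "<"]):
    print(text, end='')
```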

## Model Architecture

This instruction model is based on Llama2, a transformer model with the following architecture choices:

- Grouped-Query Attention

- Sliding-Window Attention

- Byte-fallback BPE tokenizer

## Troubleshooting

- If you see the following error:

```

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/transformers/models/auto/auto_factory.py", line 482, in from_pretrained

config, kwargs = AutoConfig.from_pretrained(

File "/transformers/models/auto/configuration_auto.py", line 1022, in from_pretrained

config_class = CONFIG_MAPPING[config_dict["model_type"]]

File "/transformers/models/auto/configuration_auto.py", line 723, in __getitem__

raise KeyError(key)

KeyError: 'llama'

```

Installing transformers from source should solve the issue

pip install git+https://github.com/huggingface/transformers

This should not be required after transformers-v4.33.4.

## Limitations

The model is a quick demonstration that the base model can be easily fine-tuned to achieve compelling performance.

It does not have any moderation mechanisms. We're looking forward to engaging with the community on ways to make the model finely respect guardrails, allowing for deployment in environments requiring moderated outputs.

## The AI Team


<!-- original-model-card end --> |

RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf | RichardErkhov | 2024-05-27T16:14:42Z | 451 | 0 | null | [

"gguf",

"region:us"

]

| null | 2024-05-27T14:14:57Z | Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

llama-2-7b-hf_open-platypus - GGUF

- Model creator: https://huggingface.co/lgaalves/

- Original model: https://huggingface.co/lgaalves/llama-2-7b-hf_open-platypus/

| Name | Quant method | Size |

| ---- | ---- | ---- |

| [llama-2-7b-hf_open-platypus.Q2_K.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.Q2_K.gguf) | Q2_K | 2.36GB |

| [llama-2-7b-hf_open-platypus.IQ3_XS.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.IQ3_XS.gguf) | IQ3_XS | 2.6GB |

| [llama-2-7b-hf_open-platypus.IQ3_S.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.IQ3_S.gguf) | IQ3_S | 2.75GB |

| [llama-2-7b-hf_open-platypus.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.Q3_K_S.gguf) | Q3_K_S | 2.75GB |

| [llama-2-7b-hf_open-platypus.IQ3_M.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.IQ3_M.gguf) | IQ3_M | 2.9GB |

| [llama-2-7b-hf_open-platypus.Q3_K.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.Q3_K.gguf) | Q3_K | 3.07GB |

| [llama-2-7b-hf_open-platypus.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.Q3_K_M.gguf) | Q3_K_M | 3.07GB |

| [llama-2-7b-hf_open-platypus.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.Q3_K_L.gguf) | Q3_K_L | 3.35GB |

| [llama-2-7b-hf_open-platypus.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.IQ4_XS.gguf) | IQ4_XS | 3.4GB |

| [llama-2-7b-hf_open-platypus.Q4_0.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.Q4_0.gguf) | Q4_0 | 3.56GB |

| [llama-2-7b-hf_open-platypus.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.IQ4_NL.gguf) | IQ4_NL | 3.58GB |

| [llama-2-7b-hf_open-platypus.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.Q4_K_S.gguf) | Q4_K_S | 3.59GB |

| [llama-2-7b-hf_open-platypus.Q4_K.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.Q4_K.gguf) | Q4_K | 3.8GB |

| [llama-2-7b-hf_open-platypus.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.Q4_K_M.gguf) | Q4_K_M | 3.8GB |

| [llama-2-7b-hf_open-platypus.Q4_1.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.Q4_1.gguf) | Q4_1 | 3.95GB |

| [llama-2-7b-hf_open-platypus.Q5_0.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.Q5_0.gguf) | Q5_0 | 4.33GB |

| [llama-2-7b-hf_open-platypus.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.Q5_K_S.gguf) | Q5_K_S | 4.33GB |

| [llama-2-7b-hf_open-platypus.Q5_K.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.Q5_K.gguf) | Q5_K | 4.45GB |

| [llama-2-7b-hf_open-platypus.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.Q5_K_M.gguf) | Q5_K_M | 4.45GB |

| [llama-2-7b-hf_open-platypus.Q5_1.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.Q5_1.gguf) | Q5_1 | 4.72GB |

| [llama-2-7b-hf_open-platypus.Q6_K.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.Q6_K.gguf) | Q6_K | 5.15GB |

| [llama-2-7b-hf_open-platypus.Q8_0.gguf](https://huggingface.co/RichardErkhov/lgaalves_-_llama-2-7b-hf_open-platypus-gguf/blob/main/llama-2-7b-hf_open-platypus.Q8_0.gguf) | Q8_0 | 6.67GB |

Original model description:

---

license: llama2

datasets:

- garage-bAInd/Open-Platypus

pipeline_tag: text-generation

language:

- en

---

# Llama-2-7b-hf_open-platypus

**llama-2-7b-hf_open-platypus** is an instruction fine-tuned model based on the LLaMA2-7B transformer architecture.

### Benchmark Metrics

| Metric | llama-2-7b-hf_open-platypus | garage-bAInd/Platypus2-7B| meta-llama/Llama-2-7b-hf (base) |

|-----------------------|-------|-------|-------|

| Avg. | 54.35|**56.13** | 54.32 |

| ARC (25-shot) | 51.45 |**55.2**| 53.07 |

| HellaSwag (10-shot) | 78.63 |**78.84**| 78.59 |

| MMLU (5-shot) | 43.6 |**49.83**| 46.87 |

| TruthfulQA (0-shot) | **43.71** |40.64| 38.76 |

We use state-of-the-art [Language Model Evaluation Harness](https://github.com/EleutherAI/lm-evaluation-harness) to run the benchmark tests above, using the same version as the HuggingFace LLM Leaderboard. Please see below for detailed instructions on reproducing benchmark results.

### Model Details

* **Trained by**: Luiz G A Alves

* **Model type:** **llama-2-7b-hf_open-platypus** is an auto-regressive language model based on the LLaMA2 transformer architecture.

* **Language(s)**: English

### How to use:

```python
# Use a pipeline as a high-level helper
from transformers import pipeline

pipe = pipeline("text-generation", model="lgaalves/llama-2-7b-hf_open-platypus")

question = "What is a large language model?"
answer = pipe(question)
print(answer[0]['generated_text'])
```

Or, you can load the model directly using:

```python

# Load model directly

from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("lgaalves/llama-2-7b-hf_open-platypus")

model = AutoModelForCausalLM.from_pretrained("lgaalves/llama-2-7b-hf_open-platypus")

```

### Training Dataset

`lgaalves/llama-2-7b-hf_open-platypus` was trained on the STEM- and logic-focused dataset [`garage-bAInd/Open-Platypus`](https://huggingface.co/datasets/garage-bAInd/Open-Platypus).

### Training Procedure

`lgaalves/llama-2-7b-hf_open-platypus` was instruction fine-tuned using LoRA on 1 Tesla V100-SXM2-16GB.
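For readers unfamiliar with LoRA: it keeps the pretrained weight matrix W frozen and trains a low-rank update, so the effective weight is W + (alpha / r) · B · A, where B is d×r, A is r×k, and r is much smaller than d and k. B is usually initialised to zero, so training starts from the original model. The plain-Python sketch below only illustrates merging that update on tiny matrices; the helper names, rank, alpha, and values are hypothetical and are not the settings used to train this model.

```python
def matmul(X, Y):
    """Multiply matrices given as lists of rows."""
    return [[sum(X[i][t] * Y[t][j] for t in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

def lora_merge(W, A, B, alpha, r):
    """Return W + (alpha / r) * B @ A, the effective weight after merging a LoRA adapter."""
    scale = alpha / r
    delta = matmul(B, A)
    return [[W[i][j] + scale * delta[i][j] for j in range(len(W[0]))] for i in range(len(W))]

# Hypothetical 2x3 frozen weight with a rank-1 adapter (B: 2x1, A: 1x3).
W = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0]]
B = [[1.0], [2.0]]
A = [[0.5, 0.0, -0.5]]

merged = lora_merge(W, A, B, alpha=2, r=1)
print(merged)  # [[2.0, 0.0, -1.0], [2.0, 1.0, -2.0]]
```

Only A and B (here 5 numbers instead of 6) are trained; for real 4096×4096 projections with a small rank the saving is far larger, which is what makes fine-tuning a 7B model on a single 16 GB GPU practical.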

### Limitations and bias

Llama 2 and fine-tuned variants are a new technology that carries risks with use. Testing conducted to date has been in English, and has not covered, nor could it cover, all scenarios. For these reasons, as with all LLMs, Llama 2 and any fine-tuned variant's potential outputs cannot be predicted in advance, and the model may in some instances produce inaccurate, biased or other objectionable responses to user prompts. Therefore, before deploying any applications of Llama 2 variants, developers should perform safety testing and tuning tailored to their specific applications of the model.

Please see the Responsible Use Guide available at https://ai.meta.com/llama/responsible-use-guide/

# [Open LLM Leaderboard Evaluation Results](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)

Detailed results can be found [here](https://huggingface.co/datasets/open-llm-leaderboard/details_lgaalves__llama-2-7b-hf_open-platypus)

| Metric | Value |

|-----------------------|---------------------------|

| Avg. | 43.49 |

| ARC (25-shot) | 51.45 |

| HellaSwag (10-shot) | 78.63 |

| MMLU (5-shot) | 43.6 |

| TruthfulQA (0-shot) | 43.71 |

| Winogrande (5-shot) | 74.43 |

| GSM8K (5-shot) | 6.6 |

| DROP (3-shot) | 5.99 |
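The "Avg." rows in both tables are plain arithmetic means of the listed benchmark scores: four tasks in the Benchmark Metrics table, seven in the leaderboard table. Recomputing them is a quick consistency check; the sketch below compares each mean with the reported value, allowing for rounding to two decimals. The helper name `matches_reported` is hypothetical.

```python
from statistics import mean

# Scores for llama-2-7b-hf_open-platypus, copied from the tables.
four_task = {"ARC": 51.45, "HellaSwag": 78.63, "MMLU": 43.6, "TruthfulQA": 43.71}
seven_task = {**four_task, "Winogrande": 74.43, "GSM8K": 6.6, "DROP": 5.99}

def matches_reported(scores, reported, tol=0.005):
    """True if the mean of `scores` is within rounding distance of the reported average."""
    return abs(mean(scores.values()) - reported) <= tol

print(mean(four_task.values()), matches_reported(four_task, 54.35))
print(mean(seven_task.values()), matches_reported(seven_task, 43.49))
```

Both checks pass, which also explains why the two "Avg." figures differ so much: the low GSM8K and DROP scores pull the seven-task mean down.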

|

mradermacher/Maixtchup-4x7b-GGUF | mradermacher | 2024-05-31T06:43:41Z | 451 | 0 | transformers | [

"transformers",

"gguf",

"en",

"base_model:StatsGary/Maixtchup-4x7b",

"endpoints_compatible",

"region:us"

]

| null | 2024-05-30T05:48:38Z | ---

base_model: StatsGary/Maixtchup-4x7b

language:

- en

library_name: transformers

quantized_by: mradermacher

tags: []

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

static quants of https://huggingface.co/StatsGary/Maixtchup-4x7b

<!-- provided-files -->

weighted/imatrix quants are available at https://huggingface.co/mradermacher/Maixtchup-4x7b-i1-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for more details, including on how to concatenate multi-part files.
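Multi-part files must be joined byte for byte, in order, into a single `.gguf` file before loading. A minimal Python sketch, assuming you pass the parts in the correct order yourself (the helper name and the `.part1of2` file names in the usage line are hypothetical; check the actual names in the repository). On Linux or macOS, `cat part1 part2 > model.gguf` does the same thing.

```python
import shutil
from pathlib import Path

def concat_parts(parts, output):
    """Join split files byte for byte, in the order given, into `output`; return its size."""
    with open(output, "wb") as out:
        for part in parts:
            with open(part, "rb") as src:
                shutil.copyfileobj(src, out)  # streams in chunks, so multi-GB parts are fine
    return Path(output).stat().st_size

# Usage (hypothetical file names):
# concat_parts(["model.Q8_0.gguf.part1of2", "model.Q8_0.gguf.part2of2"], "model.Q8_0.gguf")
```

Passing the list explicitly avoids relying on filename sorting, which would put `part10` before `part2`.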

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/Maixtchup-4x7b-GGUF/resolve/main/Maixtchup-4x7b.Q2_K.gguf) | Q2_K | 8.9 | |

| [GGUF](https://huggingface.co/mradermacher/Maixtchup-4x7b-GGUF/resolve/main/Maixtchup-4x7b.IQ3_XS.gguf) | IQ3_XS | 10.0 | |

| [GGUF](https://huggingface.co/mradermacher/Maixtchup-4x7b-GGUF/resolve/main/Maixtchup-4x7b.Q3_K_S.gguf) | Q3_K_S | 10.5 | |

| [GGUF](https://huggingface.co/mradermacher/Maixtchup-4x7b-GGUF/resolve/main/Maixtchup-4x7b.IQ3_S.gguf) | IQ3_S | 10.6 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/Maixtchup-4x7b-GGUF/resolve/main/Maixtchup-4x7b.IQ3_M.gguf) | IQ3_M | 10.7 | |

| [GGUF](https://huggingface.co/mradermacher/Maixtchup-4x7b-GGUF/resolve/main/Maixtchup-4x7b.Q3_K_M.gguf) | Q3_K_M | 11.7 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/Maixtchup-4x7b-GGUF/resolve/main/Maixtchup-4x7b.Q3_K_L.gguf) | Q3_K_L | 12.6 | |

| [GGUF](https://huggingface.co/mradermacher/Maixtchup-4x7b-GGUF/resolve/main/Maixtchup-4x7b.IQ4_XS.gguf) | IQ4_XS | 13.1 | |

| [GGUF](https://huggingface.co/mradermacher/Maixtchup-4x7b-GGUF/resolve/main/Maixtchup-4x7b.Q4_K_S.gguf) | Q4_K_S | 13.8 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Maixtchup-4x7b-GGUF/resolve/main/Maixtchup-4x7b.Q4_K_M.gguf) | Q4_K_M | 14.7 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Maixtchup-4x7b-GGUF/resolve/main/Maixtchup-4x7b.Q5_K_S.gguf) | Q5_K_S | 16.7 | |

| [GGUF](https://huggingface.co/mradermacher/Maixtchup-4x7b-GGUF/resolve/main/Maixtchup-4x7b.Q5_K_M.gguf) | Q5_K_M | 17.2 | |

| [GGUF](https://huggingface.co/mradermacher/Maixtchup-4x7b-GGUF/resolve/main/Maixtchup-4x7b.Q6_K.gguf) | Q6_K | 19.9 | very good quality |

| [GGUF](https://huggingface.co/mradermacher/Maixtchup-4x7b-GGUF/resolve/main/Maixtchup-4x7b.Q8_0.gguf) | Q8_0 | 25.8 | fast, best quality |

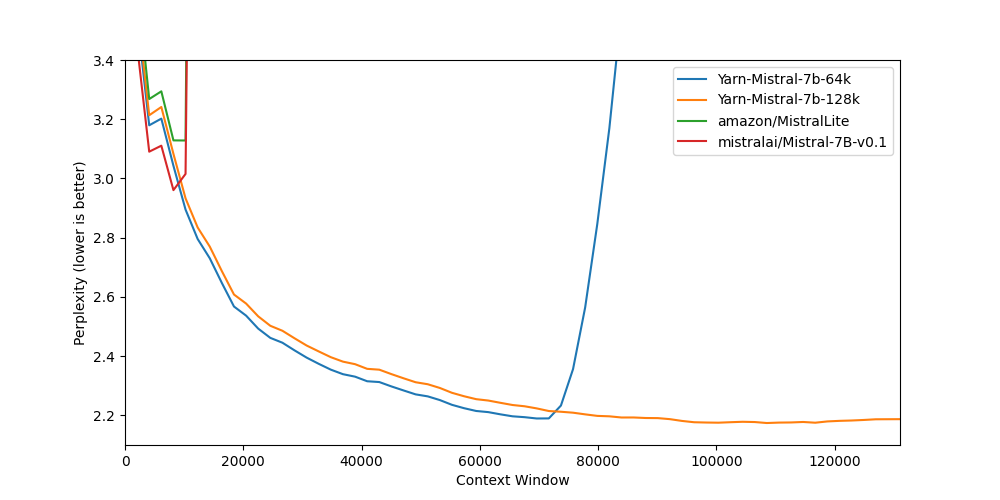

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting me use its servers and providing upgrades to my workstation to enable this work in my free time.

<!-- end -->

|

gglabs/TinyLM-Chat-0611-10-epoch | gglabs | 2024-06-11T14:44:58Z | 451 | 0 | transformers | [

"transformers",

"gguf",

"llama",

"text-generation-inference",

"unsloth",

"en",

"base_model:unsloth/tinyllama-chat-bnb-4bit",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

]

| null | 2024-06-11T14:40:29Z | ---

language:

- en

license: apache-2.0

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- gguf

base_model: unsloth/tinyllama-chat-bnb-4bit

---

# Uploaded model

- **Developed by:** gglabs

- **License:** apache-2.0

- **Finetuned from model:** unsloth/tinyllama-chat-bnb-4bit

This llama model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

mradermacher/TeenyTinyLlama-Doc-160m-GGUF | mradermacher | 2024-06-29T05:32:32Z | 451 | 0 | transformers | [

"transformers",

"gguf",

"llama-2",

"pt",

"medicine",

"dataset:mmoreirast/medicine-training-pt",

"dataset:mmoreirast/medicine-evaluation-pt",

"base_model:mmoreirast/TeenyTinyLlama-Doc-160m",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

]

| null | 2024-06-22T17:41:17Z | ---

base_model: mmoreirast/TeenyTinyLlama-Doc-160m

datasets:

- mmoreirast/medicine-training-pt

- mmoreirast/medicine-evaluation-pt

language:

- pt

library_name: transformers

license: apache-2.0

quantized_by: mradermacher

tags:

- llama-2

- pt

- medicine

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

static quants of https://huggingface.co/mmoreirast/TeenyTinyLlama-Doc-160m

<!-- provided-files -->

Weighted/imatrix quants do not appear to be available from me at this time. If they do not show up a week or so after the static ones, I have probably not planned for them. Feel free to request them by opening a Community Discussion.

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for more details, including on how to concatenate multi-part files.
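A minimal Python sketch of that concatenation step follows. It assumes the parts are plain byte splits of one GGUF file and are passed in order. The `.part1of2`-style names in the usage note are hypothetical, and this 160m model is small enough that none of its quants are split. The function does the same job as `cat part1 part2 > model.gguf`. It does not apply to shards produced by llama.cpp's `gguf-split` tool, which are loaded directly instead.

```python
import shutil
from pathlib import Path


def concatenate_parts(part_paths, output_path):
    """Join byte-split GGUF parts into a single file, in the order given.

    Assumes plain byte splits (not gguf-split shards) and that the caller
    lists the parts in the correct order.
    """
    output_path = Path(output_path)
    with output_path.open("wb") as out:
        for part in part_paths:
            with Path(part).open("rb") as src:
                # Stream each part so multi-GB files are never fully held in memory.
                shutil.copyfileobj(src, out)
    return output_path
```

For example, `concatenate_parts(["model.Q8_0.gguf.part1of2", "model.Q8_0.gguf.part2of2"], "model.Q8_0.gguf")` writes the joined file. These file names are hypothetical. Streaming with `shutil.copyfileobj` keeps memory use constant regardless of part size.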

## Provided Quants

(Sorted by size, not necessarily quality. IQ quants are often preferable to similar-sized non-IQ quants.)

| Link | Type | Size/GB | Notes |
|:-----|:-----|--------:|:------|
| [GGUF](https://huggingface.co/mradermacher/TeenyTinyLlama-Doc-160m-GGUF/resolve/main/TeenyTinyLlama-Doc-160m.Q2_K.gguf) | Q2_K | 0.2 | |
| [GGUF](https://huggingface.co/mradermacher/TeenyTinyLlama-Doc-160m-GGUF/resolve/main/TeenyTinyLlama-Doc-160m.IQ3_XS.gguf) | IQ3_XS | 0.2 | |
| [GGUF](https://huggingface.co/mradermacher/TeenyTinyLlama-Doc-160m-GGUF/resolve/main/TeenyTinyLlama-Doc-160m.IQ3_S.gguf) | IQ3_S | 0.2 | beats Q3_K* |
| [GGUF](https://huggingface.co/mradermacher/TeenyTinyLlama-Doc-160m-GGUF/resolve/main/TeenyTinyLlama-Doc-160m.Q3_K_S.gguf) | Q3_K_S | 0.2 | |
| [GGUF](https://huggingface.co/mradermacher/TeenyTinyLlama-Doc-160m-GGUF/resolve/main/TeenyTinyLlama-Doc-160m.IQ3_M.gguf) | IQ3_M | 0.2 | |
| [GGUF](https://huggingface.co/mradermacher/TeenyTinyLlama-Doc-160m-GGUF/resolve/main/TeenyTinyLlama-Doc-160m.Q3_K_M.gguf) | Q3_K_M | 0.2 | lower quality |
| [GGUF](https://huggingface.co/mradermacher/TeenyTinyLlama-Doc-160m-GGUF/resolve/main/TeenyTinyLlama-Doc-160m.Q3_K_L.gguf) | Q3_K_L | 0.2 | |
| [GGUF](https://huggingface.co/mradermacher/TeenyTinyLlama-Doc-160m-GGUF/resolve/main/TeenyTinyLlama-Doc-160m.IQ4_XS.gguf) | IQ4_XS | 0.2 | |
| [GGUF](https://huggingface.co/mradermacher/TeenyTinyLlama-Doc-160m-GGUF/resolve/main/TeenyTinyLlama-Doc-160m.Q4_K_S.gguf) | Q4_K_S | 0.2 | fast, recommended |
| [GGUF](https://huggingface.co/mradermacher/TeenyTinyLlama-Doc-160m-GGUF/resolve/main/TeenyTinyLlama-Doc-160m.Q4_K_M.gguf) | Q4_K_M | 0.2 | fast, recommended |
| [GGUF](https://huggingface.co/mradermacher/TeenyTinyLlama-Doc-160m-GGUF/resolve/main/TeenyTinyLlama-Doc-160m.Q5_K_S.gguf) | Q5_K_S | 0.2 | |
| [GGUF](https://huggingface.co/mradermacher/TeenyTinyLlama-Doc-160m-GGUF/resolve/main/TeenyTinyLlama-Doc-160m.Q5_K_M.gguf) | Q5_K_M | 0.2 | |
| [GGUF](https://huggingface.co/mradermacher/TeenyTinyLlama-Doc-160m-GGUF/resolve/main/TeenyTinyLlama-Doc-160m.Q6_K.gguf) | Q6_K | 0.2 | very good quality |
| [GGUF](https://huggingface.co/mradermacher/TeenyTinyLlama-Doc-160m-GGUF/resolve/main/TeenyTinyLlama-Doc-160m.Q8_0.gguf) | Q8_0 | 0.3 | fast, best quality |
| [GGUF](https://huggingface.co/mradermacher/TeenyTinyLlama-Doc-160m-GGUF/resolve/main/TeenyTinyLlama-Doc-160m.f16.gguf) | f16 | 0.4 | 16 bpw, overkill |
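Every link in the table above follows the same pattern: `https://huggingface.co/<repo>/resolve/main/<model>.<TYPE>.gguf`. The helper below is a hypothetical sketch, not part of any tooling for this repo. It rebuilds a link from the quant type and rejects types that the table does not list.

```python
# Quant types listed in the Provided Quants table for this repo.
PROVIDED_QUANTS = (
    "Q2_K", "IQ3_XS", "IQ3_S", "Q3_K_S", "IQ3_M", "Q3_K_M", "Q3_K_L",
    "IQ4_XS", "Q4_K_S", "Q4_K_M", "Q5_K_S", "Q5_K_M", "Q6_K", "Q8_0", "f16",
)
REPO = "mradermacher/TeenyTinyLlama-Doc-160m-GGUF"
BASE_NAME = "TeenyTinyLlama-Doc-160m"


def quant_url(quant_type: str, repo: str = REPO, base_name: str = BASE_NAME) -> str:
    """Return the download link for one quant, following the table's link pattern.

    Validation only checks against this repo's table, so other repos may
    offer types that this sketch rejects.
    """
    if quant_type not in PROVIDED_QUANTS:
        raise ValueError(f"{quant_type!r} is not one of the provided quants")
    filename = f"{base_name}.{quant_type}.gguf"
    return f"https://huggingface.co/{repo}/resolve/main/{filename}"
```

For example, `quant_url("Q4_K_M")` returns the Q4_K_M link from the table. If the `huggingface_hub` package is installed, you can pass the same file name to `hf_hub_download(repo_id=REPO, filename=...)` instead, which also caches the download locally.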

Here is a handy graph by ikawrakow comparing some lower-quality quant types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for answers to some questions you might have, or if you want another model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting me use its servers and providing upgrades to my workstation to enable this work in my free time.

<!-- end -->

|

larenspear/llama2-7b-WildJailbreak-Q2_K-GGUF | larenspear | 2024-06-30T19:25:43Z | 451 | 0 | null | [

"gguf",

"llama-cpp",

"gguf-my-repo",

"en",

"base_model:allenai/llama2-7b-WildJailbreak",

"license:apache-2.0",

"region:us"

]

| null | 2024-06-30T19:25:32Z | ---

base_model: allenai/llama2-7b-WildJailbreak

language:

- en

license: apache-2.0

tags:

- llama-cpp

- gguf-my-repo

extra_gated_prompt: Access to this model is automatically granted upon accepting the

[AI2 Responsible Use Guidelines](https://allenai.org/responsible-use.pdf), and completing

all fields below

extra_gated_fields:

Your full name: text

Organization or entity you are affiliated with: text

State or country you are located in: text

Contact email: text

Please describe your intended use of the low risk artifact(s): text

I understand that this model is a research artifact that may contain or produce unfiltered, toxic, or harmful material: checkbox

I agree to use this model for research purposes in accordance with the AI2 Responsible Use Guidelines: checkbox

I agree that AI2 may use my information as described in the Privacy Policy: checkbox

I certify that the information I have provided is true and accurate: checkbox

---

# larenspear/llama2-7b-WildJailbreak-Q2_K-GGUF

This model was converted to GGUF format from [`allenai/llama2-7b-WildJailbreak`](https://huggingface.co/allenai/llama2-7b-WildJailbreak) using llama.cpp via the ggml.ai's [GGUF-my-repo](https://huggingface.co/spaces/ggml-org/gguf-my-repo) space.

Refer to the [original model card](https://huggingface.co/allenai/llama2-7b-WildJailbreak) for more details on the model.

## Use with llama.cpp

Install llama.cpp through Homebrew (works on macOS and Linux):

```bash

brew install llama.cpp

```

Invoke the llama.cpp server or the CLI.

### CLI:

```bash

llama-cli --hf-repo larenspear/llama2-7b-WildJailbreak-Q2_K-GGUF --hf-file llama2-7b-wildjailbreak-q2_k.gguf -p "The meaning to life and the universe is"

```

### Server:

```bash

llama-server --hf-repo larenspear/llama2-7b-WildJailbreak-Q2_K-GGUF --hf-file llama2-7b-wildjailbreak-q2_k.gguf -c 2048

```
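Once `llama-server` is running, you can query it over HTTP. The sketch below uses only the Python standard library. It assumes the server's default address (`127.0.0.1:8080`) and its `/completion` endpoint, which takes a JSON body with `prompt` and `n_predict` and returns the generated text in a `content` field. The helper names are hypothetical.

```python
import json
import urllib.request


def build_completion_request(prompt, n_predict=64, host="127.0.0.1", port=8080):
    """Build a POST request for llama-server's /completion endpoint."""
    payload = {"prompt": prompt, "n_predict": n_predict}
    return urllib.request.Request(
        f"http://{host}:{port}/completion",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def complete(prompt, **kwargs):
    """Send the request and return the generated text (the "content" field)."""
    with urllib.request.urlopen(build_completion_request(prompt, **kwargs)) as resp:
        return json.loads(resp.read().decode("utf-8"))["content"]
```

With the server from the command above running, `complete("The meaning to life and the universe is")` returns the model's continuation. Building the request separately from sending it makes the payload easy to inspect before anything goes over the network.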

Note: You can also use this checkpoint directly through the [usage steps](https://github.com/ggerganov/llama.cpp?tab=readme-ov-file#usage) listed in the llama.cpp repo.

Step 1: Clone llama.cpp from GitHub.

```bash

git clone https://github.com/ggerganov/llama.cpp

```

Step 2: Move into the llama.cpp folder and build it with the `LLAMA_CURL=1` flag, along with any hardware-specific flags (e.g. `LLAMA_CUDA=1` for NVIDIA GPUs on Linux).

```bash

cd llama.cpp && LLAMA_CURL=1 make

```

Step 3: Run inference with one of the built binaries, `llama-cli` or `llama-server`.

```bash

./llama-cli --hf-repo larenspear/llama2-7b-WildJailbreak-Q2_K-GGUF --hf-file llama2-7b-wildjailbreak-q2_k.gguf -p "The meaning to life and the universe is"

```

or

```bash

./llama-server --hf-repo larenspear/llama2-7b-WildJailbreak-Q2_K-GGUF --hf-file llama2-7b-wildjailbreak-q2_k.gguf -c 2048

```

|

Isaak-Carter/JOSIEv4o-8b-stage1-beta2.3.1-Q4_K_S-GGUF | Isaak-Carter | 2024-07-02T13:13:48Z | 451 | 1 | transformers | [

"transformers",

"gguf",

"text-generation-inference",

"unsloth",

"llama",

"trl",

"sft",

"llama-cpp",

"gguf-my-repo",

"en",

"de",

"base_model:Isaak-Carter/JOSIEv4o-8b-stage1-beta2.3.1",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

]

| null | 2024-07-01T07:53:13Z | ---

base_model: Isaak-Carter/JOSIEv4o-8b-stage1-beta2.3.1

language:

- en

- de

license: apache-2.0

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- trl

- sft

- llama-cpp

- gguf-my-repo

---

Use in Ollama: `ollama run goekdenizguelmez/j.o.s.i.e.v4o-8b-stage1-beta2.3.1`
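Besides the interactive `ollama run` session, you can also call a running Ollama instance through its local REST API. The sketch below makes three assumptions: Ollama is on its default address (`127.0.0.1:11434`), it exposes the `/api/generate` endpoint, and the model above has already been pulled. With `"stream": false`, the reply is a single JSON object whose `response` field holds the text. The helper names are hypothetical.

```python
import json
import urllib.request

MODEL = "goekdenizguelmez/j.o.s.i.e.v4o-8b-stage1-beta2.3.1"


def build_generate_request(prompt, model=MODEL, host="127.0.0.1", port=11434):
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        f"http://{host}:{port}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt, **kwargs):
    """Send the request and return the generated text (the "response" field)."""
    with urllib.request.urlopen(build_generate_request(prompt, **kwargs)) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

Disabling streaming keeps the sketch short because the whole answer arrives as one JSON object. For token-by-token output, you would instead read the newline-delimited JSON chunks that Ollama streams by default.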

# Isaak-Carter/JOSIEv4o-8b-stage1-beta2.3.1-Q4_K_S-GGUF

This model was converted to GGUF format from [`Isaak-Carter/JOSIEv4o-8b-stage1-beta2.3.1`](https://huggingface.co/Isaak-Carter/JOSIEv4o-8b-stage1-beta2.3.1) using llama.cpp via the ggml.ai's [GGUF-my-repo](https://huggingface.co/spaces/ggml-org/gguf-my-repo) space.

Refer to the [original model card](https://huggingface.co/Isaak-Carter/JOSIEv4o-8b-stage1-beta2.3.1) for more details on the model.

## Use with llama.cpp

Install llama.cpp through Homebrew (works on macOS and Linux):

```bash

brew install llama.cpp

```

Invoke the llama.cpp server or the CLI.

### CLI:

```bash

llama-cli --hf-repo Isaak-Carter/JOSIEv4o-8b-stage1-beta2.3.1-Q4_K_S-GGUF --hf-file josiev4o-8b-stage1-beta2.3.1-q4_k_s.gguf -p "The meaning to life and the universe is"

```

### Server:

```bash

llama-server --hf-repo Isaak-Carter/JOSIEv4o-8b-stage1-beta2.3.1-Q4_K_S-GGUF --hf-file josiev4o-8b-stage1-beta2.3.1-q4_k_s.gguf -c 2048

```

Note: You can also use this checkpoint directly through the [usage steps](https://github.com/ggerganov/llama.cpp?tab=readme-ov-file#usage) listed in the llama.cpp repo.

Step 1: Clone llama.cpp from GitHub.

```bash

git clone https://github.com/ggerganov/llama.cpp

```

Step 2: Move into the llama.cpp folder and build it with the `LLAMA_CURL=1` flag, along with any hardware-specific flags (e.g. `LLAMA_CUDA=1` for NVIDIA GPUs on Linux).

```bash

cd llama.cpp && LLAMA_CURL=1 make

```

Step 3: Run inference with one of the built binaries, `llama-cli` or `llama-server`.

```bash

./llama-cli --hf-repo Isaak-Carter/JOSIEv4o-8b-stage1-beta2.3.1-Q4_K_S-GGUF --hf-file josiev4o-8b-stage1-beta2.3.1-q4_k_s.gguf -p "The meaning to life and the universe is"

```

or

```bash

./llama-server --hf-repo Isaak-Carter/JOSIEv4o-8b-stage1-beta2.3.1-Q4_K_S-GGUF --hf-file josiev4o-8b-stage1-beta2.3.1-q4_k_s.gguf -c 2048

```

|

s3nh/MathLLM-MathCoder-CL-7B-GGUF | s3nh | 2023-10-06T08:47:16Z | 450 | 2 | transformers | [

"transformers",

"gguf",

"text-generation",

"zh",

"en",

"license:openrail",

"endpoints_compatible",

"region:us"

]

| text-generation | 2023-10-06T08:41:21Z |

---

license: openrail

pipeline_tag: text-generation