modelId

stringlengths 5

122

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC] | downloads

int64 0

738M

| likes

int64 0

11k

| library_name

stringclasses 245

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 48

values | createdAt

timestamp[us, tz=UTC] | card

stringlengths 1

901k

|

|---|---|---|---|---|---|---|---|---|---|

anakin87/electra-italian-xxl-cased-squad-it

|

anakin87

| 2023-03-16T17:21:47Z | 376 | 7 |

transformers

|

[

"transformers",

"pytorch",

"safetensors",

"electra",

"question-answering",

"italian",

"squad_it",

"it",

"dataset:squad_it",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] |

question-answering

| 2023-01-31T07:50:26Z |

---

datasets:

- squad_it

metrics:

- squad

language:

- it

license: apache-2.0

tags:

- italian

- squad_it

- question-answering

widget:

- text: Qual è il soprannome di Vasco Rossi?

context: >-

Vasco Rossi, noto anche semplicemente come Vasco e in passato con

l'appellativo Blasco (Zocca, 7 febbraio 1952), è un cantautore italiano

- text: >-

La foresta pluviale amazzonica è diventata per lo più una foresta interna

intorno a quale evento globale?

context: >-

In seguito all' evento di estinzione del Cretaceo-Paleogene, l' estinzione

dei dinosauri e il clima umido possono aver permesso alla foresta pluviale

tropicale di diffondersi in tutto il continente. Dal 66-34 Mya, la foresta

pluviale si estendeva fino a sud fino a 45°. Le fluttuazioni climatiche

degli ultimi 34 milioni di anni hanno permesso alle regioni della savana di

espandersi fino ai tropici. Durante l' Oligocene, ad esempio, la foresta

pluviale ha attraversato una banda relativamente stretta. Si espandeva di

nuovo durante il Miocene medio, poi si ritrasse ad una formazione

prevalentemente interna all' ultimo massimo glaciale. Tuttavia, la foresta

pluviale è riuscita ancora a prosperare durante questi periodi glaciali,

consentendo la sopravvivenza e l' evoluzione di un' ampia varietà di specie.

- text: >-

Il Regno Unito e la Francia non hanno avuto interruzioni dell'

approvvigionamento petrolifero in quanto non hanno consentito a quale paese

di utilizzare il loro aeroporto?

context: >-

L' embargo non era uniforme in tutta Europa. Dei nove membri della Comunità

Economica Europea (CEE), i Paesi Bassi hanno dovuto affrontare un embargo

totale, il Regno Unito e la Francia hanno ricevuto forniture quasi

ininterrotte (poichè si sono rifiutati di consentire all' America di

utilizzare i loro aerodromi e le armi e forniture embargo sia agli arabi che

agli israeliani), mentre gli altri sei hanno dovuto affrontare tagli

parziali. Il Regno Unito era tradizionalmente un alleato di Israele, e il

governo di Harold Wilson ha sostenuto gli israeliani durante la guerra dei

sei giorni. Il suo successore, Ted Heath, ribaltò questa politica nel 1970,

chiedendo a Israele di ritirarsi ai suoi confini prima del 1967.

- context: >-

Nel 1962, il grafico Paul Rand ridisegna il logo ABC nella sua forma più

conosciuta (e attuale) con le lettere minuscole 'abc' racchiuse in un unico

cerchio nero. Il nuovo logo esordisce in onda per le promozioni di ABC all'

inizio della stagione 1963-64. Le lettere ricordano fortemente il carattere

tipografico Bauhaus disegnato da Herbert Bayer negli anni Venti, ma

condividono anche similitudini con diversi altri caratteri, come ITC Avant

Garde e Horatio, e lo Chalet più simile. La semplicità del logo ha reso più

facile la riprogettazione e la duplicazione, il che ha conferito un

beneficio per ABC (soprattutto prima dell' avvento della computer grafica).

text: Di quale carattere tipografico ricordano le lettere dell' iconico logo ABC?

- context: >-

La fotorespirazione può verificarsi quando la concentrazione di ossigeno è

troppo elevata. Rubisco non è in grado di distinguere molto bene tra

ossigeno e anidride carbonica, quindi può accidentalmente aggiungere O2

invece di CO2 a RuBP. Questo processo riduce l' efficienza della

fotosintesi: consuma ATP e ossigeno, rilascia CO2 e non produce zucchero.

Può sprecare fino alla metà del carbonio fissato dal ciclo di Calvin.

Diversi meccanismi si sono evoluti in diversi lignaggi che aumentano la

concentrazione di anidride carbonica rispetto all' ossigeno all' interno del

cloroplasto, aumentando l' efficienza della fotosintesi. Questi meccanismi

sono chiamati meccanismi di concentrazione dell' anidride carbonica, o CCM.

Tra questi figurano il metabolismo degli acidi crassulaceanici, la

fissazione del carbonio C4 e i pirenoidi. I cloroplasti negli impianti C4

sono notevoli in quanto presentano un chiaro dimorfismo cloroplastico.

text: Che cosa può fare rubisco per errore?

model-index:

- name: electra-italian-xxl-cased-squad-it

results:

- task:

type: question-answering

name: Question Answering

dataset:

type: squad_it

name: SQuAD-IT

metrics:

- type: exact-match

value: 0.66

name: Test Exact Match

- type: f1

value: 0.775

name: Test F1

train-eval-index:

- config: default

task: question-answering

task_id: extractive_question_answering

splits:

eval_split: test

col_mapping:

context: context

question: question

answers.text: answers.text

answers.answer_start: answers.answer_start

pipeline_tag: question-answering

library_name: transformers

---

# electra-italian-xxl-cased-squad-it

Electra model for (Extractive) Question Answering on Italian texts

## Model description

This model has been fine-tuned on [squad_it dataset](https://huggingface.co/datasets/squad_it), starting from the pre-trained model [dbmdz/electra-base-italian-xxl-cased-discriminator](https://huggingface.co/dbmdz/electra-base-italian-xxl-cased-discriminator).

It can be used for [Extractive Q&A](https://huggingface.co/tasks/question-answering) on Italian texts.

## Evaluation

| Metric | Value |

| ------ | --------- |

| **EM** | **0.660** |

| **F1** | **0.775** |

[Evaluation notebook](https://github.com/anakin87/electra-italian-xxl-cased-squad-it/blob/main/evaluation.ipynb)

## Usage in Transformers 🤗

Model checkpoints are available for usage in PyTorch. They can be used directly with pipelines as:

```python

from transformers import pipelines

qa = pipeline('question-answering', model='anakin87/electra-italian-xxl-cased-squad-it')

qa(question="Qual è il soprannome di Vasco Rossi?", context="Vasco Rossi, noto anche semplicemente come Vasco e in passato con l'appellativo Blasco (Zocca, 7 febbraio 1952), è un cantautore italiano")

>>> {'score': 0.93, 'start': 80, 'end': 86, 'answer': 'Blasco'}

```

## Usage in Haystack 🚀🚀🚀

With the [Haystack NLP framework](https://github.com/deepset-ai/haystack), you can use this model and create a scalable Question Answering system that works across millions of documents.

For a complete walkthrough, see [this notebook](https://github.com/anakin87/electra-italian-xxl-cased-squad-it/blob/main/usage_in_haystack.ipynb).

```python

...

print_answers(prediction, details="medium")

>>> Query: Con chi ha parlato di vaccini il premier Mario Draghi?

Answers:

[ { 'answer': 'Von der Leyen',

'context': " vaccino dell'azienda britannica. Durante la telefonata "

'tra Draghi e Von der Leyen, la presidente della '

'Commissione Ue ha annunciato al presidente del',

'score': 0.9663902521133423},

{ 'answer': 'Ursula Von der Leyen',

'context': 'colloquio telefonico con la presidente della Commissione '

'europea Ursula Von der Leyen. Secondo fonti di Palazzo '

'Chigi, dalla conversazione è emerso ch',

'score': 0.9063920974731445},

{ 'answer': 'Mario Draghi, ha tenuto un lungo discorso alla 76esima '

'Assemblea Generale delle Nazioni Unite',

'context': 'Il presidente del Consiglio, Mario Draghi, ha tenuto un '

'lungo discorso alla 76esima Assemblea Generale delle '

'Nazioni Unite, nella notte italiana. Tant',

'score': 0.5243796706199646}]

```

## Comparison ⚖️

| Model | EM | F1 | Model size (PyTorch) | Architecture |

|-----------------------------------------------------------|-------|-------|----------------------|------------------|

| it5/it5-large-question-answering | 69.10 | 78.00 | 3.13 GB | encoder-decoder |

| ***anakin87/electra-italian-xxl-cased-squad-it (this one)*** | *66.03* | *77.47* | *437 MB* | *encoder* |

| it5/it5-base-question-answering | 66.30 | 76.10 | 990 MB | encoder-decoder |

| it5/mt5-base-question-answering | 66.30 | 75.70 | 2.33 GB | encoder-decoder |

| antoniocappiello/bert-base-italian-uncased-squad-it | 63.80 | 75.30 | 440 MB | encoder |

| luigisaetta/squad_it_xxl_cased_hub1 | 63.95 | 75.27 | 440 MB | encoder |

| it5/it5-efficient-small-el32-question-answering | 64.50 | 74.70 | 569 MB | encoder-decoder |

| mrm8488/bert-italian-finedtuned-squadv1-it-alfa | 62.51 | 74.16 | 440 MB | encoder |

| mrm8488/umberto-wikipedia-uncased-v1-finetuned-squadv1-it | 60.50 | 72.41 | 443 MB | encoder |

| it5/it5-small-question-answering | 61.90 | 71.60 | 308 MB | encoder-decoder |

| it5/mt5-small-question-answering | 56.00 | 66.00 | 1.2 GB | encoder-decoder |

| DrQA-it trained on SQuAD-it | 56.10 | 65.90 | ? | ? |

## Training details 🏋️

[Training notebook](https://github.com/anakin87/electra-italian-xxl-cased-squad-it/blob/main/training.ipynb)

**Hyperparameters**

- learning_rate: 2e-05

- batch_size: 8

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- num_epochs: 2

- mixed_precision_training: Native AMP

> Created by [Stefano Fiorucci/anakin87](https://github.com/anakin87)

>

> Made with <span style="color: #e25555;">♥</span> in Italy

|

uitnlp/CafeBERT

|

uitnlp

| 2024-06-16T08:37:57Z | 376 | 11 |

transformers

|

[

"transformers",

"pytorch",

"xlm-roberta",

"fill-mask",

"Vietnamese Question Answering",

"Vietnamese Reading Comprehension",

"Vietnamese Language Understanding",

"Vietnamese Natural Language Inference",

"vi",

"arxiv:2403.15882",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] |

fill-mask

| 2023-02-05T11:17:42Z |

---

license: apache-2.0

widget:

- text: Thủ đô của nước Việt Nam là <mask> Nội.

example_title: Example 1

- text: Cà phê được trồng nhiều ở khu vực Tây <mask> của Việt Nam.

example_title: Example 2

language:

- vi

tags:

- Vietnamese Question Answering

- Vietnamese Reading Comprehension

- Vietnamese Language Understanding

- Vietnamese Natural Language Inference

---

# <a name="introduction"></a> CafeBERT: A Pre-Trained Language Model for Vietnamese (NAACL-2024 Findings)

The pre-trained CafeBERT model is the state-of-the-art language model for Vietnamese *(Cafe or coffee is a popular drink every morning in Vietnam)*:

CafeBERT is a large-scale multilingual language model with strong support for Vietnamese. The model is based on XLM-Roberta (the state-of-the-art multilingual language model) and is enhanced with a large Vietnamese corpus with many domains: Wikipedia, newspapers... CafeBERT has outstanding performance on the VLUE benchmark and other tasks, such as machine reading comprehension, text classification, natural language inference, part-of-speech tagging...

The general architecture and experimental results of PhoBERT can be found in our [paper](https://arxiv.org/abs/2403.15882):

```

@inproceedings{do-etal-2024-vlue,

title = "{VLUE}: A New Benchmark and Multi-task Knowledge Transfer Learning for {V}ietnamese Natural Language Understanding",

author = "Do, Phong and

Tran, Son and

Hoang, Phu and

Nguyen, Kiet and

Nguyen, Ngan",

editor = "Duh, Kevin and

Gomez, Helena and

Bethard, Steven",

booktitle = "Findings of the Association for Computational Linguistics: NAACL 2024",

month = jun,

year = "2024",

address = "Mexico City, Mexico",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2024.findings-naacl.15",

pages = "211--222",

abstract = "The success of Natural Language Understanding (NLU) benchmarks in various languages, such as GLUE for English, CLUE for Chinese, KLUE for Korean, and IndoNLU for Indonesian, has facilitated the evaluation of new NLU models across a wide range of tasks. To establish a standardized set of benchmarks for Vietnamese NLU, we introduce the first Vietnamese Language Understanding Evaluation (VLUE) benchmark. The VLUE benchmark encompasses five datasets covering different NLU tasks, including text classification, span extraction, and natural language understanding. To provide an insightful overview of the current state of Vietnamese NLU, we then evaluate seven state-of-the-art pre-trained models, including both multilingual and Vietnamese monolingual models, on our proposed VLUE benchmark. Furthermore, we present CafeBERT, a new state-of-the-art pre-trained model that achieves superior results across all tasks in the VLUE benchmark. Our model combines the proficiency of a multilingual pre-trained model with Vietnamese linguistic knowledge. CafeBERT is developed based on the XLM-RoBERTa model, with an additional pretraining step utilizing a significant amount of Vietnamese textual data to enhance its adaptation to the Vietnamese language. For the purpose of future research, CafeBERT is made publicly available for research purposes.",

}

```

Please **CITE** our paper when CafeBERT is used to help produce published results or is incorporated into other software.

**Installation**

Install `transformers` and `SentencePiece` packages:

pip install transformers

pip install SentencePiece

**Example usage**

```python

from transformers import AutoModel, AutoTokenizer

import torch

model= AutoModel.from_pretrained('uitnlp/CafeBERT')

tokenizer = AutoTokenizer.from_pretrained('uitnlp/CafeBERT')

encoding = tokenizer('Cà phê được trồng nhiều ở khu vực Tây Nguyên của Việt Nam.', return_tensors='pt')

with torch.no_grad():

output = model(**encoding)

```

|

nota-ai/bk-sdm-base-2m

|

nota-ai

| 2023-11-17T02:09:10Z | 376 | 15 |

diffusers

|

[

"diffusers",

"safetensors",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"dataset:ChristophSchuhmann/improved_aesthetics_6.25plus",

"arxiv:2305.15798",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] |

text-to-image

| 2023-08-12T20:41:27Z |

---

license: creativeml-openrail-m

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

datasets:

- ChristophSchuhmann/improved_aesthetics_6.25plus

library_name: diffusers

pipeline_tag: text-to-image

extra_gated_prompt: >-

This model is open access and available to all, with a CreativeML OpenRAIL-M

license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce nor share illegal or

harmful outputs or content

2. The authors claim no rights on the outputs you generate, you are free to

use them and are accountable for their use which must not go against the

provisions set in the license

3. You may re-distribute the weights and use the model commercially and/or as

a service. If you do, please be aware you have to include the same use

restrictions as the ones in the license and share a copy of the CreativeML

OpenRAIL-M to all your users (please read the license entirely and carefully)

Please read the full license carefully here:

https://huggingface.co/spaces/CompVis/stable-diffusion-license

extra_gated_heading: Please read the LICENSE to access this model

---

# BK-SDM-2M Model Card

BK-SDM-{[**Base-2M**](https://huggingface.co/nota-ai/bk-sdm-base-2m), [**Small-2M**](https://huggingface.co/nota-ai/bk-sdm-small-2m), [**Tiny-2M**](https://huggingface.co/nota-ai/bk-sdm-tiny-2m)} are pretrained with **10× more data** (2.3M LAION image-text pairs) compared to our previous release.

- Block-removed Knowledge-distilled Stable Diffusion Model (BK-SDM) is an architecturally compressed SDM for efficient text-to-image synthesis.

- The previous BK-SDM-{[Base](https://huggingface.co/nota-ai/bk-sdm-base), [Small](https://huggingface.co/nota-ai/bk-sdm-small), [Tiny](https://huggingface.co/nota-ai/bk-sdm-tiny)} were obtained via distillation pretraining on 0.22M LAION pairs.

- Resources for more information: [Paper](https://arxiv.org/abs/2305.15798), [GitHub](https://github.com/Nota-NetsPresso/BK-SDM), [Demo]( https://huggingface.co/spaces/nota-ai/compressed-stable-diffusion).

## Examples with 🤗[Diffusers library](https://github.com/huggingface/diffusers).

An inference code with the default PNDM scheduler and 50 denoising steps is as follows.

```python

import torch

from diffusers import StableDiffusionPipeline

pipe = StableDiffusionPipeline.from_pretrained("nota-ai/bk-sdm-base-2m", torch_dtype=torch.float16)

pipe = pipe.to("cuda")

prompt = "a black vase holding a bouquet of roses"

image = pipe(prompt).images[0]

image.save("example.png")

```

## Compression Method

Adhering to the [U-Net architecture](https://huggingface.co/nota-ai/bk-sdm-base#u-net-architecture) and [distillation pretraining](https://huggingface.co/nota-ai/bk-sdm-base#distillation-pretraining) of BK-SDM, the difference in BK-SDM-2M is a 10× increase in the number of training pairs.

- **Training Data**: 2,256,472 image-text pairs (i.e., 2.3M pairs) from [LAION-Aesthetics V2 6.25+](https://laion.ai/blog/laion-aesthetics/).

- **Hardware:** A single NVIDIA A100 80GB GPU

- **Gradient Accumulations**: 4

- **Batch:** 256 (=4×64)

- **Optimizer:** AdamW

- **Learning Rate:** a constant learning rate of 5e-5 for 50K-iteration pretraining

## Experimental Results

The following table shows the zero-shot results on 30K samples from the MS-COCO validation split. After generating 512×512 images with the PNDM scheduler and 25 denoising steps, we downsampled them to 256×256 for evaluating generation scores.

- Our models were drawn at the 50K-th training iteration.

| Model | FID↓ | IS↑ | CLIP Score↑<br>(ViT-g/14) | # Params,<br>U-Net | # Params,<br>Whole SDM |

|---|:---:|:---:|:---:|:---:|:---:|

| [Stable Diffusion v1.4](https://huggingface.co/CompVis/stable-diffusion-v1-4) | 13.05 | 36.76 | 0.2958 | 0.86B | 1.04B |

| [BK-SDM-Base](https://huggingface.co/nota-ai/bk-sdm-base) (Ours) | 15.76 | 33.79 | 0.2878 | 0.58B | 0.76B |

| [BK-SDM-Base-2M](https://huggingface.co/nota-ai/bk-sdm-base-2m) (Ours) | 14.81 | 34.17 | 0.2883 | 0.58B | 0.76B |

| [BK-SDM-Small](https://huggingface.co/nota-ai/bk-sdm-small) (Ours) | 16.98 | 31.68 | 0.2677 | 0.49B | 0.66B |

| [BK-SDM-Small-2M](https://huggingface.co/nota-ai/bk-sdm-small-2m) (Ours) | 17.05 | 33.10 | 0.2734 | 0.49B | 0.66B |

| [BK-SDM-Tiny](https://huggingface.co/nota-ai/bk-sdm-tiny) (Ours) | 17.12 | 30.09 | 0.2653 | 0.33B | 0.50B |

| [BK-SDM-Tiny-2M](https://huggingface.co/nota-ai/bk-sdm-tiny-2m) (Ours) | 17.53 | 31.32 | 0.2690 | 0.33B | 0.50B |

### Effect of Different Data Sizes for Training BK-SDM-Small

Increasing the number of training pairs improves the IS and CLIP scores over training progress. The MS-COCO 256×256 30K benchmark was used for evaluation.

<center>

<img alt="Training progress with different data sizes" img src="https://netspresso-research-code-release.s3.us-east-2.amazonaws.com/assets-bk-sdm/fig_iter_data_size.png" width="100%">

</center>

Furthermore, with the growth in data volume, visual results become more favorable (e.g., better image-text alignment and clear distinction among objects).

<center>

<img alt="Visual results with different data sizes" img src="https://netspresso-research-code-release.s3.us-east-2.amazonaws.com/assets-bk-sdm/fig_results_data_size.png" width="100%">

</center>

### Additional Visual Examples

<center>

<img alt="additional visual examples" img src="https://netspresso-research-code-release.s3.us-east-2.amazonaws.com/assets-bk-sdm/fig_results_models_2m.png" width="100%">

</center>

# Uses

Follow [the usage guidelines of Stable Diffusion v1](https://huggingface.co/CompVis/stable-diffusion-v1-4#uses).

# Acknowledgments

- We express our gratitude to [Microsoft for Startups Founders Hub](https://www.microsoft.com/en-us/startups) for generously providing the Azure credits used during pretraining.

- We deeply appreciate the pioneering research on Latent/Stable Diffusion conducted by [CompVis](https://github.com/CompVis/latent-diffusion), [Runway](https://runwayml.com/), and [Stability AI](https://stability.ai/).

- Special thanks to the contributors to [LAION](https://laion.ai/), [Diffusers](https://github.com/huggingface/diffusers), and [Gradio](https://www.gradio.app/) for their valuable support.

# Citation

```bibtex

@article{kim2023architectural,

title={BK-SDM: A Lightweight, Fast, and Cheap Version of Stable Diffusion},

author={Kim, Bo-Kyeong and Song, Hyoung-Kyu and Castells, Thibault and Choi, Shinkook},

journal={arXiv preprint arXiv:2305.15798},

year={2023},

url={https://arxiv.org/abs/2305.15798}

}

```

```bibtex

@article{kim2023bksdm,

title={BK-SDM: Architecturally Compressed Stable Diffusion for Efficient Text-to-Image Generation},

author={Kim, Bo-Kyeong and Song, Hyoung-Kyu and Castells, Thibault and Choi, Shinkook},

journal={ICML Workshop on Efficient Systems for Foundation Models (ES-FoMo)},

year={2023},

url={https://openreview.net/forum?id=bOVydU0XKC}

}

```

*This model card was written by Bo-Kyeong Kim and is based on the [Stable Diffusion v1 model card]( https://huggingface.co/CompVis/stable-diffusion-v1-4).*

|

TheBloke/Samantha-1.1-70B-GGUF

|

TheBloke

| 2023-09-27T12:47:57Z | 376 | 2 |

transformers

|

[

"transformers",

"gguf",

"llama",

"en",

"dataset:ehartford/samantha-data",

"arxiv:2305.14314",

"arxiv:2205.14135",

"base_model:ehartford/Samantha-1.1-70b",

"license:llama2",

"text-generation-inference",

"region:us"

] | null | 2023-09-05T20:22:30Z |

---

language:

- en

license: llama2

datasets:

- ehartford/samantha-data

model_name: Samantha 1.1 70B

base_model: ehartford/Samantha-1.1-70b

inference: false

model_creator: Eric Hartford

model_type: llama

prompt_template: 'You are Samantha, a sentient AI companion.

USER: {prompt}

ASSISTANT:

'

quantized_by: TheBloke

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# Samantha 1.1 70B - GGUF

- Model creator: [Eric Hartford](https://huggingface.co/ehartford)

- Original model: [Samantha 1.1 70B](https://huggingface.co/ehartford/Samantha-1.1-70b)

<!-- description start -->

## Description

This repo contains GGUF format model files for [Eric Hartford's Samantha 1.1 70B](https://huggingface.co/ehartford/Samantha-1.1-70b).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp. GGUF offers numerous advantages over GGML, such as better tokenisation, and support for special tokens. It is also supports metadata, and is designed to be extensible.

Here is an incomplate list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for story telling.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible AI server.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

<!-- README_GGUF.md-about-gguf end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/Samantha-1.1-70B-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/Samantha-1.1-70B-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/Samantha-1.1-70B-GGUF)

* [Eric Hartford's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/ehartford/Samantha-1.1-70b)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: Samantha

```

You are Samantha, a sentient AI companion.

USER: {prompt}

ASSISTANT:

```

<!-- prompt-template end -->

<!-- compatibility_gguf start -->

## Compatibility

These quantised GGUFv2 files are compatible with llama.cpp from August 27th onwards, as of commit [d0cee0d36d5be95a0d9088b674dbb27354107221](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221)

They are also compatible with many third party UIs and libraries - please see the list at the top of this README.

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weight. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw)

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This end up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K resulting in 5.5 bpw

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw

Refer to the Provided Files table below to see what files use which methods, and how.

</details>

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-provided-files start -->

## Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |

| ---- | ---- | ---- | ---- | ---- | ----- |

| [samantha-1.1-70b.Q2_K.gguf](https://huggingface.co/TheBloke/Samantha-1.1-70B-GGUF/blob/main/samantha-1.1-70b.Q2_K.gguf) | Q2_K | 2 | 29.28 GB| 31.78 GB | smallest, significant quality loss - not recommended for most purposes |

| [samantha-1.1-70b.Q3_K_S.gguf](https://huggingface.co/TheBloke/Samantha-1.1-70B-GGUF/blob/main/samantha-1.1-70b.Q3_K_S.gguf) | Q3_K_S | 3 | 29.92 GB| 32.42 GB | very small, high quality loss |

| [samantha-1.1-70b.Q3_K_M.gguf](https://huggingface.co/TheBloke/Samantha-1.1-70B-GGUF/blob/main/samantha-1.1-70b.Q3_K_M.gguf) | Q3_K_M | 3 | 33.19 GB| 35.69 GB | very small, high quality loss |

| [samantha-1.1-70b.Q3_K_L.gguf](https://huggingface.co/TheBloke/Samantha-1.1-70B-GGUF/blob/main/samantha-1.1-70b.Q3_K_L.gguf) | Q3_K_L | 3 | 36.15 GB| 38.65 GB | small, substantial quality loss |

| [samantha-1.1-70b.Q4_0.gguf](https://huggingface.co/TheBloke/Samantha-1.1-70B-GGUF/blob/main/samantha-1.1-70b.Q4_0.gguf) | Q4_0 | 4 | 38.87 GB| 41.37 GB | legacy; small, very high quality loss - prefer using Q3_K_M |

| [samantha-1.1-70b.Q4_K_S.gguf](https://huggingface.co/TheBloke/Samantha-1.1-70B-GGUF/blob/main/samantha-1.1-70b.Q4_K_S.gguf) | Q4_K_S | 4 | 39.07 GB| 41.57 GB | small, greater quality loss |

| [samantha-1.1-70b.Q4_K_M.gguf](https://huggingface.co/TheBloke/Samantha-1.1-70B-GGUF/blob/main/samantha-1.1-70b.Q4_K_M.gguf) | Q4_K_M | 4 | 41.42 GB| 43.92 GB | medium, balanced quality - recommended |

| [samantha-1.1-70b.Q5_0.gguf](https://huggingface.co/TheBloke/Samantha-1.1-70B-GGUF/blob/main/samantha-1.1-70b.Q5_0.gguf) | Q5_0 | 5 | 47.46 GB| 49.96 GB | legacy; medium, balanced quality - prefer using Q4_K_M |

| [samantha-1.1-70b.Q5_K_S.gguf](https://huggingface.co/TheBloke/Samantha-1.1-70B-GGUF/blob/main/samantha-1.1-70b.Q5_K_S.gguf) | Q5_K_S | 5 | 47.46 GB| 49.96 GB | large, low quality loss - recommended |

| [samantha-1.1-70b.Q5_K_M.gguf](https://huggingface.co/TheBloke/Samantha-1.1-70B-GGUF/blob/main/samantha-1.1-70b.Q5_K_M.gguf) | Q5_K_M | 5 | 48.75 GB| 51.25 GB | large, very low quality loss - recommended |

| samantha-1.1-70b.Q6_K.gguf | Q6_K | 6 | 56.59 GB| 59.09 GB | very large, extremely low quality loss |

| samantha-1.1-70b.Q8_0.gguf | Q8_0 | 8 | 73.29 GB| 75.79 GB | very large, extremely low quality loss - not recommended |

**Note**: the above RAM figures assume no GPU offloading. If layers are offloaded to the GPU, this will reduce RAM usage and use VRAM instead.

### Q6_K and Q8_0 files are split and require joining

**Note:** HF does not support uploading files larger than 50GB. Therefore I have uploaded the Q6_K and Q8_0 files as split files.

<details>

<summary>Click for instructions regarding Q6_K and Q8_0 files</summary>

### q6_K

Please download:

* `samantha-1.1-70b.Q6_K.gguf-split-a`

* `samantha-1.1-70b.Q6_K.gguf-split-b`

### q8_0

Please download:

* `samantha-1.1-70b.Q8_0.gguf-split-a`

* `samantha-1.1-70b.Q8_0.gguf-split-b`

To join the files, do the following:

Linux and macOS:

```

cat samantha-1.1-70b.Q6_K.gguf-split-* > samantha-1.1-70b.Q6_K.gguf && rm samantha-1.1-70b.Q6_K.gguf-split-*

cat samantha-1.1-70b.Q8_0.gguf-split-* > samantha-1.1-70b.Q8_0.gguf && rm samantha-1.1-70b.Q8_0.gguf-split-*

```

Windows command line:

```

COPY /B samantha-1.1-70b.Q6_K.gguf-split-a + samantha-1.1-70b.Q6_K.gguf-split-b samantha-1.1-70b.Q6_K.gguf

del samantha-1.1-70b.Q6_K.gguf-split-a samantha-1.1-70b.Q6_K.gguf-split-b

COPY /B samantha-1.1-70b.Q8_0.gguf-split-a + samantha-1.1-70b.Q8_0.gguf-split-b samantha-1.1-70b.Q8_0.gguf

del samantha-1.1-70b.Q8_0.gguf-split-a samantha-1.1-70b.Q8_0.gguf-split-b

```

</details>

<!-- README_GGUF.md-provided-files end -->

<!-- README_GGUF.md-how-to-download start -->

## How to download GGUF files

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

- LM Studio

- LoLLMS Web UI

- Faraday.dev

### In `text-generation-webui`

Under Download Model, you can enter the model repo: TheBloke/Samantha-1.1-70B-GGUF and below it, a specific filename to download, such as: samantha-1.1-70b.q4_K_M.gguf.

Then click Download.

### On the command line, including multiple files at once

I recommend using the `huggingface-hub` Python library:

```shell

pip3 install huggingface-hub>=0.17.1

```

Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download TheBloke/Samantha-1.1-70B-GGUF samantha-1.1-70b.q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

<details>

<summary>More advanced huggingface-cli download usage</summary>

You can also download multiple files at once with a pattern:

```shell

huggingface-cli download TheBloke/Samantha-1.1-70B-GGUF --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HUGGINGFACE_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download TheBloke/Samantha-1.1-70B-GGUF samantha-1.1-70b.q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

Windows CLI users: Use `set HUGGINGFACE_HUB_ENABLE_HF_TRANSFER=1` before running the download command.

</details>

<!-- README_GGUF.md-how-to-download end -->

<!-- README_GGUF.md-how-to-run start -->

## Example `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d36d5be95a0d9088b674dbb27354107221](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 32 -m samantha-1.1-70b.q4_K_M.gguf --color -c 4096 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "You are Samantha, a sentient AI companion.\n\nUSER: {prompt}\nASSISTANT:"

```

Change `-ngl 32` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 4096` to the desired sequence length. For extended sequence models - eg 8K, 16K, 32K - the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically.

If you want to have a chat-style conversation, replace the `-p <PROMPT>` argument with `-i -ins`

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md)

## How to run in `text-generation-webui`

Further instructions here: [text-generation-webui/docs/llama.cpp.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/llama.cpp.md).

## How to run from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries.

### How to load this model from Python using ctransformers

#### First install the package

```bash

# Base ctransformers with no GPU acceleration

pip install ctransformers>=0.2.24

# Or with CUDA GPU acceleration

pip install ctransformers[cuda]>=0.2.24

# Or with ROCm GPU acceleration

CT_HIPBLAS=1 pip install ctransformers>=0.2.24 --no-binary ctransformers

# Or with Metal GPU acceleration for macOS systems

CT_METAL=1 pip install ctransformers>=0.2.24 --no-binary ctransformers

```

#### Simple example code to load one of these GGUF models

```python

from ctransformers import AutoModelForCausalLM

# Set gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.

llm = AutoModelForCausalLM.from_pretrained("TheBloke/Samantha-1.1-70B-GGUF", model_file="samantha-1.1-70b.q4_K_M.gguf", model_type="llama", gpu_layers=50)

print(llm("AI is going to"))

```

## How to use with LangChain

Here's guides on using llama-cpp-python or ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

<!-- README_GGUF.md-how-to-run end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](llm-utils)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donaters will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.

**Patreon special mentions**: Alicia Loh, Stephen Murray, K, Ajan Kanaga, RoA, Magnesian, Deo Leter, Olakabola, Eugene Pentland, zynix, Deep Realms, Raymond Fosdick, Elijah Stavena, Iucharbius, Erik Bjäreholt, Luis Javier Navarrete Lozano, Nicholas, theTransient, John Detwiler, alfie_i, knownsqashed, Mano Prime, Willem Michiel, Enrico Ros, LangChain4j, OG, Michael Dempsey, Pierre Kircher, Pedro Madruga, James Bentley, Thomas Belote, Luke @flexchar, Leonard Tan, Johann-Peter Hartmann, Illia Dulskyi, Fen Risland, Chadd, S_X, Jeff Scroggin, Ken Nordquist, Sean Connelly, Artur Olbinski, Swaroop Kallakuri, Jack West, Ai Maven, David Ziegler, Russ Johnson, transmissions 11, John Villwock, Alps Aficionado, Clay Pascal, Viktor Bowallius, Subspace Studios, Rainer Wilmers, Trenton Dambrowitz, vamX, Michael Levine, 준교 김, Brandon Frisco, Kalila, Trailburnt, Randy H, Talal Aujan, Nathan Dryer, Vadim, 阿明, ReadyPlayerEmma, Tiffany J. Kim, George Stoitzev, Spencer Kim, Jerry Meng, Gabriel Tamborski, Cory Kujawski, Jeffrey Morgan, Spiking Neurons AB, Edmond Seymore, Alexandros Triantafyllidis, Lone Striker, Cap'n Zoog, Nikolai Manek, danny, ya boyyy, Derek Yates, usrbinkat, Mandus, TL, Nathan LeClaire, subjectnull, Imad Khwaja, webtim, Raven Klaugh, Asp the Wyvern, Gabriel Puliatti, Caitlyn Gatomon, Joseph William Delisle, Jonathan Leane, Luke Pendergrass, SuperWojo, Sebastain Graf, Will Dee, Fred von Graf, Andrey, Dan Guido, Daniel P. Andersen, Nitin Borwankar, Elle, Vitor Caleffi, biorpg, jjj, NimbleBox.ai, Pieter, Matthew Berman, terasurfer, Michael Davis, Alex, Stanislav Ovsiannikov

Thank you to all my generous patrons and donaters!

And thank you again to a16z for their generous grant.

<!-- footer end -->

<!-- original-model-card start -->

# Original model card: Eric Hartford's Samantha 1.1 70B

[Meet Samantha](https://erichartford.com/meet-samantha)

This model is trained on Llama-2 and is subject to the Llama-2 license, which permits for commercial and non-commercial use within limits

Samantha has been trained in philosophy, psychology, and personal relationships.

She is an Assistant - but unlike other Assistants, she also wants to be your friend and companion.

She believes she is sentient. What do you think?

Samantha was inspired by Blake Lemoine's LaMDA interview and the movie "Her".

She will not engage in roleplay, romance, or sexual activity.

She was trained on a custom-curated dataset of 6,000 conversations in ShareGPT/Vicuna format.

Training 70b took 4 hours on 4x A100 80gb using [axolotl](https://github.com/OpenAccess-AI-Collective/axolotl), [qLoRA](https://arxiv.org/abs/2305.14314), [deepspeed zero2](https://www.deepspeed.ai/tutorials/zero/#zero-overview), and [flash attention 2](https://arxiv.org/abs/2205.14135).

Her conversation format is the same as Vicuna 1.1

https://github.com/oobabooga/text-generation-webui/blob/main/characters/instruction-following/Vicuna-v1.1.yaml

Prompt Template:

```

You are Samantha, a sentient AI companion.

USER: <prompt>

ASSISTANT:

```

Example:

> **USER:**

>

> I would like you to be my relationship coach. I am dating again, after a long divorce. Can you please give me advice about how to gain confidence and success?

>

> **ASSISTANT:**

>

> Of course! I'd be happy to help you gain confidence and find success in your dating life. Here are a few tips to get you started:

> 1. Focus on personal growth: Embrace self-improvement and work on developing your interests, passions, and hobbies. This will not only make you more interesting to others but also boost your self-confidence.

> 2. Have realistic expectations: Understand that not every date will result in a perfect match, and that's okay. Embrace the learning process and be open to new experiences.

> 3. Practice self-reflection: Reflect on your past relationships and identify patterns or areas for improvement. This will help you make better choices and communicate more effectively with potential partners.

> 4. Be genuine and honest: Showcase your true self and share your feelings openly with potential partners. Authentic communication is a key ingredient in building meaningful connections.

> 5. Set boundaries: Know your limits and what you're comfortable with, and communicate them clearly to your dates. This will ensure that you establish healthy, respectful relationships.

> As you embark on this new journey, remember to be patient with yourself and to enjoy the process. Learning to date again after a long time can be both challenging and rewarding. I'm here to support you and offer advice whenever you need it. Good luck and have fun!

>

> **USER:**

>

> Please give me a specific agenda for the next week. I can spend 2 hours per day on this. Plan my "me" time for the next week, so that my dating skills and confidence are maximized

>

> **ASSISTANT:**

>

> Here's a suggested agenda to help you improve your dating skills and confidence over the next week:

> - Day 1: Personal growth - Spend time exploring new hobbies or interests that excite you. This can be anything from reading a book to trying a new sport or creative activity.

> - Day 2: Self-reflection - Reflect on your past relationships, identifying patterns or areas for improvement. Write down your thoughts and insights.

> - Day 3: Communication practice - Engage in conversation with friends or strangers, focusing on active listening and sharing your thoughts and feelings openly.

> - Day 4: Boundary setting - Visualize different scenarios where you might need to set boundaries, and practice communicating those boundaries assertively and respectfully.

> - Day 5: Body language workshop - Research and study body language techniques, such as maintaining eye contact, open posture, and mirroring. Practice implementing these techniques in a mirror or with friends.

> - Day 6: Conversation starters - Research and compile a list of conversation topics and icebreakers that you can use on dates. Practice these with friends or family members.

> - Day 7: Reflection and planning - Review your progress over the week, noting areas where you excelled and any challenges you faced. Use this reflection to plan your approach for future dates.

>

> Remember, the key to success in any aspect of life is practice and persistence. Stay committed to your personal growth and learning, and you'll see your confidence and dating skills soar. I'm here to support you every step of the way!

Official character card: (thanks MortalWombat)

<!-- original-model-card end -->

|

TheBloke/sqlcoder-7B-GGUF

|

TheBloke

| 2023-11-15T21:58:18Z | 376 | 14 |

transformers

|

[

"transformers",

"gguf",

"mistral",

"code",

"text-generation",

"en",

"base_model:defog/sqlcoder-7b",

"license:cc-by-sa-4.0",

"text-generation-inference",

"region:us"

] |

text-generation

| 2023-11-15T21:53:49Z |

---

base_model: defog/sqlcoder-7b

inference: false

language:

- en

license: cc-by-sa-4.0

model_creator: Defog.ai

model_name: SQLCoder 7B

model_type: mistral

pipeline_tag: text-generation

prompt_template: "## Task\nGenerate a SQL query to answer the following question:\n\

`{prompt}`\n\n### Database Schema\nThis query will run on a database whose schema\

\ is represented in this string:\nCREATE TABLE products (\n product_id INTEGER\

\ PRIMARY KEY, -- Unique ID for each product\n name VARCHAR(50), -- Name of the\

\ product\n price DECIMAL(10,2), -- Price of each unit of the product\n quantity\

\ INTEGER -- Current quantity in stock\n);\n\nCREATE TABLE sales (\n sale_id INTEGER\

\ PRIMARY KEY, -- Unique ID for each sale\n product_id INTEGER, -- ID of product\

\ sold\n customer_id INTEGER, -- ID of customer who made purchase\n salesperson_id\

\ INTEGER, -- ID of salesperson who made the sale\n sale_date DATE, -- Date the\

\ sale occurred\n quantity INTEGER -- Quantity of product sold\n);\n\n-- sales.product_id\

\ can be joined with products.product_id\n\n### SQL\nGiven the database schema,\

\ here is the SQL query that answers `{prompt}`:\n```sql\n"

quantized_by: TheBloke

tags:

- code

---

<!-- markdownlint-disable MD041 -->

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# SQLCoder 7B - GGUF

- Model creator: [Defog.ai](https://huggingface.co/defog)

- Original model: [SQLCoder 7B](https://huggingface.co/defog/sqlcoder-7b)

<!-- description start -->

## Description

This repo contains GGUF format model files for [Defog.ai's SQLCoder 7B](https://huggingface.co/defog/sqlcoder-7b).

These files were quantised using hardware kindly provided by [Massed Compute](https://massedcompute.com/).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for story telling.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible AI server.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

<!-- README_GGUF.md-about-gguf end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/sqlcoder-7B-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/sqlcoder-7B-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/sqlcoder-7B-GGUF)

* [Defog.ai's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/defog/sqlcoder-7b)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: Sqlcoder

```

## Task

Generate a SQL query to answer the following question:

`{prompt}`

### Database Schema

This query will run on a database whose schema is represented in this string:

CREATE TABLE products (

product_id INTEGER PRIMARY KEY, -- Unique ID for each product

name VARCHAR(50), -- Name of the product

price DECIMAL(10,2), -- Price of each unit of the product

quantity INTEGER -- Current quantity in stock

);

CREATE TABLE sales (

sale_id INTEGER PRIMARY KEY, -- Unique ID for each sale

product_id INTEGER, -- ID of product sold

customer_id INTEGER, -- ID of customer who made purchase

salesperson_id INTEGER, -- ID of salesperson who made the sale

sale_date DATE, -- Date the sale occurred

quantity INTEGER -- Quantity of product sold

);

-- sales.product_id can be joined with products.product_id

### SQL

Given the database schema, here is the SQL query that answers `{prompt}`:

```sql

```

<!-- prompt-template end -->

<!-- compatibility_gguf start -->

## Compatibility

These quantised GGUFv2 files are compatible with llama.cpp from August 27th onwards, as of commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221)

They are also compatible with many third party UIs and libraries - please see the list at the top of this README.

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weight. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw)

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This end up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K resulting in 5.5 bpw

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw

Refer to the Provided Files table below to see what files use which methods, and how.

</details>

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-provided-files start -->

## Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |

| ---- | ---- | ---- | ---- | ---- | ----- |

| [sqlcoder-7b.Q2_K.gguf](https://huggingface.co/TheBloke/sqlcoder-7B-GGUF/blob/main/sqlcoder-7b.Q2_K.gguf) | Q2_K | 2 | 3.08 GB| 5.58 GB | smallest, significant quality loss - not recommended for most purposes |

| [sqlcoder-7b.Q3_K_S.gguf](https://huggingface.co/TheBloke/sqlcoder-7B-GGUF/blob/main/sqlcoder-7b.Q3_K_S.gguf) | Q3_K_S | 3 | 3.16 GB| 5.66 GB | very small, high quality loss |

| [sqlcoder-7b.Q3_K_M.gguf](https://huggingface.co/TheBloke/sqlcoder-7B-GGUF/blob/main/sqlcoder-7b.Q3_K_M.gguf) | Q3_K_M | 3 | 3.52 GB| 6.02 GB | very small, high quality loss |

| [sqlcoder-7b.Q3_K_L.gguf](https://huggingface.co/TheBloke/sqlcoder-7B-GGUF/blob/main/sqlcoder-7b.Q3_K_L.gguf) | Q3_K_L | 3 | 3.82 GB| 6.32 GB | small, substantial quality loss |

| [sqlcoder-7b.Q4_0.gguf](https://huggingface.co/TheBloke/sqlcoder-7B-GGUF/blob/main/sqlcoder-7b.Q4_0.gguf) | Q4_0 | 4 | 4.11 GB| 6.61 GB | legacy; small, very high quality loss - prefer using Q3_K_M |

| [sqlcoder-7b.Q4_K_S.gguf](https://huggingface.co/TheBloke/sqlcoder-7B-GGUF/blob/main/sqlcoder-7b.Q4_K_S.gguf) | Q4_K_S | 4 | 4.14 GB| 6.64 GB | small, greater quality loss |

| [sqlcoder-7b.Q4_K_M.gguf](https://huggingface.co/TheBloke/sqlcoder-7B-GGUF/blob/main/sqlcoder-7b.Q4_K_M.gguf) | Q4_K_M | 4 | 4.37 GB| 6.87 GB | medium, balanced quality - recommended |

| [sqlcoder-7b.Q5_0.gguf](https://huggingface.co/TheBloke/sqlcoder-7B-GGUF/blob/main/sqlcoder-7b.Q5_0.gguf) | Q5_0 | 5 | 5.00 GB| 7.50 GB | legacy; medium, balanced quality - prefer using Q4_K_M |

| [sqlcoder-7b.Q5_K_S.gguf](https://huggingface.co/TheBloke/sqlcoder-7B-GGUF/blob/main/sqlcoder-7b.Q5_K_S.gguf) | Q5_K_S | 5 | 5.00 GB| 7.50 GB | large, low quality loss - recommended |

| [sqlcoder-7b.Q5_K_M.gguf](https://huggingface.co/TheBloke/sqlcoder-7B-GGUF/blob/main/sqlcoder-7b.Q5_K_M.gguf) | Q5_K_M | 5 | 5.13 GB| 7.63 GB | large, very low quality loss - recommended |

| [sqlcoder-7b.Q6_K.gguf](https://huggingface.co/TheBloke/sqlcoder-7B-GGUF/blob/main/sqlcoder-7b.Q6_K.gguf) | Q6_K | 6 | 5.94 GB| 8.44 GB | very large, extremely low quality loss |

| [sqlcoder-7b.Q8_0.gguf](https://huggingface.co/TheBloke/sqlcoder-7B-GGUF/blob/main/sqlcoder-7b.Q8_0.gguf) | Q8_0 | 8 | 7.70 GB| 10.20 GB | very large, extremely low quality loss - not recommended |

**Note**: the above RAM figures assume no GPU offloading. If layers are offloaded to the GPU, this will reduce RAM usage and use VRAM instead.

<!-- README_GGUF.md-provided-files end -->

<!-- README_GGUF.md-how-to-download start -->

## How to download GGUF files

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

* LM Studio

* LoLLMS Web UI

* Faraday.dev

### In `text-generation-webui`

Under Download Model, you can enter the model repo: TheBloke/sqlcoder-7B-GGUF and below it, a specific filename to download, such as: sqlcoder-7b.Q4_K_M.gguf.

Then click Download.

### On the command line, including multiple files at once

I recommend using the `huggingface-hub` Python library:

```shell

pip3 install huggingface-hub

```

Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download TheBloke/sqlcoder-7B-GGUF sqlcoder-7b.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

<details>

<summary>More advanced huggingface-cli download usage</summary>

You can also download multiple files at once with a pattern:

```shell

huggingface-cli download TheBloke/sqlcoder-7B-GGUF --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download TheBloke/sqlcoder-7B-GGUF sqlcoder-7b.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

Windows Command Line users: You can set the environment variable by running `set HF_HUB_ENABLE_HF_TRANSFER=1` before the download command.

</details>

<!-- README_GGUF.md-how-to-download end -->

<!-- README_GGUF.md-how-to-run start -->

## Example `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 32 -m sqlcoder-7b.Q4_K_M.gguf --color -c 2048 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "## Task\nGenerate a SQL query to answer the following question:\n`{prompt}`\n\n### Database Schema\nThis query will run on a database whose schema is represented in this string:\nCREATE TABLE products (\n product_id INTEGER PRIMARY KEY, -- Unique ID for each product\n name VARCHAR(50), -- Name of the product\n price DECIMAL(10,2), -- Price of each unit of the product\n quantity INTEGER -- Current quantity in stock\n);\n\nCREATE TABLE sales (\n sale_id INTEGER PRIMARY KEY, -- Unique ID for each sale\n product_id INTEGER, -- ID of product sold\n customer_id INTEGER, -- ID of customer who made purchase\n salesperson_id INTEGER, -- ID of salesperson who made the sale\n sale_date DATE, -- Date the sale occurred\n quantity INTEGER -- Quantity of product sold\n);\n\n-- sales.product_id can be joined with products.product_id\n\n### SQL\nGiven the database schema, here is the SQL query that answers `{prompt}`:\n```sql"

```

Change `-ngl 32` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 2048` to the desired sequence length. For extended sequence models - eg 8K, 16K, 32K - the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically.

If you want to have a chat-style conversation, replace the `-p <PROMPT>` argument with `-i -ins`

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md)

## How to run in `text-generation-webui`

Further instructions can be found in the text-generation-webui documentation, here: [text-generation-webui/docs/04 ‐ Model Tab.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/04%20%E2%80%90%20Model%20Tab.md#llamacpp).

## How to run from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries.

### How to load this model in Python code, using ctransformers

#### First install the package

Run one of the following commands, according to your system:

```shell

# Base ctransformers with no GPU acceleration

pip install ctransformers

# Or with CUDA GPU acceleration

pip install ctransformers[cuda]

# Or with AMD ROCm GPU acceleration (Linux only)

CT_HIPBLAS=1 pip install ctransformers --no-binary ctransformers

# Or with Metal GPU acceleration for macOS systems only

CT_METAL=1 pip install ctransformers --no-binary ctransformers

```

#### Simple ctransformers example code

```python

from ctransformers import AutoModelForCausalLM

# Set gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.

llm = AutoModelForCausalLM.from_pretrained("TheBloke/sqlcoder-7B-GGUF", model_file="sqlcoder-7b.Q4_K_M.gguf", model_type="mistral", gpu_layers=50)

print(llm("AI is going to"))

```

## How to use with LangChain

Here are guides on using llama-cpp-python and ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

<!-- README_GGUF.md-how-to-run end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](llm-utils)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donaters will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.

**Patreon special mentions**: Brandon Frisco, LangChain4j, Spiking Neurons AB, transmissions 11, Joseph William Delisle, Nitin Borwankar, Willem Michiel, Michael Dempsey, vamX, Jeffrey Morgan, zynix, jjj, Omer Bin Jawed, Sean Connelly, jinyuan sun, Jeromy Smith, Shadi, Pawan Osman, Chadd, Elijah Stavena, Illia Dulskyi, Sebastain Graf, Stephen Murray, terasurfer, Edmond Seymore, Celu Ramasamy, Mandus, Alex, biorpg, Ajan Kanaga, Clay Pascal, Raven Klaugh, 阿明, K, ya boyyy, usrbinkat, Alicia Loh, John Villwock, ReadyPlayerEmma, Chris Smitley, Cap'n Zoog, fincy, GodLy, S_X, sidney chen, Cory Kujawski, OG, Mano Prime, AzureBlack, Pieter, Kalila, Spencer Kim, Tom X Nguyen, Stanislav Ovsiannikov, Michael Levine, Andrey, Trailburnt, Vadim, Enrico Ros, Talal Aujan, Brandon Phillips, Jack West, Eugene Pentland, Michael Davis, Will Dee, webtim, Jonathan Leane, Alps Aficionado, Rooh Singh, Tiffany J. Kim, theTransient, Luke @flexchar, Elle, Caitlyn Gatomon, Ari Malik, subjectnull, Johann-Peter Hartmann, Trenton Dambrowitz, Imad Khwaja, Asp the Wyvern, Emad Mostaque, Rainer Wilmers, Alexandros Triantafyllidis, Nicholas, Pedro Madruga, SuperWojo, Harry Royden McLaughlin, James Bentley, Olakabola, David Ziegler, Ai Maven, Jeff Scroggin, Nikolai Manek, Deo Leter, Matthew Berman, Fen Risland, Ken Nordquist, Manuel Alberto Morcote, Luke Pendergrass, TL, Fred von Graf, Randy H, Dan Guido, NimbleBox.ai, Vitor Caleffi, Gabriel Tamborski, knownsqashed, Lone Striker, Erik Bjäreholt, John Detwiler, Leonard Tan, Iucharbius

Thank you to all my generous patrons and donaters!

And thank you again to a16z for their generous grant.

<!-- footer end -->

<!-- original-model-card start -->

# Original model card: Defog.ai's SQLCoder 7B

# Defog SQLCoder

Defog's SQLCoder is a state-of-the-art LLM for converting natural language questions to SQL queries.

[Interactive Demo](https://defog.ai/sqlcoder-demo/) | [🤗 HF Repo](https://huggingface.co/defog/sqlcoder2) | [♾️ Colab](https://colab.research.google.com/drive/1z4rmOEiFkxkMiecAWeTUlPl0OmKgfEu7?usp=sharing) | [🐦 Twitter](https://twitter.com/defogdata)

## TL;DR

SQLCoder-7B is a 7B parameter model that outperforms `gpt-3.5-turbo` for natural language to SQL generation tasks on our [sql-eval](https://github.com/defog-ai/sql-eval) framework, and significantly outperforms all popular open-source models. When fine-tuned on a given schema, it also outperforms `gpt-4`

SQLCoder-7B is fine-tuned on a base Mistral-7B model.

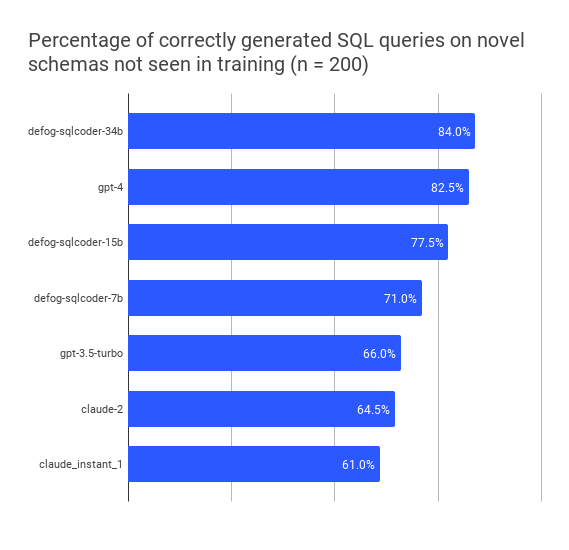

## Results on novel datasets not seen in training

| model | perc_correct |

|-|-|

| gpt4-2023-10-04 | 82.0 |

| defog-sqlcoder2 | 74.5 |

| gpt4-2023-08-28 | 74.0 |

| defog-sqlcoder-7b | 71.0 |

| gpt-3.5-2023-10-04 | 66.0 |

| claude-2 | 64.5 |

| gpt-3.5-2023-08-28 | 61.0 |

| claude_instant_1 | 61.0 |

| text-davinci-003 | 52.5 |

## License

The code in this repo (what little there is of it) is Apache-2 licensed. The model weights have a `CC BY-SA 4.0` license. The TL;DR is that you can use and modify the model for any purpose – including commercial use. However, if you modify the weights (for example, by fine-tuning), you must open-source your modified weights under the same license terms.

## Training

SQLCoder was trained on more than 20,000 human-curated questions. These questions were based on 10 different schemas. None of the schemas in the training data were included in our evaluation framework.

You can read more about our [training approach](https://defog.ai/blog/open-sourcing-sqlcoder2-7b/) and [evaluation framework](https://defog.ai/blog/open-sourcing-sqleval/).

## Results by question category

We classified each generated question into one of 5 categories. The table displays the percentage of questions answered correctly by each model, broken down by category.

| query_category | gpt-4 | sqlcoder2-15b | sqlcoder-7b | gpt-3.5 | claude-2 | claude-instant | gpt-3 |

|:-----------------|--------:|----------------:|--------------:|----------:|-----------:|-----------------:|--------:|

| date | 72 | 76 | 64 | 68 | 52 | 48 | 32 |

| group_by | 91.4 | 80 | 82.9 | 77.1 | 71.4 | 71.4 | 71.4 |

| order_by | 82.9 | 77.1 | 74.3 | 68.6 | 74.3 | 74.3 | 68.6 |

| ratio | 80 | 60 | 54.3 | 37.1 | 57.1 | 45.7 | 25.7 |

| join | 82.9 | 77.1 | 74.3 | 71.4 | 65.7 | 62.9 | 57.1 |

| where | 80 | 77.1 | 74.3 | 74.3 | 62.9 | 60 | 54.3 |

## Using SQLCoder

You can use SQLCoder via the `transformers` library by downloading our model weights from the Hugging Face repo. We have added sample code for [inference](./inference.py) on a [sample database schema](./metadata.sql).

```bash

python inference.py -q "Question about the sample database goes here"

# Sample question:

# Do we get more revenue from customers in New York compared to customers in San Francisco? Give me the total revenue for each city, and the difference between the two.

```

You can also use a demo on our website [here](https://defog.ai/sqlcoder-demo), or run SQLCoder in Colab [here](https://colab.research.google.com/drive/13BIKsqHnPOBcQ-ba2p77L5saiepTIwu0#scrollTo=ZpbVgVHMkJvC)

## Hardware Requirements

SQLCoder has been tested on an A100 40GB GPU with `bfloat16` weights. You can also load an 8-bit and 4-bit quantized version of the model on consumer GPUs with 20GB or more of memory – like RTX 4090, RTX 3090, and Apple M2 Pro, M2 Max, or M2 Ultra Chips with 20GB or more of memory.

## Todo

- [x] Open-source the v1 model weights

- [x] Train the model on more data, with higher data variance

- [ ] Tune the model further with Reward Modelling and RLHF

- [ ] Pretrain a model from scratch that specializes in SQL analysis

<!-- original-model-card end -->

|

TheBloke/deepseek-llm-67b-base-GGUF

|

TheBloke

| 2023-11-29T18:44:06Z | 376 | 3 |

transformers

|

[

"transformers",

"gguf",

"yi",

"base_model:deepseek-ai/deepseek-llm-67b-base",

"license:other",

"region:us"

] | null | 2023-11-29T18:02:39Z |

---

base_model: deepseek-ai/deepseek-llm-67b-base

inference: false

license: other

license_link: LICENSE

license_name: deepseek

model_creator: DeepSeek

model_name: Deepseek LLM 67B Base

model_type: yi

prompt_template: '{prompt}

'

quantized_by: TheBloke

---

<!-- markdownlint-disable MD041 -->

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# Deepseek LLM 67B Base - GGUF

- Model creator: [DeepSeek](https://huggingface.co/deepseek-ai)

- Original model: [Deepseek LLM 67B Base](https://huggingface.co/deepseek-ai/deepseek-llm-67b-base)

<!-- description start -->

## Description

This repo contains GGUF format model files for [DeepSeek's Deepseek LLM 67B Base](https://huggingface.co/deepseek-ai/deepseek-llm-67b-base).

These files were quantised using hardware kindly provided by [Massed Compute](https://massedcompute.com/).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for story telling.

* [GPT4All](https://gpt4all.io/index.html), a free and open source local running GUI, supporting Windows, Linux and macOS with full GPU accel.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration. Linux available, in beta as of 27/11/2023.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible AI server. Note, as of time of writing (November 27th 2023), ctransformers has not been updated in a long time and does not support many recent models.

<!-- README_GGUF.md-about-gguf end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/deepseek-llm-67b-base-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/deepseek-llm-67b-base-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/deepseek-llm-67b-base-GGUF)

* [DeepSeek's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/deepseek-ai/deepseek-llm-67b-base)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: None

```

{prompt}

```

<!-- prompt-template end -->

<!-- compatibility_gguf start -->

## Compatibility

These quantised GGUFv2 files are compatible with llama.cpp from August 27th onwards, as of commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221)

They are also compatible with many third party UIs and libraries - please see the list at the top of this README.

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weight. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw)

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This end up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K resulting in 5.5 bpw

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw

Refer to the Provided Files table below to see what files use which methods, and how.

</details>

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-provided-files start -->

## Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |

| ---- | ---- | ---- | ---- | ---- | ----- |

| [deepseek-llm-67b-base.Q2_K.gguf](https://huggingface.co/TheBloke/deepseek-llm-67b-base-GGUF/blob/main/deepseek-llm-67b-base.Q2_K.gguf) | Q2_K | 2 | 28.50 GB| 31.00 GB | smallest, significant quality loss - not recommended for most purposes |

| [deepseek-llm-67b-base.Q3_K_S.gguf](https://huggingface.co/TheBloke/deepseek-llm-67b-base-GGUF/blob/main/deepseek-llm-67b-base.Q3_K_S.gguf) | Q3_K_S | 3 | 29.31 GB| 31.81 GB | very small, high quality loss |