modelId

stringlengths 5

122

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC] | downloads

int64 0

738M

| likes

int64 0

11k

| library_name

stringclasses 245

values | tags

sequencelengths 1

4.05k

| pipeline_tag

stringclasses 48

values | createdAt

timestamp[us, tz=UTC] | card

stringlengths 1

901k

|

|---|---|---|---|---|---|---|---|---|---|

RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf | RichardErkhov | 2024-06-25T12:41:28Z | 9,565 | 0 | null | [

"gguf",

"region:us"

] | null | 2024-06-25T09:05:54Z | Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

NeuralSynthesis-7b-v0.4-slerp - GGUF

- Model creator: https://huggingface.co/Kukedlc/

- Original model: https://huggingface.co/Kukedlc/NeuralSynthesis-7b-v0.4-slerp/

| Name | Quant method | Size |

| ---- | ---- | ---- |

| [NeuralSynthesis-7b-v0.4-slerp.Q2_K.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.Q2_K.gguf) | Q2_K | 2.53GB |

| [NeuralSynthesis-7b-v0.4-slerp.IQ3_XS.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.IQ3_XS.gguf) | IQ3_XS | 2.81GB |

| [NeuralSynthesis-7b-v0.4-slerp.IQ3_S.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.IQ3_S.gguf) | IQ3_S | 2.96GB |

| [NeuralSynthesis-7b-v0.4-slerp.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.Q3_K_S.gguf) | Q3_K_S | 2.95GB |

| [NeuralSynthesis-7b-v0.4-slerp.IQ3_M.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.IQ3_M.gguf) | IQ3_M | 3.06GB |

| [NeuralSynthesis-7b-v0.4-slerp.Q3_K.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.Q3_K.gguf) | Q3_K | 3.28GB |

| [NeuralSynthesis-7b-v0.4-slerp.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.Q3_K_M.gguf) | Q3_K_M | 3.28GB |

| [NeuralSynthesis-7b-v0.4-slerp.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.Q3_K_L.gguf) | Q3_K_L | 3.56GB |

| [NeuralSynthesis-7b-v0.4-slerp.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.IQ4_XS.gguf) | IQ4_XS | 3.67GB |

| [NeuralSynthesis-7b-v0.4-slerp.Q4_0.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.Q4_0.gguf) | Q4_0 | 3.83GB |

| [NeuralSynthesis-7b-v0.4-slerp.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.IQ4_NL.gguf) | IQ4_NL | 3.87GB |

| [NeuralSynthesis-7b-v0.4-slerp.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.Q4_K_S.gguf) | Q4_K_S | 3.86GB |

| [NeuralSynthesis-7b-v0.4-slerp.Q4_K.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.Q4_K.gguf) | Q4_K | 4.07GB |

| [NeuralSynthesis-7b-v0.4-slerp.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.Q4_K_M.gguf) | Q4_K_M | 4.07GB |

| [NeuralSynthesis-7b-v0.4-slerp.Q4_1.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.Q4_1.gguf) | Q4_1 | 4.24GB |

| [NeuralSynthesis-7b-v0.4-slerp.Q5_0.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.Q5_0.gguf) | Q5_0 | 4.65GB |

| [NeuralSynthesis-7b-v0.4-slerp.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.Q5_K_S.gguf) | Q5_K_S | 4.65GB |

| [NeuralSynthesis-7b-v0.4-slerp.Q5_K.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.Q5_K.gguf) | Q5_K | 4.78GB |

| [NeuralSynthesis-7b-v0.4-slerp.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.Q5_K_M.gguf) | Q5_K_M | 4.78GB |

| [NeuralSynthesis-7b-v0.4-slerp.Q5_1.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.Q5_1.gguf) | Q5_1 | 5.07GB |

| [NeuralSynthesis-7b-v0.4-slerp.Q6_K.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.Q6_K.gguf) | Q6_K | 5.53GB |

| [NeuralSynthesis-7b-v0.4-slerp.Q8_0.gguf](https://huggingface.co/RichardErkhov/Kukedlc_-_NeuralSynthesis-7b-v0.4-slerp-gguf/blob/main/NeuralSynthesis-7b-v0.4-slerp.Q8_0.gguf) | Q8_0 | 7.17GB |

Original model description:

---

tags:

- merge

- mergekit

- lazymergekit

- allknowingroger/MultiverseEx26-7B-slerp

- Kukedlc/NeuralSynthesis-7B-v0.1

base_model:

- allknowingroger/MultiverseEx26-7B-slerp

- Kukedlc/NeuralSynthesis-7B-v0.1

license: apache-2.0

---

# NeuralSynthesis-7b-v0.4-slerp

NeuralSynthesis-7b-v0.4-slerp is a merge of the following models using [LazyMergekit](https://colab.research.google.com/drive/1obulZ1ROXHjYLn6PPZJwRR6GzgQogxxb?usp=sharing):

* [allknowingroger/MultiverseEx26-7B-slerp](https://huggingface.co/allknowingroger/MultiverseEx26-7B-slerp)

* [Kukedlc/NeuralSynthesis-7B-v0.1](https://huggingface.co/Kukedlc/NeuralSynthesis-7B-v0.1)

## 🧩 Configuration

```yaml

slices:

- sources:

- model: allknowingroger/MultiverseEx26-7B-slerp

layer_range: [0, 32]

- model: Kukedlc/NeuralSynthesis-7B-v0.1

layer_range: [0, 32]

merge_method: slerp

base_model: Kukedlc/NeuralSynthesis-7B-v0.1

parameters:

t:

- filter: self_attn

value: [0, 0.5, 0.3, 0.7, 1]

- filter: mlp

value: [1, 0.5, 0.7, 0.3, 0]

- value: 0.5

dtype: bfloat16

```

## 💻 Usage

```python

!pip install -qU transformers accelerate

from transformers import AutoTokenizer

import transformers

import torch

model = "Kukedlc/NeuralSynthesis-7b-v0.4-slerp"

messages = [{"role": "user", "content": "What is a large language model?"}]

tokenizer = AutoTokenizer.from_pretrained(model)

prompt = tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)

pipeline = transformers.pipeline(

"text-generation",

model=model,

torch_dtype=torch.float16,

device_map="auto",

)

outputs = pipeline(prompt, max_new_tokens=256, do_sample=True, temperature=0.7, top_k=50, top_p=0.95)

print(outputs[0]["generated_text"])

```

|

mradermacher/Code-Llama-Bagel-8B-GGUF | mradermacher | 2024-06-22T10:31:40Z | 9,563 | 1 | transformers | [

"transformers",

"gguf",

"merge",

"mergekit",

"lazymergekit",

"theprint/Code-Llama-Bagel-8B",

"ajibawa-2023/Code-Llama-3-8B",

"jondurbin/bagel-8b-v1.0",

"en",

"base_model:theprint/Code-Llama-Bagel-8B",

"license:llama3",

"endpoints_compatible",

"region:us"

] | null | 2024-06-22T02:20:46Z | ---

base_model: theprint/Code-Llama-Bagel-8B

language:

- en

library_name: transformers

license: llama3

quantized_by: mradermacher

tags:

- merge

- mergekit

- lazymergekit

- theprint/Code-Llama-Bagel-8B

- ajibawa-2023/Code-Llama-3-8B

- jondurbin/bagel-8b-v1.0

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

static quants of https://huggingface.co/theprint/Code-Llama-Bagel-8B

<!-- provided-files -->

weighted/imatrix quants are available at https://huggingface.co/mradermacher/Code-Llama-Bagel-8B-i1-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/Code-Llama-Bagel-8B-GGUF/resolve/main/Code-Llama-Bagel-8B.Q2_K.gguf) | Q2_K | 3.3 | |

| [GGUF](https://huggingface.co/mradermacher/Code-Llama-Bagel-8B-GGUF/resolve/main/Code-Llama-Bagel-8B.IQ3_XS.gguf) | IQ3_XS | 3.6 | |

| [GGUF](https://huggingface.co/mradermacher/Code-Llama-Bagel-8B-GGUF/resolve/main/Code-Llama-Bagel-8B.Q3_K_S.gguf) | Q3_K_S | 3.8 | |

| [GGUF](https://huggingface.co/mradermacher/Code-Llama-Bagel-8B-GGUF/resolve/main/Code-Llama-Bagel-8B.IQ3_S.gguf) | IQ3_S | 3.8 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/Code-Llama-Bagel-8B-GGUF/resolve/main/Code-Llama-Bagel-8B.IQ3_M.gguf) | IQ3_M | 3.9 | |

| [GGUF](https://huggingface.co/mradermacher/Code-Llama-Bagel-8B-GGUF/resolve/main/Code-Llama-Bagel-8B.Q3_K_M.gguf) | Q3_K_M | 4.1 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/Code-Llama-Bagel-8B-GGUF/resolve/main/Code-Llama-Bagel-8B.Q3_K_L.gguf) | Q3_K_L | 4.4 | |

| [GGUF](https://huggingface.co/mradermacher/Code-Llama-Bagel-8B-GGUF/resolve/main/Code-Llama-Bagel-8B.IQ4_XS.gguf) | IQ4_XS | 4.6 | |

| [GGUF](https://huggingface.co/mradermacher/Code-Llama-Bagel-8B-GGUF/resolve/main/Code-Llama-Bagel-8B.Q4_K_S.gguf) | Q4_K_S | 4.8 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Code-Llama-Bagel-8B-GGUF/resolve/main/Code-Llama-Bagel-8B.Q4_K_M.gguf) | Q4_K_M | 5.0 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Code-Llama-Bagel-8B-GGUF/resolve/main/Code-Llama-Bagel-8B.Q5_K_S.gguf) | Q5_K_S | 5.7 | |

| [GGUF](https://huggingface.co/mradermacher/Code-Llama-Bagel-8B-GGUF/resolve/main/Code-Llama-Bagel-8B.Q5_K_M.gguf) | Q5_K_M | 5.8 | |

| [GGUF](https://huggingface.co/mradermacher/Code-Llama-Bagel-8B-GGUF/resolve/main/Code-Llama-Bagel-8B.Q6_K.gguf) | Q6_K | 6.7 | very good quality |

| [GGUF](https://huggingface.co/mradermacher/Code-Llama-Bagel-8B-GGUF/resolve/main/Code-Llama-Bagel-8B.Q8_0.gguf) | Q8_0 | 8.6 | fast, best quality |

| [GGUF](https://huggingface.co/mradermacher/Code-Llama-Bagel-8B-GGUF/resolve/main/Code-Llama-Bagel-8B.f16.gguf) | f16 | 16.2 | 16 bpw, overkill |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

DeepMount00/Qwen2-1.5B-Ita | DeepMount00 | 2024-06-20T03:57:51Z | 9,561 | 16 | transformers | [

"transformers",

"safetensors",

"qwen2",

"text-generation",

"conversational",

"it",

"en",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-06-13T07:43:18Z | ---

language:

- it

- en

license: apache-2.0

library_name: transformers

---

# Qwen2 1.5B: Almost the Same Performance as ITALIA (iGenius) but 6 Times Smaller 🚀

### Model Overview

**Model Name:** Qwen2 1.5B Fine-tuned for Italian Language

**Version:** 1.5b

**Model Type:** Language Model

**Parameter Count:** 1.5 billion

**Language:** Italian

**Comparable Model:** [ITALIA by iGenius](https://huggingface.co/iGeniusAI) (9 billion parameters)

### Model Description

Qwen2 1.5B is a compact language model specifically fine-tuned for the Italian language. Despite its relatively small size of 1.5 billion parameters, Qwen2 1.5B demonstrates strong performance, nearly matching the capabilities of larger models, such as the **9 billion parameter ITALIA model by iGenius**. The fine-tuning process focused on optimizing the model for various language tasks in Italian, making it highly efficient and effective for Italian language applications.

### Performance Evaluation

The performance of Qwen2 1.5B was evaluated on several benchmarks and compared against the ITALIA model. The results are as follows:

### Performance Evaluation

| Model | Parameters | Average | MMLU | ARC | HELLASWAG |

|:----------:|:----------:|:-------:|:-----:|:-----:|:---------:|

| ITALIA | 9B | 43.5 | 35.22 | **38.49** | **56.79** |

| Qwen2-1.5B-Ita | 1.5B | **43.98** | **51.45** | 32.34 | 48.15 |

### Conclusion

Qwen2 1.5B demonstrates that a smaller, more efficient model can achieve performance levels comparable to much larger models. It excels in the MMLU benchmark, showing its strength in multitask language understanding. While it scores slightly lower in the ARC and HELLASWAG benchmarks, its overall performance makes it a viable option for Italian language tasks, offering a balance between efficiency and capability. |

indonesian-nlp/wav2vec2-indonesian-javanese-sundanese | indonesian-nlp | 2022-08-19T07:44:40Z | 9,560 | 5 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"automatic-speech-recognition",

"audio",

"hf-asr-leaderboard",

"id",

"jv",

"robust-speech-event",

"speech",

"su",

"sun",

"dataset:mozilla-foundation/common_voice_7_0",

"dataset:openslr",

"dataset:magic_data",

"dataset:titml",

"license:apache-2.0",

"model-index",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ---

language:

- id

- jv

- sun

datasets:

- mozilla-foundation/common_voice_7_0

- openslr

- magic_data

- titml

metrics:

- wer

tags:

- audio

- automatic-speech-recognition

- hf-asr-leaderboard

- id

- jv

- robust-speech-event

- speech

- su

license: apache-2.0

model-index:

- name: Wav2Vec2 Indonesian Javanese and Sundanese by Indonesian NLP

results:

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Common Voice 6.1

type: common_voice

args: id

metrics:

- name: Test WER

type: wer

value: 4.056

- name: Test CER

type: cer

value: 1.472

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Common Voice 7

type: mozilla-foundation/common_voice_7_0

args: id

metrics:

- name: Test WER

type: wer

value: 4.492

- name: Test CER

type: cer

value: 1.577

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Robust Speech Event - Dev Data

type: speech-recognition-community-v2/dev_data

args: id

metrics:

- name: Test WER

type: wer

value: 48.94

- task:

name: Automatic Speech Recognition

type: automatic-speech-recognition

dataset:

name: Robust Speech Event - Test Data

type: speech-recognition-community-v2/eval_data

args: id

metrics:

- name: Test WER

type: wer

value: 68.95

---

# Multilingual Speech Recognition for Indonesian Languages

This is the model built for the project

[Multilingual Speech Recognition for Indonesian Languages](https://github.com/indonesian-nlp/multilingual-asr).

It is a fine-tuned [facebook/wav2vec2-large-xlsr-53](https://huggingface.co/facebook/wav2vec2-large-xlsr-53)

model on the [Indonesian Common Voice dataset](https://huggingface.co/datasets/common_voice),

[High-quality TTS data for Javanese - SLR41](https://huggingface.co/datasets/openslr), and

[High-quality TTS data for Sundanese - SLR44](https://huggingface.co/datasets/openslr) datasets.

We also provide a [live demo](https://huggingface.co/spaces/indonesian-nlp/multilingual-asr) to test the model.

When using this model, make sure that your speech input is sampled at 16kHz.

## Usage

The model can be used directly (without a language model) as follows:

```python

import torch

import torchaudio

from datasets import load_dataset

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

test_dataset = load_dataset("common_voice", "id", split="test[:2%]")

processor = Wav2Vec2Processor.from_pretrained("indonesian-nlp/wav2vec2-indonesian-javanese-sundanese")

model = Wav2Vec2ForCTC.from_pretrained("indonesian-nlp/wav2vec2-indonesian-javanese-sundanese")

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.

# We need to read the aduio files as arrays

def speech_file_to_array_fn(batch):

speech_array, sampling_rate = torchaudio.load(batch["path"])

batch["speech"] = resampler(speech_array).squeeze().numpy()

return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

inputs = processor(test_dataset[:2]["speech"], sampling_rate=16_000, return_tensors="pt", padding=True)

with torch.no_grad():

logits = model(inputs.input_values, attention_mask=inputs.attention_mask).logits

predicted_ids = torch.argmax(logits, dim=-1)

print("Prediction:", processor.batch_decode(predicted_ids))

print("Reference:", test_dataset[:2]["sentence"])

```

## Evaluation

The model can be evaluated as follows on the Indonesian test data of Common Voice.

```python

import torch

import torchaudio

from datasets import load_dataset, load_metric

from transformers import Wav2Vec2ForCTC, Wav2Vec2Processor

import re

test_dataset = load_dataset("common_voice", "id", split="test")

wer = load_metric("wer")

processor = Wav2Vec2Processor.from_pretrained("indonesian-nlp/wav2vec2-indonesian-javanese-sundanese")

model = Wav2Vec2ForCTC.from_pretrained("indonesian-nlp/wav2vec2-indonesian-javanese-sundanese")

model.to("cuda")

chars_to_ignore_regex = '[\,\?\.\!\-\;\:\"\“\%\‘\'\”\�]'

resampler = torchaudio.transforms.Resample(48_000, 16_000)

# Preprocessing the datasets.

# We need to read the audio files as arrays

def speech_file_to_array_fn(batch):

batch["sentence"] = re.sub(chars_to_ignore_regex, '', batch["sentence"]).lower()

speech_array, sampling_rate = torchaudio.load(batch["path"])

batch["speech"] = resampler(speech_array).squeeze().numpy()

return batch

test_dataset = test_dataset.map(speech_file_to_array_fn)

# Preprocessing the datasets.

# We need to read the audio files as arrays

def evaluate(batch):

inputs = processor(batch["speech"], sampling_rate=16_000, return_tensors="pt", padding=True)

with torch.no_grad():

logits = model(inputs.input_values.to("cuda"), attention_mask=inputs.attention_mask.to("cuda")).logits

pred_ids = torch.argmax(logits, dim=-1)

batch["pred_strings"] = processor.batch_decode(pred_ids)

return batch

result = test_dataset.map(evaluate, batched=True, batch_size=8)

print("WER: {:2f}".format(100 * wer.compute(predictions=result["pred_strings"], references=result["sentence"])))

```

**Test Result**: 11.57 %

## Training

The Common Voice `train`, `validation`, and ... datasets were used for training as well as ... and ... # TODO

The script used for training can be found [here](https://github.com/cahya-wirawan/indonesian-speech-recognition)

(will be available soon)

|

vicgalle/CarbonBeagle-11B-truthy | vicgalle | 2024-03-04T12:19:58Z | 9,559 | 9 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"conversational",

"dataset:jondurbin/truthy-dpo-v0.1",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-02-10T12:40:49Z | ---

license: apache-2.0

library_name: transformers

datasets:

- jondurbin/truthy-dpo-v0.1

model-index:

- name: CarbonBeagle-11B-truthy

results:

- task:

type: text-generation

name: Text Generation

dataset:

name: AI2 Reasoning Challenge (25-Shot)

type: ai2_arc

config: ARC-Challenge

split: test

args:

num_few_shot: 25

metrics:

- type: acc_norm

value: 72.27

name: normalized accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=vicgalle/CarbonBeagle-11B-truthy

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: HellaSwag (10-Shot)

type: hellaswag

split: validation

args:

num_few_shot: 10

metrics:

- type: acc_norm

value: 89.31

name: normalized accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=vicgalle/CarbonBeagle-11B-truthy

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: MMLU (5-Shot)

type: cais/mmlu

config: all

split: test

args:

num_few_shot: 5

metrics:

- type: acc

value: 66.55

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=vicgalle/CarbonBeagle-11B-truthy

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: TruthfulQA (0-shot)

type: truthful_qa

config: multiple_choice

split: validation

args:

num_few_shot: 0

metrics:

- type: mc2

value: 78.55

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=vicgalle/CarbonBeagle-11B-truthy

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: Winogrande (5-shot)

type: winogrande

config: winogrande_xl

split: validation

args:

num_few_shot: 5

metrics:

- type: acc

value: 83.82

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=vicgalle/CarbonBeagle-11B-truthy

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: GSM8k (5-shot)

type: gsm8k

config: main

split: test

args:

num_few_shot: 5

metrics:

- type: acc

value: 66.11

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=vicgalle/CarbonBeagle-11B-truthy

name: Open LLM Leaderboard

---

# [Open LLM Leaderboard Evaluation Results](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)

Detailed results can be found [here](https://huggingface.co/datasets/open-llm-leaderboard/details_vicgalle__CarbonBeagle-11B-truthy)

| Metric |Value|

|---------------------------------|----:|

|Avg. |76.10|

|AI2 Reasoning Challenge (25-Shot)|72.27|

|HellaSwag (10-Shot) |89.31|

|MMLU (5-Shot) |66.55|

|TruthfulQA (0-shot) |78.55|

|Winogrande (5-shot) |83.82|

|GSM8k (5-shot) |66.11|

|

Yntec/Fanta | Yntec | 2024-04-20T21:46:51Z | 9,558 | 1 | diffusers | [

"diffusers",

"safetensors",

"Base Model",

"Photorealistic",

"Beautiful",

"art",

"artistic",

"michin",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"en",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2024-04-20T21:02:09Z | ---

language:

- en

license: other

tags:

- Base Model

- Photorealistic

- Beautiful

- art

- artistic

- michin

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

---

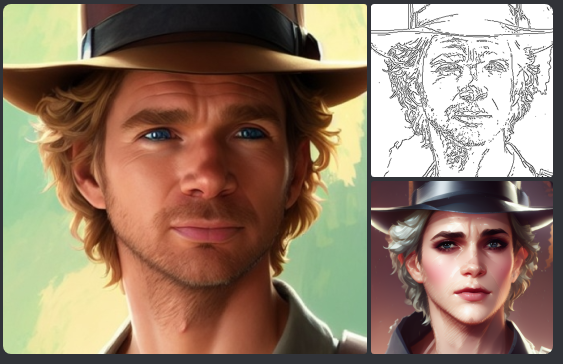

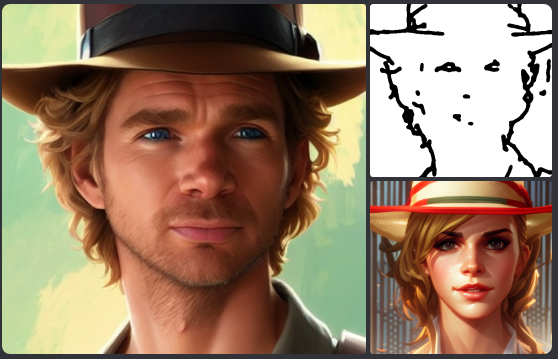

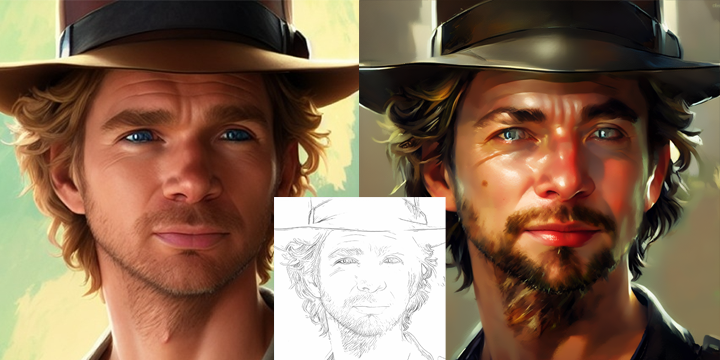

# Fanta

Samples and prompts:

(Click for larger)

Top left: Elvis's Daughters. Movie still. Pretty CUTE LITTLE Girl with sister playing with miniature toy city, bokeh. DETAILED vintage colors photography brown EYES, sitting on a box of pepsis, gorgeous detailed Ponytail, cocacola can Magazine ad, iconic, 1935, sharp focus. Illustration By KlaysMoji and leyendecker and artgerm and Dave Rapoza

Top right: cinematic 1980 movie still, handsome VALENTINE MAN with pretty woman with cleavage, classroom, school Uniforms, blackboard. Pinup. He wears a backpack, bokeh

Bottom left: analog style 70s color photograph of young kiefer sutherland as jack bauer, 24 behind the scenes

Bottom right: absurdres, adorable cute harley quinn, at night, dark alley, moon, :) red ponytail, blonde ponytail, in matte black hardsuit, military, roughed up, bat, city fog,

FantasticMix v65 (my favorite) by michin mixed with artistic models to... well, make its outputs more artistic!

Original page: https://civitai.com/models/22402?modelVersionId=77784

# FantasticDreams

Same models, different mix. This model goes hard! Perhaps too hard... |

cognitivecomputations/dolphin-2.8-mistral-7b-v02 | cognitivecomputations | 2024-05-20T14:49:07Z | 9,555 | 200 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"conversational",

"en",

"dataset:cognitivecomputations/dolphin",

"dataset:cognitivecomputations/dolphin-coder",

"dataset:cognitivecomputations/samantha-data",

"dataset:jondurbin/airoboros-2.2.1",

"dataset:teknium/openhermes-2.5",

"dataset:m-a-p/Code-Feedback",

"dataset:m-a-p/CodeFeedback-Filtered-Instruction",

"base_model:alpindale/Mistral-7B-v0.2-hf",

"license:apache-2.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-03-28T06:24:16Z | ---

base_model: alpindale/Mistral-7B-v0.2-hf

language:

- en

license: apache-2.0

datasets:

- cognitivecomputations/dolphin

- cognitivecomputations/dolphin-coder

- cognitivecomputations/samantha-data

- jondurbin/airoboros-2.2.1

- teknium/openhermes-2.5

- m-a-p/Code-Feedback

- m-a-p/CodeFeedback-Filtered-Instruction

model-index:

- name: dolphin-2.8-mistral-7b-v02

results:

- task:

type: text-generation

dataset:

type: openai_humaneval

name: HumanEval

metrics:

- name: pass@1

type: pass@1

value: 0.469

verified: false

---

# Dolphin 2.8 Mistral 7b v0.2 🐬

By Eric Hartford and Cognitive Computations

[](https://discord.gg/cognitivecomputations)

Discord: https://discord.gg/cognitivecomputations

<img src="https://cdn-uploads.huggingface.co/production/uploads/63111b2d88942700629f5771/ldkN1J0WIDQwU4vutGYiD.png" width="600" />

My appreciation for the sponsors of Dolphin 2.8:

- [Crusoe Cloud](https://crusoe.ai/) - provided excellent on-demand 10xL40S node

- [Winston Sou](https://twitter.com/WinsonDabbles) - Along with a generous anonymous sponsor, donated a massive personally owned compute resource!

- [Abacus AI](https://abacus.ai/) - my employer and partner in many things.

This model is based on [Mistral-7b-v0.2](https://huggingface.co/alpindale/Mistral-7B-v0.2-hf) a new base model released by MistralAI on March 23, 2024 but they have not yet published on HuggingFace. Thanks to @alpindale for converting / publishing.

The base model has 32k context, and the full-weights fine-tune was with 16k sequence lengths.

It took 3 days on 10x L40S provided by [Crusoe Cloud](https://crusoe.ai/)

Dolphin-2.8 has a variety of instruction, conversational, and coding skills.

Dolphin is uncensored. I have filtered the dataset to remove alignment and bias. This makes the model more compliant. You are advised to implement your own alignment layer before exposing the model as a service. It will be highly compliant to any requests, even unethical ones. Please read my blog post about uncensored models. https://erichartford.com/uncensored-models You are responsible for any content you create using this model. Enjoy responsibly.

Dolphin is licensed Apache 2.0. I grant permission for any use including commercial. Dolphin was trained on data generated from GPT4 among other models.

# Evals

```

{

"arc_challenge": {

"acc,none": 0.5921501706484642,

"acc_stderr,none": 0.014361097288449701,

"acc_norm,none": 0.6339590443686007,

"acc_norm_stderr,none": 0.014077223108470139

},

"gsm8k": {

"exact_match,strict-match": 0.4783927217589083,

"exact_match_stderr,strict-match": 0.013759618667051773,

"exact_match,flexible-extract": 0.5367702805155421,

"exact_match_stderr,flexible-extract": 0.013735191956468648

},

"hellaswag": {

"acc,none": 0.6389165504879506,

"acc_stderr,none": 0.004793330525656218,

"acc_norm,none": 0.8338976299541924,

"acc_norm_stderr,none": 0.00371411888431746

},

"mmlu": {

"acc,none": 0.6122347243982339,

"acc_stderr,none": 0.003893774654142997

},

"truthfulqa_mc2": {

"acc,none": 0.5189872652778472,

"acc_stderr,none": 0.014901128316426086

},

"winogrande": {

"acc,none": 0.7971586424625099,

"acc_stderr,none": 0.011301439925936643

}

}

```

[<img src="https://raw.githubusercontent.com/OpenAccess-AI-Collective/axolotl/main/image/axolotl-badge-web.png" alt="Built with Axolotl" width="200" height="32"/>](https://github.com/OpenAccess-AI-Collective/axolotl)

<details><summary>See axolotl config</summary>

axolotl version: `0.4.0`

```yaml

base_model: alpindale/Mistral-7B-v0.2-hf

model_type: MistralForCausalLM

tokenizer_type: LlamaTokenizer

is_mistral_derived_model: true

load_in_8bit: false

load_in_4bit: false

strict: false

datasets:

- path: /workspace/datasets/dolphin201-sharegpt2.jsonl

type: sharegpt

- path: /workspace/datasets/dolphin-coder-translate-sharegpt2.jsonl

type: sharegpt

- path: /workspace/datasets/dolphin-coder-codegen-sharegpt2.jsonl

type: sharegpt

- path: /workspace/datasets/m-a-p_Code-Feedback-sharegpt.jsonl

type: sharegpt

- path: /workspace/datasets/m-a-p_CodeFeedback-Filtered-Instruction-sharegpt.jsonl

type: sharegpt

- path: /workspace/datasets/not_samantha_norefusals.jsonl

type: sharegpt

- path: /workspace/datasets/openhermes2_5-sharegpt.jsonl

type: sharegpt

chat_template: chatml

dataset_prepared_path: last_run_prepared

val_set_size: 0.001

output_dir: /workspace/dolphin-2.8-mistral-7b

sequence_len: 16384

sample_packing: true

pad_to_sequence_len: true

wandb_project: dolphin

wandb_entity:

wandb_watch:

wandb_run_id:

wandb_log_model:

gradient_accumulation_steps: 8

micro_batch_size: 3

num_epochs: 4

adam_beta2: 0.95

adam_epsilon: 0.00001

max_grad_norm: 1.0

lr_scheduler: cosine

learning_rate: 0.000005

optimizer: adamw_bnb_8bit

train_on_inputs: false

group_by_length: false

bf16: true

fp16: false

tf32: false

gradient_checkpointing: true

gradient_checkpointing_kwargs:

use_reentrant: true

early_stopping_patience:

resume_from_checkpoint:

local_rank:

logging_steps: 1

xformers_attention:

flash_attention: true

warmup_steps: 10

eval_steps: 73

eval_table_size:

eval_table_max_new_tokens:

eval_sample_packing: false

saves_per_epoch:

save_steps: 73

save_total_limit: 2

debug:

deepspeed: deepspeed_configs/zero3_bf16.json

weight_decay: 0.1

fsdp:

fsdp_config:

special_tokens:

eos_token: "<|im_end|>"

tokens:

- "<|im_start|>"

```

</details><br>

# workspace/dolphin-2.8-mistral-7b

This model is a fine-tuned version of [alpindale/Mistral-7B-v0.2-hf](https://huggingface.co/alpindale/Mistral-7B-v0.2-hf) on the None dataset.

It achieves the following results on the evaluation set:

- Loss: 0.4828

## Model description

More information needed

## Intended uses & limitations

More information needed

## Training and evaluation data

More information needed

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-06

- train_batch_size: 3

- eval_batch_size: 3

- seed: 42

- distributed_type: multi-GPU

- num_devices: 10

- gradient_accumulation_steps: 8

- total_train_batch_size: 240

- total_eval_batch_size: 30

- optimizer: Adam with betas=(0.9,0.95) and epsilon=1e-05

- lr_scheduler_type: cosine

- lr_scheduler_warmup_steps: 10

- num_epochs: 4

### Training results

| Training Loss | Epoch | Step | Validation Loss |

|:-------------:|:-----:|:----:|:---------------:|

| 1.1736 | 0.0 | 1 | 1.0338 |

| 0.6106 | 0.36 | 73 | 0.5439 |

| 0.5766 | 0.72 | 146 | 0.5171 |

| 0.5395 | 1.06 | 219 | 0.5045 |

| 0.5218 | 1.42 | 292 | 0.4976 |

| 0.5336 | 1.78 | 365 | 0.4915 |

| 0.5018 | 2.13 | 438 | 0.4885 |

| 0.5113 | 2.48 | 511 | 0.4856 |

| 0.5066 | 2.84 | 584 | 0.4838 |

| 0.4967 | 3.19 | 657 | 0.4834 |

| 0.4956 | 3.55 | 730 | 0.4830 |

| 0.5026 | 3.9 | 803 | 0.4828 |

### Framework versions

- Transformers 4.40.0.dev0

- Pytorch 2.2.1+cu121

- Datasets 2.18.0

- Tokenizers 0.15.0

# Quants

- [dagbs/-GGUF](https://huggingface.co/dagbs/dolphin-2.8-mistral-7b-v02-GGUF)

- [bartowski/ExLlamaV2](https://huggingface.co/bartowski/dolphin-2.8-mistral-7b-v02-exl2)

- [solidrust/AWQ](https://huggingface.co/solidrust/dolphin-2.8-mistral-7b-v02-AWQ) |

facebook/mms-tts-eng | facebook | 2023-09-06T13:32:25Z | 9,548 | 122 | transformers | [

"transformers",

"pytorch",

"safetensors",

"vits",

"text-to-audio",

"mms",

"text-to-speech",

"arxiv:2305.13516",

"license:cc-by-nc-4.0",

"endpoints_compatible",

"region:us"

] | text-to-speech | 2023-08-24T09:09:22Z |

---

license: cc-by-nc-4.0

tags:

- mms

- vits

pipeline_tag: text-to-speech

---

# Massively Multilingual Speech (MMS): English Text-to-Speech

This repository contains the **English (eng)** language text-to-speech (TTS) model checkpoint.

This model is part of Facebook's [Massively Multilingual Speech](https://arxiv.org/abs/2305.13516) project, aiming to

provide speech technology across a diverse range of languages. You can find more details about the supported languages

and their ISO 639-3 codes in the [MMS Language Coverage Overview](https://dl.fbaipublicfiles.com/mms/misc/language_coverage_mms.html),

and see all MMS-TTS checkpoints on the Hugging Face Hub: [facebook/mms-tts](https://huggingface.co/models?sort=trending&search=facebook%2Fmms-tts).

MMS-TTS is available in the 🤗 Transformers library from version 4.33 onwards.

## Model Details

VITS (**V**ariational **I**nference with adversarial learning for end-to-end **T**ext-to-**S**peech) is an end-to-end

speech synthesis model that predicts a speech waveform conditional on an input text sequence. It is a conditional variational

autoencoder (VAE) comprised of a posterior encoder, decoder, and conditional prior.

A set of spectrogram-based acoustic features are predicted by the flow-based module, which is formed of a Transformer-based

text encoder and multiple coupling layers. The spectrogram is decoded using a stack of transposed convolutional layers,

much in the same style as the HiFi-GAN vocoder. Motivated by the one-to-many nature of the TTS problem, where the same text

input can be spoken in multiple ways, the model also includes a stochastic duration predictor, which allows the model to

synthesise speech with different rhythms from the same input text.

The model is trained end-to-end with a combination of losses derived from variational lower bound and adversarial training.

To improve the expressiveness of the model, normalizing flows are applied to the conditional prior distribution. During

inference, the text encodings are up-sampled based on the duration prediction module, and then mapped into the

waveform using a cascade of the flow module and HiFi-GAN decoder. Due to the stochastic nature of the duration predictor,

the model is non-deterministic, and thus requires a fixed seed to generate the same speech waveform.

For the MMS project, a separate VITS checkpoint is trained on each langauge.

## Usage

MMS-TTS is available in the 🤗 Transformers library from version 4.33 onwards. To use this checkpoint,

first install the latest version of the library:

```

pip install --upgrade transformers accelerate

```

Then, run inference with the following code-snippet:

```python

from transformers import VitsModel, AutoTokenizer

import torch

model = VitsModel.from_pretrained("facebook/mms-tts-eng")

tokenizer = AutoTokenizer.from_pretrained("facebook/mms-tts-eng")

text = "some example text in the English language"

inputs = tokenizer(text, return_tensors="pt")

with torch.no_grad():

output = model(**inputs).waveform

```

The resulting waveform can be saved as a `.wav` file:

```python

import scipy

scipy.io.wavfile.write("techno.wav", rate=model.config.sampling_rate, data=output.float().numpy())

```

Or displayed in a Jupyter Notebook / Google Colab:

```python

from IPython.display import Audio

Audio(output.numpy(), rate=model.config.sampling_rate)

```

## BibTex citation

This model was developed by Vineel Pratap et al. from Meta AI. If you use the model, consider citing the MMS paper:

```

@article{pratap2023mms,

title={Scaling Speech Technology to 1,000+ Languages},

author={Vineel Pratap and Andros Tjandra and Bowen Shi and Paden Tomasello and Arun Babu and Sayani Kundu and Ali Elkahky and Zhaoheng Ni and Apoorv Vyas and Maryam Fazel-Zarandi and Alexei Baevski and Yossi Adi and Xiaohui Zhang and Wei-Ning Hsu and Alexis Conneau and Michael Auli},

journal={arXiv},

year={2023}

}

```

## License

The model is licensed as **CC-BY-NC 4.0**.

|

mradermacher/LCARS_AI_001-GGUF | mradermacher | 2024-06-14T11:08:37Z | 9,543 | 1 | transformers | [

"transformers",

"gguf",

"text-generation-inference",

"unsloth",

"mistral",

"trl",

"chemistry",

"biology",

"legal",

"art",

"music",

"finance",

"code",

"medical",

"not-for-all-audiences",

"merge",

"climate",

"chain-of-thought",

"tree-of-knowledge",

"forest-of-thoughts",

"visual-spacial-sketchpad",

"alpha-mind",

"knowledge-graph",

"entity-detection",

"encyclopedia",

"wikipedia",

"stack-exchange",

"Reddit",

"Cyber-series",

"MegaMind",

"Cybertron",

"SpydazWeb",

"Spydaz",

"LCARS",

"star-trek",

"mega-transformers",

"Mulit-Mega-Merge",

"Multi-Lingual",

"Afro-Centric",

"African-Model",

"Ancient-One",

"en",

"sw",

"ig",

"tw",

"es",

"dataset:gretelai/synthetic_text_to_sql",

"dataset:HuggingFaceTB/cosmopedia",

"dataset:teknium/OpenHermes-2.5",

"dataset:Open-Orca/SlimOrca",

"dataset:Open-Orca/OpenOrca",

"dataset:cognitivecomputations/dolphin-coder",

"dataset:databricks/databricks-dolly-15k",

"dataset:yahma/alpaca-cleaned",

"dataset:uonlp/CulturaX",

"dataset:mwitiderrick/SwahiliPlatypus",

"dataset:swahili",

"dataset:Rogendo/English-Swahili-Sentence-Pairs",

"dataset:ise-uiuc/Magicoder-Evol-Instruct-110K",

"dataset:meta-math/MetaMathQA",

"dataset:abacusai/ARC_DPO_FewShot",

"dataset:abacusai/MetaMath_DPO_FewShot",

"dataset:abacusai/HellaSwag_DPO_FewShot",

"dataset:HaltiaAI/Her-The-Movie-Samantha-and-Theodore-Dataset",

"dataset:HuggingFaceFW/fineweb",

"dataset:occiglot/occiglot-fineweb-v0.5",

"dataset:omi-health/medical-dialogue-to-soap-summary",

"dataset:keivalya/MedQuad-MedicalQnADataset",

"dataset:ruslanmv/ai-medical-dataset",

"dataset:Shekswess/medical_llama3_instruct_dataset_short",

"dataset:ShenRuililin/MedicalQnA",

"dataset:virattt/financial-qa-10K",

"dataset:PatronusAI/financebench",

"dataset:takala/financial_phrasebank",

"dataset:Replete-AI/code_bagel",

"dataset:athirdpath/DPO_Pairs-Roleplay-Alpaca-NSFW",

"dataset:IlyaGusev/gpt_roleplay_realm",

"dataset:rickRossie/bluemoon_roleplay_chat_data_300k_messages",

"dataset:jtatman/hypnosis_dataset",

"dataset:Hypersniper/philosophy_dialogue",

"dataset:Locutusque/function-calling-chatml",

"dataset:bible-nlp/biblenlp-corpus",

"dataset:DatadudeDev/Bible",

"dataset:Helsinki-NLP/bible_para",

"dataset:HausaNLP/AfriSenti-Twitter",

"dataset:aixsatoshi/Chat-with-cosmopedia",

"dataset:HuggingFaceTB/cosmopedia-100k",

"dataset:HuggingFaceFW/fineweb-edu",

"dataset:m-a-p/CodeFeedback-Filtered-Instruction",

"dataset:heliosbrahma/mental_health_chatbot_dataset",

"base_model:LeroyDyer/LCARS_AI_001",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2024-06-14T10:20:10Z | ---

base_model: LeroyDyer/LCARS_AI_001

datasets:

- gretelai/synthetic_text_to_sql

- HuggingFaceTB/cosmopedia

- teknium/OpenHermes-2.5

- Open-Orca/SlimOrca

- Open-Orca/OpenOrca

- cognitivecomputations/dolphin-coder

- databricks/databricks-dolly-15k

- yahma/alpaca-cleaned

- uonlp/CulturaX

- mwitiderrick/SwahiliPlatypus

- swahili

- Rogendo/English-Swahili-Sentence-Pairs

- ise-uiuc/Magicoder-Evol-Instruct-110K

- meta-math/MetaMathQA

- abacusai/ARC_DPO_FewShot

- abacusai/MetaMath_DPO_FewShot

- abacusai/HellaSwag_DPO_FewShot

- HaltiaAI/Her-The-Movie-Samantha-and-Theodore-Dataset

- HuggingFaceFW/fineweb

- occiglot/occiglot-fineweb-v0.5

- omi-health/medical-dialogue-to-soap-summary

- keivalya/MedQuad-MedicalQnADataset

- ruslanmv/ai-medical-dataset

- Shekswess/medical_llama3_instruct_dataset_short

- ShenRuililin/MedicalQnA

- virattt/financial-qa-10K

- PatronusAI/financebench

- takala/financial_phrasebank

- Replete-AI/code_bagel

- athirdpath/DPO_Pairs-Roleplay-Alpaca-NSFW

- IlyaGusev/gpt_roleplay_realm

- rickRossie/bluemoon_roleplay_chat_data_300k_messages

- jtatman/hypnosis_dataset

- Hypersniper/philosophy_dialogue

- Locutusque/function-calling-chatml

- bible-nlp/biblenlp-corpus

- DatadudeDev/Bible

- Helsinki-NLP/bible_para

- HausaNLP/AfriSenti-Twitter

- aixsatoshi/Chat-with-cosmopedia

- HuggingFaceTB/cosmopedia-100k

- HuggingFaceFW/fineweb-edu

- m-a-p/CodeFeedback-Filtered-Instruction

- heliosbrahma/mental_health_chatbot_dataset

language:

- en

- sw

- ig

- tw

- es

library_name: transformers

license: apache-2.0

quantized_by: mradermacher

tags:

- text-generation-inference

- transformers

- unsloth

- mistral

- trl

- chemistry

- biology

- legal

- art

- music

- finance

- code

- medical

- not-for-all-audiences

- merge

- climate

- chain-of-thought

- tree-of-knowledge

- forest-of-thoughts

- visual-spacial-sketchpad

- alpha-mind

- knowledge-graph

- entity-detection

- encyclopedia

- wikipedia

- stack-exchange

- Reddit

- Cyber-series

- MegaMind

- Cybertron

- SpydazWeb

- Spydaz

- LCARS

- star-trek

- mega-transformers

- Mulit-Mega-Merge

- Multi-Lingual

- Afro-Centric

- African-Model

- Ancient-One

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

static quants of https://huggingface.co/LeroyDyer/LCARS_AI_001

<!-- provided-files -->

weighted/imatrix quants are available at https://huggingface.co/mradermacher/LCARS_AI_001-i1-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/LCARS_AI_001-GGUF/resolve/main/LCARS_AI_001.Q2_K.gguf) | Q2_K | 2.8 | |

| [GGUF](https://huggingface.co/mradermacher/LCARS_AI_001-GGUF/resolve/main/LCARS_AI_001.IQ3_XS.gguf) | IQ3_XS | 3.1 | |

| [GGUF](https://huggingface.co/mradermacher/LCARS_AI_001-GGUF/resolve/main/LCARS_AI_001.Q3_K_S.gguf) | Q3_K_S | 3.3 | |

| [GGUF](https://huggingface.co/mradermacher/LCARS_AI_001-GGUF/resolve/main/LCARS_AI_001.IQ3_S.gguf) | IQ3_S | 3.3 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/LCARS_AI_001-GGUF/resolve/main/LCARS_AI_001.IQ3_M.gguf) | IQ3_M | 3.4 | |

| [GGUF](https://huggingface.co/mradermacher/LCARS_AI_001-GGUF/resolve/main/LCARS_AI_001.Q3_K_M.gguf) | Q3_K_M | 3.6 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/LCARS_AI_001-GGUF/resolve/main/LCARS_AI_001.Q3_K_L.gguf) | Q3_K_L | 3.9 | |

| [GGUF](https://huggingface.co/mradermacher/LCARS_AI_001-GGUF/resolve/main/LCARS_AI_001.IQ4_XS.gguf) | IQ4_XS | 4.0 | |

| [GGUF](https://huggingface.co/mradermacher/LCARS_AI_001-GGUF/resolve/main/LCARS_AI_001.Q4_K_S.gguf) | Q4_K_S | 4.2 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/LCARS_AI_001-GGUF/resolve/main/LCARS_AI_001.Q4_K_M.gguf) | Q4_K_M | 4.5 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/LCARS_AI_001-GGUF/resolve/main/LCARS_AI_001.Q5_K_S.gguf) | Q5_K_S | 5.1 | |

| [GGUF](https://huggingface.co/mradermacher/LCARS_AI_001-GGUF/resolve/main/LCARS_AI_001.Q5_K_M.gguf) | Q5_K_M | 5.2 | |

| [GGUF](https://huggingface.co/mradermacher/LCARS_AI_001-GGUF/resolve/main/LCARS_AI_001.Q6_K.gguf) | Q6_K | 6.0 | very good quality |

| [GGUF](https://huggingface.co/mradermacher/LCARS_AI_001-GGUF/resolve/main/LCARS_AI_001.Q8_0.gguf) | Q8_0 | 7.8 | fast, best quality |

| [GGUF](https://huggingface.co/mradermacher/LCARS_AI_001-GGUF/resolve/main/LCARS_AI_001.f16.gguf) | f16 | 14.6 | 16 bpw, overkill |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

TIGER-Lab/Mantis-8B-siglip-llama3 | TIGER-Lab | 2024-05-23T04:08:15Z | 9,533 | 23 | transformers | [

"transformers",

"safetensors",

"llava",

"pretraining",

"multimodal",

"lmm",

"vlm",

"siglip",

"llama3",

"mantis",

"en",

"dataset:TIGER-Lab/Mantis-Instruct",

"arxiv:2405.01483",

"base_model:TIGER-Lab/Mantis-8B-siglip-llama3-pretraind",

"license:llama3",

"endpoints_compatible",

"region:us"

] | null | 2024-05-03T02:53:08Z | ---

base_model: TIGER-Lab/Mantis-8B-siglip-llama3-pretraind

tags:

- multimodal

- lmm

- vlm

- llava

- siglip

- llama3

- mantis

model-index:

- name: Mantis-8B-siglip-llama3

results: []

license: llama3

datasets:

- TIGER-Lab/Mantis-Instruct

language:

- en

---

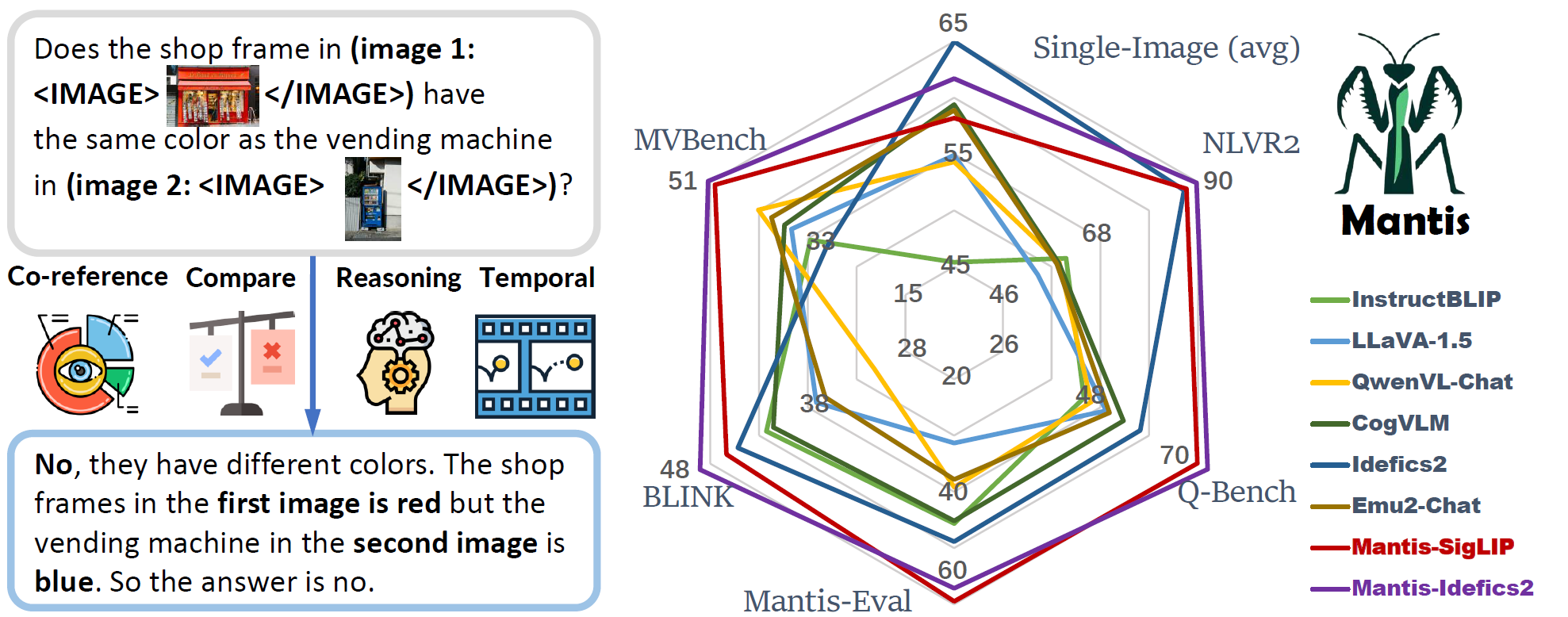

# 🔥 Mantis

[Paper](https://arxiv.org/abs/2405.01483) | [Website](https://tiger-ai-lab.github.io/Mantis/) | [Github](https://github.com/TIGER-AI-Lab/Mantis) | [Models](https://huggingface.co/collections/TIGER-Lab/mantis-6619b0834594c878cdb1d6e4) | [Demo](https://huggingface.co/spaces/TIGER-Lab/Mantis)

## Summary

- Mantis is an LLaMA-3 based LMM with **interleaved text and image as inputs**, train on Mantis-Instruct under academic-level resources (i.e. 36 hours on 16xA100-40G).

- Mantis is trained to have multi-image skills including co-reference, reasoning, comparing, temporal understanding.

- Mantis reaches the state-of-the-art performance on five multi-image benchmarks (NLVR2, Q-Bench, BLINK, MVBench, Mantis-Eval), and also maintain a strong single-image performance on par with CogVLM and Emu2.

## Multi-Image Performance

| Models | Size | Format | NLVR2 | Q-Bench | Mantis-Eval | BLINK | MVBench | Avg |

|--------------------|:----:|:--------:|:-----:|:-------:|:-----------:|:-----:|:-------:|:----:|

| GPT-4V | - | sequence | 88.80 | 76.52 | 62.67 | 51.14 | 43.50 | 64.5 |

| Open Source Models | | | | | | | | |

| Random | - | - | 48.93 | 40.20 | 23.04 | 38.09 | 27.30 | 35.5 |

| Kosmos2 | 1.6B | merge | 49.00 | 35.10 | 30.41 | 37.50 | 21.62 | 34.7 |

| LLaVA-v1.5 | 7B | merge | 53.88 | 49.32 | 31.34 | 37.13 | 36.00 | 41.5 |

| LLava-V1.6 | 7B | merge | 58.88 | 54.80 | 45.62 | 39.55 | 40.90 | 48.0 |

| Qwen-VL-Chat | 7B | merge | 58.72 | 45.90 | 39.17 | 31.17 | 42.15 | 43.4 |

| Fuyu | 8B | merge | 51.10 | 49.15 | 27.19 | 36.59 | 30.20 | 38.8 |

| BLIP-2 | 13B | merge | 59.42 | 51.20 | 49.77 | 39.45 | 31.40 | 46.2 |

| InstructBLIP | 13B | merge | 60.26 | 44.30 | 45.62 | 42.24 | 32.50 | 45.0 |

| CogVLM | 17B | merge | 58.58 | 53.20 | 45.16 | 41.54 | 37.30 | 47.2 |

| OpenFlamingo | 9B | sequence | 36.41 | 19.60 | 12.44 | 39.18 | 7.90 | 23.1 |

| Otter-Image | 9B | sequence | 49.15 | 17.50 | 14.29 | 36.26 | 15.30 | 26.5 |

| Idefics1 | 9B | sequence | 54.63 | 30.60 | 28.11 | 24.69 | 26.42 | 32.9 |

| VideoLLaVA | 7B | sequence | 56.48 | 45.70 | 35.94 | 38.92 | 44.30 | 44.3 |

| Emu2-Chat | 37B | sequence | 58.16 | 50.05 | 37.79 | 36.20 | 39.72 | 44.4 |

| Vila | 8B | sequence | 76.45 | 45.70 | 51.15 | 39.30 | 49.40 | 52.4 |

| Idefics2 | 8B | sequence | 86.87 | 57.00 | 48.85 | 45.18 | 29.68 | 53.5 |

| Mantis-CLIP | 8B | sequence | 84.66 | 66.00 | 55.76 | 47.06 | 48.30 | 60.4 |

| Mantis-SIGLIP | 8B | sequence | 87.43 | 69.90 | **59.45** | 46.35 | 50.15 | 62.7 |

| Mantis-Flamingo | 9B | sequence | 52.96 | 46.80 | 32.72 | 38.00 | 40.83 | 42.3 |

| Mantis-Idefics2 | 8B | sequence | **89.71** | **75.20** | 57.14 | **49.05** | **51.38** | **64.5** |

| $\Delta$ over SOTA | - | - | +2.84 | +18.20 | +8.30 | +3.87 | +1.98 | +11.0 |

## Single-Image Performance

| Model | Size | TextVQA | VQA | MMB | MMMU | OKVQA | SQA | MathVista | Avg |

|-----------------|:----:|:-------:|:----:|:----:|:----:|:-----:|:----:|:---------:|:----:|

| OpenFlamingo | 9B | 46.3 | 58.0 | 32.4 | 28.7 | 51.4 | 45.7 | 18.6 | 40.2 |

| Idefics1 | 9B | 39.3 | 68.8 | 45.3 | 32.5 | 50.4 | 51.6 | 21.1 | 44.1 |

| InstructBLIP | 7B | 33.6 | 75.2 | 38.3 | 30.6 | 45.2 | 70.6 | 24.4 | 45.4 |

| Yi-VL | 6B | 44.8 | 72.5 | 68.4 | 39.1 | 51.3 | 71.7 | 29.7 | 53.9 |

| Qwen-VL-Chat | 7B | 63.8 | 78.2 | 61.8 | 35.9 | 56.6 | 68.2 | 15.5 | 54.3 |

| LLaVA-1.5 | 7B | 58.2 | 76.6 | 64.8 | 35.3 | 53.4 | 70.4 | 25.6 | 54.9 |

| Emu2-Chat | 37B | <u>66.6</u> | **84.9** | 63.6 | 36.3 | **64.8** | 65.3 | 30.7 | 58.9 |

| CogVLM | 17B | **70.4** | <u>82.3</u> | 65.8 | 32.1 | <u>64.8</u> | 65.6 | 35.0 | 59.4 |

| Idefics2 | 8B | 70.4 | 79.1 | <u>75.7</u> | **43.0** | 53.5 | **86.5** | **51.4** | **65.7** |

| Mantis-CLIP | 8B | 56.4 | 73.0 | 66.0 | 38.1 | 53.0 | 73.8 | 31.7 | 56.0 |

| Mantis-SigLIP | 8B | 59.2 | 74.9 | 68.7 | 40.1 | 55.4 | 74.9 | 34.4 | 58.2 |

| Mantis-Idefics2 | 8B | 63.5 | 77.6 | 75.7 | <u>41.1</u> | 52.6 | <u>81.3</u> | <u>40.4</u> | <u>61.7</u> |

## How to use

### Installation

```bash

# This only installs minimum packages (torch, transformers, accelerate) for inference, no redundant packages are installed.

pip install git+https://github.com/TIGER-AI-Lab/Mantis.git

```

### Run example inference:

```python

from mantis.models.mllava import chat_mllava

from PIL import Image

import torch

image1 = "image1.jpg"

image2 = "image2.jpg"

images = [Image.open(image1), Image.open(image2)]

# load processor and model

from mantis.models.mllava import MLlavaProcessor, LlavaForConditionalGeneration

processor = MLlavaProcessor.from_pretrained("TIGER-Lab/Mantis-8B-siglip-llama3")

attn_implementation = None # or "flash_attention_2"

model = LlavaForConditionalGeneration.from_pretrained("TIGER-Lab/Mantis-8B-siglip-llama3", device_map="cuda", torch_dtype=torch.bfloat16, attn_implementation=attn_implementation)

generation_kwargs = {

"max_new_tokens": 1024,

"num_beams": 1,

"do_sample": False

}

# chat

text = "Describe the difference of <image> and <image> as much as you can."

response, history = chat_mllava(text, images, model, processor, **generation_kwargs)

print("USER: ", text)

print("ASSISTANT: ", response)

text = "How many wallets are there in image 1 and image 2 respectively?"

response, history = chat_mllava(text, images, model, processor, history=history, **generation_kwargs)

print("USER: ", text)

print("ASSISTANT: ", response)

"""

USER: Describe the difference of <image> and <image> as much as you can.

ASSISTANT: The second image has more variety in terms of colors and designs. While the first image only shows two brown leather pouches, the second image features four different pouches in various colors and designs, including a purple one with a gold coin, a red one with a gold coin, a black one with a gold coin, and a brown one with a gold coin. This variety makes the second image more visually interesting and dynamic.

USER: How many wallets are there in image 1 and image 2 respectively?

ASSISTANT: There are two wallets in image 1, and four wallets in image 2.

"""

```

### Training

See [mantis/train](https://github.com/TIGER-AI-Lab/Mantis/tree/main/mantis/train) for details

### Evaluation

See [mantis/benchmark](https://github.com/TIGER-AI-Lab/Mantis/tree/main/mantis/benchmark) for details

**Please cite our paper or give a star to out Github repo if you find this model useful**

## Citation

```

@inproceedings{Jiang2024MANTISIM,

title={MANTIS: Interleaved Multi-Image Instruction Tuning},

author={Dongfu Jiang and Xuan He and Huaye Zeng and Cong Wei and Max W.F. Ku and Qian Liu and Wenhu Chen},

publisher={arXiv2405.01483}

year={2024},

}

``` |

facebook/wav2vec2-large-robust-ft-libri-960h | facebook | 2023-06-23T16:47:23Z | 9,507 | 10 | transformers | [

"transformers",

"pytorch",

"safetensors",

"wav2vec2",

"automatic-speech-recognition",

"speech",

"audio",

"en",

"dataset:libri_light",

"dataset:common_voice",

"dataset:switchboard",

"dataset:fisher",

"dataset:librispeech_asr",

"arxiv:2104.01027",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | automatic-speech-recognition | 2022-03-02T23:29:05Z | ---

language: en

datasets:

- libri_light

- common_voice

- switchboard

- fisher

- librispeech_asr

tags:

- speech

- audio

- automatic-speech-recognition

widget:

- example_title: Librispeech sample 1

src: https://cdn-media.huggingface.co/speech_samples/sample1.flac

- example_title: Librispeech sample 2

src: https://cdn-media.huggingface.co/speech_samples/sample2.flac

license: apache-2.0

---

# Wav2Vec2-Large-Robust finetuned on Librispeech

[Facebook's Wav2Vec2](https://ai.facebook.com/blog/wav2vec-20-learning-the-structure-of-speech-from-raw-audio/).

This model is a fine-tuned version of the [wav2vec2-large-robust](https://huggingface.co/facebook/wav2vec2-large-robust) model.

It has been pretrained on:

- [Libri-Light](https://github.com/facebookresearch/libri-light): open-source audio books from the LibriVox project; clean, read-out audio data

- [CommonVoice](https://huggingface.co/datasets/common_voice): crowd-source collected audio data; read-out text snippets

- [Switchboard](https://catalog.ldc.upenn.edu/LDC97S62): telephone speech corpus; noisy telephone data

- [Fisher](https://catalog.ldc.upenn.edu/LDC2004T19): conversational telephone speech; noisy telephone data

and subsequently been finetuned on 960 hours of

- [Librispeech](https://huggingface.co/datasets/librispeech_asr): open-source read-out audio data.

When using the model make sure that your speech input is also sampled at 16Khz.

[Paper Robust Wav2Vec2](https://arxiv.org/abs/2104.01027)

Authors: Wei-Ning Hsu, Anuroop Sriram, Alexei Baevski, Tatiana Likhomanenko, Qiantong Xu, Vineel Pratap, Jacob Kahn, Ann Lee, Ronan Collobert, Gabriel Synnaeve, Michael Auli

**Abstract**

Self-supervised learning of speech representations has been a very active research area but most work is focused on a single domain such as read audio books for which there exist large quantities of labeled and unlabeled data. In this paper, we explore more general setups where the domain of the unlabeled data for pre-training data differs from the domain of the labeled data for fine-tuning, which in turn may differ from the test data domain. Our experiments show that using target domain data during pre-training leads to large performance improvements across a variety of setups. On a large-scale competitive setup, we show that pre-training on unlabeled in-domain data reduces the gap between models trained on in-domain and out-of-domain labeled data by 66%-73%. This has obvious practical implications since it is much easier to obtain unlabeled target domain data than labeled data. Moreover, we find that pre-training on multiple domains improves generalization performance on domains not seen during training. Code and models will be made available at this https URL.

The original model can be found under https://github.com/pytorch/fairseq/tree/master/examples/wav2vec#wav2vec-20.

# Usage

To transcribe audio files the model can be used as a standalone acoustic model as follows:

```python

from transformers import Wav2Vec2Processor, Wav2Vec2ForCTC

from datasets import load_dataset

import soundfile as sf

import torch

# load model and processor

processor = Wav2Vec2Processor.from_pretrained("facebook/wav2vec2-large-robust-ft-libri-960h")

model = Wav2Vec2ForCTC.from_pretrained("facebook/wav2vec2-large-robust-ft-libri-960h")

# define function to read in sound file

def map_to_array(batch):

speech, _ = sf.read(batch["file"])

batch["speech"] = speech

return batch

# load dummy dataset and read soundfiles

ds = load_dataset("patrickvonplaten/librispeech_asr_dummy", "clean", split="validation")

ds = ds.map(map_to_array)

# tokenize

input_values = processor(ds["speech"][:2], return_tensors="pt", padding="longest").input_values # Batch size 1

# retrieve logits

logits = model(input_values).logits

# take argmax and decode

predicted_ids = torch.argmax(logits, dim=-1)

transcription = processor.batch_decode(predicted_ids)

``` |

allenai/tulu-v2.5-13b-preference-mix-rm | allenai | 2024-06-14T02:04:27Z | 9,487 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-classification",

"en",

"dataset:allenai/tulu-2.5-preference-data",

"dataset:allenai/tulu-v2-sft-mixture",

"arxiv:2406.09279",

"base_model:allenai/tulu-2-13b",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-classification | 2024-06-11T20:11:59Z | ---

model-index:

- name: tulu-v2.5-13b-preference-mix-rm

results: []

datasets:

- allenai/tulu-2.5-preference-data

- allenai/tulu-v2-sft-mixture

language:

- en

base_model: allenai/tulu-2-13b

license: apache-2.0

---

<center>

<img src="https://huggingface.co/datasets/allenai/blog-images/resolve/main/tulu-2.5/tulu_25_banner.png" alt="Tulu 2.5 banner image" width="800px"/>

</center>

# Model Card for Tulu V2.5 13B RM - Preference Mix

Tulu is a series of language models that are trained to act as helpful assistants.

Tulu V2.5 is a series of models trained using DPO and PPO starting from the [Tulu 2 suite](https://huggingface.co/collections/allenai/tulu-v2-suite-6551b56e743e6349aab45101).

This is a reward model used for PPO training trained on our preference data mixture.

It was used to train [this](https://huggingface.co/allenai/tulu-v2.5-ppo-13b-uf-mean-13b-mix-rm) model.

For more details, read the paper:

[Unpacking DPO and PPO: Disentangling Best Practices for Learning from Preference Feedback](https://arxiv.org/abs/2406.09279).

## .Model description

- **Model type:** One model belonging to a suite of RLHF tuned chat models on a mix of publicly available, synthetic and human-created datasets.

- **Language(s) (NLP):** English

- **License:** Apache 2.0.

- **Finetuned from model:** [meta-llama/Llama-2-13b-hf](https://huggingface.co/meta-llama/Llama-2-13b-hf)

### Model Sources

- **Repository:** https://github.com/allenai/open-instruct

- **Dataset:** Data used to train this model can be found [here](https://huggingface.co/datasets/allenai/tulu-2.5-preference-data) - specifically the `preference_big_mixture` split.

- **Model Family:** The collection of related models can be found [here](https://huggingface.co/collections/allenai/tulu-v25-suite-66676520fd578080e126f618).

## Input Format

The model is trained to use the following format (note the newlines):

```

<|user|>

Your message here!

<|assistant|>

```

For best results, format all inputs in this manner. **Make sure to include a newline after `<|assistant|>`, this can affect generation quality quite a bit.**

We have included a [chat template](https://huggingface.co/docs/transformers/main/en/chat_templating) in the tokenizer implementing this template.

## Intended uses & limitations

The model was initially fine-tuned on a filtered and preprocessed of the [Tulu V2 mix dataset](https://huggingface.co/datasets/allenai/tulu-v2-sft-mixture), which contains a diverse range of human created instructions and synthetic dialogues generated primarily by other LLMs.

We then further trained the model with a [Jax RM trainer](https://github.com/hamishivi/EasyLM/blob/main/EasyLM/models/llama/llama_train_rm.py) built on [EasyLM](https://github.com/young-geng/EasyLM) on the dataset mentioned above.

This model is meant as a research artefact.

### Training hyperparameters

The following hyperparameters were used during PPO training:

- learning_rate: 1e-06

- total_train_batch_size: 512

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear cooldown to 1e-05.

- lr_scheduler_warmup_ratio: 0.03

- num_epochs: 1.0

## Citation

If you find Tulu 2.5 is useful in your work, please cite it with:

```

@misc{ivison2024unpacking,

title={{Unpacking DPO and PPO: Disentangling Best Practices for Learning from Preference Feedback}},

author={{Hamish Ivison and Yizhong Wang and Jiacheng Liu and Ellen Wu and Valentina Pyatkin and Nathan Lambert and Yejin Choi and Noah A. Smith and Hannaneh Hajishirzi}}

year={2024},

eprint={2406.09279},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

|

mradermacher/Llama-3-8B-WizardLM-196K-GGUF | mradermacher | 2024-06-28T13:38:46Z | 9,485 | 0 | transformers | [

"transformers",

"gguf",

"axolotl",

"generated_from_trainer",

"en",

"base_model:Magpie-Align/Llama-3-8B-WizardLM-196K",

"license:llama3",

"endpoints_compatible",

"region:us"

] | null | 2024-06-27T01:45:28Z | ---

base_model: Magpie-Align/Llama-3-8B-WizardLM-196K

language:

- en

library_name: transformers

license: llama3

quantized_by: mradermacher

tags:

- axolotl

- generated_from_trainer

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

static quants of https://huggingface.co/Magpie-Align/Llama-3-8B-WizardLM-196K

<!-- provided-files -->

weighted/imatrix quants are available at https://huggingface.co/mradermacher/Llama-3-8B-WizardLM-196K-i1-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/Llama-3-8B-WizardLM-196K-GGUF/resolve/main/Llama-3-8B-WizardLM-196K.Q2_K.gguf) | Q2_K | 3.3 | |

| [GGUF](https://huggingface.co/mradermacher/Llama-3-8B-WizardLM-196K-GGUF/resolve/main/Llama-3-8B-WizardLM-196K.IQ3_XS.gguf) | IQ3_XS | 3.6 | |

| [GGUF](https://huggingface.co/mradermacher/Llama-3-8B-WizardLM-196K-GGUF/resolve/main/Llama-3-8B-WizardLM-196K.Q3_K_S.gguf) | Q3_K_S | 3.8 | |

| [GGUF](https://huggingface.co/mradermacher/Llama-3-8B-WizardLM-196K-GGUF/resolve/main/Llama-3-8B-WizardLM-196K.IQ3_S.gguf) | IQ3_S | 3.8 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/Llama-3-8B-WizardLM-196K-GGUF/resolve/main/Llama-3-8B-WizardLM-196K.IQ3_M.gguf) | IQ3_M | 3.9 | |

| [GGUF](https://huggingface.co/mradermacher/Llama-3-8B-WizardLM-196K-GGUF/resolve/main/Llama-3-8B-WizardLM-196K.Q3_K_M.gguf) | Q3_K_M | 4.1 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/Llama-3-8B-WizardLM-196K-GGUF/resolve/main/Llama-3-8B-WizardLM-196K.Q3_K_L.gguf) | Q3_K_L | 4.4 | |

| [GGUF](https://huggingface.co/mradermacher/Llama-3-8B-WizardLM-196K-GGUF/resolve/main/Llama-3-8B-WizardLM-196K.IQ4_XS.gguf) | IQ4_XS | 4.6 | |

| [GGUF](https://huggingface.co/mradermacher/Llama-3-8B-WizardLM-196K-GGUF/resolve/main/Llama-3-8B-WizardLM-196K.Q4_K_S.gguf) | Q4_K_S | 4.8 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Llama-3-8B-WizardLM-196K-GGUF/resolve/main/Llama-3-8B-WizardLM-196K.Q4_K_M.gguf) | Q4_K_M | 5.0 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Llama-3-8B-WizardLM-196K-GGUF/resolve/main/Llama-3-8B-WizardLM-196K.Q5_K_S.gguf) | Q5_K_S | 5.7 | |

| [GGUF](https://huggingface.co/mradermacher/Llama-3-8B-WizardLM-196K-GGUF/resolve/main/Llama-3-8B-WizardLM-196K.Q5_K_M.gguf) | Q5_K_M | 5.8 | |

| [GGUF](https://huggingface.co/mradermacher/Llama-3-8B-WizardLM-196K-GGUF/resolve/main/Llama-3-8B-WizardLM-196K.Q6_K.gguf) | Q6_K | 6.7 | very good quality |

| [GGUF](https://huggingface.co/mradermacher/Llama-3-8B-WizardLM-196K-GGUF/resolve/main/Llama-3-8B-WizardLM-196K.Q8_0.gguf) | Q8_0 | 8.6 | fast, best quality |

| [GGUF](https://huggingface.co/mradermacher/Llama-3-8B-WizardLM-196K-GGUF/resolve/main/Llama-3-8B-WizardLM-196K.f16.gguf) | f16 | 16.2 | 16 bpw, overkill |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

PrunaAI/mattshumer-Llama-3-8B-16K-GGUF-smashed | PrunaAI | 2024-06-28T21:52:07Z | 9,467 | 0 | null | [

"gguf",

"pruna-ai",

"region:us"

] | null | 2024-06-28T21:10:37Z | ---

thumbnail: "https://assets-global.website-files.com/646b351987a8d8ce158d1940/64ec9e96b4334c0e1ac41504_Logo%20with%20white%20text.svg"

metrics:

- memory_disk

- memory_inference

- inference_latency

- inference_throughput

- inference_CO2_emissions

- inference_energy_consumption

tags:

- pruna-ai

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<a href="https://www.pruna.ai/" target="_blank" rel="noopener noreferrer">

<img src="https://i.imgur.com/eDAlcgk.png" alt="PrunaAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</a>

</div>

<!-- header end -->

[](https://twitter.com/PrunaAI)

[](https://github.com/PrunaAI)

[](https://www.linkedin.com/company/93832878/admin/feed/posts/?feedType=following)

[](https://discord.com/invite/vb6SmA3hxu)

## This repo contains GGUF versions of the mattshumer/Llama-3-8B-16K model.

# Simply make AI models cheaper, smaller, faster, and greener!

- Give a thumbs up if you like this model!

- Contact us and tell us which model to compress next [here](https://www.pruna.ai/contact).

- Request access to easily compress your *own* AI models [here](https://z0halsaff74.typeform.com/pruna-access?typeform-source=www.pruna.ai).

- Read the documentations to know more [here](https://pruna-ai-pruna.readthedocs-hosted.com/en/latest/)

- Join Pruna AI community on Discord [here](https://discord.com/invite/vb6SmA3hxu) to share feedback/suggestions or get help.

**Frequently Asked Questions**

- ***How does the compression work?*** The model is compressed with GGUF.

- ***How does the model quality change?*** The quality of the model output might vary compared to the base model.

- ***What is the model format?*** We use GGUF format.

- ***What calibration data has been used?*** If needed by the compression method, we used WikiText as the calibration data.

- ***How to compress my own models?*** You can request premium access to more compression methods and tech support for your specific use-cases [here](https://z0halsaff74.typeform.com/pruna-access?typeform-source=www.pruna.ai).

# Downloading and running the models

You can download the individual files from the Files & versions section. Here is a list of the different versions we provide. For more info checkout [this chart](https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9) and [this guide](https://www.reddit.com/r/LocalLLaMA/comments/1ba55rj/overview_of_gguf_quantization_methods/):

| Quant type | Description |

|------------|--------------------------------------------------------------------------------------------|

| Q5_K_M | High quality, recommended. |

| Q5_K_S | High quality, recommended. |

| Q4_K_M | Good quality, uses about 4.83 bits per weight, recommended. |

| Q4_K_S | Slightly lower quality with more space savings, recommended. |

| IQ4_NL | Decent quality, slightly smaller than Q4_K_S with similar performance, recommended. |

| IQ4_XS | Decent quality, smaller than Q4_K_S with similar performance, recommended. |

| Q3_K_L | Lower quality but usable, good for low RAM availability. |

| Q3_K_M | Even lower quality. |

| IQ3_M | Medium-low quality, new method with decent performance comparable to Q3_K_M. |

| IQ3_S | Lower quality, new method with decent performance, recommended over Q3_K_S quant, same size with better performance. |

| Q3_K_S | Low quality, not recommended. |

| IQ3_XS | Lower quality, new method with decent performance, slightly better than Q3_K_S. |

| Q2_K | Very low quality but surprisingly usable. |

## How to download GGUF files ?

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

* LM Studio

* LoLLMS Web UI

* Faraday.dev

- **Option A** - Downloading in `text-generation-webui`:

- **Step 1**: Under Download Model, you can enter the model repo: mattshumer-Llama-3-8B-16K-GGUF-smashed and below it, a specific filename to download, such as: phi-2.IQ3_M.gguf.

- **Step 2**: Then click Download.

- **Option B** - Downloading on the command line (including multiple files at once):

- **Step 1**: We recommend using the `huggingface-hub` Python library:

```shell

pip3 install huggingface-hub

```

- **Step 2**: Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download mattshumer-Llama-3-8B-16K-GGUF-smashed Llama-3-8B-16K.IQ3_M.gguf --local-dir . --local-dir-use-symlinks False

```

<details>

<summary>More advanced huggingface-cli download usage (click to read)</summary>

Alternatively, you can also download multiple files at once with a pattern:

```shell

huggingface-cli download mattshumer-Llama-3-8B-16K-GGUF-smashed --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download mattshumer-Llama-3-8B-16K-GGUF-smashed Llama-3-8B-16K.IQ3_M.gguf --local-dir . --local-dir-use-symlinks False

```

Windows Command Line users: You can set the environment variable by running `set HF_HUB_ENABLE_HF_TRANSFER=1` before the download command.

</details>

<!-- README_GGUF.md-how-to-download end -->

<!-- README_GGUF.md-how-to-run start -->

## How to run model in GGUF format?

- **Option A** - Introductory example with `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell