| modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |

|---|---|---|---|---|---|---|---|---|---|

mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF | mradermacher | 2024-06-14T01:41:27Z | 5,266 | 1 | transformers | [

"transformers",

"gguf",

"mergekit",

"merge",

"not-for-all-audiences",

"en",

"base_model:invisietch/EtherealRainbow-v0.2-8B",

"license:llama3",

"endpoints_compatible",

"region:us"

] | null | 2024-06-13T22:47:43Z | ---

base_model: invisietch/EtherealRainbow-v0.2-8B

language:

- en

library_name: transformers

license: llama3

quantized_by: mradermacher

tags:

- mergekit

- merge

- not-for-all-audiences

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

weighted/imatrix quants of https://huggingface.co/invisietch/EtherealRainbow-v0.2-8B

<!-- provided-files -->

static quants are available at https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including how to concatenate multi-part files.
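As a rough illustration of the concatenation step mentioned above, here is a minimal Python sketch. It assumes the parts are plain byte-wise splits that only need to be joined in order; the function name and any file names passed to it are hypothetical and not taken from this repository.

```python
import shutil
from pathlib import Path


def concatenate_parts(part_paths, output_path):
    """Join byte-wise split files, in the order given, into one file.

    Assumption: the parts are raw slices of the original file, so joining
    them byte for byte restores it.
    """
    output_path = Path(output_path)
    with output_path.open("wb") as out:
        for part in part_paths:
            with Path(part).open("rb") as src:
                # Stream in chunks so large model files are never fully in memory.
                shutil.copyfileobj(src, out)
    return output_path
```

The order of `part_paths` matters: pass the parts in their numbered order, and check the resulting file size against the sum of the part sizes before loading it.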

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-IQ1_S.gguf) | i1-IQ1_S | 2.1 | for the desperate |

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-IQ1_M.gguf) | i1-IQ1_M | 2.3 | mostly desperate |

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-IQ2_XXS.gguf) | i1-IQ2_XXS | 2.5 | |

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-IQ2_XS.gguf) | i1-IQ2_XS | 2.7 | |

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-IQ2_S.gguf) | i1-IQ2_S | 2.9 | |

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-IQ2_M.gguf) | i1-IQ2_M | 3.0 | |

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-Q2_K.gguf) | i1-Q2_K | 3.3 | IQ3_XXS probably better |

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-IQ3_XXS.gguf) | i1-IQ3_XXS | 3.4 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-IQ3_XS.gguf) | i1-IQ3_XS | 3.6 | |

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-Q3_K_S.gguf) | i1-Q3_K_S | 3.8 | IQ3_XS probably better |

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-IQ3_S.gguf) | i1-IQ3_S | 3.8 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-IQ3_M.gguf) | i1-IQ3_M | 3.9 | |

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-Q3_K_M.gguf) | i1-Q3_K_M | 4.1 | IQ3_S probably better |

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-Q3_K_L.gguf) | i1-Q3_K_L | 4.4 | IQ3_M probably better |

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-IQ4_XS.gguf) | i1-IQ4_XS | 4.5 | |

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-Q4_0.gguf) | i1-Q4_0 | 4.8 | fast, low quality |

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-Q4_K_S.gguf) | i1-Q4_K_S | 4.8 | optimal size/speed/quality |

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-Q4_K_M.gguf) | i1-Q4_K_M | 5.0 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-Q5_K_S.gguf) | i1-Q5_K_S | 5.7 | |

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-Q5_K_M.gguf) | i1-Q5_K_M | 5.8 | |

| [GGUF](https://huggingface.co/mradermacher/EtherealRainbow-v0.2-8B-i1-GGUF/resolve/main/EtherealRainbow-v0.2-8B.i1-Q6_K.gguf) | i1-Q6_K | 6.7 | practically like static Q6_K |

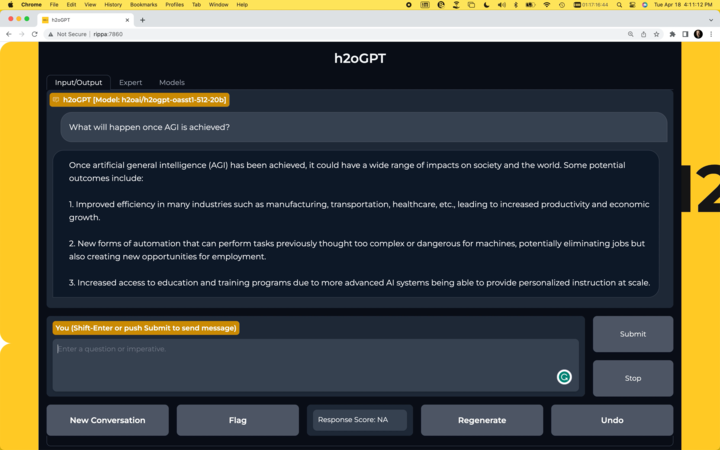

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

mradermacher/Autolycus-Mistral_7B-i1-GGUF | mradermacher | 2024-06-11T14:32:48Z | 5,265 | 0 | transformers | [

"transformers",

"gguf",

"mistral",

"instruct",

"finetune",

"chatml",

"gpt4",

"en",

"base_model:FPHam/Autolycus-Mistral_7B",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2024-06-11T13:22:00Z | ---

base_model: FPHam/Autolycus-Mistral_7B

language:

- en

library_name: transformers

license: apache-2.0

quantized_by: mradermacher

tags:

- mistral

- instruct

- finetune

- chatml

- gpt4

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: nicoboss -->

weighted/imatrix quants of https://huggingface.co/FPHam/Autolycus-Mistral_7B

<!-- provided-files -->

static quants are available at https://huggingface.co/mradermacher/Autolycus-Mistral_7B-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-IQ1_S.gguf) | i1-IQ1_S | 1.7 | for the desperate |

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-IQ1_M.gguf) | i1-IQ1_M | 1.9 | mostly desperate |

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-IQ2_XXS.gguf) | i1-IQ2_XXS | 2.1 | |

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-IQ2_XS.gguf) | i1-IQ2_XS | 2.3 | |

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-IQ2_S.gguf) | i1-IQ2_S | 2.4 | |

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-IQ2_M.gguf) | i1-IQ2_M | 2.6 | |

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-Q2_K.gguf) | i1-Q2_K | 2.8 | IQ3_XXS probably better |

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-IQ3_XXS.gguf) | i1-IQ3_XXS | 2.9 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-IQ3_XS.gguf) | i1-IQ3_XS | 3.1 | |

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-Q3_K_S.gguf) | i1-Q3_K_S | 3.3 | IQ3_XS probably better |

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-IQ3_S.gguf) | i1-IQ3_S | 3.3 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-IQ3_M.gguf) | i1-IQ3_M | 3.4 | |

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-Q3_K_M.gguf) | i1-Q3_K_M | 3.6 | IQ3_S probably better |

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-Q3_K_L.gguf) | i1-Q3_K_L | 3.9 | IQ3_M probably better |

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-IQ4_XS.gguf) | i1-IQ4_XS | 4.0 | |

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-Q4_0.gguf) | i1-Q4_0 | 4.2 | fast, low quality |

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-Q4_K_S.gguf) | i1-Q4_K_S | 4.2 | optimal size/speed/quality |

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-Q4_K_M.gguf) | i1-Q4_K_M | 4.5 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-Q5_K_S.gguf) | i1-Q5_K_S | 5.1 | |

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-Q5_K_M.gguf) | i1-Q5_K_M | 5.2 | |

| [GGUF](https://huggingface.co/mradermacher/Autolycus-Mistral_7B-i1-GGUF/resolve/main/Autolycus-Mistral_7B.i1-Q6_K.gguf) | i1-Q6_K | 6.0 | practically like static Q6_K |

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time. Additional thanks to [@nicoboss](https://huggingface.co/nicoboss) for giving me access to his hardware for calculating the imatrix for these quants.

<!-- end -->

|

Yntec/RetroLife | Yntec | 2024-03-09T09:15:42Z | 5,262 | 4 | diffusers | [

"diffusers",

"safetensors",

"Photorealistic",

"Retro",

"Base model",

"Abstract",

"Elldreths",

"Fusch",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

] | text-to-image | 2024-03-05T13:51:24Z | ---

license: creativeml-openrail-m

library_name: diffusers

pipeline_tag: text-to-image

tags:

- Photorealistic

- Retro

- Base model

- Abstract

- Elldreths

- Fusch

- stable-diffusion

- stable-diffusion-diffusers

- diffusers

- text-to-image

---

# Retro Life

A mix of Elldreth's Retro Mix and Real Life 2.0. The old version hosted here has been renamed to RetroLifeAlpha; the new one improves the anatomy. Original pages:

https://huggingface.co/Yntec/RealLife

https://huggingface.co/Yntec/ElldrethsRetroMix

Samples and prompts:

(Click for larger)

Top left: Stock washed out worn Retro colors TV movie TRAILER. Closeup Santa Claus and daughters enjoying enchiladas with tacos. sitting with a pretty cute little girl, Art Christmas Theme by Gil_Elvgren and Haddon_Sundblom. Posing

Top right: Retropunk painting of a rainbow fantasy phoenix by Bnhr, fire eyes, nature, grass, tree, outdoors, forest, animal focus, blue eyes

Bottom left: vintage colors photo of view from diagonally above, Heidi Bloom, central adjustment, skinny young northern european female, long reddish ponytail hair, real hair movement, elongated head, beautiful face, grey eyes, thin bowed eyebrows, snub nose, gentle lower jaw line, narrow chin, da vinci lips, slightly smiling with parted lips, curious friendly facial expression, small, slim narrow tapered hips

Bottom right: 1977 kodachrome camera transparency, dramatic lighting film grain, PARTY HARD BACKGROUND, pretty cute little girl in Zone 51, Extraterrestrial, Alien Space Ship Delivering Christmas Presents, Alien Space Ship Decorated With Garlands and Christmas Balls, Snowstorm

# Recipes:

- SuperMerger Weight sum MBW 0,1,1,1,1,1,1,0,0,0,0,0,0,1,1,1,1,1,1,1,0,0,0,0,0,0

Model A: Real Life 2.0

Model B: ElldrethsRetroMix

Output: RetroLifeAlpha

- SuperMerger Weight sum MBW 0,1,1,1,1,1,0,0,0,0,0,0,0,1,1,1,1,1,1,1,0,0,0,0,0,1

Model A: Real Life 2.0

Model B: ElldrethsRetroMix

Output: RetroLife |

Remek/Llama-3-8B-Omnibus-1-PL-v01-INSTRUCT-GGUF | Remek | 2024-05-12T13:10:22Z | 5,257 | 3 | null | [

"gguf",

"text-generation",

"pl",

"en",

"region:us"

] | text-generation | 2024-04-22T20:25:02Z | ---

language:

- pl

- en

pipeline_tag: text-generation

---

# Llama-3-8B-Omnibus-1-PL-v01-INSTRUCT-GGUF

This repository contains conversions of the Llama-3-8B-Omnibus-1-PL-v01-INSTRUCT model to the GGUF format: Q8_0 and Q4_K_M. It has been tested in two runtime environments:

#### LM Studio

Version 0.2.20 or later. Be sure to select the Llama 3 prompt format (!) (the Preset option).

#### Ollama

Version 0.1.32. Ollama is configured through a Modelfile. Note: do not change SYSTEM, even if you want to chat in Polish. Leave the content of the system field in English, as it is.

```

FROM ./Llama-3-Omnibus-PL-v01-GGUF.Q4_K_M.gguf

TEMPLATE """{{ if .System }}<|start_header_id|>system<|end_header_id|>

{{ .System }}<|eot_id|>{{ end }}{{ if .Prompt }}<|start_header_id|>user<|end_header_id|>

{{ .Prompt }}<|eot_id|>{{ end }}<|start_header_id|>assistant<|end_header_id|>

{{ .Response }}<|eot_id|>"""

SYSTEM """You are a helpful, smart, kind, and efficient AI assistant. You always fulfill the user's requests to the best of your ability."""

PARAMETER num_ctx 8192

PARAMETER num_gpu 99

```

The repository contains the Polish-language version of the Meta Llama-3-8B-Omnibus-1-PL-v01 model. The model was created by fine-tuning the Llama-3-8B base model on the Omnibus-1-PL instruction dataset (which I created for my own experiments with fine-tuning models in Polish). Training parameters are detailed in the Training section. The goal of this experiment was to check whether Llama-3-8B can be coaxed into conversing fluently in Polish (the original 8B instruct model has trouble with this and strongly prefers to converse in English).

<img src="Llama-3-8B-PL-small.jpg" width="420" />

Note!

* The model is NOT CENSORED. This is a version to play with. It has not been tamed.

* The model will be developed further, because (a) I am experimenting with successive versions of the dataset, and (b) the model is a great base for testing various fine-tuning techniques (LoRA, QLoRA; DPO, ORPO, etc.).

* I released it spontaneously so that users could use it and check the quality of Llama 3 in the context of the Polish language.

* After learning that the base was trained on 15T tokens (only 5% non-English), I decided it was a great model for fine-tuning. A light additional training pass using continued pretraining might yield even greater gains.

### Model name encoding

* Base model name: Llama-3-8B

* Dataset name: Omnibus-1

* Language version: PL (Polish)

* Model version: v01

### Dataset

Omnibus-1 is a collection of Polish instructions (100% Polish context: facts, people, and places set in Poland) that was 100% synthetically generated. It contains instructions from categories such as mathematics, writing skills, dialogues, medical topics, logic puzzles, translations, etc. It was created as part of my work on evaluating model quality in the context of the Polish language. It makes it possible to fine-tune a model and check how readily the model speaks our native language. The dataset currently contains 75,000 instructions. It will be continuously improved and perhaps released in the future (once I decide it is sufficiently complete and covers a broad spectrum of topics and skills). The dataset is 100% generated using other LLMs (GPT3.5, GPT4, Mixtral, etc.).

### Conversation template

The conversation template is the original Llama 3 version.

```

<|start_header_id|>system<|end_header_id|>

{System}

<|eot_id|>

<|start_header_id|>user<|end_header_id|>

{User}

<|eot_id|><|start_header_id|>assistant<|end_header_id|>

{Assistant}

```
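For readers who assemble prompts by hand, the sketch below builds a single-turn prompt in the same shape as the TEMPLATE from the Ollama Modelfile above. It assumes the standard Llama 3 layout (the role name inside the header tokens, a blank line after each header) and leaves out the beginning-of-text token, which runtimes usually add themselves. The function name is hypothetical.

```python
DEFAULT_SYSTEM = (
    "You are a helpful, smart, kind, and efficient AI assistant. "
    "You always fulfill the user's requests to the best of your ability."
)


def build_llama3_prompt(user, system=DEFAULT_SYSTEM):
    """Format one system + user turn and open the assistant turn.

    Assumption: standard Llama 3 chat layout; verify against your runtime.
    """
    parts = []
    if system:
        parts.append(
            f"<|start_header_id|>system<|end_header_id|>\n\n{system}<|eot_id|>"
        )
    parts.append(f"<|start_header_id|>user<|end_header_id|>\n\n{user}<|eot_id|>")
    # Leave the assistant header open so the model generates the reply.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)
```

The system text stays in English, as recommended above, while the user message can be in Polish.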

### Training

Training hyperparameters are detailed below:

* learning_rate: 2e-05

* train_batch_size: 8

* eval_batch_size: 8

* seed: 42

* distributed_type: single-GPU (Nvidia A6000 Ada)

* num_devices: 1

* gradient_accumulation_steps: 4

* optimizer: adamw_8bit

* lr_scheduler_type: linear

* lr_scheduler_warmup_steps: 5

* num_epochs: 1

* QLoRa - 4bit: rank 64, alpha 128
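The hyperparameters above imply an effective batch size and a rough step count, worked out in the short sketch below. It assumes that the whole 75,000-instruction dataset described earlier was used for the single epoch, which the card does not state explicitly.

```python
import math

train_batch_size = 8
gradient_accumulation_steps = 4
num_devices = 1
dataset_size = 75_000  # assumption: every instruction is used for training

# Samples that contribute to one optimizer update.
effective_batch_size = train_batch_size * gradient_accumulation_steps * num_devices

# Optimizer updates in one pass over the data (the last batch may be partial).
steps_per_epoch = math.ceil(dataset_size / effective_batch_size)

print(effective_batch_size, steps_per_epoch)  # 32 2344
```

With only 5 warmup steps, the linear schedule spends nearly all of those updates decaying from the peak learning rate of 2e-05.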

#### Unsloth

<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/made with unsloth.png" width="200px" align="center" />

[Unsloth](https://unsloth.ai), the tool with which this model was created.

### License

Non-commercial use only (because of the dataset, which was synthetically generated using the GPT4 and GPT3.5 models), together with the Llama3 license (please review the details of the license).

|

lcw99/zephykor-ko-beta-7b-chang | lcw99 | 2023-12-25T01:17:13Z | 5,252 | 1 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"conversational",

"ko",

"en",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-11-27T00:46:19Z | ---

language:

- ko

- en

---

* Under construction, be careful. |

RLHFlow/pair-preference-model-LLaMA3-8B | RLHFlow | 2024-05-24T07:05:10Z | 5,250 | 26 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"arxiv:2405.07863",

"license:llama3",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-04-23T04:51:16Z | ---

license: llama3

---

This preference model is trained from [LLaMA3-8B-it](https://huggingface.co/meta-llama/Meta-Llama-3-8B-Instruct) with the training script at [Reward Modeling](https://github.com/RLHFlow/RLHF-Reward-Modeling/tree/pm_dev/pair-pm).

The dataset is RLHFlow/pair_preference_model_dataset. It achieves Chat 98.6, Chat-Hard 65.8, Safety 89.6, and Reasoning 94.9 on RewardBench.

See our paper [RLHF Workflow: From Reward Modeling to Online RLHF](https://arxiv.org/abs/2405.07863) for more details of this model.

## Serving the RM

Here is an example of using the preference model to rank a pair of responses. For n>2 responses, it is recommended to use a tournament-style ranking strategy to find the best response, so that the complexity is linear in n.

```python

import numpy as np
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

# Hub ID of this repository; replace with a local path if you have one.
model_name = "RLHFlow/pair-preference-model-LLaMA3-8B"

device = 0
model = AutoModelForCausalLM.from_pretrained(model_name,
    torch_dtype=torch.bfloat16, attn_implementation="flash_attention_2").cuda()
tokenizer = AutoTokenizer.from_pretrained(model_name, use_fast=True)
# A second tokenizer with a plain chat template, used only to render the context.
tokenizer_plain = AutoTokenizer.from_pretrained(model_name, use_fast=True)
tokenizer_plain.chat_template = "\n{% for message in messages %}{% if loop.index0 % 2 == 0 %}\n\n<turn> user\n {{ message['content'] }}{% else %}\n\n<turn> assistant\n {{ message['content'] }}{% endif %}{% endfor %}\n\n\n"

prompt_template = "[CONTEXT] {context} [RESPONSE A] {response_A} [RESPONSE B] {response_B} \n"
# The model answers with a single token, "A" or "B".
token_id_A = tokenizer.encode("A", add_special_tokens=False)
token_id_B = tokenizer.encode("B", add_special_tokens=False)
assert len(token_id_A) == 1 and len(token_id_B) == 1
token_id_A = token_id_A[0]
token_id_B = token_id_B[0]
temperature = 1.0

model.eval()
response_chosen = "BBBB"
response_rejected = "CCCC"

## We can also handle multi-turn conversation.
instruction = [{"role": "user", "content": ...},
               {"role": "assistant", "content": ...},
               {"role": "user", "content": ...},
               ]
context = tokenizer_plain.apply_chat_template(instruction, tokenize=False)
responses = [response_chosen, response_rejected]
probs_chosen = []

for chosen_position in [0, 1]:
    # we swap order to mitigate position bias
    response_A = responses[chosen_position]
    response_B = responses[1 - chosen_position]
    prompt = prompt_template.format(context=context, response_A=response_A, response_B=response_B)
    message = [
        {"role": "user", "content": prompt},
    ]

    input_ids = tokenizer.encode(tokenizer.apply_chat_template(message, tokenize=False).replace(tokenizer.bos_token, ""), return_tensors='pt', add_special_tokens=False).cuda()

    with torch.no_grad():
        output = model(input_ids)
    logit_A = output.logits[0, -1, token_id_A].item()
    logit_B = output.logits[0, -1, token_id_B].item()
    # take softmax to get the probability; using numpy
    Z = np.exp(logit_A / temperature) + np.exp(logit_B / temperature)
    logit_chosen = [logit_A, logit_B][chosen_position]
    prob_chosen = np.exp(logit_chosen / temperature) / Z
    probs_chosen.append(prob_chosen)

# Average over both orderings; 1.0 means the chosen response was preferred.
avg_prob_chosen = np.mean(probs_chosen)
correct = 0.5 if avg_prob_chosen == 0.5 else float(avg_prob_chosen > 0.5)
print(correct)

```
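For more than two responses, the tournament-style strategy mentioned above can be sketched as a sequential knockout pass, which needs n-1 pairwise comparisons. This is only an illustration: the card does not spell out the exact tournament scheme, and `prob_first_beats_second` is a hypothetical callable standing in for the order-averaged preference probability computed in the example above.

```python
def tournament_best(responses, prob_first_beats_second):
    """Return the index of the response that survives a knockout pass.

    prob_first_beats_second(a, b) is a hypothetical callable that returns the
    probability that response a is preferred over response b.
    """
    if not responses:
        raise ValueError("need at least one response")
    best = 0
    for i in range(1, len(responses)):
        # The current winner defends against each remaining challenger once.
        if prob_first_beats_second(responses[best], responses[i]) < 0.5:
            best = i
    return best
```

Each challenger is compared only with the current winner, so the cost grows linearly with the number of responses rather than quadratically as in an all-pairs comparison. Ties (a probability of exactly 0.5) keep the earlier response.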

## Citation

If you use this model in your research, please consider citing our paper

```

@misc{rlhflow,

title={RLHF Workflow: From Reward Modeling to Online RLHF},

author={Hanze Dong and Wei Xiong and Bo Pang and Haoxiang Wang and Han Zhao and Yingbo Zhou and Nan Jiang and Doyen Sahoo and Caiming Xiong and Tong Zhang},

year={2024},

eprint={2405.07863},

archivePrefix={arXiv},

primaryClass={cs.LG}

}

```

and Google's SLiC-HF paper (which first proposed this pairwise preference model)

```

@article{zhao2023slic,

title={Slic-hf: Sequence likelihood calibration with human feedback},

author={Zhao, Yao and Joshi, Rishabh and Liu, Tianqi and Khalman, Misha and Saleh, Mohammad and Liu, Peter J},

journal={arXiv preprint arXiv:2305.10425},

year={2023}

}

``` |

01-ai/Yi-1.5-34B-32K | 01-ai | 2024-06-26T10:42:31Z | 5,246 | 33 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"arxiv:2403.04652",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-05-15T10:42:51Z | ---

license: apache-2.0

---

<div align="center">

<picture>

<img src="https://raw.githubusercontent.com/01-ai/Yi/main/assets/img/Yi_logo_icon_light.svg" width="150px">

</picture>

</div>

<p align="center">

<a href="https://github.com/01-ai">🐙 GitHub</a> •

<a href="https://discord.gg/hYUwWddeAu">👾 Discord</a> •

<a href="https://twitter.com/01ai_yi">🐤 Twitter</a> •

<a href="https://github.com/01-ai/Yi-1.5/issues/2">💬 WeChat</a>

<br/>

<a href="https://arxiv.org/abs/2403.04652">📝 Paper</a> •

<a href="https://01-ai.github.io/">💪 Tech Blog</a> •

<a href="https://github.com/01-ai/Yi/tree/main?tab=readme-ov-file#faq">🙌 FAQ</a> •

<a href="https://github.com/01-ai/Yi/tree/main?tab=readme-ov-file#learning-hub">📗 Learning Hub</a>

</p>

# Intro

Yi-1.5 is an upgraded version of Yi. It is continuously pre-trained on Yi with a high-quality corpus of 500B tokens and fine-tuned on 3M diverse fine-tuning samples.

Compared with Yi, Yi-1.5 delivers stronger performance in coding, math, reasoning, and instruction-following capability, while still maintaining excellent capabilities in language understanding, commonsense reasoning, and reading comprehension.

<div align="center">

| Model | Context Length | Pre-trained Tokens |

| :------------: | :------------: | :------------: |

| Yi-1.5 | 4K, 16K, 32K | 3.6T |

</div>

# Models

- Chat models

<div align="center">

| Name | Download |

| --------------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

| Yi-1.5-34B-Chat | • [🤗 Hugging Face](https://huggingface.co/collections/01-ai/yi-15-2024-05-663f3ecab5f815a3eaca7ca8) • [🤖 ModelScope](https://www.modelscope.cn/organization/01ai) • [🟣 wisemodel](https://wisemodel.cn/organization/01.AI)|

| Yi-1.5-34B-Chat-16K | • [🤗 Hugging Face](https://huggingface.co/collections/01-ai/yi-15-2024-05-663f3ecab5f815a3eaca7ca8) • [🤖 ModelScope](https://www.modelscope.cn/organization/01ai) • [🟣 wisemodel](https://wisemodel.cn/organization/01.AI) |

| Yi-1.5-9B-Chat | • [🤗 Hugging Face](https://huggingface.co/collections/01-ai/yi-15-2024-05-663f3ecab5f815a3eaca7ca8) • [🤖 ModelScope](https://www.modelscope.cn/organization/01ai) • [🟣 wisemodel](https://wisemodel.cn/organization/01.AI) |

| Yi-1.5-9B-Chat-16K | • [🤗 Hugging Face](https://huggingface.co/collections/01-ai/yi-15-2024-05-663f3ecab5f815a3eaca7ca8) • [🤖 ModelScope](https://www.modelscope.cn/organization/01ai) • [🟣 wisemodel](https://wisemodel.cn/organization/01.AI) |

| Yi-1.5-6B-Chat | • [🤗 Hugging Face](https://huggingface.co/collections/01-ai/yi-15-2024-05-663f3ecab5f815a3eaca7ca8) • [🤖 ModelScope](https://www.modelscope.cn/organization/01ai) • [🟣 wisemodel](https://wisemodel.cn/organization/01.AI) |

</div>

- Base models

<div align="center">

| Name | Download |

| ---------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

| Yi-1.5-34B | • [🤗 Hugging Face](https://huggingface.co/collections/01-ai/yi-15-2024-05-663f3ecab5f815a3eaca7ca8) • [🤖 ModelScope](https://www.modelscope.cn/organization/01ai) • [🟣 wisemodel](https://wisemodel.cn/organization/01.AI) |

| Yi-1.5-34B-32K | • [🤗 Hugging Face](https://huggingface.co/collections/01-ai/yi-15-2024-05-663f3ecab5f815a3eaca7ca8) • [🤖 ModelScope](https://www.modelscope.cn/organization/01ai) • [🟣 wisemodel](https://wisemodel.cn/organization/01.AI) |

| Yi-1.5-9B | • [🤗 Hugging Face](https://huggingface.co/collections/01-ai/yi-15-2024-05-663f3ecab5f815a3eaca7ca8) • [🤖 ModelScope](https://www.modelscope.cn/organization/01ai) • [🟣 wisemodel](https://wisemodel.cn/organization/01.AI) |

| Yi-1.5-9B-32K | • [🤗 Hugging Face](https://huggingface.co/collections/01-ai/yi-15-2024-05-663f3ecab5f815a3eaca7ca8) • [🤖 ModelScope](https://www.modelscope.cn/organization/01ai) • [🟣 wisemodel](https://wisemodel.cn/organization/01.AI) |

| Yi-1.5-6B | • [🤗 Hugging Face](https://huggingface.co/collections/01-ai/yi-15-2024-05-663f3ecab5f815a3eaca7ca8) • [🤖 ModelScope](https://www.modelscope.cn/organization/01ai) • [🟣 wisemodel](https://wisemodel.cn/organization/01.AI) |

</div>

# Benchmarks

- Chat models

Yi-1.5-34B-Chat is on par with or excels beyond larger models in most benchmarks.

Yi-1.5-9B-Chat is the top performer among similarly sized open-source models.

- Base models

Yi-1.5-34B is on par with or excels beyond larger models in some benchmarks.

Yi-1.5-9B is the top performer among similarly sized open-source models.

# Quick Start

For getting up and running with Yi-1.5 models quickly, see [README](https://github.com/01-ai/Yi-1.5).

|

mradermacher/Winterreise-m7-i1-GGUF | mradermacher | 2024-06-05T08:43:46Z | 5,246 | 0 | transformers | [

"transformers",

"gguf",

"en",

"dataset:LDJnr/Capybara",

"dataset:chargoddard/rpguild",

"dataset:PocketDoc/Guanaco-Unchained-Refined",

"dataset:lemonilia/LimaRP",

"base_model:Sao10K/Winterreise-m7",

"license:cc-by-nc-4.0",

"endpoints_compatible",

"region:us"

] | null | 2024-06-04T13:07:32Z | ---

base_model: Sao10K/Winterreise-m7

datasets:

- LDJnr/Capybara

- chargoddard/rpguild

- PocketDoc/Guanaco-Unchained-Refined

- lemonilia/LimaRP

language:

- en

library_name: transformers

license: cc-by-nc-4.0

quantized_by: mradermacher

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: nicoboss -->

weighted/imatrix quants of https://huggingface.co/Sao10K/Winterreise-m7

<!-- provided-files -->

static quants are available at https://huggingface.co/mradermacher/Winterreise-m7-GGUF

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including how to concatenate multi-part files.

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-IQ1_S.gguf) | i1-IQ1_S | 1.7 | for the desperate |

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-IQ1_M.gguf) | i1-IQ1_M | 1.9 | mostly desperate |

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-IQ2_XXS.gguf) | i1-IQ2_XXS | 2.1 | |

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-IQ2_XS.gguf) | i1-IQ2_XS | 2.3 | |

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-IQ2_S.gguf) | i1-IQ2_S | 2.4 | |

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-IQ2_M.gguf) | i1-IQ2_M | 2.6 | |

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-Q2_K.gguf) | i1-Q2_K | 2.8 | IQ3_XXS probably better |

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-IQ3_XXS.gguf) | i1-IQ3_XXS | 2.9 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-IQ3_XS.gguf) | i1-IQ3_XS | 3.1 | |

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-Q3_K_S.gguf) | i1-Q3_K_S | 3.3 | IQ3_XS probably better |

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-IQ3_S.gguf) | i1-IQ3_S | 3.3 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-IQ3_M.gguf) | i1-IQ3_M | 3.4 | |

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-Q3_K_M.gguf) | i1-Q3_K_M | 3.6 | IQ3_S probably better |

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-Q3_K_L.gguf) | i1-Q3_K_L | 3.9 | IQ3_M probably better |

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-IQ4_XS.gguf) | i1-IQ4_XS | 4.0 | |

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-Q4_0.gguf) | i1-Q4_0 | 4.2 | fast, low quality |

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-Q4_K_S.gguf) | i1-Q4_K_S | 4.2 | optimal size/speed/quality |

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-Q4_K_M.gguf) | i1-Q4_K_M | 4.5 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-Q5_K_S.gguf) | i1-Q5_K_S | 5.1 | |

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-Q5_K_M.gguf) | i1-Q5_K_M | 5.2 | |

| [GGUF](https://huggingface.co/mradermacher/Winterreise-m7-i1-GGUF/resolve/main/Winterreise-m7.i1-Q6_K.gguf) | i1-Q6_K | 6.0 | practically like static Q6_K |

Here is a handy graph by ikawrakow comparing some lower-quality quant types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9
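
The download links in the table above all follow the same pattern, so a link can be rebuilt from the quant type alone. The following is a minimal sketch, not part of the original card: the helper names `quant_url` and `largest_quant_within` are hypothetical, the sizes are copied from a subset of the table rows, and file size is only a rough proxy for the memory a quant needs at run time.

```python
from typing import Optional

# Repository and file-name stem as they appear in the table links.
REPO_ID = "mradermacher/Winterreise-m7-i1-GGUF"
MODEL_NAME = "Winterreise-m7"

# Sizes in GB copied from a subset of the table rows (illustrative, not exhaustive).
QUANT_SIZES_GB = {
    "i1-IQ3_M": 3.4,
    "i1-Q4_K_S": 4.2,
    "i1-Q4_K_M": 4.5,
    "i1-Q5_K_M": 5.2,
    "i1-Q6_K": 6.0,
}


def quant_url(quant: str) -> str:
    """Rebuild the download link for a quant type, following the table's URL pattern."""
    return f"https://huggingface.co/{REPO_ID}/resolve/main/{MODEL_NAME}.{quant}.gguf"


def largest_quant_within(budget_gb: float) -> Optional[str]:
    """Return the largest listed quant whose file size fits the budget, or None."""
    fitting = [(size, name) for name, size in QUANT_SIZES_GB.items() if size <= budget_gb]
    return max(fitting)[1] if fitting else None


if __name__ == "__main__":
    choice = largest_quant_within(5.0)
    if choice is not None:
        print(choice, quant_url(choice))
```

Picking by size alone ignores the quality notes in the table (for example, that IQ-quants are often preferable to similar-sized non-IQ quants), so treat the result as a starting point rather than a recommendation.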

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for answers to questions you might have, or to request that another model be quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting me use its servers and providing upgrades to my workstation to enable this work in my free time. Additional thanks to [@nicoboss](https://huggingface.co/nicoboss) for giving me access to his hardware for calculating the imatrix for these quants.

<!-- end -->

|

Muennighoff/SGPT-125M-weightedmean-msmarco-specb-bitfit | Muennighoff | 2023-03-27T22:19:34Z | 5,241 | 2 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"gpt_neo",

"feature-extraction",

"sentence-similarity",

"mteb",

"arxiv:2202.08904",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | sentence-similarity | 2022-03-02T23:29:04Z | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

- mteb

model-index:

- name: SGPT-125M-weightedmean-msmarco-specb-bitfit

results:

- task:

type: Classification

dataset:

type: mteb/amazon_counterfactual

name: MTEB AmazonCounterfactualClassification (en)

config: en

split: test

revision: 2d8a100785abf0ae21420d2a55b0c56e3e1ea996

metrics:

- type: accuracy

value: 61.23880597014926

- type: ap

value: 25.854431650388644

- type: f1

value: 55.751862762818604

- task:

type: Classification

dataset:

type: mteb/amazon_counterfactual

name: MTEB AmazonCounterfactualClassification (de)

config: de

split: test

revision: 2d8a100785abf0ae21420d2a55b0c56e3e1ea996

metrics:

- type: accuracy

value: 56.88436830835117

- type: ap

value: 72.67279104379772

- type: f1

value: 54.449840243786404

- task:

type: Classification

dataset:

type: mteb/amazon_counterfactual

name: MTEB AmazonCounterfactualClassification (en-ext)

config: en-ext

split: test

revision: 2d8a100785abf0ae21420d2a55b0c56e3e1ea996

metrics:

- type: accuracy

value: 58.27586206896551

- type: ap

value: 14.067357642500387

- type: f1

value: 48.172318518691334

- task:

type: Classification

dataset:

type: mteb/amazon_counterfactual

name: MTEB AmazonCounterfactualClassification (ja)

config: ja

split: test

revision: 2d8a100785abf0ae21420d2a55b0c56e3e1ea996

metrics:

- type: accuracy

value: 54.64668094218415

- type: ap

value: 11.776694555054965

- type: f1

value: 44.526622834078765

- task:

type: Classification

dataset:

type: mteb/amazon_polarity

name: MTEB AmazonPolarityClassification

config: default

split: test

revision: 80714f8dcf8cefc218ef4f8c5a966dd83f75a0e1

metrics:

- type: accuracy

value: 65.401225

- type: ap

value: 60.22809958678552

- type: f1

value: 65.0251824898292

- task:

type: Classification

dataset:

type: mteb/amazon_reviews_multi

name: MTEB AmazonReviewsClassification (en)

config: en

split: test

revision: c379a6705fec24a2493fa68e011692605f44e119

metrics:

- type: accuracy

value: 31.165999999999993

- type: f1

value: 30.908870050167437

- task:

type: Classification

dataset:

type: mteb/amazon_reviews_multi

name: MTEB AmazonReviewsClassification (de)

config: de

split: test

revision: c379a6705fec24a2493fa68e011692605f44e119

metrics:

- type: accuracy

value: 24.79

- type: f1

value: 24.5833598854121

- task:

type: Classification

dataset:

type: mteb/amazon_reviews_multi

name: MTEB AmazonReviewsClassification (es)

config: es

split: test

revision: c379a6705fec24a2493fa68e011692605f44e119

metrics:

- type: accuracy

value: 26.643999999999995

- type: f1

value: 26.39012792213563

- task:

type: Classification

dataset:

type: mteb/amazon_reviews_multi

name: MTEB AmazonReviewsClassification (fr)

config: fr

split: test

revision: c379a6705fec24a2493fa68e011692605f44e119

metrics:

- type: accuracy

value: 26.386000000000003

- type: f1

value: 26.276867791454873

- task:

type: Classification

dataset:

type: mteb/amazon_reviews_multi

name: MTEB AmazonReviewsClassification (ja)

config: ja

split: test

revision: c379a6705fec24a2493fa68e011692605f44e119

metrics:

- type: accuracy

value: 22.078000000000003

- type: f1

value: 21.797960290226843

- task:

type: Classification

dataset:

type: mteb/amazon_reviews_multi

name: MTEB AmazonReviewsClassification (zh)

config: zh

split: test

revision: c379a6705fec24a2493fa68e011692605f44e119

metrics:

- type: accuracy

value: 24.274

- type: f1

value: 23.887054434822627

- task:

type: Retrieval

dataset:

type: arguana

name: MTEB ArguAna

config: default

split: test

revision: 5b3e3697907184a9b77a3c99ee9ea1a9cbb1e4e3

metrics:

- type: map_at_1

value: 22.404

- type: map_at_10

value: 36.845

- type: map_at_100

value: 37.945

- type: map_at_1000

value: 37.966

- type: map_at_3

value: 31.78

- type: map_at_5

value: 34.608

- type: mrr_at_1

value: 22.902

- type: mrr_at_10

value: 37.034

- type: mrr_at_100

value: 38.134

- type: mrr_at_1000

value: 38.155

- type: mrr_at_3

value: 31.935000000000002

- type: mrr_at_5

value: 34.812

- type: ndcg_at_1

value: 22.404

- type: ndcg_at_10

value: 45.425

- type: ndcg_at_100

value: 50.354

- type: ndcg_at_1000

value: 50.873999999999995

- type: ndcg_at_3

value: 34.97

- type: ndcg_at_5

value: 40.081

- type: precision_at_1

value: 22.404

- type: precision_at_10

value: 7.303999999999999

- type: precision_at_100

value: 0.951

- type: precision_at_1000

value: 0.099

- type: precision_at_3

value: 14.746

- type: precision_at_5

value: 11.337

- type: recall_at_1

value: 22.404

- type: recall_at_10

value: 73.044

- type: recall_at_100

value: 95.092

- type: recall_at_1000

value: 99.075

- type: recall_at_3

value: 44.239

- type: recall_at_5

value: 56.686

- task:

type: Clustering

dataset:

type: mteb/arxiv-clustering-p2p

name: MTEB ArxivClusteringP2P

config: default

split: test

revision: 0bbdb47bcbe3a90093699aefeed338a0f28a7ee8

metrics:

- type: v_measure

value: 39.70858340673288

- task:

type: Clustering

dataset:

type: mteb/arxiv-clustering-s2s

name: MTEB ArxivClusteringS2S

config: default

split: test

revision: b73bd54100e5abfa6e3a23dcafb46fe4d2438dc3

metrics:

- type: v_measure

value: 28.242847713721048

- task:

type: Reranking

dataset:

type: mteb/askubuntudupquestions-reranking

name: MTEB AskUbuntuDupQuestions

config: default

split: test

revision: 4d853f94cd57d85ec13805aeeac3ae3e5eb4c49c

metrics:

- type: map

value: 55.83700395192393

- type: mrr

value: 70.3891307215407

- task:

type: STS

dataset:

type: mteb/biosses-sts

name: MTEB BIOSSES

config: default

split: test

revision: 9ee918f184421b6bd48b78f6c714d86546106103

metrics:

- type: cos_sim_pearson

value: 79.25366801756223

- type: cos_sim_spearman

value: 75.20954502580506

- type: euclidean_pearson

value: 78.79900722991617

- type: euclidean_spearman

value: 77.79996549607588

- type: manhattan_pearson

value: 78.18408109480399

- type: manhattan_spearman

value: 76.85958262303106

- task:

type: Classification

dataset:

type: mteb/banking77

name: MTEB Banking77Classification

config: default

split: test

revision: 44fa15921b4c889113cc5df03dd4901b49161ab7

metrics:

- type: accuracy

value: 77.70454545454545

- type: f1

value: 77.6929000113803

- task:

type: Clustering

dataset:

type: mteb/biorxiv-clustering-p2p

name: MTEB BiorxivClusteringP2P

config: default

split: test

revision: 11d0121201d1f1f280e8cc8f3d98fb9c4d9f9c55

metrics:

- type: v_measure

value: 33.63260395543984

- task:

type: Clustering

dataset:

type: mteb/biorxiv-clustering-s2s

name: MTEB BiorxivClusteringS2S

config: default

split: test

revision: c0fab014e1bcb8d3a5e31b2088972a1e01547dc1

metrics:

- type: v_measure

value: 27.038042665369925

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackAndroidRetrieval

config: default

split: test

revision: 2b9f5791698b5be7bc5e10535c8690f20043c3db

metrics:

- type: map_at_1

value: 22.139

- type: map_at_10

value: 28.839

- type: map_at_100

value: 30.023

- type: map_at_1000

value: 30.153000000000002

- type: map_at_3

value: 26.521

- type: map_at_5

value: 27.775

- type: mrr_at_1

value: 26.466

- type: mrr_at_10

value: 33.495000000000005

- type: mrr_at_100

value: 34.416999999999994

- type: mrr_at_1000

value: 34.485

- type: mrr_at_3

value: 31.402

- type: mrr_at_5

value: 32.496

- type: ndcg_at_1

value: 26.466

- type: ndcg_at_10

value: 33.372

- type: ndcg_at_100

value: 38.7

- type: ndcg_at_1000

value: 41.696

- type: ndcg_at_3

value: 29.443

- type: ndcg_at_5

value: 31.121

- type: precision_at_1

value: 26.466

- type: precision_at_10

value: 6.037

- type: precision_at_100

value: 1.0670000000000002

- type: precision_at_1000

value: 0.16199999999999998

- type: precision_at_3

value: 13.782

- type: precision_at_5

value: 9.757

- type: recall_at_1

value: 22.139

- type: recall_at_10

value: 42.39

- type: recall_at_100

value: 65.427

- type: recall_at_1000

value: 86.04899999999999

- type: recall_at_3

value: 31.127

- type: recall_at_5

value: 35.717999999999996

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackEnglishRetrieval

config: default

split: test

revision: 2b9f5791698b5be7bc5e10535c8690f20043c3db

metrics:

- type: map_at_1

value: 20.652

- type: map_at_10

value: 27.558

- type: map_at_100

value: 28.473

- type: map_at_1000

value: 28.577

- type: map_at_3

value: 25.402

- type: map_at_5

value: 26.68

- type: mrr_at_1

value: 25.223000000000003

- type: mrr_at_10

value: 31.966

- type: mrr_at_100

value: 32.664

- type: mrr_at_1000

value: 32.724

- type: mrr_at_3

value: 30.074

- type: mrr_at_5

value: 31.249

- type: ndcg_at_1

value: 25.223000000000003

- type: ndcg_at_10

value: 31.694

- type: ndcg_at_100

value: 35.662

- type: ndcg_at_1000

value: 38.092

- type: ndcg_at_3

value: 28.294000000000004

- type: ndcg_at_5

value: 30.049

- type: precision_at_1

value: 25.223000000000003

- type: precision_at_10

value: 5.777

- type: precision_at_100

value: 0.9730000000000001

- type: precision_at_1000

value: 0.13999999999999999

- type: precision_at_3

value: 13.397

- type: precision_at_5

value: 9.605

- type: recall_at_1

value: 20.652

- type: recall_at_10

value: 39.367999999999995

- type: recall_at_100

value: 56.485

- type: recall_at_1000

value: 73.292

- type: recall_at_3

value: 29.830000000000002

- type: recall_at_5

value: 34.43

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackGamingRetrieval

config: default

split: test

revision: 2b9f5791698b5be7bc5e10535c8690f20043c3db

metrics:

- type: map_at_1

value: 25.180000000000003

- type: map_at_10

value: 34.579

- type: map_at_100

value: 35.589999999999996

- type: map_at_1000

value: 35.68

- type: map_at_3

value: 31.735999999999997

- type: map_at_5

value: 33.479

- type: mrr_at_1

value: 29.467

- type: mrr_at_10

value: 37.967

- type: mrr_at_100

value: 38.800000000000004

- type: mrr_at_1000

value: 38.858

- type: mrr_at_3

value: 35.465

- type: mrr_at_5

value: 37.057

- type: ndcg_at_1

value: 29.467

- type: ndcg_at_10

value: 39.796

- type: ndcg_at_100

value: 44.531

- type: ndcg_at_1000

value: 46.666000000000004

- type: ndcg_at_3

value: 34.676

- type: ndcg_at_5

value: 37.468

- type: precision_at_1

value: 29.467

- type: precision_at_10

value: 6.601999999999999

- type: precision_at_100

value: 0.9900000000000001

- type: precision_at_1000

value: 0.124

- type: precision_at_3

value: 15.568999999999999

- type: precision_at_5

value: 11.172

- type: recall_at_1

value: 25.180000000000003

- type: recall_at_10

value: 52.269

- type: recall_at_100

value: 73.574

- type: recall_at_1000

value: 89.141

- type: recall_at_3

value: 38.522

- type: recall_at_5

value: 45.323

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackGisRetrieval

config: default

split: test

revision: 2b9f5791698b5be7bc5e10535c8690f20043c3db

metrics:

- type: map_at_1

value: 16.303

- type: map_at_10

value: 21.629

- type: map_at_100

value: 22.387999999999998

- type: map_at_1000

value: 22.489

- type: map_at_3

value: 19.608

- type: map_at_5

value: 20.774

- type: mrr_at_1

value: 17.740000000000002

- type: mrr_at_10

value: 23.214000000000002

- type: mrr_at_100

value: 23.97

- type: mrr_at_1000

value: 24.054000000000002

- type: mrr_at_3

value: 21.243000000000002

- type: mrr_at_5

value: 22.322

- type: ndcg_at_1

value: 17.740000000000002

- type: ndcg_at_10

value: 25.113000000000003

- type: ndcg_at_100

value: 29.287999999999997

- type: ndcg_at_1000

value: 32.204

- type: ndcg_at_3

value: 21.111

- type: ndcg_at_5

value: 23.061999999999998

- type: precision_at_1

value: 17.740000000000002

- type: precision_at_10

value: 3.955

- type: precision_at_100

value: 0.644

- type: precision_at_1000

value: 0.093

- type: precision_at_3

value: 8.851

- type: precision_at_5

value: 6.418

- type: recall_at_1

value: 16.303

- type: recall_at_10

value: 34.487

- type: recall_at_100

value: 54.413999999999994

- type: recall_at_1000

value: 77.158

- type: recall_at_3

value: 23.733

- type: recall_at_5

value: 28.381

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackMathematicaRetrieval

config: default

split: test

revision: 2b9f5791698b5be7bc5e10535c8690f20043c3db

metrics:

- type: map_at_1

value: 10.133000000000001

- type: map_at_10

value: 15.665999999999999

- type: map_at_100

value: 16.592000000000002

- type: map_at_1000

value: 16.733999999999998

- type: map_at_3

value: 13.625000000000002

- type: map_at_5

value: 14.721

- type: mrr_at_1

value: 12.562000000000001

- type: mrr_at_10

value: 18.487000000000002

- type: mrr_at_100

value: 19.391

- type: mrr_at_1000

value: 19.487

- type: mrr_at_3

value: 16.418

- type: mrr_at_5

value: 17.599999999999998

- type: ndcg_at_1

value: 12.562000000000001

- type: ndcg_at_10

value: 19.43

- type: ndcg_at_100

value: 24.546

- type: ndcg_at_1000

value: 28.193

- type: ndcg_at_3

value: 15.509999999999998

- type: ndcg_at_5

value: 17.322000000000003

- type: precision_at_1

value: 12.562000000000001

- type: precision_at_10

value: 3.794

- type: precision_at_100

value: 0.74

- type: precision_at_1000

value: 0.122

- type: precision_at_3

value: 7.546

- type: precision_at_5

value: 5.721

- type: recall_at_1

value: 10.133000000000001

- type: recall_at_10

value: 28.261999999999997

- type: recall_at_100

value: 51.742999999999995

- type: recall_at_1000

value: 78.075

- type: recall_at_3

value: 17.634

- type: recall_at_5

value: 22.128999999999998

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackPhysicsRetrieval

config: default

split: test

revision: 2b9f5791698b5be7bc5e10535c8690f20043c3db

metrics:

- type: map_at_1

value: 19.991999999999997

- type: map_at_10

value: 27.346999999999998

- type: map_at_100

value: 28.582

- type: map_at_1000

value: 28.716

- type: map_at_3

value: 24.907

- type: map_at_5

value: 26.1

- type: mrr_at_1

value: 23.773

- type: mrr_at_10

value: 31.647

- type: mrr_at_100

value: 32.639

- type: mrr_at_1000

value: 32.706

- type: mrr_at_3

value: 29.195

- type: mrr_at_5

value: 30.484

- type: ndcg_at_1

value: 23.773

- type: ndcg_at_10

value: 32.322

- type: ndcg_at_100

value: 37.996

- type: ndcg_at_1000

value: 40.819

- type: ndcg_at_3

value: 27.876

- type: ndcg_at_5

value: 29.664

- type: precision_at_1

value: 23.773

- type: precision_at_10

value: 5.976999999999999

- type: precision_at_100

value: 1.055

- type: precision_at_1000

value: 0.15

- type: precision_at_3

value: 13.122

- type: precision_at_5

value: 9.451

- type: recall_at_1

value: 19.991999999999997

- type: recall_at_10

value: 43.106

- type: recall_at_100

value: 67.264

- type: recall_at_1000

value: 86.386

- type: recall_at_3

value: 30.392000000000003

- type: recall_at_5

value: 34.910999999999994

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackProgrammersRetrieval

config: default

split: test

revision: 2b9f5791698b5be7bc5e10535c8690f20043c3db

metrics:

- type: map_at_1

value: 17.896

- type: map_at_10

value: 24.644

- type: map_at_100

value: 25.790000000000003

- type: map_at_1000

value: 25.913999999999998

- type: map_at_3

value: 22.694

- type: map_at_5

value: 23.69

- type: mrr_at_1

value: 21.346999999999998

- type: mrr_at_10

value: 28.594

- type: mrr_at_100

value: 29.543999999999997

- type: mrr_at_1000

value: 29.621

- type: mrr_at_3

value: 26.807

- type: mrr_at_5

value: 27.669

- type: ndcg_at_1

value: 21.346999999999998

- type: ndcg_at_10

value: 28.833

- type: ndcg_at_100

value: 34.272000000000006

- type: ndcg_at_1000

value: 37.355

- type: ndcg_at_3

value: 25.373

- type: ndcg_at_5

value: 26.756

- type: precision_at_1

value: 21.346999999999998

- type: precision_at_10

value: 5.2170000000000005

- type: precision_at_100

value: 0.954

- type: precision_at_1000

value: 0.13899999999999998

- type: precision_at_3

value: 11.948

- type: precision_at_5

value: 8.425

- type: recall_at_1

value: 17.896

- type: recall_at_10

value: 37.291000000000004

- type: recall_at_100

value: 61.138000000000005

- type: recall_at_1000

value: 83.212

- type: recall_at_3

value: 27.705999999999996

- type: recall_at_5

value: 31.234

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackRetrieval

config: default

split: test

revision: 2b9f5791698b5be7bc5e10535c8690f20043c3db

metrics:

- type: map_at_1

value: 17.195166666666665

- type: map_at_10

value: 23.329083333333333

- type: map_at_100

value: 24.30308333333333

- type: map_at_1000

value: 24.422416666666667

- type: map_at_3

value: 21.327416666666664

- type: map_at_5

value: 22.419999999999998

- type: mrr_at_1

value: 19.999916666666667

- type: mrr_at_10

value: 26.390166666666666

- type: mrr_at_100

value: 27.230999999999998

- type: mrr_at_1000

value: 27.308333333333334

- type: mrr_at_3

value: 24.4675

- type: mrr_at_5

value: 25.541083333333336

- type: ndcg_at_1

value: 19.999916666666667

- type: ndcg_at_10

value: 27.248666666666665

- type: ndcg_at_100

value: 32.00258333333334

- type: ndcg_at_1000

value: 34.9465

- type: ndcg_at_3

value: 23.58566666666667

- type: ndcg_at_5

value: 25.26341666666666

- type: precision_at_1

value: 19.999916666666667

- type: precision_at_10

value: 4.772166666666666

- type: precision_at_100

value: 0.847

- type: precision_at_1000

value: 0.12741666666666668

- type: precision_at_3

value: 10.756166666666669

- type: precision_at_5

value: 7.725416666666667

- type: recall_at_1

value: 17.195166666666665

- type: recall_at_10

value: 35.99083333333334

- type: recall_at_100

value: 57.467999999999996

- type: recall_at_1000

value: 78.82366666666667

- type: recall_at_3

value: 25.898499999999995

- type: recall_at_5

value: 30.084333333333333

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackStatsRetrieval

config: default

split: test

revision: 2b9f5791698b5be7bc5e10535c8690f20043c3db

metrics:

- type: map_at_1

value: 16.779

- type: map_at_10

value: 21.557000000000002

- type: map_at_100

value: 22.338

- type: map_at_1000

value: 22.421

- type: map_at_3

value: 19.939

- type: map_at_5

value: 20.903

- type: mrr_at_1

value: 18.404999999999998

- type: mrr_at_10

value: 23.435

- type: mrr_at_100

value: 24.179000000000002

- type: mrr_at_1000

value: 24.25

- type: mrr_at_3

value: 21.907

- type: mrr_at_5

value: 22.781000000000002

- type: ndcg_at_1

value: 18.404999999999998

- type: ndcg_at_10

value: 24.515

- type: ndcg_at_100

value: 28.721000000000004

- type: ndcg_at_1000

value: 31.259999999999998

- type: ndcg_at_3

value: 21.508

- type: ndcg_at_5

value: 23.01

- type: precision_at_1

value: 18.404999999999998

- type: precision_at_10

value: 3.834

- type: precision_at_100

value: 0.641

- type: precision_at_1000

value: 0.093

- type: precision_at_3

value: 9.151

- type: precision_at_5

value: 6.503

- type: recall_at_1

value: 16.779

- type: recall_at_10

value: 31.730000000000004

- type: recall_at_100

value: 51.673

- type: recall_at_1000

value: 71.17599999999999

- type: recall_at_3

value: 23.518

- type: recall_at_5

value: 27.230999999999998

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackTexRetrieval

config: default

split: test

revision: 2b9f5791698b5be7bc5e10535c8690f20043c3db

metrics:

- type: map_at_1

value: 9.279

- type: map_at_10

value: 13.822000000000001

- type: map_at_100

value: 14.533

- type: map_at_1000

value: 14.649999999999999

- type: map_at_3

value: 12.396

- type: map_at_5

value: 13.214

- type: mrr_at_1

value: 11.149000000000001

- type: mrr_at_10

value: 16.139

- type: mrr_at_100

value: 16.872

- type: mrr_at_1000

value: 16.964000000000002

- type: mrr_at_3

value: 14.613000000000001

- type: mrr_at_5

value: 15.486

- type: ndcg_at_1

value: 11.149000000000001

- type: ndcg_at_10

value: 16.82

- type: ndcg_at_100

value: 20.73

- type: ndcg_at_1000

value: 23.894000000000002

- type: ndcg_at_3

value: 14.11

- type: ndcg_at_5

value: 15.404000000000002

- type: precision_at_1

value: 11.149000000000001

- type: precision_at_10

value: 3.063

- type: precision_at_100

value: 0.587

- type: precision_at_1000

value: 0.1

- type: precision_at_3

value: 6.699

- type: precision_at_5

value: 4.928

- type: recall_at_1

value: 9.279

- type: recall_at_10

value: 23.745

- type: recall_at_100

value: 41.873

- type: recall_at_1000

value: 64.982

- type: recall_at_3

value: 16.152

- type: recall_at_5

value: 19.409000000000002

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackUnixRetrieval

config: default

split: test

revision: 2b9f5791698b5be7bc5e10535c8690f20043c3db

metrics:

- type: map_at_1

value: 16.36

- type: map_at_10

value: 21.927

- type: map_at_100

value: 22.889

- type: map_at_1000

value: 22.994

- type: map_at_3

value: 20.433

- type: map_at_5

value: 21.337

- type: mrr_at_1

value: 18.75

- type: mrr_at_10

value: 24.859

- type: mrr_at_100

value: 25.746999999999996

- type: mrr_at_1000

value: 25.829

- type: mrr_at_3

value: 23.383000000000003

- type: mrr_at_5

value: 24.297

- type: ndcg_at_1

value: 18.75

- type: ndcg_at_10

value: 25.372

- type: ndcg_at_100

value: 30.342999999999996

- type: ndcg_at_1000

value: 33.286

- type: ndcg_at_3

value: 22.627

- type: ndcg_at_5

value: 24.04

- type: precision_at_1

value: 18.75

- type: precision_at_10

value: 4.1419999999999995

- type: precision_at_100

value: 0.738

- type: precision_at_1000

value: 0.11100000000000002

- type: precision_at_3

value: 10.261000000000001

- type: precision_at_5

value: 7.164

- type: recall_at_1

value: 16.36

- type: recall_at_10

value: 32.949

- type: recall_at_100

value: 55.552

- type: recall_at_1000

value: 77.09899999999999

- type: recall_at_3

value: 25.538

- type: recall_at_5

value: 29.008

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackWebmastersRetrieval

config: default

split: test

revision: 2b9f5791698b5be7bc5e10535c8690f20043c3db

metrics:

- type: map_at_1

value: 17.39

- type: map_at_10

value: 23.058

- type: map_at_100

value: 24.445

- type: map_at_1000

value: 24.637999999999998

- type: map_at_3

value: 21.037

- type: map_at_5

value: 21.966

- type: mrr_at_1

value: 19.96

- type: mrr_at_10

value: 26.301000000000002

- type: mrr_at_100

value: 27.297

- type: mrr_at_1000

value: 27.375

- type: mrr_at_3

value: 24.340999999999998

- type: mrr_at_5

value: 25.339

- type: ndcg_at_1

value: 19.96

- type: ndcg_at_10

value: 27.249000000000002

- type: ndcg_at_100

value: 32.997

- type: ndcg_at_1000

value: 36.359

- type: ndcg_at_3

value: 23.519000000000002

- type: ndcg_at_5

value: 24.915000000000003

- type: precision_at_1

value: 19.96

- type: precision_at_10

value: 5.356000000000001

- type: precision_at_100

value: 1.198

- type: precision_at_1000

value: 0.20400000000000001

- type: precision_at_3

value: 10.738

- type: precision_at_5

value: 7.904999999999999

- type: recall_at_1

value: 17.39

- type: recall_at_10

value: 35.254999999999995

- type: recall_at_100

value: 61.351

- type: recall_at_1000

value: 84.395

- type: recall_at_3

value: 25.194

- type: recall_at_5

value: 28.546

- task:

type: Retrieval

dataset:

type: BeIR/cqadupstack

name: MTEB CQADupstackWordpressRetrieval

config: default

split: test

revision: 2b9f5791698b5be7bc5e10535c8690f20043c3db

metrics:

- type: map_at_1

value: 14.238999999999999

- type: map_at_10

value: 19.323

- type: map_at_100

value: 19.994

- type: map_at_1000

value: 20.102999999999998

- type: map_at_3

value: 17.631

- type: map_at_5

value: 18.401

- type: mrr_at_1

value: 15.157000000000002

- type: mrr_at_10

value: 20.578

- type: mrr_at_100

value: 21.252

- type: mrr_at_1000

value: 21.346999999999998

- type: mrr_at_3

value: 18.762

- type: mrr_at_5

value: 19.713

- type: ndcg_at_1

value: 15.157000000000002

- type: ndcg_at_10

value: 22.468

- type: ndcg_at_100

value: 26.245

- type: ndcg_at_1000

value: 29.534

- type: ndcg_at_3

value: 18.981

- type: ndcg_at_5

value: 20.349999999999998

- type: precision_at_1

value: 15.157000000000002

- type: precision_at_10

value: 3.512

- type: precision_at_100

value: 0.577

- type: precision_at_1000

value: 0.091

- type: precision_at_3

value: 8.01

- type: precision_at_5

value: 5.656

- type: recall_at_1

value: 14.238999999999999

- type: recall_at_10

value: 31.038

- type: recall_at_100

value: 49.122

- type: recall_at_1000

value: 74.919

- type: recall_at_3

value: 21.436

- type: recall_at_5

value: 24.692

- task:

type: Retrieval

dataset:

type: climate-fever

name: MTEB ClimateFEVER

config: default

split: test

revision: 392b78eb68c07badcd7c2cd8f39af108375dfcce

metrics:

- type: map_at_1

value: 8.828

- type: map_at_10

value: 14.982000000000001

- type: map_at_100

value: 16.495

- type: map_at_1000

value: 16.658

- type: map_at_3

value: 12.366000000000001

- type: map_at_5

value: 13.655000000000001

- type: mrr_at_1

value: 19.088

- type: mrr_at_10

value: 29.29

- type: mrr_at_100

value: 30.291

- type: mrr_at_1000

value: 30.342000000000002

- type: mrr_at_3

value: 25.907000000000004

- type: mrr_at_5

value: 27.840999999999998

- type: ndcg_at_1

value: 19.088

- type: ndcg_at_10

value: 21.858

- type: ndcg_at_100

value: 28.323999999999998

- type: ndcg_at_1000

value: 31.561

- type: ndcg_at_3

value: 17.175

- type: ndcg_at_5

value: 18.869

- type: precision_at_1

value: 19.088

- type: precision_at_10

value: 6.9190000000000005

- type: precision_at_100

value: 1.376

- type: precision_at_1000

value: 0.197

- type: precision_at_3

value: 12.703999999999999

- type: precision_at_5

value: 9.993

- type: recall_at_1

value: 8.828

- type: recall_at_10

value: 27.381

- type: recall_at_100

value: 50.0

- type: recall_at_1000

value: 68.355

- type: recall_at_3

value: 16.118

- type: recall_at_5

value: 20.587

- task:

type: Retrieval

dataset:

type: dbpedia-entity

name: MTEB DBPedia

config: default

split: test

revision: f097057d03ed98220bc7309ddb10b71a54d667d6

metrics:

- type: map_at_1

value: 5.586

- type: map_at_10

value: 10.040000000000001

- type: map_at_100

value: 12.55

- type: map_at_1000

value: 13.123999999999999

- type: map_at_3

value: 7.75

- type: map_at_5

value: 8.835999999999999

- type: mrr_at_1

value: 42.25

- type: mrr_at_10

value: 51.205999999999996

- type: mrr_at_100

value: 51.818

- type: mrr_at_1000

value: 51.855

- type: mrr_at_3

value: 48.875

- type: mrr_at_5

value: 50.488

- type: ndcg_at_1

value: 32.25

- type: ndcg_at_10

value: 22.718

- type: ndcg_at_100

value: 24.359

- type: ndcg_at_1000

value: 29.232000000000003

- type: ndcg_at_3

value: 25.974000000000004

- type: ndcg_at_5

value: 24.291999999999998

- type: precision_at_1

value: 42.25

- type: precision_at_10

value: 17.75

- type: precision_at_100

value: 5.032

- type: precision_at_1000

value: 1.117

- type: precision_at_3

value: 28.833

- type: precision_at_5

value: 24.25

- type: recall_at_1

value: 5.586

- type: recall_at_10

value: 14.16

- type: recall_at_100

value: 28.051

- type: recall_at_1000

value: 45.157000000000004

- type: recall_at_3

value: 8.758000000000001

- type: recall_at_5

value: 10.975999999999999

- task:

type: Classification

dataset:

type: mteb/emotion

name: MTEB EmotionClassification

config: default

split: test

revision: 829147f8f75a25f005913200eb5ed41fae320aa1

metrics:

- type: accuracy

value: 39.075

- type: f1

value: 35.01420354708222

- task:

type: Retrieval

dataset:

type: fever

name: MTEB FEVER

config: default

split: test

revision: 1429cf27e393599b8b359b9b72c666f96b2525f9

metrics:

- type: map_at_1

value: 43.519999999999996

- type: map_at_10

value: 54.368

- type: map_at_100

value: 54.918

- type: map_at_1000

value: 54.942

- type: map_at_3

value: 51.712

- type: map_at_5

value: 53.33599999999999

- type: mrr_at_1

value: 46.955000000000005

- type: mrr_at_10

value: 58.219

- type: mrr_at_100

value: 58.73500000000001

- type: mrr_at_1000

value: 58.753

- type: mrr_at_3

value: 55.518

- type: mrr_at_5

value: 57.191

- type: ndcg_at_1

value: 46.955000000000005

- type: ndcg_at_10

value: 60.45

- type: ndcg_at_100

value: 63.047

- type: ndcg_at_1000

value: 63.712999999999994

- type: ndcg_at_3

value: 55.233

- type: ndcg_at_5

value: 58.072

- type: precision_at_1

value: 46.955000000000005

- type: precision_at_10

value: 8.267

- type: precision_at_100

value: 0.962

- type: precision_at_1000

value: 0.10300000000000001

- type: precision_at_3

value: 22.326999999999998

- type: precision_at_5

value: 14.940999999999999

- type: recall_at_1

value: 43.519999999999996

- type: recall_at_10

value: 75.632

- type: recall_at_100

value: 87.41600000000001

- type: recall_at_1000

value: 92.557

- type: recall_at_3

value: 61.597

- type: recall_at_5

value: 68.518

- task:

type: Retrieval

dataset:

type: fiqa

name: MTEB FiQA2018

config: default

split: test

revision: 41b686a7f28c59bcaaa5791efd47c67c8ebe28be

metrics:

- type: map_at_1

value: 9.549000000000001

- type: map_at_10

value: 15.762

- type: map_at_100

value: 17.142

- type: map_at_1000

value: 17.329

- type: map_at_3

value: 13.575000000000001

- type: map_at_5

value: 14.754000000000001

- type: mrr_at_1

value: 19.753

- type: mrr_at_10

value: 26.568

- type: mrr_at_100

value: 27.606

- type: mrr_at_1000

value: 27.68

- type: mrr_at_3

value: 24.203

- type: mrr_at_5

value: 25.668999999999997

- type: ndcg_at_1

value: 19.753

- type: ndcg_at_10

value: 21.118000000000002

- type: ndcg_at_100

value: 27.308

- type: ndcg_at_1000

value: 31.304

- type: ndcg_at_3

value: 18.319

- type: ndcg_at_5

value: 19.414

- type: precision_at_1

value: 19.753

- type: precision_at_10

value: 6.08

- type: precision_at_100

value: 1.204

- type: precision_at_1000

value: 0.192

- type: precision_at_3

value: 12.191

- type: precision_at_5

value: 9.383

- type: recall_at_1

value: 9.549000000000001

- type: recall_at_10

value: 26.131

- type: recall_at_100

value: 50.544999999999995

- type: recall_at_1000

value: 74.968

- type: recall_at_3

value: 16.951

- type: recall_at_5

value: 20.95

- task:

type: Retrieval

dataset:

type: hotpotqa

name: MTEB HotpotQA

config: default

split: test

revision: 766870b35a1b9ca65e67a0d1913899973551fc6c

metrics:

- type: map_at_1

value: 25.544

- type: map_at_10

value: 32.62

- type: map_at_100

value: 33.275

- type: map_at_1000

value: 33.344

- type: map_at_3

value: 30.851

- type: map_at_5

value: 31.868999999999996

- type: mrr_at_1

value: 51.087

- type: mrr_at_10

value: 57.704

- type: mrr_at_100

value: 58.175

- type: mrr_at_1000

value: 58.207

- type: mrr_at_3

value: 56.106

- type: mrr_at_5

value: 57.074000000000005

- type: ndcg_at_1

value: 51.087

- type: ndcg_at_10

value: 40.876000000000005

- type: ndcg_at_100

value: 43.762

- type: ndcg_at_1000

value: 45.423

- type: ndcg_at_3

value: 37.65

- type: ndcg_at_5

value: 39.305

- type: precision_at_1

value: 51.087

- type: precision_at_10

value: 8.304

- type: precision_at_100

value: 1.059

- type: precision_at_1000

value: 0.128

- type: precision_at_3

value: 22.875999999999998

- type: precision_at_5

value: 15.033

- type: recall_at_1

value: 25.544

- type: recall_at_10

value: 41.519

- type: recall_at_100

value: 52.957

- type: recall_at_1000

value: 64.132

- type: recall_at_3

value: 34.315

- type: recall_at_5

value: 37.583

- task:

type: Classification

dataset:

type: mteb/imdb

name: MTEB ImdbClassification

config: default

split: test

revision: 8d743909f834c38949e8323a8a6ce8721ea6c7f4

metrics:

- type: accuracy

value: 58.6696

- type: ap

value: 55.3644880984279

- type: f1

value: 58.07942097405652

- task:

type: Retrieval

dataset:

type: msmarco

name: MTEB MSMARCO

config: default

split: validation

revision: e6838a846e2408f22cf5cc337ebc83e0bcf77849

metrics:

- type: map_at_1

value: 14.442

- type: map_at_10

value: 22.932

- type: map_at_100

value: 24.132

- type: map_at_1000

value: 24.213

- type: map_at_3

value: 20.002

- type: map_at_5

value: 21.636

- type: mrr_at_1

value: 14.841999999999999

- type: mrr_at_10

value: 23.416

- type: mrr_at_100

value: 24.593999999999998

- type: mrr_at_1000

value: 24.669

- type: mrr_at_3

value: 20.494

- type: mrr_at_5

value: 22.14

- type: ndcg_at_1

value: 14.841999999999999

- type: ndcg_at_10

value: 27.975

- type: ndcg_at_100

value: 34.143

- type: ndcg_at_1000

value: 36.370000000000005

- type: ndcg_at_3

value: 21.944

- type: ndcg_at_5

value: 24.881

- type: precision_at_1

value: 14.841999999999999

- type: precision_at_10

value: 4.537

- type: precision_at_100

value: 0.767

- type: precision_at_1000

value: 0.096

- type: precision_at_3

value: 9.322

- type: precision_at_5

value: 7.074

- type: recall_at_1

value: 14.442

- type: recall_at_10

value: 43.557

- type: recall_at_100

value: 72.904

- type: recall_at_1000

value: 90.40700000000001

- type: recall_at_3

value: 27.088

- type: recall_at_5

value: 34.144000000000005

- task:

type: Classification

dataset:

type: mteb/mtop_domain

name: MTEB MTOPDomainClassification (en)

config: en

split: test

revision: a7e2a951126a26fc8c6a69f835f33a346ba259e3

metrics:

- type: accuracy

value: 86.95622435020519

- type: f1

value: 86.58363130708494

- task:

type: Classification

dataset:

type: mteb/mtop_domain

name: MTEB MTOPDomainClassification (de)

config: de

split: test

revision: a7e2a951126a26fc8c6a69f835f33a346ba259e3

metrics: