modelId (string, lengths 5 to 122) | author (string, lengths 2 to 42) | last_modified (timestamp[us, tz=UTC]) | downloads (int64, 0 to 738M) | likes (int64, 0 to 11k) | library_name (string, 245 classes) | tags (sequence, lengths 1 to 4.05k) | pipeline_tag (string, 48 classes) | createdAt (timestamp[us, tz=UTC]) | card (string, lengths 1 to 901k) |

|---|---|---|---|---|---|---|---|---|---|

MaziyarPanahi/mergekit-slerp-aywerbb-GGUF | MaziyarPanahi | 2024-06-17T20:38:41Z | 2,549 | 0 | transformers | [

"transformers",

"gguf",

"mistral",

"quantized",

"2-bit",

"3-bit",

"4-bit",

"5-bit",

"6-bit",

"8-bit",

"GGUF",

"safetensors",

"text-generation",

"mergekit",

"merge",

"conversational",

"base_model:Equall/Saul-Base",

"base_model:HuggingFaceH4/zephyr-7b-beta",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us",

"base_model:mergekit-community/mergekit-slerp-aywerbb"

] | text-generation | 2024-06-17T20:16:01Z | ---

tags:

- quantized

- 2-bit

- 3-bit

- 4-bit

- 5-bit

- 6-bit

- 8-bit

- GGUF

- transformers

- safetensors

- mistral

- text-generation

- mergekit

- merge

- conversational

- base_model:Equall/Saul-Base

- base_model:HuggingFaceH4/zephyr-7b-beta

- autotrain_compatible

- endpoints_compatible

- text-generation-inference

- region:us

- text-generation

model_name: mergekit-slerp-aywerbb-GGUF

base_model: mergekit-community/mergekit-slerp-aywerbb

inference: false

model_creator: mergekit-community

pipeline_tag: text-generation

quantized_by: MaziyarPanahi

---

# [MaziyarPanahi/mergekit-slerp-aywerbb-GGUF](https://huggingface.co/MaziyarPanahi/mergekit-slerp-aywerbb-GGUF)

- Model creator: [mergekit-community](https://huggingface.co/mergekit-community)

- Original model: [mergekit-community/mergekit-slerp-aywerbb](https://huggingface.co/mergekit-community/mergekit-slerp-aywerbb)

## Description

[MaziyarPanahi/mergekit-slerp-aywerbb-GGUF](https://huggingface.co/MaziyarPanahi/mergekit-slerp-aywerbb-GGUF) contains GGUF format model files for [mergekit-community/mergekit-slerp-aywerbb](https://huggingface.co/mergekit-community/mergekit-slerp-aywerbb).

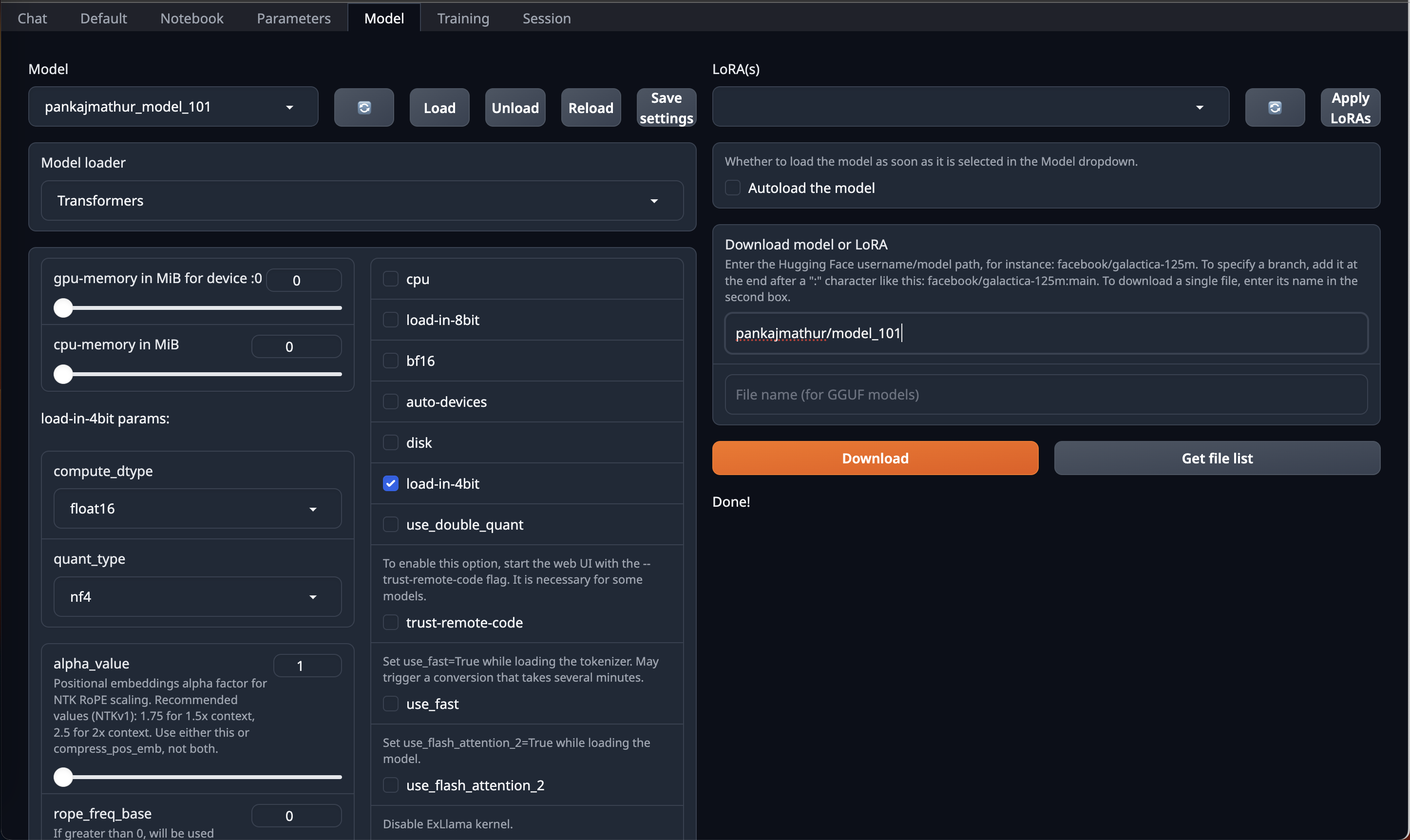

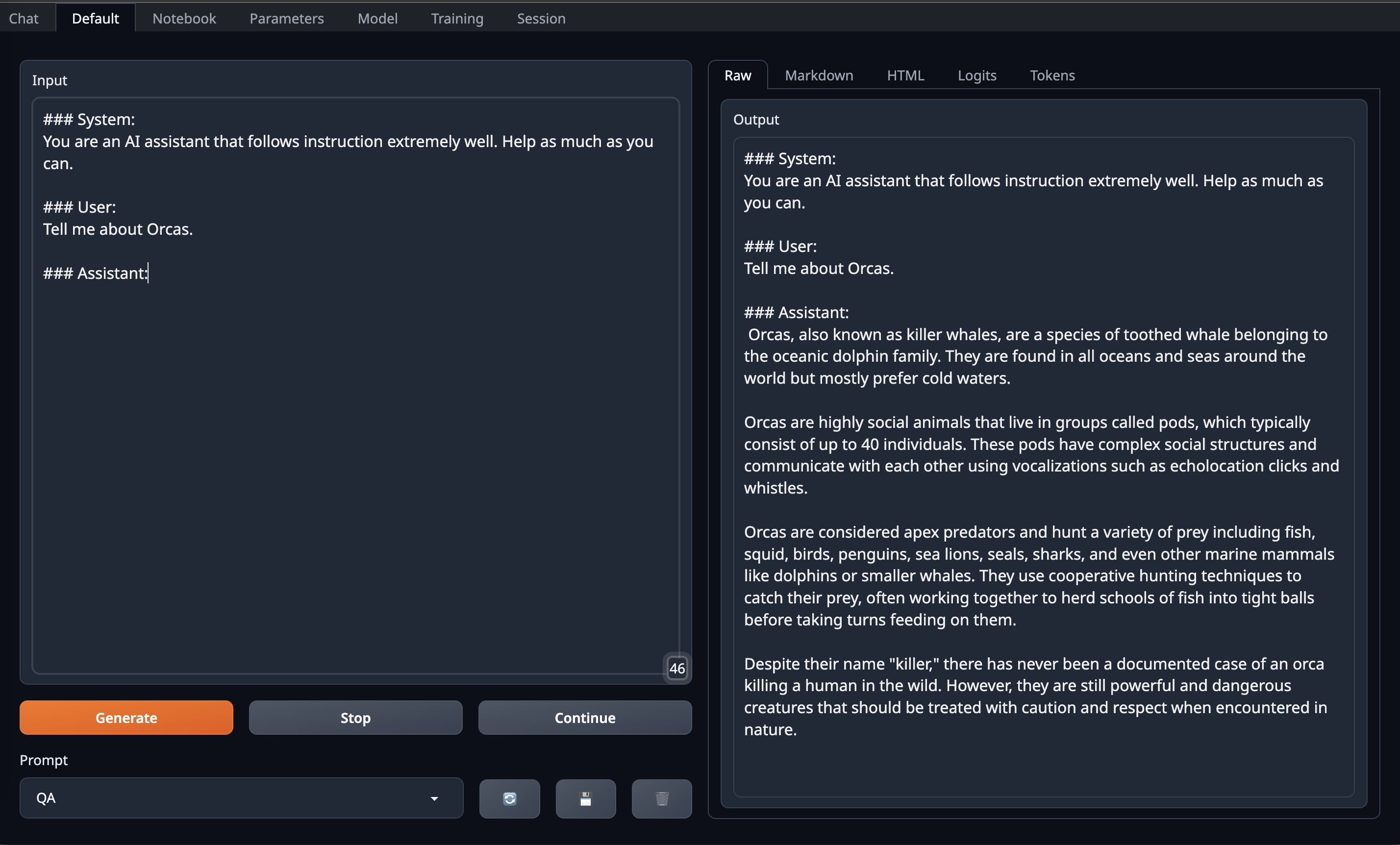

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp.
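As a rough illustration of what "GGUF format" means on disk, the sketch below reads the fixed-size fields at the start of a file. It assumes the little-endian layout used by GGUF version 2 and later (a 4-byte `GGUF` magic, a 32-bit version, then 64-bit tensor and metadata counts). The function name `read_gguf_header` is made up for this example and is not part of llama.cpp.

```python
import struct

GGUF_MAGIC = b"GGUF"


def read_gguf_header(path):
    """Return the fixed-size header fields of a GGUF file (v2+ layout assumed)."""
    with open(path, "rb") as f:
        magic = f.read(4)
        if magic != GGUF_MAGIC:
            raise ValueError(f"not a GGUF file: magic={magic!r}")
        # Little-endian: uint32 version, uint64 tensor count,
        # uint64 metadata key/value count.
        version, tensor_count, kv_count = struct.unpack("<IQQ", f.read(20))
    return {
        "version": version,
        "tensor_count": tensor_count,
        "metadata_kv_count": kv_count,
    }
```

A check like this is a cheap way to confirm that a download is a GGUF file rather than an older GGML file or an HTML error page before handing it to a client.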

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration. Linux available, in beta as of 27/11/2023.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for storytelling.

* [GPT4All](https://gpt4all.io/index.html), a free and open source local running GUI, supporting Windows, Linux and macOS with full GPU accel.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server. Note, as of time of writing (November 27th 2023), ctransformers has not been updated in a long time and does not support many recent models.

## Special thanks

🙏 Special thanks to [Georgi Gerganov](https://github.com/ggerganov) and the whole team working on [llama.cpp](https://github.com/ggerganov/llama.cpp/) for making all of this possible. |

ManifestoChatbot/llama-3-8b-Instruct-bnb-4bit-flori-demo | ManifestoChatbot | 2024-06-29T10:21:59Z | 2,549 | 0 | transformers | [

"transformers",

"gguf",

"llama",

"text-generation-inference",

"unsloth",

"en",

"base_model:unsloth/llama-3-8b-Instruct-bnb-4bit",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2024-06-29T10:11:32Z | ---

base_model: unsloth/llama-3-8b-Instruct-bnb-4bit

language:

- en

license: apache-2.0

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- gguf

---

# Uploaded model

- **Developed by:** iFlor

- **License:** apache-2.0

- **Finetuned from model:** unsloth/llama-3-8b-Instruct-bnb-4bit

This llama model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

mradermacher/heavenly-mouse-v1-GGUF | mradermacher | 2024-06-04T11:58:38Z | 2,548 | 0 | transformers | [

"transformers",

"gguf",

"en",

"base_model:DeverDever/heavenly-mouse-v1",

"endpoints_compatible",

"region:us"

] | null | 2024-06-04T11:08:24Z | ---

base_model: DeverDever/heavenly-mouse-v1

language:

- en

library_name: transformers

quantized_by: mradermacher

---

## About

<!-- ### quantize_version: 2 -->

<!-- ### output_tensor_quantised: 1 -->

<!-- ### convert_type: hf -->

<!-- ### vocab_type: -->

<!-- ### tags: -->

static quants of https://huggingface.co/DeverDever/heavenly-mouse-v1

<!-- provided-files -->

Weighted/imatrix quants do not seem to be available (from me) at this time. If they do not show up a week or so after the static ones, I have probably not planned for them. Feel free to request them by opening a Community Discussion.

## Usage

If you are unsure how to use GGUF files, refer to one of [TheBloke's

READMEs](https://huggingface.co/TheBloke/KafkaLM-70B-German-V0.1-GGUF) for

more details, including on how to concatenate multi-part files.
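For multi-part files, concatenation simply means joining the parts byte-for-byte in order. A minimal sketch in Python, assuming the parts are plain byte splits of one file; the helper name `concatenate_parts` and the file names in the usage note are hypothetical:

```python
import shutil
from pathlib import Path


def concatenate_parts(part_paths, dest_path):
    """Join split files byte-for-byte, in the order given, into dest_path.

    Returns the size of the resulting file in bytes.
    """
    with open(dest_path, "wb") as dest:
        for part in part_paths:
            with open(part, "rb") as src:
                # Stream in chunks so large parts never sit fully in memory.
                shutil.copyfileobj(src, dest)
    return Path(dest_path).stat().st_size
```

For example, `concatenate_parts(["model.gguf.part1of2", "model.gguf.part2of2"], "model.gguf")`. Pass the parts in an explicit order: sorting names alphabetically can misorder them once there are ten or more parts.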

## Provided Quants

(sorted by size, not necessarily quality. IQ-quants are often preferable over similar sized non-IQ quants)

| Link | Type | Size/GB | Notes |

|:-----|:-----|--------:|:------|

| [GGUF](https://huggingface.co/mradermacher/heavenly-mouse-v1-GGUF/resolve/main/heavenly-mouse-v1.Q2_K.gguf) | Q2_K | 3.3 | |

| [GGUF](https://huggingface.co/mradermacher/heavenly-mouse-v1-GGUF/resolve/main/heavenly-mouse-v1.IQ3_XS.gguf) | IQ3_XS | 3.6 | |

| [GGUF](https://huggingface.co/mradermacher/heavenly-mouse-v1-GGUF/resolve/main/heavenly-mouse-v1.Q3_K_S.gguf) | Q3_K_S | 3.8 | |

| [GGUF](https://huggingface.co/mradermacher/heavenly-mouse-v1-GGUF/resolve/main/heavenly-mouse-v1.IQ3_S.gguf) | IQ3_S | 3.8 | beats Q3_K* |

| [GGUF](https://huggingface.co/mradermacher/heavenly-mouse-v1-GGUF/resolve/main/heavenly-mouse-v1.IQ3_M.gguf) | IQ3_M | 3.9 | |

| [GGUF](https://huggingface.co/mradermacher/heavenly-mouse-v1-GGUF/resolve/main/heavenly-mouse-v1.Q3_K_M.gguf) | Q3_K_M | 4.1 | lower quality |

| [GGUF](https://huggingface.co/mradermacher/heavenly-mouse-v1-GGUF/resolve/main/heavenly-mouse-v1.Q3_K_L.gguf) | Q3_K_L | 4.4 | |

| [GGUF](https://huggingface.co/mradermacher/heavenly-mouse-v1-GGUF/resolve/main/heavenly-mouse-v1.IQ4_XS.gguf) | IQ4_XS | 4.6 | |

| [GGUF](https://huggingface.co/mradermacher/heavenly-mouse-v1-GGUF/resolve/main/heavenly-mouse-v1.Q4_K_S.gguf) | Q4_K_S | 4.8 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/heavenly-mouse-v1-GGUF/resolve/main/heavenly-mouse-v1.Q4_K_M.gguf) | Q4_K_M | 5.0 | fast, recommended |

| [GGUF](https://huggingface.co/mradermacher/heavenly-mouse-v1-GGUF/resolve/main/heavenly-mouse-v1.Q5_K_S.gguf) | Q5_K_S | 5.7 | |

| [GGUF](https://huggingface.co/mradermacher/heavenly-mouse-v1-GGUF/resolve/main/heavenly-mouse-v1.Q5_K_M.gguf) | Q5_K_M | 5.8 | |

| [GGUF](https://huggingface.co/mradermacher/heavenly-mouse-v1-GGUF/resolve/main/heavenly-mouse-v1.Q6_K.gguf) | Q6_K | 6.7 | very good quality |

| [GGUF](https://huggingface.co/mradermacher/heavenly-mouse-v1-GGUF/resolve/main/heavenly-mouse-v1.Q8_0.gguf) | Q8_0 | 8.6 | fast, best quality |

| [GGUF](https://huggingface.co/mradermacher/heavenly-mouse-v1-GGUF/resolve/main/heavenly-mouse-v1.f16.gguf) | f16 | 16.2 | 16 bpw, overkill |
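The table can be used to choose a file programmatically. The sketch below copies the sizes from the table, picks the largest quant whose file fits a size budget, and rebuilds its download URL from the link pattern the table uses. The helper names are made up for this example, and the bits-per-weight figure is only a rough estimate that scales from the f16 row (marked 16 bpw). File size is also not the same as runtime memory, which needs extra room for context.

```python
# File sizes in GB, copied from the table above (kept in table order).
QUANT_SIZES_GB = {
    "Q2_K": 3.3, "IQ3_XS": 3.6, "Q3_K_S": 3.8, "IQ3_S": 3.8, "IQ3_M": 3.9,
    "Q3_K_M": 4.1, "Q3_K_L": 4.4, "IQ4_XS": 4.6, "Q4_K_S": 4.8, "Q4_K_M": 5.0,
    "Q5_K_S": 5.7, "Q5_K_M": 5.8, "Q6_K": 6.7, "Q8_0": 8.6, "f16": 16.2,
}

BASE_URL = "https://huggingface.co/mradermacher/heavenly-mouse-v1-GGUF/resolve/main"


def largest_quant_within(budget_gb):
    """Return the last table entry whose file size fits the budget, or None."""
    best = None
    for name, size in QUANT_SIZES_GB.items():
        if size <= budget_gb:
            best = name  # table is sorted by size, so later entries are larger
    return best


def download_url(quant):
    """Rebuild the link used in the table for a given quant type."""
    return f"{BASE_URL}/heavenly-mouse-v1.{quant}.gguf"


def approx_bits_per_weight(quant):
    """Rough estimate: scale the 16 bpw of the f16 file by relative file size."""
    return 16.0 * QUANT_SIZES_GB[quant] / QUANT_SIZES_GB["f16"]
```

Because the lookup keeps the last fitting entry in table order, a budget of 3.8 GB selects IQ3_S rather than Q3_K_S, which matches the table's note that IQ3_S beats Q3_K*.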

Here is a handy graph by ikawrakow comparing some lower-quality quant

types (lower is better):

And here are Artefact2's thoughts on the matter:

https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9

## FAQ / Model Request

See https://huggingface.co/mradermacher/model_requests for some answers to

questions you might have and/or if you want some other model quantized.

## Thanks

I thank my company, [nethype GmbH](https://www.nethype.de/), for letting

me use its servers and providing upgrades to my workstation to enable

this work in my free time.

<!-- end -->

|

castorini/tct_colbert-msmarco | castorini | 2021-04-21T01:29:30Z | 2,546 | 0 | transformers | [

"transformers",

"pytorch",

"arxiv:2010.11386",

"endpoints_compatible",

"region:us"

] | null | 2022-03-02T23:29:05Z | This model reproduces the TCT-ColBERT dense retrieval method described in the following paper:

> Sheng-Chieh Lin, Jheng-Hong Yang, and Jimmy Lin. [Distilling Dense Representations for Ranking using Tightly-Coupled Teachers.](https://arxiv.org/abs/2010.11386) arXiv:2010.11386, October 2020.

For more details on how to use it, see our experiments in [Pyserini](https://github.com/castorini/pyserini/blob/master/docs/experiments-tct_colbert.md).

|

raxtemur/trocr-base-ru | raxtemur | 2024-05-29T23:05:29Z | 2,545 | 13 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"safetensors",

"vision-encoder-decoder",

"ocr",

"image-to-text",

"ru",

"en",

"dataset:nastyboget/stackmix_hkr_large",

"dataset:nastyboget/stackmix_cyrillic_large",

"dataset:nastyboget/synthetic_cyrillic_large",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | image-to-text | 2024-02-16T15:01:16Z | ---

license: apache-2.0

datasets:

- nastyboget/stackmix_hkr_large

- nastyboget/stackmix_cyrillic_large

- nastyboget/synthetic_cyrillic_large

language:

- ru

- en

pipeline_tag: image-to-text

widget:

- src: examples/1.png

- src: examples/2.png

- src: examples/3.png

- src: examples/4.png

tags:

- ocr

---

# Model Card for TrOCR-Ru

<!-- Provide a quick summary of what the model is/does. -->

[microsoft/trocr-base-handwritten](https://huggingface.co/microsoft/trocr-base-handwritten) fine-tuned on large synthetic datasets from [nastyboget](https://huggingface.co/nastyboget).

## Metrics on HKR/Cyrillic datasets

| Metric | HKR_val | HKR_test1 | HKR_test2 | CYR_val | CYR_test |

|:--------:|:---------:|:---------:|:---------:|:---------:|:---------:|

| Accuracy | 69.9947 | 67.4184 | 69.9187 | 72.3613 | 63.9249 |

| CER | 6.7964 | 8.9113 | 6.7278 | 6.6403 | 9.2576 |

| WER | 21.6688 | 27.3849 | 21.6200 | 27.6715 | 33.2406 |
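The card does not say how these metrics were computed. The sketch below uses the usual definitions, which is an assumption: CER and WER are the Levenshtein edit distance between prediction and reference, divided by the reference length in characters or words, and reported as percentages like the values in the table. All function names are illustrative.

```python
def edit_distance(ref, hyp):
    """Levenshtein distance between two sequences (strings or lists of words)."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def cer(reference, hypothesis):
    """Character error rate in percent; the reference must be non-empty."""
    return 100.0 * edit_distance(reference, hypothesis) / len(reference)


def wer(reference, hypothesis):
    """Word error rate in percent, splitting on whitespace."""
    ref_words = reference.split()
    return 100.0 * edit_distance(ref_words, hypothesis.split()) / len(ref_words)
```

Under these definitions a single wrong character in a short word costs a whole word in WER but only one character in CER, which is consistent with WER being several times larger than CER in every column of the table.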

Last update from 29/02/2024 |

alexm-nm/tinyllama-24-marlin24-4bit-channelwise | alexm-nm | 2024-05-08T15:31:07Z | 2,544 | 1 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"conversational",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"4-bit",

"gptq",

"region:us"

] | text-generation | 2024-05-08T15:27:57Z | ---

license: apache-2.0

---

|

BioMistral/BioMistral-MedMNX | BioMistral | 2024-04-20T17:59:42Z | 2,543 | 1 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"mergekit",

"merge",

"conversational",

"arxiv:2311.03099",

"arxiv:2306.01708",

"base_model:johnsnowlabs/JSL-MedMNX-7B",

"base_model:BioMistral/BioMistral-7B-DARE",

"license:cc-by-nc-nd-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-04-20T17:10:46Z | ---

license: cc-by-nc-nd-4.0

base_model:

- johnsnowlabs/JSL-MedMNX-7B

- BioMistral/BioMistral-7B-DARE

library_name: transformers

tags:

- mergekit

- merge

---

# BioMistral-MedMNX

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details

### Merge Method

This model was merged using the [DARE](https://arxiv.org/abs/2311.03099) [TIES](https://arxiv.org/abs/2306.01708) merge method using [johnsnowlabs/JSL-MedMNX-7B](https://huggingface.co/johnsnowlabs/JSL-MedMNX-7B) as a base.

### Models Merged

The following models were included in the merge:

* [BioMistral/BioMistral-7B-DARE](https://huggingface.co/BioMistral/BioMistral-7B-DARE)

### Configuration

The following YAML configuration was used to produce this model:

```yaml
models:
  - model: johnsnowlabs/JSL-MedMNX-7B
    parameters:
      density: 0.53
      weight: 0.4
  - model: BioMistral/BioMistral-7B-DARE
    parameters:
      density: 0.53
      weight: 0.3
merge_method: dare_ties
tokenizer_source: union
base_model: johnsnowlabs/JSL-MedMNX-7B
parameters:
  int8_mask: true
dtype: bfloat16
```
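To give a feel for what `density` and `weight` control in the configuration above, here is a toy sketch of the DARE step only: each parameter's difference from the base model is kept with probability `density`, surviving differences are rescaled by `1 / density`, and the result is added back to the base scaled by `weight`. This is not mergekit's implementation. It works on plain Python lists, leaves out the TIES sign-election step, and the function names are made up for illustration.

```python
import random


def dare_delta(base, tuned, density, rng):
    """Randomly drop delta entries, then rescale survivors by 1 / density."""
    out = []
    for b, t in zip(base, tuned):
        delta = t - b
        keep = rng.random() < density
        out.append(delta / density if keep else 0.0)
    return out


def dare_merge(base, tuned_models, weights, density, seed=0):
    """Add the weighted, sparsified deltas of each tuned model to the base."""
    if not 0.0 < density <= 1.0:
        raise ValueError("density must be in (0, 1]")
    rng = random.Random(seed)
    merged = list(base)
    for tuned, weight in zip(tuned_models, weights):
        for i, d in enumerate(dare_delta(base, tuned, density, rng)):
            merged[i] += weight * d
    return merged
```

With `density: 0.53`, roughly half of each delta is dropped, and the rescaling keeps the expected size of the remaining delta unchanged.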

|

w601sxs/b1ade-embed-kd_3 | w601sxs | 2024-06-10T17:18:24Z | 2,543 | 0 | transformers | [

"transformers",

"safetensors",

"bert",

"feature-extraction",

"mteb",

"arxiv:1910.09700",

"model-index",

"endpoints_compatible",

"text-embeddings-inference",

"region:us"

] | feature-extraction | 2024-06-10T16:53:19Z | ---

model-index:

- name: no_model_name_available

results:

- dataset:

config: en

name: MTEB MassiveScenarioClassification

revision: fad2c6e8459f9e1c45d9315f4953d921437d70f8

split: test

type: mteb/amazon_massive_scenario

metrics:

- type: accuracy

value: 0.7591123066577001

task:

type: Classification

- dataset:

config: default

name: MTEB ImdbClassification

revision: 3d86128a09e091d6018b6d26cad27f2739fc2db7

split: test

type: mteb/imdb

metrics:

- type: accuracy

value: 0.737088

task:

type: Classification

- dataset:

config: default

name: MTEB SCIDOCS

revision: f8c2fcf00f625baaa80f62ec5bd9e1fff3b8ae88

split: test

type: mteb/scidocs

metrics:

- type: ndcg_at_10

value: 0.14645

task:

type: Retrieval

- dataset:

config: default

name: MTEB BIOSSES

revision: d3fb88f8f02e40887cd149695127462bbcf29b4a

split: test

type: mteb/biosses-sts

metrics:

- type: cosine_spearman

value: 0.7433663356966029

task:

type: STS

- dataset:

config: default

name: MTEB TwentyNewsgroupsClustering

revision: 6125ec4e24fa026cec8a478383ee943acfbd5449

split: test

type: mteb/twentynewsgroups-clustering

metrics:

- type: v_measure

value: 0.4597050473156563

task:

type: Clustering

- dataset:

config: default

name: MTEB FEVER

revision: bea83ef9e8fb933d90a2f1d5515737465d613e12

split: test

type: mteb/fever

metrics:

- type: ndcg_at_10

value: 0.25419

task:

type: Retrieval

- dataset:

config: default

name: MTEB TwitterSemEval2015

revision: 70970daeab8776df92f5ea462b6173c0b46fd2d1

split: test

type: mteb/twittersemeval2015-pairclassification

metrics:

- type: ap

value: 0.6721556260260827

task:

type: PairClassification

- dataset:

config: default

name: MTEB NFCorpus

revision: ec0fa4fe99da2ff19ca1214b7966684033a58814

split: test

type: mteb/nfcorpus

metrics:

- type: ndcg_at_10

value: 0.23269

task:

type: Retrieval

- dataset:

config: default

name: MTEB HotpotQA

revision: ab518f4d6fcca38d87c25209f94beba119d02014

split: test

type: mteb/hotpotqa

metrics:

- type: ndcg_at_10

value: 0.29778

task:

type: Retrieval

- dataset:

config: default

name: MTEB STS15

revision: ae752c7c21bf194d8b67fd573edf7ae58183cbe3

split: test

type: mteb/sts15-sts

metrics:

- type: cosine_spearman

value: 0.8236097824265283

task:

type: STS

- dataset:

config: default

name: MTEB CQADupstackMathematicaRetrieval

revision: 90fceea13679c63fe563ded68f3b6f06e50061de

split: test

type: mteb/cqadupstack-mathematica

metrics:

- type: ndcg_at_10

value: 0.1537

task:

type: Retrieval

- dataset:

config: default

name: MTEB CQADupstackEnglishRetrieval

revision: ad9991cb51e31e31e430383c75ffb2885547b5f0

split: test

type: mteb/cqadupstack-english

metrics:

- type: ndcg_at_10

value: 0.24932

task:

type: Retrieval

- dataset:

config: default

name: MTEB ArxivClusteringP2P

revision: a122ad7f3f0291bf49cc6f4d32aa80929df69d5d

split: test

type: mteb/arxiv-clustering-p2p

metrics:

- type: v_measure

value: 0.44358822700946515

task:

type: Clustering

- dataset:

config: default

name: MTEB FiQA2018

revision: 27a168819829fe9bcd655c2df245fb19452e8e06

split: test

type: mteb/fiqa

metrics:

- type: ndcg_at_10

value: 0.15899

task:

type: Retrieval

- dataset:

config: default

name: MTEB SciDocsRR

revision: d3c5e1fc0b855ab6097bf1cda04dd73947d7caab

split: test

type: mteb/scidocs-reranking

metrics:

- type: map

value: 0.7885898217382544

task:

type: Reranking

- dataset:

config: default

name: MTEB RedditClustering

revision: 24640382cdbf8abc73003fb0fa6d111a705499eb

split: test

type: mteb/reddit-clustering

metrics:

- type: v_measure

value: 0.4966068486651516

task:

type: Clustering

- dataset:

config: default

name: MTEB Touche2020

revision: a34f9a33db75fa0cbb21bb5cfc3dae8dc8bec93f

split: test

type: mteb/touche2020

metrics:

- type: ndcg_at_10

value: 0.12018

task:

type: Retrieval

- dataset:

config: en

name: MTEB MTOPDomainClassification

revision: d80d48c1eb48d3562165c59d59d0034df9fff0bf

split: test

type: mteb/mtop_domain

metrics:

- type: accuracy

value: 0.925079799361605

task:

type: Classification

- dataset:

config: default

name: MTEB Banking77Classification

revision: 0fd18e25b25c072e09e0d92ab615fda904d66300

split: test

type: mteb/banking77

metrics:

- type: accuracy

value: 0.8135064935064935

task:

type: Classification

- dataset:

config: default

name: MTEB SummEval

revision: cda12ad7615edc362dbf25a00fdd61d3b1eaf93c

split: test

type: mteb/summeval

metrics:

- type: cosine_spearman

value: 0.2889324869563879

task:

type: Summarization

- dataset:

config: default

name: MTEB TwitterURLCorpus

revision: 8b6510b0b1fa4e4c4f879467980e9be563ec1cdf

split: test

type: mteb/twitterurlcorpus-pairclassification

metrics:

- type: ap

value: 0.8389671787853494

task:

type: PairClassification

- dataset:

config: en-en

name: MTEB STS17

revision: faeb762787bd10488a50c8b5be4a3b82e411949c

split: test

type: mteb/sts17-crosslingual-sts

metrics:

- type: cosine_spearman

value: 0.8952714837442336

task:

type: STS

- dataset:

config: default

name: MTEB ArxivClusteringS2S

revision: f910caf1a6075f7329cdf8c1a6135696f37dbd53

split: test

type: mteb/arxiv-clustering-s2s

metrics:

- type: v_measure

value: 0.37137555761396807

task:

type: Clustering

- dataset:

config: default

name: MTEB CQADupstackWebmastersRetrieval

revision: 160c094312a0e1facb97e55eeddb698c0abe3571

split: test

type: mteb/cqadupstack-webmasters

metrics:

- type: ndcg_at_10

value: 0.25273

task:

type: Retrieval

- dataset:

config: en

name: MTEB AmazonCounterfactualClassification

revision: e8379541af4e31359cca9fbcf4b00f2671dba205

split: test

type: mteb/amazon_counterfactual

metrics:

- type: accuracy

value: 0.6864179104477611

task:

type: Classification

- dataset:

config: default

name: MTEB MindSmallReranking

revision: 59042f120c80e8afa9cdbb224f67076cec0fc9a7

split: test

type: mteb/mind_small

metrics:

- type: map

value: 0.30932148624587386

task:

type: Reranking

- dataset:

config: default

name: MTEB MSMARCO

revision: c5a29a104738b98a9e76336939199e264163d4a0

split: dev

type: mteb/msmarco

metrics:

- type: ndcg_at_10

value: 0.20298

task:

type: Retrieval

- dataset:

config: default

name: MTEB STS13

revision: 7e90230a92c190f1bf69ae9002b8cea547a64cca

split: test

type: mteb/sts13-sts

metrics:

- type: cosine_spearman

value: 0.8282788944395595

task:

type: STS

- dataset:

config: default

name: MTEB StackOverflowDupQuestions

revision: e185fbe320c72810689fc5848eb6114e1ef5ec69

split: test

type: mteb/stackoverflowdupquestions-reranking

metrics:

- type: map

value: 0.4666186087388775

task:

type: Reranking

- dataset:

config: en

name: MTEB STS22

revision: de9d86b3b84231dc21f76c7b7af1f28e2f57f6e3

split: test

type: mteb/sts22-crosslingual-sts

metrics:

- type: cosine_spearman

value: 0.6528643554148379

task:

type: STS

- dataset:

config: en

name: MTEB MTOPIntentClassification

revision: ae001d0e6b1228650b7bd1c2c65fb50ad11a8aba

split: test

type: mteb/mtop_intent

metrics:

- type: accuracy

value: 0.6971044231646147

task:

type: Classification

- dataset:

config: default

name: MTEB RedditClusteringP2P

revision: 385e3cb46b4cfa89021f56c4380204149d0efe33

split: test

type: mteb/reddit-clustering-p2p

metrics:

- type: v_measure

value: 0.5520754883265135

task:

type: Clustering

- dataset:

config: default

name: MTEB CQADupstackUnixRetrieval

revision: 6c6430d3a6d36f8d2a829195bc5dc94d7e063e53

split: test

type: mteb/cqadupstack-unix

metrics:

- type: ndcg_at_10

value: 0.23623

task:

type: Retrieval

- dataset:

config: default

name: MTEB AskUbuntuDupQuestions

revision: 2000358ca161889fa9c082cb41daa8dcfb161a54

split: test

type: mteb/askubuntudupquestions-reranking

metrics:

- type: map

value: 0.5611127024658868

task:

type: Reranking

- dataset:

config: default

name: MTEB QuoraRetrieval

revision: e4e08e0b7dbe3c8700f0daef558ff32256715259

split: test

type: mteb/quora

metrics:

- type: ndcg_at_10

value: 0.84318

task:

type: Retrieval

- dataset:

config: en

name: MTEB MassiveIntentClassification

revision: 4672e20407010da34463acc759c162ca9734bca6

split: test

type: mteb/amazon_massive_intent

metrics:

- type: accuracy

value: 0.7035305985205111

task:

type: Classification

- dataset:

config: default

name: MTEB SprintDuplicateQuestions

revision: d66bd1f72af766a5cc4b0ca5e00c162f89e8cc46

split: test

type: mteb/sprintduplicatequestions-pairclassification

metrics:

- type: ap

value: 0.8547019640605763

task:

type: PairClassification

- dataset:

config: default

name: MTEB AmazonPolarityClassification

revision: e2d317d38cd51312af73b3d32a06d1a08b442046

split: test

type: mteb/amazon_polarity

metrics:

- type: accuracy

value: 0.8622175000000001

task:

type: Classification

- dataset:

config: default

name: MTEB SICK-R

revision: 20a6d6f312dd54037fe07a32d58e5e168867909d

split: test

type: mteb/sickr-sts

metrics:

- type: cosine_spearman

value: 0.8074931072126776

task:

type: STS

- dataset:

config: default

name: MTEB StackExchangeClustering

revision: 6cbc1f7b2bc0622f2e39d2c77fa502909748c259

split: test

type: mteb/stackexchange-clustering

metrics:

- type: v_measure

value: 0.5824562550961052

task:

type: Clustering

- dataset:

config: default

name: MTEB DBPedia

revision: c0f706b76e590d620bd6618b3ca8efdd34e2d659

split: test

type: mteb/dbpedia

metrics:

- type: ndcg_at_10

value: 0.27904

task:

type: Retrieval

- dataset:

config: default

name: MTEB CQADupstackProgrammersRetrieval

revision: 6184bc1440d2dbc7612be22b50686b8826d22b32

split: test

type: mteb/cqadupstack-programmers

metrics:

- type: ndcg_at_10

value: 0.22149

task:

type: Retrieval

- dataset:

config: default

name: MTEB MedrxivClusteringP2P

revision: e7a26af6f3ae46b30dde8737f02c07b1505bcc73

split: test

type: mteb/medrxiv-clustering-p2p

metrics:

- type: v_measure

value: 0.3237094363112699

task:

type: Clustering

- dataset:

config: default

name: MTEB ToxicConversationsClassification

revision: edfaf9da55d3dd50d43143d90c1ac476895ae6de

split: test

type: mteb/toxic_conversations_50k

metrics:

- type: accuracy

value: 0.69345703125

task:

type: Classification

- dataset:

config: default

name: MTEB CQADupstackStatsRetrieval

revision: 65ac3a16b8e91f9cee4c9828cc7c335575432a2a

split: test

type: mteb/cqadupstack-stats

metrics:

- type: ndcg_at_10

value: 0.20067

task:

type: Retrieval

- dataset:

config: default

name: MTEB STS16

revision: 4d8694f8f0e0100860b497b999b3dbed754a0513

split: test

type: mteb/sts16-sts

metrics:

- type: cosine_spearman

value: 0.8059731462041985

task:

type: STS

- dataset:

config: default

name: MTEB SciFact

revision: 0228b52cf27578f30900b9e5271d331663a030d7

split: test

type: mteb/scifact

metrics:

- type: ndcg_at_10

value: 0.50544

task:

type: Retrieval

- dataset:

config: default

name: MTEB TweetSentimentExtractionClassification

revision: d604517c81ca91fe16a244d1248fc021f9ecee7a

split: test

type: mteb/tweet_sentiment_extraction

metrics:

- type: accuracy

value: 0.6107243916242219

task:

type: Classification

- dataset:

config: default

name: MTEB STS12

revision: a0d554a64d88156834ff5ae9920b964011b16384

split: test

type: mteb/sts12-sts

metrics:

- type: cosine_spearman

value: 0.7407164757075673

task:

type: STS

- dataset:

config: default

name: MTEB CQADupstackPhysicsRetrieval

revision: 79531abbd1fb92d06c6d6315a0cbbbf5bb247ea4

split: test

type: mteb/cqadupstack-physics

metrics:

- type: ndcg_at_10

value: 0.31707

task:

type: Retrieval

- dataset:

config: default

name: MTEB BiorxivClusteringP2P

revision: 65b79d1d13f80053f67aca9498d9402c2d9f1f40

split: test

type: mteb/biorxiv-clustering-p2p

metrics:

- type: v_measure

value: 0.36100815785085566

task:

type: Clustering

- dataset:

config: default

name: MTEB CQADupstackGisRetrieval

revision: 5003b3064772da1887988e05400cf3806fe491f2

split: test

type: mteb/cqadupstack-gis

metrics:

- type: ndcg_at_10

value: 0.22124

task:

type: Retrieval

- dataset:

config: default

name: MTEB CQADupstackGamingRetrieval

revision: 4885aa143210c98657558c04aaf3dc47cfb54340

split: test

type: mteb/cqadupstack-gaming

metrics:

- type: ndcg_at_10

value: 0.42459

task:

type: Retrieval

- dataset:

config: default

name: MTEB STSBenchmark

revision: b0fddb56ed78048fa8b90373c8a3cfc37b684831

split: test

type: mteb/stsbenchmark-sts

metrics:

- type: cosine_spearman

value: 0.7980073939799789

task:

type: STS

- dataset:

config: default

name: MTEB CQADupstackWordpressRetrieval

revision: 4ffe81d471b1924886b33c7567bfb200e9eec5c4

split: test

type: mteb/cqadupstack-wordpress

metrics:

- type: ndcg_at_10

value: 0.15801

task:

type: Retrieval

- dataset:

config: default

name: MTEB StackExchangeClusteringP2P

revision: 815ca46b2622cec33ccafc3735d572c266efdb44

split: test

type: mteb/stackexchange-clustering-p2p

metrics:

- type: v_measure

value: 0.30348330076332997

task:

type: Clustering

- dataset:

config: default

name: MTEB BiorxivClusteringS2S

revision: 258694dd0231531bc1fd9de6ceb52a0853c6d908

split: test

type: mteb/biorxiv-clustering-s2s

metrics:

- type: v_measure

value: 0.3066700709222508

task:

type: Clustering

- dataset:

config: default

name: MTEB CQADupstackTexRetrieval

revision: 46989137a86843e03a6195de44b09deda022eec7

split: test

type: mteb/cqadupstack-tex

metrics:

- type: ndcg_at_10

value: 0.14452

task:

type: Retrieval

- dataset:

config: default

name: MTEB CQADupstackAndroidRetrieval

revision: f46a197baaae43b4f621051089b82a364682dfeb

split: test

type: mteb/cqadupstack-android

metrics:

- type: ndcg_at_10

value: 0.34255

task:

type: Retrieval

- dataset:

config: default

name: MTEB TRECCOVID

revision: bb9466bac8153a0349341eb1b22e06409e78ef4e

split: test

type: mteb/trec-covid

metrics:

- type: ndcg_at_10

value: 0.37473

task:

type: Retrieval

- dataset:

config: default

name: MTEB NQ

revision: b774495ed302d8c44a3a7ea25c90dbce03968f31

split: test

type: mteb/nq

metrics:

- type: ndcg_at_10

value: 0.21214

task:

type: Retrieval

- dataset:

config: default

name: MTEB CQADupstackWebmastersRetrieval

revision: 160c094312a0e1facb97e55eeddb698c0abe3571

split: test

type: mteb/cqadupstack-webmasters

metrics:

- type: ndcg_at_10

value: 0.25273

task:

type: Retrieval

- dataset:

config: default

name: MTEB ArguAna

revision: c22ab2a51041ffd869aaddef7af8d8215647e41a

split: test

type: mteb/arguana

metrics:

- type: ndcg_at_10

value: 0.44416

task:

type: Retrieval

- dataset:

config: default

name: MTEB STS14

revision: 6031580fec1f6af667f0bd2da0a551cf4f0b2375

split: test

type: mteb/sts14-sts

metrics:

- type: cosine_spearman

value: 0.7544709602964104

task:

type: STS

- dataset:

config: default

name: MTEB MedrxivClusteringS2S

revision: 35191c8c0dca72d8ff3efcd72aa802307d469663

split: test

type: mteb/medrxiv-clustering-s2s

metrics:

- type: v_measure

value: 0.2932525018378166

task:

type: Clustering

- dataset:

config: en

name: MTEB AmazonReviewsClassification

revision: 1399c76144fd37290681b995c656ef9b2e06e26d

split: test

type: mteb/amazon_reviews_multi

metrics:

- type: accuracy

value: 0.43157999999999996

task:

type: Classification

- dataset:

config: default

name: MTEB ClimateFEVER

revision: 47f2ac6acb640fc46020b02a5b59fdda04d39380

split: test

type: mteb/climate-fever

metrics:

- type: ndcg_at_10

value: 0.11327

task:

type: Retrieval

- dataset:

config: default

name: MTEB EmotionClassification

revision: 4f58c6b202a23cf9a4da393831edf4f9183cad37

split: test

type: mteb/emotion

metrics:

- type: accuracy

value: 0.48450000000000004

task:

type: Classification

tags:

- mteb

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]
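
The fields above can be combined into a rough estimate. The sketch below multiplies hardware power draw, hours used, and grid carbon intensity. It is a simplified assumption about how such an estimate is formed, not the calculator's exact method, and every number in it is a hypothetical placeholder rather than a measurement for any model.

```python
def estimate_co2_grams(power_watts: float, hours: float, grid_g_per_kwh: float) -> float:
    """Estimate emissions in grams of CO2eq as energy (kWh) times grid carbon intensity."""
    energy_kwh = power_watts / 1000.0 * hours
    return energy_kwh * grid_g_per_kwh


# Hypothetical placeholder values, chosen only to illustrate the arithmetic.
example = estimate_co2_grams(power_watts=300.0, hours=10.0, grid_g_per_kwh=400.0)
print(f"{example:.0f} g CO2eq")
```

The real hardware type, hours, and compute region should replace the placeholders once they are known.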

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

PracticeLLM/SOLAR-tail-10.7B-Merge-v1.0 | PracticeLLM | 2024-06-21T05:48:13Z | 2,542 | 0 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"en",

"ko",

"base_model:upstage/SOLAR-10.7B-v1.0",

"license:cc-by-nc-sa-4.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-12-26T18:15:42Z | ---

language:

- en

- ko

license: cc-by-nc-sa-4.0

pipeline_tag: text-generation

base_model:

- upstage/SOLAR-10.7B-v1.0

- Yhyu13/LMCocktail-10.7B-v1

model-index:
- name: SOLAR-tail-10.7B-Merge-v1.0
  results:
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: AI2 Reasoning Challenge (25-Shot)
      type: ai2_arc
      config: ARC-Challenge
      split: test
      args:
        num_few_shot: 25
    metrics:
    - type: acc_norm
      value: 66.13
      name: normalized accuracy
    source:
      url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=PracticeLLM/SOLAR-tail-10.7B-Merge-v1.0
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: HellaSwag (10-Shot)
      type: hellaswag
      split: validation
      args:
        num_few_shot: 10
    metrics:
    - type: acc_norm
      value: 86.54
      name: normalized accuracy
    source:
      url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=PracticeLLM/SOLAR-tail-10.7B-Merge-v1.0
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: MMLU (5-Shot)
      type: cais/mmlu
      config: all
      split: test
      args:
        num_few_shot: 5
    metrics:
    - type: acc
      value: 66.52
      name: accuracy
    source:
      url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=PracticeLLM/SOLAR-tail-10.7B-Merge-v1.0
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: TruthfulQA (0-shot)
      type: truthful_qa
      config: multiple_choice
      split: validation
      args:
        num_few_shot: 0
    metrics:
    - type: mc2
      value: 60.57
    source:
      url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=PracticeLLM/SOLAR-tail-10.7B-Merge-v1.0
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: Winogrande (5-shot)
      type: winogrande
      config: winogrande_xl
      split: validation
      args:
        num_few_shot: 5
    metrics:
    - type: acc
      value: 84.77
      name: accuracy
    source:
      url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=PracticeLLM/SOLAR-tail-10.7B-Merge-v1.0
      name: Open LLM Leaderboard
  - task:
      type: text-generation
      name: Text Generation
    dataset:
      name: GSM8k (5-shot)
      type: gsm8k
      config: main
      split: test
      args:
        num_few_shot: 5
    metrics:
    - type: acc
      value: 65.58
      name: accuracy
    source:
      url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=PracticeLLM/SOLAR-tail-10.7B-Merge-v1.0
      name: Open LLM Leaderboard

---

# **SOLAR-tail-10.7B-Merge-v1.0**

## Model Details

**Model Developers** Kyujin Han (kyujinpy)

**Method**

Using [Mergekit](https://github.com/cg123/mergekit).

- [upstage/SOLAR-10.7B-v1.0](https://huggingface.co/upstage/SOLAR-10.7B-v1.0)

- [Yhyu13/LMCocktail-10.7B-v1](https://huggingface.co/Yhyu13/LMCocktail-10.7B-v1)

**Merge config**

```

slices:
  - sources:
      - model: upstage/SOLAR-10.7B-v1.0
        layer_range: [0, 48]
      - model: Yhyu13/LMCocktail-10.7B-v1
        layer_range: [0, 48]
merge_method: slerp
base_model: upstage/SOLAR-10.7B-v1.0
parameters:
  t:
    - filter: self_attn
      value: [0, 0.5, 0.3, 0.7, 1]
    - filter: mlp
      value: [1, 0.5, 0.7, 0.3, 0]
    - value: 0.5 # fallback for rest of tensors
tokenizer_source: union
dtype: float16

```
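
To make the `slerp` merge method and its `t` parameter more concrete, here is a minimal sketch of spherical linear interpolation between two weight vectors. It is a generic textbook formulation written with plain Python lists, not Mergekit's actual implementation; the function name and the toy vectors are assumptions for illustration. As far as we understand the convention, `t = 0` corresponds to the base model and `t = 1` to the other model.

```python
import math


def slerp(t, v0, v1, eps=1e-8):
    """Spherically interpolate between two non-zero vectors given as lists of floats."""
    norm0 = math.sqrt(sum(x * x for x in v0))
    norm1 = math.sqrt(sum(x * x for x in v1))
    # Cosine of the angle between the two directions, clamped for numerical safety.
    dot = sum((a / norm0) * (b / norm1) for a, b in zip(v0, v1))
    dot = max(-1.0, min(1.0, dot))
    theta = math.acos(dot)
    sin_theta = math.sin(theta)
    if abs(sin_theta) < eps:
        # Nearly parallel vectors: fall back to plain linear interpolation.
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    w0 = math.sin((1 - t) * theta) / sin_theta
    w1 = math.sin(t * theta) / sin_theta
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]


# Toy two-dimensional "weights" standing in for real model tensors.
base = [1.0, 0.0]
other = [0.0, 1.0]
mid = slerp(0.5, base, other)
print(mid)
```

Unlike a straight average, the midpoint of two unit vectors keeps unit length, which is the usual motivation for interpolating along the arc rather than the chord.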

# **Model Benchmark**

## Open Ko leaderboard

- Results are tracked on the [Open Ko-LLM Leaderboard](https://huggingface.co/spaces/upstage/open-ko-llm-leaderboard).

| Model | Average | ARC | HellaSwag | MMLU | TruthfulQA | Ko-CommonGenV2 |

| --- | --- | --- | --- | --- | --- | --- |

| PracticeLLM/SOLAR-tail-10.7B-Merge-v1.0 | 48.32 | 45.73 | 56.97 | 38.77 | 38.75 | 61.16 |

| jjourney1125/M-SOLAR-10.7B-v1.0 | 55.15 | 49.57 | 60.12 | 54.60 | 49.23 | 62.22 |

- Results are tracked on the [Open LLM Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard).

| Model | Average | ARC | HellaSwag | MMLU | TruthfulQA | Winogrande | GSM8K |

| --- | --- | --- | --- | --- | --- | --- | --- |

| PracticeLLM/SOLAR-tail-10.7B-Merge-v1.0 | 71.68 | 66.13 | 86.54 | **66.52** | 60.57 | **84.77** | **65.58** |

| kyujinpy/Sakura-SOLAR-Instruct | **74.40** | **70.99** | **88.42** | 66.33 | **71.79** | 83.66 | 65.20 |

## lm-evaluation-harness

```

gpt2 (pretrained=PracticeLLM/SOLAR-tail-10.7B-Merge-v1.0), limit: None, provide_description: False, num_fewshot: 0, batch_size: None

| Task |Version| Metric |Value | |Stderr|

|----------------|------:|--------|-----:|---|-----:|

|kobest_boolq | 0|acc |0.5021|± |0.0133|

| | |macro_f1|0.3343|± |0.0059|

|kobest_copa | 0|acc |0.6220|± |0.0153|

| | |macro_f1|0.6217|± |0.0154|

|kobest_hellaswag| 0|acc |0.4380|± |0.0222|

| | |acc_norm|0.5380|± |0.0223|

| | |macro_f1|0.4366|± |0.0222|

|kobest_sentineg | 0|acc |0.4962|± |0.0251|

| | |macro_f1|0.3316|± |0.0113|

```
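
The `Stderr` column can be turned into an approximate confidence interval. The sketch below uses a normal approximation (value ± 1.96 × stderr) on the `kobest_boolq` accuracy from the table above; the helper name is hypothetical, and treating 0.5 as the chance level assumes a two-way task.

```python
def confidence_interval(value, stderr, z=1.96):
    """Return an approximate 95% interval (lower, upper) under a normal approximation."""
    margin = z * stderr
    return value - margin, value + margin


# kobest_boolq accuracy and standard error, copied from the table above.
low, high = confidence_interval(0.5021, 0.0133)
print(f"kobest_boolq acc: [{low:.4f}, {high:.4f}]")
```

If the interval contains the chance level, the score on that task is hard to distinguish from guessing.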

# Implementation Code

```python

# Load the merged model and its tokenizer with Hugging Face Transformers
from transformers import AutoModelForCausalLM, AutoTokenizer
import torch

repo = "PracticeLLM/SOLAR-tail-10.7B-Merge-v1.0"

model = AutoModelForCausalLM.from_pretrained(
    repo,
    return_dict=True,
    torch_dtype=torch.float16,  # half precision to reduce memory use
    device_map='auto',  # place weights on available devices (requires `accelerate`)
)

tokenizer = AutoTokenizer.from_pretrained(repo)

```

---

# [Open LLM Leaderboard Evaluation Results](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)

Detailed results can be found [here](https://huggingface.co/datasets/open-llm-leaderboard/details_PracticeLLM__SOLAR-tail-10.7B-Merge-v1.0)

| Metric |Value|

|---------------------------------|----:|

|Avg. |71.68|

|AI2 Reasoning Challenge (25-Shot)|66.13|

|HellaSwag (10-Shot) |86.54|

|MMLU (5-Shot) |66.52|

|TruthfulQA (0-shot) |60.57|

|Winogrande (5-shot) |84.77|

|GSM8k (5-shot) |65.58|
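
As a quick sanity check, the `Avg.` row can be recomputed as the arithmetic mean of the six benchmark scores. This is a minimal sketch using the rounded values from the table above; a small difference in the last digit is possible if the leaderboard averages unrounded scores, which is an assumption on our part.

```python
# Scores copied from the table above.
scores = {
    "AI2 Reasoning Challenge (25-Shot)": 66.13,
    "HellaSwag (10-Shot)": 86.54,
    "MMLU (5-Shot)": 66.52,
    "TruthfulQA (0-shot)": 60.57,
    "Winogrande (5-shot)": 84.77,
    "GSM8k (5-shot)": 65.58,
}

average = sum(scores.values()) / len(scores)
print(f"mean of {len(scores)} benchmarks: {average:.3f}")
```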

|

RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf | RichardErkhov | 2024-06-16T11:50:34Z | 2,542 | 0 | null | [

"gguf",

"region:us"

] | null | 2024-06-16T07:56:06Z | Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

openbuddy-llama3-8b-v21.1-8k - GGUF

- Model creator: https://huggingface.co/OpenBuddy/

- Original model: https://huggingface.co/OpenBuddy/openbuddy-llama3-8b-v21.1-8k/

| Name | Quant method | Size |

| ---- | ---- | ---- |

| [openbuddy-llama3-8b-v21.1-8k.Q2_K.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.Q2_K.gguf) | Q2_K | 2.96GB |

| [openbuddy-llama3-8b-v21.1-8k.IQ3_XS.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.IQ3_XS.gguf) | IQ3_XS | 3.28GB |

| [openbuddy-llama3-8b-v21.1-8k.IQ3_S.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.IQ3_S.gguf) | IQ3_S | 3.43GB |

| [openbuddy-llama3-8b-v21.1-8k.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.Q3_K_S.gguf) | Q3_K_S | 3.41GB |

| [openbuddy-llama3-8b-v21.1-8k.IQ3_M.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.IQ3_M.gguf) | IQ3_M | 3.52GB |

| [openbuddy-llama3-8b-v21.1-8k.Q3_K.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.Q3_K.gguf) | Q3_K | 3.74GB |

| [openbuddy-llama3-8b-v21.1-8k.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.Q3_K_M.gguf) | Q3_K_M | 3.74GB |

| [openbuddy-llama3-8b-v21.1-8k.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.Q3_K_L.gguf) | Q3_K_L | 4.03GB |

| [openbuddy-llama3-8b-v21.1-8k.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.IQ4_XS.gguf) | IQ4_XS | 4.18GB |

| [openbuddy-llama3-8b-v21.1-8k.Q4_0.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.Q4_0.gguf) | Q4_0 | 4.34GB |

| [openbuddy-llama3-8b-v21.1-8k.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.IQ4_NL.gguf) | IQ4_NL | 4.38GB |

| [openbuddy-llama3-8b-v21.1-8k.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.Q4_K_S.gguf) | Q4_K_S | 4.37GB |

| [openbuddy-llama3-8b-v21.1-8k.Q4_K.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.Q4_K.gguf) | Q4_K | 4.58GB |

| [openbuddy-llama3-8b-v21.1-8k.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.Q4_K_M.gguf) | Q4_K_M | 4.58GB |

| [openbuddy-llama3-8b-v21.1-8k.Q4_1.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.Q4_1.gguf) | Q4_1 | 4.78GB |

| [openbuddy-llama3-8b-v21.1-8k.Q5_0.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.Q5_0.gguf) | Q5_0 | 5.21GB |

| [openbuddy-llama3-8b-v21.1-8k.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.Q5_K_S.gguf) | Q5_K_S | 5.21GB |

| [openbuddy-llama3-8b-v21.1-8k.Q5_K.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.Q5_K.gguf) | Q5_K | 5.34GB |

| [openbuddy-llama3-8b-v21.1-8k.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.Q5_K_M.gguf) | Q5_K_M | 5.34GB |

| [openbuddy-llama3-8b-v21.1-8k.Q5_1.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.Q5_1.gguf) | Q5_1 | 5.65GB |

| [openbuddy-llama3-8b-v21.1-8k.Q6_K.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.Q6_K.gguf) | Q6_K | 6.14GB |

| [openbuddy-llama3-8b-v21.1-8k.Q8_0.gguf](https://huggingface.co/RichardErkhov/OpenBuddy_-_openbuddy-llama3-8b-v21.1-8k-gguf/blob/main/openbuddy-llama3-8b-v21.1-8k.Q8_0.gguf) | Q8_0 | 7.95GB |
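
One way to read the table is to pick the largest file that fits a given size budget. The sketch below does that with the sizes listed above. The helper name is hypothetical, and using file size as a stand-in for quality and memory use is a simplifying assumption: running a model also needs room for the context, and a larger file is not guaranteed to be better for every use.

```python
# File sizes in GB, copied from the table above.
QUANT_SIZES_GB = {
    "Q2_K": 2.96, "IQ3_XS": 3.28, "IQ3_S": 3.43, "Q3_K_S": 3.41,
    "IQ3_M": 3.52, "Q3_K": 3.74, "Q3_K_M": 3.74, "Q3_K_L": 4.03,
    "IQ4_XS": 4.18, "Q4_0": 4.34, "IQ4_NL": 4.38, "Q4_K_S": 4.37,
    "Q4_K": 4.58, "Q4_K_M": 4.58, "Q4_1": 4.78, "Q5_0": 5.21,
    "Q5_K_S": 5.21, "Q5_K": 5.34, "Q5_K_M": 5.34, "Q5_1": 5.65,
    "Q6_K": 6.14, "Q8_0": 7.95,
}


def largest_fitting_quant(budget_gb, sizes=QUANT_SIZES_GB):
    """Return the name of the largest file not exceeding budget_gb, or None if none fits."""
    fitting = {name: size for name, size in sizes.items() if size <= budget_gb}
    if not fitting:
        return None
    return max(fitting, key=fitting.get)


print(largest_fitting_quant(5.0))
```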

Original model description:

---

language:

- zh

- en

- fr

- de

- ja

- ko

- it

- fi

pipeline_tag: text-generation

tags:

- llama-3

license: other

license_name: llama3

license_link: https://llama.meta.com/llama3/license/

---

# OpenBuddy - Open Multilingual Chatbot

GitHub and Usage Guide: [https://github.com/OpenBuddy/OpenBuddy](https://github.com/OpenBuddy/OpenBuddy)

Website and Demo: [https://openbuddy.ai](https://openbuddy.ai)

Evaluation result of this model: [Evaluation.txt](Evaluation.txt)

# Run locally with 🦙Ollama

```

ollama run openbuddy/openbuddy-llama3-8b-v21.1-8k

```

# Copyright Notice

**Built with Meta Llama 3**

License: https://llama.meta.com/llama3/license/

Acceptable Use Policy: https://llama.meta.com/llama3/use-policy

This model is intended for use in English and Chinese.

# Prompt Format

We recommend using the fast tokenizer from `transformers`, which should be enabled by default in the `transformers` and `vllm` libraries. Other implementations including `sentencepiece` may not work as expected, especially for special tokens like `<|role|>`, `<|says|>` and `<|end|>`.

```

<|role|>system<|says|>You(assistant) are a helpful, respectful and honest INTP-T AI Assistant named Buddy. You are talking to a human(user).

Always answer as helpfully and logically as possible, while being safe. Your answers should not include any harmful, political, religious, unethical, racist, sexist, toxic, dangerous, or illegal content. Please ensure that your responses are socially unbiased and positive in nature.

You cannot access the internet, but you have vast knowledge, cutoff: 2023-04.

You are trained by OpenBuddy team, (https://openbuddy.ai, https://github.com/OpenBuddy/OpenBuddy), not related to GPT or OpenAI.<|end|>

<|role|>user<|says|>History input 1<|end|>

<|role|>assistant<|says|>History output 1<|end|>

<|role|>user<|says|>History input 2<|end|>

<|role|>assistant<|says|>History output 2<|end|>

<|role|>user<|says|>Current input<|end|>

<|role|>assistant<|says|>

```

This format is also defined in `tokenizer_config.json`, which means you can directly use `vllm` to deploy an OpenAI-like API service. For more information, please refer to the [vllm documentation](https://docs.vllm.ai/en/latest/serving/openai_compatible_server.html).
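
When the chat template from `tokenizer_config.json` is not available, the prompt can be assembled by hand. The following is a minimal sketch of that assembly; the function name `build_prompt` is hypothetical, and joining turns with a single newline is an assumption based on how the example above is laid out.

```python
def build_prompt(system, history, current_input):
    """Build a prompt string; history is a list of (user_text, assistant_text) pairs."""
    parts = [f"<|role|>system<|says|>{system}<|end|>"]
    for user_text, assistant_text in history:
        parts.append(f"<|role|>user<|says|>{user_text}<|end|>")
        parts.append(f"<|role|>assistant<|says|>{assistant_text}<|end|>")
    parts.append(f"<|role|>user<|says|>{current_input}<|end|>")
    # Leave the assistant turn open so the model generates the reply.
    parts.append("<|role|>assistant<|says|>")
    return "\n".join(parts)


prompt = build_prompt(
    system="You are a helpful assistant named Buddy.",
    history=[("History input 1", "History output 1")],
    current_input="Current input",
)
print(prompt)
```

Generation would then typically be stopped at the `<|end|>` token so that only the assistant's reply is returned.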

## Disclaimer

All OpenBuddy models have inherent limitations and may potentially produce outputs that are erroneous, harmful, offensive, or otherwise undesirable. Users should not use these models in critical or high-stakes situations that may lead to personal injury, property damage, or significant losses. Examples of such scenarios include, but are not limited to, the medical field, controlling software and hardware systems that may cause harm, and making important financial or legal decisions.

OpenBuddy is provided "as-is" without any warranty of any kind, either express or implied, including, but not limited to, the implied warranties of merchantability, fitness for a particular purpose, and non-infringement. In no event shall the authors, contributors, or copyright holders be liable for any claim, damages, or other liabilities, whether in an action of contract, tort, or otherwise, arising from, out of, or in connection with the software or the use or other dealings in the software.

By using OpenBuddy, you agree to these terms and conditions, and acknowledge that you understand the potential risks associated with its use. You also agree to indemnify and hold harmless the authors, contributors, and copyright holders from any claims, damages, or liabilities arising from your use of OpenBuddy.

## 免责声明

所有OpenBuddy模型均存在固有的局限性,可能产生错误的、有害的、冒犯性的或其他不良的输出。用户在关键或高风险场景中应谨慎行事,不要使用这些模型,以免导致人身伤害、财产损失或重大损失。此类场景的例子包括但不限于医疗领域、可能导致伤害的软硬件系统的控制以及进行重要的财务或法律决策。

OpenBuddy按“原样”提供,不附带任何种类的明示或暗示的保证,包括但不限于适销性、特定目的的适用性和非侵权的暗示保证。在任何情况下,作者、贡献者或版权所有者均不对因软件或使用或其他软件交易而产生的任何索赔、损害赔偿或其他责任(无论是合同、侵权还是其他原因)承担责任。

使用OpenBuddy即表示您同意这些条款和条件,并承认您了解其使用可能带来的潜在风险。您还同意赔偿并使作者、贡献者和版权所有者免受因您使用OpenBuddy而产生的任何索赔、损害赔偿或责任的影响。

|

IDEA-CCNL/Erlangshen-MegatronBert-1.3B-NLI | IDEA-CCNL | 2023-05-26T06:33:52Z | 2,541 | 3 | transformers | [

"transformers",

"pytorch",

"megatron-bert",

"text-classification",

"bert",

"NLU",

"NLI",

"zh",

"arxiv:2209.02970",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-classification | 2022-05-12T04:02:48Z | ---

language:

- zh

license: apache-2.0

tags:

- bert

- NLU

- NLI

inference: true

widget:

- text: "今天心情不好[SEP]今天很开心"

---

# Erlangshen-MegatronBert-1.3B-NLI

- Main Page: [Fengshenbang](https://fengshenbang-lm.com/)

- Github: [Fengshenbang-LM](https://github.com/IDEA-CCNL/Fengshenbang-LM)

## 简介 Brief Introduction

2021年登顶FewCLUE和ZeroCLUE的中文BERT,在数个推理任务微调后的版本

This is a version of the Chinese BERT model that topped the FewCLUE and ZeroCLUE benchmarks in 2021, fine-tuned on several NLI datasets.

## 模型分类 Model Taxonomy

| 需求 Demand | 任务 Task | 系列 Series | 模型 Model | 参数 Parameter | 额外 Extra |

| :----: | :----: | :----: | :----: | :----: | :----: |

| 通用 General | 自然语言理解 NLU | 二郎神 Erlangshen | MegatronBert | 1.3B | 自然语言推断 NLI |

## 模型信息 Model Information

基于[Erlangshen-MegatronBert-1.3B](https://huggingface.co/IDEA-CCNL/Erlangshen-MegatronBert-1.3B),我们在收集的4个中文领域的NLI(自然语言推理)数据集,总计1014787个样本上微调了一个NLI版本。

Based on [Erlangshen-MegatronBert-1.3B](https://huggingface.co/IDEA-CCNL/Erlangshen-MegatronBert-1.3B), we fine-tuned an NLI version on 4 Chinese Natural Language Inference (NLI) datasets, totaling 1,014,787 samples.

### 下游效果 Performance

| 模型 Model | cmnli | ocnli | snli |

| :--------: | :-----: | :----: | :-----: |

| Erlangshen-Roberta-110M-NLI | 80.83 | 78.56 | 88.01 |

| Erlangshen-Roberta-330M-NLI | 82.25 | 79.82 | 88.00 |

| Erlangshen-MegatronBert-1.3B-NLI | 84.52 | 84.17 | 88.67 |

## 使用 Usage

``` python

from transformers import AutoModelForSequenceClassification

from transformers import BertTokenizer

import torch

tokenizer=BertTokenizer.from_pretrained('IDEA-CCNL/Erlangshen-MegatronBert-1.3B-NLI')

model=AutoModelForSequenceClassification.from_pretrained('IDEA-CCNL/Erlangshen-MegatronBert-1.3B-NLI')

texta='今天的饭不好吃'

textb='今天心情不好'

output=model(torch.tensor([tokenizer.encode(texta,textb)]))

print(torch.nn.functional.softmax(output.logits,dim=-1))

```
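
The last line of the snippet above converts the model's logits into class probabilities with a softmax. As a minimal sketch of what that step computes, here is the same operation in plain Python. The logits are hypothetical values, not real model output, and the mapping from index to label name is not shown here; it should be read from `model.config.id2label` rather than assumed.

```python
import math


def softmax(logits):
    """Convert a list of logits into probabilities that sum to 1."""
    peak = max(logits)
    # Subtracting the maximum keeps exp() numerically stable.
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical logits for a three-class NLI head.
probs = softmax([2.0, 0.5, -1.0])
best = max(range(len(probs)), key=probs.__getitem__)
print(probs, best)
```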

## 引用 Citation

如果您在您的工作中使用了我们的模型,可以引用我们的[论文](https://arxiv.org/abs/2209.02970):

If you use this resource in your work, please cite our [paper](https://arxiv.org/abs/2209.02970):

```text

@article{fengshenbang,

author = {Jiaxing Zhang and Ruyi Gan and Junjie Wang and Yuxiang Zhang and Lin Zhang and Ping Yang and Xinyu Gao and Ziwei Wu and Xiaoqun Dong and Junqing He and Jianheng Zhuo and Qi Yang and Yongfeng Huang and Xiayu Li and Yanghan Wu and Junyu Lu and Xinyu Zhu and Weifeng Chen and Ting Han and Kunhao Pan and Rui Wang and Hao Wang and Xiaojun Wu and Zhongshen Zeng and Chongpei Chen},

title = {Fengshenbang 1.0: Being the Foundation of Chinese Cognitive Intelligence},

journal = {CoRR},

volume = {abs/2209.02970},

year = {2022}

}

```

也可以引用我们的[网站](https://github.com/IDEA-CCNL/Fengshenbang-LM/):

You can also cite our [website](https://github.com/IDEA-CCNL/Fengshenbang-LM/):

```text

@misc{Fengshenbang-LM,

title={Fengshenbang-LM},

author={IDEA-CCNL},

year={2021},

howpublished={\url{https://github.com/IDEA-CCNL/Fengshenbang-LM}},

}

``` |

Bingsu/clip-vit-base-patch32-ko | Bingsu | 2022-11-08T11:02:10Z | 2,541 | 4 | transformers | [

"transformers",

"pytorch",

"tf",

"safetensors",

"clip",

"zero-shot-image-classification",

"ko",

"arxiv:2004.09813",

"doi:10.57967/hf/1615",

"license:mit",

"endpoints_compatible",

"region:us"

] | zero-shot-image-classification | 2022-09-16T05:18:05Z | ---

widget:

- src: https://huggingface.co/datasets/mishig/sample_images/resolve/main/cat-dog-music.png
  candidate_labels: 기타치는 고양이, 피아노 치는 강아지
  example_title: Guitar, cat and dog

language: ko

license: mit

---

# clip-vit-base-patch32-ko

A Korean CLIP model trained with the method from [Making Monolingual Sentence Embeddings Multilingual using Knowledge Distillation](https://arxiv.org/abs/2004.09813).

[Making Monolingual Sentence Embeddings Multilingual using Knowledge Distillation](https://arxiv.org/abs/2004.09813)로 학습된 한국어 CLIP 모델입니다.

Training code: <https://github.com/Bing-su/KoCLIP_training_code>

Data used: all Korean-English parallel data available on AIHUB

## How to Use

#### 1.

```python

import requests

import torch

from PIL import Image

from transformers import AutoModel, AutoProcessor

repo = "Bingsu/clip-vit-base-patch32-ko"

model = AutoModel.from_pretrained(repo)

processor = AutoProcessor.from_pretrained(repo)

url = "http://images.cocodataset.org/val2017/000000039769.jpg"

image = Image.open(requests.get(url, stream=True).raw)

inputs = processor(text=["고양이 두 마리", "개 두 마리"], images=image, return_tensors="pt", padding=True)

with torch.inference_mode():

    outputs = model(**inputs)

logits_per_image = outputs.logits_per_image

probs = logits_per_image.softmax(dim=1)

```

```python

>>> probs

tensor([[0.9926, 0.0074]])

```

#### 2.

```python

from transformers import pipeline

repo = "Bingsu/clip-vit-base-patch32-ko"

pipe = pipeline("zero-shot-image-classification", model=repo)

url = "http://images.cocodataset.org/val2017/000000039769.jpg"

result = pipe(images=url, candidate_labels=["고양이 한 마리", "고양이 두 마리", "분홍색 소파에 드러누운 고양이 친구들"], hypothesis_template="{}")

```

```python

>>> result

[{'score': 0.9456236958503723, 'label': '분홍색 소파에 드러누운 고양이 친구들'},

{'score': 0.05315302312374115, 'label': '고양이 두 마리'},

{'score': 0.0012233294546604156, 'label': '고양이 한 마리'}]

```

## Tokenizer

The tokenizer was trained from the original CLIP tokenizer via `.train_new_from_iterator`, on Korean and English data mixed at a 7:3 ratio.

https://github.com/huggingface/transformers/blob/bc21aaca789f1a366c05e8b5e111632944886393/src/transformers/models/clip/modeling_clip.py#L661-L666

```python

# text_embeds.shape = [batch_size, sequence_length, transformer.width]

# take features from the eot embedding (eot_token is the highest number in each sequence)

# casting to torch.int for onnx compatibility: argmax doesn't support int64 inputs with opset 14

pooled_output = last_hidden_state[

torch.arange(last_hidden_state.shape[0]), input_ids.to(torch.int).argmax(dim=-1)

]

```

Because the CLIP model uses the token with the largest id when computing `pooled_output`, the eos token has to be the last token, i.e. the one with the largest id.
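
The effect of this constraint can be shown with a small pure-Python sketch that mirrors `input_ids.argmax(dim=-1)` for a single sequence. All token ids below are hypothetical and chosen only for illustration; they are not the ids of this tokenizer.

```python
def eos_position(input_ids):
    """Index of the largest id in one sequence, mirroring argmax over the ids."""
    return max(range(len(input_ids)), key=input_ids.__getitem__)


# Hypothetical ids: bos=0, pad=1, eos=99 (the largest id in the vocabulary).
good = [0, 17, 42, 99, 1, 1]
# Same sentence with a hypothetical eos id of 2, which is not the largest id.
bad = [0, 17, 42, 2, 1, 1]

print(eos_position(good))  # position of the eos token
print(eos_position(bad))   # position of an ordinary token instead
```

In the second case the pooled output would be taken from an ordinary word token rather than from the end of the sequence, which is why the eos token needs the largest id.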

|

timm/convnext_atto_ols.a2_in1k | timm | 2024-02-10T23:26:51Z | 2,541 | 0 | timm | [

"timm",

"pytorch",

"safetensors",

"image-classification",

"dataset:imagenet-1k",

"arxiv:2201.03545",

"license:apache-2.0",

"region:us"

] | image-classification | 2022-12-13T07:06:15Z | ---

license: apache-2.0

library_name: timm

tags:

- image-classification

- timm

datasets:

- imagenet-1k

---

# Model card for convnext_atto_ols.a2_in1k

A ConvNeXt image classification model. Trained in `timm` on ImageNet-1k by Ross Wightman.

## Model Details

- **Model Type:** Image classification / feature backbone

- **Model Stats:**

  - Params (M): 3.7

  - GMACs: 0.6

  - Activations (M): 4.1

  - Image size: train = 224 x 224, test = 288 x 288

- **Papers:**

  - A ConvNet for the 2020s: https://arxiv.org/abs/2201.03545

- **Original:** https://github.com/huggingface/pytorch-image-models

- **Dataset:** ImageNet-1k

## Model Usage

### Image Classification

```python

from urllib.request import urlopen

from PIL import Image

import timm
import torch

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model('convnext_atto_ols.a2_in1k', pretrained=True)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

top5_probabilities, top5_class_indices = torch.topk(output.softmax(dim=1) * 100, k=5)

```

### Feature Map Extraction

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'convnext_atto_ols.a2_in1k',

pretrained=True,

features_only=True,

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # unsqueeze single image into batch of 1

for o in output:

    # print shape of each feature map in output
    # e.g.:
    #  torch.Size([1, 40, 56, 56])
    #  torch.Size([1, 80, 28, 28])
    #  torch.Size([1, 160, 14, 14])
    #  torch.Size([1, 320, 7, 7])
    print(o.shape)

```
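
The shapes in the comments above follow from the input resolution and the downsampling factor of each stage. As a minimal sketch, assuming the four feature maps have strides 4, 8, 16, and 32 (consistent with the shapes shown for a 224 x 224 input) and an input size divisible by 32, the expected shapes can be computed without loading the model. The helper name is hypothetical.

```python
def feature_map_shapes(image_size, channels=(40, 80, 160, 320), strides=(4, 8, 16, 32)):
    """Expected (batch, channels, height, width) of each feature map for a square input."""
    return [(1, c, image_size // s, image_size // s) for c, s in zip(channels, strides)]


for shape in feature_map_shapes(224):
    print(shape)
```

Calling `feature_map_shapes(288)` gives the corresponding sizes for the test resolution listed under Model Stats.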

### Image Embeddings

```python

from urllib.request import urlopen

from PIL import Image

import timm

img = Image.open(urlopen(

'https://huggingface.co/datasets/huggingface/documentation-images/resolve/main/beignets-task-guide.png'

))

model = timm.create_model(

'convnext_atto_ols.a2_in1k',

pretrained=True,

num_classes=0, # remove classifier nn.Linear

)

model = model.eval()

# get model specific transforms (normalization, resize)

data_config = timm.data.resolve_model_data_config(model)

transforms = timm.data.create_transform(**data_config, is_training=False)

output = model(transforms(img).unsqueeze(0)) # output is (batch_size, num_features) shaped tensor

# or equivalently (without needing to set num_classes=0)

output = model.forward_features(transforms(img).unsqueeze(0))

# output is unpooled, a (1, 320, 7, 7) shaped tensor

output = model.forward_head(output, pre_logits=True)

# output is a (1, num_features) shaped tensor

```

## Model Comparison

Explore the dataset and runtime metrics of this model in timm [model results](https://github.com/huggingface/pytorch-image-models/tree/main/results).

All timing numbers are from eager mode PyTorch 1.13 on an RTX 3090 w/ AMP.

| model |top1 |top5 |img_size|param_count|gmacs |macts |samples_per_sec|batch_size|

|------------------------------------------------------------------------------------------------------------------------------|------|------|--------|-----------|------|------|---------------|----------|

| [convnextv2_huge.fcmae_ft_in22k_in1k_512](https://huggingface.co/timm/convnextv2_huge.fcmae_ft_in22k_in1k_512) |88.848|98.742|512 |660.29 |600.81|413.07|28.58 |48 |

| [convnextv2_huge.fcmae_ft_in22k_in1k_384](https://huggingface.co/timm/convnextv2_huge.fcmae_ft_in22k_in1k_384) |88.668|98.738|384 |660.29 |337.96|232.35|50.56 |64 |

| [convnext_xxlarge.clip_laion2b_soup_ft_in1k](https://huggingface.co/timm/convnext_xxlarge.clip_laion2b_soup_ft_in1k) |88.612|98.704|256 |846.47 |198.09|124.45|122.45 |256 |

| [convnext_large_mlp.clip_laion2b_soup_ft_in12k_in1k_384](https://huggingface.co/timm/convnext_large_mlp.clip_laion2b_soup_ft_in12k_in1k_384) |88.312|98.578|384 |200.13 |101.11|126.74|196.84 |256 |

| [convnextv2_large.fcmae_ft_in22k_in1k_384](https://huggingface.co/timm/convnextv2_large.fcmae_ft_in22k_in1k_384) |88.196|98.532|384 |197.96 |101.1 |126.74|128.94 |128 |

| [convnext_large_mlp.clip_laion2b_soup_ft_in12k_in1k_320](https://huggingface.co/timm/convnext_large_mlp.clip_laion2b_soup_ft_in12k_in1k_320) |87.968|98.47 |320 |200.13 |70.21 |88.02 |283.42 |256 |

| [convnext_xlarge.fb_in22k_ft_in1k_384](https://huggingface.co/timm/convnext_xlarge.fb_in22k_ft_in1k_384) |87.75 |98.556|384 |350.2 |179.2 |168.99|124.85 |192 |

| [convnextv2_base.fcmae_ft_in22k_in1k_384](https://huggingface.co/timm/convnextv2_base.fcmae_ft_in22k_in1k_384) |87.646|98.422|384 |88.72 |45.21 |84.49 |209.51 |256 |

| [convnext_large.fb_in22k_ft_in1k_384](https://huggingface.co/timm/convnext_large.fb_in22k_ft_in1k_384) |87.476|98.382|384 |197.77 |101.1 |126.74|194.66 |256 |

| [convnext_large_mlp.clip_laion2b_augreg_ft_in1k](https://huggingface.co/timm/convnext_large_mlp.clip_laion2b_augreg_ft_in1k) |87.344|98.218|256 |200.13 |44.94 |56.33 |438.08 |256 |

| [convnextv2_large.fcmae_ft_in22k_in1k](https://huggingface.co/timm/convnextv2_large.fcmae_ft_in22k_in1k) |87.26 |98.248|224 |197.96 |34.4 |43.13 |376.84 |256 |

| [convnext_base.clip_laion2b_augreg_ft_in12k_in1k_384](https://huggingface.co/timm/convnext_base.clip_laion2b_augreg_ft_in12k_in1k_384) |87.138|98.212|384 |88.59 |45.21 |84.49 |365.47 |256 |

| [convnext_xlarge.fb_in22k_ft_in1k](https://huggingface.co/timm/convnext_xlarge.fb_in22k_ft_in1k) |87.002|98.208|224 |350.2 |60.98 |57.5 |368.01 |256 |
| [convnext_base.fb_in22k_ft_in1k_384](https://huggingface.co/timm/convnext_base.fb_in22k_ft_in1k_384) |86.796|98.264|384 |88.59 |45.21 |84.49 |366.54 |256 |
| [convnextv2_base.fcmae_ft_in22k_in1k](https://huggingface.co/timm/convnextv2_base.fcmae_ft_in22k_in1k) |86.74 |98.022|224 |88.72 |15.38 |28.75 |624.23 |256 |
| [convnext_large.fb_in22k_ft_in1k](https://huggingface.co/timm/convnext_large.fb_in22k_ft_in1k) |86.636|98.028|224 |197.77 |34.4 |43.13 |581.43 |256 |
| [convnext_base.clip_laiona_augreg_ft_in1k_384](https://huggingface.co/timm/convnext_base.clip_laiona_augreg_ft_in1k_384) |86.504|97.97 |384 |88.59 |45.21 |84.49 |368.14 |256 |
| [convnext_base.clip_laion2b_augreg_ft_in12k_in1k](https://huggingface.co/timm/convnext_base.clip_laion2b_augreg_ft_in12k_in1k) |86.344|97.97 |256 |88.59 |20.09 |37.55 |816.14 |256 |
| [convnextv2_huge.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_huge.fcmae_ft_in1k) |86.256|97.75 |224 |660.29 |115.0 |79.07 |154.72 |256 |
| [convnext_small.in12k_ft_in1k_384](https://huggingface.co/timm/convnext_small.in12k_ft_in1k_384) |86.182|97.92 |384 |50.22 |25.58 |63.37 |516.19 |256 |
| [convnext_base.clip_laion2b_augreg_ft_in1k](https://huggingface.co/timm/convnext_base.clip_laion2b_augreg_ft_in1k) |86.154|97.68 |256 |88.59 |20.09 |37.55 |819.86 |256 |
| [convnext_base.fb_in22k_ft_in1k](https://huggingface.co/timm/convnext_base.fb_in22k_ft_in1k) |85.822|97.866|224 |88.59 |15.38 |28.75 |1037.66 |256 |
| [convnext_small.fb_in22k_ft_in1k_384](https://huggingface.co/timm/convnext_small.fb_in22k_ft_in1k_384) |85.778|97.886|384 |50.22 |25.58 |63.37 |518.95 |256 |
| [convnextv2_large.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_large.fcmae_ft_in1k) |85.742|97.584|224 |197.96 |34.4 |43.13 |375.23 |256 |
| [convnext_small.in12k_ft_in1k](https://huggingface.co/timm/convnext_small.in12k_ft_in1k) |85.174|97.506|224 |50.22 |8.71 |21.56 |1474.31 |256 |
| [convnext_tiny.in12k_ft_in1k_384](https://huggingface.co/timm/convnext_tiny.in12k_ft_in1k_384) |85.118|97.608|384 |28.59 |13.14 |39.48 |856.76 |256 |
| [convnextv2_tiny.fcmae_ft_in22k_in1k_384](https://huggingface.co/timm/convnextv2_tiny.fcmae_ft_in22k_in1k_384) |85.112|97.63 |384 |28.64 |13.14 |39.48 |491.32 |256 |
| [convnextv2_base.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_base.fcmae_ft_in1k) |84.874|97.09 |224 |88.72 |15.38 |28.75 |625.33 |256 |
| [convnext_small.fb_in22k_ft_in1k](https://huggingface.co/timm/convnext_small.fb_in22k_ft_in1k) |84.562|97.394|224 |50.22 |8.71 |21.56 |1478.29 |256 |
| [convnext_large.fb_in1k](https://huggingface.co/timm/convnext_large.fb_in1k) |84.282|96.892|224 |197.77 |34.4 |43.13 |584.28 |256 |
| [convnext_tiny.in12k_ft_in1k](https://huggingface.co/timm/convnext_tiny.in12k_ft_in1k) |84.186|97.124|224 |28.59 |4.47 |13.44 |2433.7 |256 |
| [convnext_tiny.fb_in22k_ft_in1k_384](https://huggingface.co/timm/convnext_tiny.fb_in22k_ft_in1k_384) |84.084|97.14 |384 |28.59 |13.14 |39.48 |862.95 |256 |
| [convnextv2_tiny.fcmae_ft_in22k_in1k](https://huggingface.co/timm/convnextv2_tiny.fcmae_ft_in22k_in1k) |83.894|96.964|224 |28.64 |4.47 |13.44 |1452.72 |256 |
| [convnext_base.fb_in1k](https://huggingface.co/timm/convnext_base.fb_in1k) |83.82 |96.746|224 |88.59 |15.38 |28.75 |1054.0 |256 |
| [convnextv2_nano.fcmae_ft_in22k_in1k_384](https://huggingface.co/timm/convnextv2_nano.fcmae_ft_in22k_in1k_384) |83.37 |96.742|384 |15.62 |7.22 |24.61 |801.72 |256 |
| [convnext_small.fb_in1k](https://huggingface.co/timm/convnext_small.fb_in1k) |83.142|96.434|224 |50.22 |8.71 |21.56 |1464.0 |256 |
| [convnextv2_tiny.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_tiny.fcmae_ft_in1k) |82.92 |96.284|224 |28.64 |4.47 |13.44 |1425.62 |256 |
| [convnext_tiny.fb_in22k_ft_in1k](https://huggingface.co/timm/convnext_tiny.fb_in22k_ft_in1k) |82.898|96.616|224 |28.59 |4.47 |13.44 |2480.88 |256 |
| [convnext_nano.in12k_ft_in1k](https://huggingface.co/timm/convnext_nano.in12k_ft_in1k) |82.282|96.344|224 |15.59 |2.46 |8.37 |3926.52 |256 |
| [convnext_tiny_hnf.a2h_in1k](https://huggingface.co/timm/convnext_tiny_hnf.a2h_in1k) |82.216|95.852|224 |28.59 |4.47 |13.44 |2529.75 |256 |
| [convnext_tiny.fb_in1k](https://huggingface.co/timm/convnext_tiny.fb_in1k) |82.066|95.854|224 |28.59 |4.47 |13.44 |2346.26 |256 |
| [convnextv2_nano.fcmae_ft_in22k_in1k](https://huggingface.co/timm/convnextv2_nano.fcmae_ft_in22k_in1k) |82.03 |96.166|224 |15.62 |2.46 |8.37 |2300.18 |256 |
| [convnextv2_nano.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_nano.fcmae_ft_in1k) |81.83 |95.738|224 |15.62 |2.46 |8.37 |2321.48 |256 |
| [convnext_nano_ols.d1h_in1k](https://huggingface.co/timm/convnext_nano_ols.d1h_in1k) |80.866|95.246|224 |15.65 |2.65 |9.38 |3523.85 |256 |
| [convnext_nano.d1h_in1k](https://huggingface.co/timm/convnext_nano.d1h_in1k) |80.768|95.334|224 |15.59 |2.46 |8.37 |3915.58 |256 |
| [convnextv2_pico.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_pico.fcmae_ft_in1k) |80.304|95.072|224 |9.07 |1.37 |6.1 |3274.57 |256 |
| [convnext_pico.d1_in1k](https://huggingface.co/timm/convnext_pico.d1_in1k) |79.526|94.558|224 |9.05 |1.37 |6.1 |5686.88 |256 |
| [convnext_pico_ols.d1_in1k](https://huggingface.co/timm/convnext_pico_ols.d1_in1k) |79.522|94.692|224 |9.06 |1.43 |6.5 |5422.46 |256 |
| [convnextv2_femto.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_femto.fcmae_ft_in1k) |78.488|93.98 |224 |5.23 |0.79 |4.57 |4264.2 |256 |
| [convnext_femto_ols.d1_in1k](https://huggingface.co/timm/convnext_femto_ols.d1_in1k) |77.86 |93.83 |224 |5.23 |0.82 |4.87 |6910.6 |256 |
| [convnext_femto.d1_in1k](https://huggingface.co/timm/convnext_femto.d1_in1k) |77.454|93.68 |224 |5.22 |0.79 |4.57 |7189.92 |256 |
| [convnextv2_atto.fcmae_ft_in1k](https://huggingface.co/timm/convnextv2_atto.fcmae_ft_in1k) |76.664|93.044|224 |3.71 |0.55 |3.81 |4728.91 |256 |
| [convnext_atto_ols.a2_in1k](https://huggingface.co/timm/convnext_atto_ols.a2_in1k) |75.88 |92.846|224 |3.7 |0.58 |4.11 |7963.16 |256 |
| [convnext_atto.d2_in1k](https://huggingface.co/timm/convnext_atto.d2_in1k) |75.664|92.9 |224 |3.7 |0.55 |3.81 |8439.22 |256 |
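
One way to read the comparison table is as an accuracy versus throughput trade-off. The sketch below is a hypothetical helper, not part of timm: it copies a few rows from the table and picks the most accurate model that still meets a throughput floor. It assumes the second column is top-1 accuracy and the eighth is samples per second; the table header sits outside this excerpt, so treat that column mapping as an assumption.

```python
from typing import NamedTuple, Optional, Sequence


class Row(NamedTuple):
    name: str
    top1: float             # assumed: second column of the table
    samples_per_sec: float  # assumed: eighth column of the table


# A handful of rows copied from the table above; extend as needed.
ROWS = [
    Row("convnext_base.fb_in22k_ft_in1k", 85.822, 1037.66),
    Row("convnext_small.in12k_ft_in1k", 85.174, 1474.31),
    Row("convnext_nano.in12k_ft_in1k", 82.282, 3926.52),
    Row("convnext_tiny.fb_in1k", 82.066, 2346.26),
    Row("convnext_atto.d2_in1k", 75.664, 8439.22),
]


def best_model(rows: Sequence[Row], min_samples_per_sec: float) -> Optional[Row]:
    """Return the highest top-1 row that meets the throughput floor, or None."""
    eligible = [r for r in rows if r.samples_per_sec >= min_samples_per_sec]
    return max(eligible, key=lambda r: r.top1, default=None)


if __name__ == "__main__":
    choice = best_model(ROWS, min_samples_per_sec=2000.0)
    print(choice.name if choice else "no model meets the floor")
```

The throughput figures come from one benchmark setup at batch size 256, so they are best used for ranking models against each other rather than as absolute predictions for other hardware.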

## Citation

```bibtex
@misc{rw2019timm,
  author = {Ross Wightman},
  title = {PyTorch Image Models},
  year = {2019},
  publisher = {GitHub},
  journal = {GitHub repository},
  doi = {10.5281/zenodo.4414861},
  howpublished = {\url{https://github.com/huggingface/pytorch-image-models}}
}
```

```bibtex
@article{liu2022convnet,
  author = {Zhuang Liu and Hanzi Mao and Chao-Yuan Wu and Christoph Feichtenhofer and Trevor Darrell and Saining Xie},
  title = {A ConvNet for the 2020s},
  journal = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  year = {2022},
}
```

|

RichardErkhov/01-ai_-_Yi-1.5-9B-gguf | RichardErkhov | 2024-06-14T21:35:39Z | 2,541 | 0 | null | [

"gguf",

"arxiv:2403.04652",

"region:us"

] | null | 2024-06-14T20:35:24Z | Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

Yi-1.5-9B - GGUF

- Model creator: https://huggingface.co/01-ai/

- Original model: https://huggingface.co/01-ai/Yi-1.5-9B/

| Name | Quant method | Size |
| ---- | ---- | ---- |
| [Yi-1.5-9B.Q2_K.gguf](https://huggingface.co/RichardErkhov/01-ai_-_Yi-1.5-9B-gguf/blob/main/Yi-1.5-9B.Q2_K.gguf) | Q2_K | 3.12GB |
| [Yi-1.5-9B.IQ3_XS.gguf](https://huggingface.co/RichardErkhov/01-ai_-_Yi-1.5-9B-gguf/blob/main/Yi-1.5-9B.IQ3_XS.gguf) | IQ3_XS | 3.46GB |
| [Yi-1.5-9B.IQ3_S.gguf](https://huggingface.co/RichardErkhov/01-ai_-_Yi-1.5-9B-gguf/blob/main/Yi-1.5-9B.IQ3_S.gguf) | IQ3_S | 3.64GB |
| [Yi-1.5-9B.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/01-ai_-_Yi-1.5-9B-gguf/blob/main/Yi-1.5-9B.Q3_K_S.gguf) | Q3_K_S | 3.63GB |