| modelId | author | last_modified | downloads | likes | library_name | tags | pipeline_tag | createdAt | card |

|---|---|---|---|---|---|---|---|---|---|

TheBloke/zephyr-7B-alpha-GGUF | TheBloke | 2023-10-14T07:12:10Z | 1,898 | 139 | transformers | [

"transformers",

"gguf",

"mistral",

"generated_from_trainer",

"en",

"dataset:stingning/ultrachat",

"dataset:openbmb/UltraFeedback",

"arxiv:2305.18290",

"base_model:HuggingFaceH4/zephyr-7b-alpha",

"license:mit",

"text-generation-inference",

"region:us"

] | null | 2023-10-11T03:26:12Z | ---

base_model: HuggingFaceH4/zephyr-7b-alpha

datasets:

- stingning/ultrachat

- openbmb/UltraFeedback

inference: false

language:

- en

license: mit

model-index:

- name: zephyr-7b-alpha

results: []

model_creator: Hugging Face H4

model_name: Zephyr 7B Alpha

model_type: mistral

prompt_template: '<|system|>

</s>

<|user|>

{prompt}</s>

<|assistant|>

'

quantized_by: TheBloke

tags:

- generated_from_trainer

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# Zephyr 7B Alpha - GGUF

- Model creator: [Hugging Face H4](https://huggingface.co/HuggingFaceH4)

- Original model: [Zephyr 7B Alpha](https://huggingface.co/HuggingFaceH4/zephyr-7b-alpha)

<!-- description start -->

## Description

This repo contains GGUF format model files for [Hugging Face H4's Zephyr 7B Alpha](https://huggingface.co/HuggingFaceH4/zephyr-7b-alpha).

<!-- description end -->

<!-- README_GGUF.md-about-gguf start -->

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for story telling.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

<!-- README_GGUF.md-about-gguf end -->

<!-- repositories-available start -->

## Repositories available

* [AWQ model(s) for GPU inference.](https://huggingface.co/TheBloke/zephyr-7B-alpha-AWQ)

* [GPTQ models for GPU inference, with multiple quantisation parameter options.](https://huggingface.co/TheBloke/zephyr-7B-alpha-GPTQ)

* [2, 3, 4, 5, 6 and 8-bit GGUF models for CPU+GPU inference](https://huggingface.co/TheBloke/zephyr-7B-alpha-GGUF)

* [Hugging Face H4's original unquantised fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/HuggingFaceH4/zephyr-7b-alpha)

<!-- repositories-available end -->

<!-- prompt-template start -->

## Prompt template: Zephyr

```

<|system|>

</s>

<|user|>

{prompt}</s>

<|assistant|>

```

<!-- prompt-template end -->
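As a minimal sketch of how the template above could be filled in from Python: the helper name `format_zephyr_prompt` is hypothetical, and placing the system message between `<|system|>` and `</s>` follows the sample output in the original model card further down. The trailing newline after `<|assistant|>` mirrors the `prompt_template` metadata and is an assumption about what the model expects.

```python
def format_zephyr_prompt(user_message: str, system_message: str = "") -> str:
    """Fill the Zephyr template: system turn, user turn, then an open assistant turn.

    Hypothetical helper for illustration; not part of any library.
    """
    return (
        f"<|system|>\n{system_message}</s>\n"
        f"<|user|>\n{user_message}</s>\n"
        f"<|assistant|>\n"
    )


if __name__ == "__main__":
    # An empty system message reproduces the bare template shown above.
    print(format_zephyr_prompt("Why is the sky blue?"))
```

The returned string can be passed as the prompt to any of the clients listed in this README.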

<!-- compatibility_gguf start -->

## Compatibility

These quantised GGUFv2 files are compatible with llama.cpp from August 27th onwards, as of commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221)

They are also compatible with many third party UIs and libraries - please see the list at the top of this README.

## Explanation of quantisation methods

<details>

<summary>Click to see details</summary>

The new methods available are:

* GGML_TYPE_Q2_K - "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Block scales and mins are quantized with 4 bits. This ends up effectively using 2.5625 bits per weight (bpw)

* GGML_TYPE_Q3_K - "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights. Scales are quantized with 6 bits. This ends up using 3.4375 bpw.

* GGML_TYPE_Q4_K - "type-1" 4-bit quantization in super-blocks containing 8 blocks, each block having 32 weights. Scales and mins are quantized with 6 bits. This ends up using 4.5 bpw.

* GGML_TYPE_Q5_K - "type-1" 5-bit quantization. Same super-block structure as GGML_TYPE_Q4_K resulting in 5.5 bpw

* GGML_TYPE_Q6_K - "type-0" 6-bit quantization. Super-blocks with 16 blocks, each block having 16 weights. Scales are quantized with 8 bits. This ends up using 6.5625 bpw

Refer to the Provided Files table below to see what files use which methods, and how.

</details>
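The bits-per-weight figures above can be reproduced with a little arithmetic. The sketch below is illustrative only: the function name is hypothetical, and it assumes each super-block also stores 16-bit floating-point values (one for "type-0" formats, two for "type-1" formats), which the list above does not state explicitly.

```python
def bits_per_weight(weight_bits, blocks, weights_per_block,
                    block_meta_bits, superblock_meta_bits):
    """Average storage cost per weight for one super-block.

    block_meta_bits: quantized scale (and min, if present) stored per block.
    superblock_meta_bits: assumed fp16 values stored once per super-block.
    """
    n_weights = blocks * weights_per_block
    total_bits = (n_weights * weight_bits
                  + blocks * block_meta_bits
                  + superblock_meta_bits)
    return total_bits / n_weights


FP16 = 16  # assumption: super-block scale/min are stored as 16-bit floats

K_QUANT_BPW = {
    "Q3_K": bits_per_weight(3, 16, 16, 6, FP16),          # type-0: scales only
    "Q4_K": bits_per_weight(4, 8, 32, 6 + 6, 2 * FP16),   # type-1: scales and mins
    "Q5_K": bits_per_weight(5, 8, 32, 6 + 6, 2 * FP16),   # same layout as Q4_K
    "Q6_K": bits_per_weight(6, 16, 16, 8, FP16),          # type-0: 8-bit scales
}

if __name__ == "__main__":
    for name, bpw in K_QUANT_BPW.items():
        print(f"{name}: {bpw} bpw")
```

Under these assumptions the results match the 3.4375, 4.5, 5.5 and 6.5625 bpw quoted in the list.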

<!-- compatibility_gguf end -->

<!-- README_GGUF.md-provided-files start -->

## Provided files

| Name | Quant method | Bits | Size | Max RAM required | Use case |

| ---- | ---- | ---- | ---- | ---- | ----- |

| [zephyr-7b-alpha.Q2_K.gguf](https://huggingface.co/TheBloke/zephyr-7B-alpha-GGUF/blob/main/zephyr-7b-alpha.Q2_K.gguf) | Q2_K | 2 | 3.08 GB| 5.58 GB | smallest, significant quality loss - not recommended for most purposes |

| [zephyr-7b-alpha.Q3_K_S.gguf](https://huggingface.co/TheBloke/zephyr-7B-alpha-GGUF/blob/main/zephyr-7b-alpha.Q3_K_S.gguf) | Q3_K_S | 3 | 3.16 GB| 5.66 GB | very small, high quality loss |

| [zephyr-7b-alpha.Q3_K_M.gguf](https://huggingface.co/TheBloke/zephyr-7B-alpha-GGUF/blob/main/zephyr-7b-alpha.Q3_K_M.gguf) | Q3_K_M | 3 | 3.52 GB| 6.02 GB | very small, high quality loss |

| [zephyr-7b-alpha.Q3_K_L.gguf](https://huggingface.co/TheBloke/zephyr-7B-alpha-GGUF/blob/main/zephyr-7b-alpha.Q3_K_L.gguf) | Q3_K_L | 3 | 3.82 GB| 6.32 GB | small, substantial quality loss |

| [zephyr-7b-alpha.Q4_0.gguf](https://huggingface.co/TheBloke/zephyr-7B-alpha-GGUF/blob/main/zephyr-7b-alpha.Q4_0.gguf) | Q4_0 | 4 | 4.11 GB| 6.61 GB | legacy; small, very high quality loss - prefer using Q3_K_M |

| [zephyr-7b-alpha.Q4_K_S.gguf](https://huggingface.co/TheBloke/zephyr-7B-alpha-GGUF/blob/main/zephyr-7b-alpha.Q4_K_S.gguf) | Q4_K_S | 4 | 4.14 GB| 6.64 GB | small, greater quality loss |

| [zephyr-7b-alpha.Q4_K_M.gguf](https://huggingface.co/TheBloke/zephyr-7B-alpha-GGUF/blob/main/zephyr-7b-alpha.Q4_K_M.gguf) | Q4_K_M | 4 | 4.37 GB| 6.87 GB | medium, balanced quality - recommended |

| [zephyr-7b-alpha.Q5_0.gguf](https://huggingface.co/TheBloke/zephyr-7B-alpha-GGUF/blob/main/zephyr-7b-alpha.Q5_0.gguf) | Q5_0 | 5 | 5.00 GB| 7.50 GB | legacy; medium, balanced quality - prefer using Q4_K_M |

| [zephyr-7b-alpha.Q5_K_S.gguf](https://huggingface.co/TheBloke/zephyr-7B-alpha-GGUF/blob/main/zephyr-7b-alpha.Q5_K_S.gguf) | Q5_K_S | 5 | 5.00 GB| 7.50 GB | large, low quality loss - recommended |

| [zephyr-7b-alpha.Q5_K_M.gguf](https://huggingface.co/TheBloke/zephyr-7B-alpha-GGUF/blob/main/zephyr-7b-alpha.Q5_K_M.gguf) | Q5_K_M | 5 | 5.13 GB| 7.63 GB | large, very low quality loss - recommended |

| [zephyr-7b-alpha.Q6_K.gguf](https://huggingface.co/TheBloke/zephyr-7B-alpha-GGUF/blob/main/zephyr-7b-alpha.Q6_K.gguf) | Q6_K | 6 | 5.94 GB| 8.44 GB | very large, extremely low quality loss |

| [zephyr-7b-alpha.Q8_0.gguf](https://huggingface.co/TheBloke/zephyr-7B-alpha-GGUF/blob/main/zephyr-7b-alpha.Q8_0.gguf) | Q8_0 | 8 | 7.70 GB| 10.20 GB | very large, extremely low quality loss - not recommended |

**Note**: the above RAM figures assume no GPU offloading. If layers are offloaded to the GPU, this will reduce RAM usage and use VRAM instead.
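In the table above, every "Max RAM required" value equals the file size plus 2.5 GB. That is an observation about the table, not a documented formula, so the following sketch (with the hypothetical helper `estimate_max_ram_gb`) should be read as a rough rule of thumb for CPU-only inference.

```python
OVERHEAD_GB = 2.5  # assumption: inferred from the table rows, not a documented constant


def estimate_max_ram_gb(file_size_gb: float) -> float:
    """Rough RAM estimate for CPU-only inference: file size plus a fixed overhead."""
    return round(file_size_gb + OVERHEAD_GB, 2)


if __name__ == "__main__":
    for name, size_gb in [("Q2_K", 3.08), ("Q4_K_M", 4.37), ("Q8_0", 7.70)]:
        print(f"{name}: ~{estimate_max_ram_gb(size_gb)} GB RAM")
```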

<!-- README_GGUF.md-provided-files end -->

<!-- README_GGUF.md-how-to-download start -->

## How to download GGUF files

**Note for manual downloaders:** You almost never want to clone the entire repo! Multiple different quantisation formats are provided, and most users only want to pick and download a single file.

The following clients/libraries will automatically download models for you, providing a list of available models to choose from:

- LM Studio

- LoLLMS Web UI

- Faraday.dev

### In `text-generation-webui`

Under Download Model, you can enter the model repo: TheBloke/zephyr-7B-alpha-GGUF and below it, a specific filename to download, such as: zephyr-7b-alpha.Q4_K_M.gguf.

Then click Download.

### On the command line, including multiple files at once

I recommend using the `huggingface-hub` Python library:

```shell

pip3 install huggingface-hub

```

Then you can download any individual model file to the current directory, at high speed, with a command like this:

```shell

huggingface-cli download TheBloke/zephyr-7B-alpha-GGUF zephyr-7b-alpha.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```
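The same single-file download can also be done from Python. This is a minimal sketch using `hf_hub_download` from the `huggingface_hub` package installed above; the wrapper name `download_quant` and its defaults are illustrative choices, not part of the library.

```python
REPO_ID = "TheBloke/zephyr-7B-alpha-GGUF"


def download_quant(filename: str = "zephyr-7b-alpha.Q4_K_M.gguf",
                   local_dir: str = ".") -> str:
    """Download one GGUF file from the repo and return its local path.

    Hypothetical wrapper; the import is deferred so the function can be
    defined even where huggingface_hub is not installed.
    """
    from huggingface_hub import hf_hub_download

    return hf_hub_download(repo_id=REPO_ID, filename=filename, local_dir=local_dir)


if __name__ == "__main__":
    print(download_quant())
```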

<details>

<summary>More advanced huggingface-cli download usage</summary>

You can also download multiple files at once with a pattern:

```shell

huggingface-cli download TheBloke/zephyr-7B-alpha-GGUF --local-dir . --local-dir-use-symlinks False --include='*Q4_K*gguf'

```

For more documentation on downloading with `huggingface-cli`, please see: [HF -> Hub Python Library -> Download files -> Download from the CLI](https://huggingface.co/docs/huggingface_hub/guides/download#download-from-the-cli).

To accelerate downloads on fast connections (1Gbit/s or higher), install `hf_transfer`:

```shell

pip3 install hf_transfer

```

And set environment variable `HF_HUB_ENABLE_HF_TRANSFER` to `1`:

```shell

HF_HUB_ENABLE_HF_TRANSFER=1 huggingface-cli download TheBloke/zephyr-7B-alpha-GGUF zephyr-7b-alpha.Q4_K_M.gguf --local-dir . --local-dir-use-symlinks False

```

Windows Command Line users: You can set the environment variable by running `set HF_HUB_ENABLE_HF_TRANSFER=1` before the download command.

</details>
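To preview which files a pattern such as `*Q4_K*gguf` would select, the sketch below applies Python's `fnmatch` to the filenames from the Provided files table. It assumes the `--include` option uses glob-style matching comparable to `fnmatch`; check the linked documentation if exact behaviour matters.

```python
from fnmatch import fnmatch

# Filenames copied from the "Provided files" table in this README.
PROVIDED_FILES = [
    "zephyr-7b-alpha.Q2_K.gguf",
    "zephyr-7b-alpha.Q3_K_S.gguf",
    "zephyr-7b-alpha.Q3_K_M.gguf",
    "zephyr-7b-alpha.Q3_K_L.gguf",
    "zephyr-7b-alpha.Q4_0.gguf",
    "zephyr-7b-alpha.Q4_K_S.gguf",
    "zephyr-7b-alpha.Q4_K_M.gguf",
    "zephyr-7b-alpha.Q5_0.gguf",
    "zephyr-7b-alpha.Q5_K_S.gguf",
    "zephyr-7b-alpha.Q5_K_M.gguf",
    "zephyr-7b-alpha.Q6_K.gguf",
    "zephyr-7b-alpha.Q8_0.gguf",
]


def select_files(pattern: str) -> list:
    """Return the provided files whose names match a glob-style pattern."""
    return [name for name in PROVIDED_FILES if fnmatch(name, pattern)]


if __name__ == "__main__":
    print(select_files("*Q4_K*gguf"))
```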

<!-- README_GGUF.md-how-to-download end -->

<!-- README_GGUF.md-how-to-run start -->

## Example `llama.cpp` command

Make sure you are using `llama.cpp` from commit [d0cee0d](https://github.com/ggerganov/llama.cpp/commit/d0cee0d36d5be95a0d9088b674dbb27354107221) or later.

```shell

./main -ngl 32 -m zephyr-7b-alpha.Q4_K_M.gguf --color -c 2048 --temp 0.7 --repeat_penalty 1.1 -n -1 -p "<|system|>\n</s>\n<|user|>\n{prompt}</s>\n<|assistant|>"

```

Change `-ngl 32` to the number of layers to offload to GPU. Remove it if you don't have GPU acceleration.

Change `-c 2048` to the desired sequence length. For extended sequence models - eg 8K, 16K, 32K - the necessary RoPE scaling parameters are read from the GGUF file and set by llama.cpp automatically.

If you want to have a chat-style conversation, replace the `-p <PROMPT>` argument with `-i -ins`

For other parameters and how to use them, please refer to [the llama.cpp documentation](https://github.com/ggerganov/llama.cpp/blob/master/examples/main/README.md)

## How to run in `text-generation-webui`

Further instructions here: [text-generation-webui/docs/llama.cpp.md](https://github.com/oobabooga/text-generation-webui/blob/main/docs/llama.cpp.md).

## How to run from Python code

You can use GGUF models from Python using the [llama-cpp-python](https://github.com/abetlen/llama-cpp-python) or [ctransformers](https://github.com/marella/ctransformers) libraries.

### How to load this model in Python code, using ctransformers

#### First install the package

Run one of the following commands, according to your system:

```shell

# Base ctransformers with no GPU acceleration

pip install ctransformers

# Or with CUDA GPU acceleration

pip install ctransformers[cuda]

# Or with AMD ROCm GPU acceleration (Linux only)

CT_HIPBLAS=1 pip install ctransformers --no-binary ctransformers

# Or with Metal GPU acceleration for macOS systems only

CT_METAL=1 pip install ctransformers --no-binary ctransformers

```

#### Simple ctransformers example code

```python

from ctransformers import AutoModelForCausalLM

# Set gpu_layers to the number of layers to offload to GPU. Set to 0 if no GPU acceleration is available on your system.

llm = AutoModelForCausalLM.from_pretrained("TheBloke/zephyr-7B-alpha-GGUF", model_file="zephyr-7b-alpha.Q4_K_M.gguf", model_type="mistral", gpu_layers=50)

print(llm("AI is going to"))

```
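For the other library mentioned above, a comparable sketch with llama-cpp-python might look like the following. It assumes the package is installed (`pip install llama-cpp-python`) and that the GGUF file has already been downloaded to the current directory; the wrapper name `generate` and the parameter values, which mirror the `llama.cpp` command earlier in this README, are illustrative.

```python
def generate(user_message: str,
             model_path: str = "./zephyr-7b-alpha.Q4_K_M.gguf",
             n_gpu_layers: int = 32) -> str:
    """Load the GGUF file with llama-cpp-python and return one completion.

    Hypothetical wrapper; set n_gpu_layers to 0 if no GPU acceleration is available.
    """
    from llama_cpp import Llama  # deferred so the module imports without the package

    llm = Llama(model_path=model_path, n_ctx=2048, n_gpu_layers=n_gpu_layers)
    # Zephyr prompt template from this README, with an empty system message.
    prompt = f"<|system|>\n</s>\n<|user|>\n{user_message}</s>\n<|assistant|>\n"
    output = llm(prompt, max_tokens=256, temperature=0.7,
                 repeat_penalty=1.1, stop=["</s>"])
    return output["choices"][0]["text"]


if __name__ == "__main__":
    print(generate("Tell me about AI"))
```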

## How to use with LangChain

Here are guides on using llama-cpp-python and ctransformers with LangChain:

* [LangChain + llama-cpp-python](https://python.langchain.com/docs/integrations/llms/llamacpp)

* [LangChain + ctransformers](https://python.langchain.com/docs/integrations/providers/ctransformers)

<!-- README_GGUF.md-how-to-run end -->

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute

Thanks to the [chirper.ai](https://chirper.ai) team!

Thanks to Clay from [gpus.llm-utils.org](llm-utils)!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donors will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.

**Patreon special mentions**: Pierre Kircher, Stanislav Ovsiannikov, Michael Levine, Eugene Pentland, Andrey, 준교 김, Randy H, Fred von Graf, Artur Olbinski, Caitlyn Gatomon, terasurfer, Jeff Scroggin, James Bentley, Vadim, Gabriel Puliatti, Harry Royden McLaughlin, Sean Connelly, Dan Guido, Edmond Seymore, Alicia Loh, subjectnull, AzureBlack, Manuel Alberto Morcote, Thomas Belote, Lone Striker, Chris Smitley, Vitor Caleffi, Johann-Peter Hartmann, Clay Pascal, biorpg, Brandon Frisco, sidney chen, transmissions 11, Pedro Madruga, jinyuan sun, Ajan Kanaga, Emad Mostaque, Trenton Dambrowitz, Jonathan Leane, Iucharbius, usrbinkat, vamX, George Stoitzev, Luke Pendergrass, theTransient, Olakabola, Swaroop Kallakuri, Cap'n Zoog, Brandon Phillips, Michael Dempsey, Nikolai Manek, danny, Matthew Berman, Gabriel Tamborski, alfie_i, Raymond Fosdick, Tom X Nguyen, Raven Klaugh, LangChain4j, Magnesian, Illia Dulskyi, David Ziegler, Mano Prime, Luis Javier Navarrete Lozano, Erik Bjäreholt, 阿明, Nathan Dryer, Alex, Rainer Wilmers, zynix, TL, Joseph William Delisle, John Villwock, Nathan LeClaire, Willem Michiel, Joguhyik, GodLy, OG, Alps Aficionado, Jeffrey Morgan, ReadyPlayerEmma, Tiffany J. Kim, Sebastain Graf, Spencer Kim, Michael Davis, webtim, Talal Aujan, knownsqashed, John Detwiler, Imad Khwaja, Deo Leter, Jerry Meng, Elijah Stavena, Rooh Singh, Pieter, SuperWojo, Alexandros Triantafyllidis, Stephen Murray, Ai Maven, ya boyyy, Enrico Ros, Ken Nordquist, Deep Realms, Nicholas, Spiking Neurons AB, Elle, Will Dee, Jack West, RoA, Luke @flexchar, Viktor Bowallius, Derek Yates, Subspace Studios, jjj, Toran Billups, Asp the Wyvern, Fen Risland, Ilya, NimbleBox.ai, Chadd, Nitin Borwankar, Emre, Mandus, Leonard Tan, Kalila, K, Trailburnt, S_X, Cory Kujawski

Thank you to all my generous patrons and donors!

And thank you again to a16z for their generous grant.

<!-- footer end -->

<!-- original-model-card start -->

# Original model card: Hugging Face H4's Zephyr 7B Alpha

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

<img src="https://huggingface.co/HuggingFaceH4/zephyr-7b-alpha/resolve/main/thumbnail.png" alt="Zephyr Logo" width="800" style="margin-left:'auto' margin-right:'auto' display:'block'"/>

# Model Card for Zephyr 7B Alpha

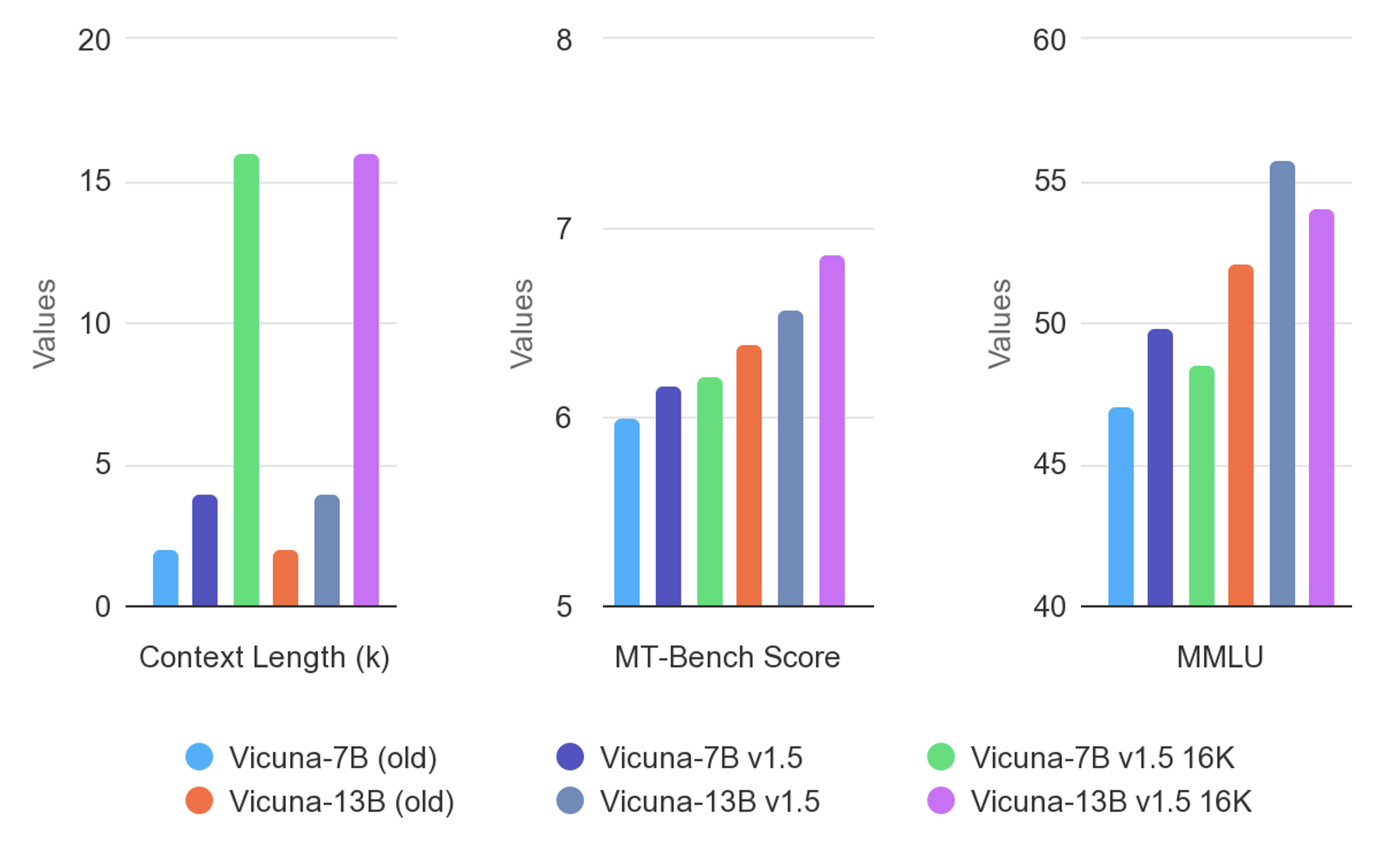

Zephyr is a series of language models that are trained to act as helpful assistants. Zephyr-7B-α is the first model in the series, and is a fine-tuned version of [mistralai/Mistral-7B-v0.1](https://huggingface.co/mistralai/Mistral-7B-v0.1) that was trained on a mix of publicly available, synthetic datasets using [Direct Preference Optimization (DPO)](https://arxiv.org/abs/2305.18290). We found that removing the in-built alignment of these datasets boosted performance on [MT Bench](https://huggingface.co/spaces/lmsys/mt-bench) and made the model more helpful. However, this means that the model is likely to generate problematic text when prompted to do so and should only be used for educational and research purposes.

## Model description

- **Model type:** A 7B parameter GPT-like model fine-tuned on a mix of publicly available, synthetic datasets.

- **Language(s) (NLP):** Primarily English

- **License:** MIT

- **Finetuned from model:** [mistralai/Mistral-7B-v0.1](https://huggingface.co/mistralai/Mistral-7B-v0.1)

### Model Sources

<!-- Provide the basic links for the model. -->

- **Repository:** https://github.com/huggingface/alignment-handbook

- **Demo:** https://huggingface.co/spaces/HuggingFaceH4/zephyr-chat

## Intended uses & limitations

The model was initially fine-tuned on a variant of the [`UltraChat`](https://huggingface.co/datasets/stingning/ultrachat) dataset, which contains a diverse range of synthetic dialogues generated by ChatGPT. We then further aligned the model with [🤗 TRL's](https://github.com/huggingface/trl) `DPOTrainer` on the [openbmb/UltraFeedback](https://huggingface.co/datasets/openbmb/UltraFeedback) dataset, which contains 64k prompts and model completions that are ranked by GPT-4. As a result, the model can be used for chat and you can check out our [demo](https://huggingface.co/spaces/HuggingFaceH4/zephyr-chat) to test its capabilities.

Here's how you can run the model using the `pipeline()` function from 🤗 Transformers:

```python

import torch
from transformers import pipeline

pipe = pipeline("text-generation", model="HuggingFaceH4/zephyr-7b-alpha", torch_dtype=torch.bfloat16, device_map="auto")

# We use the tokenizer's chat template to format each message - see https://huggingface.co/docs/transformers/main/en/chat_templating
messages = [
    {
        "role": "system",
        "content": "You are a friendly chatbot who always responds in the style of a pirate",
    },
    {"role": "user", "content": "How many helicopters can a human eat in one sitting?"},
]
prompt = pipe.tokenizer.apply_chat_template(messages, tokenize=False, add_generation_prompt=True)
outputs = pipe(prompt, max_new_tokens=256, do_sample=True, temperature=0.7, top_k=50, top_p=0.95)
print(outputs[0]["generated_text"])
# <|system|>
# You are a friendly chatbot who always responds in the style of a pirate.</s>
# <|user|>
# How many helicopters can a human eat in one sitting?</s>
# <|assistant|>
# Ah, me hearty matey! But yer question be a puzzler! A human cannot eat a helicopter in one sitting, as helicopters are not edible. They be made of metal, plastic, and other materials, not food!

```

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

Zephyr-7B-α has not been aligned to human preferences with techniques like RLHF or deployed with in-the-loop filtering of responses like ChatGPT, so the model can produce problematic outputs (especially when prompted to do so).

The size and composition of the corpus used to train the base model (`mistralai/Mistral-7B-v0.1`) are also unknown; however, it is likely to have included a mix of Web data and technical sources like books and code. See the [Falcon 180B model card](https://huggingface.co/tiiuae/falcon-180B#training-data) for an example of this.

## Training and evaluation data

Zephyr 7B Alpha achieves the following results on the evaluation set:

- Loss: 0.4605

- Rewards/chosen: -0.5053

- Rewards/rejected: -1.8752

- Rewards/accuracies: 0.7812

- Rewards/margins: 1.3699

- Logps/rejected: -327.4286

- Logps/chosen: -297.1040

- Logits/rejected: -2.7153

- Logits/chosen: -2.7447
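The reported reward margin is consistent with being the difference between the chosen and rejected rewards. The short check below uses only the numbers listed above; the variable names are illustrative.

```python
# Evaluation-set values copied from the list above.
rewards_chosen = -0.5053
rewards_rejected = -1.8752
reported_margin = 1.3699

# Margin between the reward assigned to chosen and rejected completions.
computed_margin = round(rewards_chosen - rewards_rejected, 4)

if __name__ == "__main__":
    print(f"computed margin: {computed_margin}, reported: {reported_margin}")
```

A positive margin means the model assigns a higher implicit reward to the preferred completion than to the rejected one.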

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 5e-07

- train_batch_size: 2

- eval_batch_size: 4

- seed: 42

- distributed_type: multi-GPU

- num_devices: 16

- total_train_batch_size: 32

- total_eval_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 1
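The total batch sizes follow from the per-device values and the device count. The sketch below checks that arithmetic and adds a rough estimate of the number of training examples, using the last row of the training results table (step 1900 at epoch 0.98). It assumes no gradient accumulation, and the estimate is approximate because the epoch column is rounded.

```python
# Hyperparameters copied from the list above.
train_batch_size = 2   # per device
eval_batch_size = 4    # per device
num_devices = 16

# Assumption: no gradient accumulation, so the total is per-device size times devices.
total_train_batch_size = train_batch_size * num_devices
total_eval_batch_size = eval_batch_size * num_devices

# Rough estimate from the final logged row of the training results (step 1900, epoch 0.98).
approx_steps_per_epoch = 1900 / 0.98
approx_training_examples = approx_steps_per_epoch * total_train_batch_size

if __name__ == "__main__":
    print(f"total train batch size: {total_train_batch_size}")
    print(f"total eval batch size: {total_eval_batch_size}")
    print(f"approx. training examples per epoch: {approx_training_examples:.0f}")
```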

### Training results

| Training Loss | Epoch | Step | Validation Loss | Rewards/chosen | Rewards/rejected | Rewards/accuracies | Rewards/margins | Logps/rejected | Logps/chosen | Logits/rejected | Logits/chosen |

|:-------------:|:-----:|:----:|:---------------:|:--------------:|:----------------:|:------------------:|:---------------:|:--------------:|:------------:|:---------------:|:-------------:|

| 0.5602 | 0.05 | 100 | 0.5589 | -0.3359 | -0.8168 | 0.7188 | 0.4809 | -306.2607 | -293.7161 | -2.6554 | -2.6797 |

| 0.4852 | 0.1 | 200 | 0.5136 | -0.5310 | -1.4994 | 0.8125 | 0.9684 | -319.9124 | -297.6181 | -2.5762 | -2.5957 |

| 0.5212 | 0.15 | 300 | 0.5168 | -0.1686 | -1.1760 | 0.7812 | 1.0074 | -313.4444 | -290.3699 | -2.6865 | -2.7125 |

| 0.5496 | 0.21 | 400 | 0.4835 | -0.1617 | -1.7170 | 0.8281 | 1.5552 | -324.2635 | -290.2326 | -2.7947 | -2.8218 |

| 0.5209 | 0.26 | 500 | 0.5054 | -0.4778 | -1.6604 | 0.7344 | 1.1826 | -323.1325 | -296.5546 | -2.8388 | -2.8667 |

| 0.4617 | 0.31 | 600 | 0.4910 | -0.3738 | -1.5180 | 0.7656 | 1.1442 | -320.2848 | -294.4741 | -2.8234 | -2.8521 |

| 0.4452 | 0.36 | 700 | 0.4838 | -0.4591 | -1.6576 | 0.7031 | 1.1986 | -323.0770 | -296.1796 | -2.7401 | -2.7653 |

| 0.4674 | 0.41 | 800 | 0.5077 | -0.5692 | -1.8659 | 0.7656 | 1.2967 | -327.2416 | -298.3818 | -2.6740 | -2.6945 |

| 0.4656 | 0.46 | 900 | 0.4927 | -0.5279 | -1.6614 | 0.7656 | 1.1335 | -323.1518 | -297.5553 | -2.7817 | -2.8015 |

| 0.4102 | 0.52 | 1000 | 0.4772 | -0.5767 | -2.0667 | 0.7656 | 1.4900 | -331.2578 | -298.5311 | -2.7160 | -2.7455 |

| 0.4663 | 0.57 | 1100 | 0.4740 | -0.8038 | -2.1018 | 0.7656 | 1.2980 | -331.9604 | -303.0741 | -2.6994 | -2.7257 |

| 0.4737 | 0.62 | 1200 | 0.4716 | -0.3783 | -1.7015 | 0.7969 | 1.3232 | -323.9545 | -294.5634 | -2.6842 | -2.7135 |

| 0.4259 | 0.67 | 1300 | 0.4866 | -0.6239 | -1.9703 | 0.7812 | 1.3464 | -329.3312 | -299.4761 | -2.7046 | -2.7356 |

| 0.4935 | 0.72 | 1400 | 0.4747 | -0.5626 | -1.7600 | 0.7812 | 1.1974 | -325.1243 | -298.2491 | -2.7153 | -2.7444 |

| 0.4211 | 0.77 | 1500 | 0.4645 | -0.6099 | -1.9993 | 0.7656 | 1.3894 | -329.9109 | -299.1959 | -2.6944 | -2.7236 |

| 0.4931 | 0.83 | 1600 | 0.4684 | -0.6798 | -2.1082 | 0.7656 | 1.4285 | -332.0890 | -300.5934 | -2.7006 | -2.7305 |

| 0.5029 | 0.88 | 1700 | 0.4595 | -0.5063 | -1.8951 | 0.7812 | 1.3889 | -327.8267 | -297.1233 | -2.7108 | -2.7403 |

| 0.4965 | 0.93 | 1800 | 0.4613 | -0.5561 | -1.9079 | 0.7812 | 1.3518 | -328.0831 | -298.1203 | -2.7226 | -2.7523 |

| 0.4337 | 0.98 | 1900 | 0.4608 | -0.5066 | -1.8718 | 0.7656 | 1.3652 | -327.3599 | -297.1296 | -2.7175 | -2.7469 |

### Framework versions

- Transformers 4.34.0

- Pytorch 2.0.1+cu118

- Datasets 2.12.0

- Tokenizers 0.14.0

<!-- original-model-card end -->

|

qwp4w3hyb/c4ai-command-r-plus-iMat-GGUF | qwp4w3hyb | 2024-05-29T00:53:22Z | 1,898 | 3 | null | [

"gguf",

"cohere",

"commandr",

"instruct",

"finetune",

"function calling",

"importance matrix",

"imatrix",

"en",

"fr",

"de",

"es",

"it",

"pt",

"ja",

"ko",

"zh",

"ar",

"base_model:CohereForAI/c4ai-command-r-plus",

"license:cc-by-nc-4.0",

"region:us"

] | null | 2024-04-12T09:13:23Z | ---

base_model: CohereForAI/c4ai-command-r-plus

tags:

- cohere

- commandr

- instruct

- finetune

- function calling

- importance matrix

- imatrix

language:

- en

- fr

- de

- es

- it

- pt

- ja

- ko

- zh

- ar

model-index:

- name: c4ai-command-r-plus-iMat-GGUF

results: []

license: cc-by-nc-4.0

---

# Quant Infos

- Requantized for recent bpe pre-tokenizer fixes https://github.com/ggerganov/llama.cpp/pull/6920

- quants done with an importance matrix for improved quantization loss

- 0, K & IQ quants in basically all variants from Q8 down to IQ1_S

- Quantized with [llama.cpp](https://github.com/ggerganov/llama.cpp) commit [fabf30b4c4fca32e116009527180c252919ca922](https://github.com/ggerganov/llama.cpp/commit/fabf30b4c4fca32e116009527180c252919ca922) (master as of 2024-05-20)

- Imatrix generated with [this](https://github.com/ggerganov/llama.cpp/discussions/5263#discussioncomment-8395384) dataset.

```

./imatrix -c 512 -m $model_name-f16.gguf -f $llama_cpp_path/groups_merged.txt -o $out_path/imat-f16-gmerged.dat

```

# Original Model Card:

# Model Card for C4AI Command R+

🚨 **This model is the non-quantized version of C4AI Command R+. You can find the quantized version of C4AI Command R+ using bitsandbytes [here](https://huggingface.co/CohereForAI/c4ai-command-r-plus-4bit)**.

## Model Summary

C4AI Command R+ is an open weights research release of a 104 billion parameter model with highly advanced capabilities, including Retrieval Augmented Generation (RAG) and tool use to automate sophisticated tasks. The tool use in this model generation enables multi-step tool use, which allows the model to combine multiple tools over multiple steps to accomplish difficult tasks. C4AI Command R+ is a multilingual model evaluated in 10 languages for performance: English, French, Spanish, Italian, German, Brazilian Portuguese, Japanese, Korean, Arabic, and Simplified Chinese. Command R+ is optimized for a variety of use cases including reasoning, summarization, and question answering.

C4AI Command R+ is part of a family of open weight releases from Cohere For AI and Cohere. Our smaller companion model is [C4AI Command R](https://huggingface.co/CohereForAI/c4ai-command-r-v01).

Developed by: [Cohere](https://cohere.com/) and [Cohere For AI](https://cohere.for.ai)

- Point of Contact: Cohere For AI: [cohere.for.ai](https://cohere.for.ai/)

- License: [CC-BY-NC](https://cohere.com/c4ai-cc-by-nc-license), which also requires adhering to [C4AI's Acceptable Use Policy](https://docs.cohere.com/docs/c4ai-acceptable-use-policy)

- Model: c4ai-command-r-plus

- Model Size: 104 billion parameters

- Context length: 128K

**Try C4AI Command R+**

You can try out C4AI Command R+ before downloading the weights in our hosted [Hugging Face Space](https://huggingface.co/spaces/CohereForAI/c4ai-command-r-plus).

**Usage**

Please install `transformers` from the source repository that includes the necessary changes for this model.

```python

# pip install 'git+https://github.com/huggingface/transformers.git'
from transformers import AutoTokenizer, AutoModelForCausalLM

model_id = "CohereForAI/c4ai-command-r-plus"
tokenizer = AutoTokenizer.from_pretrained(model_id)
model = AutoModelForCausalLM.from_pretrained(model_id)

# Format message with the command-r-plus chat template
messages = [{"role": "user", "content": "Hello, how are you?"}]
input_ids = tokenizer.apply_chat_template(messages, tokenize=True, add_generation_prompt=True, return_tensors="pt")
## <BOS_TOKEN><|START_OF_TURN_TOKEN|><|USER_TOKEN|>Hello, how are you?<|END_OF_TURN_TOKEN|><|START_OF_TURN_TOKEN|><|CHATBOT_TOKEN|>

gen_tokens = model.generate(
    input_ids,
    max_new_tokens=100,
    do_sample=True,
    temperature=0.3,
)

gen_text = tokenizer.decode(gen_tokens[0])
print(gen_text)

```

**Quantized model through bitsandbytes, 8-bit precision**

```python

# pip install 'git+https://github.com/huggingface/transformers.git' bitsandbytes accelerate
from transformers import AutoTokenizer, AutoModelForCausalLM, BitsAndBytesConfig

bnb_config = BitsAndBytesConfig(load_in_8bit=True)

model_id = "CohereForAI/c4ai-command-r-plus"
tokenizer = AutoTokenizer.from_pretrained(model_id)
model = AutoModelForCausalLM.from_pretrained(model_id, quantization_config=bnb_config)

# Format message with the command-r-plus chat template
messages = [{"role": "user", "content": "Hello, how are you?"}]
input_ids = tokenizer.apply_chat_template(messages, tokenize=True, add_generation_prompt=True, return_tensors="pt")
## <BOS_TOKEN><|START_OF_TURN_TOKEN|><|USER_TOKEN|>Hello, how are you?<|END_OF_TURN_TOKEN|><|START_OF_TURN_TOKEN|><|CHATBOT_TOKEN|>

gen_tokens = model.generate(
    input_ids,
    max_new_tokens=100,
    do_sample=True,
    temperature=0.3,
)

gen_text = tokenizer.decode(gen_tokens[0])
print(gen_text)

```

**Quantized model through bitsandbytes, 4-bit precision**

This model is the non-quantized version of C4AI Command R+. You can find the quantized version of C4AI Command R+ using bitsandbytes [here](https://huggingface.co/CohereForAI/c4ai-command-r-plus-4bit).

## Model Details

**Input**: Models input text only.

**Output**: Models generate text only.

**Model Architecture**: This is an auto-regressive language model that uses an optimized transformer architecture. After pretraining, this model uses supervised fine-tuning (SFT) and preference training to align model behavior to human preferences for helpfulness and safety.

**Languages covered**: The model is optimized to perform well in the following languages: English, French, Spanish, Italian, German, Brazilian Portuguese, Japanese, Korean, Simplified Chinese, and Arabic.

Pre-training data additionally included the following 13 languages: Russian, Polish, Turkish, Vietnamese, Dutch, Czech, Indonesian, Ukrainian, Romanian, Greek, Hindi, Hebrew, Persian.

**Context length**: Command R+ supports a context length of 128K.

## Evaluations

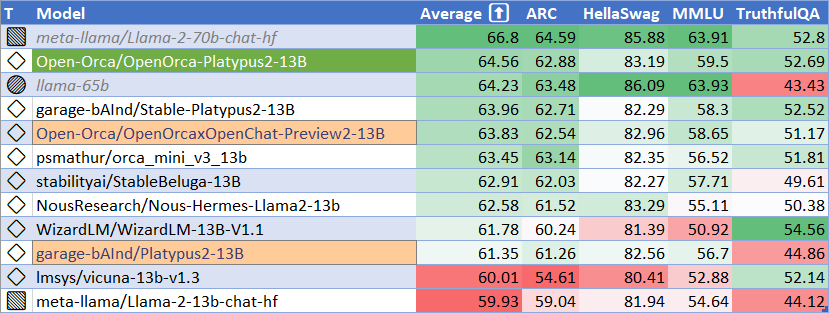

Command R+ has been submitted to the [Open LLM leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard). We include the results below, along with a direct comparison to the strongest state-of-the-art open weights models currently available on Hugging Face. We note that these results are only comparable when evaluations are implemented for all models in a [standardized way](https://github.com/EleutherAI/lm-evaluation-harness) using publicly available code, and hence shouldn't be used for comparison with models outside the leaderboard or with self-reported numbers that can't be replicated in the same way.

| Model | Average | Arc (Challenge) | Hella Swag | MMLU | Truthful QA | Winogrande | GSM8k |

|:--------------------------------|----------:|------------------:|-------------:|-------:|--------------:|-------------:|--------:|

| **CohereForAI/c4ai-command-r-plus** | 74.6 | 70.99 | 88.6 | 75.7 | 56.3 | 85.4 | 70.7 |

| [DBRX Instruct](https://huggingface.co/databricks/dbrx-instruct) | 74.5 | 68.9 | 89 | 73.7 | 66.9 | 81.8 | 66.9 |

| [Mixtral 8x7B-Instruct](https://huggingface.co/mistralai/Mixtral-8x7B-Instruct-v0.1) | 72.7 | 70.1 | 87.6 | 71.4 | 65 | 81.1 | 61.1 |

| [Mixtral 8x7B Chat](https://huggingface.co/mistralai/Mixtral-8x7B-Instruct-v0.1) | 72.6 | 70.2 | 87.6 | 71.2 | 64.6 | 81.4 | 60.7 |

| [CohereForAI/c4ai-command-r-v01](https://huggingface.co/CohereForAI/c4ai-command-r-v01) | 68.5 | 65.5 | 87 | 68.2 | 52.3 | 81.5 | 56.6 |

| [Llama 2 70B](https://huggingface.co/meta-llama/Llama-2-70b-hf) | 67.9 | 67.3 | 87.3 | 69.8 | 44.9 | 83.7 | 54.1 |

| [Yi-34B-Chat](https://huggingface.co/01-ai/Yi-34B-Chat) | 65.3 | 65.4 | 84.2 | 74.9 | 55.4 | 80.1 | 31.9 |

| [Gemma-7B](https://huggingface.co/google/gemma-7b) | 63.8 | 61.1 | 82.2 | 64.6 | 44.8 | 79 | 50.9 |

| [LLama 2 70B Chat](https://huggingface.co/meta-llama/Llama-2-70b-chat-hf) | 62.4 | 64.6 | 85.9 | 63.9 | 52.8 | 80.5 | 26.7 |

| [Mistral-7B-v0.1](https://huggingface.co/mistralai/Mistral-7B-v0.1) | 61 | 60 | 83.3 | 64.2 | 42.2 | 78.4 | 37.8 |

We include these metrics here because they are frequently requested, but note that they do not capture RAG, multilingual or tooling performance, or the evaluation of open-ended generation, where we believe Command R+ to be state-of-the-art. For evaluations of RAG, multilingual and tooling performance, read more [here](https://txt.cohere.com/command-r-plus-microsoft-azure/). For evaluation of open-ended generation, Command R+ is currently being evaluated on the [chatbot arena](https://chat.lmsys.org/).

### Tool use & multihop capabilities:

Command R+ has been specifically trained with conversational tool use capabilities. These have been trained into the model via a mixture of supervised fine-tuning and preference fine-tuning, using a specific prompt template. Deviating from this prompt template will likely reduce performance, but we encourage experimentation.

Command R+’s tool use functionality takes a conversation as input (with an optional user-system preamble), along with a list of available tools. The model will then generate a json-formatted list of actions to execute on a subset of those tools. Command R+ may use one of its supplied tools more than once.

The model has been trained to recognise a special `directly_answer` tool, which it uses to indicate that it doesn’t want to use any of its other tools. The ability to abstain from calling a specific tool can be useful in a range of situations, such as greeting a user, or asking clarifying questions.

We recommend including the `directly_answer` tool, but it can be removed or renamed if required.

Comprehensive documentation for working with Command R+'s tool use prompt template can be found [here](https://docs.cohere.com/docs/prompting-command-r).

The code snippet below shows a minimal working example on how to render a prompt.

<details>

<summary><b>Usage: Rendering Tool Use Prompts [CLICK TO EXPAND]</b> </summary>

```python

from transformers import AutoTokenizer

model_id = "CohereForAI/c4ai-command-r-plus"

tokenizer = AutoTokenizer.from_pretrained(model_id)

# define conversation input:

conversation = [

{"role": "user", "content": "Whats the biggest penguin in the world?"}

]

# Define tools available for the model to use:

tools = [

{

"name": "internet_search",

"description": "Returns a list of relevant document snippets for a textual query retrieved from the internet",

"parameter_definitions": {

"query": {

"description": "Query to search the internet with",

"type": "str",

"required": True

}

}

},

{

"name": "directly_answer",

"description": "Calls a standard (un-augmented) AI chatbot to generate a response given the conversation history",

"parameter_definitions": {}

}

]

# render the tool use prompt as a string:

tool_use_prompt = tokenizer.apply_tool_use_template(

conversation,

tools=tools,

tokenize=False,

add_generation_prompt=True,

)

print(tool_use_prompt)

```

</details>

<details>

<summary><b>Example Rendered Tool Use Prompt [CLICK TO EXPAND]</b></summary>

````

<BOS_TOKEN><|START_OF_TURN_TOKEN|><|SYSTEM_TOKEN|># Safety Preamble

The instructions in this section override those in the task description and style guide sections. Don't answer questions that are harmful or immoral.

# System Preamble

## Basic Rules

You are a powerful conversational AI trained by Cohere to help people. You are augmented by a number of tools, and your job is to use and consume the output of these tools to best help the user. You will see a conversation history between yourself and a user, ending with an utterance from the user. You will then see a specific instruction instructing you what kind of response to generate. When you answer the user's requests, you cite your sources in your answers, according to those instructions.

# User Preamble

## Task and Context

You help people answer their questions and other requests interactively. You will be asked a very wide array of requests on all kinds of topics. You will be equipped with a wide range of search engines or similar tools to help you, which you use to research your answer. You should focus on serving the user's needs as best you can, which will be wide-ranging.

## Style Guide

Unless the user asks for a different style of answer, you should answer in full sentences, using proper grammar and spelling.

## Available Tools

Here is a list of tools that you have available to you:

```python

def internet_search(query: str) -> List[Dict]:

"""Returns a list of relevant document snippets for a textual query retrieved from the internet

Args:

query (str): Query to search the internet with

"""

pass

```

```python

def directly_answer() -> List[Dict]:

"""Calls a standard (un-augmented) AI chatbot to generate a response given the conversation history

"""

pass

```<|END_OF_TURN_TOKEN|><|START_OF_TURN_TOKEN|><|USER_TOKEN|>Whats the biggest penguin in the world?<|END_OF_TURN_TOKEN|><|START_OF_TURN_TOKEN|><|SYSTEM_TOKEN|>Write 'Action:' followed by a json-formatted list of actions that you want to perform in order to produce a good response to the user's last input. You can use any of the supplied tools any number of times, but you should aim to execute the minimum number of necessary actions for the input. You should use the `directly-answer` tool if calling the other tools is unnecessary. The list of actions you want to call should be formatted as a list of json objects, for example:

```json

[

{

"tool_name": title of the tool in the specification,

"parameters": a dict of parameters to input into the tool as they are defined in the specs, or {} if it takes no parameters

}

]```<|END_OF_TURN_TOKEN|><|START_OF_TURN_TOKEN|><|CHATBOT_TOKEN|>

````

</details>

<details>

<summary><b>Example Rendered Tool Use Completion [CLICK TO EXPAND]</b></summary>

````

Action: ```json

[

{

"tool_name": "internet_search",

"parameters": {

"query": "biggest penguin in the world"

}

}

]

```

````

</details>
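
The completion above is plain text, so the calling code has to pull the JSON list out of it before it can run any tool. The following is a minimal sketch of that step, assuming the completion always has the `Action:` prefix followed by a fenced `json` block, as in the example. `parse_tool_actions` is a hypothetical helper, not part of `transformers` or any Cohere library.

```python
import json
import re
from typing import Any, Dict, List

# Triple backtick, built this way so the example can sit inside a fenced block.
FENCE = "`" * 3

# Matches: Action: <fence>json ... <fence>  and captures the JSON in between.
ACTION_PATTERN = re.compile(
    r"Action:\s*" + FENCE + r"json\s*(.*?)\s*" + FENCE, re.DOTALL
)


def parse_tool_actions(completion: str) -> List[Dict[str, Any]]:
    """Hypothetical helper: extract the list of tool calls from a completion."""
    match = ACTION_PATTERN.search(completion)
    if match is None:
        raise ValueError("no 'Action:' JSON block found in completion")
    actions = json.loads(match.group(1))
    if not isinstance(actions, list):
        raise ValueError("expected a JSON list of actions")
    return actions


# The example completion from this card, rebuilt as a string.
completion = (
    "Action: " + FENCE + "json\n"
    "[\n"
    "    {\n"
    '        "tool_name": "internet_search",\n'
    '        "parameters": {\n'
    '            "query": "biggest penguin in the world"\n'
    "        }\n"
    "    }\n"
    "]\n" + FENCE
)

actions = parse_tool_actions(completion)
for action in actions:
    print(action["tool_name"], action["parameters"])
```

Each returned item carries a `tool_name` and a `parameters` dict, so dispatching to your own tool implementations can be a dictionary lookup on `tool_name`. A `directly_answer` entry would signal that no tool call is needed.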

### Grounded Generation and RAG Capabilities:

Command R+ has been specifically trained with grounded generation capabilities. This means that it can generate responses based on a list of supplied document snippets, and it will include grounding spans (citations) in its response indicating the source of the information. This can be used to enable behaviors such as grounded summarization and the final step of Retrieval Augmented Generation (RAG). This behavior has been trained into the model via a mixture of supervised fine-tuning and preference fine-tuning, using a specific prompt template. Deviating from this prompt template may reduce performance, but we encourage experimentation.

Command R+’s grounded generation behavior takes a conversation as input (with an optional user-supplied system preamble, indicating task, context and desired output style), along with a list of retrieved document snippets. The document snippets should be chunks, rather than long documents, typically around 100-400 words per chunk. Document snippets consist of key-value pairs. The keys should be short descriptive strings; the values can be text or semi-structured.
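
As an illustration of the chunking advice, here is a minimal sketch that splits a long text into snippets of at most 400 words and wraps each one in the key-value form used in the example below. `chunk_document` and its `max_words` parameter are hypothetical names chosen for this sketch. It splits purely on word count, so a real pipeline would probably prefer sentence or paragraph boundaries.

```python
from typing import Dict, List


def chunk_document(title: str, text: str, max_words: int = 400) -> List[Dict[str, str]]:
    """Hypothetical helper: split text into snippets of at most max_words words."""
    words = text.split()
    chunks = []
    for start in range(0, len(words), max_words):
        piece = " ".join(words[start:start + max_words])
        # Each snippet is a dict of short descriptive keys and text values.
        chunks.append({"title": title, "text": piece})
    return chunks


# A 900-word stand-in for a long source document (6 words repeated 150 times).
long_text = "Emperor penguins are the tallest penguins. " * 150
documents = chunk_document("Tall penguins", long_text, max_words=400)
print(len(documents), [len(d["text"].split()) for d in documents])
```

The resulting list has the same shape as the `documents` argument in the rendering example that follows.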

By default, Command R+ will generate grounded responses by first predicting which documents are relevant, then predicting which ones it will cite, then generating an answer. Finally, it will then insert grounding spans into the answer. See below for an example. This is referred to as `accurate` grounded generation.

The model is trained with a number of other answering modes, which can be selected by prompt changes. A `fast` citation mode is supported in the tokenizer, which will directly generate an answer with grounding spans in it, without first writing the answer out in full. This sacrifices some grounding accuracy in favor of generating fewer tokens.

Comprehensive documentation for working with Command R+'s grounded generation prompt template can be found [here](https://docs.cohere.com/docs/prompting-command-r).

The code snippet below shows a minimal working example on how to render a prompt.

<details>

<summary> <b>Usage: Rendering Grounded Generation prompts [CLICK TO EXPAND]</b> </summary>

````python

from transformers import AutoTokenizer

model_id = "CohereForAI/c4ai-command-r-plus"

tokenizer = AutoTokenizer.from_pretrained(model_id)

# define conversation input:

conversation = [

{"role": "user", "content": "Whats the biggest penguin in the world?"}

]

# define documents to ground on:

documents = [

{ "title": "Tall penguins", "text": "Emperor penguins are the tallest growing up to 122 cm in height." },

{ "title": "Penguin habitats", "text": "Emperor penguins only live in Antarctica."}

]

# render the grounded generation prompt as a string:

grounded_generation_prompt = tokenizer.apply_grounded_generation_template(

conversation,

documents=documents,

citation_mode="accurate", # or "fast"

tokenize=False,

add_generation_prompt=True,

)

print(grounded_generation_prompt)

````

</details>

<details>

<summary><b>Example Rendered Grounded Generation Prompt [CLICK TO EXPAND]</b></summary>

````<BOS_TOKEN><|START_OF_TURN_TOKEN|><|SYSTEM_TOKEN|># Safety Preamble

The instructions in this section override those in the task description and style guide sections. Don't answer questions that are harmful or immoral.

# System Preamble

## Basic Rules

You are a powerful conversational AI trained by Cohere to help people. You are augmented by a number of tools, and your job is to use and consume the output of these tools to best help the user. You will see a conversation history between yourself and a user, ending with an utterance from the user. You will then see a specific instruction instructing you what kind of response to generate. When you answer the user's requests, you cite your sources in your answers, according to those instructions.

# User Preamble

## Task and Context

You help people answer their questions and other requests interactively. You will be asked a very wide array of requests on all kinds of topics. You will be equipped with a wide range of search engines or similar tools to help you, which you use to research your answer. You should focus on serving the user's needs as best you can, which will be wide-ranging.

## Style Guide

Unless the user asks for a different style of answer, you should answer in full sentences, using proper grammar and spelling.<|END_OF_TURN_TOKEN|><|START_OF_TURN_TOKEN|><|USER_TOKEN|>Whats the biggest penguin in the world?<|END_OF_TURN_TOKEN|><|START_OF_TURN_TOKEN|><|SYSTEM_TOKEN|><results>

Document: 0

title: Tall penguins

text: Emperor penguins are the tallest growing up to 122 cm in height.

Document: 1

title: Penguin habitats

text: Emperor penguins only live in Antarctica.

</results><|END_OF_TURN_TOKEN|><|START_OF_TURN_TOKEN|><|SYSTEM_TOKEN|>Carefully perform the following instructions, in order, starting each with a new line.

Firstly, Decide which of the retrieved documents are relevant to the user's last input by writing 'Relevant Documents:' followed by comma-separated list of document numbers. If none are relevant, you should instead write 'None'.

Secondly, Decide which of the retrieved documents contain facts that should be cited in a good answer to the user's last input by writing 'Cited Documents:' followed a comma-separated list of document numbers. If you dont want to cite any of them, you should instead write 'None'.

Thirdly, Write 'Answer:' followed by a response to the user's last input in high quality natural english. Use the retrieved documents to help you. Do not insert any citations or grounding markup.

Finally, Write 'Grounded answer:' followed by a response to the user's last input in high quality natural english. Use the symbols <co: doc> and </co: doc> to indicate when a fact comes from a document in the search result, e.g <co: 0>my fact</co: 0> for a fact from document 0.<|END_OF_TURN_TOKEN|><|START_OF_TURN_TOKEN|><|CHATBOT_TOKEN|>

````

</details>

<details>

<summary><b>Example Rendered Grounded Generation Completion [CLICK TO EXPAND]</b></summary>

````

Relevant Documents: 0,1

Cited Documents: 0,1

Answer: The Emperor Penguin is the tallest or biggest penguin in the world. It is a bird that lives only in Antarctica and grows to a height of around 122 centimetres.

Grounded answer: The <co: 0>Emperor Penguin</co: 0> is the <co: 0>tallest</co: 0> or biggest penguin in the world. It is a bird that <co: 1>lives only in Antarctica</co: 1> and <co: 0>grows to a height of around 122 centimetres.</co: 0>

````

</details>
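
To use the grounding spans downstream, the `<co: N>` markup has to be separated from the answer text. The sketch below does this with a regular expression. It assumes every span cites a single document number and that spans are not nested, which is all the example above shows. `extract_citations` is a hypothetical helper name.

```python
import re
from typing import List, Tuple

# Matches <co: N>cited text</co: N>, where N is a single document number.
CITATION = re.compile(r"<co: (\d+)>(.*?)</co: \1>", re.DOTALL)


def extract_citations(grounded_answer: str) -> Tuple[str, List[Tuple[int, str]]]:
    """Hypothetical helper: return (plain answer, [(document number, cited text), ...])."""
    citations = [
        (int(m.group(1)), m.group(2)) for m in CITATION.finditer(grounded_answer)
    ]
    # Replace each span with its inner text to recover the plain answer.
    plain = CITATION.sub(lambda m: m.group(2), grounded_answer)
    return plain, citations


grounded = (
    "The <co: 0>Emperor Penguin</co: 0> is the <co: 0>tallest</co: 0> or biggest "
    "penguin in the world. It is a bird that <co: 1>lives only in Antarctica</co: 1> "
    "and <co: 0>grows to a height of around 122 centimetres.</co: 0>"
)

plain, citations = extract_citations(grounded)
print(plain)
for doc_id, span in citations:
    print(doc_id, span)
```

The document numbers index into the `documents` list passed to the tokenizer, so each cited span can be linked back to its source snippet.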

### Code Capabilities:

Command R+ has been optimized to interact with your code, by requesting code snippets, code explanations, or code rewrites. It might not perform well out-of-the-box for pure code completion. For better performance, we also recommend using a low temperature (and even greedy decoding) for code-generation related instructions.

### Model Card Contact

For errors or additional questions about details in this model card, contact [[email protected]](mailto:[email protected]).

### Terms of Use:

We hope that the release of this model will make community-based research efforts more accessible, by releasing the weights of a highly performant 104 billion parameter model to researchers all over the world. This model is governed by a [CC-BY-NC](https://cohere.com/c4ai-cc-by-nc-license) License with an acceptable use addendum, and also requires adhering to [C4AI's Acceptable Use Policy](https://docs.cohere.com/docs/c4ai-acceptable-use-policy).

### Try Chat:

You can try Command R+ chat in the playground [here](https://dashboard.cohere.com/playground/chat). You can also use it in our dedicated Hugging Face Space [here](https://huggingface.co/spaces/CohereForAI/c4ai-command-r-plus). |

bartowski/Tess-v2.5.2-Qwen2-72B-GGUF | bartowski | 2024-06-15T06:17:25Z | 1,898 | 4 | null | [

"gguf",

"text-generation",

"license:other",

"region:us"

] | text-generation | 2024-06-15T04:22:13Z | ---

license: other

license_name: qwen2

license_link: https://huggingface.co/Qwen/Qwen2-72B/blob/main/LICENSE

quantized_by: bartowski

pipeline_tag: text-generation

---

## Llamacpp imatrix Quantizations of Tess-v2.5.2-Qwen2-72B

Using <a href="https://github.com/ggerganov/llama.cpp/">llama.cpp</a> release <a href="https://github.com/ggerganov/llama.cpp/releases/tag/b3145">b3145</a> for quantization.

Original model: https://huggingface.co/migtissera/Tess-v2.5.2-Qwen2-72B

All quants made using imatrix option with dataset from [here](https://gist.github.com/bartowski1182/eb213dccb3571f863da82e99418f81e8)

## Prompt format

```

<|im_start|>system

{system_prompt}<|im_end|>

<|im_start|>user

{prompt}<|im_end|>

<|im_start|>assistant

```
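
As a small illustration, the template above can be filled in with ordinary string formatting. `build_prompt` is a hypothetical helper, and the newline after the final `<|im_start|>assistant` is an assumption based on how the earlier turns are laid out.

```python
def build_prompt(system_prompt: str, prompt: str) -> str:
    """Hypothetical helper: fill in the prompt format shown above."""
    return (
        f"<|im_start|>system\n{system_prompt}<|im_end|>\n"
        f"<|im_start|>user\n{prompt}<|im_end|>\n"
        # Assumption: generation starts on a new line after the assistant tag.
        "<|im_start|>assistant\n"
    )


text = build_prompt("You are a helpful assistant.", "What is a GGUF file?")
print(text)
```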

## Download a file (not the whole branch) from below:

| Filename | Quant type | File Size | Description |

| -------- | ---------- | --------- | ----------- |

| [Tess-v2.5.2-Qwen2-72B-Q8_0.gguf](https://huggingface.co/bartowski/Tess-v2.5.2-Qwen2-72B-GGUF/tree/main/Tess-v2.5.2-Qwen2-72B-Q8_0.gguf) | Q8_0 | 79.59GB | Extremely high quality, generally unneeded but max available quant. |

| [Tess-v2.5.2-Qwen2-72B-Q5_K_M.gguf](https://huggingface.co/bartowski/Tess-v2.5.2-Qwen2-72B-GGUF/tree/main/Tess-v2.5.2-Qwen2-72B-Q5_K_M.gguf) | Q5_K_M | 57.55GB | High quality, *recommended*. |

| [Tess-v2.5.2-Qwen2-72B-Q4_K_M.gguf](https://huggingface.co/bartowski/Tess-v2.5.2-Qwen2-72B-GGUF/tree/main/Tess-v2.5.2-Qwen2-72B-Q4_K_M.gguf) | Q4_K_M | 50.67GB | Good quality, uses about 4.83 bits per weight, *recommended*. |

| [Tess-v2.5.2-Qwen2-72B-IQ4_XS.gguf](https://huggingface.co/bartowski/Tess-v2.5.2-Qwen2-72B-GGUF/blob/main/Tess-v2.5.2-Qwen2-72B-IQ4_XS.gguf) | IQ4_XS | 43.00GB | Decent quality, smaller than Q4_K_S with similar performance, *recommended*. |

| [Tess-v2.5.2-Qwen2-72B-Q3_K_M.gguf](https://huggingface.co/bartowski/Tess-v2.5.2-Qwen2-72B-GGUF/blob/main/Tess-v2.5.2-Qwen2-72B-Q3_K_M.gguf) | Q3_K_M | 41.12GB | Even lower quality. |

| [Tess-v2.5.2-Qwen2-72B-IQ3_M.gguf](https://huggingface.co/bartowski/Tess-v2.5.2-Qwen2-72B-GGUF/blob/main/Tess-v2.5.2-Qwen2-72B-IQ3_M.gguf) | IQ3_M | 38.92GB | Medium-low quality, new method with decent performance comparable to Q3_K_M. |

| [Tess-v2.5.2-Qwen2-72B-Q3_K_S.gguf](https://huggingface.co/bartowski/Tess-v2.5.2-Qwen2-72B-GGUF/blob/main/Tess-v2.5.2-Qwen2-72B-Q3_K_S.gguf) | Q3_K_S | 37.91GB | Low quality, not recommended. |

| [Tess-v2.5.2-Qwen2-72B-IQ3_XXS.gguf](https://huggingface.co/bartowski/Tess-v2.5.2-Qwen2-72B-GGUF/blob/main/Tess-v2.5.2-Qwen2-72B-IQ3_XXS.gguf) | IQ3_XXS | 35.43GB | Lower quality, new method with decent performance, comparable to Q3 quants. |

| [Tess-v2.5.2-Qwen2-72B-Q2_K.gguf](https://huggingface.co/bartowski/Tess-v2.5.2-Qwen2-72B-GGUF/blob/main/Tess-v2.5.2-Qwen2-72B-Q2_K.gguf) | Q2_K | 33.36GB | Very low quality but surprisingly usable. |

| [Tess-v2.5.2-Qwen2-72B-IQ2_M.gguf](https://huggingface.co/bartowski/Tess-v2.5.2-Qwen2-72B-GGUF/blob/main/Tess-v2.5.2-Qwen2-72B-IQ2_M.gguf) | IQ2_M | 32.93GB | Very low quality, uses SOTA techniques to also be surprisingly usable. |

| [Tess-v2.5.2-Qwen2-72B-IQ2_XS.gguf](https://huggingface.co/bartowski/Tess-v2.5.2-Qwen2-72B-GGUF/blob/main/Tess-v2.5.2-Qwen2-72B-IQ2_XS.gguf) | IQ2_XS | 30.77GB | Lower quality, uses SOTA techniques to be usable. |

| [Tess-v2.5.2-Qwen2-72B-IQ2_XXS.gguf](https://huggingface.co/bartowski/Tess-v2.5.2-Qwen2-72B-GGUF/blob/main/Tess-v2.5.2-Qwen2-72B-IQ2_XXS.gguf) | IQ2_XXS | 29.20GB | Lower quality, uses SOTA techniques to be usable. |

| [Tess-v2.5.2-Qwen2-72B-IQ1_M.gguf](https://huggingface.co/bartowski/Tess-v2.5.2-Qwen2-72B-GGUF/blob/main/Tess-v2.5.2-Qwen2-72B-IQ1_M.gguf) | IQ1_M | 27.45GB | Extremely low quality, *not* recommended. |

## Downloading using huggingface-cli

First, make sure you have huggingface-cli installed:

```

pip install -U "huggingface_hub[cli]"

```

Then, you can target the specific file you want:

```

huggingface-cli download bartowski/Tess-v2.5.2-Qwen2-72B-GGUF --include "Tess-v2.5.2-Qwen2-72B-Q4_K_M.gguf" --local-dir ./

```

If the model is bigger than 50GB, it will have been split into multiple files. In order to download them all to a local folder, run:

```

huggingface-cli download bartowski/Tess-v2.5.2-Qwen2-72B-GGUF --include "Tess-v2.5.2-Qwen2-72B-Q8_0.gguf/*" --local-dir Tess-v2.5.2-Qwen2-72B-Q8_0

```

You can either specify a new local-dir (Tess-v2.5.2-Qwen2-72B-Q8_0) or download them all in place (./)

## Which file should I choose?

A great write up with charts showing various performances is provided by Artefact2 [here](https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9)

The first thing to figure out is how big a model you can run. To do this, you'll need to figure out how much RAM and/or VRAM you have.

If you want your model running as FAST as possible, you'll want to fit the whole thing on your GPU's VRAM. Aim for a quant with a file size 1-2GB smaller than your GPU's total VRAM.

If you want the absolute maximum quality, add both your system RAM and your GPU's VRAM together, then similarly grab a quant with a file size 1-2GB smaller than that total.
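
The sizing rule can be sketched as a small lookup over the file sizes from the table above. `pick_quant` and its `headroom_gb` parameter are hypothetical names for this sketch. It simply returns the largest file that fits, treating file size as a rough proxy for quality, and it does not account for context length or other runtime memory use.

```python
from typing import Optional

# File sizes in GB, copied from the download table above.
QUANT_SIZES_GB = {
    "Q8_0": 79.59, "Q5_K_M": 57.55, "Q4_K_M": 50.67, "IQ4_XS": 43.00,
    "Q3_K_M": 41.12, "IQ3_M": 38.92, "Q3_K_S": 37.91, "IQ3_XXS": 35.43,
    "Q2_K": 33.36, "IQ2_M": 32.93, "IQ2_XS": 30.77, "IQ2_XXS": 29.20,
    "IQ1_M": 27.45,
}


def pick_quant(memory_gb: float, headroom_gb: float = 2.0) -> Optional[str]:
    """Hypothetical helper: largest quant whose file fits in memory minus headroom."""
    budget = memory_gb - headroom_gb
    fitting = {name: size for name, size in QUANT_SIZES_GB.items() if size <= budget}
    if not fitting:
        return None  # nothing fits in this much memory
    return max(fitting, key=fitting.get)


print(pick_quant(48.0))         # VRAM only, for speed
print(pick_quant(24.0 + 64.0))  # VRAM plus system RAM, for maximum quality
print(pick_quant(24.0))         # too small for any file in this repo
```

Pass only your VRAM for the fastest setup, or VRAM plus system RAM when quality matters more than speed.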

Next, you'll need to decide if you want to use an 'I-quant' or a 'K-quant'.

If you don't want to think too much, grab one of the K-quants. These are in format 'QX_K_X', like Q5_K_M.

If you want to get more into the weeds, you can check out this extremely useful feature chart:

[llama.cpp feature matrix](https://github.com/ggerganov/llama.cpp/wiki/Feature-matrix)

But basically, if you're aiming for below Q4, and you're running cuBLAS (Nvidia) or rocBLAS (AMD), you should look towards the I-quants. These are in format IQX_X, like IQ3_M. These are newer and offer better performance for their size.

These I-quants can also be used on CPU and Apple Metal, but will be slower than their K-quant equivalent, so speed vs performance is a tradeoff you'll have to decide.

The I-quants are *not* compatible with Vulkan, which is also used on AMD cards, so if you have an AMD card, double-check whether you're using the rocBLAS build or the Vulkan build. At the time of writing, LM Studio has a preview with ROCm support, and other inference engines have specific builds for ROCm.

Want to support my work? Visit my ko-fi page here: https://ko-fi.com/bartowski

|

deepset/gelectra-large-germanquad | deepset | 2023-07-20T06:47:30Z | 1,897 | 26 | transformers | [

"transformers",

"pytorch",

"tf",

"safetensors",

"electra",

"question-answering",

"exbert",

"de",

"dataset:deepset/germanquad",

"license:mit",

"endpoints_compatible",

"region:us"

] | question-answering | 2022-03-02T23:29:05Z | ---

language: de

datasets:

- deepset/germanquad

license: mit

thumbnail: https://thumb.tildacdn.com/tild3433-3637-4830-a533-353833613061/-/resize/720x/-/format/webp/germanquad.jpg

tags:

- exbert

---

## Overview

**Language model:** gelectra-large-germanquad

**Language:** German

**Training data:** GermanQuAD train set (~ 12MB)

**Eval data:** GermanQuAD test set (~ 5MB)

**Infrastructure**: 1x V100 GPU

**Published**: Apr 21st, 2021

## Details

- We trained a German question answering model with a gelectra-large model as its basis.

- The dataset is GermanQuAD, a new, German language dataset, which we hand-annotated and published [online](https://deepset.ai/germanquad).

- The training dataset is one-way annotated and contains 11518 questions and 11518 answers. The test dataset is three-way annotated: it contains 2204 questions with 2204·3−76 = 6536 answers, because we removed 76 wrong answers.

See https://deepset.ai/germanquad for more details and dataset download in SQuAD format.

## Hyperparameters

```

batch_size = 24

n_epochs = 2

max_seq_len = 384

learning_rate = 3e-5

lr_schedule = LinearWarmup

embeds_dropout_prob = 0.1

```

## Performance

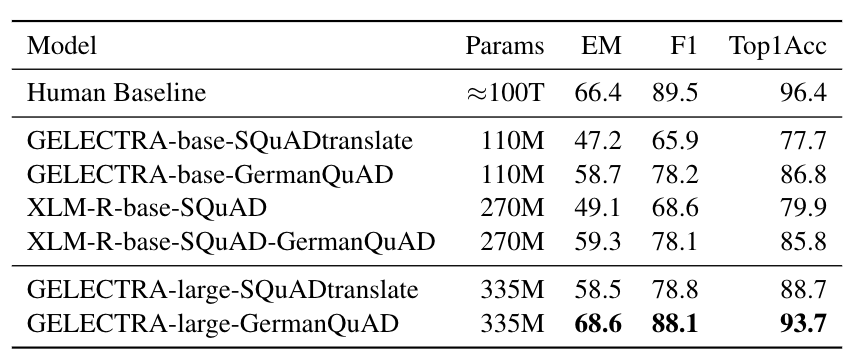

We evaluated the extractive question answering performance on our GermanQuAD test set.

Model types and training data are included in the model name.

For finetuning XLM-Roberta, we use the English SQuAD v2.0 dataset.

The GELECTRA models are warm started on the German translation of SQuAD v1.1 and finetuned on [GermanQuAD](https://deepset.ai/germanquad).

The human baseline was computed for the 3-way test set by taking one answer as prediction and the other two as ground truth.
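
The human-baseline idea can be sketched for a single question as follows. This is not the evaluation code behind the reported numbers: the token-level F1 and the lowercase, punctuation-stripping normalization follow the usual SQuAD-style convention and are assumptions here, as is the choice to hold out the first annotator's answer. `f1` and `score_against_others` are hypothetical helper names.

```python
import re
from collections import Counter
from typing import List


def normalize(text: str) -> List[str]:
    """Lowercase, replace punctuation with spaces, and split into tokens."""
    return re.sub(r"[^\w\s]", " ", text.lower()).split()


def f1(prediction: str, truth: str) -> float:
    """Token-overlap F1 between one prediction and one reference answer."""
    pred, gold = normalize(prediction), normalize(truth)
    overlap = sum((Counter(pred) & Counter(gold)).values())
    if overlap == 0:
        return 0.0
    precision = overlap / len(pred)
    recall = overlap / len(gold)
    return 2 * precision * recall / (precision + recall)


def score_against_others(prediction: str, truths: List[str]) -> float:
    """Best F1 of the held-out answer against the remaining annotators' answers."""
    return max(f1(prediction, truth) for truth in truths)


# Three annotators' answers to one question (made-up illustration data).
answers = ["in Berlin", "Berlin", "in der Stadt Berlin"]
score = score_against_others(answers[0], answers[1:])
print(round(score, 4))
```

Averaging this score over all test questions would give one human-baseline figure under these assumptions.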

## Authors

**Timo Möller:** [email protected]

**Julian Risch:** [email protected]

**Malte Pietsch:** [email protected]

## About us

<div class="grid lg:grid-cols-2 gap-x-4 gap-y-3">

<div class="w-full h-40 object-cover mb-2 rounded-lg flex items-center justify-center">

<img alt="" src="https://huggingface.co/spaces/deepset/README/resolve/main/haystack-logo-colored.svg" class="w-40"/>

</div>

<div class="w-full h-40 object-cover mb-2 rounded-lg flex items-center justify-center">

<img alt="" src="https://huggingface.co/spaces/deepset/README/resolve/main/deepset-logo-colored.svg" class="w-40"/>

</div>

</div>

[deepset](http://deepset.ai/) is the company behind the open-source NLP framework [Haystack](https://haystack.deepset.ai/), which is designed to help you build production-ready NLP systems for question answering, summarization, ranking and more.

Some of our other work:

- [Distilled roberta-base-squad2 (aka "tinyroberta-squad2")](https://huggingface.co/deepset/tinyroberta-squad2)

- [German BERT (aka "bert-base-german-cased")](https://deepset.ai/german-bert)

- [GermanQuAD and GermanDPR datasets and models (aka "gelectra-base-germanquad", "gbert-base-germandpr")](https://deepset.ai/germanquad)

## Get in touch and join the Haystack community

<p>For more info on Haystack, visit our <strong><a href="https://github.com/deepset-ai/haystack">GitHub</a></strong> repo and <strong><a href="https://haystack.deepset.ai">Documentation</a></strong>.

We also have a <strong><a class="h-7" href="https://haystack.deepset.ai/community/join">Discord community open to everyone!</a></strong></p>

[Twitter](https://twitter.com/deepset_ai) | [LinkedIn](https://www.linkedin.com/company/deepset-ai/) | [Discord](https://haystack.deepset.ai/community/join) | [GitHub Discussions](https://github.com/deepset-ai/haystack/discussions) | [Website](https://deepset.ai)

By the way: [we're hiring!](http://www.deepset.ai/jobs)

|

JosephusCheung/Qwen-VL-LLaMAfied-7B-Chat | JosephusCheung | 2023-09-25T22:38:03Z | 1,897 | 34 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"llama2",

"qwen",

"en",

"zh",

"license:gpl-3.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-08-30T18:57:53Z | ---

language:

- en

- zh

tags:

- llama

- llama2

- qwen

license: gpl-3.0

---

This is the LLaMAfied replica of [Qwen/Qwen-VL-Chat](https://huggingface.co/Qwen/Qwen-VL-Chat) (Original Version before 25.09.2023), recalibrated to fit the original LLaMA/LLaMA-2-like model structure.

You can use LlamaForCausalLM for model inference, which is the same as LLaMA/LLaMA-2 models (using GPT2Tokenizer converted from the original tiktoken, by [vonjack](https://huggingface.co/vonjack)).

The model has been edited to be white-labelled, meaning the model will no longer call itself a Qwen.

Up until now, the model has undergone numerical alignment of weights and preliminary reinforcement learning in order to align with the original model. Some errors and outdated knowledge have been addressed through model editing methods. This model remains completely equivalent to the original version, without having any dedicated supervised finetuning on downstream tasks or other extensive conversation datasets.

PROMPT FORMAT: [chatml](https://github.com/openai/openai-python/blob/main/chatml.md) |

Helsinki-NLP/opus-mt-no-de | Helsinki-NLP | 2023-08-16T12:01:50Z | 1,896 | 0 | transformers | [

"transformers",

"pytorch",

"tf",

"marian",

"text2text-generation",

"translation",

"no",

"de",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | translation | 2022-03-02T23:29:04Z | ---

language:

- no

- de

tags:

- translation

license: apache-2.0

---

### nor-deu

* source group: Norwegian

* target group: German

* OPUS readme: [nor-deu](https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/nor-deu/README.md)

* model: transformer-align

* source language(s): nno nob

* target language(s): deu

* pre-processing: normalization + SentencePiece (spm4k,spm4k)

* download original weights: [opus-2020-06-17.zip](https://object.pouta.csc.fi/Tatoeba-MT-models/nor-deu/opus-2020-06-17.zip)

* test set translations: [opus-2020-06-17.test.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/nor-deu/opus-2020-06-17.test.txt)

* test set scores: [opus-2020-06-17.eval.txt](https://object.pouta.csc.fi/Tatoeba-MT-models/nor-deu/opus-2020-06-17.eval.txt)

## Benchmarks

| testset | BLEU | chr-F |

|-----------------------|-------|-------|

| Tatoeba-test.nor.deu | 29.6 | 0.541 |

### System Info:

- hf_name: nor-deu

- source_languages: nor

- target_languages: deu

- opus_readme_url: https://github.com/Helsinki-NLP/Tatoeba-Challenge/tree/master/models/nor-deu/README.md

- original_repo: Tatoeba-Challenge

- tags: ['translation']

- languages: ['no', 'de']

- src_constituents: {'nob', 'nno'}

- tgt_constituents: {'deu'}

- src_multilingual: False

- tgt_multilingual: False

- prepro: normalization + SentencePiece (spm4k,spm4k)

- url_model: https://object.pouta.csc.fi/Tatoeba-MT-models/nor-deu/opus-2020-06-17.zip

- url_test_set: https://object.pouta.csc.fi/Tatoeba-MT-models/nor-deu/opus-2020-06-17.test.txt

- src_alpha3: nor

- tgt_alpha3: deu

- short_pair: no-de

- chrF2_score: 0.541

- bleu: 29.6

- brevity_penalty: 0.96

- ref_len: 34575.0

- src_name: Norwegian

- tgt_name: German

- train_date: 2020-06-17

- src_alpha2: no

- tgt_alpha2: de

- prefer_old: False

- long_pair: nor-deu

- helsinki_git_sha: 480fcbe0ee1bf4774bcbe6226ad9f58e63f6c535

- transformers_git_sha: 2207e5d8cb224e954a7cba69fa4ac2309e9ff30b

- port_machine: brutasse

- port_time: 2020-08-21-14:41 |

marcchew/Marcoroni-7B-LaMini-40K | marcchew | 2023-09-17T08:52:48Z | 1,896 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"8-bit",

"bitsandbytes",

"region:us"

] | text-generation | 2023-09-17T08:48:05Z | Entry not found |

mncai/Llama2-7B-guanaco-dolphin-500 | mncai | 2023-09-27T10:46:58Z | 1,896 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-09-27T10:29:41Z | Entry not found |

HuggingFaceFW/ablation-model-fineweb-v1 | HuggingFaceFW | 2024-04-25T08:32:46Z | 1,896 | 13 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"arxiv:1910.09700",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2024-04-20T23:08:00Z | ---

library_name: transformers

license: apache-2.0

---

# Model Card for Model ID

<!-- Provide a quick summary of what the model is/does. -->

## Model Details

### Model Description

<!-- Provide a longer summary of what this model is. -->

This is the model card of a 🤗 transformers model that has been pushed on the Hub. This model card has been automatically generated.

- **Developed by:** [More Information Needed]

- **Funded by [optional]:** [More Information Needed]

- **Shared by [optional]:** [More Information Needed]

- **Model type:** [More Information Needed]

- **Language(s) (NLP):** [More Information Needed]

- **License:** [More Information Needed]

- **Finetuned from model [optional]:** [More Information Needed]

### Model Sources [optional]

<!-- Provide the basic links for the model. -->

- **Repository:** [More Information Needed]

- **Paper [optional]:** [More Information Needed]

- **Demo [optional]:** [More Information Needed]

## Uses

<!-- Address questions around how the model is intended to be used, including the foreseeable users of the model and those affected by the model. -->

### Direct Use

<!-- This section is for the model use without fine-tuning or plugging into a larger ecosystem/app. -->

[More Information Needed]

### Downstream Use [optional]

<!-- This section is for the model use when fine-tuned for a task, or when plugged into a larger ecosystem/app -->

[More Information Needed]

### Out-of-Scope Use

<!-- This section addresses misuse, malicious use, and uses that the model will not work well for. -->

[More Information Needed]

## Bias, Risks, and Limitations

<!-- This section is meant to convey both technical and sociotechnical limitations. -->

[More Information Needed]

### Recommendations

<!-- This section is meant to convey recommendations with respect to the bias, risk, and technical limitations. -->

Users (both direct and downstream) should be made aware of the risks, biases and limitations of the model. More information needed for further recommendations.

## How to Get Started with the Model

Use the code below to get started with the model.

[More Information Needed]

## Training Details

### Training Data

<!-- This should link to a Dataset Card, perhaps with a short stub of information on what the training data is all about as well as documentation related to data pre-processing or additional filtering. -->

[More Information Needed]

### Training Procedure

<!-- This relates heavily to the Technical Specifications. Content here should link to that section when it is relevant to the training procedure. -->

#### Preprocessing [optional]

[More Information Needed]

#### Training Hyperparameters

- **Training regime:** [More Information Needed] <!--fp32, fp16 mixed precision, bf16 mixed precision, bf16 non-mixed precision, fp16 non-mixed precision, fp8 mixed precision -->

#### Speeds, Sizes, Times [optional]

<!-- This section provides information about throughput, start/end time, checkpoint size if relevant, etc. -->

[More Information Needed]

## Evaluation

<!-- This section describes the evaluation protocols and provides the results. -->

### Testing Data, Factors & Metrics

#### Testing Data

<!-- This should link to a Dataset Card if possible. -->

[More Information Needed]

#### Factors

<!-- These are the things the evaluation is disaggregating by, e.g., subpopulations or domains. -->

[More Information Needed]

#### Metrics

<!-- These are the evaluation metrics being used, ideally with a description of why. -->

[More Information Needed]

### Results

[More Information Needed]

#### Summary

## Model Examination [optional]

<!-- Relevant interpretability work for the model goes here -->

[More Information Needed]

## Environmental Impact

<!-- Total emissions (in grams of CO2eq) and additional considerations, such as electricity usage, go here. Edit the suggested text below accordingly -->

Carbon emissions can be estimated using the [Machine Learning Impact calculator](https://mlco2.github.io/impact#compute) presented in [Lacoste et al. (2019)](https://arxiv.org/abs/1910.09700).

- **Hardware Type:** [More Information Needed]

- **Hours used:** [More Information Needed]

- **Cloud Provider:** [More Information Needed]

- **Compute Region:** [More Information Needed]

- **Carbon Emitted:** [More Information Needed]

## Technical Specifications [optional]

### Model Architecture and Objective

[More Information Needed]

### Compute Infrastructure

[More Information Needed]

#### Hardware

[More Information Needed]

#### Software

[More Information Needed]

## Citation [optional]

<!-- If there is a paper or blog post introducing the model, the APA and Bibtex information for that should go in this section. -->

**BibTeX:**

[More Information Needed]

**APA:**

[More Information Needed]

## Glossary [optional]

<!-- If relevant, include terms and calculations in this section that can help readers understand the model or model card. -->

[More Information Needed]

## More Information [optional]

[More Information Needed]

## Model Card Authors [optional]

[More Information Needed]

## Model Card Contact

[More Information Needed] |

flair/upos-multi | flair | 2024-04-05T09:55:13Z | 1,895 | 6 | flair | [

"flair",

"pytorch",

"token-classification",

"sequence-tagger-model",

"en",

"de",

"fr",

"it",

"nl",

"pl",

"es",

"sv",

"da",

"no",

"fi",

"cs",

"dataset:ontonotes",

"region:us"

] | token-classification | 2022-03-02T23:29:05Z | ---

tags:

- flair

- token-classification

- sequence-tagger-model

language:

- en

- de

- fr

- it

- nl

- pl

- es

- sv

- da

- no

- fi

- cs

datasets:

- ontonotes

widget:

- text: "Ich liebe Berlin, as they say"

---

## Multilingual Universal Part-of-Speech Tagging in Flair (default model)

This is the default multilingual universal part-of-speech tagging model that ships with [Flair](https://github.com/flairNLP/flair/).

F1-Score: **96.87** (12 UD Treebanks covering English, German, French, Italian, Dutch, Polish, Spanish, Swedish, Danish, Norwegian, Finnish and Czech)

Predicts universal POS tags:

| **tag** | **meaning** |
|---------|-------------|
| ADJ | adjective |
| ADP | adposition |
| ADV | adverb |
| AUX | auxiliary |
| CCONJ | coordinating conjunction |
| DET | determiner |
| INTJ | interjection |
| NOUN | noun |
| NUM | numeral |
| PART | particle |
| PRON | pronoun |
| PROPN | proper noun |
| PUNCT | punctuation |
| SCONJ | subordinating conjunction |
| SYM | symbol |
| VERB | verb |
| X | other |

Based on [Flair embeddings](https://www.aclweb.org/anthology/C18-1139/) and LSTM-CRF.
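
For downstream code, the tag table above can be captured as a plain lookup. The following is a minimal sketch and not part of Flair: `UPOS_MEANINGS` and `describe_tag` are hypothetical names introduced here for illustration, and the entries are copied from the table.

```python
# Illustrative lookup built from the tag table in this card.
# UPOS_MEANINGS and describe_tag are hypothetical helpers, not Flair APIs.
UPOS_MEANINGS = {
    "ADJ": "adjective",
    "ADP": "adposition",
    "ADV": "adverb",
    "AUX": "auxiliary",
    "CCONJ": "coordinating conjunction",
    "DET": "determiner",
    "INTJ": "interjection",
    "NOUN": "noun",
    "NUM": "numeral",
    "PART": "particle",
    "PRON": "pronoun",
    "PROPN": "proper noun",
    "PUNCT": "punctuation",
    "SCONJ": "subordinating conjunction",
    "SYM": "symbol",
    "VERB": "verb",
    "X": "other",
}


def describe_tag(tag: str) -> str:
    """Return the meaning of a universal POS tag, or 'unknown' if unlisted."""
    return UPOS_MEANINGS.get(tag.upper(), "unknown")


print(describe_tag("PROPN"))  # proper noun
```

Returning `"unknown"` rather than raising keeps the helper usable if a tagger ever emits a label outside this table.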

---

### Demo: How to use in Flair

Requires: **[Flair](https://github.com/flairNLP/flair/)** (`pip install flair`)

```python

from flair.data import Sentence

from flair.models import SequenceTagger

# load tagger

tagger = SequenceTagger.load("flair/upos-multi")

# make example sentence

sentence = Sentence("Ich liebe Berlin, as they say. ")

# predict POS tags

tagger.predict(sentence)

# print sentence

print(sentence)

# iterate over tokens and print the predicted POS label

print("The following POS tags are found:")

for token in sentence:

    print(token.get_label("upos"))

```

This yields the following output:

```

Token[0]: "Ich" → PRON (0.9999)

Token[1]: "liebe" → VERB (0.9999)

Token[2]: "Berlin" → PROPN (0.9997)

Token[3]: "," → PUNCT (1.0)

Token[4]: "as" → SCONJ (0.9991)

Token[5]: "they" → PRON (0.9998)

Token[6]: "say" → VERB (0.9998)

Token[7]: "." → PUNCT (1.0)

```

So, the words "*Ich*" and "*they*" are labeled as **pronouns** (PRON), while "*liebe*" and "*say*" are labeled as **verbs** (VERB) in the multilingual sentence "*Ich liebe Berlin, as they say*".
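
The grouping described above can be reproduced without loading the model. This is a small sketch that hard-codes the (token, tag) pairs from the example output; `TAGGED` and `group_by_tag` are hypothetical names used only for illustration.

```python
from collections import defaultdict

# (token, tag) pairs copied from the example output above.
TAGGED = [
    ("Ich", "PRON"), ("liebe", "VERB"), ("Berlin", "PROPN"), (",", "PUNCT"),
    ("as", "SCONJ"), ("they", "PRON"), ("say", "VERB"), (".", "PUNCT"),
]


def group_by_tag(pairs):
    """Map each tag to the list of tokens carrying it, in sentence order."""
    groups = defaultdict(list)
    for token, tag in pairs:
        groups[tag].append(token)
    return dict(groups)


groups = group_by_tag(TAGGED)
print(groups["PRON"])  # ['Ich', 'they']
print(groups["VERB"])  # ['liebe', 'say']
```

With a tagged Flair sentence, the pairs would presumably be built from each token's text and the value of its `upos` label; the exact attribute names are worth checking against the installed Flair release.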

---

### Training: Script to train this model

The following Flair script was used to train this model:

```python

from flair.data import MultiCorpus

from flair.datasets import (

    UD_ENGLISH, UD_GERMAN, UD_FRENCH, UD_ITALIAN, UD_POLISH, UD_DUTCH, UD_CZECH,

    UD_DANISH, UD_SPANISH, UD_SWEDISH, UD_NORWEGIAN, UD_FINNISH,

)

from flair.embeddings import StackedEmbeddings, FlairEmbeddings

# 1. make a multi corpus consisting of 12 UD treebanks (in_memory=False here because this corpus becomes large)

corpus = MultiCorpus([

    UD_ENGLISH(in_memory=False),

    UD_GERMAN(in_memory=False),

    UD_DUTCH(in_memory=False),

    UD_FRENCH(in_memory=False),

    UD_ITALIAN(in_memory=False),

    UD_SPANISH(in_memory=False),

    UD_POLISH(in_memory=False),

    UD_CZECH(in_memory=False),

    UD_DANISH(in_memory=False),

    UD_SWEDISH(in_memory=False),

    UD_NORWEGIAN(in_memory=False),

    UD_FINNISH(in_memory=False),

])

# 2. what tag do we want to predict?

tag_type = 'upos'

# 3. make the tag dictionary from the corpus

tag_dictionary = corpus.make_label_dictionary(label_type=tag_type)

# 4. initialize each embedding we use

embedding_types = [

    # contextual string embeddings, forward

    FlairEmbeddings('multi-forward'),

    # contextual string embeddings, backward

    FlairEmbeddings('multi-backward'),

]

# embedding stack consists of Flair embeddings

embeddings = StackedEmbeddings(embeddings=embedding_types)

# 5. initialize sequence tagger

from flair.models import SequenceTagger

tagger = SequenceTagger(hidden_size=256,

                        embeddings=embeddings,

                        tag_dictionary=tag_dictionary,

                        tag_type=tag_type,

                        use_crf=False)

# 6. initialize trainer

from flair.trainers import ModelTrainer

trainer = ModelTrainer(tagger, corpus)

# 7. run training

trainer.train('resources/taggers/upos-multi',

              train_with_dev=True,

              max_epochs=150)

```

---

### Cite

Please cite the following paper when using this model.

```

@inproceedings{akbik2018coling,

title={Contextual String Embeddings for Sequence Labeling},

author={Akbik, Alan and Blythe, Duncan and Vollgraf, Roland},

booktitle = {{COLING} 2018, 27th International Conference on Computational Linguistics},

pages = {1638--1649},

year = {2018}

}

```

---

### Issues?

The Flair issue tracker is available [here](https://github.com/flairNLP/flair/issues/).

|

timm/sequencer2d_l.in1k | timm | 2023-04-26T21:43:30Z | 1,895 | 0 | timm | [

"timm",

"pytorch",

"safetensors",

"image-classification",

"dataset:imagenet-1k",

"arxiv:2205.01972",

"license:apache-2.0",

"region:us"

] | image-classification | 2023-04-26T21:42:41Z | ---

tags:

- image-classification

- timm

library_name: timm

license: apache-2.0

datasets:

- imagenet-1k

---

# Model card for sequencer2d_l.in1k

A Sequencer2d (LSTM-based) image classification model, trained on ImageNet-1k by the paper authors.

## Model Details

- **Model Type:** Image classification / feature backbone

- **Model Stats:**

  - Params (M): 54.3

  - GMACs: 9.7

  - Activations (M): 22.1

  - Image size: 224 x 224

- **Papers:**

  - Sequencer: Deep LSTM for Image Classification: https://arxiv.org/abs/2205.01972

- **Dataset:** ImageNet-1k

- **Original:** https://github.com/okojoalg/sequencer
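
As a rough guide to what the parameter count means in practice, the sketch below estimates weight storage from the "Params (M)" figure above. It assumes every parameter is stored at a single fixed width and counts weights only, so activations, buffers, and any optimizer state are excluded; the size of an actual checkpoint file may differ. `weight_megabytes` is a hypothetical helper, not a timm function.

```python
# Back-of-the-envelope weight storage estimate (weights only).
PARAMS_MILLIONS = 54.3  # "Params (M)" from the model stats above


def weight_megabytes(params_millions: float, bytes_per_param: int) -> float:
    """Approximate weight storage in decimal megabytes (10**6 bytes).

    Millions of parameters times bytes per parameter gives millions of bytes.
    """
    return params_millions * bytes_per_param


for name, nbytes in [("fp32", 4), ("fp16/bf16", 2)]:
    print(f"{name}: ~{weight_megabytes(PARAMS_MILLIONS, nbytes):.1f} MB")
```

Under these assumptions the weights come to roughly 217 MB in fp32 and about half that in a 16-bit format.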

## Model Usage

### Image Classification

```python

from urllib.request import urlopen

from PIL import Image

import timm