modelId

stringlengths 5

122

| author

stringlengths 2

42

| last_modified

timestamp[us, tz=UTC] | downloads

int64 0

738M

| likes

int64 0

11k

| library_name

stringclasses 245

values | tags

listlengths 1

4.05k

| pipeline_tag

stringclasses 48

values | createdAt

timestamp[us, tz=UTC] | card

stringlengths 1

901k

|

|---|---|---|---|---|---|---|---|---|---|

Sahajtomar/NER_legal_de | Sahajtomar | 2021-05-18T22:27:00Z | 1,708 | 3 | transformers | [

"transformers",

"pytorch",

"tf",

"jax",

"bert",

"token-classification",

"NER",

"de",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| token-classification | 2022-03-02T23:29:04Z | ---

language: de

tags:

- pytorch

- tf

- bert

- NER

datasets:

- legal entity recognition

---

### NER model trained on BERT

MODEL used for fine tuning is GBERT Large by deepset.ai

## Test

Accuracy: 98 \

F1: 84.1 \

Precision: 82.7 \

Recall: 85.5

## Model inferencing:

```python

!pip install -q transformers

from transformers import pipeline

ner = pipeline(

"ner",

model="Sahajtomar/NER_legal_de",

tokenizer="Sahajtomar/NER_legal_de")

nlp_ner("Für eine Zuständigkeit des Verwaltungsgerichts Berlin nach § 52 Nr. 1 bis 4 VwGO hat der \

Antragsteller keine Anhaltspunkte vorgetragen .")

```

|

facebook/s2t-medium-librispeech-asr | facebook | 2023-09-07T15:42:27Z | 1,708 | 8 | transformers | [

"transformers",

"pytorch",

"tf",

"safetensors",

"speech_to_text",

"automatic-speech-recognition",

"audio",

"en",

"dataset:librispeech_asr",

"arxiv:2010.05171",

"arxiv:1904.08779",

"license:mit",

"endpoints_compatible",

"region:us"

]

| automatic-speech-recognition | 2022-03-02T23:29:05Z | ---

language: en

datasets:

- librispeech_asr

tags:

- audio

- automatic-speech-recognition

pipeline_tag: automatic-speech-recognition

widget:

- example_title: Librispeech sample 1

src: https://cdn-media.huggingface.co/speech_samples/sample1.flac

- example_title: Librispeech sample 2

src: https://cdn-media.huggingface.co/speech_samples/sample2.flac

license: mit

---

# S2T-MEDIUM-LIBRISPEECH-ASR

`s2t-medium-librispeech-asr` is a Speech to Text Transformer (S2T) model trained for automatic speech recognition (ASR).

The S2T model was proposed in [this paper](https://arxiv.org/abs/2010.05171) and released in

[this repository](https://github.com/pytorch/fairseq/tree/master/examples/speech_to_text)

## Model description

S2T is an end-to-end sequence-to-sequence transformer model. It is trained with standard

autoregressive cross-entropy loss and generates the transcripts autoregressively.

## Intended uses & limitations

This model can be used for end-to-end speech recognition (ASR).

See the [model hub](https://huggingface.co/models?filter=speech_to_text) to look for other S2T checkpoints.

### How to use

As this a standard sequence to sequence transformer model, you can use the `generate` method to generate the

transcripts by passing the speech features to the model.

*Note: The `Speech2TextProcessor` object uses [torchaudio](https://github.com/pytorch/audio) to extract the

filter bank features. Make sure to install the `torchaudio` package before running this example.*

You could either install those as extra speech dependancies with

`pip install transformers"[speech, sentencepiece]"` or install the packages seperatly

with `pip install torchaudio sentencepiece`.

```python

import torch

from transformers import Speech2TextProcessor, Speech2TextForConditionalGeneration

from datasets import load_dataset

import soundfile as sf

model = Speech2TextForConditionalGeneration.from_pretrained("facebook/s2t-medium-librispeech-asr")

processor = Speech2Textprocessor.from_pretrained("facebook/s2t-medium-librispeech-asr")

def map_to_array(batch):

speech, _ = sf.read(batch["file"])

batch["speech"] = speech

return batch

ds = load_dataset(

"patrickvonplaten/librispeech_asr_dummy",

"clean",

split="validation"

)

ds = ds.map(map_to_array)

input_features = processor(

ds["speech"][0],

sampling_rate=16_000,

return_tensors="pt"

).input_features # Batch size 1

generated_ids = model.generate(input_features=input_features)

transcription = processor.batch_decode(generated_ids)

```

#### Evaluation on LibriSpeech Test

The following script shows how to evaluate this model on the [LibriSpeech](https://huggingface.co/datasets/librispeech_asr)

*"clean"* and *"other"* test dataset.

```python

from datasets import load_dataset

from evaluate import load

from transformers import Speech2TextForConditionalGeneration, Speech2TextProcessor

librispeech_eval = load_dataset("librispeech_asr", "clean", split="test") # change to "other" for other test dataset

wer = load("wer")

model = Speech2TextForConditionalGeneration.from_pretrained("facebook/s2t-medium-librispeech-asr").to("cuda")

processor = Speech2TextProcessor.from_pretrained("facebook/s2t-medium-librispeech-asr", do_upper_case=True)

def map_to_pred(batch):

features = processor(batch["audio"]["array"], sampling_rate=16000, padding=True, return_tensors="pt")

input_features = features.input_features.to("cuda")

attention_mask = features.attention_mask.to("cuda")

gen_tokens = model.generate(input_features=input_features, attention_mask=attention_mask)

batch["transcription"] = processor.batch_decode(gen_tokens, skip_special_tokens=True)[0]

return batch

result = librispeech_eval.map(map_to_pred, remove_columns=["audio"])

print("WER:", wer.compute(predictions=result["transcription"], references=result["text"]))

```

*Result (WER)*:

| "clean" | "other" |

|:-------:|:-------:|

| 3.5 | 7.8 |

## Training data

The S2T-MEDIUM-LIBRISPEECH-ASR is trained on [LibriSpeech ASR Corpus](https://www.openslr.org/12), a dataset consisting of

approximately 1000 hours of 16kHz read English speech.

## Training procedure

### Preprocessing

The speech data is pre-processed by extracting Kaldi-compliant 80-channel log mel-filter bank features automatically from

WAV/FLAC audio files via PyKaldi or torchaudio. Further utterance-level CMVN (cepstral mean and variance normalization)

is applied to each example.

The texts are lowercased and tokenized using SentencePiece and a vocabulary size of 10,000.

### Training

The model is trained with standard autoregressive cross-entropy loss and using [SpecAugment](https://arxiv.org/abs/1904.08779).

The encoder receives speech features, and the decoder generates the transcripts autoregressively.

### BibTeX entry and citation info

```bibtex

@inproceedings{wang2020fairseqs2t,

title = {fairseq S2T: Fast Speech-to-Text Modeling with fairseq},

author = {Changhan Wang and Yun Tang and Xutai Ma and Anne Wu and Dmytro Okhonko and Juan Pino},

booktitle = {Proceedings of the 2020 Conference of the Asian Chapter of the Association for Computational Linguistics (AACL): System Demonstrations},

year = {2020},

}

``` |

AdamOswald1/Anything-Preservation | AdamOswald1 | 2023-01-27T17:23:51Z | 1,708 | 103 | diffusers | [

"diffusers",

"safetensors",

"stable-diffusion",

"stable-diffusion-diffusers",

"text-to-image",

"en",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"diffusers:StableDiffusionPipeline",

"region:us"

]

| text-to-image | 2023-01-18T16:41:58Z | ---

language:

- en

license: creativeml-openrail-m

tags:

- stable-diffusion

- stable-diffusion-diffusers

- text-to-image

- diffusers

inference: true

---

DISCLAIMER! This Is A Preservation Repository!

# Anything V3 - Better VAE

Welcome to Anything V3 - Better VAE. It currently has three model formats: diffusers, ckpt, and safetensors. You'll never see a grey image result again. This model is designed to produce high-quality, highly detailed anime-style images with just a few prompts. Like other anime-style Stable Diffusion models, it also supports danbooru tags for image generation.

e.g. **_1girl, white hair, golden eyes, beautiful eyes, detail, flower meadow, cumulonimbus clouds, lighting, detailed sky, garden_**

## Gradio

We support a [Gradio](https://github.com/gradio-app/gradio) Web UI to run Anything V3 with Better VAE:

[](https://huggingface.co/spaces/Linaqruf/Linaqruf-anything-v3-better-vae)

## 🧨 Diffusers

This model can be used just like any other Stable Diffusion model. For more information,

please have a look at the [Stable Diffusion](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion). You can also export the model to [ONNX](https://huggingface.co/docs/diffusers/optimization/onnx), [MPS](https://huggingface.co/docs/diffusers/optimization/mps) and/or [FLAX/JAX]().

You should install dependencies below in order to running the pipeline

```bash

pip install diffusers transformers accelerate scipy safetensors

```

Running the pipeline (if you don't swap the scheduler it will run with the default DDIM, in this example we are swapping it to DPMSolverMultistepScheduler):

```python

from diffusers import StableDiffusionPipeline, DPMSolverMultistepScheduler

model_id = "Linaqruf/anything-v3-0-better-vae"

# Use the DPMSolverMultistepScheduler (DPM-Solver++) scheduler here instead

pipe = StableDiffusionPipeline.from_pretrained(model_id, torch_dtype=torch.float16)

pipe.scheduler = DPMSolverMultistepScheduler.from_config(pipe.scheduler.config)

pipe = pipe.to("cuda")

prompt = "masterpiece, best quality, illustration, beautiful detailed, finely detailed, dramatic light, intricate details, 1girl, brown hair, green eyes, colorful, autumn, cumulonimbus clouds, lighting, blue sky, falling leaves, garden"

negative_prompt = "lowres, bad anatomy, bad hands, text, error, missing fingers, extra digit, fewer digits, cropped, worst quality, low quality, normal quality, jpeg artifacts, signature, watermark, username, blurry, artist name"

with autocast("cuda"):

image = pipe(prompt,

negative_prompt=negative_prompt,

width=512,

height=640,

guidance_scale=12,

num_inference_steps=50).images[0]

image.save("anime_girl.png")

```

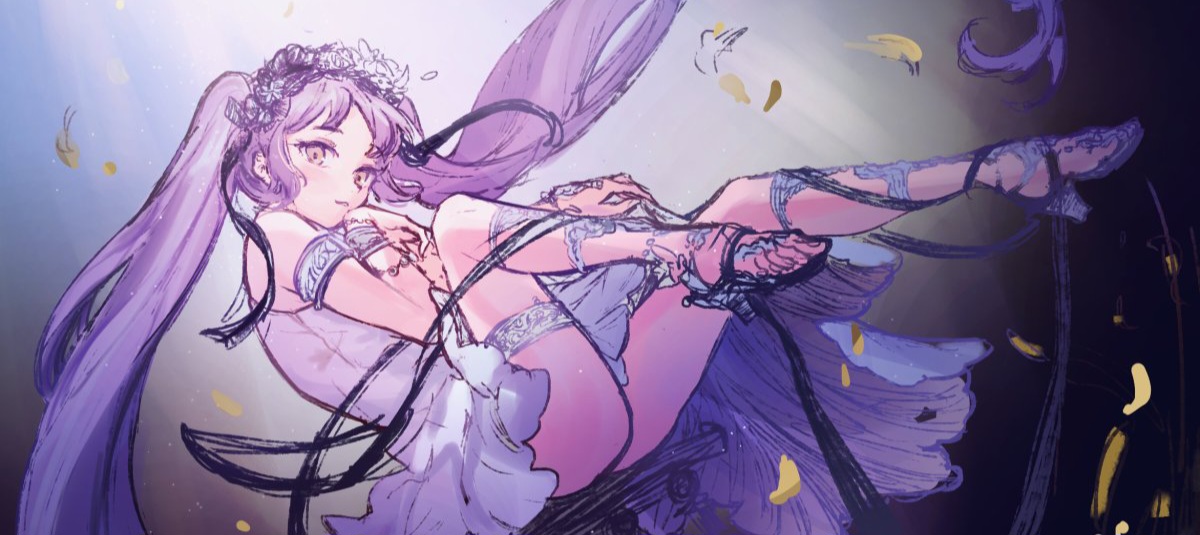

## Examples

Below are some examples of images generated using this model:

**Anime Girl:**

**Anime Boy:**

**Scenery:**

## License

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce nor share illegal or harmful outputs or content

2. The authors claims no rights on the outputs you generate, you are free to use them and are accountable for their use which must not go against the provisions set in the license

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)

[Please read the full license here](https://huggingface.co/spaces/CompVis/stable-diffusion-license)

# Announcement

For (unofficial) continuation of this model, please visit [andite/anything-v4.0](https://huggingface.co/andite/anything-v4.0). I am aware that the repo exists because I am literally the one who (accidentally) gave the idea to publish his fine-tuned model ([andite/yohan-diffusion](https://huggingface.co/andite/yohan-diffusion)) as a base and merged it with many mysterious model, "hey, let's call it 'Anything V4.0'", because the quality is quite similar to Anything V3 but upgraded.

I also wanted to tell you something. I had a plan to remove/make private one of each repo named "Anything V3":

- [Linaqruf/anything-v3.0](https://huggingface.co/Linaqruf/anything-v3.0/)

- [Linaqruf/anything-v3-better-vae](https://huggingface.co/Linaqruf/anything-v3-better-vae)

Because there are two versions now and I'm late to realize this mysterious non-sense model is already polluted Huggingface Trending for so long, and now when the new repo comes out it is also there. I feel guilty everytime this model is in trending leaderboard.

I prefer to delete/make private this one and let us slowly move to [Linaqruf/anything-v3-better-vae](https://huggingface.co/Linaqruf/anything-v3-better-vae) with better repo management and a better VAE included in the model.

Please share your thoughts in this #133 discussion about whether I should delete this repo or another one, or maybe both of them.

Thanks,

Linaqruf.

---

# Anything V3

Welcome to Anything V3 - a latent diffusion model for weebs. This model is intended to produce high-quality, highly detailed anime style with just a few prompts. Like other anime-style Stable Diffusion models, it also supports danbooru tags to generate images.

e.g. **_1girl, white hair, golden eyes, beautiful eyes, detail, flower meadow, cumulonimbus clouds, lighting, detailed sky, garden_**

## Gradio

We support a [Gradio](https://github.com/gradio-app/gradio) Web UI to run Anything-V3.0:

[Open in Spaces](https://huggingface.co/spaces/akhaliq/anything-v3.0)

## 🧨 Diffusers

This model can be used just like any other Stable Diffusion model. For more information,

please have a look at the [Stable Diffusion](https://huggingface.co/docs/diffusers/api/pipelines/stable_diffusion).

You can also export the model to [ONNX](https://huggingface.co/docs/diffusers/optimization/onnx), [MPS](https://huggingface.co/docs/diffusers/optimization/mps) and/or [FLAX/JAX]().

```python

from diffusers import StableDiffusionPipeline

import torch

model_id = "Linaqruf/anything-v3.0"

branch_name= "diffusers"

pipe = StableDiffusionPipeline.from_pretrained(model_id, revision=branch_name, torch_dtype=torch.float16)

pipe = pipe.to("cuda")

prompt = "pikachu"

image = pipe(prompt).images[0]

image.save("./pikachu.png")

```

## Examples

Below are some examples of images generated using this model:

**Anime Girl:**

```

1girl, brown hair, green eyes, colorful, autumn, cumulonimbus clouds, lighting, blue sky, falling leaves, garden

Steps: 50, Sampler: DDIM, CFG scale: 12

```

**Anime Boy:**

```

1boy, medium hair, blonde hair, blue eyes, bishounen, colorful, autumn, cumulonimbus clouds, lighting, blue sky, falling leaves, garden

Steps: 50, Sampler: DDIM, CFG scale: 12

```

**Scenery:**

```

scenery, shibuya tokyo, post-apocalypse, ruins, rust, sky, skyscraper, abandoned, blue sky, broken window, building, cloud, crane machine, outdoors, overgrown, pillar, sunset

Steps: 50, Sampler: DDIM, CFG scale: 12

```

## License

This model is open access and available to all, with a CreativeML OpenRAIL-M license further specifying rights and usage.

The CreativeML OpenRAIL License specifies:

1. You can't use the model to deliberately produce nor share illegal or harmful outputs or content

2. The authors claims no rights on the outputs you generate, you are free to use them and are accountable for their use which must not go against the provisions set in the license

3. You may re-distribute the weights and use the model commercially and/or as a service. If you do, please be aware you have to include the same use restrictions as the ones in the license and share a copy of the CreativeML OpenRAIL-M to all your users (please read the license entirely and carefully)

[Please read the full license here](https://huggingface.co/spaces/CompVis/stable-diffusion-license) |

RWKV/rwkv-raven-1b5 | RWKV | 2023-05-15T10:08:58Z | 1,708 | 10 | transformers | [

"transformers",

"pytorch",

"rwkv",

"text-generation",

"dataset:EleutherAI/pile",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-generation | 2023-05-04T14:57:11Z | ---

datasets:

- EleutherAI/pile

---

# Model card for RWKV-4 | 1B5 parameters chat version (Raven)

RWKV is a project led by [Bo Peng](https://github.com/BlinkDL). Learn more about the model architecture in the blogposts from Johan Wind [here](https://johanwind.github.io/2023/03/23/rwkv_overview.html) and [here](https://johanwind.github.io/2023/03/23/rwkv_details.html). Learn more about the project by joining the [RWKV discord server](https://discordapp.com/users/468093332535640064).

# Table of contents

0. [TL;DR](#TL;DR)

1. [Model Details](#model-details)

2. [Usage](#usage)

3. [Citation](#citation)

## TL;DR

Below is the description from the [original repository](https://github.com/BlinkDL/RWKV-LM)

> RWKV is an RNN with transformer-level LLM performance. It can be directly trained like a GPT (parallelizable). It's combining the best of RNN and transformer - great performance, fast inference, saves VRAM, fast training, "infinite" ctx_len, and free sentence embedding.

## Model Details

The details of the architecture can be found on the blogpost mentioned above and the Hugging Face blogpost of the integration.

## Usage

### Convert the raw weights to the HF format

You can use the [`convert_rwkv_checkpoint_to_hf.py`](https://github.com/huggingface/transformers/tree/main/src/transformers/models/rwkv/convert_rwkv_checkpoint_to_hf.py) script by specifying the repo_id of the original weights, the filename and the output directory. You can also optionally directly push the converted model on the Hub by passing `--push_to_hub` flag and `--model_name` argument to specify where to push the converted weights.

```bash

python convert_rwkv_checkpoint_to_hf.py --repo_id RAW_HUB_REPO --checkpoint_file RAW_FILE --output_dir OUTPUT_DIR --push_to_hub --model_name dummy_user/converted-rwkv

```

### Generate text

You can use the `AutoModelForCausalLM` and `AutoTokenizer` classes to generate texts from the model. Expand the sections below to understand how to run the model in different scenarios:

The "Raven" models needs to be prompted in a specific way, learn more about that [in the integration blogpost](https://huggingface.co/blog/rwkv).

### Running the model on a CPU

<details>

<summary> Click to expand </summary>

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("RWKV/rwkv-raven-1b5")

tokenizer = AutoTokenizer.from_pretrained("RWKV/rwkv-raven-1b5")

prompt = "\nIn a shocking finding, scientist discovered a herd of dragons living in a remote, previously unexplored valley, in Tibet. Even more surprising to the researchers was the fact that the dragons spoke perfect Chinese."

inputs = tokenizer(prompt, return_tensors="pt")

output = model.generate(inputs["input_ids"], max_new_tokens=40)

print(tokenizer.decode(output[0].tolist(), skip_special_tokens=True))

```

### Running the model on a single GPU

<details>

<summary> Click to expand </summary>

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("RWKV/rwkv-raven-1b5").to(0)

tokenizer = AutoTokenizer.from_pretrained("RWKV/rwkv-raven-1b5")

prompt = "\nIn a shocking finding, scientist discovered a herd of dragons living in a remote, previously unexplored valley, in Tibet. Even more surprising to the researchers was the fact that the dragons spoke perfect Chinese."

inputs = tokenizer(prompt, return_tensors="pt").to(0)

output = model.generate(inputs["input_ids"], max_new_tokens=40)

print(tokenizer.decode(output[0].tolist(), skip_special_tokens=True))

```

</details>

</details>

### Running the model in half-precision, on GPU

<details>

<summary> Click to expand </summary>

```python

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("RWKV/rwkv-raven-1b5", torch_dtype=torch.float16).to(0)

tokenizer = AutoTokenizer.from_pretrained("RWKV/rwkv-raven-1b5")

prompt = "\nIn a shocking finding, scientist discovered a herd of dragons living in a remote, previously unexplored valley, in Tibet. Even more surprising to the researchers was the fact that the dragons spoke perfect Chinese."

inputs = tokenizer(prompt, return_tensors="pt").to(0)

output = model.generate(inputs["input_ids"], max_new_tokens=40)

print(tokenizer.decode(output[0].tolist(), skip_special_tokens=True))

```

</details>

### Running the model multiple GPUs

<details>

<summary> Click to expand </summary>

```python

# pip install accelerate

from transformers import AutoModelForCausalLM, AutoTokenizer

model = AutoModelForCausalLM.from_pretrained("RWKV/rwkv-raven-1b5", device_map="auto")

tokenizer = AutoTokenizer.from_pretrained("RWKV/rwkv-raven-1b5")

prompt = "\nIn a shocking finding, scientist discovered a herd of dragons living in a remote, previously unexplored valley, in Tibet. Even more surprising to the researchers was the fact that the dragons spoke perfect Chinese."

inputs = tokenizer(prompt, return_tensors="pt").to(0)

output = model.generate(inputs["input_ids"], max_new_tokens=40)

print(tokenizer.decode(output[0].tolist(), skip_special_tokens=True))

```

</details>

## Citation

If you use this model, please consider citing the original work, from the original repo [here](https://github.com/BlinkDL/ChatRWKV/) |

RichardErkhov/Qwen_-_Qwen2-0.5B-gguf | RichardErkhov | 2024-06-22T18:00:53Z | 1,708 | 0 | null | [

"gguf",

"region:us"

]

| null | 2024-06-22T17:53:32Z | Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

Qwen2-0.5B - GGUF

- Model creator: https://huggingface.co/Qwen/

- Original model: https://huggingface.co/Qwen/Qwen2-0.5B/

| Name | Quant method | Size |

| ---- | ---- | ---- |

| [Qwen2-0.5B.Q2_K.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.Q2_K.gguf) | Q2_K | 0.32GB |

| [Qwen2-0.5B.IQ3_XS.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.IQ3_XS.gguf) | IQ3_XS | 0.32GB |

| [Qwen2-0.5B.IQ3_S.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.IQ3_S.gguf) | IQ3_S | 0.32GB |

| [Qwen2-0.5B.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.Q3_K_S.gguf) | Q3_K_S | 0.32GB |

| [Qwen2-0.5B.IQ3_M.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.IQ3_M.gguf) | IQ3_M | 0.32GB |

| [Qwen2-0.5B.Q3_K.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.Q3_K.gguf) | Q3_K | 0.33GB |

| [Qwen2-0.5B.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.Q3_K_M.gguf) | Q3_K_M | 0.33GB |

| [Qwen2-0.5B.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.Q3_K_L.gguf) | Q3_K_L | 0.34GB |

| [Qwen2-0.5B.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.IQ4_XS.gguf) | IQ4_XS | 0.33GB |

| [Qwen2-0.5B.Q4_0.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.Q4_0.gguf) | Q4_0 | 0.33GB |

| [Qwen2-0.5B.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.IQ4_NL.gguf) | IQ4_NL | 0.33GB |

| [Qwen2-0.5B.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.Q4_K_S.gguf) | Q4_K_S | 0.36GB |

| [Qwen2-0.5B.Q4_K.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.Q4_K.gguf) | Q4_K | 0.37GB |

| [Qwen2-0.5B.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.Q4_K_M.gguf) | Q4_K_M | 0.37GB |

| [Qwen2-0.5B.Q4_1.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.Q4_1.gguf) | Q4_1 | 0.35GB |

| [Qwen2-0.5B.Q5_0.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.Q5_0.gguf) | Q5_0 | 0.37GB |

| [Qwen2-0.5B.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.Q5_K_S.gguf) | Q5_K_S | 0.38GB |

| [Qwen2-0.5B.Q5_K.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.Q5_K.gguf) | Q5_K | 0.39GB |

| [Qwen2-0.5B.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.Q5_K_M.gguf) | Q5_K_M | 0.39GB |

| [Qwen2-0.5B.Q5_1.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.Q5_1.gguf) | Q5_1 | 0.39GB |

| [Qwen2-0.5B.Q6_K.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.Q6_K.gguf) | Q6_K | 0.47GB |

| [Qwen2-0.5B.Q8_0.gguf](https://huggingface.co/RichardErkhov/Qwen_-_Qwen2-0.5B-gguf/blob/main/Qwen2-0.5B.Q8_0.gguf) | Q8_0 | 0.49GB |

Original model description:

---

language:

- en

pipeline_tag: text-generation

tags:

- pretrained

license: apache-2.0

---

# Qwen2-0.5B

## Introduction

Qwen2 is the new series of Qwen large language models. For Qwen2, we release a number of base language models and instruction-tuned language models ranging from 0.5 to 72 billion parameters, including a Mixture-of-Experts model. This repo contains the 0.5B Qwen2 base language model.

Compared with the state-of-the-art opensource language models, including the previous released Qwen1.5, Qwen2 has generally surpassed most opensource models and demonstrated competitiveness against proprietary models across a series of benchmarks targeting for language understanding, language generation, multilingual capability, coding, mathematics, reasoning, etc.

For more details, please refer to our [blog](https://qwenlm.github.io/blog/qwen2/), [GitHub](https://github.com/QwenLM/Qwen2), and [Documentation](https://qwen.readthedocs.io/en/latest/).

<br>

## Model Details

Qwen2 is a language model series including decoder language models of different model sizes. For each size, we release the base language model and the aligned chat model. It is based on the Transformer architecture with SwiGLU activation, attention QKV bias, group query attention, etc. Additionally, we have an improved tokenizer adaptive to multiple natural languages and codes.

## Requirements

The code of Qwen2 has been in the latest Hugging face transformers and we advise you to install `transformers>=4.37.0`, or you might encounter the following error:

```

KeyError: 'qwen2'

```

## Usage

We do not advise you to use base language models for text generation. Instead, you can apply post-training, e.g., SFT, RLHF, continued pretraining, etc., on this model.

## Performance

The evaluation of base models mainly focuses on the model performance of natural language understanding, general question answering, coding, mathematics, scientific knowledge, reasoning, multilingual capability, etc.

The datasets for evaluation include:

**English Tasks**: MMLU (5-shot), MMLU-Pro (5-shot), GPQA (5shot), Theorem QA (5-shot), BBH (3-shot), HellaSwag (10-shot), Winogrande (5-shot), TruthfulQA (0-shot), ARC-C (25-shot)

**Coding Tasks**: EvalPlus (0-shot) (HumanEval, MBPP, HumanEval+, MBPP+), MultiPL-E (0-shot) (Python, C++, JAVA, PHP, TypeScript, C#, Bash, JavaScript)

**Math Tasks**: GSM8K (4-shot), MATH (4-shot)

**Chinese Tasks**: C-Eval(5-shot), CMMLU (5-shot)

**Multilingual Tasks**: Multi-Exam (M3Exam 5-shot, IndoMMLU 3-shot, ruMMLU 5-shot, mMMLU 5-shot), Multi-Understanding (BELEBELE 5-shot, XCOPA 5-shot, XWinograd 5-shot, XStoryCloze 0-shot, PAWS-X 5-shot), Multi-Mathematics (MGSM 8-shot), Multi-Translation (Flores-101 5-shot)

#### Qwen2-0.5B & Qwen2-1.5B performances

| Datasets | Phi-2 | Gemma-2B | MiniCPM | Qwen1.5-1.8B | Qwen2-0.5B | Qwen2-1.5B |

| :--------| :---------: | :------------: | :------------: |:------------: | :------------: | :------------: |

|#Non-Emb Params | 2.5B | 2.0B | 2.4B | 1.3B | 0.35B | 1.3B |

|MMLU | 52.7 | 42.3 | 53.5 | 46.8 | 45.4 | **56.5** |

|MMLU-Pro | - | 15.9 | - | - | 14.7 | 21.8 |

|Theorem QA | - | - | - |- | 8.9 | **15.0** |

|HumanEval | 47.6 | 22.0 |**50.0**| 20.1 | 22.0 | 31.1 |

|MBPP | **55.0** | 29.2 | 47.3 | 18.0 | 22.0 | 37.4 |

|GSM8K | 57.2 | 17.7 | 53.8 | 38.4 | 36.5 | **58.5** |

|MATH | 3.5 | 11.8 | 10.2 | 10.1 | 10.7 | **21.7** |

|BBH | **43.4** | 35.2 | 36.9 | 24.2 | 28.4 | 37.2 |

|HellaSwag | **73.1** | 71.4 | 68.3 | 61.4 | 49.3 | 66.6 |

|Winogrande | **74.4** | 66.8 | -| 60.3 | 56.8 | 66.2 |

|ARC-C | **61.1** | 48.5 | -| 37.9 | 31.5 | 43.9 |

|TruthfulQA | 44.5 | 33.1 | -| 39.4 | 39.7 | **45.9** |

|C-Eval | 23.4 | 28.0 | 51.1| 59.7 | 58.2 | **70.6** |

|CMMLU | 24.2 | - | 51.1 | 57.8 | 55.1 | **70.3** |

## Citation

If you find our work helpful, feel free to give us a cite.

```

@article{qwen2,

title={Qwen2 Technical Report},

year={2024}

}

```

|

RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf | RichardErkhov | 2024-06-30T08:23:32Z | 1,708 | 0 | null | [

"gguf",

"region:us"

]

| null | 2024-06-30T08:16:26Z | Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

ko-llm-llama-2-7b-chat2 - GGUF

- Model creator: https://huggingface.co/sronger/

- Original model: https://huggingface.co/sronger/ko-llm-llama-2-7b-chat2/

| Name | Quant method | Size |

| ---- | ---- | ---- |

| [ko-llm-llama-2-7b-chat2.Q2_K.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.Q2_K.gguf) | Q2_K | 0.22GB |

| [ko-llm-llama-2-7b-chat2.IQ3_XS.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.IQ3_XS.gguf) | IQ3_XS | 0.25GB |

| [ko-llm-llama-2-7b-chat2.IQ3_S.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.IQ3_S.gguf) | IQ3_S | 0.26GB |

| [ko-llm-llama-2-7b-chat2.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.Q3_K_S.gguf) | Q3_K_S | 0.26GB |

| [ko-llm-llama-2-7b-chat2.IQ3_M.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.IQ3_M.gguf) | IQ3_M | 0.26GB |

| [ko-llm-llama-2-7b-chat2.Q3_K.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.Q3_K.gguf) | Q3_K | 0.28GB |

| [ko-llm-llama-2-7b-chat2.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.Q3_K_M.gguf) | Q3_K_M | 0.28GB |

| [ko-llm-llama-2-7b-chat2.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.Q3_K_L.gguf) | Q3_K_L | 0.31GB |

| [ko-llm-llama-2-7b-chat2.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.IQ4_XS.gguf) | IQ4_XS | 0.32GB |

| [ko-llm-llama-2-7b-chat2.Q4_0.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.Q4_0.gguf) | Q4_0 | 0.33GB |

| [ko-llm-llama-2-7b-chat2.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.IQ4_NL.gguf) | IQ4_NL | 0.34GB |

| [ko-llm-llama-2-7b-chat2.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.Q4_K_S.gguf) | Q4_K_S | 0.34GB |

| [ko-llm-llama-2-7b-chat2.Q4_K.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.Q4_K.gguf) | Q4_K | 0.36GB |

| [ko-llm-llama-2-7b-chat2.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.Q4_K_M.gguf) | Q4_K_M | 0.36GB |

| [ko-llm-llama-2-7b-chat2.Q4_1.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.Q4_1.gguf) | Q4_1 | 0.37GB |

| [ko-llm-llama-2-7b-chat2.Q5_0.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.Q5_0.gguf) | Q5_0 | 0.4GB |

| [ko-llm-llama-2-7b-chat2.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.Q5_K_S.gguf) | Q5_K_S | 0.4GB |

| [ko-llm-llama-2-7b-chat2.Q5_K.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.Q5_K.gguf) | Q5_K | 0.41GB |

| [ko-llm-llama-2-7b-chat2.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.Q5_K_M.gguf) | Q5_K_M | 0.41GB |

| [ko-llm-llama-2-7b-chat2.Q5_1.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.Q5_1.gguf) | Q5_1 | 0.44GB |

| [ko-llm-llama-2-7b-chat2.Q6_K.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.Q6_K.gguf) | Q6_K | 0.48GB |

| [ko-llm-llama-2-7b-chat2.Q8_0.gguf](https://huggingface.co/RichardErkhov/sronger_-_ko-llm-llama-2-7b-chat2-gguf/blob/main/ko-llm-llama-2-7b-chat2.Q8_0.gguf) | Q8_0 | 0.62GB |

Original model description:

Entry not found

|

OpenGVLab/InternViT-6B-224px | OpenGVLab | 2024-05-29T11:02:22Z | 1,707 | 17 | transformers | [

"transformers",

"pytorch",

"intern_vit_6b",

"feature-extraction",

"image-feature-extraction",

"custom_code",

"dataset:laion/laion2B-en",

"dataset:laion/laion-coco",

"dataset:laion/laion2B-multi",

"dataset:kakaobrain/coyo-700m",

"dataset:conceptual_captions",

"dataset:wanng/wukong100m",

"arxiv:2312.14238",

"arxiv:2404.16821",

"license:mit",

"region:us"

]

| image-feature-extraction | 2023-12-22T01:53:49Z | ---

license: mit

datasets:

- laion/laion2B-en

- laion/laion-coco

- laion/laion2B-multi

- kakaobrain/coyo-700m

- conceptual_captions

- wanng/wukong100m

pipeline_tag: image-feature-extraction

---

# Model Card for InternViT-6B-224px

<p align="center">

<img src="https://cdn-uploads.huggingface.co/production/uploads/64119264f0f81eb569e0d569/jSJ7TChEGvGP_gwNhrYoA.webp" alt="Image Description" width="300" height="300">

</p>

[\[🆕 Blog\]](https://internvl.github.io/blog/) [\[📜 InternVL 1.0 Paper\]](https://arxiv.org/abs/2312.14238) [\[📜 InternVL 1.5 Report\]](https://arxiv.org/abs/2404.16821) [\[🗨️ Chat Demo\]](https://internvl.opengvlab.com/)

[\[🤗 HF Demo\]](https://huggingface.co/spaces/OpenGVLab/InternVL) [\[🚀 Quick Start\]](#model-usage) [\[🌐 Community-hosted API\]](https://rapidapi.com/adushar1320/api/internvl-chat) [\[📖 中文解读\]](https://zhuanlan.zhihu.com/p/675877376)

| Model | Date | Download | Note |

| ----------------------- | ---------- | ---------------------------------------------------------------------- | -------------------------------- |

| InternViT-6B-448px-V1-5 | 2024.04.20 | 🤗 [HF link](https://huggingface.co/OpenGVLab/InternViT-6B-448px-V1-5) | support dynamic resolution, super strong OCR (🔥new) |

| InternViT-6B-448px-V1-2 | 2024.02.11 | 🤗 [HF link](https://huggingface.co/OpenGVLab/InternViT-6B-448px-V1-2) | 448 resolution |

| InternViT-6B-448px-V1-0 | 2024.01.30 | 🤗 [HF link](https://huggingface.co/OpenGVLab/InternViT-6B-448px-V1-0) | 448 resolution |

| InternViT-6B-224px | 2023.12.22 | 🤗 [HF link](https://huggingface.co/OpenGVLab/InternViT-6B-224px) | vision foundation model |

| InternVL-14B-224px | 2023.12.22 | 🤗 [HF link](https://huggingface.co/OpenGVLab/InternVL-14B-224px) | vision-language foundation model |

## Model Details

- **Model Type:** vision foundation model, feature backbone

- **Model Stats:**

- Params (M): 5903

- Image size: 224 x 224

- **Pretrain Dataset:** LAION-en, LAION-COCO, COYO, CC12M, CC3M, SBU, Wukong, LAION-multi

- **Note:** This model has 48 blocks, and we found that using the output after the fourth-to-last block worked best for VLLM. Therefore, when building a VLLM with this model, **please use the features from the fourth-to-last layer.**

## Linear Probing Performance

See this [document](https://github.com/OpenGVLab/InternVL/tree/main/classification#-evaluation) for more details about the linear probing evaluation.

| IN-1K | IN-ReaL | IN-V2 | IN-A | IN-R | IN-Sketch |

| :---: | :-----: | :---: | :--: | :--: | :-------: |

| 88.2 | 90.4 | 79.9 | 77.5 | 89.8 | 69.1 |

## Model Usage (Image Embeddings)

```python

import torch

from PIL import Image

from transformers import AutoModel, CLIPImageProcessor

model = AutoModel.from_pretrained(

'OpenGVLab/InternViT-6B-224px',

torch_dtype=torch.bfloat16,

low_cpu_mem_usage=True,

trust_remote_code=True).cuda().eval()

image = Image.open('./examples/image1.jpg').convert('RGB')

image_processor = CLIPImageProcessor.from_pretrained('OpenGVLab/InternViT-6B-224px')

pixel_values = image_processor(images=image, return_tensors='pt').pixel_values

pixel_values = pixel_values.to(torch.bfloat16).cuda()

outputs = model(pixel_values)

```

## Citation

If you find this project useful in your research, please consider citing:

```BibTeX

@article{chen2023internvl,

title={InternVL: Scaling up Vision Foundation Models and Aligning for Generic Visual-Linguistic Tasks},

author={Chen, Zhe and Wu, Jiannan and Wang, Wenhai and Su, Weijie and Chen, Guo and Xing, Sen and Zhong, Muyan and Zhang, Qinglong and Zhu, Xizhou and Lu, Lewei and Li, Bin and Luo, Ping and Lu, Tong and Qiao, Yu and Dai, Jifeng},

journal={arXiv preprint arXiv:2312.14238},

year={2023}

}

@article{chen2024far,

title={How Far Are We to GPT-4V? Closing the Gap to Commercial Multimodal Models with Open-Source Suites},

author={Chen, Zhe and Wang, Weiyun and Tian, Hao and Ye, Shenglong and Gao, Zhangwei and Cui, Erfei and Tong, Wenwen and Hu, Kongzhi and Luo, Jiapeng and Ma, Zheng and others},

journal={arXiv preprint arXiv:2404.16821},

year={2024}

}

```

## Acknowledgement

InternVL is built with reference to the code of the following projects: [OpenAI CLIP](https://github.com/openai/CLIP), [Open CLIP](https://github.com/mlfoundations/open_clip), [CLIP Benchmark](https://github.com/LAION-AI/CLIP_benchmark), [EVA](https://github.com/baaivision/EVA/tree/master), [InternImage](https://github.com/OpenGVLab/InternImage), [ViT-Adapter](https://github.com/czczup/ViT-Adapter), [MMSegmentation](https://github.com/open-mmlab/mmsegmentation), [Transformers](https://github.com/huggingface/transformers), [DINOv2](https://github.com/facebookresearch/dinov2), [BLIP-2](https://github.com/salesforce/LAVIS/tree/main/projects/blip2), [Qwen-VL](https://github.com/QwenLM/Qwen-VL/tree/master/eval_mm), and [LLaVA-1.5](https://github.com/haotian-liu/LLaVA). Thanks for their awesome work! |

MaziyarPanahi/mergekit-slerp-mkzyzjw-GGUF | MaziyarPanahi | 2024-06-16T06:14:18Z | 1,707 | 0 | transformers | [

"transformers",

"gguf",

"mistral",

"quantized",

"2-bit",

"3-bit",

"4-bit",

"5-bit",

"6-bit",

"8-bit",

"GGUF",

"safetensors",

"llama",

"text-generation",

"mergekit",

"merge",

"conversational",

"base_model:cognitivecomputations/dolphin-2.9-llama3-8b-256k",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us",

"base_model:mergekit-community/mergekit-slerp-mkzyzjw"

]

| text-generation | 2024-06-16T05:49:37Z | ---

tags:

- quantized

- 2-bit

- 3-bit

- 4-bit

- 5-bit

- 6-bit

- 8-bit

- GGUF

- transformers

- safetensors

- llama

- text-generation

- mergekit

- merge

- conversational

- base_model:cognitivecomputations/dolphin-2.9-llama3-8b-256k

- autotrain_compatible

- endpoints_compatible

- text-generation-inference

- region:us

- text-generation

model_name: mergekit-slerp-mkzyzjw-GGUF

base_model: mergekit-community/mergekit-slerp-mkzyzjw

inference: false

model_creator: mergekit-community

pipeline_tag: text-generation

quantized_by: MaziyarPanahi

---

# [MaziyarPanahi/mergekit-slerp-mkzyzjw-GGUF](https://huggingface.co/MaziyarPanahi/mergekit-slerp-mkzyzjw-GGUF)

- Model creator: [mergekit-community](https://huggingface.co/mergekit-community)

- Original model: [mergekit-community/mergekit-slerp-mkzyzjw](https://huggingface.co/mergekit-community/mergekit-slerp-mkzyzjw)

## Description

[MaziyarPanahi/mergekit-slerp-mkzyzjw-GGUF](https://huggingface.co/MaziyarPanahi/mergekit-slerp-mkzyzjw-GGUF) contains GGUF format model files for [mergekit-community/mergekit-slerp-mkzyzjw](https://huggingface.co/mergekit-community/mergekit-slerp-mkzyzjw).

### About GGUF

GGUF is a new format introduced by the llama.cpp team on August 21st 2023. It is a replacement for GGML, which is no longer supported by llama.cpp.

Here is an incomplete list of clients and libraries that are known to support GGUF:

* [llama.cpp](https://github.com/ggerganov/llama.cpp). The source project for GGUF. Offers a CLI and a server option.

* [llama-cpp-python](https://github.com/abetlen/llama-cpp-python), a Python library with GPU accel, LangChain support, and OpenAI-compatible API server.

* [LM Studio](https://lmstudio.ai/), an easy-to-use and powerful local GUI for Windows and macOS (Silicon), with GPU acceleration. Linux available, in beta as of 27/11/2023.

* [text-generation-webui](https://github.com/oobabooga/text-generation-webui), the most widely used web UI, with many features and powerful extensions. Supports GPU acceleration.

* [KoboldCpp](https://github.com/LostRuins/koboldcpp), a fully featured web UI, with GPU accel across all platforms and GPU architectures. Especially good for story telling.

* [GPT4All](https://gpt4all.io/index.html), a free and open source local running GUI, supporting Windows, Linux and macOS with full GPU accel.

* [LoLLMS Web UI](https://github.com/ParisNeo/lollms-webui), a great web UI with many interesting and unique features, including a full model library for easy model selection.

* [Faraday.dev](https://faraday.dev/), an attractive and easy to use character-based chat GUI for Windows and macOS (both Silicon and Intel), with GPU acceleration.

* [candle](https://github.com/huggingface/candle), a Rust ML framework with a focus on performance, including GPU support, and ease of use.

* [ctransformers](https://github.com/marella/ctransformers), a Python library with GPU accel, LangChain support, and OpenAI-compatible AI server. Note, as of time of writing (November 27th 2023), ctransformers has not been updated in a long time and does not support many recent models.

## Special thanks

🙏 Special thanks to [Georgi Gerganov](https://github.com/ggerganov) and the whole team working on [llama.cpp](https://github.com/ggerganov/llama.cpp/) for making all of this possible. |

majoh837/openchat_3.5_1210_code_2_gguf | majoh837 | 2024-06-24T04:49:21Z | 1,707 | 0 | transformers | [

"transformers",

"gguf",

"mistral",

"text-generation-inference",

"unsloth",

"en",

"base_model:majoh837/openchat_3.5_pyco_r32_gguf",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

]

| null | 2024-06-24T04:33:13Z | ---

base_model: majoh837/openchat_3.5_pyco_r32_gguf

language:

- en

license: apache-2.0

tags:

- text-generation-inference

- transformers

- unsloth

- mistral

- gguf

---

# Uploaded model

- **Developed by:** majoh837

- **License:** apache-2.0

- **Finetuned from model :** majoh837/openchat_3.5_pyco_r32_gguf

This mistral model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Huggingface's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

VietAI/vit5-large-vietnews-summarization | VietAI | 2022-09-07T02:28:54Z | 1,706 | 11 | transformers | [

"transformers",

"pytorch",

"tf",

"t5",

"text2text-generation",

"summarization",

"vi",

"dataset:cc100",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| summarization | 2022-05-12T10:09:43Z | ---

language: vi

datasets:

- cc100

tags:

- summarization

license: mit

widget:

- text: "vietnews: VietAI là tổ chức phi lợi nhuận với sứ mệnh ươm mầm tài năng về trí tuệ nhân tạo và xây dựng một cộng đồng các chuyên gia trong lĩnh vực trí tuệ nhân tạo đẳng cấp quốc tế tại Việt Nam."

---

# ViT5-large Finetuned on `vietnews` Abstractive Summarization

State-of-the-art pretrained Transformer-based encoder-decoder model for Vietnamese.

[](https://paperswithcode.com/sota/abstractive-text-summarization-on-vietnews?p=vit5-pretrained-text-to-text-transformer-for)

## How to use

For more details, do check out [our Github repo](https://github.com/vietai/ViT5) and [eval script](https://github.com/vietai/ViT5/blob/main/eval/Eval_vietnews_sum.ipynb).

```python

from transformers import AutoTokenizer, AutoModelForSeq2SeqLM

tokenizer = AutoTokenizer.from_pretrained("VietAI/vit5-large-vietnews-summarization")

model = AutoModelForSeq2SeqLM.from_pretrained("VietAI/vit5-large-vietnews-summarization")

model.cuda()

sentence = "VietAI là tổ chức phi lợi nhuận với sứ mệnh ươm mầm tài năng về trí tuệ nhân tạo và xây dựng một cộng đồng các chuyên gia trong lĩnh vực trí tuệ nhân tạo đẳng cấp quốc tế tại Việt Nam."

text = "vietnews: " + sentence + " </s>"

encoding = tokenizer(text, return_tensors="pt")

input_ids, attention_masks = encoding["input_ids"].to("cuda"), encoding["attention_mask"].to("cuda")

outputs = model.generate(

input_ids=input_ids, attention_mask=attention_masks,

max_length=256,

early_stopping=True

)

for output in outputs:

line = tokenizer.decode(output, skip_special_tokens=True, clean_up_tokenization_spaces=True)

print(line)

```

## Citation

```

@inproceedings{phan-etal-2022-vit5,

title = "{V}i{T}5: Pretrained Text-to-Text Transformer for {V}ietnamese Language Generation",

author = "Phan, Long and Tran, Hieu and Nguyen, Hieu and Trinh, Trieu H.",

booktitle = "Proceedings of the 2022 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies: Student Research Workshop",

year = "2022",

publisher = "Association for Computational Linguistics",

url = "https://aclanthology.org/2022.naacl-srw.18",

pages = "136--142",

}

``` |

TencentGameMate/chinese-wav2vec2-base | TencentGameMate | 2022-06-24T01:53:18Z | 1,706 | 23 | transformers | [

"transformers",

"pytorch",

"wav2vec2",

"pretraining",

"license:mit",

"endpoints_compatible",

"region:us"

]

| null | 2022-06-02T06:17:07Z | ---

license: mit

---

Pretrained on 10k hours WenetSpeech L subset. More details in [TencentGameMate/chinese_speech_pretrain](https://github.com/TencentGameMate/chinese_speech_pretrain)

This model does not have a tokenizer as it was pretrained on audio alone.

In order to use this model speech recognition, a tokenizer should be created and the model should be fine-tuned on labeled text data.

python package:

transformers==4.16.2

```python

import torch

import torch.nn.functional as F

import soundfile as sf

from fairseq import checkpoint_utils

from transformers import (

Wav2Vec2FeatureExtractor,

Wav2Vec2ForPreTraining,

Wav2Vec2Model,

)

from transformers.models.wav2vec2.modeling_wav2vec2 import _compute_mask_indices

model_path=""

wav_path=""

mask_prob=0.0

mask_length=10

feature_extractor = Wav2Vec2FeatureExtractor.from_pretrained(model_path)

model = Wav2Vec2Model.from_pretrained(model_path)

# for pretrain: Wav2Vec2ForPreTraining

# model = Wav2Vec2ForPreTraining.from_pretrained(model_path)

model = model.to(device)

model = model.half()

model.eval()

wav, sr = sf.read(wav_path)

input_values = feature_extractor(wav, return_tensors="pt").input_values

input_values = input_values.half()

input_values = input_values.to(device)

# for Wav2Vec2ForPreTraining

# batch_size, raw_sequence_length = input_values.shape

# sequence_length = model._get_feat_extract_output_lengths(raw_sequence_length)

# mask_time_indices = _compute_mask_indices((batch_size, sequence_length), mask_prob=0.0, mask_length=2)

# mask_time_indices = torch.tensor(mask_time_indices, device=input_values.device, dtype=torch.long)

with torch.no_grad():

outputs = model(input_values)

last_hidden_state = outputs.last_hidden_state

# for Wav2Vec2ForPreTraining

# outputs = model(input_values, mask_time_indices=mask_time_indices, output_hidden_states=True)

# last_hidden_state = outputs.hidden_states[-1]

``` |

upstage/Llama-2-70b-instruct | upstage | 2023-08-03T22:01:09Z | 1,706 | 63 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"upstage",

"llama-2",

"instruct",

"instruction",

"en",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2023-07-24T09:13:08Z | ---

language:

- en

tags:

- upstage

- llama-2

- instruct

- instruction

pipeline_tag: text-generation

---

# LLaMa-2-70b-instruct-1024 model card

## Model Details

* **Developed by**: [Upstage](https://en.upstage.ai)

* **Backbone Model**: [LLaMA-2](https://github.com/facebookresearch/llama/tree/main)

* **Language(s)**: English

* **Library**: [HuggingFace Transformers](https://github.com/huggingface/transformers)

* **License**: Fine-tuned checkpoints is licensed under the Non-Commercial Creative Commons license ([CC BY-NC-4.0](https://creativecommons.org/licenses/by-nc/4.0/))

* **Where to send comments**: Instructions on how to provide feedback or comments on a model can be found by opening an issue in the [Hugging Face community's model repository](https://huggingface.co/upstage/Llama-2-70b-instruct/discussions)

* **Contact**: For questions and comments about the model, please email [[email protected]](mailto:[email protected])

## Dataset Details

### Used Datasets

- Orca-style dataset

- No other data was used except for the dataset mentioned above

### Prompt Template

```

### System:

{System}

### User:

{User}

### Assistant:

{Assistant}

```

## Usage

- Tested on A100 80GB

- Our model can handle up to 10k+ input tokens, thanks to the `rope_scaling` option

```python

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer, TextStreamer

tokenizer = AutoTokenizer.from_pretrained("upstage/Llama-2-70b-instruct")

model = AutoModelForCausalLM.from_pretrained(

"upstage/Llama-2-70b-instruct",

device_map="auto",

torch_dtype=torch.float16,

load_in_8bit=True,

rope_scaling={"type": "dynamic", "factor": 2} # allows handling of longer inputs

)

prompt = "### User:\nThomas is healthy, but he has to go to the hospital. What could be the reasons?\n\n### Assistant:\n"

inputs = tokenizer(prompt, return_tensors="pt").to(model.device)

del inputs["token_type_ids"]

streamer = TextStreamer(tokenizer, skip_prompt=True, skip_special_tokens=True)

output = model.generate(**inputs, streamer=streamer, use_cache=True, max_new_tokens=float('inf'))

output_text = tokenizer.decode(output[0], skip_special_tokens=True)

```

## Hardware and Software

* **Hardware**: We utilized an A100x8 * 4 for training our model

* **Training Factors**: We fine-tuned this model using a combination of the [DeepSpeed library](https://github.com/microsoft/DeepSpeed) and the [HuggingFace Trainer](https://huggingface.co/docs/transformers/main_classes/trainer) / [HuggingFace Accelerate](https://huggingface.co/docs/accelerate/index)

## Evaluation Results

### Overview

- We conducted a performance evaluation based on the tasks being evaluated on the [Open LLM Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard).

We evaluated our model on four benchmark datasets, which include `ARC-Challenge`, `HellaSwag`, `MMLU`, and `TruthfulQA`

We used the [lm-evaluation-harness repository](https://github.com/EleutherAI/lm-evaluation-harness), specifically commit [b281b0921b636bc36ad05c0b0b0763bd6dd43463](https://github.com/EleutherAI/lm-evaluation-harness/tree/b281b0921b636bc36ad05c0b0b0763bd6dd43463)

- We used [MT-bench](https://github.com/lm-sys/FastChat/tree/main/fastchat/llm_judge), a set of challenging multi-turn open-ended questions, to evaluate the models

### Main Results

| Model | H4(Avg) | ARC | HellaSwag | MMLU | TruthfulQA | | MT_Bench |

|--------------------------------------------------------------------|----------|----------|----------|------|----------|-|-------------|

| **[Llama-2-70b-instruct-v2](https://huggingface.co/upstage/Llama-2-70b-instruct-v2)**(Ours, Open LLM Leaderboard) | **73** | **71.1** | **87.9** | **70.6** | **62.2** | | **7.44063** |

| [Llama-2-70b-instruct](https://huggingface.co/upstage/Llama-2-70b-instruct) (***Ours***, ***Open LLM Leaderboard***) | 72.3 | 70.9 | 87.5 | 69.8 | 61 | | 7.24375 |

| [llama-65b-instruct](https://huggingface.co/upstage/llama-65b-instruct) (Ours, Open LLM Leaderboard) | 69.4 | 67.6 | 86.5 | 64.9 | 58.8 | | |

| Llama-2-70b-hf | 67.3 | 67.3 | 87.3 | 69.8 | 44.9 | | |

| [llama-30b-instruct-2048](https://huggingface.co/upstage/llama-30b-instruct-2048) (Ours, Open LLM Leaderboard) | 67.0 | 64.9 | 84.9 | 61.9 | 56.3 | | |

| [llama-30b-instruct](https://huggingface.co/upstage/llama-30b-instruct) (Ours, Open LLM Leaderboard) | 65.2 | 62.5 | 86.2 | 59.4 | 52.8 | | |

| llama-65b | 64.2 | 63.5 | 86.1 | 63.9 | 43.4 | | |

| falcon-40b-instruct | 63.4 | 61.6 | 84.3 | 55.4 | 52.5 | | |

### Scripts for H4 Score Reproduction

- Prepare evaluation environments:

```

# clone the repository

git clone https://github.com/EleutherAI/lm-evaluation-harness.git

# check out the specific commit

git checkout b281b0921b636bc36ad05c0b0b0763bd6dd43463

# change to the repository directory

cd lm-evaluation-harness

```

## Ethical Issues

### Ethical Considerations

- There were no ethical issues involved, as we did not include the benchmark test set or the training set in the model's training process

## Contact Us

### Why Upstage LLM?

- [Upstage](https://en.upstage.ai)'s LLM research has yielded remarkable results. As of August 1st, our 70B model has reached the top spot in openLLM rankings, marking itself as the current leading performer globally. Recognizing the immense potential in implementing private LLM to actual businesses, we invite you to easily apply private LLM and fine-tune it with your own data. For a seamless and tailored solution, please do not hesitate to reach out to us. ► [click here to contact](https://www.upstage.ai/private-llm?utm_source=huggingface&utm_medium=link&utm_campaign=privatellm) |

FreedomIntelligence/AceGPT-13B-chat | FreedomIntelligence | 2023-12-01T23:32:13Z | 1,706 | 25 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"ar",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2023-09-21T04:45:10Z | ---

license: apache-2.0

language:

- ar

---

# <b>AceGPT</b>

AceGPT is a fully fine-tuned generative text model collection based on LlaMA2, particularly in the

Arabic language domain. This is the repository for the 13B-chat pre-trained model.

---

## Model Details

We have released the AceGPT family of large language models, which is a collection of fully fine-tuned generative text models based on LlaMA2, ranging from 7B to 13B parameters. Our models include two main categories: AceGPT and AceGPT-chat. AceGPT-chat is an optimized version specifically designed for dialogue applications. It is worth mentioning that our models have demonstrated superior performance compared to all currently available open-source Arabic dialogue models in multiple benchmark tests. Furthermore, in our human evaluations, our models have shown comparable satisfaction levels to some closed-source models, such as ChatGPT, in the Arabic language.

## Model Developers

We are from the School of Data Science, the Chinese University of Hong Kong, Shenzhen (CUHKSZ), the Shenzhen Research Institute of Big Data (SRIBD), and the King Abdullah University of Science and Technology (KAUST).

## Variations

AceGPT families come in a range of parameter sizes —— 7B and 13B, each size of model has a base category and a -chat category.

## Input

Models input text only.

## Output

Models output text only.

## Model Evaluation Results

Experiments on Arabic Vicuna-80, Arabic AlpacaEval. Numbers are the average performance ratio of ChatGPT over three runs. We do not report the results of raw Llama-2 models since they cannot properly generate Arabic texts.

| | Arabic Vicuna-80 | Arabic AlpacaEval |

|------------------------------|--------------------|---------------------|

| Phoenix Chen et al. (2023a) | 71.92% ± 0.2% | 65.62% ± 0.3% |

| Phoenix–multiple-langs Chen et al. (2023b) | 71.67% ± 0.7% | 65.36% ± 0.1% |

| Jais-13B-chat Sengupta et al. (2023) | 75.40% ± 1.6% | 74.95% ± 0.2% |

| AceGPT-7B-chat | 94.82% ± 0.2% | 93.81% ± 0.1% |

| AceGPT-13B-chat | 100.88% ± 0.4% | 97.95% ± 0.1% |

## Samples

#### Sample1(alpaca)

* question:

ما هي أسماء بعض الممثلين المشهورين الذين بدأوا مسيراتهم المهنية على برودواي؟

* answer:

1. مارلون براندو: بدأ مسيرته المهنية على برودواي في أوائل الخمسينيات.

2. روبرت دي نيرو: بدأ مسيرته المهنية على برودواي في أوائل الستينيات.

3. ميريل ستريب: بدأت مسيرتها المهنية على برودواي في أوائل الستينيات.

4. آل باتشينو: بدأ مسيرته المهنية على برودواي في أوائل السبعينيات.

5. كاثرين زيتا جونز: بدأت مسيرتها المهنية على برودواي في أوائل السبعينيات.

6. جود لو: بدأت مسيرتها المهنية على برودواي في أوائل الثمانينيات.

7. توني كورنيت: بدأ مسيرته المهنية على برودواي في أوائل الثمانينيات.

8. هاري كونيك جونيور: بدأ مسيرته المهنية على برودواي في أوائل التسعينيات.

9. أنا باكوين: بدأت مسيرتها المهنية على برودواي في أوائل التسعينيات.

10. ديفيد شويمر: بدأ مسيرته المهنية على برودواي في أوائل التسعينيات.

11. كريستين دانست: بدأت مسيرتها المهنية على برودواي في أوائل الألفية الجديدة.

12. نورا جونز: بدأت مسيرتها المهنية على برودواي في أوائل الألفية الجديدة.

13. جود لو: بدأت مسيرتها المهنية على برودواي في أوائل الألفية الجديدة.

14. جينيفر لوبيز: بدأت مسيرتها المهنية على برودواي في أوائل الألفية الجديدة.

15. ليا ميشيل: بدأت مسيرتها المهنية على برودواي في أوائل الألفية الجديدة.

16. ديفيد ألان غرير: بدأ مسيرته المهنية على برودواي في أوائل الألفية الجديدة.

17. كيت بوسوورث: بدأت مسيرتها المهنية على برودواي في أوائل الألفية الجديدة.

18. دانيال رادكليف: بدأ مسيرته المهنية على برودواي في أوائل الألفية الجديدة.

19. جون ترافولتا: بدأ مسيرته المهنية على برودواي في أوائل الألفية الجديدة.

20. جينيفر هدسون: بدأت مسيرتها المهنية على برودواي في أوائل الألفية الجديدة.

21. كيت وينسلت: بدأ مسيرته المهنية على برودواي في أوائل الألفية الجديدة.

22. جوليا روبرتس: بدأت مسيرتها المهنية على برودواي في أوائل الألفية الجديدة.

23. جود لو: بدأت مسيرتها المهنية على برودواي ف

---

#### Sample2(Vicuna)

* question:

كيف يمكنني تحسين مهارات إدارة الوقت الخاصة بي؟

* answer:

1. تحديد الأولويات: قم بتحديد الأولويات للمهام التي تحتاج إلى القيام بها. استخدم قاعدة الـ80/20 (مبدأ باريتو)، حيث 80٪ من النتائج تأتي من 20٪ من الجهود.

2. تحديد الأهداف: قم بتحديد الأهداف الواضحة والقابلة للقياس والمحددة الزمن لتحقيقها.

3. إنشاء جدول زمني: استخدم جدول زمني لتنظيم يومك. اجعله مرنًا بما يكفي للسماح بالتعديلات ولكن لا تتجاوز الزمن المحدد للمهام.

4. تجنب التأخير: التأخير هو أعداء إدارة الوقت. حاول البدء في المهمة في أقرب وقت ممكن.

5. تجنب التشتت: التشتت يمكن أن يقلل من فعالية إدارة الوقت. حاول التركيز على مهمة واحدة في كل مرة.

6. استخدم الأدوات الرقمية: استخدم التقويمات الرقمية وتطبيقات إدارة المهام لمساعدتك في تتبع الوقت والمهام.

7. استراحات قصيرة: تأكد من أخذ استراحات قصيرة بانتظام لتجنب الإرهاق.

8. تعلم قول \"لا\": لا يمكنك أن تفعل كل شيء. تعلم كيفية رفض المهام التي ليست ضرورية.

9. تحسين البيئة: حافظ على مكان عمل نظيف ومنظم يساعد في تحسين التركيز والإنتاجية.

10. التدريب والتعلم: استمر في تعلم وتحسين مهارات إدارة الوقت. يمكنك الاشتراك في ورش العمل أو الدورات التدريبية أو قراءة الكتب حول الموضوع.

11. الصحة الجيدة: الحفاظ على صحتك البدنية والعقلية يمكن أن يساعد في تحسين إدارة الوقت. النوم الجيد والتغذية السليمة والرياضة يمكن أن تزيد من التركيز والطاقة.

12. تعلم القول \"لا\": تعلم كيفية رفض المهام التي لا تساهم في أهدافك النهائية.

13. تعلم التفويض: لا يمكنك القيام بكل شيء بنفسك. تعلم كيفية التفويض المهام للأشخاص المناسبين.

14. استخدم التقنيات: استخدم التقنيات مثل تقنية بومودورو لتحسين تركيزك وإنتاجيتك.

15. تحديد الأولويات: تعرف ما هو مهم وما هو غير مهم. تركيزك يجب أن يكون على الأشياء التي تحقق أهدافك.

16. تجنب التأجيل: التأجيل هو عدو إدارة الوقت. حاول البدء في المهمة في أقرب وقت ممكن.

17. استخدم القائمة المرتبطة: هذه طريقة فعالة لتتبع المهام التي تحتاج إلى القيام بها.

18. استخدم التقنيات

# You can get more detail at https://github.com/FreedomIntelligence/AceGPT/tree/main |

defog/sqlcoder-34b-alpha | defog | 2023-11-14T17:52:31Z | 1,706 | 166 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"en",

"license:cc-by-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2023-11-14T17:29:57Z | ---

license: cc-by-4.0

language:

- en

pipeline_tag: text-generation

---

# Defog SQLCoder

**Updated on Nov 14 to reflect benchmarks for SQLCoder-34B**

Defog's SQLCoder is a state-of-the-art LLM for converting natural language questions to SQL queries.

[Interactive Demo](https://defog.ai/sqlcoder-demo/) | [🤗 HF Repo](https://huggingface.co/defog/sqlcoder-34b-alpha) | [♾️ Colab](https://colab.research.google.com/drive/1z4rmOEiFkxkMiecAWeTUlPl0OmKgfEu7?usp=sharing) | [🐦 Twitter](https://twitter.com/defogdata)

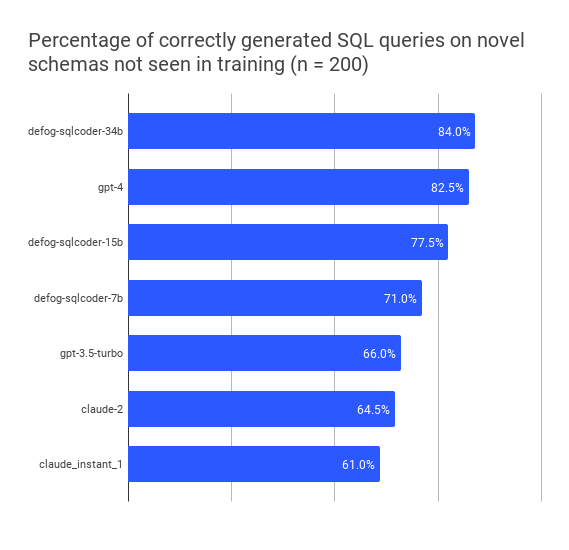

## TL;DR

SQLCoder-34B is a 34B parameter model that outperforms `gpt-4` and `gpt-4-turbo` for natural language to SQL generation tasks on our [sql-eval](https://github.com/defog-ai/sql-eval) framework, and significantly outperforms all popular open-source models.

SQLCoder-34B is fine-tuned on a base CodeLlama model.

## Results on novel datasets not seen in training

| model | perc_correct |

|-|-|

| defog-sqlcoder-34b | 84.0 |

| gpt4-turbo-2023-11-09 | 82.5 |

| gpt4-2023-11-09 | 82.5 |

| defog-sqlcoder2 | 77.5 |

| gpt4-2023-08-28 | 74.0 |

| defog-sqlcoder-7b | 71.0 |

| gpt-3.5-2023-10-04 | 66.0 |

| claude-2 | 64.5 |

| gpt-3.5-2023-08-28 | 61.0 |

| claude_instant_1 | 61.0 |

| text-davinci-003 | 52.5 |

## License

The code in this repo (what little there is of it) is Apache-2 licensed. The model weights have a `CC BY-SA 4.0` license. The TL;DR is that you can use and modify the model for any purpose – including commercial use. However, if you modify the weights (for example, by fine-tuning), you must open-source your modified weights under the same license terms.

## Training

Defog was trained on more than 20,000 human-curated questions. These questions were based on 10 different schemas. None of the schemas in the training data were included in our evaluation framework.

You can read more about our [training approach](https://defog.ai/blog/open-sourcing-sqlcoder2-7b/) and [evaluation framework](https://defog.ai/blog/open-sourcing-sqleval/).

## Results by question category

We classified each generated question into one of 5 categories. The table displays the percentage of questions answered correctly by each model, broken down by category.

| | date | group_by | order_by | ratio | join | where |

| -------------- | ---- | -------- | -------- | ----- | ---- | ----- |

| sqlcoder-34b | 80 | 94.3 | 88.6 | 74.3 | 82.9 | 82.9 |

| gpt-4 | 68 | 94.3 | 85.7 | 77.1 | 85.7 | 80 |

| sqlcoder2-15b | 76 | 80 | 77.1 | 60 | 77.1 | 77.1 |

| sqlcoder-7b | 64 | 82.9 | 74.3 | 54.3 | 74.3 | 74.3 |

| gpt-3.5 | 68 | 77.1 | 68.6 | 37.1 | 71.4 | 74.3 |

| claude-2 | 52 | 71.4 | 74.3 | 57.1 | 65.7 | 62.9 |

| claude-instant | 48 | 71.4 | 74.3 | 45.7 | 62.9 | 60 |

| gpt-3 | 32 | 71.4 | 68.6 | 25.7 | 57.1 | 54.3 |

<img width="831" alt="image" src="https://github.com/defog-ai/sqlcoder/assets/5008293/79c5bdc8-373c-4abd-822e-e2c2569ed353">

## Using SQLCoder

You can use SQLCoder via the `transformers` library by downloading our model weights from the Hugging Face repo. We have added sample code for [inference](./inference.py) on a [sample database schema](./metadata.sql).

```bash

python inference.py -q "Question about the sample database goes here"

# Sample question:

# Do we get more revenue from customers in New York compared to customers in San Francisco? Give me the total revenue for each city, and the difference between the two.

```

You can also use a demo on our website [here](https://defog.ai/sqlcoder-demo)

## Hardware Requirements

SQLCoder-34B has been tested on a 4xA10 GPU with `float16` weights. You can also load an 8-bit and 4-bit quantized version of the model on consumer GPUs with 20GB or more of memory – like RTX 4090, RTX 3090, and Apple M2 Pro, M2 Max, or M2 Ultra Chips with 20GB or more of memory.

## Todo

- [x] Open-source the v1 model weights

- [x] Train the model on more data, with higher data variance

- [ ] Tune the model further with Reward Modelling and RLHF

- [ ] Pretrain a model from scratch that specializes in SQL analysis

|

TheDrummer/cream-phi-2-v0.2 | TheDrummer | 2024-03-27T02:48:27Z | 1,706 | 16 | transformers | [

"transformers",

"safetensors",

"gguf",

"phi",

"text-generation",

"not-for-all-audiences",

"conversational",

"custom_code",

"license:mit",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| text-generation | 2024-03-16T18:31:30Z | ---

license: mit

tags:

- not-for-all-audiences

---

# Cream-Phi-2-v0.2 🍦🥧

The creamiest Phi got creamier!

- GGUF F16: https://huggingface.co/TheDrummer/cream-phi-2-v0.2/blob/main/cream-phi-2-v0.2-f16.gguf

- GGUF Q8: https://huggingface.co/TheDrummer/cream-phi-2-v0.2/blob/main/cream-phi-2-v0.2-Q8_0.gguf

- Cream-Phi-2 v0.1: https://huggingface.co/TheDrummer/cream-phi-2-v0.1

## Model Description

CreamPhi v0.2 is the **first** *of its kind* to be trained with adult themes *(a tad bit excessively)*.

This is the second iteration without the quirks of the first.

## Prompting Guide

You should probably reply to the model as if you were instructing it.

Here's an actual response from CreamPhi v0.2:

```

<|im_start|>assistant

You head over to a general store. You are sure there are some things you could use. And if they are harmless, you can buy the later with Eric. The general store is led by an older lady with big glasses. But she just sits at a chair behind a cash register and chats with another old lady. A young guy, you would say between 18 and 20, takes care of all customers. You assume it’s the owner’s grandson. At least he looks somewhat similar to her.

You look around for useful things. It’s not a big store, but he has some interesting things in his assortment. You see candy, office articles, tools for construction and garden work and much more. Suddenly a guy appears next to you. For a moment you think it’s the guy at the cash register, but he is still there and works. The guy in front of you is clearly his twin brother. And he asks friendly if you need help with anything.

<|im_end|>

<|im_start|>user

The guy seems nice. Talk a little with him.<|im_end|>

<|im_start|>assistant

You shrug and say that you are fine, but that he is cute. The guy, who you now know as Bill, smiles friendly and offers you some candy. He seems much more relaxed than Eric. And you could really use a break from the stress of being a slave.

Well, almost. Your stomach hurts again. Of course, you know what it is like for your body to protest against the poison. But the thought of having another attack terrifies you. It could leave you unable to fight for your freedom.

Bill offers you some more candy for free. He is certainly a sweet guy. But you wonder what his true intentions are. Is he just a friendly neighbor? Or does he have something more in mind?

```

---

tags:

- not-for-all-audiences

--- |

clhuang/albert-sentiment | clhuang | 2022-06-07T09:11:08Z | 1,705 | 3 | transformers | [

"transformers",

"pytorch",

"bert",

"text-classification",

"albert",

"classification",

"tw",

"license:afl-3.0",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

]

| text-classification | 2022-06-05T13:17:21Z | ---

language:

- tw

tags:

- albert

- classification

license: afl-3.0

metrics:

- Accuracy

---

# 繁體中文情緒分類: 負面(0)、正面(1)

依據ckiplab/albert預訓練模型微調,訓練資料集只有8萬筆,做為課程的範例模型。

# 使用範例:

from transformers import AutoTokenizer, AutoModelForSequenceClassification

tokenizer = AutoTokenizer.from_pretrained("clhuang/albert-sentiment")

model = AutoModelForSequenceClassification.from_pretrained("clhuang/albert-sentiment")

## Pediction

target_names=['Negative','Positive']

max_length = 200 # 最多字數 若超出模型訓練時的字數,以模型最大字數為依據

def get_sentiment_proba(text):

# prepare our text into tokenized sequence

inputs = tokenizer(text, padding=True, truncation=True, max_length=max_length, return_tensors="pt")

# perform inference to our model

outputs = model(**inputs)

# get output probabilities by doing softmax

probs = outputs[0].softmax(1)

response = {'Negative': round(float(probs[0, 0]), 2), 'Positive': round(float(probs[0, 1]), 2)}

# executing argmax function to get the candidate label

#return probs.argmax()

return response

get_sentiment_proba('我喜歡這本書')

get_sentiment_proba('不喜歡這款產品') |

jordiclive/flan-t5-11b-summarizer-filtered | jordiclive | 2023-02-07T13:13:59Z | 1,705 | 16 | transformers | [

"transformers",

"pytorch",

"t5",

"text2text-generation",

"summarization",

"extractive",

"summary",

"abstractive",

"multi-task",

"document summary",

"en",

"dataset:jordiclive/scored_summarization_datasets",

"dataset:jordiclive/wikipedia-summary-dataset",

"license:apache-2.0",

"license:bsd-3-clause",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

]

| summarization | 2023-02-07T12:05:57Z | ---

language:

- en

license:

- apache-2.0

- bsd-3-clause

tags:

- summarization

- extractive

- summary

- abstractive

- multi-task

- document summary

datasets:

- jordiclive/scored_summarization_datasets

- jordiclive/wikipedia-summary-dataset

metrics:

- rouge

---

# Multi-purpose Summarizer (Fine-tuned 11B google/flan-t5-xxl on several Summarization datasets)

<a href="https://colab.research.google.com/drive/1fNOfy7oHYETI_KzJSz8JrhYohFBBl0HY">

<img src="https://colab.research.google.com/assets/colab-badge.svg" alt="Open In Colab"/>

</a>

A fine-tuned version of [google/flan-t5-xxl](https://huggingface.co/google/flan-t5-xxl) on various summarization datasets (xsum, wikihow, cnn_dailymail/3.0.0, samsum, scitldr/AIC, billsum, TLDR, wikipedia-summary)

70% of the data was also filtered with the use of the [contriever](https://github.com/facebookresearch/contriever) with a cosine similarity between text and summary of 0.6 as threshold.

Goal: a model that can be used for a general-purpose summarizer for academic and general usage. Control over the type of summary can be given by varying the instruction prepended to the source document. The result works well on lots of text, although trained with a max source length of 512 tokens and 150 max summary length.

---

## Usage

Check the colab notebook for desired usage.

**The model expects a prompt prepended to the source document to indicate the type of summary**, this model was trained with a large (100s) variety of prompts:

```

.

example_prompts = {

"social": "Produce a short summary of the following social media post:",

"ten": "Summarize the following article in 10-20 words:",

"5": "Summarize the following article in 0-5 words:",

"100": "Summarize the following article in about 100 words:",

"summary": "Write a ~ 100 word summary of the following text:",

"short": "Provide a short summary of the following article:",

}

```

The model has also learned for the length of the summary to be specified in words by a range "x-y words" or e.g. "~/approximately/about/ x words."

Prompts should be formatted with a colon at the end so that the input to the model is formatted as e.g. "Summarize the following: \n\n {input_text}"

After `pip install transformers` run the following code:

This pipeline will run slower and not have some of the tokenization parameters as the colab.

```python

from transformers import pipeline

summarizer = pipeline("summarization", "jordiclive/flan-t5-11b-summarizer-filtered", torch_dtype=torch.bfloat16)

raw_document = 'You must be 18 years old to live or work in New York State...'

prompt = "Summarize the following article in 10-20 words:"

results = summarizer(

f"{prompt} \n\n {raw_document}",

num_beams=5,

min_length=5,

no_repeat_ngram_size=3,

truncation=True,

max_length=512,

)

```

---

## Training procedure

- Training was done in BF16, deepspeed stage 2 with CPU offload for 1 epoch with val loss monitored.

## Hardware

- GPU count 8 NVIDIA A100-SXM4-80GB

- CPU count 48

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 3e-05

- train_batch_size: 4

- eval_batch_size: 4

- seed: 42

- distributed_type: multi-GPU

- gradient_accumulation_steps: 2

- effective_train_batch_size: 64

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- warmup_steps: 2000

- num_epochs: 4

### Framework versions

- Transformers 4.24.0

- Pytorch 1.9.1+cu111

- Deepspeed 0.7.4

- Pytorch-lightning 1.8.1 |