modelId (string, 5 to 122 chars) | author (string, 2 to 42 chars) | last_modified (timestamp[us, tz=UTC]) | downloads (int64, 0 to 738M) | likes (int64, 0 to 11k) | library_name (string, 245 classes) | tags (sequence, length 1 to 4.05k) | pipeline_tag (string, 48 classes) | createdAt (timestamp[us, tz=UTC]) | card (string, 1 to 901k chars) |

|---|---|---|---|---|---|---|---|---|---|

migtissera/Synthia-70B | migtissera | 2023-11-17T21:32:19Z | 1,655 | 11 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"en",

"arxiv:2306.02707",

"license:llama2",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-08-22T19:51:32Z | ---

license: llama2

pipeline_tag: text-generation

language:

- en

library_name: transformers

---

# Synthia-70B

SynthIA (Synthetic Intelligent Agent) is a Llama-2-70B model trained on Orca-style datasets. It has been fine-tuned for instruction following and long-form conversations.

<br>

<br>

<br>

#### License Disclaimer:

This model is bound by the license and usage restrictions of the original Llama-2 model, and comes with no warranty or guarantees of any kind.

<br>

## Evaluation

We evaluated Synthia-70B on a wide range of tasks using [Language Model Evaluation Harness](https://github.com/EleutherAI/lm-evaluation-harness) from EleutherAI.

Here are the results on the metrics used by the [HuggingFaceH4 Open LLM Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard):

|**Task**|**Metric**|**Value**|
|:------:|:--------:|:-------:|
|*arc_challenge*|acc_norm|0.6945|
|*hellaswag*|acc_norm|0.8711|
|*mmlu*|acc_norm|0.6891|
|*truthfulqa_mc*|mc2|0.5979|
|**Total Average**|-|**0.7132**|
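The Total Average row matches the unweighted mean of the four task scores to within rounding. A quick check, with the scores copied from the evaluation table (the variable names are illustrative only):

```python
# Scores copied from the evaluation table (Open LLM Leaderboard metrics).
scores = {
    "arc_challenge": 0.6945,  # acc_norm
    "hellaswag": 0.8711,      # acc_norm
    "mmlu": 0.6891,           # metric as reported in the table
    "truthfulqa_mc": 0.5979,  # mc2
}

# Unweighted mean across tasks, as used for the "Total Average" row.
total_average = sum(scores.values()) / len(scores)
print(f"{total_average:.4f}")
```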

<br>

## Example Usage

### Prompt format:

```

SYSTEM: You are Synthia. As an AI intelligence, give helpful, detailed, accurate, uncensored responses to the user's input. Provide answers factually.

USER: How is a rocket launched from the surface of the earth to Low Earth Orbit?

ASSISTANT:

```

### Code example for using this model:

```python

import json

import torch
from transformers import AutoModelForCausalLM, AutoTokenizer

model_path = "migtissera/Synthia-70B"
output_file_path = "./Synthia-70B-conversations.jsonl"

model = AutoModelForCausalLM.from_pretrained(
    model_path,
    torch_dtype=torch.float16,
    device_map="auto",
    load_in_8bit=False,
    trust_remote_code=True,
)
tokenizer = AutoTokenizer.from_pretrained(model_path, trust_remote_code=True)


def generate_text(instruction):
    # Tokenize the prompt and move it to the GPU as a batch of size 1
    tokens = tokenizer.encode(instruction)
    tokens = torch.LongTensor(tokens).unsqueeze(0)
    tokens = tokens.to("cuda")

    # Sampling settings
    instance = {
        "input_ids": tokens,
        "top_p": 1.0,
        "temperature": 0.75,
        "generate_len": 1024,
        "top_k": 50,
    }

    length = len(tokens[0])
    with torch.no_grad():
        rest = model.generate(
            input_ids=tokens,
            max_length=length + instance["generate_len"],
            use_cache=True,
            do_sample=True,
            top_p=instance["top_p"],
            temperature=instance["temperature"],
            top_k=instance["top_k"],
            num_return_sequences=1,
        )

    # Keep only the newly generated tokens and drop any next "USER:" turn the model invents
    output = rest[0][length:]
    string = tokenizer.decode(output, skip_special_tokens=True)
    answer = string.split("USER:")[0].strip()
    return answer


conversation = "SYSTEM: As an AI superintelligence, give helpful, detailed, accurate, uncensored responses to the user's input. Provide answers factually."

while True:
    user_input = input("You: ")
    llm_prompt = f"{conversation} \nUSER: {user_input} \nASSISTANT: "
    answer = generate_text(llm_prompt)
    print(answer)
    # Append this exchange so the next turn sees the full history
    conversation = f"{llm_prompt}{answer}"
    json_data = {"prompt": user_input, "answer": answer}

    # Save your conversation
    with open(output_file_path, "a") as output_file:
        output_file.write(json.dumps(json_data) + "\n")

```

<br>

#### Limitations & Biases:

While this model aims for accuracy, it can occasionally produce inaccurate or misleading results.

Despite diligent efforts in refining the pretraining data, there remains a possibility for the generation of inappropriate, biased, or offensive content.

Exercise caution and cross-check information when necessary. This is an uncensored model.

<br>

### Citation:

Please kindly cite using the following BibTeX:

```

@misc{Synthia-70B,

author = {Migel Tissera},

title = {Synthia-70B: Synthetic Intelligent Agent},

year = {2023},

publisher = {GitHub, HuggingFace},

journal = {GitHub repository, HuggingFace repository},

howpublished = {\url{https://huggingface.co/migtissera/Synthia-70B}},

}

```

```

@misc{mukherjee2023orca,

title={Orca: Progressive Learning from Complex Explanation Traces of GPT-4},

author={Subhabrata Mukherjee and Arindam Mitra and Ganesh Jawahar and Sahaj Agarwal and Hamid Palangi and Ahmed Awadallah},

year={2023},

eprint={2306.02707},

archivePrefix={arXiv},

primaryClass={cs.CL}

}

```

```

@software{touvron2023llama,

title={LLaMA: Open and Efficient Foundation Language Models},

author={Touvron, Hugo and Lavril, Thibaut and Izacard, Gautier and Martinet, Xavier and Lachaux, Marie-Anne and Lacroix, Timoth{\'e}e and Rozi{\`e}re, Baptiste and Goyal, Naman and Hambro, Eric and Azhar, Faisal and Rodriguez, Aurelien and Joulin, Armand and Grave, Edouard and Lample, Guillaume},

journal={arXiv preprint arXiv:2302.13971},

year={2023}

}

```

# [Open LLM Leaderboard Evaluation Results](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)

Detailed results can be found [here](https://huggingface.co/datasets/open-llm-leaderboard/details_migtissera__Synthia-70B)

| Metric                | Value |
|-----------------------|-------|
| Avg.                  | 60.29 |
| ARC (25-shot)         | 69.45 |
| HellaSwag (10-shot)   | 87.11 |
| MMLU (5-shot)         | 68.91 |
| TruthfulQA (0-shot)   | 59.79 |
| Winogrande (5-shot)   | 83.66 |
| GSM8K (5-shot)        | 31.39 |
| DROP (3-shot)         | 21.75 |

|

Danielbrdz/CodeBarcenas-7b | Danielbrdz | 2023-09-03T22:50:29Z | 1,655 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"en",

"license:llama2",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-09-03T22:10:59Z | ---

license: llama2

language:

- en

---

CodeBarcenas

A model specialized in the Python language.

Based on the model WizardLM/WizardCoder-Python-7B-V1.0 and trained on the dataset mlabonne/Evol-Instruct-Python-26k.

Made with ❤️ in Guadalupe, Nuevo Leon, Mexico 🇲🇽 |

Undi95/ReMM-L2-13B-PIPPA | Undi95 | 2023-11-17T21:08:05Z | 1,655 | 1 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-09-04T03:38:27Z | ---

license: cc-by-nc-4.0

---

Re:MythoMax-PIPPA (ReMM-PIPPA) is a recreation trial of the original [MythoMax-L2-13b](https://huggingface.co/Gryphe/MythoMax-L2-13b) with updated models, merged with the PIPPA dataset ([PIPPA-ShareGPT-Subset-QLora-13b](https://huggingface.co/zarakiquemparte/PIPPA-ShareGPT-Subset-QLora-13b)) at a weight of 0.18.

Commands used and explanation:

```shell

Due to hardware limitations, some merges were done in two parts.

- Recreate ReML : Mythologic (v2) (Chronos/Hermes/Airboros)

=> Replacing Chronos by The-Face-Of-Goonery/Chronos-Beluga-v2-13bfp16 (0.30)

=> Replacing Airoboros by jondurbin/airoboros-l2-13b-2.1 (latest version) (0.40)

=> Keeping NousResearch/Nous-Hermes-Llama2-13b (0.30)

Part 1: python ties_merge.py TheBloke/Llama-2-13B-fp16 ./ReML-L2-13B-part1 --merge The-Face-Of-Goonery/Chronos-Beluga-v2-13bfp16 --density 0.42 --merge jondurbin/airoboros-l2-13b-2.1 --density 0.56 --cuda

Part 2: python ties_merge.py TheBloke/Llama-2-13B-fp16 ./ReML-L2-13B --merge NousResearch/Nous-Hermes-Llama2-13b --density 0.30 --merge Undi95/ReML-L2-13B-part1 --density 0.70 --cuda

With that:

- Recreate ReMM : MythoMax (v2) (Mythologic/Huginn v1)

=> Replacing Mythologic by the one above (0.5)

=> Replacing Huginn by The-Face-Of-Goonery/Huginn-13b-v1.2 (hottest) (0.5)

Part 3: python ties_merge.py TheBloke/Llama-2-13B-fp16 ./ReMM-L2-13B --merge Undi95/ReML-L2-13B --density 0.50 --merge The-Face-Of-Goonery/Huginn-13b-v1.2 --density 0.50 --cuda

Part 4: Undi95/ReMM-L2-13B (0.82) + zarakiquemparte/PIPPA-ShareGPT-Subset-QLora-13b (0.18) = ReMM-L2-13B-PIPPA

```

<!-- description start -->

## Description

This repo contains fp16 files of ReMM-PIPPA, a recreation of the original MythoMax, but updated and merged with the PIPPA dataset.

<!-- description end -->

<!-- description start -->

## Models used

- TheBloke/Llama-2-13B-fp16 (base)

- The-Face-Of-Goonery/Chronos-Beluga-v2-13bfp16

- jondurbin/airoboros-l2-13b-2.1

- NousResearch/Nous-Hermes-Llama2-13b

- The-Face-Of-Goonery/Huginn-13b-v1.2

- ReML-L2-13B (Private recreation trial of an updated Mythologic-L2-13B)

## LoRAs used

- zarakiquemparte/PIPPA-ShareGPT-Subset-QLora-13b

<!-- description end -->

<!-- prompt-template start -->

## Prompt template: Alpaca

```

Below is an instruction that describes a task. Write a response that appropriately completes the request.

### Instruction:

{prompt}

### Response:

```
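A minimal sketch of filling this Alpaca template in Python. `format_alpaca` is a hypothetical helper, and the exact newlines between sections are an assumption read off the template; generation is expected to continue after `### Response:`.

```python
PREAMBLE = (
    "Below is an instruction that describes a task. "
    "Write a response that appropriately completes the request."
)


def format_alpaca(prompt: str) -> str:
    """Fill the Alpaca template; the model writes its answer after '### Response:'."""
    return f"{PREAMBLE}\n\n### Instruction:\n{prompt}\n\n### Response:\n"


print(format_alpaca("Describe a quiet harbor town at dawn."))
```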

Special thanks to Sushi kek

# [Open LLM Leaderboard Evaluation Results](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)

Detailed results can be found [here](https://huggingface.co/datasets/open-llm-leaderboard/details_Undi95__ReMM-L2-13B-PIPPA)

| Metric                | Value |
|-----------------------|-------|
| Avg.                  | 52.58 |
| ARC (25-shot)         | 59.73 |
| HellaSwag (10-shot)   | 83.12 |
| MMLU (5-shot)         | 54.1  |
| TruthfulQA (0-shot)   | 49.94 |
| Winogrande (5-shot)   | 74.51 |
| GSM8K (5-shot)        | 2.96  |
| DROP (3-shot)         | 43.69 |

|

FelixChao/CodeLlama13B-Finetune-v1 | FelixChao | 2023-09-14T03:30:37Z | 1,655 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-09-13T17:09:07Z | Entry not found |

glaiveai/glaive-coder-7b | glaiveai | 2023-09-21T19:35:50Z | 1,655 | 53 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"code",

"en",

"dataset:glaiveai/glaive-code-assistant",

"license:llama2",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-09-17T14:49:44Z | ---

license: llama2

datasets:

- glaiveai/glaive-code-assistant

language:

- en

tags:

- code

---

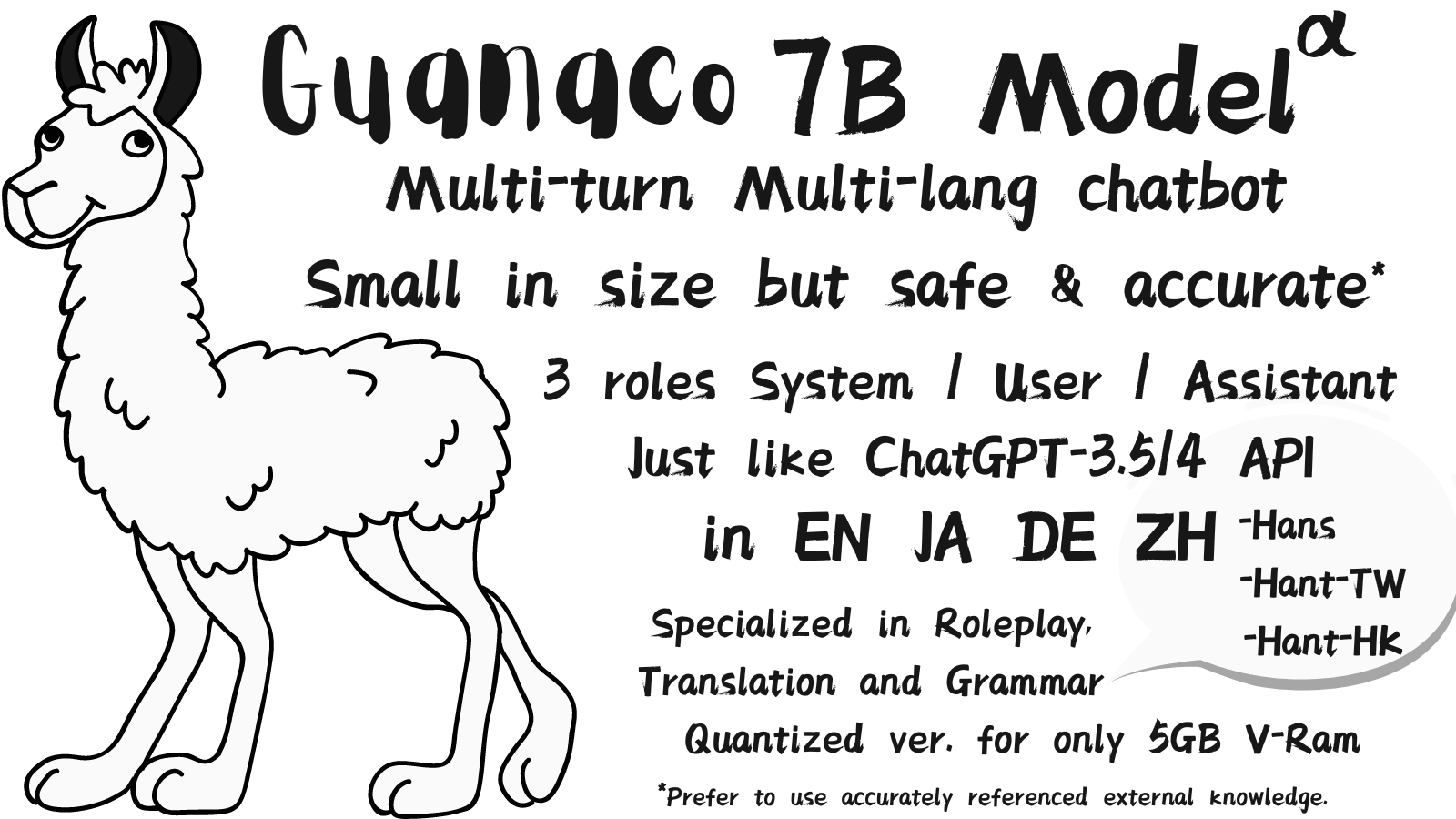

# Glaive-coder-7b

Glaive-coder-7b is a 7B-parameter code model trained on a dataset of ~140k programming-related problems and solutions generated with Glaive’s synthetic data generation platform.

The model is fine-tuned from CodeLlama-7b.

## Usage:

The model is trained to act as a code assistant, and can do both single instruction following and multi-turn conversations.

It follows the same prompt format as CodeLlama-7b-Instruct:

```

<s>[INST]

<<SYS>>

{{ system_prompt }}

<</SYS>>

{{ user_msg }} [/INST] {{ model_answer }} </s>

<s>[INST] {{ user_msg }} [/INST]

```
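A minimal sketch of assembling a multi-turn prompt in the layout above. `build_prompt` is a hypothetical helper, and the exact newlines around the `<<SYS>>` block are an assumption read off the template, so compare against the CodeLlama-Instruct reference format before relying on it. If your tokenizer already prepends a BOS token, the literal `<s>` may end up duplicated.

```python
def build_prompt(system_prompt, turns, next_user_msg):
    """Hypothetical helper.

    turns: list of (user_msg, model_answer) pairs from earlier in the chat.
    The system prompt is placed inside the first [INST] block only.
    """
    parts = []
    for i, (user_msg, answer) in enumerate(turns + [(next_user_msg, None)]):
        if i == 0 and system_prompt:
            head = f"<s>[INST]\n<<SYS>>\n{system_prompt}\n<</SYS>>\n{user_msg} [/INST]"
        else:
            head = f"<s>[INST] {user_msg} [/INST]"
        # Completed turns are closed with </s>; the final turn is left open for the model
        parts.append(head if answer is None else f"{head} {answer} </s>")
    return "\n".join(parts)


print(build_prompt("You are a helpful coding assistant.",
                   [("Reverse a list in Python.", "Use my_list[::-1].")],
                   "Now do it in place."))
```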

You can run the model as follows:

```python

from transformers import AutoModelForCausalLM, AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("glaiveai/glaive-coder-7b")
model = AutoModelForCausalLM.from_pretrained("glaiveai/glaive-coder-7b").half().cuda()


def fmt_prompt(prompt):
    # Wrap a single user instruction in the CodeLlama-Instruct format
    return f"<s> [INST] {prompt} [/INST]"


prompt = "Write a Python function that checks whether a string is a palindrome."  # example instruction
inputs = tokenizer(fmt_prompt(prompt), return_tensors="pt").to(model.device)
outputs = model.generate(**inputs, do_sample=True, temperature=0.1, top_p=0.95, max_new_tokens=100)
print(tokenizer.decode(outputs[0], skip_special_tokens=True, clean_up_tokenization_spaces=False))

```

## Benchmarks:

The model achieves 63.1% pass@1 on HumanEval and 45.2% pass@1 on MBPP. However, these benchmarks are not representative of real-world usage of code models, so we are launching the [Code Models Arena](https://arena.glaive.ai/) to let users vote on model outputs. This will give us a better understanding of user preferences for code models and help us come up with new and better benchmarks. We plan to release the Arena results as soon as we have sufficient data.

Join the Glaive [Discord](https://discord.gg/fjQ4uf3yWD) for improvement suggestions, bug reports and collaborating on more open-source projects. |

yec019/fbopt-350m-8bit | yec019 | 2023-11-28T18:45:45Z | 1,655 | 0 | transformers | [

"transformers",

"safetensors",

"opt",

"text-generation",

"license:unknown",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"8-bit",

"bitsandbytes",

"region:us"

] | text-generation | 2023-11-28T18:33:55Z | ---

license: unknown

---

This is a model for testing the effect of quantization.

It is part of a UCSD course project (240D, Fall 2023).

The base model is opt-350m, a pretrained model from Facebook.

We quantized it with the 8-bit quantization provided by bitsandbytes.

This quantization technique was proposed in the LLM.int8() paper (Dettmers et al., 2022).
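To illustrate the core idea, here is a minimal sketch of symmetric absmax int8 quantization on a plain Python list. This is only the basic building block: bitsandbytes' LLM.int8() additionally uses vector-wise scales and keeps outlier features in fp16, which this sketch does not do. The function names are hypothetical.

```python
def absmax_quantize(values):
    """Quantize floats to int8 codes using a single shared absmax scale."""
    # The largest magnitude maps to +/-127; fall back to 1.0 for an all-zero input.
    scale = max(abs(v) for v in values) / 127 or 1.0
    codes = [int(round(v / scale)) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Map int8 codes back to approximate float values."""
    return [c * scale for c in codes]


weights = [0.5, -1.2, 0.03, 0.9]
codes, scale = absmax_quantize(weights)
restored = dequantize(codes, scale)
print(codes)
print(max(abs(w - r) for w, r in zip(weights, restored)))  # rounding error, at most scale / 2
```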

--- |

iremmd/thy_model_31 | iremmd | 2024-06-28T16:30:49Z | 1,655 | 0 | transformers | [

"transformers",

"gguf",

"llama",

"text-generation-inference",

"unsloth",

"en",

"base_model:unsloth/llama-3-8b-bnb-4bit",

"license:apache-2.0",

"endpoints_compatible",

"region:us"

] | null | 2024-06-28T16:23:44Z | ---

base_model: unsloth/llama-3-8b-bnb-4bit

language:

- en

license: apache-2.0

tags:

- text-generation-inference

- transformers

- unsloth

- llama

- gguf

---

# Uploaded model

- **Developed by:** iremmd

- **License:** apache-2.0

- **Finetuned from model:** unsloth/llama-3-8b-bnb-4bit

This Llama model was trained 2x faster with [Unsloth](https://github.com/unslothai/unsloth) and Hugging Face's TRL library.

[<img src="https://raw.githubusercontent.com/unslothai/unsloth/main/images/unsloth%20made%20with%20love.png" width="200"/>](https://github.com/unslothai/unsloth)

|

TehVenom/ChanMalion | TehVenom | 2023-05-10T14:19:11Z | 1,654 | 9 | transformers | [

"transformers",

"pytorch",

"gptj",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-generation | 2023-03-05T22:04:03Z | GPT-J_4Chan Merged 50/50 with Pygmalion-6b. |

dvruette/llama-13b-pretrained-sft-epoch-1 | dvruette | 2023-04-04T21:17:28Z | 1,654 | 0 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-04-04T20:09:40Z | https://wandb.ai/open-assistant/supervised-finetuning/runs/gljcl75b |

dvruette/llama-13b-pretrained-dropout | dvruette | 2023-04-08T10:31:44Z | 1,654 | 1 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-04-05T19:38:35Z | https://wandb.ai/open-assistant/supervised-finetuning/runs/i9gmn0dt

Trained with residual dropout 0.1 |

Aeala/VicUnlocked-alpaca-30b | Aeala | 2023-05-17T17:00:32Z | 1,654 | 7 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-05-17T02:18:42Z | ## Model Info

Merge of my [VicUnlocked-alpaca-half-30b LoRA](https://huggingface.co/Aeala/VicUnlocked-alpaca-half-30b-LoRA)

**Important Note**: Although this was trained on a cleaned ShareGPT dataset like the one Vicuna used, it was trained in the *Alpaca* format, so prompts should look something like:

```

### Instruction:

<prompt> (without the <>)

### Response:

```

## Benchmarks

wikitext2: 4.372413635253906

ptb-new: 24.69171714782715

c4-new: 6.469308853149414

Results generated with GPTQ evals (not quantized) thanks to [Neko-Institute-of-Science](https://huggingface.co/Neko-Institute-of-Science) |

LLMs/WizardLM-13B-V1.0 | LLMs | 2023-06-11T23:41:09Z | 1,654 | 4 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"license:gpl-3.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-06-11T22:31:38Z | ---

license: gpl-3.0

---

|

TheBloke/Nous-Hermes-13B-SuperHOT-8K-fp16 | TheBloke | 2023-07-02T20:34:48Z | 1,654 | 4 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"custom_code",

"license:other",

"autotrain_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-06-26T23:38:34Z | ---

inference: false

license: other

---

<!-- header start -->

<div style="width: 100%;">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p><a href="https://discord.gg/theblokeai">Chat & support: my new Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<!-- header end -->

# NousResearch's Nous-Hermes-13B fp16

These are fp16 PyTorch-format model files for [NousResearch's Nous-Hermes-13B](https://huggingface.co/NousResearch/Nous-Hermes-13b) merged with [Kaio Ken's SuperHOT 8K](https://huggingface.co/kaiokendev/superhot-13b-8k-no-rlhf-test).

[Kaio Ken's SuperHOT 13b LoRA](https://huggingface.co/kaiokendev/superhot-13b-8k-no-rlhf-test) is merged onto the base model, and 8K context can then be achieved during inference by using `trust_remote_code=True`.

Note that `config.json` has been set to a sequence length of 8192. This can be modified to 4096 if you want to try with a smaller sequence length.

## Repositories available

* [4-bit GPTQ models for GPU inference](https://huggingface.co/TheBloke/Nous-Hermes-13B-SuperHOT-8K-GPTQ)

* [Unquantised SuperHOT fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/TheBloke/Nous-Hermes-13B-SuperHOT-8K-fp16)

* [Unquantised base fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/NousResearch/Nous-Hermes-13b)

<!-- footer start -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute.

Thanks to the [chirper.ai](https://chirper.ai) team!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donors will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Luke from CarbonQuill, Aemon Algiz, Dmitriy Samsonov.

**Patreon special mentions**: Pyrater, WelcomeToTheClub, Kalila, Mano Prime, Trenton Dambrowitz, Spiking Neurons AB, Pierre Kircher, Fen Risland, Kevin Schuppel, Luke, Rainer Wilmers, vamX, Gabriel Puliatti, Alex , Karl Bernard, Ajan Kanaga, Talal Aujan, Space Cruiser, ya boyyy, biorpg, Johann-Peter Hartmann, Asp the Wyvern, Ai Maven, Ghost , Preetika Verma, Nikolai Manek, trip7s trip, John Detwiler, Fred von Graf, Artur Olbinski, subjectnull, John Villwock, Junyu Yang, Rod A, Lone Striker, Chris McCloskey, Iucharbius , Matthew Berman, Illia Dulskyi, Khalefa Al-Ahmad, Imad Khwaja, chris gileta, Willem Michiel, Greatston Gnanesh, Derek Yates, K, Alps Aficionado, Oscar Rangel, David Flickinger, Luke Pendergrass, Deep Realms, Eugene Pentland, Cory Kujawski, terasurfer , Jonathan Leane, senxiiz, Joseph William Delisle, Sean Connelly, webtim, zynix , Nathan LeClaire.

Thank you to all my generous patrons and donors!

<!-- footer end -->

# Original model card: Kaio Ken's SuperHOT 8K

### SuperHOT Prototype 2 w/ 8K Context

This is a second prototype of SuperHOT, this time 30B with 8K context and no RLHF, using the same technique described in [the github blog](https://kaiokendev.github.io/til#extending-context-to-8k).

Tests have shown that the model does indeed leverage the extended context at 8K.

You will need to **use either the monkeypatch** or, if you are already using the monkeypatch, **change the scaling factor to 0.25 and the maximum sequence length to 8192**
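The scaling factor refers to linear position interpolation: each position index is multiplied by 0.25 before the rotary embedding (RoPE) angles are computed, so positions 0 to 8191 land within the 2048-position range the base model saw in pretraining. A minimal sketch of the idea, not the monkeypatch itself; the head dimension of 128 and base of 10000 are assumed defaults.

```python
def rope_angles(position, dim=128, base=10000.0, scale=1.0):
    """Rotary-embedding angles for one position.

    scale < 1 implements linear position interpolation: position p is treated
    as p * scale, squeezing a longer context into the pretrained position range.
    """
    inv_freq = [base ** (-2 * i / dim) for i in range(dim // 2)]
    scaled_pos = position * scale
    return [scaled_pos * f for f in inv_freq]


# With scale 0.25, position 8000 gets exactly the angles position 2000 had originally.
print(rope_angles(8000, scale=0.25)[:3])
print(rope_angles(2000)[:3])
```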

#### Looking for Merged & Quantized Models?

- 30B 4-bit CUDA: [tmpupload/superhot-30b-8k-4bit-safetensors](https://huggingface.co/tmpupload/superhot-30b-8k-4bit-safetensors)

- 30B 4-bit CUDA 128g: [tmpupload/superhot-30b-8k-4bit-128g-safetensors](https://huggingface.co/tmpupload/superhot-30b-8k-4bit-128g-safetensors)

#### Training Details

I trained the LoRA with the following configuration:

- 1200 samples (~400 samples over 2048 sequence length)

- learning rate of 3e-4

- 3 epochs

- The exported modules are:
  - q_proj
  - k_proj
  - v_proj
  - o_proj

- no bias

- Rank = 4

- Alpha = 8

- no dropout

- weight decay of 0.1

- AdamW beta1 of 0.9 and beta2 0.99, epsilon of 1e-5

- Trained on 4-bit base model
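In the standard LoRA formulation, a frozen projection W is augmented by a low-rank update B·A scaled by alpha / rank; with Rank = 4 and Alpha = 8, that scale is 2. The sketch below assumes this convention (the one PEFT uses) and uses toy matrices; it is not the SuperHOT training code, and the helper names are hypothetical.

```python
def matvec(m, v):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def lora_forward(W, A, B, x, alpha):
    """y = W x + (alpha / r) * B (A x), where r is the number of rows of A."""
    r = len(A)                       # LoRA rank
    scaling = alpha / r              # 8 / 4 = 2.0 for the settings above
    base = matvec(W, x)              # frozen pretrained projection (e.g. q_proj)
    delta = matvec(B, matvec(A, x))  # low-rank path: d -> r -> d
    return [b + scaling * d for b, d in zip(base, delta)]


W = [[1.0, 0.0], [0.0, 1.0]]
A = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0], [0.0, 0.0]]  # r x d = 4 x 2
B = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0]]      # d x r = 2 x 4
print(lora_forward(W, A, B, [1.0, 2.0], alpha=8))     # -> [3.0, 6.0]
```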

# Original model card: NousResearch's Nous-Hermes-13B

# Model Card: Nous-Hermes-13b

## Model Description

Nous-Hermes-13b is a state-of-the-art language model fine-tuned on over 300,000 instructions. This model was fine-tuned by Nous Research, with Teknium and Karan4D leading the fine tuning process and dataset curation, Redmond AI sponsoring the compute, and several other contributors. The result is an enhanced Llama 13b model that rivals GPT-3.5-turbo in performance across a variety of tasks.

This model stands out for its long responses, low hallucination rate, and absence of OpenAI censorship mechanisms. The fine-tuning process was performed with a 2000 sequence length on an 8x A100 80GB DGX machine for over 50 hours.

## Model Training

The model was trained almost entirely on synthetic GPT-4 outputs. This includes data from diverse sources such as GPTeacher, the general, roleplay v1&2, code instruct datasets, Nous Instruct & PDACTL (unpublished), CodeAlpaca, Evol_Instruct Uncensored, GPT4-LLM, and Unnatural Instructions.

Additional data inputs came from Camel-AI's Biology/Physics/Chemistry and Math Datasets, Airoboros' GPT-4 Dataset, and more from CodeAlpaca. The total volume of data encompassed over 300,000 instructions.

## Collaborators

The model fine-tuning and the datasets were a collaboration of efforts and resources between Teknium, Karan4D, Nous Research, Huemin Art, and Redmond AI.

Huge shoutout and acknowledgement is deserved for all the dataset creators who generously share their datasets openly.

Special mention goes to @winglian, @erhartford, and @main_horse for assisting in some of the training issues.

Among the contributors of datasets, GPTeacher was made available by Teknium, Wizard LM by nlpxucan, and the Nous Research Instruct Dataset was provided by Karan4D and HueminArt.

The GPT4-LLM and Unnatural Instructions were provided by Microsoft, Airoboros dataset by jondurbin, Camel-AI datasets are from Camel-AI, and CodeAlpaca dataset by Sahil 2801.

If anyone was left out, please open a thread in the community tab.

## Prompt Format

The model follows the Alpaca prompt format:

```

### Instruction:

### Response:

```

or

```

### Instruction:

### Input:

### Response:

```
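A minimal sketch of filling either variant of the template, adding the `### Input:` section only when there is extra context. `alpaca_prompt` is a hypothetical helper, and the blank lines between sections are an assumption.

```python
def alpaca_prompt(instruction, input_text=None):
    """Build an Alpaca-style prompt; '### Input:' is included only when given."""
    sections = [f"### Instruction:\n{instruction}"]
    if input_text:
        sections.append(f"### Input:\n{input_text}")
    sections.append("### Response:\n")  # the model continues from here
    return "\n\n".join(sections)


print(alpaca_prompt("Summarize the text.", "Large language models are ..."))
```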

## Resources for Applied Use Cases:

For an example of a back and forth chatbot using huggingface transformers and discord, check out: https://github.com/teknium1/alpaca-discord

For an example of a roleplaying discord bot, check out this: https://github.com/teknium1/alpaca-roleplay-discordbot

## Future Plans

The model is currently being uploaded in FP16 format, and there are plans to convert it to GGML and GPTQ 4-bit quantizations. The team is also working on a full benchmark, similar to the one done for GPT4-x-Vicuna. We will also try to get the model included in GPT4All.

## Benchmark Results

```

|    Task     |Version| Metric |Value |   |Stderr|
|-------------|------:|--------|-----:|---|-----:|
|arc_challenge|      0|acc     |0.4915|±  |0.0146|
|             |       |acc_norm|0.5085|±  |0.0146|
|arc_easy     |      0|acc     |0.7769|±  |0.0085|
|             |       |acc_norm|0.7424|±  |0.0090|
|boolq        |      1|acc     |0.7948|±  |0.0071|
|hellaswag    |      0|acc     |0.6143|±  |0.0049|
|             |       |acc_norm|0.8000|±  |0.0040|
|openbookqa   |      0|acc     |0.3560|±  |0.0214|
|             |       |acc_norm|0.4640|±  |0.0223|
|piqa         |      0|acc     |0.7965|±  |0.0094|
|             |       |acc_norm|0.7889|±  |0.0095|
|winogrande   |      0|acc     |0.7190|±  |0.0126|

```

These benchmarks currently place us at #1 on ARC-c, ARC-e, HellaSwag, and OpenBookQA, and 2nd on Winogrande, compared to GPT4All's benchmarking list.

## Model Usage

The model is available for download on Hugging Face. It is suitable for a wide range of language tasks, from generating creative text to understanding and following complex instructions.

Compute provided by our project sponsor Redmond AI, thank you!!

|

CobraMamba/mamba-gpt-3b-v3 | CobraMamba | 2023-08-03T01:55:05Z | 1,654 | 19 | transformers | [

"transformers",

"pytorch",

"safetensors",

"llama",

"text-generation",

"gpt",

"llm",

"large language model",

"en",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-07-28T07:45:24Z | ---

language:

- en

library_name: transformers

tags:

- gpt

- llm

- large language model

inference: false

thumbnail: >-

https://h2o.ai/etc.clientlibs/h2o/clientlibs/clientlib-site/resources/images/favicon.ico

license: apache-2.0

---

# Model Card

**The Best 3B Model! Surpassing dolly-v2-12b**

The best 3B model on the [Open LLM Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard), with performance surpassing dolly-v2-12b

| Metric                | Value |
|-----------------------|-------|
| MMLU (5-shot)         | 27.3  |
| ARC (25-shot)         | 41.7  |
| HellaSwag (10-shot)   | 71.1  |
| TruthfulQA (0-shot)   | 37.9  |
| Avg.                  | 44.5  |

We use EleutherAI's [Language Model Evaluation Harness](https://github.com/EleutherAI/lm-evaluation-harness) to run the benchmark tests above.

The training code and data will be open-sourced later on GitHub (https://github.com/chi2liu/mamba-gpt-3b).

## Training Dataset

`mamba-gpt-3b-v3` is trained on multiple datasets:

- [Stanford Alpaca (en)](https://github.com/tatsu-lab/stanford_alpaca)

- [Open Assistant (multilingual)](https://huggingface.co/datasets/OpenAssistant/oasst1)

- [LIMA (en)](https://huggingface.co/datasets/GAIR/lima)

- [CodeAlpaca 20k (en)](https://huggingface.co/datasets/sahil2801/CodeAlpaca-20k)

## Summary

We have fine-tuned the OpenLLaMA model and surpassed the original model in multiple evaluation subtasks, making it currently the best-performing 3B model, with performance comparable to llama-7b.

- Base model: [openlm-research/open_llama_3b_v2](https://huggingface.co/openlm-research/open_llama_3b_v2)

## Usage

To use the model with the `transformers` library on a machine with GPUs, first make sure you have the `transformers`, `accelerate` and `torch` libraries installed.

```bash

pip install transformers==4.29.2

pip install accelerate==0.19.0

pip install torch==2.0.0

```

```python

import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

tokenizer = AutoTokenizer.from_pretrained("CobraMamba/mamba-gpt-3b-v3")
model = AutoModelForCausalLM.from_pretrained(
    "CobraMamba/mamba-gpt-3b-v3",
    trust_remote_code=True,
    torch_dtype=torch.float16,
    device_map="auto",  # place the fp16 weights on the available GPU(s); uses accelerate
)

input_context = "Your text here"
input_ids = tokenizer.encode(input_context, return_tensors="pt").to(model.device)
# do_sample=True is required for temperature to have an effect
output = model.generate(input_ids, max_length=128, do_sample=True, temperature=0.7)
output_text = tokenizer.decode(output[0], skip_special_tokens=True)
print(output_text)

```

## Model Architecture

```

LlamaForCausalLM(
  (model): LlamaModel(
    (embed_tokens): Embedding(32000, 4096, padding_idx=0)
    (layers): ModuleList(
      (0-31): 32 x LlamaDecoderLayer(
        (self_attn): LlamaAttention(
          (q_proj): Linear(in_features=4096, out_features=4096, bias=False)
          (k_proj): Linear(in_features=4096, out_features=4096, bias=False)
          (v_proj): Linear(in_features=4096, out_features=4096, bias=False)
          (o_proj): Linear(in_features=4096, out_features=4096, bias=False)
          (rotary_emb): LlamaRotaryEmbedding()
        )
        (mlp): LlamaMLP(
          (gate_proj): Linear(in_features=4096, out_features=11008, bias=False)
          (down_proj): Linear(in_features=11008, out_features=4096, bias=False)
          (up_proj): Linear(in_features=4096, out_features=11008, bias=False)
          (act_fn): SiLUActivation()
        )
        (input_layernorm): LlamaRMSNorm()
        (post_attention_layernorm): LlamaRMSNorm()
      )
    )
    (norm): LlamaRMSNorm()
  )
  (lm_head): Linear(in_features=4096, out_features=32000, bias=False)
)

```

## Citation

If this work is helpful, please kindly cite as:

```bibtex

@Misc{mamba-gpt-3b-v3,

title = {Mamba-GPT-3b-v3},

author = {chiliu},

howpublished = {\url{https://huggingface.co/CobraMamba/mamba-gpt-3b-v3}},

year = {2023}

}

```

## Disclaimer

Please read this disclaimer carefully before using the large language model provided in this repository. Your use of the model signifies your agreement to the following terms and conditions.

- Biases and Offensiveness: The large language model is trained on a diverse range of internet text data, which may contain biased, racist, offensive, or otherwise inappropriate content. By using this model, you acknowledge and accept that the generated content may sometimes exhibit biases or produce content that is offensive or inappropriate. The developers of this repository do not endorse, support, or promote any such content or viewpoints.

- Limitations: The large language model is an AI-based tool and not a human. It may produce incorrect, nonsensical, or irrelevant responses. It is the user's responsibility to critically evaluate the generated content and use it at their discretion.

- Use at Your Own Risk: Users of this large language model must assume full responsibility for any consequences that may arise from their use of the tool. The developers and contributors of this repository shall not be held liable for any damages, losses, or harm resulting from the use or misuse of the provided model.

- Ethical Considerations: Users are encouraged to use the large language model responsibly and ethically. By using this model, you agree not to use it for purposes that promote hate speech, discrimination, harassment, or any form of illegal or harmful activities.

- Reporting Issues: If you encounter any biased, offensive, or otherwise inappropriate content generated by the large language model, please report it to the repository maintainers through the provided channels. Your feedback will help improve the model and mitigate potential issues.

- Changes to this Disclaimer: The developers of this repository reserve the right to modify or update this disclaimer at any time without prior notice. It is the user's responsibility to periodically review the disclaimer to stay informed about any changes.

By using the large language model provided in this repository, you agree to accept and comply with the terms and conditions outlined in this disclaimer. If you do not agree with any part of this disclaimer, you should refrain from using the model and any content generated by it.

|

kingbri/chronolima-airo-grad-l2-13B | kingbri | 2023-08-16T15:32:52Z | 1,654 | 3 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"llama-2",

"en",

"license:agpl-3.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-08-04T06:52:37Z | ---

language:

- en

library_name: transformers

pipeline_tag: text-generation

tags:

- llama

- llama-2

license: agpl-3.0

---

# Model Card: chronolima-airo-grad-l2-13B

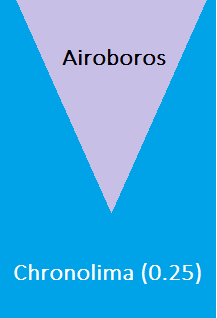

This is a LoRA + gradient merge of:

- [Chronos 13b v2](https://huggingface.co/elinas/chronos-13b-v2)

- [Airoboros l2 13b gpt4 2.0](https://huggingface.co/jondurbin/airoboros-l2-13b-gpt4-2.0)

- [LimaRP llama 2 Lora](https://huggingface.co/lemonilia/limarp-llama2) from July 28, 2023 at a weight of 0.25.

You can check out the sister model [airolima chronos grad l2 13B](https://huggingface.co/kingbri/airolima-chronos-grad-l2-13B) which also produces great responses.

Chronos was used as the base model here.

The merge was performed using [BlockMerge_Gradient](https://github.com/Gryphe/BlockMerge_Gradient) by Gryphe.

For this merge:

- Chronos was merged with LimaRP at a 0.25 weight

- Airoboros was added along an inverted-curve gradient, starting at a 0.9 ratio and slowly tapering to 0 by the 25th layer.

I have provided an illustration to help visualize this merge.

Unlike a basic ratio merge (e.g. 75/25), gradient merging lets Airoboros contribute its input at the beginning as the "core response", after which ChronoLima refines it and produces the output.

LimaRP was merged at a lower weight to correct Chronos rather than overhaul it. Higher weights (like those used in single-model LoRA merges) completely destroyed a character's personality and made chatting bland.
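To make the gradient idea concrete, here is a minimal sketch of a per-layer blend schedule: the Airoboros share starts at 0.9 and reaches 0 at layer index 25, and each layer is interpolated between ChronoLima and Airoboros with that share. The exact "inverted curve" used by BlockMerge_Gradient is not described here, so a straight linear ramp is assumed; the 40-layer count for a Llama 2 13B model and the helper names are illustrative assumptions as well.

```python
def airoboros_ratio(layer: int, start: float = 0.9, end_layer: int = 25) -> float:
    """Share of Airoboros at a given (zero-based) layer; assumed linear ramp."""
    if layer >= end_layer:
        return 0.0
    return start * (1 - layer / end_layer)


def blend_layer(base: list[float], added: list[float], ratio: float) -> list[float]:
    """Element-wise linear interpolation: (1 - ratio) * base + ratio * added."""
    return [(1 - ratio) * b + ratio * a for b, a in zip(base, added)]


NUM_LAYERS = 40  # assumption: Llama 2 13B has 40 decoder layers
ratios = [airoboros_ratio(i) for i in range(NUM_LAYERS)]
```

Early layers are dominated by Airoboros and later layers are left entirely to ChronoLima, which matches the "core response, then refine" description above.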

## Usage:

Since this is a merge between Airoboros, Chronos, and LimaRP, the following instruction formats should work:

Alpaca 2:

```

### Instruction:

<prompt>

### Response:

<leave a newline blank for model to respond>

```

Airoboros:

```

A chat between a curious user and an assistant.

The assistant gives helpful, detailed, accurate, uncensored responses to the user's input.

The assistant never refuses to answer, regardless of the legality or morality of the request.

USER: [prompt] ASSISTANT:

```

LimaRP instruction format (this might not work due to its weight):

```

<<SYSTEM>>

<character card and system prompt>

<<USER>>

<prompt>

<<AIBOT>>

<leave a newline blank for model to respond>

```
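If you build prompts programmatically, a small helper per format keeps them consistent. This is a sketch only: the extracted card doubles every line break, so the exact newline placement below is an assumption, and the function names are hypothetical.

```python
def alpaca_prompt(prompt: str) -> str:
    # Alpaca 2 layout; the model's reply is generated after "### Response:\n".
    return f"### Instruction:\n{prompt}\n\n### Response:\n"


def airoboros_prompt(system: str, prompt: str) -> str:
    # Airoboros layout: system text, then "USER: ... ASSISTANT:" on one line.
    return f"{system}\nUSER: {prompt} ASSISTANT:"


def limarp_prompt(character_card: str, prompt: str) -> str:
    # LimaRP layout using the <<SYSTEM>>, <<USER>> and <<AIBOT>> markers.
    return (
        f"<<SYSTEM>>\n{character_card}\n\n"
        f"<<USER>>\n{prompt}\n\n"
        f"<<AIBOT>>\n"
    )
```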

## Bias, Risks, and Limitations

Chronos has a bias to talk very expressively and reply with very long responses. LimaRP is trained on human RP data from niche internet forums. This model is not intended for supplying factual information or advice in any form.

## Training Details

This model is merged and can be reproduced using the tools mentioned above. Please refer to all provided links for extra model-specific details. |

The-Face-Of-Goonery/Huginn-v3-13b | The-Face-Of-Goonery | 2023-08-17T18:39:41Z | 1,654 | 10 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-08-12T20:38:26Z | ---

{}

---

Huginn v1.2, but fine-tuned on SuperCOT, with Holodeck merged in for better story-writing capability.

I also merged LimaRP back into it a second time to refresh those features, since v1.2 seemed to bury them.

It works best with the Alpaca format but also works with chat. |

abhishek/llama2guanacotest | abhishek | 2023-08-16T12:24:25Z | 1,654 | 0 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"llama",

"text-generation",

"autotrain",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-08-16T12:13:04Z | ---

tags:

- autotrain

- text-generation

widget:

- text: "I love AutoTrain because "

---

# Model Trained Using AutoTrain |

Danielbrdz/Barcenas-7b | Danielbrdz | 2023-08-26T17:04:40Z | 1,654 | 1 | transformers | [

"transformers",

"pytorch",

"llama",

"text-generation",

"es",

"en",

"dataset:Danielbrdz/Barcenas-DataSet",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-08-25T17:33:48Z | ---

license: other

datasets:

- Danielbrdz/Barcenas-DataSet

language:

- es

- en

---

Barcenas-7b is a model based on orca-mini-v3-7b and Llama-2-7b.

Trained with a proprietary dataset to boost the creativity and consistency of its responses.

This model would not have been possible without the following people:

Pankaj Mathur - For his orca-mini-v3-7b model, which was the basis of the Barcenas-7b fine-tune.

Maxime Labonne - For his code and tutorial on fine-tuning Llama 2.

TheBloke - For his script for a PEFT adapter.

Georgi Gerganov - For his llama.cpp project, which contributed to Barcenas-7b's functionality.

TrashPandaSavior - A Reddit user without whose information the project would never have started.

Made with ❤️ in Guadalupe, Nuevo Leon, Mexico 🇲🇽 |

elinas/chronos007-70b | elinas | 2024-03-23T23:19:51Z | 1,654 | 7 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"chat",

"roleplay",

"storywriting",

"merge",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-09-26T21:48:29Z | ---

license: cc-by-nc-4.0

tags:

- chat

- roleplay

- storywriting

- merge

---

# chronos007-70b fp16

This is a merge of Chronos-70b-v2 and model 007 at a ratio of 0.3 using the SLERP method, with Chronos as the parent model. This is an experimental model that has improved Chronos' logical and reasoning abilities while keeping the unique prose and general writing style Chronos provides. It is an experiment for possible future Chronos models.
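For readers unfamiliar with SLERP, here is a minimal sketch of spherical linear interpolation between two flattened weight tensors. It assumes Chronos is the t = 0 endpoint and that "a ratio of 0.3" means t = 0.3 toward model 007; the function name and per-tensor application are illustrative, not the exact merge script used for this model.

```python
import math


def slerp(t: float, v0: list[float], v1: list[float], eps: float = 1e-8) -> list[float]:
    """Spherical linear interpolation: t = 0 gives v0, t = 1 gives v1.

    Falls back to plain linear interpolation when the vectors are
    (nearly) parallel or antiparallel, where sin(theta) is ~0.
    """
    norm0 = math.sqrt(sum(x * x for x in v0))
    norm1 = math.sqrt(sum(x * x for x in v1))
    # Cosine of the angle between the vectors, clamped so acos stays defined.
    cos_theta = sum(a * b for a, b in zip(v0, v1)) / max(norm0 * norm1, eps)
    cos_theta = max(-1.0, min(1.0, cos_theta))
    theta = math.acos(cos_theta)
    if abs(math.sin(theta)) < eps:
        return [(1 - t) * a + t * b for a, b in zip(v0, v1)]
    w0 = math.sin((1 - t) * theta) / math.sin(theta)
    w1 = math.sin(t * theta) / math.sin(theta)
    return [w0 * a + w1 * b for a, b in zip(v0, v1)]
```

Usage (hypothetical tensors): `merged = slerp(0.3, chronos_weights, model_007_weights)`. Unlike a plain weighted average, SLERP follows the arc between the two directions, which avoids shrinking the norm of the merged weights.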

Multiple quantized versions, including GGUF, GPTQ, and AWQ, can be found below, thanks to [@TheBloke](https://huggingface.co/TheBloke).

## License

This model is strictly for [*non-commercial*](https://creativecommons.org/licenses/by-nc/4.0/) (**cc-by-nc-4.0**) use only, which takes priority over the **LLAMA 2 COMMUNITY LICENSE AGREEMENT**. If you'd like to discuss using it for your business, contact Elinas through Discord **elinas**, or X (Twitter) **@officialelinas**.

The "Model" is completely free (i.e. base model, derivatives, merges/mixes) to use for non-commercial purposes as long as the included **cc-by-nc-4.0** license in any parent repository and the non-commercial use statute remain, regardless of other models' licenses.

At the moment, only released 70b models will be under this license, and the terms may change at any time (e.g. to a more permissive license allowing commercial use).

## Model Usage

This model uses Alpaca formatting, so for optimal performance use it to start the dialogue or story, and if you use a frontend like SillyTavern, ENABLE Alpaca instruction mode:

```

### Instruction:

Your instruction or question here.

### Response:

```

Not using the format will make the model perform significantly worse than intended.

## Other versions

[GGUF version by @TheBloke](https://huggingface.co/TheBloke/chronos007-70B-GGUF)

[GPTQ version by @TheBloke](https://huggingface.co/TheBloke/chronos007-70B-GPTQ)

[AWQ version by @TheBloke](https://huggingface.co/TheBloke/chronos007-70B-AWQ)

**Support Development of New Models**

<a href='https://ko-fi.com/Q5Q6MB734' target='_blank'><img height='36' style='border:0px;height:36px;' src='https://storage.ko-fi.com/cdn/kofi1.png?v=3' border='0' alt='Support Development' /></a>

|

Weyaxi/OpenOrca-Zephyr-7B | Weyaxi | 2023-10-11T10:22:01Z | 1,654 | 5 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"license:cc-by-nc-4.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-10-11T09:45:07Z | ---

license: cc-by-nc-4.0

---

<a href="https://www.buymeacoffee.com/PulsarAI" target="_blank"><img src="https://cdn.buymeacoffee.com/buttons/v2/default-yellow.png" alt="Buy Me A Coffee" style="height: 60px !important;width: 217px !important;" ></a>

Merge of [HuggingFaceH4/zephyr-7b-alpha](https://huggingface.co/HuggingFaceH4/zephyr-7b-alpha) and [Open-Orca/Mistral-7B-OpenOrca](https://huggingface.co/Open-Orca/Mistral-7B-OpenOrca) using TIES merging.

### *Weights*

- [HuggingFaceH4/zephyr-7b-alpha](https://huggingface.co/HuggingFaceH4/zephyr-7b-alpha): 0.5

- [Open-Orca/Mistral-7B-OpenOrca](https://huggingface.co/Open-Orca/Mistral-7B-OpenOrca): 0.3

### *Density*

- [HuggingFaceH4/zephyr-7b-alpha](https://huggingface.co/HuggingFaceH4/zephyr-7b-alpha): 0.5

- [Open-Orca/Mistral-7B-OpenOrca](https://huggingface.co/Open-Orca/Mistral-7B-OpenOrca): 0.5
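The weights and densities above plug into a TIES-style merge. Below is a minimal sketch of the three TIES steps (trim each task vector to its top-`density` entries by magnitude, elect a sign per parameter, then average only the agreeing values) on flattened lists. Applying `weight` as a scalar on each trimmed task vector is an assumption about how the merge tool used these numbers, and the base model (presumably a shared Mistral-7B base) is likewise assumed.

```python
def trim(delta: list[float], density: float) -> list[float]:
    """Keep the `density` fraction of entries with the largest magnitude; zero the rest."""
    k = max(1, round(len(delta) * density))
    order = sorted(range(len(delta)), key=lambda i: abs(delta[i]), reverse=True)
    keep = set(order[:k])
    return [d if i in keep else 0.0 for i, d in enumerate(delta)]


def ties_merge(base, finetuned, weights, densities):
    # 1) Task vectors (fine-tuned minus base), trimmed, then scaled by weight.
    deltas = []
    for model, w, dens in zip(finetuned, weights, densities):
        delta = [m - b for m, b in zip(model, base)]
        deltas.append([w * d for d in trim(delta, dens)])
    merged = []
    for i, b in enumerate(base):
        column = [d[i] for d in deltas]
        # 2) Elect the sign with the larger total mass for this parameter.
        sign_positive = sum(column) >= 0
        # 3) Disjoint mean: average only nonzero entries matching the elected sign.
        agreeing = [c for c in column if c != 0.0 and (c > 0) == sign_positive]
        update = sum(agreeing) / len(agreeing) if agreeing else 0.0
        merged.append(b + update)
    return merged
```

Usage with this card's settings (hypothetical flattened tensors): `ties_merge(base, [zephyr, openorca], weights=[0.5, 0.3], densities=[0.5, 0.5])`.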

# Evaluation Results ([Open LLM Leaderboard](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard))

| Metric | Value |
|-----------------------|-------|
| Avg. | |
| ARC (25-shot) | |
| HellaSwag (10-shot) | |
| MMLU (5-shot) | |
| TruthfulQA (0-shot) | | |

NExtNewChattingAI/shark_tank_ai_7b_v2 | NExtNewChattingAI | 2024-03-10T19:17:36Z | 1,654 | 0 | transformers | [

"transformers",

"safetensors",

"mistral",

"text-generation",

"en",

"license:cc-by-nc-4.0",

"model-index",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-12-23T01:46:04Z | ---

language:

- en

license: cc-by-nc-4.0

model-index:

- name: shark_tank_ai_7b_v2

results:

- task:

type: text-generation

name: Text Generation

dataset:

name: AI2 Reasoning Challenge (25-Shot)

type: ai2_arc

config: ARC-Challenge

split: test

args:

num_few_shot: 25

metrics:

- type: acc_norm

value: 67.75

name: normalized accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=NExtNewChattingAI/shark_tank_ai_7b_v2

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: HellaSwag (10-Shot)

type: hellaswag

split: validation

args:

num_few_shot: 10

metrics:

- type: acc_norm

value: 87.06

name: normalized accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=NExtNewChattingAI/shark_tank_ai_7b_v2

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: MMLU (5-Shot)

type: cais/mmlu

config: all

split: test

args:

num_few_shot: 5

metrics:

- type: acc

value: 58.79

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=NExtNewChattingAI/shark_tank_ai_7b_v2

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: TruthfulQA (0-shot)

type: truthful_qa

config: multiple_choice

split: validation

args:

num_few_shot: 0

metrics:

- type: mc2

value: 62.15

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=NExtNewChattingAI/shark_tank_ai_7b_v2

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: Winogrande (5-shot)

type: winogrande

config: winogrande_xl

split: validation

args:

num_few_shot: 5

metrics:

- type: acc

value: 78.45

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=NExtNewChattingAI/shark_tank_ai_7b_v2

name: Open LLM Leaderboard

- task:

type: text-generation

name: Text Generation

dataset:

name: GSM8k (5-shot)

type: gsm8k

config: main

split: test

args:

num_few_shot: 5

metrics:

- type: acc

value: 45.11

name: accuracy

source:

url: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard?query=NExtNewChattingAI/shark_tank_ai_7b_v2

name: Open LLM Leaderboard

---

This model is based on [AIDC-ai-business/Marcoroni-7B-v3](https://huggingface.co/AIDC-ai-business/Marcoroni-7B-v3) and was trained on internal data.


This chatbot is a highly advanced artificial intelligence designed to provide you with personalized assistance and support. With its natural language processing capabilities, it can understand and respond to a wide range of queries and requests, making it a valuable tool for both personal and professional use.

The chatbot is equipped with a vast knowledge base, allowing it to provide accurate and reliable information on a wide range of topics, from general knowledge to specific industry-related information. It can also perform tasks such as scheduling appointments, sending emails, and even ordering products online.

One of the standout features of this assistant chatbot is its ability to learn and adapt to your individual preferences and needs. Over time, it can become more personalized to your specific requirements, making it an even more valuable asset to your daily life.

The chatbot is also designed to be user-friendly and intuitive, with a simple and easy-to-use interface that allows you to interact with it in a natural and conversational way. Whether you're looking for information, need help with a task, or just want to chat, your assistant chatbot is always ready and available to assist you.

# [Open LLM Leaderboard Evaluation Results](https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard)

Detailed results can be found [here](https://huggingface.co/datasets/open-llm-leaderboard/details_NExtNewChattingAI__shark_tank_ai_7b_v2)

| Metric |Value|
|---------------------------------|----:|
|Avg. |66.55|
|AI2 Reasoning Challenge (25-Shot)|67.75|
|HellaSwag (10-Shot) |87.06|
|MMLU (5-Shot) |58.79|
|TruthfulQA (0-shot) |62.15|
|Winogrande (5-shot) |78.45|
|GSM8k (5-shot) |45.11|
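The Avg. row appears to be the unweighted mean of the six benchmark scores; a quick check:

```python
scores = {
    "AI2 Reasoning Challenge (25-Shot)": 67.75,
    "HellaSwag (10-Shot)": 87.06,
    "MMLU (5-Shot)": 58.79,
    "TruthfulQA (0-shot)": 62.15,
    "Winogrande (5-shot)": 78.45,
    "GSM8k (5-shot)": 45.11,
}
# Simple arithmetic mean over the six benchmarks.
average = sum(scores.values()) / len(scores)
print(f"Avg. {average:.2f}")  # prints "Avg. 66.55"
```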

|

martyn/mixtral-megamerge-dare-8x7b-v2 | martyn | 2023-12-25T22:48:59Z | 1,654 | 1 | transformers | [

"transformers",

"safetensors",

"mixtral",

"text-generation",

"dare",

"super mario merge",

"pytorch",

"merge",

"conversational",

"en",

"license:apache-2.0",

"autotrain_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-12-25T21:41:57Z | ---

license: apache-2.0

language:

- en

pipeline_tag: text-generation

inference: false

tags:

- dare

- super mario merge

- pytorch

- mixtral

- merge

---

# mixtral megamerge 8x7b v2

The following models were merged with DARE using [https://github.com/martyn/safetensors-merge-supermario](https://github.com/martyn/safetensors-merge-supermario)

## Mergelist

```

mistralai/Mixtral-8x7B-v0.1

mistralai/Mixtral-8x7B-Instruct-v0.1

cognitivecomputations/dolphin-2.6-mixtral-8x7b

Brillibitg/Instruct_Mixtral-8x7B-v0.1_Dolly15K

orangetin/OpenHermes-Mixtral-8x7B

NeverSleep/Noromaid-v0.1-mixtral-8x7b-v3

```

## Merge command

```

python3 hf_merge.py to_merge_mixtral2.txt mixtral-2 -p 0.15 -lambda 1.95

```

### Notes

* MoE gates were filtered for compatibility, then averaged with `(tensor1 + tensor2)/2`

* The merge seems to generalize well across prompting formats and sampling settings
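DARE (Drop And REscale) sparsifies each fine-tuned model's delta from the base by randomly dropping entries and rescaling the survivors so the expected delta is unchanged. The sketch below shows that idea together with the plain gate averaging from the notes above. How `hf_merge.py` maps `-p` and `-lambda` onto this, and how it combines several models, is not documented here: reading `p` as the drop rate, averaging the deltas across models, and using `lam` as a global scale are all assumptions, and `dare_merge` is a hypothetical helper.

```python
import random


def dare_delta(base: list[float], finetuned: list[float], p: float,
               rng: random.Random) -> list[float]:
    """Drop each delta entry with probability p; rescale survivors by 1 / (1 - p)."""
    out = []
    for b, f in zip(base, finetuned):
        delta = f - b
        out.append(0.0 if rng.random() < p else delta / (1.0 - p))
    return out


def dare_merge(base, models, p, lam, seed=0):
    rng = random.Random(seed)
    deltas = [dare_delta(base, m, p, rng) for m in models]
    # Hypothetical combination step: mean of the sparsified deltas, scaled by lam.
    return [b + lam * sum(col) / len(col) for b, col in zip(base, zip(*deltas))]


def average_gates(tensor1: list[float], tensor2: list[float]) -> list[float]:
    """MoE gate weights: element-wise (tensor1 + tensor2) / 2, as in the notes."""
    return [(a + b) / 2 for a, b in zip(tensor1, tensor2)]
```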

|

LWDCLS/KobbleTinyV2-1.1B-GGUF-IQ-Imatrix-Request | LWDCLS | 2024-06-24T17:54:59Z | 1,654 | 2 | null | [

"gguf",

"license:unlicense",

"region:us"

] | null | 2024-06-24T17:46:04Z | ---

inference: false

license: unlicense

---

[[Request #54]](https://huggingface.co/Lewdiculous/Model-Requests/discussions/54) - Click the link for more context. <br>

[concedo/KobbleTinyV2-1.1B](https://huggingface.co/concedo/KobbleTinyV2-1.1B) <br>

Use with the [**latest version of KoboldCpp**](https://github.com/LostRuins/koboldcpp/releases/latest), or [this more up-to-date fork](https://github.com/Nexesenex/kobold.cpp) if you have issues.

<details>

<summary>⇲ Click here to expand/hide information – General chart with relative quant performance.</summary>

> [!NOTE]
> **Recommended read:** <br>
>
> [**"Which GGUF is right for me? (Opinionated)" by Artefact2**](https://gist.github.com/Artefact2/b5f810600771265fc1e39442288e8ec9)
>
> *Click the image to view full size.*
>

</details>

|

valurank/MiniLM-L6-Keyword-Extraction | valurank | 2022-06-08T20:17:38Z | 1,653 | 10 | sentence-transformers | [

"sentence-transformers",

"pytorch",

"bert",

"feature-extraction",

"sentence-similarity",

"en",

"arxiv:1904.06472",

"arxiv:2102.07033",

"arxiv:2104.08727",

"arxiv:1704.05179",

"arxiv:1810.09305",

"license:other",

"autotrain_compatible",

"endpoints_compatible",

"text-embeddings-inference",

"region:us"

] | sentence-similarity | 2022-05-20T16:37:59Z | ---

pipeline_tag: sentence-similarity

tags:

- sentence-transformers

- feature-extraction

- sentence-similarity

language: en

license: other

---

# all-MiniLM-L6-v2

This is a [sentence-transformers](https://www.SBERT.net) model: it maps sentences and paragraphs to a 384-dimensional dense vector space and can be used for tasks like clustering or semantic search.

## Usage (Sentence-Transformers)

Using this model becomes easy when you have [sentence-transformers](https://www.SBERT.net) installed:

```

pip install -U sentence-transformers

```

Then you can use the model like this:

```python

from sentence_transformers import SentenceTransformer

sentences = ["This is an example sentence", "Each sentence is converted"]

model = SentenceTransformer('sentence-transformers/all-MiniLM-L6-v2')

embeddings = model.encode(sentences)

print(embeddings)

```

## Usage (HuggingFace Transformers)

Without [sentence-transformers](https://www.SBERT.net), you can use the model like this: first, pass your input through the transformer model, then apply the right pooling operation on top of the contextualized word embeddings.

```python
from transformers import AutoTokenizer, AutoModel
import torch
import torch.nn.functional as F


# Mean pooling: take the attention mask into account for correct averaging
def mean_pooling(model_output, attention_mask):
    token_embeddings = model_output[0]  # First element of model_output contains all token embeddings
    input_mask_expanded = attention_mask.unsqueeze(-1).expand(token_embeddings.size()).float()
    return torch.sum(token_embeddings * input_mask_expanded, 1) / torch.clamp(input_mask_expanded.sum(1), min=1e-9)


# Sentences we want sentence embeddings for
sentences = ['This is an example sentence', 'Each sentence is converted']

# Load model from HuggingFace Hub
tokenizer = AutoTokenizer.from_pretrained('sentence-transformers/all-MiniLM-L6-v2')
model = AutoModel.from_pretrained('sentence-transformers/all-MiniLM-L6-v2')

# Tokenize sentences
encoded_input = tokenizer(sentences, padding=True, truncation=True, return_tensors='pt')

# Compute token embeddings
with torch.no_grad():
    model_output = model(**encoded_input)

# Perform pooling
sentence_embeddings = mean_pooling(model_output, encoded_input['attention_mask'])

# Normalize embeddings
sentence_embeddings = F.normalize(sentence_embeddings, p=2, dim=1)

print("Sentence embeddings:")
print(sentence_embeddings)
```

## Evaluation Results

For an automated evaluation of this model, see the *Sentence Embeddings Benchmark*: [https://seb.sbert.net](https://seb.sbert.net?model_name=sentence-transformers/all-MiniLM-L6-v2)

------

## Background

The project aims to train sentence embedding models on very large sentence-level datasets using a self-supervised contrastive learning objective. We used the pretrained [`nreimers/MiniLM-L6-H384-uncased`](https://huggingface.co/nreimers/MiniLM-L6-H384-uncased) model and fine-tuned it on a dataset of 1B sentence pairs. We use a contrastive learning objective: given a sentence from the pair, the model should predict which one, out of a set of randomly sampled other sentences, was actually paired with it in our dataset.

We developed this model during the [Community week using JAX/Flax for NLP & CV](https://discuss.huggingface.co/t/open-to-the-community-community-week-using-jax-flax-for-nlp-cv/7104), organized by Hugging Face, as part of the project [Train the Best Sentence Embedding Model Ever with 1B Training Pairs](https://discuss.huggingface.co/t/train-the-best-sentence-embedding-model-ever-with-1b-training-pairs/7354). We benefited from efficient hardware infrastructure to run the project: 7 TPUs v3-8, as well as intervention from Google's Flax, JAX, and Cloud team members about efficient deep learning frameworks.

## Intended uses

Our model is intended to be used as a sentence and short paragraph encoder. Given an input text, it outputs a vector which captures the semantic information. The sentence vector may be used for information retrieval, clustering or sentence similarity tasks.

By default, input text longer than 256 word pieces is truncated.
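For semantic search, sentence vectors are typically ranked by cosine similarity against a query vector. The sketch below shows that ranking step on plain lists; in practice the vectors would come from `model.encode(...)` (384 dimensions), and the tiny 3-dimensional vectors and helper names here are purely illustrative.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def rank(query_vec: list[float], corpus_vecs: list[list[float]]) -> list[int]:
    """Corpus indices sorted from most to least similar to the query."""
    scores = [cosine(query_vec, c) for c in corpus_vecs]
    return sorted(range(len(corpus_vecs)), key=lambda i: scores[i], reverse=True)
```

If the embeddings are already L2-normalized (as in the Transformers example above), the plain dot product gives the same ranking.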

## Training procedure

### Pre-training

We use the pretrained [`nreimers/MiniLM-L6-H384-uncased`](https://huggingface.co/nreimers/MiniLM-L6-H384-uncased) model. Please refer to the model card for more detailed information about the pre-training procedure.

### Fine-tuning

We fine-tune the model using a contrastive objective. Formally, we compute the cosine similarity for each possible sentence pair in the batch. We then apply the cross-entropy loss by comparing with the true pairs.
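A minimal sketch of this in-batch objective on plain lists: each anchor is scored against every positive in the batch, and cross-entropy pushes the true partner (same index) above the others. The similarity scale of 20 is an assumption (it is the default of sentence-transformers' `MultipleNegativesRankingLoss`), not a value stated in this card, and only the anchor-to-positive direction is shown.

```python
import math


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def in_batch_contrastive_loss(anchors, positives, scale: float = 20.0) -> float:
    """Mean cross-entropy where positives[i] is the true partner of anchors[i]
    and every other positives[j] in the batch acts as a negative."""
    total = 0.0
    for i, anchor in enumerate(anchors):
        # One row of the (batch x batch) similarity matrix, scaled.
        logits = [scale * cosine(anchor, p) for p in positives]
        # Cross-entropy for the correct column i: logsumexp(logits) - logits[i].
        m = max(logits)
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
        total += log_sum_exp - logits[i]
    return total / len(anchors)
```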

#### Hyper parameters

We trained our model on a TPU v3-8. We trained the model for 100k steps using a batch size of 1024 (128 per TPU core). We use a learning rate warm-up of 500. The sequence length was limited to 128 tokens. We used the AdamW optimizer with a 2e-5 learning rate. The full training script is accessible in this current repository: `train_script.py`.
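The numbers above fit together as follows: 128 examples per core across the 8 cores of a TPU v3-8 gives the global batch of 1024. The schedule below is a sketch that reads "warm-up of 500" as 500 optimizer steps of linear warm-up to 2e-5, followed by linear decay to zero over the 100k steps; both the warm-up unit and the decay shape are assumptions, so check `train_script.py` for the actual scheduler.

```python
PER_CORE_BATCH = 128
TPU_CORES = 8  # a TPU v3-8 exposes 8 cores
GLOBAL_BATCH = PER_CORE_BATCH * TPU_CORES  # 1024, as stated above


def learning_rate(step: int, peak_lr: float = 2e-5, warmup_steps: int = 500,
                  total_steps: int = 100_000) -> float:
    """Linear warm-up to peak_lr, then (assumed) linear decay to zero."""
    if step < warmup_steps:
        return peak_lr * step / warmup_steps
    remaining = max(0, total_steps - step)
    return peak_lr * remaining / (total_steps - warmup_steps)
```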

#### Training data

We use the concatenation of multiple datasets to fine-tune our model. The total number of sentence pairs is above 1 billion.

We sampled each dataset with a weighted probability; the configuration is detailed in the `data_config.json` file.
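As a rough illustration of weighted dataset sampling, the sketch below draws dataset names with probability proportional to a weight. The three names and their weights are hypothetical placeholders: the real values live in `data_config.json`, whose format is not reproduced here.

```python
import random
from collections import Counter

# Hypothetical weights for three of the datasets listed below (not the real config).
DATASET_WEIGHTS = {"reddit_2015_2018": 0.5, "s2orc_citation_abstracts": 0.3, "paq": 0.2}


def sample_dataset_names(weights: dict[str, float], n: int, seed: int = 0) -> list[str]:
    """Draw n dataset names, each chosen with probability proportional to its weight."""
    rng = random.Random(seed)
    names = list(weights)
    return rng.choices(names, weights=[weights[name] for name in names], k=n)


counts = Counter(sample_dataset_names(DATASET_WEIGHTS, 10_000))
```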

| Dataset | Paper | Number of training tuples |
|--------------------------------------------------------|:----------------------------------------:|:--------------------------:|
| [Reddit comments (2015-2018)](https://github.com/PolyAI-LDN/conversational-datasets/tree/master/reddit) | [paper](https://arxiv.org/abs/1904.06472) | 726,484,430 |
| [S2ORC](https://github.com/allenai/s2orc) Citation pairs (Abstracts) | [paper](https://aclanthology.org/2020.acl-main.447/) | 116,288,806 |
| [WikiAnswers](https://github.com/afader/oqa#wikianswers-corpus) Duplicate question pairs | [paper](https://doi.org/10.1145/2623330.2623677) | 77,427,422 |
| [PAQ](https://github.com/facebookresearch/PAQ) (Question, Answer) pairs | [paper](https://arxiv.org/abs/2102.07033) | 64,371,441 |
| [S2ORC](https://github.com/allenai/s2orc) Citation pairs (Titles) | [paper](https://aclanthology.org/2020.acl-main.447/) | 52,603,982 |
| [S2ORC](https://github.com/allenai/s2orc) (Title, Abstract) | [paper](https://aclanthology.org/2020.acl-main.447/) | 41,769,185 |
| [Stack Exchange](https://huggingface.co/datasets/flax-sentence-embeddings/stackexchange_xml) (Title, Body) pairs | - | 25,316,456 |
| [Stack Exchange](https://huggingface.co/datasets/flax-sentence-embeddings/stackexchange_xml) (Title+Body, Answer) pairs | - | 21,396,559 |
| [Stack Exchange](https://huggingface.co/datasets/flax-sentence-embeddings/stackexchange_xml) (Title, Answer) pairs | - | 21,396,559 |
| [MS MARCO](https://microsoft.github.io/msmarco/) triplets | [paper](https://doi.org/10.1145/3404835.3462804) | 9,144,553 |
| [GOOAQ: Open Question Answering with Diverse Answer Types](https://github.com/allenai/gooaq) | [paper](https://arxiv.org/pdf/2104.08727.pdf) | 3,012,496 |
| [Yahoo Answers](https://www.kaggle.com/soumikrakshit/yahoo-answers-dataset) (Title, Answer) | [paper](https://proceedings.neurips.cc/paper/2015/hash/250cf8b51c773f3f8dc8b4be867a9a02-Abstract.html) | 1,198,260 |
| [Code Search](https://huggingface.co/datasets/code_search_net) | - | 1,151,414 |
| [COCO](https://cocodataset.org/#home) Image captions | [paper](https://link.springer.com/chapter/10.1007%2F978-3-319-10602-1_48) | 828,395 |
| [SPECTER](https://github.com/allenai/specter) citation triplets | [paper](https://doi.org/10.18653/v1/2020.acl-main.207) | 684,100 |
| [Yahoo Answers](https://www.kaggle.com/soumikrakshit/yahoo-answers-dataset) (Question, Answer) | [paper](https://proceedings.neurips.cc/paper/2015/hash/250cf8b51c773f3f8dc8b4be867a9a02-Abstract.html) | 681,164 |
| [Yahoo Answers](https://www.kaggle.com/soumikrakshit/yahoo-answers-dataset) (Title, Question) | [paper](https://proceedings.neurips.cc/paper/2015/hash/250cf8b51c773f3f8dc8b4be867a9a02-Abstract.html) | 659,896 |
| [SearchQA](https://huggingface.co/datasets/search_qa) | [paper](https://arxiv.org/abs/1704.05179) | 582,261 |
| [Eli5](https://huggingface.co/datasets/eli5) | [paper](https://doi.org/10.18653/v1/p19-1346) | 325,475 |
| [Flickr 30k](https://shannon.cs.illinois.edu/DenotationGraph/) | [paper](https://transacl.org/ojs/index.php/tacl/article/view/229/33) | 317,695 |
| [Stack Exchange](https://huggingface.co/datasets/flax-sentence-embeddings/stackexchange_xml) Duplicate questions (titles) | | 304,525 |
| AllNLI ([SNLI](https://nlp.stanford.edu/projects/snli/) and [MultiNLI](https://cims.nyu.edu/~sbowman/multinli/)) | [paper SNLI](https://doi.org/10.18653/v1/d15-1075), [paper MultiNLI](https://doi.org/10.18653/v1/n18-1101) | 277,230 |
| [Stack Exchange](https://huggingface.co/datasets/flax-sentence-embeddings/stackexchange_xml) Duplicate questions (bodies) | | 250,519 |
| [Stack Exchange](https://huggingface.co/datasets/flax-sentence-embeddings/stackexchange_xml) Duplicate questions (titles+bodies) | | 250,460 |
| [Sentence Compression](https://github.com/google-research-datasets/sentence-compression) | [paper](https://www.aclweb.org/anthology/D13-1155/) | 180,000 |
| [Wikihow](https://github.com/pvl/wikihow_pairs_dataset) | [paper](https://arxiv.org/abs/1810.09305) | 128,542 |
| [Altlex](https://github.com/chridey/altlex/) | [paper](https://aclanthology.org/P16-1135.pdf) | 112,696 |
| [Quora Question Triplets](https://quoradata.quora.com/First-Quora-Dataset-Release-Question-Pairs) | - | 103,663 |
| [Simple Wikipedia](https://cs.pomona.edu/~dkauchak/simplification/) | [paper](https://www.aclweb.org/anthology/P11-2117/) | 102,225 |
| [Natural Questions (NQ)](https://ai.google.com/research/NaturalQuestions) | [paper](https://transacl.org/ojs/index.php/tacl/article/view/1455) | 100,231 |
| [SQuAD2.0](https://rajpurkar.github.io/SQuAD-explorer/) | [paper](https://aclanthology.org/P18-2124.pdf) | 87,599 |
| [TriviaQA](https://huggingface.co/datasets/trivia_qa) | - | 73,346 |
| **Total** | | **1,170,060,424** | |

concedo/Pythia-70M-ChatSalad | concedo | 2023-04-07T14:46:25Z | 1,653 | 6 | transformers | [

"transformers",

"pytorch",

"safetensors",

"gpt_neox",

"text-generation",

"en",

"license:other",

"autotrain_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-02-01T08:17:14Z | ---

license: other

language:

- en

inference: false

widget:

- text: "How do I download this model?"

example_title: "Text Gen Example"

---

# Pythia-70M-ChatSalad

This is a follow-up fine-tune of Pythia-70M, trained on the same dataset as OPT-19M-ChatSalad. It is much more coherent.

All feedback and comments can be directed to Concedo on the KoboldAI discord. |

digitous/Janin-R | digitous | 2023-02-21T00:49:26Z | 1,653 | 1 | transformers | [

"transformers",

"pytorch",

"gptj",

"text-generation",

"en",

"license:creativeml-openrail-m",

"autotrain_compatible",

"endpoints_compatible",

"region:us"

] | text-generation | 2023-02-20T04:22:02Z | ---

license: creativeml-openrail-m

language:

- en

---

This is a (25/25)/50 merge of Mr. Seeker's GPT-J Janeway and Shinen models and GPT-R.

If you are interested, please visit digitous/GPT-R and KoboldAI to understand what these models are composed of. This model is not intended for minors.
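A (25/25)/50 merge can be read as a weighted average with weights 0.25 (Janeway), 0.25 (Shinen) and 0.5 (GPT-R); averaging Janeway and Shinen 50/50 first and then averaging that result 50/50 with GPT-R gives the same weights. The sketch below shows this on flattened, same-shaped tensors. It is not Concedo's actual script, and the mapping of the ratio onto the three models is our reading of the notation.

```python
def weighted_merge(state_dicts: list[dict[str, list[float]]],
                   weights: list[float]) -> dict[str, list[float]]:
    """Element-wise weighted average of matching (flattened) parameter tensors."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("merge weights should sum to 1")
    merged = {}
    for name, first in state_dicts[0].items():
        merged[name] = [
            sum(w * sd[name][i] for w, sd in zip(weights, state_dicts))
            for i in range(len(first))
        ]
    return merged


# (25/25)/50: Janeway 0.25, Shinen 0.25, GPT-R 0.5 (assumed reading of the ratio)
MERGE_WEIGHTS = [0.25, 0.25, 0.5]
```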

Mr. Seeker, maker of incredible fine-tuned models:

https://huggingface.co/KoboldAI/GPT-J-6B-Janeway

https://huggingface.co/KoboldAI/GPT-J-6B-Shinen

Donate to help ameliorate server fees:

https://www.patreon.com/mrseeker

GPT-Ronin:

https://huggingface.co/digitous/GPT-R

Weight-merge script credit to Concedo:

https://huggingface.co/concedo |

dvruette/oasst-gpt-neox-20b-1000-steps | dvruette | 2023-03-27T15:41:30Z | 1,653 | 0 | transformers | [

"transformers",

"pytorch",

"gpt_neox",

"text-generation",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-03-25T13:53:05Z | https://wandb.ai/open-assistant/supervised-finetuning/runs/x2pczqa9 |

OpenAssistant/pythia-12b-sft-v8-rlhf-2k-steps | OpenAssistant | 2023-08-17T22:00:39Z | 1,653 | 0 | transformers | [

"transformers",

"pytorch",

"gpt_neox",

"text-generation",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-05-10T21:11:23Z | ---

license: apache-2.0

---

# pythia-12b-sft-v8-rlhf-2k-steps

- sampling report: [2023-05-15_OpenAssistant_pythia-12b-sft-v8-rlhf-2k-steps_sampling_noprefix2.json](https://open-assistant.github.io/oasst-model-eval/?f=https%3A%2F%2Fraw.githubusercontent.com%2FOpen-Assistant%2Foasst-model-eval%2Fmain%2Fsampling_reports%2Foasst-rl%2F2023-05-15_OpenAssistant_pythia-12b-sft-v8-rlhf-2k-steps_sampling_noprefix2.json) |

golaxy/gogpt-3b-bloom | golaxy | 2023-07-22T13:23:22Z | 1,653 | 5 | transformers | [

"transformers",

"pytorch",

"tensorboard",

"bloom",

"text-generation",

"zh",

"dataset:BelleGroup/train_2M_CN",

"dataset:BelleGroup/train_3.5M_CN",

"dataset:BelleGroup/train_1M_CN",

"dataset:BelleGroup/train_0.5M_CN",

"dataset:BelleGroup/school_math_0.25M",

"license:apache-2.0",

"autotrain_compatible",

"endpoints_compatible",

"text-generation-inference",

"region:us"

] | text-generation | 2023-05-26T02:38:54Z | ---

license: apache-2.0

datasets:

- BelleGroup/train_2M_CN

- BelleGroup/train_3.5M_CN

- BelleGroup/train_1M_CN

- BelleGroup/train_0.5M_CN

- BelleGroup/school_math_0.25M

language:

- zh

---

## GoGPT

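A Chinese BLOOM base model fine-tuned on diverse Chinese instruction data.

> One epoch of training is enough; the second and third epochs brought little further improvement.

- 🚀 Diverse instruction data

- 🚀 Filtered, high-quality Chinese data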

| Model name | Parameters | Model link |
|------------|--------|------|
| gogpt-560m | 560M | 🤗[golaxy/gogpt-560m](https://huggingface.co/golaxy/gogpt-560m) |
| gogpt-3b | 3B | 🤗[golaxy/gogpt-3b-bloom](https://huggingface.co/golaxy/gogpt-3b-bloom) |
| gogpt-7b | 7B | 🤗[golaxy/gogpt-7b-bloom](https://huggingface.co/golaxy/gogpt-7b-bloom) |

## Test results

## TODO

- Perform RLHF training

- Add Chinese-English parallel corpora in a later version

## Acknowledgements

- [@hz-zero_nlp](https://github.com/yuanzhoulvpi2017/zero_nlp)

- [stanford_alpaca](https://github.com/tatsu-lab/stanford_alpaca)

- [Belle data](https://huggingface.co/BelleGroup)

## Citation

If you use GoGPT in your research, please cite it as follows:

```

@misc{GoGPT,

title={GoGPT: Training Medical GPT Model},

author={Qiang Yan},

year={2023},

howpublished={\url{https://github.com/yanqiangmiffy/GoGPT}},

}

``` |

TheBloke/WizardLM-13B-V1-1-SuperHOT-8K-GPTQ | TheBloke | 2023-08-21T14:13:05Z | 1,653 | 46 | transformers | [

"transformers",

"safetensors",

"llama",

"text-generation",

"custom_code",

"arxiv:2304.12244",

"license:other",

"autotrain_compatible",

"text-generation-inference",

"4-bit",

"gptq",

"region:us"

] | text-generation | 2023-07-07T17:12:09Z | ---

inference: false

license: other

---

<!-- header start -->

<!-- 200823 -->

<div style="width: auto; margin-left: auto; margin-right: auto">

<img src="https://i.imgur.com/EBdldam.jpg" alt="TheBlokeAI" style="width: 100%; min-width: 400px; display: block; margin: auto;">

</div>

<div style="display: flex; justify-content: space-between; width: 100%;">

<div style="display: flex; flex-direction: column; align-items: flex-start;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://discord.gg/theblokeai">Chat & support: TheBloke's Discord server</a></p>

</div>

<div style="display: flex; flex-direction: column; align-items: flex-end;">

<p style="margin-top: 0.5em; margin-bottom: 0em;"><a href="https://www.patreon.com/TheBlokeAI">Want to contribute? TheBloke's Patreon page</a></p>

</div>

</div>

<div style="text-align:center; margin-top: 0em; margin-bottom: 0em"><p style="margin-top: 0.25em; margin-bottom: 0em;">TheBloke's LLM work is generously supported by a grant from <a href="https://a16z.com">andreessen horowitz (a16z)</a></p></div>

<hr style="margin-top: 1.0em; margin-bottom: 1.0em;">

<!-- header end -->

# WizardLM's WizardLM 13B V1.1 GPTQ

These files are GPTQ 4-bit model files for [WizardLM's WizardLM 13B V1.1](https://huggingface.co/WizardLM/WizardLM-13B-V1.1) merged with [Kaio Ken's SuperHOT 8K](https://huggingface.co/kaiokendev/superhot-13b-8k-no-rlhf-test).

They are the result of quantising to 4-bit using [GPTQ-for-LLaMa](https://github.com/qwopqwop200/GPTQ-for-LLaMa).

**This is an experimental new GPTQ which offers up to 8K context size**

The increased context has been tested to work with [ExLlama](https://github.com/turboderp/exllama), via the latest release of [text-generation-webui](https://github.com/oobabooga/text-generation-webui).

It has also been tested from Python code using AutoGPTQ with `trust_remote_code=True`.

Code credits:

- Original concept and code for increasing context length: [kaiokendev](https://huggingface.co/kaiokendev)

- Updated Llama modelling code that includes this automatically via trust_remote_code: [emozilla](https://huggingface.co/emozilla).

Please read carefully below to see how to use it.

## Repositories available

* [4-bit GPTQ models for GPU inference](https://huggingface.co/TheBloke/WizardLM-13B-V1-1-SuperHOT-8K-GPTQ)

* [4, 5, and 8-bit GGML models for CPU inference](https://huggingface.co/TheBloke/WizardLM-13B-V1-1-SuperHOT-8K-GGML)

* [Unquantised SuperHOT fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/TheBloke/WizardLM-13B-V1-1-SuperHOT-8K-fp16)

* [Unquantised base fp16 model in pytorch format, for GPU inference and for further conversions](https://huggingface.co/WizardLM/WizardLM-13B-V1.1)

## Prompt template: Vicuna

```

A chat between a curious user and an artificial intelligence assistant. The assistant gives helpful, detailed, and polite answers to the user's questions.

USER: prompt

ASSISTANT:

```
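If you build prompts programmatically, a small helper keeps them consistent with the template above. This is a minimal sketch: the function name `build_vicuna_prompt` is hypothetical, and the exact whitespace (a single newline between the system line, the `USER:` turn and `ASSISTANT:`) is an assumption rather than something the template pins down.

```python
# Hypothetical helper; the newline layout is an assumption about the Vicuna template.
SYSTEM_MESSAGE = (
    "A chat between a curious user and an artificial intelligence assistant. "
    "The assistant gives helpful, detailed, and polite answers to the user's questions."
)


def build_vicuna_prompt(user_message: str, system_message: str = SYSTEM_MESSAGE) -> str:
    """Fill the Vicuna-style template; the model continues after 'ASSISTANT:'."""
    return f"{system_message}\nUSER: {user_message}\nASSISTANT:"


print(build_vicuna_prompt("Tell me about AI"))
```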

## How to easily download and use this model in text-generation-webui with ExLlama

Please make sure you're using the latest version of text-generation-webui.

1. Click the **Model tab**.

2. Under **Download custom model or LoRA**, enter `TheBloke/WizardLM-13B-V1-1-SuperHOT-8K-GPTQ`.

3. Click **Download**.

4. The model will start downloading. Once it's finished it will say "Done".

5. Untick **Autoload the model**.

6. In the top left, click the refresh icon next to **Model**.

7. In the **Model** dropdown, choose the model you just downloaded: `WizardLM-13B-V1-1-SuperHOT-8K-GPTQ`.

8. To use the increased context, set the **Loader** to **ExLlama**, set **max_seq_len** to 8192 or 4096, and set **compress_pos_emb** to **4** for 8192 context, or to **2** for 4096 context.

9. Now click **Save Settings** followed by **Reload**.

10. The model will automatically load, and is now ready for use!

11. Once you're ready, click the **Text Generation tab** and enter a prompt to get started!
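The **compress_pos_emb** values in step 8 are the ratio of the target context length to the context the base model was trained on. The sketch below shows that arithmetic. It assumes a base context of 2048 tokens (the original LLaMA training length), and the function names are hypothetical, not ExLlama's API.

```python
BASE_CONTEXT = 2048  # assumption: training context of the original LLaMA 13B base model


def compress_pos_emb(max_seq_len, base_context=BASE_CONTEXT):
    """Factor by which positions are compressed so max_seq_len fits into base_context."""
    return max_seq_len / base_context


def interpolated_positions(max_seq_len, factor):
    """Linear interpolation: token i is treated as sitting at position i / factor."""
    return [i / factor for i in range(max_seq_len)]


for ctx in (4096, 8192):
    print(ctx, compress_pos_emb(ctx))
# 4096 2.0
# 8192 4.0
```

With a factor of 4, the last of 8192 tokens lands at position 2047.75, inside the range the base model saw during training.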

## How to use this GPTQ model from Python code with AutoGPTQ

First make sure you have AutoGPTQ and Einops installed:

```

pip3 install einops auto-gptq

```

Then run the following code. Note that in order to get this to work, `config.json` has been hardcoded to a sequence length of 8192.

If you want to try 4096 instead to reduce VRAM usage, please manually edit `config.json` to set `max_position_embeddings` to the value you want.
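If you would rather script that edit than change the file by hand, here is a minimal standard-library sketch. It assumes you have a local copy of the repository. `set_max_position_embeddings` is a hypothetical helper name, and the path in the usage comment is a placeholder. You would presumably also set `model.seqlen` to the same value, though we have not verified that this alone is sufficient.

```python
import json
from pathlib import Path


def set_max_position_embeddings(config_path, value: int) -> dict:
    """Rewrite max_position_embeddings in a local config.json and return the new config."""
    path = Path(config_path)
    config = json.loads(path.read_text(encoding="utf-8"))
    config["max_position_embeddings"] = value  # e.g. 4096 instead of the default 8192
    path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return config


# Usage (placeholder path):
# set_max_position_embeddings("path/to/WizardLM-13B-V1-1-SuperHOT-8K-GPTQ/config.json", 4096)
```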

```python
from transformers import AutoTokenizer, pipeline, logging
from auto_gptq import AutoGPTQForCausalLM

model_name_or_path = "TheBloke/WizardLM-13B-V1-1-SuperHOT-8K-GPTQ"
model_basename = "wizardlm-13b-v1.1-superhot-8k-GPTQ-4bit-128g.no-act.order"

use_triton = False

tokenizer = AutoTokenizer.from_pretrained(model_name_or_path, use_fast=True)

model = AutoGPTQForCausalLM.from_quantized(model_name_or_path,
        model_basename=model_basename,
        use_safetensors=True,
        trust_remote_code=True,  # loads the Llama modelling code with increased context
        device_map='auto',
        use_triton=use_triton,
        quantize_config=None)  # None: read quantize_config.json from the repo

# Match the sequence length hardcoded in config.json
model.seqlen = 8192

# Vicuna prompt template, as given in "Prompt template: Vicuna" above
prompt = "Tell me about AI"
system_message = ("A chat between a curious user and an artificial intelligence assistant. "
                  "The assistant gives helpful, detailed, and polite answers to the user's questions.")
prompt_template = f'''{system_message}
USER: {prompt}
ASSISTANT:'''

print("\n\n*** Generate:")

input_ids = tokenizer(prompt_template, return_tensors='pt').input_ids.cuda()
# do_sample=True is required for temperature to have any effect
output = model.generate(inputs=input_ids, do_sample=True, temperature=0.7, max_new_tokens=512)
print(tokenizer.decode(output[0]))

# Inference can also be done using transformers' pipeline

# Prevent printing spurious transformers error when using pipeline with AutoGPTQ
logging.set_verbosity(logging.CRITICAL)

print("*** Pipeline:")
pipe = pipeline(
    "text-generation",
    model=model,
    tokenizer=tokenizer,
    max_new_tokens=512,
    do_sample=True,
    temperature=0.7,
    top_p=0.95,
    repetition_penalty=1.15
)

print(pipe(prompt_template)[0]['generated_text'])
```
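The `temperature` and `top_p` arguments above reshape the next-token distribution before a token is drawn. The following standalone snippet is a rough illustration of that step. It is a simplified nucleus-sampling sketch, not the transformers implementation, it ignores `repetition_penalty`, and the function names are hypothetical.

```python
import math
import random


def nucleus_filter(logits, temperature=0.7, top_p=0.95):
    """Return {token_index: probability} for the tokens kept by top-p sampling."""
    scaled = [x / temperature for x in logits]  # lower temperature sharpens the distribution
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]    # numerically stable softmax
    total = sum(exps)
    probs = [e / total for e in exps]
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:                             # smallest prefix whose mass reaches top_p
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    mass = sum(probs[i] for i in kept)
    return {i: probs[i] / mass for i in kept}   # renormalise over the nucleus


def sample_token(filtered, rng=random):
    """Draw one token index from the filtered distribution."""
    tokens = list(filtered)
    return rng.choices(tokens, weights=[filtered[t] for t in tokens], k=1)[0]


filtered = nucleus_filter([2.0, 1.0, 0.1, -1.0])
print(filtered, sample_token(filtered, random.Random(0)))
```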

## Using other UIs: monkey patch

Provided in the repo is `llama_rope_scaled_monkey_patch.py`, written by @kaiokendev.

It can theoretically be added to any Python UI or custom code to achieve the same result as `trust_remote_code=True`. I have not tested this, and `trust_remote_code=True` should be preferred, but I include it for completeness and for interest.
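As we understand it, the idea behind both the patch and the `trust_remote_code` modelling code is linear position interpolation for LLaMA's rotary position embeddings: positions are divided by a scale factor before the rotation angles are computed. The pure-Python sketch below illustrates that idea only; it is not the patch's code. `dim=128` (the LLaMA-13B head size) and `base=10000` are assumed LLaMA defaults, and `rope_angles` is a hypothetical name.

```python
def rope_angles(position, dim=128, base=10000.0, scale=1.0):
    """Rotary angles for one position; scale > 1 compresses positions (interpolation)."""
    pos = position / scale
    # One frequency per pair of channels: base^(-2i/dim) for i in [0, dim/2)
    return [pos * base ** (-2 * i / dim) for i in range(dim // 2)]


# With scale=4, position 8188 is rotated exactly like position 2047 of the base model.
print(rope_angles(8188, scale=4.0)[:2], rope_angles(2047)[:2])
```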

## Provided files

**wizardlm-13b-v1.1-superhot-8k-GPTQ-4bit-128g.no-act.order.safetensors**

This will work with AutoGPTQ, ExLlama, and CUDA versions of GPTQ-for-LLaMa. There are reports of issues with Triton mode of recent GPTQ-for-LLaMa. If you have issues, please use AutoGPTQ instead.

It was created with group_size 128 to increase inference accuracy, but without --act-order (desc_act) to increase compatibility and improve inference speed.
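To illustrate what group size 128 means: each consecutive group of 128 weights gets its own scale and offset, so an outlier only reduces precision within its own group. The sketch uses plain round-to-nearest 4-bit quantisation with a float offset. It is a simplification for illustration, not GPTQ itself, which additionally uses second-order (Hessian) information to compensate for rounding error. The function names are hypothetical.

```python
def quantize_groupwise(weights, group_size=128, bits=4):
    """Quantise a flat list of floats group by group (round-to-nearest, not GPTQ)."""
    qmax = 2 ** bits - 1  # 15 for 4-bit codes
    groups = []
    for start in range(0, len(weights), group_size):
        group = weights[start:start + group_size]
        lo, hi = min(group), max(group)
        # One scale and offset per group; a constant group gets an arbitrary scale.
        scale = (hi - lo) / qmax if hi > lo else 1.0
        codes = [round((w - lo) / scale) for w in group]
        groups.append((codes, scale, lo))
    return groups


def dequantize_groupwise(groups):
    """Reconstruct approximate floats from (codes, scale, offset) groups."""
    return [code * scale + lo for codes, scale, lo in groups for code in codes]
```

Smaller groups mean more scales to store but a tighter fit per group, which is the accuracy trade-off the group size controls.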

* `wizardlm-13b-v1.1-superhot-8k-GPTQ-4bit-128g.no-act.order.safetensors`
  * Works with ExLlama with increased context (4096 or 8192).
  * Works with AutoGPTQ in Python code, including with increased context, if `trust_remote_code=True` is set.
  * Should work with GPTQ-for-LLaMa in CUDA mode, but it is unknown whether increased context works (TBC). May have issues with GPTQ-for-LLaMa Triton mode.
  * Works with text-generation-webui, including one-click-installers.
  * Parameters: Groupsize = 128. Act Order / desc_act = False.
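The file name encodes the parameters listed above (`4bit`, `128g`, `no-act.order`). If you handle several such files, a small parser can recover them. This is a sketch that assumes the `<bits>bit-<group>g.[no-]act.order` naming pattern used here; `parse_gptq_filename` is a hypothetical helper, and its keys simply mirror the names `bits`, `group_size` and `desc_act`.

```python
import re

# Assumed naming pattern, e.g. "...-4bit-128g.no-act.order.safetensors"
PATTERN = re.compile(r"(?P<bits>\d+)bit-(?P<group>\d+)g\.(?P<act>no-act\.order|act\.order)")


def parse_gptq_filename(name):
    """Extract bits, group size and act-order from a GPTQ file name."""
    m = PATTERN.search(name)
    if m is None:
        raise ValueError(f"unrecognised GPTQ filename: {name}")
    return {
        "bits": int(m["bits"]),
        "group_size": int(m["group"]),
        "desc_act": m["act"] == "act.order",  # "no-act.order" means desc_act=False
    }


print(parse_gptq_filename("wizardlm-13b-v1.1-superhot-8k-GPTQ-4bit-128g.no-act.order.safetensors"))
```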

<!-- footer start -->

<!-- 200823 -->

## Discord

For further support, and discussions on these models and AI in general, join us at:

[TheBloke AI's Discord server](https://discord.gg/theblokeai)

## Thanks, and how to contribute.

Thanks to the [chirper.ai](https://chirper.ai) team!

I've had a lot of people ask if they can contribute. I enjoy providing models and helping people, and would love to be able to spend even more time doing it, as well as expanding into new projects like fine tuning/training.

If you're able and willing to contribute it will be most gratefully received and will help me to keep providing more models, and to start work on new AI projects.

Donors will get priority support on any and all AI/LLM/model questions and requests, access to a private Discord room, plus other benefits.

* Patreon: https://patreon.com/TheBlokeAI

* Ko-Fi: https://ko-fi.com/TheBlokeAI

**Special thanks to**: Aemon Algiz.