url (string, lengths 58-61) | repository_url (string, 1 class) | labels_url (string, lengths 72-75) | comments_url (string, lengths 67-70) | events_url (string, lengths 65-68) | html_url (string, lengths 46-51) | id (int64, 599M-1.07B) | node_id (string, lengths 18-32) | number (int64, 1-3.38k) | title (string, lengths 1-276) | user (dict) | labels (sequence) | state (string, 2 classes) | locked (bool, 1 class) | assignee (dict) | assignees (list) | milestone (null) | comments (sequence) | created_at (int64, 1,587B-1,639B) | updated_at (int64, 1,587B-1,639B) | closed_at (null or 1,587B-1,639B) | author_association (string, 3 classes) | active_lock_reason (null) | draft (bool, 2 classes) | pull_request (dict) | body (string, lengths 0-228k, nullable) | reactions (dict) | timeline_url (string, lengths 67-70) | performed_via_github_app (null) | is_pull_request (bool, 2 classes) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/3378 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3378/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3378/comments | https://api.github.com/repos/huggingface/datasets/issues/3378/events | https://github.com/huggingface/datasets/pull/3378 | 1,070,580,126 | PR_kwDODunzps4vXF1D | 3,378 | Add The Pile subsets | {

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [] | 1,638,537,294,000 | 1,638,537,294,000 | null | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3378",

"html_url": "https://github.com/huggingface/datasets/pull/3378",

"diff_url": "https://github.com/huggingface/datasets/pull/3378.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3378.patch",

"merged_at": null

} | Add The Pile subsets:

- pubmed

- ubuntu_irc

- europarl

- hacker_news

- nih_exporter

Close bigscience-workshop/data_tooling#301. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3378/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3378/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3377 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3377/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3377/comments | https://api.github.com/repos/huggingface/datasets/issues/3377/events | https://github.com/huggingface/datasets/pull/3377 | 1,070,562,907 | PR_kwDODunzps4vXCHn | 3,377 | COCO 🥥 on the 🤗 Hub? | {

"login": "merveenoyan",

"id": 53175384,

"node_id": "MDQ6VXNlcjUzMTc1Mzg0",

"avatar_url": "https://avatars.githubusercontent.com/u/53175384?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/merveenoyan",

"html_url": "https://github.com/merveenoyan",

"followers_url": "https://api.github.com/users/merveenoyan/followers",

"following_url": "https://api.github.com/users/merveenoyan/following{/other_user}",

"gists_url": "https://api.github.com/users/merveenoyan/gists{/gist_id}",

"starred_url": "https://api.github.com/users/merveenoyan/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/merveenoyan/subscriptions",

"organizations_url": "https://api.github.com/users/merveenoyan/orgs",

"repos_url": "https://api.github.com/users/merveenoyan/repos",

"events_url": "https://api.github.com/users/merveenoyan/events{/privacy}",

"received_events_url": "https://api.github.com/users/merveenoyan/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [

"@mariosasko I fixed a couple of bugs",

"TO-DO: \r\n- [x] Add unlabeled 2017 splits, train and validation splits of 2015\r\n- [ ] Add Class Labels as list instead"

] | 1,638,536,127,000 | 1,638,543,147,000 | null | CONTRIBUTOR | null | true | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3377",

"html_url": "https://github.com/huggingface/datasets/pull/3377",

"diff_url": "https://github.com/huggingface/datasets/pull/3377.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3377.patch",

"merged_at": null

} | This is a draft PR since I ran into a few small problems.

I referred to this TFDS code: https://github.com/tensorflow/datasets/blob/2538a08c184d53b37bfcf52cc21dd382572a88f4/tensorflow_datasets/object_detection/coco.py

cc: @mariosasko | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3377/reactions",

"total_count": 1,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 1,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3377/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3376 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3376/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3376/comments | https://api.github.com/repos/huggingface/datasets/issues/3376/events | https://github.com/huggingface/datasets/pull/3376 | 1,070,522,979 | PR_kwDODunzps4vW5sB | 3,376 | Update clue benchmark | {

"login": "mariosasko",

"id": 47462742,

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mariosasko",

"html_url": "https://github.com/mariosasko",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [] | 1,638,533,161,000 | 1,638,533,161,000 | null | CONTRIBUTOR | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3376",

"html_url": "https://github.com/huggingface/datasets/pull/3376",

"diff_url": "https://github.com/huggingface/datasets/pull/3376.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3376.patch",

"merged_at": null

} | Fix #3374 | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3376/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3376/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3375 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3375/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3375/comments | https://api.github.com/repos/huggingface/datasets/issues/3375/events | https://github.com/huggingface/datasets/pull/3375 | 1,070,454,913 | PR_kwDODunzps4vWrXz | 3,375 | Support streaming zipped dataset repo by passing only repo name | {

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [] | 1,638,528,185,000 | 1,638,528,185,000 | null | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3375",

"html_url": "https://github.com/huggingface/datasets/pull/3375",

"diff_url": "https://github.com/huggingface/datasets/pull/3375.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3375.patch",

"merged_at": null

} | Fix #3373. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3375/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3375/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3374 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3374/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3374/comments | https://api.github.com/repos/huggingface/datasets/issues/3374/events | https://github.com/huggingface/datasets/issues/3374 | 1,070,426,462 | I_kwDODunzps4_zWle | 3,374 | NonMatchingChecksumError for the CLUE:cluewsc2020, chid, c3 and tnews | {

"login": "Namco0816",

"id": 34687537,

"node_id": "MDQ6VXNlcjM0Njg3NTM3",

"avatar_url": "https://avatars.githubusercontent.com/u/34687537?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Namco0816",

"html_url": "https://github.com/Namco0816",

"followers_url": "https://api.github.com/users/Namco0816/followers",

"following_url": "https://api.github.com/users/Namco0816/following{/other_user}",

"gists_url": "https://api.github.com/users/Namco0816/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Namco0816/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Namco0816/subscriptions",

"organizations_url": "https://api.github.com/users/Namco0816/orgs",

"repos_url": "https://api.github.com/users/Namco0816/repos",

"events_url": "https://api.github.com/users/Namco0816/events{/privacy}",

"received_events_url": "https://api.github.com/users/Namco0816/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | {

"login": "mariosasko",

"id": 47462742,

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mariosasko",

"html_url": "https://github.com/mariosasko",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "mariosasko",

"id": 47462742,

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mariosasko",

"html_url": "https://github.com/mariosasko",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"type": "User",

"site_admin": false

}

] | null | [] | 1,638,526,254,000 | 1,638,532,337,000 | null | NONE | null | null | null | Hi, it seems like there are updates in cluewsc2020, chid, c3 and tnews, since I could not load them due to the checksum error. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3374/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3374/timeline | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3373 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3373/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3373/comments | https://api.github.com/repos/huggingface/datasets/issues/3373/events | https://github.com/huggingface/datasets/issues/3373 | 1,070,406,391 | I_kwDODunzps4_zRr3 | 3,373 | Support streaming zipped CSV dataset repo by passing only repo name | {

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892871,

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement",

"name": "enhancement",

"color": "a2eeef",

"default": true,

"description": "New feature or request"

}

] | open | false | {

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

}

] | null | [] | 1,638,524,904,000 | 1,638,526,949,000 | null | MEMBER | null | null | null | Given a community 🤗 dataset repository containing only a zipped CSV file (only raw data, no loading script), I would like to load it in streaming mode without passing `data_files`:

```

from datasets import load_dataset

ds_name = "bigscience-catalogue-data/vietnamese_poetry_from_fsoft_ai_lab"

ds = load_dataset(ds_name, split="train", streaming=True, use_auth_token=True)

item = next(iter(ds))

```

Currently, it gives a `FileNotFoundError` because there is no glob (no "\*" after "zip://": "zip://*") in the passed URL:

```

'zip://::https://huggingface.co/datasets/bigscience-catalogue-data/vietnamese_poetry_from_fsoft_ai_lab/resolve/e5d45f1bd9a8a798cc14f0a45ebc1ce91907c792/poems_dataset.zip'

```

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3373/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3373/timeline | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3372 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3372/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3372/comments | https://api.github.com/repos/huggingface/datasets/issues/3372/events | https://github.com/huggingface/datasets/issues/3372 | 1,069,948,178 | I_kwDODunzps4_xh0S | 3,372 | [SEO improvement] Add Dataset Metadata to make datasets indexable | {

"login": "cakiki",

"id": 3664563,

"node_id": "MDQ6VXNlcjM2NjQ1NjM=",

"avatar_url": "https://avatars.githubusercontent.com/u/3664563?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/cakiki",

"html_url": "https://github.com/cakiki",

"followers_url": "https://api.github.com/users/cakiki/followers",

"following_url": "https://api.github.com/users/cakiki/following{/other_user}",

"gists_url": "https://api.github.com/users/cakiki/gists{/gist_id}",

"starred_url": "https://api.github.com/users/cakiki/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/cakiki/subscriptions",

"organizations_url": "https://api.github.com/users/cakiki/orgs",

"repos_url": "https://api.github.com/users/cakiki/repos",

"events_url": "https://api.github.com/users/cakiki/events{/privacy}",

"received_events_url": "https://api.github.com/users/cakiki/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892871,

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement",

"name": "enhancement",

"color": "a2eeef",

"default": true,

"description": "New feature or request"

}

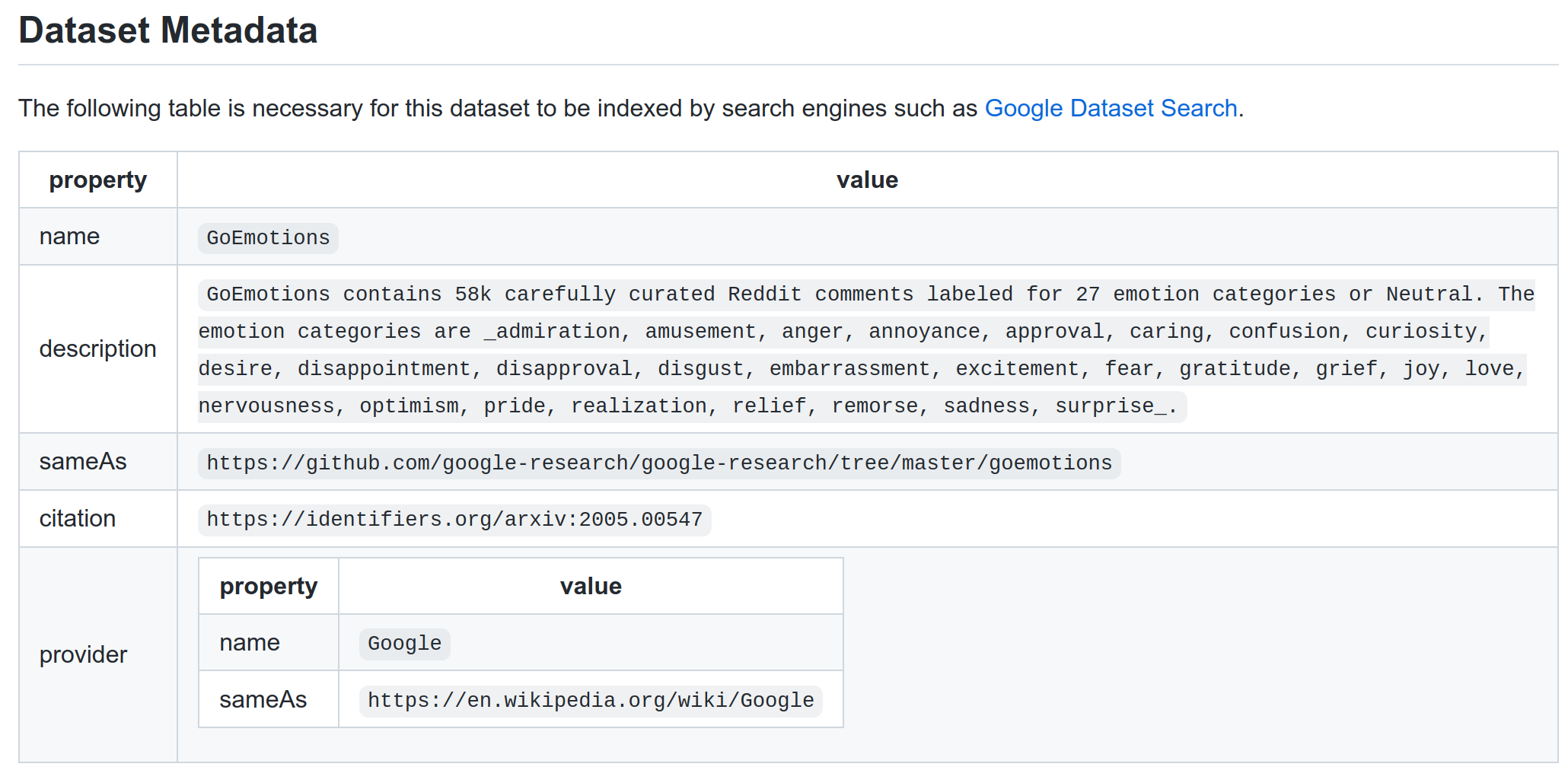

] | open | false | null | [] | null | [] | 1,638,476,467,000 | 1,638,476,467,000 | null | NONE | null | null | null | Some people who host datasets on github seem to include a table of metadata at the end of their README.md to make the dataset indexable by [Google Dataset Search](https://datasetsearch.research.google.com/) (See [here](https://github.com/google-research/google-research/tree/master/goemotions#dataset-metadata) and [here](https://github.com/cvdfoundation/google-landmark#dataset-metadata)). This could be a useful addition to canonical datasets; perhaps even community datasets.

I'll include a screenshot (as opposed to markdown) as an example so as not to have a github issue indexed as a dataset:

>

**_PS: It might very well be the case that this is already covered by some other markdown magic I'm not aware of._**

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3372/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3372/timeline | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3371 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3371/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3371/comments | https://api.github.com/repos/huggingface/datasets/issues/3371/events | https://github.com/huggingface/datasets/pull/3371 | 1,069,821,335 | PR_kwDODunzps4vUnbp | 3,371 | feat: add americas nli dataset | {

"login": "fdschmidt93",

"id": 39233597,

"node_id": "MDQ6VXNlcjM5MjMzNTk3",

"avatar_url": "https://avatars.githubusercontent.com/u/39233597?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/fdschmidt93",

"html_url": "https://github.com/fdschmidt93",

"followers_url": "https://api.github.com/users/fdschmidt93/followers",

"following_url": "https://api.github.com/users/fdschmidt93/following{/other_user}",

"gists_url": "https://api.github.com/users/fdschmidt93/gists{/gist_id}",

"starred_url": "https://api.github.com/users/fdschmidt93/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/fdschmidt93/subscriptions",

"organizations_url": "https://api.github.com/users/fdschmidt93/orgs",

"repos_url": "https://api.github.com/users/fdschmidt93/repos",

"events_url": "https://api.github.com/users/fdschmidt93/events{/privacy}",

"received_events_url": "https://api.github.com/users/fdschmidt93/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [] | 1,638,467,099,000 | 1,638,473,264,000 | null | NONE | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3371",

"html_url": "https://github.com/huggingface/datasets/pull/3371",

"diff_url": "https://github.com/huggingface/datasets/pull/3371.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3371.patch",

"merged_at": null

} | This PR adds the [Americas NLI](https://arxiv.org/abs/2104.08726) dataset, an extension of XNLI to 10 low-resource indigenous languages spoken in the Americas: Ashaninka, Aymara, Bribri, Guarani, Nahuatl, Otomi, Quechua, Raramuri, Shipibo-Konibo, and Wixarika.

One odd thing (not sure) is that I had to set

`datasets-cli dummy_data ./datasets/americas_nli/ --auto_generate --n_lines 7500`

`n_lines` very large to successfully generate the dummy files for all the subsets. Happy to get some guidance here.

Otherwise, I hope everything is in order :)

e: missed a step, onto fixing the tests

e2: there you go -- hope it's ok to have added more languages with their ISO codes to `languages.json`, need those tests to pass :laughing: | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3371/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3371/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3370 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3370/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3370/comments | https://api.github.com/repos/huggingface/datasets/issues/3370/events | https://github.com/huggingface/datasets/pull/3370 | 1,069,735,423 | PR_kwDODunzps4vUVA3 | 3,370 | Document a training loop for streaming dataset | {

"login": "lhoestq",

"id": 42851186,

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/lhoestq",

"html_url": "https://github.com/lhoestq",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | 1,638,461,820,000 | 1,638,538,475,000 | null | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3370",

"html_url": "https://github.com/huggingface/datasets/pull/3370",

"diff_url": "https://github.com/huggingface/datasets/pull/3370.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3370.patch",

"merged_at": 1638538474000

} | I added some docs about streaming datasets. In particular I added two subsections:

- one on how to use `map` for preprocessing

- one on how to use a streaming dataset in a pytorch training loop

cc @patrickvonplaten @stevhliu if you have some comments

cc @Rocketknight1 later we can add the one for TF and I might need your help ^^' | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3370/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3370/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3369 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3369/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3369/comments | https://api.github.com/repos/huggingface/datasets/issues/3369/events | https://github.com/huggingface/datasets/issues/3369 | 1,069,587,674 | I_kwDODunzps4_wJza | 3,369 | [Audio] Allow resampling for audio datasets in streaming mode | {

"login": "patrickvonplaten",

"id": 23423619,

"node_id": "MDQ6VXNlcjIzNDIzNjE5",

"avatar_url": "https://avatars.githubusercontent.com/u/23423619?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/patrickvonplaten",

"html_url": "https://github.com/patrickvonplaten",

"followers_url": "https://api.github.com/users/patrickvonplaten/followers",

"following_url": "https://api.github.com/users/patrickvonplaten/following{/other_user}",

"gists_url": "https://api.github.com/users/patrickvonplaten/gists{/gist_id}",

"starred_url": "https://api.github.com/users/patrickvonplaten/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/patrickvonplaten/subscriptions",

"organizations_url": "https://api.github.com/users/patrickvonplaten/orgs",

"repos_url": "https://api.github.com/users/patrickvonplaten/repos",

"events_url": "https://api.github.com/users/patrickvonplaten/events{/privacy}",

"received_events_url": "https://api.github.com/users/patrickvonplaten/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892871,

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement",

"name": "enhancement",

"color": "a2eeef",

"default": true,

"description": "New feature or request"

}

] | open | false | {

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

},

{

"login": "lhoestq",

"id": 42851186,

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/lhoestq",

"html_url": "https://github.com/lhoestq",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"type": "User",

"site_admin": false

}

] | null | [] | 1,638,453,897,000 | 1,638,453,908,000 | null | MEMBER | null | null | null | Many audio datasets, such as Common Voice, need to be resampled before use. In non-streaming mode this is straightforward:

```python

from datasets import Audio, load_dataset

ds = load_dataset("common_voice", "ab", split="test")

ds = ds.cast_column("audio", Audio(sampling_rate=16_000))

```

However, this currently fails in streaming mode:

```python

from datasets import Audio, load_dataset

ds = load_dataset("common_voice", "ab", split="test", streaming=True)

ds = ds.cast_column("audio", Audio(sampling_rate=16_000))

```

with the following error:

```

AttributeError: 'IterableDataset' object has no attribute 'cast_column'

```

It would be great if we could add such a feature (though I'm not 100% sure how complex this would be). | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3369/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3369/timeline | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3368 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3368/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3368/comments | https://api.github.com/repos/huggingface/datasets/issues/3368/events | https://github.com/huggingface/datasets/pull/3368 | 1,069,403,624 | PR_kwDODunzps4vTObo | 3,368 | Fix dict source_datasets tagset validator | {

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | 1,638,442,340,000 | 1,638,460,118,000 | null | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3368",

"html_url": "https://github.com/huggingface/datasets/pull/3368",

"diff_url": "https://github.com/huggingface/datasets/pull/3368.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3368.patch",

"merged_at": 1638460117000

} | Currently, the `source_datasets` tag validation does not support passing a dict with configuration keys.

This PR:

- Extends `tagset_validator` to support regex tags

- Uses `tagset_validator` to validate dict `source_datasets` | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3368/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3368/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3367 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3367/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3367/comments | https://api.github.com/repos/huggingface/datasets/issues/3367/events | https://github.com/huggingface/datasets/pull/3367 | 1,069,241,274 | PR_kwDODunzps4vSsfk | 3,367 | Fix typo in other-structured-to-text task tag | {

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | 1,638,432,147,000 | 1,638,461,234,000 | null | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3367",

"html_url": "https://github.com/huggingface/datasets/pull/3367",

"diff_url": "https://github.com/huggingface/datasets/pull/3367.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3367.patch",

"merged_at": 1638461233000

} | Fix typo in task tag:

- `other-stuctured-to-text` (before)

- `other-structured-to-text` (now) | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3367/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3367/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3366 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3366/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3366/comments | https://api.github.com/repos/huggingface/datasets/issues/3366/events | https://github.com/huggingface/datasets/issues/3366 | 1,069,214,022 | I_kwDODunzps4_uulG | 3,366 | Add multimodal datasets | {

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 2067376369,

"node_id": "MDU6TGFiZWwyMDY3Mzc2MzY5",

"url": "https://api.github.com/repos/huggingface/datasets/labels/dataset%20request",

"name": "dataset request",

"color": "e99695",

"default": false,

"description": "Requesting to add a new dataset"

}

] | open | false | {

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

} | [

{

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

}

] | null | [] | 1,638,429,844,000 | 1,638,430,413,000 | null | MEMBER | null | null | null | Epic issue to track the addition of multimodal datasets:

- [ ] #2526

- [ ] #1842

- [ ] #1810

Instructions to add a new dataset can be found [here](https://github.com/huggingface/datasets/blob/master/ADD_NEW_DATASET.md).

@VictorSanh feel free to add and sort by priority any interesting dataset. I have added the multimodal dataset requests which were already present as issues. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3366/reactions",

"total_count": 2,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 1,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 1

} | https://api.github.com/repos/huggingface/datasets/issues/3366/timeline | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3365 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3365/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3365/comments | https://api.github.com/repos/huggingface/datasets/issues/3365/events | https://github.com/huggingface/datasets/issues/3365 | 1,069,195,887 | I_kwDODunzps4_uqJv | 3,365 | Add task tags for multimodal datasets | {

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892871,

"node_id": "MDU6TGFiZWwxOTM1ODkyODcx",

"url": "https://api.github.com/repos/huggingface/datasets/labels/enhancement",

"name": "enhancement",

"color": "a2eeef",

"default": true,

"description": "New feature or request"

}

] | open | false | null | [] | null | [] | 1,638,428,300,000 | 1,638,430,389,000 | null | MEMBER | null | null | null | ## **Is your feature request related to a problem? Please describe.**

Currently, task tags relate exclusively to either text or speech processing:

- https://github.com/huggingface/datasets/blob/master/src/datasets/utils/resources/tasks.json

## **Describe the solution you'd like**

We should also add tasks related to:

- multimodality

- image

- video

CC: @VictorSanh @lewtun @lhoestq @merveenoyan @SBrandeis | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3365/reactions",

"total_count": 3,

"+1": 3,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3365/timeline | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3364 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3364/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3364/comments | https://api.github.com/repos/huggingface/datasets/issues/3364/events | https://github.com/huggingface/datasets/pull/3364 | 1,068,851,196 | PR_kwDODunzps4vRaxq | 3,364 | Use the Audio feature in the AutomaticSpeechRecognition template | {

"login": "anton-l",

"id": 26864830,

"node_id": "MDQ6VXNlcjI2ODY0ODMw",

"avatar_url": "https://avatars.githubusercontent.com/u/26864830?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/anton-l",

"html_url": "https://github.com/anton-l",

"followers_url": "https://api.github.com/users/anton-l/followers",

"following_url": "https://api.github.com/users/anton-l/following{/other_user}",

"gists_url": "https://api.github.com/users/anton-l/gists{/gist_id}",

"starred_url": "https://api.github.com/users/anton-l/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/anton-l/subscriptions",

"organizations_url": "https://api.github.com/users/anton-l/orgs",

"repos_url": "https://api.github.com/users/anton-l/repos",

"events_url": "https://api.github.com/users/anton-l/events{/privacy}",

"received_events_url": "https://api.github.com/users/anton-l/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [] | 1,638,391,346,000 | 1,638,449,170,000 | null | CONTRIBUTOR | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3364",

"html_url": "https://github.com/huggingface/datasets/pull/3364",

"diff_url": "https://github.com/huggingface/datasets/pull/3364.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3364.patch",

"merged_at": null

} | This updates the ASR template and all supported datasets to use the `Audio` feature | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3364/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3364/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3363 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3363/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3363/comments | https://api.github.com/repos/huggingface/datasets/issues/3363/events | https://github.com/huggingface/datasets/pull/3363 | 1,068,824,340 | PR_kwDODunzps4vRVCl | 3,363 | Update URL of Jeopardy! dataset | {

"login": "mariosasko",

"id": 47462742,

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mariosasko",

"html_url": "https://github.com/mariosasko",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"Closing this PR in favor of #3266."

] | 1,638,389,290,000 | 1,638,534,901,000 | null | CONTRIBUTOR | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3363",

"html_url": "https://github.com/huggingface/datasets/pull/3363",

"diff_url": "https://github.com/huggingface/datasets/pull/3363.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3363.patch",

"merged_at": null

} | Updates the URL of the Jeopardy! dataset.

Fix #3361 | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3363/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3363/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3362 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3362/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3362/comments | https://api.github.com/repos/huggingface/datasets/issues/3362/events | https://github.com/huggingface/datasets/pull/3362 | 1,068,809,768 | PR_kwDODunzps4vRR2r | 3,362 | Adapt image datasets | {

"login": "mariosasko",

"id": 47462742,

"node_id": "MDQ6VXNlcjQ3NDYyNzQy",

"avatar_url": "https://avatars.githubusercontent.com/u/47462742?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mariosasko",

"html_url": "https://github.com/mariosasko",

"followers_url": "https://api.github.com/users/mariosasko/followers",

"following_url": "https://api.github.com/users/mariosasko/following{/other_user}",

"gists_url": "https://api.github.com/users/mariosasko/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mariosasko/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariosasko/subscriptions",

"organizations_url": "https://api.github.com/users/mariosasko/orgs",

"repos_url": "https://api.github.com/users/mariosasko/repos",

"events_url": "https://api.github.com/users/mariosasko/events{/privacy}",

"received_events_url": "https://api.github.com/users/mariosasko/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [] | 1,638,388,321,000 | 1,638,553,377,000 | null | CONTRIBUTOR | null | true | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3362",

"html_url": "https://github.com/huggingface/datasets/pull/3362",

"diff_url": "https://github.com/huggingface/datasets/pull/3362.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3362.patch",

"merged_at": null

} | This PR:

* adapts the ImageClassification template to use the new Image feature

* adapts the following datasets to use the new Image feature:

* beans (+ fixes streaming)

* cats_vs_dogs (+ fixes streaming)

* cifar10

* cifar100

* fashion_mnist

* mnist

* head_qa

cc @nateraw | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3362/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3362/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3361 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3361/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3361/comments | https://api.github.com/repos/huggingface/datasets/issues/3361/events | https://github.com/huggingface/datasets/issues/3361 | 1,068,736,268 | I_kwDODunzps4_s58M | 3,361 | Jeopardy _URL access denied | {

"login": "tianjianjiang",

"id": 4812544,

"node_id": "MDQ6VXNlcjQ4MTI1NDQ=",

"avatar_url": "https://avatars.githubusercontent.com/u/4812544?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/tianjianjiang",

"html_url": "https://github.com/tianjianjiang",

"followers_url": "https://api.github.com/users/tianjianjiang/followers",

"following_url": "https://api.github.com/users/tianjianjiang/following{/other_user}",

"gists_url": "https://api.github.com/users/tianjianjiang/gists{/gist_id}",

"starred_url": "https://api.github.com/users/tianjianjiang/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/tianjianjiang/subscriptions",

"organizations_url": "https://api.github.com/users/tianjianjiang/orgs",

"repos_url": "https://api.github.com/users/tianjianjiang/repos",

"events_url": "https://api.github.com/users/tianjianjiang/events{/privacy}",

"received_events_url": "https://api.github.com/users/tianjianjiang/received_events",

"type": "User",

"site_admin": false

} | [

{

"id": 1935892857,

"node_id": "MDU6TGFiZWwxOTM1ODkyODU3",

"url": "https://api.github.com/repos/huggingface/datasets/labels/bug",

"name": "bug",

"color": "d73a4a",

"default": true,

"description": "Something isn't working"

}

] | open | false | null | [] | null | [] | 1,638,382,893,000 | 1,638,382,972,000 | null | NONE | null | null | null | ## Describe the bug

http://skeeto.s3.amazonaws.com/share/JEOPARDY_QUESTIONS1.json.gz returns Access Denied now.

However, https://drive.google.com/file/d/0BwT5wj_P7BKXb2hfM3d2RHU1ckE/view?usp=sharing from the original Reddit post https://www.reddit.com/r/datasets/comments/1uyd0t/200000_jeopardy_questions_in_a_json_file/ may work.

## Steps to reproduce the bug

```shell

> python

Python 3.7.12 (default, Sep 5 2021, 08:34:29)

[Clang 11.0.3 (clang-1103.0.32.62)] on darwin

Type "help", "copyright", "credits" or "license" for more information.

```

```python

>>> from datasets import load_dataset

>>> load_dataset("jeopardy")

```

## Expected results

The download completes.

## Actual results

```shell

Downloading: 4.18kB [00:00, 1.60MB/s]

Downloading: 2.03kB [00:00, 1.04MB/s]

Using custom data configuration default

Downloading and preparing dataset jeopardy/default (download: 12.13 MiB, generated: 34.46 MiB, post-processed: Unknown size, total: 46.59 MiB) to /Users/mike/.cache/huggingface/datasets/jeopardy/default/0.1.0/25ee3e4a73755e637b8810f6493fd36e4523dea3ca8a540529d0a6e24c7f9810...

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

File "/Users/mike/Library/Caches/pypoetry/virtualenvs/promptsource-hsdAcWsQ-py3.7/lib/python3.7/site-packages/datasets/load.py", line 1632, in load_dataset

use_auth_token=use_auth_token,

File "/Users/mike/Library/Caches/pypoetry/virtualenvs/promptsource-hsdAcWsQ-py3.7/lib/python3.7/site-packages/datasets/builder.py", line 608, in download_and_prepare

dl_manager=dl_manager, verify_infos=verify_infos, **download_and_prepare_kwargs

File "/Users/mike/Library/Caches/pypoetry/virtualenvs/promptsource-hsdAcWsQ-py3.7/lib/python3.7/site-packages/datasets/builder.py", line 675, in _download_and_prepare

split_generators = self._split_generators(dl_manager, **split_generators_kwargs)

File "/Users/mike/.cache/huggingface/modules/datasets_modules/datasets/jeopardy/25ee3e4a73755e637b8810f6493fd36e4523dea3ca8a540529d0a6e24c7f9810/jeopardy.py", line 72, in _split_generators

filepath = dl_manager.download_and_extract(_DATA_URL)

File "/Users/mike/Library/Caches/pypoetry/virtualenvs/promptsource-hsdAcWsQ-py3.7/lib/python3.7/site-packages/datasets/utils/download_manager.py", line 284, in download_and_extract

return self.extract(self.download(url_or_urls))

File "/Users/mike/Library/Caches/pypoetry/virtualenvs/promptsource-hsdAcWsQ-py3.7/lib/python3.7/site-packages/datasets/utils/download_manager.py", line 197, in download

download_func, url_or_urls, map_tuple=True, num_proc=download_config.num_proc, disable_tqdm=False

File "/Users/mike/Library/Caches/pypoetry/virtualenvs/promptsource-hsdAcWsQ-py3.7/lib/python3.7/site-packages/datasets/utils/py_utils.py", line 197, in map_nested

return function(data_struct)

File "/Users/mike/Library/Caches/pypoetry/virtualenvs/promptsource-hsdAcWsQ-py3.7/lib/python3.7/site-packages/datasets/utils/download_manager.py", line 217, in _download

return cached_path(url_or_filename, download_config=download_config)

File "/Users/mike/Library/Caches/pypoetry/virtualenvs/promptsource-hsdAcWsQ-py3.7/lib/python3.7/site-packages/datasets/utils/file_utils.py", line 305, in cached_path

use_auth_token=download_config.use_auth_token,

File "/Users/mike/Library/Caches/pypoetry/virtualenvs/promptsource-hsdAcWsQ-py3.7/lib/python3.7/site-packages/datasets/utils/file_utils.py", line 594, in get_from_cache

raise ConnectionError("Couldn't reach {}".format(url))

ConnectionError: Couldn't reach http://skeeto.s3.amazonaws.com/share/JEOPARDY_QUESTIONS1.json.gz

```

---

```shell

> curl http://skeeto.s3.amazonaws.com/share/JEOPARDY_QUESTIONS1.json.gz

```

```xml

<?xml version="1.0" encoding="UTF-8"?>

<Error><Code>AccessDenied</Code><Message>Access Denied</Message><RequestId>70Y9R36XNPEQXMGV</RequestId><HostId>G6F5AK4qo7JdaEdKGMtS0P6gdLPeFOdEfSEfvTOZEfk9km0/jAfp08QLfKSTFFj1oWIKoAoBehM=</HostId></Error>

```

## Environment info

- `datasets` version: 1.14.0

- Platform: macOS Catalina 10.15.7

- Python version: 3.7.12

- PyArrow version: 6.0.1

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3361/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3361/timeline | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3360 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3360/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3360/comments | https://api.github.com/repos/huggingface/datasets/issues/3360/events | https://github.com/huggingface/datasets/pull/3360 | 1,068,724,697 | PR_kwDODunzps4vQ_16 | 3,360 | Add The Pile USPTO subset | {

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [] | 1,638,382,085,000 | 1,638,531,929,000 | null | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3360",

"html_url": "https://github.com/huggingface/datasets/pull/3360",

"diff_url": "https://github.com/huggingface/datasets/pull/3360.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3360.patch",

"merged_at": 1638531927000

} | Add:

- USPTO subset of The Pile: "uspto" config

Close bigscience-workshop/data_tooling#297.

CC: @StellaAthena | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3360/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3360/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3359 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3359/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3359/comments | https://api.github.com/repos/huggingface/datasets/issues/3359/events | https://github.com/huggingface/datasets/pull/3359 | 1,068,638,213 | PR_kwDODunzps4vQtI0 | 3,359 | Add The Pile Free Law subset | {

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",

"followers_url": "https://api.github.com/users/albertvillanova/followers",

"following_url": "https://api.github.com/users/albertvillanova/following{/other_user}",

"gists_url": "https://api.github.com/users/albertvillanova/gists{/gist_id}",

"starred_url": "https://api.github.com/users/albertvillanova/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/albertvillanova/subscriptions",

"organizations_url": "https://api.github.com/users/albertvillanova/orgs",

"repos_url": "https://api.github.com/users/albertvillanova/repos",

"events_url": "https://api.github.com/users/albertvillanova/events{/privacy}",

"received_events_url": "https://api.github.com/users/albertvillanova/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"@albertvillanova Is there a specific reason you’re adding the Pile under “the” instead of under “pile”? That does not appear to be consistent with other datasets.",

"Hi @StellaAthena,\r\n\r\nI asked myself the same question, but at the end I decided to be consistent with previously added Pile subsets:\r\n- #2817\r\n\r\nI guess the reason is to stress that the definite article is always used before the name of the dataset (your site says: \"The Pile. An 800GB Dataset of Diverse Text for Language Modeling\"). Other datasets are not usually preceded by the definite article, like \"the SQuAD\" or \"the GLUE\" or \"the Common Voice\"...\r\n\r\nCC: @lhoestq "

] | 1,638,377,164,000 | 1,638,438,019,000 | null | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3359",

"html_url": "https://github.com/huggingface/datasets/pull/3359",

"diff_url": "https://github.com/huggingface/datasets/pull/3359.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3359.patch",

"merged_at": 1638379843000

} | Add:

- Free Law subset of The Pile: "free_law" config

Close bigscience-workshop/data_tooling#75.

CC: @StellaAthena | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3359/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3359/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3358 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3358/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3358/comments | https://api.github.com/repos/huggingface/datasets/issues/3358/events | https://github.com/huggingface/datasets/issues/3358 | 1,068,623,216 | I_kwDODunzps4_seVw | 3,358 | add new field, and get errors | {

"login": "yanllearnn",

"id": 38966558,

"node_id": "MDQ6VXNlcjM4OTY2NTU4",

"avatar_url": "https://avatars.githubusercontent.com/u/38966558?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/yanllearnn",

"html_url": "https://github.com/yanllearnn",

"followers_url": "https://api.github.com/users/yanllearnn/followers",

"following_url": "https://api.github.com/users/yanllearnn/following{/other_user}",

"gists_url": "https://api.github.com/users/yanllearnn/gists{/gist_id}",

"starred_url": "https://api.github.com/users/yanllearnn/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/yanllearnn/subscriptions",

"organizations_url": "https://api.github.com/users/yanllearnn/orgs",

"repos_url": "https://api.github.com/users/yanllearnn/repos",

"events_url": "https://api.github.com/users/yanllearnn/events{/privacy}",

"received_events_url": "https://api.github.com/users/yanllearnn/received_events",

"type": "User",

"site_admin": false

} | [] | closed | false | null | [] | null | [

"Hi, \r\n\r\ncould you please post this question on our [Forum](https://discuss.huggingface.co/) as we keep issues for bugs and feature requests? ",

"> Hi,\r\n> \r\n> could you please post this question on our [Forum](https://discuss.huggingface.co/) as we keep issues for bugs and feature requests?\r\n\r\nok."

] | 1,638,376,538,000 | 1,638,411,982,000 | null | NONE | null | null | null | After adding a new field, **tokenized_examples["example_id"]**, I get the error below.

I think it happens when the data is converted to tensors, because **tokenized_examples["example_id"]** is a list of strings.
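
A minimal sketch of the suspected cause, in plain Python rather than the `datasets` or `transformers` API: columns that hold only numbers can be turned into tensors, while a column of strings such as `example_id` cannot. The helper names `is_numeric` and `split_tensorizable` and the sample ID values are hypothetical, chosen only for illustration.

```python
from numbers import Number


def is_numeric(value):
    """Return True if value is a number or a (nested) list/tuple of numbers."""
    if isinstance(value, Number):
        return True
    if isinstance(value, (list, tuple)):
        return all(is_numeric(item) for item in value)
    return False


def split_tensorizable(batch):
    """Split a dict of columns into (numeric columns, all other columns)."""
    numeric, other = {}, {}
    for name, column in batch.items():
        target = numeric if is_numeric(column) else other
        target[name] = column
    return numeric, other


# Toy batch with the same kind of fields as in the dataset printed below.
batch = {
    "input_ids": [[101, 2054, 102], [101, 2129, 102]],
    "attention_mask": [[1, 1, 1], [1, 1, 1]],
    "start_positions": [1, 2],
    "example_id": ["id-0", "id-1"],  # hypothetical string IDs
}

numeric, other = split_tensorizable(batch)
print(sorted(numeric))  # ['attention_mask', 'input_ids', 'start_positions']
print(sorted(other))    # ['example_id']
```

If this is indeed the cause, one option would be to convert only the numeric columns, for example through the `columns` argument of `Dataset.set_format`, and keep `example_id` as a plain Python list. That is an assumption about the fix, not something confirmed in this thread.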

**all fields**

```

***************** train_dataset 1: Dataset({

features: ['attention_mask', 'end_positions', 'example_id', 'input_ids', 'start_positions', 'token_type_ids'],

num_rows: 87714

})

```

**Errors**

```

Traceback (most recent call last):

File "/usr/local/lib/python3.7/site-packages/transformers/tokenization_utils_base.py", line 705, in convert_to_tensors

tensor = as_tensor(value)

ValueError: too many dimensions 'str'

``` | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3358/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3358/timeline | null | false |

https://api.github.com/repos/huggingface/datasets/issues/3357 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3357/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3357/comments | https://api.github.com/repos/huggingface/datasets/issues/3357/events | https://github.com/huggingface/datasets/pull/3357 | 1,068,607,382 | PR_kwDODunzps4vQmcL | 3,357 | Update README.md | {

"login": "apergo-ai",

"id": 68908804,

"node_id": "MDQ6VXNlcjY4OTA4ODA0",

"avatar_url": "https://avatars.githubusercontent.com/u/68908804?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/apergo-ai",

"html_url": "https://github.com/apergo-ai",

"followers_url": "https://api.github.com/users/apergo-ai/followers",

"following_url": "https://api.github.com/users/apergo-ai/following{/other_user}",

"gists_url": "https://api.github.com/users/apergo-ai/gists{/gist_id}",

"starred_url": "https://api.github.com/users/apergo-ai/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/apergo-ai/subscriptions",

"organizations_url": "https://api.github.com/users/apergo-ai/orgs",

"repos_url": "https://api.github.com/users/apergo-ai/repos",

"events_url": "https://api.github.com/users/apergo-ai/events{/privacy}",

"received_events_url": "https://api.github.com/users/apergo-ai/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [] | 1,638,375,646,000 | 1,638,375,646,000 | null | NONE | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3357",

"html_url": "https://github.com/huggingface/datasets/pull/3357",

"diff_url": "https://github.com/huggingface/datasets/pull/3357.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3357.patch",

"merged_at": null

} | I have worked with the dataset for a while.

As far as I can tell, it is almost entirely in English (en-US). Only a few mails are in Spanish, French or German (fewer than a dozen, I would estimate). | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3357/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3357/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3356 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3356/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3356/comments | https://api.github.com/repos/huggingface/datasets/issues/3356/events | https://github.com/huggingface/datasets/pull/3356 | 1,068,503,932 | PR_kwDODunzps4vQQLD | 3,356 | to_tf_dataset() refactor | {

"login": "Rocketknight1",

"id": 12866554,

"node_id": "MDQ6VXNlcjEyODY2NTU0",

"avatar_url": "https://avatars.githubusercontent.com/u/12866554?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/Rocketknight1",

"html_url": "https://github.com/Rocketknight1",

"followers_url": "https://api.github.com/users/Rocketknight1/followers",

"following_url": "https://api.github.com/users/Rocketknight1/following{/other_user}",

"gists_url": "https://api.github.com/users/Rocketknight1/gists{/gist_id}",

"starred_url": "https://api.github.com/users/Rocketknight1/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/Rocketknight1/subscriptions",

"organizations_url": "https://api.github.com/users/Rocketknight1/orgs",

"repos_url": "https://api.github.com/users/Rocketknight1/repos",

"events_url": "https://api.github.com/users/Rocketknight1/events{/privacy}",

"received_events_url": "https://api.github.com/users/Rocketknight1/received_events",

"type": "User",

"site_admin": false

} | [] | open | false | null | [] | null | [

"Also, please don't merge yet - I need to make sure all the code samples and notebooks have a collate_fn specified, since we're removing the ability for this method to work without one!"

] | 1,638,370,470,000 | 1,638,541,032,000 | null | CONTRIBUTOR | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/3356",

"html_url": "https://github.com/huggingface/datasets/pull/3356",

"diff_url": "https://github.com/huggingface/datasets/pull/3356.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/3356.patch",

"merged_at": null

} | This is the promised cleanup to `to_tf_dataset()` now that the course is out of the way! The main changes are:

- A collator is always required (there was way too much hackiness making things like labels work without it)

- Lots of cleanup and a lot of code moved to `_get_output_signature`

- Should now handle it gracefully when the data collator adds unexpected columns | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/3356/reactions",

"total_count": 2,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 2,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/3356/timeline | null | true |

https://api.github.com/repos/huggingface/datasets/issues/3355 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/3355/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/3355/comments | https://api.github.com/repos/huggingface/datasets/issues/3355/events | https://github.com/huggingface/datasets/pull/3355 | 1,068,468,573 | PR_kwDODunzps4vQIoy | 3,355 | Extend support for streaming datasets that use pd.read_excel | {

"login": "albertvillanova",

"id": 8515462,

"node_id": "MDQ6VXNlcjg1MTU0NjI=",

"avatar_url": "https://avatars.githubusercontent.com/u/8515462?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/albertvillanova",

"html_url": "https://github.com/albertvillanova",