message


| diff


|

|---|---|

Renderer : improve performance of filter clean-up for IPR sessions.

We're creating filters in parallel by traversing the scene. Cleaning them up is

done serially, though. This commit makes sure clean-up can happen on all cores. | @@ -2468,8 +2468,13 @@ void LightFilterConnections::deregisterLightFilter( const IECore::StringVectorDa

return;

}

- for( const std::string &lightName : lightNames->readable() )

+ const std::vector<std::string> &lights = lightNames->readable();

+ tbb::parallel_for(

+ size_t(0),

+ lights.size(),

+ [this, lights, groupEmptied, filterGroup]( size_t i)

{

+ const std::string &lightName = lights[i];

ConnectionsMap::accessor a;

if( m_connections.find( a, lightName ) )

{

@@ -2480,6 +2485,7 @@ void LightFilterConnections::deregisterLightFilter( const IECore::StringVectorDa

a->second.dirty = true;

}

}

+ );

if( groupEmptied )

{

|
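The commit swaps the serial loop for `tbb::parallel_for`. This is safe because each iteration takes its own `ConnectionsMap::accessor`, which holds a write lock on a single entry of the concurrent hash map. Below is a rough Python analogue of that pattern. `Connection`, its fields and `deregister_filter` are hypothetical, and the filter-removal step is assumed because the diff hides it. Because of CPython's GIL, the sketch shows the per-entry locking structure rather than a real multi-core speed-up.

```python
import threading
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass, field


@dataclass
class Connection:
    """Hypothetical per-light record: the filters attached to one light."""
    filters: set = field(default_factory=set)
    dirty: bool = False
    # One lock per entry, playing the role of a concurrent_hash_map accessor.
    lock: threading.Lock = field(default_factory=threading.Lock)


def deregister_filter(connections, light_names, filter_name, max_workers=4):
    """Detach `filter_name` from each named light, one task per light."""

    def clean_up(light_name):
        conn = connections.get(light_name)
        if conn is None:
            return  # nothing registered for this light
        with conn.lock:  # only this entry is locked, others proceed in parallel
            conn.filters.discard(filter_name)
            conn.dirty = True

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # list() waits for every task and re-raises any worker exception.
        list(pool.map(clean_up, light_names))


connections = {name: Connection(filters={"f1", "f2"}) for name in ("a", "b", "c")}
deregister_filter(connections, ["a", "c", "missing"], "f1")
```

Unknown light names are skipped, just as a failed `m_connections.find()` skips them in the C++ code.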

help center: Rewrite "Contact support" page.

Structure page as use case -> recommended channel.

Include expected SLAs for all channels. | # Contact support

-General and technical support for Zulip is available through three

-channels:

-

-* [The Zulip development community][development-community].

- Depending on the time of day/week of your query and the complexity

- of your question, you may get complete responses immediately or up

- to a couple days later, but Zulip's threading makes it easy for us

- to reply to every thread. Please read the community conventions

- (linked above) and choose a topic when starting a conversation.

-* We aim to reply to [email support](mailto:[email protected])

- questions within a business day. Simple queries or requests for

- data imports generally receive faster replies.

-* GitHub [server](https://github.com/zulip/zulip/issues/new), web

- [frontend](https://github.com/zulip/zulip/issues/new), [mobile

- app](https://github.com/zulip/zulip-mobile/issues/new), [desktop

- app](https://github.com/zulip/zulip-desktop/issues/new). If you

- aren't able to provide a clear bug report or are otherwise

- uncertain, it can be helpful to discuss in

- [the Zulip development community][development-community]

- first to help create a better GitHub issue.

-* We don't offer phone support except as part of enterprise contracts.

-

-We love hearing feedback! Please reach out if you have feedback,

-questions, or just want to brainstorm how to make Zulip work for your

-organization.

+We're here to help! This page will guide you to the best way to reach us.

+

+* For **support requests** regarding your Zulip Cloud organization or

+ self-hosted server, [email Zulip support](mailto:[email protected]).

+ * Response time: Usually 1-3 business days, or within one business day for

+ paid customers.

+

+* For **sales**, **billing**, **partnerships**, and **other commercial

+ questions**, contact [[email protected]](mailto:[email protected]).

+ * Response time: Usually within one business day.

+

+* To **suggest new features**, **report an issue**, or **share any other

+ feedback**, join the [Zulip development community][development-community].

+ It's the best place to interactively discuss your problem or proposal. Please

+ [follow the community norms](/development-community/#community-norms) when

+ posting.

+ * Response time: Usually within one business day.

+

+* If you have a **concrete bug report**, you can create an issue in the

+ appropriate [Zulip GitHub repository](https://github.com/zulip). Use the

+ [server/web app](https://github.com/zulip/zulip/issues/new) repository if you

+ aren't sure where to start.

+ * Response time: Usually within one week.

+

+* Phone support is available only for **Enterprise support customers**.

+

+Your feedback helps us make Zulip better for everyone! Please reach out if you

+have questions, suggestions, or just want to brainstorm how to make Zulip work

+for your organization.

[development-community]: https://zulip.com/development-community/

-## Billing and commercial questions

+## Related articles

-We provide exclusively [email support](mailto:[email protected])

-for sales, billing, partnerships, and other commercial questions.

+* [Zulip Cloud billing](/help/zulip-cloud-billing)

+* [View Zulip version](/help/view-zulip-version)

|

docs: add SolidExecutionContext to relevant APIs for Solids

Summary: Make context object more prominent in the solid docs.

Test Plan: make dev

Reviewers: alangenfeld, cdecarolis | @@ -12,11 +12,12 @@ Solids are the functional unit of work in Dagster. A solid's responsibility is t

## Relevant APIs

| Name | Description |

-| -------------------------------------- | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

-| <PyObject object="solid" decorator /> | The decorator used to define solids. The decorated function is called the `compute_fn`. The decorator returns a <PyObject object="SolidDefinition" /> |

-| <PyObject object="InputDefinition" /> | InputDefinitions define the inputs to a solid compute function. These are defined on the `input_defs` argument to the <PyObject object="solid" decorator/> decorator |

-| <PyObject object="OutputDefinition" /> | OutputDefinitions define the outputs of a solid compute function. These are defined on the `output_defs` argument to the <PyObject object="solid" displayText="@solid"/> decorator |

-| <PyObject object="SolidDefinition" /> | Class for solids. You almost never want to use initialize this class directly. Instead, you should use the <PyObject object="solid" decorator /> which returns a <PyObject object="SolidDefinition" /> |

+| ------------------------------------------ | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

+| <PyObject object="solid" decorator /> | The decorator used to define solids. The decorated function is called the `compute_fn`. The decorator returns a <PyObject object="SolidDefinition" />. |

+| <PyObject object="InputDefinition" /> | InputDefinitions define the inputs to a solid compute function. These are defined on the `input_defs` argument to the <PyObject object="solid" decorator/> decorator. |

+| <PyObject object="OutputDefinition" /> | OutputDefinitions define the outputs of a solid compute function. These are defined on the `output_defs` argument to the <PyObject object="solid" displayText="@solid"/> decorator. |

+| <PyObject object="SolidExecutionContext"/> | An optional first argument to a solid's compute function, providing access to Dagster system information such as resources, logging, and the underlying Dagster instance. |

+| <PyObject object="SolidDefinition" /> | Class for solids. You almost never want to use initialize this class directly. Instead, you should use the <PyObject object="solid" decorator /> which returns a <PyObject object="SolidDefinition" />. |

## Overview

@@ -120,12 +121,7 @@ Like inputs, outputs can also have [Dagster Types](/concepts/types).

### Solid Context

-When writing a solid, users can optionally provide a first parameter, `context`. When this parameter is supplied, Dagster will supply a context object to the body of the solid, which is an instance of <PyObject object="SolidExecutionContext"/>. The context provides access to:

-

-- Solid configuration (`context.solid_config`)

-- Loggers (`context.log`)

-- Resources (`context.resources`)

-- The current run ID: (`context.run_id`)

+When writing a solid, users can optionally provide a first parameter, `context`. When this parameter is supplied, Dagster will supply a context object to the body of the solid. The context provides access to system information like solid configuration, loggers, resources, and the current run id. See <PyObject object="SolidExecutionContext"/> for the full list of properties accessible from the solid context.

For example, to access the logger and log a info message:

|

Add failing test for filtering attributes

Added a test that fails when the friendlyName of the requested attribute

is not the same as the name of the internal attribute (even though the

OIDs and the internal representation names of the attribute are the same) | @@ -81,6 +81,18 @@ def test_filter_on_attributes_1():

assert ava["serialNumber"] == ["12345"]

+def test_filter_on_attributes_2():

+

+ a = to_dict(Attribute(friendly_name="surName",name="urn:oid:2.5.4.4",

+ name_format=NAME_FORMAT_URI), ONTS)

+ required = [a]

+ ava = {"sn":["kakavas"]}

+

+ ava = filter_on_attributes(ava,required,acs=ac_factory())

+ assert list(ava.keys()) == ['sn']

+ assert ava["sn"] == ["kakavas"]

+

+

def test_filter_on_attributes_without_friendly_name():

ava = {"eduPersonTargetedID": "[email protected]",

"eduPersonAffiliation": "test",

@@ -923,3 +935,4 @@ def test_assertion_with_authn_instant():

if __name__ == "__main__":

test_assertion_2()

+

|

Update PillarStack stack.py to latest upstream version

which provides the following fixes/enhancements: | @@ -413,7 +413,7 @@ def ext_pillar(minion_id, pillar, *args, **kwargs):

stack_config_files += cfgs

for cfg in stack_config_files:

if not os.path.isfile(cfg):

- log.warning(

+ log.info(

'Ignoring pillar stack cfg "%s": file does not exist', cfg)

continue

stack = _process_stack_cfg(cfg, stack, minion_id, pillar)

@@ -424,10 +424,6 @@ def _to_unix_slashes(path):

return posixpath.join(*path.split(os.sep))

-def _construct_unicode(loader, node):

- return node.value

-

-

def _process_stack_cfg(cfg, stack, minion_id, pillar):

log.debug('Config: %s', cfg)

basedir, filename = os.path.split(cfg)

@@ -437,7 +433,8 @@ def _process_stack_cfg(cfg, stack, minion_id, pillar):

"__salt__": __salt__,

"__grains__": __grains__,

"__stack__": {

- 'traverse': salt.utils.data.traverse_dict_and_list

+ 'traverse': salt.utils.data.traverse_dict_and_list,

+ 'cfg_path': cfg,

},

"minion_id": minion_id,

"pillar": pillar,

@@ -448,7 +445,7 @@ def _process_stack_cfg(cfg, stack, minion_id, pillar):

continue # silently ignore whitespace or empty lines

paths = glob.glob(os.path.join(basedir, item))

if not paths:

- log.warning(

+ log.info(

'Ignoring pillar stack template "%s": can\'t find from root '

'dir "%s"', item, basedir

)

@@ -457,7 +454,7 @@ def _process_stack_cfg(cfg, stack, minion_id, pillar):

log.debug('YAML: basedir=%s, path=%s', basedir, path)

# FileSystemLoader always expects unix-style paths

unix_path = _to_unix_slashes(os.path.relpath(path, basedir))

- obj = salt.utils.yaml.safe_load(jenv.get_template(unix_path).render(stack=stack))

+ obj = salt.utils.yaml.safe_load(jenv.get_template(unix_path).render(stack=stack, ymlpath=path))

if not isinstance(obj, dict):

log.info('Ignoring pillar stack template "%s": Can\'t parse '

'as a valid yaml dictionary', path)

|

pmerge: color code display_failures output.

green == normal

yellow == unsolved

red == failure point.

It's not perfect, but it does make it easier

for the eye to zero in on the relevant data.

Closes: | @@ -366,21 +366,35 @@ def do_unmerge(options, out, err, vdb, matches, world_set, repo_obs):

out.write(f"finished; removed {len(matches)} packages")

-def display_failures(out, sequence, first_level=True, debug=False):

+def display_failures(out, sequence, first_level=True, debug=False, _color_index=None):

"""when resolution fails, display a nicely formatted message"""

sequence = iter(sequence)

frame = next(sequence)

+

+ def set_color(color):

+ out.first_prefix[_color_index] = out.fg(color)

+

if first_level:

+ # _color_index is an encapsulation failure; we're modifying the formatters

+ # prefix directly; that's very much a hack. Don't rely on it beyond in

+ # this function.

+ _color_index = len(out.first_prefix)

# pops below need to exactly match.

- out.first_prefix.extend((out.fg("red"), "!!! ", out.reset))

+ out.first_prefix.extend((out.fg("green"), "!!! ", out.reset))

+ else:

+ assert _color_index is not None

+ set_color("green")

+

out.write(f"request {frame.atom}, mode {frame.mode}")

for pkg, steps in sequence:

+ set_color("yellow")

out.write(f"trying {pkg.cpvstr}")

out.first_prefix.append(" ")

for step in steps:

+ set_color("red")

if isinstance(step, list):

- display_failures(out, step, False, debug=debug)

+ display_failures(out, step, False, debug=debug, _color_index=_color_index)

elif step[0] == 'reduce':

out.write("removing choices involving %s" %

', '.join(str(x) for x in step[1]))

@@ -396,6 +410,7 @@ def display_failures(out, sequence, first_level=True, debug=False):

out.write("failed due to %s" % (step[3],))

elif step[0] == "debug":

if debug:

+ set_color("yellow")

out.write(step[1])

else:

out.write(step)

|
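The `_color_index` trick reserves one slot in the formatter's shared `first_prefix` list and overwrites that slot whenever the output state changes. The sketch below strips the idea down. `PrefixWriter` is a hypothetical stand-in for pkgcore's formatter, and strings such as `"<red>"` stand in for terminal color codes.

```python
class PrefixWriter:
    """Hypothetical stand-in for a formatter with a mutable prefix list."""

    def __init__(self):
        self.first_prefix = []
        self.lines = []

    def write(self, text):
        # Every line is emitted with the current prefix pieces joined together.
        self.lines.append("".join(self.first_prefix) + text)


def show(out, frames):
    # Reserve a color slot and remember its index, as display_failures does.
    color_index = len(out.first_prefix)
    out.first_prefix.extend(["<green>", "!!! "])

    def set_color(color):
        # Overwrite only the reserved slot; the "!!! " marker stays put.
        out.first_prefix[color_index] = f"<{color}>"

    for state, message in frames:
        set_color(state)
        out.write(message)


out = PrefixWriter()
show(out, [("green", "request foo"), ("yellow", "trying foo-1.0"), ("red", "failed")])
```

The slot is addressed by position. Any code that inserts into `first_prefix` ahead of that position would therefore break it, which is why the original comment calls the approach a hack.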

viafree: Change autonaming from tv3play to viafree

Change the service part of auto-generated file names from tv3play to viafree | @@ -135,6 +135,7 @@ class Viaplay(Service, OpenGraphThumbMixin):

if self.options.output_auto:

directory = os.path.dirname(self.options.output)

self.options.service = "tv3play"

+ self.options.service = "viafree"

basename = self._autoname(dataj)

title = "%s-%s-%s" % (basename, vid, self.options.service)

if len(directory):

|

Double the docker puppet process counts

Deploy steps run the docker puppet steps with a maximum of

3 processes. This takes about 30 minutes to finish the

container configuration for a typical overcloud (in CI).

Double the number so that more puppet processes can finish their

tasks sooner. | @@ -72,7 +72,7 @@ parameters:

description: Set to True to enable debug logging with docker-puppet.py

DockerPuppetProcessCount:

type: number

- default: 3

+ default: 6

description: Number of concurrent processes to use when running docker-puppet to generate config files.

ctlplane_service_ips:

type: json

|

Update README.md

Fixed the swapped numerical and categorical target drift links in the examples | ## What is it?

-Evidently helps evaluate machine learning models during validation and monitor them in production. The tool generates interactive visual reports and JSON profiles from pandas `DataFrame` or `csv` files. You can use visual reports for ad hoc analysis, debugging and team sharing, and JSON profiles to integrate Evidently in prediction pipelines or with other visualization tools.

+Evidently helps evaluate machine learning models during validation and monitor them in production. The tool generates interactive visual reports and JSON profiles from pandas `DataFrame` or `csv` files.

+

+You can use **visual reports** for ad hoc analysis, debugging and team sharing, and **JSON profiles** to integrate Evidently in prediction pipelines or with other visualization tools.

Currently 6 reports are available.

@@ -358,11 +360,11 @@ For more information, refer to a complete <a href="https://evidentlyai.gitbook.i

[Iris](https://github.com/evidentlyai/evidently/blob/main/evidently/examples/iris_data_drift.ipynb),

[Boston](https://github.com/evidentlyai/evidently/blob/main/evidently/examples/boston_data_drift.ipynb)

-- See **Numerical Target and Data Drift** Dashboard and Profile generation to explore the results both inside a Jupyter notebook and as a separate file:

+- See **Categorical Target and Data Drift** Dashboard and Profile generation to explore the results both inside a Jupyter notebook and as a separate file:

[Iris](https://github.com/evidentlyai/evidently/blob/main/evidently/examples/iris_target_and_data_drift.ipynb),

[Breast Cancer](https://github.com/evidentlyai/evidently/blob/main/evidently/examples/breast_cancer_target_and_data_drift.ipynb)

-- See **Categorical Target and Data Drift** Dashboard and Profile generation to explore the results both inside a Jupyter notebook and as a separate file:

+- See **Numerical Target and Data Drift** Dashboard and Profile generation to explore the results both inside a Jupyter notebook and as a separate file:

[Boston](https://github.com/evidentlyai/evidently/blob/main/evidently/examples/boston_target_and_data_drift.ipynb)

- See **Regression Performance** Dashboard and Profile generation to explore the results both inside a Jupyter notebook and as a separate file:

|

fix InProcessRepositoryLocation.executable_path

Summary:

Very unlikely to matter for this particular repository location but you never know :)

Test Plan: BK | @@ -264,7 +264,9 @@ def origin(self) -> InProcessRepositoryLocationOrigin:

@property

def executable_path(self) -> Optional[str]:

- return sys.executable

+ return (

+ self._recon_repo.executable_path if self._recon_repo.executable_path else sys.executable

+ )

@property

def container_image(self) -> str:

|

Update elastic_net.pyx

Elasticnet regression:

Change "smaell" to "small" | @@ -28,7 +28,7 @@ class ElasticNet:

ElasticNet extends LinearRegression with combined L1 and L2 regularizations

on the coefficients when predicting response y with a linear combination of

the predictors in X. It can reduce the variance of the predictors, force

- some coefficients to be smaell, and improves the conditioning of the

+ some coefficients to be small, and improves the conditioning of the

problem.

cuML's ElasticNet an array-like object or cuDF DataFrame, uses coordinate

|

fix ANTSPATH

fixes | @@ -24,7 +24,7 @@ binaries:

yum:

- curl

env:

- ANTSPATH: "{{ self.install_path }}"

+ ANTSPATH: "{{ self.install_path }}/"

PATH: "{{ self.install_path }}:$PATH"

instructions: |

{{ self.install_dependencies() }}

|

Update ci.yml

more matrix-include trial and error | @@ -30,25 +30,14 @@ jobs:

"da_tests.py"

]

include:

+ - os: macos-latest

+ python-version: 3.8

+ run-type: mac

- os: ubuntu-latest

python-version: 3.8

run-type: nb

test-path: autotest_notebooks.py

- - os: macos-latest

- python-version: 3.8

- test-path: [

- "pst_tests_2.py",

- "utils_tests.py",

- "pst_from_tests.py",

- "pst_tests.py",

- "full_meal_deal_tests.py",

- "en_tests.py",

- "la_tests.py",

- "plot_tests.py",

- "moouu_tests.py",

- "mat_tests.py",

- "da_tests.py"

- ]

+

steps:

- uses: actions/[email protected]

|

Update apt_cobaltdickens.txt

Merging trails and fixing dups. | # See the file 'LICENSE' for copying permission

# Reference: https://www.secureworks.com/blog/back-to-school-cobalt-dickens-targets-universities

-

-anvc.me

-eduv.icu

-jhbn.me

-nimc.cf

-uncr.me

-unie.ga

-unie.ml

-unin.icu

-unip.cf

-unip.gq

-unir.cf

-unir.gq

-unir.ml

-unisv.xyz

-univ.red

-untc.me

-untf.me

-unts.me

-unvc.me

-ebookfafa.com

-lib-service.com

-

# Reference: https://www.secureworks.com/blog/cobalt-dickens-goes-back-to-school-again

# Reference: https://otx.alienvault.com/pulse/5d78eaf37b37c503fb07d45a

|

Disable test_backward_per_tensor in test_fake_quant

Summary:

Pull Request resolved:

This test case started failing; disable it to keep the build clean.

ghstack-source-id:

Test Plan: Unittest disabling change | @@ -89,6 +89,7 @@ class TestFakeQuantizePerTensor(TestCase):

@given(device=st.sampled_from(['cpu', 'cuda'] if torch.cuda.is_available() else ['cpu']),

X=hu.tensor(shapes=hu.array_shapes(1, 5,),

qparams=hu.qparams(dtypes=torch.quint8)))

+ @unittest.skip("temporarily disable the test")

def test_backward_per_tensor(self, device, X):

r"""Tests the backward method.

"""

|

Adding list sketches to the tsctl command

* First steps toward adding the ability to manually run a sketch.

* Revert "First steps toward adding the ability to manually run a sketch."

This reverts commit

* Adding a list sketch to tsctl. | @@ -303,6 +303,34 @@ class SearchTemplateManager(Command):

db_session.commit()

+class ListSketches(Command):

+ """List all available sketches."""

+

+ # pylint: disable=arguments-differ, method-hidden

+ def run(self):

+ """The run method for the command."""

+ sketches = Sketch.query.all()

+

+ name_len = max([len(x.name) for x in sketches])

+ desc_len = max([len(x.description) for x in sketches])

+

+ if not name_len:

+ name_len = 5

+ if not desc_len:

+ desc_len = 10

+

+ fmt_string = '{{0:^3d}} | {{1:{0:d}s}} | {{2:{1:d}s}}'.format(

+ name_len, desc_len)

+

+ print('+-'*40)

+ print(' ID | Name {0:s} | Description'.format(' '*(name_len-5)))

+ print('+-'*40)

+ for sketch in sketches:

+ print(fmt_string.format(

+ sketch.id, sketch.name, sketch.description))

+ print('-'*80)

+

+

class ImportTimeline(Command):

"""Create a new Timesketch timeline from a file."""

option_list = (

@@ -431,6 +459,7 @@ def main():

shell_manager.add_command('add_index', AddSearchIndex())

shell_manager.add_command('db', MigrateCommand)

shell_manager.add_command('drop_db', DropDataBaseTables())

+ shell_manager.add_command('list_sketches', ListSketches())

shell_manager.add_command('purge', PurgeTimeline())

shell_manager.add_command('search_template', SearchTemplateManager())

shell_manager.add_command('import', ImportTimeline())

|
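`ListSketches.run` computes its column widths with `max()` over a list. That call raises `ValueError` when the database holds no sketches, and the `if not name_len` fallback only applies when every name is empty. The sketch below builds the same kind of fixed-width table but uses `max(..., default=...)`. The `(id, name, description)` tuples stand in for `Sketch` rows, and treating a `None` description as empty is an assumption, since the diff does not show whether that column is nullable.

```python
def format_sketch_table(rows, min_name=4, min_desc=11):
    """Render (id, name, description) rows as a fixed-width text table."""
    # Treat missing names/descriptions as empty strings (assumption).
    rows = [(sid, name or "", desc or "") for sid, name, desc in rows]
    # default= keeps max() from raising ValueError when there are no rows;
    # the minimums keep the "Name"/"Description" headers from being cut off.
    name_len = max(max((len(n) for _, n, _ in rows), default=0), min_name)
    desc_len = max(max((len(d) for _, _, d in rows), default=0), min_desc)

    row_fmt = "{0:^4} | {1:<%d} | {2:<%d}" % (name_len, desc_len)
    header = row_fmt.format("ID", "Name", "Description")
    rule = "-" * len(header)
    lines = [rule, header, rule]
    lines += [row_fmt.format(sid, n, d) for sid, n, d in rows]
    lines.append(rule)
    return "\n".join(lines)


empty_table = format_sketch_table([])
table = format_sketch_table([(1, "incident", None), (2, "phishing", "Mail triage")])
```

Deriving the rule width from the rendered header also avoids the fixed `'+-'*40` / `'-'*80` separators drifting out of step with the column widths.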

[IMPR] Use ThreadList with weblinkchecker.py

Also simplify ignore url checking | @@ -133,7 +133,7 @@ from pywikibot.bot import ExistingPageBot, SingleSiteBot, suggest_help

from pywikibot.pagegenerators import (

XMLDumpPageGenerator as _XMLDumpPageGenerator,

)

-from pywikibot.tools import deprecated

+from pywikibot.tools import deprecated, ThreadList

from pywikibot.tools.formatter import color_format

try:

@@ -857,32 +857,24 @@ class WeblinkCheckerRobot(SingleSiteBot, ExistingPageBot):

self.HTTPignore = HTTPignore

self.day = day

+ # Limit the number of threads started at the same time

+ self.threads = ThreadList(limit=config.max_external_links,

+ wait_time=config.retry_wait)

+

def treat_page(self):

"""Process one page."""

page = self.current_page

- text = page.get()

- for url in weblinksIn(text):

+ for url in weblinksIn(page.text):

for ignoreR in ignorelist:

if ignoreR.match(url):

break

- else: # not ignore url

- # Limit the number of threads started at the same time. Each

- # thread will check one page, then die.

- while threading.activeCount() >= config.max_external_links:

- pywikibot.sleep(config.retry_wait)

+ else:

+ # Each thread will check one page, then die.

thread = LinkCheckThread(page, url, self.history,

self.HTTPignore, self.day)

# thread dies when program terminates

thread.setDaemon(True)

- try:

- thread.start()

- except threading.ThreadError:

- pywikibot.warning(

- "Can't start a new thread.\nPlease decrease "

- 'max_external_links in your user-config.py or use\n'

- "'-max_external_links:' option with a smaller value. "

- 'Default is 50.')

- raise

+ self.threads.append(thread)

def RepeatPageGenerator():

|
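`ThreadList` takes over the throttling that the removed `while threading.activeCount() >= config.max_external_links` loop did by hand. The class below is not pywikibot's implementation. It is a minimal stand-in that assumes only one behavior: an appended thread is started once fewer than `limit` of the list's own threads are alive, and the list sleeps `wait_time` seconds between checks. `BoundedThreadList` and `check_link` are hypothetical names.

```python
import threading
import time


class BoundedThreadList(list):
    """Hypothetical stand-in for a throttling thread list (not pywikibot's code)."""

    def __init__(self, limit=50, wait_time=0.1):
        super().__init__()
        self.limit = limit
        self.wait_time = wait_time

    def active_count(self):
        # Count only threads started by this list that are still running.
        return sum(1 for t in self if t.is_alive())

    def append(self, thread):
        # Wait for a free slot, then track and start the thread.
        while self.active_count() >= self.limit:
            time.sleep(self.wait_time)
        super().append(thread)
        thread.start()


stats = {"running": 0, "peak": 0}
stats_lock = threading.Lock()


def check_link():
    # Simulated link check that records how many checks overlap.
    with stats_lock:
        stats["running"] += 1
        stats["peak"] = max(stats["peak"], stats["running"])
    time.sleep(0.05)
    with stats_lock:
        stats["running"] -= 1


threads = BoundedThreadList(limit=2, wait_time=0.01)
for _ in range(6):
    threads.append(threading.Thread(target=check_link, daemon=True))
for t in threads:
    t.join()
```

This sketch counts only the threads it started. The removed loop compared the process-wide thread count, so unrelated threads also counted against the limit. Separately, `threading.activeCount` and `Thread.setDaemon` have been deprecated aliases since Python 3.10; the current spellings are `threading.active_count()` and the `daemon` attribute.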

[Bugfix] fskip of EliminateCommonSubexpr cannot always return false

* 'fskip' will not always return false

fskip assigned false at the end of the PackedFunc, which overwrote the true result set in the 'cast' case. The default is now assigned first.

* Update build_module.cc | @@ -318,6 +318,7 @@ class RelayBuildModule : public runtime::ModuleNode {

pass_seqs.push_back(transform::SimplifyInference());

PackedFunc fskip = PackedFunc([](TVMArgs args, TVMRetValue* rv) {

Expr expr = args[0];

+ *rv = false;

if (expr.as<CallNode>()) {

auto call_node = expr.as<CallNode>();

auto op_node = call_node->op.as<OpNode>();

@@ -328,7 +329,6 @@ class RelayBuildModule : public runtime::ModuleNode {

}

}

}

- *rv = false;

});

pass_seqs.push_back(transform::EliminateCommonSubexpr(fskip));

pass_seqs.push_back(transform::CombineParallelConv2D(3));

|
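The bug is an ordering problem with an out-parameter. The default `*rv = false` ran after the branch that set `true`, so the default always won. The Python analogue below uses a one-element list `rv` in place of `TVMRetValue*`. The real predicate is hidden in the diff context, so the check is reduced to a bare op-name comparison; `fskip_buggy`, `fskip_fixed` and `run` are illustrative names.

```python
def fskip_buggy(op_name, rv):
    if op_name == "cast":
        rv[0] = True   # meant to mark the expression as skipped...
    rv[0] = False      # ...but this unconditional default overwrites it


def fskip_fixed(op_name, rv):
    rv[0] = False      # assign the default first
    if op_name == "cast":
        rv[0] = True   # a more specific result assigned later now survives


def run(fskip, op_name):
    rv = [None]        # stands in for the TVMRetValue* out-parameter
    fskip(op_name, rv)
    return rv[0]
```

The fix keeps the default but moves it ahead of every branch that may override it.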

explicitly provide memory format when calling to clone() at Sorting.cpp

Summary: Pull Request resolved:

Test Plan: Imported from OSS | @@ -120,7 +120,7 @@ static std::tuple<Tensor&, Tensor&> kthvalue_out_impl_cpu(

indices.zero_();

return std::forward_as_tuple(values, indices);

}

- auto tmp_values = self.clone();

+ auto tmp_values = self.clone(at::MemoryFormat::Contiguous);

auto tmp_indices = at::empty(self.sizes(), self.options().dtype(kLong));

AT_DISPATCH_ALL_TYPES(self.scalar_type(), "kthvalue_cpu", [&] {

dim_apply(

@@ -290,9 +290,9 @@ Tensor median_cpu(const Tensor& self) {

#endif

TORCH_CHECK(self.numel() > 0, "median cannot be called with empty tensor");

if (self.dim() == 0 && self.numel() == 1) {

- return self.clone();

+ return self.clone(at::MemoryFormat::Contiguous);

}

- auto tmp_values = self.clone().view(-1);

+ auto tmp_values = self.clone(at::MemoryFormat::Contiguous).view(-1);

auto result = at::empty({1}, self.options());

AT_DISPATCH_ALL_TYPES(self.scalar_type(), "median", [&] {

// note, quick_select is 0 based while kthvalue is not

|

ENH: extend delete single value optimization

Allow arrays of shape (1,) for delete's obj parameter to utilize the

optimization for a single value. See | @@ -4368,6 +4368,19 @@ def delete(arr, obj, axis=None):

return new

if isinstance(obj, (int, integer)) and not isinstance(obj, bool):

+ single_value = True

+ else:

+ single_value = False

+ _obj = obj

+ obj = np.asarray(obj)

+ if obj.size == 0 and not isinstance(_obj, np.ndarray):

+ obj = obj.astype(intp)

+

+ if obj.shape == (1,):

+ obj = obj.item()

+ single_value = True

+

+ if single_value:

# optimization for a single value

if (obj < -N or obj >= N):

raise IndexError(

@@ -4384,11 +4397,6 @@ def delete(arr, obj, axis=None):

slobj2[axis] = slice(obj+1, None)

new[tuple(slobj)] = arr[tuple(slobj2)]

else:

- _obj = obj

- obj = np.asarray(obj)

- if obj.size == 0 and not isinstance(_obj, np.ndarray):

- obj = obj.astype(intp)

-

if obj.dtype == bool:

if obj.shape != (N,):

raise ValueError('boolean array argument obj to delete '

|
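The change routes a one-element `obj` onto the same fast path as a plain integer. The sketch below is a dependency-free Python model of that dispatch, not NumPy's code. `normalize_delete_index` and `delete_one` are hypothetical names, and only lists and tuples are modeled, not `(1,)`-shaped ndarrays. Booleans are excluded so that a one-element mask is not mistaken for an index, mirroring the `not isinstance(obj, bool)` guard in the diff.

```python
from numbers import Integral


def normalize_delete_index(obj):
    """Return (single_value, obj) for a simplified np.delete-style obj."""
    if isinstance(obj, Integral) and not isinstance(obj, bool):
        return True, int(obj)
    # A length-1 list/tuple holding one non-bool integer takes the fast path too.
    if (isinstance(obj, (list, tuple)) and len(obj) == 1
            and isinstance(obj[0], Integral) and not isinstance(obj[0], bool)):
        return True, int(obj[0])
    return False, obj


def delete_one(seq, index):
    """Single-value path: bounds-check like np.delete, then splice it out."""
    n = len(seq)
    if index < -n or index >= n:
        raise IndexError(f"index {index} is out of bounds for size {n}")
    index %= n  # map negative indices onto 0..n-1
    return seq[:index] + seq[index + 1:]
```

The payoff is that `delete(arr, [i])` can use two slice copies instead of building an index or mask array.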

Assert page size returned is less than or equal to amt

The EC2 API was returning empty result lists with next tokens. | @@ -64,9 +64,9 @@ class TestEC2Pagination(unittest.TestCase):

self.assertEqual(len(results), 3)

for parsed in results:

reserved_inst_offer = parsed['ReservedInstancesOfferings']

- # There should only be one reserved instance offering on each

- # page.

- self.assertEqual(len(reserved_inst_offer), 1)

+ # There should be no more than one reserved instance

+ # offering on each page.

+ self.assertLessEqual(len(reserved_inst_offer), 1)

def test_can_fall_back_to_old_starting_token(self):

# Using an operation that we know will paginate.

|
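The service can return an empty page that still carries a `NextToken`, so the test asserts an upper bound on page size rather than an exact size. The sketch below is self-contained and uses `unittest`. `fake_pages` is hypothetical in-memory paginator output, not a real EC2 response.

```python
import unittest


def fake_pages():
    """Hypothetical paginator output: an empty page that still carries a
    NextToken appears between pages that do contain results."""
    return [
        {"ReservedInstancesOfferings": [{"Id": "a"}], "NextToken": "t1"},
        {"ReservedInstancesOfferings": [], "NextToken": "t2"},
        {"ReservedInstancesOfferings": [{"Id": "b"}]},
    ]


class TestPageSize(unittest.TestCase):
    def test_pages_respect_page_size(self):
        for page in fake_pages():
            # assertEqual(len(...), 1) would fail on the empty page; the
            # upper bound still checks that no page exceeds the page size.
            self.assertLessEqual(len(page["ReservedInstancesOfferings"]), 1)
```

Run it with `python -m unittest` against the file that contains it.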

Expand advanced install instructions

For playbook-based installations of bifrost it is important to

make sure that the appropriate virtual environment is activated

and the correct working directory is used. This commit adds

that information to the existing document. | @@ -375,14 +375,37 @@ restarted.

Playbook Execution

==================

+Playbook based install provides a greater degree of visibility and control

+over the process and is suitable for advanced installation scenarios.

+

+Examples:

+

+First, make sure that the virtual environment is active (the example below

+assumes that bifrost venv is installed into the default path

+/opt/stack/bifrost).

+

+ $ . /opt/stack/bifrost/bin/activate

+ (bifrost) $

+

+Verify if the ansible-playbook executable points to the one installed in

+the virtual environment:

+

+ (bifrost) $ which ansible-playbook

+ /opt/stack/bifrost/bin/ansible-playbook

+ (bifrost) $

+

+change to the ``playbooks`` subdirectory of the cloned bifrost repository:

+

+ $ cd playbooks

+

If you have passwordless sudo enabled, run::

- ansible-playbook -vvvv -i inventory/target install.yaml

+ $ ansible-playbook -vvvv -i inventory/target install.yaml

Otherwise, add the ``-K`` to the ansible command line, to trigger ansible

to prompt for the sudo password::

- ansible-playbook -K -vvvv -i inventory/target install.yaml

+ $ ansible-playbook -K -vvvv -i inventory/target install.yaml

With regard to testing, ironic's node cleaning capability is enabled by

default, but only metadata cleaning is turned on, as it can be an unexpected

@@ -393,7 +416,7 @@ If you wish to enable full cleaning, you can achieve this by passing the option

``-e cleaning_disk_erase=true`` to the command line or executing the command

below::

- ansible-playbook -K -vvvv -i inventory/target install.yaml -e cleaning_disk_erase=true

+ $ ansible-playbook -K -vvvv -i inventory/target install.yaml -e cleaning_disk_erase=true

After you have performed an installation, you can edit

``/etc/ironic/ironic.conf`` to enable or disable cleaning as desired.

@@ -410,7 +433,7 @@ These drivers and information about them can be found in

If you would like to install the ironic staging drivers, simply pass

``-e staging_drivers_include=true`` when executing the install playbook::

- ansible-playbook -K -vvvv -i inventory/target install.yaml -e staging_drivers_include=true

+ $ ansible-playbook -K -vvvv -i inventory/target install.yaml -e staging_drivers_include=true

===============

Advanced Topics

|

Modify the list group date

Modify the list group date to the correct value, as described in the API documentation | @@ -20,9 +20,9 @@ from tempest.tests.lib.services import base

class TestGroupSnapshotsClient(base.BaseServiceTest):

FAKE_CREATE_GROUP_SNAPSHOT = {

"group_snapshot": {

- "group_id": "49c8c114-0d68-4e89-b8bc-3f5a674d54be",

- "name": "group-snapshot-001",

- "description": "Test group snapshot 1"

+ "id": "6f519a48-3183-46cf-a32f-41815f816666",

+ "name": "first_group_snapshot",

+ "group_type_id": "58737af7-786b-48b7-ab7c-2447e74b0ef4"

}

}

@@ -34,7 +34,7 @@ class TestGroupSnapshotsClient(base.BaseServiceTest):

"description": "Test group snapshot 1",

"group_type_id": "0e58433f-d108-4bf3-a22c-34e6b71ef86b",

"status": "available",

- "created_at": "20127-06-20T03:50:07Z"

+ "created_at": "2017-06-20T03:50:07Z"

}

}

@@ -102,8 +102,7 @@ class TestGroupSnapshotsClient(base.BaseServiceTest):

resp_body = {

'group_snapshots': [{

'id': group_snapshot['id'],

- 'name': group_snapshot['name'],

- 'group_type_id': group_snapshot['group_type_id']}

+ 'name': group_snapshot['name']}

for group_snapshot in

self.FAKE_LIST_GROUP_SNAPSHOTS['group_snapshots']

]

|

Fix Cisco.IOS profile

HG--

branch : feature/microservices | @@ -108,6 +108,8 @@ class Profile(BaseProfile):

)

if il.startswith("virtual-template"):

return "Vi %s" % il[16:].strip()

+ if il.startswith("service-engine"):

+ return "Service-Engine %s" % il[14:].strip()

# Serial0/1/0:15-Signaling -> Serial0/1/0:15

if il.startswith("se") and "-" in interface:

interface = interface.split("-")[0]

|
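The profile maps interface names to their canonical IOS form by slicing off a known prefix. The slice offset must equal the prefix length: `len("service-engine") == 14` and `len("virtual-template") == 16`. The sketch below is table-driven and derives the offset from the prefix itself, so the two cannot drift apart. It assumes, as the `il` name suggests, that the profile compares against the lowercased interface name. `PREFIXES` and `convert_interface` are hypothetical, not NOC's API.

```python
# Hypothetical prefix table: lowercased prefix -> canonical IOS prefix.
PREFIXES = [
    ("virtual-template", "Vi"),
    ("service-engine", "Service-Engine"),
]


def convert_interface(name):
    il = name.lower()
    for prefix, canonical in PREFIXES:
        if il.startswith(prefix):
            # Slice by len(prefix) instead of a hard-coded offset such as 14.
            return "%s %s" % (canonical, il[len(prefix):].strip())
    return name  # unknown prefixes pass through unchanged
```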

Keystone - List user groups 'membership_expires_at' attribute

With the introduction of expiring group memberships, there is a

new attribute `membership_expires_at` when listing user groups.

This patch updates the test to check the attribute and then

ignore it for group dict comparison.

Partial-Bug: | @@ -114,6 +114,13 @@ class GroupsV3TestJSON(base.BaseIdentityV3AdminTest):

self.groups_client.add_group_user(group['id'], user['id'])

# list groups which user belongs to

user_groups = self.users_client.list_user_groups(user['id'])['groups']

+ # The `membership_expires_at` attribute is present when listing user

+ # group memberships, and is not an attribute of the groups themselves.

+ # Therefore we remove it from the comparison.

+ for g in user_groups:

+ if 'membership_expires_at' in g:

+ self.assertIsNone(g['membership_expires_at'])

+ del(g['membership_expires_at'])

self.assertEqual(sorted(groups, key=lambda k: k['name']),

sorted(user_groups, key=lambda k: k['name']))

self.assertEqual(2, len(user_groups))

|

Use Bland's rule

Note: pivot_col() assumes at least one obj value is negative | @@ -195,14 +195,11 @@ def simplex(c, m, b):

if any(_.is_negative for _ in tableau[:-1, -1]):

raise NotImplementedError("Phase I for simplex isn't implemented.")

- # Pivoting strategy use Bland's rule

-

def pivot_col(obj):

- low, idx = 0, 0

- for i in range(len(obj) - 1):

- if obj[i] < low:

- low, idx = obj[i], i

- return -1 if idx == 0 else idx

+ # use Bland's rule

+ for i in range(len(obj) - 1): # pragma: no branch

+ if obj[i] < 0:

+ return i

def pivot_row(lhs, rhs):

ratio, idx = oo, 0

|
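Bland's rule chooses the entering column as the lowest-index column with a negative coefficient in the objective row, not the most negative one (Dantzig's rule). The full rule also breaks ties for the leaving row by lowest index, and together these prevent cycling on degenerate problems. Below is a minimal sketch of both entering-column choices. As in the diff, it assumes the last entry of the row is the right-hand side and skips it. Unlike the diff, which relies on a negative entry existing, it returns `None` when the tableau is already optimal. The function names are hypothetical.

```python
from typing import Optional, Sequence


def bland_pivot_col(obj: Sequence[float]) -> Optional[int]:
    """Entering column under Bland's rule: the first negative coefficient.

    The last entry of `obj` is the right-hand side and is never a candidate.
    Returns None when no coefficient is negative (the tableau is optimal).
    """
    for i in range(len(obj) - 1):
        if obj[i] < 0:
            return i
    return None


def dantzig_pivot_col(obj: Sequence[float]) -> Optional[int]:
    """Entering column under Dantzig's rule: the most negative coefficient."""
    candidates = [i for i in range(len(obj) - 1) if obj[i] < 0]
    if not candidates:
        return None
    return min(candidates, key=lambda i: obj[i])


row = [3, -1, -5, 0]           # last entry is the RHS
print(bland_pivot_col(row))    # 1: lowest index with a negative entry
print(dantzig_pivot_col(row))  # 2: most negative entry
```

Returning `None` instead of a sentinel also avoids the ambiguity in the removed code. There, a genuine pivot at index 0 led to `return -1 if idx == 0 else idx`, which made it indistinguishable from "no pivot".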

Update rules

Add key-spacing and switch from no-spaced-func to func-call-spacing

Change space-before-function-paren to require space for anonymous functions | @@ -41,13 +41,23 @@ module.exports = {

"camelcase": ["error", {"properties": "never"}],

"comma-dangle": ["warn", "always-multiline"],

"eqeqeq": ["error"],

+ "func-call-spacing": ["error"],

"indent": ["warn", 4, {"SwitchCase":1}],

"linebreak-style": ["error", "unix"],

- "semi": ["error", "always"],

+ "key-spacing": ["error"],

+ "keyword-spacing": ["error"],

+ "no-implicit-globals": ["error"],

+ "no-irregular-whitespace": ["error"],

"no-new-object": ["error"],

- "no-unneeded-ternary": ["error"],

+ "no-regex-spaces": ["error"],

"no-throw-literal": ["error"],

- "no-implicit-globals": ["error"],

+ "no-unneeded-ternary": ["error"],

+ "no-whitespace-before-property": ["error"], // match flake8 E201 and E211

+ "semi": ["error", "always"],

+ "space-before-function-paren": ["error", {"anonymous": "always", "named": "never", "asyncArrow": "always"}],

+ "space-before-blocks": ["error"],

+ "space-in-parens": ["error", "never"],

+ "space-infix-ops": ["error"], // match flake8 E225

"eslint-dimagi/no-unblessed-new": ["error", ["Date", "Error", "RegExp", "Clipboard"]],

}

|

Update hvac/api/secrets_engines/kv_v2.py

Changed to align with sshishov's suggestions and removed the duplicated code | @@ -61,10 +61,7 @@ class KvV2(VaultApiBase):

return self._adapter.get(url=api_path)

def read_secret(self, path, mount_point=DEFAULT_MOUNT_POINT):

- api_path = utils.format_url('/v1/{mount_point}/data/{path}', mount_point=mount_point, path=path)

- return self._adapter.get(

- url=api_path,

- )

+ return self.read_secret_version(path, mount_point=mount_point)

def read_secret_version(self, path, version=None, mount_point=DEFAULT_MOUNT_POINT):

"""Retrieve the secret at the specified location.

|

doc: Adding exit code for pytest usage examples

* doc: Adding exit code for pytest usage examples

Fix | @@ -168,15 +168,15 @@ You can invoke ``pytest`` from Python code directly:

.. code-block:: python

- pytest.main()

+ retcode = pytest.main()

this acts as if you would call "pytest" from the command line.

-It will not raise ``SystemExit`` but return the exitcode instead.

+It will not raise :class:`SystemExit` but return the :ref:`exit code <exit-codes>` instead.

You can pass in options and arguments:

.. code-block:: python

- pytest.main(["-x", "mytestdir"])

+ retcode = pytest.main(["-x", "mytestdir"])

You can specify additional plugins to ``pytest.main``:

@@ -191,7 +191,8 @@ You can specify additional plugins to ``pytest.main``:

print("*** test run reporting finishing")

- pytest.main(["-qq"], plugins=[MyPlugin()])

+ if __name__ == "__main__":

+ sys.exit(pytest.main(["-qq"], plugins=[MyPlugin()]))

Running it will show that ``MyPlugin`` was added and its

hook was invoked:

|

Added timer in VM resume case

15-minute timeout and 15-second interval for state checking | @@ -125,6 +125,7 @@ function Main {

Start-Sleep -s 120

# Verify the VM status

+ # Can not find if VM hibernation completion or not as soon as it disconnects the network. Assume it is in timeout.

$vmStatus = Get-AzVM -Name $vmName -ResourceGroupName $rgName -Status

if ($vmStatus.Statuses[1].DisplayStatus = "VM stopped") {

Write-LogInfo "$vmStatus.Statuses[1].DisplayStatus: Verified successfully VM status is stopped after hibernation command sent"

@@ -135,8 +136,33 @@ function Main {

# Resume the VM

Start-AzVM -Name $vmName -ResourceGroupName $rgName -NoWait | Out-Null

- Write-LogInfo "Waked up the VM $vmName in Resource Group $rgName and waiting 180 seconds"

- Start-Sleep -s 180

+ Write-LogInfo "Waked up the VM $vmName in Resource Group $rgName and continue checking its status in every 15 seconds until 15 minutes timeout "

+

+ # Wait for VM resume for 15 min-timeout

+ $timeout = New-Timespan -Minutes 15

+ $sw = [diagnostics.stopwatch]::StartNew()

+ while ($sw.elapsed -lt $timeout){

+ $vmCount = $AllVMData.Count

+ Wait-Time -seconds 15

+ $state = Run-LinuxCmd -ip $VMData.PublicIP -port $VMData.SSHPort -username $user -password $password "date"

+ if ($state -eq "TestCompleted") {

+ $kernelCompileCompleted = Run-LinuxCmd -ip $VMData.PublicIP -port $VMData.SSHPort -username $user -password $password "dmesg | grep -i 'hibernation exit'"

+ if ($kernelCompileCompleted -ne "hibernation exit") {

+ Write-LogErr "VM $($VMData.RoleName) resumed successfully but could not determine hibernation completion"

+ } else {

+ Write-LogInfo "VM $($VMData.RoleName) resumed successfully"

+ $vmCount--

+ }

+ break

+ } else {

+ Write-LogInfo "VM is still resuming!"

+ }

+ }

+ if ($vmCount -le 0){

+ Write-LogInfo "VM resume completed"

+ } else {

+ Throw "VM resume did not finish"

+ }

#Verify the VM status after power on event

$vmStatus = Get-AzVM -Name $vmName -ResourceGroupName $rgName -Status

|

Standalone: Blacklist more "test" modules from standard library.

* These were causing issues on Python 2.6 and Travis, but other systems

might be affected as well. | @@ -383,11 +383,11 @@ def scanStandardLibraryPath(stdlib_dir):

]

if import_path in ("tkinter", "importlib", "ctypes", "unittest",

- "sqlite3", "distutils"):

+ "sqlite3", "distutils", "email", "bsddb"):

if "test" in dirs:

dirs.remove("test")

- if import_path == "lib2to3":

+ if import_path in ("lib2to3", "json", "distutils"):

if "tests" in dirs:

dirs.remove("tests")

|

Minor polish to QAOA example

removes function annotations for Python 2 compatibility

puts factor of 2 on betas to match the paper convention | @@ -133,24 +133,16 @@ def Rzz(rads):

return cirq.ZZPowGate(exponent=2 * rads / np.pi, global_shift=-0.5)

-def qaoa_max_cut_unitary(

- qubits,

- betas,

- gammas,

- graph, # Nodes should be integers

-) -> cirq.OP_TREE:

+def qaoa_max_cut_unitary(qubits, betas, gammas,

+ graph): # Nodes should be integers

for beta, gamma in zip(betas, gammas):

yield (

Rzz(-0.5 * gamma).on(qubits[i], qubits[j]) for i, j in graph.edges)

- yield cirq.Rx(beta).on_each(*qubits)

+ yield cirq.Rx(2 * beta).on_each(*qubits)

-def qaoa_max_cut_circuit(

- qubits,

- betas,

- gammas,

- graph, # Nodes should be integers

-) -> cirq.Circuit:

+def qaoa_max_cut_circuit(qubits, betas, gammas,

+ graph): # Nodes should be integers

return cirq.Circuit.from_ops(

# Prepare uniform superposition

cirq.H.on_each(*qubits),

|
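The factor of 2 on the betas comes from Cirq's rotation convention. The short derivation below assumes the paper's mixer $e^{-i\beta B}$ with $B=\sum_j X_j$ and Cirq's $R_x(\theta)=e^{-i\theta X/2}$. Its second line checks that the cost layer built from the example's `Rzz` helper reproduces $e^{-i\gamma C}$ for the MaxCut cost $C=\sum_{(i,j)\in E}\tfrac12(1-Z_iZ_j)$.

```latex
e^{-i\beta B} = \prod_j e^{-i\beta X_j} = \prod_j R_x(2\beta)_j
\qquad\text{since}\qquad R_x(\theta) = e^{-i\theta X/2}

\mathrm{Rzz}(r) = \mathrm{ZZPowGate}\!\left(t = \tfrac{2r}{\pi},\ s = -\tfrac12\right)
  = e^{-i\pi t\, Z Z/2} = e^{-i r\, Z Z}
\;\Rightarrow\;
e^{-i\gamma C} = e^{-i\gamma |E|/2} \prod_{(i,j)\in E} \mathrm{Rzz}\!\left(-\tfrac{\gamma}{2}\right)_{ij}
```

The leftover factor $e^{-i\gamma|E|/2}$ is a global phase. It does not affect measurement statistics, so the circuit can omit it.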

Some changes in resource_utils.py:

_Resource now abstract

Removed ResourceBundle, as we decided to use modules for this purpose instead

Added docstrings | -from typing import Union, Optional, Tuple, Dict

+from typing import Optional, Dict

import hail as hl

# Resource classes

-class ResourceBundle:

- def __init__(self, **kwargs):

- self.resources = kwargs

- self.__dict__.update(kwargs)

-

- def __getitem__(self, item):

- return self.resources[item]

-

- def __repr__(self):

- return f"ResourceBundle({self.resources.__repr__()})"

-

- def __str__(self):

- return f"ResourceBundle({self.resources.__str__()})"

-

-

class _Resource:

+ """

+ Generic abstract resource class.

+ """

def __init__(self, path: str, source_path: Optional[str] = None, **kwargs):

+ if type(self) is _Resource:

+ raise TypeError("Can't instantiate abstract class _Resource")

+

self.source_path = source_path

self.path = path

self.__dict__.update(kwargs)

@classmethod

- def versioned(cls, versions: Dict[str, '_Resource']):

+ def versioned(cls, versions: Dict[str, '_Resource']) -> '_Resource':

+ """

+ Creates a versioned resource.

+ The `path`/`source_path` attributes of the versioned resource are those

+ of default version of the resource (first in the dict keys).

+

+ In addition, all versions of the resource are stored in the `versions` attribute.

+

+ :param dict of str -> _Resource versions: A dict of version name -> resource. The default version should be first in the dict keys.

+ :return: A Resource with versions

+ :rtype: _Resource

+ """

latest = versions[list(versions)[0]]

versioned_resource = cls(path=latest.path, source_path=latest.source_path)

versioned_resource.versions = versions

@@ -33,10 +35,26 @@ class _Resource:

class TableResource(_Resource):

- def ht(self):

+ """

+ A Hail Table resource

+ """

+ def ht(self) -> hl.Table:

+ """

+ Read and return the Hail Table resource

+ :return: Hail Table resource

+ :rtype: Table

+ """

return hl.read_table(self.path)

class MatrixTableResource(_Resource):

- def mt(self):

+ """

+ A Hail MatrixTable resource

+ """

+ def mt(self)-> hl.MatrixTable:

+ """

+ Read and return the Hail MatrixTable resource

+ :return: Hail MatrixTable resource

+ :rtype: MatrixTable

+ """

return hl.read_matrix_table(self.path)

|
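`abc.ABC` only blocks instantiation when the class declares at least one `@abstractmethod`. A base class with no abstract methods therefore needs some other guard, such as the explicit type check in the diff. Below is a minimal sketch of that pattern, together with the "first key is the default version" behaviour of `versioned`. The class names and paths are hypothetical stand-ins for `_Resource` and `TableResource`.

```python
from typing import Dict, Optional


class Resource:
    """Base class that refuses direct instantiation (stand-in for _Resource)."""

    def __init__(self, path: str, source_path: Optional[str] = None, **kwargs):
        # Only the exact base type is rejected; subclasses pass this check.
        if type(self) is Resource:
            raise TypeError("Can't instantiate abstract class Resource")
        self.path = path
        self.source_path = source_path
        self.__dict__.update(kwargs)

    @classmethod
    def versioned(cls, versions: Dict[str, "Resource"]) -> "Resource":
        # Dicts keep insertion order (Python 3.7+), so the first key is the default.
        default = versions[next(iter(versions))]
        resource = cls(path=default.path, source_path=default.source_path)
        resource.versions = versions
        return resource


class TableResource(Resource):
    """Concrete subclass; instantiating it is allowed."""


v2 = TableResource("gs://bucket/v2.ht")
v1 = TableResource("gs://bucket/v1.ht")
latest = TableResource.versioned({"v2": v2, "v1": v1})
print(latest.path)  # gs://bucket/v2.ht
```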

tests: pin ansible-lint version

This commit pins the ansible-lint version to 4.3.7 as ceph-ansible isn't

compatible with recent changes in 5.0.0 | @@ -14,5 +14,5 @@ jobs:

with:

python-version: ${{ matrix.python-version }}

architecture: x64

- - run: pip install -r <(grep ansible tests/requirements.txt) ansible-lint

+ - run: pip install -r <(grep ansible tests/requirements.txt) ansible-lint==4.3.7

- run: ansible-lint -x 106,204,205,208 -v --force-color ./roles/*/ ./infrastructure-playbooks/*.yml site-container.yml.sample site-container.yml.sample

\ No newline at end of file

|

Potential fix for bug in "Use These Parameters" popup menu entry.

The fix changes the set_parameter API so that it sets the current value instead of the default value. | @@ -55,8 +55,8 @@ class Input(object):

"""

self._parameter = parameter

- if parameter.default is not None:

- self.setValue(parameter.default)

+ if parameter.value is not None:

+ self.setValue(parameter.value)

if hasattr(parameter, 'units') and parameter.units:

self.setSuffix(" %s" % parameter.units)

|

Fix lack of space in fatal error ("tofile an issue")

Also reduces duplication by storing the error message in a variable | @@ -638,15 +638,10 @@ class LocalizedEvent(DatetimeEvent):

try:

starttz = getattr(self._vevents[self.ref]['DTSTART'].dt, 'tzinfo', None)

except KeyError:

- logger.fatal(

- "Cannot understand event {} from calendar {},\n you might want to"

- "file an issue at https://github.com/pimutils/khal/issues"

- "".format(kwargs.get('href'), kwargs.get('calendar'))

- )

+ msg = "Cannot understand event {} from calendar {}, you might want to file an issue at https://github.com/pimutils/khal/issues".format(kwargs.get('href'), kwargs.get('calendar'))

+ logger.fatal(msg)

raise FatalError( # because in ikhal you won't see the logger's output

- "Cannot understand event {} from calendar {},\n you might want to"

- "file an issue at https://github.com/pimutils/khal/issues"

- "".format(kwargs.get('href'), kwargs.get('calendar'))

+ msg

)

if starttz is None:

|
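The missing space comes from Python's implicit concatenation of adjacent string literals. The literals are joined at compile time with nothing in between, so any separating space must appear inside one of them. Below is a minimal illustration using hypothetical placeholder values.

```python
# Adjacent literals form one string before .format() is applied,
# and no separator is inserted where they meet.
broken = ("Cannot understand event {} from calendar {},\n you might want to"
          "file an issue").format("evt.ics", "home")

# Keeping the trailing space inside the first literal fixes the join.
fixed = ("Cannot understand event {} from calendar {}, you might want to "
         "file an issue").format("evt.ics", "home")

print(broken)
print(fixed)
```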

structured config docs import consistency

Closes | @@ -206,6 +206,7 @@ supported by OmegaConf (``int``, ``float``. ``bool``, ``str``, ``Enum`` or Struc

.. doctest::

+ >>> from dataclasses import dataclass, field

>>> from typing import List, Tuple

>>> @dataclass

... class User:

@@ -246,6 +247,7 @@ as arbitrary Structured configs)

.. doctest::

+ >>> from dataclasses import dataclass, field

>>> from typing import Dict

>>> @dataclass

... class DictExample:

@@ -385,6 +387,8 @@ Frozen dataclasses and attr classes are supported via OmegaConf :ref:`read-only-

.. doctest::

+ >>> from dataclasses import dataclass, field

+ >>> from typing import List

>>> @dataclass(frozen=True)

... class FrozenClass:

... x: int = 10

|

Fix 'metadata referenced before assignment' error

For the life of me, I can't figure out how this wasn't caught by the

test added in | @@ -21,6 +21,7 @@ import websockets

from . import provisioner, tag, utils

from .annotationhelper import _get_annotations, _set_annotations

from .bundle import BundleHandler, get_charm_series

+from .charm import get_local_charm_metadata

from .charmhub import CharmHub

from .charmstore import CharmStore

from .client import client, connector

@@ -1419,7 +1420,6 @@ class Model:

entity_url = str(entity_url) # allow for pathlib.Path objects

entity_path = Path(entity_url.replace('local:', ''))

bundle_path = entity_path / 'bundle.yaml'

- metadata_path = entity_path / 'metadata.yaml'

is_local = (

entity_url.startswith('local:') or

@@ -1470,12 +1470,8 @@ class Model:

entity_id,

entity=entity)

else:

+ metadata = get_local_charm_metadata(entity_path)

if not application_name:

- if str(entity_path).endswith('.charm'):

- with zipfile.ZipFile(entity_path, 'r') as charm_file:

- metadata = yaml.load(charm_file.read('metadata.yaml'), Loader=yaml.FullLoader)

- else:

- metadata = yaml.load(metadata_path.read_text(), Loader=yaml.FullLoader)

application_name = metadata['name']

# We have a local charm dir that needs to be uploaded

charm_dir = os.path.abspath(

|

Better error message for spark.serializer unset

fixes | @@ -105,10 +105,11 @@ object HailContext {

val problems = new ArrayBuffer[String]

- val serializer = conf.get("spark.serializer")

+ val serializer = conf.getOption("spark.serializer")

val kryoSerializer = "org.apache.spark.serializer.KryoSerializer"

- if (serializer != kryoSerializer)

- problems += s"Invalid configuration property spark.serializer: required $kryoSerializer. Found: $serializer."

+ if (!serializer.contains(kryoSerializer))

+ problems += s"Invalid configuration property spark.serializer: required $kryoSerializer. " +

+ s"Found: ${ serializer.getOrElse("empty parameter") }."

if (!conf.getOption("spark.kryo.registrator").exists(_.split(",").contains("is.hail.kryo.HailKryoRegistrator")))

problems += s"Invalid config parameter: spark.kryo.registrator must include is.hail.kryo.HailKryoRegistrator." +

|

Fix check on empty dataset

Without this fix we read empty datasets that resulted in several

warnings. | @@ -129,7 +129,7 @@ class SqParquetDB(SqDB):

dataset = ds.dataset(elem, format='parquet',

partitioning='hive')

- if not dataset:

+ if not dataset.files:

continue

tmp_df = self._process_dataset(dataset, namespace, start,

|
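An object that defines neither `__bool__` nor `__len__` is always truthy in Python, so a check like `if not dataset:` never fires for such an object. The fix implies the dataset object behaves this way, and that its `files` list is what actually reflects emptiness. Below is a pure-Python illustration with a hypothetical stand-in class; it does not use PyArrow.

```python
from typing import List


class FakeFileDataset:
    """Hypothetical stand-in that, like the real object, has no __bool__/__len__."""

    def __init__(self, files: List[str]):
        self.files = files


empty = FakeFileDataset(files=[])

# Without __bool__ or __len__, every instance is truthy, so this is False.
print(not empty)        # False
# The discovered-file list is what tells us the dataset is empty.
print(not empty.files)  # True
```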

Temporarily disabling tests for 0.28.x because 0.28.x is currently unsupported by the test

cases. | @@ -125,6 +125,12 @@ if [[ "${TFX_VERSION}" == 0.27.* ]]; then

)

fi

+if [[ "${TFX_VERSION}" == 0.28.* ]]; then

+ # Skipping all TFX 0.28.0 tests until all the issues has been resolved

+ # http://b/183541263 TFX Tests fails for TFX 0.28.0

+ exit 0

+fi

+

# TODO(b/182435431): Delete the following test after the hanging issue resolved.

SKIP_LIST+=(

"tfx/experimental/distributed_inference/graphdef_experiments/subgraph_partitioning/beam_pipeline_test.py"

|

[ci] Add CODEOWNERS for .buildkite/hooks

These hooks are specific to our Buildkite setup and require some context to be edited successfully. Thus they should be protected by codeowner approval. | #/.travis.yml @ray-project/ray-core

#/ci/ @ray-project/ray-core

+# Buildkite pipeline management

+.buildkite/hooks @simon-mo @krfricke

+

/.github/ISSUE_TEMPLATE/ @ericl @stephanie-wang @scv119 @pcmoritz

|

Amend fix in commit #b737828

An empty string counts as a label. It should not be ignored. | @@ -218,7 +218,10 @@ class Layout(object):

out : str

x-axis label

"""

- return self.panel_scales_x[0].name or labels.get('x', '')

+ if self.panel_scales_x[0].name is not None:

+ return self.panel_scales_x[0].name

+ else:

+ return labels.get('x', '')

def ylabel(self, labels):

"""

@@ -235,4 +238,7 @@ class Layout(object):

out : str

y-axis label

"""

- return self.panel_scales_y[0].name or labels.get('y', '')

+ if self.panel_scales_y[0].name is not None:

+ return self.panel_scales_y[0].name

+ else:

+ return labels.get('y', '')

|
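The two versions differ only for falsy names. With `or`, an explicitly empty name `''` falls through to the fallback mapping. With `is not None`, only a missing name does. Below is a minimal side-by-side sketch; the function names and the label mapping are hypothetical.

```python
from typing import Dict, Optional


def label_or(name: Optional[str], labels: Dict[str, str], axis: str) -> str:
    # Old behaviour: '' is falsy, so an explicitly empty name is replaced.
    return name or labels.get(axis, "")


def label_is_not_none(name: Optional[str], labels: Dict[str, str], axis: str) -> str:
    # New behaviour: only a missing name (None) falls back to the mapping.
    if name is not None:
        return name
    return labels.get(axis, "")


labels = {"x": "displ"}
print(repr(label_or("", labels, "x")))             # 'displ'
print(repr(label_is_not_none("", labels, "x")))    # ''
print(repr(label_is_not_none(None, labels, "x")))  # 'displ'
```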

Minor terminology fix

For libadalang#923 | @@ -1752,7 +1752,7 @@ def make_formatter(

wrapped paragraphs, the given ``line_prefix`` for each line, and given

``prefix`` and ``suffix``.

- If the ``:typeref:`` Langkit directive is used in the docstring,

+ If the ``:typeref:`` Langkit role is used in the docstring,

``get_node_name`` will be used to translate the name to the proper name in

the given language, and ``type_role_name`` will be used as the name for the

type reference role in the given language.

|

fix: move GitLab mirror to sw release

This commit moves the repo sync to the correct place. | # This job will execute a release:

# Ansible galaxy new release

# GitHub release with tag

+# Mirror to GitLab

# Documents release

name: release

@@ -60,6 +61,15 @@ jobs:

python3 -m pip install --force dist/*

kubeinit -v

fi

+ - name: Mirror to GitLab

+ run: |

+ git clone https://github.com/Kubeinit/kubeinit.git kubeinit_mirror

+ cd kubeinit_mirror

+ git branch -r | grep -v -- ' -> ' | while read remote; do git branch --track "${remote#origin/}" "$remote" 2>&1 | grep -v ' already exists'; done

+ git fetch --all

+ git pull --all

+ sed -i 's/https:\/\/github\.com\/Kubeinit\/kubeinit\.git/https:\/\/github-access:${{ secrets.GITLAB_TOKEN }}@gitlab\.com\/kubeinit\/kubeinit.git/g' .git/config

+ git push --force --all origin

release_docs:

needs: release_sw

@@ -157,12 +167,3 @@ jobs:

branch: gh-pages

directory: gh-pages

github_token: ${{ secrets.GITHUB_TOKEN }}

- - name: Mirror to GitLab

- run: |

- git clone https://github.com/Kubeinit/kubeinit.git kubeinit_mirror

- cd kubeinit_mirror

- git branch -r | grep -v '\->' | while read remote; do git branch --track "${remote#origin/}" "$remote"; done

- git fetch --all

- git pull --all

- sed -i 's/https:\/\/github\.com\/Kubeinit\/kubeinit\.git/https:\/\/github-access:${{ secrets.GITLAB_TOKEN }}@gitlab\.com\/kubeinit\/kubeinit.git/g' .git/config

- git push --force --all origin

|

doc:update virtual gpu doc

Add a note to the documentation that the GPU vendor's vGPU

driver software needs to be installed and configured. | @@ -11,7 +11,8 @@ Processing Units (vGPUs) if the hypervisor supports the hardware driver and has

the capability to create guests using those virtual devices.

This feature is highly dependent on the hypervisor, its version and the

-physical devices present on the host.

+physical devices present on the host. In addition, the vendor's vGPU driver software

+must be installed and configured on the host at the same time.

.. important:: As of the Queens release, there is no upstream continuous

integration testing with a hardware environment that has virtual

@@ -93,7 +94,8 @@ Depending on your hypervisor:

- For libvirt, virtual GPUs are seen as mediated devices. Physical PCI devices

(the graphic card here) supporting virtual GPUs propose mediated device

(mdev) types. Since mediated devices are supported by the Linux kernel

- through sysfs files, you can see the required properties as follows:

+ through sysfs files after installing the vendor's virtual GPUs driver

+ software, you can see the required properties as follows:

.. code-block:: console

|

Update README.rst

Update | *******************************************

-Uncertainty Quantification using python (UQpy)

+Uncertainty Quantification with python (UQpy)

*******************************************

|logo|

+

+====

+

:Authors: Michael D. Shields, Dimitris G. Giovanis

-:Contributors: Jiaxin Zhang, Aakash Bangalore Satish, Lohit Vandanapu, Mohit Singh Chauhan

+:Contributors: Aakash Bangalore Satish, Mohit Singh Chauhan, Lohit Vandanapu, Jiaxin Zhang

:Contact: [email protected], [email protected]

-:Web site: www.ce.jhu.edu/surg

-:Documentation: http://uqpy-docs-v0.readthedocs.io/en/latest/

-:Copyright: SURG

-:License:

-:Date: March 2018

:Version: 0.1.0

-Note

-====

-

-UQpy is currently in initial development and therefore should not be

-considered as a stable release.

Description

===========

-UQpy is a numerical tool for performing uncertainty quantification

-using python.

+UQpy (Uncertainty Quantification with python) is a general purpose Python toolbox for modeling uncertainty in physical and mathematical systems..

Supported methods

------------------

+===========

+For sampling:

1. Monte Carlo simulation (MCS),

2. Latin Hypercube Sampling (LHS),

3. Markov Chain Monte Carlo simulation (MCMC)

4. Partially Stratified Sampling (PSS).

-5. Stochastic Reduced Order Models (SROM).

+For reliability analysis:

+ 1. Subset Simulation

-Requirements

-------------

+For surrogate modeling:

+ 1. Stochastic Reduced Order Models (SROM).

+

+

+Dependencies

+===========

* ::

- Python >= 3.6.2

+ Python >= 3.6

Git >= 2.13.1

Installation

-------------

+===========

+

+From PyPI

+

+ * ::

+

+ $pip install UQpy

+

+In order to uninstall it

+

+ * ::

-This will check for all the necessary packages. It will create the required virtual environment and install all its dependencies.

+ $pip uninstall UQpy

+

+Clone your fork of the UQpy repo from your GitHub account to your local disk (to get the latest version):

* ::

$git clone https://github.com/SURGroup/UQpy.git

$cd UQpy/

- $pip install -r requirements.txt

- $python setup.py install

+ $python setup.py install (user installation)

+ $python setup.py develop (developer installation)

+Help and Support

+===========

-In order to uninstall it

+Documentation:

+ http://uqpy-docs-v0.readthedocs.io/en/latest/

- * ::

+Website:

+ www.ce.jhu.edu/surg

- $pip uninstall UQpy

.. |logo| image:: logo.jpg

|

Always return true for feedback type when interaction type is not specified.

Cleanup comments. | @@ -95,9 +95,12 @@ const CMIFeedback = {

// Coerce the value to a string for validation

value = String(value);

if (obj.type === 'true-false') {

+ // Single character matching the valid true false characters

return trueFalse.test(value) && value.length === 1;

}

if (obj.type === 'choice') {

+ // Choice is comma separated list of 0-9 and a-z

+ // Can also be wrapped in curly braces

if (value[0] === '{') {

if (value[value.length - 1] === '}') {

value = value.substring(1, value.length - 1);

@@ -108,18 +111,24 @@ const CMIFeedback = {

return value.split(',').every(singleAlphaNumeric);

}

if (obj.type === 'fill-in' || obj.type === 'performance') {

+ // Ensure it doesn't exceed the max length

if (value.length <= 255) {

return true;

}

return false;

}

if (obj.type === 'numeric') {

+ // Just validate that it's a number

return CMIDecimal.validate(value);

}

if (obj.type === 'likert') {

+ // A single alphanumeric character

return alphaNumeric.test(String(value)) && value.length === 1;

}

if (obj.type === 'matching') {

+ // Like the choice type, except that the comma separated

+ // values are pairs separted by '.'

+ // e.g. 'a.b,1.c'

if (value[0] === '{') {

if (value[value.length - 1] === '}') {

value = value.substring(1, value.length - 1);

@@ -132,8 +141,11 @@ const CMIFeedback = {

});

}

if (obj.type === 'sequencing') {

+ // Comma separated list of single characters

return value.split(',').every(singleAlphaNumeric);

}

+ // No type, return true as we can't validate

+ return true;

},

};

@@ -554,8 +566,6 @@ export default class SCORM extends BaseShim {

iframeInitialize() {

this.__setShimInterface();

- // window.parent is our own shim, so we use regular assignment

- // here to play nicely with the Proxy we are using.

try {

window.parent.API = this.shim;

} catch (e) {

|

rolling_update: add ceph-handler role

Since the introduction of ceph-handler, it has to be added to the

rolling_update playbook as well | roles:

- ceph-defaults

+ - ceph-handler

- { role: ceph-common, when: not containerized_deployment }

- { role: ceph-docker-common, when: containerized_deployment }

- ceph-config

roles:

- ceph-defaults

+ - ceph-handler

- { role: ceph-common, when: not containerized_deployment }

- { role: ceph-docker-common, when: containerized_deployment }

- ceph-config

roles:

- ceph-defaults

+ - ceph-handler

- { role: ceph-common, when: not containerized_deployment }

- { role: ceph-docker-common, when: containerized_deployment }

- ceph-config

roles:

- ceph-defaults

+ - ceph-handler

- { role: ceph-common, when: not containerized_deployment }

- { role: ceph-docker-common, when: containerized_deployment }

- ceph-config

roles:

- ceph-defaults

+ - ceph-handler

- { role: ceph-common, when: not containerized_deployment }

- { role: ceph-docker-common, when: containerized_deployment }

- ceph-config

roles:

- ceph-defaults

+ - ceph-handler

- { role: ceph-common, when: not containerized_deployment }

- { role: ceph-docker-common, when: containerized_deployment }

- ceph-config

roles:

- ceph-defaults

+ - ceph-handler

- { role: ceph-common, when: not containerized_deployment }

- { role: ceph-docker-common, when: containerized_deployment }

- ceph-config

roles:

- ceph-defaults

+ - ceph-handler

- { role: ceph-common, when: not containerized_deployment }

- { role: ceph-docker-common, when: containerized_deployment }

- ceph-config

roles:

- ceph-defaults

+ - ceph-handler

- { role: ceph-common, when: not containerized_deployment }

- { role: ceph-docker-common, when: containerized_deployment }

- ceph-config

|

add sanity check for prod installs

Test Plan:

make sanity_check

make rebuild_dagit

Reviewers: #ft, max

Subscribers: max | @@ -81,7 +81,10 @@ install_dev_python_modules_verbose:

graphql:

cd js_modules/dagit/; make generate-types

-rebuild_dagit:

+sanity_check:

+ ! pip list --exclude-editable | grep -e dagster -e dagit

+

+rebuild_dagit: sanity_check

cd js_modules/dagit/; yarn install --offline && yarn build-for-python

dev_install: install_dev_python_modules rebuild_dagit

|

paper.md: Fixed broken link

I had to use "latest" instead of "stable" for the RTD build because there is no release (yet) with the latest change (section: "use cases") | @@ -78,7 +78,7 @@ Another challenge of medical imaging is the heterogeneity of the data across hos

# Usage

-Past and ongoing research projects using `ivadomed` are listed [here](https://ivadomed.org/en/stable/use_cases.html). The figure below illustrates a cascaded architecture for segmenting spinal tumors on MRI data [@lemay_fully_2020].

+Past and ongoing research projects using `ivadomed` are listed [here](https://ivadomed.org/en/latest/use_cases.html). The figure below illustrates a cascaded architecture for segmenting spinal tumors on MRI data [@lemay_fully_2020].

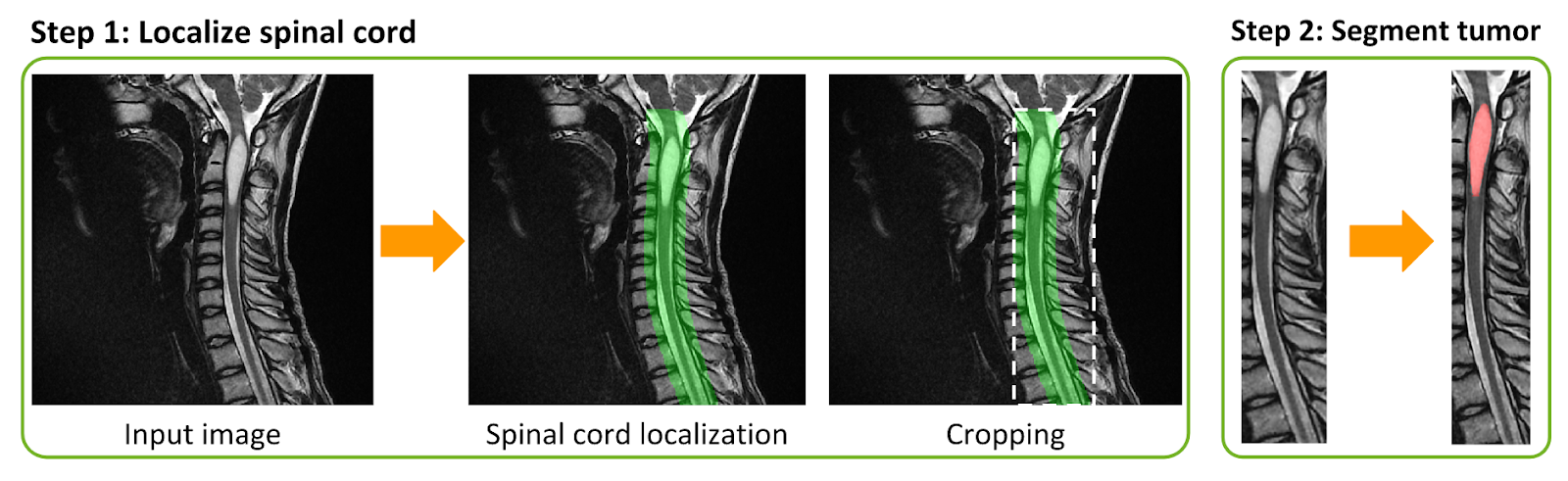

|

FIX: account for API change in ophyd

We are not doing anything with the status object we are passed yet,

but this should work with both signatures and will prevent excessive

warnings. | @@ -1788,7 +1788,7 @@ class RunEngine:

await current_run.kickoff(msg)

- def done_callback():

+ def done_callback(status=None):

self.log.debug(

"The object %r reports 'kickoff' is done " "with status %r",

msg.obj,

@@ -1840,7 +1840,7 @@ class RunEngine:

p_event = asyncio.Event(loop=self.loop)

pardon_failures = self._pardon_failures

- def done_callback():

+ def done_callback(status=None):

self.log.debug(

"The object %r reports 'complete' is done " "with status %r",

msg.obj,

@@ -1910,7 +1910,7 @@ class RunEngine:

p_event = asyncio.Event(loop=self.loop)

pardon_failures = self._pardon_failures

- def done_callback():

+ def done_callback(status=None):

self.log.debug("The object %r reports set is done "

"with status %r", msg.obj, ret.success)

task = self._loop.call_soon_threadsafe(

@@ -1941,7 +1941,7 @@ class RunEngine:

p_event = asyncio.Event(loop=self.loop)

pardon_failures = self._pardon_failures

- def done_callback():

+ def done_callback(status=None):

self.log.debug("The object %r reports trigger is "

"done with status %r.", msg.obj, ret.success)

task = self._loop.call_soon_threadsafe(

|
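Giving the parameter a default keeps a single callback compatible with both call styles: callers that invoke it with no arguments, and callers that pass a status object. Below is a minimal sketch. The helpers `notify_old`, `notify_new` and `FakeStatus` are hypothetical stand-ins for the two behaviours and are not ophyd APIs.

```python
class FakeStatus:
    """Hypothetical status object with a `success` flag."""

    def __init__(self, success: bool):
        self.success = success


calls = []


def done_callback(status=None):
    # Accepts being called with or without the status object.
    calls.append(status)


def notify_old(callback):
    callback()  # older style: no arguments


def notify_new(callback, status):
    callback(status)  # newer style: status passed positionally


notify_old(done_callback)
notify_new(done_callback, FakeStatus(True))
print(len(calls))  # 2
```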

Guess entity type on positive IDs in events and avoid some RPCs

Now specifying a single positive integer ID will add all the types

to the white/blacklist so it can be "guessed". Explicit peers will

always be only that type, and an RPC is avoided (since it was not

needed to begin with). | @@ -20,10 +20,24 @@ def _into_id_set(client, chats):

result = set()

for chat in chats:

+ if isinstance(chat, int):

+ if chat < 0:

+ result.add(chat) # Explicitly marked IDs are negative

+ else:

+ result.update({ # Support all valid types of peers

+ utils.get_peer_id(types.PeerUser(chat)),

+ utils.get_peer_id(types.PeerChat(chat)),

+ utils.get_peer_id(types.PeerChannel(chat)),

+ })

+ elif isinstance(chat, TLObject) and chat.SUBCLASS_OF_ID == 0x2d45687:

+ # 0x2d45687 == crc32(b'Peer')

+ result.add(utils.get_peer_id(chat))

+ else:

chat = client.get_input_entity(chat)

if isinstance(chat, types.InputPeerSelf):

chat = client.get_me(input_peer=True)

result.add(utils.get_peer_id(chat))

+

return result

|
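The idea is that a bare positive ID is ambiguous, so it is expanded into every marked form it could take, while negative (already marked) IDs are kept as they are. Below is a standalone sketch that does not call Telethon. Its marking scheme is an assumption made only to illustrate the set logic: users unchanged, basic groups negated, channels as `-(10**12 + id)`, which matches the "-100" prefix familiar from the Bot API. Check `utils.get_peer_id` for the exact values your version produces. The helper name is hypothetical.

```python
from typing import Iterable, Set

CHANNEL_OFFSET = 10 ** 12  # assumed "-100..." channel marking, for illustration only


def expand_ids(chats: Iterable[int]) -> Set[int]:
    """Return every marked ID a list of chat IDs could refer to."""
    result: Set[int] = set()
    for chat in chats:
        if chat < 0:
            # Already marked: the sign shows it is a group or channel.
            result.add(chat)
        else:
            # Bare positive ID: could be a user, a basic group or a channel.
            result.update({chat, -chat, -(CHANNEL_OFFSET + chat)})
    return result


print(sorted(expand_ids([42, -1001234567890])))
# [-1001234567890, -1000000000042, -42, 42]
```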

chore!: Deprecate request data source

Remove RequestDataSource | @@ -601,16 +601,6 @@ class RequestSource(DataSource):

raise NotImplementedError

-@typechecked

-class RequestDataSource(RequestSource):

- def __init__(self, *args, **kwargs):

- warnings.warn(

- "The 'RequestDataSource' class is deprecated and was renamed to RequestSource. Please use RequestSource instead. This class name will be removed in Feast 0.24.",

- DeprecationWarning,

- )

- super().__init__(*args, **kwargs)

-

-

@typechecked

class KinesisSource(DataSource):

def validate(self, config: RepoConfig):

|

Fix compute baremetal service client tests

While removing the baremetal tests in

compute baremetal

service client in clients.py also got removed but it is needed

in tempest/api/compute/admin/test_baremetal_nodes.py.

This patch fixes this. | @@ -163,6 +163,7 @@ class Manager(clients.ServiceClients):

self.aggregates_client = self.compute.AggregatesClient()

self.services_client = self.compute.ServicesClient()

self.tenant_usages_client = self.compute.TenantUsagesClient()

+ self.baremetal_nodes_client = self.compute.BaremetalNodesClient()

self.hosts_client = self.compute.HostsClient()

self.hypervisor_client = self.compute.HypervisorClient()

self.instance_usages_audit_log_client = (

|

added an image buffer to fix the artificial latency

author Maxime Ellerbach +0200

committer Maxime Ellerbach +0200

added an image buffer to fix the artificial latency | @@ -23,7 +23,8 @@ class DonkeyGymEnv(object):

conf["exe_path"] = sim_path

conf["host"] = host

conf["port"] = port

- conf['guid'] = 0

+ conf["guid"] = 0

+ conf["frame_skip"] = 1

self.env = gym.make(env_name, conf=conf)

self.frame = self.env.reset()

self.action = [0.0, 0.0, 0.0]

@@ -34,14 +35,37 @@ class DonkeyGymEnv(object):

'gyro': (0., 0., 0.),

'accel': (0., 0., 0.),

'vel': (0., 0., 0.)}

- self.delay = float(delay)

+ self.delay = float(delay) / 1000

self.record_location = record_location

self.record_gyroaccel = record_gyroaccel

self.record_velocity = record_velocity

self.record_lidar = record_lidar

+ self.buffer = []

+

+ def delay_buffer(self, frame, info):

+ now = time.time()

+ buffer_tuple = (now, frame, info)

+ self.buffer.append(buffer_tuple)

+

+ # go through the buffer

+ num_to_remove = 0

+ for buf in self.buffer:

+ if now - buf[0] >= self.delay:

+ num_to_remove += 1

+ self.frame = buf[1]

+ else:

+ break

+

+ # clear the buffer

+ del self.buffer[:num_to_remove]

+

def update(self):

while self.running:

+ if self.delay > 0.0:

+ current_frame, _, _, current_info = self.env.step(self.action)

+ self.delay_buffer(current_frame, current_info)

+ else:

self.frame, _, _, self.info = self.env.step(self.action)

def run_threaded(self, steering, throttle, brake=None):

@@ -50,8 +74,7 @@ class DonkeyGymEnv(object):

throttle = 0.0

if brake is None:

brake = 0.0

- if self.delay > 0.0:

- time.sleep(self.delay / 1000.0)

+

self.action = [steering, throttle, brake]

# Output Sim-car position information if configured

|
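The change replaces a blocking `time.sleep` with a timestamped buffer. Each frame is stored with its arrival time, and the frame exposed to the rest of the pipeline is the newest one that is at least `delay` old. Below is a minimal sketch of the same idea using `collections.deque`. The class name is hypothetical, and timestamps are passed in explicitly rather than read from `time.time()`, so the behaviour is easy to test.

```python
from collections import deque
from typing import Any, Deque, Optional, Tuple


class DelayBuffer:
    """Expose frames only once they are at least `delay` old.

    `delay` and the timestamps given to `push` must use the same unit.
    """

    def __init__(self, delay: float):
        self.delay = delay
        self._pending: Deque[Tuple[float, Any]] = deque()
        self.current: Optional[Any] = None  # newest frame old enough to release

    def push(self, now: float, frame: Any) -> Optional[Any]:
        self._pending.append((now, frame))
        # Release every frame that has aged past the delay, oldest first;
        # the last one released becomes the current frame.
        while self._pending and now - self._pending[0][0] >= self.delay:
            _, self.current = self._pending.popleft()
        return self.current


buf = DelayBuffer(delay=100)  # e.g. milliseconds
for t, frame in [(0, "a"), (50, "b"), (100, "c"), (250, "d")]:
    print(t, buf.push(t, frame))  # None, None, a, c
```

Unlike a sleep in the control path, this never blocks the loop. With `delay=0`, each frame is released as soon as it is pushed, because `now - now >= 0` holds. Popping from the left of a deque also avoids re-slicing a list on every step.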

Fix typo in docs of `nn.ARGVA`

Fixes a small typo of the docstring of `nn.ARGVA` | @@ -237,7 +237,6 @@ class ARGVA(ARGA):

r"""The Adversarially Regularized Variational Graph Auto-Encoder model from

the `"Adversarially Regularized Graph Autoencoder for Graph Embedding"

<https://arxiv.org/abs/1802.04407>`_ paper.

- paper.

Args:

encoder (Module): The encoder module to compute :math:`\mu` and

|

Close spur.SshShell connections when done

Resolves | @@ -63,8 +63,7 @@ def get_server(sid=None, name=None):

def get_connection(sid):

"""

- Attempts to connect to the given server and

- returns a connection.

+ Attempts to connect to the given server and returns a connection.

"""

server = get_server(sid)

@@ -90,6 +89,8 @@ def ensure_setup(shell):

"""

Runs sanity checks on the shell connection to ensure that

shell_manager is set up correctly.

+

+ Leaves connection open.

"""

result = shell.run(

@@ -192,6 +193,8 @@ def get_problem_status_from_server(sid):

Connects to the server and checks the status of the problems running there.

Runs `sudo shell_manager status --json` and parses its output.

+ Closes connection after running command.

+

Args:

sid: The sid of the server to check

@@ -204,6 +207,7 @@ def get_problem_status_from_server(sid):

shell = get_connection(sid)

ensure_setup(shell)

+ with shell:

output = shell.run(

["sudo", "/picoCTF-env/bin/shell_manager", "status",

"--json"]).output.decode("utf-8")

@@ -229,6 +233,8 @@ def load_problems_from_server(sid):

Connects to the server and loads the problems from its deployment state.

Runs `sudo shell_manager publish` and captures its output.

+ Closes connection after running command.

+

Args:

sid: The sid of the server to load problems from.

@@ -238,6 +244,7 @@ def load_problems_from_server(sid):

shell = get_connection(sid)

+ with shell:

result = shell.run(["sudo", "/picoCTF-env/bin/shell_manager", "publish"])

data = json.loads(result.output.decode("utf-8"))

|
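The `with shell:` blocks rely on the context-manager protocol. `__exit__` runs whether the body returns or raises, so the connection is closed on every path. The sketch below uses a hypothetical `FakeShell` stand-in rather than spur's real class, along with hypothetical `ensure_setup` and `get_status` functions. It also enters the `with` block before the sanity check. In the diff, `get_problem_status_from_server` calls `ensure_setup(shell)` before `with shell:`, so an exception raised by that check would leave the connection open.

```python
class FakeShell:
    """Hypothetical stand-in for an SSH shell that must be closed."""

    def __init__(self):
        self.closed = False

    def run(self, command):
        if self.closed:
            raise RuntimeError("connection already closed")
        return " ".join(command)

    def close(self):
        self.closed = True

    # Context-manager protocol: __exit__ runs on normal exit and on error.
    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc, tb):
        self.close()
        return False  # do not swallow the exception


def ensure_setup(shell):
    """Hypothetical sanity check; leaves the connection open."""
    shell.run(["true"])


def get_status(shell):
    # Open the `with` block before any check that might raise,
    # so the connection is closed on every path.
    with shell:
        ensure_setup(shell)
        return shell.run(["shell_manager", "status", "--json"])
```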

azure,hyperv: Fixed cpio unpacking for initrd TC

On Ubuntu distros, the initrd image consists of two concatenated archives

(microcode and initrd), so the old unpacking failed. We now skip the microcode

archive and unpack the actual initrd image. | @@ -100,17 +100,26 @@ if [ "${hv_modules:-UNDEFINED}" = "UNDEFINED" ]; then

exit 0

fi

-if [[ $DISTRO == "redhat_6" ]]; then

- yum_install -y dracut-network

+GetDistro

+case $DISTRO in

+ centos_6 | redhat_6)

+ update_repos

+ install_package dracut-network

dracut -f

if [ "$?" = "0" ]; then

- LogMsg "dracut -f ran successfully"

+ LogMsg "Info: dracut -f ran successfully"

else

- LogErr "dracut -f fails to execute"

+ LogErr "Error: dracut -f fails to execute"

SetTestStateAborted

- exit 0

- fi

+ exit 1

fi

+ ;;

+ ubuntu* | debian*)

+ update_repos

+ # Provides skipcpio binary

+ install_package dracut-core

+ ;;

+esac

if [ -f /boot/initramfs-0-rescue* ]; then

img=/boot/initramfs-0-rescue*

@@ -136,7 +145,10 @@ LogMsg "Unpacking the image..."

case $img_type in

*ASCII*cpio*)

- /usr/lib/dracut/skipcpio boot.img |zcat| cpio -id --no-absolute-filenames

+ cpio -id -F boot.img &> out.file

+ skip_block_size=$(cat out.file | awk '{print $1}')

+ dd if=boot.img of=finalInitrd.img bs=512 skip=$skip_block_size

+ /usr/lib/dracut/skipcpio finalInitrd.img |zcat| cpio -id --no-absolute-filenames

if [ $? -eq 0 ]; then

LogMsg "Successfully unpacked the image."

else

|
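The new `*ASCII*cpio*` branch works in two steps. First, `cpio -id -F boot.img` extracts only the first archive and reports how many blocks it consumed. Then `dd ... bs=512 skip=N` drops those blocks, so that `skipcpio` sees the real initrd. The Python sketch below mirrors that offset arithmetic. `skip_first_archive` is a hypothetical helper, and the sketch assumes GNU cpio's default 512-byte block size (`-B` would change it to 5120). Searching for the `N blocks` pattern is slightly more robust than `awk '{print $1}'`, which also prints the first word of any warning line that `&>` captures in the same file.

```python
import re

BLOCK_SIZE = 512  # assumed: GNU cpio's default I/O block size


def skip_first_archive(image: bytes, cpio_report: str) -> bytes:
    """Drop the leading cpio archive (e.g. early microcode) from an initrd image.

    `cpio_report` is the text cpio printed after extracting the first
    archive, e.g. "24 blocks". Equivalent to:
        dd if=boot.img of=finalInitrd.img bs=512 skip=24
    """
    match = re.search(r"(\d+) blocks?", cpio_report)
    if match is None:
        raise ValueError("no block count in cpio output: %r" % cpio_report)
    offset = int(match.group(1)) * BLOCK_SIZE
    return image[offset:]
```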

Change test to not check the actual values

There seem to be differences between Linux and macOS, probably because of the very small probability values coming out of the learner. | import tempfile

import os

+import numpy as np

from itertools import count

from nose.tools import assert_equal, eq_, raises

-from numpy.testing import assert_array_almost_equal

from rsmtool.utils import (float_format_func,

int_or_float_format_func,

@@ -397,9 +397,5 @@ class TestExpectedScores():

def test_expected_scores(self):

computed_predictions = compute_expected_scores_from_model(self.svc_with_probs, self.test_fs, 0, 4)

- expected_predictions = [1.99309843, 3.0150261, 2.81954592, 2.05229557, 2.53435775,

- 2.02165905, 0.99727808, 2.12953073, 3.89610915, 3.55630337,

- 2.92610019, 1.50800606, 3.06142746, 3.76927309, 2.00217987,

- 2.2993259, 2.91747598, 1.00907901, 1.02490296, 3.69485081,

- 2.04568922, 2.60198602, 1.8403947, 1.35505209, 1.99068596]

- assert_array_almost_equal(computed_predictions, expected_predictions, decimal=2)

+ assert len(computed_predictions) == len(self.test_fs)

+ assert np.all([((prediction >= 0) and (prediction <= 4)) for prediction in computed_predictions])

|
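The range check holds on every platform because an expected score is a probability-weighted average of the score points. The sketch below assumes that definition, with one probability column per integer score point and rows summing to 1. `expected_scores` is a hypothetical helper, not rsmtool's `compute_expected_scores_from_model`. Because each result is a convex combination of values in `[min_score, max_score]`, it must fall in that interval, so the new assertions can check it without relying on exact floating-point values. The vectorized `np.all((preds >= lo) & (preds <= hi))` is an equivalent, more idiomatic form of the list comprehension in the diff.

```python
import numpy as np


def expected_scores(probabilities, min_score, max_score):
    """Probability-weighted mean of the score points for each response.

    Hypothetical helper: assumes one column per integer score point from
    min_score to max_score, with each row summing to 1.
    """
    score_points = np.arange(min_score, max_score + 1)
    return np.asarray(probabilities) @ score_points


def in_range(preds, lo, hi):
    # Vectorized bounds check, no per-element Python loop.
    preds = np.asarray(preds)
    return bool(np.all((preds >= lo) & (preds <= hi)))
```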

ssh: Fix password authentication with Python 3.x & OpenSSH 7.5+

Since PERMDENIED_PROMPT is a byte string, the interpolation resulted

in b"user@host: b'permission denied'", which never

matched. | @@ -291,8 +291,8 @@ class Stream(mitogen.parent.Stream):

raise HostKeyError(self.hostkey_failed_msg)

elif buf.lower().startswith((

PERMDENIED_PROMPT,

- b("%s@%s: %s" % (self.username, self.hostname,

- PERMDENIED_PROMPT)),

+ b("%s@%s: " % (self.username, self.hostname))

+ + PERMDENIED_PROMPT,

)):

# issue #271: work around conflict with user shell reporting

# 'permission denied' e.g. during chdir($HOME) by only matching

|
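The failure is easy to reproduce in plain Python 3, because `%s` formatting of a `bytes` object inserts its `repr()`. In the sketch, `to_bytes` is a stand-in for the diff's `b()` helper and is assumed to encode a `str` as Latin-1. The username and hostname are made-up values.

```python
PERMDENIED_PROMPT = b"permission denied"


def to_bytes(s):
    # Stand-in for the b() helper in the diff (assumed: str -> Latin-1 bytes).
    return s.encode("latin-1")


username, hostname = "user", "host"

# Old code: %s on a bytes object inserts its repr, b'...', on Python 3.
broken = to_bytes("%s@%s: %s" % (username, hostname, PERMDENIED_PROMPT))

# Fixed code: format only the str parts, then concatenate bytes with bytes.
fixed = to_bytes("%s@%s: " % (username, hostname)) + PERMDENIED_PROMPT

print(broken)  # b"user@host: b'permission denied'"
print(fixed)   # b'user@host: permission denied'
```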

SceneTestCase : Use prefix in set comparisons in `assertScenesEqual()`

Also simplify the code for comparing pruned sets. | @@ -229,16 +229,14 @@ class SceneTestCase( GafferImageTest.ImageTestCase ) :

if "sets" in checks :

self.assertEqual( scenePlug1.setNames(), scenePlug2.setNames() )

for setName in scenePlug1.setNames() :

- if not pathsToPrune:

- self.assertEqual( scenePlug1.set( setName ), scenePlug2.set( setName ) )

- else:

- if scenePlug1.set( setName ) != scenePlug2.set( setName ):

- pruned1 = scenePlug1.set( setName )

- pruned2 = scenePlug2.set( setName )

+ set1 = scenePlug1.set( setName ).value

+ set2 = scenePlug2.set( setName ).value

for p in pathsToPrune :

- pruned1.value.prune( p )

- pruned2.value.prune( p )

- self.assertEqual( pruned1, pruned2 )

+ set1.prune( p )

+ set2.prune( p )

+ if scenePlug2PathPrefix :

+ set2 = set2.subTree( scenePlug2PathPrefix )

+ self.assertEqual( set1, set2 )

def assertPathHashesEqual( self, scenePlug1, scenePath1, scenePlug2, scenePath2, checks = allPathChecks ) :

|
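The following toy model represents sets as Python `set`s of path tuples. The comparison prunes both sets, then re-roots the second one under `scenePlug2PathPrefix` before comparing them, which mirrors the order used in the diff. `prune`, `sub_tree` and `sets_equal` are hypothetical helpers that only approximate how `PathMatcher.prune()` and `subTree()` are used here. They are not Gaffer or Cortex code. Tuples avoid a string-prefix pitfall: pruning `("a",)` does not remove `("ab",)`.

```python
def prune(paths, root):
    """Remove `root` and every path below it (toy model of prune())."""
    n = len(root)
    return {p for p in paths if p[:n] != root}


def sub_tree(paths, prefix):
    """Keep paths at or below `prefix`, re-rooted so `prefix` becomes the root."""
    n = len(prefix)
    return {p[n:] for p in paths if p[:n] == prefix}


def sets_equal(set1, set2, paths_to_prune=(), set2_prefix=None):
    # Same order as the diff: prune both sets, then re-root set2.
    for p in paths_to_prune:
        set1 = prune(set1, p)
        set2 = prune(set2, p)
    if set2_prefix:
        set2 = sub_tree(set2, set2_prefix)
    return set1 == set2
```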

cabana: fix incorrect freq&counter

fix wrong freq | @@ -65,7 +65,6 @@ QList<QPointF> CANMessages::findSignalValues(const QString &id, const Signal *si

void CANMessages::process(QHash<QString, std::deque<CanData>> *messages) {

for (auto it = messages->begin(); it != messages->end(); ++it) {

- ++counters[it.key()];

auto &msgs = can_msgs[it.key()];

const auto &new_msgs = it.value();

if (new_msgs.size() == settings.can_msg_log_size || msgs.empty()) {

|

Update http: to https: for push.mattermost.com