| message | diff |
|---|---|

Add another test case for Metadata.supports_py2

This pattern is commonly used to indicate Python 2.7 or 3.x support. | @@ -81,7 +81,8 @@ def test_normalize_file_permissions():

("3", False),

(">= 3.7", False),

("<4, > 3.2", False),

- ('>3.4', False),

+ (">3.4", False),

+ (">=2.7, !=3.0.*, !=3.1.*, !=3.2.*", True),

],

)

def test_supports_py2(requires_python, expected_result):

|

Remove long comment

was complaining about this. It looks like personal debugging

info, so I think it should be safe to delete. Otherwise, we should

put it into a more useful form. | @@ -15,5 +15,3 @@ export JOBNAME="${jobname}"

${user_script}

'''

-

-# cd / && aprun -n 1 -N 1 -cc none -d 24 -F exclusive /bin/bash -c "/usr/bin/perl /home/yadunandb/.globus/coasters/cscript4670024543168323237.pl http://10.128.0.219:60003,http://127.0.0.2:60003,http://192.5.86.107:60003 0718-5002250-000000 NOLOGGING; echo \$? > /autonfs/home/yadunandb/beagle/run019/scripts/PBS3963957609739419742.submit.exitcode" 1>>/autonfs/home/yadunandb/beagle/run019/scripts/PBS3963957609739419742.submit.stdout 2>>/autonfs/home/yadunandb/beagle/run019/scripts/PBS3963957609739419742.submit.stderr

|

Add 5th screenshot custom click & fix style issue

I tested this locally as well. | @@ -185,7 +185,10 @@ quaternary:

message: wait for IA quaternary

PR:

- overseerScript: await page.waitForNavigation({waitUntil:"domcontentloaded"}); await page.waitForSelector("#ember906");page.click("#ember906");page.done();

+ overseerScript:

+ await page.waitForNavigation({waitUntil:"domcontentloaded"});

+ await page.waitForSelector("#ember906");

+ page.click("#ember906");page.done();

message: click button for PR recoveries ("Convalecientes")

@@ -194,3 +197,11 @@ quinary:

IA:

overseerScript: page.manualWait(); await page.waitForDelay(60000); page.done();

message: wait for IA quinary

+

+ PR:

+ overseerScript:

+ await page.waitForNavigation({waitUntil:"domcontentloaded"});

+ await page.waitForSelector("#ember772");

+ page.click("#ember772");page.done();

+ message: click button for PR confirmed Deaths ("Muertes Confirmades")

+

|

doc: minor fix to resource resolution docs

Correct the documentation for what happens if a resource is not found. | @@ -241,8 +241,9 @@ An object looking for a resource invokes a resource resolver with an instance of

``Resource`` describing the resource it is after. The resolver goes through the

getters registered for that resource type in priority order attempting to obtain

the resource; once the resource is obtained, it is returned to the calling

-object. If none of the registered getters could find the resource, ``None`` is

-returned instead.

+object. If none of the registered getters could find the resource,

+``NotFoundError`` is raised (or``None`` is returned instead, if invoked with

+``strict=False``).

The most common kind of object looking for resources is a ``Workload``, and the

``Workload`` class defines

|

Update README.md

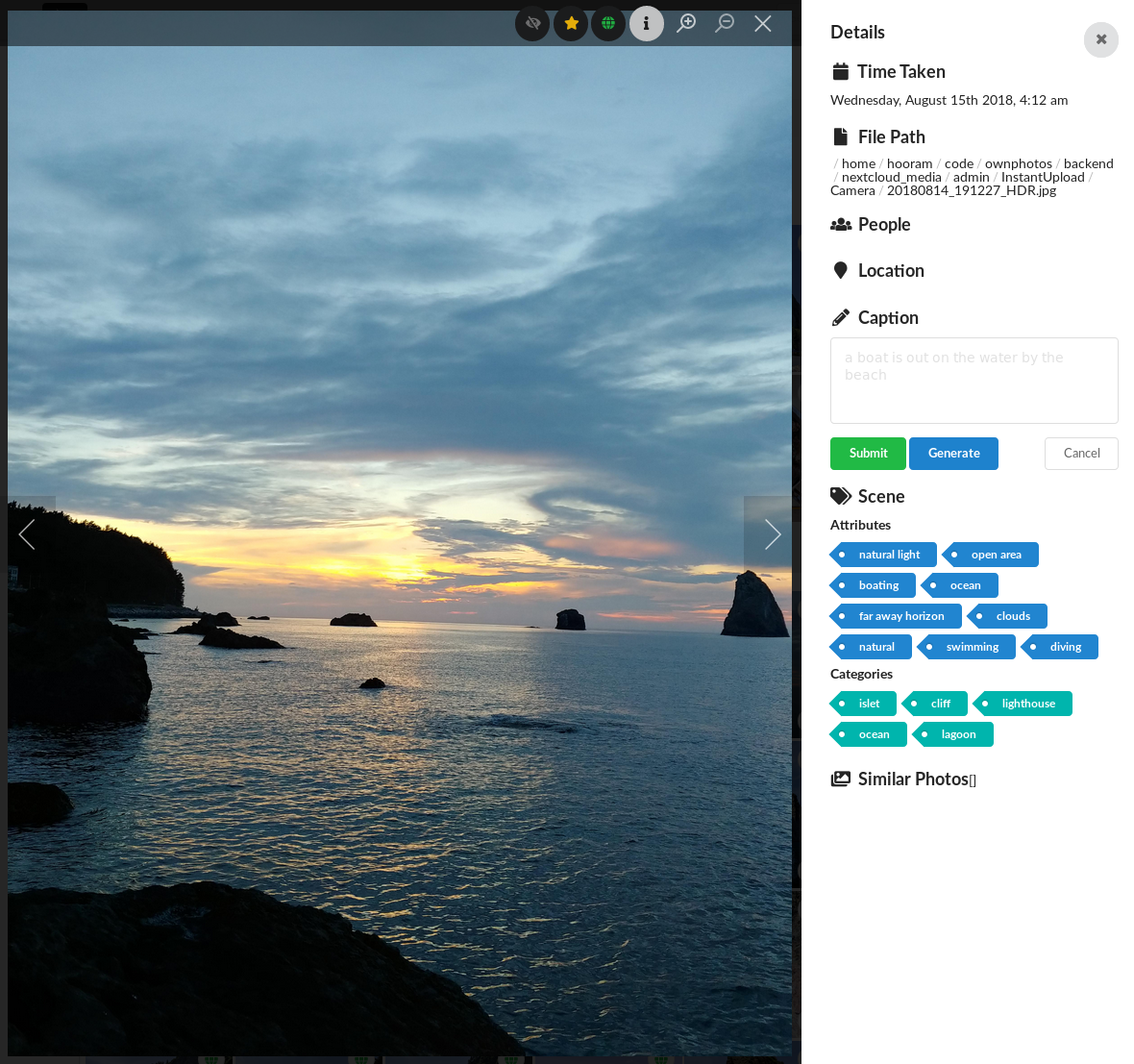

changed references to librephotos | <div style="text-align:center"><img width="100" src ="/screenshots/logo.png"/></div>

-# Ownphotos

+# LibrePhotos

## Screenshots

-

-

-

+

+

+

## Live demo

Live [demo avilable here](https://demo.ownphotos.io).

User is demo, password is demo1234.

## Discord Server

-https://discord.gg/dPCdTBN

+https://discord.gg/y4SmdUJQ

## What is it?

-- Self hosted wannabe Google Photos clone, with a slight focus on cool graphs

+- LibrePhotos is a fork of Ownphotos

+- Self hosted Google Photos clone, with a slight focus on cool graphs

- Django backend & React frontend.

- In development. Contributions are welcome!

@@ -68,19 +69,15 @@ https://discord.gg/dPCdTBN

### Docker

-Ownphotos comes with separate backend and frontend

+LibrePhotos comes with separate backend and frontend

servers. The backend serves the restful API, and the frontend serves, well,

the frontend. They are connected via a proxy.

The easiest way to do it is using Docker.

-If you want the backend server to be reachable by

-`ownphotos-api.example.com` and the frontend by `ownphotos.example.com` from

-outside. You must account for the corsaCross-Origin Resource Sharing (CORS) in your proxy.

-

## Docker-compose method (Recommended)

```

-wget https://raw.githubusercontent.com/hooram/ownphotos/dev/docker-compose.yml.template

+wget https://raw.githubusercontent.com/LibrePhotos/librephotos/dev/docker-compose.yml.template

cp docker-compose.yml.template docker-compose.yml

```

@@ -90,7 +87,7 @@ Open `docker-compose.yml` in your favorite text editor and make changes in the l

docker-compose up -d

```

-You should have ownphotos accessible after a few seconds of bootup on: [localhost:3000](http://localhost:3000)

+You should have librephotos accessible after a few minutes of bootup on: [localhost:3000](http://localhost:3000)

User is admin, password is admin and its important you change it on a public server via the ``docker-compose.yml`` file.

## First steps after setting up

|

Remove extra call to ordering in get_guid method

Guids are already ordered by -created, so the extra ordering call was

preventing prefetch_related optimizations from taking effect. | @@ -269,7 +269,7 @@ class OptionalGuidMixin(BaseIDMixin):

pass

else:

return guid

- return self.guids.order_by('-created').first()

+ return self.guids.first()

class Meta:

abstract = True

|

Update solve_captchas.py for aiopogo 2.0

Update solve_captchas to use the protobuf objects returned by aiopogo

2.0, and use the inventory_timestamp from the account dict if available. | #!/usr/bin/env python3

-from multiprocessing.managers import BaseManager

from asyncio import get_event_loop, sleep

+from multiprocessing.managers import BaseManager

from time import time

-from selenium import webdriver

-from selenium.webdriver.support.ui import WebDriverWait

-from selenium.webdriver.support import expected_conditions as EC

-from selenium.webdriver.common.by import By

from aiopogo import PGoApi, close_sessions, activate_hash_server, exceptions as ex

from aiopogo.auth_ptc import AuthPtc

+from selenium import webdriver

+from selenium.webdriver.common.by import By

+from selenium.webdriver.support import expected_conditions as EC

+from selenium.webdriver.support.ui import WebDriverWait

from monocle import altitudes, sanitized as conf

from monocle.utils import get_device_info, get_address, randomize_point

@@ -31,20 +31,14 @@ async def solve_captcha(url, api, driver, timestamp):

for attempt in range(-1, conf.MAX_RETRIES):

try:

- response = await request.call()

- return response['responses']['VERIFY_CHALLENGE']['success']

+ responses = await request.call()

+ return responses['VERIFY_CHALLENGE'].success

except (ex.HashServerException, ex.MalformedResponseException, ex.ServerBusyOrOfflineException) as e:

if attempt == conf.MAX_RETRIES - 1:

raise

else:

print('{}, trying again soon.'.format(e))

await sleep(4)

- except ex.NianticThrottlingException:

- if attempt == conf.MAX_RETRIES - 1:

- raise

- else:

- print('Throttled, trying again in 11 seconds.')

- await sleep(11)

except (KeyError, TypeError):

return False

@@ -109,16 +103,15 @@ async def main():

request.download_remote_config_version(platform=1, app_version=6301)

request.check_challenge()

request.get_hatched_eggs()

- request.get_inventory()

+ request.get_inventory(last_timestamp_ms=account.get('inventory_timestamp', 0))

request.check_awarded_badges()

request.download_settings()

- response = await request.call()

+ responses = await request.call()

account['time'] = time()

- responses = response['responses']

- challenge_url = responses['CHECK_CHALLENGE']['challenge_url']

- timestamp = responses.get('GET_INVENTORY', {}).get('inventory_delta', {}).get('new_timestamp_ms')

- account['location'] = lat, lon, alt

+ challenge_url = responses['CHECK_CHALLENGE'].challenge_url

+ timestamp = responses['GET_INVENTORY'].inventory_delta.new_timestamp_ms

+ account['location'] = lat, lon

account['inventory_timestamp'] = timestamp

if challenge_url == ' ':

account['captcha'] = False

|

Use links from releases, not packages.

Prevents duplicates. | @@ -55,8 +55,8 @@ function import_opsmgr_variables() {

{% endif %}

{% endfor %}

{% endfor %}

- {% for package in context.packages if package.is_app %}

- {% for link in package.consumes %}

+ {% for release in context.releases if release.consumes %}

+ {% for link in release.consumes %}

<% if_link('{{ link }}') do |link| %>

<% hosts = link.instances.map { |instance| instance.address } %>

export {{ link | upper }}_HOST="<%= link.instances[0].address %>"

@@ -174,8 +174,8 @@ function add_env_vars() {

{% endfor %}

{% endfor %}

- {% for package in context.packages if package.is_app %}

- {% for link in package.consumes %}

+ {% for release in context.releases if release.consumes %}

+ {% for link in release.consumes %}

<% if_link('{{ link }}') do |link| %>

cf set-env $1 {{ link | upper }}_HOST "${{ link | upper }}_HOST"

cf set-env $1 {{ link | upper }}_HOSTS "${{ link | upper }}_HOSTS"

|

Replace sudo with --user in CI caffe2 install

Summary: Pull Request resolved: | @@ -217,7 +217,7 @@ if [[ -z "$INTEGRATED" ]]; then

else

- sudo FULL_CAFFE2=1 python setup.py install

+ FULL_CAFFE2=1 python setup.py install --user

# TODO: I'm not sure why this is necessary

cp -r torch/lib/tmp_install $INSTALL_PREFIX

|

Add Timeout To The Sync Cog

Adds a 30-minute timeout while waiting for the guild to be chunked in

the sync cog, after which the cog is not loaded. | import asyncio

+import datetime

from typing import Any, Dict

from botcore.site_api import ResponseCodeError

from discord import Member, Role, User

from discord.ext import commands

-from discord.ext.commands import Cog, Context

+from discord.ext.commands import Cog, Context, errors

from bot import constants

from bot.bot import Bot

@@ -29,10 +30,17 @@ class Sync(Cog):

return

log.info("Waiting for guild to be chunked to start syncers.")

+ end = datetime.datetime.now() + datetime.timedelta(minutes=30)

while not guild.chunked:

await asyncio.sleep(10)

- log.info("Starting syncers.")

+ if datetime.datetime.now() > end:

+ # More than 30 minutes have passed while trying, abort

+ raise errors.ExtensionFailed(

+ self.__class__.__name__,

+ RuntimeError("The guild was not chunked in time, not loading sync cog.")

+ )

+ log.info("Starting syncers.")

for syncer in (_syncers.RoleSyncer, _syncers.UserSyncer):

await syncer.sync(guild)

|
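The change above polls `guild.chunked` every 10 seconds and aborts after 30 minutes. A library-agnostic sketch of the same deadline-polling pattern using only asyncio; `wait_until` and `demo` are hypothetical names. Unlike the diff, which compares `datetime.now()` values, it measures the deadline with the event loop's monotonic clock, so wall-clock adjustments cannot shorten or extend the wait.

```python
import asyncio
from typing import Callable


async def wait_until(predicate: Callable[[], bool], *, interval: float, timeout: float) -> None:
    """Poll `predicate` every `interval` seconds; raise TimeoutError once `timeout` seconds have passed.

    Hypothetical helper illustrating the pattern, not the bot's actual code.
    """
    loop = asyncio.get_running_loop()
    deadline = loop.time() + timeout  # monotonic clock, unaffected by wall-clock changes
    while not predicate():
        if loop.time() >= deadline:
            raise TimeoutError("condition not met before the deadline")
        await asyncio.sleep(interval)


async def demo() -> bool:
    """Simulate a guild that finishes chunking shortly after we start waiting."""
    state = {"chunked": False}

    async def mark_chunked() -> None:
        await asyncio.sleep(0.05)
        state["chunked"] = True

    task = asyncio.create_task(mark_chunked())
    await wait_until(lambda: state["chunked"], interval=0.01, timeout=1.0)
    await task
    return state["chunked"]
```

`asyncio.run(demo())` returns `True`; a predicate that never becomes true raises `TimeoutError`, which the caller can translate into an extension-load failure as the diff does.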

Assert that unsigned resources stay unsigned

This adds a test to verify that an unsigned resource retains the

signature version `botocore.UNSIGNED`. Previously an issue with

deepcopy caused this behavior to break. | # language governing permissions and limitations under the License.

from tests import unittest

+import botocore

import botocore.stub

+from botocore.config import Config

from botocore.stub import Stubber

from botocore.compat import six

@@ -531,3 +533,16 @@ class TestS3ObjectSummary(unittest.TestCase):

# Even though an HeadObject was used to load this, it should

# only expose the attributes from its shape defined in ListObjects.

self.assertFalse(hasattr(self.obj_summary, 'content_length'))

+

+

+class TestServiceResource(unittest.TestCase):

+ def setUp(self):

+ self.session = boto3.session.Session()

+

+ def test_unsigned_signature_version_is_not_corrupted(self):

+ config = Config(signature_version=botocore.UNSIGNED)

+ resource = self.session.resource('s3', config=config)

+ self.assertIs(

+ resource.meta.client.meta.config.signature_version,

+ botocore.UNSIGNED

+ )

|

Fix SB generator

Fixed one bug where the muxing logic was incorrect

Made the config reg addressing explicit and to-spec. | @@ -46,7 +46,7 @@ class SB(Configurable):

for side_in in sides:

if side_in == side:

continue

- mux_in = getattr(side.I, f"layer{layer}")[track]

+ mux_in = getattr(side_in.I, f"layer{layer}")[track]

self.wire(mux_in, mux.ports.I[idx])

idx += 1

for input_ in self.inputs[layer]:

@@ -60,8 +60,18 @@ class SB(Configurable):

self.add_config(config_name, mux.sel_bits)

self.wire(self.registers[config_name].ports.O, mux.ports.S)

+ # NOTE(rsetaluri): We set the config register addresses explicitly and

+ # in a well-defined order. This ordering can be considered a part of the

+ # functional spec of this module.

+ idx = 0

+ for side in sides:

+ for layer in (1, 16):

+ for track in range(5):

+ reg_name = f"mux_{side._name}_{layer}_{track}_sel"

+ self.registers[reg_name].set_addr(idx)

+ idx += 1

+

for idx, reg in enumerate(self.registers.values()):

- reg.set_addr(idx)

reg.set_addr_width(8)

reg.set_data_width(32)

self.wire(self.ports.config.config_addr, reg.ports.config_addr)

|
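The addressing loop in the diff numbers the select registers by side, then by layer (1 and 16), then by track 0 to 4. A sketch of only that enumeration makes the resulting address map easy to inspect; the side names are hypothetical placeholders for each side object's `_name`.

```python
from typing import Dict, Sequence, Tuple


def config_addresses(
    side_names: Sequence[str],
    layers: Tuple[int, ...] = (1, 16),
    num_tracks: int = 5,
) -> Dict[str, int]:
    """Map each mux select register name to an address, in the nested order side -> layer -> track."""
    addrs: Dict[str, int] = {}
    idx = 0
    for side in side_names:
        for layer in layers:
            for track in range(num_tracks):
                addrs[f"mux_{side}_{layer}_{track}_sel"] = idx
                idx += 1
    return addrs


# Hypothetical side names; the generator derives them from `side._name`.
addrs = config_addresses(["north", "south", "east", "west"])
```

With four sides this yields 40 registers: the first side's layer-16 registers start at address 5, and the second side starts at address 10.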

[Bug] Final state was stored as Hermitian by default.

Final state will not be Hermitian if initial state is not. | @@ -537,6 +537,7 @@ def _generic_ode_solve(func, ode_args, rho0, tlist, e_ops, opt,

if opt.store_final_state:

cdata = get_curr_state_data(r)

- output.final_state = Qobj(cdata, dims=dims, isherm=True)

+ output.final_state = Qobj(cdata, dims=dims,

+ isherm=rho0.isherm or None)

return output

|

BUG: fix regression in _save(): remove precision argument, which

does not exist in recent NumPy. | @@ -21,7 +21,7 @@ __all__ = ['lobpcg']

def _save(ar, fileName):

# Used only when verbosity level > 10.

- np.savetxt(fileName, ar, precision=8)

+ np.savetxt(fileName, ar)

def _report_nonhermitian(M, a, b, name):

|

fw/DatabaseOutput: Only attempt to extract config if available

Do not try to parse `kernel_config` if no data is present. | @@ -948,6 +948,7 @@ class DatabaseOutput(Output):

def kernel_config_from_db(raw):

kernel_config = {}

+ if raw:

for k, v in zip(raw[0], raw[1]):

kernel_config[k] = v

return kernel_config

|
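The flattened diff drops indentation, so it is easy to misread which lines the new `if raw:` guards. A standalone sketch of the intended shape, assuming `raw` is either empty/`None` or a pair of parallel key and value sequences:

```python
from typing import Dict, Optional, Sequence


def kernel_config_from_db(raw: Optional[Sequence[Sequence[str]]]) -> Dict[str, str]:
    """Rebuild a kernel config dict from a [keys, values] pair; return {} when nothing is stored."""
    kernel_config: Dict[str, str] = {}
    if raw:  # skip parsing entirely when the stored value is None or empty
        for k, v in zip(raw[0], raw[1]):
            kernel_config[k] = v
    return kernel_config
```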

Update org.py

Fix a string formatting issue. | @@ -7,12 +7,12 @@ def dict_to_yaml(x):

yaml = []

for key, value in x.items():

if type(value) == list:

- yaml += "{key}:".format(key=key)

+ yaml += "{key}:\n".format(key=key)

for v in value:

- yaml += "- {v}".format(v=v)

+ yaml += "- {v}\n".format(v=v)

else:

- yaml += "{key}: {value}".format(key=key, value=value)

- return "\n".join(yaml)

+ yaml += "{key}: {value}\n".format(key=key, value=value)

+ return ''.join(yaml)

class OrgConverter(KnowledgePostConverter):

|
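In the patched `dict_to_yaml`, `yaml` is a list, so `yaml += "..."` extends it one character at a time, and `''.join(yaml)` happens to rebuild the intended string. A sketch of a variant that produces the same output from whole lines; it does no quoting or escaping, so it is not a general YAML serializer.

```python
from typing import Any, Dict, List


def dict_to_yaml(x: Dict[str, Any]) -> str:
    """Render a flat dict as simple YAML: scalars as `key: value`, lists as `key:` followed by `- item` lines."""
    lines: List[str] = []
    for key, value in x.items():
        if isinstance(value, list):
            lines.append(f"{key}:")
            lines.extend(f"- {v}" for v in value)
        else:
            lines.append(f"{key}: {value}")
    # Every line ends with "\n", matching the patched version's output.
    return "".join(line + "\n" for line in lines)
```

For example, `{"title": "Post", "tags": ["a", "b"]}` renders as `title: Post`, `tags:`, `- a`, `- b`, one per line.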

Throw if block is too old

This keeps the block in new_block_pool, avoiding a broadcast storm. | @@ -648,7 +648,11 @@ class ShardState:

self.header_tip.height,

)

)

- return None

+ raise ValueError(

+ "block is too old {} << {}".format(

+ block.header.height, self.header_tip.height

+ )

+ )

if self.db.contain_minor_block_by_hash(block.header.get_hash()):

return None

|

Minor fixes to ARFReg

Apply the weighted vote only when aggregation is 'mean'

Fix typo

Update test | @@ -249,7 +249,7 @@ class BaseTreeRegressor(HoeffdingTreeRegressor):

leaf_model: base.Regressor = None,

model_selector_decay: float = 0.95,

nominal_attributes: list = None,

- attr_obs: str = "gaussian",

+ attr_obs: str = "e-bst",

attr_obs_params: dict = None,

min_samples_split: int = 5,

seed=None,

@@ -733,7 +733,7 @@ class AdaptiveRandomForestRegressor(BaseForest, base.Regressor):

>>> metric = metrics.MAE()

>>> evaluate.progressive_val_score(dataset, model, metric)

- MAE: 23.320694

+ MAE: 1.870913

"""

@@ -749,7 +749,7 @@ class AdaptiveRandomForestRegressor(BaseForest, base.Regressor):

aggregation_method: str = "median",

lambda_value: int = 6,

metric: RegressionMetric = MSE(),

- disable_weighted_vote=False,

+ disable_weighted_vote=True,

drift_detector: base.DriftDetector = ADWIN(0.001),

warning_detector: base.DriftDetector = ADWIN(0.01),

# Tree parameters

@@ -822,7 +822,7 @@ class AdaptiveRandomForestRegressor(BaseForest, base.Regressor):

y_pred = np.zeros(self.n_models)

- if not self.disable_weighted_vote:

+ if not self.disable_weighted_vote and self.aggregation_method != self._MEDIAN:

weights = np.zeros(self.n_models)

sum_weights = 0.0

for idx, model in enumerate(self.models):

@@ -1081,7 +1081,7 @@ class ForestMemberRegressor(BaseForestMember, base.Regressor):

if self._var.mean.n == 1:

return 0.5 # The expected error is the normalized mean error

- sd = math.sqrt(self._var.sigma)

+ sd = math.sqrt(self._var.get())

# We assume the error follows a normal distribution -> (empirical rule)

# 99.73% of the values lie between [mean - 3*sd, mean + 3*sd]. We

|

Strip quotes from font names in SVG

Fix | @@ -27,7 +27,9 @@ def text(svg, node, font_size):

# TODO: use real computed values

style = INITIAL_VALUES.copy()

- style['font_family'] = node.get('font-family', 'sans-serif').split(',')

+ style['font_family'] = [

+ font.strip('"\'') for font in

+ node.get('font-family', 'sans-serif').split(',')]

style['font_style'] = node.get('font-style', 'normal')

style['font_weight'] = node.get('font-weight', 400)

style['font_size'] = font_size

|
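With the patched expression, a list written with a space after each comma keeps a stray quote: `' "Times New Roman"'.strip('"\'')` leaves `' "Times New Roman'`, because the leading space stops the left-hand strip. A sketch of a variant that trims whitespace before quotes; `parse_font_family` is a hypothetical helper, not the project's function.

```python
from typing import List


def parse_font_family(value: str) -> List[str]:
    """Split a font-family list and remove surrounding whitespace and quotes from each name."""
    # Strip whitespace first so that a space after the comma does not shield the opening quote.
    return [name.strip().strip("\"'") for name in value.split(",")]
```

The caller would pass `node.get('font-family', 'sans-serif')` as before.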

Changed song playback from an asyncio.Queue to a Python list

Added $removesong <index> command | @@ -71,7 +71,7 @@ class VoiceState:

self.voice = None

self.bot = bot

self.play_next_song = asyncio.Event()

- self.songs = asyncio.Queue()

+ #self.songs = asyncio.Queue()

self.playlist = []

self.skip_votes = set() # a set of user_ids that voted

self.audio_player = self.bot.loop.create_task(self.audio_player_task())

@@ -98,7 +98,12 @@ class VoiceState:

async def audio_player_task(self):

while True:

self.play_next_song.clear()

- self.current = await self.songs.get()

+

+ if len(self.playlist) <= 0:

+ await asyncio.sleep(1)

+ continue

+

+ self.current = self.playlist[0]

await self.bot.send_message(self.current.channel, 'Now playing ' + str(self.current))

self.current.player.start()

await self.play_next_song.wait()

@@ -206,7 +211,7 @@ class Music:

player.volume = volume

entry = VoiceEntry(ctx.message, player)

await self.bot.say('Enqueued ' + str(entry))

- await state.songs.put(entry)

+ #await state.songs.put(entry)

state.playlist.append(entry)

@commands.command(pass_context=True, no_pm=True)

@@ -340,3 +345,27 @@ class Music:

count = count + 1

#playlist_string += '```'

await self.bot.say(playlist_string)

+

+

+ @commands.command(pass_context=True, no_pm=True)

+ async def removesong(self, ctx, idx : int):

+ """Removes a song in the playlist by the index."""

+

+ # I'm not sure how to check for mod status can you add this part Corp? :D

+ # isAdmin = author.permissions_in(channel).administrator

+ # # Only allow admins to change server stats

+ # if not isAdmin:

+ # await self.bot.send_message(channel, 'You do not have sufficient privileges to access this command.')

+ # return

+

+ idx = idx - 1

+ state = self.get_voice_state(ctx.message.server)

+ if idx < 0 or idx >= len(state.playlist):

+ await self.bot.say('Invalid song index, please refer to $playlist for the song index.')

+ return

+ song = state.playlist[idx]

+ await self.bot.say('Deleted {} from playlist'.format(str(song)))

+ if idx == 0:

+ await self.bot.say('Cannot delete currently playing song, use $skip instead')

+ return

+ del state.playlist[idx]

|
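In the `removesong` command above, the "Deleted ..." message is sent before the `idx == 0` check, so trying to remove the currently playing song announces a deletion that then does not happen. A framework-free sketch of the validation with the guard checked first; `remove_song` is hypothetical and returns the message instead of sending it.

```python
from typing import List, Tuple


def remove_song(playlist: List[str], position: int) -> Tuple[bool, str]:
    """Remove the song at 1-based `position`, refusing to touch the currently playing entry (index 0).

    Returns (removed, message) so the caller can relay the message to the channel.
    """
    idx = position - 1  # user-facing positions are 1-based
    if idx < 0 or idx >= len(playlist):
        return False, "Invalid song index, please refer to $playlist for the song index."
    if idx == 0:
        # Check the "now playing" guard before announcing anything.
        return False, "Cannot delete currently playing song, use $skip instead"
    song = playlist.pop(idx)
    return True, "Deleted {} from playlist".format(song)
```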

Attempt fix test timeouts

Zuul unit test jobs have sometimes been timing out, often while

executing a test that attempts getaddrinfo. Mock the getaddrinfo call

to see if that helps. | @@ -5580,17 +5580,34 @@ class TestStatsdLogging(unittest.TestCase):

# instantiation so we don't call getaddrinfo() too often and don't have

# to call bind() on our socket to detect IPv4/IPv6 on every send.

#

- # This test uses the real getaddrinfo, so we patch over the mock to

- # put the real one back. If we just stop the mock, then

- # unittest.exit() blows up, but stacking real-fake-real works okay.

- with mock.patch.object(utils.socket, 'getaddrinfo',

- self.real_getaddrinfo):

+ # This test patches over the existing mock. If we just stop the

+ # existing mock, then unittest.exit() blows up, but stacking

+ # real-fake-fake works okay.

+ calls = []

+

+ def fake_getaddrinfo(host, port, family, *args):

+ calls.append(family)

+ if len(calls) == 1:

+ raise socket.gaierror

+ # this is what a real getaddrinfo('::1', port,

+ # socket.AF_INET6) returned once

+ return [(socket.AF_INET6,

+ socket.SOCK_STREAM,

+ socket.IPPROTO_TCP,

+ '', ('::1', port, 0, 0)),

+ (socket.AF_INET6,

+ socket.SOCK_DGRAM,

+ socket.IPPROTO_UDP,

+ '',

+ ('::1', port, 0, 0))]

+

+ with mock.patch.object(utils.socket, 'getaddrinfo', fake_getaddrinfo):

logger = utils.get_logger({

'log_statsd_host': '::1',

'log_statsd_port': '9876',

}, 'some-name', log_route='some-route')

statsd_client = logger.logger.statsd_client

-

+ self.assertEqual([socket.AF_INET, socket.AF_INET6], calls)

self.assertEqual(statsd_client._sock_family, socket.AF_INET6)

self.assertEqual(statsd_client._target, ('::1', 9876, 0, 0))

|
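The test above replaces `getaddrinfo` with a fake so no real name resolution can stall the job. A self-contained sketch of the same technique with `unittest.mock`; `resolve_family` is a hypothetical stand-in for the statsd client's IPv4-then-IPv6 detection, not the project's code.

```python
import socket
from unittest import mock


def resolve_family(host: str, port: int) -> int:
    """Hypothetical resolver: try IPv4 first, fall back to IPv6, and return the family that resolved."""
    for family in (socket.AF_INET, socket.AF_INET6):
        try:
            socket.getaddrinfo(host, port, family)
        except socket.gaierror:
            continue
        return family
    raise ValueError("could not resolve {!r}".format(host))


def run_with_fake_resolver():
    """Patch getaddrinfo so the first (IPv4) lookup fails and the second (IPv6) one succeeds."""
    calls = []

    def fake_getaddrinfo(host, port, family, *args):
        calls.append(family)
        if len(calls) == 1:
            raise socket.gaierror  # simulate "::1 is not an IPv4 address"
        return [(socket.AF_INET6, socket.SOCK_DGRAM, socket.IPPROTO_UDP, "", (host, port, 0, 0))]

    # No real lookup happens while the patch is active.
    with mock.patch.object(socket, "getaddrinfo", fake_getaddrinfo):
        family = resolve_family("::1", 9876)
    return family, calls
```

Recording the `family` argument of each call, as the diff does, lets the test assert the lookup order as well as the final result.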

Updated build_application_zip to raise when there's an error.

The errors were being returned, but nothing is looking at that return value. | @@ -3,6 +3,8 @@ from io import open

import os

import tempfile

from wsgiref.util import FileWrapper

+from celery import states

+from celery.exceptions import Ignore

from celery.task import task

from celery.utils.log import get_task_logger

from django.conf import settings

@@ -152,6 +154,9 @@ def build_application_zip(include_multimedia_files, include_index_files, app,

z.writestr(path, data, file_compression)

progress += file_progress / file_count

DownloadBase.set_progress(build_application_zip, progress, 100)

+ if errors:

+ build_application_zip.update_state(state=states.FAILURE, meta={'errors': errors})

+ raise Ignore() # We want the task to fail hard, so ignore any future updates to it

else:

DownloadBase.set_progress(build_application_zip, initial_progress + file_progress, 100)

@@ -175,6 +180,3 @@ def build_application_zip(include_multimedia_files, include_index_files, app,

)

DownloadBase.set_progress(build_application_zip, 100, 100)

- return {

- "errors": errors,

- }

|

Add component overlay classes.

These are the classes that should apply to all overlays and replace

their current functions. | .new-style a.no-style {

color: inherit;

}

+

+/* -- unified overlay design component -- */

+.overlay {

+ position: fixed;

+ top: 0;

+ left: 0;

+ width: 100vw;

+ height: 100vh;

+ overflow: auto;

+ -webkit-overflow-scrolling: touch;

+

+ background-color: rgba(32,32,32,0.8);

+ z-index: 105;

+

+ pointer-events: none;

+ opacity: 0;

+

+ transition: opacity 0.3s ease;

+}

+

+.overlay.show {

+ opacity: 1;

+ pointer-events: all;

+}

+

+.overlay .overlay-content {

+ -webkit-transform: scale(0.5);

+ transform: scale(0.5);

+

+ z-index: 102;

+

+ transition: transform 0.3s ease;

+}

+

+.overlay.show .overlay-content {

+ -webkit-transform: scale(1);

+ transform: scale(1);

+}

|

Force output format to json

To solve a potential issue with "print(r.json())" at L1504 | @@ -1494,7 +1494,8 @@ class WDItemEngine(object):

'claim': statement_id,

'token': login.get_edit_token(),

'baserevid': revision,

- 'bot': True

+ 'bot': True,

+ 'format': 'json'

}

headers = {

'User-Agent': user_agent

|

DOC: sparse.csgraph: clarify laplacian normalization

Clarify that the normalized Laplacian uses symmetric normalization, as

opposed to random-walk normalization. | @@ -24,7 +24,7 @@ def laplacian(csgraph, normed=False, return_diag=False, use_out_degree=False):

csgraph : array_like or sparse matrix, 2 dimensions

compressed-sparse graph, with shape (N, N).

normed : bool, optional

- If True, then compute normalized Laplacian.

+ If True, then compute symmetric normalized Laplacian.

return_diag : bool, optional

If True, then also return an array related to vertex degrees.

use_out_degree : bool, optional

|
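For reference, the two normalizations the docstring distinguishes, written for an adjacency matrix $A$ with degree matrix $D$ and assuming every vertex has nonzero degree (how `laplacian` handles isolated vertices is not covered here):

```latex
\begin{aligned}
L &= D - A, \qquad D = \operatorname{diag}(d_1, \dots, d_N) \\
L_{\mathrm{sym}} &= D^{-1/2} L \, D^{-1/2} = I - D^{-1/2} A \, D^{-1/2} \\
L_{\mathrm{rw}} &= D^{-1} L = I - D^{-1} A
\end{aligned}
```

$L_{\mathrm{sym}}$ is symmetric whenever $A$ is, while $L_{\mathrm{rw}}$ generally is not; per the updated docstring, `normed=True` refers to $L_{\mathrm{sym}}$.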

Fix pre-0.4 builds.

Fix the regular expression used to search for libaten | @@ -106,7 +106,7 @@ cuda_files = find(curdir, lambda file: file.endswith(".cu"), True)

cuda_headers = find(curdir, lambda file: file.endswith(".cuh"), True)

headers = find(curdir, lambda file: file.endswith(".h"), True)

-libaten = list(set(find(torch_dir, re.compile("libaten", re.IGNORECASE).search, True)))

+libaten = list(set(find(torch_dir, re.compile("libaten.*so", re.IGNORECASE).match, True)))

libaten_names = [os.path.splitext(os.path.basename(entry))[0] for entry in libaten]

for i, entry in enumerate(libaten_names):

if entry[:3]=='lib':

|
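The fix swaps an unanchored `search` for `match`, which anchors at the start of the string, and also requires `so` somewhere after `libaten`. A sketch comparing the two predicates on hypothetical file names, assuming `find` passes bare file names (if it passes full paths, `match` anchors at the start of the path instead):

```python
import re

old_pred = re.compile("libaten", re.IGNORECASE).search
new_pred = re.compile("libaten.*so", re.IGNORECASE).match

# Hypothetical file names for illustration only.
names = ["libATen.so", "libATen.so.1", "libATen.a", "test_libaten.py"]
old_hits = [n for n in names if old_pred(n)]
new_hits = [n for n in names if new_pred(n)]
```

The old predicate accepts all four names; the new one keeps only the two shared-library names. `.*so` is not anchored at the end, so a name such as `libaten_sources.txt` would still match.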

Update eveonline.py

Update the documentation URL since the original URL returns a 404. | """

EVE Online Single Sign-On (SSO) OAuth2 backend

-Documentation at https://developers.eveonline.com/resource/single-sign-on

+Documentation at https://eveonline-third-party-documentation.readthedocs.io/en/latest/sso/index.html

"""

from .oauth import BaseOAuth2

@@ -8,8 +8,9 @@ from .oauth import BaseOAuth2

class EVEOnlineOAuth2(BaseOAuth2):

"""EVE Online OAuth authentication backend"""

name = 'eveonline'

- AUTHORIZATION_URL = 'https://login.eveonline.com/oauth/authorize'

- ACCESS_TOKEN_URL = 'https://login.eveonline.com/oauth/token'

+ BASE_URL = 'https://login.eveonline.com/oauth'

+ AUTHORIZATION_URL = BASE_URL + '/authorize'

+ ACCESS_TOKEN_URL = BASE_URL + '/token'

ID_KEY = 'CharacterID'

ACCESS_TOKEN_METHOD = 'POST'

EXTRA_DATA = [

|

Update reverse_words.py

This update results in fewer lines of code and better performance while preserving functionality. | # Created by sarathkaul on 18/11/19

+# Edited by farnswj1 on 4/4/20

def reverse_words(input_str: str) -> str:

@@ -13,10 +14,7 @@ def reverse_words(input_str: str) -> str:

input_str = input_str.split(" ")

new_str = list()

- for a_word in input_str:

- new_str.insert(0, a_word)

-

- return " ".join(new_str)

+ return ' '.join(reversed(input_str))

if __name__ == "__main__":

|

added more output for debugging


more info | @@ -65,7 +65,6 @@ def test_detection(test):

print("Testing %s" % name)

# Download data to temporal folder

data_dir = tempfile.TemporaryDirectory(prefix="data", dir=get_path("%s/humvee" % SSML_CWD))

- print("Temporal data dir %s" % data_dir.name)

# Temporal solution

d = test_desc['attack_data'][0]

test_data = os.path.abspath("%s/%s" % (data_dir.name, d['file_name']))

@@ -95,6 +94,8 @@ def test_detection(test):

print("Errors in detection")

print("-------------------")

print(res)

+ print("\nTested query from %s:\n" % detection)

+ print(spl2)

exit(1)

|

improve simplification for Take with inserted index

This patch replaces InsertAxis._rtake by a more powerful, unalign-based

simplification in Take.simplified. | @@ -1192,9 +1192,6 @@ class InsertAxis(Array):

return appendaxes(self.func, index.shape)

return InsertAxis(_take(self.func, index, axis), self.length)

- def _rtake(self, func, axis):

- return insertaxis(_take(func, self.func, axis), axis+self.ndim-1, self.length)

-

def _takediag(self, axis1, axis2):

assert axis1 < axis2

if axis2 == self.ndim-1:

@@ -2001,6 +1998,10 @@ class Take(Array):

def _simplified(self):

if self.indices.size == 0:

return zeros_like(self)

+ unaligned, where = unalign(self.indices)

+ if len(where) < self.indices.ndim:

+ n = self.func.ndim-1

+ return align(Take(self.func, unaligned), (*range(n), *(n+i for i in where)), self.shape)

trytake = self.func._take(self.indices, self.func.ndim-1) or \

self.indices._rtake(self.func, self.func.ndim-1)

if trytake:

|

Allow passing zero files to the Python App

Needed for LKQL's documentation generator. | @@ -1945,7 +1945,7 @@ class App(object):

def __init__(self, args=None):

self.parser = argparse.ArgumentParser(description=self.description)

- self.parser.add_argument('files', nargs='+', help='Files')

+ self.parser.add_argument('files', nargs='*', help='Files')

self.add_arguments()

# Parse command line arguments

|
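Changing `nargs='+'` to `nargs='*'` is what makes an empty file list legal. A small standard-library demonstration; the `build_parser` helper is hypothetical.

```python
import argparse


def build_parser(nargs: str) -> argparse.ArgumentParser:
    """Build a parser with a single positional `files` argument using the given nargs."""
    parser = argparse.ArgumentParser(description="demo")
    parser.add_argument("files", nargs=nargs, help="Files")
    return parser


# With nargs='*', an empty command line is accepted and yields an empty list.
args = build_parser("*").parse_args([])
```

With `nargs='+'`, the same empty command line makes argparse print a usage error and exit with status 2.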

Add a docstring to testing default gate domain constant

This was breaking my doc build for reasons I have not been able to track down. | @@ -16,6 +16,7 @@ from typing import List, Union, Sequence, Dict, Optional, TYPE_CHECKING

from cirq import ops, value

from cirq.circuits import Circuit

+from cirq._doc import document

if TYPE_CHECKING:

import cirq

@@ -33,6 +34,13 @@ DEFAULT_GATE_DOMAIN: Dict[ops.Gate, int] = {

ops.Y: 1,

ops.Z: 1

}

+document(

+ DEFAULT_GATE_DOMAIN,

+ """The default gate domain for `cirq.testing.random_circuit`.

+

+This includes the gates CNOT, CZ, H, ISWAP, CZ, S, SWAP, T, X, Y,

+and Z gates.

+""")

def random_circuit(qubits: Union[Sequence[ops.Qid], int],

|

Update ptcheat.rst

As of PyTorch 1.1.0, scheduler.step() should not be called at the start of every epoch; instead, it should be called after the optimizer has updated the weights (that is, after optimizer.step()). | @@ -237,7 +237,7 @@ Learning rate scheduling

.. code-block:: python

scheduler = optim.X(optimizer,...) # create lr scheduler

- scheduler.step() # update lr at start of epoch

+ scheduler.step() # update lr after optimizer updates weights

optim.lr_scheduler.X # where X is LambdaLR, MultiplicativeLR,

# StepLR, MultiStepLR, ExponentialLR,

# CosineAnnealingLR, ReduceLROnPlateau, CyclicLR,

|

Remove trailing slash in MANIFEST.in

Trailing slashes aren't allowed in directory patterns on Windows, and

have no effect otherwise. | @@ -2,8 +2,8 @@ include README.md

include LICENSE.txt

include requirements.txt

include qutip.bib

-recursive-include qutip/ *.pyx

-recursive-include qutip/ *.pxi

-recursive-include qutip/ *.hpp

-recursive-include qutip/ *.pxd

-recursive-include qutip/ *.ini

+recursive-include qutip *.pyx

+recursive-include qutip *.pxi

+recursive-include qutip *.hpp

+recursive-include qutip *.pxd

+recursive-include qutip *.ini

|

orgmode: Add support for console (shell-session) pygment lexer

Pygments has a lexer for shell sessions, available as "console" or

"shell-session":

This simple change enables processing for these languages for

colorized console transcripts that I use often in my blog. | '(("asymptote" . "asymptote")

("awk" . "awk")

("c" . "c")

+ ("console" . "console")

("c++" . "cpp")

("cpp" . "cpp")

("clojure" . "clojure")

("scala" . "scala")

("scheme" . "scheme")

("sh" . "sh")

+ ("shell-session" . "shell-session")

("sql" . "sql")

("sqlite" . "sqlite3")

("tcl" . "tcl"))

|

Validate that head and tail are valid properties

That is, that they are defined on the element. | @@ -3,8 +3,9 @@ import itertools

import pytest

from gaphor.C4Model import diagramitems as c4_diagramitems

+from gaphor.core.modeling.element import Element

from gaphor.diagram.presentation import LinePresentation

-from gaphor.diagram.support import get_diagram_item_metadata

+from gaphor.diagram.support import get_diagram_item_metadata, get_model_element

from gaphor.RAAML import diagramitems as raaml_diagramitems

from gaphor.SysML import diagramitems as sysml_diagramitems

from gaphor.UML import diagramitems as uml_diagramitems

@@ -44,7 +45,11 @@ blacklist = [

)

def test_line_presentations_have_metadata(diagram_item):

metadata = get_diagram_item_metadata(diagram_item)

+ element_class = get_model_element(diagram_item)

assert metadata

assert "head" in metadata

assert "tail" in metadata

+ assert issubclass(element_class, Element)

+ assert metadata["head"] in element_class.umlproperties()

+ assert metadata["tail"] in element_class.umlproperties()

|

Standardize timezone when truncating dates

This fixes the tests when run on other timezones. | @@ -109,7 +109,8 @@ def product_counts(index, period, expressions):

for product, series in index.datasets.count_by_product_through_time(period, **expressions):

click.echo(product.name)

for timerange, count in series:

- click.echo(' {}: {}'.format(timerange[0].strftime("%Y-%m-%d"), count))

+ formatted_dt = _assume_utc(timerange[0]).strftime("%Y-%m-%d")

+ click.echo(' {}: {}'.format(formatted_dt, count))

@singledispatch

@@ -127,11 +128,14 @@ def printable_dt(val):

"""

:type val: datetime.datetime

"""

- # Default to UTC.

+ return _assume_utc(val).isoformat()

+

+

+def _assume_utc(val):

if val.tzinfo is None:

- return val.replace(tzinfo=tz.tzutc()).isoformat()

+ return val.replace(tzinfo=tz.tzutc())

else:

- return val.astimezone(tz.tzutc()).isoformat()

+ return val.astimezone(tz.tzutc())

@printable.register(Range)

|
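The timezone fix above comes down to one rule. A naive datetime is labelled as UTC, and an aware one is converted to UTC, so the truncated date no longer depends on the machine running the tests. Below is a minimal sketch of that rule. It assumes the standard library's `datetime.timezone.utc` stands in for the `dateutil` `tz.tzutc()` used in the diff, and `assume_utc` is an illustrative name, not the project's function:

```python
from datetime import datetime, timedelta, timezone


def assume_utc(val: datetime) -> datetime:
    """Treat naive datetimes as UTC; convert aware ones to UTC."""
    if val.tzinfo is None:
        # No timezone attached: label it as UTC without shifting the clock time.
        return val.replace(tzinfo=timezone.utc)
    # Already timezone-aware: move the same instant into UTC.
    return val.astimezone(timezone.utc)


# Midnight at UTC+10 is still the previous day in UTC.
aware = datetime(2020, 1, 2, 0, 0, tzinfo=timezone(timedelta(hours=10)))
print(assume_utc(aware).strftime("%Y-%m-%d"))       # 2020-01-01
print(assume_utc(datetime(2020, 1, 2)).isoformat())  # 2020-01-02T00:00:00+00:00
```

Formatting only after normalizing is what makes the `strftime("%Y-%m-%d")` output stable across timezones.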

remove unused import statement

small commit and push to try running the tests on Jenkins again | @@ -4,7 +4,6 @@ Demand model of thermal loads

"""

from __future__ import division

import numpy as np

-import pandas as pd

from cea.demand import demand_writers

from cea.demand import latent_loads

from cea.demand import occupancy_model, hourly_procedure_heating_cooling_system_load, ventilation_air_flows_simple

|

Don't zip datum lists together

Hopefully this makes the iteration simpler | @@ -909,27 +909,28 @@ class EntriesHelper(object):

datum_.id = new_id

ret = []

- offset = 0

- for this_datum_meta, parent_datum_meta in zip_longest(datums, parent_datums):

- if parent_datum_meta and this_datum_meta != parent_datum_meta:

+ datums_remaining = list(datums)

+ for parent_datum_meta in parent_datums:

if not parent_datum_meta.requires_selection:

- # Add parent datums of opened subcases and automatically-selected cases

- # The position needs to be offset from 'head' in order to match the order in the

- # parent module since each loop iteration also appends 'this_datum_meta' to the list

- head = len(ret)

- ret.insert(head - offset, attr.evolve(parent_datum_meta, from_parent=True))

- offset += 1

+ ret.append(attr.evolve(parent_datum_meta, from_parent=True))

+ continue

+

+ if datums_remaining:

+ this_datum_meta = datums_remaining[0]

+ if this_datum_meta == parent_datum_meta:

+ ret.append(datums_remaining.pop(0))

elif _same_case(this_datum_meta, parent_datum_meta) and this_datum_meta.action:

if parent_datum_meta.id in datum_ids:

datum = datum_ids[parent_datum_meta.id]

set_id(this_datum_meta, '_'.join((datum.id, datum.case_type)), datum.datum)

set_id(this_datum_meta, parent_datum_meta.id)

+ ret.append(datums_remaining.pop(0))

elif _same_id(this_datum_meta, parent_datum_meta) and this_datum_meta.action:

set_id(this_datum_meta, '_'.join((this_datum_meta.id, this_datum_meta.case_type)))

- if this_datum_meta:

- ret.append(this_datum_meta)

- return ret

+ ret.append(datums_remaining.pop(0))

+

+ return ret + datums_remaining

@staticmethod

def _get_module_for_persistent_context(detail_module, module_unique_id):

|

Add docker instructions

Thanks for helping me get this to run! | @@ -21,6 +21,19 @@ python setup.py install

bash install_for_anaconda_users.sh

```

+### For Docker Users

+

+Install Docker from https://www.docker.com/

+For macOS users with [Homebrew](https://brew.sh/) installed, use `brew cask install docker`

+

+Then, run:

+

+```sh

+git clone https://github.com/OpenMined/PySyft.git

+cd PySyft/notebooks/

+docker run --rm -it -v $PWD:/notebooks -w /notebooks -p 8888:8888 openmined/pysyft jupyter notebook --ip=0.0.0.0 --allow-root

+```

+

## For Contributors

If you are interested in contributing to Syft, first check out our [Contributor Quickstart Guide](https://github.com/OpenMined/Docs/blob/master/contributor_quickstart.md) and then checkout our [Project Roadmap](https://github.com/OpenMined/Syft/blob/master/ROADMAP.md) and sign into our Slack Team channel #syft to let us know which projects sound interesting to you! (or propose your own!).

|

Update mediaprocessor.py

default to None instead of the first valid pix_fmt, letting FFmpeg choose | @@ -708,11 +708,11 @@ class MediaProcessor:

vpix_fmt = None

elif vpix_fmt and vpix_fmt not in valid_formats:

if vHDR and len(self.settings.hdr.get('pix_fmt')) > 0:

- new_vpix_fmt = next((vf for vf in self.settings.hdr.get('pix_fmt') if vf in valid_formats), valid_formats[0])

+ new_vpix_fmt = next((vf for vf in self.settings.hdr.get('pix_fmt') if vf in valid_formats), None)

elif not vHDR and len(self.settings.pix_fmt):

- new_vpix_fmt = next((vf for vf in self.settings.pix_fmt if vf in valid_formats), valid_formats[0])

+ new_vpix_fmt = next((vf for vf in self.settings.pix_fmt if vf in valid_formats), None)

else:

- new_vpix_fmt = valid_formats[0]

+ new_vpix_fmt = None

self.log.info("Pix_fmt selected %s is not compatible with encoder %s and source video format is not compatible , using %s" % (vpix_fmt, encoder, new_vpix_fmt))

vpix_fmt = new_vpix_fmt

|
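The `pix_fmt` change relies on the default argument of `next()`. When none of the preferred formats is valid for the encoder, the generator is exhausted and `next()` returns the default instead of raising `StopIteration`. Here is a small sketch of that selection. `pick_pix_fmt` is a hypothetical helper and the format names are only sample values:

```python
from typing import Iterable, List, Optional


def pick_pix_fmt(preferred: Iterable[str], valid_formats: List[str]) -> Optional[str]:
    """Return the first preferred pixel format the encoder supports, else None."""
    # With a default of None, an exhausted generator yields None rather than
    # raising StopIteration; None then means "let FFmpeg choose".
    return next((fmt for fmt in preferred if fmt in valid_formats), None)


print(pick_pix_fmt(["yuv420p10le", "yuv420p"], ["yuv420p", "nv12"]))  # yuv420p
print(pick_pix_fmt(["p010le"], ["yuv420p", "nv12"]))  # None
```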

Update suspicious_event_log_service_behavior.yml

updating filter name | @@ -8,7 +8,7 @@ datamodel: []

description: This search looks for Windows events that indicate the event logging service has been shut down.

search: (`wineventlog_security` EventCode=1100) | stats count min(_time) as firstTime max(_time) as lastTime by EventCode

dest | `security_content_ctime(firstTime)` | `security_content_ctime(lastTime)`

- | `windows_event_log_cleared_filter`

+ | `suspicious_event_log_service_behavior`

how_to_implement: To successfully implement this search, you need to be ingesting

Windows event logs from your hosts.

known_false_positives: It is possible the Event Logging service gets shut down due to system errors or legitimately administration tasks.

|

DOCS: developer style guide

First draft of a pysat style guide. | @@ -93,3 +93,35 @@ For merging, you should:

Travis to run the tests for each change you add in the pull request.

Because testing here will delay tests by other developers, please ensure

that the code passes all tests on your local system first.

+

+Project Style Guidelines

+------------------------

+

+In general, pysat follows PEP8 and numpydoc guidelines. Unit and integration

+tests use pytest, linting is done with flake8, and sphinx-build performs

+documentation tests. However, there are certain additional style elements that

+have been settled on to ensure the project maintains a consistent coding style.

+These include:

+

+* Line breaks should occur before a binary operator (ignoring flake8 W503)

+* Combine long strings using `join`

+* Preferably break long lines on open parentheses rather than using `\`

+* Avoid using Instrument class key attribute names as unrelated variable names:

+ * `platform`

+ * `name`

+ * `tag`

+ * `inst_id`

+* The pysat logger is imported into each sub-module and provides status updates

+ at the info and warning levels (as appropriate)

+* Several dependent packages have common nicknames, including:

+ * `import datetime as dt`

+ * `import numpy as np`

+ * `import pandas as pds`

+ * `import xarray as xr`

+* All classes should have `__repr__` and `__str__` functions

+* Docstrings use `Note` instead of `Notes`

+* Try to avoid creating a try/except statement where except passes

+* Use setup and teardown in test classes

+* Use pytest parametrize in test classes when appropriate

+* Provide testing class methods with informative failure statements and

+ descriptive, one-line docstrings

\ No newline at end of file

|

Re-enable Compute library tests.

ci image v0.06 does not appear to have the flakiness shown in ci image v0.05.

However, what changed between the two remains a mystery and needs further

debugging. For now, though, re-enable this to see how it fares in CI.

Fixes | @@ -27,5 +27,4 @@ source tests/scripts/setup-pytest-env.sh

find . -type f -path "*.pyc" | xargs rm -f

make cython3

-echo "Temporarily suspended while we understand flakiness with #8117"

-#run_pytest ctypes python-arm_compute_lib tests/python/contrib/test_arm_compute_lib

+run_pytest ctypes python-arm_compute_lib tests/python/contrib/test_arm_compute_lib

|

fix: travis handle git errors if any occur

eg:

fatal: Invalid symmetric difference expression

fatal: bad object | @@ -47,11 +47,14 @@ matrix:

script: bench --site test_site run-ui-tests frappe --headless

before_install:

- # do we really want to run travis?

+ # do we really want to run travis? check which files are changed and if git doesnt face any fatal errors

- |

- ONLY_DOCS_CHANGES=$(git diff --name-only $TRAVIS_COMMIT_RANGE | grep -qvE '\.(md|png|jpg|jpeg)$|^.github|LICENSE' ; echo $?)

- ONLY_JS_CHANGES=$(git diff --name-only $TRAVIS_COMMIT_RANGE | grep -qvE '\.js$' ; echo $?)

- ONLY_PY_CHANGES=$(git diff --name-only $TRAVIS_COMMIT_RANGE | grep -qvE '\.py$' ; echo $?)

+ FILES_CHANGED=$( git diff --name-only $TRAVIS_COMMIT_RANGE 2>&1 )

+

+ if [[ $FILES_CHANGED != *"fatal"* ]]; then

+ ONLY_DOCS_CHANGES=$( echo $FILES_CHANGED | grep -qvE '\.(md|png|jpg|jpeg)$|^.github|LICENSE' ; echo $? )

+ ONLY_JS_CHANGES=$( echo $FILES_CHANGED | grep -qvE '\.js$' ; echo $? )

+ ONLY_PY_CHANGES=$( echo $FILES_CHANGED | grep -qvE '\.py$' ; echo $? )

if [[ $ONLY_DOCS_CHANGES == "1" ]]; then

echo "Only docs were updated, stopping build process.";

@@ -65,6 +68,7 @@ before_install:

echo "Only Python code was updated, stopping Cypress build process.";

exit;

fi

+ fi

# install wkhtmltopdf

- wget -O /tmp/wkhtmltox.tar.xz https://github.com/frappe/wkhtmltopdf/raw/master/wkhtmltox-0.12.3_linux-generic-amd64.tar.xz

|

Update charsets

add all digits to setset

add uppercase letters to alphaset

set vars must start with alphaset char | @@ -12,13 +12,13 @@ binset = set('01')

decset = set('0123456789')

hexset = set('01234567890abcdef')

intset = set('01234567890abcdefx')

-setset = set('.abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ')

+setset = set('.abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789')

varset = set('$.:abcdefghijklmnopqrstuvwxyz_ABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789')

timeset = set('01234567890')

propset = set(':abcdefghijklmnopqrstuvwxyz_0123456789')

starset = varset.union({'*'})

tagfilt = varset.union({'#', '*'})

-alphaset = set('abcdefghijklmnopqrstuvwxyz')

+alphaset = set('abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ')

# this may be used to meh() potentially unquoted values

valmeh = whites.union({'(', ')', '=', ',', '[', ']', '{', '}'})

@@ -605,6 +605,8 @@ def parse_storm(text, off=0):

if nextchar(text, off, '$'):

_, off = nom(text, off + 1, whites)

+ if not nextin(text, off, alphaset):

+ raise s_common.BadSyntaxError(msg='Set variables must start with an alpha char')

name, off = nom(text, off, setset)

_, off = nom(text, off, whites)

|
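After the charset change, a set variable name may contain digits, but only after a leading letter. The sketch below reproduces that two-step check in plain Python. `parse_set_var_name` and `BadSyntaxError` are hypothetical stand-ins for the parser's `nom()`/`nextin()` helpers and `s_common.BadSyntaxError`, and `str.isspace()` only approximates the parser's `whites` set:

```python
ALPHASET = set('abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ')
SETSET = set('.abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789')


class BadSyntaxError(Exception):
    """Hypothetical stand-in for the parser's syntax error type."""


def parse_set_var_name(text: str, off: int = 0):
    """Parse a '$name' set variable at text[off]; return (name, new_offset)."""
    if text[off:off + 1] != '$':
        raise BadSyntaxError('expected "$"')
    off += 1
    # Skip whitespace between '$' and the name.
    while off < len(text) and text[off].isspace():
        off += 1
    # The first character must be a letter; digits are only allowed after it.
    if off >= len(text) or text[off] not in ALPHASET:
        raise BadSyntaxError('Set variables must start with an alpha char')
    start = off
    while off < len(text) and text[off] in SETSET:
        off += 1
    return text[start:off], off


print(parse_set_var_name("$foo2 = 1"))  # ('foo2', 5)
```

Checking the first character against `alphaset` before consuming the wider `setset` is what lets `$foo2` parse while `$2foo` is rejected.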

Add no_letterhead to form_dict

no_letterhead in form_dict was missing | @@ -1220,7 +1220,7 @@ def format(*args, **kwargs):

import frappe.utils.formatters

return frappe.utils.formatters.format_value(*args, **kwargs)

-def get_print(doctype=None, name=None, print_format=None, style=None, html=None, as_pdf=False, doc=None, output = None):

+def get_print(doctype=None, name=None, print_format=None, style=None, html=None, as_pdf=False, doc=None, output = None, no_letterhead = 0):

"""Get Print Format for given document.

:param doctype: DocType of document.

@@ -1236,6 +1236,7 @@ def get_print(doctype=None, name=None, print_format=None, style=None, html=None,

local.form_dict.format = print_format

local.form_dict.style = style

local.form_dict.doc = doc

+ local.form_dict.no_letterhead = no_letterhead

if not html:

html = build_page("printview")

|

update signal-scope ExternalProject version

also fix qt version argument passed to external signal-scope build | @@ -586,11 +586,12 @@ if(USE_SIGNAL_SCOPE)

ExternalProject_Add(signal-scope

GIT_REPOSITORY https://github.com/openhumanoids/signal-scope.git

- GIT_TAG 62fe2f4

+ GIT_TAG a0bc791

CMAKE_CACHE_ARGS

${default_cmake_args}

${python_args}

${qt_args}

+ -DUSED_QTVERSION:STRING=${DD_QT_VERSION}

DEPENDS

ctkPythonConsole

PythonQt

|

Remove old test code at end of launcher.py

This is from benc-mypy typechecking work. | @@ -506,10 +506,3 @@ wait

overrides=self.overrides,

debug=debug_num)

return x

-

-

-if __name__ == '__main__':

-

- s = SingleNodeLauncher()

- wrapped = s("hello", 1, 1)

- print(wrapped)

|

AnimationGadget : draw tangents in animation editor gadget.

* draw tangent as line from key to tangent end position.

* draw handle as square at tangent end position.

ref | @@ -569,12 +569,55 @@ void AnimationGadget::renderLayer( Layer layer, const Style *style, RenderReason

{

Animation::CurvePlug *curvePlug = IECore::runTimeCast<Animation::CurvePlug>( &runtimeTyped );

+ const Imath::Color3f color3 = colorFromName( drivenPlugName( curvePlug ) );

+ const Imath::Color4f color4( color3.x, color3.y, color3.z, 1.0 );

+

+ Animation::Key* previousKey = 0;

+ V2f previousKeyPosition = V2f( 0 );

+ bool previousKeySelected = false;

+

for( Animation::Key &key : *curvePlug )

{

bool isHighlighted = ( & key == m_highlightedKey.get() ) || ( selecting && b.intersects( V2f( key.getTime(), key.getValue() ) ) );

bool isSelected = m_selectedKeys->contains( &key );

V2f keyPosition = viewportGadget->worldToRasterSpace( V3f( key.getTime(), key.getValue(), 0 ) );

style->renderAnimationKey( keyPosition, isSelected || isHighlighted ? Style::HighlightedState : Style::NormalState, isHighlighted ? 3.0 : 2.0, &black );

+

+ // draw the tangents

+ //

+ // NOTE : only draw if they are unconstrained and key or adjacent key is selected

+

+ if( previousKey )

+ {

+ const Animation::Tangent& in = key.tangentIn();

+ const Animation::Tangent& out = previousKey->tangentOut();

+

+ if( ( isSelected || previousKeySelected ) && ( ! out.slopeIsConstrained() || ! out.scaleIsConstrained() ) )

+ {

+ const V2d outPosKey = out.getPosition();

+ const V2f outPosRas = viewportGadget->worldToRasterSpace( V3f( outPosKey.x, outPosKey.y, 0 ) );

+ const double outSize = 2.0;

+ const Box2f outBox( outPosRas - V2f( outSize ), outPosRas + V2f( outSize ) );

+ style->renderLine( IECore::LineSegment3f( V3f( outPosRas.x, outPosRas.y, 0 ), V3f( previousKeyPosition.x, previousKeyPosition.y, 0 ) ),

+ 1.0, &color4 );

+ style->renderRectangle( outBox );

+ }

+

+ if( ( isSelected || previousKeySelected ) && ( ! in.slopeIsConstrained() || ! in.scaleIsConstrained() ) )

+ {

+ const V2d inPosKey = in.getPosition();

+ const V2f inPosRas = viewportGadget->worldToRasterSpace( V3f( inPosKey.x, inPosKey.y, 0 ) );

+ const double inSize = 2.0;

+ const Box2f inBox( inPosRas - V2f( inSize ), inPosRas + V2f( inSize ) );

+ style->renderLine( IECore::LineSegment3f( V3f( inPosRas.x, inPosRas.y, 0 ), V3f( keyPosition.x, keyPosition.y, 0 ) ),

+ 1.0, &color4 );

+ style->renderRectangle( inBox );

+ }

+ }

+

+ previousKey = & key;

+ previousKeyPosition = keyPosition;

+ previousKeySelected = isSelected;

}

}

break;

|

Give console feedback when creating datatoken

Why:

it takes a few seconds for the tx to go through on rinkeby

printing the resulting address helps insight & debugging | @@ -76,8 +76,11 @@ alice_wallet = Wallet(ocean.web3, private_key=os.getenv('ALICE_KEY'))

Publish a datatoken.

```

+print("create datatoken: begin")

data_token = ocean.create_data_token('DataToken1', 'DT1', alice_wallet, blob=ocean.config.metadata_store_url)

token_address = data_token.address

+print("create datatoken: done")

+print(f"datatoken address: {token_address}")

```

Specify the service attributes, and connect that to `service_endpoint` and `download_service`.

|

Fix butler output.

Decode process output so that we don't get b'' everywhere. | @@ -118,7 +118,7 @@ def process_proc_output(proc, print_output=True):

lines = []

for line in iter(proc.stdout.readline, b''):

- _print('| %s' % line.rstrip())

+ _print('| %s' % line.rstrip().decode('utf-8'))

lines.append(line)

return b''.join(lines)

|
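The butler fix works because `'%s' % some_bytes` formats the `repr()` of the bytes object, including the `b'...'` wrapper. Decoding first yields plain text. Below is a minimal illustration. It assumes UTF-8 output, as the diff does, and `format_line` is an illustrative name:

```python
def format_line(line: bytes) -> str:
    """Prefix one line of process output for display, as readable text."""
    # Formatting bytes with '%s' would show "b'...'"; decode to str first.
    return '| %s' % line.rstrip().decode('utf-8')


raw = b'build finished\n'
print('| %s' % raw.rstrip())  # | b'build finished'
print(format_line(raw))       # | build finished
```

The raw bytes are still collected and joined unchanged in the diff. Only the printed copy is decoded.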

Add hall of fame

powered by | `nlp` originated from a fork of the awesome [`TensorFlow Datasets`](https://github.com/tensorflow/datasets) and the HuggingFace team want to deeply thank the TensorFlow Datasets team for building this amazing library. More details on the differences between `nlp` and `tfds` can be found in the section [Main differences between `nlp` and `tfds`](#main-differences-between-nlp-and-tfds).

+# Contributors

+

+[](https://sourcerer.io/fame/clmnt/huggingface/nlp/links/0)[](https://sourcerer.io/fame/clmnt/huggingface/nlp/links/1)[](https://sourcerer.io/fame/clmnt/huggingface/nlp/links/2)[](https://sourcerer.io/fame/clmnt/huggingface/nlp/links/3)[](https://sourcerer.io/fame/clmnt/huggingface/nlp/links/4)[](https://sourcerer.io/fame/clmnt/huggingface/nlp/links/5)[](https://sourcerer.io/fame/clmnt/huggingface/nlp/links/6)[](https://sourcerer.io/fame/clmnt/huggingface/nlp/links/7)

+

# Installation

## From PyPI

@@ -112,3 +116,4 @@ Similarly to Tensorflow Dataset, `nlp` is a utility library that downloads and p

If you're a dataset owner and wish to update any part of it (description, citation, etc.), or do not want your dataset to be included in this library, please get in touch through a GitHub issue. Thanks for your contribution to the ML community!

If you're interested in learning more about responsible AI practices, including fairness, please see Google AI's Responsible AI Practices.

+

|

[massthings] dont use this cog. i srsly, dont

massunban cmd | @@ -3,9 +3,10 @@ from collections import Counter

from typing import Union

import discord

-from redbot.core import checks, commands

+from redbot.core import checks, commands, modlog

from redbot.core.config import Config

from redbot.core.i18n import Translator, cog_i18n

+from redbot.core.utils import AsyncIter

from redbot.core.utils import chat_formatting as chat

from redbot.core.utils.mod import get_audit_reason

from redbot.core.utils.predicates import MessagePredicate

@@ -21,7 +22,7 @@ class MassThings(commands.Cog, command_attrs={"hidden": True}):

May be against Discord API terms. Use with caution.

I'm not responsible for any aftermath of using this cog."""

- __version__ = "1.0.0"

+ __version__ = "1.1.0"

# noinspection PyMissingConstructor

def __init__(self, bot):

@@ -157,3 +158,63 @@ class MassThings(commands.Cog, command_attrs={"hidden": True}):

)

)

)

+

+ @commands.group()

+ @commands.guild_only()

+ @commands.cooldown(1, 300, commands.BucketType.guild)

+ @checks.admin_or_permissions(ban_members=True)

+ @commands.bot_has_permissions(ban_members=True)

+ @commands.max_concurrency(1, commands.BucketType.guild)

+ async def massunban(self, ctx: commands.Context, banned_by: discord.Member):

+ """Unban all users that were banned by the specified user

+

+ Uses modlog data, since Discord does not save the author of a ban."""

+ cases = await modlog.get_all_cases(ctx.guild, self.bot)

+ bans = [b.user.id for b in await ctx.guild.bans()]

+ c = Counter()

+ async with ctx.typing():

+ async for case in AsyncIter(cases).filter(

+ lambda x: x.moderator.id == banned_by.id and x.action_type == "ban"

+ ):

+ if case.user.id in bans:

+ try:

+ await ctx.guild.unban(

+ case.user, reason=get_audit_reason(ctx.author, "Mass unban")

+ )

+ except Exception as e:

+ c[str(e)] += 1

+ else:

+ c["Success"] += 1

+ await ctx.send(

+ chat.info(

+ _("Unbanned users:\n{}").format(

+ chat.box(tabulate(c.most_common(), tablefmt="psql"), "ml")

+ )

+ )

+ )

+

+ @massunban.command(name="all", aliases=["everyone"])

+ @commands.cooldown(1, 300, commands.BucketType.guild)

+ @commands.max_concurrency(1, commands.BucketType.guild)

+ async def massunban_all(self, ctx):

+ """Unban everyone from current guild"""

+ bans = await ctx.guild.bans()

+ c = Counter()

+

+ async with ctx.typing():

+ for ban in bans:

+ try:

+ await ctx.guild.unban(

+ ban.user, reason=get_audit_reason(ctx.author, "Mass unban (everyone)")

+ )

+ except Exception as e:

+ c[str(e)] += 1

+ else:

+ c["Success"] += 1

+ await ctx.send(

+ chat.info(

+ _("Unbanned users:\n{}").format(

+ chat.box(tabulate(c.most_common(), tablefmt="psql"), "ml")

+ )

+ )

+ )

|

jenkins engine status checker changes

the previous check was useless: it did not fail on incorrect credentials. | @@ -85,8 +85,8 @@ class JenkinsEngine(BaseEngine):

status = config.get('PROVISIONER_UNKNOWN_STATE')

try:

client = jenkins.Jenkins(config.get('JENKINS_API_URL'), **conn_kw)

- version = client.get_version()

- if version:

+ auth_verify = client.get_whoami()

+ if auth_verify:

status = config.get('PROVISIONER_OK_STATE')

except Exception as e:

logger.exception('Could not contact JenkinsEngine backend: ')

|

Update rsmtool/utils.py

Change description of formula. | @@ -746,7 +746,7 @@ def quadratic_weighted_kappa(y_true_observed, y_pred, ddof=0):

:math:`QWK=\\frac{2*Cov[M-H]}{Var(H)+Var(M)+(\\bar{M}-\\bar{H})^2}`, where

- - :math:'Cov' - Covariance with normalization by the number of observations given

+ - :math:`Cov` - covariance with normalization by :math:`N` (the total number of observations given)

- :math:`H` - the human score

- :math:`M` - the system score

- :math:`\\bar{H}` - mean of :math:`H`

|
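For continuous scores, the docstring's formula can be evaluated directly. It uses the means, the variances, and the covariance of the human (H) and system (M) scores, each normalized by N (the `ddof=0` case). Below is a pure-Python sketch. `qwk_from_moments` is an illustrative name, not rsmtool's API:

```python
from typing import Sequence


def qwk_from_moments(human: Sequence[float], system: Sequence[float]) -> float:
    """QWK = 2*Cov(M, H) / (Var(H) + Var(M) + (mean(M) - mean(H))**2), moments over N."""
    if len(human) != len(system) or not human:
        raise ValueError("need two non-empty score lists of equal length")
    n = len(human)
    mean_h = sum(human) / n
    mean_m = sum(system) / n
    # Population (ddof=0) covariance and variances: divide by N, not N - 1.
    cov = sum((m - mean_m) * (h - mean_h) for h, m in zip(human, system)) / n
    var_h = sum((h - mean_h) ** 2 for h in human) / n
    var_m = sum((m - mean_m) ** 2 for m in system) / n
    return 2 * cov / (var_h + var_m + (mean_m - mean_h) ** 2)


print(qwk_from_moments([1, 2, 3, 4], [1, 2, 3, 4]))  # 1.0
print(qwk_from_moments([1, 2, 3, 4], [2, 3, 4, 5]))  # 0.7142857142857143
```

The second call shows how the squared mean difference penalizes a system that is perfectly correlated with the human scores but systematically offset by one point.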

Opt: allow deferred type as _booleanize

TN: | @@ -1072,7 +1072,12 @@ class Opt(Parser):

return [self.parser]

def get_type(self):

- return self._booleanize or self.parser.get_type()

+ if self._booleanize is None:

+ return self.parser.get_type()

+ elif self._booleanize is BoolType:

+ return self._booleanize

+ else:

+ return resolve_type(self._booleanize)

def create_vars_after(self, start_pos):

self.init_vars(

|

Move atbash alphabet to cipheydists

Move atbash alphabet to cipheydists and add a parameter to specify which alphabet is used for the atbash operation. | from typing import Optional, Dict, List

-from ciphey.iface import Config, ParamSpec, T, U, Decoder, registry

+from ciphey.iface import Config, ParamSpec, T, U, Decoder, registry, WordList

@registry.register

@@ -18,8 +18,7 @@ class Atbash(Decoder[str, str]):

"""

result = ""

- letters = list("abcdefghijklmnopqrstuvwxyz")

- atbash_dict = {letters[i]: letters[::-1][i] for i in range(26)}

+ atbash_dict = {self.ALPHABET[i]: self.ALPHABET[::-1][i] for i in range(26)}

# Ensure that ciphertext is a string

if type(ctext) == str:

@@ -45,10 +44,17 @@ class Atbash(Decoder[str, str]):

def __init__(self, config: Config):

super().__init__(config)

+ self.ALPHABET = config.get_resource(self._params()["dict"], WordList)

@staticmethod

def getParams() -> Optional[Dict[str, ParamSpec]]:

- return None

+ return {

+ "dict": ParamSpec(

+ desc="The alphabet used for the atbash operation.",

+ req=False,

+ default="cipheydists::list::englishAlphabet",

+ )

+ }

@staticmethod

def getTarget() -> str:

|
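The Atbash cipher maps the i-th letter of an alphabet to the i-th letter of the reversed alphabet. The diff still builds that table with `range(26)`. That fits the default English alphabet but would not adapt to alphabets of other lengths, and zipping the alphabet with its reverse sidesteps the problem. Below is a sketch under that assumption. `atbash` is an illustrative function, and preserving upper case is an assumption of this sketch, not necessarily what the decoder does:

```python
def atbash(text: str, alphabet: str = "abcdefghijklmnopqrstuvwxyz") -> str:
    """Mirror each letter within `alphabet`; leave other characters unchanged."""
    # Pair every letter with the letter at the mirrored position.
    mapping = dict(zip(alphabet, reversed(alphabet)))
    out = []
    for ch in text:
        lower = ch.lower()
        if lower in mapping:
            sub = mapping[lower]
            out.append(sub.upper() if ch.isupper() else sub)
        else:
            out.append(ch)
    return "".join(out)


print(atbash("Hello, World!"))  # Svool, Dliow!
print(atbash("abe", "abcde"))   # eda
```

Because Atbash is its own inverse, applying it twice returns the original text.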

Update CONTRIBUTING.md

Minor typo fixes | -============

Contributing

============

@@ -52,7 +51,7 @@ To set up `pysat` for local development:

Now you can make your changes locally. Tests for new instruments are

performed automatically. Tests for custom functions should be added to the

appropriately named file in ``pysat/tests``. For example, custom functions

- for the OMNI HRO data are tested in ``pysat/tests/test_omni_hro.py``. No

+ for the OMNI HRO data are tested in ``pysat/tests/test_omni_hro.py``. If no

test file exists, then you should create one. This testing uses nose, which

will run tests on any python file in the test directory that starts with

``test_``.

|

[commands] Fix certain annotations being allowed in hybrid commands

Union types were not properly constrained and callable types were

too eagerly being converted | @@ -109,6 +109,11 @@ def is_transformer(converter: Any) -> bool:

)

+def required_pos_arguments(func: Callable[..., Any]) -> int:

+ sig = inspect.signature(func)

+ return sum(p.default is p.empty for p in sig.parameters.values())

+

+

def make_converter_transformer(converter: Any) -> Type[app_commands.Transformer]:

async def transform(cls, interaction: discord.Interaction, value: str) -> Any:

try:

@@ -184,13 +189,19 @@ def replace_parameters(parameters: Dict[str, Parameter], signature: inspect.Sign

param = param.replace(annotation=make_greedy_transformer(inner, parameter))

elif is_converter(converter):

param = param.replace(annotation=make_converter_transformer(converter))

- elif origin is Union and len(args) == 2 and args[-1] is _NoneType:

+ elif origin is Union:

+ if len(args) == 2 and args[-1] is _NoneType:

# Special case Optional[X] where X is a single type that can optionally be a converter

inner = args[0]

is_inner_tranformer = is_transformer(inner)

if is_converter(inner) and not is_inner_tranformer:

param = param.replace(annotation=Optional[make_converter_transformer(inner)]) # type: ignore

+ else:

+ raise

elif callable(converter) and not inspect.isclass(converter):

+ param_count = required_pos_arguments(converter)

+ if param_count != 1:

+ raise

param = param.replace(annotation=make_callable_transformer(converter))

if parameter.default is not parameter.empty:

|
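The new guard in the hybrid-command code turns a plain callable into a transformer only when it has exactly one required argument. The helper below is copied from the diff so its behaviour can be checked in isolation. The sample converters are hypothetical:

```python
import inspect
from typing import Any, Callable


def required_pos_arguments(func: Callable[..., Any]) -> int:
    # Count parameters that have no default value.
    sig = inspect.signature(func)
    return sum(p.default is p.empty for p in sig.parameters.values())


def to_upper(value: str) -> str:
    return value.upper()


def clamp(value: str, low: int = 0, high: int = 10) -> int:
    return max(low, min(high, int(value)))


def join_two(a: str, b: str) -> str:
    return a + b


print(required_pos_arguments(to_upper))  # 1 -> accepted
print(required_pos_arguments(clamp))     # 1 -> accepted, the extras have defaults
print(required_pos_arguments(join_two))  # 2 -> rejected
```

The count includes every parameter without a default. That covers `*args`, `**kwargs`, and keyword-only parameters without defaults, so `lambda *args: None` also yields 1.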

FCR API Bulk_run_v2 handler (FIRST DRAFT)

Summary: This diff creates the handler for the v2 api for the bulk_run in FCR. | @@ -242,6 +242,31 @@ class CommandHandler(Counters, FacebookBase, FcrIface):

for dev, cmds in device_to_commands.items()

}

+ @ensure_thrift_exception

+ @input_fields_validator

+ @_append_debug_info_to_exception

+ @_ensure_uuid

+ async def bulk_run_v2(

+ self, request: ttypes.BulkRunCommandRequest

+ ) -> ttypes.BulkRunCommandResponse:

+ device_to_commands = {}

+ for device_commands in request.device_commands_list:

+ device_details = device_commands.device

+ # Since this is in the handler, is it okay to have struct as a key?

+ device_to_commands[device_details] = device_commands.commands

+

+ result = await self.bulk_run(

+ device_to_commands,

+ request.timeout,

+ request.open_timeout,

+ request.client_ip,

+ request.client_port,

+ request.uuid,

+ )

+ response = ttypes.BulkRunCommandResponse()

+ response.device_to_result = result

+ return response

+

@ensure_thrift_exception

@input_fields_validator

@_append_debug_info_to_exception

|

use isErr func

Fix release notes | @@ -15,7 +15,7 @@ script: |-

var retries = parseInt(args.retries) || 10;

for (i = 0 ; i < retries; i++) {

res = executeCommand('addEntitlement', {'persistent': args.persistent, 'replyEntriesTag': args.replyEntriesTag})

- if (res[0].Type === entryTypes.error) {

+ if (isError(res[0])) {

if (res[0].Contents.contains('[investigations] [investigation] (15)')) {

wait(1);

continue;

@@ -151,4 +151,4 @@ args:

description: Indicates how many times to try and create an entitlement in case of failure

defaultValue: "10"

scripttarget: 0

-releaseNotes: Support script to run in parallel with itself

+releaseNotes: Added support for parallel execution of the script, with better error handling

|

camerad: reduce cpu usage

wait for 50ms | @@ -126,7 +126,7 @@ CameraBuf::~CameraBuf() {

}

bool CameraBuf::acquire() {

- if (!safe_queue.try_pop(cur_buf_idx, 1)) return false;

+ if (!safe_queue.try_pop(cur_buf_idx, 50)) return false;

if (camera_bufs_metadata[cur_buf_idx].frame_id == -1) {

LOGE("no frame data? wtf");

|

[perimeterPen] move source encoding declaration to the first line

Otherwise I get this error on python2.7:

SyntaxError: Non-ASCII character '\xc2' in file perimeterPen.py on line 87, but no encoding declared; see for details | -"""Calculate the perimeter of a glyph."""

# -*- coding: utf-8 -*-

+"""Calculate the perimeter of a glyph."""

from __future__ import print_function, division, absolute_import

from fontTools.misc.py23 import *

|

Fix incorrect __round__ behaviour

In some cases with large scenario parameters,

__round__ raises an error:

TypeError: type NoneType doesn't define __round__ method

Fix __round__ behaviour when the StreamingAlgorithm result (ins.result()) is None. | @@ -330,7 +330,11 @@ class Table(Chart):

:returns: rounded float

:returns: str "n/a"

"""

- return round(ins.result(), 3) if has_result else "n/a"

+ r = ins.result()

+ if not has_result or r is None:

+ return "n/a"

+ else:

+ return round(r, 3)

def _row_has_results(self, values):

"""Determine whether row can be assumed as having values.

|
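The crash came from calling `round()` on `None`, which raises `TypeError`, and the fix treats a `None` result the same as a missing one. Below is a standalone sketch of that guard. `round_or_na` is an illustrative name, and the result is passed in directly instead of through `ins.result()`:

```python
from typing import Optional, Union


def round_or_na(result: Optional[float], has_result: bool, ndigits: int = 3) -> Union[float, str]:
    """Round a numeric result, or return "n/a" when there is nothing to show."""
    # round(None, 3) raises TypeError, so a None result is reported as "n/a".
    if not has_result or result is None:
        return "n/a"
    return round(result, ndigits)


print(round_or_na(1.23456, True))  # 1.235
print(round_or_na(None, True))     # n/a
print(round_or_na(2.0, False))     # n/a
```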

The CLI documentation link leads to a 404 error

The CLI documentation link redirects to (with an ending slash), which gives a "page not found" error. works. It could probably also be solved by modifying the redirect. | @@ -134,7 +134,7 @@ By default, the Dropbox folder names will contain the capitalised config-name in

In the above case, this will be "Dropbox (Personal)" and "Dropbox (Work)".

A full documentation of the CLI is available on the

-[website](https://samschott.github.io/maestral/cli/).

+[website](https://maestral.app/cli).

## Contribute

|

PathListingWidget : Adapt for persistent PathModel

Logically this belongs with the previous commit, but I've separated them in the hope that it'll be easier to review separately. | @@ -392,23 +392,9 @@ class PathListingWidget( GafferUI.Widget ) :

dirPath = self.__dirPath()

if self.__currentDir!=dirPath or str( self.__path )==self.__currentPath :

- selectedPaths = self.getSelectedPaths()

- expandedPaths = None

- if str( self.__path ) == self.__currentPath :

- # the path location itself hasn't changed so we are assuming that just the filter has.

- # if we're in the tree view mode, the user would probably be very happy

- # if we didn't forget what was expanded.

- if self.getDisplayMode() == self.DisplayMode.Tree :

- expandedPaths = self.getExpandedPaths()

-

_GafferUI._pathListingWidgetUpdateModel( GafferUI._qtAddress( self._qtWidget() ), dirPath.copy() )

-

- if expandedPaths is not None :

- self.setExpandedPaths( expandedPaths )

-

- self.setSelectedPaths( selectedPaths, scrollToFirst = False, expandNonLeaf = False )

-

self.__currentDir = dirPath

+ self._qtWidget().updateColumnWidths()

self.__currentPath = str( self.__path )

@@ -629,9 +615,9 @@ class _TreeView( QtWidgets.QTreeView ) :

QtWidgets.QTreeView.setModel( self, model )

- model.modelReset.connect( self.__recalculateColumnSizes )

+ model.modelReset.connect( self.updateColumnWidths )

- self.__recalculateColumnSizes()

+ self.updateColumnWidths()

def setExpansion( self, paths ) :

@@ -649,7 +635,7 @@ class _TreeView( QtWidgets.QTreeView ) :

self.collapsed.connect( self.__collapsed )

self.expanded.connect( self.__expanded )

- self.__recalculateColumnSizes()

+ self.updateColumnWidths()

self.expansionChanged.emit()

@@ -695,7 +681,7 @@ class _TreeView( QtWidgets.QTreeView ) :

QtWidgets.QTreeView.mouseDoubleClickEvent( self, event )

self.__currentEventModifiers = QtCore.Qt.NoModifier

- def __recalculateColumnSizes( self ) :

+ def updateColumnWidths( self ) :

self.__recalculatingColumnWidths = True

@@ -724,21 +710,21 @@ class _TreeView( QtWidgets.QTreeView ) :

return

# store the difference between the ideal size and what the user would prefer, so

- # we can apply it again in __recalculateColumnSizes

+ # we can apply it again in updateColumnWidths

if len( self.__idealColumnWidths ) > index :

self.__columnWidthAdjustments[index] = newWidth - self.__idealColumnWidths[index]

def __collapsed( self, index ) :

self.__propagateExpanded( index, False )

- self.__recalculateColumnSizes()

+ self.updateColumnWidths()

self.expansionChanged.emit()

def __expanded( self, index ) :

self.__propagateExpanded( index, True )

- self.__recalculateColumnSizes()

+ self.updateColumnWidths()

self.expansionChanged.emit()

|

Update developers.md : remove codacy

We took codacy out of our CI > 6 mos ago, because it was too heavy. Local was even harder to use. We missed removing it from developers.md, I guess. Fixing that now:) | @@ -130,46 +130,6 @@ pre-commit install

Now, this will auto-apply isort (import sorting), flake8 (linting) and black (automatic code formatting) to commits. Black formatting is the standard and is checked as part of pull requests.

-### 7.2 Code quality tests

-

-Use [codacy-analysis-cli](https://github.com/codacy/codacy-analysis-cli).

-

-First, install once. In a new console:

-

-```console

-curl -L https://github.com/codacy/codacy-analysis-cli/archive/master.tar.gz | tar xvz

-cd codacy-analysis-cli-* && sudo make install

-```

-

-In main console (with venv on):

-

-```console

-#run all tools, plus Metrics and Clones data.

-codacy-analysis-cli analyze --directory ~/code/ocean.py/ocean_lib/ocean

-

-#run tools individually

-codacy-analysis-cli analyze --directory ~/code/ocean.py/ocean_lib/ocean --tool Pylint

-codacy-analysis-cli analyze --directory ~/code/ocean.py/ocean_lib/ocean --tool Prospector

-codacy-analysis-cli analyze --directory ~/code/ocean.py/ocean_lib/ocean --tool Bandit

-```

-

-You'll get a report that looks like this.

-

-```console

-Found [Info] `First line should end with a period (D415)` in ocean_compute.py:50 (Prospector_pep257)

-Found [Info] `Missing docstring in __init__ (D107)` in ocean_assets.py:42 (Prospector_pep257)

-Found [Info] `Method could be a function` in ocean_pool.py:473 (PyLint_R0201)

-Found [Warning] `Possible hardcoded password: ''` in ocean_exchange.py:23 (Bandit_B107)

-Found [Metrics] in ocean_exchange.py:

- LOC - 68

- CLOC - 4

- #methods - 6

-```

-

-(C)LOC = (Commented) Lines Of Code.

-

-Finally, you can [go here](https://app.codacy.com/gh/oceanprotocol/ocean.py/dashboard) to see results of remotely-run tests. (You may need special permissions.)

-

## 8. Appendix: Contributing to docs

You are welcome to contribute to ocean.py docs and READMEs. For clean markdowns in the READMEs folder, we use the `remark` tool for automatic markdown formatting.

|

TST: Use joba='R' in gejsv

Previously joba='A' would incorrectly calculate a very small singular

value for complex128 with Windows on Azure. joba='R' calculates the rank

more accurately leading to a passing result | @@ -1920,7 +1920,7 @@ def test_gejsv_with_rank_deficient_matrix(dtype):

sva[k:] = 0

SIGMA = np.diag(work[0] / work[1] * sva[:n])

A_rank_k = u @ SIGMA @ v.T

- sva, u, v, work, iwork, info = gejsv(A_rank_k)

+ sva, u, v, work, iwork, info = gejsv(A_rank_k, joba='R')

assert_equal(iwork[0], k)

assert_equal(iwork[1], k)

|

Updated Validator.pizza to MailCheck.ai

Updated Validator.pizza to MailCheck.ai (name, url, and description) to match service's change from December 2nd 2021. | @@ -446,12 +446,12 @@ API | Description | Auth | HTTPS | CORS |

| [EVA](https://eva.pingutil.com/) | Validate email addresses | No | Yes | Yes |

| [Kickbox](https://open.kickbox.com/) | Email verification API | No | Yes | Yes |

| [Lob.com](https://lob.com/) | US Address Verification | `apiKey` | Yes | Unknown |

+| [MailCheck.ai](https://www.mailcheck.ai/#documentation) | Prevent users to sign up with temporary email addresses | No | Yes | Unknown |

| [Postman Echo](https://www.postman-echo.com) | Test api server to receive and return value from HTTP method | No | Yes | Unknown |

| [PurgoMalum](http://www.purgomalum.com) | Content validator against profanity & obscenity | No | No | Unknown |

| [US Autocomplete](https://smartystreets.com/docs/cloud/us-autocomplete-api) | Enter address data quickly with real-time address suggestions | `apiKey` | Yes | Yes |

| [US Extract](https://smartystreets.com/products/apis/us-extract-api) | Extract postal addresses from any text including emails | `apiKey` | Yes | Yes |

| [US Street Address](https://smartystreets.com/docs/cloud/us-street-api) | Validate and append data for any US postal address | `apiKey` | Yes | Yes |

-| [Validator.pizza](https://www.validator.pizza/) | Prevent users to register to websites with a disposable email address | No | Yes | Unknown |

| [vatlayer](https://vatlayer.com/documentation) | VAT number validation | `apiKey` | Yes | Unknown |

| [Verifier](https://verifier.meetchopra.com/docs#/) | Verifies that a given email is real | `apiKey` | Yes | Yes |

| [Veriphone](https://veriphone.io) | Phone number validation & carrier lookup | `apiKey` | Yes | Yes |

|

Fix vae_test

The success condition is not so reliable. I've seen several CI failures because of it. So relax it a little bit. | @@ -121,7 +121,7 @@ class VaeTest(VaeMnistTest):

last_val_loss = hist.history['val_loss'][-1]

print("loss: ", last_val_loss)

- self.assertTrue(38.0 < last_val_loss <= 39.0)

+ self.assertTrue(37.5 < last_val_loss <= 39.0)

if INTERACTIVE_MODE:

self.show_encoded_images(model)

self.show_sampled_images(lambda eps: decoding_layers(eps))

|

Update egghead_questions.json

Fixed question "Who is considered to be the founder of Earth Day?"

Fixed question "When was the first Earth Day?" | "1999",

"1970"

],

- "correct_answer": 2

+ "correct_answer": 3

},

{

"question": "Who is considered to be the founder of Earth Day?",

"President Jimmy Carter",

"President John F. Kennedy",

"Vice President Al Gore",

- "Senator Gaylord Nelson"

+ "John McConnell"

],

"correct_answer": 3

},

|

Update settings.py

Fix missing kwargs and task ids in django-celery-results | @@ -895,6 +895,7 @@ CELERY_TASK_DECORATOR_KWARGS = {

CELERY_RESULT_BACKEND = os.environ.get("CELERY_RESULT_BACKEND", "django-db")

CELERY_RESULT_PERSISTENT = True

+CELERY_RESULT_EXTENDED = True

CELERY_RESULT_EXPIRES = timedelta(days=7)

CELERY_TASK_ACKS_LATE = strtobool(

os.environ.get("CELERY_TASK_ACKS_LATE", "False")

|

[easy] Allow non-integer daemon intervals

Summary: Some new daemons may ping more frequently than once per second.

Test Plan: BK

Reviewers: johann, prha | @@ -37,7 +37,7 @@ def get_default_daemon_logger(daemon_name):

class DagsterDaemon:

def __init__(self, interval_seconds):

self._logger = get_default_daemon_logger(type(self).__name__)

- self.interval_seconds = check.int_param(interval_seconds, "interval_seconds")

+ self.interval_seconds = check.numeric_param(interval_seconds, "interval_seconds")

self._last_iteration_time = None

self._last_heartbeat_time = None

|

[cli][testing] skipping files that cannot be dumped to json

Files that are unable to be dumped to json will now be skipped in the loop. | @@ -106,7 +106,12 @@ def format_record(test_record):

elif service == 'kinesis':

kinesis_path = os.path.join(DIR_TEMPLATES, 'kinesis.json')

with open(kinesis_path, 'r') as kinesis_template:

+ try:

template = json.load(kinesis_template)

+ except ValueError as err:

+ LOGGER_CLI.error('Error loading kinesis.json: %s', err)

+ return

+

template['kinesis']['data'] = base64.b64encode(data)

template['eventSourceARN'] = 'arn:aws:kinesis:us-east-1:111222333:stream/{}'.format(source)

return template

@@ -146,8 +151,9 @@ def test_kinesis_alert_rules():

with open(rule_file_path, 'r') as rule_file_handle:

try:

contents = json.load(rule_file_handle)

- except ValueError:

- LOGGER_CLI.info('Error loading %s, bad JSON', rule_file)

+ except ValueError as err:

+ LOGGER_CLI.error('Error loading %s: %s', rule_file, err)

+ continue

test_records = contents['records']

if len(test_records) == 0:

|

Update documentation for release process

Summary: Removing references to RTD and add step for release notes.

Test Plan: docs only

Reviewers: sashank, max, alangenfeld, yuhan | @@ -37,7 +37,6 @@ It's also prudent to release from a fresh virtualenv.

- You must have PyPI credentials available to twine (see below), and you must be permissioned as a

maintainer on the projects.

-- You must be permissioned as a maintainer on ReadTheDocs.

- You must export `SLACK_RELEASE_BOT_TOKEN` with an appropriate value.