instance_id

stringlengths 10

57

| patch

stringlengths 261

37.7k

| repo

stringlengths 7

53

| base_commit

stringlengths 40

40

| hints_text

stringclasses 301

values | test_patch

stringlengths 212

2.22M

| problem_statement

stringlengths 23

37.7k

| version

stringclasses 1

value | environment_setup_commit

stringlengths 40

40

| FAIL_TO_PASS

listlengths 1

4.94k

| PASS_TO_PASS

listlengths 0

7.82k

| meta

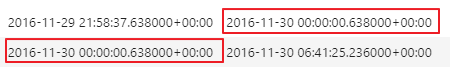

dict | created_at

stringlengths 25

25

| license

stringclasses 8

values | __index_level_0__

int64 0

6.41k

|

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

maximkulkin__lollipop-53

|

```diff
diff --git a/lollipop/types.py b/lollipop/types.py
index 5f3ac70..3ec8a50 100644
--- a/lollipop/types.py
+++ b/lollipop/types.py
@@ -1,6 +1,6 @@
 from lollipop.errors import ValidationError, ValidationErrorBuilder, \
     ErrorMessagesMixin, merge_errors
-from lollipop.utils import is_list, is_dict, make_context_aware, \
+from lollipop.utils import is_sequence, is_mapping, make_context_aware, \
     constant, identity, OpenStruct
 from lollipop.compat import string_types, int_types, iteritems, OrderedDict
 import datetime
@@ -377,7 +377,7 @@ class List(Type):
             self._fail('required')
         # TODO: Make more intelligent check for collections
-        if not is_list(data):
+        if not is_sequence(data):
             self._fail('invalid')
         errors_builder = ValidationErrorBuilder()
@@ -395,7 +395,7 @@ class List(Type):
         if value is MISSING or value is None:
             self._fail('required')
-        if not is_list(value):
+        if not is_sequence(value):
             self._fail('invalid')
         errors_builder = ValidationErrorBuilder()
@@ -422,6 +422,7 @@ class Tuple(Type):
     Example: ::
         Tuple([String(), Integer(), Boolean()]).load(['foo', 123, False])
+        # => ('foo', 123, False)
     :param list item_types: List of item types.
     :param kwargs: Same keyword arguments as for :class:`Type`.
@@ -439,11 +440,13 @@ class Tuple(Type):
         if data is MISSING or data is None:
             self._fail('required')
-        if not is_list(data):
+        if not is_sequence(data):
             self._fail('invalid')
         if len(data) != len(self.item_types):
-            self._fail('invalid_length', expected_length=len(self.item_types))
+            self._fail('invalid_length',
+                       expected_length=len(self.item_types),
+                       actual_length=len(data))
         errors_builder = ValidationErrorBuilder()
         result = []
@@ -454,13 +457,13 @@ class Tuple(Type):
                 errors_builder.add_errors({idx: ve.messages})
         errors_builder.raise_errors()
-        return super(Tuple, self).load(result, *args, **kwargs)
+        return tuple(super(Tuple, self).load(result, *args, **kwargs))
     def dump(self, value, *args, **kwargs):
         if value is MISSING or value is None:
             self._fail('required')
-        if not is_list(value):
+        if not is_sequence(value):
             self._fail('invalid')
         if len(value) != len(self.item_types):
@@ -560,7 +563,7 @@ class OneOf(Type):
         if data is MISSING or data is None:
             self._fail('required')
-        if is_dict(self.types) and self.load_hint:
+        if is_mapping(self.types) and self.load_hint:
             type_id = self.load_hint(data)
             if type_id not in self.types:
                 self._fail('unknown_type_id', type_id=type_id)
@@ -569,7 +572,8 @@ class OneOf(Type):
             result = item_type.load(data, *args, **kwargs)
             return super(OneOf, self).load(result, *args, **kwargs)
         else:
-            for item_type in (self.types.values() if is_dict(self.types) else self.types):
+            for item_type in (self.types.values()
+                              if is_mapping(self.types) else self.types):
                 try:
                     result = item_type.load(data, *args, **kwargs)
                     return super(OneOf, self).load(result, *args, **kwargs)
@@ -582,7 +586,7 @@ class OneOf(Type):
         if data is MISSING or data is None:
             self._fail('required')
-        if is_dict(self.types) and self.dump_hint:
+        if is_mapping(self.types) and self.dump_hint:
             type_id = self.dump_hint(data)
             if type_id not in self.types:
                 self._fail('unknown_type_id', type_id=type_id)
@@ -591,7 +595,8 @@ class OneOf(Type):
             result = item_type.dump(data, *args, **kwargs)
             return super(OneOf, self).dump(result, *args, **kwargs)
         else:
-            for item_type in (self.types.values() if is_dict(self.types) else self.types):
+            for item_type in (self.types.values()
+                              if is_mapping(self.types) else self.types):
                 try:
                     result = item_type.dump(data, *args, **kwargs)
                     return super(OneOf, self).dump(result, *args, **kwargs)
@@ -668,7 +673,7 @@ class Dict(Type):
         if data is MISSING or data is None:
             self._fail('required')
-        if not is_dict(data):
+        if not is_mapping(data):
             self._fail('invalid')
         errors_builder = ValidationErrorBuilder()
@@ -695,7 +700,7 @@ class Dict(Type):
         if value is MISSING or value is None:
             self._fail('required')
-        if not is_dict(value):
+        if not is_mapping(value):
             self._fail('invalid')
         errors_builder = ValidationErrorBuilder()
@@ -1091,10 +1096,10 @@ class Object(Type):
         if isinstance(bases_or_fields, Type):
             bases = [bases_or_fields]
-        if is_list(bases_or_fields) and \
+        if is_sequence(bases_or_fields) and \
                 all([isinstance(base, Type) for base in bases_or_fields]):
             bases = bases_or_fields
-        elif is_list(bases_or_fields) or is_dict(bases_or_fields):
+        elif is_sequence(bases_or_fields) or is_mapping(bases_or_fields):
             if fields is None:
                 bases = []
                 fields = bases_or_fields
@@ -1108,9 +1113,9 @@ class Object(Type):
         self._allow_extra_fields = allow_extra_fields
         self._immutable = immutable
         self._ordered = ordered
-        if only is not None and not is_list(only):
+        if only is not None and not is_sequence(only):
             only = [only]
-        if exclude is not None and not is_list(exclude):
+        if exclude is not None and not is_sequence(exclude):
             exclude = [exclude]
         self._only = only
         self._exclude = exclude
@@ -1155,7 +1160,8 @@ class Object(Type):
         if fields is not None:
             all_fields += [
                 (name, self._normalize_field(field))
-                for name, field in (iteritems(fields) if is_dict(fields) else fields)
+                for name, field in (iteritems(fields)
+                                    if is_mapping(fields) else fields)
             ]
         return OrderedDict(all_fields)
@@ -1164,7 +1170,7 @@ class Object(Type):
         if data is MISSING or data is None:
             self._fail('required')
-        if not is_dict(data):
+        if not is_mapping(data):
             self._fail('invalid')
         errors_builder = ValidationErrorBuilder()
@@ -1213,7 +1219,7 @@ class Object(Type):
         if data is None:
             self._fail('required')
-        if not is_dict(data):
+        if not is_mapping(data):
             self._fail('invalid')
         errors_builder = ValidationErrorBuilder()
@@ -1528,7 +1534,7 @@ def validated_type(base_type, name=None, validate=None):
     """
     if validate is None:
         validate = []
-    if not is_list(validate):
+    if not is_sequence(validate):
         validate = [validate]
     class ValidatedSubtype(base_type):
diff --git a/lollipop/utils.py b/lollipop/utils.py
index 596706c..fa0bb4b 100644
--- a/lollipop/utils.py
+++ b/lollipop/utils.py
@@ -1,6 +1,7 @@
 import inspect
 import re
 from lollipop.compat import DictMixin, iterkeys
+import collections
 def identity(value):
@@ -14,14 +15,18 @@ def constant(value):
     return func
-def is_list(value):
+def is_sequence(value):
     """Returns True if value supports list interface; False - otherwise"""
-    return isinstance(value, list)
+    return isinstance(value, collections.Sequence)
-
-def is_dict(value):
+def is_mapping(value):
     """Returns True if value supports dict interface; False - otherwise"""
-    return isinstance(value, dict)
+    return isinstance(value, collections.Mapping)
+
+
+# Backward compatibility
+is_list = is_sequence
+is_dict = is_mapping
 def make_context_aware(func, numargs):
diff --git a/lollipop/validators.py b/lollipop/validators.py
index 8652d96..c29b1da 100644
--- a/lollipop/validators.py
+++ b/lollipop/validators.py
@@ -1,7 +1,7 @@
 from lollipop.errors import ValidationError, ValidationErrorBuilder, \
     ErrorMessagesMixin
 from lollipop.compat import string_types, iteritems
-from lollipop.utils import make_context_aware, is_list, identity
+from lollipop.utils import make_context_aware, is_sequence, identity
 import re
@@ -291,7 +291,7 @@ class Unique(Validator):
             self._error_messages['unique'] = error
     def __call__(self, value, context=None):
-        if not is_list(value):
+        if not is_sequence(value):
             self._fail('invalid')
         seen = set()
@@ -318,12 +318,12 @@ class Each(Validator):
     def __init__(self, validators, **kwargs):
         super(Validator, self).__init__(**kwargs)
-        if not is_list(validators):
+        if not is_sequence(validators):
             validators = [validators]
         self.validators = validators
     def __call__(self, value, context=None):
-        if not is_list(value):
+        if not is_sequence(value):
             self._fail('invalid', data=value)
         error_builder = ValidationErrorBuilder()
```
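The `utils.py` hunk is the core of the fix: `is_list`/`is_dict` tested for the concrete `list` and `dict` classes, while `is_sequence`/`is_mapping` test against the `Sequence` and `Mapping` abstract base classes, so tuples and other non-`list` sequences, as well as non-`dict` mappings, now pass. A minimal standalone sketch of the two helpers follows. It is not lollipop's code, and it departs from the patch in one labelled way: it imports the ABCs from `collections.abc`, because the `collections.Sequence` and `collections.Mapping` aliases the patch relies on were deprecated in Python 3.3 and removed in Python 3.10, where calling the patched helpers raises `AttributeError`.

```python
from collections import OrderedDict
# Assumption: collections.abc instead of the bare collections aliases
# used in the patch (those aliases no longer exist on Python 3.10+).
from collections.abc import Mapping, Sequence


def is_sequence(value):
    """Return True if value supports the read-only sequence interface."""
    # list, tuple, str and range are registered as Sequence;
    # set and dict are not, since they are not indexable by position.
    return isinstance(value, Sequence)


def is_mapping(value):
    """Return True if value supports the read-only mapping interface."""
    return isinstance(value, Mapping)


# Backward-compatible aliases, mirroring the ones the patch keeps in utils.py
is_list = is_sequence
is_dict = is_mapping


if __name__ == "__main__":
    print(is_sequence(("foo", 123)))      # True: tuples are now accepted
    print(is_sequence("foobar"))          # True: strings are sequences too
    print(is_sequence(123))               # False
    print(is_sequence({"a", "b"}))        # False: sets are not sequences
    print(is_mapping(OrderedDict(a=1)))   # True
```

Because `str` is registered as a `Sequence`, the broader check also accepts strings. That is why the updated tests swap the non-collection inputs `'1, 2, 3'` and `'foo'` for `123`, and why `List(String()).dump('foobar')` is now expected to return a list of single characters.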

|

maximkulkin/lollipop

|

f7c50ac54610b7965d41f28d0ee5ee5e24dea0ee

|

```diff
diff --git a/tests/test_types.py b/tests/test_types.py
index 2ab07e1..e652fb6 100644
--- a/tests/test_types.py
+++ b/tests/test_types.py
@@ -509,7 +509,7 @@ class TestList(NameDescriptionTestsMixin, RequiredTestsMixin, ValidationTestsMix
     def test_loading_non_list_value_raises_ValidationError(self):
         with pytest.raises(ValidationError) as exc_info:
-            List(String()).load('1, 2, 3')
+            List(String()).load(123)
         assert exc_info.value.messages == List.default_error_messages['invalid']
     def test_loading_list_value_with_items_of_incorrect_type_raises_ValidationError(self):
@@ -542,9 +542,13 @@ class TestList(NameDescriptionTestsMixin, RequiredTestsMixin, ValidationTestsMix
     def test_dumping_list_value(self):
         assert List(String()).dump(['foo', 'bar', 'baz']) == ['foo', 'bar', 'baz']
+    def test_dumping_sequence_value(self):
+        assert List(String()).dump(('foo', 'bar', 'baz')) == ['foo', 'bar', 'baz']
+        assert List(String()).dump('foobar') == ['f', 'o', 'o', 'b', 'a', 'r']
+
     def test_dumping_non_list_value_raises_ValidationError(self):
         with pytest.raises(ValidationError) as exc_info:
-            List(String()).dump('1, 2, 3')
+            List(String()).dump(123)
         assert exc_info.value.messages == List.default_error_messages['invalid']
     def test_dumping_list_value_with_items_of_incorrect_type_raises_ValidationError(self):
@@ -563,15 +567,15 @@ class TestList(NameDescriptionTestsMixin, RequiredTestsMixin, ValidationTestsMix
 class TestTuple(NameDescriptionTestsMixin, RequiredTestsMixin, ValidationTestsMixin):
     tested_type = partial(Tuple, [Integer(), Integer()])
     valid_data = [123, 456]
-    valid_value = [123, 456]
+    valid_value = (123, 456)
     def test_loading_tuple_with_values_of_same_type(self):
         assert Tuple([Integer(), Integer()]).load([123, 456]) == \
-            [123, 456]
+            (123, 456)
     def test_loading_tuple_with_values_of_different_type(self):
         assert Tuple([String(), Integer(), Boolean()]).load(['foo', 123, False]) == \
-            ['foo', 123, False]
+            ('foo', 123, False)
     def test_loading_non_tuple_value_raises_ValidationError(self):
         with pytest.raises(ValidationError) as exc_info:
@@ -596,23 +600,35 @@ class TestTuple(NameDescriptionTestsMixin, RequiredTestsMixin, ValidationTestsMi
         assert inner_type.load_context == context
     def test_dump_tuple(self):
-        assert Tuple([Integer(), Integer()]).dump([123, 456]) == [123, 456]
+        assert Tuple([String(), Integer()]).dump(('hello', 123)) == ['hello', 123]
+
+    def test_dump_sequence(self):
+        assert Tuple([String(), Integer()]).dump(['hello', 123]) == ['hello', 123]
     def test_dumping_non_tuple_raises_ValidationError(self):
         with pytest.raises(ValidationError) as exc_info:
-            Tuple(String()).dump('foo')
+            Tuple([String()]).dump(123)
         assert exc_info.value.messages == Tuple.default_error_messages['invalid']
+    def test_dumping_sequence_of_incorrect_length_raises_ValidationError(self):
+        with pytest.raises(ValidationError) as exc_info:
+            Tuple([String(), Integer()]).dump(['hello', 123, 456])
+        assert exc_info.value.messages == \
+            Tuple.default_error_messages['invalid_length'].format(
+                expected_length=2,
+                actual_length=3,
+            )
+
     def test_dumping_tuple_with_items_of_incorrect_type_raises_ValidationError(self):
         with pytest.raises(ValidationError) as exc_info:
-            Tuple([String(), String()]).dump([123, 456])
+            Tuple([String(), String()]).dump(('hello', 456))
         message = String.default_error_messages['invalid']
-        assert exc_info.value.messages == {0: message, 1: message}
+        assert exc_info.value.messages == {1: message}
     def test_dumping_tuple_passes_context_to_inner_type_dump(self):
         inner_type = SpyType()
         context = object()
-        Tuple([inner_type, inner_type]).dump(['foo','foo'], context)
+        Tuple([inner_type, inner_type]).dump(('foo','foo'), context)
         assert inner_type.dump_context == context
diff --git a/tests/test_validators.py b/tests/test_validators.py
index fa84610..5d6421a 100644
--- a/tests/test_validators.py
+++ b/tests/test_validators.py
@@ -318,7 +318,7 @@ class TestRegexp:
 class TestUnique:
     def test_raising_ValidationError_if_value_is_not_collection(self):
         with raises(ValidationError) as exc_info:
-            Unique()('foo')
+            Unique()(123)
         assert exc_info.value.messages == Unique.default_error_messages['invalid']
     def test_matching_empty_collection(self):
@@ -371,7 +371,7 @@ is_small = Predicate(lambda x: x <= 5, 'Value should be small')
 class TestEach:
     def test_raising_ValidationError_if_value_is_not_collection(self):
         with raises(ValidationError) as exc_info:
-            Each(lambda x: x)('foo')
+            Each(lambda x: x)(123)
         assert exc_info.value.messages == Each.default_error_messages['invalid']
     def test_matching_empty_collections(self):
```
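The revised `TestTuple` cases pin down the behaviour after the fix: `load` accepts any sequence and returns a `tuple`, `dump` accepts a tuple or a list and returns a `list`, a length mismatch reports both `expected_length` and `actual_length`, and per-item errors are keyed by position. The sketch below imitates that contract without importing lollipop. Everything in it is hypothetical and simplified: `load_tuple`, `dump_tuple`, `_check_items`, the `string`/`integer` checkers, the stand-in `ValidationError` and the message templates are illustrative names, not lollipop's API, and it skips the validator and context handling the real `Tuple` type performs.

```python
from collections.abc import Sequence


class ValidationError(Exception):
    """Hypothetical stand-in for lollipop.errors.ValidationError."""

    def __init__(self, messages):
        super().__init__(messages)
        self.messages = messages


# Hypothetical message templates; lollipop's real default texts may differ.
INVALID = 'Value should be sequence'
INVALID_LENGTH = 'Value length should be {expected_length}, got {actual_length}'


def _check_items(item_checkers, data):
    """Shared validation for load and dump: type, length, then each item."""
    if not isinstance(data, Sequence):
        raise ValidationError(INVALID)
    if len(data) != len(item_checkers):
        raise ValidationError(INVALID_LENGTH.format(
            expected_length=len(item_checkers),
            actual_length=len(data)))
    errors, result = {}, []
    for idx, (check, item) in enumerate(zip(item_checkers, data)):
        try:
            result.append(check(item))
        except ValidationError as ve:
            errors[idx] = ve.messages  # errors are keyed by item position
    if errors:
        raise ValidationError(errors)
    return result


def load_tuple(item_checkers, data):
    """Accept any sequence; return a tuple, as the patched Tuple.load does."""
    return tuple(_check_items(item_checkers, data))


def dump_tuple(item_checkers, value):
    """Accept a tuple or a list; return a list, as the updated tests expect."""
    return _check_items(item_checkers, value)


def string(value):
    if not isinstance(value, str):
        raise ValidationError('Value should be string')
    return value


def integer(value):
    if not isinstance(value, int):
        raise ValidationError('Value should be integer')
    return value


if __name__ == '__main__':
    print(load_tuple([string, integer], ['foo', 123]))    # ('foo', 123)
    print(dump_tuple([string, integer], ('hello', 123)))  # ['hello', 123]
```

Calling `dump_tuple([string, string], ('hello', 456))` raises a `ValidationError` whose `messages` is `{1: 'Value should be string'}`, mirroring the expectation in `test_dumping_tuple_with_items_of_incorrect_type_raises_ValidationError`.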

|

Misleading Tuple type

Is it on purpose that the Tuple type dumps into / loads from a list instead of a tuple? If yes, I think the naming is a bit misleading…

```
Tuple([String(), Integer(), Boolean()]).load(('foo', 123, False))
Traceback (most recent call last):
  File "<input>", line 1, in <module>
  File "site-packages\lollipop\types.py", line 443, in load
    self._fail('invalid')
  File "site-packages\lollipop\errors.py", line 63, in _fail
    raise ValidationError(msg)
lollipop.errors.ValidationError: Invalid data: 'Value should be list'
```

|

0.0

|

f7c50ac54610b7965d41f28d0ee5ee5e24dea0ee

|

[

"tests/test_types.py::TestList::test_dumping_sequence_value",

"tests/test_types.py::TestTuple::test_loading_does_not_raise_ValidationError_if_validators_succeed",

"tests/test_types.py::TestTuple::test_loading_tuple_with_values_of_same_type",

"tests/test_types.py::TestTuple::test_loading_tuple_with_values_of_different_type",

"tests/test_types.py::TestTuple::test_dump_tuple",

"tests/test_types.py::TestTuple::test_dumping_tuple_with_items_of_incorrect_type_raises_ValidationError",

"tests/test_types.py::TestTuple::test_dumping_tuple_passes_context_to_inner_type_dump"

] |

[

"tests/test_types.py::TestString::test_loading_does_not_raise_ValidationError_if_validators_succeed",

"tests/test_types.py::TestString::test_loading_raises_ValidationError_if_validator_fails",

"tests/test_types.py::TestString::test_loading_raises_ValidationError_with_combined_messages_if_multiple_validators_fail",

"tests/test_types.py::TestString::test_loading_passes_context_to_validator",

"tests/test_types.py::TestString::test_validation_returns_None_if_validators_succeed",

"tests/test_types.py::TestString::test_validation_returns_errors_if_validator_fails",

"tests/test_types.py::TestString::test_validation_returns_combined_errors_if_multiple_validators_fail",

"tests/test_types.py::TestString::test_loading_missing_value_raises_required_error",

"tests/test_types.py::TestString::test_loading_None_raises_required_error",

"tests/test_types.py::TestString::test_dumping_missing_value_raises_required_error",

"tests/test_types.py::TestString::test_dumping_None_raises_required_error",

"tests/test_types.py::TestString::test_name",

"tests/test_types.py::TestString::test_description",

"tests/test_types.py::TestString::test_loading_string_value",

"tests/test_types.py::TestString::test_loading_non_string_value_raises_ValidationError",

"tests/test_types.py::TestString::test_dumping_string_value",

"tests/test_types.py::TestString::test_dumping_non_string_value_raises_ValidationError",

"tests/test_types.py::TestNumber::test_loading_does_not_raise_ValidationError_if_validators_succeed",

"tests/test_types.py::TestNumber::test_loading_raises_ValidationError_if_validator_fails",

"tests/test_types.py::TestNumber::test_loading_raises_ValidationError_with_combined_messages_if_multiple_validators_fail",

"tests/test_types.py::TestNumber::test_loading_passes_context_to_validator",

"tests/test_types.py::TestNumber::test_validation_returns_None_if_validators_succeed",

"tests/test_types.py::TestNumber::test_validation_returns_errors_if_validator_fails",

"tests/test_types.py::TestNumber::test_validation_returns_combined_errors_if_multiple_validators_fail",

"tests/test_types.py::TestNumber::test_loading_missing_value_raises_required_error",

"tests/test_types.py::TestNumber::test_loading_None_raises_required_error",

"tests/test_types.py::TestNumber::test_dumping_missing_value_raises_required_error",

"tests/test_types.py::TestNumber::test_dumping_None_raises_required_error",

"tests/test_types.py::TestNumber::test_name",

"tests/test_types.py::TestNumber::test_description",

"tests/test_types.py::TestNumber::test_loading_float_value",

"tests/test_types.py::TestNumber::test_loading_non_numeric_value_raises_ValidationError",

"tests/test_types.py::TestNumber::test_dumping_float_value",

"tests/test_types.py::TestNumber::test_dumping_non_numeric_value_raises_ValidationError",

"tests/test_types.py::TestInteger::test_loading_integer_value",

"tests/test_types.py::TestInteger::test_loading_long_value",

"tests/test_types.py::TestInteger::test_loading_non_numeric_value_raises_ValidationError",

"tests/test_types.py::TestInteger::test_dumping_integer_value",

"tests/test_types.py::TestInteger::test_dumping_long_value",

"tests/test_types.py::TestInteger::test_dumping_non_numeric_value_raises_ValidationError",

"tests/test_types.py::TestFloat::test_loading_float_value",

"tests/test_types.py::TestFloat::test_loading_non_numeric_value_raises_ValidationError",

"tests/test_types.py::TestFloat::test_dumping_float_value",

"tests/test_types.py::TestFloat::test_dumping_non_numeric_value_raises_ValidationError",

"tests/test_types.py::TestBoolean::test_loading_does_not_raise_ValidationError_if_validators_succeed",

"tests/test_types.py::TestBoolean::test_loading_raises_ValidationError_if_validator_fails",

"tests/test_types.py::TestBoolean::test_loading_raises_ValidationError_with_combined_messages_if_multiple_validators_fail",

"tests/test_types.py::TestBoolean::test_loading_passes_context_to_validator",

"tests/test_types.py::TestBoolean::test_validation_returns_None_if_validators_succeed",

"tests/test_types.py::TestBoolean::test_validation_returns_errors_if_validator_fails",

"tests/test_types.py::TestBoolean::test_validation_returns_combined_errors_if_multiple_validators_fail",

"tests/test_types.py::TestBoolean::test_loading_missing_value_raises_required_error",

"tests/test_types.py::TestBoolean::test_loading_None_raises_required_error",

"tests/test_types.py::TestBoolean::test_dumping_missing_value_raises_required_error",

"tests/test_types.py::TestBoolean::test_dumping_None_raises_required_error",

"tests/test_types.py::TestBoolean::test_name",

"tests/test_types.py::TestBoolean::test_description",

"tests/test_types.py::TestBoolean::test_loading_boolean_value",

"tests/test_types.py::TestBoolean::test_loading_non_boolean_value_raises_ValidationError",

"tests/test_types.py::TestBoolean::test_dumping_boolean_value",

"tests/test_types.py::TestBoolean::test_dumping_non_boolean_value_raises_ValidationError",

"tests/test_types.py::TestDateTime::test_loading_does_not_raise_ValidationError_if_validators_succeed",

"tests/test_types.py::TestDateTime::test_loading_raises_ValidationError_if_validator_fails",

"tests/test_types.py::TestDateTime::test_loading_raises_ValidationError_with_combined_messages_if_multiple_validators_fail",

"tests/test_types.py::TestDateTime::test_loading_passes_context_to_validator",

"tests/test_types.py::TestDateTime::test_validation_returns_None_if_validators_succeed",

"tests/test_types.py::TestDateTime::test_validation_returns_errors_if_validator_fails",

"tests/test_types.py::TestDateTime::test_validation_returns_combined_errors_if_multiple_validators_fail",

"tests/test_types.py::TestDateTime::test_loading_missing_value_raises_required_error",

"tests/test_types.py::TestDateTime::test_loading_None_raises_required_error",

"tests/test_types.py::TestDateTime::test_dumping_missing_value_raises_required_error",

"tests/test_types.py::TestDateTime::test_dumping_None_raises_required_error",

"tests/test_types.py::TestDateTime::test_name",

"tests/test_types.py::TestDateTime::test_description",

"tests/test_types.py::TestDateTime::test_loading_string_date",

"tests/test_types.py::TestDateTime::test_loading_using_predefined_format",

"tests/test_types.py::TestDateTime::test_loading_using_custom_format",

"tests/test_types.py::TestDateTime::test_loading_raises_ValidationError_if_value_is_not_string",

"tests/test_types.py::TestDateTime::test_customizing_error_message_if_value_is_not_string",

"tests/test_types.py::TestDateTime::test_loading_raises_ValidationError_if_value_string_does_not_match_date_format",

"tests/test_types.py::TestDateTime::test_customizing_error_message_if_value_string_does_not_match_date_format",

"tests/test_types.py::TestDateTime::test_loading_passes_deserialized_date_to_validator",

"tests/test_types.py::TestDateTime::test_dumping_date",

"tests/test_types.py::TestDateTime::test_dumping_using_predefined_format",

"tests/test_types.py::TestDateTime::test_dumping_using_custom_format",

"tests/test_types.py::TestDateTime::test_dumping_raises_ValidationError_if_value_is_not_string",

"tests/test_types.py::TestDate::test_loading_does_not_raise_ValidationError_if_validators_succeed",

"tests/test_types.py::TestDate::test_loading_raises_ValidationError_if_validator_fails",

"tests/test_types.py::TestDate::test_loading_raises_ValidationError_with_combined_messages_if_multiple_validators_fail",

"tests/test_types.py::TestDate::test_loading_passes_context_to_validator",

"tests/test_types.py::TestDate::test_validation_returns_None_if_validators_succeed",

"tests/test_types.py::TestDate::test_validation_returns_errors_if_validator_fails",

"tests/test_types.py::TestDate::test_validation_returns_combined_errors_if_multiple_validators_fail",

"tests/test_types.py::TestDate::test_loading_missing_value_raises_required_error",

"tests/test_types.py::TestDate::test_loading_None_raises_required_error",

"tests/test_types.py::TestDate::test_dumping_missing_value_raises_required_error",

"tests/test_types.py::TestDate::test_dumping_None_raises_required_error",

"tests/test_types.py::TestDate::test_name",

"tests/test_types.py::TestDate::test_description",

"tests/test_types.py::TestDate::test_loading_string_date",

"tests/test_types.py::TestDate::test_loading_using_predefined_format",

"tests/test_types.py::TestDate::test_loading_using_custom_format",

"tests/test_types.py::TestDate::test_loading_raises_ValidationError_if_value_is_not_string",

"tests/test_types.py::TestDate::test_customizing_error_message_if_value_is_not_string",

"tests/test_types.py::TestDate::test_loading_raises_ValidationError_if_value_string_does_not_match_date_format",

"tests/test_types.py::TestDate::test_customizing_error_message_if_value_string_does_not_match_date_format",

"tests/test_types.py::TestDate::test_loading_passes_deserialized_date_to_validator",

"tests/test_types.py::TestDate::test_dumping_date",

"tests/test_types.py::TestDate::test_dumping_using_predefined_format",

"tests/test_types.py::TestDate::test_dumping_using_custom_format",

"tests/test_types.py::TestDate::test_dumping_raises_ValidationError_if_value_is_not_string",

"tests/test_types.py::TestTime::test_loading_does_not_raise_ValidationError_if_validators_succeed",

"tests/test_types.py::TestTime::test_loading_raises_ValidationError_if_validator_fails",

"tests/test_types.py::TestTime::test_loading_raises_ValidationError_with_combined_messages_if_multiple_validators_fail",

"tests/test_types.py::TestTime::test_loading_passes_context_to_validator",

"tests/test_types.py::TestTime::test_validation_returns_None_if_validators_succeed",

"tests/test_types.py::TestTime::test_validation_returns_errors_if_validator_fails",

"tests/test_types.py::TestTime::test_validation_returns_combined_errors_if_multiple_validators_fail",

"tests/test_types.py::TestTime::test_loading_missing_value_raises_required_error",

"tests/test_types.py::TestTime::test_loading_None_raises_required_error",

"tests/test_types.py::TestTime::test_dumping_missing_value_raises_required_error",

"tests/test_types.py::TestTime::test_dumping_None_raises_required_error",

"tests/test_types.py::TestTime::test_name",

"tests/test_types.py::TestTime::test_description",

"tests/test_types.py::TestTime::test_loading_string_date",

"tests/test_types.py::TestTime::test_loading_using_custom_format",

"tests/test_types.py::TestTime::test_loading_raises_ValidationError_if_value_is_not_string",

"tests/test_types.py::TestTime::test_customizing_error_message_if_value_is_not_string",

"tests/test_types.py::TestTime::test_loading_raises_ValidationError_if_value_string_does_not_match_date_format",

"tests/test_types.py::TestTime::test_customizing_error_message_if_value_string_does_not_match_date_format",

"tests/test_types.py::TestTime::test_loading_passes_deserialized_date_to_validator",

"tests/test_types.py::TestTime::test_dumping_date",

"tests/test_types.py::TestTime::test_dumping_using_custom_format",

"tests/test_types.py::TestTime::test_dumping_raises_ValidationError_if_value_is_not_string",

"tests/test_types.py::TestList::test_loading_does_not_raise_ValidationError_if_validators_succeed",

"tests/test_types.py::TestList::test_loading_raises_ValidationError_if_validator_fails",

"tests/test_types.py::TestList::test_loading_raises_ValidationError_with_combined_messages_if_multiple_validators_fail",

"tests/test_types.py::TestList::test_loading_passes_context_to_validator",

"tests/test_types.py::TestList::test_validation_returns_None_if_validators_succeed",

"tests/test_types.py::TestList::test_validation_returns_errors_if_validator_fails",

"tests/test_types.py::TestList::test_validation_returns_combined_errors_if_multiple_validators_fail",

"tests/test_types.py::TestList::test_loading_missing_value_raises_required_error",

"tests/test_types.py::TestList::test_loading_None_raises_required_error",

"tests/test_types.py::TestList::test_dumping_missing_value_raises_required_error",

"tests/test_types.py::TestList::test_dumping_None_raises_required_error",

"tests/test_types.py::TestList::test_name",

"tests/test_types.py::TestList::test_description",

"tests/test_types.py::TestList::test_loading_list_value",

"tests/test_types.py::TestList::test_loading_non_list_value_raises_ValidationError",

"tests/test_types.py::TestList::test_loading_list_value_with_items_of_incorrect_type_raises_ValidationError",

"tests/test_types.py::TestList::test_loading_list_value_with_items_that_have_validation_errors_raises_ValidationError",

"tests/test_types.py::TestList::test_loading_does_not_validate_whole_list_if_items_have_errors",

"tests/test_types.py::TestList::test_loading_passes_context_to_inner_type_load",

"tests/test_types.py::TestList::test_dumping_list_value",

"tests/test_types.py::TestList::test_dumping_non_list_value_raises_ValidationError",

"tests/test_types.py::TestList::test_dumping_list_value_with_items_of_incorrect_type_raises_ValidationError",

"tests/test_types.py::TestList::test_dumping_passes_context_to_inner_type_dump",

"tests/test_types.py::TestTuple::test_loading_raises_ValidationError_if_validator_fails",

"tests/test_types.py::TestTuple::test_loading_raises_ValidationError_with_combined_messages_if_multiple_validators_fail",

"tests/test_types.py::TestTuple::test_loading_passes_context_to_validator",

"tests/test_types.py::TestTuple::test_validation_returns_None_if_validators_succeed",

"tests/test_types.py::TestTuple::test_validation_returns_errors_if_validator_fails",

"tests/test_types.py::TestTuple::test_validation_returns_combined_errors_if_multiple_validators_fail",

"tests/test_types.py::TestTuple::test_loading_missing_value_raises_required_error",

"tests/test_types.py::TestTuple::test_loading_None_raises_required_error",

"tests/test_types.py::TestTuple::test_dumping_missing_value_raises_required_error",

"tests/test_types.py::TestTuple::test_dumping_None_raises_required_error",

"tests/test_types.py::TestTuple::test_name",

"tests/test_types.py::TestTuple::test_description",

"tests/test_types.py::TestTuple::test_loading_non_tuple_value_raises_ValidationError",

"tests/test_types.py::TestTuple::test_loading_tuple_with_items_of_incorrect_type_raises_ValidationError",

"tests/test_types.py::TestTuple::test_loading_tuple_with_items_that_have_validation_errors_raises_ValidationErrors",

"tests/test_types.py::TestTuple::test_loading_passes_context_to_inner_type_load",

"tests/test_types.py::TestTuple::test_dump_sequence",

"tests/test_types.py::TestTuple::test_dumping_non_tuple_raises_ValidationError",

"tests/test_types.py::TestTuple::test_dumping_sequence_of_incorrect_length_raises_ValidationError",

"tests/test_types.py::TestDict::test_loading_does_not_raise_ValidationError_if_validators_succeed",

"tests/test_types.py::TestDict::test_loading_raises_ValidationError_if_validator_fails",

"tests/test_types.py::TestDict::test_loading_raises_ValidationError_with_combined_messages_if_multiple_validators_fail",

"tests/test_types.py::TestDict::test_loading_passes_context_to_validator",

"tests/test_types.py::TestDict::test_validation_returns_None_if_validators_succeed",

"tests/test_types.py::TestDict::test_validation_returns_errors_if_validator_fails",

"tests/test_types.py::TestDict::test_validation_returns_combined_errors_if_multiple_validators_fail",

"tests/test_types.py::TestDict::test_loading_missing_value_raises_required_error",

"tests/test_types.py::TestDict::test_loading_None_raises_required_error",

"tests/test_types.py::TestDict::test_dumping_missing_value_raises_required_error",

"tests/test_types.py::TestDict::test_dumping_None_raises_required_error",

"tests/test_types.py::TestDict::test_name",

"tests/test_types.py::TestDict::test_description",

"tests/test_types.py::TestDict::test_loading_dict_with_custom_key_type",

"tests/test_types.py::TestDict::test_loading_accepts_any_key_if_key_type_is_not_specified",

"tests/test_types.py::TestDict::test_loading_dict_with_values_of_the_same_type",

"tests/test_types.py::TestDict::test_loading_dict_with_values_of_different_types",

"tests/test_types.py::TestDict::test_loading_accepts_any_value_if_value_types_are_not_specified",

"tests/test_types.py::TestDict::test_loading_non_dict_value_raises_ValidationError",

"tests/test_types.py::TestDict::test_loading_dict_with_incorrect_key_value_raises_ValidationError",

"tests/test_types.py::TestDict::test_loading_dict_with_items_of_incorrect_type_raises_ValidationError",

"tests/test_types.py::TestDict::test_loading_dict_with_items_that_have_validation_errors_raises_ValidationError",

"tests/test_types.py::TestDict::test_loading_does_not_validate_whole_list_if_items_have_errors",

"tests/test_types.py::TestDict::test_loading_dict_with_incorrect_key_value_and_incorrect_value_raises_ValidationError_with_both_errors",

"tests/test_types.py::TestDict::test_loading_passes_context_to_inner_type_load",

"tests/test_types.py::TestDict::test_dumping_dict_with_custom_key_type",

"tests/test_types.py::TestDict::test_dumping_accepts_any_key_if_key_type_is_not_specified",

"tests/test_types.py::TestDict::test_dumping_dict_with_values_of_the_same_type",

"tests/test_types.py::TestDict::test_dumping_dict_with_values_of_different_types",

"tests/test_types.py::TestDict::test_dumping_accepts_any_value_if_value_types_are_not_specified",

"tests/test_types.py::TestDict::test_dumping_non_dict_value_raises_ValidationError",

"tests/test_types.py::TestDict::test_dumping_dict_with_incorrect_key_value_raises_ValidationError",

"tests/test_types.py::TestDict::test_dumping_dict_with_items_of_incorrect_type_raises_ValidationError",

"tests/test_types.py::TestDict::test_dumping_dict_with_incorrect_key_value_and_incorrect_value_raises_ValidationError_with_both_errors",

"tests/test_types.py::TestDict::test_dumping_passes_context_to_inner_type_dump",

"tests/test_types.py::TestOneOf::test_loading_values_of_one_of_listed_types",

"tests/test_types.py::TestOneOf::test_loading_raises_ValidationError_if_value_is_of_unlisted_type",

"tests/test_types.py::TestOneOf::test_loading_raises_ValidationError_if_deserialized_value_has_errors",

"tests/test_types.py::TestOneOf::test_loading_raises_ValidationError_if_type_hint_is_unknown",

"tests/test_types.py::TestOneOf::test_loading_with_type_hinting",

"tests/test_types.py::TestOneOf::test_loading_with_type_hinting_raises_ValidationError_if_deserialized_value_has_errors",

"tests/test_types.py::TestOneOf::test_dumping_values_of_one_of_listed_types",

"tests/test_types.py::TestOneOf::test_dumping_raises_ValidationError_if_value_is_of_unlisted_type",

"tests/test_types.py::TestOneOf::test_dumping_raises_ValidationError_if_type_hint_is_unknown",

"tests/test_types.py::TestOneOf::test_dumping_raises_ValidationError_if_serialized_value_has_errors",

"tests/test_types.py::TestOneOf::test_dumping_with_type_hinting",

"tests/test_types.py::TestOneOf::test_dumping_with_type_hinting_raises_ValidationError_if_deserialized_value_has_errors",

"tests/test_types.py::TestAttributeField::test_getting_value_returns_value_of_given_object_attribute",

"tests/test_types.py::TestAttributeField::test_getting_value_returns_value_of_configured_object_attribute",

"tests/test_types.py::TestAttributeField::test_getting_value_returns_value_of_field_name_transformed_with_given_name_transformation",

"tests/test_types.py::TestAttributeField::test_setting_value_sets_given_value_to_given_object_attribute",

"tests/test_types.py::TestAttributeField::test_setting_value_sets_given_value_to_configured_object_attribute",

"tests/test_types.py::TestAttributeField::test_setting_value_sets_given_value_to_field_name_transformed_with_given_name_transformation",

"tests/test_types.py::TestAttributeField::test_loading_value_with_field_type",

"tests/test_types.py::TestAttributeField::test_loading_given_attribute_regardless_of_attribute_override",

"tests/test_types.py::TestAttributeField::test_loading_missing_value_if_attribute_does_not_exist",

"tests/test_types.py::TestAttributeField::test_loading_passes_context_to_field_type_load",

"tests/test_types.py::TestAttributeField::test_dumping_given_attribute_from_object",

"tests/test_types.py::TestAttributeField::test_dumping_object_attribute_with_field_type",

"tests/test_types.py::TestAttributeField::test_dumping_a_different_attribute_from_object",

"tests/test_types.py::TestAttributeField::test_dumping_passes_context_to_field_type_dump",

"tests/test_types.py::TestMethodField::test_get_value_returns_result_of_calling_configured_method_on_object",

"tests/test_types.py::TestMethodField::test_get_value_returns_result_of_calling_method_calculated_by_given_function_on_object",

"tests/test_types.py::TestMethodField::test_get_value_returns_MISSING_if_get_method_is_not_specified",

"tests/test_types.py::TestMethodField::test_get_value_raises_ValueError_if_method_does_not_exist",

"tests/test_types.py::TestMethodField::test_get_value_raises_ValueError_if_property_is_not_callable",

"tests/test_types.py::TestMethodField::test_get_value_passes_context_to_method",

"tests/test_types.py::TestMethodField::test_set_value_calls_configure_method_on_object",

"tests/test_types.py::TestMethodField::test_set_value_calls_method_calculated_by_given_function_on_object",

"tests/test_types.py::TestMethodField::test_set_value_does_not_do_anything_if_set_method_is_not_specified",

"tests/test_types.py::TestMethodField::test_set_value_raises_ValueError_if_method_does_not_exist",

"tests/test_types.py::TestMethodField::test_set_value_raises_ValueError_if_property_is_not_callable",

"tests/test_types.py::TestMethodField::test_set_value_passes_context_to_method",

"tests/test_types.py::TestMethodField::test_loading_value_with_field_type",

"tests/test_types.py::TestMethodField::test_loading_value_returns_loaded_value",

"tests/test_types.py::TestMethodField::test_loading_value_passes_context_to_field_types_load",

"tests/test_types.py::TestMethodField::test_loading_value_into_existing_object_calls_field_types_load_into",

"tests/test_types.py::TestMethodField::test_loading_value_into_existing_object_calls_field_types_load_if_load_into_is_not_available",

"tests/test_types.py::TestMethodField::test_loading_value_into_existing_object_calls_field_types_load_if_old_value_is_None",

"tests/test_types.py::TestMethodField::test_loading_value_into_existing_object_calls_field_types_load_if_old_value_is_MISSING",

"tests/test_types.py::TestMethodField::test_loading_value_into_existing_object_passes_context_to_field_types_load_into",

"tests/test_types.py::TestMethodField::test_dumping_result_of_given_objects_method",

"tests/test_types.py::TestMethodField::test_dumping_result_of_objects_method_with_field_type",

"tests/test_types.py::TestMethodField::test_dumping_result_of_a_different_objects_method",

"tests/test_types.py::TestMethodField::test_dumping_raises_ValueError_if_given_method_does_not_exist",

"tests/test_types.py::TestMethodField::test_dumping_raises_ValueError_if_given_method_is_not_callable",

"tests/test_types.py::TestMethodField::test_dumping_passes_context_to_field_type_dump",

"tests/test_types.py::TestFunctionField::test_get_value_returns_result_of_calling_configured_function_with_object",

"tests/test_types.py::TestFunctionField::test_get_value_returns_MISSING_if_get_func_is_not_specified",

"tests/test_types.py::TestFunctionField::test_get_value_raises_ValueError_if_property_is_not_callable",

"tests/test_types.py::TestFunctionField::test_get_value_passes_context_to_func",

"tests/test_types.py::TestFunctionField::test_set_value_calls_configure_method_on_object",

"tests/test_types.py::TestFunctionField::test_set_value_does_not_do_anything_if_set_func_is_not_specified",

"tests/test_types.py::TestFunctionField::test_set_value_raises_ValueError_if_property_is_not_callable",

"tests/test_types.py::TestFunctionField::test_set_value_passes_context_to_func",

"tests/test_types.py::TestFunctionField::test_loading_value_with_field_type",

"tests/test_types.py::TestFunctionField::test_loading_value_returns_loaded_value",

"tests/test_types.py::TestFunctionField::test_loading_value_passes_context_to_field_types_load",

"tests/test_types.py::TestFunctionField::test_loading_value_into_existing_object_calls_field_types_load_into",

"tests/test_types.py::TestFunctionField::test_loading_value_into_existing_object_calls_field_types_load_if_load_into_is_not_available",

"tests/test_types.py::TestFunctionField::test_loading_value_into_existing_object_calls_field_types_load_if_old_value_is_None",

"tests/test_types.py::TestFunctionField::test_loading_value_into_existing_object_calls_field_types_load_if_old_value_is_MISSING",

"tests/test_types.py::TestFunctionField::test_loading_value_into_existing_object_passes_context_to_field_types_load_into",

"tests/test_types.py::TestFunctionField::test_dumping_result_of_given_function",

"tests/test_types.py::TestFunctionField::test_dumping_result_of_objects_method_with_field_type",

"tests/test_types.py::TestFunctionField::test_dumping_raises_ValueError_if_given_get_func_is_not_callable",

"tests/test_types.py::TestFunctionField::test_dumping_passes_context_to_field_type_dump",

"tests/test_types.py::TestConstant::test_name",

"tests/test_types.py::TestConstant::test_description",

"tests/test_types.py::TestConstant::test_loading_always_returns_missing",

"tests/test_types.py::TestConstant::test_loading_raises_ValidationError_if_loaded_value_is_not_a_constant_value_specified",

"tests/test_types.py::TestConstant::test_loading_value_with_inner_type_before_checking_value_correctness",

"tests/test_types.py::TestConstant::test_customizing_error_message_when_value_is_incorrect",

"tests/test_types.py::TestConstant::test_dumping_always_returns_given_value",

"tests/test_types.py::TestConstant::test_dumping_given_constant_with_field_type",

"tests/test_types.py::TestObject::test_loading_does_not_raise_ValidationError_if_validators_succeed",

"tests/test_types.py::TestObject::test_loading_raises_ValidationError_if_validator_fails",

"tests/test_types.py::TestObject::test_loading_raises_ValidationError_with_combined_messages_if_multiple_validators_fail",

"tests/test_types.py::TestObject::test_loading_passes_context_to_validator",

"tests/test_types.py::TestObject::test_validation_returns_None_if_validators_succeed",

"tests/test_types.py::TestObject::test_validation_returns_errors_if_validator_fails",

"tests/test_types.py::TestObject::test_validation_returns_combined_errors_if_multiple_validators_fail",

"tests/test_types.py::TestObject::test_loading_missing_value_raises_required_error",

"tests/test_types.py::TestObject::test_loading_None_raises_required_error",

"tests/test_types.py::TestObject::test_dumping_missing_value_raises_required_error",

"tests/test_types.py::TestObject::test_dumping_None_raises_required_error",

"tests/test_types.py::TestObject::test_name",

"tests/test_types.py::TestObject::test_description",

"tests/test_types.py::TestObject::test_default_field_type_is_unset_by_default",

"tests/test_types.py::TestObject::test_inheriting_default_field_type_from_first_base_class_that_has_it_set",

"tests/test_types.py::TestObject::test_constructor_is_unset_by_default",

"tests/test_types.py::TestObject::test_inheriting_constructor_from_first_base_class_that_has_it_set",

"tests/test_types.py::TestObject::test_allow_extra_fields_is_unset_by_default",

"tests/test_types.py::TestObject::test_inheriting_allow_extra_fields_from_first_base_class_that_has_it_set",

"tests/test_types.py::TestObject::test_immutable_is_unset_by_default",

"tests/test_types.py::TestObject::test_inheriting_immutable_from_first_base_class_that_has_it_set",

"tests/test_types.py::TestObject::test_ordered_is_unset_by_default",

"tests/test_types.py::TestObject::test_iheriting_ordered_from_first_base_class_that_has_it_set",

"tests/test_types.py::TestObject::test_loading_dict_value",

"tests/test_types.py::TestObject::test_loading_non_dict_values_raises_ValidationError",

"tests/test_types.py::TestObject::test_loading_bypasses_values_for_which_field_type_returns_missing_value",

"tests/test_types.py::TestObject::test_loading_dict_with_field_errors_raises_ValidationError_with_all_field_errors_merged",

"tests/test_types.py::TestObject::test_loading_dict_with_field_errors_does_not_run_whole_object_validators",

"tests/test_types.py::TestObject::test_loading_calls_field_load_passing_field_name_and_whole_data",

"tests/test_types.py::TestObject::test_loading_passes_context_to_inner_type_load",

"tests/test_types.py::TestObject::test_constructing_objects_with_default_constructor_on_load",

"tests/test_types.py::TestObject::test_constructing_custom_objects_on_load",

"tests/test_types.py::TestObject::test_load_ignores_extra_fields_by_default",

"tests/test_types.py::TestObject::test_load_raises_ValidationError_if_reporting_extra_fields",

"tests/test_types.py::TestObject::test_loading_inherited_fields",

"tests/test_types.py::TestObject::test_loading_multiple_inherited_fields",

"tests/test_types.py::TestObject::test_loading_raises_ValidationError_if_inherited_fields_have_errors",

"tests/test_types.py::TestObject::test_loading_only_specified_fields",

"tests/test_types.py::TestObject::test_loading_only_specified_fields_does_not_affect_own_fields",

"tests/test_types.py::TestObject::test_loading_all_but_specified_base_class_fields",

"tests/test_types.py::TestObject::test_loading_all_but_specified_fields_does_not_affect_own_fields",

"tests/test_types.py::TestObject::test_loading_values_into_existing_object",

"tests/test_types.py::TestObject::test_loading_values_into_existing_object_returns_that_object",

"tests/test_types.py::TestObject::test_loading_values_into_existing_object_passes_all_object_attributes_to_validators",

"tests/test_types.py::TestObject::test_loading_values_into_immutable_object_creates_a_copy",

"tests/test_types.py::TestObject::test_loading_values_into_immutable_object_does_not_modify_original_object",

"tests/test_types.py::TestObject::test_loading_values_into_nested_object_of_immutable_object_creates_copy_of_it_regardless_of_nested_objects_immutable_flag",

"tests/test_types.py::TestObject::test_loading_values_into_nested_object_of_immutable_object_does_not_modify_original_objects",

"tests/test_types.py::TestObject::test_loading_values_into_nested_objects_with_inplace_False_does_not_modify_original_objects",

"tests/test_types.py::TestObject::test_loading_values_into_existing_objects_ignores_missing_fields",

"tests/test_types.py::TestObject::test_loading_MISSING_into_existing_object_does_not_do_anything",

"tests/test_types.py::TestObject::test_loading_None_into_existing_objects_raises_ValidationError",

"tests/test_types.py::TestObject::test_loading_None_into_field_of_existing_object_passes_None_to_field",

"tests/test_types.py::TestObject::test_loading_values_into_existing_objects_raises_ValidationError_if_data_contains_errors",

"tests/test_types.py::TestObject::test_loading_values_into_existing_objects_raises_ValidationError_if_validator_fails",

"tests/test_types.py::TestObject::test_loading_values_into_existing_objects_annotates_field_errors_with_field_names",

"tests/test_types.py::TestObject::test_loading_values_into_existing_nested_objects",

"tests/test_types.py::TestObject::test_loading_values_into_existing_object_when_nested_object_does_not_exist",

"tests/test_types.py::TestObject::test_validating_data_for_existing_objects_returns_None_if_data_is_valid",

"tests/test_types.py::TestObject::test_validating_data_for_existing_objects_returns_errors_if_data_contains_errors",

"tests/test_types.py::TestObject::test_validating_data_for_existing_objects_returns_errors_if_validator_fails",

"tests/test_types.py::TestObject::test_validating_data_for_existing_objects_does_not_modify_original_objects",

"tests/test_types.py::TestObject::test_dumping_object_attributes",

"tests/test_types.py::TestObject::test_dumping_calls_field_dump_passing_field_name_and_whole_object",

"tests/test_types.py::TestObject::test_dumping_passes_context_to_inner_type_dump",

"tests/test_types.py::TestObject::test_dumping_inherited_fields",

"tests/test_types.py::TestObject::test_dumping_multiple_inherited_fields",

"tests/test_types.py::TestObject::test_dumping_only_specified_fields_of_base_classes",

"tests/test_types.py::TestObject::test_dumping_only_specified_fields_does_not_affect_own_fields",

"tests/test_types.py::TestObject::test_dumping_all_but_specified_base_class_fields",

"tests/test_types.py::TestObject::test_dumping_all_but_specified_fields_does_not_affect_own_fields",

"tests/test_types.py::TestObject::test_shortcut_for_specifying_constant_fields",

"tests/test_types.py::TestObject::test_dumping_fields_in_declared_order_if_ordered_is_True",

"tests/test_types.py::TestOptional::test_loading_value_calls_load_of_inner_type",

"tests/test_types.py::TestOptional::test_loading_missing_value_returns_None",

"tests/test_types.py::TestOptional::test_loading_None_returns_None",

"tests/test_types.py::TestOptional::test_loading_missing_value_does_not_call_inner_type_load",

"tests/test_types.py::TestOptional::test_loading_None_does_not_call_inner_type_load",

"tests/test_types.py::TestOptional::test_loading_passes_context_to_inner_type_load",

"tests/test_types.py::TestOptional::test_overriding_missing_value_on_load",

"tests/test_types.py::TestOptional::test_overriding_None_value_on_load",

"tests/test_types.py::TestOptional::test_using_function_to_override_value_on_load",

"tests/test_types.py::TestOptional::test_loading_passes_context_to_override_function",

"tests/test_types.py::TestOptional::test_dumping_value_calls_dump_of_inner_type",

"tests/test_types.py::TestOptional::test_dumping_missing_value_returns_None",

"tests/test_types.py::TestOptional::test_dumping_None_returns_None",

"tests/test_types.py::TestOptional::test_dumping_missing_value_does_not_call_inner_type_dump",

"tests/test_types.py::TestOptional::test_dumping_None_does_not_call_inner_type_dump",

"tests/test_types.py::TestOptional::test_dumping_passes_context_to_inner_type_dump",

"tests/test_types.py::TestOptional::test_overriding_missing_value_on_dump",

"tests/test_types.py::TestOptional::test_overriding_None_value_on_dump",

"tests/test_types.py::TestOptional::test_using_function_to_override_value_on_dump",

"tests/test_types.py::TestOptional::test_dumping_passes_context_to_override_function",

"tests/test_types.py::TestLoadOnly::test_name",

"tests/test_types.py::TestLoadOnly::test_description",

"tests/test_types.py::TestLoadOnly::test_loading_returns_inner_type_load_result",

"tests/test_types.py::TestLoadOnly::test_loading_passes_context_to_inner_type_load",

"tests/test_types.py::TestLoadOnly::test_dumping_always_returns_missing",

"tests/test_types.py::TestLoadOnly::test_dumping_does_not_call_inner_type_dump",

"tests/test_types.py::TestDumpOnly::test_name",

"tests/test_types.py::TestDumpOnly::test_description",

"tests/test_types.py::TestDumpOnly::test_loading_always_returns_missing",

"tests/test_types.py::TestDumpOnly::test_loading_does_not_call_inner_type_dump",

"tests/test_types.py::TestDumpOnly::test_dumping_returns_inner_type_dump_result",

"tests/test_types.py::TestDumpOnly::test_dumping_passes_context_to_inner_type_dump",

"tests/test_types.py::TestTransform::test_name",

"tests/test_types.py::TestTransform::test_description",

"tests/test_types.py::TestTransform::test_loading_calls_pre_load_with_original_value",

"tests/test_types.py::TestTransform::test_loading_calls_inner_type_load_with_result_of_pre_load",

"tests/test_types.py::TestTransform::test_loading_calls_post_load_with_result_of_inner_type_load",

"tests/test_types.py::TestTransform::test_transform_passes_context_to_inner_type_load",

"tests/test_types.py::TestTransform::test_transform_passes_context_to_pre_load",

"tests/test_types.py::TestTransform::test_transform_passes_context_to_post_load",

"tests/test_types.py::TestTransform::test_dumping_calls_pre_dump_with_original_value",

"tests/test_types.py::TestTransform::test_dumping_calls_inner_type_dump_with_result_of_pre_dump",

"tests/test_types.py::TestTransform::test_dumping_calls_post_dump_with_result_of_inner_type_dump",

"tests/test_types.py::TestTransform::test_transform_passes_context_to_inner_type_dump",

"tests/test_types.py::TestTransform::test_transform_passes_context_to_pre_dump",

"tests/test_types.py::TestTransform::test_transform_passes_context_to_post_dump",

"tests/test_types.py::TestValidatedType::test_returns_subclass_of_given_type",

"tests/test_types.py::TestValidatedType::test_returns_type_that_has_single_given_validator",

"tests/test_types.py::TestValidatedType::test_accepts_context_unaware_validators",

"tests/test_types.py::TestValidatedType::test_returns_type_that_has_multiple_given_validators",

"tests/test_types.py::TestValidatedType::test_specifying_more_validators_on_type_instantiation",

"tests/test_types.py::TestValidatedType::test_new_type_accepts_same_constructor_arguments_as_base_type",

"tests/test_validators.py::TestPredicate::test_matching_values",

"tests/test_validators.py::TestPredicate::test_raising_ValidationError_if_predicate_returns_False",

"tests/test_validators.py::TestPredicate::test_customizing_validation_error",

"tests/test_validators.py::TestPredicate::test_passing_context_to_predicate",

"tests/test_validators.py::TestRange::test_matching_min_value",

"tests/test_validators.py::TestRange::test_raising_ValidationError_when_matching_min_value_and_given_value_is_less",

"tests/test_validators.py::TestRange::test_customzing_min_error_message",

"tests/test_validators.py::TestRange::test_matching_max_value",

"tests/test_validators.py::TestRange::test_raising_ValidationError_when_matching_max_value_and_given_value_is_greater",

"tests/test_validators.py::TestRange::test_customzing_max_error_message",

"tests/test_validators.py::TestRange::test_matching_range",

"tests/test_validators.py::TestRange::test_raising_ValidationError_when_matching_range_and_given_value_is_less",

"tests/test_validators.py::TestRange::test_raising_ValidationError_when_matching_range_and_given_value_is_greater",

"tests/test_validators.py::TestRange::test_customzing_range_error_message",

"tests/test_validators.py::TestRange::test_customizing_all_error_messages_at_once",

"tests/test_validators.py::TestLength::test_matching_exact_value",

"tests/test_validators.py::TestLength::test_raising_ValidationError_when_matching_exact_value_and_given_value_does_not_match",

"tests/test_validators.py::TestLength::test_customizing_exact_error_message",

"tests/test_validators.py::TestLength::test_matching_min_value",

"tests/test_validators.py::TestLength::test_raising_ValidationError_when_matching_min_value_and_given_value_is_less",

"tests/test_validators.py::TestLength::test_customzing_min_error_message",

"tests/test_validators.py::TestLength::test_matching_max_value",

"tests/test_validators.py::TestLength::test_raising_ValidationError_when_matching_max_value_and_given_value_is_greater",

"tests/test_validators.py::TestLength::test_customzing_max_error_message",

"tests/test_validators.py::TestLength::test_matching_range",

"tests/test_validators.py::TestLength::test_raising_ValidationError_when_matching_range_and_given_value_is_less",

"tests/test_validators.py::TestLength::test_raising_ValidationError_when_matching_range_and_given_value_is_greater",

"tests/test_validators.py::TestLength::test_customzing_range_error_message",

"tests/test_validators.py::TestLength::test_customizing_all_error_messages_at_once",

"tests/test_validators.py::TestNoneOf::test_matching_values_other_than_given_values",

"tests/test_validators.py::TestNoneOf::test_raising_ValidationError_when_value_is_one_of_forbidden_values",

"tests/test_validators.py::TestNoneOf::test_customizing_error_message",

"tests/test_validators.py::TestAnyOf::test_matching_given_values",

"tests/test_validators.py::TestAnyOf::test_raising_ValidationError_when_value_is_other_than_given_values",

"tests/test_validators.py::TestAnyOf::test_customizing_error_message",

"tests/test_validators.py::TestRegexp::test_matching_by_string_regexp",

"tests/test_validators.py::TestRegexp::test_matching_by_string_regexp_with_flags",

"tests/test_validators.py::TestRegexp::test_matching_by_regexp",

"tests/test_validators.py::TestRegexp::test_matching_by_regexp_ignores_flags",

"tests/test_validators.py::TestRegexp::test_raising_ValidationError_if_given_string_does_not_match_string_regexp",

"tests/test_validators.py::TestRegexp::test_raising_ValidationError_if_given_string_does_not_match_regexp",

"tests/test_validators.py::TestRegexp::test_customizing_error_message",

"tests/test_validators.py::TestUnique::test_raising_ValidationError_if_value_is_not_collection",

"tests/test_validators.py::TestUnique::test_matching_empty_collection",

"tests/test_validators.py::TestUnique::test_matching_collection_of_unique_values",

"tests/test_validators.py::TestUnique::test_matching_collection_of_values_with_unique_custom_keys",

"tests/test_validators.py::TestUnique::test_raising_ValidationError_if_item_appears_more_than_once",

"tests/test_validators.py::TestUnique::test_raising_ValidationError_if_custom_key_appears_more_than_once",

"tests/test_validators.py::TestUnique::test_customizing_error_message",

"tests/test_validators.py::TestEach::test_raising_ValidationError_if_value_is_not_collection",

"tests/test_validators.py::TestEach::test_matching_empty_collections",

"tests/test_validators.py::TestEach::test_matching_collections_each_elemenet_of_which_matches_given_validators",

"tests/test_validators.py::TestEach::test_raising_ValidationError_if_single_validator_fails",

"tests/test_validators.py::TestEach::test_raising_ValidationError_if_any_item_fails_any_validator"

] |

{

"failed_lite_validators": [

"has_many_modified_files",

"has_many_hunks"

],

"has_test_patch": true,

"is_lite": false

}

|

2017-06-09 05:22:05+00:00

|

mit

| 3,835 |

|

maximkulkin__lollipop-55

|

diff --git a/lollipop/types.py b/lollipop/types.py

index 3ec8a50..acb7f3b 100644

--- a/lollipop/types.py

+++ b/lollipop/types.py

@@ -679,16 +679,22 @@ class Dict(Type):

errors_builder = ValidationErrorBuilder()

result = {}

for k, v in iteritems(data):

- value_type = self.value_types.get(k)

- if value_type is None:

- continue

try:

k = self.key_type.load(k, *args, **kwargs)

except ValidationError as ve:

errors_builder.add_error(k, ve.messages)

+ if k is MISSING:

+ continue

+

+ value_type = self.value_types.get(k)

+ if value_type is None:

+ continue

+

try:

- result[k] = value_type.load(v, *args, **kwargs)

+ value = value_type.load(v, *args, **kwargs)

+ if value is not MISSING:

+ result[k] = value

except ValidationError as ve:

errors_builder.add_error(k, ve.messages)

@@ -715,8 +721,13 @@ class Dict(Type):

except ValidationError as ve:

errors_builder.add_error(k, ve.messages)

+ if k is MISSING:

+ continue

+

try:

- result[k] = value_type.dump(v, *args, **kwargs)

+ value = value_type.dump(v, *args, **kwargs)

+ if value is not MISSING:

+ result[k] = value

except ValidationError as ve:

errors_builder.add_error(k, ve.messages)

|

maximkulkin/lollipop

|

360bbc8f9c2b6203ab5af8a3cd051f852ba8dae3

|

diff --git a/tests/test_types.py b/tests/test_types.py

index e652fb6..6489dbf 100644

--- a/tests/test_types.py

+++ b/tests/test_types.py

@@ -641,6 +641,30 @@ class TestDict(NameDescriptionTestsMixin, RequiredTestsMixin, ValidationTestsMix

assert Dict(Any(), key_type=Integer())\

.load({'123': 'foo', '456': 'bar'}) == {123: 'foo', 456: 'bar'}

+ def test_loading_dict_with_custom_key_type_and_values_of_different_types(self):

+ assert Dict({1: Integer(), 2: String()}, key_type=Integer())\

+ .load({'1': '123', '2': 'bar'}) == {1: 123, 2: 'bar'}

+

+ def test_loading_skips_key_value_if_custom_key_type_loads_to_missing(self):

+ class CustomKeyType(String):

+ def load(self, data, *args, **kwargs):

+ if data == 'foo':

+ return MISSING

+ return super(CustomKeyType, self).load(data, *args, **kwargs)

+

+ assert Dict(String(), key_type=CustomKeyType())\

+ .load({'foo': 'hello', 'bar': 'goodbye'}) == {'bar': 'goodbye'}

+

+ def test_loading_skips_key_value_if_value_type_loads_to_missing(self):

+ class CustomValueType(String):

+ def load(self, data, *args, **kwargs):

+ if data == 'foo':

+ return MISSING

+ return super(CustomValueType, self).load(data, *args, **kwargs)

+

+ assert Dict(CustomValueType())\

+ .load({'key1': 'foo', 'key2': 'bar'}) == {'key2': 'bar'}

+

def test_loading_accepts_any_key_if_key_type_is_not_specified(self):

assert Dict(Any())\

.load({'123': 'foo', 456: 'bar'}) == {'123': 'foo', 456: 'bar'}

@@ -719,7 +743,27 @@ class TestDict(NameDescriptionTestsMixin, RequiredTestsMixin, ValidationTestsMix

def test_dumping_dict_with_values_of_different_types(self):

value = {'foo': 1, 'bar': 'hello', 'baz': True}

assert Dict({'foo': Integer(), 'bar': String(), 'baz': Boolean()})\

- .load(value) == value

+ .dump(value) == value

+

+ def test_dumping_skips_key_value_if_custom_key_type_loads_to_missing(self):

+ class CustomKeyType(String):

+ def dump(self, data, *args, **kwargs):

+ if data == 'foo':

+ return MISSING

+ return super(CustomKeyType, self).load(data, *args, **kwargs)

+

+ assert Dict(String(), key_type=CustomKeyType())\

+ .dump({'foo': 'hello', 'bar': 'goodbye'}) == {'bar': 'goodbye'}

+

+ def test_dumping_skips_key_value_if_value_type_loads_to_missing(self):

+ class CustomValueType(String):

+ def dump(self, data, *args, **kwargs):

+ if data == 'foo':

+ return MISSING

+ return super(CustomValueType, self).load(data, *args, **kwargs)

+

+ assert Dict(CustomValueType())\

+ .dump({'key1': 'foo', 'key2': 'bar'}) == {'key2': 'bar'}

def test_dumping_accepts_any_value_if_value_types_are_not_specified(self):

assert Dict()\

|

Asymmetry in load/dump for Dict with key_type and dict as values_type

I think there is a problem with the Dict type when using both key_type verification and a values_type dictionary:

In Dict.dump, the key is used in its original / non-dumped form to look up the value type. However, in Dict.load, the dumped key is used to look up the value type.

This works fine when using native types as the key type, such as String and Integer, since they map to the same loaded/dumped value. But it causes a problem when using a more complex key type (e.g. in my case an Enum that dumps to a string).

I believe that in Dict.load, key_type.load should be called **before** the lookup of the value type, so that the lookup is again performed with the original / non-dumped value.
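A minimal, standalone sketch of the symmetric ordering the issue asks for, and that the patch above implements: on load the key is loaded first and the value type is looked up by the loaded key; on dump the value type is looked up by the native key before the key is dumped; entries whose key or value come back as MISSING are skipped. It does not import lollipop: `MISSING`, `EnumKey`, `Func`, `load_dict` and `dump_dict` are hypothetical stand-ins, and the per-key error collection done with `ValidationErrorBuilder` in the real code is omitted.

```python
from enum import Enum

# Hypothetical stand-in for lollipop's MISSING marker: any unique object works here.
MISSING = object()


class Color(Enum):
    RED = 'red'
    GREEN = 'green'


class EnumKey:
    """Hypothetical key type: loads a string into an Enum member, dumps it back."""

    def __init__(self, enum_cls):
        self.enum_cls = enum_cls

    def load(self, data):
        return self.enum_cls(data)

    def dump(self, value):
        return value.value


class Func:
    """Hypothetical value type wrapping plain load/dump callables."""

    def __init__(self, load, dump):
        self._load, self._dump = load, dump

    def load(self, data):
        return self._load(data)

    def dump(self, value):
        return self._dump(value)


def load_dict(data, key_type, value_types):
    result = {}
    for k, v in data.items():
        k = key_type.load(k)             # load the key first...
        if k is MISSING:
            continue
        value_type = value_types.get(k)  # ...then look up by the loaded key
        if value_type is None:
            continue
        value = value_type.load(v)
        if value is not MISSING:         # skip values that load to MISSING
            result[k] = value
    return result


def dump_dict(value, key_type, value_types):
    result = {}
    for k, v in value.items():
        value_type = value_types.get(k)  # look up by the native (loaded) key
        if value_type is None:
            continue
        dumped_key = key_type.dump(k)
        if dumped_key is MISSING:
            continue
        dumped = value_type.dump(v)
        if dumped is not MISSING:        # skip values that dump to MISSING
            result[dumped_key] = dumped
    return result


value_types = {Color.RED: Func(int, str), Color.GREEN: Func(str.upper, str.lower)}
loaded = load_dict({'red': '1', 'green': 'abc'}, EnumKey(Color), value_types)
# loaded == {Color.RED: 1, Color.GREEN: 'ABC'}
dumped = dump_dict(loaded, EnumKey(Color), value_types)
# dumped == {'red': '1', 'green': 'abc'}
```

Because both directions resolve the value type through the native key (the Enum member), a round trip is lossless even though the serialized keys are plain strings.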

|

0.0

|

360bbc8f9c2b6203ab5af8a3cd051f852ba8dae3

|

[

"tests/test_types.py::TestDict::test_loading_dict_with_custom_key_type_and_values_of_different_types",

"tests/test_types.py::TestDict::test_loading_skips_key_value_if_custom_key_type_loads_to_missing",

"tests/test_types.py::TestDict::test_loading_skips_key_value_if_value_type_loads_to_missing",

"tests/test_types.py::TestDict::test_dumping_skips_key_value_if_custom_key_type_loads_to_missing",

"tests/test_types.py::TestDict::test_dumping_skips_key_value_if_value_type_loads_to_missing"

] |

[

"tests/test_types.py::TestString::test_loading_does_not_raise_ValidationError_if_validators_succeed",

"tests/test_types.py::TestString::test_loading_raises_ValidationError_if_validator_fails",

"tests/test_types.py::TestString::test_loading_raises_ValidationError_with_combined_messages_if_multiple_validators_fail",

"tests/test_types.py::TestString::test_loading_passes_context_to_validator",

"tests/test_types.py::TestString::test_validation_returns_None_if_validators_succeed",

"tests/test_types.py::TestString::test_validation_returns_errors_if_validator_fails",

"tests/test_types.py::TestString::test_validation_returns_combined_errors_if_multiple_validators_fail",

"tests/test_types.py::TestString::test_loading_missing_value_raises_required_error",

"tests/test_types.py::TestString::test_loading_None_raises_required_error",

"tests/test_types.py::TestString::test_dumping_missing_value_raises_required_error",

"tests/test_types.py::TestString::test_dumping_None_raises_required_error",

"tests/test_types.py::TestString::test_name",

"tests/test_types.py::TestString::test_description",

"tests/test_types.py::TestString::test_loading_string_value",

"tests/test_types.py::TestString::test_loading_non_string_value_raises_ValidationError",

"tests/test_types.py::TestString::test_dumping_string_value",

"tests/test_types.py::TestString::test_dumping_non_string_value_raises_ValidationError",

"tests/test_types.py::TestNumber::test_loading_does_not_raise_ValidationError_if_validators_succeed",

"tests/test_types.py::TestNumber::test_loading_raises_ValidationError_if_validator_fails",

"tests/test_types.py::TestNumber::test_loading_raises_ValidationError_with_combined_messages_if_multiple_validators_fail",

"tests/test_types.py::TestNumber::test_loading_passes_context_to_validator",

"tests/test_types.py::TestNumber::test_validation_returns_None_if_validators_succeed",

"tests/test_types.py::TestNumber::test_validation_returns_errors_if_validator_fails",

"tests/test_types.py::TestNumber::test_validation_returns_combined_errors_if_multiple_validators_fail",

"tests/test_types.py::TestNumber::test_loading_missing_value_raises_required_error",

"tests/test_types.py::TestNumber::test_loading_None_raises_required_error",

"tests/test_types.py::TestNumber::test_dumping_missing_value_raises_required_error",

"tests/test_types.py::TestNumber::test_dumping_None_raises_required_error",

"tests/test_types.py::TestNumber::test_name",

"tests/test_types.py::TestNumber::test_description",

"tests/test_types.py::TestNumber::test_loading_float_value",

"tests/test_types.py::TestNumber::test_loading_non_numeric_value_raises_ValidationError",

"tests/test_types.py::TestNumber::test_dumping_float_value",

"tests/test_types.py::TestNumber::test_dumping_non_numeric_value_raises_ValidationError",

"tests/test_types.py::TestInteger::test_loading_integer_value",

"tests/test_types.py::TestInteger::test_loading_long_value",

"tests/test_types.py::TestInteger::test_loading_non_numeric_value_raises_ValidationError",

"tests/test_types.py::TestInteger::test_dumping_integer_value",

"tests/test_types.py::TestInteger::test_dumping_long_value",

"tests/test_types.py::TestInteger::test_dumping_non_numeric_value_raises_ValidationError",

"tests/test_types.py::TestFloat::test_loading_float_value",

"tests/test_types.py::TestFloat::test_loading_non_numeric_value_raises_ValidationError",

"tests/test_types.py::TestFloat::test_dumping_float_value",

"tests/test_types.py::TestFloat::test_dumping_non_numeric_value_raises_ValidationError",

"tests/test_types.py::TestBoolean::test_loading_does_not_raise_ValidationError_if_validators_succeed",

"tests/test_types.py::TestBoolean::test_loading_raises_ValidationError_if_validator_fails",

"tests/test_types.py::TestBoolean::test_loading_raises_ValidationError_with_combined_messages_if_multiple_validators_fail",

"tests/test_types.py::TestBoolean::test_loading_passes_context_to_validator",

"tests/test_types.py::TestBoolean::test_validation_returns_None_if_validators_succeed",

"tests/test_types.py::TestBoolean::test_validation_returns_errors_if_validator_fails",

"tests/test_types.py::TestBoolean::test_validation_returns_combined_errors_if_multiple_validators_fail",

"tests/test_types.py::TestBoolean::test_loading_missing_value_raises_required_error",

"tests/test_types.py::TestBoolean::test_loading_None_raises_required_error",

"tests/test_types.py::TestBoolean::test_dumping_missing_value_raises_required_error",

"tests/test_types.py::TestBoolean::test_dumping_None_raises_required_error",

"tests/test_types.py::TestBoolean::test_name",

"tests/test_types.py::TestBoolean::test_description",

"tests/test_types.py::TestBoolean::test_loading_boolean_value",

"tests/test_types.py::TestBoolean::test_loading_non_boolean_value_raises_ValidationError",

"tests/test_types.py::TestBoolean::test_dumping_boolean_value",

"tests/test_types.py::TestBoolean::test_dumping_non_boolean_value_raises_ValidationError",

"tests/test_types.py::TestDateTime::test_loading_does_not_raise_ValidationError_if_validators_succeed",

"tests/test_types.py::TestDateTime::test_loading_raises_ValidationError_if_validator_fails",

"tests/test_types.py::TestDateTime::test_loading_raises_ValidationError_with_combined_messages_if_multiple_validators_fail",

"tests/test_types.py::TestDateTime::test_loading_passes_context_to_validator",

"tests/test_types.py::TestDateTime::test_validation_returns_None_if_validators_succeed",

"tests/test_types.py::TestDateTime::test_validation_returns_errors_if_validator_fails",

"tests/test_types.py::TestDateTime::test_validation_returns_combined_errors_if_multiple_validators_fail",

"tests/test_types.py::TestDateTime::test_loading_missing_value_raises_required_error",

"tests/test_types.py::TestDateTime::test_loading_None_raises_required_error",

"tests/test_types.py::TestDateTime::test_dumping_missing_value_raises_required_error",

"tests/test_types.py::TestDateTime::test_dumping_None_raises_required_error",

"tests/test_types.py::TestDateTime::test_name",

"tests/test_types.py::TestDateTime::test_description",

"tests/test_types.py::TestDateTime::test_loading_string_date",

"tests/test_types.py::TestDateTime::test_loading_using_predefined_format",

"tests/test_types.py::TestDateTime::test_loading_using_custom_format",

"tests/test_types.py::TestDateTime::test_loading_raises_ValidationError_if_value_is_not_string",

"tests/test_types.py::TestDateTime::test_customizing_error_message_if_value_is_not_string",

"tests/test_types.py::TestDateTime::test_loading_raises_ValidationError_if_value_string_does_not_match_date_format",

"tests/test_types.py::TestDateTime::test_customizing_error_message_if_value_string_does_not_match_date_format",

"tests/test_types.py::TestDateTime::test_loading_passes_deserialized_date_to_validator",

"tests/test_types.py::TestDateTime::test_dumping_date",

"tests/test_types.py::TestDateTime::test_dumping_using_predefined_format",

"tests/test_types.py::TestDateTime::test_dumping_using_custom_format",

"tests/test_types.py::TestDateTime::test_dumping_raises_ValidationError_if_value_is_not_string",

"tests/test_types.py::TestDate::test_loading_does_not_raise_ValidationError_if_validators_succeed",

"tests/test_types.py::TestDate::test_loading_raises_ValidationError_if_validator_fails",

"tests/test_types.py::TestDate::test_loading_raises_ValidationError_with_combined_messages_if_multiple_validators_fail",

"tests/test_types.py::TestDate::test_loading_passes_context_to_validator",

"tests/test_types.py::TestDate::test_validation_returns_None_if_validators_succeed",

"tests/test_types.py::TestDate::test_validation_returns_errors_if_validator_fails",

"tests/test_types.py::TestDate::test_validation_returns_combined_errors_if_multiple_validators_fail",

"tests/test_types.py::TestDate::test_loading_missing_value_raises_required_error",

"tests/test_types.py::TestDate::test_loading_None_raises_required_error",

"tests/test_types.py::TestDate::test_dumping_missing_value_raises_required_error",

"tests/test_types.py::TestDate::test_dumping_None_raises_required_error",

"tests/test_types.py::TestDate::test_name",

"tests/test_types.py::TestDate::test_description",

"tests/test_types.py::TestDate::test_loading_string_date",

"tests/test_types.py::TestDate::test_loading_using_predefined_format",

"tests/test_types.py::TestDate::test_loading_using_custom_format",

"tests/test_types.py::TestDate::test_loading_raises_ValidationError_if_value_is_not_string",

"tests/test_types.py::TestDate::test_customizing_error_message_if_value_is_not_string",

"tests/test_types.py::TestDate::test_loading_raises_ValidationError_if_value_string_does_not_match_date_format",

"tests/test_types.py::TestDate::test_customizing_error_message_if_value_string_does_not_match_date_format",

"tests/test_types.py::TestDate::test_loading_passes_deserialized_date_to_validator",

"tests/test_types.py::TestDate::test_dumping_date",

"tests/test_types.py::TestDate::test_dumping_using_predefined_format",

"tests/test_types.py::TestDate::test_dumping_using_custom_format",

"tests/test_types.py::TestDate::test_dumping_raises_ValidationError_if_value_is_not_string",

"tests/test_types.py::TestTime::test_loading_does_not_raise_ValidationError_if_validators_succeed",

"tests/test_types.py::TestTime::test_loading_raises_ValidationError_if_validator_fails",

"tests/test_types.py::TestTime::test_loading_raises_ValidationError_with_combined_messages_if_multiple_validators_fail",

"tests/test_types.py::TestTime::test_loading_passes_context_to_validator",

"tests/test_types.py::TestTime::test_validation_returns_None_if_validators_succeed",

"tests/test_types.py::TestTime::test_validation_returns_errors_if_validator_fails",

"tests/test_types.py::TestTime::test_validation_returns_combined_errors_if_multiple_validators_fail",

"tests/test_types.py::TestTime::test_loading_missing_value_raises_required_error",

"tests/test_types.py::TestTime::test_loading_None_raises_required_error",

"tests/test_types.py::TestTime::test_dumping_missing_value_raises_required_error",

"tests/test_types.py::TestTime::test_dumping_None_raises_required_error",

"tests/test_types.py::TestTime::test_name",

"tests/test_types.py::TestTime::test_description",

"tests/test_types.py::TestTime::test_loading_string_date",

"tests/test_types.py::TestTime::test_loading_using_custom_format",

"tests/test_types.py::TestTime::test_loading_raises_ValidationError_if_value_is_not_string",

"tests/test_types.py::TestTime::test_customizing_error_message_if_value_is_not_string",

"tests/test_types.py::TestTime::test_loading_raises_ValidationError_if_value_string_does_not_match_date_format",

"tests/test_types.py::TestTime::test_customizing_error_message_if_value_string_does_not_match_date_format",

"tests/test_types.py::TestTime::test_loading_passes_deserialized_date_to_validator",

"tests/test_types.py::TestTime::test_dumping_date",

"tests/test_types.py::TestTime::test_dumping_using_custom_format",

"tests/test_types.py::TestTime::test_dumping_raises_ValidationError_if_value_is_not_string",

"tests/test_types.py::TestList::test_loading_does_not_raise_ValidationError_if_validators_succeed",

"tests/test_types.py::TestList::test_loading_raises_ValidationError_if_validator_fails",

"tests/test_types.py::TestList::test_loading_raises_ValidationError_with_combined_messages_if_multiple_validators_fail",

"tests/test_types.py::TestList::test_loading_passes_context_to_validator",

"tests/test_types.py::TestList::test_validation_returns_None_if_validators_succeed",

"tests/test_types.py::TestList::test_validation_returns_errors_if_validator_fails",

"tests/test_types.py::TestList::test_validation_returns_combined_errors_if_multiple_validators_fail",

"tests/test_types.py::TestList::test_loading_missing_value_raises_required_error",

"tests/test_types.py::TestList::test_loading_None_raises_required_error",

"tests/test_types.py::TestList::test_dumping_missing_value_raises_required_error",

"tests/test_types.py::TestList::test_dumping_None_raises_required_error",

"tests/test_types.py::TestList::test_name",

"tests/test_types.py::TestList::test_description",

"tests/test_types.py::TestList::test_loading_list_value",

"tests/test_types.py::TestList::test_loading_non_list_value_raises_ValidationError",