| problem_id | source | task_type | in_source_id | prompt | golden_diff | verification_info | num_tokens_prompt | num_tokens_diff |
|---|---|---|---|---|---|---|---|---|

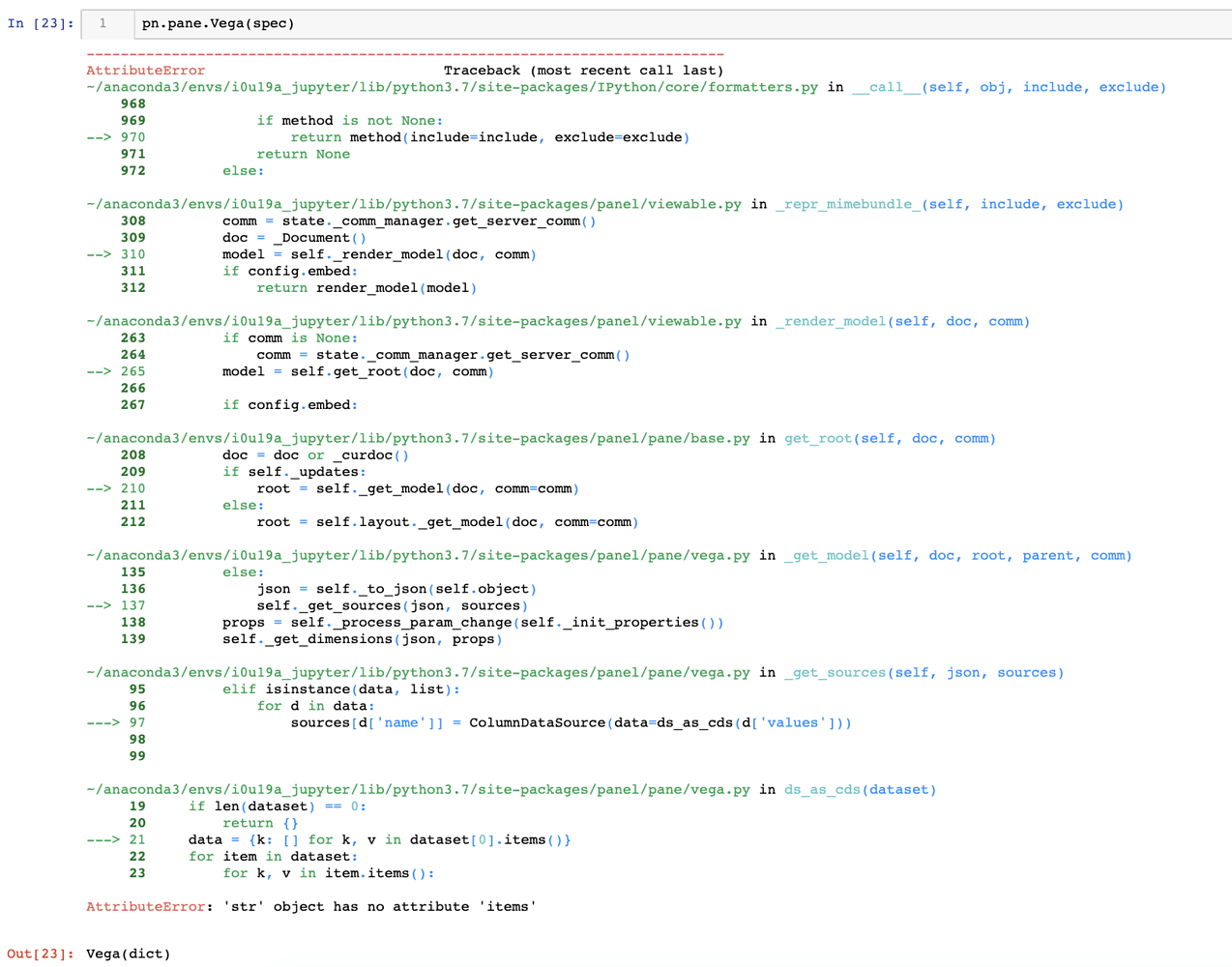

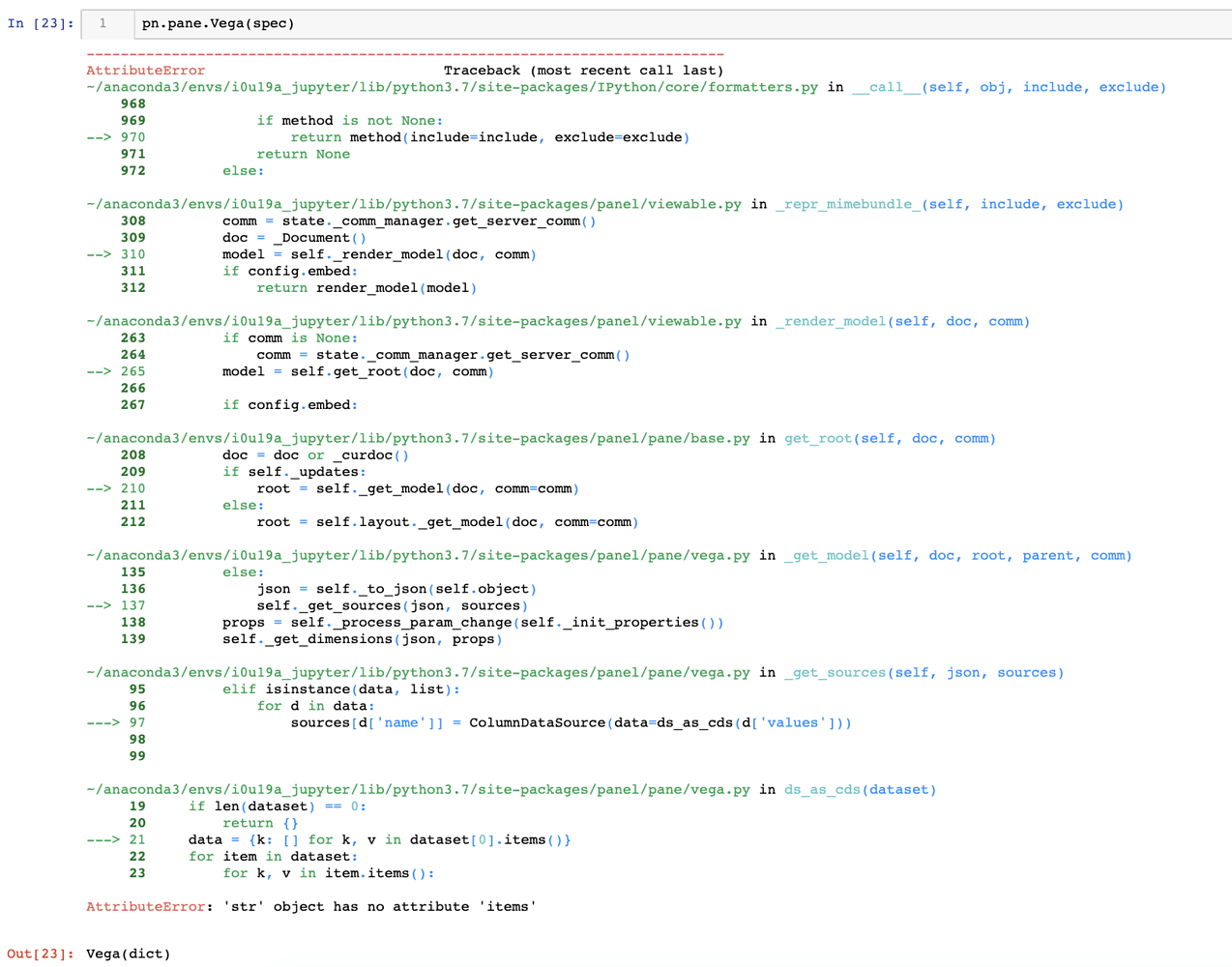

gh_patches_debug_37088 | rasdani/github-patches | git_diff | pre-commit__pre-commit-321 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Does not work within submodules

I'm getting:

```

An unexpected error has occurred: NotADirectoryError: [Errno 20] Not a directory: '/home/quentin/chef-repo/cookbooks/ssmtp-cookbook/.git/hooks/pre-commit'

```

chef-repo is my primary repository and ssmtp-cookbook a git submodule of that.

**ssmtp-cookbook/.git file contents:**

```

gitdir: ../../.git/modules/cookbooks/ssmtp-cookbook

```

</issue>
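To illustrate why the path in the error is not a directory: inside a submodule, `.git` is a plain file whose `gitdir:` line points at the real git directory, so joining `.git/hooks/...` onto the work tree cannot succeed. The following is a minimal sketch of resolving that indirection in pure Python. The helper names `resolve_git_dir` and `demo` are hypothetical and are not part of pre-commit; asking git itself (for example via `git rev-parse --git-dir`) is the more robust route, and this sketch only assumes the simple `gitdir: <path>` file format quoted in the issue.

```python
import os
import tempfile


def resolve_git_dir(work_tree):
    """Return the real git directory for ``work_tree``.

    Hypothetical helper for illustration only. In a normal checkout
    ``.git`` is a directory; in a submodule it is a file containing a
    single ``gitdir: <path>`` line, where a relative path is resolved
    against the directory that holds the ``.git`` file.
    """
    dot_git = os.path.join(work_tree, '.git')
    if os.path.isdir(dot_git):
        return dot_git
    with open(dot_git) as f:
        contents = f.read().strip()
    prefix = 'gitdir: '
    if not contents.startswith(prefix):
        raise ValueError('unrecognised .git file: {!r}'.format(contents))
    return os.path.normpath(os.path.join(work_tree, contents[len(prefix):]))


def demo():
    """Recreate the layout from the issue in a temporary directory."""
    with tempfile.TemporaryDirectory() as tmp:
        tmp = os.path.realpath(tmp)
        submodule = os.path.join(tmp, 'cookbooks', 'ssmtp-cookbook')
        os.makedirs(submodule)
        with open(os.path.join(submodule, '.git'), 'w') as f:
            f.write('gitdir: ../../.git/modules/cookbooks/ssmtp-cookbook\n')
        # Report the resolved directory relative to the superproject root.
        return os.path.relpath(resolve_git_dir(submodule), tmp)


if __name__ == '__main__':
    print(demo())
```

With a git directory resolved this way, a hook path would be built as `os.path.join(git_dir, 'hooks', 'pre-commit')` rather than by assuming a `.git` directory under the work tree.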

<code>

[start of pre_commit/git.py]

1 from __future__ import unicode_literals

2

3 import functools

4 import logging

5 import os

6 import os.path

7 import re

8

9 from pre_commit.errors import FatalError

10 from pre_commit.util import CalledProcessError

11 from pre_commit.util import cmd_output

12 from pre_commit.util import memoize_by_cwd

13

14

15 logger = logging.getLogger('pre_commit')

16

17

18 def get_root():

19 try:

20 return cmd_output('git', 'rev-parse', '--show-toplevel')[1].strip()

21 except CalledProcessError:

22 raise FatalError(

23 'Called from outside of the gits. Please cd to a git repository.'

24 )

25

26

27 def is_in_merge_conflict():

28 return (

29 os.path.exists(os.path.join('.git', 'MERGE_MSG')) and

30 os.path.exists(os.path.join('.git', 'MERGE_HEAD'))

31 )

32

33

34 def parse_merge_msg_for_conflicts(merge_msg):

35 # Conflicted files start with tabs

36 return [

37 line.lstrip('#').strip()

38 for line in merge_msg.splitlines()

39 # '#\t' for git 2.4.1

40 if line.startswith(('\t', '#\t'))

41 ]

42

43

44 @memoize_by_cwd

45 def get_conflicted_files():

46 logger.info('Checking merge-conflict files only.')

47 # Need to get the conflicted files from the MERGE_MSG because they could

48 # have resolved the conflict by choosing one side or the other

49 merge_msg = open(os.path.join('.git', 'MERGE_MSG')).read()

50 merge_conflict_filenames = parse_merge_msg_for_conflicts(merge_msg)

51

52 # This will get the rest of the changes made after the merge.

53 # If they resolved the merge conflict by choosing a mesh of both sides

54 # this will also include the conflicted files

55 tree_hash = cmd_output('git', 'write-tree')[1].strip()

56 merge_diff_filenames = cmd_output(

57 'git', 'diff', '-m', tree_hash, 'HEAD', 'MERGE_HEAD', '--name-only',

58 )[1].splitlines()

59 return set(merge_conflict_filenames) | set(merge_diff_filenames)

60

61

62 @memoize_by_cwd

63 def get_staged_files():

64 return cmd_output('git', 'diff', '--staged', '--name-only')[1].splitlines()

65

66

67 @memoize_by_cwd

68 def get_all_files():

69 return cmd_output('git', 'ls-files')[1].splitlines()

70

71

72 def get_files_matching(all_file_list_strategy):

73 @functools.wraps(all_file_list_strategy)

74 @memoize_by_cwd

75 def wrapper(include_expr, exclude_expr):

76 include_regex = re.compile(include_expr)

77 exclude_regex = re.compile(exclude_expr)

78 return set(

79 filename

80 for filename in all_file_list_strategy()

81 if (

82 include_regex.search(filename) and

83 not exclude_regex.search(filename) and

84 os.path.exists(filename)

85 )

86 )

87 return wrapper

88

89

90 get_staged_files_matching = get_files_matching(get_staged_files)

91 get_all_files_matching = get_files_matching(get_all_files)

92 get_conflicted_files_matching = get_files_matching(get_conflicted_files)

93

[end of pre_commit/git.py]

[start of pre_commit/runner.py]

1 from __future__ import unicode_literals

2

3 import os

4 import os.path

5

6 from cached_property import cached_property

7

8 import pre_commit.constants as C

9 from pre_commit import git

10 from pre_commit.clientlib.validate_config import load_config

11 from pre_commit.repository import Repository

12 from pre_commit.store import Store

13

14

15 class Runner(object):

16 """A `Runner` represents the execution context of the hooks. Notably the

17 repository under test.

18 """

19

20 def __init__(self, git_root):

21 self.git_root = git_root

22

23 @classmethod

24 def create(cls):

25 """Creates a PreCommitRunner by doing the following:

26 - Finds the root of the current git repository

27 - chdirs to that directory

28 """

29 root = git.get_root()

30 os.chdir(root)

31 return cls(root)

32

33 @cached_property

34 def config_file_path(self):

35 return os.path.join(self.git_root, C.CONFIG_FILE)

36

37 @cached_property

38 def repositories(self):

39 """Returns a tuple of the configured repositories."""

40 config = load_config(self.config_file_path)

41 repositories = tuple(Repository.create(x, self.store) for x in config)

42 for repository in repositories:

43 repository.require_installed()

44 return repositories

45

46 def get_hook_path(self, hook_type):

47 return os.path.join(self.git_root, '.git', 'hooks', hook_type)

48

49 @cached_property

50 def pre_commit_path(self):

51 return self.get_hook_path('pre-commit')

52

53 @cached_property

54 def pre_push_path(self):

55 return self.get_hook_path('pre-push')

56

57 @cached_property

58 def cmd_runner(self):

59 # TODO: remove this and inline runner.store.cmd_runner

60 return self.store.cmd_runner

61

62 @cached_property

63 def store(self):

64 return Store()

65

[end of pre_commit/runner.py]

[start of pre_commit/main.py]

1 from __future__ import unicode_literals

2

3 import argparse

4 import os

5 import sys

6

7 import pkg_resources

8

9 from pre_commit import color

10 from pre_commit import five

11 from pre_commit.commands.autoupdate import autoupdate

12 from pre_commit.commands.clean import clean

13 from pre_commit.commands.install_uninstall import install

14 from pre_commit.commands.install_uninstall import uninstall

15 from pre_commit.commands.run import run

16 from pre_commit.error_handler import error_handler

17 from pre_commit.runner import Runner

18

19

20 # https://github.com/pre-commit/pre-commit/issues/217

21 # On OSX, making a virtualenv using pyvenv at . causes `virtualenv` and `pip`

22 # to install packages to the wrong place. We don't want anything to deal with

23 # pyvenv

24 os.environ.pop('__PYVENV_LAUNCHER__', None)

25 # https://github.com/pre-commit/pre-commit/issues/300

26 # In git 2.6.3 (maybe others), git exports this while running pre-commit hooks

27 os.environ.pop('GIT_WORK_TREE', None)

28

29

30 def main(argv=None):

31 argv = argv if argv is not None else sys.argv[1:]

32 argv = [five.to_text(arg) for arg in argv]

33 parser = argparse.ArgumentParser()

34

35 # http://stackoverflow.com/a/8521644/812183

36 parser.add_argument(

37 '-V', '--version',

38 action='version',

39 version='%(prog)s {0}'.format(

40 pkg_resources.get_distribution('pre-commit').version

41 )

42 )

43

44 subparsers = parser.add_subparsers(dest='command')

45

46 install_parser = subparsers.add_parser(

47 'install', help='Install the pre-commit script.',

48 )

49 install_parser.add_argument(

50 '-f', '--overwrite', action='store_true',

51 help='Overwrite existing hooks / remove migration mode.',

52 )

53 install_parser.add_argument(

54 '--install-hooks', action='store_true',

55 help=(

56 'Whether to install hook environments for all environments '

57 'in the config file.'

58 ),

59 )

60 install_parser.add_argument(

61 '-t', '--hook-type', choices=('pre-commit', 'pre-push'),

62 default='pre-commit',

63 )

64

65 uninstall_parser = subparsers.add_parser(

66 'uninstall', help='Uninstall the pre-commit script.',

67 )

68 uninstall_parser.add_argument(

69 '-t', '--hook-type', choices=('pre-commit', 'pre-push'),

70 default='pre-commit',

71 )

72

73 subparsers.add_parser('clean', help='Clean out pre-commit files.')

74

75 subparsers.add_parser(

76 'autoupdate',

77 help="Auto-update pre-commit config to the latest repos' versions.",

78 )

79

80 run_parser = subparsers.add_parser('run', help='Run hooks.')

81 run_parser.add_argument('hook', nargs='?', help='A single hook-id to run')

82 run_parser.add_argument(

83 '--color', default='auto', type=color.use_color,

84 help='Whether to use color in output. Defaults to `auto`',

85 )

86 run_parser.add_argument(

87 '--no-stash', default=False, action='store_true',

88 help='Use this option to prevent auto stashing of unstaged files.',

89 )

90 run_parser.add_argument(

91 '--verbose', '-v', action='store_true', default=False,

92 )

93 run_parser.add_argument(

94 '--origin', '-o',

95 help='The origin branch\'s commit_id when using `git push`',

96 )

97 run_parser.add_argument(

98 '--source', '-s',

99 help='The remote branch\'s commit_id when using `git push`',

100 )

101 run_parser.add_argument(

102 '--allow-unstaged-config', default=False, action='store_true',

103 help='Allow an unstaged config to be present. Note that this will'

104 'be stashed before parsing unless --no-stash is specified'

105 )

106 run_parser.add_argument(

107 '--hook-stage', choices=('commit', 'push'), default='commit',

108 help='The stage during which the hook is fired e.g. commit or push',

109 )

110 run_mutex_group = run_parser.add_mutually_exclusive_group(required=False)

111 run_mutex_group.add_argument(

112 '--all-files', '-a', action='store_true', default=False,

113 help='Run on all the files in the repo. Implies --no-stash.',

114 )

115 run_mutex_group.add_argument(

116 '--files', nargs='*', help='Specific filenames to run hooks on.',

117 )

118

119 help = subparsers.add_parser(

120 'help', help='Show help for a specific command.'

121 )

122 help.add_argument('help_cmd', nargs='?', help='Command to show help for.')

123

124 # Argparse doesn't really provide a way to use a `default` subparser

125 if len(argv) == 0:

126 argv = ['run']

127 args = parser.parse_args(argv)

128

129 if args.command == 'help':

130 if args.help_cmd:

131 parser.parse_args([args.help_cmd, '--help'])

132 else:

133 parser.parse_args(['--help'])

134

135 with error_handler():

136 runner = Runner.create()

137

138 if args.command == 'install':

139 return install(

140 runner, overwrite=args.overwrite, hooks=args.install_hooks,

141 hook_type=args.hook_type,

142 )

143 elif args.command == 'uninstall':

144 return uninstall(runner, hook_type=args.hook_type)

145 elif args.command == 'clean':

146 return clean(runner)

147 elif args.command == 'autoupdate':

148 return autoupdate(runner)

149 elif args.command == 'run':

150 return run(runner, args)

151 else:

152 raise NotImplementedError(

153 'Command {0} not implemented.'.format(args.command)

154 )

155

156 raise AssertionError(

157 'Command {0} failed to exit with a returncode'.format(args.command)

158 )

159

160

161 if __name__ == '__main__':

162 exit(main())

163

[end of pre_commit/main.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/pre_commit/git.py b/pre_commit/git.py

--- a/pre_commit/git.py

+++ b/pre_commit/git.py

@@ -24,10 +24,18 @@

)

+def get_git_dir(git_root):

+ return os.path.normpath(os.path.join(

+ git_root,

+ cmd_output('git', 'rev-parse', '--git-dir', cwd=git_root)[1].strip(),

+ ))

+

+

def is_in_merge_conflict():

+ git_dir = get_git_dir('.')

return (

- os.path.exists(os.path.join('.git', 'MERGE_MSG')) and

- os.path.exists(os.path.join('.git', 'MERGE_HEAD'))

+ os.path.exists(os.path.join(git_dir, 'MERGE_MSG')) and

+ os.path.exists(os.path.join(git_dir, 'MERGE_HEAD'))

)

@@ -46,7 +54,7 @@

logger.info('Checking merge-conflict files only.')

# Need to get the conflicted files from the MERGE_MSG because they could

# have resolved the conflict by choosing one side or the other

- merge_msg = open(os.path.join('.git', 'MERGE_MSG')).read()

+ merge_msg = open(os.path.join(get_git_dir('.'), 'MERGE_MSG')).read()

merge_conflict_filenames = parse_merge_msg_for_conflicts(merge_msg)

# This will get the rest of the changes made after the merge.

diff --git a/pre_commit/main.py b/pre_commit/main.py

--- a/pre_commit/main.py

+++ b/pre_commit/main.py

@@ -25,6 +25,13 @@

# https://github.com/pre-commit/pre-commit/issues/300

# In git 2.6.3 (maybe others), git exports this while running pre-commit hooks

os.environ.pop('GIT_WORK_TREE', None)

+# In git 1.9.1 (maybe others), git exports these while running pre-commit hooks

+# in submodules. In the general case this causes problems.

+# These are covered by test_install_in_submodule_and_run

+# Causes git clone to clone wrong thing

+os.environ.pop('GIT_DIR', None)

+# Causes 'error invalid object ...' during commit

+os.environ.pop('GIT_INDEX_FILE', None)

def main(argv=None):

diff --git a/pre_commit/runner.py b/pre_commit/runner.py

--- a/pre_commit/runner.py

+++ b/pre_commit/runner.py

@@ -30,6 +30,10 @@

os.chdir(root)

return cls(root)

+ @cached_property

+ def git_dir(self):

+ return git.get_git_dir(self.git_root)

+

@cached_property

def config_file_path(self):

return os.path.join(self.git_root, C.CONFIG_FILE)

@@ -44,7 +48,7 @@

return repositories

def get_hook_path(self, hook_type):

- return os.path.join(self.git_root, '.git', 'hooks', hook_type)

+ return os.path.join(self.git_dir, 'hooks', hook_type)

@cached_property

def pre_commit_path(self):

| {"golden_diff": "diff --git a/pre_commit/git.py b/pre_commit/git.py\n--- a/pre_commit/git.py\n+++ b/pre_commit/git.py\n@@ -24,10 +24,18 @@\n )\n \n \n+def get_git_dir(git_root):\n+ return os.path.normpath(os.path.join(\n+ git_root,\n+ cmd_output('git', 'rev-parse', '--git-dir', cwd=git_root)[1].strip(),\n+ ))\n+\n+\n def is_in_merge_conflict():\n+ git_dir = get_git_dir('.')\n return (\n- os.path.exists(os.path.join('.git', 'MERGE_MSG')) and\n- os.path.exists(os.path.join('.git', 'MERGE_HEAD'))\n+ os.path.exists(os.path.join(git_dir, 'MERGE_MSG')) and\n+ os.path.exists(os.path.join(git_dir, 'MERGE_HEAD'))\n )\n \n \n@@ -46,7 +54,7 @@\n logger.info('Checking merge-conflict files only.')\n # Need to get the conflicted files from the MERGE_MSG because they could\n # have resolved the conflict by choosing one side or the other\n- merge_msg = open(os.path.join('.git', 'MERGE_MSG')).read()\n+ merge_msg = open(os.path.join(get_git_dir('.'), 'MERGE_MSG')).read()\n merge_conflict_filenames = parse_merge_msg_for_conflicts(merge_msg)\n \n # This will get the rest of the changes made after the merge.\ndiff --git a/pre_commit/main.py b/pre_commit/main.py\n--- a/pre_commit/main.py\n+++ b/pre_commit/main.py\n@@ -25,6 +25,13 @@\n # https://github.com/pre-commit/pre-commit/issues/300\n # In git 2.6.3 (maybe others), git exports this while running pre-commit hooks\n os.environ.pop('GIT_WORK_TREE', None)\n+# In git 1.9.1 (maybe others), git exports these while running pre-commit hooks\n+# in submodules. In the general case this causes problems.\n+# These are covered by test_install_in_submodule_and_run\n+# Causes git clone to clone wrong thing\n+os.environ.pop('GIT_DIR', None)\n+# Causes 'error invalid object ...' 
during commit\n+os.environ.pop('GIT_INDEX_FILE', None)\n \n \n def main(argv=None):\ndiff --git a/pre_commit/runner.py b/pre_commit/runner.py\n--- a/pre_commit/runner.py\n+++ b/pre_commit/runner.py\n@@ -30,6 +30,10 @@\n os.chdir(root)\n return cls(root)\n \n+ @cached_property\n+ def git_dir(self):\n+ return git.get_git_dir(self.git_root)\n+\n @cached_property\n def config_file_path(self):\n return os.path.join(self.git_root, C.CONFIG_FILE)\n@@ -44,7 +48,7 @@\n return repositories\n \n def get_hook_path(self, hook_type):\n- return os.path.join(self.git_root, '.git', 'hooks', hook_type)\n+ return os.path.join(self.git_dir, 'hooks', hook_type)\n \n @cached_property\n def pre_commit_path(self):\n", "issue": "Does not work within submodules\nI'm getting: \n\n```\nAn unexpected error has occurred: NotADirectoryError: [Errno 20] Not a directory: '/home/quentin/chef-repo/cookbooks/ssmtp-cookbook/.git/hooks/pre-commit'\n```\n\nchef-repo is my primary repository and ssmtp-cookbook a git submodule of that. \n\n**ssmtp-cookbook/.git file contents:**\n\n```\ngitdir: ../../.git/modules/cookbooks/ssmtp-cookbook\n```\n\n", "before_files": [{"content": "from __future__ import unicode_literals\n\nimport functools\nimport logging\nimport os\nimport os.path\nimport re\n\nfrom pre_commit.errors import FatalError\nfrom pre_commit.util import CalledProcessError\nfrom pre_commit.util import cmd_output\nfrom pre_commit.util import memoize_by_cwd\n\n\nlogger = logging.getLogger('pre_commit')\n\n\ndef get_root():\n try:\n return cmd_output('git', 'rev-parse', '--show-toplevel')[1].strip()\n except CalledProcessError:\n raise FatalError(\n 'Called from outside of the gits. 
Please cd to a git repository.'\n )\n\n\ndef is_in_merge_conflict():\n return (\n os.path.exists(os.path.join('.git', 'MERGE_MSG')) and\n os.path.exists(os.path.join('.git', 'MERGE_HEAD'))\n )\n\n\ndef parse_merge_msg_for_conflicts(merge_msg):\n # Conflicted files start with tabs\n return [\n line.lstrip('#').strip()\n for line in merge_msg.splitlines()\n # '#\\t' for git 2.4.1\n if line.startswith(('\\t', '#\\t'))\n ]\n\n\n@memoize_by_cwd\ndef get_conflicted_files():\n logger.info('Checking merge-conflict files only.')\n # Need to get the conflicted files from the MERGE_MSG because they could\n # have resolved the conflict by choosing one side or the other\n merge_msg = open(os.path.join('.git', 'MERGE_MSG')).read()\n merge_conflict_filenames = parse_merge_msg_for_conflicts(merge_msg)\n\n # This will get the rest of the changes made after the merge.\n # If they resolved the merge conflict by choosing a mesh of both sides\n # this will also include the conflicted files\n tree_hash = cmd_output('git', 'write-tree')[1].strip()\n merge_diff_filenames = cmd_output(\n 'git', 'diff', '-m', tree_hash, 'HEAD', 'MERGE_HEAD', '--name-only',\n )[1].splitlines()\n return set(merge_conflict_filenames) | set(merge_diff_filenames)\n\n\n@memoize_by_cwd\ndef get_staged_files():\n return cmd_output('git', 'diff', '--staged', '--name-only')[1].splitlines()\n\n\n@memoize_by_cwd\ndef get_all_files():\n return cmd_output('git', 'ls-files')[1].splitlines()\n\n\ndef get_files_matching(all_file_list_strategy):\n @functools.wraps(all_file_list_strategy)\n @memoize_by_cwd\n def wrapper(include_expr, exclude_expr):\n include_regex = re.compile(include_expr)\n exclude_regex = re.compile(exclude_expr)\n return set(\n filename\n for filename in all_file_list_strategy()\n if (\n include_regex.search(filename) and\n not exclude_regex.search(filename) and\n os.path.exists(filename)\n )\n )\n return wrapper\n\n\nget_staged_files_matching = get_files_matching(get_staged_files)\nget_all_files_matching 
= get_files_matching(get_all_files)\nget_conflicted_files_matching = get_files_matching(get_conflicted_files)\n", "path": "pre_commit/git.py"}, {"content": "from __future__ import unicode_literals\n\nimport os\nimport os.path\n\nfrom cached_property import cached_property\n\nimport pre_commit.constants as C\nfrom pre_commit import git\nfrom pre_commit.clientlib.validate_config import load_config\nfrom pre_commit.repository import Repository\nfrom pre_commit.store import Store\n\n\nclass Runner(object):\n \"\"\"A `Runner` represents the execution context of the hooks. Notably the\n repository under test.\n \"\"\"\n\n def __init__(self, git_root):\n self.git_root = git_root\n\n @classmethod\n def create(cls):\n \"\"\"Creates a PreCommitRunner by doing the following:\n - Finds the root of the current git repository\n - chdirs to that directory\n \"\"\"\n root = git.get_root()\n os.chdir(root)\n return cls(root)\n\n @cached_property\n def config_file_path(self):\n return os.path.join(self.git_root, C.CONFIG_FILE)\n\n @cached_property\n def repositories(self):\n \"\"\"Returns a tuple of the configured repositories.\"\"\"\n config = load_config(self.config_file_path)\n repositories = tuple(Repository.create(x, self.store) for x in config)\n for repository in repositories:\n repository.require_installed()\n return repositories\n\n def get_hook_path(self, hook_type):\n return os.path.join(self.git_root, '.git', 'hooks', hook_type)\n\n @cached_property\n def pre_commit_path(self):\n return self.get_hook_path('pre-commit')\n\n @cached_property\n def pre_push_path(self):\n return self.get_hook_path('pre-push')\n\n @cached_property\n def cmd_runner(self):\n # TODO: remove this and inline runner.store.cmd_runner\n return self.store.cmd_runner\n\n @cached_property\n def store(self):\n return Store()\n", "path": "pre_commit/runner.py"}, {"content": "from __future__ import unicode_literals\n\nimport argparse\nimport os\nimport sys\n\nimport pkg_resources\n\nfrom pre_commit import 
color\nfrom pre_commit import five\nfrom pre_commit.commands.autoupdate import autoupdate\nfrom pre_commit.commands.clean import clean\nfrom pre_commit.commands.install_uninstall import install\nfrom pre_commit.commands.install_uninstall import uninstall\nfrom pre_commit.commands.run import run\nfrom pre_commit.error_handler import error_handler\nfrom pre_commit.runner import Runner\n\n\n# https://github.com/pre-commit/pre-commit/issues/217\n# On OSX, making a virtualenv using pyvenv at . causes `virtualenv` and `pip`\n# to install packages to the wrong place. We don't want anything to deal with\n# pyvenv\nos.environ.pop('__PYVENV_LAUNCHER__', None)\n# https://github.com/pre-commit/pre-commit/issues/300\n# In git 2.6.3 (maybe others), git exports this while running pre-commit hooks\nos.environ.pop('GIT_WORK_TREE', None)\n\n\ndef main(argv=None):\n argv = argv if argv is not None else sys.argv[1:]\n argv = [five.to_text(arg) for arg in argv]\n parser = argparse.ArgumentParser()\n\n # http://stackoverflow.com/a/8521644/812183\n parser.add_argument(\n '-V', '--version',\n action='version',\n version='%(prog)s {0}'.format(\n pkg_resources.get_distribution('pre-commit').version\n )\n )\n\n subparsers = parser.add_subparsers(dest='command')\n\n install_parser = subparsers.add_parser(\n 'install', help='Install the pre-commit script.',\n )\n install_parser.add_argument(\n '-f', '--overwrite', action='store_true',\n help='Overwrite existing hooks / remove migration mode.',\n )\n install_parser.add_argument(\n '--install-hooks', action='store_true',\n help=(\n 'Whether to install hook environments for all environments '\n 'in the config file.'\n ),\n )\n install_parser.add_argument(\n '-t', '--hook-type', choices=('pre-commit', 'pre-push'),\n default='pre-commit',\n )\n\n uninstall_parser = subparsers.add_parser(\n 'uninstall', help='Uninstall the pre-commit script.',\n )\n uninstall_parser.add_argument(\n '-t', '--hook-type', choices=('pre-commit', 'pre-push'),\n 
default='pre-commit',\n )\n\n subparsers.add_parser('clean', help='Clean out pre-commit files.')\n\n subparsers.add_parser(\n 'autoupdate',\n help=\"Auto-update pre-commit config to the latest repos' versions.\",\n )\n\n run_parser = subparsers.add_parser('run', help='Run hooks.')\n run_parser.add_argument('hook', nargs='?', help='A single hook-id to run')\n run_parser.add_argument(\n '--color', default='auto', type=color.use_color,\n help='Whether to use color in output. Defaults to `auto`',\n )\n run_parser.add_argument(\n '--no-stash', default=False, action='store_true',\n help='Use this option to prevent auto stashing of unstaged files.',\n )\n run_parser.add_argument(\n '--verbose', '-v', action='store_true', default=False,\n )\n run_parser.add_argument(\n '--origin', '-o',\n help='The origin branch\\'s commit_id when using `git push`',\n )\n run_parser.add_argument(\n '--source', '-s',\n help='The remote branch\\'s commit_id when using `git push`',\n )\n run_parser.add_argument(\n '--allow-unstaged-config', default=False, action='store_true',\n help='Allow an unstaged config to be present. Note that this will'\n 'be stashed before parsing unless --no-stash is specified'\n )\n run_parser.add_argument(\n '--hook-stage', choices=('commit', 'push'), default='commit',\n help='The stage during which the hook is fired e.g. commit or push',\n )\n run_mutex_group = run_parser.add_mutually_exclusive_group(required=False)\n run_mutex_group.add_argument(\n '--all-files', '-a', action='store_true', default=False,\n help='Run on all the files in the repo. 
Implies --no-stash.',\n )\n run_mutex_group.add_argument(\n '--files', nargs='*', help='Specific filenames to run hooks on.',\n )\n\n help = subparsers.add_parser(\n 'help', help='Show help for a specific command.'\n )\n help.add_argument('help_cmd', nargs='?', help='Command to show help for.')\n\n # Argparse doesn't really provide a way to use a `default` subparser\n if len(argv) == 0:\n argv = ['run']\n args = parser.parse_args(argv)\n\n if args.command == 'help':\n if args.help_cmd:\n parser.parse_args([args.help_cmd, '--help'])\n else:\n parser.parse_args(['--help'])\n\n with error_handler():\n runner = Runner.create()\n\n if args.command == 'install':\n return install(\n runner, overwrite=args.overwrite, hooks=args.install_hooks,\n hook_type=args.hook_type,\n )\n elif args.command == 'uninstall':\n return uninstall(runner, hook_type=args.hook_type)\n elif args.command == 'clean':\n return clean(runner)\n elif args.command == 'autoupdate':\n return autoupdate(runner)\n elif args.command == 'run':\n return run(runner, args)\n else:\n raise NotImplementedError(\n 'Command {0} not implemented.'.format(args.command)\n )\n\n raise AssertionError(\n 'Command {0} failed to exit with a returncode'.format(args.command)\n )\n\n\nif __name__ == '__main__':\n exit(main())\n", "path": "pre_commit/main.py"}]} | 3,687 | 682 |

gh_patches_debug_9704 | rasdani/github-patches | git_diff | Textualize__textual-441 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

[textual][bug] CSS rule parsing fails when the name of the colour we pass contains a digit

So while this is working correctly:

```css

#my_widget {

background: dark_cyan;

}

```

...this fails:

```css

#my_widget {

background: turquoise4;

}

```

...with the following error:

```

• failed to parse color 'turquoise';

• failed to parse 'turquoise' as a color;

```

(maybe just a regex that doesn't take into account the fact that colour names can include numbers?)

</issue>
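The reporter's guess can be checked in isolation. The sketch below assumes the colour name is matched by a letters-only pattern like the `TOKEN` regex in the listing that follows, and compares it with a variant that also accepts digits after the first character. `TOKEN_WITH_DIGITS` and `first_token` are hypothetical names used only for this illustration; the pattern is one possible adjustment, not necessarily the fix the project adopted.

```python
import re

# Letters, underscores and hyphens only, as in the listing's TOKEN pattern.
TOKEN_BEFORE = r"[a-zA-Z_-]+"
# Hypothetical variant: digits are allowed after the first character.
TOKEN_WITH_DIGITS = r"[a-zA-Z_-][a-zA-Z0-9_-]*"


def first_token(pattern, text):
    """Return the text matched by ``pattern`` at the start of ``text``."""
    match = re.compile(pattern).match(text)
    return match.group(0) if match else None


if __name__ == "__main__":
    print(first_token(TOKEN_BEFORE, "turquoise4;"))       # turquoise
    print(first_token(TOKEN_WITH_DIGITS, "turquoise4;"))  # turquoise4
    print(first_token(TOKEN_WITH_DIGITS, "dark_cyan;"))   # dark_cyan
```

The letters-only pattern stops at the `4`, which is consistent with the error message reporting the colour as `turquoise`.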

<code>

[start of src/textual/css/tokenize.py]

1 from __future__ import annotations

2

3 import re

4 from typing import Iterable

5

6 from textual.css.tokenizer import Expect, Tokenizer, Token

7

8 COMMENT_START = r"\/\*"

9 SCALAR = r"\-?\d+\.?\d*(?:fr|%|w|h|vw|vh)"

10 DURATION = r"\d+\.?\d*(?:ms|s)"

11 NUMBER = r"\-?\d+\.?\d*"

12 COLOR = r"\#[0-9a-fA-F]{8}|\#[0-9a-fA-F]{6}|rgb\(\-?\d+\.?\d*,\-?\d+\.?\d*,\-?\d+\.?\d*\)|rgba\(\-?\d+\.?\d*,\-?\d+\.?\d*,\-?\d+\.?\d*,\-?\d+\.?\d*\)"

13 KEY_VALUE = r"[a-zA-Z_-][a-zA-Z0-9_-]*=[0-9a-zA-Z_\-\/]+"

14 TOKEN = "[a-zA-Z_-]+"

15 STRING = r"\".*?\""

16 VARIABLE_REF = r"\$[a-zA-Z0-9_\-]+"

17

18 # Values permitted in variable and rule declarations.

19 DECLARATION_VALUES = {

20 "scalar": SCALAR,

21 "duration": DURATION,

22 "number": NUMBER,

23 "color": COLOR,

24 "key_value": KEY_VALUE,

25 "token": TOKEN,

26 "string": STRING,

27 "variable_ref": VARIABLE_REF,

28 }

29

30 # The tokenizers "expectation" while at the root/highest level of scope

31 # in the CSS file. At this level we might expect to see selectors, comments,

32 # variable definitions etc.

33 expect_root_scope = Expect(

34 whitespace=r"\s+",

35 comment_start=COMMENT_START,

36 selector_start_id=r"\#[a-zA-Z_\-][a-zA-Z0-9_\-]*",

37 selector_start_class=r"\.[a-zA-Z_\-][a-zA-Z0-9_\-]*",

38 selector_start_universal=r"\*",

39 selector_start=r"[a-zA-Z_\-]+",

40 variable_name=rf"{VARIABLE_REF}:",

41 ).expect_eof(True)

42

43 # After a variable declaration e.g. "$warning-text: TOKENS;"

44 # for tokenizing variable value ------^~~~~~~^

45 expect_variable_name_continue = Expect(

46 variable_value_end=r"\n|;",

47 whitespace=r"\s+",

48 comment_start=COMMENT_START,

49 **DECLARATION_VALUES,

50 ).expect_eof(True)

51

52 expect_comment_end = Expect(

53 comment_end=re.escape("*/"),

54 )

55

56 # After we come across a selector in CSS e.g. ".my-class", we may

57 # find other selectors, pseudo-classes... e.g. ".my-class :hover"

58 expect_selector_continue = Expect(

59 whitespace=r"\s+",

60 comment_start=COMMENT_START,

61 pseudo_class=r"\:[a-zA-Z_-]+",

62 selector_id=r"\#[a-zA-Z_\-][a-zA-Z0-9_\-]*",

63 selector_class=r"\.[a-zA-Z_\-][a-zA-Z0-9_\-]*",

64 selector_universal=r"\*",

65 selector=r"[a-zA-Z_\-]+",

66 combinator_child=">",

67 new_selector=r",",

68 declaration_set_start=r"\{",

69 )

70

71 # A rule declaration e.g. "text: red;"

72 # ^---^

73 expect_declaration = Expect(

74 whitespace=r"\s+",

75 comment_start=COMMENT_START,

76 declaration_name=r"[a-zA-Z_\-]+\:",

77 declaration_set_end=r"\}",

78 )

79

80 expect_declaration_solo = Expect(

81 whitespace=r"\s+",

82 comment_start=COMMENT_START,

83 declaration_name=r"[a-zA-Z_\-]+\:",

84 declaration_set_end=r"\}",

85 ).expect_eof(True)

86

87 # The value(s)/content from a rule declaration e.g. "text: red;"

88 # ^---^

89 expect_declaration_content = Expect(

90 declaration_end=r";",

91 whitespace=r"\s+",

92 comment_start=COMMENT_START,

93 **DECLARATION_VALUES,

94 important=r"\!important",

95 comma=",",

96 declaration_set_end=r"\}",

97 )

98

99 expect_declaration_content_solo = Expect(

100 declaration_end=r";",

101 whitespace=r"\s+",

102 comment_start=COMMENT_START,

103 **DECLARATION_VALUES,

104 important=r"\!important",

105 comma=",",

106 declaration_set_end=r"\}",

107 ).expect_eof(True)

108

109

110 class TokenizerState:

111 """State machine for the tokenizer.

112

113 Attributes:

114 EXPECT: The initial expectation of the tokenizer. Since we start tokenizing

115 at the root scope, we might expect to see either a variable or selector, for example.

116 STATE_MAP: Maps token names to Expects, defines the sets of valid tokens

117 that we'd expect to see next, given the current token. For example, if

118 we've just processed a variable declaration name, we next expect to see

119 the value of that variable.

120 """

121

122 EXPECT = expect_root_scope

123 STATE_MAP = {

124 "variable_name": expect_variable_name_continue,

125 "variable_value_end": expect_root_scope,

126 "selector_start": expect_selector_continue,

127 "selector_start_id": expect_selector_continue,

128 "selector_start_class": expect_selector_continue,

129 "selector_start_universal": expect_selector_continue,

130 "selector_id": expect_selector_continue,

131 "selector_class": expect_selector_continue,

132 "selector_universal": expect_selector_continue,

133 "declaration_set_start": expect_declaration,

134 "declaration_name": expect_declaration_content,

135 "declaration_end": expect_declaration,

136 "declaration_set_end": expect_root_scope,

137 }

138

139 def __call__(self, code: str, path: str) -> Iterable[Token]:

140 tokenizer = Tokenizer(code, path=path)

141 expect = self.EXPECT

142 get_token = tokenizer.get_token

143 get_state = self.STATE_MAP.get

144 while True:

145 token = get_token(expect)

146 name = token.name

147 if name == "comment_start":

148 tokenizer.skip_to(expect_comment_end)

149 continue

150 elif name == "eof":

151 break

152 expect = get_state(name, expect)

153 yield token

154

155

156 class DeclarationTokenizerState(TokenizerState):

157 EXPECT = expect_declaration_solo

158 STATE_MAP = {

159 "declaration_name": expect_declaration_content,

160 "declaration_end": expect_declaration_solo,

161 }

162

163

164 class ValueTokenizerState(TokenizerState):

165 EXPECT = expect_declaration_content_solo

166

167

168 tokenize = TokenizerState()

169 tokenize_declarations = DeclarationTokenizerState()

170 tokenize_value = ValueTokenizerState()

171

172

173 def tokenize_values(values: dict[str, str]) -> dict[str, list[Token]]:

174 """Tokens the values in a dict of strings.

175

176 Args:

177 values (dict[str, str]): A mapping of CSS variable name on to a value, to be

178 added to the CSS context.

179

180 Returns:

181 dict[str, list[Token]]: A mapping of name on to a list of tokens,

182 """

183 value_tokens = {

184 name: list(tokenize_value(value, "__name__")) for name, value in values.items()

185 }

186 return value_tokens

187

188

189 if __name__ == "__main__":

190 from rich import print

191

192 css = """#something {

193

194 color: rgb(10,12,23)

195 }

196 """

197 # transition: offset 500 in_out_cubic;

198 tokens = tokenize(css, __name__)

199 print(list(tokens))

200

201 print(tokenize_values({"primary": "rgb(10,20,30)", "secondary": "#ff00ff"}))

202

[end of src/textual/css/tokenize.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/src/textual/css/tokenize.py b/src/textual/css/tokenize.py

--- a/src/textual/css/tokenize.py

+++ b/src/textual/css/tokenize.py

@@ -11,7 +11,7 @@

NUMBER = r"\-?\d+\.?\d*"

COLOR = r"\#[0-9a-fA-F]{8}|\#[0-9a-fA-F]{6}|rgb\(\-?\d+\.?\d*,\-?\d+\.?\d*,\-?\d+\.?\d*\)|rgba\(\-?\d+\.?\d*,\-?\d+\.?\d*,\-?\d+\.?\d*,\-?\d+\.?\d*\)"

KEY_VALUE = r"[a-zA-Z_-][a-zA-Z0-9_-]*=[0-9a-zA-Z_\-\/]+"

-TOKEN = "[a-zA-Z_-]+"

+TOKEN = "[a-zA-Z][a-zA-Z0-9_-]*"

STRING = r"\".*?\""

VARIABLE_REF = r"\$[a-zA-Z0-9_\-]+"

| {"golden_diff": "diff --git a/src/textual/css/tokenize.py b/src/textual/css/tokenize.py\n--- a/src/textual/css/tokenize.py\n+++ b/src/textual/css/tokenize.py\n@@ -11,7 +11,7 @@\n NUMBER = r\"\\-?\\d+\\.?\\d*\"\n COLOR = r\"\\#[0-9a-fA-F]{8}|\\#[0-9a-fA-F]{6}|rgb\\(\\-?\\d+\\.?\\d*,\\-?\\d+\\.?\\d*,\\-?\\d+\\.?\\d*\\)|rgba\\(\\-?\\d+\\.?\\d*,\\-?\\d+\\.?\\d*,\\-?\\d+\\.?\\d*,\\-?\\d+\\.?\\d*\\)\"\n KEY_VALUE = r\"[a-zA-Z_-][a-zA-Z0-9_-]*=[0-9a-zA-Z_\\-\\/]+\"\n-TOKEN = \"[a-zA-Z_-]+\"\n+TOKEN = \"[a-zA-Z][a-zA-Z0-9_-]*\"\n STRING = r\"\\\".*?\\\"\"\n VARIABLE_REF = r\"\\$[a-zA-Z0-9_\\-]+\"\n", "issue": "[textual][bug] CSS rule parsing fails when the name of the colour we pass contains a digit\nSo while this is working correctly:\r\n```css\r\n#my_widget {\r\n background: dark_cyan;\r\n}\r\n```\r\n\r\n...this fails:\r\n```css\r\n#my_widget {\r\n background: turquoise4;\r\n}\r\n```\r\n...with the following error:\r\n```\r\n \u2022 failed to parse color 'turquoise'; \r\n \u2022 failed to parse 'turquoise' as a color; \r\n```\r\n(maybe just a regex that doesn't take into account the fact that colour names can include numbers?)\n", "before_files": [{"content": "from __future__ import annotations\n\nimport re\nfrom typing import Iterable\n\nfrom textual.css.tokenizer import Expect, Tokenizer, Token\n\nCOMMENT_START = r\"\\/\\*\"\nSCALAR = r\"\\-?\\d+\\.?\\d*(?:fr|%|w|h|vw|vh)\"\nDURATION = r\"\\d+\\.?\\d*(?:ms|s)\"\nNUMBER = r\"\\-?\\d+\\.?\\d*\"\nCOLOR = r\"\\#[0-9a-fA-F]{8}|\\#[0-9a-fA-F]{6}|rgb\\(\\-?\\d+\\.?\\d*,\\-?\\d+\\.?\\d*,\\-?\\d+\\.?\\d*\\)|rgba\\(\\-?\\d+\\.?\\d*,\\-?\\d+\\.?\\d*,\\-?\\d+\\.?\\d*,\\-?\\d+\\.?\\d*\\)\"\nKEY_VALUE = r\"[a-zA-Z_-][a-zA-Z0-9_-]*=[0-9a-zA-Z_\\-\\/]+\"\nTOKEN = \"[a-zA-Z_-]+\"\nSTRING = r\"\\\".*?\\\"\"\nVARIABLE_REF = r\"\\$[a-zA-Z0-9_\\-]+\"\n\n# Values permitted in variable and rule declarations.\nDECLARATION_VALUES = {\n \"scalar\": SCALAR,\n \"duration\": DURATION,\n \"number\": NUMBER,\n \"color\": COLOR,\n \"key_value\": 
KEY_VALUE,\n \"token\": TOKEN,\n \"string\": STRING,\n \"variable_ref\": VARIABLE_REF,\n}\n\n# The tokenizers \"expectation\" while at the root/highest level of scope\n# in the CSS file. At this level we might expect to see selectors, comments,\n# variable definitions etc.\nexpect_root_scope = Expect(\n whitespace=r\"\\s+\",\n comment_start=COMMENT_START,\n selector_start_id=r\"\\#[a-zA-Z_\\-][a-zA-Z0-9_\\-]*\",\n selector_start_class=r\"\\.[a-zA-Z_\\-][a-zA-Z0-9_\\-]*\",\n selector_start_universal=r\"\\*\",\n selector_start=r\"[a-zA-Z_\\-]+\",\n variable_name=rf\"{VARIABLE_REF}:\",\n).expect_eof(True)\n\n# After a variable declaration e.g. \"$warning-text: TOKENS;\"\n# for tokenizing variable value ------^~~~~~~^\nexpect_variable_name_continue = Expect(\n variable_value_end=r\"\\n|;\",\n whitespace=r\"\\s+\",\n comment_start=COMMENT_START,\n **DECLARATION_VALUES,\n).expect_eof(True)\n\nexpect_comment_end = Expect(\n comment_end=re.escape(\"*/\"),\n)\n\n# After we come across a selector in CSS e.g. \".my-class\", we may\n# find other selectors, pseudo-classes... e.g. \".my-class :hover\"\nexpect_selector_continue = Expect(\n whitespace=r\"\\s+\",\n comment_start=COMMENT_START,\n pseudo_class=r\"\\:[a-zA-Z_-]+\",\n selector_id=r\"\\#[a-zA-Z_\\-][a-zA-Z0-9_\\-]*\",\n selector_class=r\"\\.[a-zA-Z_\\-][a-zA-Z0-9_\\-]*\",\n selector_universal=r\"\\*\",\n selector=r\"[a-zA-Z_\\-]+\",\n combinator_child=\">\",\n new_selector=r\",\",\n declaration_set_start=r\"\\{\",\n)\n\n# A rule declaration e.g. \"text: red;\"\n# ^---^\nexpect_declaration = Expect(\n whitespace=r\"\\s+\",\n comment_start=COMMENT_START,\n declaration_name=r\"[a-zA-Z_\\-]+\\:\",\n declaration_set_end=r\"\\}\",\n)\n\nexpect_declaration_solo = Expect(\n whitespace=r\"\\s+\",\n comment_start=COMMENT_START,\n declaration_name=r\"[a-zA-Z_\\-]+\\:\",\n declaration_set_end=r\"\\}\",\n).expect_eof(True)\n\n# The value(s)/content from a rule declaration e.g. 
\"text: red;\"\n# ^---^\nexpect_declaration_content = Expect(\n declaration_end=r\";\",\n whitespace=r\"\\s+\",\n comment_start=COMMENT_START,\n **DECLARATION_VALUES,\n important=r\"\\!important\",\n comma=\",\",\n declaration_set_end=r\"\\}\",\n)\n\nexpect_declaration_content_solo = Expect(\n declaration_end=r\";\",\n whitespace=r\"\\s+\",\n comment_start=COMMENT_START,\n **DECLARATION_VALUES,\n important=r\"\\!important\",\n comma=\",\",\n declaration_set_end=r\"\\}\",\n).expect_eof(True)\n\n\nclass TokenizerState:\n \"\"\"State machine for the tokenizer.\n\n Attributes:\n EXPECT: The initial expectation of the tokenizer. Since we start tokenizing\n at the root scope, we might expect to see either a variable or selector, for example.\n STATE_MAP: Maps token names to Expects, defines the sets of valid tokens\n that we'd expect to see next, given the current token. For example, if\n we've just processed a variable declaration name, we next expect to see\n the value of that variable.\n \"\"\"\n\n EXPECT = expect_root_scope\n STATE_MAP = {\n \"variable_name\": expect_variable_name_continue,\n \"variable_value_end\": expect_root_scope,\n \"selector_start\": expect_selector_continue,\n \"selector_start_id\": expect_selector_continue,\n \"selector_start_class\": expect_selector_continue,\n \"selector_start_universal\": expect_selector_continue,\n \"selector_id\": expect_selector_continue,\n \"selector_class\": expect_selector_continue,\n \"selector_universal\": expect_selector_continue,\n \"declaration_set_start\": expect_declaration,\n \"declaration_name\": expect_declaration_content,\n \"declaration_end\": expect_declaration,\n \"declaration_set_end\": expect_root_scope,\n }\n\n def __call__(self, code: str, path: str) -> Iterable[Token]:\n tokenizer = Tokenizer(code, path=path)\n expect = self.EXPECT\n get_token = tokenizer.get_token\n get_state = self.STATE_MAP.get\n while True:\n token = get_token(expect)\n name = token.name\n if name == \"comment_start\":\n 
tokenizer.skip_to(expect_comment_end)\n continue\n elif name == \"eof\":\n break\n expect = get_state(name, expect)\n yield token\n\n\nclass DeclarationTokenizerState(TokenizerState):\n EXPECT = expect_declaration_solo\n STATE_MAP = {\n \"declaration_name\": expect_declaration_content,\n \"declaration_end\": expect_declaration_solo,\n }\n\n\nclass ValueTokenizerState(TokenizerState):\n EXPECT = expect_declaration_content_solo\n\n\ntokenize = TokenizerState()\ntokenize_declarations = DeclarationTokenizerState()\ntokenize_value = ValueTokenizerState()\n\n\ndef tokenize_values(values: dict[str, str]) -> dict[str, list[Token]]:\n \"\"\"Tokens the values in a dict of strings.\n\n Args:\n values (dict[str, str]): A mapping of CSS variable name on to a value, to be\n added to the CSS context.\n\n Returns:\n dict[str, list[Token]]: A mapping of name on to a list of tokens,\n \"\"\"\n value_tokens = {\n name: list(tokenize_value(value, \"__name__\")) for name, value in values.items()\n }\n return value_tokens\n\n\nif __name__ == \"__main__\":\n from rich import print\n\n css = \"\"\"#something {\n\n color: rgb(10,12,23)\n }\n \"\"\"\n # transition: offset 500 in_out_cubic;\n tokens = tokenize(css, __name__)\n print(list(tokens))\n\n print(tokenize_values({\"primary\": \"rgb(10,20,30)\", \"secondary\": \"#ff00ff\"}))\n", "path": "src/textual/css/tokenize.py"}]} | 2,780 | 239 |
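
The golden diff for the `src/textual/css/tokenize.py` row above widens `TOKEN` so that digits are accepted after the first letter. As a minimal sketch of why that matters, the snippet below applies the two patterns from the diff with plain `re.match`. The helper name `leading_match` is hypothetical, and the real tokenizer combines these patterns inside `Expect`, so this illustrates only the regex behaviour, not the full parsing path.

```python
import re

OLD_TOKEN = "[a-zA-Z_-]+"             # pattern removed by the diff
NEW_TOKEN = "[a-zA-Z][a-zA-Z0-9_-]*"  # pattern added by the diff


def leading_match(pattern: str, text: str) -> str:
    """Return the prefix of `text` matched by `pattern` ('' if none).

    Hypothetical helper for illustration; it is not part of textual.
    """
    match = re.match(pattern, text)
    return match.group(0) if match else ""


# The old pattern stops at the digit, leaving "turquoise" as the token.
print(leading_match(OLD_TOKEN, "turquoise4"))  # turquoise
# The new pattern consumes the whole colour name.
print(leading_match(NEW_TOKEN, "turquoise4"))  # turquoise4
# Names without digits are matched in full by both patterns.
print(leading_match(OLD_TOKEN, "dark_cyan"), leading_match(NEW_TOKEN, "dark_cyan"))
```

One side effect is visible from the patterns alone: the new `TOKEN` requires a leading letter, so a value starting with `_` or `-` no longer matches it.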

gh_patches_debug_18348 | rasdani/github-patches | git_diff | elastic__apm-agent-python-1414 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Sanic 21.9.2 breaks exception tracking

For yet unknown reasons, Sanic 21.9.2+ broke exception tracking. The changes between 21.9.1 and 21.9.2 are here:

https://github.com/sanic-org/sanic/compare/v21.9.1...v21.9.2

The test failures are here: https://apm-ci.elastic.co/blue/organizations/jenkins/apm-agent-python%2Fapm-agent-python-nightly-mbp%2Fmaster/detail/master/787/tests/

Example:

----------------------------------------------------- Captured log call ------------------------------------------------------

INFO sanic.root:testing.py:82 http://127.0.0.1:50003/fallback-value-error

ERROR sanic.error:request.py:193 Exception occurred in one of response middleware handlers

Traceback (most recent call last):

File "/home/user/.local/lib/python3.10/site-packages/sanic_routing/router.py", line 79, in resolve

route, param_basket = self.find_route(

File "", line 24, in find_route

sanic_routing.exceptions.NotFound: Not Found

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/user/.local/lib/python3.10/site-packages/sanic/router.py", line 38, in _get

return self.resolve(

File "/home/user/.local/lib/python3.10/site-packages/sanic_routing/router.py", line 96, in resolve

raise self.exception(str(e), path=path)

sanic_routing.exceptions.NotFound: Not Found

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "handle_request", line 26, in handle_request

Any,

File "/home/user/.local/lib/python3.10/site-packages/sanic/router.py", line 66, in get

return self._get(path, method, host)

File "/home/user/.local/lib/python3.10/site-packages/sanic/router.py", line 44, in _get

raise NotFound("Requested URL {} not found".format(e.path))

sanic.exceptions.NotFound: Requested URL /fallback-value-error not found

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/home/user/.local/lib/python3.10/site-packages/sanic/request.py", line 187, in respond

response = await self.app._run_response_middleware(

File "_run_response_middleware", line 22, in _run_response_middleware

from ssl import Purpose, SSLContext, create_default_context

File "/app/elasticapm/contrib/sanic/__init__.py", line 279, in _instrument_response

await set_context(

File "/app/elasticapm/contrib/asyncio/traces.py", line 93, in set_context

data = await data()

File "/app/elasticapm/contrib/sanic/utils.py", line 121, in get_response_info

if config.capture_body and "octet-stream" not in response.content_type:

TypeError: argument of type 'NoneType' is not iterable

Checking for `response.content_type is not None` in `elasticapm/contrib/sanic/utils.py:121` doesn't resolve the issue.

@ahopkins do you happen to have an idea what could cause these failures?

</issue>

<code>

[start of elasticapm/contrib/sanic/utils.py]

1 # BSD 3-Clause License

2 #

3 # Copyright (c) 2012, the Sentry Team, see AUTHORS for more details

4 # Copyright (c) 2019, Elasticsearch BV

5 # All rights reserved.

6 #

7 # Redistribution and use in source and binary forms, with or without

8 # modification, are permitted provided that the following conditions are met:

9 #

10 # * Redistributions of source code must retain the above copyright notice, this

11 # list of conditions and the following disclaimer.

12 #

13 # * Redistributions in binary form must reproduce the above copyright notice,

14 # this list of conditions and the following disclaimer in the documentation

15 # and/or other materials provided with the distribution.

16 #

17 # * Neither the name of the copyright holder nor the names of its

18 # contributors may be used to endorse or promote products derived from

19 # this software without specific prior written permission.

20 #

21 # THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

22 # AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

23 # IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

24 # DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

25 # FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

26 # DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

27 # SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

28 # CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

29 # OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

30

31 from typing import Dict

32

33 from sanic import Sanic

34 from sanic import __version__ as version

35 from sanic.cookies import CookieJar

36 from sanic.request import Request

37 from sanic.response import HTTPResponse

38

39 from elasticapm.base import Client

40 from elasticapm.conf import Config, constants

41 from elasticapm.contrib.sanic.sanic_types import EnvInfoType

42 from elasticapm.utils import compat, get_url_dict

43

44

45 class SanicAPMConfig(dict):

46 def __init__(self, app: Sanic):

47 super(SanicAPMConfig, self).__init__()

48 for _key, _v in app.config.items():

49 if _key.startswith("ELASTIC_APM_"):

50 self[_key.replace("ELASTIC_APM_", "")] = _v

51

52

53 def get_env(request: Request) -> EnvInfoType:

54 """

55 Extract Server Environment Information from the current Request's context

56 :param request: Inbound HTTP Request

57 :return: A tuple containing the attribute and it's corresponding value for the current Application ENV

58 """

59 for _attr in ("server_name", "server_port", "version"):

60 if hasattr(request, _attr):

61 yield _attr, getattr(request, _attr)

62

63

64 # noinspection PyBroadException

65 async def get_request_info(config: Config, request: Request) -> Dict[str, str]:

66 """

67 Generate a traceable context information from the inbound HTTP request

68

69 :param config: Application Configuration used to tune the way the data is captured

70 :param request: Inbound HTTP request

71 :return: A dictionary containing the context information of the ongoing transaction

72 """

73 env = dict(get_env(request=request))

74 env.update(dict(request.app.config))

75 result = {

76 "env": env,

77 "method": request.method,

78 "socket": {

79 "remote_address": _get_client_ip(request=request),

80 "encrypted": request.scheme in ["https", "wss"],

81 },

82 "cookies": request.cookies,

83 "http_version": request.version,

84 }

85 if config.capture_headers:

86 result["headers"] = dict(request.headers)

87

88 if request.method in constants.HTTP_WITH_BODY and config.capture_body:

89 if request.content_type.startswith("multipart") or "octet-stream" in request.content_type:

90 result["body"] = "[DISCARDED]"

91 try:

92 result["body"] = request.body.decode("utf-8")

93 except Exception:

94 pass

95

96 if "body" not in result:

97 result["body"] = "[REDACTED]"

98 result["url"] = get_url_dict(request.url)

99 return result

100

101

102 async def get_response_info(config: Config, response: HTTPResponse) -> Dict[str, str]:

103 """

104 Generate a traceable context information from the inbound HTTP Response

105

106 :param config: Application Configuration used to tune the way the data is captured

107 :param response: outbound HTTP Response

108 :return: A dictionary containing the context information of the ongoing transaction

109 """

110 result = {

111 "cookies": _transform_response_cookie(cookies=response.cookies),

112 "finished": True,

113 "headers_sent": True,

114 }

115 if isinstance(response.status, compat.integer_types):

116 result["status_code"] = response.status

117

118 if config.capture_headers:

119 result["headers"] = dict(response.headers)

120

121 if config.capture_body and "octet-stream" not in response.content_type:

122 result["body"] = response.body.decode("utf-8")

123 else:

124 result["body"] = "[REDACTED]"

125

126 return result

127

128

129 def _get_client_ip(request: Request) -> str:

130 """Extract Client IP Address Information"""

131 try:

132 return request.ip or request.socket[0] or request.remote_addr

133 except IndexError:

134 return request.remote_addr

135

136

137 def make_client(client_cls=Client, **defaults) -> Client:

138 if "framework_name" not in defaults:

139 defaults["framework_name"] = "sanic"

140 defaults["framework_version"] = version

141

142 return client_cls(**defaults)

143

144

145 def _transform_response_cookie(cookies: CookieJar) -> Dict[str, str]:

146 """Transform the Sanic's CookieJar instance into a Normal dictionary to build the context"""

147 return {k: {"value": v.value, "path": v["path"]} for k, v in cookies.items()}

148

[end of elasticapm/contrib/sanic/utils.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch> | diff --git a/elasticapm/contrib/sanic/utils.py b/elasticapm/contrib/sanic/utils.py

--- a/elasticapm/contrib/sanic/utils.py

+++ b/elasticapm/contrib/sanic/utils.py

@@ -28,6 +28,7 @@

# CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

# OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

+from string import ascii_uppercase

from typing import Dict

from sanic import Sanic

@@ -71,7 +72,8 @@

:return: A dictionary containing the context information of the ongoing transaction

"""

env = dict(get_env(request=request))

- env.update(dict(request.app.config))

+ app_config = {k: v for k, v in dict(request.app.config).items() if all(letter in ascii_uppercase for letter in k)}

+ env.update(app_config)

result = {

"env": env,

"method": request.method,

| {"golden_diff": "diff --git a/elasticapm/contrib/sanic/utils.py b/elasticapm/contrib/sanic/utils.py\n--- a/elasticapm/contrib/sanic/utils.py\n+++ b/elasticapm/contrib/sanic/utils.py\n@@ -28,6 +28,7 @@\n # CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,\n # OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE\n \n+from string import ascii_uppercase\n from typing import Dict\n \n from sanic import Sanic\n@@ -71,7 +72,8 @@\n :return: A dictionary containing the context information of the ongoing transaction\n \"\"\"\n env = dict(get_env(request=request))\n- env.update(dict(request.app.config))\n+ app_config = {k: v for k, v in dict(request.app.config).items() if all(letter in ascii_uppercase for letter in k)}\n+ env.update(app_config)\n result = {\n \"env\": env,\n \"method\": request.method,\n", "issue": "Sanic 21.9.2 breaks exception tracking\nFor yet unknown reasons, Sanic 21.9.2+ broke exception tracking. The changes between 21.9.1 and 21.9.2 are here:\r\n\r\nhttps://github.com/sanic-org/sanic/compare/v21.9.1...v21.9.2\r\n\r\nThe test failures are here: https://apm-ci.elastic.co/blue/organizations/jenkins/apm-agent-python%2Fapm-agent-python-nightly-mbp%2Fmaster/detail/master/787/tests/\r\n\r\nExample:\r\n\r\n ----------------------------------------------------- Captured log call ------------------------------------------------------INFO sanic.root:testing.py:82 http://127.0.0.1:50003/fallback-value-error\r\n ERROR sanic.error:request.py:193 Exception occurred in one of response middleware handlers\r\n Traceback (most recent call last):\r\n File \"/home/user/.local/lib/python3.10/site-packages/sanic_routing/router.py\", line 79, in resolve\r\n route, param_basket = self.find_route(\r\n File \"\", line 24, in find_route\r\n sanic_routing.exceptions.NotFound: Not Found\r\n\r\n During handling of the above exception, another exception occurred:\r\n\r\n Traceback (most recent call last):\r\n File 
\"/home/user/.local/lib/python3.10/site-packages/sanic/router.py\", line 38, in _get\r\n return self.resolve(\r\n File \"/home/user/.local/lib/python3.10/site-packages/sanic_routing/router.py\", line 96, in resolve\r\n raise self.exception(str(e), path=path)\r\n sanic_routing.exceptions.NotFound: Not Found\r\n\r\n During handling of the above exception, another exception occurred:\r\n\r\n Traceback (most recent call last):\r\n File \"handle_request\", line 26, in handle_request\r\n Any,\r\n File \"/home/user/.local/lib/python3.10/site-packages/sanic/router.py\", line 66, in get\r\n return self._get(path, method, host)\r\n File \"/home/user/.local/lib/python3.10/site-packages/sanic/router.py\", line 44, in _get\r\n raise NotFound(\"Requested URL {} not found\".format(e.path))\r\n sanic.exceptions.NotFound: Requested URL /fallback-value-error not found\r\n\r\n During handling of the above exception, another exception occurred:\r\n\r\n Traceback (most recent call last):\r\n File \"/home/user/.local/lib/python3.10/site-packages/sanic/request.py\", line 187, in respond\r\n response = await self.app._run_response_middleware(\r\n File \"_run_response_middleware\", line 22, in _run_response_middleware\r\n from ssl import Purpose, SSLContext, create_default_context\r\n File \"/app/elasticapm/contrib/sanic/__init__.py\", line 279, in _instrument_response\r\n await set_context(\r\n File \"/app/elasticapm/contrib/asyncio/traces.py\", line 93, in set_context\r\n data = await data()\r\n File \"/app/elasticapm/contrib/sanic/utils.py\", line 121, in get_response_info\r\n if config.capture_body and \"octet-stream\" not in response.content_type:\r\n TypeError: argument of type 'NoneType' is not iterable\r\n\r\nChecking for `response.content_type is not None` in `elasticapm/contrib/sanic/utils.py:121` doesn't resolve the issue.\r\n\r\n@ahopkins do you happen to have an idea what could cause these failures?\n", "before_files": [{"content": "# BSD 3-Clause License\n#\n# Copyright (c) 
2012, the Sentry Team, see AUTHORS for more details\n# Copyright (c) 2019, Elasticsearch BV\n# All rights reserved.\n#\n# Redistribution and use in source and binary forms, with or without\n# modification, are permitted provided that the following conditions are met:\n#\n# * Redistributions of source code must retain the above copyright notice, this\n# list of conditions and the following disclaimer.\n#\n# * Redistributions in binary form must reproduce the above copyright notice,\n# this list of conditions and the following disclaimer in the documentation\n# and/or other materials provided with the distribution.\n#\n# * Neither the name of the copyright holder nor the names of its\n# contributors may be used to endorse or promote products derived from\n# this software without specific prior written permission.\n#\n# THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS \"AS IS\"\n# AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE\n# IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE\n# DISCLAIMED. 
IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE\n# FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL\n# DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR\n# SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER\n# CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,\n# OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE\n\nfrom typing import Dict\n\nfrom sanic import Sanic\nfrom sanic import __version__ as version\nfrom sanic.cookies import CookieJar\nfrom sanic.request import Request\nfrom sanic.response import HTTPResponse\n\nfrom elasticapm.base import Client\nfrom elasticapm.conf import Config, constants\nfrom elasticapm.contrib.sanic.sanic_types import EnvInfoType\nfrom elasticapm.utils import compat, get_url_dict\n\n\nclass SanicAPMConfig(dict):\n def __init__(self, app: Sanic):\n super(SanicAPMConfig, self).__init__()\n for _key, _v in app.config.items():\n if _key.startswith(\"ELASTIC_APM_\"):\n self[_key.replace(\"ELASTIC_APM_\", \"\")] = _v\n\n\ndef get_env(request: Request) -> EnvInfoType:\n \"\"\"\n Extract Server Environment Information from the current Request's context\n :param request: Inbound HTTP Request\n :return: A tuple containing the attribute and it's corresponding value for the current Application ENV\n \"\"\"\n for _attr in (\"server_name\", \"server_port\", \"version\"):\n if hasattr(request, _attr):\n yield _attr, getattr(request, _attr)\n\n\n# noinspection PyBroadException\nasync def get_request_info(config: Config, request: Request) -> Dict[str, str]:\n \"\"\"\n Generate a traceable context information from the inbound HTTP request\n\n :param config: Application Configuration used to tune the way the data is captured\n :param request: Inbound HTTP request\n :return: A dictionary containing the context information of the ongoing transaction\n \"\"\"\n env = dict(get_env(request=request))\n 
env.update(dict(request.app.config))\n result = {\n \"env\": env,\n \"method\": request.method,\n \"socket\": {\n \"remote_address\": _get_client_ip(request=request),\n \"encrypted\": request.scheme in [\"https\", \"wss\"],\n },\n \"cookies\": request.cookies,\n \"http_version\": request.version,\n }\n if config.capture_headers:\n result[\"headers\"] = dict(request.headers)\n\n if request.method in constants.HTTP_WITH_BODY and config.capture_body:\n if request.content_type.startswith(\"multipart\") or \"octet-stream\" in request.content_type:\n result[\"body\"] = \"[DISCARDED]\"\n try:\n result[\"body\"] = request.body.decode(\"utf-8\")\n except Exception:\n pass\n\n if \"body\" not in result:\n result[\"body\"] = \"[REDACTED]\"\n result[\"url\"] = get_url_dict(request.url)\n return result\n\n\nasync def get_response_info(config: Config, response: HTTPResponse) -> Dict[str, str]:\n \"\"\"\n Generate a traceable context information from the inbound HTTP Response\n\n :param config: Application Configuration used to tune the way the data is captured\n :param response: outbound HTTP Response\n :return: A dictionary containing the context information of the ongoing transaction\n \"\"\"\n result = {\n \"cookies\": _transform_response_cookie(cookies=response.cookies),\n \"finished\": True,\n \"headers_sent\": True,\n }\n if isinstance(response.status, compat.integer_types):\n result[\"status_code\"] = response.status\n\n if config.capture_headers:\n result[\"headers\"] = dict(response.headers)\n\n if config.capture_body and \"octet-stream\" not in response.content_type:\n result[\"body\"] = response.body.decode(\"utf-8\")\n else:\n result[\"body\"] = \"[REDACTED]\"\n\n return result\n\n\ndef _get_client_ip(request: Request) -> str:\n \"\"\"Extract Client IP Address Information\"\"\"\n try:\n return request.ip or request.socket[0] or request.remote_addr\n except IndexError:\n return request.remote_addr\n\n\ndef make_client(client_cls=Client, **defaults) -> Client:\n if 
\"framework_name\" not in defaults:\n defaults[\"framework_name\"] = \"sanic\"\n defaults[\"framework_version\"] = version\n\n return client_cls(**defaults)\n\n\ndef _transform_response_cookie(cookies: CookieJar) -> Dict[str, str]:\n \"\"\"Transform the Sanic's CookieJar instance into a Normal dictionary to build the context\"\"\"\n return {k: {\"value\": v.value, \"path\": v[\"path\"]} for k, v in cookies.items()}\n", "path": "elasticapm/contrib/sanic/utils.py"}]} | 2,923 | 224 |

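The golden diff for the `elasticapm/contrib/sanic/utils.py` row above filters `request.app.config` before merging it into `env`. A minimal sketch of that predicate on a plain dictionary follows. The sample keys and the function name `filter_config` are made up for illustration, and no Sanic objects are involved.

```python
from string import ascii_uppercase


def filter_config(config: dict) -> dict:
    """Keep only keys made up entirely of ASCII uppercase letters.

    Hypothetical helper mirroring the comprehension in the diff.
    """
    return {
        key: value
        for key, value in config.items()
        if all(letter in ascii_uppercase for letter in key)
    }


sample = {
    "DEBUG": True,            # kept: uppercase letters only
    "KEEP_ALIVE": False,      # dropped: "_" is not in ascii_uppercase
    "_converters": object(),  # dropped: lowercase letters and underscore
}
print(sorted(filter_config(sample)))  # ['DEBUG']
```

As written, the predicate also drops upper-case keys that contain underscores or digits, which may or may not be what the patch intends.
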
gh_patches_debug_27127 | rasdani/github-patches | git_diff | mindsdb__mindsdb-1011 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Endpoint to disable telemetry

Fairly self-explanatory: add an endpoint to the HTTP API that can be used to disable/enable the telemetry.

</issue>

<code>

[start of mindsdb/api/http/namespaces/util.py]

from flask import request
from flask_restx import Resource, abort
from flask import current_app as ca

from mindsdb.api.http.namespaces.configs.util import ns_conf
from mindsdb import __about__

@ns_conf.route('/ping')
class Ping(Resource):
    @ns_conf.doc('get_ping')
    def get(self):
        '''Checks server avaliable'''
        return {'status': 'ok'}

@ns_conf.route('/report_uuid')
class ReportUUID(Resource):
    @ns_conf.doc('get_report_uuid')
    def get(self):
        metamodel_name = '___monitroing_metamodel___'
        predictor = ca.mindsdb_native.create(metamodel_name)
        return {
            'report_uuid': predictor.report_uuid
        }

[end of mindsdb/api/http/namespaces/util.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)


 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1

-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch> | diff --git a/mindsdb/api/http/namespaces/util.py b/mindsdb/api/http/namespaces/util.py

--- a/mindsdb/api/http/namespaces/util.py
+++ b/mindsdb/api/http/namespaces/util.py
@@ -1,3 +1,4 @@
+import os
 from flask import request
 from flask_restx import Resource, abort
 from flask import current_app as ca
@@ -5,6 +6,8 @@
 from mindsdb.api.http.namespaces.configs.util import ns_conf
 from mindsdb import __about__

+TELEMETRY_FILE = 'telemetry.lock'
+
 @ns_conf.route('/ping')
 class Ping(Resource):
     @ns_conf.doc('get_ping')
@@ -21,3 +24,34 @@
         return {
             'report_uuid': predictor.report_uuid
         }
+
+@ns_conf.route('/telemetry')
+class Telemetry(Resource):
+    @ns_conf.doc('get_telemetry_status')
+    def get(self):
+        status = "enabled" if is_telemetry_active() else "disabled"
+        return {"status": status}
+
+    @ns_conf.doc('set_telemetry')
+    def post(self):
+        data = request.json
+        action = data['action']
+        if str(action).lower() in ["true", "enable", "on"]:
+            enable_telemetry()
+        else:
+            disable_telemetry()
+
+
+def enable_telemetry():
+    path = os.path.join(ca.config_obj['storage_dir'], TELEMETRY_FILE)
+    if os.path.exists(path):
+        os.remove(path)
+
+def disable_telemetry():
+    path = os.path.join(ca.config_obj['storage_dir'], TELEMETRY_FILE)
+    with open(path, 'w') as _:
+        pass
+
+def is_telemetry_active():
+    path = os.path.join(ca.config_obj['storage_dir'], TELEMETRY_FILE)
+    return not os.path.exists(path)

| {"golden_diff": "diff --git a/mindsdb/api/http/namespaces/util.py b/mindsdb/api/http/namespaces/util.py\n--- a/mindsdb/api/http/namespaces/util.py\n+++ b/mindsdb/api/http/namespaces/util.py\n@@ -1,3 +1,4 @@\n+import os\n from flask import request\n from flask_restx import Resource, abort\n from flask import current_app as ca\n@@ -5,6 +6,8 @@\n from mindsdb.api.http.namespaces.configs.util import ns_conf\n from mindsdb import __about__\n \n+TELEMETRY_FILE = 'telemetry.lock'\n+\n @ns_conf.route('/ping')\n class Ping(Resource):\n @ns_conf.doc('get_ping')\n@@ -21,3 +24,34 @@\n return {\n 'report_uuid': predictor.report_uuid\n }\n+\n+@ns_conf.route('/telemetry')\n+class Telemetry(Resource):\n+ @ns_conf.doc('get_telemetry_status')\n+ def get(self):\n+ status = \"enabled\" if is_telemetry_active() else \"disabled\"\n+ return {\"status\": status}\n+\n+ @ns_conf.doc('set_telemetry')\n+ def post(self):\n+ data = request.json\n+ action = data['action']\n+ if str(action).lower() in [\"true\", \"enable\", \"on\"]:\n+ enable_telemetry()\n+ else:\n+ disable_telemetry()\n+\n+\n+def enable_telemetry():\n+ path = os.path.join(ca.config_obj['storage_dir'], TELEMETRY_FILE)\n+ if os.path.exists(path):\n+ os.remove(path)\n+\n+def disable_telemetry():\n+ path = os.path.join(ca.config_obj['storage_dir'], TELEMETRY_FILE)\n+ with open(path, 'w') as _:\n+ pass\n+\n+def is_telemetry_active():\n+ path = os.path.join(ca.config_obj['storage_dir'], TELEMETRY_FILE)\n+ return not os.path.exists(path)\n", "issue": "Endpoint to disable telemtry\nfairly self explainatory, add and an endpoint to the HTTP API that can be used to disable/enable the telemtry.\r\n\nEndpoint to disable telemtry\nfairly self explainatory, add and an endpoint to the HTTP API that can be used to disable/enable the telemtry.\r\n\n", "before_files": [{"content": "from flask import request\nfrom flask_restx import Resource, abort\nfrom flask import current_app as ca\n\nfrom mindsdb.api.http.namespaces.configs.util import 
ns_conf\nfrom mindsdb import __about__\n\n@ns_conf.route('/ping')\nclass Ping(Resource):\n @ns_conf.doc('get_ping')\n def get(self):\n '''Checks server avaliable'''\n return {'status': 'ok'}\n\n@ns_conf.route('/report_uuid')\nclass ReportUUID(Resource):\n @ns_conf.doc('get_report_uuid')\n def get(self):\n metamodel_name = '___monitroing_metamodel___'\n predictor = ca.mindsdb_native.create(metamodel_name)\n return {\n 'report_uuid': predictor.report_uuid\n }\n", "path": "mindsdb/api/http/namespaces/util.py"}]} | 810 | 433 |

gh_patches_debug_67113 | rasdani/github-patches | git_diff | zestedesavoir__zds-site-5120 | You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

403 error after searching in the library

> Hello,
>
> I get a 403 error, "Vous n’avez pas les droits suffisants pour accéder à cette page." ("You do not have sufficient rights to access this page."), after searching the courses available in the library.
Here is how it came up:
>
> - I arrived on the site logged out
> - I went to "Développement web" (web development) from the banner at the top of the site
> - From there I logged in to my account (same tab, the usual button), which brought me back to the page
> - Then I searched for "PHP" in the search bar, which took me to [this link](https://zestedesavoir.com/rechercher/?q=PHP&models=content&from_library=on&category=informatique&subcategory=site-web)
>
> The 403 error occurs when I tick one to three of the checkboxes (below the search bar) to filter the results and then click the "rechercher" (search) button in the bar again.
>
> Here is [an example link](https://zestedesavoir.com/rechercher/?q=PHP&category=informatique&subcategory=site-web&from_library=on&models=) that triggers a 403 error on my side.
>
> Bye

Thread: https://zestedesavoir.com/forums/sujet/11609/erreur-403-apres-recherche-dans-la-bibliotheque/

*Sent from Zeste de Savoir*

</issue>

<code>

[start of zds/searchv2/forms.py]

1 import os

2 import random

3

4 from django import forms

5 from django.conf import settings

6 from django.utils.translation import ugettext_lazy as _

7

8 from crispy_forms.bootstrap import StrictButton

9 from crispy_forms.helper import FormHelper

10 from crispy_forms.layout import Layout, Field

11 from django.core.urlresolvers import reverse

12

13

14 class SearchForm(forms.Form):

15 q = forms.CharField(

16 label=_('Recherche'),

17 max_length=150,

18 required=False,

19 widget=forms.TextInput(

20 attrs={

21 'type': 'search',

22 'required': 'required'

23 }

24 )

25 )

26

27 choices = sorted(

28 [(k, v[0]) for k, v in settings.ZDS_APP['search']['search_groups'].items()],

29 key=lambda pair: pair[1]

30 )

31

32 models = forms.MultipleChoiceField(

33 label='',

34 widget=forms.CheckboxSelectMultiple,

35 required=False,

36 choices=choices

37 )

38

39 category = forms.CharField(widget=forms.HiddenInput, required=False)

40 subcategory = forms.CharField(widget=forms.HiddenInput, required=False)

41 from_library = forms.CharField(widget=forms.HiddenInput, required=False)

42

43 def __init__(self, *args, **kwargs):

44

45 super(SearchForm, self).__init__(*args, **kwargs)

46

47 self.helper = FormHelper()

48 self.helper.form_id = 'search-form'

49 self.helper.form_class = 'clearfix'

50 self.helper.form_method = 'get'

51 self.helper.form_action = reverse('search:query')

52

53 try:

54 with open(os.path.join(settings.BASE_DIR, 'suggestions.txt'), 'r') as suggestions_file:

55 suggestions = ', '.join(random.sample(suggestions_file.readlines(), 5)) + '…'

56 except OSError:

57 suggestions = _('Mathématiques, Droit, UDK, Langues, Python…')

58

59 self.fields['q'].widget.attrs['placeholder'] = suggestions

60

61 self.helper.layout = Layout(

62 Field('q'),

63 StrictButton('', type='submit', css_class='ico-after ico-search', title=_('Rechercher')),

64 Field('category'),

65 Field('subcategory'),