problem_id

stringlengths 18

22

| source

stringclasses 1

value | task_type

stringclasses 1

value | in_source_id

stringlengths 13

58

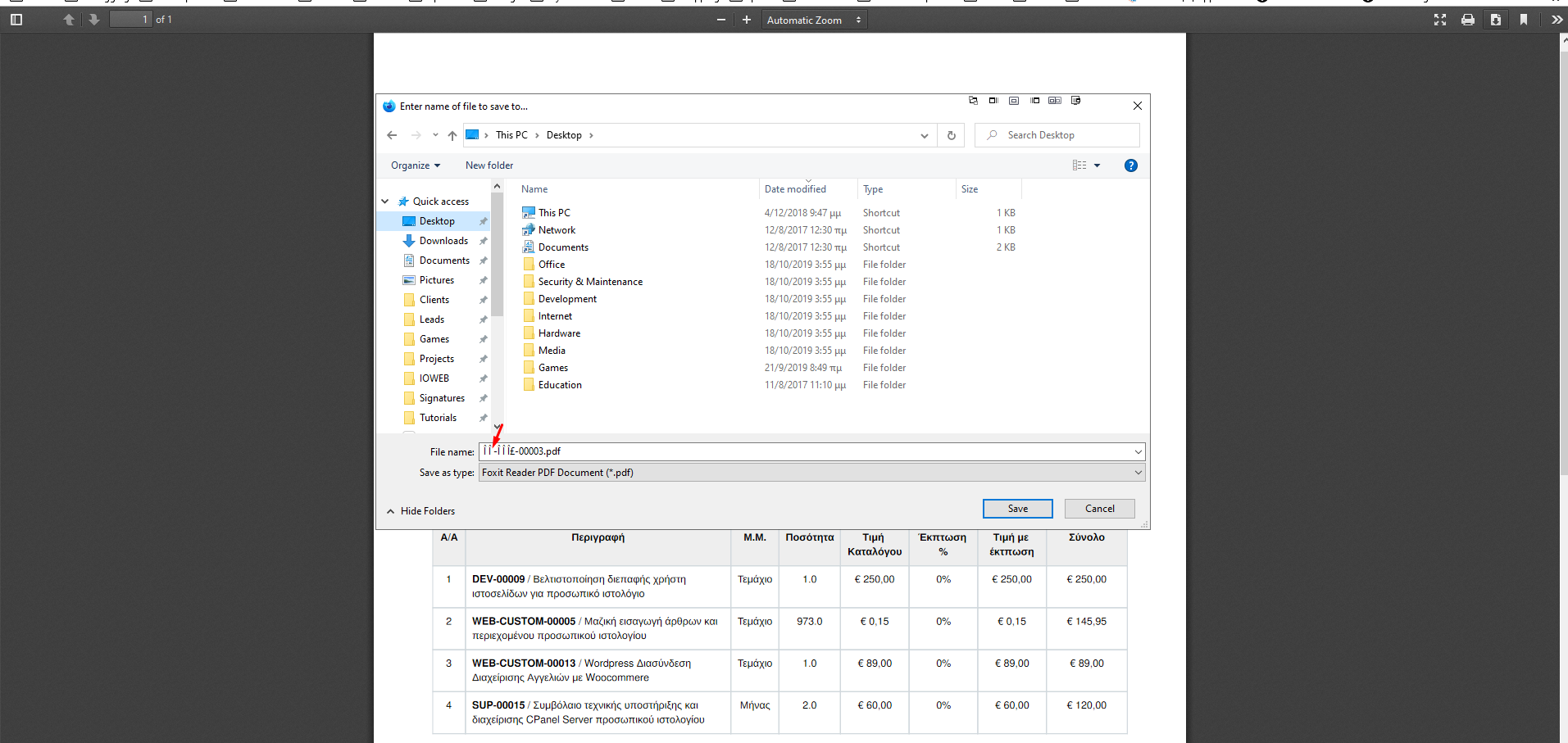

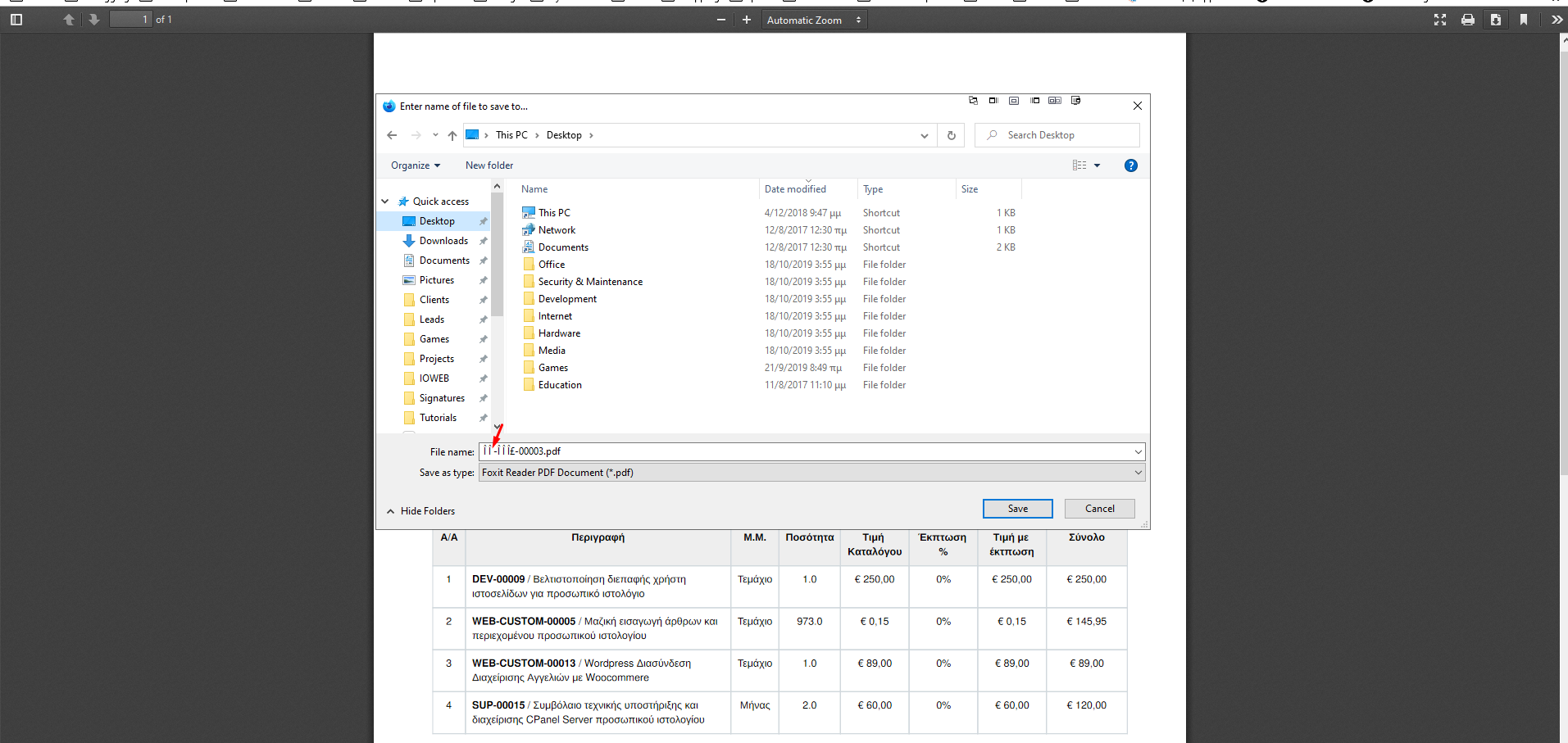

| prompt

stringlengths 1.71k

18.9k

| golden_diff

stringlengths 145

5.13k

| verification_info

stringlengths 465

23.6k

| num_tokens_prompt

int64 556

4.1k

| num_tokens_diff

int64 47

1.02k

|

|---|---|---|---|---|---|---|---|---|

gh_patches_debug_35252

|

rasdani/github-patches

|

git_diff

|

pytorch__text-1525

|

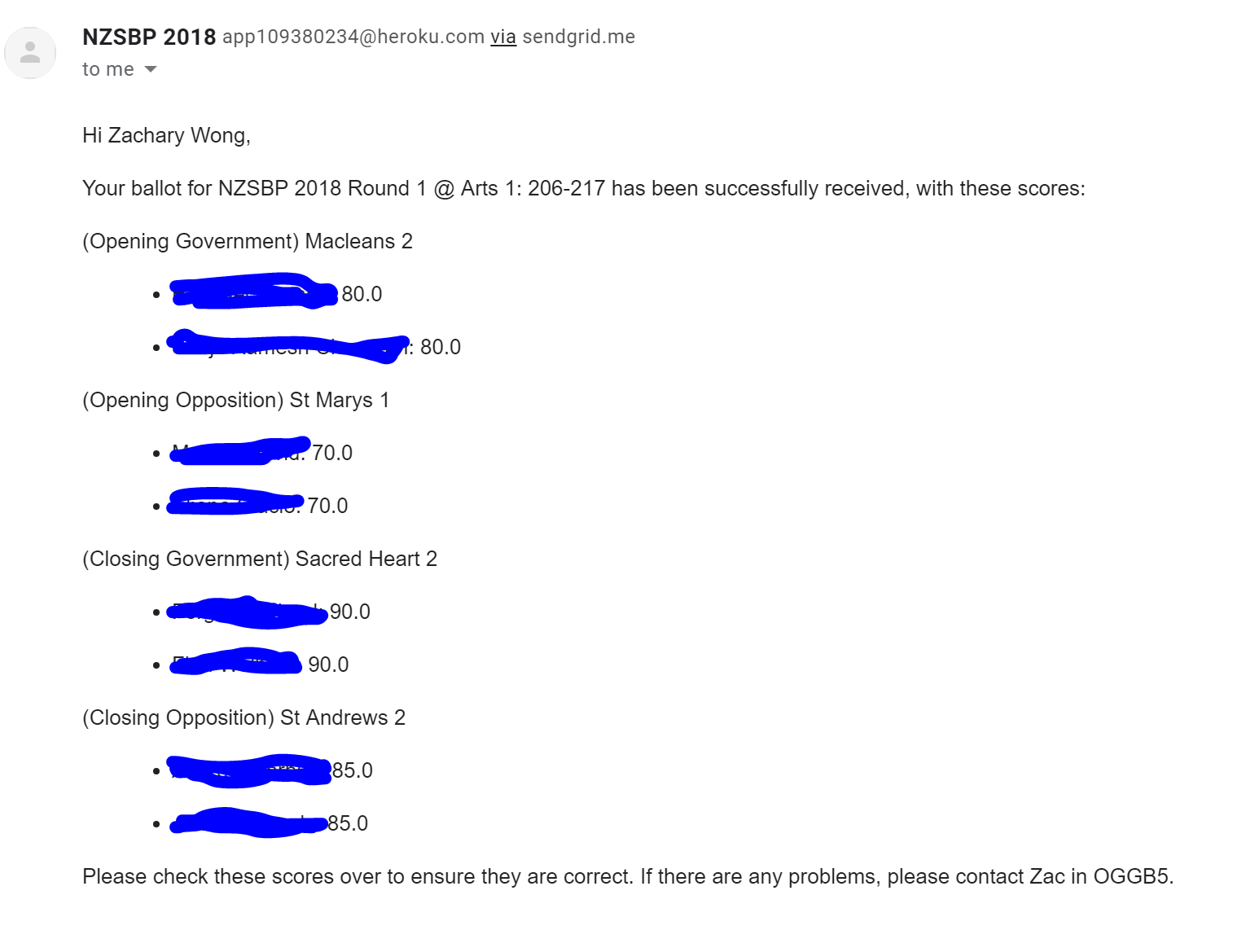

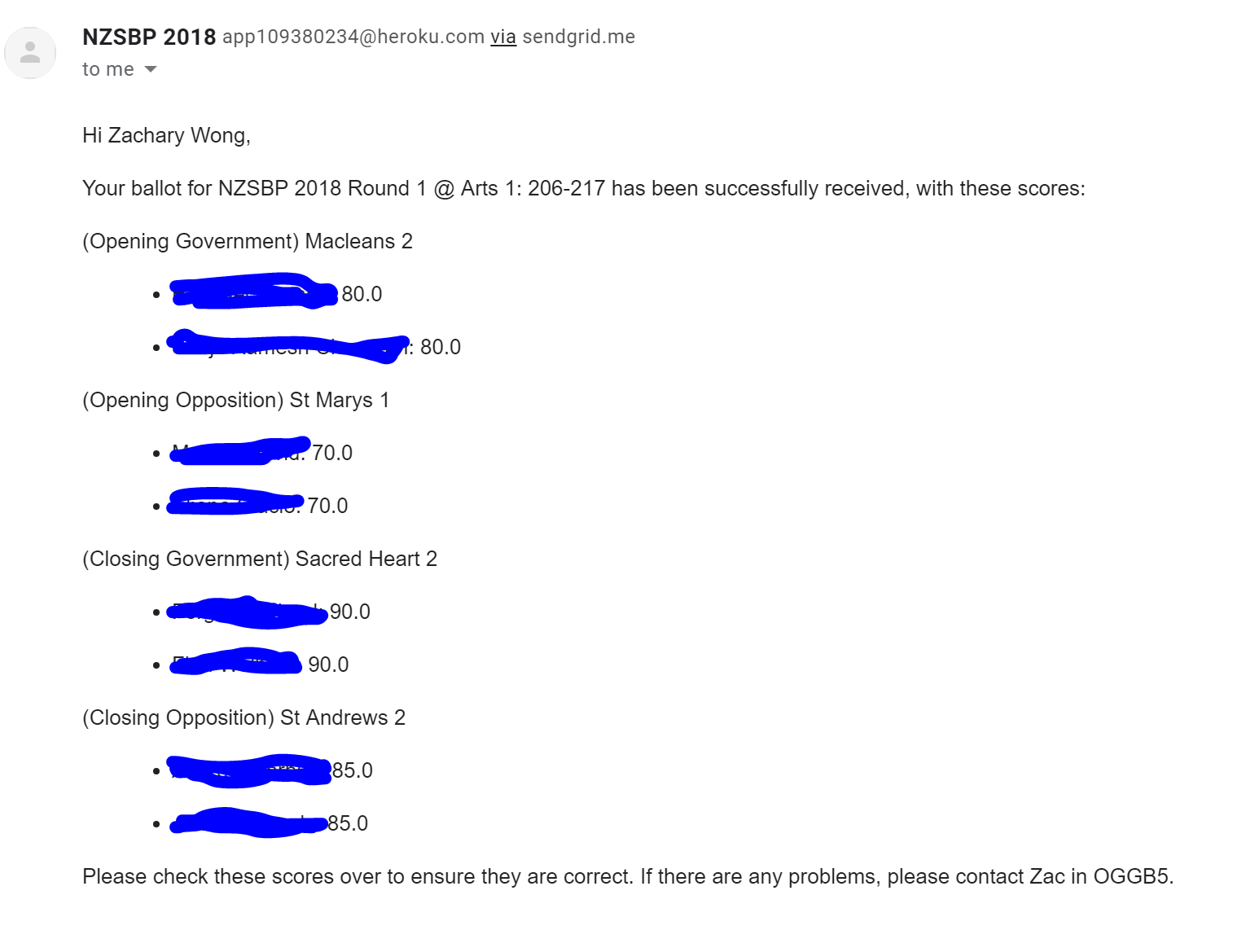

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Add a `max_words` argument to `build_vocab_from_iterator`

## 🚀 Feature

<!-- A clear and concise description of the feature proposal -->

[Link to the docs](https://pytorch.org/text/stable/vocab.html?highlight=build%20vocab#torchtext.vocab.build_vocab_from_iterator)

I believe it would be beneficial to limit the number of words you want in your vocabulary with an argument like `max_words`, e.g.:

```

vocab = build_vocab_from_iterator(yield_tokens_batch(file_path), specials=["<unk>"], max_words=50000)

```

**Motivation**

<!-- Please outline the motivation for the proposal. Is your feature request related to a problem? e.g., I'm always frustrated when [...]. If this is related to another GitHub issue, please link here too -->

This allows a controllable-sized `nn.Embedding`, with rare words being mapped to `<unk>`. Otherwise, it would not be practical to use `build_vocab_from_iterator` for larger datasets.

**Alternatives**

<!-- A clear and concise description of any alternative solutions or features you've considered, if any. -->

Keras and Huggingface's tokenizers would be viable alternatives, but do not nicely integrate with the torchtext ecosystem.

</issue>

<code>

[start of torchtext/vocab/vocab_factory.py]

1 from .vocab import Vocab

2 from typing import Dict, Iterable, Optional, List

3 from collections import Counter, OrderedDict

4 from torchtext._torchtext import (

5 Vocab as VocabPybind,

6 )

7

8

9 def vocab(ordered_dict: Dict, min_freq: int = 1,

10 specials: Optional[List[str]] = None,

11 special_first: bool = True) -> Vocab:

12 r"""Factory method for creating a vocab object which maps tokens to indices.

13

14 Note that the ordering in which key value pairs were inserted in the `ordered_dict` will be respected when building the vocab.

15 Therefore if sorting by token frequency is important to the user, the `ordered_dict` should be created in a way to reflect this.

16

17 Args:

18 ordered_dict: Ordered Dictionary mapping tokens to their corresponding occurrence frequencies.

19 min_freq: The minimum frequency needed to include a token in the vocabulary.

20 specials: Special symbols to add. The order of supplied tokens will be preserved.

21 special_first: Indicates whether to insert symbols at the beginning or at the end.

22

23 Returns:

24 torchtext.vocab.Vocab: A `Vocab` object

25

26 Examples:

27 >>> from torchtext.vocab import vocab

28 >>> from collections import Counter, OrderedDict

29 >>> counter = Counter(["a", "a", "b", "b", "b"])

30 >>> sorted_by_freq_tuples = sorted(counter.items(), key=lambda x: x[1], reverse=True)

31 >>> ordered_dict = OrderedDict(sorted_by_freq_tuples)

32 >>> v1 = vocab(ordered_dict)

33 >>> print(v1['a']) #prints 1

34 >>> print(v1['out of vocab']) #raise RuntimeError since default index is not set

35 >>> tokens = ['e', 'd', 'c', 'b', 'a']

36 >>> #adding <unk> token and default index

37 >>> unk_token = '<unk>'

38 >>> default_index = -1

39 >>> v2 = vocab(OrderedDict([(token, 1) for token in tokens]), specials=[unk_token])

40 >>> v2.set_default_index(default_index)

41 >>> print(v2['<unk>']) #prints 0

42 >>> print(v2['out of vocab']) #prints -1

43 >>> #make default index same as index of unk_token

44 >>> v2.set_default_index(v2[unk_token])

45 >>> v2['out of vocab'] is v2[unk_token] #prints True

46 """

47 specials = specials or []

48 for token in specials:

49 ordered_dict.pop(token, None)

50

51 tokens = []

52 for token, freq in ordered_dict.items():

53 if freq >= min_freq:

54 tokens.append(token)

55

56 if special_first:

57 tokens[0:0] = specials

58 else:

59 tokens.extend(specials)

60

61 return Vocab(VocabPybind(tokens, None))

62

63

64 def build_vocab_from_iterator(iterator: Iterable, min_freq: int = 1, specials: Optional[List[str]] = None, special_first: bool = True) -> Vocab:

65 """

66 Build a Vocab from an iterator.

67

68 Args:

69 iterator: Iterator used to build Vocab. Must yield list or iterator of tokens.

70 min_freq: The minimum frequency needed to include a token in the vocabulary.

71 specials: Special symbols to add. The order of supplied tokens will be preserved.

72 special_first: Indicates whether to insert symbols at the beginning or at the end.

73

74

75 Returns:

76 torchtext.vocab.Vocab: A `Vocab` object

77

78 Examples:

79 >>> #generating vocab from text file

80 >>> import io

81 >>> from torchtext.vocab import build_vocab_from_iterator

82 >>> def yield_tokens(file_path):

83 >>> with io.open(file_path, encoding = 'utf-8') as f:

84 >>> for line in f:

85 >>> yield line.strip().split()

86 >>> vocab = build_vocab_from_iterator(yield_tokens_batch(file_path), specials=["<unk>"])

87 """

88

89 counter = Counter()

90 for tokens in iterator:

91 counter.update(tokens)

92

93 sorted_by_freq_tuples = sorted(counter.items(), key=lambda x: x[0])

94 sorted_by_freq_tuples.sort(key=lambda x: x[1], reverse=True)

95 ordered_dict = OrderedDict(sorted_by_freq_tuples)

96

97 word_vocab = vocab(ordered_dict, min_freq=min_freq, specials=specials or [],

98 special_first=special_first)

99 return word_vocab

100

[end of torchtext/vocab/vocab_factory.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/torchtext/vocab/vocab_factory.py b/torchtext/vocab/vocab_factory.py

--- a/torchtext/vocab/vocab_factory.py

+++ b/torchtext/vocab/vocab_factory.py

@@ -49,6 +49,7 @@

ordered_dict.pop(token, None)

tokens = []

+ # Save room for special tokens

for token, freq in ordered_dict.items():

if freq >= min_freq:

tokens.append(token)

@@ -61,7 +62,7 @@

return Vocab(VocabPybind(tokens, None))

-def build_vocab_from_iterator(iterator: Iterable, min_freq: int = 1, specials: Optional[List[str]] = None, special_first: bool = True) -> Vocab:

+def build_vocab_from_iterator(iterator: Iterable, min_freq: int = 1, specials: Optional[List[str]] = None, special_first: bool = True, max_tokens: Optional[int] = None) -> Vocab:

"""

Build a Vocab from an iterator.

@@ -70,6 +71,7 @@

min_freq: The minimum frequency needed to include a token in the vocabulary.

specials: Special symbols to add. The order of supplied tokens will be preserved.

special_first: Indicates whether to insert symbols at the beginning or at the end.

+ max_tokens: If provided, creates the vocab from the `max_tokens - len(specials)` most frequent tokens.

Returns:

@@ -90,10 +92,16 @@

for tokens in iterator:

counter.update(tokens)

- sorted_by_freq_tuples = sorted(counter.items(), key=lambda x: x[0])

- sorted_by_freq_tuples.sort(key=lambda x: x[1], reverse=True)

- ordered_dict = OrderedDict(sorted_by_freq_tuples)

+ specials = specials or []

+

+ # First sort by descending frequency, then lexicographically

+ sorted_by_freq_tuples = sorted(counter.items(), key=lambda x: (-x[1], x[0]))

+

+ if max_tokens is None:

+ ordered_dict = OrderedDict(sorted_by_freq_tuples)

+ else:

+ assert len(specials) < max_tokens, "len(specials) >= max_tokens, so the vocab will be entirely special tokens."

+ ordered_dict = OrderedDict(sorted_by_freq_tuples[:max_tokens - len(specials)])

- word_vocab = vocab(ordered_dict, min_freq=min_freq, specials=specials or [],

- special_first=special_first)

+ word_vocab = vocab(ordered_dict, min_freq=min_freq, specials=specials, special_first=special_first)

return word_vocab

|

{"golden_diff": "diff --git a/torchtext/vocab/vocab_factory.py b/torchtext/vocab/vocab_factory.py\n--- a/torchtext/vocab/vocab_factory.py\n+++ b/torchtext/vocab/vocab_factory.py\n@@ -49,6 +49,7 @@\n ordered_dict.pop(token, None)\n \n tokens = []\n+ # Save room for special tokens\n for token, freq in ordered_dict.items():\n if freq >= min_freq:\n tokens.append(token)\n@@ -61,7 +62,7 @@\n return Vocab(VocabPybind(tokens, None))\n \n \n-def build_vocab_from_iterator(iterator: Iterable, min_freq: int = 1, specials: Optional[List[str]] = None, special_first: bool = True) -> Vocab:\n+def build_vocab_from_iterator(iterator: Iterable, min_freq: int = 1, specials: Optional[List[str]] = None, special_first: bool = True, max_tokens: Optional[int] = None) -> Vocab:\n \"\"\"\n Build a Vocab from an iterator.\n \n@@ -70,6 +71,7 @@\n min_freq: The minimum frequency needed to include a token in the vocabulary.\n specials: Special symbols to add. The order of supplied tokens will be preserved.\n special_first: Indicates whether to insert symbols at the beginning or at the end.\n+ max_tokens: If provided, creates the vocab from the `max_tokens - len(specials)` most frequent tokens.\n \n \n Returns:\n@@ -90,10 +92,16 @@\n for tokens in iterator:\n counter.update(tokens)\n \n- sorted_by_freq_tuples = sorted(counter.items(), key=lambda x: x[0])\n- sorted_by_freq_tuples.sort(key=lambda x: x[1], reverse=True)\n- ordered_dict = OrderedDict(sorted_by_freq_tuples)\n+ specials = specials or []\n+\n+ # First sort by descending frequency, then lexicographically\n+ sorted_by_freq_tuples = sorted(counter.items(), key=lambda x: (-x[1], x[0]))\n+\n+ if max_tokens is None:\n+ ordered_dict = OrderedDict(sorted_by_freq_tuples)\n+ else:\n+ assert len(specials) < max_tokens, \"len(specials) >= max_tokens, so the vocab will be entirely special tokens.\"\n+ ordered_dict = OrderedDict(sorted_by_freq_tuples[:max_tokens - len(specials)])\n \n- word_vocab = vocab(ordered_dict, min_freq=min_freq, 
specials=specials or [],\n- special_first=special_first)\n+ word_vocab = vocab(ordered_dict, min_freq=min_freq, specials=specials, special_first=special_first)\n return word_vocab\n", "issue": "Add a `max_words` argument to `build_vocab_from_iterator`\n## \ud83d\ude80 Feature\r\n<!-- A clear and concise description of the feature proposal -->\r\n\r\n[Link to the docs](https://pytorch.org/text/stable/vocab.html?highlight=build%20vocab#torchtext.vocab.build_vocab_from_iterator)\r\n\r\nI believe it would be beneficial to limit the number of words you want in your vocabulary with an argument like `max_words`, e.g.:\r\n```\r\nvocab = build_vocab_from_iterator(yield_tokens_batch(file_path), specials=[\"<unk>\"], max_words=50000)\r\n```\r\n\r\n**Motivation**\r\n\r\n<!-- Please outline the motivation for the proposal. Is your feature request related to a problem? e.g., I'm always frustrated when [...]. If this is related to another GitHub issue, please link here too -->\r\n\r\n\r\nThis allows a controllable-sized `nn.Embedding`, with rare words being mapped to `<unk>`. Otherwise, it would not be practical to use `build_vocab_from_iterator` for larger datasets.\r\n\r\n\r\n**Alternatives**\r\n\r\n<!-- A clear and concise description of any alternative solutions or features you've considered, if any. 
-->\r\n\r\nKeras and Huggingface's tokenizers would be viable alternatives, but do not nicely integrate with the torchtext ecosystem.\r\n\r\n\n", "before_files": [{"content": "from .vocab import Vocab\nfrom typing import Dict, Iterable, Optional, List\nfrom collections import Counter, OrderedDict\nfrom torchtext._torchtext import (\n Vocab as VocabPybind,\n)\n\n\ndef vocab(ordered_dict: Dict, min_freq: int = 1,\n specials: Optional[List[str]] = None,\n special_first: bool = True) -> Vocab:\n r\"\"\"Factory method for creating a vocab object which maps tokens to indices.\n\n Note that the ordering in which key value pairs were inserted in the `ordered_dict` will be respected when building the vocab.\n Therefore if sorting by token frequency is important to the user, the `ordered_dict` should be created in a way to reflect this.\n\n Args:\n ordered_dict: Ordered Dictionary mapping tokens to their corresponding occurance frequencies.\n min_freq: The minimum frequency needed to include a token in the vocabulary.\n specials: Special symbols to add. 
The order of supplied tokens will be preserved.\n special_first: Indicates whether to insert symbols at the beginning or at the end.\n\n Returns:\n torchtext.vocab.Vocab: A `Vocab` object\n\n Examples:\n >>> from torchtext.vocab import vocab\n >>> from collections import Counter, OrderedDict\n >>> counter = Counter([\"a\", \"a\", \"b\", \"b\", \"b\"])\n >>> sorted_by_freq_tuples = sorted(counter.items(), key=lambda x: x[1], reverse=True)\n >>> ordered_dict = OrderedDict(sorted_by_freq_tuples)\n >>> v1 = vocab(ordered_dict)\n >>> print(v1['a']) #prints 1\n >>> print(v1['out of vocab']) #raise RuntimeError since default index is not set\n >>> tokens = ['e', 'd', 'c', 'b', 'a']\n >>> #adding <unk> token and default index\n >>> unk_token = '<unk>'\n >>> default_index = -1\n >>> v2 = vocab(OrderedDict([(token, 1) for token in tokens]), specials=[unk_token])\n >>> v2.set_default_index(default_index)\n >>> print(v2['<unk>']) #prints 0\n >>> print(v2['out of vocab']) #prints -1\n >>> #make default index same as index of unk_token\n >>> v2.set_default_index(v2[unk_token])\n >>> v2['out of vocab'] is v2[unk_token] #prints True\n \"\"\"\n specials = specials or []\n for token in specials:\n ordered_dict.pop(token, None)\n\n tokens = []\n for token, freq in ordered_dict.items():\n if freq >= min_freq:\n tokens.append(token)\n\n if special_first:\n tokens[0:0] = specials\n else:\n tokens.extend(specials)\n\n return Vocab(VocabPybind(tokens, None))\n\n\ndef build_vocab_from_iterator(iterator: Iterable, min_freq: int = 1, specials: Optional[List[str]] = None, special_first: bool = True) -> Vocab:\n \"\"\"\n Build a Vocab from an iterator.\n\n Args:\n iterator: Iterator used to build Vocab. Must yield list or iterator of tokens.\n min_freq: The minimum frequency needed to include a token in the vocabulary.\n specials: Special symbols to add. 
The order of supplied tokens will be preserved.\n special_first: Indicates whether to insert symbols at the beginning or at the end.\n\n\n Returns:\n torchtext.vocab.Vocab: A `Vocab` object\n\n Examples:\n >>> #generating vocab from text file\n >>> import io\n >>> from torchtext.vocab import build_vocab_from_iterator\n >>> def yield_tokens(file_path):\n >>> with io.open(file_path, encoding = 'utf-8') as f:\n >>> for line in f:\n >>> yield line.strip().split()\n >>> vocab = build_vocab_from_iterator(yield_tokens_batch(file_path), specials=[\"<unk>\"])\n \"\"\"\n\n counter = Counter()\n for tokens in iterator:\n counter.update(tokens)\n\n sorted_by_freq_tuples = sorted(counter.items(), key=lambda x: x[0])\n sorted_by_freq_tuples.sort(key=lambda x: x[1], reverse=True)\n ordered_dict = OrderedDict(sorted_by_freq_tuples)\n\n word_vocab = vocab(ordered_dict, min_freq=min_freq, specials=specials or [],\n special_first=special_first)\n return word_vocab\n", "path": "torchtext/vocab/vocab_factory.py"}]}

| 1,940 | 572 |
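
The golden diff in the row above caps the vocabulary by sorting tokens by descending frequency (ties broken lexicographically) and keeping the first `max_tokens - len(specials)` entries. The following is a minimal standard-library sketch of that ranking and truncation step only. The helper name `top_tokens` and the sample corpus are assumptions made for illustration, it raises `ValueError` where the diff uses an `assert`, and it returns a plain list rather than a torchtext `Vocab`.

```python
from collections import Counter, OrderedDict


def top_tokens(token_lists, specials=None, max_tokens=None):
    """Return specials followed by the most frequent tokens, capped at max_tokens.

    Hypothetical helper: it mirrors the sort-and-slice logic of the diff,
    not the torchtext API.
    """
    specials = specials or []
    counter = Counter()
    for tokens in token_lists:
        counter.update(tokens)

    # Descending frequency first, then lexicographic order to break ties.
    ranked = sorted(counter.items(), key=lambda x: (-x[1], x[0]))

    if max_tokens is not None:
        if len(specials) >= max_tokens:
            raise ValueError("max_tokens must be greater than len(specials)")
        # Leave room for the special tokens inside the overall budget.
        ranked = ranked[: max_tokens - len(specials)]

    ordered = OrderedDict(ranked)
    # Specials are added separately, so drop them if they appeared in the data.
    for token in specials:
        ordered.pop(token, None)
    return specials + list(ordered)


corpus = [["b", "a", "b"], ["c", "a", "b"], ["d"]]
result = top_tokens(corpus, specials=["<unk>"], max_tokens=3)
print(result)
```

With a budget of three and one special token, only the two most frequent corpus tokens survive. Everything else would fall back to `<unk>` once a default index is set on the real vocabulary.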

gh_patches_debug_20842

|

rasdani/github-patches

|

git_diff

|

napari__napari-2398

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Settings manager may need to handle edge case where loaded data is None

## 🐛 Bug

Looks like the settings manager `_load` method may need to handle the case where `safe_load` returns `None`. I don't yet have a reproducible example... but I'm working on some stuff that is crashing napari a lot :joy:, so maybe settings aren't getting written correctly at close? and during one of my runs I got this traceback:

```pytb

File "/Users/talley/Desktop/t.py", line 45, in <module>

import napari

File "/Users/talley/Dropbox (HMS)/Python/forks/napari/napari/__init__.py", line 22, in <module>

from ._event_loop import gui_qt, run

File "/Users/talley/Dropbox (HMS)/Python/forks/napari/napari/_event_loop.py", line 2, in <module>

from ._qt.qt_event_loop import gui_qt, run

File "/Users/talley/Dropbox (HMS)/Python/forks/napari/napari/_qt/__init__.py", line 41, in <module>

from .qt_main_window import Window

File "/Users/talley/Dropbox (HMS)/Python/forks/napari/napari/_qt/qt_main_window.py", line 30, in <module>

from ..utils.settings import SETTINGS

File "/Users/talley/Dropbox (HMS)/Python/forks/napari/napari/utils/settings/__init__.py", line 5, in <module>

from ._manager import SETTINGS

File "/Users/talley/Dropbox (HMS)/Python/forks/napari/napari/utils/settings/_manager.py", line 177, in <module>

SETTINGS = SettingsManager()

File "/Users/talley/Dropbox (HMS)/Python/forks/napari/napari/utils/settings/_manager.py", line 66, in __init__

self._load()

File "/Users/talley/Dropbox (HMS)/Python/forks/napari/napari/utils/settings/_manager.py", line 115, in _load

for section, model_data in data.items():

AttributeError: 'NoneType' object has no attribute 'items'

```

</issue>

<code>

[start of napari/utils/settings/_manager.py]

1 """Settings management.

2 """

3

4 import os

5 from pathlib import Path

6

7 from appdirs import user_config_dir

8 from pydantic import ValidationError

9 from yaml import safe_dump, safe_load

10

11 from ._defaults import CORE_SETTINGS, ApplicationSettings, PluginSettings

12

13

14 class SettingsManager:

15 """

16 Napari settings manager using evented SettingsModels.

17

18 This provides the persistence layer for the application settings.

19

20 Parameters

21 ----------

22 config_path : str, optional

23 Provide the base folder to store napari configuration. Default is None,

24 which will point to user config provided by `appdirs`.

25 save_to_disk : bool, optional

26 Persist settings on disk. Default is True.

27

28 Notes

29 -----

30 The settings manager will create a new user configuration folder which is

31 provided by `appdirs` in a cross platform manner. On the first startup a

32 new configuration file will be created using the default values defined by

33 the `CORE_SETTINGS` models.

34

35 If a configuration file is found in the specified location, it will be

36 loaded by the `_load` method. On configuration load the following checks

37 are performed:

38

39 - If invalid sections are found, these will be removed from the file.

40 - If invalid keys are found within a valid section, these will be removed

41 from the file.

42 - If invalid values are found within valid sections and valid keys, these

43 will be replaced by the default value provided by `CORE_SETTINGS`

44 models.

45 """

46

47 _FILENAME = "settings.yaml"

48 _APPNAME = "Napari"

49 _APPAUTHOR = "Napari"

50 application: ApplicationSettings

51 plugin: PluginSettings

52

53 def __init__(self, config_path: str = None, save_to_disk: bool = True):

54 self._config_path = (

55 Path(user_config_dir(self._APPNAME, self._APPAUTHOR))

56 if config_path is None

57 else Path(config_path)

58 )

59 self._save_to_disk = save_to_disk

60 self._settings = {}

61 self._defaults = {}

62 self._models = {}

63 self._plugins = []

64

65 if not self._config_path.is_dir():

66 os.makedirs(self._config_path)

67

68 self._load()

69

70 def __getattr__(self, attr):

71 if attr in self._settings:

72 return self._settings[attr]

73

74 def __dir__(self):

75 """Add setting keys to make tab completion work."""

76 return super().__dir__() + list(self._settings)

77

78 @staticmethod

79 def _get_section_name(settings) -> str:

80 """

81 Return the normalized name of a section based on its config title.

82 """

83 section = settings.Config.title.replace(" ", "_").lower()

84 if section.endswith("_settings"):

85 section = section.replace("_settings", "")

86

87 return section

88

89 def _to_dict(self) -> dict:

90 """Convert the settings to a dictionary."""

91 data = {}

92 for section, model in self._settings.items():

93 data[section] = model.dict()

94

95 return data

96

97 def _save(self):

98 """Save configuration to disk."""

99 if self._save_to_disk:

100 path = self.path / self._FILENAME

101 with open(path, "w") as fh:

102 fh.write(safe_dump(self._to_dict()))

103

104 def _load(self):

105 """Read configuration from disk."""

106 path = self.path / self._FILENAME

107 for plugin in CORE_SETTINGS:

108 section = self._get_section_name(plugin)

109 self._defaults[section] = plugin()

110 self._models[section] = plugin

111

112 if path.is_file():

113 with open(path) as fh:

114 data = safe_load(fh.read())

115

116 # Check with models

117 for section, model_data in data.items():

118 try:

119 model = self._models[section](**model_data)

120 model.events.connect(lambda x: self._save())

121 self._settings[section] = model

122 except KeyError:

123 pass

124 except ValidationError as e:

125 # Handle extra fields

126 model_data_replace = {}

127 for error in e.errors():

128 # Grab the first error entry

129 item = error["loc"][0]

130 try:

131 model_data_replace[item] = getattr(

132 self._defaults[section], item

133 )

134 except AttributeError:

135 model_data.pop(item)

136

137 model_data.update(model_data_replace)

138 model = self._models[section](**model_data)

139 model.events.connect(lambda x: self._save())

140 self._settings[section] = model

141 else:

142 self._settings = self._defaults

143

144 self._save()

145

146 @property

147 def path(self):

148 return self._config_path

149

150 def reset(self):

151 """Reset settings to default values."""

152 for section in self._settings:

153 self._settings[section] = self._models[section]()

154

155 self._save()

156

157 def schemas(self) -> dict:

158 """Return the json schema for each of the settings model."""

159 schemas = {}

160 for section, settings in self._settings.items():

161 schemas[section] = {

162 "json_schema": settings.schema_json(),

163 "model": settings,

164 }

165

166 return schemas

167

168 def register_plugin(self, plugin):

169 """Register plugin settings with the settings manager.

170

171 Parameters

172 ----------

173 plugin:

174 The napari plugin that may or may not provide settings.

175 """

176 self._plugins.append(plugin)

177

178

179 SETTINGS = SettingsManager()

180

[end of napari/utils/settings/_manager.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/napari/utils/settings/_manager.py b/napari/utils/settings/_manager.py

--- a/napari/utils/settings/_manager.py

+++ b/napari/utils/settings/_manager.py

@@ -108,10 +108,11 @@

section = self._get_section_name(plugin)

self._defaults[section] = plugin()

self._models[section] = plugin

+ self._settings[section] = plugin()

if path.is_file():

with open(path) as fh:

- data = safe_load(fh.read())

+ data = safe_load(fh.read()) or {}

# Check with models

for section, model_data in data.items():

@@ -138,8 +139,6 @@

model = self._models[section](**model_data)

model.events.connect(lambda x: self._save())

self._settings[section] = model

- else:

- self._settings = self._defaults

self._save()

|

{"golden_diff": "diff --git a/napari/utils/settings/_manager.py b/napari/utils/settings/_manager.py\n--- a/napari/utils/settings/_manager.py\n+++ b/napari/utils/settings/_manager.py\n@@ -108,10 +108,11 @@\n section = self._get_section_name(plugin)\n self._defaults[section] = plugin()\n self._models[section] = plugin\n+ self._settings[section] = plugin()\n \n if path.is_file():\n with open(path) as fh:\n- data = safe_load(fh.read())\n+ data = safe_load(fh.read()) or {}\n \n # Check with models\n for section, model_data in data.items():\n@@ -138,8 +139,6 @@\n model = self._models[section](**model_data)\n model.events.connect(lambda x: self._save())\n self._settings[section] = model\n- else:\n- self._settings = self._defaults\n \n self._save()\n", "issue": "Settings manager may need to handle edge case where loaded data is None\n## \ud83d\udc1b Bug\r\nLooks like the settings manager `_load` method may need to handle the case where `safe_load` returns `None`. I don't yet have a reproducible example... but I'm working on some stuff that is crashing napari a lot :joy:, so maybe settings aren't getting written correctly at close? 
and during one of my runs I got this traceback:\r\n\r\n```pytb\r\n File \"/Users/talley/Desktop/t.py\", line 45, in <module>\r\n import napari\r\n File \"/Users/talley/Dropbox (HMS)/Python/forks/napari/napari/__init__.py\", line 22, in <module>\r\n from ._event_loop import gui_qt, run\r\n File \"/Users/talley/Dropbox (HMS)/Python/forks/napari/napari/_event_loop.py\", line 2, in <module>\r\n from ._qt.qt_event_loop import gui_qt, run\r\n File \"/Users/talley/Dropbox (HMS)/Python/forks/napari/napari/_qt/__init__.py\", line 41, in <module>\r\n from .qt_main_window import Window\r\n File \"/Users/talley/Dropbox (HMS)/Python/forks/napari/napari/_qt/qt_main_window.py\", line 30, in <module>\r\n from ..utils.settings import SETTINGS\r\n File \"/Users/talley/Dropbox (HMS)/Python/forks/napari/napari/utils/settings/__init__.py\", line 5, in <module>\r\n from ._manager import SETTINGS\r\n File \"/Users/talley/Dropbox (HMS)/Python/forks/napari/napari/utils/settings/_manager.py\", line 177, in <module>\r\n SETTINGS = SettingsManager()\r\n File \"/Users/talley/Dropbox (HMS)/Python/forks/napari/napari/utils/settings/_manager.py\", line 66, in __init__\r\n self._load()\r\n File \"/Users/talley/Dropbox (HMS)/Python/forks/napari/napari/utils/settings/_manager.py\", line 115, in _load\r\n for section, model_data in data.items():\r\nAttributeError: 'NoneType' object has no attribute 'items'\r\n```\n", "before_files": [{"content": "\"\"\"Settings management.\n\"\"\"\n\nimport os\nfrom pathlib import Path\n\nfrom appdirs import user_config_dir\nfrom pydantic import ValidationError\nfrom yaml import safe_dump, safe_load\n\nfrom ._defaults import CORE_SETTINGS, ApplicationSettings, PluginSettings\n\n\nclass SettingsManager:\n \"\"\"\n Napari settings manager using evented SettingsModels.\n\n This provides the presistence layer for the application settings.\n\n Parameters\n ----------\n config_path : str, optional\n Provide the base folder to store napari configuration. 
Default is None,\n which will point to user config provided by `appdirs`.\n save_to_disk : bool, optional\n Persist settings on disk. Default is True.\n\n Notes\n -----\n The settings manager will create a new user configuration folder which is\n provided by `appdirs` in a cross platform manner. On the first startup a\n new configuration file will be created using the default values defined by\n the `CORE_SETTINGS` models.\n\n If a configuration file is found in the specified location, it will be\n loaded by the `_load` method. On configuration load the following checks\n are performed:\n\n - If invalid sections are found, these will be removed from the file.\n - If invalid keys are found within a valid section, these will be removed\n from the file.\n - If invalid values are found within valid sections and valid keys, these\n will be replaced by the default value provided by `CORE_SETTINGS`\n models.\n \"\"\"\n\n _FILENAME = \"settings.yaml\"\n _APPNAME = \"Napari\"\n _APPAUTHOR = \"Napari\"\n application: ApplicationSettings\n plugin: PluginSettings\n\n def __init__(self, config_path: str = None, save_to_disk: bool = True):\n self._config_path = (\n Path(user_config_dir(self._APPNAME, self._APPAUTHOR))\n if config_path is None\n else Path(config_path)\n )\n self._save_to_disk = save_to_disk\n self._settings = {}\n self._defaults = {}\n self._models = {}\n self._plugins = []\n\n if not self._config_path.is_dir():\n os.makedirs(self._config_path)\n\n self._load()\n\n def __getattr__(self, attr):\n if attr in self._settings:\n return self._settings[attr]\n\n def __dir__(self):\n \"\"\"Add setting keys to make tab completion works.\"\"\"\n return super().__dir__() + list(self._settings)\n\n @staticmethod\n def _get_section_name(settings) -> str:\n \"\"\"\n Return the normalized name of a section based on its config title.\n \"\"\"\n section = settings.Config.title.replace(\" \", \"_\").lower()\n if section.endswith(\"_settings\"):\n section = 
section.replace(\"_settings\", \"\")\n\n return section\n\n def _to_dict(self) -> dict:\n \"\"\"Convert the settings to a dictionary.\"\"\"\n data = {}\n for section, model in self._settings.items():\n data[section] = model.dict()\n\n return data\n\n def _save(self):\n \"\"\"Save configuration to disk.\"\"\"\n if self._save_to_disk:\n path = self.path / self._FILENAME\n with open(path, \"w\") as fh:\n fh.write(safe_dump(self._to_dict()))\n\n def _load(self):\n \"\"\"Read configuration from disk.\"\"\"\n path = self.path / self._FILENAME\n for plugin in CORE_SETTINGS:\n section = self._get_section_name(plugin)\n self._defaults[section] = plugin()\n self._models[section] = plugin\n\n if path.is_file():\n with open(path) as fh:\n data = safe_load(fh.read())\n\n # Check with models\n for section, model_data in data.items():\n try:\n model = self._models[section](**model_data)\n model.events.connect(lambda x: self._save())\n self._settings[section] = model\n except KeyError:\n pass\n except ValidationError as e:\n # Handle extra fields\n model_data_replace = {}\n for error in e.errors():\n # Grab the first error entry\n item = error[\"loc\"][0]\n try:\n model_data_replace[item] = getattr(\n self._defaults[section], item\n )\n except AttributeError:\n model_data.pop(item)\n\n model_data.update(model_data_replace)\n model = self._models[section](**model_data)\n model.events.connect(lambda x: self._save())\n self._settings[section] = model\n else:\n self._settings = self._defaults\n\n self._save()\n\n @property\n def path(self):\n return self._config_path\n\n def reset(self):\n \"\"\"Reset settings to default values.\"\"\"\n for section in self._settings:\n self._settings[section] = self._models[section]()\n\n self._save()\n\n def schemas(self) -> dict:\n \"\"\"Return the json schema for each of the settings model.\"\"\"\n schemas = {}\n for section, settings in self._settings.items():\n schemas[section] = {\n \"json_schema\": settings.schema_json(),\n \"model\": 
settings,\n }\n\n return schemas\n\n def register_plugin(self, plugin):\n \"\"\"Register plugin settings with the settings manager.\n\n Parameters\n ----------\n plugin:\n The napari plugin that may or may not provide settings.\n \"\"\"\n self._plugins.append(plugin)\n\n\nSETTINGS = SettingsManager()\n", "path": "napari/utils/settings/_manager.py"}]}

| 2,640 | 215 |

gh_patches_debug_11496

|

rasdani/github-patches

|

git_diff

|

saleor__saleor-9498

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Setting external methods should throw errors when the method does not exist

### What I'm trying to achieve

I'm setting an external method that does not exist

### Steps to reproduce the problem

I base64 encoded `app:1234:some-id` that was never a real id external method id:

<img width="1440" alt="image" src="https://user-images.githubusercontent.com/2566928/154252619-496c1b91-ca79-4fe8-bc1d-abcd0cbb743c.png">

There is no error, but the delivery method is still null.

### What I expected to happen

I would expect an error response, rather than noop.

### Screenshots and logs

<!-- If applicable, add screenshots to help explain your problem. -->

**System information**

<!-- Provide the version of Saleor or whether you're using it from the `main` branch. If using Saleor Dashboard or Storefront, provide their versions too. -->

Saleor version:

- [ ] dev (current main)

- [ ] 3.0

- [ ] 2.11

- [ ] 2.10

Operating system:

- [ ] Windows

- [ ] Linux

- [ ] MacOS

- [ ] Other

Setting external methods should throw errors when the method does not exist

### What I'm trying to achieve

I'm setting an external method that does not exist

### Steps to reproduce the problem

I base64 encoded `app:1234:some-id` that was never a real id external method id:

<img width="1440" alt="image" src="https://user-images.githubusercontent.com/2566928/154252619-496c1b91-ca79-4fe8-bc1d-abcd0cbb743c.png">

There is no error, but the delivery method is still null.

### What I expected to happen

I would expect an error response, rather than noop.

### Screenshots and logs

<!-- If applicable, add screenshots to help explain your problem. -->

**System information**

<!-- Provide the version of Saleor or whether you're using it from the `main` branch. If using Saleor Dashboard or Storefront, provide their versions too. -->

Saleor version:

- [ ] dev (current main)

- [ ] 3.0

- [ ] 2.11

- [ ] 2.10

Operating system:

- [ ] Windows

- [ ] Linux

- [ ] MacOS

- [ ] Other

</issue>

<code>

[start of saleor/graphql/checkout/mutations/checkout_delivery_method_update.py]

1 from typing import Optional
2
3 import graphene
4 from django.core.exceptions import ValidationError
5
6 from ....checkout.error_codes import CheckoutErrorCode
7 from ....checkout.fetch import fetch_checkout_info, fetch_checkout_lines
8 from ....checkout.utils import (
9     delete_external_shipping_id,
10     is_shipping_required,
11     recalculate_checkout_discount,
12     set_external_shipping_id,
13 )
14 from ....plugins.webhook.utils import APP_ID_PREFIX
15 from ....shipping import interface as shipping_interface
16 from ....shipping import models as shipping_models
17 from ....shipping.utils import convert_to_shipping_method_data
18 from ....warehouse import models as warehouse_models
19 from ...core.descriptions import ADDED_IN_31, PREVIEW_FEATURE
20 from ...core.mutations import BaseMutation
21 from ...core.scalars import UUID
22 from ...core.types import CheckoutError
23 from ...core.utils import from_global_id_or_error
24 from ...shipping.types import ShippingMethod
25 from ...warehouse.types import Warehouse
26 from ..types import Checkout
27 from .utils import ERROR_DOES_NOT_SHIP, clean_delivery_method, get_checkout_by_token
28
29
30 class CheckoutDeliveryMethodUpdate(BaseMutation):
31     checkout = graphene.Field(Checkout, description="An updated checkout.")
32
33     class Arguments:
34         token = UUID(description="Checkout token.", required=False)
35         delivery_method_id = graphene.ID(
36             description="Delivery Method ID (`Warehouse` ID or `ShippingMethod` ID).",
37             required=False,
38         )
39
40     class Meta:
41         description = (
42             f"{ADDED_IN_31} Updates the delivery method "
43             f"(shipping method or pick up point) of the checkout. {PREVIEW_FEATURE}"
44         )
45         error_type_class = CheckoutError
46
47     @classmethod
48     def perform_on_shipping_method(
49         cls, info, shipping_method_id, checkout_info, lines, checkout, manager
50     ):
51         shipping_method = cls.get_node_or_error(
52             info,
53             shipping_method_id,
54             only_type=ShippingMethod,
55             field="delivery_method_id",
56             qs=shipping_models.ShippingMethod.objects.prefetch_related(
57                 "postal_code_rules"
58             ),
59         )
60
61         delivery_method = convert_to_shipping_method_data(
62             shipping_method,
63             shipping_models.ShippingMethodChannelListing.objects.filter(
64                 shipping_method=shipping_method,
65                 channel=checkout_info.channel,
66             ).first(),
67         )
68         cls._check_delivery_method(
69             checkout_info, lines, shipping_method=delivery_method, collection_point=None
70         )
71
72         cls._update_delivery_method(
73             manager,
74             checkout,
75             shipping_method=shipping_method,
76             external_shipping_method=None,
77             collection_point=None,
78         )
79         recalculate_checkout_discount(
80             manager, checkout_info, lines, info.context.discounts
81         )
82         return CheckoutDeliveryMethodUpdate(checkout=checkout)
83
84     @classmethod
85     def perform_on_external_shipping_method(
86         cls, info, shipping_method_id, checkout_info, lines, checkout, manager
87     ):
88         delivery_method = manager.get_shipping_method(
89             checkout=checkout,
90             channel_slug=checkout.channel.slug,
91             shipping_method_id=shipping_method_id,
92         )
93
94         cls._check_delivery_method(
95             checkout_info, lines, shipping_method=delivery_method, collection_point=None
96         )
97
98         cls._update_delivery_method(
99             manager,
100             checkout,
101             shipping_method=None,
102             external_shipping_method=delivery_method,
103             collection_point=None,
104         )
105         recalculate_checkout_discount(
106             manager, checkout_info, lines, info.context.discounts
107         )
108         return CheckoutDeliveryMethodUpdate(checkout=checkout)
109
110     @classmethod
111     def perform_on_collection_point(
112         cls, info, collection_point_id, checkout_info, lines, checkout, manager
113     ):
114         collection_point = cls.get_node_or_error(
115             info,
116             collection_point_id,
117             only_type=Warehouse,
118             field="delivery_method_id",
119             qs=warehouse_models.Warehouse.objects.select_related("address"),
120         )
121         cls._check_delivery_method(
122             checkout_info,
123             lines,
124             shipping_method=None,
125             collection_point=collection_point,
126         )
127         cls._update_delivery_method(
128             manager,
129             checkout,
130             shipping_method=None,
131             external_shipping_method=None,
132             collection_point=collection_point,
133         )
134         return CheckoutDeliveryMethodUpdate(checkout=checkout)
135
136     @staticmethod
137     def _check_delivery_method(
138         checkout_info,
139         lines,
140         *,
141         shipping_method: Optional[shipping_interface.ShippingMethodData],
142         collection_point: Optional[Warehouse]
143     ) -> None:
144         delivery_method = shipping_method
145         error_msg = "This shipping method is not applicable."
146
147         if collection_point is not None:
148             delivery_method = collection_point
149             error_msg = "This pick up point is not applicable."
150
151         delivery_method_is_valid = clean_delivery_method(
152             checkout_info=checkout_info, lines=lines, method=delivery_method
153         )
154         if not delivery_method_is_valid:
155             raise ValidationError(
156                 {
157                     "delivery_method_id": ValidationError(
158                         error_msg,
159                         code=CheckoutErrorCode.DELIVERY_METHOD_NOT_APPLICABLE.value,
160                     )
161                 }
162             )
163
164     @staticmethod
165     def _update_delivery_method(
166         manager,
167         checkout: Checkout,
168         *,
169         shipping_method: Optional[ShippingMethod],
170         external_shipping_method: Optional[shipping_interface.ShippingMethodData],
171         collection_point: Optional[Warehouse]
172     ) -> None:
173         if external_shipping_method:
174             set_external_shipping_id(
175                 checkout=checkout, app_shipping_id=external_shipping_method.id
176             )
177         else:
178             delete_external_shipping_id(checkout=checkout)
179         checkout.shipping_method = shipping_method
180         checkout.collection_point = collection_point
181         checkout.save(
182             update_fields=[
183                 "private_metadata",
184                 "shipping_method",
185                 "collection_point",
186                 "last_change",
187             ]
188         )
189         manager.checkout_updated(checkout)
190
191     @staticmethod
192     def _resolve_delivery_method_type(id_) -> Optional[str]:
193         if id_ is None:
194             return None
195
196         possible_types = ("Warehouse", "ShippingMethod", APP_ID_PREFIX)
197         type_, id_ = from_global_id_or_error(id_)
198         str_type = str(type_)
199
200         if str_type not in possible_types:
201             raise ValidationError(
202                 {
203                     "delivery_method_id": ValidationError(
204                         "ID does not belong to Warehouse or ShippingMethod",
205                         code=CheckoutErrorCode.INVALID.value,
206                     )
207                 }
208             )
209
210         return str_type
211
212     @classmethod
213     def perform_mutation(
214         cls,
215         _,
216         info,
217         token,
218         delivery_method_id=None,
219     ):
220
221         checkout = get_checkout_by_token(token)
222
223         manager = info.context.plugins
224         lines, unavailable_variant_pks = fetch_checkout_lines(checkout)
225         if unavailable_variant_pks:
226             not_available_variants_ids = {
227                 graphene.Node.to_global_id("ProductVariant", pk)
228                 for pk in unavailable_variant_pks
229             }
230             raise ValidationError(
231                 {
232                     "lines": ValidationError(
233                         "Some of the checkout lines variants are unavailable.",
234                         code=CheckoutErrorCode.UNAVAILABLE_VARIANT_IN_CHANNEL.value,
235                         params={"variants": not_available_variants_ids},
236                     )
237                 }
238             )
239
240         if not is_shipping_required(lines):
241             raise ValidationError(
242                 {
243                     "delivery_method": ValidationError(
244                         ERROR_DOES_NOT_SHIP,
245                         code=CheckoutErrorCode.SHIPPING_NOT_REQUIRED,
246                     )
247                 }
248             )
249         type_name = cls._resolve_delivery_method_type(delivery_method_id)
250
251         checkout_info = fetch_checkout_info(
252             checkout, lines, info.context.discounts, manager
253         )
254         if type_name == "Warehouse":
255             return cls.perform_on_collection_point(
256                 info, delivery_method_id, checkout_info, lines, checkout, manager
257             )
258         if type_name == "ShippingMethod":
259             return cls.perform_on_shipping_method(
260                 info, delivery_method_id, checkout_info, lines, checkout, manager
261             )
262         return cls.perform_on_external_shipping_method(
263             info, delivery_method_id, checkout_info, lines, checkout, manager
264         )
265

[end of saleor/graphql/checkout/mutations/checkout_delivery_method_update.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/saleor/graphql/checkout/mutations/checkout_delivery_method_update.py b/saleor/graphql/checkout/mutations/checkout_delivery_method_update.py
--- a/saleor/graphql/checkout/mutations/checkout_delivery_method_update.py
+++ b/saleor/graphql/checkout/mutations/checkout_delivery_method_update.py
@@ -91,6 +91,16 @@
             shipping_method_id=shipping_method_id,
         )
 
+        if delivery_method is None and shipping_method_id:
+            raise ValidationError(
+                {
+                    "delivery_method_id": ValidationError(
+                        f"Couldn't resolve to a node: ${shipping_method_id}",
+                        code=CheckoutErrorCode.NOT_FOUND,
+                    )
+                }
+            )
+
         cls._check_delivery_method(
             checkout_info, lines, shipping_method=delivery_method, collection_point=None
         )

|

{"golden_diff": "diff --git a/saleor/graphql/checkout/mutations/checkout_delivery_method_update.py b/saleor/graphql/checkout/mutations/checkout_delivery_method_update.py\n--- a/saleor/graphql/checkout/mutations/checkout_delivery_method_update.py\n+++ b/saleor/graphql/checkout/mutations/checkout_delivery_method_update.py\n@@ -91,6 +91,16 @@\n shipping_method_id=shipping_method_id,\n )\n \n+ if delivery_method is None and shipping_method_id:\n+ raise ValidationError(\n+ {\n+ \"delivery_method_id\": ValidationError(\n+ f\"Couldn't resolve to a node: ${shipping_method_id}\",\n+ code=CheckoutErrorCode.NOT_FOUND,\n+ )\n+ }\n+ )\n+\n cls._check_delivery_method(\n checkout_info, lines, shipping_method=delivery_method, collection_point=None\n )\n", "issue": "Setting external methods should throw errors when the method does not exist\n### What I'm trying to achieve\r\nI'm setting an external method that does not exist\r\n\r\n### Steps to reproduce the problem\r\nI base64 encoded `app:1234:some-id` that was never a real id external method id:\r\n\r\n<img width=\"1440\" alt=\"image\" src=\"https://user-images.githubusercontent.com/2566928/154252619-496c1b91-ca79-4fe8-bc1d-abcd0cbb743c.png\">\r\n\r\nThere is no error, but the delivery method is still null.\r\n\r\n\r\n### What I expected to happen\r\nI would expect an error response, rather than noop.\r\n\r\n### Screenshots and logs\r\n<!-- If applicable, add screenshots to help explain your problem. -->\r\n\r\n**System information**\r\n<!-- Provide the version of Saleor or whether you're using it from the `main` branch. If using Saleor Dashboard or Storefront, provide their versions too. 
-->\r\nSaleor version:\r\n- [ ] dev (current main)\r\n- [ ] 3.0\r\n- [ ] 2.11\r\n- [ ] 2.10\r\n\r\nOperating system:\r\n- [ ] Windows\r\n- [ ] Linux\r\n- [ ] MacOS\r\n- [ ] Other\r\n\nSetting external methods should throw errors when the method does not exist\n### What I'm trying to achieve\r\nI'm setting an external method that does not exist\r\n\r\n### Steps to reproduce the problem\r\nI base64 encoded `app:1234:some-id` that was never a real id external method id:\r\n\r\n<img width=\"1440\" alt=\"image\" src=\"https://user-images.githubusercontent.com/2566928/154252619-496c1b91-ca79-4fe8-bc1d-abcd0cbb743c.png\">\r\n\r\nThere is no error, but the delivery method is still null.\r\n\r\n\r\n### What I expected to happen\r\nI would expect an error response, rather than noop.\r\n\r\n### Screenshots and logs\r\n<!-- If applicable, add screenshots to help explain your problem. -->\r\n\r\n**System information**\r\n<!-- Provide the version of Saleor or whether you're using it from the `main` branch. If using Saleor Dashboard or Storefront, provide their versions too. 
-->\r\nSaleor version:\r\n- [ ] dev (current main)\r\n- [ ] 3.0\r\n- [ ] 2.11\r\n- [ ] 2.10\r\n\r\nOperating system:\r\n- [ ] Windows\r\n- [ ] Linux\r\n- [ ] MacOS\r\n- [ ] Other\r\n\n", "before_files": [{"content": "from typing import Optional\n\nimport graphene\nfrom django.core.exceptions import ValidationError\n\nfrom ....checkout.error_codes import CheckoutErrorCode\nfrom ....checkout.fetch import fetch_checkout_info, fetch_checkout_lines\nfrom ....checkout.utils import (\n delete_external_shipping_id,\n is_shipping_required,\n recalculate_checkout_discount,\n set_external_shipping_id,\n)\nfrom ....plugins.webhook.utils import APP_ID_PREFIX\nfrom ....shipping import interface as shipping_interface\nfrom ....shipping import models as shipping_models\nfrom ....shipping.utils import convert_to_shipping_method_data\nfrom ....warehouse import models as warehouse_models\nfrom ...core.descriptions import ADDED_IN_31, PREVIEW_FEATURE\nfrom ...core.mutations import BaseMutation\nfrom ...core.scalars import UUID\nfrom ...core.types import CheckoutError\nfrom ...core.utils import from_global_id_or_error\nfrom ...shipping.types import ShippingMethod\nfrom ...warehouse.types import Warehouse\nfrom ..types import Checkout\nfrom .utils import ERROR_DOES_NOT_SHIP, clean_delivery_method, get_checkout_by_token\n\n\nclass CheckoutDeliveryMethodUpdate(BaseMutation):\n checkout = graphene.Field(Checkout, description=\"An updated checkout.\")\n\n class Arguments:\n token = UUID(description=\"Checkout token.\", required=False)\n delivery_method_id = graphene.ID(\n description=\"Delivery Method ID (`Warehouse` ID or `ShippingMethod` ID).\",\n required=False,\n )\n\n class Meta:\n description = (\n f\"{ADDED_IN_31} Updates the delivery method \"\n f\"(shipping method or pick up point) of the checkout. 
{PREVIEW_FEATURE}\"\n )\n error_type_class = CheckoutError\n\n @classmethod\n def perform_on_shipping_method(\n cls, info, shipping_method_id, checkout_info, lines, checkout, manager\n ):\n shipping_method = cls.get_node_or_error(\n info,\n shipping_method_id,\n only_type=ShippingMethod,\n field=\"delivery_method_id\",\n qs=shipping_models.ShippingMethod.objects.prefetch_related(\n \"postal_code_rules\"\n ),\n )\n\n delivery_method = convert_to_shipping_method_data(\n shipping_method,\n shipping_models.ShippingMethodChannelListing.objects.filter(\n shipping_method=shipping_method,\n channel=checkout_info.channel,\n ).first(),\n )\n cls._check_delivery_method(\n checkout_info, lines, shipping_method=delivery_method, collection_point=None\n )\n\n cls._update_delivery_method(\n manager,\n checkout,\n shipping_method=shipping_method,\n external_shipping_method=None,\n collection_point=None,\n )\n recalculate_checkout_discount(\n manager, checkout_info, lines, info.context.discounts\n )\n return CheckoutDeliveryMethodUpdate(checkout=checkout)\n\n @classmethod\n def perform_on_external_shipping_method(\n cls, info, shipping_method_id, checkout_info, lines, checkout, manager\n ):\n delivery_method = manager.get_shipping_method(\n checkout=checkout,\n channel_slug=checkout.channel.slug,\n shipping_method_id=shipping_method_id,\n )\n\n cls._check_delivery_method(\n checkout_info, lines, shipping_method=delivery_method, collection_point=None\n )\n\n cls._update_delivery_method(\n manager,\n checkout,\n shipping_method=None,\n external_shipping_method=delivery_method,\n collection_point=None,\n )\n recalculate_checkout_discount(\n manager, checkout_info, lines, info.context.discounts\n )\n return CheckoutDeliveryMethodUpdate(checkout=checkout)\n\n @classmethod\n def perform_on_collection_point(\n cls, info, collection_point_id, checkout_info, lines, checkout, manager\n ):\n collection_point = cls.get_node_or_error(\n info,\n collection_point_id,\n only_type=Warehouse,\n 
field=\"delivery_method_id\",\n qs=warehouse_models.Warehouse.objects.select_related(\"address\"),\n )\n cls._check_delivery_method(\n checkout_info,\n lines,\n shipping_method=None,\n collection_point=collection_point,\n )\n cls._update_delivery_method(\n manager,\n checkout,\n shipping_method=None,\n external_shipping_method=None,\n collection_point=collection_point,\n )\n return CheckoutDeliveryMethodUpdate(checkout=checkout)\n\n @staticmethod\n def _check_delivery_method(\n checkout_info,\n lines,\n *,\n shipping_method: Optional[shipping_interface.ShippingMethodData],\n collection_point: Optional[Warehouse]\n ) -> None:\n delivery_method = shipping_method\n error_msg = \"This shipping method is not applicable.\"\n\n if collection_point is not None:\n delivery_method = collection_point\n error_msg = \"This pick up point is not applicable.\"\n\n delivery_method_is_valid = clean_delivery_method(\n checkout_info=checkout_info, lines=lines, method=delivery_method\n )\n if not delivery_method_is_valid:\n raise ValidationError(\n {\n \"delivery_method_id\": ValidationError(\n error_msg,\n code=CheckoutErrorCode.DELIVERY_METHOD_NOT_APPLICABLE.value,\n )\n }\n )\n\n @staticmethod\n def _update_delivery_method(\n manager,\n checkout: Checkout,\n *,\n shipping_method: Optional[ShippingMethod],\n external_shipping_method: Optional[shipping_interface.ShippingMethodData],\n collection_point: Optional[Warehouse]\n ) -> None:\n if external_shipping_method:\n set_external_shipping_id(\n checkout=checkout, app_shipping_id=external_shipping_method.id\n )\n else:\n delete_external_shipping_id(checkout=checkout)\n checkout.shipping_method = shipping_method\n checkout.collection_point = collection_point\n checkout.save(\n update_fields=[\n \"private_metadata\",\n \"shipping_method\",\n \"collection_point\",\n \"last_change\",\n ]\n )\n manager.checkout_updated(checkout)\n\n @staticmethod\n def _resolve_delivery_method_type(id_) -> Optional[str]:\n if id_ is None:\n return None\n\n 
possible_types = (\"Warehouse\", \"ShippingMethod\", APP_ID_PREFIX)\n type_, id_ = from_global_id_or_error(id_)\n str_type = str(type_)\n\n if str_type not in possible_types:\n raise ValidationError(\n {\n \"delivery_method_id\": ValidationError(\n \"ID does not belong to Warehouse or ShippingMethod\",\n code=CheckoutErrorCode.INVALID.value,\n )\n }\n )\n\n return str_type\n\n @classmethod\n def perform_mutation(\n cls,\n _,\n info,\n token,\n delivery_method_id=None,\n ):\n\n checkout = get_checkout_by_token(token)\n\n manager = info.context.plugins\n lines, unavailable_variant_pks = fetch_checkout_lines(checkout)\n if unavailable_variant_pks:\n not_available_variants_ids = {\n graphene.Node.to_global_id(\"ProductVariant\", pk)\n for pk in unavailable_variant_pks\n }\n raise ValidationError(\n {\n \"lines\": ValidationError(\n \"Some of the checkout lines variants are unavailable.\",\n code=CheckoutErrorCode.UNAVAILABLE_VARIANT_IN_CHANNEL.value,\n params={\"variants\": not_available_variants_ids},\n )\n }\n )\n\n if not is_shipping_required(lines):\n raise ValidationError(\n {\n \"delivery_method\": ValidationError(\n ERROR_DOES_NOT_SHIP,\n code=CheckoutErrorCode.SHIPPING_NOT_REQUIRED,\n )\n }\n )\n type_name = cls._resolve_delivery_method_type(delivery_method_id)\n\n checkout_info = fetch_checkout_info(\n checkout, lines, info.context.discounts, manager\n )\n if type_name == \"Warehouse\":\n return cls.perform_on_collection_point(\n info, delivery_method_id, checkout_info, lines, checkout, manager\n )\n if type_name == \"ShippingMethod\":\n return cls.perform_on_shipping_method(\n info, delivery_method_id, checkout_info, lines, checkout, manager\n )\n return cls.perform_on_external_shipping_method(\n info, delivery_method_id, checkout_info, lines, checkout, manager\n )\n", "path": "saleor/graphql/checkout/mutations/checkout_delivery_method_update.py"}]}

| 3,462 | 182 |
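
The fix in the row above comes down to a guard: when a lookup returns nothing for a non-empty ID, raise an error instead of continuing silently. A minimal, self-contained sketch of that pattern follows. `NotFoundError`, `lookup_external_method`, and `set_external_method` are hypothetical names used only for illustration; they are not Saleor APIs, and the plain dictionary stands in for whatever the plugin manager would query.

```python
from typing import Dict, Optional


class NotFoundError(Exception):
    """Hypothetical stand-in for a validation error carrying a NOT_FOUND code."""

    def __init__(self, field: str, message: str) -> None:
        super().__init__(message)
        self.field = field
        self.code = "not_found"


def lookup_external_method(registry: Dict[str, str], method_id: str) -> Optional[str]:
    # Mirrors a lookup that returns None for unknown IDs instead of raising.
    return registry.get(method_id)


def set_external_method(registry: Dict[str, str], method_id: str) -> Optional[str]:
    method = lookup_external_method(registry, method_id)
    # Guard: an ID was supplied but nothing matched, so fail loudly.
    if method is None and method_id:
        raise NotFoundError(
            "delivery_method_id", f"Couldn't resolve to a node: {method_id}"
        )
    return method
```

With this guard in place, a caller passing an unknown ID receives an error tied to the `delivery_method_id` field rather than a silent no-op.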

gh_patches_debug_30437

|

rasdani/github-patches

|

git_diff

|

rotki__rotki-4261

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Docker container's /tmp doesn't get automatically cleaned

## Problem Definition

PyInstaller extracts the files in /tmp every time the backend starts

In the docker container /tmp is never cleaned which results in an ever-increasing size on every application restart

## TODO

- [ ] Add /tmp cleanup on start

</issue>

<code>

[start of packaging/docker/entrypoint.py]

1 #!/usr/bin/python3
2 import json
3 import logging
4 import os
5 import subprocess
6 import time
7 from pathlib import Path
8 from typing import Dict, Optional, Any, List
9
10 logger = logging.getLogger('monitor')
11 logging.basicConfig(level=logging.DEBUG)
12
13 DEFAULT_LOG_LEVEL = 'critical'
14
15
16 def load_config_from_file() -> Optional[Dict[str, Any]]:
17     config_file = Path('/config/rotki_config.json')
18     if not config_file.exists():
19         logger.info('no config file provided')
20         return None
21
22     with open(config_file) as file:
23         try:
24             data = json.load(file)
25             return data
26         except json.JSONDecodeError as e:
27             logger.error(e)
28             return None
29
30
31 def load_config_from_env() -> Dict[str, Any]:
32     loglevel = os.environ.get('LOGLEVEL')
33     logfromothermodules = os.environ.get('LOGFROMOTHERMODDULES')
34     sleep_secs = os.environ.get('SLEEP_SECS')
35     max_size_in_mb_all_logs = os.environ.get('MAX_SIZE_IN_MB_ALL_LOGS')
36     max_logfiles_num = os.environ.get('MAX_LOGFILES_NUM')
37
38     return {
39         'loglevel': loglevel,
40         'logfromothermodules': logfromothermodules,
41         'sleep_secs': sleep_secs,
42         'max_logfiles_num': max_logfiles_num,
43         'max_size_in_mb_all_logs': max_size_in_mb_all_logs,
44     }
45
46
47 def load_config() -> List[str]:
48     env_config = load_config_from_env()
49     file_config = load_config_from_file()
50
51     logger.info('loading config from env')
52
53     loglevel = env_config.get('loglevel')
54     log_from_other_modules = env_config.get('logfromothermodules')
55     sleep_secs = env_config.get('sleep_secs')
56     max_logfiles_num = env_config.get('max_logfiles_num')
57     max_size_in_mb_all_logs = env_config.get('max_size_in_mb_all_logs')
58
59     if file_config is not None:
60         logger.info('loading config from file')
61
62         if file_config.get('loglevel') is not None:
63             loglevel = file_config.get('loglevel')
64
65         if file_config.get('logfromothermodules') is not None:
66             log_from_other_modules = file_config.get('logfromothermodules')
67
68         if file_config.get('sleep-secs') is not None:
69             sleep_secs = file_config.get('sleep-secs')
70
71         if file_config.get('max_logfiles_num') is not None:
72             max_logfiles_num = file_config.get('max_logfiles_num')
73
74         if file_config.get('max_size_in_mb_all_logs') is not None:
75             max_size_in_mb_all_logs = file_config.get('max_size_in_mb_all_logs')
76
77     args = [
78         '--data-dir',
79         '/data',
80         '--logfile',
81         '/logs/rotki.log',
82         '--loglevel',
83         loglevel if loglevel is not None else DEFAULT_LOG_LEVEL,
84     ]
85
86     if log_from_other_modules is True:
87         args.append('--logfromothermodules')
88
89     if sleep_secs is not None:
90         args.append('--sleep-secs')
91         args.append(str(sleep_secs))
92
93     if max_logfiles_num is not None:
94         args.append('--max-logfiles-num')
95         args.append(str(max_logfiles_num))
96
97     if max_size_in_mb_all_logs is not None:
98         args.append('--max-size-in-mb-all-logs')
99         args.append(str(max_size_in_mb_all_logs))
100
101     return args
102
103
104 base_args = [
105     '/usr/sbin/rotki',
106     '--rest-api-port',
107     '4242',
108     '--websockets-api-port',
109     '4243',
110     '--api-cors',
111     'http://localhost:*/*,app://.',
112     '--api-host',
113     '0.0.0.0',
114 ]
115
116 config_args = load_config()
117 cmd = base_args + config_args
118
119 logger.info('starting rotki backend')
120
121 rotki = subprocess.Popen(cmd)
122
123 if rotki.returncode == 1:
124     logger.error('Failed to start rotki')
125     exit(1)
126
127 logger.info('starting nginx')
128
129 nginx = subprocess.Popen('nginx -g "daemon off;"', shell=True)
130
131 if nginx.returncode == 1:
132     logger.error('Failed to start nginx')
133     exit(1)
134
135 while True:
136     time.sleep(60)
137
138     if rotki.poll() is not None:
139         logger.error('rotki has terminated exiting')
140         exit(1)
141
142     if nginx.poll() is not None:
143         logger.error('nginx was not running')
144         exit(1)
145
146     logger.info('OK: processes still running')
147

[end of packaging/docker/entrypoint.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py
--- a/file.py
+++ b/file.py
@@ -1,27 +1,35 @@
 def euclidean(a, b):
-    while b:
-        a, b = b, a % b
-    return a
+    if b == 0:
+        return a
+    return euclidean(b, a % b)
 
 
 def bresenham(x0, y0, x1, y1):
     points = []
     dx = abs(x1 - x0)
     dy = abs(y1 - y0)
-    sx = 1 if x0 < x1 else -1
-    sy = 1 if y0 < y1 else -1
-    err = dx - dy
+    x, y = x0, y0
+    sx = -1 if x0 > x1 else 1
+    sy = -1 if y0 > y1 else 1
 
-    while True:
-        points.append((x0, y0))
-        if x0 == x1 and y0 == y1:
-            break
-        e2 = 2 * err
-        if e2 > -dy:
-            err -= dy
-            x0 += sx
-        if e2 < dx:
-            err += dx
-            y0 += sy
+    if dx > dy:
+        err = dx / 2.0
+        while x != x1:
+            points.append((x, y))
+            err -= dy
+            if err < 0:
+                y += sy
+                err += dx
+            x += sx
+    else:
+        err = dy / 2.0
+        while y != y1:
+            points.append((x, y))
+            err -= dx
+            if err < 0:
+                x += sx
+                err += dy
+            y += sy
+
+    points.append((x, y))
     return points

</patch>

|

diff --git a/packaging/docker/entrypoint.py b/packaging/docker/entrypoint.py
--- a/packaging/docker/entrypoint.py
+++ b/packaging/docker/entrypoint.py
@@ -2,10 +2,12 @@
 import json
 import logging
 import os
+import shutil
 import subprocess
 import time
+from datetime import datetime, timedelta
 from pathlib import Path
-from typing import Dict, Optional, Any, List
+from typing import Any, Dict, List, Optional
 
 logger = logging.getLogger('monitor')
 logging.basicConfig(level=logging.DEBUG)
@@ -13,6 +15,41 @@
 DEFAULT_LOG_LEVEL = 'critical'
 
 
+def can_delete(file: Path, cutoff: int) -> bool:
+    return int(os.stat(file).st_mtime) <= cutoff or file.name.startswith('_MEI')
+
+
+def cleanup_tmp() -> None:
+    logger.info('Preparing to cleanup tmp directory')
+    tmp_dir = Path('/tmp/').glob('*')
+    cache_cutoff = datetime.today() - timedelta(hours=6)
+    cutoff_epoch = int(cache_cutoff.strftime("%s"))
+    to_delete = filter(lambda x: can_delete(x, cutoff_epoch), tmp_dir)
+
+    deleted = 0
+    skipped = 0
+
+    for item in to_delete:
+        path = Path(item)
+        if path.is_file():
+            try:
+                path.unlink()
+                deleted += 1
+                continue
+            except PermissionError:
+                skipped += 1
+                continue
+
+        try:
+            shutil.rmtree(item)
+            deleted += 1
+        except OSError:
+            skipped += 1
+            continue
+
+    logger.info(f'Deleted {deleted} files or directories, skipped {skipped} from /tmp')
+
+
 def load_config_from_file() -> Optional[Dict[str, Any]]:
     config_file = Path('/config/rotki_config.json')
     if not config_file.exists():
@@ -101,6 +138,8 @@
     return args
 
 
+cleanup_tmp()
+
 base_args = [
     '/usr/sbin/rotki',
     '--rest-api-port',

|

{"golden_diff": "diff --git a/packaging/docker/entrypoint.py b/packaging/docker/entrypoint.py\n--- a/packaging/docker/entrypoint.py\n+++ b/packaging/docker/entrypoint.py\n@@ -2,10 +2,12 @@\n import json\n import logging\n import os\n+import shutil\n import subprocess\n import time\n+from datetime import datetime, timedelta\n from pathlib import Path\n-from typing import Dict, Optional, Any, List\n+from typing import Any, Dict, List, Optional\n \n logger = logging.getLogger('monitor')\n logging.basicConfig(level=logging.DEBUG)\n@@ -13,6 +15,41 @@\n DEFAULT_LOG_LEVEL = 'critical'\n \n \n+def can_delete(file: Path, cutoff: int) -> bool:\n+ return int(os.stat(file).st_mtime) <= cutoff or file.name.startswith('_MEI')\n+\n+\n+def cleanup_tmp() -> None:\n+ logger.info('Preparing to cleanup tmp directory')\n+ tmp_dir = Path('/tmp/').glob('*')\n+ cache_cutoff = datetime.today() - timedelta(hours=6)\n+ cutoff_epoch = int(cache_cutoff.strftime(\"%s\"))\n+ to_delete = filter(lambda x: can_delete(x, cutoff_epoch), tmp_dir)\n+\n+ deleted = 0\n+ skipped = 0\n+\n+ for item in to_delete:\n+ path = Path(item)\n+ if path.is_file():\n+ try:\n+ path.unlink()\n+ deleted += 1\n+ continue\n+ except PermissionError:\n+ skipped += 1\n+ continue\n+\n+ try:\n+ shutil.rmtree(item)\n+ deleted += 1\n+ except OSError:\n+ skipped += 1\n+ continue\n+\n+ logger.info(f'Deleted {deleted} files or directories, skipped {skipped} from /tmp')\n+\n+\n def load_config_from_file() -> Optional[Dict[str, Any]]:\n config_file = Path('/config/rotki_config.json')\n if not config_file.exists():\n@@ -101,6 +138,8 @@\n return args\n \n \n+cleanup_tmp()\n+\n base_args = [\n '/usr/sbin/rotki',\n '--rest-api-port',\n", "issue": "Docker container's /tmp doesn't get automatically cleaned\n## Problem Definition\r\n\r\nPyInstaller extracts the files in /tmp every time the backend starts\r\nIn the docker container /tmp is never cleaned which results in an ever-increasing size on every application restart\r\n\r\n## 
TODO\r\n\r\n- [ ] Add /tmp cleanup on start\r\n\r\n\n", "before_files": [{"content": "#!/usr/bin/python3\nimport json\nimport logging\nimport os\nimport subprocess\nimport time\nfrom pathlib import Path\nfrom typing import Dict, Optional, Any, List\n\nlogger = logging.getLogger('monitor')\nlogging.basicConfig(level=logging.DEBUG)\n\nDEFAULT_LOG_LEVEL = 'critical'\n\n\ndef load_config_from_file() -> Optional[Dict[str, Any]]:\n config_file = Path('/config/rotki_config.json')\n if not config_file.exists():\n logger.info('no config file provided')\n return None\n\n with open(config_file) as file:\n try:\n data = json.load(file)\n return data\n except json.JSONDecodeError as e:\n logger.error(e)\n return None\n\n\ndef load_config_from_env() -> Dict[str, Any]:\n loglevel = os.environ.get('LOGLEVEL')\n logfromothermodules = os.environ.get('LOGFROMOTHERMODDULES')\n sleep_secs = os.environ.get('SLEEP_SECS')\n max_size_in_mb_all_logs = os.environ.get('MAX_SIZE_IN_MB_ALL_LOGS')\n max_logfiles_num = os.environ.get('MAX_LOGFILES_NUM')\n\n return {\n 'loglevel': loglevel,\n 'logfromothermodules': logfromothermodules,\n 'sleep_secs': sleep_secs,\n 'max_logfiles_num': max_logfiles_num,\n 'max_size_in_mb_all_logs': max_size_in_mb_all_logs,\n }\n\n\ndef load_config() -> List[str]:\n env_config = load_config_from_env()\n file_config = load_config_from_file()\n\n logger.info('loading config from env')\n\n loglevel = env_config.get('loglevel')\n log_from_other_modules = env_config.get('logfromothermodules')\n sleep_secs = env_config.get('sleep_secs')\n max_logfiles_num = env_config.get('max_logfiles_num')\n max_size_in_mb_all_logs = env_config.get('max_size_in_mb_all_logs')\n\n if file_config is not None:\n logger.info('loading config from file')\n\n if file_config.get('loglevel') is not None:\n loglevel = file_config.get('loglevel')\n\n if file_config.get('logfromothermodules') is not None:\n log_from_other_modules = file_config.get('logfromothermodules')\n\n if 
file_config.get('sleep-secs') is not None:\n sleep_secs = file_config.get('sleep-secs')\n\n if file_config.get('max_logfiles_num') is not None:\n max_logfiles_num = file_config.get('max_logfiles_num')\n\n if file_config.get('max_size_in_mb_all_logs') is not None:\n max_size_in_mb_all_logs = file_config.get('max_size_in_mb_all_logs')\n\n args = [\n '--data-dir',\n '/data',\n '--logfile',\n '/logs/rotki.log',\n '--loglevel',\n loglevel if loglevel is not None else DEFAULT_LOG_LEVEL,\n ]\n\n if log_from_other_modules is True:\n args.append('--logfromothermodules')\n\n if sleep_secs is not None:\n args.append('--sleep-secs')\n args.append(str(sleep_secs))\n\n if max_logfiles_num is not None:\n args.append('--max-logfiles-num')\n args.append(str(max_logfiles_num))\n\n if max_size_in_mb_all_logs is not None:\n args.append('--max-size-in-mb-all-logs')\n args.append(str(max_size_in_mb_all_logs))\n\n return args\n\n\nbase_args = [\n '/usr/sbin/rotki',\n '--rest-api-port',\n '4242',\n '--websockets-api-port',\n '4243',\n '--api-cors',\n 'http://localhost:*/*,app://.',\n '--api-host',\n '0.0.0.0',\n]\n\nconfig_args = load_config()\ncmd = base_args + config_args\n\nlogger.info('starting rotki backend')\n\nrotki = subprocess.Popen(cmd)\n\nif rotki.returncode == 1:\n logger.error('Failed to start rotki')\n exit(1)\n\nlogger.info('starting nginx')\n\nnginx = subprocess.Popen('nginx -g \"daemon off;\"', shell=True)\n\nif nginx.returncode == 1:\n logger.error('Failed to start nginx')\n exit(1)\n\nwhile True:\n time.sleep(60)\n\n if rotki.poll() is not None:\n logger.error('rotki has terminated exiting')\n exit(1)\n\n if nginx.poll() is not None:\n logger.error('nginx was not running')\n exit(1)\n\n logger.info('OK: processes still running')\n", "path": "packaging/docker/entrypoint.py"}]}

| 1,932 | 472 |

gh_patches_debug_105

|

rasdani/github-patches

|

git_diff

|

celery__celery-3671

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Request on_timeout should ignore soft time limit exception

When Request.on_timeout receives a soft timeout from billiard, it behaves exactly as if it had received a hard time limit exception. This code is run by the controller.

But the task may catch this exception and, e.g., return normally (this is what soft timeouts are for).

This causes:

1. the result to be saved twice: once as an exception by the controller (on_timeout), and a second time with the value returned by the task

2. the task status to be set to failure and then to success in the same way

3. if the task is part of a chord, the chord result counter (at least with redis) to be incremented twice instead of once, making the chord return prematurely and eventually lose tasks…

Of course, 1, 2 and 3 can lead to strange race conditions…
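
The double reporting described above can be sketched with a toy model in plain Python. This is only an illustration under stated assumptions, not Celery's implementation: `ToyChord`, `task_body` and `simulate` are hypothetical names, the local `SoftTimeLimitExceeded` class merely stands in for the Celery exception, and the counter is assumed to reset once the callback has fired.

```python
class SoftTimeLimitExceeded(Exception):
    """Local stand-in for the Celery exception (assumption, not the real class)."""


class ToyChord:
    """Hypothetical chord counter: fires once `size` results were reported."""

    def __init__(self, size):
        self.size = size
        self.pending = []  # results reported since the last firing
        self.fired = []    # one entry per callback invocation

    def on_part_return(self, result):
        self.pending.append(result)
        if len(self.pending) == self.size:
            # Assumed behaviour: the counter is cleared after the callback fires.
            self.fired.append(self.pending)
            self.pending = []


def task_body():
    """A task that catches the soft time limit and still returns a value."""
    try:
        raise SoftTimeLimitExceeded()
    except SoftTimeLimitExceeded:
        return 1


def simulate(controller_reports_soft_timeout, n_tasks=2):
    chord = ToyChord(n_tasks)
    if controller_reports_soft_timeout:
        # Controller side: each soft timeout is stored as a failure.
        for _ in range(n_tasks):
            chord.on_part_return(SoftTimeLimitExceeded())
    # Worker side: each task recovers and reports its own result.
    for _ in range(n_tasks):
        chord.on_part_return(task_body())
    return chord.fired


buggy = simulate(controller_reports_soft_timeout=True)
fixed = simulate(controller_reports_soft_timeout=False)
print(len(buggy), len(fixed))  # prints "2 1"
```

In this model the callback fires twice when the controller also reports the soft timeout (first with two exceptions, then with `[1, 1]`), and only once with `[1, 1]` when it does not, which is the pattern the worker log further down appears to show.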

## Steps to reproduce (Illustration)

With the following program in test_timeout.py:

```python
import time

import celery

app = celery.Celery('test_timeout')
app.conf.update(
    result_backend="redis://localhost/0",
    broker_url="amqp://celery:celery@localhost:5672/host",
)


@app.task(soft_time_limit=1)
def test():
    try:
        time.sleep(2)
    except Exception:
        return 1


@app.task()
def add(args):
    print("### adding", args)
    return sum(args)


@app.task()
def on_error(context, exception, traceback, **kwargs):
    print("### on_error: ", exception)


if __name__ == "__main__":
    result = celery.chord([test.s().set(link_error=on_error.s()), test.s().set(link_error=on_error.s())])(add.s())
    result.get()
```

Start a worker, then run the program:

```
$ celery -A test_timeout worker -l WARNING
$ python3 test_timeout.py
```

## Expected behavior

The add method is called with `[1, 1]` as its argument and test_timeout.py returns normally.

## Actual behavior

test_timeout.py fails with:

```

celery.backends.base.ChordError: Callback error: ChordError("Dependency 15109e05-da43-449f-9081-85d839ac0ef2 raised SoftTimeLimitExceeded('SoftTimeLimitExceeded(True,)',)",

```

On the worker side, **on_error is called, but so is the add method!**

```
[2017-11-29 23:07:25,538: WARNING/MainProcess] Soft time limit (1s) exceeded for test_timeout.test[15109e05-da43-449f-9081-85d839ac0ef2]
[2017-11-29 23:07:25,546: WARNING/MainProcess] ### on_error:
[2017-11-29 23:07:25,546: WARNING/MainProcess] SoftTimeLimitExceeded(True,)
[2017-11-29 23:07:25,547: WARNING/MainProcess] Soft time limit (1s) exceeded for test_timeout.test[38f3f7f2-4a89-4318-8ee9-36a987f73757]
[2017-11-29 23:07:25,553: ERROR/MainProcess] Chord callback for 'ef6d7a38-d1b4-40ad-b937-ffa84e40bb23' raised: ChordError("Dependency 15109e05-da43-449f-9081-85d839ac0ef2 raised SoftTimeLimitExceeded('SoftTimeLimitExceeded(True,)',)",)
Traceback (most recent call last):
  File "/usr/local/lib/python3.4/dist-packages/celery/backends/redis.py", line 290, in on_chord_part_return
    callback.delay([unpack(tup, decode) for tup in resl])
  File "/usr/local/lib/python3.4/dist-packages/celery/backends/redis.py", line 290, in <listcomp>
    callback.delay([unpack(tup, decode) for tup in resl])
  File "/usr/local/lib/python3.4/dist-packages/celery/backends/redis.py", line 243, in _unpack_chord_result
    raise ChordError('Dependency {0} raised {1!r}'.format(tid, retval))
celery.exceptions.ChordError: Dependency 15109e05-da43-449f-9081-85d839ac0ef2 raised SoftTimeLimitExceeded('SoftTimeLimitExceeded(True,)',)
[2017-11-29 23:07:25,565: WARNING/MainProcess] ### on_error:
[2017-11-29 23:07:25,565: WARNING/MainProcess] SoftTimeLimitExceeded(True,)
[2017-11-29 23:07:27,262: WARNING/PoolWorker-2] ### adding
[2017-11-29 23:07:27,264: WARNING/PoolWorker-2] [1, 1]
```

I deliberately called test.s() twice to show that the chord counter keeps counting. In fact:

- the chord result counter is incremented twice by the soft time limit errors

- it is then incremented twice more when the `test` tasks return correctly

## Conclusion

Request.on_timeout should not process soft time limit exceptions.

Here is a quick monkey patch (the correction in celery itself is trivial):

```python

def patch_celery_request_on_timeout():
    from celery.worker import request
    orig = request.Request.on_timeout