problem_id

stringlengths 18

22

| source

stringclasses 1

value | task_type

stringclasses 1

value | in_source_id

stringlengths 13

58

| prompt

stringlengths 1.71k

18.9k

| golden_diff

stringlengths 145

5.13k

| verification_info

stringlengths 465

23.6k

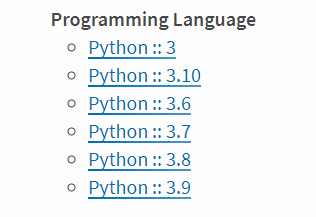

| num_tokens_prompt

int64 556

4.1k

| num_tokens_diff

int64 47

1.02k

|

|---|---|---|---|---|---|---|---|---|

gh_patches_debug_1403

|

rasdani/github-patches

|

git_diff

|

dotkom__onlineweb4-402

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

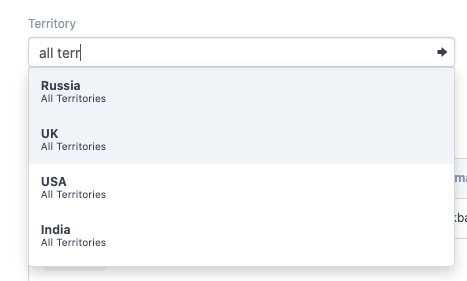

<issue>

Sort list of users when adding marks

When adding a mark, the list of user which the mark should relate to is not sorted. It should be. (It is probably sorted on realname instead of username)

- Change the list to display realname instead of username.

- Make sure it's sorted.

(Bonus would be to have a select2js-ish search on it as well, but don't use time on it.)

</issue>

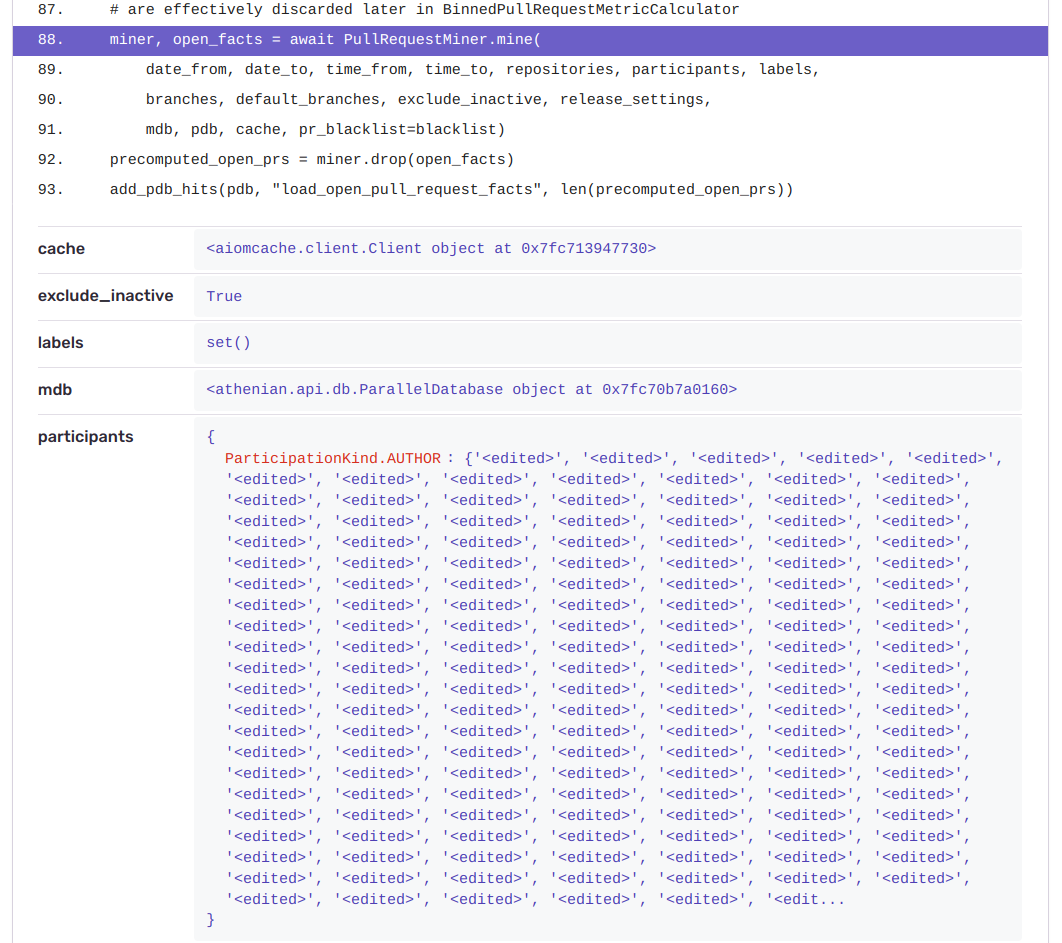

<code>

[start of apps/authentication/models.py]

1 # -*- coding: utf-8 -*-

2

3 import datetime

4 from pytz import timezone

5

6 from django.conf import settings

7 from django.contrib.auth.models import AbstractUser

8 from django.db import models

9 from django.utils.translation import ugettext as _

10 from django.utils import timezone

11

12

13 # If this list is changed, remember to check that the year property on

14 # OnlineUser is still correct!

15 FIELD_OF_STUDY_CHOICES = [

16 (0, _(u'Gjest')),

17 (1, _(u'Bachelor i Informatikk (BIT)')),

18 # master degrees take up the interval [10,30>

19 (10, _(u'Software (SW)')),

20 (11, _(u'Informasjonsforvaltning (DIF)')),

21 (12, _(u'Komplekse Datasystemer (KDS)')),

22 (13, _(u'Spillteknologi (SPT)')),

23 (14, _(u'Intelligente Systemer (IRS)')),

24 (15, _(u'Helseinformatikk (MSMEDTEK)')),

25 (30, _(u'Annen mastergrad')),

26 (80, _(u'PhD')),

27 (90, _(u'International')),

28 (100, _(u'Annet Onlinemedlem')),

29 ]

30

31 class OnlineUser(AbstractUser):

32

33 IMAGE_FOLDER = "images/profiles"

34 IMAGE_EXTENSIONS = ['.jpg', '.jpeg', '.gif', '.png']

35

36 # Online related fields

37 field_of_study = models.SmallIntegerField(_(u"studieretning"), choices=FIELD_OF_STUDY_CHOICES, default=0)

38 started_date = models.DateField(_(u"startet studie"), default=timezone.now().date())

39 compiled = models.BooleanField(_(u"kompilert"), default=False)

40

41 # Email

42 infomail = models.BooleanField(_(u"vil ha infomail"), default=True)

43

44 # Address

45 phone_number = models.CharField(_(u"telefonnummer"), max_length=20, blank=True, null=True)

46 address = models.CharField(_(u"adresse"), max_length=30, blank=True, null=True)

47 zip_code = models.CharField(_(u"postnummer"), max_length=4, blank=True, null=True)

48

49 # Other

50 allergies = models.TextField(_(u"allergier"), blank=True, null=True)

51 mark_rules = models.BooleanField(_(u"godtatt prikkeregler"), default=False)

52 rfid = models.CharField(_(u"RFID"), max_length=50, blank=True, null=True)

53 nickname = models.CharField(_(u"nickname"), max_length=50, blank=True, null=True)

54 website = models.URLField(_(u"hjemmeside"), blank=True, null=True)

55

56

57 image = models.ImageField(_(u"bilde"), max_length=200, upload_to=IMAGE_FOLDER, blank=True, null=True,

58 default=settings.DEFAULT_PROFILE_PICTURE_URL)

59

60 # NTNU credentials

61 ntnu_username = models.CharField(_(u"NTNU-brukernavn"), max_length=10, blank=True, null=True)

62

63 # TODO profile pictures

64 # TODO checkbox for forwarding of @online.ntnu.no mail

65

66 @property

67 def is_member(self):

68 """

69 Returns true if the User object is associated with Online.

70 """

71 if AllowedUsername.objects.filter(username=self.ntnu_username).filter(expiration_date__gte=timezone.now()).count() > 0:

72 return True

73 return False

74

75 def get_full_name(self):

76 """

77 Returns the first_name plus the last_name, with a space in between.

78 """

79 full_name = u'%s %s' % (self.first_name, self.last_name)

80 return full_name.strip()

81

82 def get_email(self):

83 return self.get_emails().filter(primary = True)[0]

84

85 def get_emails(self):

86 return Email.objects.all().filter(user = self)

87

88 @property

89 def year(self):

90 today = timezone.now().date()

91 started = self.started_date

92

93 # We say that a year is 360 days incase we are a bit slower to

94 # add users one year.

95 year = ((today - started).days / 360) + 1

96

97 if self.field_of_study == 0 or self.field_of_study == 100: # others

98 return 0

99 # dont return a bachelor student as 4th or 5th grade

100 elif self.field_of_study == 1: # bachelor

101 if year > 3:

102 return 3

103 return year

104 elif 9 < self.field_of_study < 30: # 10-29 is considered master

105 if year >= 2:

106 return 5

107 return 4

108 elif self.field_of_study == 80: # phd

109 return year + 5

110 elif self.field_of_study == 90: # international

111 if year == 1:

112 return 1

113 return 4

114

115 def __unicode__(self):

116 return self.get_full_name()

117

118 class Meta:

119 verbose_name = _(u"brukerprofil")

120 verbose_name_plural = _(u"brukerprofiler")

121

122

123 class Email(models.Model):

124 user = models.ForeignKey(OnlineUser, related_name="email_user")

125 email = models.EmailField(_(u"epostadresse"), unique=True)

126 primary = models.BooleanField(_(u"aktiv"), default=False)

127 verified = models.BooleanField(_(u"verifisert"), default=False)

128

129 def __unicode__(self):

130 return self.email

131

132 class Meta:

133 verbose_name = _(u"epostadresse")

134 verbose_name_plural = _(u"epostadresser")

135

136

137 class RegisterToken(models.Model):

138 user = models.ForeignKey(OnlineUser, related_name="register_user")

139 email = models.EmailField(_(u"epost"), max_length=254)

140 token = models.CharField(_(u"token"), max_length=32)

141 created = models.DateTimeField(_(u"opprettet dato"), editable=False, auto_now_add=True)

142

143 @property

144 def is_valid(self):

145 valid_period = datetime.timedelta(days=1)

146 now = timezone.now()

147 return now < self.created + valid_period

148

149

150 class AllowedUsername(models.Model):

151 """

152 Holds usernames that are considered valid members of Online and the time they expire.

153 """

154 username = models.CharField(_(u"brukernavn"), max_length=10)

155 registered = models.DateField(_(u"registrert"))

156 note = models.CharField(_(u"notat"), max_length=100)

157 description = models.TextField(_(u"beskrivelse"), blank=True, null=True)

158 expiration_date = models.DateField(_(u"utløpsdato"))

159

160 @property

161 def is_active(self):

162 return timezone.now().date() < self.expiration_date

163

164 def __unicode__(self):

165 return self.username

166

167 class Meta:

168 verbose_name = _(u"tillatt brukernavn")

169 verbose_name_plural = _(u"tillatte brukernavn")

170 ordering = (u"username",)

171

[end of apps/authentication/models.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/apps/authentication/models.py b/apps/authentication/models.py

--- a/apps/authentication/models.py

+++ b/apps/authentication/models.py

@@ -116,6 +116,7 @@

return self.get_full_name()

class Meta:

+ ordering = ['first_name', 'last_name']

verbose_name = _(u"brukerprofil")

verbose_name_plural = _(u"brukerprofiler")
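
As a rough illustration of what the `ordering = ['first_name', 'last_name']` line in the diff above asks for, the plain-Python sketch below sorts records by first name and then by last name. It does not use Django: the `User` tuple and the `sort_users` helper are hypothetical names introduced only for this example, and a real database may collate strings differently from Python's default comparison.

```python
from collections import namedtuple

# Hypothetical stand-in for a user row; this is not the real OnlineUser model.
User = namedtuple("User", ["username", "first_name", "last_name"])


def sort_users(users):
    """Sort by first_name, then last_name, mirroring the Meta.ordering in the diff."""
    return sorted(users, key=lambda u: (u.first_name, u.last_name))


users = [
    User("zed", "Anna", "Olsen"),
    User("abc", "Bjorn", "Hansen"),
    User("mno", "Anna", "Berg"),
]

# Usernames are deliberately out of step with real names, so sorting by
# username and sorting by real name give visibly different orders.
for user in sort_users(users):
    print(user.first_name, user.last_name)
```

Because the ordering is declared on the model's `Meta`, it becomes the default for querysets of that model, which is presumably why the user list shown when adding a mark ends up sorted without further changes.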

|

{"golden_diff": "diff --git a/apps/authentication/models.py b/apps/authentication/models.py\n--- a/apps/authentication/models.py\n+++ b/apps/authentication/models.py\n@@ -116,6 +116,7 @@\n return self.get_full_name()\n \n class Meta:\n+ ordering = ['first_name', 'last_name']\n verbose_name = _(u\"brukerprofil\")\n verbose_name_plural = _(u\"brukerprofiler\")\n", "issue": "Sort list of users when adding marks\nWhen adding a mark, the list of user which the mark should relate to is not sorted. It should be. (It is probably sorted on realname instead of username)\n- Change the list to display realname instead of username.\n- Make sure it's sorted.\n\n(Bonus would be to have a select2js-ish search on it as well, but don't use time on it.)\n\n", "before_files": [{"content": "# -*- coding: utf-8 -*-\n\nimport datetime\nfrom pytz import timezone\n\nfrom django.conf import settings\nfrom django.contrib.auth.models import AbstractUser\nfrom django.db import models\nfrom django.utils.translation import ugettext as _\nfrom django.utils import timezone\n\n\n# If this list is changed, remember to check that the year property on\n# OnlineUser is still correct!\nFIELD_OF_STUDY_CHOICES = [\n (0, _(u'Gjest')),\n (1, _(u'Bachelor i Informatikk (BIT)')),\n # master degrees take up the interval [10,30>\n (10, _(u'Software (SW)')),\n (11, _(u'Informasjonsforvaltning (DIF)')),\n (12, _(u'Komplekse Datasystemer (KDS)')),\n (13, _(u'Spillteknologi (SPT)')),\n (14, _(u'Intelligente Systemer (IRS)')),\n (15, _(u'Helseinformatikk (MSMEDTEK)')),\n (30, _(u'Annen mastergrad')),\n (80, _(u'PhD')),\n (90, _(u'International')),\n (100, _(u'Annet Onlinemedlem')),\n]\n\nclass OnlineUser(AbstractUser):\n\n IMAGE_FOLDER = \"images/profiles\"\n IMAGE_EXTENSIONS = ['.jpg', '.jpeg', '.gif', '.png']\n \n # Online related fields\n field_of_study = models.SmallIntegerField(_(u\"studieretning\"), choices=FIELD_OF_STUDY_CHOICES, default=0)\n started_date = models.DateField(_(u\"startet studie\"), 
default=timezone.now().date())\n compiled = models.BooleanField(_(u\"kompilert\"), default=False)\n\n # Email\n infomail = models.BooleanField(_(u\"vil ha infomail\"), default=True)\n\n # Address\n phone_number = models.CharField(_(u\"telefonnummer\"), max_length=20, blank=True, null=True)\n address = models.CharField(_(u\"adresse\"), max_length=30, blank=True, null=True)\n zip_code = models.CharField(_(u\"postnummer\"), max_length=4, blank=True, null=True)\n\n # Other\n allergies = models.TextField(_(u\"allergier\"), blank=True, null=True)\n mark_rules = models.BooleanField(_(u\"godtatt prikkeregler\"), default=False)\n rfid = models.CharField(_(u\"RFID\"), max_length=50, blank=True, null=True)\n nickname = models.CharField(_(u\"nickname\"), max_length=50, blank=True, null=True)\n website = models.URLField(_(u\"hjemmeside\"), blank=True, null=True)\n\n\n image = models.ImageField(_(u\"bilde\"), max_length=200, upload_to=IMAGE_FOLDER, blank=True, null=True,\n default=settings.DEFAULT_PROFILE_PICTURE_URL)\n\n # NTNU credentials\n ntnu_username = models.CharField(_(u\"NTNU-brukernavn\"), max_length=10, blank=True, null=True)\n\n # TODO profile pictures\n # TODO checkbox for forwarding of @online.ntnu.no mail\n \n @property\n def is_member(self):\n \"\"\"\n Returns true if the User object is associated with Online.\n \"\"\"\n if AllowedUsername.objects.filter(username=self.ntnu_username).filter(expiration_date__gte=timezone.now()).count() > 0:\n return True\n return False\n\n def get_full_name(self):\n \"\"\"\n Returns the first_name plus the last_name, with a space in between.\n \"\"\"\n full_name = u'%s %s' % (self.first_name, self.last_name)\n return full_name.strip()\n\n def get_email(self):\n return self.get_emails().filter(primary = True)[0]\n\n def get_emails(self):\n return Email.objects.all().filter(user = self)\n\n @property\n def year(self):\n today = timezone.now().date()\n started = self.started_date\n\n # We say that a year is 360 days incase we are a 
bit slower to\n # add users one year.\n year = ((today - started).days / 360) + 1\n\n if self.field_of_study == 0 or self.field_of_study == 100: # others\n return 0\n # dont return a bachelor student as 4th or 5th grade\n elif self.field_of_study == 1: # bachelor\n if year > 3:\n return 3\n return year\n elif 9 < self.field_of_study < 30: # 10-29 is considered master\n if year >= 2:\n return 5\n return 4\n elif self.field_of_study == 80: # phd\n return year + 5\n elif self.field_of_study == 90: # international\n if year == 1:\n return 1\n return 4\n\n def __unicode__(self):\n return self.get_full_name()\n\n class Meta:\n verbose_name = _(u\"brukerprofil\")\n verbose_name_plural = _(u\"brukerprofiler\")\n\n\nclass Email(models.Model):\n user = models.ForeignKey(OnlineUser, related_name=\"email_user\")\n email = models.EmailField(_(u\"epostadresse\"), unique=True)\n primary = models.BooleanField(_(u\"aktiv\"), default=False)\n verified = models.BooleanField(_(u\"verifisert\"), default=False)\n\n def __unicode__(self):\n return self.email\n\n class Meta:\n verbose_name = _(u\"epostadresse\")\n verbose_name_plural = _(u\"epostadresser\")\n\n\nclass RegisterToken(models.Model):\n user = models.ForeignKey(OnlineUser, related_name=\"register_user\")\n email = models.EmailField(_(u\"epost\"), max_length=254)\n token = models.CharField(_(u\"token\"), max_length=32)\n created = models.DateTimeField(_(u\"opprettet dato\"), editable=False, auto_now_add=True)\n\n @property\n def is_valid(self):\n valid_period = datetime.timedelta(days=1)\n now = timezone.now()\n return now < self.created + valid_period \n\n\nclass AllowedUsername(models.Model):\n \"\"\"\n Holds usernames that are considered valid members of Online and the time they expire.\n \"\"\"\n username = models.CharField(_(u\"brukernavn\"), max_length=10)\n registered = models.DateField(_(u\"registrert\"))\n note = models.CharField(_(u\"notat\"), max_length=100)\n description = models.TextField(_(u\"beskrivelse\"), 
blank=True, null=True)\n expiration_date = models.DateField(_(u\"utl\u00f8psdato\"))\n\n @property\n def is_active(self):\n return timezone.now().date() < self.expiration_date\n\n def __unicode__(self):\n return self.username\n\n class Meta:\n verbose_name = _(u\"tillatt brukernavn\")\n verbose_name_plural = _(u\"tillatte brukernavn\")\n ordering = (u\"username\",)\n", "path": "apps/authentication/models.py"}]}

| 2,615 | 92 |

gh_patches_debug_25973

|

rasdani/github-patches

|

git_diff

|

pex-tool__pex-435

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

pex 1.2.14 breaks entrypoint targeting when PEX_PYTHON is present

with 1.2.13, targeting an entrypoint with `-e` et al results in an attempt to load that entrypoint at runtime:

```

[omerta ~]$ pip install pex==1.2.13 2>&1 >/dev/null

[omerta ~]$ pex --version

pex 1.2.13

[omerta ~]$ pex -e 'pants.bin.pants_loader:main' pantsbuild.pants -o /tmp/pants.pex

[omerta ~]$ /tmp/pants.pex

Traceback (most recent call last):

File ".bootstrap/_pex/pex.py", line 365, in execute

File ".bootstrap/_pex/pex.py", line 293, in _wrap_coverage

File ".bootstrap/_pex/pex.py", line 325, in _wrap_profiling

File ".bootstrap/_pex/pex.py", line 408, in _execute

File ".bootstrap/_pex/pex.py", line 466, in execute_entry

File ".bootstrap/_pex/pex.py", line 480, in execute_pkg_resources

File ".bootstrap/pkg_resources/__init__.py", line 2297, in resolve

ImportError: No module named pants_loader

```

with 1.2.14, it seems to be re-execing against the `PEX_PYTHON` interpreter sans args which results in a bare repl when the pex is run:

```

[omerta ~]$ pip install pex==1.2.14 2>&1 >/dev/null

[omerta ~]$ pex --version

pex 1.2.14

[omerta ~]$ pex -e 'pants.bin.pants_loader:main' pantsbuild.pants -o /tmp/pants.pex

[omerta ~]$ PEX_VERBOSE=9 /tmp/pants.pex

pex: Please build pex with the subprocess32 module for more reliable requirement installation and interpreter execution.

pex: Selecting runtime interpreter based on pexrc: 0.1ms

pex: Re-executing: cmdline="['/opt/ee/python/2.7/bin/python2.7']", sys.executable="/Users/kwilson/Python/CPython-2.7.13/bin/python2.7", PEX_PYTHON="None", PEX_PYTHON_PATH="None", COMPATIBILITY_CONSTRAINTS="[]"

Python 2.7.10 (default, Dec 16 2015, 14:09:45)

[GCC 4.2.1 Compatible Apple LLVM 7.0.2 (clang-700.1.81)] on darwin

Type "help", "copyright", "credits" or "license" for more information.

>>>

```

cc @CMLivingston since this appears related to #427

</issue>

<code>

[start of pex/pex_bootstrapper.py]

1 # Copyright 2014 Pants project contributors (see CONTRIBUTORS.md).

2 # Licensed under the Apache License, Version 2.0 (see LICENSE).

3 from __future__ import print_function

4 import os

5 import sys

6

7 from .common import die, open_zip

8 from .executor import Executor

9 from .interpreter import PythonInterpreter

10 from .interpreter_constraints import matched_interpreters

11 from .tracer import TRACER

12 from .variables import ENV

13

14 __all__ = ('bootstrap_pex',)

15

16

17 def pex_info_name(entry_point):

18 """Return the PEX-INFO for an entry_point"""

19 return os.path.join(entry_point, 'PEX-INFO')

20

21

22 def is_compressed(entry_point):

23 return os.path.exists(entry_point) and not os.path.exists(pex_info_name(entry_point))

24

25

26 def read_pexinfo_from_directory(entry_point):

27 with open(pex_info_name(entry_point), 'rb') as fp:

28 return fp.read()

29

30

31 def read_pexinfo_from_zip(entry_point):

32 with open_zip(entry_point) as zf:

33 return zf.read('PEX-INFO')

34

35

36 def read_pex_info_content(entry_point):

37 """Return the raw content of a PEX-INFO."""

38 if is_compressed(entry_point):

39 return read_pexinfo_from_zip(entry_point)

40 else:

41 return read_pexinfo_from_directory(entry_point)

42

43

44 def get_pex_info(entry_point):

45 """Return the PexInfo object for an entry point."""

46 from . import pex_info

47

48 pex_info_content = read_pex_info_content(entry_point)

49 if pex_info_content:

50 return pex_info.PexInfo.from_json(pex_info_content)

51 raise ValueError('Invalid entry_point: %s' % entry_point)

52

53

54 def find_in_path(target_interpreter):

55 if os.path.exists(target_interpreter):

56 return target_interpreter

57

58 for directory in os.getenv('PATH', '').split(os.pathsep):

59 try_path = os.path.join(directory, target_interpreter)

60 if os.path.exists(try_path):

61 return try_path

62

63

64 def find_compatible_interpreters(pex_python_path, compatibility_constraints):

65 """Find all compatible interpreters on the system within the supplied constraints and use

66 PEX_PYTHON_PATH if it is set. If not, fall back to interpreters on $PATH.

67 """

68 if pex_python_path:

69 interpreters = []

70 for binary in pex_python_path.split(os.pathsep):

71 try:

72 interpreters.append(PythonInterpreter.from_binary(binary))

73 except Executor.ExecutionError:

74 print("Python interpreter %s in PEX_PYTHON_PATH failed to load properly." % binary,

75 file=sys.stderr)

76 if not interpreters:

77 die('PEX_PYTHON_PATH was defined, but no valid interpreters could be identified. Exiting.')

78 else:

79 if not os.getenv('PATH', ''):

80 # no $PATH, use sys.executable

81 interpreters = [PythonInterpreter.get()]

82 else:

83 # get all qualifying interpreters found in $PATH

84 interpreters = PythonInterpreter.all()

85

86 return list(matched_interpreters(

87 interpreters, compatibility_constraints, meet_all_constraints=True))

88

89

90 def _select_pex_python_interpreter(target_python, compatibility_constraints):

91 target = find_in_path(target_python)

92

93 if not target:

94 die('Failed to find interpreter specified by PEX_PYTHON: %s' % target)

95 if compatibility_constraints:

96 pi = PythonInterpreter.from_binary(target)

97 if not list(matched_interpreters([pi], compatibility_constraints, meet_all_constraints=True)):

98 die('Interpreter specified by PEX_PYTHON (%s) is not compatible with specified '

99 'interpreter constraints: %s' % (target, str(compatibility_constraints)))

100 if not os.path.exists(target):

101 die('Target interpreter specified by PEX_PYTHON %s does not exist. Exiting.' % target)

102 return target

103

104

105 def _select_interpreter(pex_python_path, compatibility_constraints):

106 compatible_interpreters = find_compatible_interpreters(

107 pex_python_path, compatibility_constraints)

108

109 if not compatible_interpreters:

110 die('Failed to find compatible interpreter for constraints: %s'

111 % str(compatibility_constraints))

112 # TODO: https://github.com/pantsbuild/pex/issues/430

113 target = min(compatible_interpreters).binary

114

115 if os.path.exists(target) and os.path.realpath(target) != os.path.realpath(sys.executable):

116 return target

117

118

119 def maybe_reexec_pex(compatibility_constraints):

120 """

121 Handle environment overrides for the Python interpreter to use when executing this pex.

122

123 This function supports interpreter filtering based on interpreter constraints stored in PEX-INFO

124 metadata. If PEX_PYTHON is set in a pexrc, it attempts to obtain the binary location of the

125 interpreter specified by PEX_PYTHON. If PEX_PYTHON_PATH is set, it attempts to search the path for

126 a matching interpreter in accordance with the interpreter constraints. If both variables are

127 present in a pexrc, this function gives precedence to PEX_PYTHON_PATH and errors out if no

128 compatible interpreters can be found on said path. If neither variable is set, fall through to

129 plain pex execution using PATH searching or the currently executing interpreter.

130

131 :param compatibility_constraints: list of requirements-style strings that constrain the

132 Python interpreter to re-exec this pex with.

133

134 """

135 if ENV.SHOULD_EXIT_BOOTSTRAP_REEXEC:

136 return

137

138 selected_interpreter = None

139 with TRACER.timed('Selecting runtime interpreter based on pexrc', V=3):

140 if ENV.PEX_PYTHON and not ENV.PEX_PYTHON_PATH:

141 # preserve PEX_PYTHON re-exec for backwards compatibility

142 # TODO: Kill this off completely in favor of PEX_PYTHON_PATH

143 # https://github.com/pantsbuild/pex/issues/431

144 selected_interpreter = _select_pex_python_interpreter(ENV.PEX_PYTHON,

145 compatibility_constraints)

146 elif ENV.PEX_PYTHON_PATH:

147 selected_interpreter = _select_interpreter(ENV.PEX_PYTHON_PATH, compatibility_constraints)

148

149 if selected_interpreter:

150 ENV.delete('PEX_PYTHON')

151 ENV.delete('PEX_PYTHON_PATH')

152 ENV.SHOULD_EXIT_BOOTSTRAP_REEXEC = True

153 cmdline = [selected_interpreter] + sys.argv[1:]

154 TRACER.log('Re-executing: cmdline="%s", sys.executable="%s", PEX_PYTHON="%s", '

155 'PEX_PYTHON_PATH="%s", COMPATIBILITY_CONSTRAINTS="%s"'

156 % (cmdline, sys.executable, ENV.PEX_PYTHON, ENV.PEX_PYTHON_PATH,

157 compatibility_constraints))

158 os.execve(selected_interpreter, cmdline, ENV.copy())

159

160

161 def bootstrap_pex(entry_point):

162 from .finders import register_finders

163 register_finders()

164 pex_info = get_pex_info(entry_point)

165 maybe_reexec_pex(pex_info.interpreter_constraints)

166

167 from . import pex

168 pex.PEX(entry_point).execute()

169

170

171 def bootstrap_pex_env(entry_point):

172 """Bootstrap the current runtime environment using a given pex."""

173 from .environment import PEXEnvironment

174 from .finders import register_finders

175 from .pex_info import PexInfo

176

177 register_finders()

178

179 PEXEnvironment(entry_point, PexInfo.from_pex(entry_point)).activate()

180

[end of pex/pex_bootstrapper.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/pex/pex_bootstrapper.py b/pex/pex_bootstrapper.py

--- a/pex/pex_bootstrapper.py

+++ b/pex/pex_bootstrapper.py

@@ -1,6 +1,7 @@

# Copyright 2014 Pants project contributors (see CONTRIBUTORS.md).

# Licensed under the Apache License, Version 2.0 (see LICENSE).

from __future__ import print_function

+

import os

import sys

@@ -147,14 +148,14 @@

selected_interpreter = _select_interpreter(ENV.PEX_PYTHON_PATH, compatibility_constraints)

if selected_interpreter:

- ENV.delete('PEX_PYTHON')

- ENV.delete('PEX_PYTHON_PATH')

- ENV.SHOULD_EXIT_BOOTSTRAP_REEXEC = True

- cmdline = [selected_interpreter] + sys.argv[1:]

+ cmdline = [selected_interpreter] + sys.argv

TRACER.log('Re-executing: cmdline="%s", sys.executable="%s", PEX_PYTHON="%s", '

'PEX_PYTHON_PATH="%s", COMPATIBILITY_CONSTRAINTS="%s"'

% (cmdline, sys.executable, ENV.PEX_PYTHON, ENV.PEX_PYTHON_PATH,

compatibility_constraints))

+ ENV.delete('PEX_PYTHON')

+ ENV.delete('PEX_PYTHON_PATH')

+ ENV.SHOULD_EXIT_BOOTSTRAP_REEXEC = True

os.execve(selected_interpreter, cmdline, ENV.copy())
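
The key change in the diff above is passing the full `sys.argv` to the re-exec instead of `sys.argv[1:]`. The sketch below shows why that matters, under the assumption that `argv[0]` is the path of the pex file being run. The helper `build_reexec_cmdline` and the interpreter path `/usr/bin/python2.7` are hypothetical and are not part of pex.

```python
def build_reexec_cmdline(interpreter, argv, keep_script=True):
    """Build the argument list that would be handed to os.execve.

    Hypothetical helper for illustration only. argv[0] is assumed to be the
    path of the pex file; if it is dropped, the target interpreter gets no
    script to run and starts an interactive REPL instead.
    """
    args = argv if keep_script else argv[1:]
    return [interpreter] + list(args)


argv = ["/tmp/pants.pex", "--version"]

# Behaviour reported for 1.2.14: the pex path is lost.
print(build_reexec_cmdline("/usr/bin/python2.7", argv, keep_script=False))

# Behaviour after the fix: the interpreter is asked to run the pex file.
print(build_reexec_cmdline("/usr/bin/python2.7", argv))
```

The diff also moves the `ENV.delete(...)` calls below the `TRACER.log(...)` call. That appears to be why the log in the issue printed `PEX_PYTHON="None"`: the variables had already been deleted by the time they were logged.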

|

{"golden_diff": "diff --git a/pex/pex_bootstrapper.py b/pex/pex_bootstrapper.py\n--- a/pex/pex_bootstrapper.py\n+++ b/pex/pex_bootstrapper.py\n@@ -1,6 +1,7 @@\n # Copyright 2014 Pants project contributors (see CONTRIBUTORS.md).\n # Licensed under the Apache License, Version 2.0 (see LICENSE).\n from __future__ import print_function\n+\n import os\n import sys\n \n@@ -147,14 +148,14 @@\n selected_interpreter = _select_interpreter(ENV.PEX_PYTHON_PATH, compatibility_constraints)\n \n if selected_interpreter:\n- ENV.delete('PEX_PYTHON')\n- ENV.delete('PEX_PYTHON_PATH')\n- ENV.SHOULD_EXIT_BOOTSTRAP_REEXEC = True\n- cmdline = [selected_interpreter] + sys.argv[1:]\n+ cmdline = [selected_interpreter] + sys.argv\n TRACER.log('Re-executing: cmdline=\"%s\", sys.executable=\"%s\", PEX_PYTHON=\"%s\", '\n 'PEX_PYTHON_PATH=\"%s\", COMPATIBILITY_CONSTRAINTS=\"%s\"'\n % (cmdline, sys.executable, ENV.PEX_PYTHON, ENV.PEX_PYTHON_PATH,\n compatibility_constraints))\n+ ENV.delete('PEX_PYTHON')\n+ ENV.delete('PEX_PYTHON_PATH')\n+ ENV.SHOULD_EXIT_BOOTSTRAP_REEXEC = True\n os.execve(selected_interpreter, cmdline, ENV.copy())\n", "issue": "pex 1.2.14 breaks entrypoint targeting when PEX_PYTHON is present\nwith 1.2.13, targeting an entrypoint with `-e` et al results in an attempt to load that entrypoint at runtime:\r\n\r\n```\r\n[omerta ~]$ pip install pex==1.2.13 2>&1 >/dev/null\r\n[omerta ~]$ pex --version\r\npex 1.2.13\r\n[omerta ~]$ pex -e 'pants.bin.pants_loader:main' pantsbuild.pants -o /tmp/pants.pex\r\n[omerta ~]$ /tmp/pants.pex\r\nTraceback (most recent call last):\r\n File \".bootstrap/_pex/pex.py\", line 365, in execute\r\n File \".bootstrap/_pex/pex.py\", line 293, in _wrap_coverage\r\n File \".bootstrap/_pex/pex.py\", line 325, in _wrap_profiling\r\n File \".bootstrap/_pex/pex.py\", line 408, in _execute\r\n File \".bootstrap/_pex/pex.py\", line 466, in execute_entry\r\n File \".bootstrap/_pex/pex.py\", line 480, in execute_pkg_resources\r\n File 
\".bootstrap/pkg_resources/__init__.py\", line 2297, in resolve\r\nImportError: No module named pants_loader\r\n```\r\n\r\nwith 1.2.14, it seems to be re-execing against the `PEX_PYTHON` interpreter sans args which results in a bare repl when the pex is run:\r\n\r\n```\r\n[omerta ~]$ pip install pex==1.2.14 2>&1 >/dev/null\r\n[omerta ~]$ pex --version\r\npex 1.2.14\r\n[omerta ~]$ pex -e 'pants.bin.pants_loader:main' pantsbuild.pants -o /tmp/pants.pex\r\n[omerta ~]$ PEX_VERBOSE=9 /tmp/pants.pex\r\npex: Please build pex with the subprocess32 module for more reliable requirement installation and interpreter execution.\r\npex: Selecting runtime interpreter based on pexrc: 0.1ms\r\npex: Re-executing: cmdline=\"['/opt/ee/python/2.7/bin/python2.7']\", sys.executable=\"/Users/kwilson/Python/CPython-2.7.13/bin/python2.7\", PEX_PYTHON=\"None\", PEX_PYTHON_PATH=\"None\", COMPATIBILITY_CONSTRAINTS=\"[]\"\r\nPython 2.7.10 (default, Dec 16 2015, 14:09:45) \r\n[GCC 4.2.1 Compatible Apple LLVM 7.0.2 (clang-700.1.81)] on darwin\r\nType \"help\", \"copyright\", \"credits\" or \"license\" for more information.\r\n>>> \r\n```\r\n\r\ncc @CMLivingston since this appears related to #427 \n", "before_files": [{"content": "# Copyright 2014 Pants project contributors (see CONTRIBUTORS.md).\n# Licensed under the Apache License, Version 2.0 (see LICENSE).\nfrom __future__ import print_function\nimport os\nimport sys\n\nfrom .common import die, open_zip\nfrom .executor import Executor\nfrom .interpreter import PythonInterpreter\nfrom .interpreter_constraints import matched_interpreters\nfrom .tracer import TRACER\nfrom .variables import ENV\n\n__all__ = ('bootstrap_pex',)\n\n\ndef pex_info_name(entry_point):\n \"\"\"Return the PEX-INFO for an entry_point\"\"\"\n return os.path.join(entry_point, 'PEX-INFO')\n\n\ndef is_compressed(entry_point):\n return os.path.exists(entry_point) and not os.path.exists(pex_info_name(entry_point))\n\n\ndef read_pexinfo_from_directory(entry_point):\n with 
open(pex_info_name(entry_point), 'rb') as fp:\n return fp.read()\n\n\ndef read_pexinfo_from_zip(entry_point):\n with open_zip(entry_point) as zf:\n return zf.read('PEX-INFO')\n\n\ndef read_pex_info_content(entry_point):\n \"\"\"Return the raw content of a PEX-INFO.\"\"\"\n if is_compressed(entry_point):\n return read_pexinfo_from_zip(entry_point)\n else:\n return read_pexinfo_from_directory(entry_point)\n\n\ndef get_pex_info(entry_point):\n \"\"\"Return the PexInfo object for an entry point.\"\"\"\n from . import pex_info\n\n pex_info_content = read_pex_info_content(entry_point)\n if pex_info_content:\n return pex_info.PexInfo.from_json(pex_info_content)\n raise ValueError('Invalid entry_point: %s' % entry_point)\n\n\ndef find_in_path(target_interpreter):\n if os.path.exists(target_interpreter):\n return target_interpreter\n\n for directory in os.getenv('PATH', '').split(os.pathsep):\n try_path = os.path.join(directory, target_interpreter)\n if os.path.exists(try_path):\n return try_path\n\n\ndef find_compatible_interpreters(pex_python_path, compatibility_constraints):\n \"\"\"Find all compatible interpreters on the system within the supplied constraints and use\n PEX_PYTHON_PATH if it is set. If not, fall back to interpreters on $PATH.\n \"\"\"\n if pex_python_path:\n interpreters = []\n for binary in pex_python_path.split(os.pathsep):\n try:\n interpreters.append(PythonInterpreter.from_binary(binary))\n except Executor.ExecutionError:\n print(\"Python interpreter %s in PEX_PYTHON_PATH failed to load properly.\" % binary,\n file=sys.stderr)\n if not interpreters:\n die('PEX_PYTHON_PATH was defined, but no valid interpreters could be identified. 
Exiting.')\n else:\n if not os.getenv('PATH', ''):\n # no $PATH, use sys.executable\n interpreters = [PythonInterpreter.get()]\n else:\n # get all qualifying interpreters found in $PATH\n interpreters = PythonInterpreter.all()\n\n return list(matched_interpreters(\n interpreters, compatibility_constraints, meet_all_constraints=True))\n\n\ndef _select_pex_python_interpreter(target_python, compatibility_constraints):\n target = find_in_path(target_python)\n\n if not target:\n die('Failed to find interpreter specified by PEX_PYTHON: %s' % target)\n if compatibility_constraints:\n pi = PythonInterpreter.from_binary(target)\n if not list(matched_interpreters([pi], compatibility_constraints, meet_all_constraints=True)):\n die('Interpreter specified by PEX_PYTHON (%s) is not compatible with specified '\n 'interpreter constraints: %s' % (target, str(compatibility_constraints)))\n if not os.path.exists(target):\n die('Target interpreter specified by PEX_PYTHON %s does not exist. Exiting.' % target)\n return target\n\n\ndef _select_interpreter(pex_python_path, compatibility_constraints):\n compatible_interpreters = find_compatible_interpreters(\n pex_python_path, compatibility_constraints)\n\n if not compatible_interpreters:\n die('Failed to find compatible interpreter for constraints: %s'\n % str(compatibility_constraints))\n # TODO: https://github.com/pantsbuild/pex/issues/430\n target = min(compatible_interpreters).binary\n\n if os.path.exists(target) and os.path.realpath(target) != os.path.realpath(sys.executable):\n return target\n\n\ndef maybe_reexec_pex(compatibility_constraints):\n \"\"\"\n Handle environment overrides for the Python interpreter to use when executing this pex.\n\n This function supports interpreter filtering based on interpreter constraints stored in PEX-INFO\n metadata. If PEX_PYTHON is set in a pexrc, it attempts to obtain the binary location of the\n interpreter specified by PEX_PYTHON. 
If PEX_PYTHON_PATH is set, it attempts to search the path for\n a matching interpreter in accordance with the interpreter constraints. If both variables are\n present in a pexrc, this function gives precedence to PEX_PYTHON_PATH and errors out if no\n compatible interpreters can be found on said path. If neither variable is set, fall through to\n plain pex execution using PATH searching or the currently executing interpreter.\n\n :param compatibility_constraints: list of requirements-style strings that constrain the\n Python interpreter to re-exec this pex with.\n\n \"\"\"\n if ENV.SHOULD_EXIT_BOOTSTRAP_REEXEC:\n return\n\n selected_interpreter = None\n with TRACER.timed('Selecting runtime interpreter based on pexrc', V=3):\n if ENV.PEX_PYTHON and not ENV.PEX_PYTHON_PATH:\n # preserve PEX_PYTHON re-exec for backwards compatibility\n # TODO: Kill this off completely in favor of PEX_PYTHON_PATH\n # https://github.com/pantsbuild/pex/issues/431\n selected_interpreter = _select_pex_python_interpreter(ENV.PEX_PYTHON,\n compatibility_constraints)\n elif ENV.PEX_PYTHON_PATH:\n selected_interpreter = _select_interpreter(ENV.PEX_PYTHON_PATH, compatibility_constraints)\n\n if selected_interpreter:\n ENV.delete('PEX_PYTHON')\n ENV.delete('PEX_PYTHON_PATH')\n ENV.SHOULD_EXIT_BOOTSTRAP_REEXEC = True\n cmdline = [selected_interpreter] + sys.argv[1:]\n TRACER.log('Re-executing: cmdline=\"%s\", sys.executable=\"%s\", PEX_PYTHON=\"%s\", '\n 'PEX_PYTHON_PATH=\"%s\", COMPATIBILITY_CONSTRAINTS=\"%s\"'\n % (cmdline, sys.executable, ENV.PEX_PYTHON, ENV.PEX_PYTHON_PATH,\n compatibility_constraints))\n os.execve(selected_interpreter, cmdline, ENV.copy())\n\n\ndef bootstrap_pex(entry_point):\n from .finders import register_finders\n register_finders()\n pex_info = get_pex_info(entry_point)\n maybe_reexec_pex(pex_info.interpreter_constraints)\n\n from . 
import pex\n pex.PEX(entry_point).execute()\n\n\ndef bootstrap_pex_env(entry_point):\n \"\"\"Bootstrap the current runtime environment using a given pex.\"\"\"\n from .environment import PEXEnvironment\n from .finders import register_finders\n from .pex_info import PexInfo\n\n register_finders()\n\n PEXEnvironment(entry_point, PexInfo.from_pex(entry_point)).activate()\n", "path": "pex/pex_bootstrapper.py"}]}

| 3,244 | 330 |

gh_patches_debug_28309

|

rasdani/github-patches

|

git_diff

|

pre-commit__pre-commit-1142

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

include version information in error log

would be useful to include things like:

- pre-commit version

- sys.version

- sys.executable

</issue>

<code>

[start of pre_commit/error_handler.py]

1 from __future__ import absolute_import

2 from __future__ import print_function

3 from __future__ import unicode_literals

4

5 import contextlib

6 import os.path

7 import traceback

8

9 import six

10

11 from pre_commit import five

12 from pre_commit import output

13 from pre_commit.store import Store

14

15

16 class FatalError(RuntimeError):

17 pass

18

19

20 def _to_bytes(exc):

21 try:

22 return bytes(exc)

23 except Exception:

24 return six.text_type(exc).encode('UTF-8')

25

26

27 def _log_and_exit(msg, exc, formatted):

28 error_msg = b''.join((

29 five.to_bytes(msg), b': ',

30 five.to_bytes(type(exc).__name__), b': ',

31 _to_bytes(exc), b'\n',

32 ))

33 output.write(error_msg)

34 store = Store()

35 log_path = os.path.join(store.directory, 'pre-commit.log')

36 output.write_line('Check the log at {}'.format(log_path))

37 with open(log_path, 'wb') as log:

38 output.write(error_msg, stream=log)

39 output.write_line(formatted, stream=log)

40 raise SystemExit(1)

41

42

43 @contextlib.contextmanager

44 def error_handler():

45 try:

46 yield

47 except (Exception, KeyboardInterrupt) as e:

48 if isinstance(e, FatalError):

49 msg = 'An error has occurred'

50 elif isinstance(e, KeyboardInterrupt):

51 msg = 'Interrupted (^C)'

52 else:

53 msg = 'An unexpected error has occurred'

54 _log_and_exit(msg, e, traceback.format_exc())

55

[end of pre_commit/error_handler.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/pre_commit/error_handler.py b/pre_commit/error_handler.py

--- a/pre_commit/error_handler.py

+++ b/pre_commit/error_handler.py

@@ -4,10 +4,12 @@

import contextlib

import os.path

+import sys

import traceback

import six

+import pre_commit.constants as C

from pre_commit import five

from pre_commit import output

from pre_commit.store import Store

@@ -34,9 +36,36 @@

store = Store()

log_path = os.path.join(store.directory, 'pre-commit.log')

output.write_line('Check the log at {}'.format(log_path))

+

with open(log_path, 'wb') as log:

+ output.write_line(

+ '### version information\n```', stream=log,

+ )

+ output.write_line(

+ 'pre-commit.version: {}'.format(C.VERSION), stream=log,

+ )

+ output.write_line(

+ 'sys.version:\n{}'.format(

+ '\n'.join(

+ [

+ ' {}'.format(line)

+ for line in sys.version.splitlines()

+ ],

+ ),

+ ),

+ stream=log,

+ )

+ output.write_line(

+ 'sys.executable: {}'.format(sys.executable), stream=log,

+ )

+ output.write_line('os.name: {}'.format(os.name), stream=log)

+ output.write_line(

+ 'sys.platform: {}\n```'.format(sys.platform), stream=log,

+ )

+ output.write_line('### error information\n```', stream=log)

output.write(error_msg, stream=log)

output.write_line(formatted, stream=log)

+ output.write('\n```\n', stream=log)

raise SystemExit(1)

|

{"golden_diff": "diff --git a/pre_commit/error_handler.py b/pre_commit/error_handler.py\n--- a/pre_commit/error_handler.py\n+++ b/pre_commit/error_handler.py\n@@ -4,10 +4,12 @@\n \n import contextlib\n import os.path\n+import sys\n import traceback\n \n import six\n \n+import pre_commit.constants as C\n from pre_commit import five\n from pre_commit import output\n from pre_commit.store import Store\n@@ -34,9 +36,36 @@\n store = Store()\n log_path = os.path.join(store.directory, 'pre-commit.log')\n output.write_line('Check the log at {}'.format(log_path))\n+\n with open(log_path, 'wb') as log:\n+ output.write_line(\n+ '### version information\\n```', stream=log,\n+ )\n+ output.write_line(\n+ 'pre-commit.version: {}'.format(C.VERSION), stream=log,\n+ )\n+ output.write_line(\n+ 'sys.version:\\n{}'.format(\n+ '\\n'.join(\n+ [\n+ ' {}'.format(line)\n+ for line in sys.version.splitlines()\n+ ],\n+ ),\n+ ),\n+ stream=log,\n+ )\n+ output.write_line(\n+ 'sys.executable: {}'.format(sys.executable), stream=log,\n+ )\n+ output.write_line('os.name: {}'.format(os.name), stream=log)\n+ output.write_line(\n+ 'sys.platform: {}\\n```'.format(sys.platform), stream=log,\n+ )\n+ output.write_line('### error information\\n```', stream=log)\n output.write(error_msg, stream=log)\n output.write_line(formatted, stream=log)\n+ output.write('\\n```\\n', stream=log)\n raise SystemExit(1)\n", "issue": "include version information in error log\nwould be useful to include things like:\r\n\r\n- pre-commit version\r\n- sys.version\r\n- sys.executable\n", "before_files": [{"content": "from __future__ import absolute_import\nfrom __future__ import print_function\nfrom __future__ import unicode_literals\n\nimport contextlib\nimport os.path\nimport traceback\n\nimport six\n\nfrom pre_commit import five\nfrom pre_commit import output\nfrom pre_commit.store import Store\n\n\nclass FatalError(RuntimeError):\n pass\n\n\ndef _to_bytes(exc):\n try:\n return bytes(exc)\n except Exception:\n return 
six.text_type(exc).encode('UTF-8')\n\n\ndef _log_and_exit(msg, exc, formatted):\n error_msg = b''.join((\n five.to_bytes(msg), b': ',\n five.to_bytes(type(exc).__name__), b': ',\n _to_bytes(exc), b'\\n',\n ))\n output.write(error_msg)\n store = Store()\n log_path = os.path.join(store.directory, 'pre-commit.log')\n output.write_line('Check the log at {}'.format(log_path))\n with open(log_path, 'wb') as log:\n output.write(error_msg, stream=log)\n output.write_line(formatted, stream=log)\n raise SystemExit(1)\n\n\n@contextlib.contextmanager\ndef error_handler():\n try:\n yield\n except (Exception, KeyboardInterrupt) as e:\n if isinstance(e, FatalError):\n msg = 'An error has occurred'\n elif isinstance(e, KeyboardInterrupt):\n msg = 'Interrupted (^C)'\n else:\n msg = 'An unexpected error has occurred'\n _log_and_exit(msg, e, traceback.format_exc())\n", "path": "pre_commit/error_handler.py"}]}

| 988 | 378 |

gh_patches_debug_32024

|

rasdani/github-patches

|

git_diff

|

medtagger__MedTagger-391

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Remove error about not picking category properly

## Current Behavior

When a user accesses the labeling page without choosing the category via the category page, they receive an error about not choosing the category properly. While this is necessary to prevent users from accessing this page, it makes development more difficult. Every time the front-end loads, the developer has to go back to the category page.

## Expected Behavior

There shouldn't be an error about not picking category properly.

## Steps to Reproduce the Problem

1. Go to labeling page `/labeling` without going through category page.

## Additional comment (optional)

We should probably get category using `queryParams` like before and load current category on marker page.

</issue>

<code>

[start of backend/medtagger/api/tasks/service_rest.py]

1 """Module responsible for definition of Tasks service available via HTTP REST API."""

2 from typing import Any

3

4 from flask import request

5 from flask_restplus import Resource

6

7 from medtagger.api import api

8 from medtagger.api.tasks import business, serializers

9 from medtagger.api.security import login_required, role_required

10 from medtagger.database.models import LabelTag

11

12 tasks_ns = api.namespace('tasks', 'Methods related with tasks')

13

14

15 @tasks_ns.route('')

16 class Tasks(Resource):

17 """Endpoint that manages tasks."""

18

19 @staticmethod

20 @login_required

21 @tasks_ns.marshal_with(serializers.out__task)

22 @tasks_ns.doc(security='token')

23 @tasks_ns.doc(description='Return all available tasks.')

24 @tasks_ns.doc(responses={200: 'Success'})

25 def get() -> Any:

26 """Return all available tasks."""

27 return business.get_tasks()

28

29 @staticmethod

30 @login_required

31 @role_required('admin')

32 @tasks_ns.expect(serializers.in__task)

33 @tasks_ns.marshal_with(serializers.out__task)

34 @tasks_ns.doc(security='token')

35 @tasks_ns.doc(description='Create new Task.')

36 @tasks_ns.doc(responses={201: 'Success'})

37 def post() -> Any:

38 """Create new Task."""

39 payload = request.json

40

41 key = payload['key']

42 name = payload['name']

43 image_path = payload['image_path']

44 datasets_keys = payload['datasets_keys']

45 tags = [LabelTag(tag['key'], tag['name'], tag['tools']) for tag in payload['tags']]

46

47 return business.create_task(key, name, image_path, datasets_keys, tags), 201

48

[end of backend/medtagger/api/tasks/service_rest.py]

[start of backend/medtagger/api/tasks/business.py]

1 """Module responsible for business logic in all Tasks endpoints."""

2 from typing import List

3

4 from medtagger.database.models import Task, LabelTag

5 from medtagger.repositories import (

6 tasks as TasksRepository,

7 )

8

9

10 def get_tasks() -> List[Task]:

11 """Fetch all tasks.

12

13 :return: list of tasks

14 """

15 return TasksRepository.get_all_tasks()

16

17

18 def create_task(key: str, name: str, image_path: str, datasets_keys: List[str], tags: List[LabelTag]) -> Task:

19 """Create new Task.

20

21 :param key: unique key representing Task

22 :param name: name which describes this Task

23 :param image_path: path to the image which is located on the frontend

24 :param datasets_keys: Keys of Datasets that Task takes Scans from

25 :param tags: Label Tags that will be created and assigned to Task

26 :return: Task object

27 """

28 return TasksRepository.add_task(key, name, image_path, datasets_keys, tags)

29

[end of backend/medtagger/api/tasks/business.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

def euclidean(a, b):

- while b:

- a, b = b, a % b

- return a

+ if b == 0:

+ return a

+ return euclidean(b, a % b)

def bresenham(x0, y0, x1, y1):

points = []

dx = abs(x1 - x0)

dy = abs(y1 - y0)

- sx = 1 if x0 < x1 else -1

- sy = 1 if y0 < y1 else -1

- err = dx - dy

+ x, y = x0, y0

+ sx = -1 if x0 > x1 else 1

+ sy = -1 if y0 > y1 else 1

- while True:

- points.append((x0, y0))

- if x0 == x1 and y0 == y1:

- break

- e2 = 2 * err

- if e2 > -dy:

- err -= dy

- x0 += sx

- if e2 < dx:

- err += dx

- y0 += sy

+ if dx > dy:

+ err = dx / 2.0

+ while x != x1:

+ points.append((x, y))

+ err -= dy

+ if err < 0:

+ y += sy

+ err += dx

+ x += sx

+ else:

+ err = dy / 2.0

+ while y != y1:

+ points.append((x, y))

+ err -= dx

+ if err < 0:

+ x += sx

+ err += dy

+ y += sy

+

+ points.append((x, y))

return points

</patch>

|

diff --git a/backend/medtagger/api/tasks/business.py b/backend/medtagger/api/tasks/business.py

--- a/backend/medtagger/api/tasks/business.py

+++ b/backend/medtagger/api/tasks/business.py

@@ -1,6 +1,9 @@

"""Module responsible for business logic in all Tasks endpoints."""

from typing import List

+from sqlalchemy.orm.exc import NoResultFound

+

+from medtagger.api.exceptions import NotFoundException

from medtagger.database.models import Task, LabelTag

from medtagger.repositories import (

tasks as TasksRepository,

@@ -15,6 +18,17 @@

return TasksRepository.get_all_tasks()

+def get_task_for_key(task_key: str) -> Task:

+ """Fetch Task for given key.

+

+ :return: Task

+ """

+ try:

+ return TasksRepository.get_task_by_key(task_key)

+ except NoResultFound:

+ raise NotFoundException('Did not found task for {} key!'.format(task_key))

+

+

def create_task(key: str, name: str, image_path: str, datasets_keys: List[str], tags: List[LabelTag]) -> Task:

"""Create new Task.

diff --git a/backend/medtagger/api/tasks/service_rest.py b/backend/medtagger/api/tasks/service_rest.py

--- a/backend/medtagger/api/tasks/service_rest.py

+++ b/backend/medtagger/api/tasks/service_rest.py

@@ -43,5 +43,19 @@

image_path = payload['image_path']

datasets_keys = payload['datasets_keys']

tags = [LabelTag(tag['key'], tag['name'], tag['tools']) for tag in payload['tags']]

-

return business.create_task(key, name, image_path, datasets_keys, tags), 201

+

+

+@tasks_ns.route('/<string:task_key>')

+class Task(Resource):

+ """Endpoint that manages single task."""

+

+ @staticmethod

+ @login_required

+ @tasks_ns.marshal_with(serializers.out__task)

+ @tasks_ns.doc(security='token')

+ @tasks_ns.doc(description='Get task for given key.')

+ @tasks_ns.doc(responses={200: 'Success', 404: 'Could not find task'})

+ def get(task_key: str) -> Any:

+ """Return task for given key."""

+ return business.get_task_for_key(task_key)

|

{"golden_diff": "diff --git a/backend/medtagger/api/tasks/business.py b/backend/medtagger/api/tasks/business.py\n--- a/backend/medtagger/api/tasks/business.py\n+++ b/backend/medtagger/api/tasks/business.py\n@@ -1,6 +1,9 @@\n \"\"\"Module responsible for business logic in all Tasks endpoints.\"\"\"\n from typing import List\n \n+from sqlalchemy.orm.exc import NoResultFound\n+\n+from medtagger.api.exceptions import NotFoundException\n from medtagger.database.models import Task, LabelTag\n from medtagger.repositories import (\n tasks as TasksRepository,\n@@ -15,6 +18,17 @@\n return TasksRepository.get_all_tasks()\n \n \n+def get_task_for_key(task_key: str) -> Task:\n+ \"\"\"Fetch Task for given key.\n+\n+ :return: Task\n+ \"\"\"\n+ try:\n+ return TasksRepository.get_task_by_key(task_key)\n+ except NoResultFound:\n+ raise NotFoundException('Did not found task for {} key!'.format(task_key))\n+\n+\n def create_task(key: str, name: str, image_path: str, datasets_keys: List[str], tags: List[LabelTag]) -> Task:\n \"\"\"Create new Task.\n \ndiff --git a/backend/medtagger/api/tasks/service_rest.py b/backend/medtagger/api/tasks/service_rest.py\n--- a/backend/medtagger/api/tasks/service_rest.py\n+++ b/backend/medtagger/api/tasks/service_rest.py\n@@ -43,5 +43,19 @@\n image_path = payload['image_path']\n datasets_keys = payload['datasets_keys']\n tags = [LabelTag(tag['key'], tag['name'], tag['tools']) for tag in payload['tags']]\n-\n return business.create_task(key, name, image_path, datasets_keys, tags), 201\n+\n+\n+@tasks_ns.route('/<string:task_key>')\n+class Task(Resource):\n+ \"\"\"Endpoint that manages single task.\"\"\"\n+\n+ @staticmethod\n+ @login_required\n+ @tasks_ns.marshal_with(serializers.out__task)\n+ @tasks_ns.doc(security='token')\n+ @tasks_ns.doc(description='Get task for given key.')\n+ @tasks_ns.doc(responses={200: 'Success', 404: 'Could not find task'})\n+ def get(task_key: str) -> Any:\n+ \"\"\"Return task for given key.\"\"\"\n+ return 
business.get_task_for_key(task_key)\n", "issue": "Remove error about not picking category properly\n## Current Behavior\r\n\r\nWhen user access labeling page without choosing the category via the category page he/she receives an error about not choosing the category properly. While this is necessary for preventing users accessing this page, it makes development more difficult. Every time when front-end loads, developer has to go back to category page.\r\n\r\n## Expected Behavior\r\n\r\nThere shouldn't be an error about not picking category properly. \r\n\r\n## Steps to Reproduce the Problem\r\n\r\n 1. Go to labeling page `/labeling` without going through category page.\r\n\r\n## Additional comment (optional)\r\n\r\nWe should probably get category using `queryParams` like before and load current category on marker page.\r\n\n", "before_files": [{"content": "\"\"\"Module responsible for definition of Tasks service available via HTTP REST API.\"\"\"\nfrom typing import Any\n\nfrom flask import request\nfrom flask_restplus import Resource\n\nfrom medtagger.api import api\nfrom medtagger.api.tasks import business, serializers\nfrom medtagger.api.security import login_required, role_required\nfrom medtagger.database.models import LabelTag\n\ntasks_ns = api.namespace('tasks', 'Methods related with tasks')\n\n\n@tasks_ns.route('')\nclass Tasks(Resource):\n \"\"\"Endpoint that manages tasks.\"\"\"\n\n @staticmethod\n @login_required\n @tasks_ns.marshal_with(serializers.out__task)\n @tasks_ns.doc(security='token')\n @tasks_ns.doc(description='Return all available tasks.')\n @tasks_ns.doc(responses={200: 'Success'})\n def get() -> Any:\n \"\"\"Return all available tasks.\"\"\"\n return business.get_tasks()\n\n @staticmethod\n @login_required\n @role_required('admin')\n @tasks_ns.expect(serializers.in__task)\n @tasks_ns.marshal_with(serializers.out__task)\n @tasks_ns.doc(security='token')\n @tasks_ns.doc(description='Create new Task.')\n @tasks_ns.doc(responses={201: 
'Success'})\n def post() -> Any:\n \"\"\"Create new Task.\"\"\"\n payload = request.json\n\n key = payload['key']\n name = payload['name']\n image_path = payload['image_path']\n datasets_keys = payload['datasets_keys']\n tags = [LabelTag(tag['key'], tag['name'], tag['tools']) for tag in payload['tags']]\n\n return business.create_task(key, name, image_path, datasets_keys, tags), 201\n", "path": "backend/medtagger/api/tasks/service_rest.py"}, {"content": "\"\"\"Module responsible for business logic in all Tasks endpoints.\"\"\"\nfrom typing import List\n\nfrom medtagger.database.models import Task, LabelTag\nfrom medtagger.repositories import (\n tasks as TasksRepository,\n)\n\n\ndef get_tasks() -> List[Task]:\n \"\"\"Fetch all tasks.\n\n :return: list of tasks\n \"\"\"\n return TasksRepository.get_all_tasks()\n\n\ndef create_task(key: str, name: str, image_path: str, datasets_keys: List[str], tags: List[LabelTag]) -> Task:\n \"\"\"Create new Task.\n\n :param key: unique key representing Task\n :param name: name which describes this Task\n :param image_path: path to the image which is located on the frontend\n :param datasets_keys: Keys of Datasets that Task takes Scans from\n :param tags: Label Tags that will be created and assigned to Task\n :return: Task object\n \"\"\"\n return TasksRepository.add_task(key, name, image_path, datasets_keys, tags)\n", "path": "backend/medtagger/api/tasks/business.py"}]}

| 1,426 | 526 |

gh_patches_debug_7569

|

rasdani/github-patches

|

git_diff

|

pre-commit__pre-commit-1881

|

You will be provided with a partial code base and an issue statement explaining a problem to resolve.

<issue>

Parallel execution ran into "patch does not apply"

I modified a bunch of repos using `pre-commit` in parallel and ran `git commit` at the same time, with unstaged changes. The pre-commit processes did `[WARNING] Unstaged files detected.`, stashed the changes in a patch, ran, and then tried to reapply the patches.

Some repos failed with:

```

[WARNING] Stashed changes conflicted with hook auto-fixes... Rolling back fixes...

An unexpected error has occurred: CalledProcessError: command: ('/usr/local/Cellar/git/2.31.1/libexec/git-core/git', '-c', 'core.autocrlf=false', 'apply', '--whitespace=nowarn', '/Users/chainz/.cache/pre-commit/patch1618586253')

return code: 1

expected return code: 0

stdout: (none)

stderr:

error: patch failed: .github/workflows/main.yml:21

error: .github/workflows/main.yml: patch does not apply

Check the log at /Users/chainz/.cache/pre-commit/pre-commit.log

```

It looks like this is due to use of the unix timestamp as the only differentiator in patch file paths, causing patches created in parallel to clobber each other.
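One way the collision could be avoided is sketched below. This is a minimal illustration, not pre-commit's actual code: `make_patch_filename` is a hypothetical helper, and adding the process id as a second differentiator is an assumption about what would be sufficient for processes running on the same machine.

```python
import os
import time


def make_patch_filename(patch_dir: str) -> str:
    """Build a patch path that differs between concurrent processes.

    Hypothetical helper: the Unix timestamp alone is identical for processes
    started within the same second, so the process id is appended as well.
    """
    # int(time.time()) has one-second resolution; os.getpid() is unique among
    # the processes that are alive at the same time on one machine.
    name = f'patch{int(time.time())}-{os.getpid()}'
    return os.path.join(patch_dir, name)
```

With a name built this way, two `git commit` runs that start in the same second would write to different files in the shared cache directory.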

`pre-commit.log` says:

### version information

```

pre-commit version: 2.12.0

sys.version:

3.9.4 (default, Apr 5 2021, 01:49:30)

[Clang 12.0.0 (clang-1200.0.32.29)]

sys.executable: /usr/local/Cellar/pre-commit/2.12.0/libexec/bin/python3

os.name: posix

sys.platform: darwin

```

### error information

```

An unexpected error has occurred: CalledProcessError: command: ('/usr/local/Cellar/git/2.31.1/libexec/git-core/git', '-c', 'core.autocrlf=false', 'apply', '--whitespace=nowarn', '/Users/chainz/.cache/pre-commit/patch1618586253')

return code: 1

expected return code: 0

stdout: (none)

stderr:

error: patch failed: .github/workflows/main.yml:21

error: .github/workflows/main.yml: patch does not apply

```

```

Traceback (most recent call last):

File "/usr/local/Cellar/pre-commit/2.12.0/libexec/lib/python3.9/site-packages/pre_commit/staged_files_only.py", line 20, in _git_apply

cmd_output_b('git', *args)

File "/usr/local/Cellar/pre-commit/2.12.0/libexec/lib/python3.9/site-packages/pre_commit/util.py", line 154, in cmd_output_b

raise CalledProcessError(returncode, cmd, retcode, stdout_b, stderr_b)

pre_commit.util.CalledProcessError: command: ('/usr/local/Cellar/git/2.31.1/libexec/git-core/git', 'apply', '--whitespace=nowarn', '/Users/chainz/.cache/pre-commit/patch1618586253')

return code: 1

expected return code: 0

stdout: (none)

stderr:

error: patch failed: .github/workflows/main.yml:21

error: .github/workflows/main.yml: patch does not apply

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/local/Cellar/pre-commit/2.12.0/libexec/lib/python3.9/site-packages/pre_commit/staged_files_only.py", line 68, in _unstaged_changes_cleared

_git_apply(patch_filename)

File "/usr/local/Cellar/pre-commit/2.12.0/libexec/lib/python3.9/site-packages/pre_commit/staged_files_only.py", line 23, in _git_apply

cmd_output_b('git', '-c', 'core.autocrlf=false', *args)

File "/usr/local/Cellar/pre-commit/2.12.0/libexec/lib/python3.9/site-packages/pre_commit/util.py", line 154, in cmd_output_b

raise CalledProcessError(returncode, cmd, retcode, stdout_b, stderr_b)

pre_commit.util.CalledProcessError: command: ('/usr/local/Cellar/git/2.31.1/libexec/git-core/git', '-c', 'core.autocrlf=false', 'apply', '--whitespace=nowarn', '/Users/chainz/.cache/pre-commit/patch1618586253')

return code: 1

expected return code: 0

stdout: (none)

stderr:

error: patch failed: .github/workflows/main.yml:21

error: .github/workflows/main.yml: patch does not apply

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/local/Cellar/pre-commit/2.12.0/libexec/lib/python3.9/site-packages/pre_commit/staged_files_only.py", line 20, in _git_apply

cmd_output_b('git', *args)

File "/usr/local/Cellar/pre-commit/2.12.0/libexec/lib/python3.9/site-packages/pre_commit/util.py", line 154, in cmd_output_b

raise CalledProcessError(returncode, cmd, retcode, stdout_b, stderr_b)

pre_commit.util.CalledProcessError: command: ('/usr/local/Cellar/git/2.31.1/libexec/git-core/git', 'apply', '--whitespace=nowarn', '/Users/chainz/.cache/pre-commit/patch1618586253')

return code: 1

expected return code: 0

stdout: (none)

stderr:

error: patch failed: .github/workflows/main.yml:21

error: .github/workflows/main.yml: patch does not apply

During handling of the above exception, another exception occurred:

Traceback (most recent call last):

File "/usr/local/Cellar/pre-commit/2.12.0/libexec/lib/python3.9/site-packages/pre_commit/error_handler.py", line 65, in error_handler

yield

File "/usr/local/Cellar/pre-commit/2.12.0/libexec/lib/python3.9/site-packages/pre_commit/main.py", line 357, in main

return hook_impl(

File "/usr/local/Cellar/pre-commit/2.12.0/libexec/lib/python3.9/site-packages/pre_commit/commands/hook_impl.py", line 227, in hook_impl

return retv | run(config, store, ns)

File "/usr/local/Cellar/pre-commit/2.12.0/libexec/lib/python3.9/site-packages/pre_commit/commands/run.py", line 408, in run

return _run_hooks(config, hooks, args, environ)

File "/usr/local/Cellar/python@3.9/3.9.4/Frameworks/Python.framework/Versions/3.9/lib/python3.9/contextlib.py", line 513, in __exit__

raise exc_details[1]

File "/usr/local/Cellar/python@3.9/3.9.4/Frameworks/Python.framework/Versions/3.9/lib/python3.9/contextlib.py", line 498, in __exit__

if cb(*exc_details):

File "/usr/local/Cellar/python@3.9/3.9.4/Frameworks/Python.framework/Versions/3.9/lib/python3.9/contextlib.py", line 124, in __exit__

next(self.gen)

File "/usr/local/Cellar/pre-commit/2.12.0/libexec/lib/python3.9/site-packages/pre_commit/staged_files_only.py", line 93, in staged_files_only

yield

File "/usr/local/Cellar/python@3.9/3.9.4/Frameworks/Python.framework/Versions/3.9/lib/python3.9/contextlib.py", line 124, in __exit__

next(self.gen)

File "/usr/local/Cellar/pre-commit/2.12.0/libexec/lib/python3.9/site-packages/pre_commit/staged_files_only.py", line 78, in _unstaged_changes_cleared

_git_apply(patch_filename)

File "/usr/local/Cellar/pre-commit/2.12.0/libexec/lib/python3.9/site-packages/pre_commit/staged_files_only.py", line 23, in _git_apply

cmd_output_b('git', '-c', 'core.autocrlf=false', *args)

File "/usr/local/Cellar/pre-commit/2.12.0/libexec/lib/python3.9/site-packages/pre_commit/util.py", line 154, in cmd_output_b

raise CalledProcessError(returncode, cmd, retcode, stdout_b, stderr_b)

pre_commit.util.CalledProcessError: command: ('/usr/local/Cellar/git/2.31.1/libexec/git-core/git', '-c', 'core.autocrlf=false', 'apply', '--whitespace=nowarn', '/Users/chainz/.cache/pre-commit/patch1618586253')

return code: 1

expected return code: 0

stdout: (none)

stderr:

error: patch failed: .github/workflows/main.yml:21

error: .github/workflows/main.yml: patch does not apply

```

</issue>

<code>

[start of pre_commit/staged_files_only.py]

1 import contextlib

2 import logging

3 import os.path

4 import time

5 from typing import Generator

6

7 from pre_commit import git

8 from pre_commit.util import CalledProcessError

9 from pre_commit.util import cmd_output

10 from pre_commit.util import cmd_output_b

11 from pre_commit.xargs import xargs

12

13

14 logger = logging.getLogger('pre_commit')

15

16

17 def _git_apply(patch: str) -> None:

18 args = ('apply', '--whitespace=nowarn', patch)

19 try:

20 cmd_output_b('git', *args)

21 except CalledProcessError:

22 # Retry with autocrlf=false -- see #570

23 cmd_output_b('git', '-c', 'core.autocrlf=false', *args)

24

25

26 @contextlib.contextmanager

27 def _intent_to_add_cleared() -> Generator[None, None, None]:

28 intent_to_add = git.intent_to_add_files()

29 if intent_to_add:

30 logger.warning('Unstaged intent-to-add files detected.')

31

32 xargs(('git', 'rm', '--cached', '--'), intent_to_add)

33 try:

34 yield

35 finally:

36 xargs(('git', 'add', '--intent-to-add', '--'), intent_to_add)

37 else:

38 yield

39

40

41 @contextlib.contextmanager

42 def _unstaged_changes_cleared(patch_dir: str) -> Generator[None, None, None]:

43     tree = cmd_output('git', 'write-tree')[1].strip()

44     retcode, diff_stdout_binary, _ = cmd_output_b(

45         'git', 'diff-index', '--ignore-submodules', '--binary',

46         '--exit-code', '--no-color', '--no-ext-diff', tree, '--',

47         retcode=None,

48     )

49     if retcode and diff_stdout_binary.strip():

50         patch_filename = f'patch{int(time.time())}'

51         patch_filename = os.path.join(patch_dir, patch_filename)

52         logger.warning('Unstaged files detected.')

53         logger.info(f'Stashing unstaged files to {patch_filename}.')

54         # Save the current unstaged changes as a patch

55         os.makedirs(patch_dir, exist_ok=True)

56         with open(patch_filename, 'wb') as patch_file:

57             patch_file.write(diff_stdout_binary)

58

59         # prevent recursive post-checkout hooks (#1418)

60         no_checkout_env = dict(os.environ, _PRE_COMMIT_SKIP_POST_CHECKOUT='1')

61         cmd_output_b('git', 'checkout', '--', '.', env=no_checkout_env)

62

63         try:

64             yield

65         finally:

66             # Try to apply the patch we saved

67             try:

68                 _git_apply(patch_filename)

69             except CalledProcessError:

70                 logger.warning(

71                     'Stashed changes conflicted with hook auto-fixes... '

72                     'Rolling back fixes...',

73                 )

74                 # We failed to apply the patch, presumably due to fixes made

75                 # by hooks.

76                 # Roll back the changes made by hooks.

77                 cmd_output_b('git', 'checkout', '--', '.', env=no_checkout_env)

78                 _git_apply(patch_filename)

79

80             logger.info(f'Restored changes from {patch_filename}.')

81     else:

82         # There weren't any staged files so we don't need to do anything

83         # special

84         yield

85

86

87 @contextlib.contextmanager

88 def staged_files_only(patch_dir: str) -> Generator[None, None, None]:

89     """Clear any unstaged changes from the git working directory inside this

90     context.

91     """

92     with _intent_to_add_cleared(), _unstaged_changes_cleared(patch_dir):

93         yield

94

[end of pre_commit/staged_files_only.py]

</code>

I need you to solve this issue by generating a single patch file that I can apply directly to this repository using git apply. Please respond with a single patch file in the following format.

<patch>

diff --git a/file.py b/file.py

--- a/file.py

+++ b/file.py

@@ -1,27 +1,35 @@

 def euclidean(a, b):

-    while b:

-        a, b = b, a % b

-    return a

+    if b == 0:

+        return a

+    return euclidean(b, a % b)

 def bresenham(x0, y0, x1, y1):

     points = []

     dx = abs(x1 - x0)

     dy = abs(y1 - y0)

-    sx = 1 if x0 < x1 else -1

-    sy = 1 if y0 < y1 else -1

-    err = dx - dy

+    x, y = x0, y0

+    sx = -1 if x0 > x1 else 1

+    sy = -1 if y0 > y1 else 1

-    while True:

-        points.append((x0, y0))

-        if x0 == x1 and y0 == y1:

-            break

-        e2 = 2 * err

-        if e2 > -dy:

-            err -= dy

-            x0 += sx

-        if e2 < dx:

-            err += dx

-            y0 += sy

+    if dx > dy:

+        err = dx / 2.0

+        while x != x1:

+            points.append((x, y))

+            err -= dy

+            if err < 0:

+                y += sy

+                err += dx

+            x += sx

+    else:

+        err = dy / 2.0

+        while y != y1:

+            points.append((x, y))

+            err -= dx

+            if err < 0:

+                x += sx

+                err += dy

+            y += sy

+

+    points.append((x, y))

     return points

</patch>

|

diff --git a/pre_commit/staged_files_only.py b/pre_commit/staged_files_only.py

--- a/pre_commit/staged_files_only.py

+++ b/pre_commit/staged_files_only.py

@@ -47,7 +47,7 @@

         retcode=None,

     )

     if retcode and diff_stdout_binary.strip():

-        patch_filename = f'patch{int(time.time())}'

+        patch_filename = f'patch{int(time.time())}-{os.getpid()}'

         patch_filename = os.path.join(patch_dir, patch_filename)

         logger.warning('Unstaged files detected.')

         logger.info(f'Stashing unstaged files to {patch_filename}.')

|