code

stringlengths 114

1.05M

| path

stringlengths 3

312

| quality_prob

float64 0.5

0.99

| learning_prob

float64 0.2

1

| filename

stringlengths 3

168

| kind

stringclasses 1

value |

|---|---|---|---|---|---|

# Samplitude [](https://travis-ci.com/pgdr/samplitude)

CLI generation and plotting of random variables:

```bash

$ samplitude "sin(0.31415) | sample(6) | round | cli"

0.0

0.309

0.588

0.809

0.951

1.0

```

The word _samplitude_ is a portmanteau of _sample_ and _amplitude_. This

project also started as an étude, hence should be pronounced _sampl-étude_.

`samplitude` is a chain starting with a _generator_, followed by zero or more

_filters_, followed by a consumer. Most generators are infinite (with the

exception of `range` and `lists` and possibly `stdin`). Some of the filters can

turn infinite generators into finite generators (like `sample` and `gobble`),

and some filters can turn finite generators into infinite generators, such as

`choice`.

_Consumers_ are filters that necessarily flush the input; `list`, `cli`,

`json`, `unique`, and the plotting tools, `hist`, `scatter` and `line` are

examples of consumers. The `list` consumer is a Jinja2 built-in, and other

Jinja2 consumers are `sum`, `min`, and `max`:

```bash

samplitude "sin(0.31415) | sample(5) | round | max | cli"

0.951

```

For simplicity, **s8e** is an alias for samplitude.

## Generators

In addition to the standard `range` function, we support infinite generators

* `exponential(lambd)`: `lambd` is 1.0 divided by the desired mean.

* `uniform(a, b)`: Get a random number in the range `[a, b)` or `[a, b]`

depending on rounding.

* `gauss(mu, sigma)`: `mu` is the mean, and `sigma` is the standard deviation.

* `normal(mu, sigma)`: as above

* `lognormal(mu, sigma)`: as above

* `triangular(low, high)`: Continuous distribution bounded by given lower and

upper limits, and having a given mode value in-between.

* `beta(alpha, beta)`: Conditions on the parameters are `alpha > 0` and `beta >

0`. Returned values range between 0 and 1.

* `gamma(alpha, beta)`: as above

* `weibull(alpha, beta)`: `alpha` is the scale parameter and `beta` is the shape

parameter.

* `pareto(alpha)`: Pareto distribution. `alpha` is the shape parameter.

* `vonmises(mu, kappa)`: `mu` is the mean angle, expressed in radians between 0

and `2*pi`, and `kappa` is the concentration parameter, which must be greater

than or equal to zero. If kappa is equal to zero, this distribution reduces

to a uniform random angle over the range 0 to `2*pi`.

Provided that you have installed the `scipy.stats` package, the

* `pert(low, peak, high)`

distribution is supported.

We have a special infinite generator (filter) that works on finite generators:

* `choice`,

whose behaviour is explained below.

For input from files, either use `words` with a specified environment variable

`DICTIONARY`, or pipe through

* `stdin()`

which reads from `stdin`.

If the file is a csv file, there is a `csv` generator that reads a csv file with

Pandas and outputs the first column (if nothing else is specified). Specify the

column with either an integer index or a column name:

```bash

>>> samplitude "csv('iris.csv', 'virginica') | counter | cli"

0 50

1 50

2 50

```

For other files, we have the `file` generator:

```bash

>>> s8e "file('iris.csv') | sample(1) | cli"

150,4,setosa,versicolor,virginica

```

Finally, we have `combinations` and `permutations` that are inherited from

itertools and behave exactly like those.

```bash

>>> s8e "'ABC' | permutations | cli"

```

However, the output of this is rather non-UNIXy, with the abstractions leaking through:

```bash

>>> s8e "'HT' | permutations | cli"

('H', 'T')

('T', 'H')

```

So to get a better output, we can use an _elementwise join_ `elt_join`:

```bash

>>> s8e "'HT' | permutations | elt_join | cli"

H T

T H

```

which also takes a seperator as argument:

```bash

>>> s8e "'HT' | permutations | elt_join(';') | cli"

H;T

T;H

```

This is already supported by Jinja's `map` function (notice the strings around `join`):

```bash

>>> s8e "'HT' | permutations | map('join', ';') | cli"

H;T

T;H

```

We can thus count the number of permutations of a set of size 10:

```bash

>>> s8e "range(10) | permutations | len"

3628800

```

The `product` generator takes two generators and computes a cross-product of

these. In addition,

## A warning about infinity

All generators are (potentially) infinite generators, and must be sampled with

`sample(n)` before consuming!

## Usage and installation

Install with

```bash

pip install samplitude

```

or to get bleeding release,

```bash

pip install git+https://github.com/pgdr/samplitude

```

### Examples

This is pure Jinja2:

```bash

>>> samplitude "range(5) | list"

[0, 1, 2, 3, 4]

```

However, to get a more UNIXy output, we use `cli` instead of `list`:

```bash

>>> s8e "range(5) | cli"

0

1

2

3

4

```

To limit the output, we use `sample(n)`:

```bash

>>> s8e "range(1000) | sample(5) | cli"

0

1

2

3

4

```

That isn't very helpful on the `range` generator, which is already finite, but

is much more helpful on an infinite generator. The above example is probably

better written as

```bash

>>> s8e "count() | sample(5) | cli"

0

1

2

3

4

```

However, much more interesting are the infinite random generators, such as the

`uniform` generator:

```bash

>>> s8e "uniform(0, 5) | sample(5) | cli"

3.3900198868059235

1.2002767137709318

0.40999391897569126

1.9394585953696264

4.37327472704115

```

We can round the output in case we don't need as many digits (note that `round`

is a generator as well and can be placed on either side of `sample`):

```bash

>>> s8e "uniform(0, 5) | round(2) | sample(5) | cli"

4.98

4.42

2.05

2.29

3.34

```

### Selection and modifications

The `sample` behavior is equivalent to the `head` program, or from languages

such as Haskell. The `head` alias is supported:

```bash

>>> samplitude "uniform(0, 5) | round(2) | head(5) | cli"

4.58

4.33

1.87

2.09

4.8

```

`drop` is also available:

```bash

>>> s8e "uniform(0, 5) | round(2) | drop(2) | head(3) | cli"

1.87

2.09

4.8

```

To **shift** and **scale** distributions, we can use the `shift(s)` and

`scale(s)` filters.

To get a Poisson distribution process starting at 15, we can run

```bash

>>> s8e "poisson(4) | shift(15) | sample(5) |cli"

18

21

19

22

17

```

or to get the Poisson point process (exponential distribution),

```bash

>>> s8e "exponential(4) | round | shift(15) | sample(5) |cli"

16.405

15.54

15.132

15.153

15.275

```

Both `shift` and `scale` work on generators, so to add `sin(0.1)` and

`sin(0.2)`, we can run

```bash

>>> s8e "sin(0.1) | shift(sin(0.2)) | sample(10) | cli"

```

### Choices and other operations

Using `choice` with a finite generator gives an infinite generator that chooses

from the provided generator:

```bash

>>> samplitude "range(0, 11, 2) | choice | sample(6) | cli"

8

0

8

10

4

6

```

Jinja2 supports more generic lists, e.g., lists of strings. Hence, we can write

```bash

>>> s8e "['win', 'draw', 'loss'] | choice | sample(6) | sort | cli"

draw

draw

loss

loss

loss

win

```

... and as in Python, strings are also iterable:

```bash

>>> s8e "'HT' | cli"

H

T

```

... so we can flip six coins with

```bash

>>> s8e "'HT' | choice | sample(6) | cli"

H

T

T

H

H

H

```

We can flip 100 coins and count the output with `counter` (which is

`collections.Counter`)

```bash

>>> s8e "'HT' | choice | sample(100) | counter | cli"

H 47

T 53

```

The `sort` functionality works as expected on a `Counter` object (a

`dict` type), so if we want the output sorted by key, we can run

```bash

>>> s8e "range(1,7) | choice | sample(100) | counter | sort | elt_join | cli" 42 # seed=42

1 17

2 21

3 12

4 21

5 13

6 16

```

There is a minor hack to sort by value, namely by `swap`-ing the Counter twice:

```bash

>>> s8e "range(1,7) | choice | sample(100) |

counter | swap | sort | swap | elt_join | cli" 42 # seed=42

3 12

5 13

6 16

1 17

2 21

4 21

```

The `swap` filter does an element-wise reverse, with element-wise reverse

defined on a dictionary as a list of `(value, key)` for each key-value pair in

the dictionary.

So, to get the three most common anagram strings, we can run

```bash

>>> s8e "words() | map('sort') | counter | swap | sort(reverse=True) |

swap | sample(3) | map('first') | elt_join('') | cli"

aeprs

acerst

opst

```

Using `stdin()` as a generator, we can pipe into `samplitude`. Beware that

`stdin()` flushes the input, hence `stdin` (currently) does not work with

infinite input streams.

```bash

>>> ls | samplitude "stdin() | choice | sample(1) | cli"

some_file

```

Then, if we ever wanted to shuffle `ls` we can run

```bash

>>> ls | samplitude "stdin() | shuffle | cli"

some_file

```

```bash

>>> cat FILE | samplitude "stdin() | cli"

# NOOP; cats FILE

```

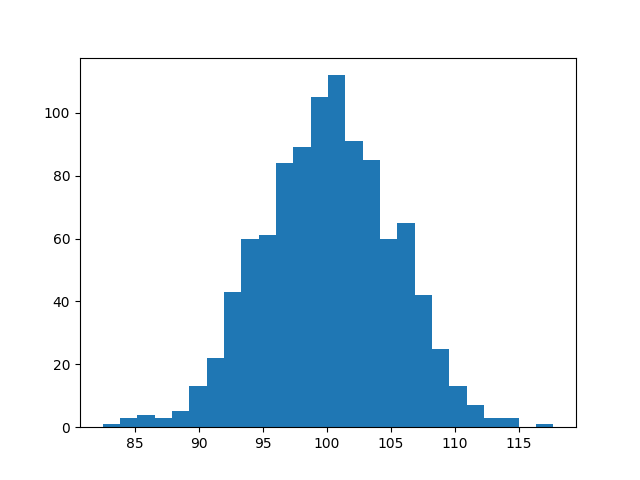

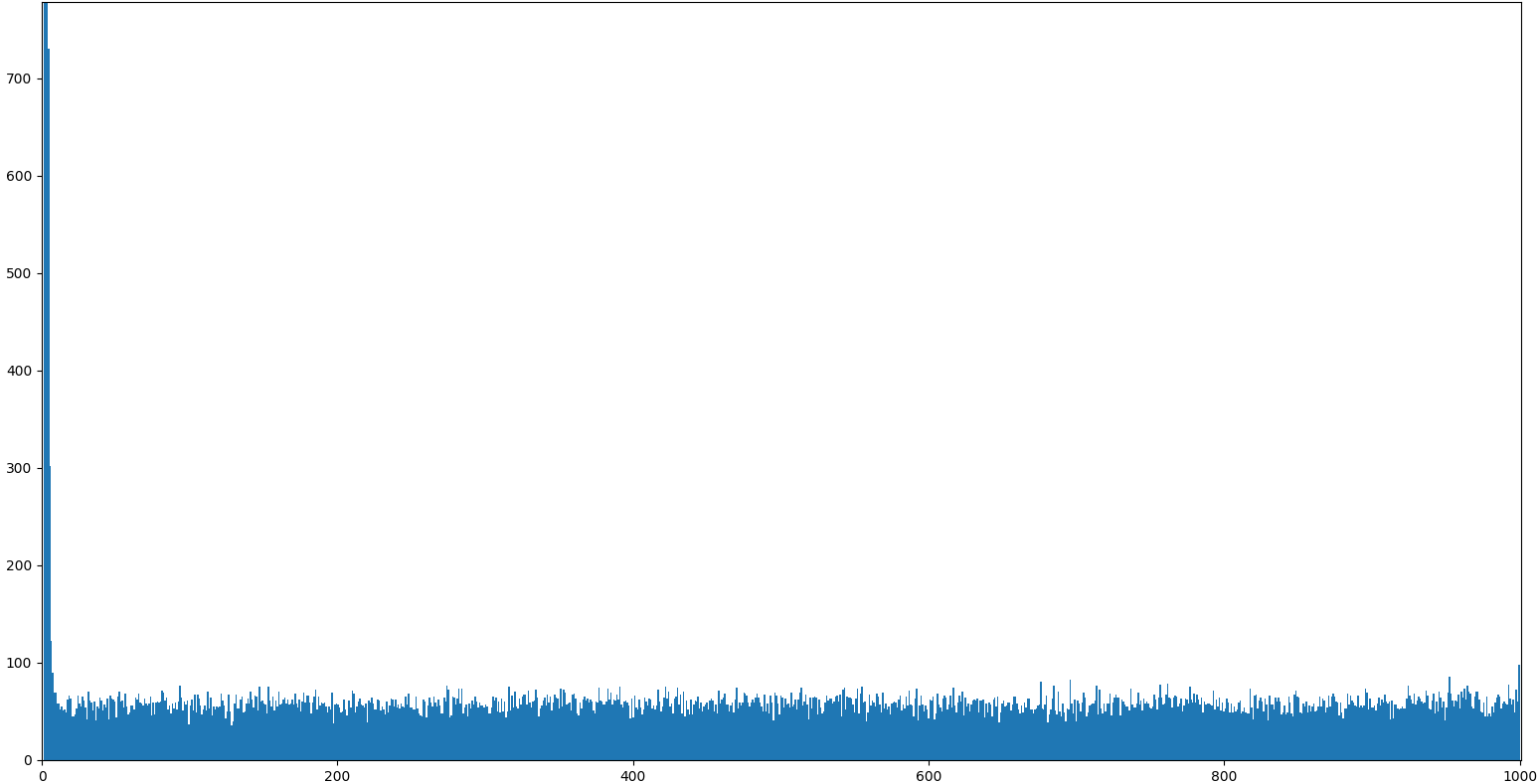

### The fun powder plot

For fun, if you have installed `matplotlib`, we support plotting, `hist` being

the most useful.

```bash

>>> samplitude "normal(100, 5) | sample(1000) | hist"

```

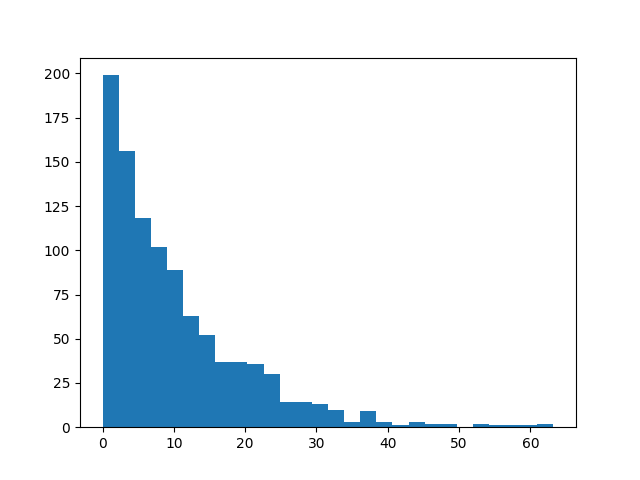

An exponential distribution can be plotted with `exponential(lamba)`. Note that

the `cli` output must be the last filter in the chain, as that is a command-line

utility only:

```bash

>>> s8e "normal(100, 5) | sample(1000) | hist | cli"

```

To **repress output after plotting**, you can use the `gobble` filter to empty

the pipe:

```bash

>>> s8e "normal(100, 5) | sample(1000) | hist | gobble"

```

The

[`pert` distribution](https://en.wikipedia.org/wiki/PERT_distribution)

takes inputs `low`, `peak`, and `high`:

```bash

>>> s8e "pert(10, 50, 90) | sample(100000) | hist(100) | gobble"

```

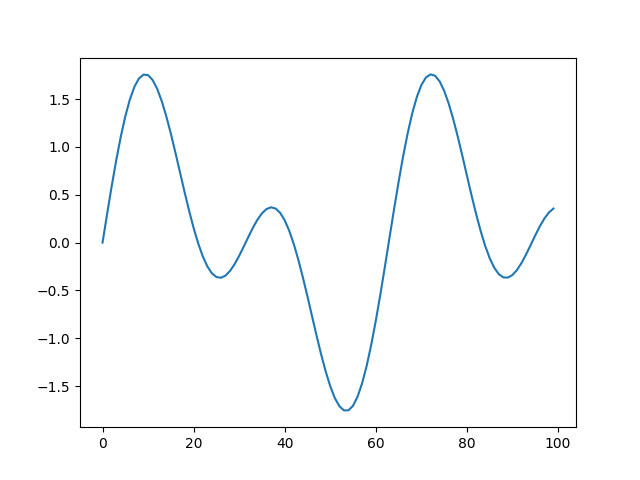

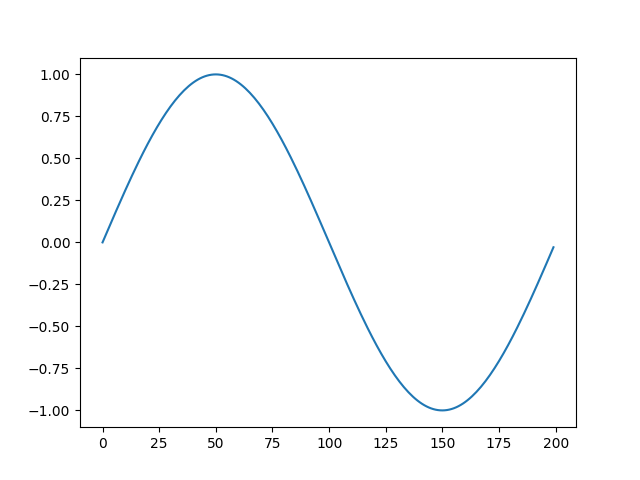

Although `hist` is the most useful, one could imaging running `s8e` on

timeseries, where a `line` plot makes most sense:

```bash

>>> s8e "sin(22/700) | sample(200) | line"

```

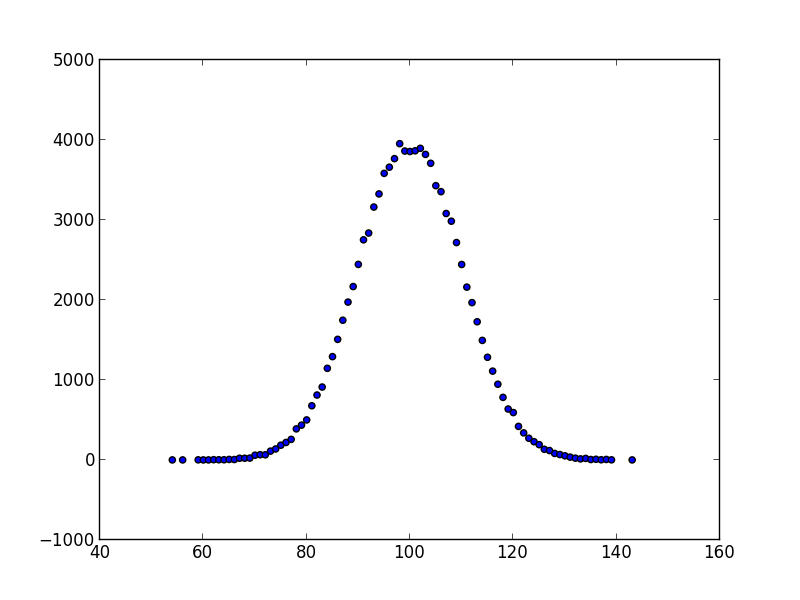

The scatter function can also be used, but requires that the input stream is a

stream of pairs, which can be obtained either by the `product` generator, or via

the `pair` or `counter` filter:

```bash

s8e "normal(100, 10) | sample(10**5) | round(0) | counter | scatter"

```

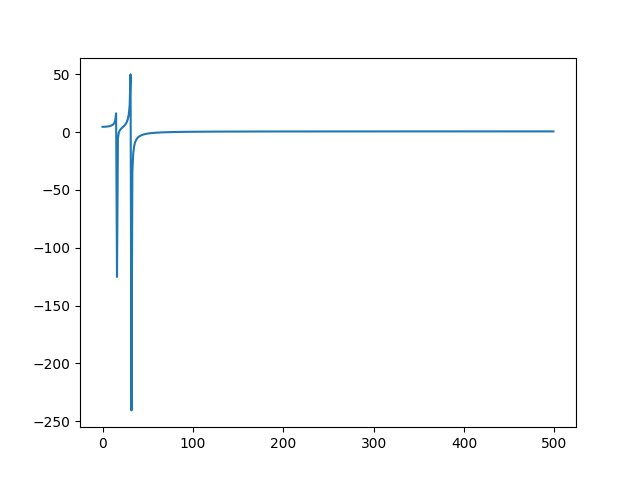

### Fourier

A fourier transform is offered as a filter `fft`:

```bash

>>> samplitude "sin(0.1) | shift(sin(0.2)) | sample(1000) | fft | line | gobble"

```

## Your own filter

If you use Samplitude programmatically, you can register your own filter by

sending a dictionary

```python

{'name1' : filter1,

'name2' : filter2,

#...,

'namen' : filtern,

}

```

to the `samplitude` function.

### Example: secretary problem

Suppose you want to emulate the secretary problem ...

#### Intermezzo: The problem

For those not familiar, you are a boss, Alice, who wants to hire a new secretary

Bob. Suppose you want to hire the tallest Bob of all your candidates, but the

candidates arrive in a stream, and you know only the number of candidates. For

each candidate, you have to accept (hire) or reject the candidate. Once you

have rejected a candidate, you cannot undo the decision.

The solution to this problem is to look at the first `n/e` (`e~2.71828` being

the Euler constant) candidates, and thereafter accept the first candidate taller

than all of the `n/e` first candidates.

#### A Samplitude solution

Let `normal(170, 10)` be the candidate generator, and let `n=100`. We create a

filter `secretary` that takes a stream and an integer (`n`) and picks according

to the solution. In order to be able to assess the quality of the solution

later, the filter must forward the entire list of candidates; hence we annotate

the one we choose with `(c, False)` for a candidate we rejected, and `(c, True)`

denotes the candidate we accepted.

```python

def secretary(gen, n):

import math

explore = int(n / math.e)

target = -float('inf')

i = 0

# explore the first n/e candidates

for c in gen:

target = max(c, target)

yield (c, False)

i += 1

if i == explore:

break

_ok = lambda c, i, found: ((i == n-1 and not found)

or (c > target and not found))

have_hired = False

for c in gen:

status = _ok(c, i, have_hired)

have_hired = have_hired or status

yield c, status

i += 1

if i == n:

return

```

Now, to emulate the secretary problem with Samplitude:

```python

from samplitude import samplitude as s8e

# insert above secretary function

n = 100

filters = {'secretary': secretary}

solution = s8e('normal(170, 10) | secretary(%d) | list' % n, filters=filters)

solution = eval(solution) # Samplitude returns an eval-able string

cands = map(lambda x: x[0], solution)

opt = [s[0] for s in solution if s[1]][0]

# the next line prints in which position the candidate is

print(1+sorted(cands, reverse=True).index(opt), '/', n)

```

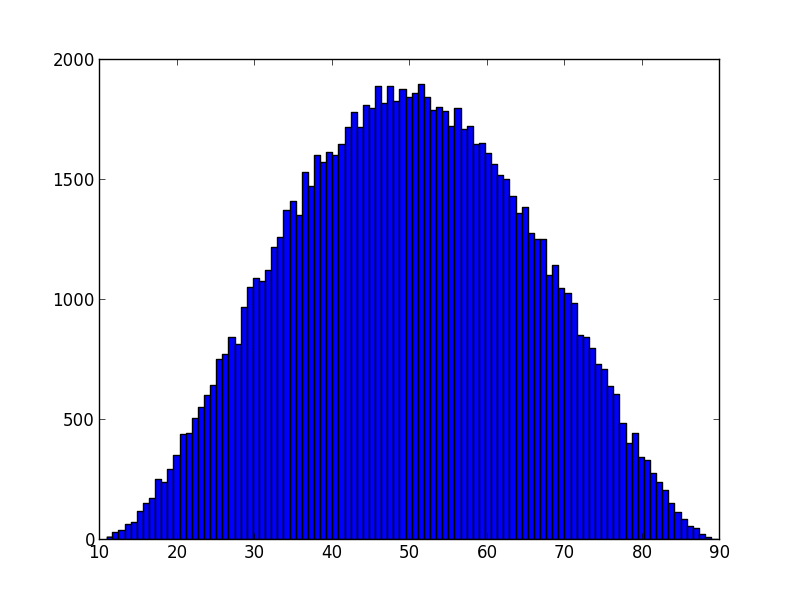

In about 67% of the cases we can expect to get one of the top candidates,

whereas the remaining 33% of the cases will be uniformly distributed. Running

100k runs with a population of size 1000 reveals the structure.

|

/samplitude-0.2.0.tar.gz/samplitude-0.2.0/README.md

| 0.765243 | 0.970882 |

README.md

|

pypi

|

import numpy as np

from .jit_compiled_functions import (sampling_get_potential_targets,

sampling_sample_from_array_condition)

class BaseSamplingSingleSpecies:

"""

Base Class for single species sampling.

"""

def __init__(self, target_species=None, **kwargs):

if target_species is None:

raise ValueError("No agent object provided for the sampling. Should be provided using kwarg "

"'target_species'.")

self.target_species = target_species

class SamplingSingleSpecies:

"""

Introduce the methods for sampling a single species

"""

def __init__(self, **kwargs):

pass

def sample_proportion_from_array(self, array_proportion, condition=None, position_attribute='position',

return_as_pandas_df=False, eliminate_sampled_pop=False):

"""

Take as input an array telling the proportion of agent to sample in each vertex.

:param array_proportion: 1D array of float. array_proportion[i] is the probability for an agent living in the

vertex of index i.

:param condition: optional, 1D array of bool, default None. If not None, tell which agent can be sampled.

:param position_attribute: optional, string, default 'position'. Tell which attribute of the agents should

be used as position.

:param return_as_pandas_df: optional, boolean, default False. Clear.

:param eliminate_sampled_pop: optional, boolean, default False. If True,

:return: a DataFrameXS if return_as_pandas_df is False, a pandas dataframe otherwise. The returned DF is

the sample of the population taken from df_population

"""

targets = sampling_get_potential_targets(self.target_species.df_population[position_attribute],

array_proportion)

if condition is not None:

targets = targets & condition

rand = np.random.uniform(0, 1, (targets.sum(),))

sampled = sampling_sample_from_array_condition(array_proportion,

self.target_species.df_population[position_attribute],

rand, targets)

|

/sampy_abm-1.0.2-py3-none-any.whl/sampy/intervention/sampling.py

| 0.878536 | 0.372077 |

sampling.py

|

pypi

|

import numpy as np

from ..pandas_xs.pandas_xs import DataFrameXS

from .jit_compiled_functions import vaccination_apply_vaccine_from_array_condition

class BaseVaccinationSingleSpeciesDisease:

def __init__(self, disease=None, **kwargs):

if disease is None:

raise ValueError(

"No disease object provided for the vaccination. Should be provided using kwarg 'disease'.")

self.disease = disease

self.target_species = self.disease.host

self.target_species.df_population['vaccinated_' + self.disease.disease_name] = False

self.target_species.dict_default_val['vaccinated_' + self.disease.disease_name] = False

class VaccinationSingleSpeciesDiseaseFixedDuration:

def __init__(self, duration_vaccine=None, **kwargs):

if duration_vaccine is None:

raise ValueError(

"No duration provided for the vaccination duration. Should be provided using kwarg 'duration_vaccine'.")

self.duration_vaccine = int(duration_vaccine)

self.target_species.df_population['cnt_vaccinated_' + self.disease.disease_name] = 0

self.target_species.dict_default_val['cnt_vaccinated_' + self.disease.disease_name] = 0

def update_vaccine_status(self):

"""

Should be call at each time-step of the simulation. Update the attribute that count how many day each individual

has been vaccinated, and remove the vaccinated status of the individual which recieved their dose for more than

'duration_vaccine' time-steps.

"""

self.target_species.df_population['cnt_vaccinated_' + self.disease.disease_name] += 1

arr_lose_vaccine = self.target_species.df_population['cnt_vaccinated_' + self.disease.disease_name] >= \

self.duration_vaccine

not_arr_lose_vaccine = ~arr_lose_vaccine

self.target_species.df_population['cnt_vaccinated_' + self.disease.disease_name] = \

self.target_species.df_population['cnt_vaccinated_' + self.disease.disease_name] * not_arr_lose_vaccine

self.target_species.df_population['vaccinated_' + self.disease.disease_name] = \

self.target_species.df_population['vaccinated_' + self.disease.disease_name] * not_arr_lose_vaccine

self.target_species.df_population['imm_' + self.disease.disease_name] = \

self.target_species.df_population['imm_' + self.disease.disease_name] * not_arr_lose_vaccine

def apply_vaccine_from_array(self, array_vaccine_level, condition=None, position_attribute='position'):

"""

Apply vaccine to the agents based on the 1D array 'array_vaccine_level'. array_vaccine_level[i] is the

probability for an agent on the vertex of index i to get vaccinated.

Note that, by default, infected and contagious agents can get vaccinated. They can be excluded using the

kwarg 'condition'

:param array_vaccine_level: 1D array of float. Floats between 0 and 1.

:param condition: optional, 1D array of bool, default None.

:param position_attribute: optional, string, default 'position'.

"""

if condition is None:

condition = np.full((self.target_species.df_population.nb_rows,), True, dtype=np.bool_)

rand = np.random.uniform(0, 1, (condition.sum(),))

newly_vaccinated = \

vaccination_apply_vaccine_from_array_condition(

self.target_species.df_population['vaccinated_' + self.disease.disease_name],

self.target_species.df_population['cnt_vaccinated_' + self.disease.disease_name],

self.target_species.df_population['imm_' + self.disease.disease_name],

array_vaccine_level, self.target_species.df_population[position_attribute], rand, condition)

not_newly_vaccinated = ~newly_vaccinated

self.target_species.df_population['inf_' + self.disease.disease_name] *= not_newly_vaccinated

self.target_species.df_population['con_' + self.disease.disease_name] *= not_newly_vaccinated

def apply_vaccine_from_dict(self, graph, dict_vertex_id_to_level, condition=None, position_attribute='position'):

"""

same as apply_vaccine_from_array, but the 1D array is replaced by a dictionary whose keys are vertices ID and

values is the vaccination level on each cell.

:param graph: graph object on which the vaccine is applied

:param dict_vertex_id_to_level: dictionnary-like object with vaccine level

:param condition: optional, 1D array of bool, default None.

:param position_attribute: optional, string, default 'position'.

"""

array_vac_level = np.full((graph.number_vertices,), 0., dtype=float)

for id_vertex, level in dict_vertex_id_to_level.items():

array_vac_level[graph.dict_cell_id_to_ind[id_vertex]] = level

self.apply_vaccine_from_array(array_vac_level, condition=condition, position_attribute=position_attribute)

|

/sampy_abm-1.0.2-py3-none-any.whl/sampy/intervention/vaccination.py

| 0.744099 | 0.390243 |

vaccination.py

|

pypi

|

import numpy as np

from .jit_compiled_functions import culling_apply_culling_from_array_condition

class BaseCullingSingleSpecies:

def __init__(self, species=None, **kwargs):

if species is None:

raise ValueError(

"No agent object provided for the culling. Should be provided using kwarg 'species'.")

self.species = species

class CullingSingleSpecies:

def __init__(self, **kwargs):

pass

def apply_culling_from_array(self, array_culling_level, condition=None, position_attribute='position'):

"""

Kill proportion of agents based on the 1D array 'array_culling_level'. array_culling_level[i] is the

probability for an agent on the vertex of index i to be killed.

By default, all agent can be killed. Use kwarg 'condition' to refine the culling.

:param array_culling_level: 1D array of float

:param condition: optional, 1D array of bool, default None.

:param position_attribute: optionnal, string, default 'position'

"""

if condition is None:

condition = np.full((self.species.df_population.nb_rows,), True, dtype=np.bool_)

rand = np.random.uniform(0, 1, (condition.sum(),))

survive_culling = culling_apply_culling_from_array_condition(array_culling_level,

self.species.df_population[position_attribute],

rand, condition)

self.species.df_population = self.species.df_population[survive_culling]

def apply_culling_from_dict(self, graph, dict_vertex_id_to_level, condition=None, position_attribute='position'):

"""

Same as apply_culling_from_array, but the 1D array is replaced by a dictionary whose keys are vertices ID and

values is the culling level on each cell.

:param graph: graph object on which the culling is applied

:param dict_vertex_id_to_level: dictionnary-like object with culling level

:param condition: optional, 1D array of bool, default None.

:param position_attribute: optional, string, default 'position'.

"""

array_cul_level = np.full((graph.number_vertices,), 0., dtype=float)

for id_vertex, level in dict_vertex_id_to_level.items():

array_cul_level[graph.dict_cell_id_to_ind[id_vertex]] = level

self.apply_culling_from_array(array_cul_level, condition=condition, position_attribute=position_attribute)

|

/sampy_abm-1.0.2-py3-none-any.whl/sampy/intervention/culling.py

| 0.804521 | 0.364353 |

culling.py

|

pypi

|

from .base import BaseTwoSpeciesDisease

from .transition import TransitionCustomProbPermanentImmunity

from .transmission import ContactTransmissionSameGraph

from ...utils.decorators import sampy_class

@sampy_class

class TwoSpeciesContactCustomProbTransitionPermanentImmunity(BaseTwoSpeciesDisease,

TransitionCustomProbPermanentImmunity,

ContactTransmissionSameGraph):

"""

Basic disease, transmission by direct contact (contagion only between agents on the same vertex), transition between

disease states encoded by user given arrays of probabilities, and permanent immunity.

IMPORTANT: We strongly recommend the user to use the "simplified" methods defined here instead of the usual

'contaminate_vertices', 'contact_contagion' and 'transition_between_states'. Indeed, the combination of

building blocks involved in this disease requires many actions to be performed in a precise order,

otherwise the model's behaviour cannot be guaranteed. See each simplified method description to learn

about each respective ordering.

:param disease_name: mandatory kwargs. String.

:param host1: mandatory kwargs. Population object of the first host.

:param host2: mandatory kwargs. Population object of the second host.

"""

def __init__(self, **kwargs):

pass

def simplified_contact_contagion(self, contact_rate_matrix, arr_timesteps_host1, arr_prob_timesteps_host1,

arr_timesteps_host2, arr_prob_timesteps_host2,

position_attribute_host1='position', position_attribute_host2='position',

condition_host1=None, condition_host2=None,

return_arr_new_infected=False, return_type_transmission=False):

"""

Propagate the disease by direct contact using the following methodology. For any vertex X of the graph, we

count the number of contagious agents N_c_host_j, then each non immuned agent of host_k on the vertex X has a

probability of

1 - (1 - contact_rate_matrix[j,k]) ** N_c_host_j

to become infected by a contact with an agent of host_j.

Detailed Explanation: each agent has a series of counter attached, telling how much time-steps they will spend

in each disease status. Those counters have to be initialized when an individual is newly

infected, and that's what this method does to the newly infected individuals.

:param contact_rate_matrix: 2D array of floats of shape (2, 2). Here, contact_rate_matrix[0][0] is the

probability of contact contagion from host1 to host1, contact_rate_matrix[0][1] is

the probability of contact contagion from host1 to host2, etc...

:param arr_timesteps_host1: 1d array of int. work in tandem with arr_prob_timesteps_host1, see below.

:param arr_prob_timesteps_host1: 1D array of float. arr_prob[i] is the probability for an agent to stay infected

but not contagious for arr_nb_timestep[i] time-steps.

:param arr_timesteps_host2: same but for host2.

:param arr_prob_timesteps_host2: same but for host2.

:param position_attribute_host1: optional, string, default 'position'. Name of the agent attribute used as

position for host1.

:param position_attribute_host2: optional, string, default 'position'. Name of the agent attribute used as

position for host2.

:param condition_host1: optional, array of bool, default None. Array of boolean such that the i-th value is

True if and only if the i-th agent of host1 (i.e. the agent at the line i of df_population)

can be infected and transmit disease. All the agent having their corresponding value to False

are protected from infection and cannot transmit the disease.

:param condition_host2: optional, array of bool, default None. Array of boolean such that the i-th value is

True if and only if the i-th agent of host2 (i.e. the agent at the line i of df_population)

can be infected and transmit disease. All the agent having their corresponding value to False

are protected from infection and cannot transmit the disease.

:param return_arr_new_infected: optional, boolean, default False. If True, the method returns two arrays telling

which agent got contaminated in each host species.

:param return_type_transmission: optional, boolean, default False.

:return: Depending on the values of the parameters 'return_arr_new_infected' and 'return_type_transmission',

the return value will either be None (both are False) or a dictionnary whose key-values are:

- 'arr_new_infected_host1', 'arr_new_infected_host2' if return_arr_new_infected is True, and the

values are 1D arrays of bool telling which agents got infected for each host;

- 'arr_type_transmission_host1', 'arr_type_transmission_host2' if return_type_transmission is True,

and the values are 1D arrays of non-negative integers such that the integer at line i is 0 if the

i-th agent has not been contaminated, 1 if it has been contaminated by a member of its own species

2 if it has been contaminated by a member of the other species, 3 if it has been contaminated by

agents from both its species and the other.

"""

dict_contagion = self.contact_contagion(contact_rate_matrix, return_arr_new_infected=True,

return_type_transmission=return_type_transmission,

position_attribute_host1=position_attribute_host1,

position_attribute_host2=position_attribute_host2,

condition_host1=condition_host1, condition_host2=condition_host2)

self.initialize_counters_of_newly_infected('host1', dict_contagion['arr_new_infected_host1'],

arr_timesteps_host1, arr_prob_timesteps_host1)

self.initialize_counters_of_newly_infected('host2', dict_contagion['arr_new_infected_host2'],

arr_timesteps_host2, arr_prob_timesteps_host2)

if not return_type_transmission and not return_arr_new_infected:

return

if return_arr_new_infected:

return dict_contagion

else: # no other case needed

del dict_contagion['arr_new_infected_host1']

del dict_contagion['arr_new_infected_host2']

return dict_contagion

def simplified_transition_between_states(self, prob_death_host1, prob_death_host2,

arr_infectious_period_host1, arr_prob_infectious_period_host1,

arr_infectious_period_host2, arr_prob_infectious_period_host2):

"""

Takes care of the transition between all the disease states. That is, agents that are at the end of their

infected period become contagious and agents at the end of their contagious period either die (with a

probability of 'prob_death') or become immuned.

Detailed Explanation: the method transition_between_states is coded in such a way that when using it for

transitionning from con to imm, all the agents at the end of their contagious period at

the time the method is called transition. Therefore, we have to make the transition

'con' to 'death' first.

:param prob_death_host1: float between 0 and 1, probability for an agent of host1 to die at the end of the

contagious period

:param prob_death_host2: float between 0 and 1, probability for an agent of host2 to die at the end of the

contagious period

:param arr_infectious_period_host1: 1d array of int, works in tandem with arr_prob_infectious_period_host1.

See Below.

:param arr_prob_infectious_period_host1: 1d array of floats, sums to 1. Same shape as

arr_infectious_period_host1. When an agent transition from infected to contagious, then

arr_prob_infectious_period_host1[i] is the probability for this agent to stay

arr_infectious_period_host1[i] timesteps contagious.

:param arr_infectious_period_host2: same as host1

:param arr_prob_infectious_period_host2: same as host1

"""

self.transition_between_states('host1', 'con', 'death', proba_death=prob_death_host1)

self.transition_between_states('host2', 'con', 'death', proba_death=prob_death_host2)

if self.host1.df_population.nb_rows != 0:

self.transition_between_states('host1', 'con', 'imm')

self.transition_between_states('host1', 'inf', 'con', arr_nb_timestep=arr_infectious_period_host1,

arr_prob_nb_timestep=arr_prob_infectious_period_host1)

if self.host2.df_population.nb_rows != 0:

self.transition_between_states('host2', 'con', 'imm')

self.transition_between_states('host2', 'inf', 'con', arr_nb_timestep=arr_infectious_period_host2,

arr_prob_nb_timestep=arr_prob_infectious_period_host2)

def simplified_contaminate_vertices(self, host, list_vertices, level, arr_timesteps, arr_prob_timesteps,

condition=None, position_attribute='position',

return_arr_newly_contaminated=True):

"""

Contaminate a list of vertices.

Detailed explanation: each agent has a series of counter attached, telling how much time-steps they will spend

in each disease status. Those counters have to be initialized when an individual is newly

infected, and that's what this method does to the newly infected individuals.

:param host: string, either 'host1' or 'host2', tells which host to infect.

:param list_vertices: list of vertices ID to be contaminated.

:param level: float, probability for agent on the vertices to be contaminated.

:param arr_timesteps: 1D array of integer. Works in tandem with 'arr_prob_timesteps'. See below.

:param arr_prob_timesteps: 1D array of float. arr_prob_timesteps[i] is the probability for an agent to stay

infected but not contagious for arr_timesteps[i] timesteps.

:param condition: optional, array of bool, default None. If not None, say which agents are susceptible to be

contaminated.

:param position_attribute: optional, string, default 'position'. Agent attribute to be used to define

their position.

:param return_arr_newly_contaminated: optional, boolean, default True. If True, the method returns an array

telling which agents were contaminated.

:return: if return_arr_newly_contaminated is set to True, returns a 1D array of bool telling which agents where

contaminated. Returns None otherwise.

"""

arr_new_contaminated = self.contaminate_vertices(host, list_vertices, level,

condition=condition, position_attribute=position_attribute,

return_arr_newly_contaminated=True)

self.initialize_counters_of_newly_infected(host, arr_new_contaminated, arr_timesteps, arr_prob_timesteps)

if return_arr_newly_contaminated:

return arr_new_contaminated

|

/sampy_abm-1.0.2-py3-none-any.whl/sampy/disease/two_species/builtin_disease.py

| 0.847148 | 0.556038 |

builtin_disease.py

|

pypi

|

import numpy as np

from .jit_compiled_functions import base_contaminate_vertices

class BaseTwoSpeciesDisease:

"""Base class for two species disease. This building block expects the following kwargs:

:param disease_name: mandatory kwargs. String.

:param host1: mandatory kwargs. Population object of the first host.

:param host2: mandatory kwargs. Population object of the second host.

"""

def __init__(self, disease_name='', host1=None, host2=None, **kwargs):

# check values have been given

if not host1:

raise ValueError('No first host given for the disease. Use Kwarg host1.')

if not host2:

raise ValueError('No second host given for the disease. Use Kwarg host2.')

if not disease_name:

raise ValueError('No name given to the disease. Use Kwarg disease_name.')

self.host1 = host1

self.host2 = host2

self.disease_name = disease_name

self.host1.df_population['inf_' + disease_name] = False

self.host1.df_population['con_' + disease_name] = False

self.host1.df_population['imm_' + disease_name] = False

if hasattr(host1, 'dict_default_val'):

self.host1.dict_default_val['inf_' + disease_name] = False

self.host1.dict_default_val['con_' + disease_name] = False

self.host1.dict_default_val['imm_' + disease_name] = False

self.host2.df_population['inf_' + disease_name] = False

self.host2.df_population['con_' + disease_name] = False

self.host2.df_population['imm_' + disease_name] = False

if hasattr(host2, 'dict_default_val'):

self.host2.dict_default_val['inf_' + disease_name] = False

self.host2.dict_default_val['con_' + disease_name] = False

self.host2.dict_default_val['imm_' + disease_name] = False

if not hasattr(self, 'set_disease_status'):

self.set_disease_status = {'inf', 'con', 'imm'}

else:

self.set_disease_status.update(['inf', 'con', 'imm'])

self.on_ticker = []

def tick(self):

"""

execute in order all the methods whose name are in the list 'on_ticker'. Those methods should not accept

any arguments.

"""

for method in self.on_ticker:

getattr(self, method)()

def contaminate_vertices(self, host, list_vertices, level, return_arr_newly_contaminated=True,

condition=None, position_attribute='position'):

"""

Contaminate the vertices given in the list 'list_vertices' with the disease. Each agent on the vertex have a

probability of 'level' to be contaminated.

:param host: string, either 'host1' or 'host2'. If host1 should be targeted, put 'host1', If host2 should be

targeted, put 'host2'. Any other input will lead to an error.

:param list_vertices: list of vertices ID to be contaminated.

:param level: float, probability for agent on the vertices to be contaminated.

:param return_arr_newly_contaminated: optional, boolean, default True. If True, the method returns an array

telling which agents were contaminated.

:param condition: optional, array of bool, default None. If not None, say which agents are susceptible to be

contaminated.

:param position_attribute: optional, string, default 'position'. Agent attribute to be used to define

their position.

:return: if return_arr_newly_contaminated is set to True, returns a 1D array of bool. Otherwise, returns

None.

"""

# here we check that the given object seems to be the one provided during construction.

if host == 'host1':

host = self.host1

elif host == 'host2':

host = self.host2

else:

raise ValueError('The "host" argument is not recognized. It should be either "host1" or "host2".')

# this is quite inefficient, but this function is not assumed to be called often.

for i, vertex_id in enumerate(list_vertices):

if i == 0:

arr_new_infected = (host.df_population[position_attribute] ==

host.graph.dict_cell_id_to_ind[vertex_id])

continue

arr_new_infected = arr_new_infected | (host.df_population[position_attribute] ==

host.graph.dict_cell_id_to_ind[vertex_id])

arr_new_infected = arr_new_infected & ~(host.df_population['inf_' + self.disease_name] |

host.df_population['con_' + self.disease_name] |

host.df_population['imm_' + self.disease_name])

if condition is not None:

arr_new_infected = arr_new_infected & condition

rand = np.random.uniform(0, 1, (arr_new_infected.sum(),))

base_contaminate_vertices(arr_new_infected, rand, level)

host.df_population['inf_' + self.disease_name] = host.df_population['inf_' + self.disease_name] | \

arr_new_infected

if return_arr_newly_contaminated:

return arr_new_infected

|

/sampy_abm-1.0.2-py3-none-any.whl/sampy/disease/two_species/base.py

| 0.77081 | 0.367185 |

base.py

|

pypi

|

import numpy as np

from .jit_compiled_functions import *

from ...utils.errors_shortcut import check_col_exists_good_type

class BaseSingleSpeciesDisease:

def __init__(self, disease_name=None, host=None, **kwargs):

# check values have been given

if host is None:

raise ValueError("No host given for the disease. Use the kwarg 'host'.")

if disease_name is None:

raise ValueError("No name given to the disease. Use the kwarg 'disease_name'.")

self.host = host

self.disease_name = disease_name

self.host.df_population['inf_' + disease_name] = False

self.host.df_population['con_' + disease_name] = False

self.host.df_population['imm_' + disease_name] = False

if hasattr(host, 'dict_default_val'):

self.host.dict_default_val['inf_' + disease_name] = False

self.host.dict_default_val['con_' + disease_name] = False

self.host.dict_default_val['imm_' + disease_name] = False

if not hasattr(self, 'list_disease_status'):

self.set_disease_status = {'inf', 'con', 'imm'}

else:

self.set_disease_status.update(['inf', 'con', 'imm'])

self.on_ticker = []

def tick(self):

"""

execute in order all the methods whose name are in the list 'on_ticker'. Those methods should not accept

any arguments.

"""

for method in self.on_ticker:

getattr(self, method)()

def _sampy_debug_count_nb_status_per_vertex(self, target_status, position_attribute='position'):

if self.host.df_population.nb_rows == 0:

return

check_col_exists_good_type(self.host.df_population, position_attribute, 'attribute_position',

prefix_dtype='int', reject_none=True)

check_col_exists_good_type(self.host.df_population, target_status + '_' + self.disease_name,

'target_status', prefix_dtype='bool', reject_none=True)

def count_nb_status_per_vertex(self, target_status, position_attribute='position'):

"""

Count the number of agent having the targeted status in each vertex. The status can either be 'inf', 'con' and

'imm', which respectively corresponds to infected, contagious and immunized agents.

:param target_status: string in ['inf', 'con', 'imm'].

:param position_attribute: optional, string.

:return: array counting the number of agent having the target status in each vertex

"""

if self.host.df_population.nb_rows == 0:

return np.full((self.host.graph.number_vertices,), 0, dtype=np.int32)

return base_conditional_count_nb_agent_per_vertex(self.host.df_population[target_status + '_' +

self.disease_name],

self.host.df_population[position_attribute],

self.host.graph.weights.shape[0])

def contaminate_vertices(self, list_vertices, level, return_arr_newly_contaminated=True,

condition=None, position_attribute='position'):

"""

Contaminate the vertices given in the list 'list_vertices' with the disease. Each agent on the vertex have a

probability of 'level' to be contaminated.

:param list_vertices: list of vertices ID to be contaminated.

:param level: float, probability for agent on the vertices to be contaminated

:param return_arr_newly_contaminated: optional, boolean, default True. If True, the method returns an array

telling which agents were contaminated.

:param condition: optional, array of bool, default None. If not None, say which agents are susceptible to be

contaminated.

:param position_attribute: optional, string, default 'position'. Agent attribute to be used to define

their position.

:return: if return_arr_newly_contaminated is set to True, returns a 1D array of bool. Otherwise, returns

None.

"""

for i, vertex_id in enumerate(list_vertices):

if i == 0:

arr_new_infected = (self.host.df_population[position_attribute] ==

self.host.graph.dict_cell_id_to_ind[vertex_id])

continue

arr_new_infected = arr_new_infected | (self.host.df_population[position_attribute] ==

self.host.graph.dict_cell_id_to_ind[vertex_id])

arr_new_infected = arr_new_infected & ~(self.host.df_population['inf_' + self.disease_name] |

self.host.df_population['con_' + self.disease_name] |

self.host.df_population['imm_' + self.disease_name])

if condition is not None:

arr_new_infected = arr_new_infected & condition

rand = np.random.uniform(0, 1, (arr_new_infected.sum(),))

base_contaminate_vertices(arr_new_infected, rand, level)

self.host.df_population['inf_' + self.disease_name] = \

self.host.df_population['inf_' + self.disease_name] | arr_new_infected

if return_arr_newly_contaminated:

return arr_new_infected

|

/sampy_abm-1.0.2-py3-none-any.whl/sampy/disease/single_species/base.py

| 0.763043 | 0.289711 |

base.py

|

pypi

|

import numpy as np

import math

import numba as nb

@nb.njit

def topology_convert_1d_array_to_2d_array(arr_1d, arr_fast_squarify, shape_0, shape_1):

rv = np.full((shape_0, shape_1), arr_1d[0])

for i in range(arr_1d.shape[0]):

rv[arr_fast_squarify[i][0]][arr_fast_squarify[i][1]] = arr_1d[i]

return rv

@nb.njit

def topology_convert_2d_array_to_1d_array(arr_2d, arr_fast_flat):

n = arr_2d.shape[0] * arr_2d.shape[1]

rv = np.full((n, ), arr_2d[0][0])

for i in range(arr_2d.shape[0]):

for j in range(arr_2d.shape[1]):

rv[arr_fast_flat[i][j]] = arr_2d[i][j]

return rv

@nb.njit

def compute_sin_attr_with_condition(arr_attr, arr_cond, time, amplitude, period, phase, intercept):

for i in range(arr_cond.shape[0]):

if arr_cond[i]:

arr_attr[i] = amplitude*np.sin(2*math.pi*time/period + phase) + intercept

@nb.njit

def get_oriented_neighborhood_of_vertices(connections):

rv = np.full(connections.shape, -1, dtype=np.int32)

for ind_center in range(connections.shape[0]):

# we first create the set of neighbours

set_neighbours = set()

nb_neighbours = 0

for i in range(connections.shape[1]):

ind_neighb = connections[ind_center][i]

if ind_neighb == -1:

pass

else:

set_neighbours.add(ind_neighb)

nb_neighbours += 1

# we now fill the returned array

for j in range(nb_neighbours):

ind_neighbour = connections[ind_center][j]

if ind_neighbour == -1:

pass

else:

rv[ind_center][0] = ind_neighbour

break

for j in range(1, nb_neighbours):

ind_current_neigh = rv[ind_center][j-1]

for k in range(connections.shape[1]):

ind_neighbour = connections[ind_current_neigh][k]

if ind_neighbour == -1:

pass

elif ind_neighbour in set_neighbours:

if j != 1 and rv[ind_center][j-2] == ind_neighbour:

pass

else:

rv[ind_center][j] = ind_neighbour

break

return rv

@nb.njit

def get_surface_array(oriented_neighbourhood_array, x_coord, y_coord, z_coord, radius):

rv = np.full((oriented_neighbourhood_array.shape[0],), 0., dtype=np.float64)

for index_center in range(oriented_neighbourhood_array.shape[0]):

# get coordinates of the center

x_center = x_coord[index_center]

y_center = y_coord[index_center]

z_center = z_coord[index_center]

# quick loop to determine the number of vertices of the current polygon

nb_vertices = 0

for i in range(oriented_neighbourhood_array.shape[1]):

if oriented_neighbourhood_array[index_center][i] != -1:

nb_vertices += 1

# we first create the normal vectors of each hyperplane defining the spherical polygon. Those vectors are not

# normalized

oriented_normal_vect = np.full((nb_vertices, 3), -1.)

current_index = 0

for i in range(oriented_neighbourhood_array.shape[1]):

index_current_neighbour = oriented_neighbourhood_array[index_center][i]

if index_current_neighbour != -1:

oriented_normal_vect[current_index][0] = x_coord[index_current_neighbour] - x_center

oriented_normal_vect[current_index][1] = y_coord[index_current_neighbour] - y_center

oriented_normal_vect[current_index][2] = z_coord[index_current_neighbour] - z_center

current_index += 1

# we know compute the coordinates of the vertices of the spherical polygon using a cross product.

oriented_vertices_polygon = np.full((nb_vertices, 3), -1.)

for i in range(nb_vertices):

vertex = np.cross(oriented_normal_vect[i][:], oriented_normal_vect[(i+1) % nb_vertices][:])

if x_center * vertex[0] + y_center * vertex[1] + z_center * vertex[2] > 0:

oriented_vertices_polygon[i][:] = vertex / (np.sqrt((vertex ** 2).sum()))

else:

oriented_vertices_polygon[i][:] = - vertex / (np.sqrt((vertex ** 2).sum()))

area = 0.

first_point = oriented_vertices_polygon[0][:]

second_point = oriented_vertices_polygon[1][:]

for i in range(2, nb_vertices):

third_point = oriented_vertices_polygon[i][:]

vec1 = second_point - np.dot(second_point, first_point) * first_point

vec2 = third_point - np.dot(third_point, first_point) * first_point

area += np.arccos(np.dot(vec1, vec2) / (np.linalg.norm(vec1) * np.linalg.norm(vec2)))

vec1 = first_point - np.dot(first_point, second_point) * second_point

vec2 = third_point - np.dot(third_point, second_point) * second_point

area += np.arccos(np.dot(vec1, vec2) / (np.linalg.norm(vec1) * np.linalg.norm(vec2)))

vec1 = first_point - np.dot(first_point, third_point) * third_point

vec2 = second_point - np.dot(second_point, third_point) * third_point

area += np.arccos(np.dot(vec1, vec2) / (np.linalg.norm(vec1) * np.linalg.norm(vec2)))

area -= np.pi

second_point = oriented_vertices_polygon[i][:]

rv[index_center] = (radius**2)*area

return rv

@nb.njit

def icosphere_get_distance_matrix(dist_matrix, connections, lats, lons, radius):

for i in range(dist_matrix.shape[0]):

for j in range(dist_matrix.shape[1]):

if connections[i][j] != -1:

dist_matrix[i][j] = radius * np.arccos(np.sin(lats[i]) * np.sin(lats[connections[i][j]]) +

np.cos(lats[i]) * np.cos(lats[connections[i][j]]) *

np.cos(lons[i] - lons[connections[i][j]]))

return dist_matrix

@nb.njit

def keep_subgraph_from_array_of_bool_equi_weight(arr_keep, connections):

counter = 0

dict_old_to_new = dict()

for i in range(arr_keep.shape[0]):

if arr_keep[i]:

dict_old_to_new[i] = counter

counter += 1

arr_nb_connections = np.full((counter,), 0, dtype=np.int32)

new_arr_connections = np.full((counter, 6), -1, dtype=np.int32)

new_arr_weights = np.full((counter, 6), -1., dtype=np.float32)

counter = 0

for i in range(arr_keep.shape[0]):

if arr_keep[i]:

for j in range(connections.shape[1]):

if connections[i][j] in dict_old_to_new:

new_arr_connections[counter][arr_nb_connections[counter]] = dict_old_to_new[connections[i][j]]

arr_nb_connections[counter] += 1

counter += 1

for i in range(arr_nb_connections.shape[0]):

for j in range(arr_nb_connections[i]):

if j + 1 == arr_nb_connections[i]:

new_arr_weights[i][j] = 1.

else:

new_arr_weights[i][j] = (j + 1) / arr_nb_connections[i]

return new_arr_connections, new_arr_weights

|

/sampy_abm-1.0.2-py3-none-any.whl/sampy/graph/jit_compiled_functions.py

| 0.487063 | 0.543045 |

jit_compiled_functions.py

|

pypi

|

from .misc import (create_grid_hexagonal_cells,

create_grid_square_cells,

create_grid_square_with_diagonals,

SubdividedIcosahedron)

from .jit_compiled_functions import (get_oriented_neighborhood_of_vertices,

get_surface_array,

topology_convert_1d_array_to_2d_array,

topology_convert_2d_array_to_1d_array,

icosphere_get_distance_matrix)

import numpy as np

from math import sqrt, pi

import pandas as pd

import os

class BaseTopology:

def __init__(self, **kwargs):

self.connections = None

self.weights = None

self.type = None

self.dict_cell_id_to_ind = {}

self.time = 0

self.on_ticker = ['increment_time']

def increment_time(self):

self.time += 1

def tick(self):

"""

execute the methods whose names are stored in the attribute on_ticker, in order.

"""

for method in self.on_ticker:

getattr(self, method)()

def save_table_id_of_vertices_to_indices(self, path_to_csv, sep, erase_existing_file=True):

"""

Create and save a two column csv allowing to match vertices id's with vertices indexes.

:param path_to_csv: string, path to the output csv

:param sep: string, separator to use in the csv.

:param erase_existing_file: optional, boolean, default True. If True, the method will check if there is already

a file at path_to_csv and delete it if it exists.

"""

if erase_existing_file:

if os.path.exists(path_to_csv):

os.remove(path_to_csv)

with open(path_to_csv, 'a') as f_out:

f_out.write("id_vertex" + sep + "index_vertex" + "\n")

for id_vertex, index in self.dict_cell_id_to_ind.items():

f_out.write(str(id_vertex) + sep + str(index) + '\n')

return

@property

def number_vertices(self):

return self.connections.shape[0]

class SquareGridWithDiagTopology(BaseTopology):

def __init__(self, shape=None, **kwargs):

if shape is None:

raise ValueError("Kwarg 'shape' is missing while initializing the graph topology. 'shape' should be a "

"tuple like object of the form (a, b), where a and b are integers bigger than 1.")

len_side_a = shape[0]

len_side_b = shape[1]

self.create_square_with_diag_grid(len_side_a, len_side_b)

self.shape = (len_side_a, len_side_b)

self.type = 'SquareGridWithDiag'

def create_square_with_diag_grid(self, len_side_a, len_side_b):

"""

Create a square grid with diagonals, where each vertex X[i][j] is linked to X[i-1][j-1], X[i][j-1], X[i+1][j-1],

X[i+1][j], X[i+1][j+1], x[i][j+1], x[i-1][j+1] and x[i-1][j] if they exist. Note that the weights on the

'diagonal connections' is reduced to take into account the fact that the vertices on the diagonal are 'further

away' (i.e. using sqrt(2) as a distance instead of 1 in the weight computation).

:param len_side_a: integer, x coordinate

:param len_side_b: integer, y coordinate

"""

if (len_side_a < 2) or (len_side_b < 2):

raise ValueError('side length attributes for HexagonalCells should be at least 2.')

self.connections, self.weights = create_grid_square_with_diagonals(len_side_a, len_side_b)

# populate the dictionary from cell coordinates to cell indexes in arrays connection and weights

for i in range(len_side_a):

for j in range(len_side_b):

self.dict_cell_id_to_ind[(i, j)] = j + i*len_side_b

class SquareGridTopology(BaseTopology):

def __init__(self, shape=None, **kwargs):

if shape is None:

raise ValueError("Kwarg 'shape' is missing while initializing the graph topology. 'shape' should be a "

"tuple like object of the form (a, b), where a and b are integers bigger than 1.")

len_side_a = shape[0]

len_side_b = shape[1]

self.create_square_grid(len_side_a, len_side_b)

self.shape = (len_side_a, len_side_b)

self.type = 'SquareGrid'

def create_square_grid(self, len_side_a, len_side_b):

"""

Create a square grid, where each vertex X[i][j] is linked to X[i-1][j], X[i][j-1], X[i+1][j], X[i][j+1] if they

exist.

:param len_side_a: integer, x coordinate

:param len_side_b: integer, y coordinate

"""

if (len_side_a < 2) or (len_side_b < 2):

raise ValueError('side length attributes for HexagonalCells should be at least 2.')

self.connections, self.weights = create_grid_square_cells(len_side_a, len_side_b)

self.shape = (len_side_a, len_side_b)

# populate the dictionary from cell coordinates to cell indexes in arrays connection and weights

for i in range(len_side_a):

for j in range(len_side_b):

self.dict_cell_id_to_ind[(i, j)] = j + i*len_side_b

class SquareGridsConvertBetween1DArrayAnd2DArrays:

def __init__(self, **kwargs):

self._array_optimized_squarification = None

self._array_optimized_flat = None

def _sampy_debug_convert_1d_array_to_2d_array(self, input_arr):

if not type(input_arr) is np.ndarray:

raise ValueError("Input variable is not a numpy array.")

if input_arr.shape != (self.number_vertices,):

raise ValueError("Input array of invalid shape.")

def convert_1d_array_to_2d_array(self, input_arr):

"""

Takes a 1D array of shape (nb_vertices,) and convert it into a 2D array of shape self.shape.

:param input_arr: 1D array.

:return: 2D array

"""

if self._array_optimized_squarification is None:

self._array_optimized_squarification = np.full((self.number_vertices, 2), 0)

for key, val in self.dict_cell_id_to_ind.items():

self._array_optimized_squarification[val][0] = key[0]

self._array_optimized_squarification[val][1] = key[1]

return topology_convert_1d_array_to_2d_array(input_arr, self._array_optimized_squarification,

self.shape[0], self.shape[1])

def _sampy_debug_convert_2d_array_to_1d_array(self, input_arr):

if not type(input_arr) is np.ndarray:

raise ValueError("Input variable is not a numpy array.")

if input_arr.shape != self.shape:

raise ValueError("Input array of invalid shape.")

def convert_2d_array_to_1d_array(self, input_array):

"""

:param input_array:

:return:

"""

if self._array_optimized_flat is None:

self._array_optimized_flat = np.full(self.shape, 0)

for key, val in self.dict_cell_id_to_ind.items():

self._array_optimized_flat[key[0], key[1]] = val

return topology_convert_2d_array_to_1d_array(input_array, self._array_optimized_flat)

class IcosphereTopology(BaseTopology):

def __init__(self, nb_sub=None, radius=1., **kwargs):

if nb_sub is None:

raise ValueError("kwarg nb_sub missing")

self.nb_sub = nb_sub

self.radius = float(radius)

icosahedron = SubdividedIcosahedron(nb_sub)

self.connections = np.copy(icosahedron.connections)

self.weights = np.copy(icosahedron.weights)

self.arr_coord = np.copy(icosahedron.arr_coord)

del icosahedron

self.type = 'IcoSphere'

self.three_d_coord_created = False

def create_3d_coord(self):

self.df_attributes['coord_x'] = self.arr_coord[:, 0].astype(np.float64)

self.df_attributes['coord_y'] = self.arr_coord[:, 1].astype(np.float64)

self.df_attributes['coord_z'] = self.arr_coord[:, 2].astype(np.float64)

norm = np.sqrt(self.df_attributes['coord_x']**2 +

self.df_attributes['coord_y']**2 +

self.df_attributes['coord_z']**2)

self.df_attributes['coord_x_normalized'] = self.df_attributes['coord_x'] / norm

self.df_attributes['coord_x'] = self.radius * self.df_attributes['coord_x_normalized']

self.df_attributes['coord_y_normalized'] = self.df_attributes['coord_y'] / norm

self.df_attributes['coord_y'] = self.radius * self.df_attributes['coord_y_normalized']

self.df_attributes['coord_z_normalized'] = self.df_attributes['coord_z'] / norm

self.df_attributes['coord_z'] = self.radius * self.df_attributes['coord_z_normalized']

self.three_d_coord_created = True

def create_pseudo_epsg4326_coordinates(self):

"""

This method approximate the shape of the earth using a sphere, which creates deformations.

"""

if not self.three_d_coord_created:

self.create_3d_coord()

self.df_attributes['lat'] = (180*(pi/2 - np.arccos(self.df_attributes['coord_z_normalized']))/pi).astype(np.float64)

self.df_attributes['lon'] = (180*np.arctan2(self.df_attributes['coord_y_normalized'],

self.df_attributes['coord_x_normalized'])/pi).astype(np.float64)

def compute_distance_matrix_on_sphere(self):

"""

This method compute a distance matrix that gives the distance between each pair of connected vertex of the

graph. The distance is the geodesic distance on a sphere (i.e. the distance

:return: Array of floats with the same shape as the array 'connections'

"""

dist_matrix = np.full(self.connections.shape, -1., dtype=np.float64)

lats_rad = (np.pi * self.df_attributes['lat'] / 180).astype(np.float64)

lons_rad = (np.pi * self.df_attributes['lon'] / 180).astype(np.float64)

dist_matrix = icosphere_get_distance_matrix(dist_matrix, self.connections, lats_rad, lons_rad, self.radius)

return dist_matrix

def create_and_save_radius_cells_as_attribute(self, radius_attribute='radius_each_cell'):

"""

Save radius of each cell. The radius of a cell centered on a vertex v is defined as the maximum distance between

v and its neighbours. The radius is saved within df_attributes.

:param radius_attribute: optional, string, default 'radius_each_cell'. Name of the attribute corresponding to

the radius of each cell.

"""

dist_matrix = self.compute_distance_matrix_on_sphere()

max_distance = np.amax(dist_matrix, axis=1).astype(np.float64)

self.df_attributes[radius_attribute] = max_distance

def compute_surface_array(self):

"""

Return an array giving the surface of each cell of the icosphere

:return: array of floats shape (nb_vertex,)

"""

# note that this orientation is not necessarily clockwise, and can be anti-clockwise for some vertices.

# But this is not important for our purpose of computing the area of each cell of the icosphere.

oriented_neigh_vert = get_oriented_neighborhood_of_vertices(self.connections)

return get_surface_array(oriented_neigh_vert,

self.df_attributes['coord_x_normalized'],

self.df_attributes['coord_y_normalized'],

self.df_attributes['coord_z_normalized'],

self.radius).astype(np.float64)

def create_and_save_surface_array_as_attribute(self):

arr_surface = self.compute_surface_array()

self.df_attributes['surface_cell'] = arr_surface

class OrientedHexagonalGrid(BaseTopology):

"""

Create an hexagonal lattice on a square. Each

"""

def __init__(self, nb_hex_x_axis=None, nb_hex_y_axis=None, **kwargs):

"""

:param nb_hex_x_axis: mandatory kwargs. Integer, number of hexagons on the horizontal axis.

:param nb_hex_y_axis: mandatory kwargs. Integer, number of hexagons on the vertical axis.

"""

pass

|

/sampy_abm-1.0.2-py3-none-any.whl/sampy/graph/topology.py

| 0.840357 | 0.441553 |

topology.py

|

pypi

|

import numpy as np

from .jit_compiled_functions import compute_sin_attr_with_condition

from ..pandas_xs.pandas_xs import DataFrameXS

class BaseVertexAttributes:

def __init__(self, **kwargs):

if not hasattr(self, 'df_attributes'):

self.df_attributes = DataFrameXS()

def _sampy_debug_create_vertex_attribute(self, attr_name, value):

if not isinstance(attr_name, str):

raise TypeError("the name of a vertex attribute should be a string.")

arr = np.array(value)

if len(arr.shape) != 0:

if len(arr.shape) > 1:

raise ValueError('Shape of provided array for graph attribute ' + attr_name + ' is ' + str(arr.shape) +

', while Sampy expects an array of shape (' + str(self.weights.shape[0]) +

',).')

if arr.shape[0] != self.weights.shape[0]:

raise ValueError('Provided array for graph attribute ' + attr_name + ' has ' +

str(arr.shape[0]) + 'elements, while the graph has ' + str(self.weights.shape[0]) +

'vertices. Those numbers should be the same.')

def create_vertex_attribute(self, attr_name, value):

"""

Creates a new vertex attribute and populates its values. Accepted input for 'value' are:

- None: in this case, the attribute column is set empty

- A single value, in which case all vertexes will have the same attribute value

- A 1D array, which will become the attribute column.

Note that if you use a 1D array, then you are implicitly working with the indexes of the vertices, that is that

the value at position 'i' in the array corresponds to the attribute value associated with the vertex whose index

is 'i'. If you want to work with vertexes id instead, use the method 'create_vertex_attribute_from_dict'.

:param attr_name: string, name of the attribute

:param value: either None, a single value, or a 1D array.

"""

if self.df_attributes.nb_rows == 0 and len(np.array(value).shape) == 0:

self.df_attributes[attr_name] = [value for _ in range(self.weights.shape[0])]

else:

self.df_attributes[attr_name] = value

def _sampy_debug_create_vertex_attribute_from_dict(self, attr_name, dict_id_to_val, default_val=np.nan):

if (not hasattr(dict_id_to_val, 'items')) or (not hasattr(dict_id_to_val.items, '__call__')):

raise ValueError('the method create_vertex_attribute_from_dict expects a dictionnary-like object, ' +

'which has a method \'items\'.')

if not isinstance(attr_name, str):

raise TypeError("the name of a vertex attribute should be a string.")

for key, _ in dict_id_to_val.items():

if key not in self.dict_cell_id_to_ind:

raise ValueError(str(key) + ' is not the id of any vertex in the graph.')

def create_vertex_attribute_from_dict(self, attr_name, dict_id_to_val, default_val):

"""

Creates a new vertex attribute and populates its values using a dictionary-like object, whose keys are id of

vertices, and values the corresponding attribute values. Note that you can specify a default value for the

vertices not appearing in the dictionary.

IMPORTANT: first, the method creates an array filled with the default value, and then replace the values in the

array using the dictionary. Therefore, the dtype of the attribute will be defined using the default

value. Thus, the user should either chose a default value with appropriate dtype, or change the

type of the attribute after creating the attribute.

:param attr_name: string, name of the attribute.

:param dict_id_to_val: Dictionary like object, whose keys are id of vertices, and values the corresponding

attribute value.

:param default_val: Value used for the vertices for which an attribute value is not provided.

"""

arr_attr = np.full((self.number_vertices,), default_val)

for key, val in dict_id_to_val.items():

arr_attr[self.dict_cell_id_to_ind[key]] = val

self.df_attributes[attr_name] = arr_attr

def change_type_attribute(self, attr_name, str_type):

"""

Change the dtype of the selected attribute. Note that the type should be supported by DataFrameXS

:param attr_name: string, name of the attribute

:param str_type: string, target dtype of the attribute

"""

self.df_attributes.change_type(attr_name, str_type)

class PeriodicAttributes:

"""

Class that adds methods to define periodically varying arguments.

"""

def __init__(self, **kwargs):

if not hasattr(self, 'df_attributes'):

self.df_attributes = DataFrameXS()

def update_periodic_attribute(self, time, attr_name, amplitude, period, phase, intercept, condition=None):

"""

Call this method to update the value of an attribute using the following formula.

amplitude * np.sin(2 * math.pi * time / period + phase) + intercept

Where time is either the value of the attribute 'time' of the graph, or if 'time' parameter is not None

:param time: float or int, used as time parameter in the update formula.

:param attr_name: string, name of the attribute

:param amplitude: float, see formula above

:param phase: float, see formula above

:param period: float, see formula above

:param intercept: float, see formula above

:param condition: optional, default None. Boolean Array saying for which cell to apply the sinusoidal variation.

If None, this method behave like an array of True has been provided.

"""

arr_attr = self.df_attributes[attr_name]

if condition is None:

condition = np.full(arr_attr.shape, True, dtype=np.bool_)

if time is None:

time = self.time

compute_sin_attr_with_condition(arr_attr, condition, time, amplitude,

period, phase, intercept)

class AttributesFrom2DArraysSquareGrids:

"""

Allow the user to add attributes based on 2D arrays. Designed to work with 'SquareGrids' topologies.

"""

def __init__(self, **kwargs):

pass

def create_attribute_from_2d_array(self, attr_name, array_2d):

"""

Create or update an attribute based on a 2D array input.

:param attr_name: string, name of the attribute

:param array_2d: 2d array

"""

if array_2d.shape != self.shape:

raise ValueError('Shapes do not match. Graph of shape ' + str(self.shape) +

' while array of shape ' + str(array_2d.shape) + '.')

arr_attr = np.full((self.number_vertices,), array_2d[0][0])

for i in range(array_2d.shape[0]):

for j in range(array_2d.shape[1]):

arr_attr[self.dict_cell_id_to_ind[(i, j)]] = array_2d[i][j]

self.df_attributes[attr_name] = arr_attr

|

/sampy_abm-1.0.2-py3-none-any.whl/sampy/graph/vertex_attributes.py

| 0.741487 | 0.456168 |

vertex_attributes.py

|

pypi

|

from .topology import (SquareGridWithDiagTopology,

SquareGridTopology,

SquareGridsConvertBetween1DArrayAnd2DArrays,

IcosphereTopology)

from .vertex_attributes import PeriodicAttributes, BaseVertexAttributes, AttributesFrom2DArraysSquareGrids

from .from_files import SaveAndLoadSquareGrids

from ..utils.decorators import sampy_class

from .misc import save_as_repository_include_metadata

from .jit_compiled_functions import keep_subgraph_from_array_of_bool_equi_weight

import os

import numpy as np

import glob

import json

@sampy_class

class SquareGridWithDiag(SquareGridWithDiagTopology,

BaseVertexAttributes,

PeriodicAttributes,

AttributesFrom2DArraysSquareGrids,

SaveAndLoadSquareGrids,

SquareGridsConvertBetween1DArrayAnd2DArrays):

"""

Landscape graph. Grid of squares, diagonals included.

"""

def __init__(self, **kwargs):

pass

@sampy_class

class SquareGrid(SquareGridTopology,

BaseVertexAttributes,

PeriodicAttributes,

AttributesFrom2DArraysSquareGrids,

SaveAndLoadSquareGrids,

SquareGridsConvertBetween1DArrayAnd2DArrays):

"""

Landscape graph. Grid of squares, diagonals excluded.

"""

def __init__(self, **kwargs):

pass

@sampy_class

class IcosphereGraph(BaseVertexAttributes,

IcosphereTopology):

"""

Graph of choice for the study of species whose species distribution is big enough so that the shape of the earth

has to be considered.

"""

def __init__(self, **kwargs):

pass

def save(self, path_to_folder, erase_folder=True):

"""

Save the graph structure in a folder using .npy files. The end result is not human-readable.

:param path_to_folder: Path to the folder. If it does not exist, the folder will be created.

:param erase_folder: optional, boolean, default True. If True, any folder already existing at 'path_to_folder'

will be deleted.

"""

metadata_json = {'nb_sub': self.nb_sub,

'type': 'icosphere',

'radius': self.radius}

save_as_repository_include_metadata(path_to_folder, metadata_json, self.df_attributes,

self.connections, self.weights, erase_folder=erase_folder)

@classmethod

def load(cls, path_to_folder, strict_check=True):

"""

Load the graph structure using a folder saved using the save method.

:param path_to_folder: path to a folder where a graph icosphere is saved.

:param strict_check: optional, boolean, default True. If true, check that the loaded graph as type 'icosphere'.

:return: An instanciated IcosphereGraph object

"""

if os.path.exists(path_to_folder):

if not os.path.isdir(path_to_folder):

raise OSError("The object at " + path_to_folder + " is not a directory.")

else:

raise OSError("Nothing at " + path_to_folder + '.')

metadata = json.load(open(path_to_folder + '/metadata_json.json'))

if metadata['type'] != 'icosphere' and strict_check:

raise ValueError("According to the metadata, the graph is not of type icosphere.")

nb_sub = int(metadata['nb_sub'])

radius = float(metadata['radius'])

graph = cls(nb_sub=3, radius=1.)

graph.radius = radius

graph.nb_sub = nb_sub

graph.connections = np.load(path_to_folder + '/connections.npy')

graph.weights = np.load(path_to_folder + '/weights.npy')

for path in glob.glob(path_to_folder + '/*'):

if os.path.basename(path).startswith('attr_'):

name = os.path.basename(path).split('.')[0]

name = name[5:]

graph.df_attributes[name] = np.load(path)

for name in metadata['attributes_none']:

graph.df_attributes[name] = None

return graph

def keep_subgraph(self, array_vertices_to_keep):

"""

Keep the specified vertices and keep the rest. Both attributes, connections and weights are updated accordingly.

:param array_vertices_to_keep: 1d array of bool. array[i] is true if the vertex of index i should be kept.

"""

new_connections, new_weights = keep_subgraph_from_array_of_bool_equi_weight(array_vertices_to_keep,

self.connections)

self.connections = new_connections

self.weights = new_weights

self.df_attributes = self.df_attributes[array_vertices_to_keep]

|

/sampy_abm-1.0.2-py3-none-any.whl/sampy/graph/builtin_graph.py

| 0.790207 | 0.266584 |

builtin_graph.py

|

pypi

|

import numpy as np

def create_2d_coords_from_oriented_connection_matrix(connections, index_first_vertex, coord_first_vertex,

list_vectors):

"""

WARNING: The graph is assumed to be connected.

Create the coordinates (2D) of each vertex of the graph. The algorithm starts at the cell given by the

kwarg 'index_first_cell', which receives as coordinates the one given in 'coord_first_cell'. Then we loop

through each neighbours of the starting cell, giving coordinates to each one using the parameter 'list_vector'

(see description of 'list_vector' parameter below for a more detailed explanation). We then repeat the process

with a vertex that has coordinates, and so on until each vertex has coordinates. This algorithm works only if

the graph is connected.

:param connections: 2D array of integers. Connection matrix used in SamPy Graph objects to encode the edges between

vertices. Here it is assumed to be oriented (that is each column correspond to a specific

direction).

:param index_first_vertex: non-negative integer

:param coord_first_vertex: couple of floats

:param list_vectors: list containing connections.shape[1] arrays, each of shape (2,).

:return: a pair (coords_x, coords_y) of 1D arrays of floats giving the coordinates of each vertex.

"""

# we now create the arrays that will contain the x and y coordinates

coord_x = np.full(connections.shape[0], 0., dtype=float)

coord_y = np.full(connections.shape[0], 0., dtype=float)

# we initialize the coordinates and create two data-structure that will allow us to recursively give coordinates

# to each vertex.

coord_x[index_first_vertex] = float(coord_first_vertex[0])

coord_y[index_first_vertex] = float(coord_first_vertex[1])

set_index_vert_with_coords = set([index_first_vertex])

list_index_vert_with_coords = [index_first_vertex]

# now we recursively give coordinates to every vertex

for i in range(connections.shape[0]):

try:

current_vertex = list_index_vert_with_coords[i]

except IndexError:

raise ValueError("Error encountered while creating vertices' coordinates. The most likely explanation"

" is that the graph is not connected.")

for j in range(connections.shape[1]):

if connections[current_vertex, j] not in set_index_vert_with_coords:

coord_x[connections[i, j]] = coord_x[current_vertex] + list_vectors[j][0]

coord_y[connections[i, j]] = coord_y[current_vertex] + list_vectors[j][1]

list_index_vert_with_coords.append(connections[i, j])

set_index_vert_with_coords.add(connections[i, j])

return coord_x, coord_y

|

/sampy_abm-1.0.2-py3-none-any.whl/sampy/graph/spatial_functions.py

| 0.744749 | 0.927462 |

spatial_functions.py

|

pypi

|

from ..pandas_xs.pandas_xs import DataFrameXS

from .misc import convert_graph_structure_to_dictionary

import numpy as np

import os

import shutil

import json

import glob

class SaveAndLoadSquareGrids:

"""

Add to the square grids graphs the possibility to save them using their own special format.

We also provide a Json

"""

def __init__(self, **kwargs):

pass

def save(self, path_to_folder, erase_folder=True):

"""

Save the graph structure in a folder using .npy files. The end result is not human readable.

:param path_to_folder: Path to the folder. If it does not exists, the folder will be created.

:param erase_folder: optional, boolean, default True. If True, any folder already existing at 'path_to_folder'

will be deleted.

"""

if os.path.exists(path_to_folder):

if not erase_folder: