code

stringlengths 501

5.19M

| package

stringlengths 2

81

| path

stringlengths 9

304

| filename

stringlengths 4

145

|

|---|---|---|---|

from zeep.utils import get_base_class

from zeep.xsd.types.simple import AnySimpleType

__all__ = ["ListType", "UnionType"]

class ListType(AnySimpleType):

"""Space separated list of simpleType values"""

def __init__(self, item_type):

self.item_type = item_type

super(ListType, self).__init__()

def __call__(self, value):

return value

def render(self, parent, value, xsd_type=None, render_path=None):

parent.text = self.xmlvalue(value)

def resolve(self):

self.item_type = self.item_type.resolve()

self.base_class = self.item_type.__class__

return self

def xmlvalue(self, value):

item_type = self.item_type

return " ".join(item_type.xmlvalue(v) for v in value)

def pythonvalue(self, value):

if not value:

return []

item_type = self.item_type

return [item_type.pythonvalue(v) for v in value.split()]

def signature(self, schema=None, standalone=True):

return self.item_type.signature(schema) + "[]"

class UnionType(AnySimpleType):

"""Simple type existing out of multiple other types"""

def __init__(self, item_types):

self.item_types = item_types

self.item_class = None

assert item_types

super(UnionType, self).__init__(None)

def resolve(self):

self.item_types = [item.resolve() for item in self.item_types]

base_class = get_base_class(self.item_types)

if issubclass(base_class, AnySimpleType) and base_class != AnySimpleType:

self.item_class = base_class

return self

def signature(self, schema=None, standalone=True):

return ""

def parse_xmlelement(

self, xmlelement, schema=None, allow_none=True, context=None, schema_type=None

):

if self.item_class:

return self.item_class().parse_xmlelement(

xmlelement, schema, allow_none, context

)

return xmlelement.text

def pythonvalue(self, value):

if self.item_class:

return self.item_class().pythonvalue(value)

return value

def xmlvalue(self, value):

if self.item_class:

return self.item_class().xmlvalue(value)

return value | zeep-roboticia | /zeep-roboticia-3.4.0.tar.gz/zeep-roboticia-3.4.0/src/zeep/xsd/types/collection.py | collection.py |

import copy

import logging

from collections import OrderedDict, deque

from itertools import chain

from cached_property import threaded_cached_property

from zeep.exceptions import UnexpectedElementError, XMLParseError

from zeep.xsd.const import NotSet, SkipValue, Nil, xsi_ns

from zeep.xsd.elements import (

Any, AnyAttribute, AttributeGroup, Choice, Element, Group, Sequence)

from zeep.xsd.elements.indicators import OrderIndicator

from zeep.xsd.types.any import AnyType

from zeep.xsd.types.simple import AnySimpleType

from zeep.xsd.utils import NamePrefixGenerator

from zeep.xsd.valueobjects import ArrayValue, CompoundValue

logger = logging.getLogger(__name__)

__all__ = ["ComplexType"]

class ComplexType(AnyType):

_xsd_name = None

def __init__(

self,

element=None,

attributes=None,

restriction=None,

extension=None,

qname=None,

is_global=False,

):

if element and type(element) == list:

element = Sequence(element)

self.name = self.__class__.__name__ if qname else None

self._element = element

self._attributes = attributes or []

self._restriction = restriction

self._extension = extension

self._extension_types = tuple()

super(ComplexType, self).__init__(qname=qname, is_global=is_global)

def __call__(self, *args, **kwargs):

if self._array_type:

return self._array_class(*args, **kwargs)

return self._value_class(*args, **kwargs)

@property

def accepted_types(self):

return (self._value_class,) + self._extension_types

@threaded_cached_property

def _array_class(self):

assert self._array_type

return type(

self.__class__.__name__,

(ArrayValue,),

{"_xsd_type": self, "__module__": "zeep.objects"},

)

@threaded_cached_property

def _value_class(self):

return type(

self.__class__.__name__,

(CompoundValue,),

{"_xsd_type": self, "__module__": "zeep.objects"},

)

def __str__(self):

return "%s(%s)" % (self.__class__.__name__, self.signature())

@threaded_cached_property

def attributes(self):

generator = NamePrefixGenerator(prefix="_attr_")

result = []

elm_names = {name for name, elm in self.elements if name is not None}

for attr in self._attributes_unwrapped:

if attr.name is None:

name = generator.get_name()

elif attr.name in elm_names:

name = "attr__%s" % attr.name

else:

name = attr.name

result.append((name, attr))

return result

@threaded_cached_property

def _attributes_unwrapped(self):

attributes = []

for attr in self._attributes:

if isinstance(attr, AttributeGroup):

attributes.extend(attr.attributes)

else:

attributes.append(attr)

return attributes

@threaded_cached_property

def elements(self):

"""List of tuples containing the element name and the element"""

result = []

for name, element in self.elements_nested:

if isinstance(element, Element):

result.append((element.attr_name, element))

else:

result.extend(element.elements)

return result

@threaded_cached_property

def elements_nested(self):

"""List of tuples containing the element name and the element"""

result = []

generator = NamePrefixGenerator()

# Handle wsdl:arrayType objects

if self._array_type:

name = generator.get_name()

if isinstance(self._element, Group):

result = [

(

name,

Sequence(

[

Any(

max_occurs="unbounded",

restrict=self._array_type.array_type,

)

]

),

)

]

else:

result = [(name, self._element)]

else:

# _element is one of All, Choice, Group, Sequence

if self._element:

result.append((generator.get_name(), self._element))

return result

@property

def _array_type(self):

attrs = {attr.qname.text: attr for attr in self._attributes if attr.qname}

array_type = attrs.get("{http://schemas.xmlsoap.org/soap/encoding/}arrayType")

return array_type

def parse_xmlelement(

self, xmlelement, schema=None, allow_none=True, context=None, schema_type=None

):

"""Consume matching xmlelements and call parse() on each

:param xmlelement: XML element objects

:type xmlelement: lxml.etree._Element

:param schema: The parent XML schema

:type schema: zeep.xsd.Schema

:param allow_none: Allow none

:type allow_none: bool

:param context: Optional parsing context (for inline schemas)

:type context: zeep.xsd.context.XmlParserContext

:param schema_type: The original type (not overriden via xsi:type)

:type schema_type: zeep.xsd.types.base.Type

:rtype: dict or None

"""

# If this is an empty complexType (<xsd:complexType name="x"/>)

if not self.attributes and not self.elements:

return None

attributes = xmlelement.attrib

init_kwargs = OrderedDict()

# If this complexType extends a simpleType then we have no nested

# elements. Parse it directly via the type object. This is the case

# for xsd:simpleContent

if isinstance(self._element, Element) and isinstance(

self._element.type, AnySimpleType

):

name, element = self.elements_nested[0]

init_kwargs[name] = element.type.parse_xmlelement(

xmlelement, schema, name, context=context

)

else:

elements = deque(xmlelement.iterchildren())

if allow_none and len(elements) == 0 and len(attributes) == 0:

return

# Parse elements. These are always indicator elements (all, choice,

# group, sequence)

assert len(self.elements_nested) < 2

for name, element in self.elements_nested:

try:

result = element.parse_xmlelements(

elements, schema, name, context=context

)

if result:

init_kwargs.update(result)

except UnexpectedElementError as exc:

raise XMLParseError(exc.message)

# Check if all children are consumed (parsed)

if elements:

if schema.settings.strict:

raise XMLParseError("Unexpected element %r" % elements[0].tag)

else:

init_kwargs["_raw_elements"] = elements

# Parse attributes

if attributes:

attributes = copy.copy(attributes)

for name, attribute in self.attributes:

if attribute.name:

if attribute.qname.text in attributes:

value = attributes.pop(attribute.qname.text)

init_kwargs[name] = attribute.parse(value)

else:

init_kwargs[name] = attribute.parse(attributes)

value = self._value_class(**init_kwargs)

schema_type = schema_type or self

if schema_type and getattr(schema_type, "_array_type", None):

value = schema_type._array_class.from_value_object(value)

return value

def render(self, parent, value, xsd_type=None, render_path=None):

"""Serialize the given value lxml.Element subelements on the parent

element.

:type parent: lxml.etree._Element

:type value: Union[list, dict, zeep.xsd.valueobjects.CompoundValue]

:type xsd_type: zeep.xsd.types.base.Type

:param render_path: list

"""

if not render_path:

render_path = [self.name]

if not self.elements_nested and not self.attributes:

return

# TODO: Implement test case for this

if value is None:

value = {}

if isinstance(value, ArrayValue):

value = value.as_value_object()

# Render attributes

for name, attribute in self.attributes:

attr_value = value[name] if name in value else NotSet

child_path = render_path + [name]

attribute.render(parent, attr_value, child_path)

if (

len(self.elements_nested) == 1

and isinstance(value, self.accepted_types)

and not isinstance(value, (list, dict, CompoundValue))

):

element = self.elements_nested[0][1]

element.type.render(parent, value, None, child_path)

return

# Render sub elements

for name, element in self.elements_nested:

if isinstance(element, Element) or element.accepts_multiple:

element_value = value[name] if name in value else NotSet

child_path = render_path + [name]

else:

element_value = value

child_path = list(render_path)

# We want to explicitly skip this sub-element

if element_value is SkipValue:

continue

if isinstance(element, Element):

element.type.render(parent, element_value, None, child_path)

else:

element.render(parent, element_value, child_path)

if xsd_type:

if xsd_type._xsd_name:

parent.set(xsi_ns("type"), xsd_type._xsd_name)

if xsd_type.qname:

parent.set(xsi_ns("type"), xsd_type.qname)

def parse_kwargs(self, kwargs, name, available_kwargs):

"""Parse the kwargs for this type and return the accepted data as

a dict.

:param kwargs: The kwargs

:type kwargs: dict

:param name: The name as which this type is registered in the parent

:type name: str

:param available_kwargs: The kwargs keys which are still available,

modified in place

:type available_kwargs: set

:rtype: dict

"""

value = None

name = name or self.name

if name in available_kwargs:

value = kwargs[name]

available_kwargs.remove(name)

if value is not Nil:

value = self._create_object(value, name)

return {name: value}

return {}

def _create_object(self, value, name):

"""Return the value as a CompoundValue object

:type value: str

:type value: list, dict, CompoundValue

"""

if value is None:

return None

if isinstance(value, list) and not self._array_type:

return [self._create_object(val, name) for val in value]

if isinstance(value, CompoundValue) or value is SkipValue:

return value

if isinstance(value, dict):

return self(**value)

# Try to automatically create an object. This might fail if there

# are multiple required arguments.

return self(value)

def resolve(self):

"""Resolve all sub elements and types"""

if self._resolved:

return self._resolved

self._resolved = self

resolved = []

for attribute in self._attributes:

value = attribute.resolve()

assert value is not None

if isinstance(value, list):

resolved.extend(value)

else:

resolved.append(value)

self._attributes = resolved

if self._extension:

self._extension = self._extension.resolve()

self._resolved = self.extend(self._extension)

elif self._restriction:

self._restriction = self._restriction.resolve()

self._resolved = self.restrict(self._restriction)

if self._element:

self._element = self._element.resolve()

return self._resolved

def extend(self, base):

"""Create a new ComplexType instance which is the current type

extending the given base type.

Used for handling xsd:extension tags

TODO: Needs a rewrite where the child containers are responsible for

the extend functionality.

:type base: zeep.xsd.types.base.Type

:rtype base: zeep.xsd.types.base.Type

"""

if isinstance(base, ComplexType):

base_attributes = base._attributes_unwrapped

base_element = base._element

else:

base_attributes = []

base_element = None

attributes = base_attributes + self._attributes_unwrapped

# Make sure we don't have duplicate (child is leading)

if base_attributes and self._attributes_unwrapped:

new_attributes = OrderedDict()

for attr in attributes:

if isinstance(attr, AnyAttribute):

new_attributes["##any"] = attr

else:

new_attributes[attr.qname.text] = attr

attributes = new_attributes.values()

# If the base and the current type both have an element defined then

# these need to be merged. The base_element might be empty (or just

# container a placeholder element).

element = []

if self._element and base_element:

self._element = self._element.resolve()

base_element = base_element.resolve()

element = self._element.clone(self._element.name)

if isinstance(base_element, OrderIndicator):

if isinstance(base_element, Choice):

element.insert(0, base_element)

elif isinstance(self._element, Choice):

element = base_element.clone(self._element.name)

element.append(self._element)

elif isinstance(element, OrderIndicator):

for item in reversed(base_element):

element.insert(0, item)

elif isinstance(element, Group):

for item in reversed(base_element):

element.child.insert(0, item)

elif isinstance(self._element, Group):

raise NotImplementedError("TODO")

else:

pass # Element (ignore for now)

elif self._element or base_element:

element = self._element or base_element

else:

element = Element("_value_1", base)

new = self.__class__(

element=element,

attributes=attributes,

qname=self.qname,

is_global=self.is_global,

)

new._extension_types = base.accepted_types

return new

def restrict(self, base):

"""Create a new complextype instance which is the current type

restricted by the base type.

Used for handling xsd:restriction

:type base: zeep.xsd.types.base.Type

:rtype base: zeep.xsd.types.base.Type

"""

attributes = list(chain(base._attributes_unwrapped, self._attributes_unwrapped))

# Make sure we don't have duplicate (self is leading)

if base._attributes_unwrapped and self._attributes_unwrapped:

new_attributes = OrderedDict()

for attr in attributes:

if isinstance(attr, AnyAttribute):

new_attributes["##any"] = attr

else:

new_attributes[attr.qname.text] = attr

attributes = list(new_attributes.values())

if base._element:

base._element.resolve()

new = self.__class__(

element=self._element or base._element,

attributes=attributes,

qname=self.qname,

is_global=self.is_global,

)

return new.resolve()

def signature(self, schema=None, standalone=True):

parts = []

for name, element in self.elements_nested:

part = element.signature(schema, standalone=False)

parts.append(part)

for name, attribute in self.attributes:

part = "%s: %s" % (name, attribute.signature(schema, standalone=False))

parts.append(part)

value = ", ".join(parts)

if standalone:

return "%s(%s)" % (self.get_prefixed_name(schema), value)

else:

return value | zeep-roboticia | /zeep-roboticia-3.4.0.tar.gz/zeep-roboticia-3.4.0/src/zeep/xsd/types/complex.py | complex.py |

import logging

from zeep.utils import qname_attr

from zeep.xsd.const import xsd_ns, xsi_ns

from zeep.xsd.types.base import Type

from zeep.xsd.valueobjects import AnyObject

logger = logging.getLogger(__name__)

__all__ = ["AnyType"]

class AnyType(Type):

_default_qname = xsd_ns("anyType")

_attributes_unwrapped = []

_element = None

def __call__(self, value=None):

return value or ""

def render(self, parent, value, xsd_type=None, render_path=None):

if isinstance(value, AnyObject):

if value.xsd_type is None:

parent.set(xsi_ns("nil"), "true")

else:

value.xsd_type.render(parent, value.value, None, render_path)

parent.set(xsi_ns("type"), value.xsd_type.qname)

elif hasattr(value, "_xsd_elm"):

value._xsd_elm.render(parent, value, render_path)

parent.set(xsi_ns("type"), value._xsd_elm.qname)

else:

parent.text = self.xmlvalue(value)

def parse_xmlelement(

self, xmlelement, schema=None, allow_none=True, context=None, schema_type=None

):

"""Consume matching xmlelements and call parse() on each

:param xmlelement: XML element objects

:type xmlelement: lxml.etree._Element

:param schema: The parent XML schema

:type schema: zeep.xsd.Schema

:param allow_none: Allow none

:type allow_none: bool

:param context: Optional parsing context (for inline schemas)

:type context: zeep.xsd.context.XmlParserContext

:param schema_type: The original type (not overriden via xsi:type)

:type schema_type: zeep.xsd.types.base.Type

:rtype: dict or None

"""

xsi_type = qname_attr(xmlelement, xsi_ns("type"))

xsi_nil = xmlelement.get(xsi_ns("nil"))

children = list(xmlelement)

# Handle xsi:nil attribute

if xsi_nil == "true":

return None

# Check if a xsi:type is defined and try to parse the xml according

# to that type.

if xsi_type and schema:

xsd_type = schema.get_type(xsi_type, fail_silently=True)

# If we were unable to resolve a type for the xsi:type (due to

# buggy soap servers) then we just return the text or lxml element.

if not xsd_type:

logger.debug(

"Unable to resolve type for %r, returning raw data", xsi_type.text

)

if xmlelement.text:

return xmlelement.text

return children

# If the xsd_type is xsd:anyType then we will recurs so ignore

# that.

if isinstance(xsd_type, self.__class__):

return xmlelement.text or None

return xsd_type.parse_xmlelement(xmlelement, schema, context=context)

# If no xsi:type is set and the element has children then there is

# not much we can do. Just return the children

elif children:

return children

elif xmlelement.text is not None:

return self.pythonvalue(xmlelement.text)

return None

def resolve(self):

return self

def xmlvalue(self, value):

"""Guess the xsd:type for the value and use corresponding serializer"""

from zeep.xsd.types import builtins

available_types = [

builtins.String,

builtins.Boolean,

builtins.Decimal,

builtins.Float,

builtins.DateTime,

builtins.Date,

builtins.Time,

]

for xsd_type in available_types:

if isinstance(value, xsd_type.accepted_types):

return xsd_type().xmlvalue(value)

return str(value)

def pythonvalue(self, value, schema=None):

return value

def signature(self, schema=None, standalone=True):

return "xsd:anyType" | zeep-roboticia | /zeep-roboticia-3.4.0.tar.gz/zeep-roboticia-3.4.0/src/zeep/xsd/types/any.py | any.py |

import base64

import datetime

import math

import re

from decimal import Decimal as _Decimal

import isodate

import pytz

import six

from zeep.xsd.const import xsd_ns

from zeep.xsd.types.any import AnyType

from zeep.xsd.types.simple import AnySimpleType

class ParseError(ValueError):

pass

class BuiltinType(object):

def __init__(self, qname=None, is_global=False):

super(BuiltinType, self).__init__(qname, is_global=True)

def check_no_collection(func):

def _wrapper(self, value):

if isinstance(value, (list, dict, set)):

raise ValueError(

"The %s type doesn't accept collections as value"

% (self.__class__.__name__)

)

return func(self, value)

return _wrapper

##

# Primitive types

class String(BuiltinType, AnySimpleType):

_default_qname = xsd_ns("string")

accepted_types = six.string_types

@check_no_collection

def xmlvalue(self, value):

if isinstance(value, bytes):

return value.decode("utf-8")

return six.text_type(value if value is not None else "")

def pythonvalue(self, value):

return value

class Boolean(BuiltinType, AnySimpleType):

_default_qname = xsd_ns("boolean")

accepted_types = (bool,)

@check_no_collection

def xmlvalue(self, value):

return "true" if value and value not in ("false", "0") else "false"

def pythonvalue(self, value):

"""Return True if the 'true' or '1'. 'false' and '0' are legal false

values, but we consider everything not true as false.

"""

return value in ("true", "1")

class Decimal(BuiltinType, AnySimpleType):

_default_qname = xsd_ns("decimal")

accepted_types = (_Decimal, float) + six.string_types

@check_no_collection

def xmlvalue(self, value):

return str(value)

def pythonvalue(self, value):

return _Decimal(value)

class Float(BuiltinType, AnySimpleType):

_default_qname = xsd_ns("float")

accepted_types = (float, _Decimal) + six.string_types

def xmlvalue(self, value):

return str(value).upper()

def pythonvalue(self, value):

return float(value)

class Double(BuiltinType, AnySimpleType):

_default_qname = xsd_ns("double")

accepted_types = (_Decimal, float) + six.string_types

@check_no_collection

def xmlvalue(self, value):

return str(value)

def pythonvalue(self, value):

return float(value)

class Duration(BuiltinType, AnySimpleType):

_default_qname = xsd_ns("duration")

accepted_types = (isodate.duration.Duration,) + six.string_types

@check_no_collection

def xmlvalue(self, value):

return isodate.duration_isoformat(value)

def pythonvalue(self, value):

if value.startswith("PT-"):

value = value.replace("PT-", "PT")

result = isodate.parse_duration(value)

return datetime.timedelta(0 - result.total_seconds())

else:

return isodate.parse_duration(value)

class DateTime(BuiltinType, AnySimpleType):

_default_qname = xsd_ns("dateTime")

accepted_types = (datetime.datetime,) + six.string_types

@check_no_collection

def xmlvalue(self, value):

if isinstance(value, six.string_types):

return value

# Bit of a hack, since datetime is a subclass of date we can't just

# test it with an isinstance(). And actually, we should not really

# care about the type, as long as it has the required attributes

if not all(hasattr(value, attr) for attr in ("hour", "minute", "second")):

value = datetime.datetime.combine(

value,

datetime.time(

getattr(value, "hour", 0),

getattr(value, "minute", 0),

getattr(value, "second", 0),

),

)

if getattr(value, "microsecond", 0):

return isodate.isostrf.strftime(value, "%Y-%m-%dT%H:%M:%S.%f%Z")

return isodate.isostrf.strftime(value, "%Y-%m-%dT%H:%M:%S%Z")

def pythonvalue(self, value):

# Determine based on the length of the value if it only contains a date

# lazy hack ;-)

if len(value) == 10:

value += "T00:00:00"

return isodate.parse_datetime(value)

class Time(BuiltinType, AnySimpleType):

_default_qname = xsd_ns("time")

accepted_types = (datetime.time,) + six.string_types

@check_no_collection

def xmlvalue(self, value):

if isinstance(value, six.string_types):

return value

if value.microsecond:

return isodate.isostrf.strftime(value, "%H:%M:%S.%f%Z")

return isodate.isostrf.strftime(value, "%H:%M:%S%Z")

def pythonvalue(self, value):

return isodate.parse_time(value)

class Date(BuiltinType, AnySimpleType):

_default_qname = xsd_ns("date")

accepted_types = (datetime.date,) + six.string_types

@check_no_collection

def xmlvalue(self, value):

if isinstance(value, six.string_types):

return value

return isodate.isostrf.strftime(value, "%Y-%m-%d")

def pythonvalue(self, value):

return isodate.parse_date(value)

class gYearMonth(BuiltinType, AnySimpleType):

"""gYearMonth represents a specific gregorian month in a specific gregorian

year.

Lexical representation: CCYY-MM

"""

accepted_types = (datetime.date,) + six.string_types

_default_qname = xsd_ns("gYearMonth")

_pattern = re.compile(

r"^(?P<year>-?\d{4,})-(?P<month>\d\d)(?P<timezone>Z|[-+]\d\d:?\d\d)?$"

)

@check_no_collection

def xmlvalue(self, value):

year, month, tzinfo = value

return "%04d-%02d%s" % (year, month, _unparse_timezone(tzinfo))

def pythonvalue(self, value):

match = self._pattern.match(value)

if not match:

raise ParseError()

group = match.groupdict()

return (

int(group["year"]),

int(group["month"]),

_parse_timezone(group["timezone"]),

)

class gYear(BuiltinType, AnySimpleType):

"""gYear represents a gregorian calendar year.

Lexical representation: CCYY

"""

accepted_types = (datetime.date,) + six.string_types

_default_qname = xsd_ns("gYear")

_pattern = re.compile(r"^(?P<year>-?\d{4,})(?P<timezone>Z|[-+]\d\d:?\d\d)?$")

@check_no_collection

def xmlvalue(self, value):

year, tzinfo = value

return "%04d%s" % (year, _unparse_timezone(tzinfo))

def pythonvalue(self, value):

match = self._pattern.match(value)

if not match:

raise ParseError()

group = match.groupdict()

return (int(group["year"]), _parse_timezone(group["timezone"]))

class gMonthDay(BuiltinType, AnySimpleType):

"""gMonthDay is a gregorian date that recurs, specifically a day of the

year such as the third of May.

Lexical representation: --MM-DD

"""

accepted_types = (datetime.date,) + six.string_types

_default_qname = xsd_ns("gMonthDay")

_pattern = re.compile(

r"^--(?P<month>\d\d)-(?P<day>\d\d)(?P<timezone>Z|[-+]\d\d:?\d\d)?$"

)

@check_no_collection

def xmlvalue(self, value):

month, day, tzinfo = value

return "--%02d-%02d%s" % (month, day, _unparse_timezone(tzinfo))

def pythonvalue(self, value):

match = self._pattern.match(value)

if not match:

raise ParseError()

group = match.groupdict()

return (

int(group["month"]),

int(group["day"]),

_parse_timezone(group["timezone"]),

)

class gDay(BuiltinType, AnySimpleType):

"""gDay is a gregorian day that recurs, specifically a day of the month

such as the 5th of the month

Lexical representation: ---DD

"""

accepted_types = (datetime.date,) + six.string_types

_default_qname = xsd_ns("gDay")

_pattern = re.compile(r"^---(?P<day>\d\d)(?P<timezone>Z|[-+]\d\d:?\d\d)?$")

@check_no_collection

def xmlvalue(self, value):

day, tzinfo = value

return "---%02d%s" % (day, _unparse_timezone(tzinfo))

def pythonvalue(self, value):

match = self._pattern.match(value)

if not match:

raise ParseError()

group = match.groupdict()

return (int(group["day"]), _parse_timezone(group["timezone"]))

class gMonth(BuiltinType, AnySimpleType):

"""gMonth is a gregorian month that recurs every year.

Lexical representation: --MM

"""

accepted_types = (datetime.date,) + six.string_types

_default_qname = xsd_ns("gMonth")

_pattern = re.compile(r"^--(?P<month>\d\d)(?P<timezone>Z|[-+]\d\d:?\d\d)?$")

@check_no_collection

def xmlvalue(self, value):

month, tzinfo = value

return "--%d%s" % (month, _unparse_timezone(tzinfo))

def pythonvalue(self, value):

match = self._pattern.match(value)

if not match:

raise ParseError()

group = match.groupdict()

return (int(group["month"]), _parse_timezone(group["timezone"]))

class HexBinary(BuiltinType, AnySimpleType):

accepted_types = six.string_types

_default_qname = xsd_ns("hexBinary")

@check_no_collection

def xmlvalue(self, value):

return value

def pythonvalue(self, value):

return value

class Base64Binary(BuiltinType, AnySimpleType):

accepted_types = six.string_types

_default_qname = xsd_ns("base64Binary")

@check_no_collection

def xmlvalue(self, value):

return base64.b64encode(value)

def pythonvalue(self, value):

return base64.b64decode(value)

class AnyURI(BuiltinType, AnySimpleType):

accepted_types = six.string_types

_default_qname = xsd_ns("anyURI")

@check_no_collection

def xmlvalue(self, value):

return value

def pythonvalue(self, value):

return value

class QName(BuiltinType, AnySimpleType):

accepted_types = six.string_types

_default_qname = xsd_ns("QName")

@check_no_collection

def xmlvalue(self, value):

return value

def pythonvalue(self, value):

return value

class Notation(BuiltinType, AnySimpleType):

accepted_types = six.string_types

_default_qname = xsd_ns("NOTATION")

##

# Derived datatypes

class NormalizedString(String):

_default_qname = xsd_ns("normalizedString")

class Token(NormalizedString):

_default_qname = xsd_ns("token")

class Language(Token):

_default_qname = xsd_ns("language")

class NmToken(Token):

_default_qname = xsd_ns("NMTOKEN")

class NmTokens(NmToken):

_default_qname = xsd_ns("NMTOKENS")

class Name(Token):

_default_qname = xsd_ns("Name")

class NCName(Name):

_default_qname = xsd_ns("NCName")

class ID(NCName):

_default_qname = xsd_ns("ID")

class IDREF(NCName):

_default_qname = xsd_ns("IDREF")

class IDREFS(IDREF):

_default_qname = xsd_ns("IDREFS")

class Entity(NCName):

_default_qname = xsd_ns("ENTITY")

class Entities(Entity):

_default_qname = xsd_ns("ENTITIES")

class Integer(Decimal):

_default_qname = xsd_ns("integer")

accepted_types = (int, float) + six.string_types

def xmlvalue(self, value):

return str(value)

def pythonvalue(self, value):

return int(value)

class NonPositiveInteger(Integer):

_default_qname = xsd_ns("nonPositiveInteger")

class NegativeInteger(Integer):

_default_qname = xsd_ns("negativeInteger")

class Long(Integer):

_default_qname = xsd_ns("long")

def pythonvalue(self, value):

return long(value) if six.PY2 else int(value) # noqa

class Int(Long):

_default_qname = xsd_ns("int")

class Short(Int):

_default_qname = xsd_ns("short")

class Byte(Short):

"""A signed 8-bit integer"""

_default_qname = xsd_ns("byte")

class NonNegativeInteger(Integer):

_default_qname = xsd_ns("nonNegativeInteger")

class UnsignedLong(NonNegativeInteger):

_default_qname = xsd_ns("unsignedLong")

class UnsignedInt(UnsignedLong):

_default_qname = xsd_ns("unsignedInt")

class UnsignedShort(UnsignedInt):

_default_qname = xsd_ns("unsignedShort")

class UnsignedByte(UnsignedShort):

_default_qname = xsd_ns("unsignedByte")

class PositiveInteger(NonNegativeInteger):

_default_qname = xsd_ns("positiveInteger")

##

# Other

def _parse_timezone(val):

"""Return a pytz.tzinfo object"""

if not val:

return

if val == "Z" or val == "+00:00":

return pytz.utc

negative = val.startswith("-")

minutes = int(val[-2:])

minutes += int(val[1:3]) * 60

if negative:

minutes = 0 - minutes

return pytz.FixedOffset(minutes)

def _unparse_timezone(tzinfo):

if not tzinfo:

return ""

if tzinfo == pytz.utc:

return "Z"

hours = math.floor(tzinfo._minutes / 60)

minutes = tzinfo._minutes % 60

if hours > 0:

return "+%02d:%02d" % (hours, minutes)

return "-%02d:%02d" % (abs(hours), minutes)

_types = [

# Primitive

String,

Boolean,

Decimal,

Float,

Double,

Duration,

DateTime,

Time,

Date,

gYearMonth,

gYear,

gMonthDay,

gDay,

gMonth,

HexBinary,

Base64Binary,

AnyURI,

QName,

Notation,

# Derived

NormalizedString,

Token,

Language,

NmToken,

NmTokens,

Name,

NCName,

ID,

IDREF,

IDREFS,

Entity,

Entities,

Integer,

NonPositiveInteger, # noqa

NegativeInteger,

Long,

Int,

Short,

Byte,

NonNegativeInteger, # noqa

UnsignedByte,

UnsignedInt,

UnsignedLong,

UnsignedShort,

PositiveInteger,

# Other

AnyType,

AnySimpleType,

]

default_types = {cls._default_qname: cls(is_global=True) for cls in _types} | zeep-roboticia | /zeep-roboticia-3.4.0.tar.gz/zeep-roboticia-3.4.0/src/zeep/xsd/types/builtins.py | builtins.py |

Authors

=======

* Michael van Tellingen

Contributors

============

* Kateryna Burda

* Alexey Stepanov

* Marco Vellinga

* jaceksnet

* Andrew Serong

* vashek

* Seppo Yli-Olli

* Sam Denton

* Dani Möller

* Julien Delasoie

* Christian González

* bjarnagin

* mcordes

* Joeri Bekker

* Bartek Wójcicki

* jhorman

* fiebiga

* David Baumgold

* Antonio Cuni

* Alexandre de Mari

* Nicolas Evrard

* Eric Wong

* Jason Vertrees

* Falldog

* Matt Grimm (mgrimm)

* Marek Wywiał

* btmanm

* Caleb Salt

* Ondřej Lanč

* Jan Murre

* Stefano Parmesan

* Julien Marechal

* Dave Wapstra

* Mike Fiedler

* Derek Harland

* Bruno Duyé

* Christoph Heuel

* Ben Tucker

* Eric Waller

* Falk Schuetzenmeister

* Jon Jenkins

* OrangGeeGee

* Raymond Piller

* Zoltan Benedek

* Øyvind Heddeland Instefjord

* Pol Sanlorenzo

| zeep | /zeep-4.1.0.tar.gz/zeep-4.1.0/CONTRIBUTORS.rst | CONTRIBUTORS.rst |

========================

Zeep: Python SOAP client

========================

A fast and modern Python SOAP client

Highlights:

* Compatible with Python 3.6, 3.7, 3.8 and PyPy

* Build on top of lxml and requests

* Support for Soap 1.1, Soap 1.2 and HTTP bindings

* Support for WS-Addressing headers

* Support for WSSE (UserNameToken / x.509 signing)

* Support for asyncio using the httpx module

* Experimental support for XOP messages

Please see for more information the documentation at

http://docs.python-zeep.org/

.. start-no-pypi

Status

------

.. image:: https://readthedocs.org/projects/python-zeep/badge/?version=latest

:target: https://readthedocs.org/projects/python-zeep/

.. image:: https://github.com/mvantellingen/python-zeep/workflows/Python%20Tests/badge.svg

:target: https://github.com/mvantellingen/python-zeep/actions?query=workflow%3A%22Python+Tests%22

.. image:: http://codecov.io/github/mvantellingen/python-zeep/coverage.svg?branch=master

:target: http://codecov.io/github/mvantellingen/python-zeep?branch=master

.. image:: https://img.shields.io/pypi/v/zeep.svg

:target: https://pypi.python.org/pypi/zeep/

.. end-no-pypi

Installation

------------

.. code-block:: bash

pip install zeep

Note that the latest version to support Python 2.7, 3.3, 3.4 and 3.5 is Zeep 3.4, install via `pip install zeep==3.4.0`

Zeep uses the lxml library for parsing xml. See https://lxml.de/installation.html for the installation requirements.

Usage

-----

.. code-block:: python

from zeep import Client

client = Client('tests/wsdl_files/example.rst')

client.service.ping()

To quickly inspect a WSDL file use::

python -m zeep <url-to-wsdl>

Please see the documentation at http://docs.python-zeep.org for more

information.

Support

=======

If you want to report a bug then please first read

http://docs.python-zeep.org/en/master/reporting_bugs.html

Please only report bugs and not support requests to the GitHub issue tracker.

| zeep | /zeep-4.1.0.tar.gz/zeep-4.1.0/README.rst | README.rst |

# zeetoo

A collection of various Python scripts created as a help in everyday work in Team II IChO PAS.

- [Geting Started](#getting-started)

- [Running Scripts](#running-scripts)

- [Command Line Interface](#command-line-interface)

- [Python API](#python-api)

- [Graphical User Interface](#graphical-user-interface)

- [Description of modules](#description-of-modules)

- [backuper](#backuper) - simple automated backup tool for Windows

- [confsearch](#confsearch) - find conformers of given molecule using RDKit

- [fixgvmol](#fixgvmol) - correct .mol files created with GaussView software

- [getcdx](#getcdx) - extract all ChemDraw files embedded in .docx file

- [gofproc](#gofproc) - simple script for processing Gaussian output files

- [sdf_to_gjf](#sdf_to_gjf) - save molecules from .sdf file as separate .gjf files

- [Requirements](#requirements)

- [License & Disclaimer](#license--disclaimer)

- [Changelog](#changelog)

## Getting Started

To use this collection of scripts you will need a Python 3 interpreter.

You can download an installer of latest version from [python.org](https://www.python.org)

(a shortcut to direct download for Windows:

[Python 3.7.4 Windows x86 executable installer](https://www.python.org/ftp/python/3.7.4/python-3.7.4.exe)).

The easiest way to get **zeetoo** up and running is to run `pip install zeetoo` in the command line*.

Alternatively, you can download this package as zip file using 'Clone or download' button on this site.

Unzip the package and from the resulting directory run `python setup.py install`

in the command line*.

And that's it, you're ready to go!

* On windows you can reach command line by right-clicking inside the directory

while holding Shift and then choosing "Open PowerShell window here" or "Open command window here".

## Running Scripts

### Command Line Interface

All zeetoo functionality is available from command line.

After installation of the package each module can be accessed with use of

`zeetoo [module_name] [parameters]`.

For more information run `zeetoo --help` to see available modules or

`zeetoo [module_name] --help` to see the help page for specific module.

### Python API

Modules contained in **zeetoo** may also be used directly from python.

This section will be supplemented with details on this topic soon.

### Graphical User Interface

A simple graphical user interface (GUI) is available for backuper script.

Please refer to the [backuper section](#backuper) for details.

GUIs for other modules will probably be available in near future.

## Description of Modules

## backuper

A simple Python script for scheduling and running automated backup.

Essentially, it copies specified files and directories to specified location

with regard to date of last modification of both, source file and existing copy:

- if source file is newer than backup version, the second will be overridden;

- if both files have the same last modification time, file will not be copied;

- if backup version is newer, it will be renamed to "oldname_last-modification-time"

and source file will be copied, preserving both versions.

After creating a specification for backup job (that is, specifying backup destination

and files that should be copied; these information are stored in .ini file),

it may be run manually or scheduled.

Scheduling is currently available only on Windows, as it uses build-in Windows task scheduler.

It is important to remember, that this is not a version control software.

Only lastly copied version is stored.

A minimal graphical user interface for this script is available (see below).

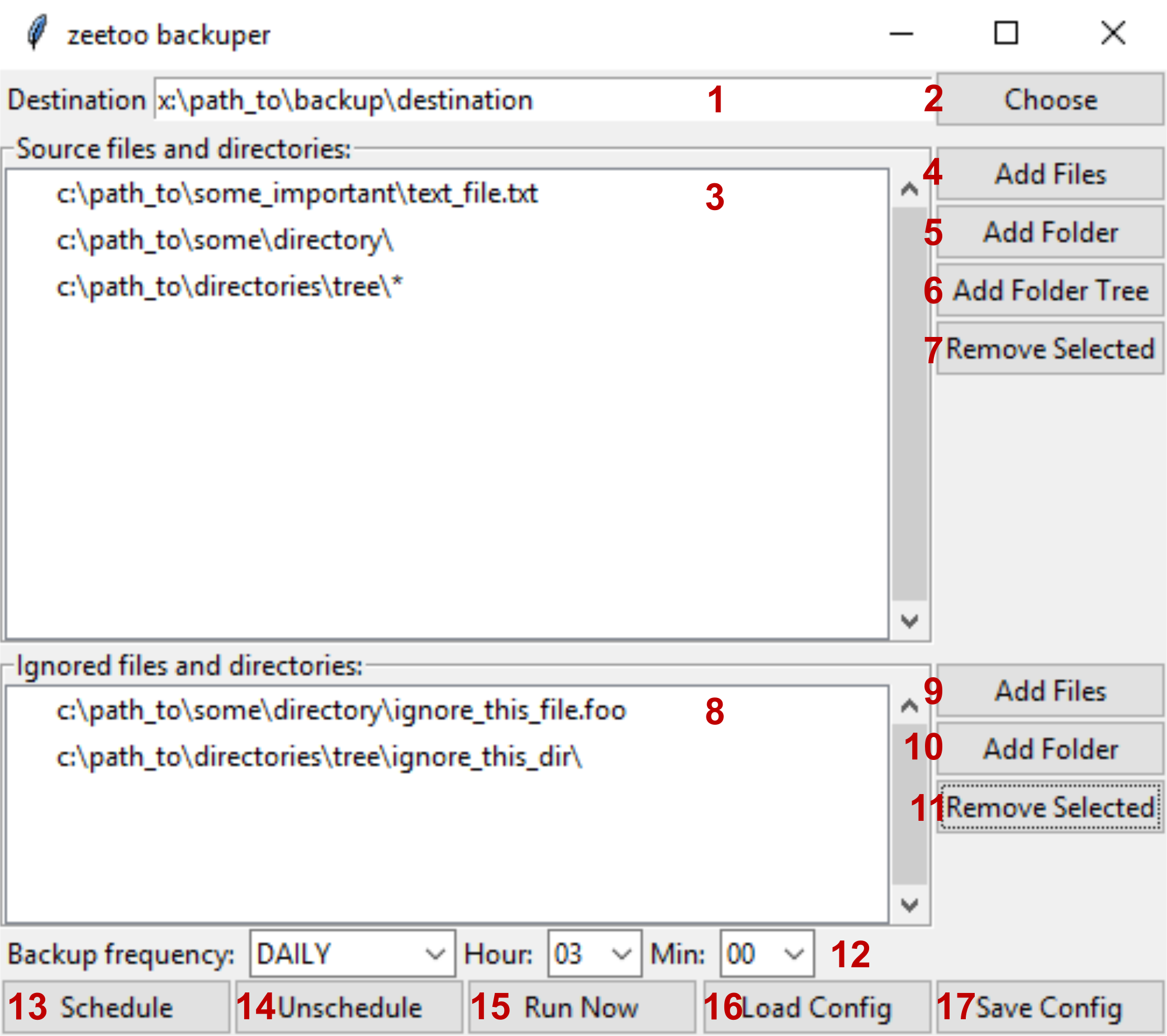

### graphical user interface for backuper module

To start up the graphical user interface (GUI) run `zeetoo backuper_gui` in the command line.

If you've downloaded the whole package manually, you may also double-click on start_gui.bat file.

A window similar to the one below should appear.

Further you'll find description of each element of this window.

1. This field shows path to backup's main directory. Files will be copied there. You can change this field directly or by clicking 2.

2. Choose backup destination directory using graphical interface.

3. All files and directories that are meant to be backuped are listed here. It will be called 'source' from now on. For more details read 4-7.

4. Add file or files to source. Files will be shown in 3 as line without slash character at the end. Each file will be copied to the directory of the same name as directory it is located in; in example shown above it would be 'x:\path_to\backup\destination\some_important\text_file.text'.

5. Add a directory to source. Directories will be shown in 3 as line with slash character at the end. All files (but not subdirectories!) present in this directory will be copied to directory with same name.

6. Add a directory tree to source. Trees will be shown in 3 as line with slash and star characters at the end. The whole content of chosen directory will be copied, including all files and subdirectories.

7. Remove selected path from source.

8. All files and directories marked as ignored will be shown here. Ignored files and directories won't be copied during backup, even if they are inside source directory or tree, or listed as source.

9. Add file or files to ignored.

10. Add directory to ignored.

11. Remove selected item from list of ignored files and directories.

12. Set how often backup should be run (once a day, once a week or once a month) and at what time.

13. Schedule backup task according to specified guidelines. WARNING: this will also automatically save configuration file.

14. Remove backup task scheduled earlier.

15. Run backup task now, according to specified guidelines. Saving configuration to file not needed.

16. Load configuration from specified file.

17. Save configuration.

Configuration is stored in `[User]/AppData/Local/zeetoo/backuper/config.ini` file.

After scheduling backup task this file should not be moved.

It can be modified though, backup task will be done with this modified guidelines from now on.

Scheduling new backup task, even using different configuration file, will override previous task,

unless task_name in this file is specifically changed.

## confsearch

Performs a conformational search on set of given molecules. Takes a .mol file (or number of them)

as an input and saves a list of generated conformers to specified .sdf file.

Some restriction on this process may be given: a number of conformers to generate,

a minimum RMSD value, a maximum energy difference, a maximum number of optimization cycles,

and a set of constraints for force field optimization.

## fixgvmol

.mol files created with GaussView (GV5 at least) lack some information, namely a mol version and END line.

Without it some programs might not be able to read such files.

This script adds these pieces of information to .mol files missing them.

## getcdx

Extracts all embedded ChemDraw files from a .docx document and saves it in a separate directory

(which might be specified by user), using in-text description of schemes/drawings as file names.

It may be specified if description of the scheme/drawing is located above or underneath it

(the former is default). Finally, It may be specified how long filename should be.

## gofproc

Extracts information about molecule energy and imaginary frequencies from given set of Gaussian

output files with *freq* job performed. Extracted data might be written to terminal (stdout)

or to specified .xlsx file (must not be opened in other programs) at the end of the file or

appended to a row, based on name of the file parsed.

Calculations, that did not converged are reported separately.

## sdf_to_gjf

Writes molecules contained in an .sdf file to a set of .gjf files in accordance with the guidelines

given by user.

# Requirements

- getcdx module requires olefile package

- gofproc module requires openpyxl package

- confsearch module requires RDKit software

Please note, that the RDKit **will not** be installed automatically with this package.

The recommended way to get RDKit software is through use of Anaconda Python distribution.

Please refer to RDKit documentation for more information.

# License & Disclaimer

See the LICENSE.txt file for license rights and limitations (MIT).

# Changelog

## v.0.1.3

- fixed sdf_to_gjf ignoring parameters "charge" and "multiplicity"

- supplemented sdf_to_gjf default values and help message

- fixed typo in sdf_to_gjf CLI ("sufix" -> "suffix")

- enabled specifying coordinates' precision in sdf_to_gjf

- enhanced handling of link0 commands by sdf_to_gjf

- removed filtering of explicitly provided non-.mol files in fixgvmol

## v.0.1.2

- getcdx now changes characters forbidden in file names to "-" instead of raising an exception

- start_gui.bat should now work regardless its location

## v.0.1.1

- fixed import errors when run as module

## v.0.1.0

- initial release | zeetoo | /zeetoo-0.1.3.tar.gz/zeetoo-0.1.3/README.md | README.md |

<div align="center">

<img width="300px" src="https://github.com/zefhub/zefhub-web-assets/blob/main/zef_logo_white.png#gh-dark-mode-only">

<img width="300px" src="https://github.com/zefhub/zefhub-web-assets/blob/main/zef_logo_black.png#gh-light-mode-only">

</div>

<p align="center">

A data-oriented toolkit for graph data

</p>

<p align="center">

<em>versioned graphs + streams + query using Python + GraphQL</em>

</p>

<div align="center">

<a href="https://github.com/zefhub/zef/actions/workflows/on-master-merge.yml">

<img src="https://github.com/zefhub/zef/actions/workflows/on-master-merge.yml/badge.svg" alt="Workflow status badge" loading="lazy" height="20">

</a>

<a href="https://github.com/zefhub/zef/blob/master/LICENSE">

<img src="https://img.shields.io/badge/license-Apache%202.0-teal" />

</a>

<a href="https://twitter.com/zefhub" target="_blank"><img src="https://img.shields.io/twitter/follow/zefhub.svg?style=social&label=Follow"></a>

<br />

<br />

<a href="https://zef.zefhub.io/">Docs</a>

<span> | </span>

<a href="https://zef.zefhub.io/blog">Blog</a>

<span> | </span>

<a href="https://zef.chat/">Chat</a>

<span> | </span>

<a href="https://www.zefhub.io/">ZefHub</a>

</div>

<br />

<br />

<br />

## Description

Zef is an open source, data-oriented toolkit for graph data. It combines the access speed and local development experience of an in-memory data structure with the power of a fully versioned, immutable database (and distributed persistence if needed with ZefHub). Furthermore, Zef includes a library of composable functional operators, effects handling, and native GraphQL support. You can pick and choose what you need for your project.

If any of these apply to you, Zef might help:

- I need a graph database with fast query speeds and hassle-free infra

- I need a graph data model that's more powerful than NetworkX but easier than Neo4j

- I need to "time travel" and access past states easily

- I like Datomic but prefer something open source that feels like working with local data structures

- I would prefer querying and traversing directly in Python, rather than a query language (like Cypher or GSQL)

- I need a GraphQL API that's easy to spin up and close to my data model

<br />

<br />

## Features

- a graph language you can use directly in Python code

- fully versioned graphs

- in-memory access speeds

- free and real-time data persistence (via ZefHub)

- work with graphs like local data structures

- no separate query language

- no ORM

- GraphQL API with low impedance mismatch to data model

- data streams and subscriptions

<br />

<br />

## Status

Zef is currently in Public Alpha.

- [x] Private Alpha: Testing Zef internally and with a closed group of users.

- [x] Public Alpha: Anyone can use Zef but please be patient with very large graphs!

- [ ] Public Beta: Stable enough for most non-enterprise use cases.

- [ ] Public: Stable for all production use cases.

<br />

<br />

## Installation

The platforms we currently support are 64-bit Linux and MacOS. The latest version can be installed via the PyPI repository using:

```bash

pip install zef

```

This will attempt to install a wheel if supported by your system and compile from source otherwise. See INSTALL for more details if compiling from source.

Check out our [installation doc](https://zef.zefhub.io/introduction/installation) for more details about getting up and running once installed.

<br />

<br />

## Using Zef

Here's some quick points to get going. Check out our [Quick Start](https://zef.zefhub.io/introduction/quick-start) and docs for more details.

A quick note, in Zef, we overloaded the "|" pipe so users can chain together values, Zef operators (ZefOps), and functions in sequential, lazy, and executable pipelines where data flow is left to right.

<br />

<div align="center">

<h3>💆 Get started 💆</h3>

</div>

```python

from zef import * # these imports unlock user friendly syntax and powerful Zef operators (ZefOps)

from zef.ops import *

g = Graph() # create an empty graph

```

<br />

<div align="center">

<h3>🌱 Add some data 🌱</h3>

</div>

```python

p1 = ET.Person | g | run # add an entity to the graph

(p1, RT.FirstName, "Yolandi") | g | run # add "fields" via relations triples: (source, relation, target)

```

<br />

<div align="center">

<h3>🐾 Traverse the graph 🐾</h3>

</div>

```python

p1 | Out[RT.FirstName] # one hop: step onto the relation

p1 | out_rel[RT.FirstName] # two hops: step onto the target

```

<br />

<div align="center">

<h3>⏳ Time travel ⌛</h3>

</div>

```python

p1 | time_travel[-2] # move reference frame back two time slices

p1 | time_travel[Time('2021 December 4 15:31:00 (+0100)')] # move to a specific date and time

```

<br />

<div align="center">

<h3>👐 Share with other users (via ZefHub) 👐</h3>

</div>

```python

g | sync[True] | run # save and sync all future changes on ZefHub

# ---------------- Python Session A (You) -----------------

g | uid | to_clipboard | run # copy uid onto local clipboard

# ---------------- Python Session B (Friend) -----------------

graph_uid: str = '...' # uid copied from Slack/WhatsApp/email/etc

g = Graph(graph_uid)

g | now | all[ET] | collect # see all entities in the latest time slice

```

<br />

<div align="center">

<h3>🚣 Choose your own adventure 🚣</h3>

</div>

- [Basic tutorial of Zef](https://zef.zefhub.io/tutorials/basic/employee-database)

- [Build Wordle clone with Zef](https://zef.zefhub.io/blog/wordle-using-zefops)

- [Import data from CSV](https://zef.zefhub.io/how-to/import-csv)

- [Import data from NetworkX](https://zef.zefhub.io/how-to/import-graph-formats)

- [Set up a GraphQL API](https://zef.zefhub.io/how-to/graphql-basic)

- [Use Zef graphs in NetworkX](https://zef.zefhub.io/how-to/use-zef-networkx)

<br />

<div align="center">

<h3>📌 A note on ZefHub 📌</h3>

</div>

Zef is designed so you can use it locally and drop it into any existing project. You have the option of syncing your graphs with ZefHub, a service that persists, syncs, and distributes graphs automatically (and the company behind Zef). ZefHub makes it possible to [share graphs with other users and see changes live](https://zef.zefhub.io/how-to/share-graphs), by memory mapping across machines in real-time!

You can create a ZefHub account for free which gives you full access to storing and sharing graphs forever. For full transparency, our long-term hope is that many users will get value from Zef or Zef + ZefHub for free, while ZefHub power users will pay a fee for added features and services.

<br />

<br />

## Roadmap

We want to make it incredibly easy for developers to build fully distributed, reactive systems with consistent data and cross-language (Python, C++, Julia) support. If there's sufficient interest, we'd be happy to share a public board of items we're working on.

<br />

<br />

## Contributing

Thank you for considering contributing to Zef! We know your time is valuable and your input makes Zef better for all current and future users.

To optimize for feedback speed, please raise bugs or suggest features directly in our community chat [https://zef.chat](https://zef.chat).

Please refer to our [CONTRIBUTING file](https://github.com/zefhub/zef/blob/master/CONTRIBUTING.md) and [CODE_OF_CONDUCT file](https://github.com/zefhub/zef/blob/master/CODE_OF_CONDUCT.md) for more details.

<br />

<br />

## License

Zef is licensed under the Apache License, Version 2.0 (the "License"). You may obtain a copy of the License at

[http://www.apache.org/licenses/LICENSE-2.0](http://www.apache.org/licenses/LICENSE-2.0)

<br />

<br />

## Dependencies

The compiled libraries make use of the following packages:

- `asio` (https://github.com/chriskohlhoff/asio)

- `JWT++` (https://github.com/Thalhammer/jwt-cpp)

- `Curl` (https://github.com/curl/curl)

- `JSON` (https://github.com/nlohmann/json)

- `Parallel hashmap` (https://github.com/greg7mdp/parallel-hashmap)

- `Ranges-v3` (https://github.com/ericniebler/range-v3)

- `Websocket++` (https://github.com/zaphoyd/websocketpp)

- `Zstandard` (https://github.com/facebook/zstd)

- `pybind11` (https://github.com/pybind/pybind11)

- `pybind_json` (https://github.com/pybind/pybind11_json)

| zef | /zef-0.17.0a3.tar.gz/zef-0.17.0a3/README.md | README.md |

# Version: 0.22

"""The Versioneer - like a rocketeer, but for versions.

The Versioneer

==============

* like a rocketeer, but for versions!

* https://github.com/python-versioneer/python-versioneer

* Brian Warner

* License: Public Domain

* Compatible with: Python 3.6, 3.7, 3.8, 3.9, 3.10 and pypy3

* [![Latest Version][pypi-image]][pypi-url]

* [![Build Status][travis-image]][travis-url]

This is a tool for managing a recorded version number in distutils/setuptools-based

python projects. The goal is to remove the tedious and error-prone "update

the embedded version string" step from your release process. Making a new

release should be as easy as recording a new tag in your version-control

system, and maybe making new tarballs.

## Quick Install

* `pip install versioneer` to somewhere in your $PATH

* add a `[versioneer]` section to your setup.cfg (see [Install](INSTALL.md))

* run `versioneer install` in your source tree, commit the results

* Verify version information with `python setup.py version`

## Version Identifiers

Source trees come from a variety of places:

* a version-control system checkout (mostly used by developers)

* a nightly tarball, produced by build automation

* a snapshot tarball, produced by a web-based VCS browser, like github's

"tarball from tag" feature

* a release tarball, produced by "setup.py sdist", distributed through PyPI

Within each source tree, the version identifier (either a string or a number,

this tool is format-agnostic) can come from a variety of places:

* ask the VCS tool itself, e.g. "git describe" (for checkouts), which knows

about recent "tags" and an absolute revision-id

* the name of the directory into which the tarball was unpacked

* an expanded VCS keyword ($Id$, etc)

* a `_version.py` created by some earlier build step

For released software, the version identifier is closely related to a VCS

tag. Some projects use tag names that include more than just the version

string (e.g. "myproject-1.2" instead of just "1.2"), in which case the tool

needs to strip the tag prefix to extract the version identifier. For

unreleased software (between tags), the version identifier should provide

enough information to help developers recreate the same tree, while also

giving them an idea of roughly how old the tree is (after version 1.2, before

version 1.3). Many VCS systems can report a description that captures this,

for example `git describe --tags --dirty --always` reports things like

"0.7-1-g574ab98-dirty" to indicate that the checkout is one revision past the

0.7 tag, has a unique revision id of "574ab98", and is "dirty" (it has

uncommitted changes).

The version identifier is used for multiple purposes:

* to allow the module to self-identify its version: `myproject.__version__`

* to choose a name and prefix for a 'setup.py sdist' tarball

## Theory of Operation

Versioneer works by adding a special `_version.py` file into your source

tree, where your `__init__.py` can import it. This `_version.py` knows how to

dynamically ask the VCS tool for version information at import time.

`_version.py` also contains `$Revision$` markers, and the installation

process marks `_version.py` to have this marker rewritten with a tag name

during the `git archive` command. As a result, generated tarballs will

contain enough information to get the proper version.

To allow `setup.py` to compute a version too, a `versioneer.py` is added to

the top level of your source tree, next to `setup.py` and the `setup.cfg`

that configures it. This overrides several distutils/setuptools commands to

compute the version when invoked, and changes `setup.py build` and `setup.py

sdist` to replace `_version.py` with a small static file that contains just

the generated version data.

## Installation

See [INSTALL.md](./INSTALL.md) for detailed installation instructions.

## Version-String Flavors

Code which uses Versioneer can learn about its version string at runtime by

importing `_version` from your main `__init__.py` file and running the

`get_versions()` function. From the "outside" (e.g. in `setup.py`), you can

import the top-level `versioneer.py` and run `get_versions()`.

Both functions return a dictionary with different flavors of version

information:

* `['version']`: A condensed version string, rendered using the selected

style. This is the most commonly used value for the project's version

string. The default "pep440" style yields strings like `0.11`,

`0.11+2.g1076c97`, or `0.11+2.g1076c97.dirty`. See the "Styles" section

below for alternative styles.

* `['full-revisionid']`: detailed revision identifier. For Git, this is the

full SHA1 commit id, e.g. "1076c978a8d3cfc70f408fe5974aa6c092c949ac".

* `['date']`: Date and time of the latest `HEAD` commit. For Git, it is the

commit date in ISO 8601 format. This will be None if the date is not

available.

* `['dirty']`: a boolean, True if the tree has uncommitted changes. Note that

this is only accurate if run in a VCS checkout, otherwise it is likely to

be False or None

* `['error']`: if the version string could not be computed, this will be set

to a string describing the problem, otherwise it will be None. It may be

useful to throw an exception in setup.py if this is set, to avoid e.g.

creating tarballs with a version string of "unknown".

Some variants are more useful than others. Including `full-revisionid` in a

bug report should allow developers to reconstruct the exact code being tested

(or indicate the presence of local changes that should be shared with the

developers). `version` is suitable for display in an "about" box or a CLI

`--version` output: it can be easily compared against release notes and lists

of bugs fixed in various releases.

The installer adds the following text to your `__init__.py` to place a basic

version in `YOURPROJECT.__version__`:

from ._version import get_versions

__version__ = get_versions()['version']

del get_versions

## Styles

The setup.cfg `style=` configuration controls how the VCS information is

rendered into a version string.

The default style, "pep440", produces a PEP440-compliant string, equal to the

un-prefixed tag name for actual releases, and containing an additional "local

version" section with more detail for in-between builds. For Git, this is

TAG[+DISTANCE.gHEX[.dirty]] , using information from `git describe --tags

--dirty --always`. For example "0.11+2.g1076c97.dirty" indicates that the

tree is like the "1076c97" commit but has uncommitted changes (".dirty"), and

that this commit is two revisions ("+2") beyond the "0.11" tag. For released

software (exactly equal to a known tag), the identifier will only contain the

stripped tag, e.g. "0.11".

Other styles are available. See [details.md](details.md) in the Versioneer

source tree for descriptions.

## Debugging

Versioneer tries to avoid fatal errors: if something goes wrong, it will tend

to return a version of "0+unknown". To investigate the problem, run `setup.py

version`, which will run the version-lookup code in a verbose mode, and will

display the full contents of `get_versions()` (including the `error` string,

which may help identify what went wrong).

## Known Limitations

Some situations are known to cause problems for Versioneer. This details the

most significant ones. More can be found on Github

[issues page](https://github.com/python-versioneer/python-versioneer/issues).

### Subprojects

Versioneer has limited support for source trees in which `setup.py` is not in

the root directory (e.g. `setup.py` and `.git/` are *not* siblings). The are

two common reasons why `setup.py` might not be in the root:

* Source trees which contain multiple subprojects, such as

[Buildbot](https://github.com/buildbot/buildbot), which contains both

"master" and "slave" subprojects, each with their own `setup.py`,

`setup.cfg`, and `tox.ini`. Projects like these produce multiple PyPI

distributions (and upload multiple independently-installable tarballs).

* Source trees whose main purpose is to contain a C library, but which also

provide bindings to Python (and perhaps other languages) in subdirectories.

Versioneer will look for `.git` in parent directories, and most operations

should get the right version string. However `pip` and `setuptools` have bugs

and implementation details which frequently cause `pip install .` from a

subproject directory to fail to find a correct version string (so it usually

defaults to `0+unknown`).

`pip install --editable .` should work correctly. `setup.py install` might

work too.

Pip-8.1.1 is known to have this problem, but hopefully it will get fixed in

some later version.

[Bug #38](https://github.com/python-versioneer/python-versioneer/issues/38) is tracking

this issue. The discussion in

[PR #61](https://github.com/python-versioneer/python-versioneer/pull/61) describes the

issue from the Versioneer side in more detail.

[pip PR#3176](https://github.com/pypa/pip/pull/3176) and

[pip PR#3615](https://github.com/pypa/pip/pull/3615) contain work to improve

pip to let Versioneer work correctly.

Versioneer-0.16 and earlier only looked for a `.git` directory next to the

`setup.cfg`, so subprojects were completely unsupported with those releases.

### Editable installs with setuptools <= 18.5

`setup.py develop` and `pip install --editable .` allow you to install a

project into a virtualenv once, then continue editing the source code (and

test) without re-installing after every change.

"Entry-point scripts" (`setup(entry_points={"console_scripts": ..})`) are a

convenient way to specify executable scripts that should be installed along

with the python package.

These both work as expected when using modern setuptools. When using

setuptools-18.5 or earlier, however, certain operations will cause

`pkg_resources.DistributionNotFound` errors when running the entrypoint

script, which must be resolved by re-installing the package. This happens

when the install happens with one version, then the egg_info data is

regenerated while a different version is checked out. Many setup.py commands

cause egg_info to be rebuilt (including `sdist`, `wheel`, and installing into

a different virtualenv), so this can be surprising.

[Bug #83](https://github.com/python-versioneer/python-versioneer/issues/83) describes

this one, but upgrading to a newer version of setuptools should probably

resolve it.

## Updating Versioneer

To upgrade your project to a new release of Versioneer, do the following:

* install the new Versioneer (`pip install -U versioneer` or equivalent)

* edit `setup.cfg`, if necessary, to include any new configuration settings

indicated by the release notes. See [UPGRADING](./UPGRADING.md) for details.

* re-run `versioneer install` in your source tree, to replace

`SRC/_version.py`

* commit any changed files

## Future Directions

This tool is designed to make it easily extended to other version-control

systems: all VCS-specific components are in separate directories like

src/git/ . The top-level `versioneer.py` script is assembled from these

components by running make-versioneer.py . In the future, make-versioneer.py

will take a VCS name as an argument, and will construct a version of

`versioneer.py` that is specific to the given VCS. It might also take the

configuration arguments that are currently provided manually during

installation by editing setup.py . Alternatively, it might go the other

direction and include code from all supported VCS systems, reducing the

number of intermediate scripts.

## Similar projects

* [setuptools_scm](https://github.com/pypa/setuptools_scm/) - a non-vendored build-time

dependency

* [minver](https://github.com/jbweston/miniver) - a lightweight reimplementation of

versioneer

* [versioningit](https://github.com/jwodder/versioningit) - a PEP 518-based setuptools

plugin

## License

To make Versioneer easier to embed, all its code is dedicated to the public

domain. The `_version.py` that it creates is also in the public domain.

Specifically, both are released under the Creative Commons "Public Domain

Dedication" license (CC0-1.0), as described in

https://creativecommons.org/publicdomain/zero/1.0/ .

[pypi-image]: https://img.shields.io/pypi/v/versioneer.svg

[pypi-url]: https://pypi.python.org/pypi/versioneer/

[travis-image]:

https://img.shields.io/travis/com/python-versioneer/python-versioneer.svg

[travis-url]: https://travis-ci.com/github/python-versioneer/python-versioneer

"""

# pylint:disable=invalid-name,import-outside-toplevel,missing-function-docstring

# pylint:disable=missing-class-docstring,too-many-branches,too-many-statements

# pylint:disable=raise-missing-from,too-many-lines,too-many-locals,import-error

# pylint:disable=too-few-public-methods,redefined-outer-name,consider-using-with

# pylint:disable=attribute-defined-outside-init,too-many-arguments

import configparser

import errno

import json

import os

import re

import subprocess

import sys

from typing import Callable, Dict

import functools

class VersioneerConfig:

"""Container for Versioneer configuration parameters."""

def get_root():

"""Get the project root directory.

We require that all commands are run from the project root, i.e. the

directory that contains setup.py, setup.cfg, and versioneer.py .

"""

root = os.path.realpath(os.path.abspath(os.getcwd()))

setup_py = os.path.join(root, "setup.py")

versioneer_py = os.path.join(root, "versioneer.py")

if not (os.path.exists(setup_py) or os.path.exists(versioneer_py)):

# allow 'python path/to/setup.py COMMAND'

root = os.path.dirname(os.path.realpath(os.path.abspath(sys.argv[0])))

setup_py = os.path.join(root, "setup.py")

versioneer_py = os.path.join(root, "versioneer.py")

if not (os.path.exists(setup_py) or os.path.exists(versioneer_py)):

err = ("Versioneer was unable to run the project root directory. "

"Versioneer requires setup.py to be executed from "

"its immediate directory (like 'python setup.py COMMAND'), "

"or in a way that lets it use sys.argv[0] to find the root "

"(like 'python path/to/setup.py COMMAND').")

raise VersioneerBadRootError(err)

try:

# Certain runtime workflows (setup.py install/develop in a setuptools

# tree) execute all dependencies in a single python process, so

# "versioneer" may be imported multiple times, and python's shared

# module-import table will cache the first one. So we can't use

# os.path.dirname(__file__), as that will find whichever

# versioneer.py was first imported, even in later projects.

my_path = os.path.realpath(os.path.abspath(__file__))

me_dir = os.path.normcase(os.path.splitext(my_path)[0])

vsr_dir = os.path.normcase(os.path.splitext(versioneer_py)[0])

if me_dir != vsr_dir:

print("Warning: build in %s is using versioneer.py from %s"

% (os.path.dirname(my_path), versioneer_py))

except NameError:

pass

return root

def get_config_from_root(root):

"""Read the project setup.cfg file to determine Versioneer config."""

# This might raise OSError (if setup.cfg is missing), or

# configparser.NoSectionError (if it lacks a [versioneer] section), or

# configparser.NoOptionError (if it lacks "VCS="). See the docstring at

# the top of versioneer.py for instructions on writing your setup.cfg .

setup_cfg = os.path.join(root, "setup.cfg")

parser = configparser.ConfigParser()

with open(setup_cfg, "r") as cfg_file:

parser.read_file(cfg_file)

VCS = parser.get("versioneer", "VCS") # mandatory

# Dict-like interface for non-mandatory entries

section = parser["versioneer"]

cfg = VersioneerConfig()

cfg.VCS = VCS

cfg.style = section.get("style", "")

cfg.versionfile_source = section.get("versionfile_source")

cfg.versionfile_build = section.get("versionfile_build")

cfg.tag_prefix = section.get("tag_prefix")

if cfg.tag_prefix in ("''", '""'):

cfg.tag_prefix = ""

cfg.parentdir_prefix = section.get("parentdir_prefix")

cfg.verbose = section.get("verbose")

return cfg

class NotThisMethod(Exception):

"""Exception raised if a method is not valid for the current scenario."""

# these dictionaries contain VCS-specific tools

LONG_VERSION_PY: Dict[str, str] = {}

HANDLERS: Dict[str, Dict[str, Callable]] = {}

def register_vcs_handler(vcs, method): # decorator

"""Create decorator to mark a method as the handler of a VCS."""

def decorate(f):

"""Store f in HANDLERS[vcs][method]."""

HANDLERS.setdefault(vcs, {})[method] = f

return f

return decorate

def run_command(commands, args, cwd=None, verbose=False, hide_stderr=False,

env=None):

"""Call the given command(s)."""

assert isinstance(commands, list)

process = None

popen_kwargs = {}

if sys.platform == "win32":

# This hides the console window if pythonw.exe is used

startupinfo = subprocess.STARTUPINFO()

startupinfo.dwFlags |= subprocess.STARTF_USESHOWWINDOW

popen_kwargs["startupinfo"] = startupinfo

for command in commands:

try:

dispcmd = str([command] + args)

# remember shell=False, so use git.cmd on windows, not just git

process = subprocess.Popen([command] + args, cwd=cwd, env=env,

stdout=subprocess.PIPE,

stderr=(subprocess.PIPE if hide_stderr

else None), **popen_kwargs)

break

except OSError:

e = sys.exc_info()[1]

if e.errno == errno.ENOENT:

continue

if verbose:

print("unable to run %s" % dispcmd)

print(e)

return None, None

else:

if verbose:

print("unable to find command, tried %s" % (commands,))

return None, None

stdout = process.communicate()[0].strip().decode()

if process.returncode != 0:

if verbose:

print("unable to run %s (error)" % dispcmd)

print("stdout was %s" % stdout)

return None, process.returncode

return stdout, process.returncode

LONG_VERSION_PY['git'] = r'''

# This file helps to compute a version number in source trees obtained from

# git-archive tarball (such as those provided by githubs download-from-tag

# feature). Distribution tarballs (built by setup.py sdist) and build

# directories (produced by setup.py build) will contain a much shorter file

# that just contains the computed version number.

# This file is released into the public domain. Generated by

# versioneer-0.22 (https://github.com/python-versioneer/python-versioneer)

"""Git implementation of _version.py."""

import errno

import os

import re

import subprocess

import sys

from typing import Callable, Dict

import functools

def get_keywords():

"""Get the keywords needed to look up the version information."""

# these strings will be replaced by git during git-archive.

# setup.py/versioneer.py will grep for the variable names, so they must

# each be defined on a line of their own. _version.py will just call

# get_keywords().

git_refnames = "%(DOLLAR)sFormat:%%d%(DOLLAR)s"

git_full = "%(DOLLAR)sFormat:%%H%(DOLLAR)s"

git_date = "%(DOLLAR)sFormat:%%ci%(DOLLAR)s"

keywords = {"refnames": git_refnames, "full": git_full, "date": git_date}

return keywords

class VersioneerConfig:

"""Container for Versioneer configuration parameters."""

def get_config():

"""Create, populate and return the VersioneerConfig() object."""

# these strings are filled in when 'setup.py versioneer' creates

# _version.py

cfg = VersioneerConfig()

cfg.VCS = "git"

cfg.style = "%(STYLE)s"

cfg.tag_prefix = "%(TAG_PREFIX)s"

cfg.parentdir_prefix = "%(PARENTDIR_PREFIX)s"

cfg.versionfile_source = "%(VERSIONFILE_SOURCE)s"

cfg.verbose = False

return cfg

class NotThisMethod(Exception):