
# zilean

[PyPI version](https://badge.fury.io/py/zilean) [codecov](https://codecov.io/gh/JohnsonJDDJ/zilean) [Documentation Status](https://zilean.readthedocs.io/en/main/?badge=main)

> _Zilean is a League of Legends character that can drift through past, present and future. The project is borrowing Zilean's temporal magic to foresee the result of a match._

Documentation: [here](https://zilean.readthedocs.io/).

**The project is open to all sorts of contributions and collaboration! Please feel free to clone, fork, PR...anything! If you are interested, contact me!**

Contact: Johnson Du <[email protected]>

- [Introduction](#Introduction)
- [Demo](#Demo)

## Introduction

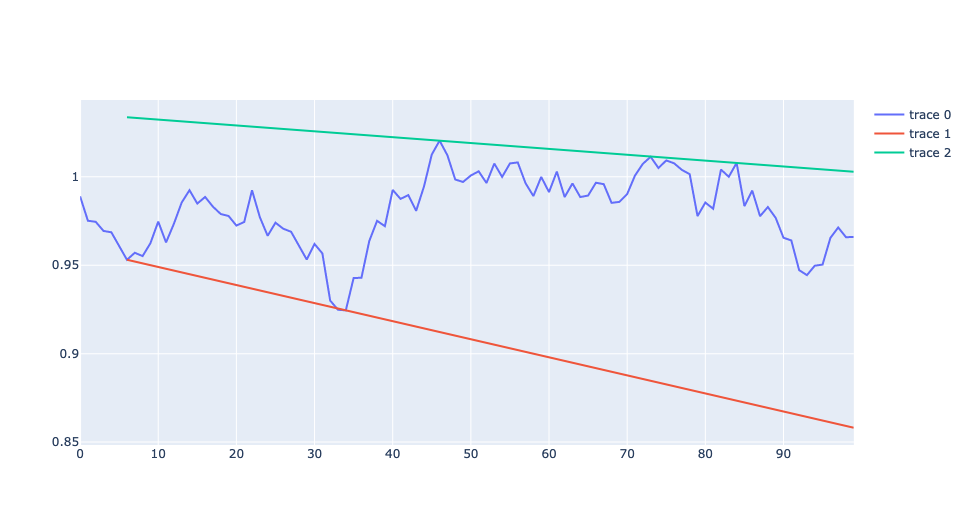

`zilean` is designed to facilitate data analysis of the Riot [MatchTimelineDto](https://developer.riotgames.com/apis#match-v5/GET_getTimeline). The `MatchTimelineDto` is a powerful object that contains information about a specific [League of Legends](https://leagueoflegends.com/) match at **every minute mark**. This makes the `MatchTimelineDto` an **ideal object for various machine learning tasks**, for example predicting match results using game statistics from before the 16-minute mark.
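To make "every minute mark" concrete, here is a minimal sketch that reads one statistic per player out of a timeline at a chosen minute. It assumes the match-v5 timeline layout, where `info.frames` holds roughly one frame per minute (frame 0 at game start) and each frame's `participantFrames` is keyed by participant id `"1"` to `"10"`. `gold_at_minute` is a hypothetical helper, not part of `zilean`, and the toy timeline values are made up.

```python
from typing import Any, Dict


def gold_at_minute(timeline: Dict[str, Any], minute: int) -> Dict[int, int]:
    """Return {participantId: totalGold} at the given minute mark.

    Assumes one frame per minute, so frames[minute] is the snapshot
    taken at that minute (frames[0] is the start of the game).
    """
    frames = timeline["info"]["frames"]
    if not 0 <= minute < len(frames):
        raise ValueError(f"no frame for minute {minute}; match has {len(frames)} frames")
    participant_frames = frames[minute]["participantFrames"]
    # participantFrames is keyed by participant id as a string ("1" .. "10")
    return {int(pid): pf["totalGold"] for pid, pf in participant_frames.items()}


# Toy timeline with two frames and two participants (illustrative values only)
toy_timeline = {
    "info": {
        "frameInterval": 60000,
        "frames": [
            {"timestamp": 0,
             "participantFrames": {"1": {"totalGold": 500}, "2": {"totalGold": 500}}},
            {"timestamp": 60000,
             "participantFrames": {"1": {"totalGold": 620}, "2": {"totalGold": 575}}},
        ],
    }
}

print(gold_at_minute(toy_timeline, 1))  # {1: 620, 2: 575}
```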

Unlike traditional sports, esports such as League of Legends have an innate advantage when it comes to data collection. Since every play happens digitally, there is huge potential to explore and perform all kinds of data analysis. `zilean` hopes to explore the potential offered by the [Riot Games API](https://developer.riotgames.com/), **and through the power of computing, make our community a better place.**

GL:HF!

## Demo

Here is a quick look at how to do League of Legends data analysis with `zilean`:

```python
from zilean import TimelineCrawler, SnapShots, read_api_key
import pandas as pd
import seaborn as sns

# Use the TimelineCrawler to fetch `MatchTimelineDto`s
# from Riot. The `MatchTimelineDto`s have game stats
# at each minute mark.

# We need an API key to fetch data. See the Riot Developer
# Portal for more info.
api_key = read_api_key("YOUR_API_KEY_HERE")  # placeholder: use your own key

# Crawl 2000 Diamond RANKED_SOLO_5x5 timelines from the Korean server.
crawler = TimelineCrawler(api_key, region="kr",
                          tier="DIAMOND", queue="RANKED_SOLO_5x5")
result = crawler.crawl(2000, match_per_id=30, file="results.json")
# This will take a long time!

# We will look at the player statistics at the 10 and 15 minute marks.
snaps = SnapShots(result, frames=[10, 15])

# Store the player statistics in a pandas DataFrame
player_stats = snaps.summary(per_frame=True)
data = pd.DataFrame(player_stats)

# Look at the distribution of totalGold difference for `player 0` (TOP player)
# at the 15 minute mark.
sns.displot(x="totalGold_0", data=data[data['frame'] == 15], hue="win")
```
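The final plot compares `totalGold_0` between won and lost matches at the 15-minute frame. Below is a dependency-free sketch of the same comparison as group means. It assumes each summary row is a dict with `frame`, `win` and `totalGold_0` keys (column names taken from the demo; the exact shape returned by `SnapShots.summary` is an assumption), and the rows are invented for illustration.

```python
from statistics import mean
from typing import Dict, List


def mean_by_outcome(rows: List[dict], column: str, frame: int) -> Dict[bool, float]:
    """Average `column` over rows at one frame, split by match outcome."""
    groups: Dict[bool, List[float]] = {}
    for row in rows:
        if row["frame"] == frame:
            groups.setdefault(bool(row["win"]), []).append(row[column])
    return {outcome: mean(values) for outcome, values in groups.items()}


# Invented example rows, shaped like the demo's DataFrame columns
rows = [
    {"frame": 10, "totalGold_0": 3200, "win": True},
    {"frame": 15, "totalGold_0": 5400, "win": True},
    {"frame": 15, "totalGold_0": 5000, "win": True},
    {"frame": 15, "totalGold_0": 4600, "win": False},
]
print(mean_by_outcome(rows, "totalGold_0", 15))  # {True: 5200, False: 4600}
```

The plot shows the whole distribution, not just two means, but the means are a quick check that the feature separates wins from losses at all.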

Here is an example of some quick machine learning.

```python
# Do some simple modelling
from sklearn.model_selection import train_test_split
from sklearn.ensemble import RandomForestClassifier

# Split the `data` DataFrame built in the demo above into training and test sets
train, test = train_test_split(data, test_size=0.33)

# Define X and y: every column except the match id and the label is a feature
X_train = train.drop(["matchId", "win"], axis=1)
y_train = train["win"].astype(int)
X_test = test.drop(["matchId", "win"], axis=1)
y_test = test["win"].astype(int)

# Build a default random forest classifier
rf = RandomForestClassifier()
rf.fit(X_train, y_train)

y_fitted = rf.predict(X_train)
print(f"Training accuracy: {(y_train == y_fitted).mean()}")
# Accuracy on the held-out rows
print(f"Test accuracy: {rf.score(X_test, y_test)}")
```

| zilean | /zilean-0.0.2.tar.gz/zilean-0.0.2/README.md | README.md |

# Notices

This repository incorporates material as listed below or described in the code.

## django-mssql-backend

Please see below for the associated license for the incorporated material from django-mssql-backend (https://github.com/ESSolutions/django-mssql-backend).

### BSD 3-Clause License

Copyright (c) 2019, ES Solutions AB

All rights reserved.

Redistribution and use in source and binary forms, with or without

modification, are permitted provided that the following conditions are met:

* Redistributions of source code must retain the above copyright notice, this

list of conditions and the following disclaimer.

* Redistributions in binary form must reproduce the above copyright notice,

this list of conditions and the following disclaimer in the documentation

and/or other materials provided with the distribution.

* Neither the name of the copyright holder nor the names of its

contributors may be used to endorse or promote products derived from

this software without specific prior written permission.

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE

IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE ARE

DISCLAIMED. IN NO EVENT SHALL THE COPYRIGHT HOLDER OR CONTRIBUTORS BE LIABLE

FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR CONSEQUENTIAL

DAMAGES (INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR

SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER

CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY,

OR TORT (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

| zilian-mssql-django | /zilian-mssql-django-1.1.4.tar.gz/zilian-mssql-django-1.1.4/NOTICE.md | NOTICE.md |

# SQL Server backend for Django

Welcome to the Zilian-MSSQL-Django 3rd party backend project!

*zilian-mssql-django* is a fork of [mssql-django](https://pypi.org/project/mssql-django/). This project provides an enterprise database connectivity option for the Django Web Framework, with support for Microsoft SQL Server and Azure SQL Database.

We'd like to give thanks to the community that made this project possible, with particular recognition of the contributors: OskarPersson, michiya, dlo and the original Google Code django-pyodbc team. Moving forward, we encourage participation in this project from both old and new contributors!

We hope you enjoy using the Zilian-MSSQL-Django 3rd party backend.

## Features

- Supports Django 3.2 and 4.0

- Tested on Microsoft SQL Server 2016, 2017, 2019

- Passes most of the tests of the Django test suite

- Compatible with [Microsoft ODBC Driver for SQL Server](https://docs.microsoft.com/en-us/sql/connect/odbc/microsoft-odbc-driver-for-sql-server), [SQL Server Native Client](https://msdn.microsoft.com/en-us/library/ms131321(v=sql.120).aspx), and [FreeTDS](https://www.freetds.org/) ODBC drivers

- Supports Azure SQL serverless database reconnection

## Dependencies

- pyodbc 3.0 or newer

## Installation

1. Install pyodbc 3.0 (or newer) and Django.

2. Install zilian-mssql-django:

       pip install zilian-mssql-django

3. Set the `ENGINE` setting in the `settings.py` file used by your Django application or project to `'mssql'`:

       'ENGINE': 'mssql'

## Configuration

### Standard Django settings

The following entries in a database-level settings dictionary in DATABASES control the behavior of the backend:

- ENGINE

  String. It must be `"mssql"`.

- NAME

  String. Database name. Required.

- HOST

  String. SQL Server instance in `"server\instance"` format.

- PORT

  String. Server instance port. An empty string means the default port.

- USER

  String. Database user name in `"user"` format. If not given, then MS Integrated Security will be used.

- PASSWORD

  String. Database user password.

- TOKEN

  String. Access token fetched as a user or service principal which has access to the database. For example, when using `azure.identity`, the result of `DefaultAzureCredential().get_token('https://database.windows.net/.default')` can be passed.

- AUTOCOMMIT

  Boolean. Set this to `False` if you want to disable Django's transaction management and implement your own.

- Trusted_Connection

  String. Default is `"yes"`. Can be set to `"no"` if required.
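Some background on the `TOKEN` entry: Microsoft's ODBC driver accepts an Azure AD access token through the pre-connect attribute `SQL_COPT_SS_ACCESS_TOKEN` (1256), packed as a 4-byte little-endian length followed by the token encoded as UTF-16-LE. The sketch below shows only that packing step with the standard library. Whether this backend does exactly this internally is an assumption, and `pack_access_token` and the token string are illustrative, not real credentials or a backend API.

```python
import struct

# Pre-connect attribute id the Microsoft ODBC driver uses for access tokens
SQL_COPT_SS_ACCESS_TOKEN = 1256


def pack_access_token(token: str) -> bytes:
    """Pack a token as a little-endian 4-byte length prefix
    followed by the token's UTF-16-LE bytes."""
    token_bytes = token.encode("utf-16-le")
    return struct.pack(f"<I{len(token_bytes)}s", len(token_bytes), token_bytes)


packed = pack_access_token("abc")  # dummy token for illustration
print(packed)  # b'\x06\x00\x00\x00a\x00b\x00c\x00'
```

With pyodbc directly, such a value would be passed before connecting as `pyodbc.connect(conn_str, attrs_before={SQL_COPT_SS_ACCESS_TOKEN: packed})`; when `TOKEN` is set, this step is presumably handled by the backend, so the sketch is background only.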

The following entries are also available in the `TEST` dictionary for any given database-level settings dictionary:

- NAME

  String. The name of the database to use when running the test suite. If the default value (`None`) is used, the test database will use the name `"test_" + NAME`.

- COLLATION

  String. The collation order to use when creating the test database. If the default value (`None`) is used, the test database is assigned the default collation of the instance of SQL Server.

- DEPENDENCIES

  List of strings. The creation-order dependencies of the database (aliases of other databases). See the official Django documentation for more details.

- MIRROR

  String. The alias of the database that this database should mirror during testing. Default value is `None`. See the official Django documentation for more details.
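For reference, here is a settings fragment using the `TEST` entries, plus a tiny hypothetical helper, `resolve_test_db_name`, that restates the naming rule above. Django applies this rule itself; the helper only illustrates it, and the collation name is just an example value.

```python
from typing import Optional

DATABASES = {
    "default": {
        "ENGINE": "mssql",
        "NAME": "mydb",
        "TEST": {
            "NAME": None,  # None -> test database is named "test_mydb"
            "COLLATION": "SQL_Latin1_General_CP1_CI_AS",  # example collation only
        },
    },
}


def resolve_test_db_name(settings: dict) -> str:
    """Illustrate the rule: TEST['NAME'] if set, else 'test_' + NAME."""
    test_name: Optional[str] = settings.get("TEST", {}).get("NAME")
    return test_name if test_name is not None else "test_" + settings["NAME"]


print(resolve_test_db_name(DATABASES["default"]))  # test_mydb
```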

### OPTIONS

Dictionary. Currently available keys are:

- driver

  String. ODBC Driver to use (`"ODBC Driver 17 for SQL Server"`, `"SQL Server Native Client 11.0"`, `"FreeTDS"` etc). Default is `"ODBC Driver 17 for SQL Server"`.

- isolation_level

  String. Sets [transaction isolation level](https://docs.microsoft.com/en-us/sql/t-sql/statements/set-transaction-isolation-level-transact-sql) for each database session. Valid values for this entry are `READ UNCOMMITTED`, `READ COMMITTED`, `REPEATABLE READ`, `SNAPSHOT`, and `SERIALIZABLE`. Default is `None`, which means no isolation level is set for the database session and the SQL Server default will be used.

- dsn

  String. A named DSN can be used instead of `HOST`.

- host_is_server

  Boolean. Only relevant if using the FreeTDS ODBC driver under Unix/Linux.

  By default, when using the FreeTDS ODBC driver the value specified in the ``HOST`` setting is used in a ``SERVERNAME`` ODBC connection string component instead of being used in a ``SERVER`` component; this means that this value should be the name of a *dataserver* definition present in the ``freetds.conf`` FreeTDS configuration file instead of a hostname or an IP address.

  But if this option is present and its value is ``True``, this special behavior is turned off. Instead, connections to the database server will be established using ``HOST`` and ``PORT`` options, without requiring ``freetds.conf`` to be configured.

  See https://www.freetds.org/userguide/dsnless.html for more information.

- unicode_results

  Boolean. If it is set to ``True``, pyodbc's *unicode_results* feature is activated and strings returned from pyodbc are always Unicode. Default value is ``False``.

- extra_params

  String. Additional parameters for the ODBC connection. The format is ``"param=value;param=value"``. [Azure AD Authentication](https://github.com/microsoft/mssql-django/wiki/Azure-AD-Authentication) (Service Principal, Interactive, Msi) can be added to this field.

- collation

  String. Name of the collation to use when performing text field lookups against the database. Default is ``None``; this means no collation specifier is added to your lookup SQL (the default collation of your database will be used). For Chinese, you can set it to ``"Chinese_PRC_CI_AS"``.

- connection_timeout

  Integer. Sets the timeout in seconds for the database connection process. Default value is ``0``, which disables the timeout.

- connection_retries

  Integer. Sets the number of times to retry the database connection process. Default value is ``5``.

- connection_retry_backoff_time

  Integer. Sets the back-off time in seconds between retries of the database connection process. Default value is ``5``.

- query_timeout

  Integer. Sets the timeout in seconds for the database query. Default value is ``0``, which disables the timeout.

- [setencoding](https://github.com/mkleehammer/pyodbc/wiki/Connection#setencoding) and [setdecoding](https://github.com/mkleehammer/pyodbc/wiki/Connection#setdecoding)

  ```python
  # Example
  "OPTIONS": {
      "setdecoding": [
          {"sqltype": pyodbc.SQL_CHAR, "encoding": 'utf-8'},
          {"sqltype": pyodbc.SQL_WCHAR, "encoding": 'utf-8'}],
      "setencoding": [
          {"encoding": "utf-8"}],
      ...
  },
  ```
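The `connection_retries` and `connection_retry_backoff_time` options describe a fixed-delay retry loop around connecting. Here is a minimal sketch, assuming `connection_retries` counts retries after the first attempt (the backend's exact counting is not documented here). `connect_with_retries` is hypothetical, and the `connect` callable stands in for the real pyodbc call.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def connect_with_retries(
    connect: Callable[[], T],
    retries: int = 5,
    backoff_time: float = 5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `connect`; on failure wait `backoff_time` seconds and retry,
    up to `retries` extra attempts. Re-raises the last error."""
    attempt = 0
    while True:
        try:
            return connect()
        except Exception:
            if attempt >= retries:
                raise
            attempt += 1
            sleep(backoff_time)


# Usage with a fake connection that fails twice before succeeding
calls = []


def flaky_connect() -> str:
    calls.append(1)
    if len(calls) < 3:
        raise ConnectionError("server not ready")
    return "connection"


waits = []
conn = connect_with_retries(flaky_connect, retries=5, backoff_time=5, sleep=waits.append)
print(conn, len(calls), waits)  # connection 3 [5, 5]
```

Injecting `sleep` keeps the sketch testable without real delays. A fixed delay matches the single back-off value described above; exponential backoff would be an alternative, but nothing here suggests the backend uses it.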

### Backend-specific settings

The following project-level settings also control the behavior of the backend:

- DATABASE_CONNECTION_POOLING

  Boolean. If it is set to ``False``, pyodbc's connection pooling feature won't be activated.

### Example

Here is an example of the database settings:

```python
DATABASES = {
    'default': {
        'ENGINE': 'mssql',
        'NAME': 'mydb',
        'USER': 'user@myserver',
        'PASSWORD': 'password',
        'HOST': 'myserver.database.windows.net',
        'PORT': '',
        'OPTIONS': {
            'driver': 'ODBC Driver 17 for SQL Server',
        },
    },
}

# set this to False if you want to turn off pyodbc's connection pooling
DATABASE_CONNECTION_POOLING = False
```

## Limitations

The following features are currently not fully supported:

- Altering a model field from or to AutoField at migration

- Django annotate functions have floating point arithmetic problems in some cases

- Annotate function with exists

- Exists function in order_by

- Righthand power and arithmetic with datetimes

- Timezones, timedeltas not fully supported

- Rename field/model with foreign key constraint

- Database level constraints

- Math degrees power or radians

- Bit-shift operators

- Filtered index

- Date extract function

- Hashing functions

JSONField lookups have limitations; more details [here](https://github.com/microsoft/mssql-django/wiki/JSONField).

| zilian-mssql-django | /zilian-mssql-django-1.1.4.tar.gz/zilian-mssql-django-1.1.4/README.md | README.md |

import os

from pathlib import Path

from django import VERSION

BASE_DIR = Path(__file__).resolve().parent.parent

DATABASES = {
    "default": {
        "ENGINE": "mssql",
        "NAME": "default",
        "USER": "sa",
        "PASSWORD": "MyPassword42",
        "HOST": "localhost",
        "PORT": "1433",
        "OPTIONS": {"driver": "ODBC Driver 17 for SQL Server", },
    },
    'other': {
        "ENGINE": "mssql",
        "NAME": "other",
        "USER": "sa",
        "PASSWORD": "MyPassword42",
        "HOST": "localhost",
        "PORT": "1433",
        "OPTIONS": {"driver": "ODBC Driver 17 for SQL Server", },
    },
}

# Django 3.0 and below unit tests don't handle more than 2 databases in DATABASES correctly
if VERSION >= (3, 1):
    DATABASES['sqlite'] = {
        "ENGINE": "django.db.backends.sqlite3",
        "NAME": str(BASE_DIR / "db.sqlitetest"),
    }

# Set to `True` locally if you want SQL queries logged to django_sql.log

DEBUG = False

# Logging
LOG_DIR = os.path.join(os.path.dirname(__file__), '..', 'logs')
os.makedirs(LOG_DIR, exist_ok=True)
LOGGING = {
    'version': 1,
    'disable_existing_loggers': True,
    'formatters': {
        'myformatter': {
            'format': '%(asctime)s P%(process)05dT%(thread)05d [%(levelname)s] %(name)s: %(message)s',
        },
    },
    'handlers': {
        'db_output': {
            'level': 'DEBUG',
            'class': 'logging.handlers.RotatingFileHandler',
            'filename': os.path.join(LOG_DIR, 'django_sql.log'),
            'formatter': 'myformatter',
        },
        'default': {
            'level': 'DEBUG',
            'class': 'logging.handlers.RotatingFileHandler',
            'filename': os.path.join(LOG_DIR, 'default.log'),
            'formatter': 'myformatter',
        }
    },
    'loggers': {
        '': {
            'handlers': ['default'],
            'level': 'DEBUG',
            'propagate': False,
        },
        'django.db': {
            'handlers': ['db_output'],
            'level': 'DEBUG',
            'propagate': False,
        },
    },
}

INSTALLED_APPS = (
    'django.contrib.contenttypes',
    'django.contrib.staticfiles',
    'django.contrib.auth',
    'mssql',
    'testapp',
)

SECRET_KEY = "django_tests_secret_key"

PASSWORD_HASHERS = [
    'django.contrib.auth.hashers.PBKDF2PasswordHasher',
]

DEFAULT_AUTO_FIELD = 'django.db.models.AutoField'

ENABLE_REGEX_TESTS = False

USE_TZ = False

TEST_RUNNER = "testapp.runners.ExcludedTestSuiteRunner"

EXCLUDED_TESTS = [
    'aggregation.tests.AggregateTestCase.test_expression_on_aggregation',
    'aggregation_regress.tests.AggregationTests.test_annotated_conditional_aggregate',
    'aggregation_regress.tests.AggregationTests.test_annotation_with_value',
    'aggregation.tests.AggregateTestCase.test_distinct_on_aggregate',
    'annotations.tests.NonAggregateAnnotationTestCase.test_annotate_exists',
    'custom_lookups.tests.BilateralTransformTests.test_transform_order_by',
    'expressions.tests.BasicExpressionsTests.test_filtering_on_annotate_that_uses_q',
    'expressions.tests.BasicExpressionsTests.test_order_by_exists',
    'expressions.tests.ExpressionOperatorTests.test_righthand_power',
    'expressions.tests.FTimeDeltaTests.test_datetime_subtraction_microseconds',
    'expressions.tests.FTimeDeltaTests.test_duration_with_datetime_microseconds',
    'expressions.tests.IterableLookupInnerExpressionsTests.test_expressions_in_lookups_join_choice',
    'expressions_case.tests.CaseExpressionTests.test_annotate_with_in_clause',
    'expressions_window.tests.WindowFunctionTests.test_nth_returns_null',
    'expressions_window.tests.WindowFunctionTests.test_nthvalue',
    'expressions_window.tests.WindowFunctionTests.test_range_n_preceding_and_following',
    'field_deconstruction.tests.FieldDeconstructionTests.test_binary_field',
    'ordering.tests.OrderingTests.test_orders_nulls_first_on_filtered_subquery',
    'get_or_create.tests.UpdateOrCreateTransactionTests.test_creation_in_transaction',
    'indexes.tests.PartialIndexTests.test_multiple_conditions',
    'introspection.tests.IntrospectionTests.test_get_constraints',
    'migrations.test_executor.ExecutorTests.test_alter_id_type_with_fk',
    'migrations.test_operations.OperationTests.test_add_constraint_percent_escaping',
    'migrations.test_operations.OperationTests.test_alter_field_pk',
    'migrations.test_operations.OperationTests.test_alter_field_reloads_state_on_fk_with_to_field_target_changes',
    'migrations.test_operations.OperationTests.test_autofield_foreignfield_growth',
    'schema.tests.SchemaTests.test_alter_auto_field_to_char_field',
    'schema.tests.SchemaTests.test_alter_auto_field_to_integer_field',
    'schema.tests.SchemaTests.test_alter_implicit_id_to_explicit',
    'schema.tests.SchemaTests.test_alter_int_pk_to_autofield_pk',
    'schema.tests.SchemaTests.test_alter_int_pk_to_bigautofield_pk',
    'schema.tests.SchemaTests.test_alter_pk_with_self_referential_field',
    'schema.tests.SchemaTests.test_no_db_constraint_added_during_primary_key_change',
    'schema.tests.SchemaTests.test_remove_field_check_does_not_remove_meta_constraints',
    'schema.tests.SchemaTests.test_remove_field_unique_does_not_remove_meta_constraints',
    'schema.tests.SchemaTests.test_text_field_with_db_index',
    'schema.tests.SchemaTests.test_unique_together_with_fk',
    'schema.tests.SchemaTests.test_unique_together_with_fk_with_existing_index',
    'aggregation.tests.AggregateTestCase.test_count_star',
    'aggregation_regress.tests.AggregationTests.test_values_list_annotation_args_ordering',
    'datatypes.tests.DataTypesTestCase.test_error_on_timezone',
    'db_functions.math.test_degrees.DegreesTests.test_integer',
    'db_functions.math.test_power.PowerTests.test_integer',
    'db_functions.math.test_radians.RadiansTests.test_integer',
    'db_functions.text.test_pad.PadTests.test_pad',
    'db_functions.text.test_replace.ReplaceTests.test_case_sensitive',
    'expressions.tests.ExpressionOperatorTests.test_lefthand_bitwise_right_shift_operator',
    'expressions.tests.FTimeDeltaTests.test_invalid_operator',
    'fixtures_regress.tests.TestFixtures.test_loaddata_raises_error_when_fixture_has_invalid_foreign_key',
    'invalid_models_tests.test_ordinary_fields.TextFieldTests.test_max_length_warning',
    'model_indexes.tests.IndexesTests.test_db_tablespace',
    'ordering.tests.OrderingTests.test_deprecated_values_annotate',
    'queries.test_qs_combinators.QuerySetSetOperationTests.test_limits',
    'backends.tests.BackendTestCase.test_unicode_password',
    'introspection.tests.IntrospectionTests.test_get_table_description_types',
    'migrations.test_commands.MigrateTests.test_migrate_syncdb_app_label',
    'migrations.test_commands.MigrateTests.test_migrate_syncdb_deferred_sql_executed_with_schemaeditor',
    'migrations.test_operations.OperationTests.test_alter_field_pk_fk',
    'schema.tests.SchemaTests.test_add_foreign_key_quoted_db_table',
    'schema.tests.SchemaTests.test_unique_and_reverse_m2m',
    'schema.tests.SchemaTests.test_unique_no_unnecessary_fk_drops',
    'select_for_update.tests.SelectForUpdateTests.test_for_update_after_from',
    'backends.tests.LastExecutedQueryTest.test_last_executed_query',
    'db_functions.datetime.test_now.NowTests.test_basic',
    'db_functions.datetime.test_extract_trunc.DateFunctionTests.test_extract_year_exact_lookup',
    'db_functions.datetime.test_extract_trunc.DateFunctionTests.test_extract_year_greaterthan_lookup',
    'db_functions.datetime.test_extract_trunc.DateFunctionTests.test_extract_year_lessthan_lookup',
    'db_functions.datetime.test_extract_trunc.DateFunctionWithTimeZoneTests.test_extract_year_exact_lookup',
    'db_functions.datetime.test_extract_trunc.DateFunctionWithTimeZoneTests.test_extract_year_greaterthan_lookup',
    'db_functions.datetime.test_extract_trunc.DateFunctionWithTimeZoneTests.test_extract_year_lessthan_lookup',
    'db_functions.datetime.test_extract_trunc.DateFunctionWithTimeZoneTests.test_trunc_ambiguous_and_invalid_times',
    'delete.tests.DeletionTests.test_only_referenced_fields_selected',
    'queries.test_db_returning.ReturningValuesTests.test_insert_returning',
    'queries.test_db_returning.ReturningValuesTests.test_insert_returning_non_integer',
    'backends.tests.BackendTestCase.test_queries',
    'introspection.tests.IntrospectionTests.test_smallautofield',
    'schema.tests.SchemaTests.test_inline_fk',
    'aggregation.tests.AggregateTestCase.test_aggregation_subquery_annotation_exists',
    'aggregation.tests.AggregateTestCase.test_aggregation_subquery_annotation_values_collision',
    'db_functions.datetime.test_extract_trunc.DateFunctionWithTimeZoneTests.test_extract_func_with_timezone',
    'db_functions.text.test_md5.MD5Tests.test_basic',
    'db_functions.text.test_md5.MD5Tests.test_transform',
    'db_functions.text.test_sha1.SHA1Tests.test_basic',
    'db_functions.text.test_sha1.SHA1Tests.test_transform',
    'db_functions.text.test_sha224.SHA224Tests.test_basic',
    'db_functions.text.test_sha224.SHA224Tests.test_transform',
    'db_functions.text.test_sha256.SHA256Tests.test_basic',
    'db_functions.text.test_sha256.SHA256Tests.test_transform',
    'db_functions.text.test_sha384.SHA384Tests.test_basic',
    'db_functions.text.test_sha384.SHA384Tests.test_transform',
    'db_functions.text.test_sha512.SHA512Tests.test_basic',
    'db_functions.text.test_sha512.SHA512Tests.test_transform',
    'expressions.tests.BasicExpressionsTests.test_case_in_filter_if_boolean_output_field',
    'expressions.tests.BasicExpressionsTests.test_subquery_in_filter',
    'expressions.tests.FTimeDeltaTests.test_date_subquery_subtraction',
    'expressions.tests.FTimeDeltaTests.test_datetime_subquery_subtraction',
    'expressions.tests.FTimeDeltaTests.test_time_subquery_subtraction',
    'expressions.tests.BasicExpressionsTests.test_filtering_on_q_that_is_boolean',
    'migrations.test_operations.OperationTests.test_alter_field_reloads_state_on_fk_with_to_field_target_type_change',
    'migrations.test_operations.OperationTests.test_autofield__bigautofield_foreignfield_growth',
    'migrations.test_operations.OperationTests.test_smallfield_autofield_foreignfield_growth',
    'migrations.test_operations.OperationTests.test_smallfield_bigautofield_foreignfield_growth',
    'schema.tests.SchemaTests.test_alter_auto_field_quoted_db_column',
    'schema.tests.SchemaTests.test_alter_autofield_pk_to_bigautofield_pk_sequence_owner',
    'schema.tests.SchemaTests.test_alter_autofield_pk_to_smallautofield_pk_sequence_owner',
    'schema.tests.SchemaTests.test_alter_primary_key_quoted_db_table',
    'schema.tests.SchemaTests.test_alter_smallint_pk_to_smallautofield_pk',
    'annotations.tests.NonAggregateAnnotationTestCase.test_combined_expression_annotation_with_aggregation',
    'db_functions.comparison.test_cast.CastTests.test_cast_to_integer',
    'db_functions.datetime.test_extract_trunc.DateFunctionTests.test_extract_func',
    'db_functions.datetime.test_extract_trunc.DateFunctionTests.test_extract_iso_weekday_func',
    'db_functions.datetime.test_extract_trunc.DateFunctionWithTimeZoneTests.test_extract_func',
    'db_functions.datetime.test_extract_trunc.DateFunctionWithTimeZoneTests.test_extract_iso_weekday_func',
    'datetimes.tests.DateTimesTests.test_datetimes_ambiguous_and_invalid_times',
    'expressions.tests.ExpressionOperatorTests.test_lefthand_bitwise_xor',
    'expressions.tests.ExpressionOperatorTests.test_lefthand_bitwise_xor_null',
    'inspectdb.tests.InspectDBTestCase.test_number_field_types',
    'inspectdb.tests.InspectDBTestCase.test_json_field',
    'ordering.tests.OrderingTests.test_default_ordering_by_f_expression',
    'ordering.tests.OrderingTests.test_order_by_nulls_first',
    'ordering.tests.OrderingTests.test_order_by_nulls_last',
    'queries.test_qs_combinators.QuerySetSetOperationTests.test_ordering_by_f_expression_and_alias',
    'queries.test_db_returning.ReturningValuesTests.test_insert_returning_multiple',
    'dbshell.tests.DbshellCommandTestCase.test_command_missing',
    'schema.tests.SchemaTests.test_char_field_pk_to_auto_field',
    'datetimes.tests.DateTimesTests.test_21432',

    # JSONFields
    'model_fields.test_jsonfield.TestQuerying.test_has_key_list',
    'model_fields.test_jsonfield.TestQuerying.test_has_key_null_value',
    'model_fields.test_jsonfield.TestQuerying.test_key_quoted_string',
    'model_fields.test_jsonfield.TestQuerying.test_lookups_with_key_transform',
    'model_fields.test_jsonfield.TestQuerying.test_ordering_grouping_by_count',
    'model_fields.test_jsonfield.TestQuerying.test_isnull_key',
    'model_fields.test_jsonfield.TestQuerying.test_none_key',
    'model_fields.test_jsonfield.TestQuerying.test_none_key_and_exact_lookup',
    'model_fields.test_jsonfield.TestQuerying.test_key_escape',
    'model_fields.test_jsonfield.TestQuerying.test_ordering_by_transform',
    'expressions_window.tests.WindowFunctionTests.test_key_transform',

    # Django 3.2
    'db_functions.datetime.test_extract_trunc.DateFunctionWithTimeZoneTests.test_trunc_func_with_timezone',
    'db_functions.datetime.test_extract_trunc.DateFunctionWithTimeZoneTests.test_trunc_timezone_applied_before_truncation',
    'expressions.tests.ExistsTests.test_optimizations',
    'expressions.tests.FTimeDeltaTests.test_delta_add',
    'expressions.tests.FTimeDeltaTests.test_delta_subtract',
    'expressions.tests.FTimeDeltaTests.test_delta_update',
    'expressions.tests.FTimeDeltaTests.test_exclude',
    'expressions.tests.FTimeDeltaTests.test_mixed_comparisons1',
    'expressions.tests.FTimeDeltaTests.test_negative_timedelta_update',
    'inspectdb.tests.InspectDBTestCase.test_field_types',
    'lookup.tests.LookupTests.test_in_ignore_none',
    'lookup.tests.LookupTests.test_in_ignore_none_with_unhashable_items',
    'queries.test_qs_combinators.QuerySetSetOperationTests.test_exists_union',
    'introspection.tests.IntrospectionTests.test_get_constraints_unique_indexes_orders',
    'schema.tests.SchemaTests.test_ci_cs_db_collation',
    'select_for_update.tests.SelectForUpdateTests.test_unsuported_no_key_raises_error',

    # Django 4.0
    'aggregation.tests.AggregateTestCase.test_aggregation_default_using_date_from_database',
    'aggregation.tests.AggregateTestCase.test_aggregation_default_using_datetime_from_database',
    'aggregation.tests.AggregateTestCase.test_aggregation_default_using_time_from_database',
    'expressions.tests.FTimeDeltaTests.test_durationfield_multiply_divide',
    'lookup.tests.LookupQueryingTests.test_alias',
    'lookup.tests.LookupQueryingTests.test_filter_exists_lhs',
    'lookup.tests.LookupQueryingTests.test_filter_lookup_lhs',
    'lookup.tests.LookupQueryingTests.test_filter_subquery_lhs',
    'lookup.tests.LookupQueryingTests.test_filter_wrapped_lookup_lhs',
    'lookup.tests.LookupQueryingTests.test_lookup_in_order_by',
    'lookup.tests.LookupTests.test_lookup_rhs',
    'order_with_respect_to.tests.OrderWithRespectToBaseTests.test_previous_and_next_in_order',
    'ordering.tests.OrderingTests.test_default_ordering_does_not_affect_group_by',
    'queries.test_explain.ExplainUnsupportedTests.test_message',
    'aggregation.tests.AggregateTestCase.test_coalesced_empty_result_set',
    'aggregation.tests.AggregateTestCase.test_empty_result_optimization',
    'queries.tests.Queries6Tests.test_col_alias_quoted',
    'backends.tests.BackendTestCase.test_queries_logger',
    'migrations.test_operations.OperationTests.test_alter_field_pk_mti_fk',
    'migrations.test_operations.OperationTests.test_run_sql_add_missing_semicolon_on_collect_sql',
    'migrations.test_operations.OperationTests.test_alter_field_pk_mti_and_fk_to_base'
]

REGEX_TESTS = [
    'lookup.tests.LookupTests.test_regex',
    'lookup.tests.LookupTests.test_regex_backreferencing',
    'lookup.tests.LookupTests.test_regex_non_ascii',
    'lookup.tests.LookupTests.test_regex_non_string',
    'lookup.tests.LookupTests.test_regex_null',
    'model_fields.test_jsonfield.TestQuerying.test_key_iregex',
    'model_fields.test_jsonfield.TestQuerying.test_key_regex',
]

| zilian-mssql-django | /zilian-mssql-django-1.1.4.tar.gz/zilian-mssql-django-1.1.4/testapp/settings.py | settings.py |

import datetime

import uuid

from django import VERSION

from django.db import models

from django.db.models import Q

from django.utils import timezone

class Author(models.Model):
    name = models.CharField(max_length=100)


class Editor(models.Model):
    name = models.CharField(max_length=100)


class Post(models.Model):
    title = models.CharField('title', max_length=255)
    author = models.ForeignKey(Author, models.CASCADE)
    # Optional secondary author
    alt_editor = models.ForeignKey(Editor, models.SET_NULL, blank=True, null=True)

    class Meta:
        unique_together = (
            ('author', 'title', 'alt_editor'),
        )

    def __str__(self):
        return self.title


class Comment(models.Model):
    post = models.ForeignKey(Post, on_delete=models.CASCADE)
    text = models.TextField('text')
    created_at = models.DateTimeField(default=timezone.now)

    def __str__(self):
        return self.text

class UUIDModel(models.Model):
    id = models.UUIDField(primary_key=True, default=uuid.uuid4, editable=False)

    def __str__(self):
        # pk is a uuid.UUID, but __str__ must return a str
        return str(self.pk)

class TestUniqueNullableModel(models.Model):
    # Issue https://github.com/ESSolutions/django-mssql-backend/issues/38:
    # This field started off as unique=True *and* null=True so it is implemented with a filtered unique index
    # Then it is made non-nullable by a subsequent migration, to check this is correctly handled (the index
    # should be dropped, then a normal unique constraint should be added, now that the column is not nullable)
    test_field = models.CharField(max_length=100, unique=True)

    # Issue https://github.com/ESSolutions/django-mssql-backend/issues/45 (case 1)
    # Field used for testing changing the 'type' of a field that's both unique & nullable
    x = models.CharField(max_length=11, null=True, unique=True)

    # A variant of Issue https://github.com/microsoft/mssql-django/issues/14 case (b)
    # but for a unique index (not db_index)
    y_renamed = models.IntegerField(null=True, unique=True)


class TestNullableUniqueTogetherModel(models.Model):
    class Meta:
        unique_together = (('a', 'b', 'c'),)

    # Issue https://github.com/ESSolutions/django-mssql-backend/issues/45 (case 2)
    # Fields used for testing changing the type of a field that is in a `unique_together`
    a = models.CharField(max_length=51, null=True)
    b = models.CharField(max_length=50)
    c = models.CharField(max_length=50)


class TestRemoveOneToOneFieldModel(models.Model):
    # Issue https://github.com/ESSolutions/django-mssql-backend/pull/51
    # Fields used for testing removing OneToOne field. Verifies that delete_unique
    # does not try to remove indexes that have already been removed
    # b = models.OneToOneField('self', on_delete=models.SET_NULL, null=True)
    a = models.CharField(max_length=50)


class TestIndexesRetainedRenamed(models.Model):
    # Issue https://github.com/microsoft/mssql-django/issues/14
    # In all these cases the column index should still exist afterwards
    # case (a) `a` starts out not nullable, but then is changed to be nullable
    a = models.IntegerField(db_index=True, null=True)
    # case (b) column originally called `b` is renamed
    b_renamed = models.IntegerField(db_index=True)
    # case (c) this entire model is renamed - this is just a column whose index can be checked afterwards
    c = models.IntegerField(db_index=True)


class M2MOtherModel(models.Model):
    name = models.CharField(max_length=10)


class TestRenameManyToManyFieldModel(models.Model):
    # Issue https://github.com/microsoft/mssql-django/issues/86
    others_renamed = models.ManyToManyField(M2MOtherModel)

class Topping(models.Model):
    name = models.UUIDField(primary_key=True, default=uuid.uuid4)


class Pizza(models.Model):
    name = models.UUIDField(primary_key=True, default=uuid.uuid4)
    toppings = models.ManyToManyField(Topping)

    def __str__(self):
        # `name` is a UUID, so convert each topping's name to str before joining
        return "%s (%s)" % (
            self.name,
            ", ".join(str(topping.name) for topping in self.toppings.all()),
        )

class TestUnsupportableUniqueConstraint(models.Model):
    class Meta:
        managed = False
        constraints = [
            models.UniqueConstraint(
                name='or_constraint',
                fields=['_type'],
                condition=(Q(status='in_progress') | Q(status='needs_changes')),
            ),
        ]

    _type = models.CharField(max_length=50)
    status = models.CharField(max_length=50)


class TestSupportableUniqueConstraint(models.Model):
    class Meta:
        constraints = [
            models.UniqueConstraint(
                name='and_constraint',
                fields=['_type'],
                condition=(
                    Q(status='in_progress') & Q(status='needs_changes') & Q(status='published')
                ),
            ),
            models.UniqueConstraint(
                name='in_constraint',
                fields=['_type'],
                condition=(Q(status__in=['in_progress', 'needs_changes'])),
            ),
        ]

    _type = models.CharField(max_length=50)
    status = models.CharField(max_length=50)


class BinaryData(models.Model):
    binary = models.BinaryField(null=True)


if VERSION >= (3, 1):
    class JSONModel(models.Model):
        value = models.JSONField()

        class Meta:
            required_db_features = {'supports_json_field'}


if VERSION >= (3, 2):
    class TestCheckConstraintWithUnicode(models.Model):
        name = models.CharField(max_length=100)

        class Meta:
            required_db_features = {
                'supports_table_check_constraints',
            }
            constraints = [
                models.CheckConstraint(
                    check=~models.Q(name__startswith='\u00f7'),
                    name='name_does_not_starts_with_\u00f7',
                )
            ]


class Question(models.Model):
    question_text = models.CharField(max_length=200)
    pub_date = models.DateTimeField('date published')

    def __str__(self):
        return self.question_text

    def was_published_recently(self):
        return self.pub_date >= timezone.now() - datetime.timedelta(days=1)


class Choice(models.Model):
    question = models.ForeignKey(Question, on_delete=models.CASCADE, null=True)
    choice_text = models.CharField(max_length=200)
    votes = models.IntegerField(default=0)

    class Meta:
        unique_together = (('question', 'choice_text'))


class Customer_name(models.Model):
    Customer_name = models.CharField(max_length=100)

    class Meta:
        ordering = ['Customer_name']


class Customer_address(models.Model):
    Customer_name = models.ForeignKey(Customer_name, on_delete=models.CASCADE)
    Customer_address = models.CharField(max_length=100)

    class Meta:
        ordering = ['Customer_address'] | zilian-mssql-django | /zilian-mssql-django-1.1.4.tar.gz/zilian-mssql-django-1.1.4/testapp/models.py | models.py |

import uuid

from django.db import migrations, models
import django.db.models.deletion
import django.utils.timezone


class Migration(migrations.Migration):

    initial = True

    dependencies = [
    ]

    operations = [
        migrations.CreateModel(
            name='Author',
            fields=[
                ('id', models.AutoField(auto_created=True, primary_key=True, serialize=False, verbose_name='ID')),
                ('name', models.CharField(max_length=100)),
            ],
        ),
        migrations.CreateModel(
            name='Editor',
            fields=[
                ('id', models.AutoField(auto_created=True, primary_key=True, serialize=False, verbose_name='ID')),
                ('name', models.CharField(max_length=100)),
            ],
        ),
        migrations.CreateModel(
            name='Post',
            fields=[
                ('id', models.AutoField(auto_created=True, primary_key=True, serialize=False, verbose_name='ID')),
                ('title', models.CharField(max_length=255, verbose_name='title')),
            ],
        ),
        migrations.AddField(
            model_name='post',
            name='alt_editor',
            field=models.ForeignKey(blank=True, null=True, on_delete=django.db.models.deletion.SET_NULL, to='testapp.Editor'),
        ),
        migrations.AddField(
            model_name='post',
            name='author',
            field=models.ForeignKey(on_delete=django.db.models.deletion.CASCADE, to='testapp.Author'),
        ),
        migrations.AlterUniqueTogether(
            name='post',
            unique_together={('author', 'title', 'alt_editor')},
        ),
        migrations.CreateModel(
            name='Comment',
            fields=[
                ('id', models.AutoField(auto_created=True, primary_key=True, serialize=False, verbose_name='ID')),
                ('post', models.ForeignKey(on_delete=django.db.models.deletion.CASCADE, to='testapp.Post')),
                ('text', models.TextField(verbose_name='text')),
                ('created_at', models.DateTimeField(default=django.utils.timezone.now)),
            ],
        ),
        migrations.CreateModel(
            name='UUIDModel',
            fields=[
                ('id', models.UUIDField(default=uuid.uuid4, editable=False, primary_key=True, serialize=False)),
            ],
        ),
    ] | zilian-mssql-django | /zilian-mssql-django-1.1.4.tar.gz/zilian-mssql-django-1.1.4/testapp/migrations/0001_initial.py | 0001_initial.py |

import binascii
import os

from django.db.utils import InterfaceError
from django.db.backends.base.creation import BaseDatabaseCreation
from django import VERSION as django_version


class DatabaseCreation(BaseDatabaseCreation):

    def cursor(self):
        if django_version >= (3, 1):
            return self.connection._nodb_cursor()

        return self.connection._nodb_connection.cursor()

    def _create_test_db(self, verbosity, autoclobber, keepdb=False):
        """
        Internal implementation - create the test db tables.
        """

        # Try to create the test DB, but if we fail due to 28000 (Login failed for user),
        # it's probably because the user doesn't have permission to [dbo].[master],
        # so we can proceed if we're keeping the DB anyway.
        # https://github.com/microsoft/mssql-django/issues/61
        try:
            return super()._create_test_db(verbosity, autoclobber, keepdb)
        except InterfaceError as err:
            if err.args[0] == '28000' and keepdb:
                self.log('Received error %s, proceeding because keepdb=True' % (
                    err.args[1],
                ))
            else:
                raise err

    def _destroy_test_db(self, test_database_name, verbosity):
        """
        Internal implementation - remove the test db tables.
        """

        # Remove the test database to clean up after
        # ourselves. Connect to the previous database (not the test database)
        # to do so, because it's not allowed to delete a database while being
        # connected to it.
        with self.cursor() as cursor:
            to_azure_sql_db = self.connection.to_azure_sql_db
            if not to_azure_sql_db:
                cursor.execute("ALTER DATABASE %s SET SINGLE_USER WITH ROLLBACK IMMEDIATE"
                               % self.connection.ops.quote_name(test_database_name))
            cursor.execute("DROP DATABASE %s"
                           % self.connection.ops.quote_name(test_database_name))

    def sql_table_creation_suffix(self):
        suffix = []
        collation = self.connection.settings_dict['TEST'].get('COLLATION', None)
        if collation:
            suffix.append('COLLATE %s' % collation)
        return ' '.join(suffix)

    # The following code to add regex support in SQLServer is taken from django-mssql
    # see https://bitbucket.org/Manfre/django-mssql
    def enable_clr(self):
        """ Enables clr for server if not already enabled

        This function will not fail if current user doesn't have
        permissions to enable clr, and clr is already enabled
        """
        with self.cursor() as cursor:
            # check whether clr is enabled
            cursor.execute('''
            SELECT value FROM sys.configurations
            WHERE name = 'clr enabled'
            ''')
            res = None
            try:
                res = cursor.fetchone()
            except Exception:
                pass

            if not res or not res[0]:
                # if not enabled enable clr
                cursor.execute("sp_configure 'clr enabled', 1")
                cursor.execute("RECONFIGURE")

                cursor.execute("sp_configure 'show advanced options', 1")
                cursor.execute("RECONFIGURE")

                cursor.execute("sp_configure 'clr strict security', 0")
                cursor.execute("RECONFIGURE")

    def install_regex_clr(self, database_name):
        sql = '''
            USE {database_name};
            -- Drop and recreate the function if it already exists
            IF OBJECT_ID('REGEXP_LIKE') IS NOT NULL
                DROP FUNCTION [dbo].[REGEXP_LIKE]
            IF EXISTS(select * from sys.assemblies where name like 'regex_clr')
                DROP ASSEMBLY regex_clr
            ;
            CREATE ASSEMBLY regex_clr
            FROM 0x{assembly_hex}
            WITH PERMISSION_SET = SAFE;
            create function [dbo].[REGEXP_LIKE]
            (
                @input nvarchar(max),
                @pattern nvarchar(max),
                @caseSensitive int
            )
            RETURNS INT AS
            EXTERNAL NAME regex_clr.UserDefinedFunctions.REGEXP_LIKE
        '''.format(
            database_name=self.connection.ops.quote_name(database_name),
            assembly_hex=self.get_regex_clr_assembly_hex(),
        ).split(';')

        self.enable_clr()

        with self.cursor() as cursor:
            for s in sql:
                cursor.execute(s)

    def get_regex_clr_assembly_hex(self):
        with open(os.path.join(os.path.dirname(__file__), 'regex_clr.dll'), 'rb') as f:
            return binascii.hexlify(f.read()).decode('ascii') | zilian-mssql-django | /zilian-mssql-django-1.1.4.tar.gz/zilian-mssql-django-1.1.4/mssql/creation.py | creation.py |
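`install_regex_clr` embeds the compiled `regex_clr.dll` in the install script as a hexadecimal `0x…` literal, then splits the script on `;` so that each piece can be executed separately. The sketch below reproduces only that assembly-and-split step. It makes several assumptions: a short made-up byte string stands in for the DLL, the helper name `build_clr_batches` is hypothetical, and the script is trimmed to the `USE` and `CREATE ASSEMBLY` statements. Unlike the original, it also drops whitespace-only pieces. Splitting on `;` cannot cut the hex literal, because `binascii.hexlify` only emits the characters `0-9a-f`.

```python
import binascii
from typing import List


def build_clr_batches(database_name: str, assembly_bytes: bytes) -> List[str]:
    """Hypothetical helper mirroring the string building in install_regex_clr."""
    # hexlify yields only 0-9a-f, so the literal can never contain ';'
    assembly_hex = binascii.hexlify(assembly_bytes).decode('ascii')
    script = '''
USE {database_name};
CREATE ASSEMBLY regex_clr
FROM 0x{assembly_hex}
WITH PERMISSION_SET = SAFE;
'''.format(database_name=database_name, assembly_hex=assembly_hex)
    # Each non-empty piece would be sent to the server with its own execute() call
    return [batch for batch in script.split(';') if batch.strip()]


if __name__ == '__main__':
    # b'MZ\x90\x00' is only a stand-in for the start of a real DLL
    for batch in build_clr_batches('[test_db]', b'MZ\x90\x00'):
        print(batch.strip())
        print('---')
```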

from django.db.backends.base.features import BaseDatabaseFeatures
from django.utils.functional import cached_property


class DatabaseFeatures(BaseDatabaseFeatures):
    allow_sliced_subqueries_with_in = False
    can_introspect_autofield = True
    can_introspect_json_field = False
    can_introspect_small_integer_field = True
    can_return_columns_from_insert = True
    can_return_id_from_insert = True
    can_return_rows_from_bulk_insert = True
    can_rollback_ddl = True
    can_use_chunked_reads = False
    for_update_after_from = True
    greatest_least_ignores_nulls = True
    has_json_object_function = False
    has_json_operators = False
    has_native_json_field = False
    has_native_uuid_field = False
    has_real_datatype = True
    has_select_for_update = True
    has_select_for_update_nowait = True
    has_select_for_update_skip_locked = True
    ignores_quoted_identifier_case = True
    ignores_table_name_case = True
    order_by_nulls_first = True
    requires_literal_defaults = True
    requires_sqlparse_for_splitting = False
    supports_boolean_expr_in_select_clause = False
    supports_covering_indexes = True
    supports_deferrable_unique_constraints = False
    supports_expression_indexes = False
    supports_ignore_conflicts = False
    supports_index_on_text_field = False
    supports_json_field_contains = False
    supports_order_by_nulls_modifier = False
    supports_over_clause = True
    supports_paramstyle_pyformat = False
    supports_primitives_in_json_field = False
    supports_regex_backreferencing = True
    supports_sequence_reset = False
    supports_subqueries_in_group_by = False
    supports_tablespaces = True
    supports_temporal_subtraction = True
    supports_timezones = False
    supports_transactions = True
    uses_savepoints = True
    has_bulk_insert = True
    supports_nullable_unique_constraints = True
    supports_partially_nullable_unique_constraints = True
    supports_partial_indexes = True
    supports_functions_in_partial_indexes = True

    @cached_property
    def has_zoneinfo_database(self):
        with self.connection.cursor() as cursor:
            cursor.execute("SELECT TOP 1 1 FROM sys.time_zone_info")
            return cursor.fetchone() is not None

    @cached_property
    def supports_json_field(self):
        return self.connection.sql_server_version >= 2016 or self.connection.to_azure_sql_db | zilian-mssql-django | /zilian-mssql-django-1.1.4.tar.gz/zilian-mssql-django-1.1.4/mssql/features.py | features.py |

import datetime
import uuid
import warnings

from django.conf import settings
from django.db.backends.base.operations import BaseDatabaseOperations
from django.db.models.expressions import Exists, ExpressionWrapper, RawSQL
from django.db.models.sql.where import WhereNode
from django.utils import timezone
from django.utils.encoding import force_str
from django import VERSION as django_version

import pytz


class DatabaseOperations(BaseDatabaseOperations):
    compiler_module = 'mssql.compiler'

    cast_char_field_without_max_length = 'nvarchar(max)'

    def max_in_list_size(self):
        # The driver might add a few parameters, so choose
        # a reasonable number below the 2100-parameter limit
        return 2048

    def _convert_field_to_tz(self, field_name, tzname):
        if settings.USE_TZ and not tzname == 'UTC':
            offset = self._get_utcoffset(tzname)
            field_name = 'DATEADD(second, %d, %s)' % (offset, field_name)
        return field_name

    def _get_utcoffset(self, tzname):
        """
        Returns UTC offset for given time zone in seconds
        """
        # SQL Server has no built-in support for tz database, see:
        # http://blogs.msdn.com/b/sqlprogrammability/archive/2008/03/18/using-time-zone-data-in-sql-server-2008.aspx
        zone = pytz.timezone(tzname)
        # no way to take DST into account at this point
        now = datetime.datetime.now()
        delta = zone.localize(now, is_dst=False).utcoffset()
        return delta.days * 86400 + delta.seconds - zone.dst(now).seconds

    def bulk_batch_size(self, fields, objs):
        """
        Returns the maximum allowed batch size for the backend. The fields
        are the fields going to be inserted in the batch, the objs contains
        all the objects to be inserted.
        """
        max_insert_rows = 1000
        fields_len = len(fields)
        if fields_len == 0:
            # Required for empty model
            # (bulk_create.tests.BulkCreateTests.test_empty_model)
            return max_insert_rows

        # MSSQL allows a query to have 2100 parameters but some parameters are
        # taken up defining `NVARCHAR` parameters to store the query text and
        # query parameters for the `sp_executesql` call. This should only take
        # up 2 parameters but I've had this error when sending 2098 parameters.
        max_query_params = 2050
        # inserts are capped at 1000 rows regardless of number of query params.
        # bulk_update CASE...WHEN...THEN statement sometimes takes 2 parameters per field
        return min(max_insert_rows, max_query_params // fields_len // 2)

    def bulk_insert_sql(self, fields, placeholder_rows):
        placeholder_rows_sql = (", ".join(row) for row in placeholder_rows)
        values_sql = ", ".join("(%s)" % sql for sql in placeholder_rows_sql)
        return "VALUES " + values_sql

    def cache_key_culling_sql(self):
        """
        Returns a SQL query that retrieves the first cache key greater than the
        smallest.

        This is used by the 'db' cache backend to determine where to start
        culling.
        """
        return "SELECT cache_key FROM (SELECT cache_key, " \
               "ROW_NUMBER() OVER (ORDER BY cache_key) AS rn FROM %s" \
               ") cache WHERE rn = %%s + 1"

    def combine_duration_expression(self, connector, sub_expressions):
        lhs, rhs = sub_expressions
        sign = ' * -1' if connector == '-' else ''
        if lhs.startswith('DATEADD'):
            col, sql = rhs, lhs
        else:
            col, sql = lhs, rhs
        params = [sign for _ in range(sql.count('DATEADD'))]
        params.append(col)
        return sql % tuple(params)

    def combine_expression(self, connector, sub_expressions):
        """
        SQL Server requires special cases for some operators in query expressions
        """
        if connector == '^':
            return 'POWER(%s)' % ','.join(sub_expressions)
        elif connector == '<<':
            # a << b == a * 2**b
            return '%s * POWER(2, %s)' % tuple(sub_expressions)
        elif connector == '>>':
            # a >> b == a / 2**b (T-SQL integer division truncates toward zero)
            return '%s / POWER(2, %s)' % tuple(sub_expressions)
        return super().combine_expression(connector, sub_expressions)

    def convert_datetimefield_value(self, value, expression, connection):
        if value is not None:
            if settings.USE_TZ:
                value = timezone.make_aware(value, self.connection.timezone)
        return value

    def convert_floatfield_value(self, value, expression, connection):
        if value is not None:
            value = float(value)
        return value

    def convert_uuidfield_value(self, value, expression, connection):
        if value is not None:
            value = uuid.UUID(value)
        return value

    def convert_booleanfield_value(self, value, expression, connection):
        return bool(value) if value in (0, 1) else value

    def date_extract_sql(self, lookup_type, field_name):
        if lookup_type == 'week_day':
            return "DATEPART(weekday, %s)" % field_name
        elif lookup_type == 'week':
            return "DATEPART(iso_week, %s)" % field_name
        elif lookup_type == 'iso_year':
            return "YEAR(DATEADD(day, 26 - DATEPART(isoww, %s), %s))" % (field_name, field_name)
        else:
            return "DATEPART(%s, %s)" % (lookup_type, field_name)

    def date_interval_sql(self, timedelta):
        """
        implements the interval functionality for expressions
        """
        sec = timedelta.seconds + timedelta.days * 86400
        sql = 'DATEADD(second, %d%%s, CAST(%%s AS datetime2))' % sec
        if timedelta.microseconds:
            sql = 'DATEADD(microsecond, %d%%s, CAST(%s AS datetime2))' % (timedelta.microseconds, sql)
        return sql

    def date_trunc_sql(self, lookup_type, field_name, tzname=''):
        CONVERT_YEAR = 'CONVERT(varchar, DATEPART(year, %s))' % field_name
        CONVERT_QUARTER = 'CONVERT(varchar, 1+((DATEPART(quarter, %s)-1)*3))' % field_name
        CONVERT_MONTH = 'CONVERT(varchar, DATEPART(month, %s))' % field_name
        CONVERT_WEEK = "DATEADD(DAY, (DATEPART(weekday, %s) + 5) %%%% 7 * -1, %s)" % (field_name, field_name)

        if lookup_type == 'year':
            return "CONVERT(datetime2, %s + '/01/01')" % CONVERT_YEAR
        if lookup_type == 'quarter':
            return "CONVERT(datetime2, %s + '/' + %s + '/01')" % (CONVERT_YEAR, CONVERT_QUARTER)
        if lookup_type == 'month':
            return "CONVERT(datetime2, %s + '/' + %s + '/01')" % (CONVERT_YEAR, CONVERT_MONTH)
        if lookup_type == 'week':
            return "CONVERT(datetime2, CONVERT(varchar, %s, 112))" % CONVERT_WEEK
        if lookup_type == 'day':
            return "CONVERT(datetime2, CONVERT(varchar(12), %s, 112))" % field_name

    def datetime_cast_date_sql(self, field_name, tzname):
        field_name = self._convert_field_to_tz(field_name, tzname)
        sql = 'CAST(%s AS date)' % field_name
        return sql

    def datetime_cast_time_sql(self, field_name, tzname):
        field_name = self._convert_field_to_tz(field_name, tzname)
        sql = 'CAST(%s AS time)' % field_name
        return sql

    def datetime_extract_sql(self, lookup_type, field_name, tzname):
        field_name = self._convert_field_to_tz(field_name, tzname)
        return self.date_extract_sql(lookup_type, field_name)

    def datetime_trunc_sql(self, lookup_type, field_name, tzname):
        field_name = self._convert_field_to_tz(field_name, tzname)
        sql = ''
        if lookup_type in ('year', 'quarter', 'month', 'week', 'day'):
            sql = self.date_trunc_sql(lookup_type, field_name)
        elif lookup_type == 'hour':
            sql = "CONVERT(datetime2, SUBSTRING(CONVERT(varchar, %s, 20), 0, 14) + ':00:00')" % field_name
        elif lookup_type == 'minute':
            sql = "CONVERT(datetime2, SUBSTRING(CONVERT(varchar, %s, 20), 0, 17) + ':00')" % field_name
        elif lookup_type == 'second':
            sql = "CONVERT(datetime2, CONVERT(varchar, %s, 20))" % field_name
        return sql

    def fetch_returned_insert_rows(self, cursor):
        """
        Given a cursor object that has just performed an INSERT...OUTPUT INSERTED
        statement into a table, return the list of returned data.
        """
        return cursor.fetchall()

    def return_insert_columns(self, fields):
        if not fields:
            return '', ()
        columns = [
            '%s.%s' % (
                'INSERTED',
                self.quote_name(field.column),
            ) for field in fields
        ]
        return 'OUTPUT %s' % ', '.join(columns), ()

    def for_update_sql(self, nowait=False, skip_locked=False, of=()):
        if skip_locked:
            return 'WITH (ROWLOCK, UPDLOCK, READPAST)'
        elif nowait:
            return 'WITH (NOWAIT, ROWLOCK, UPDLOCK)'
        else:
            return 'WITH (ROWLOCK, UPDLOCK)'

    def format_for_duration_arithmetic(self, sql):
        if sql == '%s':
            # use DATEADD only once because Django prepares only one parameter for this
            fmt = 'DATEADD(second, %s / 1000000%%s, CAST(%%s AS datetime2))'
            sql = '%%s'
        else:
            # use DATEADD twice to avoid arithmetic overflow for number part
            MICROSECOND = "DATEADD(microsecond, %s %%%%%%%% 1000000%%s, CAST(%%s AS datetime2))"
            fmt = 'DATEADD(second, %s / 1000000%%s, {})'.format(MICROSECOND)
            sql = (sql, sql)
        return fmt % sql

    def fulltext_search_sql(self, field_name):
        """
        Returns the SQL WHERE clause to use in order to perform a full-text
        search of the given field_name. Note that the resulting string should
        contain a '%s' placeholder for the value being searched against.
        """
        return 'CONTAINS(%s, %%s)' % field_name

    def get_db_converters(self, expression):
        converters = super().get_db_converters(expression)
        internal_type = expression.output_field.get_internal_type()
        if internal_type == 'DateTimeField':
            converters.append(self.convert_datetimefield_value)
        elif internal_type == 'FloatField':
            converters.append(self.convert_floatfield_value)
        elif internal_type == 'UUIDField':
            converters.append(self.convert_uuidfield_value)
        elif internal_type in ('BooleanField', 'NullBooleanField'):
            converters.append(self.convert_booleanfield_value)
        return converters

    def last_insert_id(self, cursor, table_name, pk_name):
        """
        Given a cursor object that has just performed an INSERT statement into
        a table that has an auto-incrementing ID, returns the newly created ID.

        This method also receives the table name and the name of the primary-key
        column.
        """
        # TODO: Check how the `last_insert_id` is being used in the upper layers
        #       in context of multithreaded access, compare with other backends

        # IDENT_CURRENT:  http://msdn2.microsoft.com/en-us/library/ms175098.aspx
        # SCOPE_IDENTITY: http://msdn2.microsoft.com/en-us/library/ms190315.aspx
        # @@IDENTITY:     http://msdn2.microsoft.com/en-us/library/ms187342.aspx

        # IDENT_CURRENT is not limited by scope and session; it is limited to
        # a specified table. IDENT_CURRENT returns the value generated for
        # a specific table in any session and any scope.
        # SCOPE_IDENTITY and @@IDENTITY return the last identity values that
        # are generated in any table in the current session. However,
        # SCOPE_IDENTITY returns values inserted only within the current scope;
        # @@IDENTITY is not limited to a specific scope.

        table_name = self.quote_name(table_name)
        cursor.execute("SELECT CAST(IDENT_CURRENT(%s) AS int)", [table_name])
        return cursor.fetchone()[0]

    def lookup_cast(self, lookup_type, internal_type=None):
        if lookup_type in ('iexact', 'icontains', 'istartswith', 'iendswith'):
            return "UPPER(%s)"
        return "%s"

    def max_name_length(self):
        return 128

    def no_limit_value(self):
        return None

    def prepare_sql_script(self, sql, _allow_fallback=False):
        return [sql]

    def quote_name(self, name):
        """
        Returns a quoted version of the given table, index or column name. Does
        not quote the given name if it's already been quoted.
        """
        if name.startswith('[') and name.endswith(']'):
            return name  # Quoting once is enough.
        return '[%s]' % name

    def random_function_sql(self):
        """
        Returns a SQL expression that returns a random value.
        """
        return "RAND()"

    def regex_lookup(self, lookup_type):
        """
        Returns the string to use in a query when performing regular expression
        lookups (using "regex" or "iregex"). The resulting string should
        contain a '%s' placeholder for the column being searched against.

        If the feature is not supported (or part of it is not supported), a
        NotImplementedError exception can be raised.
        """
        match_option = {'iregex': 0, 'regex': 1}[lookup_type]
        return "dbo.REGEXP_LIKE(%%s, %%s, %s)=1" % (match_option,)

    def limit_offset_sql(self, low_mark, high_mark):
        """Return LIMIT/OFFSET SQL clause."""
        limit, offset = self._get_limit_offset_params(low_mark, high_mark)
        return '%s%s' % (
            (' OFFSET %d ROWS' % offset) if offset else '',
            (' FETCH FIRST %d ROWS ONLY' % limit) if limit else '',
        )

    def last_executed_query(self, cursor, sql, params):
        """
        Returns a string of the query last executed by the given cursor, with
        placeholders replaced with actual values.

        `sql` is the raw query containing placeholders, and `params` is the
        sequence of parameters. These are used by default, but this method
        exists for database backends to provide a better implementation
        according to their own quoting schemes.
        """
        return super().last_executed_query(cursor, cursor.last_sql, cursor.last_params)

    def savepoint_create_sql(self, sid):
        """
        Returns the SQL for starting a new savepoint. Only required if the
        "uses_savepoints" feature is True. The "sid" parameter is a string
        for the savepoint id.
        """
        return "SAVE TRANSACTION %s" % sid

    def savepoint_rollback_sql(self, sid):
        """
        Returns the SQL for rolling back the given savepoint.
        """
        return "ROLLBACK TRANSACTION %s" % sid

    def _build_sequences(self, sequences, cursor):
        seqs = []
        for seq in sequences:
            cursor.execute("SELECT COUNT(*) FROM %s" % self.quote_name(seq["table"]))
            rowcnt = cursor.fetchone()[0]
            elem = {}
            if rowcnt:
                elem['start_id'] = 0
            else:
                elem['start_id'] = 1
            elem.update(seq)
            seqs.append(elem)
        return seqs

    def _sql_flush_new(self, style, tables, *, reset_sequences=False, allow_cascade=False):
        if reset_sequences:
            return [
                sequence
                for sequence in self.connection.introspection.sequence_list()
                if sequence['table'].lower() in [table.lower() for table in tables]
            ]

        return []

    def _sql_flush_old(self, style, tables, sequences, allow_cascade=False):
        return sequences

    def sql_flush(self, style, tables, *args, **kwargs):
        """
        Returns a list of SQL statements required to remove all data from
        the given database tables (without actually removing the tables
        themselves).

        The returned value also includes SQL statements required to reset DB
        sequences passed in :param sequences:.

        The `style` argument is a Style object as returned by either
        color_style() or no_style() in django.core.management.color.

        The `allow_cascade` argument determines whether truncation may cascade
        to tables with foreign keys pointing to the tables being truncated.
        """

        if not tables:
            return []

        if django_version >= (3, 1):
            sequences = self._sql_flush_new(style, tables, *args, **kwargs)
        else:
            sequences = self._sql_flush_old(style, tables, *args, **kwargs)

        from django.db import connections
        cursor = connections[self.connection.alias].cursor()

        seqs = self._build_sequences(sequences, cursor)

        COLUMNS = "TABLE_NAME, CONSTRAINT_NAME"
        WHERE = "CONSTRAINT_TYPE not in ('PRIMARY KEY','UNIQUE')"
        cursor.execute(
            "SELECT {} FROM INFORMATION_SCHEMA.TABLE_CONSTRAINTS WHERE {}".format(COLUMNS, WHERE))
        fks = cursor.fetchall()
        sql_list = ['ALTER TABLE %s NOCHECK CONSTRAINT %s;' %
                    (self.quote_name(fk[0]), self.quote_name(fk[1])) for fk in fks]
        sql_list.extend(['%s %s %s;' % (style.SQL_KEYWORD('DELETE'), style.SQL_KEYWORD('FROM'),
                                        style.SQL_FIELD(self.quote_name(table))) for table in tables])

        if self.connection.to_azure_sql_db and self.connection.sql_server_version < 2014:
            warnings.warn("Resetting identity columns is not supported "
                          "on this version of Azure SQL Database.",
                          RuntimeWarning)
        else:
            # Then reset the counters on each table.
            sql_list.extend(['%s %s (%s, %s, %s) %s %s;' % (
                style.SQL_KEYWORD('DBCC'),
                style.SQL_KEYWORD('CHECKIDENT'),
                style.SQL_FIELD(self.quote_name(seq["table"])),
                style.SQL_KEYWORD('RESEED'),
                style.SQL_FIELD('%d' % seq['start_id']),
                style.SQL_KEYWORD('WITH'),
                style.SQL_KEYWORD('NO_INFOMSGS'),
            ) for seq in seqs])

        sql_list.extend(['ALTER TABLE %s CHECK CONSTRAINT %s;' %
                         (self.quote_name(fk[0]), self.quote_name(fk[1])) for fk in fks])

        return sql_list

    def start_transaction_sql(self):
        """
        Returns the SQL statement required to start a transaction.
        """
        return "BEGIN TRANSACTION"

    def subtract_temporals(self, internal_type, lhs, rhs):
        lhs_sql, lhs_params = lhs
        rhs_sql, rhs_params = rhs
        if internal_type == 'DateField':
            sql = "CAST(DATEDIFF(day, %(rhs)s, %(lhs)s) AS bigint) * 86400 * 1000000"
            params = rhs_params + lhs_params
        else:
            SECOND = "DATEDIFF(second, %(rhs)s, %(lhs)s)"
            MICROSECOND = "DATEPART(microsecond, %(lhs)s) - DATEPART(microsecond, %(rhs)s)"
            sql = "CAST({} AS bigint) * 1000000 + {}".format(SECOND, MICROSECOND)
            params = rhs_params + lhs_params * 2 + rhs_params
        return sql % {'lhs': lhs_sql, 'rhs': rhs_sql}, params

    def tablespace_sql(self, tablespace, inline=False):
        """
        Returns the SQL that will be appended to tables or rows to define
        a tablespace. Returns '' if the backend doesn't use tablespaces.
        """
        return "ON %s" % self.quote_name(tablespace)

    def prep_for_like_query(self, x):
        """Prepares a value for use in a LIKE query."""
        # http://msdn2.microsoft.com/en-us/library/ms179859.aspx
        return force_str(x).replace('\\', '\\\\').replace('[', '[[]').replace('%', '[%]').replace('_', '[_]')

    def prep_for_iexact_query(self, x):
        """
        Same as prep_for_like_query(), but called for "iexact" matches, which
        need not necessarily be implemented using "LIKE" in the backend.
        """
        return x

    def adapt_datetimefield_value(self, value):
        """
        Transforms a datetime value to an object compatible with what is expected
        by the backend driver for datetime columns.
        """
        if value is None:
            return None
        if settings.USE_TZ and timezone.is_aware(value):
            # pyodbc doesn't support datetimeoffset
            value = value.astimezone(self.connection.timezone).replace(tzinfo=None)
        return value

    def time_trunc_sql(self, lookup_type, field_name, tzname=''):
        # if self.connection.sql_server_version >= 2012:
        #     fields = {
        #         'hour': 'DATEPART(hour, %s)' % field_name,
        #         'minute': 'DATEPART(minute, %s)' % field_name if lookup_type != 'hour' else '0',
        #         'second': 'DATEPART(second, %s)' % field_name if lookup_type == 'second' else '0',
        #     }
        #     sql = 'TIMEFROMPARTS(%(hour)s, %(minute)s, %(second)s, 0, 0)' % fields
        if lookup_type == 'hour':
            sql = "CONVERT(time, SUBSTRING(CONVERT(varchar, %s, 114), 0, 3) + ':00:00')" % field_name
        elif lookup_type == 'minute':
            sql = "CONVERT(time, SUBSTRING(CONVERT(varchar, %s, 114), 0, 6) + ':00')" % field_name
        elif lookup_type == 'second':
            sql = "CONVERT(time, SUBSTRING(CONVERT(varchar, %s, 114), 0, 9))" % field_name
        return sql

    def conditional_expression_supported_in_where_clause(self, expression):
        """
        Following "Moved conditional expression wrapping to the Exact lookup" in django 3.1
        https://github.com/django/django/commit/37e6c5b79bd0529a3c85b8c478e4002fd33a2a1d
        """
        if isinstance(expression, (Exists, WhereNode)):
            return True
        if isinstance(expression, ExpressionWrapper) and expression.conditional:
            return self.conditional_expression_supported_in_where_clause(expression.expression)
        if isinstance(expression, RawSQL) and expression.conditional:
            return True
        return False | zilian-mssql-django | /zilian-mssql-django-1.1.4.tar.gz/zilian-mssql-django-1.1.4/mssql/operations.py | operations.py |
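Three of the SQL fragments in `DatabaseOperations` rely on T-SQL date arithmetic that is easy to misread:

- the `iso_year` shift in `date_extract_sql`;
- the Monday-based `week` truncation in `date_trunc_sql`;
- the seconds-plus-microseconds difference in `subtract_temporals`.

The sketch below re-implements each of them with Python's standard library so their logic can be checked in isolation. The function names are hypothetical. The week truncation assumes the session uses `DATEFIRST 7` (Sunday = 1 for `DATEPART(weekday, ...)`, the US English default). Under a different `DATEFIRST` setting the SQL would land on a different weekday.

```python
import datetime


def iso_year_like_sqlserver(d: datetime.date) -> int:
    # YEAR(DATEADD(day, 26 - DATEPART(isoww, d), d)): moving (26 - iso_week) days
    # lands on a day whose calendar year equals the date's ISO year
    iso_week = d.isocalendar()[1]
    return (d + datetime.timedelta(days=26 - iso_week)).year


def week_start_like_sqlserver(d: datetime.date) -> datetime.date:
    # DATEPART(weekday, d) under DATEFIRST 7: Sunday=1, Monday=2, ..., Saturday=7
    sql_weekday = d.isoweekday() % 7 + 1
    # DATEADD(DAY, (weekday + 5) % 7 * -1, d) steps back to the preceding Monday
    return d - datetime.timedelta(days=(sql_weekday + 5) % 7)


def subtract_like_sqlserver(lhs: datetime.datetime, rhs: datetime.datetime) -> int:
    # DATEDIFF(second, rhs, lhs) counts second boundaries crossed, i.e. the
    # difference between both values truncated to whole seconds ...
    seconds = (lhs.replace(microsecond=0) - rhs.replace(microsecond=0)) // datetime.timedelta(seconds=1)
    # ... plus DATEPART(microsecond, lhs) - DATEPART(microsecond, rhs)
    return seconds * 1_000_000 + (lhs.microsecond - rhs.microsecond)


if __name__ == '__main__':
    print(iso_year_like_sqlserver(datetime.date(2021, 1, 1)))    # 2020
    print(week_start_like_sqlserver(datetime.date(2021, 1, 3)))  # 2020-12-28
    print(subtract_like_sqlserver(
        datetime.datetime(2020, 1, 1, 0, 0, 1, 100),
        datetime.datetime(2020, 1, 1, 0, 0, 0, 900000),
    ))  # 100100
```

Comparing these helpers against `date.isocalendar()`, `date.weekday()` and plain `datetime` subtraction over a few years of dates is a cheap way to gain confidence in the SQL strings before running them against a server.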

import os
import re
import time
import struct

from django.core.exceptions import ImproperlyConfigured

try:
    import pyodbc as Database
except ImportError as e:
    raise ImproperlyConfigured("Error loading pyodbc module: %s" % e)

from django.utils.version import get_version_tuple  # noqa

pyodbc_ver = get_version_tuple(Database.version)
if pyodbc_ver < (3, 0):
    raise ImproperlyConfigured("pyodbc 3.0 or newer is required; you have %s" % Database.version)

from django.conf import settings  # noqa
from django.db import NotSupportedError  # noqa
from django.db.backends.base.base import BaseDatabaseWrapper  # noqa
from django.utils.encoding import smart_str  # noqa
from django.utils.functional import cached_property  # noqa

if hasattr(settings, 'DATABASE_CONNECTION_POOLING'):
    if not settings.DATABASE_CONNECTION_POOLING:
        Database.pooling = False

from .client import DatabaseClient  # noqa
from .creation import DatabaseCreation  # noqa
from .features import DatabaseFeatures  # noqa
from .introspection import DatabaseIntrospection  # noqa
from .operations import DatabaseOperations  # noqa
from .schema import DatabaseSchemaEditor  # noqa

EDITION_AZURE_SQL_DB = 5

def encode_connection_string(fields):
    """Encode dictionary of keys and values as an ODBC connection string.

    See [MS-ODBCSTR] document:
    https://msdn.microsoft.com/en-us/library/ee208909%28v=sql.105%29.aspx
    """
    # As the keys are all provided by us, don't need to encode them as we know
    # they are ok.
    return ';'.join(
        '%s=%s' % (k, encode_value(v))
        for k, v in fields.items()
    )


def prepare_token_for_odbc(token):
    """
    Will prepare token for passing it to the odbc driver, as it expects
    bytes and not a string
    :param token:
    :return: packed binary byte representation of token string
    """
    if not isinstance(token, str):
        raise TypeError("Invalid token format provided.")

    tokenstr = token.encode()
    exptoken = b""
    for i in tokenstr:
        exptoken += bytes({i})
        exptoken += bytes(1)
    return struct.pack("=i", len(exptoken)) + exptoken
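`prepare_token_for_odbc` does two things:

- it follows every byte of the UTF-8-encoded token with a zero byte;
- it prefixes the result with its byte length, packed as a native-byte-order 4-byte integer (`struct` format `=i`).

For an ASCII-only token this expanded payload is exactly the UTF-16-LE encoding of the string, as the sketch below checks. The assumption here is that access tokens are ASCII text such as base64/JWT. The layout presumably matches what the Microsoft ODBC driver expects for its access-token connection attribute, but that is not stated in the source. Both function names below are hypothetical.

```python
import struct


def pack_token_bytewise(token: str) -> bytes:
    # Same logic as prepare_token_for_odbc: each UTF-8 byte followed by b"\x00",
    # prefixed by the payload length as a native-endian 4-byte int ("=i")
    expanded = b"".join(bytes((b, 0)) for b in token.encode())
    return struct.pack("=i", len(expanded)) + expanded


def pack_token_utf16(token: str) -> bytes:
    # Equivalent formulation, valid only for ASCII-only tokens
    expanded = token.encode("utf-16-le")
    return struct.pack("=i", len(expanded)) + expanded


if __name__ == "__main__":
    token = "eyJ0eXAiOiJKV1Qi"  # illustrative ASCII fragment, not a real credential
    assert pack_token_bytewise(token) == pack_token_utf16(token)
    print(pack_token_bytewise("ab"))
```

For non-ASCII input the two functions diverge, because UTF-8 multi-byte sequences are expanded byte by byte rather than re-encoded as UTF-16.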

def encode_value(v):
    """If the value contains a semicolon, or starts with a left curly brace,
    then enclose it in curly braces and escape all right curly braces.
    """
    if ';' in v or v.strip(' ').startswith('{'):
        return '{%s}' % (v.replace('}', '}}'),)
    return v
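`encode_connection_string` and `encode_value` implement the ODBC connection-string quoting rule: any value that contains `;` or starts with `{` is wrapped in braces, and every `}` inside it is doubled. The standalone copy below shows the effect on a sample dictionary. The keys and values are illustrative only, and the password is fake. A value that already carries braces, such as `'{ODBC Driver 17 for SQL Server}'`, would be quoted a second time by this rule, so a driver name is best passed without braces.

```python
def encode_value(v: str) -> str:
    # Brace-quote values that would otherwise break the key=value;... syntax
    if ';' in v or v.strip(' ').startswith('{'):
        return '{%s}' % v.replace('}', '}}')
    return v


def encode_connection_string(fields: dict) -> str:
    # Keys are trusted (supplied by the backend itself); only values are encoded
    return ';'.join('%s=%s' % (k, encode_value(v)) for k, v in fields.items())


if __name__ == '__main__':
    print(encode_connection_string({
        'DRIVER': 'ODBC Driver 17 for SQL Server',
        'SERVER': 'localhost',
        'PWD': 'p;ss}word',
    }))
    # DRIVER=ODBC Driver 17 for SQL Server;SERVER=localhost;PWD={p;ss}}word}
```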

class DatabaseWrapper(BaseDatabaseWrapper):
    vendor = 'microsoft'
    display_name = 'SQL Server'
    # This dictionary maps Field objects to their associated MS SQL column
    # types, as strings. Column-type strings can contain format strings; they'll
    # be interpolated against the values of Field.__dict__ before being output.
    # If a column type is set to None, it won't be included in the output.
    data_types = {
        'AutoField': 'int',
        'BigAutoField': 'bigint',
        'BigIntegerField': 'bigint',
        'BinaryField': 'varbinary(%(max_length)s)',
        'BooleanField': 'bit',
        'CharField': 'nvarchar(%(max_length)s)',
        'DateField': 'date',
        'DateTimeField': 'datetime2',
        'DecimalField': 'numeric(%(max_digits)s, %(decimal_places)s)',
        'DurationField': 'bigint',
        'FileField': 'nvarchar(%(max_length)s)',
        'FilePathField': 'nvarchar(%(max_length)s)',
        'FloatField': 'double precision',
        'IntegerField': 'int',
        'IPAddressField': 'nvarchar(15)',
        'GenericIPAddressField': 'nvarchar(39)',
        'JSONField': 'nvarchar(max)',
        'NullBooleanField': 'bit',
        'OneToOneField': 'int',
        'PositiveIntegerField': 'int',
        'PositiveSmallIntegerField': 'smallint',
        'PositiveBigIntegerField': 'bigint',
        'SlugField': 'nvarchar(%(max_length)s)',
        'SmallAutoField': 'smallint',
        'SmallIntegerField': 'smallint',
        'TextField': 'nvarchar(max)',
        'TimeField': 'time',
        'UUIDField': 'char(32)',
    }

    data_types_suffix = {
        'AutoField': 'IDENTITY (1, 1)',
        'BigAutoField': 'IDENTITY (1, 1)',
        'SmallAutoField': 'IDENTITY (1, 1)',
    }
    data_type_check_constraints = {
        'JSONField': '(ISJSON ("%(column)s") = 1)',
        'PositiveIntegerField': '[%(column)s] >= 0',
        'PositiveSmallIntegerField': '[%(column)s] >= 0',
        'PositiveBigIntegerField': '[%(column)s] >= 0',
    }

    operators = {
        # Since '=' is used for more than string comparison, there is no way
        # to make it case-(in)sensitive.
        'exact': '= %s',
        'iexact': "= UPPER(%s)",
        'contains': "LIKE %s ESCAPE '\\'",
        'icontains': "LIKE UPPER(%s) ESCAPE '\\'",
        'gt': '> %s',
        'gte': '>= %s',
        'lt': '< %s',
        'lte': '<= %s',
        'startswith': "LIKE %s ESCAPE '\\'",
        'endswith': "LIKE %s ESCAPE '\\'",
        'istartswith': "LIKE UPPER(%s) ESCAPE '\\'",
        'iendswith': "LIKE UPPER(%s) ESCAPE '\\'",
    }

    # The patterns below are used to generate SQL pattern lookup clauses when
    # the right-hand side of the lookup isn't a raw string (it might be an expression
    # or the result of a bilateral transformation).
    # In those cases, special characters for LIKE operators (e.g. \, %, _) should be
    # escaped on the database side.
    #
    # Note: we use str.format() here for readability as '%' is used as a wildcard for
    # the LIKE operator.
    pattern_esc = r"REPLACE(REPLACE(REPLACE({}, '\', '[\]'), '%%', '[%%]'), '_', '[_]')"
    pattern_ops = {
        'contains': "LIKE '%%' + {} + '%%'",
        'icontains': "LIKE '%%' + UPPER({}) + '%%'",
        'startswith': "LIKE {} + '%%'",
        'istartswith': "LIKE UPPER({}) + '%%'",
        'endswith': "LIKE '%%' + {}",
        'iendswith': "LIKE '%%' + UPPER({})",
    }
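
    # Illustration (comment only): for an expression right-hand side, Django's
    # pattern lookups first substitute pattern_esc into the pattern_ops entry
    # with str.format() and then substitute the compiled right-hand side SQL.
    # With a hypothetical column reference [t].[name], 'contains' becomes:
    #   LIKE '%%' + REPLACE(REPLACE(REPLACE([t].[name], '\', '[\]'), '%%', '[%%]'), '_', '[_]') + '%%'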

    Database = Database
    SchemaEditorClass = DatabaseSchemaEditor
    # Classes instantiated in __init__().
    client_class = DatabaseClient
    creation_class = DatabaseCreation
    features_class = DatabaseFeatures
    introspection_class = DatabaseIntrospection
    ops_class = DatabaseOperations

    _codes_for_networkerror = (
        '08S01',
        '08S02',
    )
    _sql_server_versions = {
        9: 2005,
        10: 2008,
        11: 2012,
        12: 2014,
        13: 2016,
        14: 2017,
        15: 2019,
    }

    # https://azure.microsoft.com/en-us/documentation/articles/sql-database-develop-csharp-retry-windows/
    _transient_error_numbers = (
        '4060',
        '10928',
        '10929',
        '40197',
        '40501',
        '40613',
        '49918',
        '49919',
        '49920',
        '[HYT00] [Microsoft][ODBC Driver 17 for SQL Server]Login timeout expired (0) (SQLDriverConnect)',
    )

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)

        opts = self.settings_dict["OPTIONS"]

        # capability for multiple result sets or cursors
        self.supports_mars = False

        # Some drivers need unicode encoded as UTF8. If this is left as
        # None, it will be determined based on the driver, namely it'll be
        # False if the driver is a windows driver and True otherwise.
        #
        # However, recent versions of FreeTDS and pyodbc (0.91 and 3.0.6 as
        # of writing) are perfectly okay being fed unicode, which is why
        # this option is configurable.
        if 'driver_needs_utf8' in opts:
            self.driver_charset = 'utf-8'
        else:
            self.driver_charset = opts.get('driver_charset', None)

        # Interval to wait for recovery from a network error; configured in
        # milliseconds and stored in seconds.
        interval = opts.get('connection_recovery_interval_msec', 0.0)
        self.connection_recovery_interval_msec = float(interval) / 1000

        # If a collation is configured, append a COLLATE clause to the
        # LIKE-based lookup operators so they respect it.
        collation = opts.get('collation', None)
        if collation:
            self.operators = dict(self.__class__.operators)
            ops = {}
            for op in self.operators:
                sql = self.operators[op]
                if sql.startswith('LIKE '):
                    ops[op] = '%s COLLATE %s' % (sql, collation)
            self.operators.update(ops)

    def create_cursor(self, name=None):
        return CursorWrapper(self.connection.cursor(), self)

    def _cursor(self):
        new_conn = False

        if self.connection is None:
            new_conn = True

        conn = super()._cursor()
        if new_conn:
            if self.sql_server_version <= 2005:
                self.data_types['DateField'] = 'datetime'
                self.data_types['DateTimeField'] = 'datetime'
                self.data_types['TimeField'] = 'datetime'

        return conn

    def get_connection_params(self):
        settings_dict = self.settings_dict
        if settings_dict['NAME'] == '':
            raise ImproperlyConfigured(
                "settings.DATABASES is improperly configured. "
                "Please supply the NAME value.")
        conn_params = settings_dict.copy()
        if conn_params['NAME'] is None:
            conn_params['NAME'] = 'master'
        return conn_params

    def get_new_connection(self, conn_params):
        database = conn_params['NAME']
        host = conn_params.get('HOST', 'localhost')
        user = conn_params.get('USER', None)
        password = conn_params.get('PASSWORD', None)
        port = conn_params.get('PORT', None)
        trusted_connection = conn_params.get('Trusted_Connection', 'yes')

        options = conn_params.get('OPTIONS', {})
        driver = options.get('driver', 'ODBC Driver 17 for SQL Server')
        dsn = options.get('dsn', None)
        options_extra_params = options.get('extra_params', '')

        # Microsoft driver names assumed here are:
        # * SQL Server Native Client 10.0/11.0
        # * ODBC Driver 11/13/17 for SQL Server
        ms_drivers = re.compile('^ODBC Driver .* for SQL Server$|^SQL Server Native Client')

        # Available ODBC connection string keywords:
        # (Microsoft drivers for Windows)
        # https://docs.microsoft.com/en-us/sql/relational-databases/native-client/applications/using-connection-string-keywords-with-sql-server-native-client
        # (Microsoft drivers for Linux/Mac)
        # https://docs.microsoft.com/en-us/sql/connect/odbc/linux-mac/connection-string-keywords-and-data-source-names-dsns
        # (FreeTDS)
        # http://www.freetds.org/userguide/odbcconnattr.htm
        cstr_parts = {}
        if dsn:
            cstr_parts['DSN'] = dsn
        else:
            # Only set DRIVER when no DSN was given in OPTIONS['dsn']
            cstr_parts['DRIVER'] = driver

        if ms_drivers.match(driver):
            if port:
                host = ','.join((host, str(port)))
            cstr_parts['SERVER'] = host
        elif options.get('host_is_server', False):
            if port:
                cstr_parts['PORT'] = str(port)
            cstr_parts['SERVER'] = host
        else:
            cstr_parts['SERVERNAME'] = host

        if user:
            cstr_parts['UID'] = user
            if 'Authentication=ActiveDirectoryInteractive' not in options_extra_params:
                cstr_parts['PWD'] = password