| url | repository_url | labels_url | comments_url | events_url | html_url | id | node_id | number | title | user | labels | state | locked | assignee | assignees | milestone | comments | created_at | updated_at | closed_at | author_association | active_lock_reason | draft | pull_request | body | reactions | timeline_url | performed_via_github_app | state_reason | is_pull_request |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

https://api.github.com/repos/huggingface/datasets/issues/24 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/24/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/24/comments | https://api.github.com/repos/huggingface/datasets/issues/24/events | https://github.com/huggingface/datasets/pull/24 | 609,064,987 | MDExOlB1bGxSZXF1ZXN0NDEwNzE5MTU0 | 24 | Add checksums | {

"login": "lhoestq",

"id": 42851186,

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/lhoestq",

"html_url": "https://github.com/lhoestq",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"type": "User",

"site_admin": false

} | []

| closed | false | null | []

| null | [

"Looks good to me :-) \r\n\r\nJust would prefer to get rid of the `_DYNAMICALLY_IMPORTED_MODULE` attribute and replace it by a `get_imported_module()` function. Maybe there is something I'm not seeing here though - what do you think? ",

"> * I'm not sure I understand the general organization of checksums. I see we have a checksum folder with potentially several checksum files but I also see that checksum files can potentially contain several checksums. Could you explain a bit more how this is organized?\r\n\r\nIt should look like this:\r\nsquad/\r\n├── squad.py/\r\n└── urls_checksums/\r\n...........└── checksums.txt\r\n\r\nIn checksums.txt, the format is one line per (url, size, checksum)\r\n\r\nI don't have a strong opinion between `urls_checksums/checksums.txt` or directly `checksums.txt` (not inside the `urls_checksums` folder), let me know what you think.\r\n\r\n\r\n> * Also regarding your comment on checksum files for \"canonical\" datasets. I understand we can just create these with `nlp-cli test` and then upload them manually to our S3, right?\r\n\r\nYes you're right",

"Update of the commands:\r\n\r\n- nlp-cli test \\<dataset\\> : Run download_and_prepare and verify checksums\r\n * --name \\<name\\> : run only for the name\r\n * --all_configs : run for all configs\r\n * --save_checksums : instead of verifying checksums, compute and save them\r\n * --ignore_checksums : don't do checksums verification\r\n\r\n- nlp-cli upload \\<dataset_folder\\> : Upload a dataset\r\n * --upload_checksums : compute and upload checksums for uploaded files\r\n\r\nTODO:\r\n- don't overwrite checksums files on S3, to let the user upload a dataset in several steps if needed\r\n\r\nQuestion:\r\n- One idea from @patrickvonplaten : shall we upload checksums everytime we upload files ? (and therefore remove the upload_checksums parameter)",

"Ok, ready to merge, then @lhoestq ?",

"Yep :)"

]

| 1,588,167,449,000 | 1,588,276,370,000 | 1,588,276,369,000 | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/24",

"html_url": "https://github.com/huggingface/datasets/pull/24",

"diff_url": "https://github.com/huggingface/datasets/pull/24.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/24.patch",

"merged_at": 1588276369000

} | ### Checksums files

They are stored next to the dataset script, in `urls_checksums/checksums.txt`.

They are used to check the integrity of the dataset's downloaded files.

I kept the same format as tensorflow-datasets.

There is one checksums file for all configs.

### Load a dataset

When you do `load("squad")`, it also downloads the checksums file and puts it next to the script, in `nlp/datasets/hash/urls_checksums/checksums.txt`.

It also verifies that the checksums of the downloaded files match the expected ones.

You can skip checksum verification with `load("squad", ignore_checksums=True)` (under the hood, this just sets `ignore_checksums=True` in the `DownloadConfig`).
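A minimal sketch of the verification step, assuming each line of `checksums.txt` holds a whitespace-separated URL, file size in bytes and SHA-256 hex digest (one line per (url, size, checksum)). The hash algorithm, the helper names and the `ignore_checksums` handling below are assumptions for illustration, not the library's actual code.

```python
import hashlib
import os
from typing import Dict, Tuple

# Assumed in-memory form of checksums.txt: {url: (size_in_bytes, sha256_hex)}
Checksums = Dict[str, Tuple[int, str]]


def read_checksums(path: str) -> Checksums:
    """Parse 'url size sha256' lines (blank lines and '#' comments are skipped)."""
    records: Checksums = {}
    with open(path, encoding="utf-8") as f:
        for line in f:
            line = line.strip()
            if not line or line.startswith("#"):
                continue
            url, size, checksum = line.split()
            records[url] = (int(size), checksum)
    return records


def size_and_sha256(path: str) -> Tuple[int, str]:
    """Hash the file in 1 MiB blocks so large downloads are not read into memory at once."""
    sha = hashlib.sha256()
    with open(path, "rb") as f:
        for block in iter(lambda: f.read(1 << 20), b""):
            sha.update(block)
    return os.path.getsize(path), sha.hexdigest()


def verify_download(url: str, local_path: str, expected: Checksums, ignore_checksums: bool = False) -> None:
    """Raise ValueError if a downloaded file does not match its recorded size and checksum."""
    if ignore_checksums:
        return
    if url not in expected:
        raise ValueError(f"No recorded checksum for {url}")
    if size_and_sha256(local_path) != expected[url]:
        raise ValueError(f"Checksum mismatch for {url}")
```

Comparing the size as well as the digest is cheap and catches truncated downloads with a clearer signal than the hash alone.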

### Test a dataset

There is a new command, `nlp-cli test squad`, that runs `download_and_prepare` to check that it works and verifies that all the checksums match. Allowed arguments are `--name`, `--all_configs`, `--ignore_checksums` and `--register_checksums`.

### Register checksums

1. If the dataset has external dataset files

The command `nlp-cli test squad --register_checksums --all_configs` runs `download_and_prepare` on all configs to check that it works, and creates the checksums file.

You can also register one config at a time using `--name` instead; the checksums file is then completed rather than overwritten.

If the script is a local script, the checksums file is moved to `urls_checksums/checksums.txt` next to the local script, so that the user can then upload both the script and the checksums file with `nlp-cli upload squad`.

2. If the dataset files are all inside the directory of the dataset script

The user can directly run `nlp-cli upload squad --register_checksums`, since there is nothing to download.

In this case, however, the whole dataset must be uploaded at once.
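For the incremental registration in case 1 (one config at a time with `--name`), the checksums file has to be merged rather than overwritten. A hypothetical helper, using the same assumed `url size sha256` line format, could look like this:

```python
import os
from typing import Dict, Tuple

# Assumed record format: {url: (size_in_bytes, sha256_hex)}
Checksums = Dict[str, Tuple[int, str]]


def register_checksums(path: str, new_records: Checksums) -> None:
    """Merge new records into an existing checksums file (hypothetical helper).

    Entries already in the file are kept, so configs can be registered one at a
    time; a URL that is registered again gets its record replaced.
    """
    records: Checksums = {}
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            for line in f:
                if line.strip():
                    url, size, checksum = line.split()
                    records[url] = (int(size), checksum)
    records.update(new_records)
    os.makedirs(os.path.dirname(path) or ".", exist_ok=True)
    with open(path, "w", encoding="utf-8") as f:
        for url in sorted(records):  # stable ordering keeps diffs of the file small
            size, checksum = records[url]
            f.write(f"{url} {size} {checksum}\n")
```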

--

PS: this doesn't allow registering checksums for canonical datasets; for now, the file has to be added manually on S3 (I guess?).

Also, I feel we must make sure that this process doesn't overly constrain users who want to upload their datasets.

Let me know what you think :) | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/24/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/24/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/23 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/23/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/23/comments | https://api.github.com/repos/huggingface/datasets/issues/23/events | https://github.com/huggingface/datasets/pull/23 | 608,508,706 | MDExOlB1bGxSZXF1ZXN0NDEwMjczOTU2 | 23 | Add metrics | {

"login": "mariamabarham",

"id": 38249783,

"node_id": "MDQ6VXNlcjM4MjQ5Nzgz",

"avatar_url": "https://avatars.githubusercontent.com/u/38249783?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mariamabarham",

"html_url": "https://github.com/mariamabarham",

"followers_url": "https://api.github.com/users/mariamabarham/followers",

"following_url": "https://api.github.com/users/mariamabarham/following{/other_user}",

"gists_url": "https://api.github.com/users/mariamabarham/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mariamabarham/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariamabarham/subscriptions",

"organizations_url": "https://api.github.com/users/mariamabarham/orgs",

"repos_url": "https://api.github.com/users/mariamabarham/repos",

"events_url": "https://api.github.com/users/mariamabarham/events{/privacy}",

"received_events_url": "https://api.github.com/users/mariamabarham/received_events",

"type": "User",

"site_admin": false

} | []

| closed | false | null | []

| null | []

| 1,588,096,925,000 | 1,664,875,916,000 | 1,589,185,178,000 | CONTRIBUTOR | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/23",

"html_url": "https://github.com/huggingface/datasets/pull/23",

"diff_url": "https://github.com/huggingface/datasets/pull/23.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/23.patch",

"merged_at": null

This PR is a draft for adding metrics (sacrebleu and seqeval are included).

Use case examples:

`import nlp`

**sacrebleu:**

```python
refs = [['The dog bit the man.', 'It was not unexpected.', 'The man bit him first.'],
        ['The dog had bit the man.', 'No one was surprised.', 'The man had bitten the dog.']]
sys = ['The dog bit the man.', "It wasn't surprising.", 'The man had just bitten him.']

sacrebleu = nlp.load_metrics('sacrebleu')
# Assumption: the loaded metric exposes sacrebleu's corpus_bleu(hypotheses, references)
bleu = sacrebleu.corpus_bleu(sys, refs)
print(bleu.score)
```
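For reference, the corpus-level score that sacrebleu reports follows the standard BLEU definition below, scaled to 0 to 100, with $N = 4$ and uniform weights $w_n = 1/N$ by default. Here $p_n$ is the modified (clipped) n-gram precision of the system output against the references, $c$ is the total length of the system output and $r$ is the effective reference length.

$$
\mathrm{BLEU} = \mathrm{BP} \cdot \exp\left(\sum_{n=1}^{N} w_n \log p_n\right),
\qquad
\mathrm{BP} =
\begin{cases}
1 & \text{if } c > r,\\
e^{\,1 - r/c} & \text{if } c \le r.
\end{cases}
$$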

**seqeval:**

```

y_true = [['O', 'O', 'O', 'B-MISC', 'I-MISC', 'I-MISC', 'O'], ['B-PER', 'I-PER', 'O']]

y_pred = [['O', 'O', 'B-MISC', 'I-MISC', 'I-MISC', 'I-MISC', 'O'], ['B-PER', 'I-PER', 'O']]

seqeval = nlp.load_metrics('seqeval')

print(seqeval.accuracy_score(y_true, y_pred))

print(seqeval.f1_score(y_true, y_pred))

```

_examples are taken from the corresponding web page_
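To make the two seqeval numbers concrete, here is a simplified, standalone re-implementation of what they measure: token-level accuracy, and entity-level F1 where a predicted entity counts only if its type and span match a gold entity exactly. This is a sketch, not seqeval's code. The helper names are hypothetical, and it only handles plain B-/I-/O tags, treating an I- tag that does not continue an open chunk of the same type as the start of a new chunk.

```python
from typing import List, Set, Tuple

# (sentence index, entity type, first token, last token)
Chunk = Tuple[int, str, int, int]


def token_accuracy(y_true: List[List[str]], y_pred: List[List[str]]) -> float:
    """Fraction of tokens whose predicted tag equals the gold tag."""
    pairs = [(t, p) for ts, ps in zip(y_true, y_pred) for t, p in zip(ts, ps)]
    return sum(t == p for t, p in pairs) / len(pairs)


def extract_chunks(sequences: List[List[str]]) -> Set[Chunk]:
    """Collect entity spans from B-/I-/O tag sequences."""
    chunks: Set[Chunk] = set()
    for s, tags in enumerate(sequences):
        start, etype = None, None
        for i, tag in enumerate(tags + ["O"]):  # trailing "O" closes a chunk at the end
            prefix, _, label = tag.partition("-")
            # B- always starts a chunk; I- starts one if it cannot continue the open chunk
            begins = prefix == "B" or (prefix == "I" and label != etype)
            if etype is not None and (tag == "O" or begins):
                chunks.add((s, etype, start, i - 1))
                start, etype = None, None
            if begins:
                start, etype = i, label
    return chunks


def chunk_f1(y_true: List[List[str]], y_pred: List[List[str]]) -> float:
    """Entity-level F1: a predicted chunk is correct only if type and boundaries match."""
    gold, pred = extract_chunks(y_true), extract_chunks(y_pred)
    correct = len(gold & pred)
    precision = correct / len(pred) if pred else 0.0
    recall = correct / len(gold) if gold else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)
```

On the `y_true`/`y_pred` example above, this gives a token accuracy of 0.8 (8 of 10 tags match) and an entity F1 of 0.5: only the PER entity matches exactly, because the predicted MISC span starts one token early.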

Your comments and suggestions are more than welcome.

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/23/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/23/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/22 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/22/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/22/comments | https://api.github.com/repos/huggingface/datasets/issues/22/events | https://github.com/huggingface/datasets/pull/22 | 608,298,586 | MDExOlB1bGxSZXF1ZXN0NDEwMTAyMjU3 | 22 | adding bleu score code | {

"login": "mariamabarham",

"id": 38249783,

"node_id": "MDQ6VXNlcjM4MjQ5Nzgz",

"avatar_url": "https://avatars.githubusercontent.com/u/38249783?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/mariamabarham",

"html_url": "https://github.com/mariamabarham",

"followers_url": "https://api.github.com/users/mariamabarham/followers",

"following_url": "https://api.github.com/users/mariamabarham/following{/other_user}",

"gists_url": "https://api.github.com/users/mariamabarham/gists{/gist_id}",

"starred_url": "https://api.github.com/users/mariamabarham/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/mariamabarham/subscriptions",

"organizations_url": "https://api.github.com/users/mariamabarham/orgs",

"repos_url": "https://api.github.com/users/mariamabarham/repos",

"events_url": "https://api.github.com/users/mariamabarham/events{/privacy}",

"received_events_url": "https://api.github.com/users/mariamabarham/received_events",

"type": "User",

"site_admin": false

} | []

| closed | false | null | []

| null | []

| 1,588,078,850,000 | 1,588,096,100,000 | 1,588,096,088,000 | CONTRIBUTOR | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/22",

"html_url": "https://github.com/huggingface/datasets/pull/22",

"diff_url": "https://github.com/huggingface/datasets/pull/22.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/22.patch",

"merged_at": null

This PR adds the BLEU score metric to the library. It can be tested by running the following code:

```python
from nlp.metrics import bleu

hyp1 = "It is a guide to action which ensures that the military always obeys the commands of the party"
ref1a = "It is a guide to action that ensures that the military forces always being under the commands of the party "
ref1b = "It is the guiding principle which guarantees the military force always being under the command of the Party"
ref1c = "It is the practical guide for the army always to heed the directions of the party"

list_of_references = [[ref1a, ref1b, ref1c]]
hypotheses = [hyp1]

# Store the result under a new name so the `bleu` module is not shadowed
score = bleu.bleu_score(list_of_references, hypotheses, 4, smooth=True)
print(score)
``` | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/22/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/22/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/21 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/21/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/21/comments | https://api.github.com/repos/huggingface/datasets/issues/21/events | https://github.com/huggingface/datasets/pull/21 | 607,914,185 | MDExOlB1bGxSZXF1ZXN0NDA5Nzk2MTM4 | 21 | Cleanup Features - Updating convert command - Fix Download manager | {

"login": "thomwolf",

"id": 7353373,

"node_id": "MDQ6VXNlcjczNTMzNzM=",

"avatar_url": "https://avatars.githubusercontent.com/u/7353373?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/thomwolf",

"html_url": "https://github.com/thomwolf",

"followers_url": "https://api.github.com/users/thomwolf/followers",

"following_url": "https://api.github.com/users/thomwolf/following{/other_user}",

"gists_url": "https://api.github.com/users/thomwolf/gists{/gist_id}",

"starred_url": "https://api.github.com/users/thomwolf/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/thomwolf/subscriptions",

"organizations_url": "https://api.github.com/users/thomwolf/orgs",

"repos_url": "https://api.github.com/users/thomwolf/repos",

"events_url": "https://api.github.com/users/thomwolf/events{/privacy}",

"received_events_url": "https://api.github.com/users/thomwolf/received_events",

"type": "User",

"site_admin": false

} | []

| closed | false | null | []

| null | [

"For conflicts, I think the mention hint \"This should be modified because it mentions ...\" is missing.",

"Looks great!"

]

| 1,588,029,415,000 | 1,588,325,387,000 | 1,588,325,386,000 | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/21",

"html_url": "https://github.com/huggingface/datasets/pull/21",

"diff_url": "https://github.com/huggingface/datasets/pull/21.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/21.patch",

"merged_at": 1588325386000

} | This PR makes a number of changes:

# Updating `Features`

Features are a complex mechanism provided in `tfds` to modify a dataset on the fly when serializing it to disk and when loading it from disk.

We don't really need this because (1) it hides too much from the user and (2) our data types can be mapped directly to Arrow tables on disk, so we usually don't need to change the format before or after serialization.

This PR extracts and refactors these features into a single `features.py` file. It still keeps a number of feature classes for easy compatibility with tfds, namely the `Sequence`, `Tensor`, `ClassLabel` and `Translation` features.

Some more complex features that involve on-the-fly pre-processing during serialization are kept:

- `ClassLabel`, which can convert label strings to integers,

- `Translation`, which performs some checks on the languages.
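The label-string-to-integer conversion that `ClassLabel` keeps can be sketched as a small two-way mapping. `SimpleClassLabel` and its methods are hypothetical names for illustration; the real feature class may expose a different interface.

```python
from typing import List, Union


class SimpleClassLabel:
    """Hypothetical, minimal stand-in for a ClassLabel feature: maps label names to ids."""

    def __init__(self, names: List[str]):
        self.names = list(names)
        self._str2int = {name: i for i, name in enumerate(self.names)}
        if len(self._str2int) != len(self.names):
            raise ValueError("Label names must be unique")

    @property
    def num_classes(self) -> int:
        return len(self.names)

    def str2int(self, label: str) -> int:
        return self._str2int[label]

    def int2str(self, index: int) -> str:
        return self.names[index]

    def encode_example(self, value: Union[str, int]) -> int:
        # Accept either a label string or an already-encoded integer id
        if isinstance(value, str):
            return self.str2int(value)
        if not 0 <= value < self.num_classes:
            raise ValueError(f"Invalid label id {value}")
        return int(value)
```

Storing integers keeps the Arrow column compact, while `names` keeps the strings recoverable at read time.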

# Updating the `convert` command

We make a few updates here:

- following the simplification of the `features` (see above), the conversions are updated,

- we also make it simpler to convert a single file,

- some code needs to be fixed manually after conversion (e.g. to remove some encoding processing in former tfds `Text` features). We highlight this code with a "git merge conflict" style syntax for easy manual fixing.
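A rough sketch of the "git merge conflict" style highlighting: every converted line that mentions a construct needing manual attention is wrapped in `<<<<<<<` / `>>>>>>>` markers together with a hint. The function name, the keyword-matching rule and the exact hint wording (borrowed from the "This should be modified because it mentions ..." hint discussed in the review) are assumptions, not the converter's actual code.

```python
from typing import List


def mark_for_manual_fix(source: str, keywords: List[str]) -> str:
    """Wrap each line mentioning one of `keywords` in conflict-style markers (hypothetical)."""
    out = []
    for line in source.splitlines():
        hits = [k for k in keywords if k in line]
        if hits:
            # Editors and `grep '<<<<<<<'` make these blocks easy to find and fix by hand
            out.append("<<<<<<< This should be modified because it mentions: " + ", ".join(hits))
            out.append(line)
            out.append(">>>>>>>")
        else:
            out.append(line)
    return "\n".join(out)
```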

# Fix download manager iterator

You kept me up quite late on Tuesday night with this `os.scandir` change @lhoestq ;-)

| {

"url": "https://api.github.com/repos/huggingface/datasets/issues/21/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/21/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/20 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/20/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/20/comments | https://api.github.com/repos/huggingface/datasets/issues/20/events | https://github.com/huggingface/datasets/pull/20 | 607,313,557 | MDExOlB1bGxSZXF1ZXN0NDA5MzEyMDI1 | 20 | remove boto3 and promise dependencies | {

"login": "lhoestq",

"id": 42851186,

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/lhoestq",

"html_url": "https://github.com/lhoestq",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"type": "User",

"site_admin": false

} | []

| closed | false | null | []

| null | []

| 1,587,973,185,000 | 1,588,003,457,000 | 1,587,996,945,000 | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/20",

"html_url": "https://github.com/huggingface/datasets/pull/20",

"diff_url": "https://github.com/huggingface/datasets/pull/20.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/20.patch",

"merged_at": 1587996945000

} | With the new download manager, we don't need `promise` anymore.

I also removed `boto3`, as in [this PR](https://github.com/huggingface/transformers/pull/3968). | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/20/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/20/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/19 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/19/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/19/comments | https://api.github.com/repos/huggingface/datasets/issues/19/events | https://github.com/huggingface/datasets/pull/19 | 606,400,645 | MDExOlB1bGxSZXF1ZXN0NDA4NjIwMjUw | 19 | Replace tf.constant for TF | {

"login": "jplu",

"id": 959590,

"node_id": "MDQ6VXNlcjk1OTU5MA==",

"avatar_url": "https://avatars.githubusercontent.com/u/959590?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/jplu",

"html_url": "https://github.com/jplu",

"followers_url": "https://api.github.com/users/jplu/followers",

"following_url": "https://api.github.com/users/jplu/following{/other_user}",

"gists_url": "https://api.github.com/users/jplu/gists{/gist_id}",

"starred_url": "https://api.github.com/users/jplu/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jplu/subscriptions",

"organizations_url": "https://api.github.com/users/jplu/orgs",

"repos_url": "https://api.github.com/users/jplu/repos",

"events_url": "https://api.github.com/users/jplu/events{/privacy}",

"received_events_url": "https://api.github.com/users/jplu/received_events",

"type": "User",

"site_admin": false

} | []

| closed | false | null | []

| null | [

"Awesome!"

]

| 1,587,742,326,000 | 1,588,152,428,000 | 1,587,849,525,000 | CONTRIBUTOR | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/19",

"html_url": "https://github.com/huggingface/datasets/pull/19",

"diff_url": "https://github.com/huggingface/datasets/pull/19.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/19.patch",

"merged_at": 1587849525000

Replace simple `tf.constant` tensors with `tf.ragged.constant`, which allows examples of different sizes in a `tf.data.Dataset`.

Training now works with TF. Here is the same example as the PyTorch one in Colab:

```python
import tensorflow as tf
import nlp
from transformers import BertTokenizerFast, TFBertForQuestionAnswering

# Load our training dataset and tokenizer
train_dataset = nlp.load('squad', split="train[:1%]")
tokenizer = BertTokenizerFast.from_pretrained('bert-base-cased')

def get_correct_alignement(context, answer):
    """Return the (start, end) character span of the answer in the context."""
    start_idx = answer['answer_start'][0]
    text = answer['text'][0]
    end_idx = start_idx + len(text)
    if context[start_idx:end_idx] == text:
        return start_idx, end_idx  # When the gold label position is good
    elif context[start_idx-1:end_idx-1] == text:
        return start_idx-1, end_idx-1  # When the gold label is off by one character
    elif context[start_idx-2:end_idx-2] == text:
        return start_idx-2, end_idx-2  # When the gold label is off by two characters
    else:
        raise ValueError()

# Tokenize our training dataset
def convert_to_features(example_batch):
    # Tokenize contexts and questions (as pairs of inputs)
    input_pairs = list(zip(example_batch['context'], example_batch['question']))
    encodings = tokenizer.batch_encode_plus(input_pairs, pad_to_max_length=True)

    # Compute start and end tokens for labels using Transformers's fast tokenizers alignment methods.
    start_positions, end_positions = [], []
    for i, (context, answer) in enumerate(zip(example_batch['context'], example_batch['answers'])):
        start_idx, end_idx = get_correct_alignement(context, answer)
        start_positions.append([encodings.char_to_token(i, start_idx)])
        end_positions.append([encodings.char_to_token(i, end_idx-1)])

    if start_positions and end_positions:
        encodings.update({'start_positions': start_positions,
                          'end_positions': end_positions})
    return encodings

train_dataset = train_dataset.map(convert_to_features, batched=True)

columns = ['input_ids', 'token_type_ids', 'attention_mask', 'start_positions', 'end_positions']
train_dataset.set_format(type='tensorflow', columns=columns)

features = {x: train_dataset[x] for x in columns[:3]}
labels = {"output_1": train_dataset["start_positions"]}
labels["output_2"] = train_dataset["end_positions"]
tfdataset = tf.data.Dataset.from_tensor_slices((features, labels)).batch(8)

model = TFBertForQuestionAnswering.from_pretrained("bert-base-cased")
loss_fn = tf.keras.losses.SparseCategoricalCrossentropy(reduction=tf.keras.losses.Reduction.NONE, from_logits=True)
opt = tf.keras.optimizers.Adam(learning_rate=3e-5)
model.compile(optimizer=opt,
              loss={'output_1': loss_fn, 'output_2': loss_fn},
              loss_weights={'output_1': 1., 'output_2': 1.},
              metrics=['accuracy'])

model.fit(tfdataset, epochs=1, steps_per_epoch=3)
``` | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/19/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/19/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/18 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/18/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/18/comments | https://api.github.com/repos/huggingface/datasets/issues/18/events | https://github.com/huggingface/datasets/pull/18 | 606,109,196 | MDExOlB1bGxSZXF1ZXN0NDA4Mzg0MTc3 | 18 | Updating caching mechanism - Allow dependency in dataset processing scripts - Fix style and quality in the repo | {

"login": "thomwolf",

"id": 7353373,

"node_id": "MDQ6VXNlcjczNTMzNzM=",

"avatar_url": "https://avatars.githubusercontent.com/u/7353373?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/thomwolf",

"html_url": "https://github.com/thomwolf",

"followers_url": "https://api.github.com/users/thomwolf/followers",

"following_url": "https://api.github.com/users/thomwolf/following{/other_user}",

"gists_url": "https://api.github.com/users/thomwolf/gists{/gist_id}",

"starred_url": "https://api.github.com/users/thomwolf/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/thomwolf/subscriptions",

"organizations_url": "https://api.github.com/users/thomwolf/orgs",

"repos_url": "https://api.github.com/users/thomwolf/repos",

"events_url": "https://api.github.com/users/thomwolf/events{/privacy}",

"received_events_url": "https://api.github.com/users/thomwolf/received_events",

"type": "User",

"site_admin": false

} | []

| closed | false | null | []

| null | [

"LGTM"

]

| 1,587,713,988,000 | 1,588,174,048,000 | 1,588,089,988,000 | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/18",

"html_url": "https://github.com/huggingface/datasets/pull/18",

"diff_url": "https://github.com/huggingface/datasets/pull/18.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/18.patch",

"merged_at": 1588089988000

This PR has a lot of content (it might be hard to review, sorry, in particular because I fixed the style across the repo at the same time).

# Style & quality:

You can now install the style and quality tools with `pip install -e .[quality]`. This installs black, the compatible version of isort, and flake8.

You can then clean the style and check the quality before merging your PR with:

```bash

make style

make quality

```

# Allow dependencies in dataset processing scripts

We now allow (some level of) imports in dataset processing scripts (in addition to PyPI imports).

Namely, you can do the following two things:

Import from a relative path to a file in the same folder as the dataset processing script:

```python

import .c4_utils

```

Or import from a relative path to a file in a folder/archive/GitHub repo whose URL you provide after the import statement with `# From: [URL]`:

```python

import .clicr.dataset_code.build_json_dataset # From: https://github.com/clips/clicr

```

In both cases, after downloading the main dataset processing script, we identify the location of these dependencies, download them and copy them into the dataset processing script folder.

Note that only direct imports in the dataset processing script are handled.

We don't recursively explore the additional imports to download further files.
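As an illustration of how such imports could be detected, here is a sketch that scans the script text line by line with a regular expression and returns each relative module path with its optional `# From:` URL. Since `import .module` is not standard Python syntax, the sketch matches these lines as plain text. The pattern and the name `find_relative_imports` are hypothetical.

```python
import re
from typing import List, Optional, Tuple

# Hypothetical pattern: "import .module.path" optionally followed by "# From: URL"
_RELATIVE_IMPORT = re.compile(r"^\s*import\s+\.([\w.]+)\s*(?:#\s*From:\s*(\S+))?\s*$")


def find_relative_imports(script: str) -> List[Tuple[str, Optional[str]]]:
    """Return (module path, external URL or None) for each direct relative import."""
    found = []
    for line in script.splitlines():
        match = _RELATIVE_IMPORT.match(line)
        if match:
            found.append((match.group(1), match.group(2)))
    return found
```

Only the script's own lines are scanned, which matches the non-recursive behaviour described above.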

Also, when we download from an additional directory (the second case above), we recursively add `__init__.py` to all the sub-folders so you can import from them.
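Adding the `__init__.py` files can be sketched with `os.walk`, which visits the downloaded folder and every sub-folder. The function name is hypothetical, and whether the top-level folder also gets an `__init__.py` is an assumption of this sketch.

```python
import os


def add_init_files(root: str) -> None:
    """Create an empty __init__.py in `root` and in every sub-folder that lacks one."""
    for dirpath, _dirnames, _filenames in os.walk(root):
        init_path = os.path.join(dirpath, "__init__.py")
        if not os.path.exists(init_path):
            # An empty file is enough to make the folder importable as a package
            with open(init_path, "w", encoding="utf-8"):
                pass
```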

This part is still being tested. If you've seen datasets that require external utilities, tell me and I can test them.

# Update the cache to have a better local structure

The local structure in the `src/datasets` folder is now: `src/datasets/DATASET_NAME/DATASET_HASH/*`
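A sketch of how a `DATASET_NAME/DATASET_HASH` directory could be derived, assuming SHA-256 over the names and contents of the script and all its dependencies; the function name and the exact hashing scheme are hypothetical.

```python
import hashlib
import os
from typing import Dict


def dataset_cache_dir(root: str, dataset_name: str, files: Dict[str, str]) -> str:
    """Return ROOT/DATASET_NAME/DATASET_HASH for a script and its dependencies.

    `files` maps each file name (the script and its local or downloaded
    dependencies) to its source code; changing any of them changes the hash.
    """
    sha = hashlib.sha256()
    for name in sorted(files):  # sort so the hash does not depend on dict order
        # NUL separators keep ("ab", "c") and ("a", "bc") from hashing the same
        sha.update(name.encode("utf-8") + b"\0")
        sha.update(files[name].encode("utf-8") + b"\0")
    return os.path.join(root, dataset_name, sha.hexdigest())
```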

The hash is computed from the full code of the dataset processing script as well as all the local and downloaded dependencies mentioned above. This way, if you change some code in a utility related to your dataset, a new hash is computed. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/18/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/18/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/17 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/17/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/17/comments | https://api.github.com/repos/huggingface/datasets/issues/17/events | https://github.com/huggingface/datasets/pull/17 | 605,753,027 | MDExOlB1bGxSZXF1ZXN0NDA4MDk3NjM0 | 17 | Add Pandas as format type | {

"login": "jplu",

"id": 959590,

"node_id": "MDQ6VXNlcjk1OTU5MA==",

"avatar_url": "https://avatars.githubusercontent.com/u/959590?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/jplu",

"html_url": "https://github.com/jplu",

"followers_url": "https://api.github.com/users/jplu/followers",

"following_url": "https://api.github.com/users/jplu/following{/other_user}",

"gists_url": "https://api.github.com/users/jplu/gists{/gist_id}",

"starred_url": "https://api.github.com/users/jplu/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jplu/subscriptions",

"organizations_url": "https://api.github.com/users/jplu/orgs",

"repos_url": "https://api.github.com/users/jplu/repos",

"events_url": "https://api.github.com/users/jplu/events{/privacy}",

"received_events_url": "https://api.github.com/users/jplu/received_events",

"type": "User",

"site_admin": false

} | []

| closed | false | null | []

| null | []

| 1,587,666,014,000 | 1,588,010,870,000 | 1,588,010,868,000 | CONTRIBUTOR | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/17",

"html_url": "https://github.com/huggingface/datasets/pull/17",

"diff_url": "https://github.com/huggingface/datasets/pull/17.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/17.patch",

"merged_at": 1588010868000

} | As detailed in the title ^^ | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/17/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/17/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/16 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/16/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/16/comments | https://api.github.com/repos/huggingface/datasets/issues/16/events | https://github.com/huggingface/datasets/pull/16 | 605,661,462 | MDExOlB1bGxSZXF1ZXN0NDA4MDIyMTUz | 16 | create our own DownloadManager | {

"login": "lhoestq",

"id": 42851186,

"node_id": "MDQ6VXNlcjQyODUxMTg2",

"avatar_url": "https://avatars.githubusercontent.com/u/42851186?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/lhoestq",

"html_url": "https://github.com/lhoestq",

"followers_url": "https://api.github.com/users/lhoestq/followers",

"following_url": "https://api.github.com/users/lhoestq/following{/other_user}",

"gists_url": "https://api.github.com/users/lhoestq/gists{/gist_id}",

"starred_url": "https://api.github.com/users/lhoestq/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/lhoestq/subscriptions",

"organizations_url": "https://api.github.com/users/lhoestq/orgs",

"repos_url": "https://api.github.com/users/lhoestq/repos",

"events_url": "https://api.github.com/users/lhoestq/events{/privacy}",

"received_events_url": "https://api.github.com/users/lhoestq/received_events",

"type": "User",

"site_admin": false

} | []

| closed | false | null | []

| null | [

"Looks great to me! ",

"The new download manager is ready. I removed the old folder and I fixed a few remaining dependencies.\r\nI tested it on squad and a few others from the dataset folder and it works fine.\r\n\r\nThe only impact of these changes is that it breaks the `download_and_prepare` script that was used to register the checksums when we create a dataset, as the checksum logic is not implemented.\r\n\r\nLet me know if you have remarks",

"Ok merged it (a bit fast for you to update the copyright, now I see that. but it's ok, we'll do a pass on these doc/copyright before releasing anyway)",

"Actually two additional things here @lhoestq (I merged too fast sorry, let's make a new PR for additional developments):\r\n- I think we can remove some dependencies now (e.g. `promises`) in setup.py, can you have a look?\r\n- also, I think we can remove the boto3 dependency like here: https://github.com/huggingface/transformers/pull/3968"

]

| 1,587,658,087,000 | 1,620,239,124,000 | 1,587,849,910,000 | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/16",

"html_url": "https://github.com/huggingface/datasets/pull/16",

"diff_url": "https://github.com/huggingface/datasets/pull/16.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/16.patch",

"merged_at": 1587849910000

} | I tried to create our own, much simpler download manager by replacing all the complicated logic with our own `cached_path` solution.

With this implementation, I tried `dataset = nlp.load('squad')` and it seems to work fine.

For the implementation, what I did exactly:

- I copied the old download manager

- I removed all the dependencies on the old `download` files

- I replaced all the download + extract calls by calls to `cached_path`

- I removed unused parameters (extract_dir, compute_stats) (maybe compute_stats could be re-added later if we want to compute stats...)

- I left some functions unimplemented for now. We will probably have to implement them because they are used by some datasets scripts (download_kaggle_data, iter_archive) or because we may need them at some point (download_checksums, _record_sizes_checksums)

Let me know if you think that this is going the right direction or if you have remarks.

Note: I haven't written any tests yet, as I wanted to read your remarks first | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/16/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/16/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/15 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/15/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/15/comments | https://api.github.com/repos/huggingface/datasets/issues/15/events | https://github.com/huggingface/datasets/pull/15 | 604,906,708 | MDExOlB1bGxSZXF1ZXN0NDA3NDEwOTk3 | 15 | [Tests] General Test Design for all dataset scripts | {

"login": "patrickvonplaten",

"id": 23423619,

"node_id": "MDQ6VXNlcjIzNDIzNjE5",

"avatar_url": "https://avatars.githubusercontent.com/u/23423619?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/patrickvonplaten",

"html_url": "https://github.com/patrickvonplaten",

"followers_url": "https://api.github.com/users/patrickvonplaten/followers",

"following_url": "https://api.github.com/users/patrickvonplaten/following{/other_user}",

"gists_url": "https://api.github.com/users/patrickvonplaten/gists{/gist_id}",

"starred_url": "https://api.github.com/users/patrickvonplaten/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/patrickvonplaten/subscriptions",

"organizations_url": "https://api.github.com/users/patrickvonplaten/orgs",

"repos_url": "https://api.github.com/users/patrickvonplaten/repos",

"events_url": "https://api.github.com/users/patrickvonplaten/events{/privacy}",

"received_events_url": "https://api.github.com/users/patrickvonplaten/received_events",

"type": "User",

"site_admin": false

} | []

| closed | false | null | []

| null | [

"> I think I'm fine with this.\r\n> \r\n> The alternative would be to host a small subset of the dataset on the S3 together with the testing script. But I think having all (test file creation + actual tests) in one file is actually quite convenient.\r\n> \r\n> Good for me!\r\n> \r\n> One question though, will we have to create one test file for each of the 100+ datasets or could we make some automatic conversion from tfds dataset test files?\r\n\r\nI think if we go the way shown in the PR we would have to create one test file for each of the 100+ datasets. \r\n\r\nAs far as I know the tfds test files all rely on the user having created a special download folder structure in `tensorflow-datasets/tensorflow_datasets/testing/test_data/fake_examples`. \r\n\r\nMy hypothesis was: \r\nBecasue, we don't want to work with PRs, no `dataset_script` is going to be in the official repo, so no `dataset_script_test` can be in the repo either. Therefore we can also not have any \"fake\" test folder structure in the repo. \r\n\r\n**BUT:** As you mentioned @thom, we could have a fake data structure on AWS. To add a test the user has to upload multiple small test files when uploading his data set script. \r\n\r\nSo for a cli this could look like:\r\n`python nlp-cli upload <data_set_script> --testfiles <relative path to test file 1> <relative path to test file 2> ...` \r\n\r\nor even easier if the user just creates the dataset folder with the script inside and the testing folder structure, then the API could look like:\r\n\r\n`python nlp-cli upload <path/to/dataset/folder>`\r\n\r\nand the dataset folder would look like\r\n```\r\nsquad\r\n- squad.py\r\n- fake_data # this dir would have to have the exact same structure we get when downloading from the official squad data url\r\n```\r\n\r\nThis way I think we wouldn't even need any test files at all for each dataset script. For special datasets like `c4` or `wikipedia` we could then allow to optionally upload another test script. 
\r\nWe just assume that this is our downloaded `url` and check all functionality from there. \r\n\r\nThinking a bit more about this solution sounds a) much less work and b) even easier for the user.\r\n\r\nA small problem I see here though:\r\n1) What do we do when the depending on the config name the downloaded folder structure is very different? I think for each dataset config name we should have one test, which could correspond to one \"fake\" folder structure on AWS\r\n\r\n@thomwolf What do you think? I would actually go for this solution instead now.\r\n@mariamabarham You have written many more tfds dataset scripts and tests than I have - what do you think? \r\n\r\n",

"Regarding the tfds tests, I don't really see a point in keeping them because:\r\n\r\n1) If you provide a fake data structure, IMO there is no need for each dataset to have an individual test file because (I think) most datasets have the same functions `_split_generators` and `_generate_examples` for which you can just test the functionality in a common test file. For special functions like these beam / pipeline functionality you probably need an extra test file. But @mariamabarham I think you have seen more than I have here as well \r\n\r\n2) The dataset test design is very much intertwined with the download manager design and contains a lot of code. I would like to separate the tests into a) tests for downloading in general b) tests for post download data set pre-processing. Since we are going to change the download code quite a lot anyway, my plan was to focus on b) first. ",

"I like the idea of having a fake data folder on S3. I have seen datasets with nested compressed files structures that would be tedious to generate with code. And for users it is probably easier to create a fake data folder by taking a subset of the actual data, and then upload it as you said.",

"> > I think I'm fine with this.\r\n> > The alternative would be to host a small subset of the dataset on the S3 together with the testing script. But I think having all (test file creation + actual tests) in one file is actually quite convenient.\r\n> > Good for me!\r\n> > One question though, will we have to create one test file for each of the 100+ datasets or could we make some automatic conversion from tfds dataset test files?\r\n> \r\n> I think if we go the way shown in the PR we would have to create one test file for each of the 100+ datasets.\r\n> \r\n> As far as I know the tfds test files all rely on the user having created a special download folder structure in `tensorflow-datasets/tensorflow_datasets/testing/test_data/fake_examples`.\r\n> \r\n> My hypothesis was:\r\n> Becasue, we don't want to work with PRs, no `dataset_script` is going to be in the official repo, so no `dataset_script_test` can be in the repo either. Therefore we can also not have any \"fake\" test folder structure in the repo.\r\n> \r\n> **BUT:** As you mentioned @thom, we could have a fake data structure on AWS. To add a test the user has to upload multiple small test files when uploading his data set script.\r\n> \r\n> So for a cli this could look like:\r\n> `python nlp-cli upload <data_set_script> --testfiles <relative path to test file 1> <relative path to test file 2> ...`\r\n> \r\n> or even easier if the user just creates the dataset folder with the script inside and the testing folder structure, then the API could look like:\r\n> \r\n> `python nlp-cli upload <path/to/dataset/folder>`\r\n> \r\n> and the dataset folder would look like\r\n> \r\n> ```\r\n> squad\r\n> - squad.py\r\n> - fake_data # this dir would have to have the exact same structure we get when downloading from the official squad data url\r\n> ```\r\n> \r\n> This way I think we wouldn't even need any test files at all for each dataset script. 
For special datasets like `c4` or `wikipedia` we could then allow to optionally upload another test script.\r\n> We just assume that this is our downloaded `url` and check all functionality from there.\r\n> \r\n> Thinking a bit more about this solution sounds a) much less work and b) even easier for the user.\r\n> \r\n> A small problem I see here though:\r\n> \r\n> 1. What do we do when the depending on the config name the downloaded folder structure is very different? I think for each dataset config name we should have one test, which could correspond to one \"fake\" folder structure on AWS\r\n> \r\n> @thomwolf What do you think? I would actually go for this solution instead now.\r\n> @mariamabarham You have written many more tfds dataset scripts and tests than I have - what do you think?\r\n\r\nI'm agreed with you just one thing, for some dataset like glue or xtreme you may have multiple datasets in it. so I think a good way is to have one main fake folder and a subdirectory for each dataset inside",

"> Regarding the tfds tests, I don't really see a point in keeping them because:\r\n> \r\n> 1. If you provide a fake data structure, IMO there is no need for each dataset to have an individual test file because (I think) most datasets have the same functions `_split_generators` and `_generate_examples` for which you can just test the functionality in a common test file. For special functions like these beam / pipeline functionality you probably need an extra test file. But @mariamabarham I think you have seen more than I have here as well\r\n> 2. The dataset test design is very much intertwined with the download manager design and contains a lot of code. I would like to separate the tests into a) tests for downloading in general b) tests for post download data set pre-processing. Since we are going to change the download code quite a lot anyway, my plan was to focus on b) first.\r\n\r\nFor _split_generator, yes. But I'm not sure for _generate_examples because there are lots of things that should be taken into account such as feature names and types, data format (json, jsonl, csv, tsv, ...)",

"Sounds good to me!\r\n\r\nWhen testing, we could thus just override the prefix in the URL inside the download manager to have them point to the test directory on our S3.\r\n\r\nCc @lhoestq ",

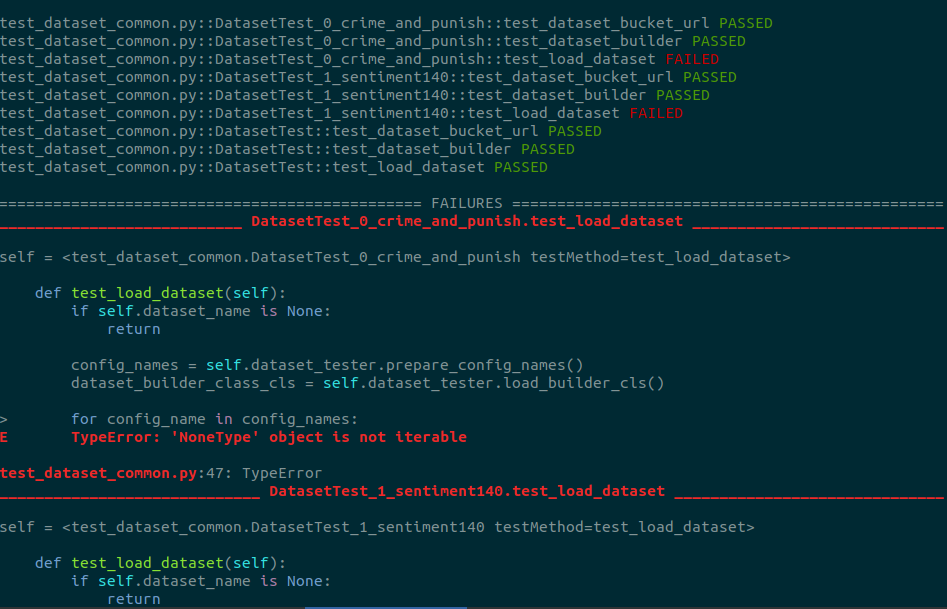

"Ok, here is a second draft for the testing structure. \r\n\r\nI think the big difficulty here is \"How can you generate tests on the fly from a given dataset name, *e.g.* `squad`\"?\r\n\r\nSo, this morning I did some research on \"parameterized testing\" and pure `unittest` or `pytest` didn't work very well. \r\nI found the lib https://github.com/wolever/parameterized, which works very nicely for our use case I think. \r\n@thomwolf - would it be ok to have a dependency on this lib for `nlp`? It seems like a light-weight lib to me. \r\n\r\nThis lib allows adding a `parameterization` decorator to a `unittest.TestCase` class so that the class can be instantiated for multiple different arguments (which are the dataset names `squad` etc. in our case).\r\n\r\nWhat I like about this lib is that one only has to add the decorator and each of the parameterized tests is shown as a separate test. \r\n\r\nWith this structure we would only have to upload the dummy data for each dataset and would not require a specific testing file. \r\n\r\nWhat do you think @thomwolf @mariamabarham @lhoestq ?",

"I think this is a nice solution.\r\n\r\nDo you think we could have the `parameterized` dependency in a `[test]` optional installation of `setup.py`? I would really like to keep the dependencies of the standard installation as small as possible. ",

"> I think this is a nice solution.\r\n> \r\n> Do you think we could have the `parameterized` dependency in a `[test]` optional installation of `setup.py`? I would really like to keep the dependencies of the standard installation as small as possible.\r\n\r\nYes definitely!",

"UPDATE: \r\n\r\nThis test design is ready now. I added dummy data to S3 for the datasets: `squad`, `crime_and_punish`, `sentiment140`. The structure can be seen on `https://s3.console.aws.amazon.com/s3/buckets/datasets.huggingface.co/nlp/squad/dummy/?region=us-east-1&tab=overview` for `squad`. \r\n\r\nAll dummy data files have to be in .zip format and called `dummy_data.zip`. The zip file should thereby have the exact same folder structure one gets from downloading the \"real\" data url(s). \r\n\r\nTo show what the .zip file looks like for the added datasets, I added the folder `nlp/datasets/dummy_data` in this PR. I think we can leave it there for the moment so that people can better see how to add dummy data tests, and later delete it like `nlp/datasets/nlp`."

]

| 1,587,573,961,000 | 1,664,875,914,000 | 1,587,998,882,000 | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/15",

"html_url": "https://github.com/huggingface/datasets/pull/15",

"diff_url": "https://github.com/huggingface/datasets/pull/15.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/15.patch",

"merged_at": 1587998882000

} | The general idea is similar to how testing is done in `transformers`. There is one general `test_dataset_common.py` file which has a `DatasetTesterMixin` class. This class implements all of the logic that can be used in a generic way for all dataset classes. The idea is to keep each individual dataset test file as minimal as possible.

In order to test whether the specific dataset class can download the data and generate the examples **without** downloading the actual data every time, a `MockDataLoaderManager` class is used. It receives a `mock_folder_structure_fn` function from each individual dataset test file; this function creates "fake" data and returns the same folder structure that would have been created when using the real data downloader. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/15/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/15/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/14 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/14/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/14/comments | https://api.github.com/repos/huggingface/datasets/issues/14/events | https://github.com/huggingface/datasets/pull/14 | 604,761,315 | MDExOlB1bGxSZXF1ZXN0NDA3MjkzNjU5 | 14 | [Download] Only create dir if not already exist | {

"login": "patrickvonplaten",

"id": 23423619,

"node_id": "MDQ6VXNlcjIzNDIzNjE5",

"avatar_url": "https://avatars.githubusercontent.com/u/23423619?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/patrickvonplaten",

"html_url": "https://github.com/patrickvonplaten",

"followers_url": "https://api.github.com/users/patrickvonplaten/followers",

"following_url": "https://api.github.com/users/patrickvonplaten/following{/other_user}",

"gists_url": "https://api.github.com/users/patrickvonplaten/gists{/gist_id}",

"starred_url": "https://api.github.com/users/patrickvonplaten/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/patrickvonplaten/subscriptions",

"organizations_url": "https://api.github.com/users/patrickvonplaten/orgs",

"repos_url": "https://api.github.com/users/patrickvonplaten/repos",

"events_url": "https://api.github.com/users/patrickvonplaten/events{/privacy}",

"received_events_url": "https://api.github.com/users/patrickvonplaten/received_events",

"type": "User",

"site_admin": false

} | []

| closed | false | null | []

| null | []

| 1,587,562,371,000 | 1,664,875,910,000 | 1,587,630,453,000 | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/14",

"html_url": "https://github.com/huggingface/datasets/pull/14",

"diff_url": "https://github.com/huggingface/datasets/pull/14.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/14.patch",

"merged_at": 1587630453000

} | This was quite annoying to find out :D.

Some datasets save to the same directory, so we should only create a new directory if it doesn't already exist. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/14/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/14/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/13 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/13/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/13/comments | https://api.github.com/repos/huggingface/datasets/issues/13/events | https://github.com/huggingface/datasets/pull/13 | 604,547,951 | MDExOlB1bGxSZXF1ZXN0NDA3MTIxMjkw | 13 | [Make style] | {

"login": "patrickvonplaten",

"id": 23423619,

"node_id": "MDQ6VXNlcjIzNDIzNjE5",

"avatar_url": "https://avatars.githubusercontent.com/u/23423619?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/patrickvonplaten",

"html_url": "https://github.com/patrickvonplaten",

"followers_url": "https://api.github.com/users/patrickvonplaten/followers",

"following_url": "https://api.github.com/users/patrickvonplaten/following{/other_user}",

"gists_url": "https://api.github.com/users/patrickvonplaten/gists{/gist_id}",

"starred_url": "https://api.github.com/users/patrickvonplaten/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/patrickvonplaten/subscriptions",

"organizations_url": "https://api.github.com/users/patrickvonplaten/orgs",

"repos_url": "https://api.github.com/users/patrickvonplaten/repos",

"events_url": "https://api.github.com/users/patrickvonplaten/events{/privacy}",

"received_events_url": "https://api.github.com/users/patrickvonplaten/received_events",

"type": "User",

"site_admin": false

} | []

| closed | false | null | []

| null | [

"I think this can be quickly reproduced. \r\nI use `black, version 19.10b0`. \r\n\r\nWhen running: \r\n`black nlp/src/arrow_reader.py` \r\nit gives me: \r\n\r\n```\r\nerror: cannot format /home/patrick/hugging_face/nlp/src/nlp/arrow_reader.py: cannot use --safe with this file; failed to parse source file. AST error message: invalid syntax (<unknown>, line 78)\r\nOh no! 💥 💔 💥\r\n1 file failed to reformat.\r\n```\r\n\r\nThe line in question is: \r\nhttps://github.com/huggingface/nlp/blob/6922a16705e61f9e31a365f2606090b84d49241f/src/nlp/arrow_reader.py#L78\r\n\r\nWhat is weird is that the trainer file in `transformers` has more or less the same syntax and black does not fail there: \r\nhttps://github.com/huggingface/transformers/blob/cb3c2212c79d7ff0a4a4e84c3db48371ecc1c15d/src/transformers/trainer.py#L95\r\n\r\nI googled quite a bit about black & typing hints yesterday and didn't find anything useful. \r\nAny ideas @thomwolf @julien-c @LysandreJik ?",

"> I think this can be quickly reproduced.\r\n> I use `black, version 19.10b0`.\r\n> \r\n> When running:\r\n> `black nlp/src/arrow_reader.py`\r\n> it gives me:\r\n> \r\n> ```\r\n> error: cannot format /home/patrick/hugging_face/nlp/src/nlp/arrow_reader.py: cannot use --safe with this file; failed to parse source file. AST error message: invalid syntax (<unknown>, line 78)\r\n> Oh no! 💥 💔 💥\r\n> 1 file failed to reformat.\r\n> ```\r\n> \r\n> The line in question is:\r\n> https://github.com/huggingface/nlp/blob/6922a16705e61f9e31a365f2606090b84d49241f/src/nlp/arrow_reader.py#L78\r\n> \r\n> What is weird is that the trainer file in `transformers` has more or less the same syntax and black does not fail there:\r\n> https://github.com/huggingface/transformers/blob/cb3c2212c79d7ff0a4a4e84c3db48371ecc1c15d/src/transformers/trainer.py#L95\r\n> \r\n> I googled quite a bit about black & typing hints yesterday and didn't find anything useful.\r\n> Any ideas @thomwolf @julien-c @LysandreJik ?\r\n\r\nOk I found the problem. It was the one Julien mentioned and has nothing to do with this line. Black's error message is a bit misleading here, I guess",

"Ok, just had to remove the python 2 syntax comments `# type`. \r\n\r\nGood to merge for me now @thomwolf "

]

| 1,587,543,006,000 | 1,664,875,911,000 | 1,587,646,942,000 | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/13",

"html_url": "https://github.com/huggingface/datasets/pull/13",

"diff_url": "https://github.com/huggingface/datasets/pull/13.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/13.patch",

"merged_at": 1587646942000

} | Added a Makefile and applied `make style` to all files.

`make style` runs the following rule:

```
style:
	black --line-length 119 --target-version py35 src
	isort --recursive src
```

It's the same code that is run in `transformers`. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/13/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/13/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/12 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/12/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/12/comments | https://api.github.com/repos/huggingface/datasets/issues/12/events | https://github.com/huggingface/datasets/pull/12 | 604,518,583 | MDExOlB1bGxSZXF1ZXN0NDA3MDk3MzA4 | 12 | [Map Function] add assert statement if map function does not return dict or None | {

"login": "patrickvonplaten",

"id": 23423619,

"node_id": "MDQ6VXNlcjIzNDIzNjE5",

"avatar_url": "https://avatars.githubusercontent.com/u/23423619?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/patrickvonplaten",

"html_url": "https://github.com/patrickvonplaten",

"followers_url": "https://api.github.com/users/patrickvonplaten/followers",

"following_url": "https://api.github.com/users/patrickvonplaten/following{/other_user}",

"gists_url": "https://api.github.com/users/patrickvonplaten/gists{/gist_id}",

"starred_url": "https://api.github.com/users/patrickvonplaten/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/patrickvonplaten/subscriptions",

"organizations_url": "https://api.github.com/users/patrickvonplaten/orgs",

"repos_url": "https://api.github.com/users/patrickvonplaten/repos",

"events_url": "https://api.github.com/users/patrickvonplaten/events{/privacy}",

"received_events_url": "https://api.github.com/users/patrickvonplaten/received_events",

"type": "User",

"site_admin": false

} | []

| closed | false | null | []

| null | [

"Also added an assert statement checking that, if a dict is returned by the function, all values of the `dict` are `lists`",

"Wait to merge until `make style` is set in place.",

"Updated the assert statements. Played around with multiple cases and it should be good now IMO. "

]

| 1,587,540,084,000 | 1,664,875,913,000 | 1,587,709,743,000 | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/12",

"html_url": "https://github.com/huggingface/datasets/pull/12",

"diff_url": "https://github.com/huggingface/datasets/pull/12.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/12.patch",

"merged_at": 1587709743000

} | IMO, if a function is provided that is not a print statement (-> returns variable of type `None`) or a function that updates the datasets (-> returns variable of type `dict`), then a `TypeError` should be raised.

Not sure whether you had cases in mind where the user should do something else @thomwolf , but I think a lot of silent errors can be avoided with this assert statement. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/12/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/12/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/11 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/11/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/11/comments | https://api.github.com/repos/huggingface/datasets/issues/11/events | https://github.com/huggingface/datasets/pull/11 | 603,921,624 | MDExOlB1bGxSZXF1ZXN0NDA2NjExODk2 | 11 | [Convert TFDS to HFDS] Extend script to also allow just converting a single file | {

"login": "patrickvonplaten",

"id": 23423619,

"node_id": "MDQ6VXNlcjIzNDIzNjE5",

"avatar_url": "https://avatars.githubusercontent.com/u/23423619?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/patrickvonplaten",

"html_url": "https://github.com/patrickvonplaten",

"followers_url": "https://api.github.com/users/patrickvonplaten/followers",

"following_url": "https://api.github.com/users/patrickvonplaten/following{/other_user}",

"gists_url": "https://api.github.com/users/patrickvonplaten/gists{/gist_id}",

"starred_url": "https://api.github.com/users/patrickvonplaten/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/patrickvonplaten/subscriptions",

"organizations_url": "https://api.github.com/users/patrickvonplaten/orgs",

"repos_url": "https://api.github.com/users/patrickvonplaten/repos",

"events_url": "https://api.github.com/users/patrickvonplaten/events{/privacy}",

"received_events_url": "https://api.github.com/users/patrickvonplaten/received_events",

"type": "User",

"site_admin": false

} | []

| closed | false | null | []

| null | []

| 1,587,468,333,000 | 1,664,875,906,000 | 1,587,502,020,000 | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/11",

"html_url": "https://github.com/huggingface/datasets/pull/11",

"diff_url": "https://github.com/huggingface/datasets/pull/11.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/11.patch",

"merged_at": 1587502020000

} | Adds another argument to be able to convert only a single file | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/11/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/11/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/10 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/10/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/10/comments | https://api.github.com/repos/huggingface/datasets/issues/10/events | https://github.com/huggingface/datasets/pull/10 | 603,909,327 | MDExOlB1bGxSZXF1ZXN0NDA2NjAxNzQ2 | 10 | Name json file "squad.json" instead of "squad.py.json" | {

"login": "patrickvonplaten",

"id": 23423619,

"node_id": "MDQ6VXNlcjIzNDIzNjE5",

"avatar_url": "https://avatars.githubusercontent.com/u/23423619?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/patrickvonplaten",

"html_url": "https://github.com/patrickvonplaten",

"followers_url": "https://api.github.com/users/patrickvonplaten/followers",

"following_url": "https://api.github.com/users/patrickvonplaten/following{/other_user}",

"gists_url": "https://api.github.com/users/patrickvonplaten/gists{/gist_id}",

"starred_url": "https://api.github.com/users/patrickvonplaten/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/patrickvonplaten/subscriptions",

"organizations_url": "https://api.github.com/users/patrickvonplaten/orgs",

"repos_url": "https://api.github.com/users/patrickvonplaten/repos",

"events_url": "https://api.github.com/users/patrickvonplaten/events{/privacy}",

"received_events_url": "https://api.github.com/users/patrickvonplaten/received_events",

"type": "User",

"site_admin": false

} | []

| closed | false | null | []

| null | []

| 1,587,467,068,000 | 1,664,875,904,000 | 1,587,502,086,000 | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/10",

"html_url": "https://github.com/huggingface/datasets/pull/10",

"diff_url": "https://github.com/huggingface/datasets/pull/10.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/10.patch",

"merged_at": 1587502086000

} | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/10/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/10/timeline | null | null | true |

|

https://api.github.com/repos/huggingface/datasets/issues/9 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/9/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/9/comments | https://api.github.com/repos/huggingface/datasets/issues/9/events | https://github.com/huggingface/datasets/pull/9 | 603,894,874 | MDExOlB1bGxSZXF1ZXN0NDA2NTkwMDQw | 9 | [Clean up] Datasets | {

"login": "patrickvonplaten",

"id": 23423619,

"node_id": "MDQ6VXNlcjIzNDIzNjE5",

"avatar_url": "https://avatars.githubusercontent.com/u/23423619?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/patrickvonplaten",

"html_url": "https://github.com/patrickvonplaten",

"followers_url": "https://api.github.com/users/patrickvonplaten/followers",

"following_url": "https://api.github.com/users/patrickvonplaten/following{/other_user}",

"gists_url": "https://api.github.com/users/patrickvonplaten/gists{/gist_id}",

"starred_url": "https://api.github.com/users/patrickvonplaten/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/patrickvonplaten/subscriptions",

"organizations_url": "https://api.github.com/users/patrickvonplaten/orgs",

"repos_url": "https://api.github.com/users/patrickvonplaten/repos",

"events_url": "https://api.github.com/users/patrickvonplaten/events{/privacy}",

"received_events_url": "https://api.github.com/users/patrickvonplaten/received_events",

"type": "User",

"site_admin": false

} | []

| closed | false | null | []

| null | [

"Yes!"

]

| 1,587,465,596,000 | 1,664,875,902,000 | 1,587,502,198,000 | MEMBER | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/9",

"html_url": "https://github.com/huggingface/datasets/pull/9",

"diff_url": "https://github.com/huggingface/datasets/pull/9.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/9.patch",

"merged_at": 1587502198000

} | Clean up `nlp/datasets` folder.

As I understand it, the `nlp/datasets` folder will eventually not exist at all.

The folder `nlp/datasets/nlp` is kept for the moment, but won't be needed in the future, since it will live on S3 (actually it already does) at: `https://s3.console.aws.amazon.com/s3/buckets/datasets.huggingface.co/nlp/?region=us-east-1`, and the individual dataset downloader scripts will be added to `nlp/src/nlp` when the user downloads them.

The folder `nlp/datasets/checksums` is kept for now, but won't be needed anymore in the future.

The remaining folders/files are leftovers from tensorflow-datasets and are not needed. They can be looked up in the private tensorflow-dataset repo. | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/9/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/9/timeline | null | null | true |

https://api.github.com/repos/huggingface/datasets/issues/8 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/8/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/8/comments | https://api.github.com/repos/huggingface/datasets/issues/8/events | https://github.com/huggingface/datasets/pull/8 | 601,783,243 | MDExOlB1bGxSZXF1ZXN0NDA0OTg0NDUz | 8 | Fix issue 6: error when the citation is missing in the DatasetInfo | {

"login": "jplu",

"id": 959590,

"node_id": "MDQ6VXNlcjk1OTU5MA==",

"avatar_url": "https://avatars.githubusercontent.com/u/959590?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/jplu",

"html_url": "https://github.com/jplu",

"followers_url": "https://api.github.com/users/jplu/followers",

"following_url": "https://api.github.com/users/jplu/following{/other_user}",

"gists_url": "https://api.github.com/users/jplu/gists{/gist_id}",

"starred_url": "https://api.github.com/users/jplu/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jplu/subscriptions",

"organizations_url": "https://api.github.com/users/jplu/orgs",

"repos_url": "https://api.github.com/users/jplu/repos",

"events_url": "https://api.github.com/users/jplu/events{/privacy}",

"received_events_url": "https://api.github.com/users/jplu/received_events",

"type": "User",

"site_admin": false

} | []

| closed | false | null | []

| null | []

| 1,587,110,666,000 | 1,588,152,431,000 | 1,587,389,052,000 | CONTRIBUTOR | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/8",

"html_url": "https://github.com/huggingface/datasets/pull/8",

"diff_url": "https://github.com/huggingface/datasets/pull/8.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/8.patch",

"merged_at": 1587389052000

} | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/8/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/8/timeline | null | null | true |

|

https://api.github.com/repos/huggingface/datasets/issues/7 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/7/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/7/comments | https://api.github.com/repos/huggingface/datasets/issues/7/events | https://github.com/huggingface/datasets/pull/7 | 601,780,534 | MDExOlB1bGxSZXF1ZXN0NDA0OTgyMzA2 | 7 | Fix issue 5: allow empty datasets | {

"login": "jplu",

"id": 959590,

"node_id": "MDQ6VXNlcjk1OTU5MA==",

"avatar_url": "https://avatars.githubusercontent.com/u/959590?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/jplu",

"html_url": "https://github.com/jplu",

"followers_url": "https://api.github.com/users/jplu/followers",

"following_url": "https://api.github.com/users/jplu/following{/other_user}",

"gists_url": "https://api.github.com/users/jplu/gists{/gist_id}",

"starred_url": "https://api.github.com/users/jplu/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jplu/subscriptions",

"organizations_url": "https://api.github.com/users/jplu/orgs",

"repos_url": "https://api.github.com/users/jplu/repos",

"events_url": "https://api.github.com/users/jplu/events{/privacy}",

"received_events_url": "https://api.github.com/users/jplu/received_events",

"type": "User",

"site_admin": false

} | []

| closed | false | null | []

| null | []

| 1,587,110,396,000 | 1,588,152,433,000 | 1,587,389,028,000 | CONTRIBUTOR | null | false | {

"url": "https://api.github.com/repos/huggingface/datasets/pulls/7",

"html_url": "https://github.com/huggingface/datasets/pull/7",

"diff_url": "https://github.com/huggingface/datasets/pull/7.diff",

"patch_url": "https://github.com/huggingface/datasets/pull/7.patch",

"merged_at": 1587389027000

} | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/7/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/7/timeline | null | null | true |

|

https://api.github.com/repos/huggingface/datasets/issues/6 | https://api.github.com/repos/huggingface/datasets | https://api.github.com/repos/huggingface/datasets/issues/6/labels{/name} | https://api.github.com/repos/huggingface/datasets/issues/6/comments | https://api.github.com/repos/huggingface/datasets/issues/6/events | https://github.com/huggingface/datasets/issues/6 | 600,330,836 | MDU6SXNzdWU2MDAzMzA4MzY= | 6 | Error when citation is not given in the DatasetInfo | {

"login": "jplu",

"id": 959590,

"node_id": "MDQ6VXNlcjk1OTU5MA==",

"avatar_url": "https://avatars.githubusercontent.com/u/959590?v=4",

"gravatar_id": "",

"url": "https://api.github.com/users/jplu",

"html_url": "https://github.com/jplu",

"followers_url": "https://api.github.com/users/jplu/followers",

"following_url": "https://api.github.com/users/jplu/following{/other_user}",

"gists_url": "https://api.github.com/users/jplu/gists{/gist_id}",

"starred_url": "https://api.github.com/users/jplu/starred{/owner}{/repo}",

"subscriptions_url": "https://api.github.com/users/jplu/subscriptions",

"organizations_url": "https://api.github.com/users/jplu/orgs",

"repos_url": "https://api.github.com/users/jplu/repos",

"events_url": "https://api.github.com/users/jplu/events{/privacy}",

"received_events_url": "https://api.github.com/users/jplu/received_events",

"type": "User",

"site_admin": false

} | []

| closed | false | null | []

| null | [

"Yes looks good to me.\r\nNote that we may refactor quite strongly the `info.py` to make it a lot simpler (it's very complicated for basically a dictionary of info I think)",

"No problem ^^ It might just be a temporary fix :)",

"Fixed."

]

| 1,586,960,094,000 | 1,588,152,202,000 | 1,588,152,202,000 | CONTRIBUTOR | null | null | null | The following error is raised when the `citation` parameter is missing when we instantiate a `DatasetInfo`:

```
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
  File "/home/jplu/dev/jplu/datasets/src/nlp/info.py", line 338, in __repr__
    citation_pprint = _indent('"""{}"""'.format(self.citation.strip()))
AttributeError: 'NoneType' object has no attribute 'strip'
```

I propose to do the following change in the `info.py` file. The method:

```python
def __repr__(self):
    splits_pprint = _indent("\n".join(["{"] + [
        " '{}': {},".format(k, split.num_examples)
        for k, split in sorted(self.splits.items())
    ] + ["}"]))
    features_pprint = _indent(repr(self.features))
    citation_pprint = _indent('"""{}"""'.format(self.citation.strip()))
    return INFO_STR.format(
        name=self.name,
        version=self.version,
        description=self.description,
        total_num_examples=self.splits.total_num_examples,
        features=features_pprint,
        splits=splits_pprint,
        citation=citation_pprint,
        homepage=self.homepage,
        supervised_keys=self.supervised_keys,
        # Proto add a \n that we strip.
        license=str(self.license).strip())
```

Becomes:

```python
def __repr__(self):
    splits_pprint = _indent("\n".join(["{"] + [
        " '{}': {},".format(k, split.num_examples)
        for k, split in sorted(self.splits.items())
    ] + ["}"]))
    features_pprint = _indent(repr(self.features))
    # The strip is done only if the citation is given
    citation_pprint = self.citation
    if self.citation:
        citation_pprint = _indent('"""{}"""'.format(self.citation.strip()))
    return INFO_STR.format(
        name=self.name,
        version=self.version,
        description=self.description,
        total_num_examples=self.splits.total_num_examples,
        features=features_pprint,
        splits=splits_pprint,
        citation=citation_pprint,
        homepage=self.homepage,
        supervised_keys=self.supervised_keys,
        # Proto add a \n that we strip.
        license=str(self.license).strip())
```

With this change the error no longer occurs. @thomwolf are you ok with this fix? | {

"url": "https://api.github.com/repos/huggingface/datasets/issues/6/reactions",

"total_count": 0,

"+1": 0,

"-1": 0,

"laugh": 0,

"hooray": 0,

"confused": 0,

"heart": 0,

"rocket": 0,

"eyes": 0

} | https://api.github.com/repos/huggingface/datasets/issues/6/timeline | null | completed | false |