huihui-ai/r1-1776-GGUF

This model was converted from perplexity-ai/r1-1776 to GGUF. Even GPUs with as little as 8 GB of memory can try it.

GGUF: Q2_K, Q3_K_M, Q4_K_M, and Q8_0 are all supported.

BF16 to f16.gguf

- Download the perplexity-ai/r1-1776 model. This requires approximately 1.21 TB of space.

cd /home/admin/models

huggingface-cli download perplexity-ai/r1-1776 --local-dir ./perplexity-ai/r1-1776

- Use the llama.cpp conversion script to convert r1-1776 to GGUF format. This requires approximately 1.22 TB of additional space.

python convert_hf_to_gguf.py /home/admin/models/perplexity-ai/r1-1776 --outfile /home/admin/models/perplexity-ai/r1-1776/ggml-model-f16.gguf --outtype f16

- Use the llama.cpp quantization program to quantize the model (llama-quantize needs to be compiled first). Other quantization options are available. Convert to Q2_K first, which requires approximately 227 GB of additional space.

llama-quantize /home/admin/models/perplexity-ai/r1-1776/ggml-model-f16.gguf /home/admin/models/perplexity-ai/r1-1776/ggml-model-Q2_K.gguf Q2_K

- Use llama-cli to test.

llama-cli -m /home/admin/models/perplexity-ai/r1-1776/ggml-model-Q2_K.gguf -n 2048
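The space figures quoted in the steps above add up quickly. The following is a minimal sketch of that arithmetic, assuming decimal units (1 TB = 1000 GB) and that all three artifacts are kept on the same volume. The names `STEP_SIZES_GB`, `required_gb`, and `has_enough_space` are hypothetical helpers for illustration, not part of llama.cpp.

```python
import shutil

# Approximate sizes quoted in the steps above, in GB (assuming 1 TB = 1000 GB).
STEP_SIZES_GB = {
    "downloaded r1-1776 model": 1210,
    "ggml-model-f16.gguf": 1220,
    "ggml-model-Q2_K.gguf": 227,
}


def required_gb(sizes=STEP_SIZES_GB):
    """Total space needed if every artifact is kept on one volume."""
    return sum(sizes.values())


def has_enough_space(path, needed_gb):
    """Compare the free space on the volume holding `path` with `needed_gb`."""
    free_gb = shutil.disk_usage(path).free / 1e9
    return free_gb >= needed_gb


total = required_gb()
print(f"Total needed: about {total} GB")
print("Enough free space on this volume:", has_enough_space(".", total))
```

If space is tight, the f16 file can be deleted once the quantized files you need have been produced, which lowers the steady-state requirement.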

Use with ollama

You can use huihui_ai/perplexity-ai-r1 directly

ollama run huihui_ai/perplexity-ai-r1:671b-q2_K

Modelfile

The Modelfile is based on ggml-model-Q2_K.gguf.

A single GPU with 24 GB of memory can hold 4 layers of the model, so num_gpu is set to 4.

With 8 GPUs of 24 GB each, num_gpu can be 32. This parameter can be set in ollama.

The Modelfile sets num_gpu to the minimum value of 1, which can be changed later by setting parameters.

Adjust num_gpu according to the number of GPUs and the GPU memory available, and tune the other parameters based on your own tests.
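The num_gpu arithmetic above can be sketched as a small calculation. This assumes the figure of 4 layers per 24 GB GPU quoted above for the Q2_K model, and that the count scales linearly with the number of identical GPUs. `suggested_num_gpu` is a hypothetical helper, not an ollama function, and the result is only a starting point for your own tests.

```python
# Layers that fit on one 24 GB GPU, as quoted above for ggml-model-Q2_K.gguf.
LAYERS_PER_24GB_GPU = 4


def suggested_num_gpu(gpu_count, layers_per_gpu=LAYERS_PER_24GB_GPU):
    """Estimate num_gpu (layers offloaded to GPU) for identical 24 GB GPUs.

    Never returns less than 1, matching the minimum used in the Modelfile.
    """
    return max(1, gpu_count * layers_per_gpu)


print(suggested_num_gpu(1))  # single 24 GB GPU
print(suggested_num_gpu(8))  # eight 24 GB GPUs
```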

- Modify Modelfile

FROM perplexity-ai/r1-1776/ggml-model-Q2_K.gguf

TEMPLATE """{{- if .System }}{{ .System }}{{ end }}

{{- range $i, $_ := .Messages }}

{{- $last := eq (len (slice $.Messages $i)) 1}}

{{- if eq .Role "user" }}<|User|>{{ .Content }}

{{- else if eq .Role "assistant" }}<|Assistant|>{{ .Content }}{{- if not $last }}<|end▁of▁sentence|>{{- end }}

{{- end }}

{{- if and $last (ne .Role "assistant") }}<|Assistant|>{{- end }}

{{- end }}"""

PARAMETER stop <|begin▁of▁sentence|>

PARAMETER stop <|end▁of▁sentence|>

PARAMETER stop <|User|>

PARAMETER stop <|Assistant|>

PARAMETER num_gpu 1

- Use ollama create to create the quantized model.

ollama create -f Modelfile huihui_ai/perplexity-ai-r1:671b-q2_K

- Run model

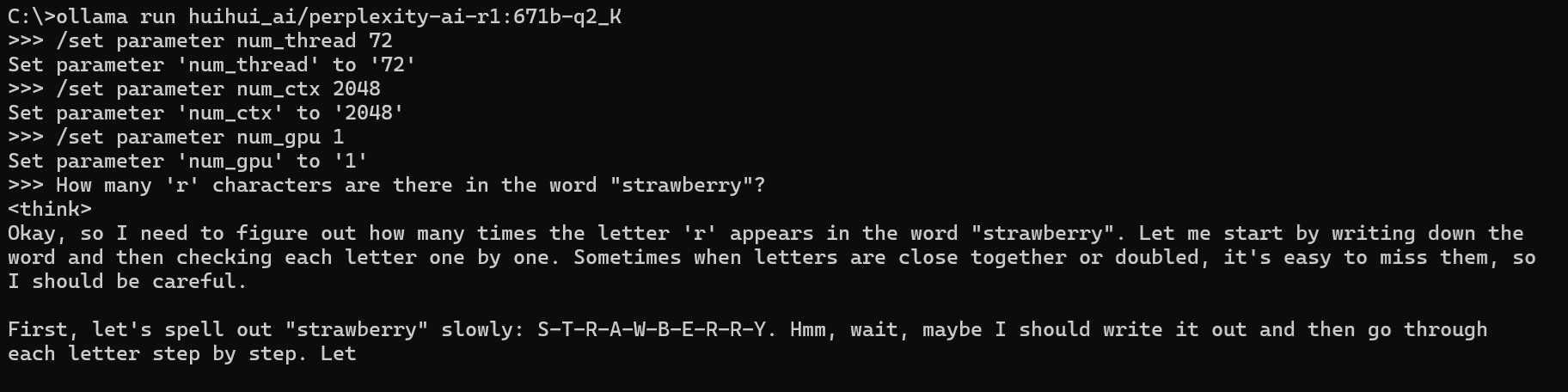

ollama run huihui_ai/perplexity-ai-r1:671b-q2_K

- Set parameters before asking the question.

Each /set parameter command in ollama can cause the model to reload.

"num_thread" is the number of CPU threads used for inference. It is recommended to set it to half the number of cores in your computer; otherwise, the CPU will be at 100%.

"num_ctx" sets the size of the context window, in tokens, that the model can use during inference.

/set parameter num_thread 32

/set parameter num_ctx 2048

If you have an 8-GPU configuration (24 GB each), you also need to set the num_gpu parameter.


/set parameter num_gpu 32

Set the three parameters above one at a time; do not send them all to Ollama at once.
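The "half of the cores" rule for num_thread can be sketched as follows. Note the assumption: Python's `os.cpu_count()` reports logical CPUs, which may be twice the physical core count on machines with hyper-threading, so treat the result as a starting point. `suggested_num_thread` and `set_commands` are hypothetical helpers that only build the /set lines to type into the ollama prompt one at a time.

```python
import os


def suggested_num_thread(cpu_count=None):
    """Return half of the available CPUs, but at least 1."""
    if cpu_count is None:
        # os.cpu_count() counts logical CPUs and may return None.
        cpu_count = os.cpu_count() or 2
    return max(1, cpu_count // 2)


def set_commands(num_thread, num_ctx=2048, num_gpu=None):
    """Build the /set parameter lines, to be entered one at a time."""
    commands = [
        f"/set parameter num_thread {num_thread}",
        f"/set parameter num_ctx {num_ctx}",
    ]
    if num_gpu is not None:
        commands.append(f"/set parameter num_gpu {num_gpu}")
    return commands


for line in set_commands(suggested_num_thread(), num_ctx=2048):
    print(line)
```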

- The Q2_K GGUF is now available for download. To merge the split weights into a single file, use this command:

llama-gguf-split --merge Q2_K-GGUF/r1-1776-Q2_K-00001-of-00005.gguf r1-1776-q2_K.gguf
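Before merging, it can help to confirm that every shard has finished downloading. The sketch below derives the expected shard names from the first shard's file name, assuming the `-00001-of-00005.gguf` naming pattern shown in the command above and that all shards sit in the same directory. `expected_shards` and `missing_shards` are hypothetical helpers, not part of llama.cpp.

```python
import re
from pathlib import Path

# Matches names such as r1-1776-Q2_K-00001-of-00005.gguf
SHARD_RE = re.compile(r"^(?P<prefix>.+)-(?P<index>\d{5})-of-(?P<total>\d{5})\.gguf$")


def expected_shards(first_shard):
    """List the paths of all shards implied by the first shard's name."""
    path = Path(first_shard)
    match = SHARD_RE.match(path.name)
    if match is None:
        raise ValueError(f"not a split GGUF file name: {path.name}")
    total = int(match["total"])
    prefix = match["prefix"]
    return [
        path.with_name(f"{prefix}-{i:05d}-of-{total:05d}.gguf")
        for i in range(1, total + 1)
    ]


def missing_shards(first_shard):
    """Return the expected shard paths that do not exist on disk."""
    return [p for p in expected_shards(first_shard) if not p.exists()]


for shard in expected_shards("Q2_K-GGUF/r1-1776-Q2_K-00001-of-00005.gguf"):
    print(shard)
```

If `missing_shards` returns an empty list, all shards are present and the merge command can be run.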

Q3_K_M and Q4_K_M are also supported and will likely need at least 12 GB of memory. We will upload Q3_K_M and Q4_K_M shortly.

Q8_0 is also supported and will likely need at least 24 GB of memory. We will upload Q8_0 shortly.

Donation

If you like it, please click 'like' and follow us for more updates.

Your donation helps us continue development and improvement; even a cup of coffee helps.

- bitcoin:

bc1qqnkhuchxw0zqjh2ku3lu4hq45hc6gy84uk70ge
