Citrus: Leveraging Expert Cognitive Pathways in a Medical Language Model for Advanced Medical Decision Support

📑Paper |🤗Github Page |🤗Model |📚Medical Reasoning Data | 📚Evaluation Data

The Introduction to Our Work

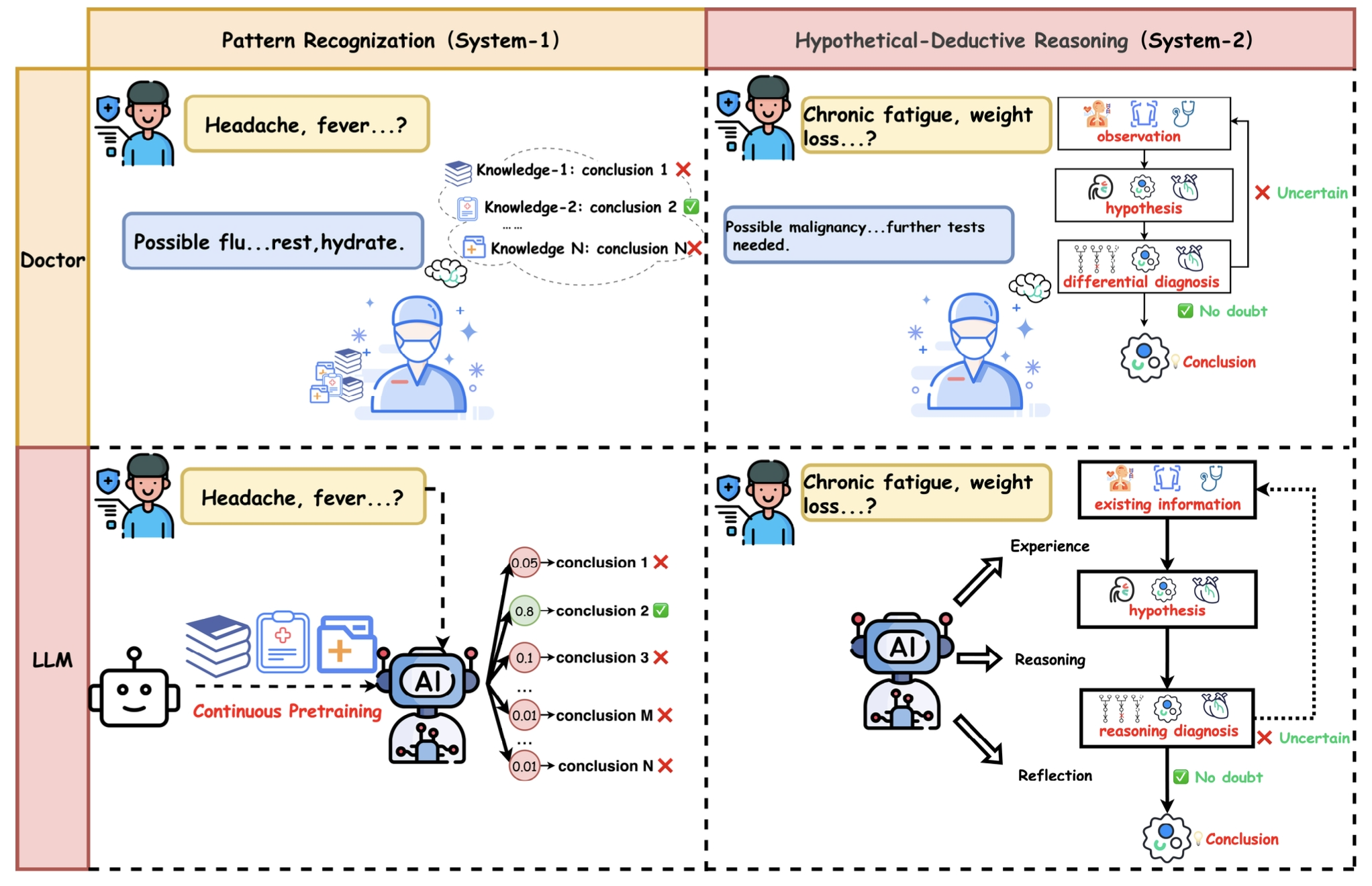

1. Main approaches

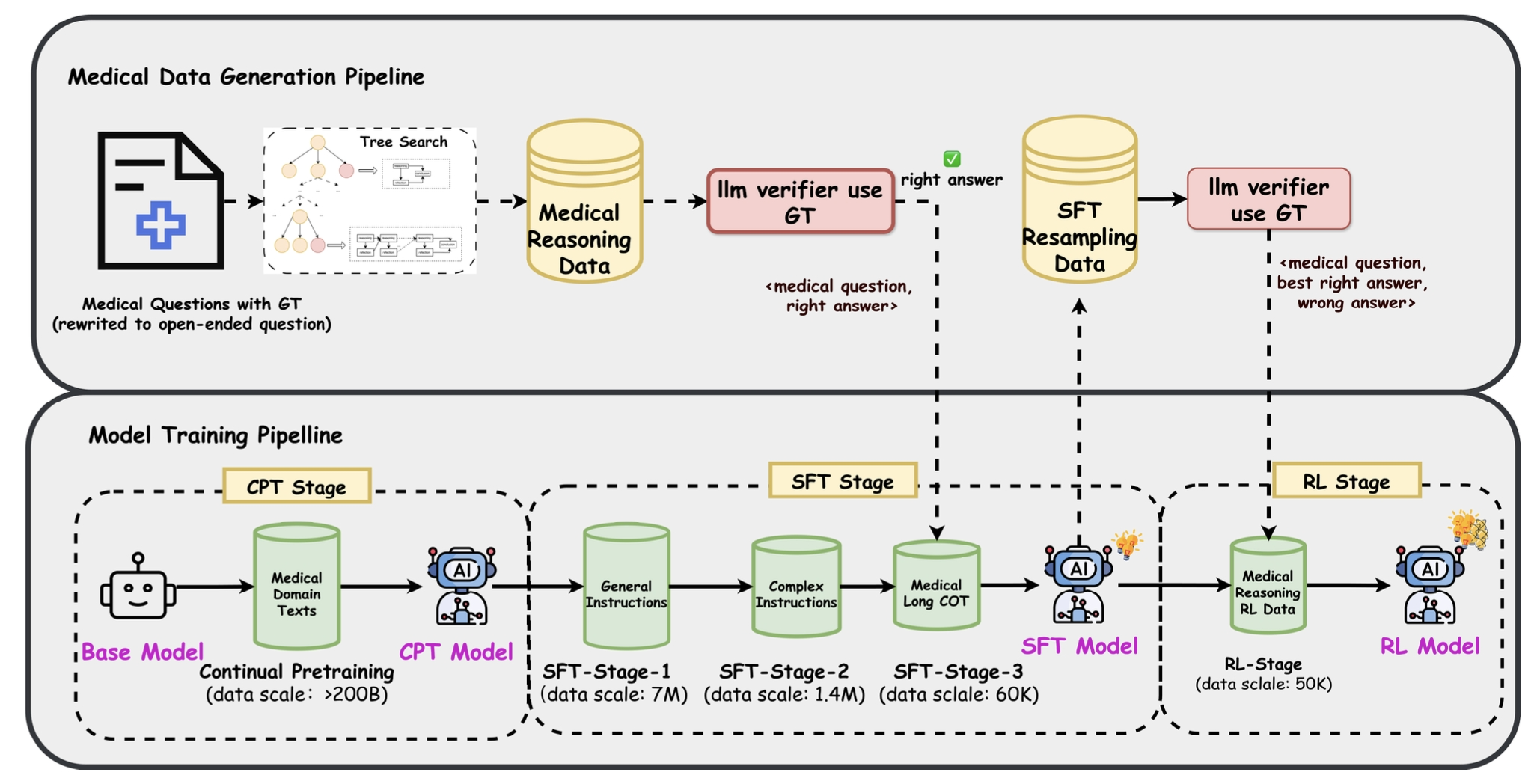

2. Overview of training stages and training data pipeline

The contributions of this work are as follows:

- We propose a training-free reasoning approach that emulates the cognitive processes of medical experts, enabling large language models to enhance their medical capabilities in clinical diagnosis and treatment.

- In conjunction with the data construction method, we introduce a multi-stage post-training approach to further improve the model’s medical performance.

- We have made the Citrus model and its training data publicly available as open-source resources to advance research in AI-driven medical decision-making.

- We have developed and open-sourced a large-scale, updatable clinical practice evaluation dataset based on real-world data, accurately reflecting the distribution of patients in real-world settings.

Notice

- Our model is built with Llama3.1-70B. Llama 3.1 is licensed under the Llama 3.1 Community License, Copyright © Meta Platforms, Inc. All Rights Reserved.

- Our default license is MIT, provided it does not conflict with the Llama license.
