
license: apache-2.0

Pre-trained models and output samples of ControlNet-LLLite.

Note: The model structure is highly experimental and may be subject to change in the future.

Inference with ComfyUI: https://github.com/kohya-ss/ControlNet-LLLite-ComfyUI

For AUTOMATIC1111's Web UI, the sd-webui-controlnet extension supports ControlNet-LLLite.

Training: https://github.com/kohya-ss/sd-scripts/blob/sdxl/docs/train_lllite_README.md

The recommended preprocessing for the blur model is Gaussian blur.
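As a rough illustration of what Gaussian blur preprocessing does to a control image, here is a minimal pure-Python sketch that operates on a grayscale image stored as a list of rows. The function names, the clamped border handling, and the choice of `sigma` are all assumptions made for illustration; this document does not state which blur strength was used in training.

```python
import math


def gaussian_kernel(sigma, radius=None):
    """Build a normalized 1D Gaussian kernel (weights sum to 1)."""
    if radius is None:
        # Cover roughly three standard deviations on each side (a common heuristic).
        radius = max(1, int(3 * sigma + 0.5))
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def _blur_rows(img, kernel):
    """Convolve every row with the kernel, repeating edge pixels at the borders."""
    radius = len(kernel) // 2
    out = []
    for row in img:
        n = len(row)
        new_row = []
        for x in range(n):
            acc = 0.0
            for k, w in enumerate(kernel):
                xi = min(max(x + k - radius, 0), n - 1)  # clamp to valid range
                acc += w * row[xi]
            new_row.append(acc)
        out.append(new_row)
    return out


def gaussian_blur(img, sigma):
    """Separable Gaussian blur: blur the rows, then blur the columns."""
    kernel = gaussian_kernel(sigma)
    rows_done = _blur_rows(img, kernel)
    transposed = [list(col) for col in zip(*rows_done)]
    cols_done = _blur_rows(transposed, kernel)
    return [list(row) for row in zip(*cols_done)]
```

In practice an image library would normally be used instead, for example Pillow's `ImageFilter.GaussianBlur(radius=...)`, with the radius chosen by experiment.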

Naming Rules

controllllite_v01032064e_sdxl_blur_500-1000.safetensors

- v01: Version Flag.

- 032: Dimensions of conditioning.

- 064: Dimensions of control module.

- sdxl: Base Model.

- blur: The control method. anime means the LLLite model is trained on/with an anime SDXL model and images.

- 500-1000: (Optional) Timesteps for training. If this is 500-1000, please apply control only during the first half of the steps.
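The naming rules can be applied mechanically. The sketch below parses a file name into its fields using a regular expression inferred from the file names listed in this document; it is not an official specification. `parse_lllite_name` and `LLLiteName` are hypothetical names, and the single letter after the dimensions (such as `e`) is captured as `suffix` because its meaning is not explained here.

```python
import re
from dataclasses import dataclass
from typing import Optional, Tuple

# Pattern inferred from the file names in this document (an assumption).
_NAME_RE = re.compile(
    r"^controllllite_v(?P<version>\d{2})(?P<cond>\d{3})(?P<module>\d{3})"
    r"(?P<suffix>[a-z]?)_(?P<base>[a-z0-9]+)_(?P<rest>.+?)"
    r"(?:[_-](?P<t_min>\d+)-(?P<t_max>\d+))?\.safetensors$"
)


@dataclass(frozen=True)
class LLLiteName:
    version: str                          # e.g. "01"
    cond_dim: int                         # dimensions of conditioning
    module_dim: int                       # dimensions of control module
    suffix: str                           # e.g. "e"; meaning not documented here
    base_model: str                       # e.g. "sdxl"
    method: str                           # e.g. "blur", "fake_scribble"
    anime: bool                           # True if trained on the anime model
    extra: Tuple[str, ...]                # trailing tags such as "beta" or "v2"
    timesteps: Optional[Tuple[int, int]]  # training timesteps, if present


def parse_lllite_name(filename: str) -> LLLiteName:
    m = _NAME_RE.match(filename)
    if m is None:
        raise ValueError(f"unrecognized LLLite file name: {filename!r}")
    # Some names separate tokens with "-" instead of "_", so split on both.
    tokens = re.split(r"[_-]", m["rest"])
    if "anime" in tokens:
        i = tokens.index("anime")
        method_tokens, extra = tokens[:i], tokens[i + 1:]
    else:
        method_tokens, extra = tokens, []
    timesteps = None
    if m["t_min"] is not None:
        timesteps = (int(m["t_min"]), int(m["t_max"]))
    return LLLiteName(
        version=m["version"],
        cond_dim=int(m["cond"]),
        module_dim=int(m["module"]),
        suffix=m["suffix"],
        base_model=m["base"],
        method="_".join(method_tokens),
        anime="anime" in tokens,
        extra=tuple(extra),
        timesteps=timesteps,
    )
```

For example, `parse_lllite_name("controllllite_v01032064e_sdxl_blur_500-1000.safetensors")` yields conditioning dimension 32, control module dimension 64, method `blur`, and timesteps `(500, 1000)`.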

Models

Trained on sdxl base

- controllllite_v01032064e_sdxl_blur-500-1000.safetensors

- trained with 3,919 generated images and Gaussian blur preprocessing.

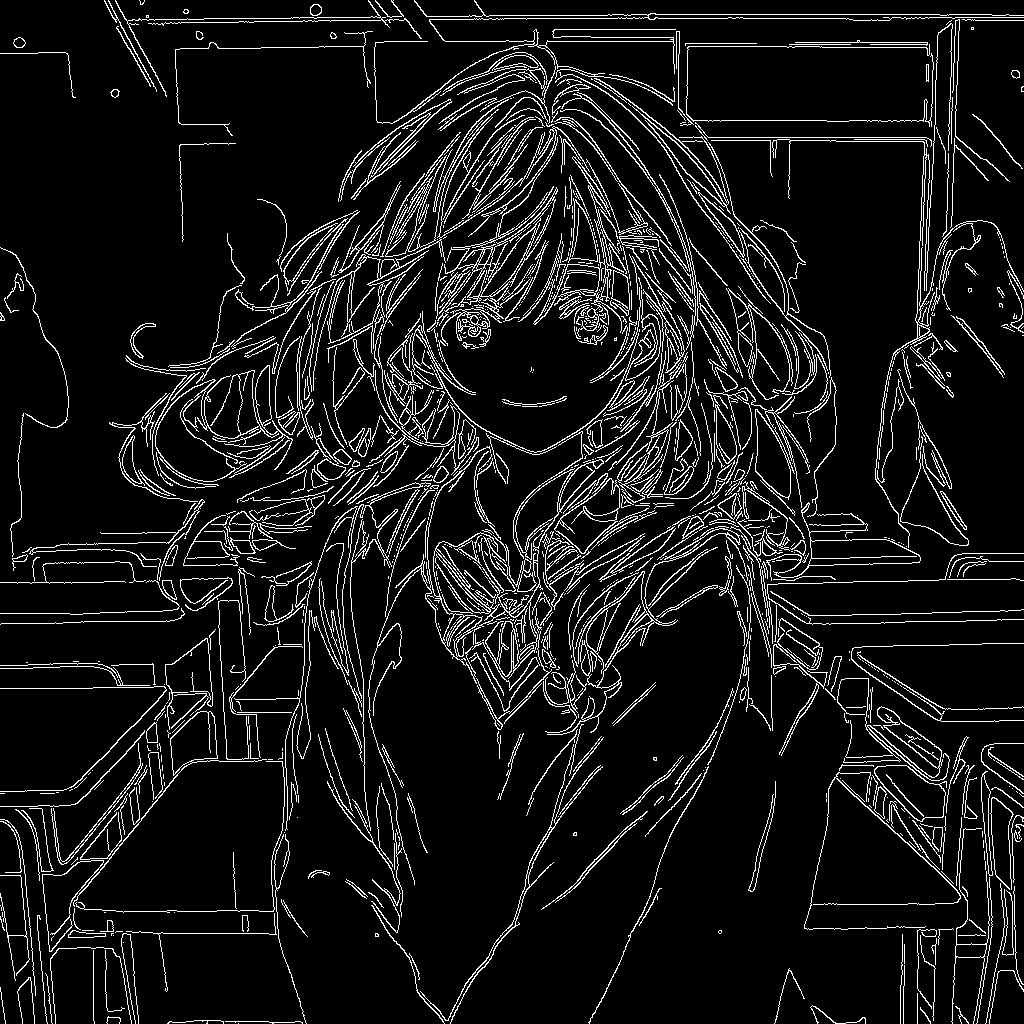

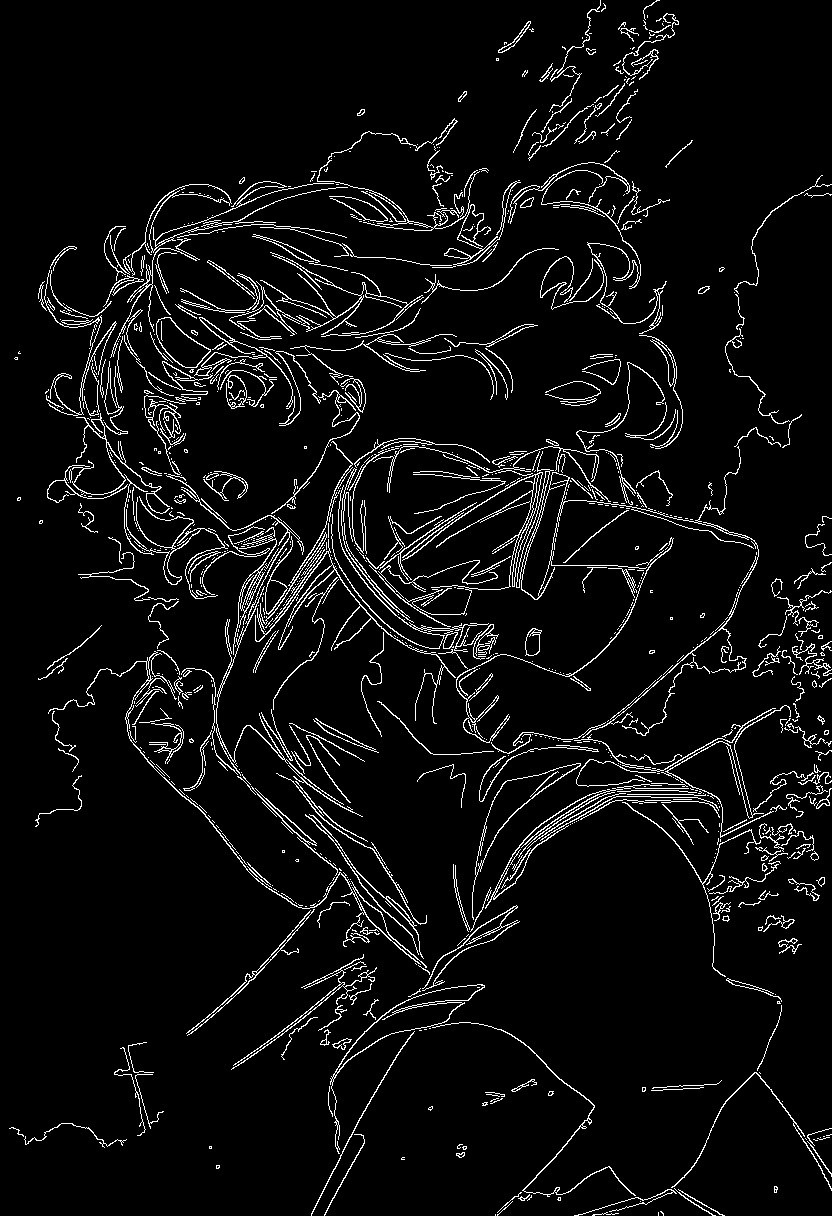

- controllllite_v01032064e_sdxl_canny.safetensors

- trained with 3,919 generated images and canny preprocessing.

- controllllite_v01032064e_sdxl_depth_500-1000.safetensors

- trained with 3,919 generated images and MiDaS v3 - Large preprocessing.

Trained on anime model

The base model that these ControlNet-LLLite models were trained on is our custom model.

- controllllite_v01016032e_sdxl_blur_anime_beta.safetensors

- beta version.

- controllllite_v01032064e_sdxl_blur-anime_500-1000.safetensors

- trained with 2,836 generated images and Gaussian blur preprocessing.

- controllllite_v01032064e_sdxl_canny_anime.safetensors

- trained with 921 generated images and canny preprocessing.

- controllllite_v01008016e_sdxl_depth_anime.safetensors

- trained with 1,433 generated images and MiDaS v3 - Large preprocessing.

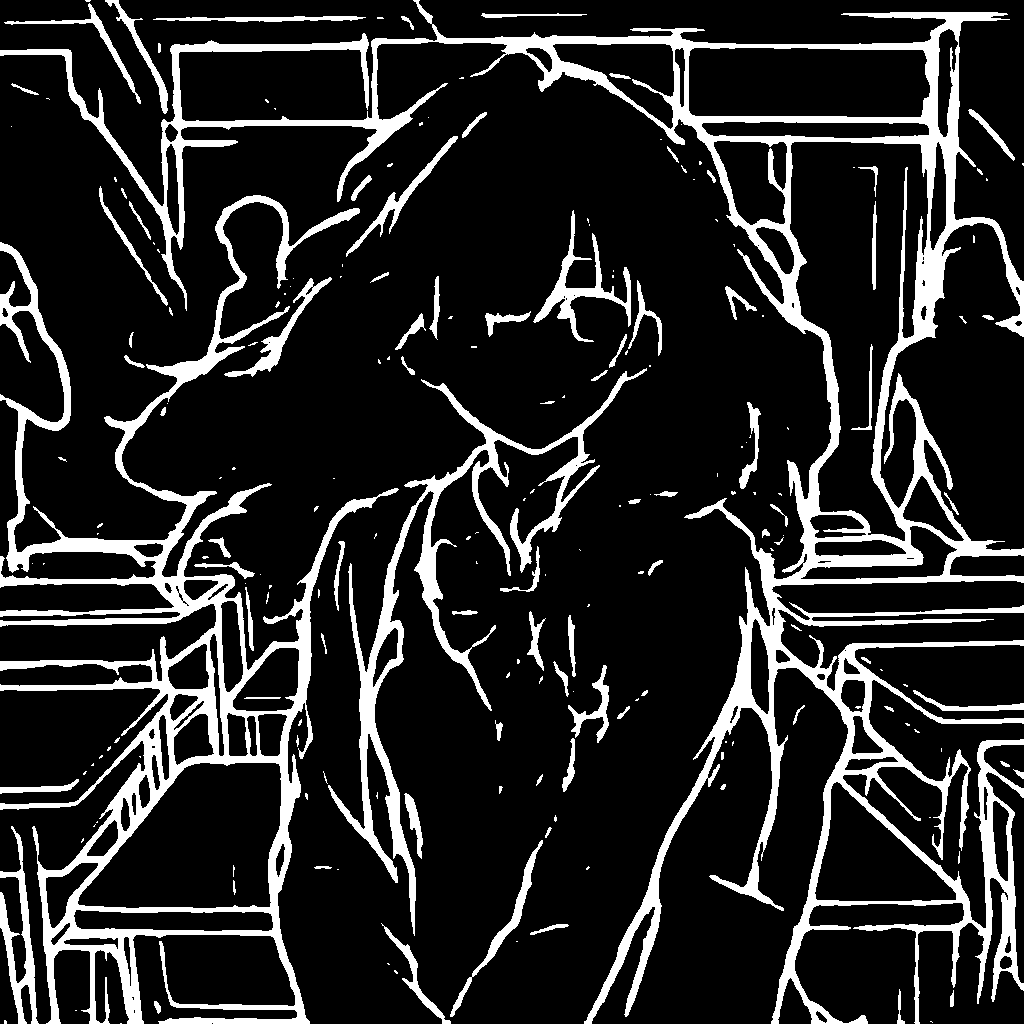

- controllllite_v01032064e_sdxl_fake_scribble_anime.safetensors

- trained with 921 generated images and PiDiNet preprocessing.

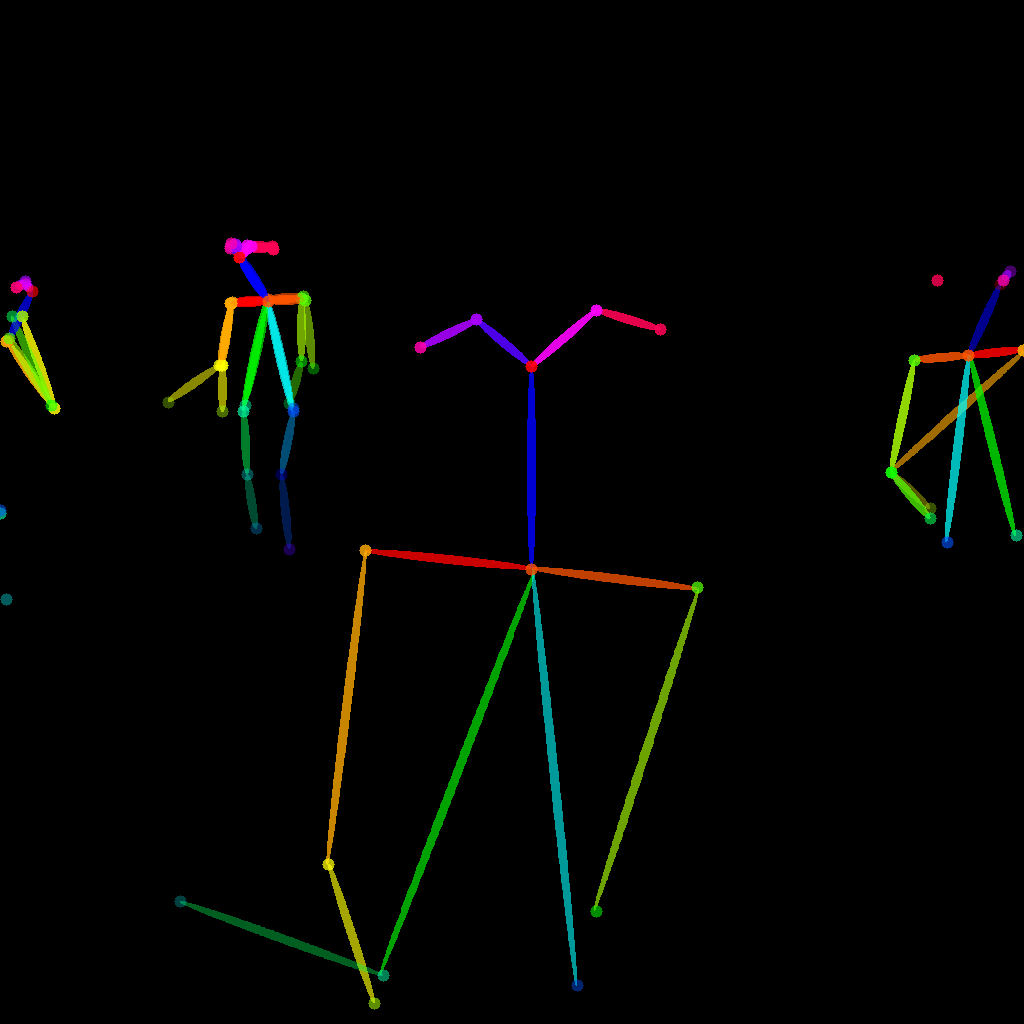

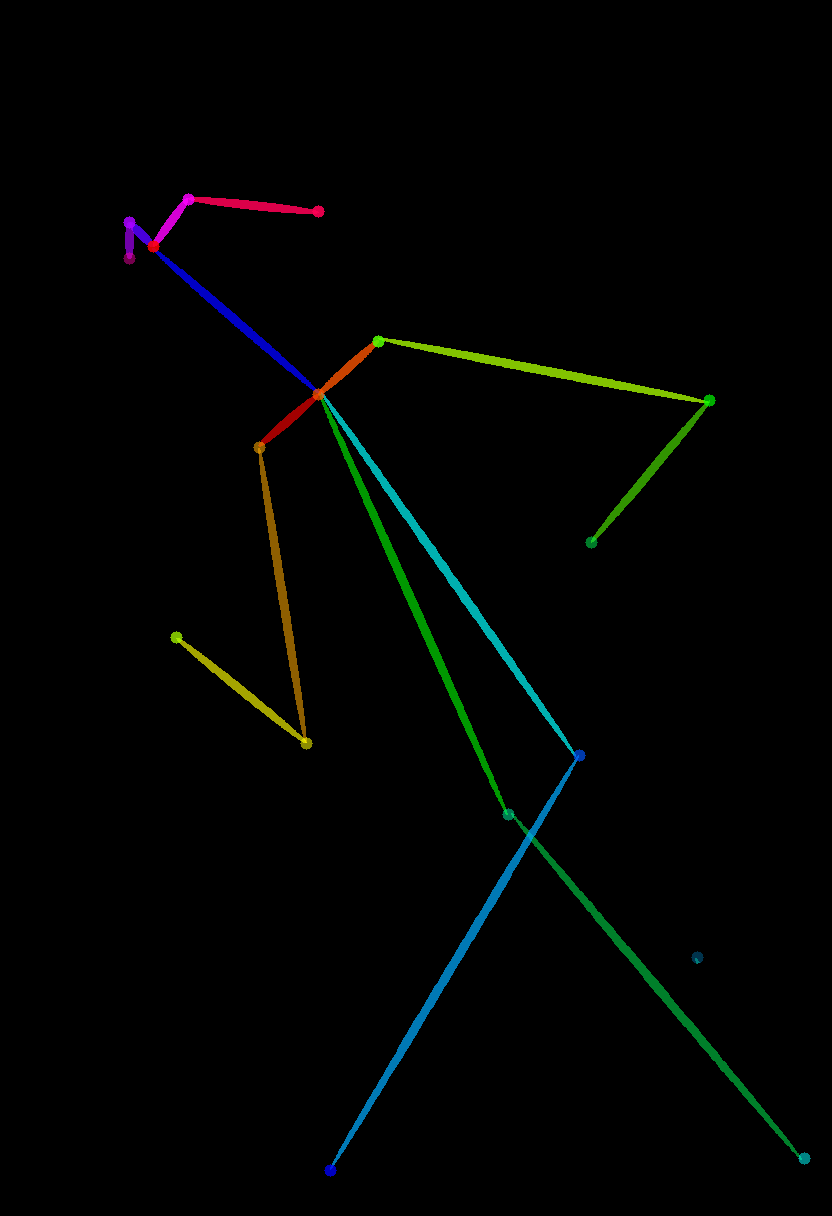

- controllllite_v01032064e_sdxl_pose_anime.safetensors

- trained with 921 generated images and MMPose preprocessing.

- controllllite_v01032064e_sdxl_pose_anime_v2_500-1000.safetensors

- trained with 1,415 generated images and MMPose preprocessing.

- controllllite_v01016032e_sdxl_replicate_anime_0-500.safetensors

- trained with 896 generated image pairs, 1024x1024 and 2048x2048 (highres. fix-ed).

- Trained on timesteps 0-500, but it seems to work for 0-1000.

- controllllite_v01032064e_sdxl_replicate_anime_v2.safetensors

- trained with 896 generated image pairs, 1024x1024 and 2048x2048 (highres. fix-ed).

- Trained on timesteps 0-1000.
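Several of the models above carry a training timestep range in their names. A minimal sketch of how such a range might translate into sampling steps follows. It assumes 1000 training timesteps and sampling steps spaced evenly from the noisiest timestep down to zero; real samplers may space steps differently. `control_step_range` is a hypothetical helper, not part of any UI or library.

```python
from typing import Tuple


def control_step_range(t_min: int, t_max: int, num_steps: int,
                       total_timesteps: int = 1000) -> Tuple[int, int]:
    """Return (start, end) sampling-step indices, end exclusive.

    Assumes evenly spaced sampling steps (an illustrative simplification).
    """
    if not (0 <= t_min < t_max <= total_timesteps):
        raise ValueError("expected 0 <= t_min < t_max <= total_timesteps")
    if num_steps <= 0:
        raise ValueError("num_steps must be positive")
    # Sampling runs from high timesteps (most noise) to low ones, so a
    # high timestep range corresponds to the earliest sampling steps.
    start = num_steps * (total_timesteps - t_max) // total_timesteps
    end = num_steps * (total_timesteps - t_min) // total_timesteps
    return start, end
```

Under these assumptions a 500-1000 model with 30 sampling steps maps to steps 0 through 14, which matches the advice to control only the first half. The same arithmetic would place a 0-500 model in the second half.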

About replicate model

- Replicates the control image, mixed with the prompt, as closely as the model can.

- No preprocessor is required. Also works for img2img.

Samples

sdxl base

anime model

replicate

Sample images are generated by a custom model.