---
license: mit
language:
- en
base_model:
- mistralai/Mistral-7B-Instruct-v0.3
---

Model Information

We release RankMistral100, the full ranking model distilled from GPT-4o-2024-08-06 and used in the paper *Sliding Windows Are Not the End: Exploring Full Ranking with Long-Context Large Language Models*.

Useful links: 📝 Paper • 🤗 Dataset • 🧩 GitHub

Training framework

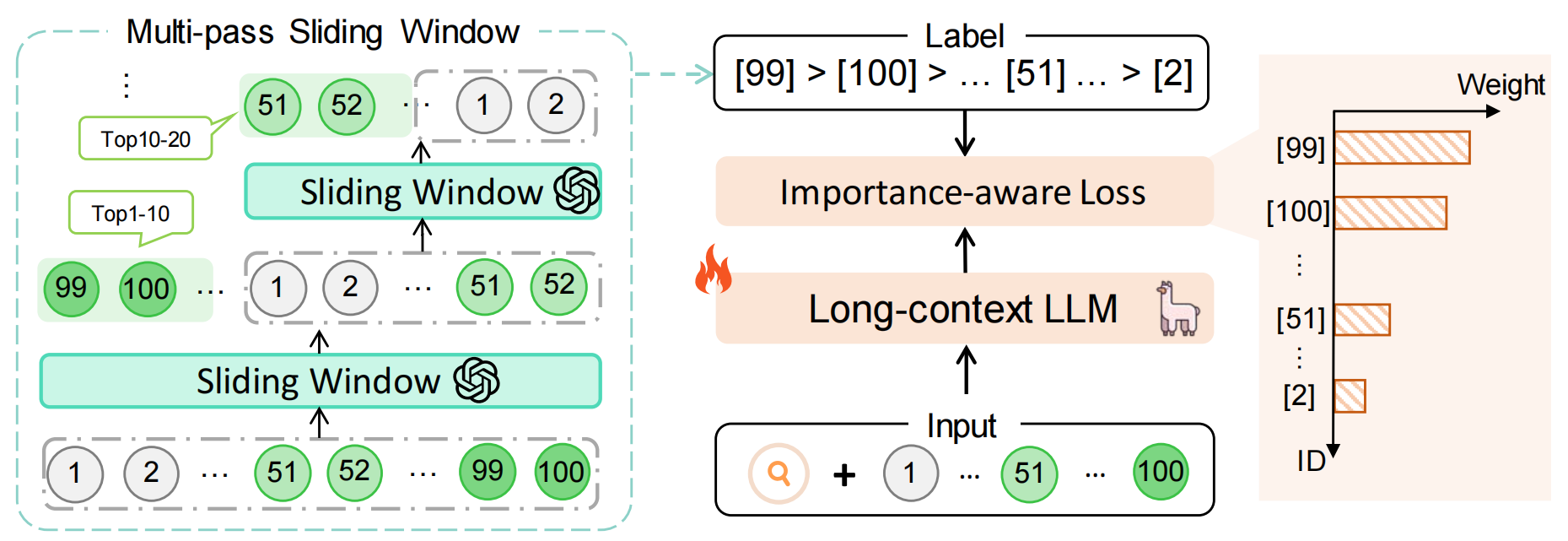

Our full ranking model reranks 100 passages directly in a single pass, abandoning the sliding window strategy. To generate the full ranking lists used as training labels, we propose a multi-pass sliding window approach, and we design an importance-aware training loss for optimization.
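To make the contrast concrete, here is a minimal sketch of a back-to-front sliding-window pass (the strategy full ranking replaces) and of a repeated, multi-pass variant of the kind used to build fuller ranking labels. This is not the authors' labeling pipeline: the window size of 20, step of 10, the `rank_fn` interface, and the toy `oracle` ranker (which stands in for a teacher LLM such as GPT-4o) are all illustrative assumptions.

```python
import random
from typing import Callable, Dict, List, Sequence

# A listwise ranker receives passage IDs and returns the same IDs reordered
# from most to least relevant. In practice this would be an LLM call; here it
# is a plain callback (hypothetical interface).
RankFn = Callable[[List[str]], List[str]]


def sliding_window_pass(ids: Sequence[str], rank_fn: RankFn,
                        window: int = 20, step: int = 10) -> List[str]:
    """One back-to-front pass: rerank the last `window` candidates, then
    slide `step` positions toward the front and repeat until position 0."""
    ranked = list(ids)
    start = max(len(ranked) - window, 0)
    while True:
        ranked[start:start + window] = rank_fn(ranked[start:start + window])
        if start == 0:
            return ranked
        start = max(start - step, 0)


def multi_pass_sliding_window(ids: Sequence[str], rank_fn: RankFn,
                              passes: int, window: int = 20,
                              step: int = 10) -> List[str]:
    """Repeat the pass so positions beyond the first `step` also get sorted."""
    ranked = list(ids)
    for _ in range(passes):
        ranked = sliding_window_pass(ranked, rank_fn, window, step)
    return ranked


# Toy oracle ranker: sorts each window by a known relevance score.
relevance: Dict[str, int] = {f"p{i}": i for i in range(100)}  # higher = better


def oracle(chunk: List[str]) -> List[str]:
    return sorted(chunk, key=lambda pid: relevance[pid], reverse=True)


candidates = list(relevance)
random.Random(0).shuffle(candidates)
# With this oracle, one pass only guarantees the top 10 positions are correct.
one_pass = sliding_window_pass(candidates, oracle)
# Repeating the pass sorts progressively deeper into the list.
ten_pass = multi_pass_sliding_window(candidates, oracle, passes=10)
```

A single pass needs nine LLM calls over 100 candidates yet only fixes the head of the list, which is why repeated passes are needed for a full-list label; a full ranking model instead reads all 100 passages in one call.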

Backbone Model

RankMistral100 is fine-tuned from [Mistral-7B-Instruct-v0.3](https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.3).

Performance

RankMistral100 surpasses the strong baseline RankZephyr by about 1.2 points on the BEIR average (52.40 vs. 51.15).

| Model | Covid | DBPedia | SciFact | NFCorpus | Signal | Robust04 | Touche | News | Avg. |
|---|---|---|---|---|---|---|---|---|---|
| BM25 | 59.47 | 31.80 | 67.89 | 33.75 | 33.04 | 40.70 | 44.22 | 39.52 | 43.80 |
| monoBERT (340M) | 73.45 | 41.69 | 62.22 | 34.92 | 30.63 | 44.21 | 30.26 | 47.03 | 45.55 |
| monoT5 (220M) | 75.94 | 42.43 | 65.07 | 35.42 | 31.20 | 44.15 | 30.35 | 46.98 | 46.44 |
| RankVicuna (7B) | 79.19 | 44.51 | 70.67 | 34.51 | 34.24 | 48.33 | 33.00 | 47.15 | 48.95 |
| RankZephyr (7B) | 82.92 | 44.42 | 75.42 | 38.26 | 31.41 | 53.73 | 30.22 | 52.80 | 51.15 |
| RankMistral100 (7B) | 82.24 | 43.54 | 77.04 | 39.14 | 33.99 | 57.91 | 34.63 | 50.59 | 52.40 |
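As a quick sanity check on the Avg. column and the margin over RankZephyr, the snippet below recomputes each row's mean from the per-dataset scores in the table. It assumes Avg. is the unweighted mean of the eight dataset scores; that is inferred from the numbers, not stated in this card.

```python
from typing import Dict, List

# Per-dataset scores copied from the table (order: Covid, DBPedia, SciFact,
# NFCorpus, Signal, Robust04, Touche, News) plus the reported Avg. column.
SCORES: Dict[str, List[float]] = {
    "BM25": [59.47, 31.80, 67.89, 33.75, 33.04, 40.70, 44.22, 39.52],
    "monoBERT": [73.45, 41.69, 62.22, 34.92, 30.63, 44.21, 30.26, 47.03],
    "monoT5": [75.94, 42.43, 65.07, 35.42, 31.20, 44.15, 30.35, 46.98],
    "RankVicuna": [79.19, 44.51, 70.67, 34.51, 34.24, 48.33, 33.00, 47.15],
    "RankZephyr": [82.92, 44.42, 75.42, 38.26, 31.41, 53.73, 30.22, 52.80],
    "RankMistral100": [82.24, 43.54, 77.04, 39.14, 33.99, 57.91, 34.63, 50.59],
}
REPORTED_AVG: Dict[str, float] = {
    "BM25": 43.80, "monoBERT": 45.55, "monoT5": 46.44,
    "RankVicuna": 48.95, "RankZephyr": 51.15, "RankMistral100": 52.40,
}


def mean(xs: List[float]) -> float:
    return sum(xs) / len(xs)


# Assumption: Avg. is the unweighted mean over the eight datasets.
for model, row in SCORES.items():
    print(f"{model:<15} recomputed={mean(row):.3f}  reported={REPORTED_AVG[model]:.2f}")

gap = mean(SCORES["RankMistral100"]) - mean(SCORES["RankZephyr"])
print(f"RankMistral100 - RankZephyr: {gap:.2f} points")
```

The recomputed means agree with the reported Avg. column to within 0.02. The only visible difference is RankMistral100 (52.385 from the rounded per-dataset scores vs. 52.40 reported), which is consistent with the average having been taken before rounding; either way the margin over RankZephyr is roughly 1.2 points.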

🌹 If you use this model, please ✨star our GitHub repository to support us. Your star means a lot!