language: pt

license: apache-2.0

widget:
- text: O futuro de DI caiu 20 bps nesta manhã
  example_title: Example 1
- text: >-
    O Nubank decidiu cortar a faixa de preço da oferta pública inicial (IPO)
    após revés no humor dos mercados internacionais com as fintechs.
  example_title: Example 2
- text: O Ibovespa acompanha correção do mercado e fecha com alta moderada
  example_title: Example 3

FinBERT-PT-BR: Financial BERT PT BR

FinBERT-PT-BR is a pre-trained NLP model for sentiment analysis of Brazilian Portuguese financial texts.

The model was trained in two main stages: language modeling and sentiment modeling. In the first stage, a language model was trained on more than 1.4 million Portuguese financial news texts. Building on this language model, a sentiment classifier was then trained with only a small set of labeled texts (500) and still converged satisfactorily.

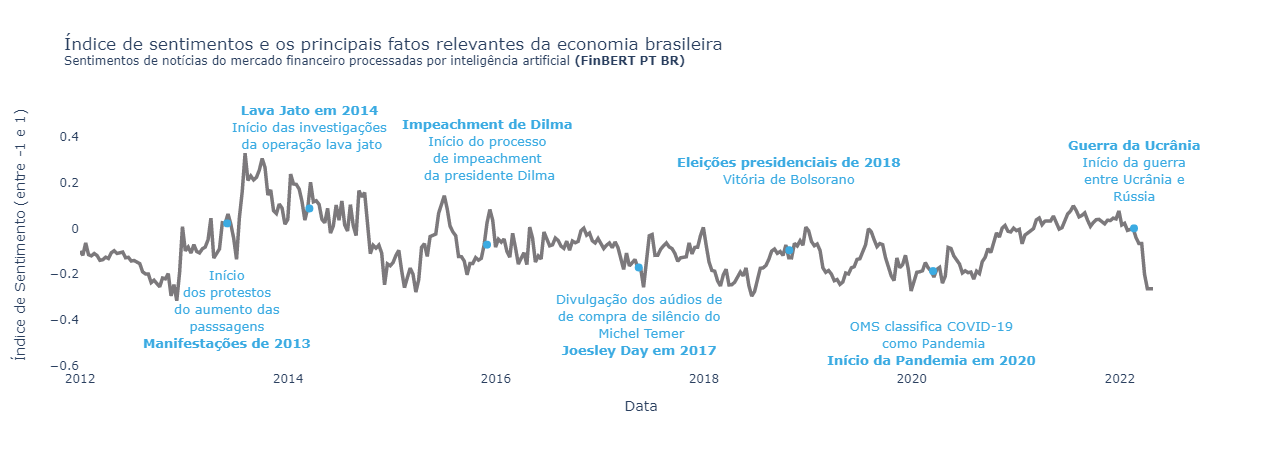

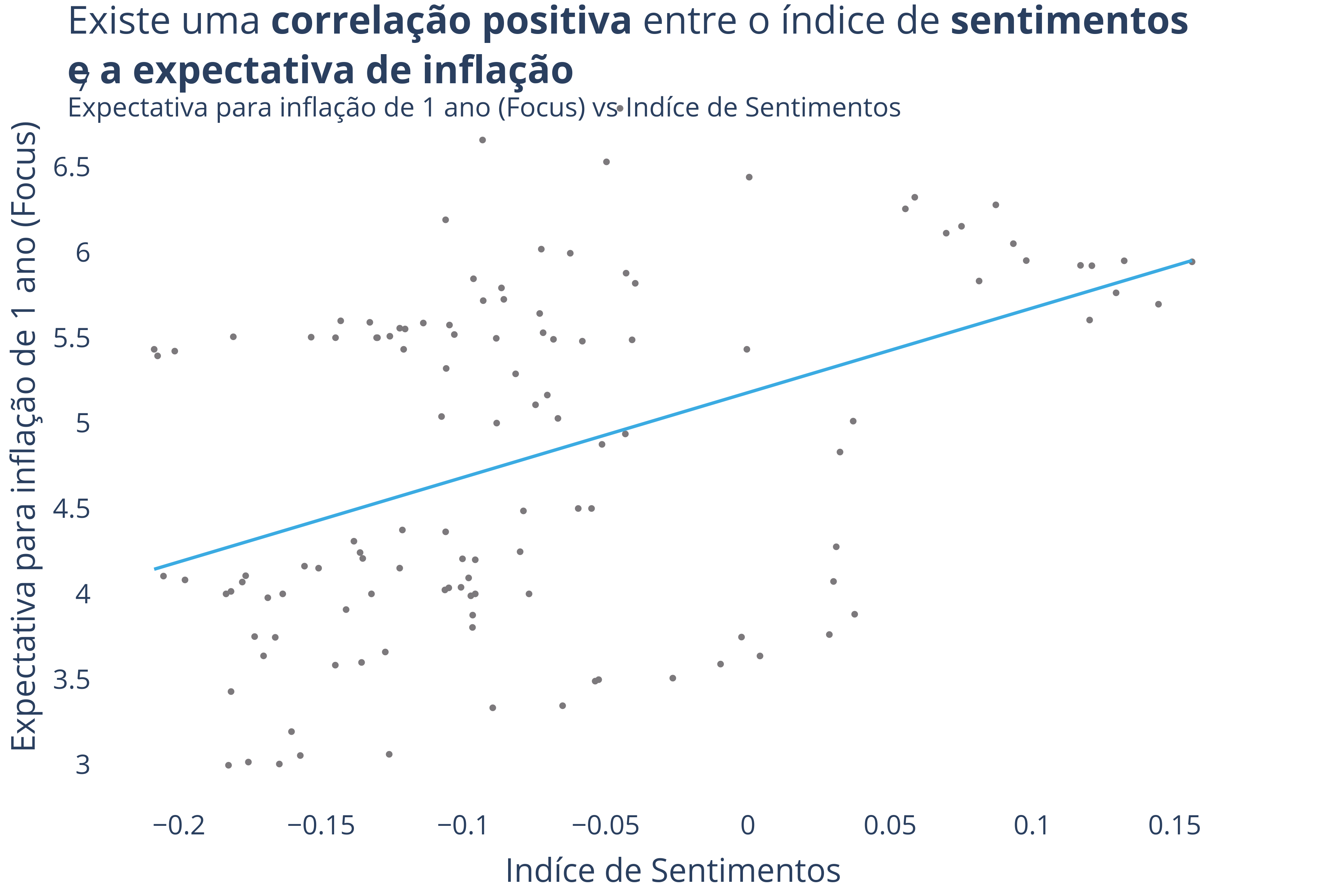

The work concludes with a comparative analysis against other models and a presentation of possible applications of the developed model. In the comparative analysis, the developed model achieved better results than the current state-of-the-art models. The applications demonstrate that the model can be used to build sentiment indices, design investment strategies, and analyze macroeconomic data such as inflation.

Applications

Sentiment Index
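As a rough illustration of how a sentiment index could be built from the model's predictions, the sketch below averages label scores per day. The scoring scheme (+1, -1, 0), the function name `daily_sentiment_index`, and the sample dates are assumptions made for this example and are not taken from the thesis.

```python
from collections import defaultdict

# Hypothetical scoring scheme: an assumption for this sketch, not the thesis' definition
LABEL_SCORES = {"POSITIVE": 1, "NEGATIVE": -1, "NEUTRAL": 0}


def daily_sentiment_index(labeled_texts):
    """Average the label scores per date.

    labeled_texts: iterable of (date_string, label) pairs, where label is
    one of the keys of LABEL_SCORES.
    Returns a dict mapping each date to a value between -1 and 1.
    """
    totals = defaultdict(int)
    counts = defaultdict(int)
    for date, label in labeled_texts:
        totals[date] += LABEL_SCORES[label]
        counts[date] += 1
    return {date: totals[date] / counts[date] for date in sorted(totals)}


# Made-up predictions, standing in for model outputs on dated news texts
predictions = [
    ("2021-12-01", "POSITIVE"),
    ("2021-12-01", "NEGATIVE"),
    ("2021-12-01", "POSITIVE"),
    ("2021-12-02", "NEGATIVE"),
    ("2021-12-02", "NEUTRAL"),
]
index = daily_sentiment_index(predictions)
print(index)
```

A value near 1 means mostly positive texts on that day, a value near -1 mostly negative ones. Other aggregations, such as weighting by model confidence, are equally plausible.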

Inflation Analysis

Usage

To use the model, you need a Hugging Face access token, which you can create on the access tokens page of your Hugging Face account settings.

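from transformers import AutoTokenizer, BertForSequenceClassification
import numpy as np

# Map the classifier's output indices to sentiment labels
pred_mapper = {
    0: "POSITIVE",
    1: "NEGATIVE",
    2: "NEUTRAL"
}

# Replace with your own Hugging Face access token
huggingface_auth_token = 'AUTH_TOKEN'

# Note: recent transformers releases take `token=`; older ones used `use_auth_token=`
tokenizer = AutoTokenizer.from_pretrained("lucas-leme/FinBERT-PT-BR", token=huggingface_auth_token)
finbertptbr = BertForSequenceClassification.from_pretrained("lucas-leme/FinBERT-PT-BR", token=huggingface_auth_token)

# Tokenize a batch of sentences ("Today the stock market fell", "Today the stock market rose"),
# padding and truncating to at most 512 tokens
tokens = tokenizer(["Hoje a bolsa caiu", "Hoje a bolsa subiu"], return_tensors="pt",
                   padding=True, truncation=True, max_length=512)

# Run the classifier and pick the highest-scoring label for each sentence
finbertptbr_outputs = finbertptbr(**tokens)
preds = [pred_mapper[np.argmax(pred)] for pred in finbertptbr_outputs.logits.cpu().detach().numpy()]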
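The usage snippet above returns only the top label for each sentence. If a confidence score is also useful, one option is to apply a softmax to each row of logits. The sketch below does this in plain Python. The helper names `softmax` and `label_with_confidence` and the logit values are made up for illustration; real logits could be obtained with `finbertptbr_outputs.logits.tolist()`.

```python
import math

# Same index-to-label mapping as in the usage snippet
pred_mapper = {0: "POSITIVE", 1: "NEGATIVE", 2: "NEUTRAL"}


def softmax(logits):
    """Convert a list of raw logits into probabilities that sum to 1."""
    peak = max(logits)
    # Subtract the maximum before exponentiating for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def label_with_confidence(logits):
    """Return (label, probability) for the highest-scoring class."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return pred_mapper[best], probs[best]


# Made-up logits for two sentences (illustrative values, not real model output)
example_logits = [[-1.2, 3.4, 0.1], [2.8, -0.5, 0.3]]
results = [label_with_confidence(row) for row in example_logits]
for label, confidence in results:
    print(f"{label}: {confidence:.3f}")
```

The softmax value is the model's relative preference among the three classes, not a calibrated probability, so it is best read as a rough ranking signal.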

Author

Paper - Stay tuned!

Undergraduate thesis: FinBERT-PT-BR: Análise de sentimentos de textos em português referentes ao mercado financeiro