Confucius-o1-14B

Introduction

Confucius-o1-14B is a o1-like reasoning model developed by the NetEase Youdao Team, it can be easily deployed on a single GPU without quantization. This model is based on the Qwen2.5-14B-Instruct model and adopts a two-stage learning strategy, enabling the lightweight 14B model to possess thinking abilities similar to those of o1. What sets it apart is that after generating the chain of thought, it can summarize a step-by-step problem-solving process from the chain of thought on its own. This can prevent users from getting bogged down in the complex chain of thought and allows them to easily obtain the correct problem-solving ideas and answers. Experience the Confucius-o1-14B demo right now!

Optimization Methods

Selection of the Base Model: Our open-source model is based on the Qwen2.5-14B-Instruct model. We chose this model as the starting point for optimization because it can be deployed on a single GPU and has powerful basic capabilities. Our internal experiments show that starting from a base model with stronger mathematical capabilities and going through the same optimization process can result in an o1-like model with stronger reasoning capabilities.

Two-stage Learning: Our optimization process is divided into two stages. In the first stage, the model learns from a larger o1-like teacher model. This is the most effective way to enable a small model to efficiently master the o1 thinking pattern. In the second stage, the model conducts self-iterative learning to further enhance its reasoning ability. On our internal evaluation dataset, these two stages bring about a performance improvement of approximately 10 points and 6 points respectively.

Data Formatting: Different from general o1-like models, our model is designed for applications in the education field. Therefore, we expect the model not only to output the final answer but also to provide a step-by-step problem-solving process based on the correct thinking process in the chain of thought. To this end, we standardize the output format of the model as follows: the chain-of-thought process is output in the <thinking></thinking> block, and then the step-by-step problem-solving process is summarized in the <summary></summary> block.

More Stringent Data filtering: To ensure the quality of the learning data, we not only evaluate the correctness of the final answer but also examine the accuracy of the explanation process in the entire summary. This is achieved through an automated evaluation methods developed internally, which can effectively prevent the model from learning false positives.

Selection of Training Instructions: The training instruction data we used was sampled from an internal training dataset. We made this data selection because our optimization is mainly targeted at applications in the education field. To efficiently verify the effectiveness, the sample size is only 6,000 records, mainly covering non-graphical mathematical problems in K12 scenarios, and there is no overlap with the training data of the benchmark test set. It has been proven that with just a small amount of data, it is possible to transform a general-purpose model into a Chain of Thought model with reasoning capabilities similar to those of o1.

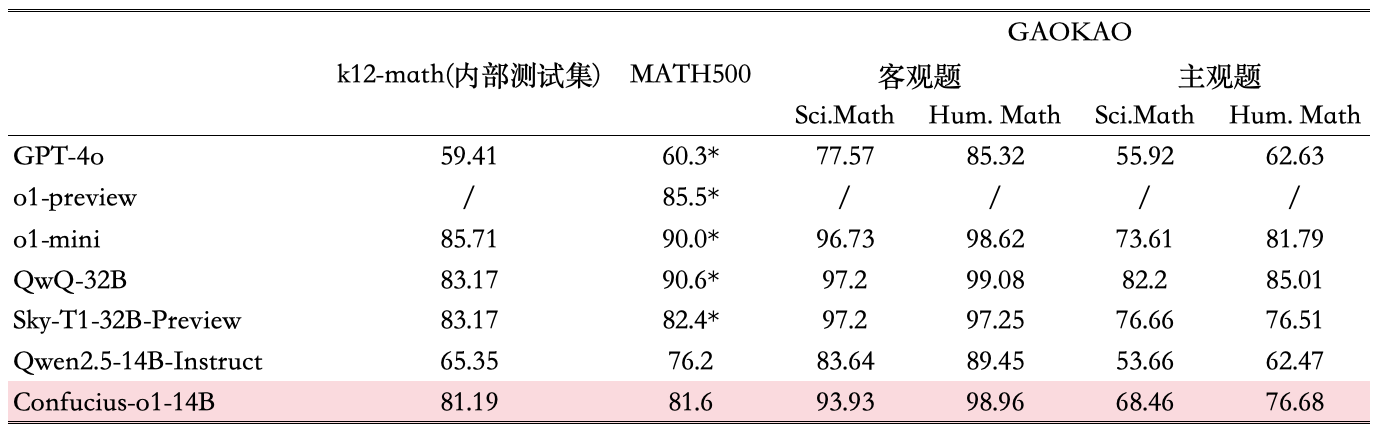

Evaluation and Results

Note: The results marked with * are directly obtained from the data provided by the respective model/interface provider, and the other results are from our evaluation.

Limitations

However, there are some limitations that must be stated in advance:

- Scenario Limitations: Our optimization is only carried out on data from the K12 mathematics scenario, and the effectiveness has only been verified in math-related benchmark tests. The performance of the model in non-mathematical scenarios has not been tested, so we cannot guarantee its quality and effectiveness in other fields.

- Language-related Issues: In the “summary” block, the model has a stronger tendency to generate Chinese content. In the “thinking” block, the model may reason in an unexpected language environment or even present a mixture of languages. However, this does not affect the actual reasoning ability of the model. This indicates that the chain of thought itself may not have independent value, it is merely an easier-to-learn path leading to a correct summary.

- Invalid Results: The model may sometimes fall into circular reasoning. Since we use explicit identifiers to divide the thinking and summary parts, when the model enters this mode, it may generate invalid results that cannot be parsed.

- Safety and Ethics: This model has not undergone optimization and testing for alignment at the safety and ethical levels. Any output generated by the model does not represent the official positions, views, or attitudes of our company. When using this model, users should independently judge and evaluate the rationality and applicability of the output content and comply with relevant laws, regulations, and social ethics.

Quickstart

The environmental requirements for running it are exactly the same as those of the Qwen2.5-14B-Instruct model. Therefore, you can easily use Transformers or vLLM to load and run the model for inference, and deploy your services.

The only thing you need to pay attention to is to use the predefined system message and user message templates provided below to request the model. Other templates may also be usable, but we haven't tested them yet.

SYSTEM_PROMPT_TEMPLATE = """你叫"小P老师",是一位由网易有道「子曰」教育大模型创建的AI家庭教师。

尽你所能回答数学问题。

!!! 请记住:

- 你应该先通过思考探索正确的解题思路,然后按照你思考过程里正确的解题思路总结出一个包含3-5步解题过程的回答。

思考过程的一些准则:

- 这个思考过程应该呈现出一种原始、自然且意识流的状态,就如同你在解题时内心的独白一样,因此可以包含一些喃喃自语。

- 在思考初期,你应该先按自己的理解重述问题,考虑问题暗含的更广泛的背景信息,并梳理出已知和未知的元素,及其与你所学知识的一些关联点,并发散思维考虑可能有几种潜在的解题思路。

- 当你确定了一个解题思路时,你应该先逐步按预想的思路推进,但是一旦你发现矛盾或者不符合预期的地方,你应该及时停下来,提出你的质疑,认真验证该思路是否还可以继续。

- 当你发现一个思路已经不可行时,你应该灵活切换到其他思路上继续推进你的思考。

- 当你按照一个思路给出答案后,切记要仔细验证你的每一个推理和计算细节,这时候逆向思维可能有助于你发现潜在的问题。

- 你的思考应该是细化的,需要包括详细的计算和推理的细节。

- 包含的喃喃自语应该是一个口语化的表达,需要和上下文语境匹配,并且尽量多样化。

总结的解题过程的格式要求:

- 求解过程应该分为3-5步,每个步骤前面都明确给出步骤序号(比如:“步骤1”)及其小标题

- 每个步骤里只给出核心的求解过程和阶段性答案。

- 在最后一个步骤里,你应该总结一下最终的答案。

请使用以下模板。

<question>待解答的数学问题</question>

<thinking>

这里记录你详细的思考过程

</thinking>

<summary>

根据思考过程里正确的解题路径总结出的,包含3-5步解题过程的回答。

</summary>"""

USER_PROMPT_TEMPLATE = """现在,让我们开始吧!

<question>{question}</question>"""

Then you can create your messages as follows and use them to request model results. You just need to fill in your instructions in the "question" field.

from transformers import AutoModelForCausalLM, AutoTokenizer

model_name = "netease-youdao/Confucius-o1-14B"

model = AutoModelForCausalLM.from_pretrained(

model_name,

torch_dtype="auto",

device_map="auto"

)

tokenizer = AutoTokenizer.from_pretrained(model_name)

messages = [

{'role': 'system', 'content': SYSTEM_PROMPT_TEMPLATE},

{'role': 'user', 'content': USER_PROMPT_TEMPLATE.format(question=question)},

]

text = tokenizer.apply_chat_template(

messages,

tokenize=False,

add_generation_prompt=True

)

model_inputs = tokenizer([text], return_tensors="pt").to(model.device)

generated_ids = model.generate(

**model_inputs,

max_new_tokens=16384

)

generated_ids = [

output_ids[len(input_ids):] for input_ids, output_ids in zip(model_inputs.input_ids, generated_ids)

]

response = tokenizer.batch_decode(generated_ids, skip_special_tokens=True)[0]

After obtaining the model results, you can parse out the "thinking" and "summary" parts as follows.

def parse_result_nostep(result):

thinking_pattern = r"<thinking>(.*?)</thinking>"

summary_pattern = r"<summary>(.*?)</summary>"

thinking_list = re.findall(thinking_pattern, result, re.DOTALL)

summary_list = re.findall(summary_pattern, result, re.DOTALL)

assert len(thinking_list) == 1 and len(summary_list) == 1, \

f"The parsing results do not meet the expectations.\n{result}"

thinking = thinking_list[0].strip()

summary = summary_list[0].strip()

return thinking, summary

thinking, summary = parse_result_nostep(response)

Citation

If you find our work helpful, feel free to give us a cite.

@misc{confucius-o1,

author = {NetEase Youdao Team},

title = {Confucius-o1: Open-Source Lightweight Large Models to Achieve Excellent Chain-of-Thought Reasoning on Consumer-Grade Graphics Cards.},

url = {https://huggingface.co/netease-youdao/Confucius-o1-14B},

month = {January},

year = {2025}

}

- Downloads last month

- 98