File size: 11,083 Bytes

9e9d27b 339b1e7 9e9d27b 339b1e7 9e9d27b 339b1e7 9e9d27b 339b1e7 9e9d27b 339b1e7 9e9d27b 339b1e7 9e9d27b 339b1e7 9e9d27b 339b1e7 9e9d27b 339b1e7 9e9d27b 339b1e7 9e9d27b 339b1e7 9e9d27b 339b1e7 9e9d27b |

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 114 115 116 117 118 119 120 121 122 123 124 125 126 127 128 129 130 131 132 133 134 135 136 137 138 139 140 141 142 143 144 145 146 147 148 149 150 151 152 153 154 155 156 157 158 159 160 161 162 163 164 165 166 167 168 169 170 171 172 173 174 175 176 177 178 179 180 181 |

---

language:

- multilingual

- en

- de

- fr

- ja

license: mit

tags:

- object-detection

- vision

- generated_from_trainer

- DocLayNet

- COCO

- PDF

- IBM

- Financial-Reports

- Finance

- Manuals

- Scientific-Articles

- Science

- Laws

- Law

- Regulations

- Patents

- Government-Tenders

- object-detection

- image-segmentation

- token-classification

inference: false

datasets:

- pierreguillou/DocLayNet-base

metrics:

- precision

- recall

- f1

- accuracy

model-index:

- name: layout-xlm-base-finetuned-with-DocLayNet-base-at-linelevel-ml384

results:

- task:

name: Token Classification

type: token-classification

metrics:

- name: f1

type: f1

value: 0.7336

---

<!-- This model card has been generated automatically according to the information the Trainer had access to. You

should probably proofread and complete it, then remove this comment. -->

# Document Understanding model (at line level)

This model is a fine-tuned version of [microsoft/layoutxlm-base](https://huggingface.co/microsoft/layoutxlm-base) with the [DocLayNet base](https://huggingface.co/datasets/pierreguillou/DocLayNet-base) dataset.

It achieves the following results on the evaluation set:

- Loss: 0.2364

- Precision: 0.7260

- Recall: 0.7415

- F1: 0.7336

- Accuracy: 0.9373

## References

### Other models

- LiLT base

- [Document Understanding model (at paragraph level)](https://huggingface.co/pierreguillou/lilt-xlm-roberta-base-finetuned-with-DocLayNet-base-at-paragraphlevel-ml512)

- [Document Understanding model (at line level)](https://huggingface.co/pierreguillou/lilt-xlm-roberta-base-finetuned-with-DocLayNet-base-at-linelevel-ml384)

### Blog posts

- Layout XLM base

- (03/05/2023) [Document AI | Inference APP and fine-tuning notebook for Document Understanding at line level with LayoutXLM base]()

- LiLT base

- (02/16/2023) [Document AI | Inference APP and fine-tuning notebook for Document Understanding at paragraph level](https://medium.com/@pierre_guillou/document-ai-inference-app-and-fine-tuning-notebook-for-document-understanding-at-paragraph-level-c18d16e53cf8)

- (02/14/2023) [Document AI | Inference APP for Document Understanding at line level](https://medium.com/@pierre_guillou/document-ai-inference-app-for-document-understanding-at-line-level-a35bbfa98893)

- (02/10/2023) [Document AI | Document Understanding model at line level with LiLT, Tesseract and DocLayNet dataset](https://medium.com/@pierre_guillou/document-ai-document-understanding-model-at-line-level-with-lilt-tesseract-and-doclaynet-dataset-347107a643b8)

- (01/31/2023) [Document AI | DocLayNet image viewer APP](https://medium.com/@pierre_guillou/document-ai-doclaynet-image-viewer-app-3ac54c19956)

- (01/27/2023) [Document AI | Processing of DocLayNet dataset to be used by layout models of the Hugging Face hub (finetuning, inference)](https://medium.com/@pierre_guillou/document-ai-processing-of-doclaynet-dataset-to-be-used-by-layout-models-of-the-hugging-face-hub-308d8bd81cdb)

### Notebooks (paragraph level)

- LiLT base

- [Document AI | Inference APP at paragraph level with a Document Understanding model (LiLT fine-tuned on DocLayNet dataset)](https://github.com/piegu/language-models/blob/master/Gradio_inference_on_LiLT_model_finetuned_on_DocLayNet_base_in_any_language_at_levelparagraphs_ml512.ipynb)

- [Document AI | Inference at paragraph level with a Document Understanding model (LiLT fine-tuned on DocLayNet dataset)](https://github.com/piegu/language-models/blob/master/inference_on_LiLT_model_finetuned_on_DocLayNet_base_in_any_language_at_levelparagraphs_ml512.ipynb)

- [Document AI | Fine-tune LiLT on DocLayNet base in any language at paragraph level (chunk of 512 tokens with overlap)](https://github.com/piegu/language-models/blob/master/Fine_tune_LiLT_on_DocLayNet_base_in_any_language_at_paragraphlevel_ml_512.ipynb)

### Notebooks (line level)

- Layout XLM base

- [Document AI | Inference at line level with a Document Understanding model (LayoutXLM base fine-tuned on DocLayNet dataset)](https://github.com/piegu/language-models/blob/master/inference_on_LayoutXLM_base_model_finetuned_on_DocLayNet_base_in_any_language_at_levellines_ml384.ipynb)

- [Document AI | Inference APP at line level with a Document Understanding model (LayoutXLM base fine-tuned on DocLayNet base dataset)](https://github.com/piegu/language-models/blob/master/Gradio_inference_on_LayoutXLM_base_model_finetuned_on_DocLayNet_base_in_any_language_at_levellines_ml384.ipynb)

- [Document AI | Fine-tune LayoutXLM base on DocLayNet base in any language at line level (chunk of 384 tokens with overlap)](https://github.com/piegu/language-models/blob/master/Fine_tune_LayoutXLM_base_on_DocLayNet_base_in_any_language_at_linelevel_ml_384.ipynb)

- LiLT base

- [Document AI | Inference at line level with a Document Understanding model (LiLT fine-tuned on DocLayNet dataset)](https://github.com/piegu/language-models/blob/master/inference_on_LiLT_model_finetuned_on_DocLayNet_base_in_any_language_at_levellines_ml384.ipynb)

- [Document AI | Inference APP at line level with a Document Understanding model (LiLT fine-tuned on DocLayNet dataset)](https://github.com/piegu/language-models/blob/master/Gradio_inference_on_LiLT_model_finetuned_on_DocLayNet_base_in_any_language_at_levellines_ml384.ipynb)

- [Document AI | Fine-tune LiLT on DocLayNet base in any language at line level (chunk of 384 tokens with overlap)](https://github.com/piegu/language-models/blob/master/Fine_tune_LiLT_on_DocLayNet_base_in_any_language_at_linelevel_ml_384.ipynb)

- [DocLayNet image viewer APP](https://github.com/piegu/language-models/blob/master/DocLayNet_image_viewer_APP.ipynb)

- [Processing of DocLayNet dataset to be used by layout models of the Hugging Face hub (finetuning, inference)](processing_DocLayNet_dataset_to_be_used_by_layout_models_of_HF_hub.ipynb)

### APP

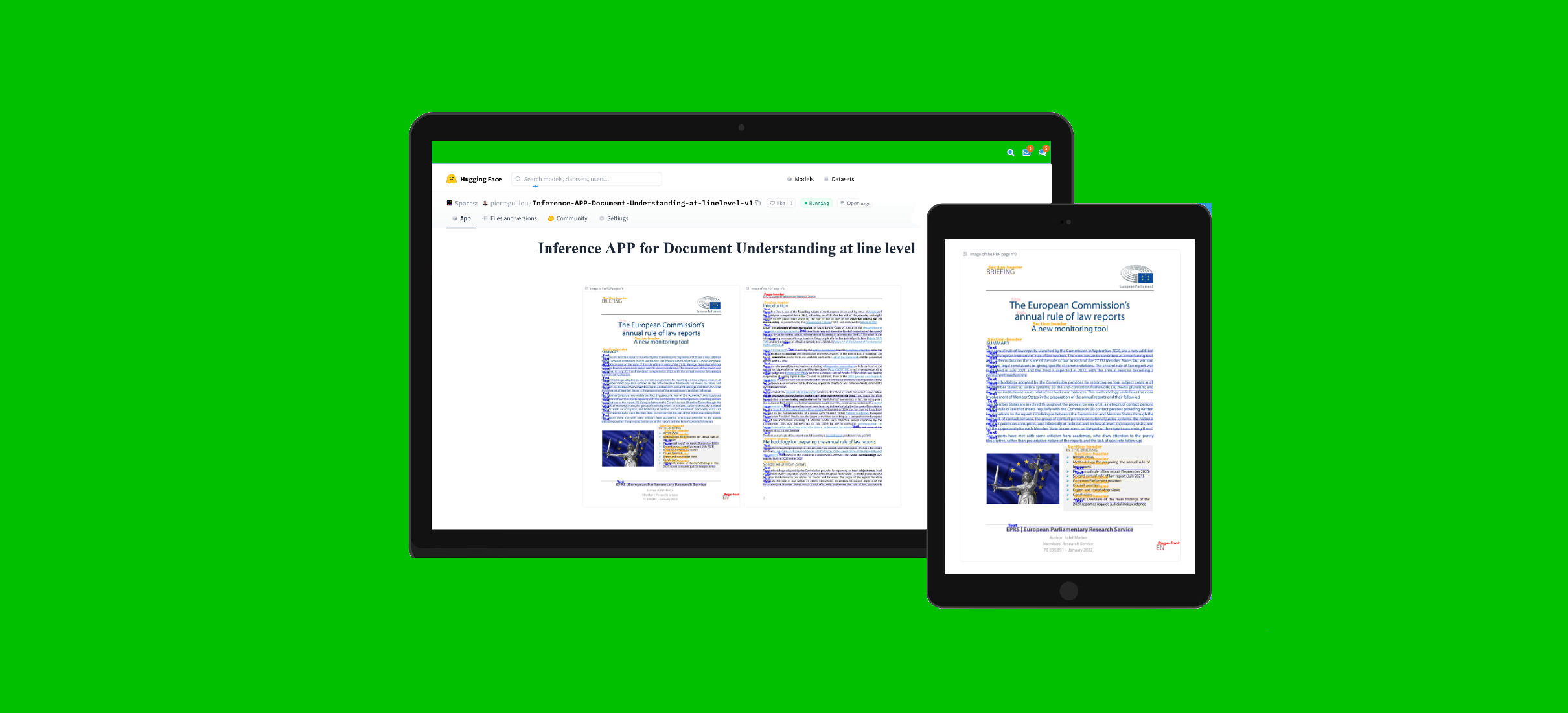

You can test this model with this APP in Hugging Face Spaces: [Inference APP for Document Understanding at line level (v2)](https://huggingface.co/spaces/pierreguillou/Inference-APP-Document-Understanding-at-linelevel-v2).

### DocLayNet dataset

[DocLayNet dataset](https://github.com/DS4SD/DocLayNet) (IBM) provides page-by-page layout segmentation ground-truth using bounding-boxes for 11 distinct class labels on 80863 unique pages from 6 document categories.

Until today, the dataset can be downloaded through direct links or as a dataset from Hugging Face datasets:

- direct links: [doclaynet_core.zip](https://codait-cos-dax.s3.us.cloud-object-storage.appdomain.cloud/dax-doclaynet/1.0.0/DocLayNet_core.zip) (28 GiB), [doclaynet_extra.zip](https://codait-cos-dax.s3.us.cloud-object-storage.appdomain.cloud/dax-doclaynet/1.0.0/DocLayNet_extra.zip) (7.5 GiB)

- Hugging Face dataset library: [dataset DocLayNet](https://huggingface.co/datasets/ds4sd/DocLayNet)

Paper: [DocLayNet: A Large Human-Annotated Dataset for Document-Layout Analysis](https://arxiv.org/abs/2206.01062) (06/02/2022)

## Model description

The model was finetuned at **line level on chunk of 384 tokens with overlap of 128 tokens**. Thus, the model was trained with all layout and text data of all pages of the dataset.

At inference time, a calculation of best probabilities give the label to each line bounding boxes.

## Inference

See notebook: [Document AI | Inference at line level with a Document Understanding model (LayoutXLM base fine-tuned on DocLayNet dataset)](https://github.com/piegu/language-models/blob/master/inference_on_LayoutXLM_base_model_finetuned_on_DocLayNet_base_in_any_language_at_levellines_ml384.ipynb)

## Training and evaluation data

See notebook: [Document AI | Fine-tune LayoutXLM base on DocLayNet base in any language at line level (chunk of 384 tokens with overlap)](https://github.com/piegu/language-models/blob/master/Fine_tune_LayoutXLM_base_on_DocLayNet_base_in_any_language_at_linelevel_ml_384.ipynb)

## Training procedure

### Training hyperparameters

The following hyperparameters were used during training:

- learning_rate: 2e-05

- train_batch_size: 8

- eval_batch_size: 16

- seed: 42

- optimizer: Adam with betas=(0.9,0.999) and epsilon=1e-08

- lr_scheduler_type: linear

- lr_scheduler_warmup_ratio: 0.1

- num_epochs: 3

- mixed_precision_training: Native AMP

### Training results

| Training Loss | Epoch | Step | Accuracy | F1 | Validation Loss | Precision | Recall |

|:-------------:|:-----:|:----:|:--------:|:------:|:---------------:|:---------:|:------:|

| No log | 0.12 | 300 | 0.8413 | 0.1311 | 0.5185 | 0.1437 | 0.1205 |

| 0.9231 | 0.25 | 600 | 0.8751 | 0.5031 | 0.4108 | 0.4637 | 0.5498 |

| 0.9231 | 0.37 | 900 | 0.8887 | 0.5206 | 0.3911 | 0.5076 | 0.5343 |

| 0.369 | 0.5 | 1200 | 0.8724 | 0.5365 | 0.4118 | 0.5094 | 0.5667 |

| 0.2737 | 0.62 | 1500 | 0.8960 | 0.6033 | 0.3328 | 0.6046 | 0.6020 |

| 0.2737 | 0.75 | 1800 | 0.9186 | 0.6404 | 0.2984 | 0.6062 | 0.6787 |

| 0.2542 | 0.87 | 2100 | 0.9163 | 0.6593 | 0.3115 | 0.6324 | 0.6887 |

| 0.2542 | 1.0 | 2400 | 0.9198 | 0.6537 | 0.2878 | 0.6160 | 0.6962 |

| 0.1938 | 1.12 | 2700 | 0.9165 | 0.6752 | 0.3414 | 0.6673 | 0.6833 |

| 0.1581 | 1.25 | 3000 | 0.9193 | 0.6871 | 0.3611 | 0.6868 | 0.6875 |

| 0.1581 | 1.37 | 3300 | 0.9256 | 0.6822 | 0.2763 | 0.6988 | 0.6663 |

| 0.1428 | 1.5 | 3600 | 0.9287 | 0.7084 | 0.3065 | 0.7246 | 0.6929 |

| 0.1428 | 1.62 | 3900 | 0.9194 | 0.6812 | 0.2942 | 0.6866 | 0.6760 |

| 0.1025 | 1.74 | 4200 | 0.9347 | 0.7223 | 0.2990 | 0.7315 | 0.7133 |

| 0.1225 | 1.87 | 4500 | 0.9360 | 0.7048 | 0.2729 | 0.7249 | 0.6858 |

| 0.1225 | 1.99 | 4800 | 0.9396 | 0.7222 | 0.2826 | 0.7497 | 0.6966 |

| 0.108 | 2.12 | 5100 | 0.9301 | 0.7193 | 0.3071 | 0.7022 | 0.7372 |

| 0.108 | 2.24 | 5400 | 0.9334 | 0.7243 | 0.2999 | 0.7250 | 0.7237 |

| 0.0799 | 2.37 | 5700 | 0.9382 | 0.7254 | 0.2710 | 0.7310 | 0.7198 |

| 0.0793 | 2.49 | 6000 | 0.9329 | 0.7228 | 0.3201 | 0.7352 | 0.7108 |

| 0.0793 | 2.62 | 6300 | 0.9373 | 0.7336 | 0.3035 | 0.7260 | 0.7415 |

| 0.0696 | 2.74 | 6600 | 0.9374 | 0.7275 | 0.3137 | 0.7313 | 0.7237 |

| 0.0696 | 2.87 | 6900 | 0.9381 | 0.7253 | 0.3242 | 0.7369 | 0.7142 |

| 0.0866 | 2.99 | 7200 | 0.2473 | 0.7439 | 0.7207 | 0.7321 | 0.9407 |

### Framework versions

- Transformers 4.26.1

- Pytorch 1.10.0+cu111

- Datasets 2.10.1

- Tokenizers 0.13.2

|