SmolLM2-1.7B-TLDR

This model is a fine-tuned version of SmolLM2-1.7B-Instruct, optimized for generating concise summaries of long texts (TL;DR - Too Long; Didn't Read). It was trained using Group Relative Policy Optimization (GRPO) to improve the model's ability to extract key information from longer documents while maintaining brevity.

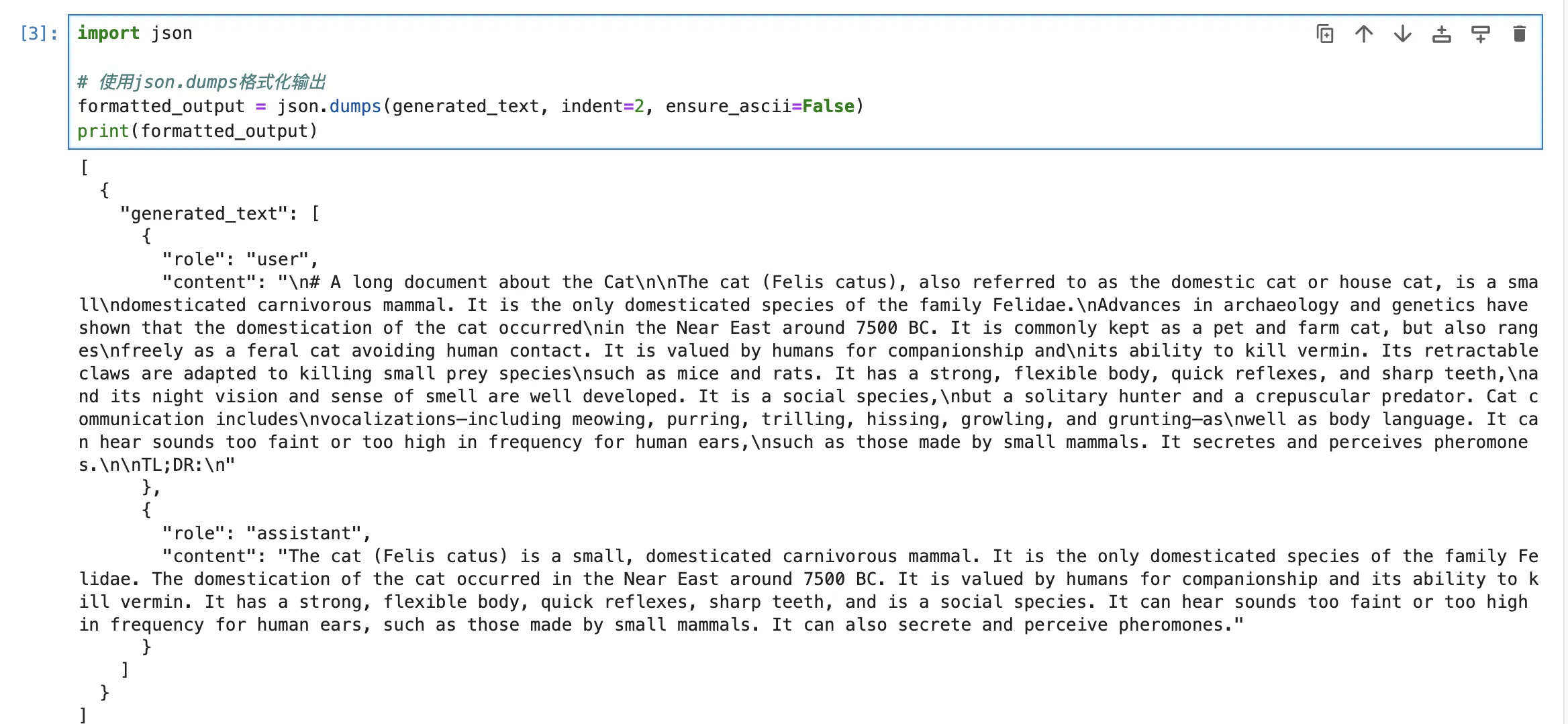

Demo

Uses

This model is specifically designed for text summarization tasks, particularly producing TL;DR versions of longer documents. The model works best when prompted with a long text followed by "TL;DR:" to indicate where the summary should begin.

Example usage:

from transformers import pipeline

generator = pipeline("text-generation", model="real-jiakai/SmolLM2-1.7B-TLDR")

messages = [

{"role": "user", "content": "Your long text here...\n\nTL;DR:"}

]

generate_kwargs = {

"max_new_tokens": 256,

"do_sample": True,

"temperature": 0.5,

"min_p": 0.1,

}

generated_text = generator(messages, **generate_kwargs)

print(generated_text)

Training Details

Training Data

The model was fine-tuned on the mlabonne/smoltldr dataset, which contains 2000 training samples of long-form content paired with concise summaries. Each sample consists of a prompt (long text) and a completion (summary).

Training Procedure

The model was trained using the TRL (Transformer Reinforcement Learning) library's GRPOTrainer with the following configuration:

Training Hyperparameters

- Learning rate: 2e-5

- Batch size: 2 per device

- Gradient accumulation steps: 8

- Training epochs: 1

- Max prompt length: 512

- Max completion length: 96

- Number of generations per prompt: 4

- Optimizer: AdamW 8-bit

- Precision: BF16

- Reward function: Length-based optimization targeting concise summaries

The training process utilized LoRA (Low-Rank Adaptation) for parameter-efficient fine-tuning with the following configuration:

- LoRA rank (r): 16

- LoRA alpha: 32

- Target modules: All linear layers

Training Process

Training showed a progression of loss values from near-zero to approximately 0.01 over 125 steps, indicating gradual learning of the summarization task through reinforcement. The complete training process took approximately 1 hour on two NVIDIA RTX 4090 GPUs.

Citation

@misc{allal2025smollm2smolgoesbig,

title={SmolLM2: When Smol Goes Big -- Data-Centric Training of a Small Language Model},

author={Loubna Ben Allal and Anton Lozhkov and Elie Bakouch and Gabriel Martín Blázquez and Guilherme Penedo and Lewis Tunstall and Andrés Marafioti and Hynek Kydlíček and Agustín Piqueres Lajarín and Vaibhav Srivastav and Joshua Lochner and Caleb Fahlgren and Xuan-Son Nguyen and Clémentine Fourrier and Ben Burtenshaw and Hugo Larcher and Haojun Zhao and Cyril Zakka and Mathieu Morlon and Colin Raffel and Leandro von Werra and Thomas Wolf},

year={2025},

eprint={2502.02737},

archivePrefix={arXiv},

primaryClass={cs.CL},

url={https://arxiv.org/abs/2502.02737},

}

- Downloads last month

- 0